A WORD FROM ME

When I studied electronics engineering at the undergraduate level, I came to understand that electronic engineering is a pure technique, something I had already learned for myself through analysis and self-taught practice at home. I saw then that electronics cannot be understood only from a technical drawing and the symbols on the drawing paper. An electronics engineer must be able to engineer devices and electronic materials that are structured to fit the function and requirements desired by individuals, groups, or the wider needs of the earth. In this respect electronics engineering is unlike other fields such as building or civil engineering, architectural engineering, mapping techniques (geodesy and geology), mechanical engineering, and the newer electrical engineering branch of informatics engineering. In electronics engineering, the engineering drawing, the components, the raw materials, the design process, the analysis of both software and brainware, scale comparison, component replacement (component equivalents), the installation of components on the PCB (whether by soldering or by later techniques such as SMT, up to nanotechnology), the quality of the component materials used, and robotic versus human assembly are all interrelated, and together they determine the results of the process and the performance of the tools and materials desired by the user.
After I graduated from college, and during a further year of hardware design study equivalent to postgraduate (S-2) level, I researched with the equipment I had at the time in my study and workspace at home. I found that the performance of an electronic device cannot be established from paper calculations and design drawings alone. After we compute a design using the circuit theory taught in lectures and in books such as Electronic Principles (Malvino) and Integrated Electronics (Millman and Halkias), we are still constrained in how we place the electronic components according to the drawing or design that we, or the user, want. The installed components must match the design drawing exactly, along with the numerical analysis, the circuit theory calculations (node, mesh, Norton, Thevenin, superposition, linearity analysis, etc.), and the mathematical transformations used in the analysis (Laplace, Fourier, differential, integral, Jacobian, etc.). As a point of comparison, we have to install components that are as good and as close to ideal as possible, according to the calculations and the circuit design analysis.

Design of electronic devices (Hardware design) = Performance of components and materials of electronics .

Electronic Engineering = Pure Technique

XXX . XXX stack pointer

A stack pointer is a small register that stores the address of the last program request in a stack. A stack is a specialized buffer which stores data from the top down. As new requests come in, they "push down" the older ones. The most recently entered request always resides at the top of the stack, and the program always takes requests from the top.

A stack (also called a pushdown stack) operates in a last-in/first-out sense. On a stack that grows upward in memory, when a new data item is entered or "pushed" onto the top of the stack, the stack pointer increments to the next physical memory address, and the new item is copied to that address. When a data item is "pulled" or "popped" from the top of the stack, the item is copied from the address of the stack pointer, and the stack pointer decrements to the next available item at the top of the stack. On a stack that grows downward, the increment and decrement are swapped.

A stack is a LIFO (last in, first out - the last entry you push on to the stack is the first one you get back when you pop) data structure that is typically used to hold stack frames (bits of the stack that belong to the current function).

This includes, but is not limited to:

- the return address.

- a place for a return value.

- passed parameters.

- local variables.

The actual implementation of a stack depends on the microprocessor architecture. It can grow up or down in memory and can move either before or after the push/pop operations.
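The two choices mentioned above (growth direction, and whether the pointer moves before or after the access) give four conventions. The following is a minimal sketch of all four; the function name `make_stack` and its parameters are hypothetical, chosen only for this illustration, and memory is modelled as a Python dictionary rather than real RAM.

```python
def make_stack(grows_down: bool, sp_points_to_last_item: bool, base: int):
    """Return (push, pop, state) for one of the four stack conventions."""
    mem = {}                       # sparse model of memory: address -> value
    state = {"sp": base}
    step = -1 if grows_down else 1

    def push(value):
        if sp_points_to_last_item:
            # SP points at the last stored item: move first, then store.
            state["sp"] += step
            mem[state["sp"]] = value
        else:
            # SP points at the next free slot: store first, then move.
            mem[state["sp"]] = value
            state["sp"] += step

    def pop():
        if sp_points_to_last_item:
            # Read the item SP points at, then move back.
            value = mem[state["sp"]]
            state["sp"] -= step
        else:
            # Move back onto the last stored item, then read it.
            state["sp"] -= step
            value = mem[state["sp"]]
        return value

    return push, pop, state
```

Whichever convention is chosen, pop must undo exactly what push did, so the stack pointer returns to its starting value once every pushed item has been popped.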

Operations that typically affect the stack are:

- subroutine calls and returns.

- interrupt calls and returns.

- code explicitly pushing and popping entries.

- direct manipulation of the SP register.

Consider the following program in my (fictional) assembly language:

    Addr  Opcodes   Instructions    ; Comments
    ----  --------  --------------  ----------
                                    ; 1: pc<-0000, sp<-8000
    0000  01 00 07  load r0,7       ; 2: pc<-0003, r0<-7
    0003  02 00     push r0         ; 3: pc<-0005, sp<-7ffe, (sp:7ffe)<-0007
    0005  03 00 00  call 000b       ; 4: pc<-000b, sp<-7ffc, (sp:7ffc)<-0008
    0008  04 00     pop r0          ; 7: pc<-000a, r0<-(sp:7ffe[0007]), sp<-8000
    000a  05        halt            ; 8: pc<-000a
    000b  06 01 02  load r1,[sp+2]  ; 5: pc<-000e, r1<-(sp+2:7ffe[0007])
    000e  07        ret             ; 6: pc<-(sp:7ffc[0008]), sp<-7ffe

1. This is the starting condition where the program counter is zero and the stack pointer is 8000 (all these numbers are hexadecimal).

2. This simply loads register r0 with the immediate value 7 and moves to the next step (I'll assume that you understand the default behavior will be to move to the next step unless otherwise specified).

3. This pushes r0 onto the stack by reducing the stack pointer by two then storing the value of the register to that location.

4. This calls a subroutine. What would have been the program counter is pushed on to the stack in a similar fashion to r0 in the previous step and then the program counter is set to its new value. This is no different to a user-level push other than the fact it's done more as a system-level thing.

5. This loads r1 from a memory location calculated from the stack pointer - it shows a way to pass parameters to functions.

6. The return statement extracts the value from where the stack pointer points and loads it into the program counter, adjusting the stack pointer up at the same time. This is like a system-level pop (see next step).

7. Popping r0 off the stack involves extracting the value from where the stack pointer points then adjusting that stack pointer up.

8. Halt instruction simply leaves program counter where it is, an infinite loop of sorts.

Hopefully from that description, it will become clear. Bottom line is: a stack is useful for storing state in a LIFO way and this is generally ideal for the way most microprocessors do subroutine calls.
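The walkthrough above can be replayed with a small interpreter. This is only a sketch of the fictional machine: the tuple encoding of each instruction, the name `run`, and the dictionary used for memory are assumptions made for illustration, not part of the original listing.

```python
def run():
    """Execute the fictional program and return (registers, sp, trace)."""
    mem = {}                          # sparse memory: address -> 16-bit word
    reg = {"r0": 0, "r1": 0}
    pc, sp = 0x0000, 0x8000           # step 1: starting condition
    trace = []                        # (pc, sp) after each executed instruction

    # address -> (operation, operand a, operand b, instruction size in bytes)
    program = {
        0x0000: ("load_imm", "r0", 7, 3),
        0x0003: ("push", "r0", None, 2),
        0x0005: ("call", 0x000B, None, 3),
        0x0008: ("pop", "r0", None, 2),
        0x000A: ("halt", None, None, 1),
        0x000B: ("load_sp", "r1", 2, 3),
        0x000E: ("ret", None, None, 1),
    }

    while True:
        op, a, b, size = program[pc]
        next_pc = pc + size           # default: fall through to next instruction
        if op == "load_imm":
            reg[a] = b
        elif op == "push":            # adjust sp down, then store the value
            sp -= 2
            mem[sp] = reg[a]
        elif op == "call":            # push the return address, then jump
            sp -= 2
            mem[sp] = next_pc
            next_pc = a
        elif op == "pop":             # read the value, then adjust sp up
            reg[a] = mem[sp]
            sp += 2
        elif op == "load_sp":         # read a parameter relative to sp
            reg[a] = mem[sp + b]
        elif op == "ret":             # pop the return address into pc
            next_pc = mem[sp]
            sp += 2
        elif op == "halt":            # pc stays where it is; stop simulating
            trace.append((pc, sp))
            break
        trace.append((next_pc, sp))
        pc = next_pc
    return reg, sp, trace
```

Calling `run()` returns the final registers, the final stack pointer and the list of (pc, sp) pairs, which can be compared line by line with the comments in the listing.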

Just to clarify the steps taken when pushing and popping values in the above example (whether explicitly or by call/return), see the following examples:

    LOAD R0,7
    PUSH R0
                         Adjust sp       Store val
    sp-> +--------+      +--------+      +--------+
         |  xxxx  |  sp->|  xxxx  |  sp->|  0007  |
         |        |      |        |      |        |
         |        |      |        |      |        |
         |        |      |        |      |        |
         +--------+      +--------+      +--------+

    POP R0
                         Get value       Adjust sp
         +--------+      +--------+  sp->+--------+
    sp-> |  0007  |  sp->|  0007  |      |  0007  |
         |        |      |        |      |        |
         |        |      |        |      |        |
         |        |      |        |      |        |
         +--------+      +--------+      +--------+

To push a value onto a stack that grows upward, the stack pointer is incremented to point to the next physical memory address, and the new value is copied to that address in memory. On a stack that grows downward, as in the example above, the stack pointer is decremented instead.

To pop a value from the stack, the value is copied from the address of the stack pointer, and the stack pointer is then moved back in the opposite direction, pointing it to the next available item in the stack.

The most typical use of a hardware stack is to store the return address of a subroutine call. When the subroutine is finished executing, the return address is popped off the top of the stack and placed in the Program Counter register, causing the processor to resume execution at the next instruction following the call to the subroutine.

The Stack Pointer is a register which holds the address of the next available spot on the stack.

The stack is an area in memory reserved to store a stack, that is, a LIFO (Last In First Out) type of container, where we store local variables and return addresses, allowing simple management of nested function calls in a typical program.

For 8085: Stack pointer is a special purpose 16-bit register in the Microprocessor, which holds the address of the top of the stack.

The stack pointer register in a computer is made available for general purpose use by programs executing at lower privilege levels than interrupt handlers. A set of instructions in such programs, excluding stack operations, stores data other than the stack pointer, such as operands, and the like, in the stack pointer register. When switching execution to an interrupt handler on an interrupt, return address data for the currently executing program is pushed onto a stack at the interrupt handler's privilege level. Thus, storing other data in the stack pointer register does not result in stack corruption. Also, these instructions can store data in a scratch portion of a stack segment beyond the current stack pointer.

The Stack is an area of memory for keeping temporary data. The CALL instruction uses the stack to keep the return address for procedures. The RET instruction gets this value from the stack and returns to that offset. The same thing happens when an INT instruction calls an interrupt: it stores the flag register, code segment and offset on the stack. The IRET instruction is used to return from an interrupt call.

The Stack is a Last In First Out (LIFO) memory. Data is placed onto the Stack with a PUSH instruction and removed with a POP instruction. The Stack memory is maintained by two registers: the Stack Pointer (SP) and the Stack Segment (SS) register. When a word of data is PUSHED onto the stack, the high-order 8-bit byte is placed in location SP-1 and the low-order 8-bit byte is placed in location SP-2. The SP is then decremented by 2. The SP is added to (SS x 10H) to form the physical stack memory address. The reverse sequence occurs when data is POPPED from the Stack: the low-order 8-bit byte is read from location SP and the high-order 8-bit byte from location SP+1. The SP is then incremented by 2.
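As a rough illustration of the byte placement and the SS x 10H address calculation, here is a minimal sketch of a real-mode stack. The class name `Stack8086` and its methods are hypothetical; on a push the low byte ends up at the new SP and the high byte one address above it, and a pop reads them back in that layout.

```python
class Stack8086:
    """Toy model of 8086 PUSH/POP on a 1 MiB real-mode address space."""

    def __init__(self, ss: int, sp: int):
        self.ss = ss
        self.sp = sp
        self.mem = bytearray(1 << 20)

    def physical(self, offset: int) -> int:
        # Physical address = SS * 10H + 16-bit offset, wrapped to 20 bits.
        return ((self.ss << 4) + (offset & 0xFFFF)) & 0xFFFFF

    def push(self, word: int) -> None:
        self.mem[self.physical(self.sp - 1)] = (word >> 8) & 0xFF  # high byte
        self.mem[self.physical(self.sp - 2)] = word & 0xFF         # low byte
        self.sp = (self.sp - 2) & 0xFFFF

    def pop(self) -> int:
        low = self.mem[self.physical(self.sp)]
        high = self.mem[self.physical(self.sp + 1)]
        self.sp = (self.sp + 2) & 0xFFFF
        return (high << 8) | low
```

With SS = 1000H and SP = F820H, `physical(sp)` gives 1F820H, and a single push leaves SP at F81EH.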

The stack pointer holds the address of the top of the stack. A stack allows functions to pass arguments stored on the stack to each other, and to create scoped variables. Scope in this context means that the variable is popped off the stack when the stack frame is gone, and/or when the function returns. Without a stack, you would need to use explicit memory addresses for everything. That would make it impossible (or at least severely difficult) to design high-level programming languages for the architecture. Also, each CPU mode usually has its own banked stack pointer, so when exceptions occur (interrupts, for example), the exception handler routine can use its own stack without corrupting the user process.

On some CPUs, there is a dedicated set of registers for the stack. When a call instruction is executed, one register is loaded with the program counter at the same time as a second register is loaded with the contents of the first, a third register is loaded with the contents of the second, a fourth with the third, etc. When a return instruction is executed, the program counter is latched with the contents of the first stack register at the same time as that register is latched from the second; the second register is loaded from the third, etc. Note that such hardware stacks tend to be rather small (many of the smaller PIC series micros, for example, have a two-level stack).

While a hardware stack does have some advantages (push and pop don't add any time to a call/return, for example) having registers which can be loaded with two sources adds cost. If the stack gets very big, it will be cheaper to replace the push-pull registers with an addressable memory. Even if a small dedicated memory is used for this, it's cheaper to have 32 addressable registers and a 5-bit pointer register with increment/decrement logic, than it is to have 32 registers each with two inputs. If an application might need more stack than would easily fit on the CPU, it's possible to use a stack pointer along with logic to store/fetch stack data from main RAM.
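The two designs compared above can be sketched side by side. Both classes below are illustrative only: the names are hypothetical, and filling the vacated level with zero on return is an assumption, since real parts differ in what they leave there.

```python
class ShiftRegisterStack:
    """Fixed-depth return stack where every level shifts on call and return."""

    def __init__(self, depth: int):
        self.levels = [0] * depth

    def call(self, return_address: int) -> None:
        # Every register takes its neighbour's value; the deepest entry is lost.
        self.levels = [return_address] + self.levels[:-1]

    def ret(self) -> int:
        top = self.levels[0]
        # Shift the other way; the vacated level is filled with 0 (assumption).
        self.levels = self.levels[1:] + [0]
        return top


class PointerStack:
    """Same interface, built from addressable cells plus a small pointer."""

    def __init__(self, depth: int):
        self.depth = depth
        self.cells = [0] * depth
        self.pointer = 0              # e.g. 5 bits are enough when depth is 32

    def call(self, return_address: int) -> None:
        self.cells[self.pointer] = return_address
        self.pointer = (self.pointer + 1) % self.depth

    def ret(self) -> int:
        self.pointer = (self.pointer - 1) % self.depth
        return self.cells[self.pointer]
```

In the first class every level changes on each call or return, which is what requires two-input registers in hardware; in the second only one cell and the pointer change. With a depth of two, a third nested call silently discards the oldest return address.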

Stack register

A stack register is a computer central processor register whose purpose is to keep track of a call stack. On an accumulator-based architecture machine, this may be a dedicated register such as SP on an Intel x86 machine. On a general register machine, it may be a register which is reserved by convention, such as on the PDP-11 or RISC machines. Some designs such as the Data General Eclipse had no dedicated register, but used a reserved hardware memory address for this function.

Machines before the late 1960s—such as the PDP-8 and HP 2100—did not have compilers which supported recursion. Their subroutine instructions typically would save the current location in the jump address, and then set the program counter to the next address. While this is simpler than maintaining a stack, since there is only one return location per subroutine code section, there cannot be recursion without considerable effort on the part of the programmer.
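A minimal sketch of that stack-less calling scheme shows why recursion breaks. The function names and the octal addresses are hypothetical; the model simply stores the return address in the first word of the subroutine and starts execution at the following word.

```python
def jms_style_call(memory: dict, subroutine_entry: int, return_address: int) -> int:
    """Save the return address in the subroutine's first word.

    Returns the address where execution of the subroutine body begins.
    """
    memory[subroutine_entry] = return_address
    return subroutine_entry + 1


def jms_style_return(memory: dict, subroutine_entry: int) -> int:
    """Return by jumping indirectly through the saved word."""
    return memory[subroutine_entry]


memory = {}
SUB = 0o200                                   # hypothetical subroutine entry
jms_style_call(memory, SUB, 0o101)            # outer call from address 0o100
jms_style_call(memory, SUB, 0o206)            # the subroutine calls itself
inner_return = jms_style_return(memory, SUB)  # goes back inside the subroutine
outer_return = jms_style_return(memory, SUB)  # the slot was overwritten
```

Because there is only one slot per subroutine, the second call overwrites the first return address, so the outer caller at 0o101 can no longer be reached.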

A stack machine has 2 or more stack registers — one of them keeps track of a call stack, the other(s) keep track of other stack(s).

Stack registers in x86

In the 8086, the main stack register is called the stack pointer (SP). The stack segment register (SS) usually holds the memory segment that stores the call stack of the currently executing program. SP points to the current stack top. By default, the stack grows downward in memory, so newer values are placed at lower memory addresses. To push a value onto the stack, the PUSH instruction is used. To pop a value from the stack, the POP instruction is used.

Example: Assume that SS = 0x1000 and SP = 0xF820. This means that the current stack top is the physical address 0x1F820 (this is due to memory segmentation in the 8086: 0x1000 x 0x10 + 0xF820). The next two machine instructions of the program are:

PUSH AX

PUSH BX

- The first instruction pushes the value stored in AX (a 16-bit register) onto the stack. This is done by subtracting 2 (2 bytes) from SP.

- The new value of SP becomes 0xF81E. The CPU then copies the value of AX to the memory word whose physical address is 0x1F81E.

- When "PUSH BX" is executed, SP is set to 0xF81C and BX is copied to 0x1F81C.

POP BX

POP AX

POP BX copies the word at 0x1F81C (which is the old value of BX) to BX, then increases SP by 2. SP is now 0xF81E. POP AX copies the word at 0x1F81E to AX, then sets SP to 0xF820.

NOTE: In the 8086, PUSH and POP instructions can only work with 16-bit elements.

Stack engine

Simpler processors store the stack pointer in a regular hardware register and use the arithmetic logic unit (ALU) to manipulate its value. Typically push and pop are translated into multiple micro-ops, to separately add/subtract the stack pointer, and perform the load/store in memory.

Newer processors contain a dedicated stack engine to optimize stack operations. Pentium M was the first x86 processor to introduce a stack engine. In its implementation, the stack pointer is split among two registers: ESP_O, which is a 32-bit register, and ESP_d, an 8-bit delta value that is updated directly by stack operations. PUSH, POP, CALL and RET opcodes operate directly with the ESP_d register. If ESP_d is near overflow or the ESP register is referenced from other instructions (when ESP_d ≠ 0), a synchronisation micro-op is inserted that updates ESP_O using the ALU and resets ESP_d to 0. This design has remained largely unmodified in later Intel processors, although ESP_O has been expanded to 64 bits.

A stack engine similar to Intel's was also adopted in the AMD K8 microarchitecture. In Bulldozer, the need for synchronization micro-ops was removed, but the internal design of the stack engine is not known.
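The split between a full-width register and a small delta can be modelled in a few lines. This is a behavioural sketch only, not a description of any real microarchitecture: the class name, the 4-byte operand size and the exact overflow threshold are assumptions.

```python
class StackEngineSketch:
    """Toy model: a full-width base value plus a signed 8-bit delta."""

    def __init__(self, esp: int):
        self.esp_o = esp        # full-width value, updated only through the ALU
        self.esp_d = 0          # small delta updated directly by push/pop
        self.sync_uops = 0      # how many synchronisation micro-ops were needed

    def _sync(self) -> None:
        # Fold the delta into the full-width register and reset it.
        if self.esp_d != 0:
            self.esp_o = (self.esp_o + self.esp_d) & 0xFFFFFFFF
            self.esp_d = 0
            self.sync_uops += 1

    def _adjust(self, amount: int) -> None:
        # Synchronise first if the delta would leave the signed 8-bit range.
        if not -128 <= self.esp_d + amount <= 127:
            self._sync()
        self.esp_d += amount

    def push(self) -> None:
        self._adjust(-4)

    def pop(self) -> None:
        self._adjust(+4)

    def read_esp(self) -> int:
        # Any other instruction that references ESP forces a synchronisation.
        self._sync()
        return self.esp_o
```

A run of pushes and pops costs no synchronisation in this model; the first explicit read of the stack pointer after them costs one.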

What is a “stack pointer” in a microprocessor?

The “stack” is a block of random-access memory (RAM) for storage of data or addresses, and is usually organized as a series of either “top-down” or “bottom-up” locations. The “stack pointer” is a special function register that “points to” (contains the address of) a location within the block, and is used along with special instructions to access the stack contents:

- The PUSH instruction places data into the location pointed to by the stack pointer, and then causes the stack pointer’s value to be incremented (for a “bottom-up” stack) or decremented (for a “top-down” stack).

- The POP instruction takes data from the location pointed to by the stack pointer, and then causes the stack pointer’s value to be decremented (for a “bottom-up” stack) or incremented (for a “top-down” stack).

Other instructions (particularly those associated with interrupt handling) may modify the stack pointer’s value, so programmers using low-level (i.e. assembly) languages to program the microprocessor must be careful when performing stack operations. Some microprocessors will set an error flag if the stack pointer goes above (stack overflow) or below (stack underflow) pre-defined values, but the implementation of these - and other - flags is specific to the particular microprocessor. For many systems the stack is a relatively small amount of the total amount of RAM, so additional care is required to guard against stack errors. This can be important for programs that require recursive operations, so stack size is often a critical factor in determining maximum recursion depth in many systems.
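The error flags and the link between stack size and recursion depth can be sketched as follows. The class `GuardedStack`, its flag names and the helper `max_recursion_depth` are hypothetical; the model assumes a top-down stack that occupies the addresses from `limit` up to `base - 1`.

```python
class GuardedStack:
    """Top-down stack with overflow and underflow flags."""

    def __init__(self, base: int, limit: int):
        self.base = base          # one past the highest stack address
        self.limit = limit        # lowest address the stack may use
        self.sp = base
        self.mem = {}
        self.overflow = False
        self.underflow = False

    def push(self, value: int) -> bool:
        if self.sp - 1 < self.limit:
            self.overflow = True  # no room left: flag it, do not store
            return False
        self.sp -= 1
        self.mem[self.sp] = value
        return True

    def pop(self):
        if self.sp >= self.base:
            self.underflow = True  # nothing to pop: flag it
            return None
        value = self.mem[self.sp]
        self.sp += 1
        return value


def max_recursion_depth(stack_bytes: int, frame_bytes: int) -> int:
    """Upper bound on nesting depth if every call uses frame_bytes of stack."""
    return stack_bytes // frame_bytes
```

For instance, a 128-byte stack with 16-byte frames allows at most 8 nested calls under this simple model, before counting anything an interrupt handler might push.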

There is so much more that can be said about stacks, even though their operation is fundamentally quite simple.

A stack is an abstract data type that serves as a collection of elements, with two principal operations:

- push, which adds an element to the collection, and

- pop, which removes the most recently added element that was not yet removed.

The order in which elements come off a stack gives rise to its alternative name, LIFO (last in, first out).
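As an abstract data type, a stack needs nothing more than these two operations. A minimal sketch, using a Python list as the backing store (the class name `Stack` is just for illustration):

```python
from typing import Generic, List, TypeVar

T = TypeVar("T")


class Stack(Generic[T]):
    """Abstract LIFO collection with push and pop."""

    def __init__(self) -> None:
        self._items: List[T] = []

    def push(self, item: T) -> None:
        # Add an element to the collection.
        self._items.append(item)

    def pop(self) -> T:
        # Remove the most recently added element that was not yet removed.
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def __len__(self) -> int:
        return len(self._items)
```

Pushing 1, 2, 3 and then popping three times yields 3, 2, 1, which is the LIFO order described above.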

You can consider it as extra space to store your register data when you get short with the limited number of general purpose registers.

With respect to the Intel 8085 microprocessor, the Stack Pointer points to the top of the stack. The programmer sets the address held in the Stack Pointer (SP) with the LXI SP instruction:

LXI SP, 4000 H. (H - Hexadecimal)

SP is a 16-bit register in Intel 8085 microprocessor and hence takes 2 bytes of data as input.

At times, the number of general purpose registers is not sufficient for a calculation and you have to free some of them for further work, or you want to save important data immediately. In those cases you can store register contents on the stack (using PUSH) and retrieve them from the stack (using POP) when needed.

A PUSH operation in the Intel 8085 takes 2 bytes as input and always operates on a register pair (PSW, that is the accumulator and flags; B and C; D and E; H and L). The higher byte is stored first, then the lower byte.

LXI SP, 4000 H

PUSH B

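The Stack Pointer is first decremented (to 3FFF H) and the data in Register B is stored at that address; the Stack Pointer is then decremented again (to 3FFE H) and the data in Register C is stored there. After PUSH B the Stack Pointer therefore holds 3FFE H, and location 4000 H itself is not written.

The POP instruction of the Intel 8085 works in the reverse order: the lower byte is read from the address in the Stack Pointer, the Stack Pointer is incremented, the higher byte is read, and the Stack Pointer is incremented again.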
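The 8085 decrements SP before each byte is stored on a PUSH and increments it after each byte is read on a POP. A minimal sketch of that order for one register pair follows; the function names and the dictionary standing in for memory are hypothetical.

```python
def push_pair(mem: dict, sp: int, high: int, low: int) -> int:
    """Model of PUSH rp: decrement SP before each store. Returns the new SP."""
    sp = (sp - 1) & 0xFFFF
    mem[sp] = high & 0xFF          # higher byte, e.g. register B
    sp = (sp - 1) & 0xFFFF
    mem[sp] = low & 0xFF           # lower byte, e.g. register C
    return sp


def pop_pair(mem: dict, sp: int):
    """Model of POP rp: read, then increment. Returns (new SP, high, low)."""
    low = mem[sp]
    sp = (sp + 1) & 0xFFFF
    high = mem[sp]
    sp = (sp + 1) & 0xFFFF
    return sp, high, low
```

Starting from SP = 4000H, `push_pair` stores the higher byte at 3FFFH and the lower byte at 3FFEH, and `pop_pair` restores both bytes and returns SP to 4000H.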

XXX . XXX 4% zero AVR Stack & Stack Pointer

What is a stack?

A stack is a consecutive block of data memory allocated by the programmer. This block of memory can be used both by the microcontroller's internal control and by the programmer to store data temporarily. The stack operates with a Last In First Out (LIFO) mechanism, i.e. the last thing stored on the stack is the first thing to be retrieved from it.

What is the Stack Pointer?

The stack pointer is basically a register or registers that hold either "the memory address of the last location on the stack where data was stored" or "the memory address of the next available location on the stack to store data." The definition of the stack pointer depends on the design of the microcontroller.

In AVR microcontrollers, such as the ATMega8515, ATMega16, ATTiny13, etc., the stack pointer holds the address of the next available location on the stack.

The AVR Stack Pointer

The AVR 8-bit microcontroller stack pointer can consist of either a single I/O register, SPL (Stack Pointer Low), or two I/O registers, SPL and SPH (Stack Pointer High). The size of the stack pointer depends on the amount of data memory a microcontroller contains. If the entire data memory can be addressed using 8 bits then the stack pointer is 8 bits wide, i.e. SPL only; otherwise the stack pointer consists of SPL and SPH.

Setting-up the AVR Stack

A programmer sets up the stack on a microcontroller by loading the start address of the stack into the microcontroller's stack pointer. The code below shows how to set up the stack in an AVR ATMega8515 microcontroller. Here the address being loaded is that of the last memory location in SRAM. This code could also be used for the ATMega16, ATMega32 or any other AVR microcontroller with a 16-bit stack pointer; you just have to replace the "m8515def.inc" include file with "m16def.inc" or "m32def.inc" respectively.

Notes:

- RAMEND is a label that represents the address of the last memory location in SRAM. To use this label you MUST ensure that you include the definition header file for the specific microcontroller.

- The functions low() and high() are used by the assembler to return the low byte and high byte respectively of a 16-bit word. Remember we are dealing with an 8-bit microcontroller, which can handle only 8 bits at a time. RAMEND is a 16-bit word and so we use these functions to split it.

    .include "m8515def.inc"

    LDI R16, low(RAMEND)    ; low byte of the last SRAM address
    OUT SPL, R16
    LDI R16, high(RAMEND)   ; high byte of the last SRAM address
    OUT SPH, R16

AVR Microcontroller's Stack Operation

As mentioned earlier, the stack can be used by both the microcontroller's internal control and the programmer. The programmer accesses the stack using the PUSH and POP instructions. The PUSH instruction is used to store data on the stack while the POP instruction is used to retrieve data from the stack. The operation of the AVR stack will be discussed using the code that follows.

Notes:

Notice here the microcontroller being used in the code below is the Atmel ATTiny2313 AVR microcontroller. This AVR microcontroller's data memory can be addressed using 8-bits and therefore its stack pointer is defined only by the SPL.

    /*
     * Compatible with both AVR Studio 5 & AVR Studio 6
     *
     * Created: 10/15/2011 2:44:24 PM
     * Author: AVR Tutorials
     */

    .include "tn2313def.inc"

    ; Set up AVR ATTiny2313 stack
    LDI R16, RAMEND
    OUT SPL, R16

    ; Load three test values
    LDI R16, 0x33
    LDI R17, 0x25
    LDI R18, 0x0A

    ; Exercise the stack
    PUSH R16
    PUSH R17
    POP R17
    PUSH R18

    end: RJMP end

Figure 1 - Initial Stack

Figure 2 - Stack After First PUSH

Figure 3 - Stack After Second PUSH

Figure 4 - Stack After POP

Figure 5 - Stack After Third PUSH
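The five stack states in the figures can be reproduced with a short simulation. This is a sketch under two assumptions that are labeled in the code: RAMEND is taken to be 0xDF for the ATTiny2313, and PUSH is modelled as store then decrement, with POP as increment then load. The names `AvrStack` and `run_example` are hypothetical.

```python
RAMEND = 0xDF  # assumed last SRAM address of the ATTiny2313


class AvrStack:
    """Toy model of the AVR stack with an 8-bit stack pointer."""

    def __init__(self, ramend: int):
        self.sp = ramend           # SP holds the next available location
        self.sram = {}

    def push(self, value: int) -> None:
        self.sram[self.sp] = value & 0xFF   # store at SP ...
        self.sp -= 1                        # ... then decrement SP

    def pop(self) -> int:
        self.sp += 1                        # increment SP ...
        return self.sram[self.sp]           # ... then load from SP


def run_example():
    """Replay the PUSH/POP sequence from the listing above."""
    stack = AvrStack(RAMEND)
    r16, r17, r18 = 0x33, 0x25, 0x0A
    stack.push(r16)        # first PUSH
    stack.push(r17)        # second PUSH
    r17 = stack.pop()      # POP
    stack.push(r18)        # third PUSH overwrites the popped slot
    return stack.sp, stack.sram, r17
```

Note that the POP does not erase the popped byte; it only moves the stack pointer, so the third PUSH overwrites the location that held the second value.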

Stack pointer

Often used in computer programming, the stack pointer marks a cutoff point for relevance in a stack of memory. On a stack that grows downward, this point is the smallest valid address: anything at a lower address is treated as garbage, and anything at a higher address within the stack is valid data.

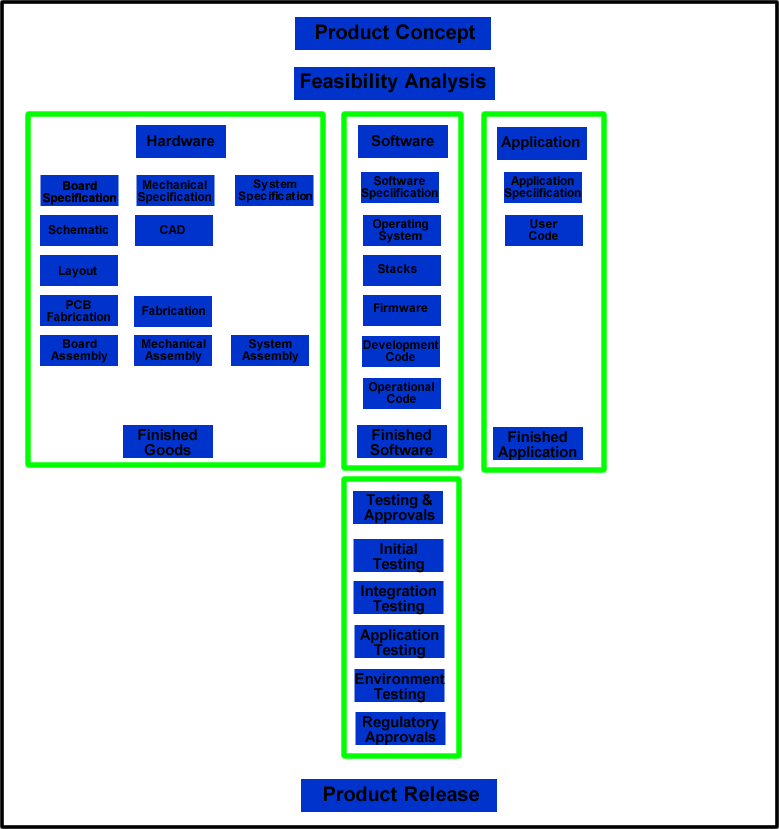

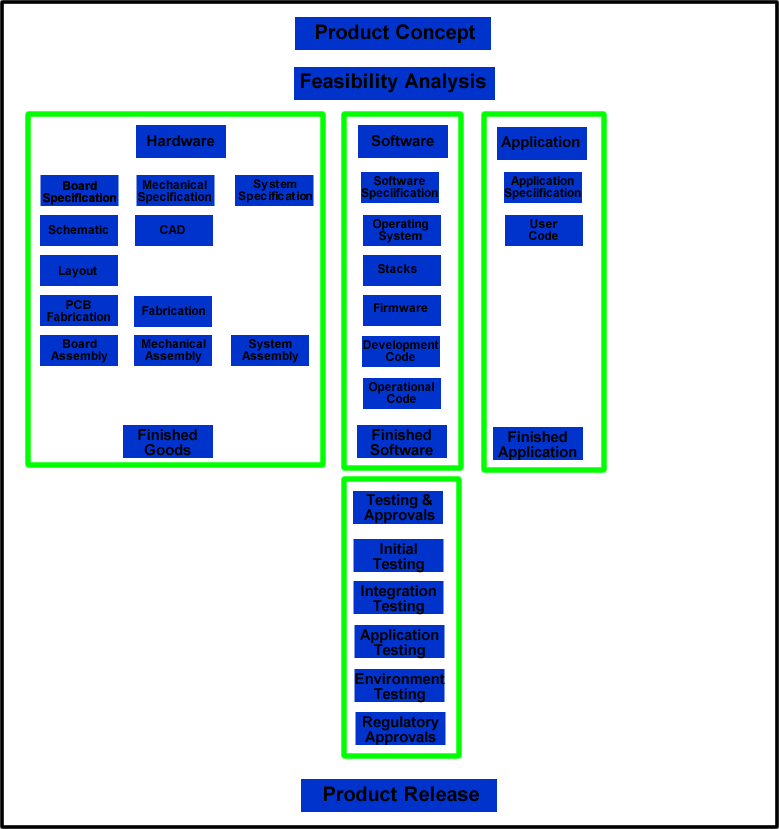

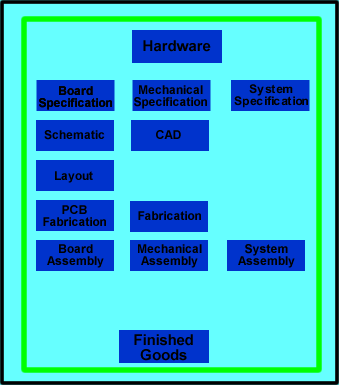

The flowchart below shows the basic stages an Idea goes through to become a tangible reality. In this article, we focus on the Hardware part of product development. Please refer to the other articles in this series for explanations of Software, User Application and Testing.

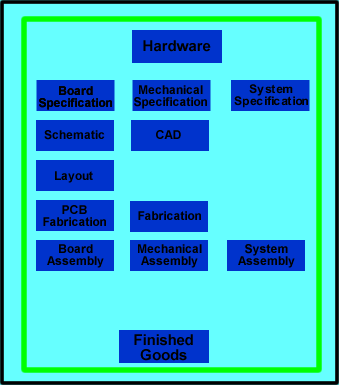

The Hardware block is expanded below for easier reference. This is a high-level diagram of the basic flow to create electronics; each of the nearly a dozen stages indicated in the diagram could have multiple sub-stages, depending on the design complexity and requirements.

To illustrate this process, we’ll use the Idea of a smart garage door opener. The idea is that a homeowner wants to use a smart-phone to check if the home garage door is opened or closed. The homeowner would also like to be able to remotely open or close the door with the smart-phone, for example to let in a guest or a child who forgot or lost a key.

Referring back to the flow chart diagram at the beginning of this article, the Idea needs to be conceptualized so that it can be shared with everyone that will be working on it. The Concept sets out what the idea is, what it does, who will use it and in what context. Even if it’s just you, it is still important to write out and document the Concept so you can focus on specifics. This is the starting point for the requirements of the idea.

The Concept is very similar to the idea, but with added details and refinement: A garage door opener that is powered from a standard US 120V, 10A circuit. It should have a wired push-button to open/close manually, an integrated light fixture and safety sensor inputs (e.g., it doesn’t close if something or someone is in the way). It should be able to handle single-wide and double-wide doors. It connects wirelessly with a smart-phone (Apple iOS or Android; no Windows, Blackberry or others). As this is a fairly generalized description, it shouldn’t take more than 1 hour to write out a Concept of a thought-out Idea.

Next is to do an Analysis or Feasibility study. This is where you investigate all the possible (and even the impossible) ways you can realize the idea. It’s best to partition the concept in various blocks or modules to make the analysis more cohesive. Some major blocks in this Concept for a smart garage door opener are Motor, Lighting, Power, Communications, Enclosure, Controls.

It’s worth emphasizing that at this initial stage, all the various possibilities should be taken into account, researched and explored. Nothing should be dismissed. The Feasibility process is to document the findings and then rank them; pick the top candidate as the “recommendation” for going forward.

Let’s dive into the Communications: Should the device have wired or wireless capabilities? There are many wireless communications methods available including Cellular, Radio, WiFi, WiMax, Bluetooth, Zigbee and others. Wired solutions could include standard Ethernet or Ethernet-Over-Powerline. Each of these should be examined to determine whether it would meet the requirements, what its advantages and disadvantages are, and its availability and cost. Other things to consider may include size, weight, power usage, and regulatory compliance.

For a smaller, not too complicated product (and these metrics of course depend on your background, experience with similar products, and many other factors) budget about 45 hours for the Feasibility study. This may seem like a large up-front time investment, but being thorough at the beginning pays huge dividends as the project progresses. The cost of making changes at this stage is also very low; the cost increases exponentially with each stage.

As an example: Imagine the scenario where you launch the product – Yay! A happy homeowner buys your smart garage door opener and has just paid the installer to put it in. The homeowner launches the smart-phone app but can’t “find” the garage door opener. After a long talk with your (expensive) support tech, the problem turns out to be that the opener is “too far away” to communicate.

No longer a happy homeowner when it comes to your product (and you can be sure you’ll hear about it on Twitter, Facebook and Yelp). You have to take a return on the opener and reimburse the owner for the cost of the installation/removal. Expensive! And you find out that as more customers buy and install the opener, more than half have the same problem. This means a recall and redesign, which could have been avoided by a thorough and detailed investigation during the Feasibility stage.

Having completed the Feasibility study and defined solid recommendations for how to meet the Concept requirements, the next phase involves defining the Hardware, Software and User Application.

These items can be put into sub-groups under hardware: Board Specification, Mechanical Specification and System Specification. Now let’s look at each of these in a little more detail.

From this specification, a System Bill Of Materials (BOM) is created which lists every unique item: a description of what it is, the vendor, the vendor part number, an optional internal part number and a placement designator. The BOM is used by the purchasing department to buy the necessary amount of materials to build the unit, and by the manufacturing group to know how to assemble it. For some products there are literally no items that would be included in this specification, and for other products this is the only specification, because they are built completely from commercial off-the-shelf (COTS) parts. I budget 15 hours on average for developing this specification.

The CAD files are sent to the fabricator who cuts, bends, stamps, drills and otherwise works on the metal to create what is on the drawings. Similarly, a product may use 3-D printing (an additive process) or a CNC milling machine (a subtractive process) to create pieces of the product. Rapid prototype companies can provide quick turn-around of low volumes in 1 to 3 days, but creating mechanical pieces can often take 1 to 3 weeks.

If you are not familiar with all the types of components available to you as a designer, there is a helpful 3-Volume Encyclopedia of Electronic Components; the set includes key information on electronics parts for your projects, complete with photographs, schematics, and diagrams.

Once you understand the components, an excellent reference is one that provides the essential information every circuit designer needs to produce a working circuit, as well as information on how to make a design that is robust, tolerant to noise and temperature, and able to operate in the system for which it is intended. It looks at best practices, design guidelines, and engineering knowledge gained from years of experience, and includes practical, real-world considerations for components and printed circuit boards (PCBs) as well as their manufacturability, reliability, and cost.

Of particular importance is the pin-out (i.e., what each pin or connection of a device actually does) for the components. Also included is the power usage and timing information. It’s also useful to include the device sizes (e.g., width, length, height and weight) for reference. How each device is used and how it connects with other devices on the board is described in this document. As such, the board specification is used as the guideline for the schematics.

This is a rather detailed document; it can take 40 hours to read through data sheets and determine how devices work together. In the case of our garage door opener example, how does the processor on the board control the motor to turn the gears which open and close the door? How does the door status get read from a sensor and sent to the communications chip? All of the signals travel on wires and board traces to the pins of chips, and the designer has to determine which pins and wires are connected.

The Hardware block is expanded below for easier reference. This is a high-level diagram of the basic flow to create electronics; The nearly a dozen stages indicated in the diagram could have multiple sub-stages, depending on the design complexity and requirements.

To illustrate this process, we’ll use the Idea of a smart garage door opener. The idea is that a homeowner wants to use a smart-phone to check if the home garage door is opened or closed. The homeowner would also like to be able to remotely open or close the door with the smart-phone. For example, to let a guest in or a child that forgot/lost a key.

Referring back to the flow chart diagram at the beginning of this article, the Idea needs to be conceptualized so that it can be shared with everyone that will be working on it. The Concept set out what the idea is, what it does, who will use it and in what context. Even if it’s just you, it still important to write out and document the Concept so you can focus on specifics. This is the starting point for the requirements of the idea.

The Concept is very similar to the Idea, but with added detail and refinement: a garage door opener that is powered from a standard US 120V, 10A circuit. It should have a wired push-button to open/close manually, an integrated light fixture and safety sensor inputs (e.g., it doesn’t close if something or someone is in the way). It should be able to handle single-wide and double-wide doors. It connects wirelessly with a smart-phone (Apple iOS or Android; no Windows, BlackBerry or others). As this is a fairly generalized description, it shouldn’t take more than 1 hour to write out the Concept for a well-thought-out Idea.

Next comes the Analysis or Feasibility study. This is where you investigate all the possible (and even the impossible) ways you can realize the idea. It’s best to partition the Concept into blocks or modules to make the analysis more cohesive. Some major blocks in this Concept for a smart garage door opener are Motor, Lighting, Power, Communications, Enclosure and Controls.

It’s worth emphasizing that at this initial stage, all the various possibilities should be taken into account, researched and explored. Nothing should be dismissed. The Feasibility process is to document the findings, rank them, and pick the top candidate as the “recommendation” for going forward.

Let’s dive into Communications: should the device have wired or wireless capabilities? There are many wireless communications methods available, including Cellular, Radio, WiFi, WiMax, Bluetooth, Zigbee and others. Wired solutions could include standard Ethernet or Ethernet-over-Powerline. Each of these should be examined to determine whether it would meet the requirements, what its advantages and disadvantages are, and its availability and cost. Other considerations may include size, weight, power usage and regulatory compliance.
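One common way to document and rank the candidates is a weighted scoring matrix. The sketch below is only an illustration of that ranking step: the criteria weights and the 1-to-5 scores are made-up placeholders, not measurements or recommendations, and a real study would replace them with researched values.

```python
# Hypothetical criteria weights (sum to 1.0); placeholders for illustration only.
WEIGHTS = {"range": 0.4, "cost": 0.3, "power": 0.2, "availability": 0.1}

# Hypothetical 1-5 scores per candidate; NOT real data.
CANDIDATES = {
    "WiFi":      {"range": 4, "cost": 4, "power": 2, "availability": 5},
    "Bluetooth": {"range": 2, "cost": 5, "power": 4, "availability": 5},
    "Zigbee":    {"range": 3, "cost": 3, "power": 5, "availability": 3},
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-criterion scores into a single weighted total."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank(candidates):
    """Return candidate names ordered from highest to lowest weighted score."""
    return sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)


ranking = rank(CANDIDATES)
recommendation = ranking[0]  # the top candidate becomes the "recommendation"
print(ranking, recommendation)
```

Changing the weights changes the ranking, which is the point: the weights record what matters most for this product, so they belong in the Feasibility document alongside the findings.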

For a smaller, not too complicated product (and these metrics of course depend on your background, experience with similar products, and many other factors), budget about 45 hours for the Feasibility study. This may seem like a large up-front time investment, but being thorough at the beginning pays huge dividends as the project progresses. The cost of making changes at this stage is also very low; it rises steeply with each subsequent stage.

As an example, imagine the scenario where you launch the product. Yay! A happy homeowner buys your smart garage door opener and has just paid the installer to put it in. The homeowner launches the smart-phone app but can’t “find” the garage door opener. After a long talk with your (expensive) support tech, the problem turns out to be that the opener is “too far away” to communicate.

The homeowner is no longer happy with your product (and you can be sure you’ll hear about it on Twitter, Facebook and Yelp). You have to take back the opener and reimburse the owner for the cost of installation and removal. Expensive! And as more customers buy and install the opener, you find that more than half have the same problem. This means a recall and a redesign, which could have been avoided by a thorough and detailed investigation during the Feasibility stage.

Having completed the Feasibility study and defined solid recommendations for how to meet the Concept requirements, the next phase involves defining the Hardware, Software and User Application.

Hardware

Let’s start with Hardware. This is the physical, tangible “thing” that you are going to produce. In the case of the smart garage door opener, there is a motor, gears, a metal case and a clear plastic light cover. A three-prong power cord plugs into an outlet and connects to the power supply inside the opener. Cables and wires connect all the printed circuit boards (PCB) together. Each board has chips, connectors, heat-sinks and other components. There are nuts, bolts, grommets and fasteners holding everything together. All of this is “hardware”. You can see some of this in the picture below.

These items can be put into sub-groups under hardware: Board Specification, Mechanical Specification and System Specification. Now let’s look at each of these in a little more detail.

System Specification

The System Specification is where the “commercial off-the-shelf” (COTS) or “third-party” pieces are called out. In this case, these are the power supply (for converting the 120V AC from the house receptacle into 5V DC in the opener), the cable harnesses (which connect the AC/DC power supply to each board), the gears, and the screws and fasteners.

From this specification, a System Bill Of Materials (BOM) is created which lists every unique item: a description of what it is, the vendor, the vendor part number, an optional internal part number and a placement designator. The BOM is used by the purchasing department to buy the necessary amount of materials to build the unit, and by the manufacturing group to know how to assemble it. For some products there are literally no items that would be included in this specification, and for other products, built completely from COTS parts, this is the only specification. I budget 15 hours on average for developing this specification.

Mechanical Specification

The Mechanical Specification defines the brackets, the metal case that houses everything, the clear plastic light cover, etc. This specification provides the detail drawings of each piece with all dimensions, holes, hole diameters, bend lines and stamp areas. The designer uses a CAD (Computer Aided Design) program to create all the documents. The CAD files are used by the metal and plastic fabricators to create the housing. The specification can be created fairly easily, as the bulk of the work is in the actual CAD file creation. Figure on 3 hours for the specification; the CAD work can take from a few hours to many tens of hours depending on what is being designed. For a garage door housing and brackets, 10 hours should be sufficient.

The CAD files are sent to the fabricator, who cuts, bends, stamps, drills and otherwise works the metal to create what is on the drawings. Similarly, a product may use 3-D printing (an additive process) or a CNC milling machine (a subtractive process) to create pieces of the product. Rapid prototype companies can provide quick turn-around of low volumes in 1 to 3 days, but creating mechanical pieces can often take 1 to 3 weeks.

Hardware Board Specification

The Board Specification, also referred to as the Hardware Specification, contains all of the information about the various modules, chips, resistors, capacitors, antennae, heat sinks and other electronic, interconnect and passive components on a board. If there are multiple boards in a design, there would typically be a specification for each board. This specification has the basic characteristics and implementation details for each component, and is a very condensed summary of the data sheet. This document focuses on the electrical parameters and connectivity of the components.

If you are not familiar with all the types of components available to you as a designer, there is a helpful three-volume Encyclopedia of Electronic Components that includes key information on electronics parts for your projects, complete with photographs, schematics and diagrams.

Once you understand the components, a good circuit design reference provides the essential information that every circuit designer needs to produce a working circuit, as well as information on how to make a design that is robust, tolerant to noise and temperature, and able to operate in the system for which it is intended. Such a reference looks at best practices, design guidelines, and engineering knowledge gained from years of experience, and includes practical, real-world considerations for components and printed circuit boards (PCBs) as well as their manufacturability, reliability, and cost.

Of particular importance is the pin-out (i.e., what each pin or connection of a device actually does) for the components. Also included are the power usage and timing information. It’s also useful to include the device sizes (e.g., width, length, height and weight) for reference. This document describes how each device is used and how it connects with other devices on the board. As such, the board specification serves as the guideline for the schematics.

This is a rather detailed document; it can take 40 hours to read through data sheets and determine how devices work together. In the case of our garage door opener example, how does the processor on the board control the motor to turn the gears which open and close the door? How does the door status get read from a sensor and sent to the communications chip? All of the signals travel on wires and board traces to the pins of chips, and the designer has to determine which pins and wires are connected.

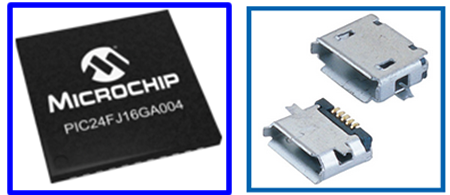

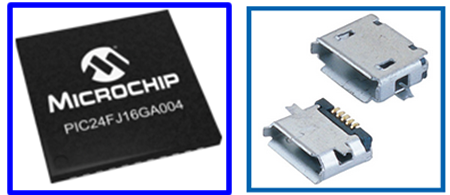

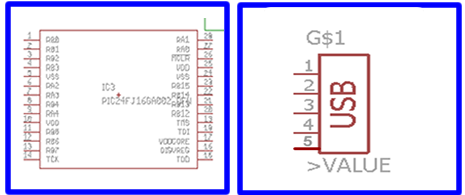

For an illustrative example of what occurs in the next stages, let’s look at the PIC24FJ16GA004 microcontroller from Microchip and a USB Micro-B connector from Assmann WSW Components. The microcontroller is an “active” component, whereas the USB connector is a “passive” component.

This is what the actual components look like (not to scale), and what would be soldered to the Printed Circuit Board (PCB):

This is the pin-out diagram, which would be included in the Hardware Board Specification:

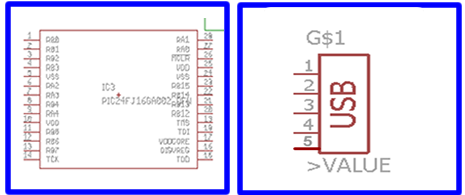

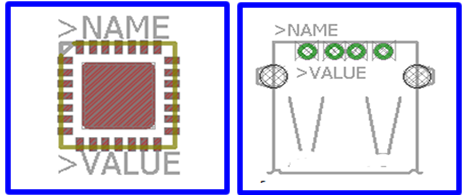

The schematic symbol created for the schematic library:

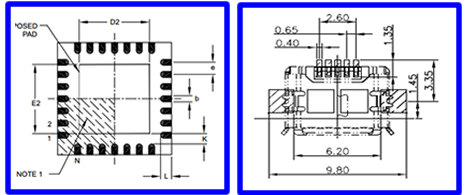

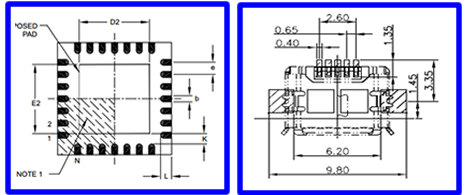

The physical dimensions of the device, along with the solder areas:

The layout symbol created for the PCB library:

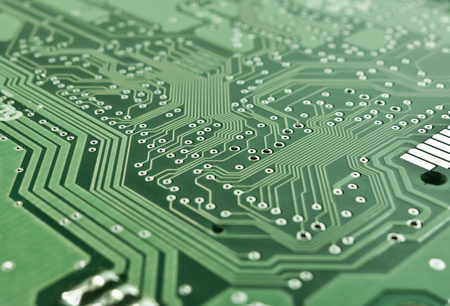

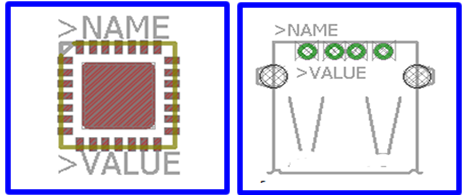

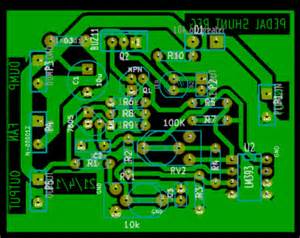

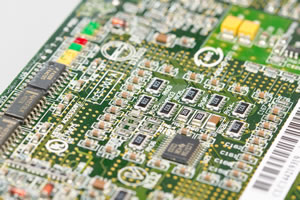

Hardware Design / Schematic Capture

With a comprehensive board specification and the relevant datasheets, a schematic can be created. The first step is to make a diagram or symbol for each component. These are also called “library elements”. A symbol is a representation that shows the electrical connections for the device. It also has Power and Ground pins, which are often not shown on the schematic as these are common to many devices (the exception is when there are specific, non-common power and/or ground connections, often the case with communications or precision components).

You’ll need some tools to get started at this stage. The “Eagle” tools from Cadsoft (a division of Autodesk) are excellent for beginners, hobbyists and entrepreneurs as well as full-scale industry engineering firms. The software scales from simple designs to very complex ones. Another tool vendor to consider is Altium. Most of the vendors have fully functional free versions that are typically limited by the number of components, the number of schematic pages, the physical board dimensions, the number of board layers or some combination of these.

It’s also helpful to have a physical book to refer to as you are working with these tools. The online materials available in .pdf format are extensive, but printing them can be costly and reading them on screen can be straining.

Many schematic tool vendors include the most often used components in a packaged library, and component vendors also have symbol libraries for their devices. However, there are many different schematic tools and many different components, so schematic symbols for the major components in a design often need to be created. Once all the symbols are available, the engineer can connect the pins together as required for the design.

At this stage, all of the interconnects, passives, electromagnetic and active components for the design are accounted for on the schematic. The library for each of these contains a description, a vendor, a vendor part number and an optional internal part number. When a symbol is placed on the schematic, it is assigned a unique reference number which is used in the board assembly process. Once all of the parts are placed on the schematic, a parts Bill Of Materials (BOM) can be generated. This is used by the purchasing group to know how many of which item to buy.
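As a rough sketch of how a parts BOM falls out of the schematic, the snippet below groups placed symbols by part number and counts them. Apart from the PIC24FJ16GA004 mentioned earlier, the reference designators and part numbers are hypothetical placeholders, and the data layout is an assumption rather than any particular tool's export format.

```python
from collections import defaultdict

# Hypothetical placed parts: (reference designator, vendor part number).
PLACED_PARTS = [
    ("U1", "PIC24FJ16GA004"),
    ("J1", "USB-MICRO-B"),    # placeholder part number
    ("R1", "RES-10K-0603"),   # placeholder part number
    ("R2", "RES-10K-0603"),
    ("C1", "CAP-100N-0603"),  # placeholder part number
]


def build_bom(placed_parts):
    """Group reference designators by part number and count quantities."""
    refs_by_part = defaultdict(list)
    for ref, part_number in placed_parts:
        refs_by_part[part_number].append(ref)
    return [
        {"part_number": part, "quantity": len(refs), "references": sorted(refs)}
        for part, refs in sorted(refs_by_part.items())
    ]


bom = build_bom(PLACED_PARTS)
for line in bom:
    print(line["part_number"], line["quantity"], ",".join(line["references"]))
```

The quantity column is what purchasing needs, while the reference designators tell the assembler where each part goes on the board.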

Also, once all of the components have been connected or “wired” together on the schematic, a “netlist” can be created. This is a file which lists the network of connections between the components on the board and is used in creating the physical layout of the board.
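To make the idea of a netlist concrete, here is a minimal sketch that builds one as a mapping from net name to the component pins on that net. The pin numbers and net names are hypothetical placeholders, not taken from a datasheet, and real tools use their own file formats.

```python
from collections import defaultdict

# Hypothetical connections: (reference designator, pin, net name).
CONNECTIONS = [
    ("U1", "1", "VDD"),
    ("U1", "2", "GND"),
    ("U1", "3", "USB_D_PLUS"),
    ("J1", "3", "USB_D_PLUS"),
    ("J1", "5", "GND"),
    ("C1", "1", "VDD"),
    ("C1", "2", "GND"),
]


def build_netlist(connections):
    """Map each net name to the sorted list of (reference, pin) pairs on it."""
    nets = defaultdict(list)
    for ref, pin, net in connections:
        nets[net].append((ref, pin))
    return {net: sorted(pins) for net, pins in sorted(nets.items())}


netlist = build_netlist(CONNECTIONS)
for net, pins in netlist.items():
    print(net, " ".join(f"{ref}.{pin}" for ref, pin in pins))
```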

This entire process is referred to as “Hardware Design” or “Schematic Capture”. For a small design with only a few components, budget about 15 hours for this step.

Depending on the selected components and their functionality, it may be possible to simulate the operational characteristics of the design. Many manufacturers provide simulation models for their components, and it is also possible to develop models “in-house” (or pay a third-party developer to create them). A first-level simulation may only use simple static timing analysis (STA) models and generate cycle-based results. This is generally a less computationally intensive method to validate and verify basic operations.

For example, are the address and data lines connected properly? Is the combinatorial logic generating the expected output for a given input? At the other extreme, a fully simulated schematic will have parameters for all of the input buffer circuits, output driver circuits and internal timing.

These timing parameters include such things as:

- Input Delay

- Output Delay

- Min/Max/Typical Input Skew

- Min/Max/Typical Output Skew

- Internal Propagation Delay
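As a simplified illustration of how such parameters are used, the sketch below adds up worst-case delays along one signal path and compares the total against the clock period. This is a deliberately reduced model, not a full static timing analysis, and all of the numbers are hypothetical values in picoseconds.

```python
from dataclasses import dataclass


@dataclass
class PathTiming:
    """Worst-case (maximum) delays for one driver-to-receiver path, in ps."""
    output_delay_ps: int       # delay through the driving device's output
    propagation_delay_ps: int  # internal / interconnect propagation delay
    input_delay_ps: int        # delay through the receiving device's input
    skew_ps: int               # worst-case skew allowance


def slack_ps(path: PathTiming, clock_period_ps: int) -> int:
    """Return the timing margin; a negative value means the path is too slow."""
    worst_case = (
        path.output_delay_ps
        + path.propagation_delay_ps
        + path.input_delay_ps
        + path.skew_ps
    )
    return clock_period_ps - worst_case


# Hypothetical path and a 16 MHz clock (62,500 ps period).
path = PathTiming(output_delay_ps=8000, propagation_delay_ps=3000,
                  input_delay_ps=5000, skew_ps=1000)
print(slack_ps(path, clock_period_ps=62_500))
```

A positive result means the signal arrives with margin to spare; the same path checked against a much faster clock would show negative slack and flag a timing problem.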

PCB Design / Board Layout

In the Printed Circuit Board (PCB) Design stage, also called “Board Layout”, the engineer determines how the physical board will look with all of the components in place. Using another software tool (although usually from the same vendor as the schematic capture tools), the designer creates a 2-dimensional shape of a board (e.g., a 3 inch x 5 inch rectangle or a 4.5 inch diameter circle) to represent the PCB.

Similar to the schematic capture library, there must be a PCB library element for each component. Whereas the schematic symbol is a conceptual representation of the electrical connections of the component, the PCB symbol is an exact physical representation (width, length, height). The layout symbol shows the solder areas for the pins and pads of surface-mount parts, as well as where the holes are for through-hole components.

The PCB engineer places the components on the board and begins the process of placing metal traces to create the netlist connections indicated in the schematic. The “nets” in the schematic are virtual, abstracted connections, and the PCB connections are where the actual metal will be on the board. This is the stage where the ground (e.g., GND) and power (e.g., VCC or VDD) planes and connections are created.

The “writing” for the part outlines, component numbering, company name / logo, product information, etc. is put into a “silk screen” on either/both the top and bottom sides of the PCB.

PCB design is both a science and an art, especially for analog designs and high-speed designs. Even for a simple, low-frequency, digital design, the PCB layout can take at least as long as the schematic capture stage. In this example, I would budget about 15 hours.

At the end of the board layout phase, the CAD software generates the files to be used for the physical PCB creation. These are commonly called “Gerber” files. Each Gerber file represents only one PCB layer, which means you will usually get seven files for a two-layer board:

- Top layer

- Bottom layer

- Solder Stop Mask Top

- Solder Stop Mask Bottom

- Silk Top

- Silk Bottom

- Drill – some PCB fabricators may want a different format file named “excellon.cam”
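The seven-file count follows from the list above: one file per copper layer, two solder masks, two silk screens and one drill file. As a rough sketch, assuming a board with more copper layers simply adds one file per extra layer (an assumption for illustration; fabricators may ask for additional files), the count can be expressed as:

```python
def gerber_file_count(copper_layers: int) -> int:
    """Estimate the number of fabrication files for a board.

    Assumption (not from any fabricator's specification): one file per
    copper layer, top and bottom solder mask, top and bottom silk screen,
    and one drill file.
    """
    if copper_layers < 2:
        raise ValueError("this sketch only covers boards with two or more copper layers")
    solder_masks = 2
    silk_screens = 2
    drill_files = 1
    return copper_layers + solder_masks + silk_screens + drill_files


print(gerber_file_count(2))  # the two-layer case from the list above
```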

At a minimum, the physical connection netlist from the PCB layout should be compared to the netlist from the schematic capture. There should be a one-to-one correspondence between the two netlists. If they don’t match up, there is either a trace missing on the board, a trace added on the board or an incorrect trace routing on the board.
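The comparison can be sketched as a set difference between the two netlists. The netlist representation below (net name mapped to a list of reference/pin pairs) and the example nets are assumptions for illustration; layout tools perform this check with their own formats.

```python
def connections(netlist):
    """Flatten a netlist into a set of (net, reference, pin) tuples."""
    return {(net, ref, pin) for net, pins in netlist.items() for ref, pin in pins}


def compare_netlists(schematic, layout):
    """Report connections missing from the board and connections added on it."""
    sch, lay = connections(schematic), connections(layout)
    return {
        "missing_on_board": sorted(sch - lay),
        "extra_on_board": sorted(lay - sch),
    }


# Hypothetical example: J1 pin 5 was routed to VDD instead of GND.
SCHEMATIC = {"GND": [("U1", "2"), ("J1", "5")], "VDD": [("U1", "1"), ("C1", "1")]}
LAYOUT = {"GND": [("U1", "2")], "VDD": [("U1", "1"), ("C1", "1"), ("J1", "5")]}

report = compare_netlists(SCHEMATIC, LAYOUT)
print(report)
```

An incorrectly routed trace shows up in both lists at once: the intended connection is missing and an unintended one has been added. Two matching netlists produce two empty lists.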

Simulations can become more accurate at this stage, since the connections between components are now defined. The characteristics of the connections depend on such things as the length, width and amount of material (e.g., the copper pour) used in the trace, the number of connections between different layers of the PCB (e.g., the vias), the dielectric or type of PCB material (e.g., FR4) and other factors.

It is also possible to simulate more than just the electrical signal characteristics of this virtual board. Heat maps and temperature profiles can be created, and scans can be generated for electromagnetic interference (EMI) and electromagnetic radiation (EMR), which essentially show how much “noise” the system creates at various frequencies.

At this point, the actual design process is complete.
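The hour budgets quoted for each stage up to this point can be added together for a rough sense of the total design effort on a small product like the garage door opener. The figures below are the ones given in this article; the stage labels are shortened for illustration.

```python
# Hour budgets quoted in the article for each design stage.
BUDGET_HOURS = {
    "Concept": 1,
    "Feasibility study": 45,
    "System Specification": 15,
    "Mechanical Specification": 3,
    "Mechanical CAD work": 10,
    "Board Specification": 40,
    "Schematic Capture": 15,
    "PCB Layout": 15,
}

total_hours = sum(BUDGET_HOURS.values())
for stage, hours in BUDGET_HOURS.items():
    print(f"{stage:26s}{hours:4d} h")
print(f"{'Total':26s}{total_hours:4d} h")
```

Note that this total covers only the hardware design stages discussed so far; it leaves out software, the user application, fabrication and assembly.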

PCB Fabrication

There are many places which will create the green (sometimes red) glass-reinforced epoxy laminate sheets that are the printed circuit board. A small, rectangular, 2-sided PCB can be produced very quickly for nominal cost. Many companies can quick-turn up to 10 PCBs in under 2 days for $25 to $50 each.

Here is an example of a “bare” board:

Board Assembly /PCB Stuffing

With the components you ordered during the specification stage and the boards back from the fabricator, it’s time to “assemble” everything. Also called “stuffing” the board, this is where the parts are soldered to the board to create a finished product. Many designers will use the same company for fabrication and assembly. This can save on costs, since both services are included in a packaged price. It can also save time, since there is no delay in mailing the PCB to you and then mailing the PCB plus components to the assembler.

For reference, a small, 2-sided board with a low number of components can be assembled in under 2 days for less than $50 per board. The cost drops significantly for a 1- to 2-week delivery. Per-unit costs also decrease when ordered in volume, so the final production costs will be considerably less than the initial prototype.

The final example, an assembled PCB:

The process for taking a concept through to a product starts with software to create a physical description for the various components, their placement and their connections. The output of the software program is used by the manufacturing / assembly house to make the PCB which is ready to accept the components.

There are many options available for PCB manufacturing, and these depend on your specific needs:

- Number of Boards (prototypes, development, pre-production, small production, large production)

- Turn-around time (overnight, days, 1-2 weeks)

- Price

- Component Type / Size

- Trace Widths

- Number of layers

- Number of Components

- Market (hobby, commercial, military, avionics, medical, etc.)

- Location (USA, China, Mexico)

A prototype is defined as ‘an original model on which something is patterned’.

A prototype circuit board is a working model of a printed circuit board (PCB) that has been created especially to check the functionality of the final PCB. A prototype can be created prior to a small as well as large-volume production requirement. In some cases, prototyping is also carried out for a single PCB requirement.

Why is a prototype needed?

Because a circuit diagram alone cannot serve as an effective base for manufacturing the final product, it is imperative to first turn the diagram into an actual PCB and check its feasibility. Once feasibility has been established and improvements have been analyzed, the final product can be manufactured.

There are several advantages to making prototype circuit boards:

- Once a prototype has been manufactured and tested, there are very few hurdles in the actual PCB manufacturing process.

- With a prototype, the customer gets a clear idea of how their end product would function. Risks and weak points, if any, can be easily identified at an early stage.

- Once a product goes into production, making changes to the product becomes very costly and time consuming, especially when large-volume production is involved. Typically, production doesn’t begin until the prototype circuit board has been approved by the customer.

There are several different types of PCBs that can be prototyped before they are put into production. These include single or multi-layer PCBs, flex or rigid PCBs, insulated metal substrate (IMS) PCBs, and PF Rogers PCBs. Prototyping can also be carried out for flexible printed circuits (FPCs), which are used in several different applications. There are different types of FPCs, such as single-sided FPC, dual access FPC, double-sided FPC and multilayer FPC.

Most PCB manufacturers offer prototyping services. They will likely provide customers with several packages from which they can choose. A few manufacturers also offer add-on services such as electrical testing and standard tooling for free. Such firms should be preferred, as these free services give added value.

It typically takes a couple of days to manufacture prototype circuit boards. Experts recommend choosing a firm that specializes in PCB manufacturing and has a proven track record in the field. Before finalizing a prototyping project, ask the firm to provide some samples or references of their previous projects. Many firms also provide services to tweak and improve your existing prototype circuit board for better results.

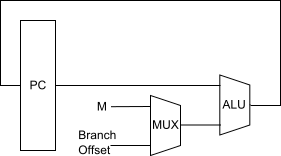

The Program Counter (PC) is a register structure that contains the address pointer value of the current instruction. Each cycle, the value at the pointer is read into the instruction decoder and the program counter is updated to point to the next instruction. For RISC computers updating the PC register is as simple as adding the machine word length (in bytes) to the PC. In a CISC machine, however, the length of the current instruction needs to be calculated, and that length value needs to be added to the PC.

Updating the PC

The PC can be updated by making the enable signal high. After each instruction cycle the PC needs to be updated to point to the next instruction in memory. It is important to know how the memory is arranged before constructing your PC update circuit.

Harvard-based systems tend to store one machine word per memory location. This means that every cycle the PC needs to be incremented by 1. Computers that share data and instruction memory together typically are byte addressable, which is to say that each byte has its own address, as opposed to each machine word having its own address. In these situations, the PC needs to be incremented by the number of bytes in the machine word.

In this image, the letter M is being used as the amount by which to update the PC each cycle. This might be a variable in the case of a CISC machine.

Example: MIPS

The MIPS architecture uses a byte-addressable instruction memory unit. MIPS is a RISC computer, and that means that all the instructions are the same length: 32-bits. Every cycle, therefore, the PC needs to be incremented by 4 (32 bits = 4 bytes).
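A minimal sketch of this fixed-increment update, modeling the PC as a 32-bit value in software. The function name, the example address and the wrap-around mask are illustrative assumptions, not part of any MIPS specification.

```python
WORD_BYTES = 4          # 32-bit instructions = 4 bytes each
PC_MASK = 0xFFFFFFFF    # keep the modeled PC within 32 bits


def next_pc(pc: int) -> int:
    """Advance a byte-addressed PC to the next fixed-length instruction."""
    return (pc + WORD_BYTES) & PC_MASK


pc = 0x0000_1000  # hypothetical starting address
for _ in range(3):
    print(hex(pc))
    pc = next_pc(pc)
```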

Example: Intel IA32

The Intel IA32 (better known by some as "x86") is a CISC architecture, which means that each instruction can be a different length. The Intel memory is byte-addressable. Each cycle the instruction decoder needs to determine the length of the instruction, in bytes, and it needs to output that value to the PC. The PC unit increments itself by the value received from the instruction decoder.
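The sketch below illustrates the variable-length case with a toy instruction encoding in which the first byte determines the instruction length. The opcodes and lengths are invented for illustration and are NOT real x86 encodings; actual IA32 length decoding is far more involved.

```python
# Toy encoding (hypothetical): opcode byte -> total instruction length in bytes.
LENGTH_BY_OPCODE = {0x01: 1, 0x02: 2, 0x03: 5}


def instruction_length(memory: bytes, pc: int) -> int:
    """Play the decoder's role: report the length of the instruction at pc."""
    return LENGTH_BY_OPCODE[memory[pc]]


def instruction_starts(memory: bytes) -> list:
    """Walk memory, advancing the PC by each decoded instruction length."""
    pc, starts = 0, []
    while pc < len(memory):
        starts.append(pc)
        pc += instruction_length(memory, pc)  # PC += length from the decoder
    return starts


program = bytes([0x01, 0x02, 0xAA, 0x03, 0x10, 0x20, 0x30, 0x40, 0x01])
print(instruction_starts(program))
```

Unlike the fixed-length case, the next PC value cannot be known until the current instruction has been at least partially decoded.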

Branching

Branching occurs at one of a set of special instructions known collectively as "branch" or "jump" instructions. In a branch or a jump, control is moved to a different instruction at a different location in instruction memory.

During a branch, a new address for the PC is loaded, typically from the instruction or from a register. This new value is loaded into the PC, and future instructions are loaded from that location.

Non-Offset Branching

A non-offset branch, frequently referred to as a "jump", is a branch where the previous PC value is discarded and a new PC value is loaded from an external source.

In this image, the PC value is either loaded with an updated version of itself, or else it is loaded with a new Branch Address. For simplification we do not show the control signals to the MUX.

Offset Branching

An offset branch is a branch where a value is added to (or subtracted from) the current PC value to produce the new value. This is typically used in systems where the PC value is larger than a register value or an immediate value, so that it is not possible to load a complete value into the PC. It is also commonly used to support relocatable binaries, which may be loaded at an arbitrary base address.

In this image there is a second ALU unit. Notice that we could simplify this circuit and remove the second ALU unit if we use the configuration below:

These are just two possible configurations for this circuit.
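The two configurations can be compared with a small behavioural model. The function names are hypothetical, and the sketch assumes the offset is a signed value added to the current PC, as the text describes; some real processors add it to the address of the following instruction instead.

```python
def sign_extend(value, bits):
    """Interpret an unsigned `bits`-wide field as a two's-complement number."""
    sign_bit = 1 << (bits - 1)
    return (value ^ sign_bit) - sign_bit

def next_pc_two_adders(pc, increment, offset, take_branch):
    """First configuration: two adders, MUX selects between their outputs."""
    sequential = pc + increment
    target = pc + offset
    return target if take_branch else sequential

def next_pc_one_adder(pc, increment, offset, take_branch):
    """Second configuration: MUX selects the addend, one adder does the sum."""
    addend = offset if take_branch else increment
    return pc + addend

offset = sign_extend(0xF0, 8)  # an 8-bit field holding -16
for taken in (False, True):
    a = next_pc_two_adders(0x200, 2, offset, taken)
    b = next_pc_one_adder(0x200, 2, offset, taken)
    print(taken, hex(a), hex(b))
```

Both functions return the same value for every input, which is why the second adder can be dropped when the MUX is moved in front of the remaining one.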

Offset and Non-Offset Branching

Many systems have capabilities to use both offset and non-offset branching. Some systems may differentiate between the two as "near jump" and "far jump" respectively, although this terminology is archaic.

Program counter

The program counter (PC), commonly called the instruction pointer (IP) in Intel x86 and Itanium microprocessors, and sometimes called the instruction address register (IAR), the instruction counter, or just part of the instruction sequencer, is a processor register that indicates where a computer is in its program sequence.

In most processors, the PC is incremented after fetching an instruction, and holds the memory address of ("points to") the next instruction that would be executed. (In a processor where the incrementation precedes the fetch, the PC points to the current instruction being executed.)

Processors usually fetch instructions sequentially from memory, but control transfer instructions change the sequence by placing a new value in the PC. These include branches (sometimes called jumps), subroutine calls, and returns. A transfer that is conditional on the truth of some assertion lets the computer follow a different sequence under different conditions.

A branch provides that the next instruction is fetched from somewhere else in memory. A subroutine call not only branches but saves the preceding contents of the PC somewhere. A return retrieves the saved contents of the PC and places it back in the PC, resuming sequential execution with the instruction following the subroutine call.
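A minimal sketch of the difference between a branch, a call and a return follows. The class `ControlUnit` is hypothetical, and it assumes that the saved PC goes onto a stack and that the PC has already been advanced past the call instruction when the call executes; real machines may use a link register or other storage instead.

```python
class ControlUnit:
    """Toy model of a PC plus a place to save return addresses."""

    def __init__(self, pc=0):
        self.pc = pc
        self.return_stack = []

    def step(self, length=1):
        """Sequential execution: advance past the current instruction."""
        self.pc += length

    def branch(self, target):
        """A branch only replaces the PC."""
        self.pc = target

    def call(self, target):
        """A call saves the current PC contents, then branches."""
        self.return_stack.append(self.pc)
        self.pc = target

    def ret(self):
        """A return puts the saved value back into the PC."""
        self.pc = self.return_stack.pop()

cu = ControlUnit(pc=0x10)
cu.step()        # the call instruction at 0x10 has been fetched, PC is 0x11
cu.call(0x80)    # 0x11 is saved, execution continues in the subroutine
cu.step()        # one instruction of the subroutine
cu.ret()         # back to the instruction following the call
print(hex(cu.pc))
```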

Front panel of an IBM 701 computer introduced in 1952. Lights in the middle display the contents of various registers. The instruction counter is at the lower left.

Hardware implementation

In a typical central processing unit (CPU), the PC is a digital counter (which is the origin of the term "program counter") that may be one of many registers in the CPU hardware. The instruction cycle[4] begins with a fetch, in which the CPU places the value of the PC on the address bus to send it to the memory. The memory responds by sending the contents of that memory location on the data bus. (This is the stored-program computer model, in which executable instructions are stored alongside ordinary data in memory, and handled identically by it[5]). Following the fetch, the CPU proceeds to execution, taking some action based on the memory contents that it obtained. At some point in this cycle, the PC will be modified so that the next instruction executed is a different one (typically, incremented so that the next instruction is the one starting at the memory address immediately following the last memory location of the current instruction).

Like other processor registers, the PC may be a bank of binary latches, each one representing one bit of the value of the PC.[6] The number of bits (the width of the PC) relates to the processor architecture. For instance, a “32-bit” CPU may use 32 bits to be able to address $2^{32}$ units of memory. If the PC is a binary counter, it may increment when a pulse is applied to its COUNT UP input, or the CPU may compute some other value and load it into the PC by a pulse to its LOAD input.[7]
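The counter behaviour can be modelled in software as a fixed-width register with two operations. This is a sketch only: the class name `ProgramCounter` and the method names `count_up` and `load` are chosen to mirror the COUNT UP and LOAD inputs mentioned above, and the wrap-around on overflow is an assumption about how a plain binary counter behaves.

```python
class ProgramCounter:
    """Fixed-width binary counter with COUNT UP and LOAD operations."""

    def __init__(self, width_bits=32):
        self.width_bits = width_bits
        self.mask = (1 << width_bits) - 1  # e.g. 2**32 - 1 for a 32-bit PC
        self.value = 0

    def count_up(self):
        """Pulse on COUNT UP: increment, wrapping at the register width."""
        self.value = (self.value + 1) & self.mask

    def load(self, new_value):
        """Pulse on LOAD: replace the contents with a computed value."""
        self.value = new_value & self.mask

pc = ProgramCounter(width_bits=4)  # a tiny 4-bit PC addresses 16 locations
pc.load(14)
pc.count_up()
pc.count_up()                      # 15 + 1 wraps around to 0
print(pc.value, pc.mask + 1)
```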

To identify the current instruction, the PC may be combined with other registers that identify a segment or page. This approach permits a PC with fewer bits by assuming that most memory units of interest are within the current vicinity.
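One well-known instance of this idea is the real-mode addressing of the Intel 8086, where a 16-bit instruction pointer is combined with a 16-bit code segment register to form a 20-bit address. The sketch below uses that scheme purely as an example; the function name `physical_address` is hypothetical, and other architectures combine segment or page registers differently.

```python
def physical_address(segment, offset):
    """8086 real-mode style: shift the segment left 4 bits, add the offset,
    and keep the low 20 bits."""
    return ((segment << 4) + offset) & 0xFFFFF

# A 16-bit IP of 0x0010 within segment 0x1234 selects address 0x12350.
print(hex(physical_address(0x1234, 0x0010)))
```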

Consequences in machine architecture

Use of a PC that normally increments assumes that what a computer does is execute a usually linear sequence of instructions. Such a PC is central to the von Neumann architecture. Thus programmers write a sequential control flow even for algorithms that do not have to be sequential. The resulting “von Neumann bottleneck” led to research into parallel computing, including non-von Neumann or dataflow models that did not use a PC; for example, rather than specifying sequential steps, the high-level programmer might specify desired function and the low-level programmer might specify this using combinatory logic.

This research also led to ways of making conventional, PC-based CPUs run faster, including:

- Pipelining, in which different hardware in the CPU executes different phases of multiple instructions simultaneously.

- The very long instruction word (VLIW) architecture, where a single instruction can achieve multiple effects.

- Techniques to predict out-of-order execution and prepare subsequent instructions for execution outside the regular sequence.

Consequences in high-level programming

Modern high-level programming languages still follow the sequential-execution model and, indeed, a common way of identifying programming errors is with a “procedure execution” in which the programmer's finger identifies the point of execution as a PC would. The high-level language is essentially the machine language of a virtual machine, too complex to be built as hardware but instead emulated or interpreted by software.

However, new programming models transcend sequential-execution programming:

- When writing a multi-threaded program, the programmer may write each thread as a sequence of instructions without specifying the timing of any instruction relative to instructions in other threads.

- In event-driven programming, the programmer may write sequences of instructions to respond to events without specifying an overall sequence for the program.

- In dataflow programming, the programmer may write each section of a computing pipeline without specifying the timing relative to other sections.

A program counter is a register in a computer processor that contains the address (location) of the instruction being executed at the current time. As each instruction is fetched, the program counter increases its stored value (by 1 in the simplest case) so that it points to the next instruction in sequence.

The program counter (PC) is also called the instruction pointer.

A program counter is also known as an instruction counter, instruction pointer, instruction address register or sequence control register.

You will always need both. The program counter (PC) holds the address of the next instruction to be executed, while the instruction register (IR) holds the encoded instruction itself. After the instruction is fetched, the program counter is incremented by one "address value" (to the location of the next instruction). The instruction is then decoded and executed appropriately.

You need both because, if you had only a program counter and used it for both purposes, you would get the following troublesome system:

[Beginning of program execution]

- PC contains 0x00000000 (say this is start address of program in memory)

- Encoded instruction is fetched from the memory and placed into PC.

- The instruction is decoded and executed.

- Now it is time to move onto the next instruction so we go back to the PC to see what the address of the next instruction is. However, we have a problem because PC's previous address was removed so we have no idea where the next instruction is.
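For contrast, here is a sketch of the working arrangement with a separate instruction register. The memory contents are placeholder strings rather than real encodings, and the function name `run` is hypothetical; the only point is that the fetched instruction lands in `ir` while `pc` keeps holding an address.

```python
def run(memory, start=0, steps=3):
    """Fetch `steps` instructions, keeping the address and the instruction
    in two separate registers."""
    pc = start
    trace = []
    for _ in range(steps):
        ir = memory[pc]          # fetch: the encoded instruction goes to the IR
        pc += 1                  # the PC still holds an address: the next one
        trace.append((pc, ir))   # decode and execute would use `ir` here
    return trace

memory = ["LOAD", "ADD", "STORE"]  # placeholder "encoded instructions"
print(run(memory))
```

Because the fetch never overwrites `pc`, the address of the next instruction is always available after the current one has been decoded and executed.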

P.S. The width of the registers varies depending on the architecture's word size. For example, for a 32-bit processor, the word size is 32 bits. Therefore, the registers on the CPU would be 32 bits wide. Instruction registers are no different in dimensions. The difference is in the behavior and interpretation. Instructions are encoded in various forms; however, they still occupy a 32-bit register. For example, the Nios II processor from Altera contains 3 different instruction types, each encoded differently.

The Program Counter

What is the program counter?

The Program Counter (PC) is a register that is a part of every central processing unit (CPU) or microprocessor. Every microcontroller contains a microprocessor and thus has a program counter. The purpose of the program counter is to hold the address of the next instruction to be executed by the microcontroller's microprocessor.

The size (width) of the program counter of a microcontroller is measured in bits and is directly related to the size of the microcontroller's program memory.

Determining the size of the AVR Program Counter

As mentioned above, the purpose of the program counter (PC) is to hold the address of the next instruction to be executed by the CPU. Instructions are stored in the program memory of a microcontroller, and thus the PC width is directly related to the size of the microcontroller's program memory. As an example, let's determine the width of the program counter for the ATMega8515 and ATMega16 AVR microcontrollers.

The width (size) of the program counter (PC) is basically the smallest possible number of bits necessary to address the microcontroller's program memory based on its organisation. Let's say n is the width of the PC; then n is related to the size of the program memory by the following equation:

$2^n = \text{size of program memory}$

$n = \dfrac{\log(\text{size of program memory})}{\log 2}$

For the ATMega8515 microcontroller the program memory is 8k-Bytes organised as 4k-Words. As such the width of the ATMega8515 microcontroller Program Counter n is given by:

$2^n = 4\text{k} = 4 \times 1024$

$n = \dfrac{\log(4096)}{\log 2} = 12$ bits

For the ATMega16 microcontroller the program memory is 16k-Bytes organised as 8k-Words. As such the width of the ATMega16 microcontroller Program Counter n is given by:

$2^n = 8\text{k} = 8 \times 1024$

$n = \dfrac{\log(8192)}{\log 2} = 13$ bits
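The same calculation can be checked with a few lines of code. This is a sketch with hypothetical helper names; it assumes 16-bit (2-byte) program words, as in the two AVR examples above, and uses an integer bit count rather than floating-point logarithms so that sizes that are not exact powers of two round up.

```python
def pc_width_bits(program_memory_words):
    """Smallest n such that 2**n >= number of addressable program words."""
    return (program_memory_words - 1).bit_length()

def words_from_bytes(flash_bytes, bytes_per_word=2):
    """Program memory organised as 16-bit words: two bytes per word."""
    return flash_bytes // bytes_per_word

for name, flash_bytes in (("ATMega8515", 8 * 1024), ("ATMega16", 16 * 1024)):
    words = words_from_bytes(flash_bytes)
    print(name, words, pc_width_bits(words))
```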

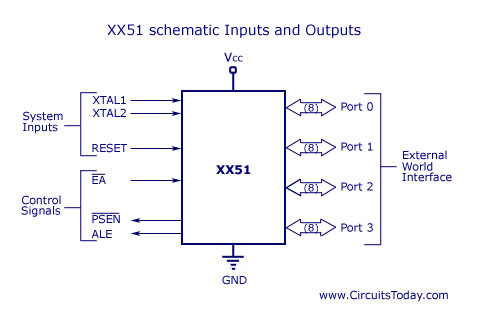

THE PROGRAM COUNTER AND ROM SPACE IN THE 8051

This section examines the role of the program counter (PC) register in executing an 8051 program. We also discuss ROM memory space for various 8051 family members.

Program counter in the 8051

Another important register in the 8051 is the PC (program counter). The program counter points to the address of the next instruction to be executed. As the CPU fetches the opcode from the program ROM, the program counter is incremented to point to the next instruction. The program counter in the 8051 is 16 bits wide. This means that the 8051 can access program addresses 0000 to FFFFH, a total of 64K bytes of code. However, not all members of the 8051 have the entire 64K bytes of on-chip ROM installed, as we will see soon. Where does the 8051 wake up when it is powered? We will discuss this important topic next.

Where the 8051 wakes up when it is powered up

One question that we must ask about any microcontroller (or microprocessor) is: at what address does the CPU wake up when power is applied? Each microprocessor is different. In the case of the 8051 family (that is, all members regardless of the maker and variation), the microcontroller wakes up at memory address 0000 when it is powered up. By powering up we mean applying Vcc to the RESET pin as discussed in Chapter 4. In other words, when the 8051 is powered up, the PC (program counter) has the value 0000 in it. This means that it expects the first opcode to be stored at ROM address 0000H. For this reason, in an 8051 system the first opcode must be burned into memory location 0000H of program ROM, since this is where the CPU looks for the first instruction when it is booted. We achieve this with the ORG statement in the source program, as shown earlier. Next, we discuss the step-by-step action of the program counter in fetching and executing a sample program.

Placing code in program ROM

To get a better understanding of the role of the program counter in fetching and executing a program, we examine the action of the program counter as each instruction is fetched and executed. First, we examine once more the list file of the sample program and how the code is placed in the ROM of an 8051 chip. As we can see, the opcode and operand for each instruction are listed on the left side of the list file.

Program 2-1: List File

ROM Address

After the program is burned into the ROM of an 8051 family member such as the 8751, AT8951, or DS5000, the opcode and operand are placed in ROM memory locations starting at 0000 as shown in the list below.

The list shows that address 0000 contains 7D, which is the opcode for moving a value into register R5, and address 0001 contains the operand (in this case 25H) to be moved to R5. Therefore, the instruction “MOV R5,#25H” has a machine code of “7D25”, where 7D is the opcode and 25 is the operand. Similarly, the machine code “7F34” is located in memory locations 0002 and 0003 and represents the opcode and the operand for the instruction “MOV R7,#34H”. In the same way, machine code “7400” is located in memory locations 0004 and 0005 and represents the opcode and the operand for the instruction “MOV A, #0”. The memory location 0006 has the opcode of 2D, which is the opcode for the instruction “ADD A, R5”, and memory location 0007 has the content 2F, which is the opcode for the “ADD A, R7” instruction. The opcode for the instruction “ADD A, #12H” is located at address 0008 and the operand 12H at address 0009. The memory location 000AH has the opcode for the SJMP instruction and its target address is located in location 000BH. The reason the target address is FE is explained in the next chapter.
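The ROM layout described above can be written out and walked through in a short sketch. Two byte values are not spelled out in the text and are filled in from the standard 8051 instruction set: 24H for “ADD A, #data” and 80H for SJMP. The names `ROM`, `INSTRUCTION_LENGTH`, `pc_trace` and `sjmp_target` are hypothetical. Reading FE as a signed offset of -2, relative to the address that follows the SJMP instruction, is offered here only as a preview and not as the source's own explanation.

```python
# ROM contents for the sample program, addresses 0000H to 000BH.
ROM = bytes([0x7D, 0x25,   # 0000: MOV R5,#25H
             0x7F, 0x34,   # 0002: MOV R7,#34H
             0x74, 0x00,   # 0004: MOV A,#0
             0x2D,         # 0006: ADD A,R5
             0x2F,         # 0007: ADD A,R7
             0x24, 0x12,   # 0008: ADD A,#12H  (opcode 24H assumed, see lead-in)
             0x80, 0xFE])  # 000A: SJMP        (opcode 80H assumed, see lead-in)

# Length in bytes of each opcode used by this program only.
INSTRUCTION_LENGTH = {0x7D: 2, 0x7F: 2, 0x74: 2, 0x2D: 1, 0x2F: 1, 0x24: 2, 0x80: 2}

def pc_trace(rom):
    """PC values at which each opcode is fetched, walking straight through
    the ROM once (the final SJMP is not followed)."""
    pc, trace = 0, []
    while pc < len(rom):
        trace.append(pc)
        pc += INSTRUCTION_LENGTH[rom[pc]]
    return trace

def sjmp_target(sjmp_address, rel_byte):
    """Target of a 2-byte SJMP: address after the instruction plus the
    signed 8-bit offset."""
    offset = rel_byte - 0x100 if rel_byte >= 0x80 else rel_byte
    return (sjmp_address + 2 + offset) & 0xFFFF

print([f"{pc:04X}" for pc in pc_trace(ROM)])
print(f"{sjmp_target(0x000A, ROM[0x000B]):04X}")
```

Under these assumptions the SJMP at 000AH jumps back to 000AH, so the program ends in a loop on that instruction.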

Executing a program byte by byte

Assuming that the above program is burned into the ROM of an 8051 chip (or 8751, AT8951, or DS5000), the following is a step-by-step description of the action of the 8051 upon applying power to it.

- When the 8051 is powered up, the PC (program counter) holds 0000 and the CPU starts to fetch the first opcode from location 0000 of the program ROM. In the case of the above program the first opcode is 7D, which is the code for moving an operand to R5. Upon executing the opcode, the CPU fetches the value 25 and places it in R5. Now one instruction is finished. Then the program counter is incremented to point to 0002 (PC = 0002), which contains opcode 7F, the opcode for the instruction “MOV R7, ...”.

- Upon executing the opcode 7F, the value 34H is moved into R7. Then the program counter is incremented to 0004.

- ROM location 0004 has the opcode for the instruction “MOV A, #0”. This

instruction is executed and now PC = 0006. Notice that all the above instruc