In electronics, mechanics and informatics alike, a locking device is needed so that a system can be controlled properly, especially when that control relies on detector techniques, including those of the A/D/S Tour in Space Time.

Love in S H I N to A/D/S Tour

(Gen. Mac Tech Zone e-Locking and Detection)

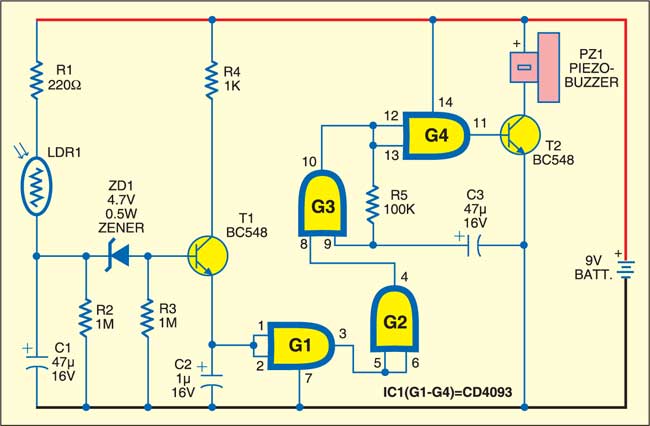

Dark Detector

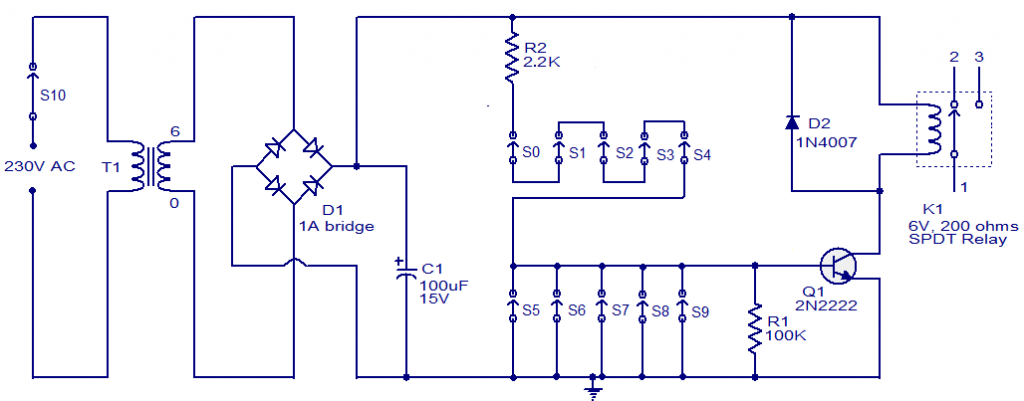

II0 Code Lock Circuit using Transistor

This electronic circuit is one of the simplest code lock circuits one can easily make at home. It uses one transistor, a relay and a few passive components, and its logic is also very simple. Even though the circuit is simple, it works well and efficiently for a simple cupboard or shelf locker.

WORKING OF CODE LOCK CIRCUIT:

The working of this circuit is very simple: it just uses a transistor as a switch, with a relay at its collector as the load. Five switches (S0 to S4) are arranged in series, with a current-limiting resistor R2 connected across them. Another five switches (S5 to S9) are connected between the base of the transistor and ground. So the circuit uses the transistor as a switch, and the transistor turns ON only when all the switches S0 to S4 are ON and S5 to S9 are OFF. This is the primary logic of the circuit. Let's see how to design it as we wish.

Now, coming to the design of this circuit: we should shuffle the switches on the panel so that the password is hard to guess. For example, if your password is 58901, arrange the circuit so that keypad key 5 is switch S0, 8 is S1, 9 is S2, 0 is S3 and 1 is S4. Since these switches are in series, current will not flow unless you press the keys in the right combination.
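The Python sketch below models this switching logic. It assumes an idealized transistor that turns ON exactly when the series chain S0 to S4 is fully closed and none of S5 to S9 pulls its base to ground. The 58901 mapping follows the example above. Wiring the remaining keys (2, 3, 4, 6, 7) as S5 to S9 is our assumption, and `build_wiring` and `relay_energized` are hypothetical names.

```python
def build_wiring(password: str) -> dict[str, str]:
    """Map each keypad digit to a switch name (hypothetical helper).

    Password digits become the series switches S0..S4; the leftover
    digits are assumed to be the base-to-ground switches S5..S9.
    """
    if len(password) != 5 or len(set(password)) != 5 or not password.isdigit():
        raise ValueError("expected 5 distinct digits")
    wiring = {digit: f"S{i}" for i, digit in enumerate(password)}
    decoys = [d for d in "0123456789" if d not in password]
    wiring.update({digit: f"S{5 + i}" for i, digit in enumerate(decoys)})
    return wiring


def relay_energized(pressed: set[str], wiring: dict[str, str]) -> bool:
    """Idealized transistor switch: ON only if S0..S4 are all closed
    (series path complete) and no S5..S9 switch grounds the base."""
    closed = {wiring[key] for key in pressed}
    series_complete = all(f"S{i}" in closed for i in range(5))
    base_grounded = any(f"S{i}" in closed for i in range(5, 10))
    return series_complete and not base_grounded


wiring = build_wiring("58901")
print(relay_energized({"5", "8", "9", "0", "1"}, wiring))       # True
print(relay_energized({"5", "8", "9", "0"}, wiring))            # False: chain open
print(relay_energized({"5", "8", "9", "0", "1", "2"}, wiring))  # False: base grounded
```

Because the password switches are in series, the order of pressing does not matter in this model. All five keys must be held at the same time.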

UPDATE:

The circuit diagram above has a small bug: the resistor R1 should not be pulled down to ground. Instead, it should connect switch S9 to the base of transistor Q1, so that it biases the transistor to switch the relay ON.

Electronic Components: Transducers, Sensors, Detectors

- Transducers generate physical effects when driven by an electrical signal, or vice versa.

- Sensors (detectors) are transducers that react to environmental conditions by changing their electrical properties or generating an electrical signal.

- The transducers listed here are single electronic components (as opposed to complete assemblies), and are passive (see Semiconductors and Tubes for active ones). Only the most common ones are listed here.

- Audio

- Loudspeaker – Electromagnetic or piezoelectric device to generate full audio

- Buzzer – Electromagnetic or piezoelectric sounder to generate tones

- Position, motion

- Linear variable differential transformer (LVDT) – Magnetic – detects linear position

- Rotary encoder, Shaft Encoder – Optical, magnetic, resistive or switches – detects absolute or relative angle or rotational speed

- Inclinometer – Capacitive – detects angle with respect to gravity

- Motion sensor, Vibration sensor

- Flow meter – detects flow in liquid or gas

- Force, torque

- Strain gauge – Piezoelectric or resistive – detects squeezing, stretching, twisting

- Accelerometer – Piezoelectric – detects acceleration, gravity

- Thermal

- Thermocouple, thermopile – Wires that generate a voltage proportional to the temperature difference

- Thermistor – Resistor whose resistance changes with temperature, increasing (PTC) or decreasing (NTC)

- Resistance Temperature Detector (RTD) – Wire whose resistance changes with temperature

- Bolometer – Device for measuring the power of incident electromagnetic radiation

- Thermal cutoff – Switch that is opened or closed when a set temperature is exceeded

- Magnetic field

- Magnetometer, Gauss meter

- Humidity

- Electromagnetic, light

- Photo resistor – Light dependent resistor (LDR)


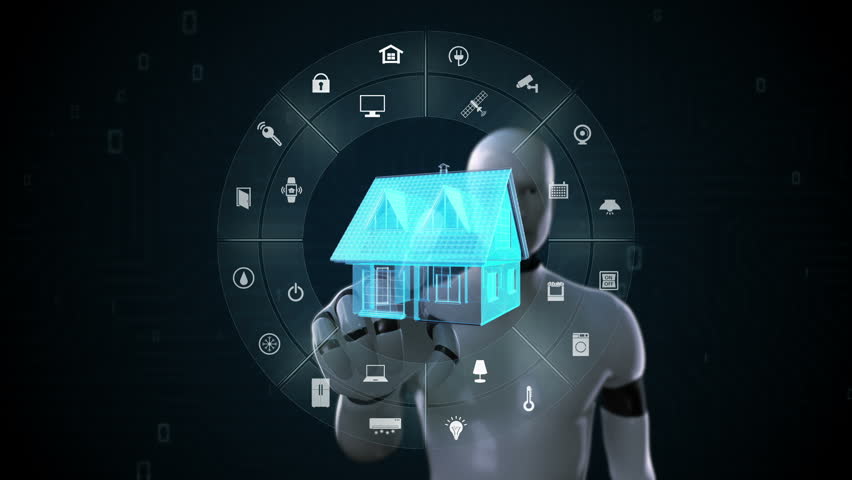

II0 II0 Importance and Classification of Electronic Security System

An electronic security system is any electronic equipment that performs security operations for a facility or an area. These operations include surveillance, access control, alarming or intrusion control. The system is powered from the mains, with a backup power source such as a battery. It can also include electrical and mechanical gear. The choice of security system depends purely on the area to be protected and the threats it faces.

Importance of Electronic Security System:

Electronic security systems are widely used in corporate workplaces, commercial premises and shopping centers. They are also used in railway stations and other public places. These systems have been well received because they can be operated from a remote location. They are also used as access control systems, fire detection and prevention systems, and attendance record systems. As crime rates increase day by day, most people do not feel comfortable until their security is ensured, whether at the office or at home. So we should choose a good electronic security system for protection.

Security systems can be classified in different ways: by function, by the technology used, and by the conditions of need.

Based on function, electronic security systems are categorized as follows:

- CCTV Surveillance Security System

- Fire Detection/Alarming System

- Access Control/Attendance System

CCTV Surveillance Systems:

Surveillance is the process of watching over a facility or area that is under suspicion or needs to be secured. The main part of a surveillance security system is the camera, or CCTV camera, which acts as the eyes of the system. The system also includes other equipment for viewing and saving the recorded surveillance data. Closed-circuit IP cameras and CCTV cameras transfer image information to a remote access point.

The main feature of this system is that it can be used anywhere human activity needs to be watched. Typical components of a CCTV surveillance system are cameras, network equipment, IP cameras and monitors. In this system, crime can be detected through the cameras. An alarm rings after receiving the signal from the cameras connected to the CCTV system, flagging an intrusion or suspicious event in a protected area or facility. The complete operation can run over the internet.

The figure below represents the CCTV Surveillance System.

IP Surveillance System:

The IP-Surveillance system is designed for security purposes. It lets clients control and record video/audio over an IP computer network, such as a LAN or the internet. In simple terms, an IP-Surveillance system uses a network camera, a network switch, and a computer for reviewing, supervising and saving video/audio, as shown in the figure below.

In an IP-Surveillance system, digitized video/audio streams can be sent to any location, however far away, over a wired or wireless IP network. This enables video monitoring and recording from anywhere with network access.

Attendance and Access Control Systems:

A system that provides secured access to a facility, or to another system in order to enter or control it, is called an access control system. It can also act as an attendance system, playing a dual role. Access control systems are classified by the credentials the user presents: a PIN, biometrics or a smart card. A system can even require several of these credentials together for multi-factor access control. Some attendance and access control systems are:

- Access Control System

- RF based access control and attendance system

Electronic security systems are applied in various fields, such as:

- home automation
- residential (homes and apartments)
- commercial (offices, bank lockers)
- industrial
- medical
- transportation

The most commonly used applications include electronic security systems for railway compartments, electronic eyes with security, and electronic voting systems.

One example of an electronic security system:

As the block diagram shows, the system is mainly designed around an electronic eye (LDR sensor). Such systems are used in bank lockers and jewelry shops. When the cash box is closed, neither the buzzer nor the binary counter/divider is active, which indicates that the box is closed. If anyone tries to open the locker door, light falls on the LDR sensor and its resistance decreases. This causes the buzzer to alert the customer. The process continues until the box is closed.
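The Python sketch below illustrates the electronic-eye idea. It assumes the LDR sits on the supply side of a voltage divider, with a fixed resistor to ground. More light means lower LDR resistance, which lifts the divider voltage above a comparator threshold. All of these values are hypothetical and not taken from the original circuit: the supply voltage, the fixed resistor, the 2.5 V threshold and the dark/lit LDR resistances.

```python
def divider_voltage(r_ldr: float, r_fixed: float = 10_000.0, v_supply: float = 5.0) -> float:
    """Node voltage with the LDR on the supply side and r_fixed to ground."""
    return v_supply * r_fixed / (r_fixed + r_ldr)


def buzzer_on(r_ldr: float, threshold: float = 2.5) -> bool:
    """Sound the buzzer when light lowers the LDR resistance enough
    to lift the divider voltage above the (assumed) threshold."""
    return divider_voltage(r_ldr) > threshold


# Assumed LDR resistances: about 1 Mohm inside a closed (dark) box, about 1 kohm when lit.
for label, r in [("door closed (dark)", 1_000_000.0), ("door open (lit)", 1_000.0)]:
    print(f"{label}: {divider_voltage(r):.2f} V, buzzer={buzzer_on(r)}")
```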

Electronic Eye Controlled Security System (Locker Guard Project)

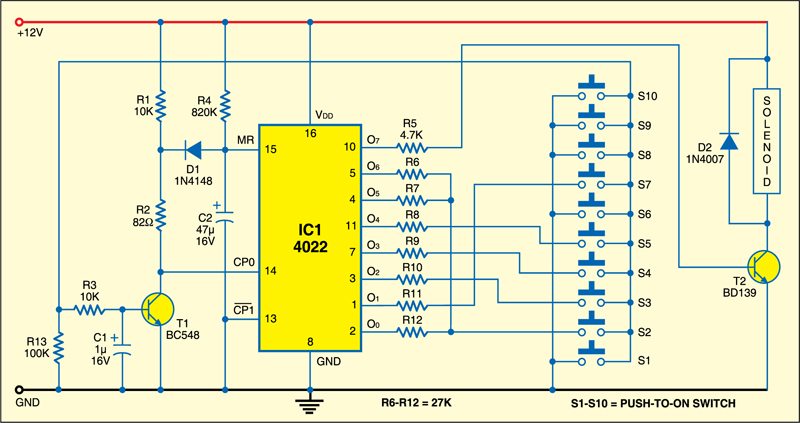

Intelligent Electronic Lock Circuit

The usual way of securing anything is with mechanical locks, which operate with a specific key or a few keys. However, locking a large area requires many locks. Conventional locks are also heavy and do not offer the desired protection, as they can easily be broken with tools. Security breaches are therefore associated with mechanical locks, and electronic security systems are used to solve these problems.

Nowadays, many devices are based on digital technology. Examples include digital door lock systems for automatic door opening and closing, and token-based digital identity devices. These locking systems are controlled by a keypad and are installed at the side of the door. An intelligent electronic security lock system offers freedom from the physical and mental stress a person faces when away from home.

1. Intelligent Electronic Lock Circuit Diagram:

The circuit shown below represents an intelligent electronic lock built using transistors only. To open this lock, one has to press switches S1 through S4 in sequence. To mislead others, you may label these switches with different numbers on the keypad. For example, on a 10-key keypad (0 to 9), assign any four arbitrary numbers to S1 to S4 and the remaining six numbers to the leftover switches. These leftover switches are wired in parallel with the disable switch S6. Because the four password digits are mixed with the remaining six digits, which are connected across the disable switch terminals, an unknown person is prevented from energizing relay RL1.

For authorized persons, a four-digit password is easy to remember. To energize relay RL1, one has to press switches S1 to S4 in sequence within six seconds. Each switch press should take 0.75 to 1.25 seconds. The relay will not operate if this time is less than 0.75 s or more than 1.25 s.

A special feature of this circuit is that pressing any switch wired across switch S6 disables the whole circuit for about one minute. The circuit comprises three sections: sequential switching, relay latch-up, and disabling. The disabling section consists of transistors T1, T2 and Zener diode ZD5. When the disable switch S6 is pressed, this section cuts off the positive supply to the sequential switching and relay latch-up sections for one minute.
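The Python sketch below models the timing rules behaviourally. It follows the rules as stated and does not simulate the transistor circuit. It makes three assumptions:

- the 0.75 to 1.25 s window applies to the gap between consecutive presses;
- the whole sequence must finish within 6 s;
- pressing S6 (or any key wired across it) locks the keypad for 60 s.

The class name is hypothetical.

```python
from dataclasses import dataclass

SEQUENCE = ["S1", "S2", "S3", "S4"]
MIN_GAP, MAX_GAP = 0.75, 1.25  # assumed: allowed seconds between consecutive presses
TOTAL_WINDOW = 6.0             # whole sequence must finish within 6 s
LOCKOUT = 60.0                 # S6 (or any key wired across it) disables for ~1 min


@dataclass
class SequentialLockModel:
    """Behavioural model of the stated rules, not of the transistor circuit."""
    step: int = 0
    start: float = 0.0
    last: float = 0.0
    disabled_until: float = 0.0
    energized: bool = False

    def press(self, key: str, t: float) -> None:
        """Feed one key press that happens at time t (seconds)."""
        if t < self.disabled_until:
            return  # supply to the switching sections is cut off
        if key == "S6":
            self.disabled_until = t + LOCKOUT
            self.step = 0
            return
        if key == "S5":
            self.energized = False  # reset: relay RL1 de-energizes
            self.step = 0
            return
        if self.step > 0:
            gap = t - self.last
            if not MIN_GAP <= gap <= MAX_GAP or t - self.start > TOTAL_WINDOW:
                self.step = 0  # timing rule broken: sequence starts over
        if key != SEQUENCE[self.step]:
            self.step = 0
            if key != SEQUENCE[0]:
                return  # wrong key: ignore it
        if self.step == 0:
            self.start = t
        self.last = t
        self.step += 1
        if self.step == len(SEQUENCE):
            self.energized = True  # relay RL1 latches on
            self.step = 0


lock = SequentialLockModel()
for key, t in [("S1", 0.0), ("S2", 1.0), ("S3", 2.0), ("S4", 3.0)]:
    lock.press(key, t)
print(lock.energized)  # True
```

Under this reading, three gaps of at most 1.25 s add up to at most 3.75 s, so the 6 s limit never binds. It is kept only to mirror the text.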

In the idle state, capacitor C1 is discharged and its voltage is below 4.7 V. Thus, transistor T1 and the Zener diode are not conducting, so the collector voltage of transistor T1 is higher than that of transistor T2. Therefore, +12 V is supplied to the relay latch-up and sequential switching sections.

The sequential switching section includes:

- transistors T3, T4, T5;
- Zener diodes ZD1, ZD2, ZD3;
- tactile switches S1 to S4;
- timing capacitors C2 to C4.

When the tactile switches are pressed, the timing capacitors are charged through resistors. So, when the switches are pressed in sequence, transistors T3, T4 and T5 stay in conduction for a few seconds: T3 for 6 seconds, T4 for 3 seconds, and T5 for 1.5 seconds.

If pressing the tactile switches takes longer than 6 seconds, transistor T3 stops conducting because of the time lapse. Sequential switching is then not achieved, and relay RL1 cannot be energized. However, when switches S1, S2, S3 and S4 are operated correctly in sequence, capacitor C5 is charged through resistor R9 and the voltage across it rises above 4.7 volts. Transistors T6, T7, T8 and Zener diode ZD4 then start conducting, and relay RL1 is energized.

If you then press the reset switch S5 momentarily, capacitor C5 is instantly discharged through resistor R8, and the voltage across it falls below 4.7 volts. Therefore, transistors T6, T7, T8 and Zener diode ZD4 stop conducting again and relay RL1 de-energizes.
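How long C5 takes to reach the 4.7 V threshold can be estimated from the standard RC charging curve, V(t) = Vs(1 − e^(−t/RC)). Solving for t gives t = RC·ln(Vs/(Vs − Vth)).

The Python sketch below assumes the 12 V supply from the text and an ideal RC path through R9. The component values R9 = 100 kΩ and C5 = 10 µF are hypothetical, because the original does not give them. Loading by the transistors is ignored.

```python
import math


def time_to_threshold(r_ohms: float, c_farads: float, v_supply: float, v_threshold: float) -> float:
    """Seconds for an ideal RC circuit charging from 0 V to reach v_threshold."""
    if not 0 < v_threshold < v_supply:
        raise ValueError("threshold must lie between 0 and the supply voltage")
    tau = r_ohms * c_farads  # time constant, seconds
    return tau * math.log(v_supply / (v_supply - v_threshold))


# Hypothetical R9 and C5; only the 12 V supply and 4.7 V threshold come from the text.
t = time_to_threshold(r_ohms=100e3, c_farads=10e-6, v_supply=12.0, v_threshold=4.7)
print(f"C5 crosses 4.7 V after about {t:.2f} s")
```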

2. Password Based Door Locking System:

In this password-based door locking system, a keypad is used to open and close the door. When a password is entered and matches the stored one, the door unlocks for a limited period of time. After this fixed unlock period, the relay energizes and the door locks again. If an unauthorized person enters a wrong password in an attempt to open the door, the system immediately switches on a buzzer.

The working of this project can be described by the block diagram above. It consists of a microcontroller, a keypad, a buzzer, an LCD, a stepper motor and a motor driver.

The keypad is an input device used to enter the password that opens the door. It passes the entered code signals to the microcontroller. The LCD and the buzzer are the indicating devices, used for alarms and for displaying information. The stepper motor moves the door to open and close it. The motor driver drives the motor after receiving the code signals from the microcontroller.

The microcontroller used in this project is from the 8051 family and is programmed with the Keil software.

When a person enters a password through the keypad, the microcontroller reads the data and compares it with the stored data. If the entered password matches the stored data, the microcontroller sends this information to the LCD, which displays that the code is valid. It also sends command signals to the motor driver to rotate the motor in the direction that opens the door. After a set time delay, the spring system closes its relay and the door returns to its normal position.

If a person trying to open the door enters a wrong password, the microcontroller switches on the buzzer for further action. In this way, a simple electronic door lock system can be implemented with a microcontroller.
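The decision logic of this project can be sketched in Python. The original firmware is written for an 8051 in Keil and is not shown, so this is not that code. The function name, the action strings, the stored password and the unlock period are all hypothetical. Using `hmac.compare_digest` is our own design choice: it compares without an early exit, so the check does not leak how many leading characters were correct.

```python
import hmac

STORED_PASSWORD = "4711"  # hypothetical code held by the controller
UNLOCK_SECONDS = 5        # hypothetical unlock period


def handle_entry(entered: str, stored: str = STORED_PASSWORD) -> list[str]:
    """Return the actions the controller would take for one keypad entry."""
    # compare_digest avoids an early-exit comparison that leaks timing.
    if hmac.compare_digest(entered.encode(), stored.encode()):
        return [
            "LCD: code is valid",
            "MOTOR DRIVER: rotate motor to open door",
            f"RELOCK: after {UNLOCK_SECONDS} s",
        ]
    return ["BUZZER: on"]


print(handle_entry("4711"))
print(handle_entry("1234"))
```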

3. ATmega Based Garage Door Opening:

This project is more advanced than the one above. It uses Android technology instead of a keypad to open and close the door, so users can open and close the door with their Android phones.

The main aim of this project is to unlock a garage door from an Android device, such as a phone or tablet, by entering a single password in an Android application. The system uses a microcontroller, a Bluetooth modem, a buzzer, an Android phone, a relay driver, lamps and relays to achieve remote-controlled operation of the door.

The Android device connects to this system over Bluetooth. The Bluetooth module is interfaced to the microcontroller, which is programmed with a particular password for opening and closing the garage door.

Before the password is sent, the phone's Bluetooth is paired with the Bluetooth modem attached to the controller. After the password is entered on the Android device, it is sent to the microcontroller over Bluetooth. The microcontroller then compares it with the password stored in its memory. If the two passwords match, the microcontroller sends control signals to the relay driver.

The relay then drives the motor that opens and closes the garage door. In this demonstration, the motor is replaced with a lamp for visualization. If the entered password is wrong, the system raises an alarm.

Thus, this is all about intelligent electronic locks and the basic working of electronic door lock systems.

II0 II0 II0 Space-Time Ripples: How Scientists Could Detect of Lockers Gravity Waves

For years, scientists have been trying — and failing — to detect theoretical ripples in space-time called gravitational waves.

Four gravitational wave detectors are currently in operation.

Gravitational waves, predicted by Einstein's theory of general relativity, are thought to be created by some of the most violent events in the universe, such as the collision of two neutron stars. Neutron stars are extremely dense dead stars left over after supernova explosions. When two merge into each other, they are predicted to release strong gravitational waves that should be detectable on Earth.

A new way of seeing the universe

Einstein's theory of general relativity describes how objects with mass bend and curve space-time. Imagine holding out a taut bedsheet and placing a football in the center. Just as the bed sheet curves around the football, space-time curves around objects with mass.

And like ripples moving across a lake, the distortion in space-time caused by accelerating objects gradually decreases in strength, so by the time it finally reaches Earth it is very hard to detect. Hard, but not impossible. Detecting gravitational waves opens up a new way of investigating the universe.

The waves could also help researchers probe some other mysterious and powerful cosmic events.

"Gravitational waves have great penetrating power, so they will allow us to see directly to the center of the systems responsible for supernova explosions, gamma-ray bursts and a wealth of other systems so far hidden from view. we need additional detector for looking for energy in 4 gravitation .

Gravity is most accurately described by the general theory of relativity (proposed by Albert Einstein in 1915) which describes gravity not as a force, but as a consequence of the curvature of spacetime caused by the uneven distribution of mass.

Albert Einstein called gravity a distortion in the shape of space-time. ... Newton's theory says this can occur because of gravity, a force attracting those objects to one another or to a single, third object. Einstein also says this occurs due to gravity -- but in his theory, gravity is not a force.

According to Newton, gravity is a force acting mutually between two objects by virtue of their masses (the heavier the objects, the stronger the gravity); he considered gravity a pull. According to Einstein, gravity is a curvature in the four-dimensional space-time fabric, proportional to the objects' masses.

“Imagination is more important than knowledge,” Einstein would say. It's no coincidence that around the same time, Einstein began to use thought experiments that would change the way he thought about his future experiments. ... His work on gravity was influenced by imagining riding a free-falling elevator. We have an illustration of a 240-key keypad, like an electronic lock switch, as the input for the e-S H I N to A/D/S tour, and then for detecting gravitational energy out in space, in space and time.

In space, it is possible to create "artificial gravity" by spinning your spacecraft or space station. ... Technically, rotation produces the same effect as gravity because it produces a force (called the centrifugal force) just like gravity produces a force.
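For a spinning station, the apparent gravity at the rim equals the centripetal acceleration a = ω²r. The spin rate needed for a target level is therefore ω = sqrt(a/r). The short Python sketch below applies this; the 100 m radius is a hypothetical example.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def spin_rate_rpm(radius_m: float, target_g: float = 1.0) -> float:
    """Revolutions per minute needed so that omega^2 * r equals target_g * G0."""
    omega = math.sqrt(target_g * G0 / radius_m)  # angular speed, rad/s
    return omega * 60.0 / (2.0 * math.pi)


print(f"{spin_rate_rpm(100.0):.2f} rpm for 1 g at a 100 m radius")
```

A smaller station must spin faster for the same apparent gravity, because ω grows as 1/sqrt(r).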

The gravitational pull of the moon pulls the seas towards it, causing the ocean tides. Gravity creates stars and planets by pulling together the material from which they are made. Gravity not only pulls on mass but also on light. Albert Einstein discovered this principle.

Does the influence of gravity extend out forever? ... As you get farther away from a gravitational body such as the sun or the earth (i.e. as your distance r increases), its gravitational effect on you weakens but never goes completely away, at least according to Newton's law of gravity.

To summarize, general relativity says that matter bends spacetime, and the effect of that bending is a generalized kind of force acting on objects. However, it isn't a force as such that acts on the object; the object is simply following its geodesic path through spacetime.

The better news is that there is no science that says that gravity control is impossible. First, we do know that gravity and electromagnetism are linked phenomena. ... Historically, gravity has been studied in the general sense, but not very much from the point of view of seeking propulsion breakthroughs.

Earth's gravity is what keeps you on the ground and what makes things fall. ... So, the closer objects are to each other, the stronger their gravitational pull is. Earth's gravity comes from all its mass. All its mass makes a combined gravitational pull on all the mass in your body.

Gravity from Earth keeps the Moon and human-made satellites in orbit. It is true that gravity decreases with distance, so it is possible to be far away from a planet or star and feel less gravity. But that doesn't account for the weightless feeling that astronauts experience in space.

It is a common misconception that astronauts in orbit are weightless because they have flown high enough to escape the Earth's gravity. In fact, at an altitude of 400 kilometres (250 mi), equivalent to a typical orbit of the ISS, gravity is still nearly 90% as strong as at the Earth's surface.

Einstein said it is impossible, but as Jennifer Ouellette explains some scientists are still trying to break the cosmic speed limit – even if it means bending the laws of physics. "It is impossible to travel faster than light, and certainly not desirable, as one's hat keeps blowing off."

Also, under Einstein's theory of general relativity, gravity can bend time. Picture a four-dimensional fabric called space-time. When anything that has mass sits on that piece of fabric, it causes a dimple or a bending of space-time.

Albert Einstein, in his theory of special relativity, determined that the laws of physics are the same for all non-accelerating observers, and he showed that the speed of light within a vacuum is the same no matter the speed at which an observer travels.

The special theory of relativity implies that only particles with zero rest mass may travel at the speed of light. Tachyons, particles whose speed exceeds that of light, have been hypothesized, but their existence would violate causality, and the consensus of physicists is that they cannot exist.

The faster the relative velocity, the greater the time dilation between one another, with the rate of time reaching zero as one approaches the speed of light (299,792,458 m/s). This causes massless particles that travel at the speed of light to be unaffected by the passage of time.
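The size of this effect is given by the Lorentz factor γ = 1/sqrt(1 − v²/c²). A clock moving at speed v runs at 1/γ of the rate of a clock at rest relative to the observer. The small Python sketch below shows that rate approaching zero as v approaches c.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def lorentz_factor(v: float) -> float:
    """gamma = 1 / sqrt(1 - v^2/c^2), valid for 0 <= v < c."""
    if not 0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


for fraction in (0.1, 0.5, 0.9, 0.99):
    gamma = lorentz_factor(fraction * C)
    print(f"v = {fraction:.2f} c: moving clock runs at {1 / gamma:.3f} of the rest rate")
```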

The force of gravity is weakest at the equator, for two reasons: the centrifugal force caused by the Earth's rotation, and the fact that points on the equator are furthest from the center of the Earth. The force of gravity varies with latitude, increasing from about 9.780 m/s² at the Equator to about 9.832 m/s² at the poles.
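The latitude dependence is commonly modelled with the Somigliana normal-gravity formula. The Python sketch below uses the WGS 84 ellipsoid constants. It covers sea level only, ignoring altitude and local anomalies, and reproduces the equator and pole values quoted above.

```python
import math

# WGS 84 normal gravity (Somigliana formula) constants
G_EQUATOR = 9.7803253359    # normal gravity at the equator, m/s^2
K = 0.00193185265241        # normal gravity formula constant
E2 = 0.00669437999013       # first eccentricity squared of the ellipsoid


def normal_gravity(latitude_deg: float) -> float:
    """Sea-level normal gravity (m/s^2) at the given geodetic latitude."""
    s2 = math.sin(math.radians(latitude_deg)) ** 2
    return G_EQUATOR * (1 + K * s2) / math.sqrt(1 - E2 * s2)


for lat in (0, 45, 90):
    print(f"{lat:>2} deg: {normal_gravity(lat):.4f} m/s^2")
```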

In string theory, believed to be a consistent theory of quantum gravity, the graviton is a massless state of a fundamental string. If it exists, the graviton is expected to be massless because the gravitational force is very long range and appears to propagate at the speed of light.

The gravitational constant, called G in physics equations, is an empirical physical constant. It is used to express the force between two objects caused by gravity, and it appears in Newton's universal law of gravitation. G is about 6.67408 × 10⁻¹¹ N⋅m²/kg².

Wormholes (virtual point matrix) are consistent with the general theory of relativity, but whether wormholes actually exist remains to be seen. A wormhole could connect extremely long distances such as a billion light years or more, short distances such as a few meters, different universes, or different points in time.

According to the current understanding of physics, an object within space-time cannot exceed the speed of light, which means an attempt to travel to any other galaxy would be a journey of millions of earth years via conventional flight.

Real-world and retro travel is possible. Because of the vastness of those distances, interstellar travel would require either a high percentage of the speed of light or a huge travel time, lasting from decades to millennia or longer. The speeds required for interstellar travel in a human lifetime far exceed what current methods of spacecraft propulsion can provide.

A causal loop is a paradox of time travel that occurs when a future event is the cause of a past event, which in turn is the cause of the future event. Both events then exist in spacetime, but their origin cannot be determined.

However, making one body advance or delay more than a few milliseconds compared to another body is not feasible with current technology. As for backwards time travel, it is possible to find solutions in general relativity that allow for it, but the solutions require conditions that may not be physically possible.

With an estimated light-travel distance of about 13.4 billion light-years (and a proper distance of approximately 32 billion light-years (9.8 billion parsecs) from Earth due to the Universe's expansion since the light we now observe left it about 13.4 billion years ago), astronomers announced it as the most distant ...
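The parsec figure follows from the light-year figure by a unit conversion, using 1 parsec ≈ 3.26156 light-years:

```python
LY_PER_PARSEC = 3.26156  # light-years in one parsec


def ly_to_parsec(light_years: float) -> float:
    """Convert a distance in light-years to parsecs."""
    return light_years / LY_PER_PARSEC


print(f"{ly_to_parsec(32e9) / 1e9:.1f} billion parsecs")  # about 9.8
```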

Tachyons. In special relativity, it is impossible to accelerate an object to the speed of light, or for a massive object to move at the speed of light. However, it might be possible for an object to exist which always moves faster than light.

A spacecraft equipped with a warp drive may travel at speeds greater than that of light by many orders of magnitude. ... The problem of a material object exceeding light speed is that an infinite amount of kinetic energy would be required to travel at exactly the speed of light.

In astronomy, the interstellar medium (ISM) is the matter and radiation that exists in the space between the star systems in a galaxy. This matter includes gas in ionic, atomic, and molecular form, as well as dust and cosmic rays. It fills interstellar space and blends smoothly into the surrounding intergalactic space.

Explanations of why ships can travel faster than light in hyperspace vary; hyperspace may be smaller than real space and therefore a star ship's propulsion seems to be greatly multiplied, or else the speed of light in hyperspace is not a barrier as it is in real space.

g-lock in astronomy celluloid

g-force. A force acting on a body as a result of acceleration or gravity, informally described in units of acceleration equal to one g. For example, a 12 pound object undergoing a g-force of 2g experiences 24 pounds of force.


The acceleration that causes blackouts in fighter pilots is called the maximum g-force. Fighter pilots experience this force when accelerating or decelerating quickly. At high g's, the pilot's blood pressure changes and the flow of oxygen to the brain rapidly decreases.

As objects accelerate through the air toward (or away from) the ground, gravitational forces exert resistance against human bodies, objects, and matter of all kinds. ... As we accelerate faster and faster and fly higher and higher, the gravitational impact on our bodies grows greater.

RPM stands for "Revolutions per minute." This is how centrifuge manufacturers generally describe how fast the centrifuge is going. ... RCF (relative centrifugal force) is measured in force x gravity or g-force. This is the force exerted on the contents of the rotor, resulting from the revolutions of the rotor.
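The RPM-to-RCF conversion follows from RCF = rω²/g. With r in centimetres and speed in rpm, this reduces to the familiar bench formula RCF ≈ 1.118 × 10⁻⁵ × r × RPM². The Python sketch below computes both for comparison; the 10 cm radius and 10,000 rpm are hypothetical examples.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def rcf(rpm: float, radius_cm: float) -> float:
    """Relative centrifugal force (multiples of g) at radius_cm from the axis."""
    omega = 2 * math.pi * rpm / 60.0  # angular speed, rad/s
    return (radius_cm / 100.0) * omega ** 2 / G0


def rcf_shortcut(rpm: float, radius_cm: float) -> float:
    """Common bench formula: 1.118e-5 * r(cm) * rpm^2."""
    return 1.118e-5 * radius_cm * rpm ** 2


print(f"{rcf(10_000, 10.0):.0f} x g (first principles)")
print(f"{rcf_shortcut(10_000, 10.0):.0f} x g (shortcut)")
```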

Impact From a Falling Object

The first step is to set the equations for gravitational potential energy and work equal to each other and solve for force. Since W = PE, F × d = m × g × h, so F = (m × g × h) ÷ d.
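Here is a worked example of the estimate F = (m × g × h) ÷ d in Python. The 2 kg mass, 3 m drop and 5 cm stopping distance are hypothetical. The result is the average force over the stopping distance d, not the peak force.

```python
G0 = 9.80665  # standard gravity, m/s^2


def average_impact_force(mass_kg: float, drop_height_m: float, stop_distance_m: float) -> float:
    """F = m * g * h / d: potential energy lost equals work done stopping the object."""
    return mass_kg * G0 * drop_height_m / stop_distance_m


print(f"{average_impact_force(2.0, 3.0, 0.05):.0f} N")
```

Halving the stopping distance doubles the average force, which is why soft landings (larger d) hurt less.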

1 g is the average gravitational acceleration on Earth, the average force, which affects a resting person at sea level. 0 g is the value at zero gravity. 1 g = 9.80665 m/s² = 32.17405 ft/s². To reach this value at a linear acceleration, you must accelerate from 0 to 60 mph in 2.74 seconds.
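Both numbers in that paragraph can be checked in Python. Dividing 60 mph (in m/s) by 1 g gives the 0 to 60 mph time, and dividing 9.80665 m/s² by 0.3048 m/ft gives the ft/s² value.

```python
MPH_TO_MS = 0.44704   # exact: 1 mph = 0.44704 m/s
FT_TO_M = 0.3048      # exact: 1 ft = 0.3048 m
G0 = 9.80665          # standard gravity, m/s^2

seconds = 60 * MPH_TO_MS / G0
print(f"0 to 60 mph at 1 g takes {seconds:.2f} s")
print(f"1 g = {G0 / FT_TO_M:.5f} ft/s^2")
```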

To calculate this g-force, use the formula g = G × m ÷ r². The little g is the g-force, or the acceleration caused by gravity. The big G is Newton's gravitational constant, approximately 6.67 × 10⁻¹¹ N·m²/kg². The little m is the mass of the object, and the little r is the radius of the object.
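The Python sketch below applies the relation g = G × m ÷ r² to Earth. It uses the commonly quoted Earth mass of 5.972 × 10²⁴ kg (our addition) and the 6371 km mean radius.

```python
G = 6.674e-11        # gravitational constant, N m^2 / kg^2
M_EARTH = 5.972e24   # mass of Earth, kg
R_EARTH = 6.371e6    # mean radius of Earth, m


def surface_gravity(mass_kg: float, radius_m: float) -> float:
    """g = G * m / r^2 for a spherical body."""
    return G * mass_kg / radius_m ** 2


print(f"g at Earth's surface = {surface_gravity(M_EARTH, R_EARTH):.2f} m/s^2")
```

The result is close to 9.8 m/s². The small difference from measured values comes mainly from ignoring Earth's rotation.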

When something falls on Earth with an acceleration of 9.8 m/s², it is accelerating at 1 g. A person in free fall at 1 g would feel no external forces, but from the physics perspective we would say that they are experiencing an external force of gravity.

The magnitude of the force of gravity acting upon the passenger (or car) can easily be found using the equation Fgrav = m × g, where g is the acceleration of gravity (9.8 m/s²). The magnitude of the normal force depends on three factors: the speed of the car, the radius of the loop, and the mass of the rider.

That force will cause the plane to accelerate unless it exactly balances gravity and drag. Since the pilot is strapped into the plane, he or she feels the force caused by the acceleration of the seat and/or straps. ... The g-forces you feel are caused by inertia.

g-force is pretty much an acceleration force. For example, 1 g (Earth gravity) is basically an acceleration of 9.8 m/s² towards the Earth; you don't accelerate because the ground resists this force.

Well, Einstein gave us the answer to that: it would feel exactly like standing on the surface of the Earth, where the acceleration due to gravity is 1 g. ... The overall speed doesn't matter, and the astronauts would have no way of directly perceiving their velocity, but yes, they would constantly perceive the acceleration.

At the surface of the Earth, the acceleration due to gravity is roughly 9.8 m/s². The average distance to the centre of the Earth is 6371 km. Using these values, we can work out the gravitational acceleration at a given altitude. Example: find the acceleration due to gravity 1000 km above Earth's surface.
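Because gravity falls off as 1/r², the acceleration at height h is g(h) = g₀ × (R/(R + h))². The Python sketch below works the 1000 km example. It also checks the 400 km ISS altitude, where gravity was said earlier to be nearly 90% of its surface value.

```python
G_SURFACE = 9.8       # m/s^2, the surface value used in the text
R_EARTH_KM = 6371.0   # average distance to Earth's centre, km


def gravity_at_altitude(h_km: float) -> float:
    """Inverse-square scaling of the surface value to altitude h_km."""
    return G_SURFACE * (R_EARTH_KM / (R_EARTH_KM + h_km)) ** 2


for h in (400.0, 1000.0):
    g = gravity_at_altitude(h)
    print(f"{h:.0f} km: {g:.2f} m/s^2 ({g / G_SURFACE:.0%} of surface)")
```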

G-force induced loss of consciousness (abbreviated as G-LOC, pronounced 'JEE-lock') is a term generally used in aerospace physiology to describe a loss of consciousness occurring from excessive and sustained g-forces draining blood away from the brain causing cerebral hypoxia. The condition is most likely to affect pilots of high performance fighter and aerobatic aircraft or astronauts but is possible on some extreme amusement park rides. G-LOC incidents have caused fatal accidents in high performance aircraft capable of sustaining high g for extended periods. High-G training for pilots of high performance aircraft or spacecraft often includes ground training for G-LOC in special centrifuges, with some profiles exposing pilots to 9 gs for a sustained period.

- Greyout - a loss of color vision

- Tunnel vision - loss of peripheral vision, retaining only the center vision

- Blackout - a complete loss of vision but retaining consciousness.

- G-LOC - where consciousness is lost.

Because of the high level of sensitivity that the eye’s retina has to hypoxia, symptoms are usually first experienced visually. As the retinal blood pressure decreases below globe pressure (usually 10–21 mm Hg), blood flow begins to cease to the retina, first affecting perfusion farthest from the optic disc and retinal artery with progression towards central vision. Skilled pilots can use this loss of vision as their indicator that they are at maximum turn performance without losing consciousness. Recovery is usually prompt following removal of g-force but a period of several seconds of disorientation may occur. Absolute incapacitation is the period of time when the aircrew member is physically unconscious and averages about 12 seconds. Relative incapacitation is the period in which the consciousness has been regained, but the person is confused and remains unable to perform simple tasks. This period averages about 15 seconds. Upon regaining cerebral blood flow, the G-LOC victim usually experiences myoclonic convulsions (often called the ‘funky chicken’) and often full amnesia of the event is experienced.[1] Brief but vivid dreams have been reported to follow G-LOC. If G-LOC occurs at low altitude, this momentary lapse can prove fatal and even highly experienced pilots can pull straight to a G-LOC condition without first perceiving the visual onset warnings that would normally be used as the sign to back off from pulling any more gs.

The human body is much more tolerant of g-force when it is applied laterally (across the body) than when it is applied longitudinally (along the length of the body). Unfortunately, most sustained g-forces incurred by pilots are applied longitudinally. This has led to experiments with prone-pilot aircraft designs, which lay the pilot face down, and (more successfully) with reclined positions for astronauts.

The g thresholds at which these effects occur depend on the training, age and fitness of the individual. An untrained individual not used to the G-straining manoeuvre can black out between 4 and 6 g, particularly if this is pulled suddenly. A trained, fit individual wearing a g-suit and practising the straining manoeuvre can, with some difficulty, sustain up to 12 to 14 g without loss of consciousness. The “Blue Angels” have to perform their manoeuvres without the aid of g-suits, and regularly sustain 3 to 5 second bursts of 10 g.