This forward-looking research presents a comprehensive view of a new IoT concept proposed specifically for robotics: the Internet of Robotic Things (IoRT). IoRT combines diverse technologies such as cloud computing, artificial intelligence (AI), machine learning, and the Internet of Things (IoT).

The Future of Robotics. Robotics engineers are designing the next generation of robots to look, feel, and act more human, to make it easier for us to warm up to a cold machine. Realistic-looking hair and skin with embedded sensors will allow robots to react naturally in their environment.

Robots that cook and clean are unlikely to arrive in the next fifty years, mainly because automating tasks such as ironing, washing dishes, and folding clothes would be very expensive. Monitoring health problems and attending to the elderly are the domestic roles robots are more likely to play in the future.

Robots could replace nearly a third of the U.S. workforce by 2030. Over the next 13 years, the rising tide of automation will force as many as 70 million workers in the United States to find another way to make money, a new study from the global consultancy McKinsey predicts.

Robots are already working in our everyday lives and have changed the way that some industries operate. The future of robotics will change how we live forever. ... Soon, robots could look and function similar to humans. Robots may become smarter than their creators as soon as 2029, as experts estimate.

Some kinds of jobs are better done by robots than by humans. Most robots today are used to do repetitive actions or jobs considered too dangerous for humans. A robot is ideal for going into a building that might contain a bomb. Robots are also used in factories to build things like cars, candy bars, and electronics.

Robots are a good way to implement lean principles in industry. They save time because they can produce more products, and their high accuracy reduces the amount of wasted material. Including robots in production lines can also save money, because they offer a quick return on investment (ROI).

Robotics deals with the design, construction, operation, and use of robots, as well as computer systems for their control, sensory feedback, and information processing. These technologies are used to develop machines that can substitute for humans and replicate human actions.

By 2019, more than 1.4 million new industrial robots will be installed in factories around the world - that's the latest forecast from the International Federation of Robotics (IFR).

Robot software is the set of coded commands or instructions that tell a mechanical device and electronic system, known together as a robot, what tasks to perform. Robot software is used to perform autonomous tasks. Many software systems and frameworks have been proposed to make programming robots easier.

Robotics and Artificial Intelligence. Artificial Intelligence (AI) is a general term that implies the use of a computer to model and/or replicate intelligent behavior. Research in AI focuses on the development and analysis of algorithms that learn and/or perform intelligent behavior with minimal human intervention.

The Internet

The Internet is a worldwide system, or network, of computers. It got started in the late 1960s, originally conceived as a network that could survive nuclear war. Back then it was called ARPAnet, named after the Advanced Research Projects Agency (ARPA) of the United States federal government.

Protocol and packets When people began to connect their computers into ARPAnet, the need became clear for a universal set of standards, called a protocol, to ensure that all the machines “speak the same language.” The modern Internet is such that you can use any type of computer (IBM-compatible, Mac, or other) and take advantage of all the network’s resources. All Internet activity consists of computers “talking” to one another. This occurs in machine language. However, the situation is vastly more complicated than when data goes from one place to another within a single computer. In the Internet (often called simply the Net), data must often go through several different computers to get from the transmitting or source computer to the receiving or destination computer. These intermediate computers are called nodes, servers, hosts, or Internet service providers (ISPs). Millions of people are simultaneously using the Net; the most efficient route between a given source and destination can change from moment to moment. The Net is set up in such a way that signals always try to follow the most efficient route. If you are connected to a distant computer, say a machine at the National Hurricane Center, the requests you make of it and the data it sends you are broken into small units called packets. Each packet coming to you has, in effect, your computer’s name written on it. But not all packets necessarily travel the same route through the network. Ultimately, all the packets are reassembled into the data you want, say, the infrared satellite image of a hurricane, even though they might not arrive in the same order they were sent.
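The idea of splitting data into labeled packets and reassembling them at the destination can be sketched in a few lines of Python. This is only a toy model: the dictionary fields `dest`, `seq`, and `payload` and the 8-byte packet size are assumptions made for illustration, and real Internet packets carry far more header information.

```python
import random

def split_into_packets(data: bytes, destination: str, size: int = 8):
    """Break data into small numbered packets, each labeled with its destination."""
    return [
        {"dest": destination, "seq": i, "payload": data[start:start + size]}
        for i, start in enumerate(range(0, len(data), size))
    ]

def reassemble(packets):
    """Rebuild the original data by putting packets back in sequence order."""
    ordered = sorted(packets, key=lambda p: p["seq"])
    return b"".join(p["payload"] for p in ordered)

message = b"infrared satellite image of a hurricane"
packets = split_into_packets(message, destination="my-computer")
random.shuffle(packets)  # simulate packets arriving in a different order
assert reassemble(packets) == message
```

The sequence number is what lets the destination computer restore the original order even though the packets took different routes.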

E-mail and newsgroups

For many computer users, communication via Internet electronic mail (e-mail) and/or newsgroups has practically replaced the postal service. You can leave messages for, and receive them from, friends and relatives scattered throughout the world.

To effectively use e-mail or newsgroups, everyone must have an Internet address. These tend to be arcane. An example is

sciencewriter@nanosecond.com

The first part of the address, before the @ symbol, is the username. The word after the @ sign and before the period (or dot) represents the domain name. The three letter abbreviation after the dot is the domain type. In this case, “com” stands for “commercial.” Nanosecond is a commercial provider. Other common domain types include “net” (network), “org” (organization), “edu” (educational institution), and “gov” (government). In recent years, country abbreviations have been increasingly used at the ends of Internet addresses, such as “us” for United States, “de” for Germany, “uk” for United Kingdom, and “jp” for Japan.
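As a small illustration, the parts of such an address can be pulled apart in Python. The function name `split_address` and the lookup table are hypothetical helpers, and the sketch assumes the simple form shown above (one @ sign, a domain name, and a domain type).

```python
# Domain types listed in the text, with their meanings.
DOMAIN_TYPES = {
    "com": "commercial",
    "net": "network",
    "org": "organization",
    "edu": "educational institution",
    "gov": "government",
}

def split_address(address: str):
    """Return (username, domain name, domain type) for a simple e-mail address."""
    username, _, host = address.partition("@")             # text before and after the @ sign
    domain_name, _, domain_type = host.rpartition(".")     # split at the last dot
    return username, domain_name, domain_type

user, name, kind = split_address("sciencewriter@nanosecond.com")
print(user, name, kind, DOMAIN_TYPES.get(kind, "unknown"))
# prints: sciencewriter nanosecond com commercial
```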

Internet conversations You can carry on a teletype-style conversation with other computer users via the Internet, but it takes a bit of getting used to. When done among users within a single service provider, this is called chat. When done among people connected to different service providers, it is called Internet relay chat (IRC). Typing messages to and reading them from other people in real time is more personal than letter writing, because your addressees get their messages immediately. But it’s less personal than talking on the telephone, especially at first, because you cannot hear, or make, vocal inflections. It is possible to digitize voice signals and transfer them via the Internet. This has given rise to hardware and software schemes that claim to provide virtually toll-free long-distance telephone communications. As of this writing, this is similar to amateur radio in terms of reliability and quality of connection. When Net traffic is light, such connections can be good. But when Net traffic is heavy, the quality is marginal to poor. Audio signals, like any other form of Internet data, are broken into packets. All, or nearly all, the packets must be received and reassembled before a good signal can be heard. This takes variable time, depending on the route each packet takes through the Net. If many of the packets arrive disproportionately late, the destination computer can only “do its best” to reassemble the signal. In the worst case, the signal might not get through at all.

Getting information One of the most important features of the Internet is the fact that it can get you in touch with thousands of sources of information. Data is transferred among computers by means of a file transfer protocol (FTP) that allows the files on the hard drives of distant computers to become available exactly as if the data were stored on your own computer’s hard drive, except the access time is slower. You can also store files on distant computers’ hard drives. When using FTP, you should be aware of the time at the remote location, and avoid, if possible, accessing files during the peak hours at the remote computer. Peak hours usually correspond to working hours, or approximately 8:00 a.m. to 5:00 p.m. local time, Monday through Friday. You must take time differences into account if you’re not in the same time zone as the remote computer.

The World Wide Web (also called WWW or the Web) is one of the most powerful information servers you will find on-line. Its outstanding feature is hypertext, a scheme of cross-referencing. Certain words, phrases, and images make up so-called links. When you select a link in a Web page or Web site (a document containing text and graphics and sometimes also other types of files), your computer is transferred to another document dealing with the same or a related subject. This site will probably also contain numerous links. Before long, you might find yourself “surfing” the Web for hours going from site to site. The word surfing derives from the similarity of this activity to television “channel surfing.” The Web works fastest during the predawn hours in the United States, when the fewest people are connected to the Internet. When Net traffic is heavy, Web documents can take a long time to appear. In some instances you won’t be able to get a page at all; you’ll sit there staring at a blank display or at an hourglass. This problem is worst with comparatively slow telephone-line modems, but it can occur even with the most expensive, high-speed Internet connections. When you experience it, you’ll know why some people refer to the Web as the “World Wide Wait.”

Getting connected If you’re not an Internet user but would like to get access, try calling the computer science department of the nearest trade school, college, or university. Many, if not most, academic institutions have Internet access, and some will let you in for a reasonable charge. If you aren’t near a school that can provide you with Internet service, you can get connected through a commercial provider. You’ll have to pay a fee, generally by the month. You might also have to pay for any hours you use per month past a certain maximum.

Robotics and artificial intelligence

A robot is a sophisticated machine, or a set of machines working together, that performs certain tasks. Some people imagine robots as having two legs, a head, two arms with functional end effectors (hands), an artificial voice, and an electronic brain. The technical name for a humanoid robot is android. Androids are within the realm of technological possibility, but most robots are structurally simpler than, and don’t look or behave like, people. An electronic brain, also called artificial intelligence (AI), is more than mere fiction, thanks to the explosive evolution of computer hardware and software. But even the smartest computer these days would make a dog seem brilliant by comparison. Some scientists think computers and robots might become as smart as, or smarter than, human beings. Others believe the human brain and mind are more complicated than anything that can be duplicated with electronic circuits.

Asimov’s three laws In one of his early science-fiction stories, the famous author Isaac Asimov first mentioned the word robotics, along with three “fundamental rules” that all robots ought to obey:

• First Law: A robot must not injure, or allow the injury of, any human being.

• Second Law: A robot must obey all orders from humans, except orders that would contradict the First Law.

• Third Law: A robot must protect itself, except when to do so would contradict the First Law or the Second Law.

These rules were first coined in the 1940s, but they are still considered good standards for robots nowadays.

Robot generations Some researchers have analyzed the evolution of robots, marking progress according to so-called robot generations. This has been done with computers and integrated circuits, so it only seems natural to do it with robots, too. One of the first engineers to make formal mention of robot generations was the Japanese engineer Eiji Nakano.

First generation According to Nakano, a first-generation robot is a simple mechanical arm without AI. Such machines have the ability to make precise motions at high speed, many times, for a long time. They have found widespread industrial application and have been around for more than half a century. These are the fast-moving systems that install rivets and screws in assembly lines, that solder connections on printed circuits, and that, in general, have taken over tedious, mind-numbing chores that used to be done by humans. First-generation robots can work in groups if their actions are synchronized. The operation of these machines must be constantly watched, because if they get out of alignment and are allowed to keep operating anyway, the result can be a series of bad production units. At worst, a misaligned and unsupervised robot might create havoc of a sort that you can hardly begin to imagine even if you let your mind run wild.

Second generation A second-generation robot has some level of AI. Such a machine is equipped with pressure sensors, proximity sensors, tactile sensors, binocular vision, binaural hearing, and/or other devices that keep it informed about goings-on in the world around it. A computer called a robot controller processes the data from the sensors and adjusts the operation of the robot accordingly. The earliest second-generation robots came into common use around 1980. Second-generation robots can stay synchronized with each other, without having to be overseen constantly by a human operator. Of course, periodic checking is needed with any machine, because things can always go wrong, and the more complex the system, the more ways it can malfunction.

Third generation Nakano gave mention to third-generation robots, but in the years since the publication of his original paper, some things have changed. Two major avenues are developing for advanced robot technology. These are the autonomous robot and the insect robot. Both of these technologies hold promise for the future. An autonomous robot can work on its own. It contains a controller and can do things largely without supervision, either by an outside computer or by a human being. A good example of this type of third-generation robot is the personal robot about which technophiles dream.

There are some situations in which autonomous robots don’t work well. In these cases, many simple robots, all under the control of one central computer, can be used. They function like ants in an anthill or bees in a hive. The individual machines are stupid, but the group as a whole is intelligent.

Fourth generation and beyond Nakano did not write about anything past the third generation of robots. But we might mention a fourth-generation robot: a machine of a sort yet to be deployed. An example is a fleet or population of robots that reproduce and evolve. Past that, we might say that a fifth-generation robot is something humans haven’t yet dreamed of or, if someone has thought up such a thing, he or she has not published the brainstorm.

Independent or dependent? Years ago, roboticist and engineer Rodney Brooks became well known for his work with insect robots. His approach to robotics and AI was at first considered unorthodox. Before his time, engineers wanted robotic AI systems to mimic human thought processes. The machines were supposed to stand alone and be capable of operating independently of humans or other machines. But Brooks believed that insectlike intelligence might be superior to humanlike intelligence in many applications. Support for his argument came from the fact that insect colonies are highly efficient, and often they survive adversity better than supposedly higher life forms.

Insect robots

Insect robots operate in large numbers under the control of a central AI system. A mobile insect robot has several legs, a set of wheels, or a track drive. The first machines of this type, developed by Brooks, looked like beetles. They ranged in size from more than a foot long to less than a millimeter across. Most significant is the fact that they worked collectively. Individual robots, each with its own controller, do not necessarily work well together in a team. This shouldn’t be too surprising; people are the same way. Professional sports teams have been assembled by buying the best players in the business, but the team won’t win unless the players get along. Insects, in contrast, are stupid at the individual level. Ants and bees are like idiot robots, or, perhaps, like ideal soldiers. But an anthill or beehive, just like a well-trained military unit, is an efficient system, controlled by a powerful central brain. Rodney Brooks saw this fundamental difference between autonomous and collective intelligence. He saw that most of his colleagues were trying to build autonomous robots, perhaps because of the natural tendency for humans to envision robots as humanoid. To Brooks, it was obvious that a major avenue of technology was being neglected. Thus he began designing robot colonies, each consisting of many units under the control of a central AI system. Brooks envisioned microscopic insect robots that might live in your house, coming out at night to clean your floors and countertops. “Antibody robots” of even tinier proportions could be injected into a person infected with some previously incurable disease.

Controlled by a central microprocessor, they could seek out the disease bacteria or viruses and swallow them up.

Autonomous robots

A robot is autonomous if it is self-contained, housing its own computer system, and not depending on a central computer for its commands. It gets around under its own power, usually by rolling on wheels or by moving on two, four, or six legs. Robots are shown as squares and controllers as solid black dots. In the drawing at A, there is only one controller; it is central and is common to all the individual robots. The computer communicates with, and coordinates, the robots through wires or fiber optics or via radio. In the drawing at B, each robot has its own controller, and there is no central computer. Straight lines show possible paths of communication among robot controllers in both scenarios. Simple robots, like those in assembly lines, are not autonomous. The more complex the task, and the more different things a robot must do, the more autonomy it will have. The most autonomous robots have AI. The ultimate autonomous robot will act like a living animal or human. Such a machine has not yet been developed, and it will probably be at least the year 2050 before this level of sophistication is reached.

Androids

An android is a robot, often very sophisticated, that takes a more or less human form. An android usually propels itself by rolling on small wheels in its base. The technology for fully functional arms is under development, but the software needed for their operation has not been made cost-effective for small robots. Legs are hard to design and engineer and aren’t really necessary; wheels or track drives work well enough. (Elevators can be used to allow a rolling android to get from floor to floor in a building.) An android has a rotatable head equipped with position sensors. Binocular, or stereo, vision allows the android to perceive depth, thereby locating objects anyplace within a large room. Speech recognition and synthesis are common. Because of their humanlike appearance, androids are ideal for use wherever there are children. Androids, in conjunction with computer terminals, might someday replace school teachers in some situations. It is possible that the teaching profession will suffer because of this, but it is more likely that the opposite will be true. There will be a demand for people to teach children how to use robots. Robots might free human teachers to spend more time in areas like humanities and philosophy, while robots instruct students in computer programming, reading, arithmetic, and other rote-memory subjects. Robotic teachers, if responsibly used, might help us raise children into sensitive and compassionate adults.

Robot arms Robot arms are technically called manipulators. Some robots, especially industrial robots, are nothing more than sophisticated manipulators. A robot arm can be categorized according to its geometry. Some manipulators resemble human arms. The joints in these machines can be given names like “shoulder,” “elbow,” and “wrist.” Other manipulators are so different from human arms that these names don’t make sense. An arm that employs revolute geometry is similar to a human arm, with a “shoulder,” “elbow,” and “wrist.” An arm with cartesian geometry is far different from a human arm, and moves along axes (x, y, z) that are best described as “up-and-down,” “right-and-left,” and “front-to-back.”

Degrees of freedom The term degrees of freedom refers to the number of different ways in which a robot manipulator can move. Most manipulators move in three dimensions, but often they have more than three degrees of freedom.

You can use your own arm to get an idea of the degrees of freedom that a robot arm might have. Extend your right arm straight out toward the horizon. Extend your index finger so it is pointing. Keep your arm straight, and move it from the shoulder. You can move in three ways. Up-and-down movement is called pitch. Movement to the right and left is called yaw. You can rotate your whole arm as if you were using it as a screwdriver. This motion is called roll. Your shoulder therefore has three degrees of freedom: pitch, yaw, and roll. Now move your arm from the elbow only. This is hard to do without also moving your shoulder. Holding your shoulder in the same position constantly, you will see that your elbow joint has the equivalent of pitch in your shoulder joint. But that is all. Your elbow, therefore, has one degree of freedom. Extend your arm toward the horizon again. Now move only your wrist. Try to keep the arm above the wrist straight and motionless. Your wrist can bend up-and-down, side-to-side, and it can also twist a little. Your lower arm has the same three degrees of freedom that your shoulder has, although its roll capability is limited. In total, your arm has seven degrees of freedom: three in the shoulder, one in the elbow, and three in the arm below the elbow. You might think that a robot should never need more than three degrees of freedom. But the extra possible motions, provided by multiple joints, give a robot arm versatility that it could not have with only three degrees of freedom. (Just imagine how inconvenient life would be if your elbow and wrist were locked and only your shoulder could move.)

Degrees of rotation

The term degrees of rotation refers to the extent to which a robot joint, or a set of robot joints, can turn clockwise or counterclockwise about a prescribed axis. Some reference point is always used, and the angles are given in degrees with respect to that joint. Rotation in one direction (usually clockwise) is represented by positive angles; rotation in the opposite direction is specified by negative angles. Thus, if angle X = 58 degrees, it refers to a rotation of 58 degrees clockwise with respect to the reference axis. If angle Y = -74 degrees, it refers to a rotation of 74 degrees counterclockwise. Figure 34-2 shows a robot arm with three joints. The reference axes are J1, J2, and J3, for rotation angles X, Y, and Z. The individual angles add together. To move this robot arm to a certain position within its work envelope, or the region in space that the arm can reach, the operator enters data into a computer. This data includes the measures of angles X, Y, and Z. The operator has specified X = 39, Y = 75, and Z = 51. In this example, no other parameters are shown. (This is to keep the illustration simple.) But there would probably be variables such as the length of the arm sections, the base rotation angle, and the position of the robot gripper (hand).
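The way the joint angles add together can be sketched in Python. This is an illustration under stated assumptions, not the method behind the figure: it assumes each joint angle is measured relative to the previous arm section, that the arm moves in a single plane, and that the first reference axis lies along +x with y pointing up. The section lengths are hypothetical, since the text gives none.

```python
import math
from itertools import accumulate

def section_headings(joint_angles_deg):
    """Cumulative heading of each arm section; the joint angles add together."""
    return list(accumulate(joint_angles_deg))

def gripper_position(joint_angles_deg, section_lengths):
    """Planar position of the arm tip, with clockwise angles taken as positive.

    Assumes the first reference axis lies along +x and y points up, so a
    clockwise (positive) rotation turns a section toward -y.
    """
    x = y = 0.0
    for heading, length in zip(section_headings(joint_angles_deg), section_lengths):
        theta = math.radians(-heading)  # convert clockwise-positive to the usual math angle
        x += length * math.cos(theta)
        y += length * math.sin(theta)
    return x, y

angles = [39, 75, 51]            # X, Y, Z from the text, in degrees
print(section_headings(angles))  # [39, 114, 165]
print(gripper_position(angles, [1.0, 1.0, 0.5]))  # hypothetical section lengths
```

With the section lengths added, the same three angles are enough to locate the gripper, which is why the text lists arm-section length among the other variables a real system would need.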

Articulated geometry

The word articulated means “broken into sections by joints.” A robot arm with articulated geometry bears some resemblance to the arm of a human. The versatility is defined in terms of the number of degrees of freedom. For example, an arm might have three degrees of freedom: base rotation (the equivalent of azimuth), elevation angle, and reach (the equivalent of radius). If you’re a mathematician, you might recognize this as a spherical coordinate scheme. There are several different articulated geometries for any given number of degrees of freedom.
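For readers who want to see the spherical scheme in concrete terms, the following Python sketch converts base rotation, elevation angle, and reach into rectangular coordinates. The axis conventions are assumptions: base rotation is measured counterclockwise from the x axis, elevation is measured upward from the horizontal plane, and z points up.

```python
import math

def articulated_to_cartesian(base_rotation_deg, elevation_deg, reach):
    """Convert (azimuth, elevation, radius) to (x, y, z)."""
    az = math.radians(base_rotation_deg)
    el = math.radians(elevation_deg)
    horizontal = reach * math.cos(el)  # length of the arm's shadow on the floor plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        reach * math.sin(el),
    )

# Arm turned 90 degrees at the base, raised 30 degrees, extended 2 units.
print(articulated_to_cartesian(90, 30, 2.0))
```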

Cartesian coordinate geometry Another mode of robot arm movement is known as cartesian coordinate geometry or rectangular coordinate geometry. This term comes from the cartesian system often used for graphing mathematical functions. The axes are always perpendicular to each other. Variables are assigned the letters x and y in a two-dimensional cartesian plane, or x, y, and z in cartesian three-space. The dimensions are called reach for the x variable, elevation for the y variable, and depth for the z variable.

Cylindrical coordinate geometry A robot arm can be guided by means of a plane polar coordinate system with an elevation dimension added. This is known as cylindrical coordinate geometry. In the cylindrical system, a reference plane is used. An origin point is chosen in this plane. A reference axis is defined, running away from the origin in the reference plane. In the reference plane, the position of any point can be specified in terms of reach x, elevation y, and rotation z, the angle that the reach arm subtends relative to the reference axis. This is like cartesian coordinate geometry, except that the sliding movement is also capable of rotation. The rotation angle z can range from 0 to 360 degrees counterclockwise from the reference axis. In some systems, the range is specified as 0 to +180 degrees (up to a half circle counterclockwise from the reference axis), and 0 to -180 degrees (up to a half circle clockwise from the reference axis).
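A short Python sketch may make the cylindrical scheme and its two angle ranges more concrete. The function names are hypothetical, the output axes (two in the reference plane plus a height) are an assumed convention, and the signed form treats counterclockwise as positive and clockwise as negative, which is an assumption about how the two half circles are told apart.

```python
import math

def cylindrical_to_cartesian(reach, elevation, rotation_deg):
    """Convert reach, elevation, and rotation angle to rectangular coordinates.

    Returns (along the reference axis, across it in the reference plane, height).
    """
    angle = math.radians(rotation_deg)
    return (reach * math.cos(angle), reach * math.sin(angle), elevation)

def to_signed_rotation(rotation_deg):
    """Map a 0 to 360 degree counterclockwise angle to the -180 to +180 range."""
    r = rotation_deg % 360.0
    return r - 360.0 if r > 180.0 else r

print(to_signed_rotation(270))  # -90.0, a quarter circle clockwise
print(cylindrical_to_cartesian(1.0, 2.0, 90))
```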

Revolute geometry A robot arm can be made capable of moving in three dimensions using revolute geometry. The whole arm can rotate through a full circle (360 degrees) at the base point, or shoulder. There is also an elevation joint at the base that can move the arm through 90 degrees, from horizontal to vertical. A joint in the middle of the robot arm, at the elbow, moves through 180 degrees, from a straight position to doubled back on itself. There might be, but is not always, a wrist joint that can flex like the elbow and/or twist around and around. A 90-degree-elevation revolute robot arm can reach any point within a half sphere. The radius of the half sphere is the length of the arm when its elbow and wrist (if any) are straightened out. A 180-degree-elevation revolute arm can be designed that will reach any point within a fully defined sphere, with the exception of the small obstructed region around the base.
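The half-sphere and full-sphere work envelopes can be expressed as a simple reachability test. The sketch below assumes the base sits at the origin with z pointing up, treats the 90-degree case as the upper half sphere, and ignores the small obstructed region around the base; the function name is hypothetical.

```python
import math

def within_reach(x, y, z, arm_length, elevation_range_deg=90):
    """Return True if the point lies inside the arm's idealized work envelope."""
    distance = math.sqrt(x * x + y * y + z * z)
    if distance > arm_length:
        return False          # beyond the fully straightened arm
    if elevation_range_deg >= 180:
        return True           # full sphere (obstructed base region ignored)
    return z >= 0             # half sphere at or above the horizontal plane

print(within_reach(0.3, 0.4, 0.5, arm_length=1.0))        # inside the half sphere
print(within_reach(0.3, 0.4, -0.5, arm_length=1.0))       # below the base plane
print(within_reach(0.3, 0.4, -0.5, arm_length=1.0, elevation_range_deg=180))
```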

Robotic hearing and vision Machine hearing involves more than just the picking up of sound (done with a microphone) and the amplification of the resulting audio signals (done with an amplifier). A sophisticated robot can tell from which direction the sound is coming and perhaps deduce the nature of the source: human voice, gasoline engine, fire, or barking dog.

Binaural hearing Even with your eyes closed, you can usually tell from which direction a sound is coming. This is because you have binaural hearing. Sound arrives at your left ear with a different intensity, and in a different phase, than it arrives at your right ear. Your brain processes this information, allowing you to locate the source of the sound, with certain limitations. If you are confused, you can turn your head until the sound direction becomes apparent to you. Robots can be equipped with binaural hearing. Two sound transducers are positioned, one on either side of the robot’s head. A microprocessor compares the relative phase and intensity of signals from the two transducers. This lets the robot determine, within certain limitations, the direction from which sound is coming. If the robot is confused, it can turn until the confusion is eliminated and a meaningful bearing is obtained. If the robot can move around and take bearings from more than one position, a more accurate determination of the source location is possible if the source is not too far away.
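One common way to turn the comparison between two transducers into a bearing is to use the difference in arrival time, which is closely related to the phase difference mentioned above. The sketch below is a simplification under stated assumptions: the source is far away compared with the transducer spacing, sound travels at about 343 meters per second in air, and the 0.2-meter spacing in the example is hypothetical. Intensity differences are not used here.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at roughly 20 degrees Celsius

def bearing_from_delay(delay_s, transducer_spacing_m):
    """Estimate the source angle from straight ahead, in degrees.

    delay_s is how much earlier the sound reaches one transducer than the
    other; its sign tells which side the source is on.
    """
    ratio = SPEED_OF_SOUND * delay_s / transducer_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # guard against measurement noise
    return math.degrees(math.asin(ratio))

# Sound arriving 0.29 milliseconds earlier at one transducer, spaced 0.2 m apart.
print(round(bearing_from_delay(0.00029, 0.2), 1))
```

A single delay cannot distinguish a source in front from one directly behind at the mirror-image angle, which is one reason a robot, like a person, may need to turn before the bearing becomes unambiguous.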

Hearing and AI With the advent of microprocessors that can compare patterns, waveforms, and huge arrays of numbers in a matter of microseconds, it is possible for a robot to determine the nature of a sound source, as well as where it comes from. A human voice produces one sort of waveform, a clarinet produces another, a growling bear produces another, and shattering glass produces yet another. Thousands of different waveforms can be stored by a robot controller and incoming sounds compared with this repertoire. In this way, a robot can immediately tell if a particular noise is a lawn mower going by or a person shouting, an aircraft taking off or a car going down the street. Beyond this coarse mode of sound recognition, an advanced robot can identify a person by analyzing the waveform of his or her voice. The machine can even decipher commonly spoken words. This allows a robot to recognize a voice as yours or that of some unknown person and react accordingly. For example, if you tell your personal robot to get you a hammer and a box of nails, it can do so by recognizing the voice as yours and the words as giving that particular command. But if a burglar comes up your walkway, approaches your robot, and tells it to go jump in the lake, the robot can trundle off, find you by homing in on the transponder you’re wearing for that very purpose, and let you know that an unidentified person in your yard just told it to hydrologically dispose of itself.
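Comparing an incoming sound with a stored repertoire can be illustrated with a toy Python example. It uses normalized correlation as the similarity measure, which is an assumption chosen for simplicity; practical recognizers typically compare spectra or other features and must cope with timing shifts. The two stored waveforms are synthetic tones invented for the example.

```python
import math

def similarity(a, b):
    """Normalized correlation between two equal-length waveforms (-1 to 1)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def identify(sample, repertoire):
    """Return the name of the stored waveform most similar to the sample."""
    return max(repertoire, key=lambda name: similarity(sample, repertoire[name]))

n = 64
repertoire = {
    "low tone": [math.sin(2 * math.pi * 4 * i / n) for i in range(n)],
    "high tone": [math.sin(2 * math.pi * 9 * i / n) for i in range(n)],
}
# An incoming sound: the low tone, quieter and with a small offset added.
incoming = [0.8 * s + 0.05 for s in repertoire["low tone"]]
print(identify(incoming, repertoire))  # low tone
```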

Visible-light vision

A visible-light robotic vision system must have a device for receiving incoming images. This is usually a charge-coupled device (CCD) video camera, similar to the type used in home video cameras. The camera produces an analog video signal. This is processed into digital form by an analog-to-digital converter (ADC). The digital signal is clarified by digital signal processing (DSP). The resulting data goes to the robot controller. The moving image, received from the camera and processed by the circuitry, contains an enormous amount of information. It’s easy to present a robot controller with a detailed and meaningful moving image. But getting the machine’s brain to know what’s happening, and to determine whether or not these events are significant, is another problem altogether.
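The chain from analog signal to ADC to DSP can be mimicked on a handful of sample values. Everything specific in this sketch is an assumption: an 8-bit converter, a full-scale input of 1.0 volt, and a three-sample moving average standing in for the much more elaborate processing a real DSP stage would do.

```python
def adc(samples, full_scale=1.0, bits=8):
    """Convert analog sample voltages to integer codes, clipping out-of-range input."""
    levels = 2 ** bits - 1
    codes = []
    for v in samples:
        clipped = min(max(v, 0.0), full_scale)
        codes.append(round(clipped / full_scale * levels))
    return codes

def smooth(codes, window=3):
    """Moving average over neighboring codes, a simple stand-in for DSP."""
    half = window // 2
    out = []
    for i in range(len(codes)):
        chunk = codes[max(0, i - half): i + half + 1]
        out.append(sum(chunk) / len(chunk))
    return out

analog_line = [0.10, 0.12, 0.90, 0.11, 0.13]  # one noisy spike in a dim scan line
digital = adc(analog_line)
print(digital)
print(smooth(digital))  # the spike is spread out and reduced
```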

Vision and AI There are subtle things about an image that a machine will not notice unless it has advanced AI. How, for example, is a robot to know whether an object presents a threat? Is that four-legged thing there a big dog, or is it a bear cub? How is a robot to forecast the behavior of an object? Is that stationary biped a human or a mannequin? Why is it holding a stick? Is the stick a weapon? What does the biped want to do with the stick, if anything? The biped could be a department-store dummy with a closed-up umbrella or a baseball bat. It could be an old man with a cane. Maybe it is a hunter with a rifle. You can think up various images that look similar, but that have completely different meanings. You know right away if a person is carrying a tire iron to help you fix a flat tire, or if the person is clutching it with the intention of smashing something up. How is a robot to determine subtle things like this from the images it sees? It is important for a police robot or a security robot to know what constitutes a threat and what does not. In some robot applications, it isn’t necessary for the robot to know much about what’s happening. Simple object recognition is good enough. Industrial robots are programmed to look for certain things, and usually they aren’t hard to identify. A bottle that is too tall or too short, a surface that’s out of alignment, or a flaw in a piece of fabric is easy to pick out.

Sensitivity and resolution

Sensitivity is the ability of a machine to see in dim light or to detect weak impulses at invisible wavelengths. In some environments, high sensitivity is necessary. In others, it is not needed and might not be wanted. A robot that works in bright sunlight doesn’t need to be able to see well in a dark cave. A robot designed for working in mines, pipes, or caverns must be able to see in dim light, using a system that might be blinded by ordinary daylight.

Resolution is the extent to which a machine can differentiate between objects. The better the resolution, the keener the vision. Human eyes have excellent resolution, but machines can be designed with greater resolution. In general, the better the resolution, the more confined the field of vision must be. To understand why this is true, think of a telescope. The higher the magnification, the better the resolution (up to a certain maximum useful magnification). Increasing the magnification reduces the angle, or field, of vision. Zeroing in on one object or zone is done at the expense of other objects or zones.

Sensitivity and resolution are interdependent. If all other factors remain constant, improved sensitivity causes a sacrifice in resolution. Also, the better the resolution, the less well the vision system will function in dim light.
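The telescope trade-off between resolution and field of vision can be put into numbers. The sketch below assumes a sensor with a fixed number of pixels across the image, so the angle covered by each pixel is simply the field of view divided by the pixel count. The 60-degree field and 600-pixel width are invented figures, and `angle_per_pixel` and `zoom` are illustrative names.

```python
def angle_per_pixel(field_of_view_deg, pixels_across):
    """Smaller values mean finer detail can be told apart."""
    return field_of_view_deg / pixels_across


def zoom(field_of_view_deg, magnification):
    """Magnifying the image narrows the field of view by the same factor."""
    return field_of_view_deg / magnification


wide = angle_per_pixel(60.0, 600)               # degrees per pixel, wide view
narrow = angle_per_pixel(zoom(60.0, 4.0), 600)  # same sensor at 4x magnification
print(wide, narrow)
```

With the same sensor, four times the magnification gives four times finer angular detail, but the robot now sees only a quarter of the original angle.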

Invisible and passive vision

Robots have a big advantage over people when it comes to vision. Machines can see at wavelengths to which humans are blind. Human eyes are sensitive to electromagnetic waves whose length ranges from 390 to 750 nanometers (nm). The nanometer is a billionth (10⁻⁹) of a meter, or a millionth of a millimeter. The longest visible wavelengths look red. As the wavelength gets shorter, the color changes through orange, yellow, green, blue, and indigo. The shortest waves look violet. Energy at wavelengths somewhat longer than 750 nm is infrared (IR); energy at wavelengths somewhat shorter than 390 nm is ultraviolet (UV).

Machines, and even nonhuman living species, often do not see in this exact same range of wavelengths. In fact, insects can see UV that we can’t and are blind to red and orange light that we can see. (Maybe you’ve used orange “bug lights” when camping to keep the flying pests from coming around at night or those UV devices that attract bugs and then zap them.) A robot might be designed to see IR or UV or both, as well as (or instead of) visible light. Video cameras can be sensitive to a range of wavelengths much wider than the range we see.

Robots can be made to “see” in an environment that is dark and cold and that radiates too little energy to be detected at any electromagnetic wavelength. In these cases the robot provides its own illumination. This can be a simple lamp, a laser, an IR device, or a UV device. Or the robot might emanate radio waves and detect the echoes; this is radar. Some robots can navigate via ultrasonic echoes, like bats; this is sonar.
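As a small illustration of the wavelength bands above, the following sketch sorts a wavelength into ultraviolet, visible, or infrared using the 390 nm and 750 nm limits quoted in the text. The function name and the wording of the labels are assumptions made for the example.

```python
VISIBLE_MIN_NM = 390  # shortest wavelength human eyes respond to (violet)
VISIBLE_MAX_NM = 750  # longest wavelength human eyes respond to (red)


def classify_wavelength(nm):
    """Return which band a wavelength in nanometers falls into."""
    if nm < VISIBLE_MIN_NM:
        return "ultraviolet side"
    if nm > VISIBLE_MAX_NM:
        return "infrared side"
    return "visible"


for nm in (350, 550, 900):
    print(nm, classify_wavelength(nm))
```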

Binocular vision

Binocular machine vision is the analog of binocular human vision. It is sometimes called stereo vision. In humans, binocular vision allows perception of depth. With only one eye, that is, with monocular vision, you can infer depth to a limited extent on the basis of perspective. Almost everyone, however, has had the experience of being fooled when looking at a scene with one eye covered or blocked. A nearby pole and a distant tower might seem to be adjacent, when in fact they are a city block apart. In binocular robot vision, the primary requirements are high-resolution video cameras and a sufficiently powerful robot controller.
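One common way to turn two camera views into a depth estimate is the pinhole-camera relation depth = focal length × baseline / disparity, where the disparity is how far an object shifts between the left and right images. The text does not give this formula, so treat the sketch below as a standard textbook relation offered for illustration; the focal length, camera spacing, and disparity values are invented.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Estimate distance (meters) to a point seen by two parallel cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Two cameras 0.1 m apart, focal length 700 pixels (assumed values).
near_pole = depth_from_disparity(700, 0.1, 35)   # large shift: close object
far_tower = depth_from_disparity(700, 0.1, 1)    # tiny shift: distant object
print(near_pole, far_tower)
```

The pole and the tower that look adjacent to one eye produce very different disparities, which is what lets the controller tell them apart.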

Color sensing

Robot vision systems often function only in gray scale, like old-fashioned 1950s television. But color sensing can be added, in a manner similar to the way it is added to television systems. Color sensing can help a robot with AI tell what an object is. Is that horizontal surface a floor inside a building, or is it a grassy yard? (If it is green, it’s probably a grassy yard or maybe a playing field with artificial turf.) Sometimes, objects have regions of different colors that have identical brightness as seen by a gray-scale system; these objects, obviously, can be seen in more detail with a color-sensing system than with a vision system that sees only shades of gray.

In a typical color-sensing vision system, three gray-scale cameras are used. Each camera has a color filter in its lens. One filter is red, another is green, and another is blue. These are the three primary colors. All possible hues, levels of brightness, and levels of saturation are made up of these three colors in various ratios. The signals from the three cameras are processed by a microcomputer, and the result is fed to the robot controller.
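To see why three filtered cameras reveal detail that a single gray-scale camera misses, consider two regions with the same overall brightness but different colors. The sketch below uses Python's standard `colorsys` module to derive hue from the red, green, and blue readings. The pixel values are invented, and a plain average is only an assumed stand-in for what a gray-scale camera would report.

```python
import colorsys


def gray_level(r, g, b):
    """Brightness as a simple average of the three camera readings (0-255)."""
    return (r + g + b) / 3


def hue(r, g, b):
    """Hue in the range 0..1, computed from 0-255 camera readings."""
    h, _s, _v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h


reddish = (200, 50, 50)    # readings from the red, green, blue cameras
greenish = (50, 200, 50)

print(gray_level(*reddish), gray_level(*greenish))  # identical brightness
print(hue(*reddish), hue(*greenish))                # clearly different hues
```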

Robotic navigation

Mobile robots must get around in their environment without wasting motion, without running into things, and without tipping over or falling down a flight of stairs. The nature of a robotic navigation system depends on the size of the work area, the type of robot used, and the sorts of tasks the robot is required to perform. In this section, we’ll look at a few of the more common methods of robotic navigation.

Clinometer

A clinometer is a device for measuring the steepness of a sloping surface. Mobile robots use clinometers to avoid inclines that might cause them to tip over or that are too steep for them to ascend while carrying a load. The floor in a building is almost always horizontal. Thus, its incline is zero. But sometimes there are inclines such as ramps. A good example is the kind of ramp used for wheelchairs, in which a very small elevation change occurs. A rolling robot can’t climb stairs, but it might use a wheelchair ramp, provided the ramp isn’t so steep that it would upset the robot’s balance or cause it to lose its payload. In a clinometer, a transducer produces an electrical signal whenever the device is tipped from the horizontal.
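A robot controller might use the clinometer reading along the following lines. This is a sketch only: the slope is computed from rise and run rather than read from a real transducer, and the 10-degree limit is an arbitrary assumed threshold, not a figure from the text.

```python
import math


def incline_deg(rise, run):
    """Slope angle in degrees for a given rise over a horizontal run."""
    return math.degrees(math.atan2(rise, run))


def safe_to_climb(rise, run, max_deg=10.0):
    """True if the slope is within the robot's (assumed) tipping limit."""
    return abs(incline_deg(rise, run)) <= max_deg


print(incline_deg(0, 5), safe_to_climb(0, 5))    # level floor
print(incline_deg(1, 12), safe_to_climb(1, 12))  # gentle wheelchair-style ramp
print(incline_deg(1, 1), safe_to_climb(1, 1))    # far too steep
```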

Edge detection

The term edge detection refers to the ability of a robotic vision system to locate boundaries. It also refers to the robot’s knowledge of what to do with respect to those boundaries. A robot car, bus, or truck might use edge detection to see the edges of a road and use the data to keep itself on the road. But it would have to stay a certain distance from the right-hand edge of the pavement to avoid crossing into the lane of oncoming traffic (Fig. 34-8). It would have to stay off the road shoulder. It would have to tell the difference between pavement and other surfaces, such as gravel, grass, sand, and snow. The robot car could use beacons for this purpose, but this would require the installation of the guidance system beforehand. That would limit the robot car to roads that are equipped with such navigation aids. The interior of a home contains straight-line edge boundaries of all kinds, and each boundary represents a potential point of reference for a mobile robotic navigation system. The controller in a personal home robot would have to be programmed to know the difference between, say, the line where carpet ends and tile begins and the line where a flight of stairs begins. The vertical line produced by the intersection of two walls would present a different situation than the vertical line produced by the edge of a doorway.
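At its simplest, locating a boundary means finding where brightness changes sharply between neighboring pixels. The sketch below scans one row of gray-scale values and reports the positions where the jump exceeds a threshold. The pixel values and the threshold of 50 are invented for illustration, and practical systems use far more robust methods.

```python
def find_edges(scanline, threshold=50):
    """Return indexes where brightness jumps by at least `threshold`."""
    return [
        i
        for i in range(1, len(scanline))
        if abs(scanline[i] - scanline[i - 1]) >= threshold
    ]


# Dark grass, then bright pavement, then dark grass again.
row = [40, 42, 41, 120, 118, 121, 43, 40]
edges = find_edges(row)
print(edges)  # positions of the left and right pavement edges
```

Finding the edges is only the first half of the problem; the robot still has to decide which edge is the road shoulder and which is a doorway or a stair.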

Embedded path

An embedded path is a means of guiding a robot along a specific route. This scheme is commonly used by a mobile robot called an automated guided vehicle (AGV). A common embedded path consists of a buried, current-carrying wire. The current in the wire produces a magnetic field that the robot can follow. This method of guidance has been suggested as a way to keep a car on a highway, even if the driver isn’t paying attention. The wire needs a constant supply of electricity for this guidance method to work. If this current is interrupted for any reason, the robot will lose its way unless some backup navigation method (or good old human control) is substituted. Alternatives to wires, such as colored or reflective paints or tapes, do not need a supply of power, and this gives them an advantage. Tape is easy to remove and put somewhere else; this is difficult to do with paint and practically impossible with wires embedded in concrete. However, tape will be obscured by even the lightest snowfall, and at night, glare from oncoming headlights might be confused for reflections from the tape.
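One simple way a vehicle could follow a buried wire is to compare the field strength picked up by a sensor on each side and steer toward the stronger side. The sketch below assumes two hypothetical sensors and a proportional steering rule; neither detail comes from the text.

```python
def steering_command(left_signal, right_signal, gain=1.0):
    """Positive result: steer right. Negative result: steer left."""
    return gain * (right_signal - left_signal)


print(steering_command(0.5, 0.5))  # centered over the wire: go straight
print(steering_command(0.2, 0.8))  # wire is off to the right: steer right
print(steering_command(0.8, 0.2))  # wire is off to the left: steer left
```

If both readings drop to zero, for example because the current in the wire has been interrupted, this rule gives no guidance at all, which is why a backup navigation method is needed.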

Range sensing and plotting

Range sensing is the measurement of distances to objects in a robot’s environment in a single dimension. Range plotting is the creation of a graph of the distance (range) to objects, as a function of the direction in two or three dimensions.

For one-dimensional (1-D) range sensing, a signal is sent out, and the robot measures the time it takes for the echo to come back. This signal can be sound, in which case the device is sonar. Or it can be a radio wave; this constitutes radar. Laser beams can also be used. Close-in, one-dimensional range sensing is known as proximity sensing.

Two-dimensional (2-D) range plotting involves mapping the distance to various objects, as a function of their direction. The echo return time for a sonar signal, for example, might be measured every few degrees around a complete circle, resulting in a set of range points. A better plot would be obtained if the range were plotted every degree, every tenth of a degree, or even every minute of arc (1/60 degree). But no matter how detailed the direction resolution, a 2-D range plot renders only one plane, such as the floor level in a room, or some horizontal plane above the floor. The greater the number of echo samples in a complete circle (that is, the smaller the angle between samples), the more detail can be resolved at a given distance from the robot, and the greater the distance at which a given amount of detail can be resolved.

Three-dimensional (3-D) range plotting is done in spherical coordinates: azimuth (compass bearing), elevation (degrees above the horizontal), and range (distance). The distance must be measured for a large number of directions (preferably at least several thousand) at all orientations. In a furnished room, a 3-D sonar range plot would show ceiling fixtures, things on the floor, objects on top of a desk, and other details not visible with a 2-D plot. The greater the number of echo samples in a complete sphere surrounding the robot, the more detail can be resolved at a given distance, and the greater the range at which a given amount of detail can be resolved.
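The echo-timing idea can be sketched as follows: the range is half the round-trip time multiplied by the signal speed, and a 2-D range plot is that measurement repeated at regular angles. The speed of sound used here (343 m/s, roughly air at room temperature) and the echo times are assumptions for the example.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: sonar in air at about 20 degrees C


def echo_range(round_trip_s, speed_m_s=SPEED_OF_SOUND_M_S):
    """Distance to the object: the signal travels out and back."""
    return speed_m_s * round_trip_s / 2


def range_plot_2d(echo_times_s, step_deg):
    """Convert echoes taken every `step_deg` degrees into (x, y) points."""
    points = []
    for k, t in enumerate(echo_times_s):
        r = echo_range(t)
        angle = math.radians(k * step_deg)
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Four echoes, one every 90 degrees, all from walls about 1.7 m away.
plot = range_plot_2d([0.01, 0.01, 0.01, 0.01], step_deg=90)
for x, y in plot:
    print(round(x, 3), round(y, 3))
```

Reducing `step_deg` gives more points around the circle and therefore a more detailed plot, at the cost of more measurements.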

Epipolar navigation

Epipolar navigation is a means by which a machine can locate objects in three-dimensional space. It can also navigate, and figure out its own position and path. Epipolar navigation works by evaluating the way an image changes as the viewer moves. The human eyes and brain do this without having to think, although they are not very precise. Robot vision systems, along with AI, can do it with extreme precision.

Imagine you’re piloting an airplane over the ocean, with an island in sight. For instrumentation you have only a computer that holds a map of the island, a video camera, and AI software. You can figure out your coordinates and altitude, using only these devices, by letting the computer work with the image of the island. As you fly along, you aim the camera at the island and keep it there. The computer sees an image that constantly changes shape. The computer has the map data, so it knows the true size, shape, and location of the island. The computer compares the shape/size of the image it sees, from the vantage point of the aircraft, with the actual shape/size of the island, which it knows from the map data. From this alone, it can determine your altitude, your speed relative to the surface, your exact latitude, and your exact longitude. There is a one-to-one correspondence between all points within sight of the island and the size/shape of the island’s image.

Epipolar navigation works on any scale, for any speed. It is a method by which robots can find their way without triangulation, direction finding, beacons, sonar, or radar. It is only necessary that the robot have a computer map of its environment and that viewing conditions be satisfactory.

Telepresence

Telepresence is a refined, advanced form of robot remote control. The robot operator gets a sense of being “on location,” even if the remotely controlled machine, or telechir, and the operator are miles apart. Control and feedback are done by means of telemetry sent over wires, optical fibers, or radio.

What it’s like

What would it be like to operate a telechir? Here is a possible scenario. The robot is autonomous and has a humanoid form. The control station consists of a suit that you wear or a chair in which you sit with various manipulators and displays. Sensors can give you feelings of pressure, sight, and sound. You wear a helmet with a viewing screen that shows whatever the robot camera sees. When your head turns, the robot head, with its vision system, follows, so you see an image that changes as you turn your head, as if you were in a space suit or diving suit at the location of the robot. Binocular robot vision provides a sense of depth. Binaural robot hearing allows you to perceive sounds. Special vision modes let you see UV or IR; special hearing modes let you hear ultrasound or infrasound.

Robot propulsion can be carried out by means of a track drive, a wheel drive, or robot legs. If the propulsion uses legs, you propel the robot by walking around a room. Otherwise you sit in a chair and drive the robot like a car. The telechir has two arms, each with grippers resembling human hands. When you want to pick something up, you go through the motions. Back-pressure sensors and position sensors let you feel what’s going on. If an object weighs 10 pounds, it will feel as if it weighs 10 pounds. But it will be as if you’re wearing thick gloves; you won’t be able to feel texture. You might throw a switch, and something that weighs 10 pounds feels as if it only weighs 1 pound. This might be called “strength 10” mode. If you switch to “strength 100” mode, a 100-pound object seems to weigh 1 pound. Figure 34-10 is a simple block diagram of a telepresence system.

Applications

You can certainly think of many different uses for a telepresence system. Some applications are:

• Working in extreme heat or cold

• Working under high pressure, such as on the sea floor

• Working in a vacuum, such as in space

• Working where there is dangerous radiation

• Disarming bombs

• Handling toxic substances

• Police robotics

• Robot soldiers

• Neurosurgery

Of course, the robot must be able to survive conditions at its location. Also, it must have some way to recover if it falls or gets knocked over.

Limitations

In theory, the technology for telepresence exists right now. But there are some problems that will be difficult, if not impossible, to overcome. The most serious limitation is the fact that telemetry cannot, and never will, travel faster than the speed of light in free space. This seems fast at first thought (186,282 miles, or 299,792 kilometers, per second). But it is slow on an interplanetary scale. The moon is more than a light second away from the earth; the sun is 8 light minutes away. The nearest stars are at distances of several light years. The delay between the sending of a command, and the arrival of the return signal, must be less than 0.1 second if telepresence is to be realistic. This means that the telechir cannot be more than about 9300 miles, or 15,000 kilometers, away from the control operator.

Another problem is the resolution of the robot’s vision. A human being with good eyesight can see things with several times the detail of the best fast-scan television sets. To send that much detail, at realistic speed, would take up a huge signal bandwidth. There are engineering problems (and cost problems) that go along with this.

Still another limitation is best put as a question: How will a robot be able to “feel” something and transmit these impulses to the human brain? For example, an apple feels smooth, a peach feels fuzzy, and an orange feels shiny yet bumpy. How can this sense of texture be realistically transmitted to the human brain? Will people allow electrodes to be implanted in their brains so they can perceive the universe as if they are robots?
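The distance limit follows directly from the figures in the text: the command and its return signal together must take less than 0.1 second, so the one-way trip gets half of that. The short calculation below reproduces the roughly 15,000-kilometer figure. The earth-moon distance of 384,400 km is an added assumption (a commonly quoted average), used only to show how far outside the limit the moon lies.

```python
SPEED_OF_LIGHT_KM_S = 299_792   # from the text
KM_PER_MILE = 1.609344


def max_telechir_distance_km(max_round_trip_s):
    """Farthest the telechir can be if command plus reply must fit the delay."""
    return SPEED_OF_LIGHT_KM_S * max_round_trip_s / 2


def round_trip_delay_s(distance_km):
    """Time for a command to go out and the return signal to come back."""
    return 2 * distance_km / SPEED_OF_LIGHT_KM_S


limit_km = max_telechir_distance_km(0.1)
print(round(limit_km), "km, about", round(limit_km / KM_PER_MILE), "miles")

MOON_DISTANCE_KM = 384_400  # assumed average earth-moon distance
print(round(round_trip_delay_s(MOON_DISTANCE_KM), 2), "seconds to the moon and back")
```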

The mind of the machine

A simple electronic calculator doesn’t have AI. But a machine that can learn from its mistakes, or that can show reasoning power, does. Between these extremes, there is no precise dividing line. As computers become more powerful, people tend to set higher standards for what they will call AI. Things that were once thought of as AI are now quite ordinary. Things that seem fantastic now will someday be humdrum. There is a tongue-in-cheek axiom: AI is AI only as long as it remains esoteric.

Relationship with robotics

Robotics and artificial intelligence go together; they complement each other. Scientists have dreamed for more than a century about building smart androids: robots that look and act like people. Androids exist, but they aren’t very smart. Powerful computers exist, but they lack mobility. If a machine has the ability to move around under its own power, to lift things, and to move things, it seems reasonable that it should do so with some degree of intelligence if it is to accomplish anything worthwhile. Otherwise it is little more than a bumbling box, and might even be dangerous, like a driverless car with a brick on the gas pedal. If a computer is to manipulate anything, it will need to be able to move around, to grasp, to lift, and to carry objects. It might contemplate fantastic exploits and discover new secrets about the cosmos, but if it can’t act on its thoughts, the work (and the risk) must be undertaken by people, whose strength, maneuverability, and courage are limited.

Expert systems

The term expert systems refers to a method of reasoning in AI. Sometimes this scheme is called the rule-based system. Expert systems are used in the control of smart robots. The heart of an expert system is a set of facts and rules. In the case of a robotic system, the facts consist of data about the robot’s environment, such as a factory, an office, or a kitchen. The rules are statements of the logical form “If X, then Y,” similar to many of the statements in high-level programming languages. An inference engine decides which logical rules should be applied in various situations and instructs the robot to carry out certain tasks. But the operation of the system can only be as sophisticated as the data supplied by human programmers. Expert systems can be used in computers to help people do research, make decisions, and make forecasts. A good example is a program that assists a physician in making a diagnosis. The computer asks questions and arrives at a conclusion based on the answers given by the patient and doctor. One of the biggest advantages of expert systems is the fact that reprogramming is easy. As the environment changes, the robot can be taught new rules, and supplied with new facts.
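A toy version of such a rule-based system fits in a few lines. In this sketch the facts are plain strings, each rule pairs a set of required facts with a conclusion, and the inference engine keeps applying rules until nothing new can be concluded (a strategy usually called forward chaining). The facts and rules are invented examples for a mobile robot.

```python
def infer(facts, rules):
    """Apply "if X, then Y" rules repeatedly until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Each rule: (facts that must all hold, fact or action that follows).
rules = [
    (frozenset({"floor_is_wet"}), "slow_down"),
    (frozenset({"obstacle_ahead", "path_left_clear"}), "turn_left"),
    (frozenset({"slow_down", "carrying_load"}), "grip_tighter"),
]

result = infer({"floor_is_wet", "carrying_load"}, rules)
print(sorted(result))
```

Teaching the robot a new behavior means appending another rule to the list or supplying new facts, which is the ease of reprogramming mentioned above.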

How smart a machine?

Experts in the field of AI have been disappointed in the past few decades. Computers have been designed that can handle tasks no human could ever contend with, such as navigating a space probe. Machines have been built that can play chess and checkers well enough to compete with human masters. Modern machines can understand, as well as synthesize, much of any spoken language. But these abilities, by themselves, don’t count for much in the dreams of scientists who hope to create artificial life. The human mind is incredibly complicated. A circuit that would have occupied, and used all the electricity in, a whole city in 1940 can now be housed in a box the size of a vitamin pill and run by a battery. Imagine this degree of miniaturization happening again, then again, and then again. Would that begin to approach the level of sophistication in your body’s nervous system? Perhaps. A human brain might be nothing more than a digital switching network, but no electronic device yet conceived has come anywhere near its level of intelligence. Some experts think that a machine might someday be built that is smarter than a human. But most concede that if it’s ever done, it won’t be for a very long time. Other experts say that we humans cannot create a mind more powerful than our own, because that would violate the laws of nature.

Artificial life

What, exactly, constitutes something living, which makes it different from something nonliving? This has been one of the great questions of science throughout history. In some cultures, life is ascribed to things that Americans think of as inanimate. One definition of artificial life involves the ability of a human-made thing to reproduce itself. Suppose you were to synthesize a new kind of molecule in a beaker and named it QNA (which stands for some weird name nobody can ever remember). Suppose this molecule, like DNA, could make replicas of itself, so that when you put one of them in a glass of water, you’d have a whole glassful in a few days. This molecule would be artificial life, in the sense that it could reproduce and that it was made by humans, rather than by nature in general.

You might build a robot that could assemble other robots like itself. The machine would also be artificial life according to the above definition. It would, of course, be far different from the QNA molecule in terms of size and environment. But reproduction ability is the basis for the definition. The QNA molecule and the self-replicating robot both meet the definition. A truly self-replicating robot hasn’t yet been developed. Society is a long way from having to worry that robots might build copies of themselves and take over the whole planet. But artificially living robots might be possible from a technological standpoint. Robots would probably reproduce by merely assembling other robots similar to themselves. Robots could also build machines much different from themselves individually. It’s interesting to think of the ways in which artificially living machine populations might evolve.

Another definition for artificial life involves thought processes. At what point does machine intelligence become consciousness? Can a machine ever be aware of its own existence, and be able to ponder its place in the universe, the way a human being can?
The reproduction question is answerable by an “either/or,” but the consciousness question can be endlessly debated. One person might say that all computers are fully conscious; another person could point out that a sizable proportion of the human population, at any given time, is semiconscious or unconscious. In the end, questions like this might not matter much. As long as smart robots obey Asimov’s three laws, the issue of whether or not they are living beings will most likely take second place to more pragmatic concerns.

How we can combine robots with the Internet of Things

The Internet of Things is a popular vision of objects with internet connections sending information back and forth to make our lives easier and more comfortable. It’s emerging in our homes, through everything from voice-controlled speakers to smart temperature sensors. To improve our fitness, smart watches and Fitbits are telling online apps how much we’re moving around. And across entire cities, interconnected devices are doing everything from increasing the efficiency of transport to flood detection.

In parallel, robots are steadily moving outside the confines of factory lines. They’re starting to appear as guides in shopping malls and cruise ships, for instance. As prices fall and Artificial Intelligence (AI) and mechanical technology continue to improve, we will get more and more used to them making independent decisions in our homes, streets and workplaces.

Here lies a major opportunity. Robots become considerably more capable with internet connections. There is a growing view that the next evolution of the Internet of Things will be to incorporate them into the network, opening up thrilling possibilities along the way.

Home improvements

Even simple robots become useful when connected to the internet, getting updates about their environment from sensors, say, or learning about their users’ whereabouts and the status of appliances in the vicinity. This lets them lend their bodies, eyes and ears to give an otherwise impersonal smart environment a user-friendly persona. This can be particularly helpful for people at home who are older or have disabilities.

We recently unveiled a futuristic apartment at Heriot-Watt University to work on such possibilities. It is one of a few such test sites around the EU, and our whole focus is on people with special needs and on how robots can help them by interacting with connected devices in a smart home.

Suppose a doorbell rings that has smart video features. A robot could find the person in the home by accessing their location via sensors, then tell them who is at the door and why. Or it could help make video calls to family members or a professional carer, including allowing them to make virtual visits by acting as a telepresence platform.

Equally, it could offer protection. It could inform them the oven has been left on, for example; phones or tablets are less reliable for such tasks because they can be misplaced or not heard. Similarly, the robot could raise the alarm if its user appears to be in difficulty.
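The doorbell and oven scenarios share one pattern: a connected device reports an event, and the robot decides what to do and where to go. The sketch below illustrates that pattern only. The event names, the dictionary layout, and the `robot_response` function are all hypothetical and do not describe any real smart-home product or the Heriot-Watt system.

```python
def robot_response(event, user_room):
    """Map an event from a connected device to a robot action (as text)."""
    kind = event["type"]
    if kind == "doorbell":
        return f"Go to the {user_room} and say: {event['visitor']} is at the door."
    if kind == "oven_left_on":
        return f"Go to the {user_room} and warn: the oven has been left on."
    if kind == "user_in_difficulty":
        return "Raise the alarm and call the carer."
    return "No action needed."


# The user's location would come from sensors in the home (assumed here).
print(robot_response({"type": "doorbell", "visitor": "the district nurse"}, "kitchen"))
print(robot_response({"type": "oven_left_on"}, "living room"))
print(robot_response({"type": "user_in_difficulty"}, "bedroom"))
```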

Of course, voice-assistant devices like Alexa or Google Home can offer some of the same services. But robots are far better at moving, sensing and interacting with their environment. They can also engage their users by pointing at objects or acting more naturally, using gestures or facial expressions. These “social abilities” create bonds which are crucially important for making users more accepting of the support and making it more effective.

Robots offshore

There are comparable opportunities in the business world. Oil and gas companies are looking at the Internet of Things, for example, experimenting with wireless sensors that collect information such as temperature, pressure and corrosion levels to detect and possibly predict faults in their offshore equipment.

In future, robots could be alerted to problem areas by sensors to go and check the integrity of pipes and wells, and to make sure they are operating as efficiently and safely as possible. Or they could place sensors in parts of offshore equipment which are hard to reach, or help to calibrate them or replace their batteries. The likes of the ORCA Hub, a £36m project led by the Edinburgh Centre for Robotics, bringing together leading experts and over 30 industry partners, is developing such systems. The aim is to reduce the costs and the risks of humans working in remote hazardous locations.

Working underwater is particularly challenging, since radio waves don’t move well under the sea. Underwater autonomous vehicles and sensors usually communicate using acoustic waves, which are many times slower (1,500 metres a second vs 300 million metres a second for radio waves). Acoustic communication devices are also much more expensive than those used above the water.
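The gap between the two speeds is easier to appreciate as a delay. Using the figures quoted above, the sketch below compares how long a message takes to cross one kilometre; the one-kilometre distance is an arbitrary choice for illustration.

```python
ACOUSTIC_M_S = 1_500        # sound in seawater, from the text
RADIO_M_S = 300_000_000     # radio waves, from the text


def one_way_delay_s(distance_m, speed_m_s):
    """Time for a signal to cover the distance at the given speed."""
    return distance_m / speed_m_s


distance_m = 1_000  # assumed separation between two underwater vehicles
acoustic_delay = one_way_delay_s(distance_m, ACOUSTIC_M_S)
radio_delay = one_way_delay_s(distance_m, RADIO_M_S)

print(round(acoustic_delay, 3), "s by sound")
print(radio_delay, "s by radio")
print(RADIO_M_S // ACOUSTIC_M_S, "times slower")
```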

This academic project is developing a new generation of low-cost acoustic communication devices, and trying to make underwater sensor networks more efficient. It should help sensors and underwater autonomous vehicles to do more together in future: repair and maintenance work similar to what is already possible above the water, plus other benefits such as helping vehicles to communicate with one another over longer distances and tracking their location.

Beyond oil and gas, there is similar potential in sector after sector. There are equivalents in nuclear power, for instance, and in cleaning and maintaining the likes of bridges and buildings. My colleagues and I are also looking at possibilities in areas such as farming, manufacturing, logistics and waste.

First, however, the research sectors around the Internet of Things and robotics need to properly share their knowledge and expertise.

To the same end, industry and universities need to look at setting up joint research projects. It is particularly important to address safety and security issues, such as hackers taking control of a robot and using it to spy or cause damage. Such issues could make customers wary and ruin a market opportunity.

We also need systems that can work together, rather than in isolated applications. That way, new and more useful services can be quickly and effectively introduced with no disruption to existing ones. If we can solve such problems and unite robotics and the Internet of Things, the combination genuinely has the potential to change the world.

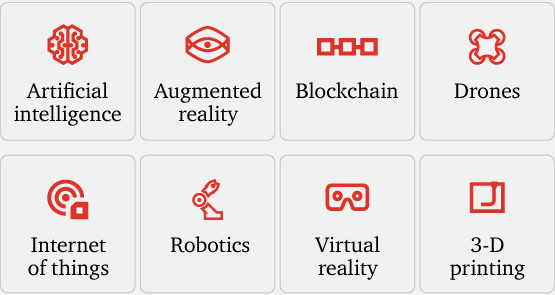

The new tech future

Artificial intelligence

Software algorithms are automating complex decision-making tasks to mimic human thought processes and senses. Artificial intelligence (AI) is not a monolithic technology. A subset of AI, machine learning, focuses on the development of computer programs that can teach themselves to learn, understand, reason, plan, and act when blasted with data. Machine learning carries enormous potential for the creation of meaningful products and services: for example, hospitals using a library of scanned images to quickly and accurately detect and diagnose cancer; insurance companies digitally and automatically recognizing and assessing car damage; or security companies trading clunky typed passwords for voice recognition.

Embodied AI

Technologies: 3-D printing, AI, Drones, IoT, Robotics

AI is everywhere. Along with IoT sensors, it’s integrated in many products, from simple cameras to sophisticated drones. Embedded sensors collect data, which is fed to algorithms that give that object the illusion of intelligence. This enables drones to follow a moving object like a truck or a person autonomously. It enables a 3-D printer to automatically modify a design as it is being printed to have a stronger structure, become lighter, or be more cost effective to print. It enables AR glasses to overlay data on an anchored endpoint or allow you to communicate via voice with a robot or conversational agent.

_______________________________________________________________________________

e- Intern ROBO ART

_______________________________________________________________________________
