"Giving artificial intelligence to people, created by people, is the most important.”

Thanks to My Family : AMNIMARJESLOW AL DO FOUR DO AL ONE ( 4 / 1 / 2020 )

EINSTEIN FOR PHYSICS AND MECHANICS QUANTUM

Einstein was the first physicist to say that Planck's discovery of the quantum (h) would require a rewriting of the laws of physics. To support his point, in 1905 he proposed that light sometimes acts as a particle, which he called a light quantum.

Einstein accepted that quantum mechanics was an experimentally confirmed empirical theory; he simply believed that some of its structural features make it inherently incomplete, as opposed to the view, advanced by Bohr and others, that quantum mechanics is as complete an account of microphysics as can be given.

Quantum mechanics is the body of scientific laws that describe the wacky behavior of photons, electrons and the other particles that make up the universe. ... At the scale of atoms and electrons, many of the equations of classical mechanics, which describe how things move at everyday sizes and speeds, cease to be useful.

Quantum mechanics (QM; also known as quantum physics, quantum theory, the wave mechanical model, or matrix mechanics), including quantum field theory, is a fundamental theory in physics which describes nature at the smallest scales of energy levels of atoms and subatomic particles.

In physics, a quantum (plural: quanta) is the minimum amount of any physical entity (physical property) involved in an interaction. The fundamental notion that a physical property may be "quantized" is referred to as "the hypothesis of quantization".

Quantum mechanics of time travel. Until recently, most studies of time travel were based upon classical general relativity. Coming up with a quantum version of time travel requires us to figure out the time evolution equations for density states in the presence of closed timelike curves (CTCs).

Reality is the sum or aggregate of all that is real or existent, as opposed to that which is only imaginary. The term is also used to refer to the ontological status of things, indicating their existence. In physical terms, reality is the totality of the universe, known and unknown.

A quantum computer with a given number of qubits is fundamentally different from a classical computer composed of the same number of classical bits. ... The qubits are in a superposition of states before any measurement is made, which directly affects the possible outcomes of the computation.
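The superposition described above can be sketched in a few lines of Python. This is only a toy illustration: the function names (`hadamard`, `measurement_probabilities`, `amplitudes_needed`) and the choice of the Hadamard gate are assumptions made for the example, not something taken from the text. The one physical rule it relies on is the standard one, that the probability of a measurement outcome is the squared magnitude of its amplitude.

```python
import math

def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (a0, a1).

    The gate is an illustrative choice: it turns the definite
    state |0> into an equal superposition of |0> and |1>.
    """
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

def measurement_probabilities(state):
    """Probability of each outcome is the squared magnitude of its amplitude."""
    return tuple(abs(a) ** 2 for a in state)

def amplitudes_needed(n_qubits):
    """Number of amplitudes required to describe n qubits in general."""
    return 2 ** n_qubits

zero = (1.0, 0.0)                      # qubit prepared in the definite state |0>
superposed = hadamard(zero)            # equal superposition before measurement
probs = measurement_probabilities(superposed)

print("outcome probabilities:", probs)
print("amplitudes for 10 qubits:", amplitudes_needed(10))
```

Ten classical bits hold one of 1,024 values at a time, whereas describing ten qubits in general takes 1,024 amplitudes at once, which is one way to see why the two kinds of machine are not equivalent.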

Einstein and the Quantum

___________________________

Einstein had become completely unforgiving of quantum mechanics’ probabilistic interpretation of the universe and would step away from it forever.

Quantum mechanics is very impressive. But an inner voice tells me that it is not yet the real thing. The theory produces a good deal but hardly brings us closer to the secret of the Old One. I am at all events convinced that He does not play dice.

For the rest of Einstein’s life (even including the last moments just before his death on April 18, 1955), his scientific endeavors were committed to this vision as he focused on finding a unified field theory. Among other things, such a theory was to unify gravity (as described by Einstein’s very own general relativity) and electromagnetism (as described by Maxwell’s equations), and most importantly, it was to rid physics of the “quantum uncertainty.”

Nonetheless, Einstein’s relationship with quantum theory wasn’t always so strained, and in fact, he led the way in its development for some 20 years, as it transitioned from quantum theory into quantum mechanics. So, what happened?

By 1900, a 42-year-old Max Planck (1858–1947) had spent almost six years attempting to understand the fundamental basis for the radiation spectrum produced by an object when it’s heated to a certain temperature (e.g., an electric stove burner turns red upon heating), and it was beginning to look like his efforts were going to be in vain, thanks to new experimental data that had revealed an error in his theory. Nonetheless, Planck quickly made the needed revisions, yielding a theory that was in perfect agreement with experiment.

However, the price for this success would be costly, nothing short of the total upheaval of classical physics. His new theory would also give us the curious concept of energy quanta: at the atomic level, matter absorbs and emits energy only in discrete “chunks”—not to a continuous degree as classical physics had always assured. Needless to say, Planck and others were hesitant to fully embrace this aspect of his new theory. However, Einstein would do so immediately and run with it for almost 20 years.
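To make the idea of energy “chunks” concrete, here is a minimal sketch using Planck’s relation E = hf, under which radiation of frequency f is exchanged only in whole multiples of hf. The function names and the 700 nm wavelength (chosen to stand in for the red glow of a heated burner) are assumptions made for illustration; the constants are the standard SI values.

```python
PLANCK_H = 6.62607015e-34        # Planck constant in joule-seconds (SI value)
SPEED_OF_LIGHT = 299_792_458.0   # speed of light in metres per second (SI value)

def quantum_energy(frequency_hz):
    """Energy of a single quantum at the given frequency: E = h * f."""
    return PLANCK_H * frequency_hz

def allowed_energies(frequency_hz, max_quanta):
    """Energies that can be exchanged: 0, 1, 2, ... whole quanta, nothing in between."""
    return [n * quantum_energy(frequency_hz) for n in range(max_quanta + 1)]

# Assumed example: red light with a wavelength of 700 nanometres.
red_frequency = SPEED_OF_LIGHT / 700e-9
one_quantum = quantum_energy(red_frequency)
levels = allowed_energies(red_frequency, 3)

print(f"one quantum of red light: {one_quantum:.3e} J")
print("allowed exchanges:", [f"{e:.3e}" for e in levels])
```

Classical physics would allow any value between the entries of `levels`; Planck’s theory allows only the entries themselves.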

In 1905, at the age of 26, Einstein published “On a Heuristic Point of View Concerning the Production and Transformation of Light.” That same year he published three other groundbreaking papers, which would change physics forever, and finished his PhD; this was his annus mirabilis, Latin for “miraculous year.” In the paper he proposed that light, too, comes in chunks (i.e., light quanta), or behaves as particles, which we now call photons.

The nature of light had been debated many times before, with some of the earliest theories dating back to the ancient Greeks. With the last in a series of papers on electricity and magnetism published in 1864 by James Clerk Maxwell (1831–1879), along with his two-volume book A Treatise on Electricity and Magnetism published in 1873, light as an electromagnetic wave, not a particle (photon), had been set in stone. And rightly so, for most of light’s fundamental properties were well described by it being a wave. Not all of them, however, and Einstein’s light quanta were able to successfully address these discrepancies.

Nonetheless, Einstein’s idea met with tremendous resistance, much more than Planck had ever suffered with his quantum theory. The general sentiment of the physics community was clear: don’t mess with the wave theory of light! Einstein was undeterred, and he continued to explore the consequences of light as a particle, implementing it at will in his work on quantum theory, as he blazed the way forward.

In 1909, while considering light’s momentum, he found the astounding result that light behaved as both a particle and a wave, a sort of a duality that had never been described before (de Broglie’s version of wave-particle duality would arrive in 1923). In referring to his results, he concluded:

It is therefore my opinion that the next stage in the development of theoretical physics will bring us a theory of light that can be understood as a kind of fusion of the wave and [particle] theories of light.

Einstein stood completely alone in his conclusion, but still continued to push his agenda forward.

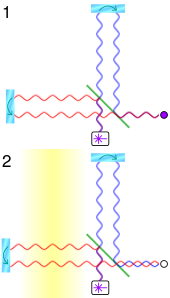

After taking time away to focus on general relativity, Einstein returned to the quantum theory of light in July 1916. His efforts culminated in three papers, two in 1916 and the most prominent one in 1917. It had been 16 years since Planck’s original theory, yet despite its incredible success, it was still tarnished by being an awkward hybrid of mostly rigorous classical derivations with the speculation of energy quanta sprinkled in to smooth out the rough edges; it was far from being a full-fledged quantum theory. And while Einstein was able to arrive at a “much more” quantum derivation of Planck’s work, he too fell short, having to rely on assumptions from other theories. Nonetheless, with this work, Einstein would be successful at obtaining a much deeper understanding of light and its interaction with matter.

A major success of the theory would be its prediction of stimulated emission, where a passing photon “bumps” an electron in an atom on its way by, causing it to fall into a lower energy state, resulting in the emission of a photon (in addition to the one passing by in the first place); this novel mechanism forms the foundation of modern-day lasers.

Einstein also uncovered another interesting phenomenon, one he found very startling, to the point of being a flaw in his current formulation. In addition to stimulated emission, which occurs as the result of a passing photon, an atom also experiences spontaneous emission. As the name implies, it happens naturally (in the absence of a passing photon), but is otherwise a very similar process to stimulated emission. (It’s most familiar to us in radioactive decay processes, where radiation, such as x-rays or gamma rays, is naturally given off.) Since it happens spontaneously, the emitted photon can fly off in any direction, which is simply not known ahead of time. In other words, the direction the photon flies off in is inherently random; this deeply troubled Einstein, and would mark the beginning of his uneasiness with quantum theory, which would eventually culminate in his denouncement of quantum mechanics altogether in 1926.

Einstein would make his last big contribution to quantum theory (and perhaps to physics) in 1925. In 1924, Satyendra Nath Bose (1894–1974) finally succeeded in obtaining a fully quantum version of Planck’s theory. And he did it by embracing Einstein’s light quanta concept, something no physicist, other than Einstein himself, had done since its introduction in 1905. The work was revolutionary, and would establish the area of quantum statistics. Einstein had spent some two decades wrestling with the fundamental nature of light, and he must have immediately realized what Bose had accomplished (having seen his own work fall short of such a feat).

Convinced that the method developed by Bose for light also applied to atoms, Einstein proceeded to develop the quantum theory of the monoatomic ideal gas:

If Bose’s derivation of Planck’s radiation formula is taken seriously, then one will not be allowed to ignore [my] theory of the ideal gas; since if it is justified to regard the radiation [light] as a quantum gas, then the analogy between the quantum gas [light] and the molecule gas has to be a complete one.

Einstein wrote three papers detailing his method. In the first paper (presented to the Prussian Academy only eight days after Bose’s paper was received for publication and published later in 1924), Einstein successfully applied Bose’s new method to the monoatomic ideal gas and, among other things, established an equivalence between light and atoms.

The second paper, which was published in 1925, is the most significant of the three. Here, Einstein predicts the occurrence of a very unusual phase transition, which we now call Bose-Einstein condensation (BEC). In BEC, the atoms in the gas begin to “pile up” or condense into the lowest (single-particle) energy state, as the temperature is lowered. This effect becomes most pronounced as the temperature is lowered to absolute zero, at which point all the gas atoms condense into this lowest-energy state.
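The “piling up” can be illustrated with the textbook result for a uniform, non-interacting Bose gas in three dimensions, where the fraction of atoms in the lowest state below the critical temperature Tc is 1 − (T/Tc)^(3/2). That formula is brought in here as an assumption for illustration; it is not stated in the text, and real trapped gases follow a different exponent. The function name and the example critical temperature are likewise hypothetical.

```python
def condensate_fraction(temperature_k, critical_temperature_k):
    """Fraction of atoms in the lowest-energy state.

    Assumes a uniform, non-interacting Bose gas in three dimensions:
    fraction = 1 - (T / Tc) ** 1.5 below Tc, and zero at or above Tc.
    """
    if critical_temperature_k <= 0:
        raise ValueError("critical temperature must be positive")
    if temperature_k < 0:
        raise ValueError("temperature cannot be below absolute zero")
    if temperature_k >= critical_temperature_k:
        return 0.0
    return 1.0 - (temperature_k / critical_temperature_k) ** 1.5

# Hypothetical critical temperature of 200 nanokelvin, for illustration only.
EXAMPLE_TC_K = 200e-9

for scale in (1.0, 0.75, 0.5, 0.25, 0.0):
    t = scale * EXAMPLE_TC_K
    fraction = condensate_fraction(t, EXAMPLE_TC_K)
    print(f"T = {scale:.2f} Tc -> {fraction:.1%} of atoms condensed")
```

The fraction climbs from zero at the critical temperature to 100 percent at absolute zero, matching the qualitative picture in the paragraph above.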

The amazing part about BEC is that the condensation of the atoms has nothing to do with attractive interactions pulling (condensing) them together, which is normally how condensation occurs. It has to do with the quantum nature of the atoms themselves. Although BEC wasn’t taken too seriously at the time, it was finally shown to be true in 1995, when experimentalists were able to cool a system of rubidium-87 to near absolute zero using a combination of novel cooling techniques.

In a 12-month period of sustained creativity throughout 1926, Erwin Schrödinger (1887–1961) would produce six major papers on a new quantum theory known as wave mechanics, which would give us his famous wave equation. Einstein would initially welcome Schrödinger’s success with open arms, saying, “the idea of your work springs from true genius!” Ten days later Einstein added, “I am convinced that you have made a decisive advance with your formulation of the quantum condition….” However, his feelings would soon change.

The physical implications of Schrödinger’s wave equation were still a big mystery to everyone, including Schrödinger himself. It would finally be Max Born who got it right: “The motion of particles follows probability laws….” In other words, unlike a classical particle, a quantum particle (electron, photon, etc.) doesn’t move along a well-defined physical path with well-defined values for its key properties, such as position, momentum, energy and the like, at every instant in time. Such physical quantities (and many others) are determined entirely by an inherent quantum probability.

The notion of an underlying quantum probability proved to be too much for Einstein (and Schrödinger as well), who would now turn his back on the new quantum mechanics forever to pursue his dream of a causal unified field theory. In the end, Einstein would never realize this final dream, and the “strangeness” of quantum mechanics continues with us today.

Basically you can think of the division between the relativity and quantum systems as “smooth” versus “chunky”. In general relativity, events are continuous and deterministic, meaning that every cause matches up to a specific, local effect. In quantum mechanics, events produced by the interaction of subatomic particles happen in jumps (yes, quantum leaps), with probabilistic rather than definite outcomes. Quantum rules allow connections forbidden by classical physics. This was demonstrated in a much-discussed recent experiment in which Dutch researchers defied the local effect. They showed that two particles – in this case, electrons – could influence each other instantly, even though they were a mile apart. When you try to interpret smooth relativistic laws in a chunky quantum style, or vice versa, things go dreadfully wrong.

Relativity gives nonsensical answers when you try to scale it down to quantum size, eventually descending to infinite values in its description of gravity. Likewise, quantum mechanics runs into serious trouble when you blow it up to cosmic dimensions. Quantum fields carry a certain amount of energy, even in seemingly empty space, and the amount of energy gets bigger as the fields get bigger. According to Einstein, energy and mass are equivalent (that’s the message of E = mc²), so piling up energy is exactly like piling up mass. Go big enough, and the amount of energy in the quantum fields becomes so great that it creates a black hole that causes the universe to fold in on itself.

When Einstein unveiled general relativity, he not only superseded Isaac Newton’s theory of gravity; he also unleashed a new way of looking at physics that led to the modern conception of the Big Bang and black holes, not to mention atomic bombs and the time adjustments essential to your phone’s GPS. Likewise, quantum mechanics did much more than reformulate James Clerk Maxwell’s textbook equations of electricity, magnetism and light. It provided the conceptual tools for the Large Hadron Collider, solar cells and all of modern microelectronics.

What emerges from the dust-up could be nothing less than a third revolution in modern physics, with staggering implications. It could tell us where the laws of nature came from, and whether the cosmos is built on uncertainty or whether it is fundamentally deterministic, with every event linked definitively to a cause.

Small is beautiful

Craig Hogan, champion of the quantum view, is what you might call a lamp-post physicist: rather than groping about in the dark, he prefers to focus his efforts where the light is bright, because that’s where you are most likely to be able to see something interesting. That’s the guiding principle behind his current research. The clash between relativity and quantum mechanics happens when you try to analyse what gravity is doing over extremely short distances, he notes, so he has decided to get a really good look at what is happening right there. “I’m betting there’s an experiment we can do that might be able to see something about what’s going on, about that interface that we still don’t understand,” he says.

A basic assumption in Einstein’s physics – an assumption going all the way back to Aristotle, really – is that space is continuous and infinitely divisible, so that any distance could be chopped up into even smaller distances. But Hogan questions whether that is really true. Just as a pixel is the smallest unit of an image on your screen and a photon is the smallest unit of light, he argues, so there might be an unbreakable smallest unit of distance: a quantum of space.

In Hogan’s scenario, it would be meaningless to ask how gravity behaves at distances smaller than a single chunk of space. There would be no way for gravity to function at the smallest scales because no such scale would exist. Or put another way, general relativity would be forced to make peace with quantum physics, because the space in which physicists measure the effects of relativity would itself be divided into unbreakable quantum units. The theatre of reality in which gravity acts would take place on a quantum stage.

Hogan acknowledges that his concept sounds a bit odd, even to a lot of his colleagues on the quantum side of things. Since the late 1960s, a group of physicists and mathematicians have been developing a framework called string theory to help reconcile general relativity with quantum mechanics; over the years, it has evolved into the default mainstream theory, even as it has failed to deliver on much of its early promise. Like the chunky-space solution, string theory assumes a fundamental structure to space, but from there the two diverge. String theory posits that every object in the universe consists of vibrating strings of energy. Like chunky space, string theory averts gravitational catastrophe by introducing a finite, smallest scale to the universe, although the unit strings are drastically smaller even than the spatial structures Hogan is trying to find.

Chunky space does not neatly align with the ideas in string theory – or in any other proposed physics model, for that matter. “It’s a new idea. It’s not in the textbooks; it’s not a prediction of any standard theory,” Hogan says, sounding not the least bit concerned. “But there isn’t any standard theory, right?”

If he is right about the chunkiness of space, that would knock out a lot of the current formulations of string theory and inspire a fresh approach to reformulating general relativity in quantum terms. It would suggest new ways to understand the inherent nature of space and time. And weirdest of all, perhaps, it would bolster the notion that our seemingly three-dimensional reality is composed of more basic, two-dimensional units. Hogan takes the “pixel” metaphor seriously: just as a TV picture can create the impression of depth from a bunch of flat pixels, he suggests, so space itself might emerge from a collection of elements that act as if they inhabit only two dimensions.

Like many ideas from the far edge of today’s theoretical physics, Hogan’s speculations can sound suspiciously like late-night philosophising in the freshman dorm. What makes them drastically different is that he plans to put them to a hard experimental test. As in, right now.

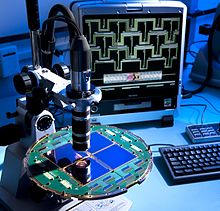

Starting in 2007, Hogan began thinking about how to build a device that could measure the exceedingly fine graininess of space. As it turns out, his colleagues had plenty of ideas about how to do that, drawing on technology developed to search for gravitational waves. Within two years Hogan had put together a proposal and was working with collaborators at Fermilab, the University of Chicago and other institutions to build a chunk-detecting machine, which he more elegantly calls a “holometer”. (The name is an esoteric pun, referencing both a 17th-century surveying instrument and the theory that 2D space could appear three-dimensional, analogous to a hologram.)

Beneath its layers of conceptual complexity, the holometer is technologically little more than a laser beam, a half-reflective mirror to split the laser into two perpendicular beams, and two other mirrors to bounce those beams back along a pair of 40m-long tunnels. The beams are calibrated to register the precise locations of the mirrors. If space is chunky, the locations of the mirrors would constantly wander about (strictly speaking, space itself is doing the wandering), creating a constant, random variation in their separation. When the two beams are recombined, they’d be slightly out of sync, and the amount of the discrepancy would reveal the scale of the chunks of space.

For the scale of chunkiness that Hogan hopes to find, he needs to measure distances to an accuracy of 10⁻¹⁸ m, about 100 million times smaller than a hydrogen atom, and collect data at a rate of about 100 million readings per second. Amazingly, such an experiment is not only possible, but practical. “We were able to do it pretty cheaply because of advances in photonics, a lot of off-the-shelf parts, fast electronics and things like that,” Hogan says. “It’s a pretty speculative experiment, so you wouldn’t have done it unless it was cheap.” The holometer is currently humming away, collecting data at the target accuracy; he expects to have preliminary readings by the end of the year.
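The figures quoted above are easy to sanity-check. The short sketch below assumes a round size of 10⁻¹⁰ m for a hydrogen atom, a value supplied here for illustration rather than taken from the text, and treats the quoted data rate as sustained; the variable names are hypothetical.

```python
TARGET_ACCURACY_M = 1e-18           # accuracy quoted for the holometer
HYDROGEN_ATOM_SIZE_M = 1e-10        # assumed round figure for a hydrogen atom
READINGS_PER_SECOND = 100_000_000   # quoted data rate, about 100 million per second

# How many times smaller than the atom is the target accuracy?
size_ratio = HYDROGEN_ATOM_SIZE_M / TARGET_ACCURACY_M

# How many readings accumulate in an hour at the quoted rate?
readings_per_hour = READINGS_PER_SECOND * 3600

print(f"atom size / target accuracy = {size_ratio:.1e}")
print(f"readings per hour = {readings_per_hour:,}")
```

With that assumed atomic size, the ratio comes out at roughly one hundred million, consistent with the comparison in the text.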

Hogan has his share of fierce sceptics, including many within the theoretical physics community. The reason for the disagreement is easy to appreciate: a success for the holometer would mean failure for a lot of the work being done in string theory. Despite this superficial sparring, though, Hogan and most of his theorist colleagues share a deep core conviction: they broadly agree that general relativity will ultimately prove subordinate to quantum mechanics. The other three fundamental forces follow quantum rules, so it makes sense that gravity must as well.

For most of today’s theorists, however, belief in the primacy of quantum mechanics runs deeper still. At a philosophical – epistemological – level, they regard the large-scale reality of classical physics as a kind of illusion, an approximation that emerges from the more “true” aspects of the quantum world operating at an extremely small scale. Chunky space certainly aligns with that worldview.

Hogan likens his project to the landmark Michelson-Morley experiment of the 19th century, which searched for the aether – the hypothetical substance of space that, according to the leading theory of the time, transmitted light waves through a vacuum. The experiment found nothing; that perplexing null result helped inspire Einstein’s special theory of relativity, which in turn spawned the general theory of relativity and eventually turned the entire world of physics upside down. Adding to the historical connection, the Michelson-Morley experiment also measured the structure of space using mirrors and a split beam of light, following a setup remarkably similar to Hogan’s.

“We’re doing the holometer in that kind of spirit. If we don’t see something or we do see something, either way it’s interesting. The reason to do the experiment is just to see whether we can find something to guide the theory,” Hogan says. “You find out what your theorist colleagues are made of by how they react to this idea. There’s a world of very mathematical thinking out there. I’m hoping for an experimental result that forces people to focus the theoretical thinking in a different direction.”

Whether or not he finds his quantum structure of space, Hogan is confident the holometer will help physics address its big-small problem. It will show the right way (or rule out the wrong way) to understand the underlying quantum structure of space and how that affects the relativistic laws of gravity flowing through it.

A bigger vision

If you are looking for a totally different direction, Lee Smolin of the Perimeter Institute is your man. Where Hogan goes gently against the grain, Smolin is a full-on dissenter: “There’s a thing that Richard Feynman told me when I was a graduate student. He said, approximately, ‘If all your colleagues have tried to demonstrate that something’s true and failed, it might be because that thing is not true.’ Well, string theory has been going for 40 or 50 years without definitive progress.”

And that is just the start of a broader critique. Smolin thinks the small-scale approach to physics is inherently incomplete. Current versions of quantum field theory do a fine job explaining how individual particles or small systems of particles behave, but they fail to take into account what is needed to have a sensible theory of the cosmos as a whole. They don’t explain why reality is like this, and not like something else. In Smolin’s terms, quantum mechanics is merely “a theory of subsystems of the universe”.

A more fruitful path forward, he suggests, is to consider the universe as a single enormous system, and to build a new kind of theory that can apply to the whole thing. And we already have a theory that provides a framework for that approach: general relativity. Unlike the quantum framework, general relativity allows no place for an outside observer or external clock, because there is no “outside”. Instead, all of reality is described in terms of relationships between objects and between different regions of space. Even something as basic as inertia (the resistance of your car to move until forced to by the engine, and its tendency to keep moving after you take your foot off the accelerator) can be thought of as connected to the gravitational field of every other particle in the universe.

That last statement is strange enough that it’s worth pausing for a moment to consider it more closely. Consider a thought problem, closely related to the one that originally led Einstein to this idea in 1907. What if the universe were entirely empty except for two astronauts? One of them is spinning, the other is stationary. The spinning one feels dizzy, doing cartwheels in space. But which one of the two is spinning? From either astronaut’s perspective, the other is the one spinning. Without any external reference, Einstein argued, there is no way to say which one is correct, and no reason why one should feel an effect different from what the other experiences.

The distinction between the two astronauts makes sense only when you reintroduce the rest of the universe. In the classic interpretation of general relativity, then, inertia exists only because you can measure it against the entire cosmic gravitational field. What holds true in that thought problem holds true for every object in the real world: the behaviour of each part is inextricably related to that of every other part. If you’ve ever felt as if you wanted to be a part of something big, well, this is the right kind of physics for you. It is also, Smolin thinks, a promising way to obtain bigger answers about how nature really works, across all scales.

“General relativity is not a description of subsystems. It is a description of the whole universe as a closed system,” he says. When physicists are trying to resolve the clash between relativity and quantum mechanics, therefore, it seems like a smart strategy for them to follow Einstein’s lead and go as big as they possibly can.

Smolin is keenly aware that he is pushing against the prevailing devotion to small-scale, quantum-style thinking. “I don’t mean to stir things up; it just kind of happens that way. My role is to think clearly about these difficult issues, put my conclusions out there, and let the dust settle,” he says genially. “I hope people will engage with the arguments, but I really hope that the arguments lead to testable predictions.”

At first blush, Smolin’s ideas sound like a formidable starting point for concrete experimentation. Much as all of the parts of the universe are linked across space, they may also be linked across time, he suggests. His arguments led him to hypothesise that the laws of physics evolve over the history of the universe. Over the years, he has developed two detailed proposals for how this might happen. His theory of cosmological natural selection, which he hammered out in the 1990s, envisions black holes as cosmic eggs that hatch new universes. More recently, he has developed a provocative hypothesis about the emergence of the laws of quantum mechanics, called the principle of precedence – and this one seems much more readily put to the test.

Smolin’s principle of precedence arises as an answer to the question of why physical phenomena are reproducible. If you perform an experiment that has been performed before, you expect the outcome will be the same as in the past. (Strike a match and it bursts into flame; strike another match the same way and… you get the idea.) Reproducibility is such a familiar part of life that we typically don’t even think about it. We simply attribute consistent outcomes to the action of a natural “law” that acts the same way at all times. Smolin hypothesises that those laws actually may emerge over time, as quantum systems copy the behaviour of similar systems in the past.

One possible way to catch emergence in the act is by running an experiment that has never been done before, so there is no past version (that is, no precedent) for it to copy. Such an experiment might involve the creation of a highly complex quantum system, containing many components that exist in a novel entangled state. If the principle of precedence is correct, the initial response of the system will be essentially random. As the experiment is repeated, however, precedence builds up and the response should become predictable… in theory. “A system by which the universe is building up precedent would be hard to distinguish from the noises of experimental practice,” Smolin concedes, “but it’s not impossible.”

Although precedence can play out at the atomic scale, its influence would be system-wide, cosmic. It ties back to Smolin’s idea that small-scale, reductionist thinking seems like the wrong way to solve the big puzzles. Getting the two classes of physics theories to work together, though important, is not enough, either. What he wants to know – what we all want to know – is why the universe is the way it is. Why does time move forward and not backward? How did we end up here, with these laws and this universe, not some others?

The present lack of any meaningful answer to those questions reveals “something deeply wrong with our understanding of quantum field theory”, Smolin says. Like Hogan, he is less concerned about the outcome of any one experiment than he is with the larger programme of seeking fundamental truths. For Smolin, that means being able to tell a complete, coherent story about the universe; it means being able to predict experiments, but also to explain the unique properties that made atoms, planets, rainbows and people. Here again he draws inspiration from Einstein.

“The lesson of general relativity, again and again, is the triumph of relationalism,” Smolin says. The most likely way to get the big answers is to engage with the universe as a whole.

And the winner is?

If you wanted to pick a referee in the big-small debate, you could hardly do better than Sean Carroll, an expert in cosmology, field theory and gravitational physics at Caltech. He knows his way around relativity, he knows his way around quantum mechanics, and he has a healthy sense of the absurd: he calls his personal blog Preposterous Universe. Right off the bat, Carroll awards most of the points to the quantum side. “Most of us in this game believe that quantum mechanics is much more fundamental than general relativity is,” he says. That has been the prevailing view ever since the 1920s, when Einstein tried and repeatedly failed to find flaws in the counterintuitive predictions of quantum theory. The recent Dutch experiment demonstrating an instantaneous quantum connection between two widely separated particles – the kind of event that Einstein derided as “spooky action at a distance” – only underscores the strength of the evidence.

Taking a larger view, the real issue is not general relativity versus quantum field theory, Carroll explains, but classical dynamics versus quantum dynamics. Relativity, despite its perceived strangeness, is classical in how it regards cause and effect; quantum mechanics most definitely is not. Einstein was optimistic that some deeper discoveries would uncover a classical, deterministic reality hiding beneath quantum mechanics, but no such order has yet been found. The demonstrated reality of spooky action at a distance argues that such order does not exist.

“If anything, people underappreciate the extent to which quantum mechanics just completely throws away our notions of space and locality [the notion that a physical event can affect only its immediate surroundings]. Those things simply are not there in quantum mechanics,” Carroll says. They may be large-scale impressions that emerge from very different small-scale phenomena, like Hogan’s argument about 3D reality emerging from 2D quantum units of space.

Despite that seeming endorsement, Carroll regards Hogan’s holometer as a long shot, though he admits it is removed from his area of research. At the other end, he doesn’t think much of Smolin’s efforts to start with space as a fundamental thing; he believes the notion is as absurd as trying to argue that air is more fundamental than atoms. As for what kind of quantum system might take physics to the next level, Carroll remains broadly optimistic about string theory, which he says “seems to be a very natural extension of quantum field theory”. In all these ways, he is true to the mainstream, quantum-based thinking in modern physics.

Yet Carroll’s ruling, while almost entirely pro-quantum, is not purely an endorsement of small-scale thinking. There are still huge gaps in what quantum theory can explain. “Our inability to figure out the correct version of quantum mechanics is embarrassing,” he says. “And our current way of thinking about quantum mechanics is simply a complete failure when you try to think about cosmology or the whole universe. We don’t even know what time is.” Both Hogan and Smolin endorse this sentiment, although they disagree about what to do in response. Carroll favours a bottom-up explanation in which time emerges from small-scale quantum interactions, but declares himself “entirely agnostic” about Smolin’s competing suggestion that time is more universal and fundamental. In the case of time, then, the jury is still out.

No matter how the theories shake out, the large scale is inescapably important, because it is the world we inhabit and observe. In essence, the universe as a whole is the answer, and the challenge to physicists is to find ways to make it pop out of their equations. Even if Hogan is right, his space-chunks have to average out to the smooth reality we experience every day. Even if Smolin is wrong, there is an entire cosmos out there with unique properties that need to be explained – something that, for now at least, quantum physics alone cannot do.

By pushing at the bounds of understanding, Hogan and Smolin are helping the field of physics make that connection. They are nudging it toward reconciliation not just between quantum mechanics and general relativity, but between idea and perception. The next great theory of physics will undoubtedly lead to beautiful new mathematics and unimaginable new technologies. But the best thing it can do is create deeper meaning that connects back to us, the observers, who get to define ourselves as the fundamental scale of the universe.

___________________________________________________________

Einstein and quantum resonance reviews the magnetism of the earth and

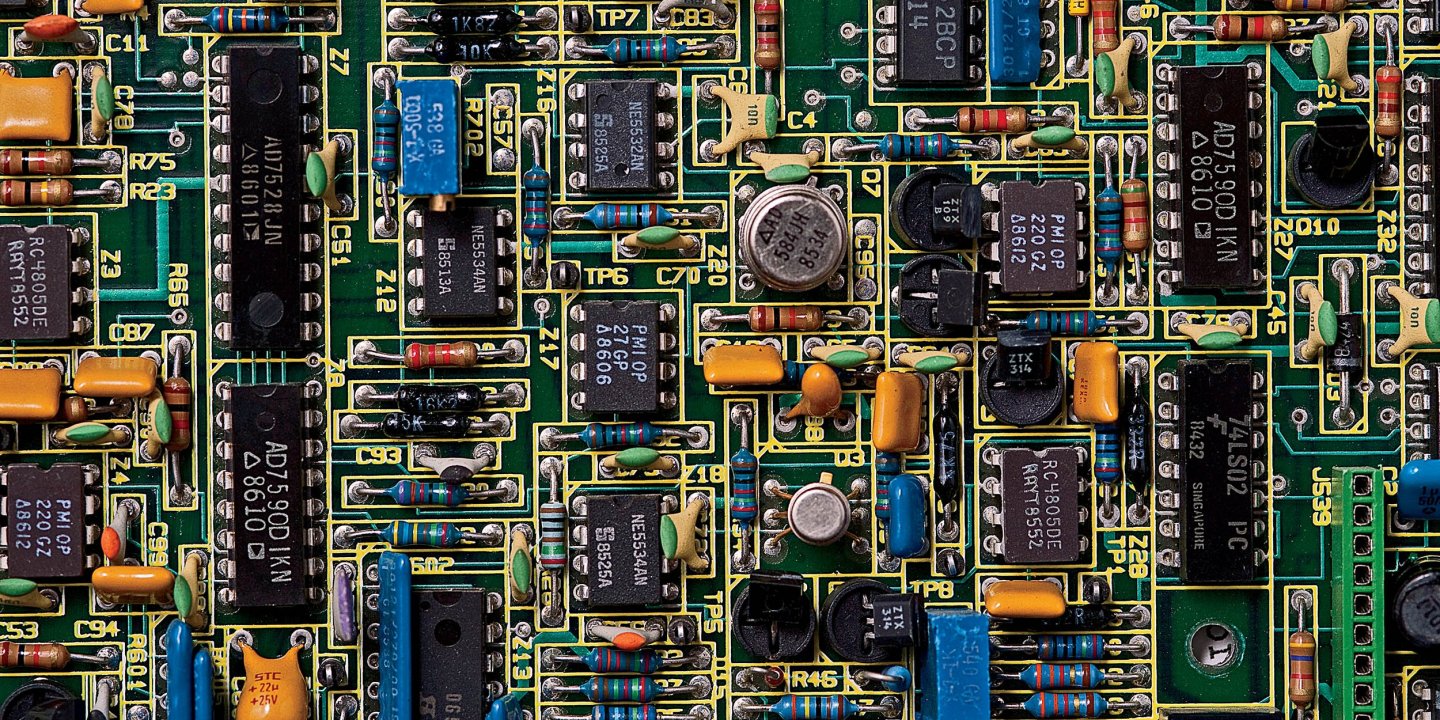

Electronic Device Systems

___________________________________________________________

Energy resonance occurs in lighting systems within electronic ballasts as well as discharge lamps, and this resonance is related to the alpha constant, to Einstein's famous energy equation, E = mc², and to Planck's quantized dimensions involving mass, energy, and space. This report will show how both the alpha constant and Einstein's equation have the same type of resonance characteristic and that characteristic is comparable to a space-time relationship dependent on three spatial dimensions and time. It is predicted this resonance occurs at a specific frequency that is proportional to the square of the cube of three-dimensional space-time. A periodic triune energy resonance map for subatomic structures has been mathematically formulated using this predicted space-time resonance relationship that is based on a triune dependent and dynamic structure consisting of electric fields, magnetic fields, and gravitational fields.

Resonance is a well-known and documented characteristic associated with electromagnetic energy in discharge lamps. It can be a positive or negative factor depending on the lamp design and/or the associated ballast design. In terms of fluorescent discharge lamp design, the resonance radiation is an important consideration for producing efficient ultraviolet radiation. In the fluorescent lamp, the generation of ultraviolet resonance radiation from the metastable state to the ground state is necessary for the subsequent conversion of the 254 nanometer resonance radiation by the phosphor into visible light. In some high intensity discharge lamps operating at high frequency, the occurrence of acoustic resonance can be destructive to an operating lamp by causing the lamp to instantly extinguish because the impedance approaches infinity as the discharge approaches resonance. So it is important to understand the fundamental basis for discharge lamp resonance. But how this resonance radiation is related to space-time has never been explored or satisfactorily explained, as evidenced by the accepted mystery of the meaning of the resonant alpha constant for hydrogen. That mystery had to be initially solved and then related to Einstein's energy-mass relationship before the resonance relationship could be associated with spatial dimensions and time. Once that was resolved, the relationship of this resonance characteristic with three-dimensional space and one time interval then became mathematically apparent. This resonant condition is basically compatible with Maxwell's electromagnetic theory and Planck's quantized concept. It should explain some of the commonality with Einstein's relativity concept and the presently postulated superstring theory.

An Einstein-Rosen bridge (ER), commonly called a wormhole, is a theoretical passage through space and time that could create shortcuts for long journeys across the universe. This phenomenon was predicted in 1935, when Albert Einstein and Nathan Rosen published a paper showing the existence of a corridor or passage directly connecting one part of the universe to another as part of a black hole-white hole system. It was the first mathematical description of a wormhole, obtained by performing a coordinate transformation on the Schwarzschild metric that removed the region containing the curvature singularity.

Since then, many interpretations and developments of this theory have been made, one of the most famous being ER=EPR; another is space travel or time travel. The solar system, with our planet, is moving fast through space, leaving behind something like a trail with information encrypted inside it. And one could imagine making a bridge between two points distant in space and time.

These space and time travel developments while not yet proven in an experiment have been multiple times .

RESONANCE FOR TO GRAVITATION WAVE

_____________________________________________

Gravitational waves are disturbances in the curvature of spacetime, generated by accelerated masses, that propagate as waves outward from their source at the speed of light. They were proposed by Henri Poincaré in 1905 and subsequently predicted in 1916 by Albert Einstein on the basis of his general theory of relativity. Gravitational waves transport energy as gravitational radiation, a form of radiant energy similar to electromagnetic radiation. Newton's law of universal gravitation, part of classical mechanics, does not provide for their existence, since that law is predicated on the assumption that physical interactions propagate instantaneously (at infinite speed) – showing one of the ways the methods of classical physics are unable to explain phenomena associated with relativity.

Gravitational-wave astronomy is a branch of observational astronomy that uses gravitational waves to collect observational data about sources of detectable gravitational waves such as binary star systems composed of white dwarfs, neutron stars, and black holes; and events such as supernovae, and the formation of the early universe shortly after the Big Bang.

In 1993, Russell A. Hulse and Joseph H. Taylor, Jr. received the Nobel Prize in Physics for the discovery and observation of the Hulse-Taylor binary pulsar, which offered the first indirect evidence of the existence of gravitational waves.

On 11 February 2016, the LIGO and Virgo Scientific Collaboration announced they had made the first direct observation of gravitational waves. The observation was made five months earlier, on 14 September 2015, using the Advanced LIGO detectors. The gravitational waves originated from the merging of a binary black hole system. After the initial announcement the LIGO instruments detected two more confirmed, and one potential, gravitational wave events. In August 2017, the two LIGO instruments and the Virgo instrument observed a fourth gravitational wave from merging black holes and a fifth gravitational wave from a binary neutron star merger. Several other gravitational wave detectors are planned or under construction.

In Einstein's general theory of relativity, gravity is treated as a phenomenon resulting from the curvature of space time. This curvature is caused by the presence of mass. Generally, the more mass that is contained within a given volume of space, the greater the curvature of space time will be at the boundary of its volume. As objects with mass move around in space time, the curvature changes to reflect the changed locations of those objects. In certain circumstances, accelerating objects generate changes in this curvature, which propagate outwards at the speed of light in a wave-like manner. These propagating phenomena are known as gravitational waves.

As a gravitational wave passes an observer, that observer will find space time distorted by the effects of strain. Distances between objects increase and decrease rhythmically as the wave passes, at a frequency equal to that of the wave. This occurs despite such free objects never being subjected to an unbalanced force. The magnitude of this effect decreases in proportion to the inverse distance from the source. Inspiraling binary neutron stars are predicted to be a powerful source of gravitational waves as they coalesce, due to the very large acceleration of their masses as they orbit close to one another. However, due to the astronomical distances to these sources, the effects when measured on Earth are predicted to be very small, having strains of less than 1 part in 10²⁰. Scientists have demonstrated the existence of these waves with ever more sensitive detectors. The most sensitive detector accomplished the task possessing a sensitivity measurement of about one part in 5×10²² (as of 2012) provided by the LIGO and VIRGO observatories. A space based observatory, the Laser Interferometer Space Antenna, is currently under development by ESA.

Gravitational waves can penetrate regions of space that electromagnetic waves cannot. They are able to allow the observation of the merger of black holes and possibly other exotic objects in the distant Universe. Such systems cannot be observed with more traditional means such as optical telescopes or radio telescopes, and so gravitational wave astronomy gives new insights into the working of the Universe. In particular, gravitational waves could be of interest to cosmologists as they offer a possible way of observing the very early Universe. This is not possible with conventional astronomy, since before recombination the Universe was opaque to electromagnetic radiation. Precise measurements of gravitational waves will also allow scientists to test more thoroughly the general theory of relativity.

In principle, gravitational waves could exist at any frequency. However, very low frequency waves would be impossible to detect and there is no credible source for detectable waves of very high frequency. Stephen Hawking and Werner Israel list different frequency bands for gravitational waves that could plausibly be detected, ranging from 10⁻⁷ Hz up to 10¹¹ Hz.

The possibility of gravitational waves was discussed in 1893 by Oliver Heaviside using the analogy between the inverse-square law in gravitation and electricity. In 1905, Henri Poincaré proposed gravitational waves, emanating from a body and propagating at the speed of light, as being required by the Lorentz transformations and suggested that, in analogy to an accelerating electrical charge producing electromagnetic waves, accelerated masses in a relativistic field theory of gravity should produce gravitational waves. When Einstein published his general theory of relativity in 1915, he was skeptical of Poincaré's idea since the theory implied there were no "gravitational dipoles". Nonetheless, he still pursued the idea and based on various approximations came to the conclusion there must, in fact, be three types of gravitational waves (dubbed longitudinal-longitudinal, transverse-longitudinal, and transverse-transverse by Hermann Weyl).

However, the nature of Einstein's approximations led many (including Einstein himself) to doubt the result. In 1922, Arthur Eddington showed that two of Einstein's types of waves were artifacts of the coordinate system he used, and could be made to propagate at any speed by choosing appropriate coordinates, leading Eddington to jest that they "propagate at the speed of thought". This also cast doubt on the physicality of the third (transverse-transverse) type that Eddington showed always propagate at the speed of light regardless of coordinate system. In 1936, Einstein and Nathan Rosen submitted a paper to Physical Review in which they claimed gravitational waves could not exist in the full general theory of relativity because any such solution of the field equations would have a singularity. The journal sent their manuscript to be reviewed by Howard P. Robertson, who anonymously reported that the singularities in question were simply the harmless coordinate singularities of the employed cylindrical coordinates. Einstein, who was unfamiliar with the concept of peer review, angrily withdrew the manuscript, never to publish in Physical Review again. Nonetheless, his assistant Leopold Infeld, who had been in contact with Robertson, convinced Einstein that the criticism was correct, and the paper was rewritten with the opposite conclusion and published elsewhere.

In 1956, Felix Pirani remedied the confusion caused by the use of various coordinate systems by rephrasing the gravitational waves in terms of the manifestly observable Riemann curvature tensor. At the time this work was mostly ignored because the community was focused on a different question: whether gravitational waves could transmit energy. This matter was settled by a thought experiment proposed by Richard Feynman during the first "GR" conference at Chapel Hill in 1957. In short, his argument known as the "sticky bead argument" notes that if one takes a rod with beads then the effect of a passing gravitational wave would be to move the beads along the rod; friction would then produce heat, implying that the passing wave had done work. Shortly after, Hermann Bondi, a former gravitational wave skeptic, published a detailed version of the "sticky bead argument".

After the Chapel Hill conference, Joseph Weber started designing and building the first gravitational wave detectors now known as Weber bars. In 1969, Weber claimed to have detected the first gravitational waves, and by 1970 he was "detecting" signals regularly from the Galactic Center; however, the frequency of detection soon raised doubts on the validity of his observations as the implied rate of energy loss of the Milky Way would drain our galaxy of energy on a timescale much shorter than its inferred age. These doubts were strengthened when, by the mid-1970s, repeated experiments from other groups building their own Weber bars across the globe failed to find any signals, and by the late 1970s general consensus was that Weber's results were spurious.

In the same period, the first indirect evidence for the existence of gravitational waves was discovered. In 1974, Russell Alan Hulse and Joseph Hooton Taylor, Jr. discovered the first binary pulsar, a discovery that earned them the 1993 Nobel Prize in Physics. Pulsar timing observations over the next decade showed a gradual decay of the orbital period of the Hulse-Taylor pulsar that matched the loss of energy and angular momentum in gravitational radiation predicted by general relativity.

This indirect detection of gravitational waves motivated further searches despite Weber's discredited result. Some groups continued to improve Weber's original concept, while others pursued the detection of gravitational waves using laser interferometers. The idea of using a laser interferometer to detect gravitational waves seems to have been floated by various people independently, including M. E. Gertsenshtein and V. I. Pustovoit in 1962, and Vladimir B. Braginskiĭ in 1966. The first prototypes were developed in the 1970s by Robert L. Forward and Rainer Weiss. In the decades that followed, ever more sensitive instruments were constructed, culminating in the construction of GEO600, LIGO, and Virgo.

After years of null results, improved detectors became operational in 2015, and LIGO made the first direct detection of gravitational waves on 14 September 2015. It was inferred that the signal, dubbed GW150914, originated from the merger of two black holes with masses 36 (+5/−4) M⊙ and 29 (+4/−4) M⊙, resulting in a 62 (+4/−4) M⊙ black hole. This suggested that the gravitational wave signal carried the energy of roughly three solar masses, or about 5 × 10⁴⁷ joules.
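The energy figure quoted for GW150914 can be checked with E = mc². The sketch below is only an order-of-magnitude check: the solar mass and the speed of light are rounded, assumed values that do not appear in the text, and only the central mass estimates are used, ignoring the quoted uncertainties.

```python
# Back-of-the-envelope check of the GW150914 energy budget via E = m * c**2.
# The constants are rounded, assumed values; only central mass estimates are used.
SOLAR_MASS_KG = 1.989e30        # assumed mass of the Sun, kg
SPEED_OF_LIGHT_M_S = 2.998e8    # speed of light, m/s


def mass_energy_joules(mass_in_solar_masses: float) -> float:
    """Return the rest-mass energy of the given number of solar masses."""
    return mass_in_solar_masses * SOLAR_MASS_KG * SPEED_OF_LIGHT_M_S ** 2


# Mass that went missing in the merger: initial masses minus the remnant.
radiated_solar_masses = 36 + 29 - 62
radiated_energy = mass_energy_joules(radiated_solar_masses)

print(f"radiated mass:   {radiated_solar_masses} solar masses")
print(f"radiated energy: {radiated_energy:.2e} J")
```

With these inputs the result lands a little above 5 × 10⁴⁷ joules, consistent with the rounded figure in the text.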

A year earlier it appeared LIGO might have been beaten to the punch when the BICEP2 collaboration claimed to have detected the imprint of gravitational waves in the cosmic microwave background. However, they were later forced to retract their result.

In 2017, the Nobel Prize in Physics was awarded to Rainer Weiss, Kip Thorne and Barry Barish for their role in the detection of gravitational waves.

Effects of passing

Gravitational waves are constantly passing Earth; however, even the strongest have a minuscule effect and their sources are generally at a great distance. For example, the waves given off by the cataclysmic final merger of GW150914 reached Earth after travelling over a billion light-years, as a ripple in spacetime that changed the length of a 4-km LIGO arm by a thousandth of the width of a proton, proportionally equivalent to changing the distance to the nearest star outside the Solar System by one hair's width. This tiny effect from even extreme gravitational waves makes them observable on Earth only with the most sophisticated detectors.
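The comparison above is simple strain arithmetic: strain is the change in length divided by the length, and the same fraction can be applied to any other distance. The sketch below is a rough illustration only. The proton width, the length of a light-year and the distance to the nearest star are assumed values not given in the text, and the result is sensitive to the proton size chosen.

```python
# Strain arithmetic for the GW150914 example: strain = change in length / length.
# The three constants below are illustrative assumptions, not values from the text.
PROTON_WIDTH_M = 1.7e-15            # assumed proton diameter, m
LIGHT_YEAR_M = 9.461e15             # metres in one light-year
NEAREST_STAR_LIGHT_YEARS = 4.24     # assumed distance to the nearest star

ARM_LENGTH_M = 4_000.0              # the 4-km LIGO arm mentioned in the text

# "A thousandth of the width of a proton" over one detector arm.
arm_change_m = PROTON_WIDTH_M / 1_000
strain = arm_change_m / ARM_LENGTH_M

# Apply the same fractional change to the distance to the nearest star.
star_distance_m = NEAREST_STAR_LIGHT_YEARS * LIGHT_YEAR_M
star_distance_change_m = strain * star_distance_m

print(f"strain h:                  {strain:.1e}")
print(f"change over star distance: {star_distance_change_m * 1e6:.0f} micrometres")
```

Under these assumptions the change over the distance to the nearest star comes out at a few tens of micrometres, which is the scale of a fine human hair.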

The effects of a passing gravitational wave, in an extremely exaggerated form, can be visualized by imagining a perfectly flat region of spacetime with a group of motionless test particles lying in a plane, e.g. the surface of a computer screen. As a gravitational wave passes through the particles along a line perpendicular to the plane of the particles, i.e. following the observer's line of vision into the screen, the particles will follow the distortion in spacetime, oscillating in a "cruciform" manner, as shown in the animations. The area enclosed by the test particles does not change and there is no motion along the direction of propagation.

The oscillations depicted in the animation are exaggerated for the purpose of discussion – in reality a gravitational wave has a very small amplitude (as formulated in linearized gravity). However, they help illustrate the kind of oscillations associated with gravitational waves as produced by a pair of masses in a circular orbit. In this case the amplitude of the gravitational wave is constant, but its plane of polarization changes or rotates at twice the orbital rate, so the time-varying gravitational wave size, or 'periodic spacetime strain', exhibits a variation as shown in the animation. If the orbit of the masses is elliptical then the gravitational wave's amplitude also varies with time according to Einstein's quadrupole formula.

As with other waves, there are a number of characteristics used to describe a gravitational wave:

- Amplitude: Usually denoted h, this is the size of the wave – the fraction of stretching or squeezing in the animation. The amplitude shown here is roughly h = 0.5 (or 50%). Gravitational waves passing through the Earth are many sextillion times weaker than this – h ≈ 10⁻²⁰.

- Frequency: Usually denoted f, this is the frequency with which the wave oscillates (1 divided by the amount of time between two successive maximum stretches or squeezes).

- Wavelength: Usually denoted λ, this is the distance along the wave between points of maximum stretch or squeeze.

- Speed: This is the speed at which a point on the wave (for example, a point of maximum stretch or squeeze) travels. For gravitational waves with small amplitudes, this wave speed is equal to the speed of light (c).

The speed, wavelength, and frequency of a gravitational wave are related by the equation c = λ f, just like the equation for a light wave. For example, the animations shown here oscillate roughly once every two seconds. This would correspond to a frequency of 0.5 Hz, and a wavelength of about 600 000 km, or 47 times the diameter of the Earth.
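The relation c = λ f can be applied directly to the example of one oscillation every two seconds. This is a minimal sketch; the Earth diameter used for the comparison is an assumed mean value that does not appear in the text.

```python
# Wavelength of a gravitational wave from its frequency, using c = wavelength * f.
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light, km/s
EARTH_DIAMETER_KM = 12_742.0        # assumed mean diameter of the Earth, km


def wavelength_km(frequency_hz: float) -> float:
    """Return the wavelength in km of a wave travelling at the speed of light."""
    return SPEED_OF_LIGHT_KM_S / frequency_hz


period_s = 2.0                      # one oscillation every two seconds
frequency_hz = 1.0 / period_s       # 0.5 Hz
wavelength = wavelength_km(frequency_hz)
earth_diameters = wavelength / EARTH_DIAMETER_KM

print(f"frequency:  {frequency_hz} Hz")
print(f"wavelength: {wavelength:,.0f} km")
print(f"that is about {earth_diameters:.0f} Earth diameters")
```

The same function gives the wavelength for any other frequency, for example the roughly kilometre-scale to thousand-kilometre-scale wavelengths in the band where ground-based detectors operate.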

In the above example, it is assumed that the wave is linearly polarized with a "plus" polarization, written h+. Polarization of a gravitational wave is just like polarization of a light wave except that the polarizations of a gravitational wave are 45 degrees apart, as opposed to 90 degrees. In particular, in a "cross"-polarized gravitational wave, h×, the effect on the test particles would be basically the same, but rotated by 45 degrees, as shown in the second animation. Just as with light polarization, the polarizations of gravitational waves may also be expressed in terms of circularly polarized waves. Gravitational waves are polarized because of the nature of their source.

Sources

In general terms, gravitational waves are radiated by objects whose motion involves acceleration and its change, provided that the motion is not perfectly spherically symmetric (like an expanding or contracting sphere) or rotationally symmetric (like a spinning disk or sphere). A simple example of this principle is a spinning dumbbell. If the dumbbell spins around its axis of symmetry, it will not radiate gravitational waves; if it tumbles end over end, as in the case of two planets orbiting each other, it will radiate gravitational waves. The heavier the dumbbell, and the faster it tumbles, the greater is the gravitational radiation it will give off. In an extreme case, such as when the two weights of the dumbbell are massive stars like neutron stars or black holes, orbiting each other quickly, then significant amounts of gravitational radiation would be given off.

Some more detailed examples:

- Two objects orbiting each other, as a planet would orbit the Sun, will radiate.

- A spinning non-axisymmetric planetoid – say with a large bump or dimple on the equator – will radiate.

- A supernova will radiate except in the unlikely event that the explosion is perfectly symmetric.

- An isolated non-spinning solid object moving at a constant velocity will not radiate. This can be regarded as a consequence of the principle of conservation of linear momentum.

- A spinning disk will not radiate. This can be regarded as a consequence of the principle of conservation of angular momentum. However, it will show gravitomagnetic effects.

- A spherically pulsating spherical star (non-zero monopole moment or mass, but zero quadrupole moment) will not radiate, in agreement with Birkhoff's theorem.

More technically, the second time derivative of the quadrupole moment (or the l-th time derivative of the l-th multipole moment) of an isolated system's stress–energy tensor must be non-zero in order for it to emit gravitational radiation. This is analogous to the changing dipole moment of charge or current that is necessary for the emission of electromagnetic radiation.

Binaries

Gravitational waves carry energy away from their sources and, in the case of orbiting bodies, this is associated with an in-spiral or decrease in orbit. Imagine for example a simple system of two masses – such as the Earth–Sun system – moving slowly compared to the speed of light in circular orbits. Assume that these two masses orbit each other in a circular orbit in the x–y plane. To a good approximation, the masses follow simple Keplerian orbits. However, such an orbit represents a changing quadrupole moment. That is, the system will give off gravitational waves.

In theory, the loss of energy through gravitational radiation could eventually drop the Earth into the Sun. However, the total energy of the Earth orbiting the Sun (kinetic energy + gravitational potential energy) is about 1.14×10³⁶ joules of which only 200 watts (joules per second) is lost through gravitational radiation, leading to a decay in the orbit by about 1×10⁻¹⁵ meters per day or roughly the diameter of a proton. At this rate, it would take the Earth approximately 1×10¹³ times more than the current age of the Universe to spiral onto the Sun. This estimate overlooks the decrease in r over time, but the majority of the time the bodies are far apart and only radiating slowly, so the difference is unimportant in this example.
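The Earth–Sun figures can be reproduced approximately with the standard leading-order (quadrupole) expressions for two point masses on a circular orbit. The sketch below writes those expressions out as an assumption, since they are not stated in this paragraph, and uses rounded constants for the masses, the orbital radius and the age of the Universe. Treat the output as an order-of-magnitude check.

```python
# Gravitational radiation from the Earth-Sun system, circular-orbit approximation.
# Assumed leading-order formulas for point masses m1, m2 at separation r:
#   power = (32/5) * G**4 / c**5 * (m1*m2)**2 * (m1+m2) / r**5
#   dr/dt = -(64/5) * G**3 / c**5 * (m1*m2) * (m1+m2) / r**3
G = 6.674e-11                   # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8                     # speed of light, m/s
SUN_KG = 1.989e30               # assumed mass of the Sun
EARTH_KG = 5.972e24             # assumed mass of the Earth
ORBIT_RADIUS_M = 1.496e11       # assumed Earth-Sun separation (1 au)
SECONDS_PER_DAY = 86_400.0
SECONDS_PER_YEAR = 3.156e7
AGE_OF_UNIVERSE_YEARS = 1.38e10  # assumed age of the Universe


def gw_power_watts(m1: float, m2: float, r: float) -> float:
    """Power radiated in gravitational waves by a circular binary."""
    return (32 / 5) * G**4 / C**5 * (m1 * m2) ** 2 * (m1 + m2) / r**5


def orbital_decay_m_per_s(m1: float, m2: float, r: float) -> float:
    """Rate of change of the separation (negative: the orbit shrinks)."""
    return -(64 / 5) * G**3 / C**5 * (m1 * m2) * (m1 + m2) / r**3


power = gw_power_watts(SUN_KG, EARTH_KG, ORBIT_RADIUS_M)
decay_rate = orbital_decay_m_per_s(SUN_KG, EARTH_KG, ORBIT_RADIUS_M)
decay_per_day = abs(decay_rate) * SECONDS_PER_DAY

# Because dr/dt scales as 1/r**3, integrating from r down to 0 gives
# an inspiral time of r / (4 * |dr/dt|).
inspiral_s = ORBIT_RADIUS_M / (4 * abs(decay_rate))
universe_ages = inspiral_s / SECONDS_PER_YEAR / AGE_OF_UNIVERSE_YEARS

print(f"radiated power:  {power:.0f} W")
print(f"orbital decay:   {decay_per_day:.1e} m per day")
print(f"inspiral time:   {universe_ages:.1e} ages of the Universe")
```

With these inputs the power comes out close to 200 watts, the decay close to 10⁻¹⁵ metres per day, and the inspiral time within an order of magnitude of 10¹³ ages of the Universe.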

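More generally, the rate of orbital decay can be approximated by

$$\frac{dr}{dt} = -\frac{64}{5}\,\frac{G^3}{c^5}\,\frac{(m_1 m_2)(m_1 + m_2)}{r^3},$$

where r is the separation between the bodies, t time, G the gravitational constant, c the speed of light, and m1 and m2 the masses of the bodies. This leads to an expected time to merger of

$$t = \frac{5}{256}\,\frac{c^5}{G^3}\,\frac{r^4}{(m_1 m_2)(m_1 + m_2)}.$$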

Compact binaries

Compact stars like white dwarfs and neutron stars can be constituents of binaries. For example, a pair of solar mass neutron stars in a circular orbit at a separation of 1.89×10⁸ m (189,000 km) has an orbital period of 1,000 seconds, and an expected lifetime of 1.30×10¹³ seconds or about 414,000 years. Such a system could be observed by LISA if it were not too far away. A far greater number of white dwarf binaries exist with orbital periods in this range. White dwarf binaries have masses in the order of the Sun, and diameters in the order of the Earth. They cannot get much closer together than 10,000 km before they will merge and explode in a supernova which would also end the emission of gravitational waves. Until then, their gravitational radiation would be comparable to that of a neutron star binary.

When the orbit of a neutron star binary has decayed to 1.89×10⁶ m (1890 km), its remaining lifetime is about 130,000 seconds or 36 hours. The orbital frequency will vary from 1 orbit per second at the start, to 918 orbits per second when the orbit has shrunk to 20 km at merger. The majority of gravitational radiation emitted will be at twice the orbital frequency. Just before merger, the inspiral could be observed by LIGO if such a binary were close enough. LIGO has only a few minutes to observe this merger out of a total orbital lifetime that may have been billions of years. In August 2017, LIGO and Virgo observed the first binary neutron star inspiral in GW170817, and 70 observatories collaborated to detect the electromagnetic counterpart, a kilonova in the galaxy NGC 4993, 40 megaparsecs away, emitting a short gamma ray burst (GRB 170817A) seconds after the merger, followed by a longer optical transient (AT 2017gfo) powered by r-process nuclei. The Advanced LIGO detectors should be able to detect such events up to 200 megaparsecs away. Within this range, of the order of 40 events are expected per year.
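The neutron star binary numbers above follow from two relations: Kepler's third law for the orbital period, and the leading-order merger time for a circular binary, t = (5/256) (c⁵/G³) r⁴ / ((m1 m2)(m1 + m2)). The sketch below assumes point masses of exactly one solar mass each and rounded constants, so it reproduces the quoted values only approximately.

```python
import math

# Orbital period and merger time for a circular binary of two point masses.
G = 6.674e-11                   # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8                     # speed of light, m/s
SOLAR_MASS_KG = 1.989e30        # assumed mass of each neutron star


def orbital_period_s(m1: float, m2: float, r: float) -> float:
    """Kepler's third law for a circular orbit of separation r."""
    return 2 * math.pi * math.sqrt(r**3 / (G * (m1 + m2)))


def time_to_merger_s(m1: float, m2: float, r: float) -> float:
    """Leading-order time for gravitational radiation to shrink the orbit to zero."""
    return (5 / 256) * C**5 / G**3 * r**4 / ((m1 * m2) * (m1 + m2))


m = SOLAR_MASS_KG
wide_period_s = orbital_period_s(m, m, 1.89e8)       # text: about 1,000 s
wide_lifetime_s = time_to_merger_s(m, m, 1.89e8)     # text: about 1.30e13 s
close_lifetime_h = time_to_merger_s(m, m, 1.89e6) / 3600   # text: about 36 hours
merger_orbits_per_s = 1 / orbital_period_s(m, m, 2.0e4)    # text: about 918 per second

print(f"period at 189,000 km:   {wide_period_s:.0f} s")
print(f"lifetime at 189,000 km: {wide_lifetime_s:.2e} s")
print(f"lifetime at 1,890 km:   {close_lifetime_h:.0f} hours")
print(f"orbits per second at 20 km: {merger_orbits_per_s:.0f}")
```

Because the merger time scales as r⁴, shrinking the separation by a factor of 100 shortens the remaining lifetime by a factor of 10⁸, which is why the last stage of the inspiral takes hours after a lifetime of hundreds of thousands of years or more.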

Black hole binaries

Black hole binaries emit gravitational waves during their in-spiral, merger, and ring-down phases. The largest amplitude of emission occurs during the merger phase, which can be modeled with the techniques of numerical relativity. The first direct detection of gravitational waves, GW150914, came from the merger of two black holes.

Supernovae

A supernova is a transient astronomical event that occurs during the last stellar evolutionary stages of a massive star's life, whose dramatic and catastrophic destruction is marked by one final titanic explosion. This explosion can happen in one of many ways, but in all of them a significant proportion of the matter in the star is blown away into the surrounding space at extremely high velocities (up to 10% of the speed of light). Unless there is perfect spherical symmetry in these explosions (i.e., unless matter is spewed out evenly in all directions), there will be gravitational radiation from the explosion. This is because gravitational waves are generated by a changing quadrupole moment, which can happen only when there is asymmetrical movement of masses. Since the exact mechanism by which supernovae take place is not fully understood, it is not easy to model the gravitational radiation emitted by them.

Spinning neutron stars

As noted above, a mass distribution will emit gravitational radiation only when there is spherically asymmetric motion among the masses. A spinning neutron star will generally emit no gravitational radiation because neutron stars are highly dense objects with a strong gravitational field that keeps them almost perfectly spherical. In some cases, however, there might be slight deformities on the surface called "mountains", which are bumps extending no more than 10 centimeters (4 inches) above the surface, that make the spinning spherically asymmetric. This gives the star a quadrupole moment that changes with time, and it will emit gravitational waves until the deformities are smoothed out.

Inflation

Many models of the Universe suggest that there was an inflationary epoch in the early history of the Universe when space expanded by a large factor in a very short amount of time. If this expansion was not symmetric in all directions, it may have emitted gravitational radiation detectable today as a gravitational wave background. This background signal is too weak for any currently operational gravitational wave detector to observe, and it is thought it may be decades before such an observation can be made.

Properties and behaviour

Energy, momentum, and angular momentum

Water waves, sound waves, and electromagnetic waves are able to carry energy, momentum, and angular momentum and by doing so they carry those away from the source. Gravitational waves perform the same function. Thus, for example, a binary system loses angular momentum as the two orbiting objects spiral towards each other—the angular momentum is radiated away by gravitational waves.

The waves can also carry off linear momentum, a possibility that has some interesting implications for astrophysics. After two supermassive black holes coalesce, emission of linear momentum can produce a "kick" with amplitude as large as 4000 km/s. This is fast enough to eject the coalesced black hole completely from its host galaxy. Even if the kick is too small to eject the black hole completely, it can remove it temporarily from the nucleus of the galaxy, after which it will oscillate about the center, eventually coming to rest. A kicked black hole can also carry a star cluster with it, forming a hyper-compact stellar system. Or it may carry gas, allowing the recoiling black hole to appear temporarily as a "naked quasar". The quasar SDSS J092712.65+294344.0 is thought to contain a recoiling supermassive black hole.

Redshifting

Like electromagnetic waves, gravitational waves should exhibit shifting of wavelength due to the relative velocities of the source and observer, but also due to distortions of space-time, such as cosmic expansion. This is the case even though gravity itself is a cause of distortions of space-time. Redshifting of gravitational waves is different from redshifting due to gravity.
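The cosmological part of this shift can be illustrated with a minimal sketch. It assumes the standard relation f_obs = f_emit / (1 + z), the same one that applies to light. The function name and the sample numbers are illustrative assumptions and are not taken from any particular detection.

```python
def observed_frequency(f_emitted_hz: float, z: float) -> float:
    """Frequency measured by the observer for a wave emitted at f_emitted_hz.

    Assumes the usual redshift relation f_obs = f_emit / (1 + z),
    where z combines Doppler and cosmological contributions.
    """
    if z <= -1.0:
        raise ValueError("redshift must be greater than -1")
    return f_emitted_hz / (1.0 + z)


if __name__ == "__main__":
    # Hypothetical source emitting at 200 Hz, seen from redshift z = 0.1.
    print(observed_frequency(200.0, 0.1))
```

A positive z lowers the observed frequency (redshift); a negative z, for an approaching source, raises it.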

Quantum gravity, wave-particle aspects, and graviton

In the framework of quantum field theory, the graviton is the name given to a hypothetical elementary particle speculated to be the force carrier that mediates gravity. However, the graviton is not yet proven to exist, and no scientific model yet exists that successfully reconciles general relativity, which describes gravity, and the Standard Model, which describes all other fundamental forces. Attempts to construct a theory of quantum gravity have been made, but none is yet accepted.

If such a particle exists, it is expected to be massless (because the gravitational force appears to have unlimited range) and must be a spin-2 boson. It can be shown that any massless spin-2 field would give rise to a force indistinguishable from gravitation, because a massless spin-2 field must couple to (interact with) the stress–energy tensor in the same way that the gravitational field does; therefore if a massless spin-2 particle were ever discovered, it would be likely to be the graviton without further distinction from other massless spin-2 particles. Such a discovery would unite quantum theory with gravity.

Significance for study of the early universe

Due to the weakness of the coupling of gravity to matter, gravitational waves experience very little absorption or scattering, even as they travel over astronomical distances. In particular, gravitational waves are expected to be unaffected by the opacity of the very early universe. In these early phases, space had not yet become "transparent," so observations based upon light, radio waves, and other electromagnetic radiation that far back into time are limited or unavailable. Therefore, gravitational waves are expected in principle to have the potential to provide a wealth of observational data about the very early universe.

Determining direction of travel

The difficulty of directly detecting gravitational waves means it is also difficult for a single detector to identify by itself the direction of a source. Therefore, multiple detectors are used, both to distinguish signals from other "noise" by confirming the signal is not of earthly origin, and also to determine direction by means of triangulation. This technique uses the fact that the waves travel at the speed of light and will reach different detectors at different times depending on their source direction. Although the differences in arrival time may be just a few milliseconds, this is sufficient to identify the direction of the origin of the wave with considerable precision.
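The arrival-time argument can be sketched for a single pair of detectors. The code below is a simplified illustration, assuming a plane wave travelling at the speed of light and a straight-line baseline between the two sites. The 3,000 km baseline is an approximate, assumed figure for two widely separated detectors, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def arrival_delay(baseline_m: float, angle_rad: float) -> float:
    """Arrival-time difference (s) between two detectors.

    angle_rad is the angle between the source direction and the line
    joining the detectors: 0 means the wave travels along the baseline,
    pi/2 means it arrives at both detectors simultaneously.
    """
    return baseline_m * math.cos(angle_rad) / C


def angle_from_delay(baseline_m: float, delay_s: float) -> float:
    """Invert arrival_delay: angle between source direction and baseline."""
    cos_angle = C * delay_s / baseline_m
    if abs(cos_angle) > 1.0:
        raise ValueError("delay exceeds the light travel time along the baseline")
    return math.acos(cos_angle)


if __name__ == "__main__":
    baseline = 3.0e6  # assumed baseline of about 3,000 km
    print(arrival_delay(baseline, 0.0))  # largest possible delay for this baseline
    print(math.degrees(angle_from_delay(baseline, 0.005)))
```

A single delay only fixes the angle to the baseline, which corresponds to a ring on the sky. Additional detectors supply further delays that narrow the ring down to a smaller region.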

Only in the case of GW170814 were three detectors operating at the time of the event, so its direction is precisely defined. The detection by all three instruments led to a very accurate estimate of the position of the source, with a 90% credible region of just 60 deg², a factor of 20 more accurate than before.

Gravitational wave astronomy

Two-dimensional representation of gravitational waves generated by two neutron stars orbiting each other.

During the past century, astronomy has been revolutionized by the use of new methods for observing the universe. Astronomical observations were originally made using visible light. Galileo Galilei pioneered the use of telescopes to enhance these observations. However, visible light is only a small portion of the electromagnetic spectrum, and not all objects in the distant universe shine strongly in this particular band. More useful information may be found, for example, in radio wavelengths. Using radio telescopes, astronomers have found pulsars and quasars and have made other unprecedented discoveries of objects not formerly known to scientists. Observations in the microwave band led to the detection of faint imprints of the Big Bang, a discovery Stephen Hawking called the "greatest discovery of the century, if not all time". Similar advances in observations using gamma rays, x-rays, ultraviolet light, and infrared light have also brought new insights to astronomy. As each of these regions of the spectrum has opened, new discoveries have been made that could not have been made otherwise. Astronomers hope that the same holds true of gravitational waves.

Gravitational waves have two important and unique properties. First, there is no need for any type of matter to be present nearby in order for the waves to be generated by a binary system of uncharged black holes, which would emit no electromagnetic radiation. Second, gravitational waves can pass through any intervening matter without being scattered significantly. Whereas light from distant stars may be blocked out by interstellar dust, for example, gravitational waves will pass through essentially unimpeded. These two features allow gravitational waves to carry information about astronomical phenomena heretofore never observed by humans.

The sources of gravitational waves described above are in the low-frequency end of the gravitational-wave spectrum (10⁻⁷ to 10⁵ Hz). An astrophysical source at the high-frequency end of the gravitational-wave spectrum (above 10⁵ Hz and probably 10¹⁰ Hz) generates relic gravitational waves that are theorized to be faint imprints of the Big Bang, like the cosmic microwave background. At these high frequencies it is potentially possible that the sources may be "man made", that is, gravitational waves generated and detected in the laboratory.

A supermassive black hole, created from the merger of the black holes at the center of two merging galaxies detected by the Hubble Space Telescope, is theorized to have been ejected from the merger center by gravitational waves

Detection

Indirect detection

Although the waves from the Earth–Sun system are minuscule, astronomers can point to other sources for which the radiation should be substantial. One important example is the Hulse–Taylor binary – a pair of stars, one of which is a pulsar. The characteristics of their orbit can be deduced from the Doppler shifting of radio signals given off by the pulsar. Each of the stars is about 1.4 M☉ and the size of their orbits is about 1/75 of the Earth–Sun orbit, just a few times larger than the diameter of our own Sun. The combination of greater masses and smaller separation means that the energy given off by the Hulse–Taylor binary will be far greater than the energy given off by the Earth–Sun system – roughly 10²² times as much.
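The scale of this comparison can be checked with a rough sketch using the standard quadrupole-formula result for two point masses on a circular orbit, P = (32/5) G⁴ (m₁m₂)² (m₁+m₂) / (c⁵ r⁵). This is a simplification: it treats the quoted orbit size as the separation r and ignores orbital eccentricity, which raises the emitted power for an eccentric system such as the Hulse–Taylor binary. The constants are rounded and the function name is hypothetical.

```python
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8        # speed of light, m/s
M_SUN = 1.989e30   # solar mass, kg
M_EARTH = 5.972e24 # Earth mass, kg
AU = 1.496e11      # Earth-Sun distance, m


def gw_power_circular(m1_kg: float, m2_kg: float, separation_m: float) -> float:
    """Gravitational-wave power (W) of a circular binary, quadrupole formula."""
    return (32.0 / 5.0) * G**4 / C**5 * (m1_kg * m2_kg) ** 2 * (m1_kg + m2_kg) / separation_m**5


if __name__ == "__main__":
    earth_sun = gw_power_circular(M_SUN, M_EARTH, AU)
    # Assumption: two 1.4 solar-mass stars separated by 1/75 of an AU, circular orbit.
    binary = gw_power_circular(1.4 * M_SUN, 1.4 * M_SUN, AU / 75.0)
    print(f"Earth-Sun power: {earth_sun:.3g} W")
    print(f"circular-orbit ratio: {binary / earth_sun:.3g}")
```

Under these assumptions the Earth–Sun system radiates on the order of 200 W. The circular-orbit ratio comes out somewhat below the figure quoted above, and the eccentricity that this sketch neglects accounts for most of the difference.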

The information about the orbit can be used to predict how much energy (and angular momentum) would be radiated in the form of gravitational waves. As the binary system loses energy, the stars gradually draw closer to each other, and the orbital period decreases. The resulting trajectory of each star is an inspiral, a spiral with decreasing radius. General relativity precisely describes these trajectories; in particular, the energy radiated in gravitational waves determines the rate of decrease in the period, defined as the time interval between successive periastrons (points of closest approach of the two stars). For the Hulse–Taylor pulsar, the predicted current change in radius is about 3 mm per orbit, and the change in the 7.75 hr period is about 76.5 microseconds per year. Following a preliminary observation showing an orbital energy loss consistent with gravitational waves, careful timing observations by Taylor and Joel Weisberg dramatically confirmed the predicted period decrease to within 10%. With the improved statistics of more than 30 years of timing data since the pulsar's discovery, the observed change in the orbital period currently matches the prediction from gravitational radiation assumed by general relativity to within 0.2 percent. In 1993, spurred in part by this indirect detection of gravitational waves, the Nobel Committee awarded the Nobel Prize in Physics to Hulse and Taylor for "the discovery of a new type of pulsar, a discovery that has opened up new possibilities for the study of gravitation." The lifetime of this binary system, from the present to merger, is estimated to be a few hundred million years.
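The predicted rate of period decrease can be sketched with the standard quadrupole-order expression for an eccentric binary, dP/dt = −(192π/5) (2πT☉/P)^(5/3) f(e) m₁m₂/(m₁+m₂)^(1/3), with masses in solar units, T☉ = GM☉/c³, and f(e) = (1 + (73/24)e² + (37/96)e⁴)/(1 − e²)^(7/2). The orbital parameters in the usage example (eccentricity, masses, period in seconds) are approximate values assumed for illustration, and the function name is hypothetical.

```python
import math

T_SUN = 4.925491e-6  # G * M_sun / c^3, in seconds


def orbital_period_derivative(period_s: float, ecc: float,
                              m1_msun: float, m2_msun: float) -> float:
    """Dimensionless dP/dt from gravitational-wave emission (quadrupole order)."""
    # Eccentricity enhancement factor f(e); equals 1 for a circular orbit.
    enhancement = (1.0 + (73.0 / 24.0) * ecc**2 + (37.0 / 96.0) * ecc**4) / (1.0 - ecc**2) ** 3.5
    mass_term = m1_msun * m2_msun / (m1_msun + m2_msun) ** (1.0 / 3.0)
    return -(192.0 * math.pi / 5.0) * (2.0 * math.pi * T_SUN / period_s) ** (5.0 / 3.0) * enhancement * mass_term


if __name__ == "__main__":
    # Assumed, approximate parameters for a Hulse-Taylor-like system.
    pdot = orbital_period_derivative(period_s=27907.0, ecc=0.617,
                                     m1_msun=1.44, m2_msun=1.39)
    print(f"dP/dt = {pdot:.3e} (seconds of period change per second)")
```

The result is negative because the period shrinks as the orbit loses energy. Multiplying by the number of seconds in a year converts it to a period change per year.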

Inspirals are very important sources of gravitational waves. Any time two compact objects (white dwarfs, neutron stars, or black holes) are in close orbits, they send out intense gravitational waves. As they spiral closer to each other, these waves become more intense. At some point they should become so intense that direct detection by their effect on objects on Earth or in space is possible. This direct detection is the goal of several large scale experiments.

The only difficulty is that most systems like the Hulse–Taylor binary are so far away. The amplitude of waves given off by the Hulse–Taylor binary at Earth would be roughly h ≈ 10⁻²⁶. There are some sources, however, that astrophysicists expect to find that produce much greater amplitudes of h ≈ 10⁻²⁰. At least eight other binary pulsars have been discovered.

Difficulties

Gravitational waves are not easily detectable. When they reach the Earth, they have a small amplitude, with a strain of approximately 10⁻²¹, meaning that an extremely sensitive detector is needed and that other sources of noise can overwhelm the signal. Gravitational waves are expected to have frequencies in the range 10⁻¹⁶ Hz < f < 10⁴ Hz.

Ground-based detectors

Though the Hulse–Taylor observations were very important, they give only indirect evidence for gravitational waves. A more conclusive observation would be a direct measurement of the effect of a passing gravitational wave, which could also provide more information about the system that generated it. Any such direct detection is complicated by the extraordinarily small effect the waves would produce on a detector. The amplitude of a spherical wave will fall off as the inverse of the distance from the source (the 1/R term in the formulas for h above). Thus, even waves from extreme systems like merging binary black holes die out to very small amplitudes by the time they reach the Earth. Astrophysicists expect that some gravitational waves passing the Earth may be as large as h ≈ 10⁻²⁰, but generally no bigger.
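The 1/R fall-off can be expressed as a simple scaling rule. The sketch below assumes only that the amplitude is inversely proportional to distance. The function name and the sample values are illustrative assumptions, not properties of a specific source.

```python
def strain_at_distance(h_ref: float, r_ref: float, r: float) -> float:
    """Scale a strain amplitude known at distance r_ref to a new distance r.

    Uses h proportional to 1/R; r_ref and r must be in the same units.
    """
    if r_ref <= 0.0 or r <= 0.0:
        raise ValueError("distances must be positive")
    return h_ref * r_ref / r


if __name__ == "__main__":
    # Hypothetical source: strain 1e-20 at some reference distance,
    # observed again from ten times farther away.
    print(strain_at_distance(1e-20, 1.0, 10.0))
```

Because the amplitude falls as 1/R rather than 1/R², a tenfold improvement in detector strain sensitivity extends the reach by a factor of ten in distance.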

Resonant antennae

A simple device theorised to detect the expected wave motion is called a Weber bar – a large, solid bar of metal isolated from outside vibrations. This type of instrument was the first type of gravitational wave detector. Strains in space due to an incident gravitational wave excite the bar's resonant frequency and could thus be amplified to detectable levels. Conceivably, a nearby supernova might be strong enough to be seen without resonant amplification. With this instrument, Joseph Weber claimed to have detected daily signals of gravitational waves. His results, however, were contested in 1974 by physicists Richard Garwin and David Douglass. Modern forms of the Weber bar are still operated, cryogenically cooled, with superconducting quantum interference devices to detect vibration. Weber bars are not sensitive enough to detect anything but extremely powerful gravitational waves.

MiniGRAIL is a spherical gravitational wave antenna using this principle. It is based at Leiden University, consisting of an exactingly machined 1,150 kg sphere cryogenically cooled to 20 millikelvins. The spherical configuration allows for equal sensitivity in all directions, and is somewhat experimentally simpler than larger linear devices requiring high vacuum. Events are detected by measuring deformation of the detector sphere. MiniGRAIL is highly sensitive in the 2–4 kHz range, suitable for detecting gravitational waves from rotating neutron star instabilities or small black hole mergers.

There are currently two detectors focused on the higher end of the gravitational wave spectrum (10⁻⁷ to 10⁵ Hz): one at University of Birmingham, England, and the other at INFN Genoa, Italy. A third is under development at Chongqing University, China. The Birmingham detector measures changes in the polarization state of a microwave beam circulating in a closed loop about one meter across. Both detectors are expected to be sensitive to periodic spacetime strains of h ~ 2×10⁻¹³ /√Hz, given as an amplitude spectral density. The INFN Genoa detector is a resonant antenna consisting of two coupled spherical superconducting harmonic oscillators a few centimeters in diameter. The oscillators are designed to have (when uncoupled) almost equal resonant frequencies. The system is currently expected to have a sensitivity to periodic spacetime strains of h ~ 2×10⁻¹⁷ /√Hz, with an expectation to reach a sensitivity of h ~ 2×10⁻²⁰ /√Hz. The Chongqing University detector is planned to detect relic high-frequency gravitational waves with the predicted typical parameters ~10¹¹ Hz (100 GHz) and h ~ 10⁻³⁰ to 10⁻³².

Interferometers

A more sensitive class of detector uses laser interferometry to measure gravitational-wave induced motion between separated 'free' masses. This allows the masses to be separated by large distances (increasing the signal size); a further advantage is that it is sensitive to a wide range of frequencies (not just those near a resonance as is the case for Weber bars). After years of development, ground-based interferometers made the first direct detection of gravitational waves in 2015. Currently, the most sensitive is LIGO, the Laser Interferometer Gravitational-Wave Observatory. LIGO has three detectors: one in Livingston, Louisiana, one at the Hanford site in Richland, Washington, and a third (formerly installed as a second detector at Hanford) that is planned to be moved to India. Each observatory has two light storage arms that are 4 kilometers in length. These are at 90 degree angles to each other, with the light passing through 1 m diameter vacuum tubes running the entire 4 kilometers. A passing gravitational wave will slightly stretch one arm as it shortens the other. This is precisely the motion to which an interferometer is most sensitive.
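The size of this stretching can be estimated with a short sketch. It assumes an optimally oriented and polarized wave whose wavelength is much longer than the arm, and it takes the strain h to be the differential arm-length change divided by the arm length, so that one arm lengthens by hL/2 while the other shortens by the same amount. The function names are hypothetical.

```python
def arm_length_changes(strain: float, arm_length_m: float) -> tuple[float, float]:
    """Return (stretch, squeeze) in metres for the two perpendicular arms.

    Assumes an optimally oriented wave: one arm grows by h*L/2 while
    the other shrinks by h*L/2.
    """
    half = 0.5 * strain * arm_length_m
    return half, -half


def differential_change(strain: float, arm_length_m: float) -> float:
    """Difference between the two arm lengths, the quantity an interferometer senses."""
    stretch, squeeze = arm_length_changes(strain, arm_length_m)
    return stretch - squeeze


if __name__ == "__main__":
    # A strain of order 1e-21 acting on 4 km arms.
    print(arm_length_changes(1e-21, 4000.0))
    print(differential_change(1e-21, 4000.0))
```

For a strain of 10⁻²¹ and 4 km arms, each arm changes by about 2×10⁻¹⁸ m, far smaller than the diameter of a proton, which is why laser interferometry over long baselines is needed.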