AND DIGITAL

Video Graphics Array (VGA) is an analog computer display standard first marketed by IBM in 1987. Although the VGA standard itself has been replaced by newer standards, VGA is still implemented on Pocket PC devices. VGA was the last graphics standard followed by the majority of graphics card manufacturers. Windows still uses a VGA mode for display because it is supported by so many manufacturers of monitors and graphics cards.

A graphics card ("Cirrus Logic").

A VGA card is also called a video card, video adapter, display card, graphics card, graphics board, display adapter, or graphics adapter. The term VGA is also often used to refer to a screen resolution of 640 × 480, whatever the graphics card manufacturer. The VGA card translates computer output for the monitor. Graphic design work and video games require a high-powered graphics card. Well-known graphics card manufacturers include ATI and nVidia.

In addition, VGA can refer to the 15-pin VGA connector, which is still widely used to deliver analog video signals to the monitor. The VGA standard was officially replaced by IBM's XGA standard, but in practice it was superseded by Super VGA.

Today's VGA cards use a graphics accelerator chipset with integrated three-dimensional (3D) acceleration. In addition to the VGA card, there is a separate computer peripheral called a "3D accelerator" [1] [2], whose function is to process 3D image data more thoroughly. 3D accelerators, which no longer require an IRQ, are capable of more complex 3D graphics manipulation; for example, computer games that support 3D display can be rendered with much more realistic images. This is because many of the 3D graphics functions that used to be performed by the processor on the motherboard can now be performed by the graphics processor on the 3D accelerator. With this division of labor, the processor on the motherboard is free to handle other data processing tasks. In addition, programmers do not need to write their own 3D graphics functions, because those functions are already provided by the 3D accelerator.

Note that the 3D chipset on a VGA card does not perform as well as a separate 3D accelerator installed alongside the VGA card. However, the 3D chipset on the VGA card does support some of the acceleration features of a 3D accelerator. Importantly, a 3D accelerator works best when the game software uses the accelerator's special functions. Games that support these features are now beginning to appear, most notably with support for 3D accelerators based on the 3Dfx Voodoo, Rendition Verite, and 3Dlabs Permedia chipsets.

A graphics card ("Oak Technology").

A VGA card, often called a graphics card or video card, translates digital signals from the computer into a graphical display on the monitor screen. It is used for drawing and designing graphics as well as for playing games, and is also known as a display card. The place where the graphics card is installed is called an expansion slot. There are many kinds of chipsets (processors) on VGA cards, because each VGA card maker has its own flagship chipset. Manufacturers of VGA card chipsets include NVidia, 3DFX, S3, ATi, Matrox, SiS, Cirrus Logic, Number Nine (#9), Trident, Tseng, 3D Labs, STB, OTi, and so on.

The ISA VGA card is a type of VGA card inserted into an ISA (Industry Standard Architecture) expansion slot, a bus with 8-bit or 16-bit I/O. VGA cards of this type are no longer used because their data transfer speed is very slow, and both image smoothness and the range of colors are very limited. The ISA expansion bus with an 8-bit I/O system was first introduced in 1981, while the 16-bit version was first introduced in 1984.

Image of the ISA (Industry Standard Architecture) expansion slot model, a bus with a 16-bit I/O system otherwise known as the "AT bus".

Image of EISA expansion slot model (Extended Industry Standard Architecture) bus with 32-bit I / O system

Image of PCI expansion slot (Peripheral Component Interconnect) expansion bus model with 32-bit or 64-bit I / O system

Features of VGA card physical form: AGP 3.3 volts, 1.5 volt AGP, AGP Universal, AGP Pro 3.3 volts, AGP Pro 1.5 volt, and AGP Pro Universal

Image model of AGP expansion slot (Accelerated Graphics Port) bus with 128-bit or 256-bit I / O system.

Physical details of expansion slots PCI bus and PCIe bus (PCIe 1x and PCIe 16x).

Physical details of PCIe bus expansion slots (PCIe 1x, PCIe 4x, PCIe 8x, and PCIe 16x).

Image of the PCIe (Peripheral Component Interconnect Express) expansion slot model, a bus whose I/O system is serial, with transfer speeds of up to 32 GByte/s.

X . I Homemade VGA Adapter An inexpensive solution, pushing the envelope on MCU clock Cycle optimization

Introduction

Motivation

The goal of our project is to create a VGA video adapter. This “homemade video card” should be able to connect to any monitor that follows the VGA standard, using a standard connector, and display the desired material reliably. The challenges involved here stem from adapting a general-use microprocessor that we are familiar with to a specific task that it may (or may not) be suited to. The project required researching and understanding how the VGA standard displays a picture on a computer screen, identifying the shortcomings and advantages of the MEGA644 processor in accomplishing this, developing and fabricating the hardware needed to interface with the screen, and converting images to a format that can be stored in memory and displayed by the microprocessor.

We divided our goals for this project into a progression of three different tasks, each building on the previous one.

- First, we wanted to display color bars on the screen, by means of direct output from the microcontroller to an analog circuit that transformed pin outs to VGA output.

- Next, we wanted to display color bars or a static image to the screen, by means of triggering RGB outputs from static random-access memory (SRAM).

- Finally, we wanted to render an animation or video to the screen, by means of triggering outputs from SRAM but also writing to SRAM live data simultaneously.

Our ultimate goal, originally, was to stream a live CCD camera to VGA output using our device. However, upon delivery of the CCD camera and studying its output, we observed very quickly that its transmitted signals were not suitable to be converted to VGA in the scope of the remaining time in our 5-week project. This will be described at greater length after a brief background about the standards and parameters relating to VGA.

While this is a “solved problem” by industry standards, it poses a number of interesting challenges to the inquiring student.

- The clock speed of the processor versus the needed clock speed of the VGA standard (overclocking).

- The onboard memory the MEGA644 has versus the needed memory (external memory).

- The exact timing needed for the VGA output standard (cycle accuracy).

- Fabrication of appropriate hardware to address shortcomings of the processor for the above tasks or in simply building hardware filters/interfaces for the VGA standard.

Research

Video Graphics Array (VGA) is a video standard devised by IBM for their PS/2 computers in 1987. Its widespread adoption has since made it the baseline for all displays, and it remains the baseline for operation today. The standard specifies a set of screen resolutions, operating modes, and minimum hardware requirements.

There are five signals in the VGA connection that we are most interested in: two for timing conditioning and three for colorization.

For conditioning, the vertical sync pulse is a digital active low signal whose negative edge triggers the monitor moving focus to the topmost line, leftmost pixel of the screen to display RGB; the horizontal sync pulse is a digital active low signal whose negative edge triggers the monitor focus to the leftmost pixel of the next line down from where current focus is. When not in the presence of sync pulses, the monitor moves focus to the pixel to the right of existing focus, one pixel per clock cycle on a 25.175 MHz clock.

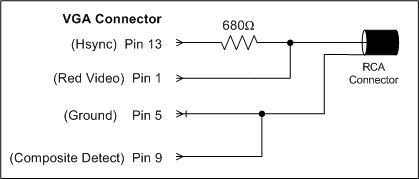

The other three signals with which we are concerned are for Red, Blue, and Green, which are each analog signals sent to the monitor. Thus, since we store values (like the colors in an image) as digital elements in the MCU, part of the hardware for such a device would require a Digital-to-Analog conversion.

Pinout diagram of a VGA adapter

VGA Waveform Guide, from Altera

Summary of stages and stage overlap in VGA standard

Timing of stages in VGA standard

Reconsideration of Project Scope

Now, with insight into how VGA signals and timing work, we return to the original proposal of live CCD camera display to VGA. Upon learning more about a sample WiseCom miniature camera and its limited accompanying documentation, we learned that its output is a single signal consisting of sync pulses for each line and rapidly changing RGB values in between the sync pulses at a notably higher voltage. The signal appeared to be compliant with NTSC standards, with a period of 50 uS between sync pulses (versus 31.78 uS for our VGA output).

Because of this timing difference, even with a buffer and additional digital circuitry, our VGA screen could change at most once every two samples, making the change from NTSC to VGA functionally insignificant in terms of quality. Moreover, having to isolate the sync pulse and decode RGB values from a single signal would add to the complexity of this goal. Though it is possible to convert NTSC to VGA through precise edge-based interrupts, timing, sampling, and buffering, that goal seemed unlikely and risky given the amount of time remaining, and would likely have entailed an entire project in and of itself.

To replace the live camera feed as our ultimate goal for VGA display, we needed to choose another application, one that could exhibit real-time memory loading and animation during porch time. An added goal was to include user interactivity, which would, in turn, require more ports than we have available, thus needing a design solution to address the limited number of ports.

We decided to implement a ‘Paint’-like application to accompany the already existing Image Mode functionality, where the user loads a static image to memory. In Paint Mode, the device would take advantage of the image the user loaded previously, storing this as the background. Using a joystick, the user would then move a colored cursor around the screen, painting their choice of 16 4-bit colors superimposed on the background. In addition to providing user interactivity, handling more functions than there are available ports, and updating animation in real time, Paint Mode would require the use of multiple memory blocks, with the image stored more than once, because we need to preserve the color of the background image that is obscured by the cursor so it can be restored once the cursor moves away. This added design capability would not be necessary if there did not exist a “clear” paint color that the user can select to move around the screen without painting on top of the background, thus affording an additional functionality.

Aside from working to develop a VGA driver on an MCU that is not intended to have the memory or speed to drive such a device, the most significant takeaways from this project are the understanding of VGA as a precision data vehicle and the achievement of creating a standalone and self-sufficient application to fulfill these goals. As mentioned in the initial project description, our goals, including VGA output and, now, creating Paint, are not broaching on uncharted technological territory; however, implementing these functionalities in something as low-level as assembly has the potential to offer greater optimization capabilities (and as such, reward and satisfaction at the end of the day).

High-Level Design

System Organization

In order to meet the requirements of our final design, including display of both painted and loaded data images, we needed to implement three different programs, each executed for a different purpose.

High-Level Block Diagram

The first program processes the user's image into a data file. The contents of this file can then be loaded into program memory of a second application, which writes the data to SRAM, saving the pixel colors to memory for later use. After loading completes, this application displays the loaded image to the VGA monitor in 8-bit color. To display an image at a high enough resolution, the pixel data for the image will exceed the storage capacity of the MCU. Thus, a series of files is created for each image that is processed, which can be loaded into SRAM over the course of numerous program executions. This procedure represents Image Mode.

A separate application affords the user the functionality of the Paint Mode application described above.

On the hardware side, the necessary connections need to be made between the MCU and memory. Furthermore, MCU and SRAM outputs are digital, but VGA color input is analog, requiring a Digital-to-Analog Converter circuit. Finally, a Tri-state stands between memory and the VGA connector to prevent memory’s RGBS output pins from driving the monitor while our sync pulses are low, so that they do not interfere with pixel alignment and ruin the integrity of the signal and image.

Other important considerations and high-level design tradeoffs regarding port assignments, timing, and implementation are described in more detail below.

Port Assignments

Both Image Mode and Paint Mode will be displaying data from SRAM to the screen, meaning that they will each require 18 address bits as outputs (to point to a location in SRAM). Overall, SRAM has 19 address bits, but we need not use all of them. The distribution of these bits is described in more detail under the Memory Loading heading of the Software section.

Both modes also share a need for an enable bit for the Tri-state, a Vertical sync pulse to signal the monitor for a new screen, a Horizontal sync pulse to signal the monitor for a new line, an SRAM write bit, and an SRAM output or read bit, all of which are active low.

With 23 bits occupied in each of our modes, we are left with nine to use.

In the Image Mode, it is critical that we load 8 bits of color into SRAM, allowing for 256 different colors to be sent to VGA. This leaves only one vacant pin, which will go unused.

In the Paint Mode, the user needs to be able to interact with the application via joystick and button, thus requiring 5 inputs and leaving 4 remaining. In order for the cursor in paint to move about the screen (and thus be written and re-written to SRAM), we need output ports from the MCU. However, in order to restore the portion of the image obscured by the cursor once it has moved, we also need to have input ports to read from SRAM.

This point represents an integral design decision in our development process, where more bit functions exist than there are ports. To resolve this, we first considered the different applications of the ports in demand. Realizing that we do not have space for 8 inputs and 8 outputs for SRAM I/O RGBS data, we decided that, solely for the paint application, colorization will be reduced to 4 bits instead of 8 bits. While the Image Mode will preserve 256 colors, the Paint Mode’s pigment selections will be limited to just 16.

After this decision, we have need for 4 SRAM inputs, 4 SRAM outputs, and 5 joystick inputs (for four directions and a button), but only nine pins exist to fit these. Seeing that there are nine inputs, including two groups of four (for joystick directions and SRAM I/O), we decided to multiplex these together. Using the horizontal sync (which goes low for ~70 clock cycles per line displayed to the screen) as a multiplexing control bit, we can read the joystick inputs while the horizontal sync is low, and otherwise read the outputs of SRAM on those pins. This allows the joystick button and the outputs to write to SRAM to receive their own (un-shared or un-multiplexed) pins, unperturbed.
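The multiplexing described above can be sketched in software as a simple model. This is only an illustration: the function names are hypothetical, the 70-cycle sync width and 800-cycle line are taken from the surrounding text, and the assumption that the sync pulse occupies the first cycles of the line is made purely for convenience.

```python
HSYNC_LOW_CYCLES = 70   # approximate sync width quoted in the text
CYCLES_PER_LINE = 800   # cycles spent on each line


def shared_pins(hsync_is_low, joystick_nibble, sram_nibble):
    """Model the 4 shared input pins: joystick while hsync is low, SRAM otherwise."""
    source = joystick_nibble if hsync_is_low else sram_nibble
    return source & 0x0F


def sample_line(joystick_nibble, sram_nibbles):
    """Walk one scan line and split the samples by which source drove the pins.

    Assumption (hypothetical): hsync is low for the first HSYNC_LOW_CYCLES
    cycles of the line.
    """
    joystick_reads, sram_reads = [], []
    for cycle in range(CYCLES_PER_LINE):
        hsync_is_low = cycle < HSYNC_LOW_CYCLES
        value = shared_pins(hsync_is_low, joystick_nibble, sram_nibbles[cycle])
        (joystick_reads if hsync_is_low else sram_reads).append(value)
    return joystick_reads, sram_reads


joy, mem = sample_line(0b0101, [c % 16 for c in range(CYCLES_PER_LINE)])
print(len(joy), len(mem))
```

In this model the joystick is visible on the shared pins for only 70 of every 800 cycles, which is still far more often than a human input needs to be sampled.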

Output Port Assignments

Input Pin Assignments

Thus, the input pins were placed on PORTD alongside the Horizontal sync, the Vertical sync, and the Tri-state output enable, each of which is assigned as an individual bit, independently of the others. The four RGBS data outputs to SRAM I/O were placed on PORTB, together with the remaining two address bits and SRAM’s write-enable and output-enable bits, so that they could be assigned as a cluster. The reason is cycle cost: in the library of available assembly instructions, reassigning a port’s entire 8-bit value costs 1 cycle, whereas altering an individual bit in isolation costs 2 cycles. Placing four data bits that are always assigned together on the same port as the individually driven bits would therefore have been a poor decision.

Timing Considerations

Next, we need to assess the timing of our operation. Although we generally use a 16 MHz crystal in lab, 25.175 MHz is the VGA standard, and gives us more clock cycles to work with, giving us more flexibility in operation during time that we are outputting to the display. As such, we decided to overclock the MCU by ~25% using a 25.175 MHz crystal, and, provided that, we can identify the following:

Now, we can observe how this will impact our capacity for output, knowing that we will follow the general VGA standard and spend 800 cycles processing each line at the proper frequency, and use this to identify an optimal number of lines to process to match a standard 60 Hz refresh rate as closely as possible.

For convenience, we decided to have 512 lines per screen, and can proceed to identify the following.
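As a rough check on these choices, the timing figures follow directly from the clock frequency, the 800 cycles per line, and the 512 lines per screen. The sketch below only restates that arithmetic; the function and key names are illustrative.

```python
PIXEL_CLOCK_HZ = 25_175_000   # crystal frequency chosen for the project
CYCLES_PER_LINE = 800         # cycles spent on each line
LINES_PER_SCREEN = 512        # lines per screen chosen for convenience


def vga_timing(clock_hz, cycles_per_line, lines_per_screen):
    """Derive cycle, line, and frame timing from the three design parameters."""
    cycle_s = 1.0 / clock_hz
    line_s = cycles_per_line * cycle_s
    frame_s = lines_per_screen * line_s
    return {
        "cycle_ns": cycle_s * 1e9,
        "line_us": line_s * 1e6,
        "frame_ms": frame_s * 1e3,
        "refresh_hz": 1.0 / frame_s,
        "cycles_per_frame": cycles_per_line * lines_per_screen,
    }


timing = vga_timing(PIXEL_CLOCK_HZ, CYCLES_PER_LINE, LINES_PER_SCREEN)
for name, value in timing.items():
    print(f"{name}: {value:.2f}")
```

Under these parameters each cycle lasts about 39.7 ns, each line about 31.78 uS, and the screen refreshes at roughly 61.5 Hz, slightly above the nominal 60 Hz.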

Timing Tradeoffs

The most critical feature of VGA is precise and consistent timing. We observed from various examples that a single clock cycle difference between horizontal screen lines of output results in a jagged, zigzag appearance, since one clock cycle difference equates to a one-pixel shift.

Since each clock cycle represents a pixel, the only way to obtain full resolution across a line is to change the output for every pixel, and thus every clock cycle. Although our original proposal acknowledged an interest in implementing a VGA-output program in C, it became very evident through initial testing that programming in C or any higher-level language would sacrifice our granular control over clock cycles and possibly compromise the consistency and precision of our output, particularly because we cannot control the compiler’s output or how many clock cycles are used for a given line of code. With this in mind, we proceeded to implement the body of our code in assembly language.

Even with the body in assembly, we were left to consider different design implementations for how to output the VGA signal to the screen. Two ideas were having the assembly body output a single line to the screen versus having the assembly body output an entire screen. Having the routine called once per line means that the code needs to respond and be prepared within ~31.78 uS, but more importantly, this preparation needs to take place during the fraction of that time not being used for display (when the function can be released: less than one-fourth of that, or less than 7.9 uS). Having the routine called once per screen means that the code needs to respond and be prepared within ~16.7 mS, or rather within the fraction of that time during which lines at the bottom of the screen are not being printed but withheld (which, for 32 of 512 lines, would be ~1.03 mS).
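The two preparation windows can also be expressed as cycle budgets, which is the unit that matters when writing assembly. This is a small sketch under the figures quoted above (an 800-cycle line, at most one-fourth of it free, and 32 withheld lines); the names are illustrative.

```python
PIXEL_CLOCK_HZ = 25_175_000
CYCLES_PER_LINE = 800
BLANK_LINES = 32   # lines withheld at the bottom of the screen


def window(cycles):
    """Return a (cycles, seconds) pair for a preparation window."""
    return cycles, cycles / PIXEL_CLOCK_HZ


# Upper bound for the per-line approach: one-fourth of a line.
per_line = window(CYCLES_PER_LINE // 4)
# Per-screen approach: all cycles of the withheld lines.
per_screen = window(BLANK_LINES * CYCLES_PER_LINE)

print(f"per line:   {per_line[0]} cycles, {per_line[1] * 1e6:.2f} us")
print(f"per screen: {per_screen[0]} cycles, {per_screen[1] * 1e3:.3f} ms")
```

The per-line approach leaves at most about 200 cycles of preparation time, while the per-screen approach leaves about 25,600 cycles (roughly 1.02 ms at this clock, close to the ~1.03 mS figure above).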

These considerations were as follows:

- [1] Executing the assembly body called from an interrupt in C

Tradeoffs: easy to implement regular interrupt; need to have a timer count to ~400,000 if implemented once per screen; losing ~70 clock cycles from entering the interrupt; losing ~10 clock cycles from entering the assembly function

- [2] Executing the assembly body called from a naked interrupt in C

Tradeoffs: more challenging to implement and preserve registers; losing ~5 clock cycles from entering the interrupt; losing ~10 clock cycles from entering the assembly function

- [3] Executing the assembly body called from an interrupt in assembly

Tradeoffs: not too challenging to implement, simply a new technique; minimal overhead; (like the above) would need to account for latency, since the processor completes the current instruction before entering an interrupt even if it costs numerous clock cycles, so that each line of display starts at the same precise pixel; (also like the above) we would need to use caution when assigning tasks to the main routine, since we are not always guaranteed to finish them, which is arguably dangerous if the program relies on these tasks completing to re-write part of the pixels in memory before the next screen

- [4] Executing the assembly body in an assembly loop

Tradeoffs: the most intuitive to implement; most granular control over allocation of cycles; least associated overhead; requires the rest of the application to be programmed in assembly; requires the most time to construct, debug, and track memory, register assignments, cycle accuracy, and other low-level parameters that are generally taken care of in higher-level languages

In the interests of pushing the capacity of the MCU as far as possible, we began testing concept [1] just to see if it would work. We could observe that the output was consistent and the interrupt was being called in equal intervals in trials with a prescaler of 1024. However, as the prescaler was lowered to approach the realistic and required value of one, we noticed significant performance degradation and lag time on interrupt calls, eliminating this from our list of viable solutions.

We proceeded to investigate naked interrupts for concept [2] and began conducting trials with those, but noticed performance degradation here as well. It took longer to reach lag than in the previous case, but it still occurred before reaching a prescaler of one.

Between remaining concepts [3] and [4], we decided in the interests of granular control to pursue concept [4] since we would consistently be fully cognizant of the number of clock cycles for performance and could use that insight to keep our options open for project extensions and a larger pool of potential applications to display on the VGA later in the process once image display was successfully achieved.

Hardware

Hardware Selection

Hardware selection was driven by the fact that the microprocessor we were most familiar with, the Atmel MEGA 644, is not particularly suited to the task of driving video. While the task is not impossible, a professional hardware designer would make different architecture choices to leverage the innate capabilities of different processors and their I/O configurations. We saw this as a primary challenge of the project and set out to improve upon other examples in terms of screen resolution and execution features.

The initial hardware platform was inspired by a reference project in which someone attempted to build a VGA driver using the 644. We adapted the basic platform to our needs and then added to it as necessary to avoid the hacks others had used to overcome the system's limitations.

The basic task is to write pixels to a screen. Considering the VGA standard of 640 x 480 active pixels, this yields over 300,000 pixels sent to the screen 60 times per second. Each pixel can be represented as a byte to yield a 256 color palette, and this in turn generates about 2.46 megabits of information per screen, or roughly 147 megabits per second at 60 Hz! Needless to say, this is far beyond the memory capability of the MEGA 644, even if we were to co-opt all of the EEPROM, which is only good for 2KB. Therefore some serious outboard memory will be required.
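The arithmetic behind this storage and bandwidth estimate can be checked with a few lines. The sketch assumes one byte per pixel and a 60 Hz refresh, as stated above; the variable names are illustrative.

```python
WIDTH, HEIGHT = 640, 480      # active pixels in standard VGA
BITS_PER_PIXEL = 8            # one byte per pixel, 256 colors
REFRESH_HZ = 60
EEPROM_BYTES = 2 * 1024       # on-chip EEPROM mentioned in the text

pixels = WIDTH * HEIGHT
frame_bits = pixels * BITS_PER_PIXEL
frame_bytes = frame_bits // 8
bits_per_second = frame_bits * REFRESH_HZ

print(f"pixels per screen: {pixels}")
print(f"bits per screen:   {frame_bits} (~{frame_bits / 1e6:.2f} Mbit)")
print(f"bits per second:   {bits_per_second} (~{bits_per_second / 1e6:.0f} Mbit/s)")
print(f"screens of EEPROM needed: {frame_bytes / EEPROM_BYTES:.0f}x")
```

A single screen needs 307,200 bytes, about 150 times the capacity of the 2KB EEPROM, which is why external memory is unavoidable.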

Additionally, the flow of this data stream needs to be tightly controlled, and data bus collisions must be avoided for this to work. Therefore, a traffic cop is needed to enforce blanking intervals, to ensure proper synchronization, and to allow for writing information to the outboard memory as well as reading the stored memory to send it to the screen. For this we will use an Octal Bus Transceiver (hereafter referred to as a ‘tristate’) because it has the attractive features of being enabled through 1-bit control and effectively preventing reverse biasing, an important consideration which will be revisited later in the project.

The last component of the core hardware is a DAC to interpret the color palette we have sent to the screen and generate the analog R-G-B information that the VGA monitor is waiting for. While dedicated DACs are available, a simple passive-component DAC was chosen for several reasons, the most important of which is that it works well.

With these needs in mind, we confirmed our use of the MEGA644, selected a 512K x 8 Static RAM chip and a 74LS245 octal bus transceiver, and used lab surplus resistors and diodes to build the analog DAC. The choices of hardware reflect the concept that we will be dealing with the data a byte at a time and do not need bit-accurate recall; one command reads, writes, or passes through a byte (colored pixel) in one motion. Given that the response time of the SRAM is on the order of 10 nS, it will be quick enough to work; older hardware such as the EEPROM chips kicking around our shop had insufficient capacity, and their access times were too long by at least an order of magnitude.

The real difficulty in the SRAM concept was the package. Chips of the capacity and speed to fit our needs are not available in a PDIP format. I saw a number of ‘creative solutions’ to this problem on hobby websites and came up with the idea of soldering ribbon cables to the j-pins of the SOJ chip we had and terminating them to a 40-pin IC socket. (It seems that a 36-pin adapter either does not exist or is sufficiently rare as to avoid detection.)

SOJ SRAM Conversion

The last selection was the one that drove the entire project: the timing crystal. The AT MEGA 644 is rated to run at 20 MHz; however, the VGA standard is 25.175 MHz. This would mean ‘overclocking’ by about 26%. Overclocking results in higher power draw, higher operating temperatures, and the possibility of processor breakdown through timer errors or the inability of output ports to cycle fast enough. Obviously, we took the overclock route and selected a 25.175 MHz oscillator crystal.

Power was supplied by a standard 7805-style voltage regulator, a 340T5. This supplies a regulated 5VDC supply at 1.0 A (more than enough!) and we added 2 bolt-on heat sinks to accomplish sufficient dissipation. The circuits were assembled on spare solderboards found in the MAE and ECE labs that had friendly configurations and were populated by hand.

Hardware Layout

Signal Flow

Signal flow is generally not as one would expect, that is, with the MCU accessing the byte stored in memory and sending it to the screen. Not only could the processor not run fast enough to accomplish this, but there is no real need to do so. Rather, the MCU sends a stream of addresses to the SRAM, which then dumps the needed byte directly to the tristate to be exported to the screen.

Image Mode Hardware Flow, write information to SRAM and display a static image

If a user interface is desired, then the flow is more complex with the selection of enabling the signal from the user (such as a joystick) when there is no signal being sent to the screen.

Paint Mode Hardware Flow, interact with user input

Logical Design

The purpose of the logic gates is to enable or disable data flow based on the state of the MCU and therefore the SRAM. We could have done this through a separate pinout from the MCU; unfortunately, the ports are fully populated, so we required a passive method of doing this.

The logic gates are also buffered by TriState #2, as previously mentioned. If this were not done and simple AND gates were used, then when the user signal was disallowed the output would be driven to a logical low and data collisions would occur. Moreover, by using a truth-table style approach to scheduling the user interface, we were able to exploit those valuable times when the processor was not sending data to the screen to interact with the user or to process the image itself.

Logical Enabling of User Interface

Final Architecture

Our final hardware architecture evolved into something quite different from what it started as, due to the limitations of the microprocessor and the desire for enhanced functionality. In order for us to be able to implement a paint-style application, it became necessary to create additional functionality independent of the MEGA 644. Additionally, we used a set of manual jumpers and multipin headers to switch between operational modes.

For example, Jumper J1 selects which half of the SRAM’s memory addresses is accessed by manually setting the Most Significant Bit to 1 or 0. We were simply out of pins on the 644 to access all the addresses any other way. Likewise, Jumper J2 selects or deselects the logic set depending on which operating mode you are using, much like the jumpers on any number of computer components such as hard drives.

Mode Selection Jumpers

Lastly, it should be observed that we now understand completely why today’s video cards are so resource-intensive or resort to using system memory to render viable graphics. In order to render VGA-standard resolution with a reduced color palette, we overdrove our little microcontroller to a ‘screaming’ 25.175 MHz and loaded up 4 Mbit (512 KB) of external memory; today’s video cards often exceed 3 GB of memory and utilize processor speeds exceeding 3 GHz in order to drive a modern high resolution monitor with the millions of colors we demand… and they have no part in the implementation of the application that they are displaying!

Software

Image Processing

One of the component functionalities of our product allows users to display their own image on the VGA monitor. In order to resolve the provided image of variable input resolution to 256 x 480 (shown on a 512 x 480 canvas), a Java application retrieves the input image and divides it into 122,880 (or 256*480) equally sized rectangles, each taking up 1/256 of horizontal space and 1/480 of vertical space.

For each rectangle in the prospective newly-resolved image, the program reviews all pixels in the input image that lie within that rectangular space and collects their RGB values. Then, it computes Red, Green, and Blue values for the rectangle by summing the Red, Green, and Blue values of the pixels in the input image that lie within that rectangular space, weighting each value by the fraction of the rectangle's area that the given pixel occupies.

This weighted average (for each Red, Green, and Blue) is between 0 and 255, and is calculated using the formula below where: x represents a pixel in the newly-resolved image, i represents a pixel in user’s provided image, and X represents the subset of all pixels i that are contained in rectangle x when the new and old images are scaled to be of equal dimensions and superimposed.
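The formula itself did not survive in this copy. Going only by the description above, it can be reconstructed roughly as follows; the symbols c_i (the 0 to 255 value of one color channel at input pixel i) and A(·) (area after the two images are scaled and superimposed) are our own notation and are not taken from the original figure.

```latex
WA_c(x) \;=\; \sum_{i \in X} c_i \,\frac{A(i \cap x)}{A(x)},
\qquad c \in \{R, G, B\}
```

Because the fractional areas of the pixels covering rectangle x sum to one, the result stays between 0 and 255, as stated above.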

For our 8-bit colors, we assign two bits for Red, two bits for Blue, two bits for Green, and two bits for Shading (which makes the displayed color brighter or more white-based, and which we return to later). Thus, we needed to map the domain of 0 to 255 to the range of 0 to 3 for each color. Over the entire domain 0 to 255, the goal is a function in which each of the output values (0 to 3) is evenly represented. We do this keeping in mind that for the paint mode of the application, values will be re-colorized from 8-bit to 4-bit; since the most significant bit of each color element (RGBS) will be used independently for that conversion, we want the color to be asserted or not asserted over equal halves of the original image's 8-bit-per-channel input domain. For this, we can right-shift the RGB values by six to reduce the initial 8-bit value to a 2-bit one, which maps 64 consecutive input values to each output value and so keeps the distribution even across the range.
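As a minimal sketch of the mapping just described, the C fragment below right-shifts each channel by six and packs the four 2-bit fields into one byte, then derives the 4-bit paint color from the most significant bit of each field. The bit order within the byte (R in bits 7-6, G in 5-4, B in 3-2, S in 1-0) and all function names are assumptions for illustration; the original converter is written in Java and its layout is not given here.

```c
#include <stdint.h>

/* Reduce one 8-bit channel (0..255) to 2 bits (0..3) by dropping the six
 * low bits; each output value covers 64 consecutive inputs. */
static uint8_t quantize2(uint8_t value)
{
    return (uint8_t)(value >> 6);
}

/* Pack R, G, B (8-bit each) and a 2-bit shading value into one byte.
 * ASSUMED layout: R[7:6] G[5:4] B[3:2] S[1:0]. */
static uint8_t pack_rgbs(uint8_t r8, uint8_t g8, uint8_t b8, uint8_t shade2)
{
    return (uint8_t)((quantize2(r8) << 6) |
                     (quantize2(g8) << 4) |
                     (quantize2(b8) << 2) |
                     (shade2 & 0x3u));
}

/* Derive the 4-bit paint-mode color by keeping only the most significant
 * bit of each 2-bit field (same assumed layout). */
static uint8_t rgbs8_to_rgbs4(uint8_t rgbs)
{
    return (uint8_t)((((rgbs >> 7) & 1u) << 3) |
                     (((rgbs >> 5) & 1u) << 2) |
                     (((rgbs >> 3) & 1u) << 1) |
                     ((rgbs >> 1) & 1u));
}
```

For instance, under this layout a pixel with R = 255, G = 0, B = 128 and shading 1 packs to 0xC9, and its paint-mode color is binary 1010.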

Finally, we calculate the shading, with higher shading values indicative of more brightness. For this, we surveyed 8-bit-per-channel colors to qualitatively observe trends that dictate noticeable brightness changes. Namely, we saw that increasing the Red, Green, or Blue in isolation (with the others set to zero) increases the presence of that color, as expected, but produces no noticeable change in brightness, even when the changing color has been raised to 255. As such, the mapping function that we use to determine the two shading bits from RGB should not include R, G, or B values by themselves in a sum. Rather, increasing a pairwise combination of Red, Green, or Blue results in noticeable brightness changes.

Thus, we can calculate a shading score as follows, where WA stands for the weighted average from above, and x represents the position of a pixel in our newly-resolved image:

We continue using the weighted averages since they offer more control over granularity for our calculations. Next, we analyzed greyscale colors (where Red = Green = Blue exactly) to look for the qualitative point at which brightness levels change dramatically. We selected 190, 210, and 230 as those points, and solved the above equation for Shading with those as input parameters. Thus, if a given pixel in the newly-resolved image maps to a Shading(x) below that of the first threshold (190), shading is zero. For values above 190's shading but below 210's, shading is set to one; above 210's but below 230's, shading is two; and above 230's, shading is three.
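The thresholding step can be sketched as below. The shading formula itself is not reproduced in this copy, so `shading_score` is a purely hypothetical stand-in (a sum of pairwise products, chosen only because it ignores any single channel in isolation); only the threshold logic, which compares a pixel's score against the scores of the greys 190, 210, and 230, follows the text. How a score exactly equal to a threshold is treated is also an assumption.

```c
#include <stdint.h>

/* HYPOTHETICAL score, not the authors' formula: sum of pairwise products,
 * so a single channel on its own contributes nothing. */
static double shading_score(double r, double g, double b)
{
    return r * g + g * b + r * b;
}

/* Map weighted-average R, G, B (0..255) to a 2-bit shading value by
 * comparing the score against the scores of the greys 190, 210 and 230. */
static uint8_t shading_bits(double r, double g, double b)
{
    const double grey[3] = { 190.0, 210.0, 230.0 };
    const double score = shading_score(r, g, b);
    uint8_t level = 0;

    for (int k = 0; k < 3; k++) {
        /* Each threshold is the score of a grey with R = G = B. */
        if (score > shading_score(grey[k], grey[k], grey[k])) {
            level = (uint8_t)(k + 1);
        }
    }
    return level;
}
```

With this stand-in score, pure red (255, 0, 0) gets shading 0, while the greys 200, 220, and 255 get shading 1, 2, and 3 respectively.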

Memory Loading

First, we needed to determine how to assign the 19 address bits of SRAM so as to store pixel information. Eight bits were assigned to represent the x-component of the pixel position on the screen (which does, in fact, range from 0 to 255). Nine bits were assigned to represent the y-component of the pixel position (since these range from 0 to 479, and 512 is the lowest power of two that contains them). To simplify things further, we actually write 512 lines to the screen, although the bottom-most 32 lines are empty and only used for processing and memory-interaction time. There are two unused bits, indicating that our 512 KB SRAM can store four images in all. For the purposes of our paint application, we can toggle between two images using bit 17, controlled by the program. Bit 18 is left as a manual switch that the user can toggle to choose the half of memory that they want displayed (or which two-image storage cluster, high or low).
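The address layout described above can be sketched in C as follows. The text gives the field widths and the roles of bits 17 and 18, but not which coordinate occupies the low bits; placing x in bits 0-7 and y in bits 8-16 is an assumption, as is the function name.

```c
#include <stdint.h>

#define X_BITS 8u   /* x ranges over 0..255 */
#define Y_BITS 9u   /* y ranges over 0..511 (479 visible) */

/* Compose a 19-bit SRAM address.
 * ASSUMED layout: x in bits 0-7, y in bits 8-16.
 * From the text: bit 17 selects one of two images under program control,
 * bit 18 is the manually switched half of memory. */
static uint32_t sram_address(uint8_t x, uint16_t y,
                             uint8_t image_sel, uint8_t manual_half)
{
    return ((uint32_t)(manual_half & 1u) << 18) |
           ((uint32_t)(image_sel & 1u) << 17) |
           ((uint32_t)(y & 0x1FFu) << X_BITS) |
           (uint32_t)x;
}
```

Under this layout the last visible pixel (x = 255, y = 479) of the first image lands at address 122,879, and setting bit 17 moves the same pixel 131,072 addresses higher.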

Assignment of SRAM Address Bits

Furthermore, arrays in C (as compiled for this microcontroller) cannot occupy more than 32,767 bytes, so since each of the elements of our array is a byte, the largest number of entries that can be stored in the array is 32,767 (or one less than 2^15). For simplicity and convenience, we devised a scheme that writes to SRAM four separate times; each time, we write the values stored in an array of maximum length to 32,767 addresses in SRAM (losing one pixel at the end, but avoiding visual incongruities by hiding the last column of each line when displayed on the screen so that a crisp edge exists at the right side of the image). The two uppermost of the 17 address bits used for one image are held constant for each program execution. As such, the Java program outputs four files, each containing an array of length 32,767 where the value is the 8-bit RGBS value and the index represents the 15 least-significant address bits. Accompanying the array is another variable, representing the two most-significant address bits for the partner array.
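A small sketch of the four-load scheme: each load holds two upper address bits constant and walks the array index through the low 15 bits. It assumes the two held bits sit directly above the index, at zero-indexed address bits 15 and 16; the names `CHUNK_LEN` and `chunk_address` are illustrative only.

```c
#include <stdint.h>

#define CHUNK_LEN  32767u  /* largest array the loader can hold */
#define NUM_CHUNKS 4u      /* one program execution per chunk */

/* Address written for element `index` of the chunk whose two upper
 * address bits are `upper2` (0..3).
 * ASSUMPTION: the held bits are address bits 15 and 16 (zero-indexed). */
static uint32_t chunk_address(uint8_t upper2, uint16_t index)
{
    return ((uint32_t)(upper2 & 0x3u) << 15) | (uint32_t)(index & 0x7FFFu);
}

/* Total number of pixels written across all loads. */
static uint32_t pixels_written(void)
{
    return NUM_CHUNKS * CHUNK_LEN;
}
```

Since each array stops at index 32,766, the address with all 15 low bits set is never written in any chunk, which corresponds to the one lost pixel per load mentioned above; four loads cover 131,068 of the 131,072 addresses.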

Alternative solutions could have been carried out in only two or three program-memory writes to SRAM; however, because a single program execution would then have to adjust any combination of the 17 address bits that we are using, instead of keeping the two uppermost constant and gradually incrementing the others, these solutions would have sacrificed code readability, intuitiveness, ease of use, and convenience. This was a design tradeoff with which we were satisfied. Moreover, we opted to continue using a single array for each memory load for much the same reasons. Even with multiple arrays, we could not increase the memory load of a single program execution by a full power of two over the existing implementation, so there is little to gain from deviating from the existing design.

Image Mode

The first mode in which our VGA adapter can be used is to load and display static images to the screen. Since the precision of the loading component of this mode need not be cycle-sensitive like sending images to the screen, we can implement this in C. Then, as discussed earlier, actually sending the SRAM data to VGA is implemented in cycle-accurate assembly.

The procedure for the C routine is relatively simple.

We take the code snippet from our image processor and include it in the variable declarations, storing the array in program memory. When the program begins to execute, we stall briefly (on the order of milliseconds) to allow SRAM to initialize, so as to not blow the internal transistors in the chip and render it non-functional. Then, we iterate over the elements of the array, output each value to the I/O ports of SRAM, and output the address of the value to the SRAM address ports.

Once this is complete, we can briefly set the SRAM Write-Enable to low to write the value to memory while keeping the other active low enable pins at a logical high (including the SRAM Output Enable, Tri-state enable, and Vertical sync pulse). The Horizontal sync pulse is kept low for reasons of multiplexing the versatile pins on the microcontroller that serve multiple purposes at different times or in different modes; however, this has no impact on the output since the Tri-state enable is kept high, preventing signals from being transmitted to the monitor.

As mentioned before, the fifteen least-significant bits of the pixel’s SRAM address are determined by the index of the array value while the two next-least-significant bits are defined for all values in a particular load file code snippet. Meanwhile, the most significant bit is set manually. Generally speaking: enable, write-enable, and read-enable pins are kept on for a few clock cycles to allow for the estimated logic propagation to take place in the breadboarded digital circuits such that the signals reach their destination before enable bits (as potential multiplexing bits) are disabled once again.

Upon completion, we proceed to the display stage.

Based on our earlier calculations, it is essential to be cycle accurate, regardless of which branches are executed throughout the program: the RGBS output must start and end at exactly the same time and pixel, every time. Knowing this, each required operation was recorded, and the total number of clock cycles throughout the program was managed to ensure cycle accuracy.

Summary of AVR Assembly Instruction Set, from Atmel

Program Flow of Image Mode

Paint Mode

The second mode in which our VGA adapter can be used includes a ‘Paint’-like application. The user can start with a blank screen or an image already having been loaded into SRAM and then draw on top of the image in 4-bit colors, where one bit is used to represent each of Red, Green, Blue, and Shading.

From the user perspective, their interactions with the application abide by the following usability requirements:

- See the image already loaded in SRAM displayed to the screen in 16-color (4-bit) form. (A)

- See a cursor (a square, serving as your paint brush) displayed on the screen, having a white border outside and your selected paint color inside. (B)

- Press a button to toggle the color of your brush between 16 different 4-bit color options. (C)

- Draw in the selected color on top of the image as your cursor moves about the screen. (D)

- Have the ability to select a “clear” or null color so as to not draw on the image as your cursor moves about the screen. (Since we have the shading bit, binary 1111 and 0111 will appear the same, so we can have one of these serve as white pigment and the other serve as clear.) (E)

Fulfillment of this application included the following operational requirements, derived from the above user-display interactions:

- Draw the image stored in SRAM to VGA. (a)

- Allow the user cursor to move about the screen, storing last movement. (b1)

- Superimpose the user cursor on top of the image. (b2)

- Read joystick directional input to determine cursor movement. (b3)

- Return pixel contents to their original form after cursor has moved away. (b4)

- Read and debounce the button to determine pigment changes. (c)

- Superimpose the selected pigment color on top of the center of the cursor. (d1)

- Store permanent changes to the screen from painting non-clear colors. (d2)

- Disable permanent pigment changes when selected color is clear. (e)

Given the timing constraints, one of the most taxing elements of the project was developing an entire program flow in assembly. Trying to be clock cycle conservative, we worked to maximize available register space by intelligently determining the most necessary and important values to be stored there.

Register Assignments in Paint Mode

As such, between each screen print, we can recover the pixels from ‘SRAM[17]=1’ elements where the cursor is covering it in ‘SRAM[17]=0’, write any pigment changes from a moving, non-colorless cursor to the background image in ‘SRAM[17]=1’, and then re-draw the cursor on top of the foreground image.

Tasks by Line Number in Paint Mode

The following state machine for the button debounce executes only while the horizontal sync pulse is drawn low and only while line 486 of the screen is being displayed (after RGB has finished being sent, while the program is polling user input for cursor movement before printing the cursor once again to the foreground).

Debounce State Machine for Color Toggle Button
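The state diagram is not reproduced in this copy. As a rough sketch of how such a debounce is commonly structured, the C fragment below uses a conventional four-state machine sampled once per frame and advances the 4-bit brush color on a confirmed press. The state names, the once-per-frame sampling, and the `brush_t` type are assumptions for illustration; the authors' version is written in assembly and may differ.

```c
#include <stdint.h>

/* Conventional four-state debounce (assumed, not taken from the figure). */
typedef enum {
    RELEASED,
    MAYBE_PUSHED,
    PUSHED,
    MAYBE_RELEASED
} debounce_state_t;

typedef struct {
    debounce_state_t state;
    uint8_t color;            /* current 4-bit brush color, 0..15 */
} brush_t;

/* Call once per frame with the raw button sample (nonzero = pressed).
 * A press counts only if seen on two consecutive samples. */
static void debounce_step(brush_t *b, int pressed)
{
    switch (b->state) {
    case RELEASED:
        if (pressed) b->state = MAYBE_PUSHED;
        break;
    case MAYBE_PUSHED:
        if (pressed) {
            b->state = PUSHED;
            b->color = (uint8_t)((b->color + 1u) & 0x0Fu); /* next of 16 */
        } else {
            b->state = RELEASED;   /* glitch: ignore */
        }
        break;
    case PUSHED:
        if (!pressed) b->state = MAYBE_RELEASED;
        break;
    case MAYBE_RELEASED:
        b->state = pressed ? PUSHED : RELEASED;
        break;
    }
}
```

Sampling once per frame spaces the two confirming reads roughly 16 ms apart, which is in the range usually considered adequate for mechanical buttons.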

Program Flow of Paint Mode

Results

Original JPEG

256-Color VGA Bitmap

VGA Standard

We started testing without SRAM or many other circuit elements, simply sending static colors to the screen directly from the MCU without any intermediate stops. This allowed us to better understand the timing constraints of VGA. Oddly enough, in spite of ample documentation for VGA timing standards, our testing revealed that many of the listed timing requirements are not strictly enforced by the underlying technology. For example, new lines were triggered by the negative edge of the horizontal sync pulse, but the duration of the pulse was irrelevant. Further, the cycles within the porches could be moved about wherever necessary, and if need be, RGB could begin transmitting immediately before or after the horizontal sync is low. The only constraint that was observed to be detrimental to the display operating correctly was asserting RGB when sync pulses are low.

As such, we adjusted most of the timings in our routines based on logical order and flow, maintaining RGB sent to the monitor for as long a time and as long a line as necessary, and observed no quality loss from deviating from the published times. Even the number of clock cycles between sync pulses is unrestricted (though changing it results in a different refresh rate); however, varying the number of cycles between syncs amongst lines in close proximity leads to a jaggedness in the display, and depending on the delay, potentially even a static discontinuity across the full length of the horizontal.

Before continuing, we used the oscilloscope to confirm that the system was behaving as expected. Namely, RGB outputs should consistently be held to zero when the Tri-state is disabled, and the Tri-state should be consistently disabled when either of the syncs are low.

CH1 Horizontal Sync, CH2 Tri-state Enable

The horizontal sync goes low once every 31.78 µs, that is, once every 800 clock cycles. The Tri-state is only enabled to transmit RGB when the sync is not low.

CH1 Horizontal Sync, CH2 RGB

RGB is never asserted while the sync is low.

CH1 Tri-State Enable, CH2 Vertical Sync

The vertical sync goes low once every 16.27 ms, that is, once every 800*512 clock cycles. The Tri-state is disabled during lines 480-512 and 1-2 for vertical sync time.
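As a quick check of the two periods quoted in these captions, both follow directly from the 25.175 MHz clock, 800 cycles per line, and 512 lines per frame stated in this report; the implied refresh rate is our own derived figure.

```latex
T_{\text{line}} = \frac{800}{25.175\ \text{MHz}} \approx 31.78\ \mu\text{s},
\qquad
T_{\text{frame}} = \frac{800 \times 512}{25.175\ \text{MHz}} \approx 16.27\ \text{ms},
\qquad
f_{\text{refresh}} = \frac{1}{T_{\text{frame}}} \approx 61.5\ \text{Hz}
```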

CH1 Tri-State Enable, CH2 RGB

RGB is only asserted when the Tri-state enable is low (active).

Integrating SRAM

We then proceeded to connect SRAM and send values stored in memory to the Tri-state, DAC, and monitor. We started with color bars (greyscale, monochrome, and multi-colored) to confirm that the analog component was wired correctly and the order of the bits was as expected. After this, we advanced to basic pictures, whose conversion to a 256-color scheme is less lossy than that of photographs.

As expected, photographs do not convert as well to our 256-color scheme as drawings or images created with a quantized palette of basic colors do. However, some photos convert better than others. The most lossy range seems to be that of mid-level, semi-bright colors, including some skin tones, indicating that we could return to our algorithm for calculating shading and consider raising the thresholds required for shading bits to be asserted.

One observation of note is the consistency of SRAM. After power cycling, the contents of SRAM are cleared, and the display appears like static, indicating a variety of numbers near to or around 255. When we first began using SRAM, it was extremely reliable and consistently output what we had written to it. However, we noticed after significant amounts of testing that some bits, often random, would get overwritten by static upon further compiles. Since we load an image to memory over the course of four compilations, we noticed that the most recent load was always preserved; however, as later loads took place (even if consecutive loads and compiles were of identical code) a few new pixels would get drowned in static. We attribute this to the rapid frequency with which we are interacting with the chip, and perhaps unreliability between the SRAM to Tri-state connection.

Port Interference

Without a tri-state or other barrier between the pins that write to SRAM during the memory load stage, this connection remains intact during the time that the MCU calls the SRAM to output to the Tri-state and display to the screen. When we forced those outputs low, we observed a generally black screen with consistent splotches of color that were few and far between. Forcing those outputs high produced a similar result, but white-based. Turning the MCU outputs into high-impedance inputs during the display stage resulted in jagged lines indicative of inconsistent timing across the screen, and generally one or more drastic discontinuities, as can be seen on the left below. Adding a pull-up resistor pacified the drastic discontinuity but left many of the smaller ones intact.

The only way for the image to appear with crisp borders and lines is to remove the connection between those ports and SRAM entirely during the display stage, as seen to the right. This seemed to indicate that even when a port was not asserting any voltage, simply being a connected input causes the monitor to receive the RGB signals late, yielding a jagged edge and sometimes even static. Although we speculate that the cause of this may be processor delay, since the processor directly outputs the sync functions and thus must be lagged by the changing input voltages, or even line delay, we have not been able to confirm the source.

Ports Connected as High Impedance Inputs

MCU's Ports to SRAM I/O Disconnected

Possible Extensions

One of our previous goals that is worth future consideration is achieving full resolution with a MEGA644. We settled for half resolution, since it takes one cycle to increment the address counter and a second cycle to output it, meaning that we can trigger a new pixel at most once every two cycles in this configuration. One possible solution is to clock the crystal at twice the VGA standard, or 50.35 MHz, thus executing two instructions in the time that the monitor has shifted focus by a single pixel. Alternatively, one could use double-buffered memory with dual processors running concurrently, alternating frames, and synchronized via SPI.

Additionally, a brief analysis of the final version of the Paint Mode procedure shows that there are at least 100 free cycles per line that can be used for computation. Furthermore, the operations taking place between line 480 and line 511 can easily be consolidated, seeing as how the cost of many of them is only on the order of tens of cycles rather than hundreds. The current implementation was nonetheless devised this way for readability and debuggability; since the scope of this project did not require any additional computation, that convenience cost us nothing. Leveraging these otherwise unused cycles for additional lines or columns, barring memory issues, is certainly possible under this configuration.

Another alternative that was not brought to our attention until the final few days of the project is the possibility of using a MEGA128 instead of a MEGA644, so as to allot for more ports, easing the need for digital logic and simplifying the design.

Conclusions

Meeting Expectations

Our final design was able to successfully read from and write to SRAM and trigger SRAM addresses to output stored data through the Tri-state and DAC to produce a crisp image of the user's choice on the display. In terms of usability, our design was satisfactory. The issue with having so many different stages and programs in the design, as a result of circumventing memory restrictions, is that the operator must be familiar with the procedure for the system, and as such, it is accompanied by detailed instructions from the very first step when the user submits an image for processing. The device has not revealed any safety flaws, and has no accuracy measure except for the similarity or dissimilarity of submitted images to their on-screen 256-color counterparts. We believe that the device performs well in this capacity, and can further improve as more complexity is added to the 2-bit shading calculation. For interference, we have recognized and sidestepped an issue with port conflicts resulting in static and delays affecting the screen impression. Finally, speed of execution is a regime in which our design reigns superior, having been coded in assembly language for cycle-accurate, optimum performance. Although the speed of the design cannot be improved upon without changing the screen refresh rate, we could, in the future, seek to add or consolidate more computation during the time of processing.

From a personal standpoint, I (Ryan) recognized midway through the design process that our project, originally intended as a simple pursuit to better understand and optimize image displays with an MCU, inadvertently ended up being the perfect capstone to my undergraduate career in ECE, embracing a healthy balance of the broad majority of core classes in the department—ranging from manipulating voltage signals, to design of analog circuits for RGBS output, digital logic for toggling between assignments for our limited number of ports using multiplexers, extensive assembly programming, memory distribution, and knowledge of embedded computing.

Societal Impact

There are many applications where the human interface to a microprocessor is integral to the functionality of a task, yet it is often the case that those same interfaces are woefully inadequate past the point of debugging or demonstration of concept. Input devices such as button or keypad presses or even keyboard-and-mouse entries are generally sufficient both from a usability standpoint and from adequacy of the type of information gathered without being excessively onerous to accommodate programming-wise. It is the output, however, that is often lacking in clarity.

An LCD screen is quite inexpensive and easy to set up, but you are restricted to a small set of characters (mostly alphanumeric) displayed in monochrome dot-matrix style. It does not even execute quickly. While these shortcomings don’t really matter with a simple information stream, such as a reaction timer high score, anything of more complexity is all but lost. The situation improves with a serial communication tool such as PuTTY or the once-ubiquitous HyperTerminal in that strings, numbers and the like may be sent to a screen with improved flexibility and speed. A graph can be created using ingenuity in placing symbols on the page like dot-matrix printers of the 1970s did, but that is where the capability ends; a picture is out of the question.

Suppose you wanted to display a photo taken by a robot’s on-board camera. Or perhaps you wish to display the results or condition of the process in question in a truly intelligible fashion. The successful execution of this project and further extension for data transmission and storage can allow for all of that and more. With this system, it would be possible to output full VGA images to nearly any screen available with obvious application to demonstrations in labs or views of working equipment and processes with the interface being made by an $8 chip.

Standards

The standards relevant to this project are fairly straightforward in that the goal is based around being able to get the microprocessor to output a signal that adheres to the VGA format. Quite obviously the most important standard is that of the Video Graphics Array by IBM, which specifies a set of screen resolutions, operating modes, and minimum hardware requirements. Additionally, VGA spells out voltage, impedance, refresh rates and color palettes; NTSC-M dictates color coding. Other standards that have an influence on the parameters of the project, albeit tangentially, are those referring to VGA configurations [IEEE 1275-1994], SRAM [JEDEC 100B.01, JESD21-C], and microprocessors themselves [ICS 35.160].

Safety Considerations

As with any application using consumer electronics, especially when one is intentionally hacking into a system, there exists the risk of electrical shock. Fortunately, all of our work was constrained to the low-voltage side of the equipment. All of the voltages that we were dealing with were 5 VDC or below, thus effectively removing that danger.

The use of soldering equipment carries with it the obvious burn hazards as well as the not-so-obvious hazard of lead exposure from the solder and smoke, as the better solders do indeed contain lead. This was mitigated by frequent hand-washing, especially before eating, by soldering in well-ventilated areas, and by a conscious effort to inhale as little smoke as possible.

Lastly would be the risk of fire that can arise from electronics, especially those being driven above their ratings. While our setup did indeed get quite hot at times it was never left unattended or in the immediate proximity of combustible materials.

Intellectual Property Considerations

The VGA standard was introduced in 1987; it is still the default minimum standard to which all computers must adhere. As it is a standard, and an old one at that, it is to be adhered to rather than patented.

The hardware we used was all off-the-shelf grade material and no proprietary software was used. Circuit designs were all standard practice methods and (as stated in the Hardware Development section) the initial core hardware layout was inspired by tutorials on Lucid Science. References on software techniques and the like were all openly published and their use was encouraged. Nonetheless, all computer source code was original with the obvious exception of C libraries used by the Atmel Compiler.

We are not seeking a patent or any other exclusivity for any part of this project; this was done as an investigation of a ‘solved problem’ in order to further our understanding of the use of microcontrollers.

Acknowledgements

We would like to thank Bruce Land and the Spring 2012 ECE 4760 TAs for their ideas, insight, and dedication. In many cases, their advice from experience and suggestions from domain knowledge saved us hours of potential mistakes, component seeking, debugging, and deliberating, and as a result, their efforts contributed greatly to our project's success. Moreover, their commitment to the course and countless hours in lab and lecture not only worked to improve the quality of our final product, but furthermore, improved the overall quality of the design experience from taking this course. Much gratitude goes out to all those involved for using this course as a forum to instill passion and excitement for engineering design.

Appendices

Parts List

Schematics

Code

- Image Converter (rgbs_converter.java)

- Sample Images and Output (rgbs_examples.zip)

- Image Mode Data Loader (image_mode.c)

- Image Mode Pixel Display (image_mode.s)

- Paint Mode Program Loader (paint_mode.c)

- Paint Mode Pixel Display (paint_mode.s)

X . III What exactly is VGA, and what is the difference between it and a video card?

Before VGA was introduced we had a few other graphics standards, such as Hercules, which displayed either text (25 lines of 80 characters) or relatively high-definition monochrome graphics (at 720x348 pixels).

Other standards at the time were CGA (Colour Graphics Adapter), which allowed up to 16 colours (in text mode) and a resolution of up to 640x200 pixels. The result of that would look like this:

Finally, a noteworthy PC standard was the Enhanced Graphics Adapter (EGA), which allowed resolutions up to 640×350 with 16 colours chosen from a palette of 64.

(I am ignoring non-PC standards to keep this relatively short. If I start to add Atari or Amiga standards -up to 4096 colours at the time!- then this will get quite long.)

Then in 1987 IBM introduced the PS/2 computer. It had several noteworthy differences compared with its predecessors, which included new ports for mice and keyboards (previously mice used 25-pin or 9-pin serial ports, if you had a mouse at all), standard 3½ inch drives, and a new graphics adapter with both a high resolution and many colours.

This graphics standard was called Video Graphics Array. It used a 3-row, 15-pin connector to transfer analog signals to a monitor. This connector lasted until a few years ago, when it was replaced by superior digital standards such as DVI and DisplayPort.

After VGA

Progress did not stop with the VGA standards. Shortly after the introduction of VGA new standards arose such as the 800x600 Super VGA (SVGA), which used the same connector. (Hercules, CGA, EGA etc. all had their own connectors. You could not connect a CGA monitor to a VGA card, not even if you tried to display a low enough resolution.)

Since then we have moved on to much higher resolution displays, but the most often used name remains VGA, even though the correct names would be SVGA, XGA, UXGA, etc.

Another thing which gets called 'VGA' is the DE15 connector used with the original VGA card. This usually blue connector is not the only way to transfer analog 'VGA signals' to a monitor, but it is the most common.

(Left: DB15HD. Right: alternative VGA connectors, usually used for better quality.)

A third way 'VGA' is used is to describe a graphics card, even though that card might produce entirely different resolutions than VGA. The use is technically wrong, or should at least be 'VGA compatible card', but common speech does not make that difference.

That leaves writing to VGA

This comes from the way the memory on an IBM XT was divided. The CPU could access up to 1 MiB (1024 KiB) of memory. The bottom 640 KiB was reserved for RAM, the upper 384 KiB for add-in cards, ROM etc.

This upper area is where the VGA card's memory was mapped to. You could directly write to it and the result would show up on the display.

This was not just used for VGA, but also for same generation alternatives.

G = Graphics Mode Video RAM
M = Monochrome Text Mode Video RAM
C = Color Text Mode Video RAM
V = Video ROM BIOS (would be "a" in PS/2)
a = Adapter board ROM and special-purpose RAM (free UMA space)
r = Additional PS/2 Motherboard ROM BIOS (free UMA in non-PS/2 systems)
R = Motherboard ROM BIOS
b = IBM Cassette BASIC ROM (would be "R" in IBM compatibles)
h = High Memory Area (HMA), if HIMEM.SYS is loaded.

Conventional (Base) Memory: First 640 KiB (or ten chunks of 64 KiB).

Upper Memory Area (UMA), one character per KiB:

0A0000: GGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGGG
0B0000: MMMMMMMMMMMMMMMMMMMMMMMMMMMMMMMMCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCC
0C0000: VVVVVVVVVVVVVVVVVVVVVVVVVVVVVVVVaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
0D0000: aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa
0E0000: rrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrrr
0F0000: RRRRRRRRRRRRRRRRRRRRRRRRbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbRRRRRRRR

Writing to a "fixed address" was essentially writing to a video card directly. All those ISA video cards (CGA, EGA, VGA) essentially had some RAM (and registers) mapped directly into the CPU's memory and I/O space.

So when you wrote a byte to a certain memory location, that character (in text mode) appeared on screen immediately, since you in fact wrote into a memory located on a video card, and video card just used that memory.
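To make the idea concrete, here is a small sketch of what "writing a character to video memory" amounts to in colour text mode, where each screen cell is a 16-bit word holding the character code in the low byte and the colour attribute in the high byte. On real hardware under DOS the buffer would start at physical address B8000h (the "C" region of the map above); the function takes the buffer as a parameter so that it can also be tried against an ordinary array. The name `put_char` is made up for this example.

```c
#include <stdint.h>

#define TEXT_COLS 80
#define TEXT_ROWS 25

/* Store one character cell in an 80x25 colour text buffer.
 * Low byte = character code, high byte = attribute (colours).
 * On a real PC in text mode, text_buf would point at B8000h; here it is
 * a parameter so the code can run against a plain array. */
static void put_char(volatile uint16_t *text_buf, int row, int col,
                     char ch, uint8_t attr)
{
    text_buf[row * TEXT_COLS + col] =
        (uint16_t)(((uint16_t)attr << 8) | (uint8_t)ch);
}
```

Writing 'A' with attribute 07h (light grey on black) to row 0, column 0 stores the word 0741h in the first cell; on the original hardware the letter would appear in the top-left corner as soon as the card next scanned that cell.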

This all looks very confusing today, especially considering that today's video cards sometimes are called VGA (and they bear little resemblance to "true" VGA cards from the 1990s). However, even modern cards emulate some of the functionality of these older designs (you can boot DOS on most modern PCs and use DOS programs that write to video memory directly). Of course, nowadays it's all emulated in the video card's firmware.

Even if your video card is integrated, it is still connected to the rest of the system via some kind of a bus: PCIe, PCI, AGP, ISA, etc. These buses can connect external components to the motherboard, and can connect internal components inside the chipset (SATA, video, etc.).

We have explained that old video cards worked by having video memory mapped into the processor's address space. This was the card's own memory. The northbridge knows to redirect requests for this mapped memory to the VGA device.

Then on top of that there were many expansions and new modes for VGA-compatible cards. This led to the creation of the VESA BIOS Extensions (VBE), which operate through int 10h. This supports basic 2D acceleration (BitBlt), hardware cursors, double/triple buffering, etc. This is the basic method for full-color display at any supported resolution (including high resolutions). This normally used memory internal to the card too, with the northbridge performing redirection as with classic VGA. This is the simplest way to use full-color, full-resolution graphics.

Next there is some direct method of accessing the GPU without using the BIOS, which provides access to the same features as VBE, and possibly additional ones.
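As a rough illustration of what "writing to card memory" means in a full-color mode, the sketch below computes where a pixel lives in a linear framebuffer. It is a simulation on a Python `bytearray`, not a VBE call. The 640 × 480, 32-bit mode, the blue-green-red byte order and the function names are assumptions chosen for the example; a real program would read the bytes-per-scanline value and pixel format from the mode information the BIOS reports.

```python
def pixel_offset(x: int, y: int, pitch: int, bytes_per_pixel: int = 4) -> int:
    """Byte offset of pixel (x, y); pitch is the number of bytes per scanline."""
    return y * pitch + x * bytes_per_pixel


def put_pixel(fb: bytearray, x: int, y: int, rgb: tuple,
              pitch: int, bytes_per_pixel: int = 4) -> None:
    """Store one pixel, assuming blue, green, red byte order in memory."""
    r, g, b = rgb
    off = pixel_offset(x, y, pitch, bytes_per_pixel)
    fb[off:off + 3] = bytes((b, g, r))


# Assumed example mode: 640x480 at 32 bits per pixel, no padding per line.
WIDTH, HEIGHT, BPP = 640, 480, 4
PITCH = WIDTH * BPP
framebuffer = bytearray(PITCH * HEIGHT)   # stands in for the card's memory

put_pixel(framebuffer, 1, 1, (255, 128, 0), PITCH)
print(pixel_offset(1, 1, PITCH))          # offset of the pixel just written
```

Double buffering follows the same arithmetic: a second region of the same size is drawn into while the first is displayed, and the display start is then switched.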

Then there is the GPU interface that can support 3D acceleration, GP-GPU computation, etc. This definitely requires manufacturer-provided drivers or specifications for full use, and frequently there are substantial differences even between devices from the same manufacturer.

X . IIII Reference Design for Switching VGA Signals in a Laptop

This application shows how the MAX4885E low-capacitance VGA switch can be used to perform the switching function in a laptop computer. The MAX4885E draws nearly zero current, fits into a 4mm x 4mm package, and incorporates most of the switches and active components used in a discrete implementation. All device outputs are protected to ±15kV Human Body Model (HBM) so that the designer can eliminate many ESD components, thereby reducing cost and saving board space. An application circuit shows the MAX4885E used for VGA signal switching between a laptop and docking station.

Introduction

Analog VGA signals have been part of the PC world since IBM introduced VGA in 1987. Today most business-oriented laptops need to work with a docking station and with the vast number of existing projectors. Nearly all projectors have a VGA port, which is the only common way for a typical user to hook up a laptop. Although digital connections such as DVI™ and HDMI™ are appearing, the vast majority of projectors still only support VGA.

The requirement to support VGA through the docking station and the VGA port will likely continue for many years, until one digital standard fully replaces the ubiquitous blue VGA connector on the laptop. Maxim introduced the MAX4885E low-capacitance VGA switch to perform that switching function.

The MAX4885E draws nearly zero current, fits into a 4mm x 4mm package, and incorporates most of the switches and active components used in a discrete implementation. All device outputs are protected to ±15kV HBM (Human Body Model), so that the designer can eliminate many ESD components, reduce cost, and save board space.

MAX4885E Is Optimized for VGA Switching

RGB Switching

RGB switching requires high-bandwidth switches. The MAX4885E contains three SPDT switches that exhibit

DDC Switching

DDC switching is also done on the MAX4885E, which uses a pair of SPDT n-Channel FETs to do the switching for the SDA and SCL. By actually switching the outputs, the system can only hook up to the monitor in use. Switching the outputs further reduces the capacitance that the DDC circuit will see, since only one device is connected at a time. In addition, all outputs are again protected to ±15kV (HBM), so no additional ESD diodes are needed. The gate of the FET is switched to a voltage level, VL. This voltage is normally the same as the GPU I/O (2.5V to 3.3V). The DDC signals are actually I²C signals, with pullup resistors on both sides of the switch. Since the signals going to the monitor can be as high as 5.5V, the GPU needs to be protected and level shifted. By biasing the gate of the switch FET to the same voltage of the GPU, the FET protects the GPU from signals that exceed the VL.¹ By using two SPDT n-Channel FETs, the GPU only has one capacitive load and is protected from high voltages and ESD events.

Horizontal and Vertical Level Translation and Buffering

Horizontal and vertical synchronization signals are required to interface the GPU to full TTL-level signals. Pullups on the monitor can, in fact, pull these signals to +5.5V. The MAX4885E has a pair of level-translating buffers that take a signal between 0.8V and 2V and translate it to a full TTL output; the device can supply ±8mA, which meets the VESA specification. The output is referenced to 5V, so there is no issue with voltage compatibility. Again the horizontal and vertical outputs have ±15kV (HBM) ESD protection, so no added diodes are needed.

Integrated LC Filter for Harmonic Stability

The MAX4885E integrates all the key switches, FETs, and buffers typically used for VGA switching into a tiny 4mm x 4mm TQFN package. However, many systems require some form of bandwidth limiting filter to prevent harmonics from radiating. The MAX4885E did not include any filtering for that function. Passive component values would be too large, and an active filter would require considerable current. While the MAX4885E could have integrated a triple-amplifier/filter, that would have made the device draw as much as 100mA, too much to be tolerated in a laptop. Instead, the device's LC filter draws no current and accomplishes the same task. The MAX4885E draws < 5µA at idle and a few mA when driving the monitor for the horizontal and vertical buffers.

High Integration Reduces Component Count

Table 1 shows how the MAX4885E replaces as many as 14 standard devices. Remember that the MAX4885E fits in a 16mm² package.

Table 1. Components Eliminated with the MAX4885E

| Quantity | Component | Function | Package | Size (mm²) |
|---|---|---|---|---|
| 1 | 74FST3257 | R, G, B | 16-TSSOP | 35 |
| 2 | 74LVC1G125 | H, V | SC70 | 8 |
| 4 | 2N7002 | DDC | SOT23 | 24 |
| 7 | NUP2301 | ESD | SC88 | 28 |
| 14 | Total savings | | | 95 |

The assortment of standard and inexpensive devices shows that the MAX4885E replaces 14 standard parts that require 95mm². There may be ways to reduce the parts count, perhaps to 10 parts and 50mm², by using more specific and integrated devices, but the resulting component costs would undoubtedly be higher.
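The totals in Table 1 can be checked with a few lines of Python. The part names, quantities and areas are taken from the table; the net saving compared with the 16mm² package is simple arithmetic added here and is not a figure quoted by the application note.

```python
# (component, quantity, total board area in mm^2) as listed in Table 1
discrete_parts = [
    ("74FST3257", 1, 35),   # R, G, B switch
    ("74LVC1G125", 2, 8),   # H, V buffers
    ("2N7002", 4, 24),      # DDC FETs
    ("NUP2301", 7, 28),     # ESD protection
]

MAX4885E_AREA_MM2 = 16      # 4mm x 4mm package

total_parts = sum(qty for _, qty, _ in discrete_parts)
total_area = sum(area for _, _, area in discrete_parts)
net_area_saved = total_area - MAX4885E_AREA_MM2

print(f"discrete parts: {total_parts}, area: {total_area} mm^2")
print(f"net board area saved: {net_area_saved} mm^2 "
      f"({100 * net_area_saved / total_area:.0f}% of the discrete area)")
```

The sums reproduce the 14 parts and 95mm² given in the table.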

The MAX4885E is priced to sell below the sum of the costs of these many components. The MAX4885E thus saves board space and placement cost. It improves reliability and the high-frequency analog performance of RGB switches.

Applications Circuit

The circuit in Figure 1 shows the MAX4885E used in a docking station application for a laptop. All the critical components are present. All ESD concerns are managed, and only one control bit is required to select the dock vs. an internal connector. The circuit only draws a few µA at idle and a few mA to supply the horizontal and vertical buffers.

Figure 1. Application circuit for a VGA connection between a laptop and docking station features the MAX4885E VGA switch. The connector pin assignment for the docking station is determined by the designer. This design is just an illustration of one configuration.


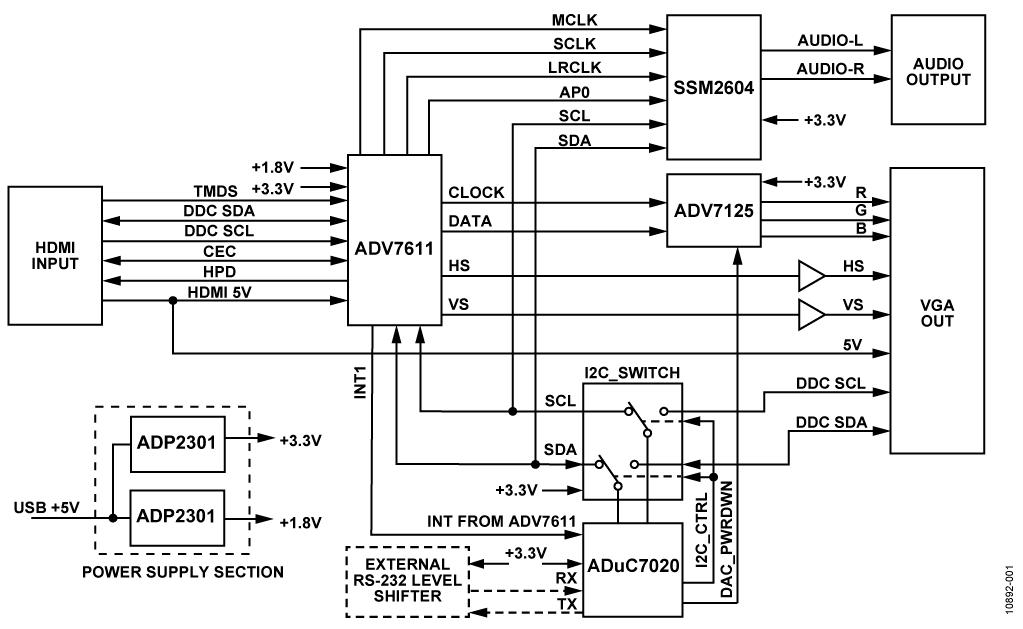

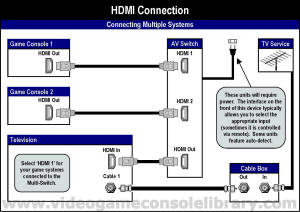

USB Powered DVI/HDMI-to-VGA Converter (HDMI2VGA) with Audio Extraction

Reference Design using part ADV7611 by Analog Devices

Description

- The circuit is a complete solution for converting HDMI/DVI to VGA (HDMI2VGA) with an analog audio output. It uses the low-power ADV7611 high-definition multimedia interface (HDMI) receiver, which is capable of receiving video streams up to 165 MHz. The circuit is powered from a USB cable and works for resolutions up to 1600 × 1200 at 60 Hz.
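The 165 MHz limit and the 1600 × 1200 at 60 Hz ceiling are related through the pixel clock, which a short calculation can show. The sketch assumes the standard VESA timing for this mode (2160 total pixels per line and 1250 total lines, including blanking); those totals come from the VESA monitor timing tables, not from the reference design text, and the function name is made up.

```python
def pixel_clock_hz(h_total: int, v_total: int, refresh_hz: int) -> int:
    """Pixel clock = total pixels per line x total lines per frame x frames per second."""
    return h_total * v_total * refresh_hz


RECEIVER_LIMIT_HZ = 165_000_000   # maximum rate quoted for the ADV7611

# Assumed VESA timing for 1600x1200 at 60 Hz: totals include blanking intervals.
clock = pixel_clock_hz(h_total=2160, v_total=1250, refresh_hz=60)

print(f"1600x1200@60 needs {clock / 1e6:.1f} MHz")
print("fits within the receiver limit:", clock <= RECEIVER_LIMIT_HZ)
```

Under these assumptions the mode needs 162 MHz, just under the receiver's limit, which is consistent with it being the highest resolution listed.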

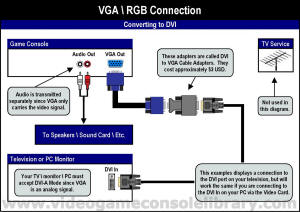


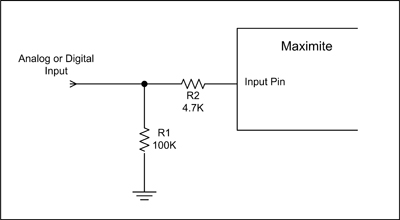

Another danger is SCR latch up which can be caused by a large current (>20mA) being forced through the protective diodes. This could happen if the Maximite is connected to another circuit that is powered up before the Maximite and in this case the best protection is to include a series resistor to limit the current on any susceptible inputs.
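A quick way to size such a series resistor is Ohm's law across the resistor while the protective diode conducts. The sketch below assumes the Maximite is unpowered (its supply rail sits at 0V), the external signal is 5V and the diode drops about 0.6V. These values and the function name are illustrative assumptions, not figures from the Maximite documentation.

```python
def min_series_resistor(v_external: float, i_limit: float,
                        v_rail: float = 0.0, v_diode: float = 0.6) -> float:
    """Smallest resistance (ohms) that keeps the protection-diode current at or below i_limit.

    The voltage across the resistor is the external voltage minus the rail
    voltage and the forward drop of the conducting protective diode.
    """
    v_across = v_external - v_rail - v_diode
    if v_across <= 0:
        return 0.0            # diode never conducts, no resistor needed
    return v_across / i_limit


# Assumed case: 5V signal applied to an unpowered board, 20mA danger threshold.
r_min = min_series_resistor(v_external=5.0, i_limit=0.020)
print(f"minimum series resistance: about {r_min:.0f} ohms")
```

Since 20mA is the level at which latch-up becomes a risk, a practical design would pick a noticeably larger resistor than this minimum, as long as the input signal still switches cleanly.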

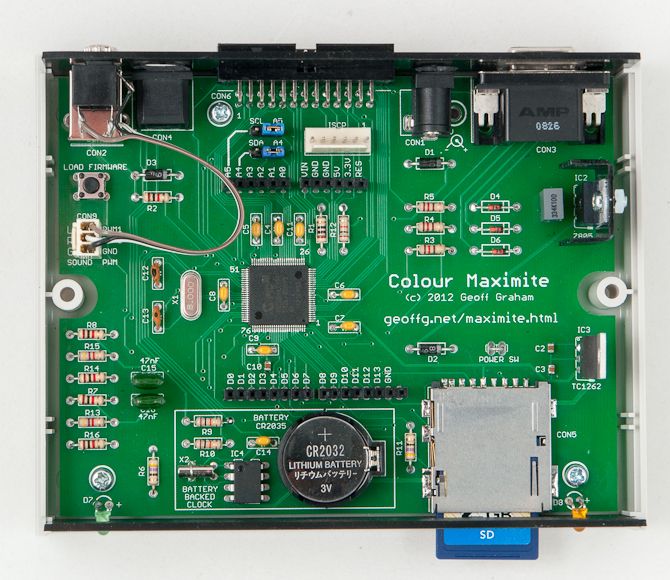

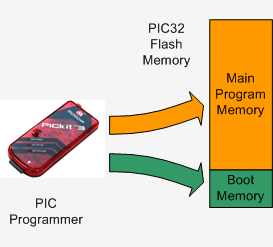

When you buy a PIC32 chip from Microchip, it is supplied completely blank. So, the first thing that you must do is program the chip with the firmware included in the construction pack (available at the bottom of this page). This operation is illustrated on the right.

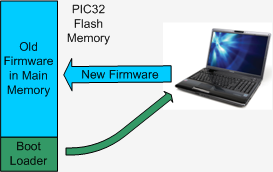

Once the boot loader is in place you can update the firmware via the USB interface and a Windows computer. Full instructions will be included with the update but in essence the boot loader is run at power up and its job is to download the new firmware and reprogram the main program memory (as illustrated on the left).
