Live electronic music

Live electronic music (also known as live electronics) is a form of music that can include traditional electronic sound-generating devices, modified electric musical instruments, hacked sound-generating technologies, and computers. Initially the practice developed in reaction to sound-based composition for fixed media such as musique concrète, electronic music and early computer music. Musical improvisation often plays a large role in the performance of this music. The timbres of various sounds may be transformed extensively using devices such as amplifiers, filters, ring modulators and other forms of circuitry. Real-time generation and manipulation of audio using live coding is now commonplace.

Laptronica is a form of live electronic music or computer music in which laptops are used as musical instruments. The term is a portmanteau of "laptop computer" and "electronica". It gained a certain degree of currency in the 1990s and is significant because it marks the point at which powerful computation became available to musicians in highly portable form, and therefore in live performance. Many sophisticated forms of sound production, manipulation and organization (which had hitherto been available only in studios or academic institutions) became usable in live performance, largely by younger musicians influenced by and interested in developing experimental popular music forms. A combination of many laptops can be used to form a laptop orchestra.

Live coding

Live coding (Collins, McLean, Rohrhuber, and Ward 2003), sometimes referred to as 'on-the-fly programming' (Wang and Cook 2004) or 'just in time programming', is a programming practice centred upon the use of improvised interactive programming. Live coding is often used to create sound and image based digital media, and is particularly prevalent in computer music, combining algorithmic composition with improvisation (Collins 2003). Typically, the process of writing is made visible by projecting the computer screen in the audience space, and ways of visualising the code are an area of active research (McLean, Griffiths, Collins, and Wiggins 2010). There are also approaches to human live coding in improvised dance (Anon. 2009). Live coding techniques are also employed outside of performance, such as in producing sound for film (Rohrhuber 2008, 60–70) or audio/visual work for interactive art installations.

Live coding is also an increasingly popular technique in programming-related lectures and conference presentations, and has been described as a "best practice" for computer science lectures by Mark Guzdial (2011).

Electroacoustic improvisation

Electroacoustic improvisation (EAI) is a form of free improvisation that was originally referred to as live electronics. It has been part of the sound art world since the 1930s with the early works of John Cage (Schrader 1991; Cage 1960). Source magazine published articles by a number of leading electronic and avant-garde composers in the 1960s (Anon. n.d.(a)).

It was further influenced by electronic and electroacoustic music and the music of American experimental composers such as John Cage, Morton Feldman and David Tudor. British free improvisation group AMM, particularly their guitarist Keith Rowe, have also played a contributing role in bringing attention to the practice.

Electronic musical instrument

An electronic musical instrument is a musical instrument that produces sound using electronic circuitry. Such an instrument sounds by outputting an electrical, electronic or digital audio signal that ultimately is plugged into a power amplifier which drives a loudspeaker, creating the sound heard by the performer and listener.

An electronic instrument might include a user interface for controlling its sound, often by adjusting the pitch, frequency, or duration of each note. A common user interface is the musical keyboard, which functions similarly to the keyboard on an acoustic piano, except that with an electronic keyboard, the keyboard itself does not make any sound. An electronic keyboard sends a signal to a synth module, computer or other electronic or digital sound generator, which then creates a sound. However, it is increasingly common to separate user interface and sound-generating functions into a music controller (input device) and a music synthesizer, respectively, with the two devices communicating through a musical performance description language such as MIDI or Open Sound Control.

All electronic musical instruments can be viewed as a subset of audio signal processing applications. Simple electronic musical instruments are sometimes called sound effects; the border between sound effects and actual musical instruments is often unclear.

As of the 2010s, electronic musical instruments are widely used in most styles of music. In popular music styles such as electronic dance music, almost all of the instrument sounds used in recordings come from electronic instruments (e.g., bass synth, synthesizer, drum machine). Development of new electronic musical instruments, controllers, and synthesizers continues to be a highly active and interdisciplinary field of research. Specialized conferences, notably the International Conference on New Interfaces for Musical Expression, have been organized to report cutting-edge work, as well as to provide a showcase for artists who perform or create music with new electronic music instruments, controllers, and synthesizers.

Early examples

In the 18th century, musicians and composers adapted a number of acoustic instruments to exploit the novelty of electricity. Thus, in the broadest sense, the first electrified musical instrument was the Denis d'or keyboard, dating from 1753, followed shortly by the clavecin électrique by the Frenchman Jean-Baptiste de Laborde in 1761. The Denis d'or consisted of a keyboard instrument of over 700 strings, electrified temporarily to enhance sonic qualities. The clavecin électrique was a keyboard instrument with plectra (picks) activated electrically. However, neither instrument used electricity as a sound-source.

The first electric synthesizer was invented in 1876 by Elisha Gray.[1][2] The "Musical Telegraph" was a chance by-product of his telephone technology when Gray accidentally discovered that he could control sound from a self-vibrating electromagnetic circuit and so invented a basic oscillator. The Musical Telegraph used steel reeds oscillated by electromagnets and transmitted over a telephone line. Gray also built a simple loudspeaker device into later models, which consisted of a diaphragm vibrating in a magnetic field.

A significant invention, which later had a profound effect on electronic music, was the audion in 1906. This was the first thermionic valve, or vacuum tube, and it led to the generation and amplification of electrical signals, radio broadcasting, and electronic computation, among other things. Other early synthesizers included the Telharmonium (1897), the Theremin (1919), Jörg Mager's Spharophon (1924) and Partiturophone, Taubmann's similar Electronde (1933), Maurice Martenot's ondes Martenot ("Martenot waves", 1928), and Trautwein's Trautonium (1930). The Mellertion (1933) used a non-standard scale, Bertrand's Dynaphone could produce octaves and perfect fifths, the Emicon was an American keyboard-controlled instrument constructed in 1930, and the German Hellertion combined four instruments to produce chords. Three Russian instruments also appeared: Oubouhof's Croix Sonore (1934), Ivor Darreg's microtonal 'Electronic Keyboard Oboe' (1937) and the ANS synthesizer, constructed by the Russian scientist Evgeny Murzin from 1937 to 1958. Only two models of the ANS were built, and the only surviving example is currently stored at the Lomonosov University in Moscow. It has been used in many Russian movies, such as Solaris, to produce unusual, "cosmic" sounds.[3][4]

Hugh Le Caine, John Hanert, Raymond Scott, composer Percy Grainger (with Burnett Cross), and others built a variety of automated electronic-music controllers during the late 1940s and 1950s. In 1959 Daphne Oram produced a novel method of synthesis, her "Oramics" technique, driven by drawings on a 35 mm film strip; it was used for a number of years at the BBC Radiophonic Workshop.[5] This workshop was also responsible for the theme to the TV series Doctor Who, a piece, largely created by Delia Derbyshire, that more than any other ensured the popularity of electronic music in the UK.

Telharmonium

In 1897 Thaddeus Cahill patented an instrument called the Telharmonium (or Teleharmonium, also known as the Dynamaphone). Using tonewheels to generate musical sounds as electrical signals by additive synthesis, it was capable of producing any combination of notes and overtones, at any dynamic level. This technology was later used to design the Hammond organ. Between 1901 and 1910 Cahill had three progressively larger and more complex versions made, the first weighing seven tons, the last in excess of 200 tons. Portability was managed only by rail and with the use of thirty boxcars. By 1912, public interest had waned, and Cahill's enterprise was bankrupt.[6]
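The additive synthesis mentioned above can be illustrated with a minimal Python sketch: a tone is built by summing sine partials at integer multiples of a fundamental. The function names and the partial amplitudes are illustrative assumptions, not values taken from Cahill's design.

```python
import math

def additive_sample(t, fundamental_hz, partial_amps):
    """Sum sine partials at integer multiples of the fundamental.

    partial_amps[k] is the amplitude of harmonic k + 1 (hypothetical values).
    """
    return sum(
        amp * math.sin(2.0 * math.pi * fundamental_hz * (k + 1) * t)
        for k, amp in enumerate(partial_amps)
    )

def render(fundamental_hz, partial_amps, duration_s=0.01, sample_rate=8000):
    """Render a short buffer of samples as a list of floats."""
    n = round(duration_s * sample_rate)
    return [
        additive_sample(i / sample_rate, fundamental_hz, partial_amps)
        for i in range(n)
    ]

# A 220 Hz tone with three partials of decreasing strength.
buffer = render(220.0, [1.0, 0.5, 0.25])
```

Changing the relative amplitudes in `partial_amps` changes the timbre while the pitch stays the same, which is the sense in which such an instrument can combine notes and overtones freely.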

Theremin

Another development, which aroused the interest of many composers, occurred in 1919–1920. In Leningrad, Leon Theremin (actually Lev Termen) built and demonstrated his Etherophone, which was later renamed the Theremin. This led to the first compositions for electronic instruments, as opposed to noisemakers and re-purposed machines. The Theremin was notable for being the first musical instrument played without touching it. In 1929, Joseph Schillinger composed First Airphonic Suite for Theremin and Orchestra, premièred with the Cleveland Orchestra with Leon Theremin as soloist. The next year Henry Cowell commissioned Theremin to create the first electronic rhythm machine, called the Rhythmicon. Cowell wrote some compositions for it, and he and Schillinger premiered it in 1932.

Ondes Martenot

The 1920s have been called the apex of the Mechanical Age and the dawning of the Electrical Age. In 1922, in Paris, Darius Milhaud began experiments with "vocal transformation by phonograph speed change."[7] These continued until 1927. This decade brought a wealth of early electronic instruments: along with the Theremin came the Trautonium and the Ondes Martenot, which was designed to reproduce the microtonal sounds found in Hindu music. Maurice Martenot invented the Ondes Martenot in 1928, and soon demonstrated it in Paris. Composers who went on to use the instrument include Boulez, Honegger, Jolivet, Koechlin, Messiaen, Milhaud, Tremblay, and Varèse. Radiohead guitarist and multi-instrumentalist Jonny Greenwood also uses it in his compositions and in many Radiohead songs. In 1937, Messiaen wrote Fête des belles eaux for 6 ondes Martenot, and wrote solo parts for it in Trois petites Liturgies de la Présence Divine (1943–44) and the Turangalîla-Symphonie (1946–48/90).

Trautonium

The Trautonium was invented in 1928. It was based on the subharmonic scale, and the resulting sounds were often used to emulate bell or gong sounds, as in the 1950s Bayreuth productions of Parsifal. In 1942, Richard Strauss used it for the bell- and gong-part in the Dresden première of his Japanese Festival Music. This new class of instruments, microtonal by nature, was only adopted slowly by composers at first, but by the early 1930s there was a burst of new works incorporating these and other electronic instruments.

Hammond organ and Novachord

In 1929 Laurens Hammond established his company for the manufacture of electronic instruments. He went on to produce the Hammond organ, which was based on the principles of the Telharmonium, along with other developments including early reverberation units.[8] The Hammond organ is an electromechanical instrument, as it used both mechanical elements and electronic parts. A Hammond organ used spinning metal tonewheels to produce different sounds. A magnetic pickup similar in design to the pickups in an electric guitar is used to transmit the pitches in the tonewheels to an amplifier and speaker enclosure. While the Hammond organ was designed to be a lower-cost alternative to a pipe organ for church music, musicians soon discovered that the Hammond was an excellent instrument for blues and jazz; indeed, an entire genre of music developed built around this instrument, known as the organ trio (typically Hammond organ, drums, and a third instrument, either saxophone or guitar).

The first commercially manufactured synthesizer was the Novachord, built by the Hammond Organ Company from 1938 to 1942, which offered 72-note polyphony using 12 oscillators driving monostable-based divide-down circuits, basic envelope control and resonant low-pass filters. The instrument featured 163 vacuum tubes and weighed 500 pounds. Its use of envelope control is notable, since this is perhaps the most significant distinction between the modern synthesizer and other electronic instruments.
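The divide-down idea can be sketched in a few lines of Python: twelve master oscillators supply the top octave, and each divide-by-two stage yields the same pitch class one octave lower. The sketch assumes six octaves per oscillator (72 / 12) and an equal-tempered top octave starting near 4186 Hz; neither the tuning nor the exact circuit arrangement comes from the text, and the names are hypothetical.

```python
def divide_down(top_octave_hz, octaves=6):
    """Return the frequency of every note, highest octave first.

    Stage 0 is the undivided oscillator output; each later stage
    halves the frequency of the stage before it.
    """
    notes = []
    for stage in range(octaves):
        notes.extend(f / (2 ** stage) for f in top_octave_hz)
    return notes

# Hypothetical top octave: 12 equal-tempered semitones (assumed tuning).
TOP_C_HZ = 4186.01
top_octave = [TOP_C_HZ * 2 ** (n / 12) for n in range(12)]

all_notes = divide_down(top_octave)
```

Because every note is derived from a continuously running oscillator, all 72 pitches are available at once, which is how such a design achieves full polyphony with only twelve tone sources.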

Analogue synthesis 1950–1980

The most commonly used electronic instruments are synthesizers, so-called because they artificially generate sound using a variety of techniques. All early circuit-based synthesis involved the use of analogue circuitry, particularly voltage controlled amplifiers, oscillators and filters. An important technological development was the invention of the Clavivox synthesizer in 1956 by Raymond Scott with subassembly by Robert Moog. French composer and engineer Edgard Varèse created a variety of compositions using electronic horns, whistles, and tape. Most notably, he wrote Poème électronique for the Philips pavilion at the Brussels World Fair in 1958.

Modular synthesizers

RCA produced experimental devices to synthesize voice and music in the 1950s. The Mark II Music Synthesizer was housed at the Columbia-Princeton Electronic Music Center in New York City. Designed by Herbert Belar and Harry Olson at RCA, with contributions from Vladimir Ussachevsky and Peter Mauzey, it was installed at Columbia University in 1957. Consisting of a room-sized array of interconnected sound synthesis components, it was only capable of producing music by programming,[2] using a paper tape sequencer punched with holes to control pitch sources and filters, similar to a mechanical player piano but capable of generating a wide variety of sounds. The vacuum tube system had to be patched to create timbres.

In the 1960s synthesizers were still usually confined to studios due to their size. They were usually modular in design, their stand-alone signal sources and processors connected with patch cords or by other means and controlled by a common controlling device. Harald Bode, Don Buchla, Hugh Le Caine, Raymond Scott and Paul Ketoff were among the first to build such instruments, in the late 1950s and early 1960s. Buchla later produced a commercial modular synthesizer, the Buchla Music Easel.[9] Robert Moog, who had been a student of Peter Mauzey, one of the RCA Mark II engineers, created a synthesizer that could reasonably be used by musicians, designing the circuits while he was at Columbia-Princeton. The Moog synthesizer was first displayed at the Audio Engineering Society convention in 1964.[10] It required experience to set up sounds but was smaller and more intuitive than what had come before, less like a machine and more like a musical instrument. Moog established standards for control interfacing, using a logarithmic 1-volt-per-octave scale for pitch control and a separate triggering signal. This standardization allowed synthesizers from different manufacturers to operate simultaneously. Pitch control was usually performed either with an organ-style keyboard or a music sequencer producing a timed series of control voltages. During the late 1960s hundreds of popular recordings used Moog synthesizers. Other early commercial synthesizer manufacturers included ARP, who also started with modular synthesizers before producing all-in-one instruments, and British firm EMS.
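Under a 1-volt-per-octave convention, each added volt doubles the oscillator frequency, so the control voltage is the base-2 logarithm of the frequency ratio. The Python sketch below shows the conversion in both directions; the function names and the 0 V reference pitch (middle C, about 261.63 Hz) are assumptions chosen for illustration.

```python
import math

def cv_to_hz(volts, base_hz=261.63):
    """Convert a control voltage to frequency; base_hz is the assumed pitch at 0 V."""
    return base_hz * 2.0 ** volts

def hz_to_cv(freq_hz, base_hz=261.63):
    """Convert a frequency to the control voltage that would produce it."""
    return math.log2(freq_hz / base_hz)

# One equal-tempered semitone corresponds to 1/12 of a volt.
semitone_ratio = cv_to_hz(1.0 / 12.0) / cv_to_hz(0.0)
```

A practical consequence is that transposition becomes simple addition: adding a fixed voltage to any control signal shifts every pitch by the same musical interval.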

Integrated synthesizers

In 1970, Moog designed the Minimoog, a non-modular synthesizer with a built-in keyboard. The analogue circuits were interconnected with switches in a simplified arrangement called "normalization." Though less flexible than a modular design, normalization made the instrument more portable and easier to use. The Minimoog sold 12,000 units[11] and further standardized the design of subsequent synthesizers with its integrated keyboard, pitch and modulation wheels and VCO->VCF->VCA signal flow. It has become celebrated for its "fat" sound, and known for its tuning problems. Miniaturized solid-state components allowed synthesizers to become self-contained, portable instruments that soon appeared in live performance and quickly became widely used in popular music and electronic art music.[12]

Polyphony

Many early analog synthesizers were monophonic, producing only one tone at a time. Popular monophonic synthesizers include the Moog Minimoog. A few, such as the Moog Sonic Six, ARP Odyssey and EML 101, could produce two different pitches at a time when two keys were pressed. Polyphony (multiple simultaneous tones, which enables chords) was only obtainable with electronic organ designs at first. Popular electronic keyboards combining organ circuits with synthesizer processing included the ARP Omni and Moog's Polymoog and Opus 3.

By 1976 affordable polyphonic synthesizers began to appear, notably the Yamaha CS-50, CS-60 and CS-80, the Sequential Circuits Prophet-5 and the Oberheim Four-Voice. These remained complex, heavy and relatively costly. The recording of settings in digital memory allowed storage and recall of sounds. The first practical polyphonic synth, and the first to use a microprocessor as a controller, was the Sequential Circuits Prophet-5 introduced in late 1977.[13] For the first time, musicians had a practical polyphonic synthesizer that could save all knob settings in computer memory and recall them at the touch of a button. The Prophet-5's design paradigm became a new standard, slowly pushing out more complex and recondite modular designs.

Tape recording

In 1935, another significant development was made in Germany. Allgemeine Elektrizitäts Gesellschaft (AEG) demonstrated the first commercially produced magnetic tape recorder, called the Magnetophon. Audio tape, which had the advantage of being fairly light as well as having good audio fidelity, ultimately replaced the bulkier wire recorders.

The term "electronic music" (which first came into use during the 1930s) came to include the tape recorder as an essential element: "electronically produced sounds recorded on tape and arranged by the composer to form a musical composition".[17] It was also indispensable to musique concrète.

Tape also gave rise to the first, analogue, sample-playback keyboards, the Chamberlin and its more famous successor the Mellotron, an electro-mechanical, polyphonic keyboard originally developed and built in Birmingham, England in the early 1960s.

Sound sequencer

During the 1940s–1960s, Raymond Scott, an American composer of electronic music, invented various kinds of music sequencers for his electric compositions. Step sequencers played rigid patterns of notes using a grid of (usually) 16 buttons, or steps, each step being 1/16 of a measure. These patterns of notes were then chained together to form longer compositions. Software sequencers have been in continuous use since the 1950s in the context of computer music, including computer-played music (software sequencer), computer-composed music (music synthesis), and computer sound generation (sound synthesis).
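The timing of a 16-step grid follows directly from the tempo: one measure is divided into equal steps, and each active step fires at its start time. The Python sketch below assumes a 4/4 measure and represents a pattern as a list of MIDI note numbers, with None for a silent step; both conventions and all names are illustrative rather than taken from any particular sequencer.

```python
def step_times(bpm, steps=16, beats_per_measure=4):
    """Return the start time in seconds of each step in one measure."""
    measure_s = beats_per_measure * 60.0 / bpm
    step_s = measure_s / steps
    return [i * step_s for i in range(steps)]

def schedule(pattern, bpm):
    """Pair each non-None step in the pattern with its start time."""
    times = step_times(bpm, steps=len(pattern))
    return [(t, note) for t, note in zip(times, pattern) if note is not None]

# Hypothetical 16-step pattern: a note on every second step.
pattern = [60, None, 62, None] * 4
events = schedule(pattern, bpm=120)
```

Chaining patterns, as described above, then amounts to offsetting each pattern's event times by the length of the measures that precede it.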

Digital era 1980–2000

Digital synthesis

The first digital synthesizers were academic experiments in sound synthesis using digital computers. FM synthesis was developed for this purpose, as a way of generating complex sounds digitally with the smallest number of computational operations per sound sample. In 1983 Yamaha introduced the first stand-alone digital synthesizer, the DX7. It used frequency modulation synthesis (FM synthesis), first developed by John Chowning at Stanford University during the late sixties.[18] Chowning exclusively licensed his FM synthesis patent to Yamaha in 1975.[19] Yamaha subsequently released their first FM synthesizers, the GS-1 and GS-2, which were costly and heavy. There followed a pair of smaller, preset versions, the CE20 and CE25 Combo Ensembles, targeted primarily at the home organ market and featuring four-octave keyboards.[20] Yamaha's third generation of digital synthesizers was a commercial success; it consisted of the DX7 and DX9 (1983). Both models were compact, reasonably priced, and dependent on custom digital integrated circuits to produce FM tonalities. The DX7 was the first mass market all-digital synthesizer.[21] It became indispensable to many music artists of the 1980s, and demand soon exceeded supply.[22] The DX7 sold over 200,000 units within three years.[23]
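The economy of FM synthesis is visible in its simplest textbook form, where one sine wave (the modulator) varies the phase of another (the carrier), so each output sample costs only two sine evaluations. The following Python sketch shows that two-operator form; it is not a model of the DX7's actual operator arrangement, and the function and parameter names are assumptions.

```python
import math

def fm_sample(t, carrier_hz, modulator_hz, index):
    """Two-operator FM: a sine carrier whose phase is modulated by a sine.

    index (the modulation index) controls how strongly the modulator
    bends the carrier, and therefore how many sidebands are audible.
    """
    return math.sin(
        2.0 * math.pi * carrier_hz * t
        + index * math.sin(2.0 * math.pi * modulator_hz * t)
    )

def render(carrier_hz, modulator_hz, index, n=64, sample_rate=8000):
    """Render n samples as a list of floats."""
    return [fm_sample(i / sample_rate, carrier_hz, modulator_hz, index) for i in range(n)]

# With index 0 the output is a plain sine; raising it brightens the tone.
plain = render(440.0, 110.0, 0.0)
bright = render(440.0, 110.0, 5.0)
```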

The DX series was not easy to program but offered a detailed, percussive sound that led to the demise of the electro-mechanical Rhodes piano, which was heavier and larger than a DX synth. Following the success of FM synthesis, Yamaha signed a contract with Stanford University in 1989 to develop digital waveguide synthesis, leading to the first commercial physical modeling synthesizer, Yamaha's VL-1, in 1994.[24] The DX7 was affordable enough for amateurs and young bands to buy, unlike the costly synthesizers of previous generations, which were mainly used by top professionals.

Sampling

The Fairlight CMI (Computer Musical Instrument), the first polyphonic digital sampler, was the harbinger of sample-based synthesizers.[25] Designed in 1978 by Peter Vogel and Kim Ryrie and based on a dual microprocessor computer designed by Tony Furse in Sydney, Australia, the Fairlight CMI gave musicians the ability to modify volume, attack, decay, and use special effects like vibrato. Sample waveforms could be displayed on-screen and modified using a light pen. The Synclavier from New England Digital was a similar system. Jon Appleton (with Jones and Alonso) invented the Dartmouth Digital Synthesizer, later to become the New England Digital Corp's Synclavier. The Kurzweil K250, first produced in 1983, was also a successful polyphonic digital music synthesizer, noted for its ability to reproduce several instruments synchronously and having a velocity-sensitive keyboard.

Computer music

An important new development was the advent of computers for the purpose of composing music, as opposed to manipulating or creating sounds. Iannis Xenakis began what is called musique stochastique, or stochastic music, which is a method of composing that employs mathematical probability systems. Different probability algorithms were used to create a piece under a set of parameters. Xenakis used graph paper and a ruler to aid in calculating the velocity trajectories of glissandi for his orchestral composition Metastasis (1953–54), but later turned to the use of computers to compose pieces like ST/4 for string quartet and ST/48 for orchestra (both 1962).

The impact of computers continued in 1956. Lejaren Hiller and Leonard Isaacson composed Illiac Suite for string quartet, the first complete work of computer-assisted composition using algorithmic composition.

In 1957, Max Mathews at Bell Labs wrote the first program in the MUSIC-N series, the first family of computer programs for generating digital audio waveforms through direct synthesis. Barry Vercoe later wrote MUSIC 11, a next-generation music synthesis program based on MUSIC IV-BF (it later evolved into Csound, which is still widely used).

In the mid-1980s, Miller Puckette at IRCAM developed graphic signal-processing software for the 4X called Max (after Max Mathews), and later ported it to the Macintosh (with David Zicarelli extending it for Opcode[31]) for real-time MIDI control, making algorithmic composition available to most composers with a modest computer programming background.

MIDI

In 1980, a group of musicians and music merchants met to standardize an interface by which new instruments could communicate control instructions with other instruments and the prevalent microcomputer. This standard was dubbed MIDI (Musical Instrument Digital Interface). A paper was authored by Dave Smith of Sequential Circuits and proposed to the Audio Engineering Society in 1981. Then, in August 1983, the MIDI Specification 1.0 was finalized.

The advent of MIDI technology allows a single keystroke, control wheel motion, pedal movement, or command from a microcomputer to activate every device in the studio remotely and in synchrony, with each device responding according to conditions predetermined by the composer.
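At the wire level, the control instructions described above are short byte messages. As a minimal sketch, a MIDI 1.0 Note On message is three bytes: a status byte combining the message type (0x90) with a 4-bit channel, followed by a 7-bit note number and a 7-bit velocity. The Python helper names below are hypothetical; actually sending the bytes to a device is outside the scope of the sketch.

```python
def _check(channel, note, velocity):
    """Validate the ranges allowed by the 4-bit channel and 7-bit data bytes."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")

def note_on(channel, note, velocity):
    """Build a 3-byte Note On message (channel is zero-based here)."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])

def note_off(channel, note, velocity=0):
    """Build a 3-byte Note Off message."""
    _check(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])

# Middle C (note 60) on the first channel at moderate velocity.
msg = note_on(0, 60, 100)
```

Because every device on a channel receives the same bytes, one key press can trigger several instruments at once, each interpreting the note according to its own settings.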

MIDI instruments and software made powerful control of sophisticated instruments easily affordable by many studios and individuals. Acoustic sounds became reintegrated into studios via sampling and sampled-ROM-based instruments.

Modern electronic musical instruments

The increasing power and decreasing cost of sound-generating electronics (and especially of the personal computer), combined with the standardization of the MIDI and Open Sound Control musical performance description languages, has facilitated the separation of musical instruments into music controllers and music synthesizers.
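To make the controller/synthesizer split concrete, the Python sketch below encodes a single Open Sound Control message of the kind a controller might send to a separate synthesizer. It follows the OSC 1.0 layout: a null-terminated address string padded to a multiple of four bytes, a type tag string, then big-endian arguments. The address "/synth/freq" and the function names are made up for illustration, and only a single float32 argument is handled.

```python
import struct

def osc_string(s):
    """Encode ASCII text, null-terminated and null-padded to a multiple of 4 bytes."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)

def osc_message(address, value):
    """Encode an OSC message carrying one float32 argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)

# Hypothetical address: ask a synthesizer to set its frequency to 440 Hz.
packet = osc_message("/synth/freq", 440.0)
```

Such a packet would typically be sent over a network socket, which is what lets the controller and the sound generator run on different devices.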

By far the most common musical controller is the musical keyboard. Other controllers include the radiodrum, Akai's EWI and Yamaha's WX wind controllers, the guitar-like SynthAxe, the BodySynth, the Buchla Thunder, the Continuum Fingerboard, the Roland Octapad, various isomorphic keyboards including the Thummer, the Kaossilator Pro, and kits like I-CubeX.

Reactable

The Reactable is a round translucent table with a backlit interactive display. By placing and manipulating blocks called tangibles on the table surface, while interacting with the visual display via finger gestures, a virtual modular synthesizer is operated, creating music or sound effects.

Percussa AudioCubes

AudioCubes are autonomous wireless cubes powered by an internal computer system and rechargeable battery. They have internal RGB lighting, and are capable of detecting each other's location, orientation and distance. The cubes can also detect distances to the user's hands and fingers. Through interaction with the cubes, a variety of music and sound software can be operated. AudioCubes have applications in sound design, music production, DJing and live performance.

Kaossilator

The Kaossilator and Kaossilator Pro are compact instruments where the position of a finger on the touch pad controls two note-characteristics; usually the pitch is changed with a left-right motion and the tonal property, filter or other parameter changes with an up-down motion. The touch pad can be set to different musical scales and keys. The instrument can record a repeating loop of adjustable length, set to any tempo, and new loops of sound can be layered on top of existing ones. This lends itself to electronic dance-music but is more limited for controlled sequences of notes, as the pad on a regular Kaossilator is featureless.
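The two-axis mapping described above can be sketched in Python: the horizontal position is quantized to the notes of a chosen scale, and the vertical position sweeps a second parameter, here a filter cutoff. This is an illustrative model only; the scale table, the two-octave range, the cutoff limits and the function names are assumptions, not Korg's implementation.

```python
MAJOR = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of a major scale

def pad_to_note(x, root=60, scale=MAJOR, octaves=2):
    """Quantize a horizontal pad position x in [0, 1] to a MIDI note in the scale."""
    steps = len(scale) * octaves
    i = min(int(x * steps), steps - 1)  # clamp x == 1.0 onto the last step
    octave, degree = divmod(i, len(scale))
    return root + 12 * octave + scale[degree]

def pad_to_cutoff(y, lo_hz=100.0, hi_hz=8000.0):
    """Map vertical position y in [0, 1] to a cutoff on an exponential curve."""
    return lo_hz * (hi_hz / lo_hz) ** y

# A touch in the middle of the pad, three quarters of the way up.
note = pad_to_note(0.5)
cutoff = pad_to_cutoff(0.75)
```

Quantizing to a scale is what keeps a featureless pad in key: any horizontal position lands on a valid note, at the cost of making a specific intended note harder to hit.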

Eigenharp

The Eigenharp is a large instrument resembling a bassoon, which can be interacted with through big buttons, a drum sequencer and a mouthpiece. The sound processing is done on a separate computer.

XTH Sense

The XTH Sense is a wearable instrument that uses muscle sounds from the human body (known as mechanomyogram) to make music and sound effects. As a performer moves, the body produces muscle sounds that are captured by a chip microphone worn on the arm or leg. The muscle sounds are then live sampled using a dedicated software program and a library of modular audio effects. The performer controls the live sampling parameters by varying the force, speed and articulation of the movement.

AlphaSphere

The AlphaSphere is a spherical instrument that consists of 48 tactile pads that respond to pressure as well as touch. Custom software allows the pads to be indefinitely programmed individually or by groups in terms of function, note, and pressure parameter among many other settings. The primary concept of the AlphaSphere is to increase the level of expression available to electronic musicians, by allowing for the playing style of a musical instrument.

Chip music

Chiptune, chipmusic, or chip music is music written in sound formats where many of the sound textures are synthesized or sequenced in real time by a computer or video game console sound chip, sometimes including sample-based synthesis and low bit sample playback. Many chip music devices featured synthesizers in tandem with low rate sample playback.

DIY culture

During the late 1970s and early 1980s, DIY (Do it yourself) designs were published in hobby electronics magazines (notably the Formant modular synth, a DIY clone of the Moog system, published by Elektor) and kits were supplied by companies such as Paia in the US, and Maplin Electronics in the UK.

Circuit bending

In 1966, Reed Ghazala discovered and began to teach "circuit bending": the application of the creative short circuit, a process of chance short-circuiting that creates experimental electronic instruments and explores sonic elements mainly of timbre, with less regard to pitch or rhythm. The practice was influenced by John Cage's aleatoric music concept.[32]

Much of this manipulation of circuits directly, especially to the point of destruction, was pioneered by Louis and Bebe Barron in the early 1950s, such as their work with John Cage on the Williams Mix and especially in the soundtrack to Forbidden Planet.

Modern circuit bending is the creative customization of the circuits within electronic devices such as low voltage, battery-powered guitar effects, children's toys and small digital synthesizers to create new musical or visual instruments and sound generators. Emphasizing spontaneity and randomness, the techniques of circuit bending have been commonly associated with noise music, though many more conventional contemporary musicians and musical groups have been known to experiment with "bent" instruments. Circuit bending usually involves dismantling the machine and adding components such as switches and potentiometers that alter the circuit. With the revived interest in analogue synthesizers, circuit bending became a cheap way for many experimental musicians to create their own individual analogue sound generators. Many schematics are now available for building noise generators such as the Atari Punk Console or the Dub Siren, as well as for simple modifications to children's toys such as the Speak & Spell, a popular target for circuit benders.

Modular synthesizers

The modular synthesizer is a type of synthesizer consisting of separate interchangeable modules. These are also available as kits for hobbyist DIY constructors. Many hobbyist designers also sell bare PCBs and front panels to other hobbyists.

it is working on musical control with the Sixense TrueMotion motion controller. Immersive virtual musical instruments, or immersive virtual instruments for music and sound aim to represent musical events and sound parameters in a virtual reality so that they can be perceived not only through auditory feedback but also visually in 3D and possibly through tactile as well as haptic feedback, allowing the development of novel interaction metaphors beyond manipulation such as prehension.

Synthesizer

A synthesizer (often abbreviated as synth) is an electronic musical instrument that generates audio signals that may be converted to sound. Synthesizers may imitate traditional musical instruments such as piano, flute, vocals, or natural sounds such as ocean waves; or generate novel electronic timbres. They are often played with a musical keyboard, but they can be controlled via a variety of other devices, including music sequencers, instrument controllers, fingerboards, guitar synthesizers, wind controllers, and electronic drums. Synthesizers without built-in controllers are often called sound modules, and are controlled via USB, MIDI or CV/gate using a controller device, often a MIDI keyboard or other controller.

Synthesizers use various methods to generate electronic signals (sounds). Among the most popular waveform synthesis techniques are subtractive synthesis, additive synthesis, wavetable synthesis, frequency modulation synthesis, phase distortion synthesis, physical modeling synthesis and sample-based synthesis.
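Two of the techniques listed above can be sketched in a few lines. The following is a minimal illustration, not a description of any particular instrument: the function names, the sample rate and the parameter values are assumptions chosen for the example, and the FM function uses the common phase-modulation form with a single modulator.

```python
import math

SAMPLE_RATE = 8000  # samples per second; an illustrative value only


def additive(freq, harmonic_amps, n_samples, sample_rate=SAMPLE_RATE):
    """Additive synthesis: sum sine partials at integer multiples of freq.

    harmonic_amps[k] is the amplitude of harmonic number k + 1.
    """
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        out.append(sum(amp * math.sin(2 * math.pi * freq * (k + 1) * t)
                       for k, amp in enumerate(harmonic_amps)))
    return out


def fm(carrier, modulator, index, n_samples, sample_rate=SAMPLE_RATE):
    """Two-operator FM: a modulator sine varies the phase of a carrier sine.

    index controls how strongly the modulator acts; index = 0 gives a pure sine.
    """
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        phase = 2 * math.pi * carrier * t
        out.append(math.sin(phase + index * math.sin(2 * math.pi * modulator * t)))
    return out


# A tone with three harmonics of decreasing strength, and an FM tone.
organ_like = additive(220.0, [1.0, 0.5, 0.25], n_samples=64)
bell_like = fm(440.0, 110.0, index=2.0, n_samples=64)
```

Additive synthesis builds a timbre by stacking partials, whereas FM obtains a rich spectrum from only two oscillators, which is one reason it suited early digital hardware.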

Synthesizers were first used in pop music in the 1960s. In the late 1970s, synths were used in progressive rock, pop and disco. In the 1980s, the invention of the relatively inexpensive Yamaha DX7 synth made digital synthesizers widely available. 1980s pop and dance music often made heavy use of synthesizers. In the 2010s, synthesizers were used in many genres, such as pop, hip hop, metal, rock and dance. Contemporary classical music composers of the 20th and 21st centuries write compositions for synthesizer.

Early electric instruments

One of the earliest electric musical instruments, the Musical Telegraph, was invented in 1876 by American electrical engineer Elisha Gray. He accidentally discovered the sound generation from a self-vibrating electromechanical circuit, and invented a basic single-note oscillator. This instrument used steel reeds with oscillations created by electromagnets transmitted over a telegraph line. Gray also built a simple loudspeaker device into later models, consisting of a vibrating diaphragm in a magnetic field, to make the oscillator audible.[3][4] This instrument was a remote electromechanical musical instrument that used telegraphy and electric buzzers that generated fixed timbre sound. Though it lacked an arbitrary sound-synthesis function, some have erroneously called it the first synthesizer.

In 1897, Thaddeus Cahill invented the Telharmonium, which was capable of additive synthesis. Cahill's business was unsuccessful for various reasons, but similar and more compact instruments were subsequently developed, such as electronic and tonewheel organs including the Hammond organ, which was invented in 1934.

Emergence of electronics and early electronic instruments

In 1906, American engineer Lee de Forest ushered in the "electronics age".[5] He invented the first amplifying vacuum tube, called the Audion tube. This led to new entertainment technologies, including radio and sound films. These new technologies also influenced the music industry, and resulted in various early electronic musical instruments that used vacuum tubes, including:

- Audion piano by Lee de Forest in 1915[6]

- Theremin by Léon Theremin in 1920[7]

- Ondes Martenot by Maurice Martenot in 1928

- Trautonium by Friedrich Trautwein in 1929

Most of these early instruments used heterodyne circuits to produce audio frequencies, and were limited in their synthesis capabilities. Ondes Martenot and Trautonium were continuously developed for several decades, finally developing qualities similar to later synthesizers.
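The heterodyne principle mentioned above can be illustrated numerically: mixing (multiplying) two oscillator signals yields components at the sum and the difference of their frequencies, and the difference can fall in the audible range even when both oscillators run far above it. This is a minimal sketch; the oscillator frequencies are hypothetical values chosen for the example and are not taken from any of the instruments listed.

```python
import math


def beat_frequency(f_fixed, f_variable):
    """Audible difference frequency produced by mixing two oscillators."""
    return abs(f_fixed - f_variable)


def mixed_sample(f1, f2, t):
    """Product of two sine oscillators at time t (an idealized mixer)."""
    return math.sin(2 * math.pi * f1 * t) * math.sin(2 * math.pi * f2 * t)


def sum_and_difference(f1, f2, t):
    """The same signal written as difference and sum components,
    using sin(a) * sin(b) = 0.5 * (cos(a - b) - cos(a + b))."""
    return 0.5 * (math.cos(2 * math.pi * (f1 - f2) * t)
                  - math.cos(2 * math.pi * (f1 + f2) * t))


# Hypothetical radio-frequency oscillators, 440 Hz apart.
audible_hz = beat_frequency(170_000.0, 169_560.0)
```

In a real instrument the sum component lies far above the audible range and is filtered out, leaving only the difference tone.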

Graphical sound

In the 1920s, Arseny Avraamov developed various systems of graphic sonic art,[8] and similar graphical sound and tonewheel systems were developed around the world.[9] In 1938, Soviet engineer Yevgeny Murzin designed a compositional tool called ANS, one of the earliest real-time additive synthesizers using optoelectronics. Although his idea of reconstructing a sound from its visible image was apparently simple, the instrument was not realized until 20 years later, in 1958, as Murzin was "an engineer who worked in areas unrelated to music."[10]

Subtractive synthesis and polyphonic synthesizer

In the 1930s and 1940s, the basic elements required for the modern analog subtractive synthesizers — audio oscillators, audio filters, envelope controllers, and various effects units — had already appeared and were utilized in several electronic instruments.[citation needed]

The earliest polyphonic synthesizers were developed in Germany and the United States. The Warbo Formant Orgel, developed by Harald Bode in Germany in 1937, was a four-voice key-assignment keyboard with two formant filters and a dynamic envelope controller.

The Hammond Novachord, released in 1939, was an electronic keyboard that used twelve sets of top-octave oscillators with octave dividers to generate sound, with vibrato, a resonator filter bank and a dynamic envelope controller. During the three years that Hammond manufactured this model, 1,069 units were shipped, but production was discontinued at the start of World War II. Both instruments were the forerunners of the later electronic organs and polyphonic synthesizers.
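The divide-down idea behind top-octave oscillators can be sketched as follows: twelve oscillators supply the pitches of the highest octave, and each divider stage halves the frequency to supply the same pitch class one octave lower. The tuning below (equal temperament starting from roughly 4186.01 Hz) and the number of octaves are assumptions made for the illustration, not documented Novachord values.

```python
def divide_down(top_octave_hz, n_octaves):
    """For each top-octave pitch, return it followed by successive halvings."""
    return [[f / (2 ** k) for k in range(n_octaves)] for f in top_octave_hz]


# Assumed top octave: twelve equal-tempered semitones above about 4186.01 Hz.
TOP_OCTAVE = [4186.01 * 2 ** (i / 12) for i in range(12)]

# Each row is one pitch class; each column is one octave lower than the last.
pitch_table = divide_down(TOP_OCTAVE, n_octaves=6)
```

Because every lower note is derived from one of only twelve oscillators, all octaves of a pitch class stay locked in tune with each other, and every key can sound at once.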

Monophonic electronic keyboards

In the 1940s and 1950s, before the popularization of electronic organs and the introductions of combo organs, manufacturers developed and marketed various portable monophonic electronic instruments with small keyboards. These small instruments consisted of an electronic oscillator, vibrato effect, passive filters etc. Most of these (except for Clavivox) were designed for conventional ensembles, rather than as experimental instruments for electronic music studios—but they contributed to the evolution of modern synthesizers. These small instruments included:

- Solovox (1940) by Hammond Organ Company: a monophonic attachment keyboard instrument consisting of a large tone cabinet and a small keyboard unit, intended to accompany a piano with a monophonic lead voice of organ or orchestral sound.

- Multimonica (1940) designed by Harald Bode, produced by Hohner: dual keyboard instrument consisting of an electrically blown reed organ (lower) and a monophonic sawtooth synthesizer (upper).

- Ondioline (1941) designed by Georges Jenny in France.

- Clavioline (1947) designed by Constant Martin, produced by Selmer, Gibson, etc. This instrument was featured on various 1960s popular recordings, including Del Shannon's "Runaway" (1961), and The Beatles' "Baby, You're a Rich Man" (1967).

- Univox (1951) by Jennings Musical Instruments (JMI). This instrument was featured on The Tornados' "Telstar" (1962).

- Clavivox (1952) by Raymond Scott.

- first portable digital keyboard (1971) by D. Ross Grable

Other innovations

In the late 1940s, Canadian inventor and composer Hugh Le Caine invented the Electronic Sackbut, a voltage-controlled electronic musical instrument that provided the earliest real-time control of three aspects of sound (volume, pitch, and timbre), corresponding to today's touch-sensitive keyboard, pitch and modulation controllers. The controllers were initially implemented as a multidimensional pressure keyboard in 1945, then changed to a group of dedicated controllers operated by the left hand in 1948.[16]

In Japan, as early as 1935, Yamaha released the Magna organ,[17] a multi-timbral keyboard instrument based on electrically blown free reeds with pickups.[18] It may have been similar to the electrostatic reed organs developed by Frederick Albert Hoschke in 1934 and then manufactured by Everett and Wurlitzer until 1961.

In 1949, Japanese composer Minao Shibata discussed the concept of "a musical instrument with very high performance" that can "synthesize any kind of sound waves" and is "...operated very easily," predicting that with such an instrument, "...the music scene will be changed drastically."

Electronic music studios as sound synthesizers

After World War II, electronic music including electroacoustic music and musique concrète was created by contemporary composers, and numerous electronic music studios were established around the world, especially in Cologne, Paris and Milan. These studios were typically filled with electronic equipment including oscillators, filters, tape recorders, audio consoles etc., and the whole studio functioned as a "sound synthesizer".

Origin of the term "sound synthesizer"

In 1951–1952, RCA produced a machine called the Electronic Music Synthesizer; however, it was more accurately a composition machine, because it did not produce sounds in real time.[21] RCA then developed the first programmable sound synthesizer, RCA Mark II Sound Synthesizer, installing it at the Columbia-Princeton Electronic Music Center in 1957.[22] Prominent composers including Vladimir Ussachevsky, Otto Luening, Milton Babbitt, Halim El-Dabh, Bülent Arel, Charles Wuorinen, and Mario Davidovsky used the RCA Synthesizer extensively in various compositions.

From modular synthesizer to popular music

In 1959–1960, Harald Bode developed a modular synthesizer and sound processor,[24][25] and in 1961, he wrote a paper exploring the concept of a self-contained portable modular synthesizer using newly emerging transistor technology.[26] He also served as AES session chairman on music and electronics for the fall conventions in 1962 and 1964.[27] His ideas were adopted by Donald Buchla and Robert Moog in the United States, and Paolo Ketoff et al. in Italy[28][29][30] at about the same time:[31] among them, Moog is known as the first synthesizer designer to popularize the voltage control technique in analog electronic musical instruments.[31]

A working group at the Roman Electronic Music Center in Italy comprised composer Gino Marinuzzi, Jr., designer Giuliano Strini, MSEE, and sound engineer and technician Paolo Ketoff; their vacuum-tube modular "FonoSynth" slightly predated (1957–58) Moog and Buchla's work. Later the group created a solid-state version, the "Synket". Both devices remained prototypes (except a model made for John Eaton, who wrote a "Concert Piece for Synket and Orchestra"), owned and used only by Marinuzzi, notably in the original soundtrack of Mario Bava's sci-fi film "Terrore nello spazio" (a.k.a. Planet of the Vampires, 1965), and a RAI-TV mini-series, "Jeckyll".[28][29][30]

Robert Moog built his first prototype between 1963 and 1964, and was then commissioned by the Alwin Nikolais Dance Theater of NY;[32][33] while Donald Buchla was commissioned by Morton Subotnick.[34][35] In the late 1960s to 1970s, the development of miniaturized solid-state components allowed synthesizers to become self-contained, portable instruments, as proposed by Harald Bode in 1961. By the early 1980s, companies were selling compact, modestly priced synthesizers to the public. This, along with the development of Musical Instrument Digital Interface (MIDI), made it easier to integrate and synchronize synthesizers and other electronic instruments for use in musical composition. In the 1990s, synthesizer emulations began to appear in computer software, known as software synthesizers. From 1996 onward, Steinberg's Virtual Studio Technology (VST) plug-ins – and a host of other kinds of competing plug-in software, all designed to run on personal computers – began emulating classic hardware synthesizers, becoming increasingly successful at doing so during the following decades.

Audio sample: First Movement (Allegro) of Brandenburg Concerto Number 3 played on synthesizer.

The synthesizer had a considerable effect on 20th-century music.[36] Micky Dolenz of The Monkees bought one of the first Moog synthesizers. The band was the first to release an album featuring a Moog with Pisces, Aquarius, Capricorn & Jones Ltd. in 1967,[37] which became a Billboard number-one album. A few months later the title track of the Doors' 1967 album Strange Days featured a Moog played by Paul Beaver. Wendy Carlos's Switched-On Bach (1968), recorded using Moog synthesizers, also influenced numerous musicians of that era and is one of the most popular recordings of classical music ever made,[38] alongside the records (particularly Snowflakes are Dancing in 1974) of Isao Tomita, who in the early 1970s utilized synthesizers to create new artificial sounds (rather than simply mimicking real instruments[39]) and made significant advances in analog synthesizer programming.[40]

The sound of the Moog reached the mass market with Simon and Garfunkel's Bookends in 1968 and The Beatles' Abbey Road the following year; hundreds of other popular recordings subsequently used synthesizers, most famously the portable Minimoog. Electronic music albums by Beaver and Krause, Tonto's Expanding Head Band, The United States of America, and White Noise reached a sizable cult audience, and progressive rock musicians such as Richard Wright of Pink Floyd and Rick Wakeman of Yes were soon using the new portable synthesizers extensively. Stevie Wonder and Herbie Hancock also played a major role in popularising synthesizers in Black American music.[41][42] Other early users included Emerson, Lake & Palmer's Keith Emerson, Tony Banks of Genesis, Todd Rundgren, Pete Townshend, and The Crazy World of Arthur Brown's Vincent Crane. In Europe, the first no. 1 single to feature a Moog prominently was Chicory Tip's 1972 hit "Son of My Father".

Polyphonic keyboards and the digital revolution

In 1973, Yamaha developed the Yamaha GX-1, an early polyphonic synthesizer.[45] Other polyphonic synthesizers followed, mainly manufactured in Japan and the United States from the mid-1970s to the early-1980s, and included Roland Corporation's RS-101 and RS-202 (1975 and 1976) string synthesizers,[46][47] the Yamaha CS-80 (1976), Oberheim's Polyphonic and OB-X (1975 and 1979), Sequential Circuits' Prophet-5 (1978), and Roland's Jupiter-4 and Jupiter-8 (1978 and 1981). The success of the Prophet-5, a polyphonic and microprocessor-controlled keyboard synthesizer, aided the shift of synthesizers towards their familiar modern shape, away from large modular units and towards smaller keyboard instruments.[48] This form factor helped accelerate the integration of synthesizers into popular music, a shift that had been lent powerful momentum by the Minimoog, and also later the ARP Odyssey.[49] Earlier polyphonic electronic instruments of the 1970s, rooted in string synthesizers before advancing to multi-synthesizers incorporating monosynths and more, gradually fell out of favour in the wake of these newer, note-assigned polyphonic keyboard synthesizers.[50]

In 1973,[51] Yamaha licensed the first digital synthesis algorithm, frequency modulation synthesis (FM synthesis), from John Chowning, who had experimented with it since 1971.[52] Yamaha's engineers began adapting Chowning's algorithm for use in a commercial digital synthesizer, adding improvements such as the "key scaling" method to avoid the introduction of distortion that normally occurred in analog systems during frequency modulation.[53] In the 1970s, Yamaha were granted a number of patents, under the company's former name "Nippon Gakki Seizo Kabushiki Kaisha", evolving Chowning's early work on FM synthesis technology.[54] Yamaha built the first prototype digital synthesizer in 1974.[51] Yamaha eventually commercialized FM synthesis technology with the Yamaha GS-1, the first FM digital synthesizer, released in 1980.[55] The first commercial digital synthesizer, the Casio VL-1,[56] had been released a year earlier, in 1979.[57]

By the end of the 1970s, digital synthesizers and digital samplers had arrived on the market around the world (and are still sold today),[note 1] as the result of preceding research and development.[note 1] Compared with analog synthesizer sounds, the digital sounds produced by these new instruments tended to have a number of different characteristics: clear attack and sound outlines, carrying sounds, rich overtones with inharmonic contents, and complex motion of sound textures, amongst others. While these new instruments were expensive, these characteristics meant musicians were quick to adopt them, especially in the United Kingdom[58] and the United States. This encouraged a trend towards producing music using digital sounds,[note 2] and laid the foundations for the development of the inexpensive digital instruments popular in the next decade (see below). Relatively successful instruments, with each selling more than several hundred units per series, included the NED Synclavier (1977), Fairlight CMI (1979), E-mu Emulator (1981), and PPG Wave (1981).[note 1][58][59][60][61]

In 1983, however, Yamaha's revolutionary DX7 digital synthesizer[51][62] swept through popular music, leading to the adoption and development of digital synthesizers in many varying forms during the 1980s, and the rapid decline of analog synthesizer technology. In 1987, Roland's D-50 synthesizer was released, which combined the already existing sample-based synthesis[note 3] and the onboard digital effects,[63] while Korg's even more popular M1 (1988) now also heralded the era of the workstation synthesizer, based on ROM sample sounds for composing and sequencing whole songs, rather than solely traditional sound synthesis.[64]

Throughout the 1990s, the popularity of electronic dance music employing analog sounds, the appearance of digital analog modelling synthesizers to recreate these sounds, and the development of the Eurorack modular synthesizer system, initially introduced with the Doepfer A-100 and since adopted by other manufacturers, all contributed to the resurgence of interest in analog technology. The turn of the century also saw improvements in technology that led to the popularity of digital software synthesizers.[65] In the 2010s, new analog synthesizers, both in keyboard instrument and modular form, were released alongside current digital hardware instruments.[66] In 2016, Korg announced the release of the Korg Minilogue, the first polyphonic analogue synth to be mass-produced in decades.

Electronic dance music

Electronic dance music (also known as EDM, dance music,[1] club music, or simply dance) is a broad range of percussive electronic music genres made largely for nightclubs, raves, and festivals. It is generally produced for playback by disc jockeys (DJs) who create seamless selections of tracks, called a mix, by segueing from one recording to another.[2] EDM producers also perform their music live in a concert or festival setting in what is sometimes called a live PA. In Europe, EDM is more commonly called 'dance music', or simply 'dance'.[3]

In the late 1980s and early 1990s, following the emergence of raving, pirate radio, and an upsurge of interest in club culture, EDM achieved widespread mainstream popularity in Europe. In the United States at that time, acceptance of dance culture was not universal, and although both electro and Chicago house music were influential both in Europe and the US, mainstream media outlets and the record industry remained openly hostile to it. There was also a perceived association between EDM and drug culture, which led governments at state and city level to enact laws and policies intended to halt the spread of rave culture.[4]

Subsequently, in the new millennium, the popularity of EDM increased globally, largely in Australia and the United States. By the early 2010s, the term "electronic dance music" and the initialism "EDM" were being pushed by the American music industry and music press in an effort to rebrand American rave culture.[4] Despite the industry's attempt to create a specific EDM brand, the initialism remains in use as an umbrella term for multiple genres, including house, techno, trance, drum and bass, and dubstep, as well as their respective subgenres.

Terminology

The term "electronic dance music" (EDM) was used in the United States as early as 1985, although the term "dance music" did not catch on as a blanket term[95] until the late 1990s, when the larger US music industry created music charts for "dance" (Billboard magazine has maintained a "dance" chart since 1974 and it continues to this day).[93] Writing in The Guardian, journalist Simon Reynolds noted that the American music industry's adoption of the term EDM in the late 2000s was an attempt to re-brand US "rave culture" and differentiate it from the 1990s rave scene. In the UK, "dance music" or "dance" are more common terms for EDM.[4] What is widely perceived to be "club music" has changed over time; it now includes different genres and may not always encompass EDM. Similarly, "electronic dance music" can mean different things to different people. Both "club music" and "EDM" seem vague, but the terms are sometimes used to refer to distinct and unrelated genres (club music is defined by what is popular, whereas EDM is distinguished by musical attributes).[96] In July 1995, Nervous Records and Project X Magazine hosted the first awards ceremony, calling it the "Electronic Dance Music Awards".

Production

Electronic dance music is generally composed and produced in a recording studio with specialized equipment such as samplers, synthesizers, effects units and MIDI controllers all set up to interact with one another using the MIDI protocol. In the genre's early days, hardware electronic musical instruments were used and the focus in production was mainly on manipulating MIDI data as opposed to manipulating audio signals. However, since the late 1990s the use of software has been increasing. A modern electronic music production studio generally consists of a computer running a digital audio workstation (DAW), with various plug-ins installed such as software synthesizers and effects units, which are controlled with a MIDI controller such as a MIDI keyboard. This setup suffices for a producer to create an entire track from start to finish, ready to be mastered.
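To make "manipulating MIDI data" concrete, the sketch below builds the raw bytes of two MIDI channel voice messages, note-on and note-off. A note-on message is a status byte (0x90 plus the channel number) followed by a note number and a velocity, each in the range 0–127; note-off uses status 0x80. The helper names are hypothetical and only the Python standard library is used; sending the bytes to a device would require a MIDI library or driver that is not shown here.

```python
def _check(channel, note, velocity):
    """Validate ranges: 16 channels (0-15), 7-bit note and velocity values."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must be 0-127")


def note_on(channel, note, velocity):
    """Return the three bytes of a MIDI note-on message."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel, note, velocity=0):
    """Return the three bytes of a MIDI note-off message."""
    _check(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])


# Middle C (note number 60) on the first channel, moderately loud, then released.
start = note_on(0, 60, 100)
stop = note_off(0, 60)
```

Because the messages describe performance gestures rather than sound, the same data can drive any connected synthesizer, sampler or software instrument.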

Ghost production

A ghost producer is a hired music producer in a business arrangement who produces a song for another DJ or artist who releases it as their own, typically under a contract that prevents the producer from identifying themselves as a contributor to the song.[140] Ghost producers are often noted in a song's credits.[140] Ghost producers receive a simple fee or royalty payments for their work and are often able to work as they prefer, without the intense pressure of fame and the lifestyle of an internationally recognized DJ.[139] A ghost producer, also regularly called a bedroom producer due to the availability of digital audio workstation software that facilitates a "work from home"-style musical production career, may increase their notability in the music industry by becoming acquainted with established "big name" DJs and producers.[139] Producers like Martin Garrix and Porter Robinson are often noted for their ghost production work for other producers, while David Guetta and Steve Aoki are noted for their use of ghost producers in their songs. DJs like Tiësto have openly credited their ghost producers in an attempt to avoid censure and for transparency.

Many ghost producers sign agreements that prevent them from working for anyone else or establishing themselves as a solo artist.[142] Such non-disclosure agreements are often noted as predatory because ghost producers, especially teenage producers, do not have an understanding of the music industry. London producer Mat Zo has alleged that DJs who hire ghost producers "have pretended to make their own music and [left] us actual producers to struggle".

Remix

A remix is a piece of media which has been altered from its original state by adding, removing, and/or changing pieces of the item. A song, piece of artwork, book, video, or photograph can all be remixes. The only characteristic of a remix is that it appropriates and changes other materials to create something new.

Most commonly, remixes are a subset of audio mixing in music and song recordings. Songs may be remixed for a variety of reasons:

- to adapt or revise a song for radio or nightclub play

- to create a stereo or surround sound version of a song where none was previously available

- to improve the fidelity of an older song for which the original master has been lost or degraded

- to alter a song to suit a specific music genre or radio format

- to use some of the same materials, allowing the song to reach a different audience

- to alter a song for artistic purposes

- to provide additional versions of a song for use as bonus tracks or for a B-side, for example, in times when a CD single might carry a total of 4 tracks

- to create a connection between a smaller artist and a more successful one, as was the case with Fatboy Slim's remix of "Brimful of Asha" by Cornershop

- to improve the first or demo mix of the song, generally to ensure a professional product

- to provide an alternative version of a song

- to improve a song from its original state

Remixes should not be confused with edits, which usually involve shortening a final stereo master for marketing or broadcasting purposes. Another distinction should be made between a remix, which recombines audio pieces from a recording to create an altered version of a song (like Mike D's remix of Moby's "Natural Blues"), and a cover, which is a re-recording of someone else's song.

While audio mixing is one of the most popular and recognized forms of remixing, it is not the only form of media that is remixed. Literature, film, technology, and social systems can all be argued to be forms of remix.

Since the beginnings of recorded sound in the late 19th century, technology has enabled people to rearrange the normal listening experience. With the advent of easily editable magnetic tape in the 1940s and 1950s and the subsequent development of multitrack recording, such alterations became more common. In those decades the experimental genre of musique concrète used tape manipulation to create sound compositions. Less artistically lofty edits produced medleys or novelty recordings of various types.

After the rise of dance music in the late 1980s, a new form of remix was popularised, where the vocals would be kept and the instruments would be replaced, often with matching backing in the house music idiom. Kevin Saunderson was the first producer to change the art of remixing by creating his own original music, entirely replacing the earlier track, then mixing back in the artist's original lyrics to make his remix. He introduced this technique for the first time with the Wee Papa Girl Rappers song "Heat it Up", in 1988. Another clear example of this approach is Roberta Flack's 1989 ballad "Uh-Uh Ooh-Ooh Look Out (Here It Comes)", which Chicago House great Steve "Silk" Hurley dramatically reworked into a boisterous floor-filler by stripping away all the instrumental tracks and substituting a minimalist, sequenced "track" to underpin her vocal delivery. The art of the remix gradually evolved, and soon more avant-garde artists such as Aphex Twin were creating more experimental remixes of songs (relying on the groundwork of Cabaret Voltaire and others), which varied radically from their original sound and were not guided by pragmatic considerations such as sales or "danceability", but were created for "art's sake".

A remix in art often takes multiple perspectives upon the same theme. An artist takes an original work of art and adds their own take on the piece, creating something completely different while still leaving traces of the original work. It is essentially a reworked abstraction of the original work that retains remnants of the original piece and lets its true meanings shine through. Famous examples include The Marilyn Diptych by Andy Warhol (modifies colors and styles of one image), and The Weeping Woman by Pablo Picasso (merges various angles of perspective into one view). Some of Picasso's other famous paintings also incorporate parts of his life, such as his love affairs. For example, his painting Les Trois Danseuses, or The Three Dancers, is about a love triangle.

In recent years the concept of the remix has been applied analogously to other media and products. In 2001, the British Channel 4 television program Jaaaaam was produced as a remix of the sketches from the comedy show Jam. In 2003 The Coca-Cola Company released a new version of their soft drink Sprite with tropical flavors under the name Sprite Remix.

Remix production is now often involved in media production as a form of parody. The Scary Movie series is famous for its comic remix of various well-known horror movies such as Ring, Scream, and Saw. This form of remix is also used in advertisements, creating parodies of famous movies, TV series, etc. For example, McDonald's published a commercial poster that parodied the movie The Dark Knight.

Because remixes may borrow heavily from an existing piece of music (possibly more than one), the issue of intellectual property becomes a concern. The most important question is whether a remixer is free to redistribute his or her work, or whether the remix falls under the category of a derivative work according to, for example, United States copyright law. Of note are open questions concerning the legality of visual works, like the art form of collage, which can be plagued with licensing issues.

There are two obvious extremes with regard to derivative works. If the song is substantively dissimilar in form (for example, it might only borrow a motif which is modified, and be completely different in all other respects), then it may not necessarily be a derivative work (depending on how heavily modified the melody and chord progressions were). On the other hand, if the remixer only changes a few things (for example, the instrument and tempo), then it is clearly a derivative work and subject to the copyrights of the original work's copyright holder.

The Creative Commons is a non-profit organization that allows the sharing and use of creativity and knowledge through free legal tools and explicitly aims for enabling a Remix culture.[20] They created a website that allows artists to share their work with other users, giving them the ability to share, use, or build upon their work, under the Creative Commons license. The artist can limit the copyright to specific users for specific purposes, while protecting the users and the artist.

The exclusive rights of the copyright owner over acts such as reproduction/copying, communication, adaptation and performance – unless licensed openly – by their very nature reduce the ability to negotiate copyright material without permission.[22] Remixes will inevitably encounter legal problems when the whole or a substantial part of the original material has been reproduced, copied, communicated, adapted or performed – unless permission has been given in advance through a voluntary open content license like a Creative Commons license, there is fair dealing involved (the scope of which is extraordinarily narrow), a statutory license exists, or permission has been sought and obtained from the copyright owner. Generally, the courts consider what will amount to a substantial part by reference to its quality, as opposed to quantity, and the importance the part taken bears in relation to the work as a whole.

There are proposed theories of reform regarding copyright law and remixes. Nicolas Suzor believes that copyright law should be reformed in such a manner as to allow certain reuses of copyright material without the permission of the copyright owner where those derivatives are highly transformative and do not impact upon the primary market of the copyright owner. There certainly appears to be a strong argument that non-commercial derivatives, which do not compete with the market for the original material, should be afforded some defense to copyright actions.

Architectural acoustics

Architectural acoustics (also known as room acoustics and building acoustics) is the science and engineering of achieving a good sound within a building and is a branch of acoustical engineering. The first application of modern scientific methods to architectural acoustics was carried out by Wallace Sabine in the Fogg Museum lecture room; he then applied his newfound knowledge to the design of Symphony Hall, Boston.

Architectural acoustics can be about achieving good speech intelligibility in a theatre, restaurant or railway station, enhancing the quality of music in a concert hall or recording studio, or suppressing noise to make offices and homes more productive and pleasant places to work and live in. Architectural acoustic design is usually done by acoustic consultants.

Building skin envelope

This science analyzes noise transmission from the building's exterior envelope to its interior and vice versa. The main noise paths are roofs, eaves, walls, windows, doors and penetrations. Sufficient control ensures space functionality and is often required based on building use and local municipal codes. An example would be providing a suitable design for a home which is to be constructed close to a high volume roadway, or under the flight path of a major airport, or of the airport itself.

Inter-space noise control

This is the science of limiting and/or controlling noise transmission from one building space to another to ensure space functionality and speech privacy. The typical sound paths are ceilings, room partitions, acoustic ceiling panels (such as wood dropped ceiling panels), doors, windows, flanking, ducting and other penetrations. Technical solutions depend on the source of the noise and the path of acoustic transmission, for example footstep noise or noise from vibrations caused by air or water flow. An example would be providing suitable party wall design in an apartment complex to minimize the mutual disturbance due to noise by residents in adjacent apartments.

Interior space acoustics

This is the science of controlling a room's surfaces based on sound absorbing and reflecting properties. Excessive reverberation time, which can be calculated, can lead to poor speech intelligibility.
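The calculation mentioned above is commonly done with Sabine's formula, RT60 ≈ 0.161 · V / A, where V is the room volume in cubic metres and A is the total absorption (each surface area in square metres multiplied by its absorption coefficient). The sketch below is a minimal illustration of that formula; the function name, the room dimensions and the absorption coefficients are assumptions chosen for the example, not measured data.

```python
def sabine_rt60(volume_m3, surfaces):
    """Estimate reverberation time in seconds with Sabine's formula.

    surfaces: iterable of (area_m2, absorption_coefficient) pairs.
    """
    # Total absorption A in metric sabins (m^2 of perfect absorber).
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    if total_absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / total_absorption


# Hypothetical 10 m x 8 m x 3 m room; coefficients are illustrative only.
room_volume = 10 * 8 * 3
hard_room = [(80, 0.02), (80, 0.05), (108, 0.03)]     # floor, ceiling, walls
treated_room = [(80, 0.30), (80, 0.70), (108, 0.03)]  # carpet, acoustic tile, walls

print(f"hard surfaces: {sabine_rt60(room_volume, hard_room):.2f} s")
print(f"treated room:  {sabine_rt60(room_volume, treated_room):.2f} s")
```

Under these assumed values the treated room has a much shorter reverberation time than the hard-surfaced one, which is the effect that absorptive finishes are meant to achieve. Sabine's formula is an estimate that works best for fairly reverberant rooms with evenly distributed absorption.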

Sound reflections create standing waves that produce natural resonances that can be heard as a pleasant sensation or an annoying one.[5] Reflective surfaces can be angled and coordinated to provide good coverage of sound for a listener in a concert hall or music recital space. To illustrate this concept consider the difference between a modern large office meeting room or lecture theater and a traditional classroom with all hard surfaces.
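For an idealised rectangular room with rigid walls, the standing-wave (room mode) frequencies are often estimated as f = (c/2) · sqrt((nx/Lx)² + (ny/Ly)² + (nz/Lz)²), where c is the speed of sound and nx, ny, nz are non-negative integers. The following sketch applies that textbook formula; the room dimensions, the assumed speed of sound and the function name are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 degrees C


def room_mode_frequency(dims_m, indices, c=SPEED_OF_SOUND):
    """Mode frequency (Hz) of an ideal rigid-walled rectangular room.

    dims_m:  (Lx, Ly, Lz) room dimensions in metres.
    indices: (nx, ny, nz) non-negative integer mode numbers.
    """
    return (c / 2.0) * math.sqrt(
        sum((n / length) ** 2 for n, length in zip(indices, dims_m))
    )


# Hypothetical 5 m x 4 m x 3 m room: lowest axial mode along each dimension.
room = (5.0, 4.0, 3.0)
for mode in [(1, 0, 0), (0, 1, 0), (0, 0, 1)]:
    print(mode, f"{room_mode_frequency(room, mode):.1f} Hz")
```

Real rooms have absorptive, non-parallel and furnished surfaces, so measured resonances can differ from these idealised values.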

Interior building surfaces can be constructed of many different materials and finishes. Ideal acoustical panels are those without a face or finish material that interferes with the acoustical infill or substrate. Fabric covered panels are one way to heighten acoustical absorption. Perforated metal also shows sound absorbing qualities.[6] Finish material is used to cover over the acoustical substrate. Mineral fiber board, or Micore, is a commonly used acoustical substrate. Finish materials often consist of fabric, wood or acoustical tile. Fabric can be wrapped around substrates to create what is referred to as a "pre-fabricated panel" and often provides good noise absorption if laid onto a wall.

Prefabricated panels are limited to the size of the substrate, ranging from 2 by 4 feet (0.61 m × 1.22 m) to 4 by 10 feet (1.2 m × 3.0 m). Fabric retained in a wall-mounted perimeter track system is referred to as "on-site acoustical wall panels". This is constructed by framing the perimeter track into shape, infilling the acoustical substrate and then stretching and tucking the fabric into the perimeter frame system. On-site wall panels can be constructed to accommodate door frames, baseboard, or any other intrusion. Large panels (generally, greater than 50 square feet (4.6 m²)) can be created on walls and ceilings with this method. Wood finishes can consist of punched or routed slots and provide a natural look to the interior space, although acoustical absorption may not be great.

There are three ways to improve workplace acoustics and solve workplace sound problems – the ABCs.

- A = Absorb (via drapes, carpets, ceiling tiles, etc.)

- B = Block (via panels, walls, floors, ceilings and layout)

- C = Cover-up (via sound masking)

Mechanical equipment noise

Building services noise control is the science of controlling noise produced by:

- ACMV (air conditioning and mechanical ventilation) systems in buildings, termed HVAC in North America

- Elevators

- Electrical generators positioned within or attached to a building

- Any other building service infrastructure component that emits sound.

Inadequate control may lead to elevated sound levels within the space which can be annoying and reduce speech intelligibility. Typical improvements are vibration isolation of mechanical equipment, and sound traps in ductwork. Sound masking can also be created by adjusting HVAC noise to a predetermined level.

Acoustic transmission

Acoustic transmission is the transmission of sounds through and between materials, including air, walls, and musical instruments.

The degree to which sound is transferred between two materials depends on how well their acoustical impedances match.
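For a plane wave striking a flat boundary at normal incidence, the fraction of sound intensity transmitted is T = 4·Z1·Z2 / (Z1 + Z2)², where Z1 and Z2 are the characteristic acoustic impedances of the two media, and the reflected fraction is 1 − T. The sketch below evaluates that standard expression; the function name and the rounded impedance values for air and water are assumptions used only for illustration.

```python
def intensity_transmission(z1, z2):
    """Fraction of incident sound intensity transmitted across a flat
    boundary at normal incidence, given the two characteristic impedances."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2


# Approximate characteristic impedances in Pa*s/m (rounded, illustrative values).
Z_AIR = 413.0
Z_WATER = 1.48e6

t_air_water = intensity_transmission(Z_AIR, Z_WATER)
print(f"air -> water transmitted fraction: {t_air_water:.4%}")
print(f"matched media transmitted fraction: {intensity_transmission(Z_AIR, Z_AIR):.0%}")
```

With these assumed values only about a tenth of one percent of the intensity crosses from air into water, while perfectly matched impedances transmit everything.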

In musical instrument design

Musical instruments are generally designed to radiate sound effectively. A high-impedance part of the instrument, such as a string, transmits vibrations through a bridge (intermediate impedance) to a soundboard (lower impedance). The soundboard then moves the still lower-impedance air. Without bridge and soundboard, the instrument does not transmit enough sound to the air, and is too quiet to be performed with. An electric guitar has no soundboard; it uses a pickup and artificial amplification. Without amplification, electric guitars are very quiet.

Stethoscope

Stethoscopes roughly match the acoustical impedance of the human body, so they transmit sounds from a patient's chest to the doctor's ear much more effectively than the air does. Putting an ear to someone's chest would have a similar effect.

In building design

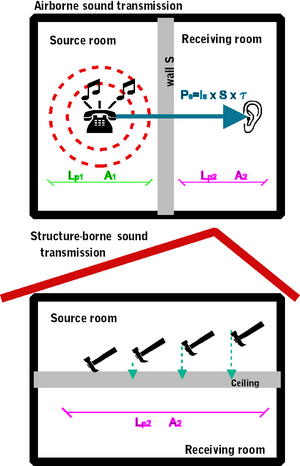

Acoustic transmission in building design refers to a number of processes by which sound can be transferred from one part of a building to another. Typically these are:

- Airborne transmission - a noise source in one room sends air pressure waves that set one side of a wall or structural element vibrating, so that the other face of the wall vibrates and radiates sound into the adjacent room. Structural isolation therefore becomes an important consideration in the acoustic design of buildings. Highly sensitive areas of buildings, for example recording studios, may be almost entirely isolated from the rest of a structure by constructing the studios as effective boxes supported by springs. Air tightness also becomes an important control technique. A tightly sealed door might have reasonable sound reduction properties, but if it is left open only a few millimeters its effectiveness is reduced to practically nothing. The most important acoustic control method is adding mass into the structure, such as a heavy dividing wall, which will usually reduce airborne sound transmission better than a light one.

- Impact transmission - a noise source in one room results from the impact of an object on a separating surface, such as a floor, which transmits the sound to an adjacent room. A typical example would be the sound of footsteps in a room being heard in a room below. Acoustic control measures usually include attempts to isolate the source of the impact, or cushioning it. For example, carpets will perform significantly better than hard floors.

- Flanking transmission - a more complex form of noise transmission, where the resultant vibrations from a noise source are transmitted to other rooms of the building usually by elements of structure within the building. For example, in a steel framed building, once the frame itself is set into motion the effective transmission can be pronounced.
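Two points from the airborne transmission item above can be put into rough numbers. One commonly quoted approximation, the field-incidence mass law, estimates the transmission loss of a single-leaf partition as TL ≈ 20·log10(f·m) − 47 dB, with f in hertz and m the surface mass in kg/m². The transmission loss of a partition made of several elements is found by area-weighting their transmission coefficients τ = 10^(−TL/10). The sketch below is only illustrative: the function names, the door size, the 40 dB door rating and the treatment of a gap as a 0 dB opening are all simplifying assumptions.

```python
import math


def mass_law_tl(surface_mass_kg_m2, frequency_hz):
    """Approximate field-incidence mass-law transmission loss in dB."""
    return 20.0 * math.log10(frequency_hz * surface_mass_kg_m2) - 47.0


def composite_tl(elements):
    """Transmission loss (dB) of a partition built from several elements.

    elements: iterable of (area_m2, transmission_loss_db) pairs.
    """
    elements = list(elements)
    total_area = sum(area for area, _ in elements)
    # Area-weighted average of the transmission coefficients.
    mean_tau = sum(area * 10.0 ** (-tl / 10.0) for area, tl in elements) / total_area
    return -10.0 * math.log10(mean_tau)


# Doubling the surface mass adds about 6 dB in this approximation.
print(f"{mass_law_tl(100, 500):.1f} dB vs {mass_law_tl(200, 500):.1f} dB")

# Hypothetical 1.8 m^2 door rated 40 dB, sealed and then with a 5 mm x 2 m gap.
sealed = composite_tl([(1.8, 40.0)])
with_gap = composite_tl([(1.8, 40.0), (0.01, 0.0)])
print(f"sealed: {sealed:.1f} dB, with gap: {with_gap:.1f} dB")
```

Even with these crude assumptions, a gap of about half a percent of the door area removes a large share of its sound reduction, which is why air tightness matters as much as mass.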

Reflection (physics)

Reflection is the change in direction of a wavefront at an interface between two different media so that the wavefront returns into the medium from which it originated. Common examples include the reflection of light, sound and water waves. The law of reflection says that for specular reflection the angle at which the wave is incident on the surface equals the angle at which it is reflected. Mirrors exhibit specular reflection.

In acoustics, reflection causes echoes and is used in sonar. In geology, it is important in the study of seismic waves. Reflection is observed with surface waves in bodies of water. Reflection is observed with many types of electromagnetic wave, besides visible light. Reflection of VHF and higher frequencies is important for radio transmission and for radar. Even hard X-rays and gamma rays can be reflected at shallow angles with special "grazing" mirrors.

Reflection of light

Reflection of light is either specular (mirror-like) or diffuse (retaining the energy, but losing the image) depending on the nature of the interface. In specular reflection the phase of the reflected waves depends on the choice of the origin of coordinates, but the relative phase between s and p (TE and TM) polarizations is fixed by the properties of the media and of the interface between them.[1]

A mirror provides the most common model for specular light reflection, and typically consists of a glass sheet with a metallic coating where the significant reflection occurs. Reflection is enhanced in metals by suppression of wave propagation beyond their skin depths. Reflection also occurs at the surface of transparent media, such as water or glass.

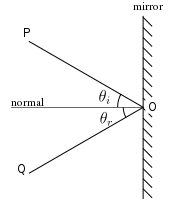

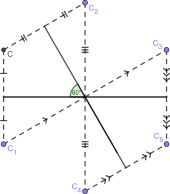

In the diagram, a light ray PO strikes a vertical mirror at point O, and the reflected ray is OQ. By projecting an imaginary line through point O perpendicular to the mirror, known as the normal, we can measure the angle of incidence, θi, and the angle of reflection, θr. The law of reflection states that θi = θr, or in other words, the angle of incidence equals the angle of reflection.

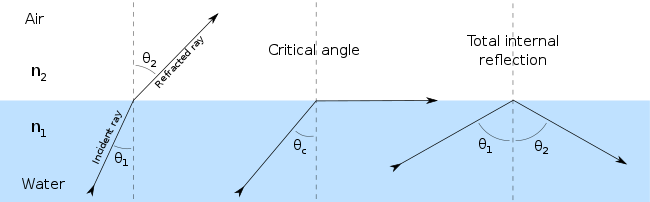

In fact, reflection of light may occur whenever light travels from a medium of a given refractive index into a medium with a different refractive index. In the most general case, a certain fraction of the light is reflected from the interface, and the remainder is refracted. Solving Maxwell's equations for a light ray striking a boundary allows the derivation of the Fresnel equations, which can be used to predict how much of the light is reflected, and how much is refracted in a given situation. This is analogous to the way impedance mismatch in an electric circuit causes reflection of signals. Total internal reflection of light from a denser medium occurs if the angle of incidence is greater than the critical angle.
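Two simple results follow from this description. At normal incidence the Fresnel equations reduce to a reflected power fraction R = ((n1 − n2) / (n1 + n2))², and the critical angle for total internal reflection is θc = arcsin(n2 / n1) when n1 > n2. The sketch below evaluates both; the function names and the refractive indices (1.0 for air, 1.5 for a typical glass) are illustrative assumptions.

```python
import math


def normal_incidence_reflectance(n1, n2):
    """Fraction of light power reflected at normal incidence when passing
    from a medium of refractive index n1 into one of index n2."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def critical_angle_deg(n1, n2):
    """Critical angle in degrees for light travelling from index n1 toward
    index n2, or None when total internal reflection cannot occur."""
    if n1 <= n2:
        return None
    return math.degrees(math.asin(n2 / n1))


N_AIR, N_GLASS = 1.0, 1.5  # assumed, rounded refractive indices

print(f"air -> glass reflectance: {normal_incidence_reflectance(N_AIR, N_GLASS):.1%}")
print(f"glass -> air critical angle: {critical_angle_deg(N_GLASS, N_AIR):.1f} degrees")
print(f"air -> glass critical angle: {critical_angle_deg(N_AIR, N_GLASS)}")
```

The remaining fraction 1 − R is transmitted (refracted) when absorption is negligible. At other angles of incidence the full Fresnel equations, which depend on polarization, are needed.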

Total internal reflection is used as a means of focusing waves that cannot effectively be reflected by common means. X-ray telescopes are constructed by creating a converging "tunnel" for the waves. As the waves interact at low angle with the surface of this tunnel they are reflected toward the focus point (or toward another interaction with the tunnel surface, eventually being directed to the detector at the focus). A conventional reflector would be useless as the X-rays would simply pass through the intended reflector.

When light reflects off a material denser (with higher refractive index) than the external medium, it undergoes a phase inversion. In contrast, a less dense, lower refractive index material will reflect light in phase. This is an important principle in the field of thin-film optics.

Specular reflection forms images. Reflection from a flat surface forms a mirror image, which appears to be reversed from left to right because we compare the image we see to what we would see if we were rotated into the position of the image. Specular reflection at a curved surface forms an image which may be magnified or demagnified; curved mirrors have optical power. Such mirrors may have surfaces that are spherical or parabolic.

Laws of reflection

If the reflecting surface is very smooth, the reflection of light that occurs is called specular or regular reflection. The laws of reflection are as follows:

- The incident ray, the reflected ray and the normal to the reflection surface at the point of the incidence lie in the same plane.

- The angle which the incident ray makes with the normal is equal to the angle which the reflected ray makes to the same normal.

- The reflected ray and the incident ray are on the opposite sides of the normal.

These three laws can all be derived from the Fresnel equations.
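In vector form, the laws of specular reflection are often written as r = d − 2(d·n)n, where d is the direction of the incident ray and n is the unit normal to the surface. Because r is a combination of d and n, it lies in their common plane, and its angle to the normal equals that of the incoming ray. The following is a minimal sketch of that formula; the function names and the example ray are assumptions for illustration.

```python
import math


def reflect(direction, normal):
    """Reflect a ray direction about a surface normal: r = d - 2(d.n)n."""
    length = math.sqrt(sum(c * c for c in normal))
    n = [c / length for c in normal]  # make sure the normal is a unit vector
    d_dot_n = sum(d * c for d, c in zip(direction, n))
    return [d - 2.0 * d_dot_n * c for d, c in zip(direction, n)]


def angle_to_normal_deg(vector, normal):
    """Unsigned angle in degrees between a vector and the normal's axis."""
    dot = sum(v * c for v, c in zip(vector, normal))
    len_v = math.sqrt(sum(v * v for v in vector))
    len_n = math.sqrt(sum(c * c for c in normal))
    cos_angle = min(1.0, abs(dot) / (len_v * len_n))  # clamp rounding errors
    return math.degrees(math.acos(cos_angle))


# Ray travelling down and to the right onto a horizontal mirror (normal +y).
incident = [1.0, -1.0, 0.0]
mirror_normal = [0.0, 1.0, 0.0]
reflected = reflect(incident, mirror_normal)

print(reflected)
print(angle_to_normal_deg(incident, mirror_normal),
      angle_to_normal_deg(reflected, mirror_normal))
```

The two printed angles are the angle of incidence and the angle of reflection for the example ray, both measured from the normal.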

Mechanism

In classical electrodynamics, light is considered as an electromagnetic wave, which is described by Maxwell's equations. Light waves incident on a material induce small oscillations of polarisation in the individual atoms (or oscillation of electrons, in metals), causing each particle to radiate a small secondary wave in all directions, like a dipole antenna. All these waves add up to give specular reflection and refraction, according to the Huygens–Fresnel principle.

In the case of dielectrics such as glass, the electric field of the light acts on the electrons in the material, and the moving electrons generate fields and become new radiators. The refracted light in the glass is the combination of the forward radiation of the electrons and the incident light. The reflected light is the combination of the backward radiation of all of the electrons.

In metals, electrons with no binding energy are called free electrons. When these electrons oscillate with the incident light, the phase difference between their radiation field and the incident field is π (180°), so the forward radiation cancels the incident light, and backward radiation is just the reflected light.

Light–matter interaction in terms of photons is a topic of quantum electrodynamics, and is described in detail by Richard Feynman in his popular book QED: The Strange Theory of Light and Matter.

Diffuse reflection

When light strikes the surface of a (non-metallic) material it bounces off in all directions due to multiple reflections by the microscopic irregularities inside the material (e.g. the grain boundaries of a polycrystalline material, or the cell or fiber boundaries of an organic material) and by its surface, if it is rough. Thus, an 'image' is not formed. This is called diffuse reflection. The exact form of the reflection depends on the structure of the material. One common model for diffuse reflection is Lambertian reflectance, in which the light is reflected with equal luminance (in photometry) or radiance (in radiometry) in all directions, as defined by Lambert's cosine law.
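Lambert's cosine law can be stated compactly: the radiant intensity leaving an ideal diffuse surface at an angle θ from the surface normal is I(θ) = I0·cos θ, where I0 is the intensity along the normal. The apparent area of the surface shrinks by the same cos θ factor, which is why its radiance looks the same from every direction. The sketch below tabulates this relation; the function name and sample angles are illustrative assumptions.

```python
import math


def lambertian_intensity(normal_intensity, angle_deg):
    """Radiant intensity of an ideal Lambertian surface at a given angle
    from the surface normal, following Lambert's cosine law."""
    if not 0.0 <= angle_deg <= 90.0:
        raise ValueError("angle must be between 0 and 90 degrees")
    return normal_intensity * math.cos(math.radians(angle_deg))


# Relative intensity at a few viewing angles, normalised to 1 along the normal.
for angle in (0, 30, 60, 85):
    print(f"{angle:2d} degrees: {lambertian_intensity(1.0, angle):.3f}")
```

Real materials only approximate this behaviour; glossy or strongly textured surfaces deviate from the ideal Lambertian model.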

The light sent to our eyes by most of the objects we see is due to diffuse reflection from their surface, so that this is our primary mechanism of physical observation.[2]

Retroreflection

Some surfaces exhibit retroreflection. The structure of these surfaces is such that light is returned in the direction from which it came.