The black box metaphor in machine learning

Deep learning is a machine learning technique that teaches computers to do what comes naturally to humans: learn by example. Deep learning is a key technology behind driverless cars, enabling them to recognize a stop sign, or to distinguish a pedestrian from a lamppost. It is the key to voice control in consumer devices like phones, tablets, TVs, and hands-free speakers. Deep learning is getting lots of attention lately, and for good reason. It’s achieving results that were not possible before.

In deep learning, a computer model learns to perform classification tasks directly from images, text, or sound. Deep learning models can achieve state-of-the-art accuracy, sometimes exceeding human-level performance. Models are trained by using a large set of labeled data and neural network architectures that contain many layers.

1. Explanations

When people think about artificial intelligence, they typically seem to have in mind the first kind of explanation. The expectation is that the system made a deliberation and chose a course of action based on the expected outcome. Although there are cases where this is possible, increasingly we are seeing a move towards systems that are more similar to the second case; that is, they receive stimuli and then they just react.

There are very good reasons for this (not least because the world is complicated), but it does mean that it’s harder to understand why a particular decision was made, or why we ended up with one model as opposed to another. With that in mind, let’s dig into what we mean by a model, and the metaphor of the black box.

2. Boxes and Models

The black box metaphor dates back to the early days of cybernetics and behaviourism, and typically refers to a system for which we can only observe the inputs and outputs, but not the internal workings. Indeed, this was the way in which B. F. Skinner conceptualized minds in general. Although he successfully demonstrated how certain learned behaviours could be explained by a reinforcement signal which linked certain inputs to certain outputs, he then famously made the mistake of thinking that this theory could easily explain all of human behaviour, including language.

As a simpler example of a black box, consider a thought experiment from Skinner: you are given a box with a set of inputs (switches and buttons) and a set of outputs (lights which are either on or off). By manipulating the inputs, you are able to observe the corresponding outputs, but you cannot look inside to see how the box works. In the simplest case, such as a light switch in a room, it is easy to determine with great confidence that the switch controls the light level. For a sufficiently complex system, however, it may be effectively impossible to determine how the box works by just trying various combinations.

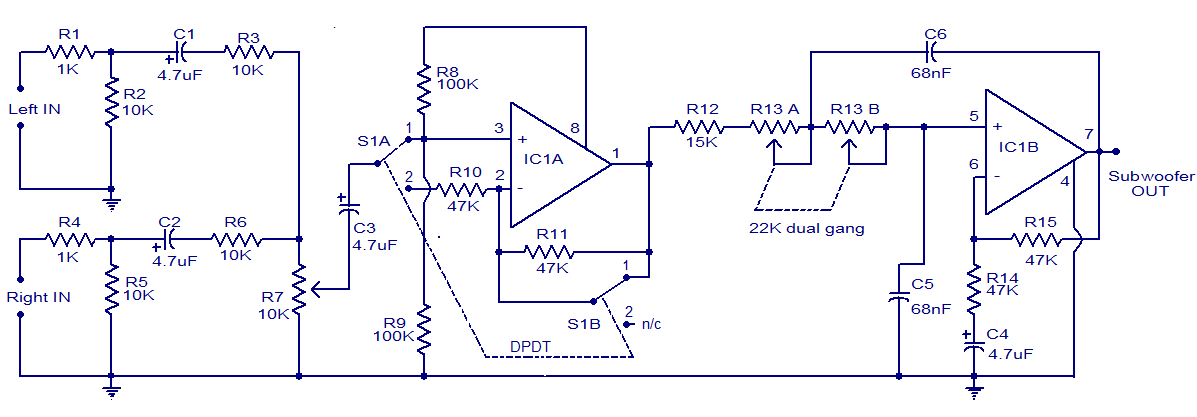
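To make the combinatorics concrete, here is a minimal Python sketch of probing a box purely from the outside. The `hidden_box` function and its wiring are hypothetical, invented for illustration, and the sketch assumes the box has no memory, so each switch setting always produces the same lights. Even under that generous assumption, exhaustive probing needs 2ⁿ experiments for n on/off switches.

```python
from itertools import product


def hidden_box(switches):
    """Stand-in for the sealed box: we may call it, but pretend we cannot read it."""
    s0, s1, s2 = switches
    # Hypothetical wiring, chosen only for illustration.
    light_a = s0 != s1   # on when exactly one of the first two switches is on
    light_b = s1 and s2  # on only when the last two switches are both on
    return (light_a, light_b)


def probe(box, n_switches):
    """Record the output for every possible switch setting (2**n experiments)."""
    return {setting: box(setting) for setting in product((False, True), repeat=n_switches)}


truth_table = probe(hidden_box, 3)
print(len(truth_table), "experiments for 3 switches")

# The number of experiments doubles with every extra switch.
for n in (10, 30, 60):
    print(n, "switches ->", 2 ** n, "experiments")
```

Three switches take 8 experiments; sixty would take more than 10¹⁸, which is why trying combinations stops being a viable way to understand a complex box.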

Now imagine that you are allowed to open up the box and look inside. You are even given a full wiring diagram, showing what all the components are, and how they are connected. Moreover, none of the components are complex in and of themselves; everything is built up from simple components such as resistors and capacitors, each of which has behaviour that is well understood in isolation. Now, not only do you have access to the full specification of all the components in the system, you can even run experiments to see how each of the various components responds to particular inputs.

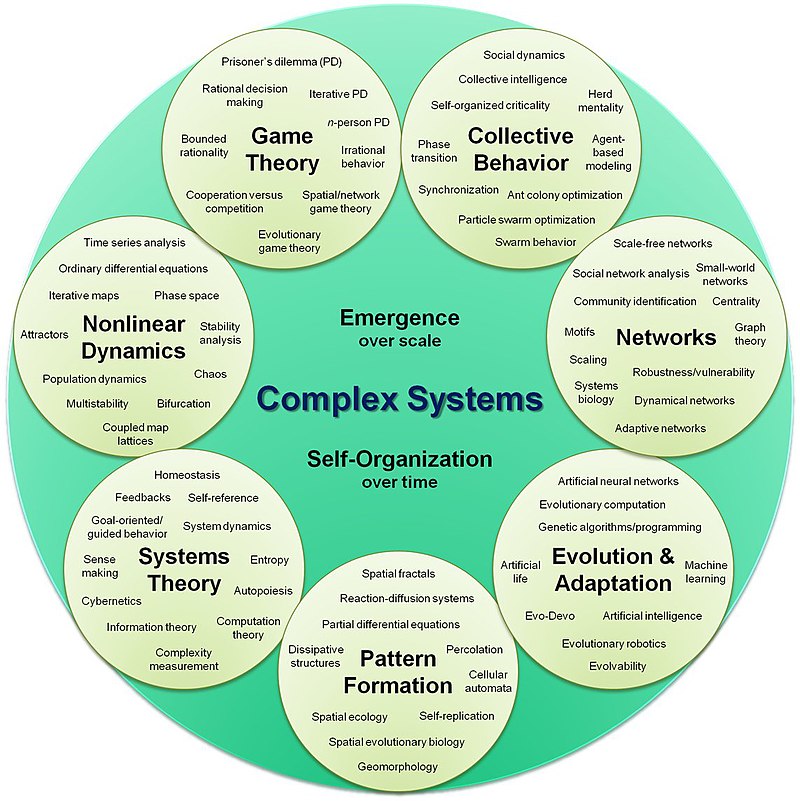

You might think that with all this information in hand, you would now be in a position to give a good explanation of how the box works. After all, each individual component is understood, and there is no hidden information. Unfortunately, complexity arises from the interaction of many simple components. For a sufficiently complex system, it is unlikely you’d be able to predict what the output of the box will be for a given input, without running the experiment to find out. The only explanation for why the box is doing what it does is that all of the components are following the rules that govern their individual behaviour, and the overall behaviour emerges from their interactions.

Even more importantly, beyond the how of the system, you would likely be at a loss to explain why each component had been placed where it is, even if you knew the overall purpose of the system. Given that the box was designed for some purpose, we assume that each component was added for a reason. For a particularly clever system, however, each component might end up taking on multiple roles, as in the case of DNA. Although this can lead to a very efficient system, it also makes it very difficult to even think about summarizing the purpose of each component. In other words, the how of the system is completely transparent, but the why is potentially unfathomable.

This, as it turns out, is a perfect metaphor for deep learning. In general, the entire system is open to inspection. Moreover, it is entirely made up of simple components that are easily understood in isolation. Even if we know the purpose of the overall system, however, there is not necessarily a simple explanation we can offer as to how the system works, other than the fact that each individual component operates according to its own rules, in response to the input. This, indeed, is the true explanation of how the system works, and it is entirely transparent. The tougher question, of course, is why each component has taken on the role that it has. To understand this further, it will be helpful to separate the idea of a model from the algorithm used to train it.

3. Models and Algorithms

To really get into the details, we need to be a bit more precise about what we’re talking about. Harris refers to “how the algorithm is accomplishing what it’s accomplishing”, but there are really two parts here: a model (such as a deep learning system) and a learning algorithm (which we use to fit the model to data). When Harris refers to “the algorithm”, he is presumably talking about the model, not necessarily how it was trained.

What exactly do we mean by a model? Although the term is perhaps somewhat vague, a statistical model basically captures the assumptions we make about how things work in the world, with details to be learned from data. In particular, a model specifies what the inputs are, what the outputs are, and typically how we think the inputs might interact with each other in generating the output.

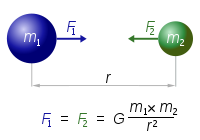

A classic example of a model is the equations which govern Newtonian gravity. The model states that the output (the force of gravity between two objects) is determined by three input values: the mass of the first object, the mass of the second object, and the distance between them. More precisely, it states that gravity will be proportional to the product of the two masses, divided by the distance squared. Critically, it doesn’t explain why these factors should be the factors that influence gravity; it merely tries to provide a parsimonious explanation that allows us to predict gravity for any situation.
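Written as an equation (standard notation, not taken from the original text), the Newtonian model is shown below, where F is the force, m₁ and m₂ are the two masses, r is the distance between them, and G is the scaling factor. The second part works through one consequence: doubling the distance while keeping the masses fixed reduces the force to a quarter of its original value.

```latex
F = G \, \frac{m_1 m_2}{r^2},
\qquad
F(2r) = G \, \frac{m_1 m_2}{(2r)^2} = \frac{1}{4} \, G \, \frac{m_1 m_2}{r^2} = \frac{1}{4} \, F(r)
```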

Of course, even if this were completely correct, in order to be able to make a prediction, we also need to know the corresponding scaling factor, G. In principle, however, it should be possible to learn this value through observation. If we have assumed the correct (or close to correct) model for how things operate in reality, we have a good chance of being able to learn the relevant details from data.
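As a sketch of what learning G from observation could look like, the Python below fits the single parameter of the Newtonian model by least squares. The observations are synthetic (generated from the accepted value of G with 1% multiplicative noise), so this illustrates the fitting step only and does not describe how G was actually measured. Because the model is F = G·x with x = m₁m₂/r², minimizing the squared error has the closed-form solution G = ΣxᵢFᵢ / Σxᵢ².

```python
import random

G_TRUE = 6.674e-11  # used only to simulate the observations


def simulate_observations(n, rng, noise=0.01):
    """Hypothetical measurements (m1, m2, r, force) with 1% multiplicative noise."""
    data = []
    for _ in range(n):
        m1 = rng.uniform(1.0, 100.0)  # kg
        m2 = rng.uniform(1.0, 100.0)  # kg
        r = rng.uniform(0.5, 2.0)     # m
        force = G_TRUE * m1 * m2 / r ** 2 * (1 + rng.gauss(0.0, noise))
        data.append((m1, m2, r, force))
    return data


def fit_G(data):
    """Least-squares estimate of G for the model F = G * x, with x = m1*m2/r**2.

    Minimizing sum((F_i - G * x_i)**2) gives G = sum(x_i * F_i) / sum(x_i**2).
    """
    xs = [m1 * m2 / r ** 2 for m1, m2, r, _ in data]
    fs = [f for _, _, _, f in data]
    return sum(x * f for x, f in zip(xs, fs)) / sum(x * x for x in xs)


observations = simulate_observations(200, random.Random(0))
G_hat = fit_G(observations)
print(f"estimated G = {G_hat:.4e}")
```

The model's form is fixed in advance; only one number is learned from data, which is what makes this case so much easier than fitting a deep network.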

In the case of gravity, of course, Einstein eventually showed that Newton’s model was only approximately correct, and that it fails in extreme conditions. For most circumstances, however, the Newtonian model is good enough, which is why people were able to learn the constant G = 6.674 × 10⁻¹¹ N·m²/kg², and use it to make predictions.

Einstein’s model is much more complex, with more details to be learned through observation. In most circumstances, it gives approximately the same prediction as the Newtonian model would, but it is more accurate in extreme circumstances, and of course has been essential in the development of technologies such as GPS. Even more impressively, the secondary predictions of relativity have been astounding, successfully predicting, for example, the existence of black holes before we could ever hope to test for their existence. And yet we know that Einstein’s model, too, is not completely correct, as it fails to agree with the models of quantum mechanics under even more extreme conditions.

Gravitation, of course, is deterministic (as far as we know). In machine learning and statistics, by contrast, we are typically dealing with models that involve uncertainty or randomness. For example, a simple model of how long you are going to live would be to just predict the average of the population for the country in which you live. A better model might take into account relevant factors, such as your current health status, your genes, how much you exercise, whether or not you smoke cigarettes, etc. In pretty much every case, however, there will be some uncertainty about the prediction, because we don’t know all the relevant factors. (This is different, of course, from the apparent true randomness which occurs at the sub-atomic level, but we won’t worry about that difference here.)

In addition to being an incredibly successful rebranding of neural networks and machine learning (itself arguably a rather successful rebranding of statistics), the term deep learning refers to a particular type of model, one in which the outputs are the results of a series of many simple transformations applied to the inputs (much like our wiring diagram from above). Although deep learning models are certainly complex, they are not black boxes. In fact, it would be more accurate to refer to them as glass boxes, because we can literally look inside and see what each component is doing.
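A minimal Python sketch of what “looking inside” means in practice: a tiny two-layer network in which every intermediate value is recorded. The layer sizes and weights are hypothetical and set by hand; in a trained model they would come from data, and there would be far more of them, but every step would be just as open to inspection.

```python
def relu(v):
    """Elementwise non-linearity: negative values become 0."""
    return [max(0.0, x) for x in v]


def dense(weights, bias, v):
    """One simple transformation: a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]


# Hypothetical parameters for a tiny 2-3-1 network.
W1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
b1 = [0.0, 0.0, -1.0]
W2 = [[1.0, -2.0, 0.5]]
b2 = [0.1]


def forward(x):
    """Run the network, keeping every intermediate value so it can be inspected."""
    trace = {"input": x}
    trace["hidden_pre"] = dense(W1, b1, x)
    trace["hidden"] = relu(trace["hidden_pre"])
    trace["output"] = dense(W2, b2, trace["hidden"])
    return trace


for name, value in forward([2.0, 1.0]).items():
    print(name, value)
```

Nothing is hidden: the third hidden unit, for instance, can be seen to receive -1.0 and be switched off by the ReLU. What the trace does not tell you is why the weights have the values they do.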

The problem, of course, is that these systems are also complicated. If I give you a simple set of rules to follow in order to make a prediction, as long as there aren’t too many rules and the rules themselves are simple, you could pretty easily figure out the full set of input-to-output mappings in your mind. This is also true, though to a lesser extent, with a class of models known as linear models, where the effect of changing any one input can be interpreted without knowing about the value of other inputs.

Deep learning models, by contrast, typically involve non-linearities and interactions between inputs, which means that not only is there no simple mapping from inputs to outputs, but the effect of changing one input may depend critically on the values of other inputs. This makes it very hard to mentally figure out what’s happening, but the details are nevertheless transparent and completely open to inspection.
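The contrast between linear and non-linear models can be shown with two toy Python functions. Both models and their weights are hypothetical; the non-linear one is a single ReLU unit, chosen as the simplest building block of the kind used in deep networks. The helper measures how much the output changes when x1 moves from 0 to 1, at several fixed values of x2.

```python
def linear_model(x1, x2):
    # Hypothetical weights; the effect of x1 never depends on x2.
    return 3.0 * x1 + 2.0 * x2


def nonlinear_model(x1, x2):
    # A single ReLU unit: x1 only matters when the weighted sum is positive.
    return max(0.0, 3.0 * x1 + 2.0 * x2 - 4.0)


def effect_of_x1(model, x2, delta=1.0):
    """Change in output when x1 goes from 0 to delta, holding x2 fixed."""
    return model(delta, x2) - model(0.0, x2)


for x2 in (0.0, 1.0, 5.0):
    print(x2, effect_of_x1(linear_model, x2), effect_of_x1(nonlinear_model, x2))
```

The linear model's effect is 3.0 every time, while the ReLU unit's effect is 0.0, 1.0, and 3.0 as x2 goes from 0 to 1 to 5: the same change in x1 matters more or less depending on x2.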

The actual computation performed by these models in making a prediction is typically quite straightforward; where things get difficult is in the actual learning of the model parameters from data. As described above, once we assume a certain form for a model (in this case, a flexible neural network), we then need to try to figure out good values for the parameters from data.

In the example of gravity, once we have assumed a “good enough” model (proportional to the product of the masses and inversely proportional to the distance squared), we just need to determine the value of one parameter (G) by fitting the model to observations. With modern deep learning systems, by contrast, there can easily be millions of such parameters to be learned.

In practice, nearly all of these deep learning models are trained using some variant of an algorithm called stochastic gradient descent (SGD), which takes random samples from the training data, and gradually adjusts all parameters to make the predicted output more like what we want. Exactly why it works as well as it does is still not well understood, but the main thing to keep in mind is that it, too, is transparent.

Because it is usually initialized with random values for all parameters, and because it visits the training examples in a random order, SGD can lead to different parameters each time we run it. The algorithm itself, however, is deterministic: if we used the same initialization, the same data, and the same sequence of random samples (in practice, the same random seed), it would produce the same result. In other words, neither the model nor the algorithm is a black box.
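The following Python sketch shows plain SGD on a one-input linear model, under assumed settings (learning rate, step count, and synthetic data from y = 2x + 1 plus Gaussian noise). All of its randomness, both the initial parameters and the order in which training examples are sampled, comes from a single seeded generator. Running it twice with the same seed reproduces the parameters exactly, while a different seed gives slightly different ones. Real frameworks add complications (mini-batches, parallel hardware) that can introduce further nondeterminism, which this sketch ignores.

```python
import random


def sgd_fit(data, steps=2000, lr=0.05, seed=0):
    """Fit y ≈ w*x + b by stochastic gradient descent on squared error.

    All randomness (initial parameters and the order of sampled examples)
    comes from one seeded generator, so the same seed gives the same result.
    """
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random initialization
    for _ in range(steps):
        x, y = rng.choice(data)   # one randomly sampled training example
        error = (w * x + b) - y   # prediction minus target
        # Gradient of 0.5 * error**2 with respect to w is error * x, and to b is error.
        w -= lr * error * x
        b -= lr * error
    return w, b


# Hypothetical training data: y = 2x + 1 with a little Gaussian noise.
noise_rng = random.Random(123)
data = [(k / 10, 2 * (k / 10) + 1 + noise_rng.gauss(0.0, 0.1)) for k in range(-10, 11)]

run_a = sgd_fit(data, seed=42)
run_b = sgd_fit(data, seed=42)  # same seed: identical parameters
run_c = sgd_fit(data, seed=7)   # different seed: slightly different parameters
print(run_a, run_b, run_c)
```

All three runs land near w = 2 and b = 1, but only the two runs sharing a seed agree exactly.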

Although it is somewhat unsatisfying, the complete answer to why a machine learning system did something ultimately lies in the combination of the assumptions we made in designing the model, the data it was trained on, and various decisions made about how to learn the parameters, including the randomness in the initialization.

4. Back to black boxes

Why does all this matter? Well, there are at least two ways in which the concept of black boxes is highly relevant to machine learning.

First, there are plenty of algorithms and software systems (and not just those based on machine learning) that are black boxes as far as the user is concerned. This is perhaps most commonly the case in proprietary software, where users don’t have access to the inner workings, and all they get to see are the inputs and outputs. This is the sort of system that ProPublica reported on in its coverage of judicial sentencing algorithms (specifically the COMPAS system from Northpointe). In that case, we know the inputs, and we can see the risk scores that have been given to people as the output. We don’t, however, have access to the algorithm used by the company, or the data it was trained on. Nevertheless, it is safe to say that someone has access to the details (presumably the employees of the company), and it is very likely completely transparent to them.

The second way in which the metaphor of black boxes is relevant is with respect to the systems we are trying to learn, such as human vision. In some ways, human behaviour is unusually transparent, in that we can actually ask people why they did something, and obtain explanations. However, there is good reason to believe that we don’t always know the true reasons for the things we do. Far from being transparent to ourselves, we simply don’t have conscious access to many of the internal processes that govern our behaviour. If asked to explain why we did something, we may be able to provide a narrative that at least conveys how the decision-making process felt to us. If asked to explain how we are able to recognize objects, by contrast, we might think we can provide some sort of explanation (something involving edges and colours), but in reality, this process operates well below the level of consciousness.

Although there are special circumstances in which we can actually inspect the inner workings of human or other mammalian systems, such as neuroscience experiments, in general, we are trying to use machine learning to mimic human behaviour using only the inputs and the outputs. In other words, from the perspective of a machine learning system, the human is the black box.

5. Conclusion

In conclusion, it’s useful to reflect on what people want when they think of systems that are not black boxes. People typically imagine something like the scenario in which a self-driving car has gone off the road, and we want to know why. In the popular imagination, the expectation seems to be that the car must have evaluated possible outcomes, assigned them probabilities, and chosen the one most likely to produce the best outcome, where “best” is determined according to some sort of morality that has been programmed into it.

In reality, it is highly unlikely that this is how things will work. Rather, if we ask the car why it did what it did, the answer will be that it applied a transparent and deterministic computation using the values of its parameters, given its current input, and this determined its actions. If we ask why it had those particular parameters, the answer will be that they are the result of the model that was chosen, the data it was trained on, and the details of the learning algorithm that was used.

This does seem frustratingly unhelpful, and it is easy to see why people reach for the black box metaphor. However, consider that we don’t actually have this kind of access for the systems we are trying to mimic. If we ask a human driver why they went off the road, they will likely be capable of responding in language, and giving some account of themselves (that they were drunk, or distracted, or had to swerve, or were blinded by the weather), and yet aside from providing some sort of narrative coherence, we don’t really know why they did it, and neither do they. At least with machine learning, we can recreate the same setting and probe the internal state. It might be complicated to understand, but it is not a black box.

Block Diagram

What is a Block Diagram?

A block diagram is a specialized, high-level flowchart used in engineering. It is used to design new systems or to describe and improve existing ones. Its structure provides a high-level overview of major system components, key process participants, and important working relationships.

Types and Uses of Block Diagrams

A block diagram provides a quick, high-level view of a system to rapidly identify points of interest or trouble spots. Because of its high-level perspective, it may not offer the level of detail required for more comprehensive planning or implementation. A block diagram will not show every wire and switch in detail; that's the job of a circuit diagram.

A block diagram is especially focused on the input and output of a system. It cares less about what happens between input and output. This principle is referred to as a black box in engineering: either the parts that get us from input to output are not known, or they are not important.

How to Make a Block Diagram

Block diagrams are made similarly to flowcharts. You will want to create blocks, often represented by rectangular shapes, that represent important points of interest in the system from input to output. Lines connecting the blocks will show the relationship between these components.

In SmartDraw, you'll want to start with a block diagram template that already has the relevant library of block diagram shapes docked. Adding, moving, and deleting shapes is easy with just a few keystrokes or with drag-and-drop. SmartDraw's block diagram tool will help build your diagram automatically.

Symbols Used in Block Diagrams

Block diagrams use very basic geometric shapes: boxes and circles. The principal parts and functions are represented by blocks connected by straight and segmented lines illustrating relationships.

When block diagrams are used in electrical engineering, the arrows connecting components represent the direction of signal flow through the system.

Whatever any specific block represents should be written on the inside of that block.

A block diagram can also be drawn in increasing detail if analysis requires it. Feel free to add as little or as much detail as you want using more specific electrical schematic symbols.
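A block diagram maps naturally onto code: each block is a function treated as a black box, and each arrow says where its output goes next. The Python below is a minimal sketch under assumed conditions: the three blocks (`amplifier`, `clipper`, `offset`) are hypothetical, and the runner only handles a single chain with no branches or feedback loops.

```python
# Hypothetical blocks for a simple signal chain. Each block is a black box:
# the diagram only cares about what goes in and what comes out.
def amplifier(signal):
    return [10.0 * s for s in signal]


def clipper(signal, limit=5.0):
    return [max(-limit, min(limit, s)) for s in signal]


def offset(signal):
    return [s + 1.0 for s in signal]


# Blocks are the nodes; each connection is an arrow giving the direction of signal flow.
blocks = {"amplifier": amplifier, "clipper": clipper, "offset": offset}
connections = [
    ("input", "amplifier"),
    ("amplifier", "clipper"),
    ("clipper", "offset"),
    ("offset", "output"),
]


def run_chain(blocks, connections, signal):
    """Follow the arrows from 'input' to 'output', applying each block in turn.

    Assumes a single linear chain (no branches or feedback loops).
    """
    next_block = dict(connections)
    node = next_block["input"]
    while node != "output":
        signal = blocks[node](signal)
        node = next_block[node]
    return signal


out = run_chain(blocks, connections, [0.5, 0.25, -1.0])
print(out)
```

Swapping the internals of any block leaves the diagram, and the runner, unchanged, which is exactly the black box principle the diagram relies on.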

Block Diagram: Best Practices

- Identify the system. Determine the system to be illustrated. Define components, inputs, and outputs.

- Create and label the diagram. Add a symbol for each component of the system, connecting them with arrows to indicate flow. Also, label each block so that it is easily identified.

- Indicate input and output. Label the input that activates a block, and label the output that ends the block.

- Verify accuracy. Consult with all stakeholders to verify accuracy.

Block Diagram Examples

The best way to understand block diagrams is to look at some examples of block diagrams.

Block Diagram Scoreboard

“For example, we make everything smaller. The physical accuracy of the models hasn't changed, but we're entering regimes where there's increasing cross talks between components simply because we're packing them together more closely.”

New product demands, such as the push for greener technologies (which calls for ever-improving energy minimization), are creating an environment for design engineers in which simulation-based verification alone is simply not practical. “When you're designing a product, such as, say, a cellphone, you have maybe about a hundred or so components on the circuit board. That's a lot of design values. To completely explore that design space and try every possible combination of components is infeasible.”

“When we want to do design optimization we can't be concerned with every single variable inside the system. All we really care about is what's happening in the aggregate: the signals at the outside of the device where the humans are interacting with it. So we want these abstracted models, and that's what machine learning gives you: models that you then use for simulation.”

Accomplishing this is no small task. Conventional simulations require engineers to model everything in a system and represent all of those effects; the alternative is completely data-driven modeling, not based on any prior knowledge of what's inside the system. To do this, engineers need machine learning algorithms that can predict a particular output and represent the behaviors of particular components.
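A minimal Python sketch of the data-driven idea: treat a component as a black box, record input/output measurements, and fit a behavioral model to them. Everything here is an assumption for illustration: `hidden_device` stands in for the real component (its tanh-shaped transfer curve is invented), the measurements are simulated with small Gaussian noise, and a cubic polynomial fitted by least squares stands in for whatever machine learning model a real project would use.

```python
import math
import random


def hidden_device(v):
    """Stand-in for a component we can only measure (hypothetical transfer curve)."""
    return math.tanh(v)


def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (A^T A) c = A^T y."""
    n = degree + 1
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Solve the small linear system by Gaussian elimination with partial pivoting.
    m = [row[:] + [rhs] for row, rhs in zip(ata, aty)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n] - sum(m[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = s / m[r][r]
    return coeffs  # coeffs[i] multiplies v**i


def evaluate(coeffs, v):
    """Behavioral model: predicts the output without knowing the internals."""
    return sum(c * v ** i for i, c in enumerate(coeffs))


# Simulated "measurements": 41 input levels, each with a little measurement noise.
rng = random.Random(1)
xs = [-1.0 + 0.05 * k for k in range(41)]
ys = [hidden_device(x) + rng.gauss(0.0, 0.005) for x in xs]

coeffs = fit_polynomial(xs, ys, degree=3)
print(evaluate(coeffs, 0.5), hidden_device(0.5))
```

Once fitted, `evaluate(coeffs, v)` can be called in place of the device in a simulation, and the coefficients say nothing about the device's internals, which is the property that lets behavioral models preserve IP.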

Machine learning-based modeling also offers several other benefits that should be attractive to companies, such as the ability to share models without revealing vital intellectual property (IP). “Because behavioral models only describe, say, input/output characteristics, they don't tell you what's inside the black box. They preserve or obscure IP. With a behavioral model a supplier can easily share that model with their customer without disclosing proprietary information. It allows for the free flow of critical information and it allows the customer then to be able to design their system using that model from the supplier.”

Most integrated circuit manufacturers, for example, use Input/Output Buffer Information Specification (IBIS) models to share information about input/output (I/O) signals with customers, while also protecting IP. IBIS models tell you absolutely nothing about the circuit design details.

“Where machine learning can help is to make models such as IBIS better. IBIS models don't represent interactions between the multiple I/O pins of an integrated circuit. There's a lot of unintended coupling that current models can't replicate. But with more powerful methods based on machine learning for obtaining models, next-gen models may be able to capture those important effects.”

The other great benefit would be reduced time to market. In the current state of circuit design there's almost a sense of planned failure that eats up a lot of development time. “Many chips don't pass qualification testing and need to undergo a re-spin. With better models we can get designs right the first time.”

System-level ESD (electrostatic discharge) design is described as a world built on trial and error that would benefit greatly from behavioral modeling. “[Design engineers] make a product, say a laptop, it undergoes testing, probably fails, then they start sticking additional components on the circuit board until it passes... and it wastes a lot of time. We build in time to fix things, but it's often by the seat of one's pants. If we had accurate models for how these systems would respond to ESD, we could design them to pass qualification testing the first time.”

The willingness and interest in machine learning-based behavioral models is there, but the hurdles are in the details. How do you actually do this? Today, machine learning is largely applied to image recognition, natural language processing, and, perhaps most ignominiously, the sort of behavior prediction that lets Google guess which ads to serve you. “There's only been a little bit of work in regards to electronics modeling. We have to figure out all the details. We're working with real measurement data. How much do you need? Do you need to process or filter it before delivering it to the algorithm? And which algorithms are suitable for representing electronic components and systems? We have to answer all of those questions.”

CAEML's aim is to demonstrate, over a five-year period, that machine learning can be applied to modeling for many different applications within the realm of electronics design. As part of that, the center will be doing foundational research on the machine learning algorithms themselves: identifying the ones that are most suitable and how to use them.

“Although we're working on many applications (signal integrity analysis, IP reuse, power delivery network design, even IC layouts and physical design), all of which require models, there are common problems that we're facing, and a lot of them pertain to working with a limited set of real measurement data. Machine learning theorists have really only focused on the algorithm. They assumed there's an unlimited quantity of data available, and that's not realistic, at least in our domain. In order to get data you have to fabricate samples and measure them, and that takes time and money. The amount of data, though it seems huge to us, is very small compared to what they use in the field.”

Electronics and Artificial Intelligence

Through artificial intelligence, ITCL aims to design and implement processes that, when run on physical architectures, maximise certain results through the actions they trigger, always with a focus on improving productivity.

The area has a long history of working with companies throughout the country, having participated in a number of projects, both R&D and process improvement. Its developments stand out in application areas where intelligent systems, advanced communications, and microelectronics are key factors: Smart Cities, Smart Energy, the Industrial Internet of Things (IIoT), Industry 4.0, Factories of the Future (FoF), and Machine-to-Machine (M2M) technology.

Lines of work of the research group

Some of the lines of work of the research group in electronics and artificial intelligence are the following:

- Design and prototyping of electronic boards and advanced devices for integration in equipment and telemedicine

- ARM Solution Design

- Programming embedded systems under Linux

- Programming of microprocessors for data acquisition, control and advanced communications.

- Data analysis in intelligent systems: Design of custom algorithms and design of experiments. Feature selection, feature-based process optimization, dynamic data series clustering, output model prediction.

- Specific developments for sustainable mobility: domestic and collective electric vehicle charging systems, demand analysis of loads, energy distribution according to constraints, localization and control systems, carpooling, carsharing, route optimization.

- Design of intelligent systems for precision agriculture, infrastructures and cities.

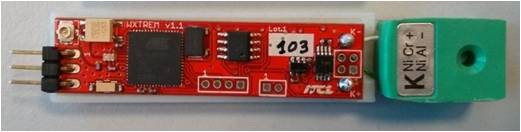

Technology samples

- Microcontrollers: several architectures (ARM, RISC) and manufacturers (Microchip, Atmel, Freescale, Raspberry).

- Wireless Communication: ZigBee, Bluetooth v2.0 and v4.0, radio frequency (434 MHz), Wi-Fi, GPRS, RFID Mifare.

- Communication Protocols: RS-485, RS-232, I2C, SPI, CAN bus, PLC, UART, TCP/IP.

- Positioning: GPS, GPRS.

- Sensors: temperature (thermocouple, PT100, RTD), light sensors, load cells, movement sensors (accelerometers, gyroscopes), biosensors, potential-free contacts, touch sensors, skin conductivity, infrared sensors, etc.

- HMI (Human Machine Interface): light and acoustic indicators, displays (LCD, graphic, alphanumeric, etc.), keyboards.

Deep Learning

How does deep learning attain such impressive results?

In a word, accuracy. Deep learning achieves recognition accuracy at higher levels than ever before. This helps consumer electronics meet user expectations, and it is crucial for safety-critical applications like driverless cars. Recent advances have improved deep learning to the point where it outperforms humans in some tasks, such as classifying objects in images.

While deep learning was first theorized in the 1980s, there are two main reasons it has only recently become useful:

- Deep learning requires large amounts of labeled data. For example, driverless car development requires millions of images and thousands of hours of video.

- Deep learning requires substantial computing power. High-performance GPUs have a parallel architecture that is efficient for deep learning. When combined with clusters or cloud computing, this enables development teams to reduce training time for a deep learning network from weeks to hours or less.

Examples of Deep Learning at Work

Deep learning applications are used in industries from automated driving to medical devices.

Automated Driving: Automotive researchers are using deep learning to automatically detect objects such as stop signs and traffic lights. In addition, deep learning is used to detect pedestrians, which helps decrease accidents.

Aerospace and Defense: Deep learning is used to identify objects in satellite imagery, locating areas of interest and identifying safe or unsafe zones for troops.

Medical Research: Cancer researchers are using deep learning to automatically detect cancer cells. Teams at UCLA built an advanced microscope that yields a high-dimensional data set used to train a deep learning application to accurately identify cancer cells.

Industrial Automation: Deep learning is helping to improve worker safety around heavy machinery by automatically detecting when people or objects are within an unsafe distance of machines.

Electronics: Deep learning is being used in automated hearing and speech translation. For example, home assistance devices that respond to your voice and know your preferences are powered by deep learning applications.

How Deep Learning Works

Most deep learning methods use neural network architectures, which is why deep learning models are often referred to as deep neural networks.

The term “deep” usually refers to the number of hidden layers in the neural network. Traditional neural networks only contain 2-3 hidden layers, while deep networks can have as many as 150.

Deep learning models are trained by using large sets of labeled data and neural network architectures that learn features directly from the data without the need for manual feature extraction.

Figure 1: Neural networks, which are organized in layers consisting of a set of interconnected nodes. Networks can have tens or hundreds of hidden layers.

One of the most popular types of deep neural networks is known as convolutional neural networks (CNN or ConvNet).

A CNN convolves learned features with input data, and uses 2D convolutional layers, making this architecture well suited to processing 2D data, such as images.

CNNs eliminate the need for manual feature extraction, so you do not need to identify features used to classify images. The CNN works by extracting features directly from images. The relevant features are not pretrained; they are learned while the network trains on a collection of images. This automated feature extraction makes deep learning models highly accurate for computer vision tasks such as object classification.

Figure 2: Example of a network with many convolutional layers. Filters are applied to each training image at different resolutions, and the output of each convolved image serves as the input to the next layer.

CNNs learn to detect different features of an image using tens or hundreds of hidden layers. Every hidden layer increases the complexity of the learned image features. For example, the first hidden layer could learn how to detect edges, and the last could learn how to detect more complex shapes specifically catered to the shape of the object we are trying to recognize.
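
The sketch below illustrates the kind of first-layer feature described above. It applies a hand-picked vertical-edge kernel to a small image that is dark on the left and bright on the right. The kernel is a Sobel-style kernel chosen here as an assumption for illustration; a trained CNN learns its own kernels. The response is large only where the brightness changes.

```python
def conv2d(image, kernel):
    # Valid cross-correlation (no padding, stride 1), as used by CNN convolutional layers.
    kh, kw = len(kernel), len(kernel[0])
    return [
        [sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
         for c in range(len(image[0]) - kw + 1)]
        for r in range(len(image) - kh + 1)
    ]


# Hand-picked vertical-edge kernel (Sobel-style); a trained CNN would learn its own.
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# 4x6 image: dark left half (0), bright right half (10).
image = [[0, 0, 0, 10, 10, 10] for _ in range(4)]

response = conv2d(image, vertical_edge)
print(response[0])  # [0, 40, 40, 0]
```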

What's the Difference Between Machine Learning and Deep Learning?

Deep learning is a specialized form of machine learning. A machine learning workflow starts with relevant features being manually extracted from images. The features are then used to create a model that categorizes the objects in the image. With a deep learning workflow, relevant features are automatically extracted from images. In addition, deep learning performs "end-to-end learning", where a network is given raw data and a task to perform, such as classification, and it learns how to do this automatically.

Another key difference is that deep learning algorithms scale with data, whereas shallow learning converges. Shallow learning refers to machine learning methods that plateau at a certain level of performance when you add more examples and training data to the model.

A key advantage of deep learning networks is that they often continue to improve as the size of your data increases.

Figure 3. Comparing a machine learning approach to categorizing vehicles (left) with deep learning (right). In machine learning, you manually choose features and a classifier to sort images. With deep learning, feature extraction and modeling steps are automatic.

Choosing Between Machine Learning and Deep Learning

Machine learning offers a variety of techniques and models you can choose based on your application, the size of data you're processing, and the type of problem you want to solve. A successful deep learning application requires a very large amount of data (thousands of images) to train the model, as well as GPUs, or graphics processing units, to rapidly process your data.

When choosing between machine learning and deep learning, consider whether you have a high-performance GPU and lots of labeled data. If you don't have either of those things, it may make more sense to use machine learning instead of deep learning. Deep learning is generally more complex, so you'll need at least a few thousand images to get reliable results. Having a high-performance GPU means the model will take less time to analyze all those images.

How to Create and Train Deep Learning Models

The three most common ways people use deep learning to perform object classification are:

Training from Scratch

To train a deep network from scratch, you gather a very large labeled data set and design a network architecture that will learn the features and model. This is good for new applications, or applications that will have a large number of output categories. This is a less common approach because with the large amount of data and rate of learning, these networks typically take days or weeks to train.

Transfer Learning

Most deep learning applications use the transfer learning approach, a process that involves fine-tuning a pretrained model. You start with an existing network, such as AlexNet or GoogLeNet, and feed in new data containing previously unknown classes. After making some tweaks to the network, you can now perform a new task, such as categorizing only dogs or cats instead of 1000 different objects. This also has the advantage of needing much less data (processing thousands of images, rather than millions), so computation time drops to minutes or hours.

Transfer learning requires an interface to the internals of the pre-existing network, so it can be surgically modified and enhanced for the new task. MATLAB® has tools and functions designed to help you do transfer learning.
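
The idea behind transfer learning can be sketched independently of any particular toolbox: keep the pretrained layers frozen and train only a new output layer for the new classes. This is a toy illustration, not the MATLAB workflow. `pretrained_features` is a hypothetical fixed function standing in for the early layers of a network such as AlexNet. The new layer is a logistic-regression classifier, and the two-class data are made up.

```python
import math


def pretrained_features(x):
    # Hypothetical stand-in for the frozen layers of a pretrained network:
    # a fixed mapping from a raw input to a feature vector. It is never updated.
    return [x[0] + x[1], x[0] - x[1], 1.0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_new_head(samples, labels, epochs=200, lr=0.5):
    # New output layer for the new task (logistic regression on the frozen features).
    # Only these weights change during training.
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            f = pretrained_features(x)
            p = sigmoid(sum(w * v for w, v in zip(weights, f)))
            weights = [w - lr * (p - y) * v for w, v in zip(weights, f)]
    return weights


def predict(weights, x):
    f = pretrained_features(x)
    return 1 if sigmoid(sum(w * v for w, v in zip(weights, f))) >= 0.5 else 0


# Made-up two-class data for the new task (0 = "cat", 1 = "dog").
samples = [[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 0.9]]
labels = [0, 0, 1, 1]
head = train_new_head(samples, labels)
print([predict(head, x) for x in samples])  # [0, 0, 1, 1]
```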

Feature Extraction

A slightly less common, more specialized approach to deep learning is to use the network as a feature extractor. Since all the layers are tasked with learning certain features from images, we can pull these features out of the network at any time during the training process. These features can then be used as input to a machine learning model such as support vector machines (SVM).
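
Here is a minimal sketch of the feature-extraction approach, in which feature vectors pulled from a network layer are fed to a separate linear SVM. The feature values are made-up numbers standing in for network activations. The SVM is trained with plain stochastic subgradient descent on a regularized hinge loss, an assumption chosen for brevity; in practice you would use an existing SVM implementation.

```python
def train_linear_svm(features, labels, lr=0.1, lam=0.01, epochs=100):
    # Stochastic subgradient descent on an L2-regularized hinge loss.
    # Labels must be +1 or -1; the bias b is not regularized.
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # The regularization term shrinks the weights slightly on every step.
            w = [(1 - lr * lam) * wi for wi in w]
            if margin < 1:
                # Inside the margin or misclassified: take a hinge-loss subgradient step.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Made-up feature vectors standing in for activations pulled from a network layer.
features = [[2.0, 2.5], [3.0, 2.0], [2.5, 3.0], [-2.0, -1.5], [-3.0, -2.5], [-2.5, -2.0]]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(features, labels)
print([predict(w, b, x) for x in features])  # [1, 1, 1, -1, -1, -1]
```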

Accelerating Deep Learning Models with GPUs

Training a deep learning model can take a long time, from days to weeks. Using GPU acceleration can speed up the process significantly. Using MATLAB with a GPU reduces the time required to train a network and can cut the training time for an image classification problem from days down to hours. In training deep learning models, MATLAB uses GPUs (when available) without requiring you to understand how to program GPUs explicitly.

Figure 4. Deep Learning Toolbox commands for training your own CNN from scratch or using a pretrained model for transfer learning.

Deep Learning Applications

Pretrained deep neural network models can be used to quickly apply deep learning to your problems by performing transfer learning or feature extraction. For MATLAB users, some available models include AlexNet, VGG-16, and VGG-19, as well as Caffe models (for example, from Caffe Model Zoo) imported using importCaffeNetwork.

Use AlexNet to Recognize Objects with Your Webcam

Use MATLAB, a simple webcam, and a deep neural network to identify objects in your surroundings.

Example: Object Detection Using Deep Learning

In addition to object recognition, which identifies a specific object in an image or video, deep learning can also be used for object detection. Object detection means recognizing and locating the object in a scene, and it allows for multiple objects to be located within the image.

With just a few lines of code, MATLAB lets you do deep learning without being an expert. Get started quickly, create and visualize models, and deploy models to servers and embedded devices.

Teams are successful using MATLAB for deep learning because it lets you:

- Create and Visualize Models with Just a Few Lines of Code. MATLAB lets you build deep learning models with minimal code. With MATLAB, you can quickly import pretrained models and visualize and debug intermediate results as you adjust training parameters.

- Perform Deep Learning Without Being an Expert. You can use MATLAB to learn and gain expertise in the area of deep learning. Most of us have never taken a course in deep learning. We have to learn on the job. MATLAB makes learning about this field practical and accessible. In addition, MATLAB enables domain experts to do deep learning – instead of handing the task over to data scientists who may not know your industry or application.

- Automate Ground Truth Labeling of Images and Video. MATLAB enables users to interactively label objects within images and can automate ground truth labeling within videos for training and testing deep learning models. This interactive and automated approach can lead to better results in less time.

- Integrate Deep Learning in a Single Workflow. MATLAB can unify multiple domains in a single workflow. With MATLAB, you can do your thinking and programming in one environment. It offers tools and functions for deep learning, and also for a range of domains that feed into deep learning algorithms, such as signal processing, computer vision, and data analytics.

Function block diagrams

"A picture is worth a thousand words" is a familiar proverb that asserts that complex stories can be told with a single still image, or that an image may be more influential than a substantial amount of text. It also aptly characterizes the goals of visualization-based software in industrial control.

A function block diagram (FBD) can replace thousands of lines from a textual program. Graphical programming is an intuitive way of specifying system functionality by assembling and connecting function blocks. The first two parts of this series evaluated ladder diagrams and textual programming as choices for models of computation. Here, the strengths and weaknesses of FBDs will be discussed and compared.

Execution control of function blocks in an FBD network is implicit from the function block position in an FBD.

FBDs were introduced by IEC 61131-3 to overcome the weaknesses associated with textual programming and ladder diagrams. An FBD network primarily comprises interconnected functions and function blocks to express system behavior. Function blocks were introduced to address the need to reuse common tasks such as proportional-integral-derivative (PID) control, counters, and timers at different parts of an application or in different projects. A function block is a packaged element of software that describes the behavior of data, a data structure and an external interface defined as a set of input and output parameters.

In many ways, function blocks can be compared with the integrated circuits used in electronic equipment. A function block is depicted as a rectangular block with inputs entering from the left and outputs exiting on the right. See the diagram of a typical function block with inputs and outputs.

Key features of function blocks are data preservation between executions, encapsulation, and information hiding. Data preservation is enabled by making a separate copy of a function block in memory every time it is called. Encapsulation handles a collection of software elements as one entity, and information hiding restricts external access to the data and procedures within an encapsulated element. Because of encapsulation and information hiding, system designers do not run the risk of accidentally modifying code or overwriting internal data when copying code from a previous control solution.
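
The three features above can be illustrated with a Python sketch (not IEC 61131-3 code) of a simplified up-counter, loosely modeled on the standard's CTU block. The count and the previous input live inside each instance, so they persist between executions. The instance bundles data and behavior into one entity. The edge-detection flag is kept private by convention. The class name `UpCounter` is hypothetical, and the standard block's upper count limit is omitted.

```python
class UpCounter:
    """Simplified up-counter function block, loosely modeled on IEC 61131-3 CTU.

    Inputs per call: cu (count pulse), r (reset), pv (preset value).
    Outputs: q (True once cv >= pv) and cv (current count).
    """

    def __init__(self):
        # Internal state preserved between executions of this instance.
        self.cv = 0
        self.q = False
        self._prev_cu = False  # hidden detail: used only for rising-edge detection

    def __call__(self, cu, r, pv):
        if r:
            self.cv = 0
        elif cu and not self._prev_cu:
            # Count only on a rising edge of cu, not while it stays True.
            self.cv += 1
        self._prev_cu = cu
        self.q = self.cv >= pv
        return self.q, self.cv


# Two separate instances keep separate data, so reusing the block is safe.
parts_counter = UpCounter()
reject_counter = UpCounter()
for pulse in [True, False, True, False, True]:
    parts_counter(cu=pulse, r=False, pv=3)
reject_counter(cu=True, r=False, pv=3)
print(parts_counter.q, parts_counter.cv, reject_counter.cv)  # True 3 1
```

Because `parts_counter` and `reject_counter` are separate instances, counting on one never disturbs the other's data.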

Functions, function block diagrams

A function is a software element that, when executed with a particular set of input values, produces one primary result and does not have any internal storage. Functions are often confused with function blocks, which have internal storage and may have multiple outputs. Some examples of functions are trigonometric functions like sin() and cos(), arithmetic functions like add and multiply, and string handling functions. Examples of function blocks include PID controllers, counters, and timers.
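
The distinction can be sketched in Python (not IEC 61131-3 syntax). `scale` behaves like a function: the same inputs always give the same single result. `RisingEdge`, loosely modeled on the standard's R_TRIG block, behaves like a function block. It keeps an internal memory, so the same input (`True`) can produce different outputs on successive calls. Both names are illustrative.

```python
def scale(value, gain, offset):
    # A function: one primary result, no internal storage.
    return value * gain + offset


class RisingEdge:
    """Function block sketch, loosely modeled on IEC 61131-3 R_TRIG."""

    def __init__(self):
        self._memory = False  # internal storage retained between calls

    def __call__(self, clk):
        q = clk and not self._memory  # True only on a False -> True transition
        self._memory = clk
        return q


print(scale(2.0, 3.0, 1.0), scale(2.0, 3.0, 1.0))  # 7.0 7.0
edge = RisingEdge()
print([edge(x) for x in [False, True, True, False, True]])  # [False, True, False, False, True]
```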

An FBD is a program constructed by connecting multiple functions and function blocks so that the output of one block becomes the input for the next. Unlike textual programming, no variables are necessary to pass data from one subroutine to another because the wires connecting different blocks automatically encapsulate and transfer data.

An FBD can be used to express the behavior of function blocks, as well as programs. It also can be used to describe steps, actions, and transitions within sequential function charts (SFCs).

A function block is not evaluated unless all inputs that come from other elements are available. When a function block executes, it evaluates all its variables, including input and internal variables as well as output variables. During its execution, the algorithm creates new values for the output and internal variables. As discussed, functions and function blocks are the building blocks of FBDs. In FBDs, the signals are considered to flow from the outputs of functions or function blocks to the inputs of other functions or function blocks.
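
The rule that a block runs only once all of its inputs are available amounts to evaluating the network in dependency (topological) order. The sketch below does this with Python's standard `graphlib.TopologicalSorter` (Python 3.9 or later). The three-block network, its block names, and the dictionary representation of wires are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical FBD network: each block lists the sources of its input wires and
# the operation it performs. "setpoint" and "measured" are external inputs.
blocks = {
    "error": (["setpoint", "measured"], lambda sp, pv: sp - pv),
    "gain": (["error"], lambda e: 2.0 * e),
    "limit": (["gain"], lambda u: max(-10.0, min(10.0, u))),
}


def evaluate_network(blocks, external_inputs):
    # Dependency graph: block -> the blocks whose outputs it needs.
    graph = {name: set(sources) for name, (sources, _) in blocks.items()}
    values = dict(external_inputs)
    order = []
    for name in TopologicalSorter(graph).static_order():
        if name in values:  # external input: nothing to compute
            continue
        sources, op = blocks[name]
        # Topological order guarantees every input value already exists here.
        values[name] = op(*(values[s] for s in sources))
        order.append(name)
    return values, order


values, order = evaluate_network(blocks, {"setpoint": 50.0, "measured": 47.0})
print(order, values["limit"])  # ['error', 'gain', 'limit'] 6.0
```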

Outputs of function blocks are updated as a result of function block evaluations. Changes of signal states and values therefore naturally propagate from left to right across the FBD network. The signal also can be fed back from function block outputs to inputs of the preceding blocks. A feedback path implies that a value within the path is retained after the FBD network is evaluated and used as the starting value on the next network evaluation. See FBD network diagram.

The execution control of function blocks in an FBD network is implicit from the position of the function block in an FBD. For example, in the "FBD network..." diagram, the "Plant Simulator" function is evaluated after the "Control" function block. Execution order can be controlled by enabling a function block for execution and having output terminals that change state once execution is complete. Execution of an FBD network is considered complete only when all outputs of all functions and function blocks are updated.
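
The execution order and feedback path described above can be sketched in Python as repeated network evaluations (scans). The "Control" block is evaluated before the "Plant Simulator" block. The plant output is retained and fed back as the controller's measurement on the next evaluation. The proportional controller, the first-order plant model, and all gains are assumptions made for illustration.

```python
def control(setpoint, measurement, kp=0.5):
    # Proportional controller: output proportional to the error.
    return kp * (setpoint - measurement)


class PlantSimulator:
    """First-order plant sketch: each scan, the output moves part way toward the input."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha
        self.output = 0.0  # internal state retained between network evaluations

    def __call__(self, u):
        self.output += self.alpha * (u - self.output)
        return self.output


def run_scans(setpoint, scans):
    plant = PlantSimulator()
    feedback = 0.0  # value on the feedback path, kept for the next evaluation
    history = []
    for _ in range(scans):
        u = control(setpoint, feedback)  # "Control" is evaluated first
        feedback = plant(u)              # then "Plant Simulator"
        history.append(feedback)
    return history


print(round(run_scans(10.0, 50)[-1], 3))  # 3.333
```

With proportional control alone, this loop settles at one third of the setpoint rather than at the setpoint itself. The retained feedback value is what carries the state from one scan to the next.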

Signals from outputs of function blocks can become inputs to other functions.

Strengths of FBD

Some FBD strengths follow.

Intuitive and easy to program. Because FBDs are graphical, it is easy for system designers without extensive programming training to understand and program control logic. This benefits domain experts who may not necessarily be experts at writing specific control algorithms in textual languages but understand the logic of the control algorithm. They can use existing function blocks to easily construct programs for data acquisition, and process and discrete control.

Extensive code reuse. One of the main benefits of function blocks is code reuse. As discussed, system designers can use existing function blocks such as PIDs and filters or encapsulate custom logic and easily reuse this code throughout programs. Since separate copies are made every time these function blocks are called, system designers do not risk accidentally overwriting data. Additionally, function blocks can be invoked from ladder diagrams and even textual languages such as structured text, making them highly portable among different models of computation.

Parallel execution. With the introduction of multiple-processor-based systems, programmable automation controllers and PCs now can execute multiple functions at the same time. Graphical programming languages, such as FBDs, can efficiently represent parallel logic. While textual programmers use specific threading and timing libraries to take advantage of multithreading, graphical, FBD, and dataflow languages (such as National Instruments LabVIEW) can automatically execute parallel function blocks in different threads. This helps in applications requiring advanced control, including multiple PIDs in parallel.
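
As a rough Python illustration, the sketch below defines a simple discrete PID block and dispatches two independent instances to a thread pool using the standard `concurrent.futures.ThreadPoolExecutor`. The PID form, gains, and sample time are assumptions. The sketch shows only that branches with no wires between them can be scheduled independently. It is not how LabVIEW or a controller runtime schedules blocks, and in CPython the global interpreter lock prevents pure-Python threads from running truly in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


class PID:
    """Discrete PID function block sketch (positional form, fixed sample time dt)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0  # internal state retained between executions
        self.prev_error = None

    def __call__(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Two independent loops (e.g. temperature and flow). No wire connects them,
# so nothing forces one to run before the other.
temperature_pid = PID(kp=2.0, ki=0.5, kd=0.0, dt=0.1)
flow_pid = PID(kp=1.0, ki=0.0, kd=0.1, dt=0.1)

with ThreadPoolExecutor(max_workers=2) as pool:
    temp_future = pool.submit(temperature_pid, 80.0, 75.0)
    flow_future = pool.submit(flow_pid, 12.0, 10.0)
    print(temp_future.result(), flow_future.result())  # 10.25 2.0
```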

Execution traceability and easy debugging. The graphical data flow of FBDs makes debugging easy because system designers can follow the wire connections between functions and function blocks. Many FBD program editors (such as Siemens Step 7) also provide animation showing data flow to make debugging easier.

Weaknesses of FBD

Some FBD weaknesses follow.

Algorithm development. Low-level functions and mathematical algorithms are traditionally represented in text functions; even algorithms for function blocks conventionally have been written using textual programming. Furthermore, function blocks abstract the intricacies of an algorithm, making it difficult for domain experts trying to learn the details of advanced control and signal processing techniques.

Limited execution control. Execution of an FBD network is left to right and is suitable for continuous behavior. While system designers can control the execution of a network through "jump" constructs and also by using data dependency between two function blocks, FBDs are not ideal for solving sequencing problems. For instance, going from the "tank fill" state to the "tank stir" state requires evaluation of all the current states. Depending on the output, a transition action has to take place before moving to the next state. While this can be achieved using data dependency of function blocks, such sequencing might require significant time and effort.
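
For comparison, the fill-then-stir sequence becomes straightforward when it is written as explicit steps with transition conditions, which is the style SFCs provide. The Python sketch below is illustrative only. The step names, the `level` and `stir_seconds` signals, and the thresholds are hypothetical.

```python
from enum import Enum, auto


class Step(Enum):
    FILL = auto()
    STIR = auto()
    DRAIN = auto()
    DONE = auto()


def next_step(step, level, stir_seconds):
    # Each step has one transition condition; only the active step's condition is checked.
    if step is Step.FILL and level >= 90.0:
        return Step.STIR
    if step is Step.STIR and stir_seconds >= 30.0:
        return Step.DRAIN
    if step is Step.DRAIN and level <= 5.0:
        return Step.DONE
    return step


def actions(step):
    # Outputs driven while a step is active (valve and motor commands).
    return {
        "fill_valve": step is Step.FILL,
        "mixer": step is Step.STIR,
        "drain_valve": step is Step.DRAIN,
    }


step = Step.FILL
readings = [(40.0, 0.0), (92.0, 0.0), (92.0, 10.0), (92.0, 31.0), (3.0, 31.0)]
trace = []
for level, stir_seconds in readings:
    step = next_step(step, level, stir_seconds)
    trace.append(step.name)
print(trace)  # ['FILL', 'STIR', 'STIR', 'DRAIN', 'DONE']
```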

IT integration. With businesses increasingly seeking ways to connect modern factory floors to the enterprise, connectivity to the Web and databases has become extremely important. While textual programs have database-logging capabilities and source code control features, FBDs generally are unable to integrate natively with IT systems. Furthermore, IT managers are often trained only in textual programming.

Need for training. Although intuitive, data flow is not commonly taught as a model of computation. In the U.S., engineers are trained to use textual languages, such as C++, Fortran, and Visual Basic, and technicians are trained in ladder logic or electrical circuits. FBDs require added training, as they represent a paradigm shift in writing a control program.

FBDs are a graphical way of representing a control program and follow a dataflow programming model. The intuitiveness, ease of use, and code reuse of FBDs make them very popular with engineers. FBDs are ideal for complex applications with parallel execution and for continuous processing. They also effectively fill gaps in ladder logic, such as encapsulation and code reuse. To overcome some of their weaknesses, engineers must employ mixed models of computation. FBDs are used in conjunction with textual programming for algorithms and IT integration. Batch and discrete operations are improved by adding SFCs. The SFC model of computation addresses some of the challenges faced by FBDs and will be covered in the fourth installment of this five-part series.

Artificial intelligence

Artificial intelligence (AI) is the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. The term is frequently applied to the project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, or learn from past experience. Since the development of the digital computer in the 1940s, it has been demonstrated that computers can be programmed to carry out very complex tasks—as, for example, discovering proofs for mathematical theorems or playing chess—with great proficiency. Still, despite continuing advances in computer processing speed and memory capacity, there are as yet no programs that can match human flexibility over wider domains or in tasks requiring much everyday knowledge. On the other hand, some programs have attained the performance levels of human experts and professionals in performing certain specific tasks, so that artificial intelligence in this limited sense is found in applications as diverse as medical diagnosis, computer search engines, and voice or handwriting recognition.

What is intelligence?

All but the simplest human behaviour is ascribed to intelligence, while even the most complicated insect behaviour is never taken as an indication of intelligence. What is the difference? Consider the behaviour of the digger wasp, Sphex ichneumoneus. When the female wasp returns to her burrow with food, she first deposits it on the threshold, checks for intruders inside her burrow, and only then, if the coast is clear, carries her food inside. The real nature of the wasp's instinctual behaviour is revealed if the food is moved a few inches away from the entrance to her burrow while she is inside: on emerging, she will repeat the whole procedure as often as the food is displaced. Intelligence—conspicuously absent in the case of Sphex—must include the ability to adapt to new circumstances.

Psychologists generally do not characterize human intelligence by just one trait but by the combination of many diverse abilities. Research in AI has focused chiefly on the following components of intelligence: learning, reasoning, problem solving, perception, and using language.

Learning

There are a number of different forms of learning as applied to artificial intelligence. The simplest is learning by trial and error. For example, a simple computer program for solving mate-in-one chess problems might try moves at random until mate is found. The program might then store the solution with the position so that the next time the computer encountered the same position it would recall the solution. This simple memorizing of individual items and procedures—known as rote learning—is relatively easy to implement on a computer. More challenging is the problem of implementing what is called generalization. Generalization involves applying past experience to analogous new situations. For example, a program that learns the past tense of regular English verbs by rote will not be able to produce the past tense of a word such as jump unless it previously had been presented with jumped, whereas a program that is able to generalize can learn the "add ed" rule and so form the past tense of jump based on experience with similar verbs.

Reasoning

To reason is to draw inferences appropriate to the situation. Inferences are classified as either deductive or inductive. An example of the former is, "Fred must be in either the museum or the café. He is not in the café; therefore he is in the museum," and of the latter, "Previous accidents of this sort were caused by instrument failure; therefore this accident was caused by instrument failure." The most significant difference between these forms of reasoning is that in the deductive case the truth of the premises guarantees the truth of the conclusion, whereas in the inductive case the truth of the premise lends support to the conclusion without giving absolute assurance. Inductive reasoning is common in science, where data are collected and tentative models are developed to describe and predict future behaviour—until the appearance of anomalous data forces the model to be revised. Deductive reasoning is common in mathematics and logic, where elaborate structures of irrefutable theorems are built up from a small set of basic axioms and rules.

There has been considerable success in programming computers to draw inferences, especially deductive inferences. However, true reasoning involves more than just drawing inferences; it involves drawing inferences relevant to the solution of the particular task or situation. This is one of the hardest problems confronting AI.
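
The defining property of deduction is that true premises guarantee a true conclusion. For the Fred example, this can be checked mechanically by enumerating every truth assignment: the argument is valid if no assignment makes all the premises true and the conclusion false. The Python sketch below does exactly that; the proposition names are illustrative. The inductive example about instrument failure cannot be verified this way, since its premises only support the conclusion.

```python
from itertools import product


def is_valid(premises, conclusion, variables):
    # Deductively valid: every assignment that satisfies all premises
    # also satisfies the conclusion.
    for values in product([True, False], repeat=len(variables)):
        world = dict(zip(variables, values))
        if all(p(world) for p in premises) and not conclusion(world):
            return False
    return True


variables = ["museum", "cafe"]
premises = [
    lambda w: w["museum"] or w["cafe"],  # Fred is in the museum or the café
    lambda w: not w["cafe"],             # He is not in the café
]
print(is_valid(premises, lambda w: w["museum"], variables))  # True
# Dropping the second premise removes the guarantee:
print(is_valid(premises[:1], lambda w: w["museum"], variables))  # False
```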

Problem solving

Problem solving, particularly in artificial intelligence, may be characterized as a systematic search through a range of possible actions in order to reach some predefined goal or solution. Problem-solving methods divide into special purpose and general purpose. A special-purpose method is tailor-made for a particular problem and often exploits very specific features of the situation in which the problem is embedded. In contrast, a general-purpose method is applicable to a wide variety of problems. One general-purpose technique used in AI is means-end analysis—a step-by-step, or incremental, reduction of the difference between the current state and the final goal. The program selects actions from a list of means—in the case of a simple robot this might consist of PICKUP, PUTDOWN, MOVEFORWARD, MOVEBACK, MOVELEFT, and MOVERIGHT—until the goal is reached.

Many diverse problems have been solved by artificial intelligence programs. Some examples are finding the winning move (or sequence of moves) in a board game, devising mathematical proofs, and manipulating "virtual objects" in a computer-generated world.
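
A much simplified version of means-end analysis for the robot example can be sketched in Python. At each step, the program measures the difference between the current state and the goal, then applies the action from the list of means that reduces it most. The sketch makes several assumptions: the robot moves on an obstacle-free grid, the mapping of actions to grid directions is our own, PICKUP and PUTDOWN are omitted, and the difference is the Manhattan distance. Full means-end analysis also sets up subgoals when no action reduces the difference directly, which this sketch does not attempt.

```python
# Effect of each action on an (x, y) position; this mapping is an assumption.
ACTIONS = {
    "MOVEFORWARD": (0, 1),
    "MOVEBACK": (0, -1),
    "MOVERIGHT": (1, 0),
    "MOVELEFT": (-1, 0),
}


def difference(state, goal):
    # Size of the remaining difference (Manhattan distance on the grid).
    return abs(goal[0] - state[0]) + abs(goal[1] - state[1])


def means_end_plan(start, goal, max_steps=100):
    state, plan = start, []
    while difference(state, goal) > 0 and len(plan) < max_steps:
        # Choose the action whose result is closest to the goal.
        name, (dx, dy) = min(
            ACTIONS.items(),
            key=lambda item: difference((state[0] + item[1][0], state[1] + item[1][1]), goal),
        )
        state = (state[0] + dx, state[1] + dy)
        plan.append(name)
    return plan, state


plan, final = means_end_plan((0, 0), (2, 1))
print(plan, final)  # ['MOVEFORWARD', 'MOVERIGHT', 'MOVERIGHT'] (2, 1)
```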

Perception

In perception the environment is scanned by means of various sensory organs, real or artificial, and the scene is decomposed into separate objects in various spatial relationships. Analysis is complicated by the fact that an object may appear different depending on the angle from which it is viewed, the direction and intensity of illumination in the scene, and how much the object contrasts with the surrounding field.

At present, artificial perception is sufficiently well advanced to enable optical sensors to identify individuals, autonomous vehicles to drive at moderate speeds on the open road, and robots to roam through buildings collecting empty soda cans. One of the earliest systems to integrate perception and action was FREDDY, a stationary robot with a moving television eye and a pincer hand, constructed at the University of Edinburgh, Scotland, during the period 1966–73 under the direction of Donald Michie. FREDDY was able to recognize a variety of objects and could be instructed to assemble simple artifacts, such as a toy car, from a random heap of components.

Language

A language is a system of signs having meaning by convention. In this sense, language need not be confined to the spoken word. Traffic signs, for example, form a minilanguage, it being a matter of convention that a particular hazard symbol means "hazard ahead" in some countries. It is distinctive of languages that linguistic units possess meaning by convention, and linguistic meaning is very different from what is called natural meaning, exemplified in statements such as "Those clouds mean rain" and "The fall in pressure means the valve is malfunctioning."

An important characteristic of full-fledged human languages—in contrast to birdcalls and traffic signs—is their productivity. A productive language can formulate an unlimited variety of sentences.

It is relatively easy to write computer programs that seem able, in severely restricted contexts, to respond fluently in a human language to questions and statements. Although none of these programs actually understands language, they may, in principle, reach the point where their command of a language is indistinguishable from that of a normal human. What, then, is involved in genuine understanding, if even a computer that uses language like a native human speaker is not acknowledged to understand? There is no universally agreed upon answer to this difficult question. According to one theory, whether or not one understands depends not only on one’s behaviour but also on one’s history: in order to be said to understand, one must have learned the language and have been trained to take one’s place in the linguistic community by means of interaction with other language users.

Methods and goals in AI

Symbolic vs. connectionist approaches

AI research follows two distinct, and to some extent competing, methods, the symbolic (or "top-down") approach, and the connectionist (or "bottom-up") approach. The top-down approach seeks to replicate intelligence by analyzing cognition independent of the biological structure of the brain, in terms of the processing of symbols—whence the symbolic label. The bottom-up approach, on the other hand, involves creating artificial neural networks in imitation of the brain's structure—whence the connectionist label.

To illustrate the difference between these approaches, consider the task of building a system, equipped with an optical scanner, that recognizes the letters of the alphabet. A bottom-up approach typically involves training an artificial neural network by presenting letters to it one by one, gradually improving performance by "tuning" the network. (Tuning adjusts the responsiveness of different neural pathways to different stimuli.) In contrast, a top-down approach typically involves writing a computer program that compares each letter with geometric descriptions. Simply put, neural activities are the basis of the bottom-up approach, while symbolic descriptions are the basis of the top-down approach.

In The Fundamentals of Learning (1932), Edward Thorndike, a psychologist at Columbia University, New York City, first suggested that human learning consists of some unknown property of connections between neurons in the brain. In The Organization of Behavior (1949), Donald Hebb, a psychologist at McGill University, Montreal, Canada, suggested that learning specifically involves strengthening certain patterns of neural activity by increasing the probability (weight) of induced neuron firing between the associated connections. The notion of weighted connections is described in a later section, Connectionism.
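
Hebb's idea is commonly formalized as the Hebbian learning rule, in which a connection weight grows in proportion to the product of the activities on both sides of it (Δw = η · x · y). The Python sketch below applies that textbook formalization, which is a later simplification rather than Hebb's own formula. The learning rate and activity patterns are made up.

```python
def hebbian_update(weights, pre, post, rate=0.1):
    # Hebbian rule: strengthen each connection in proportion to the product
    # of presynaptic activity (pre[i]) and postsynaptic activity (post).
    return [w + rate * x * post for w, x in zip(weights, pre)]


weights = [0.0, 0.0, 0.0]
# Inputs 0 and 1 repeatedly fire together with the output neuron; input 2 never does.
for _ in range(5):
    weights = hebbian_update(weights, pre=[1.0, 1.0, 0.0], post=1.0)
print([round(w, 2) for w in weights])  # [0.5, 0.5, 0.0]
```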

In 1957 two vigorous advocates of symbolic AI—Allen Newell, a researcher at the RAND Corporation, Santa Monica, California, and Herbert Simon, a psychologist and computer scientist at Carnegie Mellon University, Pittsburgh, Pennsylvania—summed up the top-down approach in what they called the physical symbol system hypothesis. This hypothesis states that processing structures of symbols is sufficient, in principle, to produce artificial intelligence in a digital computer and that, moreover, human intelligence is the result of the same type of symbolic manipulations.

During the 1950s and ’60s the top-down and bottom-up approaches were pursued simultaneously, and both achieved noteworthy, if limited, results. During the 1970s, however, bottom-up AI was neglected, and it was not until the 1980s that this approach again became prominent. Nowadays both approaches are followed, and both are acknowledged as facing difficulties. Symbolic techniques work in simplified realms but typically break down when confronted with the real world; meanwhile, bottom-up researchers have been unable to replicate the nervous systems of even the simplest living things. Caenorhabditis elegans, a much-studied worm, has approximately 300 neurons whose pattern of interconnections is perfectly known. Yet connectionist models have failed to mimic even this worm. Evidently, the neurons of connectionist theory are gross oversimplifications of the real thing.

Strong AI, applied AI, and cognitive simulation

Employing the methods outlined above, AI research attempts to reach one of three goals: strong AI, applied AI, or cognitive simulation. Strong AI aims to build machines that think. (The term strong AI was introduced for this category of research in 1980 by the philosopher John Searle of the University of California at Berkeley.) The ultimate ambition of strong AI is to produce a machine whose overall intellectual ability is indistinguishable from that of a human being. As is described in the section Early milestones in AI, this goal generated great interest in the 1950s and '60s, but such optimism has given way to an appreciation of the extreme difficulties involved. To date, progress has been meagre. Some critics doubt whether research will produce even a system with the overall intellectual ability of an ant in the foreseeable future. Indeed, some researchers working in AI's other two branches view strong AI as not worth pursuing.

Applied AI, also known as advanced information processing, aims to produce commercially viable "smart" systems—for example, "expert" medical diagnosis systems and stock-trading systems. Applied AI has enjoyed considerable success, as described in the section Expert systems.

In cognitive simulation, computers are used to test theories about how the human mind works—for example, theories about how people recognize faces or recall memories. Cognitive simulation is already a powerful tool in both neuroscience and cognitive psychology.

Alan Turing and the beginning of AI

Theoretical work

The earliest substantial work in the field of artificial intelligence was done in the mid-20th century by the British logician and computer pioneer Alan Mathison Turing. In 1935 Turing described an abstract computing machine consisting of a limitless memory and a scanner that moves back and forth through the memory, symbol by symbol, reading what it finds and writing further symbols. The actions of the scanner are dictated by a program of instructions that also is stored in the memory in the form of symbols. This is Turing’s stored-program concept, and implicit in it is the possibility of the machine operating on, and so modifying or improving, its own program. Turing’s conception is now known simply as the universal Turing machine. All modern computers are in essence universal Turing machines.

During World War II, Turing was a leading cryptanalyst at the Government Code and Cypher School in Bletchley Park, Buckinghamshire, England. Turing could not turn to the project of building a stored-program electronic computing machine until the cessation of hostilities in Europe in 1945. Nevertheless, during the war he gave considerable thought to the issue of machine intelligence. One of Turing’s colleagues at Bletchley Park, Donald Michie (who later founded the Department of Machine Intelligence and Perception at the University of Edinburgh), later recalled that Turing often discussed how computers could learn from experience as well as solve new problems through the use of guiding principles—a process now known as heuristic problem solving.
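Turing’s 1935 abstract machine, a scanner moving over a limitless memory and reading and writing symbols under a program of instructions, can be illustrated with a small simulator. This is a minimal sketch, not Turing’s own formulation: the transition-table format, the function name `run_turing_machine`, and the binary-increment program `INCREMENT` are illustrative assumptions. It also simplifies the stored-program idea, since the instructions sit in a separate Python table rather than on the tape itself.

```python
from typing import Dict, Tuple

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# This format is an illustrative assumption, not Turing's original notation.
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

BLANK = "_"

def run_turing_machine(rules: Rules, tape_input: str, start: str, halt: str,
                       max_steps: int = 10_000) -> str:
    """Simulate a single-tape machine whose tape grows on demand in both directions."""
    tape = {i: s for i, s in enumerate(tape_input)}  # sparse stand-in for a limitless memory
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = tape.get(head, BLANK)          # the scanner reads the current square
        write, move, state = rules[(state, symbol)]
        tape[head] = write                      # ...writes a symbol...
        head += move                            # ...and moves one square left (-1) or right (+1)
        steps += 1
    if not tape:
        return ""
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)

# Hypothetical example program: add 1 to a binary number.
# Scan right past the last digit, then propagate the carry leftward.
INCREMENT: Rules = {
    ("right", "0"): ("0", +1, "right"),
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", -1, "done"),
    ("carry", BLANK): ("1", -1, "done"),
}

if __name__ == "__main__":
    print(run_turing_machine(INCREMENT, "1011", "right", "done"))  # prints 1100
```

A universal machine would go one step further and read the `INCREMENT` table itself from the tape, which is what makes a program something the machine could in principle modify.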

Turing gave quite possibly the earliest public lecture (London, 1947) to mention computer intelligence, saying, “What we want is a machine that can learn from experience,” and that the “possibility of letting the machine alter its own instructions provides the mechanism for this.” In 1948 he introduced many of the central concepts of AI in a report entitled “Intelligent Machinery.” However, Turing did not publish this paper, and many of his ideas were later reinvented by others. For instance, one of Turing’s original ideas was to train a network of artificial neurons to perform specific tasks, an approach described in the section Connectionism.

Chess

At Bletchley Park, Turing illustrated his ideas on machine intelligence by reference to chess—a useful source of challenging and clearly defined problems against which proposed methods for problem solving could be tested. In principle, a chess-playing computer could play by searching exhaustively through all the available moves, but in practice this is impossible because it would involve examining an astronomically large number of moves. Heuristics are necessary to guide a narrower, more discriminative search. Although Turing experimented with designing chess programs, he had to content himself with theory in the absence of a computer to run his chess program. The first true AI programs had to await the arrival of stored-program electronic digital computers.

In 1945 Turing predicted that computers would one day play very good chess, and just over 50 years later, in 1997, Deep Blue, a chess computer built by the International Business Machines Corporation (IBM), beat the reigning world champion, Garry Kasparov, in a six-game match. While Turing’s prediction came true, his expectation that chess programming would contribute to the understanding of how human beings think did not. The huge improvement in computer chess since Turing’s day is attributable to advances in computer engineering rather than advances in AI—Deep Blue’s 256 parallel processors enabled it to examine 200 million possible moves per second and to look ahead as many as 14 turns of play. Many agree with Noam Chomsky, a linguist at the Massachusetts Institute of Technology (MIT), who opined that a computer beating a grandmaster at chess is about as interesting as a bulldozer winning an Olympic weightlifting competition.

The Turing test

In 1950 Turing sidestepped the traditional debate concerning the definition of intelligence, introducing a practical test for computer intelligence that is now known simply as the Turing test. The Turing test involves three participants: a computer, a human interrogator, and a human foil. The interrogator attempts to determine, by asking questions of the other two participants, which is the computer. All communication is via keyboard and display screen. The interrogator may ask questions as penetrating and wide-ranging as he or she likes, and the computer is permitted to do everything possible to force a wrong identification. (For instance, the computer might answer, “No,” in response to, “Are you a computer?” and might follow a request to multiply one large number by another with a long pause and an incorrect answer.) The foil must help the interrogator to make a correct identification. A number of different people play the roles of interrogator and foil, and, if a sufficient proportion of the interrogators are unable to distinguish the computer from the human being, then (according to proponents of Turing’s test) the computer is considered an intelligent, thinking entity.

Early milestones in AI

The first AI programs

The earliest successful AI program was written in 1951 by Christopher Strachey, later director of the Programming Research Group at the University of Oxford. Strachey’s checkers (draughts) program ran on the Ferranti Mark I computer at the University of Manchester, England. By the summer of 1952 this program could play a complete game of checkers at a reasonable speed.

Information about the earliest successful demonstration of machine learning was published in 1952. Shopper, written by Anthony Oettinger at the University of Cambridge, ran on the EDSAC computer. Shopper’s simulated world was a mall of eight shops. When instructed to purchase an item, Shopper would search for it, visiting shops at random until the item was found. While searching, Shopper would memorize a few of the items stocked in each shop visited (just as a human shopper might). The next time Shopper was sent out for the same item, or for some other item that it had already located, it would go to the right shop straight away. This simple form of learning, as is pointed out in the introductory section What is intelligence?, is called rote learning.
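The rote learning that Shopper performed can be sketched in a few lines. This is a toy model, not a reconstruction of Oettinger’s EDSAC program: the class name `Shopper`, the made-up inventory, and the rule that the shopper notes the first `items_to_note` items of every shop it visits are illustrative assumptions. What it does follow from the description above is the behaviour: search eight shops at random, memorize some stock along the way, and go straight to the right shop next time.

```python
import random
from typing import Dict, List

class Shopper:
    """Toy rote learner loosely modelled on the description of Oettinger's Shopper.

    The mall layout, item names, and 'note a few items per shop' rule are
    illustrative assumptions, not details of the original program.
    """

    def __init__(self, mall: Dict[int, List[str]], items_to_note: int = 2, seed: int = 0):
        self.mall = mall                      # shop number -> items stocked there
        self.items_to_note = items_to_note    # how many items to memorize per shop visited
        self.memory: Dict[str, int] = {}      # item -> shop where it was seen
        self.rng = random.Random(seed)        # seeded so runs are reproducible

    def buy(self, item: str) -> int:
        """Fetch `item` and return the number of shops visited."""
        if item in self.memory:               # rote recall: go straight to the right shop
            return 1
        if not any(item in stock for stock in self.mall.values()):
            raise KeyError(item)              # avoid searching forever for a missing item
        visits = 0
        while True:
            shop = self.rng.choice(list(self.mall))   # visit shops at random
            visits += 1
            stock = self.mall[shop]
            # Memorize a few of the items stocked here, as a human shopper might.
            for seen in stock[: self.items_to_note]:
                self.memory.setdefault(seen, shop)
            if item in stock:
                self.memory[item] = shop
                return visits

if __name__ == "__main__":
    mall = {n: [f"item{n}a", f"item{n}b", f"item{n}c"] for n in range(8)}
    shopper = Shopper(mall, seed=1)
    shopper.buy("item5c")                     # first trip: random search
    print(shopper.buy("item5c"))              # prints 1: the shop was remembered
```

Nothing here generalizes: the shopper can only recall exact items it has already seen, which is precisely what distinguishes rote learning from the generalization that Samuel later added to his checkers program.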

The first AI program to run in the United States also was a checkers program, written in 1952 by Arthur Samuel for the prototype of the IBM 701. Samuel took over the essentials of Strachey’s checkers program and over a period of years considerably extended it. In 1955 he added features that enabled the program to learn from experience. Samuel included mechanisms for both rote learning and generalization, enhancements that eventually led to his program’s winning one game against a former Connecticut checkers champion in 1962.

Evolutionary computing

Samuel’s checkers program was also notable for being one of the first efforts at evolutionary computing. (His program “evolved” by pitting a modified copy against the current best version of his program, with the winner becoming the new standard.) Evolutionary computing typically involves the use of some automatic method of generating and evaluating successive “generations” of a program, until a highly proficient solution evolves.

A leading proponent of evolutionary computing, John Holland, also wrote test software for the prototype of the IBM 701 computer. In particular, he helped design a neural-network “virtual” rat that could be trained to navigate through a maze. This work convinced Holland of the efficacy of the bottom-up approach. While continuing to consult for IBM, Holland moved to the University of Michigan in 1952 to pursue a doctorate in mathematics. He soon switched, however, to a new interdisciplinary program in computers and information processing (later known as communications science) created by Arthur Burks, one of the builders of ENIAC and its successor EDVAC. In his 1959 dissertation, which earned what was most likely the world’s first computer science Ph.D., Holland proposed a new type of computer—a multiprocessor computer—that would assign each artificial neuron in a network to a separate processor. (In 1985 Daniel Hillis solved the engineering difficulties to build the first such computer, the 65,536-processor Thinking Machines Corporation supercomputer.)
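Samuel’s scheme of pitting a modified copy against the current best version, with the winner becoming the new standard, can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not Samuel’s checkers code: a “program” is reduced to a list of numeric weights, and the hypothetical `play_match` replaces a real game with a scoring rule in which the player closer to a hidden ideal weight vector wins. With a single champion and a single challenger per generation, the loop amounts to stochastic hill climbing, the simplest form of evolutionary search.

```python
import random
from typing import List

Weights = List[float]

def play_match(challenger: Weights, champion: Weights, target: Weights) -> bool:
    """Hypothetical stand-in for a game of checkers: the player whose weights lie
    closer to a hidden 'ideal' evaluation wins. True means the challenger wins outright."""
    def error(w: Weights) -> float:
        return sum((a - b) ** 2 for a, b in zip(w, target))
    return error(challenger) < error(champion)

def evolve(target: Weights, generations: int = 2000, step: float = 0.1,
           seed: int = 0) -> Weights:
    """Repeatedly pit a mutated copy against the current best; keep the winner."""
    rng = random.Random(seed)
    champion = [0.0] * len(target)            # initial 'current best version'
    for _ in range(generations):
        # Modified copy: nudge every weight by a small random amount.
        challenger = [w + rng.gauss(0.0, step) for w in champion]
        if play_match(challenger, champion, target):
            champion = challenger             # the winner becomes the new standard
    return champion

if __name__ == "__main__":
    print(evolve([0.5, -1.0, 2.0]))           # weights drift toward the hidden target
```

Because a challenger replaces the champion only when it wins outright, the champion never gets worse. Holland’s genetic algorithms, described next, extend this idea to whole populations and add recombination between candidates.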

Holland joined the faculty at Michigan after graduation and over the next four decades directed much of the research into methods of automating evolutionary computing, a process now known by the term genetic algorithms. Systems implemented in Holland’s laboratory included a chess program, models of single-cell biological organisms, and a classifier system for controlling a simulated gas-pipeline network. Genetic algorithms are no longer restricted to “academic” demonstrations, however; in one important practical application, a genetic algorithm cooperates with a witness to a crime in order to generate a portrait of the criminal.

Logical reasoning and problem solving

The ability to reason logically is an important aspect of intelligence and has always been a major focus of AI research. An important landmark in this area was a theorem-proving program written in 1955–56 by Allen Newell and J. Clifford Shaw of the RAND Corporation and Herbert Simon of Carnegie Mellon University. The Logic Theorist, as the program became known, was designed to prove theorems from Principia Mathematica (1910–13), a three-volume work by the British philosopher-mathematicians Alfred North Whitehead and Bertrand Russell. In one instance, a proof devised by the program was more elegant than the proof given in the books.

Newell, Simon, and Shaw went on to write a more powerful program, the General Problem Solver, or GPS. The first version of GPS ran in 1957, and work continued on the project for about a decade. GPS could solve an impressive variety of puzzles using a trial-and-error approach. However, one criticism of GPS, and of similar programs that lack any learning capability, is that the program’s intelligence is entirely secondhand, coming from whatever information the programmer explicitly includes.

English dialogue

Two of the best-known early AI programs, Eliza and Parry, gave an eerie semblance of intelligent conversation. (Details of both were first published in 1966.) Eliza, written by Joseph Weizenbaum of MIT’s AI Laboratory, simulated a human therapist. Parry, written by Stanford University psychiatrist Kenneth Colby, simulated a human paranoiac. Psychiatrists who were asked to decide whether they were communicating with Parry or a human paranoiac were often unable to tell. Nevertheless, neither Parry nor Eliza could reasonably be described as intelligent. Parry’s contributions to the conversation were canned—constructed in advance by the programmer and stored away in the computer’s memory. Eliza, too, relied on canned sentences and simple programming tricks.

AI programming languages