labour force

All the members of a particular organization or country who are able to work, viewed collectively.

‘a firm with a labour force of one hundred people’

Workforce

The workforce or labour force (labor force in American English; see spelling differences) is the labour pool in employment. It is generally used to describe those working for a single company or industry, but can also apply to a geographic region like a city, state, or country. Within a company, its value can be labelled as its "Workforce in Place". The workforce of a country includes both the employed and the unemployed. The labour force participation rate, LFPR (or economic activity rate, EAR), is the ratio between the labour force and the overall size of their cohort (national population of the same age range). The term generally excludes the employers or management, and can imply those involved in manual labour. It may also mean all those who are available for work.
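The participation-rate definition above reduces to a single ratio. As a minimal sketch in Python, assuming hypothetical figures (the function name `participation_rate` and all numbers are illustrative and do not come from any statistical agency):

```python
def participation_rate(employed: float, unemployed: float, cohort_population: float) -> float:
    """Labour force participation rate as a fraction of the cohort.

    The labour force is taken as employed plus unemployed, as described
    in the text; cohort_population is the national population of the
    same age range.
    """
    if cohort_population <= 0:
        raise ValueError("cohort_population must be positive")
    labour_force = employed + unemployed
    return labour_force / cohort_population


# Hypothetical figures, in millions of people.
rate = participation_rate(employed=30.0, unemployed=2.0, cohort_population=50.0)
print(f"LFPR: {rate:.1%}")  # LFPR: 64.0%
```

Note that the unemployed count in the numerator, so a rise in unemployment alone does not lower the rate; only people leaving the labour force altogether do.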

Formal and informal

Formal labour is any sort of employment that is structured and paid in a formal way.[1] Unlike the informal sector of the economy, formal labour within a country contributes to that country's gross national product.[2] Informal labour is labour that falls short of being a formal arrangement in law or in practice.[3] It can be paid or unpaid and it is always unstructured and unregulated.[4] Formal employment is more reliable than informal employment. Generally, the former yields higher income and greater benefits and securities for both men and women.[5]

Informal labour in the world

The contribution of informal labourers is immense. Informal labour is expanding globally, most significantly in developing countries.[6] According to a study done by Jacques Charmes, in the year 2000 informal labour made up 57% of non-agricultural employment, 40% of urban employment, and 83% of the new jobs in Latin America. That same year, informal labour made up 78% of non-agricultural employment, 61% of urban employment, and 93% of the new jobs in Africa.[7] Particularly after an economic crisis, labourers tend to shift from the formal sector to the informal sector. This trend was seen after the Asian economic crisis which began in 1997.[8]

Informal labour and gender

Gender is frequently associated with informal labour. Women are employed more often informally than they are formally, and informal labour is an overall larger source of employment for females than it is for males.[5] Women frequent the informal sector of the economy through occupations like home-based workers and street vendors.[8] The Penguin Atlas of Women in the World shows that in the 1990s, 81% of women in Benin were street vendors, 55% in Guatemala, 44% in Mexico, 33% in Kenya, and 14% in India. Overall, 60% of women workers in the developing world are employed in the informal sector.[1] The specific percentages are 84% and 58% for women in Sub-Saharan Africa and Latin America respectively.[1] The percentages for men in both of these areas of the world are lower, amounting to 63% and 48% respectively.[1] In Asia, 65% of women workers and 65% of men workers are employed in the informal sector.[9] Globally, a large percentage of women that are formally employed also work in the informal sector behind the scenes. These women make up the hidden work force.[9]

Agricultural and non-agricultural labour

Formal and informal labour can be divided into the subcategories of agricultural work and non-agricultural work. Martha Chen et al. believe these four categories of labour are closely related to one another.[10] A majority of agricultural work is informal, which the Penguin Atlas of Women in the World defines as unregistered or unstructured.[9] Non-agricultural work can also be informal. According to Martha Chen, informal labour makes up 48% of non-agricultural work in North Africa, 51% in Latin America, 65% in Asia, and 72% in Sub-Saharan Africa.[5]

Agriculture and gender

The agricultural sector of the economy has remained stable in recent years.[11] According to the Penguin Atlas of Women in the World, women make up 40% of the agricultural labour force in most parts of the world, while in developing countries they make up 67% of the agricultural workforce.[9] Joni Seager shows in her atlas that specific tasks within agricultural work are also gendered. For example, for the production of wheat in a village in Northwest China, men perform the ploughing, the planting, and the spraying, while women perform the weeding, the fertilising, the processing, and the storage.[9] In terms of food production worldwide, the atlas shows that women produce 80% of the food in Sub-Saharan Africa, 50% in Asia, 45% in the Caribbean, 25% in North Africa and in the Middle East, and 25% in Latin America.[9] A majority of the work women do on the farm is considered housework and is therefore negligible in employment statistics.[9]

Paid and unpaid

Paid and unpaid work are also closely related to formal and informal labour. Some informal work is unpaid, or paid under the table.[10] Unpaid work can be work that is done at home to sustain a family, like child care work, or actual habitual daily labour that is not monetarily rewarded, like working the fields.[9] Unpaid workers have zero earnings, and although their work is valuable, it is hard to estimate its true value; how to do so remains a matter of debate. Men and women tend to work in different areas of the economy, regardless of whether their work is paid or unpaid. Women focus on the service sector, while men focus on the industrial sector.

Unpaid labour and gender

Women usually work fewer hours in income generating jobs than men do.[5] Often it is housework that is unpaid. Worldwide, women and girls are responsible for a great amount of household work.[9] The Penguin Atlas of Women in the World, published in 2008, stated that in Madagascar, women spend 20 hours per week on housework, while men spend only two.[9] In Mexico, women spend 33 hours and men spend 5 hours.[9] In Mongolia the housework hours amount to 27 and 12 for women and men respectively.[9] In Spain, women spend 26 hours on housework and men spend 4 hours.[9] Only in the Netherlands do men spend 10% more time than women do on activities within the home or for the household.[9]

The Penguin Atlas of Women in the World also stated that in developing countries, women and girls spend a significant amount of time fetching water for the week, while men do not. For example, in Malawi women spend 6.3 hours per week fetching water, while men spend 43 minutes. Girls in Malawi spend 3.3 hours per week fetching water, and boys spend 1.1 hours.[9] Even if women and men both spend time on household work and other unpaid activities, this work is also gendered.[5]

Unearned pay and gender

In the United Kingdom in 2014, two-thirds of workers on long-term sick leave were women, despite women only constituting half of the workforce, even after excluding maternity leave.

It May Surprise You Which Countries Are Replacing Workers With Robots the Fastest

Automation has been responsible for improvements in manufacturing productivity for decades.

Advanced robotics will accelerate this trend. Machines, after all, can perform many manufacturing tasks more efficiently, effectively and consistently than humans, leading to increased output, better quality and less waste. And machines don’t require health insurance, coffee breaks, maternity leave or sleep.

The industrial world realizes this and robot sales have been surging, increasing 29 percent in 2014 alone, according to the International Federation of Robotics.

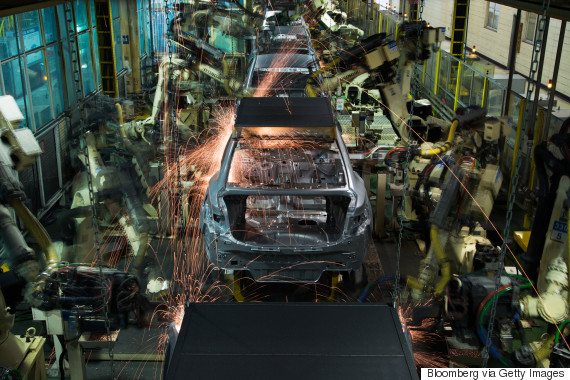

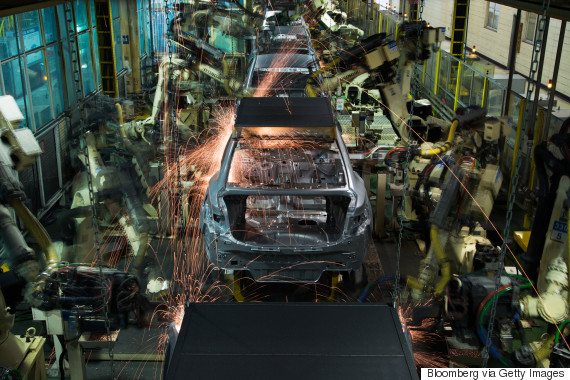

Robots at the Hyundai factory in Asan, South Korea, on Jan. 20, 2015. (SeongJoon Cho/Bloomberg via Getty Images)

Of the more than 229,000 industrial robots sold in 2014 (the most recent statistics available), more than 57,000 were sold to Chinese manufacturers, 29,300 to Japanese companies, 26,200 to companies in the U.S., 24,700 to South Koreans and more than 20,000 to German companies. By comparison, robot sales in India totaled just 2,100, IFR reported.

None of this should surprise us. Automation makes little or no economic sense in countries where there is comparatively little manufacturing or where abundant cheap labor is readily available. The basic economic trade-off between the cost of labor and the cost of automation is the primary consideration. Labor laws, cultural considerations, the availability of capital and the age and skill levels of local workers also are important factors.
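The trade-off between the cost of labor and the cost of automation can be illustrated with a simple payback calculation. This is only a rough sketch: the function name `payback_years` and every figure below are hypothetical, and the calculation ignores financing, taxes, severance rules and quality effects.

```python
def payback_years(robot_cost: float, annual_labor_saved: float, annual_robot_upkeep: float) -> float:
    """Years needed for labor savings to repay a robot's purchase price.

    Returns infinity when upkeep is at least as large as the labor
    cost saved, i.e. when the robot never pays for itself.
    """
    net_saving = annual_labor_saved - annual_robot_upkeep
    if net_saving <= 0:
        return float("inf")
    return robot_cost / net_saving


# Hypothetical figures in U.S. dollars: the same robot in a high-wage
# and in a low-wage economy.
high_wage = payback_years(robot_cost=100_000, annual_labor_saved=60_000, annual_robot_upkeep=10_000)
low_wage = payback_years(robot_cost=100_000, annual_labor_saved=12_000, annual_robot_upkeep=10_000)

print(f"high-wage payback: {high_wage:.1f} years")  # high-wage payback: 2.0 years
print(f"low-wage payback: {low_wage:.1f} years")    # low-wage payback: 50.0 years
```

With these made-up numbers the identical machine repays itself in two years where labor is expensive and in fifty where it is cheap, which is the pattern the paragraph above describes.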

Consider an economy such as India’s. When you have 1.3 billion people who can make things cheaply, it doesn’t make a lot of economic sense to automate. In fact, Indians appear more likely to design and produce labor-saving robots and other such machinery than to use it in their factories.

Also important in the “buy” or “don’t buy” calculation involving robotics is the technical ability of machines vis-a-vis manual labor. Some jobs — think textile cutting and stitching, for example — simply need human hands, at least for now. This is good news, all else being equal, not only for China and India, but for other emerging market economies like Bangladesh, Indonesia, Pakistan, Thailand and Turkey, which are significant textile exporters.

But with wages increasing in emerging economies, we soon may see more robotics even in textiles, especially in cotton products where the raw material is shipped to countries like Pakistan, converted to textiles and sent back to the U.S.

What this all means is that the next stage of the robotic revolution — dubbed Industry 4.0 — will affect some countries more than others and some industries and job categories more than others. Every industry has certain unique jobs, each with its own required tasks. Some jobs can be automated, others not. Moreover, different tasks require different robotic functions — some of which will require very expensive robotic systems. All of this has to be considered.

A worker in Guangzhou, China on March 4, 2011. (AP Photo/Kin Cheung)

Last fall we took a close look at the world’s 25 largest manufacturing export economies to see which countries are most aggressively automating production and which are lagging. Nearly half of the countries — Brazil, China, the Czech Republic, India, Indonesia, Mexico, Poland, Russia, South Korea, Taiwan and Thailand — are generally considered emerging markets.

Surprisingly, the countries moving ahead most aggressively — installing more robots than would be expected given their productivity-adjusted labor costs — are emerging markets: Indonesia, South Korea, Taiwan and Thailand. Manufacturers in South Korea and Thailand, in particular, have been automating at a comparatively breakneck pace; the Indonesians and Taiwanese slightly slower.

The fast pace of automation in South Korea and Taiwan can be explained in part by higher-than-average wage increases, aging workforces and low unemployment rates. In a developing economy like Indonesia, the motive might be different — to improve quality so local factories can compete with those in Japan and the West.

Other countries that have been rapidly integrating robotics into manufacturing, but not quite as quickly, are Canada, China, Japan, Russia, the U.K. and the U.S. China is an interesting case because it’s automating rapidly despite the fact that Chinese wages are still comparatively low.

The reason for this, we believe, is that Chinese manufacturers see the writing on the wall. Labor costs have been increasing rapidly in China, a trend that is likely to continue. Moreover, with an aging workforce — complicated by the country’s decades-old though recently abandoned one-child policy — skill shortages appear on the horizon. And quality remains a big concern. The strategic use of robots can help compensate for these shortcomings.

Countries moving more slowly in the adoption of industrial robotics include Australia, the Czech Republic, Germany, Mexico and Poland. While there are other factors at play as well, labor regulations that require employers to justify layoffs and pay idled workers for long periods of time appear to be largely responsible for the slower pace.

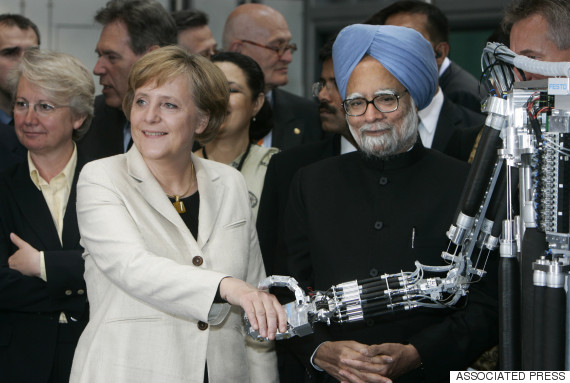

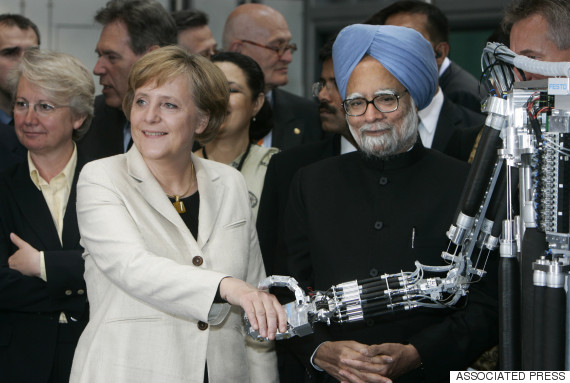

German Chancellor Angela Merkel shakes hands with a robot at an industrial fair in 2006 alongside Indian Prime Minister Manmohan Singh. (AP Photo/Joerg Sarbach)

The slowest adopters of robotic technology among the 25 largest manufacturing exporters have been Austria, Belgium, Brazil, France, India, Italy, the Netherlands, Sweden, Spain and Switzerland. With the exception of India, all of these nations have aging work forces, some of the highest productivity-adjusted labor costs in the world and are likely to face serious skill shortages in coming years.

Under the circumstances, automation would appear to be a no-brainer — except that their governments, in effect, discourage it with various restrictions on replacing workers with machines, including years of mandatory severance pay in some cases.

India has been moving slowly because the economic balance still favors India’s abundant cheap labor. But there are bureaucratic hurdles as well. Indian companies with more than 100 employees must obtain permission from the government before they fire anyone. Imagine what that involves. This lack of flexibility not only discourages the automation of existing factories, it also sends a powerful signal that any company or investor considering automation needs to think twice — maybe three times — before sinking money into a new modern factory.

As the world’s emerging economies industrialize and labor costs rise — as is now happening in China and happened even earlier in South Korea and Taiwan — the picture is likely to change.

For now, however, workers in most developing economies would appear to have little to fear. Robots may be coming to their factories, but not any time soon.

Technological unemployment

Technological unemployment is the loss of jobs caused by technological change. Such change typically includes the introduction of labour-saving "mechanical-muscle" machines or more efficient "mechanical-mind" processes (automation). Just as horses employed as prime movers were gradually made obsolete by the automobile, humans' jobs have also been affected throughout modern history. Historical examples include artisan weavers reduced to poverty after the introduction of mechanised looms. During World War II, Alan Turing's Bombe machine compressed and decoded thousands of man-years' worth of encrypted data in a matter of hours. A contemporary example of technological unemployment is the displacement of retail cashiers by self-service tills.

That technological change can cause short-term job losses is widely accepted. The view that it can lead to lasting increases in unemployment has long been controversial. Participants in the technological unemployment debates can be broadly divided into optimists and pessimists. Optimists agree that innovation may be disruptive to jobs in the short term, yet hold that various compensation effects ensure there is never a long-term negative impact on jobs, whereas pessimists contend that at least in some circumstances, new technologies can lead to a lasting decline in the total number of workers in employment. The phrase "technological unemployment" was popularised by John Maynard Keynes in the 1930s. Yet the issue of machines displacing human labour has been discussed since at least Aristotle's time.

Prior to the 18th century both the elite and common people would generally take the pessimistic view on technological unemployment, at least in cases where the issue arose. Due to generally low unemployment in much of pre-modern history, the topic was rarely a prominent concern. In the 18th century fears over the impact of machinery on jobs intensified with the growth of mass unemployment, especially in Great Britain which was then at the forefront of the Industrial Revolution. Yet some economic thinkers began to argue against these fears, claiming that overall innovation would not have negative effects on jobs. These arguments were formalised in the early 19th century by the classical economists. During the second half of the 19th century, it became increasingly apparent that technological progress was benefiting all sections of society, including the working class. Concerns over the negative impact of innovation diminished. The term "Luddite fallacy" was coined to describe the thinking that innovation would have lasting harmful effects on employment.

The view that technology is unlikely to lead to long term unemployment has been repeatedly challenged by a minority of economists. In the early 1800s these included Ricardo himself. There were dozens of economists warning about technological unemployment during brief intensifications of the debate that spiked in the 1930s and 1960s. Especially in Europe, there were further warnings in the closing two decades of the twentieth century, as commentators noted an enduring rise in unemployment suffered by many industrialised nations since the 1970s. Yet a clear majority of both professional economists and the interested general public held the optimistic view through most of the 20th century.

In the second decade of the 21st century, a number of studies have been released suggesting that technological unemployment may be increasing worldwide. Oxford Professors Carl Benedikt Frey and Michael Osborne, for example, have estimated that 47 percent of U.S. jobs are at risk of automation.[1] However, on PBS NewsHour, they made clear that their findings do not necessarily imply future technological unemployment.[2] While many economists and commentators still argue such fears are unfounded, as was widely accepted for most of the previous two centuries, concern over technological unemployment is growing once again.[3][4][5] A report in Wired in 2017 quotes knowledgeable people such as economist Gene Sperling and management professor Andrew McAfee on the idea that handling existing and impending job loss to automation is a "significant issue".[6] Regarding a recent claim by Treasury Secretary Steve Mnuchin that automation is not "going to have any kind of big effect on the economy for the next 50 or 100 years", says McAfee, "I don't talk to anyone in the field who believes that."[6] Recent technological innovations have the potential to render humans obsolete in professional, white-collar, low-skilled and creative fields, and in other "mental jobs".

In the 21st century, robots are beginning to perform roles not just in manufacturing, but in the service sector; e.g. in healthcare.

Long term effects on employment

There are more sectors losing jobs than creating jobs. And the general-purpose aspect of software technology means that even the industries and jobs that it creates are not forever.

The concept of structural unemployment, a lasting level of joblessness that does not disappear even at the high point of the business cycle, became popular in the 1960s. For pessimists, technological unemployment is one of the factors driving the wider phenomenon of structural unemployment. Since the 1980s, even optimistic economists have increasingly accepted that structural unemployment has indeed risen in advanced economies, but they have tended to blame this on globalisation and offshoring rather than technological change. Others claim a chief cause of the lasting increase in unemployment has been the reluctance of governments to pursue expansionary policies since the displacement of Keynesianism that occurred in the 1970s and early 80s. In the 21st century, and especially since 2013, pessimists have been arguing with increasing frequency that lasting worldwide technological unemployment is a growing threat.

Compensation effects

John Kay inventor of the Fly Shuttle AD 1753, by Ford Madox Brown, depicting the inventor John Kay kissing his wife goodbye as men carry him away from his home to escape a mob angry about his labour-saving mechanical loom. Compensation effects were not widely understood at this time.

Compensation effects include:

- By new machines. (The labour needed to build the new equipment that applied innovation requires.)

- By new investments. (Enabled by the cost savings and therefore increased profits from the new technology.)

- By changes in wages. (In cases where unemployment does occur, this can cause a lowering of wages, thus allowing more workers to be re-employed at the now lower cost. On the other hand, sometimes workers will enjoy wage increases as their profitability rises. This leads to increased income and therefore increased spending, which in turn encourages job creation.)

- By lower prices. (Which then lead to more demand, and therefore more employment.) Lower prices can also help offset wage cuts, as cheaper goods will increase workers' buying power.

- By new products. (Where innovation directly creates new jobs.)

Many economists now pessimistic about technological unemployment accept that compensation effects did largely operate as the optimists claimed through most of the 19th and 20th centuries. Yet they hold that the advent of computerisation means that compensation effects are now less effective. An early example of this argument was made by Wassily Leontief in 1983. He conceded that after some disruption, the advance of mechanization during the Industrial Revolution actually increased the demand for labour as well as increasing pay due to effects that flow from increased productivity. While early machines lowered the demand for muscle power, they were unintelligent and needed large armies of human operators to remain productive. Yet since the introduction of computers into the workplace, there is now less need not just for muscle power but also for human brain power. Hence even as productivity continues to rise, the lower demand for human labour may mean less pay and employment. However, this argument is not fully supported by more recent empirical studies. A 2003 study by Erik Brynjolfsson and Lorin M. Hitt presents direct evidence suggesting a positive short-term effect of computerization on firm-level measured productivity and output growth. In addition, they find that the long-term productivity contribution of computerization and technological changes might be even greater.

The Luddite fallacy

If the Luddite fallacy were true we would all be out of work because productivity has been increasing for two centuries.

There are two underlying premises for why long-term difficulty could develop. The one that has traditionally been deployed is that ascribed to the Luddites (whether or not it is a truly accurate summary of their thinking), which is that there is a finite amount of work available and if machines do that work, there can be no other work left for humans to do. Economists call this the lump of labour fallacy, arguing that in reality no such limitation exists. However, the other premise is that it is possible for long-term difficulty to arise that has nothing to do with any lump of labour. In this view, the amount of work that can exist is infinite, but (1) machines can do most of the "easy" work, (2) the definition of what is "easy" expands as information technology progresses, and (3) the work that lies beyond "easy" (the work that requires more skill, talent, knowledge, and insightful connections between pieces of knowledge) may require greater cognitive faculties than most humans are able to supply, as point 2 continually advances. This latter view is the one supported by many modern advocates of the possibility of long-term, systemic technological unemployment.

Skill levels and technological unemployment

A common view among those discussing the effect of innovation on the labour market has been that it mainly hurts those with low skills, while often benefiting skilled workers. According to scholars such as Lawrence F. Katz, this may have been true for much of the twentieth century, yet in the 19th century, innovations in the workplace largely displaced costly skilled artisans, and generally benefited the low skilled. While 21st century innovation has been replacing some unskilled work, other low skilled occupations remain resistant to automation, while white collar work requiring intermediate skills is increasingly being performed by autonomous computer programs.[27][28][29]

Some recent studies, however, such as a 2015 paper by Georg Graetz and Guy Michaels, found that at least in the area they studied – the impact of industrial robots – innovation is boosting pay for highly skilled workers while having a more negative impact on those with low to medium skills.[30] A 2015 report by Carl Benedikt Frey, Michael Osborne and Citi Research agreed that innovation had been disruptive mostly to middle-skilled jobs, yet predicted that in the next ten years the impact of automation would fall most heavily on those with low skills.[31]

Geoff Colvin at Forbes argued that predictions on the kind of work a computer will never be able to do have proven inaccurate. A better approach to anticipating the skills through which humans will provide value would be to identify activities where we will insist that humans remain accountable for important decisions, such as with judges, CEOs, bus drivers and government leaders, or where human nature can only be satisfied by deep interpersonal connections, even if those tasks could be automated.[32]

In contrast, others see even skilled human laborers becoming obsolete. Oxford academics Carl Benedikt Frey and Michael A. Osborne have predicted that computerization could make nearly half of jobs redundant.[33] Of the 702 professions assessed, they found that a job's susceptibility to automation was strongly correlated with education and income, with office jobs and service work among those more at risk.[34] In 2012, Vinod Khosla, a co-founder of Sun Microsystems, predicted that 80% of medical doctors' jobs would be lost in the next two decades to automated machine-learning medical diagnostic software.

Empirical findings

There has been a lot of empirical research that attempts to quantify the impact of technological unemployment, mostly done at the microeconomic level. Most existing firm-level research has found a labor-friendly nature of technological innovations. For example, German economists Stefan Lachenmaier and Horst Rottmann find that both product and process innovation have a positive effect on employment. Interestingly, they also find that process innovation has a more significant job creation effect than product innovation.[36] This result is supported by evidence in the United States as well, which shows that manufacturing firm innovations have a positive effect on the total number of jobs, not just limited to firm-specific behavior.[37]

At the industry level, however, researchers have found mixed results with regard to the employment effect of technological changes. A 2017 study on manufacturing and service sectors in 11 European countries suggests that positive employment effects of technological innovation exist only in the medium- and high-tech sectors. There also seems to be a negative correlation between employment and capital formation, which suggests that technological progress could potentially be labor-saving given that process innovation is often incorporated in investment.[38]

Limited macroeconomic analysis has been done to study the relationship between technological shocks and unemployment. The small amount of existing research, however, suggests mixed results. Italian economist Marco Vivarelli finds that the labor-saving effect of process innovation seems to have affected the Italian economy more negatively than that of the United States. On the other hand, the job creating effect of product innovation could only be observed in the United States, not Italy.[39] Another study in 2013 finds a more transitory, rather than permanent, unemployment effect of technological change.

Measures of technological innovation

There have been four main approaches that attempt to capture and document technological innovation quantitatively. The first one, proposed by Jordi Gali in 1999 and further developed by Neville Francis and Valerie A. Ramey in 2005, is to use long-run restrictions in a Vector Autoregression (VAR) to identify technological shocks, assuming that only technology affects long-run productivity.[41][42]

The second approach is from Susanto Basu, John Fernald and Miles Kimball.[43] They create a measure of aggregate technology change with augmented Solow residuals, controlling for aggregate, non-technological effects such as non-constant returns and imperfect competition.
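As a rough illustration of the starting point for the second approach, the sketch below computes the plain, unaugmented Solow residual: output growth minus share-weighted growth in capital and labour. It is not the Basu, Fernald and Kimball measure, which adds the corrections described above. The function name, the capital share of 0.3, and the sample figures are assumptions made only for this example.

```python
import math


def solow_residual_growth(output, capital, labour, capital_share=0.3):
    """Growth of the standard Solow residual, in log differences.

    For each period t: dlnA = dlnY - a * dlnK - (1 - a) * dlnL,
    where a is the (assumed constant) capital share of income.
    This is the textbook residual, without any augmentation.
    """
    residuals = []
    for t in range(1, len(output)):
        d_y = math.log(output[t] / output[t - 1])
        d_k = math.log(capital[t] / capital[t - 1])
        d_l = math.log(labour[t] / labour[t - 1])
        residuals.append(d_y - capital_share * d_k - (1 - capital_share) * d_l)
    return residuals


if __name__ == "__main__":
    # Illustrative, made-up series: three periods of output, capital and labour.
    y = [100.0, 104.0, 109.0]
    k = [300.0, 306.0, 315.0]
    l = [50.0, 50.5, 51.0]
    for period, growth in enumerate(solow_residual_growth(y, k, l), start=1):
        print(f"period {period}: residual growth = {growth:.4f}")
```

Whatever output growth the weighted input growth cannot account for is attributed to technology, which is why the plain residual also absorbs non-technological effects unless they are controlled for.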

The third method, initially developed by John Shea in 1999, takes a more direct approach and employs observable indicators such as Research and Development (R&D) spending, and number of patent applications.[44] This measure of technological innovation is very widely used in empirical research, since it does not rely on the assumption that only technology affects long-run productivity, and fairly accurately captures the output variation based on input variation. However, there are limitations with direct measures such as R&D. For example, since R&D only measures the input in innovation, the output is unlikely to be perfectly correlated with the input. In addition, R&D fails to capture the indeterminate lag between developing a new product or service, and bringing it to market.[45]

The fourth approach, constructed by Michelle Alexopoulos, looks at the number of new titles published in the fields of technology and computer science to reflect technological progress, which turns out to be consistent with R&D expenditure data.[46] Compared with R&D, this indicator captures the lag between changes in technology.

Welfare payments

The use of various forms of subsidies has often been accepted as a solution to technological unemployment even by conservatives and by those who are optimistic about the long term effect on jobs. Welfare programmes have historically tended to be more durable once established, compared with other solutions to unemployment such as directly creating jobs with public works. Despite being the first person to create a formal system describing compensation effects, Ramsey McCulloch and most other classical economists advocated government aid for those suffering from technological unemployment, as they understood that market adjustment to new technology was not instantaneous and that those displaced by labour-saving technology would not always be able to immediately obtain alternative employment through their own efforts.

Basic income

Several commentators have argued that traditional forms of welfare payment may be inadequate as a response to the future challenges posed by technological unemployment, and have suggested a basic income as an alternative. People advocating some form of basic income as a solution to technological unemployment include Martin Ford,[120] Erik Brynjolfsson,[81] Robert Reich and Guy Standing. Reich has gone as far as to say the introduction of a basic income, perhaps implemented as a negative income tax, is "almost inevitable",[121] while Standing has said he considers that a basic income is becoming "politically essential".[122] Since late 2015, new basic income pilots have been announced in Finland, the Netherlands, and Canada. Further recent advocacy for basic income has arisen from a number of technology entrepreneurs, the most prominent being Sam Altman, president of Y Combinator.[123]

Skepticism about basic income includes both right and left elements, and proposals for different forms of it have come from all segments of the spectrum. For example, while the best-known proposed forms (with taxation and distribution) are usually thought of as left-leaning ideas that right-leaning people try to defend against, other forms have been proposed even by libertarians, such as von Hayek and Friedman. Republican president Nixon's Family Assistance Plan (FAP) of 1969, which had much in common with basic income, passed in the House but was defeated in the Senate.[124]

One objection to basic income is that it could be a disincentive to work, but evidence from older pilots in India, Africa, and Canada indicates that this does not happen and that a basic income encourages low-level entrepreneurship and more productive, collaborative work. Another objection is that funding it sustainably is a huge challenge. While new revenue-raising ideas have been proposed such as Martin Ford's wage recapture tax, how to fund a generous basic income remains a debated question, and skeptics have dismissed it as utopian. Even from a progressive viewpoint, there are concerns that a basic income set too low may not help the economically vulnerable, especially if financed largely from cuts to other forms of welfare.

To better address both the funding concerns and concerns about government control, one alternative model is that the cost and control would be distributed across the private sector instead of the public sector. Companies across the economy would be required to employ humans, but the job descriptions would be left to private innovation, and individuals would have to compete to be hired and retained. This would be a for-profit sector analog of basic income, that is, a market-based form of basic income. It differs from a job guarantee in that the government is not the employer (rather, companies are) and there is no aspect of having employees who "cannot be fired", a problem that interferes with economic dynamism. The economic salvation in this model is not that every individual is guaranteed a job, but rather just that enough jobs exist that massive unemployment is avoided and employment is no longer solely the privilege of only the very smartest or highly trained 20% of the population. Another option for a market-based form of basic income has been proposed by the Center for Economic and Social Justice (CESJ) as part of "a Just Third Way" (a Third Way with greater justice) through widely distributed power and liberty. Called the Capital Homestead Act,[128] it is reminiscent of James S. Albus's Peoples' Capitalism[68][69] in that money creation and securities ownership are widely and directly distributed to individuals rather than flowing through, or being concentrated in, centralized or elite mechanisms.

Education

Improved access to quality education, including skills training for adults, is a solution that in principle at least is not opposed by any side of the political spectrum, and is welcomed even by those who are optimistic about long-term technological employment. Improved education paid for by government tends to be especially popular with industry.

Proponents of this brand of policy assert that higher-level, more specialized learning is a way to capitalize on the growing technology industry. Leading technology research university MIT published an open letter to policymakers advocating for the "reinvention of education", namely a shift "away from rote learning" and towards STEM disciplines.[129] Similar statements released by the U.S. President's Council of Advisors on Science and Technology (PCAST) have also been used to support this STEM emphasis on enrollment choice in higher learning.[130] Education reform is also a part of the UK government's "Industrial Strategy", a plan announcing the nation's intent to invest millions into a "technical education system".[131] The proposal includes the establishment of a retraining program for workers who wish to adapt their skill-sets. These suggestions combat the concerns over automation through policy choices aiming to meet the emerging needs of society via updated information. Academics who applaud such moves often note a gap between economic security and formal education,[132] a disparity exacerbated by the rising demand for specialized skills, and education's potential to reduce it.

However, several academics have also argued that improved education alone will not be sufficient to solve technological unemployment, pointing to recent declines in the demand for many intermediate skills, and suggesting that not everyone is capable of becoming proficient in the most advanced skills. Kim Taipale has said that "The era of bell curve distributions that supported a bulging social middle class is over... Education per se is not going to make up the difference",[133] while in a 2011 op-ed piece, Paul Krugman, an economics professor and columnist for the New York Times, argued that better education would be an insufficient solution to technological unemployment, as it "actually reduces the demand for highly educated workers".

Public works

Programmes of public works have traditionally been used as a way for governments to directly boost employment, though this has often been opposed by some, but not all, conservatives. Jean-Baptiste Say, although generally associated with free market economics, advised that public works could be a solution to technological unemployment.[135] Some commentators, such as professor Mathew Forstater, have advised that public works and guaranteed jobs in the public sector may be the ideal solution to technological unemployment, as unlike welfare or guaranteed income schemes they provide people with the social recognition and meaningful engagement that comes with work.

For less developed economies, public works may be an easier solution to administer than universal welfare programmes.[23] As of 2015, calls for public works in the advanced economies have been less frequent even from progressives, due to concerns about sovereign debt. A partial exception is for spending on infrastructure, which has been recommended as a solution to technological unemployment even by economists previously associated with a neoliberal agenda, such as Larry Summers.

Shorter working hours

In 1870, the average American worker clocked up about 75 hours per week. Just prior to World War II working hours had fallen to about 42 per week, and the fall was similar in other advanced economies. According to Wassily Leontief, this was a voluntary increase in technological unemployment. The reduction in working hours helped share out available work, and was favoured by workers who were happy to reduce hours to gain extra leisure, as innovation was at the time generally helping to increase their rates of pay.[23]

Further reductions in working hours have been proposed as a possible solution to unemployment by economists including John R. Commons, Lord Keynes and Luigi Pasinetti. Yet once working hours have reached about 40 hours per week, workers have been less enthusiastic about further reductions, both to prevent loss of income and because many value engaging in work for its own sake. Generally, 20th-century economists had argued against further reductions as a solution to unemployment, saying it reflects a lump of labour fallacy.[139] In 2014, Google's co-founder, Larry Page, suggested a four-day workweek, so that as technology continues to displace jobs, more people can find employment.

Broadening the ownership of technological assets

Several solutions have been proposed which don't fall easily into the traditional left-right political spectrum. This includes broadening the ownership of robots and other productive capital assets. Enlarging the ownership of technologies has been advocated by people including James S. Albus,[68][142] John Lanchester,[143] Richard B. Freeman,[126] and Noah Smith.[144] Jaron Lanier has proposed a somewhat similar solution: a mechanism where ordinary people receive "nano payments" for the big data they generate by their regular surfing and other aspects of their online presence.[145]

Structural changes towards a post-scarcity economy

The Zeitgeist Movement (TZM), The Venus Project (TVP) as well as various individuals and organizations propose structural changes towards a form of a post-scarcity economy in which people are 'freed' from their automatable, monotonous jobs, instead of 'losing' their jobs. In the system proposed by TZM all jobs are either automated, abolished for bringing no true value for society (such as ordinary advertising), rationalized by more efficient, sustainable and open processes and collaboration, or carried out based on altruism and social relevance (see also: Whuffie), as opposed to compulsion or monetary gain. The movement also speculates that the free time made available to people will permit a renaissance of creativity, invention, community and social capital as well as reducing stress.

Others

The threat of technological unemployment has occasionally been used by free market economists as a justification for supply side reforms, to make it easier for employers to hire and fire workers. Conversely, it has also been used as a reason to justify an increase in employee protection.

Economists including Larry Summers have advised a package of measures may be needed. He advised vigorous cooperative efforts to address the "myriad devices" – such as tax havens, bank secrecy, money laundering, and regulatory arbitrage – which enable the holders of great wealth to avoid paying taxes, and to make it more difficult to accumulate great fortunes without requiring "great social contributions" in return. Summers suggested more vigorous enforcement of anti-monopoly laws; reductions in "excessive" protection for intellectual property; greater encouragement of profit-sharing schemes that may benefit workers and give them a stake in wealth accumulation; strengthening of collective bargaining arrangements; improvements in corporate governance; strengthening of financial regulation to eliminate subsidies to financial activity; easing of land-use restrictions that may cause estates to keep rising in value; better training for young people and retraining for displaced workers; and increased public and private investment in infrastructure development, such as energy production and transportation.

Michael Spence has advised that responding to the future impact of technology will require a detailed understanding of the global forces and flows technology has set in motion. Adapting to them "will require shifts in mindsets, policies, investments (especially in human capital), and quite possibly models of employment and distribution".

Since the publication of their 2011 book Race Against The Machine, MIT professors Andrew McAfee and Erik Brynjolfsson have been prominent among those raising concern about technological unemployment. The two professors remain relatively optimistic, however, stating "the key to winning the race is not to compete against machines but to compete with machines".

Disruptive innovation is a term in the field of business administration which refers to an innovation that creates a new market and value network and eventually disrupts an existing market and value network, displacing established market-leading firms, products, and alliances.[2] The term was defined and first analyzed by the American scholar Clayton M. Christensen and his collaborators beginning in 1995,[3] and has been called the most influential business idea of the early 21st century.[4]

Not all innovations are disruptive, even if they are revolutionary. For example, the first automobiles in the late 19th century were not a disruptive innovation, because early automobiles were expensive luxury items that did not disrupt the market for horse-drawn vehicles. The market for transportation essentially remained intact until the debut of the lower-priced Ford Model T in 1908.[5] The mass-produced automobile was a disruptive innovation, because it changed the transportation market, whereas the first thirty years of automobiles did not.

Disruptive innovations tend to be produced by outsiders and entrepreneurs, rather than existing market-leading companies. The business environment of market leaders does not allow them to pursue disruptive innovations when they first arise, because they are not profitable enough at first and because their development can take scarce resources away from sustaining innovations (which are needed to compete against current competition).[6] A disruptive process can take longer to develop than the conventional approach, and the risk associated with it is higher than for other, more incremental or evolutionary forms of innovation, but once it is deployed in the market, it achieves a much faster penetration and higher degree of impact on the established markets.[7]

Beyond business and economics, disruptive innovations can also be considered to disrupt complex systems, including economic and business-related aspects.

The term disruptive technologies was coined by Clayton M. Christensen and introduced in his 1995 article Disruptive Technologies: Catching the Wave,[9] which he cowrote with Joseph Bower. The article is aimed at management executives who make the funding or purchasing decisions in companies, rather than the research community. He describes the term further in his book The Innovator's Dilemma.[10] Innovator's Dilemma explored the cases of the disk drive industry (which, with its rapid generational change, is to the study of business what fruit flies are to the study of genetics, as Christensen was advised in the 1990s[11]) and the excavating equipment industry (where hydraulic actuation slowly displaced cable-actuated movement). In his sequel with Michael E. Raynor, The Innovator's Solution,[12] Christensen replaced the term disruptive technology with disruptive innovation because he recognized that few technologies are intrinsically disruptive or sustaining in character; rather, it is the business model that the technology enables that creates the disruptive impact. However, Christensen's evolution from a technological focus to a business-modelling focus is central to understanding the evolution of business at the market or industry level. Christensen and Mark W. Johnson, who cofounded the management consulting firm Innosight, described the dynamics of "business model innovation" in the 2008 Harvard Business Review article "Reinventing Your Business Model".[13] The concept of disruptive technology continues a long tradition of identifying radical technical change in the study of innovation by economists, and the development of tools for its management at a firm or policy level.

The term “disruptive innovation” is misleading when it is used to refer to a product or service at one fixed point, rather than to the evolution of that product or service over time.

In the late 1990s, the automotive sector began to embrace a perspective of "constructive disruptive technology" by working with the consultant David E. O'Ryan, whereby the use of current off-the-shelf technology was integrated with newer innovation to create what he called "an unfair advantage". The process or technology change as a whole had to be "constructive" in improving the current method of manufacturing, yet disruptively impact the whole of the business case model, resulting in a significant reduction of waste, energy, materials, labor, or legacy costs to the user.

In keeping with the insight that what matters economically is the business model, not the technological sophistication itself, Christensen's theory explains why many disruptive innovations are not "advanced technologies", which the technology mudslide hypothesis would lead one to expect. Rather, they are often novel combinations of existing off-the-shelf components, applied cleverly to a small, fledgling value network.

Rainbow revenue story

The current theoretical understanding of disruptive innovation is different from what might be expected by default, an idea that Clayton M. Christensen called the "technology mudslide hypothesis". This is the simplistic idea that an established firm fails because it doesn't "keep up technologically" with other firms. In this hypothesis, firms are like climbers scrambling upward on crumbling footing, where it takes constant upward-climbing effort just to stay still, and any break from the effort (such as complacency born of profitability) causes a rapid downhill slide. Christensen and colleagues have shown that this simplistic hypothesis is wrong; it doesn't model reality. What they have shown is that good firms are usually aware of the innovations, but their business environment does not allow them to pursue them when they first arise, because they are not profitable enough at first and because their development can take scarce resources away from that of sustaining innovations (which are needed to compete against current competition). In Christensen's terms, a firm's existing value networks place insufficient value on the disruptive innovation to allow its pursuit by that firm. Meanwhile, start-up firms inhabit different value networks, at least until the day that their disruptive innovation is able to invade the older value network. At that time, the established firm in that network can at best only fend off the market share attack with a me-too entry, for which survival (not thriving) is the only reward.[6]

Christensen defines a disruptive innovation as a product or service designed for a new set of customers.

"Generally, disruptive innovations were technologically straightforward, consisting of off-the-shelf components put together in a product architecture that was often simpler than prior approaches. They offered less of what customers in established markets wanted and so could rarely be initially employed there. They offered a different package of attributes valued only in emerging markets remote from, and unimportant to, the mainstream."[14]

Christensen argues that disruptive innovations can hurt successful, well-managed companies that are responsive to their customers and have excellent research and development. These companies tend to ignore the markets most susceptible to disruptive innovations, because the markets have very tight profit margins and are too small to provide a good growth rate to an established (sizable) firm.[15] Thus, disruptive technology provides an example of an instance when the common business-world advice to "focus on the customer" (or "stay close to the customer", or "listen to the customer") can be strategically counterproductive.

While Christensen argued that disruptive innovations can hurt successful, well-managed companies, O'Ryan countered that "constructive" integration of existing, new, and forward-thinking innovation could improve the economic benefits of these same well-managed companies, once decision-making management understood the systemic benefits as a whole.

Christensen distinguishes between "low-end disruption", which targets customers who do not need the full performance valued by customers at the high end of the market, and "new-market disruption", which targets customers who have needs that were previously unserved by existing incumbents.[16]

"Low-end disruption" occurs when the rate at which products improve exceeds the rate at which customers can adopt the new performance. Therefore, at some point the performance of the product overshoots the needs of certain customer segments. At this point, a disruptive technology may enter the market and provide a product that has lower performance than the incumbent but that exceeds the requirements of certain segments, thereby gaining a foothold in the market.

In low-end disruption, the disruptor is focused initially on serving the least profitable customer, who is happy with a good enough product. This type of customer is not willing to pay a premium for enhancements in product functionality. Once the disruptor has gained a foothold in this customer segment, it seeks to improve its profit margin. To get higher profit margins, the disruptor needs to enter the segment where the customer is willing to pay a little more for higher quality. To ensure this quality in its product, the disruptor needs to innovate. The incumbent will not do much to retain its share in a not-so-profitable segment, and will move up-market and focus on its more attractive customers. After a number of such encounters, the incumbent is squeezed into smaller markets than it was previously serving. Finally, the disruptive technology meets the demands of the most profitable segment and drives the established company out of the market.

"New market disruption" occurs when a product fits a new or emerging market segment that is not being served by existing incumbents in the industry.

The extrapolation of the theory to all aspects of life has been challenged,[17][18] as has the methodology of relying on selected case studies as the principal form of evidence.[17] Jill Lepore points out that some companies identified by the theory as victims of disruption a decade or more ago, rather than being defunct, remain dominant in their industries today (including Seagate Technology, U.S. Steel, and Bucyrus).[17] Lepore questions whether the theory has been oversold and misapplied, as if it were able to explain everything in every sphere of life, including not just business but education and public institutions.

Disruptive technology

In 2009, Milan Zeleny described high technology as disruptive technology and raised the question of what is being disrupted. The answer, according to Zeleny, is the support network of high technology.[19] For example, introducing electric cars disrupts the support network for gasoline cars (network of gas and service stations). Such disruption is fully expected and therefore effectively resisted by support net owners. In the long run, high (disruptive) technology bypasses, upgrades, or replaces the outdated support network. Questioning the concept of a disruptive technology, Haxell (2012) questions how such technologies get named and framed, pointing out that this is a positioned and retrospective act.[20][21]

Technology, being a form of social relationship, always evolves. No technology remains fixed. Technology starts, develops, persists, mutates, stagnates, and declines, just like living organisms.[22] The evolutionary life cycle occurs in the use and development of any technology. A new high-technology core emerges and challenges existing technology support nets (TSNs), which are thus forced to coevolve with it. New versions of the core are designed and fitted into an increasingly appropriate TSN, with smaller and smaller high-technology effects. High technology becomes regular technology, with more efficient versions fitting the same support net. Finally, even the efficiency gains diminish, emphasis shifts to product tertiary attributes (appearance, style), and technology becomes TSN-preserving appropriate technology. This technological equilibrium state becomes established and fixated, resisting being interrupted by a technological mutation; then new high technology appears and the cycle is repeated.

Regarding this evolving process of technology, Christensen said:

"The technological changes that damage established companies are usually not radically new or difficult from a technological point of view. They do, however, have two important characteristics: First, they typically present a different package of performance attributes, ones that, at least at the outset, are not valued by existing customers. Second, the performance attributes that existing customers do value improve at such a rapid rate that the new technology can later invade those established markets."[23]

Joseph Bower[24] explained the process of how disruptive technology, through its requisite support net, dramatically transforms a certain industry.

"When the technology that has the potential for revolutionizing an industry emerges, established companies typically see it as unattractive: it's not something their mainstream customers want, and its projected profit margins aren't sufficient to cover big-company cost structure. As a result, the new technology tends to get ignored in favor of what's currently popular with the best customers. But then another company steps in to bring the innovation to a new market. Once the disruptive technology becomes established there, smaller-scale innovations rapidly raise the technology's performance on attributes that mainstream customers value."[25]

The automobile was high technology with respect to the horse carriage; however, it evolved into technology and finally into appropriate technology with a stable, unchanging TSN. The main high-technology advance in the offing is some form of electric car, whether the energy source is the sun, hydrogen, water, air pressure, or a traditional charging outlet. Electric cars preceded the gasoline automobile by many decades and are now returning to replace the traditional gasoline automobile. The printing press was a development that changed the way that information was stored, transmitted, and replicated. This empowered authors, but it also promoted censorship and information overload in writing technology.

Milan Zeleny described the above phenomenon.[26] He also wrote that:

"Implementing high technology is often resisted. This resistance is well understood on the part of active participants in the requisite TSN. The electric car will be resisted by gas-station operators in the same way automated teller machines (ATMs) were resisted by bank tellers and automobiles by horsewhip makers. Technology does not qualitatively restructure the TSN and therefore will not be resisted and never has been resisted. Middle management resists business process reengineering because BPR represents a direct assault on the support net (coordinative hierarchy) they thrive on. Teamwork and multi-functionality is resisted by those whose TSN provides the comfort of narrow specialization and command-driven work."

Social media could be considered a disruptive innovation within sports. More specifically, it has changed the way that sports news circulates compared with the pre-internet era, when sports news was mainly on TV, radio, and in newspapers. Social media has created a new market for sports that was not around before, in the sense that players and fans have instant access to information related to sports.

High-technology effects

High technology is a technology core that changes the very architecture (structure and organization) of the components of the technology support net. High technology therefore transforms the qualitative nature of the TSN's tasks and their relations, as well as their requisite physical, energy, and information flows. It also affects the skills required, the roles played, and the styles of management and coordination: the organizational culture itself.

This kind of technology core is different from regular technology core, which preserves the qualitative nature of flows and the structure of the support and only allows users to perform the same tasks in the same way, but faster, more reliably, in larger quantities, or more efficiently. It is also different from appropriate technology core, which preserves the TSN itself with the purpose of technology implementation and allows users to do the same thing in the same way at comparable levels of efficiency, instead of improving the efficiency of performance.[28]

As for the difference between high technology and low technology, Milan Zeleny once said:

"The effects of high technology always breaks the direct comparability by changing the system itself, therefore requiring new measures and new assessments of its productivity. High technology cannot be compared and evaluated with the existing technology purely on the basis of cost, net present value or return on investment. Only within an unchanging and relatively stable TSN would such direct financial comparability be meaningful. For example, you can directly compare a manual typewriter with an electric typewriter, but not a typewriter with a word processor. Therein lies the management challenge of high technology."[29]

However, not all modern technologies are high technologies. They have to be used as such, function as such, and be embedded in their requisite TSNs. They have to empower the individual because only through the individual can they empower knowledge. Not all information technologies have integrative effects. Some information systems are still designed to improve the traditional hierarchy of command and thus preserve and entrench the existing TSN. The administrative model of management, for instance, further aggravates the division of task and labor, further specializes knowledge, separates management from workers, and concentrates information and knowledge in centers.

As knowledge surpasses capital, labor, and raw materials as the dominant economic resource, technologies are also starting to reflect this shift. Technologies are rapidly shifting from centralized hierarchies to distributed networks. Nowadays knowledge does not reside in a super-mind, super-book, or super-database, but in a complex relational pattern of networks brought forth to coordinate human action.

Practical example of disruption

In the practical world, the popularization of personal computers illustrates how knowledge contributes to ongoing technological innovation. The original centralized concept (one computer, many persons) is a knowledge-defying idea of the prehistory of computing, and its inadequacies and failures have become clearly apparent. The era of personal computing brought powerful computers "on every desk" (one person, one computer). This short transitional period was necessary for getting used to the new computing environment, but was inadequate from the vantage point of producing knowledge. Adequate knowledge creation and management come mainly from networking and distributed computing (one person, many computers). Each person's computer must form an access point to the entire computing landscape or ecology through the Internet of other computers, databases, and mainframes, as well as production, distribution, and retailing facilities, and the like. For the first time, technology empowers individuals rather than external hierarchies. It transfers influence and power where it optimally belongs: at the loci of the useful knowledge. Even though hierarchies and bureaucracies do not innovate, free and empowered individuals do; knowledge, innovation, spontaneity, and self-reliance are becoming increasingly valued and promoted.

Working time

Working time is the period of time that a person spends at paid labor. Unpaid labor such as personal housework or caring for children or pets is not considered part of the working week.

Many countries regulate the work week by law, such as stipulating minimum daily rest periods, annual holidays, and a maximum number of working hours per week. Working time may vary from person to person, often depending on location, culture, lifestyle choice, and the profitability of the individual's livelihood. For example, someone who is supporting children and paying a large mortgage will need to work more hours to meet basic costs of living than someone of the same earning power without children. Because fewer people than ever are having children,[1] choosing part-time work is becoming more popular.[2]

Standard working hours (or normal working hours) refers to legislation that limits working hours per day, per week, per month, or per year. If an employee needs to work overtime, the employer must pay overtime as required by law. Generally speaking, standard working hours worldwide are around 40 to 44 hours per week (but not everywhere: from 35 hours per week in France[3] to up to 112 hours per week in North Korean labor camps[4]), and overtime payments are around 25% to 50% above the normal hourly rate. Maximum working hours refers to the greatest number of hours an employee may work; the employee cannot work more than the level specified in the maximum working hours law.
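The relationship between standard hours and overtime premiums described above can be illustrated with a short calculation. This is only a sketch: the function name `weekly_pay`, the 40-hour default, and the flat premium applied to every overtime hour are assumptions made for illustration, and actual rules differ by jurisdiction.

```python
def weekly_pay(hours_worked, hourly_rate, standard_hours=40.0, overtime_premium=0.25):
    """Gross weekly pay, with hours above the standard paid at a premium.

    standard_hours and overtime_premium are illustrative defaults taken from
    the typical ranges quoted in the text (40 to 44 hours, 25% to 50%).
    """
    if hours_worked < 0 or hourly_rate < 0:
        raise ValueError("hours_worked and hourly_rate must be non-negative")
    # Hours up to the standard are paid at the normal rate.
    regular_hours = min(hours_worked, standard_hours)
    # Any remaining hours are overtime, paid at the normal rate plus the premium.
    overtime_hours = max(hours_worked - standard_hours, 0.0)
    return regular_hours * hourly_rate + overtime_hours * hourly_rate * (1.0 + overtime_premium)


if __name__ == "__main__":
    # 44 hours at a hypothetical rate of 20 per hour, with a 25% and a 50% premium.
    print(weekly_pay(44, 20.0))
    print(weekly_pay(44, 20.0, overtime_premium=0.5))
```

Under these assumptions, only the four hours above the standard attract the premium, so raising the premium from 25% to 50% changes the pay for those four hours alone.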

Since the 1960s, the consensus among anthropologists, historians, and sociologists has been that early hunter-gatherer societies enjoyed more leisure time than is permitted by capitalist and agrarian societies;[6][7] for instance, one camp of !Kung Bushmen was estimated to work two-and-a-half days per week, at around 6 hours a day.[8] Aggregated comparisons show that on average the working day was less than five hours.[6]

Subsequent studies in the 1970s examined the Machiguenga of the Upper Amazon and the Kayapo of northern Brazil. These studies expanded the definition of work beyond purely hunting-gathering activities, but the overall average across the hunter-gatherer societies studied was still below 4.86 hours, while the maximum was below 8 hours.[6] Popular perception is still aligned with the old academic consensus that hunter-gatherers worked far in excess of modern humans' forty-hour week.[7]

The industrial revolution made it possible for a larger segment of the population to work year-round, because this labor was not tied to the season and artificial lighting made it possible to work longer each day. Peasants and farm laborers moved from rural areas to work in urban factories, and working time during the year increased significantly.[9] Before collective bargaining and worker protection laws, there was a financial incentive for a company to maximize the return on expensive machinery by having long hours. Records indicate that work schedules as long as twelve to sixteen hours per day, six to seven days per week were practiced in some industrial sites.[citation needed]

1906 – strike for the 8 working hours per day in France

Technology has also continued to improve worker productivity, permitting standards of living to rise as hours decline.[13] In developed economies, as the time needed to manufacture goods has declined, more working hours have become available to provide services, resulting in a shift of much of the workforce between sectors.

Economic growth in monetary terms tends to be concentrated in health care, education, government, criminal justice, corrections, and other activities that are regarded as necessary for society rather than those that contribute directly to the production of material goods.

In the mid-2000s, the Netherlands was the first country in the industrialized world where the overall average working week dropped to less than 30 hours.[14]

Gradual decrease in working hours

Most countries in the developed world have seen average hours worked decrease significantly.[15][16] For example, in the U.S. in the late 19th century it was estimated that the average work week was over 60 hours per week.[17] Today the average hours worked in the U.S. is around 33,[18] with the average man employed full-time for 8.4 hours per work day, and the average woman employed full-time for 7.7 hours per work day.[19] The front runners for lowest average weekly work hours are the Netherlands with 27 hours,[20] and France with 30 hours.[21] At current rates the Netherlands is set to become the first country to reach an average work week under 21 hours.[22] In a 2011 report of 26 OECD countries, Germany had the lowest average working hours per week at 25.6 hours.[23]

The New Economics Foundation has recommended moving to a 21-hour standard work week to address problems with unemployment, high carbon emissions, low well-being, entrenched inequalities, overworking, family care, and the general lack of free time.[24][25][26] Actual work week lengths have been falling in the developed world.[27]

Factors that have contributed to lowering average work hours and increasing standard of living have been:

- Technological advances in efficiency such as mechanization, robotics and information technology.

- The increased participation of women in earning income, where previously they were commonly bound exclusively to homemaking and childrearing.

- Dropping fertility rates, which mean fewer hours need to be worked to support children.

Workweek structure

The structure of the work week varies considerably for different professions and cultures. Among salaried workers in the western world, the work week often consists of Monday to Friday or Saturday with the weekend set aside as a time of personal work and leisure. Sunday is set aside in the western world because it is the Christian sabbath.

The traditional American business hours are 9:00 a.m. to 5:00 p.m., Monday to Friday, representing a workweek of five eight-hour days comprising 40 hours in total. These are the origin of the phrase 9-to-5, used to describe a conventional and possibly tedious job.[32] Negatively used, it connotes a tedious or unremarkable occupation. The phrase also indicates that a person is an employee, usually in a large company, rather than self-employed. More neutrally, it connotes a job with stable hours and low career risk, but still a position of subordinate employment. The actual time at work often varies between 35 and 48 hours in practice, depending on whether breaks are included. In many traditional white-collar positions, employees were required to be in the office during these hours to take orders from the bosses, hence the relationship between this phrase and subordination. Workplace hours have become more flexible, but the phrase is still commonly used.

Several countries have adopted a workweek from Monday morning until Friday noon, either due to religious rules (observance of Shabbat in Israel, whose workweek is Sunday to Friday afternoon) or the growing predominance of a 35–37.5 hour workweek in continental Europe. Several Muslim countries have a standard Sunday through Thursday or Saturday through Wednesday workweek, leaving Friday for religious observance and providing breaks for the daily prayer times.

Average annual hours actually worked per worker

OECD ranking

Differences among countries and regions

South Korea

South Korea has the fastest-shortening working time in the OECD,[36] the result of the government's proactive move to lower working hours at all levels in order to increase leisure and relaxation time. The government introduced the mandatory forty-hour, five-day working week in 2004 for companies with over 1,000 employees. Beyond regular working hours, it is legal to demand up to 12 hours of overtime during the week, plus another 16 hours on weekends.[citation needed] The 40-hour workweek expanded to companies with 300 employees or more in 2005, 100 employees or more in 2006, 50 or more in 2007, and 20 or more in 2008, with full inclusion of all workers nationwide in July 2011.[37] The government has continuously increased public holidays, to 16 days in 2013, more than the 10 days of the United States and double the United Kingdom's 8 days.[38] Despite those efforts, South Korea's work hours are still relatively long, with an average of 2,163 hours per year in 2012.[39]

Japan

Work hours in Japan are decreasing, but many Japanese still work long hours.[40] Recently, Japan's Ministry of Health, Labor and Welfare (MHLW) issued a draft report recommending major changes to the regulations that govern working hours. The centerpiece of the proposal is an exemption from overtime pay for white-collar workers.[citation needed]

Japan has enacted an 8-hour work day and 40-hour work week (44 hours in specified workplaces). The overtime limits are: 15 hours a week, 27 hours over two weeks, 43 hours over four weeks, 45 hours a month, 81 hours over two months and 120 hours over three months; however, some workers get around these restrictions by working several hours a day without 'clocking in', whether physically or metaphorically.[41][citation needed] The overtime allowance should not be lower than 125% and not more than 150% of the normal hourly rate.[42]

European countries

In most European Union countries, working time is gradually decreasing.[43] The European Union's working time directive imposes a 48-hour maximum working week that applies to every member state except the United Kingdom and Malta (which have an opt-out, meaning that UK-based employees may work longer than 48 hours if they wish, but they cannot be forced to do so).[44] France has enacted a 35-hour workweek by law, and similar results have been produced in other countries through collective bargaining. A major reason for the low annual hours worked in Europe is a relatively high amount of paid annual leave.[45] Fixed employment comes with four to six weeks of holiday as standard. In the UK, for example, full-time employees are entitled to 28 days of paid leave a year.[46]

Mexico

Mexican laws mandate a maximum of 48 hours of work per week, but they are rarely observed or enforced due to loopholes in the law, the volatility of labor rights in Mexico, and its underdevelopment relative to other member countries of the Organisation for Economic Co-operation and Development (OECD). Indeed, private sector employees often work overtime without receiving overtime compensation. Fear of unemployment and threats by employers explain in part why the 48-hour work week is disregarded.

Colombia

Articles 161 to 167 of the Substantive Work Code in Colombia provide for a maximum of 48 hours of work a week.[47]

Australia

In Australia, between 1974 and 1997 no marked change took place in the average amount of time spent at work by Australians of "prime working age" (that is, between 25 and 54 years of age). Throughout this period, the average time spent at work by prime working-age Australians (including those who did not spend any time at work) remained stable at between 27 and 28 hours per week. This unchanging average, however, masks a significant redistribution of work from men to women. Between 1974 and 1997, the average time spent at work by prime working-age Australian men fell from 45 to 36 hours per week, while the average time spent at work by prime working-age Australian women rose from 12 to 19 hours per week. In the period leading up to 1997, the amount of time Australian workers spent at work outside the hours of 9 a.m. to 5 p.m. on weekdays also increased.[48]

In 2009, a rapid increase in the number of working hours was reported in a study by The Australia Institute. The study found the average Australian worked 1,855 hours per year. According to Clive Hamilton of The Australia Institute, this surpasses even Japan. The Australia Institute believes that Australians work the highest number of hours in the developed world.[49]
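Because this article quotes some figures per year and others per week, a rough conversion can help when comparing them. The sketch below simply spreads annual hours evenly over 52 calendar weeks; the function name `average_weekly_hours` and the 52-week divisor are assumptions for illustration, and since weeks of leave are included in the divisor the result understates the hours in weeks actually worked.

```python
WEEKS_PER_YEAR = 52  # assumption: calendar weeks, with no adjustment for leave


def average_weekly_hours(annual_hours, weeks=WEEKS_PER_YEAR):
    """Spread an annual hours figure evenly across the given number of weeks."""
    if weeks <= 0:
        raise ValueError("weeks must be positive")
    return annual_hours / weeks


if __name__ == "__main__":
    # The 1,855 hours per year reported in the 2009 study quoted above.
    print(round(average_weekly_hours(1855), 1))
```

Passing a smaller value for `weeks` (for example, to exclude weeks of paid leave) gives a higher per-week figure for the same annual total.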

From January 1, 2010, Australia enacted a 38-hour workweek in accordance with the Fair Work Act 2009, with an allowance for additional hours as overtime.[50]