Before we continue the discussion from my previous writing on indoor pharmaceutical plants controlled by electronics and robots, I will discuss the development of television: from black-and-white to color, from analog to digital, and on to LED television. I am explaining this because these topics all relate to precision techniques and to capturing dynamic movement second by second through continuous observation, which became possible with the development of monotonic communication techniques, namely television technology. I learned much of my technical knowledge of television while taking electronics engineering courses, working in the television field, and carrying out case studies with television manufacturers such as SHARP Electronics and SAMSUNG Electronics, as well as with SIEMENS PABX. Manufacturing a television requires many methods, applied step by step, to produce a good television, made precisely and correctly, that customers will accept and use both at launch and today. Before a television is assembled and its circuit boards are printed, the manufacturer must research and develop what will be applied in production, step by step:

1. Design phase

2. New product test phase

3. Market research stage

4. Early production stage

5. Assembly stage

6. Factory setting and user setting stage for the TV product

7. TV product checking stage

8. Sampling stage for strength and reliability testing of the TV

9. Product launch stage

10. TV product review stage

Television (e-Monotonic VisiON, or e-MICON) advanced very rapidly in the 20th and 21st centuries, but there have been many obstacles concerning the weight, size, and flexibility of television sets. Televisions (e-MICON) are now being carefully crafted to use more flexible and dynamic components and to use energy more efficiently in vision electronics (e-Learn + e-Gain + e-Control).


Gen. Mac Tech

Black and White Television (e-MICON)

Monochrome TV Transmitter

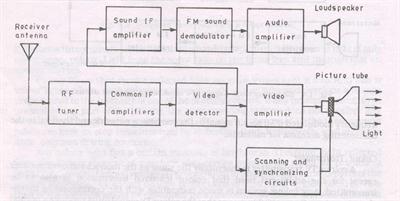

Monochrome TV Transmitter: The figure shows a simplified block diagram of a television transmitter. The video signals obtained from the camera tube are applied to a number of video amplifier stages. The first stage is located in the camera housing and raises the weak signal voltage to a level high enough to be carried over a coaxial cable to the following amplifier stages.

The synchronizing generator produces sets of pulses that operate the system at the appropriate times. This unit includes wave-generating and wave-shaping circuits, for example multivibrator circuits, blocking oscillator circuits, and clipping circuits. The repetition rates of the pulse trains are controlled by a frequency-stabilized master oscillator.
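As a rough illustration of how one master oscillator can set both pulse rates, the sketch below models a counter chain of the kind used in sync generators for the 525-line, 60-field monochrome standard. It assumes a 31,500 Hz master oscillator (twice the line rate, so that pulses can also be placed at half-line intervals), a divide-by-2 stage for the horizontal rate, and divide-by-3, 5, 5, 7 stages (product 525) for the vertical rate. The function names are hypothetical.

```python
# Minimal sketch of a sync-generator countdown chain (assumed 525-line, 60-field standard).
MASTER_HZ = 31_500  # assumed master oscillator: twice the 15,750 Hz line rate


def divide(freq_hz: int, ratio: int) -> int:
    """Model one counter stage: one output pulse for every `ratio` input pulses."""
    if freq_hz % ratio:
        raise ValueError(f"{freq_hz} Hz is not an integer multiple of {ratio}")
    return freq_hz // ratio


def sync_rates(master_hz: int = MASTER_HZ) -> tuple[int, int]:
    """Return (horizontal_hz, vertical_hz) derived from the master oscillator."""
    horizontal = divide(master_hz, 2)       # line (horizontal) rate
    vertical = master_hz
    for stage in (3, 5, 5, 7):              # 3 * 5 * 5 * 7 = 525
        vertical = divide(vertical, stage)  # field (vertical) rate
    return horizontal, vertical


if __name__ == "__main__":
    h, v = sync_rates()
    print(f"horizontal: {h} Hz, vertical: {v} Hz, lines per field: {h / v}")
```

Because both rates are counted down from the same oscillator, they cannot drift relative to each other, and their ratio (15,750 / 60 = 262.5 lines per field) is fixed by the divider chain.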

The horizontal synchronizing pulses are applied to the horizontal deflection saw-tooth generator; the vertical synchronizing pulses are applied to the vertical deflection saw-tooth generator; two sets of blanking pulses are applied to the control grid of the camera tube to blank it during vertical and horizontal retrace; and a pulse train consisting of all the above pulse groups is applied to the video amplifier channel for transmission to the receiver.

The carrier generated by a crystal-controlled oscillator is passed through a number of frequency multiplier and amplifier stages. This produces a carrier wave of the desired frequency and power. The level of the picture signal, together with the synchronizing and blanking pulses, is raised so that it can modulate this carrier. High-level grid modulation is usually employed.

When the carrier is amplitude modulated by the video signal (bandwidth 5 MHz), it generates two sidebands, and the total bandwidth required for a TV channel would be 10 MHz, which is too large. Therefore vestigial sideband transmission is used: one sideband (say, the upper) is transmitted in full, along with a reduced part of the other. For this purpose, the output of the final RF amplifier is applied to a vestigial sideband filter, which suppresses the unwanted portion of the lower sideband of the modulated wave.
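The bandwidth saving can be checked with simple arithmetic. The sketch below takes the 5 MHz video bandwidth from the text and assumes a 0.75 MHz vestige of the lower sideband, the value used in several 625-line systems such as CCIR System B. The vestige width is an assumption, and a real filter rolls off gradually rather than cutting off at a sharp edge.

```python
VIDEO_BW_MHZ = 5.0   # highest video frequency, from the text
VESTIGE_MHZ = 0.75   # assumed width of the transmitted lower-sideband vestige


def dsb_bandwidth(video_bw: float) -> float:
    """Full AM: both sidebands are sent, so the signal occupies twice the video bandwidth."""
    return 2 * video_bw


def vsb_bandwidth(video_bw: float, vestige: float) -> float:
    """Vestigial sideband: the full upper sideband plus a small part of the lower one."""
    return video_bw + vestige


if __name__ == "__main__":
    full = dsb_bandwidth(VIDEO_BW_MHZ)
    vsb = vsb_bandwidth(VIDEO_BW_MHZ, VESTIGE_MHZ)
    print(f"DSB: {full} MHz, VSB: {vsb} MHz, saving: {full - vsb} MHz")
```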

The modulated RF energy is carried from the transmitter to the transmitting antenna by a coaxial transmission line. The antenna is mounted high to cover a large transmission area.

An FM transmitter is used to transmit the audio signal. The carrier frequency used for audio modulation is 5.5 MHz above the one used for video modulation. Both the sound and picture signals are transmitted by the same antenna through a diplexer called the picture-sound diplexer.
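Two quick calculations follow from this paragraph: the sound carrier sits a fixed 5.5 MHz above the picture carrier, and the bandwidth of the FM sound signal can be estimated with Carson's rule, B ≈ 2(Δf + f_m). The peak deviation (50 kHz) and highest audio frequency (15 kHz) used below are assumptions typical of 625-line systems, and the 48.25 MHz picture carrier is only an illustrative value.

```python
SOUND_OFFSET_MHZ = 5.5  # sound carrier offset above the picture carrier, from the text


def sound_carrier(picture_carrier_mhz: float) -> float:
    """Sound carrier frequency for a given picture carrier, in MHz."""
    return picture_carrier_mhz + SOUND_OFFSET_MHZ


def carson_bandwidth_khz(peak_deviation_khz: float, max_audio_khz: float) -> float:
    """Carson's rule estimate of the bandwidth occupied by an FM signal."""
    return 2 * (peak_deviation_khz + max_audio_khz)


if __name__ == "__main__":
    print(sound_carrier(48.25))          # illustrative picture carrier
    print(carson_bandwidth_khz(50, 15))  # assumed deviation and audio bandwidth
```

The estimated 130 kHz is small compared with the picture signal, which is why the sound carrier can share the channel near its upper edge.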

Ten components of the black-and-white television circuit:

Black-and-white televisions work like weaving: just as mats, blankets, sarong patterns, and ulos shawls are built up from vertical and horizontal threads, the picture is woven onto the television screen from vertical and horizontal scanning, carried to the set by electromagnetic waves.

1. Inputs and outputs:

Blocks shown: AC input, power supply, television receiver (all sections), picture tube, speaker.

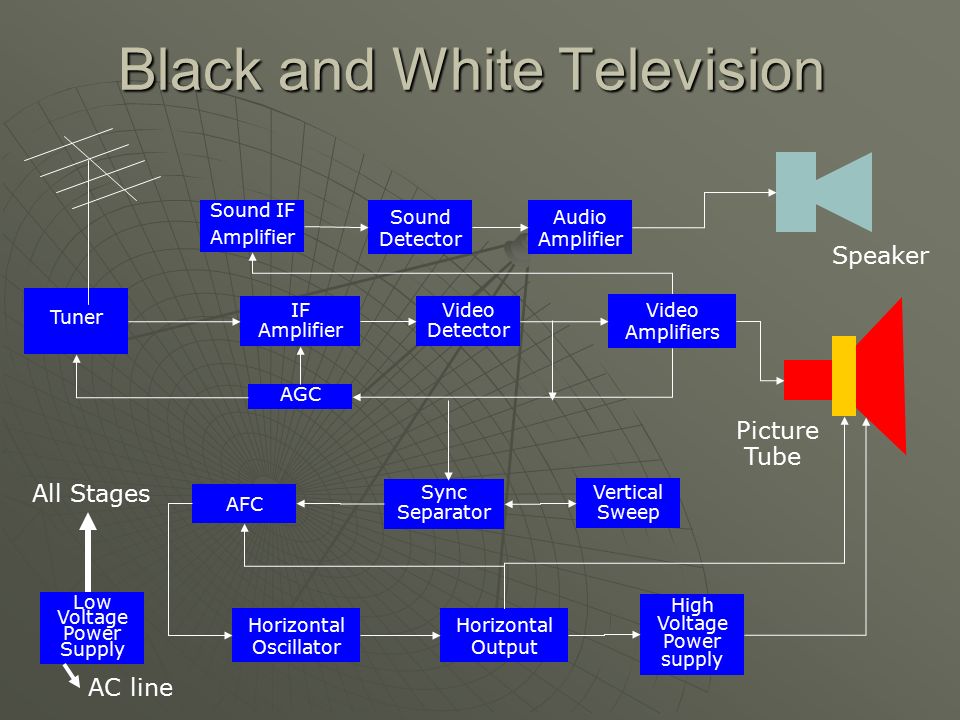

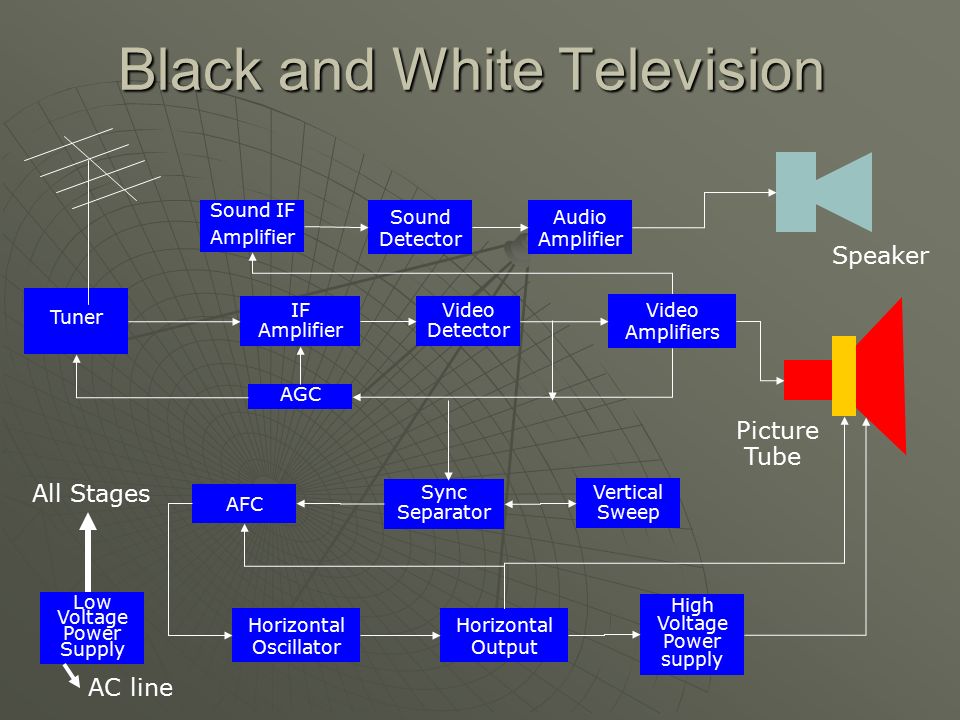

2. Black-and-white television block diagram:

Blocks shown: sound IF amplifier, sound detector, audio amplifier, speaker, tuner, IF amplifier, video detector, video amplifiers, AGC, picture tube, all stages, vertical sweep, AFC, sync separator, low-voltage power supply, high-voltage power supply, horizontal oscillator, horizontal output, AC line.

3. Function of each block:

Low-voltage power supply: supplies DC voltage to all sections of the television; it is also where the AC line voltage is converted to DC. Sound IF amplifier: receives and amplifies the sound IF signal. Sound detector: separates the audio signal from the sound carrier.

4. Continuation:

5. Continuation (AGC, AFC, sync separator, vertical sweep):

Horizontal oscillator, horizontal output, high-voltage power supply, picture tube, deflection yoke.

6. Color television block diagram:

Blocks shown: speaker, picture tube, all stages, AC line, sound IF amplifier, sound detector, audio amplifier, tuner, IF amplifier, video detector, video amplifiers, AGC (automatic gain control), vertical sweep, AFC, sync separator, low-voltage power supply, high-voltage power supply, horizontal oscillator, horizontal output.

7. Details of the vertical and horizontal sections:

Blocks shown: high-voltage rectifier, horizontal oscillator, horizontal driver, horizontal output, flyback, filament, coil, yoke, to filament, coil 2, coil 1, picture tube, all stages, vertical oscillator, vertical driver, vertical output, low-voltage power supply, AC line.

8. Scanning:

Horizontal scanning: 15,734 Hz (15,750 Hz in the original monochrome standard). Vertical scanning: 59.94 Hz pulse (60 Hz in the original monochrome standard). Interlaced scanning: the horizontal deflection pulls the beam from left to right, and the vertical deflection pulls it from top to bottom. One complete picture, called a frame, is composed of 525 lines: 1 field = 262½ lines, and 2 fields = 525 lines = 1 frame.

9. The composite video signal is composed of the following:

a. Video signal: gives the picture tube the correct image information (30 Hz to 4.2 MHz).

b. Horizontal blanking pulse: tells the receiver to blank the electron beam.

c. Horizontal synchronizing pulse.

d. Vertical blanking pulse.

e. Vertical synchronizing pulse.

f. Equalizing pulse.

10. Block diagram of a black-and-white TV

A television performs three tasks: it shows a picture (video), it produces the sounds (audio) that accompany the picture, and it synchronizes the picture it produces with the picture that is transmitted. A picture without sound is unacceptable, and sound without a picture is like watching a radio. If the picture on the screen is not synchronized with the picture that is transmitted, the resulting picture is chaotic. This section describes the basic operation of a television, and how it receives signals, converts signals, and produces both picture and sound.
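The composite video signal listed in item 9 can be pictured as a sequence of voltage levels along one scan line. The sketch below builds one line as samples in IRE units, assuming approximate U.S. (525-line) values: a 63.5 µs line, a 1.5 µs front porch, a 4.7 µs horizontal sync pulse at -40 IRE, a 4.7 µs back porch at blanking level (0 IRE), and picture levels from 7.5 IRE (black) to 100 IRE (white). The timing figures are rounded assumptions, the function name is hypothetical, and the color burst carried on the back porch of color signals is left out.

```python
from collections.abc import Callable

LINE_US = 63.5          # assumed duration of one horizontal line
FRONT_PORCH_US = 1.5    # blanking before the sync pulse
SYNC_US = 4.7           # horizontal sync pulse
BACK_PORCH_US = 4.7     # blanking after the sync pulse
SYNC_IRE, BLANK_IRE, BLACK_IRE, WHITE_IRE = -40.0, 0.0, 7.5, 100.0


def composite_line(brightness: Callable[[float], float], step_us: float = 0.1) -> list[float]:
    """Return one line of composite video sampled every `step_us` microseconds.

    `brightness(x)` gives the picture brightness (0 = black, 1 = white) at
    position x (0 = left edge, 1 = right edge) of the visible part of the line.
    """
    active_start = FRONT_PORCH_US + SYNC_US + BACK_PORCH_US
    active_len = LINE_US - active_start
    samples = []
    for i in range(round(LINE_US / step_us)):
        t = i * step_us
        if t < FRONT_PORCH_US:
            level = BLANK_IRE                    # front porch (horizontal blanking)
        elif t < FRONT_PORCH_US + SYNC_US:
            level = SYNC_IRE                     # horizontal sync pulse
        elif t < active_start:
            level = BLANK_IRE                    # back porch (horizontal blanking)
        else:
            x = (t - active_start) / active_len  # position across the visible line
            level = BLACK_IRE + (WHITE_IRE - BLACK_IRE) * brightness(x)
        samples.append(level)
    return samples


if __name__ == "__main__":
    ramp = composite_line(lambda x: x)  # gray scale from black (left) to white (right)
    print(min(ramp), max(ramp), len(ramp))
```

Keeping the sync pulse below black level is what lets the receiver separate timing information from picture information using amplitude alone.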

How TV Produces a Picture and Sound

A picture is a series of tiny squares. Using black and white as an example, the squares range from black to white with many gray tones in between to add definition. When you magnify a black and white photograph, the small squares that define the picture become apparent, as shown in FIG. 1.

The signal a television station transmits also is made up of tiny squares of light and the spaces between the squares. You can see the tiny squares on the television screen when you tune to an unused channel. At the television station, the tiny squares that define a picture are converted to electrical signals. Accompanying the electrical signals is all of the information about the squares of light, including their positions and their intensity. This information is used by the receiver that converts the signals to a picture to duplicate the transmitted picture. The more tiny squares there are in a picture, the better the picture quality or resolution.

Also, the television receiver must be able to stay in step with the television station’s transmitter. The television camera scans a scene much like a person scans a page of printed material. Starting at the top-left corner, a person scans the first line from left to right. Then, when the first line is complete, the person’s eye moves down one line and back to the left side of the page. This pattern continues until the page is finished.
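The reading analogy maps directly onto how a two-dimensional picture becomes a one-dimensional signal. In the sketch below (function names hypothetical), the transmitter reads a small grid of brightness values row by row, and the receiver rebuilds the picture by cutting the stream into lines of the agreed length. Rebuilding with the wrong line length, as a receiver out of step with the transmitter effectively does, shifts every line and skews the picture.

```python
def scan(image: list[list[int]]) -> list[int]:
    """Transmitter: read rows top to bottom, each row left to right."""
    return [value for row in image for value in row]


def rebuild(stream: list[int], line_length: int) -> list[list[int]]:
    """Receiver: cut the serial stream back into lines of `line_length` values."""
    return [stream[i:i + line_length] for i in range(0, len(stream), line_length)]


if __name__ == "__main__":
    picture = [
        [0, 9, 0, 0],
        [0, 9, 0, 0],
        [0, 9, 0, 0],
    ]  # a vertical bar
    signal = scan(picture)
    print(rebuild(signal, 4) == picture)  # True: receiver in step with transmitter
    for line in rebuild(signal, 3):       # out of step: the bar slants and wraps around
        print(line)
```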

To ensure that the receiver stays in step with the transmitter and duplicates the transmitter’s left-to-right and top-to-bottom scanning pattern, the transmitter sends a series of timing signals, called synchronizing (sync) signals, along with the picture and sound signals. Using the sync signals, the receiver can stay in step with the camera’s scanning pattern. Sync signals can be divided into two sets of instructions. The instructions that return the beam from right to left to start each new line are called horizontal sync signals. The instructions that return the beam from bottom to top to start each new field are called vertical sync signals. If there are problems with the sync signals, the picture on the television appears to flicker, tear horizontally, or roll vertically.

Reducing flicker is discussed in the next section. Horizontal tearing and vertical rolling are discussed in the section Processing the Sync Signals, later in this section.

Video Basics

There are 525 horizontal lines in one television picture (frame), with 285 squares of information on each line. In the U.S., a television station transmits 30 complete frames every second. Transmitting only 30 frames per second causes the picture to appear to flicker. This flickering is reduced by interlacing the 525 horizontal lines. Starting at the top of the screen, the electron gun in the picture tube (cathode ray tube or CRT) evenly scans 262½ lines down the screen, shown as solid lines in FIG. 2. Then, the electron gun goes back to the top-left of the screen and scans another 262½ lines between the first lines. The second set of scan lines is shown as dashed lines in FIG. 2. Interlacing the lines reduces the flickering and produces much the same effect as 60 complete pictures per second.

A set of 262½ lines is called a field. It takes two fields to produce one complete frame.
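The order in which an interlaced frame is painted can be listed directly. The sketch below numbers the 525 lines of a frame from top to bottom and assigns the odd-numbered lines to the first field and the even-numbered lines to the second. This is a simplification: in the real signal each field is 262½ lines, and that half line is what offsets the second field, whereas here the split is 263 + 262 whole lines. The function name is hypothetical.

```python
def interlaced_fields(total_lines: int = 525) -> tuple[list[int], list[int]]:
    """Split the lines of one frame (numbered top to bottom from 1) into two fields."""
    first = list(range(1, total_lines + 1, 2))   # 1, 3, 5, ... scanned first
    second = list(range(2, total_lines + 1, 2))  # 2, 4, 6, ... fill the gaps
    return first, second


if __name__ == "__main__":
    first, second = interlaced_fields()
    print(len(first), len(second))  # 263 262
    print(first[:3], second[:3])    # [1, 3, 5] [2, 4, 6]
```

With 60 fields per second, the screen as a whole is swept from top to bottom 60 times a second even though only 30 complete frames are sent, which is why interlacing reduces visible flicker.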


If the television is not receiving a picture, it still produces a scanning pattern. This pattern, called a scanning raster or snow, appears as a series of horizontal white lines, even though the individual lines cannot be distinguished. The snow pattern is important because it shows that radio frequency signals are being received. The radio frequency signals carry the video, audio and sync signals. You can reproduce a scanning raster by disconnecting the television’s antenna and tuning to an unused channel. If the snow pattern is absent, there is a problem with one of the early stages of reception, such as the tuner or the IF stages.

In addition to the blanking pulses used to blank retrace lines at the end of the sweep cycle, the newer televisions have a built-in blanking circuit which blanks the raster if there is no signal. You can see the retrace lines if you maximize the brightness.

In addition to the scanning raster, a camera must also determine the amount of red, green and blue in the original picture, and the brightness of each square that makes up the picture. When this information is transmitted to the receiver, the receiver must be able to reproduce the original picture by combining correct proportions of red, green and blue, and by reproducing the brightness of each square that defines the picture. All of this picture information is sent to the receiver in video signals.

Using the controls on the television, it is possible to adjust the brightness and contrast to wash out or intensify the color of the picture. Even slight adjustments can cause light areas in a picture to lose detail and dark areas to appear lighter. These problems can be solved easily using the television’s controls. Other problems with color and brightness are not so easy to identify. Later sections provide more information about analyzing the color and brightness of the picture produced by the television when troubleshooting picture problems.

Sound Basics

Television sound (audio) is transmitted as FM signals because FM signals are less noisy than AM signals. At the television station, the FM audio signals are combined with the video transmission. Section 6 describes how the sound system works for monaural (mono) and stereo sound, and how to use the television’s sound to locate troubles in a receiver.

Using a Block Diagram

Television manuals and repair documentation usually include block diagrams. These diagrams break down the entire process of receiving signals, converting signals, and producing picture and sound into a chart that makes it easy to follow the sequence of processes, called stages. Thus, block diagrams can be a useful troubleshooting tool, even though they do not replace the more complex schematics that we will discuss later in the guide.

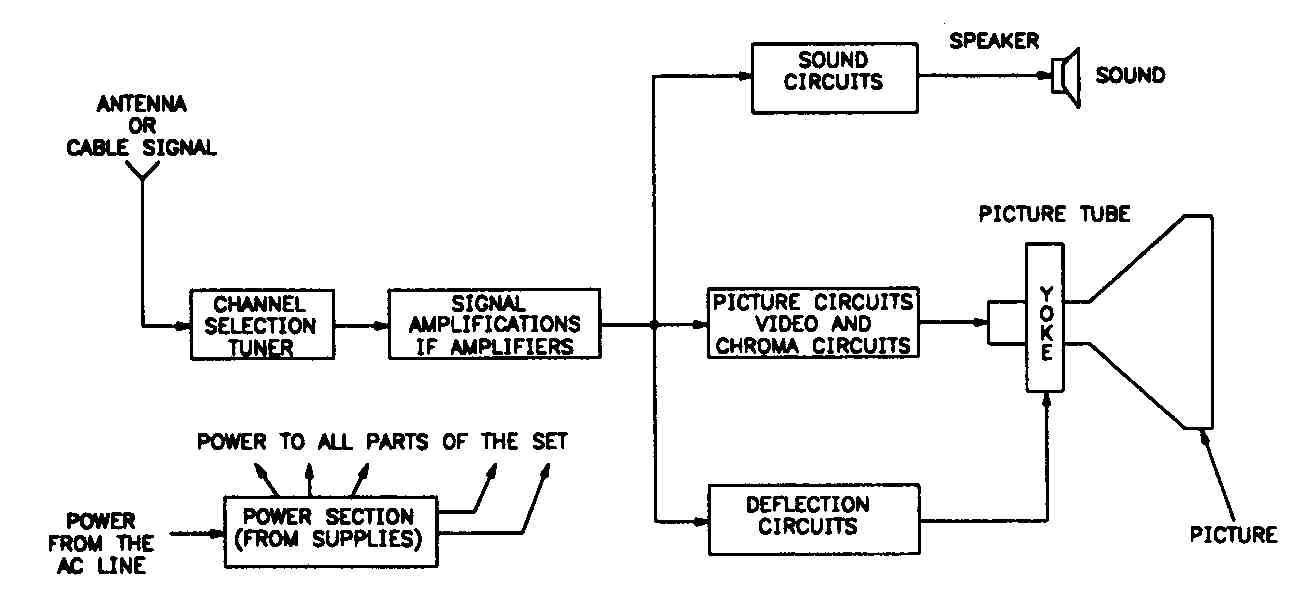

FIG. 4. A functional block diagram showing the basic steps in the process of converting a signal into televised information.

Block diagrams can show high-level views of a process or of complex circuit information. The simplest version of a block diagram is a black box diagram, shown in FIG. 3, in which there is an input and an output, but the process of receiving the signal, converting the signal, and producing picture and sound appears as a black box providing no details.

The block diagram shown in FIG. 4 is somewhat more complex. It shows that the television is receiving the appropriate power so that it can complete the required processes. The diagram also shows the basic steps in the process of receiving the signal, converting the signal, and producing the picture and sound, as well as the sequence in which the process takes place.

The antenna receives the signal from the television station. When the television’s tuner selects the channel assigned to the television station’s transmitting frequency, the television’s intermediate-frequency (IF) amplifiers amplify the selected signal. Then, the signal is split into three parts: audio signal, video signal and sync signal. These three signals are processed simultaneously.

FIG. 5 is a more typical block diagram. This diagram shows all of the processes that take place in the television, from receiving the signal from the television station to producing the video and audio. This diagram makes it easy to trace the signal through each stage of the process.

The following sections describe each stage in the block diagram. Refer to the block diagram in FIG. 5 while reading through the sections.

Receiving the Signal

Even though the antenna is separate from the television and is often treated as an independent stage, electrically it is really part of the television’s tuner. The antenna receives the RF (radio frequency) signal from the television station and passes the signal to the tuner. Not all antennas receive all television channels. Some antennas work only with VHF channels (channels 2 through 13), and others work only with UHF channels (channels 14 through 83). There are also antennas that receive all channels, as well as some that are designed for only one channel.

Cable companies receive signals through satellite dishes, and many people now receive television directly from satellites as well. The signal received from the satellite, whether at a cable company or at home, goes to an amplifier and converter that lowers its frequency. The signals are then passed through a cable to the television. Cable signals are typically stronger and cleaner than antenna signals.

Each television station transmits at a different frequency. The unit of measurement for a television frequency is megahertz (MHz). The frequencies for the VHF channels are 54 MHz through 216 MHz. The frequencies for the UHF channels are 470 MHz through 890 MHz. The tuner’s job is to select a signal at an assigned frequency (channel) from all of the available signals, both weak and strong, then process the signal so that it can be used by the IF (intermediate frequency) amplifiers. IF amplifiers are described in the next section, Increasing the Signal’s Strength.
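Within each band the channels are spaced 6 MHz apart, so a channel number can be converted to its frequency range with a small lookup. The sketch below follows the historical U.S. allocation that the text describes (VHF 2 to 13, UHF 14 to 83); note the gaps between channels 4 and 5 (72 to 76 MHz) and between channels 6 and 7 (88 to 174 MHz), which are used by other services. The picture carrier is taken as 1.25 MHz above the lower channel edge and the sound carrier 4.5 MHz above that, the U.S. values. The function names are hypothetical.

```python
# (first channel, last channel, lower edge of the first channel in MHz)
BANDS = [
    (2, 4, 54),     # low VHF
    (5, 6, 76),     # low VHF, after the 72-76 MHz gap
    (7, 13, 174),   # high VHF
    (14, 83, 470),  # UHF, historical allocation up to 890 MHz
]
CHANNEL_WIDTH_MHZ = 6


def channel_edges(channel: int) -> tuple[int, int]:
    """Return (lower, upper) edge frequencies in MHz of a U.S. broadcast channel."""
    for first, last, base in BANDS:
        if first <= channel <= last:
            lower = base + CHANNEL_WIDTH_MHZ * (channel - first)
            return lower, lower + CHANNEL_WIDTH_MHZ
    raise ValueError(f"channel {channel} is not a broadcast TV channel")


def carriers(channel: int) -> tuple[float, float]:
    """Return (picture carrier, sound carrier) in MHz."""
    lower, _ = channel_edges(channel)
    picture = lower + 1.25
    return picture, picture + 4.5


if __name__ == "__main__":
    for ch in (2, 6, 7, 13, 14, 83):
        print(ch, channel_edges(ch), carriers(ch))
```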

FIG. 5. A typical block diagram, tracing the signal through each stage of video and audio production process.

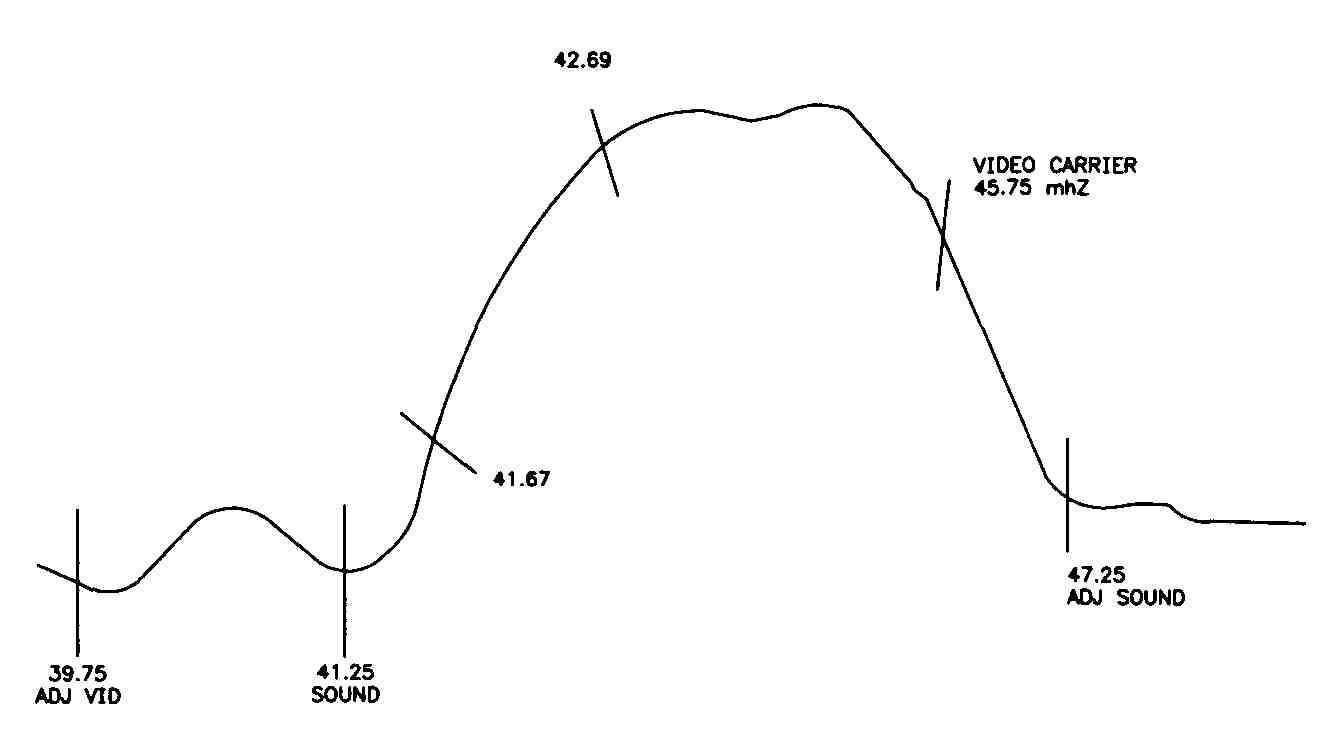

After the RF signal is processed, the output is the IF signal, which contains the composite video, audio and sync signals. FIG. 6 shows the waveform of the IF signal.

1. 39.75 MHz—Adjacent channel’s video carrier.

2. 41.25 MHz—Audio carrier.

3. 41.67 to 42.67 MHz—Color carrier.

4. 45.75 MHz—Video carrier.

5. 47.25 MHz—Adjacent channel’s audio carrier.

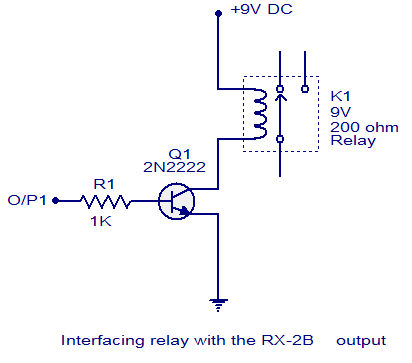
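The numbers in this list follow from the tuner's local oscillator, which in U.S. receivers runs 45.75 MHz above the selected picture carrier. Because each IF is the local-oscillator frequency minus the incoming frequency, the order of the carriers is reversed: the sound carrier, 4.5 MHz above the picture carrier on the air, lands 4.5 MHz below it in the IF. The sketch assumes U.S. 6 MHz channel spacing, a color subcarrier 3.579545 MHz above the picture carrier, and adjacent channels that sit directly next to the selected one. The function name is hypothetical.

```python
IF_PICTURE_MHZ = 45.75       # video IF, from the list above
SOUND_OFFSET_MHZ = 4.5       # sound carrier above picture carrier (U.S.)
COLOR_OFFSET_MHZ = 3.579545  # color subcarrier above picture carrier (U.S.)
CHANNEL_SPACING_MHZ = 6.0


def if_carriers(picture_mhz: float) -> dict[str, float]:
    """Map the RF carriers around a selected channel to their IF frequencies."""
    local_osc = picture_mhz + IF_PICTURE_MHZ  # oscillator runs above the signal
    rf = {
        "video carrier": picture_mhz,
        "audio carrier": picture_mhz + SOUND_OFFSET_MHZ,
        "color carrier": picture_mhz + COLOR_OFFSET_MHZ,
        # next channel up: its picture carrier is 6 MHz higher
        "adjacent channel's video carrier": picture_mhz + CHANNEL_SPACING_MHZ,
        # next channel down: its sound carrier is 1.5 MHz below our picture carrier
        "adjacent channel's audio carrier": picture_mhz - CHANNEL_SPACING_MHZ + SOUND_OFFSET_MHZ,
    }
    return {name: round(local_osc - f, 3) for name, f in rf.items()}


if __name__ == "__main__":
    for name, f in if_carriers(55.25).items():  # channel 2 picture carrier
        print(f"{f:7.3f} MHz  {name}")
```

The result is the same whichever channel is selected, and the spacing between the video and audio IF carriers, 45.75 - 41.25 = 4.5 MHz, is the sound IF that the video detector later produces.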

The IF signal is sent to the IF amplifiers, where its strength is adjusted. If all signals were of equal strength, this adjustment would not be necessary. However, signals are not all of equal strength, so the adjustment is made by a control signal called the automatic gain control (AGC). The AGC is described in the next section.

Increasing the Signal’s Strength

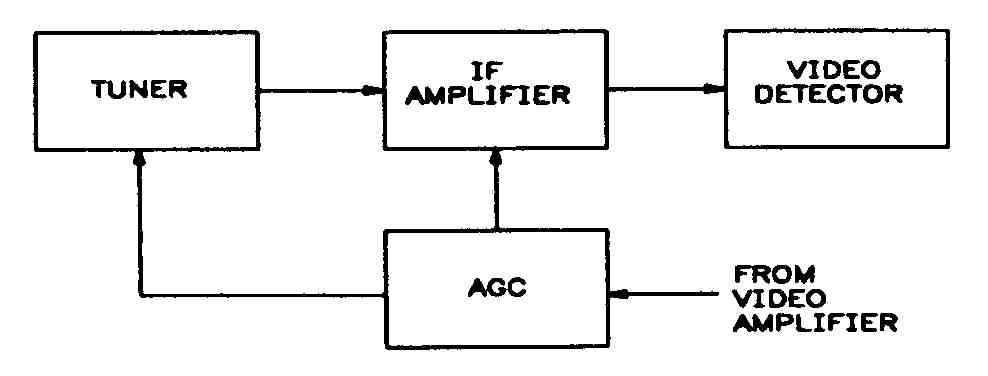

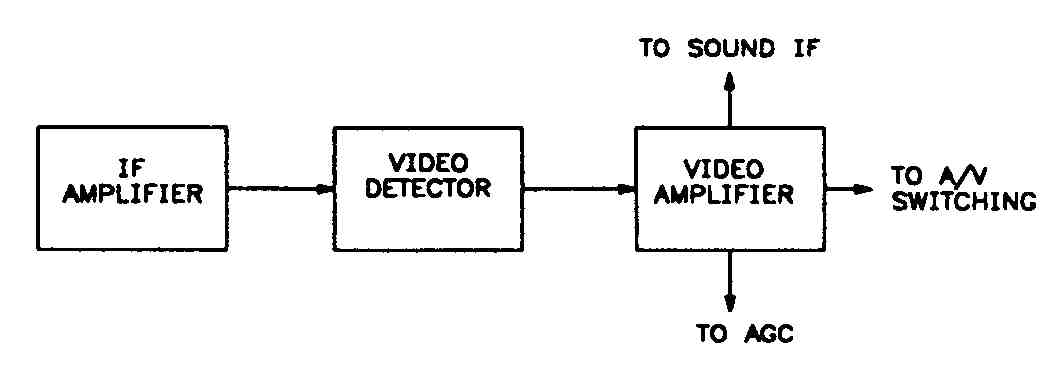

Under normal conditions, the signal from the tuner is not strong enough to operate the picture and sound. Therefore, the signal strength has to be increased. The IF amplifiers, shown in FIG. 7, amplify the signal strength.

The AGC, shown in FIG. 7, monitors the average strength of the sync signals. The AGC adjusts the signal’s strength by increasing or decreasing a control voltage to the tuner and the IF amplifiers. If the voltage level of the sync signals is too low, the AGC increases the control voltage to the tuner and the IF amplifiers. If the voltage level of the sync signals is too high, the AGC decreases the control voltage to the tuner and the IF amplifiers.
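The AGC is a feedback loop: it measures the sync level, compares it with a desired level, and nudges the gain in the direction that reduces the difference. The sketch below models that loop in the abstract with a single gain number. The target level, starting gain, correction rate, and function name are hypothetical, and a real AGC works with a control voltage whose polarity depends on the circuit design.

```python
def run_agc(sync_levels: list[float], target: float = 1.0,
            gain: float = 1.0, rate: float = 0.5) -> tuple[float, list[float]]:
    """Apply a simple AGC loop to a sequence of incoming sync amplitudes.

    Returns the final gain and the sync amplitude seen after amplification
    at each step.
    """
    amplified = []
    for level in sync_levels:
        measured = gain * level             # sync amplitude after the tuner and IF stages
        gain += rate * (target - measured)  # too low: raise gain; too high: lower it
        gain = max(gain, 0.0)               # gain cannot go negative
        amplified.append(measured)
    return gain, amplified


if __name__ == "__main__":
    weak_gain, _ = run_agc([0.25] * 200)   # weak station: gain settles near 4
    strong_gain, _ = run_agc([2.0] * 200)  # strong station: gain settles near 0.5
    print(round(weak_gain, 3), round(strong_gain, 3))
```

Both stations end up with the same amplified sync level. In this model the correction must stay small relative to the signal (rate × level below 2); otherwise the loop overshoots and oscillates instead of settling.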

Separating Video and Audio

After the signal is amplified sufficiently in the IF amplifiers, the signal is passed to the video detector, shown in FIG. 8. The video detector is in the integrated circuit (IC) that is part of the video IF amplifier and converts the amplified signal from the IF amplifiers and separates the signal into two types:

1. The composite video, which is an AM signal (30 Hz to 4.2 MHz) containing the video and the sync signals.

2. The sound IF, which is an FM signal (4.5 MHz).

Then, the composite video signal and the sound IF signal are sent to the video amplifiers.

Processing the Video

The video amplifiers perform several tasks. First, the video amplifiers increase the signal from the video detector. Then, the sound IF signal and sync signals are separated from the picture information. The sound IF signal is sent to the sound IF amplifier. The sync signals are sent to the sync separator.

The video signal is amplified more, then sent to the video processing circuit. The contrast control, located in the video processing circuit, controls the amount of video sent to the CRT, like the volume control adjusts the audio level sent to the speakers. In color televisions, the color signals are separated at this point and sent to the luminance and chroma processing stage.

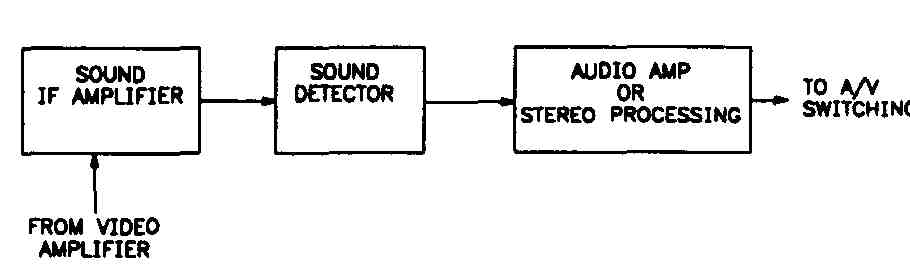

Processing the Audio

The audio processing system, shown in FIG. 9, receives an audio signal from the video amplifier and processes it so that the speakers can produce the sound that accompanies the picture.

The sound IF amplifier increases the FM audio signal before it is sent to the sound detector. Before the audio signal can be sent to the speaker, it must be separated from the sound carrier by the sound detector. The output from the sound detector is an electrical audio signal that must be amplified again by the audio amplifier before it can be heard through the speaker. After the sound completes its trip through the sound processing system, the speaker converts the electrical audio signal to audible sound.

The volume control and tone control are in the audio amplifier stage.

Processing the Sync Signals

Remember that the sync signals are sent by the television station along with the audio and video signals, and ensure that the picture that appears on the screen is synchronized with the picture that was transmitted. Loss of sync signals can cause many problems, from no video to partial picture loss and tearing.

The sync amplifier increases the sync to the required level and makes sure the correct video timing and placement information is present. The sync separator separates the sync signals into horizontal sync signals and vertical sync signals. The horizontal sync signals control the starting time of the left-to-right lines on the screen. The vertical sync signals control the starting time of the picture from the top-left corner of the screen. As mentioned previously, problems with the sync signal processing can cause vertical rolling or horizontal tearing.
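One way to see what the sync separator does is to treat it in two steps: first slice off everything above a threshold between blanking and sync-tip level, leaving only the sync pulses, then sort the pulses by width. The vertical sync interval is made of pulses far wider (about 27 µs each in the U.S. standard) than the roughly 5 µs horizontal sync pulse. In many receivers the sorting is done with an RC differentiator for the horizontal pulses and an RC integrator for the vertical pulse; the sketch below swaps that analog approach for a simple width comparison. Levels are in IRE units (blanking 0, sync tip -40); the slicing level, the 10 µs dividing width, and the function names are assumptions, and the narrow equalizing pulses are not treated separately.

```python
SLICE_LEVEL_IRE = -20.0  # assumed slicing level between blanking (0) and sync tip (-40)


def find_sync_pulses(samples: list[float], step_us: float) -> list[tuple[float, float]]:
    """Return (start_time_us, width_us) for every run of samples below the slice level."""
    pulses = []
    start = None
    for i, level in enumerate(samples):
        below = level < SLICE_LEVEL_IRE
        if below and start is None:
            start = i                              # leading edge of a pulse
        elif not below and start is not None:
            pulses.append((start * step_us, (i - start) * step_us))
            start = None                           # trailing edge
    if start is not None:                          # pulse still running at the end
        pulses.append((start * step_us, (len(samples) - start) * step_us))
    return pulses


def separate_sync(pulses: list[tuple[float, float]],
                  split_us: float = 10.0) -> tuple[list[float], list[float]]:
    """Sort pulses into horizontal (narrow) and vertical (wide) start times."""
    horizontal = [t for t, width in pulses if width < split_us]
    vertical = [t for t, width in pulses if width >= split_us]
    return horizontal, vertical


if __name__ == "__main__":
    # 1 µs per sample: a 5 µs horizontal pulse, then later a 27 µs vertical pulse
    signal = [0.0] * 10 + [-40.0] * 5 + [0.0] * 50 + [-40.0] * 27 + [0.0] * 10
    pulses = find_sync_pulses(signal, step_us=1.0)
    print(pulses)                 # [(10.0, 5.0), (65.0, 27.0)]
    print(separate_sync(pulses))  # ([10.0], [65.0])
```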

Horizontal Sync Signals

The horizontal sync signals are sent to the automatic phase control (APC), shown in FIG. 10. The rate at which the horizontal output operates determines the actual rate at which the picture is scanned onto the screen. This scan rate must be synchronized with the rate at which the picture is transmitted, so the APC compares the horizontal output with the incoming sync signals.

After the comparison is made, any adjustments to the horizontal signal are made by the horizontal oscillator. If the rate is too slow, the APC causes the oscillator to speed up, which produces a faster horizontal scan rate. If the rate is too fast, the APC causes the oscillator to slow down, which produces a slower horizontal scan rate. In this way, the horizontal oscillator produces a horizontal scan signal that is correctly synchronized with the sync signals. The horizontal hold control is in the horizontal oscillator. Next, the horizontal signal is sent to the horizontal output stage.
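The APC and horizontal oscillator together behave like a phase-locked loop. The sketch below is a simplified, discrete model of it: once per line, the timing error between the incoming sync pulse and the oscillator's own pulse is measured, and a correction proportional to that error shortens or lengthens the oscillator's next period. The free-running period of 64.0 µs (slightly slow compared with a 63.5 µs line), the loop gain, and the function name are hypothetical values chosen for illustration.

```python
def apc_loop(lines: int, sync_period_us: float = 63.5,
             free_period_us: float = 64.0, loop_gain: float = 0.3) -> list[float]:
    """Simulate an APC-controlled horizontal oscillator for `lines` lines.

    Returns the timing error (sync time minus oscillator time, in µs) at each line.
    A negative error means the oscillator pulse arrived late, i.e. it is running slow.
    """
    osc_time = 0.0
    errors = []
    for n in range(lines):
        sync_time = n * sync_period_us
        error = sync_time - osc_time
        errors.append(error)
        # Running slow (error < 0) shortens the next period, speeding the oscillator up;
        # running fast (error > 0) lengthens it, slowing the oscillator down.
        osc_time += free_period_us + loop_gain * error
    return errors


if __name__ == "__main__":
    locked = apc_loop(60)
    unlocked = apc_loop(60, loop_gain=0.0)  # no APC: the error keeps growing
    print(round(locked[-1], 3), round(unlocked[-1], 3))
```

With the APC active, the error settles at a constant value instead of growing line after line: the oscillator then runs at exactly the line rate with a small fixed phase offset, which is typical of this simple kind of loop. Without correction, the oscillator falls further behind on every line, which on screen appears as the picture tearing horizontally.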

The horizontal output stage is a powerful signal amplifier, which is turned on and off by the horizontal oscillator. The main job of the horizontal output stage is to provide adequate horizontal scan power to the deflection yoke, an electrical assembly attached to the neck of the CRT which controls the horizontal and vertical scan that produces the picture. The output signal causes the electron beam in the CRT to scan horizontally at a rate synchronized with the sync signal: 15,750 lines every second, 525 of which make up one frame of the video. A considerable amount of power is needed to deflect the electron beam so rapidly. The television’s horizontal output stage, also shown in FIG. 10, provides the required signal to generate the high voltage needed by the CRT.
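The scanning figures used in this section are tied together by simple arithmetic: the line rate is the number of lines per frame times the frame rate, and one line lasts the reciprocal of the line rate. The sketch below checks this for the monochrome U.S. standard and for the later color standard, whose line rate of 4.5 MHz / 286 (about 15,734 Hz) gives 29.97 frames and 59.94 fields per second. The function names are hypothetical.

```python
LINES_PER_FRAME = 525
FIELDS_PER_FRAME = 2


def line_rate_hz(frames_per_second: float) -> float:
    """Horizontal (line) rate: every frame is made of 525 lines."""
    return LINES_PER_FRAME * frames_per_second


def line_period_us(line_hz: float) -> float:
    """Time available to scan one line, in microseconds."""
    return 1e6 / line_hz


if __name__ == "__main__":
    mono = line_rate_hz(30)
    print(mono, round(line_period_us(mono), 2))  # 15750, about 63.49 µs per line

    color_line = 4.5e6 / 286                     # color (NTSC) line rate
    color_frame = color_line / LINES_PER_FRAME
    print(round(color_line, 2), round(color_frame, 2),
          round(color_frame * FIELDS_PER_FRAME, 2))
```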

The vertical sync signals are sent to the vertical oscillator section. Then, after amplification, the signal is sent to the vertical deflection yoke. The output signal causes the electron beam in the CRT to scan vertically at a rate that is synchronized with the sync signals. The vertical hold control, and the picture height and linearity controls are in the television’s vertical scan stage.

The CRT, shown in FIG. 11, receives input from several sources in the television. It receives the voltage necessary to operate the electron gun from the high-voltage power supply which is in the flyback transformer stage. The video signals come from the video amplifiers. The horizontal and vertical scan, processed from the sync signals, provide the timing and location information for the video. The video signal provides the chrominance (color) for color televisions, and luminance (intensity) for both color and black-and-white televisions.

From these inputs, the CRT reproduces the picture transmitted by the television station. If all parts of the television work properly, the reproduced picture is synchronized with the transmitted picture, and the reproduced picture is high quality, without flickering or distortions. However, all parts of the television do not always work properly, and a low quality reproduced picture or no picture can result. The remainder of this guide describes troubleshooting methods that can help you locate and repair problems that occur.

Quiz:

1. Define the following terms:

a. Antenna

b. Audio

c. Automatic phase control (APC)

d. Automatic gain control (AGC)

e. Deflection yoke

f. Electron gun

g. Frame

h. Horizontal oscillator

i. Horizontal sync signals

j. IF amplifier

k. Interlacing

l. Sync signals

m. Tuner

n. Vertical sync signals

o. Video

2. How many horizontal lines does it take to produce one frame?

3. What is the purpose of the sync separator?

4. How is flickering reduced in a picture?

5. What are the two functions of the video amplifier?

6. What are the three types of signals transmitted over a carrier signal from the television station?

7. Why are FM signals used to transmit audio information?

8. Why is a block diagram a useful troubleshooting tool?

Key:

1.a. A device that collects the composite RF signal transmitted from the television station.

1.b. Any sound, mechanical or electrical, that can be heard. Audio is normally 20 Hz to 20,000 Hz.

1.c. Holding the horizontal oscillator in step (phase) with the horizontal sync signal.

1.d. Regulating the receiver’s overall amplification (gain) to produce a constant output from variable input.

1.e. An electrical assembly attached to the neck of the CRT which controls the horizontal and vertical scan that produces the picture.

1.f. An assembly in the CRT that emits a small electron beam.

1.g. One television picture in which there are 525 horizontal lines.

1.h. An oscillator that makes adjustments to the horizontal sync signal.

1.i. Right-to-left instructions that control the horizontal scanning on the screen.

1.j. Amplifier that increases the signal strength from the tuner.

1.k. A method of scanning the 525 horizontal lines in two successive fields of 262½ lines to reduce picture flicker. After the first field is scanned, the lines from the second field are scanned between the lines of the first field.

1.l. A series of signals or timing signals that synchronize the picture scanned on the screen with the picture transmitted from the television station.

1.m. The circuit that selects the channel assigned to the television station’s transmitting frequency.

1.n. Bottom-to-top instructions that control vertical scanning on the screen.

1.o. Picture signals that are used to produce the picture on the screen.

2. 525.

3. Circuit that separates the sync signals into horizontal sync signals and vertical sync signals.

4. The 525 horizontal scan lines are interlaced in two fields of 262½ lines each.

5. Amplifies the signal from the video detector and separates the sound IF signal and the sync signals from the picture information.

6. Composite video, audio and sync signals.

7. FM signals are less noisy than AM signals.

8. They show high-level views of a process or complex circuit information.

Block Diagram Colour TV


Electronic systems

The final, insurmountable problems with any form of mechanical scanning were the limited number of scans per second, which produced a flickering image, and the relatively large size of each hole in the disk, which resulted in poor resolution. In 1908 a Scottish electrical engineer, A.A. Campbell Swinton, wrote that the problems “can probably be solved by the employment of two beams of kathode rays” instead of spinning disks. Cathode rays are beams of electrons generated in a vacuum tube. Steered by magnetic fields or electric fields, Swinton argued, they could “paint” a fleeting picture on the glass screen of a tube coated on the inside with a phosphorescent material. Because the rays move at nearly the speed of light, they would avoid the flicker problem, and their tiny size would allow excellent resolution. Swinton never built a set (for, as he said, the possible financial reward would not be enough to make it worthwhile), but unknown to him such work had already begun in Russia. In 1907 Boris Rosing, a lecturer at the St. Petersburg Institute of Technology, put together equipment consisting of a mechanical scanner and a cathode-ray-tube receiver. There is no record of Rosing actually demonstrating a working television, but he had an interested student named Vladimir Kosma Zworykin, who soon emigrated to America.

In 1923, while working for the Westinghouse Electric Company in Pittsburgh, Pennsylvania, Zworykin filed a patent application for an all-electronic television system, although he was as yet unable to build and demonstrate it. In 1929 he convinced David Sarnoff, vice president and general manager of Westinghouse’s parent company, the Radio Corporation of America (RCA), to support his research by predicting that in two years, with $100,000 of funding, he could produce a workable electronic television system. Meanwhile, the first demonstration of a primitive electronic system had been made in San Francisco in 1927 by Philo Taylor Farnsworth, a young man with only a high-school education. Farnsworth had garnered research funds by convincing his investors that he could market an economically viable television system in six months for an investment of only $5,000. In the event, it took the efforts of both men and more than $50 million before anyone made a profit.

With his first hundred thousand dollars of RCA research money, Zworykin developed a workable cathode-ray receiver that he called the Kinescope. At the same time, Farnsworth was perfecting his Image Dissector camera tube (shown in the photograph). In 1930 Zworykin visited Farnsworth’s laboratory and was given a demonstration of the Image Dissector. At that point a healthy cooperation might have arisen between the two pioneers, but competition, spurred by the vision of corporate profits, kept them apart. Sarnoff offered Farnsworth $100,000 for his patents but was summarily turned down. Farnsworth instead accepted an offer to join RCA’s rival Philco, but he soon left to set up his own firm. Then in 1931 Zworykin’s RCA team, after learning much from the study of Farnsworth’s Image Dissector, came up with the Iconoscope camera tube, and with it they finally had a working electronic system.

In England the Gramophone Company, Ltd., and the London branch of the Columbia Phonograph Company joined in 1931 to form Electric and Musical Industries, Ltd. (EMI). Through the Gramophone Company’s ties with RCA-Victor, EMI was privy to Zworykin’s research, and soon a team under Isaac Shoenberg produced a complete and practical electronic system, reproducing moving images on a cathode-ray tube at 405 lines per picture and 25 pictures per second. Baird excoriated this intrusion of a “non-English” system, but he reluctantly began research on his own system of 240-line pictures by inviting a collaboration with Farnsworth. On November 2, 1936, the BBC instituted an electronic TV competition between Baird and EMI, broadcasting the two systems from the Alexandra Palace (called for the occasion the “world’s first, public, regular, high-definition television station”). Several weeks later a fire destroyed Baird’s laboratories. EMI was declared the victor and went on to monopolize the BBC’s interest. Baird never really recovered; he died several years later, nearly forgotten and destitute.

By 1932 the conflict between RCA and Farnsworth had moved to the courts, both sides claiming the invention of electronic television. Years later the suit was finally ruled in favour of Farnsworth, and in 1939 RCA signed a patent-licensing agreement with Farnsworth Television and Radio, Inc. This was the first time RCA ever agreed to pay royalties to another company. But RCA, with its great production capability and estimable public-relations budget, was able to take the lion’s share of the credit for creating television. At the 1939 World’s Fair in New York City, Sarnoff inaugurated America’s first regular electronic broadcasting, and 10 days later, at the official opening ceremonies, Franklin D. Roosevelt became the first U.S. president to be televised.

Important questions had to be settled regarding basic standards before the introduction of public broadcasting services, and these questions were not everywhere fully resolved until about 1951. The United States adopted a picture repetition rate of 30 per second, while in Europe the standard became 25. All the countries of the world came to use one or the other, just as all countries eventually adopted the U.S. resolution standard of 525 lines per picture or the European standard of 625 lines. By the early 1950s technology had progressed so far, and television had become so widely established, that the time was ripe to tackle in earnest the problem of creating television images in natural colours.

Colour television

Colour television was by no means a new idea. In the late 19th century a Russian scientist by the name of A.A. Polumordvinov devised a system of spinning Nipkow disks and concentric cylinders with slits covered by red, green, and blue filters. But he was far ahead of the technology of the day; even the most basic black-and-white television was decades away. In 1928, Baird gave demonstrations in London of a colour system using a Nipkow disk with three spirals of 30 apertures, one spiral for each primary colour in sequence. The light source at the receiver consisted of two gas-discharge tubes: one filled with mercury vapour and helium for the green and blue colours, and a neon tube for red. The quality, however, was quite poor.

In the early 20th century, many inventors designed colour systems that looked sound on paper but that required technology of the future. Their basic concept was later called the “sequential” system. They proposed to scan the picture with three successive filters coloured red, blue, and green. At the receiving end the three components would be reproduced in succession so quickly that the human eye would “see” the original multicoloured picture. Unfortunately, this method required too fast a rate of scanning for the crude television systems of the day. Also, existing black-and-white receivers would not be able to reproduce the pictures. Sequential systems therefore came to be described as “noncompatible.”

An alternative approach—practically much more difficult, even daunting at first—would be a “simultaneous” system, which would transmit the three primary-colour signals together and which would also be “compatible” with existing black-and-white receivers. In 1924, Harold McCreary designed such a system using cathode-ray tubes. He planned to use a separate cathode-ray camera to scan each of the three primary-colour components of a picture. He would then transmit the three signals simultaneously and use a separate cathode-ray tube for each colour at the receiving end. In each tube, when the resulting electron beam struck the “screen” end, phosphors coated there would glow the appropriate colour. The result would be three coloured images, each composed of one primary colour. A series of mirrors would then combine these images into one picture. Although McCreary never made this apparatus actually work, it is important as the first simultaneous patent, as well as the first to use a separate camera tube for each primary colour and glowing colour phosphors on the receiving end. In 1929 Herbert Ives and colleagues at Bell Laboratories transmitted 50-line colour television images between New York City and Washington, D.C.; this was a mechanical method, using spinning disks, but one that sent the three primary colour signals simultaneously over three separate circuits.

At the same time, Sarnoff whipped his troops at RCA into developing the first all-electronic compatible colour system.

In 1950 the FCC approved CBS’s colour television and corresponding broadcast standards for immediate commercial use. However, out of 12 million television sets in existence, only some two dozen could receive the CBS colour signal, and after only a few months the broadcasts were abandoned. Then, in June 1951, Sarnoff and RCA proudly unveiled their new system. The design used dichroic mirrors to separate the blue, red, and green components of the original image and focus each component on its own monochrome camera tube. Each tube created a signal corresponding to the red, green, or blue component of the image. The receiving tube consisted of three electron guns, one for each primary-colour signal. The screen in turn comprised a grid of hundreds of thousands of tiny triangles of discrete phosphors, one for each primary colour. Every 1/60 of a second the entire picture was scanned, separated into the three colour components, and transmitted; and every 1/60 of a second the receiver’s three electron guns painted the entire picture simultaneously with red, green, and blue, left to right, line by line.

And the RCA colour system was compatible with existing black-and-white sets. It managed this by converting the three colour signals into two: the total brightness, or luminance, signal (called the “Y” signal) and a complex second signal containing the colour information. The Y signal corresponded to a regular monochrome signal, so that any black-and-white receiver could pick it up and simply ignore the colour signal.
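The luminance/colour split described above can be sketched in a few lines. This is a simplified illustration, not the NTSC encoder itself: it uses the familiar luminance weights 0.299, 0.587, and 0.114 (as in NTSC and ITU-R BT.601) and represents the "complex second signal" as the two colour-difference values R − Y and B − Y, whereas the real NTSC signal quadrature-modulates two colour components onto a subcarrier. The function names are hypothetical.

```python
def encode_compatible(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Split R, G, B values (0.0-1.0) into luminance and two colour differences."""
    # Luminance ("Y" signal): green is weighted most, matching the eye's sensitivity.
    y = 0.299 * r + 0.587 * g + 0.114 * b
    # Colour information as differences from luminance; both are zero for any grey.
    return y, r - y, b - y


def decode_colour(y: float, r_minus_y: float, b_minus_y: float) -> tuple[float, float, float]:
    """A colour receiver recovers R, G, B from Y and the two differences."""
    r = r_minus_y + y
    b = b_minus_y + y
    # Solve the luminance equation for green.
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b


def monochrome_receiver(y: float, r_minus_y: float, b_minus_y: float) -> float:
    """A black-and-white set uses only the luminance and ignores the colour signal."""
    return y


# A pure red patch appears on a monochrome set as a dark grey of brightness 0.299.
print(monochrome_receiver(*encode_compatible(1.0, 0.0, 0.0)))
```

Because the colour-difference values vanish for white, grey, and black, a monochrome picture carries no colour signal at all, which is one way to see why the scheme stays compatible.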

In 1952 the National Television System Committee (NTSC) was reformed, this time with the purpose of creating an “industry color system.” The NTSC system that was demonstrated to the press in August 1952 and that would serve into the 21st century was virtually the RCA system. The first RCA colour TV set, the CT-100, rolled off the production line in early 1954. It had a 12-inch screen and cost $1,000, as compared with the 21-inch black-and-white sets of the day, which sold for $300. It was not until the 1960s that colour television became profitable.

In 1960 Japan adopted the NTSC colour standard. In Europe, two different systems came into prominence over the following decade: in Germany Walter Bruch developed the PAL (phase alternation line) system, and in France Henri de France developed SECAM (système électronique couleur avec mémoire). Both were basically the NTSC system, with some subtle modifications. By 1970, therefore, North America and Japan were using NTSC; France, its former dependencies, and the countries of the Soviet Union were using SECAM; and Germany, the United Kingdom, and the rest of Europe had adopted PAL. These are still the standards of colour television today, despite preparations for a digital future.

Digital television

Digital television technology emerged to public view in the 1990s. In the United States professional action was spurred by a demonstration in 1987 of a new analog high-definition television (HDTV) system by NHK, Japan’s public television network. This incited the FCC to declare an open competition to create American HDTV, and in June 1990 the General Instrument Corporation (GI) surprised the industry by announcing the world’s first all-digital television system. Designed by the Korean-born engineer Woo Paik, the GI system displayed a 1,080-line colour picture on a wide-screen receiver and managed to transmit the necessary information for this picture over a conventional television channel. Heretofore, the main obstacle to producing digital TV had been the problem of bandwidth. Even a standard-definition television (SDTV) signal, after digitizing, would occupy more than 10 times the radio frequency space of conventional analog television, which is typically broadcast in a six-megahertz channel. HDTV, in order to be a practical alternative, would have to be compressed into about 1 percent of its original space. The GI team surmounted the problem by transmitting only changes in the picture, once a complete frame existed.
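The idea of sending only the changes once a complete frame exists can be shown with a toy "conditional replenishment" sketch. It is not GI's actual algorithm; practical digital television coders combine motion-compensated prediction with transform coding. Here frames are hypothetical flat lists of pixel values, and the function names are illustrative.

```python
from typing import List, Tuple

Frame = List[int]
Update = List[Tuple[int, int]]  # (pixel index, new value)


def encode_changes(previous: Frame, current: Frame) -> Update:
    """Transmit only the pixels that differ from the previous frame."""
    return [(i, v) for i, (p, v) in enumerate(zip(previous, current)) if p != v]


def apply_changes(previous: Frame, update: Update) -> Frame:
    """Receiver side: rebuild the current frame from the stored one plus the changes."""
    frame = list(previous)  # copy so the stored frame is not altered
    for index, value in update:
        frame[index] = value
    return frame


first = [10] * 12                 # complete frame, sent once
second = list(first)
second[3] = second[4] = 200       # a small moving object
update = encode_changes(first, second)
print(update)                     # [(3, 200), (4, 200)]
```

A still scene produces an empty update, while heavy motion makes the update approach the size of a full frame, which is why real coders predict motion rather than merely listing changed pixels.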

Within a few months of GI’s announcement, both the Zenith Electronics Corporation and the David Sarnoff Research Center (formerly RCA Laboratories) announced their own digital HDTV systems. In 1993 these and four other TV laboratories formed a “Grand Alliance” to develop marketable HDTV. In the meantime, an entire range of new possibilities aside from HDTV emerged. Digital broadcasters could certainly show a high-definition picture over a regular six-megahertz channel, but they might “multicast” instead, transmitting five or six digital standard-definition programs over that same channel. Indeed, digital transmission made “smart TV” a real possibility, where the home receiver might become a computer in its own right. This meant that broadcasters might offer not only pay-per-view or interactive entertainment programming but also computer services such as e-mail, two-way paging, and Internet access.

In late 1996 the FCC approved standards proposed by the Advanced Television Systems Committee (ATSC) for all digital television, both high-definition and standard-definition, in the United States. According to the FCC’s plan, all stations in the country would be broadcasting digitally by May 1, 2003, on a second channel. They would still be broadcasting in analog as well; programs would be “simulcast” in digital and analog, giving the public time to make the switch gradually. In 2006 analog transmissions would cease, old TV sets would become useless, and broadcasters would return their original analog spectrum to the government to be auctioned off for other uses.

At least such was the plan. In a very short time the FCC’s schedule seemed in doubt, as the future form of digital TV remained unclear. Less than 3 percent of the 25 million TV sets sold in America in 2000 were digital, and although 150 stations in 52 cities were broadcasting digitally by that year, most of those stations were merely broadcasting standard-definition programs in digital format. Almost no HDTV was to be seen, and few viewers were even aware of the digital channels. Furthermore, although two-thirds of American viewers had cable TV, most cable companies were refusing to carry the new digital channels. In response, the FCC was considering a rule requiring them to do so; but this in turn would require consumers to purchase a digital cable box, and there was much disagreement within the industry on how to design such a box.

Europe, meanwhile, was far ahead of the United States in digital broadcasting, partly because there was no requirement to incorporate HDTV. In 1993 a consortium of European broadcasters, manufacturers, and regulatory bodies agreed on the Digital Video Broadcasting (DVB) standard, and efforts were begun to apply this standard to satellite, cable, and then terrestrial broadcasting. By the end of the decade some 30 percent of all homes in the United Kingdom had access to digital programming via digital TV sets or via conversion boxes atop their analog sets. Japan began its own digital broadcasting via satellite in December 2000 and planned to begin digital terrestrial broadcasting, using a modification of DVB, in 2003. Both Japan and Europe had target dates similar to that of the United States for ultimate conversion to digital television—i.e., between 2006 and 2010. However, they too faced similar stumbling blocks, so that timetables for the full transition to digital television were in doubt around the world.

A television system involves equipment located at the source of production, equipment located in the home of the viewer, and equipment used to convey the television signal from the producer to the viewer. The purpose of all of this equipment, as stated in the introduction to this article, is to extend the human senses of vision and hearing beyond their natural limits of physical distance. A television system must be designed, therefore, to embrace the essential capabilities of these senses, particularly the sense of vision. The aspects of vision that must be considered include the ability of the human eye to distinguish the brightness, colours, details, sizes, shapes, and positions of objects in a scene before it. Aspects of hearing include the ability of the ear to distinguish the pitch, loudness, and distribution of sounds. In working to satisfy these capabilities, television systems must strike appropriate compromises between the quality of the desired image and the costs of reproducing it. They must also be designed to override, within reasonable limits, the effects of interference and to minimize visual and audial distortions in the transmission and reproduction processes. The particular compromises chosen for a given television service—e.g., broadcast or cable service—are embodied in the television standards adopted and enforced by the responsible government agencies in each country.

Television technology must deal with the fact that human vision employs hundreds of thousands of separate electrical circuits, located in the optic nerve running from the retina to the brain, in order to convey simultaneously in two dimensions the whole content of a scene on which the eye is focused. In electrical communication, however, it is feasible to employ only one circuit (i.e., the broadcast channel) to connect a transmitter with a receiver. This fundamental disparity is overcome in television practice by a process known as image analysis, whereby the scene to be televised is broken up by the camera’s image sensors into an orderly sequence of electrical waves and these waves are sent over the single channel, one after the other. At the receiver the waves are translated back into a corresponding sequence of lights and shadows, and these are reassembled in their correct positions on the viewing screen.

This sequential reproduction of visual images is feasible only because the visual sense displays persistence; that is, the brain retains the impression of illumination for about one-tenth of a second after the source of light is removed from the eye. If, therefore, the process of image synthesis takes less than one-tenth of a second, the eye will be unaware that the picture is being reassembled piecemeal, and it will appear as if the whole surface of the viewing screen is continuously illuminated. By the same token, it will then be possible to re-create more than 10 pictures per second and to simulate thereby the motion of the scene so that it appears to be continuous.

In practice, to depict rapid motion smoothly it is customary to transmit from 25 to 30 complete pictures per second. To provide detail sufficient to accommodate a wide range of subject matter, each picture is analyzed into 200,000 or more picture elements, or pixels. This analysis implies that the rate at which these details are transmitted over the television system exceeds 2,000,000 per second. To provide a system suitable for public use and also capable of such speed has required the full resources of modern electronic technology.
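The pixel-rate claim follows from multiplying the two figures in this paragraph. The short sketch below (the function name is illustrative) shows that 200,000 picture elements repeated 25 or 30 times per second give 5 or 6 million elements per second, comfortably above the stated 2,000,000.

```python
def pixel_rate(pixels_per_picture: int, pictures_per_second: int) -> int:
    """Picture elements that must pass through the channel each second."""
    return pixels_per_picture * pictures_per_second


for pictures in (25, 30):  # the European and North American picture rates
    print(pictures, pixel_rate(200_000, pictures))
```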

Image analysis

Flicker

The first requirement to be met in image analysis is that the reproduced picture shall not flicker, since flicker induces severe visual fatigue. Flicker becomes more evident as the brightness of the picture increases. If flicker is to be unobjectionable at brightness suitable for home viewing during daylight as well as evening hours, the successive illuminations of the picture screen should occur no fewer than 50 times per second. This is approximately twice the rate of picture repetition needed for smooth reproduction of motion. To avoid flicker, therefore, twice as much channel space is needed as would suffice to depict motion.

The same disparity occurs in motion-picture practice, in which satisfactory performance with respect to flicker requires twice as much film as is necessary for smooth simulation of motion. A way around this difficulty has been found, in motion pictures as well as in television, by projecting each picture twice. In motion pictures, the projector interposes a shutter briefly between film and lens while a single frame of the film is being projected. In television, each image is analyzed and synthesized in two sets of spaced lines, one of which fits successively within the spaces of the other. Thus the picture area is illuminated twice during each complete picture transmission, although each line in the image is present only once during that time. This technique is feasible because the eye is comparatively insensitive to flicker when the variation of light is confined to a small part of the field of view. Hence, flicker of the individual lines is not evident. If the eye did not have this fortunate property, a television channel would have to occupy about twice as much spectrum space as it now does.
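The two interleaved sets of lines can be illustrated by splitting a frame into alternate lines and weaving them back together. This sketch ignores timing (on a real screen the two fields are shown 1/50 or 1/60 second apart), and the lines are hypothetical strings; it only shows that each field covers the whole picture height while every line appears exactly once per frame.

```python
from typing import List, Sequence, Tuple


def split_fields(frame_lines: Sequence[str]) -> Tuple[List[str], List[str]]:
    """Divide a frame into two interlaced fields of alternate lines."""
    first_field = list(frame_lines[0::2])   # lines 1, 3, 5, ... counting from 1
    second_field = list(frame_lines[1::2])  # lines 2, 4, 6, ... fill the gaps
    return first_field, second_field


def weave_fields(first_field: Sequence[str], second_field: Sequence[str]) -> List[str]:
    """Reassemble the frame by slotting the second field into the gaps of the first."""
    frame: List[str] = []
    for i, line in enumerate(first_field):
        frame.append(line)
        if i < len(second_field):
            frame.append(second_field[i])
    return frame


frame = [f"line{i}" for i in range(1, 8)]  # a tiny 7-line "frame"
odd_field, even_field = split_fields(frame)
print(odd_field)   # ['line1', 'line3', 'line5', 'line7']
print(even_field)  # ['line2', 'line4', 'line6']
```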

It is thus possible to avoid flicker and simulate rapid motion by a picture rate of about 25 per second, with two screen illuminations per picture. The precise value of the picture-repetition rate used in a given region has been chosen by reference to the electric power frequency that predominates in that region. In Europe, where 50-hertz alternating current is the rule, the television picture rate is 25 per second (50 screen illuminations per second). In North America the picture rate is 30 per second (60 screen illuminations per second) to match the 60-hertz alternating current that predominates there. The higher picture-transmission rate of North America allows the pictures there to be about five times as bright as those in Europe for the same susceptibility to flicker, but this advantage is offset by a 20 percent reduction in picture detail for equal utilization of the channel.

Resolution

The second aspect of performance to be met in a television system is the detailed structure of the image. A printed engraving may possess several million halftone dots per square foot of area. However, engraving reproductions are intended for minute inspection, and so the dot structure must not be apparent to the unaided eye even at close range. Such fine detail would be a costly waste in television, since the television picture is viewed at comparatively long range. Standard-definition television (SDTV) is designed on the assumption that viewers in the typical home setting are located at a distance equal to six or seven times the height of the picture screen—on average some 3 metres (10 feet) away. Even high-definition television (HDTV) assumes a viewer who is seated no closer than three times the picture height away. Under these conditions, a picture structure of about 200,000 picture elements for SDTV (approximately 800,000 for HDTV) is a suitable compromise.

The physiological basis of this compromise lies in the fact that the normal eye, under conditions typical of television viewing, can resolve pictorial details if the angle that these details subtend at the eye is not less than two minutes of arc. This implies that the SDTV structure of 200,000 elements in a picture 16 cm (0.5 foot) high can just be resolved at a distance of about 3 metres (10 feet), and the HDTV structure can be resolved at about 1 metre (3 feet). The structure of both pictures may be objectionably evident at short range—e.g., while tuning the receiver—but it would be inappropriate to require a system to assume the heavy costs of transmitting detail that would be used by only a small part of the audience for a small part of the viewing time.

Picture shape

The third item to be selected in image analysis is the shape of the picture. For SDTV, the universal picture is a rectangle that is one-third wider than it is high. This 4:3 ratio (or aspect ratio) was originally chosen in the 1950s to match the dimensions of standard 35-mm motion-picture film (prior to the advent of wide-screen cinema) in the interest of televising film without waste of frame area. HDTV sets, introduced in the 1980s, accommodate wide-screen pictures by offering an aspect ratio of 16:9. Regardless of the aspect ratio, in both SDTV and HDTV the width of the screen rectangle is greater than its height in order to incorporate the horizontal motion that predominates in virtually all televised events.

Scanning

The fourth determination in image analysis is the path over which the image structure is explored at the camera and reconstituted on the receiver screen. In standard television, the pattern is a series of parallel straight lines, each progressing from left to right, the lines following in sequence from top to bottom of the picture frame. The exploration of the image structure proceeds at a constant speed along each line, since this provides uniform loading of the transmission channel under the demands of a given structural detail, no matter where in the frame the detail lies. The line-by-line, left-to-right, top-to-bottom dissection and reconstitution of television images is known as scanning, from its similarity to the progression of the line of vision in reading a page of printed matter. The agent that disassembles the light values along each line is called the scanning spot, in reference to the focused beam of electrons that scans the image in a camera tube and recreates the image in a picture tube. Tubes are no longer employed in most video cameras (see the section Television cameras and displays), but even in modern transistorized cameras the image is dissected into a series of “spots,” and the path of dissection is called the scanning pattern, or raster.

The scanning pattern

Interlaced lines

The standard scanning pattern, as displayed on a standard television screen, consists of two sets of lines. One set is scanned first, and the lines are so laid down that an equal empty space is maintained between lines. The second set is laid down after the first and is so positioned that its lines fall precisely in the empty spaces of the first set. The area of the image is thus scanned twice, but each point in the area is passed over only once. This is known as interlaced scanning, and it is used in all the standard television broadcast services of the world. Each set of alternate lines is known as a scanning field; the two fields together, comprising the whole scanning pattern, are known as a scanning frame. The repetition rate of field scanning is standardized in accordance with the frequency of electric power, as noted above, at either 50 or 60 fields per second; corresponding rates of frame scanning are 25 and 30 frames per second. In the North American monochrome system, 525 scan lines are transmitted about 30 times per second, for a horizontal sweep frequency of 525 × 30 = 15,750 hertz. In the colour television system, the 525 scan lines are retained, but the sweep frequency is adjusted to 15,734 hertz and the field rate is reduced slightly below 60 hertz. This is done to ensure backward compatibility of the colour system with the older black-and-white system, a concept discussed in the section Compatible colour television.
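The line-frequency arithmetic in this paragraph can be checked with a short sketch. The colour figures depend on a detail not stated in the text: in the NTSC colour standard the line frequency was set to 4.5 MHz ÷ 286 (tying it to the sound-carrier spacing), which works out to a field rate of 60 ÷ 1.001, about 59.94 hertz. Exact fractions are used so the relationship is not blurred by rounding.

```python
from fractions import Fraction

LINES = 525

# Monochrome North America: 525 lines, 30 frames per second.
mono_line_rate = LINES * 30                       # 15,750 lines per second
# Europe for comparison: 625 lines, 25 frames per second.
europe_line_rate = 625 * 25                       # 15,625 lines per second

# NTSC colour: line rate tied to the 4.5 MHz sound-carrier spacing.
colour_line_rate = Fraction(4_500_000, 286)       # about 15,734.27 Hz
colour_frame_rate = colour_line_rate / LINES      # about 29.97 frames per second
colour_field_rate = 2 * colour_frame_rate         # about 59.94 fields per second

print(mono_line_rate)                             # 15750
print(europe_line_rate)                           # 15625
print(round(float(colour_line_rate), 2), round(float(colour_field_rate), 2))
```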

For SDTV, the total number of lines in the scanning pattern has been set to provide a maximum pictorial detail on the order of 200,000 pixels. Since the frame area is four units wide by three units high, this figure implies a pattern of about 520 pixels in its width (along each line) and 390 pixels in its height (across the lines). This latter figure would imply a scanning pattern of about 400 lines (one line per pixel), were it not for the fact that many of the picture details, falling in random positions on the scanning pattern, lie partly on two lines and hence require two lines for accurate reproduction. Scanning patterns are designed, therefore, to possess about 40 percent more lines than the number of pixels to be reproduced in the vertical direction. Actual values in use in television broadcasting in various regions are 405 lines, 525 lines, 625 lines, and 819 lines per frame. These values have been chosen to suit the frequency band of the channel actually assigned in the respective geographic regions.
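The reasoning in this paragraph can be reproduced numerically: fit 200,000 pixels into a 4:3 rectangle, then add about 40 percent more lines than vertical pixels. The function names are illustrative, and the exact results (516 × 387 and 546 lines) differ slightly from the text's rounded "about 520 × 390" and from the 525-line standard actually chosen.

```python
import math


def grid_for(total_pixels: int, aspect_w: int = 4, aspect_h: int = 3) -> tuple[int, int]:
    """Width and height in pixels of a grid with the given total and aspect ratio."""
    height = math.sqrt(total_pixels * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h
    return round(width), round(height)


def lines_needed(vertical_pixels: int, extra: float = 0.40) -> int:
    """Scanning lines needed when details may straddle two lines (about 40% more)."""
    return round(vertical_pixels * (1 + extra))


width, height = grid_for(200_000)
print(width, height)        # 516 387
print(lines_needed(390))    # 546, in the neighbourhood of the 525-line standard
```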

The relationship between the ideal and actual scanning patterns is shown in the diagram of aspect ratios. The part of the pattern beyond the dashed lines of A (the “safe action area”) is lost as the scanning spot retraces. The remaining area of the pattern is actively employed in analyzing and synthesizing the picture information and is adjusted to have the 4:3 or 16:9 aspect ratio of SDTV or HDTV. In practice, some of the safe action area may be hidden behind the decorative mask that surrounds the picture tube of the receiver, as shown by the dashed lines of B, leaving programmers to work with what is known as the “safe title area.”

Deflection signals

The scanning spot is made to follow the interlaced paths described above by being subjected to two repetitive motions simultaneously. One is a horizontally directed back-and-forth motion in which the spot is moved at constant speed from left to right and then returned as rapidly as possible, while extinguished and inactive, from right to left. At the same time a vertical motion is imparted to the spot, moving it at a comparatively slow rate from the top to the bottom of the frame. This motion spreads out the more rapid left-to-right scans, forming the first field of alternate lines and empty spaces. When the bottom of the frame is reached, the spot moves vertically upward as rapidly as possible, while extinguished and inactive. The next top-to-bottom motion then spreads out the horizontal line scans so that they fall in the empty spaces of the previously scanned field. Precise interlacing of the successive field scans is facilitated if the total number of lines in the frame is an odd number. All the numbers of lines used in standard television were chosen for this reason.
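Why an odd line count helps can be seen in an idealized model of the two deflection motions, treating each as a linear sawtooth with instantaneous retrace (a simplifying assumption; real retrace takes time, as the next section explains). With 525 lines each field holds 262.5 lines, so the second field's lines automatically begin halfway between those of the first. With a hypothetical even count such as 524, the second field lands exactly on top of the first. The function names are illustrative.

```python
from fractions import Fraction


def spot_position(t: Fraction, lines: int = 525, frame_rate: int = 30) -> tuple[Fraction, Fraction]:
    """Horizontal and vertical spot position (0 = left/top, 1 = right/bottom) at time t.

    Idealized model: both motions are linear sawtooth ramps with instantaneous retrace.
    """
    line_period = Fraction(1, lines * frame_rate)   # one left-to-right sweep
    field_period = Fraction(1, 2 * frame_rate)      # one top-to-bottom sweep
    x = (t % line_period) / line_period
    y = (t % field_period) / field_period
    return x, y


def line_start_height(n: int, lines: int = 525, frame_rate: int = 30) -> Fraction:
    """Vertical position at which scan line n (counting from 0) begins."""
    t = Fraction(n, lines * frame_rate)
    return spot_position(t, lines, frame_rate)[1]


for n in (0, 1, 263):
    print(n, line_start_height(n))   # 0 0, then 1 2/525, then 263 1/525
print(line_start_height(262, lines=524))  # 0: with 524 lines the fields coincide
```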

Synchronization signals

The return of the scanning spot from right to left and from bottom to top of the frame, during which it is inactive, consumes time that cannot be devoted to transmitting picture information. This time is used to transmit synchronizing control signals that keep the scanning process at the receiver in step with that at the transmitter. The amount of time lost during retracing of the spot proportionately reduces the actual number of picture elements that can be reproduced. For instance, in the 525-line scanning pattern used in North America, about 15 percent of each line is lost in the return motion, and about 35 out of the 525 lines are blanked out while the spot returns from bottom to top of two successive fields. The scanning area that is actually in use for reproduction of the picture therefore contains a maximum of about 435 pixels along each line, and it has 490 active lines capable of reproducing 350 pixels in the vertical direction. The frame can therefore accommodate at most about 350 × 435, or 152,000, picture elements.
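The picture-element budget in this paragraph follows from the stated figures. This sketch takes the text's numbers as given (35 blanked lines, 435 active pixels per line, and the factor of about 1.4 lines per vertical pixel, written as 7/5 to keep the arithmetic exact).

```python
TOTAL_LINES = 525
BLANKED_LINES = 35        # lines lost while the spot returns from bottom to top (two fields)
PIXELS_PER_LINE = 435     # active pixels left after horizontal retrace

active_lines = TOTAL_LINES - BLANKED_LINES          # 490
# About 1.4 (= 7/5) lines are needed per vertical pixel.
vertical_pixels = active_lines * 5 // 7             # 350
picture_elements = vertical_pixels * PIXELS_PER_LINE

print(active_lines, vertical_pixels, picture_elements)  # 490 350 152250
```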

The time taken by the scanning spot to move over the active portion of each scanning line is on the order of 50 millionths of a second, or 50 microseconds. In the American system, 525 lines are transmitted in about one-thirtieth of a second, which is equivalent to about 64 microseconds per line. Up to 15 percent of this time is consumed in the horizontal retrace motion of the spot, leaving 54 microseconds (54 × 10⁻⁶ second) for active reproduction of as many as 435 pixels in each line. This represents a maximum rate of 435 ÷ (54 × 10⁻⁶) ≅ 8,000,000 pixels per second. Since two pixels can be approximately represented by one cycle of the transmission signal wave, the signal must be capable of carrying components as high as four megahertz (4 million cycles per second). The American six-megahertz television channel provides a sufficient band of frequencies for this picture signal, leaving an additional two megahertz to transmit the sound program, to protect against interference, and mostly to meet the requirements of vestigial side-band transmission.
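The chain of reasoning from line timing to channel bandwidth can be followed step by step in a short calculation. It uses only the figures given in the text; computed without intermediate rounding, the results come out at about 63.5 microseconds per line, 54.0 microseconds active, 8.06 million pixels per second, and 4.03 MHz.

```python
LINES = 525
FRAME_RATE = 30            # nominal pictures per second
RETRACE_FRACTION = 0.15    # share of each line spent on horizontal retrace
PIXELS_PER_LINE = 435

line_time = 1 / (LINES * FRAME_RATE)              # seconds per line
active_time = line_time * (1 - RETRACE_FRACTION)  # seconds of picture per line
pixel_rate = PIXELS_PER_LINE / active_time        # pixels per second
# One cycle of the signal wave can represent roughly two pixels (one light, one dark).
video_bandwidth_hz = pixel_rate / 2

print(f"line time   {line_time * 1e6:.1f} us")
print(f"active time {active_time * 1e6:.1f} us")
print(f"pixel rate  {pixel_rate / 1e6:.2f} million per second")
print(f"bandwidth   {video_bandwidth_hz / 1e6:.2f} MHz")
```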

The picture signal

Wave form

The translation of the televised scene into its electrical counterpart results in a sequence of electrical waves known as the television picture signal. This is represented graphically as a wave form, in which the range of electrical values (voltage or current) is plotted vertically and time is plotted horizontally. The electrical values correspond to the brightness of the image at each point on the scanning line, and time is essentially the position on the line of the point in question.

The television signal wave form is actually a composite made up of three individual signals. The first is a continuous sequence of electrical values corresponding to the brightnesses along each line. This signal contains what is known as the luminance information. The luminance signal is interspersed with blanking pulses, which correspond to the times during which the scanning spot is inactivated and retraced from the end of one line to the beginning of the next, as described above. Superimposed on the blanking pulses are additional short pulses corresponding to the synchronization signals (also described above), whose purpose is to cause the scanning spots at the transmitter and receiver to retrace to the next line at precisely the same instant. These three individual signals (luminance, blanking, and synchronization) are added together to produce the composite video signal.
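How the three components combine on a single line can be sketched as a list of signal levels. The levels follow the North American convention in IRE units (sync tip at −40, blanking at 0, black at 7.5, white at 100); the segment lengths are hypothetical sample counts rather than standard timings, and the colour burst is omitted. The function name is illustrative.

```python
from typing import List

# Signal levels in IRE units, North American (NTSC) convention.
SYNC_LEVEL = -40.0
BLANKING_LEVEL = 0.0
BLACK_LEVEL = 7.5
WHITE_LEVEL = 100.0


def composite_line(brightness: List[float], front_porch: int = 2,
                   sync_width: int = 5, back_porch: int = 6) -> List[float]:
    """One line of composite video from brightness samples in the range 0.0-1.0.

    Segment lengths are in samples and are illustrative, not standard timings.
    """
    # Luminance: map 0.0 (black) .. 1.0 (white) onto the black-to-white range.
    luminance = [BLACK_LEVEL + b * (WHITE_LEVEL - BLACK_LEVEL) for b in brightness]
    # Horizontal blanking interval with the sync pulse superimposed (below black).
    blanking = ([BLANKING_LEVEL] * front_porch
                + [SYNC_LEVEL] * sync_width
                + [BLANKING_LEVEL] * back_porch)
    return blanking + luminance


print(composite_line([0.0, 0.5, 1.0]))
```

Placing the sync pulse below the black level is what lets a receiver separate synchronizing information from picture information by amplitude alone.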

A blank interval also occurs twice every 525 lines (or twice every 625 lines, depending on the system) when the scanning spot, having reached the bottom of the frame, retraces to the top. This movement is guided by the vertical synchronization signal, a serrated series of impulses that occurs shortly after the scanning spot has reached the bottom of the frame. The vertical synchronization signal is followed by a series of horizontal synchronizing impulses at black level with no luminance information. The interval of time allocated for the reproducing beam to travel from the bottom of the picture to the top is called the vertical blanking interval. During this time, no picture information is transmitted. In the American system, the vertical blanking interval is equivalent to the time necessary to trace a total of 21 scan lines for each field. The reproducing beam in television receivers actually gets to the top of the screen more quickly than the allocated 21 scan lines, but it is not visible since it falls off the screen. Some of these scan lines can then be used to send other information, such as a vertical interval reference signal to calibrate colour receivers, text information to be displayed for the hard-of-hearing (closed captioning), or (in Europe) teletext.

Distortion and interference

The signal wave form that makes up a television picture signal embodies all the picture information to be transmitted from camera to receiver screen as well as the synchronizing information required to keep the receiver and transmitter scanning operations in exact step with each other. The television system, therefore, must deliver the wave form to each receiver as accurately and as free from blemishes as possible. Unfortunately, almost every item of equipment in the system (amplifiers, cables, transmitter, transmitting antenna, receiving antenna, and receiver circuits) conspires to distort the wave form or permits it to be contaminated by “noise” (random electrical currents) or interference.

Among the possible distortions in the signal producing the picture are (1) failure to maintain the rapidity with which the wave form rises or falls as the scanning spot crosses a sharp boundary between light and dark areas of the image, producing a loss of detail, or “smear,” in the reproduced image; (2) the introduction of overshoots, which cause excessively harsh outlines; and (3) failure to maintain the average value of the wave form over extended periods, which causes the image as a whole to be too bright or too dark.
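The first of these distortions, smear, can be illustrated by passing a sharp dark-to-bright edge through a crude band-limiting filter. A simple moving average is used here as a stand-in for a channel that cannot follow rapid changes; it is an assumption for illustration, not a model of any particular amplifier, and it does not reproduce overshoot or loss of the average level. The function name is illustrative.

```python
from typing import List


def band_limit(signal: List[float], taps: int = 3) -> List[float]:
    """Crude low-pass filter: average each sample with up to taps-1 predecessors."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - taps + 1): i + 1]
        out.append(sum(window) / len(window))
    return out


edge = [0.0] * 4 + [1.0] * 4     # dark area followed by a bright area
smeared = band_limit(edge)
print(smeared)  # the abrupt step becomes a ramp through intermediate greys
```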

Throughout the system, amplifiers must be used to keep the television signal strong relative to the noise that is everywhere present. These random currents, generated by thermally induced motions of electrons in the circuits, cause a speckled “snow” to appear in the picture. Pictures received from distant stations are subject to this form of interference, since the radio wave by then is so weak that it cannot override random currents in the receiving antenna. Other sources of noise include electrical storms and electric motors. Distortions of a striated type may be caused by interference from signals of stations other than that to which the receiver is tuned.

Another form of distortion arises when a broadcast television signal arrives at the receiver from more than one path. This can occur when the original signal bounces or is reflected off large buildings or other physical structures. The time delays in the different paths result in the creation of “ghosts” in the received picture. These ghosts also can occur in cable television systems from electrical reflections of the signal along the cable. Care in the design of the receiver tuner and amplifier circuits is necessary to minimize such interference, and channels must be allocated to neighbouring communities at sufficient geographic separations and frequency intervals to protect the local service.
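The horizontal position of a ghost follows directly from the extra distance the reflected signal travels. This sketch rests on two assumptions that are not in the text. The propagation speed is taken as the speed of light, and the visible part of each scan line is assumed to last about 52.6 microseconds. `ghost_offset` is a hypothetical helper.

```python
# Sketch: horizontal position of a multipath "ghost".
# Assumptions (not from the text): propagation at the speed of light and a
# visible line time of about 52.6 microseconds. ghost_offset is a
# hypothetical helper written for this illustration.

SPEED_OF_LIGHT_M_S = 299_792_458.0
ACTIVE_LINE_TIME_S = 52.6e-6   # assumed duration of the visible part of a line


def ghost_offset(extra_path_m, screen_width_cm):
    """Return (delay in microseconds, shift in cm) of the ghost image.

    The reflected copy arrives later, so it is drawn further along the scan
    line, i.e. to the right of the main image.
    """
    delay_s = extra_path_m / SPEED_OF_LIGHT_M_S
    shift_cm = delay_s / ACTIVE_LINE_TIME_S * screen_width_cm
    return delay_s * 1e6, shift_cm


if __name__ == "__main__":
    delay_us, shift_cm = ghost_offset(300.0, 50.0)
    print(f"delay {delay_us:.2f} us, ghost shifted {shift_cm:.2f} cm to the right")
```

Under these assumptions, a reflection that travels 300 metres further arrives about 1 microsecond late. On a screen 50 cm wide, that places the ghost just under 1 cm to the right of the main image.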

Bandwidth requirements

The quality and quantity of television service are limited fundamentally by the rate at which it is feasible to transmit the picture information over the television channel. If, as is stated above, the televised image is dissected, within a few hundredths of a second, into approximately 200,000 pixels, then the electrical impulses corresponding to the pixels must pass through the channel at a rate of several million per second. Moreover, since the picture content may vary, from frame to frame, from simple close-up shots having little fine detail to comprehensive distant scenes in which the limiting detail of the system comes into play, the actual rate of transmitting the picture information varies considerably. The television channel must be capable, therefore, of handling information over a continuous band of frequencies several million cycles wide. This is testimony to the extraordinary discriminating power of the human sense of sight. By comparison, the ear is satisfied by sound carried over a channel only 10,000 cycles wide.
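The sketch below works through the arithmetic behind "several million per second". It makes two assumptions of its own. The frame time is taken as 1/30 second, since the text says only "a few hundredths of a second". It also uses the rule of thumb that one cycle of the video signal can carry at most two pixels, one light and one dark.

```python
# Sketch of the arithmetic behind "several million per second".
# Assumptions: a frame time of 1/30 s and the rule of thumb that one cycle
# of the video signal carries at most two pixels (one light, one dark).

PIXELS_PER_FRAME = 200_000   # from the text
FRAME_TIME_S = 1 / 30        # assumed frame period
AUDIO_CHANNEL_HZ = 10_000    # sound channel width from the text

pixel_rate = PIXELS_PER_FRAME / FRAME_TIME_S   # pixels per second
video_bandwidth_hz = pixel_rate / 2            # highest video frequency needed

print(f"pixel rate: {pixel_rate / 1e6:.1f} million per second")
print(f"video bandwidth: {video_bandwidth_hz / 1e6:.1f} MHz")
print(f"ratio to a 10 kHz sound channel: {video_bandwidth_hz / AUDIO_CHANNEL_HZ:.0f}x")
```

Under these assumptions, 6 million pixels per second require a video band of about 3 MHz. That is several hundred times wider than the sound channel.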

In the United States, the television channel, occupying six megahertz in the radio spectrum, is 600 times as wide as the channel used by each standard amplitude modulation (AM) sound broadcasting station. In fact, one television station uses nearly six times as much spectrum space as all the commercial AM sound broadcasting channels combined. Since each television broadcast must occupy so much spectrum space, a limited number of channels is available in any given locality. Moreover, the quantity of service is in conflict with the quality of reproduction. If the detail of the television image is to be increased (other parameters of the transmission being unchanged), then the channel width must be increased proportionately, and this decreases the number of channels that can be accommodated in the spectrum. This fundamental conflict between quality of transmission and number of available channels dictates that the quality of reproduction shall just satisfy the typical viewer under normal viewing conditions. Any excess of performance beyond this ultimately will result in a restriction of program choice.
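The trade-off between quality and number of channels can be made concrete. The 6 MHz and 10 kHz channel widths below come from the text. The 72 MHz band being divided up is a hypothetical figure chosen only for illustration, and `channels_available` is a hypothetical helper. The loop shows that scaling the picture detail, and with it the channel width, by a factor cuts the number of channels that fit by the same factor.

```python
# Sketch of the spectrum arithmetic in the paragraph above.
# TV and AM channel widths come from the text; BAND_HZ is a hypothetical
# allocation used only for illustration.

TV_CHANNEL_HZ = 6_000_000
AM_CHANNEL_HZ = 10_000
BAND_HZ = 72_000_000  # hypothetical band set aside for television


def channels_available(band_hz, channel_hz):
    """Number of whole channels that fit in a band of the given width."""
    return band_hz // channel_hz


print(TV_CHANNEL_HZ // AM_CHANNEL_HZ)  # 600
for detail_factor in (1, 2, 3):
    # More detail needs a proportionately wider channel (other parameters unchanged).
    width = TV_CHANNEL_HZ * detail_factor
    print(f"detail x{detail_factor}: {channels_available(BAND_HZ, width)} channels")
```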

Compatible colour television

Compatible colour television represents electronic technology at its pinnacle of achievement, carefully balancing the needs of human perception with the need for technological efficiency. The transmission of colour images requires that extra information be added to the basic monochrome television signal, described above. At the same time, this more complex colour signal must be “compatible” with black-and-white television, so that all sets can pick up and display the same transmission. The design of compatible colour systems, accomplished in the 1950s, was truly a marvel of electrical engineering. The fact that the standards chosen at that time are still in use attests to how well they were designed.

The first compatible colour system was designed in 1950–51 by engineers at the Radio Corporation of America (RCA) and was accepted in 1952 by the National Television Systems Committee (NTSC) as the standard for broadcast television in the United States. (See the section The development of television systems: Colour television.) The essentials of the NTSC system have formed the basis of all other colour television systems. Two rival European systems, PAL (phase alternating line) and SECAM (système électronique couleur avec mémoire), are modifications of the NTSC system that have special application to European conditions. One or another of these three systems has been adopted by all countries of the world (see the table). All are discussed in this section, with the American (NTSC) system being used to describe the basic principles of colour television.

Basic principles of compatible colour: The NTSC system

The technique of compatible colour television utilizes two transmissions. One of these carries information about the brightness, or luminance, of the televised scene, and the other carries the colour, or chrominance, information. Since the ability of the human eye to perceive detail is most acute when viewing white light, the luminance transmission carries the impression of fine detail. Because it employs methods essentially identical to those of a monochrome television system, it can be picked up by black-and-white receivers. The chrominance transmission has no appreciable effect on black-and-white receivers, yet, when used with the luminance transmission in a colour receiver, it produces an image in full colour.

Historically, compatibility was of great importance because it allowed colour transmissions to be introduced without obsolescence of the many millions of monochrome receivers in use. In a larger sense, the luminance-chrominance method of colour transmission is advantageous because it utilizes the limited channels of the radio spectrum more efficiently than other colour transmission methods.

To create the luminance-chrominance values, it is necessary first to analyze each colour in the scene into its component primary colours. Light can be analyzed in this way by passing it through three coloured filters, typically red, green, and blue. The amounts of light passing through each filter, plus a description of the colour transmission properties of the filters, serve uniquely to characterize the coloured light. (The techniques for accomplishing this are described in the section Transmission: Generating the colour picture signal.)

The fact that virtually the whole range of colours may be synthesized from only three primary colours is essentially a description of the process by which the eye and mind of the observer recognize and distinguish colours. Like visual persistence (the basis of reproducing motion in television), this is a fortunate property of vision, since it permits a simple three-part specification to represent any of the 10,000 or more colours and brightnesses that may be distinguished by the human eye. If vision were dependent on the energy-versus-wavelength relationship (the physical method of specifying colour), it is doubtful that colour reproduction could be incorporated in any mass-communication system.

By transforming the primary-colour values, it is possible to specify any coloured light by three quantities: (1) its luminance (brightness or “brilliance”); (2) its hue (the redness, orangeness, blueness, or greenness, etc., of the light); and (3) its saturation (vivid versus pastel quality). Since the intended luminance value of each point in the scanning pattern is transmitted by the methods of monochrome television, it is only necessary to transmit, via an additional two-valued signal, supplementary information giving the hue and saturation of the intended colour at the respective points.
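NTSC carries the "additional two-valued signal" as linear combinations of two colour-difference values, R − Y and B − Y, alongside the luminance Y. The sketch below shows that split. It assumes the standard NTSC luminance weights 0.299, 0.587 and 0.114, which are not given in the text, and gamma-corrected primaries in the range 0 to 1. The function names are hypothetical.

```python
# Sketch: luminance plus two colour-difference values.
# Assumptions: the standard NTSC luminance weights (not given in the text)
# and gamma-corrected primaries r, g, b in the range 0..1.


def luminance(r, g, b):
    """Weighted sum of the primaries: green counts most, blue least."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def colour_difference(r, g, b):
    """Return (Y, R - Y, B - Y); both differences vanish for any grey."""
    y = luminance(r, g, b)
    return y, r - y, b - y


if __name__ == "__main__":
    for name, rgb in {"white": (1, 1, 1), "mid grey": (0.5, 0.5, 0.5), "red": (1, 0, 0)}.items():
        y, r_y, b_y = colour_difference(*rgb)
        print(f"{name:8s} Y={y:.3f}  R-Y={r_y:+.3f}  B-Y={b_y:+.3f}")
```

Both difference values are zero for whites and greys, so a colourless scene adds nothing beyond the monochrome signal. Green need not be sent separately, because it can be recovered from Y and the two differences.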

Chrominance, defined as that part of the colour specification remaining when the luminance is removed, is a combination of the two independent quantities, hue and saturation. Chrominance may be represented graphically in polar coordinates on a colour circle (as shown in the figure), with saturation as the radius and hue as the angle. Hues are arranged counterclockwise around the circle as they appear in the spectrum, from red to blue. The centre of the circle represents white light (the colour of zero saturation), and the outermost rim represents maximum saturation. Points on any radius of the circle represent all colours of the same hue, the saturation becoming less (that is, the colour becoming less vivid, or more pastel) as the point approaches the central "white point." A diagram of this type is the basis of the international standard system of colour specification.
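The colour circle can be imitated numerically by converting two colour-difference signals to polar form, with radius as saturation and angle as hue. This sketch assumes the scaled colour-difference signals U = 0.492(B − Y) and V = 0.877(R − Y) used in NTSC and PAL, which the text does not define. Angles are measured counterclockwise from the +U axis. With these conventions red, yellow, green and blue come out at increasing angles, matching the order described above. A pastel pink keeps the hue of red at half its saturation.

```python
# Sketch: chrominance as a point on a colour circle.
# Assumptions: U = 0.492(B - Y), V = 0.877(R - Y) with NTSC luminance weights;
# angles measured counterclockwise from the +U axis.
import math


def chroma_polar(r, g, b):
    """Return (saturation, hue in degrees); hue is meaningless when saturation is 0."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    saturation = math.hypot(u, v)                    # distance from the white point
    hue_deg = math.degrees(math.atan2(v, u)) % 360   # angle around the circle
    return saturation, hue_deg


if __name__ == "__main__":
    colours = {"white": (1, 1, 1), "red": (1, 0, 0), "pink": (1, 0.5, 0.5),
               "yellow": (1, 1, 0), "green": (0, 1, 0), "blue": (0, 0, 1)}
    for name, rgb in colours.items():
        s, h = chroma_polar(*rgb)
        print(f"{name:6s} saturation={s:.3f} hue={h:6.1f} deg")
```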

In the NTSC system, the chrominance signal is an alternating current of precisely specified frequency (3.579545 ± 0.000010 megahertz), the precision permitting its accurate recovery at the receiver even in the presence of severe noise or interference. Any change in the amplitude of its alternations at any instant corresponds to a change in the saturation of the colours being passed over by the scanning spot at that instant, whereas a shift in time of its alternations (a change in "phase") similarly corresponds to a shift in the hue. As the different saturations and hues of the televised scene are successively uncovered by scanning in the camera, the amplitude and phase, respectively, of the chrominance signal change accordingly. The chrominance signal is thereby simultaneously modulated in both amplitude and phase. This doubly modulated signal is added to the luminance signal (as shown in the diagram of the colour signal wave form), and the composite signal is imposed on the carrier wave. The chrominance signal takes the form of a subcarrier located precisely 3.579545 megahertz above the picture carrier frequency.
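The sketch below builds the composite signal for a single uniformly coloured patch: a luminance level plus a subcarrier. The subcarrier's amplitude stands for saturation and its phase for hue. The 3.579545 MHz frequency comes from the text. Sampling at four times the subcarrier frequency and leaving out band-limiting, sync and burst are simplifying assumptions, and `composite_samples` is a hypothetical helper.

```python
# Sketch: one uniformly coloured patch as luminance plus a chrominance
# subcarrier (amplitude = saturation, phase = hue).
# Assumptions: four samples per subcarrier cycle; no band-limiting, sync or burst.
import math

F_SC = 3.579545e6        # chrominance subcarrier frequency in Hz (from the text)
SAMPLE_RATE = 4 * F_SC   # assumed sampling rate


def composite_samples(luma, saturation, hue_deg, n):
    """Return n samples of luma + saturation * sin(2*pi*F_SC*t + hue)."""
    phase = math.radians(hue_deg)
    return [luma + saturation * math.sin(2 * math.pi * F_SC * k / SAMPLE_RATE + phase)
            for k in range(n)]


if __name__ == "__main__":
    # Same brightness and saturation, hues 90 degrees apart: the second
    # waveform is the first one shifted by a quarter of a subcarrier cycle.
    a = composite_samples(0.5, 0.2, 0, 8)
    b = composite_samples(0.5, 0.2, 90, 8)
    print([round(x, 3) for x in a])
    print([round(x, 3) for x in b])
```

Over whole subcarrier cycles the chrominance term averages to zero, so the average of each waveform is the luminance level alone. Hue and saturation appear only in the timing and size of the alternations.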

The picture carrier is thus simultaneously amplitude modulated by (1) the luminance signal, to represent changes in the intended luminance, and (2) the chrominance subcarrier, which in turn is amplitude modulated to represent changes in the intended saturation and phase modulated to represent changes in the intended hue. When a colour receiver is tuned to the transmission, the picture signal is recovered in a video detector, which responds to the amplitude-modulated luminance signal in the usual manner of a black-and-white receiver. An amplifier stage, tuned to the 3.58-megahertz chrominance frequency, then selects the chrominance subcarrier from the picture signal and passes it to a detector, which recovers independently the amplitude-modulated saturation signal and the phase-modulated hue signal. Because absolute phase information is difficult to extract, the hue signal is made easier to decode by a phase reference transmitted for each horizontal scan line in the form of a short burst of the chrominance subcarrier. This chrominance, or colour, burst consists of a minimum of eight full cycles of the chrominance subcarrier and is placed on the “back porch” of the blanking pulse, immediately after the horizontal synchronization pulse (as shown in the diagram).
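The role of the colour burst can be shown with synchronous detection: correlate the signal with sine and cosine references over whole cycles to get its amplitude and phase. The sketch makes several assumptions. The luminance has already been filtered out, the signal is sampled at four times the subcarrier, and hue is measured relative to the burst's own phase. Real NTSC places the burst at a fixed angle to the colour axes. The helper names are hypothetical. The point is that an unknown phase shift common to burst and chrominance, such as a path delay, cancels out of the decoded hue.

```python
# Sketch: recovering saturation and hue by synchronous detection, using the
# colour burst as phase reference. Assumptions: luminance already removed,
# four samples per subcarrier cycle, hue measured relative to the burst phase.
import math

SAMPLES_PER_CYCLE = 4


def tone(amplitude, phase_rad, n, offset_rad=0.0):
    """A subcarrier segment; offset_rad models a phase shift unknown to the receiver."""
    return [amplitude * math.sin(2 * math.pi * k / SAMPLES_PER_CYCLE + phase_rad + offset_rad)
            for k in range(n)]


def amplitude_and_phase(samples):
    """Correlate with sine/cosine references over whole cycles (len multiple of 4)."""
    n = len(samples)
    s = sum(x * math.sin(2 * math.pi * k / SAMPLES_PER_CYCLE) for k, x in enumerate(samples))
    c = sum(x * math.cos(2 * math.pi * k / SAMPLES_PER_CYCLE) for k, x in enumerate(samples))
    return 2 * math.hypot(s, c) / n, math.atan2(c, s)


def decode_line(burst, chroma):
    """Hue = chroma phase minus burst phase, so any common offset cancels."""
    _, burst_phase = amplitude_and_phase(burst)
    saturation, chroma_phase = amplitude_and_phase(chroma)
    return saturation, math.degrees(chroma_phase - burst_phase) % 360


if __name__ == "__main__":
    unknown_shift = 1.234                                # radians, unknown to the receiver
    burst = tone(0.2, 0.0, 36, unknown_shift)            # nine cycles of burst
    chroma = tone(0.3, math.radians(120), 400, unknown_shift)
    print(decode_line(burst, chroma))                    # approximately (0.3, 120.0)
```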

When compatible colour transmissions are received on a black-and-white receiver, the receiver treats the chrominance subcarrier as though it were a part of the intended monochrome transmission. If steps were not taken to prevent it, the subcarrier would produce interference in the form of a fine dot pattern on the television screen. Fortunately, the dot pattern can be rendered almost invisible in monochrome reception by deriving the timing of the scanning motions directly from the source that establishes the chrominance subcarrier itself. The dot pattern of interference from the chrominance signal, therefore, can be made to have opposite effects on successive scannings of the pattern; that is, a point brightened by the dot interference on one line scan is darkened an equal amount on the next scan of that line, so that the net effect of the interference, integrated in the eye over successive scans, is virtually zero. Thus, the monochrome receiver in effect ignores the chrominance component of the transmission. It deals with the luminance signal in the conventional manner, producing from it a black-and-white image. This black-and-white rendition, incidentally, is not a compromise; it is essentially identical to the image that would be produced by a monochrome system viewing the same scene.
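The cancellation of the dot pattern depends on how the subcarrier is tied to the scanning rates. In NTSC the subcarrier is an odd multiple of half the line frequency, 455/2 times the line frequency. That is a standard NTSC relationship, not stated in the text, and so is the line frequency of 4.5 MHz / 286 used here. Exact fractions show that each line, and each 525-line frame, contains a whole number of subcarrier cycles plus exactly one half. The subcarrier therefore arrives in opposite phase on successive scans of the same line. `phase_flips` is a hypothetical helper.

```python
# Sketch: why the dot pattern cancels. Assumption (standard NTSC, not from the
# text): fsc = 455/2 x fH with fH = 4.5 MHz / 286. Fractions keep it exact.
from fractions import Fraction

F_H = Fraction(4_500_000, 286)   # line frequency, Hz
F_SC = Fraction(455, 2) * F_H    # chrominance subcarrier, Hz (about 3.579545 MHz)
LINES_PER_FRAME = 525

cycles_per_line = F_SC / F_H                        # 455/2 = 227.5
cycles_per_frame = cycles_per_line * LINES_PER_FRAME


def phase_flips(cycles):
    """True if the interval holds a whole number of cycles plus exactly one half."""
    return (cycles - Fraction(1, 2)).denominator == 1


if __name__ == "__main__":
    print(f"subcarrier: {float(F_SC):,.2f} Hz")
    print(f"cycles per line: {float(cycles_per_line)}, flips: {phase_flips(cycles_per_line)}")
    print(f"cycles per frame: {float(cycles_per_frame)}, flips: {phase_flips(cycles_per_frame)}")
```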

The television channel, when occupied by a compatible colour transmission, is usually diagrammed as shown in the figure. The chrominance information modulates the chrominance subcarrier in the form of two orthogonal components, the I signal and the Q signal. This form of quadrature modulation accomplishes the simultaneous amplitude and phase modulation of the chrominance subcarrier. The I signal represents hues along the orange-cyan colour axis, and the Q signal represents hues along the magenta-yellow colour axis. The human eye is much less sensitive to spatial detail in colour than in brightness, and thus the chrominance information is allocated much less bandwidth than the luminance information. Furthermore, since the eye resolves finer detail in the hues represented by the I signal, the I signal is allotted 1.5 megahertz, while the Q signal is restricted to only 0.5 megahertz. To conserve spectrum, vestigial-sideband modulation is used for the I signal, giving the lower sideband the full 1.5 megahertz. Because the chrominance information is carried by quadrature modulation, the subcarrier itself is suppressed and only its sidebands are transmitted.
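The I/Q scheme can be sketched in two steps. First, convert the primaries to Y, I and Q. Second, place I on the cosine phase and Q on the sine phase of a suppressed subcarrier. The matrix uses the commonly quoted NTSC YIQ coefficients, which are not given in the text. The sketch simplifies the phase conventions, since real NTSC sets the I and Q axes at fixed angles to the burst. It also assumes four samples per subcarrier cycle and omits the separate I and Q bandwidth limits. The function names are hypothetical. The sketch shows two things. I and Q can be recovered independently because the two phases are orthogonal. A grey produces no chrominance output at all, which is what a suppressed carrier means.

```python
# Sketch: NTSC-style YIQ conversion and suppressed-carrier quadrature modulation.
# Assumptions: commonly quoted YIQ coefficients, simplified phase reference,
# four samples per subcarrier cycle, no band-limiting of I and Q.
import math


def rgb_to_yiq(r, g, b):
    y = 0.299 * r + 0.587 * g + 0.114 * b
    i = 0.596 * r - 0.274 * g - 0.322 * b   # orange-cyan axis
    q = 0.211 * r - 0.523 * g + 0.312 * b   # magenta-yellow axis
    return y, i, q


def quadrature_modulate(i, q, n_cycles):
    """Chroma samples: I on the cosine phase, Q on the sine phase, no carrier term."""
    return [i * math.cos(2 * math.pi * k / 4) + q * math.sin(2 * math.pi * k / 4)
            for k in range(4 * n_cycles)]


def quadrature_demodulate(chroma):
    """Multiply by each reference phase and average over whole cycles."""
    n = len(chroma)
    i = 2 * sum(x * math.cos(2 * math.pi * k / 4) for k, x in enumerate(chroma)) / n
    q = 2 * sum(x * math.sin(2 * math.pi * k / 4) for k, x in enumerate(chroma)) / n
    return i, q


if __name__ == "__main__":
    for name, rgb in {"red": (1, 0, 0), "grey": (0.5, 0.5, 0.5)}.items():
        y, i, q = rgb_to_yiq(*rgb)
        chroma = quadrature_modulate(i, q, n_cycles=10)
        i_rec, q_rec = quadrature_demodulate(chroma)
        peak = max(abs(x) for x in chroma)
        print(f"{name}: Y={y:.3f} I={i_rec:+.3f} Q={q_rec:+.3f} chroma peak={peak:.3f}")
```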