Optical fiber technology uses a cable whose transmission medium is glass, enclosed in a protective covering so that the light sent by the transmitter is not lost through refraction before it arrives at the receiver. Users want to receive and store large volumes of data, and they want delivery to be very fast, because the world demands information that is fast, precise, and accurate. Unlike the cables commonly used for audio, video, or electrical power, optical fiber cannot simply be soldered or joined with jumpers between cable connectors. A fiber optic link needs two to three kinds of cable to carry data between the transmitter and the receiver:

1. Fiber optic access cable (runs to the receiver)

2. Fiber optic backbone cable (on the distribution line, usually underground)

3. Fiber optic transmitter cable (located at the transmitter)

A broken or faulty access cable cannot be soldered or joined; it can only be replaced with a new cable of the same length. Spare access cable is coiled into a loop and tied neatly. In theory, bending can refract the light away from a straight path through the fiber, but the effect on the transmitted data can be measured with an attenuation meter. The access cable is usable if the measured attenuation is no more than 2 dB, and the reading should not fall below -17. If it does, the access cable must be replaced or the loop inspected. Backbone cables need particular care because they run underground or under the sea. When a backbone cable is severed, a splicer is used: the device holds the two broken ends together and heats them so that the two halves of the backbone cable are joined again. Backbone cable runs are typically 100 to 200 meters long before a transmitter amplifier is added.

Fiber-optic communication

Fiber-optic communication is a method of transmitting information from one place to another by sending pulses of light through an optical fiber. The light forms an electromagnetic carrier wave that is modulated to carry information. Fiber is preferred over electrical cabling when high bandwidth, long distance, or immunity to electromagnetic interference are required.

Optical fiber is used by many telecommunications companies to transmit telephone signals, Internet communication, and cable television signals. Researchers at Bell Labs have reached internet speeds of over 100 petabit × kilometer per second using fiber-optic communication.

Background

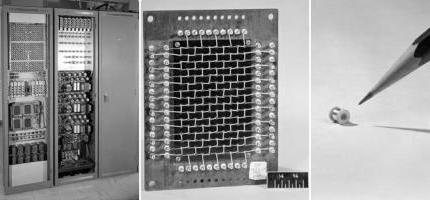

First developed in the 1970s, fiber-optics have revolutionized the telecommunications industry and have played a major role in the advent of the Information Age. Because of their advantages over electrical transmission, optical fibers have largely replaced copper wire communications in core networks in the developed world.

The process of communicating using fiber-optics involves the following basic steps:

- creating the optical signal involving the use of a transmitter, usually from an electrical signal

- relaying the signal along the fiber, ensuring that the signal does not become too distorted or weak

- receiving the optical signal

- converting it into an electrical signal
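The four steps above can be sketched as a toy simulation. This is only an illustration under simplifying assumptions that are not in the text: on-off keying as the modulation, a single lumped loss figure for the fiber, and a fixed decision threshold at the receiver. All function names and values are hypothetical.

```python
def transmit(bits, power_on_mw=1.0):
    """Transmitter: map each electrical bit to an optical power level (on-off keying)."""
    return [power_on_mw if b else 0.0 for b in bits]


def propagate(powers_mw, loss_db):
    """Fiber: scale every power sample by the total link loss, given in dB."""
    factor = 10 ** (-loss_db / 10)
    return [p * factor for p in powers_mw]


def receive(powers_mw, threshold_mw):
    """Receiver: convert optical power back into electrical bits by thresholding."""
    return [1 if p > threshold_mw else 0 for p in powers_mw]


bits = [1, 0, 1, 1, 0]
launched = transmit(bits)
arrived = propagate(launched, loss_db=10.0)   # a 10 dB loss leaves 10% of the power
recovered = receive(arrived, threshold_mw=0.05)
print(recovered == bits)
```

If the loss grows until the "on" level falls below the threshold, the receiver can no longer tell ones from zeros, which is why the signal must not become too weak along the way.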

Applications

Optical fiber is used by many telecommunications companies to transmit telephone signals, Internet communication and cable television signals. Due to much lower attenuation and interference, optical fiber has large advantages over existing copper wire in long-distance, high-demand applications. However, infrastructure development within cities was relatively difficult and time-consuming, and fiber-optic systems were complex and expensive to install and operate. Due to these difficulties, fiber-optic communication systems have primarily been installed in long-distance applications, where they can be used to their full transmission capacity, offsetting the increased cost. The prices of fiber-optic communications have dropped considerably since 2000.

The price for rolling out fiber to the home has currently become more cost-effective than that of rolling out a copper based network. Prices have dropped to $850 per subscriber in the US and lower in countries like The Netherlands, where digging costs are low and housing density is high.

Since 1990, when optical-amplification systems became commercially available, the telecommunications industry has laid a vast network of intercity and transoceanic fiber communication lines. By 2002, an intercontinental network of 250,000 km of submarine communications cable with a capacity of 2.56 Tb/s was completed, and although specific network capacities are privileged information, telecommunications investment reports indicate that network capacity has increased dramatically since 2004.

Flash Back

In 1880 Alexander Graham Bell and his assistant Charles Sumner Tainter created a very early precursor to fiber-optic communications, the Photophone, at Bell's newly established Volta Laboratory in Washington, D.C. Bell considered it his most important invention. The device allowed for the transmission of sound on a beam of light. On June 3, 1880, Bell conducted the world's first wireless telephone transmission between two buildings, some 213 meters apart. Due to its use of an atmospheric transmission medium, the Photophone would not prove practical until advances in laser and optical fiber technologies permitted the secure transport of light. The Photophone's first practical use came in military communication systems many decades later.

In 1954 Harold Hopkins and Narinder Singh Kapany showed that rolled fiber glass allowed light to be transmitted. Until then, it had been assumed that light could travel only through a straight medium.

Jun-ichi Nishizawa, a Japanese scientist at Tohoku University, proposed the use of optical fibers for communications in 1963.[6] Nishizawa invented the PIN diode and the static induction transistor, both of which contributed to the development of optical fiber communications.

In 1966 Charles K. Kao and George Hockham at STC Laboratories (STL) showed that the losses of 1,000 dB/km in existing glass (compared to 5–10 dB/km in coaxial cable) were due to contaminants which could potentially be removed.

Optical fiber was successfully developed in 1970 by Corning Glass Works, with attenuation low enough for communication purposes (about 20 dB/km) and at the same time GaAs semiconductor lasers were developed that were compact and therefore suitable for transmitting light through fiber optic cables for long distances.

After a period of research starting from 1975, the first commercial fiber-optic communications system was developed which operated at a wavelength around 0.8 µm and used GaAs semiconductor lasers. This first-generation system operated at a bit rate of 45 Mbit/s with repeater spacing of up to 10 km. Soon on 22 April 1977, General Telephone and Electronics sent the first live telephone traffic through fiber optics at a 6 Mbit/s throughput in Long Beach, California.

In October 1973, Corning Glass signed a development contract with CSELT and Pirelli aimed to test fiber optics in an urban environment: in September 1977, the second cable in this test series, named COS-2, was experimentally deployed in two lines (9 km) in Turin, for the first time in a big city, at a speed of 140 Mbit/s.

The second generation of fiber-optic communication was developed for commercial use in the early 1980s, operated at 1.3 µm and used InGaAsP semiconductor lasers. These early systems were initially limited by multi-mode fiber dispersion. In 1981 single-mode fiber was shown to greatly improve system performance, although practical connectors capable of working with single-mode fiber proved difficult to develop. In 1984, a fiber optic cable had already been developed that would help further progress toward fiber optic cables that would circle the globe. Canadian service provider SaskTel had completed construction of what was then the world’s longest commercial fiber optic network, which covered 3,268 km and linked 52 communities. By 1987, these systems were operating at bit rates of up to 1.7 Gb/s with repeater spacing up to 50 km.

The first transatlantic telephone cable to use optical fiber was TAT-8, based on Desurvire optimised laser amplification technology. It went into operation in 1988.

Third-generation fiber-optic systems operated at 1.55 µm and had losses of about 0.2 dB/km. This development was spurred by the discovery of indium gallium arsenide and the development of the indium gallium arsenide photodiode by Pearsall. Earlier systems at that wavelength had suffered from pulse spreading when using conventional InGaAsP semiconductor lasers. Scientists overcame this difficulty by using dispersion-shifted fibers designed to have minimal dispersion at 1.55 µm or by limiting the laser spectrum to a single longitudinal mode. These developments eventually allowed third-generation systems to operate commercially at 2.5 Gbit/s with repeater spacing in excess of 100 km.

The fourth generation of fiber-optic communication systems used optical amplification to reduce the need for repeaters and wavelength-division multiplexing to increase data capacity. These two improvements caused a revolution that resulted in the doubling of system capacity every six months starting in 1992 until a bit rate of 10 Tb/s was reached by 2001. In 2006 a bit-rate of 14 Tbit/s was reached over a single 160 km line using optical amplifiers.

The focus of development for the fifth generation of fiber-optic communications is on extending the wavelength range over which a WDM system can operate. The conventional wavelength window, known as the C band, covers the wavelength range 1.53–1.57 µm, and dry fiber has a low-loss window promising an extension of that range to 1.30–1.65 µm. Other developments include the concept of "optical solitons", pulses that preserve their shape by counteracting the effects of dispersion with the nonlinear effects of the fiber by using pulses of a specific shape.

In the late 1990s through 2000, industry promoters and research companies such as KMI and RHK predicted massive increases in demand for communications bandwidth due to increased use of the Internet and the commercialization of various bandwidth-intensive consumer services, such as video on demand. Internet protocol data traffic was increasing exponentially, at a faster rate than integrated circuit complexity had increased under Moore's Law. From the bust of the dot-com bubble through 2006, however, the main trend in the industry has been consolidation of firms and offshoring of manufacturing to reduce costs. Companies such as Verizon and AT&T have taken advantage of fiber-optic communications to deliver a variety of high-throughput data and broadband services to consumers' homes.

Technology

Modern fiber-optic communication systems generally include an optical transmitter to convert an electrical signal into an optical signal to send through the optical fiber, a cable containing bundles of multiple optical fibers that is routed through underground conduits and buildings, multiple kinds of amplifiers, and an optical receiver to recover the signal as an electrical signal. The information transmitted is typically digital information generated by computers, telephone systems and cable television companies.

Transmitters

The most commonly used optical transmitters are semiconductor devices such as light-emitting diodes (LEDs) and laser diodes. The difference between LEDs and laser diodes is that LEDs produce incoherent light, while laser diodes produce coherent light. For use in optical communications, semiconductor optical transmitters must be designed to be compact, efficient and reliable, while operating in an optimal wavelength range and directly modulated at high frequencies.

In its simplest form, an LED is a forward-biased p-n junction, emitting light through spontaneous emission, a phenomenon referred to as electroluminescence. The emitted light is incoherent with a relatively wide spectral width of 30–60 nm. LED light transmission is also inefficient, with only about 1% of input power, or about 100 microwatts, eventually converted into launched power which has been coupled into the optical fiber. However, due to their relatively simple design, LEDs are very useful for low-cost applications.

Communications LEDs are most commonly made from indium gallium arsenide phosphide (InGaAsP) or gallium arsenide (GaAs). Because InGaAsP LEDs operate at a longer wavelength than GaAs LEDs (1.3 micrometers vs. 0.81–0.87 micrometers), their output spectrum, while equivalent in energy, is wider in wavelength terms by a factor of about 1.7. The large spectrum width of LEDs is subject to higher fiber dispersion, considerably limiting their bit rate-distance product (a common measure of usefulness). LEDs are suitable primarily for local-area-network applications with bit rates of 10–100 Mbit/s and transmission distances of a few kilometers. LEDs have also been developed that use several quantum wells to emit light at different wavelengths over a broad spectrum and are currently in use for local-area WDM (Wavelength-Division Multiplexing) networks.

Today, LEDs have been largely superseded by VCSEL (Vertical Cavity Surface Emitting Laser) devices, which offer improved speed, power and spectral properties, at a similar cost. Common VCSEL devices couple well to multi-mode fiber.

A semiconductor laser emits light through stimulated emission rather than spontaneous emission, which results in high output power (~100 mW) as well as other benefits related to the nature of coherent light. The output of a laser is relatively directional, allowing high coupling efficiency (~50 %) into single-mode fiber. The narrow spectral width also allows for high bit rates since it reduces the effect of chromatic dispersion. Furthermore, semiconductor lasers can be modulated directly at high frequencies because of short recombination time.
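To put the quoted power figures side by side, the short sketch below converts the launched power of a laser (about 100 mW output at about 50% coupling) and of an LED (about 100 microwatts launched) to dBm, the logarithmic unit commonly used in link budgets. The function names are illustrative, and the inputs are the approximate values from the text, not measurements.

```python
import math


def mw_to_dbm(power_mw):
    """Convert a power in milliwatts to dBm (decibels relative to 1 mW)."""
    return 10 * math.log10(power_mw)


def launched_power_mw(output_mw, coupling_efficiency):
    """Power actually coupled into the fiber for a given coupling efficiency."""
    return output_mw * coupling_efficiency


laser_mw = launched_power_mw(100.0, 0.5)   # ~100 mW output, ~50% coupling
led_mw = 0.1                               # ~100 microwatts launched by an LED

print(f"laser: {mw_to_dbm(laser_mw):.1f} dBm")
print(f"LED:   {mw_to_dbm(led_mw):.1f} dBm")
print(f"difference: {mw_to_dbm(laser_mw) - mw_to_dbm(led_mw):.1f} dB")
```

With these round numbers the laser launches roughly 27 dB more power than the LED, which is headroom that can be spent on longer fiber spans.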

Commonly used classes of semiconductor laser transmitters used in fiber optics include VCSEL (Vertical-Cavity Surface-Emitting Laser), Fabry–Pérot and DFB (Distributed Feed Back).

Laser diodes are often directly modulated, that is the light output is controlled by a current applied directly to the device. For very high data rates or very long distance links, a laser source may be operated continuous wave, and the light modulated by an external device, an optical modulator, such as an electro-absorption modulator or Mach–Zehnder interferometer. External modulation increases the achievable link distance by eliminating laser chirp, which broadens the linewidth of directly modulated lasers, increasing the chromatic dispersion in the fiber. For very high bandwidth efficiency, coherent modulation can be used to vary the phase of the light in addition to the amplitude, enabling the use of QPSK, QAM, and OFDM.
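As a minimal sketch of how a phase-modulated format carries data, the example below maps pairs of bits onto the four phases of a QPSK constellation and recovers them by picking the nearest constellation point. The Gray-coded bit assignment and the 45-degree phase offset are assumptions chosen for illustration; real systems differ in mapping, pulse shaping, and carrier recovery.

```python
import cmath
import math

# Gray-coded QPSK: neighbouring constellation points differ in exactly one bit.
QPSK = {
    (0, 0): cmath.exp(1j * math.pi / 4),
    (0, 1): cmath.exp(1j * 3 * math.pi / 4),
    (1, 1): cmath.exp(1j * 5 * math.pi / 4),
    (1, 0): cmath.exp(1j * 7 * math.pi / 4),
}


def modulate(bits):
    """Map an even-length bit sequence to unit-amplitude complex symbols."""
    if len(bits) % 2:
        raise ValueError("QPSK needs an even number of bits")
    return [QPSK[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def demodulate(symbols):
    """Recover bits by choosing the nearest constellation point for each symbol."""
    bits = []
    for s in symbols:
        pair = min(QPSK, key=lambda k: abs(QPSK[k] - s))
        bits.extend(pair)
    return bits


data = [0, 0, 0, 1, 1, 1, 1, 0]
symbols = modulate(data)
print(demodulate(symbols) == data)
```

Each symbol has the same amplitude and differs only in phase, so two bits are carried per symbol without changing the optical power.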

A transceiver is a device combining a transmitter and a receiver in a single housing.

Receivers

The main component of an optical receiver is a photodetector, which converts light into electricity using the photoelectric effect. The primary photodetectors for telecommunications are made from indium gallium arsenide. The photodetector is typically a semiconductor-based photodiode. Types of photodiodes include p-n photodiodes, p-i-n photodiodes, and avalanche photodiodes. Metal-semiconductor-metal (MSM) photodetectors are also used due to their suitability for circuit integration in regenerators and wavelength-division multiplexers.

Optical-electrical converters are typically coupled with a transimpedance amplifier and a limiting amplifier to produce a digital signal in the electrical domain from the incoming optical signal, which may be attenuated and distorted while passing through the channel. Further signal processing such as clock recovery from data (CDR) performed by a phase-locked loop may also be applied before the data is passed on.

Coherent receivers use a local oscillator laser in combination with a pair of hybrid couplers and four photodetectors per polarization, followed by high speed ADCs and digital signal processing to recover data modulated with QPSK, QAM, or OFDM.

Digital predistortion

An optical communication system transmitter consists of a digital-to-analog converter (DAC), a driver amplifier and a Mach–Zehnder-Modulator. The deployment of higher modulation formats (> 4QAM) or higher Baud rates (> 32 GBaud) diminishes the system performance due to linear and non-linear transmitter effects. These effects can be categorised in linear distortions due to DAC bandwidth limitation and transmitter I/Q skew as well as non-linear effects caused by gain saturation in the driver amplifier and the Mach–Zehnder modulator. Digital predistortion counteracts the degrading effects and enables Baud rates up to 56 GBaud and modulation formats like 64QAM and 128QAM with the commercially available components. The transmitter digital signal processor performs digital predistortion on the input signals using the inverse transmitter model before uploading the samples to the DAC.

Older digital predistortion methods only addressed linear effects. Recent publications also compensate for non-linear distortions. Berenguer et al. model the Mach–Zehnder modulator as an independent Wiener system, and the DAC and the driver amplifier are modelled by a truncated, time-invariant Volterra series. Khanna et al. use a memory polynomial to model the transmitter components jointly. In both approaches the Volterra series or memory polynomial coefficients are found using an indirect-learning architecture. Duthel et al. record, for each branch of the Mach–Zehnder modulator, several signals at different polarities and phases. The signals are used to calculate the optical field. Cross-correlating in-phase and quadrature fields identifies the timing skew. The frequency response and the non-linear effects are determined by the indirect-learning architecture.
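The idea of applying an inverse transmitter model can be illustrated with the simplest possible case. The sketch below is not the Volterra or memory-polynomial method of the works cited above; it assumes an ideal, memoryless Mach–Zehnder modulator biased at its null point, whose field transfer is a sine of the drive voltage, and inverts that characteristic with an arcsine. The half-wave voltage `V_PI` is a hypothetical value.

```python
import math

V_PI = 3.0  # hypothetical half-wave voltage of the modulator, in volts


def mzm_field(drive_v, v_pi=V_PI):
    """Normalized output field of an ideal MZM biased at null (sine characteristic)."""
    return math.sin(math.pi * drive_v / (2 * v_pi))


def predistort(target_field, v_pi=V_PI):
    """Inverse model: drive voltage that yields the requested normalized field."""
    if not -1.0 <= target_field <= 1.0:
        raise ValueError("target field must lie in [-1, 1]")
    return (2 * v_pi / math.pi) * math.asin(target_field)


targets = [-1.0, -0.5, 0.0, 0.5, 1.0]
outputs = [mzm_field(predistort(t)) for t in targets]
for t, o in zip(targets, outputs):
    print(f"target {t:+.2f} -> output {o:+.6f}")
```

Without the arcsine step, equally spaced drive levels would be compressed near the extremes of the sine curve, which is the kind of non-linear distortion that higher-order formats such as 64QAM are sensitive to.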

Fiber cable types

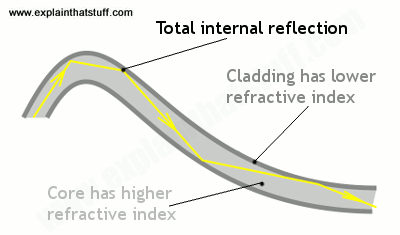

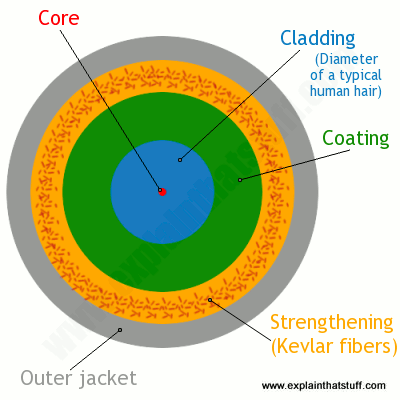

An optical fiber cable consists of a core, cladding, and a buffer (a protective outer coating), in which the cladding guides the light along the core by using the method of total internal reflection. The core and the cladding (which has a lower-refractive-index) are usually made of high-quality silica glass, although they can both be made of plastic as well. Connecting two optical fibers is done by fusion splicing or mechanical splicing and requires special skills and interconnection technology due to the microscopic precision required to align the fiber cores.
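Total internal reflection at the core-cladding boundary follows from Snell's law. The sketch below computes the critical angle and the numerical aperture of a step-index fiber from the two refractive indices. The index values 1.48 and 1.46 are illustrative assumptions, not figures from the text.

```python
import math


def critical_angle_deg(n_core, n_clad):
    """Smallest angle of incidence (from the normal) that is totally reflected."""
    if n_clad >= n_core:
        raise ValueError("cladding index must be lower than core index")
    return math.degrees(math.asin(n_clad / n_core))


def numerical_aperture(n_core, n_clad):
    """Numerical aperture of a step-index fiber, for light entering from air."""
    return math.sqrt(n_core ** 2 - n_clad ** 2)


n_core, n_clad = 1.48, 1.46   # illustrative values for silica glass
theta_c = critical_angle_deg(n_core, n_clad)
na = numerical_aperture(n_core, n_clad)
acceptance = math.degrees(math.asin(na))

print(f"critical angle: {theta_c:.1f} degrees")
print(f"numerical aperture: {na:.3f}")
print(f"acceptance half-angle in air: {acceptance:.1f} degrees")
```

Rays that strike the boundary at more than the critical angle stay in the core, so only light entering within the acceptance cone is guided.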

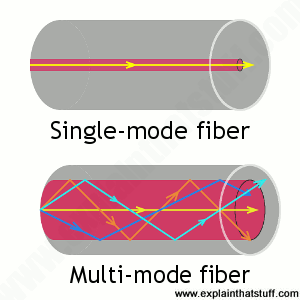

Two main types of optical fiber used in optic communications include multi-mode optical fibers and single-mode optical fibers. A multi-mode optical fiber has a larger core (≥ 50 micrometers), allowing less precise, cheaper transmitters and receivers to connect to it as well as cheaper connectors. However, a multi-mode fiber introduces multimode distortion, which often limits the bandwidth and length of the link. Furthermore, because of their higher dopant content, multi-mode fibers are usually expensive and exhibit higher attenuation. The core of a single-mode fiber is smaller (<10 micrometers) and requires more expensive components and interconnection methods, but allows much longer, higher-performance links.
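Whether a step-index fiber guides one mode or many can be estimated from its normalized frequency, the V number, which depends on core radius, numerical aperture, and wavelength; below about 2.405 only a single transverse mode propagates. The core sizes, apertures, and wavelengths in this sketch are illustrative assumptions chosen to fall in the core-diameter ranges mentioned above.

```python
import math

SINGLE_MODE_CUTOFF = 2.405  # first zero of the Bessel function J0


def v_number(core_radius_um, numerical_aperture, wavelength_um):
    """Normalized frequency of a step-index fiber."""
    return 2 * math.pi * core_radius_um * numerical_aperture / wavelength_um


def is_single_mode(core_radius_um, numerical_aperture, wavelength_um):
    """True when only the fundamental mode is guided."""
    return v_number(core_radius_um, numerical_aperture, wavelength_um) < SINGLE_MODE_CUTOFF


# Illustrative fibers: ~8 um core at 1.55 um, and 50 um core at 0.85 um.
v_small = v_number(4.1, 0.12, 1.55)
v_large = v_number(25.0, 0.20, 0.85)

print(f"small core: V = {v_small:.2f}, single-mode: {is_single_mode(4.1, 0.12, 1.55)}")
print(f"large core: V = {v_large:.1f}, single-mode: {is_single_mode(25.0, 0.20, 0.85)}")
```

The large-core example has a V number far above the cutoff, so it carries many modes, which is the origin of the multimode distortion described above.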

In order to package fiber into a commercially viable product, it typically is protectively coated by using ultraviolet (UV), light-cured acrylate polymers, then terminated with optical fiber connectors, and finally assembled into a cable. After that, it can be laid in the ground and then run through the walls of a building and deployed aerially in a manner similar to copper cables. These fibers require less maintenance than common twisted pair wires once they are deployed.

Specialized cables are used for long-distance subsea data transmission, e.g. transatlantic communications cables. New (2011–2013) cables operated by commercial enterprises (Emerald Atlantis, Hibernia Atlantic) typically have four strands of fiber and cross the Atlantic (NYC-London) in 60–70 ms. Each such cable cost about $300M in 2011, according to The Chronicle Herald.

Another common practice is to bundle many fiber optic strands within long-distance power transmission cable. This exploits power transmission rights of way effectively, ensures a power company can own and control the fiber required to monitor its own devices and lines, is effectively immune to tampering, and simplifies the deployment of smart grid technology.

Amplification

Optical amplifier

The transmission distance of a fiber-optic communication system has traditionally been limited by fiber attenuation and by fiber distortion. By using opto-electronic repeaters, these problems have been eliminated. These repeaters convert the signal into an electrical signal, and then use a transmitter to send the signal again at a higher intensity than was received, thus counteracting the loss incurred in the previous segment. Because of the high complexity with modern wavelength-division multiplexed signals (including the fact that they had to be installed about once every 20 km), the cost of these repeaters is very high.

An alternative approach is to use optical amplifiers which amplify the optical signal directly without having to convert the signal to the electrical domain. One common type of optical amplifier is called an Erbium-doped fiber amplifier, or EDFA. These are made by doping a length of fiber with the rare-earth mineral erbium and pumping it with light from a laser with a shorter wavelength than the communications signal (typically 980 nm). EDFAs provide gain in the ITU C band at 1550 nm, which is near the loss minimum for optical fiber.

Optical amplifiers have several significant advantages over electrical repeaters. First, an optical amplifier can amplify a very wide band at once which can include hundreds of individual channels, eliminating the need to demultiplex DWDM signals at each amplifier. Second, optical amplifiers operate independently of the data rate and modulation format, enabling multiple data rates and modulation formats to co-exist and enabling upgrading of the data rate of a system without having to replace all of the repeaters. Third, optical amplifiers are much simpler than a repeater with the same capabilities and are therefore significantly more reliable. Optical amplifiers have largely replaced repeaters in new installations, although electronic repeaters are still widely used as transponders for wavelength conversion.

Wavelength-division multiplexing

Wavelength-division multiplexing (WDM) is the practice of multiplying the available capacity of optical fibers through use of parallel channels, each channel on a dedicated wavelength of light. This requires a wavelength division multiplexer in the transmitting equipment and a demultiplexer (essentially a spectrometer) in the receiving equipment. Arrayed waveguide gratings are commonly used for multiplexing and demultiplexing in WDM. Using WDM technology now commercially available, the bandwidth of a fiber can be divided into as many as 160 channels to support a combined bit rate in the range of 1.6 Tbit/s.

Parameters

Bandwidth–distance product

Because the effect of dispersion increases with the length of the fiber, a fiber transmission system is often characterized by its bandwidth–distance product, usually expressed in units of MHz·km. This value is a product of bandwidth and distance because there is a trade-off between the bandwidth of the signal and the distance over which it can be carried. For example, a common multi-mode fiber with bandwidth–distance product of 500 MHz·km could carry a 500 MHz signal for 1 km or a 1000 MHz signal for 0.5 km.
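The trade-off can be written as a one-line calculation. The sketch below reproduces the 500 MHz·km example from the paragraph above; the function names are illustrative, and the model assumes the product stays constant over the lengths considered.

```python
def max_bandwidth_mhz(bdp_mhz_km, length_km):
    """Highest signal bandwidth a fiber of the given length can carry."""
    return bdp_mhz_km / length_km


def max_length_km(bdp_mhz_km, bandwidth_mhz):
    """Longest fiber run that still supports the given signal bandwidth."""
    return bdp_mhz_km / bandwidth_mhz


BDP = 500.0  # MHz·km, the multi-mode example from the text

print(max_bandwidth_mhz(BDP, 1.0))    # bandwidth available over 1 km
print(max_bandwidth_mhz(BDP, 0.5))    # bandwidth available over 0.5 km
print(max_length_km(BDP, 1000.0))     # reach of a 1000 MHz signal
```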

Engineers are always looking at current limitations in order to improve fiber-optic communication, and several of these restrictions are currently being researched.

Record speeds

Each fiber can carry many independent channels, each using a different wavelength of light (wavelength-division multiplexing). The net data rate (data rate without overhead bytes) per fiber is the per-channel data rate reduced by the FEC overhead, multiplied by the number of channels (usually up to eighty in commercial dense WDM systems as of 2008).

| Year | Organization | Effective speed | WDM channels | Per channel speed | Distance |
|---|---|---|---|---|---|
| 2009 | Alcatel-Lucent | 15 Tbit/s | 155 | 100 Gbit/s | 90 km |
| 2010 | NTT | 69.1 Tbit/s | 432 | 171 Gbit/s | 240 km |
| 2011 | KIT | 26 Tbit/s | 1 | 26 Tbit/s | 50 km |
| 2011 | NEC | 101 Tbit/s | 370 | 273 Gbit/s | 165 km |
| 2012 | NEC, Corning | 1.05 Pbit/s (12 cores) | | | 52.4 km |
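The relation between channel count, per-channel rate, and net rate can be checked against the multi-channel rows of the table. In this sketch the FEC overhead is assumed to be expressed as extra bits relative to the payload, so the net rate is the raw rate divided by one plus the overhead; the text does not state which definition is used, and the 7% figure is only an illustrative value.

```python
def raw_rate_tbps(channels, per_channel_gbps):
    """Aggregate line rate in Tbit/s before any overhead is removed."""
    return channels * per_channel_gbps / 1000


def net_rate_tbps(channels, per_channel_gbps, fec_overhead):
    """Net rate in Tbit/s, with fec_overhead given as a fraction of the payload."""
    return raw_rate_tbps(channels, per_channel_gbps) / (1 + fec_overhead)


# (organization, WDM channels, per-channel Gbit/s, reported effective Tbit/s)
records = [
    ("Alcatel-Lucent", 155, 100, 15.0),
    ("NTT", 432, 171, 69.1),
    ("NEC", 370, 273, 101.0),
]

for org, channels, rate, reported in records:
    raw = raw_rate_tbps(channels, rate)
    print(f"{org}: raw {raw:.1f} Tbit/s, reported {reported} Tbit/s")

print(f"NTT with an assumed 7% overhead: {net_rate_tbps(432, 171, 0.07):.1f} Tbit/s")
```

The raw products come out at or slightly above the reported effective speeds, which is consistent with the reported figures being net of overhead.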

In 2013, New Scientist reported that a team at the University of Southampton had achieved a throughput of 73.7 Tbit per second over 310 m, with the signal traveling at 99.7% the vacuum speed of light through a hollow-core photonic crystal fiber.

Dispersion

For modern glass optical fiber, the maximum transmission distance is limited not by direct material absorption but by several types of dispersion, or spreading of optical pulses as they travel along the fiber. Dispersion in optical fibers is caused by a variety of factors. Intermodal dispersion, caused by the different axial speeds of different transverse modes, limits the performance of multi-mode fiber. Because single-mode fiber supports only one transverse mode, intermodal dispersion is eliminated.

In single-mode fiber performance is primarily limited by chromatic dispersion (also called group velocity dispersion), which occurs because the index of the glass varies slightly depending on the wavelength of the light, and light from real optical transmitters necessarily has nonzero spectral width (due to modulation). Polarization mode dispersion, another source of limitation, occurs because although the single-mode fiber can sustain only one transverse mode, it can carry this mode with two different polarizations, and slight imperfections or distortions in a fiber can alter the propagation velocities for the two polarizations. This phenomenon is called fiber birefringence and can be counteracted by polarization-maintaining optical fiber. Dispersion limits the bandwidth of the fiber because the spreading optical pulse limits the rate that pulses can follow one another on the fiber and still be distinguishable at the receiver.

Some dispersion, notably chromatic dispersion, can be removed by a 'dispersion compensator'. This works by using a specially prepared length of fiber that has the opposite dispersion to that induced by the transmission fiber, and this sharpens the pulse so that it can be correctly decoded by the electronics.
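A rough feel for chromatic dispersion and its compensation comes from two linear relations: pulse spreading is the product of the dispersion coefficient, the fiber length, and the source spectral width, and a compensating fiber cancels it when its own dispersion-length product is equal and opposite. The coefficient of 17 ps/(nm·km) is a typical value for standard single-mode fiber near 1550 nm; the 80 km span, 0.1 nm spectral width, and the compensating fiber's coefficient of -100 ps/(nm·km) are assumptions for illustration.

```python
def pulse_spread_ps(dispersion_ps_nm_km, length_km, spectral_width_nm):
    """Approximate pulse broadening caused by chromatic dispersion."""
    return abs(dispersion_ps_nm_km) * length_km * spectral_width_nm


def compensating_length_km(d_tx_ps_nm_km, length_tx_km, d_comp_ps_nm_km):
    """Length of compensating fiber that cancels the accumulated dispersion."""
    if d_tx_ps_nm_km * d_comp_ps_nm_km >= 0:
        raise ValueError("compensating fiber must have dispersion of opposite sign")
    return -d_tx_ps_nm_km * length_tx_km / d_comp_ps_nm_km


D_TX = 17.0      # ps/(nm·km), typical standard single-mode fiber near 1550 nm
D_COMP = -100.0  # ps/(nm·km), assumed compensating fiber
SPAN_KM = 80.0   # assumed span length

spread = pulse_spread_ps(D_TX, SPAN_KM, 0.1)
comp_len = compensating_length_km(D_TX, SPAN_KM, D_COMP)
residual = D_TX * SPAN_KM + D_COMP * comp_len

print(f"spread without compensation: {spread:.0f} ps")
print(f"compensating fiber needed: {comp_len:.1f} km")
print(f"residual dispersion: {residual:.1f} ps/nm")
```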

Attenuation

Fiber attenuation, which necessitates the use of amplification systems, is caused by a combination of material absorption, Rayleigh scattering, Mie scattering, and connection losses. Although material absorption for pure silica is only around 0.03 dB/km (modern fiber has attenuation around 0.3 dB/km), impurities in the original optical fibers caused attenuation of about 1000 dB/km. Other forms of attenuation are caused by physical stresses to the fiber, microscopic fluctuations in density, and imperfect splicing techniques.
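Because attenuation is quoted in dB/km, the loss in decibels grows linearly with length while the remaining power falls exponentially. The sketch below compares the attenuation figures mentioned above; the 30 dB power budget used for the span estimate is a hypothetical value.

```python
def remaining_fraction(attenuation_db_per_km, length_km):
    """Fraction of the launched optical power left after the given length."""
    return 10 ** (-attenuation_db_per_km * length_km / 10)


def max_span_km(attenuation_db_per_km, budget_db):
    """Longest span whose total loss stays within the given power budget."""
    return budget_db / attenuation_db_per_km


# Early fiber at ~1000 dB/km: only 10% of the light survives 10 meters.
print(remaining_fraction(1000.0, 0.01))

# Modern fiber at ~0.3 dB/km: 0.1% survives 100 km.
print(remaining_fraction(0.3, 100.0))

# With an assumed 30 dB budget, the two fibers reach very different distances.
print(max_span_km(1000.0, 30.0), "km versus", max_span_km(0.3, 30.0), "km")
```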

Transmission windows

Each effect that contributes to attenuation and dispersion depends on the optical wavelength. There are wavelength bands (or windows) where these effects are weakest, and these are the most favorable for transmission. These windows have been standardized, and the currently defined bands are the following:

| Band | Description | Wavelength Range |
|---|---|---|
| O band | original | 1260 to 1360 nm |
| E band | extended | 1360 to 1460 nm |
| S band | short wavelengths | 1460 to 1530 nm |
| C band | conventional ("erbium window") | 1530 to 1565 nm |
| L band | long wavelengths | 1565 to 1625 nm |
| U band | ultralong wavelengths | 1625 to 1675 nm |

Note that this table shows that current technology has managed to bridge the second and third windows that were originally disjoint.

Historically, there was a window used below the O band, called the first window, at 800–900 nm; however, losses are high in this region so this window is used primarily for short-distance communications. The current lower windows (O and E) around 1300 nm have much lower losses. This region has zero dispersion. The middle windows (S and C) around 1500 nm are the most widely used. This region has the lowest attenuation losses and achieves the longest range. It does have some dispersion, so dispersion compensator devices are used to remove this.
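The band table lends itself to a simple lookup. The sketch below returns the band for a given wavelength; because adjacent bands share their boundary values in the table, it assumes half-open ranges that assign a boundary wavelength to the upper band, which is a choice made here and not something the table specifies.

```python
# (band name, lower edge in nm inclusive, upper edge in nm exclusive)
BANDS = [
    ("O", 1260, 1360),
    ("E", 1360, 1460),
    ("S", 1460, 1530),
    ("C", 1530, 1565),
    ("L", 1565, 1625),
    ("U", 1625, 1675),
]


def band_for(wavelength_nm):
    """Return the band letter for a wavelength, or None if it lies outside all bands."""
    for name, low, high in BANDS:
        if low <= wavelength_nm < high:
            return name
    return None


for wl in (850, 1310, 1550, 1565, 1600):
    print(wl, "nm ->", band_for(wl))
```

A wavelength of 850 nm returns `None` because the historical first window lies below the O band and is not part of the standardized set.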

Regeneration

When a communications link must span a larger distance than existing fiber-optic technology is capable of, the signal must be regenerated at intermediate points in the link by optical communications repeaters. Repeaters add substantial cost to a communication system, and so system designers attempt to minimize their use.

Recent advances in fiber and optical communications technology have reduced signal degradation so far that regeneration of the optical signal is only needed over distances of hundreds of kilometers. This has greatly reduced the cost of optical networking, particularly over undersea spans where the cost and reliability of repeaters is one of the key factors determining the performance of the whole cable system. The main advances contributing to these performance improvements are dispersion management, which seeks to balance the effects of dispersion against non-linearity; and solitons, which use nonlinear effects in the fiber to enable dispersion-free propagation over long distances.

Last mile

Although fiber-optic systems excel in high-bandwidth applications, optical fiber has been slow to achieve its goal of fiber to the premises or to solve the last mile problem. However, as bandwidth demand increases, more and more progress towards this goal can be observed. In Japan, for instance, EPON has largely replaced DSL as a broadband Internet source. South Korea’s KT also provides a service called FTTH (Fiber To The Home), which provides fiber-optic connections to the subscriber’s home. The largest FTTH deployments are in Japan, South Korea, and China. Singapore started implementation of its all-fiber Next Generation Nationwide Broadband Network (Next Gen NBN), which is slated for completion in 2012 and is being installed by OpenNet. Since services began rolling out in September 2010, network coverage in Singapore has reached 85% nationwide.

In the US, Verizon Communications provides a FTTH service called FiOS to select high-ARPU (Average Revenue Per User) markets within its existing territory. The other major surviving ILEC (or Incumbent Local Exchange Carrier), AT&T, uses a FTTN (Fiber To The Node) service called U-verse with twisted-pair to the home. Their MSO competitors employ FTTN with coax using HFC. All of the major access networks use fiber for the bulk of the distance from the service provider's network to the customer.

The globally dominant access network technology is EPON (Ethernet Passive Optical Network). In Europe, and among telcos in the United States, BPON (ATM-based Broadband PON) and GPON (Gigabit PON) had roots in the FSAN (Full Service Access Network) and ITU-T standards organizations under their control.

Comparison with electrical transmission

The choice between optical fiber and electrical (or copper) transmission for a particular system is made based on a number of trade-offs. Optical fiber is generally chosen for systems requiring higher bandwidth or spanning longer distances than electrical cabling can accommodate.

The main benefits of fiber are its exceptionally low loss (allowing long distances between amplifiers/repeaters), its absence of ground currents and other parasitic signal and power issues common to long parallel electric conductor runs (due to its reliance on light rather than electricity for transmission, and the dielectric nature of fiber optic), and its inherently high data-carrying capacity. Thousands of electrical links would be required to replace a single high bandwidth fiber cable. Another benefit of fibers is that even when run alongside each other for long distances, fiber cables experience effectively no crosstalk, in contrast to some types of electrical transmission lines. Fiber can be installed in areas with high electromagnetic interference (EMI), such as alongside utility lines, power lines, and railroad tracks. Nonmetallic all-dielectric cables are also ideal for areas of high lightning-strike incidence.

For comparison, while single-line, voice-grade copper systems longer than a couple of kilometers require in-line signal repeaters for satisfactory performance, it is not unusual for optical systems to go over 100 kilometers (62 mi) with no active or passive processing. Single-mode fiber cables are commonly available in 12 km lengths, minimizing the number of splices required over a long cable run. Multi-mode fiber is available in lengths up to 4 km, although industrial standards only mandate 2 km unbroken runs.
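To make the effect of reel length on splice count concrete, here is a minimal sketch. It assumes reels are simply joined end to end and ignores slack, route constraints, and repair splices; the function name `splices_needed` is illustrative only.

```python
import math


def splices_needed(run_km: float, reel_km: float) -> int:
    """Return the number of splices needed to join reels end to end.

    Assumption: every reel is used at full length except possibly the last,
    and one splice joins each pair of adjacent reels.
    """
    if run_km <= 0 or reel_km <= 0:
        raise ValueError("lengths must be positive")
    reels = math.ceil(run_km / reel_km)
    return reels - 1


if __name__ == "__main__":
    # A 100 km run built from 12 km single-mode reels vs 4 km multi-mode reels.
    print("single-mode splices:", splices_needed(100, 12))
    print("multi-mode splices:", splices_needed(100, 4))
```

Under these assumptions, the longer single-mode reels need roughly a third as many splices over the same run.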

In short-distance and relatively low-bandwidth applications, electrical transmission is often preferred because of its:

- Lower material cost, where large quantities are not required

- Lower cost of transmitters and receivers

- Capability to carry electrical power as well as signals (in appropriately designed cables)

- Ease of operating transducers in linear mode.

Optical fibers are more difficult and expensive to splice than electrical conductors. And at higher powers, optical fibers are susceptible to fiber fuse, resulting in catastrophic destruction of the fiber core and damage to transmission components.

Because of these benefits of electrical transmission, optical communication is not common in short box-to-box, backplane, or chip-to-chip applications; however, optical systems on those scales have been demonstrated in the laboratory.

In certain situations fiber may be used even for short distance or low bandwidth applications, due to other important features:

- Immunity to electromagnetic interference, including nuclear electromagnetic pulses.

- High electrical resistance, making it safe to use near high-voltage equipment or between areas with different earth potentials.

- Lighter weight—important, for example, in aircraft.

- No sparks—important in flammable or explosive gas environments.[27]

- Not electromagnetically radiating, and difficult to tap without disrupting the signal—important in high-security environments.

- Much smaller cable size—important where pathway is limited, such as networking an existing building, where smaller channels can be drilled and space can be saved in existing cable ducts and trays.

- Resistance to corrosion due to non-metallic transmission medium

Optical fiber cables can be installed in buildings with the same equipment that is used to install copper and coaxial cables, with some modifications due to the small size and limited pull tension and bend radius of optical cables. Optical cables can typically be installed in duct systems in spans of 6000 meters or more depending on the duct's condition, layout of the duct system, and installation technique. Longer cables can be coiled at an intermediate point and pulled farther into the duct system as necessary.

Governing standards

In order for various manufacturers to be able to develop components that function compatibly in fiber optic communication systems, a number of standards have been developed. The International Telecommunications Union publishes several standards related to the characteristics and performance of fibers themselves, including

- ITU-T G.651, "Characteristics of a 50/125 µm multimode graded index optical fibre cable"

- ITU-T G.652, "Characteristics of a single-mode optical fibre cable"

Other standards specify performance criteria for fiber, transmitters, and receivers to be used together in conforming systems. Some of these standards are:

- 100 Gigabit Ethernet

- 10 Gigabit Ethernet

- Fibre Channel

- Gigabit Ethernet

- HIPPI

- Synchronous Digital Hierarchy

- Synchronous Optical Networking

- Optical Transport Network (OTN)

TOSLINK is the most common format for digital audio cable using plastic optical fiber to connect digital sources to digital receivers.

What is fiber optics?

We're used to the idea of information traveling in different ways. When we speak into a landline telephone, a wire cable carries the sounds from our voice into a socket in the wall, where another cable takes it to the local telephone exchange. Cellphones work a different way: they send and receive information using invisible radio waves—a technology called wireless because it uses no cables. Fiber optics works a third way. It sends information coded in a beam of light down a glass or plastic pipe. It was originally developed for endoscopes in the 1950s to help doctors see inside the human body without having to cut it open first. In the 1960s, engineers found a way of using the same technology to transmit telephone calls at the speed of light (normally that's 186,000 miles or 300,000 km per second in a vacuum, but slows to about two thirds this speed in a fiber-optic cable).
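The speed figures above translate directly into signal delay. The following rough sketch uses the article's rounded value of 300,000 km per second and the "about two thirds" factor; real fibers vary with their refractive index, so treat the results as estimates. The names are illustrative.

```python
C_VACUUM_KM_PER_S = 300_000.0   # rounded speed of light in a vacuum
FIBER_SPEED_FRACTION = 2 / 3    # "about two thirds" of the vacuum speed (approximate)


def one_way_delay_ms(distance_km: float,
                     speed_fraction: float = FIBER_SPEED_FRACTION) -> float:
    """Estimate the one-way propagation delay in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance must not be negative")
    speed_km_per_s = C_VACUUM_KM_PER_S * speed_fraction
    return distance_km / speed_km_per_s * 1000.0


if __name__ == "__main__":
    for km in (100, 1000, 6000):
        print(f"{km} km of fiber: about {one_way_delay_ms(km):.2f} ms one way")
```

With these numbers, light takes roughly 5 milliseconds to cover 1,000 km of fiber, before any equipment delays are added.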

Optical technology

Photo: A section of 144-strand fiber-optic cable. Each strand is made of optically pure glass and is thinner than a human hair. Picture by Tech. Sgt. Brian Davidson, courtesy of US Air Force.

A fiber-optic cable is made up of incredibly thin strands of glass or plastic known as optical fibers; one cable can have as few as two strands or as many as several hundred. Each strand is less than a tenth as thick as a human hair and can carry something like 25,000 telephone calls, so an entire fiber-optic cable can easily carry several million calls.
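The arithmetic behind "several million calls" is easy to check. This small sketch takes the article's rough figure of 25,000 calls per strand at face value; the 144-strand count is borrowed from the photo caption above and is only an example.

```python
CALLS_PER_STRAND = 25_000  # rough figure quoted in the text


def cable_call_capacity(strands: int,
                        calls_per_strand: int = CALLS_PER_STRAND) -> int:
    """Estimate how many simultaneous calls a whole cable could carry."""
    if strands < 0:
        raise ValueError("strand count must not be negative")
    return strands * calls_per_strand


if __name__ == "__main__":
    print(f"{cable_call_capacity(144):,} calls on a 144-strand cable")
```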

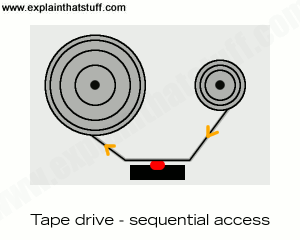

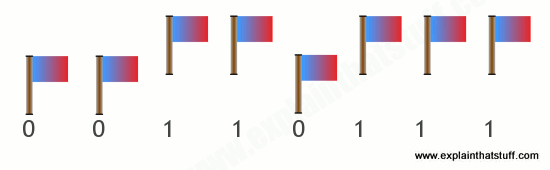

Fiber-optic cables carry information between two places using entirely optical (light-based) technology. Suppose you wanted to send information from your computer to a friend's house down the street using fiber optics. You could hook your computer up to a laser, which would convert electrical information from the computer into a series of light pulses. Then you'd fire the laser down the fiber-optic cable. After traveling down the cable, the light beams would emerge at the other end. Your friend would need a photoelectric cell (light-detecting component) to turn the pulses of light back into electrical information his or her computer could understand. So the whole apparatus would be like a really neat, hi-tech version of the kind of telephone you can make out of two baked-bean cans and a length of string!

How fiber-optics works

Photo: Fiber-optic cables are thin enough to bend, taking the light signals inside in curved paths too. Picture courtesy of NASA Glenn Research Center (NASA-GRC).

Artwork: Total internal reflection keeps light rays bouncing down the inside of a fiber-optic cable.

Light travels down a fiber-optic cable by bouncing repeatedly off the walls. Each tiny photon (particle of light) bounces down the pipe like a bobsleigh going down an ice run. Now you might expect a beam of light, traveling in a clear glass pipe, simply to leak out of the edges. But if light hits the glass wall at a really shallow, glancing angle (for glass surrounded by air, more than about 42 degrees away from the perpendicular to the surface), it reflects back in again, as though the glass were really a mirror. This phenomenon is called total internal reflection. It's one of the things that keeps light inside the pipe.

The other thing that keeps light in the pipe is the structure of the cable, which is made up of two separate parts. The main part of the cable—in the middle—is called the core and that's the bit the light travels through. Wrapped around the outside of the core is another layer of glass called the cladding. The cladding's job is to keep the light signals inside the core. It can do this because it is made of a different type of glass to the core. (More technically, the cladding has a lower refractive index.)
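The angle at which total internal reflection sets in follows from Snell's law: measured from the perpendicular to the surface, the critical angle is the arcsine of the outer refractive index divided by the inner one. The sketch below works this out; the refractive index values are typical textbook figures chosen for illustration, not numbers taken from this article.

```python
import math


def critical_angle_deg(n_inner: float, n_outer: float) -> float:
    """Critical angle in degrees, measured from the perpendicular to the surface.

    Light striking the boundary at a larger angle than this is totally
    reflected. Only defined when the outer material has the lower index.
    """
    if n_outer >= n_inner:
        raise ValueError("total internal reflection needs n_outer < n_inner")
    return math.degrees(math.asin(n_outer / n_inner))


if __name__ == "__main__":
    # Assumed values: ordinary glass (about 1.5) surrounded by air (about 1.0).
    print(f"glass to air: {critical_angle_deg(1.5, 1.0):.1f} degrees")
    # Assumed values: a core and cladding whose indices differ only slightly.
    print(f"core to cladding: {critical_angle_deg(1.48, 1.46):.1f} degrees")
```

Because core and cladding indices are close together, the critical angle inside a real fiber is much larger than for bare glass in air, so only rays traveling nearly parallel to the fiber axis stay trapped in the core.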

Types of fiber-optic cables

Optical fibers carry light signals down them in what are called modes. That sounds technical but it just means different ways of traveling: a mode is simply the path that a light beam follows down the fiber. One mode is to go straight down the middle of the fiber. Another is to bounce down the fiber at a shallow angle. Other modes involve bouncing down the fiber at other angles, more or less steep.

Artworks: Above: Light travels in different ways in single-mode and multi-mode fibers. Below: Inside a typical single-mode fiber cable (not drawn to scale). The thin core is surrounded by cladding roughly ten times bigger in diameter, a plastic outer coating (about twice the diameter of the cladding), some strengthening fibers made of a tough material such as Kevlar®, with a protective outer jacket on the outside.

The simplest type of optical fiber is called single-mode. It has a very thin core about 5-10 microns (millionths of a meter) in diameter. In a single-mode fiber, all signals travel straight down the middle without bouncing off the edges (red line in diagram). Cable TV, Internet, and telephone signals are generally carried by single-mode fibers, wrapped together into a huge bundle. Cables like this can send information over 100 km (60 miles).

Another type of fiber-optic cable is called multi-mode. Each optical fiber in a multi-mode cable is about 10 times bigger than one in a single-mode cable. This means light beams can travel through the core by following a variety of different paths (purple, green, and blue lines)—in other words, in multiple different modes. Multi-mode cables can send information only over relatively short distances and are used (among other things) to link computer networks together.

Even thicker fibers are used in a medical tool called a gastroscope (a type of endoscope), which doctors poke down someone's throat for detecting illnesses inside their stomach. A gastroscope is a thick fiber-optic cable consisting of many optical fibers. At the top end of a gastroscope, there is an eyepiece and a lamp. The lamp shines its light down one part of the cable into the patient's stomach. When the light reaches the stomach, it reflects off the stomach walls into a lens at the bottom of the cable. Then it travels back up another part of the cable into the doctor's eyepiece. Other types of endoscopes work the same way and can be used to inspect different parts of the body. There is also an industrial version of the tool, called a fiberscope, which can be used to examine things like inaccessible pieces of machinery in airplane engines.

Try this fiber-optic experiment!

This nice little experiment is a modern-day recreation of a famous scientific demonstration carried out by Irish physicist John Tyndall in 1870.

It's best to do it in a darkened bathroom or kitchen at the sink or washbasin. You'll need an old clear, plastic drinks bottle, the brightest flashlight (torch) you can find, some aluminum foil, and some sticky tape.

- Take the plastic bottle and wrap aluminum foil tightly around the sides, leaving the top and bottom of the bottle uncovered. If you need to, hold the foil in place with sticky tape.

- Fill the bottle with water.

- Switch on the flashlight and press it against the base of the bottle so the light shines up inside the water. It works best if you press the flashlight tightly against the bottle. You need as much light to enter the bottle as possible, so use the brightest flashlight you can find.

- Standing by the sink, tilt the bottle so the water starts to pour out. Keep the flashlight pressed tight against the bottle. If the room is darkened, you should see the spout of water lighting up ever so slightly. Notice how the water carries the light, with the light beam bending as it goes! If you can't see much light in the water spout, try a brighter flashlight.

Photo: Seen from below, your water bottle should look like this when it's wrapped in aluminum foil. The foil stops light leaking out from the sides of the bottle. Don't cover the bottom of the bottle or light won't be able to get in. The black object on the right is my flashlight, just before I pressed it against the bottle. You can already see some of its light shining into the bottom of the bottle.

Uses for fiber optics

Shooting light down a pipe seems like a neat scientific party trick, and you might not think there'd be many practical applications for something like that. But just as electricity can power many types of machines, beams of light can carry many types of information—so they can help us in many ways. We don't notice just how commonplace fiber-optic cables have become because the laser-powered signals they carry flicker far beneath our feet, deep under office floors and city streets. The technologies that use it—computer networking, broadcasting, medical scanning, and military equipment (to name just four)—do so quite invisibly.

Computer networks

Fiber-optic cables are now the main way of carrying information over long distances because they have three very big advantages over old-style copper cables:

- Less attenuation (signal loss): Information travels roughly 10 times further before it needs amplifying, which makes fiber networks simpler and cheaper to operate and maintain.

- No interference: Unlike with copper cables, there's no "crosstalk" (electromagnetic interference) between optical fibers, so they transmit information more reliably with better signal quality.

- Higher bandwidth: As we've already seen, fiber-optic cables can carry far more data than copper cables of the same diameter.

You're reading these words now thanks to the Internet. You probably chanced upon this page with a search engine like Google, which operates a worldwide network of giant data centers connected by vast-capacity fiber-optic cables (and is now trying to roll out fast fiber connections to the rest of us). Having clicked on a search engine link, you've downloaded this web page from my web server and my words have whistled most of the way to you down more fiber-optic cables. Indeed, if you're using fast fiber-optic broadband, optical fiber cables are doing almost all the work every time you go online. With most high-speed broadband connections, only the last part of the information's journey (the so-called "last mile" from the fiber-connected cabinet on your street to your house or apartment) involves old-fashioned wires. It's fiber-optic cables, not copper wires, that now carry "likes" and "tweets" under our streets, through an increasing number of rural areas, and even deep beneath the oceans linking continents. If you picture the Internet (and the World Wide Web that rides on it) as a global spider's web, the strands holding it together are fiber-optic cables; according to some estimates, fiber cables cover over 99 percent of the Internet's total mileage, and carry over 99 percent of all international communications traffic.

The faster people can access the Internet, the more they can—and will—do online. The arrival of broadband Internet made possible the phenomenon of cloud computing (where people store and process their data remotely, using online services instead of a home or business PC in their own premises). In much the same way, the steady rollout of fiber broadband (typically 5–10 times faster than conventional DSL broadband, which uses ordinary telephone lines) will make it much more commonplace for people to do things like streaming movies online instead of watching broadcast TV or renting DVDs. With more fiber capacity and faster connections, we'll be tracking and controlling many more aspects of our lives online using the so-called Internet of things.

But it's not just public Internet data that streams down fiber-optic lines. Computers were once connected over long distances by telephone lines or (over shorter distances) copper Ethernet cables, but fiber cables are increasingly the preferred method of networking computers because they're very affordable, secure, reliable, and have much higher capacity. Instead of linking its offices over the public Internet, it's perfectly possible for a company to set up its own fiber network (if it can afford to do so) or (more likely) buy space on a private fiber network. Many private computer networks run on what's called dark fiber, which sounds a bit sinister, but is simply the unused capacity on another network (optical fibers waiting to be lit up).

The Internet was cleverly designed to ferry any kind of information for any kind of use; it's not limited to carrying computer data. While telephone lines once carried the Internet, now the fiber-optic Internet carries telephone (and Skype) calls instead. Where telephone calls were once routed down an intricate patchwork of copper cables and microwave links between cities, most long-distance calls are now routed down fiber-optic lines. Vast quantities of fiber were laid from the 1980s onward; estimates vary wildly, but the worldwide total is believed to be several hundred million kilometers (enough to cross the United States about a million times). In the mid-2000s, it was estimated that as much as 98 percent of this was unused "dark fiber"; today, although much more fiber is in use, it's still generally believed that most networks contain anywhere from a third to a half dark fiber.

Photo: Fiber-optic networks are expensive to construct (largely because it costs so much to dig up streets). Because the labor and construction costs are much more expensive than the cable itself, many network operators deliberately lay much more cable than they currently need. Picture by Chris Willis courtesy of US Air Force.

Broadcasting

Back in the early 20th century, radio and TV broadcasting was born from a relatively simple idea: it was technically quite easy to shoot electromagnetic waves through the air from a single transmitter (at the broadcasting station) to thousands of antennas on people's homes. These days, while radio still beams through the air, we're just as likely to get our TV through fiber-optic cables.

Cable TV companies pioneered the transition from the 1950s onward, originally using coaxial cables (copper cables with a sheath of metal screening wrapped around them to prevent crosstalk interference), which carried just a handful of analog TV signals. As more and more people connected to cable and the networks started to offer greater choice of channels and programs, cable operators found they needed to switch from coaxial cables to optical fibers and from analog to digital broadcasting. Fortunately, scientists were already figuring out how that might be possible; as far back as 1966, Charles Kao (and his colleague George Hockham) had done the math, proving how a single optical fiber cable might carry enough data for several hundred TV channels (or several hundred thousand telephone calls). It was only a matter of time before the world of cable TV took notice, and Kao's "groundbreaking achievement" was properly recognized when he was awarded the 2009 Nobel Prize in Physics.

Apart from offering much higher capacity, optical fibers suffer less from interference, so offer better signal (picture and sound) quality; they need less amplification to boost signals so they travel over long distances; and they're altogether more cost effective. In the future, fiber broadband may well be how most of us watch television, perhaps through systems such as IPTV (Internet Protocol Television), which uses the Internet's standard way of carrying data ("packet switching") to serve TV programs and movies on demand. While the copper telephone line is still the primary information route into many people's homes, in the future, our main connection to the world will be a high-bandwidth fiber-optic cable carrying any and every kind of information.

Medicine

Medical gadgets that could help doctors peer inside our bodies without cutting them open were the first proper application of fiber optics over a half century ago. Today, gastroscopes (as these things are called) are just as important as ever, but fiber optics continues to spawn important new forms of medical scanning and diagnosis.

One of the latest developments is called a lab on a fiber, and involves inserting hair-thin fiber-optic cables, with built-in sensors, into a patient's body. These sorts of fibers are similar in scale to the ones in communication cables and thinner than the relatively chunky light guides used in gastroscopes. How do they work? Light zaps through them from a lamp or laser, through the part of the body the doctor wants to study. As the light whistles through the fiber, the patient's body alters its properties in a particular way (altering the light's intensity or wavelength very slightly, perhaps). By measuring the way the light changes (using techniques such as interferometry), an instrument attached to the other end of the fiber can measure some critical aspect of how the patient's body is working, such as their temperature, blood pressure, cell pH, or the presence of medicines in their bloodstream. In other words, rather than simply using light to see inside the patient's body, this type of fiber-optic cable uses light to sense or measure it instead.

Military

Photo: Fiber optics on the battlefield. This Enhanced Fiber-Optic Guided Missile (EFOG-M) has an infrared fiber-optic camera mounted in its nose so that the gunner firing it can see where it's going as it travels. Picture courtesy of US Army.

It's easy to picture Internet users linked together by giant webs of fiber-optic cables; it's much less obvious that the world's hi-tech military forces are connected the same way. Fiber-optic cables are inexpensive, thin, lightweight, high-capacity, robust against attack, and extremely secure, so they offer perfect ways to connect military bases and other installations, such as missile launch sites and radar tracking stations. Since they don't carry electrical signals, they don't give off electromagnetic radiation that an enemy can detect, and they're robust against electromagnetic interference (including systematic enemy "jamming" attacks). Another benefit is the relatively light weight of fiber cables compared to traditional wires made of cumbersome and expensive copper metal. Tanks, military airplanes, and helicopters have all been slowly switching from metal cables to fiber-optic ones. Partly it's a matter of cutting costs and saving weight (fiber-optic cables weigh nearly 90 percent less than comparable "twisted-pair" copper cables). But it also improves reliability; for example, unlike traditional cables on an airplane, which have to be carefully shielded (insulated) to protect them against lightning strikes, optical fibers are completely immune to that kind of problem.

Optical fiber cabling and component specification considerations

An earlier discussion provided information on the fundamentals of optical light sources and transmission. In this continuation of that discussion, I will present information on the means by which that optical theory is put into practice by professionals in the networking and cabling industries.

Unlike balanced twisted-pair media, optical fiber cabling can be considered application-dependent media. This means that considerations such as distance, application and equipment cost play a role in the media selection process.

The Telecommunications Industry Association (TIA) and the International Organization for Standardization (ISO), through reference to specifications from the International Electrotechnical Commission and the International Telecommunication Union, recognize six grades of multimode and singlemode optical fiber as shown in the table on page 12. Physical dimensions related to the optical fiber (e.g. diameter and non-circularity), mechanical requirements, and optical specifications such as attenuation and bandwidth are specified. It is important to keep in mind that these specifications are for the “raw” optical fiber before it is subjected to the cabling process. TIA and ISO use these optical fiber requirements to then specify requirements for OM1, OM2, OM3, OM4, OS1 and OS2 optical fiber cables and cabling.

While media selection may seem onerous, comparing the throughput and distance needs in your target environment against performance parameters is a good way to initiate the selection process. Although such comparisons may lead to the conclusion that singlemode fiber is the optimum medium under all scenarios, there are tradeoffs to consider related to the cost of optoelectronics and application implementation.

The XLR8 tool from Siemon combines splice activation and mechanical crimping into a single step, enabling quick and reliable field termination of LC and SC connectors.

In particular, singlemode optoelectronics rely on much more powerful and precise light sources and can cost 2 to 4 times more than multimode optoelectronics. Also, multimode media is typically easier to terminate and install in the field than singlemode. Additionally, it is always more cost-effective to transmit at 850 nm for multimode applications and at 1310 nm for singlemode applications. Finally, optoelectronics that use multiple transmit lasers (e.g. 10GBase-LX4 uses four separate laser sources per fiber) or other multiplexing techniques cost significantly more than optoelectronics that transmit over one wavelength.

A good rule of thumb is to consider multimode fiber to be the most cost-effective choice for applications up to 550 meters in length.
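The rule of thumb can be written as a first-pass selection helper. This is only a sketch: the 550-meter threshold comes from the text, while falling back to singlemode beyond it is an assumption made here for illustration. A real selection would also weigh the application, data rate, and optoelectronics cost discussed above.

```python
MULTIMODE_RULE_OF_THUMB_M = 550.0  # threshold quoted in the text


def suggest_fiber_type(link_length_m: float) -> str:
    """Suggest a starting point for media selection based on length alone.

    Assumption: anything longer than the rule-of-thumb distance is handed
    to singlemode; the source only states the multimode half of the rule.
    """
    if link_length_m <= 0:
        raise ValueError("link length must be positive")
    if link_length_m <= MULTIMODE_RULE_OF_THUMB_M:
        return "multimode"
    return "singlemode"


if __name__ == "__main__":
    for length in (90, 550, 2000):
        print(f"{length} m -> {suggest_fiber_type(length)}")
```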

Optical fiber cabling configurations

Optical fiber cabling is typically deployed in pairs; one fiber is used to transmit and the other is used to receive. Due to its extended distance support of applications compared to balanced twisted-pair cabling, optical fiber cabling is the perfect media for use in customer-owned outside plant (OSP), backbone cabling, and centralized cabling applications.

Customer-owned OSP cabling is deployed between buildings in a campus environment and includes the terminating connecting hardware at or within the structures. Interestingly, customer-owned OSP cabling is typically intended to have a useful life in excess of 30 years, so great care should be taken to specify robust cabling media. Requirements pertaining to customer-owned outside plant cabling and pathways can be found in ANSI/TIA-758-A and BS EN 50174-3.

Backbone cabling is deployed between entrance facilities, access-provider spaces, service-provider spaces, common equipment rooms, common telecommunications rooms, equipment rooms, telecommunications rooms, and telecommunications enclosures within a commercial building. Backbone cabling must be configured in a star topology and may contain one (main) or two (main and intermediate) levels of crossconnects. Backbone cabling requirements are specified in ANSI/TIA-568-C.0, ANSI/TIA-568-C.1, and ISO/IEC 11801 Ed2.0.

Centralized optical fiber cabling may be deployed as an alternative to the optical crossconnect to support centralized electronics deployment in single-tenant buildings. Centralized optical fiber cabling supports direct connections from the work area to the centralized crossconnect via a pull-through cable and the use of an interconnect or splice in the telecommunications room or enclosure. Note that the maximum allowed distance of the pull-through cable between the work area and the centralized crossconnect is 90 meters (295 feet). Centralized cabling requirements are specified in ANSI/TIA-568-C.0, ANSI/TIA-568-C.1, and ISO/IEC 11801 Ed2.0.

Optical fiber cabling may also be used in the horizontal cabling infrastructure, although there are no provisions allowing extended distance in the TIA and ISO standards.

Horizontal cabling is deployed between the work area and the telecommunications room or enclosure. Horizontal cabling includes the connector and cords at the work area and the optical fiber patch panel. A full crossconnect or interconnect may be deployed along with an optional multi-user telecommunications outlet assembly (MUTOA) or consolidation point (CP) for a total of four connectors in the channel. The maximum horizontal cable length shall be 90 meters (295 feet) and the total length of work area cords, patch cords or jumpers, and equipment cords shall be 10 meters (32 feet) for both optical fiber and balanced twisted-pair cabling channels. Horizontal cabling requirements are specified in ANSI/TIA-568-C.0, ANSI/TIA-568-C.1, and ISO/IEC 11801 Ed2.0.
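The horizontal channel limits above lend themselves to a simple design check. The sketch below encodes only the three figures quoted in this paragraph (90 meters of cable, 10 meters of cords in total, four connectors); the class and function names are hypothetical and it is no substitute for the standards themselves.

```python
from dataclasses import dataclass
from typing import List

MAX_HORIZONTAL_CABLE_M = 90.0  # maximum horizontal cable length from the text
MAX_TOTAL_CORDS_M = 10.0       # work area, patch, and equipment cords combined
MAX_CONNECTORS = 4             # total connectors in the channel


@dataclass
class HorizontalChannel:
    cable_m: float     # length of the horizontal cable
    cords_m: float     # combined length of all cords and jumpers
    connectors: int    # number of connectors in the channel


def channel_violations(channel: HorizontalChannel) -> List[str]:
    """Return a list of limit violations; an empty list means none found."""
    problems = []
    if channel.cable_m > MAX_HORIZONTAL_CABLE_M:
        problems.append(f"horizontal cable exceeds {MAX_HORIZONTAL_CABLE_M:g} m")
    if channel.cords_m > MAX_TOTAL_CORDS_M:
        problems.append(f"total cord length exceeds {MAX_TOTAL_CORDS_M:g} m")
    if channel.connectors > MAX_CONNECTORS:
        problems.append(f"more than {MAX_CONNECTORS} connectors in the channel")
    return problems


if __name__ == "__main__":
    print(channel_violations(HorizontalChannel(85, 8, 4)))
    print(channel_violations(HorizontalChannel(95, 12, 5)))
```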

Optical fiber cable

The optical fiber that enables light transmission is actually an assembly of three subcomponents: the core, the cladding, and the coating. The core is made of glass (or, more accurately, silica) and is the medium through which the light propagates. The core may have an overall diameter of 9 µm for singlemode or 50 µm or 62.5 µm for multimode transmission. Surrounding the core is a second layer of glass with a lower index of refraction that contains the light by reflecting it back into the core. This second layer is called the cladding and, regardless of the glass core construction, has an overall diameter of 125 µm. Combining the core and cladding diameters is the source of optical fiber descriptors, such as 50/125 µm or 62.5/125 µm, that are applied to optical fibers commonly used for telecommunications applications. The purpose of the outermost layer, called the coating, is to add strength and build up the outer diameter to a manageable 250 µm (about three times the diameter of a human hair). The coating is not glass, but rather a protective polymer such as urethane acrylate, that may be optionally colored for identification purposes.
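The descriptor convention is just the core diameter followed by the cladding diameter. As a small illustration, the helper below builds the descriptor from the dimensions quoted in this paragraph; the function name is made up for this example.

```python
CLADDING_DIAMETER_UM = 125.0  # cladding diameter quoted in the text


def fiber_descriptor(core_um: float,
                     cladding_um: float = CLADDING_DIAMETER_UM) -> str:
    """Format a core/cladding descriptor such as '50/125 µm'."""
    if not 0 < core_um < cladding_um:
        raise ValueError("core must be positive and thinner than the cladding")
    # The 'g' format drops a trailing '.0', so 50.0 prints as '50'.
    return f"{core_um:g}/{cladding_um:g} µm"


if __name__ == "__main__":
    for core in (9, 50, 62.5):
        print(fiber_descriptor(core))
```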

Cabling optical fibers makes them easier to handle, facilitates connector termination, provides protection, and increases strength and durability. The cabling process differs depending upon whether the optical fibers are intended for use in indoor, outdoor, or indoor/outdoor environments.

Indoor optical fiber cables are suitable for inside (including riser and plenum) building applications. To facilitate connector terminations, a 900µm plastic buffer is applied over the optical fiber core, cladding, and coating subassembly to create a tight buffered fiber. Up to 12 tight buffered fibers are then encircled with aramid yarns for strength, and then enclosed by an overall flame-retardant thermoplastic jacket to form a finished optical fiber cable. For indoor cables with higher than 12-fiber counts, groups of jacketed optical fiber cables (typically 6- or 12-fiber count) are bundled together with a central strength member (for support and to maintain cable geometry) and are enclosed by an overall flame-retardant thermoplastic jacket. Supported fiber counts are typically between 2 and 144.

Outdoor (also known as outside plant or OSP) optical fiber cables are used outside of the building and are suitable for lashed aerial, duct, and underground conduit applications. To protect the optical fibers from water and freezing, up to twelve 250-µm coated optical fibers are enclosed in a loose buffer tube that is filled with water-blocking gel. For up to 12-fiber applications, the gel-filled loose tube is encircled with water-blocking tapes and aramid yarns and enclosed within an overall ultraviolet and water-resistant black polyolefin jacket. For outdoor cables with higher than 12-fiber counts, groups of loose buffer tubes (typically 6- or 12-fiber count) are bundled together with a central strength member, water-blocking tapes, and aramid yarns and then enclosed within an overall ultraviolet and water-resistant black polyolefin jacket. Corrugated aluminum, interlocking steel armor, or dual jackets may be applied for additional protection against crushing and rodent damage. Supported fiber counts are typically between 12 and 144.

[Figure: Several of the optical interconnection technologies described in this article. Clockwise from upper left are MTP/MPO-style trunking cable assemblies, duplex LC-connected optical fiber cables, plug-and-play array modules (one with MPO/MTP-style connectors showing and the other with LC connectors showing), and a pass-through adapter plate.]

Indoor/outdoor optical fiber cables offer the ultraviolet and water resistance benefits of outdoor optical fiber cables combined with a fire-retardant jacket that allows the cable to be deployed inside the building entrance facility beyond the maximum 15.2-meter (50-foot) distance that is specified for OSP cables. Note that there is no length limitation in countries outside of the United States that do not specify riser- or plenum-rated cabling. The advantage of using indoor/outdoor optical fiber cables in this scenario is that the number of transition splices and hardware connections is reduced. Indoor/outdoor optical fiber cables are similar in construction to outdoor optical fiber cables except that the 250-µm coated optical fibers may be either tight buffered or enclosed within loose buffer tubes. Loose-tube indoor/outdoor optical fiber cables have a smaller overall diameter than tight-buffered indoor/outdoor optical fiber cables. However, tight-buffered indoor/outdoor cables are typically more convenient to terminate because they do not contain water-blocking gel or require the use of breakout kits (described later).
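The choice among the three cable types can be summarized as a small decision rule. The sketch below is illustrative only: the function name `recommend_cable` and its parameters are hypothetical, and it encodes nothing beyond the 15.2-meter OSP limit and the fire-rating caveat described above.

```python
# Limit quoted in the text for OSP cable run inside the building entrance facility.
OSP_INDOOR_LIMIT_M = 15.2


def recommend_cable(outdoor_run: bool, indoor_run_m: float,
                    fire_rating_required: bool = True) -> str:
    """Return "indoor", "outdoor", or "indoor/outdoor" for a cable run.

    outdoor_run          -- True if any part of the run is outside the building
    indoor_run_m         -- length of the run inside the building, in meters
    fire_rating_required -- False where riser/plenum ratings are not specified
    """
    if not outdoor_run:
        return "indoor"
    # OSP cable may enter the building only up to the limit, unless no
    # riser- or plenum-rated cabling is required at all.
    if indoor_run_m <= OSP_INDOOR_LIMIT_M or not fire_rating_required:
        return "outdoor"
    # A longer indoor run needs a fire-retardant jacket; indoor/outdoor cable
    # avoids a transition splice to a separate indoor cable.
    return "indoor/outdoor"


if __name__ == "__main__":
    print(recommend_cable(outdoor_run=True, indoor_run_m=40.0))
```

An outdoor cable spliced to an indoor cable at the entrance facility is the other way to handle the long-run case; the rule above simply prefers the option with fewer splices, as the text does.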

[Figure: A typical schematic for centralized optical fiber cabling using an interconnection; the centralized system supports direct connections from the work area to the centralized crossconnect via a pull-through cable and the interconnect.]

Optical fiber interconnections

Unlike the plug-and-jack combination that makes up a mated balanced twisted-pair connection, an interconnection is used to mate two tight-buffered optical fibers. An optical fiber interconnection typically consists of two plugs (connectors) that are aligned in a nose-to-nose orientation and held in place with an adapter (also called a coupler or bulkhead). The performance of the optical fiber interconnection is highly reliant upon the connector’s internal ferrule and the adapter’s alignment sleeve. These components work in tandem to retain and properly align the optical fibers in the plug-adapter-plug configuration. The internal connector ferrule is fabricated using a high-precision manufacturing process to ensure that the optical fiber is properly seated and its position is tightly controlled. The tight tolerances of the alignment sleeve ensure that the optical fibers held in place by the ferrule are aligned as closely as possible. Although more expensive, ceramic alignment sleeves maintain slightly tighter tolerances than metal or plastic alignment sleeves, are not as susceptible to performance variations due to temperature fluctuations, and may be specified for extremely low-loss applications.

Accurate plug-adapter-plug alignment minimizes the light energy lost at the optical fiber interconnection, and maintaining precise tolerances becomes especially critical as the optical fiber core diameter decreases. For example, if two 62.5-µm optical fibers are off-center by 4 µm in opposite directions, then 13% of the light energy escapes or is lost at the interconnection point. This same misalignment in a 9-µm singlemode fiber would result in almost a total loss of light energy. The critical nature of the core alignment is the reason why different optical fiber types, including 62.5-µm and 50-µm multimode fiber, should never be mixed in the same link or channel.
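To get a feel for why the same offset matters so much more in a smaller core, the sketch below estimates the overlap between two identical, laterally offset cores and converts a fractional loss to decibels. It rests on assumptions not made in the article: light is treated as uniformly filling the core (a purely geometric model), and "4 µm in opposite directions" is read as an 8-µm center-to-center offset. Real loss depends on the mode power distribution, so this model is not expected to reproduce the 13% figure exactly. The function names are hypothetical.

```python
import math


def overlap_fraction(core_diameter_um: float, offset_um: float) -> float:
    """Fraction of one core's area overlapped by an identical core whose
    center is displaced by offset_um (uniform-illumination assumption)."""
    d = abs(offset_um)
    if d >= core_diameter_um:
        return 0.0  # the two cores no longer overlap at all
    x = d / core_diameter_um  # offset as a fraction of the core diameter
    # Area of the lens-shaped intersection of two equal circles,
    # divided by the area of one circle.
    return (2.0 / math.pi) * (math.acos(x) - x * math.sqrt(1.0 - x * x))


def loss_db(fraction_lost: float) -> float:
    """Convert a fractional power loss (0 <= fraction_lost < 1) to decibels."""
    return -10.0 * math.log10(1.0 - fraction_lost)


if __name__ == "__main__":
    for core_um in (62.5, 50.0, 9.0):
        kept = overlap_fraction(core_um, 8.0)
        print(f"{core_um:5.1f} um core, 8 um offset: {kept:.2f} of core area overlaps")
    print(f"13% loss corresponds to {loss_db(0.13):.2f} dB")
```

Under this model the 62.5-µm cores still overlap over most of their area, while the 9-µm cores overlap over only a small fraction, consistent with the near-total loss the text describes for singlemode fiber. The 13% loss quoted above corresponds to roughly 0.6 dB.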

Optical fiber breakout kits are used to facilitate termination of loose-tube optical fibers used in indoor/outdoor and outdoor applications. Once the water-blocking gel is thoroughly removed from the optical fibers, the breakout kit allows furcation tubes (typically 1.2 mm to 3.0 mm in diameter) to be installed over the 250-µm optical fibers, increasing the diameter and forming a short “jacket” so that the optical fibers may be terminated to the desired optical fiber connector. Selection of the correct furcation tube ensures compatibility with all optical fiber connectors.

Users can choose from many optical fiber connector options.

Traditional optical fiber connectors are represented by the SC and ST connector styles. These two types of optical fiber connectors were recognized when optical fiber cabling was described in the first published TIA and ISO/IEC telecommunications cabling standards. The ST connector features a round metal coupling ring that twists and latches onto the adapter and is only available as a simplex assembly (two assemblies are required per link or channel). SC connectors feature a quick push-pull latching mechanism and have an advantage in that they may be used in conjunction with a duplex clip that more easily supports the interconnection of the two optical fibers in a link or channel. SC optical fiber connectors are generally recommended over ST optical fiber connectors for use in new installations due to their duplexing capability. Both ST and SC connectors may be field-terminated using an epoxy/polish or mechanical splice method.

[Figure: Singlemode fiber cores are 9 µm in diameter, while multimode fiber cores may be 50 or 62.5 µm. Regardless of core size, the cladding is 125 µm and the coating 250 µm.]

Small form factor (SFF) refers to a family of optical fiber interfaces that support double the connector density of traditional optical fiber connectors. The most common SFF interface is the LC connector, with the MT-RJ having some limited legacy market presence. Both interfaces feature duplex configurations and a small pluggable form with external plug latch that is approximately the same size as the 8-position modular plug used for copper connections. The LC connector may be field terminated using an epoxy/polish method or mechanical splice method. The MT-RJ connector is field terminated using a traditional no-epoxy/no-polish mechanical splice termination method. The main difference between the MT-RJ and LC optical connector is related to the performance of the internal ferrule. The LC’s internal ferrule maintains sufficiently tight tolerances to fully support both singlemode and multimode applications, while the MT-RJ connector is recommended for use in legacy applications only. Field termination of MT-RJ connectors is not recommended for singlemode applications.

Array optical fiber connectors are the newest recognized style of optical fiber interfaces and are intended to support extremely high-density environments as well as emerging technologies such as 40GBase-SR4 and 100GBase-SR10 that will require more than two optical fibers per link or channel. There are typically 12 or 24 fibers in an array connector, although one array connector may support as many as 144 fibers. A multi-fiber push-on (MPO) style interface is the most basic array interface. MTP optical fiber connectors are intermateable with MPO connectors; however, they are engineered to deliver improved mechanical and optical performance and are recommended for deployment in new installations. MPO/MTP connectors cannot be field terminated. Array or “plug-and-play” modules are self-contained and typically support the interconnection of two 12-fiber MPO/MTP interfaces with 24 LC connections or one 12-fiber MPO/MTP interface with 12 SC or LC connections.
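The fiber arithmetic behind array connectors can be sketched as follows. The per-link fiber counts are an assumption brought in from IEEE 802.3ba rather than from this article: 40GBase-SR4 uses four transmit and four receive fibers, and 100GBase-SR10 uses ten of each. The function and table names are hypothetical.

```python
import math

# Fibers needed per link; lane counts are taken from IEEE 802.3ba
# (an assumption, not stated in the article).
FIBERS_PER_LINK = {
    "40GBase-SR4": 8,     # 4 transmit + 4 receive
    "100GBase-SR10": 20,  # 10 transmit + 10 receive
}


def array_connectors_per_link(application: str, fibers_per_connector: int = 12):
    """Return (connectors needed, fibers left unused) for one link."""
    needed = FIBERS_PER_LINK[application]
    connectors = math.ceil(needed / fibers_per_connector)
    unused = connectors * fibers_per_connector - needed
    return connectors, unused


def duplex_links(total_fibers: int) -> int:
    """Number of two-fiber (duplex) links a module's fibers can serve."""
    return total_fibers // 2


if __name__ == "__main__":
    print(array_connectors_per_link("40GBase-SR4", 12))
    print(array_connectors_per_link("100GBase-SR10", 12))
    print(array_connectors_per_link("100GBase-SR10", 24))
    print(duplex_links(2 * 12))  # module fed by two 12-fiber MPO/MTP interfaces
```

The same arithmetic explains the module configuration described above: two 12-fiber interfaces carry 24 fibers, which break out to 24 single-fiber LC connections, or 12 duplex links.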

Optical fiber cabling deployment

The most common optical fiber cabling deployment approach is to field terminate the optical fiber connectors to the optical fiber cable using the appropriate epoxy/polish or no-epoxy/no-polish mechanical termination method. However, the new MPO/MTP plug-and-play modules and MPO/MTP array connectors are not supported by field termination and there are considerations, such as installer expertise and the IT construction/upgrade schedule, that may favor the use of factory-terminated pigtails or trunking assemblies over field termination methods.

[Figure: The environment in which the fiber cable will be used, e.g. indoor or outdoor, will determine the cable’s construction and the treatment of the fibers within that cable.]

The pros and cons of these termination methods are described here.

Field termination supports the lowest raw material cost for SC, ST, LC, and MT-RJ optical fiber cabling systems. However, the time needed for field termination is the longest of the three deployment options and installer skill-level requirements are higher, which may increase the project installation costs. No-epoxy/no-polish and certain mechanical-splice-style termination methods require less installation skill than the epoxy/polish method; however, the connectors used in conjunction with mechanical termination methods are more expensive, and the performance (especially using the no-epoxy/no-polish method) may be lower and more variable.

Optical fiber pigtails feature a factory-preterminated and tested SC, ST, LC, or MT-RJ optical fiber connector and a 1-meter stub of 62.5/125-µm multimode, 50/125-µm multimode, or singlemode optical fiber. The stub end of the pigtail is then fusion-spliced to the optical fiber. Fusion splicing provides a consistent, nearly loss-free termination and can be fast with trained technicians and the proper equipment. The main benefits of this approach are the assurance of low-loss performance at the interconnection and the elimination of the need for endface inspections and possible connector reterminations.

Trunking cable assemblies provide an efficient alternative to field-terminated components or splice connections and allow up to 75% faster field deployment times. Trunking cable assemblies are custom factory preterminated and tested lengths of optical fiber cable terminated on both ends with SC, ST, LC, MT-RJ, or MPO/MTP optical fiber connectors that are simply pulled and plugged in. When deploying trunking cable assemblies, cable-length specification is critical and precise planning is required up front. Trunking cable assemblies that have an MPO/MTP connector on one or both ends are commonly referred to as “plug-and-play” cable assemblies. MPO/MTP plug-and-play cable assemblies have the smallest connector profile and, therefore, have the smallest pathway, cabinet, and rack-space requirements of all trunking cable assembly options.

Installing backbone cabling systems

The backbone system consists of connections between entrance facilities, equipment rooms and telecommunications closets. Backbone systems are often referred to as riser systems because in many installations the bulk of the system, especially the cable, is installed in a vertical riser. In multistory buildings, for example, the backbone connects the equipment or computer room in the basement with telecommunications closets located on every floor.

In a campus environment, however, the backbone may run horizontally, connecting different entrance facilities or remote telecommunications closets. In some applications, then, there is no real difference between the terms "horizontal" and "vertical." Physical topologies may vary, and sometimes connections don't fit our assumptions about what a backbone is.

The main requirement of a backbone system is that it be able to support many different user applications, from simple voice transmission to very unforgiving high-speed data and multimedia networks. To meet this requirement, system designers and installers must use foresight when planning a backbone system. Installations today have to anticipate future growth and prospective applications as well as current needs. For this reason, many backbone installations depend on optical fiber, and can include dozens of spare fibers, if not actual cables. Another recent trend is to install hybrid fiber-optic cables in the backbone, terminating and using the multimode fibers but leaving the singlemode fibers dark, or unused, to support future needs.

Backbone cabling systems that are being installed can usually be classified into one of four different topologies, each of which has its own characteristics. To properly install these systems--Ethernet, token ring, fiber distributed data interface, and asynchronous transfer mode--it is essential to know, at least in a general way, how they function.

Ethernet, FOIRL and 10Base-FL

The fiber-optic inter-repeater link, or FOIRL, section of the 802.3 standard of the Institute of Electrical and Electronics Engineers expands the scope of the traditional Ethernet topology. As specified in IEEE's 802.3 10Base-5 specification, large 50-ohm Thick Ethernet, or Thicknet, trunk cables are accessed using vampire taps that pierce the cable to contact the conductor at specific intervals of 2.5 meters. (This explains the bandmarks on the cable jacket.)

Transceivers connect to the taps. Attachment unit interface or transceiver cables attach the transceiver to the data terminal equipment, usually a workstation or hub that services several nodes. Ethernet trunks are often used in large networks, with each coaxial-cable segment running up to 500 meters without repeaters in a physical bus topology.

More flexible 50-ohm Thin Ethernet cables, also called Thinnet, are often installed to support smaller networks, limited to 185 meters. The relevant specification is IEEE 802.3's 10Base-2 document.

Less-expensive Thinnet is a good option for Ethernet networks that are limited to a small geographical area and relatively few users, such as a single office or department. Workstations are linked in a bus topology, but the cable can be directly attached to the workstation, creating a daisy-chain. Both 10Base-5 and 10Base-2 are designed to run at 10 megabits per second.
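The coaxial limits quoted above lend themselves to a small planning check. This is a minimal sketch using only the figures in the text (500 meters and 2.5-meter tap marks for 10Base-5, 185 meters for 10Base-2, 10 Mbits/sec for both). The class and function names are hypothetical, and measuring tap marks from one end of the cable is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class CoaxEthernet:
    name: str
    max_segment_m: float             # longest segment without a repeater
    rate_mbps: int                   # signaling rate
    tap_spacing_m: Optional[float]   # bandmark interval, if taps are used


COAX = {
    "10Base-5": CoaxEthernet("10Base-5", 500.0, 10, 2.5),
    "10Base-2": CoaxEthernet("10Base-2", 185.0, 10, None),
}


def segment_ok(kind: str, length_m: float) -> bool:
    """True if a single segment of this length needs no repeater."""
    return 0 < length_m <= COAX[kind].max_segment_m


def tap_positions(kind: str, length_m: float) -> List[float]:
    """Bandmark positions along the cable, measured from one end
    (illustrative assumption); empty for cable types without tap marks."""
    spacing = COAX[kind].tap_spacing_m
    if spacing is None:
        return []
    count = int(length_m // spacing)
    return [i * spacing for i in range(count + 1)]


if __name__ == "__main__":
    print(segment_ok("10Base-2", 200.0))    # exceeds the 185-meter limit
    print(tap_positions("10Base-5", 10.0))
```

Keeping the limits in one table makes it easy to reject a proposed run before any cable is pulled.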

When considering a 10Base-5 or 10Base-2 network, it should be noted that neither is recommended by the TIA/EIA-568-A commercial building wiring standard of the Telecommunications Industry Association and Electronic Industries Association (both in Arlington, VA). Both networks use a bus topology, which is significantly different from the star topology recommended by 568-A.

For 10-Mbit/sec Ethernet, you can use a 10Base-T system running on unshielded twisted-pair, or UTP, cable.