ROBO ( Ringing On Boat ) in Electronic Signal

ROBO COP

Ringing (signal)

Ringing (telephony).

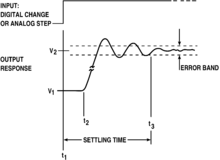

An illustration of overshoot, followed by ringing and settle time.

It is also known as ripple, particularly in electricity or in frequency domain response.

Electricity

In electrical circuits, ringing is an unwanted oscillation of a voltage or current. It happens when an electrical pulse causes the parasitic capacitances and inductances in the circuit (i.e. those that are not part of the design, but just by-products of the materials used to construct the circuit) to resonate at their characteristic frequency. Ringing artifacts are also present in square waves; see Gibbs phenomenon.Ringing is undesirable because it causes extra current to flow, thereby wasting energy and causing extra heating of the components; it can cause unwanted electromagnetic radiation to be emitted ; it can delay arrival at a desired final state (increase settling time); and it may cause unwanted triggering of bistable elements in digital circuits. Ringy communications circuits may suffer falsing.

Ringing can be due to signal reflection, in which case it may be minimized by impedance matching.

Video

In video circuits, electrical ringing causes closely spaced repeated ghosts of a vertical or diagonal edge where dark changes to light or vice versa, going from left to right. In a CRT the electron beam upon changing from dark to light or vice versa instead of changing quickly to the desired intensity and staying there, overshoots and undershoots a few times. This bouncing could occur anywhere in the electronics or cabling and is often caused by or accentuated by a too high setting of the sharpness control.Audio

Ringing can affect audio equipment in a number of ways. Audio amplifiers can produce ringing depending on their design, although the transients that can produce such ringing rarely occur in audio signals.Transducers (i.e., microphones and loudspeakers) can also ring. Mechanical ringing is more of a problem with loudspeakers as the moving masses are larger and less easily damped, but unless extreme they are difficult to audibly identify.

In digital audio, ringing can occur as a result of filters such as brickwall filters. Here, the ringing occurs before the transient as well as after.

Signal processing

In signal processing, "ringing" may refer to ringing artifacts: spurious signals near sharp transitions. These have a number of causes, and occur for instance in JPEG compression and as pre-echo in some audio compression.Formal verification

In the context of hardware and software systems, formal verification is the act of proving or disproving the correctness of intended algorithms underlying a system with respect to a certain formal specification or property, using formal methods of mathematics.

Formal verification can be helpful in proving the correctness of systems such as: cryptographic protocols, combinational circuits, digital circuits with internal memory, and software expressed as source code.

The verification of these systems is done by providing a formal proof on an abstract mathematical model of the system, the correspondence between the mathematical model and the nature of the system being otherwise known by construction. Examples of mathematical objects often used to model systems are: finite state machines, labelled transition systems, Petri nets, vector addition systems, timed automata, hybrid automata, process algebra, formal semantics of programming languages such as operational semantics, denotational semantics, axiomatic semantics and Hoare logic .

One approach and formation is model checking, which consists of a systematically exhaustive exploration of the mathematical model (this is possible for finite models, but also for some infinite models where infinite sets of states can be effectively represented finitely by using abstraction or taking advantage of symmetry). Usually this consists of exploring all states and transitions in the model, by using smart and domain-specific abstraction techniques to consider whole groups of states in a single operation and reduce computing time. Implementation techniques include state space enumeration, symbolic state space enumeration, abstract interpretation, symbolic simulation, abstraction refinement. The properties to be verified are often described in temporal logics, such as linear temporal logic (LTL), Property Specification Language (PSL), SystemVerilog Assertions (SVA), or computational tree logic (CTL). The great advantage of model checking is that it is often fully automatic; its primary disadvantage is that it does not in general scale to large systems; symbolic models are typically limited to a few hundred bits of state, while explicit state enumeration requires the state space being explored to be relatively small.

Another approach is deductive verification. It consists of generating from the system and its specifications (and possibly other annotations) a collection of mathematical proof obligations, the truth of which imply conformance of the system to its specification, and discharging these obligations using either interactive theorem provers (such as HOL, ACL2, Isabelle, Coq or PVS), automatic theorem provers, or satisfiability modulo theories (SMT) solvers. This approach has the disadvantage that it typically requires the user to understand in detail why the system works correctly, and to convey this information to the verification system, either in the form of a sequence of theorems to be proved or in the form of specifications of system components (e.g. functions or procedures) and perhaps subcomponents (such as loops or data structures).

Software

Formal verification of software programs involves proving that a program satisfies a formal specification of its behavior. Subareas of formal verification include deductive verification (see above), abstract interpretation, automated theorem proving, type systems, and lightweight formal methods. A promising type-based verification approach is dependently typed programming, in which the types of functions include (at least part of) those functions' specifications, and type-checking the code establishes its correctness against those specifications. Fully featured dependently typed languages support deductive verification as a special case.Another complementary approach is program derivation, in which efficient code is produced from functional specifications by a series of correctness-preserving steps. An example of this approach is the Bird–Meertens formalism, and this approach can be seen as another form of correctness by construction.

These techniques can be sound, meaning that the verified properties can be logically deduced from the semantics, or unsound, meaning that there is no such guarantee. A sound technique yields a result only once it has searched the entire space of possibilities. An example of an unsound technique is one that searches only a subset of the possibilities, for instance only integers up to a certain number, and give a "good-enough" result. Techniques can also be decidable, meaning that their algorithmic implementations are guaranteed to terminate with an answer, or undecidable, meaning that they may never terminate. Because they are bounded, unsound techniques are often more likely to be decidable than sound ones.

Verification and validation

Verification is one aspect of testing a product's fitness for purpose. Validation is the complementary aspect. Often one refers to the overall checking process as V & V.- Validation: "Are we trying to make the right thing?", i.e., is the product specified to the user's actual needs?

- Verification: "Have we made what we were trying to make?", i.e., does the product conform to the specifications?

Automated program repair

Program repair is performed with respect to an oracle, encompassing the desired functionality of the program which is used for validation of the generated fix. A simple example is a test-suite—the input/output pairs specify the functionality of the program. A variety of techniques are employed, most notably using satisfiability modulo theories (SMT) solvers, and genetic programming, using evolutionary computing to generate and evaluate possible candidates for fixes. The former method is deterministic, while the latter is randomized.Program repair combines techniques from formal verification and program synthesis. Fault-localization techniques in formal verification are used to compute program points which might be possible bug-locations, which can be targeted by the synthesis modules. Repair systems often focus on a small pre-defined class of bugs in order to reduce the search space. Industrial use is limited owing to the computational cost of existing techniques.

Industry use

The growth in complexity of designs increases the importance of formal verification techniques in the hardware industry. At present, formal verification is used by most or all leading hardware companies, but its use in the software industry is still languishing. This could be attributed to the greater need in the hardware industry, where errors have greater commercial significance. Because of the potential subtle interactions between components, it is increasingly difficult to exercise a realistic set of possibilities by simulation. Important aspects of hardware design are amenable to automated proof methods, making formal verification easier to introduce and more productive.As of 2011, several operating systems have been formally verified: NICTA's Secure Embedded L4 microkernel, sold commercially as seL4 by OK Labs; OSEK/VDX based real-time operating system ORIENTAIS by East China Normal University; Green Hills Software's Integrity operating system; and SYSGO's PikeOS.

As of 2016, Yale and Columbia professors Zhong Shao and Ronghui Gu developed a formal verification protocol for blockchain called CertiKOS. The program is the first example of formal verification in the blockchain world, and an example of formal verification being used explicitly as a security program.

As of 2017, formal verification has been applied to the design of large computer networks through a mathematical model of the network, and as part of a new network technology category, intent-based networking. Network software vendors that offer formal verification solutions include Cisco Forward Networks and Veriflow Systems.

The CompCert C compiler is a formally verified C compiler implementing the majority of ISO C.

Intelligent verification

Intelligent Verification, including intelligent testbench automation, is a form of functional verification of electronic hardware designs used to verify that a design conforms to specification before device fabrication. Intelligent verification uses information derived from the design and specification(s) to expose bugs in and between hardware IP's. Intelligent verification tools require considerably less engineering effort and user guidance to achieve verification results that meet or exceed the standard approach of writing a testbench program.

The first generation of intelligent verification tools optimized one part of the verification process known as Regression testing with a feature called automated coverage feedback. With automated coverage feedback, the test description is automatically adjusted to target design functionality that has not been previously verified (or "covered") by other tests existing tests. A key property of automated coverage feedback is that, given the same test environment, the software will automatically change the tests to improve functional design coverage in response to changes in the design.

Newer intelligent verification tools are able to derive the essential functions one would expect of a testbench (stimulus, coverage, and checking) from a single, compact, high-level model. Using a single model that represents and resembles the original specification greatly reduces the chance of human error in the testbench development process that can lead to both missed bugs and false failures.

Other properties of intelligent verification may include:

- Providing verification results on or above par with a testbench program but driven by a compact high-level model

- Applicability to all levels of simulation to decrease reliance on testbench programs

- Eliminating opportunities for programming errors and divergent interpretations of the specification, esp. between IP and SoC teams

- Providing direction as to why certain coverage points were not detected.

- Automatically tracking paths through design structure to coverage points, to create new tests.

- Ensuring that various aspects of the design are only verified once in the same test sets.

- Scaling the test automatically for different hardware and software configurations of a system.

- Support for different verification methodologies like constrained random, directed, graph-based, use-case based in the same tool.

- Code coverage

- Branch coverage

- Expression coverage

- Functional coverage

- Assertion coverage

Achieving confidence that a design is functionally correct continues to become more difficult. To counter these problems, in the late 1980s fast logic simulators and specialized hardware description languages such as Verilog and VHDL became popular. In the 1990s, constrained random simulation methodologies emerged using hardware verification languages such as Vera and e, as well as SystemVerilog (in 2002), to further improve verification quality and time.

Intelligent verification approaches supplement constrained random simulation methodologies, which bases test generation on external input rather than design structure.Intelligent verification is intended to automatically utilize design knowledge during simulation, which has become increasingly important over the last decade due to increased design size and complexity, and a separation between the engineering team that created a design and the team verifying its correct operation.

There has been substantial research into the intelligent verification area, and commercial tools that leverage this technique are just beginning to emerge.

Functional verification

In electronic design automation, functional verification is the task of verifying that the logic design conforms to specification. In everyday terms, functional verification attempts to answer the question "Does this proposed design do what is intended?" This is a complex task, and takes the majority of time and effort in most large electronic system design projects. Functional verification is a part of more encompassing design verification, which, besides functional verification, considers non-functional aspects like timing, layout and power.

Functional verification is very difficult because of the sheer volume of possible test-cases that exist in even a simple design. Frequently there are more than 10^80 possible tests to comprehensively verify a design – a number that is impossible to achieve in a lifetime. This effort is equivalent to program verification, and is NP-hard or even worse – and no solution has been found that works well in all cases. However, it can be attacked by many methods. None of them are perfect, but each can be helpful in certain circumstances:

- Logic simulation simulates the logic before it is built.

- Simulation acceleration applies special purpose hardware to the logic simulation problem.

- Emulation builds a version of system using programmable logic. This is expensive, and still much slower than the real hardware, but orders of magnitude faster than simulation. It can be used, for example, to boot the operating system on a processor.

- Formal verification attempts to prove mathematically that certain requirements (also expressed formally) are met, or that certain undesired behaviors (such as deadlock) cannot occur.

- Intelligent verification uses automation to adapt the testbench to changes in the register transfer level code.

- HDL-specific versions of lint, and other heuristics, are used to find common problems.

A simulation environment is typically composed of several types of components:

- The generator generates input vectors that are used to search for anomalies that exist between the intent (specifications) and the implementation (HDL Code). This type of generator utilizes an NP-complete type of SAT Solver that can be computationally expensive. Other types of generators include manually created vectors, Graph-Based generators (GBMs) proprietary generators. Modern generators create directed-random and random stimuli that are statistically driven to verify random parts of the design. The randomness is important to achieve a high distribution over the huge space of the available input stimuli. To this end, users of these generators intentionally under-specify the requirements for the generated tests. It is the role of the generator to randomly fill this gap. This mechanism allows the generator to create inputs that reveal bugs not being searched for directly by the user. Generators also bias the stimuli toward design corner cases to further stress the logic. Biasing and randomness serve different goals and there are tradeoffs between them, hence different generators have a different mix of these characteristics. Since the input for the design must be valid (legal) and many targets (such as biasing) should be maintained, many generators use the constraint satisfaction problem (CSP) technique to solve the complex testing requirements. The legality of the design inputs and the biasing arsenal are modeled. The model-based generators use this model to produce the correct stimuli for the target design.

- The drivers translate the stimuli produced by the generator into the actual inputs for the design under verification. Generators create inputs at a high level of abstraction, namely, as transactions or assembly language. The drivers convert this input into actual design inputs as defined in the specification of the design's interface.

- The simulator produces the outputs of the design, based on the design’s current state (the state of the flip-flops) and the injected inputs. The simulator has a description of the design net-list. This description is created by synthesizing the HDL to a low gate level net-list.

- The monitor converts the state of the design and its outputs to a transaction abstraction level so it can be stored in a 'score-boards' database to be checked later on.

- The checker validates that the contents of the 'score-boards' are legal. There are cases where the generator creates expected results, in addition to the inputs. In these cases, the checker must validate that the actual results match the expected ones.

- The arbitration manager manages all the above components together.

High-level verification (HLV), or electronic system-level (ESL) verification, is the task to verify ESL designs at high abstraction level, i.e., it is the task to verify a model that represents hardware above register-transfer level (RTL) abstract level. For high-level synthesis (HLS or C synthesis), HLV is to HLS as functional verification is to logic synthesis.

Electronic digital hardware design has evolved from low level abstraction at gate level to register transfer level (RTL), the abstraction level above RTL is commonly called high-level, ESL, or behavioral/algorithmic level.

In high-level synthesis, behavioral/algorithmic designs in ANSI C/C++/SystemC code is synthesized to RTL, which is then synthesized into gate level through logic synthesis. Functional verification is the task to make sure a design at RTL or gate level conforms to a specification. As logic synthesis matures, most functional verification is done at the higher abstraction, i.e. at RTL level, the correctness of logic synthesis tool in the translating process from RTL description to gate netlist is of less concern today.

High-level synthesis is still an emerging technology, so High-level verification today has two important areas under development

- to validate HLS is correct in the translation process, i.e. to validate the design before and after HLS are equivalent, typically through formal methods

- to verify a design in ANSI C/C++/SystemC code is conforming to a specification, typically through logic simulation.

Runtime verification

Runtime verification is a computing system analysis and execution

approach based on extracting information from a running system and

using it to detect and possibly react to observed behaviors satisfying

or violating certain properties .

Some very particular properties, such as datarace and deadlock

freedom, are typically desired to be satisfied by all systems and may be

best implemented algorithmically. Other properties can be more

conveniently captured as formal specifications. Runtime verification specifications are typically expressed in trace predicate formalisms, such as finite state machines, regular expressions, context-free patterns, linear temporal logics,

etc., or extensions of these. This allows for a less ad-hoc approach

than normal testing. However, any mechanism for monitoring an executing

system is considered runtime verification, including verifying against

test oracles and reference implementations.

When formal requirements specifications are provided, monitors are

synthesized from them and infused within the system by means of

instrumentation. Runtime verification can be used for many purposes,

such as security or safety policy monitoring, debugging, testing,

verification, validation, profiling, fault protection, behavior

modification (e.g., recovery), etc. Runtime verification avoids the

complexity of traditional formal verification techniques, such as model checking

and theorem proving, by analyzing only one or a few execution traces

and by working directly with the actual system, thus scaling up

relatively well and giving more confidence in the results of the

analysis (because it avoids the tedious and error-prone step of

formally modelling the system), at the expense of less coverage.

Moreover, through its reflective capabilities runtime verification can

be made an integral part of the target system, monitoring and guiding

its execution during deployment.

Basic approaches

Overview of the monitor based verification process as described by Havelund et al. in A Tutorial on Runtime Verification.

- The system can be monitored during the execution itself (online) or after the execution e.g. in form of log analysis (offline).

- The verifying code is integrated into the system (as done in Aspect-oriented Programming) or is provided as an external entity.

- The monitor can report violation or validation of the desired specification.

- A monitor is created from some formal specification. This process usually can be done automatically if there is an equivalent automaton for the formal language the property is specified in. To transform a regular expression a finite-state machine can be used; a property in linear temporal logic can be transformed into a Büchi automaton (see also Linear temporal logic to Büchi automaton).

- The system is instrumented to send events concerning its execution state to the monitor.

- The system is executed and gets verified by the monitor.

- The monitor verifies the received event trace and produces a verdict whether the specification is satisfied. Additionally, the monitor sends feedback to the system to possibly correct false behaviour. When using offline monitoring the system of cause cannot receive any feedback, as the verification is done at a later point in time.

Examples

The examples below discuss some simple properties which have been considered, possibly with small variations, by several runtime verification groups by the time of this writing (April 2011). To make them more interesting, each property below uses a different specification formalism and all of them are parametric. Parametric properties are properties about traces formed with parametric events, which are events that bind data to parameters. Here a parametric property has the form , where is a specification in some appropriate formalism referring to generic (uninstantiated) parametric events. The intuition for such parametric properties is that the property expressed by must hold for all parameter instances encountered (through parametric events) in the observed trace. None of the following examples are specific to any particular runtime verification system, though support for parameters is obviously needed. In the following examples Java syntax is assumed, thus "==" is logical equality, while "=" is assignment. Some methods (e.g.,update() in the UnsafeEnumExample) are dummy methods, which are not part of the Java API, that are used for clarity.

HasNext

The HasNext Property

hasNext() method be called and return true before the next() method is called. If this

does not occur, it is very possible that a user will iterate "off the end of" a Collection.

The figure to the right shows a finite state machine that defines a

possible monitor for checking and enforcing this property with runtime

verification. From the unknown state, it is always an error to call the next() method because such an operation could be unsafe. If hasNext() is called and returns true, it is safe to call next(), so the monitor enters the more state. If, however, the hasNext() method returns false, there are no more elements, and the monitor enters the none state. In the more and none states, calling the hasNext() method provides no new information. It is safe to call the next() method from the more state, but it becomes unknown if more elements exist, so the monitor reenters the initial unknown state. Finally, calling the next() method from the none state results in entering the error state. What follows is a representation of this property using parametric past time linear temporal logic.

This formula says that any call to the

next() method must be immediately preceded by a call to hasNext() method that returns true. The property here is parametric in the Iterator i.

Conceptually, this means that there will be one copy of the monitor

for each possible Iterator in a test program, although runtime

verification systems need not implement their parametric monitors this

way. The monitor for this property would be set to trigger a handler

when the formula is violated (equivalently when the finite state machine

enters the error state), which will occur when either next() is called without first calling hasNext(), or when hasNext() is called before next(), but returned false.

UnsafeEnum

Code that violates the UnsafeEnum Property

This pattern is parametric in both the Enumeration and the Vector. Intuitively, and as above runtime verification systems need not implement their parametric monitors this way, one may think of the parametric monitor for this property as creating and keeping track of a non-parametric monitor instance for each possible pair of Vector and Enumeration. Some events may concern several monitors at the same time, such as

v.update(), so the runtime verification system

must (again conceptually) dispatch them to all interested monitors.

Here the property is specified so that it states the bad behaviors of

the program. This property, then, must be monitored for the match of

the pattern. The figure to the right shows Java code that matches this

pattern, thus violating the property. The Vector, v, is updated after

the Enumeration, e, is created, and e is then used.

SafeLock

The previous two examples show finite state properties, but properties used in runtime verification may be much more complex. The SafeLock property enforces the policy that the number of acquires and releases of a (reentrant) Lock class are matched within a given method call. This, of course, disallows release of Locks in methods other than the ones that acquire them, but this is very possibly a desirable goal for the tested system to achieve. Below is a specification of this property using a parametric context-free pattern:

A trace showing two violations of the SafeLock property.

Research Challenges and Applications

Most of the runtime verification research addresses one or more of the topics listed below.Reducing Runtime Overhead

Observing an executing system typically incurs some runtime overhead (hardware monitors may make an exception). It is important to reduce the overhead of runtime verification tools as much as possible, particularly when the generated monitors are deployed with the system. Runtime overhead reducing techniques include:- Improved instrumentation. Extracting events from the executing system and sending them to monitors can generate a large runtime overhead if done naively. Good system instrumentation is critical for any runtime verification tool, unless the tool explicitly targets existing execution logs. There are many instrumentation approaches in current use, each with its advantages and disadvantages, ranging from custom or manual instrumentation, to specialized libraries, to compilation into aspect-oriented languages, to augmenting the virtual machine, to building upon hardware support.

- Combination with static analysis. A common

combination of static and dynamic analyses, particularly encountered in

compilers, is to monitor all the requirements which cannot be

discharged statically. A dual and ultimately equivalent approach tends

to become the norm

in runtime verification, namely to use static analysis to reduce the

amount of otherwise exhaustive monitoring. Static analysis can be

performed both on the property to monitor and on the system to be

monitored. Static analysis of the property to monitor can reveal that

certain events are unnecessary to monitor, that the creation of certain

monitors can be delayed, and that certain existing monitors will never

trigger and thus can be garbage collected. Static analysis of the

system to monitor can detect code that can never influence the monitors.

For example, when monitoring the HasNext property above, one needs not instrument portions of code where each call

i.next()is immediately preceded on any path by a calli.hasnext()which returns true (visible on the control-flow graph). - Efficient monitor generation and management. When monitoring parametric properties like the ones in the examples above, the monitoring system needs to keep track of the status of the monitored property with respect to each parameter instance. The number of such instances is theoretically unbounded and tends to be enormous in practice. An important research challenge is how to efficiently dispatch observed events to precisely those instances which need them. A related challenge is how to keep the number of such instances small (so that dispatching is faster), or in other words, how to avoid creating unnecessary instances for as long as possible and, dually, how to remove already created instances as soon as they become unnecessary. Finally, parametric monitoring algorithms typically generalize similar algorithms for generating non-parametric monitors. Thus, the quality of the generated non-parametric monitors dictates the quality of the resulting parametric monitors. However, unlike in other verification approaches (e.g., model checking), the number of states or the size of the generated monitor is less important in runtime verification; in fact, some monitors can have infinitely many states, such as the one for the SafeLock property above, although at any point in time only a finite number of states may have occurred. What is important is how efficiently the monitor transits from a state to its next state when it receives an event from the executing system.

Specifying Properties

One of the major practical impediments of all formal approaches is that their users are reluctant to, or don't know and don't want to learn how to read or write specifications. In some cases the specifications are implicit, such as those for deadlocks and data-races, but in most cases they need to be produced. An additional inconvenience, particularly in the context of runtime verification, is that many existing specification languages are not expressive enough to capture the intended properties.- Better formalisms. A significant amount of work in the runtime verification community has been put into designing specification formalisms that fit the desired application domains for runtime verification better than the conventional specification formalisms. Some of these consist of slight or no syntactic changes to the conventional formalisms, but only of changes to their semantics (e.g., finite trace versus infinite trace semantics, etc.) and to their implementation (optimized finite state machines instead of Buchi automata, etc.). Others extend existing formalisms with features that are amenable for runtime verification but may not easily be for other verification approaches, such as adding parameters, as seen in the examples above. Finally, there are specification formalisms that have been designed specifically for runtime verification, attempting to achieve their best for this domain and caring little about other application domains. Designing universally better or domain-specifically better specification formalisms for runtime verification is and will continue to be one of its major research challenges.

- Quantitative properties. Compared to other verification approaches, runtime verification is able to operate on concrete values of system state variables, which makes it possible to collect statistical information about the program execution and use this information to assess complex quantitative properties. More expressive property languages that will allow us to fully utilize this capability are needed.

- Better interfaces. Reading and writing property specifications is not easy for non-experts. Even experts often stare for minutes at relatively small temporal logic formulae (particularly when they have nested "until" operators). An important research area is to develop powerful user interfaces for various specification formalisms that would allow users to more easily understand, write and maybe even visualize properties.

- Mining specifications. No matter what tool support is available to help users produce specifications, they will almost always be more pleased to have to write no specifications at all, particularly when they are trivial. Fortunately, there are plenty of programs out there making supposedly correct use of the actions/events that one wants to have properties about. If that is the case, then it is conceivable that one would like to make use of those correct programs by automatically learning from them the desired properties. Even if the overall quality of the automatically mined specifications is expected to be lower than that of manually produced specifications, they can serve as a start point for the latter or as the basis for automatic runtime verification tools aimed specifically at finding bugs (where a poor specification turns into false positives or negatives, often acceptable during testing).

Execution Models and Predictive Analysis

The capability of a runtime verifier to detect errors strictly depends on its capability to analyze execution traces. When the monitors are deployed with the system, instrumentation is typically minimal and the execution traces are as simple as possible to keep the runtime overhead low. When runtime verification is used for testing, one can afford more comprehensive instrumentations that augment events with important system information that can be used by the monitors to construct and therefore analyze more refined models of the executing system. For example, augmenting events with vector-clock information and with data and control flow information allows the monitors to construct a causal model of the running system in which the observed execution was only one possible instance. Any other permutation of events which is consistent with the model is a feasible execution of the system, which could happen under a different thread interleaving. Detecting property violations in such inferred executions (by monitoring them) makes the monitor predict errors which did not happen in the observed execution, but which can happen in another execution of the same system. An important research challenge is to extract models from execution traces which comprise as many other execution traces as possible.Behavior Modification

Unlike testing or exhaustive verification, runtime verification holds the promise to allow the system to recover from detected violations, through reconfiguration, micro-resets, or through finer intervention mechanisms sometimes referred to as tuning or steering. Implementation of these techniques within the rigorous framework of runtime verification gives rise to additional challenges.- Specification of actions. One needs to specify the modification to be performed in an abstract enough fashion that does not require the user to know irrelevant implementation details. In addition, when such a modification can take place needs to be specified in order to maintain the integrity of the system.

- Reasoning about intervention effects. It is important to know that an intervention improves the situation, or at least does not make the situation worse.

- Action interfaces. Similar to the instrumentation for monitoring, we need to enable the system to receive action invocations. Invocation mechanisms are by necessity going to be dependent on the implementation details of the system. However, at the specification level, we need to provide the user with a declarative way of providing feedback to the system by specifying what actions should be applied when under what conditions.

Related Work

Aspect-oriented Programming

In recent years[when?], researchers in Runtime Verification have recognized the potential of using Aspect-oriented Programming as a technique for defining program instrumentation in a modular way. Aspect-oriented programming (AOP) generally promotes the modularization of crosscutting concerns. Runtime Verification naturally is one such concern and can hence benefit from certain properties of AOP. Aspect-oriented monitor definitions are largely declarative, and hence tend to be simpler to reason about than instrumentation expressed through a program transformation written in an imperative programming language. Further, static analyses can reason about monitoring aspects more easily than about other forms of program instrumentation, as all instrumentation is contained within a single aspect. Many current runtime verification tools are hence built in the form of specification compilers, that take an expressive high-level specification as input and produce as output code written in some Aspect-oriented programming language (most often AspectJ).Combination with Formal Verification

Runtime verification, if used in combination with provably correct recovery code, can provide an invaluable infrastructure for program verification, which can significantly lower the latter's complexity. For example, formally verifying heap-sort algorithm is very challenging. One less challenging technique to verify it is to monitor its output to be sorted (a linear complexity monitor) and, if not sorted, then sort it using some easily verifiable procedure, say insertion sort. The resulting sorting program is now more easily verifiable, the only thing being required from heap-sort is that it does not destroy the original elements regarded as a multiset, which is much easier to prove. Looking at from the other direction, one can use formal verification to reduce the overhead of runtime verification, as already mentioned above for static analysis instead of formal verification. Indeed, one can start with a fully runtime verified, but probably slow program. Then one can use formal verification (or static analysis) to discharge monitors, same way a compiler uses static analysis to discharge runtime checks of type correctness or memory safety.Increasing Coverage

Compared to the more traditional verification approaches, an immediate disadvantage of runtime verification is its reduced coverage. This is not problematic when the runtime monitors are deployed with the system (together with appropriate recovery code to be executed when the property is violated), but it may limit the effectiveness of runtime verification when used to find errors in systems. Techniques to increase the coverage of runtime verification for error detection purposes include:- Input generation. It is well known that generating a good set of inputs (program input variable values, system call values, thread schedules, etc.) can enormously increase the effectiveness of testing. That holds true for runtime verification used for error detection, too, but in addition to using the program code to drive the input generation process, in runtime verification one can also use the property specifications, when available, and can also use monitoring techniques to induce desired behaviors. This use of runtime verification makes it closely related to model-based testing, although the runtime verification specifications are typically general purpose, not necessarily crafted for testing reasons. Consider, for example, that one wants to test the general-purpose UnsafeEnum property above. Instead of just generating the above-mentioned monitor to passively observe the system execution, one can generate a smarter monitor which freezes the thread attempting to generate the second e.nextElement() event (right before it generates it), letting the other threads execute in a hope that one of them may generate a v.update() event, in which case an error has been found.

- Dynamic symbolic execution. In symbolic execution programs are executed and monitored symbolically, that is, without concrete inputs. One symbolic execution of the system may cover a large set of concrete inputs. Off-the-shelf constraint solving or satisfiability checking techniques are often used to drive symbolic executions or to systematically explore their space. When the underlying satisfiability checkers cannot handle a choice point, then a concrete input can be generated to pass that point; this combination of concrete and symbolic execution is also referred to as concolic execution.

Profiling (computer programming)

In software engineering, profiling ("program profiling", "software profiling") is a form of dynamic program analysis that measures, for example, the space (memory) or time complexity of a program, the usage of particular instructions, or the frequency and duration of function calls. Most commonly, profiling information serves to aid program optimization.

Profiling is achieved by instrumenting either the program source code or its binary executable form using a tool called a profiler (or code profiler). Profilers may use a number of different techniques, such as event-based, statistical, instrumented, and simulation methods.

Gathering program events

Profilers use a wide variety of techniques to collect data, including hardware interrupts, code instrumentation, instruction set simulation, operating system hooks, and performance counters. Profilers are used in the performance engineering process.Use of profilers

Graphical output of the CodeAnalyst profiler.

Program analysis tools are extremely important for understanding program behavior. Computer architects need such tools to evaluate how well programs will perform on new architectures. Software writers need tools to analyze their programs and identify critical sections of code. Compiler writers often use such tools to find out how well their instruction scheduling or branch prediction algorithm is performing...The output of a profiler may be:

— ATOM, PLDI, '94

- A statistical summary of the events observed (a profile)

- Summary profile information is often shown annotated against the source code statements where the events occur, so the size of measurement data is linear to the code size of the program.

/* ------------ source------------------------- count */ 0001 IF X = "A" 0055 0002 THEN DO 0003 ADD 1 to XCOUNT 0032 0004 ELSE 0005 IF X = "B" 0055

- A stream of recorded events (a trace)

- For sequential programs, a summary profile is usually sufficient, but performance problems in parallel programs (waiting for messages or synchronization issues) often depend on the time relationship of events, thus requiring a full trace to get an understanding of what is happening.

- The size of a (full) trace is linear to the program's instruction path length, making it somewhat impractical. A trace may therefore be initiated at one point in a program and terminated at another point to limit the output.

- An ongoing interaction with the hypervisor (continuous or periodic monitoring via on-screen display for instance)

- This provides the opportunity to switch a trace on or off at any desired point during execution in addition to viewing on-going metrics about the (still executing) program. It also provides the opportunity to suspend asynchronous processes at critical points to examine interactions with other parallel processes in more detail.

History

Performance-analysis tools existed on IBM/360 and IBM/370 platforms from the early 1970s, usually based on timer interrupts which recorded the Program status word (PSW) at set timer-intervals to detect "hot spots" in executing code. This was an early example of sampling (see below). In early 1974 instruction-set simulators permitted full trace and other performance-monitoring features.Profiler-driven program analysis on Unix dates back to 1973 , when Unix systems included a basic tool,

prof, which listed each function and how much of program execution time it used. In 1982 gprof extended the concept to a complete call graph analysis.

In 1994, Amitabh Srivastava and Alan Eustace of Digital Equipment Corporation published a paper describing ATOM (Analysis Tools with OM). The ATOM platform converts a program into its own profiler: at compile time, it inserts code into the program to be analyzed. That inserted code outputs analysis data. This technique - modifying a program to analyze itself - is known as "instrumentation".

In 2004 both the

gprof and ATOM papers appeared on the list of the 50 most influential PLDI papers for the 20-year period ending in 1999.

Profiler types based on output

Flat profiler

Flat profilers compute the average call times, from the calls, and do not break down the call times based on the callee or the context.Call-graph profiler

Call graph profilers show the call times, and frequencies of the functions, and also the call-chains involved based on the callee. In some tools full context is not preserved.Input-sensitive profiler

Input-sensitive profilers add a further dimension to flat or call-graph profilers by relating performance measures to features of the input workloads, such as input size or input values. They generate charts that characterize how an application's performance scales as a function of its input.Data granularity in profiler types

Profilers, which are also programs themselves, analyze target programs by collecting information on their execution. Based on their data granularity, on how profilers collect information, they are classified into event based or statistical profilers. Profilers interrupt program execution to collect information, which may result in a limited resolution in the time measurements, which should be taken with a grain of salt. Basic block profilers report a number of machine clock cycles devoted to executing each line of code, or a timing based on adding these together; the timings reported per basic block may not reflect a difference between cache hits and misses.Event-based profilers

The programming languages listed here have event-based profilers:- Java: the JVMTI (JVM Tools Interface) API, formerly JVMPI (JVM Profiling Interface), provides hooks to profilers, for trapping events like calls, class-load, unload, thread enter leave.

- .NET: Can attach a profiling agent as a COM server to the CLR using Profiling API. Like Java, the runtime then provides various callbacks into the agent, for trapping events like method JIT / enter / leave, object creation, etc. Particularly powerful in that the profiling agent can rewrite the target application's bytecode in arbitrary ways.

- Python: Python profiling includes the profile module, hotshot (which is call-graph based), and using the 'sys.setprofile' function to trap events like c_{call,return,exception}, python_{call,return,exception}.

- Ruby: Ruby also uses a similar interface to Python for profiling. Flat-profiler in profile.rb, module, and ruby-prof a C-extension are present.

Statistical profilers

Some profilers operate by sampling. A sampling profiler probes the target program's call stack at regular intervals using operating system interrupts. Sampling profiles are typically less numerically accurate and specific, but allow the target program to run at near full speed.The resulting data are not exact, but a statistical approximation. "The actual amount of error is usually more than one sampling period. In fact, if a value is n times the sampling period, the expected error in it is the square-root of n sampling periods."

In practice, sampling profilers can often provide a more accurate picture of the target program's execution than other approaches, as they are not as intrusive to the target program, and thus don't have as many side effects (such as on memory caches or instruction decoding pipelines). Also since they don't affect the execution speed as much, they can detect issues that would otherwise be hidden. They are also relatively immune to over-evaluating the cost of small, frequently called routines or 'tight' loops. They can show the relative amount of time spent in user mode versus interruptible kernel mode such as system call processing.

Still, kernel code to handle the interrupts entails a minor loss of CPU cycles, diverted cache usage, and is unable to distinguish the various tasks occurring in uninterruptible kernel code (microsecond-range activity).

Dedicated hardware can go beyond this: ARM Cortex-M3 and some recent MIPS processors JTAG interface have a PCSAMPLE register, which samples the program counter in a truly undetectable manner, allowing non-intrusive collection of a flat profile.

Some commonly used statistical profilers for Java/managed code are SmartBear Software's AQtime and Microsoft's CLR Profiler. Those profilers also support native code profiling, along with Apple Inc.'s Shark (OSX), OProfile (Linux), Intel VTune and Parallel Amplifier (part of Intel Parallel Studio), and Oracle Performance Analyzer, among others.

Instrumentation

This technique effectively adds instructions to the target program to collect the required information. Note that instrumenting a program can cause performance changes, and may in some cases lead to inaccurate results and/or heisenbugs. The effect will depend on what information is being collected, on the level of timing details reported, and on whether basic block profiling is used in conjunction with instrumentation. For example, adding code to count every procedure/routine call will probably have less effect than counting how many times each statement is obeyed. A few computers have special hardware to collect information; in this case the impact on the program is minimal.Instrumentation is key to determining the level of control and amount of time resolution available to the profilers.

- Manual: Performed by the programmer, e.g. by adding instructions to explicitly calculate runtimes, simply count events or calls to measurement APIs such as the Application Response Measurement standard.

- Automatic source level: instrumentation added to the source code by an automatic tool according to an instrumentation policy.

- Intermediate language: instrumentation added to assembly or decompiled bytecodes giving support for multiple higher-level source languages and avoiding (non-symbolic) binary offset re-writing issues.

- Compiler assisted

- Binary translation: The tool adds instrumentation to a compiled executable.

- Runtime instrumentation: Directly before execution the code is instrumented. The program run is fully supervised and controlled by the tool.

- Runtime injection: More lightweight than runtime instrumentation. Code is modified at runtime to have jumps to helper functions.

Interpreter instrumentation

- Interpreter debug options can enable the collection of performance metrics as the interpreter encounters each target statement. A bytecode, control table or JIT interpreters are three examples that usually have complete control over execution of the target code, thus enabling extremely comprehensive data collection opportunities.

Hypervisor/Simulator

- Hypervisor: Data are collected by running the (usually) unmodified program under a hypervisor. Example: SIMMON

- Simulator and Hypervisor: Data collected interactively and selectively by running the unmodified program under an Instruction Set Simulator.

Performance prediction

In computer science, performance prediction means to estimate the execution time or other performance factors (such as cache misses) of a program on a given computer. It is being widely used for computer architects to evaluate new computer designs, for compiler writers to explore new optimizations, and also for advanced developers to tune their programs.

There are many approaches to predict program 's performance on computers. They can be roughly divided into three major categories:

- simulation-based prediction

- profile-based prediction

- analytical modeling

Simulation-based prediction

Performance data can be directly obtained from computer simulators, within which each instruction of the target program is actually dynamically executed given a particular input data set. Simulators can predict program's performance very accurately, but takes considerable time to handle large programs. Examples include the PACE and Wisconsin Wind Tunnel simulators as well as the more recent WARPP simulation toolkit which attempts to significantly reduce the time required for parallel system simulation.Another approach, based on trace-based simulation does not run every instruction, but runs a trace file which store important program events only. This approach loses some flexibility and accuracy compared to cycle-accurate simulation mentioned above but can be much faster. The generation of traces often consumes considerable amounts of storage space and can severely impact the runtime of applications if large amount of data are recorded during execution.

Profile-based prediction

The classic approach of performance prediction treats a program as a set of basic blocks connected by execution path. Thus the execution time of the whole program is the sum of execution time of each basic block multiplied by its execution frequency, as shown in the following formula:The execution frequencies of basic blocks are generated from a profiler, which is why this method is called profile-based prediction. The execution time of a basic block is usually obtained from a simple instruction scheduler.

Classic profile-based prediction worked well for early single-issue, in-order execution processors, but fails to accurately predict the performance of modern processors. The major reason is that modern processors can issue and execute several instructions at the same time, sometimes out of the original order and cross the boundary of basic blocks.

_________________________________________________________________________________

Analog signal processing

Analog signal processing is a type of signal processing conducted on continuous analog signals by some analog means (as opposed to the discrete digital signal processing where the signal processing is carried out by a digital process). "Analog" indicates something that is mathematically represented as a set of continuous values. This differs from "digital" which uses a series of discrete quantities to represent signal. Analog values are typically represented as a voltage, electric current, or electric charge around components in the electronic devices. An error or noise affecting such physical quantities will result in a corresponding error in the signals represented by such physical quantities.Examples of analog signal processing include crossover filters in loudspeakers, "bass", "treble" and "volume" controls on stereos, and "tint" controls on TVs. Common analog processing elements include capacitors, resistors and inductors (as the passive elements) and transistors or opamps (as the active elements).

Tools used in analog signal processing

A system's behavior can be mathematically modeled and is represented in the time domain as h(t) and in the frequency domain as H(s), where s is a complex number in the form of s=a+ib, or s=a+jb in electrical engineering terms (electrical engineers use "j" instead of "i" because current is represented by the variable i). Input signals are usually called x(t) or X(s) and output signals are usually called y(t) or Y(s).Convolution is the basic concept in signal processing that states an input signal can be combined with the system's function to find the output signal. It is the integral of the product of two waveforms after one has reversed and shifted; the symbol for convolution is *.

Consider two waveforms f and g. By calculating the convolution, we determine how much a reversed function g must be shifted along the x-axis to become identical to function f. The convolution function essentially reverses and slides function g along the axis, and calculates the integral of their (f and the reversed and shifted g) product for each possible amount of sliding. When the functions match, the value of (f*g) is maximized. This occurs because when positive areas (peaks) or negative areas (troughs) are multiplied, they contribute to the integral.

Fourier transform

The Fourier transform is a function that transforms a signal or system in the time domain into the frequency domain, but it only works for certain functions. The constraint on which systems or signals can be transformed by the Fourier Transform is that:Laplace transform

The Laplace transform is a generalized Fourier transform. It allows a transform of any system or signal because it is a transform into the complex plane instead of just the jω line like the Fourier transform. The major difference is that the Laplace transform has a region of convergence for which the transform is valid. This implies that a signal in frequency may have more than one signal in time; the correct time signal for the transform is determined by the region of convergence. If the region of convergence includes the jω axis, jω can be substituted into the Laplace transform for s and it's the same as the Fourier transform. The Laplace transform is:Bode plots

Bode plots are plots of magnitude vs. frequency and phase vs. frequency for a system. The magnitude axis is in [Decibel] (dB). The phase axis is in either degrees or radians. The frequency axes are in a [logarithmic scale]. These are useful because for sinusoidal inputs, the output is the input multiplied by the value of the magnitude plot at the frequency and shifted by the value of the phase plot at the frequency.Domains

Time domain

This is the domain that most people are familiar with. A plot in the time domain shows the amplitude of the signal with respect to time.Frequency domain

A plot in the frequency domain shows either the phase shift or magnitude of a signal at each frequency that it exists at. These can be found by taking the Fourier transform of a time signal and are plotted similarly to a bode plot.Signals

While any signal can be used in analog signal processing, there are many types of signals that are used very frequently.Sinusoids

Sinusoids are the building block of analog signal processing. All real world signals can be represented as an infinite sum of sinusoidal functions via a Fourier series. A sinusoidal function can be represented in terms of an exponential by the application of Euler's Formula.Impulse

An impulse (Dirac delta function) is defined as a signal that has an infinite magnitude and an infinitesimally narrow width with an area under it of one, centered at zero. An impulse can be represented as an infinite sum of sinusoids that includes all possible frequencies. It is not, in reality, possible to generate such a signal, but it can be sufficiently approximated with a large amplitude, narrow pulse, to produce the theoretical impulse response in a network to a high degree of accuracy. The symbol for an impulse is δ(t). If an impulse is used as an input to a system, the output is known as the impulse response. The impulse response defines the system because all possible frequencies are represented in the inputStep

A unit step function, also called the Heaviside step function, is a signal that has a magnitude of zero before zero and a magnitude of one after zero. The symbol for a unit step is u(t). If a step is used as the input to a system, the output is called the step response. The step response shows how a system responds to a sudden input, similar to turning on a switch. The period before the output stabilizes is called the transient part of a signal. The step response can be multiplied with other signals to show how the system responds when an input is suddenly turned on.The unit step function is related to the Dirac delta function by;

Systems

Linear time-invariant (LTI)

Linearity means that if you have two inputs and two corresponding outputs, if you take a linear combination of those two inputs you will get a linear combination of the outputs. An example of a linear system is a first order low-pass or high-pass filter. Linear systems are made out of analog devices that demonstrate linear properties. These devices don't have to be entirely linear, but must have a region of operation that is linear. An operational amplifier is a non-linear device, but has a region of operation that is linear, so it can be modeled as linear within that region of operation. Time-invariance means it doesn't matter when you start a system, the same output will result. For example, if you have a system and put an input into it today, you would get the same output if you started the system tomorrow instead. There aren't any real systems that are LTI, but many systems can be modeled as LTI for simplicity in determining what their output will be. All systems have some dependence on things like temperature, signal level or other factors that cause them to be non-linear or non-time-invariant, but most are stable enough to model as LTI. Linearity and time-invariance are important because they are the only types of systems that can be easily solved using conventional analog signal processing methods. Once a system becomes non-linear or non-time-invariant, it becomes a non-linear differential equations problem, and there are very few of those that can actually be solved.Analogue electronics

Analogue electronics (American English: analog electronics) are electronic systems with a continuously variable signal, in contrast to digital electronics where signals usually take only two levels. The term "analogue" describes the proportional relationship between a signal and a voltage or current that represents the signal. The word analogue is derived from the Greek word ανάλογος (analogos) meaning "proportional" .An analogue signal uses some attribute of the medium to convey the signal's information. For example, an aneroid barometer uses the angular position of a needle as the signal to convey the information of changes in atmospheric pressure. Electrical signals may represent information by changing their voltage, current, frequency, or total charge. Information is converted from some other physical form (such as sound, light, temperature, pressure, position) to an electrical signal by a transducer which converts one type of energy into another (e.g. a microphone).

The signals take any value from a given range, and each unique signal value represents different information. Any change in the signal is meaningful, and each level of the signal represents a different level of the phenomenon that it represents. For example, suppose the signal is being used to represent temperature, with one volt representing one degree Celsius. In such a system, 10 volts would represent 10 degrees, and 10.1 volts would represent 10.1 degrees.

Another method of conveying an analogue signal is to use modulation. In this, some base carrier signal has one of its properties altered: amplitude modulation (AM) involves altering the amplitude of a sinusoidal voltage waveform by the source information, frequency modulation (FM) changes the frequency. Other techniques, such as phase modulation or changing the phase of the carrier signal, are also used.

In an analogue sound recording, the variation in pressure of a sound striking a microphone creates a corresponding variation in the current passing through it or voltage across it. An increase in the volume of the sound causes the fluctuation of the current or voltage to increase proportionally while keeping the same waveform or shape.

Mechanical, pneumatic, hydraulic, and other systems may also use analogue signals.

Inherent noise

Analogue systems invariably include noise that is random disturbances or variations, some caused by the random thermal vibrations of atomic particles. Since all variations of an analogue signal are significant, any disturbance is equivalent to a change in the original signal and so appears as noise. As the signal is copied and re-copied, or transmitted over long distances, these random variations become more significant and lead to signal degradation. Other sources of noise may include crosstalk from other signals or poorly designed components. These disturbances are reduced by shielding and by using low-noise amplifiers (LNA).Analogue vs digital electronics

Since the information is encoded differently in analogue and digital electronics, the way they process a signal is consequently different. All operations that can be performed on an analogue signal such as amplification, filtering, limiting, and others, can also be duplicated in the digital domain. Every digital circuit is also an analogue circuit, in that the behaviour of any digital circuit can be explained using the rules of analogue circuits.The use of microelectronics has made digital devices cheap and widely available.

Noise

The effect of noise on an analogue circuit is a function of the level of noise. The greater the noise level, the more the analogue signal is disturbed, slowly becoming less usable. Because of this, analogue signals are said to "fail gracefully". Analogue signals can still contain intelligible information with very high levels of noise. Digital circuits, on the other hand, are not affected at all by the presence of noise until a certain threshold is reached, at which point they fail catastrophically. For digital telecommunications, it is possible to increase the noise threshold with the use of error detection and correction coding schemes and algorithms. Nevertheless, there is still a point at which catastrophic failure of the link occurs. digital electronics, because the information is quantized, as long as the signal stays inside a range of values, it represents the same information. In digital circuits the signal is regenerated at each logic gate, lessening or removing noise In analogue circuits, signal loss can be regenerated with amplifiers. However, noise is cumulative throughout the system and the amplifier itself will add to the noise according to its noise figure.Precision

A number of factors affect how precise a signal is, mainly the noise present in the original signal and the noise added by processing (see signal-to-noise ratio). Fundamental physical limits such as the shot noise in components limits the resolution of analogue signals. In digital electronics additional precision is obtained by using additional digits to represent the signal. The practical limit in the number of digits is determined by the performance of the analogue-to-digital converter (ADC), since digital operations can usually be performed without loss of precision. The ADC takes an analogue signal and changes it into a series of binary numbers. The ADC may be used in simple digital display devices, e. g., thermometers or light meters but it may also be used in digital sound recording and in data acquisition. However, a digital-to-analogue converter (DAC) is used to change a digital signal to an analogue signal. A DAC takes a series of binary numbers and converts it to an analogue signal. It is common to find a DAC in the gain-control system of an op-amp which in turn may be used to control digital amplifiers and filters.Design difficulty

Analogue circuits are typically harder to design, requiring more skill than comparable digital systems. This is one of the main reasons that digital systems have become more common than analogue devices. An analogue circuit is usually designed by hand, and the process is much less automated than for digital systems. Since the early 2000s, there were some platforms that were developed which enabled Analog design to be defined using software - which allows faster prototyping. However, if a digital electronic device is to interact with the real world, it will always need an analogue interface. For example, every digital radio receiver has an analogue preamplifier as the first stage in the receive chain.Circuit classification

Analogue circuits can be entirely passive, consisting of resistors, capacitors and inductors. Active circuits also contain active elements like transistors. Many passive analogue circuits are built from lumped elements. That is, discrete components. However, an alternative is distributed element circuits built from pieces of transmission line.____________________________________________________________________________

Digital electronics

Digital electronics, digital technology or digital (electronic) circuits are electronics that operate on digital signals. In contrast, analog circuits manipulate analog signals whose performance is more subject to manufacturing tolerance, signal attenuation and noise. Digital techniques are helpful because it is a lot easier to get an electronic device to switch into one of a number of known states than to accurately reproduce a continuous range of values.Digital electronic circuits are usually made from large assemblies of logic gates (often printed on integrated circuits), simple electronic representations of Boolean logic functions

An advantage of digital circuits when compared to analog circuits is that signals represented digitally can be transmitted without degradation caused by noise. For example, a continuous audio signal transmitted as a sequence of 1s and 0s, can be reconstructed without error, provided the noise picked up in transmission is not enough to prevent identification of the 1s and 0s.

In a digital system, a more precise representation of a signal can be obtained by using more binary digits to represent it. While this requires more digital circuits to process the signals, each digit is handled by the same kind of hardware, resulting in an easily scalable system. In an analog system, additional resolution requires fundamental improvements in the linearity and noise characteristics of each step of the signal chain.

With computer-controlled digital systems, new functions to be added through software revision and no hardware changes. Often this can be done outside of the factory by updating the product's software. So, the product's design errors can be corrected after the product is in a customer's hands.

Information storage can be easier in digital systems than in analog ones. The noise immunity of digital systems permits data to be stored and retrieved without degradation. In an analog system, noise from aging and wear degrade the information stored. In a digital system, as long as the total noise is below a certain level, the information can be recovered perfectly. Even when more significant noise is present, the use of redundancy permits the recovery of the original data provided too many errors do not occur.

In some cases, digital circuits use more energy than analog circuits to accomplish the same tasks, thus producing more heat which increases the complexity of the circuits such as the inclusion of heat sinks. In portable or battery-powered systems this can limit use of digital systems. For example, battery-powered cellular telephones often use a low-power analog front-end to amplify and tune in the radio signals from the base station. However, a base station has grid power and can use power-hungry, but very flexible software radios. Such base stations can be easily reprogrammed to process the signals used in new cellular standards.

Many useful digital systems must translate from continuous analog signals to discrete digital signals. This causes quantization errors. Quantization error can be reduced if the system stores enough digital data to represent the signal to the desired degree of fidelity. The Nyquist-Shannon sampling theorem provides an important guideline as to how much digital data is needed to accurately portray a given analog signal.

In some systems, if a single piece of digital data is lost or misinterpreted, the meaning of large blocks of related data can completely change. For example, a single-bit error in audio data stored directly as linear pulse code modulation causes, at worst, a single click. Instead, many people use audio compression to save storage space and download time, even though a single bit error may cause a larger disruption.

Because of the cliff effect, it can be difficult for users to tell if a particular system is right on the edge of failure, or if it can tolerate much more noise before failing. Digital fragility can be reduced by designing a digital system for robustness. For example, a parity bit or other error management method can be inserted into the signal path. These schemes help the system detect errors, and then either correct the errors, or request retransmission of the data.

Construction

A binary clock, hand-wired on breadboards

Another form of digital circuit is constructed from lookup tables, (many sold as "programmable logic devices", though other kinds of PLDs exist). Lookup tables can perform the same functions as machines based on logic gates, but can be easily reprogrammed without changing the wiring. This means that a designer can often repair design errors without changing the arrangement of wires. Therefore, in small volume products, programmable logic devices are often the preferred solution. They are usually designed by engineers using electronic design automation software.

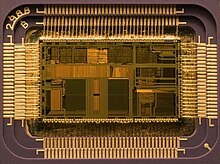

Integrated circuits consist of multiple transistors on one silicon chip, and are the least expensive way to make large number of interconnected logic gates. Integrated circuits are usually interconnected on a printed circuit board which is a board which holds electrical components, and connects them together with copper traces.

Design

Engineers use many methods to minimize logic functions, in order to reduce the circuit's complexity. When the complexity is less, the circuit also has fewer errors and less electronics, and is therefore less expensive.The most widely used simplification is a minimization algorithm like the Espresso heuristic logic minimizer within a CAD system, although historically, binary decision diagrams, an automated Quine–McCluskey algorithm, truth tables, Karnaugh maps, and Boolean algebra have been used.

When the volumes are medium to large, and the logic can be slow, or involves complex algorithms or sequences, often a small microcontroller is programmed to make an embedded system. These are usually programmed by software engineers.

When only one digital circuit is needed, and its design is totally customized, as for a factory production line controller, the conventional solution is a programmable logic controller, or PLC. These are usually programmed by electricians, using ladder logic.

Representation

Representations are crucial to an engineer's design of digital circuits. Some analysis methods only work with particular representations.The classical way to represent a digital circuit is with an equivalent set of logic gates. Each logic symbol is represented by a different shape. The actual set of shapes was introduced in 1984 under IEEE/ANSI standard 91-1984. "The logic symbol given under this standard are being increasingly used now and have even started appearing in the literature published by manufacturers of digital integrated circuits."

Another way, often with the least electronics, is to construct an equivalent system of electronic switches (usually transistors). One of the easiest ways is to simply have a memory containing a truth table. The inputs are fed into the address of the memory, and the data outputs of the memory become the outputs.

For automated analysis, these representations have digital file formats that can be processed by computer programs. Most digital engineers are very careful to select computer programs ("tools") with compatible file formats.

Combinational vs. Sequential

To choose representations, engineers consider types of digital systems. Most digital systems divide into "combinational systems" and "sequential systems." A combinational system always presents the same output when given the same inputs. It is basically a representation of a set of logic functions, as already discussed.A sequential system is a combinational system with some of the outputs fed back as inputs. This makes the digital machine perform a "sequence" of operations. The simplest sequential system is probably a flip flop, a mechanism that represents a binary digit or "bit".