Instrumentation and control in electronics refers to electronic devices that measure and manage the operation of a system according to a given program and set of work orders. The goal is a system that produces what is desired and expected: a quality product, made efficiently, effectively and optimally. In electronics engineering, instrumentation and control is divided into two parts. In open-loop instrumentation and control, a human still plays a role in setting the time, the place and the form of the resulting product. In closed-loop instrumentation and control, a human has almost no manual role. The electronic device has been programmed according to the desired flowchart and adjusts both timing and placement by itself, so such devices can be called Auto Electronics.

Control engineering

Control engineering or control systems engineering is an engineering discipline that applies automatic control theory to design systems with desired behaviors in control environments. The discipline of controls overlaps and is usually taught along with electrical engineering at many institutions around the world.

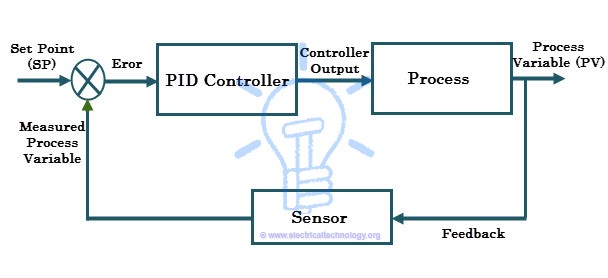

The practice uses sensors and detectors to measure the output performance of the process being controlled; these measurements are used to provide corrective feedback helping to achieve the desired performance. Systems designed to perform without requiring human input are called automatic control systems (such as cruise control for regulating the speed of a car). Multi-disciplinary in nature, control systems engineering activities focus on implementation of control systems mainly derived by mathematical modeling of a diverse range of systems.

Control systems play a critical role in space flight

Modern day control engineering is a relatively new field of study that gained significant attention during the 20th century with the advancement of technology. It can be broadly defined or classified as practical application of control theory. Control engineering has an essential role in a wide range of control systems, from simple household washing machines to high-performance F-16 fighter aircraft. It seeks to understand physical systems, using mathematical modeling, in terms of inputs, outputs and various components with different behaviors; use control systems design tools to develop controllers for those systems; and implement controllers in physical systems employing available technology. A system can be mechanical, electrical, fluid, chemical, financial or biological, and the mathematical modeling, analysis and controller design uses control theory in one or many of the time, frequency and complex-s domains, depending on the nature of the design problem.

In his 1868 paper "On Governors", James Clerk Maxwell was able to explain instabilities exhibited by the flyball governor using differential equations to describe the control system. This demonstrated the importance and usefulness of mathematical models and methods in understanding complex phenomena, and it signaled the beginning of mathematical control and systems theory. Elements of control theory had appeared earlier but not as dramatically and convincingly as in Maxwell's analysis.

Control theory made significant strides over the next century. New mathematical techniques, together with advances in electronic and computer technologies, made it possible to control dynamical systems far more complex than the original flyball governor could stabilize. The new mathematical techniques included developments in optimal control in the 1950s and 1960s, followed by progress in stochastic, robust, adaptive and nonlinear control methods in the 1970s and 1980s. Applications of control methodology have helped to make possible space travel and communication satellites, safer and more efficient aircraft, cleaner automobile engines, and cleaner and more efficient chemical processes.

Before it emerged as a unique discipline, control engineering was practiced as a part of mechanical engineering. Control theory was studied as a part of electrical engineering, since electrical circuits can often be easily described using control theory techniques. In the very first control relationships, a current output was represented by a voltage control input. However, lacking adequate technology to implement electrical control systems, designers were left with less efficient, slow-responding mechanical systems. A very effective mechanical controller that is still widely used in some hydro plants is the governor. Later, before modern power electronics, mechanical engineers devised process control systems for industrial applications using pneumatic and hydraulic control devices, many of which are still in use today.

Control theory

There are two major divisions in control theory, classical and modern, and both have direct implications for control engineering applications. The scope of classical control theory is limited to single-input and single-output (SISO) system design, except when analyzing disturbance rejection using a second input. The system analysis is carried out in one of three ways: in the time domain using differential equations, in the complex-s domain with the Laplace transform, or in the frequency domain by transforming from the complex-s domain. Many systems may be assumed to have a second-order, single-variable system response in the time domain. A controller designed using classical theory often requires on-site tuning because of incorrect design approximations. Yet classical controller designs are easier to implement physically than systems designed using modern control theory, so these controllers are preferred in most industrial applications. The most common controllers designed using classical control theory are PID controllers. A less common implementation may include a lead filter, a lag filter, or both. The end goal is to meet requirements that are typically given in the time domain as the step response, or at times in the frequency domain as the open-loop response. The step-response characteristics applied in a specification are typically percent overshoot, settling time, etc. The open-loop response characteristics applied in a specification are typically gain margin, phase margin and bandwidth. These characteristics may be evaluated through simulation, using a dynamic model of the system under control coupled with the compensation model.

In contrast, modern control theory is carried out in the state space and can deal with multiple-input and multiple-output (MIMO) systems. This overcomes the limitations of classical control theory in more sophisticated design problems, such as fighter aircraft control, with the limitation that no frequency domain analysis is possible. In modern design, a system is represented to the greatest advantage as a set of decoupled first-order differential equations defined using state variables. Nonlinear, multivariable, adaptive and robust control theories come under this division. Matrix methods are significantly limited for MIMO systems where linear independence cannot be assured in the relationship between inputs and outputs. Because the field is fairly new, modern control theory still has many areas to be explored. Scholars such as Rudolf E. Kalman and Aleksandr Lyapunov are well known among the people who have shaped modern control theory.
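The sketch below ties the two views together. It takes a standard second-order plant $\omega_n^2/(s^2 + 2\zeta\omega_n s + \omega_n^2)$ and writes it as two first-order state equations, with $x_1 = y$ and $x_2 = \dot{y}$. It simulates a unit step and then measures the classical specifications mentioned above: percent overshoot and a 2 % settling time. The plant, the values $\zeta = 0.5$ and $\omega_n = 1$ rad/s, the Runge-Kutta integrator and the 2 % band are illustrative assumptions, not taken from the text.

```python
import math

def simulate_step(zeta, wn, t_end=20.0, dt=1e-3):
    """Unit-step response of wn^2 / (s^2 + 2*zeta*wn*s + wn^2), written as
    two first-order state equations: x1 = y, x2 = dy/dt."""
    def deriv(x1, x2, u):
        # x1' = x2,  x2' = -wn^2*x1 - 2*zeta*wn*x2 + wn^2*u
        return x2, -wn * wn * x1 - 2.0 * zeta * wn * x2 + wn * wn * u

    x1 = x2 = 0.0
    times, outputs = [0.0], [0.0]
    for k in range(int(round(t_end / dt))):
        # one classical fourth-order Runge-Kutta step with the input held at u = 1
        a1, a2 = deriv(x1, x2, 1.0)
        b1, b2 = deriv(x1 + 0.5 * dt * a1, x2 + 0.5 * dt * a2, 1.0)
        c1, c2 = deriv(x1 + 0.5 * dt * b1, x2 + 0.5 * dt * b2, 1.0)
        d1, d2 = deriv(x1 + dt * c1, x2 + dt * c2, 1.0)
        x1 += dt / 6.0 * (a1 + 2.0 * b1 + 2.0 * c1 + d1)
        x2 += dt / 6.0 * (a2 + 2.0 * b2 + 2.0 * c2 + d2)
        times.append((k + 1) * dt)
        outputs.append(x1)
    return times, outputs

def step_metrics(times, outputs, final_value=1.0, band=0.02):
    """Percent overshoot, and settling time taken as the last instant the
    output lies outside a +/- band around the final value."""
    overshoot = max(0.0, (max(outputs) - final_value) / final_value * 100.0)
    settling_time = 0.0
    for t, y in zip(times, outputs):
        if abs(y - final_value) > band * abs(final_value):
            settling_time = t
    return overshoot, settling_time

times, outputs = simulate_step(zeta=0.5, wn=1.0)
overshoot, settling_time = step_metrics(times, outputs)
print(f"percent overshoot = {overshoot:.1f} %, 2 % settling time = {settling_time:.2f} s")
```

For $\zeta = 0.5$ the measured overshoot should land close to the textbook value $100\,e^{-\zeta\pi/\sqrt{1-\zeta^2}} \approx 16.3\,\%$. This gives a quick sanity check that the state-space simulation is right.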

Control systems

Control engineering is the engineering discipline that focuses on modeling a diverse range of dynamic systems (e.g. mechanical systems) and on designing controllers that will cause these systems to behave in the desired manner. Although such controllers need not be electrical, many are, and hence control engineering is often viewed as a subfield of electrical engineering. However, the falling price of microprocessors is making the actual implementation of a control system essentially trivial. As a result, focus is shifting back to the mechanical and process engineering disciplines, since intimate knowledge of the physical system being controlled is often desired. Electrical circuits, digital signal processors and microcontrollers can all be used to implement control systems. Control engineering has a wide range of applications, from the flight and propulsion systems of commercial airliners to the cruise control present in many modern automobiles.

In most cases, control engineers use feedback when designing control systems. This is often accomplished using a PID controller. For example, in an automobile with cruise control, the vehicle's speed is continuously monitored and fed back to the system, which adjusts the motor's torque accordingly. Where there is regular feedback, control theory can be used to determine how the system responds to that feedback. In practically all such systems stability is important, and control theory can help ensure that it is achieved.

Although feedback is an important aspect of control engineering, control engineers may also work on the control of systems without feedback. This is known as open loop control. A classic example of open loop control is a washing machine that runs through a pre-determined cycle without the use of sensors.
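To contrast the two approaches, here is a minimal sketch of the cruise-control example as a simple car model that meets a hill. The open-loop version applies a fixed force computed in advance for a level road, much as the washing machine runs a fixed cycle. The closed-loop version feeds the measured speed back to a proportional-integral (PI) controller. The mass, drag coefficient, hill force and gains are all assumed values chosen for illustration.

```python
def simulate_cruise(closed_loop, v_ref=25.0, t_end=200.0, dt=0.01):
    """Return the final speed [m/s] of an assumed car model
    m*dv/dt = F - b*v - F_hill, with a 500 N hill force from t = 50 s."""
    m, b = 1000.0, 50.0          # assumed mass [kg] and linear drag coefficient [N*s/m]
    kp, ki = 800.0, 200.0        # assumed PI gains
    v = v_ref                    # start already cruising at the set-point
    integral_force = b * v_ref   # integrator pre-loaded with the level-road force
    for k in range(int(round(t_end / dt))):
        t = k * dt
        hill_force = 500.0 if t >= 50.0 else 0.0
        if closed_loop:
            error = v_ref - v                    # measured speed is fed back
            integral_force += ki * error * dt    # integral action removes steady-state error
            force = kp * error + integral_force
        else:
            force = b * v_ref                    # fixed input computed for a level road
        v += dt * (force - b * v - hill_force) / m   # forward-Euler step of the car model
    return v

print(f"open loop:   {simulate_cruise(closed_loop=False):.2f} m/s")
print(f"closed loop: {simulate_cruise(closed_loop=True):.2f} m/s")
```

With these numbers the open-loop car settles about 10 m/s below the set-point, because 500 N / 50 N·s/m = 10 m/s. The feedback loop returns to 25 m/s. This is the practical difference between the pre-determined cycle and regular feedback.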

Control engineering education

At many universities around the world, control engineering courses are taught primarily in electrical engineering, but some courses are taught in mechatronics engineering, mechanical engineering and aerospace engineering. At others, control engineering is connected to computer science, as most control techniques today are implemented through computers, often as embedded systems (as in the automotive field). The field of control within chemical engineering is often known as process control. It deals primarily with the control of variables in a chemical process in a plant. It is taught as part of the undergraduate curriculum of any chemical engineering program and employs many of the same principles as control engineering. Other engineering disciplines also overlap with control engineering, since it can be applied to any system for which a suitable model can be derived. However, specialised control engineering departments do exist, for example, the Department of Automatic Control and Systems Engineering at the University of Sheffield and the Department of Systems Engineering at the United States Naval Academy.

Control engineering has diversified applications that include science, finance management, and even human behavior. Students of control engineering may start with a linear control systems course, called classical control theory, that deals with the time and complex-s domains and requires a thorough background in elementary mathematics and the Laplace transform. In linear control, the student performs frequency-domain and time-domain analysis. Digital control and nonlinear control courses require the Z-transform and algebra, respectively, and could be said to complete a basic control education.

Recent advancement

Originally, control engineering was all about continuous systems. The development of computer control tools created a need for discrete control system engineering, because the communications between the computer-based digital controller and the physical system are governed by a computer clock. The discrete-domain equivalent of the Laplace transform is the Z-transform. Today, many control systems are computer controlled and consist of both digital and analog components. Therefore, at the design stage there are two options. Either the digital components are mapped into the continuous domain and the design is carried out there, or the analog components are mapped into the discrete domain and the design is carried out there. The first method is more common in practice, because many industrial systems have many continuous components (mechanical, fluid, biological and analog electrical) and only a few digital controllers.
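As a small worked example of mapping an analog component into the discrete domain, take an assumed first-order plant $\dot{x} = -a x + b u$. Assume its input is held constant between clock ticks of period $T$ (a zero-order hold). Then the plant is described exactly at the sampling instants by $x[k+1] = \varphi\,x[k] + \gamma\,u[k]$, with $\varphi = e^{-aT}$ and $\gamma = (b/a)(1 - e^{-aT})$. Its Z-domain transfer function is $G(z) = \gamma/(z - \varphi)$. The sketch below checks this against the continuous step response and compares it with a cruder forward-Euler approximation. The values of $a$, $b$ and $T$ are arbitrary illustrative choices.

```python
import math

def zoh_discretize(a, b, T):
    """Exact zero-order-hold discretization of dx/dt = -a*x + b*u (a > 0)."""
    phi = math.exp(-a * T)
    gamma = (b / a) * (1.0 - phi)
    return phi, gamma

def euler_discretize(a, b, T):
    """Forward-Euler approximation of the same plant, for comparison."""
    return 1.0 - a * T, b * T

def discrete_step(phi, gamma, n):
    """First n samples of the discrete step response, starting from x = 0."""
    x, samples = 0.0, []
    for _ in range(n):
        samples.append(x)
        x = phi * x + gamma * 1.0  # u[k] = 1, held constant over each sample period
    return samples

a, b, T, n = 2.0, 1.0, 0.1, 50
exact = [(b / a) * (1.0 - math.exp(-a * k * T)) for k in range(n)]
zoh = discrete_step(*zoh_discretize(a, b, T), n)
euler = discrete_step(*euler_discretize(a, b, T), n)
zoh_err = max(abs(p - q) for p, q in zip(zoh, exact))
euler_err = max(abs(p - q) for p, q in zip(euler, exact))
print(f"max error at the samples: ZOH = {zoh_err:.1e}, forward Euler = {euler_err:.1e}")
```

The zero-order-hold model matches the continuous plant at every sample, up to rounding. The Euler model drifts by about 0.02 at this sample period, which is why the mapping method matters when the design is carried out in the discrete domain.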

Similarly, the design technique has progressed from paper-and-ruler based manual design to computer-aided design and now to computer-automated design or CAutoD which has been made possible by evolutionary computation. CAutoD can be applied not just to tuning a predefined control scheme, but also to controller structure optimisation, system identification and invention of novel control systems, based purely upon a performance requirement, independent of any specific control scheme.
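CAutoD tools are far more general than anything that fits here. As a minimal illustration of the narrowest case mentioned, tuning a predefined control scheme by evolutionary computation, the sketch below uses a (1+1) evolution strategy. It mutates the gains of a PI controller and keeps a mutation only if it lowers a performance cost. The first-order plant $\dot{x} = -x + u$, the cost (integral of squared error plus a penalty on control-signal jumps), the gain bounds and the mutation size are all assumptions made for this example. None of them describes a particular CAutoD tool.

```python
import random

def pi_cost(kp, ki, dt=0.01, t_end=10.0, effort_weight=0.1):
    """Cost of a unit-step response on the assumed plant dx/dt = -x + u:
    integral of squared error plus a penalty on jumps in the control signal."""
    x = integral = u_prev = cost = 0.0
    for _ in range(int(round(t_end / dt))):
        error = 1.0 - x
        integral += error * dt
        u = kp * error + ki * integral
        cost += error * error * dt + effort_weight * (u - u_prev) ** 2
        u_prev = u
        x += dt * (-x + u)  # forward-Euler step of the plant
    return cost

def evolve_gains(kp, ki, iterations=300, sigma=0.5, seed=1):
    """(1+1) evolution strategy: mutate the gains, keep the child if it is better."""
    rng = random.Random(seed)
    best = (kp, ki)
    best_cost = pi_cost(*best)
    for _ in range(iterations):
        # Gaussian mutation, clipped to an assumed admissible range [0, 20]
        child = tuple(min(20.0, max(0.0, g + rng.gauss(0.0, sigma))) for g in best)
        child_cost = pi_cost(*child)
        if child_cost < best_cost:
            best, best_cost = child, child_cost
    return best, best_cost

(kp, ki), cost = evolve_gains(0.5, 0.5)
print(f"tuned gains kp = {kp:.2f}, ki = {ki:.2f}, cost {pi_cost(0.5, 0.5):.3f} -> {cost:.3f}")
```

Only the performance requirement, expressed as the cost function, steers the search. This is the property the paragraph highlights. Structure optimisation would mutate the controller form as well as its parameters.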

Resilient control systems extend the traditional focus on planned disturbances to frameworks that attempt to address multiple types of unexpected disturbance. In particular, they adapt and transform the behavior of the control system in response to malicious actors, abnormal failure modes, undesirable human action, and so on.

Control reconfiguration

Control reconfiguration is an active approach in control theory to achieve fault-tolerant control for dynamic systems. It is used when severe faults, such as actuator or sensor outages, cause a break-up of the control loop, which must be restructured to prevent failure at the system level. In addition to loop restructuring, the controller parameters must be adjusted to accommodate changed plant dynamics. Control reconfiguration is a building block toward increasing the dependability of systems under feedback control.

Reconfiguration problem

Schematic diagram of a typical active fault-tolerant control system. In the nominal, i.e. fault-free situation, the lower control loop operates to meet the control goals. The fault-detection (FDI) module monitors the closed-loop system to detect and isolate faults. The fault estimate is passed to the reconfiguration block, which modifies the control loop to reach the control goals in spite of the fault.

Fault modelling

The figure to the right shows a plant controlled by a controller in a standard control loop. The nominal linear model of the plant is

$$\dot{x}(t) = A\,x(t) + B\,u(t), \qquad y(t) = C\,x(t).$$

The plant subject to a fault (indicated by a red arrow in the figure) is modelled in general by

$$\dot{x}_f(t) = A_f\,x_f(t) + B_f\,u_f(t), \qquad y_f(t) = C_f\,x_f(t),$$

where the subscript $f$ indicates that the system is faulty. This approach models multiplicative faults by modified system matrices. Specifically, actuator faults are represented by the new input matrix $B_f$, sensor faults by the output map $C_f$, and internal plant faults by the system matrix $A_f$.
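To make the fault model and the FDI block concrete, here is a minimal residual-based detection sketch for a scalar plant. The real plant switches from the nominal input gain $b$ to a faulty gain $b_f = 0.5\,b$, an actuator that has lost half its effectiveness. A copy of the nominal model runs in parallel, driven by the same input, and the residual $r = y - \hat{y}$ is compared with a fixed threshold. The plant numbers, the fault size and timing, and the threshold are assumptions. Practical FDI schemes usually add observer feedback and isolation logic, which this sketch leaves out.

```python
def detect_actuator_fault(t_fault=5.0, t_end=10.0, dt=0.001, threshold=0.05):
    """Scalar plant x' = a*x + b*u, y = c*x. From t_fault the real plant uses
    the faulty input gain b_f; the nominal model keeps using b. Returns the
    first time |y - y_hat| exceeds the threshold, and the residual then."""
    a, b, c = -1.0, 1.0, 1.0   # assumed nominal model
    b_f = 0.5 * b              # assumed actuator fault: 50 % loss of effectiveness
    u = 1.0                    # constant test input applied to both
    x = x_hat = 0.0
    for k in range(int(round(t_end / dt))):
        t = k * dt
        b_now = b_f if t >= t_fault else b
        x += dt * (a * x + b_now * u)       # "measured" plant (multiplicative fault in B)
        x_hat += dt * (a * x_hat + b * u)   # nominal model inside the FDI block
        residual = c * x - c * x_hat
        if abs(residual) > threshold:
            return t, residual
    return None, 0.0

t_detect, residual = detect_actuator_fault()
print(f"fault flagged at t = {t_detect:.3f} s, residual = {residual:.3f}")
```

Before the fault the residual is exactly zero, because the two models are identical. Afterwards it grows until it crosses the threshold. The threshold trades detection delay against false alarms once sensor noise is present.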

The upper part of the figure shows a supervisory loop consisting of fault detection and isolation (FDI) and reconfiguration which changes the loop by

- choosing new input and output signals from $\{u_f, y_f\}$ to reach the control goal,

- changing the controller internals (including dynamic structure and parameters),

- adjusting the reference input.

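Alternative scenarios can model faults as an additive external signal $f$ influencing the state derivatives and outputs as follows:

$$\dot{x}_f(t) = A\,x_f(t) + B\,u_f(t) + E\,f(t), \qquad y_f(t) = C\,x_f(t) + F\,f(t),$$

where the matrices $E$ and $F$ describe how the fault enters the state equation and the output.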

Reconfiguration goals

The goal of reconfiguration is to keep the reconfigured control-loop performance sufficient to prevent plant shutdown. The following goals are distinguished:

- Stabilization

- Equilibrium recovery

- Output trajectory recovery

- State trajectory recovery

- Transient time response recovery

Usually a combination of goals is pursued in practice, such as the equilibrium-recovery goal with stability.

The question whether or not these or similar goals can be reached for specific faults is addressed by reconfigurability analysis.

Reconfiguration approaches

Fault hiding

Linear model following

In linear model following, the aim is to recover a formal feature of the nominal closed loop. The classical pseudo-inverse method uses the closed-loop system matrix $A - BK$ of a state-feedback control structure $u = -Kx$. The new controller $K_f$ is chosen so that $A_f - B_f K_f$ approximates $A - BK$ in the sense of an induced matrix norm. In perfect model following, a dynamic compensator is introduced to allow exact recovery of the complete loop behaviour under certain conditions.
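A minimal sketch of the pseudo-inverse computation for a single-input plant follows. For a column vector $b_f$ the pseudo-inverse is $b_f^{\mathsf T}/(b_f^{\mathsf T} b_f)$. The gain $K_f = B_f^{+}(A_f - A + BK)$ is then the least-squares choice: it minimizes the Frobenius norm of the mismatch $(A - BK) - (A_f - B_f K_f)$. The plant matrices, nominal gain and fault below are assumed values. Plain lists are used to keep the example dependency-free.

```python
def mat_add(P, Q, sign=1.0):
    """Element-wise P + sign*Q."""
    return [[p + sign * q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]

def mat_mul(P, Q):
    """Matrix product P @ Q for nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]

def pseudo_inverse_gain(A, B, K, A_f, B_f):
    """Pseudo-inverse method for a single-input plant (B and B_f are n x 1):
    K_f = pinv(B_f) (A_f - A + B K), with pinv(b) = b^T / (b^T b)."""
    target = mat_add(mat_add(A_f, A, -1.0), mat_mul(B, K))  # A_f - A + B K
    norm_sq = sum(row[0] ** 2 for row in B_f)
    B_f_pinv = [[row[0] / norm_sq for row in B_f]]           # 1 x n
    return mat_mul(B_f_pinv, target)                        # 1 x n gain K_f

def closed_loop_matrix(A, B, K):
    """A - B K for state feedback u = -K x."""
    return mat_add(A, mat_mul(B, K), -1.0)

A = [[0.0, 1.0], [-2.0, -3.0]]   # assumed plant
B = [[0.0], [1.0]]
K = [[4.0, 1.0]]                 # assumed nominal state-feedback gain
B_f = [[0.0], [0.5]]             # assumed actuator fault: half effectiveness
K_f = pseudo_inverse_gain(A, B, K, A, B_f)
print("reconfigured gain K_f =", K_f)
print("nominal closed loop:  ", closed_loop_matrix(A, B, K))
print("recovered closed loop:", closed_loop_matrix(A, B_f, K_f))
```

In this example the fault only scales the input, so the closed loop is recovered exactly: the gain doubles. When the faulty input direction cannot reproduce $A - BK$, the least-squares gain gives only an approximation. That approximation is known not to guarantee closed-loop stability on its own, so the result needs to be checked.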

In eigenstructure assignment, the closed-loop eigenvalues and eigenvectors (the eigenstructure) are restored to their nominal values after a fault.

Optimisation-based control schemes

Optimisation control schemes include linear-quadratic regulator design (LQR), model predictive control (MPC) and eigenstructure assignment methods.

Probabilistic approaches

Some probabilistic approaches have been developed.

Learning control

Learning approaches include learning automata, neural networks, etc.

Mathematical tools and frameworks

The methods by which reconfiguration is achieved differ considerably. The following list gives an overview of commonly used mathematical approaches.

- Adaptive control (AC)

- Disturbance decoupling (DD)

- Eigenstructure assignment (EA)

- Gain scheduling (GS)/linear parameter varying (LPV)

- Generalised internal model control (GIMC)

- Intelligent control (IC)

- Linear matrix inequality (LMI)

- Linear-quadratic regulator (LQR)

- Model following (MF)

- Model predictive control (MPC)

- Pseudo-inverse method (PIM)

- Robust control techniques

Fault accommodation is another common approach to achieving fault tolerance. In contrast to control reconfiguration, accommodation is limited to internal controller changes. The sets of signals manipulated and measured by the controller are fixed, which means that the loop cannot be restructured.

Instrumentation

Instrumentation is a collective term for measuring instruments used for indicating, measuring and recording physical quantities. It has its origins in the art and science of scientific instrument-making.

The term instrumentation may refer to a single device or group of devices used for direct reading, such as thermometers. When many sensors are used, instrumentation may instead become part of a complex industrial control system in areas such as manufacturing, vehicles and transportation. Instrumentation can be found in the household as well; a smoke detector or a heating thermostat are examples.

History and development

The history of instrumentation can be divided into several phases.

Pre-industrial

Elements of industrial instrumentation have long histories. Scales for comparing weights and simple pointers to indicate position are ancient technologies. Some of the earliest measurements were of time. One of the oldest water clocks was found in the tomb of the ancient Egyptian pharaoh Amenhotep I, buried around 1500 BCE.[1] Improvements were incorporated in the clocks. By 270 BCE they had the rudiments of an automatic control system device.[2] In 1663 Christopher Wren presented the Royal Society with a design for a "weather clock". A drawing shows meteorological sensors moving pens over paper driven by clockwork. Such devices did not become standard in meteorology for two centuries.[3] The concept has remained virtually unchanged, as evidenced by pneumatic chart recorders, where a pressurized bellows displaces a pen. Integrating sensors, displays, recorders and controls was uncommon until the industrial revolution, limited by both need and practicality.

Early industrial

Early systems used direct process connections to local control panels for control and indication. From the early 1930s these panels saw the introduction of pneumatic transmitters and automatic three-term (PID) controllers. The ranges of pneumatic transmitters were defined by the need to control valves and actuators in the field. The standard signal typically ranged from 3 to 15 psi (20 to 100 kPa, or 0.2 to 1.0 kg/cm²), with 6 to 30 psi occasionally used for larger valves. Transistor electronics enabled wiring to replace pipes. The range was initially 20 to 100 mA at up to 90 V for loop-powered devices, reduced to 4 to 20 mA at 12 to 24 V in more modern systems. A transmitter is a device that produces an output signal, often in the form of a 4–20 mA electrical current signal, although many other options using voltage, frequency, pressure or Ethernet are possible. The transistor was commercialized by the mid-1950s.[4]
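Both the pneumatic 3–15 psi signal and the electronic 4–20 mA signal are linear, offset ranges. Converting a reading to engineering units is therefore a straight-line mapping, as in the sketch below. Because 0 % corresponds to 4 mA (a "live zero"), a broken loop that reads near 0 mA can be told apart from a genuine 0 % value. The 0–200 °C range and the 3.6 mA / 21 mA plausibility limits are assumed values for illustration. Real fault limits depend on the device and the site's conventions.

```python
def scale_signal(signal, signal_lo, signal_hi, eng_lo, eng_hi):
    """Linearly map a standard instrument signal onto an engineering range."""
    fraction = (signal - signal_lo) / (signal_hi - signal_lo)
    return eng_lo + fraction * (eng_hi - eng_lo)

def loop_current_to_value(current_ma, eng_lo, eng_hi, fault_lo_ma=3.6, fault_hi_ma=21.0):
    """Convert a 4-20 mA loop current to engineering units, rejecting currents
    outside assumed plausibility limits (e.g. about 0 mA for an open loop)."""
    if not fault_lo_ma <= current_ma <= fault_hi_ma:
        raise ValueError(f"loop current {current_ma} mA suggests a wiring or transmitter fault")
    return scale_signal(current_ma, 4.0, 20.0, eng_lo, eng_hi)

print(loop_current_to_value(12.0, 0.0, 200.0))  # mid-scale current on an assumed 0-200 degC range
print(scale_signal(9.0, 3.0, 15.0, 0.0, 100.0))  # mid-scale 3-15 psi signal, as a percentage
```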

Instruments attached to a control system provided signals used to operate solenoids, valves, regulators, circuit breakers, relays and other devices. Such devices could control a desired output variable, and provide either remote or automated control capabilities.

Each instrument company introduced its own standard instrumentation signal. This caused confusion until the 4–20 mA range was adopted as the standard electronic instrument signal for transmitters and valves. The signal was eventually standardized as ANSI/ISA S50, "Compatibility of Analog Signals for Electronic Industrial Process Instruments", in the 1970s. The move from mechanical pneumatic transmitters, controllers and valves to electronic instruments reduced maintenance costs, as electronic instruments were more dependable than mechanical ones. Their greater accuracy also increased efficiency and production. Pneumatics retained some advantages, however, and remained favored in corrosive and explosive atmospheres.

Automatic process control

Large integrated computer-based systems

Pneumatic "three-term" PID controller, widely used before electronics became reliable, cheaper and safe to use in hazardous areas (Siemens Telepneu example)

However, whilst providing a central control focus, this arrangement was inflexible. Each control loop had its own controller hardware, and operators had to move around the control room continually to view different parts of the process. With the coming of electronic processors and graphic displays, it became possible to replace these discrete controllers with computer-based algorithms. These were hosted on a network of input/output racks with their own control processors, which could be distributed around the plant and communicate with the graphic display in the control room or rooms. The distributed control concept was born.

The introduction of DCSs and SCADA allowed easy interconnection and re-configuration of plant controls such as cascaded loops and interlocks, and easy interfacing with other production computer systems. It enabled sophisticated alarm handling, introduced automatic event logging, removed the need for physical records such as chart recorders, allowed the control racks to be networked and thereby located locally to plant to reduce cabling runs, and provided high level overviews of plant status and production levels.

Applications

In some cases the sensor is a very minor element of the mechanism. Digital cameras and wristwatches might technically meet the loose definition of instrumentation because they record and/or display sensed information. Under most circumstances neither would be called instrumentation, but when used to measure the elapsed time of a race and to document the winner at the finish line, both would be called instrumentation.

Household

A very simple example of an instrumentation system is a mechanical thermostat, used to control a household furnace and thus to control room temperature. A typical unit senses temperature with a bi-metallic strip. It displays temperature by a needle on the free end of the strip. It activates the furnace by a mercury switch. As the switch is rotated by the strip, the mercury makes physical (and thus electrical) contact between electrodes.

Another example of an instrumentation system is a home security system. Such a system consists of sensors (motion detection, switches to detect door openings), simple algorithms to detect intrusion, local control (arm/disarm) and remote monitoring of the system so that the police can be summoned. Communication is an inherent part of the design.
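The "simple algorithm" and local arm/disarm control of a security system can be sketched as a three-state machine. Any sensor event while the system is armed raises the alarm and passes a message to the remote-monitoring link. The state names, the sensor labels and the `notify` callback that stands in for that link are hypothetical. Real panels add entry delays, zones and tamper detection.

```python
class SecuritySystem:
    """Minimal arm/disarm/alarm logic for a home security system (illustrative)."""

    def __init__(self, notify):
        self.state = "disarmed"
        self.notify = notify   # hypothetical stand-in for the remote-monitoring link

    def arm(self):
        if self.state == "disarmed":
            self.state = "armed"

    def disarm(self):
        self.state = "disarmed"   # also silences an active alarm

    def sensor_tripped(self, sensor):
        # intrusion rule: a door switch or motion event while armed raises the alarm once
        if self.state == "armed":
            self.state = "alarm"
            self.notify(f"intrusion detected by {sensor}")

messages = []
system = SecuritySystem(messages.append)
system.arm()
system.sensor_tripped("hall motion sensor")
print(system.state, messages)
```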

Kitchen appliances use sensors for control.

- A refrigerator maintains a constant temperature by measuring the internal temperature.

- A microwave oven sometimes cooks via a heat-sense-heat-sense cycle until sensing done.

- An automatic ice machine makes ice until a limit switch is thrown.

- Pop-up bread toasters can operate by time or by heat measurements.

- Some ovens use a temperature probe to cook until a target internal food temperature is reached.

- A common toilet refills the water tank until a float closes the valve. The float is acting as a water level sensor.

Automotive

Modern automobiles have complex instrumentation. In addition to displays of engine rotational speed and vehicle linear speed, there are also displays of battery voltage and current, fluid levels, fluid temperatures, distance traveled and feedback from various controls (turn signals, parking brake, headlights, transmission position). Cautions may be displayed for special problems (fuel low, check engine, tire pressure low, door ajar, seat belt unfastened). Problems are recorded so they can be reported to diagnostic equipment. Navigation systems can provide voice commands to reach a destination. Automotive instrumentation must be cheap and reliable over long periods in harsh environments. There may be independent airbag systems that contain sensors, logic and actuators. Anti-skid braking systems use sensors to control the brakes, while cruise control affects throttle position. A wide variety of services can be provided via communication links such as the OnStar system. Autonomous cars (with exotic instrumentation) have been demonstrated.

Aircraft

Early aircraft had a few sensors. "Steam gauges" converted air pressures into needle deflections that could be interpreted as altitude and airspeed. A magnetic compass provided a sense of direction. The displays to the pilot were as critical as the measurements.

A modern aircraft has a far more sophisticated suite of sensors and displays, which are embedded into avionics systems. The aircraft may contain inertial navigation systems, global positioning systems, weather radar, autopilots, and aircraft stabilization systems. Redundant sensors are used for reliability. A subset of the information may be transferred to a crash recorder to aid mishap investigations. Modern pilot displays now include computer displays, including head-up displays.

Air traffic control radar is a distributed instrumentation system. The ground portion transmits an electromagnetic pulse and, at minimum, receives an echo. Aircraft carry transponders that transmit codes on reception of the pulse. The system displays each aircraft's map location, an identifier and, optionally, its altitude. The map location is based on the sensed antenna direction and the sensed time delay. The other information is embedded in the transponder transmission.
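Turning the sensed antenna direction and time delay into a map location is a short calculation. The pulse travels out and back, so range $= c\,\Delta t / 2$. The bearing then places the target on a polar grid around the radar. The sketch below treats slant range as ground range (ignoring altitude) and ignores any fixed transponder reply delay. Both are simplifying assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def echo_to_position(bearing_deg, round_trip_s):
    """East/north offset in metres of a target from the radar, given the antenna
    bearing (degrees clockwise from north) and the round-trip echo delay."""
    slant_range = SPEED_OF_LIGHT * round_trip_s / 2.0   # out-and-back path, so halve it
    theta = math.radians(bearing_deg)
    return slant_range * math.sin(theta), slant_range * math.cos(theta)

east, north = echo_to_position(bearing_deg=90.0, round_trip_s=1e-3)
print(f"east = {east / 1000:.1f} km, north = {north / 1000:.1f} km")
```

A 1 ms round trip corresponds to roughly 150 km, which gives a feel for the timing precision the receiver needs.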

Laboratory instrumentation

Among the possible uses of the term is a collection of laboratory test equipment controlled by a computer through an IEEE-488 bus (also known as GPIB, for General Purpose Interface Bus, or HP-IB, for Hewlett-Packard Interface Bus). Laboratory equipment is available to measure many electrical and chemical quantities. Such a collection of equipment might be used to automate the testing of drinking water for pollutants.

Measurement parameters

Instrumentation is used to measure many parameters (physical values). These parameters include:


Instrumentation engineering

The instrumentation part of a Piping and instrumentation diagram will be developed by an instrumentation engineer.

Instrumentation engineering is loosely defined because the required tasks are very domain dependent. An expert in the biomedical instrumentation of laboratory rats has very different concerns than the expert in rocket instrumentation. Common concerns of both are the selection of appropriate sensors based on size, weight, cost, reliability, accuracy, longevity, environmental robustness and frequency response. Some sensors are literally fired in artillery shells. Others sense thermonuclear explosions until destroyed. Invariably sensor data must be recorded, transmitted or displayed. Recording rates and capacities vary enormously. Transmission can be trivial or can be clandestine, encrypted and low-power in the presence of jamming. Displays can be trivially simple or can require consultation with human factors experts. Control system design varies from trivial to a separate specialty.

Instrumentation engineers are responsible for integrating the sensors with the recorders, transmitters, displays or control systems, and producing the Piping and instrumentation diagram for the process. They may design or specify installation, wiring and signal conditioning. They may be responsible for calibration, testing and maintenance of the system.

In a research environment it is common for subject matter experts to have substantial instrumentation system expertise. An astronomer knows the structure of the universe and a great deal about telescopes: optics, pointing and cameras (or other sensing elements). That often includes hard-won knowledge of the operational procedures that provide the best results. For example, an astronomer often knows techniques to minimize the temperature gradients that cause air turbulence within the telescope.

Instrumentation technologists, technicians and mechanics specialize in troubleshooting, repairing and maintaining instruments and instrumentation systems.

Typical Industrial Transmitter Signal Types

- Current loop (4–20 mA): electrical

- HART: data signalling, often overlaid on a current loop

- Foundation Fieldbus: data signalling

- Profibus: data signalling

Impact of modern development

Ralph Müller (1940) stated "That the history of physical science is largely the history of instruments and their intelligent use is well known. The broad generalizations and theories which have arisen from time to time have stood or fallen on the basis of accurate measurement, and in several instances new instruments have had to be devised for the purpose. There is little evidence to show that the mind of modern man is superior to that of the ancients. His tools are incomparably better."

Davis Baird has argued that the major change associated with Floris Cohen's identification of a "fourth big scientific revolution" after World War II is the development of scientific instrumentation, not only in chemistry but across the sciences. In chemistry, the introduction of new instrumentation in the 1940s was "nothing less than a scientific and technological revolution" in which classical wet-and-dry methods of structural organic chemistry were discarded, and new areas of research opened up.

As early as 1954, W A Wildhack discussed both the productive and destructive potential inherent in process control. The ability to make precise, verifiable and reproducible measurements of the natural world, at levels that were not previously observable, using scientific instrumentation, has "provided a different texture of the world". This instrumentation revolution fundamentally changes human abilities to monitor and respond, as is illustrated in the examples of DDT monitoring and the use of UV spectrophotometry and gas chromatography to monitor water pollutants.

The PID Controller

We will explore how to implement both analog and digital control systems. We will be using a PID (Proportional Integral Derivative) controller. With a PID controller, we can control thermal, electrical, chemical and mechanical processes. The PID controller is found at the heart of many industrial control systems.

In this first of three installments, we will answer the “why” questions. We will also lay a foundation to better understand what a PID controller is. In subsequent installments, we will explore how to tune the PID controller and how to implement a digital PID using the ZILOG Encore! microprocessor.

The goal of this series is to introduce you to the world of control electronics. Concepts will be explained in a simple, intuitive fashion and useful, practical examples will be presented. The math will be kept to an absolute minimum. This is not to say that the math is not important. Quite the opposite — control systems may be modeled and analyzed mathematically. The mathematics is nothing short of amazing and I would encourage you to peruse it. There are hundreds of books that explain the theory and mathematics of control systems. These books will introduce you to powerful tools, such as Laplace transforms, root locus, and Bode plots. Again, this series of articles hardly scratches the surface. There is much more to be learned.

What Is PID Control?

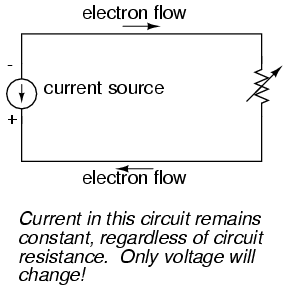

The term PID is an acronym that stands for Proportional Integral Derivative. A PID controller is part of a feedback system. A PID system uses Proportional, Integral, and Derivative drive elements to control a process. Some of you already know what P, I, and D stand for. Don’t worry if you don’t; we will soon cover these terms with easy-to-understand examples.

Why Do I Need PID Control?

You need PID control because some things are difficult to control using standard methods. Let me illustrate with an example. My first experience with control systems was a failure. My goal was to regulate the output of a power supply using a PIC microcontroller. The PIC read the output voltage with an A/D converter and adjusted a PWM signal to regulate the output. The control strategy was very simple: if the measured voltage was below the set-point, turn on the PWM; if it was above the set-point, turn off the PWM. The PIC power supply almost worked. It did produce the DC output voltage that I wanted. Unfortunately, it also had a significant AC ripple riding on the DC signal.

The control strategy I just described is called on-off or bang-bang control. Many types of systems use this control strategy. Take the furnace in my house as an example. When the temperature is below the set-point, the furnace is on. When the temperature is above the set-point, the furnace is off. Just like my power supply, the temperature never settles: plotted over time, it oscillates above and below the set-point, roughly like a sine wave.
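To see why on-off control oscillates, here is a minimal Python sketch of a bang-bang loop. The process is hypothetical: a temperature that rises at a fixed rate while the heater is on and falls at a slower fixed rate while it is off, with the sensor reading delayed by a few steps to stand in for the lag present in any real furnace or power supply. The function and parameter names are illustrative assumptions, not taken from the article.

```python
from collections import deque


def simulate_on_off(setpoint=25.0, start=20.0, steps=600, delay=10,
                    heat_rate=0.1, cool_rate=0.05):
    """Toy on-off (bang-bang) loop: the heater is either fully on or fully off.

    Hypothetical plant: the temperature rises by heat_rate per step while the
    heater is on and falls by cool_rate per step while it is off. The sensor
    reports the temperature from `delay` steps earlier, standing in for the
    lag of a real system. `delay` must be at least 1.
    """
    temp = start
    readings = deque([start] * delay, maxlen=delay)  # sensor pipeline, oldest first
    temps, switches, heater_on = [], 0, False
    for _ in range(steps):
        measured = readings[0]                 # delayed measurement
        wanted = measured < setpoint           # on below set-point, off above it
        if wanted != heater_on:
            heater_on = wanted
            switches += 1
        temp += heat_rate if heater_on else -cool_rate
        readings.append(temp)                  # maxlen drops the oldest reading
        temps.append(temp)
    return temps, switches


temps, switches = simulate_on_off()
late = temps[-200:]
print(switches, round(min(late), 2), round(max(late), 2))
```

With these defaults the temperature keeps cycling between roughly 24.5 and 26 degrees instead of settling at 25. Shortening `delay` narrows the swing, but the heater still has only two states, so the oscillation never disappears.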

For some types of control, this is acceptable; for others, it is not. You wouldn’t want this type of control for a servo motor — bad things would happen! Just imagine — the motor would be full power in one direction and, the next moment, full power in the other direction. You can see where the term bang-bang comes from. That servo won’t last long!

The PID controller takes control systems to the next level. It can provide a controlled — almost intelligent — drive for systems. We will now examine the individual components of the PID system. This step is necessary to understand the entire PID system. Please don’t skip this section; you must know how the individual components function to understand the whole system.

What Is Proportional?

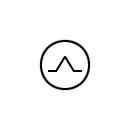

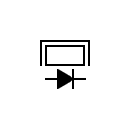

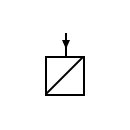

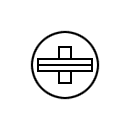

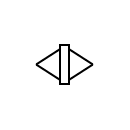

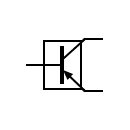

This one is easy. The proportional component is simply gain. We can use an inverting op-amp, as shown in Figure 1.

FIGURE 1.

In this op-amp circuit, the gain is set by the values of the resistors. We have the following mathematical relationship:

Vout = -Vin * Rf /Ri
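The gain relationship is easy to check numerically. This sketch assumes an ideal op-amp (no saturation at the supply rails), and the resistor values are hypothetical examples rather than the values used in Figure 1.

```python
def inverting_gain(r_f, r_i):
    """Closed-loop gain of an ideal inverting op-amp stage: -Rf / Ri."""
    return -r_f / r_i


def inverting_amp_output(v_in, r_f, r_i):
    """Vout = -Vin * Rf / Ri for an ideal op-amp (rail saturation ignored)."""
    return v_in * inverting_gain(r_f, r_i)


# Hypothetical values: Rf = 100 kOhm and Ri = 10 kOhm give a gain of -10.
print(inverting_gain(100e3, 10e3))              # -10.0
print(inverting_amp_output(0.5, 100e3, 10e3))   # -5.0
```

Changing either resistor scales the proportional drive, which is exactly how the P gain is adjusted in the analog controller.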

What Is Integral?

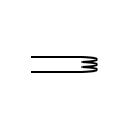

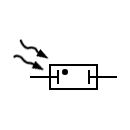

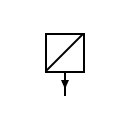

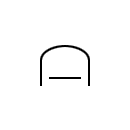

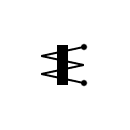

Integral is shorthand for integration. You can think of this as accumulation (adding) of a quantity over time. For example, you are now integrating this information into your store of knowledge. Your store of knowledge has components of both time and knowledge. Obviously, we all started as babies with virtually no knowledge. Over time, we have integrated knowledge into our brains.

In our PID controller, we are integrating voltage as time progresses. A schematic of an integrator circuit is shown in Figure 2.

FIGURE 2.

The output voltage is described mathematically by the following equation:

Vout = -(1/RC) * (area under curve) + initial output voltage (set by any charge already on the capacitor)

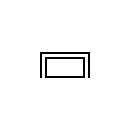

Area here is the product of voltage and time (volt-seconds). Let’s examine the operation of an ideal integrator. We can simplify the math by making the 1/RC term equal to 1 (e.g., let R = 100 kΩ and C = 10 µF, so that RC = 1 s). Figure 3 illustrates the input/output relationships of the integrator.

FIGURE 3.

From time 0 to 2 seconds, a 2 V signal is applied to the input of the integrator. The output of the integrator at the end of this time period is -4 V (remember that the circuit is inverting). The integrator has accumulated a 2 V signal for 2 seconds, so the area is 4 V·s. From T2 to T4, no voltage is applied to the integrator, and the output is unchanged. In the remainder of this diagram, you can see that the integrator output reverses direction when the input signal changes polarity.
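The Figure 3 walk-through can be reproduced numerically by summing volt-seconds with a small time step. This is a sketch of an ideal integrator with RC = 1, as in the text; the -2 V segment from 4 to 6 seconds is a hypothetical addition that shows the output moving back toward zero, since the rest of the figure is not reproduced here.

```python
def ideal_integrator(samples, dt, rc=1.0, v0=0.0):
    """Ideal inverting integrator: Vout = -(1/RC) * (area under curve) + Vout(0).

    The area is accumulated with the rectangle rule, one sample per time step.
    """
    out, area = [], 0.0
    for v in samples:
        area += v * dt                 # volt-seconds accumulated so far
        out.append(-area / rc + v0)    # inverting, scaled by 1/RC
    return out


dt = 0.001
n = 2000                               # 2 seconds' worth of samples
vin = [2.0] * n + [0.0] * n + [-2.0] * n
vout = ideal_integrator(vin, dt)
print(vout[n - 1], vout[2 * n - 1], vout[3 * n - 1])  # close to -4, -4 and 0
```

The held value during the 0 V segment is the "memory" of the integrator: once the input stops, the accumulated area stays put until an input of the opposite polarity removes it.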

The previous discussion assumed an ideal integrator. Real capacitors have some leakage and will tend to discharge themselves. Also, real op-amps may charge the capacitor with no input present (because of their input bias current and offset voltage). If the circuit is built as drawn, it will likely saturate after a few minutes of operation. To prevent this saturation, add a resistor in parallel with the capacitor. For our purposes, we are not concerned about the saturation, because we will be using the integrator with other circuits that control the charge on the capacitor.

To better understand the integrator, let’s look at a typical application. Integrators are often found in high-end audio amplifiers.

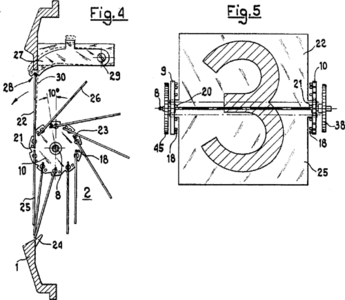

FIGURE 4.

In this application, they are called DC servos. A typical application is shown in Figure 5.

FIGURE 5.

The purpose of this circuit is to remove the unwanted DC voltage from the output of the audio amplifier. Any DC voltage seen on the output of the amplifier will tend to charge the integrator’s capacitor. The integrator then changes the bias of the audio amplifier to remove the DC component. The resistor and capacitor are selected so that the circuit will not respond to audio frequencies.

Also, recall that a pure AC waveform is symmetrical about zero. The part above 0 tends to charge the capacitor, while the part below discharges it. Therefore, when you integrate an AC waveform over a long period of time, you get 0. Even a small DC voltage, however, will charge the capacitor over a long period of time, thus rebiasing the amplifier.
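The DC-servo argument (AC averages out, DC accumulates) can be checked with a short sketch. It integrates a 1 kHz sine wave, standing in for audio, with and without a small DC offset; the amplitude, offset, sample rate, and RC = 1 s are assumptions chosen for illustration.

```python
import math


def integrate_signal(dc_offset, amplitude=1.0, freq_hz=1000.0, seconds=1.0, fs=100_000):
    """Accumulate the area (volt-seconds) under a sine wave plus a DC offset.

    The rectangle rule is used with fs samples per second; dividing the result
    by RC (taken as 1 s here) and inverting it would give the integrator output.
    """
    dt = 1.0 / fs
    area = 0.0
    for k in range(int(seconds * fs)):
        t = k * dt
        area += (amplitude * math.sin(2 * math.pi * freq_hz * t) + dc_offset) * dt
    return area


print(integrate_signal(0.0))    # essentially zero: whole cycles cancel
print(integrate_signal(0.01))   # about 0.01 V*s from a 10 mV offset
```

The 1 V audio signal contributes nothing net, while a 10 mV offset keeps adding area for as long as it is present, which is what lets the DC servo detect and cancel it.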

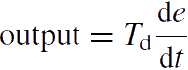

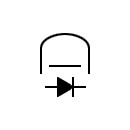

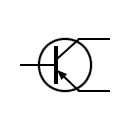

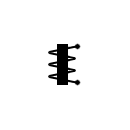

What Is Derivative?

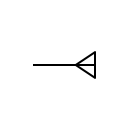

The derivative is a measurement of the rate of change. The ideal differentiator is shown in Figure 5. This circuit looks similar to the high-pass filters you have seen in other schematics: low frequencies are attenuated, while high frequencies are allowed to pass. The mathematics that describes the differentiator is:

Vout = -RC * (rate of change)

Rate of change is equivalent to measuring the slope of a line. Slope is a measure of the change in voltage divided by the change in time. In mathematical terms, this is referred to as a delta voltage over delta time or simply dv/dt. If we apply a ramp to the differentiator, we get a steady DC output voltage. Figure 6 illustrates the input/output relationship of a differentiator.

FIGURE 6.

To simplify the math, we will let RC = 1. From time 0 to 2, the voltage changes by -4 volts, while the time changes by 2 seconds. The slope of this line is, therefore, -2 V/s. The output of the differentiator will be +2 V (remember that the stage is inverting).
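The same kind of numerical check works for the differentiator. This sketch assumes RC = 1 and a ramp that starts at 0 V and falls 4 V over 2 seconds (the starting voltage is an assumption); a backward difference between consecutive samples stands in for the ideal derivative.

```python
def ideal_differentiator(samples, dt, rc=1.0):
    """Ideal inverting differentiator: Vout = -RC * dV/dt.

    dV/dt is approximated by the backward difference between consecutive samples,
    so the output list is one element shorter than the input.
    """
    return [-rc * (curr - prev) / dt for prev, curr in zip(samples, samples[1:])]


dt = 0.01
ramp = [-2.0 * k * dt for k in range(201)]   # 0 V down to -4 V over 2 s
vout = ideal_differentiator(ramp, dt)
print(min(vout), max(vout))                  # both approximately +2.0
```

A constant slope gives a constant output, which is why a ramp in produces a steady DC voltage out.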

Servo Motor System

Now that we are familiar with the P, I, and D terms, let’s examine how they are combined to form a complete system. We will be using the PID controller to control a DC servo motor. I used a Hitec brand servo motor typically found in R/C model cars and airplanes. This servo is inexpensive and readily available. You can also purchase replacement gears (more on that in the next installment!).

The servo mechanism consists of several components, as shown in Photo 1.

PHOTO 1.

We have a DC motor, a set of gears, and a variable resistor. The resistor is attached to the last gear. This variable resistor is used to determine the rotational position of the motor.

The servo was gutted. I only used the motor and the variable resistor, as shown in Photo 2.

PHOTO 2.

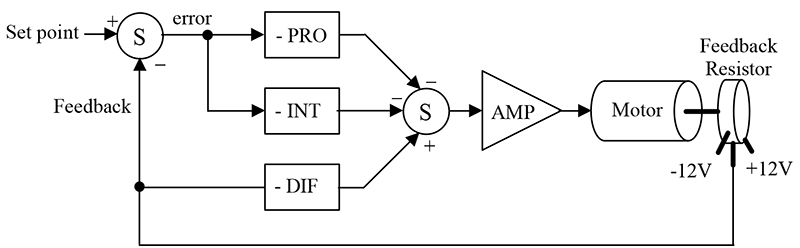

PID Block Diagram

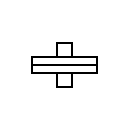

A block diagram showing the functional relationships of the PID controller is shown in Figure 7.

FIGURE 7.

The first thing to notice is that this is a parallel process. The P, I, and D terms are calculated independently and then added at the summer Σ. The input to this loop is the set-point; in this application, it can range from –12 to +12 VDC. The output is motor position. Position is measured by the variable resistor and fed back as a voltage between –12 and +12 VDC. We will now examine each of the PID terms independently to see how they are related. For this discussion, assume that the set-point is 0 VDC.

On the far left of Figure 7, we see a summing junction. The difference between the set-point and the feedback is the error of the system. If the measured motor position is more positive than it should be, the error will be negative (i.e., a negative correction is required). Likewise, if the measured motor position is -1 V, the error will be +1 V (i.e., a positive correction is required; remember that the set-point is 0 VDC).

The error is multiplied by the gain of the proportional block. Notice that the block diagram shows this as a negative gain. This was done so that the block diagram and the schematic (presented later) will be consistent with each other. The proportional amplifier output is sent to the second summing junction, where the sign is again inverted. The amplifier boosts the signal’s current and drives the motor.

This chain gets to be quite long, so let’s summarize the operation of each term in a few simple sentences.

Proportional:

- An error must be present!

- The system will try to correct the error by turning the motor in a direction that opposes the error.

- The intensity of the correction is determined by proportional gain. If there is no error, there is no proportional drive.

Integral:

- An error must be present!

- The integral section accumulates the error. A small error can become a large correction over a period of time.

- As the error is accumulated, the motor is forced to correct the error.

- Finally, the integrator will drive the motor past the set-point. An error opposite to the original one is needed to discharge the capacitor.

Derivative:

- The motor must be moving!

- The differentiator will have a high output voltage when the motor is moving fast and a low output voltage when the motor is moving slowly.

- This signal is applied in such a way as to slow down the motor.

- If the motor is not moving, the differentiator has 0 output voltage.
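The block diagram maps directly onto a few lines of code. The sketch below is a minimal discrete-time parallel PID in Python, not the analog circuit itself: it uses positive gains with error = set-point − feedback instead of the circuit's inverting stages, and it drives a deliberately crude, hypothetical motor model in which shaft speed is simply proportional to the drive signal. The gains (kp = 2, ki = 0.5, kd = 0.1), the time step, and the class name are illustrative assumptions.

```python
class ParallelPID:
    """Minimal discrete parallel PID: P, I and D are computed separately, then summed."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0        # accumulated error (the "capacitor charge")
        self.prev_error = None     # no previous sample before the first update

    def update(self, setpoint, feedback, dt):
        error = setpoint - feedback                  # first summing junction
        self.integral += error * dt                  # I: accumulate error over time
        if self.prev_error is None:
            derivative = 0.0                         # D: no rate information yet
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        # Second summing junction: add the three independently computed terms.
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_servo(setpoint=1.0, steps=2000, dt=0.01):
    """Run the loop on a crude, hypothetical servo model and return the final position."""
    pid = ParallelPID(kp=2.0, ki=0.5, kd=0.1)
    position = 0.0
    for _ in range(steps):
        drive = pid.update(setpoint, position, dt)
        position += drive * dt     # assumed plant: shaft speed proportional to drive
    return position


print(simulate_servo())            # settles close to the 1.0 set-point
```

Because the set-point is constant, the derivative of the error equals minus the rate of change of the feedback, so the D term always opposes motion, matching the "slow down the motor" behavior in the summary.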

Schematic

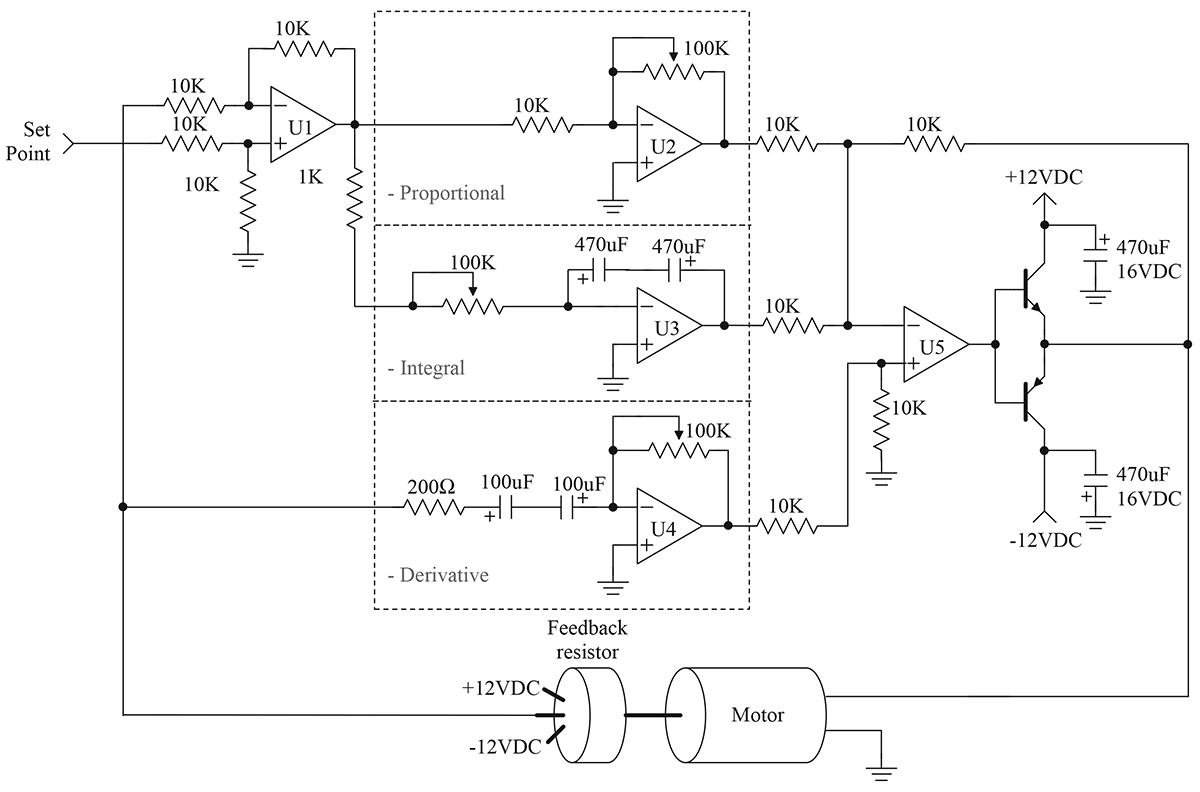

Figure 8 contains a simplified schematic of a servo motor PID control system.

FIGURE 8.

This schematic is an adaptation of the PID controller presented by Professor Jacob in his book, Industrial Control Electronics. This type of system has the advantage of easy tuning. This circuit is also simple and easy to construct.

The schematic has the same physical layout as the block diagram. Op-amp U1 is used as the summing junction for the set-point and measured motor position. The individual P, I, and D functions are implemented by U2, U3, and U4, respectively. Finally, op-amp U5 sums the individual PID terms. The P and I terms are inverted, while the D term is not. Darlington transistors have been added to U5 to boost the current to a level sufficient to drive the motor.

The individual P, I, and D components appear just as they were presented earlier in this article. Each of the terms has a variable resistor to adjust its gain. The adjustment (tuning) of this circuit is the topic for the next installment.

Component selection for this circuit is not critical. The variable resistors should be multiturn for ease of adjustment. General-purpose op-amps may be used; however, U3 should be a FET-input type. The FET-input design is better for the integrator, since its very low input bias current will not self-charge the integrator capacitor. I found a quad op-amp (such as the LF347N) to be ideal for this application. Large capacitors are required for the integrator and derivative circuits, and such large values necessitate electrolytic capacitors. Electrolytic capacitors may be operated as a non-polarized capacitor by placing two of them in series, back to back, as shown in the schematic.

Testing

Before we can test the PID circuit, we need to know more about the mechanical system. We need to know how it responds to a command and how the individual P, I, and D terms interact. You will have to be patient and wait for the next installment. In the meantime, go ahead and breadboard the circuit. You can use a function generator to verify the individual stages. See how the individual stages respond to sine, square, and triangle waveforms. Remember to use a low frequency (less than 10 Hz), which is approximately the frequency range of the servo motor system.

Stay tuned; next time, we will learn how to tune the PID controller. We will add additional circuitry to prevent a condition called integral wind-up. Also, keep a lookout for installment three, where we will implement the PID on a ZILOG Encore! microcontroller.

Analog Electronic PID Controllers

Although analog electronic process controllers are considered a newer technology than pneumatic process controllers, they are actually “more obsolete” than pneumatic controllers. Panel-mounted (inside a control room environment) analog electronic controllers were a great improvement over panel-mounted pneumatic controllers when they were first introduced to industry, but they were superseded by digital controller technology later on. Field-mounted pneumatic controllers were either replaced by panel-mounted electronic controllers (either analog or digital) or left alone. Applications still exist for field-mounted pneumatic controllers, even now at the beginning of the 21st century, but very few applications exist for analog electronic controllers in any location.

Analog electronic controllers enjoy only two advantages over digital electronic controllers: greater reliability and faster response. Now that digital industrial electronics has reached a very high level of reliability, the first advantage is academic, leaving only the second advantage for practical consideration. The advantage of faster speed may be fruitful in applications such as motion control, but for most industrial processes even the slowest digital controller is fast enough[1]. Furthermore, the numerous advantages offered by digital technology (data recording, networking capability, self-diagnostics, flexible configuration, function blocks for implementing different control strategies) severely weaken the relative importance of reliability and speed.

Most analog electronic PID controllers utilized operational amplifiers in their designs. It is relatively easy to construct circuits performing amplification (gain), integration, differentiation, summation, and other useful control functions with just a few op-amps, resistors, and capacitors.

The following schematic diagram shows a full PID controller implemented using eight operational amplifiers, designed to input and output voltage signals representing PV, SP, and Output:

This controller implements the parallel, or independent PID algorithm, since each tuning adjustment (P, I, and D) act independently of each other:
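For reference, one common textbook way of writing the parallel (independent) form is given below. The notation is an assumption, since the equation that accompanied this schematic is not reproduced here: m is the controller output, e the error, K_p the proportional gain, τ_i the integral time, τ_d the derivative time, and b the output bias.

```latex
m = K_p\,e + \frac{1}{\tau_i}\int e\,dt + \tau_d\,\frac{de}{dt} + b
```

Because each coefficient multiplies only its own term, turning one adjustment leaves the other two actions unchanged.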

It is possible to construct an analog PID controller with fewer operational amplifiers. An example is shown here:

As you can see, a single operational amplifier does all the work of calculating proportional, integral, and derivative responses. The first three amplifiers do nothing but buffer the input signals and calculate error (PV − SP, or SP − PV, depending on the direction of action).

This controller design happens to implement the series or interacting PID equation. Adjusting either the derivative or integral potentiometers also has an effect on the proportional (gain) value, and adjusting the gain of course has an effect on all terms of the PID equation:
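The interaction can be made concrete with a short sketch. It assumes the common textbook series form m = K_p[(1 + τ_d/τ_i)e + (1/τ_i)∫e dt + τ_d de/dt] + b (an assumption, since the equation itself is not reproduced here) and expands it into the effective multiplier on each of e, ∫e dt, and de/dt. The function name is hypothetical.

```python
def series_to_effective_gains(kp, ti, td):
    """Expand the series (interacting) PID form into effective term multipliers.

    Assumed form: m = kp * [(1 + td/ti) * e + (1/ti) * integral(e) + td * de/dt] + b
    Returns the multipliers on e, integral(e) and de/dt, in that order.
    """
    p_eff = kp * (1.0 + td / ti)  # proportional multiplier depends on ti and td too
    i_eff = kp / ti
    d_eff = kp * td
    return p_eff, i_eff, d_eff


print(series_to_effective_gains(1.0, 2.0, 0.5))  # (1.25, 0.5, 0.5)
print(series_to_effective_gains(1.0, 2.0, 1.0))  # (1.5, 0.5, 1.0)
```

Raising only the derivative time from 0.5 to 1.0 also raises the effective proportional multiplier from 1.25 to 1.5, and changing kp rescales all three terms, which is the interaction described above.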

It should be apparent to you now why analog controllers tend to implement the series equation instead of the parallel or ideal PID equations: they are simpler and less expensive to build that way.

One popular analog electronic controller was the Foxboro model 62H, shown in the following photographs. Like the model 130 pneumatic controller, this electronic controller was designed to fit into a rack next to several other controllers. Tuning parameters were adjustable by moving potentiometer knobs under a side-panel accessible by partially removing the controller from its rack:

The Fisher corporation manufactured a series of analog electronic controllers called the AC2, which were similar in construction to the Foxboro model 62H, but very narrow in width so that many could be fit into a compact panel space.

As with the panel-mounted pneumatic controllers that preceded them and the panel-mounted digital controllers that followed, the tuning parameters for a panel-mounted analog electronic controller were typically accessed on the controller’s side. The controller could be slid partially out of the panel to reveal the P, I, and D adjustment knobs (as well as direct/reverse action switches and other configuration controls).

Indicators on the front of an analog electronic controller served to display the process variable (PV), setpoint (SP), and manipulated variable (MV, or output) for operator information. Many analog electronic controllers did not have separate meter indications for PV and SP, but rather used a single meter movement to display the error signal, or difference between PV and SP. On the Foxboro model 62H, a hand-adjustable knob provided both indication and control over SP, while a small edge-reading meter movement displayed the error. A negative meter indication showed that the PV was below setpoint, and a positive meter indication showed that the PV was above setpoint.

The Fisher AC2 analog electronic controller used the same basic technique, cleverly applied in such a way that the PV was displayed in real engineering units. The setpoint adjustment was a large wheel, mounted so the edge faced the operator. Along the circumference of this wheel was a scale showing the process variable range, from the LRV at one extreme of the wheel’s travel to the URV at the other extreme. The setpoint was the scale value at the middle of the wheel edge as seen by the operator. A single meter movement needle traced an arc along the circumference of the wheel over this same viewable range. If the error was zero (PV = SP), the needle would be positioned in the middle of this viewing range, pointed at the same value along the scale as the setpoint. If the error was positive, the needle would rise up to point to a larger (higher) value on the scale, and if the error was negative the needle would point to a smaller (lower) value on the scale. For any fixed value of PV, this error needle would therefore move in exact step with the wheel as it was rotated by the operator’s hand. Thus, a single adjustment and a single meter movement displayed both SP and PV in very clear and unambiguous form.

Taylor manufactured a line of analog panel-mounted controllers that worked much the same way, with the SP adjustment being a graduated tape reeled to and fro by the SP adjustment knob. The middle of the viewable section of tape (as seen through a plastic window) was the setpoint value, and a single meter movement needle pointed to the PV value as a function of error. If the error happened to be zero (PV = SP), the needle would point to the middle of this viewable section of tape, which was the SP value.

Another popular panel-mounted analog electronic controller was the Moore Syncro, which featured plug-in modules for implementing different control algorithms (different PID equations, nonlinear signal conditioning, etc.). These plug-in function modules were a hardware precursor to the software “function blocks” appearing in later generations of digital controllers: a simple way of organizing controller functionality so that technicians unfamiliar with computer programming could easily configure a controller to do different types of control functions. Later models of the Syncro featured fluorescent bargraph displays of PV and SP for easy viewing in low-light conditions.

Analog single-loop controllers are largely a thing of the past, with the exception of some low-cost or specialty applications. An example of the former is shown here, a simple analog temperature controller small enough to fit in the palm of my hand:

This particular controller happened to be part of a sulfur dioxide analyzer system, controlling the internal temperature of a gas regulator panel to prevent vapors in the sample stream from condensing in low spots of the tubing and regulator system. The accuracy of such a temperature control application was not critical – if temperature was regulated to ±5 degrees Fahrenheit it would be more than adequate. This is an application where an analog controller makes perfect sense: it is very compact, simple, extremely reliable, and inexpensive. None of the features associated with digital PID controllers (programmability, networking, precision) would have any merit in this application.

In contrast to single-loop analog controllers, multi-loop systems control dozens or even hundreds of process loops at a time. Prior to the advent of reliable digital technology, the only electronic process control systems capable of handling the numerous loops within large industrial installations such as power generating plants, oil refineries, and chemical processing facilities were analog systems, and several manufacturers produced multi-loop analog systems just for these large-scale control applications.

One of the most technologically advanced analog electronic products manufactured for industrial control applications was the Foxboro SPEC 200 system[2]. Although the SPEC 200 system used panel-mounted indicators, recorders, and other interface components resembling panel-mounted control systems, the actual control functions were implemented in a separate equipment rack which Foxboro called a nest[3]. Printed circuit boards plugged into each “nest” provided all the control functions (PID controllers, alarm units, integrators, signal selectors, etc.) necessary, with analog signal wires connecting the various functions together with panel-mounted displays and with field instruments to form a working system.

Analog field instrument signals (4-20 mA, or in some cases 10-50 mA) were all converted to a 0-10 VDC range for signal processing within the SPEC 200 nest. Operational amplifiers (mostly the model LM301) formed the “building blocks” of the control functions, with a ±15 VDC power supply providing DC power for everything to operate.
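The signal conversion amounts to a linear scaling. The sketch below assumes the bottom of the current range (the "live zero") maps to 0 V and the top maps to 10 V; the article does not describe how the SPEC 200 hardware performed the conversion, so the function is illustrative only and its name is hypothetical.

```python
def ma_to_nest_volts(current_ma, lo_ma=4.0, hi_ma=20.0, lo_v=0.0, hi_v=10.0):
    """Linearly map a live-zero current signal onto a 0-10 V range (assumed mapping)."""
    fraction = (current_ma - lo_ma) / (hi_ma - lo_ma)  # 0.0 at lo_ma, 1.0 at hi_ma
    return lo_v + fraction * (hi_v - lo_v)


print(ma_to_nest_volts(12.0))                          # 5.0 (mid-scale, 4-20 mA)
print(ma_to_nest_volts(30.0, lo_ma=10.0, hi_ma=50.0))  # 5.0 (mid-scale, 10-50 mA)
```

Converting both current standards to one voltage range lets every card in the nest use the same op-amp circuitry regardless of which field signal it came from.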

As an example of SPEC 200 technology, the following photographs show a model 2AX+A4 proportional-integral (P+I) controller card, inserted into a metal frame (called a “module” by Foxboro). This module was designed to fit into a slot in a SPEC 200 “nest” where it would reside alongside many other similar cards, each card performing its own control function:

Tuning and alarm adjustments may be seen in the right-hand photograph. This particular controller is set to a proportional band value of approximately 170, and an integral time constant of just over 0.01 minutes per repeat. A two-position rotary switch near the bottom of the card selected either reverse (“Dec”) or direct (“Inc”) control action.
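The card's dial settings can be translated into the gain and reset-rate terms used elsewhere in this article. This sketch assumes the proportional band is expressed in percent (gain = 100 / PB) and relies on the fact that minutes per repeat and repeats per minute are reciprocals; the function names are hypothetical.

```python
def proportional_band_to_gain(pb_percent):
    """Controller gain from proportional band in percent: gain = 100 / PB."""
    return 100.0 / pb_percent


def minutes_per_repeat_to_repeats_per_minute(ti_minutes):
    """Integral time (min/repeat) and reset rate (repeats/min) are reciprocals."""
    return 1.0 / ti_minutes


print(round(proportional_band_to_gain(170), 3))                  # 0.588
print(round(minutes_per_repeat_to_repeats_per_minute(0.01), 6))  # 100.0
```

Under these assumptions the card's setting corresponds to a gain of about 0.59 and a reset rate of roughly 100 repeats per minute (slightly fewer, since the integral time is just over 0.01 minutes).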

The array of copper pins at the top of the module form the male half of a cable connector, providing connection between the control card and the front-panel instrument accessible to operations personnel. Since the tuning controls appear on the face of this controller card (making it a “card tuned” controller), they were not accessible to operators but rather only to the technical personnel with access to the nest area. Other versions of controller cards (“control station tuned”) had blank places where the P and I potentiometer adjustments appear on this model, with tuning adjustments provided on the panel-mounted instrument displays for easier access to operators.

The set of ten screw terminals at the bottom of the module provided connection points for the input and output voltage signals. The following list gives the general descriptions of each terminal pair, with the descriptions for this particular P + I controller given in parentheses:

• Terminals (1+) and (1-): Input signal #1 (Process variable input)

• Terminals (2+) and (2-): Output signal #1 (Manipulated variable output)

• Terminals (3+) and (3-): Input #2, Output #4, or Option #1 (Remote setpoint)

• Terminals (4+) and (4-): Input #3, Output #3, or Option #2 (Optional alarm)

• Terminals (5+) and (5-): Input #4, Output #2, or Option #3 (Optional 24 VAC)

A photograph of the printed circuit board (card) removed from the metal module clearly shows the analog electronic components:

Foxboro went to great lengths in their design process to maximize reliability of the SPEC 200 system, already an inherently reliable technology by virtue of its simple, analog nature. As a result, the reliability of SPEC 200 control systems is the stuff of legend[4].

[1] The real problem with digital controller speed is that the time delay between successive “scans” translates into dead time for the control loop. Dead time is the single greatest impediment to feedback control.

[2] Although the SPEC 200 system – like most analog electronic control systems – is considered obsolete, working installations may still be found at the time of this writing (2008). A report published by the Electric Power Research Institute (see References at the end of this chapter) in 2001 documents a SPEC 200 analog control system installed in a nuclear power plant in the United States as recently as 1992, and another as recently as 2001 in a Korean nuclear power plant.

[3] Foxboro provided the option of a self-contained, panel-mounted SPEC 200 controller unit with all electronics contained in a single module, but the split architecture of the display/nest areas was preferred for large installations where many dozens of loops (especially cascade, feedforward, ratio, and other multi-component control strategies) would be serviced by the same system.

[4] I once encountered an engineer who joked that the number “200” in “SPEC 200” represented the number of years the system was designed to continuously operate. At another facility, I encountered instrument technicians who were a bit afraid of a SPEC 200 system running a section of their plant: the system had never suffered a failure of any kind since it was installed decades ago, and as a result no one in the shop had any experience troubleshooting it. As it turns out, the entire facility was eventually shut down and sold, with the SPEC 200 nest running faithfully until the day its power was turned off!

How Do PID Controllers Work?

PID = Proportional + Integral + Derivative

The PID controller is the most common control algorithm used in industrial automation and applications; by a commonly cited estimate, more than 95% of industrial controllers are of the PID type. PID controllers are used for precise and accurate control of various parameters.

Most often they are used for the regulation of temperature, pressure, speed, flow, and other process variables. Due to their robust performance and functional simplicity, they have been adopted in a vast range of industrial applications where precise control is the foremost requirement. Let’s see how the PID controller works.

What Is a PID Controller?

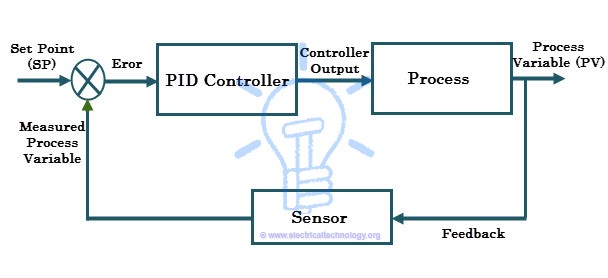

A combination of proportional, integral, and derivative actions is commonly referred to as PID action, hence the name PID (Proportional-Integral-Derivative) controller. The three coefficients are tuned for each specific application in order to get the optimal response.

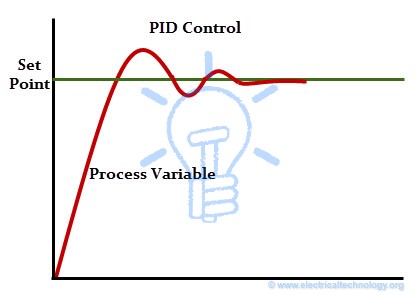

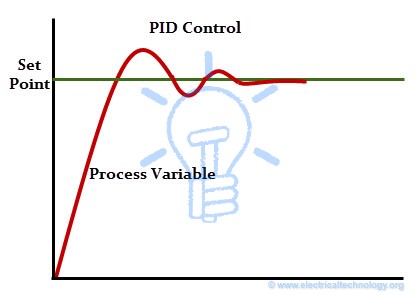

The controller receives its input from the sensor; this measured value is referred to as the actual process variable (PV). It also accepts the desired value of the process variable, referred to as the set point (SP). It then calculates and combines the proportional, integral, and derivative responses to compute the output for the actuator.

Consider the typical control system shown in the figure above, in which the process variable of a process has to be maintained at a particular level. Assume that the process variable is temperature (in degrees Celsius). To measure the process variable (i.e., temperature), a sensor is used (let us say an RTD).

A set point is the desired value of the process variable. Suppose the process has to be maintained at 80 °C; the set point is then 80 °C. Assume that the temperature measured by the sensor (the process variable) is 50 °C. The error is therefore 80 − 50 = 30 °C.

This deviation of the actual value from the desired value causes the PID control algorithm to produce an output to the actuator (here, a heater) based on the combination of proportional, integral, and derivative responses. The PID controller continuously varies the output to the actuator until the process variable settles at the set point. This arrangement is called a closed-loop feedback control system.
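The 50 °C to 80 °C example can be simulated in a few lines. This is a minimal sketch under stated assumptions: a hypothetical first-order thermal process whose steady-state temperature is the ambient temperature plus the heater output in percent, a 100 s time constant, heater output clamped to 0-100 %, and illustrative gains (kp = 4, ki = 0.05, kd = 10). The class also stops integrating while the output is saturated, a simple anti-windup scheme that the text does not discuss; none of these names come from a real library.

```python
class ClampedPID:
    """Discrete PID with output limits and conditional-integration anti-windup."""

    def __init__(self, kp, ki, kd, out_min=0.0, out_max=100.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, pv, dt):
        error = setpoint - pv                              # e = SP - PV
        if self.prev_error is None:
            derivative = 0.0                               # no rate on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        if self.out_min <= output <= self.out_max:
            self.integral = candidate                      # integrate only when unsaturated
        return min(max(output, self.out_min), self.out_max)


def simulate_heater(setpoint=80.0, start=50.0, ambient=50.0, tau=100.0,
                    seconds=3000, dt=1.0):
    """Hypothetical first-order process: steady-state temperature = ambient + heater %."""
    pid = ClampedPID(kp=4.0, ki=0.05, kd=10.0)
    temp = start
    for _ in range(int(seconds / dt)):
        heater = pid.update(setpoint, temp, dt)            # heater output in percent
        temp += dt * (ambient - temp + heater) / tau       # Euler step of the process
    return temp


print(round(simulate_heater(), 2))                         # 80.0
```

At the start the 30 °C error saturates the heater at 100 %; as the temperature approaches 80 °C the proportional drive fades and the integral term takes over, holding the roughly 30 % output this hypothetical process needs to stay at the set point.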

Working of PID Controller

In manual control, the operator periodically reads the process variable (the quantity to be controlled, such as temperature, flow, or speed) and adjusts the control variable (the quantity manipulated to bring the process variable within prescribed limits, such as a heating element, flow valve, or motor input). In automatic control, on the other hand, measurement and adjustment are made automatically on a continuous basis. Modern industrial controllers are generally of the automatic (closed-loop) type and are usually made to produce one control action or a combination of them. These control actions include ON-OFF control, proportional control, proportional-integral control, proportional-derivative control, and proportional-integral-derivative control.

In an ON-OFF controller, only two states are possible for the manipulated variable: fully ON (when the process variable is below the set point) or fully OFF (when the process variable is above the set point). As a result, the process variable oscillates around the set point. To achieve precise control, most industries use the PID controller (or PI or PD, depending on the application). Let us look at these control actions.

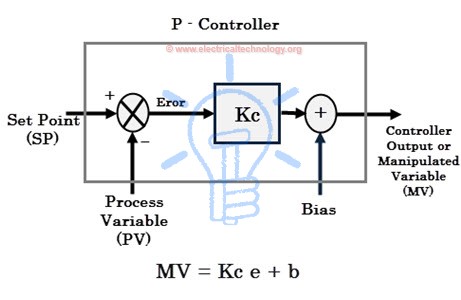

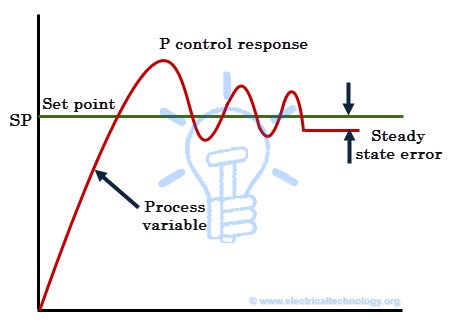

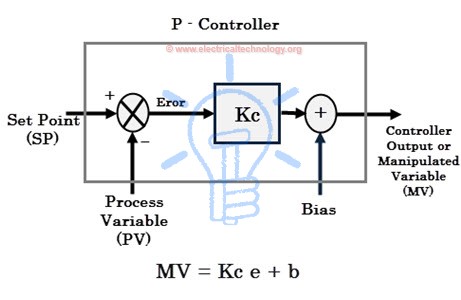

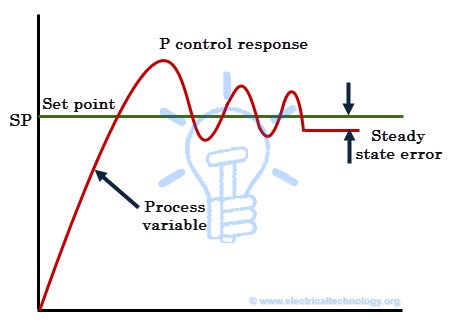

P-Control Response

Proportional control, or simply a P-controller, produces a control output proportional to the current error. Here the error is the difference between the set point and the process variable (i.e., e = SP – PV). The error multiplied by the proportional gain (Kc) gives the output response; in other words, the proportional gain sets the ratio of the output response to the error.

For example, if the error is 20 and Kc is 4, the proportional response will be 80. If the error is zero, the controller output will be zero. Increasing the proportional gain Kc increases the speed of the response (the transient response). However, if Kc is increased beyond its normal range, the process variable starts to oscillate and the system can become unstable.
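The effect of raising Kc can be illustrated with a simulation. This is a sketch under assumptions: the process is a hypothetical one made of three identical unity-gain first-order lags in series, integrated with a simple Euler step. For that particular model, the characteristic equation (τs + 1)³ + Kc = 0 puts the stability limit at Kc = 8, so Kc = 2 settles while Kc = 12 produces growing oscillations.

```python
def simulate_p_control(kc, setpoint=1.0, tau=1.0, dt=0.001, t_end=40.0):
    """P-only control of a hypothetical process: three identical unity-gain
    first-order lags in series (time constant tau). Returns PV samples."""
    x1 = x2 = x3 = 0.0
    pv = []
    for _ in range(round(t_end / dt)):
        output = kc * (setpoint - x3)   # proportional action on e = SP - PV
        x1 += dt * (output - x1) / tau  # lag 1
        x2 += dt * (x1 - x2) / tau      # lag 2
        x3 += dt * (x2 - x3) / tau      # lag 3 (this is the process variable)
        pv.append(x3)
    return pv


for kc in (2.0, 12.0):
    tail = simulate_p_control(kc)[-5000:]   # last 5 seconds of the run
    print(f"Kc={kc}: PV in last 5 s ranges {min(tail):.3g} to {max(tail):.3g}")
```

The gains 2 and 12 are arbitrary choices on either side of the limit for this assumed model; for a real process the limit depends on its dynamics and has to be found by analysis or testing.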

Although a P-controller keeps the process variable stable with a good speed of response, there is generally an error between the set point and the actual process variable. In most cases, when this controller is used alone, it is provided with a manual reset (bias) to reduce the error. However, it cannot reach a zero-error state, so the P-controller response shows a steady-state error, as shown in the figure.
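The steady-state error follows from a short calculation. Assuming (hypothetically) a unity-gain process whose PV settles where the controller output equals PV, the steady state requires Kc(SP - PV) = PV, i.e. PV = Kc·SP/(1 + Kc). With Kc = 4 and SP = 100 this gives PV = 80 and an error of 20, the same numbers as the example above: a nonzero error is exactly what sustains the output. The simulation below checks this under the same assumptions.

```python
def p_control_steady_state(kc=4.0, setpoint=100.0, tau=5.0, dt=0.01, t_end=60.0):
    """Run P-only control of a hypothetical unity-gain first-order process
    until it settles; return (PV, error, controller output)."""
    pv = 0.0
    for _ in range(round(t_end / dt)):
        error = setpoint - pv
        output = kc * error              # proportional action only
        pv += dt * (output - pv) / tau   # assumed process: tau * dPV/dt = output - PV
    error = setpoint - pv
    return pv, error, kc * error


pv, error, output = p_control_steady_state()
print(f"PV={pv:.2f}, error={error:.2f}, output={output:.2f}")
```

It prints `PV=80.00, error=20.00, output=80.00`. Raising the gain (for example `kc=16.0`) shrinks the error to 100/17, about 5.9, but does not remove it.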

I-Control Response

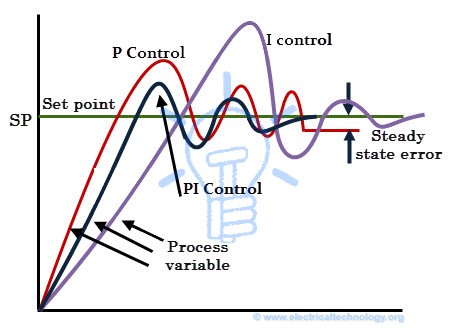

An integral controller, or I-controller, is mainly used to reduce the steady-state error of the system. The integral component integrates the error over time until the error becomes zero, so even a small error, if it persists, produces a steadily growing integral response. When the error reaches zero, the I-controller holds its output to the final control device at the last value, which maintains zero steady-state error; a P-controller, by contrast, gives zero output when the error is zero. If the error is negative, the integral response (output) decreases. Used alone, an I-controller responds slowly, but it improves the steady-state response. Increasing the integral gain Ki (equivalently, decreasing the integral time) makes the response faster.
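The integral behavior described above (growth under a persistent error, holding at zero error, decreasing for a negative error) can be sketched as a running sum. The class name `IntegralAction` and the values of `ki` and `dt` are hypothetical, chosen only for illustration.

```python
class IntegralAction:
    """I-only action: output = Ki * (running sum of error * dt).
    Ki and dt are illustrative parameters, not values from the text."""

    def __init__(self, ki, dt):
        self.ki = ki
        self.dt = dt
        self.integral = 0.0

    def update(self, error):
        self.integral += error * self.dt   # accumulate the error over time
        return self.ki * self.integral


ctrl = IntegralAction(ki=0.5, dt=0.1)
for _ in range(50):               # 5 s of a small constant error
    out = ctrl.update(2.0)
print(f"after persistent error: {out:.2f}")
for _ in range(20):               # error back at zero
    out = ctrl.update(0.0)
print(f"with zero error (held): {out:.2f}")
for _ in range(10):               # negative error for 1 s
    out = ctrl.update(-1.0)
print(f"after negative error: {out:.2f}")
```

With these numbers the three printed outputs are 5.00, 5.00 (held at its last value) and 4.50.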

For many applications, proportional and integral control are combined to get both a good speed of response (from the P action) and a better steady-state response (from the I action). PI controllers are the most common choice in industrial operation because they improve both the transient and the steady-state response. The responses of I-control alone, P-control alone and PI control are shown in the figure below.
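A minimal comparison of P-only and PI control, assuming a hypothetical unity-gain first-order process (time constant `tau`) and illustrative gains `kc=4.0` and `ki=2.0`: the integral term keeps adjusting the output until the error is gone.

```python
def simulate_pi(kc=4.0, ki=2.0, setpoint=100.0, tau=5.0, dt=0.01, t_end=60.0):
    """PI control of a hypothetical unity-gain first-order process.
    Returns the final process variable. Gains and model are assumptions."""
    pv = 0.0
    integral = 0.0
    for _ in range(round(t_end / dt)):
        error = setpoint - pv
        integral += error * dt
        output = kc * error + ki * integral   # P term + I term
        pv += dt * (output - pv) / tau        # assumed: tau * dPV/dt = output - PV
    return pv


print(f"P only (ki=0): PV = {simulate_pi(ki=0.0):.2f}")
print(f"PI (ki=2):     PV = {simulate_pi():.2f}")
```

The P-only run settles at 80.00 (a steady-state error of 20), while the PI run settles at 100.00.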

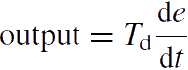

D-Controller Response

A derivative controller (or simply D-controller) looks at how fast the process variable changes per unit of time and produces an output proportional to that rate of change. The derivative output equals the rate of change of the error multiplied by a derivative constant. D-action is most useful when the process variable starts to change at a high rate.

In such a case, the D-controller moves the final control device (such as a control valve or motor) in the direction that counteracts the rapid change of the process variable. Note that a D-controller is never used alone: when the error is constant its rate of change is zero, so the derivative output is zero and cannot correct a steady offset.

Derivative action increases the speed of the response because it gives the output a kick start, anticipating the future behavior of the error. The larger the derivative term (obtained by increasing the derivative constant, or derivative time Td), the more rapidly the D-controller responds to changes in the process variable.

In most PID controllers, the D response depends only on the process variable rather than on the error. This avoids spikes (sudden jumps) in the output when the operator changes the set point suddenly. Most control systems also use a small derivative time Td, because the derivative response is very sensitive to noise in the process variable and can produce an extremely high output even for a small amount of noise.
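The difference between applying the derivative to the error and applying it to the process variable shows up when the set point jumps. The function below is a hypothetical helper that computes both forms from two consecutive samples, with illustrative values of `kd` and `dt`.

```python
def derivative_terms(sp_prev, sp, pv_prev, pv, kd, dt):
    """Return (D on error, D on measurement) for one sample.

    D on error:        Kd * (e - e_prev) / dt, with e = SP - PV
    D on measurement:  Kd * (PV_prev - PV) / dt
    Both are equal while SP is constant; they differ only when SP jumps.
    """
    e_prev = sp_prev - pv_prev
    e = sp - pv
    d_on_error = kd * (e - e_prev) / dt
    d_on_measurement = kd * (pv_prev - pv) / dt
    return d_on_error, d_on_measurement


# Operator steps the set point from 50 to 60 while PV is steady at 50.
print(derivative_terms(sp_prev=50.0, sp=60.0, pv_prev=50.0, pv=50.0, kd=2.0, dt=0.1))
# PV rising quickly (50 -> 51 in one sample) with a constant set point.
print(derivative_terms(sp_prev=60.0, sp=60.0, pv_prev=50.0, pv=51.0, kd=2.0, dt=0.1))
```

For the set point step, the derivative-on-error term jumps to 200 while the derivative-on-measurement term stays at 0. When only PV is changing, both terms are equal (-20 here), so the anticipating action on the process variable is kept.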

Combining proportional, integral and derivative control responses forms a PID controller. PID controllers are used almost universally; however, one must choose the PID settings and tune the controller properly to get the desired output. Tuning is the process of obtaining the desired response from the PID controller by setting suitable proportional, integral and derivative gains.
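Putting the three actions together, a PID update can be sketched as follows. This is a minimal, hypothetical positional-form implementation (class `PID`, illustrative gains and a made-up first-order test process), not the algorithm of any particular vendor; practical controllers also add output limits, anti-windup and derivative filtering, which are omitted here.

```python
class PID:
    """Minimal positional-form PID sketch (illustrative, not a vendor API):

        output = Kp*e + Ki*integral(e dt) - Kd*dPV/dt

    The derivative acts on the process variable, as described above for many
    industrial PIDs. No output limits or anti-windup are included.
    """

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_pv = None

    def update(self, setpoint, pv):
        error = setpoint - pv
        self.integral += error * self.dt
        if self.prev_pv is None:
            d_pv = 0.0                     # no derivative on the first sample
        else:
            d_pv = (pv - self.prev_pv) / self.dt
        self.prev_pv = pv
        return self.kp * error + self.ki * self.integral - self.kd * d_pv


# Hypothetical unity-gain first-order process: tau * dPV/dt = output - PV.
pid = PID(kp=4.0, ki=2.0, kd=0.5, dt=0.01)
pv, tau = 0.0, 5.0
for _ in range(6000):                      # 60 s of simulated time
    output = pid.update(100.0, pv)
    pv += pid.dt * (output - pv) / tau
print(f"final PV: {pv:.2f}")
```

With these assumed gains the simulated PV settles at the set point of 100 within the 60 s run.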

There are several methods of tuning a PID controller to get the desired response, including trial and error, the process reaction curve technique and the Ziegler-Nichols method. The Ziegler-Nichols and trial-and-error methods are the most popular.
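As one concrete example of a tuning method, the classic Ziegler-Nichols ultimate-gain rules turn two measured quantities into controller settings: the ultimate gain Ku at which a P-only loop oscillates steadily, and the period Pu of that oscillation. The sketch below encodes the classic table (P: Kp = 0.5Ku; PI: Kp = 0.45Ku, Ti = Pu/1.2; PID: Kp = 0.6Ku, Ti = Pu/2, Td = Pu/8); Ziegler and Nichols also gave an open-loop (reaction curve) variant, which is not shown. The Ku and Pu values in the usage lines are hypothetical.

```python
def ziegler_nichols(ku, pu, controller="PID"):
    """Classic Ziegler-Nichols ultimate-gain (closed-loop) tuning rules.

    ku: ultimate gain, the P-only gain at which the loop oscillates steadily
    pu: period of that sustained oscillation (seconds)
    Returns (Kp, Ki, Kd) in parallel form, with Ki = Kp/Ti and Kd = Kp*Td.
    """
    if controller == "P":
        kp, ti, td = 0.5 * ku, None, 0.0
    elif controller == "PI":
        kp, ti, td = 0.45 * ku, pu / 1.2, 0.0
    elif controller == "PID":
        kp, ti, td = 0.6 * ku, pu / 2.0, pu / 8.0
    else:
        raise ValueError(f"unknown controller type: {controller}")
    ki = kp / ti if ti is not None else 0.0   # no integral action for P-only
    return kp, ki, kp * td


# Hypothetical test result: sustained oscillation at Ku = 8.0 with Pu = 3.6 s.
for kind in ("P", "PI", "PID"):
    kp, ki, kd = ziegler_nichols(8.0, 3.6, kind)
    print(f"{kind:>3}: Kp={kp:.3f}, Ki={ki:.3f}, Kd={kd:.3f}")
```

For these hypothetical values the PID row gives Kp = 4.8, Ki ≈ 2.667 and Kd = 2.16. Such settings are a starting point that often needs further trial-and-error adjustment.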

That covers the PID controller and how it works. Because of their simple structure, PID controllers suit a wide variety of processes, and they can be tuned even without a detailed mathematical model of the process. Applications include PID-based motor speed control, temperature control, pressure control, flow control and liquid level control.

Real-time PID Controllers

Many types of PID controllers are available on the market today, covering industrial control needs such as level, flow, temperature and pressure. When a process parameter is to be controlled with PID, the options are a PLC or a standalone PID controller.

Standalone PID controllers are used where one or two loops need to be monitored and controlled, or where a larger system would be difficult to access. These dedicated devices offer a variety of options for single- and dual-loop control. Standalone PID controllers offer multiple set point configurations and can also generate multiple independent alarms.

Examples of standalone controllers include Yokogawa temperature controllers, Honeywell PID controllers, OMEGA auto-tune PID controllers, ABB PID controllers and Siemens PID controllers.

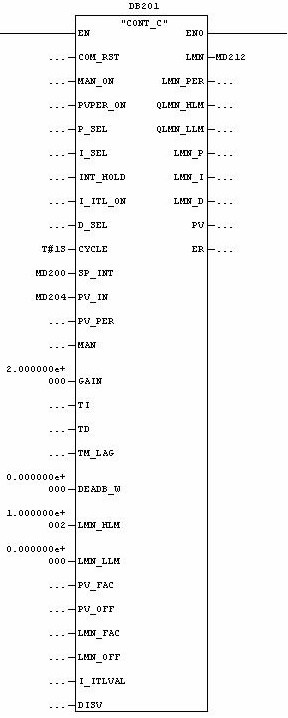

In most control applications, PLCs are used as PID controllers. PID blocks are built into PLCs/PACs and offer advanced options for precise control. PLCs are more intelligent and powerful than standalone controllers and make the job easier. The programming software of the major PLC families includes a PID block, whether the PLC is from Siemens, ABB, AB (Allen-Bradley), Delta, Emerson or Yokogawa.

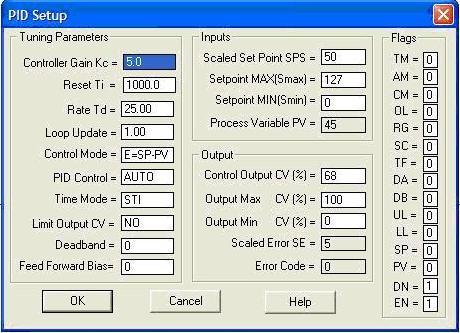

The figure below shows the PID controller VIs offered by the LabVIEW PID toolset.

Literature study and gauge of appeal

Loving Enter Day on Timing (LED on T)

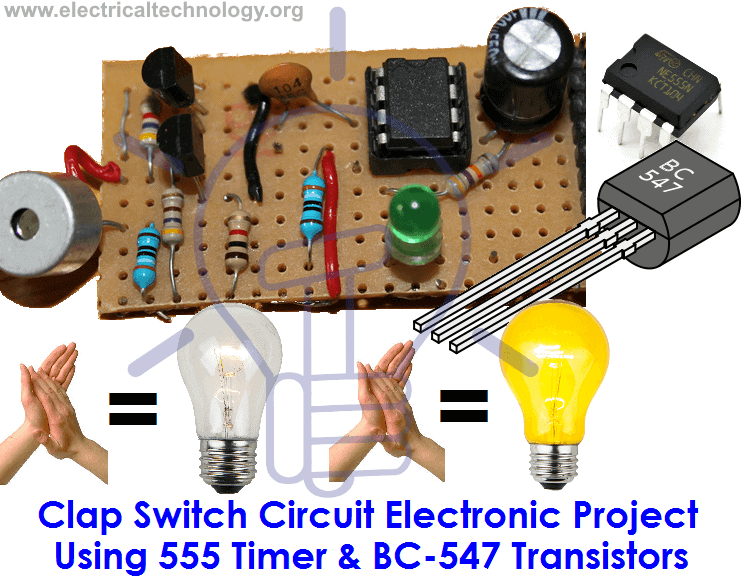

Clap Switch Circuit Electronic Project Using 555 Timer & BC-547 Transistors

Introduction

The Clap Switch is a basic electronics mini-project built from common components. It can turn an electrical component or circuit ON or OFF in response to the sound of a clap.

It is called a Clap Switch because the condenser mic used in this project responds to sounds with a pitch similar to a clap. The input does not have to be an actual clap; any sound with a similarly high pitch will work. In terms of energy, the mic converts sound energy into an electrical signal, which the circuit then uses to make the LED glow.

Required Components

As already mentioned, this is a basic electronics mini-project built from basic components. The following components are required to make the Clap Switch.

- 1K, 4.7K, 47K, 330 and 470 ohm resistors

- One 10µF and two 100nF capacitors

- Electret condenser mic

- Two BC547 transistors

- LED

- 555 timer

- 9V Battery

Working Principle of Clap Switch Circuit

This circuit (shown below) is built around a sound-activated sensor, which senses the sound of a clap as its input and passes it to the rest of the circuit to produce the output. When sound reaches the electret condenser mic, the mic converts it into an electrical signal and the LED turns on. The LED turns ON when we give a sound input and turns OFF automatically after a few seconds. The LED on-time can be changed by varying the value of the timing capacitor connected to the 555 timer, whose main purpose is to generate the pulse.
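The article does not give the 555 wiring in detail. Assuming the timer is used in the standard monostable configuration, which matches the described behavior (the LED turns on when triggered and off by itself), the on-time is set by one timing resistor R and one timing capacitor C as t = ln(3)·R·C ≈ 1.1·R·C. The resistor and capacitor values below are hypothetical and chosen only to show the trend.

```python
import math


def monostable_on_time(r_ohms, c_farads):
    """On-time of a 555 timer wired as a standard monostable (assumed here).

    The capacitor charges through R until it reaches 2/3 of the supply,
    which gives t = R * C * ln(3), commonly rounded to 1.1 * R * C.
    """
    return math.log(3) * r_ohms * c_farads


R_TIMING = 100e3  # hypothetical timing resistor, 100 kOhm
for c in (10e-6, 47e-6, 100e-6):  # hypothetical timing capacitors
    print(f"C = {c * 1e6:.0f} uF -> LED on for about {monostable_on_time(R_TIMING, c):.1f} s")
```

With R = 100 kΩ, the on-time grows in proportion to C: about 1.1 s for 10 µF, 5.2 s for 47 µF and 11.0 s for 100 µF.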

Although the circuit is called a Clap Switch, the input is not restricted to a clap. Any sound with a similar pitch works, so it can also be called a “Sound Operated Switch”. The circuit is mainly transistor-based: the negative terminal of the mic is connected directly to a transistor. No electronic switch is used to turn the circuit on or off, so as soon as the battery is connected the circuit is ON and responds to sound input. You can modify the circuit by adding a relay as an electronic switch to turn it ON or OFF.

As soon as a sound input is given, the circuit amplifies the sound signal and passes it to the 555 timer, which generates the pulse that turns the LED ON. Make sure that the negative side of the condenser mic is connected to the amplifier; otherwise the circuit may heat up, and it may not work with different transistor models. The condenser mic has a short range by default, and its sensitivity cannot be increased for long-distance use. The same circuit can also be used to switch a lamp, so it leaves plenty of room for modification.

Advantages & Disadvantages

- It can be used to turn an LED or lamp ON and OFF simply by clapping your hands.

- The LED can be replaced with a fan or any other electric component at the output to get the desired result.

- The condenser mic used in this circuit has a short range by default, which cannot be changed.

Applications

The Clap Switch is not restricted to turning LEDs ON and OFF; it can be used with electric appliances such as a tube light, fan or radio, or with any other basic circuit that you want to turn ON by sound.

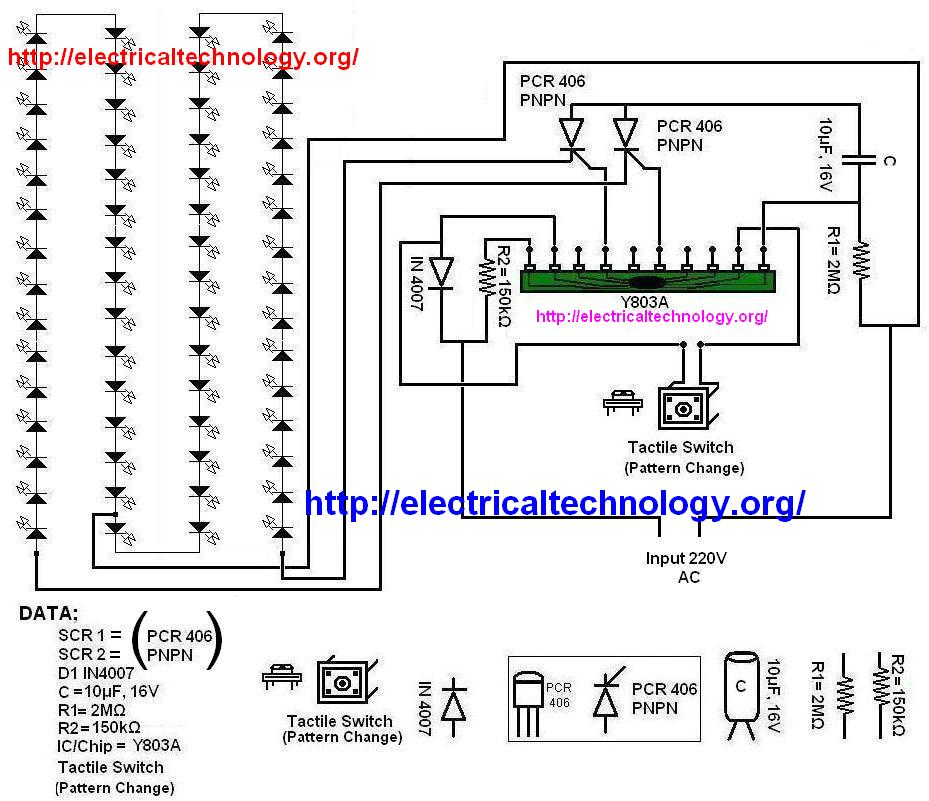

LED String / Strip Circuit Diagram Using PCR-406

This is a nice, interesting circuit for a blinking/dancing LED string or strip.

You should try to make one at home: it is simple and cheap, and its basic components are available at any electronics shop.

In this LED strip/string circuit (dancing/blinking LED circuit), the LED bulbs light up and glow in different ways, “dancing” and “blinking” in the following modes:

- Combination

- In Waves

- Sequential

- Slo-Glo

- Chasing/Flash

- Slow/Fade

- Twinkle/Flash

- Steady On.

Here we will go step by step through building one of these blinking and dancing LED circuits.

Inside the box: the basic circuit on a general-purpose PCB (back side of the PCB).