Before discussing the touchscreen, let us look at a basic concept: an electronic component called the capacitor, which stores energy in an electric field.

Capacitors and Capacitance

These are two nouns with two different meanings. Recall from static electricity:

Capacitance: a parameter describing an object's ability to store energy in the form of an electric field.

Capacitors: objects specially designed to store energy in the form of an electric field.

You probably already know the symbol that represents a capacitance in a circuit diagram:

The symbol represents the simplest form of capacitors: two parallel conductor plates separated by a dielectric material. What is a dielectric material? In short, the dielectric material is an insulator. Each dielectric material has a dielectric constant that determines how much energy can be stored in the form of an electric field.
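To make the role of the dielectric constant concrete, here is a minimal sketch of the ideal parallel-plate formula C = ε0 · εr · A / d and the stored energy E = ½ · C · V². The plate area, gap and the dielectric constant of 5.0 are illustrative assumptions, not values from the text above.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity in farads per metre


def parallel_plate_capacitance(area_m2, gap_m, dielectric_constant=1.0):
    """Ideal parallel-plate capacitance: C = eps0 * eps_r * A / d."""
    if gap_m <= 0:
        raise ValueError("gap must be positive")
    return EPSILON_0 * dielectric_constant * area_m2 / gap_m


def stored_energy(capacitance_f, voltage_v):
    """Energy stored in the electric field: E = 1/2 * C * V^2."""
    return 0.5 * capacitance_f * voltage_v ** 2


# Illustrative (assumed) geometry: 1 cm^2 plates, 1 mm apart.
air = parallel_plate_capacitance(1e-4, 1e-3, dielectric_constant=1.0)
glass = parallel_plate_capacitance(1e-4, 1e-3, dielectric_constant=5.0)

print(f"air gap:   {air:.3e} F")
print(f"glass gap: {glass:.3e} F")
print(f"energy at 5 V with glass: {stored_energy(glass, 5.0):.3e} J")
```

With the same geometry and voltage, a dielectric constant five times larger gives five times the capacitance and therefore five times the stored energy.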

Actually, capacitors do not have to be shaped like parallel plates. Search for "capacitor" and you will find plenty of tubular ones like this:

So a capacitor need not be made of plates. Any object with a conductor-dielectric-conductor configuration has a capacitance (it can store energy in the form of an electric field), so in an electrical circuit it can be represented by the capacitor symbol shown above. That includes two conducting tubes of different radii placed on the same axis. So where else can we find a capacitor?

The main example is the touchscreen.

Touchscreen

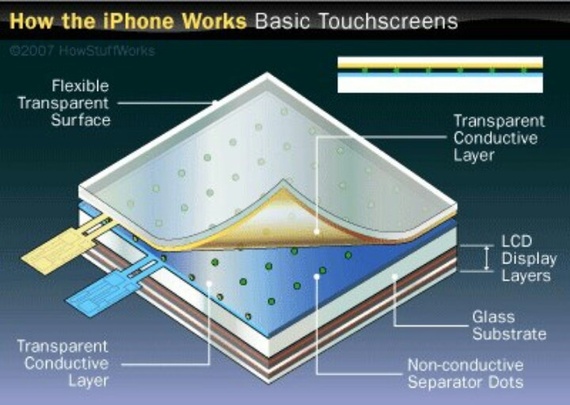

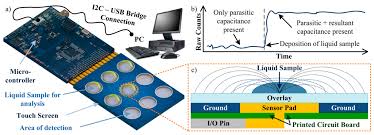

Have you ever wondered how your smartphone or tablet detects the touch of a finger? There are many touchscreen methods, one of which is the capacitive touchscreen. In this method, the screen acts as a dielectric, and underneath it is a layer of conductors. Because the conductivity of a finger differs from that of air, when you touch the screen your smartphone detects a change in capacitance in the area you touched. That information is then processed by the processor.

This is generally referred to as capacitive sensing. Another example is two conductors dipped into water: you get a capacitor whose dielectric is a mixture of air and water, in proportions that depend on the water level. From this you can measure the depth or height of the water by observing how the capacitance changes with the water level. Such a sensor is called a water level sensor.
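As a rough illustration of this idea, the sketch below converts a capacitance reading into a water level. It assumes the capacitance grows linearly from an "empty" value (probe fully in air) to a "full" value (probe fully submerged). The function name, the calibration values and the linear model are all assumptions for illustration; a real probe would need its own calibration.

```python
def water_level_cm(c_measured, c_empty, c_full, probe_length_cm):
    """Estimate the water level along a probe from its capacitance.

    Assumes a linear relation between the submerged fraction of the
    probe and the measured capacitance (an idealised model).
    """
    if c_full <= c_empty:
        raise ValueError("c_full must be greater than c_empty")
    fraction = (c_measured - c_empty) / (c_full - c_empty)
    fraction = min(1.0, max(0.0, fraction))  # clamp noisy readings
    return fraction * probe_length_cm


# Hypothetical calibration: 10 pF when dry, 100 pF when fully submerged,
# on a 50 cm probe. A reading of 55 pF is halfway between the two.
print(water_level_cm(55.0, 10.0, 100.0, 50.0))
```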

For example, suppose we want to fill paddy fields with water automatically, and the water in a paddy should be 30 cm deep. Because of evaporation during the day, the water level drops, which changes the sensor's capacitance. Once the change passes a certain threshold, an automatic filling system adds water to the field and stops filling when the sensor capacitance returns to the value it had at a water level of 30 cm.
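The filling logic described here can be sketched as a small on/off controller with two thresholds. The 30 cm target comes from the example; the 25 cm "start filling" threshold and the class name are assumptions chosen only to make the sketch concrete.

```python
class FillController:
    """Two-threshold (hysteresis) controller for an automatic filling system."""

    def __init__(self, target_cm=30.0, low_cm=25.0):
        if low_cm >= target_cm:
            raise ValueError("low_cm must be below target_cm")
        self.target_cm = target_cm  # stop filling at this level
        self.low_cm = low_cm        # start filling at or below this level (assumed)
        self.filling = False

    def update(self, level_cm):
        """Return True while the filling system should be running."""
        if level_cm <= self.low_cm:
            self.filling = True
        elif level_cm >= self.target_cm:
            self.filling = False
        # Between the thresholds the previous state is kept.
        return self.filling


controller = FillController()
readings = [30.0, 27.0, 25.0, 28.0, 30.0, 29.0]
states = [controller.update(level) for level in readings]
print(states)
```

Using two thresholds instead of one keeps the system from switching on and off rapidly when the level hovers around a single set point.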

X . I

How Does A Touchscreen Work?


Answer:

Touchscreens let us interact with computers, tablets, phones, remote controls and a host of other things with great ease, but how do they work?

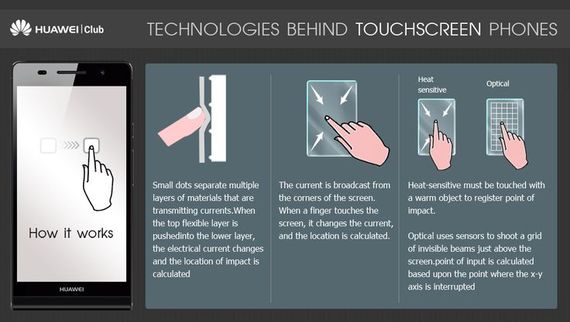

There are two types of touchscreens used in many devices and they work very differently.

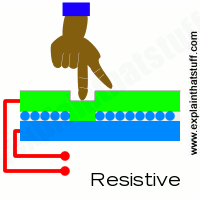

Resistive:

(Image courtesy of PlanarTouch)

Resistive touchscreens, which were common in touchscreen devices before Apple introduced the iPhone, work by having two layers of conductive material separated by air gaps. When you apply pressure to an area of the screen, a circuit completes and the device is given the coordinate where the circuit was completed. Think of it like putting a dot on a piece of graph paper: the screen reports that the dot is 7 boxes up and 3 boxes in from the left. You can usually identify these screens by their flexible, plastic-like outer layer.

Positives of Resistive Touchscreens:

- Cheap to produce.

- Can be used with gloves, stylus or pointing devices.

Negatives of Resistive Touchscreens:

- Can only detect one point of contact (one finger) at a time on most touchscreens.

- Not as resilient/durable as other touchscreens as layers must be made of flexible material.

- “Feel” of touch screen not as smooth/responsive as other technologies.

Capacitive:

(Image courtesy of PlanarTouch)

Capacitive touchscreens, brought to the mass market by the Apple iPhone, work by applying a very small current to all four corners of the screen. When a finger or a specially designed stylus touches the screen, a circuit is created and a voltage drop develops; sensors register the location of that voltage drop. You can usually identify these screens by their hard glass outer layer.

Positives of Capacitive Touchscreens:

- Very responsive to touch with natural movements.

- Able to detect multiple points of contact (fingers) to enable pinch zooming, swiping and other hand motions.

- Hard outer glass layer makes for very high quality displays with vibrant colors and high contrast ratios.

Negatives of Capacitive Touchscreens:

- Touch input does not work if you're wearing gloves (special touch-enabled gloves are available).

- Screens are often very reflective, reducing outdoor usability.

- Much more expensive to produce.

X . II

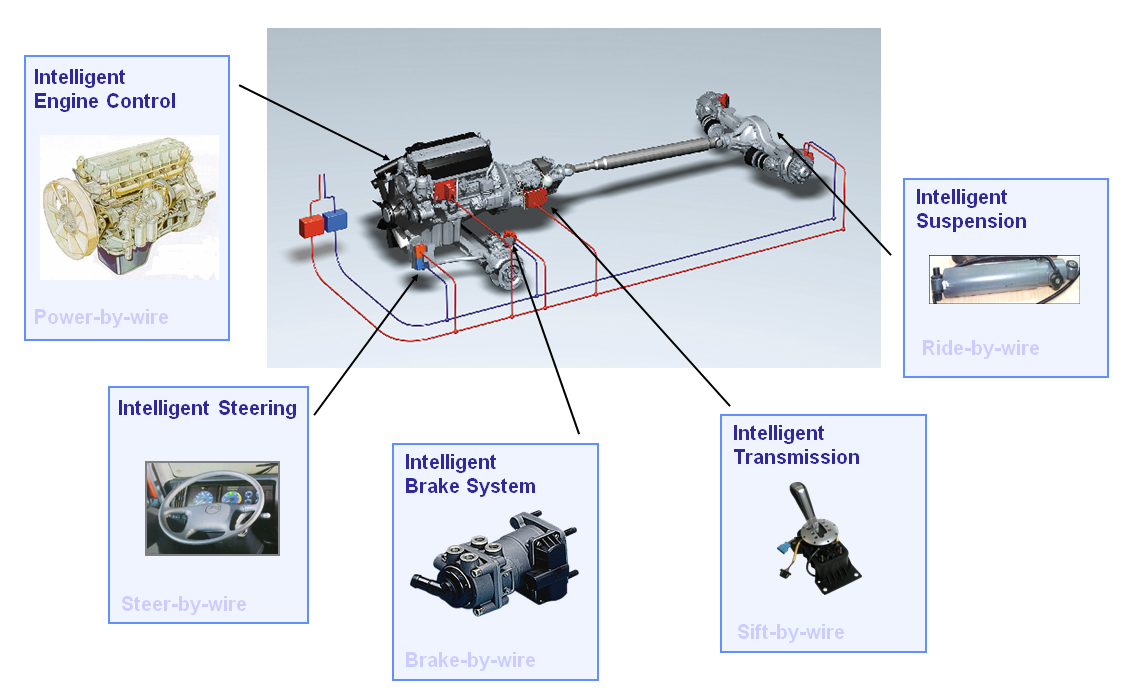

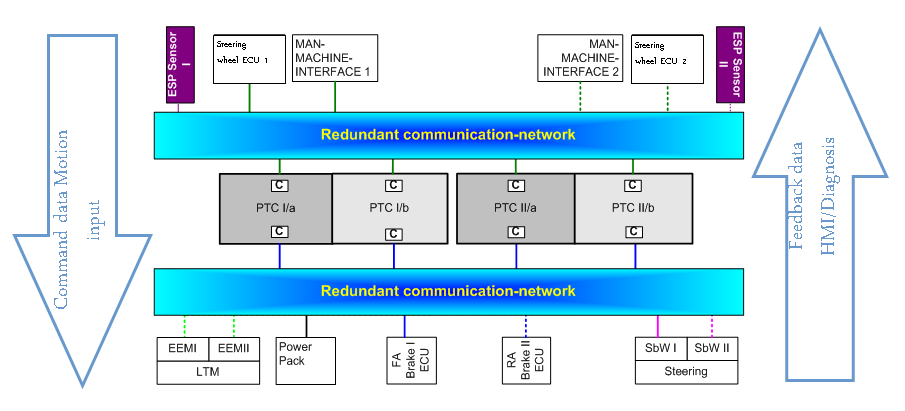

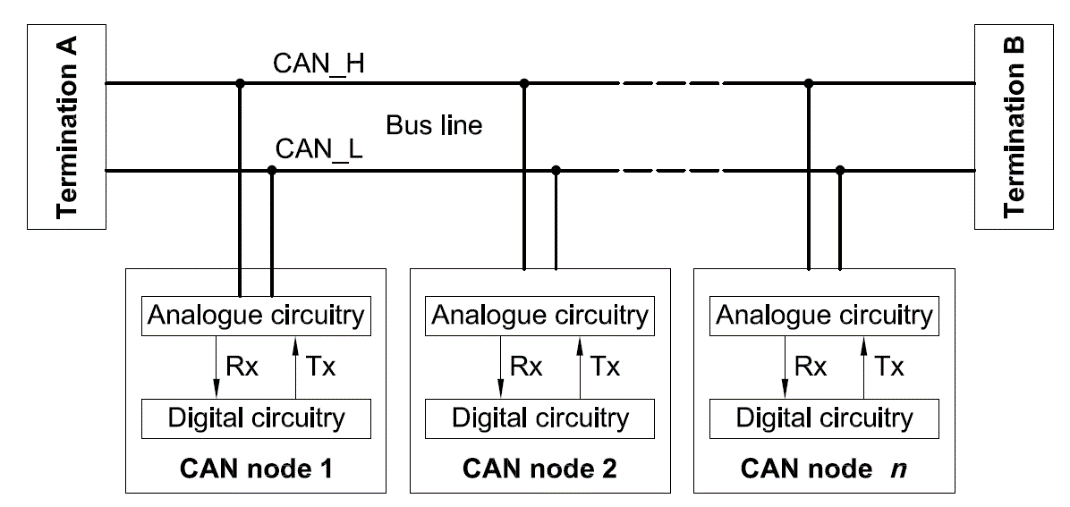

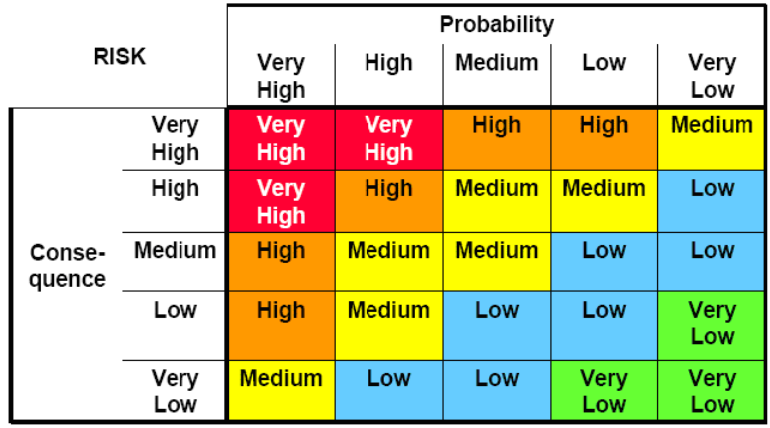

The new technologies of touchscreen available for ROBO Dynamic

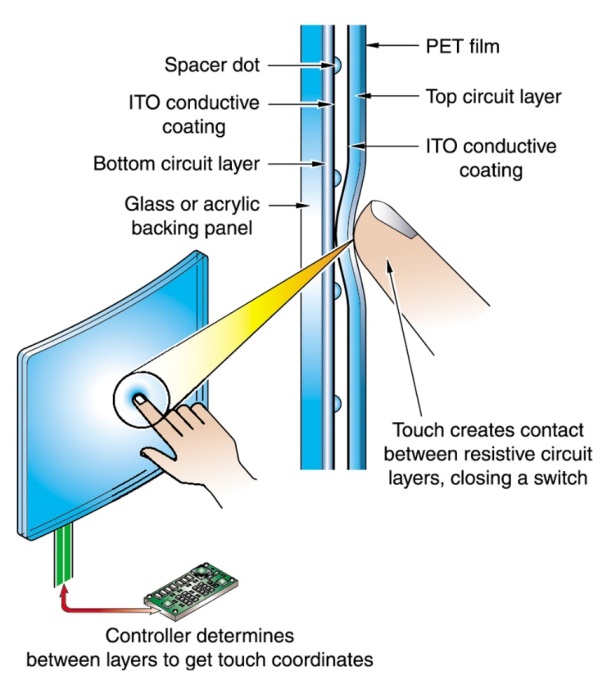

4 Wire Resistive Touch screen technology

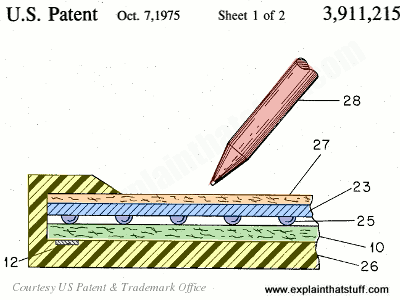

The 4 Wire Resistive touchscreen consists of a glass layer with a conductive coating on top and a polyester top sheet with a conductive coating on the bottom. The conductive surfaces are held apart by "spacer dots", usually glass beads that are silk-screened onto the coated glass.

When a person presses on the top sheet, its conductive side comes into contact with the conductive side of the glass, effectively closing a circuit (this is called pressure sensing). The voltage at the point of contact is read from a wire connected to the top sheet. It can be operated with a finger, gloved finger, leather or stylus pen. It offers many advantages, such as high accuracy, quick response, drift-free and stable operation, and a rated lifetime of 1,000,000 finger touches.
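Since the voltage read at the point of contact varies with position, a controller typically digitises it and maps it onto screen coordinates. The following is a minimal sketch of that mapping, assuming a 12-bit ADC and a simple two-point calibration per axis. The calibration numbers, the `CALIB` dictionary and the function names are hypothetical.

```python
def adc_to_pixel(raw, raw_min, raw_max, size_px):
    """Map a raw ADC reading onto a pixel index in [0, size_px - 1]."""
    span = raw_max - raw_min
    if span <= 0:
        raise ValueError("raw_max must be greater than raw_min")
    fraction = (raw - raw_min) / span
    fraction = min(1.0, max(0.0, fraction))  # clamp readings outside the calibrated range
    return round(fraction * (size_px - 1))


def touch_position(raw_x, raw_y, calib, width_px, height_px):
    """Convert one pair of raw axis readings into pixel coordinates."""
    x = adc_to_pixel(raw_x, calib["x_min"], calib["x_max"], width_px)
    y = adc_to_pixel(raw_y, calib["y_min"], calib["y_max"], height_px)
    return x, y


# Hypothetical calibration readings taken at the screen edges.
CALIB = {"x_min": 200, "x_max": 3800, "y_min": 300, "y_max": 3700}

print(touch_position(200, 300, CALIB, 800, 480))    # top-left corner
print(touch_position(3800, 3700, CALIB, 800, 480))  # bottom-right corner
```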

The 5 wire resistive touch screen is a two-layer structure: two materials (film or glass) coated with ITO are attached with a gap between them so that the ITO layers face each other. Touch input is made when the top layer is pressed down and the two ITO layers make contact. Insulators called spacing dots sit between the top and bottom ITO layers and prevent unintended contact (input) between the layers when the screen is not pressed.

When the top ITO film is pressed and makes contact with the bottom glass, the contacted area is detected via electrical conduction. The notable characteristic of 5 wire resistive is that only the bottom glass has a detecting function. Even if the top ITO film is damaged, the detecting function is not affected (except in the damaged area).

The capacitive touch screen is a four-layer glass screen. The inner surface and the interlayer of the glass are each coated with a layer of ITO, and the outermost layer is a thin layer of silica glass. The interlayer ITO coating serves as the working surface, with four electrodes led out at the four corners; the inner ITO layer acts as a shield to ensure a good working environment.

When a finger touches the screen, the electric field of the human body forms a coupling capacitor between the user and the touch-screen surface. For high-frequency current a capacitor behaves as a direct conductor, so the finger draws a very small current away from the contact point. This current flows out through the electrodes at the four corners of the screen, and the current through each electrode depends on the distance from the finger to that corner. By accurately calculating the ratio of the four currents, the controller obtains the location of the touch point.
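The "ratio of the four currents" step can be sketched as follows. This is an idealised model, not a description of any particular controller: it assumes that the corner nearest the finger carries the largest share of the current and that the shares vary linearly across the panel. The function name and the coordinate convention (origin at the top-left corner) are assumptions.

```python
def locate_touch(i_tl, i_tr, i_bl, i_br, width, height):
    """Estimate a touch position from the four corner currents.

    i_tl, i_tr, i_bl, i_br are the currents at the top-left, top-right,
    bottom-left and bottom-right electrodes. Returns (x, y) measured
    from the top-left corner, or None when no current flows.
    """
    total = i_tl + i_tr + i_bl + i_br
    if total <= 0:
        return None  # no touch detected
    x = (i_tr + i_br) / total * width   # share drawn through the right-hand corners
    y = (i_bl + i_br) / total * height  # share drawn through the bottom corners
    return x, y


# Equal currents at all four corners place the touch at the centre.
print(locate_touch(1.0, 1.0, 1.0, 1.0, 100.0, 60.0))
```

Real controllers also have to correct for panel non-linearity and noise, which this sketch ignores.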

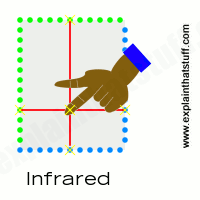

The infrared touch screen uses a dense matrix of infrared beams in the X and Y directions to detect and locate the user's touch. A circuit board frame is installed in front of the display, with infrared LEDs and infrared receivers arranged along the four sides of the screen so that their beams form a crossed horizontal and vertical infrared matrix.

When the user touches the screen, the finger blocks the horizontal and vertical infrared beams passing through that location, so the position of the touch point on the screen can be determined. It can detect essentially any input, including a finger, gloved finger, stylus or pen.
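A minimal sketch of this beam-interruption logic is shown below. It assumes each axis reports a list of booleans (True when the receiver still sees its beam) and that beams are evenly spaced; the 5 mm pitch and the function names are illustrative assumptions.

```python
def blocked_center(beams):
    """Return the mean index of the blocked beams, or None if all are lit.

    beams is a list of booleans: True means the receiver sees its beam.
    """
    blocked = [i for i, lit in enumerate(beams) if not lit]
    if not blocked:
        return None
    return sum(blocked) / len(blocked)


def locate_ir_touch(x_beams, y_beams, pitch_mm=5.0):
    """Combine both axes into an (x, y) position in millimetres."""
    cx = blocked_center(x_beams)
    cy = blocked_center(y_beams)
    if cx is None or cy is None:
        return None  # a touch must interrupt beams on both axes
    return cx * pitch_mm, cy * pitch_mm


# A finger covering vertical beams 3 and 4 and horizontal beam 6.
x_beams = [True] * 10
y_beams = [True] * 10
x_beams[3] = x_beams[4] = False
y_beams[6] = False
print(locate_ir_touch(x_beams, y_beams))
```

Averaging the blocked indices lets a finger that covers two adjacent beams be placed between them, which is one simple way to get resolution finer than the beam pitch.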

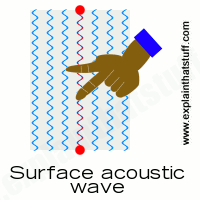

SAW touch screen technology

A Surface Acoustic Wave (SAW) touch screen uses ultrasonic waves that pass over the touchscreen panel. It is based on sending acoustic waves across a clear glass panel with a series of transducers and reflectors. When the panel is touched, a portion of the wave is absorbed. This change in the ultrasonic waves registers the position of the touch event, and this information is sent to the controller for processing. Surface wave touchscreen panels can be damaged by outside elements, and contaminants on the surface can also interfere with the functionality of the touchscreen.

When a finger touches the screen, the waves are absorbed, causing a touch event to be detected at that point. SAW touch screen technology is recommended for public information kiosks and other high traffic indoor environments.

Interactive touch foil is a transparent touch-sensitive film. Affixed to the back of a glass or transparent acrylic panel, it instantly transforms the panel into a touch screen of that size. Only a few microns thick, our thin film display screens can turn any surface into a high definition multimedia display surface. Rear projected images, video and dynamic media content can be displayed in any format, anywhere.

GreenTouch's touch foil can be pasted on a glass window or on the inside of a glass door, and a simple back-projection or front-projection projector can then turn the window or door into a large touch screen. This film enables projection of product information directly on the glass to attract interaction from potential customers. It is a promotional display that not only provides interactive promotion and customer experience around the clock but also records interaction data from potential customers.

X . III

TOUCH SCREEN TECHNOLOGIES

Resistive Touch Screen Technology

Resistive technology is versatile and economical for applications such as food service and retail point of sale, industrial process control and instrumentation, portable and handheld products and communication devices.

Resistive touch screens have a flexible top layer and a rigid bottom layer separated by insulating dots, with the inside surface of each layer coated with a transparent conductive coating.

Voltage applied to the layers produces a gradient across each layer. Pressing the flexible top sheet creates electrical contact between the resistive layers, essentially closing a switch in the circuit.

Advantages:

- Value solution

- Activated by any stylus

- High touch point resolution

- Low power requirements

Disadvantages:

- Reduced optical clarity

- Polyester surface can be damaged

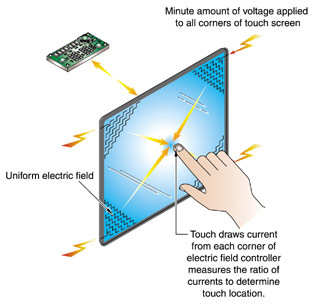

Capacitive Touch Screen Technology

Capacitive technology offers durability, reliability, and optical clarity. Popular applications include gaming machines, ATM installations, kiosks, industrial equipment, and point-of-sale.

Capacitive touch screens are curved or flat glass substrates coated with a transparent metal oxide. A voltage is applied to the corners of the overlay, creating a minute uniform electric field. A bare finger draws current from each corner of the electric field, creating a voltage drop that is measured to determine touch location.

Advantages:

- Extremely durable

- Very accurate

- Good optical clarity

- Good resolution

Disadvantages:

- Requires bare finger or capacitive stylus

- Severe scratch can affect operation within the damaged area

Dispersive (DST) Touch Screen Technology

Dispersive Signal Technology (DST) consists of a chemically-strengthened glass substrate with piezos mounted on each corner, mated to a sophisticated, dedicated controller.

The DST Touch System determines the touch position by pinpointing the source of "bending waves" created by finger or stylus contact within the glass substrate. This process of interpreting bending waves within the glass substrate helps eliminate traditional performance issues related to on-screen contaminants and surface damage, and provides fast, accurate touch attributes.

Advantages:

- Fast, accurate repeatable touch

- Touch operates with static objects or other touches on the screen

- Touch unaffected by surface contaminants, such as dirt, dust and grime

- Excellent light transmission provides vibrant optical characteristics with anti-glare properties

- Operation unaffected by surface damage

- Input flexibility from finger or stylus, such as pencil, credit card, fingernail, or almost any pointing stylus

- Available for display sizes 32" to 46"

Disadvantages:

- More expensive to integrate than Optical

- Only available for displays 32" and larger

Acoustic Wave Touch Screen Technology (SAW)

Because of its high optical clarity and accuracy, acoustic wave technology is typically used in kiosk applications.

Acoustic wave touch screens use transducers mounted at the edge of a glass overlay to emit ultrasonic sound waves along two sides. These waves are reflected across the surface of the glass and received by sensors. A finger or other soft tipped stylus absorbs some of the acoustic energy and the controller measures the amplitude change of the wave to determine touch location.

Advantages:

- Good optical clarity

- Z-axis capability

- Durable glass front

Disadvantages:

- Requires finger or sound absorbing stylus

- Difficult to industrialize

- Signal affected by surface liquids or other contaminants

Infrared Touch Screen Technology

Infrared touch screens are primarily used for large displays, banking machines, and in military applications.

Infrared touch screens are based on light-beam interruption technology. Instead of an overlay on the surface, a frame surrounds the display. The frame has light sources, or light emitting diodes (LEDs), on one side and light detectors on the opposite side, creating an optical grid across the screen.

When an object touches the screen, the invisible light beam is interrupted, causing a drop in the signal received by the photo sensors.

Advantages:

- 100% light transmission (not an overlay)

- Accurate

Disadvantages:

- Costly

- Low reliability (MTBF for diodes)

- Parallax problems

- Accidental activation

- Low touch resolution

- No protection for display surface

Optical Touch Screen Technology

Optical touch screen technology is ideal for large LCD and plasma displays up to 100" diagonal.

Optical touch screen technology uses two line scanning cameras located at the corners of the screen. The cameras track the movement of any object close to the surface by detecting the interruption of an infra-red light source. The light is emitted in a plane across the surface of the screen and can be either active (infra-red LED) or passive (special reflective surfaces).
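The text does not say how the two camera readings are combined into a position. One common approach is triangulation, sketched below under stated assumptions: the cameras sit at the top-left and top-right corners, each reports the angle between the top edge of the screen and its line of sight to the object, and both angles lie strictly between 0 and 90 degrees. The function name and the coordinate convention are hypothetical.

```python
import math


def triangulate(alpha_deg, beta_deg, width):
    """Locate an object from the sight-line angles of two corner cameras.

    alpha_deg: angle at the top-left camera, measured from the top edge.
    beta_deg:  angle at the top-right camera, measured from the top edge.
    width:     distance between the two cameras.
    Returns (x, y) with the origin at the top-left camera and y pointing
    down the screen.
    """
    alpha = math.radians(alpha_deg)
    beta = math.radians(beta_deg)
    if not (0 < alpha < math.pi / 2 and 0 < beta < math.pi / 2):
        raise ValueError("angles must be strictly between 0 and 90 degrees")
    tan_a, tan_b = math.tan(alpha), math.tan(beta)
    # Intersect the lines y = x * tan(alpha) and y = (width - x) * tan(beta).
    x = width * tan_b / (tan_a + tan_b)
    y = x * tan_a
    return x, y


# Two 45-degree sight lines meet halfway between the cameras.
x, y = triangulate(45.0, 45.0, 100.0)
print(round(x, 6), round(y, 6))
```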

Advantages:

- 100% light transmission (not an overlay)

- Accurate

- Can be retro-fitted to any existing large format LCD or Plasma display

- Can be used with finger, gloved hand or stylus

- Requires only one calibration

- Plug and play - no software drivers

Disadvantages:

- Can be affected by direct sunlight

- Frame increases overall depth of monitor

- Cannot be fitted to plasma and LCD displays with integrated speakers

What is multi-touch?

The term "multi-touch" is now in common use to describe any touch screen that can recognise more than one point of contact, in effect the use of two or more fingers simultaneously.

However, there are now several different types of multi-touch, depending on the touch technology employed. Below is an explanation of the different types of touch available, which also acts as a guide for the terms we use for describing the touch screens we supply.

Single Touch

Single Touch occurs when a finger or stylus creates a touch event on the surface of a touch sensor or within a touch field so that it is detected by the touch controller and the application can determine the X,Y coordinates of the touch event.

These technologies have been integrated into millions of devices and typically do not have the ability to detect or resolve more than a single touch point at a time as part of their standard configuration.

Single Touch with Pen Input

Single Touch with Pen input functionality can range from a simple, inactive pointer or stylus to complex, active tethered pens.

Inactive pens enable the same input characteristics as a finger, but with greater pointing accuracy, while sophisticated, active pens can provide more control and uses for the touch system with drawing and palm rejection capabilities, and mouse event capabilities.

Single Touch with Gesture

Enhancements to firmware, software and hardware by many single touch technologies have increased their touch functionality. Some touch technologies can use advanced processing capabilities to "detect" or recognize that a second touch event is occurring, which is called a "gesture event".

Since single touch systems can't resolve the exact location of the second touch event, they rely on algorithms to interpret or anticipate the intended gesture event input. Common industry terms for this functionality are two-finger gestures, dual touch, dual control, and gesture touch.

Two Touch (2-point)

Two Touch refers to a touch system that can detect and resolve two discrete, simultaneous touch events.

The best demonstration of Two Touch capability is to draw two parallel lines on the screen at the same time. Two Touch systems can also support gesturing.

Multi-touch

Multi-touch refers to a touch system's ability to simultaneously detect and resolve three or more touch points. All of the touches are detected and fully resolved, resulting in a dramatically improved touch experience.

Multi-touch is expected by many to become a widely-used interface, mainly because of the speed, efficiency and intuitiveness of the technology.

X . IIII

Touchscreen in cars

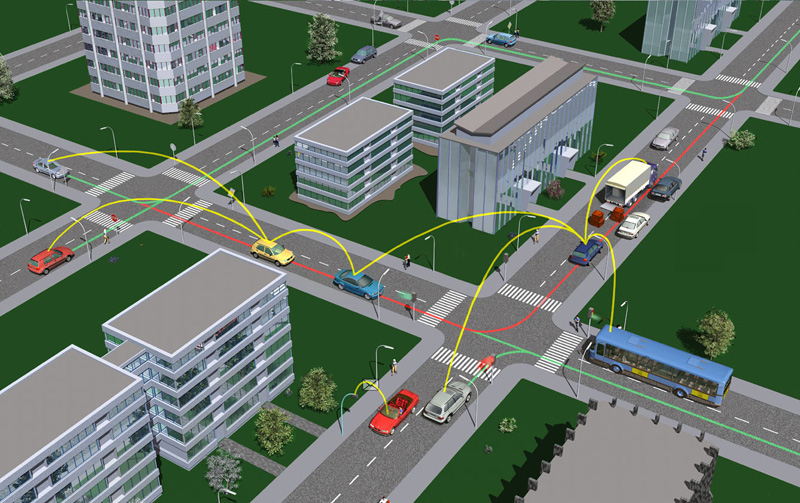

Infotainment displays often have touchscreen features that let the driver select functions by touching the display. Touchscreen technology is a direct-manipulation, gesture-based technology. A touchscreen is an electronic visual display capable of detecting and locating a touch over its display area. It is sensitive to the touch of a human finger, hand or pointed fingernail, and to passive objects such as a stylus. Users can simply move things on the screen, scroll them, zoom them and much more.

There are four main touchscreen technologies:

- Resistive

- Capacitive

- Surface Acoustic Wave

- Infrared

Resistive LCD touchscreen monitors rely on a touch overlay, which is composed of a flexible top layer and a rigid bottom layer separated by insulating dots, attached to a touchscreen controller. The inside surface of each of the two layers is coated with a transparent metal oxide coating (ITO) that facilitates a gradient across each layer when voltage is applied. Pressing the flexible top sheet creates electrical contact between the resistive layers, producing a switch closing in the circuit. The control electronics alternate voltage between the layers and pass the resulting X and Y touch coordinates to the touchscreen controller. The touchscreen controller data is then passed on to the computer operating system for processing.

Resistive touchscreen

Because of its versatility and cost-effectiveness, resistive touchscreen technology is the touch technology of choice for many markets and applications. Resistive touchscreens are used in food service, retail point-of-sale (POS), medical monitoring devices, industrial process control and instrumentation, and portable and handheld products. Resistive touchscreen technology has many advantages over alternative touchscreen technologies (acoustic wave, capacitive, infrared). Highly durable, resistive touchscreens are less susceptible to the contaminants that easily affect acoustic wave touchscreens. In addition, resistive touchscreens are less sensitive to the effects of severe scratches that would incapacitate capacitive touchscreens. A drawback can be the overly soft feel when pressing the screen, since a mechanical deformation is required to connect the two resistive layers to each other.

One can use anything on a resistive touchscreen to make the touch interface work: a gloved finger, a fingernail, a stylus device. Anything that creates enough pressure at the point of impact will activate the mechanism, and the touch will be registered. For this reason, resistive touchscreens require slight pressure in order to register a touch, and are not always as quick to respond as capacitive touchscreens. In addition, the resistive touchscreen's multiple layers cause the display to be less sharp, with lower contrast than we might see on capacitive screens. While most resistive screens don't allow for multi-touch gestures such as pinch to zoom, they can register a touch by one finger when another finger is already touching a different location on the screen.

Capacitive touchscreen technology is the most popular and durable touchscreen technology used all over the world. It consists of a glass panel coated with a capacitive (conductive) material, Indium Tin Oxide (ITO). Capacitive systems transmit almost 90% of the light from the monitor. In surface-capacitive screens, only one side of the insulator is coated with a conducting layer. While the screen is operational, a uniform electrostatic field is formed over the conductive layer. Whenever a human finger touches the screen, conduction of electric charges occurs over the uncoated layer, which results in the formation of a dynamic capacitor. The controller then detects the position of the touch by measuring the change in capacitance at the four corners of the screen. In projected-capacitive touchscreen technology, the conductive ITO layer is etched to form a grid of multiple horizontal and vertical electrodes. Sensing takes place along both the X and Y axes using the clearly etched ITO pattern. The projected-capacitive screen contains a sensor at every intersection of a row and a column, thereby increasing the accuracy of the system.
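Because a projected-capacitive panel has a sensor at every row and column intersection, one scan yields a grid of capacitance changes. The sketch below shows one simple way to turn such a grid into touch points: report every cell that exceeds a threshold and is strictly larger than all of its neighbours. The grid values, the threshold and the function name are illustrative assumptions; production controllers use more elaborate filtering and interpolation.

```python
def find_touches(delta, threshold):
    """Return (row, column) cells that are local peaks above a threshold.

    delta is a rectangular list of lists holding the capacitance change
    measured at each row/column intersection.
    """
    rows, cols = len(delta), len(delta[0])
    touches = []
    for r in range(rows):
        for c in range(cols):
            value = delta[r][c]
            if value < threshold:
                continue
            neighbours = [
                delta[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            ]
            # Strict comparison: a flat plateau of equal values reports no peak.
            if all(value > n for n in neighbours):
                touches.append((r, c))
    return touches


# Hypothetical scan with two fingers on the panel.
grid = [
    [0, 1, 0, 0, 0],
    [1, 9, 1, 0, 0],
    [0, 1, 0, 2, 7],
    [0, 0, 0, 1, 2],
]
print(find_touches(grid, threshold=5))
```

Since every intersection is measured independently, two fingers show up as two separate peaks, which is what makes multi-touch possible on this kind of panel.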

Projected capacitive touchscreen

Since capacitive screens are made of one main layer, which keeps getting thinner as technology advances, these screens are not only more sensitive and accurate, but the display itself can also be much sharper. Capacitive touchscreens can also support multi-touch gestures, in which several fingers are used at the same time. On screens without multi-touch support, if one finger is already touching one part of the screen, a second touch cannot be sensed accurately.

Acoustic interfaces

In the following subsections, the acoustic technologies are outlined in order of their development.

Beepers

- Safety warnings

  - Door is open

  - Seat belt is not used

  - ADAS warnings

- Comfort feedback

  - Lights left on

  - Parking assist

  - Speed limit

Voice feedback

Today’s text-to-speech (TTS) systems are capable of vocalizing complex texts with proper clarity. The typical fields of usage are the following:

- Navigation systems

- Telecommunication systems

- Warning messages

Voice control

Naturally, Apple and Google are trying to offer their own solutions integrated into automotive infotainment systems, for example Apple CarPlay. Google has not presented a market-ready solution yet, but in January 2014 it announced the Open Automotive Alliance, which includes General Motors, Honda Motor, Audi, Hyundai, and chipmaker Nvidia, and which aims to customize Google’s mobile operating system for vehicles.

Visual interfaces

Analogue gauge

Old instrument cluster: electronic gauges, LCD, control lamps (Source: BMW)

In the newest LCD-based instrument clusters, the analogue gauge style of display will continue to be available, as detailed in the following subsection.

LCD display

Liquid crystals are almost transparent substances, exhibiting the properties of both solid and liquid matter. Light passing through liquid crystals follows the alignment of the molecules that make them up – a property of solid matter. In the 1960s it was discovered that charging liquid crystals with electricity changed their molecular alignment, and consequently the way light passed through them; a property of liquids.

LCD is described as a transmissive technology because the display works by letting varying amounts of a fixed-intensity white backlight through an active filter. The red, green and blue elements of a pixel are achieved through simple filtering of the white light.

Most liquid crystals are organic compounds consisting of long rod-like molecules which, in their natural state, arrange themselves with their long axes roughly parallel. It is possible to precisely control the alignment of these molecules by flowing the liquid crystal along a finely grooved surface. The alignment of the molecules follows the grooves, so if the grooves are exactly parallel, then the alignment of the molecules also becomes exactly parallel.

In their natural state, LCD molecules are arranged in a loosely ordered fashion with their long axes parallel. However, when they come into contact with a grooved surface in a fixed direction, they line up in parallel along the grooves.

The first principle of an LCD consists of sandwiching liquid crystals between two finely grooved surfaces, where the grooves on one surface are perpendicular (at 90 degrees) to the grooves on the other. If the molecules at one surface are aligned north to south, and the molecules on the other are aligned east to west, then those in-between are forced into a twisted state of 90 degrees. Light follows the alignment of the molecules, and therefore is also twisted through 90 degrees as it passes through the liquid crystals. However, when a voltage is applied to the liquid crystal, the molecules rearrange themselves vertically, allowing light to pass through untwisted.

The second principle of an LCD relies on the properties of polarising filters and light itself. Natural light waves are orientated at random angles. A polarising filter is simply a set of incredibly fine parallel lines. These lines act like a net, blocking all light waves apart from those (coincidentally) orientated parallel to the lines. A second polarising filter with lines arranged perpendicular (at 90 degrees) to the first would therefore totally block this already polarised light. Light would only pass through the second polariser if its lines were exactly parallel with the first, or if the light itself had been twisted to match the second polariser.

Liquid crystal display operating principles

A typical twisted nematic (TN) liquid crystal display consists of two polarising filters with their lines arranged perpendicular (at 90 degrees) to each other, which, as described above, would block all light trying to pass through. But in-between these polarisers are the twisted liquid crystals. Therefore light is polarised by the first filter, twisted through 90 degrees by the liquid crystals, finally allowing it to completely pass through the second polarising filter. However, when an electrical voltage is applied across the liquid crystal, the molecules realign vertically, allowing the light to pass through untwisted but to be blocked by the second polariser. Consequently, no voltage equals light passing through, while applied voltage equals no light emerging at the other end.
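The two states of the TN cell can be illustrated with Malus's law, which says that an ideal polariser passes a fraction cos²(θ) of already polarised light, where θ is the angle between the light's polarisation and the filter's lines. The sketch below is an idealised model: it assumes perfect polarisers crossed at 90 degrees and assumes the light's polarisation is rotated by exactly the twist of the liquid crystal. The function names are hypothetical.

```python
import math


def malus_fraction(angle_deg):
    """Fraction of polarised light passed by an ideal polariser (Malus's law)."""
    return math.cos(math.radians(angle_deg)) ** 2


def tn_cell_fraction(twist_deg):
    """Fraction of light leaving a TN cell placed between crossed polarisers.

    The light leaves the first polariser at 0 degrees, is rotated by
    twist_deg, and then meets the second polariser at 90 degrees.
    """
    return malus_fraction(90.0 - twist_deg)


print(f"no voltage (90 degree twist): {tn_cell_fraction(90.0):.3f}")
print(f"voltage applied (no twist):   {tn_cell_fraction(0.0):.3f}")
```

The fractions are relative to the light that has already passed the first polariser; an ideal first polariser removes about half of unpolarised light before the cell is even reached.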

Basically, two LCD control techniques exist: passive matrix and active matrix. The earliest laptops (until the mid-1990s) were equipped with monochrome passive-matrix LCDs; later, colour active-matrix displays became standard on all laptops. Passive-matrix LCDs are still used today for less demanding applications. In particular, this technology is used on portable devices where less information needs to be displayed, where the lowest power consumption (no backlight) and low cost are desired, and/or where readability in direct sunlight is needed.

The most common type of active matrix LCDs (AMLCDs) is the Thin Film Transistor LCD (TFT LCD), which contains, besides the polarizing sheets and cells of liquid crystal, a matrix of thin-film transistors. In a TFT screen a matrix of transistors is connected to the LCD panel – one transistor for each colour (RGB) of each pixel. These transistors drive the pixels, eliminating at a stroke the problems of ghosting and slow response speed that afflict non-TFT LCDs.

The liquid crystal elements of each pixel are arranged so that in their normal state (with no voltage applied) the light coming through the passive filter is polarised so as to pass through the screen. When a voltage is applied across the liquid crystal elements they twist by up to ninety degrees in proportion to the voltage, changing their polarisation and thereby blocking the light’s path. The transistors control the degree of twist and hence the intensity of the red, green and blue elements of each pixel forming the image on the display.

TFT screens can be made much thinner than LCDs, making them lighter, and their response times have reached values as fast as 5 ms.

Several TFT panel types exist, differing in backlight and panel technology.

LCDs do not produce light themselves, so a built-in light source is needed to produce a visible image. (However, low-cost monochrome LCDs are available without a backlight.) Until about 2010 the backlight of large LCD panels was based on Cold Cathode Fluorescent Lamps (CCFLs). These have several disadvantages, such as higher voltage and power requirements, thicker panel design, no high-speed switching and faster ageing. The new LED-based backlight technologies eliminated these drawbacks and replaced CCFLs.

The panel technologies can be divided into three main groups:

- Twisted Nematic (TN)

- In Plane Switching (IPS)

- Vertical Alignment (VA)

The TN panel provides the shortest response time (>1 ms) and is a very cost-effective technology. On the other hand, these panels use only 18-bit colour depth, which can be increased virtually, but the colour reproduction is still not perfect. Another disadvantage is the poor viewing angle.

The core strengths of IPS panels are accurate colour reproduction and a wide viewing angle. The response time is higher than that of TN panels, but by now it has been brought to an acceptable level (>5 ms). Despite their higher cost, IPS panels are, all in all, the best TFT LCD panels at present.

In terms of their properties, VA panels lie between TN and IPS panels. They offer the best contrast ratio, but with a higher response time (>8 ms) and medium colour reproduction.

OLED display

The basic structure of an OLED is a cathode (which injects electrons), an emissive layer and an anode (which removes electrons). Modern OLED devices use many more layers in order to make them more efficient, but the basic functionality remains the same.

A flexible OLED display prototype

OLED displays have the following advantages over LCD displays:

- Lower power consumption

- Faster refresh rate and better contrast

- Greater brightness and fuller viewing angle

- Exciting displays (such as ultra-thin, flexible or transparent displays)

- Better durability (OLEDs are very durable and can operate in a broader temperature range)

- Lighter weight (the screen can be made very thin)

OLEDs have a limited lifetime (like any display, really), which was quite a problem a few years ago. But there has been constant progress, and today this is almost a non-issue. OLEDs now last long enough to be used in mobile devices and TVs, but the lifetime of a vehicle is significantly longer. OLEDs can also be problematic in direct sunlight because of their emissive nature, which could also be a problem in a car. But companies are working to improve this, and newer mobile device displays are quite good in that respect.

Today OLED displays are used mainly in small (2" to 5") displays for mobile devices such as phones, cameras and MP3 players. OLED displays carry a price premium over LCDs, but offer brighter pictures and better power efficiency. Making larger OLEDs is possible, but currently difficult and expensive. In 2014 several new OLED TVs were announced and presented, which shows that manufacturers are pushing this promising technology, and this should lower costs in the future.

A software-configurable instrument cluster is essentially an LCD display behind the steering wheel, which can be customized for different applications (e.g. sport, luxury sedan display or special diagnostic display). Themes can be selected with several colour and shape configurations, and the user can also decide which gauges or windows should appear. An option to alter the font size could help the visually impaired. The central console display can also be temporarily ported over to the instrument cluster, showing radio channel information or the current image of the parking system's status. The advantage is that the driver does not have to look away from the instrument panel.

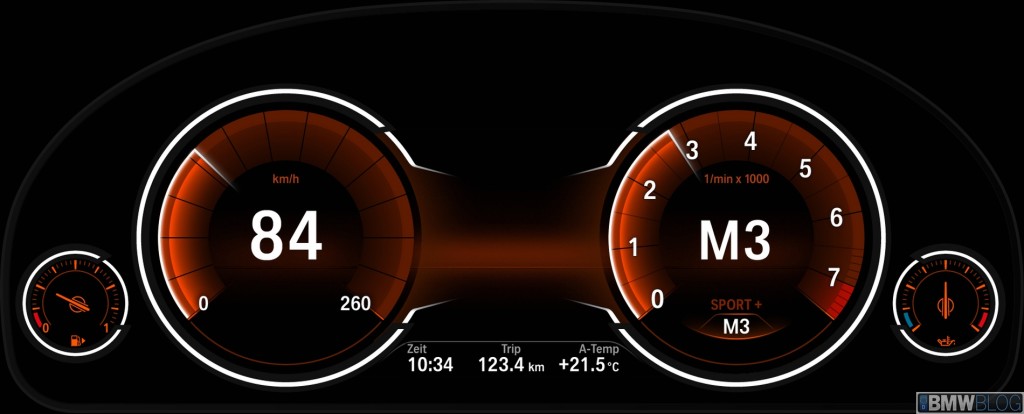

The figure shows a BMW instrument panel, which is based on a 10.2”, high-resolution (318 dpi) LED LCD display with a 6:1 aspect ratio.

The SPORT+ and COMFORT modes of the BMW 5 Series’ instrument cluster


Head-Up Display (HUD)

Head-Up Display on the M-Technik BMW M6 sports car (Source: BMW)

A HUD system contains a projector and a system of mirrors that beams an easy-to-read, high-contrast image onto a translucent film on the windscreen, directly in the driver's line of sight. The image is projected in such a way that it appears to be about two metres away, above the tip of the bonnet, making it particularly comfortable to read. BMW claims that the Head-Up Display halves the time it takes for the eyes to shift focus from the road to the instruments and back. The system's height can be adjusted for optimal viewing. The newest HUDs provide a full-colour display, which makes the car even more comfortable for the driver. More colours make it easier to differentiate between general driving information, like speed limits and navigation directions, and urgent warning signals. Important information like “Pedestrian in the road” is now even clearer and more recognisable, and this in turn reduces the driver's reaction time.

New technologies like the Head-Up Display (HUD) might be a promising solution for reducing the time it takes to inform the driver, because the information (road sign, speed limit, hazardous situation) can be projected directly in front of the driver's eyes without any distraction. There is research on using HUD technology on the full windshield, which would revolutionize, for example, night vision applications by giving the driver a “simulated” view of the road path and obstacles.

Next generation HUD demonstration

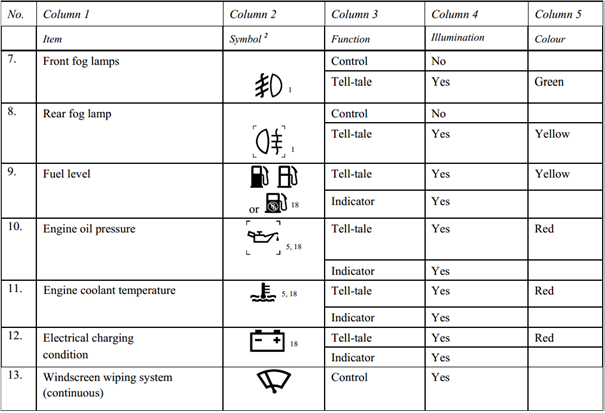

Indicator lights (Tell-tales)

Indicator lights are regulated by automobile safety standards worldwide. In the United States, National Highway Traffic Safety Administration Federal Motor Vehicle Safety Standard 101 includes indicator lights in its specifications. In Europe and throughout most of the rest of the world, they are specified by the ECE Regulations, more precisely the “United Nations (UN) Vehicle Regulations - 1958 Agreement”.

The exact meaning and usage of other (unregulated) symbols are manufacturer-specific in most cases.

Excerpt from the ECE Regulations (Source: UNECE)

Haptic interfaces

- Pedal force and vibration for warning and efficiency functions

- Force feedback steering wheel and vibration

- Driver’s seat vibration for warning and safety functions

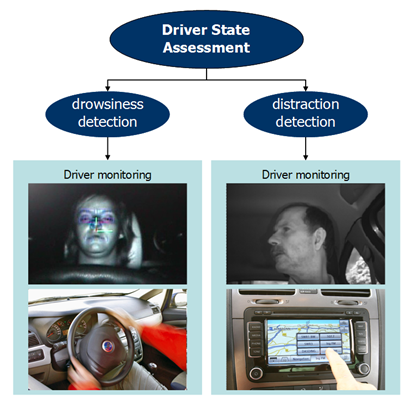

Driver State Assessment

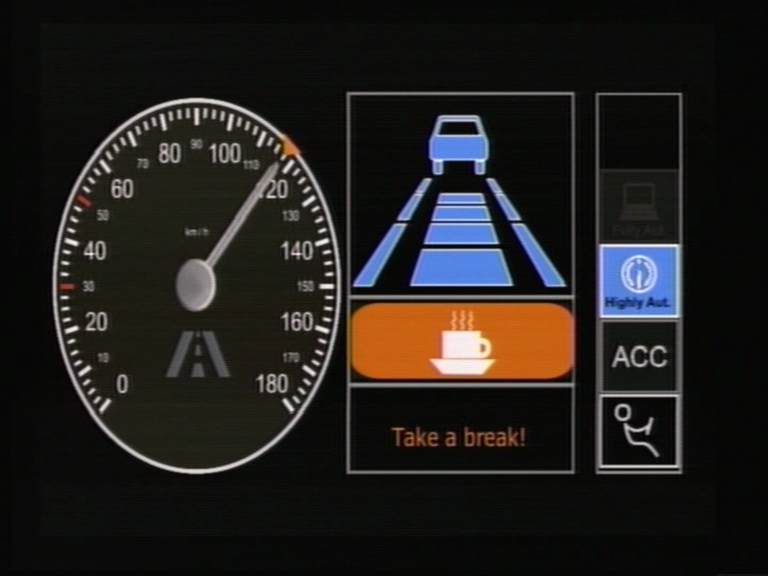

The risk of momentarily falling asleep during long-distance driving at night is quite high. In underload situations drivers may easily lose attention, and combined with monotony the risk of falling asleep becomes even higher.

Studies show that, after just four hours of non-stop driving, drivers' reaction times can be up to 50 % slower. So the risk of an accident doubles during this time. And the risk increases more than eight-fold after just six hours of non-stop driving! This is the reason why driving time recording devices (tachograph) are mandatory all across Europe for commercial vehicles.

The driver status is calculated by special algorithms based on direct and indirect monitoring of the driver. The assessment of the driver can be grouped into the following categories:

- Drowsiness level

  - Direct monitoring

    - Eye movement

    - Eye blinking time and frequency

  - Indirect monitoring

    - Driver activity

    - Lane keeping

    - Pedal positions

    - Steering wheel intensity

- Attention/distraction level

  - Whether the driver's gaze is focused on the road

  - Use of control buttons

Indirect monitoring means the evaluation of the driver activity based on other sensor information (e.g. steering wheel movement, buttons/switches). The indirect algorithms calculate an individual behavioural pattern for the driver during the first few minutes of every trip. This pattern is then continuously compared with the current steering behaviour and the current driving situation, courtesy of the vehicle's electronic control unit. This process allows the system to detect typical indicators of drowsiness and warn the driver by emitting an audible signal and flashing up a warning message in the instrument cluster.
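The baseline-and-compare idea behind indirect monitoring can be sketched as follows. This is a simplified illustration, not the algorithm of any production system: it assumes that steering activity is summarised by the mean absolute steering rate, that the baseline comes from samples recorded in the first minutes of the trip, and that a fixed z-score threshold (hypothetical value) flags a deviation.

```python
from statistics import mean, stdev


def build_baseline(steering_rates):
    """Return (mean, standard deviation) of the absolute steering rate.

    steering_rates: samples recorded during the first minutes of the trip.
    """
    magnitudes = [abs(rate) for rate in steering_rates]
    return mean(magnitudes), stdev(magnitudes)


def deviates_from_baseline(window, baseline, z_threshold=3.0):
    """True if the current window differs strongly from the driver's own baseline.

    z_threshold is a hypothetical tuning value chosen for illustration.
    """
    mu, sigma = baseline
    current = mean([abs(rate) for rate in window])
    if sigma == 0.0:
        return False  # no variation in the baseline, cannot normalise
    return abs(current - mu) / sigma > z_threshold


if __name__ == "__main__":
    baseline = build_baseline([1.0, 1.2, 0.8, 1.1, 0.9])
    print(deviates_from_baseline([1.0, 1.1, 0.9], baseline))  # normal steering
    print(deviates_from_baseline([3.0, 3.2, 2.8], baseline))  # abrupt corrections
```

A real system would combine several signals (lane position, pedal use, time of day) before issuing a warning; a single feature is used here only to keep the example short.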

Driver distraction is a leading cause of motor-vehicle crashes. Developing driver warning systems that measure the driver status can help to reduce distraction-related crashes. For such a system, accurately recognizing driver distraction is critical. The challenge of detecting driver distraction is to develop algorithms suitable for identifying different types of distraction. Visual distraction and cognitive distraction are the two major types, which can be described as “eye-off-road” and “mind-off-road”, respectively.

Combination of driver state assessment (Source: HAVEit)

From the point of view of highly automated driving, it is essential to know the status of the driver: whether he is able to take back control in a dangerous situation, or whether a minimum safety risk manoeuvre has to be carried out because of a medical emergency of the driver.

Trajectory planning layer

The formulation of the vehicle trajectory, i.e. the road path that the vehicle travels, is composed of two tasks: trajectory planning and trajectory execution. The trajectory planning part involves the calculation of different route possibilities with respect to the surrounding environment, includes the ranking and prioritization of the different route options based on minimizing the risk of a collision, and ends with the selection of the optimum trajectory. The trajectory execution part contains the trajectory segmentation and the generation of the motion vector containing the longitudinal and lateral control commands that will be carried out by the intelligent actuators of the execution layer.

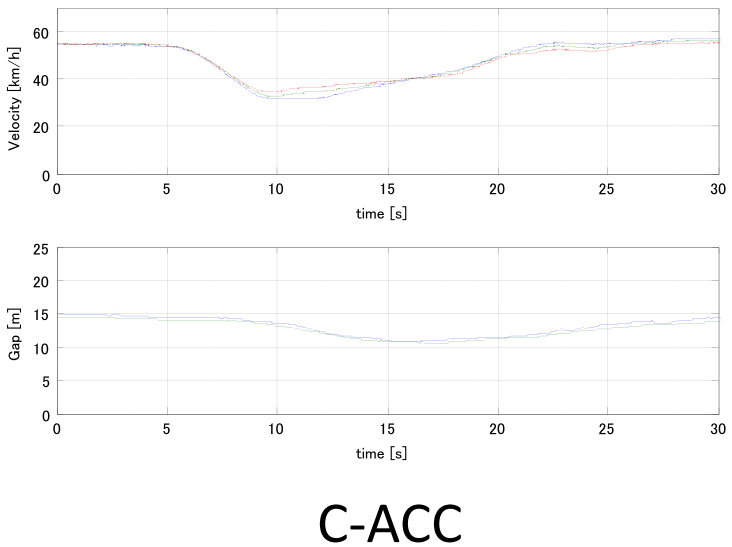

There are already vehicle automation functions available in series production (e.g. intelligent parking assistance systems) that use trajectory planning and execution. These systems are able to park the car under predefined circumstances without any driver intervention, but there are significant limitations compared to the highly automated driving. The most important difference is that parking assistance systems operate in a static or quasi-static environment around zero velocity, while for example temporary auto-pilot drives the vehicle highly automated around 130 km/h in a continuously and rapidly changing environment.

The output of this layer is a trajectory represented in the motion vector that specifies the vehicle status (position, heading and speed) for the subsequent moments.

Longitudinal motion

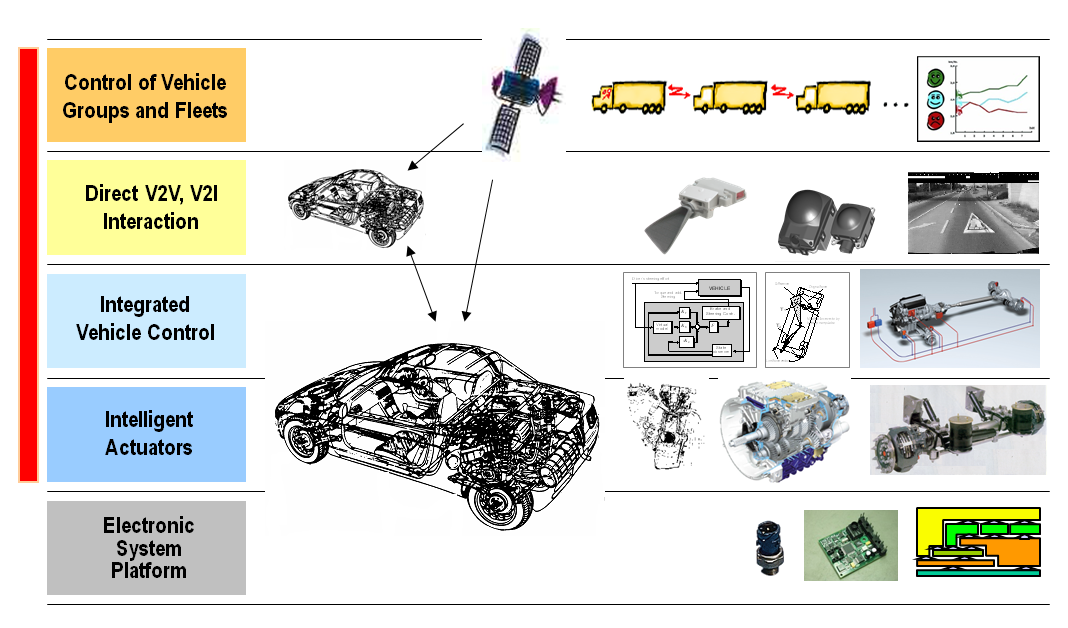

Early Cruise Control (CC) functions were at first only able to maintain a certain engine speed; later systems were capable of holding a predefined vehicle speed. This involved longitudinal speed control using the engine as the only actuator. The extension of the cruise control system with a long-range RADAR led to the invention of the Adaptive Cruise Control (ACC) system. Besides longitudinal speed control, ACC systems could also keep a speed-dependent safe distance behind the preceding vehicle. Switching between speed and distance control was automatic, based on the traffic situation in front of the vehicle. ACC began to use the brake system as a second actuator. With the Stop & Go function, the applicability of ACC was extended to high-congestion, stop-and-go traffic. ACC with Stop & Go can control longitudinal speed down to zero and back to the set speed, permitting efficient use in traffic jams. Today’s most advanced ACC systems also take into consideration topographic information from the eHorizon, such as the curves and slopes ahead, to calculate an optimum speed profile for the next few kilometres.
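The automatic switching between speed control and distance control can be sketched with a very small controller. This is an illustrative simplification, not a production ACC law: it assumes a constant time-gap spacing policy, simple proportional terms, and takes the smaller (more cautious) of the two acceleration requests. All gains and the function name are hypothetical.

```python
def acc_acceleration(ego_speed, set_speed, gap=None, lead_speed=None,
                     time_gap=1.8, standstill_gap=2.0,
                     k_speed=0.5, k_gap=0.2, k_rel=0.6):
    """Return a requested acceleration in m/s^2 (negative means braking).

    gap and lead_speed are None when the radar sees no preceding vehicle.
    All gains are hypothetical illustration values.
    """
    # Speed control: approach the speed selected by the driver.
    speed_request = k_speed * (set_speed - ego_speed)
    if gap is None or lead_speed is None:
        return speed_request

    # Distance control: keep a speed-dependent safe gap to the preceding vehicle.
    desired_gap = standstill_gap + time_gap * ego_speed
    distance_request = k_gap * (gap - desired_gap) + k_rel * (lead_speed - ego_speed)

    # The more cautious request wins, which switches the mode automatically.
    return min(speed_request, distance_request)


if __name__ == "__main__":
    print(acc_acceleration(25.0, 30.0))                             # free road: speed control
    print(acc_acceleration(25.0, 30.0, gap=20.0, lead_speed=20.0))  # slow vehicle close ahead: braking
```

Because the desired gap shrinks to the standstill gap as the ego speed approaches zero, the same law can in principle follow a vehicle down to a stop, which is the idea behind the Stop & Go extension.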

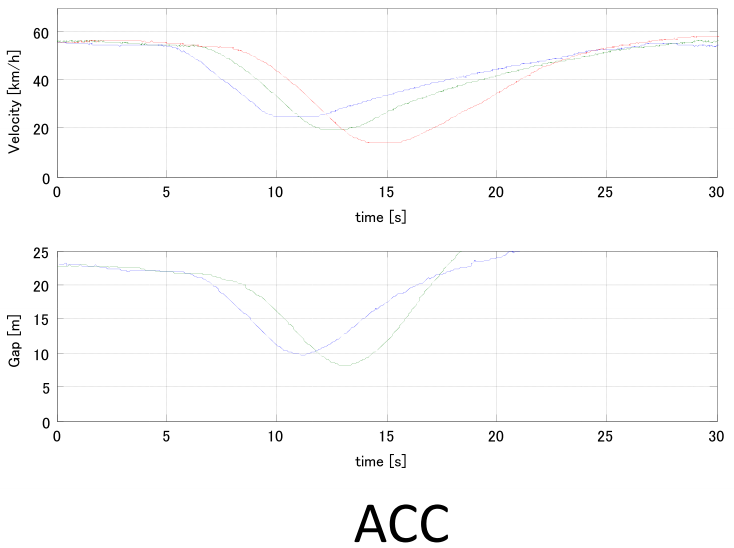

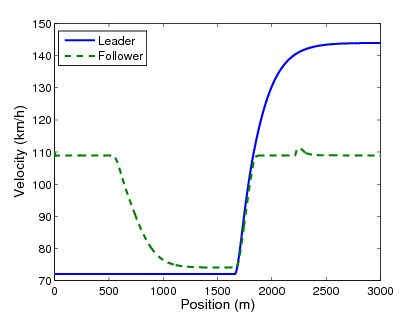

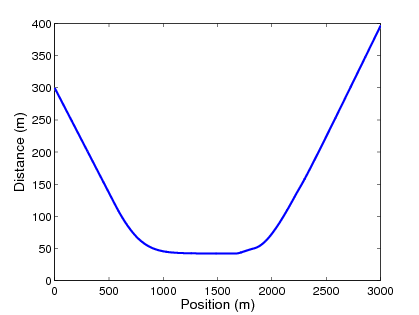

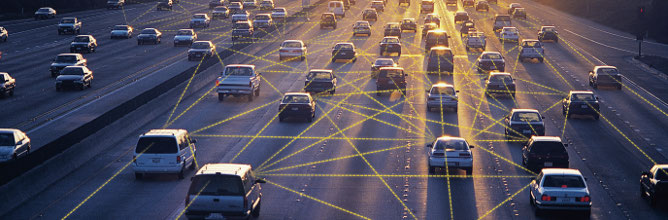

In contrast to standard adaptive cruise control (ACC), which uses vehicle-mounted radar sensors to detect the distance and the speed of the preceding vehicle, Cooperative ACC uses V2V communication to transmit acceleration data, reducing the time delay of onboard ranging sensors. This enables the following vehicles to adjust their speeds according to the preceding vehicle, resulting in better distance-keeping performance. The figure shows two comparison diagrams for ACC and Cooperative ACC, indicating that V2V information exchange instead of external sensor measurement can significantly reduce the time delay of the control loop.

Speed and distance profile comparison of standard ACC versus Cooperative ACC systems (Source: Toyota)

Lateral motion

The objective of assisted parking systems is to improve the comfort and safety of parking manoeuvres: comfort, by parking the vehicle without the driver steering, and safety, by precisely calculating the parking space size and a collision-free motion trajectory, avoiding the human errors that may occur during a parking manoeuvre. Series-production assisted parking systems appeared on the market in the early 2000s. Advances in electronic technology enabled the development of precise ultrasonic sensors for short-range distance measurement and of electronic power-assisted steering for driverless steering of the vehicle.

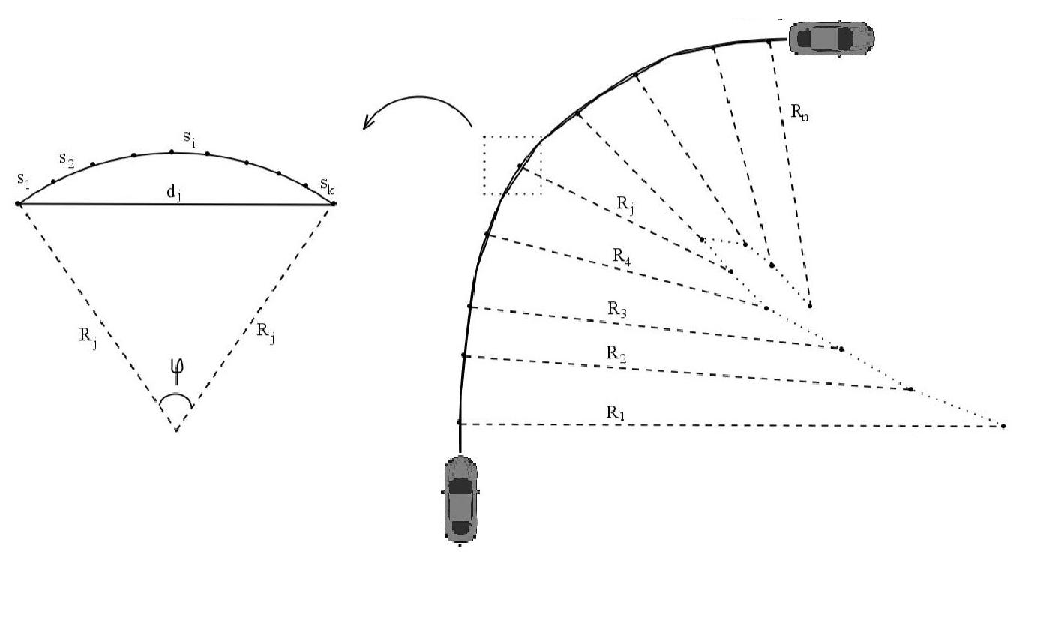

The task of trajectory planning starts with the determination of a proper parking space. By travelling at low speed and continuously measuring the distance at the side of the vehicle, the parking gap can be calculated and a proper parking space selected. As the first automated parking systems were capable of handling only parallel parking, the initial formulas for trajectory calculation were divided into the following segments:

- straight backward path

- full (right) steering backward path

- straight backward path

- full (left) steering backward path

- (optional straight forward path)

Illustration of the parallel parking trajectory segmentation (Source: Ford)

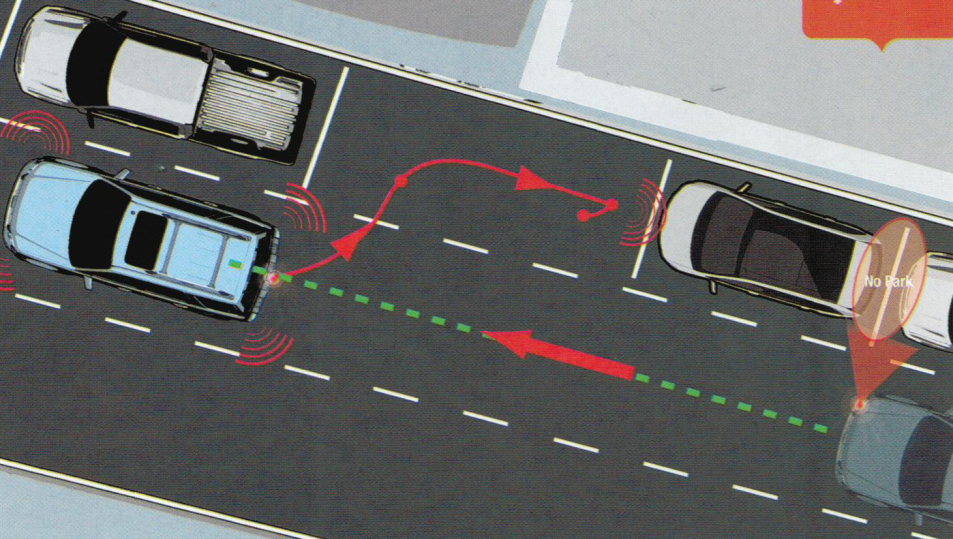
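The parking gap measurement described above (driving slowly past parked cars while measuring the side distance) can be sketched as follows. The example assumes fixed-rate samples of vehicle speed and side distance, and a hypothetical depth threshold above which the side of the road counts as free; the function names and the numbers are illustrative only.

```python
def measure_gaps(samples, dt, depth_threshold_m):
    """Return the lengths (m) of the free gaps found along the kerb.

    samples: list of (speed_mps, side_distance_m) taken every dt seconds.
    A sample counts as free space when the side distance reaches the threshold.
    """
    gaps, current = [], 0.0
    for speed, side_distance in samples:
        if side_distance >= depth_threshold_m:
            current += speed * dt          # distance travelled alongside free space
        elif current > 0.0:
            gaps.append(current)           # gap ended at the next obstacle
            current = 0.0
    if current > 0.0:
        gaps.append(current)
    return gaps


def select_gap(gaps, vehicle_length_m, margin_m):
    """Index of the first gap long enough for the vehicle plus margin, or None."""
    for index, gap in enumerate(gaps):
        if gap >= vehicle_length_m + margin_m:
            return index
    return None


if __name__ == "__main__":
    # 2 m/s past a parked car, a free stretch, then another parked car.
    samples = [(2.0, 0.5)] * 5 + [(2.0, 2.5)] * 30 + [(2.0, 0.5)] * 5
    gaps = measure_gaps(samples, dt=0.1, depth_threshold_m=2.0)
    print(gaps, select_gap(gaps, vehicle_length_m=4.5, margin_m=1.0))
```

Once a gap is selected, the planner would fit the backward path segments listed above into it; that geometric step is not shown here.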

As different scenarios exist in everyday parking situations, automated parking systems also try to assist drivers in situations other than parallel parking. Today’s advanced parking assist systems can also cope with perpendicular or angle parking, which require a slightly different approach to lateral motion control.

One topic of recent research in highly automated driving, which is especially challenging in urban environments, is fully autonomous parking control. The challenge arises from narrow corridors, tight turns and unpredictable moving obstacles, as well as multiple switches of driving direction. The following figure shows common parking spots in urban environments that are the subject of research and development today.

Layout of common parking scenarios for automated parking systems (Source: TU Wien)

Besides parking another good example of low speed combined longitudinal and lateral control is the traffic jam assist system. At speeds between zero and 40 or 60 km/h (depending on OEMs), the traffic jam assist system keeps pace with the traffic flow and helps to steer the car within certain constraints. It also accelerates and brakes autonomously. The system is based on the functionality of the adaptive cruise control with stop & go, extended by adding the lateral control of steering and lane guidance. The function is based on the built-in radar sensors, a wide-angle video camera and the ultrasonic sensors of the parking system. As drivers spend a great amount of their time in heavy traffic, such systems could reduce the risk of rear-end collisions and protect the drivers mentally by relieving them from stressful driving.

Traffic jam assistant system in action (Source: Audi)

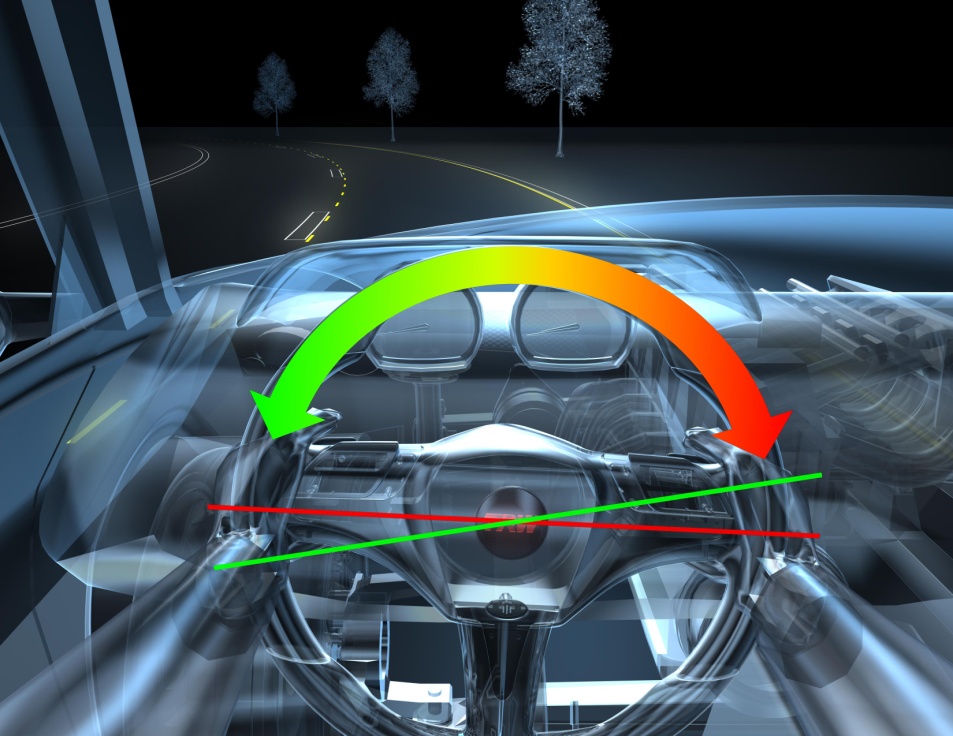

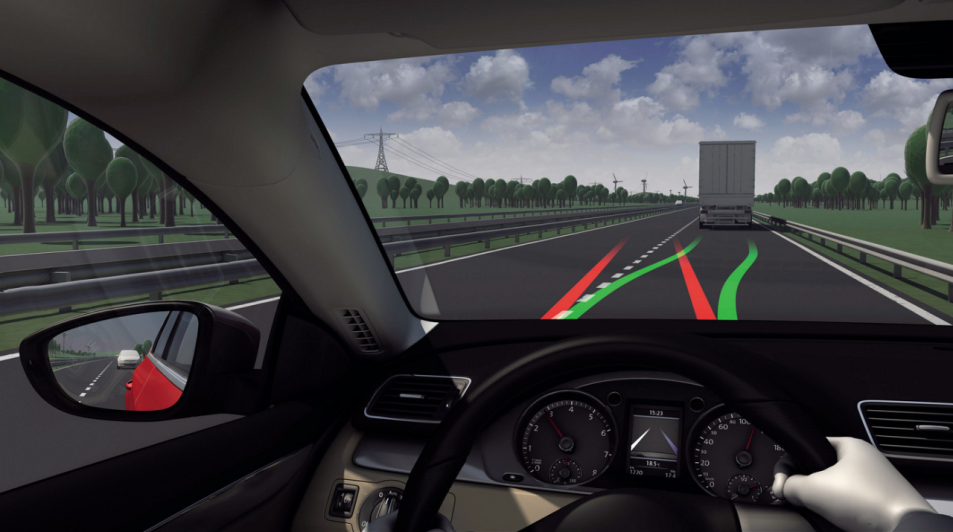

Today’s lane keeping assist (LKA) systems are the first signs of higher-speed lateral control of vehicles. Based on camera and radar information, these systems are capable of sensing whether the vehicle is deviating from its lane, and they then help the vehicle stay inside the lane by an automated steering and/or braking intervention. An advanced extension of LKA is lane centring assist (LCA), where the vehicle not only stays inside the lane but the lateral control algorithm keeps it on a path near the centre of the lane. The primary objective of the lane keeping assist and lane centring assist functions is to warn and assist the driver; these systems are definitely not designed to replace the driver in steering the vehicle, although on a technical level they would be able to do so.

The operation of today’s Lane Keeping Assist (LKA) system (Source: Volkswagen)

Complex functions like highly automated driving with combined longitudinal and lateral control will definitely appear first on highways, since traffic is more predictable and relatively safe there (one-way traffic only, good-quality road with relatively wide lanes, side protection, clearly visible lane markings, no pedestrians or cyclists, etc.). As highways are the best places to introduce hands-free driving at higher speeds, one could expect a production vehicle equipped with a temporary autopilot, or in other words an automated highway driving assist function, as soon as the end of this decade.

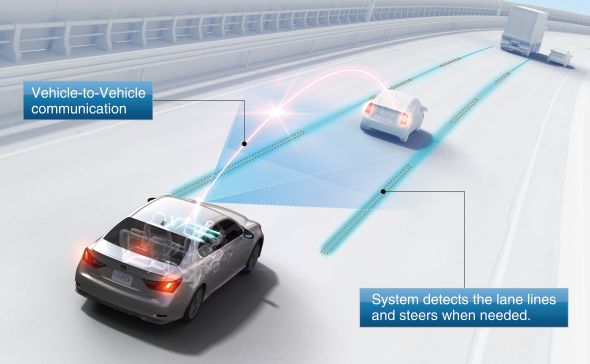

Automated highway driving means the automated control of the complex driving tasks of highway driving, like driving at a safe speed selected by the driver, changing lanes or overtaking vehicles ahead depending on the traffic circumstances, automatically reducing speed as necessary, or stopping the vehicle in the rightmost lane in case of an emergency. Toyota Motor has already demonstrated its advanced highway driving support system prototype in real traffic operation. The two vehicles communicate with each other, keeping their lane and following the preceding vehicle to maintain a safe distance.

Automated Highway Driving Assist system operation (Source: Toyota)

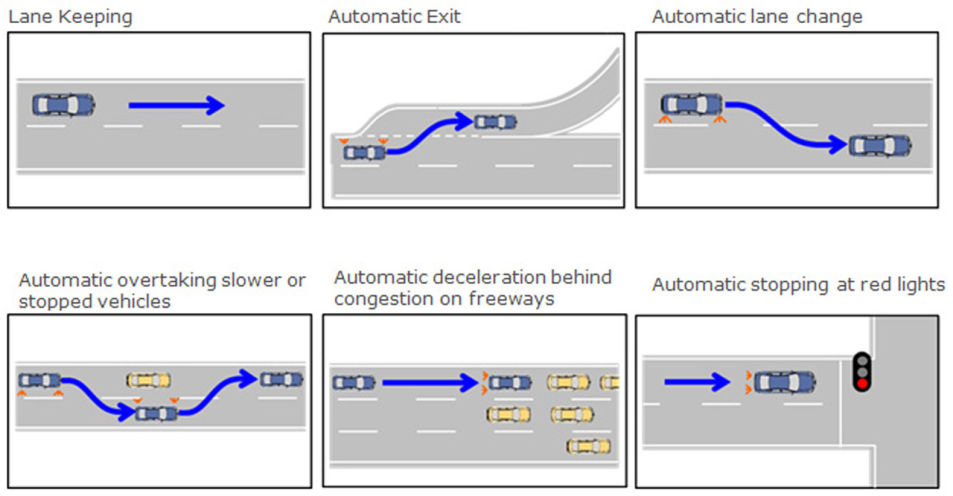

Nissan has also announced that it is developing highly automated cars, targeted to hit the road by 2020. Equipped with laser scanners, around-view monitor cameras, and advanced artificial intelligence and actuators, the vehicle is not fully autonomous, as its systems are designed to allow the driver to take over control manually at any time. The figure illustrates the highly automated Leaf being tested in a number of combined longitudinal and lateral control scenarios, including automated highway exit, lane change and overtaking.

Scenarios of single or combined longitudinal and lateral control (Source: Nissan)

Automation level

- Availability of a higher automation level

- Real-time environment sensing

- Current status of vehicle dynamics

- Driver attention

- Driver request for higher automation

Auto-pilot

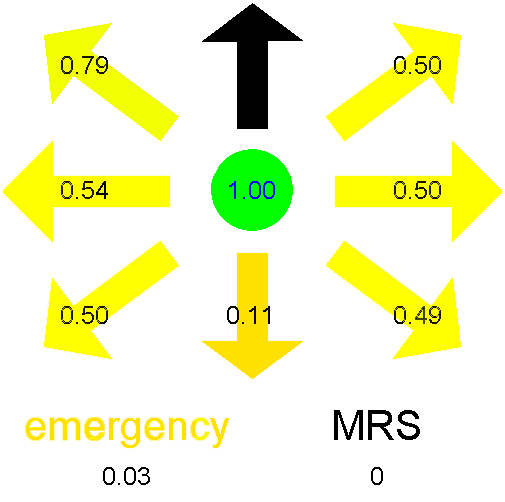

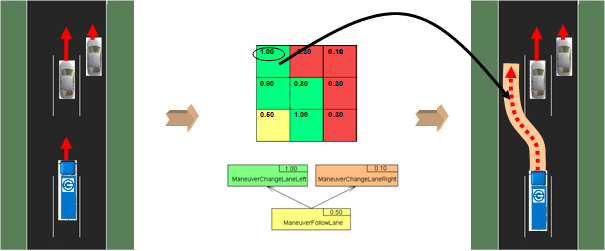

From the results of the multi-sensor based fusion module, the co-pilot algorithms will identify the type of situation the vehicle is in and generate driving strategy options to handle the situation. Driving strategy in this context means a set of feasible manoeuvres and trajectories to be realized by the vehicle or the driver. Driving strategy determination is based upon the analysis of the current driving situation (based on both environment sensor information and future estimation). The assessment of the danger level of the current driving situation may lead the system to select a minimum safety risk strategy or an emergency strategy for the sake of safety. The objective of the auto-pilot is to determine the optimum driving strategy for the driving context.

The co-pilot will provide the set of trajectories with their prioritization. Furthermore, these trajectories provide a description of the manoeuvres which the automation intends to perform. Every trajectory is assigned to a specific manoeuvre (e.g. slow down and stop; slow down and steer right; speed up, steer left and overtake) and vice versa. The calculations are based on the input from the perception layer. Irrespective of the automation level, the auto-pilot process is divided into two main functions:

- The definition of a driving strategy at a high level, which is described using a manoeuvre language.

- The definition of trajectory at a lower level: this function uses the previously selected manoeuvre to define a reduced possibility field of the trajectory.

The “definition of trajectory” sub-module describes and evaluates the manoeuvres proposed by the “definition of driving strategy” sub module in greater detail, choosing the best manoeuvres first, until a predefined calculation time span (which is linked to a safe reaction time) elapses.

The manoeuvre grid with priority rankings (Source: HAVEit)

The manoeuvre grid contains the potential longitudinal and lateral actions that the vehicle is capable of performing in its current position. The output of this grid is a ranking of the manoeuvres. The calculation takes into consideration 9 possible combinations, the product of the 3 longitudinal and 3 lateral options. In the longitudinal direction, the vehicle can accelerate, decelerate (brake) or keep the current velocity, while in the lateral direction, the vehicle can change lane either to the left or to the right, or can stay in the current lane. There are two additional manoeuvres that have to be considered during the potential manoeuvre calculation: the minimum safety risk manoeuvre and the emergency manoeuvre with maximum braking to standstill. This gives a total of eleven manoeuvres. Each manoeuvre gets a performance indication number between zero and one.
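The counting argument above (3 longitudinal times 3 lateral options, plus two special manoeuvres) and the ranking by performance indicator can be sketched in a few lines. The manoeuvre labels and function names below are illustrative choices, not identifiers from the HAVEit project.

```python
from itertools import product

LONGITUDINAL = ("accelerate", "keep speed", "decelerate")
LATERAL = ("change lane left", "stay in lane", "change lane right")
SPECIAL = ("minimum safety risk manoeuvre", "emergency braking to standstill")


def all_manoeuvres():
    """The 3 x 3 grid combinations followed by the two additional manoeuvres."""
    grid = [f"{lon} / {lat}" for lon, lat in product(LONGITUDINAL, LATERAL)]
    return grid + list(SPECIAL)


def rank_manoeuvres(indicators):
    """Sort manoeuvres by performance indicator (each between 0 and 1), best first."""
    for name, value in indicators.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"indicator for {name!r} must lie in [0, 1]")
    return sorted(indicators, key=indicators.get, reverse=True)


if __name__ == "__main__":
    manoeuvres = all_manoeuvres()
    print(len(manoeuvres))  # 11
    print(rank_manoeuvres({"accelerate / change lane left": 0.9,
                           "keep speed / stay in lane": 0.5,
                           "decelerate / stay in lane": 0.2}))
```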

When several potential vehicle trajectories are available that do not carry the risk of a collision, the next task is a more detailed evaluation to select the optimum trajectory from among them. To select the optimum path, the potential trajectories are ranked and optimized through qualitative and quantitative evaluation according to key performance indicators like:

- trajectory complexity

- travelling time

- driving comfort

- fuel consumption

- safety margins

According to the assumed driving situation in the figure, the best manoeuvre, taking into consideration the trajectory ratings of the grid and tree performance indicators, is overtaking and acceleration in the left lane.

The decision of the optimum trajectory (Source: HAVEit)
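One simple way to turn the key performance indicators listed above into a single ranking is a weighted sum. The source does not state how the indicators are combined, so the weighted sum, the weights and the candidate ratings below are all assumptions made purely for illustration; every rating is taken to be normalised so that 1 is best.

```python
# Hypothetical weights for the key performance indicators (they sum to 1).
KPI_WEIGHTS = {
    "trajectory complexity": 0.1,
    "travelling time": 0.2,
    "driving comfort": 0.2,
    "fuel consumption": 0.2,
    "safety margins": 0.3,
}

# Hypothetical normalised ratings (1 = best) for two collision-free candidates.
CANDIDATES = {
    "overtake in left lane": {
        "trajectory complexity": 0.6, "travelling time": 0.9,
        "driving comfort": 0.7, "fuel consumption": 0.6, "safety margins": 0.8,
    },
    "follow in current lane": {
        "trajectory complexity": 0.9, "travelling time": 0.4,
        "driving comfort": 0.9, "fuel consumption": 0.8, "safety margins": 0.7,
    },
}


def trajectory_score(ratings, weights):
    """Weighted sum of the normalised indicator ratings."""
    return sum(weights[name] * ratings[name] for name in weights)


def select_optimum(candidates, weights):
    """Name of the candidate trajectory with the highest weighted score."""
    return max(candidates, key=lambda name: trajectory_score(candidates[name], weights))


if __name__ == "__main__":
    for name, ratings in CANDIDATES.items():
        print(name, round(trajectory_score(ratings, KPI_WEIGHTS), 2))
    print("selected:", select_optimum(CANDIDATES, KPI_WEIGHTS))
```

Changing the weights changes the outcome, which is why the choice of weighting is itself a design decision.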

Motion vector generation

Trajectory execution layer


Another task of the trajectory execution layer is the execution of the calculated control commands (motion vector) and to provide feedback about the actual status. The motion vector contains the selected trajectory that has to be followed including the desired longitudinal and lateral movement of the vehicle.

The execution of the motion vector is distributed among the intelligent actuators of the vehicle. The task distribution and the harmonization of the intelligent actuators are carried out by the integrated vehicle (powertrain) controller.

Longitudinal control

Design of speed profile

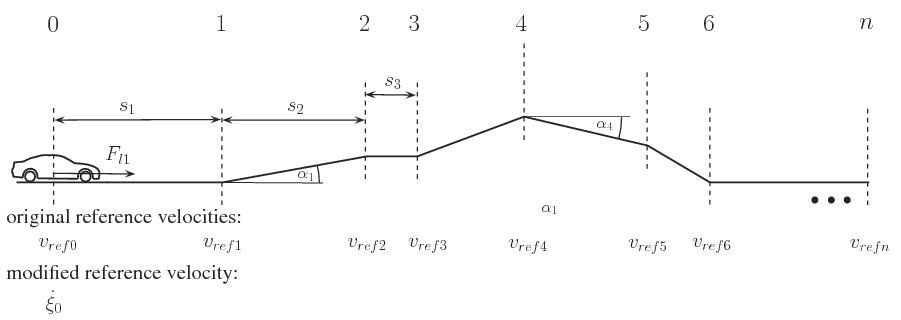

The road ahead of the vehicle is divided into several sections and reference speeds are selected for them, as the figure shows. The road inclinations and the speed limits are assumed to be known at the endpoints of each section. Knowledge of the road slopes is a necessary assumption for the calculation of the velocity signal. In practice the slope can be obtained in two ways: either a contour map containing the level lines is used, or an estimation method is applied. In the former case a map used in other navigation tasks can be extended with slope information. Several methods have been proposed for slope estimation. They use cameras, laser/inertial profilometers, differential GPS or GPS/INS systems. An estimation method based on a vehicle model and Kalman filters has also been proposed.

Division of road

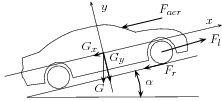

The simplified model of the longitudinal dynamics of the vehicle is shown in the figure. The longitudinal movement of the vehicle is influenced by the traction force $F_{l1}$ as the control signal and by the disturbances $F_{d1}$. Several longitudinal disturbances influence the movement of the vehicle. The rolling resistance is modelled by the empirical form

$$F_r = F_z f_0 \left(1 + \kappa \dot{\xi}^2\right),$$

where $F_z$ is the vertical load of the wheel, $f_0$ and $\kappa$ are empirical parameters depending on tyre and road conditions and $\dot{\xi}$ is the velocity of the vehicle. The aerodynamic force is formulated as

$$F_{aer} = 0.5\, C_w \rho A_0 \dot{\xi}_{rel}^2,$$

where $C_w$ is the drag coefficient, $\rho$ is the density of air, $A_0$ is the reference area and $\dot{\xi}_{rel}$ is the velocity of the vehicle relative to the air. In still air $\dot{\xi}_{rel} = \dot{\xi}$, which is assumed here. The longitudinal component of the weight force is $F_g = m g \sin\alpha$, where $m$ is the mass of the vehicle, $g$ is the gravitational acceleration and $\alpha$ is the angle of the slope. The acceleration of the vehicle is the following:

$$\ddot{\xi} = \frac{F_{l1} - F_{d1}}{m},$$

where $m$ is the mass of the vehicle, $\xi$ is the position of the vehicle, and $F_{l1}$ and $F_{d1}$ are the traction force and the disturbance force ($F_{d1} = F_r + F_{aer} + F_g$), respectively.

Simplified vehicle model
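The force balance of the simplified vehicle model can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the rolling resistance is taken as F_z·f0·(1 + κ·v²), the aerodynamic drag as 0.5·C_w·ρ·A0·v² (still air), the slope force as m·g·sin α, and the vertical load as m·g·cos α. The function names and all numeric parameter values are hypothetical and not taken from the source.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def disturbance_force(v, mass, alpha, f0=0.015, kappa=1e-5,
                      c_w=0.3, rho=1.2, a0=2.2):
    """Sum of rolling, aerodynamic and slope resistance in newtons.

    v: vehicle speed in m/s, alpha: road slope angle in radians.
    All default parameter values are hypothetical illustration values.
    """
    f_z = mass * G * math.cos(alpha)            # assumed vertical load
    f_roll = f_z * f0 * (1.0 + kappa * v * v)   # empirical rolling resistance
    f_aer = 0.5 * c_w * rho * a0 * v * v        # drag in still air
    f_slope = mass * G * math.sin(alpha)        # longitudinal weight component
    return f_roll + f_aer + f_slope


def acceleration(traction_force, v, mass, alpha):
    """Longitudinal acceleration: (traction - disturbance) / mass."""
    return (traction_force - disturbance_force(v, mass, alpha)) / mass


if __name__ == "__main__":
    m = 1500.0
    v = 25.0
    slope = math.radians(3.0)
    hold = disturbance_force(v, m, slope)
    print(f"traction needed to hold {v} m/s on a 3 degree slope: {hold:.0f} N")
    print(f"acceleration with 3000 N traction: {acceleration(3000.0, v, m, slope):.2f} m/s^2")
```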

Although acceleration and deceleration may occur between the section points, an average speed is used. Thus the acceleration of the vehicle is considered to be constant between these points. In this case the movement of the vehicle, using simple kinematic equations, is

$$\dot{\xi}_1^2 - \dot{\xi}_0^2 = 2 s_1 \ddot{\xi},$$

where $\dot{\xi}_0$ is the velocity of the vehicle at the initial point, $\dot{\xi}_1$ is the velocity of the vehicle at the first point and $s_1$ is the distance between these points. Thus the velocity at the first section point is the following:

$$\dot{\xi}_1^2 = \dot{\xi}_0^2 + \frac{2}{m} s_1 \left(F_{l1} - F_{d1}\right).$$

The velocity at the first section point $\dot{\xi}_1$ is defined as the reference velocity, $v_{ref,1} = \dot{\xi}_1$. This relationship also applies to the next road section:

$$\dot{\xi}_2^2 = \dot{\xi}_1^2 + \frac{2}{m} s_2 \left(F_{l2} - F_{d2}\right).$$

It is important to emphasize that the longitudinal force $F_{l1}$ is known only for the first section. Moreover, the longitudinal forces $F_{li}$, $i \in [2, n]$, are not known while travelling in the first section. Therefore, in the calculation of the control force it is assumed that additional longitudinal forces will not act on the vehicle, i.e., the longitudinal forces $F_{li}$, $i \in [2, n]$, will not affect the next sections. At the same time the disturbances from road slope are known ahead. Similarly, the velocity of the vehicle can be formalized at the next $n$ section points. Using this principle a velocity chain, which contains the required velocities along the way of the vehicle, is constructed. The velocities of the vehicle are described at each section point of the road by expressions similar to the one for the first section. The velocity at the $n$-th section point is the following:

$$\dot{\xi}_n^2 = \dot{\xi}_0^2 + \frac{2}{m}\left(s_1 F_{l1} - \sum_{i=1}^{n} s_i F_{di}\right) = v_{ref,n}^2.$$

It is also an important goal to track the momentary value of the velocity. It can be considered in the following equation: $\dot{\xi}_0^2 = v_{ref,0}^2$.

The disturbance force can be divided into two parts: the first part is the resistance force from the road slope, $F_{di,r}$, while the second part, $F_{di,o}$, contains all of the other resistances such as rolling resistance, aerodynamic forces etc. We assume that $F_{di,r}$ is known while $F_{di,o}$ is unknown. $F_{di,r}$ depends on the mass of the vehicle and the angle of the slope $\alpha_i$. When the control force $F_{l1}$ is calculated, of all the unmeasured disturbances only $F_{d1,o}$ influences the vehicle. In the control design the effects of the unmeasured disturbances $F_{di,o}$, $i \in [2, n]$, are ignored. The consequence of this assumption is that the model does not contain all the information about the road disturbances, therefore it is necessary to design a robust speed controller. This controller can ignore the undesirable effects. Consequently, the equations of the vehicle at the section points are calculated in the following way:

$$\dot{\xi}_0^2 = v_{ref,0}^2 \tag{1}$$

$$\dot{\xi}_0^2 + \frac{2}{m} s_1 F_{l1} - \frac{2}{m} s_1 F_{d1,o} = v_{ref,1}^2 + \frac{2}{m} s_1 F_{d1,r} \tag{2}$$

$$\dot{\xi}_0^2 + \frac{2}{m} s_1 F_{l1} - \frac{2}{m} s_1 F_{d1,o} = v_{ref,2}^2 + \frac{2}{m}\left(s_1 F_{d1,r} + s_2 F_{d2,r}\right) \tag{3}$$

$$\vdots \tag{4}$$

$$\dot{\xi}_0^2 + \frac{2}{m} s_1 F_{l1} - \frac{2}{m} s_1 F_{d1,o} = v_{ref,n}^2 + \frac{2}{m}\sum_{i=1}^{n} s_i F_{di,r} \tag{5}$$

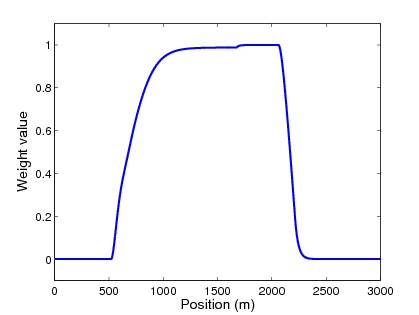
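The velocity chain can be illustrated numerically. The sketch below propagates the squared velocity from one section point to the next with the constant-acceleration relation v_next² = v² + (2/m)·s·(F_l - F_d). In line with the assumptions in the text, the traction force acts only in the first section and only the slope resistance is included as a known disturbance; taking that resistance as m·g·sin α is an assumption of this example, and the function name and the numbers are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def velocity_chain(v0, traction_first_section, mass, lengths, slopes):
    """Predicted speeds (m/s) at the end of each road section.

    lengths: section lengths s_i in metres, slopes: slope angles alpha_i in radians.
    Traction is applied in the first section only; unmeasured disturbances
    (rolling resistance, drag) are ignored, as in the simplified design model.
    """
    v_squared = v0 ** 2
    chain = []
    for index, (s, alpha) in enumerate(zip(lengths, slopes)):
        traction = traction_first_section if index == 0 else 0.0
        slope_force = mass * G * math.sin(alpha)   # known disturbance from the slope
        v_squared += 2.0 / mass * s * (traction - slope_force)
        chain.append(math.sqrt(max(v_squared, 0.0)))  # clamp: the vehicle cannot go below standstill
    return chain


if __name__ == "__main__":
    # 20 m/s initial speed, 1500 N traction in the first 100 m, then a downhill and an uphill section.
    speeds = velocity_chain(20.0, 1500.0, 1500.0,
                            lengths=[100.0, 100.0, 100.0],
                            slopes=[0.0, math.radians(-2.0), math.radians(2.0)])
    print([round(v, 2) for v in speeds])
```

Comparing such predicted speeds with the reference speeds of the sections is what allows the controller to account for slopes before the vehicle reaches them.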

In the following step, weights are applied to the reference speeds. An additional weight is applied to the momentary speed, and a further weight is applied to the leader speed. While the reference-speed weights represent the rate of the road conditions, the momentary-speed weight has an essential role: it determines the tracking requirement of the current reference velocity. Increasing the momentary-speed weight makes the momentary velocity more important and the road conditions less important. Similarly, increasing the leader-speed weight makes the road conditions and the momentary velocity negligible. The weights should sum to one.

Weights have an important role in control design. By an appropriate selection of the weights, the importance of the road conditions is taken into consideration. For example, when all of the weight is placed on the momentary speed, the control exercise is simplified to a cruise control problem without any road conditions. When equivalent weights are used, the road conditions are considered with the same importance. When all of the weight is placed on the leader speed, only the tracking of the preceding vehicle is carried out. The optimal determination of the weights has an important role, i.e., to achieve a balance between the current velocity and the effect of the road slope. Consequently, a balance between the velocity and the economy parameters of the vehicle is formalized.
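The role of the weights can be illustrated with a small sketch. It assumes, purely for illustration, that the weighted speeds are combined linearly; the chapter's own formula is not reproduced here, and the function name and arguments are hypothetical.

```python
def blend_speeds(v_ref0, v_lead, v_refs, q, w, gammas):
    """Illustrative convex combination of speeds (an assumption, not the chapter's formula).

    v_ref0 : current reference velocity, weighted by q (momentary-speed weight)
    v_lead : leader speed, weighted by w
    v_refs : reference speeds at the section points, weighted by gammas
    """
    if len(v_refs) != len(gammas):
        raise ValueError("one weight is needed per section point")
    weights = [q, w, *gammas]
    if any(x < 0.0 for x in weights):
        raise ValueError("weights must be non-negative")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to one")
    return q * v_ref0 + w * v_lead + sum(g * v for g, v in zip(gammas, v_refs))


# All weight on the momentary speed: plain cruise control, road conditions ignored.
cruise = blend_speeds(25.0, 20.0, [22.0, 18.0], q=1.0, w=0.0, gammas=[0.0, 0.0])

# All weight on the leader speed: pure tracking of the preceding vehicle.
follow = blend_speeds(25.0, 20.0, [22.0, 18.0], q=0.0, w=1.0, gammas=[0.0, 0.0])

# Equivalent weights: every speed is considered with the same importance.
equal = blend_speeds(25.0, 20.0, [22.0, 18.0], q=0.25, w=0.25, gammas=[0.25, 0.25])
```

The sum-to-one check mirrors the constraint stated in the text; the three calls correspond to the three special cases described above.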

By summarizing the above equations, the following formula is obtained:

| (6) |

The formula contains a term that depends on the road slopes, the reference velocities and the weights:

| (7) |

Equation (6) is rearranged:

| (8) |

The resulting parameter is calculated based on the designed weights. Consequently, the road conditions can be considered by speed tracking. The momentary velocity of the vehicle should be equal to this parameter, which contains the road information. Its calculation requires the measurement of the longitudinal acceleration.

6.1.2. Optimization of the vehicle cruise control

The dependence on the weights takes the following form:

| (9) |

The longitudinal control force must be minimized. In practice, a modified form of this optimization is used instead because of the simpler numerical computation. Simultaneously, the difference between the momentary velocity and the modified velocity must be minimized.

The two optimization criteria lead to different optimal solutions. In the first criterion the road inclinations and speed limits are taken into consideration by using appropriately chosen weights. At the same time, the second criterion is optimal if this information is ignored; the weights for the latter case are denoted separately.

The first criterion is met by transforming the quadratic form into a linear programming problem, subject to constraints. This task is nonlinear because of the weights. The optimization task is solved by a linear programming method, such as the simplex algorithm.

The second criterion must also be taken into consideration. The optimal solution can be determined in a relatively easy way, since the vehicle tracks the predefined velocity if the road conditions are not considered. Consequently, the optimal solution is achieved by selecting the weights so that the road conditions are not considered.

In the proposed method two further performance weights are introduced. The first performance weight is related to the importance of the minimization of the longitudinal control force, while the second performance weight is related to the minimization of the difference between the momentary velocity and the modified velocity. There is a constraint on the performance weights.

Thus the performance weights, which guarantee balance between the optimization tasks, are calculated in the following expressions:

| (10) |

i = 1, ..., n | (11) |

Based on the calculated performance weights the speed can be predicted.
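One way to picture the balance between the two optimization tasks is the sketch below. It rests on two assumptions that are not confirmed by the text here: that the two performance weights sum to one, and that the final weights are a convex combination of the two optimal weight sets. All names are hypothetical.

```python
def balance_weights(force_optimal, tracking_optimal, r1):
    """Blend two weight sets with performance weights r1 and r2 = 1 - r1.

    force_optimal    : weights that minimize the longitudinal control force
    tracking_optimal : weights that minimize the velocity difference
    r1               : importance of force minimization, assumed to lie in [0, 1]
    """
    if not 0.0 <= r1 <= 1.0:
        raise ValueError("r1 must lie in [0, 1]")
    if len(force_optimal) != len(tracking_optimal):
        raise ValueError("both weight sets must have the same length")
    r2 = 1.0 - r1  # assumed constraint: the performance weights sum to one
    return [r1 * f + r2 * t for f, t in zip(force_optimal, tracking_optimal)]


# Hypothetical weight sets, ordered as [momentary-speed weight, section weights...].
force_optimal = [0.2, 0.5, 0.3]     # road conditions taken into account
tracking_optimal = [1.0, 0.0, 0.0]  # road conditions ignored

balanced = balance_weights(force_optimal, tracking_optimal, r1=0.5)
```

Because both inputs sum to one, the blended weights also sum to one, so the constraint on the weights is preserved for any admissible `r1`.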

The tracking of the preceding vehicle is necessary to avoid a collision, therefore the weight of the leader speed is not reduced. If the preceding vehicle accelerates, the tracking vehicle must accelerate as well. As the velocity increases so does the braking distance, therefore the following vehicle strictly tracks the velocity of the preceding vehicle. On the other hand, it is necessary to prevent the velocity of the vehicle from increasing above the official speed limit. Therefore the tracked velocity of the preceding vehicle is limited by the maximum speed. If the preceding vehicle accelerates and exceeds the speed limit, the following vehicle may fall behind.
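The limitation of the tracked leader velocity can be sketched as a simple saturation. The function names are hypothetical, and the gap calculation assumes constant speeds over the interval.

```python
def tracked_leader_speed(v_lead, v_limit):
    """Leader speed used for tracking, saturated at the speed limit (m/s)."""
    if v_lead < 0.0 or v_limit < 0.0:
        raise ValueError("speeds must be non-negative")
    return min(v_lead, v_limit)


def gap_growth(v_lead, v_limit, duration):
    """Extra distance (m) by which the follower falls behind over `duration` seconds,
    assuming it drives exactly at the tracked speed and both speeds stay constant."""
    return (v_lead - tracked_leader_speed(v_lead, v_limit)) * duration


# Leader at 30 m/s on a road limited to 25 m/s: the follower tracks 25 m/s
# and falls 50 m further behind in 10 s.
tracked = tracked_leader_speed(30.0, 25.0)
extra_gap = gap_growth(30.0, 25.0, 10.0)
```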

is not reduced. If the preceding vehicle accelerates, the tracking

vehicle must accelerate as well. As the velocity increases so does the

braking distance, therefore the following vehicle strictly tracks the

velocity of the preceding vehicle. On the other hand it is necessary to

prevent the velocity of the vehicle from increasing above the official

speed limit. Therefore the tracked velocity of the preceding vehicle is

limited by the maximum speed. If the preceding vehicle accelerates and

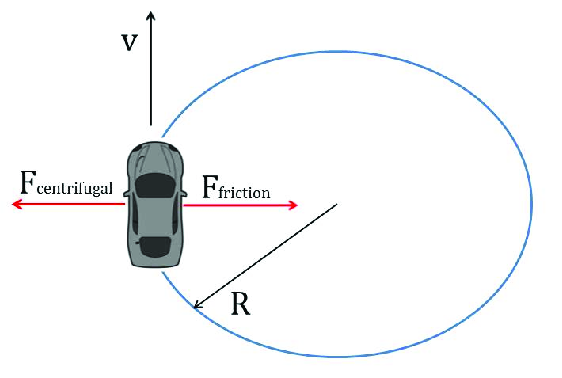

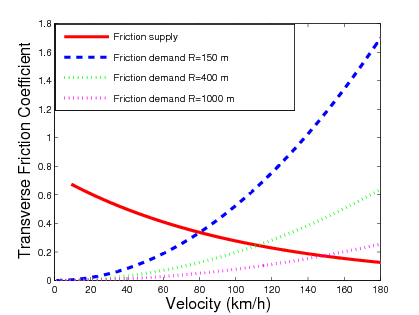

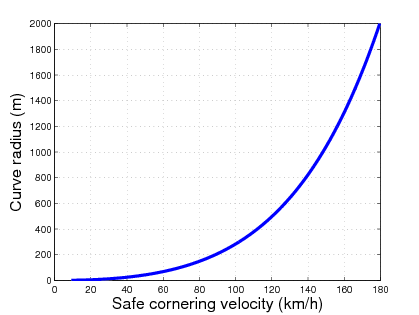

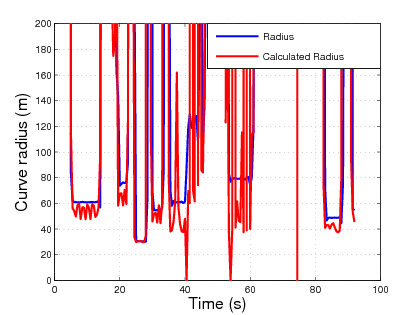

exceeds the speed limit the following vehicle may fall behind.Remark 3.1 Look-ahead control considering efficiency and safe cornering

The maximum cornering velocity for each section can be calculated in advance, knowing the designed path of the vehicle. If the speed limit at a section point exceeds the safe cornering velocity, then the speed limit is substituted with the smallest value concerning skidding or rollover, i.e.,

| (12) |
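The substitution can be sketched as taking the minimum of the candidate velocities. The skidding and rollover limits below use common rigid-vehicle approximations (friction-limited and static-rollover-threshold cornering), which are assumptions of this sketch and not necessarily the expressions used in the chapter; all names and parameters are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def skid_limit(radius, friction):
    """Friction-limited cornering speed on a flat curve: v = sqrt(mu * g * R)."""
    return math.sqrt(friction * G * radius)


def rollover_limit(radius, track_width, cg_height):
    """Static rollover threshold for a rigid vehicle: v = sqrt(g * R * t / (2 * h))."""
    return math.sqrt(G * radius * track_width / (2.0 * cg_height))


def section_speed_limit(v_limit, v_skid, v_roll):
    """Speed limit of a section after accounting for safe cornering."""
    return min(v_limit, v_skid, v_roll)


# A 100 m radius curve with hypothetical vehicle and road data.
v_skid = skid_limit(radius=100.0, friction=0.5)
v_roll = rollover_limit(radius=100.0, track_width=2.0, cg_height=1.0)
v_safe = section_speed_limit(25.0, v_skid, v_roll)
```

When the posted limit is already below both cornering limits, `section_speed_limit` returns it unchanged.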

Implementation of the velocity design

The longitudinal control force is then designed. The role of the high-level controller is to calculate this required longitudinal force. In the third step the real physical inputs of the system, such as the throttle, the gear position and the brake pressure, are generated by the low-level controller.

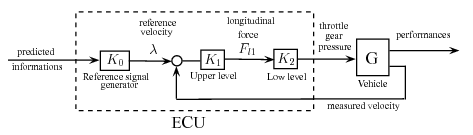

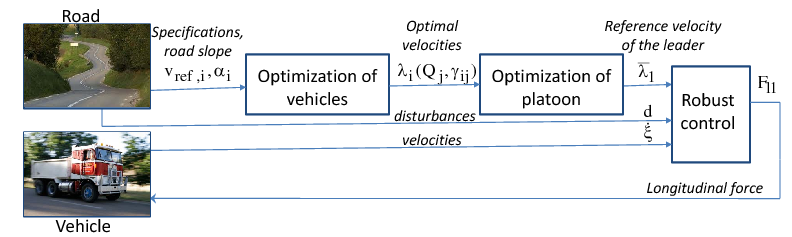

Implementation of the controlled system

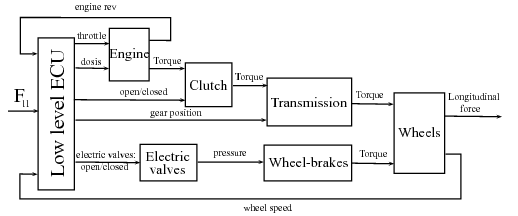

In the proposed method the steps are separated from each other. The reference velocity signal generator can be added to the upper-lower Adaptive Cruise Control (ACC) system. It is possible to design a reference signal generator unit almost independently and to attach it to the ACC system. Thus the reference signal unit can be designed and produced independently from automobile suppliers; only a few vehicle data are needed. This possibility of independent implementation is an important advantage in practice. The high-level controller calculates both positive and negative forces, therefore both the driving and the braking systems are actuated. The figure shows the architecture of the low-level controller.

Architecture of the low-level controller

The engine is controlled by the throttle, which could be a butterfly gate or a quantity of injected fuel. The engine-management system and the fuel-injection system have their own controllers, so the realization of the low-level controller requires only the torque-rev-load characteristics of the engine. In this case the rev of the engine is measured and the required torque is computed from the longitudinal force of the high-level controller; the throttle is then determined by an interpolation step using a look-up table. The position of the automatic transmission is determined by logic functions that depend on the fuel consumption and the maximum rev of the engine. The pressures on the cylinders of the wheels increase during braking. During normal traveling the ABS actuation is not necessary; in case of an emergency the optimal driving is overridden by the safety requirements. The necessary braking pressure for the required braking force is computed from the ratios of the hydraulic/pneumatic parts.
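The interpolation step can be sketched as a bilinear look-up over engine rev and required torque. The table values, axis grids, driveline ratio and function names below are hypothetical placeholders, not data from the chapter.

```python
from bisect import bisect_right


def _bracket(axis, x):
    """Clamp x to the axis range and return (i, t): x lies between axis[i] and
    axis[i + 1], at fraction t of the way."""
    x = min(max(x, axis[0]), axis[-1])
    i = min(bisect_right(axis, x) - 1, len(axis) - 2)
    t = (x - axis[i]) / (axis[i + 1] - axis[i])
    return i, t


def required_torque(force, wheel_radius, total_ratio, efficiency=1.0):
    """Engine torque (Nm) needed for a longitudinal force (N) at the wheels."""
    return force * wheel_radius / (total_ratio * efficiency)


def throttle_from_table(rev_axis, torque_axis, table, rev, torque):
    """Bilinear interpolation of throttle in a rev x torque look-up table."""
    i, tr = _bracket(rev_axis, rev)
    j, tt = _bracket(torque_axis, torque)
    low = table[i][j] * (1.0 - tt) + table[i][j + 1] * tt
    high = table[i + 1][j] * (1.0 - tt) + table[i + 1][j + 1] * tt
    return low * (1.0 - tr) + high * tr


# Hypothetical engine map: rows follow rev_axis (rpm), columns follow torque_axis (Nm).
rev_axis = [1000.0, 2000.0, 3000.0]
torque_axis = [0.0, 500.0, 1000.0]
table = [
    [0.0, 0.50, 1.0],
    [0.0, 0.40, 0.8],
    [0.0, 0.45, 0.9],
]

torque = required_torque(force=4000.0, wheel_radius=0.5, total_ratio=10.0)
throttle = throttle_from_table(rev_axis, torque_axis, table, rev=1500.0, torque=500.0)
```

Inputs outside the table are clamped to its edges, a simple choice that keeps the throttle command within the mapped range.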

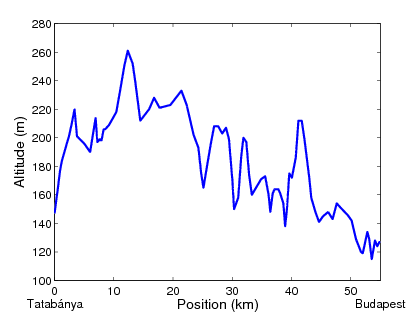

In the simulation example a transportation route with real data is analyzed. The terrain characteristics and geographical information are those of a section of the M1 Hungarian highway between Tatabánya and Budapest. In the simulation a typical F-Class, 6-gear truck of given mass and engine power travels along the route. The road section has a regulated maximal velocity but also contains other speed limits, and it contains hilly parts as well. Thus, it is an acceptable route for the analysis of road conditions, i.e., inclinations and speed limits. Publicly accessible up-to-date geographical/navigational databases and visualisation programs, such as Google Earth and Google Maps, are used for the experiment.

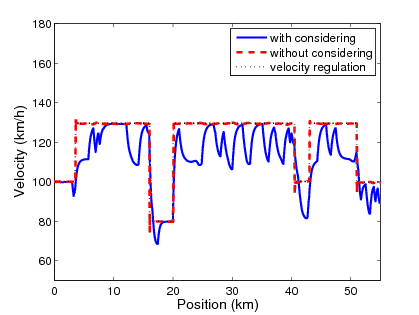

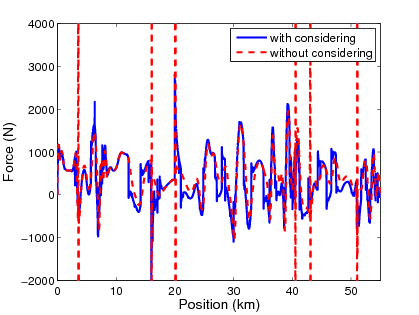

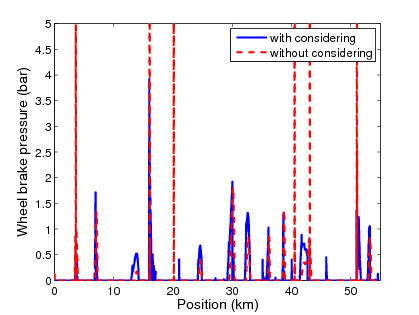

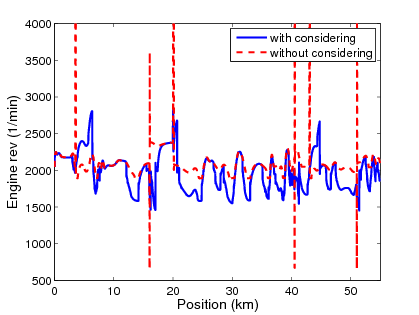

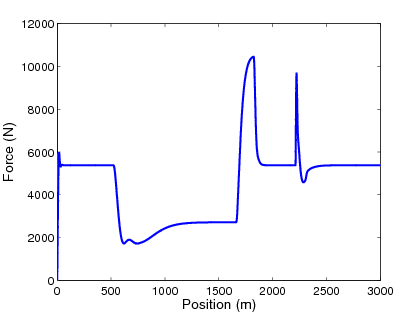

In this example two different controllers are compared. The first is the proposed controller, which considers road conditions such as inclinations and speed limits and is illustrated by a solid line in the figures. The second is a conventional ACC system, which ignores this information and is illustrated by a dashed line. The figure shows the time responses of the simulation.

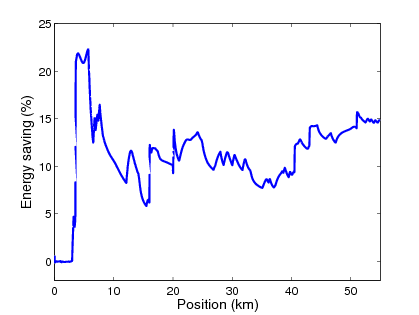

Figure (a) shows that the motorway contains several uphill and downhill sections. Figure (b) shows the velocity of the vehicle with speed limits in both cases. The conventional ACC system tracks the predefined speed limits as accurately as possible and the tracking error is minimal. In the proposed method the speed is determined by the speed limits and simultaneously takes the road inclinations into consideration according to the optimal requirement. Figure (c) shows the required longitudinal force. The high-precision tracking of the predefined velocities in the conventional ACC system often requires extremely high forces with abrupt changes in the signals. Because the road conditions are taken into account, less energy is required during the journey in the proposed control method, see Figure (f). The proposed method requires less energy than the conventional method. The fuel consumption can also be calculated by using the following approximation: