MOTION DETECTION

Motion detection is the process of detecting a change in the position of an object relative to its surroundings or a change in the surroundings relative to an object. Motion detection can be achieved by either mechanical or electronic methods. When motion detection is accomplished by natural organisms, it is called motion perception.

Methods

Motion can be detected by:

- Infrared (passive and active sensors)

- Optics (video and camera systems)

- Radio Frequency Energy (radar, microwave and tomographic motion detection)

- Sound (microphones and acoustic sensors)

- Vibration (triboelectric, seismic, and inertia-switch sensors)

- Magnetism (magnetic sensors and magnetometers)

Mechanical

The most basic form of mechanical motion detection is a switch or trigger. For example, the keys of a typewriter use a mechanical method of detecting motion: each key is a manual switch that is either off or on, and each letter that appears is the result of motion of the corresponding key turning its switch on.

Electronic

The principal methods by which motion can be electronically identified are optical detection and acoustic detection. Infrared light or laser technology may be used for optical detection. Motion detection devices, such as passive infrared (PIR) motion detectors, have a sensor that detects a disturbance in the infrared spectrum. Once motion is detected, a signal can activate an alarm or a camera that captures an image or video of the moving subject.

The chief applications for such detection are detecting unauthorized entry, detecting that an area is no longer occupied so that its lighting can be switched off, and detecting a moving object that triggers a camera to record subsequent events.

A simple algorithm for motion detection by a fixed camera compares the current image with a reference image and simply counts the number of different pixels. Since images will naturally differ due to factors such as varying lighting, camera flicker, and CCD dark currents, pre-processing is useful to reduce the number of false positive alarms.
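The reference-image comparison described above can be sketched in a few lines. This is a minimal illustration. It assumes 8-bit grayscale frames stored as lists of rows. The function names, the 3x3 box blur used as pre-processing, and the `threshold` and `min_pixels` values are illustrative choices, not part of any particular product.

```python
def box_blur(frame, radius=1):
    """Average each pixel with its neighbours to suppress sensor noise (pre-processing)."""
    height, width = len(frame), len(frame[0])
    blurred = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total, count = 0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < height and 0 <= xx < width:
                        total += frame[yy][xx]
                        count += 1
            blurred[y][x] = total / count
    return blurred


def count_changed_pixels(reference, current, threshold=25):
    """Count pixels whose blurred intensity differs by more than `threshold`."""
    ref, cur = box_blur(reference), box_blur(current)
    return sum(
        1
        for ref_row, cur_row in zip(ref, cur)
        for a, b in zip(ref_row, cur_row)
        if abs(a - b) > threshold
    )


def motion_detected(reference, current, threshold=25, min_pixels=5):
    """Raise an alarm only if enough pixels changed, ignoring isolated noise."""
    return count_changed_pixels(reference, current, threshold) >= min_pixels


# Usage: a uniform 10x10 scene, then the same scene with a bright 3x3 object.
reference = [[100] * 10 for _ in range(10)]
current = [row[:] for row in reference]
for y in range(3, 6):
    for x in range(3, 6):
        current[y][x] = 250
print(motion_detected(reference, current))  # True
```

Blurring before thresholding means that small, pixel-level fluctuations rarely exceed `threshold`, while a real object changes a whole neighbourhood. Requiring `min_pixels` changed pixels adds a second guard against false alarms.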

More complex algorithms are necessary to detect motion when the camera itself is moving, or when the motion of a specific object must be detected in a field containing other movement which can be ignored. An example might be a painting surrounded by visitors in an art gallery. For the case of a moving camera, models based on optical flow are used to distinguish between apparent background motion caused by the camera movement and that of independent objects moving in the scene.
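Full optical-flow models estimate a motion vector for every pixel. The sketch below swaps in a much simpler stand-in to show the same idea. It assumes the camera motion is a pure integer translation and finds the shift that best aligns consecutive frames (lowest mean absolute difference). Pixels that still differ after alignment are flagged as independently moving. The function names, search range and threshold are hypothetical choices made for illustration.

```python
import random


def mean_abs_diff(prev, curr, dx, dy):
    """Mean |curr(x, y) - prev(x - dx, y - dy)| over the pixels both frames share."""
    height, width = len(prev), len(prev[0])
    total, count = 0, 0
    for y in range(height):
        for x in range(width):
            px, py = x - dx, y - dy
            if 0 <= px < width and 0 <= py < height:
                total += abs(curr[y][x] - prev[py][px])
                count += 1
    return total / count


def estimate_camera_shift(prev, curr, max_shift=3):
    """Brute-force search for the global translation caused by camera movement."""
    shifts = [(dx, dy)
              for dy in range(-max_shift, max_shift + 1)
              for dx in range(-max_shift, max_shift + 1)]
    return min(shifts, key=lambda s: mean_abs_diff(prev, curr, s[0], s[1]))


def independent_motion(prev, curr, threshold=30, max_shift=3):
    """Return the estimated camera shift and a mask of pixels that moved on their own."""
    dx, dy = estimate_camera_shift(prev, curr, max_shift)
    height, width = len(prev), len(prev[0])
    mask = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            px, py = x - dx, y - dy
            if 0 <= px < width and 0 <= py < height:
                mask[y][x] = abs(curr[y][x] - prev[py][px]) > threshold
    return (dx, dy), mask


# Usage: a textured "world" seen through a 16x16 window that pans between frames.
rng = random.Random(1)
world = [[rng.randint(0, 100) for _ in range(30)] for _ in range(30)]
prev = [[world[y + 5][x + 5] for x in range(16)] for y in range(16)]
curr = [[world[y + 6][x + 3] for x in range(16)] for y in range(16)]  # camera shift (2, -1)
for y in range(6, 9):
    for x in range(6, 9):
        curr[y][x] = 255  # a small object that moves independently of the background
shift, mask = independent_motion(prev, curr)
```

After alignment the panning background cancels out. Only the object's pixels remain above the threshold, which is the separation of apparent and independent motion described above.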

Devices

Motion detecting devices include:

- Sony Computer Entertainment's PlayStation Move or PlayStation Eye for PS3 and PlayStation Camera for PS4

- Microsoft Corporation's Kinect for Xbox 360, Windows 7, 8, 8.1 or Xbox One

- Nintendo's Wii Remote

- ASUS Eee Stick

- HP's Swing

X . I

MOTION PERCEPTION

The perception of an object's motion can be viewed from the sensor's standpoint using the principle of analog and digital, as illustrated in my previous writings: analog works by motion in natural time, following the process as it happens, while digital works on motion in fast time. An example is the way the Lord Jesus proved to Thomas that it was He (Jesus), which could quickly be called logically digital: Jesus turned the palm of His hand toward Thomas, Thomas put his finger on the palm of the Lord Jesus, and then Thomas believed in the Lord Jesus (0000 ----- 1001).

Motion perception is the process of inferring the speed and direction of elements in a scene based on visual, vestibular and proprioceptive inputs. Although this process appears straightforward to most observers, it has proven to be a difficult problem from a computational perspective, and extraordinarily difficult to explain in terms of neural processing.

Motion perception is studied by many disciplines, including psychology (visual perception), neurology, neurophysiology, engineering, and computer science.

The dorsal stream (green) and ventral stream (purple) are shown. They originate from a common source in the visual cortex. The dorsal stream is responsible for detection of location and motion.

Neuropsychology

The inability to perceive motion is called akinetopsia, and it may be caused by a lesion to cortical area V5 in the extrastriate cortex. Neuropsychological studies of a patient who could not see motion, and who saw the world as a series of static "frames" instead, suggested that visual area V5 in humans is homologous to motion-processing area MT in other primates.

First-order motion perception

Example of beta movement, often confused with the phi phenomenon, in which a succession of still images gives the illusion of a moving ball.

The perception of motion defined purely by changes in luminance is referred to as "first-order" motion perception. It is mediated by relatively simple "motion sensors" in the visual system that have evolved to detect a change in luminance at one point on the retina and correlate it with a change in luminance at a neighbouring point on the retina after a short delay. Sensors that work this way have been referred to as Hassenstein-Reichardt detectors (after the scientists Bernhard Hassenstein and Werner Reichardt, who first modelled them), motion-energy sensors, or Elaborated Reichardt Detectors. These sensors detect motion by spatio-temporal correlation and are plausible models for how the visual system may detect motion, although there is still considerable debate regarding the exact nature of this process.
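A Hassenstein-Reichardt detector can be sketched as two mirror-symmetric half-detectors whose outputs are subtracted. This is a minimal discrete-time illustration. It assumes two neighbouring receptors sampled at the same instants. It also uses a pure delay of `delay` samples where the model often uses a low-pass filter as the delay stage. `reichardt_response` is a hypothetical name.

```python
def reichardt_response(left, right, delay=1):
    """Opponent correlation of two neighbouring receptor signals.

    Positive output signals motion from the left receptor towards the right one;
    negative output signals the opposite direction.
    """
    if len(left) != len(right):
        raise ValueError("receptor signals must have the same length")
    total = 0.0
    for t in range(delay, len(left)):
        rightward = left[t - delay] * right[t]  # left saw it first, right sees it now
        leftward = right[t - delay] * left[t]   # the mirror-image half-detector
        total += rightward - leftward
    return total


# A bright spot passes the left receptor at t = 3 and the right one at t = 4.
left = [0, 0, 0, 1, 0, 0, 0, 0]
right = [0, 0, 0, 0, 1, 0, 0, 0]
print(reichardt_response(left, right))  # 1.0 (rightward)
print(reichardt_response(right, left))  # -1.0 (leftward)
```

Subtracting the two halves cancels the response to a flash that reaches both receptors at once. Only a luminance change that travels from one point to the neighbouring point after the delay produces a signed output.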

Second-order motion perception

Second-order motion is motion in which the moving contour is defined by contrast, texture, flicker or some other quality that does not result in an increase in luminance or motion energy in the Fourier spectrum of the stimulus. There is much evidence to suggest that early processing of first- and second-order motion is carried out by separate pathways. Second-order mechanisms have poorer temporal resolution and are low-pass in terms of the range of spatial frequencies to which they respond. Second-order motion produces a weaker motion aftereffect unless tested with dynamically flickering stimuli. First and second-order signals appear to be fully combined at the level of Area V5/MT of the visual system.

The aperture problem

The aperture problem. The grating appears to be moving down and to the right, perpendicular to the orientation of the bars. But it could be moving in many other directions, such as only down, or only to the right. It is impossible to determine unless the ends of the bars become visible in the aperture.

Individual neurons early in the visual system (V1) respond to motion that occurs locally within their receptive field. Because each local motion-detecting neuron will suffer from the aperture problem, the estimates from many neurons need to be integrated into a global motion estimate. This appears to occur in Area MT/V5 in the human visual cortex.
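One common computational account of this integration is the intersection-of-constraints rule. Each local detector only measures the speed component along the normal to its edge, so it constrains the true velocity to a line. Combining detectors with different edge orientations then pins down a single velocity. The least-squares sketch below illustrates that idea as a toy model. It assumes noise-free measurements and a single rigid velocity, and it is not a claim about how MT neurons compute it. `integrate_local_motion` is a hypothetical name.

```python
import math


def integrate_local_motion(measurements):
    """Estimate a global 2D velocity from local (normal_angle, normal_speed) pairs.

    Each pair states v . (cos a, sin a) == normal_speed. With at least two distinct
    edge orientations the least-squares solution is unique.
    """
    sxx = sxy = syy = bx = by = 0.0
    for angle, speed in measurements:
        nx, ny = math.cos(angle), math.sin(angle)
        sxx += nx * nx
        sxy += nx * ny
        syy += ny * ny
        bx += nx * speed
        by += ny * speed
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        # Every detector saw the same edge orientation: the aperture problem remains.
        raise ValueError("velocity is ambiguous from a single edge orientation")
    vx = (syy * bx - sxy * by) / det
    vy = (sxx * by - sxy * bx) / det
    return vx, vy


# Three detectors viewing differently oriented edges of an object moving at (3, -1).
true_v = (3.0, -1.0)
angles = [0.0, math.pi / 4, math.pi / 2]
local = [(a, true_v[0] * math.cos(a) + true_v[1] * math.sin(a)) for a in angles]
vx, vy = integrate_local_motion(local)
```

A single orientation leaves the 2x2 system singular, which is the aperture problem in algebraic form. A second orientation is enough to make it solvable.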

Motion integration

Having extracted motion signals (first- or second-order) from the retinal image, the visual system must integrate those individual local motion signals at various parts of the visual field into a 2-dimensional or global representation of moving objects and surfaces. Further processing is required to detect coherent motion or "global motion" present in a scene.

The ability of a subject to detect coherent motion is commonly tested using motion coherence discrimination tasks. For these tasks, dynamic random-dot patterns (also called random dot kinematograms) are used that consist of 'signal' dots moving in one direction and 'noise' dots moving in random directions. Sensitivity to motion coherence is assessed by measuring the ratio of 'signal' to 'noise' dots required to determine the coherent motion direction. This required ratio is called the motion coherence threshold.
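A random dot kinematogram of the kind used in these tasks can be generated as follows. This sketch rests on several assumptions. Dots live in a unit square that wraps around, and the signal dots are re-chosen on every frame. Coherence is expressed as the fraction of dots that are signal dots. Parameter names and values are illustrative, not a standard protocol.

```python
import math
import random


def step_dots(dots, coherence, direction, speed, rng):
    """Advance a random dot kinematogram by one frame.

    A `coherence` fraction of the dots (chosen afresh each frame) are signal dots
    moving in `direction` (radians); the rest are noise dots moving in random
    directions. Positions wrap around the edges of the unit square.
    """
    n_signal = round(coherence * len(dots))
    signal = set(rng.sample(range(len(dots)), n_signal))
    moved = []
    for i, (x, y) in enumerate(dots):
        angle = direction if i in signal else rng.uniform(0.0, 2.0 * math.pi)
        moved.append(((x + speed * math.cos(angle)) % 1.0,
                      (y + speed * math.sin(angle)) % 1.0))
    return moved


rng = random.Random(0)
dots = [(rng.random(), rng.random()) for _ in range(100)]
next_frame = step_dots(dots, coherence=0.3, direction=0.0, speed=0.01, rng=rng)
```

In an experiment, `coherence` would be lowered across trials until the observer can no longer report `direction` reliably. That point estimates the motion coherence threshold.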

Motion in depth

As in other aspects of vision, the observer's visual input is generally insufficient to determine the true nature of stimulus sources, in this case their velocity in the real world. In monocular vision for example, the visual input will be a 2D projection of a 3D scene. The motion cues present in the 2D projection will by default be insufficient to reconstruct the motion present in the 3D scene. Put differently, many 3D scenes will be compatible with a single 2D projection. The problem of motion estimation generalizes to binocular vision when we consider occlusion or motion perception at relatively large distances, where binocular disparity is a poor cue to depth. This fundamental difficulty is referred to as the inverse problem.

Nonetheless, some humans do perceive motion in depth. There are indications that the brain uses various cues, in particular temporal changes in disparity as well as monocular velocity ratios, for producing a sensation of motion in depth.
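The ambiguity can be made concrete with a pinhole projection, a standard idealization of monocular viewing. An image coordinate is x = f * X / Z. Scaling an object's position and velocity by the same factor therefore leaves its whole image trajectory unchanged. The one-dimensional image, the focal length f and the specific numbers below are assumptions chosen for illustration.

```python
import math


def project(X, Z, focal_length=1.0):
    """Perspective (pinhole) projection of a point onto a one-dimensional image."""
    return focal_length * X / Z


def image_trajectory(X0, Z0, VX, VZ, times, focal_length=1.0):
    """Image positions over time of a point moving from (X0, Z0) with velocity (VX, VZ)."""
    return [project(X0 + VX * t, Z0 + VZ * t, focal_length) for t in times]


times = [0.0, 0.5, 1.0, 1.5, 2.0]
# A near object approaching slowly ...
near = image_trajectory(1.0, 4.0, 0.5, -1.0, times)
# ... and an object three times farther away, moving three times faster.
far = image_trajectory(3.0, 12.0, 1.5, -3.0, times)
same = all(math.isclose(a, b) for a, b in zip(near, far))
```

Both scenes produce identical image motion, so the 2D input alone cannot say which one occurred.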

Perceptual learning of motion

Detection and discrimination of motion can be improved by training with long-term results. Participants trained to detect the movements of dots on a screen in only one direction become particularly good at detecting small movements in the directions around that in which they have been trained. This improvement was still present 10 weeks later. However, perceptual learning is highly specific. For example, the participants show no improvement when tested around other motion directions, or for other sorts of stimuli.

Cognitive map

A cognitive map is a type of mental representation that an individual uses to acquire, code, store, recall, and decode information about the relative locations and attributes of phenomena in their spatial environment. Place cells work with other types of neurons in the hippocampus and surrounding regions of the brain to perform this kind of spatial processing, but the ways in which they function within the hippocampus are still being researched.

Many species of mammals can keep track of spatial location even in the absence of visual, auditory, olfactory, or tactile cues by integrating their movements; this ability is referred to in the literature as path integration. A number of theoretical models have explored mechanisms by which path integration could be performed by neural networks. In most models, such as those of Samsonovich and McNaughton (1997) or Burak and Fiete (2009), the principal ingredients are (1) an internal representation of position, (2) internal representations of the speed and direction of movement, and (3) a mechanism for shifting the encoded position by the right amount when the animal moves. Because cells in the medial entorhinal cortex (MEC) encode information about position (grid cells) and movement (head direction cells and conjunctive position-by-direction cells), this area is currently viewed as the most promising candidate for the place in the brain where path integration occurs.
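The computation these models must achieve can be illustrated without any neural machinery, as plain vector integration (dead reckoning). This sketch is only an analogy to the three ingredients listed above. It assumes noise-free speed and heading signals in a flat 2D environment. It is not the Samsonovich-McNaughton or Burak-Fiete network model, and the function names are hypothetical.

```python
import math


def path_integrate(steps, start=(0.0, 0.0)):
    """Dead reckoning: update an estimated position from self-motion signals.

    `steps` is a list of (speed, heading_radians, duration) tuples, standing in for
    ingredient (2); the update inside the loop plays the role of ingredient (3).
    """
    x, y = start  # ingredient (1): the current position estimate
    for speed, heading, duration in steps:
        x += speed * math.cos(heading) * duration
        y += speed * math.sin(heading) * duration
    return x, y


def homing_vector(position):
    """Distance and heading that lead straight back to the starting point."""
    x, y = position
    return math.hypot(x, y), math.atan2(-y, -x)


# Walk 2 units east, then 2 units north, without any external cues.
position = path_integrate([(1.0, 0.0, 2.0), (1.0, math.pi / 2, 2.0)])
distance, heading = homing_vector(position)
```

The homing vector falls out of the integrated position directly. This is the kind of output path integration makes possible when landmarks are absent.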

X . II

CAMERA

A camera is an optical instrument for recording or capturing images, which may be stored locally, transmitted to another location, or both. The images may be individual still photographs or sequences of images constituting videos or movies. The camera is a remote sensing device as it senses subjects without physical contact. The word camera comes from camera obscura, which means "dark chamber" and is the Latin name of the original device for projecting an image of external reality onto a flat surface. The modern photographic camera evolved from the camera obscura. The functioning of the camera is very similar to the functioning of the human eye.

Functional description

Basic elements of a modern still camera

A movie camera or a video camera operates similarly to a still camera, except it records a series of static images in rapid succession, commonly at a rate of 24 frames per second. When the images are combined and displayed in order, the illusion of motion is achieved.

History


The forerunner to the photographic camera was the camera obscura. Camera obscura (Latin for "dark room") is the natural optical phenomenon that occurs when an image of a scene at the other side of a screen (or, for instance, a wall) is projected through a small hole in that screen and forms an inverted image (left to right and upside down) on a surface opposite to the opening. The oldest known record of this principle is a description by the Han Chinese philosopher Mozi (ca. 470 to ca. 391 BC). Mozi correctly asserted that the camera obscura image is inverted because light travels in straight lines from its source. The use of a lens in the opening of a wall or closed window shutter of a darkened room to project images used as a drawing aid has been traced back to circa 1550. Since the late 17th century, portable camera obscura devices in tents and boxes have been used as a drawing aid.


Before the development of the photographic camera, it had been known for hundreds of years that some substances, such as silver salts, darkened when exposed to sunlight. In a series of experiments, published in 1727, the German scientist Johann Heinrich Schulze demonstrated that the darkening of the salts was due to light alone, and not influenced by heat or exposure to air. The Swedish chemist Carl Wilhelm Scheele showed in 1777 that silver chloride was especially susceptible to darkening from light exposure, and that once darkened, it becomes insoluble in an ammonia solution. The first person to use this chemistry to create images was Thomas Wedgwood.

To create images, Wedgwood placed items, such as leaves and insect wings, on ceramic pots coated with silver nitrate, and exposed the set-up to light. These images weren't permanent, however, as Wedgwood didn't employ a fixing mechanism. He ultimately failed at his goal of using the process to create fixed images created by a camera obscura.

The first permanent photograph of a camera image was made in 1826 by Joseph Nicéphore Niépce using a sliding wooden box camera made by Charles and Vincent Chevalier in Paris. Niépce had been experimenting with ways to fix the images of a camera obscura since 1816. The photograph Niépce succeeded in creating shows the view from his window. It was made using an 8-hour exposure on pewter coated with bitumen. Niépce called his process "heliography". Niépce corresponded with the inventor Louis-Jacques-Mandé Daguerre, and the pair entered into a partnership to improve the heliographic process. Niépce had experimented further with other chemicals to improve contrast in his heliographs. Daguerre contributed an improved camera obscura design, but the partnership ended when Niépce died in 1833. Daguerre succeeded in producing a high-contrast and extremely sharp image by exposing a plate coated with silver iodide and then exposing this plate to mercury vapor. By 1837, he was able to fix the images with a common salt solution. He called this process the Daguerreotype, and tried unsuccessfully for a couple of years to commercialize it. Eventually, with the help of the scientist and politician François Arago, the French government acquired Daguerre's process for public release. In exchange, pensions were provided to Daguerre as well as to Niépce's son, Isidore.

In the 1830s, the English scientist Henry Fox Talbot independently invented a process to fix camera images using silver salts. Although dismayed that Daguerre had beaten him to the announcement of photography, on January 31, 1839 he submitted a pamphlet to the Royal Institution entitled Some Account of the Art of Photogenic Drawing, which was the first published description of photography. Within two years, Talbot developed a two-step process for creating photographs on paper, which he called calotypes. The calotyping process was the first to utilize negative prints, which reverse all values in the photograph - black shows up as white and vice versa. Negative prints allow, in principle, unlimited duplicates of the positive print to be made. Calotyping also introduced the ability for a printmaker to alter the resulting image through retouching.

Calotypes were never as popular or widespread as daguerreotypes, owing mainly to the fact that the latter produced sharper details. However, because daguerreotypes only produce a direct positive print, no duplicates can be made. It is the two-step negative/positive process that formed the basis for modern photography.

The first photographic camera developed for commercial manufacture was a daguerreotype camera, built by Alphonse Giroux in 1839. Giroux signed a contract with Daguerre and Isidore Niépce to produce the cameras in France, with each device and accessories costing 400 francs. The camera was a double-box design, with a landscape lens fitted to the outer box, and a holder for a ground glass focusing screen and image plate on the inner box. By sliding the inner box, objects at various distances could be brought to as sharp a focus as desired. After a satisfactory image had been focused on the screen, the screen was replaced with a sensitized plate. A knurled wheel controlled a copper flap in front of the lens, which functioned as a shutter. The early daguerreotype cameras required long exposure times, which in 1839 could be from 5 to 30 minutes.

After the introduction of the Giroux daguerreotype camera, other manufacturers quickly produced improved variations. Charles Chevalier, who had earlier provided Niépce with lenses, created in 1841 a double-box camera using a half-sized plate for imaging. Chevalier’s camera had a hinged bed, allowing for half of the bed to fold onto the back of the nested box. In addition to having increased portability, the camera had a faster lens, bringing exposure times down to 3 minutes, and a prism at the front of the lens, which allowed the image to be laterally correct. Another French design emerged in 1841, created by Marc Antoine Gaudin. The Nouvel Appareil Gaudin camera had a metal disc with three differently-sized holes mounted on the front of the lens. Rotating to a different hole effectively provided variable f-stops, letting different amounts of light into the camera. Instead of using nested boxes to focus, the Gaudin camera used nested brass tubes. In Germany, Peter Friedrich Voigtländer designed an all-metal camera with a conical shape that produced circular pictures of about 3 inches in diameter. The distinguishing characteristic of the Voigtländer camera was its use of a lens designed by Josef Max Petzval. The f/3.5 Petzval lens was nearly 30 times faster than any other lens of the period, and was the first to be made specifically for portraiture. Its design was the most widely used for portraits until Carl Zeiss introduced the anastigmat lens in 1889.

Within a decade of being introduced in America, 3 general forms of camera were in popular use: the American- or chamfered-box camera, the Robert’s-type camera or “Boston box”, and the Lewis-type camera. The American-box camera had beveled edges at the front and rear, and an opening in the rear where the formed image could be viewed on ground glass. The top of the camera had hinged doors for placing photographic plates. Inside there was one available slot for distant objects, and another slot in the back for close-ups. The lens was focused either by sliding or with a rack and pinion mechanism. The Robert’s-type cameras were similar to the American-box, except for having a knob-fronted worm gear on the front of the camera, which moved the back box for focusing. Many Robert’s-type cameras allowed focusing directly on the lens mount. The third popular daguerreotype camera in America was the Lewis-type, introduced in 1851, which utilized a bellows for focusing. The main body of the Lewis-type camera was mounted on the front box, but the rear section was slotted into the bed for easy sliding. Once focused, a set screw was tightened to hold the rear section in place. Having the bellows in the middle of the body facilitated making a second, in-camera copy of the original image.

Daguerreotype cameras formed images on silvered copper plates. The earliest daguerreotype cameras required several minutes to half an hour to expose images on the plates. By 1840, exposure times were reduced to just a few seconds owing to improvements in the chemical preparation and development processes, and to advances in lens design. American daguerreotypists introduced manufactured plates in mass production, and plate sizes became internationally standardized: whole plate (6.5 x 8.5 inches), three-quarter plate (5.5 x 7 1/8 inches), half plate (4.5 x 5.5 inches), quarter plate (3.25 x 4.25 inches), sixth plate (2.75 x 3.25 inches), and ninth plate (2 x 2.5 inches). Plates were often cut to fit cases and jewelry with circular and oval shapes. Larger plates were produced, with sizes such as 9 x 13 inches (“double-whole” plate), or 13.5 x 16.5 inches (Southworth & Hawes’ plate).

The collodion wet plate process that gradually replaced the daguerreotype during the 1850s required photographers to coat and sensitize thin glass or iron plates shortly before use and expose them in the camera while still wet. Early wet plate cameras were very simple and little different from Daguerreotype cameras, but more sophisticated designs eventually appeared. The Dubroni of 1864 allowed the sensitizing and developing of the plates to be carried out inside the camera itself rather than in a separate darkroom. Other cameras were fitted with multiple lenses for photographing several small portraits on a single larger plate, useful when making cartes de visite. It was during the wet plate era that the use of bellows for focusing became widespread, making the bulkier and less easily adjusted nested box design obsolete.

For many years, exposure times were long enough that the photographer simply removed the lens cap, counted off the number of seconds (or minutes) estimated to be required by the lighting conditions, then replaced the cap. As more sensitive photographic materials became available, cameras began to incorporate mechanical shutter mechanisms that allowed very short and accurately timed exposures to be made.

The use of photographic film was pioneered by George Eastman, who started manufacturing paper film in 1885 before switching to celluloid in 1889. His first camera, which he called the "Kodak," was first offered for sale in 1888. It was a very simple box camera with a fixed-focus lens and single shutter speed, which along with its relatively low price appealed to the average consumer. The Kodak came pre-loaded with enough film for 100 exposures and needed to be sent back to the factory for processing and reloading when the roll was finished. By the end of the 19th century Eastman had expanded his lineup to several models including both box and folding cameras.

Film also made it possible to capture motion (cinematography), establishing the movie industry by the end of the 19th century.

The first camera using digital electronics to capture and store images was developed by Kodak engineer Steven Sasson in 1975. He used a charge-coupled device (CCD) provided by Fairchild Semiconductor, which provided only 0.01 megapixels to capture images. Sasson combined the CCD device with movie camera parts to create a digital camera that saved black and white images onto a cassette tape. The images were then read from the cassette and viewed on a TV monitor. Later, cassette tapes were replaced by flash memory.

Gradually, during the 2000s and 2010s, digital cameras became the dominant type of camera in consumer photography, television and movies.

Mechanics

Image capture

Traditional cameras capture light onto a photographic plate or photographic film. Video and digital cameras use an electronic image sensor, usually a charge-coupled device (CCD) or a CMOS sensor, to capture images, which can be transferred or stored in a memory card or other storage inside the camera for later playback or processing.

Cameras that capture many images in sequence are known as movie cameras, or as ciné cameras in Europe; those designed for single images are still cameras.

However, these categories overlap, as still cameras are often used to capture moving images in special effects work and many modern cameras can quickly switch between still and motion recording modes.

Lens


The lens of a camera captures the light from the subject and brings it to a focus on the sensor. The design and manufacture of the lens is critical to the quality of the photograph being taken. The technological revolution in camera design in the 19th century transformed optical glass manufacture and lens design, with great benefits for modern lens manufacture in a wide range of optical instruments from reading glasses to microscopes. Pioneers included Zeiss and Leitz.

Camera lenses are made in a wide range of focal lengths, from extreme wide angle through standard to medium telephoto. Each lens is best suited to a certain type of photography. The extreme wide angle may be preferred for architecture because it can capture a wide view of a building. The normal lens, because it often has a wide aperture, is often used for street and documentary photography. The telephoto lens is useful for sports and wildlife, but it is more susceptible to camera shake.
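The trade-offs between focal lengths follow from simple geometry. The sketch below assumes a thin lens focused at infinity and a 36 mm wide (35 mm full-frame) sensor. These assumptions, and the function names, are illustrative. The first function shows why a short focal length captures a wide view of a building. The second shows why telephoto lenses are more sensitive to camera shake: the same small angular wobble moves the image across the sensor in proportion to the focal length.

```python
import math

SENSOR_WIDTH_MM = 36.0  # assumed 35 mm full-frame sensor width


def horizontal_angle_of_view(focal_length_mm, sensor_width_mm=SENSOR_WIDTH_MM):
    """Angle of view in degrees for a lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def shake_blur_mm(focal_length_mm, shake_degrees):
    """Image displacement on the sensor caused by a small angular camera movement."""
    return focal_length_mm * math.tan(math.radians(shake_degrees))


for name, f in [("extreme wide angle", 14), ("standard", 50), ("telephoto", 200)]:
    print(f"{name:>18}: {f:3d} mm -> {horizontal_angle_of_view(f):5.1f} degrees")
```

A 200 mm lens smears the image four times as far as a 50 mm lens for the same shake.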

Focus

The distance range in which objects appear clear and sharp, called depth of field, can be adjusted by many cameras. This allows a photographer to control which objects appear in focus and which do not.
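The effect of the aperture on depth of field can be estimated with the standard thin-lens approximations built on the hyperfocal distance H = f^2 / (N * c) + f. Here f is the focal length, N the f-number and c the acceptable circle of confusion. The 0.03 mm value for c is a conventional assumption for 35 mm film, and the helper names are hypothetical. Treat the numbers as estimates rather than exact limits.

```python
import math


def hyperfocal_mm(focal_mm, f_number, coc_mm=0.03):
    """Focus distance beyond which everything to infinity is acceptably sharp."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Return the (near, far) limits of acceptable sharpness; far may be infinite."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    far = math.inf if subject_mm >= h else subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


# A 50 mm lens focused at 3 m: stopping down from f/2.8 to f/16 deepens the zone of sharpness.
for n in (2.8, 8, 16):
    near, far = depth_of_field_mm(50, n, 3000)
    print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```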

Rangefinder cameras allow the distance to objects to be measured by means of a coupled parallax unit on top of the camera, allowing the focus to be set with accuracy. Single-lens reflex cameras allow the photographer to determine the focus and composition visually using the objective lens and a moving mirror to project the image onto a ground glass or plastic micro-prism screen. Twin-lens reflex cameras use an objective lens and a focusing lens unit (usually identical to the objective lens) in a parallel body for composition and focusing. View cameras use a ground glass screen which is removed and replaced by either a photographic plate or a reusable holder containing sheet film before exposure. Modern cameras often offer autofocus systems to focus the camera automatically by a variety of methods.
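The distance measurement in a rangefinder is a triangulation. This sketch assumes a simplified geometry: the subject is straight ahead of one window, and the second window sits a fixed baseline away. The second view must then be rotated by an angle a with tan(a) = baseline / distance to superimpose the two images. The 60 mm baseline and the function names are illustrative assumptions.

```python
import math


def distance_from_parallax(baseline_m, angle_rad):
    """Subject distance implied by the rotation needed to superimpose the two views."""
    return baseline_m / math.tan(angle_rad)


def parallax_for_distance(baseline_m, distance_m):
    """Rotation (radians) needed to superimpose the views for a subject at distance_m."""
    return math.atan(baseline_m / distance_m)


BASELINE_M = 0.06  # assumed spacing between the two rangefinder windows

for d in (1.0, 3.0, 10.0, 11.0):
    angle = math.degrees(parallax_for_distance(BASELINE_M, d))
    print(f"{d:4.1f} m -> {angle:.3f} degrees")
```

The angle changes very little between distant subjects, and for small angles it scales roughly with the baseline. This is why a longer baseline lets the focus be set more accurately.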

Some experimental cameras, for example the planar Fourier capture array (PFCA), do not require focusing to allow them to take pictures. In conventional digital photography, lenses or mirrors map all of the light originating from a single point of an in-focus object to a single point at the sensor plane. Each pixel thus relates an independent piece of information about the far-away scene. In contrast, a PFCA does not have a lens or mirror, but each pixel has an idiosyncratic pair of diffraction gratings above it, allowing each pixel to likewise relate an independent piece of information (specifically, one component of the 2D Fourier transform) about the far-away scene. Together, complete scene information is captured and images can be reconstructed by computation.
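The idea of capturing Fourier components and reconstructing the image computationally can be sketched with a plain discrete Fourier transform. This is a heavily idealized model that assumes each sensor element directly reports one complex 2D Fourier coefficient of a tiny 4x4 scene. A real PFCA's grating-based measurements are more complicated than this. The function names are hypothetical.

```python
import cmath


def dft2(image):
    """2D discrete Fourier transform: one complex coefficient per (u, v) frequency."""
    h, w = len(image), len(image[0])
    return [[sum(image[y][x] * cmath.exp(-2j * cmath.pi * (u * y / h + v * x / w))
                 for y in range(h) for x in range(w))
             for v in range(w)]
            for u in range(h)]


def reconstruct(coefficients):
    """Inverse 2D DFT: rebuild the scene from the full set of Fourier components."""
    h, w = len(coefficients), len(coefficients[0])
    return [[(sum(coefficients[u][v] * cmath.exp(2j * cmath.pi * (u * y / h + v * x / w))
                  for u in range(h) for v in range(w)) / (h * w)).real
             for x in range(w)]
            for y in range(h)]


scene = [[0, 0, 9, 0],
         [0, 5, 9, 0],
         [0, 0, 9, 0],
         [3, 0, 0, 0]]
measurements = dft2(scene)         # idealized sensor: one coefficient per element
image = reconstruct(measurements)  # computation takes the place of a lens
```

No single measurement resembles the scene. Only the complete set of components, combined by computation, recovers the image.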

Some cameras offer post focusing: the pictures are taken first and focused later on a personal computer. Such a camera uses many tiny lenses over the sensor to capture light arriving from many different angles of a scene, an approach called plenoptic technology. One current plenoptic camera design has 40,000 lenses working together to capture the optimal picture.

Exposure control

The size of the aperture and the brightness of the scene control the amount of light that enters the camera during a period of time, and the shutter controls the length of time that the light hits the recording surface. Equivalent exposures can be made using a large aperture with a fast shutter speed or a small aperture with a slow shutter speed.

Shutters

Although a range of different shutter devices have been used during the development of the camera, only two types have been widely used and remain in use today.

The leaf shutter, or more precisely the in-lens shutter, is a shutter contained within the lens structure, often close to the diaphragm. It consists of a number of metal leaves which are maintained under spring tension and which are opened and then closed when the shutter is released. The exposure time is determined by the interval between opening and closing. In this shutter design, the whole film frame is exposed at one time. This makes flash synchronisation much simpler, as the flash only needs to fire once the shutter is fully open. Disadvantages of such shutters are their inability to reliably produce very fast shutter speeds (faster than 1/500th second or so) and the additional cost and weight of having to include a shutter mechanism for every lens.

The focal-plane shutter operates as close to the film plane as possible and consists of cloth curtains that are pulled across the film plane with a carefully determined gap between the two curtains (typically running horizontally) or consisting of a series of metal plates (typically moving vertically) just in front of the film plane. The focal-plane shutter is primarily associated with the single lens reflex type of cameras, since covering the film rather than blocking light passing through the lens allows the photographer to view through the lens at all times except during the exposure itself. Covering the film also facilitates removing the lens from a loaded camera (many SLRs have interchangeable lenses).

Complexities

Professional medium format SLR (single-lens reflex) cameras (typically using 120/220 roll film) use a hybrid solution, since such a large focal-plane shutter would be difficult to make and might run slowly. A manually inserted blade known as a dark slide allows the film to be covered when changing lenses or film backs. A blind inside the camera covers the film before and after the exposure (but is not designed to give accurately controlled exposure times), and a leaf shutter that is normally open is installed in the lens. To take a picture, the leaf shutter closes, the blind opens, the leaf shutter opens then closes again, and finally the blind closes and the leaf shutter re-opens (the last step may only occur when the shutter is re-cocked).

With a focal-plane shutter, exposing the whole film plane can take much longer than the exposure time. The exposure time does not depend on the time taken to make the exposure overall, only on the difference between the time a specific point on the film is uncovered and the time it is covered up again. For example, an exposure of 1/1000 second may be achieved by the shutter curtains moving across the film plane in 1/50th of a second but with the two curtains only separated by 1/20th of the frame width. In practice the curtains do not run at a constant speed as they would in an ideal design, so obtaining an even exposure time depends mainly on being able to make the two curtains accelerate in a similar manner.
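The arithmetic in the 1/1000 second example above can be checked with a small model of a travelling slit. The model assumes both curtains move at the same constant speed, which the text notes real curtains do not. The names `TRAVEL`, `SLIT` and `point_exposure_window` are hypothetical.

```python
def point_exposure_window(position, travel_time_s, slit_fraction):
    """Times at which a point on the film is uncovered and then covered again.

    `position` runs from 0 (the edge where the curtains start) to 1 (the far edge).
    The second curtain starts once the first has opened a slit `slit_fraction` of
    the frame wide, and both curtains are assumed to move at the same constant speed.
    """
    opens = position * travel_time_s
    closes = (position + slit_fraction) * travel_time_s
    return opens, closes


TRAVEL = 1 / 50  # each curtain crosses the frame in 1/50 s
SLIT = 1 / 20    # the curtains are separated by 1/20 of the frame width

for p in (0.0, 0.5, 1.0):
    opens, closes = point_exposure_window(p, TRAVEL, SLIT)
    print(f"position {p:.1f}: exposed for {closes - opens:.4f} s, starting at {opens:.4f} s")

total_s = (1 + SLIT) * TRAVEL  # from the first point uncovered to the last point covered
```

Every point receives the same 1/1000 s exposure, yet the frame as a whole takes (1 + 1/20) x 1/50 = 0.021 s to expose.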

When photographing rapidly moving objects, the use of a focal-plane shutter can produce some unexpected effects, since the film closest to the start position of the curtains is exposed earlier than the film closest to the end position. Typically this can result in a moving object leaving a slanting image. The direction of the slant depends on the direction the shutter curtains run in (noting also that as in all cameras the image is inverted and reversed by the lens, i.e. "top-left" is at the bottom right of the sensor as seen by a photographer behind the camera).
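The slant can be reproduced with a toy simulation in sensor coordinates. It assumes each row is exposed instantly as the slit passes, that the slit moves from the top row to the bottom row, and that the bar moves at a constant speed. It ignores the image inversion by the lens mentioned above. The function name and parameters are hypothetical.

```python
def capture_moving_bar(width, height, bar_x0, bar_speed, row_delay):
    """Record a thin vertical bar moving sideways while the slit travels down the frame.

    Row r is assumed to be exposed instantaneously at time r * row_delay, so the
    bar is recorded at a different horizontal position in each row.
    """
    image = []
    for row in range(height):
        t = row * row_delay  # moment the slit passes this row
        bar_x = round(bar_x0 + bar_speed * t)
        image.append(["#" if col == bar_x else "." for col in range(width)])
    return image


for line in capture_moving_bar(width=10, height=5, bar_x0=2, bar_speed=1.0, row_delay=1.0):
    print("".join(line))
```

Reversing the bar's direction, or the direction the slit travels, reverses the slant. This matches the dependence on curtain direction described above.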

Focal-plane shutters are also difficult to synchronise with flash bulbs and electronic flash and it is often only possible to use flash at shutter speeds where the curtain that opens to reveal the film completes its run and the film is fully uncovered, before the second curtain starts to travel and cover it up again. Typically 35mm film SLRs could sync flash at only up to 1/60th second if the camera has horizontal run cloth curtains, and 1/125th if using a vertical run metal shutter.

Formats

A wide range of film and plate formats have been used by cameras. In the early history plate sizes were often specific for the make and model of camera, although some standardisation quickly developed for the more popular cameras. The introduction of roll film drove the standardization process still further, so that by the 1950s only a few standard roll films were in use. These included 120 film providing 8, 12 or 16 exposures, 220 film providing 16 or 24 exposures, 127 film providing 8 or 12 exposures (principally in Brownie cameras) and 135 (35 mm film) providing 12, 20 or 36 exposures, or up to 72 exposures in the half-frame format or in bulk cassettes for the Leica Camera range.

For cine cameras, film 35 mm wide and perforated with sprocket holes was established as the standard format in the 1890s. It was used for nearly all film-based professional motion picture production. For amateur use, several smaller and therefore less expensive formats were introduced. 17.5 mm film, created by splitting 35 mm film, was one early amateur format, but 9.5 mm film, introduced in Europe in 1922, and 16 mm film, introduced in the US in 1923, soon became the standards for "home movies" in their respective hemispheres. In 1932, the even more economical 8 mm format was created by doubling the number of perforations in 16 mm film, then splitting it, usually after exposure and processing. The Super 8 format, still 8 mm wide but with smaller perforations to make room for substantially larger film frames, was introduced in 1965.

Camera accessories

Accessories for cameras are mainly for care, protection, special effects and functions.

- Lens hood: used on the end of a lens to block the sun or other light source to prevent glare and lens flare (see also matte box).

- Lens cap: covers and protects the lens during storage.

- Lens adapter: sometimes called a step-ring, adapts the lens to other size filters.

- Lens filters: allow artificial colors or change light density.

- Lens extension tubes allow close focus in macro photography.

- Flash equipment: including light diffuser, mount and stand, reflector, soft box, trigger and cord.

- Care and protection: including camera case and cover, maintenance tools, and screen protector.

- Large format cameras use special equipment, which includes a magnifier loupe, viewfinder, angle finder and focusing rail/truck.

- Battery and sometimes a charger.

- Some professional SLRs could be provided with interchangeable finders for eye-level or waist-level focusing, focusing screens, eye-cups, data backs, motor drives for film transportation or external battery packs.

- Tripod, microscope adapter, cable release, electric wire release.

Camera design history

Plate camera

The earliest cameras produced in significant numbers were plate cameras, which used sensitised glass plates. Light entered a lens mounted on a lens board, which was separated from the plate by an extendible bellows. There were simple box cameras for glass plates, but also single-lens reflex cameras with interchangeable lenses and even cameras for color photography (Autochrome Lumière). Many of these cameras had controls to raise or lower the lens and to tilt it forwards or backwards to control perspective.

Focussing of these plate cameras was by the use of a ground glass screen at the point of focus. Because lens design only allowed rather small aperture lenses, the image on the ground glass screen was faint, and most photographers had a dark cloth to cover their heads to allow focussing and composition to be carried out more easily. When focus and composition were satisfactory, the ground glass screen was removed and a sensitised plate, protected by a dark slide, was put in its place. To make the exposure, the dark slide was carefully slid out, the shutter was opened and then closed, and the dark slide was replaced.

Glass plates were later replaced by sheet film held in a dark slide; adaptor sleeves were made to allow sheet film to be used in plate holders. In addition to the ground glass, a simple optical viewfinder was often fitted. Cameras which take single exposures on sheet film and are functionally identical to plate cameras remained in use much longer, well into the 20th century, for static, high-image-quality work (see Large-format camera, below).

Folding camera

The introduction of films enabled the existing designs for plate cameras to be made much smaller and for the base-plate to be hinged so that it could be folded up, compressing the bellows. These designs were very compact, and small models were dubbed vest pocket cameras. Folding rollfilm cameras were preceded by folding plate cameras, which were more compact than other designs.

Box camera

Box cameras were introduced as a budget level camera and had few if any controls. The original box Brownie models had a small reflex viewfinder mounted on the top of the camera and had no aperture or focusing controls and just a simple shutter. Later models such as the Brownie 127 had larger direct view optical viewfinders together with a curved film path to reduce the impact of deficiencies in the lens.

Rangefinder camera

As camera and lens technology developed and wide aperture lenses became more common, rangefinder cameras were introduced to make focusing more precise. Early rangefinders had two separate viewfinder windows, one of which was linked to the focusing mechanism and moved right or left as the focusing ring was turned. The two separate images were brought together on a ground glass viewing screen. When vertical lines in the object being photographed met exactly in the combined image, the object was in focus. A normal composition viewfinder was also provided. Later the viewfinder and rangefinder were combined. Many rangefinder cameras had interchangeable lenses, each lens requiring its own range- and viewfinder linkages.

Rangefinder cameras were produced in half- and full-frame 35 mm and rollfilm (medium format).

Instant picture camera

After exposure, every photograph is drawn through pinch rollers inside the instant camera, which spread the developer paste contained in the paper 'sandwich' over the image. After a minute, the cover sheet just needs to be removed to obtain a single original positive image with a fixed format. With some systems it was also possible to create an instant image negative, from which copies could then be made in the photo lab. The ultimate development was Polaroid's SX-70 system, in which a series of ten motor-driven shots could be made without having to remove any cover sheets from the pictures. There were instant cameras for a variety of formats, as well as cartridges with instant film for normal system cameras.

Single-lens reflex

In the single-lens reflex camera, the photographer sees the scene through the camera lens. This avoids the problem of parallax, which occurs when the viewfinder or viewing lens is separated from the taking lens. Single-lens reflex cameras have been made in several formats, including sheet film 5x7" and 4x5", and roll film 220/120 taking 8, 10, 12 or 16 photographs on a 120 roll and twice that number on a 220 film. These correspond to 6x9, 6x7, 6x6 and 6x4.5 respectively (all dimensions in cm). Notable manufacturers of large format and roll film SLR cameras include Bronica, Graflex, Hasselblad, Mamiya, and Pentax. However, the most common SLR format has been 35 mm, followed by the migration to digital SLR cameras, which use almost identically sized bodies and sometimes the same lens systems.

Almost all SLR cameras use a front-surfaced mirror in the optical path to direct the light from the lens via a viewing screen and pentaprism to the eyepiece. At the time of exposure the mirror is flipped up out of the light path before the shutter opens. Some early cameras experimented with other methods of providing through-the-lens viewing, including the use of a semi-transparent pellicle as in the Canon Pellix[44] and others with a small periscope such as in the Corfield Periflex series.[45]

Twin-lens reflex

Twin-lens reflex cameras used a pair of nearly identical lenses, one to form the image and one as a viewfinder. The lenses were arranged with the viewing lens immediately above the taking lens. The viewing lens projects an image onto a viewing screen which can be seen from above. Some manufacturers such as Mamiya also provided a reflex head to attach to the viewing screen to allow the camera to be held to the eye when in use. The advantage of a TLR was that it could be easily focussed using the viewing screen and that, under most circumstances, the view seen in the viewing screen was identical to that recorded on film. At close distances, however, parallax errors were encountered, and some cameras also included an indicator to show what part of the composition would be excluded.

Some TLRs had interchangeable lenses, but as these had to be paired lenses they were relatively heavy and did not provide the range of focal lengths that the SLR could support. Most TLRs used 120 or 220 film; some used the smaller 127 film.

Large-format camera

The large-format camera, taking sheet film, is a direct successor of the early plate cameras and remained in use for high quality photography and for technical, architectural and industrial photography. There are three common types: the view camera, with its monorail and field camera variants, and the press camera. They have an extensible bellows with the lens and shutter mounted on a lens plate at the front. Backs taking rollfilm, and later digital backs, are available in addition to the standard dark slide back. These cameras have a wide range of movements allowing very close control of focus and perspective. Composition and focusing are done on view cameras by viewing a ground-glass screen, which is replaced by the film to make the exposure; they are suitable for static subjects only and are slow to use.

Medium-format camera

Medium-format cameras have a film size between the large-format cameras and smaller 35mm cameras. Typically these systems use 120 or 220 rollfilm. The most common image sizes are 6×4.5 cm, 6×6 cm and 6×7 cm; the older 6×9 cm is rarely used. The designs of this kind of camera show greater variation than their larger brethren, ranging from monorail systems through the classic Hasselblad model with separate backs, to smaller rangefinder cameras. There are even compact amateur cameras available in this format.

Subminiature camera

Cameras taking film significantly smaller than 35 mm were made. Subminiature cameras were first produced in the nineteenth century. The expensive 8×11 mm Minox, the only type of camera produced by the company from 1937 to 1976, became very widely known and was often used for espionage (the Minox company later also produced larger cameras). Later inexpensive subminiatures were made for general use, some using rewound 16 mm cine film. Image quality with these small film sizes was limited.

Movie camera

A ciné camera or movie camera takes a rapid sequence of photographs on an image sensor or on strips of film. In contrast to a still camera, which captures a single snapshot at a time, the ciné camera takes a series of images, each called a "frame", through the use of an intermittent mechanism.

The frames are later played back in a ciné projector at a specific speed, called the "frame rate" (number of frames per second). While viewing, a person's eyes and brain merge the separate pictures to create the illusion of motion. The first ciné camera was built around 1888 and by 1890 several types were being manufactured. The standard film size for ciné cameras was quickly established as 35mm film, and this remained in use until the transition to digital cinematography. Other professional standard formats include 70 mm film and 16 mm film, whilst amateur filmmakers used 9.5 mm film, 8 mm film (Standard 8) and Super 8 before the move into digital format.

The size and complexity of ciné cameras varies greatly depending on the uses required of the camera. Some professional equipment is very large and too heavy to be hand held whilst some amateur cameras were designed to be very small and light for single-handed operation.

Camcorders

A camcorder is an electronic device combining a video camera and a video recorder. Although marketing materials may use the colloquial term "camcorder", the name on the package and manual is often "video camera recorder". Most devices capable of recording video are camera phones and digital cameras primarily intended for still pictures; the term "camcorder" is used to describe a portable, self-contained device, with video capture and recording its primary function.

Professional video camera

A professional video camera (often called a television camera even though the use has spread beyond television) is a high-end device for creating electronic moving images (as opposed to a movie camera, which earlier recorded the images on film). Originally developed for use in television studios, they are now also used for music videos, direct-to-video movies, corporate and educational videos, wedding videos, etc. These cameras earlier used vacuum tubes and later electronic sensors.

Digital camera

A digital camera (or digicam) is a camera that captures images and videos, encodes them digitally and stores them for later reproduction. Most cameras sold today are digital, and digital cameras are incorporated into many devices ranging from mobile phones (called camera phones) to vehicles.

Digital and film cameras share an optical system, typically using a lens with a variable diaphragm to focus light onto an image pickup device. The diaphragm and shutter admit the correct amount of light to the imager, just as with film, but the image pickup device is electronic rather than chemical. However, unlike film cameras, digital cameras can display images on a screen immediately after they are recorded, and can store and delete images from memory. Most digital cameras can also record moving videos with sound. Some digital cameras can crop and stitch pictures and perform other elementary image editing.

Consumers adopted digital cameras in the 1990s. Professional video cameras transitioned to digital around the 2000s-2010s. Finally, movie cameras transitioned to digital in the 2010s.

Panoramic camera

Panoramic cameras are fixed-lens digital action cameras. They usually have a single fish-eye lens or multiple lenses to cover a field of view from 180° up to 360°.

VR camera

VR cameras are panoramic cameras that also cover the top and bottom in their field of view. There have also been camera rigs employing multiple cameras to cover the whole 360° by 360° field of view. The most famous VR camera rig is known as 'Google Jump'.

The Giroux daguerreotype camera, the first to be commercially produced

Leica M9 with a Summicron-M 28/2 ASPH Lens

Cinématographe Lumière at the Institut Lumière, France

Front and back of Canon PowerShot A95, a typical pocket-size digital camera

Arri Alexa, a digital movie camera

X . III MOTION ESTIMATION

Motion estimation is the process of determining motion vectors that describe the transformation from one 2D image to another, usually from adjacent frames in a video sequence. It is an ill-posed problem, as the motion is in three dimensions but the images are a projection of the 3D scene onto a 2D plane. The motion vectors may relate to the whole image (global motion estimation) or to specific parts, such as rectangular blocks, arbitrarily shaped patches or even individual pixels. The motion vectors may be represented by a translational model or by many other models that can approximate the motion of a real video camera, such as rotation and translation in all three dimensions and zoom.

Motion vectors that result from a movement into the plane of the image, combined with a lateral movement to the lower-right. This is a visualization of the motion estimation performed in order to compress an MPEG movie.

Related terms

More often than not, the term motion estimation and the term optical flow are used interchangeably. It is also related in concept to image registration and stereo correspondence. In fact all of these terms refer to the process of finding corresponding points between two images or video frames. The points that correspond to each other in two views (images or frames) of a real scene or object are "usually" the same point in that scene or on that object. Before we do motion estimation, we must define our measurement of correspondence, i.e., the matching metric, which is a measurement of how similar two image points are. There is no right or wrong here; the choice of matching metric is usually related to what the final estimated motion is used for as well as the optimisation strategy in the estimation process.
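To make the idea of a matching metric concrete, here is a minimal sketch of three common choices: sum of absolute differences (SAD), sum of squared differences (SSD) and normalised cross-correlation (NCC), applied to two small patches flattened into lists. The function names are hypothetical. The example illustrates why the choice depends on the application: a patch and a brighter, higher-contrast copy of it score poorly under SAD and SSD, but perfectly under NCC, which ignores a uniform gain and offset.

```python
import math

def sad(a, b):
    """Sum of absolute differences between equal-sized patches (lower = more similar)."""
    return sum(abs(p - q) for p, q in zip(a, b))

def ssd(a, b):
    """Sum of squared differences (lower = more similar); penalises large errors more."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

def ncc(a, b):
    """Normalised cross-correlation in [-1, 1] (higher = more similar).

    Subtracting each patch's mean and normalising by its spread makes the score
    insensitive to a uniform brightness offset and gain. Undefined for a constant patch.
    """
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [p - ma for p in a]
    db = [q - mb for q in b]
    num = sum(p * q for p, q in zip(da, db))
    den = math.sqrt(sum(p * p for p in da) * sum(q * q for q in db))
    return num / den

patch = [10, 20, 30, 40, 35, 25, 15, 5, 12]   # a 3x3 patch, flattened row by row
brighter = [2 * p + 10 for p in patch]        # same structure, different gain and offset
print(sad(patch, brighter), ssd(patch, brighter))  # large, because intensities differ
print(ncc(patch, brighter))                         # 1.0, up to rounding
```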

Algorithms

The methods for finding motion vectors can be categorised into pixel based methods ("direct") and feature based methods ("indirect"). A famous debate resulted in two papers from the opposing factions being produced to try to establish a conclusion.

Direct methods

- Block-matching algorithm

- Phase correlation and frequency domain methods

- Pixel recursive algorithms

- Optical flow
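The first of the direct methods, block matching, can be sketched as an exhaustive ("full search") comparison of one block of the current frame against every candidate position within a small search window in the reference frame, using SAD as the matching criterion. This is a minimal sketch on frames stored as lists of lists, not any codec's actual search; the function names, block size and search range are illustrative assumptions, and the returned vector is the offset into the reference frame of the best match.

```python
import random

def block(frame, x, y, size):
    """Extract a size x size block whose top-left corner is (x, y)."""
    return [row[x:x + size] for row in frame[y:y + size]]

def sad(a, b):
    """Sum of absolute differences between two blocks."""
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))

def match_block(ref, cur, bx, by, size=4, search=3):
    """Full-search block matching.

    Returns (dx, dy, cost): the displacement into the reference frame whose block
    best matches the current block at (bx, by), and its SAD cost.
    """
    target = block(cur, bx, by, size)
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            x, y = bx + dx, by + dy
            if x < 0 or y < 0 or x + size > w or y + size > h:
                continue  # candidate block would fall outside the reference frame
            cost = sad(block(ref, x, y, size), target)
            if best is None or cost < best[2]:
                best = (dx, dy, cost)
    return best

# Synthetic test: the current frame is the reference frame shifted so that
# cur[y][x] == ref[y + 1][x + 2] (zero-filled where no source pixel exists).
rng = random.Random(0)
ref = [[rng.randrange(256) for _ in range(16)] for _ in range(16)]
cur = [[ref[y + 1][x + 2] if y + 1 < 16 and x + 2 < 16 else 0 for x in range(16)]
       for y in range(16)]
print(match_block(ref, cur, 6, 6))  # (2, 1, 0)
```

Full search is simple but costly (every candidate in a (2·search+1)² window is evaluated), which is why practical encoders often use faster, non-exhaustive search patterns.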

Indirect methods

Indirect methods use features, such as those found by corner detection, and match corresponding features between frames, usually with a statistical function applied over a local or global area. The purpose of the statistical function is to remove matches that do not correspond to the actual motion.

Statistical functions that have been successfully used include RANSAC.
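As an illustration of how RANSAC removes bad matches, the sketch below fits the simplest motion model, a single global translation, to a list of feature correspondences that includes gross mismatches. A translation is fixed by one match, so each hypothesis comes from one randomly chosen match; the hypothesis that agrees with the most matches (within a pixel tolerance) wins and is then refined by averaging its inliers. The function name, tolerance, iteration count and synthetic data are assumptions for illustration; richer models (affine, homography) need larger minimal samples.

```python
import random

def ransac_translation(matches, tol=1.0, iterations=100, seed=0):
    """Estimate a single 2D translation from feature matches using RANSAC.

    matches -- list of ((x, y), (x2, y2)) pairs of corresponding feature positions.
    Returns ((tx, ty), inliers).
    """
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        (x, y), (x2, y2) = rng.choice(matches)   # minimal sample: one match
        tx, ty = x2 - x, y2 - y                  # candidate motion vector
        inliers = [m for m in matches
                   if abs((m[1][0] - m[0][0]) - tx) <= tol
                   and abs((m[1][1] - m[0][1]) - ty) <= tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # For a pure translation, the least-squares refit is the mean displacement.
    n = len(best_inliers)
    tx = sum(q[0] - p[0] for p, q in best_inliers) / n
    ty = sum(q[1] - p[1] for p, q in best_inliers) / n
    return (tx, ty), best_inliers

# 20 matches that follow a true motion of (5, -3), plus 8 gross mismatches.
good = [((i, 2 * i), (i + 5, 2 * i - 3)) for i in range(20)]
bad = [((i, i), (i - 12 + 3 * i, i + 9 + 2 * i)) for i in range(8)]
(tx, ty), inliers = ransac_translation(good + bad)
print(tx, ty, len(inliers))  # 5.0 -3.0 20
```

Simply averaging all 28 displacements would be pulled away from (5, -3) by the mismatches; RANSAC discards them before averaging.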

Additional note on the categorization

It can be argued that almost all methods require some kind of definition of the matching criteria. The difference is only whether you summarise over a local image region first and then compare the summarisations (such as feature-based methods), or compare each pixel first (such as squaring the difference) and then summarise over a local image region (block-based motion and filter-based motion). An emerging type of matching criteria summarises a local image region first for every pixel location (through some feature transform such as the Laplacian transform), compares each summarised pixel, and summarises over a local image region again. Some matching criteria have the ability to exclude points that do not actually correspond to each other despite producing a good matching score; others do not have this ability, but they are still matching criteria.

Applications

Video coding

Applying the motion vectors to an image to synthesize the transformation to the next image is called motion compensation. As a way of exploiting temporal redundancy, motion estimation and compensation are key parts of video compression. Almost all video coding standards use block-based motion estimation and compensation, including the MPEG series and, most recently, HEVC.

X . IIII MOTION CONTROL

A motion controller controls the motion of some object. Motion controllers are frequently implemented using digital computers, but they can also be implemented with only analog components.

Implementation

Motion controllers require a load (something to be moved), a prime mover (something to cause the load to move), some sensors (to be able to sense the motion and monitor the prime mover), and a controller to provie, packaging, distribution or use[citation needed].
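The roles of load, prime mover, sensor and controller can be illustrated with a toy closed-loop simulation. This is a minimal sketch, not a description of any particular product: it assumes a proportional (P) control law, an ideal noise-free position sensor, and a prime mover that sets the load's velocity directly. The function and parameter names are hypothetical.

```python
def simulate_motion_control(target, kp=2.0, dt=0.1, steps=100):
    """Toy closed-loop position control with a proportional controller.

    Load: a point whose position should reach `target`.
    Prime mover: modelled as setting the load's velocity directly.
    Sensor: reports the current position exactly (an idealisation).
    Controller: commands a velocity proportional to the position error.
    Returns the position after each control step.
    """
    position = 0.0
    history = []
    for _ in range(steps):
        measured = position            # sensor reads the load's position
        error = target - measured      # controller compares it with the set-point
        velocity = kp * error          # controller output: velocity command
        position += velocity * dt      # prime mover moves the load for one time step
        history.append(position)
    return history

trace = simulate_motion_control(target=10.0)
print(round(trace[0], 3), round(trace[-1], 6))  # 2.0 10.0
```

With `kp * dt = 0.2`, the remaining error shrinks by a factor of 0.8 on every step, so the load settles smoothly on the target; a larger gain would react faster, and with `kp * dt` above 2 this discrete loop would become unstable.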

Benefits

Motion controllers are used to achieve some desired benefit(s) which can include:

- increased position and speed accuracy

- higher speeds

- faster reaction time

- increased production

- smoother movements

- reduction in costs

- integration with other automation

- integration with other processes

- ability to convert desired specifications into motion required to produce a product

- increased information and ability to diagnose and troubleshoot

- increased consistency

- improved efficiency

- elimination of hazards to humans or animals

Gaming

Motion controllers using accelerometers are used as controllers for video games. They were publicly introduced in 1981 with Datasoft's "Le Stick" controller for the Atari 2600, and were made more popular from 2006 by the Wii Remote controller for Nintendo's Wii console, which uses accelerometers to detect its approximate orientation and acceleration and also contains an image sensor, so it can be used as a pointing device. It was followed by other similar devices, including the ASUS Eee Stick, Sony PlayStation Move (which also uses magnetometers to track the Earth's magnetic field and computer vision via the PlayStation Eye to aid in position tracking), and HP Swing.

Other systems use different mechanisms for input, such as Microsoft's Kinect, which combines infrared structured light and computer vision, and the Razer Hydra, which uses a magnetic field to determine position and orientation.

Wii Remote with original strap.
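Why an accelerometer can report an approximate orientation: when the controller is held still, the only acceleration it measures is gravity, and the direction of gravity in the sensor's own axes reveals how the device is tilted. The sketch below computes pitch and roll from a reading in units of g. It is a minimal sketch under stated assumptions: the device is at rest, the z axis points out of its face when lying flat, and the sign conventions are one common choice (real devices differ). Rotation about the gravity vector (yaw) cannot be recovered this way, which is one reason some systems add other sensors such as magnetometers or cameras.

```python
import math

def tilt_from_accelerometer(ax, ay, az):
    """Estimate (pitch, roll) in degrees from a 3-axis accelerometer reading at rest.

    ax, ay, az -- measured acceleration in g along the device's x, y and z axes.
    Assumes the only measured acceleration is gravity (device not moving) and that
    z points out of the device's face when it lies flat; conventions vary by device.
    """
    roll = math.degrees(math.atan2(ay, az))                     # rotation about the x axis
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))   # rotation about the y axis
    return pitch, roll

# Device tilted so that half of gravity appears on its x axis.
pitch, roll = tilt_from_accelerometer(0.5, 0.0, math.sqrt(0.75))
print(round(pitch, 6), round(roll, 6))
```

Here the pitch comes out at about -30 degrees and the roll at about 0; while the controller is being swung, its own acceleration adds to gravity and the estimate becomes only approximate.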

The Sega AM2 arcade game Hang-On, designed by Yu Suzuki, was controlled using a video game arcade cabinet resembling a motorbike, which the player moved with their body. This began the "Taikan" trend, the use of motion-controlled hydraulic arcade cabinets in many arcade games of the late 1980s, two decades before motion controls became popular on video game consoles.

The Sega Activator was released for the Mega Drive (Genesis) in 1993. It was based on the Light Harp, invented by Assaf Gurner, an Israeli musician and kung fu martial artist who researched interdisciplinary concepts to create the experience of playing an instrument using the whole body's motion. The Activator could read the player's physical movements and was the first controller to allow full-body motion sensing. The original invention was a three-octave musical instrument that could interpret the user's gestures as musical notes via the MIDI protocol. It was first registered as a patent in Israel on May 11, 1988, after four years of R&D. In 1992 the first complete Light Harp was created by Assaf Gurner and Oded Zur and presented to Sega of America. However, the Activator was a commercial failure due to its "unwieldiness and inaccuracy".

Another early motion-sensing device was the Sega VR headset, first announced in 1991. It featured built-in sensors that tracked the player's movement and head position, but was never officially released. Another early example is the 2000 light gun shooter arcade game Police 911, which used motion sensing technology to detect the player's movements, which are reflected by the player character within the game. The Atari Mindlink was an early proposed motion controller for the Atari 2600, which measured the movement of the user's eyebrows with a fitted headband.

X . IIIII MOTION DETECTOR

A motion detector is a device that detects moving objects, particularly people. Such a device is often integrated as a component of a system that automatically performs a task or alerts a user of motion in an area. They form a vital component of security, automated lighting control, home control, energy efficiency, and other useful systems.

A motion detector attached to an outdoor, automatic light.

Overview

An electronic motion detector contains an optical, microwave, or acoustic sensor, and in many cases a transmitter for illumination. A passive sensor, however, senses only a signature from the moving object itself, via emission or reflection: the signal can be emitted by the object, or by some ambient emitter such as the sun or a radio station of sufficient strength. Changes in the optical, microwave, or acoustic field in the device's proximity are interpreted by the electronics based on one of the technologies listed below. Most low-cost motion detectors can detect motion at distances of at least 15 feet (4.6 m). Specialized systems cost more but have much longer ranges. Tomographic motion detection systems can cover much larger areas because the radio waves are at frequencies which penetrate most walls and obstructions, and are detected in multiple locations, not only at the location of the transmitter.

Low-cost motion detector used to control lighting.

Motion detectors have found wide use in domestic and commercial applications. One common application is activating automatic door openers in businesses and public buildings. Motion sensors are also widely used in lieu of a true occupancy sensor in activating street lights or indoor lights in walkways, such as lobbies and staircases. In such smart lighting systems, energy is conserved by only powering the lights for the duration of a timer, after which the person has presumably left the area. A motion detector may be among the sensors of a burglar alarm that is used to alert the home owner or security service when it detects the motion of a possible intruder. Such a detector may also trigger a security camera to record the possible intrusion.

Sensor technology

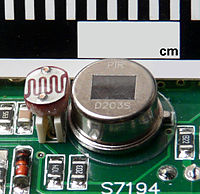

See also: Motion detection § Methods

A passive infrared detector mounted on a circuit board (right), along with a photoresistive detector for visible light (left). This is the type most commonly encountered in household motion sensing devices and is designed to turn on a light only when motion is detected and when the surrounding environment is sufficiently dark.

Several types of motion detection are in wide use:

- Passive infrared (PIR)

- Passive infrared (PIR) sensors are sensitive to a person's skin temperature through emitted black body radiation at mid-infrared wavelengths, in contrast to background objects at room temperature. No energy is emitted from the sensor, thus the name passive infrared. This distinguishes it from the electric eye for instance (not usually considered a motion detector), in which the crossing of a person or vehicle interrupts a visible or infrared beam.

- Microwave

- These detect motion through the principle of Doppler radar, and are similar to a radar speed gun. A continuous wave of microwave radiation is emitted, and phase shifts in the reflected microwaves due to motion of an object toward (or away from) the receiver result in a heterodyne signal at a low audio frequency.

- Ultrasonic

- An ultrasonic wave (sound at a frequency higher than a human ear can hear) is emitted and reflections from nearby objects are received. Exactly as in Doppler radar, heterodyne detection of the received field indicates motion. The detected Doppler shift is also at low audio frequencies (for walking speeds), since the ultrasonic wavelength of around a centimeter is similar to the wavelengths used in microwave motion detectors. One potential drawback of ultrasonic sensors is that the sensor can be sensitive to motion in areas where coverage is undesired, for instance, due to reflections of sound waves around corners. Such extended coverage may be desirable for lighting control, where the goal is detection of any occupancy in an area. But for opening an automatic door, for example, a sensor selective to traffic in the path toward the door is superior.

- Tomographic motion detector

- These systems sense disturbances to radio waves as they pass from node to node of a mesh network. They can cover large areas completely because they can sense through walls and other obstructions.

- Video camera software

- With the proliferation of low-cost digital cameras able to shoot video, it is possible to use the output of such a camera to detect motion in its field of view using software. This solution is particularly attractive when the intent is to record video triggered by motion detection, as no hardware beyond the camera and computer is needed. Since the observed field may be normally illuminated, this may be considered another passive technology. However it can also be used together with near-infrared illumination to detect motion in the dark, that is, with the illumination at a wavelength undetectable by a human eye.

- Gesture detector

- Photodetectors and infrared lighting elements can support digital screens to detect hand motions and gestures with the aid of machine learning algorithms.
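The microwave and ultrasonic detectors above rely on the same Doppler relation: for a reflector moving straight toward or away from a co-located transmitter and receiver at speed v, the echo is shifted by 2·v·f0/c, where f0 is the carrier frequency and c the propagation speed of the wave (the factor 2 comes from the round trip). The sketch below evaluates it for both sensor types. The 10.525 GHz microwave carrier, the 40 kHz ultrasonic carrier and the 1 m/s walking speed are illustrative assumptions, not values stated in the text.

```python
def doppler_shift(speed, carrier_hz, wave_speed):
    """Doppler frequency (Hz) of an echo from a reflector moving radially at `speed` (m/s).

    Monostatic case (transmitter and receiver together): shift = 2 * v * f0 / c.
    """
    return 2.0 * speed * carrier_hz / wave_speed

SPEED_OF_LIGHT = 3.0e8   # m/s, approximate
SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 degrees C

walking = 1.0  # m/s, assumed walking speed
microwave = doppler_shift(walking, 10.525e9, SPEED_OF_LIGHT)  # assumed X-band carrier
ultrasonic = doppler_shift(walking, 40e3, SPEED_OF_SOUND)      # assumed 40 kHz transducer
print(round(microwave), round(ultrasonic))  # 70 233  (Hz: low audio frequencies)

# Wavelengths in centimetres: both are on the order of a centimetre.
print(round(100 * SPEED_OF_LIGHT / 10.525e9, 2), round(100 * SPEED_OF_SOUND / 40e3, 2))  # 2.85 0.86
```

Because the shift depends only on speed divided by wavelength (f0/c = 1/λ), two sensors with similar wavelengths produce similar, low-frequency Doppler signals even though one uses radio waves and the other sound.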

Dual-technology motion detectors

Many modern motion detectors use combinations of different technologies. While combining multiple sensing technologies into one detector can help reduce false triggering, it does so at the expense of reduced detection probabilities and increased vulnerability. For example, many dual-tech sensors combine both a PIR sensor and a microwave sensor into one unit. For motion to be detected, both sensors must trip together. This lowers the probability of a false alarm since heat and light changes may trip the PIR but not the microwave, or moving tree branches may trigger the microwave but not the PIR. If an intruder is able to fool either the PIR or microwave, however, the sensor will not detect it.

Often, PIR technology is paired with another technology to maximize accuracy and reduce energy use. PIR draws less energy than emissive microwave detection, so many sensors are calibrated so that when the PIR sensor is tripped, it activates a microwave sensor. If the latter also picks up an intruder, then the alarm is sounded.
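The dual-technology logic described in this section can be sketched as a short evaluation loop: the low-power PIR stage runs continuously, the microwave stage is woken only when the PIR trips, and an alarm requires both to agree. The function name and the event representation (one pair of booleans per event) are hypothetical simplifications; real detectors also apply timing windows and sensitivity settings not modelled here.

```python
def dual_tech_alarm(events):
    """Evaluate a PIR-gated dual-technology detector over a sequence of events.

    events -- iterable of (pir_tripped, microwave_would_trip) booleans.
    Returns (number_of_alarms, number_of_microwave_activations).
    """
    alarms = 0
    microwave_activations = 0
    for pir, microwave in events:
        if not pir:
            continue                  # low-power PIR stage saw nothing; microwave stays off
        microwave_activations += 1    # wake the microwave stage to confirm
        if microwave:
            alarms += 1               # both technologies agree: sound the alarm
    return alarms, microwave_activations

events = [
    (True, False),   # heat/light change fools the PIR only: no alarm
    (False, True),   # swaying branch seen only by microwave: never checked, no alarm
    (True, True),    # intruder trips both: alarm
    (False, False),  # quiet
]
print(dual_tech_alarm(events))  # (1, 2)
```

The same sketch also shows the weakness noted above: an intruder who avoids tripping the PIR never wakes the microwave stage, so no alarm is raised.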

X . IIIIII OPTICAL FLOW

Optical flow or optic flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and a scene. The concept of optical flow was introduced by the American psychologist James J. Gibson in the 1940s to describe the visual stimulus provided to animals moving through the world.

Gibson stressed the importance of optic flow for affordance perception, the ability to discern possibilities for action within the environment. Followers of Gibson and his ecological approach to psychology have further demonstrated the role of the optical flow stimulus for the perception of movement by the observer in the world; perception of the shape, distance and movement of objects in the world; and the control of locomotion.

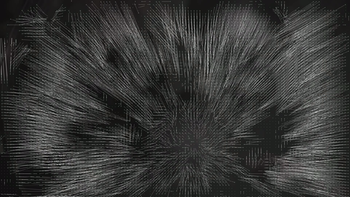

The term optical flow is also used by roboticists, encompassing related techniques from image processing and control of navigation including motion detection, object segmentation, time-to-contact information, focus of expansion calculations, luminance, motion compensated encoding, and stereo disparity measurement.

The optic flow experienced by a rotating observer (in this case a fly). The direction and magnitude of optic flow at each location is represented by the direction and length of each arrow.

Estimation

Sequences of ordered images allow the estimation of motion as either instantaneous image velocities or discrete image displacements. Fleet and Weiss provide a tutorial introduction to gradient based optical flow. John L. Barron, David J. Fleet, and Steven Beauchemin provide a performance analysis of a number of optical flow techniques. It emphasizes the accuracy and density of measurements.

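The optical flow methods try to calculate the motion between two image frames which are taken at times $t$ and $t + \Delta t$ at every voxel position. These methods are called differential since they are based on local Taylor series approximations of the image signal; that is, they use partial derivatives with respect to the spatial and temporal coordinates.

For a 2D+t dimensional case (3D or n-D cases are similar) a voxel at location $(x, y, t)$ with intensity $I(x, y, t)$ will have moved by $\Delta x$, $\Delta y$ and $\Delta t$ between the two image frames, and the following brightness constancy constraint can be given:

$$I(x, y, t) = I(x + \Delta x,\, y + \Delta y,\, t + \Delta t)$$

Assuming the movement to be small, the right-hand side can be developed with a Taylor series to get:

$$I(x + \Delta x,\, y + \Delta y,\, t + \Delta t) = I(x, y, t) + \frac{\partial I}{\partial x}\Delta x + \frac{\partial I}{\partial y}\Delta y + \frac{\partial I}{\partial t}\Delta t + \text{higher-order terms}$$

From these equations it follows that:

$$\frac{\partial I}{\partial x}\Delta x + \frac{\partial I}{\partial y}\Delta y + \frac{\partial I}{\partial t}\Delta t = 0$$

and, dividing by $\Delta t$,

$$\frac{\partial I}{\partial x}V_x + \frac{\partial I}{\partial y}V_y + \frac{\partial I}{\partial t} = 0$$

where $V_x$ and $V_y$ are the $x$ and $y$ components of the velocity or optical flow of $I(x, y, t)$, and $\frac{\partial I}{\partial x}$, $\frac{\partial I}{\partial y}$ and $\frac{\partial I}{\partial t}$ are the derivatives of the image at $(x, y, t)$ in the corresponding directions. $I_x$, $I_y$ and $I_t$ can be written for the derivatives in the following.

Thus:

$$I_x V_x + I_y V_y = -I_t \quad\text{or}\quad \nabla I \cdot \vec{V} = -I_t$$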

Methods for determination

- Phase correlation – inverse of normalized cross-power spectrum

- Block-based methods – minimizing sum of squared differences or sum of absolute differences, or maximizing normalized cross-correlation

- Differential methods of estimating optical flow, based on partial derivatives of the image signal and/or the sought flow field and higher-order partial derivatives, such as:

  - Lucas–Kanade method – regarding image patches and an affine model for the flow field

  - Horn–Schunck method – optimizing a functional based on residuals from the brightness constancy constraint, and a particular regularization term expressing the expected smoothness of the flow field

  - Buxton–Buxton method – based on a model of the motion of edges in image sequences

  - Black–Jepson method – coarse optical flow via correlation

  - General variational methods – a range of modifications/extensions of Horn–Schunck, using other data terms and other smoothness terms.

- Discrete optimization methods – the search space is quantized, and then image matching is addressed through label assignment at every pixel, such that the corresponding deformation minimizes the distance between the source and the target image. The optimal solution is often recovered through max-flow/min-cut algorithms, linear programming or belief propagation methods.
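Of these, block matching is the simplest to illustrate. The sketch below is a minimal exhaustive search, assuming greyscale frames stored as nested lists and an integer search radius; it scores candidates with the sum of squared differences, though the sum of absolute differences or normalized cross-correlation could be substituted. The function names are hypothetical.

```python
def ssd(block_a, block_b):
    """Sum of squared differences between two equally sized blocks."""
    return sum((a - b) ** 2
               for row_a, row_b in zip(block_a, block_b)
               for a, b in zip(row_a, row_b))


def get_block(frame, top, left, size):
    """Cut out a size-by-size block whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in frame[top:top + size]]


def match_block(prev, curr, top, left, size, radius):
    """Return the integer (dx, dy) moving the block from prev to curr with minimum SSD."""
    reference = get_block(prev, top, left, size)
    height, width = len(curr), len(curr[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            t, l = top + dy, left + dx
            if t < 0 or l < 0 or t + size > height or l + size > width:
                continue  # candidate block would extend past the frame edge
            cost = ssd(reference, get_block(curr, t, l, size))
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    if best is None:
        raise ValueError("no candidate block fits inside the frame")
    return best[1], best[2]
```

For example, if `curr` is `prev` with its content shifted one pixel right and two pixels down, `match_block(prev, curr, top=4, left=4, size=4, radius=3)` should return `(1, 2)` for a sufficiently textured image. The cost of exhaustive search grows with the square of the radius, which is why practical video encoders often use faster search patterns.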

Uses

Motion estimation and video compression have developed as a major aspect of optical flow research. While the optical flow field is superficially similar to a dense motion field derived from the techniques of motion estimation, optical flow is the study not only of the determination of the optical flow field itself, but also of its use in estimating the three-dimensional nature and structure of the scene, as well as the 3D motion of objects and the observer relative to the scene; most of these approaches use the image Jacobian.

Optical flow has been used by robotics researchers in many areas, such as object detection and tracking, image dominant plane extraction, movement detection, robot navigation and visual odometry. Optical flow information has been recognized as being useful for controlling micro air vehicles.

The application of optical flow includes the problem of inferring not only the motion of the observer and objects in the scene, but also the structure of objects and the environment. Since awareness of motion and the generation of mental maps of the structure of our environment are critical components of animal (and human) vision, the conversion of this innate ability to a computer capability is similarly crucial in the field of machine vision.

Consider a five-frame clip of a ball moving from the bottom left of a field of vision to the top right. Motion estimation techniques can determine that, on a two-dimensional plane, the ball is moving up and to the right, and vectors describing this motion can be extracted from the sequence of frames. For the purposes of video compression (e.g., MPEG), the sequence is now described as well as it needs to be. However, in the field of machine vision, whether the ball is moving to the right or the observer is moving to the left is critical information that cannot be determined from these frames alone. Even if a static, patterned background were present in the five frames, we could not confidently state that the ball was moving to the right, because the pattern might be at an infinite distance from the observer.

The optical flow vector of a moving object in a video sequence.

Optical flow sensor

An optical flow sensor is a vision sensor capable of measuring optical flow or visual motion and outputting a measurement based on optical flow. Various configurations of optical flow sensors exist. One configuration is an image sensor chip connected to a processor programmed to run an optical flow algorithm. Another configuration uses a vision chip, which is an integrated circuit having both the image sensor and the processor on the same die, allowing for a compact implementation. An example of this is a generic optical mouse sensor used in an optical mouse. In some cases the processing circuitry may be implemented using analog or mixed-signal circuits to enable fast optical flow computation using minimal current consumption.

One area of contemporary research is the use of neuromorphic engineering techniques to implement circuits that respond to optical flow, and thus may be appropriate for use in an optical flow sensor. Such circuits may draw inspiration from biological neural circuitry that similarly responds to optical flow.

Optical flow sensors are used extensively in computer optical mice, as the main sensing component for measuring the motion of the mouse across a surface.

Optical flow sensors are also being used in robotics applications, primarily where there is a need to measure visual motion or relative motion between the robot and other objects in its vicinity. The use of optical flow sensors in unmanned aerial vehicles (UAVs), for stability and obstacle avoidance, is also an area of current research.

Hub to Hub Electronics

Electronics is the science of controlling electrical energy electrically, in which the electrons have a fundamental role. Electronics deals with electrical circuits that involve active electrical components such as vacuum tubes, transistors, diodes, integrated circuits, associated passive electrical components, and interconnection technologies. Commonly, electronic devices contain circuitry consisting primarily or exclusively of active semiconductors supplemented with passive elements; such a circuit is described as an electronic circuit.

Surface-mount electronic components

The science of electronics is also considered to be a branch of physics and electrical engineering.

The nonlinear behaviour of active components and their ability to control electron flows make amplification of weak signals possible, and electronics is widely used in information processing, telecommunication, and signal processing. The ability of electronic devices to act as switches makes digital information processing possible. Interconnection technologies such as circuit boards, electronics packaging technology, and other varied forms of communication infrastructure complete circuit functionality and turn the mixed components into a working system.

Electronics is distinct from electrical and electro-mechanical science and technology, which deal with the generation, distribution, switching, storage, and conversion of electrical energy to and from other energy forms using wires, motors, generators, batteries, switches, relays, transformers, resistors, and other passive components. This distinction started around 1906 with the invention by Lee De Forest of the triode, which made electrical amplification of weak radio signals and audio signals possible with a non-mechanical device. Until 1950 this field was called "radio technology" because its principal application was the design and theory of radio transmitters, receivers, and vacuum tubes.

Today, most electronic devices use semiconductor components to perform electron control. The study of semiconductor devices and related technology is considered a branch of solid-state physics, whereas the design and construction of electronic circuits to solve practical problems come under electronics engineering. This article focuses on engineering aspects of electronics.

Digital electronics

Digital electronics or digital (electronic) circuits are electronics that handle digital signals (discrete bands of analog levels) rather than continuous ranges as used in analog electronics. All levels within a band of values represent the same information state. Because of this discretization, relatively small changes to the analog signal levels due to manufacturing tolerance, signal attenuation or noise do not leave the discrete envelope, and as a result are ignored by signal-state sensing circuitry.

In most cases, the number of these states is two, and they are represented by two voltage bands: one near a reference value (typically termed "ground" or zero volts), and the other near the supply voltage. These correspond to the false and true values of the Boolean domain, respectively. Digital techniques are useful because it is easier to get an electronic device to switch into one of a number of known states than to accurately reproduce a continuous range of values.
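As a minimal sketch of the band idea, the Python function below maps a received voltage to a logic state. The thresholds are assumptions modelled on classic 5 V TTL inputs (at most 0.8 V is read as 0, at least 2.0 V is read as 1); voltages in between belong to neither band.

```python
V_IL_MAX = 0.8  # assumed: highest input voltage guaranteed to read as logic 0
V_IH_MIN = 2.0  # assumed: lowest input voltage guaranteed to read as logic 1


def read_level(voltage):
    """Return 0 or 1 for a voltage inside a band, or None for the undefined region."""
    if voltage <= V_IL_MAX:
        return 0
    if voltage >= V_IH_MIN:
        return 1
    return None


# Bits 1, 0, 1, 1, 0 sent as 5 V / 0 V, each disturbed by a different amount of noise.
received = [4.6, 0.3, 3.9, 4.8, 0.5]
decoded = [read_level(v) for v in received]
```

Every noisy voltage in this example still decodes to the bit that was sent, which is the sense in which small analog disturbances are ignored; only a disturbance large enough to push a level into the gap, or across it, causes trouble.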

Digital electronic circuits are usually made from large assemblies of logic gates, simple electronic representations of Boolean logic functions.
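To illustrate gates as Boolean functions that can be composed, the sketch below models a two-input NAND gate in Python and builds NOT, AND, OR and XOR from it alone. NAND is functionally complete, so any Boolean function can be assembled this way; the gate function names are illustrative.

```python
def nand(a, b):
    """Two-input NAND gate on bits 0/1."""
    return 0 if (a and b) else 1


def not_gate(a):
    return nand(a, a)


def and_gate(a, b):
    return not_gate(nand(a, b))


def or_gate(a, b):
    # De Morgan: a OR b == NOT(NOT a AND NOT b)
    return nand(not_gate(a), not_gate(b))


def xor_gate(a, b):
    # Four-NAND XOR: the first NAND's output feeds two further gates.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


# Print the truth table: a, b, AND, OR, XOR.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```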

Properties

An advantage of digital circuits when compared to analog circuits is that signals represented digitally can be transmitted without degradation due to noise.[8] For example, a continuous audio signal transmitted as a sequence of 1s and 0s can be reconstructed without error, provided the noise picked up in transmission is not enough to prevent identification of the 1s and 0s. An hour of music can be stored on a compact disc using about 6 billion binary digits.

In a digital system, a more precise representation of a signal can be obtained by using more binary digits to represent it. While this requires more digital circuits to process the signals, each digit is handled by the same kind of hardware, resulting in an easily scalable system. In an analog system, additional resolution requires fundamental improvements in the linearity and noise characteristics of each step of the signal chain.

Computer-controlled digital systems can be controlled by software, allowing new functions to be added without changing hardware. Often this can be done outside of the factory by updating the product's software, so design errors can be corrected even after the product is in a customer's hands.

Information storage can be easier in digital systems than in analog ones. The noise immunity of digital systems permits data to be stored and retrieved without degradation. In an analog system, noise from aging and wear degrades the information stored. In a digital system, as long as the total noise is below a certain level, the information can be recovered perfectly.

Even when more significant noise is present, the use of redundancy permits the recovery of the original data provided too many errors do not occur.
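The simplest form of such redundancy is a repetition code: send every bit several times and take a majority vote. The sketch below assumes three copies per bit, so it recovers from one flipped copy per bit but not from two; it illustrates the principle and is not claimed to be what any particular system uses.

```python
def encode_repeat(bits, copies=3):
    """Send each bit `copies` times in a row."""
    return [bit for bit in bits for _ in range(copies)]


def decode_majority(received, copies=3):
    """Recover each bit by majority vote over its group of copies."""
    return [1 if sum(received[i:i + copies]) > copies // 2 else 0
            for i in range(0, len(received), copies)]


sent = encode_repeat([1, 0, 1])  # [1, 1, 1, 0, 0, 0, 1, 1, 1]

one_error = sent.copy()
one_error[1] ^= 1                # one flipped copy: still recoverable

two_errors = sent.copy()
two_errors[3] ^= 1
two_errors[4] ^= 1               # two flipped copies of the same bit: too many errors
```

Decoding `one_error` gives back `[1, 0, 1]`, while `two_errors` decodes to `[1, 1, 1]`: the redundancy helps only while errors stay below what the code can outvote.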

In some cases, digital circuits use more energy than analog circuits to accomplish the same tasks, and thus produce more heat, which increases circuit complexity (for example, by requiring heat sinks). In portable or battery-powered systems this can limit the use of digital systems.

For example, battery-powered cellular telephones often use a low-power analog front-end to amplify and tune in the radio signals from the base station. However, a base station has grid power and can use power-hungry, but very flexible software radios. Such base stations can be easily reprogrammed to process the signals used in new cellular standards.

Digital circuits are sometimes more expensive, especially in small quantities.

Most useful digital systems must translate from continuous analog signals to discrete digital signals. This causes quantization errors. Quantization error can be reduced if the system stores enough digital data to represent the signal to the desired degree of fidelity. The Nyquist-Shannon sampling theorem provides an important guideline as to how much digital data is needed to accurately portray a given analog signal.
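The effect of word length on quantization error can be checked numerically. The sketch below assumes a uniform quantizer with $2^b$ levels spread evenly over $[-1, 1]$; its step is $2/(2^b - 1)$, so the error never exceeds half a step, and each extra bit roughly halves it. The function names are illustrative.

```python
import math


def quantize(value, bits, full_scale=1.0):
    """Round value to the nearest of 2**bits evenly spaced levels in [-full_scale, full_scale]."""
    step = 2 * full_scale / (2 ** bits - 1)
    index = round((value + full_scale) / step)
    return -full_scale + index * step


def worst_error(bits, samples=1000):
    """Largest quantization error over one period of a full-scale sine wave."""
    worst = 0.0
    for n in range(samples):
        v = math.sin(2 * math.pi * n / samples)
        worst = max(worst, abs(v - quantize(v, bits)))
    return worst


for bits in (4, 8, 12, 16):
    bound = 1 / (2 ** bits - 1)  # half of the step size
    print(f"{bits:2d} bits: worst error {worst_error(bits):.2e} (bound {bound:.2e})")
```

The sketch only addresses amplitude resolution; how often to sample is the separate question covered by the Nyquist–Shannon theorem.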

In some systems, if a single piece of digital data is lost or misinterpreted, the meaning of large blocks of related data can completely change. Because of the cliff effect, it can be difficult for users to tell if a particular system is right on the edge of failure, or if it can tolerate much more noise before failing.

Digital fragility can be reduced by designing a digital system for robustness. For example, a parity bit or other error management method can be inserted into the signal path. These schemes help the system detect errors, and then either correct the errors, or at least ask for a new copy of the data. In a state machine, the state transition logic can be designed to catch unused states and trigger a reset sequence or other error recovery routine.
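Both techniques in the paragraph above can be sketched in a few lines of Python. The parity functions assume even parity; the state names and the two-bit encoding with one unused code are hypothetical.

```python
def add_even_parity(bits):
    """Append one bit so that the total number of 1s is even."""
    return bits + [sum(bits) % 2]


def parity_ok(word):
    """True if the word still has an even number of 1s."""
    return sum(word) % 2 == 0


# Hypothetical three-state machine stored in two bits; code 0b11 is never used.
IDLE, RUN, DONE = 0b00, 0b01, 0b10
TRANSITIONS = {IDLE: RUN, RUN: DONE, DONE: IDLE}


def next_state(state):
    """Advance the machine; any unused code (e.g. after a bit flip) resets to IDLE."""
    return TRANSITIONS.get(state, IDLE)


word = add_even_parity([1, 0, 1, 1, 0, 0, 1])

single = word.copy()
single[2] ^= 1   # one flipped bit: detected

double = word.copy()
double[2] ^= 1
double[5] ^= 1   # two flipped bits cancel out and go unnoticed
```

The last case shows why a single parity bit only detects an odd number of flipped bits and cannot say which bit is wrong; correcting errors needs more redundancy.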

Digital memory and transmission systems can use techniques such as error detection and correction, which add redundant data so that errors in transmission and storage can be detected and corrected.
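A classic example of such a code is the Hamming(7,4) code, which adds three parity bits to every four data bits and can correct any single flipped bit in the seven-bit word. The Python sketch below uses the conventional layout with parity bits at positions 1, 2 and 4; the function names are illustrative.

```python
def hamming74_encode(d):
    """Four data bits -> seven-bit codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit and return the four data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s3  # 1-based position of the bad bit, 0 if none
    if error_pos:
        c[error_pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
corrupted = hamming74_encode(data)
corrupted[5] ^= 1                    # flip the bit at position 6 in transit
recovered = hamming74_decode(corrupted)
```

The three syndrome bits spell out, in binary, the position of the flipped bit, which is what lets the decoder repair the error rather than merely detect it.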

On the other hand, some techniques used in digital systems make those systems more vulnerable to single-bit errors. These techniques are acceptable when the underlying bits are reliable enough that such errors are highly unlikely.

A single-bit error in audio data stored directly as linear pulse-code modulation (such as on an audio CD) causes, at worst, a single click. However, many people use audio compression to save storage space and download time, even though a single-bit error in compressed data may corrupt the entire song.
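The contrast can be demonstrated with Python's standard `zlib` module, used here as a stand-in for a general-purpose compressor; real audio codecs work differently, so this only illustrates the principle. The "audio" is a hypothetical ramp of 16-bit samples.

```python
import zlib


def flip_bit(data, bit_index):
    """Return a copy of data with one bit inverted."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


# Hypothetical audio: 1,000 signed 16-bit samples stored as raw little-endian PCM.
samples = [(n * 37) % 2000 - 1000 for n in range(1000)]
pcm = b"".join(s.to_bytes(2, "little", signed=True) for s in samples)

# Uncompressed: one flipped bit damages exactly one sample.
damaged = flip_bit(pcm, 8 * 500 + 3)
changed_samples = sum(1 for i in range(0, len(pcm), 2)
                      if pcm[i:i + 2] != damaged[i:i + 2])

# Compressed: one flipped bit in the middle of the stream ruins the whole copy.
packed = zlib.compress(pcm)
try:
    compressed_ok = zlib.decompress(flip_bit(packed, 8 * (len(packed) // 2))) == pcm
except zlib.error:
    compressed_ok = False  # the damaged stream could not be decoded at all

print("PCM samples changed:", changed_samples)
print("compressed copy recovered intact:", compressed_ok)
```

Because zlib also checks an Adler-32 checksum, the damaged compressed copy is rejected outright rather than played back with a glitch.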

Construction

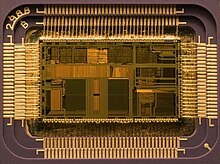

A digital circuit is typically constructed from small electronic circuits called logic gates that can be used to create combinational logic. Each logic gate is designed to perform a function of boolean logic when acting on logic signals. A logic gate is generally created from one or more electrically controlled switches, usually transistors but thermionic valves have seen historic use. The output of a logic gate can, in turn, control or feed into more logic gates.A binary clock, hand-wired on breadboards
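As a sketch of how gate outputs feed further gates to form combinational logic, the Python below models a one-bit full adder as XOR, AND and OR gates (using Python's bitwise operators on 0/1 values) and chains them into a ripple-carry adder, where each stage's carry output drives the next stage's carry input. The helper names are illustrative.

```python
def full_adder(a, b, carry_in):
    """One-bit full adder built from XOR, AND and OR gates."""
    partial = a ^ b                              # XOR gate
    total = partial ^ carry_in                   # XOR gate
    carry_out = (a & b) | (partial & carry_in)   # two AND gates feeding an OR gate
    return total, carry_out


def ripple_carry_add(x_bits, y_bits):
    """Add two little-endian bit lists of equal length; the carry ripples stage to stage."""
    carry = 0
    out = []
    for a, b in zip(x_bits, y_bits):
        s, carry = full_adder(a, b, carry)
        out.append(s)
    out.append(carry)  # final carry becomes the extra high bit
    return out


def to_bits(n, width):
    """Little-endian list of the low `width` bits of n."""
    return [(n >> i) & 1 for i in range(width)]


def from_bits(bits):
    return sum(bit << i for i, bit in enumerate(bits))


print(from_bits(ripple_carry_add(to_bits(11, 4), to_bits(6, 4))))
```

The ripple structure is simple but slow for wide words, since the last sum bit must wait for the carry to pass through every stage.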

Integrated circuits consist of multiple transistors on one silicon chip, and are the least expensive way to make large numbers of interconnected logic gates. Integrated circuits are usually designed by engineers using electronic design automation software (see below for more information) to perform some type of function.

Integrated circuits are usually interconnected on a printed circuit board, a board that holds electrical components and connects them together with copper traces.

Design

Each logic symbol is represented by a different shape. The actual set of shapes was introduced in 1984 under IEEE/ANSI standard 91-1984. "The logic symbol given under this standard are being increasingly used now and have even started appearing in the literature published by manufacturers of digital integrated circuits."