High fidelity (hi-fi or hifi) reproduction is a term used by home stereo listeners, audiophiles and home audio enthusiasts for high-quality sound reproduction. It distinguishes such reproduction from the lower-quality sound produced by inexpensive audio equipment, and from the inferior sound reproduction heard in recordings made until the late 1940s.

Ideally, high-fidelity equipment has inaudible noise and distortion, and a flat (neutral, uncolored) frequency response within the intended frequency range.

Hi-fi speakers are a key component of quality audio reproduction.

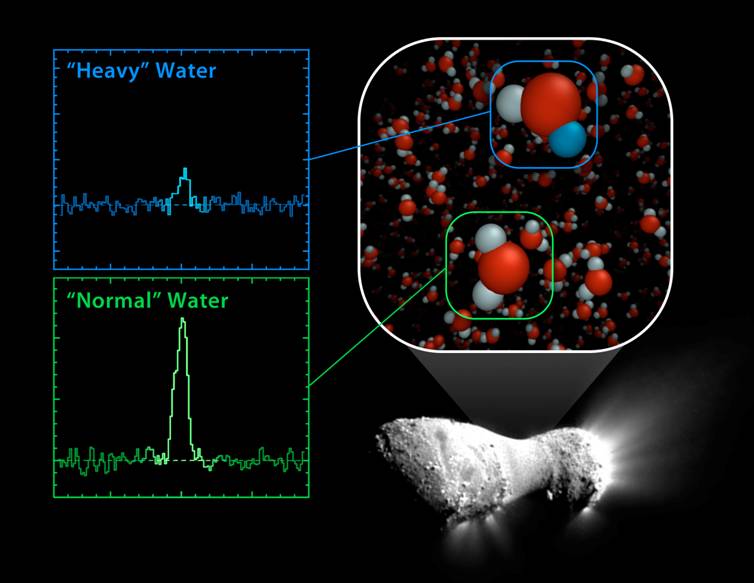


Bell Laboratories began experimenting with a wider range of recording techniques in the early 1930s. Performances by Leopold Stokowski and the Philadelphia Orchestra were recorded in 1931 and 1932 using telephone lines between the Academy of Music in Philadelphia and Bell Labs in New Jersey. Some multitrack recordings were made on optical sound film, which led to new advances used primarily by MGM (as early as 1937) and 20th Century-Fox Film Corporation (as early as 1941). RCA Victor began recording performances by several orchestras using optical sound around 1941, resulting in higher-fidelity masters for 78-rpm discs. During the 1930s, Avery Fisher, an amateur violinist, began experimenting with audio design and acoustics. He wanted to make a radio that would sound as though he were listening to a live orchestra, one that would achieve high fidelity to the original sound. After World War II, Harry F. Olson conducted an experiment in which test subjects listened to a live orchestra through a hidden variable acoustic filter. The results showed that listeners preferred high-fidelity reproduction once the noise and distortion introduced by early sound equipment were removed.

Beginning in 1948, several innovations created the conditions that made major improvements in home-audio quality possible:

- Reel-to-reel audio tape recording, based on technology taken from Germany after WWII, helped musical artists such as Bing Crosby make and distribute recordings with better fidelity.

- The advent of the 33⅓ rpm Long Play (LP) microgroove vinyl record, with lower surface noise and quantitatively specified equalization curves as well as noise-reduction and dynamic range systems. Classical music fans, who were opinion leaders in the audio market, quickly adopted LPs because, unlike with older records, most classical works would fit on a single LP.

- FM radio, with wider audio bandwidth and less susceptibility to signal interference and fading than AM radio, though AM could be heard at longer distances at night.

- Better amplifier designs, with more attention to frequency response and much higher power output capability, reproducing audio without perceptible distortion.

- New loudspeaker designs, including acoustic suspension, developed by Edgar Villchur and Henry Kloss, improved bass frequency response.

In the late 1950s and early 1960s, the development of the Westrex single-groove stereophonic record cutterhead led to the next wave of home-audio improvement, and in common parlance, stereo displaced hi-fi. Records were now played on a stereo. In the world of the audiophile, however, the concept of high fidelity continued to refer to the goal of highly accurate sound reproduction and to the technological resources available for approaching that goal. This period is regarded as the "Golden Age of Hi-Fi", when vacuum tube equipment manufacturers of the time produced many models considered endearing by modern audiophiles, and just before solid state (transistorized) equipment was introduced to the market, subsequently replacing tube equipment as the mainstream technology.

A popular type of system for reproducing music beginning in the 1970s was the integrated music centre—which combined a phonograph turntable, AM-FM radio tuner, tape player, preamplifier, and power amplifier in one package, often sold with its own separate, detachable or integrated speakers. These systems advertised their simplicity. The consumer did not have to select and assemble individual components, or be familiar with impedance and power ratings. Purists generally avoid referring to these systems as high fidelity, though some are capable of very good quality sound reproduction. Audiophiles in the 1970s and 1980s preferred to buy each component separately. That way, they could choose models of each component with the specifications that they desired. In the 1980s, a number of audiophile magazines became available, offering reviews of components and articles on how to choose and test speakers, amplifiers and other components.

Listening tests

Listening tests are used by hi-fi manufacturers, audiophile magazines and audio engineering researchers and scientists. A listener assessing the sound quality of a component or recording may be able to see which components are being used in the test, for example when the same musical piece is played through a tube power amplifier and then a solid-state amplifier. In that case, the listener's pre-existing biases for or against certain components or brands could affect their judgement. To address this issue, researchers began to use blind tests, in which the researchers know which components are being tested but the listeners do not. In a double-blind experiment, neither the listeners nor the researchers know who belongs to the control group and who belongs to the experimental group, or which audio component is being used for which listening sample. Only after all the data has been recorded (and in some cases, analyzed) do the researchers learn which components or recordings the listeners preferred.

A commonly used variant of this test is the ABX test. A subject is presented with two known samples (sample A, the reference, and sample B, an alternative) and one unknown sample X, for three samples in total. X is randomly selected from A and B, and the subject identifies X as being either A or B. There is no way to prove that a given lossy method is transparent. However, a properly conducted double-blind test can prove that a lossy method is not transparent.

Scientific double-blind tests are sometimes used as part of attempts to ascertain whether certain audio components (such as expensive, exotic cables) have any subjectively perceivable effect on sound quality. Some "audiophile" magazines, such as Stereophile and The Absolute Sound, do not accept data gleaned from these double-blind tests in their evaluations of audio equipment.
John Atkinson, current editor of Stereophile, described his experience in a July 2005 editorial titled "Blind Tests & Bus Stops". In 1978, after seeing the results of blind tests, he bought a solid-state amplifier, the Quad 405. Months later he came to feel that "the magic was gone", and that feeling lasted until he replaced it with a tube amplifier. Robert Harley of The Absolute Sound wrote in a 2008 editorial (Issue 183) that "...blind listening tests fundamentally distort the listening process and are worthless in determining the audibility of a certain phenomenon."

Doug Schneider, editor of the online Soundstage network, refuted this position with two editorials in 2009. He stated: "Blind tests are at the core of the decades’ worth of research into loudspeaker design done at Canada’s National Research Council (NRC). The NRC researchers knew that for their result to be credible within the scientific community and to have the most meaningful results, they had to eliminate bias, and blind testing was the only way to do so." Many Canadian companies such as Axiom, Energy, Mirage, Paradigm, PSB and Revel use blind testing extensively in designing their loudspeakers. Audio professional Dr. Sean Olive of Harman International shares this view.
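The ABX procedure described above lends itself to simple statistics. The sketch below is a minimal illustration and is not taken from any of the sources named here. It assumes that the listener's answers are compared against a randomly generated sequence of hidden X assignments. It also assumes that "no audible difference" can be modeled as coin-flip guessing, with a probability of 0.5 per trial. The helper names `make_abx_sequence`, `score` and `abx_p_value` are hypothetical.

```python
import math
import random


def make_abx_sequence(n_trials: int, seed: int) -> list[str]:
    """For each trial, randomly decide whether the hidden sample X is A or B."""
    rng = random.Random(seed)
    return [rng.choice("AB") for _ in range(n_trials)]


def score(answers: list[str], responses: list[str]) -> int:
    """Count the trials where the listener correctly identified X."""
    return sum(a == r for a, r in zip(answers, responses))


def abx_p_value(correct: int, n_trials: int) -> float:
    """One-sided binomial p-value: the probability of getting at least
    `correct` answers right out of `n_trials` by pure guessing (p = 0.5)."""
    hits = sum(math.comb(n_trials, k) for k in range(correct, n_trials + 1))
    return hits / 2 ** n_trials


if __name__ == "__main__":
    answers = make_abx_sequence(16, seed=1)
    # Simulated listener who hears no difference and simply guesses.
    guesses = make_abx_sequence(16, seed=2)
    correct = score(answers, guesses)
    print(f"{correct}/16 correct, p = {abx_p_value(correct, 16):.3f}")
```

Over 16 trials, 12 correct answers give p ≈ 0.038, which would usually be read as evidence of an audible difference. Eight correct answers give p ≈ 0.60. A high p-value does not prove that the two samples are indistinguishable. This matches the asymmetry noted above: a test can show that a method is not transparent, but it cannot prove that a method is transparent.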

Semblance of realism

Stereophonic sound provided a partial solution to the problem of creating the illusion of live orchestral performers. It creates a phantom middle channel when the listener sits exactly midway between the two front loudspeakers. When the listener moves slightly to the side, however, this phantom channel disappears or is greatly reduced. In the 1970s, quadraphonic sound attempted to reproduce the reverberation of the performance space but, again, the technology of the time was insufficient for the task. Consumers did not want to pay the additional cost, or give up the space required, for a marginal improvement in realism. With the rise in popularity of home theater, however, multi-channel playback systems became affordable, and many consumers were willing to tolerate the six to eight channels required in a home theater. Signal processors that synthesize an approximation of a good concert hall have also advanced, and they can now provide a somewhat more realistic illusion of listening in a real hall.

In addition to spatial realism, the playback of music must be subjectively free from noise, such as hiss or hum, to achieve realism. The compact disc (CD) provides about 90 decibels of dynamic range, which exceeds the 80 dB dynamic range of music as normally perceived in a concert hall. Audio equipment must also be able to reproduce frequencies high enough and low enough to be realistic. The human hearing range, for healthy young people, is 20 Hz to 20,000 Hz, and most adults cannot hear above 15 kHz. CDs are capable of reproducing frequencies as low as 10 Hz and as high as 22.05 kHz, making them adequate for reproducing the frequency range that most humans can hear. The equipment must also introduce no noticeable distortion of the signal, and no emphasis or de-emphasis of any frequency within this range.
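The CD figures above follow from two parameters of the CD format: 44.1 kHz sampling and 16-bit samples. The short calculation below assumes ideal conversion, with no dither or converter noise. It shows where the 22.05 kHz upper limit comes from, which is half the sampling rate (the Nyquist frequency). It also gives the theoretical ratio between full scale and one quantization step. The function names are illustrative only.

```python
import math

CD_SAMPLE_RATE_HZ = 44_100  # samples per second on an audio CD
CD_BIT_DEPTH = 16           # bits per sample, per channel


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a sampled signal can represent: half the sample rate."""
    return sample_rate_hz / 2


def ideal_dynamic_range_db(bits: int) -> float:
    """Ratio of full scale to one quantization step, in decibels.

    Real converters, and the dither normally added before quantizing,
    land somewhat below this ideal figure.
    """
    return 20 * math.log10(2 ** bits)


print(nyquist_hz(CD_SAMPLE_RATE_HZ))                   # 22050.0
print(round(ideal_dynamic_range_db(CD_BIT_DEPTH), 1))  # 96.3
```

The ideal 16-bit figure of about 96 dB is an upper bound. The roughly 90 dB quoted above falls within it, and both exceed the 80 dB concert-hall range.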

Modularity

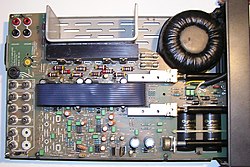

Modular components made by Samsung and Harman Kardon

A preamplifier and a power amplifier combined in one box, at the cost of slightly less flexibility in upgrades, is called an integrated amplifier; with a tuner added, it is a receiver. A monophonic power amplifier, called a monoblock, is often used to power a subwoofer. Other modules in the system may include components such as cartridges, tonearms, hi-fi turntables, digital media players, digital audio players, DVD players that play a wide variety of discs including CDs, CD recorders, MiniDisc recorders, hi-fi videocassette recorders (VCRs) and reel-to-reel tape recorders. Signal modification equipment can include equalizers and signal processors.

This modularity allows the enthusiast to spend as little or as much as they want on a component that suits their specific needs. In a system built from separates, a failure in one component often still allows partial use of the rest of the system. An integrated system that needs repair, by contrast, cannot be used at all. Another advantage of modularity is the ability to spend money on only a few core components at first and add further components later. Disadvantages of this approach include increased cost, complexity, and the space required for the components.

Modern equipment

Since the 2000s, hi-fi equipment can include signal sources such as digital audio tape (DAT), digital audio broadcasting (DAB) or HD Radio tuners. Some modern hi-fi equipment can be connected digitally using fibre-optic TOSLINK cables, universal serial bus (USB) ports (including ports for playing digital audio files), or Wi-Fi. Another modern component is the music server, which consists of one or more computer hard drives that hold music in the form of computer files. When the music is stored in a lossless audio file format such as FLAC, Monkey's Audio or WMA Lossless, computer playback of the recorded audio can serve as an audiophile-quality source for a hi-fi system.
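"Lossless" means that decoding returns exactly the bits that were encoded. FLAC and the other codecs named above are not in Python's standard library. The sketch below therefore swaps in the general-purpose `zlib` compressor purely to illustrate that property on 16-bit PCM samples. It is not how FLAC works internally, since FLAC uses audio-specific prediction. The test tone parameters and the `pcm16_sine` helper are arbitrary assumptions.

```python
import array
import math
import zlib


def pcm16_sine(freq_hz: float = 441.0, sample_rate: int = 44_100,
               seconds: float = 1.0, amplitude: float = 0.5) -> bytes:
    """Generate a mono sine tone as raw signed 16-bit PCM bytes."""
    n = round(sample_rate * seconds)
    peak = amplitude * 32767
    samples = array.array(
        "h",
        (round(peak * math.sin(2 * math.pi * freq_hz * i / sample_rate))
         for i in range(n)),
    )
    return samples.tobytes()


raw = pcm16_sine()
packed = zlib.compress(raw, level=9)
restored = zlib.decompress(packed)

# Lossless: every byte comes back unchanged, yet the stored form is smaller.
print(restored == raw, len(raw), len(packed))
```

A lossy codec would fail the `restored == raw` comparison by design, trading exactness for a smaller file.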

Sound recording and reproduction

Acoustic analog recording is achieved by a microphone diaphragm that senses the changes in atmospheric pressure caused by acoustic sound waves. These changes are recorded as a mechanical representation of the sound waves on a medium such as a phonograph record, in which a stylus cuts grooves on the record. In magnetic tape recording, the sound waves vibrate the microphone diaphragm and are converted into a varying electric current. An electromagnet then converts this current into a varying magnetic field, which records the sound as magnetized areas on a plastic tape with a magnetic coating. Analog sound reproduction is the reverse process, with a larger loudspeaker diaphragm causing changes in atmospheric pressure to form acoustic sound waves. Electrical signals may also be recorded directly from devices such as an electric guitar pickup or a synthesizer. In that case the recording process uses no acoustics, other than the need for musicians to hear how well they are playing during recording sessions via headphones.

Digital recording and reproduction converts the analog sound signal picked up by the microphone to a digital form by the process of digitization. This lets the audio data be stored and transmitted by a wider variety of media. Digital recording stores audio as a series of binary numbers (zeros and ones) representing samples of the amplitude of the audio signal at equal time intervals, at a sample rate high enough to convey all sounds capable of being heard. Digital recordings are considered higher quality than analog recordings not necessarily because they have higher fidelity (wider frequency response or dynamic range), but because the digital format can prevent much loss of quality found in analog recording due to noise and electromagnetic interference in playback and mechanical deterioration or damage to the storage medium. Whereas successive copies of an analog recording tend to degrade in quality, as more noise is added, a digital audio recording can be reproduced endlessly with no degradation in sound quality. A digital audio signal must be reconverted to analog form during playback before it is amplified and connected to a loudspeaker to produce sound.
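The description of digitization above can be made concrete with a short sketch: samples of the amplitude are taken at equal time intervals and stored as binary numbers. The sketch samples a test tone at a fixed rate and rounds each sample to a signed integer code. The function name, the 1 kHz tone, the 8 kHz rate and the deliberately coarse 3-bit resolution are illustrative assumptions. They were chosen so that the output is small enough to read and are not parameters of any real format.

```python
import math
from typing import Callable


def sample_and_quantize(signal: Callable[[float], float], sample_rate: int,
                        duration_s: float, bits: int) -> list[int]:
    """Sample `signal` (a function of time in seconds, range -1..1) at equal
    intervals and round each sample to a signed integer code."""
    n_samples = round(sample_rate * duration_s)
    max_code = 2 ** (bits - 1) - 1          # largest positive code, e.g. 3 for 3 bits
    codes = []
    for n in range(n_samples):
        t = n / sample_rate                 # equally spaced sampling instants
        x = max(-1.0, min(1.0, signal(t)))  # clip anything beyond full scale
        codes.append(round(x * max_code))
    return codes


def tone(t: float) -> float:
    return math.sin(2 * math.pi * 1000 * t)  # 1 kHz sine at full scale


codes = sample_and_quantize(tone, sample_rate=8000, duration_s=0.001, bits=3)
print(codes)  # [0, 2, 3, 2, 0, -2, -3, -2]
```

With `bits=16`, the same code produces integers between -32,767 and 32,767, which gives far finer steps than the 3-bit example. Once the signal is a list of integers, copying it is exact, because `list(codes) == codes` always holds. This is the property behind the statement that a digital recording can be reproduced without degradation.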


Long before sound was first recorded, music was recorded, first by written notation and then also by mechanical devices. Wind-up music boxes are one example: a mechanism turns a spindle that plucks tines, producing a melody. Automatic music reproduction traces back as far as the 9th century, when the Banū Mūsā brothers invented the earliest known mechanical musical instrument, in this case a hydropowered organ that played interchangeable cylinders. According to Charles B. Fowler, this "...cylinder with raised pins on the surface remained the basic device to produce and reproduce music mechanically until the second half of the nineteenth century." The Banū Mūsā brothers also invented an automatic flute player, which appears to have been the first programmable machine. According to Fowler, the automata were a robot band that performed "...more than fifty facial and body actions during each musical selection." In the 14th century, Flanders introduced a mechanical bell-ringer controlled by a rotating cylinder. Similar designs appeared in barrel organs (15th century), musical clocks (1598), barrel pianos (1805), and musical boxes (ca. 1800).

The fairground organ, developed in 1892, used a system of accordion-folded punched cardboard books. The player piano, first demonstrated in 1876, used a punched paper scroll that could store an arbitrarily long piece of music. The most sophisticated of the piano rolls were "hand-played", meaning that the roll represented the actual performance of an individual, not just a transcription of the sheet music. The technology to record a live performance onto a piano roll was not developed until 1904. Piano rolls were in continuous mass production from 1896 to 2008. A 1908 U.S. Supreme Court copyright case noted that, in 1902 alone, between 70,000 and 75,000 player pianos were manufactured, and between 1,000,000 and 1,500,000 piano rolls were produced. The use of piano rolls began to decline in the 1920s, although one type is still being made today.

Disc phonograph

Recording of Bell's voice on a wax disc in 1885, identified in 2013

Emile Berliner with disc record gramophone

The next major technical development was the invention of the gramophone disc. It is generally credited to Emile Berliner and was commercially introduced in the United States in 1889, though others had demonstrated similar disc apparatus earlier, most notably Alexander Graham Bell in 1881. Discs were easier to manufacture, transport and store, and they had the additional benefit of being marginally louder than cylinders, which were necessarily single-sided. Sales of the gramophone record overtook those of the cylinder ca. 1910, and by the end of World War I the disc had become the dominant commercial recording format. Edison, who was the main producer of cylinders, created the Edison Disc Record in an attempt to regain his market. In various permutations, the audio disc format became the primary medium for consumer sound recordings until the end of the 20th century. The double-sided 78 rpm shellac disc was the standard consumer music format from the early 1910s to the late 1950s.

There was no universally accepted speed, and various companies offered discs that played at several different speeds. Even so, the major recording companies eventually settled on a de facto industry standard of nominally 78 revolutions per minute, though the actual speed differed between America and the rest of the world. The specified speed was 78.26 rpm in America and 77.92 rpm in the rest of the world. The difference resulted from the different frequencies of the AC power driving the stroboscopes used to calibrate recording lathes and turntables. The nominal speed gave the format its common nickname, the "seventy-eight", though the name arose only after other speeds had become available. Discs were made of shellac or similar brittle plastic-like materials, and they were played with needles made from a variety of materials, including mild steel, thorn, and even sapphire. Discs had a distinctly limited playing life that varied depending on how they were produced.
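The stroboscope explanation can be checked with simple arithmetic. A stroboscope disc carries evenly spaced bars and is lit by a lamp that flickers at twice the mains frequency. The bars appear to stand still when the disc advances by exactly one bar per flash. The bar counts below are an assumption: 92 bars under 60 Hz power and 77 bars under 50 Hz power. They are a commonly cited explanation rather than something stated in the text, but they reproduce both specified speeds. The function name is hypothetical.

```python
def strobe_speed_rpm(mains_hz: float, bars: int) -> float:
    """Speed at which a stroboscope disc with `bars` evenly spaced marks
    appears stationary under a lamp flickering at twice the mains frequency."""
    flashes_per_minute = 2 * mains_hz * 60
    # The disc must advance by exactly one bar per flash.
    return flashes_per_minute / bars


print(f"{strobe_speed_rpm(60, 92):.2f}")  # 78.26 (America, 60 Hz)
print(f"{strobe_speed_rpm(50, 77):.2f}")  # 77.92 (rest of the world, 50 Hz)
```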

Earlier, purely acoustic methods of recording had limited sensitivity and frequency range. Mid-frequency range notes could be recorded, but very low and very high frequencies could not. Instruments such as the violin were difficult to transfer to disc. One technique to deal with this involved using a Stroh violin, which was fitted with a conical horn connected to a diaphragm vibrated by the violin bridge. The horn was no longer required once electrical recording was developed.

The long-playing 33⅓ rpm microgroove vinyl record, or "LP", was developed at Columbia Records and introduced in 1948. The short-playing but convenient 7-inch 45 rpm microgroove vinyl single was introduced by RCA Victor in 1949. In the US and most developed countries, the two new vinyl formats completely replaced 78 rpm shellac discs by the end of the 1950s, but in some corners of the world the "78" lingered on far into the 1960s. Vinyl was much more expensive than shellac, one of several factors that made its use for 78 rpm records very unusual. With a long-playing disc, however, the added cost was acceptable, and the compact "45" format required very little material. Vinyl offered improved performance, both in stamping and in playback. If played with a good diamond stylus mounted in a lightweight pickup on a well-adjusted tonearm, it was long-lasting. If protected from dust, scuffs and scratches, it produced very little noise. Vinyl records were, over-optimistically, advertised as "unbreakable". They were not, but they were much less fragile than shellac, which had itself once been touted as "unbreakable" compared to wax cylinders.

Electrical recording

RCA-44, a classic ribbon microphone introduced in 1932. Similar units were widely used for recording and broadcasting in the 1940s and are occasionally still used today.

Between the invention of the phonograph in 1877 and the first commercial digital recordings in the early 1970s, arguably the most important milestone in the history of sound recording was the introduction of what was then called electrical recording, in which a microphone was used to convert the sound into an electrical signal that was amplified and used to actuate the recording stylus. This innovation eliminated the "horn sound" resonances characteristic of the acoustical process, produced clearer and more full-bodied recordings by greatly extending the useful range of audio frequencies, and allowed previously unrecordable distant and feeble sounds to be captured.

Sound recording began as a purely mechanical process. Except for a few crude telephone-based recording devices with no means of amplification, such as the Telegraphone, it remained so until the 1920s when several radio-related developments in electronics converged to revolutionize the recording process. These included improved microphones and auxiliary devices such as electronic filters, all dependent on electronic amplification to be of practical use in recording. In 1906, Lee De Forest invented the Audion triode vacuum tube, an electronic valve that could amplify weak electrical signals. By 1915, it was in use in long-distance telephone circuits that made conversations between New York and San Francisco practical. Refined versions of this tube were the basis of all electronic sound systems until the commercial introduction of the first transistor-based audio devices in the mid-1950s.

During World War I, engineers in the United States and Great Britain worked on ways to record and reproduce, among other things, the sound of a German U-boat for training purposes. Acoustical recording methods of the time could not reproduce the sounds accurately. The earliest results were not promising.

The first electrical recording issued to the public, with little fanfare, was of the November 11, 1920, funeral service for The Unknown Warrior in Westminster Abbey, London. The recording engineers used microphones of the type used in contemporary telephones. Four were discreetly set up in the abbey and wired to recording equipment in a vehicle outside. Although electronic amplification was used, the audio was weak and unclear. The procedure did, however, produce a recording that would otherwise not have been possible in those circumstances. For several years, this little-noted disc remained the only issued electrical recording.

Several record companies and independent inventors, notably Orlando Marsh, experimented with equipment and techniques for electrical recording in the early 1920s. Marsh's electrically recorded Autograph Records were already being sold to the public in 1924, a year before the first such offerings from the major record companies, but their overall sound quality was too low to demonstrate any obvious advantage over traditional acoustical methods. Marsh's microphone technique was idiosyncratic and his work had little if any impact on the systems being developed by others.

Telephone industry giant Western Electric had research laboratories (merged with the AT&T engineering department in 1925 to form Bell Telephone Laboratories) with material and human resources that no record company or independent inventor could match. They had the best microphone, a condenser type developed there in 1916 and greatly improved in 1922, and the best amplifiers and test equipment. They had already patented an electromechanical recorder in 1918, and in the early 1920s, they decided to intensively apply their hardware and expertise to developing two state-of-the-art systems for electronically recording and reproducing sound: one that employed conventional discs and another that recorded optically on motion picture film. Their engineers pioneered the use of mechanical analogs of electrical circuits and developed a superior "rubber line" recorder for cutting the groove into the wax master in the disc recording system.

By 1924, such dramatic progress had been made that Western Electric arranged a demonstration for the two leading record companies, the Victor Talking Machine Company and the Columbia Phonograph Company. Both soon licensed the system and both made their earliest published electrical recordings in February 1925, but neither actually released them until several months later. To avoid making their existing catalogs instantly obsolete, the two long-time archrivals agreed privately not to publicize the new process until November 1925, by which time enough electrically recorded repertory would be available to meet the anticipated demand. During the next few years, the lesser record companies licensed or developed other electrical recording systems. By 1929 only the budget label Harmony was still issuing new recordings made by the old acoustical process.

Comparison of some surviving Western Electric test recordings with early commercial releases indicates that the record companies "dumbed down" the frequency range of the system so the recordings would not overwhelm non-electronic playback equipment, which reproduced very low frequencies as an unpleasant rattle and rapidly wore out discs with strongly recorded high frequencies.

Other recording formats

In the 1920s, Phonofilm and other early motion picture sound systems employed optical recording technology, in which the audio signal was graphically recorded on photographic film. The amplitude variations comprising the signal were used to modulate a light source, which was imaged onto the moving film through a narrow slit. This allowed the signal to be photographed as variations in the density or width of a "sound track". The projector used a steady light and a photoelectric cell to convert these variations back into an electrical signal, which was amplified and sent to loudspeakers behind the screen. Ironically, the introduction of "talkies" was spearheaded by The Jazz Singer (1927), which used the Vitaphone sound-on-disc system rather than an optical soundtrack. Optical sound became the standard motion picture audio system throughout the world. It remains so for theatrical release prints, despite attempts in the 1950s to substitute magnetic soundtracks. Currently, all release prints on 35 mm film include an analog optical soundtrack, usually stereo with Dolby SR noise reduction. In addition, an optically recorded digital soundtrack in Dolby Digital and/or Sony SDDS form is likely to be present. An optically recorded timecode is also commonly included to synchronise CD-ROMs that contain a DTS soundtrack.

This period also saw several other historic developments, including the introduction of the first practical magnetic sound recording system, the magnetic wire recorder, which was based on the work of Danish inventor Valdemar Poulsen. Magnetic wire recorders were effective, but their sound quality was poor, so between the wars they were primarily used for voice recording and marketed as business dictating machines. In 1924, a German engineer, Dr. Kurt Stille, developed the Poulsen wire recorder as a dictating machine. The following year, Ludwig Blattner began work that eventually produced the Blattnerphone, enhancing it to use steel tape instead of wire.
The BBC started using Blattnerphones in 1930 to record radio programmes. In 1933, radio pioneer Guglielmo Marconi's company purchased the rights to the Blattnerphone, and newly developed Marconi-Stille recorders were installed in the BBC's Maida Vale Studios in March 1935. The tape used in Blattnerphones and Marconi-Stille recorders was the same material used to make razor blades, and not surprisingly the fearsome Marconi-Stille recorders were considered so dangerous that technicians had to operate them from another room for safety. Because of the high recording speeds required, they used enormous reels about one metre in diameter, and the thin tape frequently broke, sending jagged lengths of razor steel flying around the studio. The K1 Magnetophon was the first practical tape recorder, developed by AEG in Germany in 1935.

Magnetic tape

Magnetic audio tapes: acetate base (left) and polyester base (right)

A typical Compact Cassette

Tape editing was easier and more accurate than the cumbersome disc-to-disc editing procedures previously in some limited use. Together with tape's consistently high audio quality, this finally convinced radio networks to routinely prerecord their entertainment programming, most of which had formerly been broadcast live. Also, for the first time, broadcasters, regulators and other interested parties were able to undertake comprehensive audio logging of each day's radio broadcasts. Innovations like multitracking and tape echo allowed radio programs and advertisements to be produced to a high level of complexity and sophistication. These changes, combined with innovations such as the endless loop broadcast cartridge, significantly altered the pacing and production style of radio program content and advertising.

Stereo and hi-fi

In 1881, it was noted during experiments in transmitting sound from the Paris Opera that it was possible to follow the movement of singers on the stage if earpieces connected to different microphones were held to the two ears. This discovery was commercialized in 1890 with the Théâtrophone system, which operated for over forty years until 1932. In 1931, Alan Blumlein, a British electronics engineer working for EMI, designed a way to make the sound of an actor in a film follow his movement across the screen. In December 1931, he submitted a patent including the idea, and in 1933 this became UK patent number 394,325. Over the next two years, Blumlein developed stereo microphones and a stereo disc-cutting head, and recorded a number of short films with stereo soundtracks.

In the 1930s, experiments with magnetic tape enabled the development of the first practical commercial sound systems that could record and reproduce high-fidelity stereophonic sound. The experiments with stereo during the 1930s and 1940s were hampered by problems with synchronization. A major breakthrough in practical stereo sound was made by Bell Laboratories, which in 1937 demonstrated a practical system of two-channel stereo using dual optical sound tracks on film. Major movie studios quickly developed three-track and four-track sound systems. The first stereo sound recording for a commercial film was made by Judy Garland for the MGM movie Listen, Darling in 1938. The first commercially released movie with a stereo soundtrack was Walt Disney's Fantasia, released in 1940. The 1941 release of Fantasia used the "Fantasound" sound system. This system used a separate film for the sound, synchronized with the film carrying the picture. The sound film had four double-width optical soundtracks. Three carried left, center and right audio, and the fourth was a "control" track with three recorded tones that controlled the playback volume of the three audio channels.
Because of the complex equipment this system required, Disney exhibited the movie as a roadshow, and only in the United States. Regular releases of the movie used standard mono optical 35 mm stock until 1956. In that year, Disney released the film with a stereo soundtrack that used the "CinemaScope" four-track magnetic sound system.

German audio engineers working on magnetic tape developed stereo recording by 1941, although a two-track push-pull monaural technique had existed in 1939. Of 250 stereophonic recordings made during WW2, only three survive: Beethoven's 5th Piano Concerto with Walter Gieseking and Arthur Rother, a Brahms serenade, and the last movement of Bruckner's 8th Symphony with von Karajan. The Audio Engineering Society has issued all these recordings on CD. (Varese Sarabande had released the Beethoven concerto on LP, and it has been reissued on CD several times since.) Other early German stereophonic tapes are believed to have been destroyed in bombings. Not until Ampex introduced the first commercial two-track tape recorders in the late 1940s did stereo tape recording become commercially feasible. However, despite the availability of multitrack tape, stereo did not become the standard system for commercial music recording for some years, and it remained a specialist market during the 1950s. EMI (UK) was the first company to release commercial stereophonic tapes. They issued their first Stereosonic tape in 1954. Others quickly followed, under the His Master's Voice and Columbia labels. In all, 161 Stereosonic tapes were released, mostly of classical music or lyric recordings. RCA imported these tapes into the USA.

Two-track stereophonic tapes were more successful in America during the second half of the 1950s. They were duplicated in real time (1:1) or at twice the normal speed (2:1), whereas later 4-track tapes were often duplicated at up to 16 times the normal speed, which in many cases lowered the sound quality. Early American 2-track stereophonic tapes were very expensive. A typical example is the price list of the Sonotape/Westminster reels: $6.95, $11.95 and $17.95 for the 7000, 9000 and 8000 series respectively. Some HMV tapes released in the USA also cost up to $15.

The history of stereo recording changed after the late 1957 introduction of the Westrex stereo phonograph disc, which used the groove format developed earlier by Blumlein. Decca Records in England came out with FFRR (Full Frequency Range Recording) in the 1940s, which became accepted worldwide as a standard for higher-quality recording on vinyl records. The Ernest Ansermet recording of Igor Stravinsky's Petrushka in 1946 was key in the development of full frequency range records and in alerting the listening public to high fidelity.

Record companies mixed most popular music singles into monophonic sound until the mid-1960s, and then commonly released major recordings in both mono and stereo until the early 1970s. Many 1960s pop albums that were available only in stereo in the 2000s had originally been released only in mono, and record companies produced the "stereo" versions of these albums by simply separating the two tracks of the master tape, creating "pseudo stereo". In the mid-1960s, as stereo became more popular, many mono recordings (such as The Beach Boys' Pet Sounds) were remastered using the so-called "fake stereo" method, which spread the sound across the stereo field by directing higher-frequency sound into one channel and lower-frequency sound into the other.

1950s to 1980s

The next important innovation was small cartridge-based tape systems, of which the compact cassette, commercialized by the Philips electronics company in 1964, is the best known. Initially a low-fidelity format for spoken-word recording that was inadequate for music reproduction, the cassette was improved in a series of steps until it entirely replaced the competing formats: the larger 8-track tape (used primarily in cars) and the fairly similar "Deutsche Cassette" developed by the German company Grundig. The latter system was not particularly common in Europe and practically unheard of in America. The compact cassette became a major consumer audio format, and advances in electronic and mechanical miniaturization led to the development of the Sony Walkman, a pocket-sized cassette player introduced in 1979. The Walkman was the first personal music player, and it gave a major boost to sales of prerecorded cassettes, which became the first widely successful release format that used a re-recordable medium: the vinyl record was a playback-only medium, and commercially prerecorded tapes for reel-to-reel tape decks, which many consumers found difficult to operate, never became more than an uncommon niche market item.

A key advance in audio fidelity came with the Dolby A noise reduction system, invented by Ray Dolby and introduced into professional recording studios in 1966. It suppressed the low-level but sometimes quite noticeable steady background hiss, which was the only easily audible downside of mastering on tape instead of recording directly to disc. A competing system, dbx, invented by David Blackmer, also found success in professional audio. A simpler variant of Dolby's noise reduction system, known as Dolby B, greatly improved the sound of cassette tape recordings by reducing the especially high level of hiss that resulted from the cassette's miniaturized tape format. Dolby noise reduction and its variants also eventually found wide application in the recording and film industries. Dolby B was crucial to the popularization and commercial success of the cassette as a domestic recording and playback medium, and it became a standard feature in the booming home and car stereo market of the 1970s and beyond. The compact cassette format also benefited enormously from improvements to the tape itself, as coatings with wider frequency responses and lower inherent noise were developed, often based on cobalt and chrome oxides as the magnetic material instead of the more usual iron oxide.

The multitrack audio cartridge was in wide use in the radio industry from the late 1950s to the 1980s, and in the 1960s the pre-recorded 8-track cartridge was launched as a consumer audio format by Bill Lear of the Lear Jet aircraft company (although its correct name was the 'Lear Jet Cartridge', it was seldom referred to as such). Aimed particularly at the automotive market, 8-track players were the first practical, affordable car hi-fi systems, and the cartridges could produce better sound quality than the compact cassette. However, the cassette's smaller size and greater durability, together with the ability to create home-recorded music "compilations" (8-track recorders were rare), made it the dominant consumer format for portable audio devices in the 1970s and 1980s.

There had been experiments with multi-channel sound for many years, usually for special musical or cultural events, but the first commercial application of the concept came in the early 1970s with the introduction of quadraphonic sound. This spin-off of multitrack recording used four tracks (instead of the two used in stereo) and four speakers to create a 360-degree audio field around the listener. Following the release of the first consumer 4-channel hi-fi systems, a number of popular albums were released in one of the competing four-channel formats; among the best known are Mike Oldfield's Tubular Bells and Pink Floyd's The Dark Side of the Moon. Quadraphonic sound was not a commercial success, partly because of competing and somewhat incompatible four-channel sound systems (e.g., CBS, JVC, Dynaco and others all had systems) and partly because of the generally poor quality of the released music, even when played as intended on the correct equipment. It eventually faded out in the late 1970s, although this early venture paved the way for the eventual introduction of domestic surround sound systems for home theatre, which have gained enormous popularity since the introduction of the DVD. This widespread adoption has occurred despite the confusion introduced by the multitude of available surround sound standards.

Audio components

The replacement of the relatively fragile thermionic valve (vacuum tube) by the smaller, lighter, cooler-running, less expensive, more robust, and less power-hungry transistor also accelerated the sale of consumer high-fidelity "hi-fi" sound systems from the 1960s onward. In the 1950s, most record players were monophonic and had relatively low sound quality, and few consumers could afford high-quality stereophonic sound systems. In the 1960s, American manufacturers introduced a new generation of "modular" hi-fi components: separate turntables, pre-amplifiers, and power amplifiers (also combined as integrated amplifiers), tape recorders, and other ancillary equipment such as the graphic equaliser, all of which could be connected together to create a complete home sound system. These developments were rapidly taken up by major Japanese electronics companies, which soon flooded the world market with relatively affordable, high-quality transistorized audio components. By the 1980s, corporations like Sony had become world leaders in the music recording and playback industry.

Digital recording

Graphical representation of a sound wave in analog (red) and 4-bit digital (blue).

A digital sound recorder from Sony

The most recent and revolutionary developments have been in digital recording, with the development of various uncompressed and compressed digital audio file formats, processors fast enough to convert the digital data to sound in real time, and inexpensive mass storage. These developments produced new types of portable digital audio players. The MiniDisc player, which used ATRAC compression on small, cheap, rewritable discs, was introduced in the 1990s but became obsolescent as solid-state non-volatile flash memory dropped in price. As technologies that increase the amount of data that can be stored on a single medium, such as Super Audio CD, DVD-A, Blu-ray Disc, and HD DVD, became available, longer programs of higher quality could fit onto a single disc. Sound files are readily downloaded from the Internet and other sources, and copied onto computers and digital audio players. Digital audio technology is now used in all areas of audio, from casual use of music files of moderate quality to the most demanding professional applications. New applications such as internet radio and podcasting have appeared.
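To make the analog-versus-4-bit illustration earlier in this section concrete, here is a minimal Python sketch of sampling and 4-bit quantization. The 1 kHz tone, the 16 kHz sample rate, and the helper names `sample_sine` and `quantize` are assumptions chosen for illustration, not part of any format described here.

```python
import math


def sample_sine(freq_hz: float, rate_hz: float, n: int) -> list[float]:
    """Sample a unit-amplitude sine wave n times at the given sample rate."""
    return [math.sin(2 * math.pi * freq_hz * k / rate_hz) for k in range(n)]


def quantize(x: float, bits: int) -> int:
    """Map a sample in [-1.0, 1.0] to a signed integer code of the given width."""
    levels = 2 ** (bits - 1)                      # 8 for 4 bits: codes -8..7
    code = round(x * levels)
    return max(-levels, min(levels - 1, code))    # clip to the representable range


analog = sample_sine(1000.0, 16000.0, 16)         # one full cycle, 16 samples
codes = [quantize(v, 4) for v in analog]          # 4-bit integer codes
reconstructed = [c / 8 for c in codes]            # back to the [-1, 1) scale
print(codes)
```

With only 16 levels, each reconstructed sample can be off by up to half a step (1/16 of full scale), and the positive peak is clipped to 7/8 because a two's-complement code has one more negative level than positive ones. That rounding error is the quantization noise that additional bits reduce.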

Technological developments in recording, editing, and consuming have transformed the record, movie and television industries in recent decades. Audio editing became practicable with the invention of magnetic tape recording, but digital audio and cheap mass storage allow computers to edit audio files quickly, easily, and cheaply. Today, the process of making a recording is separated into tracking, mixing and mastering. Multitrack recording makes it possible to capture signals from several microphones, or from different takes, to tape, disc or mass storage with maximized headroom and quality, allowing flexibility in the mixing and mastering stages that was previously unavailable.

Software

There are many different digital audio recording and processing programs running under several computer operating systems for all purposes, ranging from casual users (e.g., a small business person recording her "to-do" list on an inexpensive digital recorder) to serious amateurs (an unsigned "indie" band recording their demo on a laptop) to professional sound engineers who are recording albums, film scores and doing sound design for video games. A comprehensive list of digital recording applications is available at the digital audio workstation article. Digital dictation software for recording and transcribing speech has different requirements; intelligibility and flexible playback facilities are priorities, while a wide frequency range and high audio quality are not.

Legal status

UK

Since 1934, copyright law has treated sound recordings (or phonograms) differently from musical works. The Copyright, Designs and Patents Act 1988 defines a sound recording as (a) a recording of sounds, from which the sounds may be reproduced, or (b) a recording of the whole or any part of a literary, dramatic or musical work, from which sounds reproducing the work or part may be produced, regardless of the medium on which the recording is made or the method by which the sounds are reproduced or produced. It thus covers vinyl records, tapes, compact discs, digital audiotapes, and MP3s that embody recordings.

X . II

Frequency response

Two applications of frequency response analysis are related but have different objectives. For an audio system, the objective may be to reproduce the input signal with no distortion. That would require a uniform (flat) magnitude of response up to the bandwidth limitation of the system, with the signal delayed by precisely the same amount of time at all frequencies. That amount of time could be seconds, or weeks or months in the case of recorded media. In contrast, for a feedback apparatus used to control a dynamic system, the objective is to give the closed-loop system improved response as compared to the uncompensated system. The feedback generally needs to respond to system dynamics within a very small number of cycles of oscillation (usually less than one full cycle), and with a definite phase angle relative to the commanded control input. For feedback of sufficient amplification, getting the phase angle wrong can lead to instability for an open-loop stable system, or failure to stabilize a system that is open-loop unstable. Digital filters may be used for both audio systems and feedback control systems, but since the objectives are different, generally the phase characteristics of the filters will be significantly different for the two applications.
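The ideal described above, a flat magnitude with the same delay at every frequency, is exactly the frequency response of a pure delay. The following Python sketch evaluates the discrete-time response of an ideal delay and estimates its group delay numerically; the 5-sample delay and the function names are illustrative assumptions.

```python
import cmath


def freq_response(h: list[float], omega: float) -> complex:
    """Evaluate H(e^{j*omega}) = sum_n h[n] * e^{-j*omega*n} for an FIR impulse response."""
    return sum(hn * cmath.exp(-1j * omega * n) for n, hn in enumerate(h))


def group_delay(h: list[float], omega: float, d_omega: float = 1e-4) -> float:
    """Estimate -d(phase)/d(omega), in samples, with a small forward difference."""
    ratio = freq_response(h, omega + d_omega) / freq_response(h, omega)
    return -cmath.phase(ratio) / d_omega          # phase of the ratio avoids 2*pi wrapping


DELAY = 5
h_delay = [0.0] * DELAY + [1.0]                   # ideal delay: h[n] = 1 only at n = 5

for w in (0.1, 0.5, 1.0, 2.0, 3.0):               # angular frequency in radians per sample
    H = freq_response(h_delay, w)
    print(f"omega={w}: |H|={abs(H):.6f}, group delay={group_delay(h_delay, w):.3f} samples")
```

Every frequency passes with unit gain and is delayed by the same 5 samples, so the waveform comes out unchanged, only later. A filter whose group delay varied with frequency would smear transients even if its magnitude response were flat.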

Estimation and plotting

Frequency response of a low pass filter with 6 dB per octave or 20 dB per decade

The frequency response of a system can be measured by applying a test signal, for example:

- applying an impulse to the system and measuring its response (see impulse response)

- sweeping a constant-amplitude pure tone through the bandwidth of interest and measuring the output level and phase shift relative to the input

- applying a signal with a wide frequency spectrum (for example, digitally generated maximum length sequence noise, or an analog filtered white-noise equivalent such as pink noise), and calculating the impulse response by deconvolution of this input signal and the output signal of the system.
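As a sketch of the third method, the Python code below excites a hypothetical system with a periodic maximum length sequence and recovers its impulse response by frequency-domain deconvolution (dividing the output spectrum by the input spectrum). The 6-stage shift register, its feedback taps, and the four-tap "system under test" are assumptions for illustration; the example is noise-free, whereas a real measurement would also average several periods.

```python
import cmath
import math


def mls_63() -> list[float]:
    """Period-63 maximum length sequence from a 6-stage Fibonacci LFSR.

    Feedback b[k] = b[k-5] XOR b[k-6], i.e. the primitive polynomial x^6 + x + 1.
    """
    state = [1] * 6                                # any non-zero seed; state[0] is newest
    seq = []
    for _ in range(63):
        seq.append(1.0 if state[5] else -1.0)      # map bits {0, 1} to {-1, +1}
        feedback = state[4] ^ state[5]
        state = [feedback] + state[:5]
    return seq


def dft(x: list) -> list[complex]:
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X: list[complex]) -> list[complex]:
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n for t in range(n)]


def circular_convolve(h: list[float], x: list[float]) -> list[float]:
    """Steady-state output of an FIR system driven by the periodic input x."""
    n = len(x)
    return [sum(h[m] * x[(t - m) % n] for m in range(len(h))) for t in range(n)]


excitation = mls_63()
h_true = [0.5, 0.3, -0.2, 0.1]                     # hypothetical system under test
response = circular_convolve(h_true, excitation)   # what the measurement would record

X = dft(excitation)
Y = dft(response)
H = [y / x for y, x in zip(Y, X)]                  # frequency response: output / input per bin
h_est = [v.real for v in idft(H)]                  # impulse response by deconvolution
print([round(v, 6) for v in h_est[:6]])
```

An MLS suits this because its spectrum has no zeros (every bin except DC has the same magnitude), so the division is well conditioned at every frequency.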

These response measurements can be plotted in three ways: by plotting the magnitude and phase measurements on two rectangular plots as functions of frequency to obtain a Bode plot; by plotting the magnitude and phase angle on a single polar plot with frequency as a parameter to obtain a Nyquist plot; or by plotting magnitude and phase on a single rectangular plot with frequency as a parameter to obtain a Nichols plot.

For audio systems with nearly uniform time delay at all frequencies, the magnitude versus frequency portion of the Bode plot may be all that is of interest. For design of control systems, any of the three types of plots [Bode, Nyquist, Nichols] can be used to infer closed-loop stability and stability margins (gain and phase margins) from the open-loop frequency response, provided that for the Bode analysis the phase-versus-frequency plot is included.
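The caption earlier in this section quotes a slope of 6 dB per octave or 20 dB per decade. Assuming an ideal first-order low-pass (the source does not give the circuit) with a hypothetical 1 kHz corner, the magnitude part of its Bode plot can be computed directly:

```python
import math


def lowpass_db(f_hz: float, fc_hz: float) -> float:
    """Magnitude in dB of a first-order low-pass: |H| = 1 / sqrt(1 + (f/fc)^2)."""
    return -10 * math.log10(1 + (f_hz / fc_hz) ** 2)


FC = 1000.0  # assumed corner frequency in Hz
for f in (10, 100, 1000, 10_000, 100_000):
    print(f"{f:>7} Hz: {lowpass_db(f, FC):7.2f} dB")

at_corner = lowpass_db(FC, FC)                                   # about -3 dB
per_octave = lowpass_db(20 * FC, FC) - lowpass_db(10 * FC, FC)   # approaches -6.02 dB
per_decade = lowpass_db(100 * FC, FC) - lowpass_db(10 * FC, FC)  # approaches -20 dB
```

Well above the corner the curve becomes a straight line on log-frequency axes, and the two quoted figures are the same slope: 20 log10(2) is about 6.02 dB per doubling of frequency.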

Nonlinear frequency response

Applications

In electronics, the stimulus is an input signal. In the audible range, frequency response is usually discussed in connection with electronic amplifiers, microphones and loudspeakers. Radio spectrum frequency response can refer to measurements of coaxial cable, twisted-pair cable, video switching equipment, wireless communications devices, and antenna systems. Infrasonic frequency response measurements include those of earthquakes and electroencephalography (brain waves).

Frequency response requirements differ depending on the application. In high fidelity audio, an amplifier requires a frequency response of at least 20–20,000 Hz, with a tolerance as tight as ±0.1 dB in the mid-range frequencies around 1000 Hz; in telephony, however, a frequency response of 400–4,000 Hz with a tolerance of ±1 dB is sufficient for intelligibility of speech.

Frequency response curves are often used to indicate the accuracy of electronic components or systems. When a system or component reproduces all desired input signals with no emphasis or attenuation of a particular frequency band, the system or component is said to be "flat", or to have a flat frequency response curve.

Once a frequency response has been measured (e.g., as an impulse response), provided the system is linear and time-invariant, its characteristic can be approximated with arbitrary accuracy by a digital filter. Similarly, if a system is demonstrated to have a poor frequency response, a digital or analog filter can be applied to the signals prior to their reproduction to compensate for these deficiencies.
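As an illustration of the "arbitrary accuracy" claim, this sketch treats the sampled impulse response of a hypothetical one-pole system as a measurement and uses its first N samples directly as FIR filter taps. The system, its pole at 0.9, and the tap counts are assumptions; the point is only that the error shrinks as more of the measured response is kept.

```python
import cmath

A = 0.9  # hypothetical one-pole system y[n] = A*y[n-1] + x[n], so h[n] = A**n


def exact_response(omega: float) -> complex:
    """Closed-form frequency response of the one-pole system."""
    return 1 / (1 - A * cmath.exp(-1j * omega))


def fir_response(taps: list[float], omega: float) -> complex:
    """Frequency response of an FIR filter with the given taps."""
    return sum(h * cmath.exp(-1j * omega * n) for n, h in enumerate(taps))


def max_error(num_taps: int, omegas: list[float]) -> float:
    """Worst-case deviation when the measured impulse response is truncated to num_taps."""
    taps = [A ** n for n in range(num_taps)]
    return max(abs(fir_response(taps, w) - exact_response(w)) for w in omegas)


omegas = [0.05 * k for k in range(63)]             # 0 to 3.1 rad/sample
errors = {n: max_error(n, omegas) for n in (8, 32, 128)}
for n, err in errors.items():
    print(f"{n:>4} taps: max error {err:.2e}")
```

For this system the truncation error is A^N / |1 - A e^{-jω}|, which never exceeds A^N / (1 - A), so it falls geometrically as taps are added. Responses that decay slowly need more taps for the same accuracy.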

X . III

TINY HI-FI STEREO

INTRODUCTION

Not long ago we were introduced to a tiny stereo playback product, the Walkman: a stereo cassette player so small and light that it can be taken anywhere, supplied with lightweight, soft-padded headphones. The Walkman is still popular today, even though its market has shrunk. So that it can be listened to while studying in one's room, the Walkman has now been fitted with a pair of earbuds without compromising sound quality, making it a truly tiny hi-fi device.

Indeed, there has lately been a trend toward miniaturizing hi-fi equipment. Gone are the days of disco with its pounding, frenetic music; listeners now tend to prefer lighter, sweeter music, or heavy music that is still pleasant to the ear. Hi-fi manufacturers now seem to be actively designing tiny, portable products that can easily be placed anywhere in a room. The hi-fi compo is one such product: it is currently popular, its following keeps growing, and it is ideal for office workers and students living in boarding houses or rented rooms. Its sound quality is not inferior to that of a large hi-fi system. Moreover, a hi-fi compo contains all the components of a complete hi-fi system, so it delivers a truly satisfying listening experience.

Remember the saying "small is beautiful". But all of this is for those with thick wallets; those of more modest means can, at most, listen to one at a friend's house. What can we do? Fortunately, we are electronics hobbyists, so we can set aside some money and assemble a compact stereo that qualifies as hi-fi.

COMPACT HI-FI STEREO

Strictly speaking, it is not accurate to say that we are going to assemble a hi-fi compo, because we will only build the pre-amplifier and power amplifier sections. The radio tuner and the other complicated circuits will not be discussed here. Beginners who are just learning to tinker will find this project very interesting, because the circuit is very easy to build.

Figure 1 shows the block diagram of the compact hi-fi stereo. The system consists of a pre-amplifier that can be configured as a tape-head equalization amplifier, a phono pick-up amplifier, or simply a linear microphone pre-amplifier. The next stage contains the controls for signal level (volume), bass and treble tone, and stereo channel balance.

The final stage is a power amplifier that drives the speakers. The pre-amplifier can be configured to suit the signal source to be connected: referring to Table 1, we fit the feedback network that corresponds to the input signal.

picture 1

Figure labels: sinyal = signal; sumber sinyal = signal source; prapenguat = pre-amplifier; penguat daya = power amplifier

PRE-AMPLIFIER

The pre-amplifier is needed when the signal comes from a tape head, a magnetic phono cartridge ("magnetic needle"), or a microphone. Through its feedback network, the pre-amplifier equalizes the input signal so that its frequency response becomes flat; the following amplifier stages then amplify all frequencies by the same amount.
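The article does not name the equalization curve, but for magnetic phono cartridges the usual playback standard is RIAA. Assuming that curve (its three time constants, 3180 µs, 318 µs, and 75 µs, are not given in the source), the following sketch computes how much the pre-amplifier's gain must vary with frequency:

```python
import math

# Standard RIAA playback time constants in seconds (an assumption; the article names no curve)
T_BASS = 3180e-6   # pole: bass boost below about 50 Hz
T_MID = 318e-6     # zero: shelf above about 500 Hz
T_TREBLE = 75e-6   # pole: treble cut above about 2.1 kHz


def riaa_playback_db(f_hz: float) -> float:
    """Magnitude of (1 + s*T_MID) / ((1 + s*T_BASS)(1 + s*T_TREBLE)) at s = j*2*pi*f, in dB."""
    w = 2 * math.pi * f_hz
    mag = math.hypot(1.0, w * T_MID) / (math.hypot(1.0, w * T_BASS) * math.hypot(1.0, w * T_TREBLE))
    return 20 * math.log10(mag)


def riaa_relative_db(f_hz: float) -> float:
    """Playback gain relative to 1 kHz, the usual reference point."""
    return riaa_playback_db(f_hz) - riaa_playback_db(1000.0)


for f in (20, 100, 1000, 10_000, 20_000):
    print(f"{f:>6} Hz: {riaa_relative_db(f):+6.2f} dB")
```

The playback amplifier boosts bass by roughly 19 dB at 20 Hz and cuts treble by roughly 20 dB at 20 kHz relative to 1 kHz, undoing the opposite emphasis applied when the record was cut so that the overall response is flat. Tape-head equalization (the NAB curve referred to in Table 2) follows the same idea with different time constants.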

For the pre-amplifier we will use the NEC UPC1032H IC, which is made specifically for this purpose. As a front-end amplifier, the UPC1032H meets low-noise (low-hiss) requirements and is ideal for equalizing tape-head or magnetic-cartridge signals.

Figure 2 shows the pre-amplifier circuit, which is quite simple. The equalization network is formed by R1, R2, R3, C2, C3, and C4; the values of these components can be found in Table 1. Table 2 lists the characteristics of the UPC1032H when used as a tape-head equalization pre-amplifier. When it is used as a magnetic-cartridge or microphone pre-amplifier, the figures obtained are even better.

picture 2

TONE CONTROL CIRCUIT

After some consideration, we chose a Baxandall-type tone control circuit, which is quite adequate for this system. The circuit is shown in Figure 3.

The input of the tone control circuit is a selector switch that chooses between the pre-amplifier and other sources. The "volume" potentiometer is paired with a "loudness" network, which boosts the bass. The volume potentiometer can be either a logarithmic (type A) or a linear (type B) one; if a linear potentiometer is used, R6 must be fitted so that it behaves logarithmically.
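The schematic is not reproduced here, so this sketch assumes the common arrangement in which R6 is a fixed resistor from the wiper of the linear pot to ground; the 50 kΩ pot and 10 kΩ load are hypothetical values. It compares the divider ratio of a plain linear pot with that of the loaded one:

```python
def loaded_pot_ratio(x: float, r_pot: float, r_load: float) -> float:
    """Output/input of a linear pot at rotation x (0..1) with r_load from wiper to ground.

    Assumed topology: input across the whole track, output taken at the wiper.
    """
    if x <= 0.0:
        return 0.0
    lower = x * r_pot                                   # track resistance, wiper to ground
    upper = (1.0 - x) * r_pot                           # track resistance, input to wiper
    lower_loaded = lower * r_load / (lower + r_load)    # lower section in parallel with r_load
    return lower_loaded / (upper + lower_loaded)


R_POT, R_LOAD = 50e3, 10e3  # hypothetical values
for x in (0.1, 0.25, 0.5, 0.75, 1.0):
    print(f"rotation {x:.2f}: unloaded {x:.3f}, loaded {loaded_pot_ratio(x, R_POT, R_LOAD):.3f}")
```

At mid-rotation the loaded pot passes about 22% of the input (about -13 dB) instead of 50% (-6 dB), bending the curve toward the logarithmic law the ear expects. It is only an approximation, and the load resistor also lowers the impedance seen by the preceding stage.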

Ahead of the tone controls sits a transistor amplifier connected with collector-to-base feedback. The "balance" control, the linear potentiometer P2, is placed at the input of this transistor. Bass is adjusted with P3 and treble with P4. The output of the tone control circuit is also brought out to an extra output socket, for use when a larger external power amplifier is connected.

Picture 3

STEREO POWER AMPLIFIER

For the power amplifier we will use a single-chip stereo amplifier IC, the TA7230P, which is also made in Japan, by Toshiba. Its external circuit is very simple, as shown in Figure 4.

R14, R15, C24, and C25 at the inputs form a low-pass network. C15 and C18 couple the input signals while blocking the DC voltage at pins 3 and 8, and C16 and C17 bypass the AC signal so that the low frequencies are not attenuated. C19 is a bypass capacitor that filters ripple from the AF amplifier's supply. The amplifier outputs are taken from pins 2 and 10 through capacitors C22 and C23, while the supply is connected to pins 1 and 8.
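Each of these RC networks has a corner frequency of 1/(2πRC). The component values are on the Figure 4 schematic rather than in the text, so the resistor and capacitor values below, and the 20 kΩ input resistance assumed for the coupling capacitor, are hypothetical; the sketch only shows how to check where each network acts.

```python
import math


def corner_hz(r_ohm: float, c_farad: float) -> float:
    """-3 dB corner frequency of a first-order RC network: f = 1 / (2*pi*R*C)."""
    return 1.0 / (2.0 * math.pi * r_ohm * c_farad)


# Hypothetical values; the real ones are on the Figure 4 schematic
lowpass_fc = corner_hz(4.7e3, 1e-9)      # series R into shunt C at the amplifier input
coupling_fc = corner_hz(20e3, 4.7e-6)    # coupling capacitor into an assumed 20 kOhm input
print(f"input low-pass corner: {lowpass_fc:.0f} Hz")
print(f"coupling high-pass corner: {coupling_fc:.2f} Hz")
```

A typical aim is to put the input low-pass well above the audio band (here about 34 kHz) so that it suppresses ultrasonic and RF interference without dulling the treble, and the coupling capacitor's corner well below 20 Hz so that the bass is not cut.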

picture 4

COMPLETE CIRCUIT

Figure 6 shows the complete circuit of this small hi-fi stereo system. Having become familiar with the individual sections, we now combine them into the complete system. All parts of the hi-fi system were deliberately laid out on a PCB for easy assembly and operation.

picture 6

Table 1: values of R1, R2, R3, C2, C3, and C4 for each pre-amplifier input type

Table 2

UPC1032H specifications

Absolute maximum ratings (Ta = 25 °C):

- Supply voltage: Vcc = 18 V (operating range: 8 to 17 V)
- Power dissipation: PT = 270 mW (Ta = 75 °C)
- Operating temperature: Topt = -20 to +75 °C
- Storage temperature: Tstg = -40 to +125 °C

Operating characteristics (Vcc = 13.2 V, RL = 10 kΩ, f = 1 kHz, Ta = 25 °C), test conditions:

- VI = 0
- Vo = 0.3 V
- Vo = 0.3 V, RNF = 1.8 kΩ
- KF = 1 %, NAB = 35 dB
- Vo = 0.3 V, NAB = 35 dB
- Rg = 2.2 kΩ, NAB = 35 dB

Table 3

TA7230P specifications

Supply voltage: VCC = 4.5 to 20 V

Operating conditions (VCC = 14 V, RL = 8 Ω, Rg = 600 Ω, f = 1 kHz, Ta = 25 °C), test conditions:

- VCC = 14 V; VCC = 20 V
- KF = 10 %; RL = 4 Ω, KF = 10 %
- PO = 0.6 W; RL = 4 Ω, PO = 1 W
- Vo = 1 Vrms
- Rg = 10 kΩ
- Rg = 0, PO = 1.8 W
- Rg = 0, f = 100 Hz

picture 1

X . IIII

Speaker Circuit

Small speakers (such as car speakers or those of a compo system) generally have a very weak bass response because of their small size. The problem can be solved with an electronic (active) speaker that uses electronic feedback techniques.

In practice, an electronic speaker separates the woofer from the other frequency ranges electronically, using an active crossover. This article presents crossovers for 2-way and 3-way systems; the 2-way system is the simpler of the two.

Two-Way System

The simplest electronic speaker system is the 2-way or bi-amp system, which can give satisfactory results. Its advantages are reduced TIM (transient intermodulation) distortion and independent adjustment of bass and treble. A crossover frequency of 340 Hz was chosen (above the speaker's own resonance frequency), because the design is intended for a small speaker box. If a subwoofer is used for the bass channel, the crossover frequency must be changed to below 100 Hz. As a guide to resonance frequencies: 20–40 Hz for a large box, 40–80 Hz for a medium box, and 80 Hz and above for a small box.
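The text gives the 340 Hz crossover point but not the filter type. Assuming second-order Butterworth low-pass and high-pass sections (a common choice for active crossovers, not confirmed by the source), the split between the woofer feed and the upper-band feed can be sketched as:

```python
import math

F_CROSS = 340.0  # crossover frequency from the text, in Hz


def butter2_lowpass_db(f: float, fc: float) -> float:
    """Second-order Butterworth low-pass magnitude: |H|^2 = 1 / (1 + (f/fc)^4)."""
    return -10 * math.log10(1 + (f / fc) ** 4)


def butter2_highpass_db(f: float, fc: float) -> float:
    """Second-order Butterworth high-pass magnitude: |H|^2 = (f/fc)^4 / (1 + (f/fc)^4)."""
    r = (f / fc) ** 4
    return 10 * math.log10(r / (1 + r))


for f in (34, 100, 340, 1000, 3400):
    woofer = butter2_lowpass_db(f, F_CROSS)
    upper = butter2_highpass_db(f, F_CROSS)
    print(f"{f:>5} Hz: woofer {woofer:7.2f} dB, upper band {upper:7.2f} dB")
```

Both outputs are 3 dB down at 340 Hz and fall at 12 dB per octave beyond it, and their powers add up to the input power at every frequency. For a subwoofer, the same calculation applies with `F_CROSS` set below 100 Hz.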

B1, the power amplifier that drives the woofer, should be chosen to suit our needs. The power rating of woofer SP1 must exceed the power amplifier's output, because the feedback system increases the power delivered to the woofer considerably. For an ordinary room, a 20–30 W power amplifier is suitable. The power amplifier should be well suited to low frequencies and have a large damping factor.

SP2 can be any combination of higher-frequency drivers (tweeter and super tweeter, midrange and tweeter, or midrange and super tweeter), separated in the conventional way with an active crossover, which gives satisfactory results.

Another option for the bi-amp system is to use a full-range speaker in a small box as SP2, with a subwoofer on a separate bass channel.

Three-Way System

This system is similar to the 2-way system, but here the midrange is separated out by a band-pass filter.
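A minimal sketch of the band-pass idea, assuming second-order Butterworth sections (the text does not specify the filter type): cascading a high-pass and a low-pass gives the midrange feed. The 100 Hz and 15 kHz corners are taken from option two in the next paragraph and serve only as an example.

```python
import math


def butter2_lowpass_db(f: float, fc: float) -> float:
    """Second-order Butterworth low-pass magnitude in dB."""
    return -10 * math.log10(1 + (f / fc) ** 4)


def butter2_highpass_db(f: float, fc: float) -> float:
    """Second-order Butterworth high-pass magnitude in dB."""
    r = (f / fc) ** 4
    return 10 * math.log10(r / (1 + r))


def midrange_bandpass_db(f: float, f_low: float, f_high: float) -> float:
    """Band-pass formed by cascading a high-pass at f_low with a low-pass at f_high (dB add)."""
    return butter2_highpass_db(f, f_low) + butter2_lowpass_db(f, f_high)


F_LOW, F_HIGH = 100.0, 15_000.0  # option-two crossover points from the text
for f in (30, 100, 1000, 15_000, 30_000):
    print(f"{f:>6} Hz: midrange feed {midrange_bandpass_db(f, F_LOW, F_HIGH):7.2f} dB")
```

Because the two corners are far apart, the passband is essentially flat between them; with corners closer together the sections would interact and the mid-band gain would sag.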

There are several possible speaker choices. Option one: SP1 woofer, SP2 midrange, SP3 tweeter. Option two: SP1 subwoofer, SP2 midrange, SP3 super tweeter (crossover frequencies below 100 Hz and above 15 kHz). Option three: SP1 subwoofer, SP2 full-range speaker system (woofer, midrange, and tweeter with a passive crossover), SP3 super tweeter.

The power amplifier requirements are the same as for the 2-way system. P3 is adjusted by ear once the system is installed: starting from the ground end, turn it slowly until a hum appears and then oscillation begins. The optimum setting is reached by turning it back a little.

Components list

- P1 = 100K A (logarithmic)
- P2 = P4 = 5K (linear)
- P3 = 1K B (linear)
- R1 = 47K
- R2 = R3 = 4K7
- R4 = 22K
- R5 = 1K8
- R6 = 1 Ω, 5 W
- R7 = R8 = 47K
- R9 = 10K
- R10 = 220K
- R11 = 100K
- R12 = 1K
- R13 = 6K8
- R14 = 3K3
- R15 = 1K5
- R16 = 1K
- R17 = 6K8
- C1 = 100nF
- C2 = 68nF
- C3 = 180nF
- C4 = 6n8
- C5 = 470nF
- C6 = 1 µF/25 V (tantalum)
- Q1 = Q2 = BC107 (or BC108, BC109)
- A1–A4 = IC1 = TL074 (or TL075)
- SP1, SP2: see text

X . IIIII

VU Meter

This instrument is one of the monitoring fixtures used to view the peak level of an audio system. The VU meter display uses LEDs because they respond quickly and operate at low voltages and small currents, which makes them compatible with integrated-circuit designs.

This circuit uses the LM3915N IC, which can drive up to 10 LEDs. It contains 10 comparators that compare the input voltage level with the reference voltage applied to pin 6. The IC also has a built-in reference voltage source, so no external reference is needed.

The reference voltage can be raised by fitting resistors R1 and R2 (Figure 1). The raised reference is insensitive to changes in temperature and supply voltage. This reference voltage drives the voltage divider inside the IC.
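The reference and the logarithmic steps can be checked numerically. This sketch assumes the reference arrangement described in the LM3915 datasheet (R1 from pin 7 to pin 8, R2 from pin 8 to ground) and ideal 3 dB spacing between the 10 comparator thresholds; the resistor values and the 75 µA adjust-pin current are assumptions to verify against the datasheet.

```python
def lm3915_vref(r1_ohm: float, r2_ohm: float, i_adj_amp: float = 75e-6) -> float:
    """Reference voltage: V = 1.25 * (1 + R2/R1) + I_ADJ * R2.

    R1 runs from REF OUT (pin 7) to REF ADJ (pin 8), R2 from pin 8 to ground;
    the default adjust-pin current is an assumed typical value.
    """
    return 1.25 * (1.0 + r2_ohm / r1_ohm) + i_adj_amp * r2_ohm


def led_thresholds(v_full_scale: float, leds: int = 10, db_per_step: float = 3.0) -> list[float]:
    """Ideal comparator thresholds for a log bar graph: top LED at full scale, 3 dB per step."""
    return [v_full_scale * 10 ** (-db_per_step * (leds - n) / 20) for n in range(1, leds + 1)]


vref = lm3915_vref(390.0, 0.0)        # pin 8 grounded, so R2 = 0: the 1.25 V minimum
raised = lm3915_vref(1.2e3, 1.2e3)    # hypothetical R1 = R2 = 1.2 kOhm: about 2.6 V
for n, v in enumerate(led_thresholds(vref), start=1):
    print(f"LED {n:>2} lights above {v * 1000:6.1f} mV")
```

With pin 8 grounded, as in this circuit, the display spans 27 dB from the first to the tenth LED, so the bottom LED lights at roughly 56 mV.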

The input (audio) signal must first be rectified, half-wave only, before it enters pin 5. The input signal is first amplified by TR-1, and the amplified voltage is then buffered by TR-2. The output is taken from the emitter of TR-2, half-wave rectified by D11, and filtered by R6, C4, and R8.

The input level is set with trimpot P1. Pin 8 is grounded, so the reference output (pin 7) sits at its minimum of 1.25 V, which is applied to the upper end of the reference divider (pin 6). R9 is made fairly small (390 Ω) so that the current drawn from pin 7 is large enough to light the LEDs. C6 is fitted to prevent the oscillations that an unfiltered LED supply voltage can cause. S1 selects "fast" or "slow" response.
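The rectify-and-filter stage behaves like a peak detector: a fast charge and a slow decay. A minimal simulation follows, assuming D11 charges C4 through R6 and C4 discharges through R8 (the topology is an assumption), with the parts-list values R6 = 47 Ω, C4 = 0.47 µF, and R8 = 100 kΩ. How S1 changes the time constant is not described, so the "fast" variant with a 10 kΩ discharge resistor is purely hypothetical.

```python
import math


def rc_envelope(signal, fs_hz, r_charge, r_discharge, c_farad):
    """Ideal diode charging C through r_charge; C discharging through r_discharge.

    Simplifications: no diode drop, and the discharge path is ignored while the diode conducts.
    """
    attack = 1.0 - math.exp(-1.0 / (fs_hz * r_charge * c_farad))
    release = 1.0 - math.exp(-1.0 / (fs_hz * r_discharge * c_farad))
    v = 0.0
    out = []
    for x in signal:
        if x > v:                     # diode forward biased: C charges toward the input
            v += attack * (x - v)
        else:                         # diode off: C discharges toward 0 V
            v -= release * v
        out.append(v)
    return out


FS = 48_000
tone = [math.sin(2 * math.pi * 1000 * n / FS) for n in range(FS // 10)]  # 100 ms at 1 kHz
signal = tone + [0.0] * (FS // 10)                                        # then 100 ms of silence

slow = rc_envelope(signal, FS, 47.0, 100e3, 0.47e-6)   # R6, R8, C4 from the parts list
fast = rc_envelope(signal, FS, 47.0, 10e3, 0.47e-6)    # hypothetical smaller discharge resistor
print(f"level at end of tone: {slow[len(tone) - 1]:.3f}")
print(f"after 100 ms of silence: slow {slow[-1]:.3f}, fast {fast[-1]:.5f}")
```

The charge time constant (about 22 µs) lets the display catch peaks almost instantly, while the 47 ms discharge time constant holds the reading long enough for the eye to follow.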

When assembling, note that each PCB holds one mono channel, so two identical circuits are needed for stereo. TR-1 in the power supply acts as a regulator, and the circuit receives a supply of about 12 V. A small transformer (300 mA) is sufficient, with a 0-6-12 V secondary or a 2 × 6 V center-tapped secondary.

The circuit can be driven from the output of a tape deck, a pre-amplifier (tone control), or a speaker output. When the signal is taken from a speaker, a 1 MΩ series resistor is added as an attenuator so that the signal entering the circuit is not too large. The LED display range is set with trimpot P1.

Components list

- R1 = 2k2
- R2 = 1M5
- R3 = 15k
- R4 = 220k
- R5 = 2k7
- R6 = 47 Ω
- R7 = 10 Ω
- R8 = 100k
- R9 = 390 Ω
- VR = 100k trimpot
- C1 = C3 = C5 = C6 = 2.2 µF/16 V
- C4 = 0.47 µF/10 V
- D11 = 1N4148
- L1…L10 = LED
- T1 = T2 = 2SC828
- IC = LM3915N

X . IIIIII

Digital signal processor

A digital signal processor (DSP) is a specialized microprocessor (or a SIP block), with its architecture optimized for the operational needs of digital signal processing. The goal of DSPs is usually to measure, filter or compress continuous real-world analog signals. Most general-purpose microprocessors can also execute digital signal processing algorithms successfully, but dedicated DSPs usually have better power efficiency, which makes them more suitable for portable devices such as mobile phones, where power consumption is constrained. DSPs often use special memory architectures that are able to fetch multiple data or instructions at the same time.

A digital signal processor chip found in a guitar effects unit.

Overview

A typical digital processing system

Digital signal processing algorithms typically require a large number of mathematical operations to be performed quickly and repeatedly on a series of data samples. Signals (perhaps from audio or video sensors) are constantly converted from analog to digital, manipulated digitally, and then converted back to analog form. Many DSP applications have constraints on latency; that is, for the system to work, the DSP operation must be completed within some fixed time, and deferred (or batch) processing is not viable.

Most general-purpose microprocessors and operating systems can execute DSP algorithms successfully, but are not suitable for use in portable devices such as mobile phones and PDAs because of power efficiency constraints. A specialized digital signal processor, however, will tend to provide a lower-cost solution, with better performance, lower latency, and no requirements for specialised cooling or large batteries.

Such performance improvements have led to the introduction of digital signal processing in commercial communications satellites, where hundreds or even thousands of analogue filters, switches, frequency converters and so on are required to receive and process the uplinked signals and ready them for downlinking. These can be replaced with specialised DSPs, with significant benefits to the satellites' weight, power consumption, complexity and cost of construction, reliability, and flexibility of operation. For example, the SES-12 and SES-14 satellites from operator SES, both intended for launch in 2017, are being built by Airbus Defence and Space with 25% of capacity using DSP.

The architecture of a digital signal processor is optimized specifically for digital signal processing. Most also support some of the features of an applications processor or microcontroller, since signal processing is rarely the only task of a system. Some useful features for optimizing DSP algorithms are outlined below.

Architecture

Software architecture

By the standards of general-purpose processors, DSP instruction sets are often highly irregular. While traditional instruction sets are made up of more general instructions that allow them to perform a wider variety of operations, instruction sets optimized for digital signal processing contain instructions for common mathematical operations that occur frequently in DSP calculations. Both traditional and DSP-optimized instruction sets are able to compute any arbitrary operation, but an operation that might require multiple ARM or x86 instructions to compute might require only one instruction in a DSP-optimized instruction set.

One implication for software architecture is that hand-optimized assembly-code routines are commonly packaged into libraries for re-use, instead of relying on advanced compiler technologies to handle essential algorithms. Even with modern compiler optimizations, hand-optimized assembly code is more efficient, and many common algorithms involved in DSP calculations are hand-written in order to take full advantage of the architectural optimizations.

Instruction sets

- Multiply–accumulate (MAC) operations, including fused multiply–add (FMA)
  - used extensively in all kinds of matrix operations
    - convolution for filtering
    - dot product
    - polynomial evaluation
  - fundamental DSP algorithms depend heavily on multiply–accumulate performance

- Instructions to increase parallelism: SIMD, VLIW, superscalar architecture

- Specialized instructions for modulo addressing in ring buffers and bit-reversed addressing mode for FFT cross-referencing

- Digital signal processors sometimes use time-stationary encoding to simplify hardware and increase coding efficiency.

- Multiple arithmetic units may require memory architectures to support several accesses per instruction cycle

- Special loop controls, such as architectural support for executing a few instruction words in a very tight loop without overhead for instruction fetches or exit testing.
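To make the multiply–accumulate and ring-buffer items above concrete, here is a minimal Python sketch of a direct-form FIR filter. It is an illustration of the technique, not DSP code: the inner loop is the chain of MACs that a DSP would issue as single-cycle instructions inside a zero-overhead hardware loop, and the `% n` index stands in for hardware modulo addressing. The class and method names are hypothetical.

```python
class FirFilter:
    """Direct-form FIR filter with a circular delay line (illustrative sketch)."""

    def __init__(self, coeffs):
        if not coeffs:
            raise ValueError("need at least one coefficient")
        self.coeffs = list(coeffs)
        self.delay = [0.0] * len(self.coeffs)  # ring buffer of past input samples
        self.pos = 0  # slot where the newest sample is written

    def process(self, sample):
        """Push one input sample and return one output sample."""
        n = len(self.coeffs)
        self.delay[self.pos] = sample
        acc = 0.0  # plays the role of the MAC unit's accumulator
        idx = self.pos
        for c in self.coeffs:
            acc += c * self.delay[idx]  # one multiply-accumulate per tap
            idx = (idx - 1) % n  # step back in time; modulo wraps like modulo addressing
        self.pos = (self.pos + 1) % n  # advance the write pointer without a bounds test
        return acc
```

For example, feeding the impulse `[1, 0, 0, 0]` through `FirFilter([0.5, 0.3, 0.2])` yields `[0.5, 0.3, 0.2, 0.0]`: the impulse response of an FIR filter is simply its coefficient list.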

Data instructions

- Saturation arithmetic, in which operations that overflow clamp at the maximum (or minimum) value that the register can hold rather than wrapping around (maximum + 1 does not overflow to minimum as in many general-purpose CPUs; instead it stays at maximum). Sometimes various sticky-bit operation modes are available.

- Fixed-point arithmetic is often used to speed up arithmetic processing

- Single-cycle operations to increase the benefits of pipelining
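The difference between wrapping and saturating arithmetic can be sketched in Python for a 16-bit signed register. Python integers are unbounded, so the 16-bit behaviour is emulated explicitly; the function names are illustrative and not taken from any DSP toolchain.

```python
INT16_MIN, INT16_MAX = -32768, 32767


def wrap_add16(a, b):
    """Two's-complement 16-bit add that wraps on overflow, as many general-purpose CPUs do."""
    s = (a + b) & 0xFFFF  # keep only the low 16 bits
    return s - 0x10000 if s > INT16_MAX else s  # reinterpret as signed


def sat_add16(a, b):
    """16-bit add that clamps at the register limits instead of wrapping."""
    return max(INT16_MIN, min(INT16_MAX, a + b))
```

With these definitions, `wrap_add16(32767, 1)` jumps to `-32768`, while `sat_add16(32767, 1)` stays at `32767`. For audio, the saturated result is a mild clip, whereas the wrapped one is a full-scale jump of the opposite sign.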

Program flow

- Floating-point unit integrated directly into the datapath

- Pipelined architecture

- Highly parallel multiplier–accumulators (MAC units)

- Hardware-controlled looping, to reduce or eliminate the overhead required for looping operations

Hardware architecture

Memory architecture

DSPs are usually optimized for streaming data and use special memory architectures that can fetch multiple data words or instructions at the same time, such as the Harvard architecture or the modified von Neumann architecture, which use separate program and data memories (sometimes with concurrent access on multiple data buses).

DSPs sometimes rely on supporting code to know about cache hierarchies and the associated delays. This is a tradeoff that allows for better performance. In addition, DMA is used extensively.

Addressing and virtual memory

DSPs frequently use multi-tasking operating systems, but have no support for virtual memory or memory protection. Operating systems that use virtual memory require more time for context switching among processes, which increases latency.

- Hardware modulo addressing
  - allows circular buffers to be implemented without having to test for wrapping

- Bit-reversed addressing, a special addressing mode
  - useful for calculating FFTs

- Exclusion of a memory management unit

- Memory-address calculation unit
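Bit-reversed addressing exists because an in-place radix-2 FFT reads or writes its data in an order where each index has its binary digits reversed. The Python sketch below computes that permutation in software, which costs a loop per index; a DSP's address generator produces the same sequence as a side effect of ordinary loads. Function names are hypothetical.

```python
def bit_reverse(index, bits):
    """Reverse the lowest `bits` bits of `index` (e.g. 0b001 -> 0b100 when bits=3)."""
    result = 0
    for _ in range(bits):
        result = (result << 1) | (index & 1)  # move the lowest bit of index into result
        index >>= 1
    return result


def bit_reversed_order(data):
    """Reorder a power-of-two-length sequence into bit-reversed order for a radix-2 FFT."""
    n = len(data)
    bits = n.bit_length() - 1
    if n == 0 or (1 << bits) != n:
        raise ValueError("length must be a power of two")
    return [data[bit_reverse(i, bits)] for i in range(n)]
```

For an 8-point FFT, `bit_reversed_order(list(range(8)))` gives `[0, 4, 2, 6, 1, 5, 3, 7]`.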


Prior to the advent of stand-alone DSP chips discussed below, most DSP applications were implemented using bit-slice processors. The AMD 2901 bit-slice chip with its family of components was a very popular choice. There were reference designs from AMD, but very often the specifics of a particular design were tailored to the application. These bit-slice architectures would sometimes include a peripheral multiplier chip. Examples of these multipliers were a series from TRW including the TDC1008 and TDC1010, some of which included an accumulator, providing the requisite multiply–accumulate (MAC) function.

In 1976, Richard Wiggins proposed the Speak & Spell concept to Paul Breedlove, Larry Brantingham, and Gene Frantz at Texas Instruments' Dallas research facility. Two years later, in 1978, they produced the first Speak & Spell, with the technological centerpiece being the TMS5100, the industry's first digital signal processor. It also set other milestones, being the first chip to use linear predictive coding to perform speech synthesis.

In 1978, Intel released the 2920 as an "analog signal processor". It had an on-chip ADC/DAC with an internal signal processor, but it didn't have a hardware multiplier and was not successful in the market. In 1979, AMI released the S2811. It was designed as a microprocessor peripheral, and it had to be initialized by the host. The S2811 was likewise not successful in the market.

In 1980 the first stand-alone, complete DSPs – the NEC µPD7720 and AT&T DSP1 – were presented at the International Solid-State Circuits Conference '80. Both processors were inspired by the research in PSTN telecommunications.

The Altamira DX-1 was another early DSP, utilizing quad integer pipelines with delayed branches and branch prediction.

Another DSP produced by Texas Instruments (TI), the TMS32010 presented in 1983, proved to be an even bigger success. It was based on the Harvard architecture, and so had separate instruction and data memory. It already had a special instruction set, with instructions like load-and-accumulate or multiply-and-accumulate. It could work on 16-bit numbers and needed 390 ns for a multiply–add operation. TI is now the market leader in general-purpose DSPs.

About five years later, the second generation of DSPs began to spread. They had three memories for storing two operands simultaneously and included hardware to accelerate tight loops; they also had an addressing unit capable of loop-addressing. Some of them operated on 24-bit variables, and a typical model required only about 21 ns for a MAC. Examples of this generation include the AT&T DSP16A and the Motorola 56000.

The main improvement in the third generation was the appearance of application-specific units and instructions in the data path, or sometimes as coprocessors. These units allowed direct hardware acceleration of very specific but complex mathematical problems, like the Fourier transform or matrix operations. Some chips, like the Motorola MC68356, even included more than one processor core to work in parallel. Other DSPs from 1995 include the TI TMS320C541 and the TMS320C80.

The fourth generation is best characterized by changes in the instruction set and the instruction encoding/decoding. SIMD extensions were added, and VLIW and superscalar architectures appeared. Clock speeds also increased, making a 3 ns MAC possible.
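The per-MAC times quoted for the first, second and fourth generations (390 ns, 21 ns and 3 ns) translate directly into peak multiply–accumulate rates. The arithmetic below assumes one MAC completes per stated interval and ignores chips with several MAC units working in parallel:

$$
R = \frac{1}{t_{\text{MAC}}}:\qquad
\frac{1}{390\ \text{ns}} \approx 2.56\times10^{6}\ \text{MAC/s},\qquad
\frac{1}{21\ \text{ns}} \approx 4.76\times10^{7}\ \text{MAC/s},\qquad
\frac{1}{3\ \text{ns}} \approx 3.33\times10^{8}\ \text{MAC/s}
$$

That is roughly a 130-fold increase in single-unit MAC throughput between the TMS32010 and the fourth generation.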

Modern DSPs

Modern signal processors yield greater performance, due in part to both technological and architectural advances such as smaller design rules, fast-access two-level caches, (E)DMA circuitry and wider bus systems. Not all DSPs provide the same speed, and many kinds of signal processors exist, each better suited for a specific task, ranging in price from about US$1.50 to US$300.

Texas Instruments produces the C6000 series DSPs, which have clock speeds of 1.2 GHz and implement separate instruction and data caches. They also have an 8 MiB second-level cache and 64 EDMA channels. The top models are capable of as many as 8000 MIPS (million instructions per second), use VLIW (very long instruction word), perform eight operations per clock cycle and are compatible with a broad range of external peripherals and various buses (PCI, serial, etc.). TMS320C6474 chips each have three such DSPs, and the newest-generation C6000 chips support floating-point as well as fixed-point processing.

Freescale produces a multi-core DSP family, the MSC81xx. The MSC81xx is based on StarCore Architecture processors and the latest MSC8144 DSP combines four programmable SC3400 StarCore DSP cores. Each SC3400 StarCore DSP core has a clock speed of 1 GHz.

XMOS produces a multi-core, multi-threaded line of processors well suited to DSP operations. They come in various speeds ranging from 400 to 1600 MIPS. The processors have a multi-threaded architecture that allows up to 8 real-time threads per core, meaning that a 4-core device would support up to 32 real-time threads. Threads communicate with each other through buffered channels capable of up to 80 Mbit/s. The devices are easily programmable in C and aim to bridge the gap between conventional microcontrollers and FPGAs.

CEVA, Inc. produces and licenses three distinct families of DSPs. Perhaps the best known and most widely deployed is the CEVA-TeakLite DSP family, a classic memory-based architecture, with 16-bit or 32-bit word-widths and single or dual MACs. The CEVA-X DSP family offers a combination of VLIW and SIMD architectures, with different members of the family offering dual or quad 16-bit MACs. The CEVA-XC DSP family targets Software-defined Radio (SDR) modem designs and leverages a unique combination of VLIW and Vector architectures with 32 16-bit MACs.

Analog Devices produces the SHARC-based DSPs, which range in performance from 66 MHz/198 MFLOPS (million floating-point operations per second) to 400 MHz/2400 MFLOPS. Some models support multiple multipliers and ALUs, SIMD instructions and audio-processing-specific components and peripherals. The Blackfin family of embedded digital signal processors combines the features of a DSP with those of a general-purpose processor. As a result, these processors can run simple operating systems like μCLinux, velOSity and Nucleus RTOS while operating on real-time data.

NXP Semiconductors produces DSPs based on TriMedia VLIW technology, optimized for audio and video processing. In some products the DSP core is hidden as a fixed-function block inside an SoC, but NXP also provides a range of flexible single-core media processors. The TriMedia media processors support both fixed-point and floating-point arithmetic, and have specific instructions to deal with complex filters and entropy coding.

CSR produces the Quatro family of SoCs that contain one or more custom Imaging DSPs optimized for processing document image data for scanner and copier applications.

Most DSPs use fixed-point arithmetic, because in real-world signal processing the additional range provided by floating point is usually not needed, and the reduced hardware complexity brings a large speed and cost benefit. Floating-point DSPs may be invaluable in applications where a wide dynamic range is required. Product developers might also use floating-point DSPs to reduce the cost and complexity of software development in exchange for more expensive hardware, since it is generally easier to implement algorithms in floating point.
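Part of why floating point is easier to program is that fixed-point code must manage scaling by hand. A common 16-bit convention is Q15, in which an integer represents a fraction in [-1, 1) scaled by 2^15. The Python sketch below shows one Q15 multiply under assumed conventions (round half up, then saturate); real DSPs and libraries offer several rounding and saturation modes, and the helper names are hypothetical.

```python
Q15_ONE = 1 << 15  # scale factor: real value = integer / 2**15
Q15_MIN, Q15_MAX = -32768, 32767


def to_q15(x):
    """Convert a float to Q15, rounding to nearest and saturating to 16 bits."""
    return max(Q15_MIN, min(Q15_MAX, round(x * Q15_ONE)))


def from_q15(q):
    """Convert a Q15 integer back to a float."""
    return q / Q15_ONE


def q15_mul(a, b):
    """Multiply two Q15 values (assumed convention: round half up, then saturate)."""
    product = a * b  # Q30 intermediate, fits in a 32-bit accumulator
    result = (product + (1 << 14)) >> 15  # add half an LSB, then rescale to Q15
    return max(Q15_MIN, min(Q15_MAX, result))  # only (-1) * (-1) can overflow
```

For example, `q15_mul(to_q15(0.5), to_q15(0.5))` returns `8192`, which `from_q15` maps back to `0.25`. The one product that does not fit, `-1 * -1 = +1`, saturates to `32767`, the largest Q15 value.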

Generally, DSPs are dedicated integrated circuits; however DSP functionality can also be produced by using field-programmable gate array chips (FPGAs).

Embedded general-purpose RISC processors are becoming increasingly DSP-like in functionality. For example, the OMAP3 processors include an ARM Cortex-A8 and a C6000 DSP.

In communications, a new breed of DSPs combining DSP functions with hardware acceleration is making its way into the mainstream. Such modem processors include ASOCS ModemX and CEVA's XC4000.

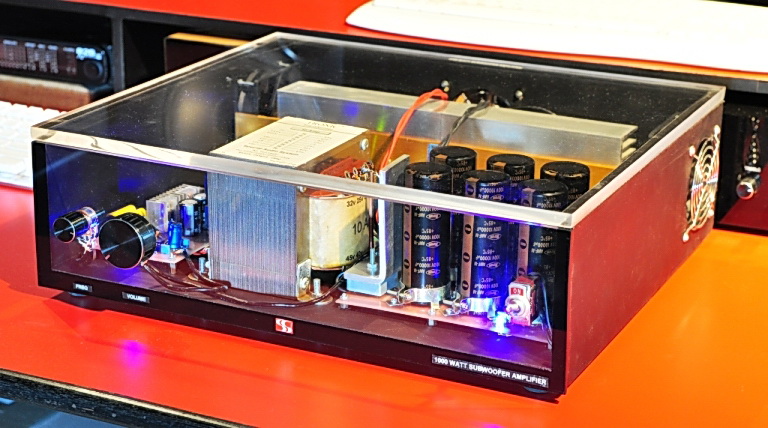

Audio stereo power amplifier


How a Digital Amplifier Works

Most audiophiles and enthusiasts have grown up with at least a basic understanding of what an amplifier does. It takes a tiny alternating electrical signal that represents the moment-to-moment variations of musical frequencies and their amplitudes (volume levels), and increases its strength many times so that it is powerful enough to drive the cones and domes of speakers back and forth, generating air pressure variations (waves) that replicate the original sound waves. Musical tones vary from as few as 16 cycles per second (16 Hz), a very low pipe-organ note, to 15,000 cycles per second (15 kHz) or more, such as the highest harmonics of a cymbal or a violin.

Hi-Fi Analog Amplifiers

Until quite recently, the majority of high-fidelity audio amplifiers were analog, and most were of a type called Class A/B. What does that mean? Perhaps one of the easiest ways to understand how an analog audio amplifier works is to think of it as a kind of servo-controlled “valve” (“valve” also being the British term for a vacuum tube) that regulates energy stored up from the wall outlet and then releases it in measured amounts to your loudspeakers. The amount being discharged is synchronized to the rapid variations of the incoming audio signal. This weak AC signal is used to modulate a circuit that releases power (voltage and current) stored up by the big capacitors and transformer in the amplifier’s power supply, discharging it in a way that exactly parallels the tiny modulations of the incoming audio signal.

This signal in the amplifier’s input stage varies the conductivity of the output circuit’s transistors, which release power from the amplifier’s power supply to move your loudspeaker’s cones and domes. It’s almost as though you were rapidly opening and closing a faucet (your hand on the faucet is the audio signal), releasing the stored-up water pressure (the water tower or reservoir stands in for the storage capacitors) in a particular pattern, a kind of liquid code. For our purposes, that’s all we need to know about analog amplification.

Digital Amplification