Robotic Humanoids And Digital Humans

This section looks at humanoid robots and at the applications of digital human beings in many aspects of modern-day life, including where you might see digital humans in your everyday life today.

Humanoid robots are being used for inspection, maintenance, and disaster response at power plants, relieving human workers of laborious and dangerous tasks. Similarly, they are being prepared to take over routine tasks for astronauts in space travel.

Humanoid robots are powered by artificial intelligence and can listen, talk, move, and respond. They use sensors and actuators (motors that control movement) and have features modeled after human body parts. Whether a robot is structurally similar to a male (an android) or a female (a gynoid), it is a challenge to create realistic robots that replicate human capabilities. The first modern-day humanoid robots were created to learn how to make better prosthetics for humans, but today they are developed for many purposes: to entertain us, to do specific jobs such as home health work or manufacturing, and more. Artificial intelligence is what makes these robots human-like, helping humanoids listen, understand, and respond to their environment and to their interactions with humans.

Digital Human Beings

Digital human beings are photorealistic, digitized virtual versions of humans.

These AI-powered virtual beings are designed to interact, sympathize, and hold conversations just as a fellow human would. Digital humans have already been used in television, movies, and video games, but there are limitations to using them to replace human actors.

Life-like robots could prove useful in helping the elderly, children, or anyone who needs assistance with day-to-day tasks or interactions. For instance, a number of studies have explored the effectiveness of humanoid robots in supporting children with autism through play. It remains to be seen what the future will look like when intelligence is coupled with a perfectly human appearance.

Robotics can, and will, change our lives in the near future, especially as the world faces exposure to radiation and pandemics, and as we plan trips to other planets such as Mars.

__________________________________________________________________________________

Robots already record our shows, cook our food, play our music, and even run our cars. We just don't see them, because these "robots" don't have a face we can talk to or a butt we can kick.

Right now, all modern technology is designed to bring the world to you: phone, radio, television, internet. But if trends continue, robots will soon bring you to the world, everywhere, and at the speed of thought: a mind and a hand wherever they are needed, while you sit safely at home and run the show.

We have taken the first steps toward welcoming robots into our homes; now we just have to wait a little while for them to be guided toward making us more human, or, we might say, modern humans living modern lives.

Here we consider future robots as artificial robotic organisms that have properties mimicking, and greatly extending, the capabilities of natural organisms. The unique properties of softness and compliance make these machines highly suited to interactions with delicate things, including the human body. In addition, we will touch upon concepts in emerging robotics that have not previously been considered, including biodegradability and regenerative energy transduction. How these new technologies will ultimately drive robotics, and the exact form of future robots, is unknown, but here we can at least glimpse the future impact of robotics on humans.

A “robot” is often defined in terms of its capability: it is a machine that can carry out a complex series of actions automatically, especially one programmable by a computer. This is a useful definition that encompasses a large proportion of conventional robots, of the kind you see in science-fiction films. This definition, together with the weight of established cultural views of what a robot is, has an impact on our views of what a robot could be. The best indication of this can be seen by examining cultural attitudes to robots around the world. If we type the word “robot” into the English-language version of the Google search engine, we obtain images that are almost exclusively humanoid, shiny, rigid in structure, and almost clinical. In popular culture, robots became exemplars of an alien threat. Yet the boundaries between smart materials, artificial intelligence, embodiment, biology, and robotics are blurring.

Smart Materials for Soft Robots

A smart material is one that exhibits an observable effect in one domain when stimulated through another domain. Such effects span all domains, including mechanical, electrical, chemical, optical, and thermal. For example, a thermochromic material exhibits a color change when heated, while an electroactive polymer generates a mechanical output (i.e., it moves) when electrically stimulated (Bar-Cohen, 2004). Smart materials can add new capabilities to robotics, and especially to artificial organisms. Do we need a robot that can detect a particular chemical? We can use a smart material that changes its electrical properties when exposed to that chemical. Do we need a robotic device that can be implanted in a person but will degrade to nothing when it has done its job? We can use biodegradable, biocompatible, and selectively dissolvable polymers. The “smartness” of smart materials can even be quantified: their IQ can be calculated by assessing their responsiveness, agility, and complexity. If we combine multiple smart materials in one robot, we can greatly increase the IQ of its body.

Smart materials can be hard, such as piezo materials (Curie and Curie, 1881), flexible, such as shape memory alloys (Wu and Wayman, 1987), soft, such as dielectric elastomers (Pelrine et al., 2000), and even fluidic, such as ferrofluids (Albrecht et al., 1997) and electrorheological fluids (Winslow, 1949). This shows the great versatility and variety of these materials, which largely cover the same set of physical properties (stiffness, elasticity, viscosity) as biological tissue. One important point to recognize in almost all biological organisms, and certainly all animals, is their reliance on softness. No animal, large or small, insect or mammal, reptile or fish, is totally hard. Even insects, with their rigid exoskeletons, are internally soft and compliant.

Directly related to this is nature's reliance on the actuation (the generation of movement and forces) of soft tissue such as muscles. The humble cockroach is an excellent example: although it has a very rigid and hard body, its limbs are articulated by soft muscle tissue (Jahromi and Atwood, 1969). If we look closer at the animal kingdom, we see many organisms that are almost totally soft. These include worms, slugs, molluscs, cephalopods, and smaller algae such as euglena. They exploit their softness to bend, twist, and squeeze in order to change shape, hide, and locomote. An octopus, for example, can squeeze out of a container through an aperture less than a tenth the diameter of its body (Mather, 2006). Despite their softness, octopuses can also generate forces sufficient to crush objects and other organisms while being dextrous enough to unscrew the top of a jar (BBC, 2003). Such remarkable body deformations are made possible not only by soft muscle tissue but also by the exploitation of hydraulic and hydrostatic principles that enable controllable changes in stiffness (Kier and Smith, 1985).

We now have ample examples in nature of what can be done with soft materials, and we want to exploit these capabilities in our robots. Let us now look at some of the technologies that have the potential to deliver this capability. State-of-the-art soft robotic technologies can be split into three groups: 1) hydraulic and pneumatic soft systems; 2) smart actuator and sensor materials; and 3) stiffness-changing materials. In recent years soft robotics has come to the fore through the resurgence of fluidic drive systems, combined with a greater understanding and modelling of elastomeric materials. Although great work has been done in perfecting pneumatic braided rubber actuators (Meller et al., 2014), this discrete, component-based approach limits their range of application.

A better approach is

shown in the pneunet class of robotic actuators (Ilievski et al., 2011)

and their evolution into wearable soft devices (Polygerinos et al.,

2015) and robust robots (Tolley et al., 2014). Pneunets are monolithic

multichamber pneumatic structures made from silicone and polyurethane

elastomers. Unfortunately hydraulic and pneumatic systems are severely

limited due to their need for external pumps, air/fluid reservoirs, and

valves. These add significant bulk and weight to the robot and reduce

its softness. A far better approach is to work toward systems that do

not rely on such bulky ancillaries. Smart materials actuators and

sensors have the potential to deliver this by substituting fluidic

pressure with electrical, thermal, or photonic effects. For example,

electroactive polymers (EAPs) turn electrical energy into mechanical

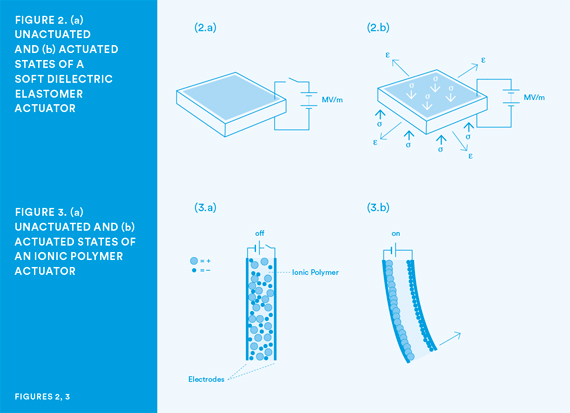

deformation. Figures 2 and 3 show two common forms of EAP: the

dielectric elastomer actuator (DEA) (Pelrine et al., 2000) and the ionic

polymer actuator (IPA) (Shahinpoor and Kim, 2001). The DEA is composed

of a central elastomeric layer with high dielectric constant that is

sandwiched between two compliant electrode layers. When a large electric

field (of the order MV/m) is applied to the composite structure,

opposing charges collect at the two electrodes and these are attracted

by Coulomb forces, labelled σ in Figure 2. These induce Maxwell stresses

in the elastomer, causing it to compress between the electrodes and to

expand in the plane, labelled ε in Figure 2. Since Coulomb forces are

inversely proportional to charge separation, and the electrodes expand

upon actuation, resulting in a larger charge collecting area, the

induced stress in the DEA actuator is proportional to the square of the

electric field. This encourages us to make the elastomer layer as thin

as possible. Unfortunately, a thinner elastomer layer means we need more

layers to make our robot, with a consequently higher chance of

manufacturing defect or electrical breakdown. Because DEAs have power

density close to biological muscles (Pelrine et al., 2000), they are

good candidates for development into wearable assist devices and

artificial organisms.
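The squared dependence on field can be made concrete with the widely used effective-pressure approximation for dielectric elastomers, p = ε0·εr·E² with E = V/t. The sketch below is a minimal Python illustration of that relation only; the permittivity, film thickness, and drive voltage are hypothetical round numbers, not values from the cited work.

```python
# Effective electrostatic (Maxwell) pressure on a dielectric elastomer film.
# Assumes the common approximation p = eps0 * eps_r * E**2 with E = V / t;
# all numeric inputs used below are hypothetical.

EPSILON_0 = 8.854e-12  # vacuum permittivity in F/m


def maxwell_pressure_pa(voltage_v: float, thickness_m: float, relative_permittivity: float) -> float:
    """Return the effective compressive pressure (Pa) across the elastomer."""
    if thickness_m <= 0:
        raise ValueError("thickness must be positive")
    field_v_per_m = voltage_v / thickness_m  # uniform field between the electrodes
    return EPSILON_0 * relative_permittivity * field_v_per_m ** 2


if __name__ == "__main__":
    # Hypothetical film: eps_r = 3, 50 micrometres thick, driven at 2.5 kV (50 MV/m).
    thick = maxwell_pressure_pa(2_500, 50e-6, 3.0)
    # Same voltage across a film half as thick: the field doubles, so pressure quadruples.
    thin = maxwell_pressure_pa(2_500, 25e-6, 3.0)
    print(f"{thick / 1e3:.1f} kPa -> {thin / 1e3:.1f} kPa (ratio {thin / thick:.1f})")
```

With these assumed inputs the pressure rises from roughly 66 kPa to about 266 kPa when the film is halved in thickness, which is the trade-off the paragraph describes: thinner layers pay off quadratically, at the cost of needing more of them.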

Ionic polymer actuators, on the other hand, are smart materials that operate through a different electromechanical principle, as shown in Figure 3. The IPA is fabricated from a central ionic conductor layer, again sandwiched between two conducting electrodes, but in contrast to DEAs the electric field is much lower (of the order of kV/m), and therefore the electrodes must be more conductive. When an electric field is applied, free ions within the ionic conductor move toward the electrodes, where they collect. The high concentration of ions at each electrode causes it to expand as like charges repel through local Coulomb forces. If the cations (+) and anions (-) differ significantly in size and charge, the two electrodes expand by different amounts and the IPA bends. The advantage of the IPA is that it operates at much lower voltages than the DEA, but it can only generate lower forces.

A more recent addition to the smart materials portfolio is the coiled nylon actuator (Haines et al., 2014). This is a thermal actuator fabricated from a single twist-insertion-buckled nylon filament. When heated, this structure contracts. Although the nylon coil actuator has the potential to deliver low-cost and reliable soft robotics, it is cursed by its thermal cycle. In common with all other thermal actuators, including shape memory alloys, it is relatively straightforward to heat the structure (and thereby cause contraction of the muscle-like filament), but it is much more challenging to reverse this and cool the device. As a result, the cycle speed of nylon (and SMA) actuators is slow, at less than 10 Hz. In contrast, DEAs and IPAs have been demonstrated at hundreds of hertz, and the DEA has even been shown to operate as a loudspeaker (Keplinger et al., 2013).
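Why cooling, rather than heating, sets the speed limit can be sketched with a lumped-capacitance (Newton's law of cooling) model, in which the filament is treated as a single thermal mass relaxing toward ambient with time constant tau. This is a simplified illustration under that assumption; the temperatures, time constant, and heat-pulse length below are hypothetical.

```python
# Why cooling, not heating, limits thermal actuators (nylon coils, SMAs).
# Lumped-capacitance sketch: the actuator is treated as a single thermal mass
# that cools exponentially toward ambient with time constant tau.
# Every number used below is hypothetical.
import math


def cooling_time_s(t_hot_c: float, t_release_c: float, t_ambient_c: float, tau_s: float) -> float:
    """Time to cool from t_hot_c to t_release_c under Newton's law of cooling."""
    if not t_hot_c > t_release_c > t_ambient_c:
        raise ValueError("need t_hot_c > t_release_c > t_ambient_c")
    return tau_s * math.log((t_hot_c - t_ambient_c) / (t_release_c - t_ambient_c))


def max_cycle_rate_hz(heat_time_s: float, cool_time_s: float) -> float:
    """Upper bound on actuation frequency if each cycle is one heat plus one cool."""
    return 1.0 / (heat_time_s + cool_time_s)


if __name__ == "__main__":
    # Hypothetical filament: a short electrical heat pulse, but passive cooling in still air.
    cool = cooling_time_s(t_hot_c=90.0, t_release_c=60.0, t_ambient_c=25.0, tau_s=0.5)
    print(f"cooling {cool:.2f} s -> at most {max_cycle_rate_hz(0.05, cool):.1f} Hz")
```

In this toy model the heat pulse is already short, so the cycle rate is dominated by the cooling term; reducing tau (a smaller thermal mass or forced cooling) is the main lever, which is consistent with the text's point that reversing the thermal cycle is the hard part.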

The final capability needed to realize the body of soft robotic organisms is stiffness change. Although this may be achieved through muscle activation, as in the octopus, a number of soft robotic technologies can achieve stiffness modulation independently of actuation. These include shape memory polymers (SMPs) and granular jamming. SMPs are polymers that undergo a controllable and reversible phase transition from a rigid, glassy state to a soft, rubbery state (Lendlein et al., 2002). They are most commonly stimulated by heat, but some SMPs transition between phases when stimulated photonically or electrically. The remarkable property of SMPs is their ability to “memorize” a programmed state. In this way an SMP robot can be made to transition between soft and hard, and when the operation is complete it can be made to return automatically to its pre-programmed shape. One exciting possibility is to combine SMPs with actuators that are stimulated by the same energy source. For example, a thermally operated shape memory alloy can be combined with a thermal SMP to yield a complex structure that encompasses actuation, stiffness change, and memory in one unit, driven solely by heat (Rossiter et al., 2014).

Granular jamming, in contrast to SMP phase change, is a more mechanical mechanism (Amend et al., 2012). A compliant chamber is filled with granular material, and the stiffness of the chamber can be controlled by pumping a fluid, such as air, into and out of it. When air is evacuated from the chamber, the pressure difference between the atmosphere outside and the partial vacuum inside presses the granules together so that they jam and become rigid. In this way a binary soft-hard stiffness-changing structure can be made. Such a composite structure is well suited to wearable assist devices and exploratory robots.
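The jamming mechanism above is controlled by a single quantity: atmospheric pressure minus chamber pressure. The toy Python model below captures only that, with a hypothetical threshold standing in for the "binary soft-hard" behavior; real jammed granular media stiffen progressively with confining pressure, so this is an illustration, not a physical model.

```python
# Toy state model of a granular-jamming cell, as described above.
# It only captures the controlling quantity (atmosphere minus chamber pressure);
# the "rigid" threshold is a hypothetical parameter, not a material property.
from dataclasses import dataclass

ATMOSPHERIC_PA = 101_325.0  # standard atmospheric pressure


@dataclass
class JammingCell:
    rigid_threshold_pa: float = 50_000.0  # hypothetical confining pressure we call "rigid"
    chamber_pa: float = ATMOSPHERIC_PA    # starts vented: granules are loose

    def confining_pressure_pa(self) -> float:
        """Net pressure the outside air exerts on the granules through the membrane."""
        return ATMOSPHERIC_PA - self.chamber_pa

    def evacuate(self, target_pa: float) -> None:
        """Pump air out of the chamber down to target_pa (absolute pressure)."""
        if not 0.0 <= target_pa <= ATMOSPHERIC_PA:
            raise ValueError("target must lie between vacuum and atmospheric pressure")
        self.chamber_pa = target_pa

    def vent(self) -> None:
        """Let air back in; the granules unjam and the cell becomes soft again."""
        self.chamber_pa = ATMOSPHERIC_PA

    def state(self) -> str:
        return "rigid" if self.confining_pressure_pa() >= self.rigid_threshold_pa else "soft"


if __name__ == "__main__":
    cell = JammingCell()
    print(cell.state())      # vented
    cell.evacuate(20_000.0)  # partial vacuum: about 81 kPa confining pressure
    print(cell.state())
    cell.vent()
    print(cell.state())
```

Note that even a perfect vacuum cannot give more than one atmosphere of confining pressure, which bounds how stiff a vacuum-driven jamming cell can become.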

Robots Where You Don’t Expect Them

Having touched above on the technologies that will give us a new generation of robotics, let us now examine how these robots may appear in our lives and how we will interact, and live, with them.

Smart Skins

The compliance of soft robots makes them ideally suited to direct interaction with biological tissue. The soft-soft interaction of a soft robot and a human is inherently much safer than the hard-soft interface imposed by conventional rigid robots. There has been much work on smart materials for direct skin-to-skin contact and for integration on the human skin, including electrical connections and electronic components (Kim et al., 2011). A functional soft robotic second skin can offer many advantages beyond conventional clothing. For example, it may mimic the color-changing abilities of the cephalopods (Morin et al., 2012), or it may be able to translocate fluids like the teleost fishes (Rossiter et al., 2012) and thereby regulate temperature. The natural extension of such skins lies in smart bandages that promote healing and reduce the spread of antimicrobial-resistant bacteria by reducing the need for antibiotics. Of course, skins can substitute for clothing, but we are some way from social acceptance of second skins as a replacement for conventional clothing. If, on the other hand, we exploit fibrous soft actuation technologies such as the nylon coil actuator and shape memory alloy-polymer composites (Rossiter et al., 2014), we can weave artificial muscles into fabric. This yields the possibility of active and reactive clothing. Such smart garments also offer a unique new facility: because the smart material is in direct contact with the skin and has actuation capabilities, it can mechanically stimulate the skin directly. In this way we can integrate tactile communication into clothing. The tactile communication channel has largely been left behind by the other senses. Take, for example, the modern smartphone: it has high bandwidth in both visual and auditory outputs but almost non-existent touch-stimulating capabilities. With touch-enabled clothing we can generate natural “affective” senses of touch, giving us a potentially revolutionary new communication channel. Instead of a coarse vibrating motor (as used in mobile phones), we can stroke, tickle, or otherwise impart pleasant tactile feelings (Knoop and Rossiter, 2015).

Assist Devices

If the smart clothing above is able to generate larger forces, it can be used not just for communication but also for physical support. For people who are frail, disabled, or elderly, a future solution will be power-assist clothing that restores mobility. Restoring mobility can have a great impact on the quality of life of the wearer and may even enable them to return to productive life, thereby helping the wider economy. The challenge with such a proposition lies in the power density of the actuation technologies within the assist device. If the wearer is weak, for example because they have lost muscle mass, they will need significant extra power, but the weight of the actuators and energy supply that provide it could be prohibitive. Therefore the assist device should be as light and comfortable as possible, with actuation having a power density significantly higher than that of biological muscles. This is currently beyond the state of the art. Ultimately, wearable assist devices will make conventional assist devices redundant. Why use a wheelchair if you can walk again by wearing soft robotic Power Pants?
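The weight problem described above can be made concrete with a back-of-envelope mass budget. The sketch assumes "power density" means specific power (watts per kilogram of actuator) and adds a battery sized for a given runtime; every input value is a hypothetical placeholder, not a measurement of muscle or of any real device.

```python
# Back-of-envelope mass budget for a powered assist garment.
# "Power density" is taken here as specific power (W per kg of actuator);
# all input values are hypothetical placeholders, not measured data.


def assist_mass_kg(assist_power_w: float,
                   actuator_w_per_kg: float,
                   runtime_h: float,
                   battery_wh_per_kg: float) -> tuple[float, float]:
    """Return (actuator mass, battery mass) needed to deliver assist_power_w for runtime_h."""
    actuator_kg = assist_power_w / actuator_w_per_kg
    battery_kg = assist_power_w * runtime_h / battery_wh_per_kg
    return actuator_kg, battery_kg


if __name__ == "__main__":
    # Two hypothetical actuator technologies, one ten times better than the other.
    for w_per_kg in (50.0, 500.0):
        act, bat = assist_mass_kg(100.0, w_per_kg, runtime_h=2.0, battery_wh_per_kg=150.0)
        print(f"{w_per_kg:>5.0f} W/kg: actuators {act:.2f} kg + battery {bat:.2f} kg")
```

Raising actuator specific power shrinks only the actuator term; the energy store is sized by runtime and battery energy density, so both have to improve for the garment to become genuinely light.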

Medical Devices

We can extend the bio-integration exemplified by the wearable devices described above into the body. Because soft robotics is so suitable for interaction with biological tissue, it is natural to think of a device that can be implanted into the body and can interact physically with internal structures. We can then build implantable medical devices that restore the functionality of diseased and damaged organs and structures. Take, for example, soft tissue cancer, which can affect organs ranging from the bowels and prostate to the larynx and trachea. In these diseases a typical treatment involves surgical excision of the cancer and management of the resulting condition. A patient with laryngeal cancer may have a laryngectomy and thereafter will be unable to speak and must endure a permanent tracheostomy. By developing and implanting a soft robotic replacement organ, we may restore functional capabilities and enable the patient to once again speak, swallow, cough, and enjoy their lives. Such bio-integrating soft robotics is under development and expected to appear in the clinic over the next ten to fifteen years.

Biodegradable and Environmental Robots

It is natural to extend the notion of bio-integration from the domestic (human-centric) environment to the natural environment. Currently, robots that operate in the natural environment are hampered by their underlying technologies. Because the robots are made of rigid, complex, and environmentally harmful materials, they must be constantly monitored, and when they reach the end of their productive lives they must be recovered and safely disposed of. If, on the other hand, we can make robots totally environmentally benign, we can be less concerned with their recovery after failure. This is now possible through the development of biodegradable soft robotics (Rossiter et al., 2016). By exploiting smart materials that are not only environmentally safe in operation but also degrade safely to nothing in the environment, we can realize robots that live, die, and decay without environmental damage. This changes the way we deploy robots in the environment: instead of having to track and recall a small number of environmentally damaging robots, we can deploy thousands and even millions of robots, safe in the knowledge that they will degrade without causing damage. A natural extension of a biodegradable robot is one that is edible. An edible robot can be eaten, do its job inside the body, and then be digested by the body. This provides a new method for the controlled, and comfortable, delivery of treatments and drugs into the body.

Intelligent Soft Robots

All of the soft actuators described above operate as transducers; that is, they convert one form of energy into another. This transduction effect can often be reversed. For example, dielectric elastomer actuators can be reconfigured to become dielectric elastomer generators (Jin et al., 2011). In such a generator the soft elastomer membrane is mechanically deformed, and this results in the generation of an electrical output. Now we can combine this generator effect with the wearable robotics described above. A wearable actuator-generator device may, for example, provide added power when walking uphill, and once the user has reached the top of the hill, it can generate power from body movement as the user leisurely walks down. This kind of soft robotic “regenerative braking” is just one example of the potential of bidirectional energy conversion in soft robotics. In such materials we have two of the components of computation: input and output. By combining these capabilities with the strain-responsive properties inherent in the materials, we can realize robots that compute with their bodies. This is a powerful new paradigm, often described in its more general form as embodied intelligence or morphological computation (Pfeifer and Gómez, 2009). Through morphological computation we can devolve low-level control to the body of the soft robot. Do we therefore need a brain in our soft robotic organism? In many simple soft robots the brain may be redundant, with all effective computing performed by the body itself. This further simplifies the soft robot and again adds to its potential for ubiquity.
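The soft robotic "regenerative braking" idea above can be illustrated with a simple energy ledger: in actuator mode the garment draws energy to help on climbs, and in generator mode it returns energy on descents. This is a hypothetical sketch; the assist fraction, conversion efficiencies, and body mass are made-up parameters, and only gravitational work is counted.

```python
# Energy ledger for a hypothetical actuator-generator garment on a hill walk:
# it assists on climbs (drawing energy) and harvests on descents (returning energy).
# Efficiencies, assist fraction, and body mass are all hypothetical.
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class Segment:
    elevation_change_m: float  # positive = uphill, negative = downhill


def net_battery_energy_j(segments, body_mass_kg, assist_fraction, actuator_eff, generator_eff):
    """Positive result = net energy drawn from the battery; negative = net energy harvested."""
    total_j = 0.0
    for seg in segments:
        # Share of the gravitational work that the garment handles on this segment.
        work_j = body_mass_kg * G * abs(seg.elevation_change_m) * assist_fraction
        if seg.elevation_change_m > 0:
            total_j += work_j / actuator_eff   # actuator mode: electrical -> mechanical
        elif seg.elevation_change_m < 0:
            total_j -= work_j * generator_eff  # generator mode: mechanical -> electrical
    return total_j


if __name__ == "__main__":
    walk = [Segment(+10.0), Segment(-10.0)]  # up a 10 m hill and back down
    with_regen = net_battery_energy_j(walk, 70.0, 0.2, 0.5, 0.5)
    without_regen = net_battery_energy_j(walk, 70.0, 0.2, 0.5, 0.0)
    print(f"net draw: {with_regen:.0f} J with regeneration vs {without_regen:.0f} J without")
```

The same transducer appears on both sides of the ledger, which is the point of the paragraph: a material that converts energy in both directions lets one device both help and harvest.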

Conclusions

We have only scratched the surface of what a robot is, how it can be thought of as a soft robotic organism, and how smart materials will help realize and revolutionize future robotics. The impact on humans has been discussed, and yet the true extent of this impact is something we can only guess at. Just as the impact of the Internet and the World Wide Web was impossible to predict, we cannot imagine where future robotics will take us. Immersive virtual reality? Certainly. Replacement bodies? Likely. Complete disruption of lives and society? Quite possibly! As we walk the path of the Robotics Revolution, we will look back at this decade as the one in which robotics really took off and laid the foundations for our future world.

____________________________________________________________________________

A robot has some consistent characteristics:

- Robots all consist of some sort of mechanical construction. The mechanical aspect of a robot helps it complete tasks in the environment for which it’s designed. For example, the Mars 2020 Rover’s wheels are individually motorized and made of titanium tubing, which helps the rover grip the harsh terrain of the red planet firmly.

- Robots need electrical components that control and power the machinery. Essentially, a source of electric current (a battery, for example) is needed to power the large majority of robots.

- Robots contain at least some level of computer programming. Without a set of code telling it what to do, a robot would just be another piece of simple machinery. Inserting a program into a robot gives it the ability to know when and how to carry out a task.

The robotics industry is still relatively young, but it has already made amazing strides. From the deepest depths of our oceans to the highest heights of outer space, robots can be found performing tasks that humans couldn’t dream of achieving.

There are five types of robots:

Pre-Programmed Robots

Pre-programmed robots operate in a controlled environment where they do simple, monotonous tasks. An example of a pre-programmed robot would be a mechanical arm on an automotive assembly line. The arm serves one function (to weld a door on, to insert a certain part into the engine, etc.), and its job is to perform that task longer, faster, and more efficiently than a human.

Humanoid Robots

Humanoid robots are robots that look like and/or mimic human behavior. These robots usually perform human-like activities (like running, jumping, and carrying objects), and are sometimes designed to look like us, even having human faces and expressions. Two of the most prominent examples of humanoid robots are Hanson Robotics’ Sophia and Boston Dynamics’ Atlas.

Autonomous Robots

Autonomous robots operate independently of human operators. These robots are usually designed to carry out tasks in open environments that do not require human supervision. An example of an autonomous robot would be the Roomba vacuum cleaner, which uses sensors to roam freely throughout a home.

Teleoperated Robots

Teleoperated robots are mechanical bots controlled by humans. These robots usually work in extreme geographical conditions, weather, circumstances, etc. Examples of teleoperated robots are the human-controlled submarines used to fix underwater pipe leaks during the BP oil spill, or drones used to detect landmines on a battlefield.

Augmenting Robots

Augmenting robots either enhance current human capabilities or replace capabilities a human may have lost. Some examples of augmenting robots are robotic prosthetic limbs or exoskeletons used to lift hefty weights.

Applications of Robotics

- Helping fight forest fires

- Working alongside humans in manufacturing plants (known as co-bots)

- Robots that offer companionship to elderly individuals

- Surgical assistants

- Last-mile package and food order delivery

- Autonomous household robots that carry out tasks like vacuuming and mowing the grass

- Assisting with finding items and carrying them throughout warehouses

- Used during search-and-rescue missions after natural disasters

- Landmine detectors in war zones

Uses of Robots

Manufacturing

The manufacturing industry is probably the oldest and best-known user of robots. These robots and co-bots (bots that work alongside humans) efficiently test and assemble products such as cars and industrial equipment. It’s estimated that there are more than three million industrial robots in use right now.

Logistics

Shipping, handling, and quality control robots are becoming a must-have for most retailers and logistics companies. Because we now expect our packages to arrive at blazing speeds, logistics companies employ robots in warehouses, and even on the road, to help maximize time efficiency. Right now, there are robots taking your items off the shelves, transporting them across the warehouse floor, and packaging them. Additionally, a rise in last-mile robots (robots that will autonomously deliver your package to your door) ensures that you’ll have a face-to-metal-face encounter with a logistics bot in the near future.

Home

It’s not science fiction anymore. Robots can be seen all over our homes, helping with chores, reminding us of our schedules, and even entertaining our kids. The best-known example of a home robot is the Roomba autonomous vacuum cleaner. Robots have now also evolved to do everything from autonomously mowing grass to cleaning pools.

Travel

Is there anything more science fiction-like than autonomous vehicles? Self-driving cars are no longer just imagination. A combination of data science and robotics, self-driving vehicles are taking the world by storm. Automakers such as Tesla, Ford, Volkswagen, and BMW, along with autonomous-driving companies such as Waymo, are all working on the next wave of travel that will let us sit back, relax, and enjoy the ride. Rideshare companies Uber and Lyft have also worked on autonomous rideshare vehicles that don’t require humans to operate them.

Healthcare

Robots have made enormous strides in the healthcare industry. These mechanical marvels are used in just about every aspect of healthcare, from robot-assisted surgeries to bots that help humans recover from injury in physical therapy. Examples of robots at work in healthcare are Toyota’s healthcare assistants, which help people regain the ability to walk, and “TUG,” a robot designed to move autonomously throughout a hospital and deliver everything from medicines to clean linens.

________________________________________________________________________________

Let's discuss some of the components of modern electronics in the 20th and 21st centuries, and in the future.

If we had a big enough construction set, with enough wheels, gears, and other bits and bobs, and a limitless supply of electronic components, could you bolt together a living, breathing, walking, talking robot as good as a human in every way?

That might sound like one question, but it's really several. First, there's the matter of whether it's technically possible to build a robot that compares with a human. But there's also a much bigger question of why you'd want to do that and whether it's even a useful thing to do. When humans can reproduce so easily, why do we want to create clunky mechanical replicas of ourselves? And if there really is a good reason for doing so, what's the best way to go about it? Here we'll take a detailed look at what robots are, how they're designed, and some of the things they can do for us.

Imaginary friends

Close your eyes and think "robot." What picture leaps to mind? Most likely a fictional creature like R2-D2 or C-3PO from Star Wars. Very likely a humanoid: a humanlike robot with arms, legs, and a head, probably painted metallic silver. Unless you happen to work in robotics, I doubt you pictured a mechanical snake or a clockwork cockroach, a bomb disposal robot, or a Roomba robot vacuum cleaner.

What you pictured, in other words, would have been based more on science fiction than fact, more on imagination than reality. Where the sci-fi robots we see in movies and TV shows tend to be humanoids, the humdrum robots working away in the world around us (things like robotic welder arms in car-assembly plants) are much more functional and much less entertaining. For some reason, sci-fi writers have an obsession with robots that are little more than flawed, tin-can replacement humans. Maybe that makes for a better story, but it doesn't really reflect the current state of robot technology, with its emphasis on developing practical robots that can work alongside humans.

How do you build a robot?

Photo: Is this a robot?

It certainly looks like one, but it has no senses of any kind, no electronic or mechanical onboard computer for thinking, and its limbs have no motors or other means to move themselves. With no perception, cognition, or action, it cannot be a robot—even if it looks like one.

If robots like C-3PO really did exist, how would anyone ever have developed them? What would it have taken to make a general-purpose robot similar to a human?

It's easy enough to write entertaining stories about intelligent robots taking control of the planet, but just try developing robots like that yourself and see how far you get. Where would you even start? Actually, where any robot engineer starts: by breaking that one big problem into smaller, more manageable chunks. Essentially, there are three problems we need to solve: how to make our robot 1) sense things (detect objects in the world), 2) think about those things (in a more or less "intelligent" way, which is a tricky problem we'll explore in a moment), and then 3) act on them (move or otherwise physically respond to the things it detects and thinks about).

In psychology (the science of human behavior) and in robotics, these things are called perception (sensing), cognition (thinking), and action (moving). Some robots have only one or two. For example, robot welding arms in factories are mostly about action (though they may have sensors), while robot vacuum cleaners are mostly about perception and action and have no cognition to speak of. As we'll see in a moment, there's been a long and lively debate over whether robots really need cognition, but most engineers would agree that a machine needs both perception and action to qualify as a robot.

Perception (sensing)

We experience the world through our five senses, but what about robots? How do they get a feel for the things around them?

Vision

Humans are seeing machines: estimates vary wildly, but there's general agreement that about 25–60 percent of our cerebral cortex is devoted to processing images from our eyes and building them into a 3D visual model of the world. Now machine vision is really quite simple: all you need to do to give a robot eyes is to glue a couple of digital cameras to its head. But machine perception—understanding what the camera sees (a pattern of orange and black), what it represents (a tiger), what that representation means (the possibility of being eaten), and how relevant it is to you from one minute to the next (not at all, because the tiger is locked inside a cage)—is almost infinitely harder.

Like other problems in robotics, tackling perception as a theoretical issue ("how does a robot see and perceive the world?") is much harder than approaching it as a practical problem. So if you were designing something like a Roomba vacuum cleaning robot, you could spend a good few years agonizing over how to give it eyes that "see" a room and navigate around the objects it contains. Or you could forget all about something so involved as seeing and simply use a giant, pressure-sensitive bumper. Let the robot scrabble around until the bumper hits something, then apply the brakes and tell it to creep away in a different direction.
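The bumper strategy needs no map at all, and a short simulation shows why it still works. This is a minimal Python sketch under made-up assumptions: the "room" is a grid of cells, the robot can head in eight directions, and a fixed random seed keeps runs repeatable. The function `simulate` and its parameters are hypothetical names for illustration, not how any real Roomba is programmed. The robot drives straight until its next move would hit a wall or obstacle, then picks a new random heading, and the function reports what fraction of the floor it has crossed.

```python
import random

# The eight headings the hypothetical robot can choose from (grid steps).
HEADINGS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, 1), (1, -1), (-1, 1), (-1, -1)]


def simulate(width=20, height=15, obstacles=frozenset(), steps=5000, seed=1):
    """Bump-and-turn coverage on a grid 'room'.

    The robot drives straight until its next move would hit a wall or an
    obstacle (its "bumper" fires), then picks a new random heading. No map is
    built. Returns the fraction of free floor cells the robot has passed over.
    """
    rng = random.Random(seed)
    free = {(x, y) for x in range(width) for y in range(height)} - set(obstacles)
    x, y = 0, 0  # assumed starting corner
    if (x, y) not in free:
        raise ValueError("starting cell is blocked")
    dx, dy = rng.choice(HEADINGS)
    visited = {(x, y)}
    for _ in range(steps):
        nx, ny = x + dx, y + dy
        if (nx, ny) in free:
            x, y = nx, ny                  # keep driving in a straight line
            visited.add((x, y))
        else:
            dx, dy = rng.choice(HEADINGS)  # bumper hit: creep off in a new direction
    return len(visited) / len(free)


for n in (100, 1000, 10000):
    print(n, "steps:", round(simulate(steps=n), 2), "of the floor covered")
```

Because the seed is fixed, a longer run replays the same bounces as a shorter one and then keeps going, so coverage can only stay the same or grow as the step count rises.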

Photo: Look, no eyes! Spot, a quadruped robot built by Boston Dynamics, has a lidar (a kind of laser radar) where you'd expect its head to be (the small gray box at the front). Photo by Sgt. Eric Keenan courtesy of US Marine Corps.

Perception, in other words, doesn't have to mean vision. And that's a very important lesson for ambitious projects such as self-driving (robotic) cars. One way to build a self-driving car would be to create a super-lifelike humanoid robot and stick it in the driving seat of an ordinary car. It would drive in exactly the same way as you or I might do: by looking out through the windshield (with its digital camera eyes), interpreting what it sees, and controlling the car in response with its hands and feet. But you could also build a self-driving car an entirely different way without anyone in the driving seat—and this is how most robotics engineers have approached the problem. Instead of eyes, you'd use things like GPS satellite navigation, lidar, sonar, radar, and infrared detectors, accelerometers—and any number of other sensors to build up a very different kind of picture of where the car is, how it's proceeding in relation to the road and other cars, and what you need to do next to keep it safely in motion. Drivers see with their eyes; self-driving cars see with their sensors. A driver's brain builds a moving 3D model of the road; self-driving cars have computers, surfing a flood of digital data quite unlike a human's mental model. That doesn't mean there's no similarity at all. It's quite easy to imagine a neural network (a computer simulation of interconnected brain cells that can be trained to recognize patterns) processing information from a self-driving car's sensors so the vehicle can recognize situations like driving behind a learner, spotting a looming emergency when children are playing ball by the side of the road, and other danger signs that experienced drivers recognize automatically.

Hearing

Just as seeing is a misnomer when it comes to machine vision, so the other human senses (hearing, smell, taste, and touch) don't have exact replicas in the world of robotics. Where a person hears with their ears, a robot uses a microphone to convert sounds into electrical signals that can be digitally processed. It's relatively straightforward to sample a sound signal, analyze the frequencies it contains (for example, using a mathematical descrambling trick called a Fourier transform), and compare the frequency "fingerprint" with a list of stored patterns. If the frequencies in your signal match the pattern of a human scream, it's a scream you're hearing—even if you're a robot and a scream means nothing to you.

There's a big difference between hearing simple sounds and understanding what a voice is saying to you, but even that problem isn't beyond a machine's capability. Computers have been successfully turning human speech into recognizable text for decades; even my old PC, with simple, off-the-shelf, voice recognition software, can listen to my voice and faithfully print my words on the screen. Interpreting the meaning of words is a very different thing from turning sounds into words in the first place, but it's a start.
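The frequency-fingerprint idea can be sketched in a few lines of Python. This is a minimal illustration, assuming NumPy is available, an 8 kHz sample rate, and a handful of hypothetical stored patterns built from pure tones (the "scream" entry is only a stand-in, not real scream data). It uses NumPy's real FFT (`np.fft.rfft`) to get each signal's frequency content, scales that spectrum to unit length, and picks the stored pattern with the highest cosine similarity. Real sound recognizers are far more elaborate; this only shows the compare-the-fingerprint step.

```python
import numpy as np

SAMPLE_RATE = 8000  # samples per second (assumed)


def fingerprint(signal):
    """Normalized magnitude spectrum: how much of each frequency the sound contains."""
    spectrum = np.abs(np.fft.rfft(signal))
    norm = np.linalg.norm(spectrum)
    return spectrum / norm if norm > 0 else spectrum


def tone(freq_hz, seconds=0.5):
    """Synthesize a pure sine tone, standing in for a recorded sound."""
    t = np.arange(int(SAMPLE_RATE * seconds)) / SAMPLE_RATE
    return np.sin(2 * np.pi * freq_hz * t)


# Hypothetical stored patterns; a real system would learn these from recordings.
PATTERNS = {
    "low hum": fingerprint(tone(120)),
    "whistle": fingerprint(tone(2000)),
    "scream (stand-in)": fingerprint(tone(1000) + 0.5 * tone(3000)),
}


def classify(signal):
    """Return the stored pattern whose fingerprint best matches (cosine similarity)."""
    fp = fingerprint(signal)
    # Both vectors have unit length, so the dot product is the cosine similarity.
    return max(PATTERNS, key=lambda name: float(np.dot(fp, PATTERNS[name])))


rng = np.random.default_rng(0)
noisy_whistle = tone(2000) + 0.3 * rng.standard_normal(int(SAMPLE_RATE * 0.5))
print(classify(noisy_whistle))  # whistle
```

Even with random noise mixed in, the 2 kHz peak dominates the spectrum, so the noisy recording still lands closest to the "whistle" fingerprint.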

Smell

You might think building a robotic nose is more of a technical challenge, but it's just a matter of building the right sensor. Smell is effectively a chemical recognition system: molecules of vapor from a bacon butty, a yawning iris, or the volatile liquids in perfume drift into our noses and bind onto receptive cells, stimulating them electro-chemically. Our brains do the rest. The way our brains are built explains some of their highly unusual features, such as why smells are powerful memory triggers. (The reason is simply that the bits of our brain that process smells are physically very close to two other key bits of our brains, namely the hippocampus, a kind of "crossroads" in our memory, and the amygdala, where emotions are processed.)

So, in the words of the old joke, if robots have no nose, how do they smell? We have plenty of machines that can recognize chemicals, including mass spectrometers and gas chromatographs, but they're elaborate, expensive, and unwieldy; not the sorts of things you could easily stuff up a nose. Nevertheless, robot scientists have successfully built simpler electrochemical detectors that resemble (at least, conceptually) the way the human nose converts smells into electrical signals. Once that job's done, and the sensor has produced a pattern of digital data, all you're left with is a computational problem; not "what does this smell like?" but "what does this data pattern represent?" It's exactly like seeing or hearing: once the signals have left your eyes, ears, or nose, and reached your brain, the problem is simply one of pattern recognition.
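To make "simply one of pattern recognition" concrete, here is a minimal Python sketch using a nearest-centroid classifier, one of the simplest pattern-recognition techniques (swapped in for illustration; it is not a claim about how any particular electronic nose works). It assumes a hypothetical array of four electrochemical sensors whose readings are made-up numbers: each known smell is summarized by the average of its example readings, and a new reading gets the label of the closest average.

```python
import math

# Hypothetical training data: each reading is the response of an imaginary
# 4-element sensor array (arbitrary units). All values are made up.
TRAINING = {
    "coffee": [[0.9, 0.2, 0.4, 0.1], [0.8, 0.3, 0.5, 0.1]],
    "smoke": [[0.1, 0.9, 0.2, 0.7], [0.2, 0.8, 0.1, 0.8]],
    "perfume": [[0.3, 0.1, 0.9, 0.2], [0.4, 0.2, 0.8, 0.3]],
}


def centroid(vectors):
    """Average a list of equal-length vectors, component by component."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


CENTROIDS = {label: centroid(readings) for label, readings in TRAINING.items()}


def identify(reading):
    """Label a new reading with the smell whose average pattern is nearest (Euclidean)."""
    return min(CENTROIDS, key=lambda label: math.dist(reading, CENTROIDS[label]))


print(identify([0.85, 0.25, 0.45, 0.15]))  # coffee
```

The sensor never "knows" what coffee is; it only finds which stored data pattern the new numbers most resemble, which is exactly the point the paragraph makes.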

Other senses

Photo: Engineers can build amazingly realistic prosthetic hands.

If we could modify these things with touch sensors, maybe they could double up as working hands for robots? Photo by Sarah Fortney courtesy of US Navy.

Although robots have had arms and primitive grabber claws for over half a century, giving them anything like a working human hand has proved far more of a challenge. Imagine a robot that can play Beethoven sonatas like a concert pianist, perform high-precision brain surgery, carve stone like a sculptor, or a thousand other things we humans can do with our touch-sensitive hands. As the New York Times reported in September 2014, building a robot with human touch has suddenly become one of the most interesting problems in robotics research.

Taste, too, boils down simply to using appropriate chemical sensors. If you want to build a food-tasting robot, a pH meter would be a good starting point, perhaps with something to measure viscosity (how easily a fluid flows). Then again, if you've already given your robot eyes and a nose, that would go a long way to giving it taste, because the look and smell of food play a big part in that.

One of the misleading things about trying to develop a humanoid robot is that it tricks us into replicating only the five basic human senses—and one of the great things about robots is that they can use any kind of sensor or detector we can lay our hands on. There's no need at all for robot vision to be confined to the ordinary visible spectrum of light: robots could just as easily see X rays or infrared (with heat detectors). Robots could also navigate like homing pigeons by following Earth's magnetic field or (better still) by using GPS to track their precise position from one moment to the next. Why limit ourselves to human limitations?

Cognition (thinking)

Thinking about thinking is a recipe for doing not much at all—other than thinking; that's the occupational hazard of philosophers. And if that sounds fatuous, consider all the books and scientific articles that have been published on artificial intelligence since British computer scientist Alan Turing developed what is now called the Turing test (a way of establishing whether a machine is "intelligent") in 1950. Psychologists, philosophers, and computer scientists have been wrestling with definitions of "intelligence" ever since. But that hasn't necessarily got them any nearer to developing an intelligent machine.

"No cognition. Just sensing and action. This is all I would build, and completely leave out... the intelligence of artificial intelligence."

Rodney A. Brooks, Robot: The Future of Flesh and Machines, p.36.

Emotional intelligence

Photo: Robots are designed with friendly faces so humans don't feel threatened when they work alongside them.

This one is called Emo and it lives at Think Tank, the science museum in Birmingham, England. Its digital-camera eyes help it to learn and recognize human expressions, while the rubber-tube lips allow it to smile and make expressions of its own.

Whether they're deemed intelligent or not, computers and robots are quintessentially logical and rational where humans are more emotional and inconsistent. Developing robots that are emotional—particularly ones that can sense and respond to human emotions—is arguably much more important than making intelligent machines. Would you rather your coworkers were cold, logical, hyperintelligent beings who could solve every problem and never make a mistake? Or friendly, easy-going, pleasant to pass time with, and fallibly human? Most people would probably choose the latter, simply because it makes for more effective teamwork—and that's how most of us generally get things done. So developing a likeable robot that has the ability to listen, smile, tell jokes round the water cooler, and sympathize when your life takes a turn for the worse is arguably just as important as making one that's clever. Indeed, one of the main reasons for developing humanoid robots is not to replicate human emotions but to make machines that people don't feel scared or threatened by—and building robots that can make eye contact, chuckle, or smile is a very effective way to do that.

Emotion is often in the eye of the beholder—especially when it comes to humans and machines. When people look at cars, they tend to see faces (two headlights for eyes, a radiator grille for a mouth) or link particular emotions with certain colours of paintwork (a red car is racy, a black one is dark and mysterious, a silver one is elegant and professional). In much the same way, people project feelings onto robots simply because of how they look or move: the robot has no emotions; the emotions it conjures up are entirely in your mind. One of the world's leading robot engineers, MIT's Rodney Brooks, tells a story of how he was involved in the development of a robotic baby toy so lifelike that it provoked sincere feelings of attachment in the adults and children who looked after it. Kismet, an "emotional robot" developed in the late 1990s by Cynthia Breazeal, one of his students, listens, coos, and pays attention to humans in a startlingly babylike way—to the extent that people grow very attached to it, as a parent to a child. Again, the robot has nothing like human emotions; it simply provokes an authentic emotional reaction in humans and we interpret our own feelings as though the robot were emotional too. In other words, we might redefine the problem of developing emotional robots as making machines that humans really care about.

Action (doing)

How a robot moves and responds to the world is the most important thing about it. Intelligent machines that sense and think but don't move or respond hardly qualify as robots; they're really just computers. Action is a much more complex problem than it might seem, both in humans and machines. In humans, the sheer number of muscles, tendons, bones, and nerves in our limbs makes coordinated, accurate body control a logistical nightmare. There's nothing easier than lifting your hand to scratch your nose—your brain makes it seem so easy—but if we try to replicate this sort of behavior in a machine, we instantly realize how difficult it is. That's one reason why, until relatively recently, virtually all robots moved around on wheels rather than fully articulated human legs (wheels are generally faster and more reliable, but hopeless at managing rough terrain or stairs).

Just because a robot has to move, it doesn't follow that it has to move like a person. Factory robots are designed around giant electric, hydraulic, or pneumatic arms fitted with various tools geared to specific jobs, like painting, welding, or laser-cutting fabric. No human can swivel their wrist through 360 degrees, but factory robots can; there's simply no good reason to be bound by human limitations. Indeed, there's no reason why robots have to act (move) like humans at all. Virtually every other animal you can think of, from salamanders and sharks to snakes and turkeys, has been replicated in robot form: it often makes much more sense for robots to scuttle round like animals than prance about like people. By the same token, making "emotional robots" (ones to which people feel emotions) doesn't necessarily have to mean building humanoids. That explains the instant success of Sony's robotic AIBO dogs, launched in 1999. They were essentially robotic pets onto which people projected their need for companionship.

Photo: Robots don't have to look or work like humans. This is BigDog, the infamous robotic "pack-mule" designed for the US military by Boston Dynamics.

Where most robots are electrically powered, this one is driven by four hydraulic legs powered by a small internal combustion engine from a go-kart. In theory, that gives it a big advantage over robots powered by batteries (it should be able to go much further); in practice, its official range is just 32km (20 miles). Photo by Kyle J. O. Olson courtesy of US Marine Corps.

Human perception and cognition are hard things for robots to emulate, partly because it's easy to get bogged down in abstract and theoretical arguments about what these terms actually mean. Action is a much simpler problem: movement is movement—we don't have to worry about defining it, the same way we worry over "intelligence," for example. Ironically, though we admire the remarkable grace of a ballet dancer, the leaps and bounds of a world-class athlete, or the painstaking care of a traditional craftsman, we take it for granted that robots will be able to zing about or make things for us with even greater precision. How do they manage it? Some use hydraulics. Most, however, rely on relatively simple, much more affordable electric stepper motors and servo motors, which let a robot spin its wheels or swing its limbs with pinpoint control. Unlike humans, who get tired and make mistakes, robots make reliably repeatable moves; they get it right every time.

What are robots actually like?

Real-world robots fall into two broad categories. Most are task-specific robots, designed to do one job and repeat it over and over again. Hardly any are general-purpose robots capable of doing a wide variety of jobs (in the way that humans are general-purpose flesh-and-blood machines). Indeed, those multi-purpose robots are still pretty much confined to robotics labs.

Robot arms

Photo: It might never have occurred to you that a robot built the car you're driving today. This Jaguar assembly robot (a Kawasaki ZX165U) is a demonstration model at Think Tank, the Birmingham science museum. It can lift loads of up to 300kg and reach up to 3.5m (11.5ft)—quite a bit more than a human arm!

Riveting and welding, swinging and sparking—most of the world's robots are high-powered arms, like the ones you see in car factories. Although they became popular in the 1970s, they were invented in the 1950s and first widely deployed in the 1960s by companies such as General Motors. The original robot arm, Unimate, made its debut on the Johnny Carson show back in 1966. Modern robot arms have more degrees of freedom (they can be turned or rotated in more ways) and can be controlled much more precisely.

Whether robot arms really qualify as robots is a moot point. Many of them lack much in the way of perception or cognition; they're simply machines that repeat preprogrammed actions. Fast, strong, powerful, and dangerous, they're usually fenced off in safety cages and seldom work anywhere near people (a recent article in the New York Times noted that 33 people have been killed by robots in the United States during the last 30 years). A few years ago, Rodney Brooks reinvented the whole idea of the robot arm with an affordable ($25,000), easy-to-use, user-friendly industrial robot called Baxter, which evolved into a similar machine named Sawyer. It can be "trained" (Brooks avoids the word "reprogrammed") simply by moving its limbs, and it has enough onboard sensory perception and cognition to work safely alongside humans, sharing (for example) exactly the same assembly line.

Photo: Robot arms are versatile, precise, and—unlike human factory workers—don't need rest, sleep, or holiday. But "all work and no play..." So this one is learning to play drums for a change, at Think Tank, the Birmingham science museum.

Remote-controlled (teleoperated) machines

Some of the machines we think of as robots are nothing of the kind: they merely appear robotic (and intelligent) because humans are controlling them remotely. Bomb disposal robots work this way: they're simply robot trucks with cameras and manipulator arms operated by joysticks. Until recently, space-exploration robots were designed much the same way, though autonomous rovers (with enough onboard cognition to control themselves) are now commonplace. So while 1997's Mars Sojourner (from the Pathfinder Mission) was semi-autonomous and largely remote-controlled from Earth, the much bigger and newer Mars Spirit and Opportunity rovers (launched in 2003) are far more autonomous.

Photo: Bomb-disposal robots are almost always remote-controlled. This one, Explosive Ordnance Disposal Mobile Unit (EODMU) 8, can pick up suspect devices with its jaw and carry them to safety. Photo by Joe Ebalo courtesy of US Navy.

Photo: NASA's FIDO was one of its first semi-autonomous robot rovers. Onboard cameras allow space scientists to control it remotely from Earth. Photo courtesy of NASA JPL Planetary Robotics Laboratory and NASA on the Commons.

Semi-autonomous household robots

If you've got a robot in your home, most likely it's a robot vacuum cleaner or lawn mower. Although these machines give the impression that they're autonomous and semi-intelligent, they're much simpler (and less robotic) than they appear. When you switch on a Roomba, it doesn't have any idea about the room it's cleaning—how big it is, how dirty it is, or the layout of the furniture. And, unlike a human, it doesn't attempt to build itself a mental model of the room as it's going along. It simply bounces off things randomly and repeatedly, working on the (correct) assumption that if it does this for long enough, the room will be fairly clean in the end. There are a few extra little tweaks, including a spiraling, on-the-spot cleaning mode that kicks in when a "dirt detect" sensor finds concentrated debris, and the ability to follow edges. But essentially, a Roomba cleans at random. Robot lawn mowers work in a somewhat similar way (sometimes with a tether to stop them straying too far).

General-purpose robots

Although advanced robots like Baxter can be trained to do many different things, they're still essentially single-domain machines. Whether they're picking out badly formed machine parts for quality control or shifting boxes from one place to another, they're designed only to work on factory floors. We still don't have a robot that can make the breakfast, take the kids to school, drive itself to work somewhere else, come back home again, clean the house, cook the dinner, and put itself on recharge—unless you count your husband or wife.

Back in the 1990s, when Kevin Warwick wrote his bestselling book March of the Machines, building intelligent, autonomous, general-purpose robots was considered an overly ambitious research goal. Engineers like Warwick typified a "hands-on" alternative approach to robotics, where grand plans were put aside and robots simply evolved as their creators figured out better ways of building robots with more advanced perception, cognition, and action. It's more like robot evolution, working from the bottom up to develop increasingly advanced creatures, than any sort of top-down approach that might be conceived by a kind of robot-world equivalent of God.

Roll time forwards, however, and much has changed. Although engineers like Kevin Warwick and Rodney Brooks are still champions of the pragmatic, bottom-up, minimal-cognition approach, elsewhere, general-purpose autonomous robots are making great strides forward—often literally, as well as metaphorically. The US Defense Department's research wing, DARPA, has sponsored competitions to develop humanoid robots that can cope with a variety of tricky emergency situations, such as rescuing people from natural disasters. (DARPA claims the intention is humanitarian, but similar technology seems certain to be used in robotic soldiers.) Thanks to video sites such as YouTube, robots like these, which would once have been top-secret, have been "growing up in public"—with each new incarnation of the stair-stomping, chair-balancing, car-driving robots instantly going viral on social media.

Self-driving cars

Photo: A cutting-edge, self-driving Lincoln MKZ packed with sensors, including roof-mounted lidar, GPS, and radar. Photo by Jake McClung courtesy of US Marine Corps.

Self-driving cars are a different flavor of general-purpose, autonomous robot. But they've yet to catch the public's imagination in quite the same way, perhaps because they've been developed more quietly, even secretly, by companies such as Google. Now you could argue that there's nothing remotely general-purpose about driving a car: it involves a robot operating successfully in a single domain (the highway) in just the same way as a Baxter (on the factory floor) or a Roomba (cleaning your home). But the sheer complexity of driving—even humans take years to properly master it—makes it, arguably, as much of a general-purpose challenge as the one the DARPA robots are facing. Think of all the different things you have to learn as a driver: starting off, stopping at a signal, turning a corner, overtaking, parallel parking, slowing down when the car in front indicates, emergency stops... to say nothing of driving by day or night, in all kinds of weather, on every kind of country road and superhighway. Maybe it would be easier just to stick a humanoid robot in the driving seat after all.

Our robot future

There's no unknowing the things we learn. Technologies cannot be uninvented. The march of the robots is unstoppable—but quite where they're marching to, no-one yet knows. Futurologists like Raymond Kurzweil believe humans and machines will merge after we reach a point called the singularity, where vastly powerful machines become more intelligent than people. Humans will download their minds to computers and zoom into the future, not in the "bodiless exultation" of cyberspace (as William Gibson once put it) but in a steel and plastic doppelganger: a machine-body powered by the immortal essence of a human mind.

More pragmatic, less dramatic scientists such as Rodney Brooks see a quieter form of evolution where the last few decades of robotic technology begin to augment what millions of years of natural selection have already cobbled together. Brooks argues that we've been on this path for years, with advanced prosthetic limbs, heart pacemakers, cochlear implants for deaf people, robot "exoskeletons" that paralyzed people can slip over their bodies to help them walk again, and (before much longer) widely available artificial retinas for the blind. There will be no revolutionary jump from human to robot but a smarter, smoother transition from flesh machines to hybrids that are part human and part robot.

--------------------------------------------------------------------------------------------------------------------------

Sensors and Transducers Used in Robots

The interaction between the robot and the environment must be informed by data on the state of the environment, and the features of the individual objects contained in the robot’s workspace, and information on the status of the robot itself and its mechanisms. We must know something about the mechanical and physical properties of the environment and particular objects, their position and orientation in space, the position and orientation of the robot links and the end-effector, the forces and velocities reached by these links, etc. These data are needed for the proper control of the robot using feedback loops, and can be provided by means of sensors and transducers, either intelligent (integrated with computer systems, such as vision systems), or non-intelligent (providing input data for the computer).

Functions of sensors in robots

The sensors used in robotics can be divided into two groups: sensors delivering information about parameters describing the state of the robot, and sensors delivering information about the state of the environment. All these data are present in the outputs of the sensors as signals representing corresponding parameter values.
The parameters describing the state of the robot include the position and velocity of its particular links and the forces exerted by those links. The parameters describing the state of the environment include the shapes, position and orientation of the objects grasped by the robot, the colors of these objects, parameters of the disturbances acting on the robot during operation, and other specific environmental data which must be known in order to perform the given operation. Robot sensors (for example vision sensors) are usually located within the robot links or in their close vicinity. The gripper usually features the highest concentration of sensors.
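A feedback loop simply means the controller keeps comparing what a sensor reports with what it wants and acts on the difference. Below is a minimal Python sketch of that idea for a single joint, assuming an idealized noise-free position sensor, an actuator that follows velocity commands exactly, and a purely proportional control law (real robot controllers commonly add integral and derivative terms, and use more sensors). The function `run_joint` and its gain `kp` are hypothetical names for illustration.

```python
def run_joint(target_deg, kp=2.0, dt=0.01, steps=500, start_deg=0.0):
    """Simulate a position feedback loop for one robot joint.

    Each control cycle reads the (idealized) position sensor, compares it with
    the target, and commands a joint velocity proportional to the error.
    """
    position = start_deg
    for _ in range(steps):
        measured = position              # ideal sensor: no noise, no delay
        error = target_deg - measured    # how far the joint still has to go
        velocity_cmd = kp * error        # proportional control law, deg/s
        position += velocity_cmd * dt    # ideal actuator follows the command
    return position


print(round(run_joint(90.0), 3))  # close to 90 after 5 simulated seconds
```

In this idealized model the error shrinks by a factor of (1 - kp*dt) every cycle, so the joint settles smoothly as long as kp*dt stays between 0 and 1; push kp*dt above 2 and the loop overshoots more each cycle and diverges.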

Types of Robot Sensors

- Light sensors. A Light sensor is used to detect light and create a voltage difference. ...

- Sound Sensor. ...

- Temperature Sensor. ...

- Contact Sensor. ...

- Proximity Sensor. ...

- Distance Sensor. ...

- Pressure Sensors. ...

- Tilt Sensors.

Robots need to use sensors to create a picture of whatever environment they are in. An example of a sensor used in some robots is called LIDAR (Light Detection And Ranging). ... Lasers illuminate objects in the environment, which reflect the light back. The robot analyzes these reflections to create a map of its environment.
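A typical scanning lidar reports a list of distances, one per beam angle. The first step toward a map is turning those distances into points. This is a minimal Python sketch assuming evenly spaced beam angles; the parameter names `angle_min` and `angle_increment` are borrowed from the common ROS LaserScan convention, but these functions are hypothetical helpers, not a ROS API. A range of 0 or beyond `max_range` is treated as "no echo".

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, max_range=10.0):
    """Convert one lidar sweep into (x, y) points in the robot's own frame.

    ranges[i] is the distance measured along the beam at angle
    angle_min + i * angle_increment (radians, counterclockwise from straight ahead).
    """
    points = []
    for i, r in enumerate(ranges):
        if 0 < r <= max_range:  # skip beams that returned no usable echo
            theta = angle_min + i * angle_increment
            points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def to_world(points, robot_x, robot_y, robot_heading):
    """Rotate and shift robot-frame points into map coordinates, given the robot's pose."""
    c, s = math.cos(robot_heading), math.sin(robot_heading)
    return [(robot_x + c * px - s * py, robot_y + s * px + c * py) for px, py in points]


# Three beams: straight ahead, to the left (no echo), and directly behind.
local = scan_to_points([1.0, 0.0, 2.0], angle_min=0.0, angle_increment=math.pi / 2)
print(to_world(local, robot_x=5.0, robot_y=5.0, robot_heading=0.0))
```

Collecting the world-frame points from many scans, each taken at a known pose, gradually builds up the kind of map the paragraph describes.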

Type of Sensor – The presence of an object can be detected with proximity sensors and other sensor technologies like ultrasonic sensors, capacitive, photoelectric, inductive, or magnetic; or for advanced applications, generally image sensors and vision software like OpenCV are used.

Sensors provide analogs to human senses and can monitor other phenomena for which humans lack explicit sensors.

- Simple Touch: Sensing an object's presence or absence.

- Complex Touch: Sensing an object's size, shape and/or hardness.

- Simple Force: Measuring force along a single axis.

- Complex Force: Measuring force along multiple axes.

- Simple Vision: Detecting edges, holes and corners.

- Complex Vision: Recognizing objects.

- Proximity: Non-contact detection of an object.

- Object Proximity: The presence/absence of an object, bearing, color, distance between objects.

- Physical orientation: The coordinates of an object in space.

- Heat: The wavelength of infrared or ultraviolet rays, temperature, magnitude, direction.

- Chemicals: The presence, identity, and concentration of chemicals or reactants.

- Light: The presence, color, and intensity of light.

- Sound: The presence, frequency, and intensity of sound.

Internal sensors

Internal sensors are part of the robot itself and measure its internal state. They are used to measure the position, velocity and acceleration of the robot's joints or end effectors.

Position sensor

Position sensors measure the position of a joint (the degree to which the joint is extended). They include:

- Encoder: a digital optical device that converts motion into a sequence of digital pulses.

- Potentiometer: a variable resistance device that expresses linear or angular displacements in terms of voltage.

- Linear variable differential transformer: a displacement transducer that provides high accuracy. It generates an AC signal whose magnitude is a function of the displacement of a moving core.

- Synchros and Resolvers
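For the potentiometer in the list above, reading a joint angle comes down to a proportion. A minimal Python sketch, assuming a linear potentiometer wired as a voltage divider across the reference voltage of a 10-bit analog-to-digital converter (readings 0 to 1023) and 270 degrees of mechanical travel; both numbers are illustrative assumptions, and `pot_angle` is a hypothetical helper.

```python
def pot_angle(adc_counts, adc_max=1023, travel_deg=270.0):
    """Convert a digitized potentiometer wiper voltage into a joint angle in degrees.

    With the pot wired across the ADC reference, the wiper voltage is the same
    fraction of the reference as the shaft's position is of its full travel.
    """
    if not 0 <= adc_counts <= adc_max:
        raise ValueError("reading outside the ADC range")
    return travel_deg * adc_counts / adc_max


print(pot_angle(341))  # one third of the range, so 90.0 degrees
```

The resolution is set by the converter here: 270 degrees spread over 1023 steps is roughly a quarter of a degree per count.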

Velocity Sensor

A velocity or speed sensor takes consecutive position measurements at known intervals and computes the time rate of change in the position values.

Applications

In a parts feeder, a vision sensor can eliminate the need for an alignment pallet. Vision-enabled insertion robots can precisely perform fitting and insertion operations of machine parts.

Robot Sensors

Sensors are devices for sensing and measuring geometric and physical properties of robots and the surrounding environment:

- Position, orientation, velocity, acceleration
- Distance, size
- Force, moment
- Temperature, luminance, weight
- etc.

Internal Sensors

Figure: acceleration sensors, velocity sensor, optical encoder.

Robot sensors can be classified into two groups: internal sensors and external sensors.

- Internal sensors obtain information about the robot itself: position sensors, velocity sensors, acceleration sensors, motor torque sensors, etc.

External Sensors

External sensors obtain information about the surrounding environment:

- Cameras for viewing the environment
- Range sensors: IR sensor, laser range finder, ultrasonic sensor, etc.
- Contact and proximity sensors: photodiode, IR detector, RFID, touch, etc.
- Force sensors: measuring the interaction forces with the environment
- etc.

Position Measurement

An optical encoder measures the rotational angle of a motor shaft.

- It consists of a light beam, a light detector, and a rotating disc with a radial grating on its surface.
- The grating consists of black lines separated by clear spaces. The lines and spaces have the same width.
- Line: cuts the beam, giving a low signal output.
- Space: lets the beam pass, giving a high signal output.
- A train of pulses is generated as the disc rotates. By counting the pulses, it is possible to know the rotational angle.

Optical Encoders

How can the resolution be increased, i.e. how can the value of s (the angle step per pulse) be made smaller?

- Increase the number of lines and spaces; this increases the manufacturing cost.
- Evaluate the two trains of pulses together, using set operations, interpolation, etc.
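One widely used way to evaluate two pulse trains is quadrature decoding, named here as a concrete technique rather than as the one the source had in mind: a second channel B is placed a quarter of a line period out of phase with channel A, and counting every rising and falling edge of both channels gives four counts per line (s becomes a quarter of the line angle) and also reveals the direction of rotation. The sketch below assumes that two-channel arrangement; the type and function names are hypothetical.

```c
/* Quadrature ("x4") decoding of channels A and B, offset by a quarter of a
 * line period. Every edge on either channel is counted, giving four counts
 * per line instead of one, with the sign giving the direction. */
typedef struct {
    unsigned prev;  /* last sampled state, (A << 1) | B */
    long count;     /* +1 per forward edge, -1 per reverse edge */
} QuadDecoder;

/* Indexed by (previous state << 2) | current state. Forward order is
 * 00 -> 01 -> 11 -> 10 -> 00; transitions where both bits change are
 * invalid and counted as 0. */
static const signed char QUAD_TABLE[16] = {
     0, +1, -1,  0,
    -1,  0,  0, +1,
    +1,  0,  0, -1,
     0, -1, +1,  0
};

void quad_init(QuadDecoder *d, int a, int b)
{
    d->prev = ((unsigned)(a != 0) << 1) | (unsigned)(b != 0);
    d->count = 0;
}

/* Call on every sample of the two channels. Returns 0 for a normal step,
 * -1 if both channels changed at once (a sample was missed and the step
 * cannot be attributed to a direction). */
int quad_update(QuadDecoder *d, int a, int b)
{
    unsigned curr = ((unsigned)(a != 0) << 1) | (unsigned)(b != 0);
    unsigned idx = (d->prev << 2) | curr;
    int invalid = (d->prev ^ curr) == 3u;
    d->count += QUAD_TABLE[idx];
    d->prev = curr;
    return invalid ? -1 : 0;
}
```

In firmware, quad_update would typically be called from a pin-change interrupt or a fast timer so that no transition is missed.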

In robotics, we are usually more interested in measuring joint angles than the angle of the motor shaft. Adding a reduction mechanism (gearbox, etc.) increases the measurement resolution of the joint angle n times, where n is the gear ratio (velocity ratio) of the reduction mechanism: one turn of the joint corresponds to n turns of the motor shaft.
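Combining the pulse count with the gear ratio gives the joint angle directly. A sketch assuming a hypothetical encoder that yields counts_per_motor_rev counts per motor revolution (for example 2000 from a 500-line disc decoded four times per line) and a reduction ratio n:

```c
/* Joint angle in degrees from motor-shaft encoder counts through a
 * reduction of ratio gear_ratio (n motor turns per joint turn). */
double joint_angle_deg(long counts, int counts_per_motor_rev, double gear_ratio)
{
    /* Motor angle is 360 * counts / counts_per_rev; the joint turns n times slower. */
    return (360.0 * counts) / (counts_per_motor_rev * gear_ratio);
}

/* Smallest measurable joint angle: the motor-side resolution divided by n. */
double joint_resolution_deg(int counts_per_motor_rev, double gear_ratio)
{
    return 360.0 / (counts_per_motor_rev * gear_ratio);
}
```

With 2000 counts per motor revolution and n = 100, the joint resolution is 360 / 200000 = 0.0018 degrees.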

Inertial Sensors

- Gyroscopes
  - Heading sensors that keep their orientation relative to a fixed frame, giving an absolute measure of the heading of a mobile system.
  - Two categories: mechanical gyroscopes and optical gyroscopes.
- Accelerometers
  - Measure accelerations with respect to an inertial frame.
  - Common applications: tilt sensing in static applications, vibration analysis, full inertial navigation systems (INS).

Applications of Gyroscopes

Gyroscopes can be perplexing objects because they move in peculiar ways and even seem to defy gravity. They are found in:

- a bicycle
- an advanced navigation system on the space shuttle
- a typical airplane, which uses about a dozen gyroscopes in everything from its compass to its autopilot
- the Russian Mir space station, which used 11 gyroscopes to keep its orientation to the sun
- the Hubble Space Telescope, which has a batch of navigational gyros as well

Accelerometers

Accelerometers measure the inertial force generated when a mass is subjected to a change in velocity. This force may change:

- the tension of a string
- the deflection of a beam
- the vibrating frequency of a mass
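The beam-deflection case can be written as a force balance: the inertial force m*a on the proof mass equals the restoring force k*x of the beam, so a = k*x / m. A sketch of that static, undamped model; the stiffness, mass, and deflection values used below are illustrative assumptions.

```c
/* Proof-mass accelerometer, static undamped model:
 * m * a = k * x  =>  a = k * x / m
 * k: beam (spring) stiffness in N/m, mass in kg, deflection in m.
 * Returns acceleration in m/s^2. */
double accel_from_deflection(double k_n_per_m, double mass_kg, double deflection_m)
{
    return k_n_per_m * deflection_m / mass_kg;
}
```

A 10 g proof mass on a 100 N/m beam that deflects 1 mm corresponds to about 10 m/s^2, roughly 1 g.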

Touch and Proximity Sensors

Touch and proximity sensors detect whether an object is close to or touching a robot. A proximity sensor does not give the distance; it only indicates that an object is present. Typical sensors:

- IR proximity sensors
- photodiodes
- touch sensors
- RFID detectors
- etc.

Photodiodes

Photodiodes generate a current or voltage when illuminated by light. Their working principle is the same as that of IR sensors; the difference lies in the wavelength of the light they sense.

Touch Sensors

Working principle:

- A force-sensing resistor changes its resistance when it is pressed or bent.
- A touch button closes the circuit when it is pressed.
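A force-sensing resistor is commonly read through a voltage divider, and a resistance threshold turns the analog reading into a touch/no-touch decision. A sketch assuming a hypothetical wiring (FSR from the supply to the output node, fixed resistor from the output node to ground) and illustrative component values:

```c
/* Divider: Vcc -- R_fsr -- (Vout) -- R_fixed -- GND.
 * Pressing the FSR lowers its resistance, which raises Vout. */
typedef struct {
    double vcc;      /* supply voltage, volts */
    double r_fixed;  /* fixed divider resistor, ohms */
} FsrDivider;

/* Solve Vout = Vcc * R_fixed / (R_fixed + R_fsr) for R_fsr. */
double fsr_resistance(const FsrDivider *d, double vout)
{
    return d->r_fixed * (d->vcc - vout) / vout;
}

/* Returns 1 if the FSR resistance is below the touch threshold, else 0. */
int fsr_is_touched(const FsrDivider *d, double vout, double r_threshold)
{
    if (vout <= 0.0)
        return 0;  /* no current through the divider: FSR effectively open */
    return fsr_resistance(d, vout) < r_threshold;
}
```

With a 5 V supply and a 10 kΩ fixed resistor, an output of 2.5 V means the FSR is at 10 kΩ; in practice the threshold would be tuned to the FSR's datasheet curve and the required touch force.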

Robot Actuators

- Electrical actuators
- Hydraulic actuators
- Pneumatic actuators
- Others (shape memory alloys (SMA), heat, etc.)

Hydraulic Actuation

Hydraulic actuators drive robot joints using the pressure of a fluid such as oil or water.

- Advantage: high power output.
- Disadvantages: difficult to control (hence low accuracy), slow response, large size, dirty.

Early robots used hydraulic actuation.

Pneumatic Actuation

Pneumatic actuators drive robot joints using air pressure.

- Advantages: clean, small, and cheap.
- Disadvantage: position is difficult to control precisely.

They are mainly used for the open/close control of robot grippers.

Electrical Actuators

Stepping motors, DC motors, AC motors, and servo motors.

- Advantages: small size, easy to control with high control accuracy, fast response, clean.
- Disadvantage: low power output compared to hydraulic actuators.

Motion Transmission

Why do we need a motion transmission mechanism? To:

- transfer motion from one type to another
- change the direction of motion
- change the speed of motion
- deliver large forces

Gears are the most commonly used transmission devices in robots. Gears are wheels with teeth, used to transfer motion or power from one moving part to another.
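For an ideal gear pair, the speed and force (torque) effects listed above follow from the tooth ratio. A sketch that ignores friction losses; the tooth counts and input values are illustrative, and the type and function names are hypothetical.

```c
typedef struct {
    double speed_rpm;
    double torque_nm;
} GearOutput;

/* Ideal (lossless) gear pair: the driven gear turns slower by the tooth
 * ratio and, since power is conserved, delivers proportionally more torque.
 * An external gear pair also reverses direction, which is not modelled here. */
GearOutput gear_pair(int teeth_driver, int teeth_driven,
                     double input_rpm, double input_torque_nm)
{
    double ratio = (double)teeth_driven / teeth_driver;  /* reduction ratio n */
    GearOutput out;
    out.speed_rpm = input_rpm / ratio;
    out.torque_nm = input_torque_nm * ratio;
    return out;
}
```

A 20-tooth pinion driving a 60-tooth gear gives a 3:1 reduction: 3000 rpm at 0.5 N·m becomes 1000 rpm at 1.5 N·m.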

Harmonic Drive

Components:

- a flex spline, which can deform
- a wave generator with a plug that pushes the flex spline
- a circular spline

Design: the flex spline has fewer teeth than the circular spline.

Working principle:

- As the wave generator plug rotates, it deforms the flex spline, so the flex spline teeth that mesh with the circular spline teeth keep changing.
- Because of the difference in tooth count, each revolution of the wave generator makes the flex spline and the circular spline rotate relative to each other by the angle corresponding to the tooth difference. With the circular spline fixed, the flex spline turns in the direction opposite to the wave generator.

Reduction ratio: (flex spline teeth - circular spline teeth) / flex spline teeth

Advantages:

- zero backlash
- high reduction ratio in a single stage
- compact and lightweight
- high torque capability
- coaxial input and output shafts
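The reduction-ratio formula is the output-to-input speed ratio for the common arrangement with the circular spline fixed, the wave generator as input, and the flex spline as output; it comes out negative because the flex spline turns against the wave generator. A small sketch with illustrative tooth counts (the function name is hypothetical):

```c
/* Speed ratio (flex spline output / wave generator input) with the
 * circular spline fixed: (N_flex - N_circ) / N_flex.
 * Negative because N_flex < N_circ: the output turns opposite to the input. */
double harmonic_drive_ratio(int n_flex, int n_circ)
{
    return (double)(n_flex - n_circ) / n_flex;
}
```

With 200 flex spline teeth and 202 circular spline teeth the ratio is -2/200 = -1/100, i.e. a 100:1 reduction in a single stage.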

Example: A Simple Robot Design

Three rules of robotics (Isaac Asimov's Three Laws of Robotics):

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given to it by human beings, except where such orders would conflict with the First rule.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second rule.

The robot was created to explore:

- Robotic hardware technologies and mechanical components

- Schematics and circuit solutions

- Microcontrollers, sensors and other electronic components

- Embedded software programming

- Real-time intelligent processing algorithms

The robot's main design objectives were:

- Simple mechanical hardware: parts that are easy to find and build with

- Simple schematics: a minimal number of electronic circuit components

- Most of the complexity placed in the robot software, which is easier to enhance and expand than hardware and circuitry

- Low cost of parts

Robot Functional Diagram

Robot Design Highlights

Under the hood (main board view):

- Main processor: Philips P89C51RD2 microcontroller

Robot Sensory System

- Proximity sensors
  - Passive: front-left, front-right, and rear contact bumpers
  - Active: infrared (IR) emitter/receiver sensors using a modulated triangulation algorithm
    - Sharp GP2D15 short-range sensor (front, at the base)
    - Sharp GP2Y0A0YK long-range IR sensor, which detects obstacles up to 10 feet away. It is mounted on a rotating head positioned by a bipolar Clifton Precision stepper motor, allowing a 360-degree sweep.

- Locomotion speed sensors: independent for each wheel, using reflective optical encoding

- Head home sensor

- Light sensor

- Tilt sensor

- Low supply voltage sensor
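The triangulation principle behind the active IR sensors can be shown with similar triangles: the emitter and the receiving lens sit a baseline b apart, and the reflected spot lands on the position-sensitive detector at an offset x from the lens axis, so the distance is d = f * b / x, where f is the lens focal length. This is a geometric sketch with illustrative dimensions and a hypothetical function name, not the Sharp modules' internal processing; the modules report their result as an output signal that must be interpreted using their datasheets.

```c
/* Optical triangulation by similar triangles: d / b = f / x, so d = f * b / x.
 * All lengths in the same unit (e.g. millimetres).
 * Returns -1.0 if no spot offset is detected (object absent or out of range). */
double triangulation_distance(double focal_len, double baseline, double spot_offset)
{
    if (spot_offset <= 0.0)
        return -1.0;
    return focal_len * baseline / spot_offset;
}
```

With f = 10 mm, b = 20 mm, and a 0.5 mm spot offset, the object is 400 mm away. Because x shrinks as 1/d, the same detector offers much finer distance resolution up close than far away.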

Robot Propulsion:

- Independent control of the left and right gearboxes and drive wheels

- Toshiba TA7267BP full-bridge (H-bridge) motor driver

- Precise power load control using Pulse Width Modulation (PWM)

- Continuous adjustment of the robot speed using IR sensor feedback with optical encoders, automatically compensating for surface characteristics, angle/slope, battery charge level, etc.
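The speed feedback described above combines two steps: computing wheel speed from encoder ticks counted over a fixed period (the time rate of change of position) and correcting the PWM duty cycle. The article does not publish its control law, so the sketch below assumes a simple incremental correction; all names, gains, and wheel dimensions are hypothetical.

```c
typedef struct {
    double ticks_per_rev;  /* encoder ticks per wheel revolution */
    double wheel_circ_m;   /* wheel circumference, metres */
    double kp;             /* correction gain, duty per (m/s) of error */
    double duty;           /* current PWM duty cycle, 0.0 .. 1.0 */
} WheelController;

/* Wheel speed in m/s from the ticks counted during dt seconds. */
double wheel_speed(const WheelController *c, long ticks, double dt)
{
    return (ticks / c->ticks_per_rev) * c->wheel_circ_m / dt;
}

/* One control step; returns the new duty cycle, clamped to [0, 1].
 * Adding kp * error to the previous duty accumulates the error over time
 * (integral action), so a load that needs more power, such as a slope or a
 * weaker battery, is compensated automatically. */
double wheel_control_step(WheelController *c, double target_mps, long ticks, double dt)
{
    double error = target_mps - wheel_speed(c, ticks, dt);
    c->duty += c->kp * error;
    if (c->duty < 0.0)
        c->duty = 0.0;
    if (c->duty > 1.0)
        c->duty = 1.0;
    return c->duty;
}
```

Called every dt seconds with the ticks counted since the previous call, this raises the duty cycle when the wheel runs slow and lowers it when the wheel runs fast. A real controller would also need tuning of kp and handling of a stalled wheel.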

Communication with Robot

- Heartbeat LED indicator

- LED Signal Tower (3 LEDs/7 colors)

- Sound effects using audio beeper

- Audible Morse code system to communicate error codes and status messages

- Robot Mode switches

- Modified RS232 UART interface for robot firmware upload and maintenance

- 27 MHz 4-channel radio control/command input interface
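The Morse status messages can be generated from a numeric error code with a lookup table. The sketch below assumes error codes are plain decimal numbers (the article does not specify the code format) and only builds the dot/dash pattern and its length in standard Morse timing units; driving the beeper is left out because it depends on the hardware. Function names are hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Morse patterns for the digits 0-9: '.' is a short beep, '-' a long beep. */
static const char *const MORSE_DIGITS[10] = {
    "-----", ".----", "..---", "...--", "....-",
    ".....", "-....", "--...", "---..", "----."
};

/* Writes the Morse form of an error code into buf, one space between
 * digits (42 -> "....- ..---"). Returns 0 on success, -1 if buf is too
 * small (buf may then hold a partial result). */
int morse_encode_code(unsigned code, char *buf, size_t buf_len)
{
    int digits[20];
    int n = 0;
    size_t used = 0;

    /* Collect decimal digits, least significant first. */
    do {
        digits[n++] = (int)(code % 10u);
        code /= 10u;
    } while (code != 0u);

    /* Emit the most significant digit first. */
    while (n-- > 0) {
        const char *pat = MORSE_DIGITS[digits[n]];
        size_t len = strlen(pat);
        size_t sep = (used > 0) ? 1 : 0;
        if (used + sep + len + 1 > buf_len)  /* +1 for the terminating NUL */
            return -1;
        if (sep)
            buf[used++] = ' ';
        memcpy(buf + used, pat, len);
        used += len;
        buf[used] = '\0';
    }
    return 0;
}

/* Duration in dot units using standard Morse timing: dot = 1, dash = 3,
 * gap between elements of one character = 1, gap between characters = 3. */
unsigned morse_duration_units(const char *s)
{
    unsigned units = 0;
    for (size_t i = 0; s[i] != '\0'; i++) {
        if (s[i] == ' ') {
            units += 3;
            continue;
        }
        units += (s[i] == '-') ? 3 : 1;
        if (s[i + 1] != '\0' && s[i + 1] != ' ')
            units += 1;  /* gap inside the character */
    }
    return units;
}
```

A beeper routine would then sound 1 unit for '.' and 3 units for '-', with the same gaps that morse_duration_units counts.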

Robot Software Architecture

Concept overview:

- The first step after the robot powers up is a thorough system self-diagnostic, including tests of the hardware and electronic circuitry.

- The Robot's main routine consists of an infinite loop, within which various logic sub-routines are called in sequence.

- Example routines include:
  - processing sensory interrupts (bumper activation, object detection within IR proximity range, unexpected changes in speed, ...)
  - behavior decisions (deciding on driving directions, setting goals, etc.)
  - processing communication commands
  - minor self-diagnostics
  - etc.

- The routines are designed to take little time and exit almost immediately. They do not wait for their task to complete (e.g. turning the robot 180 degrees); instead, they add the required entries to the robot's task message queue and exit.

- The robot task queue is constantly monitored and its tasks are processed by the separate timer and sensory interrupt driven routines. Tasks in the message queue have various types and priority levels.

- C programming language

- Message Task Queue

- Real-time pre-emptive multitasking algorithm

- Stalled wheel detection routines and PWM load balancing
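The post-and-return pattern of the main-loop routines can be sketched as a small fixed-size priority queue, which suits an 8051-class microcontroller with no heap. The article does not show its firmware, so the task types, capacity, and function names below are hypothetical. On real hardware, posting and taking must run with interrupts disabled (or otherwise be made atomic), because interrupt routines and the main loop share the queue; this sketch does not do that.

```c
#include <stdbool.h>

/* Hypothetical task types; the article does not list the real ones. */
typedef enum { TASK_STOP, TASK_TURN, TASK_DRIVE, TASK_BEEP } TaskType;

typedef struct {
    TaskType type;
    unsigned char priority;  /* higher value = more urgent */
    int arg;                 /* e.g. degrees to turn */
} Task;

#define QUEUE_CAP 8

typedef struct {
    Task items[QUEUE_CAP];
    unsigned char count;
} TaskQueue;

/* Called by the fast main-loop routines: record the task and return at once. */
bool task_post(TaskQueue *q, TaskType type, unsigned char priority, int arg)
{
    if (q->count >= QUEUE_CAP)
        return false;  /* queue full: the caller decides what to drop */
    q->items[q->count].type = type;
    q->items[q->count].priority = priority;
    q->items[q->count].arg = arg;
    q->count++;
    return true;
}

/* Called by the timer/interrupt-driven processing: take the most urgent
 * task. Among equal priorities the oldest task wins (FIFO order). */
bool task_take(TaskQueue *q, Task *out)
{
    unsigned char best = 0;
    if (q->count == 0)
        return false;
    for (unsigned char i = 1; i < q->count; i++)
        if (q->items[i].priority > q->items[best].priority)
            best = i;
    *out = q->items[best];
    /* Shift the remaining tasks down to keep their insertion order. */
    for (unsigned char i = best; i + 1 < q->count; i++)
        q->items[i] = q->items[i + 1];
    q->count--;
    return true;
}
```

A linear scan is adequate for a handful of entries; a larger queue would more likely use a heap or one FIFO per priority level.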
