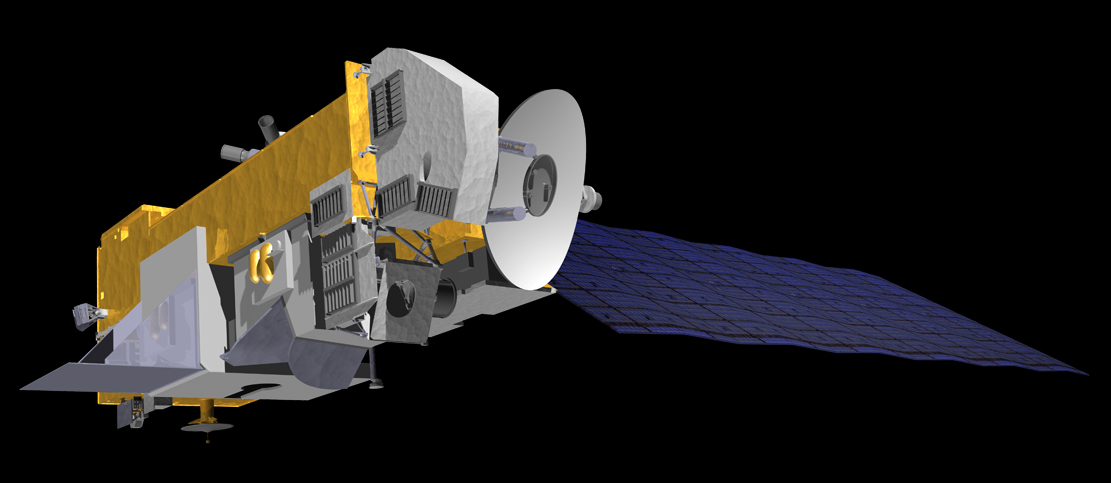

Earth observation satellite

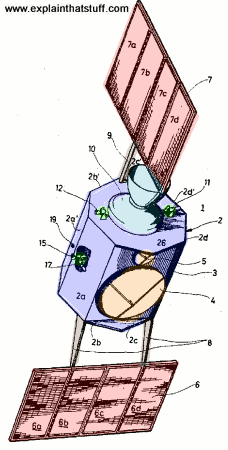

Most Earth observation satellites carry instruments that should be operated at a relatively low altitude. Altitudes below 500-600 kilometers are generally avoided, however, because air drag at such low altitudes is significant enough to make frequent orbit-reboost maneuvers necessary. The European Space Agency's Earth observation satellites ERS-1, ERS-2 and Envisat, as well as EUMETSAT's MetOp spacecraft, all operate at altitudes of about 800 km. ESA's Proba-1, Proba-2 and SMOS spacecraft observe the Earth from an altitude of about 700 km. The UAE's Earth observation satellites, DubaiSat-1 and DubaiSat-2, are also in low Earth orbit (LEO) and provide satellite imagery of various parts of the Earth.

To get (nearly) global coverage, a low orbit must be polar or nearly so. A low orbit has an orbital period of roughly 100 minutes, during which the Earth rotates about 25 degrees around its polar axis; as a result, the ground track shifts about 25 degrees of longitude westward with each successive orbit. Most of these satellites are in sun-synchronous orbits.
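The 100-minute period and the 25-degree shift both follow from Kepler's third law and the Earth's rotation rate. The sketch below is a rough illustration that assumes a circular orbit and ignores the slow drift of the orbital plane; the constant and function names are our own, not from any particular library.

```python
import math

MU_EARTH = 398_600.4418     # km^3/s^2, Earth's gravitational parameter
R_EARTH = 6378.137          # km, equatorial radius
SIDEREAL_DAY_MIN = 1436.07  # minutes for one rotation of the Earth relative to the stars

def orbital_period_min(altitude_km):
    """Period of a circular orbit at the given altitude (Kepler's third law)."""
    a = R_EARTH + altitude_km
    return 2 * math.pi * math.sqrt(a**3 / MU_EARTH) / 60

def ground_track_shift_deg(period_min):
    """Westward longitude shift of the ground track between successive orbits,
    assuming the orbital plane stays fixed in space."""
    return 360.0 * period_min / SIDEREAL_DAY_MIN

# Altitudes quoted for the ESA and EUMETSAT spacecraft
for h in (700, 800):
    T = orbital_period_min(h)
    print(f"{h} km: period {T:.1f} min, shift {ground_track_shift_deg(T):.1f} deg")
```

At both altitudes the period comes out close to 100 minutes, and a 100-minute orbit gives a shift of about 25 degrees.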

Spacecraft carrying instruments for which an altitude of about 36,000 km is suitable sometimes use a geostationary orbit. Such an orbit allows uninterrupted coverage of more than a third of the Earth's surface. Three geostationary spacecraft spaced 120 degrees apart in longitude can cover the whole Earth except the extreme polar regions. This type of orbit is used mainly for meteorological satellites.
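The 36,000 km figure and the coverage claims can be checked with a little geometry. This is a sketch assuming a spherical Earth and counting everything above the geometric horizon (0 degrees elevation) as "covered"; real instruments need the satellite well above the horizon, so usable coverage is somewhat smaller.

```python
import math

MU_EARTH = 398_600.4418     # km^3/s^2, Earth's gravitational parameter
R_EARTH = 6378.137          # km, equatorial radius
SIDEREAL_DAY_S = 86_164.09  # s, one rotation of the Earth relative to the stars

def geostationary_radius_km():
    """Kepler's third law solved for the orbit whose period equals one sidereal day."""
    return (MU_EARTH * SIDEREAL_DAY_S**2 / (4 * math.pi**2)) ** (1 / 3)

def visible_fraction(radius_km):
    """Fraction of the Earth's surface above the geometric horizon (spherical cap)."""
    return (1 - R_EARTH / radius_km) / 2

def max_central_angle_deg(radius_km):
    """Largest angle, at the Earth's centre, between the sub-satellite point
    and a point that can still see the satellite on the horizon."""
    return math.degrees(math.acos(R_EARTH / radius_km))

r = geostationary_radius_km()
print(f"altitude ~ {r - R_EARTH:.0f} km")
print(f"visible fraction ~ {visible_fraction(r):.2f}")
print(f"reach ~ {max_central_angle_deg(r):.1f} deg from the sub-satellite point")
```

The reach of roughly 81 degrees from the sub-satellite point is more than the 60 degrees needed on each side for three satellites 120 degrees apart to overlap in longitude, while latitudes beyond about 81 degrees never see the satellite above the horizon.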

Weather

A weather satellite is a type of satellite that is primarily used to monitor the weather and climate of the Earth.[7] These meteorological satellites, however, see more than clouds and cloud systems. City lights, fires, effects of pollution, auroras, sand and dust storms, snow cover, ice mapping, boundaries of ocean currents, energy flows, etc., are other types of environmental information collected using weather satellites.

Weather satellite images helped in monitoring the volcanic ash cloud from Mount St. Helens and activity from other volcanoes such as Mount Etna.[8]

Smoke from fires

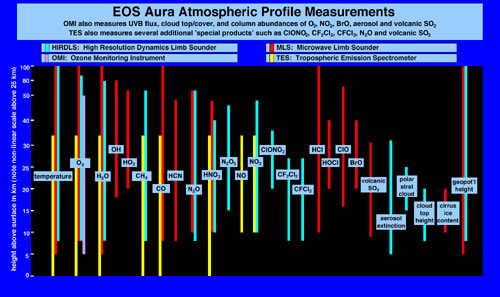

Environmental monitoring

Other environmental satellites can assist environmental monitoring by detecting changes in the Earth's vegetation, atmospheric trace-gas content, sea state, ocean color and ice fields. Droughts, for instance, can be monitored by comparing the current state of vegetation with its long-term average.[9] Changes at sea can be tracked as well: the 2002 oil spill off the northwest coast of Spain was watched carefully by the European ENVISAT, which, though not a weather satellite, carries an instrument (ASAR) that can see changes in the sea surface. Anthropogenic emissions can be monitored by evaluating data on tropospheric NO2 and SO2.
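The drought comparison amounts to computing an anomaly: the current value of a vegetation index minus its long-term average for the same time of year. The text does not name an index; this sketch assumes the widely used NDVI (Normalized Difference Vegetation Index), computed from near-infrared and red reflectance, and the reflectance values are made up for illustration.

```python
from statistics import mean

def ndvi(nir, red):
    """Normalized Difference Vegetation Index from near-infrared and red reflectance."""
    return (nir - red) / (nir + red)

def vegetation_anomaly(current, history):
    """Current index value minus the long-term average for the same period.
    Negative values mean vegetation is below normal, a possible drought signal."""
    return current - mean(history)

# Hypothetical reflectances for one pixel, same month in three earlier years
past = [ndvi(0.45, 0.08), ndvi(0.48, 0.07), ndvi(0.44, 0.09)]
# Hypothetical reflectances for the same pixel this year
now = ndvi(0.30, 0.12)
print(f"anomaly: {vegetation_anomaly(now, past):+.2f}")
```

In practice the average would be taken over many years and computed per pixel across whole images, but the comparison is the same.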

These satellites are almost always in sun-synchronous and "frozen" orbits. A sun-synchronous orbit is generally close enough to polar to give the desired global coverage, while its relatively constant geometry with respect to the Sun is usually an advantage for the instruments. A "frozen" orbit is chosen because it is the closest to a circular orbit that is possible in the Earth's gravitational field.
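A sun-synchronous orbit exploits the Earth's equatorial bulge (the J2 term of its gravity field), which makes the orbital plane precess. Choosing the inclination so that the plane turns about 0.986 degrees per day, once per year, keeps the geometry to the Sun constant. This sketch uses the standard first-order J2 formula for a circular orbit and ignores the small eccentricity of a frozen orbit; it shows why such orbits are only "close to" polar, at roughly 98 degrees.

```python
import math

MU_EARTH = 398_600.4418   # km^3/s^2, Earth's gravitational parameter
R_EARTH = 6378.137        # km, equatorial radius
J2 = 1.08263e-3           # Earth's oblateness coefficient
# The orbital plane must turn 360 degrees per tropical year to track the Sun
SUN_SYNC_RATE = 2 * math.pi / (365.2422 * 86_400)  # rad/s

def sun_synchronous_inclination_deg(altitude_km):
    """Inclination of a circular orbit whose J2-driven nodal precession
    matches the Sun's apparent motion (first-order theory only)."""
    a = R_EARTH + altitude_km
    n = math.sqrt(MU_EARTH / a**3)  # mean motion, rad/s
    # dOmega/dt = -1.5 * n * J2 * (R/a)^2 * cos(i); solve for cos(i)
    cos_i = -SUN_SYNC_RATE / (1.5 * n * J2 * (R_EARTH / a) ** 2)
    return math.degrees(math.acos(cos_i))

for h in (700, 800):
    print(f"{h} km -> {sun_synchronous_inclination_deg(h):.1f} deg")
```

The inclination is slightly greater than 90 degrees (a slightly retrograde orbit), so the satellite passes within about 8 degrees of each pole, which is close enough for nearly global coverage.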

Mapping

Terrain can be mapped from space with the use of satellites, such as Radarsat-1 and TerraSAR-X.

Satellites: the sky has eyes

There are approximately 120 private companies in the world that operate about a third of the thousands of small, orbiting, uninhabited space vehicles commonly known as satellites; none of these companies is American. In the USA, the only entities allowed to own and operate satellites -- even those used for scientific research, or to provide commercial services relating to communications, entertainment or weather forecasting -- are the Department of Defense (DOD) and the USA's independent intelligence-gathering agencies: the Central Intelligence Agency (created in 1947), the National Security Agency (1952), the National Reconnaissance Office (1960) and the National Imagery and Mapping Agency (1996). Foreign governments, private companies and scientific institutions must either pay a fee or enter into an exclusive information-sharing agreement (or both) if they want access to America's satellites.

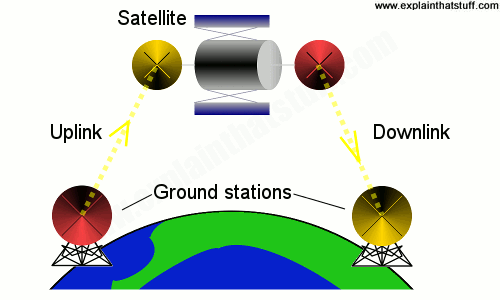

In the other countries that have placed satellites in orbit (Russia, China, France, Japan, India, Canada and Israel), the military/intelligence satellites (if any) are kept separate from the commercial ones, and private companies can operate their own satellite systems. This makes sense from an operational point of view. With some exceptions, military satellites are designed to move in complex elliptical orbits around the earth, so that they can photograph very large areas of the surface (whole continents) and locate the sources of certain microwave transmissions. Commercial satellites, by contrast, are "geo-stationary," that is, designed to stay "motionless" in orbit above, or rather in between, specific locations that can't be connected by cables (see diagram below).

The US military makes extensive use of both kinds of satellites. As might be expected, geo-stationary satellites are used to produce very detailed radar images or photographs of small, strategically important locations, and to gather signals intelligence by intercepting and eavesdropping on certain transmissions. But the DOD also uses geo-stationary satellites to guide precision weapons to their targets. The satellites do this by directing down to the surface a laser beam that can be detected, locked on to and then followed all the way down by bombs or missiles that have laser sensors installed in their nose cones. And so, unlike their "cousins," the uninhabited aerial vehicles, these satellites aren't fully automated killers. But they're close, and will get even closer if self-avowed President George W. Bush succeeds in his effort to revive Ronald Reagan's "Star Wars" missile defense plan from the 1980s.

It is highly instructive that the very first American satellite was a "reconnaissance" (spy) satellite and that it was the product of an intense, behind-the-scenes competition between the DOD and the CIA, which are still in competition with each other. (Note the battle to see who will achieve "Total Information Awareness" first.) On February 7, 1958 -- three years after the Air Force had begun work on a satellite that would provide continuous surveillance of "pre-selected areas of the earth" in order "to determine the status of a potential enemy's war-making capability," and one year after the Soviets launched Sputnik, thereby beating the Americans into space -- President Dwight Eisenhower turned to the CIA and asked it to develop a reconnaissance satellite of its own. Unlike the Air Force's satellite program, which had tried unsuccessfully to produce a satellite that would use electronics to beam its photographs down to earth, the CIA's CORONA program concentrated on producing a satellite that would simply put its photographs into a canister that could be dropped down and recovered from wherever it landed.

To make sure that the DOD didn't feel slighted, on February 7, 1958 Eisenhower also created the Advanced Research Projects Agency (ARPA), which later became the Defense Advanced Research Projects Agency (DARPA). The DOD could also console itself with the fact that it still operated the Space Surveillance Network, which ever since 1957 has used ground-based radar and optical sensing equipment to detect, identify, track and catalogue all the artificial objects orbiting Earth, including satellites (both active and inactive), spent rocket bodies and other pieces of debris, even those as small as baseballs.

It took the CIA over a year and 14 separate launches to get an operational CORONA satellite into orbit. But Eisenhower deemed it worth the expense and the wait. In a single day, the satellite's Keyhole-1 camera, which could "resolve" objects as small as 40 feet long, photographed more of the Soviet Union than all of the Air Force's U-2 spy planes put together had photographed over a period of years.

In 1963, CORONA was supplemented by GAMBIT, a "close-look" or "spotting" satellite designed to target specific areas. Thanks to its high-resolution Keyhole-7 camera, which could resolve objects as small as 18 inches long, GAMBIT was able to take photographs useful for detailed intelligence work, such as inspecting foreign weapons systems. After GAMBIT, the CIA developed HEXAGON, which could cover even more ground than CORONA and still resolve objects as small as 1 or 2 feet long. At the same time (the early 1970s), the Air Force developed the Defense Support Program, which placed a constellation of satellites in geostationary orbit around the Earth. Unlike their predecessors, these satellites carried infrared sensors as well as photographic cameras, which allowed them to work at night and despite heavy cloud cover.

According to the European Parliament and other researchers, the early 1970s was also the period in which the various American intelligence-gathering agencies -- trying to keep up with the satellite launches of the International Telecommunications Satellite Organization, formed in 1964 and capable of providing truly global coverage in 1968 -- developed the ECHELON system. Built upon a 1947 intelligence-sharing agreement between the USA, Canada, England, Australia and New Zealand, the ECHELON system uses immense ground-based listening stations (see picture below) to intercept and eavesdrop upon satellite-based communications. These listening stations are dispersed throughout the world, in such places as Yakima (Washington State, USA), Sugar Grove (West Virginia, USA), Menwith Hill (England), Hong Kong, Guam, Misawa Air Base (Japan), and Sabana Seca (Puerto Rico); they allow the English-speaking countries (the USA, mostly) to eavesdrop upon what the rest of the world is saying in their faxes, telexes and international telephone calls.

(Listening station in West Virginia, USA.)

By 1976, satellites such as CRYSTAL were finally able to do what Eisenhower wanted the Air Force's first satellites to do, namely, use electronic signals to relay images down to earth as soon as they are captured by the satellite's (Keyhole-11) cameras. But, despite their incredible sophistication, these space-to-earth transmissions -- like all wireless broadcasts -- were and still are vulnerable to interception.

In 1982, the Department of Defense began launching the first of the 24 satellites that today enable the operation of the Global Positioning System (GPS). Because it is the subject of a vast and rapidly expanding literature, GPS has been taken up in a separate entry.

Wireless surveillance in England.

The camera is at lower left; the modulator is on the right; and the microwave "beamer" is at upper left.

There are several serious problems with using traditional coaxial or even high-tech fiber-optic cables to transmit video images from surveillance cameras to the stations at which the images are monitored and/or recorded. Cables are easily damaged or severed by bad weather, birds, insects, accidents or saboteurs, and thus require nearly constant monitoring, maintenance and repair. Furthermore, cables cannot be strung over long distances without sacrificing image quality or building expensive booster stations along the way. The use of cable thus requires the monitoring stations to be physically close to the areas under video surveillance. But proximity might be undesirable if the surveillance is intended to be covert, or impossible if the subject of surveillance is constantly on the move.

To solve these problems, video surveillance has gone wireless. There are several means by which live video images can be transmitted without cables or wires: lasers, radar signals and radio (all of which are forms of electromagnetic radiation, differing only in frequency). The most commonly used is radio frequency ("radio" for short), which includes the microwave band. Radio broadcasts intended to travel long distances usually rely on ground-based antennas or satellites that relay the signal from the transmitter to the receiver. Broadcasts intended to travel short distances are usually beamed "point-to-point," that is, directly from the transmitter to the receiver. (Unfortunately for the people who visit and work in the area, an example of a high-powered point-to-point system can be found right in the heart of New York City's Times Square.)
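Because all of these signals travel at the speed of light, frequency and wavelength are tied together by c = fλ, which is what "differing only in frequency" means in practice. The frequencies below are illustrative assumptions, not values from the text.

```python
C = 299_792_458  # speed of light in vacuum, m/s

def wavelength_m(frequency_hz):
    """Wavelength of an electromagnetic wave: lambda = c / f."""
    return C / frequency_hz

# Illustrative frequencies (assumed, not taken from the text)
examples = {
    "FM radio, 100 MHz": 100e6,
    "microwave link, 2.4 GHz": 2.4e9,
    "infrared laser, 1550 nm": C / 1550e-9,
}
for name, f in examples.items():
    print(f"{name}: {f:.3g} Hz, wavelength {wavelength_m(f):.3g} m")
```

Radio wavelengths run from metres down to centimetres, while lasers operate at wavelengths hundreds of thousands of times shorter.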

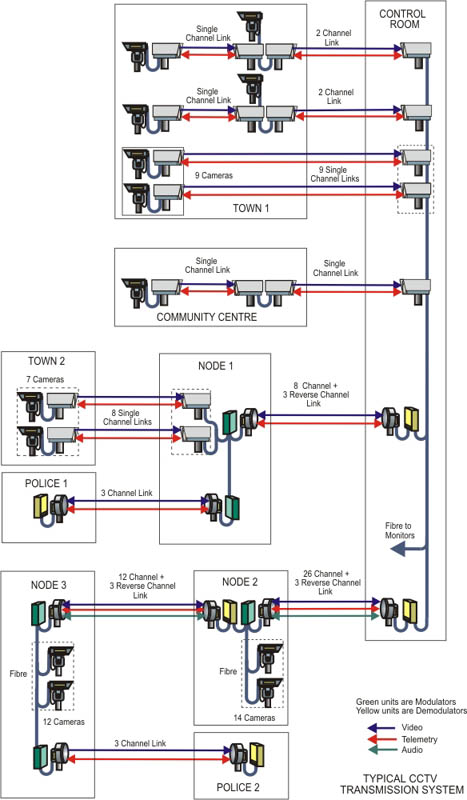

In England, whole towns are covered by complex networks of point-to-point systems. This schematic explains the operation of the secure system of which the wireless surveillance camera depicted above is a part. Note that the video signal is first "modulated" (encrypted), then relayed to a "control room," where it is "demodulated," then relayed through a "node," where it is modulated again, before it finally reaches the police station, where it is (no doubt) decrypted and watched and/or recorded.

In America, many law enforcement agencies use radio signals to transmit, relay and receive live video images. These agencies include the California Highway Patrol, the US Border Patrol and the New York Police Department (NYPD). Their use of wireless cameras can be either covert or overt.

In covert operations ("stake-outs"), the cameras are usually small devices that beam their signals over short distances (usually to an unmarked van or car parked nearby) from hidden, semi-permanent locations on or near the suspects. In overt operations ("routine patrols"), the cameras are usually large devices that beam their signals over relatively long distances (back to headquarters or some other command-and-control center) from their mounts atop news-style vans or mobile command centers (usually buses). Overt wireless spy cameras can also be installed on helicopters, planes, unmanned aerial vehicles and satellites.

Whether used covertly or overtly, wireless video is a risky business for two reasons: 1) though such transmissions are often "modulated" or otherwise encrypted, they are nevertheless sent on "open," non-secured frequencies, and so can be intercepted and, given time, decoded; and 2) officers routinely stationed near the transmitters can suffer serious health problems if over-exposed to microwave radiation.
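The second risk depends strongly on distance. In the far field, the power density along an antenna's main beam falls off with the square of the distance, S = PG / (4πr²). The transmitter power and antenna gain below are hypothetical, and the formula says nothing about the actual levels of any particular installation; it only illustrates why the people stationed closest to a transmitter receive by far the most exposure.

```python
import math

def power_density_w_per_m2(power_w, gain_linear, distance_m):
    """Far-field power density on an antenna's main beam: S = P * G / (4 * pi * r^2)."""
    return power_w * gain_linear / (4 * math.pi * distance_m**2)

# Hypothetical link: a 1 W transmitter feeding a 20 dBi dish (linear gain 100)
P = 1.0
G = 10 ** (20 / 10)
for r in (1, 10, 100):
    print(f"{r:>4} m: {power_density_w_per_m2(P, G, r):.4f} W/m^2")
```

Each tenfold increase in distance cuts the power density a hundredfold. Very close to the antenna (in its near field) this simple formula no longer applies.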

wireless video surveillance

radio frequencies and microwaves

MSNBC and the Microwave Monster

Without intending to do so or realizing what it did, MSNBC (cable television) solved a small but important mystery during the program that it aired between 2:30 pm and 3 pm (EST) on Tuesday, 3 July 2001. Devoted to face recognition software and the use of surveillance cameras in public places, this program -- an awkward mish-mash of live and pre-recorded segments -- included a short interview with Bill Brown of the Surveillance Camera Players that had been taped in Times Square, Manhattan, earlier in the day.

The now-solved mystery was this: who owns and operates the large unlabeled surveillance camera installed right in the heart of Times Square? It's the one installed at the top of a City-owned pole on the north end of the traffic island that lies between 43rd and 44th Streets and between Broadway and Seventh Avenue. (See photograph above.)

In addition to systematically violating people's right to privacy, this surveillance camera is jeopardizing, and possibly harming, the health of the tens of thousands of unsuspecting people who work in the surrounding buildings and/or visit Times Square, the so-called crossroads of the world, as tourists. Unlike most surveillance cameras in New York City, the one in Times Square is outfitted with a large, expensive and very nasty-looking microwave transmitter, which obviously beams the images captured by the camera to an equally large receiver installed high up in a building two blocks away. In the process, the transmitter -- which operates continually, is installed only 25 feet above ground level, and violates federal law in that it doesn't bear a label saying that it has been inspected and approved by the Federal Communications Commission -- regularly pollutes the entire area with microwave radiation.

Who could afford to operate such a monster, which costs around $10,000? Who could operate it on City-owned property? Who would operate it with such complete disregard for federal law and the public health? The New York Police Department, which maintains a police station just one block away? The Department of Defense, which operates a recruiting center at the far end of the traffic island? No one knew.

And so the Microwave Monster has been an important topic of discussion during every single walking tour of surveillance camera locations in Times Square. Indeed, had there been enough time, Bill Brown would have shown the Monster to MSNBC's reporter Monica Novotny when he gave her an abbreviated version of the Times Square walking tour on the morning of Tuesday, 3 July. It would have been very interesting indeed if the MSNBC crew had included shots of the Monster in the collage of Times Square surveillance cameras that opened Novotny's report.

The mystery was solved by the following, perhaps unscripted admission. To provide continuity between an interview with Larry Irving of the Privacy Council and Monica Novotny's interview with Bill Brown, "Rick" at the MSNBC Live news-desk said:

"We're going to talk a little bit more about this. How many surveillance cameras are there in Times Square, for example? Well, you're looking at [sic] one of them right now. It's probably the most famous: NBC's Times Square camera, and that's just one of many."

Despite Rick's obvious mistake -- the viewer wasn't looking at a surveillance camera, but through the lens of one -- it was easy to deduce the location of this "famous" camera by the view of the area it afforded: the exact same location occupied by the Microwave Monster. There can be no doubt; no other camera is positioned to take such a view. The Microwave Monster has nothing whatsoever to do with law enforcement: it isn't owned or operated by the NYPD or the US military, but by NBC.

It is imperative that we get NBC to confirm or deny that it is in fact the owner/operator of the Microwave Monster; NBC must explain how it justifies the use of a dangerous, high-powered microwave transmitter in the heart of Times Square.

U.S. acts over satellite images

LONDON, England -- U.S. officials plan to encrypt data after it was revealed that European satellite TV viewers can tune into live spy plane transmissions. CNN confirmed on Thursday that footage from American aircraft flying over Medovci, Bosnia, could still be accessed live by satellite. The alarm was raised by British satellite enthusiast John Locker who told CNN: "I thought that the U.S. had made a deadly error. My first thought was that they were sending their spy plane pictures through the wrong satellite by mistake, and broadcasting secret information across Europe."

Locker, from the Wirral, north-western England, said he tried repeatedly to warn British, NATO and U.S. officials about the leak but his warnings were set aside. One officer wrote back to tell him that the problem was a "known hardware limitation." Locker said that it was easier for terrorists to tune into live video of U.S. intelligence activity than to pick up Disney cartoons or newly released movies. The high-intensity signal can be picked up using just basic equipment.

Pentagon officials told CNN's Jamie McIntyre the video was deliberately left "in the clear" and did not show sensitive information. The video, which on Thursday appeared to show a region's mountainous terrain, military-type vehicles, and other landmarks, is coming from either an unmanned Hunter surveillance drone, or the manned P-3 type of aircraft. The P-3 is similar to the four-engined, propeller plane that was forced to land on China's Hainan Island in April, 2001, after a mid-air collision with one of Beijing's warplanes.

Pentagon officials said the only "story" regarding the video would be that having to send such video in the clear underscores a lack of technology among NATO allies. The unclassified video is made available by agreement with the U.S. and any NATO allies who have troops deployed in the Bosnian region. A NATO spokesman in Brussels told CNN that it was a U.S. issue and the Pentagon had decided on the level of security at which it would accept the material.

Locker said he was able to identify the spy aircraft, the Hunter and the P-3, which were helpfully labeled in the corner of the screen. Geoff Bains, editor of Britain's "What Satellite TV?" magazine, said any satellite TV enthusiast in Europe could tune in with a one-metre dish costing about £60 ($90) and a free-to-air DBS digital satellite receiver costing from £150 ($220) upwards. "There's nothing complicated about the hardware to receive the signal," he told CNN.

Viewers tuning into the satellite this week were able to watch a security alert round the U.S. Army's headquarters at Camp Bondsteel in Kosovo. Last week, the spy plane provided airborne cover for a heavily protected patrol of the Macedonian-Kosovo border near Skopje. The pictures, from manned spy aircraft and drones, have been broadcast through a satellite over Brazil. The links, which are not encrypted, have also been transmitted over the Internet.

Locker said: "Obviously I'm not a military analyst and I'm not an expert in this field but I am just amazed this type of material is going out free-to-air. They put up data quite often which identified vehicles and the area to within two metres. That to me is a risk."

Richard Perle, chairman of the Pentagon's Defense Policy Board, told the BBC: "There are plans to encrypt this data. We have discovered in the period since September 11 how important this sort of real-time intelligence is. Now we are making much better use of this kind of information and it will make sense to encrypt it in the future."

Defence analyst Andrew Brookes of the International Institute for Strategic Studies told CNN the information being transmitted did not pose a risk to the planes or crew, and seemed designed for training purposes. He said the decision to use channels accessible to the public was a question of prioritising as there are only limited numbers of secret military satellite channels available.

aerial surveillance

uninhabited aerial vehicles (UAVs)

Both helicopters and spy planes have a very serious weakness: they must be piloted by human beings; that is to say, human beings must be aboard them. And so, they cannot be lost with impunity; they cannot enter into or create toxic environments. One must avoid not only the purely negative consequences of crashes (death and property destruction), but also the consequences that are positive for one's enemies (the taking of prisoners of war, hostages and other potential sources of sensitive information).

And so, in order to maim and kill from a distance and without fear of being maimed or killed, the United States military has since 1964 spent billions of dollars researching and developing uninhabited (or "unmanned") aerial vehicles ("UAVs"). Because most of them can use the US military's system of satellites to communicate with their ground stations, these UAVs have mostly been used by the Air Force for reconnaissance and surveillance ("imagery intelligence") at very long ranges (between 50 and 3,000 nautical miles away). But they have also been used as combat aircraft, that is, as automated killers.

Of particular interest are (1) the Global Hawk, made by Northrop Grumman, (2) the Predator, made by General Atomics, (3) the Cypher, made by Sikorsky, and (4) the Desert Hawk, made by Lockheed Martin.

(1) Each Global Hawk costs $15 million to manufacture. This UAV is over 40 feet long, and thus requires a very large run-way for take-offs and landings. Controlled by a human operator, not an on-board computer, the Global Hawk can stay in the air for as long as 40 hours. In that time, and without stopping once to re-fuel, it can travel 3,000 miles to its target; focus upon a huge area (as many as 3,000 square miles) from as high up as 65,000 feet; use electro-optical, infra-red and Synthetic Aperture Radar (SAR) cameras to take pictures of the ground; use wireless technology or satellites to transmit those pictures in "real time"; and then return back to its homebase. The Global Hawk was first used by the Department of Defense to help NATO bombers spot potential targets in the 1998 war over Kosovo.

(2) The Predator is quite similar to the Global Hawk: it also requires a human operator and a long run-way for take-offs and landings. But, at $4.5 million each, the 27-foot-long Predator is cheaper and smaller. As a result, it is worth the risk of being sighted and shot-down to fly a Predator at relatively low altitudes (25,000 feet and below). Predators were first deployed for reconnaissance and surveillance operations ("RQ-1") by the US military during the 1995 civil war in Bosnia. Predators were used far more extensively in the USA's assault against and occupation of Afghanistan in October 2001.

Each Predator is equipped with a color nose camera (generally used by the aerial vehicle operator for flight control), a day variable aperture TV camera, a variable aperture infrared camera (for low light/night), and a synthetic aperture radar for looking through smoke, clouds or haze. The cameras produce full motion video and the SAR still frame radar images. According to Coast Guard Lt. Cmdr. Troy Beshears, a UAV platform manager in Washington, D.C., a Predator's cameras "can read a 3 to 6-inch letter from 10,000 feet."

Predator "drones" can also be equipped with lasers, targeting systems, and a pair of "Hellfire" anti-tank missiles, and used in combat ("MQ-1"). It was in fact an armed Predator that the Central Intelligence Agency -- working by remote-control from its headquarters in Langley, Virginia -- used on 3 November 2002 to assassinate Qaed Salim Sinan al-Harethi, an alleged member of Al Qaeda, and five other men (one, Kamal Derwish, a US citizen), as they traveled in a car on a road in Yemen. Al-Harethi was suspected of being responsible for the attack on the USS Cole, as well as the recent attack on a French oil tanker off the Yemeni coast.

Unfortunately, this still-unpunished war crime wasn't the first time that the CIA used an armed UAV to assassinate a group of "suspects." Earlier in the year (2002), in Afghanistan, an armed Predator was used to assassinate Mohammed Atef, al Qaeda's chief of operations. Other attacks by armed Predators -- such as those against the Taliban's former leader, Mullah Omar, and Gulbuddin Hekmatyar (a former Afghan prime minister and the head of the Islamic fundamentalist Hezb-e-Islami group) -- have missed their targets and/or killed civilians. (Here the CIA is following the example of the Israeli special forces, which have for years been using American-made missile-firing helicopters to assassinate suspected Palestinian terrorists.)

And yet the US military didn't use Predators in its March 2003 assault against and occupation of Iraq. The reason was clear: too many of the damn things -- as many as 12 percent of them, according to one estimate -- had crashed in Afghanistan due to bad weather conditions and other "technical" problems. In addition to wasting money, these little-publicized crashes made great trophies. But these facts shouldn't have come as surprises to the Department of Defense, which had already been informed by Thomas P. Christie, the director of the Pentagon's Operational Test and Evaluation Office, that, "As tested, the Predator UAV system is not operationally effective or suitable [...] Poor target location accuracy, ineffective communications, and limits imposed by relatively benign weather, including rain, negatively impact missions such as strike support, combat search and rescue, area search, and continuous coverage."

In place of Predators, the US military forces in Iraq used AAI's Shadow 200, which cost $300,000 each. Much smaller than Predators, the 11-foot-long Shadows don't require run-ways to take-off and land. Like helicopters, they only require clearance space above them. And yet Shadows can still send live feeds from their video and infrared cameras over distances of 77 miles. Another "Unmanned Combat Air Vehicle" or UCAV is the X-45, manufactured by Boeing ($10 million each) and capable of launching precision-guided weapons.

(3) The Cypher is relatively small. Some "mini-Cyphers" are only 8 feet tall and 3 feet wide, and weigh only 30 pounds, which allows them to enter buildings as well as hover above or land on top of them. (Other UAVs, such as MLB's truck-launched UAV, can be even smaller.) But the uniqueness of the Cypher isn't simply a matter of its small size and maneuverability. Unlike the Global Hawk and the Predator, the Cypher flies itself. A fully automated uninhabited spy plane, it simply needs to know where to go to find its targets. Once given this information, the Cypher can launch itself (vertically, like a helicopter); can use the military's network of Global Positioning System (GPS) satellites to find out where its launch has placed it; and can use a variety of on-board cameras to "see" where it needs to go. Internal computer programs will also tell the Cypher when it has arrived, what to do, and when to return to homebase.

Onboard each Cypher are video cameras, Forward-Looking Infra-Red (FLIR) cameras, chemical detectors, magnetometers, radio and satellite links, microphones to relay pre-recorded announcements, and so-called non-lethal payloads (tear gas and/or smoke canisters, steel spikes to puncture tires or printed propaganda). As one can see from this "mixed" payload, the Cypher is very different from both the Global Hawk and the Predator. It isn't primarily designed to be used as a spy plane (or as an automated assassin) in wars against heavily armed combatants, though it can serve quite well in such instances. The Cypher's real usefulness is in what the US military calls "operations other than war," that is to say, in domestic police actions or enforcements of the law against civilian non-combatants one wants to disperse or arrest, but not murder in cold blood.

(4) The Desert Hawk is truly tiny. Manufactured by Lockheed Martin, it's less than 3 feet long and, according to a 6 March 2004 report by Jim Skeen of the Daily News, it's being used by the US military in both Iraq and Afghanistan.

Palmdale Calif. - A tiny spy plane developed by Lockheed Martin engineers in Palmdale is doing sentry duty around U.S. air bases in Iraq and Afghanistan. Weighing seven pounds and looking like something out of a hobby shop, Desert Hawk controls itself without human guidance and carries video cameras and infrared sensors that allow security personnel to scout outside bases without exposing themselves to danger.

"A key factor of our system is it's fully autonomous. You don't have to have a guy standing there with a joy stick," said David Eichstedt, Lockheed Martin's project manager.

A product of Lockheed Martin Aeronautics Co./Palmdale, the craft is formally known as the Force Protection Airborne Surveillance System, or FPASS. It was nicknamed "Desert Hawk" by the general in charge of air forces in the Middle East. The Desert Hawk is just 32 inches long and has a wingspan of 52 inches, powered by an electric motor and able to fly for an hour to 75 minutes at a time. Each system includes six Desert Hawks, a ground station and a remote viewing terminal, plus a field kit for repairs. While the Desert Hawk looks like a remote-controlled model plane, it is much more sophisticated. Its missions are programmed in advance using a touch-screen interface on a laptop computer. A mission can be changed while the aircraft is in flight. The aircraft lands by itself, without guidance from its human operators. Rather than taking off from a runway, the aircraft are launched into the air with a bungee cord that can stretch out to 150 feet. "It can fling it up 200 feet in short order," Eichstedt [of Lockheed Martin] said.

The Defense Department is looking at expanding the use of Desert Hawk by placing unmanned air vehicles and their operators with Army Special Operations forces. Four systems have been delivered to the British Army. For now, Lockheed Martin is focused on military uses of the Desert Hawk.

Federal Aviation Administration regulations block unmanned air vehicles from using the national air space [sic], making civilian applications problematic. NASA and the aviation industry are embarking on an effort to determine what regulations and what advancements in unmanned air vehicle technologies are needed to allow aircraft like Desert Hawk as well as larger unmanned craft to routinely use the national air space. Resolving those issues will open up the national air space for civilian applications of UAVs.

"You can think of all kinds of cool things to do with UAVs -- look for forest fires, search and rescue missions, monitoring aqueducts and pipelines," Eichstedt said.

The Desert Hawk's roots go back to 1996 when Lockheed Martin was conducting research and development under a program called Micro Air Vehicle for the Defense Advanced Research Projects Agency. At the time, DARPA was interested in the idea of developing a UAV that was no more than 6 inches long. Out of that work came development of autopilot and communications technologies that would serve as the backbone of Desert Hawk. In February 2002, as the Afghanistan campaign was well under way, Lockheed Martin entered into a contract with the Air Force to begin providing the Desert Hawk systems. The first two systems were delivered four months later. The Air Force was so pleased with the system that it nominated Desert Hawk for the 2002 Collier Trophy, the aviation world's equivalent of an Academy Award. While it did not win the Collier, the Air Force's nomination was high praise. Employment numbers on the program were not disclosed, but company spokeswoman Dianne Knippel said the Desert Hawk is not a large employment project. The Desert Hawk is in use in both Iraq and Afghanistan, but Lockheed Martin officials would not comment on how many have been deployed [or lost].

*

One often hears about the use of UAVs in "the war on terrorism" and "homeland security." For example, on 11 November 2002, the Newhouse News Service reported that "Pilotless Planes' Latest Target May Be Homeland Security," that is, "[i]f concerns about their safety and reliability can be resolved." Good point, but there is another "concern" that must be addressed before UAVs can be used over the USA: the Federal Aviation Administration has to approve their use in commercial flight zones, which is something it hasn't done yet, and isn't likely to do due to the still-unresolved problem of crashes.

On 26 November 2002, The Gaithersburg News reported that UAVs can be used to gather photographs and videotape for use in Lockheed Martin's Geographic Information System (GIS), which displays large quantities of detailed information in the form of digital maps, which in turn can be used to make quick and well-chosen responses to emergencies (such as terrorist attacks). But there are still significant technical problems. At the demonstration at Lockheed Martin's plant in Gaithersburg, there was in the words of the reporter "a planned demonstration" that literally "never got off the ground" (most unfortunate, because this particular demonstration concerned the actual merging of UAV and GIS technologies!), and "another demonstration" that was cancelled due to "weather and technical glitches."

On 23 July 2003, The Herald Sun reported that the Australian military would soon start using UAVs to track "rebels" and "gangs of thugs" on the Solomon Islands. "Once located," the story says, "targets can be kept under surveillance, filmed and photographed day and night." But these one-meter-long UAVs will not use on-board transmitters. Instead, "film and photographs will be flown back to base, probably at Honiara airport, where they will be analysed by military experts and defence scientists and used to help plan operations." And so, while they are "more secure" to the extent they aren't sending out transmissions that the rebels can intercept, these UAVs will more likely be targets for ground-to-air attacks: bring one down and you've destroyed both the UAV itself and any images it took.

surveillance from the air

spy planes

As we've mentioned elsewhere, helicopters have certain drawbacks: they are very noisy; they must fly at relatively low altitudes; they cannot "hide" by achieving high altitudes; they're vulnerable to small arms fire from the ground; and they can't fly long distances or stay aloft for long periods of time without refueling. And so helicopters are increasingly being used alongside a variety of other aircraft, including spy planes.

To be as undetectable, as sneaky, as possible, spy planes aren't large military jets, but small propeller-driven "private" airplanes (usually Cessnas or De Havillands) that have been packed with military equipment. The motors have been modified so that they can run "silently." As a result, spy planes make virtually no noise, look "perfectly innocent" and are highly unlikely to be detected, even when they fly slowly at low altitudes. (The only dead giveaway is the antennae-laden "radome" on the plane's underside.)

Ironically, spy planes are easier to spot at night than during the day. They frequently fly very slowly -- so slowly, in fact, that at times they appear to be motionless. Spy planes don't keep to the easy-to-spot flight routes maintained by the Federal Aviation Administration (FAA) for jet-powered commercial aircraft, and sometimes circle several times around a "pivot" (target) on the ground. The bodies of spy planes are silver or white, and bear few if any identifying or distinguishing markings or insignia. Finally, unlike the lights on commercial airplanes, which are bright and continuously shining, the lights on spy planes are dim and/or red-colored, alternate in irregular patterns ("flicker") and sometimes go completely dark, and then light up again, in a matter of seconds.

Spy planes have long been used by so-called intelligence-gathering agencies such as the National Security Agency (NSA) to monitor foreign countries, especially the Soviet Union. But in recent years, especially since September 11th, spy planes are being used to fly reconnaissance missions over the territory of the United States of America. To our knowledge, at least two "authorities" are currently using spy planes for the purpose of "homeland security": the Federal Bureau of Investigation (FBI) and the Department of Defense.

On 15 March 2003, the Associated Press (AP) reported that "the FBI has a fleet of aircraft [...] flying America's skies to track and collect intelligence from suspected terrorists." There are around 100 of these planes, which are nicknamed "Nightstalkers" and can be flown by any of the FBI's 56 field offices. Each spy plane is equipped with what the AP describes as "electronic surveillance equipment so agents can pursue listening devices placed in cars, in buildings and even along streets, or listen to cell phone calls. Still others fly photography missions [using] infrared devices that allow agents to track people and vehicles in the dark."

The AP report went on to explain that, "Legally, no warrants are necessary for the FBI to track cars or people from the air. Law enforcement officials need warrants to search homes or to plant listening devices or monitor cell phone calls -- and that includes when the listener is flying in an airplane [...] A senior FBI official, speaking on condition of anonymity, said the FBI does not do flyovers to listen to telephone calls and gather electronic data from random citizens in hopes the data will provide leads. Rather, the planes are used to follow specific individuals."

In mid-October 2002, the Department of Defense (DOD) used the series of sniper attacks in the Washington, DC area as an opportunity to "offer" the use of its spy planes to catch the culprits (one of whom, it turned out, had extensive military training). Dispatched from the 204th Military Intelligence Battalion at Fort Bliss, Texas, the DOD's planes are even more sophisticated than those flown by the FBI: each one is equipped with long-range cameras, radar and hyperspectral sensors, Forward Looking Infra-Red (FLIR) imaging devices, on-board computers, target-acquisition and tracking software programs, and microwave transmitters to "downlink" to monitoring stations on the ground.

News reports indicate that the Department of Defense's kindly offer was reluctantly refused. There had been too much criticism of the offer, which clearly violated the spirit, if not the actual letter, of the 1878 Posse Comitatus Act (there must be a strict separation between the military and domestic law enforcement agencies, that is, if democracy is to survive).

Needless to say, the Bush Administration wants to repeal the Posse Comitatus Act, and for the same cynical reasons that the New York Police Department wants to nullify the Handschu Consent Decree: September 11th rendered these internal controls both obsolete and an obstacle to preventing future attacks. And so, without generating any objections at all, the DOD announced in March 2003 (five months after the sniper attacks had ended) that it was deploying Black Hawk helicopters in New York's "airspace." No doubt, DOD (or NSA?) spy planes were also deployed.

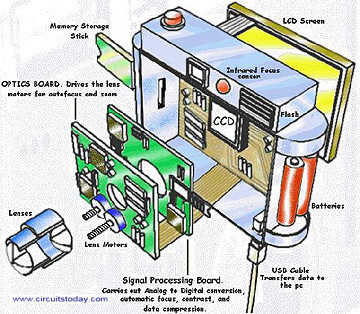

A digital camera can be considered a modification of the conventional analog camera. Most of its components are the same, except that instead of light falling on photosensitive film as in an analog camera, it falls on an image sensor. Whereas analog cameras depend mostly on mechanical and chemical processes, digital cameras depend on digital processes. This is a major shift from its predecessor, as it has greatly simplified saving and sharing photos and video.

Digital Camera Basics

As mentioned earlier, the basic components of analog and digital cameras are the same. The main difference is that an analog camera records the image on film, which is then printed on photographic paper. If you need to send these photos by e-mail, you first have to convert them to digital form by scanning them.

Digital photos do not have this problem. The photos from a digital camera are already in a digital format that a computer can easily recognize (0s and 1s). A digital image is made up of tiny dots called pixels, and the colour and brightness of each pixel is stored as a string of 0s and 1s.

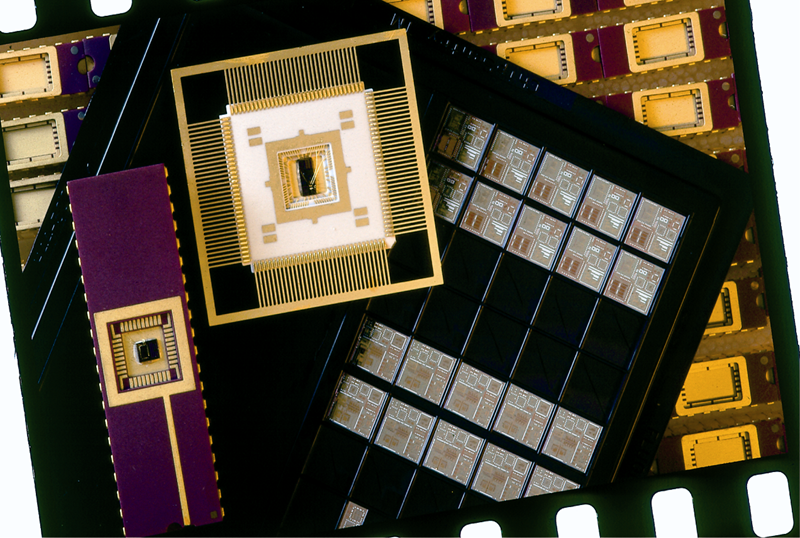

The image sensor used in a digital camera can be either a Charge Coupled Device (CCD) or a Complementary Metal Oxide Semiconductor (CMOS) sensor. Both of these image sensors have been explained in detail earlier.

The image sensor is basically a microchip about 10 mm wide. The chip consists of an array of sensors that convert light into electrical charges. Although both CMOS and CCD sensors are very common, CMOS chips are known to be cheaper, while higher-resolution, more expensive cameras have mostly used CCD technology.

A digital camera has one or more lenses that focus the incoming light onto an image sensor, which converts the light into electrical signals. Light reaches the image sensor as soon as the photographer presses the shutter button and the shutter opens. The pixels are illuminated with different intensities, and each builds up an electrical charge that depends on how much light it received, generating an electrical signal. This signal is then converted into digital data by an analog-to-digital converter and stored in the camera's memory.
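The last step above, turning the sensor's analog signal into digital data, is the job of an analog-to-digital converter (ADC). The sketch below models an ideal linear n-bit ADC, assuming a reference voltage `v_ref` of 1.0 V and an 8-bit output; the function names `quantize` and `to_bits` are hypothetical, and real camera ADCs (which typically use more bits) also add noise, gain and offset that this ignores.

```python
def quantize(voltage: float, v_ref: float = 1.0, bits: int = 8) -> int:
    """Map an analog voltage in [0, v_ref] to an integer code (ideal linear ADC)."""
    levels = 2 ** bits                      # number of distinct output codes
    code = int(voltage / v_ref * levels)    # truncation = floor for non-negative inputs
    return max(0, min(levels - 1, code))    # clamp to the valid code range


def to_bits(code: int, bits: int = 8) -> str:
    """Show the stored value as the string of 0s and 1s the camera keeps."""
    return format(code, f"0{bits}b")


# A few simulated photosite voltages, from dark to fully lit.
for v in (0.0, 0.25, 0.5, 1.0):
    c = quantize(v)
    print(f"{v:.2f} V -> code {c:3d} -> {to_bits(c)}")
```

Clamping matters at the top of the range: a fully lit photosite (1.0 V) would otherwise map to 256, which does not fit in 8 bits, so it is stored as 255, the brightest representable value.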

Pixel Resolution of a Digital Camera

The clarity of photos taken with a digital camera depends on the camera's resolution, which is measured in pixels. The more pixels, the higher the resolution and the better the picture quality. Cameras are available with many different resolutions, and they differ mainly in price.

- 256×256 – This is the lowest resolution, found on very cheap cameras (65,536 pixels in total). Images taken at this resolution look blurred and grainy, and the quality is almost always unacceptable.

- 640×480 – This is a somewhat higher resolution than 256×256 (307,200 pixels). Images are clearer, but cameras at this resolution are still considered low end. They are suitable for posting pictures on websites.

- 1216×912 – This resolution is suitable for printing pictures. A total of about 1,109,000 pixels is available.

- 1600×1200 – This is considered high resolution (1,920,000 pixels). Pictures taken at this resolution can be used to make a 4×5-inch print with the same quality you would get from a photo lab.

- 2240×1680 – This is commonly referred to as a 4-megapixel camera (3,763,200 pixels). At this resolution you can make photo prints of up to 16×20 inches.

- 4064×2704 – This is the resolution of an 11.1-megapixel camera. At this resolution you can make 13.5×9-inch prints with no loss of picture quality.

- There are even higher-resolution cameras, with up to 20 million pixels or so.
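The pixel counts and print sizes in the list above follow from simple arithmetic: total pixels are width times height, and the largest good-quality print is the pixel dimensions divided by a chosen pixel density. The sketch below assumes 300 pixels per inch (ppi) as the threshold for photo-lab quality, a common rule of thumb rather than a figure from the list; the function names are hypothetical. At 300 ppi, 4064×2704 works out to about 13.5×9 inches, matching the list, whereas a 16×20-inch print from 2240×1680 corresponds to only about 110 ppi.

```python
# Resolutions from the list above (width x height, in pixels).
RESOLUTIONS = [(256, 256), (640, 480), (1216, 912),
               (1600, 1200), (2240, 1680), (4064, 2704)]


def total_pixels(width: int, height: int) -> int:
    """Total pixel count is simply width times height."""
    return width * height


def megapixels(width: int, height: int) -> float:
    """Pixel count in millions, the figure cameras are usually marketed with."""
    return total_pixels(width, height) / 1_000_000


def max_print_inches(width: int, height: int, ppi: float = 300.0):
    """Largest print (in inches) at the given pixels-per-inch density.
    300 ppi is an assumed rule of thumb for photo-lab quality."""
    return width / ppi, height / ppi


for w, h in RESOLUTIONS:
    pw, ph = max_print_inches(w, h)
    print(f"{w}x{h}: {total_pixels(w, h):,} pixels "
          f"({megapixels(w, h):.1f} MP), about {pw:.1f} x {ph:.1f} in at 300 ppi")
```

Passing a lower `ppi` (for example 150) gives the larger print sizes that are usually acceptable for pictures viewed from farther away.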

Color Filtering using Demosaicing Algorithms

The sensors used in digital cameras are actually colour-blind: they only record the intensity of the light that hits them. To produce a colour image, the photosites use filters to obtain the three primary colours. When these colours are combined, the full range of colours is reproduced.

To do this, a colour filter array is placed over the photosites, so that each individual photosite records only red, green or blue light and the sensor is divided into red, green and blue pixels. The true colour at each location is then estimated by a process called interpolation. The filter most commonly used for this is the Bayer filter pattern, in which rows of alternating red and green filters alternate with rows of alternating blue and green filters. As a result, there are as many green pixels as red and blue pixels combined. The colours are used in unequal proportions because the human eye is not equally sensitive to all three: it is most sensitive to green, so a larger share of green pixels produces an image that looks more natural.

The main advantage of this method is that only one sensor is required to record all the colour information, so both the size and the price of the camera can be reduced considerably. The Bayer filter produces a mosaic of red, green and blue samples of varying intensity. A demosaicing algorithm then converts this mosaic into an image in which every pixel has a full colour: for each pixel, the two colours it did not record are estimated by averaging the values of the closest surrounding pixels that did record them.
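The Bayer filter and demosaicing steps described above can be illustrated with a small sketch. It assumes an RGGB arrangement (red and green on even rows, green and blue on odd rows) and uses simple neighbour averaging in a 3×3 window; the function names are hypothetical, and real cameras use more sophisticated, edge-aware demosaicing algorithms.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def bayer_channel(row: int, col: int) -> int:
    """Return the channel (0 = red, 1 = green, 2 = blue) that the filter at
    (row, col) passes, for an assumed RGGB Bayer layout."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1   # even rows: R G R G ...
    return 1 if col % 2 == 0 else 2       # odd rows:  G B G B ...


def mosaic(image: List[List[RGB]]) -> List[List[float]]:
    """Simulate the colour-blind sensor behind the filter array: each photosite
    keeps only the one colour its filter lets through."""
    return [[px[bayer_channel(r, c)] for c, px in enumerate(row)]
            for r, row in enumerate(image)]


def demosaic(raw: List[List[float]]) -> List[List[RGB]]:
    """Simple bilinear-style demosaicing: keep the colour each pixel recorded and
    estimate the two missing colours by averaging the neighbouring pixels
    (3x3 window, centre excluded) that recorded them."""
    h, w = len(raw), len(raw[0])
    out: List[List[RGB]] = []
    for r in range(h):
        row_out: List[RGB] = []
        for c in range(w):
            sums, counts = [0.0, 0.0, 0.0], [0, 0, 0]
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if (dr or dc) and 0 <= rr < h and 0 <= cc < w:
                        ch = bayer_channel(rr, cc)
                        sums[ch] += raw[rr][cc]
                        counts[ch] += 1
            est = [s / n if n else 0.0 for s, n in zip(sums, counts)]
            est[bayer_channel(r, c)] = raw[r][c]   # measured value is kept as-is
            row_out.append((est[0], est[1], est[2]))
        out.append(row_out)
    return out


# A flat patch of one colour: every pixel should come back with that colour.
patch = [[(0.9, 0.5, 0.1)] * 4 for _ in range(4)]
restored = demosaic(mosaic(patch))
print(restored[1][1])
```

In a 4×4 patch this layout has 8 green, 4 red and 4 blue photosites, which is the "as many green as red and blue combined" proportion of the Bayer pattern. A flat patch is reconstructed exactly; errors appear only near edges and fine detail, which is what more advanced algorithms try to reduce.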

Take a look at the digital camera schematic shown below.

- Digital Camera Diagram

Parameters of a Digital Camera

Like a film camera, a digital camera has certain parameters that determine the exposure and clarity of the image. First of all, the amount of light that enters through the lens and hits the sensor has to be controlled. For this, the parameters are:

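1. Aperture – Aperture refers to the diameter of the opening in the lens through which light enters. It is usually expressed as an f-number such as f/2.8 or f/8, where a larger f-number means a smaller opening. It can be set in automatic as well as manual mode. Professionals prefer manual mode, as they can bring their own touch to the image.

2. Shutter Speed – Shutter speed refers to the length of time the shutter stays open, and therefore how long the light passing through the aperture reaches the sensor. It can be set automatically or, on many cameras, manually. Both the aperture and the shutter speed play important roles in making a good image.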

3. Focal Length – The focal length is set by the manufacturer's lens design (within a range, on zoom lenses). It is the distance between the optical centre of the lens and the sensor when the lens is focused at infinity. The field of view it produces also depends on the size of the sensor: a smaller sensor needs a proportionally shorter focal length to give the same field of view.

4. Lens – There are mainly four types of lenses used for a digital camera. They differ according to the cost of the camera, and also focal length adjustment. They are

- Fixed-focus, fixed-zoom lens – They are very common and are used in inexpensive cameras.

- Optical-zoom lenses with automatic focus – These are lenses with focal length adjustments. They also have the “wide” and “telephoto” options.

- Digital zoom – A full-sized image is produced by enlarging the pixels from the centre of the image sensor. Because no optical magnification is involved, the result depends on the resolution of the sensor used in the camera, and image quality drops as the zoom increases.

- Replaceable lens systems – Some digital cameras accept interchangeable lenses, including lenses made for 35mm cameras, so as to obtain better images.
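The aperture, shutter speed, focal length and digital zoom parameters listed above can each be expressed with a little arithmetic. This is a minimal sketch under stated assumptions: aperture is given as an f-number N (focal length divided by the diameter of the opening), shutter speed as a time t in seconds, and exposure is summarized with the standard photographic exposure value EV = log2(N²/t); the 35mm-equivalent focal length uses the 43.27 mm diagonal of a 36×24 mm film frame as the reference; and digital zoom is modelled as a centre crop enlarged by simple pixel repetition, whereas real cameras usually interpolate more smoothly. All function names are hypothetical.

```python
import math
from typing import List

# Diagonal of the 36 x 24 mm frame of 35mm film, about 43.27 mm.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def exposure_value(f_number: float, shutter_s: float) -> float:
    """Standard exposure value EV = log2(N^2 / t). Each +1 EV lets in half as
    much light, whether by closing the aperture or shortening the shutter time."""
    return math.log2(f_number ** 2 / shutter_s)


def crop_factor(sensor_diagonal_mm: float) -> float:
    """How many times smaller a sensor's diagonal is than the 35mm film frame's."""
    return FULL_FRAME_DIAGONAL_MM / sensor_diagonal_mm


def equivalent_focal_length(focal_mm: float, crop: float) -> float:
    """Focal length giving the same field of view on a 35mm (full-frame) camera."""
    return focal_mm * crop


def digital_zoom(image: List[List[int]], factor: int) -> List[List[int]]:
    """Crop the central 1/factor of the frame and enlarge it back to full size by
    repeating pixels (nearest neighbour); no new detail is created."""
    h, w = len(image), len(image[0])
    ch, cw = max(1, h // factor), max(1, w // factor)
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = [row[left:left + cw] for row in image[top:top + ch]]
    return [[crop[r * ch // h][c * cw // w] for c in range(w)] for r in range(h)]


# f/8 at 1/125 s and f/5.6 at 1/250 s give nearly the same exposure (about 13 EV).
print(round(exposure_value(8, 1 / 125), 2), round(exposure_value(5.6, 1 / 250), 2))
# A 6 mm lens on a sensor with a 7.2 mm diagonal frames like a ~36 mm full-frame lens.
print(round(equivalent_focal_length(6.0, crop_factor(7.2)), 1))
```

The two exposure pairs show why aperture and shutter speed are traded against each other: opening the aperture by one stop while halving the shutter time leaves the exposure almost unchanged. The digital zoom function makes the quality loss visible: a 2× zoom on a 4×4 image keeps only the central 2×2 pixels and repeats each of them four times.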

Digital Cameras vs. Analog Cameras

- The picture quality obtained in a film camera is much better than that in a digital camera.

- Advances in technology have made photography with digital techniques easier as well as more popular.

- Since digital copies can be posted on websites, photos can be shared with anyone in the world.

Different Types Of Digital Cameras

Digital cameras are mainly classified according to their use, automatic and manual focus, and also price. Here are the classifications.

1. Compact digital cameras

Compact cameras are the most widely used and the simplest cameras available. They are used for everyday purposes and are therefore called “point and shoot cameras”. They are very small and hence portable. Since they are cheaper than other cameras, they also have fewer features and lower picture quality. These cameras are further classified by size: the smallest are generally called ultra-compact cameras, and the others are called compact cameras.

Here are some features of these cameras:

- Compact and simple.

- Images can be stored in computer as JPEG files.

- Live preview can be seen before taking photos.

- Low power flashes are available for taking photos in the dark.

- Contains auto-focus system with closer focusing ability.

- Zoom capability.

Although these features are available, they are often more limited than on other cameras. The flash may be effective only for nearby subjects, and the live preview may be slow to follow moving subjects. The image sensors used in these cameras are very small, with a diagonal of about 6mm and a crop factor of about 6.

Compact Digital Cameras

2. Bridge cameras

Bridge cameras are often mistaken for single-lens reflex (SLR) cameras. Though they look similar, their features are different. Some of their features are:

- Fixed lens

- Small image sensors

- Live preview of the image to be taken

- Auto-focus using contrast-detect method and also manual focus.

- Image stabilization to reduce blur caused by camera shake.

- Image can be stored as a raw data as well as compressed JPEG format.

Though they resemble SLRs in many ways, they operate much more slowly. They are fairly large, and their fixed lenses offer a very high zoom range and fast apertures. Focus can be set automatically or manually as needed. The image is previewed on either an LCD or an Electronic View Finder (EVF).

Bridge Cameras

3. Digital single lens reflex cameras (DSLR)

This is one of the highest-end types of camera available for a decent price. It uses the same single-lens reflex method as a film SLR, but with a digital image sensor. In the SLR method, a mirror reflects the light passing through the lens up into a separate optical viewfinder.

Some features of this camera are

- Dedicated autofocus sensors are set up in the mirror box.

- Has live preview mode.

- Large, high-end sensors with crop factors from 2 down to 1, ranging from about 18mm to 36mm wide.

- High picture quality even at low light.

- The depth of field is shallower at a given aperture than with smaller-sensor cameras.

- The photographer can choose the lens suited to the situation, and lenses are easily interchangeable.

- A focal plane shutter is used in front of the imager.

Digital single lens reflex cameras (DSLR)


4. Electronic viewfinder (EVF)

These cameras combine very large sensors with interchangeable lenses, but the preview is shown on an EVF instead of through a mirror, so they avoid the mechanical complexity of a DSLR.

Electronic View Finder

5. Digital rangefinders

This is a digital camera equipped with a rangefinder, an optical device that measures the distance to the subject for focusing. With this type of camera, distant photography is possible. Other cameras can also take photos of distant subjects, but they do not use the rangefinder technique.

Digital rangefinders

6. Line-scan cameras

This type of camera is used to capture high-resolution images at very high speed. To make this possible, it uses a single row (line) of image-sensor pixels instead of a two-dimensional matrix. It can capture a continuous stream of images of constantly moving materials, such as products on a conveyor belt. The data produced by a line-scan camera is one-dimensional and has to be assembled in a computer into a 2-D image, which can then be processed further as needed.

Line-scan cameras
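How a line-scan camera builds a 2-D picture from 1-D data can be shown with a small sketch: each readout of the single sensor row becomes one row of the final image while the object moves past. The `read_line` callback stands in for the hardware readout and, like the function names and the sample scene, is hypothetical. The `line_rate_hz` helper assumes square pixels, so the camera must read one line each time the object moves by one pixel's footprint.

```python
from typing import Callable, List


def line_rate_hz(object_speed_m_s: float, pixel_footprint_m: float) -> float:
    """Lines per second needed so that each line covers the same distance along
    the direction of motion as one pixel does across it (square pixels)."""
    return object_speed_m_s / pixel_footprint_m


def capture_line_scan(read_line: Callable[[int], List[int]],
                      n_lines: int) -> List[List[int]]:
    """Stack successive 1-D readouts of the single sensor row into a 2-D image.
    read_line(t) stands in for the hardware readout at time step t."""
    return [list(read_line(t)) for t in range(n_lines)]


# Hypothetical object moving under the camera, one row visible per time step.
scene = [[0, 0, 9, 9, 0, 0],
         [0, 9, 0, 0, 9, 0],
         [0, 9, 9, 9, 9, 0]]

image = capture_line_scan(lambda t: scene[t], n_lines=len(scene))
for row in image:
    print("".join("#" if v else "." for v in row))

# e.g. a belt moving at 1 m/s imaged at 0.1 mm per pixel needs about 10,000 lines/s
print(round(line_rate_hz(1.0, 0.0001)))
```

If the line rate is too slow for the object's speed, the assembled image is squashed along the direction of motion; if it is too fast, the image is stretched.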

The charge-coupled device (CCD) and the CMOS active pixel sensor (APS) have already been covered in earlier posts, so it is time to compare them and look at their advantages and disadvantages. Although both are widely used in cameras, they differ in parameters such as gain and speed.

Comparison – Charge Coupled Device and CMOS Active Pixel Sensor

- Both devices convert light into electrical signals and are used for the same applications. After conversion, the signal has to be read out from each cell, and this readout works differently in the two devices.

- In a CCD, the charge from each pixel is transferred to the end of the array and read out there. It is then converted into a digital signal by an analog-to-digital converter (ADC). A CMOS active pixel sensor instead reads the signal using transistors and amplifiers at each pixel, and the signal is moved over conventional wires.
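The difference in readout can be illustrated with a toy model. This Python sketch assumes an idealised, noiseless sensor, signal values expressed as a fraction of full scale, and an 8-bit ADC; the function names are hypothetical, and real devices are considerably more involved.

```python
from collections import deque
from typing import List, Sequence, Tuple

Frame = List[List[float]]  # analog signal per pixel, as a fraction of full scale


def adc(value: float, bits: int = 8) -> int:
    """Idealised ADC: clip to [0, 1] and round to the nearest integer code."""
    levels = (1 << bits) - 1
    clipped = min(max(value, 0.0), 1.0)
    return int(clipped * levels + 0.5)


def ccd_readout(frame: Frame) -> List[int]:
    """CCD-style readout: rows are shifted one at a time into a serial
    register at the edge of the array, and that register is then shifted
    out pixel by pixel through a single output node and ADC."""
    rows = deque(deque(row) for row in frame)  # copies; input is untouched
    out: List[int] = []
    while rows:
        serial_register = rows.popleft()  # next row arrives at the array edge
        while serial_register:
            charge = serial_register.popleft()  # one packet reaches the output
            out.append(adc(charge))
    return out


def cmos_readout(frame: Frame, addresses: Sequence[Tuple[int, int]]) -> List[int]:
    """CMOS APS-style readout: each pixel has its own transistors/amplifier
    and is selected by (row, column) address over ordinary wiring, so pixels
    can be read in any order (simplified here to one ADC call per pixel)."""
    return [adc(frame[r][c]) for r, c in addresses]
```

For example, `ccd_readout([[0.0, 0.5], [1.0, 0.25]])` returns `[0, 128, 255, 64]`: every pixel passes through the same output stage in a fixed order. On the same frame, `cmos_readout(frame, [(1, 1)])` reads just that one pixel and returns `[64]`.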

Difference – Charge Coupled Device and CMOS Active Pixel Sensor

- CCD image sensors produce very high-quality pictures, with less noise than CMOS APS devices.

- In a CMOS sensor, the transistors sit right next to each pixel. As a result, many of the photons that hit the device strike the transistors instead of the light-sensitive area. The sensitivity of a CMOS active pixel sensor is therefore lower than that of a CCD.

- CCD sensors are designed in a way that requires more power to operate. For comparable devices, a CCD is considered to consume almost 100 times more power than an equivalent CMOS active pixel sensor.

- CCDs have been used in imaging devices far more widely than CMOS active pixel sensors, so they have been studied much more extensively. As a result, CCD technology is more mature and tends to produce higher-quality pixels.
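The sensitivity point in the list above comes down to how much of each pixel's area can actually collect light. The Python sketch below treats that light-sensitive fraction as a single number (the pixel's fill factor). The photon count, fill factors and quantum efficiency in the usage lines are made-up illustrative values, not measurements of any real sensor.

```python
def collected_electrons(photons: float, fill_factor: float,
                        quantum_efficiency: float) -> float:
    """Expected photo-electrons collected by one pixel.

    fill_factor: fraction of the pixel area that is light-sensitive (0..1);
        in a CMOS APS the rest is taken up by the in-pixel transistors.
    quantum_efficiency: fraction of photons reaching the light-sensitive
        area that are converted to electrons (0..1).
    """
    for name, value in (("fill_factor", fill_factor),
                        ("quantum_efficiency", quantum_efficiency)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return photons * fill_factor * quantum_efficiency


if __name__ == "__main__":
    # Illustrative (assumed) numbers only.
    photons = 1000
    ccd = collected_electrons(photons, fill_factor=1.0, quantum_efficiency=0.6)
    cmos = collected_electrons(photons, fill_factor=0.5, quantum_efficiency=0.6)
    print(f"CCD: {ccd:.0f} e-, CMOS: {cmos:.0f} e-")  # CCD: 600 e-, CMOS: 300 e-
```

With everything else equal, halving the light-sensitive area halves the collected signal, which is the loss of sensitivity the list describes.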

"Nannycams" and other insecure forms of video surveillance

Thousands of people who have installed a popular wireless video camera, intending to increase the security of their homes and offices, have instead unknowingly opened a window on their activities to anyone equipped with a cheap receiver. The wireless video camera, which is heavily advertised on the Internet, is intended to send its video signal to a nearby base station, allowing it to be viewed on a computer or a television. But its signal can be intercepted from more than a quarter-mile away by off-the-shelf electronic equipment costing less than $250.

A recent drive around the New Jersey suburbs with two security experts underscored the ease with which a digital eavesdropper can peek into homes where the cameras are put to use as video baby monitors and inexpensive security cameras. The rangy young driver pulled his truck around a corner in the well-to-do suburban town of Chatham and stopped in front of an unpretentious house. A window on his laptop's screen that had been flickering suddenly showed a crisp black-and-white video image: a living room, seen from somewhere near the floor. Baby toys were strewn across the floor, and a woman sat on a couch.

After showing the nanny-cam images, the man, a privacy advocate who asked that his name not be used, drove on, scanning other houses and finding a view from above a back door and of an empty crib [...]

The vulnerability of wireless products has been well understood for decades. The radio spectrum is crowded, and broadcast is an inherently leaky medium; baby monitors would sometimes receive signals from early cordless phones (most are scrambled today to prevent monitoring). A subculture of enthusiasts grew up around inexpensive scanning equipment that could pick up signals from cordless and cellular phones, as former Speaker Newt Gingrich discovered when recordings of a 1996 conference call strategy session were released by Democrats [...]

In the case of the XCam2, the cameras transmit an unscrambled analog radio signal that can be picked up by receivers sold with the cameras. Replacing the receiver's small antenna with a more powerful one and adding a signal amplifier to pick up transmissions over greater distances is a trivial task for anyone who knows his way around a RadioShack and can use a soldering iron.

Products intended for the consumer market rarely include strong security, said Gary McGraw, the chief technology officer of Cigital, a software risk-management company. That is because security costs money, and even pennies of added expense eat into profits. "When you're talking about a cheap thing that's consumer grade that you're supposed to sell lots and lots of copies of, that really matters," he said.

Refitting an X10 camera with encryption technology would be beyond the skills of most consumers. It is best for manufacturers to design security features into products from the start, because adding them afterward is far more difficult, Mr. McGraw said. The cameras are only the latest example of systems that are too insecure in their first versions, he said, and cited other examples, including Microsoft's Windows operating system. "It's going to take a long time for consumer goods to have any security wedged into them at all," he said [...]

As a security expert, Mr. Rubin said he was concerned about the kinds of mischief that a criminal could carry out by substituting one video image for another. In one scenario, a robber or kidnapper wanting to get past a security camera at the front door could secretly record the video image of a trusted neighbor knocking. Later, the robber could force that image into the victim's receiver with a more powerful signal. "I have my computer retransmit these images while I come by," he said, explaining the view of a would-be robber. Far-fetched, perhaps. That is the way security experts think. But those who use the cameras and find out about the security hole seem to grasp the implications quickly.

The real news from Washington

At approximately 9:00 am (Eastern Standard Time) on Sunday 21 April 2002, the CBS news program Sunday Morning with Charles Osgood included a segment on surveillance cameras. Reported by news correspondent Martha Teichner and produced by Jason Sacca, this 10-minute-long segment focused upon the police video surveillance system in Washington, D.C., and included interviews with D.C. Police Chief Charles Ramsey, Congressional Representative Connie Morella, Bill Brown of the New York Surveillance Camera Players, and Marc Rotenberg of the Electronic Privacy Information Center (EPIC), which has put up a new web site exclusively devoted to surveillance cameras in the District of Columbia.

If one didn't see the TV broadcast but simply read about it on CBS's web site, one might reasonably have thought that the focus of the story was primarily New York City, not Washington, D.C.

(CBS) Times Square. The best place in the United States to lose yourself. Pretty anonymous, right? Think again. As many as 200 surveillance cameras are observing every move you make.

That's nothing compared to Washington, D.C., where the chief of police says that he potentially has access to an unlimited number of cameras.

This Sunday Morning, the slippery slope of surveillance.

Americans have grown accustomed to surveillance cameras watching them in convenience stores, at work, and at ATM machines. But in the aftermath of Sept. 11, many private businesses, town governments, and police departments are installing surveillance cameras, often in public places, at what privacy advocates say is an alarming rate. They want to know, "Who's watching the watchers?"

CBS News Sunday Morning Correspondent Martha Teichner reports on the growing debate between security and privacy.

Unfortunately, this was only the beginning of the confusion. A patch-work construction, CBS's program on surveillance introduced several, possibly (un)related themes: 1) the use of police cameras to watch political demonstrators (the program opened with shots of and commentary about demonstrators walking beneath surveillance cameras during a large anti-war demonstration held in Washington, D.C., on 20 April 2002); 2) the use of Park Police cameras to watch visitors to the monuments in the nation's capital; 3) the use of police cameras to watch tourists in Times Square; and 4) the use of surveillance cameras in England (there was a brief sub-segment that included shots of cameras in London and a badly recorded voice-over that was no doubt left in because it couldn't be re-recorded without flying back to England). There was also 5) a series of ad hoc references to topics of "current" interest, such as face recognition software, traffic or "red-light" cameras and the use of surveillance cameras to watch passengers on JetBlue airplanes. (We must place the word "current" in quotes because CBS passed on lots of out-of-date information about these subjects: Correspondent Teichner referred to the use of face recognition in Tampa Bay, Florida, without mentioning the fact that Tampa's police stopped using the software back in August 2001 because it was totally unreliable; and she referred to traffic cameras without mentioning the fact that, in the last few months, these devices have either been successfully challenged in court or taken down in several American cities and states, including Denver, San Diego and Hawaii.)

Unfortunately for the (average) viewer, at least two of these themes -- the sub-segment on England and the series of ad hoc references -- should never have been included in the first place. The time spent on these matters, which are clearly of secondary concern, should have been devoted instead to the widespread but under-reported use of cameras by police departments to watch political demonstrations both large and small, both in the nation's capital and elsewhere. (Note well that the DC Independent Media Center carried a great deal of coverage of the 20 April 2002 demonstrations, but did not mention the police's use of high-tech surveillance cameras to watch the participants in them, despite the fact that -- according to the police -- many of these cameras were originally installed to surveil the demonstrators outside of the World Bank/International Monetary Fund meetings that were held in Washington, D.C., in April 2000.) Instead, CBS chose to devote the majority of its attention to the use of police cameras to watch visitors to the national monuments in Washington, D.C., and "tourists" in Times Square.

According to EPIC's Marc Rotenberg, D.C. will be the main field upon which the looming battle over surveillance cameras and privacy rights will be fought. Rotenberg's right, but not necessarily (or solely) for the reason given, i.e., that Washington's monuments -- the Lincoln Memorial, the Vietnam Veterans' Memorial, et al. -- literally symbolize the country's commitment to "liberty and justice for all," and so should not be defiled or trivialized by the presence of devices that provide "security for some." People won't stand for it, or so goes this line of reasoning.

But "people" will stand for it: CBS says so and of course CBS has "the facts" to prove it. According to a poll "out today," 77 percent (of those who responded) were in favor of the use of police cameras at the nation's monuments; 81 percent didn't think such cameras were an invasion of the right to privacy; and 72 percent would be willing to give up "some" of their personal liberties for increased "security." Even more damaging to the position(s) taken by Rotenberg, Rep. Morella and Bill Brown (that is to say, everyone other than Police Chief Ramsey) was the "fact" that, despite the use of video surveillance by 80 percent of America's 19,000 police departments, CBS "couldn't find any evidence of serious abuses."

Neither Rotenberg, Morella, nor Brown was given an opportunity to respond to these alleged facts, which are either completely irrelevant or obviously inaccurate. The express intention of the Bill of Rights was to guarantee the protection of certain basic human rights, including the right to privacy, despite the vicissitudes of public opinion, unless or only if those vicissitudes rose to the level at which another amendment to the Constitution was necessary. The Ninth Amendment makes it clear that, even if the Fourth Amendment doesn't use the word "privacy," the right to privacy (among other unspecified rights) is still protected by the Constitution. Serious abuses of video cameras have been reported over and over again in New York's newspapers. In the most recent case, the building superintendent at 597 Fifth Avenue in Manhattan was caught using the "security" system he himself installed and maintained, not to prevent crimes, but to commit them, i.e., to watch women's bathrooms for sexual gratification. Such stories have led to the passage of measures against secret videotaping in Florida and other states.

In the absence of this truly relevant information, the viewer of the CBS program must have thought that all the anti-surveillance activists have going for them is: 1) the Fourth Amendment to the U.S. Constitution, which CBS showed Bill Brown reading aloud to what the TV network identified as a police surveillance camera in Times Square (this scene was in fact the segment's conclusion); 2) the scene in which EPIC's photographers were physically ejected from a building by private security guards (in the incongruous voice-over, Correspondent Teichner implored her viewers "You be the judge" of whether or not this horrific footage documents precisely the sort of "abuse" that CBS claimed that it couldn't find among police officers, who sometimes moonlight as security guards); and 3) the scene in which Marc Rotenberg pointed out that Police Chief Ramsey was obviously lying when he (Ramsey) claimed that his cameras can't zoom in and focus on the words in a flyer or newspaper that is being distributed by someone standing on the street, nor can they pull back and read a license plate on a car one mile away, when (as Rotenberg pointed out) a camera with these abilities (and more) can be purchased at any electronics store in America for under $1,000.

Though that was a lot of damaging testimony, it wasn't enough to give the viewer a sense of what's really at stake in the battle over the surveillance of public places by the D.C. police. As a result, the opposition -- even if it was articulated by three (very) different people (Rotenberg, Brown and Morella) -- seemed irrelevant, out-of-step and possibly motivated by ulterior ("political") motives, unlike humble public servant Charles Ramsey. "This isn't the 19th Century," the ultra-modern Chief of Police, possibly confused about the century in which the Bill of Rights was passed, said to CBS; "it's the 21st Century, and we're going to use what's available to us."