Eye Diagram Basics: Reading and applying eye diagrams

Accelerating data rates, greater design complexity, standards requirements, and shorter cycle times put greater demand on design engineers to debug complex signal integrity issues as early as possible. Because today's serial data links operate at gigahertz transmission frequencies, a host of variables can affect the integrity of signals, including transmission-line effects, impedance mismatches, signal routing, termination schemes, and grounding schemes. By using an oscilloscope to create an eye diagram, engineers can quickly evaluate system performance and gain insight into the nature of channel imperfections that can lead to errors when a receiver tries to interpret the value of a bit.

A serial digital signal can suffer impairments as it travels from a transmitter to a receiver. The transmitter, PCB traces, connectors, and cables will introduce interference that will degrade a signal both in its amplitude and timing. A signal can also suffer impairments from internal sources. For example, when signals on adjacent pairs of PCB traces or IC pins toggle, crosstalk among those signals can interfere with other signals. Thus, you need to determine at what point to place the oscilloscope probe in order to generate an eye diagram that will help you locate the source of the problem. Furthermore, where you place an oscilloscope's probe will produce differing signals on the display.

Generating an eye diagram

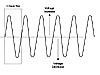

An eye diagram is a common indicator of the quality of signals in high-speed digital transmissions. An oscilloscope generates an eye diagram by overlaying sweeps of different segments of a long data stream driven by a master clock. The triggering edge may be positive or negative, but the displayed pulse that appears after a delay period may go either way; there is no way of knowing beforehand the value of an arbitrary bit. Therefore, when many such transitions have been overlaid, positive and negative pulses are superimposed on each other. Overlaying many bits produces an eye diagram, so called because the resulting image looks like the opening of an eye.

In an ideal world, eye diagrams would look like rectangular boxes. In reality, communications are imperfect, so the transitions do not line perfectly on top of each other, and an eye-shaped pattern results. On an oscilloscope, the shape of an eye diagram will depend upon various types of triggering signals, such as clock triggers, divided clock triggers, and pattern triggers. Differences in timing and amplitude from bit to bit cause the eye opening to shrink.

Interpreting an eye diagram

A properly constructed eye should contain every possible bit sequence from simple alternate 1’s and 0’s to isolated 1’s after long runs of 0’s, and all other patterns that may show up weaknesses in the design. Eye diagrams usually include voltage and time samples of the data acquired at some sample rate below the data rate. In Figure 1, the bit sequences 011, 001, 100, and 110 are superimposed over one another to obtain the final eye diagram.

A perfect eye diagram contains an immense amount of parametric information about a signal, like the effects deriving from physics, irrespective of how infrequently these effects occur. If a logic 1 is so distorted that the receiver at the far end can misjudge it for logic 0, you will easily discern this from an eye diagram. What you will not be able to detect, however, are logic or protocol problems, such as when a system is supposed to transmit a logic 0 but sends a logic 1, or when the logic is in conflict with a protocol.

What is jitter?

Although in theory eye diagrams should look like rectangular boxes, the finite rise and fall times of signals and oscilloscopes cause eye diagrams to actually look more like the image in Figure 2a. When high-speed digital signals are transmitted, the impairments introduced at various stages lead to timing errors. One such timing error is “jitter,” which results from the misalignment of rise and fall times (Figure 2b).

Jitter occurs when a riding or falling edges occur at times that differ from the ideal time. Some edges occur early, some occur late. In a digital circuit, all signals are transmitted in reference to clock signals. The deviation of the digital signals as a result of reflections, intersymbol interference, crosstalk, PVT (process-voltage-temperature) variations, and other factors amounts to jitter. Some jitter is simply random.

In Figure 2c, the absolute timing error or jitter margin is less than that in Figure 2b, but the eye opening in Figure 2c is smaller because of the higher bit rate. With the increase in bit rate, the absolute time error represents an increasing portion of the cycle, thus reducing the size of the eye opening. This may increase the potential for data errors.

The effect of termination is clearly visible in the eye diagrams generated. With improper termination, the eye looks constrained or stressed (Figure 3a), and with improved termination schemes, the eye becomes more relaxed (Figure 3b). A poorly terminated signal line suffers from multiple reflections. The reflected waves are of significant amplitude, which may severely constrict the eye. Typically, this is the worst-case operating condition for the receiver, and if the receiver can operate error-free in the presence of such interference, then it meets specifications.

As can be seen in Figure 4, an eye diagram can reveal important information. It can indicate the best point for sampling, divulge the SNR (signal-to-noise ratio) at the sampling point, and indicate the amount of jitter and distortion. Additionally, it can show the time variation at zero crossing, which is a measure of jitter.

A serial digital signal can suffer impairments as it travels from a transmitter to a receiver. The transmitter, PCB traces, connectors, and cables will introduce interference that will degrade a signal both in its amplitude and timing. A signal can also suffer impairments from internal sources. For example, when signals on adjacent pairs of PCB traces or IC pins toggle, crosstalk among those signals can interfere with other signals. Thus, you need to determine at what point to place the oscilloscope probe in order to generate an eye diagram that will help you locate the source of the problem. Furthermore, where you place an oscilloscope's probe will produce differing signals on the display.

Generating an eye diagram

An eye diagram is a common indicator of the quality of signals in high-speed digital transmissions. An oscilloscope generates an eye diagram by overlaying sweeps of different segments of a long data stream driven by a master clock. The triggering edge may be positive or negative, but the displayed pulse that appears after a delay period may go either way; there is no way of knowing beforehand the value of an arbitrary bit. Therefore, when many such transitions have been overlaid, positive and negative pulses are superimposed on each other. Overlaying many bits produces an eye diagram, so called because the resulting image looks like the opening of an eye.

In an ideal world, eye diagrams would look like rectangular boxes. In reality, communications are imperfect, so the transitions do not line perfectly on top of each other, and an eye-shaped pattern results. On an oscilloscope, the shape of an eye diagram will depend upon various types of triggering signals, such as clock triggers, divided clock triggers, and pattern triggers. Differences in timing and amplitude from bit to bit cause the eye opening to shrink.

Interpreting an eye diagram

A properly constructed eye should contain every possible bit sequence from simple alternate 1’s and 0’s to isolated 1’s after long runs of 0’s, and all other patterns that may show up weaknesses in the design. Eye diagrams usually include voltage and time samples of the data acquired at some sample rate below the data rate. In Figure 1, the bit sequences 011, 001, 100, and 110 are superimposed over one another to obtain the final eye diagram.

|

A perfect eye diagram contains an immense amount of parametric information about a signal, like the effects deriving from physics, irrespective of how infrequently these effects occur. If a logic 1 is so distorted that the receiver at the far end can misjudge it for logic 0, you will easily discern this from an eye diagram. What you will not be able to detect, however, are logic or protocol problems, such as when a system is supposed to transmit a logic 0 but sends a logic 1, or when the logic is in conflict with a protocol.

What is jitter?

Although in theory eye diagrams should look like rectangular boxes, the finite rise and fall times of signals and oscilloscopes cause eye diagrams to actually look more like the image in Figure 2a. When high-speed digital signals are transmitted, the impairments introduced at various stages lead to timing errors. One such timing error is “jitter,” which results from the misalignment of rise and fall times (Figure 2b).

|

Jitter occurs when a riding or falling edges occur at times that differ from the ideal time. Some edges occur early, some occur late. In a digital circuit, all signals are transmitted in reference to clock signals. The deviation of the digital signals as a result of reflections, intersymbol interference, crosstalk, PVT (process-voltage-temperature) variations, and other factors amounts to jitter. Some jitter is simply random.

In Figure 2c, the absolute timing error or jitter margin is less than that in Figure 2b, but the eye opening in Figure 2c is smaller because of the higher bit rate. With the increase in bit rate, the absolute time error represents an increasing portion of the cycle, thus reducing the size of the eye opening. This may increase the potential for data errors.

The effect of termination is clearly visible in the eye diagrams generated. With improper termination, the eye looks constrained or stressed (Figure 3a), and with improved termination schemes, the eye becomes more relaxed (Figure 3b). A poorly terminated signal line suffers from multiple reflections. The reflected waves are of significant amplitude, which may severely constrict the eye. Typically, this is the worst-case operating condition for the receiver, and if the receiver can operate error-free in the presence of such interference, then it meets specifications.

|

As can be seen in Figure 4, an eye diagram can reveal important information. It can indicate the best point for sampling, divulge the SNR (signal-to-noise ratio) at the sampling point, and indicate the amount of jitter and distortion. Additionally, it can show the time variation at zero crossing, which is a measure of jitter.

|

Eye diagrams provide instant visual data that engineers can use to check the signal integrity of a design and uncover problems early in the design process. Used in conjunction with other measurements such as bit-error rate, an eye diagram can help a designer predict performance and identify possible sources of problems.

Evolution of the eye diagram

focused on the evolution of the eye diagram: from individual signal integrity measurements to a BER bathtub that determines eye closure or Tj at a specific BER. The downside to the BER bathtub is that it solely focuses on the horizontal time/jitter closure.

The ability of a receiver to accurately discern a 1 from a 0 is dependent not only on the horizontal placement of the logic state but also on the voltage level at the decision threshold. The evolution of the eye diagram needs to take this into account for proper signal integrity characterization.

Noise decomposition (Figure 1) extends the characterization of eye diagrams to account for vertical closure. In the same sense that we can decompose T(jitter) into Rj and Dj, we can also decompose T(noise) into Rn and Dn. For BW limited systems with severe ISI, the vertical closure can sometimes dominate the BER failures and needs to be characterized.

The resultant eye diagram or BERT scan (Figure 2) is an evolution of the eye diagram to take into account not only the horizontal impairments to the eye but also the vertical impairments. It essentially combines a BER bathtub with horizontal jitter closing and a BER bathtub with vertical noise closing.

I hope that this information helps you to see how the eye diagram has evolved and how these new measurement results can help you develop a system with better signal integrity. Please don’t hesitate to post up your questions or comments.

XXX . XXX How to verify and debug next-generation memory

With the recent release of both LPDDR3 and DDR4 specifications by JEDEC, the move to the next generation of memory technologies is in full swing. First out of the gates was LPDDR3, which will replace LPDDR2 in mobile devices and ultra-thin laptops with commercial deployments beginning in 2014. Then came the new specification for synchronous DDR4, the next generation DRAM used in everything from laptops to servers that will likely start seeing production deployments in early 2015.

The trends in the new memory specs are predictable: higher speeds, increased densities, smaller packaging, and reduced power consumptions. For design engineers charged with electrical verification and debugging, the new standards bring a number of notable changes and new measurement challenges. The combination of faster speeds and lower voltage means that signal integrity is more important than ever, while denser packaging will create signal access challenges.

This article highlights the changes these new standards bring to electrical verification and describes how to prepare for proper signal access to LPDDR3 and DDR4 memory systems. It will also look at instrument selection and techniques needed for performing electrical verification tests on these emerging standards.

LPDDR3 Overview

In May of 2012, JEDEC published the JESD209-3 Low Power Memory Device Standard. In comparison to LPDDR2, LPDDR3 offers a higher data rate, greater bandwidth and power efficiency, and higher memory density. LPDDR3 achieves a data rate of 1600 MT/s and uses a number of new technologies, including write-leveling and command/address training, optional on-die termination (ODT), and low-I/O capacitance.

As with LPDDR2, LPDDR3 supports both package-on-package and discrete packaging types to meet the requirements of various mobile devices, and it offers designers the ability to select the options that best meet the needs of their product. LPDDR3 preserves the power-efficient features and signaling interface of LPDDR2, allowing for fast clock stop/start, low-power self-refresh, and smart array management.

From an electrical verification perspective, LPDDR3 is pushing the system power envelope with its combination of lower operating voltage and higher bandwidths. By comparison, first-generation LPDDR ran at 200 MHz at 1.8V. With LPDDR2 voltage dropped to 1.2V while speed increased to 533 MHz. Now LPDDR3 calls for the same 1.2V but runs at 800 MHz. This means that signal integrity will be an important factor in order to verify clock cycles on shorter 1.25 ns clock periods. Table 1 shows a comparison between the two generations, indicating the need for higher bandwidth oscilloscopes to maintain signal integrity.

DDR4 Overview

Released in September of 2012, the DDR4 specification calls for greater performance, significantly increased packaging density, improved reliability and lower power consumption compared to DDR3. As shown in Table 2, voltages drop to 1.2V from 1.5V to reduce power consumption while the performance factor nearly doubles to 3,200 MT/sec. (megatransfers per second). Other changes include higher densities to support memory-intensive server applications, higher data rates and the ability to stack pins for higher density module placement.

From a test perspective, as with LPDDRR3, the move to higher transfer rates and lower voltages will increase the emphasis on signal integrity and timing and margin measurements for validating memory technologies. To accommodate the increased measurement complexity, JEDEC is making a number of changes and updates to required test methodology.

One of the most significant changes is the proposed requirement to establish the reference voltage or V center used for compliance testing using a variable approach. For DDR3, this value was fixed at 750 mV. The new approach involves making multiple acquisitions of the output data (DQ) and a data strobe signal (DQS) Write burst. The largest to smallest voltage value for each is then measured and an average created using a simple formula. This then becomes the DQ voltage reference for centering and making reference measurements using an eye diagram. This process is shown in Figure 1.

Another notable change in DDR4 testing is the expanded use of eye diagrams. The proposed test specification will set required eye height and width and will provide guidance on what will be considered a deterministic level of performance from a timing perspective. It will also set margin requirements with random or Gaussian noise applied to the measurement. Figure 2 shows the eye diagram for DQ from the DD4 spec with recommended mask sizing that is placed on the Vref center.

Following the lead of many serial standards, DDR4 will now incorporate a statistical jitter measurement approach for speeds greater than 2133. For speeds under 2133, all jitter will be assumed to be deterministic jitter (DJ). For 2133 and above, tests will look at both DJ and random jitter (RJ). To date, many of the timing parameters for jitter have not been published, but designers should be aware that jitter testing will be a requirement. One benefit of expanded jitter testing in DDR4 is that should devices fail to meet jitter requirements, the test and measurement vendor community offers robust jitter decomposition tools that can help isolate the source of problems.

In addition, JEDEC will no longer require de-rating of pass/fail limits for setup and hold measurement based on signal slew rate, as was the case with DDR2 and DDR3. In discussing the issue with engineers, the JEDEC standards committee found that the complex de-rating procedure was poorly understood and rarely performed. Typically, most engineers relied on making standard slew rate measurements. In DDR4, this has been replaced with the Vref averaging and eye-mask measurements described above.

The trends in the new memory specs are predictable: higher speeds, increased densities, smaller packaging, and reduced power consumptions. For design engineers charged with electrical verification and debugging, the new standards bring a number of notable changes and new measurement challenges. The combination of faster speeds and lower voltage means that signal integrity is more important than ever, while denser packaging will create signal access challenges.

This article highlights the changes these new standards bring to electrical verification and describes how to prepare for proper signal access to LPDDR3 and DDR4 memory systems. It will also look at instrument selection and techniques needed for performing electrical verification tests on these emerging standards.

LPDDR3 Overview

In May of 2012, JEDEC published the JESD209-3 Low Power Memory Device Standard. In comparison to LPDDR2, LPDDR3 offers a higher data rate, greater bandwidth and power efficiency, and higher memory density. LPDDR3 achieves a data rate of 1600 MT/s and uses a number of new technologies, including write-leveling and command/address training, optional on-die termination (ODT), and low-I/O capacitance.

As with LPDDR2, LPDDR3 supports both package-on-package and discrete packaging types to meet the requirements of various mobile devices, and it offers designers the ability to select the options that best meet the needs of their product. LPDDR3 preserves the power-efficient features and signaling interface of LPDDR2, allowing for fast clock stop/start, low-power self-refresh, and smart array management.

From an electrical verification perspective, LPDDR3 is pushing the system power envelope with its combination of lower operating voltage and higher bandwidths. By comparison, first-generation LPDDR ran at 200 MHz at 1.8V. With LPDDR2 voltage dropped to 1.2V while speed increased to 533 MHz. Now LPDDR3 calls for the same 1.2V but runs at 800 MHz. This means that signal integrity will be an important factor in order to verify clock cycles on shorter 1.25 ns clock periods. Table 1 shows a comparison between the two generations, indicating the need for higher bandwidth oscilloscopes to maintain signal integrity.

Table 1 – Faster speeds with LPDDR3 require greater oscilloscope bandwidth.

DDR4 Overview

Released in September of 2012, the DDR4 specification calls for greater performance, significantly increased packaging density, improved reliability and lower power consumption compared to DDR3. As shown in Table 2, voltages drop to 1.2V from 1.5V to reduce power consumption while the performance factor nearly doubles to 3,200 MT/sec. (megatransfers per second). Other changes include higher densities to support memory-intensive server applications, higher data rates and the ability to stack pins for higher density module placement.

Table 2 – The DDR4 specification calls for faster transfers, higher densities and lower power consumption compared to DDR3.

From a test perspective, as with LPDDRR3, the move to higher transfer rates and lower voltages will increase the emphasis on signal integrity and timing and margin measurements for validating memory technologies. To accommodate the increased measurement complexity, JEDEC is making a number of changes and updates to required test methodology.

One of the most significant changes is the proposed requirement to establish the reference voltage or V center used for compliance testing using a variable approach. For DDR3, this value was fixed at 750 mV. The new approach involves making multiple acquisitions of the output data (DQ) and a data strobe signal (DQS) Write burst. The largest to smallest voltage value for each is then measured and an average created using a simple formula. This then becomes the DQ voltage reference for centering and making reference measurements using an eye diagram. This process is shown in Figure 1.

Figure 1 – Early DDR4 verification proposals indicate a variable approach to establishing the reference voltage or V center.

Another notable change in DDR4 testing is the expanded use of eye diagrams. The proposed test specification will set required eye height and width and will provide guidance on what will be considered a deterministic level of performance from a timing perspective. It will also set margin requirements with random or Gaussian noise applied to the measurement. Figure 2 shows the eye diagram for DQ from the DD4 spec with recommended mask sizing that is placed on the Vref center.

Figure 2 –The proposed DDR4 specification adds eye diagram requirements.

Following the lead of many serial standards, DDR4 will now incorporate a statistical jitter measurement approach for speeds greater than 2133. For speeds under 2133, all jitter will be assumed to be deterministic jitter (DJ). For 2133 and above, tests will look at both DJ and random jitter (RJ). To date, many of the timing parameters for jitter have not been published, but designers should be aware that jitter testing will be a requirement. One benefit of expanded jitter testing in DDR4 is that should devices fail to meet jitter requirements, the test and measurement vendor community offers robust jitter decomposition tools that can help isolate the source of problems.

In addition, JEDEC will no longer require de-rating of pass/fail limits for setup and hold measurement based on signal slew rate, as was the case with DDR2 and DDR3. In discussing the issue with engineers, the JEDEC standards committee found that the complex de-rating procedure was poorly understood and rarely performed. Typically, most engineers relied on making standard slew rate measurements. In DDR4, this has been replaced with the Vref averaging and eye-mask measurements described above.

XXX . XXX 4%zero null How much RAM (random access memory) does a human brain have?

Working memory is not equivalent to RAM. While limitations in working memory is something we may occasionally face, it’s really not a blocking issue for most people, like it is for a computer. Although, working memory limitations can certainly be annoying when trying to remember a phone number, taking a person’s order without a notepad, or juggling 10 requests at once.

RAM on the other hand is used to load up pretty much everything you are actively working on, all the applications, browser windows and plenty of other stuff, as to be accessible as quickly as possible without the need to read from a disk. When it runs out, it causes serious performance issues for the computer.

To illustrate, look at the memory requirements on my brain when writing this answer.

While I am writing, my mind doesn’t need to “load up” anything. I simply have immediate access to all the contents in my brain, the entire time I am writing.

I can recall all the articles I’ve read on the topic, I can synthesize what I know of biology, neurology, computer science and creative writing. Combining that with my knowledge of English, written communication mixed with touch typing, eye sight and vocalizing (I often read my posts out-loud before posting) I generate the answer.

I then review and edit what I’ve written.

Oh, my dogs want to come inside. Now I access my muscle memory. I’ve just stood up, opened the door, let them in, closed the door and sat back down. It was an epic use of my entire body! Now I can continue exactly where I left off.

Comparing it like this, the human brain has pretty much an amount of RAM, because pretty much everything we’ve ever known is available to us all the time.

Side note: The attempts to calculate how much “memory” the human brain has by estimating the neurons, is not useful and wildly inaccurate.

Extensive use of any part of the mind leads to thickening of that part of the brain. Neurons are constantly created.

If a person spends their entire life learning, languages, new hobbies and activities, etc., they will continually increase the number of connections and the number of neurons. As far as is useful, there simply is no limit to the size of our minds and there will never be a way to find if there is a limit.

The human brain does not have RAM, nor does it have anything exactly comparable.

The closest analog to RAM in the human brain might be what is called “working memory” or “short term memory.”

The capacity of working memory is difficult to quantify, but cognitive scientists measure its capacity at “7 +/- 2 items.”

But what is an item?

An item is anything that can be held in working memory, so the definition is a bit circular. An item could be a word, a name, a number, or a concept. With “chunking,” an item could be a set of related concepts, so “the three little pigs” or “1 2 3” or “red white and blue” might be one chunked item or maybe two.

Working memory is for concepts. Vision seems to have its own type of short term memory, called visual short term memory (VSTM), and its capacity seems to be 3 - 5 items, where an item is something within the visual field that can be seen or noticed.

Most computational neuroscientists tend to estimate human storage capacity somewhere between 10 terabytes and 100 terabytes, though the full spectrum of guesses ranges from 1 terabyte to 2.5 petabytes. (One terabyte is equal to about 1,000 gigabytes or about 1 million megabytes; a petabyte is about 1,000 terabytes.)

The math behind these estimates is fairly simple. The human brain contains roughly 100 billion neurons. Each of these neurons seems capable of making around 1,000 connections, representing about 1,000 potential synapses, which largely do the work of data storage. Multiply each of these 100 billion neurons by the approximately 1,000 connections it can make, and you get 100 trillion data points, or about 100 terabytes of information.

Can you grow new brain cells?

The science of neurogenesis suggests it's possible to create neurons that improve your memory and thinking skills.

There are many aspects of aging you cannot prevent, but surprisingly, memory trouble is not one of them.

"The dogma for the longest time was that adult brains couldn't generate any new brain cells. "But the reality is that everyone has the capacity to develop new cells that can help enhance cognitive functions."

XXX , XXX 4%zero null 0 Resistive random-access memory

Resistive random-access memory (RRAM or ReRAM) is a type of non-volatile (NV) random-access (RAM) computer memory that works by changing the resistance across a dielectric solid-state material often referred to as a memristor. This technology bears some similarities to conductive-bridging RAM (CBRAM), and phase-change memory (PCM).

CBRAM involves one electrode providing ions that dissolve readily in an electrolyte material, while PCM involves generating sufficient Joule heating to effect amorphous-to-crystalline or crystalline-to-amorphous phase changes. On the other hand, RRAM involves generating defects in a thin oxide layer, known as oxygen vacancies (oxide bond locations where the oxygen has been removed), which can subsequently charge and drift under an electric field. The motion of oxygen ions and vacancies in the oxide would be analogous to the motion of electrons and holes in a semiconductor.

RRAM is currently under development by a number of companies, some of which have filed patent applications claiming various implementations of this technology. RRAM has entered commercialization on an initially limited KB-capacity scale.

Although anticipated as a replacement technology for flash memory, the cost benefit and performance benefit of RRAM have not been enough for companies to proceed with the replacement. A broad range of materials apparently can potentially be used for RRAM. However, the discovery[5] that the popular high-κ gate dielectric HfO2 can be used as a low-voltage RRAM has greatly encouraged others to investigate many other possibilities. Among others, SiOx has been identified to offer significant benefits. Weebit-Nano Ltd is one company that is pursuing SiOx and has already demonstrated functional devices.

In February 2012, Rambus bought an RRAM company called Unity Semiconductor for $35 million.[6] Panasonic launched an RRAM evaluation kit in May 2012, based on a tantalum oxide 1T1R (1 transistor – 1 resistor) memory cell architecture.[7]

In 2013, Crossbar introduced an RRAM prototype as a chip about the size of a postage stamp that could store 1 TB of data. In August 2013, the company claimed that large-scale production of their RRAM chips was scheduled for 2015.[8] The memory structure (Ag/a-Si/Si) closely resembles a silver-based CBRAM.

Different forms of RRAM have been disclosed, based on different dielectric materials, spanning from perovskites to transition metal oxides to chalcogenides. Silicon dioxide was shown to exhibit resistive switching as early as 1967,[9] and has recently been revisited.[10][11]

Leon Chua argued that all two-terminal non-volatile memory devices including RRAM should be considered memristors.[12] Stan Williams of HP Labs also argued that RRAM was a memristor.[13] However, others challenged this terminology and the applicability of memristor theory to any physically realizable device is open to question.[14][15] Whether redox-based resistively switching elements (RRAM) are covered by the current memristor theory is disputed.[16]

Silicon oxide presents an interesting case of resistance switching. Two distinct modes of intrinsic switching have been reported - surface-based, in which conductive silicon filaments are generated at exposed edges (which may be internal - within pores - or external - on the surface of mesa structures), and bulk switching, in which oxygen vacancy filaments are generated within the bulk of the oxide. The former mode suffers from oxidation of the filaments in air, requiring hermetic sealing to enable switching. The latter requires no sealing. In 2014 researchers from Rice University announced a silicon filament-based device that used a porous silicon oxide dielectric with no external edge structure - rather, filaments were formed at internal edges within pores. Devices can be manufactured at room temperature and have a sub-2V forming voltage, high on-off ratio, low power consumption, nine-bit capacity per cell, high switching speeds and good endurance. Problems with their inoperability in air can be overcome by hermetic sealing of devices[17]. Bulk switching in silicon oxide, pioneered by researchers at UCL (University College London) since 2012[18], offers low electroforming voltages (2.5V), switching voltages around 1V, switching times in the nanoseconds regime, and more than 10,000,000 cycles without device failure - all in ambient conditions.[19]

Forming

The basic idea is that a dielectric, which is normally insulating, can be made to conduct through a filament or conduction path formed after application of a sufficiently high voltage.[20] The conduction path can arise from different mechanisms, including vacancy or metal defect migration. Once the filament is formed, it may be reset (broken, resulting in high resistance) or set (re-formed, resulting in lower resistance) by another voltage. Many current paths, rather than a single filament, are possibly involved.[21] The presence of these current paths in the dielectric can be in situ demonstrated via conductive atomic force microscopy.[20][22][23][24]

The low-resistance path can be either localized (filamentary) or homogeneous. Both effects can occur either throughout the entire distance between the electrodes or only in proximity to one of the electrodes. Filamentary and homogenous switching effects can be distinguished by measuring the area dependence of the low-resistance state.[25]

Under certain conditions, the forming operation may be bypassed.[26] It is expected that under these conditions, the initial current is already quite high compared to insulating oxide layers.

CBRAM cells generally would not require forming if Cu ions are already present in the electrolyte, having already been driven-in by a designed photo-diffusion or annealing process; such cells may also readily return to their initial state.[27] In the absence of such Cu initially being in the electrolyte, the voltage would still be applied directly to the electrolyte, and forming would be a strong possibility.[28]

Operation styles

For random-access type memories, a 1T1R (one transistor, one resistor) architecture is preferred because the transistor isolates current to cells that are selected from cells that are not. On the other hand, a cross-point architecture is more compact and may enable vertically stacking memory layers, ideally suited for mass-storage devices. However, in the absence of any transistors, isolation must be provided by a "selector" device, such as a diode, in series with the memory element or by the memory element itself. Such isolation capabilities are inferior to the use of transistors if the on/off ratio for the selector is not sufficient, limiting the ability to operate very large arrays in this architecture. Thin film based threshold switch can work as a selector for bipolar and unipolar RRAM. Threshold switch-based selector was demonstrated for 64 Mb array. However, One shouldn't forget that the cross-point architecture requires BEOL compatible two terminal selectors like punch-through diode for bipolar RRAM or PIN diode for unipolar RRAM.

Polarity can be either binary or unary. Bipolar effects cause polarity to reverse when switching from low to high resistance (reset operation) compared to switching high to low (set operation). Unipolar switching leaves polarity unaffected, but uses different voltages.

Material systems for resistive memory cells

Multiple inorganic and organic material systems display thermal or ionic resistive switching effects. These can be grouped into the following categories:

- phase-change chalcogenides such as Ge

2Sb

2Te

5 or AgInSbTe - binary transition metal oxides such as NiO or TiO

2 - perovskites such as Sr(Zr)TiO

3 or PCMO - solid-state electrolytes such as GeS, GeSe, SiO

x or Cu

2S - organic charge-transfer complexes such as CuTCNQ

- organic donor–acceptor systems such as Al AIDCN

- two dimensional (layered) insulating materials like hexagonal boron nitride

Demonstrations

Papers at the IEDM Conference in 2007 suggested for the first time that RRAM exhibits lower programming currents than PRAM or MRAM without sacrificing programming performance, retention or endurance. Some commonly cited ReRAM systems are described further below.

HfO2-based RRAM

At IEDM 2008, the highest-performance RRAM technology to date was demonstrated by ITRI using HfO2 with a Ti buffer layer, showing switching times less than 10 ns and currents less than 30μA. At IEDM 2010, ITRI again broke the speed record, showing <0.3 ns switching time, while also showing process and operation improvements to allow yield up to 100% and endurance up to 10 billion cycles. IMEC presented updates of their RRAM program at the 2012 Symposia on VLSI Technology and Circuits, including a solution with a 500 nA operating current.

ITRI had focused on the Ti/HfO2 system since its first publication in 2008. ITRI's patent 8362454 has since been sold to TSMC; the number of prior licensees is unknown. On the other hand, IMEC focused mainly on Hf/HfO2. Winbond had done more recent work toward advancing and commercializing the HfO2-based RRAM.

Panasonic

Panasonic revealed its TaOx-based ReRAM at IEDM 2008. A key requirement was the need for a high work function metal such as Pt or Ir to interface with the TaOx layer. The change of O content results in resistance change as well as Schottky barrier change. More recently, a Ta2O5/TaOx layer was implemented, which still requires the high work function metal to interface with Ta2O5.[41] This system has been associated with high endurance demonstration (trillion cycles),[42] but products are specified at 100K cycles.[43] Filament diameters as large as ~100 nm have been observed.[44] Panasonic released a 4Mb part with Fujitsu,[45] and is developing 40nm embedded memory with UMC.

HP Memristor

On 30 April 2008, HP announced that they had discovered the memristor, originally envisioned as a missing 4th fundamental circuit element by Chua in 1971. On 8 July they announced they would begin prototyping RRAM using their memristors.[47] HP first demonstrated its memristor using TiOx,[48] but later migrated to TaOx,[49] possibly due to improved stability.[50] The TaOx-based device has some material similarity to Panasonic's ReRAM, but the operation characteristics are different. The Hf/HfOx system was similarly studied.[51]

Adesto Technologies

Adesto Technologies' ReRAM is based on filaments generated from the electrode metal rather than oxygen vacancies. The original material system was Ag/GeS2[52] but eventually migrated to ZrTe/Al2O3.[53] The tellurium filament achieved better stability as compared to silver. Adesto has targeted the ultralow power memory for Internet-of-Things (IoT) applications. Adesto has released products manufactured at Altis foundry[54] and entered into a 45nm foundry agreement with TowerJazz/Panasonic.

Crossbar

Crossbar implements an Ag filament in amorphous Si along with a threshold switching system to achieve a diode+ReRAM.[56][57] Their system includes the use of a transistor in 1T1R or 1TNR architecture. Crossbar started producing samples at SMIC on the 40nm process in 2017.[58] The Ag filament diameter has been visualized on the scale of tens of nanometers.

Programmable metallization cell

Infineon Technologies calls it conductive-bridging RAM(CBRAM), NEC has a variant called “Nanobridge” and Sony calls their version “electrolytic memory”. New research suggests CBRAM can be 3D printed[

ReRam test boards

- Panasonic AM13L-STK2 : MN101LR05D 8-bit MCU with built in ReRAM for evaluation, USB 2.0 connector

Future applications

Compared to PRAM, RRAM operates at a faster timescale (switching time can be less than 10 ns), while compared to MRAM, it has a simpler, smaller cell structure (less than 8F² MIM stack). A vertical 1D1R (one diode, one resistive switching device) integration can be used for crossbar memory structure to reduce the unit cell size to 4F² (F is the feature dimension).[62] Compared to flash memory and racetrack memory, a lower voltage is sufficient, and hence it can be used in low-power applications. Also, due to its relatively small access latency and high density, RRAM is considered a promising candidate for designing caches.

ITRI has shown that RRAM is scalable below 30 nm. The motion of oxygen atoms is a key phenomenon for oxide-based RRAM; one study indicated that oxygen motion may take place in regions as small as 2 nm.[66] It is believed that if a filament is responsible, it would not exhibit direct scaling with cell size.[67] Instead, the current compliance limit (set by an outside resistor, for example) could define the current-carrying capacity of the filament.

A significant hurdle to realizing the potential of RRAM is the sneak path problem that occurs in larger passive arrays. In 2010, complementary resistive switching (CRS) was introduced as a possible solution to sneak-path current interference.[69] In the CRS approach, the information storing states are pairs of high- and low-resistance states (HRS/LRS and LRS/HRS) so that the overall resistance is always high, allowing larger passive crossbar arrays.

A drawback to the initial CRS solution is the requirement for switching endurance caused by conventional destructive readout based on current measurements. A new approach for a nondestructive readout based on capacity measurement potentially lowers the requirements for both material endurance and power consumption. Bi-layer structure is used to produce the nonlinearity in LRS to avoid the sneak path problem. A single-layer device exhibiting a strong nonlinear conduction in LRS was reported. Another bi-layer structure was introduced for bipolar RRAM to improve the HRS and stability.

Another solution to the sneak current issue is to perform read and reset operations in parallel across an entire row of cells, while using set on selected cells.In this case, for a 3D-RRAM 1TNR array, with a column of N RRAM cells situated above a select transistor, only the intrinsic nonlinearity of the HRS is required to be sufficiently large, since the number of vertical levels N is limited (e.g., N = 8–32), and this has been shown possible for a low-current RRAM system.

Modeling of 2D and 3D caches designed with RRAM and other non-volatile random access memories such as MRAM and PCM can be done using DESTINY tool.

How RAM Works

Random access memory (RAM) is the best known form of computer memory. RAM is considered "random access" because you can access any memory cell directly if you know the row and column that intersect at that cell.

The opposite of RAM is serial access memory (SAM). SAM stores data as a series of memory cells that can only be accessed sequentially (like a cassette tape). If the data is not in the current location, each memory cell is checked until the needed data is found. SAM works very well for memory buffers, where the data is normally stored in the order in which it will be used (a good example is the texture buffer memory on a video card). RAM data, on the other hand, can be accessed in any order.

Similar to a microprocessor, a memory chip is an integrated circuit (IC) made of millions of transistors and capacitors. In the most common form of computer memory, dynamic random access memory (DRAM), a transistor and a capacitor are paired to create a memory cell, which represents a single bit of data. The capacitor holds the bit of information -- a 0 or a 1 (see How Bits and Bytes Work for information on bits). The transistor acts as a switch that lets the control circuitry on the memory chip read the capacitor or change its state.

A capacitor is like a small bucket that is able to store electrons. To store a 1 in the memory cell, the bucket is filled with electrons. To store a 0, it is emptied. The problem with the capacitor's bucket is that it has a leak. In a matter of a few milliseconds a full bucket becomes empty. Therefore, for dynamic memory to work, either the CPU or the memory controller has to come along and recharge all of the capacitors holding a 1 before they discharge. To do this, the memory controller reads the memory and then writes it right back. This refresh operation happens automatically thousands of times per second.

The capacitor in a dynamic RAM memory cell is like a leaky bucket. It needs to be refreshed periodically or it will discharge to 0. This refresh operation is where dynamic RAM gets its name. Dynamic RAM has to be dynamically refreshed all of the time or it forgets what it is holding. The downside of all of this refreshing is that it takes time and slows down the memory.

In this article, you'll learn all about what RAM is, what kind you should buy and how to install it.

Memory is made up of bits arranged in a two-dimensional grid.

In this figure, red cells represent 1s and white cells represent 0s. In the animation, a column is selected and then rows are charged to write data into the specific column.

Memory cells are etched onto a silicon wafer in an array of columns (bitlines) and rows (wordlines). The intersection of a bitline and wordline constitutes the address of the memory cell.

DRAM works by sending a charge through the appropriate column (CAS) to activate the transistor at each bit in the column. When writing, the row lines contain the state the capacitor should take on. When reading, the sense-amplifier determines the level of charge in the capacitor. If it is more than 50 percent, it reads it as a 1; otherwise it reads it as a 0. The counter tracks the refresh sequence based on which rows have been accessed in what order. The length of time necessary to do all this is so short that it is expressed in nanoseconds (billionths of a second). A memory chip rating of 70ns means that it takes 70 nanoseconds to completely read and recharge each cell.

Memory cells alone would be worthless without some way to get information in and out of them. So the memory cells have a whole support infrastructure of other specialized circuits. These circuits perform functions such as:

- Identifying each row and column (row address select and column address select)

- Keeping track of the refresh sequence (counter)

- Reading and restoring the signal from a cell (sense amplifier)

- Telling a cell whether it should take a charge or not (write enable)

Other functions of the memory controller include a series of tasks that include identifying the type, speed and amount of memory and checking for errors.

Static RAM is fast and expensive, and dynamic RAM is less expensive and slower. So static RAM is used to create the CPU's speed-sensitive cache, while dynamic RAM forms the larger system RAM space.

Memory chips in desktop computers originally used a pin configuration called dual inline package (DIP). This pin configuration could be soldered into holes on the computer's motherboard or plugged into a socket that was soldered on the motherboard. This method worked fine when computers typically operated on a couple of megabytes or less of RAM, but as the need for memory grew, the number of chips needing space on the motherboard increased.

The solution was to place the memory chips, along with all of the support components, on a separate printed circuit board (PCB) that could then be plugged into a special connector (memory bank) on the motherboard. Most of these chips use a small outline J-lead (SOJ) pin configuration, but quite a few manufacturers use the thin small outline package (TSOP) configuration as well. The key difference between these newer pin types and the original DIP configuration is that SOJ and TSOP chips are surface-mounted to the PCB. In other words, the pins are soldered directly to the surface of the board, not inserted in holes or sockets.

Memory chips are normally only available as part of a card called a module. You've probably seen memory listed as 8x32 or 4x16. These numbers represent the number of the chips multiplied by the capacity of each individual chip, which is measured in megabits (Mb), or one million bits. Take the result and divide it by eight to get the number of megabytes on that module. For example, 4x32 means that the module has four 32-megabit chips. Multiply 4 by 32 and you get 128 megabits. Since we know that a byte has 8 bits, we need to divide our result of 128 by 8. Our result is 16 megabytes!

The following are some common types of RAM:

- SRAM: Static random access memory uses multiple transistors, typically four to six, for each memory cell but doesn't have a capacitor in each cell. It is used primarily for cache.

- DRAM: Dynamic random access memory has memory cells with a paired transistor and capacitor requiring constant refreshing.

- FPM DRAM: Fast page mode dynamic random access memory was the original form of DRAM. It waits through the entire process of locating a bit of data by column and row and then reading the bit before it starts on the next bit. Maximum transfer rate to L2 cache is approximately 176 MBps.

- EDO DRAM: Extended data-out dynamic random access memory does not wait for all of the processing of the first bit before continuing to the next one. As soon as the address of the first bit is located, EDO DRAM begins looking for the next bit. It is about five percent faster than FPM. Maximum transfer rate to L2 cache is approximately 264 MBps.

- SDRAM: Synchronous dynamic random access memory takes advantage of the burst mode concept to greatly improve performance. It does this by staying on the row containing the requested bit and moving rapidly through the columns, reading each bit as it goes. The idea is that most of the time the data needed by the CPU will be in sequence. SDRAM is about five percent faster than EDO RAM and is the most common form in desktops today. Maximum transfer rate to L2 cache is approximately 528 MBps.

- DDR SDRAM: Double data rate synchronous dynamic RAM is just like SDRAM except that is has higher bandwidth, meaning greater speed. Maximum transfer rate to L2 cache is approximately 1,064 MBps (for DDR SDRAM 133 MHZ).

- RDRAM: Rambus dynamic random access memory is a radical departure from the previous DRAM architecture. Designed by Rambus, RDRAM uses a Rambus in-line memory module (RIMM), which is similar in size and pin configuration to a standard DIMM. What makes RDRAM so different is its use of a special high-speed data bus called the Rambus channel. RDRAM memory chips work in parallel to achieve a data rate of 800 MHz, or 1,600 MBps. Since they operate at such high speeds, they generate much more heat than other types of chips. To help dissipate the excess heat Rambus chips are fitted with a heat spreader, which looks like a long thin wafer. Just like there are smaller versions of DIMMs, there are also SO-RIMMs, designed for notebook computers.

- Credit Card Memory: Credit card memory is a proprietary self-contained DRAM memory module that plugs into a special slot for use in notebook computers.

- PCMCIA Memory Card: Another self-contained DRAM module for notebooks, cards of this type are not proprietary and should work with any notebook computer whose system bus matches the memory card's configuration.

- CMOS RAM: CMOS RAM is a term for the small amount of memory used by your computer and some other devices to remember things like hard disk settings -- see Why does my computer need a battery? for details. This memory uses a small battery to provide it with the power it needs to maintain the memory contents.

- VRAM: VideoRAM, also known as multiport dynamic random access memory (MPDRAM), is a type of RAM used specifically for video adapters or 3-D accelerators. The "multiport" part comes from the fact that VRAM normally has two independent access ports instead of one, allowing the CPU and graphics processor to access the RAM simultaneously. VRAM is located on the graphics card and comes in a variety of formats, many of which are proprietary. The amount of VRAM is a determining factor in the resolution and color depth of the display. VRAM is also used to hold graphics-specific information such as 3-D geometry data and texture maps. True multiport VRAM tends to be expensive, so today, many graphics cards use SGRAM (synchronous graphics RAM) instead. Performance is nearly the same, but SGRAM is cheaper.

The type of board and connector used for RAM in desktop computershas evolved over the past few years. The first types were proprietary, meaning that different computer manufacturers developed memory boards that would only work with their specific systems. Then came SIMM, which stands for single in-line memory module. This memory board used a 30-pin connector and was about 3.5 x .75 inches in size (about 9 x 2 cm). In most computers, you had to install SIMMs in pairs of equal capacity and speed. This is because the width of the bus is more than a single SIMM. For example, you would install two 8-megabyte (MB) SIMMs to get 16 megabytes total RAM. Each SIMM could send 8 bits of data at one time, while the system bus could handle 16 bits at a time. Later SIMM boards, slightly larger at 4.25 x 1 inch (about 11 x 2.5 cm), used a 72-pin connector for increased bandwidth and allowed for up to 256 MB of RAM.

As processors grew in speed and bandwidth capability, the industry adopted a new standard in dual in-line memory module (DIMM). With a whopping 168-pin or 184-pin connector and a size of 5.4 x 1 inch (about 14 x 2.5 cm), DIMMs range in capacity from 8 MB to 1 GB per module and can be installed singly instead of in pairs. Most PC memory modules and the modules for the Mac G5 systems operate at 2.5 volts, while older Mac G4 systems typically use 3.3 volts. Another standard, Rambus in-line memory module (RIMM), is comparable in size and pin configuration to DIMM but uses a special memory bus to greatly increase speed.

Many brands of notebook computers use proprietary memory modules, but several manufacturers use RAM based on the small outline dual in-line memory module (SODIMM) configuration. SODIMM cards are small, about 2 x 1 inch (5 x 2.5 cm), and have 144 or 200 pins. Capacity ranges from 16 MB to 1 GB per module. To conserve space, the Apple iMac desktop computer uses SODIMMs instead of the traditional DIMMs. Sub-notebook computers use even smaller DIMMs, known as MicroDIMMs, which have either 144 pins or 172 pins.

Most memory available today is highly reliable. Most systems simply have the memory controller check for errors at start-up and rely on that. Memory chips with built-in error-checking typically use a method known as parity to check for errors. Parity chips have an extra bit for every 8 bits of data. The way parity works is simple. Let's look at even parity first.

When the 8 bits in a byte receive data, the chip adds up the total number of 1s. If the total number of 1s is odd, the parity bit is set to 1. If the total is even, the parity bit is set to 0. When the data is read back out of the bits, the total is added up again and compared to the parity bit. If the total is odd and the parity bit is 1, then the data is assumed to be valid and is sent to the CPU. But if the total is odd and the parity bit is 0, the chip knows that there is an error somewhere in the 8 bits and dumps the data. Odd parity works the same way, but the parity bit is set to 1 when the total number of 1s in the byte are even.

The problem with parity is that it discovers errors but does nothing to correct them. If a byte of data does not match its parity bit, then the data are discarded and the system tries again. Computers in critical positions need a higher level of fault tolerance. High-end servers often have a form of error-checking known as error-correction code (ECC). Like parity, ECC uses additional bits to monitor the data in each byte. The difference is that ECC uses several bits for error checking -- how many depends on the width of the bus -- instead of one. ECC memory uses a special algorithm not only to detect single bit errors, but actually correct them as well. ECC memory will also detect instances when more than one bit of data in a byte fails. Such failures are very rare, and they are not correctable, even with ECC.

The majority of computers sold today use nonparity memory chips. These chips do not provide any type of built-in error checking, but instead rely on the memory controller for error detection.

It's been said that you can never have enough money, and the same holds true for RAM, especially if you do a lot of graphics-intensive work or gaming. Next to the CPU itself, RAM is the most important factor in computer performance. If you don't have enough, adding RAM can make more of a difference than getting a new CPU!

If your system responds slowly or accesses the hard drive constantly, then you need to add more RAM. If you are running Windows XP, Microsoft recommends 128MB as the minimum RAM requirement. At 64MB, you may experience frequent application problems. For optimal performance with standard desktop applications, 256MB is recommended. If you are running Windows 95/98, you need a bare minimum of 32 MB, and your computer will work much better with 64 MB. Windows NT/2000 needs at least 64 MB, and it will take everything you can throw at it, so you'll probably want 128 MB or more.

Linux works happily on a system with only 4 MB of RAM. If you plan to add X-Windows or do much serious work, however, you'll probably want 64 MB. Mac OS X systems should have a minimum of 128 MB, or for optimal performance, 512 MB.

The amount of RAM listed for each system above is estimated for normal usage -- accessing the Internet, word processing, standard home/office applications and light entertainment. If you do computer-aided design (CAD), 3-D modeling/animation or heavy data processing, or if you are a serious gamer, then you will most likely need more RAM. You may also need more RAM if your computer acts as a server of some sort (Web pages, database, application, FTP or network).

Another question is how much VRAM you want on your video card. Almost all cards that you can buy today have at least 16 MB of RAM. This is normally enough to operate in a typical office environment. You should probably invest in a 32-MB or better graphics card if you want to do any of the following:

- Play realistic games

- Capture and edit video

- Create 3-D graphics

- Work in a high-resolution, full-color environment

- Design full-color illustrations

When shopping for video cards, remember that your monitor and computer must be capable of supporting the card you choose.

Most of the time, installing RAM is a very simple and straightforward procedure. The key is to do your research. Here's what you need to know:

- How much RAM you have

- How much RAM you wish to add

- Form factor

- RAM type

- Tools needed

- Warranty

- Where it goes

RAM is usually sold in multiples of 16 megabytes: 16, 32, 64, 128, 256, 512, 1024 (which is the same as 1GB). This means that if you currently have a system with 64 MB RAM and you want at least 100 MB RAM total, then you will probably need to add another 64 MB module.

To install more RAM, look for memory modules on your computer's motherboard. At the left is a Macintosh G4 and on the right is a PC.

Once you know how much RAM you want, check to see what form factor(card type) you need to buy. You can find this in the manual that came with your computer, or you can contact the manufacturer. An important thing to realize is that your options will depend on the design of your computer. Most computers sold today for normal home/office use have DIMM slots. High-end systems are moving to RIMM technology, which will eventually take over in standard desktop computers as well. Since DIMM and RIMM slots look a lot alike, be very careful to make sure you know which type your computer uses. Putting the wrong type of card in a slot can cause damage to your system and ruin the card.

You will also need to know what type of RAM is required. Some computers require very specific types of RAM to operate. For example, your computer may only work with 60ns-70ns parity EDO RAM. Most computers are not quite that restrictive, but they do have limitations. For optimal performance, the RAM you add to your computer must also match the existing RAM in speed, parity and type. The most common type available today is SDRAM.

Additionally, some computers support Dual Channel RAM configuration either as an option or as a requirement. Dual Channel means that RAM modules are installed in matched pairs, so if there is a 512MB RAM card installed, there is another 512 MB card installed next to it. When Dual Channel is an optional configuration, installing RAM in matched pairs speeds up the performance of certain applications. When it's a requirement, as in computers with the Mac G5 chip(s), the computer will not function properly without matched pairs of RAM chips.

For complete guidelines on setting up Dual Channel configuration on Intel Pentium 4-based systems, check out this guide.

Before you open your computer, check to make sure you won't be voiding the warranty. Some manufacturers seal the case and request that the customer have an authorized technician install RAM. If you're set to open the case, turn off and unplug the computer. Ground yourself by using an anti-static pad or wrist strap to discharge any static electricity. Depending on your computer, you may need a screwdriver or nut-driver to open the case. Many systems sold today come in tool-less cases that use thumbscrews or a simple latch.

The actual installation of the memory module does not normally require any tools. RAM is installed in a series of slots on the motherboard known as the memory bank. The memory module is notched at one end so you won't be able to insert it in the wrong direction. For SIMMs and some DIMMs, you install the module by placing it in the slot at approximately a 45-degree angle. Then push it forward until it is perpendicular to the motherboard and the small metal clips at each end snap into place. If the clips do not catch properly, check to make sure the notch is at the right end and the card is firmly seated. Many DIMMs do not have metal clips; they rely on friction to hold them in place. Again, just make sure the module is firmly seated in the slot.

Once the module is installed, close the case, plug the computer back in and power it up. When the computer starts the POST, it should automatically recognize the memory.

Read-Only Memory (ROM)

Every computer uses both read-write (RW) memory and read-only (R) memory. We see these same designations applied to CD-RW and CD-R, or DVD-RW and DVD-R optical disks. "Writing to" memory is the same as placing information in a memory address. "Reading from" memory is the same as retrieving information from an address. Information can be temporarily stored (written) in RAM, then a moment later, taken out (read). New information can then be written to the same place. Although the acronym RAM stands for random access memory, think of it, for the moment, as read/write memory: It can be both written to and read from.

ROM is read-only memory, and typically, doesn't allow changes. ROM can have information written into it only one time. From that point on, all we can do is read whatever was put there. Imagine a bulletin board under glass at the back of a classroom. ROM information is like hard-copy notes placed under the glass. At the end of the day, we turn out the lights and everyone goes home. The words on the paper remain unchanged. The next day, the notes are exactly the way they were the day before.

ROM is nonvolatile because no electrical current is required for the information to remain stored. ROM chips are mostly used for BIOS, although the same concept and acronym applies to commercial pre-recorded compact disks. The ROM in CDROM stands for read-only memory. Although CD-RW and DVD-RW can be changed, they're referred to as permanent storage media.

CAUTION

In some instances, ROM can be changed through the use of certain tools. Flash ROM is nonvolatile memory that occasionally can be changed, such as when a BIOS chip must be updated.

A single letter can really mess you up on the exam if you don't pay close attention. We've seen questions like, "RAM BIOS is used to permanently store instructions for a hardware device: True or False?" (The answer is false.) Keep your eyes peeled, and remember that RAM sounds like RANdom. RAM is never used in BIOS. Because the BIOS instructions are permanent, they almost always use ROM.

RAM, on the other hand, is like a blackboard. It starts out empty, then during the day, information is written on it, read from it, and maybe even erased. When something is erased, new information is then written to the same place on the blackboard. At the end of the day, we turn off the lights and wash off the blackboard. Whatever data was on the board goes away forever. When you turn off the power to a computer, RAM no longer has the necessary electrical current to sustain the data in its memory cells. Once again, RAM is volatile because it can't store information without using electricity.

NOTE

Windows sets aside (allocates) some amount of memory as resource memory. When we write and erase many times on a blackboard, we get a chalk build-up. Similarly, resource memory can sometimes become disorganized and confusing for Windows to read. You can repair this memory fragmentation either by re-starting the machine or by using specialized third-party software utilities.

RAM Versus ROM

RAM is to a computer as your attention span is to your mind. When you cram for this exam, you'll focus your attention on facts and figures, placing them into short-term memory just long enough to write them out to a piece of paper in the exam room. After the data is stored to the sheet of paper, you can "erase" the information in your attention area and bring in new data. New data might be an exam question, on which you can then perform calculations such as determining a correct answer. When you require the information you wrote to the paper, you can return it to your attention by reading the page.

CAUTION

The tear-away Cram Sheet on the front cover of this book is designed to give you the minimal basics of those difficult-to-remember facts you'll likely want to have handy during the exam. Although you can't bring the sheet into the exam room, you can try to remember them long enough to write them on the blank piece of paper you'll be given when you've entered the exam room.

The piece of paper in the example is similar to a floppy disk. There isn't a lot of room on the paper, but you can carry it easily in your shirt pocket. A loose-leaf binder or notebook would be more like a hard disk. Depending upon the size of the binder, you can store a lot more information than on a single piece of paper. If you were to engrave the information on the desk in the exam room (not allowed), it would be analogous to authoring a CDROM.

ROM is like your long-term memory, holding the things you remember from your past. This is also like the information stored in BIOS and CMOS. When the computer "wakes up," ROM settings provide an awareness of the size of the hard disk, the presence of a sound card, whether or not any memory exists (and how to use it), and simple access routines to permanent hardware.

Basic Input/Output System (BIOS)

When you turn on a PC, the processor first looks at the basic input/output system to determine the machine's fundamental configuration and environment. This information is stored in a ROM chip and largely determines what peripherals the system can support. BIOS instructions are updated regularly by the manufacturer, not by the end user. If the chip is made to be updated (re-programmed) by the end user, it is often called Flash BIOS, or sometimes, Flash ROM. These programmable chips are often referred to as EEPROM (pronounced ee-prom) chips, discussed in a moment.

In a human being, BIOS would be like waking up and learning that you have a head, two arms, and two legs. The POST would be like a quick self- assessment as to whether or not you can move your arms and legs, and how bad a headache you have. CMOS would be like knowing your name, your address, and that you were last configured as a drinking machine.

Shadow RAM

In Chapter 2, "Motherboards," we pointed out that memory speed has usually been measured in nanoseconds (billionths of a second). We measure processor speeds in megahertz (millions of cycles per second) or gigahertz (billions of cycles per second). Although gigahertz CPUs operate in billionths of a second, instructions executing out of other processors, such as BIOS chips, execute quite a bit slower. The CPU and other devices may have to repeatedly query the BIOS chip for simple but permanent instructions, thereby reducing system performance. Shadow RAM is a method of storing a copy of certain BIOS instructions in main memory, rather than leaving them in a chip. The process improves execution speed and avoids constant calls to the slower chip. Many computers provide an option to shadow both the BIOS and certain video functions.

DRAM, fast page mode (FPM), and extended data output (EDO) mode all measured memory access times in nanoseconds. A 70ns unit would be labeled a "7." A 60ns unit would be labeled a "6," and so on. The lower the number, the faster the memory (shorter access time). With the introduction of SDRAM, these time measurements became less accurate. At such short intervals, fractions began to lose any real meaning. Instead, it began to make more sense to use speed measurements in the same way as CPUs. For this reason, SRAM and SDRAM modules use ratings such as 66MHz, 100MHz, 133MHz, or 800MHz.

Programmable ROM

Here's an example of one-time, read-only memory: storing a book on CDROM. Technically, write-once, read-many (times) is written as WORM. A magnetic disk is write-many, read-many, but you won't see a WMRM acronym. Instead, we speak of re-writeable optical disks. CD-RW changed the way that we use CDs and DVDs, just as programmable ROM chips changed the BIOS.

A manufacturing mask is the photographic blueprint for the given chip. It's used to etch the complex circuitry into a piece (chip) of silicon. The overall combination of silicon wafers, circuits, and microscopic components making up a CPU is called the die (like one of a pair of dice). The formal name for a chip that cannot be modified is mask ROM (from the manufacturing mask). The following types of chips offer varying degrees of programmability:

- Programmable ROM (PROM)—Requires a special type of machine called a PROM programmer or PROM burner (like a CD burner) and can be changed only one time. The original chip is blank, and the programmer burns in specific instructions. From that point, it cannot be changed.

- Erasable programmable ROM (EPROM)—Uses the PROM burner, but can be erased by shining ultraviolet (UV) light through a window in the top of the chip. Normal room light contains very little UV light.

- Electrically erasable programmable ROM (EEPROM)—Can be erased by an electrical charge, then written to by using higher-than-normal voltage. EEPROM can be erased one byte at a time, rather than erasing the entire chip with UV light. Because these chips can be changed without opening a casing, they're often used to store programmable instructions in devices such as printers and other peripherals.

Flash BIOS

With advances in technology, most BIOS chips became Flash EEPROM. These chips make it easier to change the BIOS. Rather than pulling out an actual chip and replacing it with a newer one, upgraded programming can be downloaded through the Internet or a bulletin board service (BBS). A small installation program changes the actual program instructions, eliminating the need for opening the computer case.

These types of chips are sometimes called Flash ROM or Flash memory, and store data much as EEPROM does. They use a super-voltage charge to erase a block of data. However, as we said earlier, EEPROM can be erased only one byte at a time. Although both Flash ROM and EEPROM can perform unlimited read/write operations, they can be erased only a certain number of times. (Be aware that Flash memory is not the same thing as nonvolatile memory cards used in such devices as digital cameras.)

CMOS Memory

As you know, basic motherboards vary in components such as CD or DVD drives, hard drives, memory, and so forth. The CMOS chip is a particular type of memory (static RAM) used to store optional system settings for those components. For example, the board might have a floppy drive and some memory chips. The BIOS stores instructions as to how to reach those components, and the fact that they exist. The CMOS stores variable settings, such as the disk size, the number of platters, and how much memory happens to be installed.

CMOS tends to store information about "unexpected" devices, and settings are held in memory through the use of a small electrical charge. Although CMOS is technically volatile memory, a trickle chargecomes from a battery installed on the motherboard. Even when the main power is turned off, the charge continues to maintain the settings. However, if the battery power fails, all CMOS information vanishes.

CAUTION

BIOS determines compatibility. Some modern BIOS settings are often stored in the CMOS chip. Older BIOS was completely stored in nonvolatile ROM chips, often soldered right onto the motherboard. Remember that the CMOS is almost always where the computer's configuration settings are stored. BIOS is where basic input/output routines for the computer are stored.

CMOS is different from ROM BIOS in that the CMOS settings require some source of electrical power. Nonvolatile memory doesn't require electricity at all. CMOS settings are essential to the configuration of a specific computer. BIOS instructions typically work with a generic type of motherboard and its chipset.

A symptom of a fading CMOS battery is that the system date begins to fluctuate, sometimes by months at a time. Backing up files and software are a standard part of keeping a current backup, but you should also have a report of the current CMOS settings. On many PCs, turning on a local printer, re-starting the machine (as opposed to a first-time boot), and going into the CMOS settings can generate this type of report. Press the Print Screen key at each screen.

When you exit out of the CMOS setup, the machine will most likely restart. From within Windows, open a text editor (for example, Notepad) and print a blank page. The stored page in the printer comes out as part of the print job. From a DOS command line, you can send an end-of-form page request to the printer to print the last page in the printer's memory. The following ^L is actually created by pressing the Ctrl+L key. Type echo ^L > prn.

NOTE

Most computers cannot access the PRN device before a successful boot process. Therefore, the Print Screen function may not work. However, a warm reset, as opposed to a power-down and cold reboot, often allows the Print Screen function to remain in low memory. If the Print Screen function doesn't remain loaded on a particular machine, the only other way to store the CMOS settings is to manually write them down on a piece of paper.

Random Access Memory (RAM)

The memory experts over at Crucial Technology, a division of Micron Technology, Inc. have created a great illustration of memory. We're going to modify their original inspiration, and expand it to include some of the related concepts discussed throughout this book. Imagine a motherboard as being like a printing business. Originally, there was only "the guy in charge" and a few employees. They all worked in a small building, and things were pretty disorganized. The CPU—the boss—is in charge of getting things done. The other components on the board all have been developed to lend a helping hand.

When the CPU finishes a processing job, it uses the address bus to set up memory locations for the results of its processing. It then sends the data to the memory controller, where each bit in every byte is stored in a memory cell. At some point, if the CPU needs the results again, it orders the memory controller to find the stored bits and send them back.

Dynamic RAM (DRAM)

In the old days, when the boss took in a print job, he'd have to go running back to the pressman to have it printed. The pressman is the memory controller, and the printing press is a memory chip. (The print job is a set of bits the CPU needs to move out of its registers.) The pressman would examine each document he got from the boss, character by character, and grab matching lead blocks, individually carved with each letter. He would then place each block of lead into a form, one by one. In other words, each bit gets its own address in a matrix.

After the form was typeset (filled with letters), the pressman slopped on ink and put a piece of paper under the press. He would crank down a handle and print a copy of the document. Then he had to re-ink the grid to get it ready to print another copy. This is much like the process where a memory controller takes bits from the CPU, examines them, then assigns each one a memory address. The "printing" step is the moment the storage takes place in the memory cells. Keep an eye on that moment, because the re-inking step relates to a memory refresh.

NOTE

A controller is a small device, usually a single chip, that controls data flow for a particular piece of hardware. A memory chip is also a device, and the memory controller executes various instructions as to how to use the chip. A disk drive controller contains instructions to operate the drive mechanics. Most PC motherboards use simple controllers for the basic I/O ports, as well as having two controllers for IDE drives.