XXX . XXX Four-dimensional space

A four-dimensional space or 4D space is a mathematical extension of the concept of three-dimensional or 3D space. Three-dimensional space is the simplest possible generalization of the observation that one only needs three numbers, called dimensions, to describe the sizes or locations of objects in the everyday world. For example, the volume of a rectangular box is found by measuring its length (often labeled x), width (y), and depth (z).

The idea of adding a fourth dimension began with Joseph-Louis Lagrange in the mid-1700s and culminated in a precise formalization of the concept in 1854 by Bernhard Riemann. In 1880 Charles Howard Hinton popularized these insights in an essay titled What is the Fourth Dimension?, which explained the concept of a four-dimensional cube with a step-by-step generalization of the properties of lines, squares, and cubes. The simplest form of Hinton's method is to draw two ordinary cubes separated by an "unseen" distance, and then draw lines between their equivalent vertices. This can be seen in the accompanying animation, whenever it shows a smaller inner cube inside a larger outer cube. The eight lines connecting the vertices of the two cubes in that case represent a single direction in the "unseen" fourth dimension.

Higher dimensional spaces have since become one of the foundations for formally expressing modern mathematics and physics. Large parts of these topics could not exist in their current forms without the use of such spaces. Einstein's concept of spacetime uses such a 4D space, though it has a Minkowski structure that is a bit more complicated than Euclidean 4D space.

When dimensional locations are given as ordered lists of numbers such as (t,x,y,z) they are called vectors or n-tuples. It is only when such locations are linked together into more complicated shapes that the full richness and geometric complexity of 4D and higher spaces emerges. A hint of that complexity can be seen in the accompanying animation of one of the simplest possible 4D objects, the 4D cube or tesseract.

The 4D equivalent of a cube, known as a tesseract. The tesseract is rotating in four dimensions, which are then projected into three dimensions.

Lagrange wrote in his Mécanique analytique (published 1788, based on work done around 1755) that mechanics can be viewed as operating in a four-dimensional space — three dimensions of space, and one of time.[1] In 1827 Möbius realized that a fourth dimension would allow a three-dimensional form to be rotated onto its mirror-image,[2]:141 and by 1853 Ludwig Schläfli had discovered many polytopes in higher dimensions, although his work was not published until after his death.[2]:142–143 Higher dimensions were soon put on firm footing by Bernhard Riemann's 1854 thesis, Über die Hypothesen welche der Geometrie zu Grunde liegen, in which he considered a "point" to be any sequence of coordinates (x1, ..., xn). The possibility of geometry in higher dimensions, including four dimensions in particular, was thus established.

An arithmetic of four dimensions called quaternions was defined by William Rowan Hamilton in 1843. This associative algebra was the source of the science of vector analysis in three dimensions, as recounted in A History of Vector Analysis. Soon after, tessarines and coquaternions were introduced as other four-dimensional algebras over R.

One of the first major expositors of the fourth dimension was Charles Howard Hinton, starting in 1880 with his essay What is the Fourth Dimension?, published in the Dublin University Magazine.[3] He coined the terms tesseract, ana and kata in his book A New Era of Thought, and introduced a method for visualising the fourth dimension using cubes in the book Fourth Dimension.[4][5]

Hinton's ideas inspired a fantasy about a "Church of the Fourth Dimension" featured by Martin Gardner in his January 1962 "Mathematical Games" column in Scientific American. In 1886 Victor Schlegel described[6] his method of visualizing four-dimensional objects with Schlegel diagrams.

In 1908, Hermann Minkowski presented a paper[7] consolidating the role of time as the fourth dimension of spacetime, the basis for Einstein's theories of special and general relativity.[8] But the geometry of spacetime, being non-Euclidean, is profoundly different from that popularised by Hinton. The study of Minkowski space required new mathematics quite different from that of four-dimensional Euclidean space, and so developed along quite different lines. This separation was less clear in the popular imagination, with works of fiction and philosophy blurring the distinction, so in 1973 H. S. M. Coxeter felt compelled to write:

Vectors

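Mathematically, four-dimensional space is simply a space with four spatial dimensions, that is, a space that needs four parameters to specify a point in it. For example, a general point might have position vector a, equal to

$$\mathbf{a} = \begin{pmatrix} a_1 \\ a_2 \\ a_3 \\ a_4 \end{pmatrix}.$$

This can be written in terms of the four standard basis vectors (e1, e2, e3, e4), given by

$$\mathbf{e}_1 = \begin{pmatrix} 1 \\ 0 \\ 0 \\ 0 \end{pmatrix},\quad \mathbf{e}_2 = \begin{pmatrix} 0 \\ 1 \\ 0 \\ 0 \end{pmatrix},\quad \mathbf{e}_3 = \begin{pmatrix} 0 \\ 0 \\ 1 \\ 0 \end{pmatrix},\quad \mathbf{e}_4 = \begin{pmatrix} 0 \\ 0 \\ 0 \\ 1 \end{pmatrix},$$

so the general vector a is

$$\mathbf{a} = a_1\mathbf{e}_1 + a_2\mathbf{e}_2 + a_3\mathbf{e}_3 + a_4\mathbf{e}_4.$$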

Vectors add, subtract and scale as in three dimensions.

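The dot product of Euclidean three-dimensional space generalizes to four dimensions as

$$\mathbf{a} \cdot \mathbf{b} = a_1 b_1 + a_2 b_2 + a_3 b_3 + a_4 b_4.$$

It can be used to calculate the norm or length of a vector,

$$|\mathbf{a}| = \sqrt{\mathbf{a} \cdot \mathbf{a}} = \sqrt{a_1^2 + a_2^2 + a_3^2 + a_4^2},$$

and to calculate or define the angle between two non-zero vectors as

$$\theta = \arccos \frac{\mathbf{a} \cdot \mathbf{b}}{|\mathbf{a}|\,|\mathbf{b}|}.$$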

Minkowski spacetime is four-dimensional space with geometry defined by a non-degenerate pairing different from the dot product:

$$\mathbf{a} \cdot \mathbf{b} = a_1 b_1 + a_2 b_2 + a_3 b_3 - a_4 b_4.$$

As an example, the distance squared between the points (0,0,0,0) and (1,1,1,0) is 3 in both the Euclidean and Minkowskian 4-spaces, while the distance squared between (0,0,0,0) and (1,1,1,1) is 4 in Euclidean space and 2 in Minkowski space; increasing the fourth coordinate actually decreases the metric distance. This leads to many of the well-known apparent "paradoxes" of relativity.
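The two distance computations in this example can be checked with a short sketch. It assumes the Minkowski pairing subtracts the square of the fourth coordinate, which is the sign convention implied by the numbers quoted above; the function names are illustrative.

```python
def euclidean_sq(a, b):
    """Squared Euclidean distance between two 4-tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def minkowski_sq(a, b):
    """Squared interval, assuming the fourth coordinate enters with a minus sign."""
    d = [x - y for x, y in zip(a, b)]
    return d[0] ** 2 + d[1] ** 2 + d[2] ** 2 - d[3] ** 2


origin = (0, 0, 0, 0)
p = (1, 1, 1, 0)
q = (1, 1, 1, 1)

print(euclidean_sq(origin, p), minkowski_sq(origin, p))  # 3 3
print(euclidean_sq(origin, q), minkowski_sq(origin, q))  # 4 2
```

Moving from p to q changes only the fourth coordinate, yet the Euclidean value grows while the Minkowski value shrinks.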

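The cross product is not defined in four dimensions. Instead the exterior product is used for some applications, and is defined as follows:

$$\begin{aligned}
\mathbf{a} \wedge \mathbf{b} ={} & (a_1 b_2 - a_2 b_1)\,\mathbf{e}_{12} + (a_1 b_3 - a_3 b_1)\,\mathbf{e}_{13} + (a_1 b_4 - a_4 b_1)\,\mathbf{e}_{14} \\
& + (a_2 b_3 - a_3 b_2)\,\mathbf{e}_{23} + (a_2 b_4 - a_4 b_2)\,\mathbf{e}_{24} + (a_3 b_4 - a_4 b_3)\,\mathbf{e}_{34}.
\end{aligned}$$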

This is bivector valued, with bivectors in four dimensions forming a six-dimensional linear space with basis (e12, e13, e14, e23, e24, e34). They can be used to generate rotations in four dimensions.
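As a minimal sketch of why the result has six components, the code below computes the coefficient of each basis bivector e_ij (i < j) using the standard antisymmetric formula a_i b_j - a_j b_i. The function name `wedge` and the dictionary layout are illustrative choices.

```python
from itertools import combinations


def wedge(a, b):
    """Bivector components of a ∧ b in 4D, keyed by 1-based index pairs (i, j) with i < j."""
    return {
        (i + 1, j + 1): a[i] * b[j] - a[j] * b[i]
        for i, j in combinations(range(4), 2)
    }


e1 = (1, 0, 0, 0)
e2 = (0, 1, 0, 0)

print(wedge(e1, e2))       # only the (1, 2) component is non-zero
print(len(wedge(e1, e2)))  # 6 components, one per basis bivector
```

Swapping the arguments flips the sign of every component, and wedging a vector with itself gives zero in every component.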

Orthogonality and vocabulary

In the familiar three-dimensional space in which we live there are three coordinate axes—usually labeled x, y, and z—with each axis orthogonal (i.e. perpendicular) to the other two. The six cardinal directions in this space can be called up, down, east, west, north, and south. Positions along these axes can be called altitude, longitude, and latitude. Lengths measured along these axes can be called height, width, and depth.

Comparatively, four-dimensional space has an extra coordinate axis, orthogonal to the other three, which is usually labeled w. To describe the two additional cardinal directions, Charles Howard Hinton coined the terms ana and kata, from the Greek words meaning "up toward" and "down from", respectively. A position along the w axis can be called spissitude, as coined by Henry More.

Geometry

The geometry of four-dimensional space is much more complex than that of three-dimensional space, due to the extra degree of freedom.

Just as in three dimensions there are polyhedra made of two dimensional polygons, in four dimensions there are 4-polytopes made of polyhedra. In three dimensions, there are 5 regular polyhedra known as the Platonic solids. In four dimensions, there are 6 convex regular 4-polytopes, the analogues of the Platonic solids. Relaxing the conditions for regularity generates a further 58 convex uniform 4-polytopes, analogous to the 13 semi-regular Archimedean solids in three dimensions. Relaxing the conditions for convexity generates a further 10 nonconvex regular 4-polytopes.

| A4, [3,3,3] | B4, [4,3,3] | B4, [4,3,3] | F4, [3,4,3] | H4, [5,3,3] | H4, [5,3,3] |
|---|---|---|---|---|---|
| 5-cell {3,3,3} | tesseract {4,3,3} | 16-cell {3,3,4} | 24-cell {3,4,3} | 120-cell {5,3,3} | 600-cell {3,3,5} |

In three dimensions, a circle may be extruded to form a cylinder. In four dimensions, there are several different cylinder-like objects. A sphere may be extruded to obtain a spherical cylinder (a cylinder with spherical "caps", known as a spherinder), and a cylinder may be extruded to obtain a cylindrical prism (a cubinder). The Cartesian product of two circles may be taken to obtain a duocylinder. All three can "roll" in four-dimensional space, each with its own properties.

In three dimensions, curves can form knots but surfaces cannot (unless they are self-intersecting). In four dimensions, however, knots made using curves can be trivially untied by displacing them in the fourth direction—but 2D surfaces can form non-trivial, non-self-intersecting knots in 4D space.[9][page needed] Because these surfaces are two-dimensional, they can form much more complex knots than strings in 3D space can. The Klein bottle is an example of such a knotted surface.[citation needed] Another such surface is the real projective plane.

Hypersphere

The set of points in Euclidean 4-space having the same distance R from a fixed point P0 forms a hypersurface known as a 3-sphere. The hyper-volume of the enclosed space is:

$$V = \tfrac{1}{2} \pi^2 R^4$$

This is part of the Friedmann–Lemaître–Robertson–Walker metric in general relativity, where R is replaced by a function R(t), with t denoting the cosmological age of the universe. An R that grows or shrinks with time means an expanding or collapsing universe, depending on the mass density inside.[10]

Cognition

Research using virtual reality finds that humans, in spite of living in a three-dimensional world, can, without special practice, make spatial judgments based on the length of, and angle between, line segments embedded in four-dimensional space.[11] The researchers noted that "the participants in our study had minimal practice in these tasks, and it remains an open question whether it is possible to obtain more sustainable, definitive, and richer 4D representations with increased perceptual experience in 4D virtual environments."[11] In another study,[12] the ability of humans to orient themselves in 2D, 3D and 4D mazes has been tested. Each maze consisted of four path segments of random length and connected with orthogonal random bends, but without branches or loops (i.e. actually labyrinths). The graphical interface was based on John McIntosh's free 4D Maze game.[13] The participating persons had to navigate through the path and finally estimate the linear direction back to the starting point. The researchers found that some of the participants were able to mentally integrate their path after some practice in 4D (the lower-dimensional cases were for comparison and for the participants to learn the method).

Dimensional analogy

To understand the nature of four-dimensional space, a device called dimensional analogy is commonly employed. Dimensional analogy is the study of how (n − 1) dimensions relate to n dimensions, and then inferring how n dimensions would relate to (n + 1) dimensions.

Dimensional analogy was used by Edwin Abbott Abbott in the book Flatland, which narrates a story about a square that lives in a two-dimensional world, like the surface of a piece of paper. From the perspective of this square, a three-dimensional being has seemingly god-like powers, such as the ability to remove objects from a safe without breaking it open (by moving them across the third dimension), to see everything that from the two-dimensional perspective is enclosed behind walls, and to remain completely invisible by standing a few inches away in the third dimension.

By applying dimensional analogy, one can infer that a four-dimensional being would be capable of similar feats from our three-dimensional perspective. Rudy Rucker illustrates this in his novel Spaceland, in which the protagonist encounters four-dimensional beings who demonstrate such powers.

Cross-sections

As a three-dimensional object passes through a two-dimensional plane, a two-dimensional being would only see a cross-section of the three-dimensional object. For example, if a spherical balloon passed through a sheet of paper, a being on the paper would see first a single point, then a circle gradually growing larger, then smaller again until it shrank to a point and then disappeared. Similarly, if a four-dimensional object passed through three dimensions, we would see a three-dimensional cross-section of the four-dimensional object—for example, a hypersphere would appear first as a point, then as a growing sphere, with the sphere then shrinking to a single point and then disappearing.[15] This means of visualizing aspects of the fourth dimension was used in the novel Flatland and also in several works of Charles Howard Hinton.
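The growing and shrinking sphere can be made concrete with a small sketch. It assumes a hypersphere of radius R centred at the origin and a slicing hyperplane at offset w along the fourth axis, so the visible sphere has radius sqrt(R² - w²); the function name is illustrative.

```python
import math


def cross_section_radius(R, w):
    """Radius of the 3D sphere seen when a hypersphere of radius R is cut at offset w.

    Returns None when the slicing hyperplane misses the hypersphere entirely.
    """
    if abs(w) > R:
        return None
    return math.sqrt(R * R - w * w)


# Sweep the hypersphere through our space: a point, a growing sphere, then a shrinking one.
for w in (-1.0, -0.5, 0.0, 0.5, 1.0):
    print(w, cross_section_radius(1.0, w))
```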

Projections

A useful application of dimensional analogy in visualizing the fourth dimension is in projection. A projection is a way of representing an n-dimensional object in n − 1 dimensions. For instance, computer screens are two-dimensional, and all the photographs of three-dimensional people, places and things are represented in two dimensions by projecting the objects onto a flat surface. When this is done, depth is removed and replaced with indirect information. The retina of the eye is also a two-dimensional array of receptors but the brain is able to perceive the nature of three-dimensional objects by inference from indirect information (such as shading, foreshortening, binocular vision, etc.). Artists often use perspective to give an illusion of three-dimensional depth to two-dimensional pictures.

Similarly, objects in the fourth dimension can be mathematically projected to the familiar three dimensions, where they can be more conveniently examined. In this case, the 'retina' of the four-dimensional eye is a three-dimensional array of receptors. A hypothetical being with such an eye would perceive the nature of four-dimensional objects by inferring four-dimensional depth from indirect information in the three-dimensional images in its retina.

The perspective projection of three-dimensional objects into the retina of the eye introduces artifacts such as foreshortening, which the brain interprets as depth in the third dimension. In the same way, perspective projection from four dimensions produces similar foreshortening effects. By applying dimensional analogy, one may infer four-dimensional "depth" from these effects.
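A minimal sketch of four-dimensional foreshortening follows. It assumes a viewpoint on the w axis at w = `viewer_w` and an image hyperplane at w = 0; both are illustrative choices, and the function name is hypothetical. Points must satisfy w < `viewer_w`.

```python
def project_4d_to_3d(point, viewer_w=3.0):
    """Perspective-project (x, y, z, w) onto the hyperplane w = 0.

    The viewpoint is assumed to sit at (0, 0, 0, viewer_w).
    """
    x, y, z, w = point
    scale = viewer_w / (viewer_w - w)  # larger for points closer to the viewer
    return (x * scale, y * scale, z * scale)


# Two points with identical x, y, z but different depth along w.
near = project_4d_to_3d((1.0, 0.0, 0.0, 1.0))
far = project_4d_to_3d((1.0, 0.0, 0.0, -1.0))

print(near)  # (1.5, 0.0, 0.0)
print(far)   # (0.75, 0.0, 0.0)
```

The point that is farther away in the fourth direction lands closer to the centre of the image, which is the foreshortening cue described above.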

As an illustration of this principle, the following sequence of images compares various views of the three-dimensional cube with analogous projections of the four-dimensional tesseract into three-dimensional space.

| Cube | Tesseract | Description |
|---|---|---|
|  |  | The image on the left is a cube viewed face-on. The analogous viewpoint of the tesseract in 4 dimensions is the cell-first perspective projection, shown on the right. One may draw an analogy between the two: just as the cube projects to a square, the tesseract projects to a cube. Note that the other 5 faces of the cube are not seen here. They are obscured by the visible face. Similarly, the other 7 cells of the tesseract are not seen here because they are obscured by the visible cell. |
|  |  | The image on the left shows the same cube viewed edge-on. The analogous viewpoint of a tesseract is the face-first perspective projection, shown on the right. Just as the edge-first projection of the cube consists of two trapezoids, the face-first projection of the tesseract consists of two frustums. The nearest edge of the cube in this viewpoint is the one lying between the red and green faces. Likewise, the nearest face of the tesseract is the one lying between the red and green cells. |
|  |  | On the left is the cube viewed corner-first. This is analogous to the edge-first perspective projection of the tesseract, shown on the right. Just as the cube's vertex-first projection consists of 3 deltoids surrounding a vertex, the tesseract's edge-first projection consists of 3 hexahedral volumes surrounding an edge. Just as the nearest vertex of the cube is the one where the three faces meet, so the nearest edge of the tesseract is the one in the center of the projection volume, where the three cells meet. |
|  |  | A different analogy may be drawn between the edge-first projection of the tesseract and the edge-first projection of the cube. The cube's edge-first projection has two trapezoids surrounding an edge, while the tesseract has three hexahedral volumes surrounding an edge. |
|  |  | On the left is the cube viewed corner-first. The vertex-first perspective projection of the tesseract is shown on the right. The cube's vertex-first projection has three tetragons surrounding a vertex, but the tesseract's vertex-first projection has four hexahedral volumes surrounding a vertex. Just as the nearest corner of the cube is the one lying at the center of the image, so the nearest vertex of the tesseract lies not on the boundary of the projected volume, but at its center inside, where all four cells meet. Note that only three of the cube's 6 faces can be seen here, because the other 3 lie behind these three faces, on the opposite side of the cube. Similarly, only 4 of the tesseract's 8 cells can be seen here; the remaining 4 lie behind these 4 in the fourth direction, on the far side of the tesseract. |

Shadows

A concept closely related to projection is the casting of shadows.

If a light is shone on a three-dimensional object, a two-dimensional shadow is cast. By dimensional analogy, light shone on a two-dimensional object in a two-dimensional world would cast a one-dimensional shadow, and light on a one-dimensional object in a one-dimensional world would cast a zero-dimensional shadow, that is, a point of non-light. Going the other way, one may infer that light shone on a four-dimensional object in a four-dimensional world would cast a three-dimensional shadow.

If the wireframe of a cube is lit from above, the resulting shadow is a square within a square with the corresponding corners connected. Similarly, if the wireframe of a tesseract were lit from “above” (in the fourth dimension), its shadow would be that of a three-dimensional cube within another three-dimensional cube. (Note that, technically, the visual representation shown here is actually a two-dimensional image of the three-dimensional shadow of the four-dimensional wireframe figure.)
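The cube-within-a-cube shadow can be reproduced numerically. The sketch below assumes a tesseract with vertices at (±1, ±1, ±1, ±1), a point light at (0, 0, 0, 3), and a "floor" hyperplane at w = -1; these positions and the function name `shadow` are illustrative assumptions.

```python
from itertools import product


def shadow(vertex, light_w=3.0):
    """Cast a vertex from a light at (0, 0, 0, light_w) onto the hyperplane w = -1."""
    x, y, z, w = vertex
    s = (light_w + 1.0) / (light_w - w)
    return (x * s, y * s, z * s)


vertices = list(product((-1.0, 1.0), repeat=4))  # the 16 tesseract vertices

# Edges join vertices that differ in exactly one coordinate.
edges = [
    (u, v)
    for i, u in enumerate(vertices)
    for v in vertices[i + 1:]
    if sum(a != b for a, b in zip(u, v)) == 1
]

# Shadows of the cell nearest the light and of the cell resting on the floor.
outer = sorted({shadow(v) for v in vertices if v[3] == 1.0})
inner = sorted({shadow(v) for v in vertices if v[3] == -1.0})

print(len(vertices), len(edges))  # 16 32
print(outer[0], inner[0])         # (-2.0, -2.0, -2.0) (-1.0, -1.0, -1.0)
```

The cell nearest the light casts the larger cube and the far cell the smaller one; the 8 edges running along w become the lines joining corresponding corners.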

Bounding volumes

Dimensional analogy also helps in inferring basic properties of objects in higher dimensions. For example, two-dimensional objects are bounded by one-dimensional boundaries: a square is bounded by four edges. Three-dimensional objects are bounded by two-dimensional surfaces: a cube is bounded by 6 square faces. By applying dimensional analogy, one may infer that a four-dimensional cube, known as a tesseract, is bounded by three-dimensional volumes. And indeed, this is the case: mathematics shows that the tesseract is bounded by 8 cubes. Knowing this is key to understanding how to interpret a three-dimensional projection of the tesseract. The boundaries of the tesseract project to volumes in the image, not merely two-dimensional surfaces.
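The boundary counts quoted here follow a single pattern: an n-dimensional cube has 2^(n-k) · C(n, k) faces of dimension k. The sketch below tabulates this standard formula; the function name is illustrative.

```python
from math import comb


def cube_face_count(n, k):
    """Number of k-dimensional faces of an n-dimensional cube: 2**(n - k) * C(n, k)."""
    return 2 ** (n - k) * comb(n, k)


# For each shape, list the counts of vertices, edges, faces, cells (k = 0 .. n-1).
for n, name in ((2, "square"), (3, "cube"), (4, "tesseract")):
    print(name, [cube_face_count(n, k) for k in range(n)])
# square [4, 4]
# cube [8, 12, 6]
# tesseract [16, 32, 24, 8]
```

The last entry in each list is the number of bounding pieces: 4 edges for the square, 6 faces for the cube, and 8 cubic cells for the tesseract.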

Visual scope

Being three-dimensional, we are only able to see the world with our eyes in two dimensions. A four-dimensional being would be able to see the world in three dimensions. For example, it would be able to see all six sides of an opaque box simultaneously, and in fact, what is inside the box at the same time, just as we can see the interior of a square on a piece of paper. It would be able to see all points in 3-dimensional space simultaneously, including the inner structure of solid objects and things obscured from our three-dimensional viewpoint. Our brains receive images in two dimensions and use reasoning to help us "picture" three-dimensional objects.

Limitations

Reasoning by analogy from familiar lower dimensions can be an excellent intuitive guide, but care must be exercised not to accept results that are not more rigorously tested. For example, consider the formulas for the circumference of a circle, $C = 2\pi r$, and the surface area of a sphere, $A = 4\pi r^2$. One might be tempted to suppose that the surface volume of a hypersphere is $V = 6\pi r^3$, or perhaps $V = 8\pi r^3$, but either of these would be wrong. The correct formula is $V = 2\pi^2 r^3$.
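One way to avoid guessing is to use the general formula for the boundary of a ball in n-dimensional Euclidean space, 2π^(n/2) r^(n-1) / Γ(n/2). The sketch below evaluates this standard result for n = 2, 3, 4; the function name is illustrative.

```python
import math


def sphere_boundary_measure(n, r):
    """Measure of the boundary of a radius-r ball in n-dimensional Euclidean space."""
    return 2 * math.pi ** (n / 2) / math.gamma(n / 2) * r ** (n - 1)


r = 1.0
print(sphere_boundary_measure(2, r), 2 * math.pi)       # circumference of a circle
print(sphere_boundary_measure(3, r), 4 * math.pi)       # surface area of a sphere
print(sphere_boundary_measure(4, r), 2 * math.pi ** 2)  # surface volume of a hypersphere
```

The coefficient for n = 4 contains π², which no simple extrapolation from the coefficients 2π and 4π would suggest.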

Exotic R4

In mathematics, an exotic R4 is a differentiable manifold that is homeomorphic but not diffeomorphic to the Euclidean space R4. The first examples were found in 1982 by Michael Freedman and others, by using the contrast between Freedman's theorems about topological 4-manifolds, and Simon Donaldson's theorems about smooth 4-manifolds. There is a continuum of non-diffeomorphic differentiable structures of R4, as was shown first by Clifford Taubes.

Prior to this construction, non-diffeomorphic smooth structures on spheres – exotic spheres – were already known to exist, although the question of the existence of such structures for the particular case of the 4-sphere remained open (and still remains open as of 2018). For any positive integer n other than 4, there are no exotic smooth structures on Rn; in other words, if n ≠ 4 then any smooth manifold homeomorphic to Rn is diffeomorphic to Rn.

Small exotic R4s

An exotic R4 is called small if it can be smoothly embedded as an open subset of the standard R4.

Small exotic R4s can be constructed by starting with a non-trivial smooth 5-dimensional h-cobordism (which exists by Donaldson's proof that the h-cobordism theorem fails in this dimension) and using Freedman's theorem that the topological h-cobordism theorem holds in this dimension.

Large exotic R4s

An exotic R4 is called large if it cannot be smoothly embedded as an open subset of the standard R4.

Examples of large exotic R4s can be constructed using the fact that compact 4-manifolds can often be split as a topological sum (by Freedman's work), but cannot be split as a smooth sum (by Donaldson's work).

Michael Hartley Freedman and Laurence R. Taylor (1986) showed that there is a maximal exotic R4, into which all other R4s can be smoothly embedded as open subsets.

Related exotic structures

Casson handles are homeomorphic to D2×R2 by Freedman's theorem (where D2 is the closed unit disc) but it follows from Donaldson's theorem that they are not all diffeomorphic to D2×R2. In other words, some Casson handles are exotic D2×R2s.

It is not known (as of 2017) whether or not there are any exotic 4-spheres; such an exotic 4-sphere would be a counterexample to the smooth generalized Poincaré conjecture in dimension 4. Some plausible candidates are given by Gluck twists.

Example concept: Transition maps

A transition map provides a way of comparing two charts of an atlas. To make this comparison, we consider the composition of one chart with the inverse of the other. This composition is not well-defined unless we restrict both charts to the intersection of their domains of definition. (For example, if we have a chart of Europe and a chart of Russia, then we can compare these two charts on their overlap, namely the European part of Russia.)

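To be more precise, suppose that $(U_\alpha, \varphi_\alpha)$ and $(U_\beta, \varphi_\beta)$ are two charts for a manifold M such that $U_\alpha \cap U_\beta$ is non-empty. The transition map $\tau_{\alpha,\beta} \colon \varphi_\alpha(U_\alpha \cap U_\beta) \to \varphi_\beta(U_\alpha \cap U_\beta)$ is the map defined by

$$\tau_{\alpha,\beta} = \varphi_\beta \circ \varphi_\alpha^{-1}.$$

Note that since $\varphi_\alpha$ and $\varphi_\beta$ are both homeomorphisms, the transition map $\tau_{\alpha,\beta}$ is also a homeomorphism.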
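A concrete instance, chosen here only for illustration, is the unit circle covered by two stereographic charts: one projecting from the north pole (0, 1) and one from the south pole (0, -1). The sketch composes the second chart with the inverse of the first; all function names are hypothetical.

```python
def chart_north(p):
    """Stereographic projection from the north pole (0, 1); undefined at that pole."""
    x, y = p
    return x / (1.0 - y)


def chart_north_inverse(t):
    """Inverse of chart_north: maps a real number back to a point on the unit circle."""
    d = t * t + 1.0
    return (2.0 * t / d, (t * t - 1.0) / d)


def chart_south(p):
    """Stereographic projection from the south pole (0, -1); undefined at that pole."""
    x, y = p
    return x / (1.0 + y)


def transition(t):
    """Transition map chart_south after chart_north_inverse, defined for t != 0."""
    return chart_south(chart_north_inverse(t))


for t in (0.5, 2.0, -4.0):
    print(t, transition(t), 1.0 / t)  # the last two columns agree
```

On the overlap (the circle minus both poles) the transition map works out to t ↦ 1/t, which is continuous with a continuous inverse, as expected of a homeomorphism. The value t = 0 is excluded because it corresponds to the south pole, which lies outside the second chart's domain.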

More structure

One often desires more structure on a manifold than simply the topological structure. For example, if one would like an unambiguous notion of differentiation of functions on a manifold, then it is necessary to construct an atlas whose transition functions are differentiable. Such a manifold is called differentiable. Given a differentiable manifold, one can unambiguously define the notion of tangent vectors and then directional derivatives.

If each transition function is a smooth map, then the atlas is called a smooth atlas, and the manifold itself is called smooth. Alternatively, one could require that the transition maps have only k continuous derivatives, in which case the atlas is said to be $C^k$.

Very generally, if each transition function belongs to a pseudo-group $\mathcal{G}$ of homeomorphisms of Euclidean space, then the atlas is called a $\mathcal{G}$-atlas. If the transition maps between charts of an atlas preserve a local trivialization, then the atlas defines the structure of a fibre bundle.

XXX . XXX 4% zero 2 dimensional, 3 dimensional, 4 dimensional and 5 dimensional

To answer this question, first understand what a dimension is. The dimension of a space is the number of mutually perpendicular straight lines that can be drawn in that space.

For example,

1) On a piece of paper, two such lines can be drawn, so the page is 2-dimensional.

2) In a die, a box or a room, three such lines can be drawn or imagined, so these are 3-dimensional.

* A straight line itself is one-dimensional (there is some ambiguity over whether a curved line can be treated as 1D by using a curved coordinate, but that is a separate case in physics; in general, a straight line is 1D).

* A point is said to be zero-dimensional.

-- These are the spatial dimensions. Now let's come to higher dimensions.

1) In pure mathematics, any number of dimensions can be assumed, with no need to visualize them; one generalizes the lower-dimensional formulae using more than 3 variables.

2) In physics (especially in special relativity), time behaves like another dimension alongside the spatial ones. Including time, relativistic mechanics uses a 4D mathematical structure called 'space-time', and the corresponding diagrammatic representation is called 'Minkowski space/diagram'. In general relativity, the mathematical analysis may use the arbitrary number of dimensions discussed above, but for practical cases 4D space-time is used.

With each added dimension you gain an additional direction of reference.

A sheet of paper, for instance, is two-dimensional: it has only length and width. Our brains are wired to perceive three dimensions, which gives us one more direction of reference, height. Beyond the three dimensions we can perceive, four or more dimensions become a challenge for the human mind even to imagine in terms of further directions of reference. Some physical theories, such as string theory and M-theory, propose additional dimensions that are "enfolded" within the three dimensions that frame our conscious awareness; if they exist, we are not consciously aware of them.

Two dimensions

If you locate somebody on Earth by longitude and latitude, that description is two-dimensional. Two dimensions can be represented on paper very easily.

Three dimensions

When an aircraft is flying, a minimum of three dimensions is required to represent its position: latitude, longitude and altitude. Three dimensions cannot be represented directly on paper or on a 2D computer screen.

Four dimensions

A flying aircraft's position cannot be fully described by three dimensions alone, because it is moving at every moment. Time is also required, to state when the aircraft was at a particular position.

So its position is now represented by latitude, longitude, altitude and time.

Imagine a one-dimensional shape. It is bounded by zero-dimensional shapes (vertices).

A two-dimensional shape is made up of one-dimensional shapes (sides) and zero-dimensional shapes (vertices).

A three-dimensional shape is made up of two-dimensional (faces), one-dimensional (edges) and zero-dimensional (vertices) shapes.

Similarly, a four-dimensional shape is like a three-dimensional shape, but it is also made up of three-dimensional shapes. This means that, whereas we see shapes in two dimensions (as we do in our universe), a four-dimensional observer would see them in three dimensions, and could see inside or around a three-dimensional shape.

This all assumes, of course, that you are talking about spatial dimensions. We live in only one time dimension, meaning that our experience of time is linear; we travel along it without being able to explore it. If you thought looking inside objects was mind-boggling, imagine living in a universe where you could freely choose where in time to move!

In this sense, the four dimensions describe change and movement through space and time.

The one-dimensional interval

The two-dimensional square

The three-dimensional cube

The four-dimensional cube: the tesseract

Stereo vision

A knotty challenge

Using colors to visualize the extra dimensions

XXX . XXX 4%zero null 0 Lumped element model jump to electronic circuit

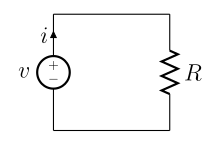

The lumped element model (also called lumped parameter model, or lumped component model) simplifies the description of the behaviour of spatially distributed physical systems into a topology consisting of discrete entities that approximate the behaviour of the distributed system under certain assumptions. It is useful in electrical systems (including electronics), mechanical multibody systems, heat transfer, acoustics, etc.

Mathematically speaking, the simplification reduces the state space of the system to a finite dimension, and the partial differential equations (PDEs) of the continuous (infinite-dimensional) time and space model of the physical system into ordinary differential equations (ODEs) with a finite number of parameters.

Electrical systems

Lumped matter discipline

The lumped matter discipline is a set of imposed assumptions in electrical engineering that provides the foundation for lumped circuit abstraction used in network analysis. The self-imposed constraints are:

1. The change of the magnetic flux in time outside a conductor is zero.

2. The change of the charge in time inside conducting elements is zero.

3. Signal timescales of interest are much larger than propagation delay of electromagnetic waves across the lumped element.

The first two assumptions result in Kirchhoff's circuit laws when applied to Maxwell's equations and are only applicable when the circuit is in steady state. The third assumption is the basis of the lumped element model used in network analysis. Less severe assumptions result in the distributed element model, while still not requiring the direct application of the full Maxwell equations.

Lumped element model

The lumped element model of electronic circuits makes the simplifying assumption that the attributes of the circuit (resistance, capacitance, inductance, and gain) are concentrated into idealized electrical components (resistors, capacitors, inductors, etc.) joined by a network of perfectly conducting wires.

The lumped element model is valid whenever $L_c \ll \lambda$, where $L_c$ denotes the circuit's characteristic length, and $\lambda$ denotes the circuit's operating wavelength. Otherwise, when the circuit length is on the order of a wavelength, we must consider more general models, such as the distributed element model (including transmission lines), whose dynamic behaviour is described by Maxwell's equations. Another way of viewing the validity of the lumped element model is to note that this model ignores the finite time it takes signals to propagate around a circuit. Whenever this propagation time is not significant to the application, the lumped element model can be used. This is the case when the propagation time is much less than the period of the signal involved. However, with increasing propagation time there will be an increasing error between the assumed and actual phase of the signal, which in turn results in an error in the assumed amplitude of the signal. The exact point at which the lumped element model can no longer be used depends to a certain extent on how accurately the signal needs to be known in a given application.
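The validity condition can be sketched numerically. The example below compares a circuit's characteristic length with its operating wavelength; the one-tenth threshold and the `velocity_factor` parameter are assumptions chosen for illustration, since the text itself only requires the length to be much smaller than the wavelength.

```python
C0 = 299_792_458.0  # speed of light in vacuum, in m/s


def wavelength(frequency_hz: float, velocity_factor: float = 1.0) -> float:
    """Operating wavelength in metres for a signal of the given frequency."""
    return velocity_factor * C0 / frequency_hz


def is_lumped(length_m: float, frequency_hz: float,
              ratio: float = 0.1, velocity_factor: float = 1.0) -> bool:
    """Return True if the circuit length is below `ratio` wavelengths.

    `ratio` = 0.1 is an assumed rule of thumb, not a value from the text.
    """
    return length_m < ratio * wavelength(frequency_hz, velocity_factor)


# A 10 cm circuit at 1 MHz (wavelength about 300 m) versus at 3 GHz (about 10 cm).
print(is_lumped(0.10, 1e6))
print(is_lumped(0.10, 3e9))
```

How strict the threshold should be depends, as noted above, on how accurately the signal needs to be known in the application.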

Real-world components exhibit non-ideal characteristics which are, in reality, distributed elements but are often represented to a first-order approximation by lumped elements. To account for leakage in capacitors, for example, we can model the non-ideal capacitor as having a large lumped resistor connected in parallel, even though the leakage is, in reality, distributed throughout the dielectric. Similarly, a wire-wound resistor has significant inductance as well as resistance distributed along its length, but we can model this as a lumped inductor in series with the ideal resistor.

Thermal systems

A lumped capacitance model, also called lumped system analysis, reduces a thermal system to a number of discrete "lumps" and assumes that the temperature difference inside each lump is negligible. This approximation is useful to simplify otherwise complex differential heat equations. It was developed as a mathematical analog of electrical capacitance, although it also includes thermal analogs of electrical resistance.

The lumped capacitance model is a common approximation in transient conduction, which may be used whenever heat conduction within an object is much faster than heat transfer across the boundary of the object. The method of approximation then suitably reduces one aspect of the transient conduction system (spatial temperature variation within the object) to a more mathematically tractable form (that is, it is assumed that the temperature within the object is completely uniform in space, although this spatially uniform temperature value changes over time). The rising uniform temperature within the object or part of a system, can then be treated like a capacitative reservoir which absorbs heat until it reaches a steady thermal state in time (after which temperature does not change within it).

An early-discovered example of a lumped-capacitance system that exhibits mathematically simple behavior due to such physical simplifications is any system that conforms to Newton's law of cooling. This law simply states that the temperature of a hot (or cold) object progresses toward the temperature of its environment in a simple exponential fashion. Objects follow this law strictly only if the rate of heat conduction within them is much larger than the heat flow into or out of them. In such cases it makes sense to talk of a single "object temperature" at any given time (since there is no spatial temperature variation within the object). The uniform temperature within the object also allows its total thermal energy excess or deficit to vary proportionally to its surface temperature, thus setting up the Newton's law of cooling requirement that the rate of temperature decrease is proportional to the difference between the object and the environment. This in turn leads to simple exponential heating or cooling behavior (details below).

Method

To determine the number of lumps, the Biot number (Bi), a dimensionless parameter of the system, is used. Bi is defined as the ratio of the conductive heat resistance within the object to the convective heat transfer resistance across the object's boundary with a uniform bath of different temperature. When the thermal resistance to heat transferred into the object is larger than the resistance to heat being diffused completely within the object, the Biot number is less than 1. In this case, particularly for Biot numbers which are even smaller, the approximation of spatially uniform temperature within the object can begin to be used, since it can be presumed that heat transferred into the object has time to uniformly distribute itself, due to the lower resistance to doing so, as compared with the resistance to heat entering the object.

If the Biot number is less than 0.1 for a solid object, then the entire material will be at nearly the same temperature, and the dominant temperature difference will be at the surface. The object may then be regarded as being "thermally thin". The Biot number must generally be less than 0.1 for usefully accurate approximation and heat transfer analysis. The mathematical solution to the lumped system approximation gives Newton's law of cooling.

A Biot number greater than 0.1 (a "thermally thick" substance) indicates that one cannot make this assumption, and more complicated heat transfer equations for "transient heat conduction" will be required to describe the time-varying and non-spatially-uniform temperature field within the material body.

The single capacitance approach can be expanded to involve many resistive and capacitive elements, with Bi < 0.1 for each lump. As the Biot number is calculated based upon a characteristic length of the system, the system can often be broken into a sufficient number of sections, or lumps, so that the Biot number is acceptably small.

Some characteristic lengths of thermal systems are:

For arbitrary shapes, it may be useful to consider the characteristic length to be volume / surface area.
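The Biot number test can be sketched as follows, assuming the usual expression Bi = h·L_c/k (convective coefficient times characteristic length, divided by the thermal conductivity of the solid) and the volume-to-surface-area characteristic length mentioned above. The material values are illustrative, not measured data.

```python
import math


def biot_number(h: float, k: float, volume: float, surface_area: float) -> float:
    """Bi = h * L_c / k, with L_c taken as volume / surface area.

    h: convective heat transfer coefficient, W/(m^2 K)
    k: thermal conductivity of the solid, W/(m K)
    """
    characteristic_length = volume / surface_area
    return h * characteristic_length / k


# Illustrative case: a small metal sphere (radius 1 cm) cooling in still air.
radius = 0.01
volume = 4.0 / 3.0 * math.pi * radius ** 3
area = 4.0 * math.pi * radius ** 2
bi = biot_number(h=10.0, k=400.0, volume=volume, surface_area=area)

print(f"Bi = {bi:.2e}")
print("lumped model acceptable" if bi < 0.1 else "use transient conduction analysis")
```

For a sphere, volume divided by surface area is one third of the radius, so the characteristic length here is about 3.3 mm.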

Thermal purely resistive circuits

A useful concept used in heat transfer applications once the condition of steady state heat conduction has been reached, is the representation of thermal transfer by what is known as thermal circuits. A thermal circuit is the representation of the resistance to heat flow in each element of a circuit, as though it were an electrical resistor. The heat transferred is analogous to the electric current and the thermal resistance is analogous to the electrical resistor. The values of the thermal resistance for the different modes of heat transfer are then calculated as the denominators of the developed equations. The thermal resistances of the different modes of heat transfer are used in analyzing combined modes of heat transfer. The lack of "capacitative" elements in the following purely resistive example, means that no section of the circuit is absorbing energy or changing in distribution of temperature. This is equivalent to demanding that a state of steady state heat conduction (or transfer, as in radiation) has already been established.

The equations describing the three heat transfer modes and their thermal resistances in steady state conditions, as discussed previously, are summarized in the table below:

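| Transfer Mode | Rate of Heat Transfer | Thermal Resistance |
|---|---|---|
| Conduction | $\dot{Q} = \dfrac{T_1 - T_2}{L/(kA)}$ | $\dfrac{L}{kA}$ |
| Convection | $\dot{Q} = \dfrac{T_\text{surf} - T_\text{envr}}{1/(h_\text{conv} A_\text{surf})}$ | $\dfrac{1}{h_\text{conv} A_\text{surf}}$ |
| Radiation | $\dot{Q} = \dfrac{T_\text{surf} - T_\text{surr}}{1/(h_r A_\text{surf})}$, where $h_r = \epsilon \sigma (T_\text{surf}^2 + T_\text{surr}^2)(T_\text{surf} + T_\text{surr})$ | $\dfrac{1}{h_r A_\text{surf}}$ |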

In cases where there is heat transfer through different media (for example, through a composite material), the equivalent resistance is the sum of the resistances of the components that make up the composite. Likewise, in cases where there are different heat transfer modes, the total resistance is the sum of the resistances of the different modes. Using the thermal circuit concept, the amount of heat transferred through any medium is the quotient of the temperature change and the total thermal resistance of the medium.

As an example, consider a composite wall of cross-sectional area $A$. The composite is made of an $L_1$ long cement plaster with a thermal coefficient $k_1$ and $L_2$ long paper faced fiber glass, with thermal coefficient $k_2$. The left surface of the wall is at $T_i$ and exposed to air with a convective coefficient of $h_i$. The right surface of the wall is at $T_o$ and exposed to air with convective coefficient $h_o$.

Using the thermal resistance concept, heat flow through the composite is as follows:

$$\dot{Q} = \frac{T_i - T_o}{R_i + R_1 + R_2 + R_o}$$

where

$R_i = \dfrac{1}{h_i A}$, $R_o = \dfrac{1}{h_o A}$, $R_1 = \dfrac{L_1}{k_1 A}$, and $R_2 = \dfrac{L_2}{k_2 A}$
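The composite wall can be worked through numerically as a series thermal circuit: each convective boundary contributes a resistance 1/(hA), each solid layer contributes thickness/(kA), and the heat flow is the temperature difference divided by the sum. The numbers below are illustrative assumptions, not values from the example above.

```python
def composite_wall_heat_flow(area, t_left, t_right, h_left, h_right, layers):
    """Steady-state heat flow (W) through a wall modelled as series resistances.

    layers: list of (thickness_m, conductivity_W_per_mK) tuples.
    Returns (heat_flow, total_resistance).
    """
    r_left = 1.0 / (h_left * area)            # convection on the left surface
    r_right = 1.0 / (h_right * area)          # convection on the right surface
    r_layers = [thickness / (k * area) for thickness, k in layers]  # conduction
    r_total = r_left + sum(r_layers) + r_right
    return (t_left - t_right) / r_total, r_total


# Assumed values: 1 m^2 wall, 2 cm plaster (k = 0.72), 10 cm fiber glass (k = 0.04).
q, r_total = composite_wall_heat_flow(
    area=1.0, t_left=20.0, t_right=-5.0,
    h_left=10.0, h_right=25.0,
    layers=[(0.02, 0.72), (0.10, 0.04)],
)
print(f"total resistance = {r_total:.3f} K/W, heat flow = {q:.2f} W")
```

With these assumed values the insulation layer dominates the total resistance, which is why adding or thickening it changes the heat flow far more than changing the plaster.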

Newton's law of cooling

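Newton's law of cooling is an empirical relationship attributed to English physicist Sir Isaac Newton (1642–1727). This law stated in non-mathematical form is the following:

The rate of heat loss of a body is proportional to the temperature difference between the body and its surroundings.

Or, using symbols:

$$\text{Rate of cooling} \propto \Delta T$$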

An object at a different temperature from its surroundings will ultimately come to a common temperature with its surroundings. A relatively hot object cools as it warms its surroundings; a cool object is warmed by its surroundings. When considering how quickly (or slowly) something cools, we speak of its rate of cooling - how many degrees' change in temperature per unit of time.

The rate of cooling of an object depends on how much hotter the object is than its surroundings. The temperature change per minute of a hot apple pie will be more if the pie is put in a cold freezer than if it is placed on the kitchen table. When the pie cools in the freezer, the temperature difference between it and its surroundings is greater. On a cold day, a warm home will leak heat to the outside at a greater rate when there is a large difference between the inside and outside temperatures. Keeping the inside of a home at high temperature on a cold day is thus more costly than keeping it at a lower temperature. If the temperature difference is kept small, the rate of cooling will be correspondingly low.

As Newton's law of cooling states, the rate of cooling of an object - whether by conduction, convection, or radiation - is approximately proportional to the temperature difference ΔT. Frozen food will warm up faster in a warm room than in a cold room. Note that the rate of cooling experienced on a cold day can be increased by the added convection effect of the wind. This is referred to as wind chill. For example, a wind chill of -20 °C means that heat is being lost at the same rate as if the temperature were -20 °C without wind.

Applicable situations

This law describes many situations in which an object has a large thermal capacity and large conductivity, and is suddenly immersed in a uniform bath which conducts heat relatively poorly. It is an example of a thermal circuit with one resistive and one capacitative element. For the law to be correct, the temperatures at all points inside the body must be approximately the same at each time point, including the temperature at its surface. Thus, the temperature difference between the body and surroundings does not depend on which part of the body is chosen, since all parts of the body have effectively the same temperature. In these situations, the material of the body does not act to "insulate" other parts of the body from heat flow, and all of the significant insulation (or "thermal resistance") controlling the rate of heat flow in the situation resides in the area of contact between the body and its surroundings. Across this boundary, the temperature-value jumps in a discontinuous fashion.

In such situations, heat can be transferred from the exterior to the interior of a body, across the insulating boundary, by convection, conduction, or diffusion, so long as the boundary serves as a relatively poor conductor with regard to the object's interior. The presence of a physical insulator is not required, so long as the process which serves to pass heat across the boundary is "slow" in comparison to the conductive transfer of heat inside the body (or inside the region of interest—the "lump" described above).

In such a situation, the object acts as the "capacitative" circuit element, and the resistance of the thermal contact at the boundary acts as the (single) thermal resistor. In electrical circuits, such a combination would charge or discharge toward the input voltage, according to a simple exponential law in time. In the thermal circuit, this configuration results in the same behavior in temperature: an exponential approach of the object temperature to the bath temperature.

Mathematical statement

Newton's law is mathematically stated by the simple first-order differential equation:

$$\frac{dQ}{dt} = -h A \left(T(t) - T_\text{env}\right) = -h A \, \Delta T(t)$$

where

- $Q$ is thermal energy in joules

- $h$ is the heat transfer coefficient between the surface and the fluid

- $A$ is the surface area through which the heat is transferred

- $T$ is the temperature of the object's surface and interior (since these are the same in this approximation)

- $T_\text{env}$ is the temperature of the environment

- $\Delta T(t) = T(t) - T_\text{env}$ is the time-dependent temperature difference (thermal gradient) between the object and the environment

Putting heat transfers into this form is sometimes not a very good approximation, depending on ratios of heat conductances in the system. If the differences are not large, an accurate formulation of heat transfers in the system may require analysis of heat flow based on the (transient) heat transfer equation in nonhomogeneous or poorly conductive media.

Solution in terms of object heat capacity

If the entire body is treated as a lumped capacitance heat reservoir, with total heat content $Q$ which is proportional to simple total heat capacity $C$ and to $T$, the temperature of the body, then $Q = C T$. It is expected that the system will experience exponential decay with time in the temperature of a body.

From the definition of heat capacity comes the relation $C = dQ/dT$. Differentiating this equation with regard to time gives the identity (valid so long as temperatures in the object are uniform at any given time): $dQ/dt = C \, (dT/dt)$. This expression may be used to replace $dQ/dt$ in the first equation which begins this section, above. Then, if $T(t)$ is the temperature of such a body at time $t$, and $T_\text{env}$ is the temperature of the environment around the body:

$$\frac{dT(t)}{dt} = -r \left(T(t) - T_\text{env}\right) = -r \, \Delta T(t)$$

where

$r = hA/C$ is a positive constant characteristic of the system, which must be in units of $s^{-1}$, and is therefore sometimes expressed in terms of a characteristic time constant $t_0$ given by: $t_0 = 1/r$. Thus, in thermal systems, $t_0 = C/(hA)$. (The total heat capacity $C$ of a system may be further represented by its mass-specific heat capacity $c_p$ multiplied by its mass $m$, so that the time constant $t_0$ is also given by $m c_p/(hA)$).

The solution of this differential equation, by standard methods of integration and substitution of boundary conditions, gives:

$$T(t) = T_\text{env} + \left(T(0) - T_\text{env}\right) e^{-r t}$$

If:

- $\Delta T(t)$ is defined as $T(t) - T_\text{env}$, where $\Delta T(0)$ is the initial temperature difference at time 0,

then the Newtonian solution is written as:

$$\Delta T(t) = \Delta T(0) \, e^{-r t} = \Delta T(0) \, e^{-t/t_0}$$

This same solution is almost immediately apparent if the initial differential equation is written in terms of $\Delta T(t)$, as the single function to be solved for:

$$\frac{dT(t)}{dt} = \frac{d \, \Delta T(t)}{dt} = -\frac{1}{t_0} \, \Delta T(t)$$
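As a rough check of the exponential solution, the sketch below evaluates the closed form and compares it with a direct step-by-step (forward Euler) integration of the rate equation, in which the temperature changes at a rate proportional to its difference from the environment. The starting temperature, environment temperature and time constant are assumed values for illustration.

```python
import math


def newton_temperature(t, temp_start, temp_env, rate):
    """Closed-form temperature after time t for a decay constant `rate` (1/s)."""
    return temp_env + (temp_start - temp_env) * math.exp(-rate * t)


def euler_temperature(t_end, temp_start, temp_env, rate, steps=100_000):
    """Integrate dT/dt = -rate * (T - temp_env) with small forward Euler steps."""
    dt = t_end / steps
    temp = temp_start
    for _ in range(steps):
        temp += -rate * (temp - temp_env) * dt
    return temp


# Assumed case: an object at 90 degrees in a 20 degree room, time constant 300 s.
rate = 1.0 / 300.0
exact = newton_temperature(600.0, 90.0, 20.0, rate)
numeric = euler_temperature(600.0, 90.0, 20.0, rate)
print(f"closed form: {exact:.3f}, Euler: {numeric:.3f}")
```

After one time constant the temperature difference has fallen to 1/e of its initial value, and after two time constants (the 600 s used here) to 1/e² of it.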

Applications

This mode of analysis has been applied to forensic sciences to analyze the time of death of humans. Also, it can be applied to HVAC (heating, ventilating and air-conditioning, which can be referred to as "building climate control"), to ensure more nearly instantaneous effects of a change in comfort level setting.[3]

Mechanical systems

The simplifying assumptions in this domain are:

- all objects are rigid bodies;

- all interactions between rigid bodies take place via kinematic pairs (joints), springs and dampers.

Acoustics

In this context, the lumped component model extends the distributed concepts of Acoustic theory subject to approximation. In the acoustical lumped component model, certain physical components with acoustical properties may be approximated as behaving similarly to standard electronic components or simple combinations of components.

- A rigid-walled cavity containing air (or similar compressible fluid) may be approximated as a capacitor whose value is proportional to the volume of the cavity. The validity of this approximation relies on the shortest wavelength of interest being significantly (much) larger than the longest dimension of the cavity.

- A reflex port may be approximated as an inductor whose value is proportional to the effective length of the port divided by its cross-sectional area. The effective length is the actual length plus an end correction. This approximation relies on the shortest wavelength of interest being significantly larger than the longest dimension of the port.

- Certain types of damping material can be approximated as a resistor. The value depends on the properties and dimensions of the material. The approximation relies on the wavelengths being long enough and on the properties of the material itself.

- A loudspeaker drive unit (typically a woofer or subwoofer drive unit) may be approximated as a series connection of a zero-impedance voltage source, a resistor, a capacitor and an inductor. The values depend on the specifications of the unit and the wavelength of interest.

Heat transfer for buildings

The simplifying assumption in this domain is:

- all heat transfer mechanisms are linear, implying that radiation and convection are linearised for each problem;

Several publications describe how to generate LEMs of buildings. In most cases the building is considered a single thermal zone, and in this case turning multi-layered walls into lumped elements can be one of the most complicated tasks in creating the model. Ramallo-González's method (Dominant Layer Method) is the most accurate and simple so far.[4] In this method, one of the layers is selected as the dominant layer of the whole construction; this layer is chosen considering the most relevant frequencies of the problem. In his thesis, Ramallo-González shows the whole process of obtaining the LEM of a complete building.

LEMs of buildings have also been used to evaluate the efficiency of domestic energy systems. In this case, the LEMs made it possible to run many simulations under different future weather scenarios.

XXX . XXX 4%zero null 0 1 2 Isomorphism

In mathematics, an isomorphism (from the Ancient Greek: ἴσος isos "equal", and μορφή morphe "form" or "shape") is a homomorphism or morphism (i.e. a mathematical mapping) that admits an inverse.[note 1] Two mathematical objects are isomorphic if an isomorphism exists between them. An automorphism is an isomorphism whose source and target coincide. The interest of isomorphisms lies in the fact that two isomorphic objects cannot be distinguished by using only the properties used to define morphisms; thus isomorphic objects may be considered the same as long as one considers only these properties and their consequences.

For most algebraic structures, including groups and rings, a homomorphism is an isomorphism if and only if it is bijective.

In topology, where the morphisms are continuous functions, isomorphisms are also called homeomorphisms or bicontinuous functions. In mathematical analysis, where the morphisms are differentiable functions, isomorphisms are also called diffeomorphisms.

A canonical isomorphism is a canonical map that is an isomorphism. Two objects are said to be canonically isomorphic if there is a canonical isomorphism between them. For example, the canonical map from a finite-dimensional vector space V to its second dual space is a canonical isomorphism; on the other hand, V is isomorphic to its dual space but not canonically in general.

Isomorphisms are formalized using category theory. A morphism f : X → Y in a category is an isomorphism if it admits a two-sided inverse, meaning that there is another morphism g : Y → X in that category such that gf = 1X and fg = 1Y, where 1X and 1Y are the identity morphisms of X and Y, respectively.

The group of fifth roots of unity under multiplication is isomorphic to the group of rotations of the regular pentagon under composition.

Examples

Logarithm and exponential

Let $\mathbb{R}^+$ be the multiplicative group of positive real numbers, and let $\mathbb{R}$ be the additive group of real numbers.

The logarithm function $\log : \mathbb{R}^+ \to \mathbb{R}$ satisfies $\log(xy) = \log x + \log y$ for all $x, y \in \mathbb{R}^+$, so it is a group homomorphism. The exponential function $\exp : \mathbb{R} \to \mathbb{R}^+$ satisfies $\exp(x + y) = (\exp x)(\exp y)$ for all $x, y \in \mathbb{R}$, so it too is a homomorphism.

The identities $\log \exp x = x$ and $\exp \log y = y$ show that $\log$ and $\exp$ are inverses of each other. Since $\log$ is a homomorphism that has an inverse that is also a homomorphism, $\log$ is an isomorphism of groups.

Because $\log$ is an isomorphism, it translates multiplication of positive real numbers into addition of real numbers. This facility makes it possible to multiply real numbers using a ruler and a table of logarithms, or using a slide rule with a logarithmic scale.
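The slide rule idea can be sketched in a few lines: to multiply two positive numbers, add their logarithms and map the sum back with the exponential. The function name `multiply_via_logs` is made up for this example, and floating-point results agree only up to rounding.

```python
import math


def multiply_via_logs(x: float, y: float) -> float:
    """Multiply two positive reals by adding their logarithms."""
    return math.exp(math.log(x) + math.log(y))


x, y = 3.7, 12.5

# Homomorphism property: the log of a product is the sum of the logs.
print(math.isclose(math.log(x * y), math.log(x) + math.log(y)))

# Inverse property: exp undoes log, so the product is recovered.
print(math.isclose(multiply_via_logs(x, y), x * y))
```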

Integers modulo 6

Consider the group $(\mathbb{Z}_6, +)$, the integers from 0 to 5 with addition modulo 6. Also consider the group $(\mathbb{Z}_2 \times \mathbb{Z}_3, +)$, the ordered pairs where the x coordinates can be 0 or 1, and the y coordinates can be 0, 1, or 2, where addition in the x-coordinate is modulo 2 and addition in the y-coordinate is modulo 3.

These structures are isomorphic under addition, under the following scheme:

- (0,0) ↦ 0

- (1,1) ↦ 1

- (0,2) ↦ 2

- (1,0) ↦ 3

- (0,1) ↦ 4

- (1,2) ↦ 5

or in general (a,b) ↦ (3a + 4b) mod 6.

For example, (1,1) + (1,0) = (0,1), which translates in the other system as 1 + 3 = 4.

Even though these two groups "look" different in that the sets contain different elements, they are indeed isomorphic: their structures are exactly the same. More generally, the direct product of two cyclic groups $\mathbb{Z}_m$ and $\mathbb{Z}_n$ is isomorphic to $(\mathbb{Z}_{mn}, +)$ if and only if m and n are coprime, per the Chinese remainder theorem.
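The correspondence (a,b) ↦ (3a + 4b) mod 6 can be checked exhaustively, since both groups have only six elements. The sketch below verifies that the map is a bijection and that it respects addition; the helper names are chosen for this example.

```python
from itertools import product


def phi(a: int, b: int) -> int:
    """The map (a, b) -> (3a + 4b) mod 6 from the pairs to the integers mod 6."""
    return (3 * a + 4 * b) % 6


def add_pair(p, q):
    """Componentwise addition: modulo 2 in the first slot, modulo 3 in the second."""
    return ((p[0] + q[0]) % 2, (p[1] + q[1]) % 3)


pairs = list(product(range(2), range(3)))

# Bijective: the six pairs hit each of 0..5 exactly once.
is_bijective = sorted(phi(a, b) for a, b in pairs) == list(range(6))

# Homomorphism: mapping a sum gives the sum (mod 6) of the mapped values.
is_homomorphism = all(
    phi(*add_pair(p, q)) == (phi(*p) + phi(*q)) % 6
    for p in pairs
    for q in pairs
)

print(is_bijective, is_homomorphism)
```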

Relation-preserving isomorphism

If one object consists of a set X with a binary relation R and the other object consists of a set Y with a binary relation S then an isomorphism from X to Y is a bijective function ƒ: X → Y such that:[2]

$$S(f(u), f(v)) \text{ if and only if } R(u, v)$$

S is reflexive, irreflexive, symmetric, antisymmetric, asymmetric, transitive, total, trichotomous, a partial order, total order, strict weak order, total preorder (weak order), an equivalence relation, or a relation with any other special properties, if and only if R is.

For example, if R is an ordering ≤ and S an ordering ⊑, then an isomorphism from X to Y is a bijective function ƒ: X → Y such that

$$f(u) \sqsubseteq f(v) \text{ if and only if } u \leq v$$

Such an isomorphism is called an order isomorphism or (less commonly) an isotone isomorphism.
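A small finite case makes the definition of an order isomorphism concrete. The sketch below checks a candidate map between the divisors of 6 ordered by divisibility and the subsets of {2, 3} ordered by inclusion; the example sets and the helper `is_order_isomorphism` are choices made for this illustration.

```python
from itertools import product


def is_order_isomorphism(f, xs, ys, leq_x, leq_y) -> bool:
    """Check that f is a bijection xs -> ys with u <= v exactly when f(u) <= f(v)."""
    images = [f(x) for x in xs]
    bijective = len(set(images)) == len(xs) and set(images) == set(ys)
    preserves = all(
        leq_x(u, v) == leq_y(f(u), f(v)) for u, v in product(xs, repeat=2)
    )
    return bijective and preserves


divisors = [1, 2, 3, 6]
subsets = [frozenset(), frozenset({2}), frozenset({3}), frozenset({2, 3})]


def prime_factors(n):
    """Send a divisor of 6 to the set of primes (2 and/or 3) dividing it."""
    return frozenset(p for p in (2, 3) if n % p == 0)


def divides(u, v):
    return v % u == 0


def subset(a, b):
    return a <= b


print(is_order_isomorphism(prime_factors, divisors, subsets, divides, subset))
# The same map fails if the divisors carry the usual numeric order instead.
print(is_order_isomorphism(prime_factors, divisors, subsets,
                           lambda u, v: u <= v, subset))
```

The second check fails because 2 ≤ 3 numerically while {2} is not a subset of {3}, so the map is a bijection that does not preserve that order.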

If X = Y, then this is a relation-preserving automorphism.

Isomorphism vs. bijective morphism

In a concrete category (that is, roughly speaking, a category whose objects are sets and morphisms are mappings between sets), such as the category of topological spaces or categories of algebraic objects like groups, rings, and modules, an isomorphism must be bijective on the underlying sets. In algebraic categories (specifically, categories of varieties in the sense of universal algebra), an isomorphism is the same as a homomorphism which is bijective on underlying sets. However, there are concrete categories in which bijective morphisms are not necessarily isomorphisms (such as the category of topological spaces), and there are categories in which each object admits an underlying set but in which isomorphisms need not be bijective (such as the homotopy category of CW-complexes).

Applications

In abstract algebra, two basic isomorphisms are defined:

- Group isomorphism, an isomorphism between groups

- Ring isomorphism, an isomorphism between rings. (Isomorphisms between fields are actually ring isomorphisms)

Just as the automorphisms of an algebraic structure form a group, the isomorphisms between two algebras sharing a common structure form a heap. Letting a particular isomorphism identify the two structures turns this heap into a group.

In mathematical analysis, the Laplace transform is an isomorphism mapping hard differential equations into easier algebraic equations.

In category theory, let the category C consist of two classes, one of objects and the other of morphisms. Then a general definition of isomorphism that covers the previous and many other cases is: an isomorphism is a morphism ƒ: a → b that has an inverse, i.e. there exists a morphism g: b → a with ƒg = 1b and gƒ = 1a. For example, a bijective linear map is an isomorphism between vector spaces, and a bijective continuous function whose inverse is also continuous is an isomorphism between topological spaces, called a homeomorphism.

In graph theory, an isomorphism between two graphs G and H is a bijective map f from the vertices of G to the vertices of H that preserves the "edge structure" in the sense that there is an edge from vertex u to vertex v in G if and only if there is an edge from ƒ(u) to ƒ(v) in H. See graph isomorphism.
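The edge-preservation condition can be checked directly for a candidate vertex map. The sketch below treats graphs as undirected, stores each edge as a two-element `frozenset`, and only verifies a given map; it does not search for one. The two example graphs are made up for illustration.

```python
def is_graph_isomorphism(f, vertices_g, edges_g, vertices_h, edges_h) -> bool:
    """Check that the dict f is a bijection on vertices that preserves edges.

    Edges are sets of frozenset({u, v}) pairs (undirected, no self-loops).
    """
    bijective = (
        set(f) == set(vertices_g)
        and set(f.values()) == set(vertices_h)
        and len(set(f.values())) == len(vertices_g)
    )
    if not bijective:
        return False
    # For a bijection, "edge in G iff edge in H" means the image of G's
    # edge set is exactly H's edge set.
    image = {frozenset(f[v] for v in edge) for edge in edges_g}
    return image == set(edges_h)


# G is the 4-cycle 0-1-2-3-0; H is a 4-cycle on letters drawn in another order.
vertices_g = [0, 1, 2, 3]
edges_g = {frozenset(e) for e in [(0, 1), (1, 2), (2, 3), (3, 0)]}
vertices_h = ["a", "b", "c", "d"]
edges_h = {frozenset(e) for e in [("a", "c"), ("c", "b"), ("b", "d"), ("d", "a")]}

good_map = {0: "a", 1: "c", 2: "b", 3: "d"}
bad_map = {0: "a", 1: "b", 2: "c", 3: "d"}

print(is_graph_isomorphism(good_map, vertices_g, edges_g, vertices_h, edges_h))
print(is_graph_isomorphism(bad_map, vertices_g, edges_g, vertices_h, edges_h))
```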

In mathematical analysis, an isomorphism between two Hilbert spaces is a bijection preserving addition, scalar multiplication, and inner product.

In early theories of logical atomism, the formal relationship between facts and true propositions was theorized by Bertrand Russell and Ludwig Wittgenstein to be isomorphic. An example of this line of thinking can be found in Russell's Introduction to Mathematical Philosophy.

In cybernetics, the good regulator or Conant–Ashby theorem is stated "Every good regulator of a system must be a model of that system". Whether regulated or self-regulating, an isomorphism is required between the regulator and processing parts of the system.

Relation with equality

In certain areas of mathematics, notably category theory, it is valuable to distinguish between equality on the one hand and isomorphism on the other.[3] Equality is when two objects are exactly the same, and everything that's true about one object is true about the other, while an isomorphism implies everything that's true about a designated part of one object's structure is true about the other's. For example, the sets

- $A = \{x \in \mathbb{Z} \mid x^2 < 2\}$ and $B = \{-1, 0, 1\}$

are equal; they are merely different presentations—the first an intensional one (in set builder notation), and the second extensional (by explicit enumeration)—of the same subset of the integers. By contrast, the sets {A,B,C} and {1,2,3} are not equal—the first has elements that are letters, while the second has elements that are numbers. These are isomorphic as sets, since finite sets are determined up to isomorphism by their cardinality (number of elements) and these both have three elements, but there are many choices of isomorphism—one isomorphism is

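- $\text{A} \mapsto 1, \text{B} \mapsto 2, \text{C} \mapsto 3$, while another is $\text{A} \mapsto 3, \text{B} \mapsto 2, \text{C} \mapsto 1$,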

and no one isomorphism is intrinsically better than any other.[note 2][note 3] On this view and in this sense, these two sets are not equal because one cannot consider them identical: one can choose an isomorphism between them, but that is a weaker claim than identity—and valid only in the context of the chosen isomorphism.
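To see how many choices there are, the isomorphisms between {A,B,C} and {1,2,3} as sets (that is, the bijections) can simply be enumerated. The sketch below builds each one as a dictionary from letters to numbers.

```python
from itertools import permutations

letters = ["A", "B", "C"]
numbers = [1, 2, 3]

# Each ordering of the numbers, paired position by position with the
# letters, gives one bijection from the letters to the numbers.
bijections = [dict(zip(letters, ordering)) for ordering in permutations(numbers)]

print(len(bijections))
for mapping in bijections:
    print(mapping)
```

Nothing in the bare sets singles out one of these mappings over the others, which is the sense in which the two sets are isomorphic but not equal.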

Sometimes the isomorphisms can seem obvious and compelling, but are still not equalities. As a simple example, the genealogical relationships among Joe, John, and Bobby Kennedy are, in a real sense, the same as those among the American football quarterbacks in the Manning family: Archie, Peyton, and Eli. The father-son pairings and the elder-brother-younger-brother pairings correspond perfectly. That similarity between the two family structures illustrates the origin of the word isomorphism (Greek iso-, "same," and -morph, "form" or "shape"). But because the Kennedys are not the same people as the Mannings, the two genealogical structures are merely isomorphic and not equal.

Another example is more formal and more directly illustrates the motivation for distinguishing equality from isomorphism: the distinction between a finite-dimensional vector space V and its dual space V* = { φ: V → K} of linear maps from V to its field of scalars K. These spaces have the same dimension, and thus are isomorphic as abstract vector spaces (since algebraically, vector spaces are classified by dimension, just as sets are classified by cardinality), but there is no "natural" choice of isomorphism $V \to V^*$. If one chooses a basis for V, then this yields an isomorphism: For all u, v ∈ V,

- $v \mapsto \phi_v \in V^*$ such that $\phi_v(u) = v^{\mathrm{T}} u$.

This corresponds to transforming a column vector (element of V) to a row vector (element of V*) by transpose, but a different choice of basis gives a different isomorphism: the isomorphism "depends on the choice of basis". More subtly, there is a map from a vector space V to its double dual V** = { x: V* → K} that does not depend on the choice of basis: For all v ∈ V and φ ∈ V*,

- $v \mapsto x_v \in V^{**}$ such that $x_v(\phi) = \phi(v)$.

This leads to a third notion, that of a natural isomorphism: while V and V** are different sets, there is a "natural" choice of isomorphism between them. This intuitive notion of "an isomorphism that does not depend on an arbitrary choice" is formalized in the notion of a natural transformation; briefly, that one may consistently identify, or more generally map from, a vector space to its double dual, $V \to V^{**}$, for any vector space in a consistent way. Formalizing this intuition is a motivation for the development of category theory.

However, there is a case where the distinction between natural isomorphism and equality is usually not made. That is for the objects that may be characterized by a universal property. In fact, there is a unique isomorphism, necessarily natural, between two objects sharing the same universal property. A typical example is the set of real numbers, which may be defined through infinite decimal expansion, infinite binary expansion, Cauchy sequences, Dedekind cuts and many other ways. Formally these constructions define different objects, which all are solutions of the same universal property. As these objects have exactly the same properties, one may forget the method of construction and consider them as equal. This is what everybody does when talking of "the set of the real numbers". The same occurs with quotient spaces: they are commonly constructed as sets of equivalence classes. However, talking of a set of sets may be counterintuitive, and quotient spaces are commonly considered as a pair of a set of undetermined objects, often called "points", and a surjective map onto this set.

If one wishes to draw a distinction between an arbitrary isomorphism (one that depends on a choice) and a natural isomorphism (one that can be done consistently), one may write ≈ for an unnatural isomorphism and ≅ for a natural isomorphism, as in V ≈ V* and V ≅ V**. This convention is not universally followed, and authors who wish to distinguish between unnatural isomorphisms and natural isomorphisms will generally explicitly state the distinction.

Generally, saying that two objects are equal is reserved for when there is a notion of a larger (ambient) space that these objects live in. Most often, one speaks of equality of two subsets of a given set (as in the integer set example above), but not of two objects abstractly presented. For example, the 2-dimensional unit sphere in 3-dimensional space

S² = {(x, y, z) ∈ R³ : x² + y² + z² = 1}

and the Riemann sphere, which can be presented as the one-point compactification of the complex plane C ∪ {∞} or as the complex projective line (a quotient space)

(C² ∖ {(0, 0)}) / C*

are three different descriptions for a mathematical object, all of which are isomorphic, but not equal because they are not all subsets of a single space: the first is a subset of R³, the second is C ≅ R²[note 4] plus an additional point, and the third is a subquotient of C².

In the context of category theory, objects are usually at most isomorphic—indeed, a motivation for the development of category theory was showing that different constructions in homology theory yielded equivalent (isomorphic) groups. Given maps between two objects X and Y, however, one asks if they are equal or not (they are both elements of the set Hom(X, Y), hence equality is the proper relationship), particularly in commutative diagrams.

Notes

- ^ For clarity, by inverse is meant inverse homomorphism or inverse morphism respectively, not inverse function.

- ^ A, B, C have a conventional order, namely alphabetical order, and similarly 1, 2, 3 have the order from the integers, and thus one particular isomorphism is "natural", namely A ↦ 1, B ↦ 2, C ↦ 3.

- ^ In fact, there are precisely 3! = 6 different isomorphisms between two sets with three elements. This is equal to the number of automorphisms of a given three-element set (which in turn is equal to the order of the symmetric group on three letters), and more generally one has that the set of isomorphisms between two objects, denoted Iso(A, B), is a torsor for the automorphism group of A, and also a torsor for the automorphism group of B. In fact, automorphisms of an object are a key reason to be concerned with the distinction between isomorphism and equality, as demonstrated in the effect of change of basis on the identification of a vector space with its dual or with its double dual, as elaborated in the sequel.

- ^ Being precise, the identification of the complex numbers with the real plane,

XXX . XXX Equation of time

The equation of time describes the discrepancy between two kinds of solar time. The word equation is used in the medieval sense of "reconcile a difference". The two times that differ are the apparent solar time, which directly tracks the diurnal motion of the Sun, and mean solar time, which tracks a theoretical mean Sun with noons 24 hours apart. Apparent solar time can be obtained by measurement of the current position (hour angle) of the Sun, as indicated (with limited accuracy) by a sundial. Mean solar time, for the same place, would be the time indicated by a steady clock set so that over the year its differences from apparent solar time would resolve to zero.

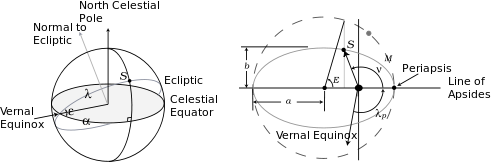

The equation of time is the east or west component of the analemma, a curve representing the angular offset of the Sun from its mean position on the celestial sphere as viewed from Earth. The equation of time values for each day of the year, compiled by astronomical observatories, were widely listed in almanacs and ephemerides.

The concept

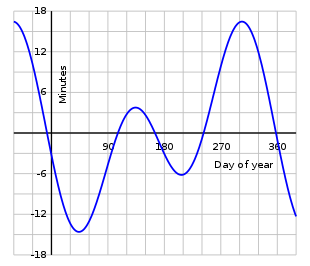

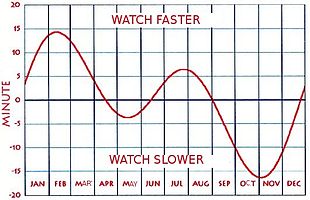

During a year the equation of time varies as shown on the graph; its change from one year to the next is slight. Apparent time, and the sundial, can be ahead (fast) by as much as 16 min 33 s (around 3 November), or behind (slow) by as much as 14 min 6 s (around 12 February). The equation of time has zeros near 15 April, 13 June, 1 September and 25 December. Ignoring very slow changes in the Earth's orbit and rotation, these events are repeated at the same times every tropical year. However, due to the non-integer number of days in a year, these dates can vary by a day or so from year to year.

The graph of the equation of time is closely approximated by the sum of two sine curves, one with a period of a year and one with a period of half a year. The curves reflect two astronomical effects, each causing a different non-uniformity in the apparent daily motion of the Sun relative to the stars:

- the obliquity of the ecliptic (the plane of the Earth's annual orbital motion around the Sun), which is inclined by about 23.44 degrees relative to the plane of the Earth's equator; and

- the eccentricity of the Earth's orbit around the Sun, which is about 0.0167.
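The two-curve description can be made concrete with a commonly quoted empirical approximation. The sketch below is an illustration only, not the method used to compile almanac tables: the coefficients (9.87, 7.53 and 1.5 minutes), the day offset of 81 and the function name `equation_of_time_minutes` are assumptions taken from that approximation rather than from this article, and the result is good to roughly a minute. A positive value here means the sundial is ahead of the clock.

```python
import math


def equation_of_time_minutes(day_of_year: int) -> float:
    """Approximate equation of time in minutes (positive: sundial ahead of clock).

    Assumed empirical fit: a half-year sine term (mainly the obliquity effect)
    plus year-period terms (mainly the eccentricity effect).
    """
    # Angle that advances by 360 degrees over a 365-day year.
    b = math.radians(360.0 * (day_of_year - 81) / 365.0)
    half_year_term = 9.87 * math.sin(2.0 * b)
    annual_term = -7.53 * math.cos(b) - 1.5 * math.sin(b)
    return half_year_term + annual_term


if __name__ == "__main__":
    # Day numbers are for a non-leap year.
    for label, day in [("12 February", 43), ("15 April", 105), ("3 November", 307)]:
        print(f"{label}: {equation_of_time_minutes(day):+.1f} min")
```

Evaluated at the dates quoted above, this fit gives a value close to zero in mid-April, a negative value of about a quarter of an hour in mid-February and a positive value of similar size in early November, in line with the extremes described earlier.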

The equation of time is constant only for a planet with zero axial tilt and zero orbital eccentricity. On Mars the difference between sundial time and clock time can be as much as 50 minutes, due to the considerably greater eccentricity of its orbit. The planet Uranus, which has an extremely large axial tilt, has an equation of time that makes its days start and finish several hours earlier or later depending on where it is in its orbit.

Sign of the equation of time

There is no universally accepted definition of the sign of the equation of time. Some publications show it as positive when a sundial is ahead of a clock, as shown in the upper graph above; others when the clock is ahead of the sundial, as shown in the lower graph. In the English-speaking world, the former usage is the more common, but is not always followed. Anyone who makes use of a published table or graph should first check its sign usage. Often, there is a note or caption which explains it. Otherwise, the sign can be determined by knowing that, during the first three months of each year, the clock is ahead of the sundial. The mnemonic "NYSS" (pronounced "nice"), for "new year, sundial slow", can be useful. Some published tables avoid the ambiguity by not using signs, but by showing phrases such as "sundial fast" or "sundial slow" instead.[6]

In this article, and others in English Wikipedia, a positive value of the equation of time implies that a sundial is ahead of a clock.
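Because published values may carry either sign, it can help to normalise a value before applying it. The following is a minimal sketch under this article's convention (positive means the sundial is ahead of the clock); the function names and the `positive_means` labels are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta


def normalise_eot(value_minutes: float, positive_means: str) -> float:
    """Return the equation of time with positive meaning 'sundial ahead'.

    positive_means is 'sundial_fast' or 'clock_fast' (hypothetical labels
    for the two sign conventions found in published tables).
    """
    if positive_means == "sundial_fast":
        return value_minutes
    if positive_means == "clock_fast":
        return -value_minutes
    raise ValueError("positive_means must be 'sundial_fast' or 'clock_fast'")


def sundial_to_clock(sundial_reading: datetime, eot_minutes: float) -> datetime:
    """Local mean time from a sundial reading: subtract the sundial's lead."""
    return sundial_reading - timedelta(minutes=eot_minutes)


def clock_to_sundial(mean_time: datetime, eot_minutes: float) -> datetime:
    """Apparent solar time from local mean time: add the sundial's lead."""
    return mean_time + timedelta(minutes=eot_minutes)


if __name__ == "__main__":
    reading = datetime(2023, 11, 3, 12, 0, 0)
    # Early November: the sundial is roughly 16.5 minutes fast.
    print(sundial_to_clock(reading, normalise_eot(16.5, "sundial_fast")))
```

Keeping the sign handling in one place means the two conversion functions never need to know which convention a particular table used.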

History

The phrase "equation of time" is derived from the medieval Latin aequātiō diērum, meaning "equation of days" or "difference of days". The word aequātiō was widely used in early astronomy to tabulate the difference between an observed value and the expected value (as in the equation of centre, the equation of the equinoxes, the equation of the epicycle). The difference between apparent solar time and mean time has been recognized by astronomers since antiquity, but prior to the invention of accurate mechanical clocks in the mid-17th century, sundials were the only reliable timepieces, and apparent solar time was the generally accepted standard.

A description of apparent and mean time was given by Nevil Maskelyne in the Nautical Almanac for 1767: "Apparent Time is that deduced immediately from the Sun, whether from the Observation of his passing the Meridian, or from his observed Rising or Setting. This Time is different from that shewn by Clocks and Watches well regulated at Land, which is called equated or mean Time." He went on to say that, at sea, the apparent time found from observation of the Sun must be corrected by the equation of time, if the observer requires the mean time.[1]

The right time was originally considered to be that which was shown by a sundial. When good mechanical clocks were introduced, they agreed with sundials only near four dates each year, so the equation of time was used to "correct" their readings to obtain sundial time. Some clocks, called equation clocks, included an internal mechanism to perform this "correction". Later, as clocks became the dominant timepieces, uncorrected clock time, i.e., "mean time", became the accepted standard. The readings of sundials, when they were used, were then, and often still are, corrected with the equation of time, applied in the reverse direction, to obtain clock time. Many sundials, therefore, have tables or graphs of the equation of time engraved on them to allow the user to make this correction.

The equation of time was used historically to set clocks. Between the invention of accurate clocks in 1656 and the advent of commercial time distribution services around 1900, there were three common land-based ways to set clocks. Firstly, in the unusual event of having an astronomer present, the Sun's transit across the meridian (the moment the Sun was highest in the sky) was noted; the clock was then set to noon and offset by the number of minutes given by the equation of time for that date. Secondly, and much more commonly, a sundial was read, a table of the equation of time (usually engraved on the dial) was consulted, and the watch or clock was set accordingly. Both methods gave the mean time, albeit local to a point of longitude. (The third method did not use the equation of time; instead, it used stellar observations to give sidereal time, exploiting the relationship between sidereal time and mean solar time.)[7]

Of course, the equation of time can still be used, when required, to obtain apparent solar time from clock time. Devices such as solar trackers, which move to keep pace with the Sun's movements in the sky, frequently do not include sensors to determine the Sun's position. Instead, they are controlled by a clock mechanism, along with a mechanism that incorporates the equation of time to make the device keep pace with the Sun.
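A clock-driven tracker of this kind can be sketched as follows. This is only an illustration of the idea, assuming that the clock already shows local mean solar time for the tracker's longitude and that the equation of time for the day is supplied in minutes (positive when the sundial is ahead); the function name `sun_hour_angle_degrees` is hypothetical. It relies on the Sun's hour angle advancing 15 degrees per hour of apparent solar time and being zero at apparent noon.

```python
def sun_hour_angle_degrees(mean_time_hours: float, eot_minutes: float) -> float:
    """Hour angle of the Sun in degrees: negative before apparent noon, positive after.

    mean_time_hours: local mean solar time as decimal hours (assumed input).
    eot_minutes: equation of time, positive when the sundial is ahead of the clock.
    """
    apparent_time_hours = mean_time_hours + eot_minutes / 60.0
    # 360 degrees in 24 hours gives 15 degrees per hour, measured from apparent noon.
    return 15.0 * (apparent_time_hours - 12.0)


if __name__ == "__main__":
    # Clock at 12:00 with the sundial 16 minutes ahead: the Sun is already
    # about 4 degrees past the meridian.
    print(sun_hour_angle_degrees(12.0, 16.0))
```

A real tracker would also need the Sun's declination and the site's latitude to turn the hour angle into pointing directions; those steps are outside this sketch.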

Ancient history — Babylon and Egypt

The irregular daily movement of the Sun was known to the Babylonians. Book III of Ptolemy's Almagest is primarily concerned with the Sun's anomaly, and he tabulated the equation of time in his Handy Tables.[8] Ptolemy discusses the correction needed to convert the meridian crossing of the Sun to mean solar time and takes into consideration the nonuniform motion of the Sun along the ecliptic and the meridian correction for the Sun's ecliptic longitude. He states the maximum correction is 8 1⁄3 time-degrees or 5⁄9 of an hour, about 33 minutes (Book III, chapter 9).[9] However, he did not consider the effect to be relevant for most calculations, since it was negligible for the slow-moving luminaries, and applied it only to the fastest-moving luminary, the Moon.

Medieval and Renaissance astronomy

Based on Ptolemy's discussion in the Almagest, medieval Islamic astronomers such as al-Khwarizmi, al-Battani, Kushyar ibn Labban, Jamshīd al-Kāshī and others, made improvements to the solar tables and the value of obliquity, and published tables of the equation of time (taʿdīl al-ayyām bi layālayhā) in their zij (astronomical tables).[10]

After that, the next substantial improvement in the computation did not come until Kepler's final overthrow of the geocentric astronomy of the ancients. Gerald J. Toomer uses the medieval term "equation", from the Latin aequātiō,[n 1] for Ptolemy's difference between the mean solar time and the apparent solar time. Johannes Kepler's definition of the equation is "the difference between the number of degrees and minutes of the mean anomaly and the degrees and minutes of the corrected anomaly."[11]

Apparent time versus mean time

Until the invention of the pendulum and the development of reliable clocks during the 17th century, the equation of time as defined by Ptolemy remained a curiosity, of importance only to astronomers. However, when mechanical clocks started to take over timekeeping from sundials, which had served humanity for centuries, the difference between clock time and sundial time became an issue for everyday life. Apparent solar time is the time indicated by the Sun on a sundial (or measured by its transit over a preferred local meridian), while mean solar time is the average as indicated by well-regulated clocks. The first tables to give the equation of time in an essentially correct way were published in 1665 by Christiaan Huygens.[12] Huygens, following the tradition of Ptolemy and medieval astronomers in general, set his values for the equation of time so as to make all values positive throughout the year.[13][n 2]

Another set of tables was published in 1672–73 by John Flamsteed, who later became the first Astronomer Royal of the new Royal Greenwich Observatory. These appear to have been the first essentially correct tables that gave today's meaning of Mean Time (rather than mean time based on the latest sunrise of the year as proposed by Huygens). Flamsteed adopted the convention of tabulating and naming the correction in the sense that it was to be applied to the apparent time to give mean time.[14]

The equation of time, correctly based on the two major components of the Sun's irregularity of apparent motion,[n 3] was not generally adopted until after Flamsteed's tables of 1672–73, published with the posthumous edition of the works of Jeremiah Horrocks.[15]

Robert Hooke (1635–1703), who mathematically analyzed the universal joint, was the first to note that the geometry and mathematical description of the (non-secular) equation of time and the universal joint were identical, and proposed the use of a universal joint in the construction of a "mechanical sundial".

18th and early 19th centuries

The corrections in Flamsteed's tables of 1672–1673 and 1680 gave mean time computed essentially correctly and without need for further offset. But the numerical values in tables of the equation of time have changed somewhat since then, owing to three factors:

- general improvements in accuracy that came from refinements in astronomical measurement techniques,

- slow intrinsic changes in the equation of time, occurring as a result of small long-term changes in the Earth's obliquity and eccentricity (affecting, for instance, the distance and dates of perihelion), and

- the inclusion of small sources of additional variation in the apparent motion of the Sun, unknown in the 17th century, but discovered from the 18th century onwards, including the effects of the Moon[n 4], Venus and Jupiter.[17]