The microwave domain

Microwave is a term used to identify electromagnetic waves from 10³ megahertz (1 gigahertz) up to 300 gigahertz, so named because of the short physical wavelengths at these frequencies. Short-wavelength energy offers distinct advantages in many applications. For instance, sufficient directivity can be obtained using relatively small antennas and low-power transmitters. These characteristics are ideal for both military and civilian radar and communication applications. Microwave frequencies also make small antennas and other small components possible, and this size advantage can be part of the solution to problems of space, weight, or both. Microwave frequencies are significant in the design of shipboard radar because they make it possible to detect smaller targets. They also present special problems in transmission, generation, and circuit design that are not encountered at lower frequencies. Conventional circuit theory is based on voltages and currents, while microwave theory is based on electromagnetic fields.

Apparatus and techniques may be described qualitatively as "microwave" when the wavelengths of signals are roughly the same as the dimensions of the equipment, so that lumped-element circuit theory is inaccurate. As a consequence, practical microwave technique tends to move away from the discrete resistors, capacitors, and inductors used with lower-frequency radio waves. Instead, distributed circuit elements and transmission-line theory are more useful methods for design and analysis. Open-wire and coaxial transmission lines give way to waveguides and stripline, and lumped-element tuned circuits are replaced by cavity resonators or resonant lines. Effects of reflection, polarization, scattering, diffraction and atmospheric absorption usually associated with visible light are of practical significance in the study of microwave propagation. The same equations of electromagnetic theory apply at all frequencies.

Microwave engineering has grown in relevance as the microwave domain has moved into the commercial sector and is no longer confined to 20th and 21st century military technologies. Inexpensive components and digital communications in the microwave domain have opened up new areas for the discipline, including radar, satellite links, wireless radio, optical communication, faster computer circuits, and collision-avoidance radar.

Microwave transmission is the transmission of information or energy by microwave radio waves. Although an experimental 64 km (40 mile) microwave telecommunication link across the English Channel was demonstrated in 1931, the development of radar in World War II provided the technology for practical exploitation of microwave communication. In the 1950s, large transcontinental microwave relay networks, consisting of chains of repeater stations linked by line-of-sight beams of microwaves were built in Europe and America to relay long distance telephone traffic and television programs between cities. Communication satellites which transferred data between ground stations by microwaves took over much long distance traffic in the 1960s. In recent years, there has been an explosive increase in use of the microwave spectrum by new telecommunication technologies such as wireless networks, and direct-broadcast satellites which broadcast television and radio directly into consumers' homes.

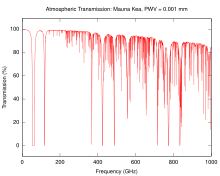

The atmospheric attenuation of microwaves in dry air with a precipitable water vapor level of 0.001 mm. The downward spikes in the graph correspond to frequencies at which microwaves are absorbed more strongly, such as by oxygen molecules.

Uses

Microwaves are widely used for point-to-point communications because their small wavelength allows conveniently sized antennas to direct them in narrow beams, which can be pointed directly at the receiving antenna. This allows nearby microwave equipment to use the same frequencies without interfering with each other, as lower-frequency radio waves do. Another advantage is that the high frequency of microwaves gives the microwave band a very large information-carrying capacity; the microwave band has a bandwidth 30 times that of all the rest of the radio spectrum below it. A disadvantage is that microwaves are limited to line-of-sight propagation; they cannot pass around hills or mountains as lower-frequency radio waves can.

Microwave radio transmission is commonly used in point-to-point communication systems on the surface of the Earth, in satellite communications, and in deep space radio communications. Other parts of the microwave radio band are used for radars, radio navigation systems, sensor systems, and radio astronomy.

The next higher part of the radio electromagnetic spectrum, where the frequencies are above 30 GHz and below 100 GHz, is called "millimeter waves" because the wavelengths are conveniently measured in millimeters, ranging from 10 mm down to 3.0 mm (higher-frequency waves have shorter wavelengths). Radio waves in this band are usually strongly attenuated by the Earth's atmosphere and the particles it contains, especially during wet weather. Also, in a wide band of frequencies around 60 GHz, the radio waves are strongly attenuated by molecular oxygen in the atmosphere. The electronic technologies needed in the millimeter wave band are also much more difficult to use than those of the microwave band.
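The band edges quoted above follow from the relation between wavelength and frequency, λ = c/f. The short Python sketch below is only an illustration of that arithmetic; the function name `wavelength_mm` and the choice of sample frequencies are assumptions made for this example.

```python
# Speed of light in vacuum, in metres per second.
C = 299_792_458.0


def wavelength_mm(freq_ghz: float) -> float:
    """Return the free-space wavelength in millimetres for a frequency in GHz."""
    return C / (freq_ghz * 1e9) * 1e3


if __name__ == "__main__":
    # Band edges mentioned in the text: 1 GHz, 30 GHz, 100 GHz and 300 GHz.
    for f in (1, 30, 100, 300):
        print(f"{f:>4} GHz -> {wavelength_mm(f):8.2f} mm")
```

At 30 GHz the wavelength comes out at roughly 10 mm and at 100 GHz at roughly 3 mm, matching the millimeter-wave range given in the text.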

- Wireless transmission of information

  - One-way (e.g. television broadcasting) and two-way telecommunication using communications satellites

  - Terrestrial microwave relay links in telecommunications networks, including backbone or backhaul carriers in cellular networks

- Wireless transmission of power

  - Proposed systems, e.g. for connecting solar power collecting satellites to terrestrial power grids

C band horn-reflector antennas on the roof of a telephone switching center in Seattle, Washington, part of the U.S. AT&T Long Lines microwave relay network.

Microwave radio relay

Beginning in the 1950s, networks of microwave relay links, such as the AT&T Long Lines system in the U.S., carried long distance telephone calls and television programs between cities. The first system, dubbed TD-2 and built by AT&T, connected New York and Boston in 1947 with a series of eight radio relay stations.[1] These included long daisy-chained series of such links that traversed mountain ranges and spanned continents. Much of the transcontinental traffic is now carried by cheaper optical fibers and communication satellites, but microwave relay remains important for shorter distances.

Planning

Because of the high frequencies used, a line-of-sight path between the stations is required. Additionally, to avoid attenuation of the beam, an area around the beam called the first Fresnel zone must be free from obstacles; obstacles in the signal field cause unwanted attenuation. High mountain peak or ridge positions are often ideal.
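The size of the region that must be kept clear can be estimated with the standard first-Fresnel-zone formula, r = sqrt(λ·d1·d2 / (d1 + d2)), where d1 and d2 are the distances from the point of interest to each station. The Python sketch below is a minimal illustration under that assumption; the function name and the 6 GHz, 40 km example hop are hypothetical values chosen for the example, not figures from a real link.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def fresnel_radius_m(freq_ghz: float, d1_km: float, d2_km: float) -> float:
    """Radius of the first Fresnel zone, in metres.

    d1_km and d2_km are the distances from the point being checked
    to the two ends of the link.
    """
    wavelength = C / (freq_ghz * 1e9)      # metres
    d1, d2 = d1_km * 1e3, d2_km * 1e3      # metres
    return math.sqrt(wavelength * d1 * d2 / (d1 + d2))


if __name__ == "__main__":
    # Hypothetical 40 km hop at 6 GHz, checked at mid-path where the zone is widest.
    print(f"{fresnel_radius_m(6.0, 20.0, 20.0):.1f} m")
```

For this hypothetical hop the zone is a little over 22 m in radius at mid-path, which illustrates why elevated sites such as ridges are attractive.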

Production truck used for remote broadcasts by television news, with a microwave dish on a retractable telescoping mast to transmit live video back to the studio.

Atmospheric stratification typically bends the radio path downward, so the link can reach beyond the geometric horizon: the effect is equivalent to increasing the Earth's radius from 6370 km to about 8500 km (the 4/3 equivalent-radius effect). Rare temperature, humidity and pressure profiles versus height may produce large deviations and distortion of the propagation and affect transmission quality. Intense rain and snow, which cause rain fade, must also be considered as an impairment, especially at frequencies above 10 GHz. All of these factors, collectively known as path loss, make it necessary to compute suitable power margins in order to keep the link operating for a high percentage of the time, such as the standard 99.99% or 99.999% used in 'carrier class' services of most telecommunication operators.
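Two of the figures above lend themselves to a quick calculation: the extra reach given by the 4/3 equivalent Earth radius, and the outage time implied by an availability target. The Python sketch below is illustrative only. It assumes the usual small-height approximation d = sqrt(2·k·R·h) for the distance to the horizon, and the function names and the 50 m antenna height are hypothetical choices for this example.

```python
import math

EARTH_RADIUS_KM = 6370.0  # geometric Earth radius quoted in the text
K_FACTOR = 4.0 / 3.0      # equivalent-radius factor for a typical atmosphere


def radio_horizon_km(antenna_height_m: float, k: float = K_FACTOR) -> float:
    """Distance to the horizon for one antenna, in km.

    Uses d = sqrt(2 * k * R * h), valid when the height is much
    smaller than the Earth's radius.
    """
    effective_radius_m = k * EARTH_RADIUS_KM * 1e3
    return math.sqrt(2.0 * effective_radius_m * antenna_height_m) / 1e3


def allowed_outage_minutes_per_year(availability_percent: float) -> float:
    """Yearly outage budget, in minutes, for a given availability target."""
    minutes_per_year = 365 * 24 * 60
    return (1.0 - availability_percent / 100.0) * minutes_per_year


if __name__ == "__main__":
    h = 50.0  # hypothetical antenna height in metres
    print(f"geometric horizon: {radio_horizon_km(h, k=1.0):.1f} km")
    print(f"radio horizon:     {radio_horizon_km(h):.1f} km")
    for a in (99.99, 99.999):
        print(f"{a}% -> {allowed_outage_minutes_per_year(a):.2f} min/year")
```

The maximum hop between two towers is approximately the sum of their individual horizon distances. The availability targets correspond to roughly 53 minutes and 5 minutes of permitted outage per year respectively.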

The link was built in 1979 by Telettra to transmit 300 telephone channels and 1 TV signal in the 2 GHz frequency band. (Hop distance is the distance between two microwave stations.)

The previous considerations represent typical problems of terrestrial microwave radio links in so-called backbone networks. Until the 1990s, hop lengths of a few tens of kilometers (typically 10 to 60 km) were widely used, in frequency bands below 10 GHz, and, above all, the information to be transmitted was a stream containing a fixed-capacity block. The target was to supply the requested availability for the whole block (plesiochronous digital hierarchy, PDH, or synchronous digital hierarchy, SDH). Fading and/or multipath affecting the link for short periods during the day had to be counteracted by a diversity architecture. During the 1990s, microwave radio links began to be widely used for urban links in cellular networks. Link distance requirements changed to shorter hops (less than 10 km, typically 3 to 5 km), and frequencies increased to bands between 11 and 43 GHz and, more recently, up to 86 GHz (E-band). Furthermore, link planning now deals more with intense rainfall and less with multipath, so diversity schemes have become less used. Another big change during the last decade was the evolution towards packet radio transmission. Therefore, new countermeasures, such as adaptive modulation, have been adopted.

The emitted power is regulated by norms (EIRP limits) for both cellular systems and microwave links. These microwave transmissions typically use an emitted power of 30 mW to 0.3 W, radiated by a parabolic antenna in a narrow beam a few degrees wide (1° to 3–4°). The microwave channel arrangement is regulated by the International Telecommunication Union (ITU-R) or local regulations (ETSI, FCC). In the last decade the spectrum dedicated to each microwave band has become extremely overcrowded, driving the adoption of techniques that increase transmission capacity (frequency reuse, polarization-division multiplexing, XPIC, MIMO).
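Regulatory limits are normally expressed as EIRP in decibel units, so it can help to see how the transmitter powers quoted above translate. The Python sketch below converts milliwatts to dBm and adds an antenna gain to obtain EIRP. The 38 dBi gain and the feeder-loss parameter are hypothetical values assumed for illustration, not figures from the text or from any regulation.

```python
import math


def mw_to_dbm(power_mw: float) -> float:
    """Convert a power in milliwatts to dBm."""
    return 10.0 * math.log10(power_mw)


def eirp_dbm(power_mw: float, antenna_gain_dbi: float,
             feeder_loss_db: float = 0.0) -> float:
    """EIRP in dBm: transmitter power plus antenna gain minus feeder loss."""
    return mw_to_dbm(power_mw) + antenna_gain_dbi - feeder_loss_db


if __name__ == "__main__":
    ASSUMED_GAIN_DBI = 38.0  # hypothetical parabolic-dish gain
    for p_mw in (30.0, 300.0):  # the 30 mW to 0.3 W range from the text
        print(f"{p_mw:5.0f} mW = {mw_to_dbm(p_mw):5.2f} dBm, "
              f"EIRP = {eirp_dbm(p_mw, ASSUMED_GAIN_DBI):5.2f} dBm")
```

The calculation shows how a highly directive antenna can raise the effective radiated power far above the transmitter output, which is why limits are set on EIRP rather than on transmitter power alone.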

Flash Back

US Army Signal Corps portable microwave relay station, 1945. Microwave relay systems were first developed in World War II for secure military communication.

In 1931 an Anglo-French consortium headed by André C. Clavier demonstrated an experimental microwave relay link across the English Channel using 10-foot (3 m) dishes. Telephony, telegraph and facsimile data were transmitted over the bidirectional 1.7 GHz beams 64 km (40 miles) between Dover, UK and Calais, France. The radiated power, produced by a miniature Barkhausen-Kurz tube located at the dish's focus, was one-half watt. A 1933 military microwave link between airports at St. Inglevert, France and Lympne, UK, a distance of 56 km (35 miles), was followed in 1935 by a 300 MHz telecommunication link, the first commercial microwave relay system.

The development of radar during World War II provided much of the microwave technology which made practical microwave communication links possible, particularly the klystron oscillator and techniques of designing parabolic antennas. Though not commonly known, the US military used both portable and fixed-station microwave communications in the European Theater during World War II.

After the war, telephone companies used this technology to build large microwave radio relay networks to carry long-distance telephone calls. During the 1950s a unit of the US telephone carrier, AT&T Long Lines, built a transcontinental system of microwave relay links across the US that grew to carry the majority of US long-distance telephone traffic, as well as television network signals. The main motivation in 1946 for using microwave radio instead of cable was that a large capacity could be installed quickly and at less cost. It was expected at that time that the annual operating costs for microwave radio would be greater than for cable. There were two main reasons that a large capacity had to be introduced suddenly: pent-up demand for long-distance telephone service, because of the hiatus during the war years, and the new medium of television, which needed more bandwidth than radio. The prototype, called TDX, was tested in 1946 with a connection between New York City and Murray Hill, the location of Bell Laboratories. The TDX system was set up between New York and Boston in 1947. The TDX was upgraded to the TD2 system, which used the Morton tube (416B and later 416C, manufactured by Western Electric) in the transmitters, and then later to TD3, which used solid-state electronics.

Military microwave relay systems continued to be used into the 1960s, when many of these systems were supplanted with tropospheric scatter or communication satellite systems. When the NATO military arm was formed, much of this existing equipment was transferred to communications groups. The typical communications systems used by NATO during that time period consisted of the technologies which had been developed for use by the telephone carrier entities in host countries. One example from the USA is the RCA CW-20A 1–2 GHz microwave relay system which utilized flexible UHF cable rather than the rigid waveguide required by higher frequency systems, making it ideal for tactical applications. The typical microwave relay installation or portable van had two radio systems (plus backup) connecting two line of sight sites. These radios would often carry 24 telephone channels frequency division multiplexed on the microwave carrier (i.e. Lenkurt 33C FDM). Any channel could be designated to carry up to 18 teletype communications instead. Similar systems from Germany and other member nations were also in use.

Long distance microwave relay networks were built in many countries until the 1980s, when the technology lost its share of fixed operation to newer technologies such as fiber-optic cable and communication satellites, which offer lower cost per bit.

During the Cold War, the US intelligence agencies, such as the National Security Agency (NSA), were reportedly able to intercept Soviet microwave traffic using satellites such as Rhyolite.[8] Much of the beam of a microwave link passes the receiving antenna and radiates toward the horizon, into space. By positioning a geosynchronous satellite in the path of the beam, the microwave beam can be received.

Since the turn of the century, microwave radio relay systems have been used increasingly in portable radio applications. The technology is particularly suited to this application because of lower operating costs, a more efficient infrastructure, and provision of direct hardware access to the portable radio operator.

A microwave link is a communications system that uses a beam of radio waves in the microwave frequency range to transmit video, audio, or data between two locations, which can be from just a few feet or meters to several miles or kilometers apart. Microwave links are commonly used by television broadcasters to transmit programmes across a country, for instance, or from an outside broadcast back to a studio.

Mobile units can be camera mounted, allowing cameras the freedom to move around without trailing cables. These are often seen on the touchlines of sports fields on Steadicam systems.

Properties of microwave links

- Involve line of sight (LOS) communication technology

- Affected greatly by environmental constraints, including rain fade

- Have very limited penetration capabilities through obstacles such as hills, buildings and trees

- Sensitive to high pollen count

- Signals can be degraded during Solar proton events

Uses of microwave links

- In communications between satellites and base stations

- As backbone carriers for cellular systems

- In short range indoor communications

- Linking remote and regional telephone exchanges to larger (main) exchanges without the need for copper/optical fibre lines.

- Measuring the intensity of rain between two locations

Troposcatter

Terrestrial microwave relay links are limited in distance to the visual horizon, a few tens of miles or kilometers depending on tower height. Tropospheric scatter ("troposcatter" or "scatter") was a technology developed in the 1950s to allow microwave communication links beyond the horizon, to a range of several hundred kilometers. The transmitter radiates a beam of microwaves into the sky, at a shallow angle above the horizon toward the receiver. As the beam passes through the troposphere, a small fraction of the microwave energy is scattered back toward the ground by water vapor and dust in the air. A sensitive receiver beyond the horizon picks up this scattered signal. Signal clarity obtained by this method depends on the weather and other factors, so creating a reliable over-the-horizon radio relay link is technically difficult. Troposcatter links are therefore used only in special circumstances where satellites and other long-distance communication channels cannot be relied on, such as in military communications.

Microwave power transmission

Microwave power transmission (MPT) is the use of microwaves to transmit power through outer space or the atmosphere without the need for wires. It is a sub-type of the more general wireless energy transfer methods.

Flash Back

Following World War II, which saw the development of high-power microwave emitters known as cavity magnetrons, the idea of using microwaves to transmit power was researched. In 1964, William C. Brown demonstrated a miniature helicopter equipped with a combination antenna and rectifier device called a rectenna. The rectenna converted microwave power into electricity, allowing the helicopter to fly.[10] In principle, the rectenna is capable of very high conversion efficiencies, over 90% in optimal circumstances.

Most proposed MPT systems now include a phased-array microwave transmitter. While these have lower efficiency levels, they have the advantage of being electrically steered using no moving parts, and are easier to scale to the levels that a practical MPT system requires.

Using microwave power transmission to deliver electricity to communities without having to build cable-based infrastructure is being studied at Grand Bassin on Reunion Island in the Indian Ocean.

Common safety concerns

The common reaction to microwave transmission is one of concern, as microwaves are generally perceived by the public as a dangerous form of radiation, a perception stemming from their use in microwave ovens. While high-power microwaves can be painful and dangerous, as in the United States military's Active Denial System, MPT systems are generally proposed to have only low intensity at the rectenna. This would be extremely safe, as the power levels would be about equal to the leakage from a microwave oven and only slightly more than a cell phone; however, the relatively diffuse microwave beam necessitates a large receiving antenna area for a significant amount of energy to be transmitted.

Research has involved exposing multiple generations of animals to microwave radiation of this or higher intensity, and no health issues have been found.

Proposed uses

MPT is the most commonly proposed method for transferring energy to the surface of the Earth from solar power satellites or other in-orbit power sources. MPT is occasionally proposed for the power supply in beam-powered propulsion for orbital lift space ships. Although lasers are more commonly proposed, their low efficiency in light generation and reception has led some designers to opt for microwave-based systems.

Current status

Wireless power transmission using microwaves is well proven. Experiments in the tens of kilowatts were performed at Goldstone in California in 1975 and more recently (1997) at Grand Bassin on Reunion Island. In 2008, a long-range transmission experiment successfully transmitted 20 watts 92 miles (148 km) from a mountain on Maui to the main island of Hawaii. JAXA announced on 12 March 2015 that it had wirelessly beamed 1.8 kilowatts 50 meters to a small receiver by converting electricity to microwaves and then back to electricity. This is the standard plan for this type of power. On 12 March 2015, Mitsubishi Heavy Industries demonstrated transmission of 10 kilowatts (kW) of power to a receiver unit located 500 meters (m) away.

Wireless power transfer

Wireless power transfer (WPT), wireless power transmission, wireless energy transmission, or electromagnetic power transfer is the transmission of electrical energy without wires. Wireless power transmission technologies use time-varying electric, magnetic, or electromagnetic fields. Wireless transmission is useful to power electrical devices where interconnecting wires are inconvenient, hazardous, or are not possible.

Wireless power techniques mainly fall into two categories, non-radiative and radiative. In near field or non-radiative techniques, power is transferred over short distances by magnetic fields using inductive coupling between coils of wire, or by electric fields using capacitive coupling between metal electrodes. Inductive coupling is the most widely used wireless technology; its applications include charging handheld devices like phones and electric toothbrushes, RFID tags, and chargers for implantable medical devices like artificial cardiac pacemakers, or electric vehicles.

In far-field or radiative techniques, also called power beaming, power is transferred by beams of electromagnetic radiation, like microwaves or laser beams. These techniques can transport energy longer distances but must be aimed at the receiver. Proposed applications for this type are solar power satellites, and wireless powered drone aircraft.

An important issue associated with all wireless power systems is limiting the exposure of people and other living things to potentially injurious electromagnetic fields.

Inductive charging pad for LG smartphone, using the Qi system, an example of near-field wireless transfer. When the phone is set on the pad, a coil in the pad creates a magnetic field[1] which induces a current in another coil, in the phone, charging its battery.

Overview

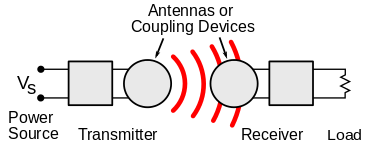

Wireless power transfer is a generic term for a number of different technologies for transmitting energy by means of electromagnetic fields.[8][9][10] The technologies, listed in the table below, differ in the distance over which they can transfer power efficiently, whether the transmitter must be aimed (directed) at the receiver, and in the type of electromagnetic energy they use: time-varying electric fields, magnetic fields, radio waves, microwaves, infrared or visible light waves.[11]

In general a wireless power system consists of a "transmitter" connected to a source of power such as a mains power line, which converts the power to a time-varying electromagnetic field, and one or more "receiver" devices which receive the power and convert it back to DC or AC electric current which is used by an electrical load.[8][11] At the transmitter the input power is converted to an oscillating electromagnetic field by some type of "antenna" device. The word "antenna" is used loosely here; it may be a coil of wire which generates a magnetic field, a metal plate which generates an electric field, an antenna which radiates radio waves, or a laser which generates light. A similar antenna or coupling device at the receiver converts the oscillating fields to an electric current. An important parameter that determines the type of waves is the frequency, which determines the wavelength.

Wireless power uses the same fields and waves as wireless communication devices like radio, another familiar technology that involves electrical energy transmitted without wires by electromagnetic fields, used in cellphones, radio and television broadcasting, and WiFi. In radio communication the goal is the transmission of information, so the amount of power reaching the receiver is not so important, as long as it is sufficient for the information to be received intelligibly. In wireless communication technologies only tiny amounts of power reach the receiver. In contrast, with wireless power the amount of energy received is the important thing, so the efficiency (the fraction of transmitted energy that is received) is the more significant parameter.[9] For this reason, wireless power technologies are likely to be more limited by distance than wireless communication technologies.

These are the different wireless power technologies:

| Technology | Range[17] | Directivity[11] | Frequency | Antenna devices | Current and/or possible future applications |
|---|---|---|---|---|---|
| Inductive coupling | Short | Low | Hz – MHz | Wire coils | Electric toothbrush and razor battery charging, induction stovetops and industrial heaters. |
| Resonant inductive coupling | Mid | Low | kHz – GHz | Tuned wire coils, lumped element resonators | Charging portable devices (Qi), biomedical implants, electric vehicles, powering buses, trains, MAGLEV, RFID, smartcards. |
| Capacitive coupling | Short | Low | kHz – MHz | Metal plate electrodes | Charging portable devices, power routing in large-scale integrated circuits, smartcards. |
| Magnetodynamic coupling[15] | Short | N.A. | Hz | Rotating magnets | Charging electric vehicles, buses, biomedical implants. |
| Microwaves | Long | High | GHz | Parabolic dishes, phased arrays, rectennas | Solar power satellite, powering drone aircraft, charging wireless devices. |
| Light waves | Long | High | ≥THz | Lasers, photocells, lenses | Powering drone aircraft, powering space elevator climbers. |

Field regions

Electric and magnetic fields are created by charged particles in matter such as electrons. A stationary charge creates an electrostatic field in the space around it. A steady current of charges (direct current, DC) creates a static magnetic field around it. These fields contain energy but cannot carry power because they are static. However, time-varying fields can carry power.[18] Accelerating electric charges, such as are found in an alternating current (AC) of electrons in a wire, create time-varying electric and magnetic fields in the space around them. These fields can exert oscillating forces on the electrons in a receiving "antenna", causing them to move back and forth. This motion represents an alternating current which can be used to power a load.

The oscillating electric and magnetic fields surrounding moving electric charges in an antenna device can be divided into two regions, depending on the distance $D_\text{range}$ from the antenna. The boundary between the regions is somewhat vaguely defined.[11] The fields have different characteristics in these regions, and different technologies are used for transferring power:

- Near-field or nonradiative region – This means the area within about 1 wavelength (λ) of the antenna. In this region the oscillating electric and magnetic fields are separate[12] and power can be transferred via electric fields by capacitive coupling (electrostatic induction) between metal electrodes, or via magnetic fields by inductive coupling (electromagnetic induction) between coils of wire. These fields are not radiative,[20] meaning the energy stays within a short distance of the transmitter. If there is no receiving device or absorbing material within their limited range to "couple" to, no power leaves the transmitter.[22] The range of these fields is short, and depends on the size and shape of the "antenna" devices, which are usually coils of wire. The fields, and thus the power transmitted, decrease exponentially with distance, so if the distance between the two "antennas" $D_\text{range}$ is much larger than the diameter of the "antennas" $D_\text{ant}$, very little power will be received. Therefore, these techniques cannot be used for long-range power transmission.

  Resonance, such as resonant inductive coupling, can increase the coupling between the antennas greatly, allowing efficient transmission at somewhat greater distances, although the fields still decrease exponentially. Therefore the range of near-field devices is conventionally divided into two categories:

  - Short range – up to about one antenna diameter: $D_\text{range} \le D_\text{ant}$. This is the range over which ordinary nonresonant capacitive or inductive coupling can transfer practical amounts of power.

  - Mid-range – up to 10 times the antenna diameter: $D_\text{range} \le 10\,D_\text{ant}$. This is the range over which resonant capacitive or inductive coupling can transfer practical amounts of power.

- Far-field or radiative region – Beyond about 1 wavelength (λ) of the antenna, the electric and magnetic fields are perpendicular to each other and propagate as an electromagnetic wave; examples are radio waves, microwaves, or light waves. This part of the energy is radiative,[20] meaning it leaves the antenna whether or not there is a receiver to absorb it. The portion of energy which does not strike the receiving antenna is dissipated and lost to the system. The amount of power emitted as electromagnetic waves by an antenna depends on the ratio of the antenna's size $D_\text{ant}$ to the wavelength of the waves λ,[28] which is determined by the frequency: $\lambda = c/f$. At low frequencies $f$ where the antenna is much smaller than the size of the waves, $D_\text{ant} \ll \lambda$, very little power is radiated. Therefore the near-field devices above, which use lower frequencies, radiate almost none of their energy as electromagnetic radiation. Antennas about the same size as the wavelength, $D_\text{ant} \approx \lambda$, such as monopole or dipole antennas, radiate power efficiently, but the electromagnetic waves are radiated in all directions (omnidirectionally), so if the receiving antenna is far away, only a small amount of the radiation will hit it. Therefore, these can be used for short-range, inefficient power transmission but not for long-range transmission.[29]

  However, unlike fields, electromagnetic radiation can be focused by reflection or refraction into beams. By using a high-gain antenna or optical system which concentrates the radiation into a narrow beam aimed at the receiver, it can be used for long-range power transmission. From the Rayleigh criterion, to produce the narrow beams necessary to focus a significant amount of the energy on a distant receiver, an antenna must be much larger than the wavelength of the waves used: $D_\text{ant} \gg \lambda = c/f$.[30] Practical beam power devices require wavelengths in the centimeter region or below, corresponding to frequencies above 1 GHz, in the microwave range or above.[8]
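The requirement that a beaming antenna be much larger than the wavelength can be illustrated numerically. The Python sketch below assumes the Rayleigh-criterion angle for a circular aperture, θ ≈ 1.22 λ/D, and a small-angle estimate of the central-lobe diameter at a distant receiver. The function names and the 2.45 GHz, 3 m, 1 km example values are hypothetical and chosen only for illustration.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength in metres: lambda = c / f."""
    return C / freq_hz


def aperture_in_wavelengths(freq_hz: float, aperture_m: float) -> float:
    """Antenna size expressed as a multiple of the wavelength (D_ant / lambda)."""
    return aperture_m / wavelength_m(freq_hz)


def spot_diameter_m(freq_hz: float, aperture_m: float, range_m: float) -> float:
    """Approximate diameter of the central diffraction lobe at range_m.

    Uses the Rayleigh angle theta = 1.22 * lambda / D (radians) and the
    small-angle approximation, so it only makes sense far from the antenna
    and when the aperture is much larger than the wavelength.
    """
    theta = 1.22 * wavelength_m(freq_hz) / aperture_m
    return 2.0 * range_m * theta


if __name__ == "__main__":
    # Hypothetical comparison: a 3 m aperture at 100 kHz and at 2.45 GHz.
    for f in (100e3, 2.45e9):
        print(f"{f:.3g} Hz: D_ant / lambda = {aperture_in_wavelengths(f, 3.0):.3g}")
    print(f"spot at 1 km, 2.45 GHz: {spot_diameter_m(2.45e9, 3.0, 1000.0):.1f} m")
```

At 100 kHz the 3 m antenna is a tiny fraction of a wavelength and radiates very little, while at 2.45 GHz it spans many wavelengths. Even so, the estimated spot at 1 km is on the order of 100 m across, which shows why practical power beaming pushes toward large apertures and short wavelengths.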

Near-field (nonradiative) techniques

At large relative distance, the near-field components of electric and magnetic fields are approximately quasi-static oscillating dipole fields. These fields decrease with the cube of distance: $(D_\text{range}/D_\text{ant})^{-3}$. Since power is proportional to the square of the field strength, the power transferred decreases as $(D_\text{range}/D_\text{ant})^{-6}$, or 60 dB per decade. In other words, if far apart, doubling the distance between the two antennas causes the power received to decrease by a factor of $2^6 = 64$. As a result, inductive and capacitive coupling can only be used for short-range power transfer, within a few times the diameter of the antenna device $D_\text{ant}$. Unlike in a radiative system, where the maximum radiation occurs when the dipole antennas are oriented transverse to the direction of propagation, with dipole fields the maximum coupling occurs when the dipoles are oriented longitudinally.

Inductive coupling
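The sixth-power falloff of dipole near fields described above, which is what limits inductive and capacitive coupling to short range, can be checked with a few lines of arithmetic. The Python sketch below is illustrative only; the function names are assumptions made for this example.

```python
import math


def near_field_power_ratio(distance_factor: float, field_exponent: int = 3) -> float:
    """Received-power ratio after the separation is multiplied by distance_factor.

    The field falls as distance**-field_exponent and power goes as the
    square of the field, so power falls as distance**(-2 * field_exponent).
    """
    return distance_factor ** (-2 * field_exponent)


def to_db(ratio: float) -> float:
    """Express a power ratio in decibels."""
    return 10.0 * math.log10(ratio)


if __name__ == "__main__":
    for factor in (2, 10):
        ratio = near_field_power_ratio(factor)
        print(f"distance x{factor}: power x{ratio:.3g} ({to_db(ratio):.1f} dB)")
```

Doubling the separation gives a ratio of 1/64, about 18 dB of loss, and a tenfold increase gives the 60 dB per decade quoted in the text.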

Inductive coupling is the oldest and most widely used wireless power technology, and virtually the only one so far which is used in commercial products. It is used in inductive charging stands for cordless appliances used in wet environments such as electric toothbrushes[14] and shavers, to reduce the risk of electric shock.[35] Another application area is "transcutaneous" recharging of biomedical prosthetic devices implanted in the human body, such as cardiac pacemakers and insulin pumps, to avoid having wires passing through the skin.[36][37] It is also used to charge electric vehicles such as cars and to either charge or power transit vehicles like buses and trains.[14][16]

However, the fastest-growing use is wireless charging pads that recharge mobile and handheld wireless devices such as laptop and tablet computers, cellphones, digital media players, and video game controllers.[16]

The power transferred increases with frequency[34] and with the mutual inductance between the coils,[9] which depends on their geometry and the distance between them. A widely used figure of merit is the coupling coefficient k. This dimensionless parameter is equal to the fraction of magnetic flux through the transmitter coil L1 that passes through the receiver coil L2 when L2 is open circuited. If the two coils are on the same axis and close together, so that all the magnetic flux from L1 passes through L2, then k = 1 and the link efficiency approaches 100%. The greater the separation between the coils, the more of the magnetic field from the first coil misses the second, and the lower k and the link efficiency are, approaching zero at large separations.[34] The link efficiency and power transferred are roughly proportional to k².[34] In order to achieve high efficiency, the coils must be very close together, within a fraction of the coil diameter Dant,[34] usually within centimeters,[29] with the coils' axes aligned. Wide, flat coil shapes are usually used to increase coupling.[34] Ferrite "flux confinement" cores can confine the magnetic fields, improving coupling and reducing interference to nearby electronics, but they are heavy and bulky, so small wireless devices often use air-core coils.
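As an illustration of the coupling coefficient, the sketch below uses the conventional circuit-theory definition k = M / √(L1·L2), where M is the mutual inductance; that formula and the example coil values are not given in the text above and are assumptions made for the example. The k² scaling is the rough proportionality quoted in the paragraph.

```python
import math


def coupling_coefficient(mutual_h: float, l1_h: float, l2_h: float) -> float:
    """k = M / sqrt(L1 * L2), a dimensionless value between 0 and 1."""
    k = mutual_h / math.sqrt(l1_h * l2_h)
    if not 0.0 <= k <= 1.0 + 1e-12:
        raise ValueError("mutual inductance is not physical for these coils")
    return min(k, 1.0)


def relative_transfer(k: float) -> float:
    """Rough k**2 scaling of transferred power, normalised to 1 at k = 1."""
    return k ** 2


if __name__ == "__main__":
    # Hypothetical pair of 24 uH coils whose mutual inductance falls
    # as they are moved apart.
    for m in (24e-6, 12e-6, 2.4e-6):
        k = coupling_coefficient(m, 24e-6, 24e-6)
        print(f"M = {m * 1e6:.1f} uH -> k = {k:.2f}, "
              f"relative transfer = {relative_transfer(k):.3f}")
```

Halving k cuts the relative transfer to a quarter, which is why loosely coupled nonresonant links lose efficiency so quickly.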

Ordinary inductive coupling can only achieve high efficiency when the coils are very close together, usually adjacent. In most modern inductive systems resonant inductive coupling (described below) is used, in which the efficiency is increased by using resonant circuits. This can achieve high efficiencies at greater distances than nonresonant inductive coupling.
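The short working range of nonresonant coupling follows from the steep near-field falloff noted earlier, in which received power drops as the inverse sixth power of distance. A minimal sketch of that scaling, valid only under the quasi-static dipole assumption and with illustrative function names:

```python
import math


def near_field_power_ratio(distance_ratio: float) -> float:
    """Received-power ratio when the separation is scaled by `distance_ratio`.

    Assumes the quasi-static dipole regime: field ~ 1/r**3, power ~ 1/r**6.
    """
    return distance_ratio ** -6


def to_db(power_ratio: float) -> float:
    """Express a power ratio in decibels."""
    return 10.0 * math.log10(power_ratio)


if __name__ == "__main__":
    for scale in (2.0, 10.0):
        ratio = near_field_power_ratio(scale)
        print(f"distance x{scale:g}: power x{ratio:.3g} ({to_db(ratio):.1f} dB)")
```

Doubling the distance gives a factor of 1/64 (about −18 dB), and a tenfold increase gives −60 dB, matching the 60 dB per decade figure.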

Powermat inductive charging spots in a coffee shop. Customers can set their phones and computers on them to recharge.

Resonant inductive coupling

Diagram of the resonant inductive wireless power system demonstrated by Marin Soljačić's MIT team in 2007. The resonant circuits were coils of copper wire which resonated with their internal capacitance (dotted capacitors) at 10 MHz. Power was coupled into the transmitter resonator, and out of the receiver resonator into the rectifier, by small coils which also served for impedance matching.

Nikola Tesla first discovered resonant coupling during his pioneering experiments in wireless power transfer around the turn of the 20th century, but the possibilities of using resonant coupling to increase transmission range have only recently been explored.[43] In 2007 a team led by Marin Soljačić at MIT used two coupled tuned circuits, each made of a 25 cm self-resonant coil of wire at 10 MHz, to transmit 60 W of power over a distance of 2 meters (6.6 ft) (8 times the coil diameter) at around 40% efficiency. Soljačić founded the company WiTricity (the same name the team used for the technology), which is attempting to commercialize the technology.

The concept behind the WiTricity resonant inductive coupling system is that high Q factor resonators (Highly Resonant) exchange energy at a much higher rate than they lose energy due to internal damping.[24] Therefore, by using resonance, the same amount of power can be transferred at greater distances, using the much weaker magnetic fields out in the peripheral regions ("tails") of the near fields (these are sometimes called evanescent fields[24]). Resonant inductive coupling can achieve high efficiency at ranges of 4 to 10 times the coil diameter (Dant). This is called "mid-range" transfer,[26] in contrast to the "short range" of nonresonant inductive transfer, which can achieve similar efficiencies only when the coils are adjacent. Another advantage is that resonant circuits interact with each other so much more strongly than they do with nonresonant objects that power losses due to absorption in stray nearby objects are negligible.
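The benefit of high-Q resonators can be illustrated with a commonly used textbook expression for the best achievable efficiency of two coupled resonators with an optimally matched load, η = U² / (1 + √(1 + U²))², where U = k·√(Q1·Q2). This expression is not stated in the text above and is used here as an assumption; the k and Q values are hypothetical.

```python
import math


def max_link_efficiency(k: float, q1: float, q2: float) -> float:
    """Upper bound on link efficiency for two coupled resonators.

    Uses the figure of merit U = k * sqrt(Q1 * Q2) and assumes an
    optimally matched load (an idealisation).
    """
    u_sq = k * k * q1 * q2
    return u_sq / (1.0 + math.sqrt(1.0 + u_sq)) ** 2


if __name__ == "__main__":
    k = 0.01  # weak coupling, as at several coil diameters
    for q in (10.0, 100.0, 1000.0):
        print(f"k = {k}, Q = {q:g}: efficiency <= {max_link_efficiency(k, q, q):.3f}")
```

At the same weak coupling of k = 0.01, raising Q from 10 to 1000 moves the bound from a fraction of a percent to roughly 82%, which is the sense in which resonance extends the usable range.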

A drawback of resonant coupling is that at close range, when the two resonant circuits are tightly coupled, the resonant frequency of the system is no longer constant but "splits" into two resonant peaks, so maximum power transfer no longer occurs at the original resonant frequency and the oscillator must be tuned to the new resonance peak. Operating on such a shifted peak is called "single resonant" operation.[49] "Single resonant" systems, in which only the secondary is a tuned circuit, have also been used. The principle behind this is also called "(magnetic) phase synchronization" and has been in practical use for automated guided vehicles (AGVs) in Japan since around 1993.[53] More recently, the Highly Resonant concept presented by MIT researchers has been applied to the secondary-side resonator alone, yielding a high-efficiency, wide-gap, high-power wireless power transfer system that is used for the induction current collector of the SCMaglev.
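The frequency splitting can be sketched for the idealised case of two identical, lossless, magnetically coupled LC circuits, for which the two peaks lie at f0/√(1 + k) and f0/√(1 − k). This model and the example values are assumptions for illustration; real systems with losses and unequal resonators deviate from it.

```python
import math


def split_frequencies(f0_hz: float, k: float) -> tuple[float, float]:
    """Lower and upper resonant peaks of two identical coupled LC circuits.

    Idealised: lossless, identical resonators, purely magnetic coupling.
    """
    if not 0.0 <= k < 1.0:
        raise ValueError("k must satisfy 0 <= k < 1")
    return f0_hz / math.sqrt(1.0 + k), f0_hz / math.sqrt(1.0 - k)


if __name__ == "__main__":
    f0 = 10e6  # 10 MHz, the resonant frequency quoted for the MIT coils
    for k in (0.0, 0.01, 0.1, 0.3):
        lo, hi = split_frequencies(f0, k)
        print(f"k = {k:<4}: peaks at {lo / 1e6:.3f} MHz and {hi / 1e6:.3f} MHz")
```

For weak coupling the two peaks are nearly indistinguishable from f0, while at k = 0.1 they are already about 1 MHz apart, so a fixed-frequency oscillator would sit in the dip between them.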

Resonant technology is currently being widely incorporated in modern inductive wireless power systems.[34] One of the possibilities envisioned for this technology is area wireless power coverage. A coil in the wall or ceiling of a room might be able to wirelessly power lights and mobile devices anywhere in the room, with reasonable efficiency.[35] An environmental and economic benefit of wirelessly powering small devices such as clocks, radios, music players and remote controls is that it could drastically reduce the 6 billion batteries disposed of each year, a large source of toxic waste and groundwater contamination.

Capacitive coupling

In capacitive coupling (electrostatic induction), the conjugate of inductive coupling, energy is transmitted by electric fields[9] between electrodes such as metal plates. The transmitter and receiver electrodes form a capacitor, with the intervening space as the dielectric.[9][12][14][36][55] An alternating voltage generated by the transmitter is applied to the transmitting plate, and the oscillating electric field induces an alternating potential on the receiver plate by electrostatic induction,[9][55] which causes an alternating current to flow in the load circuit. The amount of power transferred increases with the frequency,[55] the square of the voltage, and the capacitance between the plates, which is proportional to the area of the smaller plate and (for short distances) inversely proportional to the separation.[9]

Capacitive coupling has only been used practically in a few low-power applications, because the very high voltages on the electrodes required to transmit significant power can be hazardous and can cause unpleasant side effects such as noxious ozone production. In addition, in contrast to magnetic fields,[24] electric fields interact strongly with most materials, including the human body, due to dielectric polarization.[36] Intervening materials between or near the electrodes can absorb the energy, in the case of humans possibly causing excessive electromagnetic field exposure.[12] However, capacitive coupling has a few advantages over inductive coupling. The field is largely confined between the capacitor plates, reducing interference, which in inductive coupling requires heavy ferrite "flux confinement" cores. Also, alignment requirements between the transmitter and receiver are less critical. Capacitive coupling has recently been applied to charging battery-powered portable devices[56] and is being considered as a means of transferring power between substrate layers in integrated circuits.
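The dependence on plate area, separation, frequency and voltage described above can be sketched with the ideal parallel-plate formula C = ε·A/d and the current through that capacitance, I = 2πfCV. The plate dimensions, drive voltage and frequencies below are hypothetical, and fringing fields and the load circuit are ignored.

```python
import math

EPSILON_0 = 8.854187817e-12  # vacuum permittivity, F/m


def plate_capacitance(area_m2: float, gap_m: float, eps_r: float = 1.0) -> float:
    """Ideal parallel-plate capacitance, ignoring fringing fields."""
    return eps_r * EPSILON_0 * area_m2 / gap_m


def coupling_current(v_rms: float, freq_hz: float, cap_f: float) -> float:
    """RMS current through the coupling capacitance alone: I = 2*pi*f*C*V."""
    return 2.0 * math.pi * freq_hz * cap_f * v_rms


if __name__ == "__main__":
    # Hypothetical 10 cm x 10 cm plates driven at 100 V RMS.
    for gap_mm in (1.0, 5.0):
        c = plate_capacitance(0.01, gap_mm * 1e-3)
        for f in (100e3, 1e6):
            i = coupling_current(100.0, f, c)
            print(f"gap = {gap_mm} mm, f = {f:.0e} Hz: "
                  f"C = {c * 1e12:.1f} pF, I = {i * 1e3:.2f} mA")
```

Because the capacitance of small plates is only tens of picofarads, appreciable current requires either a high frequency or a high voltage, which is the practical limitation noted in the text.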

Two types of circuit have been used:

- Bipolar design: In this type of circuit, there are two transmitter plates and two receiver plates. Each transmitter plate is coupled to a receiver plate. The transmitter oscillator drives the transmitter plates in opposite phase (180° phase difference) by a high alternating voltage, and the load is connected between the two receiver plates. The alternating electric fields induce opposite phase alternating potentials in the receiver plates, and this "push-pull" action causes current to flow back and forth between the plates through the load. A disadvantage of this configuration for wireless charging is that the two plates in the receiving device must be aligned face to face with the charger plates for the device to work.

- Unipolar design: In this type of circuit, the transmitter and receiver have only one active electrode, and either the ground or a large passive electrode serves as the return path for the current. The transmitter oscillator is connected between an active and a passive electrode. The load is also connected between an active and a passive electrode. The electric field produced by the transmitter induces alternating charge displacement in the load dipole through electrostatic induction.

Resonant capacitive coupling

Resonance can also be used with capacitive coupling to extend the range. At the turn of the 20th century, Nikola Tesla did the first experiments with both resonant inductive and capacitive coupling.

Magnetodynamic coupling

In this method, power is transmitted between two rotating armatures, one in the transmitter and one in the receiver, which rotate synchronously, coupled together by a magnetic field generated by permanent magnets on the armatures.[15] The transmitter armature is turned either by or as the rotor of an electric motor, and its magnetic field exerts torque on the receiver armature, turning it. The magnetic field acts like a mechanical coupling between the armatures.[15] The receiver armature produces power to drive the load, either by turning a separate electric generator or by using the receiver armature itself as the rotor in a generator.

This device has been proposed as an alternative to inductive power transfer for noncontact charging of electric vehicles.[15] A rotating armature embedded in a garage floor or curb would turn a receiver armature in the underside of the vehicle to charge its batteries.[15] It is claimed that this technique can transfer power over distances of 10 to 15 cm (4 to 6 inches) with high efficiency, over 90%. Also, the low-frequency stray magnetic fields produced by the rotating magnets produce less electromagnetic interference to nearby electronic devices than the high-frequency magnetic fields produced by inductive coupling systems. A prototype system charging electric vehicles has been in operation at the University of British Columbia since 2012. Other researchers, however, claim that the two energy conversions (electrical to mechanical to electrical again) make the system less efficient than electrical systems like inductive coupling.

Far-field (radiative) techniques

Far-field methods achieve longer ranges, often multiple kilometers, where the distance is much greater than the diameter of the device(s). The main reason for longer ranges with radio wave and optical devices is that electromagnetic radiation in the far field can be made to match the shape of the receiving area (using high-directivity antennas or well-collimated laser beams). The maximum directivity for antennas is physically limited by diffraction.

In general, visible light (from lasers) and microwaves (from purpose-designed antennas) are the forms of electromagnetic radiation best suited to energy transfer.

The dimensions of the components may be dictated by the distance from transmitter to receiver, the wavelength and the Rayleigh criterion or diffraction limit, used in standard radio frequency antenna design, which also applies to lasers. Airy's diffraction limit is also frequently used to determine an approximate spot size at an arbitrary distance from the aperture. Electromagnetic radiation experiences less diffraction at shorter wavelengths (higher frequencies); so, for example, a blue laser is diffracted less than a red one.

The Rayleigh criterion dictates that any radio wave, microwave or laser beam will spread and become weaker and diffuse over distance; the larger the transmitter antenna or laser aperture compared to the wavelength of radiation, the tighter the beam and the less it will spread as a function of distance (and vice versa). Smaller antennae also suffer from excessive losses due to side lobes. However, the concept of laser aperture considerably differs from an antenna. Typically, a laser aperture much larger than the wavelength induces multi-moded radiation and mostly collimators are used before emitted radiation couples into a fiber or into space.

Ultimately, beamwidth is physically determined by diffraction due to the dish size in relation to the wavelength of the electromagnetic radiation used to make the beam.

Microwave power beaming can be more efficient than lasers, and is less prone to atmospheric attenuation caused by dust or water vapor.

Here, the power levels are calculated by combining the above parameters together, and adding in the gains and losses due to the antenna characteristics and the transparency and dispersion of the medium through which the radiation passes. That process is known as calculating a link budget.
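A link budget of this kind is often sketched with the Friis free-space relation, in which received power equals transmitted power plus antenna gains minus free-space path loss and any other losses, all in decibels. The function names and the example numbers below are assumptions for illustration. The relation holds only in the far field, and it overestimates received power when the receiving aperture captures a large part of the beam, as in focused power-beaming systems.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss between isotropic antennas: 20*log10(4*pi*R/lambda)."""
    wavelength = C / freq_hz
    return 20.0 * math.log10(4.0 * math.pi * distance_m / wavelength)


def received_power_dbm(tx_power_dbm: float, tx_gain_dbi: float,
                       rx_gain_dbi: float, distance_m: float,
                       freq_hz: float, other_losses_db: float = 0.0) -> float:
    """Friis link budget in decibel form (far field only)."""
    return (tx_power_dbm + tx_gain_dbi + rx_gain_dbi
            - free_space_path_loss_db(distance_m, freq_hz)
            - other_losses_db)


if __name__ == "__main__":
    # Hypothetical link: 1 kW (60 dBm) at 2.45 GHz over 1 km with
    # 30 dBi antennas at each end and 1 dB of atmospheric loss.
    p_rx = received_power_dbm(60.0, 30.0, 30.0, 1000.0, 2.45e9, 1.0)
    print(f"path loss = {free_space_path_loss_db(1000.0, 2.45e9):.2f} dB")
    print(f"received power = {p_rx:.2f} dBm")
```

The path loss over 1 km at 2.45 GHz is about 100 dB, which shows why high-gain antennas at both ends are essential for any useful far-field power transfer.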

Microwaves

An artist's depiction of a solar satellite that could send electric energy by microwaves to a space vessel or planetary surface.

Power beaming by microwaves has the difficulty that, for most space applications, the required aperture sizes are very large due to diffraction limiting antenna directionality. For example, the 1978 NASA study of solar power satellites required a 1-kilometre-diameter (0.62 mi) transmitting antenna and a 10-kilometre-diameter (6.2 mi) receiving rectenna for a microwave beam at 2.45 GHz.[65] These sizes can be somewhat decreased by using shorter wavelengths, although short wavelengths may have difficulties with atmospheric absorption and beam blockage by rain or water droplets. Because of the "thinned-array curse", it is not possible to make a narrower beam by combining the beams of several smaller satellites.
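The aperture sizes quoted for the NASA study can be checked roughly against the Airy diffraction limit, which puts the diameter of the central spot (to its first null) at about 2.44·λ·R/D for a circular aperture of diameter D at range R. The geostationary altitude of 35,786 km used below is an assumption; the text does not state the orbit.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s
GEO_ALTITUDE_M = 35_786e3  # assumed geostationary altitude, not from the text


def airy_spot_diameter_m(aperture_m: float, distance_m: float,
                         freq_hz: float) -> float:
    """Diameter of the Airy disk out to its first null: 2.44 * lambda * R / D."""
    wavelength = C / freq_hz
    return 2.44 * wavelength * distance_m / aperture_m


if __name__ == "__main__":
    spot = airy_spot_diameter_m(1000.0, GEO_ALTITUDE_M, 2.45e9)
    print(f"2.45 GHz, 1 km antenna: spot diameter = {spot / 1e3:.1f} km")
    # Doubling the frequency (halving the wavelength) halves the spot.
    spot_hi = airy_spot_diameter_m(1000.0, GEO_ALTITUDE_M, 4.9e9)
    print(f"4.9 GHz, 1 km antenna: spot diameter = {spot_hi / 1e3:.1f} km")
```

Under these assumptions the 1 km transmitter produces a spot of about 10.7 km on the ground, consistent with the 10 km rectenna in the study, and a shorter wavelength shrinks the spot in proportion.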

For earthbound applications, a large-area receiving array 10 km in diameter allows large total power levels to be used while operating at the low power density suggested for human electromagnetic exposure safety. A human-safe power density of 1 mW/cm² distributed across a 10 km diameter area corresponds to a total power on the order of 750 megawatts (about 785 MW if the full circular area is uniformly illuminated). This is the power level found in many modern electric power plants.
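The total power figure is simply power density multiplied by area. A minimal sketch of the arithmetic, assuming a circular receiving area with perfectly uniform illumination (a real beam tapers toward the edge):

```python
import math


def total_power_w(density_mw_per_cm2: float, diameter_km: float) -> float:
    """Total power over a uniformly illuminated circular area."""
    radius_cm = diameter_km * 1e5 / 2.0  # 1 km = 1e5 cm
    area_cm2 = math.pi * radius_cm ** 2
    return density_mw_per_cm2 * 1e-3 * area_cm2  # mW -> W


if __name__ == "__main__":
    power = total_power_w(1.0, 10.0)
    print(f"1 mW/cm^2 over a 10 km circle: {power / 1e6:.0f} MW")
```

A uniform 1 mW/cm² over a full 10 km circle works out to roughly 785 MW, the same order as the figure quoted above.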

Following World War II, which saw the development of high-power microwave emitters known as cavity magnetrons, the idea of using microwaves to transfer power was researched. By 1964, a miniature helicopter propelled by microwave power had been demonstrated.[66]

Japanese researcher Hidetsugu Yagi also investigated wireless energy transmission using a directional array antenna that he designed. In February 1926, Yagi and his colleague Shintaro Uda published their first paper on the tuned high-gain directional array now known as the Yagi antenna. While it did not prove to be particularly useful for power transmission, this beam antenna has been widely adopted throughout the broadcasting and wireless telecommunications industries due to its excellent performance characteristics.[67]

Wireless high power transmission using microwaves is well proven. Experiments in the tens of kilowatts have been performed at Goldstone in California in 1975 and more recently (1997) at Grand Bassin on Reunion Island.[71] These methods achieve distances on the order of a kilometer.

Under experimental conditions, microwave conversion efficiency was measured to be around 54%.[72]

A change to 24 GHz has been suggested as microwave emitters similar to LEDs have been made with very high quantum efficiencies using negative resistance, i.e., Gunn or IMPATT diodes, and this would be viable for short range links.

In 2013, inventor Hatem Zeine demonstrated how wireless power transmission using phased array antennas can deliver electrical power up to 30 feet. It uses the same radio frequencies as WiFi.[73][74]

In 2015, researchers at the University of Washington introduced power over Wi-Fi, which trickle-charges batteries and powers battery-free cameras and temperature sensors using transmissions from Wi-Fi routers. Wi-Fi signals were shown to power battery-free temperature and camera sensors at ranges of up to 20 feet. It was also shown that Wi-Fi can be used to wirelessly trickle-charge nickel–metal hydride and lithium-ion coin-cell batteries at distances of up to 28 feet.

In 2017, the Federal Communications Commission (FCC) certified the first mid-field radio frequency (RF) transmitter of wireless power.

Lasers

Advantages compared to other wireless methods are:[81]

- Collimated monochromatic wavefront propagation allows narrow beam cross-section area for transmission over large distances.

- Compact size: solid state lasers fit into small products.

- No radio-frequency interference to existing radio communication such as Wi-Fi and cell phones.

- Access control: only receivers hit by the laser receive power.

Drawbacks include:

- Laser radiation is hazardous. Low power levels can blind humans and other animals. High power levels can kill through localized spot heating.

- Conversion between electricity and light is limited. Photovoltaic cells achieve 40%–50% efficiency.[82] (The conversion efficiency of laser light into electricity is much higher than that of sunlight into electricity.)

- Atmospheric absorption, and absorption and scattering by clouds, fog, rain, etc., causes up to 100% losses.

- Requires a direct line of sight with the target. (Instead of being beamed directly onto the receiver, the laser light can also be guided by an optical fiber. Then one speaks of power-over-fiber technology.)

Other details include propagation,[88] and the coherence and the range limitation problem.[89]

Geoffrey Landis is one of the pioneers of solar power satellites[93] and laser-based transfer of energy especially for space and lunar missions. The demand for safe and frequent space missions has resulted in proposals for a laser-powered space elevator.[94][95]

NASA's Dryden Flight Research Center demonstrated a lightweight unmanned model plane powered by a laser beam.[96] This proof-of-concept demonstrates the feasibility of periodic recharging using the laser beam system.

Atmospheric plasma channel coupling

In atmospheric plasma channel coupling, energy is transferred between two electrodes by electrical conduction through ionized air.[97] When an electric field gradient exists between the two electrodes, exceeding 34 kilovolts per centimeter at sea level atmospheric pressure, an electric arc occurs. This atmospheric dielectric breakdown results in the flow of electric current along a random trajectory through an ionized plasma channel between the two electrodes. An example of this is natural lightning, where one electrode is a virtual point in a cloud and the other is a point on Earth. Laser Induced Plasma Channel (LIPC) research is presently underway using ultrafast lasers to artificially promote development of the plasma channel through the air, directing the electric arc and guiding the current across a specific path in a controllable manner.[99] The laser energy reduces the atmospheric dielectric breakdown voltage, and the air is made less insulating by superheating, which lowers the density of the filament of air.[100]

This new process is being explored for use as a laser lightning rod and as a means to trigger lightning bolts from clouds for natural lightning channel studies,[101] for artificial atmospheric propagation studies, as a substitute for conventional radio antennas,[102] for applications associated with electric welding and machining, for diverting power from high-voltage capacitor discharges, for directed-energy weapon applications employing electrical conduction through a ground return path, and for electronic jamming.

Energy harvesting

In the context of wireless power, energy harvesting, also called power harvesting or energy scavenging, is the conversion of ambient energy from the environment to electric power, mainly to power small autonomous wireless electronic devices.[110] The ambient energy may come from stray electric or magnetic fields or radio waves from nearby electrical equipment, light, thermal energy (heat), or kinetic energy such as vibration or motion of the device.[110] Although the efficiency of conversion is usually low and the power gathered often minuscule (milliwatts or microwatts),[110] it can be adequate to run or recharge small micropower wireless devices such as remote sensors, which are proliferating in many fields.[111][110] This new technology is being developed to eliminate the need for battery replacement or charging of such wireless devices, allowing them to operate completely autonomously.

Flash Back

19th century developments and dead ends

The 19th century saw many developments of theories, and counter-theories, on how electrical energy might be transmitted. In 1826 André-Marie Ampère found Ampère's circuital law, showing that electric current produces a magnetic field. In 1831 Michael Faraday described, with his law of induction, the electromotive force driving a current in a conductor loop by a time-varying magnetic flux. The fact that electrical energy could be transmitted at a distance without wires was actually observed by many inventors and experimenters, but, lacking a coherent theory, they attributed these phenomena vaguely to electromagnetic induction.[117] A concise explanation of these phenomena would come from the 1860s with James Clerk Maxwell's equations, which established a theory unifying electricity and magnetism into electromagnetism and predicted the existence of electromagnetic waves as the "wireless" carrier of electromagnetic energy. Around 1884 John Henry Poynting defined the Poynting vector and gave Poynting's theorem, which describe the flow of power across an area within electromagnetic radiation and allow for a correct analysis of wireless power transfer systems. This was followed by Heinrich Rudolf Hertz's 1888 validation of the theory, which included the evidence for radio waves.

During the same period two schemes of wireless signaling were put forward by William Henry Ward (1871) and Mahlon Loomis (1872) that were based on the erroneous belief that there was an electrified atmospheric stratum accessible at low altitude. Both inventors' patents noted that this layer, connected with a return path using "Earth currents", would allow for wireless telegraphy as well as supply power for the telegraph, doing away with artificial batteries, and could also be used for lighting, heat, and motive power. A more practical demonstration of wireless transmission via conduction came in Amos Dolbear's 1879 magneto electric telephone, which used ground conduction to transmit over a distance of a quarter of a mile.

Tesla

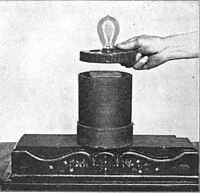

Tesla demonstrating wireless transmission by "electrostatic induction" during an 1891 lecture at Columbia College. The two metal sheets are connected to a Tesla coil oscillator, which applies high-voltage radio frequency alternating current. An oscillating electric field between the sheets ionizes the low-pressure gas in the two long Geissler tubes in his hands, causing them to glow in a manner similar to neon tubes.

Near-field and non-radiative technologies

Inductive power transfer between nearby wire coils was the earliest wireless power technology to be developed, existing since the transformer was developed in the 1800s. Induction heating has been used since the early 1900s. With the advent of cordless devices, induction charging stands have been developed for appliances used in wet environments, like electric toothbrushes and electric razors, to eliminate the hazard of electric shock. One of the earliest proposed applications of inductive transfer was to power electric locomotives. In 1892 Maurice Hutin and Maurice Leblanc patented a wireless method of powering railroad trains using resonant coils inductively coupled to a track wire at 3 kHz. The first passive RFID (Radio Frequency Identification) technologies were invented by Mario Cardullo (1973) and Koelle et al. (1975) and by the 1990s were being used in proximity cards and contactless smartcards.

The proliferation of portable wireless communication devices such as mobile phones, tablets, and laptop computers in recent decades is currently driving the development of mid-range wireless powering and charging technology to eliminate the need for these devices to be tethered to wall plugs during charging.[145] The Wireless Power Consortium was established in 2008 to develop interoperable standards across manufacturers. Its Qi inductive power standard, published in August 2009, enables high-efficiency charging and powering of portable devices of up to 5 watts over distances of 4 cm (1.6 inches). The wireless device is placed on a flat charger plate (which can be embedded in table tops at cafes, for example) and power is transferred from a flat coil in the charger to a similar one in the device.

In 2007, a team led by Marin Soljačić at MIT used a dual resonance transmitter with a 25 cm diameter secondary tuned to 10 MHz to transfer 60 W of power to a similar dual resonance receiver over a distance of 2 meters (6.6 ft) (eight times the transmitter coil diameter) at around 40% efficiency. In 2008 the team of Greg Leyh and Mike Kennan of Nevada Lightning Lab used a grounded dual resonance transmitter with a 57 cm diameter secondary tuned to 60 kHz and a similar grounded dual resonance receiver to transfer power through coupled electric fields with an earth return circuit over a distance of 12 meters (39 ft).

Microwaves and lasers

Before World War II, little progress was made in wireless power transmission. Radio was developed for communication uses, but could not be used for power transmission because the relatively low-frequency radio waves spread out in all directions and little energy reached the receiver. In radio communication, an amplifier at the receiver intensifies a weak signal using energy from another source. For power transmission, efficient transmission required transmitters that could generate higher-frequency microwaves, which can be focused in narrow beams towards a receiver.

The development of microwave technology during World War II, such as the klystron and magnetron tubes and parabolic antennas, made radiative (far-field) methods practical for the first time, and the first long-distance wireless power transmission was achieved in the 1960s by William C. Brown. In 1964 Brown invented the rectenna, which could efficiently convert microwaves to DC power, and in the same year demonstrated it with the first wireless-powered aircraft, a model helicopter powered by microwaves beamed from the ground. A major motivation for microwave research in the 1970s and 80s was to develop a solar power satellite. Conceived in 1968 by Peter Glaser, this would harvest energy from sunlight using solar cells and beam it down to Earth as microwaves to huge rectennas, which would convert it to electrical energy on the electric power grid. In landmark 1975 experiments as technical director of a JPL/Raytheon program, Brown demonstrated long-range transmission by beaming 475 W of microwave power to a rectenna a mile away, with a microwave-to-DC conversion efficiency of 54%. At NASA's Jet Propulsion Laboratory he and Robert Dickinson transmitted 30 kW DC output power across 1.5 km with 2.38 GHz microwaves from a 26 m dish to a 7.3 × 3.5 m rectenna array. The incident-RF-to-DC conversion efficiency of the rectenna was 80%. In 1983 Japan launched MINIX (Microwave Ionosphere Nonlinear Interaction Experiment), a rocket experiment to test transmission of high-power microwaves through the ionosphere.

In recent years a focus of research has been the development of wireless-powered drone aircraft, which began in 1959 with the Dept. of Defense's RAMP (Raytheon Airborne Microwave Platform) project which sponsored Brown's research. In 1987 Canada's Communications Research Center developed a small prototype airplane called Stationary High Altitude Relay Platform (SHARP) to relay telecommunication data between points on earth similar to a communication satellite. Powered by a rectenna, it could fly at 13 miles (21 km) altitude and stay aloft for months. In 1992 a team at Kyoto University built a more advanced craft called MILAX (MIcrowave Lifted Airplane eXperiment).

In 2003 NASA flew the first laser powered aircraft. The small model plane's motor was powered by electricity generated by photocells from a beam of infrared light from a ground-based laser, while a control system kept the laser pointed at the plane.

The World Wireless System was a telecommunications and electrical power delivery system proposed at the turn of the 20th century by inventor Nikola Tesla, based on his theories of using Earth and its atmosphere as electrical conductors. He claimed this system would allow for "the transmission of electric energy without wires" on a global scale[1] as well as point-to-point wireless telecommunications and broadcasting. From the mid-1890s on he made public statements citing two related methods to accomplish this. By the end of 1900 he had convinced banker J. P. Morgan to finance construction of a wireless station (eventually sited at Wardenclyffe) based on his ideas, intended to transmit messages across the Atlantic to England and to ships at sea. Almost as soon as the contract was signed, Tesla decided to scale up the facility to include his ideas of terrestrial wireless power transmission, to better compete with Guglielmo Marconi's radio-based telegraph system.[2] Morgan refused to fund the changes and, when no additional investment capital became available, the project at Wardenclyffe was abandoned in 1906, never to become operational.

During this period Tesla filed numerous patents associated with the basic functions of his system, including transformer design, transmission methods, tuning circuits, and methods of signaling. He also described a plan to have some thirty Wardenclyffe-style telecommunications stations positioned around the world to be tied into existing telephone and telegraph systems. He would continue to elaborate to the press and in his writings for the next few decades on the system's capabilities and how it was superior to radio-based systems.

Despite his claims of having "carried on practical experiments in wireless transmission",[3] there is no documentation that he ever transmitted power beyond relatively short distances, and modern scientific opinion is generally that his wireless power scheme would not have worked.

Oscillation frequency

The impedance of a Tesla transformer as a function of frequency measured by a network analyzer.[28] The coil acts as a transmission line, exhibiting multiple resonant frequencies.

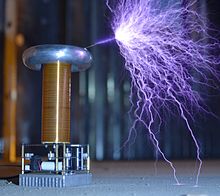

The resonant frequency of Tesla coils is in the low radio frequency (RF) range, usually between 50 kHz and 1 MHz. However, because of the impulsive nature of the spark they produce broadband radio noise, and without shielding can be a significant source of RFI, interfering with nearby radio and television reception.
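As a rough illustration of where such frequencies come from, the secondary circuit can be approximated as a lumped LC resonator with f = 1 / (2π√(LC)). This is a simplification, since the coil actually behaves as a transmission line with several resonances, and the inductance and capacitance values below are hypothetical.

```python
import math


def resonant_frequency_hz(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency of an ideal lumped LC circuit."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


if __name__ == "__main__":
    # Hypothetical secondary: 20 mH winding with 20 pF of combined
    # self-capacitance and top-load capacitance.
    f = resonant_frequency_hz(20e-3, 20e-12)
    print(f"lumped-model resonance = {f / 1e3:.1f} kHz")
```

These example values give a resonance near 250 kHz, inside the 50 kHz to 1 MHz range quoted above.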

Output voltage

The above peak voltage is only achieved in coils in which air discharges do not occur; in coils which produce sparks, like entertainment coils, the peak voltage on the terminal is limited to the voltage at which the air breaks down and becomes conductive. As the output voltage increases during each voltage pulse, it reaches the point where the air next to the high voltage terminal ionizes, and coronas, brush discharges, and streamer arcs break out from the terminal. This happens when the electric field strength exceeds the dielectric strength of the air, about 30 kV per centimeter. Since the electric field is greatest at sharp points and edges, air discharges start at these points on the high voltage terminal. The voltage on the high voltage terminal cannot increase above the air breakdown voltage, because additional electric charge pumped into the terminal from the secondary winding just escapes into the air. The output voltage of open-air Tesla coils is limited to several million volts by air breakdown, but higher voltages can be achieved by coils immersed in pressurized tanks of insulating oil.

The top load or "toroid" electrode

If the top electrode is large and smooth enough, the electric field at its surface may never get high enough even at the peak voltage to cause air breakdown, and air discharges will not occur. Some entertainment coils have a sharp "spark point" projecting from the torus to start discharges.
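To get a feel for how electrode size sets the breakdown limit, the following minimal sketch models the top load as an isolated smooth sphere, for which the surface field is E = V / r. That geometry is an assumption made for simplicity (a real toroid has a different field distribution), and the function name is hypothetical. The 30 kV/cm figure is the dielectric strength of air quoted above.

```python
# Dielectric strength of air from the text, in volts per centimeter.
E_BREAKDOWN_V_PER_CM = 30e3


def max_voltage_before_breakdown(radius_cm: float) -> float:
    """Highest voltage an isolated smooth sphere can hold before the
    surface field E = V / r reaches the breakdown field of air.

    Assumption: sphere far from other conductors; a toroid will differ.
    """
    return E_BREAKDOWN_V_PER_CM * radius_cm


for radius_cm in (1.0, 10.0, 30.0):
    kilovolts = max_voltage_before_breakdown(radius_cm) / 1e3
    print(f"radius {radius_cm:5.1f} cm -> breakdown near {kilovolts:.0f} kV")
```

Under this simplified model the sustainable voltage scales linearly with radius, which is consistent with large, smooth electrodes suppressing discharges and a sharp "spark point" (very small radius) starting them.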

Types

The term "Tesla coil" is applied to a number of high voltage resonant transformer circuits. Tesla coil circuits can be classified by the type of "excitation" they use, that is, the type of circuit used to apply current to the primary winding of the resonant transformer:

- Spark-excited or Spark Gap Tesla Coil (SGTC) - This type uses a spark gap to switch pulses of current through the primary, exciting oscillation in the transformer. This pulsed (disruptive) drive creates a pulsed high voltage output. Spark gaps have disadvantages due to the high primary currents they must handle. They produce a very loud noise while operating, noxious ozone gas, and high temperatures which often require a cooling system. The energy dissipated in the spark also reduces the Q factor and the output voltage.

- Static spark gap - This is the most common type, which was described in detail in the previous section. It is used in most entertainment coils. An AC voltage from a high voltage supply transformer charges a capacitor, which discharges through the spark gap. The spark rate is not adjustable but is determined by the line frequency. Multiple sparks may occur on each half-cycle, so the pulses of output voltage may not be equally-spaced.

- Static triggered spark gap - Commercial and industrial circuits often apply a DC voltage from a power supply to charge the capacitor, and use high voltage pulses generated by an oscillator applied to a triggering electrode to trigger the spark.[15] This allows control of the spark rate and exciting voltage. Commercial spark gaps are often enclosed in an insulating gas atmosphere such as sulfur hexafluoride, reducing the length and thus the energy loss in the spark.

- Rotary spark gap - These use a spark gap consisting of electrodes around the periphery of a wheel rotated at high speed by a motor, which create sparks when they pass by a stationary electrode. Tesla used this type on his big coils, and they are used today on large entertainment coils. The rapid separation speed of the electrodes quenches the spark quickly, allowing "first notch" quenching, making possible higher voltages. The wheel is usually driven by a synchronous motor, so the sparks are synchronized with the AC line frequency, the spark occurring at the same point on the AC waveform on each cycle, so the primary pulses are repeatable.

- Switched or Solid State Tesla Coil (SSTC) - These use power semiconductor devices, usually thyristors or transistors such as MOSFETs or IGBTs,[15] to switch pulses of current from a DC power supply through the primary winding. They provide pulsed (disruptive) excitation without the disadvantages of a spark gap: the loud noise, high temperatures, and poor efficiency. The voltage, frequency, and excitation waveform can be finely controlled. SSTCs are used in most commercial, industrial, and research applications[15] as well as higher quality entertainment coils.

- Single resonant solid state Tesla coil (SRSSTC) - In this circuit the primary does not have a capacitor and so is not a tuned circuit; only the secondary is. The pulses of current to the primary from the switching transistors excite resonance in the secondary tuned circuit. Single tuned SSTCs are simpler, but don't have as high a Q and cannot produce as high voltage from a given input power as the DRSSTC.

- Dual Resonant Solid State Tesla Coil (DRSSTC) - The circuit is similar to the double tuned spark excited circuit, except in place of the spark gap semiconductor switches are used. This functions similarly to the double tuned spark-excited circuit. Since both primary and secondary are resonant it has higher Q and can generate higher voltage for a given input power than the SRSSTC.

- Singing Tesla coil or musical Tesla coil - This is a Tesla coil which can be played like a musical instrument, with its high voltage discharges reproducing simple musical tones. The drive current pulses applied to the primary are modulated at an audio rate by a solid state "interrupter" circuit, causing the arc discharge from the high voltage terminal to emit sounds. Only tones and simple chords have been produced so far; the coil cannot function as a loudspeaker, reproducing complex music or voice sounds. The sound output is controlled by a keyboard or MIDI file applied to the circuit through a MIDI interface. Two modulation techniques have been used: AM (amplitude modulation of the exciting voltage) and PFM (pulse-frequency modulation). These are mainly built as novelties for entertainment.

- Continuous wave - In these the transformer is driven by a feedback oscillator, which applies a sinusoidal current to the transformer. The primary tuned circuit serves as the tank circuit of the oscillator, and the circuit resembles a radio transmitter. Unlike the previous circuits which generate a pulsed output, they generate a continuous sine wave output. Power vacuum tubes are often used as active devices instead of transistors because they are more robust and tolerant of overloads. In general, continuous excitation produces lower output voltages from a given input power than pulsed excitation.

- Two coil or double-resonant circuits - Virtually all present Tesla coils use the two coil resonant transformer, consisting of a primary winding to which current pulses are applied, and a secondary winding that produces the high voltage, invented by Tesla in 1891. The term "Tesla coil" normally refers to these circuits.

- Three coil, triple-resonant, or magnifier circuits - These are circuits with three coils, based on Tesla's "magnifying transmitter" circuit which he began experimenting with sometime before 1898 and installed in his Colorado Springs lab 1899-1900, and patented in 1902. They consist of a two coil air-core step-up transformer similar to the Tesla transformer, with the secondary connected to a third coil not magnetically coupled to the others, called the "extra" or "resonator" coil, which is series-fed and resonates with its own capacitance. The presence of three energy-storing tank circuits gives this circuit more complicated resonant behavior. It is the subject of research, but has been used in few practical applications.

Primary switching

Modern transistor or vacuum tube Tesla coils do not use a primary spark gap. Instead, the transistor(s) or vacuum tube(s) provide the switching or amplifying function necessary to generate RF power for the primary circuit. Solid-state Tesla coils use the lowest primary operating voltage, typically between 155 and 800 volts, and drive the primary winding using either a single, half-bridge, or full-bridge arrangement of bipolar transistors, MOSFETs or IGBTs to switch the primary current. Vacuum tube coils typically operate with plate voltages between 1500 and 6000 volts, while most spark gap coils operate with primary voltages of 6,000 to 25,000 volts. The primary winding of a traditional transistor Tesla coil is wound around only the bottom portion of the secondary coil. This configuration illustrates operation of the secondary as a pumped resonator. The primary 'induces' alternating voltage into the bottom-most portion of the secondary, providing regular 'pushes' (similar to providing properly timed pushes to a playground swing). Additional energy is transferred from the primary to the secondary inductance and top-load capacitance during each "push", and secondary output voltage builds (called 'ring-up'). An electronic feedback circuit is usually used to adaptively synchronize the primary oscillator to the growing resonance in the secondary, and this is the only tuning consideration beyond the initial choice of a reasonable top-load.

Demonstration of the Nevada Lightning Laboratory 1:12 scale prototype twin Tesla Coil at Maker Faire 2008

Spectrum analyzer as a microwave instrument

Spectrum analysis primarily measures power, frequency, and noise. It is concerned primarily with characterizing signal components (such as its spurious and harmonic components, modulation, noise, etc.) Spectrum analysis locates frequencies where microwave energy exists.

Distortion measurements

Distortion measurement is an area where the spectrum analyzer makes a significant contribution. There are two basic types of distortion that are usually specified by the manufacturer: harmonic distortion and two-tone, third-order intermodulation distortion. The third-order intermodulation products are represented by: 2f1 - f2 and 2f2 - f1, where f1 and f2 are the two-tone input signals. The HP 8565A can measure harmonic distortion products up to 100 dB down in the 1.7 to 22 GHz frequency range. Third-order intermodulation products can also be measured up to 100 dB down, depending on signal separation and frequency range. In all, the HP 8565A is capable of making a wide variety of distortion measurements with speed and precision.
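The product frequencies 2f1 - f2 and 2f2 - f1 are easy to compute ahead of a measurement so you know where to look on the display. The sketch below is a minimal illustration; the function name and the two tone frequencies (5.900 GHz and 6.000 GHz) are hypothetical values chosen for the example.

```python
def third_order_products(f1_hz: int, f2_hz: int) -> tuple[int, int]:
    """Return the two third-order intermodulation frequencies
    (2*f1 - f2, 2*f2 - f1) for a two-tone input."""
    return 2 * f1_hz - f2_hz, 2 * f2_hz - f1_hz


# Hypothetical two-tone input, 100 MHz apart (integer hertz avoids rounding).
f1_hz = 5_900_000_000
f2_hz = 6_000_000_000

lower_hz, upper_hz = third_order_products(f1_hz, f2_hz)
print(f"2f1 - f2 = {lower_hz / 1e9:.3f} GHz")
print(f"2f2 - f1 = {upper_hz / 1e9:.3f} GHz")
```

Each product sits one tone spacing outside the pair, which is why third-order products are hard to filter out: they fall close to the wanted signals.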

Distortion in amplifiers

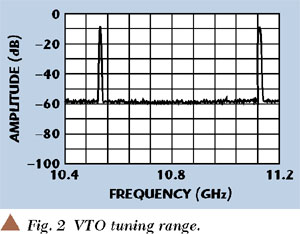

All amplifiers generate some distortion at the output and these distortion products can be significant if the amplifier is overdriven with a high-level input signal. The test setup in Figure 1 was used to measure the third-order intermodulation products of a microwave FET (field-effect transistor) amplifier. Directional couplers and attenuators were used to provide isolation between sources. Figure 2 is a CRT photo of a two-tone, third-order intermodulation measurement. The third-order products P(2f1 - f2) and P(2f2 - f1) are 50 dB below the two-tone signals P(f1) and P(f2). The difference between the power levels of the two-tone signals and the intermodulation products is known as the "intermodulation ratio". Note that if you adjust the power levels of the two tones P(f1) and P(f2) to be exactly equal in power, the power levels of the intermodulation products will be exactly equal as well. So tweak the power levels of P(f1) and P(f2) carefully or you will have several choices on the display to calculate the intermodulation ratio from.

Third-order intercept point measurement

If you measured and plotted the power levels P(f1) and P(2f2-f1) versus input power, eventually at some power level they would be equal. (We promise to cover this in more depth in a future chapter on receivers.) This is known as the "third-order intercept point", sometimes abbreviated TOI, sometimes IP3, or even other weird ways. The higher the TOI, the more power an amplifier can handle. The beautiful thing about the analyzer display in Figure 2 is that the amplifier's TOI can be calculated from this one picture. How is this possible without taking multiple sets of data and plotting the relationship between P(f1) and P(2f2-f1)? A phenomenon you need to be aware of is that the third-order intermodulation products increase 3 dB for every 1 dB that the input power of the two tones is increased, while the two-tone power levels only increase 1 dB/dB (thanks for the correction, Barrett!). Why does this happen? Let some scientist worry about that, just be glad that it does and use it to calculate the TOI from the data in Figure 2:

P(f1) = 0 dBm

P(2f2-f1) = -50 dBm

Intermodulation Ratio = P(f1) - P(2f2-f1) = 0 dBm - (-50 dBm) = 50 dB

TOI = P(f1) + 1/2 (Intermodulation Ratio) = 0 dBm + 25 dB = 25 dBm

How close should the two frequencies be for measuring intermodulation ratio and TOI? Why argue with an HP manual? Use 100 MHz separation, with 1 MHz resolution bandwidth.

One word of caution about TOI measurements: the actual intercept point is only a mathematical construct; you should never try to measure it directly. Chances are the DUT will blow up well before the four output tones are all equal in power!
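The single-picture TOI calculation can be sketched in a few lines. The reasoning behind the factor of one half: the products rise 3 dB/dB while the tones rise 1 dB/dB, so the gap between them closes at 2 dB/dB, and an intermodulation ratio of 50 dB is used up after another 25 dB of tone power. The function names below are hypothetical, and the model assumes the ideal 3:1 slope holds all the way up, which a real device will not do near compression.

```python
def toi_dbm(p_tone_dbm: float, p_im3_dbm: float) -> float:
    """Output third-order intercept from one two-tone measurement:
    TOI = P(tone) + (P(tone) - P(IM3)) / 2."""
    intermod_ratio_db = p_tone_dbm - p_im3_dbm
    return p_tone_dbm + intermod_ratio_db / 2.0


def im3_dbm_at(p_tone_dbm: float, toi: float) -> float:
    """Extrapolated IM3 level for a given tone level, assuming the ideal
    3 dB/dB slope: P(IM3) = 3 * P(tone) - 2 * TOI."""
    return 3.0 * p_tone_dbm - 2.0 * toi


# Values read from the measurement described in the text.
toi = toi_dbm(p_tone_dbm=0.0, p_im3_dbm=-50.0)
print(f"TOI = {toi} dBm")

# Consistency check: the extrapolated lines meet at the intercept point.
print(f"IM3 at the intercept: {im3_dbm_at(toi, toi)} dBm")
```

The second function is the same straight-line extrapolation run in the other direction, so it can also predict the product level at a lower drive level.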

Figure 1. Two-tone test setup

RES BW 1 MHz, REF LEVEL 0 dBm, LOG SCALE 10 dB/

FREQUENCY 5.950 GHz, FREQ SPAN/DIV 50 MHz

Figure 2. Two-tone, third-order intermodulation products

Distortion in mixers

Mixers utilize the non-linear characteristics of an active or passive device to achieve a desired frequency conversion. This results in some distortion at the output due to the inherent non-linearity of the device. Figure 3 illustrates the test setup and CRT photograph of a typical mixer measurement.

Figure 3. Mixer measurement

Conversion loss (SSB):

RFin - IF = (-30) - (-40) = 10 dB

LO to IF Isolation:

LOin - LOout(IF) = (+5) - (-25) = 30 dB

RF to IF Isolation:

RFin - RFout(IF) = (-30) - (-57) = 27 dB

Third-Order Distortion Product (2 LO - RF) = -64 dBm @ 600 MHz.
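The mixer figures above are all simple differences of power levels read from the display. A minimal sketch of the arithmetic follows, using the levels quoted above; the variable names are hypothetical and the levels are assumed to be in dBm, consistent with the distortion product quoted in dBm.

```python
# Levels read from the mixer measurement (assumed to be in dBm).
rf_in_dbm = -30.0          # RF signal applied to the mixer
if_out_dbm = -40.0         # desired IF product at the IF port
lo_in_dbm = 5.0            # LO drive applied to the mixer
lo_at_if_port_dbm = -25.0  # LO leakage seen at the IF port
rf_at_if_port_dbm = -57.0  # RF leakage seen at the IF port

# Each figure of merit is a difference of two levels, so the result is in dB.
conversion_loss_db = rf_in_dbm - if_out_dbm
lo_to_if_isolation_db = lo_in_dbm - lo_at_if_port_dbm
rf_to_if_isolation_db = rf_in_dbm - rf_at_if_port_dbm

print(f"Conversion loss (SSB): {conversion_loss_db} dB")
print(f"LO to IF isolation:    {lo_to_if_isolation_db} dB")
print(f"RF to IF isolation:    {rf_to_if_isolation_db} dB")
```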

Distortion in oscillators