Sensors and Actuators A: Physical brings together multidisciplinary interests in one journal entirely devoted to disseminating information on all aspects of research and development of solid-state devices for transducing physical signals. Sensors and Actuators A: Physical regularly publishes original papers, letters to the Editors and, from time to time, invited review articles within the following device areas:

• Fundamentals and Physics, such as: classification of effects, physical effects, measurement theory, modelling of sensors, measurement standards, measurement errors, units and constants, time and frequency measurement. Modelling papers should introduce new modelling techniques to the field and be supported by experimental results.

• Materials and their Processing, such as: piezoelectric materials, polymers, metal oxides, III-V and II-VI semiconductors, thick and thin films, optical glass fibres, amorphous, polycrystalline and monocrystalline silicon.

• Optoelectronic sensors, such as: photovoltaic diodes, photoconductors, photodiodes, phototransistors, position-sensitive photodetectors, optoisolators, photodiode arrays, charge-coupled devices, light-emitting diodes, injection lasers and liquid-crystal displays.

• Mechanical sensors, such as: metallic, thin-film and semiconductor strain gauges, diffused silicon pressure sensors, silicon accelerometers, solid-state displacement transducers, piezojunction devices, piezoelectric field-effect transducers (PiFETs), tunnel-diode strain sensors, surface acoustic wave devices, silicon micromechanical switches, solid-state flow meters and electronic flow controllers.

• Thermal sensors, such as: platinum resistors, thermistors, diode temperature sensors, silicon transistor thermometers, integrated temperature transducers, PTAT circuits, thermocouples, thermopiles, pyroelectric thermometers, quartz thermometers, power transistors and thick-film thermal print heads.

• Magnetic sensors, such as: magnetoresistors, Corbino disks, magnetodiodes, Hall-effect devices, integrated Hall devices, silicon depletion-layer magnetometers, magneto-injection transistors, magnistors, lateral magnetotransistors, carrier-domain magnetometers, MOS magnetic-field sensors, solid-state read and write heads.

• Micromechanics, such as: research papers on actuators, structures, integrated sensors-actuators, microsystems, and other devices or subdevices ranging in size from millimetres to sub-microns; micromechatronics; microelectromechanical systems; microoptomechanical systems; microchemomechanical systems; microrobots; silicon and non-silicon fabrication techniques; basic studies of physical phenomena of interest to micromechanics; analysis of microsystems; exploration of new topics and materials related to micromechanics; microsystem-related problems like power supplies and signal transmission, microsystem-related simulation tools; other topics of interest to micromechanics.

• Interface electronics: electronic circuits which are designed to interface directly with the above transducers and which are used for improving or complementing the characteristics of these devices, such as linearization, A/D conversion, temperature compensation, light-intensity compensation, current/frequency conversion and microcomputer interfacing.

• Sensor Systems and Applications, such as: sensor buses, multiple-sensor systems, sensor networks, voting systems, telemetering, sensor arrays, and automotive, environmental, monitoring and control, consumer, medical, alarm and security, robotic, nautical, aeronautical and space measurement systems.

Glimpses of electronic and human comparisons in sensors and actuators

We describe a smart sensing system developed for the flavour analysis of liquids. The system comprises both a so-called “electronic tongue” based on shear horizontal surface acoustic wave (SH-SAW) sensors, which analyses the liquid phase, and a so-called “electronic nose” based on ChemFET sensors, which analyses the gaseous phase. Flavour is generally understood to be the overall experience from the combination of oral and nasal stimulation and is principally derived from a combination of the human senses of taste (gustation) and smell (olfaction). Thus, by combining two types of microsensors, an artificial flavour sensing system has been developed. Initial tests conducted with different liquid samples, i.e. water, orange juice and milk (of different fat contents), resulted in 100% discrimination using principal components analysis, although the electronic nose was found to contribute little. Further flavour experiments were therefore designed to demonstrate the potential of the combined electronic nose/tongue flavour system, using low-vapour-pressure, taste-biased solutions and high-vapour-pressure, smell-biased solutions. Only the combined flavour analysis system could achieve 100% discrimination between all the different liquids. We believe that this is the first report of a SAW-based analysis system that determines flavour through the combination of both liquid and headspace analysis.
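Principal components analysis of combined nose and tongue data can be illustrated with a short NumPy sketch. It is not the authors' processing chain: the five-channel layout (three hypothetical SH-SAW channels, two hypothetical ChemFET channels), the synthetic class means and the simple feature-level fusion (concatenating both instruments' features per sample) are all assumptions made for illustration.

```python
import numpy as np


def pca_scores(features: np.ndarray, n_components: int = 2):
    """Project samples onto their leading principal components.

    features: (n_samples, n_features) array, one row per measurement.
    Returns (scores, explained_variance_ratio) for the first n_components.
    """
    # Autoscale each feature (zero mean, unit variance) so that channels with
    # large raw outputs do not dominate the decomposition.
    centred = features - features.mean(axis=0)
    std = centred.std(axis=0, ddof=1)
    std[std == 0] = 1.0  # guard against constant channels
    scaled = centred / std
    # Rows of vt are the principal axes, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(scaled, full_matrices=False)
    variance = s**2 / (scaled.shape[0] - 1)
    ratio = variance / variance.sum()
    scores = scaled @ vt[:n_components].T
    return scores, ratio[:n_components]


# Hypothetical data: 3 SH-SAW "tongue" channels followed by 2 ChemFET "nose"
# channels, 4 replicate measurements of each of 3 liquids.
rng = np.random.default_rng(0)
class_means = np.array([
    [0.0, 0.0, 0.0, 0.0, 0.0],  # liquid A
    [3.0, 1.0, 0.0, 0.5, 0.0],  # liquid B
    [0.0, 3.0, 2.0, 0.0, 0.5],  # liquid C
])
labels = np.repeat([0, 1, 2], 4)
fused = class_means[labels] + 0.1 * rng.normal(size=(12, 5))  # feature-level fusion

scores, ratio = pca_scores(fused, n_components=2)
centroids = np.array([scores[labels == k].mean(axis=0) for k in range(3)])
print(ratio.round(3))
print(centroids.round(2))
```

Discrimination is then judged by whether the replicate clusters of each liquid stay separated in the score plot; dropping the two nose columns from `fused` is one way to compare tongue-only and combined performance.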

The proposed architecture is based on the concept of modularity and comprises several freely interconnectable modules (function blocks) that host separate sensors and circuitry. Each module is designed to be self-contained and manages all its on-board resources, hiding the implementation details behind a common interface.

This interface makes it possible to handle all modules the same way regardless of their electrical characteristics, something fundamental when dealing with heterogeneous gas sensor technologies (having different electrical characteristics not only in the form of power requirements but also regarding the electrical properties associated with their transducer principles). Moreover, this versatility also enables the integration of modules with auxiliary functionalities, like data logging, wireless communications (e.g., Bluetooth, Wi-Fi, and Zigbee), or even non-gas-related sensors (e.g., GPS, humidity, and wind speed), provided that they all adhere to the same interface. Accordingly, it is also possible to design modules with several sensors in a way that is transparent to the rest of the e-nose, making it feasible to create modules with high-sensor-count integrated chips, like those employed by Cyranose 320 (32 sensors) or by Che Harun et al. (900 sensors).
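The idea of a common interface that hides each module's electronics can be sketched with a Python abstract base class. This is a hypothetical illustration, not the interface defined by the original architecture: the class names, the `channels()`/`read()` methods and the placeholder readings are all assumptions.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Reading:
    channel: str  # hypothetical channel name, e.g. "mox/s0" or "gps/lat"
    value: float
    unit: str


class Module(ABC):
    """Hypothetical common interface every e-nose module exposes.

    Each module owns its hardware and hides it behind these methods, so the
    rest of the system never needs to know its electrical characteristics.
    """

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def channels(self) -> list[str]:
        """Names of the quantities this module can report."""

    @abstractmethod
    def read(self) -> list[Reading]:
        """Acquire one sample from every channel."""


class MoxArrayModule(Module):
    """A gas-sensing module hosting several sensors behind one interface."""

    def __init__(self, name: str, n_sensors: int) -> None:
        super().__init__(name)
        self.n_sensors = n_sensors

    def channels(self) -> list[str]:
        return [f"{self.name}/s{i}" for i in range(self.n_sensors)]

    def read(self) -> list[Reading]:
        # Placeholder value: real firmware would drive heaters and sample an ADC.
        return [Reading(ch, 10_000.0, "ohm") for ch in self.channels()]


class GpsModule(Module):
    """An auxiliary, non-gas module that still speaks the same interface."""

    def channels(self) -> list[str]:
        return [f"{self.name}/lat", f"{self.name}/lon"]

    def read(self) -> list[Reading]:
        return [Reading(ch, 0.0, "deg") for ch in self.channels()]


# Host code treats every module identically, whatever it contains.
modules: list[Module] = [MoxArrayModule("mox", n_sensors=4), GpsModule("gps")]
snapshot = [r for m in modules for r in m.read()]
print(len(snapshot))  # 6
```

Because a multi-sensor chip only changes what `channels()` returns, a 32- or 900-sensor module would plug into the same host loop without changes.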

Modules can be connected to or disconnected from the e-nose in a plug-and-play fashion, enabling easy configuration for the target application. Specifically, they are connected in a daisy-chain topology that shares a single power and communications bus. Once a module is connected and powered, it works as an individual agent that handles its own resources and communicates with the others. Thus, neither a central main board is required nor is any module indispensable for the correct operation of the e-nose (power can be provided externally), so an e-nose can be assembled from only the required modules.
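The masterless, plug-and-play behaviour can be illustrated with a toy Python model of the shared bus. This is only a sketch under stated assumptions: the original does not specify the bus protocol, and the `SharedBus`/`Agent` classes, the "hello"/"bye" announcements and the module names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """A module acting as an independent agent on the bus (hypothetical)."""
    name: str
    inbox: list = field(default_factory=list)


@dataclass
class SharedBus:
    """Toy model of the single power-and-communications bus.

    There is no master: any attached module may broadcast, and every other
    attached module receives the message.
    """
    attached: dict = field(default_factory=dict)

    def attach(self, module: Agent) -> None:
        self.attached[module.name] = module
        self.broadcast(module.name, "hello")  # announce on hot-plug

    def detach(self, name: str) -> None:
        self.attached.pop(name, None)
        self.broadcast(name, "bye")  # remaining modules learn it has left

    def broadcast(self, sender: str, msg: str) -> None:
        for name, mod in self.attached.items():
            if name != sender:
                mod.inbox.append((sender, msg))


bus = SharedBus()
for n in ("pid", "mox", "logger"):
    bus.attach(Agent(n))
bus.detach("mox")                # unplugging one module...
bus.broadcast("pid", "ppm=3.2")  # ...does not stop the others communicating
print(bus.attached["logger"].inbox)
```

Note that the logger, attached last, never sees earlier announcements; a real design would need some way for late joiners to discover modules already on the bus.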

Though a module is the basic element of the proposed architecture, within it we can differentiate a series of components required for its operation. These components are present in all modules, but their physical distribution and/or electronic design may vary depending on the specific implementation. To keep a clear and readable structure, we describe each of these components in the following subsections.

In this work, we have revised the current state of electronic noses, focusing on their ability to adapt to different requirements (target gases, concentration ranges, georeferenced measurements, and others). We have found that most e-noses are conceived for very specific applications, involving the detection of a small set of gases, and that they lack versatility for general-purpose artificial olfaction.

To overcome these limitations, we have proposed a novel architecture that enhances the capabilities of e-noses. It combines heterogeneous gas sensor technologies and auxiliary devices (e.g., GPS and wireless communications) in a completely modular design of interconnectable smart modules, thus enabling an easy and cost-efficient reconfiguration of the e-nose and increasing its service lifecycle, as faulty modules can be replaced individually, and new functions added when needed.

To this end, we have implemented a prototype and tested it in three real applications. Each scenario had its own distinct requirements in terms of sensing capabilities, autonomy, and mobility, which were successfully met by assembling our prototype into completely different e-noses.

Finally, our next steps will focus on the development of additional modules (e.g., QCM gas sensors, ambient sensors for drift correction, and GSM communications) and on the further improvement of the functionality of our design.

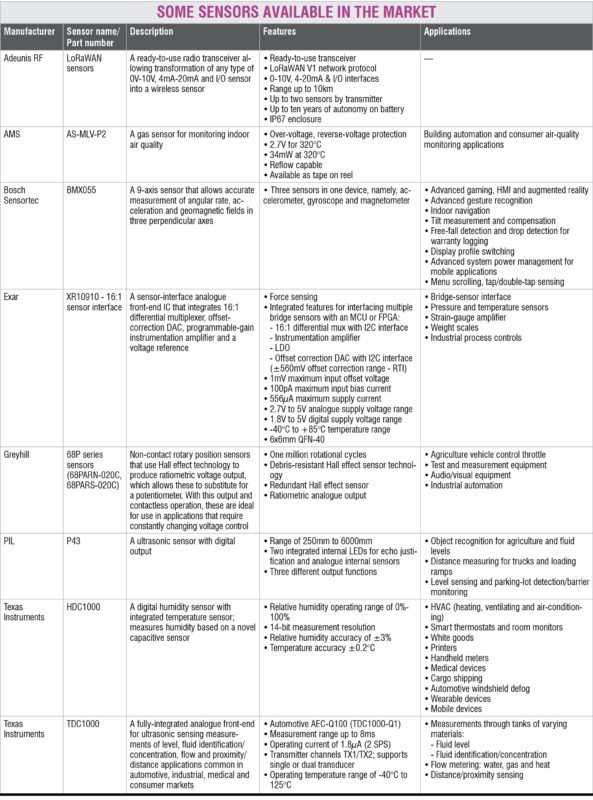

Sensor Technology and Latest Trends

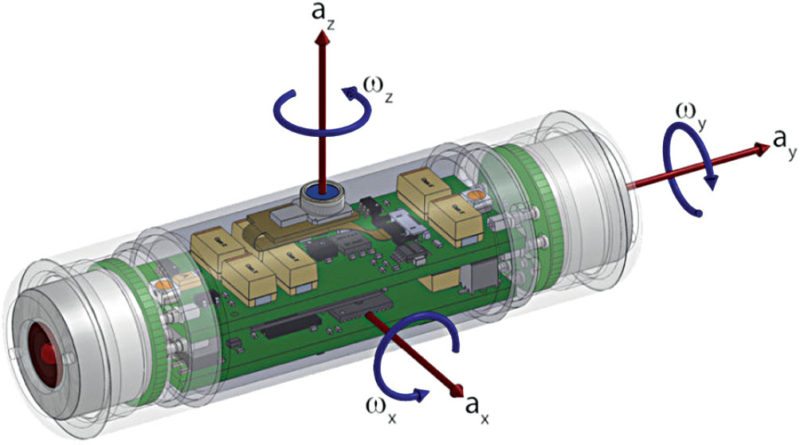

A sensor like the accelerometer is not something new. It has been miniaturised, and volume production has made it so cost-effective that you can put it almost everywhere. Such sensors first became widespread as components in mobile phones and tablets, but you can now find them in industrial equipment, toys, medical electronics, wearables and even vehicle safety systems.

What is driving these little pieces of technology that are so crucial for the Internet of Things (IoT)? Could sensors be the new linen?

The IoT has had a great influence on sensor technology. Whether it is wearables, implantables, smart fabrics or smart pills, improvements in micro-electro-mechanical systems (MEMS) technology and sensors have been one of the driving factors.

Hybrid circuits

Walden C. Rhines, chairman and CEO of Mentor Graphics, told us in an interview that the IoT has created a greater interest in multi-die packaging, in analogue and RF for relatively low-complexity designs and in hybrid circuits that combine sensors, actuators, MEMS and other things with ASIC chips and then package these accordingly.

Firms like PragmatIC Printing are developing ultra-thin, low-cost flexible microcircuits that can be incorporated in mass-market packaging. Steven Bagshaw, marketing executive at the Centre for Process Innovation Ltd, also mentioned that hybrid electronics will give rise to wireless medical devices for rapid diagnostics using printed sensors, helping to build better medical devices in line with the IoT concept.

The Centre for Nano Science and Engineering (CeNSE) at the Indian Institute of Science (IISc) is also working on nano-electro-mechanical systems (NEMS). Dr Vijay Mishra, CTO at CeNSE, IISc, presented a talk on nanotech sensor technology for human health monitoring at the 4th edition of the Electronics Rocks conference, held in Bengaluru last year.

Scaling up sensor deployment

John Rogers’ materials science research team at the University of Illinois, USA, has developed a way of building circuits that act like tattoos, collect power wirelessly and can be worn just about anywhere on the body. Their sensors harvest energy through near-field communication (NFC) to power themselves. Rogers’ company, MC10 Inc., has marketed these in the form of sensor patches and has been working on a new generation of the technology since late 2014.

“We have always gone towards higher and higher integration. Starting from small chips, we kept on integrating more features and blocks into it. From just individual sensors in the beginning, we brought in more sensors into the mix to create a sensor hub for engineers. Embedded microcontrollers and a communication system followed this, and the final result is the self-contained unit we see today.”

Large-area integration makes the most of the small size of these microelectronic devices by using processes that scale them across larger areas.

ISORG and Plastic Logic have developed a flexible plastic image sensor made from flexible photodetectors and organic thin-film technology. This technology won them the prestigious FLEXI award, a recognition given to firms in the flexible and printed electronics segment.

Integrated smart sensors

Newer sensor technology integrates the vital components of a smart sensor on a single chip, offering a controlled set of specifications across the sensor's operating range. The underlying idea is to integrate sensing at the silicon level itself, which is believed to reduce power consumption while simplifying product development. How are companies implementing it?

Texas Instruments not only integrates the data conversion and communication sections of a smart sensor but also helps either eliminate the traditional sensing element or integrate it on-chip. For instance, a wheel speed sensor typically employs either a multi-pole ring magnet and Hall-sensor arrangement or a magnetic rotary encoder and magnet arrangement for measuring wheel speed in vehicles.

“The proposed inductive switch sensor completely does away with a costly multi-pole ring magnet and utilises the metallic wheel hub and printed circuit board itself as a sensor to measure wheel speed. Inductive sensing utilises LC tank resonance to identify the presence of metallic teeth and valleys, using them as the object that switches the output between high/low states. Given sensitivity, mounting as well as temperature issues with magnets, new solutions make way for a reliable non-magnet approach and low-cost implementation. Moreover, this technology is enabling the placement of control electronics remotely from the location of sensing, thereby making it easier to operate the sub-system away from a noisy environment,” explains Sanjay Jain, analogue applications manager, MGTS, Texas Instruments India (SC sales and marketing). This technology is also being adapted for many other position- and speed-sensing applications, including passenger-occupancy detection, seatbelt-buckle detection and gear-position detection, to name a few.
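The inductive-switch principle can be sketched numerically. This Python sketch is a simplified model, not TI's implementation: the coil and capacitor values, the hysteresis band, the 48-tooth hub and the assumption that a tooth lowers the effective inductance (as eddy currents do in a conductive, non-ferrous target; a ferromagnetic target can shift it the other way) are all hypothetical. It computes the LC tank frequency f = 1/(2π√(LC)), turns frequency shifts into high/low states with a hysteretic comparator, and counts rising edges to estimate wheel speed.

```python
import math


def resonant_frequency(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency of an ideal LC tank: f = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def to_switch_states(freqs_hz, on_above_hz, off_below_hz):
    """Comparator with hysteresis: output goes high while a tooth is over the coil."""
    state, states = False, []
    for f in freqs_hz:
        if not state and f > on_above_hz:
            state = True
        elif state and f < off_below_hz:
            state = False
        states.append(state)
    return states


def wheel_speed_rpm(states, sample_rate_hz, teeth_per_rev):
    """Count low-to-high transitions (one per tooth) and convert to rev/min."""
    rising = sum(1 for prev, cur in zip(states, states[1:]) if cur and not prev)
    duration_s = len(states) / sample_rate_hz
    return rising / teeth_per_rev / duration_s * 60.0


# Hypothetical coil and capacitor values; a tooth is assumed to lower the
# effective inductance (eddy-current dominated, non-ferrous target).
C = 100e-12
f_valley = resonant_frequency(18e-6, C)  # about 3.75 MHz
f_tooth = resonant_frequency(17e-6, C)   # about 3.86 MHz
mid = (f_valley + f_tooth) / 2
band = 10e3  # hysteresis band, rejects small frequency jitter

# 20 valley/tooth periods sampled at 10 kHz (0.02 s) on a 48-tooth hub.
freqs = ([f_valley] * 5 + [f_tooth] * 5) * 20
states = to_switch_states(freqs, mid + band, mid - band)
print(round(wheel_speed_rpm(states, 10_000, 48)))  # 1250
```

The hysteresis band is the design choice that makes the output a clean switch: small frequency jitter near the threshold cannot toggle the state back and forth.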

Allegro MicroSystems has launched an angle-position sensor that is also programmable. The A1335 is a contactless, programmable magnetic angle-position sensor integrated circuit. It uses a system-on-chip architecture with a front end based on circular vertical Hall (CVH) technology. It also includes programmable, microprocessor-based signal processing and supports multiple communication interfaces, including inter-integrated circuit (I2C), serial peripheral interface (SPI) and single-edge nibble transmission (SENT).
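Of these interfaces, SENT (SAE J2716) is the least familiar; it encodes each 4-bit nibble as a pulse whose length is 12 + n clock ticks, after a 56-tick synchronisation pulse. The Python sketch below shows only that timing idea for a simplified frame. It does not describe the A1335's actual SENT data format: the 12-bit angle resolution, the three data nibbles and the caller-supplied CRC placeholder are assumptions, and the CRC algorithm itself is deliberately omitted.

```python
SYNC_TICKS = 56     # calibration/synchronisation pulse length, in clock ticks
NIBBLE_OFFSET = 12  # a nibble of value n is sent as a pulse of 12 + n ticks


def angle_to_code(angle_deg: float, bits: int = 12) -> int:
    """Quantise an angle in degrees to an unsigned code (resolution assumed)."""
    full_scale = 1 << bits
    return round((angle_deg % 360.0) / 360.0 * full_scale) % full_scale


def code_to_nibbles(code: int, n_nibbles: int = 3) -> list[int]:
    """Split a code into 4-bit data nibbles, most significant nibble first."""
    return [(code >> (4 * i)) & 0xF for i in reversed(range(n_nibbles))]


def frame_ticks(status: int, data: list[int], crc: int) -> list[int]:
    """Pulse lengths (in ticks) of one simplified SENT-style frame.

    The CRC nibble is supplied by the caller: its algorithm is defined in
    SAE J2716 and is deliberately not reproduced in this sketch.
    """
    nibbles = [status, *data, crc]
    if any(not 0 <= n <= 15 for n in nibbles):
        raise ValueError("every nibble must be a 4-bit value")
    return [SYNC_TICKS] + [NIBBLE_OFFSET + n for n in nibbles]


code = angle_to_code(90.0)       # 1024 for an assumed 12-bit angle
nibbles = code_to_nibbles(code)  # [4, 0, 0]
print(frame_ticks(status=0, data=nibbles, crc=0))  # [56, 12, 16, 12, 12, 12]
```

Because every nibble pulse lasts between 12 and 27 ticks, a receiver can recover values simply by timing falling edges, which is what lets SENT run over a single signal wire.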

Handling data

Traditional heavyweight cryptography is difficult to deploy on typical sensor hardware, which is one reason so many insecure IoT devices are being deployed. “Regulations for the IoT need to address issues of minimum specifications for IoT devices. Existing IoT sensors are not equipped to take advantage of 5G technology either,” explained Kevin Curran, IEEE senior member and reader in computer science at the University of Ulster, in an interview with EFY.

Production process upgrades

In an interaction earlier this year, Uday Prabhu, general manager, electronics product engineering and product management, Robert Bosch Engineering and Business Solutions Pvt Ltd, explained that compression is happening on a large scale with MEMS. An increase in the number of transistors that can go into a chip has resulted in reduced size with higher functionality. Earlier, MEMS elements functioned exclusively as sensing elements.

“Now, intelligence, which is the processor, is built into the MEMS chip itself. This is the primary influencer for the wearables segment, with MEMS chips doubling as control units. Also, structural advances help make finer measurements of acceleration, momentum and so on,” he adds.

Will we soon see the addition of communications technologies to the MEMS chip? Probably not! Prabhu explained that it is good to separate fast- and slow-moving technologies. Otherwise, our wearables would become obsolete very fast and may not support usage with a new, upgraded phone.

What is trending in sensor technology

A lot of applications have long used sensors (of one sort or another) to diagnose, manage and report information. In the last few years, however, sensors have become increasingly important as devices and sub-systems have become more sophisticated with the increase in demand for reliability, especially in safety-critical applications. “The advent of real-time data capture and analysis applications is making these new-age sensor technologies increasingly useful in a range of applications, including consumer electronics, automotive and industrial segments.”

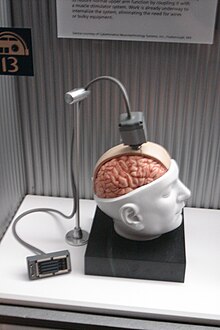

Sensor Implants for Everywhere in the Body

IEEE Fellow Jan Rabaey is building the first wireless, dust-particle-size sensors that could eventually be implanted in the human body to monitor nerves, muscles, and organs.

The sensors, known as neural dust motes, have so far been implanted in rats’ muscles and peripheral nerves. The motes rely on ultrasound projected into the body for power and to read out measurements. Ultrasound, already widely used in hospitals, can penetrate nearly anywhere in the body. In August, the journal Neuron published an article describing the researchers’ work: “Wireless Recording in the Peripheral Nervous System With Ultrasonic Neural Dust.”

Rabaey, the scientific codirector of the Wireless Research Center and chair of the electrical engineering division at UC-Berkeley, has been with the university since 1987. His background is primarily in integrated circuits and systems, including wireless systems such as the low-power interfaces he describes in the Neuron article. In 1995 he was elevated to IEEE Fellow for “contributions in design synthesis and concurrent architectures for signal processing applications.”

For the past decade, he has been collaborating with IEEE Senior Member Jose Carmena and other IEEE colleagues, like Senior Members Michel Maharbiz and Elad Alon, on wireless brain-machine interfaces (BMIs). Carmena, a professor of electrical engineering and neuroscience at UC-Berkeley, is codirector of its Center for Neural Engineering and Prostheses. Carmena, a coauthor of the article on the neural dust, is also cochair of IEEE Brain. Rabaey is an active member of the initiative and has been presenting his BMI research at IEEE Brain workshops including one held in December at Columbia University.

The Institute asked him about his work and about his concept of a human intranet.

What is a neural dust mote?

Think of the dust mote as a measurement device tapping into the body’s electric fields. A 1-millimeter cube, about the size of a large grain of sand, the mote contains a piezoelectric crystal that converts ultrasound vibrations projected to it from outside the body into electricity to power a tiny, on-board transistor placed in contact with a nerve or muscle fiber. As natural electrical activity in the nerve varies, it changes the current passing through the transistor, thus providing a read-out mechanism for the nerve’s signal.

To send the information back out of the body, the mote also uses ultrasound. The external transducer first sends ultrasound vibrations to power the mote and then listens for the returning echo as vibrations bounce back. The changing current through the transistor alters the piezoelectric crystal’s mechanical impedance, thereby modulating the amplitude of the signal received by the ultrasound transducer in the room. The slight change in received signal strength allows the receiver to determine the voltage sensed by the mote.
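The readout principle, in which the sensed voltage modulates echo amplitude and the receiver inverts that modulation, can be sketched in Python. This is a deliberately simplified, hypothetical model and not the researchers' signal chain: it assumes a linear relation A = A0(1 + kV) between nerve voltage and echo amplitude, uses invented values for the baseline amplitude A0 and sensitivity k, and estimates amplitude from the RMS of an ideal sine burst.

```python
import math


def synth_echo(amplitude, cycles=8, samples_per_cycle=32):
    """Sampled echo: a sine burst with the given amplitude (whole cycles)."""
    n = cycles * samples_per_cycle
    return [amplitude * math.sin(2 * math.pi * i / samples_per_cycle) for i in range(n)]


def measure_amplitude(echo):
    """Estimate burst amplitude from its RMS (exact for whole sine cycles)."""
    rms = math.sqrt(sum(s * s for s in echo) / len(echo))
    return rms * math.sqrt(2.0)


def decode_voltage(amplitude, baseline_amplitude, sensitivity_per_mv):
    """Invert the assumed linear model A = A0 * (1 + k * V) to recover V (mV)."""
    return (amplitude / baseline_amplitude - 1.0) / sensitivity_per_mv


# Hypothetical numbers: unit baseline echo, 0.2 % amplitude change per mV.
A0, k = 1.0, 0.002
nerve_mv = [0.0, 0.05, -0.03, 0.10]  # voltages seen by the mote
echoes = [synth_echo(A0 * (1 + k * v)) for v in nerve_mv]  # one echo per interrogation
recovered = [decode_voltage(measure_amplitude(e), A0, k) for e in echoes]
print([round(v, 3) for v in recovered])
```

In practice the amplitude change is tiny and buried in noise, so a real receiver would average many echoes and calibrate A0 and k rather than assume them.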

Right now the mote is larger than the researchers would like. Once it has been shrunk to 50 microns on a side, small enough to be inserted into the brain or central nervous system, the sensor could identify changes as they occur in the human body or during a particular physical activity. Based on that information, a physician or even the person themselves could stimulate a certain body part, such as a peripheral nerve, or a part of the brain. For example, the mote could be implanted in the brain of a paraplegic to enable control of a computer or a robotic arm.

A human intranet would be a network of sensors and actuators that connects points in your brain and your body. The electronics could be in the form of neural motes, or they could be in wearables attached to clothing or integrated into garments. Electronics could also be worn on top of the skin or inserted under the skin, like an electronic tattoo. The sensors would continuously monitor our state of health—what is going on in our bodies, and how well, or not so well, we are performing activities.

Marathon runners, for example, could continuously measure their heart rate and glucose level, and the air their muscles receive. Sensors would collect that information, send it wirelessly to an outside network with a central computer, where it would be processed and converted to a form that could say how the body is performing. That information also could be sent back to the runner. Even better, that processing could be performed on a local processor, avoiding the need to transmit the data.

We already have one of these computational systems: the brain. It gets signals from different nerves, processes them, and decides how to act on them. This natural computer could react to the implanted sensors. That is where BMIs come in. They would connect our biological brain to the electronics that will be on us. BMIs allow for a tighter integration among these auxiliary devices and how we operate as humans. Combine these electronics with the brain, and we have what I call the human intranet. With it, every part of the body could be monitored, rather than just through a single wearable on, say, the wrist. People could see early signs of wellness or illness, which would be great to know.

All the activity taking place today with augmented reality and other ways to enhance ourselves with technology is already moving toward real-time monitoring of the body. However, the true human intranet is still many years away.

Who could benefit from the human intranet?

People with severe medical problems, such as paraplegics, and those who push the limits of their bodies, such as athletes and those in the military. They are going to drive this. Also some artists willing to experiment with their bodies in the interest of their art. The extra sensing capability could expand the scope of what they’re doing.

In the long term, the human intranet will benefit everyone, because it will allow the able-bodied to augment themselves with technology and enhance their interactions with the world around them.

Brain

We also report on an implantable device called an electroceutical, which someday will be able to treat patients with chronic diseases with targeted treatments by adjusting the electrical signals emitted by the nervous system. We also profile the work of IEEE Fellow Jan Rabaey, who is building the first wireless, dust-particle-sized sensors called neural dust motes that could eventually be implanted in the human body to monitor nerves, muscles, and organs. And we feature Braiq, the startup launched by IEEE Fellow Paul Sajda, which is developing an emotional intelligence software program for autonomous cars.

When it comes to learning about what’s going on in brain research, IEEE has a number of products and services, conferences, and standards focused on the latest technologies.

The Future of Medicine Might Be Bioelectronic Implants

Unlike oral drugs that travel through the bloodstream and interact with organs along the way, often causing side effects, electroceuticals could precisely target the medical condition by controlling the neural signals going to a specific organ. The procedure is minimally invasive.

Leading the way in this new form of treatment is one of the world’s largest pharmaceutical companies, GlaxoSmithKline of Brentford, England, which is also involved with the IEEE Brain Initiative.

GSK is currently conducting research on how the treatment will improve conditions that include rheumatoid arthritis and diabetes. Google’s life sciences venture, Verily, partnered with GSK in August to help advance the research. The two companies are investing a combined US $700 million over the next seven years to study the treatment, which won’t be available to patients for 10 years.

“Our internal organs are under electrical control, and this means there is potential for treatment that hasn’t been fully developed until now,” says Roy Katso, director of open innovation and funding partnerships at GSK. Katso is the external engagement lead for the company’s work in this area. “Over time, as the efficacy of electroceuticals is proven, implantable devices may either become the standard line of treatment or complement conventional treatments.”

ACTIVITIES UNDERWAY

Despite much optimism about electroceuticals from the health care community, few studies have been conducted to determine their effectiveness in treatments. Moreover, researchers, including those at GSK, still don’t fully understand the body’s electrical pathways, or how to precisely manipulate their currents to treat medical conditions, says Katso.

Others are also in the field. The U.S. National Institutes of Health announced this year it will provide more than $20 million for research under its Stimulating Peripheral Activity to Relieve Conditions (SPARC) program. And DARPA received $80 million from the U.S. government for its initiative, ElectRx, to develop bioelectronic treatments for chronic diseases and mental health conditions for active-duty military personnel and veterans.

Researchers from the University of California, Berkeley, have designed electroceutical neural dust motes the size of a 1-millimeter cube, or about as big as a large grain of sand.

Startup NeuSpera Medical of San Jose, Calif., received $8 million from GSK’s venture fund to develop an injectable electroceutical, which could eliminate the need for surgery.

ELECTROCEUTICALS IN PRACTICE

GSK researchers are working to shrink the electroceutical, which could be as large as a pacemaker, to about the size of a pill, or even smaller. Katso and his colleagues are testing how small the device can be while still delivering optimal treatment. GSK is also analyzing the physiological effects of electroceuticals to better regulate their electrical signals, including the voltage output and duration.

The treatment requires the device to be attached to the nerve or area of the nervous system that affects organs associated with a disease. For people with asthma, the device likely would be attached to a pulmonary nerve to block signals that cause the lungs to constrict.

Beyond electrically stimulating the nerves, electroceuticals could monitor diseases as well. For people with diabetes, the sensor could detect in real time if glucose levels were too high or too low. The device could then modify the nerve impulses that stimulate insulin production in the pancreas.

Electroceuticals also could monitor the progression of a disease, sharing information with the patient’s physician. The devices could be customized for each patient to account for the severity of a disease.

“In the future, we will be talking about bioelectronic medicine the way we talk about pacemakers today,” Katso says. “Those growing up with technology as well as patients with rare conditions may be more accepting of implanting devices in their bodies. However, at some point in the future, [people] will likely take the treatment for granted, and [it] will be the norm.”

Sensor

Sensors are used in everyday objects such as touch-sensitive elevator buttons (tactile sensor) and lamps that dim or brighten when their base is touched, besides innumerable applications of which most people are never aware. With advances in micromachinery and easy-to-use microcontroller platforms, the uses of sensors have expanded beyond the traditional fields of temperature, pressure or flow measurement, for example into MARG (magnetic, angular rate, and gravity) sensors. Moreover, analog sensors such as potentiometers and force-sensing resistors are still widely used. Applications include manufacturing and machinery, airplanes and aerospace, cars, medicine, robotics and many other aspects of our day-to-day life.

A sensor's sensitivity indicates how much the sensor's output changes when the input quantity being measured changes. For instance, if the mercury in a thermometer moves 1 cm when the temperature changes by 1 °C, the sensitivity is 1 cm/°C (it is basically the slope Δy/Δx, assuming a linear characteristic). Some sensors can also affect what they measure; for instance, a room-temperature thermometer inserted into a hot cup of liquid cools the liquid while the liquid heats the thermometer. Sensors are usually designed to have a small effect on what is measured; making the sensor smaller often improves this and may introduce other advantages. Technological progress allows more and more sensors to be manufactured on a microscopic scale as microsensors using MEMS technology. In most cases, a microsensor achieves a significantly faster response and higher sensitivity than macroscopic approaches.
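In practice the sensitivity Δy/Δx is usually estimated from several calibration points rather than from a single pair. The following Python sketch fits a straight line by ordinary least squares, one common approach among several; the four temperature/column-position pairs are hypothetical data chosen to match the 1 cm/°C thermometer example. The fitted slope is the sensitivity and the intercept is the offset.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = s*x + b; returns (sensitivity s, offset b)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical calibration of the thermometer example: column position (cm)
# recorded at four reference temperatures (deg C).
temps_c = [20.0, 25.0, 30.0, 35.0]
column_cm = [2.0, 7.0, 12.0, 17.0]
sensitivity, offset = fit_line(temps_c, column_cm)
print(sensitivity, offset)  # 1.0 -18.0

# Using the calibration in reverse: convert a new reading back to temperature.
print((14.5 - offset) / sensitivity)  # 32.5
```

Using all points rather than two averages out random noise in individual readings, which makes the estimated slope less sensitive to any single bad calibration point.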

Classification of measurement errors

A good sensor obeys the following rules:

- it is sensitive to the measured property

- it is insensitive to any other property likely to be encountered in its application, and

- it does not influence the measured property.

For an analog sensor signal to be processed, or used in digital equipment, it needs to be converted to a digital signal, using an analog-to-digital converter.
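An ideal analog-to-digital conversion can be sketched in a few lines of Python. This is a textbook idealisation under stated assumptions, not any particular converter's behaviour: a unipolar input range of 0 to V_ref, a floor-type transfer function with clipping at both ends, and a hypothetical 10-bit, 3.3 V converter. Mapping a code back to the centre of its input interval keeps the reconstruction error within half a least significant bit (LSB).

```python
import math


def adc_code(voltage: float, v_ref: float, bits: int) -> int:
    """Ideal unipolar ADC: floor(V / V_ref * 2**bits), clipped to the code range."""
    full_scale = 2 ** bits
    code = math.floor(voltage / v_ref * full_scale)
    return max(0, min(full_scale - 1, code))


def code_to_voltage(code: int, v_ref: float, bits: int) -> float:
    """Centre of the input interval represented by a code."""
    lsb = v_ref / 2 ** bits
    return (code + 0.5) * lsb


# Hypothetical 10-bit converter with a 3.3 V reference.
V_REF, BITS = 3.3, 10
code = adc_code(1.0, V_REF, BITS)
error = code_to_voltage(code, V_REF, BITS) - 1.0
print(code, f"{error * 1e3:.2f} mV")  # 310 0.63 mV
```

The clipping in `adc_code` mirrors the limited full-scale range of a real converter: inputs above V_ref all map to the top code.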

Sensor deviations

Since sensors cannot replicate an ideal transfer function, several types of deviations can occur that limit sensor accuracy:

- Since the range of the output signal is always limited, the output signal will eventually reach a minimum or maximum when the measured property exceeds the limits. The full-scale range defines the maximum and minimum values of the measured property.

- The sensitivity may in practice differ from the value specified. This is called a sensitivity error. This is an error in the slope of a linear transfer function.

- If the output signal differs from the correct value by a constant, the sensor has an offset error or bias. This is an error in the y-intercept of a linear transfer function.

- Nonlinearity is the deviation of a sensor's transfer function from a straight-line transfer function. Usually, this is defined by the amount the output differs from ideal behavior over the full range of the sensor, often noted as a percentage of the full range.

- Deviation caused by rapid changes of the measured property over time is a dynamic error. Often, this behavior is described with a Bode plot showing sensitivity error and phase shift as a function of the frequency of a periodic input signal.

- If the output signal slowly changes independent of the measured property, this is defined as drift. Long term drift over months or years is caused by physical changes in the sensor.

- Noise is a random deviation of the signal that varies in time.

- A hysteresis error causes the output value to vary depending on the previous input values. If a sensor's output is different depending on whether a specific input value was reached by increasing vs. decreasing the input, then the sensor has a hysteresis error.

- If the sensor has a digital output, the output is essentially an approximation of the measured property. The resulting error is called quantization error.

- If the signal is monitored digitally, the sampling frequency can cause a dynamic error, or if the input variable or added noise changes periodically at a frequency near a multiple of the sampling rate, aliasing errors may occur.

- The sensor may to some extent be sensitive to properties other than the property being measured. For example, most sensors are influenced by the temperature of their environment.
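Several of these deviations are usually quantified from calibration sweeps. The Python sketch below computes three of them under stated assumptions: nonlinearity against the straight line through the two end points (one common convention; a best-fit straight line is another), hysteresis as the largest gap between increasing and decreasing sweeps, both as a percentage of full scale, and the apparent frequency of a tone folded back by sampling. The sweep data are hypothetical.

```python
def endpoint_nonlinearity_pct_fs(x, y):
    """Largest deviation from the straight line through the two end points,
    as a percentage of the full-scale output span."""
    slope = (y[-1] - y[0]) / (x[-1] - x[0])
    deviations = [abs(yi - (y[0] + slope * (xi - x[0]))) for xi, yi in zip(x, y)]
    return 100.0 * max(deviations) / abs(y[-1] - y[0])


def hysteresis_pct_fs(up, down, full_scale):
    """Largest difference between increasing and decreasing sweeps, in % FS."""
    return 100.0 * max(abs(u - d) for u, d in zip(up, down)) / full_scale


def aliased_frequency(f_signal, f_sample):
    """Apparent frequency of a sampled tone, folded into [0, f_sample / 2]."""
    f = f_signal % f_sample
    return min(f, f_sample - f)


# Hypothetical calibration sweep of a 0-100 unit sensor.
x = [0, 25, 50, 75, 100]
y_up = [0.0, 26.0, 51.5, 76.0, 100.0]    # readings while increasing the input
y_down = [0.0, 27.0, 52.5, 77.5, 100.0]  # readings while decreasing it
print(endpoint_nonlinearity_pct_fs(x, y_up))   # 1.5
print(hysteresis_pct_fs(y_up, y_down, 100.0))  # 1.5
print(aliased_frequency(950, 1000))            # 50
```

The last line shows why aliasing is dangerous: a 950 Hz disturbance sampled at 1 kHz is indistinguishable from a genuine 50 Hz signal in the digital data.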

Resolution

The resolution of a sensor is the smallest change it can detect in the quantity that it is measuring. The resolution of a sensor with a digital output is usually the resolution of the digital output. The resolution is related to the precision with which the measurement is made, but they are not the same thing. A sensor's accuracy may be considerably worse than its resolution.

Sensors in nature

All living organisms contain biological sensors with functions similar to those of the mechanical devices described. Most of these are specialized cells that are sensitive to:

- Light, motion, temperature, magnetic fields, gravity, humidity, moisture, vibration, pressure, electrical fields, sound, and other physical aspects of the external environment

- Physical aspects of the internal environment, such as stretch, motion of the organism, and position of appendages (proprioception)

- Environmental molecules, including toxins, nutrients, and pheromones

- Interactions between biomolecules, including some kinetic parameters

- Internal metabolic indicators, such as glucose level, oxygen level, or osmolality

- Internal signal molecules, such as hormones, neurotransmitters, and cytokines

Chemical sensor

A chemical sensor is a self-contained analytical device that can provide information about the chemical composition of its environment, that is, a liquid or a gas phase.[5] The information is provided in the form of a measurable physical signal that is correlated with the concentration of a certain chemical species (termed the analyte). Two main steps are involved in the functioning of a chemical sensor, namely, recognition and transduction. In the recognition step, analyte molecules interact selectively with receptor molecules or sites included in the structure of the recognition element of the sensor. Consequently, a characteristic physical parameter varies, and this variation is reported by means of an integrated transducer that generates the output signal. A chemical sensor based on recognition material of biological nature is a biosensor. However, as synthetic biomimetic materials are increasingly replacing recognition biomaterials to some extent, a sharp distinction between a biosensor and a standard chemical sensor is superfluous. Typical biomimetic materials used in sensor development are molecularly imprinted polymers and aptamers.

Biosensor

In biomedicine and biotechnology, sensors that detect analytes by means of a biological component, such as cells, proteins, nucleic acids or biomimetic polymers, are called biosensors. A non-biological sensor for biological analytes, even an organic (carbon-chemistry) one, is instead referred to as a sensor or nanosensor. This terminology applies to both in vitro and in vivo applications. Encapsulating the biological component in a biosensor presents a slightly different problem than in ordinary sensors; this can be done either by means of a semipermeable barrier, such as a dialysis membrane or a hydrogel, or with a 3D polymer matrix, which either physically constrains the sensing macromolecule or chemically constrains it by binding it to the scaffold.

Actuator

An actuator requires a control signal and a source of energy. The control signal is relatively low energy and may be electric voltage or current, pneumatic or hydraulic pressure, or even human power. Its main energy source may be an electric current, hydraulic fluid pressure, or pneumatic pressure. When it receives a control signal, an actuator responds by converting the source's energy into mechanical motion.

An actuator is the mechanism by which a control system acts upon an environment. The control system can be simple (a fixed mechanical or electronic system), software-based (e.g. a printer driver or robot control system), a human, or any other input.

Hydraulic

A hydraulic actuator consists of a cylinder or fluid motor that uses hydraulic power to facilitate mechanical operation. The mechanical motion gives an output in terms of linear, rotary or oscillatory motion. Because liquids are nearly incompressible, a hydraulic actuator can exert a large force. The drawback of this approach is its limited acceleration.

The hydraulic cylinder consists of a hollow cylindrical tube along which a piston can slide. The term single acting is used when the fluid pressure is applied to just one side of the piston. The piston can move in only one direction, a spring frequently being used to give the piston a return stroke. The term double acting is used when pressure is applied on each side of the piston; any difference in pressure between the two sides of the piston moves the piston to one side or the other.[2]
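The force a cylinder can exert follows from pressure times piston area. The sketch below, written in C++ like the robot code later in this document, computes it for single- and double-acting cylinders; the bore and rod sizes are illustrative, and friction, spring return force and seal losses are ignored. Strictly speaking, in a double-acting cylinder with a rod on one side it is the difference in force, not pressure, that decides the direction, because the rod reduces the piston area on that side.

```cpp
// Piston face area (m^2) from bore diameter (m).
double piston_area(double bore_m) {
    const double pi = 3.14159265358979323846;
    return pi * bore_m * bore_m / 4.0;
}

// Single-acting cylinder: force = pressure x piston area
// (return spring and friction ignored).
double single_acting_force(double pressure_pa, double bore_m) {
    return pressure_pa * piston_area(bore_m);
}

// Double-acting cylinder: net force from the pressures on both faces.
// The rod side has a smaller effective area (bore area minus rod area).
// Positive result = extending, negative = retracting.
double double_acting_net_force(double p_cap_pa, double p_rod_pa,
                               double bore_m, double rod_m) {
    const double cap_area = piston_area(bore_m);
    const double rod_side_area = cap_area - piston_area(rod_m);
    return p_cap_pa * cap_area - p_rod_pa * rod_side_area;
}
```

With 10 MPa on a 50 mm bore, the ideal force is about 19.6 kN; with equal pressure of 1 MPa on both sides of a 50 mm piston with a 20 mm rod, the cylinder still extends with about 314 N.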

Pneumatic

Pneumatic rack and pinion actuators for valve controls of water pipes

Pneumatic actuators enable considerable forces to be produced from relatively small pressure changes. These forces are often used with valves to move diaphragms to affect the flow of liquid through the valve.

Electric

An electric actuator is powered by a motor that converts electrical energy into mechanical torque. The electrical energy is used to actuate equipment such as multi-turn valves. It is one of the cleanest and most readily available forms of actuator because it does not directly involve oil or other fossil fuels.

Thermal or magnetic (shape memory alloys)

Actuators that can be actuated by applying thermal or magnetic energy have been used in commercial applications. Thermal actuators tend to be compact, lightweight and economical, with high power density. These actuators use shape memory materials (SMMs), such as shape memory alloys (SMAs) or magnetic shape-memory alloys (MSMAs). Some popular manufacturers of these devices are Finnish Modti Inc., American Dynalloy and Rotork.

Mechanical

A mechanical actuator functions to execute movement by converting one kind of motion, such as rotary motion, into another kind, such as linear motion. An example is a rack and pinion. The operation of mechanical actuators is based on combinations of structural components, such as gears and rails, or pulleys and chains.

3D printed soft actuators

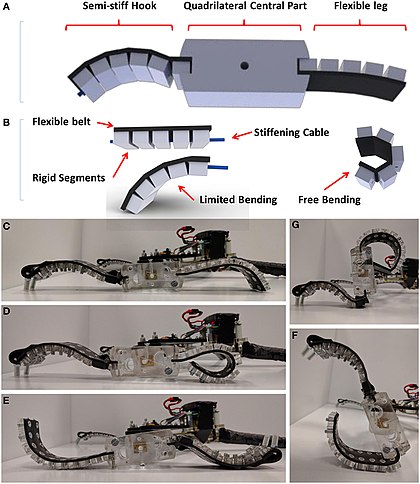

Soft actuators are being developed to handle fragile objects, for example when harvesting fruit in agriculture or manipulating internal organs in biomedicine, tasks that have always been challenging for robotics. Unlike conventional actuators, soft actuators produce flexible motion by integrating microscopic changes at the molecular level into a macroscopic deformation of the actuator materials.

The majority of existing soft actuators are fabricated using multistep, low-yield processes such as micro-moulding, solid freeform fabrication, and mask lithography. However, these methods require manual fabrication of devices, post-processing/assembly, and lengthy iterations before the fabrication process matures. To avoid the tedious and time-consuming aspects of current fabrication processes, researchers are exploring better-suited manufacturing approaches for soft actuators. Soft systems that can be fabricated in a single step by rapid prototyping methods, such as 3D printing, are therefore used to narrow the gap between the design and implementation of soft actuators, making the process faster, less expensive, and simpler. They also allow all actuator components to be incorporated into a single structure, eliminating the need for external joints, adhesives, and fasteners. This reduces the number of discrete parts, post-processing steps, and fabrication time.

3D printed soft actuators are classified into two main groups, namely "semi 3D printed soft actuators" and "3D printed soft actuators". This classification distinguishes soft actuators that are fabricated entirely by 3D printing from those whose parts are made by 3D printers and then post-processed. It also clarifies the main advantage of fully 3D printed soft actuators over semi 3D printed ones: they can operate without any further assembly.

Shape memory polymer (SMP) actuators are the most similar to human muscles, responding to a range of stimuli such as light, electrical, magnetic, heat, pH, and moisture changes. They have some deficiencies, including fatigue and slow response, which have been improved through the introduction of smart materials and the combination of different materials by means of advanced fabrication technology. The advent of 3D printers has opened a new pathway for fabricating low-cost, fast-response SMP actuators. The ability of an SMP to respond to external stimuli like heat, moisture, electrical input, light or magnetic field by recovering its original shape is referred to as the shape memory effect (SME). SMPs exhibit some rewarding features, such as low density, high strain recovery, biocompatibility, and biodegradability.

Photopolymers, or light-activated polymers (LAPs), are another type of SMP, activated by light stimuli. LAP actuators can be controlled remotely, with instant response and without any physical contact, simply by varying the light frequency or intensity.

The need for soft, lightweight and biocompatible actuators in soft robotics has led researchers to devise pneumatic soft actuators, because of their intrinsic compliance and their ability to produce muscle-like tension.

Electroactive polymers (EAPs), such as dielectric elastomers (DE), ionic polymer metal composites (IPMC), ionic electroactive polymers, polyelectrolyte gels, and gel-metal composites, are common materials for forming 3D layered structures that can be tailored to work as soft actuators. EAP actuators are categorized as 3D printed soft actuators that change shape in response to electrical excitation.

Examples and applications

In engineering, actuators are frequently used as mechanisms to introduce motion, or to clamp an object so as to prevent motion. In electronic engineering, actuators are a subdivision of transducers. They are devices that transform an input signal (mainly an electrical signal) into some form of motion.

Examples of actuators

- Comb drive

- Digital micromirror device

- Electric motor

- Electroactive polymer

- Hydraulic cylinder

- Piezoelectric actuator

- Pneumatic actuator

- Screw jack

- Servomechanism

- Solenoid

- Stepper motor

- Shape-memory alloy

- Thermal bimorph

Circular to linear conversion

Motors are mostly used when circular motions are needed, but can also be used for linear applications by transforming circular to linear motion with a lead screw or similar mechanism. On the other hand, some actuators are intrinsically linear, such as piezoelectric actuators. Conversion between circular and linear motion is commonly made via a few simple types of mechanism, including:

- Screw: Screw jack, ball screw and roller screw actuators all operate on the principle of the simple machine known as the screw. By rotating the actuator's nut, the screw shaft moves in a line. By moving the screw shaft, the nut rotates.

- Wheel and axle: Hoist, winch, rack and pinion, chain drive, belt drive, rigid chain and rigid belt actuators operate on the principle of the wheel and axle. By rotating a wheel/axle (e.g. drum, gear, pulley or shaft) a linear member (e.g. cable, rack, chain or belt) moves. By moving the linear member, the wheel/axle rotates.
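Both mechanisms reduce to simple proportionalities. A minimal C++ sketch, assuming no backlash or slip: a screw advances by its lead (the axial travel per revolution) each turn, and a wheel, drum, pulley or pinion pays out one circumference per turn. The lead and radius values in the usage note are illustrative.

```cpp
// Screw: the nut (or shaft) advances one lead per revolution.
double screw_travel_mm(double lead_mm, double revolutions) {
    return lead_mm * revolutions;
}

// Wheel and axle (drum, pulley, pinion): the linear member (cable, belt,
// rack) moves by the arc length rolled off, i.e. circumference x turns.
double wheel_travel_mm(double radius_mm, double revolutions) {
    const double pi = 3.14159265358979323846;
    return 2.0 * pi * radius_mm * revolutions;
}
```

For example, 10 turns of a screw with a 2 mm lead move the nut 20 mm, and one turn of a pinion with a 10 mm pitch radius moves its rack about 62.8 mm.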

Virtual instrumentation

In virtual instrumentation, actuators and sensors are the hardware complements of virtual instruments.

Performance metrics

Performance metrics for actuators include speed, acceleration, and force (alternatively, angular speed, angular acceleration, and torque), as well as energy efficiency and considerations such as mass, volume, operating conditions, and durability, among others.

Force

When considering force in actuators for applications, two main metrics should be considered: static and dynamic load. Static load is the force capability of the actuator while not in motion; dynamic load is its force capability while in motion. The two rarely have the same load capacity and must be considered separately.

Speed

Speed should be considered primarily at no load, since the speed will invariably decrease as the load increases. The rate at which the speed decreases correlates directly with the amount of force and the initial speed.

Operating conditions

Actuators are commonly rated using the standard IP Code rating system (IEC 60529), which grades how well an enclosure keeps out solids such as dust and liquids such as water. Actuators intended for harsh environments will have a higher IP rating than those for personal or common industrial use.

Durability

This will be determined by each individual manufacturer, depending on usage and quality.

Robotics

These technologies are used to develop machines that can substitute for humans and replicate human actions. Robots can be used in any situation and for any purpose, but today many are used in dangerous environments (including bomb detection and de-activation), manufacturing processes, or where humans cannot survive. Robots can take on any form but some are made to resemble humans in appearance. This is said to help in the acceptance of a robot in certain replicative behaviors usually performed by people. Such robots attempt to replicate walking, lifting, speech, cognition, and basically anything a human can do. Many of today's robots are inspired by nature, contributing to the field of bio-inspired robotics.

The concept of creating machines that can operate autonomously dates back to classical times, but research into the functionality and potential uses of robots did not grow substantially until the 20th century.[1] Throughout history, it has been frequently assumed that robots will one day be able to mimic human behavior and manage tasks in a human-like fashion. Today, robotics is a rapidly growing field, as technological advances continue; researching, designing, and building new robots serve various practical purposes, whether domestically, commercially, or militarily. Many robots are built to do jobs that are hazardous to people such as defusing bombs, finding survivors in unstable ruins, and exploring mines and shipwrecks. Robotics is also used in STEM (Science, Technology, Engineering, and Mathematics) as a teaching aid.

Robotics is a branch of engineering that involves the conception, design, manufacture, and operation of robots. This field overlaps with electronics, computer science, artificial intelligence, mechatronics, nanotechnology and bioengineering.

Science-fiction author Isaac Asimov is often given credit for being the first person to use the term robotics in a short story composed in the 1940s. In the story, Asimov suggested three principles to guide the behavior of robots and smart machines. Asimov's Three Laws of Robotics, as they are called, have survived to the present:

1. Robots must never harm human beings.
2. Robots must follow instructions from humans without violating rule 1.
3. Robots must protect themselves without violating the other rules.

The Shadow robot hand system

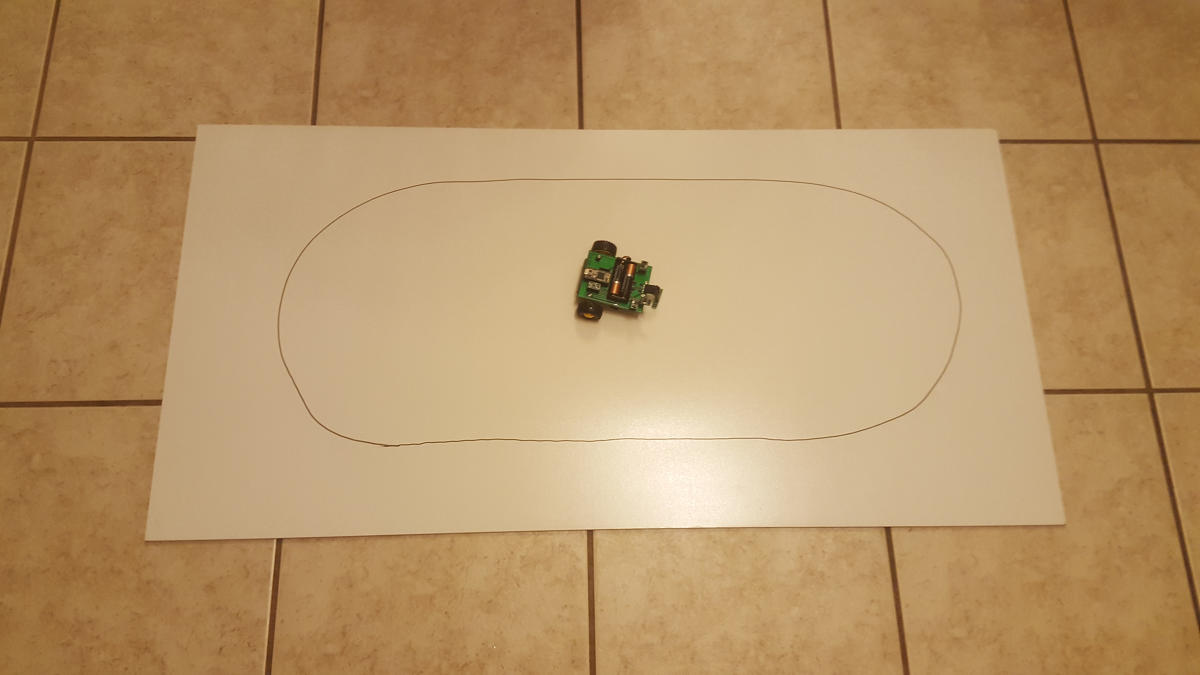

How to Build a Robot - Design and Schematic

Start building a robot that can follow lines or walls and avoid obstacles!

Overview

This is part 1 of a series of articles on my experiences building a robot that can do various things. I thought it would be neat to create a robot that was easy to put together with a single soldering iron and was also affordable. I made up the following requirements for my robot:

- Many kits are expensive, so it must be relatively inexpensive.

- It must be easily put together without special equipment.

- It must be easily programmable without a complicated IDE or programmer.

- It must be powerful enough for expandability.

- It should run off a simple power source.

- It should be able to follow a line or a wall, and avoid obstacles.

Choosing the components

The first step in any project is figuring out what pieces are required. A robot needs a few key things to be useful: a way to move, think, and interact with its surroundings. To keep costs down, I need to get by with two wheels. This means that to steer, I need two separate motors that can be operated independently. I also need a ball caster that the robot can lean on to glide along. This has the unfortunate downside that the robot really can't drive on any surfaces other than smooth floors. I want the brains to be some sort of well-known microcontroller platform, so that it won't need a special programmer or a guide to use the development tools. The robot needs sensors that make it aware of lines, walls, and obstacles. I also want to minimize the number of different places I buy things from, to keep shipping costs low. Lastly, the components need to be small because I want to design the board for low-cost PCB manufacturing and stay within the limits of the free version of Eagle CAD.

Mechanical: Motors, Gears, Wheels

I found a couple of websites that offer various hobby motors and robot parts, but I settled on Pololu because their prices were decent and they had everything I needed. The products from Tamiya looked pretty good. The 70168 Double Gearbox Kit comes with gears, motors, and shafts, which greatly simplifies the mechanical design. It's also very cheap! The motors normally run on 3V, but could run higher at the expense of reduced operational life. Several gear ratios are supported, so I can fine-tune the speed of the robot once I have it. I decided on the cheapest wheels that would fit the shaft of this kit, the Tamiya 70101 Truck Tire Set. This set comes with four wheels and I only need two, but it's cheap and spares never hurt! The front wheel is just a ball caster or plastic screw so that the robot can slide along the floor.

Brains: Microcontroller

There are several microcontroller platforms that are fairly popular. The obvious choice is some sort of Arduino, given its popularity. Other options are Teensy, Launchpad, and Raspberry Pi. The Pi is way too big and power hungry, and the Launchpad is too big. I've used Teensy in the past and had good success. The Teensy is slightly more expensive than the Arduino Mini but offers a much more powerful platform. The latest Teensy has a Cortex-M4, which is plenty of power for a simple robot. A bonus is that the Teensy has an onboard 500mA regulator, which can be used for all of the sensors.

Interaction: Sensors

Different sensors are needed for following lines and following walls. The line-following sensors are usually reflectometers that vary a voltage depending on how much light is reflected from the ground. This is done using an LED and a photodiode or other light detector. The wall and obstacle detectors are usually some sort of distance sensor. Both of these types were available in a convenient DIP breakout form from the same store as the motors, which saves on shipping and makes them easy to solder! For the line sensor, I found one with 3 sensors, which allows the line to be kept centered on the robot at all times. For the distance sensor, I decided on the high-brightness IR sensor, since I'm operating at a lower voltage than the sensor expects.

Power: Motor Driver, Battery

The motor driver needs to be able to drive the 3V motors above. I also wanted it to be scalable in case I upgrade the motors in the future. I found one from the same store as above here. It can operate on 0-11V and supply plenty of current for any motor I'd want to add in the future. For the battery, I'd prefer that the robot run on almost anything. The input to the Teensy accepts up to 5.5V, which means a lithium cell could be used. Lithium cells require a battery charger, though, and I don't want to add that to the expenses. Using two normal AA batteries offers quite a bit of power without this need. The downside is that they only supply ~3V and are large. An input voltage of 3V is below the 3.3V output of the Teensy's linear regulator. The robot will still operate, because all of the components chosen for the Teensy can operate on a lower voltage. However, the Teensy's onboard regulator will be in dropout, so its 3.3V rail will effectively be unregulated.

Optional Items

I want a way to control the board through my smartphone at some point, so I included a BLE (Bluetooth Low Energy) device in the schematic. This isn't necessary to follow lines and walls, but I thought it would be a cool addition. I also want to be able to remove parts easily, so I'm going to use female headers to connect everything to the board.

Complete Bill of Materials

Necessary Materials

| Part Type | Part Number | Cost (USD) |
|---|---|---|
| Microcontroller | Teensy 3.2 | 19.80 |
| Motor | Tamiya 70168 | 9.25 |
| Motor Driver | DRV8835 | 4.49 |
| Ball Caster | Tamiya 70144 | 5.99 |
| Reflectance Sensor | QTR-3RC | 4.95 |
| Tires | Tamiya 70101 | 4.10 |
| Distance Sensor | Pololu 38kHz | 5.95 |
| PCB | Elecrow 10x10cm | 14.00 |
| Battery Case | 2-AA Battery Holder | 0.79 |
| Total w/o shipping: | | $69.32 |
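Keeping prices in integer cents avoids floating-point rounding when totalling a bill of materials. This small C++ sketch just sums the cost column of the necessary-materials table; by this arithmetic the nine rows add up to $69.32 before shipping.

```cpp
#include <string>
#include <vector>

struct BomLine {
    std::string part;
    long cents;  // price in US cents, so the sum is exact
};

// Sum every line of the BOM.
long bom_total_cents(const std::vector<BomLine>& lines) {
    long total = 0;
    for (const BomLine& line : lines) total += line.cents;
    return total;
}

// Prices copied from the "Necessary Materials" table.
const std::vector<BomLine> kNecessary = {
    {"Teensy 3.2", 1980},     {"Tamiya 70168", 925},
    {"DRV8835", 449},         {"Tamiya 70144", 599},
    {"QTR-3RC", 495},         {"Tamiya 70101", 410},
    {"Pololu 38kHz", 595},    {"Elecrow 10x10cm", 1400},
    {"2-AA Battery Holder", 79},
};
```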

Optional Materials

| Part Type | Part Number | Cost (USD) |
|---|---|---|
| Wireless | nRF51 Dongle | 52.39 |
| Connectors | Various female 100mil headers | 5.00 |

Schematic

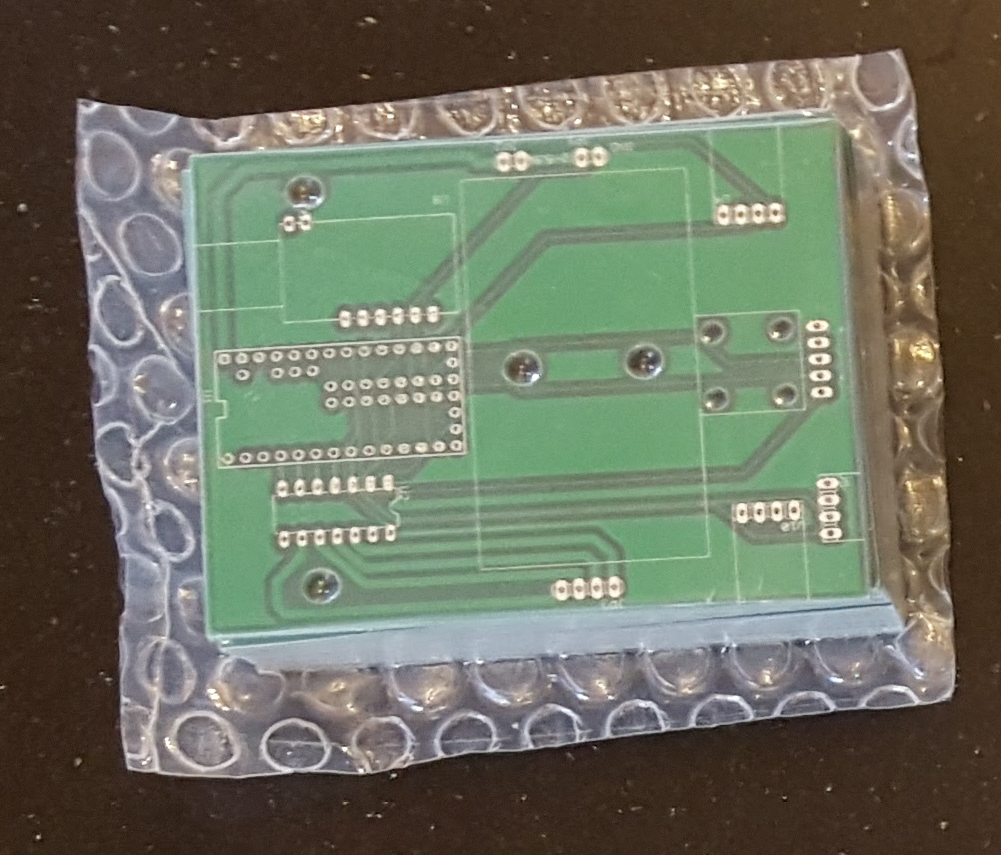

I am using the freeware version of Eagle CAD to draw the schematic and layout. I have created custom symbols/footprints for all of the items except the Teensy, available for download in Part 2 of this series. The Teensy has libraries for Eagle here. You might notice the schematic lacks any simple devices like resistors or capacitors. This is because every one of these parts is a break-out board, to make assembly as easy as possible. Any recent chip will likely be surface mount, which is difficult for a hobbyist to solder. The schematics for each of these boards are available from their respective sellers. Here are some key points for this schematic:

- I put a jumper between the battery and the rest of the circuitry. This is useful to disconnect the power without removing any batteries, to measure current, or to install a diode in its place to protect the circuit from reverse polarity.

- All interfaces are simple digital I/O except two. There is a UART connection between the nRF51 and the Teensy through pins 9/10. The motor controller requires PWM, which comes from pins 6 and 4 of the Teensy.

- There is no LED on the schematic. The LED that is on the Teensy can be used for debugging or indication.

- There is no button. I considered putting a button on the reset line of the Teensy but decided against it to keep costs lower.

- When programming the Teensy through USB, you must either cut the small trace connecting Vin/Vusb or make sure the batteries are not connected while the USB is plugged in.
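A PWM motor driver typically needs a direction level and a duty cycle per motor. Assuming the DRV8835 is wired in its PHASE/ENABLE mode (one pin per motor sets direction, the PWM pin sets speed; the schematic only specifies the PWM pins, so the direction wiring here is an assumption), a signed speed command can be split as in this hypothetical C++ helper. It is not part of the author's robot library.

```cpp
#include <cstdint>
#include <cstdlib>

// Hypothetical command for one motor channel of a PHASE/ENABLE-style
// driver: one pin selects direction, the other receives a PWM duty.
struct MotorCommand {
    bool reverse;        // level for the direction (PHASE) pin
    std::uint8_t duty;   // 0-255, as written to an 8-bit PWM output
};

// Map a signed speed in percent (-100..100) to direction + 8-bit duty.
MotorCommand to_motor_command(int speed_percent) {
    if (speed_percent > 100) speed_percent = 100;    // clamp out-of-range input
    if (speed_percent < -100) speed_percent = -100;
    MotorCommand cmd;
    cmd.reverse = speed_percent < 0;
    // Scale |speed| from 0..100 to 0..255 with integer rounding.
    cmd.duty = static_cast<std::uint8_t>((std::abs(speed_percent) * 255 + 50) / 100);
    return cmd;
}
```

On the Teensy, the result would then be applied with Arduino's `digitalWrite()` on the direction pin and `analogWrite()` (8-bit by default) on the PWM pin.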

Schematic File

Conclusion

In this article I outlined the requirements for the robot and my design choices to meet those requirements. These choices led to a schematic and bill of materials (BOM) to add up the costs for the project. In part 2 of this series, I'll draw the circuit board so it can be manufactured.

This is part 2 of a series of articles on my experiences building a robot that can do various things. Please see part 1 here.

In this article I'll talk about how I converted the design and schematic into a printed-circuit board that can be ordered online!

Choosing a Board House

There are so many board houses these days that it can actually be hard to choose one. I went with the lowest-cost one I could find, since my objective was an affordable robot and I don't have any complicated board requirements. I found a website called Elecrow that offered a deal on 10 PCBs for only $14! That's pretty amazing. The shipping doubles the cost if you want it shipped in a reasonable amount of time, but it's still not too bad considering it's coming from China. Whichever board house you choose, make sure to look at its specifications for boards. Some key things to look for are:

- How many layers do they support?

- Do they offer silkscreen for free on both layers?

- What is the minimum via size?

- What are the drill hole ranges?

- What are the minimum trace widths and spacing? This specification is one of the more critical ones for small/dense boards, because if the board house can't handle close traces it will be difficult to route the board.

- It makes it way easier if the board house has a DRC (design-rule check) file you can load into your layout program to make sure you adhere to their specifications. Fortunately, Elecrow made one of these files for 2-layer boards.
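At its core, a DRC compares layout features against the board house's minimums. The toy C++ sketch below shows the idea for three of the specifications listed. The struct names and the 6 mil / 12 mil limits used in the usage note are hypothetical, and a real DRC in Eagle checks every feature geometrically using the loaded rules file.

```cpp
#include <string>
#include <vector>

// Hypothetical board-house limits, in mils. Real values come from the
// board house's published specification or DRC file.
struct DesignRules {
    double min_trace_mil;
    double min_space_mil;
    double min_drill_mil;
};

struct Violation {
    std::string what;
    double value_mil;
    double limit_mil;
};

// Compare the worst-case measured features of a layout against the rules
// and return every feature that falls below its minimum.
std::vector<Violation> check_rules(const DesignRules& rules,
                                   double narrowest_trace_mil,
                                   double tightest_space_mil,
                                   double smallest_drill_mil) {
    std::vector<Violation> out;
    if (narrowest_trace_mil < rules.min_trace_mil)
        out.push_back({"trace width", narrowest_trace_mil, rules.min_trace_mil});
    if (tightest_space_mil < rules.min_space_mil)
        out.push_back({"trace spacing", tightest_space_mil, rules.min_space_mil});
    if (smallest_drill_mil < rules.min_drill_mil)
        out.push_back({"drill size", smallest_drill_mil, rules.min_drill_mil});
    return out;
}
```

With rules of 6 mil trace, 6 mil space and 12 mil drill, a layout whose tightest spacing is 5 mil reports a single "trace spacing" violation.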

Placing Components

It's important to spend a lot of time on component placement to meet mechanical requirements and to group circuits together. This board has a lot of break-out boards tied together, so grouping circuits isn't as crucial. The big mechanical issue is fitting everything on the board size allowed by Eagle. The freeware version of Eagle only allows a board of up to 100 x 80mm. This works out, since the board house has a special on boards that are 10x10cm or less. However, it makes it difficult to fit large items such as batteries and motors. I created packages for all of the components and placed them as shown below. The only issue I had was that two of the ball caster screws interfere with the battery holder's plastic, so either the battery holder will be nudged up because it's resting on the screws, or I can skip those two screws and rely on the front two. The line sensor will actually be connected with a series of headers because it has to be very close to the floor. Since I didn't have room underneath the board, it will have to be soldered to the top with a right-angle connector and come off the front of the robot.

Routing Key Nets

The auto-router doesn't do a good job of routing power traces. I routed the important connections, such as power, motor, and motor control, manually, as shown below. The trace width for power should be as large as possible to limit the voltage drop. If possible, it's best to route all of the traces manually so you know what's happening with the signals. For signals such as low-speed digital connections and other non-critical nets, the auto-router can be a great time saver. I did not route the ground because I plan to use a ground plane to connect the grounds together. The yellow lines shown below are called "ratsnest" lines, and they show which connections have yet to be made. They are useful during manual routing to see where each net should go.
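How wide is wide enough? A rough estimate of a trace's DC resistance is R = ρL/(w·t), and the drop is that resistance times the current. The C++ sketch below assumes a copper resistivity of about 1.72e-8 Ω·m and 1 oz copper (about 35 µm thick); plating, temperature and the board house's actual copper weight will shift the numbers.

```cpp
// Approximate DC resistance of a straight copper trace: R = rho * L / (w * t).
// Assumptions: copper resistivity ~1.72e-8 ohm*m near room temperature and
// 1 oz copper (~35 um thick); real boards vary with plating and temperature.
double trace_resistance_ohm(double length_mm, double width_mm,
                            double thickness_um = 35.0,
                            double rho_ohm_m = 1.72e-8) {
    const double length_m = length_mm * 1e-3;
    const double width_m = width_mm * 1e-3;
    const double thickness_m = thickness_um * 1e-6;
    return rho_ohm_m * length_m / (width_m * thickness_m);
}

// Voltage drop along the trace for a given current (Ohm's law).
double trace_drop_volts(double current_a, double length_mm, double width_mm) {
    return current_a * trace_resistance_ohm(length_mm, width_mm);
}
```

At 1 A over 100 mm, widening a trace from 10 mil (0.254 mm) to 1 mm cuts the estimated drop from about 0.19 V to about 0.05 V, which matters when the whole supply is only ~3V.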

Auto-routing the Rest

I set up the auto-router to use the spacing and trace width specified by the board house, which were actually just the default settings. I also told the router to use high effort and all of my computer's processing power. A board this small doesn't tax the auto-router much, but a larger board could take a while. Before running the auto-router, be sure to save a copy of your PCB file in case the result isn't what you want.

Here is the result of the auto-router:

Clean-up and Ground Pour

It's important to run a DRC because the auto-router can make mistakes and leave little stray traces that shouldn't be there. The DRC caught one overlap error that the auto-router created near pin 5 of the line sensor:

I also removed all of the ground traces since I intend to use a ground pour. To use a ground pour, draw a polygon around the board on top of the board dimension lines. Then use the "name" command to set the net to "GND." I set up the ground plane to stay 50 mils away from any other traces using the Spacing option in the polygon settings. This reduces the odds that a trace could be shorted to ground if the board house makes a mistake.

Ground Plane Properties

Ground Plane, No Stitching

The ground plane needs to be stitched together using ground vias. This minimizes capacitive coupling between the layers which can cause issues with analog and RF circuitry. More importantly for this robot though is that it reduces the loops and length that the return current needs to take to make it back to the battery. It also allows areas that the plane couldn't reach because of signal traces to be filled.

Ground Plane - Stitched

Final DRC and ERC checks

Run the DRC and ERC checkers one last time to make sure there aren't any board issues that will be discovered by the board house. It's also good practice to double check key connections and orientations, especially off-board connections. It's really common to get them backwards.

Creating Gerbers

What are Gerbers? They are a collection of files that the board house uses to create the PCB. A CAM (computer-aided manufacturing) file is a way to tell the design program how to create the Gerbers. Elecrow has a CAM file that is available for Eagle which makes it really easy to create Gerbers. Essentially it defines which layers should be combined in each Gerber file. The CAM processor looks like the following:

After processing the job, the following files are generated. These files are combined into a zip and uploaded to the board house during the checkout process.

I ordered the boards from Elecrow using the Shenzhen DHL (2-3 business days) shipping method. I placed my order on Oct/18 and received the boards on Oct/23! Here they are:

Note: The boards pictured have a smaller hole pattern for a ball caster. I redesigned the package for this article to fit a larger ball caster.

Conclusion

In this article I showed the process of taking a schematic and creating a PCB that can be ordered from online manufacturers. Boards are so cheap to make these days that unless a project is very simple, it makes sense to order the PCB. Your project will be much cleaner and take less time to wire together! In the next article I'll put the robot together and verify the electrical connections!

This is part 3 of a series of articles on my experiences building a robot that can do various things.

In this article I'll talk about how I assembled the robot and wrote a robot library to test the various circuitry.

Gathering the Components

I ordered all of the components, which all arrived reasonably quickly. Here they are laid out, ready to be assembled.

Assembling the Mechanical Components

Ball Caster

The ball caster came as a kit that had to be assembled. It offered a variety of size options. It wasn't too difficult so I didn't take any pictures of the assembly process.

Motor

The motor also came as a kit and was much more complicated. The motor kit offers 4 different gear ratios. I ended up choosing 38:1 for the gear ratio because I didn't want the robot to be too slow, but it still had to handle pulling its own weight around. The higher gear ratios would have provided an unnecessary amount of torque, since the only real weight on the robot is the batteries. The gear ratio can always be changed after assembly, but it would be a bit of a pain. The motor speed can also be adjusted by altering the PWM duty cycle sent to the motor controller, so if the gear ratio produced too high a speed, it can be lowered in software.
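The gear-ratio trade-off is easy to estimate: the output shaft turns at the motor speed divided by the ratio, while the torque is ideally multiplied by the same ratio (gear losses ignored). In this C++ sketch, the motor speed and wheel diameter in the usage note are hypothetical placeholders, not the specifications of the Tamiya motor or tires.

```cpp
// Output shaft speed after a gear reduction: motor rpm / ratio.
double output_rpm(double motor_rpm, double gear_ratio) {
    return motor_rpm / gear_ratio;
}

// Ideal output torque after the reduction (losses ignored): torque x ratio.
double output_torque(double motor_torque, double gear_ratio) {
    return motor_torque * gear_ratio;
}

// Ground speed (m/s) of a wheel of the given diameter turning at wheel_rpm,
// assuming no slip: one circumference per revolution.
double wheel_speed_mps(double wheel_rpm, double wheel_diameter_mm) {
    const double pi = 3.14159265358979323846;
    const double circumference_m = pi * wheel_diameter_mm * 1e-3;
    return circumference_m * wheel_rpm / 60.0;
}
```

For instance, a motor spinning at a hypothetical 11,400 rpm through a 38:1 reduction turns the wheels at 300 rpm, which for a hypothetical 36 mm wheel is roughly 0.57 m/s.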

Here are all of the various pieces from the kit. I used a cutting board for assembly because the small screws have a habit of rolling off the table.

The fully assembled robot motor and gears. The kit came with everything required, including grease. The only tool I needed was a screwdriver.

Attached to the PCB, it actually lines up nicely with the silkscreen!

Soldering the Components

I used female headers in case I wanted to swap out the components later. Soldering all of the male headers to the DIP breakout boards was a bit tedious, but much easier than soldering all of the surface-mount components would have been! I had to stack several headers together to get the line sensor close enough to the ground to be effective. If I started from scratch, I'd prefer a cleaner way of mounting the line sensor.

Testing the Motors

At first I connected the motors directly to the two AA batteries to check that the gears were properly greased. I also measured the current to make sure it was within spec. After confirming that the motors could turn both forwards and in reverse, I connected them to the motor driver. I wrote some test software that ran the motors forwards and backwards for five seconds each. This proved out the connections between the Teensy, the motor driver, and the motors all at once. It's a pleasing result when you don't have to troubleshoot connections on a first-revision PCB!

The following code drives the robot forwards for 5 seconds at a speed setting of 100 (a little under half power, assuming the default 8-bit PWM range of 0-255), and then backwards for 5 seconds. I wrote a small library, robot.h and robot.ino, that takes care of turns and sensor reading; it's listed at the end of the article. To program the Teensy, install the Teensyduino add-on for the Arduino IDE. Programming is then exactly the same as with an Arduino.

I used a jumper as an on/off switch during testing.

It's alive!

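#include "robot.h"

void setup()
{
  Serial.begin(38400);
  Serial.println("Boot");
  rbt_init();

  rbt_move(FWD, 100);   // forwards at a PWM value of 100 for 5 seconds
  delay(5000);
  rbt_move(REV, 100);   // then backwards for 5 seconds
  delay(5000);
  rbt_move(BRAKE, 0);   // stop both motors
}

void loop()
{
  // nothing to do: the test runs once in setup()
}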


Testing the Sensors

To test the sensors, I wrote a program that prints the raw sensor values. I found that I couldn't enable all of the wall sensors at the same time, or they would all report an obstacle 100% of the time. This is because the IR receivers have a wide viewing angle and pick up the signals from adjacent transmitters. I wrote the library so that it takes turns reading each sensor, using each sensor's enable pin to switch it on only during its own time slot. The wall sensors also have to be angled up slightly, or they detect the ground as an object.

The sensor-reading function takes about 1 ms to run, so the sensors can be sampled at up to roughly 1 kHz, which leaves room for fairly complex control algorithms if needed. The test code also showed that the line sensor has to be very close to the ground to reliably tell colors apart. If the robot is a couple of inches off the ground, the line sensor readings max out at 1000, the 1 ms timeout of the reading loop. This can actually be useful: the robot can stop moving when it's being carried around.

Line sensor very close to ground, but not touching!

#include "robot.h"

void setup()
{
  Serial.begin(38400);
  Serial.println("Boot");
  rbt_init();
}

uint16_t lleft, lmid, lright;   // line sensor counts (0-1000)
boolean wleft, wmid, wright;    // true when a wall sensor detects an object

void loop()
{
  rbt_sns(&lleft, &lmid, &lright, &wleft, &wmid, &wright);

  // the leading spaces keep the printed fields from running together
  Serial.print("Line left: ");
  Serial.print(lleft);
  Serial.print("  Line mid: ");
  Serial.print(lmid);
  Serial.print("  Line right: ");
  Serial.print(lright);
  Serial.print("  Wall left: ");
  Serial.print(wleft);
  Serial.print("  Wall mid: ");
  Serial.print(wmid);
  Serial.print("  Wall right: ");
  Serial.println(wright);

  delay(100);  // throttle the output so the serial monitor stays readable
}


Conclusion

In this article I showed the process of assembling the robot and testing the components individually. It's important to do this when you first get boards back, because you never know what mistakes might have been made in the design or manufacture of the PCB. If you jump straight into the application design, you might miss something that causes problems down the road. In the next article I'll talk about how to turn the robot into a line follower by writing a simple algorithm that keeps it centered on a black line.

Robot Library

robot.h

#ifndef ROBOT_H
#define ROBOT_H

#include "Arduino.h"

/* DRV8835 motor driver pins */
const int BPHASE = 5;
const int APHASE = 3;
const int AEN = 4;
const int BEN = 6;
const int DRV_MODE = 2;

#define MOTOR_REV LOW
#define MOTOR_FWD HIGH

/* reflectance (line) sensor interface */
const int OUT1 = 33;
const int OUT2 = 32;
const int OUT3 = 31;

/* wall sensor interface: each sensor has an enable pin and an output pin */
const int WALL_LEFT_EN = 15;
const int WALL_LEFT = 14;
const int WALL_RIGHT_EN = 19;
const int WALL_RIGHT = 18;
const int WALL_MID_EN = 17;
const int WALL_MID = 16;

/* robot interface */
typedef enum {
  LEFT,
  RIGHT,
  FWD,
  REV,
  BRAKE,
} direction_t;

/* speed is the PWM value passed to analogWrite(); 0 stops both motors */
void rbt_move(direction_t new_dir, uint8_t speed);

/* reads all line and wall sensors; takes about 1 ms */
void rbt_sns(uint16_t *line_left,
             uint16_t *line_mid,
             uint16_t *line_right,
             boolean *wall_left,
             boolean *wall_mid,
             boolean *wall_right);

void rbt_init();

#endif /* ROBOT_H */


robot.ino

#include "robot.h"

void rbt_init()
{
  /* DRV8835 motor driver pins */
  pinMode(BPHASE, OUTPUT);
  pinMode(APHASE, OUTPUT);
  pinMode(AEN, OUTPUT);
  pinMode(BEN, OUTPUT);
  pinMode(DRV_MODE, OUTPUT);

  /* wall sensors: enable pins are outputs, detection pins are inputs */
  pinMode(WALL_LEFT_EN, OUTPUT);
  pinMode(WALL_MID_EN, OUTPUT);
  pinMode(WALL_RIGHT_EN, OUTPUT);
  pinMode(WALL_LEFT, INPUT);
  pinMode(WALL_MID, INPUT);
  pinMode(WALL_RIGHT, INPUT);

  /* start with every wall sensor disabled */
  digitalWrite(WALL_LEFT_EN, LOW);
  digitalWrite(WALL_MID_EN, LOW);
  digitalWrite(WALL_RIGHT_EN, LOW);

  /* simplified drive mode (PHASE/ENABLE) */
  digitalWrite(DRV_MODE, HIGH);
}

void rbt_move(direction_t new_dir, uint8_t speed)
{
  if (speed)
  {
    switch (new_dir) {
      case LEFT:
        /* arc left: motor B runs at half speed, rounded up */
        digitalWrite(BPHASE, MOTOR_FWD);
        digitalWrite(APHASE, MOTOR_FWD);
        analogWrite(AEN, speed);
        analogWrite(BEN, speed - speed/2);
        break;
      case RIGHT:
        /* arc right: motor A runs at half speed, rounded up */
        digitalWrite(BPHASE, MOTOR_FWD);
        digitalWrite(APHASE, MOTOR_FWD);
        analogWrite(AEN, speed - speed/2);
        analogWrite(BEN, speed);
        break;
      case FWD:
        digitalWrite(BPHASE, MOTOR_FWD);
        digitalWrite(APHASE, MOTOR_FWD);
        analogWrite(AEN, speed);
        analogWrite(BEN, speed);
        break;
      case REV:
        digitalWrite(BPHASE, MOTOR_REV);
        digitalWrite(APHASE, MOTOR_REV);
        analogWrite(AEN, speed);
        analogWrite(BEN, speed);
        break;
      default:
        /* BRAKE: zero PWM on both enable pins */
        analogWrite(AEN, 0);
        analogWrite(BEN, 0);
        break;
    }
  }
  else
  {
    /* a speed of 0 always stops both motors */
    analogWrite(AEN, 0);
    analogWrite(BEN, 0);
  }
}

/* function takes about 1ms to run */
#define LOOP_ITER_CNT 2

void rbt_sns(uint16_t *line_left,
             uint16_t *line_mid,
             uint16_t *line_right,
             boolean *wall_left,
             boolean *wall_mid,
             boolean *wall_right)
{
  *line_left = 0;
  *line_mid = 0;
  *line_right = 0;
  uint16_t usec_timer = 0;

  /* line sensor: charge the capacitors by driving the lines high */
  pinMode(OUT1, OUTPUT);
  pinMode(OUT2, OUTPUT);
  pinMode(OUT3, OUTPUT);
  digitalWrite(OUT1, HIGH);
  digitalWrite(OUT2, HIGH);
  digitalWrite(OUT3, HIGH);
  delayMicroseconds(3);

  /* set to Hi-Z to let the capacitors discharge */
  pinMode(OUT1, INPUT);
  pinMode(OUT2, INPUT);
  pinMode(OUT3, INPUT);

  /* enable first wall sensor */
  digitalWrite(WALL_LEFT_EN, HIGH);

  while (1) {
    /* each loop is about 2us at 48MHz */
    usec_timer += LOOP_ITER_CNT;

    /* while a line still reads high its capacitor hasn't decayed yet,
       so add one loop's worth of time to that sensor's count */
    if (digitalRead(OUT1) == HIGH)
    {
      (*line_left) += LOOP_ITER_CNT;
    }
    if (digitalRead(OUT2) == HIGH)
    {
      (*line_mid) += LOOP_ITER_CNT;
    }
    if (digitalRead(OUT3) == HIGH)
    {
      (*line_right) += LOOP_ITER_CNT;
    }

    /* take turns reading wall sensors because they interfere with each other;
       the outputs are active low, so LOW means an object was detected */
    if (usec_timer == 300)
    {
      *wall_left = (digitalRead(WALL_LEFT) == LOW);
      digitalWrite(WALL_LEFT_EN, LOW);
    }
    if (usec_timer == 400)
    {
      digitalWrite(WALL_MID_EN, HIGH);
    }
    if (usec_timer == 700)
    {
      *wall_mid = (digitalRead(WALL_MID) == LOW);
      digitalWrite(WALL_MID_EN, LOW);
      digitalWrite(WALL_RIGHT_EN, HIGH);
    }
    if (usec_timer >= 1000)
    {
      *wall_right = (digitalRead(WALL_RIGHT) == LOW);
      /* switch the right sensor off so it can't interfere with the
         left sensor at the start of the next call */
      digitalWrite(WALL_RIGHT_EN, LOW);
      return;
    }
  }
}

This is part 4 of a series of articles on my experiences building a robot that can do various things. I thought it would be neat to create a robot that was easy to put together with a single soldering iron and was also affordable. I made up the following requirements for my robot:

- Many kits are expensive, so it must be relatively inexpensive.

- It must be easily put together without special equipment.

- It must be easily programmable without a complicated IDE or programmer.

- It must be powerful enough for expandability.

- It should run off a simple power source.

- It should be able to follow a line or a wall, and avoid obstacles.

In this article I'll talk about how to program the robot to be a line follower.

#include "robot.h"

void rbt_init()
{
  pinMode(BPHASE, OUTPUT);
  pinMode(APHASE, OUTPUT);
  pinMode(AEN, OUTPUT);
  pinMode(BEN, OUTPUT);
  pinMode(DRV_MODE, OUTPUT);
  pinMode(WALL_LEFT_EN, OUTPUT);
  pinMode(WALL_MID_EN, OUTPUT);
  pinMode(WALL_RIGHT_EN, OUTPUT);
  pinMode(WALL_LEFT, INPUT);
  pinMode(WALL_MID, INPUT);
  pinMode(WALL_RIGHT, INPUT);
  digitalWrite(WALL_LEFT_EN,LOW);
  digitalWrite(WALL_MID_EN,LOW);
  digitalWrite(WALL_RIGHT_EN,LOW);
  /*simplified drive mode*/
  digitalWrite(DRV_MODE, HIGH);
}

void rbt_move(direction_t new_dir, uint8_t speed)
{
  if(speed)
  {
    switch(new_dir){
      case LEFT:
        digitalWrite(BPHASE,MOTOR_FWD);
        digitalWrite(APHASE,MOTOR_FWD);
        analogWrite(AEN,speed);
        analogWrite(BEN,speed-speed/2);
        break;
      case RIGHT:
        digitalWrite(BPHASE,MOTOR_FWD);
        digitalWrite(APHASE,MOTOR_FWD);
        analogWrite(AEN,speed-speed/2);
        analogWrite(BEN,speed);
        break;
      case FWD:
        digitalWrite(BPHASE,MOTOR_FWD);
        digitalWrite(APHASE,MOTOR_FWD);
        analogWrite(AEN,speed);
        analogWrite(BEN,speed);
        break;
      case REV:
        digitalWrite(BPHASE,MOTOR_REV);
        digitalWrite(APHASE,MOTOR_REV);
        analogWrite(AEN,speed);
        analogWrite(BEN,speed);
        break;
      default:
        analogWrite(AEN,0);
        analogWrite(BEN,0);
        break;
    }
  }
  else
  {
    analogWrite(AEN,0);
    analogWrite(BEN,0);
  }
}

/*function takes 1ms to run*/
#define LOOP_ITER_CNT 2

void rbt_sns( uint16_t *line_left,
              uint16_t *line_mid,
              uint16_t *line_right,
              boolean *wall_left,
              boolean *wall_mid,
              boolean *wall_right)
{
  *line_left=0;
  *line_mid=0;
  *line_right=0;
  uint16_t usec_timer=0;
  /*line sensor*/
  /*charge lines*/
  pinMode(OUT1, OUTPUT);
  pinMode(OUT2, OUTPUT);
  pinMode(OUT3, OUTPUT);
  digitalWrite(OUT1,HIGH);
  digitalWrite(OUT2,HIGH);
  digitalWrite(OUT3,HIGH);
  delayMicroseconds(3);
  /*set to Hi-Z to let cap discharge*/
  pinMode(OUT1, INPUT);
  pinMode(OUT2, INPUT);
  pinMode(OUT3, INPUT);
  /*enable first wall sensor*/
  digitalWrite(WALL_LEFT_EN,HIGH);
  while(1){
    /*each loop is about 2us at 48MHz*/
    usec_timer+=LOOP_ITER_CNT;
    /*increment counts for line sensors every us to track the decay of the capacitor*/
    if(digitalRead(OUT1) == 1)
    {
      (*line_left)+=LOOP_ITER_CNT;
    }
    if(digitalRead(OUT2) == 1)
    {
      (*line_mid)+=LOOP_ITER_CNT;
    }
    if(digitalRead(OUT3) == 1)
    {
      (*line_right)+=LOOP_ITER_CNT;
    }
    /*take turns reading wall sensors because they interfere with each other*/
    if(usec_timer == 300)
    {
      *wall_left = (digitalRead(WALL_LEFT) ? false:true);
      digitalWrite(WALL_LEFT_EN,LOW);
    }
    if(usec_timer == 400)
    {
      digitalWrite(WALL_MID_EN,HIGH);
    }
    if(usec_timer == 700)
    {