History and Quantum Mechanical Quantities

The Photoelectric Effect

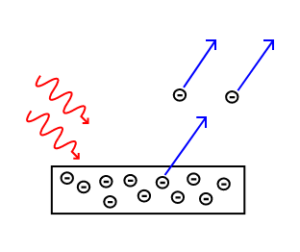

Electrons are emitted from matter that is absorbing energy from electromagnetic radiation, resulting in the photoelectric effect.

Learning Objectives

Explain how the photoelectric effect paradox was solved by Albert Einstein.

Key Takeaways

KEY POINTS

- The energy of the emitted electrons depends only on the frequency of the incident light, and not on the light intensity.

- Einstein explained the photoelectric effect by describing light as composed of discrete particles.

- Study of the photoelectric effect led to important steps in understanding the quantum nature of light and electrons, which would eventually lead to the concept of wave-particle duality.

KEY TERMS

black body radiation: The type of electromagnetic radiation within or surrounding a body in thermodynamic equilibrium with its environment, or emitted by a black body (an opaque and non-reflective body) held at constant, uniform temperature.

photoelectron: An electron emitted from matter after absorbing energy from electromagnetic radiation.

wave-particle duality: A postulation that all particles exhibit both wave and particle properties. It is a central concept of quantum mechanics.

The photoelectric effect typically requires photons with energies from a few electronvolts to 1 MeV for heavier elements, roughly in the ultraviolet and X-ray range. Study of the photoelectric effect led to important steps in understanding the quantum nature of light and electrons and influenced the formation of the concept of wave-particle duality.

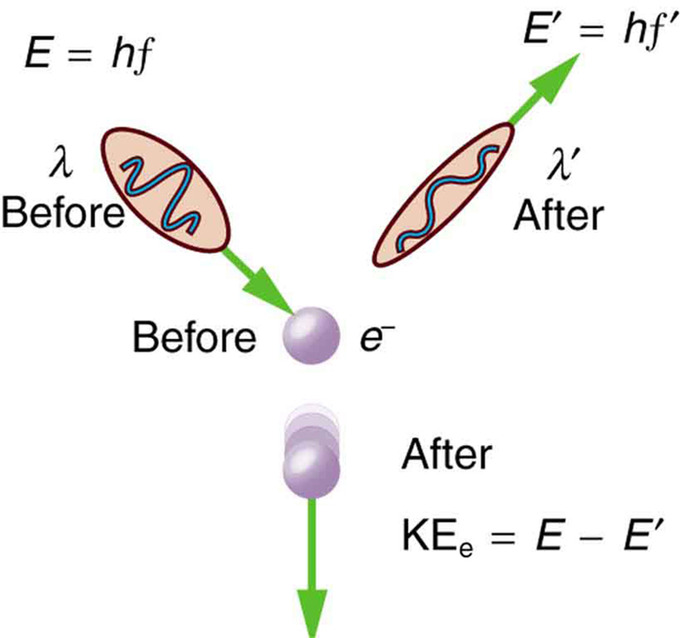

The Photoelectric Effect: Electrons are emitted from matter by absorbed light.

Photoelectric Effect: A brief introduction to the Photoelectric Effect and electron photoemission.

Heinrich Hertz discovered the photoelectric effect in 1887. Although electrons had not been discovered yet, Hertz observed that electric currents were produced when ultraviolet light was shined on a metal. By the beginning of the 20th century, physicists confirmed that:

- The energy of the individual photoelectrons increased with the frequency (or color) of the light, but was independent of the intensity (or brightness) of the radiation.

- The photoelectric current was determined by the light’s intensity; doubling the intensity of the light doubled the number of emitted electrons.

In 1905, Albert Einstein solved this apparent paradox by describing light as composed of discrete quanta (now called photons), rather than continuous waves. Building on Max Planck’s theory of black body radiation, Einstein theorized that the energy in each quantum of light was equal to the frequency multiplied by a constant h, later called Planck’s constant. A photon above a threshold frequency has the required energy to eject a single electron, creating the observed effect. As the frequency of the incoming light increases, each photon carries more energy, which increases the energy of each outgoing photoelectron. Doubling the intensity doubles the number of photons, so the number of photoelectrons should double accordingly.

According to Einstein, the maximum kinetic energy of an ejected electron is given by $K_{\max} = hf - \varphi$, where $h$ is the Planck constant and $f$ is the frequency of the incident photon. The term $\varphi$ is known as the work function, the minimum energy required to remove an electron from the surface of the metal. The work function satisfies $\varphi = h f_0$, where $f_0$ is the threshold frequency for the metal for the onset of the photoelectric effect. The value of the work function is an intrinsic property of the material.
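As a rough numerical illustration of Einstein's relation $K_{\max} = hf - \varphi$ and the threshold condition $\varphi = h f_0$, the following sketch evaluates both for two wavelengths. The work function of 2.3 eV and the two wavelengths are hypothetical values chosen only for illustration; they do not come from the text.

```python
# Physical constants (exact values in the 2019 SI definition)
H = 6.62607015e-34     # Planck constant, J*s
EV = 1.602176634e-19   # one electronvolt in joules
C = 299_792_458.0      # speed of light, m/s


def max_kinetic_energy_ev(frequency_hz, work_function_ev):
    """Return K_max = h*f - phi in eV, or None below the threshold frequency."""
    photon_energy_ev = H * frequency_hz / EV
    if photon_energy_ev < work_function_ev:
        return None  # no photoemission, however intense the light is
    return photon_energy_ev - work_function_ev


def threshold_frequency_hz(work_function_ev):
    """Return the threshold frequency f_0 = phi / h."""
    return work_function_ev * EV / H


PHI_EXAMPLE_EV = 2.3  # hypothetical work function, for illustration only

uv = max_kinetic_energy_ev(C / 250e-9, PHI_EXAMPLE_EV)   # 250 nm ultraviolet
red = max_kinetic_energy_ev(C / 700e-9, PHI_EXAMPLE_EV)  # 700 nm red light
f0 = threshold_frequency_hz(PHI_EXAMPLE_EV)

print("UV light, K_max (eV):", uv)
print("Red light, K_max (eV):", red)
print("Threshold frequency (Hz):", f0)
```

Under these assumed numbers the ultraviolet photon ejects an electron while the red photon does not, regardless of how many red photons arrive. This is the frequency dependence that the classical wave picture could not explain.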

Is light then composed of particles or waves? Young’s experiment suggested that it was a wave, but the photoelectric effect indicated that it should be made of particles. This question would be resolved by de Broglie: light, and all matter, have both wave-like and particle-like properties.

Photon Energies of the EM Spectrum

The electromagnetic (EM) spectrum is the range of all possible frequencies of electromagnetic radiation.

Learning Objectives

Compare photon energy with the frequency of the radiation

Key Takeaways

KEY POINTS

- Electromagnetic radiation is classified according to wavelength, divided into radio waves, microwaves, terahertz (or sub-millimeter) radiation, infrared, the visible region humans perceive as light, ultraviolet, X-rays, and gamma rays.

- Photon energy is proportional to the frequency of the radiation.

- Most parts of the electromagnetic spectrum are used in science for spectroscopic and other probing interactions as ways to study and characterize matter.

KEY TERMS

- Planck constant: a physical constant that is the quantum of action in quantum mechanics. It has the units of angular momentum. The Planck constant was first described by Max Planck, in his derivation of Planck’s law, as the proportionality constant between the energy of a photon (a quantum of electromagnetic radiation) and the frequency of its associated electromagnetic wave.

- Maxwell’s equations: A set of equations describing how electric and magnetic fields are generated and altered by each other and by charges and currents.

The Electromagnetic Spectrum

The electromagnetic (EM) spectrum is the range of all possible frequencies of electromagnetic radiation. The electromagnetic spectrum extends from below the low frequencies used for modern radio communication to gamma radiation at the short-wavelength (high-frequency) end, thereby covering wavelengths of thousands of kilometers down to those of a fraction of the size of an atom (approximately an angstrom). The limit for long wavelengths is the size of the universe itself.

Electromagnetic spectrum: This shows the electromagnetic spectrum, including the visible region, as a function of both frequency (left) and wavelength (right).

Filling in the Electromagnetic Spectrum

In 1895, Wilhelm Röntgen noticed a new type of radiation emitted during an experiment with an evacuated tube subjected to a high voltage. He called these radiations ‘X-rays’ and found that they were able to travel through parts of the human body but were reflected or stopped by denser matter such as bones. Before long, there were many new uses for them in the field of medicine.

The last portion of the electromagnetic spectrum was filled in with the discovery of gamma rays. In 1900, Paul Villard was studying the radioactive emissions of radium when he identified a new type of radiation that he first thought consisted of particles similar to known alpha and beta particles, but far more penetrating than either. However, in 1910, British physicist William Henry Bragg demonstrated that gamma rays are electromagnetic radiation, not particles. In 1914, Ernest Rutherford (who had named them gamma rays in 1903 when he realized that they were fundamentally different from charged alpha and beta rays) and Edward Andrade measured their wavelengths, and found that gamma rays were similar to X-rays, but with shorter wavelengths and higher frequencies.

The relationship between photon energy and the radiation’s frequency and wavelength is given by the following equivalent expressions: $E = hf = \frac{hc}{\lambda}$, where $f$ is the frequency, $\lambda$ is the wavelength, $E$ is the photon energy, $c$ is the speed of light, and $h$ is the Planck constant. Generally, electromagnetic radiation is classified by wavelength into radio waves, microwaves, terahertz (or sub-millimeter) radiation, infrared, the visible region humans perceive as light, ultraviolet, X-rays, and gamma rays. The behavior of EM radiation depends on its wavelength. When EM radiation interacts with single atoms and molecules, its behavior also depends on the amount of energy per quantum (photon) it carries.
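To get a feel for how photon energy scales across the spectrum, the sketch below applies $E = hf = hc/\lambda$ to one representative wavelength per band. The wavelengths are illustrative picks (assumptions, not band boundaries), and the function names are hypothetical.

```python
H = 6.62607015e-34     # Planck constant, J*s
C = 299_792_458.0      # speed of light, m/s
EV = 1.602176634e-19   # one electronvolt in joules


def frequency_hz(wavelength_m):
    """Frequency f = c / lambda."""
    return C / wavelength_m


def photon_energy_ev(wavelength_m):
    """Photon energy E = h*c / lambda, expressed in electronvolts."""
    return H * C / (wavelength_m * EV)


# One illustrative wavelength per band (assumed values, in metres)
EXAMPLES = {
    "radio": 3.0,
    "microwave": 1e-2,
    "infrared": 1e-5,
    "visible (green)": 532e-9,
    "ultraviolet": 1e-7,
    "X-ray": 1e-10,
}

for band, wavelength in EXAMPLES.items():
    print(f"{band:16s} f = {frequency_hz(wavelength):.3e} Hz, "
          f"E = {photon_energy_ev(wavelength):.3e} eV")
```

The printed energies span many orders of magnitude, from far below an electronvolt for radio waves to thousands of electronvolts for X-rays.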

Most parts of the electromagnetic spectrum are used in science for spectroscopic and other probing interactions as ways to study and characterize matter. Also, radiation from various parts of the spectrum has many other uses in communications and manufacturing.

Energy, Mass, and Momentum of Photon

A photon is an elementary particle, the quantum of light, which carries momentum and energy.

Learning Objectives

State physical properties of a photon

Key Takeaways

KEY POINTS

- The energy of a photon is proportional to its frequency.

- The momentum of a photon is proportional to its wave vector.

- The rest mass of a photon is zero.

KEY TERMS

- black body radiation: The type of electromagnetic radiation within or surrounding a body in thermodynamic equilibrium with its environment, or emitted by a black body (an opaque and non-reflective body) held at constant, uniform temperature.

- elementary particle: a particle not known to have any substructure

- photoelectric effect: The occurrence of electrons being emitted from matter (metals and non-metallic solids, liquids, or gases) as a consequence of their absorption of energy from electromagnetic radiation.

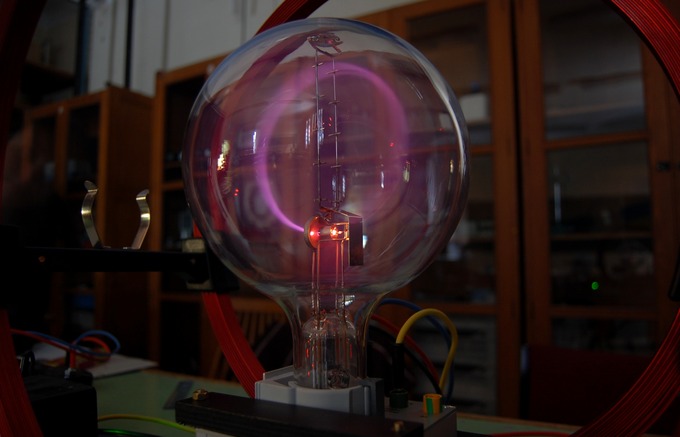

Photons are emitted in many natural processes and from light sources such as floor lamps or lasers. For example, when a charge is accelerated it emits photons, a phenomenon known as synchrotron radiation. During a molecular, atomic, or nuclear transition to a lower or higher energy level, photons of various energies are emitted or absorbed, respectively. A photon can also be emitted when a particle and its corresponding antiparticle are annihilated. During all these processes, photons carry energy and momentum.

laser: Photons emitted in a coherent beam from a laser.

Momentum of photon: According to the theory of special relativity, the energy $E$ and momentum $p$ of a particle with rest mass $m$ are related by $E^2 = (pc)^2 + (mc^2)^2$, where $c$ is the speed of light. In the case of a photon with zero rest mass, we get $E = pc$. Combining this with the photon energy relation $E = hf$, we get $p = \frac{hf}{c} = \frac{h}{\lambda}$. Here, $\lambda$ is the wavelength of the light. Since momentum is a vector quantity and $\mathbf{p}$ points in the direction of the photon’s propagation, we can write $\mathbf{p} = \hbar \mathbf{k}$, where $\hbar = \frac{h}{2\pi}$ and $\mathbf{k}$ is the wave vector.

You may wonder how an object with zero rest mass can have nonzero momentum. This confusion often arises because of the commonly used form of momentum ($p = mv$ in non-relativistic mechanics and $p = \gamma m v$ in relativistic mechanics, where $v$ is the velocity and $\gamma = \frac{1}{\sqrt{1 - v^2/c^2}}$). This formula cannot be used in the case $v = c$, where $\gamma$ is undefined.
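The following sketch checks numerically that a massless photon still carries momentum. It assumes the relations $p = h/\lambda$, $|\mathbf{p}| = \hbar k$ with $k = 2\pi/\lambda$, and $E^2 = (pc)^2 + (mc^2)^2$. The 635 nm wavelength matches the red laser mentioned in a caption elsewhere in this text; the function names are hypothetical.

```python
import math

H = 6.62607015e-34        # Planck constant, J*s
C = 299_792_458.0         # speed of light, m/s
HBAR = H / (2 * math.pi)  # reduced Planck constant, J*s


def photon_momentum(wavelength_m):
    """Magnitude of the photon momentum, p = h / lambda."""
    return H / wavelength_m


def wave_number(wavelength_m):
    """Magnitude of the wave vector, k = 2*pi / lambda."""
    return 2 * math.pi / wavelength_m


def energy_from_momentum(p, rest_mass_kg=0.0):
    """Relativistic energy from E^2 = (p*c)^2 + (m*c^2)^2."""
    return math.sqrt((p * C) ** 2 + (rest_mass_kg * C ** 2) ** 2)


wavelength = 635e-9                 # red laser light, metres
p = photon_momentum(wavelength)
energy = energy_from_momentum(p)    # rest mass left at zero for a photon

print("momentum (kg*m/s):", p)
print("hbar * k (kg*m/s):", HBAR * wave_number(wavelength))
print("energy from p*c (J):", energy)
print("energy from h*c/lambda (J):", H * C / wavelength)
```

Both routes to the momentum and both routes to the energy agree, without ever using the $\gamma m v$ form, which is not applicable to a photon.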

Implications of Quantum Mechanics

Quantum mechanics has had enormous success in explaining microscopic systems and has become a foundation of modern science and technology.

Learning Objectives

Explain importance of quantum mechanics for technology and other branches of science

Key Takeaways

KEY POINTS

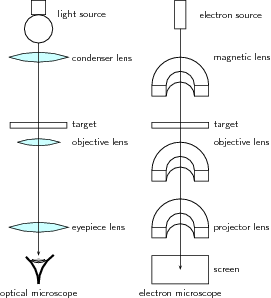

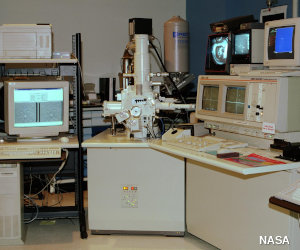

- A great number of modern technological inventions are based on quantum mechanics, including the laser, the transistor, the electron microscope, and magnetic resonance imaging.

- Quantum mechanics is also critically important for understanding how individual atoms combine covalently to form molecules. The application of quantum mechanics to chemistry is known as quantum chemistry.

- Researchers are currently seeking robust methods of directly manipulating quantum states for applications in computer and information science.

KEY TERMS

- cryptography: the practice and study of techniques for secure communication in the presence of third parties

- relativistic quantum mechanics: a theoretical framework for constructing quantum mechanical models of fields and many-body systems

- string theory: an active research framework in particle physics that attempts to reconcile quantum mechanics and general relativity

Quantum mechanics is also critically important for understanding how individual atoms combine covalently to form molecules. The application of quantum mechanics to chemistry is known as quantum chemistry. Relativistic quantum mechanics can, in principle, mathematically describe most of chemistry. Quantum mechanics can also provide quantitative insight into ionic and covalent bonding processes by explicitly showing which molecules are energetically favorable compared with others and the magnitudes of the energies involved. Furthermore, most of the calculations performed in modern computational chemistry rely on quantum mechanics.

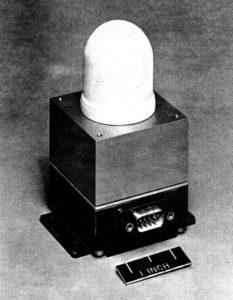

A great number of modern technological inventions operate on a scale where quantum effects are significant. Examples include the laser, the transistor (and thus the microchip), the electron microscope, and magnetic resonance imaging (MRI). The study of semiconductors led to the invention of the diode and the transistor, which are indispensable parts of modern electronic systems and devices.

Laser: Red (635-nm), green (532-nm), and blue-violet (445-nm) lasers

Particle-Wave Duality

Wave–particle duality postulates that all physical entities exhibit both wave and particle properties.

Learning Objectives

Describe experiments that demonstrated wave-particle duality of physical entities

Key Takeaways

KEY POINTS

- All entities in Nature behave as both a particle and a wave, depending on the specifics of the phenomena under consideration.

- Particle-wave duality is usually hidden in macroscopic phenomena, conforming to our intuition.

- In the double-slit experiment with electrons, each individual event displays the particle-like property of localization (a “dot”). After many repetitions, however, the image shows an interference pattern, which indicates that each event is in fact governed by a probability distribution.

KEY TERMS

- Maxwell’s equations: A set of equations describing how electric and magnetic fields are generated and altered by each other and by charges and currents.

- black body: An idealized physical body that absorbs all incident electromagnetic radiation, regardless of frequency or angle of incidence. Although black body is a theoretical concept, you can find approximate realizations of black body in nature.

- photoelectric effects: In photoelectric effects, electrons are emitted from matter (metals and non-metallic solids, liquids or gases) as a consequence of their absorption of energy from electromagnetic radiation.

From a classical physics point of view, particles and waves are distinct concepts. They are mutually exclusive, in the sense that a particle doesn’t exhibit wave-like properties and vice versa. Intuitively, a baseball doesn’t disappear via destructive interference, and our voice cannot be localized in space. Why then is it that physicists believe in wave-particle duality? Because that’s how mother Nature operates, as they have learned from several ground-breaking experiments. Here is a short, chronological list of those experiments:

- Young’s double-slit experiment: In the early nineteenth century, the double-slit experiments by Young and Fresnel provided evidence that light is a wave. In 1861, James Clerk Maxwell explained light as the propagation of electromagnetic waves according to Maxwell’s equations.

- Black body radiation: In 1901, to explain the observed spectrum of light emitted by a glowing object, Max Planck assumed that the energy of the radiation in the cavity was quantized, contradicting the established belief that electromagnetic radiation is a wave.

- Photoelectric effect: The classical wave theory of light also fails to explain the photoelectric effect. In 1905, Albert Einstein explained the photoelectric effect by postulating the existence of photons, quanta of light energy with particulate qualities.

- De Broglie’s wave (matter wave): In 1924, Louis-Victor de Broglie formulated the de Broglie hypothesis, claiming that all matter, not just light, has a wave-like nature. His hypothesis was soon confirmed by the observation that electrons (matter) also display diffraction patterns, which is intuitively a wave property.

So, why do we not notice a baseball acting like a wave? The wavelength of the matter wave associated with a baseball, say moving at 95 miles per hour, is extremely small compared to the size of the ball so that wave-like behavior is never noticeable.
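To put a number on this claim, the sketch below evaluates the de Broglie relation $\lambda = h/p$ with $p = mv$ for a baseball at 95 miles per hour. The mass (0.145 kg) and diameter (0.074 m) are assumed typical values, and the electron speed is an arbitrary illustrative choice used only for contrast.

```python
H = 6.62607015e-34       # Planck constant, J*s
MPH_TO_M_PER_S = 0.44704 # exact conversion factor


def de_broglie_wavelength_m(mass_kg, speed_m_per_s):
    """Non-relativistic de Broglie wavelength, lambda = h / (m * v)."""
    return H / (mass_kg * speed_m_per_s)


BASEBALL_MASS_KG = 0.145     # assumed typical baseball mass
BASEBALL_DIAMETER_M = 0.074  # assumed typical baseball diameter
ELECTRON_MASS_KG = 9.1093837e-31
ELECTRON_SPEED = 1.0e6       # illustrative speed, m/s (well below c)

baseball_speed = 95 * MPH_TO_M_PER_S
baseball_wavelength = de_broglie_wavelength_m(BASEBALL_MASS_KG, baseball_speed)
electron_wavelength = de_broglie_wavelength_m(ELECTRON_MASS_KG, ELECTRON_SPEED)

print("baseball wavelength (m):", baseball_wavelength)
print("wavelength / ball diameter:", baseball_wavelength / BASEBALL_DIAMETER_M)
print("electron wavelength (m):", electron_wavelength)
```

With these assumptions the baseball wavelength is of order 1e-34 m, more than thirty orders of magnitude smaller than the ball, while the electron wavelength is comparable to atomic spacings. That is why diffraction is observable for electrons but not for baseballs.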

Diffraction Revisited

De Broglie’s hypothesis was that particles should show wave-like properties such as diffraction or interference.

Learning Objectives

Compare application of X-ray, electron, and neutron diffraction for materials research

Key Takeaways

KEY POINTS

- The wavelength of an electron is given by the de Broglie equation $\lambda = \frac{h}{p}$.

- Because of the different forms of interaction involved, X-rays, electrons, and neutrons are suitable for different studies of material properties.

- De Broglie’s idea completed the wave-particle duality.

KEY TERMS

- photoelectric effect: The observation of electrons being emitted from matter (metals and non-metallic solids, liquids, or gases) as a consequence of their absorption of energy from electromagnetic radiation.

- black body radiation: The type of electromagnetic radiation within or surrounding a body in thermodynamic equilibrium with its environment, or emitted by a black body (an opaque and non-reflective body) held at constant, uniform temperature.

- grating: Any regularly spaced collection of essentially identical, parallel, elongated elements.

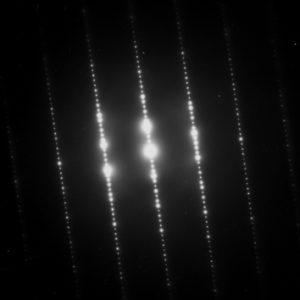

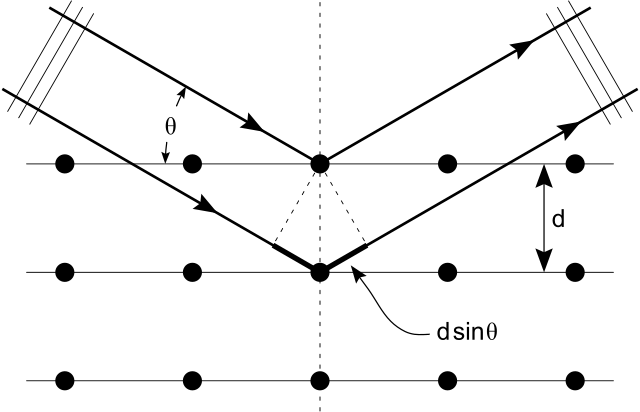

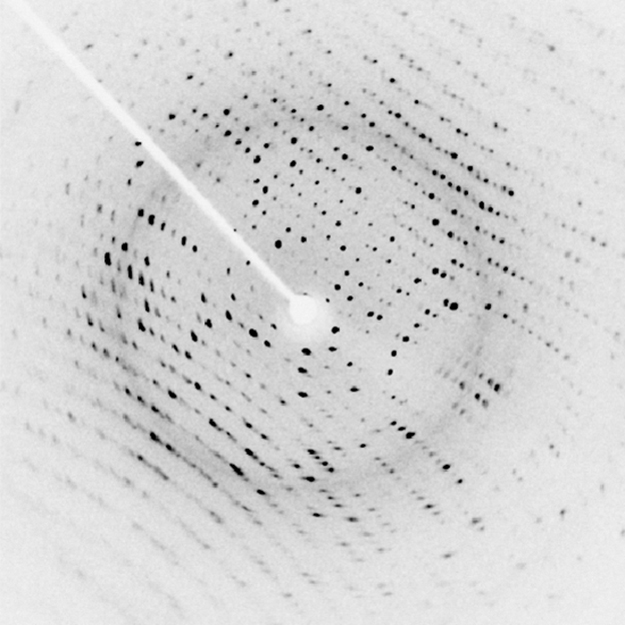

From the work by Planck (black body radiation) and Einstein (photoelectric effect), physicists understood that electromagnetic waves sometimes behaved like particles. De Broglie’s hypothesis is complementary to this idea: particles should also show wave-like properties such as diffraction or interference. De Broglie’s formula was confirmed three years later for electrons (which have rest mass) with the observation of electron diffraction in two independent experiments. George Paget Thomson passed a beam of electrons through a thin metal film and observed the predicted interference patterns. Clinton Joseph Davisson and Lester Halbert Germer guided their beam through a crystalline grid to observe diffraction patterns.

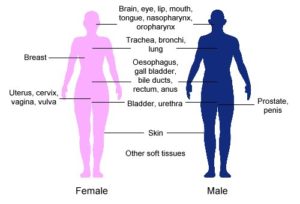

X-ray diffraction is a commonly used tool in materials research. Thanks to the wave-particle duality, matter wave diffraction can also be used for this purpose. The electron, which is easy to produce and manipulate, is a common choice. A neutron is another particle of choice. Due to the different kinds of interactions involved in the diffraction processes, the three types of radiation (X-ray, electron, neutron) are suitable for different kinds of studies.

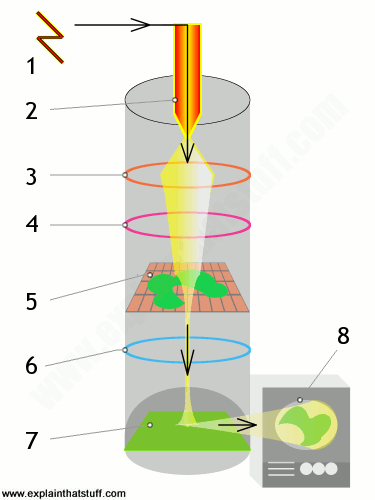

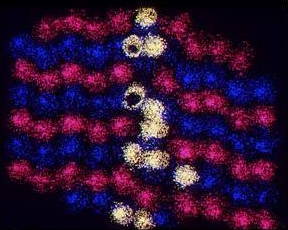

Electron diffraction is most frequently used in solid state physics and chemistry to study the crystalline structure of solids. Experiments are usually performed using a transmission electron microscope or a scanning electron microscope. In these instruments, electrons are accelerated by an electrostatic potential in order to gain the desired energy and, thus, wavelength before they interact with the sample to be studied. The periodic structure of a crystalline solid acts as a diffraction grating, scattering the electrons in a predictable manner. Working back from the observed diffraction pattern, it is then possible to deduce the structure of the crystal producing the diffraction pattern. Unlike other types of radiation used in diffraction studies of materials, such as X-rays and neutrons, electrons are charged particles and interact with matter through the Coulomb forces. This means that the incident electrons feel the influence of both the positively charged atomic nuclei and the surrounding electrons. In comparison, X-rays interact with the spatial distribution of the valence electrons, while neutrons are scattered by the atomic nuclei through the strong nuclear force.
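The link between accelerating potential and electron wavelength can be sketched as follows. The code assumes an electron starting from rest, uses the relativistic relation $(pc)^2 = K^2 + 2Kmc^2$ (which follows from $E^2 = (pc)^2 + (mc^2)^2$ with $E = K + mc^2$) together with $\lambda = h/p$, and evaluates a few illustrative voltages that are not taken from the text.

```python
import math

H = 6.62607015e-34          # Planck constant, J*s
C = 299_792_458.0           # speed of light, m/s
E_CHARGE = 1.602176634e-19  # elementary charge, C
M_E = 9.1093837e-31         # electron rest mass, kg (rounded)


def electron_wavelength_m(voltage_v):
    """Relativistic de Broglie wavelength of an electron accelerated
    from rest through a potential difference of voltage_v volts."""
    kinetic = E_CHARGE * voltage_v  # kinetic energy gained, in joules
    # (p*c)^2 = K^2 + 2*K*m*c^2, from E^2 = (p*c)^2 + (m*c^2)^2
    pc = math.sqrt(kinetic ** 2 + 2 * kinetic * M_E * C ** 2)
    return H * C / pc               # lambda = h / p


for voltage in (1e3, 1e4, 1e5, 2e5):  # illustrative voltages, in volts
    picometres = electron_wavelength_m(voltage) * 1e12
    print(f"{voltage:9.0f} V -> {picometres:.3f} pm")
```

At the voltages assumed here the wavelengths are a few picometres to a few tens of picometres, much shorter than typical interatomic distances, which is consistent with a crystal lattice acting as a diffraction grating for the beam.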

Electron Diffraction Pattern: Typical electron diffraction pattern obtained in a transmission electron microscope with a parallel electron beam.

The Wave Function

A wave function is a probability amplitude in quantum mechanics that describes the quantum state of a particle and how it behaves.

Learning Objectives

Relate the wave function with the probability density of finding a particle, commenting on the constraints the wave function must satisfy for this to make sense

Key Takeaways

KEY POINTS

- $|\psi(x, t)|^2$ corresponds to the probability density of finding a particle at a given location $x$ at a given time $t$.

- The laws of quantum mechanics (the Schrödinger equation) describe how the wave function evolves over time. The Schrödinger equation is a type of wave equation, which explains the name “wave function”.

- A wave function must satisfy a set of mathematical constraints for the calculations and physical interpretation to make sense.

KEY TERMS

Schrödinger equation: A partial differential equation that describes how the quantum state of some physical system changes with time. It was formulated in late 1925 and published in 1926 by the Austrian physicist Erwin Schrödinger.

harmonic oscillator: a system that, when displaced from its equilibrium position, experiences a restoring force proportional to the displacement

Trajectories of a Harmonic Oscillator: This figure shows some trajectories of a harmonic oscillator (a ball attached to a spring) in classical mechanics (A-B) and quantum mechanics (C-H). In quantum mechanics (C-H), the ball has a wave function, which is shown with its real part in blue and its imaginary part in red. The trajectories C-F are examples of standing waves, or “stationary states.” Each standing-wave frequency is proportional to a possible energy level of the oscillator. This “energy quantization” does not occur in classical physics, where the oscillator can have any energy.

The wave function must satisfy the following constraints for the calculations and physical interpretation to make sense:

- It must everywhere be finite.

- It must everywhere be a continuous function and continuously differentiable.

- It must everywhere satisfy the relevant normalization condition so that the particle (or system of particles) exists somewhere with 100-percent certainty.
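The normalization constraint can be checked numerically for a simple case. The sketch below assumes a one-dimensional Gaussian wave function $\psi(x) = (\pi\sigma^2)^{-1/4} e^{-x^2/(2\sigma^2)}$, which is an illustrative choice rather than an example from the text, and integrates $|\psi|^2$ with the trapezoidal rule.

```python
import math


def psi(x, sigma=1.0):
    """Gaussian wave function, normalized so that |psi|^2 integrates to 1."""
    return (math.pi * sigma ** 2) ** -0.25 * math.exp(-x ** 2 / (2 * sigma ** 2))


def probability(a, b, sigma=1.0, steps=20_000):
    """Probability of finding the particle in [a, b]: the trapezoidal
    integral of the probability density |psi(x)|^2 over that interval."""
    dx = (b - a) / steps
    total = 0.5 * (abs(psi(a, sigma)) ** 2 + abs(psi(b, sigma)) ** 2)
    for i in range(1, steps):
        total += abs(psi(a + i * dx, sigma)) ** 2
    return total * dx


everywhere = probability(-10.0, 10.0)  # wide enough to capture the whole packet
within_sigma = probability(-1.0, 1.0)  # probability of |x| < sigma

print("total probability:", everywhere)
print("probability within one sigma:", within_sigma)
```

The first integral comes out at 1 to within numerical error, which is the statement that the particle exists somewhere with certainty. The second gives the probability of finding it within one $\sigma$ of the origin, which for this Gaussian equals $\operatorname{erf}(1)$.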

de Broglie and the Wave Nature of Matter

The concept of “matter waves” or “de Broglie waves” reflects the wave-particle duality of matter.

Learning Objectives

Formulate the de Broglie relation as an equation

Key Takeaways

KEY POINTS

- The de Broglie relation shows that the wavelength of a particle is inversely proportional to its momentum.

- The Davisson-Germer experiment demonstrated the wave nature of matter and completed the theory of wave-particle duality.

- Experiments have demonstrated that the de Broglie hypothesis is applicable to atoms and macromolecules.

KEY TERMS

- diffraction: The bending of a wave around the edges of an opening or an obstacle.

- special relativity: A theory that (neglecting the effects of gravity) reconciles the principle of relativity with the observation that the speed of light is constant in all frames of reference.

- wave-particle duality: A postulation that all particles exhibit both wave and particle properties. It is a central concept of quantum mechanics.

Einstein derived in his theory of special relativity that the energy and momentum of a photon have the following relationship:

$E = pc$ ($E$: energy, $p$: momentum, $c$: speed of light).

He also demonstrated, in his study of the photoelectric effect, that the energy of a photon is directly proportional to its frequency, giving us this equation:

$E = hf$ ($h$: Planck constant, $f$: frequency).

Combining the two equations, we can derive a relationship between the momentum and wavelength of light:

$E = hf = \frac{hc}{\lambda} = pc$. Therefore, we arrive at $\lambda = \frac{h}{p}$.

De Broglie’s hypothesis is that this relationship, $\lambda = \frac{h}{p}$, derived for electromagnetic waves, can be adopted to describe matter (e.g., electrons and neutrons) as well.

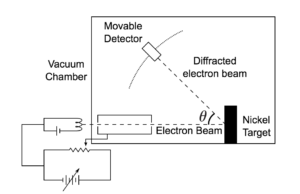

De Broglie didn’t have any experimental proof at the time of his proposal. It took three years for Clinton Davisson and Lester Germer to observe diffraction patterns from electrons scattered by a crystalline metallic target. Before the acceptance of the de Broglie hypothesis, diffraction was a property thought to be exhibited by waves only. Therefore, the presence of any diffraction effects by matter demonstrated the wave-like nature of matter. This was a pivotal result in the development of quantum mechanics. Just as the photoelectric effect demonstrated the particle nature of light, the Davisson–Germer experiment showed the wave nature of matter, thus completing the theory of wave-particle duality.

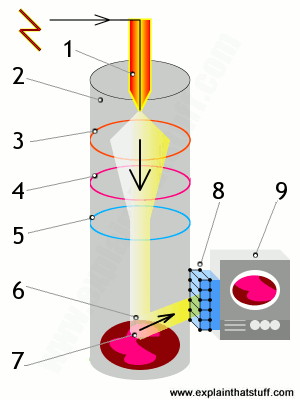

Davisson-Germer Experimental Setup: The experiment included an electron gun consisting of a heated filament that released thermally excited electrons, which were then accelerated through a potential difference (giving them a certain amount of kinetic energy towards the nickel crystal). To avoid collisions of the electrons with other molecules on their way towards the surface, the experiment was conducted in a vacuum chamber. To measure the number of electrons that were scattered at different angles, an electron detector that could be moved on an arc path about the crystal was used. The detector was designed to accept only elastically scattered electrons.
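As a rough consistency check of the de Broglie relation against this kind of experiment, the sketch below compares $\lambda = h/p$ for slow electrons with the wavelength implied by a first-order grating condition $\lambda = d \sin\theta$. The accelerating voltage (54 V), row spacing on the nickel surface (0.215 nm), and peak angle (50 degrees) are values commonly quoted in textbooks for the Davisson-Germer experiment; they are assumptions here and do not come from this text.

```python
import math

H = 6.62607015e-34          # Planck constant, J*s
E_CHARGE = 1.602176634e-19  # elementary charge, C
M_E = 9.1093837e-31         # electron rest mass, kg (rounded)


def de_broglie_wavelength_m(voltage_v):
    """Non-relativistic wavelength for an electron accelerated through
    voltage_v volts: p = sqrt(2*m*e*V), lambda = h / p."""
    momentum = math.sqrt(2 * M_E * E_CHARGE * voltage_v)
    return H / momentum


def grating_wavelength_m(spacing_m, angle_deg, order=1):
    """Wavelength from the grating condition order * lambda = d * sin(theta)."""
    return spacing_m * math.sin(math.radians(angle_deg)) / order


# Commonly quoted textbook values (assumed, not from this text)
VOLTAGE_V = 54.0
ROW_SPACING_M = 0.215e-9
PEAK_ANGLE_DEG = 50.0

predicted = de_broglie_wavelength_m(VOLTAGE_V)
measured = grating_wavelength_m(ROW_SPACING_M, PEAK_ANGLE_DEG)

print("de Broglie prediction (nm):", predicted * 1e9)
print("from diffraction peak (nm):", measured * 1e9)
print("relative difference:", abs(predicted - measured) / predicted)
```

Under these assumed values the two wavelengths agree to within a few percent. The non-relativistic momentum formula is adequate here because 54 eV is tiny compared with the electron rest energy.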

The Heisenberg Uncertainty Principle

The uncertainty principle asserts a basic limit to the precision with which some physical properties of a particle can be known simultaneously.

Learning Objectives

Relate the Heisenberg uncertainty principle with the matter wave nature of all quantum objects

Key Takeaways

KEY POINTS

- The uncertainty principle is inherent in the properties of all wave-like systems. It arises in quantum mechanics simply because of the matter-wave nature of all quantum objects.

- The uncertainty principle is not a statement about the observational success of current technology.

- The more precisely the position of a particle is determined, the less precisely its momentum can be known, and vice versa. This can be formulated as the following inequality: $\Delta x \, \Delta p \geq \frac{\hbar}{2}$, where $\hbar = \frac{h}{2\pi}$ is the reduced Planck constant.

KEY TERMS

- matter wave: A concept that reflects the wave-particle duality of matter. The theory was proposed by Louis de Broglie.

- Rayleigh criterion: A criterion, first proposed by Lord Rayleigh, for estimating the angular resolution of an optical system from the diameter of its aperture and the wavelength of the light.

The principle is quite counterintuitive, so the early students of quantum theory had to be reassured that naive measurements to violate it were bound always to be unworkable. One way in which Heisenberg originally illustrated the intrinsic impossibility of violating the uncertainty principle is by using an imaginary microscope as a measuring device.

Heisenberg Microscope: Heisenberg’s microscope, with a cone of light rays focusing on a particle with angle $\varepsilon$. Heisenberg imagines an experimenter trying to measure the position and momentum of an electron by shooting a photon at it.

Examples

Example One

If the photon has a short wavelength and therefore a large momentum, the position can be measured accurately. But the photon scatters in a random direction, transferring a large and uncertain amount of momentum to the electron. If the photon has a long wavelength and low momentum, the collision does not disturb the electron’s momentum very much, but the scattering will reveal its position only vaguely.

Example Two

If a large aperture is used for the microscope, the electron’s location can be well resolved (see Rayleigh criterion); but by the principle of conservation of momentum, the transverse momentum of the incoming photon and hence the new momentum of the electron resolves poorly. If a small aperture is used, the accuracy of both resolutions is the other way around.

Heisenberg’s Argument

Heisenberg’s argument is summarized as follows. He begins by supposing that an electron is like a classical particle, moving in the $x$ direction along a line below the microscope, as in the illustration. Let the cone of light rays leaving the microscope lens and focusing on the electron make an angle $\varepsilon$ with the electron. Let $\lambda$ be the wavelength of the light rays. Then, according to the laws of classical optics, the microscope can only resolve the position of the electron up to an accuracy of $\Delta x = \frac{\lambda}{\sin(\varepsilon/2)}$. When an observer perceives an image of the particle, it’s because the light rays strike the particle and bounce back through the microscope to their eye. However, we know from experimental evidence that when a photon strikes an electron, the latter has a recoil with momentum proportional to $\frac{h}{\lambda}$, where $h$ is Planck’s constant.

It is at this point that Heisenberg introduces objective indeterminacy into the thought experiment. He writes that “the recoil cannot be exactly known, since the direction of the scattered photon is undetermined within the bundle of rays entering the microscope”. In particular, the electron’s momentum in the $x$ direction is only determined up to $\Delta p_x \approx \frac{h}{\lambda} \sin(\varepsilon/2)$. Combining the relations for $\Delta x$ and $\Delta p_x$, we thus have that $\Delta x \, \Delta p_x \approx h$, which is an approximate expression of Heisenberg’s uncertainty principle.
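The trade-off in the microscope argument can be made concrete with a short calculation. The sketch below assumes the classical resolution limit $\Delta x = \lambda / \sin(\varepsilon/2)$ and the recoil spread $\Delta p_x \approx (h/\lambda)\sin(\varepsilon/2)$, and evaluates both for a few illustrative wavelengths and cone angles (the specific values are arbitrary choices).

```python
import math

H = 6.62607015e-34  # Planck constant, J*s


def position_uncertainty(wavelength_m, cone_angle_rad):
    """Resolution limit of the microscope, dx = lambda / sin(eps / 2)."""
    return wavelength_m / math.sin(cone_angle_rad / 2)


def momentum_uncertainty(wavelength_m, cone_angle_rad):
    """Spread of the recoil momentum along x, dp = (h / lambda) * sin(eps / 2)."""
    return (H / wavelength_m) * math.sin(cone_angle_rad / 2)


products = []
for wavelength in (500e-9, 1e-10, 1e-12):  # visible, X-ray, gamma (illustrative)
    for angle_deg in (10, 60, 120):        # illustrative cone angles
        eps = math.radians(angle_deg)
        dx = position_uncertainty(wavelength, eps)
        dp = momentum_uncertainty(wavelength, eps)
        products.append(dx * dp)
        print(f"lambda={wavelength:.1e} m, eps={angle_deg:3d} deg: "
              f"dx={dx:.2e} m, dp={dp:.2e} kg*m/s, dx*dp/h={dx * dp / H:.3f}")
```

Shortening the wavelength or widening the cone shrinks $\Delta x$ but enlarges $\Delta p_x$ by the same factor, so within this simplified model the product stays at roughly $h$ whatever the settings of the microscope.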

Heisenberg Uncertainty Principle Derived and Explained

One of the most often quoted results of quantum physics, this doozy forces us to reconsider what we can know about the universe. Some things cannot be known simultaneously. In fact, if anything about a system is known perfectly, there is likely another characteristic that is completely shrouded in uncertainty. So significant figures ARE important after all!

Philosophical Implications

Since its inception, many counter-intuitive aspects of quantum mechanics have provoked strong philosophical debates.

Learning Objectives

Formulate the Copenhagen interpretation of the probabilistic nature of quantum mechanics

Key Takeaways

KEY POINTS

- According to the Copenhagen interpretation, the probabilistic nature of quantum mechanics is intrinsic in our physical universe.

- When quantum wave function collapse occurs, physical possibilities are reduced into a single possibility as seen by an observer.

- Once a particle in an entangled state is measured and its state is determined, the Copenhagen interpretation demands that the state of the other entangled particle is also determined instantaneously.

KEY TERMS

- probability density function: Any function whose integral over a set gives the probability that a random variable has a value in that set.

- Bell’s theorem: A no-go theorem famous for drawing an important line in the sand between quantum mechanics (QM) and the world as we know it classically. In its simplest form, Bell’s theorem states: No physical theory of local hidden variables can ever reproduce all of the predictions of quantum mechanics.

- epistemological: Of or pertaining to epistemology or theory of knowledge, as a field of study.

Since its inception, many counter-intuitive aspects and results of quantum mechanics have provoked strong philosophical debates and many interpretations. Even fundamental issues, such as Max Born’s basic rules interpreting $|\psi|^2$ as a probability density function, took decades to be appreciated by society and many leading scientists. Indeed, the renowned physicist Richard Feynman once said, “I think I can safely say that nobody understands quantum mechanics.”

The Copenhagen Interpretation

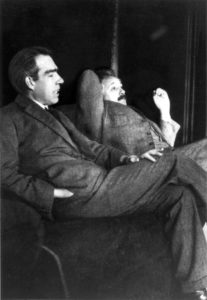

The Copenhagen interpretation, due largely to the Danish theoretical physicist Niels Bohr, remains a quantum mechanical formalism that is widely accepted amongst physicists, some 75 years after its enunciation. According to this interpretation, the probabilistic nature of quantum mechanics is not a temporary feature which will eventually be replaced by a deterministic theory, but instead must be considered a final renunciation of the classical idea of causality.

Niels Bohr and Albert Einstein: Niels Bohr (left) and Albert Einstein (right). Despite their pioneering contributions to the inception of quantum mechanics, they disagreed on its interpretation.

Philosophical Implications

It is also believed therein that any well-defined application of the quantum mechanical formalism must always make reference to the experimental arrangement. This is due to the quantum mechanical principle of wave function collapse. That is, a wave function which is initially in a superposition of several different possible states appears to reduce to a single one of those states after interaction with an observer. In simplified terms, it is the reduction of the physical possibilities into a single possibility as seen by an observer. This raises philosophical questions about whether something that is never observed actually exists.

Einstein-Podolsky-Rosen (EPR) Paradox

Albert Einstein (shown in the figure above; himself one of the founders of quantum theory) disliked this loss of determinism in measurement in the Copenhagen interpretation. Einstein held that there should be a local hidden variable theory underlying quantum mechanics and, consequently, that the present theory was incomplete. He produced a series of objections to the theory, the most famous of which has become known as the Einstein-Podolsky-Rosen (EPR) paradox. John Bell showed, through Bell’s theorem, that this “EPR” paradox led to experimentally testable differences between quantum mechanics and local realistic theories. Experiments have been performed confirming the accuracy of quantum mechanics, thereby demonstrating that the physical world cannot be described by any local realistic theory. The Bohr-Einstein debates provide a vibrant critique of the Copenhagen interpretation from an epistemological point of view.

Quantum Entanglement

One of the most bizarre aspects of quantum mechanics is known as quantum entanglement. Quantum entanglement occurs when particles interact physically and then become separated, while isolated from the rest of the universe to prevent any deterioration of the quantum state. According to the Copenhagen interpretation of quantum mechanics, their shared state is indefinite until measured. Once a particle in the entangled state is measured and its state is determined, the Copenhagen interpretation demands that the other particle’s state is also determined instantaneously. This bizarre action at a distance (which seemingly violates the speed limit on the transmission of information implicit in the theory of relativity) is what bothered Einstein the most. (According to the theory of relativity, nothing can travel faster than the speed of light in a vacuum. This seemingly puts a limit on the speed at which information can be transmitted.) Quantum entanglement is the key element in proposals for quantum computers and quantum teleportation.

Applications of Quantum Mechanics

Fluorescence and Phosphorescence

Fluorescence and phosphorescence are photoluminescence processes in which a material emits photons after excitation.

Learning Objectives

Compare mechanisms of fluorescence and phosphorescence light emission.

Key Takeaways

Key Points

- The emitted light usually has a longer wavelength, and therefore lower energy, than the absorbed radiation.

- Fluorescence occurs when an orbital electron of a molecule or atom relaxes to its ground state by emitting a photon of light after being excited to a higher quantum state by some type of energy.

- In phosphorescence, excitation of electrons to a higher state is accompanied by a change of spin state. Relaxation is a slow process since it involves energy state transitions that are “forbidden” in quantum mechanics.

Key Terms

- spin: A quantum angular momentum associated with subatomic particles; it also creates a magnetic moment.

- photon: The quantum of light and other electromagnetic energy, regarded as a discrete particle having zero rest mass, no electric charge, and an indefinitely long lifetime.

- ground state: the stationary state of lowest energy of a particle or system of particles

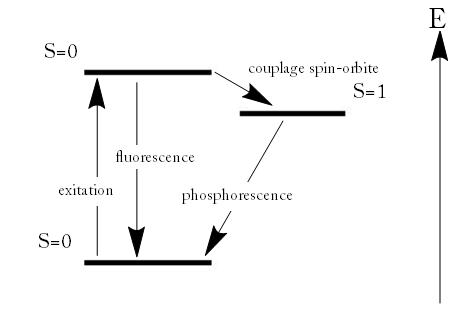

Fluorescence and Phosphorescence

Fluorescence is the emission of light by a substance that has absorbed light or other electromagnetic radiation. It is a form of photoluminescence. In most cases, the emitted light has a longer wavelength, and therefore lower energy, than the absorbed radiation. However, when the absorbed electromagnetic radiation is intense, it is possible for one electron to absorb two photons; this two-photon absorption can lead to emission of radiation having a shorter wavelength than the absorbed radiation. The emitted radiation may also be of the same wavelength as the absorbed radiation, termed “resonance fluorescence”.

Fluorescence occurs when an orbital electron of a molecule or atom relaxes to its ground state by emitting a photon of light after being excited to a higher quantum state by some type of energy. The most striking examples of fluorescence occur when the absorbed radiation is in the ultraviolet region of the spectrum, and thus invisible to the human eye, and the emitted light is in the visible region.
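The link between longer wavelength and lower photon energy can be checked numerically. The sketch below uses the standard relation $E = hc/\lambda$; the two wavelengths (365 nm absorbed, 520 nm emitted) are hypothetical values chosen only to illustrate ultraviolet excitation with visible emission, and the function name is an assumption of this example.

```python
# Physical constants (exact SI values)
H = 6.62607015e-34      # Planck constant, J*s
C = 299_792_458.0       # speed of light, m/s
EV = 1.602176634e-19    # joules per electronvolt


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy E = h*c/lambda in eV, for a wavelength given in nm."""
    return H * C / (wavelength_nm * 1e-9) / EV


# Hypothetical example wavelengths (not taken from the text)
absorbed_nm = 365.0   # ultraviolet excitation
emitted_nm = 520.0    # visible (green) emission

e_abs = photon_energy_ev(absorbed_nm)
e_emit = photon_energy_ev(emitted_nm)
print(f"absorbed: {e_abs:.2f} eV, emitted: {e_emit:.2f} eV, "
      f"difference: {e_abs - e_emit:.2f} eV")
```

With these assumed wavelengths, the emitted photon carries roughly 1 eV less than the absorbed one; the difference is the energy the material dissipates before emitting.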

Fluorescence: Fluorescent minerals emit visible light when exposed to ultraviolet light

Fluorescence and Phosphorescence: Energy scheme used to explain the difference between fluorescence and phosphorescence

Phosphorescence: Phosphorescent material glowing in the dark.

Lasers

A laser is a device that emits monochromatic light through a process of optical amplification based on the stimulated emission of photons.

Learning Objectives

Identify the process that generates laser emission and the defining characteristics of laser light.

Key Takeaways

Key Points

- Principles of laser operation are largely based on quantum mechanics, most importantly on the process of the stimulated emission of photons.

- Spontaneous emission is a random decaying process. The phase associated with the emitted photon is also random.

- Atomic transition can be stimulated by the presence of an incoming photon at a frequency associated with the atomic transition. This process leads to optical amplification as an identical photon is emitted along with the incoming photon.

Key Terms

- free-electron laser: a laser that uses a relativistic electron beam as the lasing medium, which moves freely through a magnetic structure

- monochromatic: Describes a beam of light with a single wavelength (i.e., of one specific color or frequency).

- coherence: an ideal property of waves that enables stationary (i.e., temporally and spatially constant) interference

Principles of laser operation are largely based on quantum mechanics. (One exception would be free-electron lasers, whose operation can be explained solely by classical electrodynamics.) When an electron is excited from a lower-energy to a higher-energy level, it will not stay that way forever. An electron in an excited state may decay to an unoccupied lower-energy state according to a particular time constant characterizing that transition. When such an electron decays without external influence, it emits a photon; this process is called “spontaneous emission.” The phase associated with the emitted photon is random. A material with many atoms in an excited state may thus produce radiation that is very monochromatic, but the individual photons would have no common phase relationship and would emanate in random directions. This is the mechanism of fluorescence and thermal emission.

However, an external photon at a frequency associated with the atomic transition can affect the quantum mechanical state of the atom. As the incident photon passes by, the rate of transitions of the excited atom can be significantly enhanced beyond that due to spontaneous emission. This “induced” decay process is called stimulated emission. In stimulated emission, the decaying atom produces an identical “copy” of the incoming photon. Therefore, after the atom decays, we have two identical outgoing photons. Since there was only one incoming photon, we amplified the intensity of light by a factor of 2!

Stimulated Photon Emission: In the stimulated emission process, a photon (with a frequency equal to that of the atomic transition) encounters an excited atom, and a new photon identical to the incoming photon is produced. The result is an atom in the ground state with two outgoing photons.

Holography

Holography is an optical technique that enables three-dimensional images to be made.

Learning Objectives

Explain how holographic images are recorded and describe their properties.

Key Takeaways

Key Points

- When the two laser beams reach the recording medium, their light waves intersect and interfere with each other. It is this interference pattern that is imprinted on the recording medium.

- When a reconstruction beam illuminates the hologram, it is diffracted by the hologram’s surface pattern. This produces a light field identical to the one originally produced by the scene and scattered onto the hologram.

- The holographic image changes as the position and orientation of the viewing system change, in exactly the same way as if the object were still present, thus making the image appear three-dimensional.

Key Terms

- interference: An effect caused by the superposition of two systems of waves, such as a distortion on a broadcast signal due to atmospheric or other effects.

- laser: A device that produces a monochromatic, coherent beam of light.

- silver halide: The light-sensitive chemicals used in photographic film and paper.

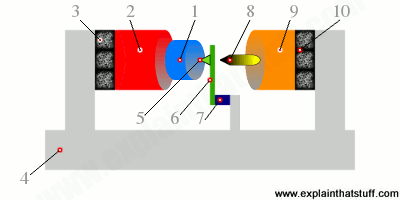

Holograms are recorded using a flash of light that illuminates a scene and then imprints on a recording medium, much in the way a photograph is recorded. In addition, however, part of the light beam must be shone directly onto the recording medium; this second light beam is known as the reference beam. A hologram requires a laser as the sole light source, because laser light is needed to produce an interference pattern on the recording plate. To prevent external light from interfering, holograms are usually taken in darkness, or in low-level light of a different color from the laser light used in making the hologram. Holography requires a specific exposure time, which can be controlled using a shutter or by electronically timing the laser.

Recording a hologram: Holograms are recorded using a flash of light that illuminates a scene and then imprints on a recording medium, much in the way a photograph is recorded. In addition, however, part of the light beam must be shone directly onto the recording medium – this second light beam is known as the reference beam.

To record a hologram, the laser beam is split into two beams:

- One beam (known as the illumination or object beam) is spread using lenses and directed onto the scene using mirrors. Some of the light scattered (reflected) from the scene then falls onto the recording medium.

- The second beam (known as the reference beam) is also spread through the use of lenses, but is directed so that it doesn’t come in contact with the scene, and instead travels directly onto the recording medium.

Reconstructing a hologram: An interference pattern can be considered an encoded version of a scene, requiring a particular key – the original light source – in order to view its contents. This missing key is provided later by shining a laser, identical to the one used to record the hologram, onto the developed film. When this beam illuminates the hologram, it is diffracted by the hologram’s surface pattern. This produces a light field identical to the one originally produced by the scene and scattered onto the hologram.

Several different materials can be used as the recording medium. One of the most common is a film very similar to photographic film (silver halide photographic emulsion), but with a much higher concentration of light-reactive grains, making it capable of the much higher resolution that holograms require. A layer of this recording medium (e.g. silver halide) is attached to a transparent substrate, which is commonly glass, but may also be plastic.

Process: When the two laser beams reach the recording medium, their light waves intersect and interfere with each other. It is this interference pattern that is imprinted on the recording medium. The pattern itself is seemingly random, as it represents the way in which the scene’s light interfered with the original light source – but not the original light source itself. The interference pattern can be considered an encoded version of the scene, requiring a particular key – the original light source – in order to view its contents.

This missing key is provided later by shining a laser, identical to the one used to record the hologram, onto the developed film. When this beam illuminates the hologram, it is diffracted by the hologram’s surface pattern. This produces a light field identical to the one originally produced by the scene and scattered onto the hologram. The image this effect produces in a person’s retina is known as a virtual image.
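To see why the recording medium needs such high resolution, it helps to estimate how fine the interference fringes are. The sketch below assumes the simplest case of two plane waves of the same wavelength crossing at an angle $\theta$, for which the fringe spacing is $\Lambda = \lambda / (2\sin(\theta/2))$. The wavelength (632.8 nm, a helium-neon laser) and the beam angle are illustrative assumptions, not values from the text, and a real scene produces a far more complicated pattern.

```python
import math


def fringe_spacing_nm(wavelength_nm: float, angle_deg: float) -> float:
    """Spacing of interference fringes formed by two plane waves.

    angle_deg is the full angle between the two beams' propagation
    directions; the spacing is lambda / (2 * sin(angle / 2)).
    """
    half_angle = math.radians(angle_deg) / 2.0
    return wavelength_nm / (2.0 * math.sin(half_angle))


# Illustrative assumptions: helium-neon laser, beams crossing at 30 degrees
wavelength_nm = 632.8
angle_deg = 30.0

spacing = fringe_spacing_nm(wavelength_nm, angle_deg)
fringes_per_mm = 1e6 / spacing  # 1 mm = 1e6 nm
print(f"fringe spacing: {spacing:.0f} nm ({fringes_per_mm:.0f} fringes per mm)")
```

Under these assumptions the fringes are about a micrometer apart, so the medium must resolve on the order of hundreds of lines per millimeter, and more as the angle between the beams grows.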

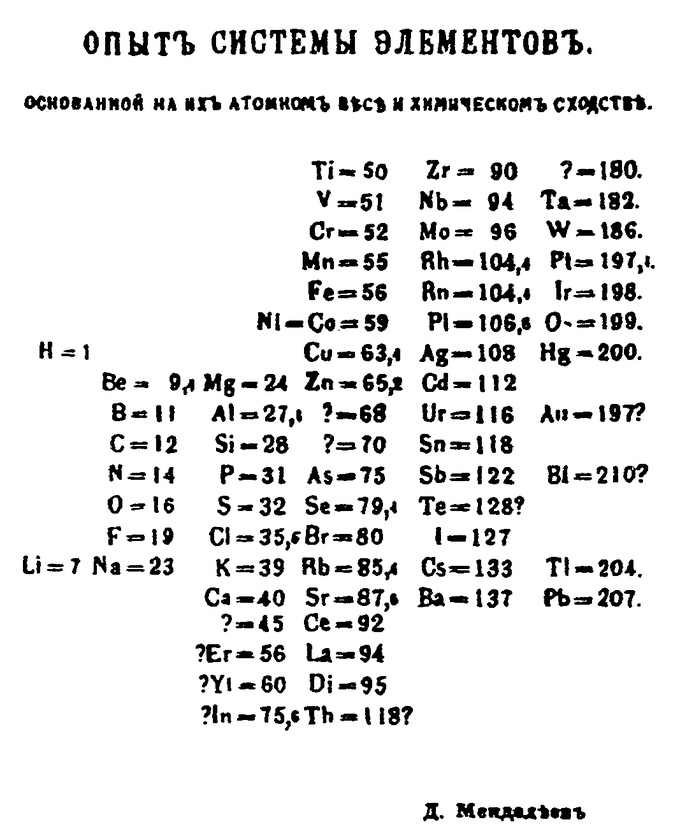

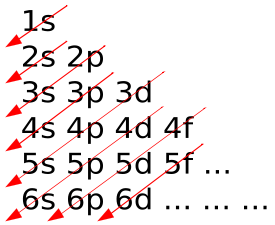

The periodic table is a tabular display of the chemical elements. The elements are organized based on their atomic numbers, electron configurations, and recurring chemical properties.

The Periodic Table of Elements

A periodic table is a tabular display of elements organized by their atomic numbers, electron configurations, and chemical properties.

Learning Objectives

Explain how properties of elements vary within groups and across periods in the periodic table.

Key Takeaways

Key Points

- A periodic table is a useful framework for analyzing chemical behavior. Such tables are widely used in chemistry and other sciences.

- A group, or family, is a vertical column in the periodic table. Groups usually have more significant periodic trends than do periods and blocks.

- A period is a horizontal row in the periodic table. Elements in the same period show trends in atomic radius, ionization energy, electron affinity, and electronegativity.

Key Terms

- atomic orbital: The quantum mechanical behavior of an electron in an atom describing the probability of the electron’s particular position and energy.

- electron affinity: the amount of energy released when an electron is added to a neutral atom or molecule to form a negative ion

- ionization energy: the amount of energy required to remove an electron from an atom or molecule in the gas phase

In the periodic table, elements are presented in order of increasing atomic number (the number of protons). The rows of the table are called periods; the columns of the s- (columns 1-2 and He), d- (columns 3-12), and p-blocks (columns 13-18, except He) are called groups. (The terminology of s-, p-, and d-blocks originates from the valence atomic orbitals that the element’s electrons occupy.) Some groups have specific names, such as the halogens or the noble gases. Since, by definition, a periodic table incorporates recurring trends, any such table can be used to derive relationships between the properties of the elements and predict the properties of new, yet-to-be-discovered, or synthesized elements. As a result, the periodic table provides a useful framework for analyzing chemical behavior, and such tables are widely used in chemistry and other sciences.
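The connection between blocks and valence orbitals can be illustrated with a short sketch. It assumes the Madelung (n + l) filling rule, which is not stated in the text and is only an approximation: it ignores known exceptions in electron configurations, and its assignment of a few elements (such as lanthanum and actinium) differs between conventions. The function names are hypothetical.

```python
def madelung_order(max_n: int = 8):
    """Subshells (n, l) sorted by the Madelung rule: n + l, then n."""
    subshells = [(n, l) for n in range(1, max_n + 1) for l in range(n)]
    return sorted(subshells, key=lambda nl: (nl[0] + nl[1], nl[0]))


def block_of(atomic_number: int) -> str:
    """Block letter (s, p, d, or f) of the subshell holding the last electron.

    Approximate: assumes strict Madelung filling with no exceptions.
    """
    if not 1 <= atomic_number <= 118:
        raise ValueError("atomic number must be between 1 and 118")
    remaining = atomic_number
    for n, l in madelung_order():
        capacity = 2 * (2 * l + 1)   # electrons that fit in this subshell
        if remaining <= capacity:
            return "spdf"[l]
        remaining -= capacity
    raise RuntimeError("unreachable for atomic numbers up to 118")


for symbol, z in [("H", 1), ("He", 2), ("C", 6), ("Cl", 17), ("Fe", 26), ("U", 92)]:
    print(f"{symbol} (Z={z}): {block_of(z)}-block")
```

Note that helium comes out as an s-block element, consistent with the "columns 1-2 and He" description above.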

Blocks in the Periodic Table: A diagram of the periodic table, highlighting the different blocks

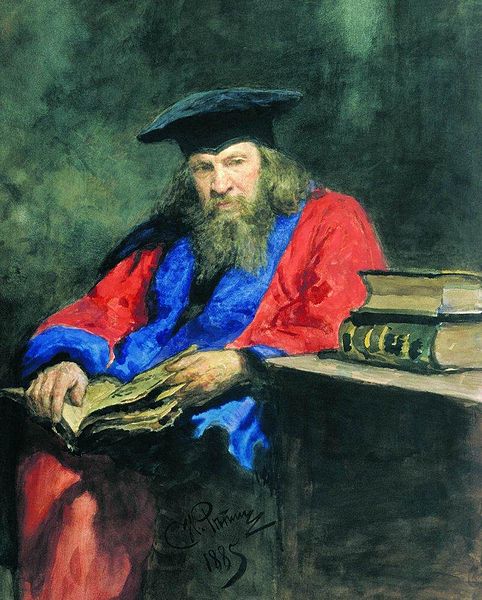

History of the Periodic Table

Although precursors exist, Dmitri Mendeleev is generally credited with the publication, in 1869, of the first widely recognized periodic table. Mendeleev designed the table in such a way that recurring (“periodic”) trends in the properties of the elements could be shown. Using the trends he observed, he left gaps for those elements that he thought were “missing.” He even predicted the properties that he thought the missing elements would have when they were discovered. Many of these elements were indeed later discovered, and Mendeleev’s predictions proved to be correct.

Groups

A group, or family, is a vertical column in the periodic table. Groups usually have more significant periodic trends than do periods and blocks, which are explained below. Modern quantum mechanical theories of atomic structure explain group trends by proposing that elements in the same group generally have the same electron configurations in their valence (or outermost, partially filled) shell. Consequently, elements in the same group tend to have shared chemistry and exhibit a clear trend in properties with increasing atomic number. However, in some parts of the periodic table, such as the d-block and the f-block, horizontal similarities can be as important as, or more pronounced than, vertical similarities.

Periods

A period is a horizontal row in the periodic table. Although groups generally have more significant periodic trends, there are regions where horizontal trends are more significant than vertical group trends, such as in the f-block, where the lanthanides and actinides form two substantial horizontal series of elements. Elements in the same period show trends in atomic radius, ionization energy, and electron affinity. Atomic radius usually decreases from left to right across a period. This occurs because each successive element has an added proton and electron, which causes the electron to be drawn closer to the nucleus, decreasing the radius.

The periodic table: Here is the complete periodic table with atomic numbers, groups, and periods. Each entry on the periodic table represents one element, and compounds are made up of several of these elements.

X-Rays

X-rays are a form of electromagnetic radiation and have wavelengths in the range of 0.01 to 10 nanometers.

Learning Objectives

Describe the properties of X-rays and how they can be generated.

Key Takeaways

Key Points

- X-rays can be generated by an x-ray tube (a type of vacuum tube) or by a particle accelerator.

- X-ray fluorescence and Bremsstrahlung are processes through which x-rays are produced.

- Synchrotron radiation is generated by particle accelerators. Its unique features are x-ray outputs many orders of magnitude greater than those of x-ray tubes, wide x-ray spectra, excellent collimation, and linear polarization.

Key Terms

- photon: The quantum of light and other electromagnetic energy, regarded as a discrete particle having zero rest mass, no electric charge, and an indefinitely long lifetime.

- particle accelerator: A device that accelerates electrically charged particles to extremely high speeds, for the purpose of inducing high-energy reactions or producing high-energy radiation.

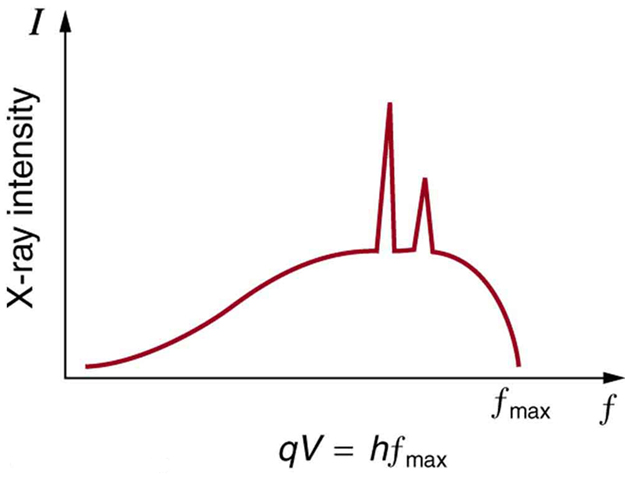

X-Ray Spectrum and Applications: X-rays are part of the electromagnetic spectrum, with wavelengths shorter than those of visible light. Different applications use different parts of the X-ray spectrum.

When high-velocity electrons strike the metal target (the anode) of an x-ray tube, x-rays are produced by two processes:

- X-ray fluorescence: If an incident electron has enough energy, it can knock an orbital electron out of the inner electron shell of a metal atom. As a result, electrons from higher energy levels fill the vacancy, and x-ray photons are emitted. This process produces an emission spectrum of x-rays at a few discrete frequencies, sometimes referred to as spectral lines. The spectral lines generated depend on the target (anode) element used and are therefore called characteristic lines. Usually these are transitions from upper shells into the K shell (called K lines), or the L shell (called L lines), and so on.

- Bremsstrahlung: Literally meaning “braking radiation,” Bremsstrahlung is radiation given off by the electrons as they are scattered by the strong electric field near the high-Z (proton number) nuclei. These x-rays have a continuous spectrum. The intensity of the x-rays increases linearly with decreasing frequency, starting from zero at the maximum photon energy, which equals the energy of the incident electrons and is set by the voltage on the x-ray tube.
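The upper limit of the continuous spectrum can be estimated directly from the tube voltage. The sketch below assumes that an electron accelerated through a potential difference $V$ can give at most its full kinetic energy $eV$ to a single photon, so the shortest emitted wavelength is $\lambda_{\min} = hc/(eV)$. The tube voltages are hypothetical illustrative values.

```python
# Physical constants (exact SI values)
H = 6.62607015e-34      # Planck constant, J*s
C = 299_792_458.0       # speed of light, m/s
E = 1.602176634e-19     # elementary charge, C


def min_wavelength_nm(tube_voltage_v: float) -> float:
    """Shortest Bremsstrahlung wavelength, lambda_min = h*c / (e*V), in nm."""
    return H * C / (E * tube_voltage_v) * 1e9


# Hypothetical tube voltages, for illustration only
for kilovolts in (30, 60, 120):
    wavelength = min_wavelength_nm(kilovolts * 1e3)
    print(f"{kilovolts} kV tube: max photon energy {kilovolts} keV, "
          f"min wavelength {wavelength:.4f} nm")
```

Doubling the tube voltage halves the minimum wavelength, and all of these values fall within the 0.01 to 10 nanometer range quoted for x-rays above.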

A specialized source of x-rays that is becoming widely used in research is synchrotron radiation, which is generated by particle accelerators. Its unique features are x-ray outputs many orders of magnitude greater than those of x-ray tubes, wide x-ray spectra, excellent collimation, and linear polarization.

Quantum-Mechanical View of Atoms

An atom is a basic unit of matter that consists of a nucleus surrounded by a cloud of negatively charged electrons, whose distribution is described by atomic orbitals.

Learning Objectives

Identify major contributions to the understanding of atomic structure that were made by Niels Bohr, Erwin Schrödinger, and Werner Heisenberg.

Key Takeaways

Key Points

- Niels Bohr suggested that the electrons were confined into clearly defined, quantized orbits, and could jump between these, but could not freely spiral inward or outward in intermediate states.

- Erwin Schrödinger, in 1926, developed a mathematical model of the atom that described the electrons as three-dimensional waveforms rather than point particles.

- The modern quantum mechanical view of hydrogen has evolved further since Schrödinger by taking relativistic correction terms into account. The resulting theory is referred to as quantum electrodynamics (QED).

Key Terms

- wave-particle duality: A postulation that all particles exhibit both wave and particle properties. It is a central concept of quantum mechanics.

- scanning tunneling microscope: An instrument for imaging surfaces at the atomic level.

- semiclassical approach: A theory in which one part of a system is described quantum-mechanically whereas the other is treated classically.

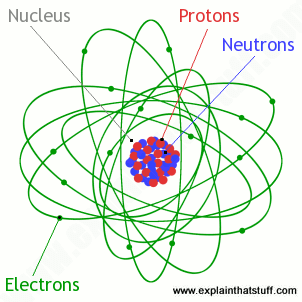

The atom is a basic unit of matter that consists of a nucleus surrounded by negatively charged electrons. The atomic nucleus contains a mix of positively charged protons and electrically neutral neutrons. The electrons of an atom are bound to the nucleus by the electromagnetic (Coulomb) force. Atoms are minuscule objects with diameters of a few tenths of a nanometer and tiny masses proportional to the volume implied by these dimensions. Atoms in solid states (or, to be precise, their electron clouds) can be observed individually using special instruments such as the scanning tunneling microscope.

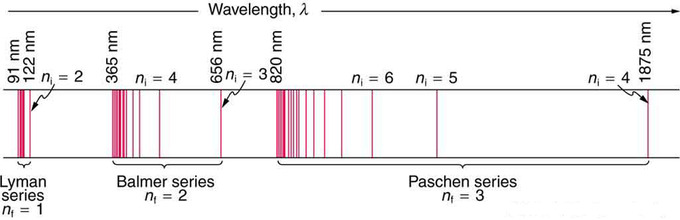

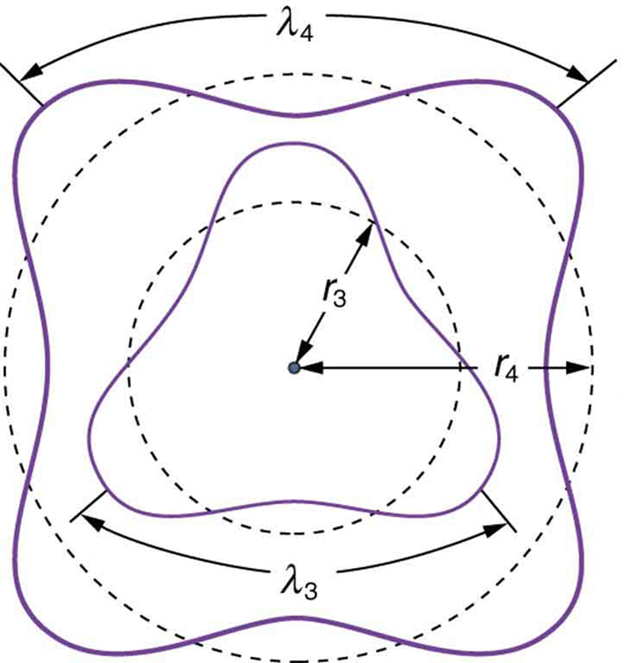

Hydrogen-1 (one proton and one electron) is the simplest atom, and, not surprisingly, our quantum mechanical understanding of atoms evolved with the understanding of this species. In 1913, physicist Niels Bohr suggested that the electrons were confined into clearly defined, quantized orbits, and could jump between these, but could not freely spiral inward or outward in intermediate states. An electron must absorb or emit specific amounts of energy to transition between these fixed orbits. Bohr’s model successfully explained the spectroscopic data of hydrogen, but it adopted a semiclassical approach in which the electron was still considered a (classical) particle.
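The "specific amounts of energy" in Bohr’s model can be made concrete. The sketch below assumes the standard Bohr energy levels for hydrogen, $E_n = -13.6\ \text{eV}/n^2$ (a textbook result not derived in this section), and converts the energy difference between two orbits into a photon wavelength using $\lambda = hc/\Delta E$. It neglects the finite mass of the nucleus and all relativistic corrections, so the results are approximate.

```python
# Physical constants
H = 6.62607015e-34        # Planck constant, J*s
C = 299_792_458.0         # speed of light, m/s
EV = 1.602176634e-19      # joules per electronvolt
RYDBERG_EV = 13.605693    # hydrogen ground-state binding energy, eV (approx.)


def bohr_energy_ev(n: int) -> float:
    """Energy of the n-th Bohr orbit in hydrogen, in eV (negative = bound)."""
    return -RYDBERG_EV / n**2


def transition_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of the photon emitted when the electron drops between orbits."""
    delta_e_ev = bohr_energy_ev(n_upper) - bohr_energy_ev(n_lower)
    return H * C / (delta_e_ev * EV) * 1e9


print(f"n=2 -> n=1: {transition_wavelength_nm(2, 1):.1f} nm (ultraviolet)")
print(f"n=3 -> n=2: {transition_wavelength_nm(3, 2):.1f} nm (visible red)")
```

The two transitions shown correspond to the well-known Lyman-alpha and Balmer-alpha lines of the hydrogen spectrum.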

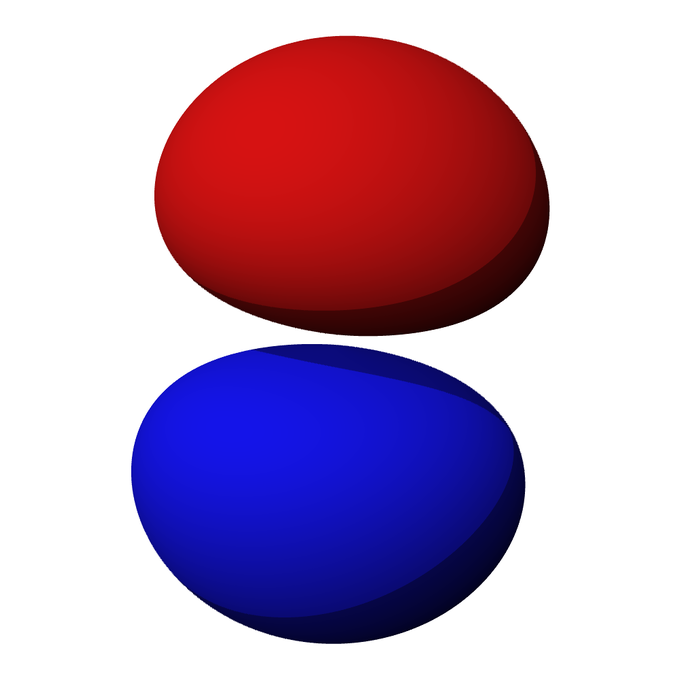

Adopting Louis de Broglie’s proposal of wave-particle duality, Erwin Schrödinger, in 1926, developed a mathematical model of the atom that described the electrons as three-dimensional waveforms rather than point particles. A consequence of using waveforms to describe particles is that it is mathematically impossible to obtain precise values for both the position and momentum of a particle at the same time; this became known as the uncertainty principle, formulated by Werner Heisenberg in 1927. Thereafter, the planetary model of the atom was discarded in favor of one that described atomic orbital zones around the nucleus where a given electron is most likely to be observed.

Illustration of the Helium Atom: This is an illustration of the helium atom, depicting the nucleus (pink) and the electron cloud distribution (black). The nucleus (upper right) in helium-4 is in reality spherically symmetric and closely resembles the electron cloud, although for more complicated nuclei this is not always the case. The black bar is one angstrom ($10^{-10}$ m, or 100 pm).
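As a rough illustration of the uncertainty principle, consider its usual quantitative form, which is not written out in the text and is assumed here. The confinement length $\Delta x = 10^{-10}$ m is an illustrative choice, roughly the size of an atom.

```latex
\Delta x \, \Delta p \ge \frac{\hbar}{2}
\quad\Longrightarrow\quad
\Delta p \ge \frac{\hbar}{2\,\Delta x}
= \frac{1.055 \times 10^{-34}\ \text{J s}}{2 \times 10^{-10}\ \text{m}}
\approx 5.3 \times 10^{-25}\ \text{kg m/s}
```

For an electron (mass about $9.1 \times 10^{-31}$ kg), this momentum spread corresponds to a speed uncertainty of several hundred kilometers per second, which is why a sharply defined orbit is not a meaningful description at atomic scales.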

The modern quantum mechanical view of hydrogen has evolved further since Schrödinger by taking relativistic correction terms into account. Quantum electrodynamics (QED), a relativistic quantum field theory describing the interaction of electrically charged particles, has successfully predicted minuscule corrections in energy levels. One of hydrogen’s atomic transitions (n=2 to n=1, where n is the principal quantum number) has been measured to an extraordinary precision of 1 part in a hundred trillion. This kind of spectroscopic precision allows physicists to refine quantum theories of atoms by accounting for minuscule discrepancies between experimental results and theories.

Planck’s Quantum Hypothesis and Black Body Radiation

A black body emits radiation called black body radiation. Planck described the radiation by assuming that radiation was emitted in quanta.

Learning Objectives

Identify the assumption made by Max Planck to describe the electromagnetic radiation emitted by a black body.

Key Takeaways

Key Points

- A black body in thermal equilibrium emits electromagnetic radiation called black body radiation.

- The radiation has a specific spectrum and intensity that depends only on the temperature of the body.

- Max Planck, in 1901, accurately described the radiation by assuming that electromagnetic radiation was emitted in discrete packets (or quanta). Planck’s quantum hypothesis was a pioneering work, heralding the advent of a new era of modern physics and quantum theory.

Key Terms

- spectral radiance: measures of the quantity of radiation that passes through or is emitted from a surface and falls within a given solid angle in a specified direction.

- black body: An idealized physical body that absorbs all incident electromagnetic radiation, regardless of frequency or angle of incidence. Although black body is a theoretical concept, you can find approximate realizations of black body in nature.

- Planck constant: a physical constant that is the quantum of action in quantum mechanics. It has the units of angular momentum. The Planck constant was first described by Max Planck, in his derivation of Planck’s law, as the proportionality constant between the energy of a photon (unit of electromagnetic radiation) and the frequency of its associated electromagnetic wave.

A black body in thermal equilibrium (i.e., at a constant temperature) emits electromagnetic radiation called black body radiation. Black body radiation has a characteristic, continuous frequency spectrum that depends only on the body’s temperature. Max Planck, in 1901, accurately described the radiation by assuming that electromagnetic radiation was emitted in discrete packets (or quanta). Planck’s quantum hypothesis was a pioneering work, heralding the advent of a new era of modern physics and quantum theory.

Explaining the properties of black-body radiation was a major challenge in theoretical physics during the late nineteenth century. Predictions based on classical theories failed to explain black body spectra observed experimentally, especially at shorter wavelengths. The puzzle was solved in 1901 by Max Planck in the formalism now known as Planck’s law of black-body radiation. Contrary to the common belief that electromagnetic radiation can take continuous values of energy, Planck introduced a radical concept that electromagnetic radiation was emitted in discrete packets (or quanta) of energy. Although Planck’s derivation is beyond the scope of this section (it will be covered in Quantum Mechanics), Planck’s law may be written:

$$B_\lambda(T) = \frac{2hc^2}{\lambda^5} \frac{1}{e^{hc/(\lambda k_B T)} - 1}$$

where $B_\lambda$ is the spectral radiance of the surface of the black body, $T$ is its absolute temperature, $\lambda$ is the wavelength of the radiation, $k_B$ is the Boltzmann constant, $h$ is the Planck constant, and $c$ is the speed of light. This equation explains the black body spectra shown below. Planck’s quantum hypothesis is one of the breakthroughs of modern physics. It was in his derivation of Planck’s law that he introduced the Planck constant for the first time.

Black body radiation spectrum: Typical spectrum from a black body at different temperatures (shown in blue, green and red curves). As the temperature decreases, the peak of the black-body radiation curve moves to lower intensities and longer wavelengths. The black line is the prediction of a classical theory for an object at 5,000 K, showing a catastrophic discrepancy at shorter wavelengths.

Note that the spectral radiance depends on two variables, wavelength and temperature. The radiation has a specific spectrum and intensity that depends only on the temperature of the body. Despite its simplicity, Planck’s law describes radiation properties of objects (e.g. our body, planets, stars) reasonably well.
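The temperature dependence described above can be explored numerically. The sketch below evaluates the standard wavelength form of Planck’s law, $B_\lambda(T) = \frac{2hc^2}{\lambda^5}\,\frac{1}{e^{hc/(\lambda k_B T)} - 1}$, and locates the peak of the spectrum by a simple scan over a 1 nm grid. The temperatures, the scan range, and the function names are assumptions made for this illustration.

```python
import math

# Physical constants (exact SI values)
H = 6.62607015e-34      # Planck constant, J*s
C = 299_792_458.0       # speed of light, m/s
K_B = 1.380649e-23      # Boltzmann constant, J/K


def spectral_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Planck's law: spectral radiance per unit wavelength, W / (m^2 sr m)."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return (2.0 * H * C**2 / wavelength_m**5) / math.expm1(x)


def peak_wavelength_nm(temperature_k: float,
                       start_nm: int = 100, stop_nm: int = 3000) -> int:
    """Wavelength (on a 1 nm grid) at which the spectral radiance is largest."""
    return max(range(start_nm, stop_nm + 1),
               key=lambda nm: spectral_radiance(nm * 1e-9, temperature_k))


for temperature in (3000, 4000, 5000):
    peak_nm = peak_wavelength_nm(temperature)
    peak_value = spectral_radiance(peak_nm * 1e-9, temperature)
    print(f"T = {temperature} K: peak near {peak_nm} nm, "
          f"radiance {peak_value:.3e} W/(m^2 sr m)")
```

Running the scan shows both trends noted for the figure: as the temperature decreases, the peak shifts to longer wavelengths and its height drops.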

The Early Atom

The Discovery of the Parts of the Atom

Modern scientific usage denotes the atom as composed of constituent particles: the electron, the proton and the neutron.

Learning Objectives

Discuss experiments that led to the discovery of the electron and the nucleus.

Key Takeaways

Key Points

- The British physicist J. J. Thomson performed experiments studying cathode rays and discovered that they were unique particles, later named electrons.

- Rutherford proved that the hydrogen nucleus is present in other nuclei.

- In 1932, James Chadwick showed that there were uncharged particles in the radiation he was using. These particles, later called neutrons, had a mass similar to that of protons but did not have the same characteristics as protons.

Key Terms

- scintillation: A flash of light produced in a transparent material by the passage of a particle.

- alpha particle: A positively charged nucleus of a helium-4 atom (consisting of two protons and two neutrons), emitted as a consequence of radioactivity.

- cathode: An electrode through which electric current flows out of a polarized electrical device.

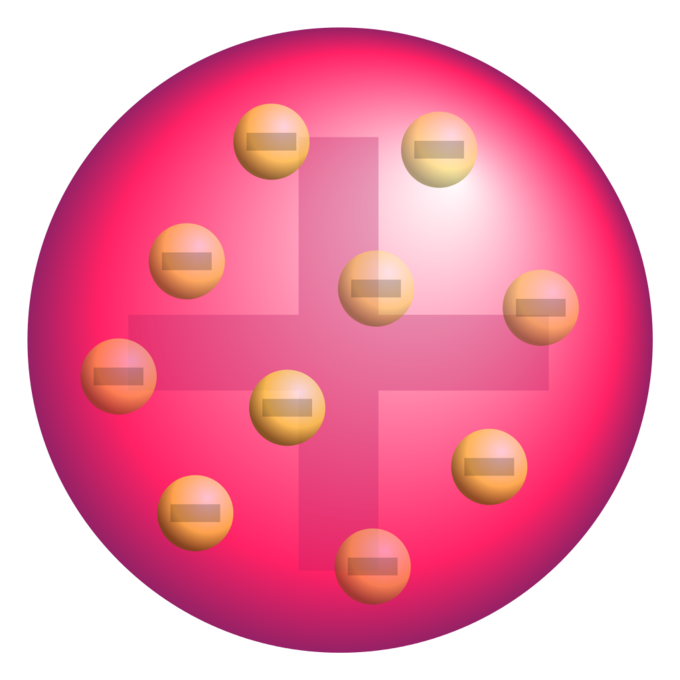

Classical Atomic Model: Atomic model before the advent of Quantum Mechanics.

Electron

The German physicist Johann Wilhelm Hittorf undertook the study of electrical conductivity in rarefied gases. In 1869, he discovered a glow emitted from the cathode that increased in size with decreasing gas pressure. In 1897, the British physicist J. J. Thomson performed experiments demonstrating that cathode rays were unique particles, rather than waves, atoms or molecules, as was believed earlier. Thomson made good estimates of both the charge $e$ and the mass $m$, finding that cathode ray particles (which he called “corpuscles”) had perhaps one thousandth the mass of hydrogen, the least massive ion known. He showed that their charge-to-mass ratio ($e/m$) was independent of cathode material. (The figure shows a beam of deflected electrons.)

Electron Beam: A beam of electrons deflected in a circle by a magnetic field.
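The circular deflection in the figure suggests one way to estimate the charge-to-mass ratio. The sketch below is a modern teaching-lab style calculation, not a reconstruction of Thomson’s own procedure: it assumes electrons are accelerated from rest through a voltage $V$ (so $eV = \tfrac{1}{2}mv^2$) and then move in a circle of radius $r$ in a uniform magnetic field $B$ (so $evB = mv^2/r$), which together give $e/m = 2V/(B^2 r^2)$. All measurement values are hypothetical.

```python
def charge_to_mass_ratio(voltage_v: float, field_t: float, radius_m: float) -> float:
    """Estimate e/m in C/kg from e/m = 2V / (B^2 r^2).

    Assumes non-relativistic electrons accelerated from rest through
    voltage_v and moving perpendicular to a uniform magnetic field.
    """
    return 2.0 * voltage_v / (field_t**2 * radius_m**2)


# Hypothetical measurements, chosen only for illustration
voltage_v = 200.0     # accelerating voltage, volts
field_t = 1.0e-3      # magnetic field, tesla
radius_m = 0.0477     # radius of the beam's circular path, meters

ratio = charge_to_mass_ratio(voltage_v, field_t, radius_m)
print(f"estimated e/m: {ratio:.3e} C/kg")
```

With these assumed readings, the estimate lands close to the accepted value of about $1.76 \times 10^{11}$ C/kg. Because the formula contains no property of the cathode, the same ratio is expected whatever the cathode material, in line with Thomson’s finding.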

Proton

In 1917 (in experiments reported in 1919), Rutherford proved that the hydrogen nucleus is present in other nuclei, a result usually described as the discovery of the proton. Earlier, Rutherford had learned to create hydrogen nuclei as a type of radiation produced by the impact of alpha particles on hydrogen gas; these nuclei were recognized by their unique penetration signature in air and their appearance in scintillation detectors. These experiments began when Rutherford noticed that when alpha particles were shot into air (mostly nitrogen), his scintillation detectors displayed the signatures of typical hydrogen nuclei as a product. After experimentation, Rutherford traced the reaction to the nitrogen in air, and found that the effect was larger when alpha particles were directed into pure nitrogen gas. Rutherford determined that the only possible source of this hydrogen was the nitrogen, and therefore nitrogen must contain hydrogen nuclei. One hydrogen nucleus was knocked off by the impact of the alpha particle, producing oxygen-17 in the process. This was the first reported nuclear reaction, $^{14}\text{N} + \alpha \rightarrow {}^{17}\text{O} + p$.

Neutron

In 1920, Ernest Rutherford conceived of the possible existence of the neutron. In particular, Rutherford examined the disparity between the atomic number of an atom and its atomic mass. His explanation was the existence of a neutrally charged particle within the atomic nucleus. He considered the neutron to be a neutral double consisting of an electron orbiting a proton. In 1932, James Chadwick detected uncharged particles in the radiation he was studying. These particles had a mass similar to that of protons but did not share the protons' other characteristics. Chadwick followed some of the predictions of Rutherford, the first to work in this then-unknown field.

Early Models of the Atom

Dalton believed that matter is composed of discrete units called atoms: indivisible, ultimate particles of matter.

Learning Objectives

Describe the postulates of Dalton’s atomic theory and the atomic theories of ancient Greek philosophers.

Key Takeaways

Key Points

- The atom is a basic unit of matter that consists of a dense central nucleus surrounded by a cloud of negatively charged electrons.

- Scattered knowledge discovered by alchemists over the Middle Ages contributed to the discovery of atoms.

- Dalton established his atomic theory based on the fact that the masses of reactants in specific chemical reactions always have a particular mass ratio.

Key Terms

- electromagnetic force: a long-range fundamental force that acts between charged bodies, mediated by the exchange of photons

- Avogadro’s number: the number of constituent particles (usually atoms or molecules) in one mole of a given substance. It has units of reciprocal moles, and its value is $6.02214076 \times 10^{23} \text{ mol}^{-1}$ (exact by definition since the 2019 revision of the SI)

- nucleus: the massive, positively charged central part of an atom, made up of protons and neutrons

Illustration of the Helium Atom: This is an illustration of the helium atom, depicting the nucleus (pink) and the electron cloud distribution (black). The nucleus (upper right) in helium-4 is in reality spherically symmetric and closely resembles the electron cloud, although for more complicated nuclei this is not always the case. The black bar is one angstrom ($10^{-10}$ m, or 100 pm).

The Greeks and others speculated about the properties of atoms, proposing that only a few types existed and that all matter was formed as various combinations of these types. The famous proposal that the basic elements were earth, air, fire, and water was brilliant but incorrect. The Greeks had identified the most common examples of the four states of matter (solid, gas, plasma, and liquid) rather than the basic chemical elements. More than 2000 years passed before observations could be made with equipment capable of revealing the true nature of atoms.

Over the centuries, discoveries were made regarding the properties of substances and their chemical reactions. Certain systematic features were recognized, but similarities between common and rare elements resulted in efforts to transmute them (lead into gold, in particular) for financial gain. Secrecy was commonplace. Alchemists discovered and rediscovered many facts but did not make them broadly available. As the Middle Ages ended, the practice of alchemy gradually faded, and the science of chemistry arose. It was no longer possible, nor considered desirable, to keep discoveries secret. Collective knowledge grew, and by the beginning of the 19th century, an important fact was well established: the masses of reactants in specific chemical reactions always have a particular mass ratio. This is very strong indirect evidence that there are basic units (atoms and molecules) that have these same mass ratios. English chemist John Dalton (1766-1844) did much of this work, with significant contributions by the Italian physicist Amedeo Avogadro (1776-1856). It was Avogadro who developed the idea of a fixed number of atoms and molecules in a mole. This special number is called Avogadro’s number in his honor ($N_A \approx 6.022 \times 10^{23} \text{ mol}^{-1}$).
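The fixed mass ratio can be made concrete with a small numerical sketch. It uses water as an assumed example (water is not discussed in the text), with rounded standard atomic masses: whatever the sample size, the oxygen-to-hydrogen mass ratio comes out the same, which is what one expects if the sample is built from identical H2O units. The function names are illustrative only.

```python
# Rounded standard atomic masses in g/mol (reference values, not from the text).
ATOMIC_MASS = {"H": 1.008, "O": 15.999}
AVOGADRO = 6.02214076e23  # particles per mole


def oxygen_to_hydrogen_mass_ratio(moles_of_water: float) -> float:
    """Mass of oxygen divided by mass of hydrogen in a sample of H2O."""
    mass_h = 2 * ATOMIC_MASS["H"] * moles_of_water  # two H atoms per molecule
    mass_o = 1 * ATOMIC_MASS["O"] * moles_of_water  # one O atom per molecule
    return mass_o / mass_h


def molecules_in_sample(moles: float) -> float:
    """Number of molecules in a sample containing `moles` of substance."""
    return moles * AVOGADRO


for n in (0.5, 1.0, 20.0):
    ratio = oxygen_to_hydrogen_mass_ratio(n)
    count = molecules_in_sample(n)
    print(f"{n:5.1f} mol water: O/H mass ratio = {ratio:.3f}, molecules = {count:.3e}")
```

The ratio stays near 7.94 for every sample size, while the number of molecules scales with the amount of substance.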

Dalton believed that matter is composed of discrete units called atoms, as opposed to the obsolete notion that matter could be divided into any arbitrarily small quantity. He also believed that atoms are the indivisible, ultimate particles of matter. However, this belief was overturned near the end of the 19th century by Thomson, with his discovery of electrons.

The Thomson Model

Thomson proposed that the atom is composed of electrons surrounded by a soup of positive charge to balance the electrons’ negative charges.

Learning Objectives

Describe the model of the atom proposed by J. J. Thomson.

Key Takeaways

Key Points

- J. J. Thomson, who discovered the electron in 1897, proposed the plum pudding model of the atom in 1904 before the discovery of the atomic nucleus in order to include the electron in the atomic model.

- In Thomson’s model, the atom is composed of electrons surrounded by a soup of positive charge to balance the electrons’ negative charges, like negatively charged “plums” surrounded by positively charged “pudding”.

- The 1904 Thomson model was disproved by Hans Geiger’s and Ernest Marsden’s 1909 gold foil experiment.

Key Terms

- nucleus: the massive, positively charged central part of an atom, made up of protons and neutrons

Plum pudding model of the atom: A schematic presentation of the plum pudding model of the atom; in Thomson’s mathematical model the “corpuscles” (in modern language, electrons) were arranged non-randomly, in rotating rings.

The 1904 Thomson model was disproved by the 1909 gold foil experiment performed by Hans Geiger and Ernest Marsden. Ernest Rutherford interpreted this experiment in 1911 to suggest that the atom contains a very small nucleus carrying a very high positive charge (in the case of gold, Rutherford estimated enough to balance the collective negative charge of about 100 electrons; the modern value is 79). His conclusions led him to propose the Rutherford model of the atom.

The Rutherford Model

Rutherford confirmed that the atom had a concentrated center of positive charge and relatively large mass.

Learning Objectives

Describe the gold foil experiment performed by Geiger and Marsden under Rutherford’s direction and its implications for the model of the atom.

Key Takeaways

Key Points

- Rutherford overturned Thomson’s model in 1911 with his well-known gold foil experiment, in which he demonstrated that the atom has a tiny, high-mass nucleus.

- In his experiment, Rutherford observed that many alpha particles were deflected at small angles while others were reflected back to the alpha source.

- This highly concentrated, positively charged region is named the “nucleus” of the atom.

Key Terms

- alpha particle: A positively charged nucleus of a helium-4 atom (consisting of two protons and two neutrons), emitted as a consequence of radioactivity; α-particle.

Atomic Planetary Model: Basic diagram of the atomic planetary model; electrons are in green, and the nucleus is in red

The Bohr Model of the Atom

Bohr suggested that electrons in hydrogen could have certain classical motions only when restricted by a quantum rule.

Learning Objectives

Describe the model of the atom proposed by Niels Bohr.

Key Takeaways

Key Points

- According to Bohr: 1) Electrons in atoms orbit the nucleus, 2) The electrons can only orbit stably, without radiating, in certain orbits, and 3) Electrons can only gain and lose energy by jumping from one allowed orbit to another.

- The significance of the Bohr model is that the laws of classical mechanics apply to the motion of the electron about the nucleus only when restricted by a quantum rule. Therefore, his atomic model is called a semiclassical model.

- The laws of classical mechanics predict that the electron should release electromagnetic radiation while orbiting a nucleus, suggesting that all atoms should be unstable!

Key Terms

- Maxwell’s equations: A set of equations describing how electric and magnetic fields are generated and altered by each other and by charges and currents.

- semiclassical: a theory in which one part of a system is described quantum-mechanically whereas the other is treated classically.

The Bohr Model of the Atom

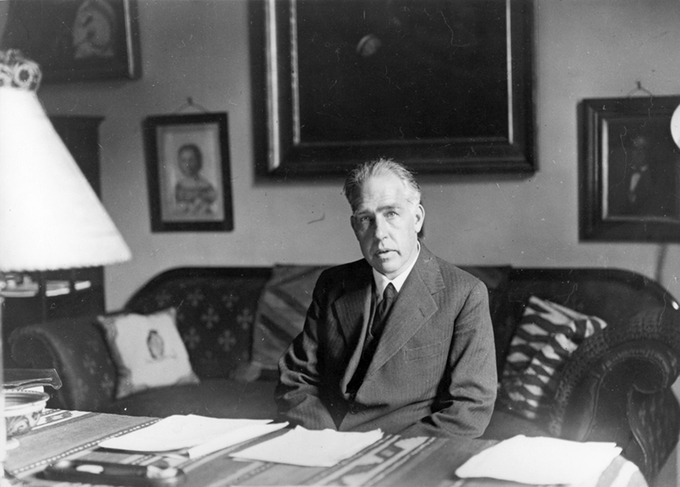

The great Danish physicist Niels Bohr (1885–1962) made immediate use of Rutherford’s planetary model of the atom. Bohr became convinced of its validity and spent part of 1912 at Rutherford’s laboratory. In 1913, after returning to Copenhagen, he began publishing his theory of the simplest atom, hydrogen, based on the planetary model of the atom.

Niels Bohr: Niels Bohr, Danish physicist, used the planetary model of the atom to explain the atomic spectrum and size of the hydrogen atom. His many contributions to the development of atomic physics and quantum mechanics; his personal influence on many students and colleagues; and his personal integrity, especially in the face of Nazi oppression, earned him a prominent place in history. (credit: Unknown Author, via Wikimedia Commons)

The planetary model of the atom posed one big puzzle. The laws of classical mechanics predict that the electron should release electromagnetic radiation while orbiting a nucleus (according to Maxwell’s equations, an accelerating charge should emit electromagnetic radiation). Because the electron would lose energy, it would gradually spiral inward, collapsing into the nucleus. This atomic model is disastrous because it predicts that all atoms are unstable. Also, as the electron spiraled inward, the emission would gradually increase in frequency as the orbit got smaller and faster. This would produce a continuous smear, in frequency, of electromagnetic radiation. However, late 19th-century experiments with electric discharges had shown that atoms emit light (that is, electromagnetic radiation) only at certain discrete frequencies.
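To give a sense of scale for this instability, the sketch below evaluates two classical quantities for a hydrogen-like circular orbit. The orbital frequency follows from balancing the Coulomb force against the centripetal force. The collapse time uses the standard textbook estimate $t = r^3 / (4 r_e^2 c)$, obtained by integrating the Larmor radiation loss over a slowly shrinking orbit, where $r_e$ is the classical electron radius. Neither formula is derived in this text, and the starting radius (about 0.53 angstrom) is an assumption.

```python
import math

# Rounded physical constants in SI units.
E_CHARGE = 1.602176634e-19   # elementary charge, C
E_MASS = 9.1093837e-31       # electron mass, kg
EPSILON_0 = 8.8541878e-12    # vacuum permittivity, F/m
C_LIGHT = 2.99792458e8       # speed of light, m/s
START_RADIUS = 5.29177e-11   # assumed initial orbit radius, m

COULOMB_K = E_CHARGE**2 / (4 * math.pi * EPSILON_0)  # e^2 / (4 pi eps0), J*m


def classical_orbit_frequency(radius: float) -> float:
    """Orbital frequency (Hz) of a circular Coulomb orbit of given radius."""
    speed = math.sqrt(COULOMB_K / (E_MASS * radius))  # from m v^2 / r = k / r^2
    return speed / (2 * math.pi * radius)


def classical_collapse_time(radius: float) -> float:
    """Estimated time (s) for the orbit to spiral into the nucleus."""
    r_e = COULOMB_K / (E_MASS * C_LIGHT**2)  # classical electron radius
    return radius**3 / (4 * r_e**2 * C_LIGHT)


for fraction in (1.0, 0.5, 0.25):
    r = fraction * START_RADIUS
    print(f"r = {r:.2e} m -> orbital frequency = {classical_orbit_frequency(r):.2e} Hz")

print(f"collapse time from start radius = {classical_collapse_time(START_RADIUS):.2e} s")
```

The frequency rises continuously as the radius shrinks, which is the "continuous smear" described above, and the estimated lifetime is on the order of $10^{-11}$ s.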

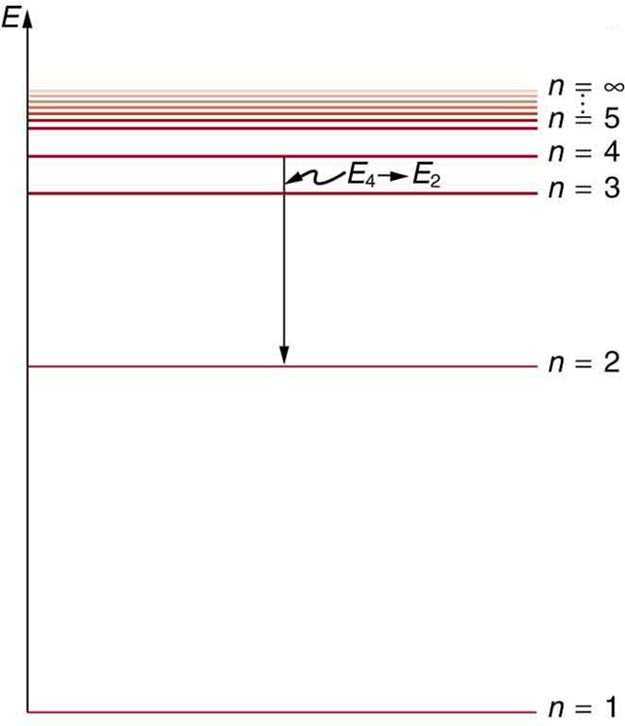

To overcome this difficulty, Niels Bohr proposed, in 1913, what is now called the Bohr model of the atom. He suggested that electrons could only have certain classical motions:

- Electrons in atoms orbit the nucleus.

- The electrons can only orbit stably, without radiating, in certain orbits (called by Bohr the “stationary orbits”) at a certain discrete set of distances from the nucleus. These orbits are associated with definite energies and are also called energy shells or energy levels. In these orbits, the electron’s acceleration does not result in the radiation and energy loss that classical electrodynamics would otherwise require.

- Electrons can only gain and lose energy by jumping from one allowed orbit to another, absorbing or emitting electromagnetic radiation with a frequency determined by the energy difference of the levels according to the Planck relation:

$\Delta E = E_2 - E_1 = h\nu$,

where $h$ is Planck’s constant and $\nu$ is the frequency of the radiation.
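The Planck relation can be turned into a one-line calculation: divide the energy gap between two levels by Planck's constant to get the frequency of the emitted or absorbed radiation. The sketch below is a minimal illustration. The two level energies are the commonly quoted hydrogen values for the second and third levels (about -3.40 eV and -1.51 eV); they are assumptions brought in for the example and do not appear in this text.

```python
PLANCK_H = 6.62607015e-34   # Planck's constant, J*s
EV = 1.602176634e-19        # joules per electronvolt
C_LIGHT = 2.99792458e8      # speed of light, m/s


def transition_frequency(e_upper_ev: float, e_lower_ev: float) -> float:
    """Frequency (Hz) of radiation for a jump between two levels given in eV."""
    delta_e_joules = (e_upper_ev - e_lower_ev) * EV
    return delta_e_joules / PLANCK_H


# Assumed hydrogen level energies (eV) for the third and second orbits.
nu = transition_frequency(e_upper_ev=-1.51, e_lower_ev=-3.40)
wavelength = C_LIGHT / nu

print(f"frequency  = {nu:.3e} Hz")
print(f"wavelength = {wavelength * 1e9:.0f} nm")
```

With these inputs the wavelength comes out close to 656 nm, in the red part of the visible spectrum.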

Semiclassical Model

The significance of the Bohr model is that the laws of classical mechanics apply to the motion of the electron about the nucleus only when restricted by a quantum rule. Therefore, his atomic model is called a semiclassical model.

Basic Assumptions of the Bohr Model

Bohr explained hydrogen’s spectrum successfully by adopting a quantization condition and by introducing the Planck constant in his model.

Learning Objectives

Describe the basic assumptions that Niels Bohr applied to the planetary model of the atom.

Key Takeaways

Key Points

- Classical electrodynamics predicts that an atom described by a (classical) planetary model would be unstable.

- To explain the hydrogen spectrum, Bohr had to make a few assumptions that electrons could only have certain classical motions.

- After the seminal work by Planck, Einstein, and Bohr, physicists began to realize that it was essential to introduce the notion of “quantization” to explain the microscopic world.

Key Terms

- black body: An idealized physical body that absorbs all incident electromagnetic radiation, regardless of frequency or angle of incidence. Although a black body is a theoretical concept, approximate realizations of a black body can be found in nature.

- photoelectric effect: The occurrence of electrons being emitted from matter (metals and non-metallic solids, liquids, or gases) as a consequence of their absorption of energy from electromagnetic radiation.

To explain the hydrogen spectrum, Bohr made the following assumptions about the electron’s motion:

- Electrons in atoms orbit the nucleus.

- The electrons can only orbit stably, without radiating, in certain orbits (called by Bohr the “stationary orbits”) at a certain discrete set of distances from the nucleus. These orbits are associated with definite energies and are also called energy shells or energy levels. In these orbits, the electron’s acceleration does not result in the radiation and energy loss that classical electrodynamics would otherwise require.

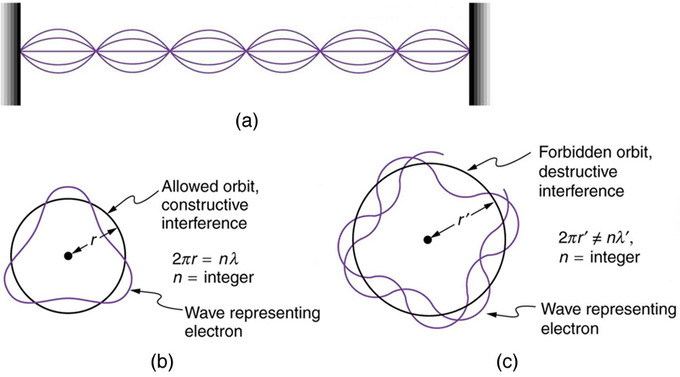

- Electrons can only gain and lose energy by jumping from one allowed orbit to another, absorbing or emitting electromagnetic radiation with a frequency $\nu$ determined by the energy difference of the levels according to the Planck relation: $\Delta E = E_2 - E_1 = h\nu$, where $h$ is the Planck constant. In addition, Bohr also assumed that the angular momentum $L$ is restricted to be an integer multiple of a fixed unit: $L = n\frac{h}{2\pi} = n\hbar$, where $n = 1, 2, 3, \ldots$ is called the principal quantum number, and $\hbar = h/2\pi$.
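The assumptions above are enough to fix the size and energy of each allowed orbit. As a sketch of the standard textbook argument (not spelled out in this text): setting the Coulomb force equal to the centripetal force, $m v^2 / r = e^2 / (4\pi\varepsilon_0 r^2)$, and requiring the angular momentum $m v r$ to equal $n\hbar$ gives $r_n = 4\pi\varepsilon_0 (n\hbar)^2 / (m e^2)$ and a total energy $E_n = -e^2 / (8\pi\varepsilon_0 r_n)$. The code below evaluates these expressions for hydrogen; the function name `bohr_orbit` is illustrative.

```python
import math

# Rounded physical constants in SI units.
PLANCK_H = 6.62607015e-34    # Planck constant, J*s
HBAR = PLANCK_H / (2 * math.pi)
E_CHARGE = 1.602176634e-19   # elementary charge, C
E_MASS = 9.1093837e-31       # electron mass, kg
EPSILON_0 = 8.8541878e-12    # vacuum permittivity, F/m


def bohr_orbit(n: int) -> tuple[float, float]:
    """Return (radius in m, total energy in eV) of the nth Bohr orbit of hydrogen."""
    if n < 1:
        raise ValueError("principal quantum number n must be a positive integer")
    # Radius from force balance combined with L = n * hbar.
    radius = 4 * math.pi * EPSILON_0 * (n * HBAR) ** 2 / (E_MASS * E_CHARGE**2)
    # Total energy = kinetic + potential = -e^2 / (8 pi eps0 r).
    energy_joules = -E_CHARGE**2 / (8 * math.pi * EPSILON_0 * radius)
    return radius, energy_joules / E_CHARGE


for n in (1, 2, 3):
    radius, energy_ev = bohr_orbit(n)
    print(f"n = {n}: radius = {radius:.3e} m, energy = {energy_ev:.2f} eV")
```

The radii grow as $n^2$ and the energies shrink in magnitude as $1/n^2$; for $n = 1$ the result is about 0.53 angstrom and about -13.6 eV.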