Y . K;Z I . What Is the Difference between Electronic and Electrical Devices?

When the field of electronics was invented in 1883, electrical devices had already been around for at least 100 years. For example:

- The first electric batteries were invented by a fellow named Alessandro Volta in 1800. Volta’s contribution is so important that the common volt is named for him. (There is some archeological evidence that the ancient Parthian Empire may have invented the electric battery in the second century BC, but if so we don’t know what they used their batteries for, and their invention was forgotten for 2,000 years.)

- The electric telegraph was invented in the 1830s and popularized in America by Samuel Morse, who invented the famous Morse code used to encode the alphabet and numerals into a series of short and long clicks that could be transmitted via telegraph. In 1866, a telegraph cable was laid across the Atlantic Ocean allowing instantaneous communication between the United States and Europe.

The answer lies in how devices manipulate electricity to do their work. Electrical devices take the energy of electric current and transform it in simple ways into some other form of energy — most likely light, heat, or motion. The heating elements in a toaster turn electrical energy into heat so you can burn your toast. And the motor in your vacuum cleaner turns electrical energy into motion that drives a pump that sucks the burnt toast crumbs out of your carpet.

In contrast, electronic devices do much more. Instead of just converting electrical energy into heat, light, or motion, electronic devices are designed to manipulate the electrical current itself to coax it into doing interesting and useful things.

That very first electronic device invented in 1883 by Thomas Edison manipulated the electric current passing through a light bulb in a way that let Edison create a device that could monitor the voltage being provided to an electrical circuit and automatically increase or decrease the voltage if it became too low or too high.

One of the most common things that electronic devices do is manipulate electric current in a way that adds meaningful information to the current. For example, audio electronic devices add sound information to an electric current so that you can listen to music or talk on a cellphone. And video devices add images to an electric current so you can watch great movies until you know every line by heart.

Keep in mind that the distinction between electric and electronic devices is a bit blurry. What used to be simple electrical devices now often include some electronic components in them. For example, your toaster may contain an electronic thermostat that attempts to keep the heat at just the right temperature to make perfect toast.

And even the most complicated electronic devices have simple electrical components in them. For example, although your TV set’s remote control is a pretty complicated little electronic device, it contains batteries, which are simple electrical devices.

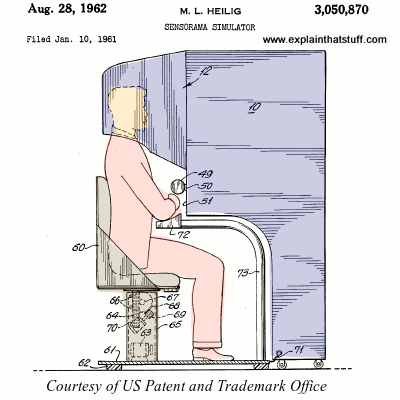

Y . I/O Electronics

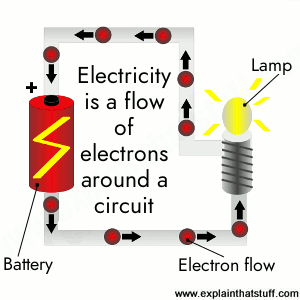

They store your money. They monitor your heartbeat. They carry the sound of your voice into other people's homes. They bring airplanes into land and guide cars safely to their destination—they even fire off the airbags if we get into trouble. It's amazing to think just how many things "they" actually do. "They" are electrons: tiny particles within atoms that march around defined paths known as circuits carrying electrical energy. One of the greatest things people learned to do in the 20th century was to use electrons to control machines and process information. The electronics revolution, as this is known, accelerated the computer revolution and both these things have transformed many areas of our lives. But how exactly do nanoscopically small particles, far too small to see, achieve things that are so big and dramatic? Let's take a closer look and find out!

What's the difference between electricity and electronics?

Electronics is a much more subtle kind of electricity in which tiny electric currents (and, in theory, single electrons) are carefully directed around much more complex circuits to process signals (such as those that carry radio and television programs) or store and process information. Think of something like a microwave oven and it's easy to see the difference between ordinary electricity and electronics. In a microwave, electricity provides the power that generates high-energy waves that cook your food; electronics controls the electrical circuit that does the cooking.

Photo: The 2500-watt heating element inside this electric kettle operates on a current of about 10 amps. By contrast, electronic components use currents likely to be measured in fractions of milliamps (which are thousandths of amps). In other words, a typical electric appliance is likely to be using currents tens, hundreds, or thousands of times bigger than a typical electronic one.

Analog and digital electronics

Photo: Digital technology: Large digital clocks like this are quick and easy for runners to read. Photo by Jhi L. Scott courtesy of US Navy.

There are two very different ways of storing information—known as analog and digital. It sounds like quite an abstract idea, but it's really very simple. Suppose you take an old-fashioned photograph of someone with a film camera. The camera captures light streaming in through the shutter at the front as a pattern of light and dark areas on chemically treated plastic. The scene you're photographing is converted into a kind of instant, chemical painting—an "analogy" of what you're looking at. That's why we say this is an analog way of storing information. But if you take a photograph of exactly the same scene with a digital camera, the camera stores a very different record. Instead of saving a recognizable pattern of light and dark, it converts the light and dark areas into numbers and stores those instead. Storing a numerical, coded version of something is known as digital.

Electronic equipment generally works on information in either analog or digital format. In an old-fashioned transistor radio, broadcast signals enter the radio's circuitry via the antenna sticking out of the case. These are analog signals: they are radio waves, traveling through the air from a distant radio transmitter, that vibrate up and down in a pattern that corresponds exactly to the words and music they carry. So loud rock music means bigger signals than quiet classical music. The radio keeps the signals in analog form as it receives them, boosts them, and turns them back into sounds you can hear. But in a modern digital radio, things happen in a different way. First, the signals travel in digital format—as coded numbers. When they arrive at your radio, the numbers are converted back into sound signals. It's a very different way of processing information and it has both advantages and disadvantages. Generally, most modern forms of electronic equipment (including computers, cell phones, digital cameras, digital radios, hearing aids, and televisions) use digital electronics.

Electronic components

If you've ever looked down on a city from a skyscraper window, you'll have marveled at all the tiny little buildings beneath you and the streets linking them together in all sorts of intricate ways. Every building has a function and the streets, which allow people to travel from one part of a city to another or visit different buildings in turn, make all the buildings work together. The collection of buildings, the way they're arranged, and the many connections between them is what makes a vibrant city so much more than the sum of its individual parts.The circuits inside pieces of electronic equipment are a bit like cities too: they're packed with components (similar to buildings) that do different jobs and the components are linked together by cables or printed metal connections (similar to streets). Unlike in a city, where virtually every building is unique and even two supposedly identical homes or office blocks may be subtly different, electronic circuits are built up from a small number of standard components. But, just like LEGO®, you can put these components together in an infinite number of different places so they do an infinite number of different jobs.

These are some of the most important components you'll encounter:

Resistors

These are the simplest components in any circuit. Their job is to restrict the flow of electrons and reduce the current or voltage flowing by converting electrical energy into heat. Resistors come in many different shapes and sizes. Variable resistors (also known as potentiometers) have a dial control on them so they change the amount of resistance when you turn them. Volume controls in audio equipment use variable resistors like these.Read more in our main article about resistors

Photo: A typical resistor on the circuit board from a radio.

Diodes

The electronic equivalents of one-way streets, diodes allow an electric current to flow through them in only one direction. They are also known as rectifiers. Diodes can be used to change alternating currents (ones flowing back and forth round a circuit, constantly swapping direction) into direct currents (ones that always flow in the same direction).Read more in our main article about diodes.

Photo: Diodes look similar to resistors but work in a different way and do a completely different job. Unlike a resistor, which can be inserted into a circuit either way around, a diode has to be wired in the right direction (corresponding to the arrow on this circuit board).

Capacitors

These relatively simple components consist of two pieces of conducting material (such as metal) separated by a non-conducting (insulating) material called a dielectric. They are often used as timing devices, but they can transform electrical currents in other ways too. In a radio, one of the most important jobs, tuning into the station you want to listen to, is done by a capacitor.Read more in our main article about capacitors.

Transistors

Easily the most important components in computers, transistors can switch tiny electric currents on and off or amplify them (transform small electric currents into much larger ones). Transistors that work as switches act as the memories in computers, while transistors working as amplifiers boost the volume of sounds in hearing aids. When transistors are connected together, they make devices called logic gates that can carry out very basic forms of decision making. (Thyristors are a little bit like transistors, but work in a different way.)Read more in our main article about transistors.

Photo: A typical field-effect transistor (FET) on an electronic circuit board.

Opto-electronic (optical electronic) components

There are various components that can turn light into electricity or vice-versa. Photocells (also known as photoelectric cells) generate tiny electric currents when light falls on them and they're used as "magic eye" beams in various types of sensing equipment, including some kinds of smoke detector. Light-emitting diodes (LEDs) work in the opposite way, converting small electric currents into light. LEDs are typically used on the instrument panels of stereo equipment. Liquid crystal displays (LCDs), such as those used in flatscreen LCD televisions and laptop computers, are more sophisticated examples of opto-electronics.Photo: An LED mounted in an electronic circuit. This is one of the LEDs that makes red light inside an optical computer mouse.

Electronic components have something very important in common. Whatever job they do, they work by controlling the flow of electrons through their structure in a very precise way. Most of these components are made of solid pieces of partly conducting, partly insulating materials called semiconductors (described in more detail in our article about transistors). Because electronics involves understanding the precise mechanisms of how solids let electrons pass through them, it's sometimes known as solid-state physics. That's why you'll often see pieces of electronic equipment described as "solid-state."

Electronic circuits

The key to an electronic device is not just the components it contains, but the way they are arranged in circuits. The simplest possible circuit is a continuous loop connecting two components, like two beads fastened on the same necklace. Analog electronic appliances tend to have far simpler circuits than digital ones. A basic transistor radio might have a few dozen different components and a circuit board probably no bigger than the cover of a paperback book. But in something like a computer, which uses digital technology, circuits are much more dense and complex and include hundreds, thousands, or even millions of separate pathways. Generally speaking, the more complex the circuit, the more intricate the operations it can perform.If you've experimented with simple electronics, you'll know that the easiest way to build a circuit is simply to connect components together with short lengths of copper cable. But the more components you have to connect, the harder this becomes. That's why electronics designers usually opt for a more systematic way of arranging components on what's called a circuit board. A basic circuit board is simply a rectangle of plastic with copper connecting tracks on one side and lots of holes drilled through it. You can easily connect components together by poking them through the holes and using the copper to link them together, removing bits of copper as necessary, and adding extra wires to make additional connections. This type of circuit board is often called "breadboard".

Electronic equipment that you buy in stores takes this idea a step further using circuit boards that are made automatically in factories. The exact layout of the circuit is printed chemically onto a plastic board, with all the copper tracks created automatically during the manufacturing process. Components are then simply pushed through pre-drilled holes and fastened into place with a kind of electrically conducting adhesive known as solder. A circuit manufactured in this way is known as a printed circuit board (PCB).

Photo: Soldering components into an electronic circuit. The smoke you can see comes from the solder melting and turning to a vapor. The blue plastic rectangle I'm soldering onto here is a typical printed circuit board—and you see various components sticking up from it, including a bunch of resistors at the front and a large integrated circuit at the top.

Although PCBs are a great advance on hand-wired circuit boards, they're still quite difficult to use when you need to connect hundreds, thousands, or even millions of components together. The reason early computers were so big, power hungry, slow, expensive, and unreliable is because their components were wired together manually in this old-fashioned way. In the late 1950s, however, engineers Jack Kilby and Robert Noyce independently developed a way of creating electronic components in miniature form on the surface of pieces of silicon. Using these integrated circuits, it rapidly became possible to squeeze hundreds, thousands, millions, and then hundreds of millions of miniaturized components onto chips of silicon about the size of a finger nail. That's how computers became smaller, cheaper, and much more reliable from the 1960s onward.

Photo: Miniaturization. There's more computing power in the processing chip resting on my finger here than you would have found in a room-sized computer from the 1940s!

Electronics around us

Electronics is now so pervasive that it's almost easier to think of things that don't use it than of things that do.Entertainment was one of the first areas to benefit, with radio (and later television) both critically dependent on the arrival of electronic components. Although the telephone was invented before electronics was properly developed, modern telephone systems, cellphone networks, and the computers networks at the heart of the Internet all benefit from sophisticated, digital electronics.

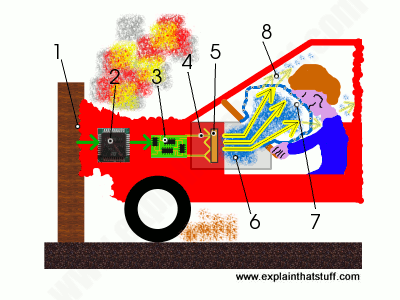

Try to think of something you do that doesn't involve electronics and you may struggle. Your car engine probably has electronic circuits in it—and what about the GPS satellite navigation device that tells you where to go? Even the airbag in your steering wheel is triggered by an electronic circuit that detects when you need some extra protection.

Electronic equipment saves our lives in other ways too. Hospitals are packed with all kinds of electronic gadgets, from heart-rate monitors and ultrasound scanners to complex brain scanners and X-ray machines. Hearing aids were among the first gadgets to benefit from the development of tiny transistors in the mid-20th century, and ever-smaller integrated circuits have allowed hearing aids to become smaller and more powerful in the decades ever since.

Who'd have thought have electrons—just about the smallest things you could ever imagine—would change people's lives in so many important ways?

A brief history of electronics

- 1874: Irish scientist George Johnstone Stoney (1826–1911) suggests electricity must be "built" out of tiny electrical charges. He coins the name "electron" about 20 years later.

- 1875: American scientist George R. Carey builds a photoelectric cell that makes electricity when light shines on it.

- 1879: Englishman Sir William Crookes (1832–1919) develops his cathode-ray tube (similar to an old-style, "tube"-based television) to study electrons (which were then known as "cathode rays").

- 1883: Prolific American inventor Thomas Edison (1847–1931) discovers thermionic emission (also known as the Edison effect), where electrons are given off by a heated filament.

- 1887: German physicist Heinrich Hertz (1857–1894) finds out more about the photoelectric effect, the connection between light and electricity that Carey had stumbled on the previous decade.

- 1897: British physicist J.J. Thomson (1856–1940) shows that cathode rays are negatively charged particles. They are soon renamed electrons.

- 1904: John Ambrose Fleming (1849–1945), an English scientist, produces the Fleming valve (later renamed the diode). It becomes an indispensable component in radios.

- 1906: American inventor Lee De Forest (1873–1961), goes one better and develops an improved valve known as the triode (or audion), greatly improving the design of radios. De Forest is often credited as a father of modern radio.

- 1947: Americans John Bardeen (1908–1991), Walter Brattain (1902–1987), and William Shockley (1910–1989) develop the transistor at Bell Laboratories. It revolutionizes electronics and digital computers in the second half of the 20th century.

- 1958: Working independently, American engineers Jack Kilby (1923–2005) of Texas Instruments and Robert Noyce (1927–1990) of Fairchild Semiconductor (and later of Intel) develop integrated circuits.

- 1971: Marcian Edward (Ted) Hoff (1937–) and Federico Faggin (1941–) manage to squeeze all the key components of a computer onto a single chip, producing the world's first general-purpose microprocessor, the Intel 4004.

- 1987: American scientists Theodore Fulton and Gerald Dolan of Bell Laboratories develop the first single-electron transistor.

- 2008: Hewlett-Packard researcher Stanley Williams builds the first working memristor, a new kind of magnetic circuit component that works like a resistor with a memory, first imagined by American physicist Leon Chua almost four decades earlier (in 1971).

Y . I/O I . Computers

It was probably the worst prediction in history. Back in the 1940s, Thomas Watson, boss of the giant IBM Corporation, reputedly forecast that the world would need no more than "about five computers." Six decades later and the global population of computers has now risen to something like one billion machines!

To be fair to Watson, computers have changed enormously in that time. In the 1940s, they were giant scientific and military behemoths commissioned by the government at a cost of millions of dollars apiece; today, most computers are not even recognizable as such: they are embedded in everything from microwave ovens to cellphones and digital radios. What makes computers flexible enough to work in all these different appliances? How come they are so phenomenally useful? And how exactly do they work? Let's take a closer look!

Photo: The IBM Blue Gene/P supercomputer at Argonne National Laboratory is one of the world's most powerful computers—but really it's just a super-scaled up version of the computer sitting right next to you. Picture courtesy of Argonne National Laboratory published on Flickr in 2009 under a Creative Commons Licence.

What is a computer?

Photo: Computers that used to take up a huge room now fit comfortably on your finger!.

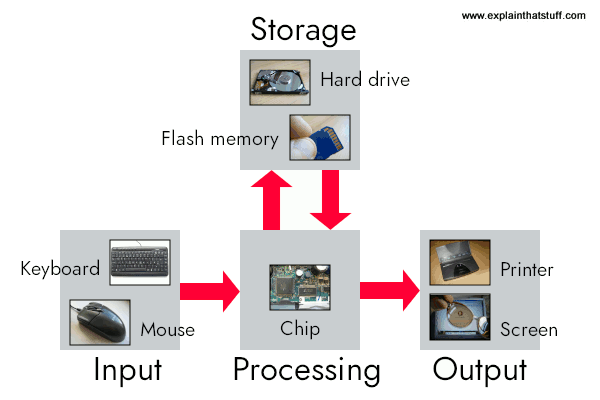

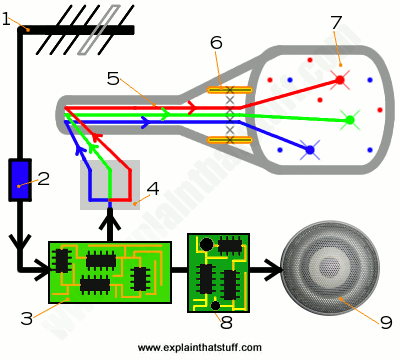

A computer is an electronic machine that processes information—in other words, an information processor: it takes in raw information (or data) at one end, stores it until it's ready to work on it, chews and crunches it for a bit, then spits out the results at the other end. All these processes have a name. Taking in information is called input, storing information is better known as memory (or storage), chewing information is also known as processing, and spitting out results is called output.

Imagine if a computer were a person. Suppose you have a friend who's really good at math. She is so good that everyone she knows posts their math problems to her. Each morning, she goes to her letterbox and finds a pile of new math problems waiting for her attention. She piles them up on her desk until she gets around to looking at them. Each afternoon, she takes a letter off the top of the pile, studies the problem, works out the solution, and scribbles the answer on the back. She puts this in an envelope addressed to the person who sent her the original problem and sticks it in her out tray, ready to post. Then she moves to the next letter in the pile. You can see that your friend is working just like a computer. Her letterbox is her input; the pile on her desk is her memory; her brain is the processor that works out the solutions to the problems; and the out tray on her desk is her output.

Once you understand that computers are about input, memory, processing, and output, all the junk on your desk makes a lot more sense:

Artwork: A computer works by combining input, storage, processing, and output. All the main parts of a computer system are involved in one of these four processes.

- Input: Your keyboard and mouse, for example, are just input units—ways of getting information into your computer that it can process. If you use a microphone and voice recognition software, that's another form of input.

- Memory/storage: Your computer probably stores all your documents and files on a hard-drive: a huge magnetic memory. But smaller, computer-based devices like digital cameras and cellphones use other kinds of storage such as flash memory cards.

- Processing: Your computer's processor (sometimes known as the central processing unit) is a microchip buried deep inside. It works amazingly hard and gets incredibly hot in the process. That's why your computer has a little fan blowing away—to stop its brain from overheating!

- Output: Your computer probably has an LCD screen capable of displaying high-resolution (very detailed) graphics, and probably also stereo loudspeakers. You may have an inkjet printer on your desk too to make a more permanent form of output.

What is a computer program?

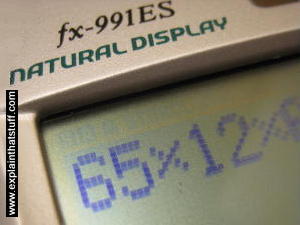

As you can read in our long article on computer history, the first computers were gigantic calculating machines and all they ever really did was "crunch numbers": solve lengthy, difficult, or tedious mathematical problems. Today, computers work on a much wider variety of problems—but they are all still, essentially, calculations. Everything a computer does, from helping you to edit a photograph you've taken with a digital camera to displaying a web page, involves manipulating numbers in one way or another.

Photo: Calculators and computers are very similar, because both work by processing numbers. However, a calculator simply figures out the results of calculations; and that's all it ever does. A computer stores complex sets of instructions called programs and uses them to do much more interesting things.

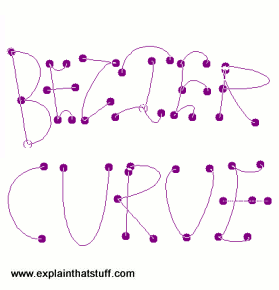

Suppose you're looking at a digital photo you just taken in a paint or photo-editing program and you decide you want a mirror image of it (in other words, flip it from left to right). You probably know that the photo is made up of millions of individual pixels (colored squares) arranged in a grid pattern. The computer stores each pixel as a number, so taking a digital photo is really like an instant, orderly exercise in painting by numbers! To flip a digital photo, the computer simply reverses the sequence of numbers so they run from right to left instead of left to right. Or suppose you want to make the photograph brighter. All you have to do is slide the little "brightness" icon. The computer then works through all the pixels, increasing the brightness value for each one by, say, 10 percent to make the entire image brighter. So, once again, the problem boils down to numbers and calculations.

What makes a computer different from a calculator is that it can work all by itself. You just give it your instructions (called a program) and off it goes, performing a long and complex series of operations all by itself. Back in the 1970s and 1980s, if you wanted a home computer to do almost anything at all, you had to write your own little program to do it. For example, before you could write a letter on a computer, you had to write a program that would read the letters you typed on the keyboard, store them in the memory, and display them on the screen. Writing the program usually took more time than doing whatever it was that you had originally wanted to do (writing the letter). Pretty soon, people started selling programs like word processors to save you the need to write programs yourself.

Today, most computer users rely on prewritten programs like Microsoft Word and Excel or download apps for their tablets and smartphones without caring much how they got there. Hardly anyone writes programs any more, which is a shame, because it's great fun and a really useful skill. Most people see their computers as tools that help them do jobs, rather than complex electronic machines they have to pre-program. Some would say that's just as well, because most of us have better things to do than computer programming. Then again, if we all rely on computer programs and apps, someone has to write them, and those skills need to survive. Thankfully, there's been a recent resurgence of interest in computer programming. "Coding" (an informal name for programming, since programs are sometimes referred to as "code") is being taught in schools again with the help of easy-to-use programming languages like Scratch. There's a growing hobbyist movement, linked to build-it yourself gadgets like the Raspberry Pi and Arduino. And Code Clubs, where volunteers teach kids programming, are springing up all over the world.

What's the difference between hardware and software?

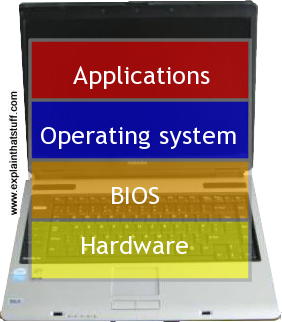

The beauty of a computer is that it can run a word-processing program one minute—and then a photo-editing program five seconds later. In other words, although we don't really think of it this way, the computer can be reprogrammed as many times as you like. This is why programs are also called software. They're "soft" in the sense that they are not fixed: they can be changed easily. By contrast, a computer's hardware—the bits and pieces from which it is made (and the peripherals, like the mouse and printer, you plug into it)—is pretty much fixed when you buy it off the shelf. The hardware is what makes your computer powerful; the ability to run different software is what makes it flexible. That computers can do so many different jobs is what makes them so useful—and that's why millions of us can no longer live without them!What is an operating system?

Suppose you're back in the late 1970s, before off-the-shelf computer programs have really been invented. You want to program your computer to work as a word processor so you can bash out your first novel—which is relatively easy but will take you a few days of work. A few weeks later, you tire of writing things and decide to reprogram your machine so it'll play chess. Later still, you decide to program it to store your photo collection. Every one of these programs does different things, but they also do quite a lot of similar things too. For example, they all need to be able to read the keys pressed down on the keyboard, store things in memory and retrieve them, and display characters (or pictures) on the screen. If you were writing lots of different programs, you'd find yourself writing the same bits of programming to do these same basic operations every time. That's a bit of a programming chore, so why not simply collect together all the bits of program that do these basic functions and reuse them each time?

Photo: Typical computer architecture: You can think of a computer as a series of layers, with the hardware at the bottom, the BIOS connecting the hardware to the operating system, and the applications you actually use (such as word processors, Web browsers, and so on) running on top of that. Each of these layers is relatively independent so, for example, the same Windows operating system might run on laptops running a different BIOS, while a computer running Windows (or another operating system) can run any number of different applications.

That's the basic idea behind an operating system: it's the core software in a computer that (essentially) controls the basic chores of input, output, storage, and processing. You can think of an operating system as the "foundations" of the software in a computer that other programs (called applications) are built on top of. So a word processor and a chess game are two different applications that both rely on the operating system to carry out their basic input, output, and so on. The operating system relies on an even more fundamental piece of programming called the BIOS (Basic Input Output System), which is the link between the operating system software and the hardware. Unlike the operating system, which is the same from one computer to another, the BIOS does vary from machine to machine according to the precise hardware configuration and is usually written by the hardware manufacturer. The BIOS is not, strictly speaking, software: it's a program semi-permanently stored into one of the computer's main chips, so it's known as firmware (it is usually designed so it can be updated occasionally, however).

Operating systems have another big benefit. Back in the 1970s (and early 1980s), virtually all computers were maddeningly different. They all ran in their own, idiosyncratic ways with fairly unique hardware (different processor chips, memory addresses, screen sizes and all the rest). Programs written for one machine (such as an Apple) usually wouldn't run on any other machine (such as an IBM) without quite extensive conversion. That was a big problem for programmers because it meant they had to rewrite all their programs each time they wanted to run them on different machines. How did operating systems help? If you have a standard operating system and you tweak it so it will work on any machine, all you have to do is write applications that work on the operating system. Then any application will work on any machine. The operating system that definitively made this breakthrough was, of course, Microsoft Windows, spawned by Bill Gates. (It's important to note that there were earlier operating systems too. You can read more of that story in our article on the history of computers.)

Computers for everyone?

Photo: The original OLPC computer, courtesy of One Laptop Per Child, licensed under a Creative Commons License.

What should we do about the digital divide—the gap between people who use computers and those who don't? Most people have chosen just to ignore it, but US computer pioneer Nicholas Negroponte and his team have taken a much more practical approach. Over the last few years, they've worked to create a trimmed-down, low-cost laptop suitable for people who live in developing countries where electricity and telephone access are harder to find. Their project is known as OLPC: One Laptop Per Child.

What's different about the OLPC?

In essence, an OLPC computer is no different from any other laptop: it's a machine with input, output, memory storage, and a processor—the key components of any computer. But in OLPC, these parts have been designed especially for developing countries.Here are some of the key features:

- Low cost: OLPC was originally designed to cost just $100. Although it failed to meet that target, it is still cheaper than most traditional laptops.

- Inexpensive LCD screen: The hi-tech screen is designed to work outdoors in bright sunlight, but costs only $35 to make—a fraction of the cost of a normal LCD flat panel display.

- Trimmed down operating system: The operating system is like the conductor of an orchestra: the part of a computer that makes all the other parts (from the processor chip to the buttons on the mouse) work in harmony. Originally, OLPC used only Linux (an efficient and low-cost operating system developed by thousands of volunteers), but it began offering Microsoft Windows versions as an alternative in 2008.

- Wireless broadband: In some parts of Africa, fewer than one person in a hundred has access to a wired, landline telephone, so dialup Internet access via telephone would be no use for OLPC users. Each machine's wireless chip will allow it to create an ad-hoc network with other machines nearby—so OLPC users will be able to talk to one another and exchange information effortlessly.

- Flash memory: Instead of an expensive and relatively unreliable hard drive, OLPC uses a huge lump of flash memory—like the memory used in USB flash memory sticks and digital camera memory cards.

- Own power: Home electricity supplies are scarce in many developing countries, so OLPC has a hand crank and built-in generator. One minute of cranking generates up to 10 minutes of power.

Is OLPC a good idea?

Anything that closes the digital divide, helping poorer children gain access to education and opportunity, must be a good thing. However, some critics have questioned whether projects like this are really meeting the most immediate needs of people in developing countries. According to the World Health Organization, around 1.1 billion people (18 percent of the world's population) have no access to safe drinking water, while 2.7 billion (a staggering 42 percent of the world's population) lack basic sanitation. During the 1990s, around 2 billion people were affected by major natural disasters such as floods and droughts. Every single day, 5000 children die because of dirty water—that's more people dying each day than were killed in the 9/11 terrorist attacks.With basic problems on this scale, it could be argued that providing access to computers and the Internet is not a high priority for most of the world's poorer people. Then again, education is one of the most important weapons in the fight against poverty. Perhaps computers could provide young people with the knowledge they need to help themselves, their families, and communities escape a life sentence of hardship?

Y . I/O II . Integrated circuits

Have you ever heard of a 1940s computer called the ENIAC? It was about the same length and weight as three to four double-decker buses and contained 18,000 buzzing electronic switches known as vacuum tubes. Despite its gargantuan size, it was thousands of times less powerful than a modern laptop—a machine about 100 times smaller.

If the history of computing sounds like a magic trick—squeezing more and more power into less and less space—it is! What made it possible was the invention of the integrated circuit (IC) in 1958. It's a neat way of cramming hundreds, thousands, millions, or even billions of electronic components onto tiny chips of silicon no bigger than a fingernail. Let's take a closer look at ICs and how they work!

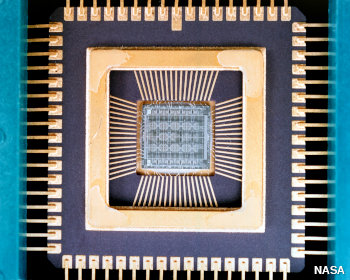

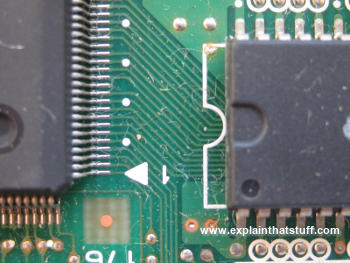

Photo: An integrated circuit from the outside. This is what an IC looks like when it's conveniently packaged inside a flash memory chip. Inside the black protective case, there's a tiny integrated circuit, with millions of transistors capable of storing millions of binary digits of information. You can see what the circuit itself looks like in the photograph below.

What is an integrated circuit?

Photo: An integrated circuit from the inside. If you could lift the cover off a typical microchip like the one in the top photo (and you can't very easily—believe me, I've tried!), this is what you'd find inside. The integrated circuit is the tiny square in the center. Connections run out from it to the terminals (metal pins or legs) around the edge. When you hook up something to one of these terminals, you're actually connecting into the circuit itself. You can just about see the pattern of electronic components on the surface of the chip itself. Photo by courtesy of NASA Glenn Research Center (NASA-GRC).

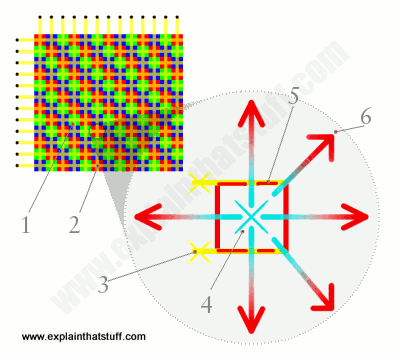

Open up a television or a radio and you'll see it's built around a printed circuit board (PCB): a bit like an electric street-map with small electronic components (such as resistors and capacitors) in place of the buildings and printed copper connections linking them together like miniature metal streets. Circuit boards are fine in small appliances like this, but if you try to use the same technique to build a complex electronic machine, such as a computer, you quickly hit a snag. Even the simplest computer needs eight electronic switches to store a single byte (character) of information. So if you want to build a computer with just enough memory to store this paragraph, you're looking at about 750 characters times 8 or about 6000 switches—for a single paragraph! If you plump for switches like they had in the ENIAC—vacuum tubes about the size of an adult thumb—you soon end up with a whopping great big, power-hungry machine that needs its own mini electricity plant to keep it running.

When three American physicists invented transistors in 1947, things improved somewhat. Transistors were a fraction the size of vacuum tubes and relays (the electromagnetic switches that had started to replace vacuum tubes in the mid-1940s), used much less power, and were far more reliable. But there was still the problem of linking all those transistors together in complex circuits. Even after transistors were invented, computers were still a tangled mass of wires.

Photo: A typical modern transistor mounted on a printed circuit board. Imagine having to wire hundreds of millions of these things onto a PCB!

Integrated circuits changed all that. The basic idea was to take a complete circuit, with all its many components and the connections between them, and recreate the whole thing in microscopically tiny form on the surface of a piece of silicon. It was an amazingly clever idea and it's made possible all kinds of "microelectronic" gadgets we now take for granted, from digital watches and pocket calculators to Moon-landing rockets and missiles with built-in satellite navigation.

Integrated circuits revolutionized electronics and computing during the 1960s and 1970s. First, engineers were putting dozens of components on a chip in what was called Small-Scale Integration (SSI). Medium-Scale Integration (MSI) soon followed, with hundreds of components in an area the same size. Predictably, around 1970, Large-Scale Integration (LSI) brought thousands of components, Very-Large-Scale Integration (VLSI) gave us tens of thousands, and Ultra Large Scale (ULSI) millions—and all on chips no bigger than they'd been before. In 1965, Gordon Moore of the Intel Company, a leading chip maker, noticed that the number of components on a chip was doubling roughly every one to two years. Moore's Law, as this is known, has continued to hold ever since. Interviewed by The New York Times 50 years later, in 2015, Moore revealed his astonishment that the law has continued to hold: "The original prediction was to look at 10 years, which I thought was a stretch. This was going from about 60 elements on an integrated circuit to 60,000—a thousandfold extrapolation over 10 years. I thought that was pretty wild. The fact that something similar is going on for 50 years is truly amazing."

How are integrated circuits made?

How do we make something like a memory or processor chip for a computer? It all starts with a raw chemical element such as silicon, which is chemically treated or doped to make it have different electrical properties...Doping semiconductors

Photo: A traditional printed circuit board (PCB) like this has tracks linking together the terminals (metal connecting legs) from different electronic components. Think of the tracks as "streets" making paths between "buildings" where useful things are done (the components themselves). There's a miniaturized version of a circuit board inside an integrated circuit: the tracks are created in microscopic form on the surface of a silicon wafer. This is the reverse side of the flash memory chip in our top photo.

If you've read our articles on diodes and transistors, you'll be familiar with the idea of semiconductors. Traditionally, people thought of materials fitting into two neat categories: those that allow electricity to flow through them quite readily (conductors) and those that don't (insulators). Metals make up most of the conductors, while nonmetals such as plastics, wood, and glass are the insulators. In fact, things are far more complex than this—especially when it comes to certain elements in the middle of the periodic table (in groups 14 and 15), notably silicon and germanium. Normally insulators, these elements can be made to behave more like conductors if we add small quantities of impurities to them in a process known as doping. If you add antimony to silicon, you give it slightly more electrons than it would normally have—and the power to conduct electricity. Silicon "doped" that way is called n-type. Add boron instead of antimony and you remove some of silicon's electrons, leaving behind "holes" that work as "negative electrons," carrying a positive electric current in the opposite way. That kind of silicon is called p-type. Putting areas of n-type and p-type silicon side by side creates junctions where electrons behave in very interesting ways—and that's how we create electronic, semiconductor-based components like diodes, transistors, and memories.

Inside a chip plant

Photo: A silicon wafer. Photo by courtesy of NASA Glenn Research Center (NASA-GRC).

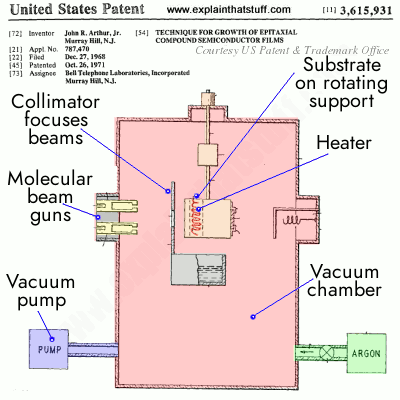

The process of making an integrated circuit starts off with a big single crystal of silicon, shaped like a long solid pipe, which is "salami sliced" into thin discs (about the dimensions of a compact disc) called wafers. The wafers are marked out into many identical square or rectangular areas, each of which will make up a single silicon chip (sometimes called a microchip). Thousands, millions, or billions of components are then created on each chip by doping different areas of the surface to turn them into n-type or p-type silicon. Doping is done by a variety of different processes. In one of them, known as sputtering, ions of the doping material are fired at the silicon wafer like bullets from a gun. Another process called vapor deposition involves introducing the doping material as a gas and letting it condense so the impurity atoms create a thin film on the surface of the silicon wafer. Molecular beam epitaxy is a much more precise form of deposition.

Of course, making integrated circuits that pack hundreds, millions, or billions of components onto a fingernail-sized chip of silicon is all a bit more complex and involved than it sounds. Imagine the havoc even a speck of dirt could cause when you're working at the microscopic (or sometimes even the nanoscopic) scale. That's why semiconductors are made in spotless laboratory environments called clean rooms, where the air is meticulously filtered and workers have to pass in and out through airlocks wearing all kinds of protective clothing.

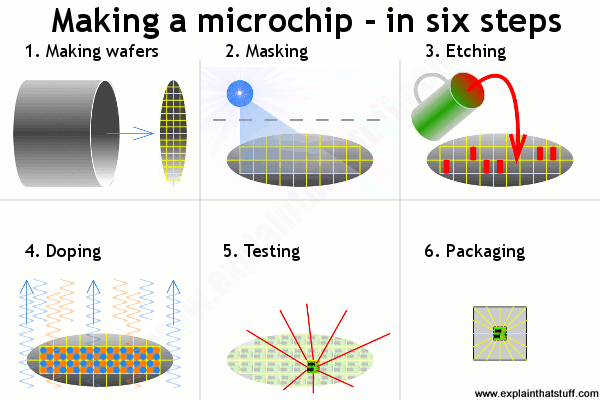

How you make a microchip - a quick summary

Although making a chip is very intricate and complex, there are really only six separate steps (some of them are repeated more than once). Greatly simplified, here's how the process works:

- Making wafers: We grow pure silicon crystals into long cylinders and slice them (like salami) into thin wafers, each of which will ultimately be cut up into many chips.

- Masking: We heat the wafers to coat them in silicon dioxide and use ultraviolet light (blue) to add a hard, protective layer called photoresist.

- Etching: We use a chemical to remove some of the photoresist, making a kind of template pattern showing where we want areas of n-type and p-type silicon.

- Doping: We heat the etched wafers with gases containing impurities to make the areas of n-type and p-type silicon. More masking and etching may follow.

- Testing: Long metal connection leads run from a computer-controlled testing machine to the terminals on each chip. Any chips that don't work are marked and rejected.

- Packaging: All the chips that work OK are cut out of the wafer and packaged into protective lumps of plastic, ready for use in computers and other electronic equipment.

Who invented the integrated circuit?

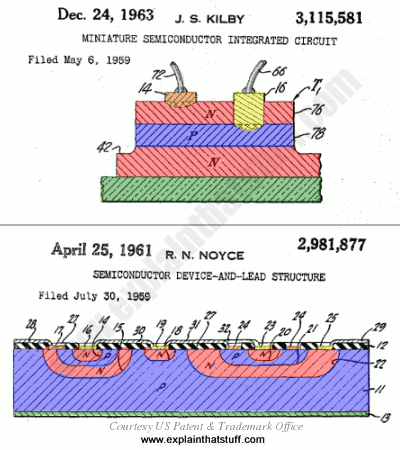

You've probably read in books that ICs were developed jointly by Jack Kilby (1923–2005) and Robert Noyce (1927–1990), as though these two men happily collaborated on their brilliant invention! In fact, Kilby and Noyce came up with the idea independently, at more or less exactly the same time, prompting a furious battle for the rights to the invention that was anything but happy.

Photo: Computer microchips like these—and all the appliances and gadgets that use them—owe their existence to Jack Kilby and Robert Noyce. Photo by Warren Gretz courtesy of US Department of Energy/National Renewable Energy Laboratory (US DOE/NREL).

How could two people invent the same thing at exactly the same time? Easy: integrated circuits were an idea waiting to happen. By the mid-1950s, the world (and the military, in particular) had discovered the amazing potential of electronic computers and it was blindingly apparent to visionaries like Kilby and Noyce that there needed to be a better way of building and connecting transistors in large quantities. Kilby was working at Texas Instruments when he came upon the idea he called the monolithic principle: trying to build all the different parts of an electronic circuit on a silicon chip. On September 12, 1958, he hand-built the world's first, crude integrated circuit using a chip of germanium (a semiconducting element similar to silicon) and Texas Instruments applied for a patent on the idea the following year.

Meanwhile, at another company called Fairchild Semiconductor (formed by a small group of associates who had originally worked for the transistor pioneer William Shockley) the equally brilliant Robert Noyce was experimenting with miniature circuits of his own. In 1959, he used a series of photographic and chemical techniques known as the planar process (which had just been developed by a colleague, Jean Hoerni) to produce the first, practical, integrated circuit, a method that Fairchild then tried to patent.

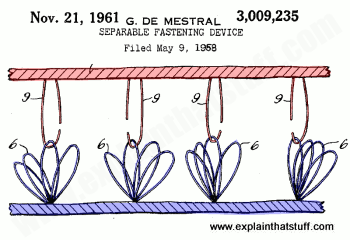

Artwork: Snap! Two great electrical engineers, Jack Kilby and Robert Noyce, came up with the same idea at almost exactly the same time in 1959. Although Kilby filed his patent first, Noyce's patent was granted earlier. Here are drawings from their original patent applications. You can see that we have essentially the same idea in both, with electronic components formed from junctions between layers of p-type (blue) and n-type (red) semiconductors. Connections to the p-type and n-type regions are shown in orange and yellow and the base layers (substrates) are shown in green. Artworks courtesy of US Patent and Trademark Office with our own added coloring to improve clarity and highlight the similarities. You can find links to the patents themselves in the references down below.

There was considerable overlap between the two men's work and Texas Instruments and Fairchild battled in the courts for much of the 1960s over who had really developed the integrated circuit. Finally, in 1969, the companies agreed to share the idea.

Kilby and Noyce are now rightly regarded as joint-inventors of arguably the most important and far-reaching technology developed in the 20th century. Both men were inducted into the National Inventors Hall of Fame (Kilby in 1982, Noyce the following year) and Kilby's breakthrough was also recognized with the award of a half-share in the Nobel Prize in Physics in 2000 (as Kilby very generously noted in his acceptance speech, Noyce would surely have shared in the prize too had he not died of a heart attack a decade earlier).

While Kilby is remembered as a brilliant scientist, Noyce's legacy has an added dimension. In 1968, he co-founded the Intel Electronics company with Gordon Moore (1929–), which went on to develop the microprocessor (single-chip computer) in 1974. With IBM, Microsoft, Apple, and other pioneering companies, Intel is credited with helping to bring affordable personal computers to our homes and workplaces. Thanks to Noyce and Kilby, and brilliant engineers who subsequently built on their work, there are now something like two billion computers in use throughout the world, many of them incorporated into cellphones, portable satellite navigation devices, and other electronic gadgets.

Y . I/O III Molecular beam epitaxy

Growing crystals is easy. Fill a plastic bottle almost to the top with cold water and place it in a freezer for a couple of hours. Take it out again at just the right time and the water will still be a liquid but, if you tilt the bottle very gently, it will snap into an amazing snow forest of ice crystals right before your eyes! Growing single crystals for scientific or industrial use in such things as integrated circuits is somewhat harder because you need to combine atoms of different chemical elements much more precisely. At the opposite end of the spectrum from growing random ice crystals in your freezer, one of the most exacting methods of making a crystal is a technique called molecular beam epitaxy (MBE). It sounds horribly complex, but it's fairly easy to understand. Let's take a closer look!

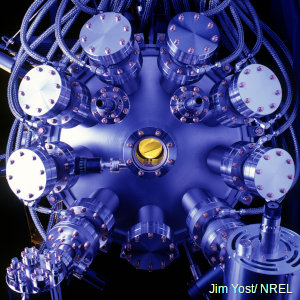

Photo: Molecular beam epitaxy (MBE) being used to make photovoltaic solar cells: You can see the beams around the edge that fire molecules onto the substrate in the center. Photo by Jim Yost courtesy of US DOE/NREL (U.S. Department of Energy/National Renewable Energy Laboratory).

What is molecular beam epitaxy?

Photo: Molecular beam epitaxy (MBE) in action. MBE takes place in ultra-high vacuum (UHV) chambers like this, at temperatures of around 500°C (932°F), to ensure a totally clean, dust-free environment; the slightest contamination could ruin the crystal. Photo by Jim Yost courtesy of US DOE/NREL (U.S. Department of Energy/National Renewable Energy Laboratory).

To make an interesting new crystal using MBE, you start off with a base material called a substrate, which could be a familiar semiconductor material such as silicon, germanium, or gallium arsenide. First, you heat the substrate, typically to some hundreds of degrees (for example, 500–600°C or about 900–1100°F in the case of gallium arsenide). Then you fire relatively precise beams of atoms or molecules (heated up so they're in gas form) at the substrate from "guns" called effusion cells. You need one "gun" for each different beam, shooting a different kind of molecule at the substrate, depending on the nature of the crystal you're trying to create. The molecules land on the surface of the substrate, condense, and build up very slowly and systematically in ultra-thin layers, so the complex, single crystal you're after grows one atomic layer at a time. That's why MBE is an example of what's called thin-film deposition. Since it involves building up materials by manipulating atoms and molecules, it's also a perfect example of what we mean by nanotechnology.

One reason that MBE is such a precise way of making a crystal is that it happens in highly controlled conditions: extreme cleanliness and what's called an ultra-high vacuum (UHV), so no dirt particles or unwanted gas molecules can interfere with or contaminate the crystal growth. "Extreme cleanliness" means even cleaner than the conditions used in normal semiconductor manufacture; an "ultra-high vacuum" means the pressure is so low that it's at the limit of what's easily measurable.

That's pretty much MBE in a nutshell. If you want a really simply analogy, it's a little bit like the way an inkjet printer makes layers of colored print on a page by firing jets of ink from hot guns. In an inkjet printer, you have four separate guns firing different colored inks (one for cyan ink, one for magenta, one for yellow, and one for black), which slowly build up a complex colored image on the paper. In MBE, separate beams fire different molecules and they build up on the surface of the substrate, albeit more slowly than in inkjet printing—MBE can take hours! Epitaxially simply means "arranged on top of," so all molecular beam epitaxy really means is using beams of molecules to build up layers on top of a substrate.

Typical uses

You might want to create a semiconductor laser for a CD player, or an advanced computer chip, or a low-temperature superconductor. Or maybe you want to build a solar-cell by depositing a thin film of a photovoltaic material (something that creates electricity when light falls on it) onto a substrate. In short, if you're designing a really precise thin-film device for computing, optics, or photonics (using light beams to carry and process signals in a similar way to electronics), MBE is one of the techniques you'll probably consider using. Apart from industrial processes, it's also used in all kinds of advanced nanotechnology research.Advantages and disadvantages

Why use MBE rather than some other method making a crystal? It's particularly good for making high-quality (low-defect, highly uniform) semiconductor crystals from compounds (based on elements in groups III(a)–V(a) of the periodic table), or from a number of different elements, instead of from a single element. It also allows extremely thin films to be fabricated in a very precise, carefully controlled way. Unfortunately, it does have some drawbacks too. It's a slow and laborious method (crystal growth rate is typically a few microns per hour), which means it's more suited for scientific research laboratories than high-volume production, and the equipment involved is complex and very expensive (partly because of the difficulty of achieving such clean, high-vacuum conditions).

Who invented molecular beam epitaxy?

The basic MBE technique was developed around 1968 at Bell Laboratories by two American physicists, Chinese-born Alfred Y. Cho and John R. Arthur, Jr. Important contributions were also made by other scientists, such as Japanese-born physicist Leo Esaki (who won the 1973 Nobel Prize in Physics for his work on semiconductor electronics) and Ray Tsu, working at IBM. Since then, many other researchers have developed and refined the process. Alfred Cho was awarded the inaugural Nanotechnology International Prize, RUSNANOPRIZE-2009, for his work in developing MBE.Photo: A drawing taken from John R. Arthur, Jr.'s original molecular beam epitaxy patent, filed in 1968 and granted in 1971. Artwork courtesy of US Patent and Trademark office.

Y . I/O IIII The Internet

When you chat to somebody on the Net or send them an e-mail, do you ever stop to think how many different computers you are using in the process? There's the computer on your own desk, of course, and another one at the other end where the other person is sitting, ready to communicate with you. But in between your two machines, making communication between them possible, there are probably about a dozen other computers bridging the gap. Collectively, all the world's linked-up computers are called the Internet. How do they talk to one another? Let's take a closer look!

Photo: What most of us think of as the Internet—Google, eBay, and all the rest of it—is actually the World Wide Web. The Internet is the underlying telecommunication network that makes the Web possible. If you use broadband, your computer is probably connected to the Internet all the time it's on.

What is the Internet?

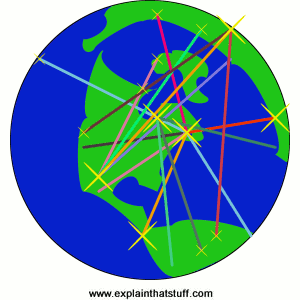

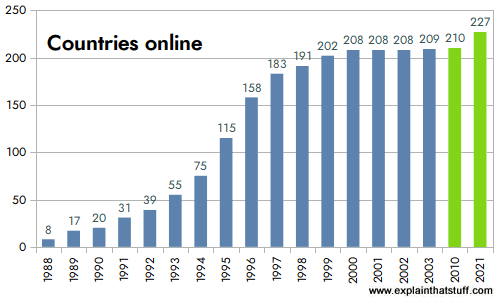

Global communication is easy now thanks to an intricately linked worldwide computer network that we call the Internet. In less than 20 years, the Internet has expanded to link up around 210 different nations. Even some of the world's poorest developing nations are now connected.

Lots of people use the word "Internet" to mean going online. Actually, the "Internet" is nothing more than the basic computer network. Think of it like the telephone network or the network of highways that criss-cross the world. Telephones and highways are networks, just like the Internet. The things you say on the telephone and the traffic that travels down roads run on "top" of the basic network. In much the same way, things like the World Wide Web (the information pages we can browse online), instant messaging chat programs, MP3 music downloading, and file sharing are all things that run on top of the basic computer network that we call the Internet.

The Internet is a collection of standalone computers (and computer networks in companies, schools, and colleges) all loosely linked together, mostly using the telephone network. The connections between the computers are a mixture of old-fashioned copper cables, fiber-optic cables (which send messages in pulses of light), wireless radio connections (which transmit information by radio. waves), and satellite links.

Photo: Countries online: Between 1988 and 2003, virtually every country in the world went online. Although most countries are now "wired," that doesn't mean everyone is online in all those countries, as you can see from the next chart, below. Source: Redrawn by Explainthatstuff.com from ITU World Telecommunication Development Report: Access Indicators for the Information Society: Summary, 2003. I've not updated this figure because the message is pretty clear: virtually all countries now have at least some Internet access.

What does the Internet do?

The Internet has one very simple job: to move computerized information (known as data) from one place to another. That's it! The machines that make up the Internet treat all the information they handle in exactly the same way. In this respect, the Internet works a bit like the postal service. Letters are simply passed from one place to another, no matter who they are from or what messages they contain. The job of the mail service is to move letters from place to place, not to worry about why people are writing letters in the first place; the same applies to the Internet.

Just like the mail service, the Internet's simplicity means it can handle many different kinds of information helping people to do many different jobs. It's not specialized to handle emails, Web pages, chat messages, or anything else: all information is handled equally and passed on in exactly the same way. Because the Internet is so simply designed, people can easily use it to run new "applications"—new things that run on top of the basic computer network. That's why, when two European inventors developed Skype, a way of making telephone calls over the Net, they just had to write a program that could turn speech into Internet data and back again. No-one had to rebuild the entire Internet to make Skype possible.

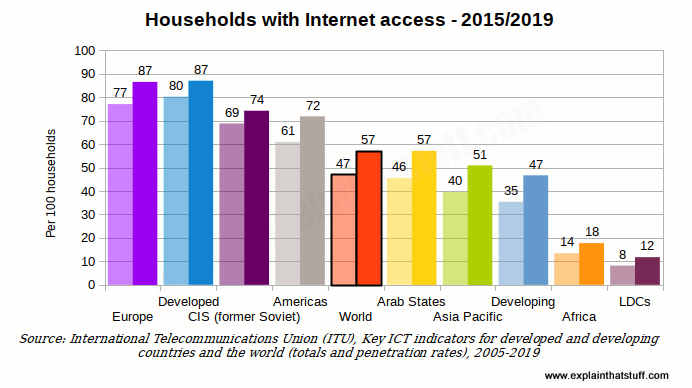

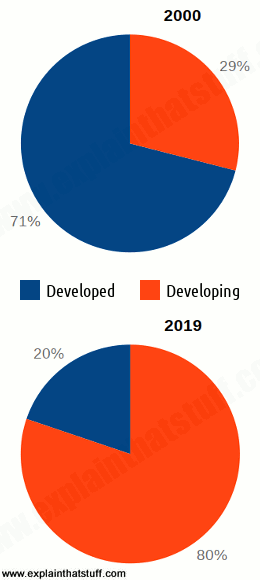

Photo: Internet use around the world: This chart compares the estimated percentage of households with Internet access for different world regions and economic groupings. Although there have been dramatic improvements in all regions, there are still great disparities between the "richer" nations and the "poorer" ones. The world average, shown by the black-outlined orange center bar, is still only 46.4 out of 100 (less than half). Not surprisingly, richer nations are to the left of the average and poorer ones to the right. Source: Redrawn from Chart 1.5 of the Executive Summary of Measuring the Information Society 2015, International Telecommunication Union (ITU).

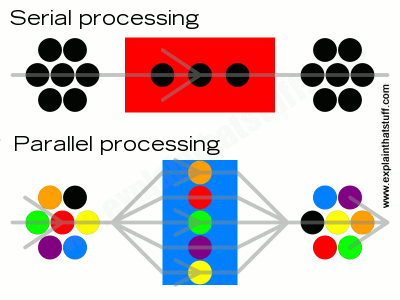

How does Internet data move?

Circuit switching

Much of the Internet runs on the ordinary public telephone network—but there's a big difference between how a telephone call works and how the Internet carries data. If you ring a friend, your telephone opens a direct connection (or circuit) between your home and theirs. If you had a big map of the worldwide telephone system (and it would be a really big map!), you could theoretically mark a direct line, running along lots of miles of cable, all the way from your phone to the phone in your friend's house. For as long as you're on the phone, that circuit stays permanently open between your two phones. This way of linking phones together is called circuit switching. In the old days, when you made a call, someone sitting at a "switchboard" (literally, a board made of wood with wires and sockets all over it) pulled wires in and out to make a temporary circuits that connected one home to another. Now the circuit switching is done automatically by an electronic telephone exchange.If you think about it, circuit switching is a really inefficient way to use a network. All the time you're connected to your friend's house, no-one else can get through to either of you by phone. (Imagine being on your computer, typing an email for an hour or more—and no-one being able to email you while you were doing so.) Suppose you talk very slowly on the phone, leave long gaps of silence, or go off to make a cup of coffee. Even though you're not actually sending information down the line, the circuit is still connected—and still blocking other people from using it.

Packet switching

The Internet could, theoretically, work by circuit switching—and some parts of it still do. If you have a traditional "dialup" connection to the Net (where your computer dials a telephone number to reach your Internet service provider in what's effectively an ordinary phone call), you're using circuit switching to go online. You'll know how maddeningly inefficient this can be. No-one can phone you while you're online; you'll be billed for every second you stay on the Net; and your Net connection will work relatively slowly.Most data moves over the Internet in a completely different way called packet switching. Suppose you send an email to someone in China. Instead of opening up a long and convoluted circuit between your home and China and sending your email down it all in one go, the email is broken up into tiny pieces called packets. Each one is tagged with its ultimate destination and allowed to travel separately. In theory, all the packets could travel by totally different routes. When they reach their ultimate destination, they are reassembled to make an email again.

Packet switching is much more efficient than circuit switching. You don't have to have a permanent connection between the two places that are communicating, for a start, so you're not blocking an entire chunk of the network each time you send a message. Many people can use the network at the same time and since the packets can flow by many different routes, depending on which ones are quietest or busiest, the whole network is used more evenly—which makes for quicker and more efficient communication all round.

How packet switching works

What is circuit switching?

Suppose you want to move home from the United States to Africa and you decide to take your whole house with you—not just the contents, but the building too! Imagine the nightmare of trying to haul a house from one side of the world to the other. You'd need to plan a route very carefully in advance. You'd need roads to be closed so your house could squeeze down them on the back of a gigantic truck. You'd also need to book a special ship to cross the ocean. The whole thing would be slow and difficult and the slightest problem en-route could slow you down for days. You'd also be slowing down all the other people trying to travel at the same time. Circuit switching is a bit like this. It's how a phone call works.Picture: Circuit switching is like moving your house slowly, all in one go, along a fixed route between two places.

What is packet switching?

Is there a better way? Well, what if you dismantled your home instead, numbered all the bricks, put each one in an envelope, and mailed them separately to Africa? All those bricks could travel by separate routes. Some might go by ship; some might go by air. Some might travel quickly; others slowly. But you don't actually care. All that matters to you is that the bricks arrive at the other end, one way or another. Then you can simply put them back together again to recreate your house. Mailing the bricks wouldn't stop other people mailing things and wouldn't clog up the roads, seas, or airways. Because the bricks could be traveling "in parallel," over many separate routes at the same time, they'd probably arrive much quicker. This is how packet switching works. When you send an email or browse the Web, the data you send is split up into lots of packets that travel separately over the Internet.

Picture: Packet switching is like breaking your house into lots of bits and mailing them in separate packets. Because the pieces travel separately, in parallel, they usually go more quickly and make better overall use of the network.

How computers do different jobs on the Internet

Photo: The Internet is really nothing more than a load of wires! Much of the Internet's traffic moves along ethernet networking cables like this one.

There are hundreds of millions of computers on the Net, but they don't all do exactly the same thing. Some of them are like electronic filing cabinets that simply store information and pass it on when requested. These machines are called servers. Machines that hold ordinary documents are called file servers; ones that hold people's mail are called mail servers; and the ones that hold Web pages are Web servers. There are tens of millions of servers on the Internet.

A computer that gets information from a server is called a client. When your computer connects over the Internet to a mail server at your ISP (Internet Service Provider) so you can read your messages, your computer is the client and the ISP computer is the server. There are far more clients on the Internet than servers—probably getting on for a billion by now!

When two computers on the Internet swap information back and forth on a more-or-less equal basis, they are known as peers. If you use an instant messaging program to chat to a friend, and you start swapping party photos back and forth, you're taking part in what's called peer-to-peer (P2P) communication. In P2P, the machines involved sometimes act as clients and sometimes as servers. For example, if you send a photo to your friend, your computer is the server (supplying the photo) and the friend's computer is the client (accessing the photo). If your friend sends you a photo in return, the two computers swap over roles.

Apart from clients and servers, the Internet is also made up of intermediate computers called routers, whose job is really just to make connections between different systems. If you have several computers at home or school, you probably have a single router that connects them all to the Internet. The router is like the mailbox on the end of your street: it's your single point of entry to the worldwide network.

How the Net really works: TCP/IP and DNS

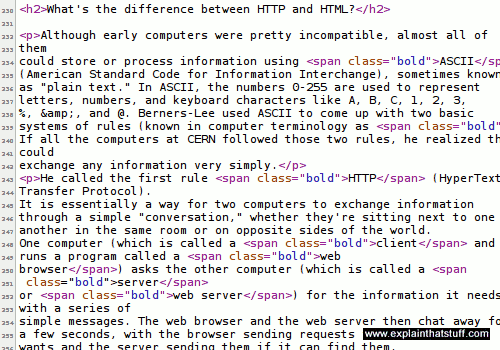

The real Internet doesn't involving moving home with the help of envelopes—and the information that flows back and forth can't be controlled by people like you or me. That's probably just as well given how much data flows over the Net each day—roughly 3 billion emails and a huge amount of traffic downloaded from the world's 250 million websites by its 2 billion users. If everything is sent by packet-sharing, and no-one really controls it, how does that vast mass of data ever reach its destination without getting lost?The answer is called TCP/IP, which stands for Transmission Control Protocol/Internet Protocol. It's the Internet's fundamental "control system" and it's really two systems in one. In the computer world, a "protocol" is simply a standard way of doing things—a tried and trusted method that everybody follows to ensure things get done properly. So what do TCP and IP actually do?

Internet Protocol (IP) is simply the Internet's addressing system. All the machines on the Internet—yours, mine, and everyone else's—are identified by an Internet Protocol (IP) address that takes the form of a series of digits separated by dots or colons. If all the machines have numeric addresses, every machine knows exactly how (and where) to contact every other machine. When it comes to websites, we usually refer to them by easy-to-remember names (like www.explainthatstuff.com) rather than their actual IP addresses—and there's a relatively simple system called DNS (Domain Name System) that enables a computer to look up the IP address for any given website. In the original version of IP, known as IPv4, addresses consisted of four pairs of digits, such as 12.34.56.78 or 123.255.212.55, but the rapid growth in Internet use meant that all possible addresses were used up by January 2011. That has prompted the introduction of a new IP system with more addresses, which is known as IPv6, where each address is much longer and looks something like this: 123a:b716:7291:0da2:912c:0321:0ffe:1da2.

The other part of the control system, Transmission Control Protocol (TCP), sorts out how packets of data move back and forth between one computer (in other words, one IP address) and another. It's TCP that figures out how to get the data from the source to the destination, arranging for it to be broken into packets, transmitted, resent if they get lost, and reassembled into the correct order at the other end.

A brief history of the Internet

- 1844: Samuel Morse transmits the first electric telegraph message, eventually making it possible for people to send messages around the world in a matter of minutes.

- 1876: Alexander Graham Bell (and various rivals) develop the telephone.

- 1940: George Stibitz accesses a computer in New York using a teletype (remote terminal) in New Hampshire, connected over a telephone line.

- 1945: Vannevar Bush, a US government scientist, publishes a paper called As We May Think, anticipating the development of the World Wide Web by half a century.

- 1958: Modern modems are developed at Bell Labs. Within a few years, AT&T and Bell begin selling them commercially for use on the public telephone system.

- 1964: Paul Baran, a researcher at RAND, invents the basic concept of computers communicating by sending "message blocks" (small packets of data); Welsh physicist Donald Davies has a very similar idea and coins the name "packet switching," which sticks.

- 1963: J.C.R. Licklider envisages a network that can link people and user-friendly computers together.

- 1964: Larry Roberts, a US computer scientist, experiments with connecting computers over long distances.

- 1960s: Ted Nelson invents hypertext, a way of linking together separate documents that eventually becomes a key part of the World Wide Web.

- 1966: Inspired by the work of Licklider, Bob Taylor of the US government's Advanced Research Projects Agency (ARPA) hires Larry Roberts to begin developing a national computer network.

- 1969: The ARPANET computer network is launched, initially linking together four scientific institutions in California and Utah.

- 1971: Ray Tomlinson sends the first email, introducing the @ sign as a way of separating a user's name from the name of the computer where their mail is stored.

- 1973: Bob Metcalfe invents Ethernet, a convenient way of linking computers and peripherals (things like printers) on a local network.

- 1974: Vinton Cerf and Bob Kahn write an influential paper describing how computers linked on a network they called an "internet" could send messages via packet switching, using a protocol (set of formal rules) called TCP (Transmission Control Protocol).

- 1978: TCP is improved by adding the concept of computer addresses (Internet Protocol or IP addresses) to which Internet traffic can be routed. This lays the foundation of TCP/IP, the basis of the modern Internet.

- 1978: Ward Christensen sets up Computerized Bulletin Board System (a forerunner of topic-based Internet forums, groups, and chat rooms) so computer hobbyists can swap information.

- 1983: TCP/IP is officially adopted as the standard way in which Internet computers will communicate.

- 1982–1984: DNS (Domain Name System) is developed, allowing people to refer to unfriendly IP addresses (12.34.56.78) with friendly and memorable names (like google.com).

- 1986: The US National Science Foundation (NSF) creates its own network, NSFnet, allowing universities to piggyback onto the ARPANET's growing infrastructure.

- 1988: Finish computer scientist Jarkko Oikarinen invents IRC (Internet Relay Chat), which allows people to create "rooms" where they can talk about topics in real-time with like-minded online friends.

- 1989: The Peapod grocery store pioneers online grocery shopping and e-commerce.

- 1989: Tim Berners-Lee invents the World Wide Web at CERN, the European particle physics laboratory in Switzerland. It owes a considerable debt to the earlier work of Ted Nelson and Vannevar Bush.

- 1993: Marc Andreessen writes Mosaic, the first user-friendly web browser, which later evolves into Netscape and Mozilla.

- 1993: Oliver McBryan develops the World Wide Web Worm, one of the first search engines.

- 1994: People soon find they need help navigating the fast-growing World Wide Web. Brian Pinkerton writes WebCrawler, a more sophisticated search engine and Jerry Yang and David Filo launch Yahoo!, a directory of websites organized in an easy-to-use, tree-like hierarchy.

- 1995: E-commerce properly begins when Jeff Bezos founds Amazon.com and Pierre Omidyar sets up eBay.

- 1996: ICQ becomes the first user-friendly instant messaging (IM) system on the Internet.

- 1997: Jorn Barger publishes the first blog (web-log).

- 1998: Larry Page and Sergey Brin develop a search engine called BackRub that they quickly decide to rename Google.

- 2004: Harvard student Mark Zuckerberg revolutionizes social networking with Facebook, an easy-to-use website that connects people with their friends.

- 2006: Jack Dorsey and Evan Williams found Twitter, an even simpler "microblogging" site where people share their thoughts and observations in off-the-cuff, 140-character status messages.

Y . I/O IIIII Wireless Internet

Imagine for a moment if all the wireless connections in the world were instantly replaced by cables. You'd have cables stretching through the air from every radio in every home hundreds of miles back to the transmitters. You'd have wires reaching from every cellphone to every phone mast. Radio-controlled cars would disappear too, replaced by yet more cables. You couldn't step out of the door without tripping over cables. You couldn't fly a plane through the sky without getting tangled up. If you peered through your window, you'd see nothing at all but a cats-cradle of wires. That, then, is the brilliance of wireless: it does away with all those cables, leaving our lives simple, uncluttered, and free! Let's take a closer look at how it works.

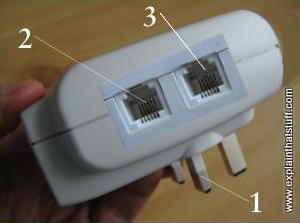

Photo: A typical wireless router. This one, made by Netgear, can connect up to four different computers to the Internet at once using wired connections, because it has four ethernet sockets. But—in theory—it can connect far more machines using wireless. The white bar sticking out of the back is the wireless antenna.From wireless to radio

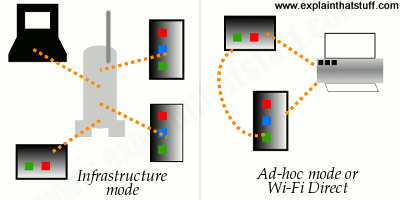

Wireless started out as a way of sending audio programs through the air. Pretty soon we started calling it radio and, when pictures were added to the signal, TV was born. The word "wireless" had become pretty old-fashioned by the mid-20th century, but over the last few years it's made a comeback. Now it's hip to be wireless once again thanks to the Internet. By 2007, approximately half of all the world's Internet users were expected to be using some kind of wireless access—many of them in developing countries where traditional wired forms of access, based on telephone networks, are not available. Wireless Internet, commonly used in systems called Wi-Fi®, WAP, and i-mode, has made the Internet more convenient than ever before. But what makes it different from ordinary Internet access?From radio to Wi-Fi

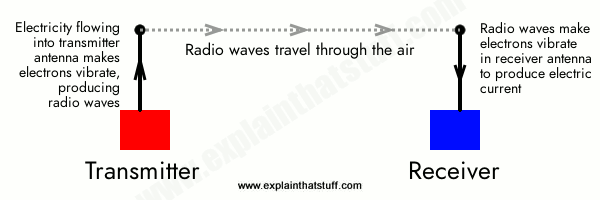

Artwork: The basic concept of radio: sending messages from a transmitter to a receiver at the speed of light using radio waves. In wireless Internet, the communication is two-way: there's a transmitter and receiver in both your computer (or handheld device) and the piece of equipment (such as a router) that connects you to the Internet.