Automatic control glance with

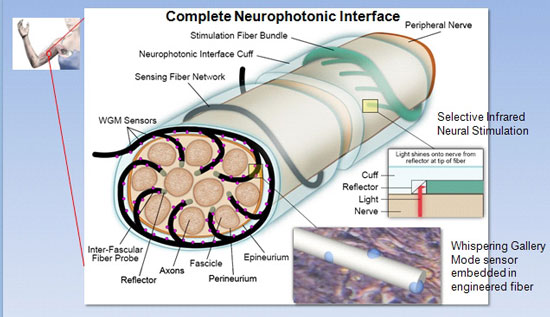

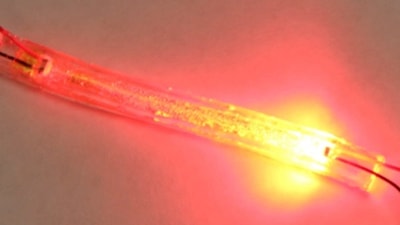

X.I PID / PITa and digital control efficiencies

Most proportional-integral-derivative (PID) applications use digital controllers, though some still use analog ones. Digital control can offer additional process control system efficiencies, and digital controllers have several advantages over analog controllers in PID applications. The answers to the following five questions explain how digital control can make a process control system more efficient:

Digital PID controller

I. Maria: For PID applications, about how many analog controllers are still in place or in use?

Anselo: Based on the plants where we have run projects and on conversations with customers, we estimate that fewer than 5% of the controllers in production today are analog.

II. Maria: What are the main advantages of using digital controllers over analog controllers in PID applications?

Anselo: Digital controllers offer many useful options that are unavailable in analog controllers, such as variable tuning; selectable PID structure [P and D on process variable (PV) or error] and form; gap control; error squared; tuning algorithms; accurate tuning settings; and others.

III. Maria: Are there PID applications where analog has advantages over digital?

Anselo: I have heard that a digital controller must have an input-to-output response time of less than 100 milliseconds to match the speed of an analog controller. So, for loops that require a very fast response, an analog controller is faster than most digital controllers.

IV. Maria: When transitioning from analog to digital PID-type controllers, what are the special considerations, if any, for selection, setup, operation, optimization, or modifications over time?

Anselo: The first step is to make sure you PROPERLY convert the current tuning in the analog controller to the digital controller.

Also, make sure you don't configure a lot of new alarms just because it is easy to do so.

Finally, investigate the new features of the digital controller to find better ways to duplicate or improve previous functionality.

V. Maria: Beyond performance, what are some other advantages of digital controllers for PID applications?

Anselo: Other advantages include easier collection of data from the controller and easier troubleshooting of the controller's action.

System PID / PITa (Maria and Anselo): Understanding PID control and loop tuning fundamentals

PID loop tuning may not be a hard science, but it’s not magic either. Here are some tuning tips that work. A "control loop" is a feedback mechanism that attempts to correct discrepancies between a measured process variable and the desired setpoint. A special-purpose computer known as the "controller" applies the necessary corrective efforts via an actuator that can drive the process variable up or down. A home furnace uses a basic feedback controller to turn the heat up or down if the temperature measured by the thermostat is too low or too high.

For industrial applications, a proportional-integral-derivative (PID) controller tracks the error between the process variable and the setpoint, the integral of recent errors, and the derivative of the error signal. It computes its next corrective effort from a weighted sum of those three terms, then applies the results to the process, and awaits the next measurement. It repeats this measure-decide-actuate loop until the error is eliminated.
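The measure-decide-actuate cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's algorithm. It makes four assumptions: the ideal (ISA) form, a fixed sample interval `dt`, an error defined as setpoint minus process variable, and a rectangular approximation of the integral. The class `DiscretePID` and the first-order test process in `simulate_first_order` are hypothetical names chosen for this sketch.

```python
class DiscretePID:
    """Minimal positional PID controller in the ideal (ISA) form.

    Assumptions: error = setpoint - pv, fixed sample interval dt,
    rectangular integration, derivative taken on the error.
    """

    def __init__(self, gain, ti, td, dt):
        self.gain = gain        # controller gain P
        self.ti = ti            # integral time TI, seconds
        self.td = td            # derivative time TD, seconds
        self.dt = dt            # sample interval, seconds
        self.integral = 0.0     # running approximation of the integral of the error
        self.prev_error = None  # error from the previous sample

    def update(self, setpoint, pv):
        """Take one measurement and return the next controller output."""
        error = setpoint - pv
        self.integral += error * self.dt
        # No derivative on the very first sample, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        # Weighted sum of the proportional, integral, and derivative terms.
        return self.gain * (error + self.integral / self.ti + self.td * derivative)


def simulate_first_order(pid, setpoint, steps, tau=2.0, process_gain=1.0):
    """Run the loop against a hypothetical first-order process (Euler integration)."""
    pv = 0.0
    for _ in range(steps):
        co = pid.update(setpoint, pv)                      # measure and decide
        pv += (pid.dt / tau) * (process_gain * co - pv)    # actuate; the process responds
    return pv


pid = DiscretePID(gain=1.0, ti=2.0, td=0.0, dt=0.1)
final_pv = simulate_first_order(pid, setpoint=50.0, steps=600)
```

With these illustrative settings the process variable ends up at the setpoint of 50. A real controller adds output limits, measurement filtering, and anti-windup logic, all of which this sketch omits.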

PID basics

A PID controller using the ideal or International Society of Automation (ISA) standard form of the PID algorithm computes its output CO(t) according to the formula shown in the accompanying figure, where PV(t) is the process variable measured at time t and the error e(t) is the difference between the process variable and the setpoint. The PID formula weights the proportional term by a factor of P, the integral term by a factor of P/TI, and the derivative term by a factor of P·TD, where P is the controller gain, TI is the integral time, and TD is the derivative time.
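Written out from the weightings just described, the ideal form reads as follows. This is a reconstruction from the text rather than a copy of the original figure, and the sign convention for e(t) varies between vendors.

$$
CO(t) = P\left[\, e(t) + \frac{1}{T_I}\int_0^{t} e(\tau)\,d\tau + T_D\,\frac{de(t)}{dt} \right]
$$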

That terminology bears some explaining. "Gain" refers to the percentage by which the error signal will gain or lose strength as it passes through the controller en route to becoming part of the controller's output. A PID controller with a high gain will tend to generate particularly aggressive corrective efforts.

The "integral time" refers to a hypothetical sequence of events where the error starts at zero, then abruptly jumps to a fixed value. Such an error would cause an instantaneous response from the controller's proportional term and a response from the integral term that starts at zero and increases steadily. The time required for the integral term to catch up to the unchanging proportional term is the integral time TI. A PID controller with a long integral time is more heavily weighted toward proportional action than integral action.

Similarly, the "derivative time" TD is a measure of the relative influence of the derivative term in the PID formula. If the error were to start at zero and begin increasing at a fixed rate, the proportional term would start at zero, while the derivative term assumes a fixed value. The proportional term would then increase steadily until it catches up with the derivative term at the end of the derivative time. A PID controller with a long derivative time is more heavily weighted toward derivative action than proportional action.
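Both definitions can be checked numerically. The two hypothetical helper functions below step through time in small increments of `dt` and report when one term catches up with the other. Under the stated assumptions (positive gain, error step, and ramp rate), the results come out at TI and TD regardless of the gain, because the gain multiplies both terms equally.

```python
def integral_time_check(gain, ti, e0, dt=0.001):
    """Error steps from 0 to e0 at t = 0; return when the integral term reaches the proportional term."""
    t, integral = 0.0, 0.0
    p_term = gain * e0                      # proportional term: instantaneous, then constant
    while gain * integral / ti < p_term:    # integral term: P/TI times the integrated error
        integral += e0 * dt
        t += dt
    return t


def derivative_time_check(gain, td, rate, dt=0.001):
    """Error ramps up from 0 at a fixed rate; return when the proportional term reaches the derivative term."""
    t = 0.0
    d_term = gain * td * rate               # derivative term: constant for a constant-rate ramp
    while gain * rate * t < d_term:         # proportional term: grows as P * rate * t
        t += dt
    return t


ti_measured = integral_time_check(gain=2.0, ti=5.0, e0=1.0)      # close to 5.0
td_measured = derivative_time_check(gain=2.0, td=0.5, rate=3.0)  # close to 0.5
```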

Historical note

The first feedback controllers included just the proportional term. For mathematical reasons that only became apparent later on, a P-only controller tends to drive the error downward to a small, but non-zero, value and then quit. Operators observing this phenomenon would manually increase the controller's output until the last vestiges of the error were eliminated. They called this operation "resetting" the controller.

When the integral term was introduced, operators observed that it would tend to perform the reset operation automatically. That is, the controller would augment its proportional action just enough to eliminate the error entirely. Hence, integral action was originally called "automatic reset" and remains labeled that way on some PID controllers to this day. The derivative term was invented shortly thereafter and was described, accurately enough, as "rate control."

Tricky business

Loop tuning is the art of selecting values for the tuning parameters P, TI, and TD so that the controller will be able to eliminate an error quickly without causing the process variable to fluctuate excessively. That's easier said than done.

Consider a car's cruise controller, for example. It can accelerate the car to a desired cruising speed, but not instantaneously. The car's inertia causes a delay between the time that the controller engages the accelerator and the time that the car's speed reaches the setpoint. How well a PID controller performs depends in large part on such lags.

Suppose an overloaded car with an undersized engine suddenly starts up a steep hill. The ensuing error between the car's actual and desired speeds would cause the controller's derivative and proportional actions to kick in immediately. The controller would begin to accelerate the car, but only as fast as the lag allows.

After a while, the integral action would also begin to contribute to the controller's output and eventually come to dominate it because the error decreases so slowly when the lag time is long, and a sustained error is what drives the integral action. But exactly when that would happen and how dominant the integral action would become thereafter would depend on the severity of the lag and the relative sizes of the controller's integral and derivative times.

This simple example demonstrates a fundamental principle of PID tuning. The best choice for each of the tuning parameters P, TI, and TD depends on the values of the other two as well as the behavior of the controlled process. Furthermore, modifying the tuning of any one term affects the performance of the others because the modified controller affects the process, and the process in turn affects the controller.

How can a control engineer designing a PID loop determine the values for P, TI, and TD that will work best for a particular application? John G. Ziegler and Nathaniel B. Nichols of Taylor Instruments (now part of ABB) addressed that question in 1942 when they published two loop tuning techniques that remain popular to this day.

Their open-loop technique is based on the results of a bump or step test, in which the controller is taken offline and manually forced to increase its output abruptly. A strip chart of the process variable's subsequent trajectory is known as the "reaction curve" (see Figure 2).

A sloped line drawn tangent to the reaction curve at its steepest point shows how fast the process reacted to the step change in the controller's output. The inverse of this line's slope is the process time constant T, which measures the severity of the lag.

The reaction curve also shows how long it took for the process to demonstrate its initial reaction to the step (the dead time d) and how much the process variable increased relative to the size of the step (the process gain K). By trial and error, Ziegler and Nichols determined that the best settings for the tuning parameters P, TI, and TD could be computed from T, d, and K as follows: P = 1.2T/(Kd), TI = 2d, and TD = 0.5d.
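The open-loop rules translate directly into code. The function below is a sketch that applies the classic Ziegler-Nichols reaction-curve settings for a full PID controller as they are usually tabulated. The function and argument names are illustrative, and TI and TD come out in whatever time units were used to measure the dead time.

```python
def ziegler_nichols_open_loop(process_gain, time_constant, dead_time):
    """Return (P, TI, TD) from reaction-curve measurements K, T, and d.

    Classic Ziegler-Nichols PID settings: P = 1.2 T / (K d), TI = 2 d, TD = 0.5 d.
    """
    if process_gain == 0 or dead_time <= 0:
        raise ValueError("process gain must be nonzero and dead time positive")
    p = 1.2 * time_constant / (process_gain * dead_time)
    ti = 2.0 * dead_time
    td = 0.5 * dead_time
    return p, ti, td


# Hypothetical reaction curve: K = 2, T = 10 s, d = 1.5 s.
p, ti, td = ziegler_nichols_open_loop(2.0, 10.0, 1.5)   # about (4.0, 3.0, 0.75)
```

As the caveats that follow point out, these numbers are a starting point. They also assume a particular version of the PID formula.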

After these parameter settings have been loaded into the PID formula and the controller returned to automatic mode, the controller should be able to eliminate future errors without causing the process variable to fluctuate excessively.

Ziegler and Nichols also described a closed loop tuning technique that is conducted with the controller in automatic mode, but with the integral and derivative actions shut off. The controller gain is increased until even the slightest error causes a sustained oscillation in the process variable (see Figure 3).

The smallest controller gain that can cause such an oscillation is called the "ultimate gain" Pu. The period of those oscillations is called the "ultimate period" Tu. The appropriate tuning parameters can be computed from these two values according to the following rules: P = 0.6Pu, TI = 0.5Tu, and TD = 0.125Tu.
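The closed-loop rules are just as short. This sketch uses the classic Ziegler-Nichols PID multipliers as they are commonly quoted. The function name and the example test result are hypothetical.

```python
def ziegler_nichols_closed_loop(ultimate_gain, ultimate_period):
    """Return (P, TI, TD) from the ultimate gain Pu and ultimate period Tu.

    Classic Ziegler-Nichols PID settings: P = 0.6 Pu, TI = 0.5 Tu, TD = 0.125 Tu.
    """
    p = 0.6 * ultimate_gain
    ti = 0.5 * ultimate_period
    td = 0.125 * ultimate_period
    return p, ti, td


# Hypothetical test result: sustained oscillation at Pu = 5 with a period Tu = 8 s.
p, ti, td = ziegler_nichols_closed_loop(5.0, 8.0)   # about (3.0, 4.0, 1.0)
```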

Caveats

Unfortunately, PID loop tuning isn't really that simple. Different PID controllers use different versions of the PID formula, and each must be tuned according to the appropriate set of rules. The rules also change when:

- The derivative and/or the integral actions are disabled.

- The process itself is inherently oscillatory.

- The process behaves as if it contains its own integral term (as is the case with level control).

- The dead time d is very small or significantly larger than the time constant T.

That's where loop tuning becomes an art. It takes more than a little experience and sometimes a lot of luck to come up with just the right combination of P, TI, and TD.

Key concepts

A PID controller with a high gain tends to generate particularly aggressive corrective efforts.

Loop tuning is the art of selecting values for tuning parameters that enable the controller to eliminate the error quickly without causing excessive process variable fluctuations.

Different PID controllers use different versions of the PID formula, and each must be tuned according to the appropriate set of rules.

________________________________________________________________________________

Fixing PID

Proportional-integral-derivative controllers may be ubiquitous, but they’re not perfect.

Although the PID algorithm was first applied to a feedback control problem more than a century ago and has served as the de facto standard for process control since the 1940s, PID loops have seen a number of improvements over the years. Mechanical PID mechanisms have been supplanted in turn by pneumatic, electronic, and computer-based devices; and the PID calculation itself has been tweaked to provide tighter control.

Integral action or automatic reset was the first fix introduced to improve the performance of feedback controllers back when they were equipped with only proportional action. A basic “P-only” controller applies a corrective effort to the process in proportion to the difference or error between the desired setpoint and the current process variable measurement.

When the setpoint goes up or the process variable goes down, the error between them grows in the positive direction, causing a P-only controller to respond with a positive control effort that drives the process variable back up towards the setpoint. In the converse case, the error grows in the negative direction and the controller responds with a negative control effort that drives the process variable back down.

A P-only controller is intuitive and easy to implement, but it tends to reach a point of diminishing returns. As the error decreases with each new control effort, so does the next control effort. That in turn slows the rate at which the error decreases until it ceases to change altogether. Unfortunately, that steady-state error is never zero. A P-only controller always leaves the process variable slightly off the setpoint. See the “Steady-state error” graphic for a demonstration of why this happens.

In this simple example of a generic control loop, a proportional-only controller with a gain of 2 drives a process with a steady-state gain of 3. That is, the controller amplifies the error by a factor of 2 to produce the control effort, and the process multiplies the control effort by a factor of 3 (and adds a few transient oscillations) to produce the process variable. If the setpoint is 70%, the process variable will end up at 60% after the transients die out. The error remains nonzero, yet the controller stops trying to reduce it any further.

Integral action operating in parallel with proportional action eliminates that steady-state error. It increases the control effort in proportion to the running total or integral of all the previous errors and continues to grow so long as any error remains. As a result, a proportional-plus-integral or “PI” controller never gives up. It continues to apply an ever-increasing control effort until the process variable reaches the setpoint.
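The numbers in the steady-state example can be reproduced with a short simulation. The sketch below replaces the graphic's process with a first-order lag that has a one-second time constant, integrated with Euler steps, so it settles without the transient oscillations. With `ti=None` it runs the P-only controller; otherwise it runs a PI controller in the ideal form. The integral time of 1 second is an arbitrary illustrative choice.

```python
def simulate_loop(ti=None, controller_gain=2.0, process_gain=3.0, setpoint=70.0,
                  tau=1.0, dt=0.1, steps=500):
    """Return the final process variable (in %) for P-only (ti=None) or PI control."""
    pv = 0.0
    integral = 0.0
    for _ in range(steps):
        error = setpoint - pv
        co = controller_gain * error                   # proportional action
        if ti is not None:
            integral += error * dt
            co += controller_gain * integral / ti      # integral action (ideal form)
        pv += (dt / tau) * (process_gain * co - pv)    # first-order lag toward 3 x co
    return pv


p_only = simulate_loop()                # settles near 60
with_integral = simulate_loop(ti=1.0)   # settles near 70
```

The P-only run settles where PV = 3 × 2 × (70 − PV), that is, at 60%. The PI run keeps integrating until the error is gone and settles at 70%.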

Unfortunately, the introduction of integral action causes other problems that require their own fixes. Arguably the most significant is an increased risk of closed-loop instability where the integral action’s persistence does more harm than good. It can grow so large that it forces the process variable to overshoot the setpoint.

If the controlled process happens to be particularly sensitive to the controller’s efforts, that overshoot will cause an even larger error in the opposite direction. The controller will eventually change course in an effort to eliminate the new error but will end up driving the process variable back the other way, ending up even further from the setpoint. Eventually, the controller will start oscillating from fully on to fully off in a vain effort to reach a steady state.

The simplest solution to this problem is to reduce the amplification factor or gain by which the controller multiplies the integrated error to generate the integral action. On the other hand, reducing the integral gain risks increasing the time required for the closed-loop system to reach a steady state with zero error. Hundreds of analytical and heuristic techniques have been developed over the years to determine values for the integral and proportional gains that are just right for a particular application.

Reset windup

Integral action can also cause reset windup when the actuator is too small to implement an especially large control effort requested by the controller. That can happen when a burner isn’t large enough to supply the necessary heat, a valve is too small to generate a high enough flow rate, or a pump reaches its maximum speed. The actuator is said to be saturated at some limiting value—either its maximum or minimum output.

When actuator saturation prevents the process variable from rising any further, the controller continues to see positive errors between the setpoint and the process variable. The integrated error continues to rise, and the integral action continues to call for an increasingly aggressive control effort. However, the actuator remains stuck at its maximum output, unable to affect the process any further, so the process variable doesn’t get any closer to the setpoint.

An operator can try to fix the problem by reducing the setpoint back into the range that the actuator is capable of achieving, but the controller will not respond because of the enormous value that the total integrated error will have achieved during the time that the actuator was saturated fully on. That total will remain very large for a very long time, no matter what the current value of the error happens to be. As a result, the integral action will remain very high, and the controller will keep pushing the actuator against its upper limit.

Fortunately, the error will turn negative if the operator drops the setpoint low enough, so the total integrated error will eventually start to fall. Still, a long series of negative errors will be required to cancel the long series of positive errors that had been accumulating in the integrator’s running total. Until that happens, the integral action will remain large enough to continue saturating the actuator, no matter what the simultaneous proportional action is calling for. See the “Reset windup” graphic.

Several techniques have been devised to protect against reset windup. The obvious solution is to replace the undersized actuator with a larger one capable of actually driving the process variable all the way to the setpoint, or to select setpoints that the existing actuator can actually reach. Otherwise, the integrator can simply be turned off when the actuator saturates.

In this example, the operator has tried to increase the setpoint to a value higher than the actuator is capable of achieving. After observing that the controller has been unable to drive the process variable that high, the operator returns the setpoint to a lower value. Note that the controller does not begin to lower its control effort until well after the setpoint has been lowered because the integral action has grown very large during the controller’s futile attempt to reach the higher setpoint. Instead, the controller continues to call for a maximum control effort even though the error has turned negative. The control effort does not begin to drop until the negative errors following the setpoint change have persisted as long as the positive errors had persisted prior to the setpoint change (or more precisely, until the integral of the negative errors reaches the same magnitude as the integral of the positive errors).

In this case, the operator has repeated the same sequence of setpoint moves, but this time using a PID controller equipped with reset windup protection. Extra logic added to the PID algorithm shuts off the controller’s integrator when the actuator hits its upper limit. The process variable still can’t reach the higher setpoint, but the controller’s integral action doesn’t wind up during the attempt. That allows the controller to respond immediately when the setpoint is lowered.

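The effect shown in the two windup graphics can be reproduced with a hypothetical PI loop. Its actuator is clamped between 0% and 100%, and its process (a unit-gain, first-order lag) therefore can never exceed 100%. The windup protection here is one simple form of the fix described above: the integrator is frozen on any sample where the actuator is saturated at either limit. The setpoint schedule, the tuning, and the `samples_until_unsaturated` helper are all illustrative assumptions.

```python
def samples_until_unsaturated(anti_windup, gain=1.0, ti=2.0, dt=0.1, tau=1.0):
    """Raise the setpoint to an unreachable 150%, then drop it to 50%.

    Returns how many samples after the drop the actuator stays pinned at 100%.
    """
    schedule = [150.0] * 300 + [50.0] * 700   # hypothetical setpoint moves
    pv, integral = 0.0, 0.0
    for k, setpoint in enumerate(schedule):
        error = setpoint - pv
        candidate = integral + error * dt
        requested = gain * (error + candidate / ti)
        co = min(max(requested, 0.0), 100.0)  # actuator limits: 0% to 100%
        if not anti_windup or co == requested:
            integral = candidate              # freeze the integrator while saturated
        pv += (dt / tau) * (co - pv)          # unit-gain process: pv cannot exceed 100
        if k >= 300 and co < 100.0:
            return k - 300
    return None


without_protection = samples_until_unsaturated(anti_windup=False)
with_protection = samples_until_unsaturated(anti_windup=True)
```

In this setup the unprotected controller holds the actuator at 100% for more than 200 samples after the setpoint drop, while the protected one backs off on the first sample.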

Pre-loading

Reset windup also occurs when the actuator is turned off but the controller isn’t. In a cascade controller, for example, the outer-loop controller will see no response to its control efforts while the inner loop is in manual mode. If the outer-loop controller is left operating during that interval, its integral action will “wind up” as the error remains constant.

Similarly, when the burner, valve, or pump is shut down between cycles of a batching operation, the process variable is prevented from getting any closer to the setpoint, again leading to windup. That’s not a problem while the actuator remains off, but as soon as the actuator is reactivated at the beginning of the next batch, the controller will immediately call for a 100% control effort and saturate the actuator.

The obvious solution to this problem is to turn off the controller’s integrator whenever the actuator is turned off or to eliminate the error artificially by adjusting the setpoint to whatever value the process variable takes between batches. But there’s another approach that not only prevents reset windup between batches but actually improves the controller’s performance during the next batch.

Pre-loading freezes the output of the controller’s integrator between batches so that the integral action starts the next batch with the total integrated error that it had accumulated as of the end of the previous batch. This technique assumes that the controller is eventually going to need the same amount of integral action to reach the same steady state as in the previous batch, so there’s no point in starting the integrated error at zero. With pre-loading, the integral action essentially picks up right where it left off at the end of the previous batch, thereby shortening the time required to achieve a steady state in the next batch.

Pre-loading works best if each successive batch is more-or-less identical to its predecessor so that the controller is attempting to achieve the same setpoint under the same load conditions every time. But even if the batches aren’t identical, it is sometimes possible to use a mathematical model of the process to predict what level of integral action is eventually going to be required to achieve a steady state. The required integrated error can then be deduced and pre-loaded into the controller’s integrator at the start of each batch. This approach will also work for a continuous process if it can be modeled prior to the initial start-up.
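A rough sketch of pre-loading, under deliberately simplified assumptions: every batch starts with the process variable at zero, the process is a unit-gain first-order lag, and the same PI tuning and setpoint are used each time. The hypothetical `run_batch` helper returns two values. The first is the integrator value at the end of the batch. The second is the sample after which the error stays within 1 unit of the setpoint. A second batch can therefore be started either from zero or from the frozen integrator value.

```python
def run_batch(integral, setpoint=50.0, gain=0.5, ti=2.0, dt=0.1, tau=1.0, steps=400):
    """Run one hypothetical batch starting from pv = 0.

    Returns (integrator value at the end of the batch, settling sample), where the
    settling sample is the first sample after which |error| stays below 1.
    """
    pv = 0.0
    last_outside = -1
    for k in range(steps):
        error = setpoint - pv
        if abs(error) >= 1.0:
            last_outside = k
        integral += error * dt
        co = gain * (error + integral / ti)
        pv += (dt / tau) * (co - pv)   # unit-gain first-order process
    return integral, last_outside + 1


end_integral, settle_from_zero = run_batch(0.0)   # batch 1: integrator starts at zero
_, settle_preloaded = run_batch(end_integral)     # batch 2: integrator pre-loaded
```

Under these assumptions the pre-loaded batch settles noticeably sooner, at the cost of a small overshoot. The reason is that its integrator starts near the value the steady state requires (200 here, since 0.5 × 200 / 2 = 50).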

Bumpless transfer

One potential drawback to pre-loading is the abrupt change it can cause in the actuator’s output at the beginning of each batch. Although the actuator won’t immediately slam all the way open if pre-loading is used to prevent reset windup, it will try to start the next batch at or near the position it was in at the end of the previous batch. If that causes an abrupt change that is likely to damage the actuator, the controller’s integrator can be ramped up gradually to its pre-load value.

A similar problem can occur when a controller is switched from automatic to manual mode and back again. If an operator manually modifies the control effort during that interval, the controller will abruptly switch the control effort to whatever the PID algorithm is calling for at the time automatic mode is resumed.

Bumpless transfer solves this problem by artificially pre-loading the integrator with whatever value is required to restart automatic operations without changing the control effort from wherever the operator left it. The controller will still have to make adjustments if the operator’s actions, changes in the process variable, or changes in the setpoint have created an error while the controller was in manual mode, but less of a “bump” will result when the controller is “transferred” back to automatic mode.
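The arithmetic behind bumpless transfer is a one-line rearrangement of the PI formula. Solve CO = P(e + integral/TI) for the integral, using the output the operator left behind and the current error. The class below is a hypothetical sketch (ideal-form PI, error = setpoint − PV, derivative action omitted), not any particular controller's transfer logic.

```python
class ManualAutoPI:
    """Hypothetical ideal-form PI controller with manual mode and bumpless transfer."""

    def __init__(self, gain, ti, dt):
        self.gain = gain
        self.ti = ti
        self.dt = dt
        self.integral = 0.0
        self.output = 0.0
        self.manual = False

    def set_manual(self, output):
        """Operator takes over and sets the control effort directly."""
        self.manual = True
        self.output = output

    def set_auto(self, setpoint, pv):
        """Return to automatic without a bump by pre-loading the integrator."""
        error = setpoint - pv
        # Solve output = gain * (error + integral / ti) for the integral,
        # using the output the operator left behind.
        self.integral = (self.output / self.gain - error) * self.ti
        self.manual = False

    def update(self, setpoint, pv):
        """Return the control effort for this sample."""
        if self.manual:
            return self.output
        error = setpoint - pv
        self.integral += error * self.dt
        self.output = self.gain * (error + self.integral / self.ti)
        return self.output


ctrl = ManualAutoPI(gain=2.0, ti=5.0, dt=0.1)
ctrl.set_manual(42.0)                  # operator parks the output at 42%
ctrl.set_auto(setpoint=50.0, pv=45.0)  # an error of 5 developed while in manual
first_auto_output = ctrl.update(50.0, 45.0)
```

The first automatic output is 42.2%, a small step from the operator's 42%. That step reflects only one sample of integral action on the remaining error. Without the pre-load, the output would jump to whatever the PID formula produced from the stale integrator.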

Still more windup problems

All of these windup-related problems are compounded by deadtime—the interval required for the process variable to change following a change in the control effort. Deadtime typically occurs when the process variable sensor is located too far downstream from the actuator. No matter how hard the controller works, it cannot begin to reduce an error between the process variable and the setpoint until the material that the actuator has modified reaches the sensor.

As the controller is waiting for its efforts to take effect, the process variable and the error will remain fixed, causing the integral action to wind up just as if the actuator were saturated or turned off. The most common fix to this problem is to de-tune the controller; that is, reduce the integral gain to reduce the maximum integral action caused by windup.

The same effect can be achieved by equipping the integral action with its own deadtime so that windup does not begin until at least some of the process deadtime has elapsed. This in turn lowers the total integrated error that the controller can accumulate during the interval that the error is fixed.

Deadtime-induced windup can also be ameliorated by making the integral action intermittent. Let the proportional action do all the work until the process variable has settled somewhere close to the setpoint, then turn on the integral action only long enough to eliminate the remaining steady-state error. This approach not only delays the onset of windup, it gives the integral action only small errors to deal with, thereby reducing the maximum windup effect.

But wait, there’s more

This only scratches the surface of the many ways engineers have sought to improve control performance. The PID algorithm has also been modified to deal with velocity-limited actuators, time-varying process models, noise in the process variable measurement, excessive derivative action during setpoint changes, and more. Future installments of this series will look at the effects these problems cause and how they can be avoided.

_________________________________________________________________________________

Open- vs. closed-loop control

Automatic control operations can be described as either open-loop or closed-loop. The difference is feedback. A closed-loop control system includes:

- The process that is to be controlled

- An instrument with a sensor that measures the condition of the process

- A transmitter that converts the measurement into an electronic signal

- A controller that reads the transmitter's signal and decides whether or not the current condition of the process is acceptable, and

- An actuator functioning as the final control element that applies a corrective effort to the process per the controller's instructions.

In a closed-loop control system, information flows around a feedback loop from the process to the sensor to the transmitter to the controller to the actuator and back to the process. This measure-decide-actuate sequence, known as closed-loop control, repeats as often as necessary until the desired process condition is achieved. Familiar examples include a thermostat controlling a furnace to maintain the temperature in a room and cruise control maintaining the speed of a car.

But not all automatic control operations require feedback. A much larger class of control commands can be executed in an open-loop configuration without confirmation or further adjustment. Open-loop control is sufficient for predictable operations such as opening a door, starting a motor, or turning off a pump.

Continuous closed-loop control

Continuing the analysis, it is clear that not all closed-loop operations are alike. For a continuous process, a feedback loop attempts to maintain a process variable (or controlled variable) at a desired value known as the setpoint. The controller subtracts the latest measurement of the process variable from the setpoint to generate an error signal. The magnitude and duration of the error signal then determine the value of the controller's output, or manipulated variable, which in turn dictates the corrective efforts applied by the actuator.

For example, a car equipped with a cruise control uses a speedometer to measure and maintain the car's speed. If the car is traveling too slowly, the controller instructs the accelerator to feed more fuel to the engine. If the car is traveling too quickly, the controller lets up on the accelerator. The car is the process, the speedometer is the sensor, and the accelerator is the actuator.

The car's speed is the process variable. Other common process variables include temperatures, pressures, flow rates, and tank levels. These are all quantities that can vary constantly and can be measured at any time. Common actuators for manipulating such conditions include heating elements, valves, and dampers.

Discrete closed-loop control

For a discrete process, the variable of interest is measured only when a triggering event occurs, and the measure-decide-actuate sequence is typically executed just once for each event. For example, the human controller driving the car uses her eyes to measure ambient light levels at the beginning of each trip. If she decides that it's too dark to see well, she turns on the car's lights. No further adjustment is required until the next triggering event such as the end of the trip.

Feedback loops for discrete processes are generally much simpler than continuous control loops since discrete processes do not involve as much inertia. The driver controlling the car gets instantaneous results after turning on the lights, whereas the cruise control sees much more gradual results as the car slowly speeds up or slows down.

Inertia tends to complicate the design of a continuous control loop since a continuous controller typically needs to make a series of decisions before the results of its earlier efforts are completely evident. It has to anticipate the cumulative effects of its recent corrective efforts and plan future efforts accordingly. Waiting to see how each one turns out before trying another simply takes too long.

Open-loop control

Open-loop controllers do not use feedback per se. They apply a single control effort when so commanded and assume that the desired results will be achieved. An open-loop controller may still measure the results of its commands: Did the door actually open? Did the motor actually start? Is the pump actually off? Generally, these actions are for safety considerations rather than as part of the control sequence.

Even closed-loop feedback controllers must operate in an open-loop mode on occasion. A sensor may fail to generate the feedback signal, or an operator may take over the feedback operation in order to manipulate the controller's output manually.

Operator intervention is generally required when a feedback controller proves unable to maintain stable closed-loop control. For example, a particularly aggressive pressure controller may overcompensate for a drop in line pressure. If the controller then overcompensates for its overcompensation, the pressure may end up lower than before, then higher, then even lower, then even higher, etc. The simplest way to terminate such unstable oscillations is to break the loop and regain control manually.

There are also many applications where experienced operators can make manual corrections faster than a feedback controller can. Using their knowledge of the process's past behavior, operators can manipulate the process inputs now to achieve the desired output values later. A feedback controller, on the other hand, must wait until the effects of its latest efforts are measurable before it can decide on the next appropriate control action. Predictable processes with long time constants or excessive dead time are particularly well suited to open-loop manual control.

Open- and closed-loop control combined

The principal drawback of open-loop control is a loss of accuracy. Without feedback, there is no guarantee that the control efforts applied to the process will actually have the desired effect. If speed and accuracy are both required, open-loop and closed-loop control can be applied simultaneously using a feedforward strategy. A feedforward controller uses a mathematical model of the process to make its initial control moves like an experienced operator would. It then measures the results of its open-loop efforts and makes additional corrections as necessary like a traditional feedback controller.

Feedforward is particularly useful when sensors are available to measure an impending disturbance before it hits the process. If its future effects on the process can be accurately predicted with the process model, the controller can take preemptive actions to counteract the disturbance as it occurs.

For example, if a car equipped with cruise control and radar could see a hill coming, it could begin to accelerate even before it begins to slow down. The car may not end up at the desired speed as it climbs the hill, but even that error can eventually be eliminated by the cruise controller's normal feedback control algorithm. Without the advance notice provided by the radar, the cruise controller wouldn't know that acceleration is required until the car had already slowed below the desired speed halfway up the hill.
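The feedforward-plus-feedback idea can be sketched with a toy cruise-control model. Everything numeric here is an assumption for illustration, not from the article: the car obeys `dv/dt = (u - drag*v - load) / mass`, the hill appears as a step load at t = 5 s that the radar measures the moment it arrives, and the PI tuning is hypothetical. `ff_gain` scales how well the feedforward model predicts the hill's load: 0 is pure feedback, 1 is a perfect model, and values in between show feedback cleaning up what an imperfect model misses.

```python
def cruise_worst_error(ff_gain, t_end=40.0, dt=0.01):
    """Worst speed error when a hill adds a step load at t = 5 s (toy model).

    ff_gain = 0 gives pure feedback; ff_gain = 1 means the feedforward model
    predicts the hill's load exactly; values in between model a partly wrong model.
    """
    mass, drag = 1.0, 0.5             # hypothetical car parameters
    v_sp = 1.0                        # desired speed (arbitrary units)
    kc, ti = 1.0, 2.0                 # hypothetical PI tuning
    v = v_sp
    integral = drag * v_sp * ti / kc  # preload so the PI output starts at u = drag * v_sp
    worst, t = 0.0, 0.0
    while t < t_end:
        load = 0.5 if t >= 5.0 else 0.0        # hill load, assumed measured by radar
        error = v_sp - v
        integral += error * dt
        u_fb = kc * (error + integral / ti)    # feedback trims whatever is left over
        u_ff = ff_gain * load                  # model-based preemptive throttle move
        v += dt * (u_fb + u_ff - drag * v - load) / mass  # Euler step of the car model
        worst = max(worst, abs(error))
        t += dt
    return worst


for gain in (0.0, 0.8, 1.0):
    print(gain, round(cruise_worst_error(gain), 4))
```

Because this toy model is linear, an 80% accurate feedforward model leaves roughly 20% of the feedback-only speed dip for the PI loop to remove.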

Open- and closed-loop control in parallel

Many automatic control systems use both open- and closed-loop control in parallel. Consider, for example, a brewery that ferments and bottles beer.

Brew kettles in a modern brewery rely on continuous closed-loop control to maintain prescribed temperatures and pressures while turning water and grain into fermentable mash.

A brewery's bottling line uses both discrete closed-loop control and open-loop control to fill and cap the individual bottles.

The conditions inside the brew kettles are maintained by closed-loop controllers using feedback loops that measure the temperature and pressure, then adjust steam flow into the kettle and flow pumps to compensate for out-of-spec conditions. Open-loop control is also required for one-time operations such as starting and stopping the mixer motors or opening and closing the steam lines to the heat exchangers.

Simultaneously, finished batches of beer are bottled using open-loop and discrete closed-loop control. A proximity sensor determines that a bottle is present before filling can begin, then a valve opens to fill each bottle until a level sensor determines that the bottle is full.

In general, continuous closed-loop control applications require at least a few ancillary open-loop control operations, whereas many open-loop control applications require no feedback loops at all.

Which is which?

The differences between continuous closed-loop control, discrete closed-loop control, and open-loop control can be subtle. Here are some snippets of pseudo-code to illustrate each:

Open-loop control

IF (time_for_action = TRUE) THEN
    take_action(X)
END

Discrete closed-loop control

IF (time_for_action = TRUE) THEN
    measure(Y)
    IF (Y = specified_condition) THEN
        take_action(X)
    END
END

Continuous closed-loop control

WHILE (Y <> specified_condition)
    take_action(X)
    measure(Y)
    wait(Z)
REPEAT

In the first two cases, the time for action usually means that a particular step has been reached in the control sequence. At that point, an open-loop controller would simply execute action X and proceed to the next step in the program. A discrete closed-loop controller would first measure or observe some condition Y in the process to determine whether action X needs to be executed. Once activated, a continuous closed-loop controller is always ready for action. It takes action X, measures condition Y, waits Z seconds, then repeats the loop until Y has reached the specified condition. In the discrete case, the specified condition is usually a discrete event such as the completion of a prior task or a change in some go/no-go decision criterion. In the continuous case, the specified condition is usually met when the measured variable reaches a desired value.
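For readers who want to run the three patterns, here is one possible Python rendering of the pseudo-code. The function names mirror the pseudo-code, while the demo values (a pump, a "dark" light reading, a level filled toward 10.0) are hypothetical. Two small liberties are taken: the continuous loop measures Y once before its first test, since the pseudo-code's WHILE needs a value of Y to compare, and the action receives the latest measurement because a continuous correction usually depends on it. The "specified condition" is a tolerance test rather than exact equality, which is safer for real-valued measurements.

```python
import time


def open_loop(time_for_action, take_action):
    """Open loop: act when commanded and assume the action succeeds."""
    if time_for_action:
        take_action()


def discrete_closed_loop(time_for_action, measure, specified_condition, take_action):
    """Discrete closed loop: measure once per triggering event, then decide."""
    if time_for_action:
        if measure() == specified_condition:
            take_action()


def continuous_closed_loop(measure, reached, take_action, wait_s=0.0):
    """Continuous closed loop: act, measure, wait, and repeat until done."""
    y = measure()
    while not reached(y):
        take_action(y)      # correction based on the latest measurement
        y = measure()
        time.sleep(wait_s)  # Z in the pseudo-code
    return y


if __name__ == "__main__":
    log = []
    open_loop(True, lambda: log.append("pump off"))
    discrete_closed_loop(True, lambda: "dark", "dark", lambda: log.append("lights on"))

    level = [0.0]  # hypothetical tank level, filled toward 10.0

    def fill(y):
        level[0] += 0.5 * (10.0 - y)  # proportional correction

    final = continuous_closed_loop(lambda: level[0], lambda y: abs(10.0 - y) < 0.5, fill)
    print(log, final)  # ['pump off', 'lights on'] 9.6875
```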

Key concepts:

- There are different types of control loops and the most critical characteristic that separates them is how they handle feedback.

- The needs of an application should be the primary reason for choosing one type or another.

- In some cases, human intervention may be more desirable than an automatic approach.

Fundamentals of integrating vs. self-regulating processes

Sometimes a process is easier to control if it “leaks.” Here’s a look at why.

An integrating process produces an output that is proportional to the running total of some input that the process has been accumulating over time. Alternatively, an integrating process can accumulate a related quantity proportional to the input rather than the input itself. Either way, if the input happens to turn negative, an integrating process will start to relinquish whatever it has accumulated, thereby lowering its output.

For the water tank shown in the "Integrating process example," input is the water flowing into the tank—the net flow from all incoming streams minus outgoing streams. The output is the level of water the tank has accumulated. As long as net inflow remains positive (more incoming than outgoing), the output level will continue to increase as the tank fills up. If net inflow turns negative (more outgoing than incoming), output level will decrease as the tank drains.

Servo motors have an entirely different accumulation mechanism, but the same effects. A servo motor's input voltage generates torque, which accelerates a load around the shaft. The resulting rotation turns the load a bit more for every instant that the input voltage is non-zero. The load's net position results from the accumulation of all those incremental rotations, each of which is proportional to input voltage at that instant. As long as input voltage remains positive, the output position will continue to increase. If input voltage turns negative, the output position will decrease as the shaft turns backwards.

The water tank and servo motor also behave similarly in that fixing their inputs at zero will fix their outputs at whatever values they'd reached up to that point. The tank's water level will remain unchanged as long as incoming flow exactly matches outgoing flow (typically when both are zero), and the servo motor's shaft will remain in its last position as long as input voltage is neither positive nor negative.

This is a defining characteristic of all integrating processes. They can accumulate their inputs and subsequently disperse them without suffering spontaneous losses to the surrounding environment. Accumulation and dispersal rates can vary considerably from process to process, and the two rates can be different within the same process depending on the effects of friction and inertia. But once a chunk of input has been successfully added to the running total, it will stay there until a negative input removes it. That is, a drop of water that has entered the tank will remain within (or be replaced) until outflow exceeds inflow.
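The water tank's integrating behavior can be sketched as a running sum. This is a minimal discrete-time model, assuming a constant cross-sectional area and one net inflow value (inflow minus outflow) per time step; the function name and numbers are illustrative only. Note that the level holds exactly wherever it was left whenever net inflow is zero.

```python
def simulate_tank(net_inflows, dt=1.0, area=1.0, level0=0.0):
    """Integrating process: the level is the running total of net inflow.

    net_inflows holds one net flow value (inflow minus outflow) per time step.
    Nothing leaks, so the level only changes when net inflow is non-zero.
    """
    level = level0
    levels = []
    for q_net in net_inflows:
        level += q_net * dt / area  # accumulate (or release) volume
        levels.append(level)
    return levels


# Fill for 5 steps, hold at zero net inflow for 5, then drain for 3.
print(simulate_tank([2] * 5 + [0] * 5 + [-1] * 3))
```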

Losses as well as gains

Other processes can lose what they've accumulated without the benefit of a negative input. A leaky tank will lose water no matter how the inlet and outlet valves are set, and a servo motor rotating against a torsional spring will lose position whether input voltage is positive, negative, or zero.

Such processes can reach an equilibrium point where further accumulations are offset by spontaneous losses. If a tank is leaky enough, a given inflow rate will not be able to raise the output level beyond a certain height. If the spring opposing the servo motor is strong enough, it will eventually prevent the shaft from rotating any further.

These are often called non-integrating processes, though "short-term integrating" might be a more apt description. They accumulate their inputs just as integrating processes do but only until they reach an equilibrium point between input and losses, as shown in the "Non-integrating process example."
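Adding a leak turns the same tank into a non-integrating process. The sketch below assumes, purely for simplicity, that the leak's outflow is proportional to the level (a real orifice behaves more like the square root of level), and it uses hypothetical numbers. With a constant inflow, the level stops rising where inflow equals leak outflow, that is, at `inflow / leak_coeff`; with no leak it keeps climbing like the integrating tank above.

```python
def simulate_leaky_tank(inflow, leak_coeff, steps, dt=0.1, area=1.0, level0=0.0):
    """Self-regulating tank: outflow through the leak rises with the level.

    Assumes a linear leak (outflow = leak_coeff * level); the equilibrium
    idea is the same for a square-root orifice, only the numbers change.
    """
    level = level0
    for _ in range(steps):
        outflow = leak_coeff * level            # spontaneous loss grows with level
        level += (inflow - outflow) * dt / area
    return level


print(simulate_leaky_tank(inflow=2.0, leak_coeff=0.5, steps=2000))  # settles near 2.0 / 0.5 = 4.0
print(simulate_leaky_tank(inflow=2.0, leak_coeff=0.0, steps=2000))  # no leak: about 400 and still rising
```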

Key concepts:

- Processes have specific characteristics that affect the way in which they should be controlled.

- Understanding how a process responds to control efforts is a critical step of establishing control strategy.

Fundamentals of cascade control

Sometimes two controllers can do a better job of keeping one process variable where you want it.

When multiple sensors are available for measuring conditions in a controlled process, a cascade control system can often perform better than a traditional single-measurement controller. Consider, for example, the steam-fed water heater shown in the sidebar "Heating Water with Cascade Control." In Figure A, a traditional controller is shown measuring the temperature inside the tank and manipulating the steam valve opening to add more or less heat as inflowing water disturbs the tank temperature. This arrangement works well enough if the steam supply and the steam valve are sufficiently consistent to produce another X% change in tank temperature every time the controller calls for another Y% change in the valve opening.

However, several factors could alter the ratio of X to Y or the time required for the tank temperature to change after a control effort. The pressure in the steam supply line could drop while other tanks are drawing down the steam supply they share, in which case the controller would have to open the valve more than Y% in order to achieve the same X% change in tank temperature.

Or, the steam valve could start sticking as friction takes its mechanical toll over time. That would lengthen the time required for the valve to open to the extent called for by the controller and slow the rate at which the tank temperature changes in response to a given control effort.

A better way

A cascade control system could solve both of these problems, as shown in Figure B, where a second controller has taken over responsibility for manipulating the valve opening based on measurements from a second sensor monitoring the steam flow rate. Instead of dictating how widely the valve should be opened, the first controller now tells the second controller how much heat it wants in terms of a desired steam flow rate.


The second controller then manipulates the valve opening until the steam is flowing at the requested rate. If that rate turns out to be insufficient to produce the desired tank temperature, the first controller can call for a higher flow rate, thereby inducing the second controller to provide more steam and more heat (or vice versa).

That may sound like a convoluted way to achieve the same result as the first controller could achieve on its own, but a cascade control system should be able to provide much faster compensation when the steam flow is disturbed. In the original single-controller arrangement, a drop in the steam supply pressure would first have to lower the tank temperature before the temperature sensor could even notice the disturbance. With the second controller and second sensor on the job, the steam flow rate can be measured and maintained much more quickly and precisely, allowing the first controller to assume that it will in fact get whatever steam flow rate it requests, no matter what happens to the steam pressure.

The second controller can also shield the first controller from deteriorating valve performance. The valve might still slow down as it wears out or gums up, and the second controller might have to work harder as a result, but the first controller would be unaffected as long as the second controller is able to maintain the steam flow rate at the required level.

Without the acceleration afforded by the second controller, the first controller would see the process becoming slower and slower. It might still be able to achieve the desired tank temperature on its own, but unless a perceptive operator notices the effect and re-tunes it to be more aggressive about responding to disturbances in the tank temperature, it too would become slower and slower.

Similarly, the second controller can smooth out any quirks or nonlinearities in the valve's performance, such as an orifice that is harder to close than to open. The second controller might have to struggle a bit to achieve the desired steam flow rate, but if it can do so quickly enough, the first controller will never see the effects of the valve's quirky behavior.

Elements of cascade control

The Cascade Control Block Diagram shows a generic cascade control system with two controllers, two sensors, and one actuator acting on two processes in series. A primary or master controller generates a control effort that serves as the setpoint for a secondary or slave controller. That controller in turn uses the actuator to apply its control effort directly to the secondary process. The secondary process then generates a secondary process variable that serves as the control effort for the primary process.

The geometry of this block diagram defines an inner loop involving the secondary controller and an outer loop involving the primary controller. The inner loop functions like a traditional feedback control system with a setpoint, a process variable, and a controller acting on a process by means of an actuator. The outer loop does the same except that it uses the entire inner loop as its actuator.

In the water heater example, the tank temperature controller would be primary since it defines the setpoint that the steam flow controller is required to achieve. The water in the tank, the tank temperature, the steam, and the steam flow rate would be the primary process, the primary process variable, the secondary process, and the secondary process variable, respectively (refer to the Cascade Control Block Diagram). The valve that the steam flow controller uses to maintain the steam flow rate serves as the actuator which acts directly on the secondary process and indirectly on the primary process.
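The benefit described above can be reproduced in a toy simulation. This is a sketch under loudly stated assumptions, not a model of any real heater: steam flow follows the valve through a 1 s first-order lag scaled by supply pressure, tank temperature follows steam flow through a 10 s lag (so, in these arbitrary units, steady-state temperature equals steam flow), the shared supply pressure sags by 30% at t = 50 s, and all PI tunings are hypothetical. The single loop and the primary loop use comparable tunings, so the difference comes from the secondary flow loop correcting the pressure sag before it reaches the tank.

```python
def pi_step(error, integral, kc, ti, dt):
    """One step of a positional PI controller; returns (output, new integral)."""
    integral += error * dt
    return kc * (error + integral / ti), integral


def simulate(cascade, t_end=150.0, dt=0.01):
    """Return (worst temperature error, final temperature) for one run."""
    t_sp = 50.0                     # temperature setpoint (arbitrary units)
    tau_flow, tau_tank = 1.0, 10.0  # steam flow reacts much faster than the tank
    pressure = 100.0                # steam supply pressure (arbitrary units)
    flow = temp = t_sp              # steady state: temp equals flow in this toy model
    valve0 = flow / pressure        # valve opening that holds steady state

    # Hypothetical PI tunings; integrals preloaded so each run starts bumpless.
    kc_o, ti_o = 2.0, 10.0          # primary: temperature -> steam flow setpoint
    kc_i, ti_i = 0.02, 1.0          # secondary: steam flow -> valve opening
    kc_s, ti_s = 0.02, 10.0         # single loop: temperature -> valve opening
    int_o = t_sp * ti_o / kc_o
    int_i = valve0 * ti_i / kc_i
    int_s = valve0 * ti_s / kc_s

    worst, t = 0.0, 0.0
    while t < t_end:
        if t >= 50.0:
            pressure = 70.0         # other tanks draw down the shared steam supply
        err = t_sp - temp
        if cascade:
            flow_sp, int_o = pi_step(err, int_o, kc_o, ti_o, dt)
            valve, int_i = pi_step(flow_sp - flow, int_i, kc_i, ti_i, dt)
        else:
            valve, int_s = pi_step(err, int_s, kc_s, ti_s, dt)
        valve = min(max(valve, 0.0), 1.0)
        # Euler integration of the two first-order lags.
        flow += dt * (pressure * valve - flow) / tau_flow
        temp += dt * (flow - temp) / tau_tank
        worst = max(worst, abs(t_sp - temp))
        t += dt
    return worst, temp


if __name__ == "__main__":
    print("single loop:", simulate(cascade=False))
    print("cascade:    ", simulate(cascade=True))
```

Both arrangements eventually restore the setpoint; the cascade simply lets far less of the pressure disturbance show up in the tank temperature along the way.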

Requirements

Naturally, a cascade control system can't solve every feedback control problem, but it can prove advantageous under the right circumstances:

- The inner loop has influence over the outer loop. The actions of the secondary controller must affect the primary process variable in a predictable and repeatable way or else the primary controller will have no mechanism for influencing its own process.

- The inner loop is faster than the outer loop. The secondary process must react to the secondary controller's efforts at least three or four times faster than the primary process reacts to the primary controller. This allows the secondary controller enough time to compensate for inner loop disturbances before they can affect the primary process.

- The inner loop disturbances are less severe than the outer loop disturbances. Otherwise, the secondary controller will be constantly correcting for disturbances to the secondary process and unable to apply consistent corrective efforts to the primary process.

Cascade control block diagram

A cascade control system reacts to physical phenomena shown in blue and process data shown in green.

In the water heater example:

- Setpoint - temperature desired for the water in the tank

- Primary controller (master) - measures water temperature in the tank and asks the secondary controller for more or less heat

- Secondary controller (slave) - measures and maintains steam flow rate directly

- Actuator - steam flow valve

- Secondary process - steam in the supply line

- Inner loop disturbances - fluctuations in steam supply pressure

- Primary process - water in the tank

- Outer loop disturbances - fluctuations in the tank temperature due to uncontrolled ambient conditions, especially fluctuations in the inflow temperature

- Secondary process variable - steam flow rate

- Primary process variable - tank water temperature

Cascade control can also have its drawbacks. Most notably, the extra sensor and controller tend to increase the overall equipment costs. Cascade control systems are also more complex than single-measurement controllers, requiring twice as much tuning. Then again, the tuning procedure is fairly straightforward: tune the secondary controller first, then the primary controller using the same tuning tools applicable to single-measurement controllers.

However, if the inner loop tuning is too aggressive and the two processes operate on similar time scales, the two controllers might compete with each other to the point of driving the closed-loop system unstable. Fortunately, this is unlikely if the inner loop is inherently faster than the outer loop or the tuning forces it to be.

And it's not always clear when cascade control will be worth the extra effort and expense. There are several classic examples that typically benefit from cascade control (often involving a flow rate as the secondary process variable), but it's usually easier to predict when a cascade control system won't help than to predict when it will.

Key concepts:

- When more than one element can affect a single process variable, treating each separately can make the process easier to control.

- One process variable that depends on more than one measurement might need more than one controller.

________________________________________________________________________________

Back to Basics: Closed-loop stability

Stability is how a control loop reduces errors between the measured process variable and its desired value or setpoint.

For the purposes of feedback control, stability refers to a control loop’s ability to reduce errors between the measured process variable and its desired value or setpoint. A stable control loop will manipulate the process so as to bring the process variable closer to the setpoint, whereas an unstable control loop will maintain or even widen the gap between them.

With the exception of explosive devices that depend on self-sustained reactions to increase the temperature and pressure of a process exponentially, feedback loops are generally designed to be stable so that the process variable will eventually achieve a constant steady state after a setpoint change or a disturbance to the process. Unfortunately, some control loops don’t turn out that way. The problem is often a matter of inertia – a process’s tendency to continue moving in the same direction after the controller has tried to reverse course.

Consider, for example, the child’s toy shown in the first figure. It consists of a weight hanging from a vertical spring that the human controller can raise or lower by tugging on the spring’s handle. If the controller’s goal is to position the weight at a specified height above the floor, it would be a simple matter to slowly raise the handle until the height measurement matches the desired setpoint.

Doing so would certainly achieve the desired objective, but if this were an industrial positioning system, the inordinate amount of time required to move the weight slowly to its final height would degrade the performance of any process that depends on the weight’s position. The longer the weight remains above or below the setpoint, the poorer the performance.

Moving the weight faster would address the time-out-of-position problem, but moving it too quickly could make matters worse. The weight’s inertia might cause it to move past the setpoint even after the controller has observed the impending overshoot and begun pushing in the opposite direction. And if the controller’s attempt to reverse course is also too aggressive, the weight will overshoot the other way. Fortunately, each successive overshoot will typically be smaller than the last so that the weight will eventually reach the desired height after bouncing around a bit. But as anyone who has ever played with such a toy knows, the faster the controller moves the handle, the longer those oscillations will be sustained. And at one particular speed corresponding to the resonant frequency of the weight-and-spring process, each successive overshoot will have the same magnitude as its predecessor and the oscillations will continue until the controller gives up.

But if the controller were to become even more aggressive, those oscillations would grow in magnitude until the spring reaches its maximum distention or breaks. Such an unstable control loop might be amusing for a child playing with a toy spring, but it would be disastrous for a commercial positioning system or any other application of closed-loop feedback.
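The progression from decaying to sustained to growing oscillations can be reproduced with a deliberately simplified discrete-time loop. This is not a model of the weight-and-spring toy itself: it assumes an integrating process that moves by `gain` times the error the controller saw one sample earlier, with that one-sample lag standing in for inertia. The error then obeys e[n+1] = e[n] - gain * e[n-1], and for gains above 0.25 each oscillation cycle shrinks or grows by a factor of sqrt(gain) per sample. Gains below 1 therefore settle, a gain of exactly 1 oscillates indefinitely at constant amplitude, and larger gains diverge.

```python
def simulate(gain, setpoint=1.0, steps=60):
    """Return the error history of a one-sample-lag integrating loop."""
    y_prev, y = 0.0, 0.0  # PV one sample ago and PV now
    errors = []
    for _ in range(steps):
        seen_error = setpoint - y_prev            # controller acts on stale data
        y_prev, y = y, y + gain * seen_error      # process integrates the correction
        errors.append(setpoint - y)
    return errors


def classify(errors, window=12):
    """Compare the largest early and late errors to label the response."""
    early = max(abs(e) for e in errors[:window])
    late = max(abs(e) for e in errors[-window:])
    if late < 0.5 * early:
        return "decaying"
    if late > 2.0 * early:
        return "growing"
    return "sustained"


for g in (0.5, 1.0, 1.5):
    print(g, classify(simulate(g)))
```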

One solution to this problem would be to limit the controller’s aggressiveness by equipping it with a speed-sensitive damper such as a dashpot or a shock absorber, as shown in the second figure. Such a device would resist the controller’s movements more and more as the controller tries to move faster and faster. The derivative term in a PID controller serves the same function, though too much derivative damping can actually make matters worse.

__________________________________________________________________________________

Disturbance-Rejection vs. Setpoint-Tracking Controllers

Designing a feedback control loop starts with understanding its objective as well as the process’s behavior.

The principal objective of a feedback controller is typically either disturbance rejection or setpoint tracking. A controller designed to reject disturbances will take action to force the process variable back toward the desired setpoint whenever a disturbance or load on the process causes a deviation.

A car’s cruise controller, for example, will throttle up the engine whenever it detects a drop in the car’s speed during an uphill climb. It will continue working to reject or overcome the extra load on the car until the car is once again moving as fast as the driver originally specified. Disturbance-rejection controllers are best suited for applications where the setpoint is constant and the process variable is required to stay close to it.

In contrast, a setpoint-tracking controller is appropriate when the setpoint is expected to change frequently and the controller is required to raise or lower the process variable accordingly. A luxury car equipped with an automatic temperature controller will track a changing setpoint by adjusting the heater’s output whenever a new driver calls for a new interior temperature.

Disturbance-rejection and setpoint-tracking controllers can each do the job of the other (a cruise controller can increase the car’s speed when the driver wants to go faster, and the car’s temperature controller can cut back the heating when the sun comes out), but optimal performance generally requires that a controller be designed or tuned for one role or the other. To see why, consider the feedback loop shown in the Control Loop diagram and the effects of an abrupt disturbance to the process or an abrupt change in the process variable’s setpoint.

Open-loop operations

First, suppose that the feedback path is disabled so that the controller is operating in open-loop mode. After a disturbance, the process variable will begin to change according to the magnitude of the load and the physical characteristics of the process. In the cruise control example, the sudden resistance added by the hill will start to decelerate the car according to the hill’s steepness and the car’s inertia.

Note that an open-loop controller doesn’t actually play any role in determining how the process reacts to a disturbance, so the controller’s tuning is irrelevant when feedback is disabled. In contrast, a setpoint change will pass through both the controller and the process, even without any feedback. See the Open-Loop Operations diagram.

As a result, the mathematical inertia of the controller combines with the physical inertia of the process to make the process’s response to a setpoint change slower than its response to an abrupt disturbance. This is especially true when the controller is equipped with integral action. The I component of a PID controller tends to filter or average out the effects of a setpoint change by introducing a time lag that limits the rate at which the resulting control effort can change.

In the car temperature control example, this phenomenon is evident when the controller starts turning up the heat upon receiving the driver’s request for a warmer interior. The car’s heater will in turn begin to raise the car’s temperature at a rate that depends on how aggressively the controller is tuned and how quickly the interior temperature reacts to the heater’s efforts. A direct disturbance such as a burst of sunshine would typically raise the car’s temperature at a much faster rate because the effects of the disturbance would not depend on the controller ramping up first.

Closed-loop operations

Of course an open-loop controller can’t really reject disturbances nor track setpoint changes without feedback, so it makes sense to ask, “What happens to that extra setpoint response time when the feedback is enabled?” Usually, nothing. Unless the controller happens to be equipped with setpoint filtering, the setpoint response will remain slower than the disturbance response by exactly the same amount as in the open-loop case. See the Closed-Loop Operations diagram.

But since that difference in response times is attributable entirely to the time lag of the controller, one might wonder if it would still be possible to design a setpoint-tracking controller that is just as fast as its disturbance-rejection counterpart by tuning it to respond instantaneously to a setpoint change.

That won’t work either. Eliminating the controller’s time lag would require disabling its integral action, and that would prevent the process variable from ever reaching the setpoint. For more on this steady-state offset phenomenon, see “The Three Faces of PID.”
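The steady-state offset is easy to demonstrate numerically. This sketch assumes a first-order self-regulating process, `tau * dPV/dt = kp * u - PV`, with hypothetical parameter values. Under proportional-only control the process variable settles at `kp*kc / (1 + kp*kc)` of the setpoint, leaving an offset of `1 / (1 + kp*kc)`; adding integral action drives that offset to zero.

```python
def closed_loop_final_pv(kc, ti=None, kp=1.0, tau=1.0, setpoint=1.0, t_end=30.0, dt=0.01):
    """Final PV of a first-order process under P (ti=None) or PI control."""
    pv, integral, t = 0.0, 0.0, 0.0
    while t < t_end:
        error = setpoint - pv
        output = kc * error                  # proportional action
        if ti is not None:
            integral += error * dt
            output += kc * integral / ti     # integral action removes the offset
        pv += dt * (kp * output - pv) / tau  # Euler step of tau*dPV/dt = kp*u - PV
        t += dt
    return pv


print(closed_loop_final_pv(kc=2.0))          # P only: about 2/3, an offset of 1/3
print(closed_loop_final_pv(kc=2.0, ti=1.0))  # PI: about 1.0, no offset
```

Raising `kc` shrinks the proportional-only offset but never removes it, which is why the integral term (and its time lag) stays in the controller.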

On the other hand, the controller’s mathematical inertia can be minimized without completely defeating its ability to eliminate errors between the process variable and the setpoint. A fast setpoint-tracking controller would require particularly aggressive tuning, but that shouldn’t be a problem so long as the controller never needs to reject a disturbance. But if an unexpected load ever does disturb the process abruptly, a setpoint-tracking controller will tend to overreact and cause the process variable to oscillate unnecessarily.

Conversely, a controller tuned to reject abrupt disturbances will typically be relatively slow about implementing a setpoint change. Fortunately, a typical feedback control loop in an industrial application will operate for extended periods at a constant setpoint, so the only time that a disturbance-rejection controller normally experiences a delay because of a setpoint change is at start-up.

Caveats

Unfortunately, that’s not the end of the disturbance-rejection vs. setpoint-tracking story. To this point we have assumed that the process is subject to abrupt disturbances such as when a car with cruise control suddenly encounters a steep hill. Many if not most feedback control applications involve much less dramatic disturbances—rolling hills rather than steep inclines, for example.

When the physical properties of the process limit the rate at which disturbances can affect the process variable, the disturbance response will sometimes be slower than the setpoint response, not faster. In such cases, more aggressive tuning would be appropriate for a disturbance-rejection controller than for its setpoint-tracking counterpart. The key is determining which scenario applies to the process at hand and which objective the controller is required to achieve.

___________________________________________________________________________________

Tuning PID loops for level control

One-in-four control loops are regulating level, but techniques for tuning PID controllers in these integrating processes are not widely understood.

Since the first two PID controller tuning methods were published in 1942 by J. G. Ziegler and N. B. Nichols, more than 100 additional tuning rules have been developed for self-regulating control loops (e.g., flow, temperature, pressure). In contrast, fewer than 10 tuning methods have been developed for integrating (e.g., level) process types, though roughly one-in-four industrial PID loops controls liquid level.

Applicable process types

This modified Ziegler-Nichols tuning method is intended for use with integrating processes, and level control loops (Figure ) are the most common example.


Unlike a self-regulating process, an integrating process will stabilize at only one controller output, which has to be at the point of equilibrium. If the controller output is set to a different value, the process will increase or decrease indefinitely at a steady slope.

Note: This tuning method provides a fast response to disturbances in level and is therefore not suitable for tuning surge tank level control loops.

The modified Ziegler-Nichols tuning rules presented here are designed for use on a non-interactive controller algorithm with its integral time set in minutes. Dataforth's MAQ20 industrial data acquisition and control system uses this approach as do other controllers from a variety of manufacturers.

Procedure

To apply these tuning rules to an integrating process, follow these steps. The process variable and controller output must be time-trended so that measurements can be taken from them, as illustrated in Figure 3.

Step I. Do a step test

a) Make sure that the uncontrolled flows into and out of the vessel are as constant as possible.

b) Put the controller in manual control mode.

c) Wait for a steady slope in the level. If the level is very volatile, wait long enough to be able to confidently draw a straight line through the general slope of the level.

d) Make a step change in the controller output. Try to make the step change 5% to 10% in size, if the process can tolerate it.

e) Wait for the level to change its slope into a new direction. If the level is volatile, wait long enough to be able to confidently draw a straight line through the general slope of the level.

f) Restore the level to an acceptable operating point and place the controller back into automatic control mode.

Step II. Determine process characteristics

Based on the example shown in Figure 3:

a) Draw a line (Slope 1) through the initial slope, and extend it to the right as shown in Figure 3.

b) Draw a line (Slope 2) through the final slope, and extend it to the left to intersect Slope 1.

c) Measure the time between the beginning of the change in controller output and the intersection between Slope 1 and Slope 2. This is the process dead time (td), the first parameter required for tuning the controller.

d) If td was measured in seconds, divide it by 60 to convert it to minutes. As mentioned earlier, the calculations here are based on the integral time in minutes, so all time measurements should be in minutes.

e) Pick any two points (PV1 and PV2) on Slope 1, located conveniently far from each other to make accurate measurements, and note the time between them (T1).

f) Pick any two points (PV3 and PV4) on Slope 2, located conveniently far from each other to make accurate measurements, and note the time between them (T2).

g) Calculate the difference in the two slopes (DS) as follows:

DS = (PV4 - PV3) / T2 - (PV2 - PV1) / T1

Note: If T1 and T2 measurements were made in seconds, divide them by 60 to convert them to minutes.

h) If the PV is not ranged 0%-100%, convert DS to a percentage of the range as follows:

DS% = 100 × DS / (PV range max - PV range min)

i) Calculate the process integration rate (ri), which is the second parameter needed for tuning the controller:

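ri = DS [in %/min] / dCO [in %]

where dCO is the size of the step change made in the controller output in Step I, item d). Because DS is expressed in percent per minute, ri has units of 1/min.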
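The Step II arithmetic can be checked numerically. The helpers below are hypothetical and not part of any product. They take readings already picked off the trend, with times assumed to be in minutes. They compute the dead time from the intersection of Slope 1 and Slope 2 and then evaluate the DS, DS% and ri formulas.

```python
"""Step II arithmetic for an integrating process (hypothetical helpers).

All times are assumed to be in minutes already; PV values are in engineering
units read off the two straight lines drawn on the trend.
"""


def line_through(t_a, pv_a, t_b, pv_b):
    """Return (slope, intercept) of the straight line through two trend points."""
    slope = (pv_b - pv_a) / (t_b - t_a)
    return slope, pv_a - slope * t_a


def dead_time(step_time, slope1, slope2):
    """td: time from the controller output step to where Slope 1 meets Slope 2."""
    (m1, b1), (m2, b2) = slope1, slope2
    if m1 == m2:
        raise ValueError("slopes are parallel; the step did not change the slope")
    t_cross = (b2 - b1) / (m1 - m2)   # solve m1*t + b1 == m2*t + b2
    return t_cross - step_time


def integration_rate(pv1, pv2, t1, pv3, pv4, t2, d_co, pv_min=0.0, pv_max=100.0):
    """ri in 1/min from the DS, DS% and ri formulas.

    t1, t2: minutes between PV1/PV2 and PV3/PV4; d_co: output step in %.
    The sign of ri follows the direction of the process response.
    """
    ds = (pv4 - pv3) / t2 - (pv2 - pv1) / t1      # difference in slopes, PV units/min
    ds_pct = 100.0 * ds / (pv_max - pv_min)        # as % of PV range per minute
    return ds_pct / d_co
```

Here is a made-up example. Slope 1 passes through 40% at t = 0 and 42% at t = 4 min. Slope 2 passes through 42.4% at t = 8 min and 41.2% at t = 12 min. The output was stepped by -5% at t = 5 min. With these readings the functions give td = 1 min and ri = (-0.3 - 0.5) / -5 = 0.16 per minute. Averaging the results of the repeated tests in Step III is then a simple mean.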

Step III. Repeat

Perform Steps I and II at least three more times to obtain good average values for the process characteristics td and ri.

Step IV. Calculate tuning constants

Using the equations below, calculate your tuning constants. Both PI and PID calculations are provided since some users will select the former based on the slow-moving nature of many level applications.

For PI Control

Controller Gain, Kc = 0.45 / (ri × td)

Integral Time, Ti = 6.67 × td

Derivative Time, Td = 0

For PID Control

Controller Gain, Kc = 0.75 / (ri × td)

Integral Time, Ti = 5 × td

Derivative Time, Td = 0.4 × td
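These equations translate directly into code. In this sketch, `level_tuning` and `Tuning` are hypothetical names. As the procedure requires, ri is in 1/min and td is in minutes. The magnitude of ri is assumed, with the controller's direct or reverse action configured separately.

```python
from dataclasses import dataclass


@dataclass
class Tuning:
    kc: float   # controller gain
    ti: float   # integral time, minutes
    td: float   # derivative time, minutes


def level_tuning(ri, dead_time, mode="PI"):
    """Modified Ziegler-Nichols constants for a non-interactive controller.

    ri: process integration rate (1/min, magnitude); dead_time: td in minutes.
    """
    if ri <= 0 or dead_time <= 0:
        raise ValueError("ri and td must be positive (use the magnitude of ri)")
    if mode == "PI":
        return Tuning(kc=0.45 / (ri * dead_time), ti=6.67 * dead_time, td=0.0)
    if mode == "PID":
        return Tuning(kc=0.75 / (ri * dead_time), ti=5.0 * dead_time, td=0.4 * dead_time)
    raise ValueError("mode must be 'PI' or 'PID'")
```

Take illustrative values of ri = 0.16 per minute and td = 1 minute. The PI rules give Kc ≈ 2.81 and Ti = 6.67 min. The PID rules give Kc ≈ 4.69, Ti = 5 min and Td = 0.4 min.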

Note that these tuning equations look different from the commonly published Ziegler-Nichols equations. The first reason is that Kc has been reduced and Ti increased by a factor of two, to make the loop more stable and less oscillatory. The second reason is that the Ziegler-Nichols equations for PID control target an interactive controller algorithm, while this approach is designed for a non-interactive algorithm such as the one used in the Dataforth MAQ20 and other controllers. (If you are using a different controller, make sure you find out which approach it uses.) The PID equations above have been adjusted to compensate for the difference.
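You may need to move settings between the two forms, for example when a controller turns out to use the interactive algorithm. The standard textbook conversion between the series (interactive) and ideal (non-interactive) forms can be sketched as follows. This is the generic mathematical equivalence and is offered as an aid. It is not necessarily the exact adjustment used to derive the PID constants above. Some controllers also use yet another form, with independent gains. The function names are hypothetical.

```python
import math


def interactive_to_noninteractive(kc, ti, td):
    """Series (interactive) settings -> ideal (non-interactive) settings.

    Follows from expanding Kc' * (1 + 1/(Ti' s)) * (1 + Td' s).
    """
    kc_ni = kc * (1.0 + td / ti)
    ti_ni = ti + td
    td_ni = ti * td / (ti + td)
    return kc_ni, ti_ni, td_ni


def noninteractive_to_interactive(kc, ti, td):
    """Ideal (non-interactive) settings -> series (interactive) settings.

    Only possible when Ti >= 4 * Td; otherwise no real series equivalent exists.
    """
    if ti < 4.0 * td:
        raise ValueError("no interactive equivalent when Ti < 4*Td")
    root = math.sqrt(1.0 - 4.0 * td / ti)
    ti_i = ti / 2.0 * (1.0 + root)
    td_i = ti / 2.0 * (1.0 - root)
    kc_i = kc * ti_i / ti
    return kc_i, ti_i, td_i
```

For PI settings (Td = 0) both functions return the inputs unchanged, which is why the conversion only matters for PID.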

Step V. Enter the values

Key your calculated values into the controller, making sure the algorithm is set to non-interactive, and put the controller back into automatic mode.


Step VI. Test and tune your work

Change the setpoint to test the new values and see how the loop responds. It might still need some additional fine-tuning to look like Figure 4. For integrating processes, Kc and Ti need to be adjusted simultaneously and in opposite directions. For example, to slow down the control loop, use Kc / 2 and Ti × 2.
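The adjustment rule fits in a one-line helper. `rescale_integrating_loop` is a hypothetical name. Applying factors other than the factor of two in the example is an assumption extrapolated from that example.

```python
def rescale_integrating_loop(kc, ti, factor):
    """Return (Kc, Ti) moved in opposite directions by the same factor.

    factor > 1 slows the loop (e.g. 2 gives Kc / 2 and Ti x 2); factor < 1 speeds it up.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    return kc / factor, ti * factor
```

For example, slowing a loop tuned at Kc = 3.0 and Ti = 6 min by a factor of two gives Kc = 1.5 and Ti = 12 min.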

With just a few modifications to the original Ziegler-Nichols tuning approach, these rules can be used to tune level control loops for both stability and fast response to setpoint changes and disturbances.

Lee Payne is CEO of Dataforth.

Key concepts:

- Modified Ziegler-Nichols tuning rules are effective for tuning level control loops.

- They are designed for use with integrating processes and on non-interactive controller algorithms.

- These rules provide loop stability as well as fast response to setpoint changes and disturbances.

_________________________________________________________________________________

Designing control: Smart sensors and data acquisition

Smart sensors enable accurate, efficient, and expansive data collection. Smart sensing solutions can preserve signal integrity in harsh industrial environments and give customers quick and easy solutions for test and measurement and other big data applications.

Traditional sensors such as thermocouples, resistance temperature detectors (RTDs), strain gages, linear variable differential transformers (LVDTs) and flowmeters measure physical parameters and provide data needed to monitor and control processes. Many of these produce low-level analog outputs that require precision signal conditioning to preserve the critical information they are gathering. Signal conditioning functions include sensor excitation, signal amplification, anti-alias filtering, low-pass and high-pass filtering, linearization, and an often overlooked but essential feature: isolation. Once signal conditioning has been performed, sensor signals can be digitized using an analog-to-digital converter and then further processed by a data acquisition system.

System on a chip

Developments in electronics are rapidly changing the way process data is collected using sensors. Microcontrollers and microprocessors have become much more sophisticated, incorporating communications interfaces, excitation sources, high-resolution analog to digital (A to D) and D to A converters, general purpose discrete I/O, fast architectures, math support, and low-power modes. Commonly called SoC, or system-on-chip, these small devices outperform the full and half-length ISA and PCI card solutions of yesterday at a much reduced cost. Microcontrollers with integrated data converters are even available in packages as small as 2 mm x 3 mm.

So what does all this mean for smart sensors and data acquisition? How are these changes in electronics technology changing the way data is acquired and used?

First, let's understand what a smart sensor is. Smart sensors analyze collected measurements, make decisions based on the physical parameter being measured, and most importantly, are able to communicate. Local computational power enables self-test, self-calibration, cancellation of component drift over time and temperature, remote updating, and reconfiguration.
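Self-calibration can be implemented in many ways, and the article does not specify a method. One common approach, shown here only as an illustrative assumption, is a two-point gain/offset correction. It is computed from the readings of two known reference inputs. Re-running it periodically is one way local electronics can cancel drift. The class name and the reference values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TwoPointCalibration:
    """Hypothetical gain/offset correction from two known reference inputs."""
    gain: float
    offset: float

    @classmethod
    def from_references(cls, raw_low, raw_high, ref_low, ref_high):
        """Solve ref = gain * raw + offset using the two reference readings."""
        if raw_high == raw_low:
            raise ValueError("reference readings must differ")
        gain = (ref_high - ref_low) / (raw_high - raw_low)
        return cls(gain=gain, offset=ref_low - gain * raw_low)

    def apply(self, raw):
        """Convert a raw reading into corrected engineering units."""
        return self.gain * raw + self.offset
```

Take raw readings of 1000 and 5000 counts for references of 0 and 100 units. A later reading of 3000 counts then maps to 50 units.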

Widely distributed

Low-cost and low-power electronics allow signal conditioning to occur much closer to the sensor, or even within the sensor package itself. This enables processing power to be widely distributed throughout a data acquisition system, resulting in far more signal processing capability than was previously possible. Systems are flexible and scalable and sensor packages are rugged, leading to high reliability. Long bundles of sensor wires composed of carefully routed twisted-shielded pair wiring with specific shield grounding requirements now can be replaced with fewer wires that connect to multiple sensors and don't have as stringent routing and shielding needs. Since low-level analog signal lines can be kept short with smart sensors, less environmental electrical noise is coupled into them and signal integrity is preserved. Once a conditioned sensor data format and a smart sensor communication standard are defined, measurements of physical parameters from a wide range of sensors can be handled universally. In a nutshell, smart sensors make data acquisition systems easy to design, use, and maintain.

Smart sensors

Smart sensors may be thought of as discrete elements like an intelligent thermocouple or accelerometer with integrated electronics, but in a broader sense, smart sensing and smart signal processing at the system level also are rapidly evolving with advances in electronics. Data acquisition systems used to rely on host computer software to perform complex calculations on measured data. Now, individual signal conditioning input and output modules commonly interface to between 1 and 32 sensors and perform signal processing functions within a module, remote from a host computer.

Signals can be locally monitored for alarm conditions with programmed actions taken when limits are reached. In addition, high-performance signal conditioners provide essential sensor signal isolation required in harsh industrial applications and protect against transient events, such as electrostatic discharge (ESD) or secondary lightning strikes, as well as extreme overvoltage.
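Local limit monitoring of this kind can be sketched as a small state machine. `LimitAlarm` is a hypothetical name, and the "programmed action" is modeled as a callback. The deadband (hysteresis) is an added assumption. It keeps the alarm from chattering when the signal hovers near a limit.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class LimitAlarm:
    """Hypothetical local limit alarm with an assumed deadband (hysteresis)."""
    low: float
    high: float
    deadband: float
    on_alarm: Callable[[str, float], None]   # the programmed action
    state: Optional[str] = None              # None, "LOW" or "HIGH"

    def update(self, value):
        # Clear an active alarm only once the value is back inside the limit by the deadband.
        if self.state == "HIGH" and value < self.high - self.deadband:
            self.state = None
        elif self.state == "LOW" and value > self.low + self.deadband:
            self.state = None
        # Raise a new alarm (and run its action) when a limit is crossed.
        if self.state is None:
            if value > self.high:
                self.state = "HIGH"
                self.on_alarm("HIGH", value)
            elif value < self.low:
                self.state = "LOW"
                self.on_alarm("LOW", value)
        return self.state
```

The action runs once per excursion instead of on every sample, because a new alarm is raised only after the previous one has cleared.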

SoC microcontrollers and microprocessors in a data acquisition system have integrated peripherals for communicating over Ethernet, USB, CAN, and other fieldbuses. Fast processors, math support, and local memory allow control functions, such as proportional-integral-derivative (PID) algorithms, to reside and execute within a system control module and operate in parallel with communication to individual I/O modules and host computer software applications. Many systems are moving toward true stand-alone capability where no host computer is required to run application software. Data acquisition and control is fully contained within the data acquisition system with application software running on system resources and user interface occurring simply through a web browser from anywhere in the world.

Higher cost, safety considerations

As with any technology, smart sensors have disadvantages. Integration of electronics with sensors drives up system costs. When retrofitting existing installations, costs to change wiring can be significant. New hardware and systems have learning curves for optimal operation. Safety and compliance to regulatory standards may prohibit smart sensor use. Each application needs to be individually analyzed with costs weighed against benefits, but in general, smart sensors and smart systems are changing the way data is collected and will work their way into new and existing applications.

The world is hungry for more data. The need is omnipresent in industrial applications, manufacturing, laboratories, medical applications, and now even in our homes. Data is used to automate processes and expand capabilities. Data analysis tells us about health of system components, efficiency of processes, and fault conditions.

Signal integrity

Smart sensors enable accurate, efficient, and expansive data collection. More than ever, smart sensors and smart sensing are the future of data acquisition and processing.

Smart sensing solutions can preserve signal integrity in harsh industrial environments and give customers quick and easy solutions for test and measurement applications. Products that help with this include signal conditioning modules, data acquisition and control systems, and data communication products, which can offer some of the best performance metrics in the industry while maintaining low cost. Such products remove analog problems from system design and development and provide a better user experience for data acquisition and control.

Key concepts

- Smart sensors and data acquisition help with Internet of Things (IoT) and big data.

- System-on-a-chip capabilities distribute intelligence to sensors.

- Integrity of signals from sensors ensures proper information.

Tuning PID control loops for fast response

When choosing a tuning strategy for a specific control loop, it is important to match the technique to the needs of that loop and the larger process. It is also important to have more than one approach in your repertoire, and the Cohen-Coon method can be a handy addition in the right situation.

As originally designed, the method produced loops with too much oscillatory response, and it consequently fell into disuse. However, with some modification, Cohen-Coon tuning rules have proved their value for control loops that need to respond quickly while being much less prone to oscillation.

Applicable process types

The Cohen-Coon tuning method isn't suitable for every application. For starters, it can be used only on self-regulating processes. Most control loops, e.g., flow, temperature, pressure, speed, and composition, are, at least to some extent, self-regulating processes. (On the other hand, the most common integrating process is a level control loop.)

A self-regulating process always stabilizes at some point of equilibrium, which depends on the process design and the controller output. If the controller output is set to a different value, the process will respond and stabilize at a new point of equilibrium.

A

self-regulating process always stabilizes at some point of equilibrium,

which depends on the process design and the controller output. If the