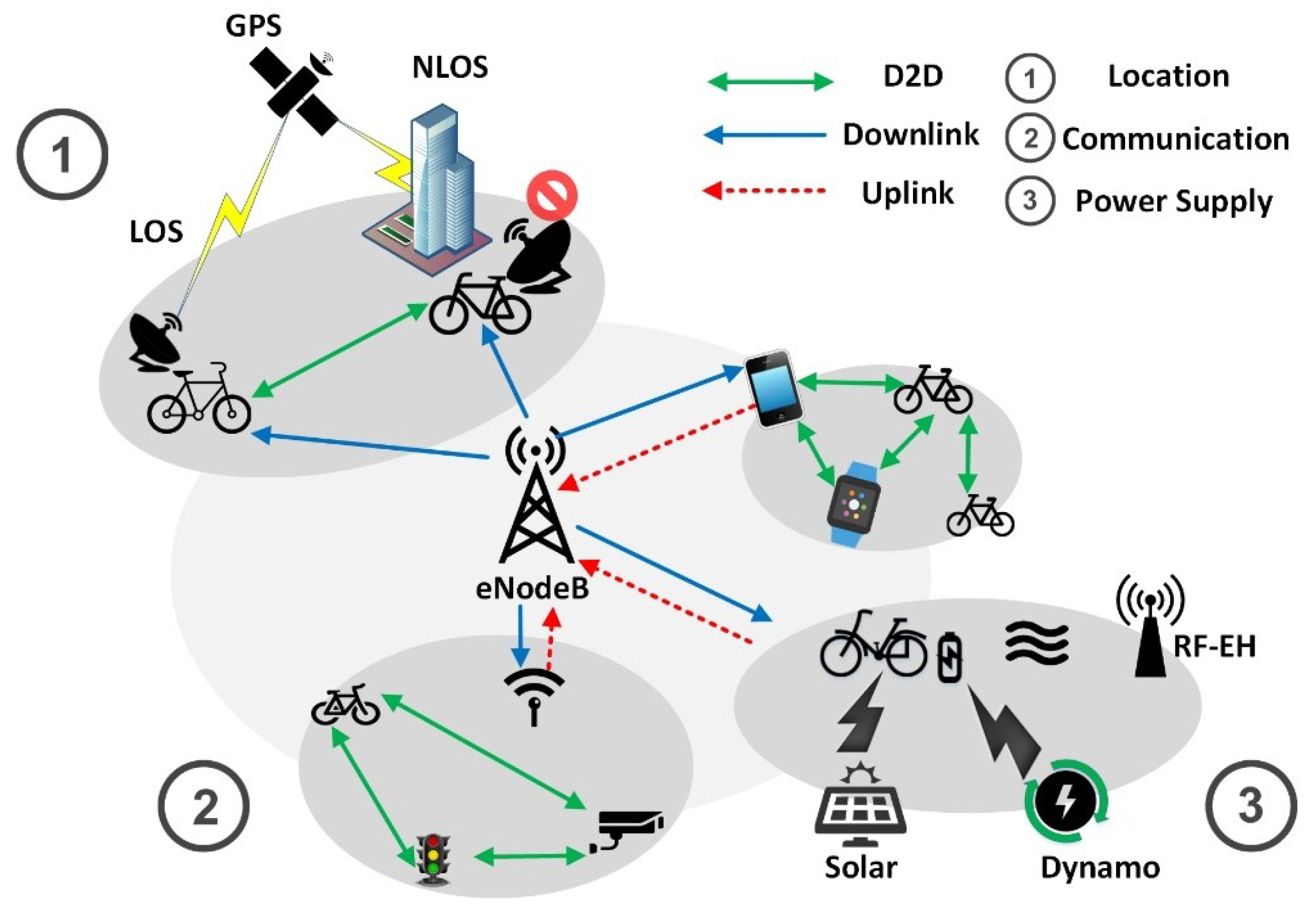

Dynamo movement in component electronics is done either manually or using DC signals as well as data transmission on computer networks driven by digital signal signals that are started up by DC signals because all electronic equipment will run when DC power is given. on both traditional and hybrid internet networks are found many points where there is what is called ADA (Address - Data --- Accumulation), there is individual computer as manifested as a computer hardware system and interface while ADA on computer networks internationally or INTERNET is made through the network server that is connected to the modem as well as proxy points and fire wall which is where ADA is located, while the center of the ADA is in the future energy system called Future Energy Cloud as the ADA Data Bank. proxy points and fire walls and protocol traffic on the international network of computers on the earth moving networks that have been determined in each country where they are adapted to the best contour and climate in the country and on extraterrestrial systems. The bank data movement ADA uses the principle of the Dynamo Virtual movement that gets energy from the Cloud system. This virtual dynamo can move all existing electronic system networks on the earth where for the time being is on computer electronic communication machines and has not provided supply to other electronic machine tool equipment but can be driven if it is connected interface with international computer networks (INTERNET).

LOVE ( Line ON Victory Endless )

Gen. Mac Tech Zone Virtual Dynamo on e- WET

Virtual reality (VR) is a computer technology that uses virtual reality headsets or multi-projected environments, sometimes in combination with physical environments or props, to generate realistic images, sounds and other sensations that simulate a user’s physical presence in a virtual or imaginary environment.

Virtual Reality technologies will be more focused on the game and events sphere as it is already doing so in 2017 and will go beyond to add more evolved app usage experience to offer an elevated dose of entertainment for the gaming user.

We find:

With iPhone X, Apple is trying to change the face of AR by making it a common use case for masses. Also A whole bunch of top tech players think this technology which is also called a mixed reality or immersive environments — is all set to create a truly digital-physical blended environment for the people who are majorly consuming digital world through their mobile power house

Some of The Popular AR/VR Companies :

The smart objects will be interacting with our smartphone/tablets which will eventually function like our TV remort displaying and analyzing data, interfacing with social networks to monitor “things” that can tweet or post, paying for subscription services, ordering replacement consumables and updating object firmware.

Connected World:

- Microsoft is powering their popular IIS(Intelligent Systems Service) by integrating IoT capabilities to their enterprise service offerings.

- Some of the known communication technology powering IoT concept is RFID, WIFI, EnOcean, RiotOS etc….

- Google is working on two of its ambitious project called Nest & Brillo which is circled around usage of IoT to fuel your home automation needs. Brillo is an IoT OS which enables Wi-Fi, Bluetooth Low Energy, and other Android stuffs.

the virtual reality process is moved by future cloud energy with the virtual dynamo connection concept

The Dynamo Process

Dynamo Process by moving a magnet next to a closed electric circuit, or changing the magnetic field passing through it, an electric current could be "induced" to flow in it. That "electromagnetic induction" remains the principle behind electric generators, transformers and many other devices. .

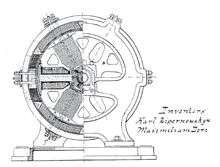

That is showed that another way of inducing the current was to move the electric conductor while the magnetic source stood still. This was the principle behind his disk dynamo, which featured a conducting disk spinning in a magnetic field--you may imagine it to be spun up by some belt and pulley, not drawn here. The electric circuit was then completed by stationary wires touching the disk on its rim and on its axle, shown on the right side of the drawing. This is not a very practical dynamo design (unless one seeks to generate huge currents at very low voltages), but in the large-scale universe, most currents are apparently produced by motions of this sort.

The Disk Dynamo

| disk dynamo needs a magnetic field in order to produce an electric current. Is it possible for the current which it generated to also produce the magnetic field which the dynamo process required? |

| At first sight this looks like a "chicken and egg" propostion: to produce a chicken, you need an egg, but to produce an egg you need a chicken--so which of these came first? Similarly here--to produce a current, you need a magnetic field, but to produce a magnetic field you need a current. Where does one begin? Actually, weak magnetic fields are always present and would be gradually amplified by the process, so this poses no obstacle. |

| When the shaft of a dynamo is turned by some outside force, its electrical connections can produce an electric current. Many dynamos however are reversible: if an electric current is fed into its electrical connection, the force on the current can turn the same shaft, converting the dynamo into a motor. In some models of "hybrid" automobiles, electric motors fed by a storage battery help the gasoline engine accelerate the car, but then when the car slows down, they become dynamos and feed an electric current back into the battery, saving energy which otherwise might turn into waste heat in brakes. |

| The rotating disk here is a shallow layer of water containing copper sulfate, which conducts electricity. The solution in put in a Petri dish and placed on top of one of the poles of a vertical laboratory electromagnet; little pieces of cork floating on the fluid then trace its rotation The electrodes are two circles of copper (they can be sawed from the ends of pipes)--a big one on the outer boundary of the solution and a small one in the middle. When the terminals of a battery of an electric power supply are connected to the two copper circles, the fluid starts rotating. Reverse the contacts and the rotation reverses too. |

The word dynamo (from the Greek word dynamis (δύναμις), meaning force or power) was originally another name for an electrical generator, and still has some regional usage as a replacement for the word generator. The word "dynamo" was coined in 1831 by Michael Faraday, who utilized his invention toward making many discoveries in electricity (Faraday discovered electrical induction) and magnetism.

The original "dynamo principle" of Wehrner von Siemens or Werner von Siemens referred only the direct current generators which use exclusively the self-excitation (self-induction) principle to generate DC power. The earlier DC generators which used permanent magnets were not considered "dynamo electric machines". The invention of the dynamo principle (self-induction) was a huge technological leap over the old traditional permanent magnet based DC generators. The discovery of the dynamo principle made industrial scale electric power generation technically and economically feasible. After the invention of the alternator and that alternating current can be used as a power supply, the word dynamo became associated exclusively with the commutated direct current electric generator, while an AC electrical generator using either slip rings or rotor magnets would become known as an alternator.

A small electrical generator built into the hub of a bicycle wheel to power lights is called a hub dynamo, although these are invariably AC devices, and are actually magnetos.

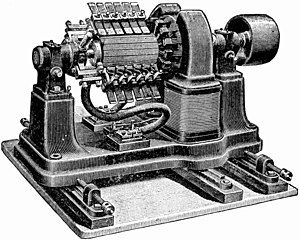

The electric dynamo uses rotating coils of wire and magnetic fields to convert mechanical rotation into a pulsing direct electric current through Faraday's law of induction. A dynamo machine consists of a stationary structure, called the stator, which provides a constant magnetic field, and a set of rotating windings called the armature which turn within that field. Due to Faraday's law of induction the motion of the wire within the magnetic field creates an electromotive force which pushes on the electrons in the metal, creating an electric current in the wire. On small machines the constant magnetic field may be provided by one or more permanent magnets; larger machines have the constant magnetic field provided by one or more electromagnets, which are usually called field coils.

Commutation

The commutator is needed to produce direct current. When a loop of wire rotates in a magnetic field, the magnetic flux through it, and thus the potential induced in it, reverses with each half turn, generating an alternating current. However, in the early days of electric experimentation, alternating current generally had no known use. The few uses for electricity, such as electroplating, used direct current provided by messy liquid batteries. Dynamos were invented as a replacement for batteries. The commutator is essentially a rotary switch. It consists of a set of contacts mounted on the machine's shaft, combined with graphite-block stationary contacts, called "brushes", because the earliest such fixed contacts were metal brushes. The commutator reverses the connection of the windings to the external circuit when the potential reverses, so instead of alternating current, a pulsing direct current is produced.

Excitation

The earliest dynamos used permanent magnets to create the magnetic field. These were referred to as "magneto-electric machines" or magnetos.[4] However, researchers found that stronger magnetic fields, and so more power, could be produced by using electromagnets (field coils) on the stator.[5] These were called "dynamo-electric machines" or dynamos.[4] The field coils of the stator were originally separately excited by a separate, smaller, dynamo or magneto. An important development by Wilde and Siemens was the discovery (by 1866) that a dynamo could also bootstrap itself to be self-excited, using current generated by the dynamo itself. This allowed the growth of a much more powerful field, thus far greater output power.

Self-excited direct current dynamos commonly have a combination of series and parallel (shunt) field windings which are directly supplied power by the rotor through the commutator in a regenerative manner. They are started and operated in a manner similar to modern portable alternating current electric generators, which are not used with other generators on an electric grid.

There is a weak residual magnetic field that persists in the metal frame of the device when it is not operating, which has been imprinted onto the metal by the field windings. The dynamo begins rotating while not connected to an external load. The residual magnetic field induces a very small electrical current into the rotor windings as they begin to rotate. Without an external load attached, this small current is then fully supplied to the field windings, which in combination with the residual field, cause the rotor to produce more current. In this manner the self-exciting dynamo builds up its internal magnetic fields until it reaches its normal operating voltage. When it is able to produce sufficient current to sustain both its internal fields and an external load, it is ready to be used.

A self-excited dynamo with insufficient residual magnetic field in the metal frame will not be able to produce any current in the rotor, regardless of what speed the rotor spins. This situation can also occur in modern self-excited portable generators, and is resolved for both types of generators in a similar manner, by applying a brief direct current battery charge to the output terminals of the stopped generator. The battery energizes the windings just enough to imprint the residual field, to enable building up the current. This is referred to as flashing the field.

Both types of self-excited generator, which have been attached to a large external load while it was stationary, will not be able to build up voltage even if the residual field is present. The load acts as an energy sink and continuously drains away the small rotor current produced by the residual field, preventing magnetic field buildup in the field coil.

Induction with permanent magnets

The operating principle of electromagnetic generators was discovered in the years 1831–1832 by Michael Faraday. The principle, later called Faraday's law, is that an electromotive force is generated in an electrical conductor which encircles a varying magnetic flux.

He also built the first electromagnetic generator, called the Faraday disk, a type of homopolar generator, using a copper disc rotating between the poles of a horseshoe magnet. It produced a small DC voltage. This was not a dynamo in the current sense, because it did not use a commutator.

This design was inefficient, due to self-cancelling counterflows of current in regions of the disk that were not under the influence of the magnetic field. While current was induced directly underneath the magnet, the current would circulate backwards in regions that were outside the influence of the magnetic field. This counterflow limited the power output to the pickup wires, and induced waste heating of the copper disc. Later homopolar generators would solve this problem by using an array of magnets arranged around the disc perimeter to maintain a steady field effect in one current-flow direction.

Another disadvantage was that the output voltage was very low, due to the single current path through the magnetic flux. Faraday and others found that higher, more useful voltages could be produced by winding multiple turns of wire into a coil. Wire windings can conveniently produce any voltage desired by changing the number of turns, so they have been a feature of all subsequent generator designs, requiring the invention of the commutator to produce direct current.

The first dynamos

The first dynamo based on Faraday's principles was built in 1832 by Hippolyte Pixii, a French instrument maker. It used a permanent magnet which was rotated by a crank. The spinning magnet was positioned so that its north and south poles passed by a piece of iron wrapped with insulated wire.

Pixii found that the spinning magnet produced a pulse of current in the wire each time a pole passed the coil. However, the north and south poles of the magnet induced currents in opposite directions. To convert the alternating current to DC, Pixii invented a commutator, a split metal cylinder on the shaft, with two springy metal contacts that pressed against it.

This early design had a problem: the electric current it produced consisted of a series of "spikes" or pulses of current separated by none at all, resulting in a low average power output. As with electric motors of the period, the designers did not fully realize the seriously detrimental effects of large air gaps in the magnetic circuit.

Antonio Pacinotti, an Italian physics professor, solved this problem around 1860 by replacing the spinning two-pole axial coil with a multi-pole toroidal one, which he created by wrapping an iron ring with a continuous winding, connected to the commutator at many equally spaced points around the ring; the commutator being divided into many segments. This meant that some part of the coil was continually passing by the magnets, smoothing out the current.

The Woolrich Electrical Generator of 1844, now in Thinktank, Birmingham Science Museum, is the earliest electrical generator used in an industrial process. It was used by the firm of Elkingtons for commercial electroplating.

Dynamo self excitation

Independently of Faraday, the Hungarian Anyos Jedlik started experimenting in 1827 with the electromagnetic rotating devices which he called electromagnetic self-rotors. In the prototype of the single-pole electric starter, both the stationary and the revolving parts were electromagnetic.

About 1856 he formulated the concept of the dynamo about six years before Siemens and Wheatstone but did not patent it as he thought he was not the first to realize this. His dynamo used, instead of permanent magnets, two electromagnets placed opposite to each other to induce the magnetic field around the rotor. It was also the discovery of the principle of dynamo self-excitation,[13] which replaced permanent magnet designs.

Practical designs

The dynamo was the first electrical generator capable of delivering power for industry. The modern dynamo, fit for use in industrial applications, was invented independently by Sir Charles Wheatstone, Werner von Siemens and Samuel Alfred Varley. Varley took out a patent on 24 December 1866, while Siemens and Wheatstone both announced their discoveries on 17 January 1867, the latter delivering a paper on his discovery to the Royal Society.

The "dynamo-electric machine" employed self-powering electromagnetic field coils rather than permanent magnets to create the stator field.[14] Wheatstone's design was similar to Siemens', with the difference that in the Siemens design the stator electromagnets were in series with the rotor, but in Wheatstone's design they were in parallel. The use of electromagnets rather than permanent magnets greatly increased the power output of a dynamo and enabled high power generation for the first time. This invention led directly to the first major industrial uses of electricity. For example, in the 1870s Siemens used electromagnetic dynamos to power electric arc furnaces for the production of metals and other materials.

The dynamo machine that was developed consisted of a stationary structure, which provides the magnetic field, and a set of rotating windings which turn within that field. On larger machines the constant magnetic field is provided by one or more electromagnets, which are usually called field coils.

Zénobe Gramme reinvented Pacinotti's design in 1871 when designing the first commercial power plants operated in Paris. An advantage of Gramme's design was a better path for the magnetic flux, by filling the space occupied by the magnetic field with heavy iron cores and minimizing the air gaps between the stationary and rotating parts. The Gramme dynamo was one of the first machines to generate commercial quantities of power for industry.[16] Further improvements were made on the Gramme ring, but the basic concept of a spinning endless loop of wire remains at the heart of all modern dynamos.[17]

Charles F. Brush assembled his first dynamo in the summer of 1876 using a horse-drawn treadmill to power it. Brush's design modified the Gramme dynamo by shaping the ring armature like a disc rather than a cylinder shape. The field electromagnets were also positioned on the sides of the armature disc rather than around the circumference.

Rotary converters

After dynamos and motors were found to allow easy conversion back and forth between mechanical or electrical power, they were combined in devices called rotary converters, rotating machines whose purpose was not to provide mechanical power to loads but to convert one type of electric current into another, for example DC into AC. They were multi-field single-rotor devices with two or more sets of rotating contacts (either commutators or sliprings, as required), one to provide power to one set of armature windings to turn the device, and one or more attached to other windings to produce the output current.

The rotary converter can directly convert, internally, any type of electric power into any other. This includes converting between direct current (DC) and alternating current (AC), three phase and single phase power, 25 Hz AC and 60 Hz AC, or many different output voltages at the same time. The size and mass of the rotor was made large so that the rotor would act as a flywheel to help smooth out any sudden surges or dropouts in the applied power.

The technology of rotary converters was replaced in the early 20th century by mercury-vapor rectifiers, which were smaller, did not produce vibration and noise, and required less maintenance. The same conversion tasks are now performed by solid state power semiconductor devices. Rotary converters remained in use in the West Side IRT subway in Manhattan into the late 1960s, and possibly some years later. They were powered by 25 Hz AC, and provided DC at 600 volts for the trains.

uses

Electric power generation

Dynamos, usually driven by steam engines, were widely used in power stations to generate electricity for industrial and domestic purposes. They have since been replaced by alternators.

Large industrial dynamos with series and parallel (shunt) windings can be difficult to use together in a power plant, unless either the rotor or field wiring or the mechanical drive systems are coupled together in certain special combinations. It seems theoretically possible to run dynamos in parallel to create induction and self sustaining system for electrical power.

Transport

Dynamos were used in motor vehicles to generate electricity for battery charging. An early type was the third-brush dynamo. They have, again, been replaced by alternators.

Modern uses

Dynamos still have some uses in low power applications, particularly where low voltage DC is required, since an alternator with a semiconductor rectifier can be inefficient in these applications.

Hand cranked dynamos are used in clockwork radios, hand powered flashlights, mobile phone chargers, and other human powered equipment to recharge batteries.

A bottle dynamo or sidewall dynamo is a small electrical generator for bicycles employed to power a bicycle's lights. The traditional bottle dynamo (pictured) is not actually a dynamo, which creates DC power, but a low-power magneto that generates AC. Newer models can include a rectifier to create DC output to charge batteries for electronic devices including cellphones or GPS receivers

Named after their resemblance to bottles, these generators are also called sidewall dynamos because they operate using a roller placed on the sidewall of a bicycle tire. When the bicycle is in motion and the dynamo roller is engaged, electricity is generated as the tire spins the roller.

Two other dynamo systems used on bicycles are hub dynamos and bottom bracket dynamos.

Advantages over hub dynamos

- No extra resistance when disengaged

- When engaged, a dynamo requires the bicycle rider to exert more effort to maintain a given speed than would otherwise be necessary when the dynamo is not present or disengaged. Bottle dynamos can be completely disengaged when they are not in use, whereas a hub dynamo will always have added drag (though it may be so low as to be irrelevant or unnoticeable to the rider, and it is reduced significantly when lights are not being powered by the hub).

- Easy retrofitting

- A bottle dynamo may be more feasible than a hub dynamo to add to an existing bicycle, as it does not require a replacement or rebuilt wheel.

- Price

- A bottle dynamo is generally cheaper than a hub dynamo, but not always.

Disadvantages over hub dynamos

- Slippage

- In wet conditions, the roller on a bottle dynamo can slip against the surface of a tire, which interrupts or reduces the amount of electricity generated. This can cause the lights to go out completely or intermittently. Hub dynamos do not need traction and are sealed from the elements.

- Increased resistance

- Bottle dynamos typically create more drag than hub dynamos. However, when they are properly adjusted, the drag may be so low as to be trivial, and there is no resistance when the bottle dynamo is disengaged.

- Tire wear

- Because bottle dynamos rub against the sidewall of a tire to generate electricity, they cause added wear on the side of tire. Hub dynamos do not.

- Noise

- Bottle dynamos make an easily audible mechanical humming or whirring sound when engaged. Hub dynamos are silent.

- Switching

- Bottle dynamos must be physically repositioned to engage them, to turn on the lamps. Hub dynamos are switched on electronically. Hub dynamos can be engaged automatically by using electronic ambient light detection, providing zero-effort activation.

- Positioning

- Bottle dynamos must be carefully adjusted to touch the sidewall at correct angles, height and pressure. Bottle dynamos can be knocked out of position if the bike falls, or if the mounting screws are too loose. A badly positioned bottle dynamo will make more noise and drag, slip more easily, and can in worst case fall into the spokes. Some dynamo mounts have tabs to try to prevent the latter.

Technological Evolution of the ICT Sector

Segment Routing

Segment routing is a technology that is gaining popularity as a way to simplify MPLS networks. It has the benefits of interfacing with software-defined networks and allows for source-based routing. It does this without keeping state in the core of the network. It's one of the topics I'm learning more about at Cisco Live U.S. this week.

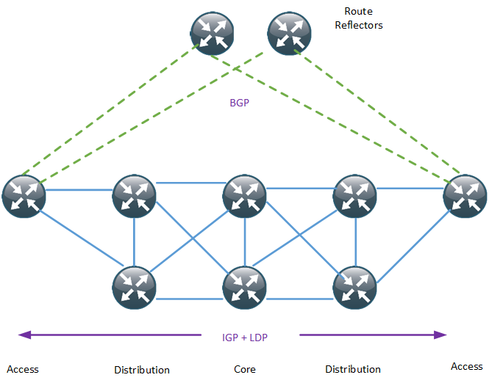

Multi Protocol Label Switching (MPLS) is the main forwarding paradigm in all the major service providers (SP) networks. MPLS, as the name implies, uses labels to forward the packets, thus providing the major advantage of a Border Gateway Protocol (BGP)-free core. To assign these labels, the most commonly used protocol is the Label Distribution Protocol (LDP). A typical provider network can then look like the diagram below.

In order to provide stability and scalability, large networks should have as few protocols as possible running in the core, and they should keep state away from the core if possible. That brings up some of the drawbacks of LDP:

- It uses an additional protocol running on all devices just to generate labels

- It introduces the potential for blackholing of traffic, because Interior Gateway Protocol (IGP) and LDP are not synchronized

- It does not employ global label space, meaning that adjacent routers may use a different label to reach the same router

- It's difficult to visualize the Label Switched Path (LSP) because of the issue above

- It may take time to recover after link failure unless session protection is utilized

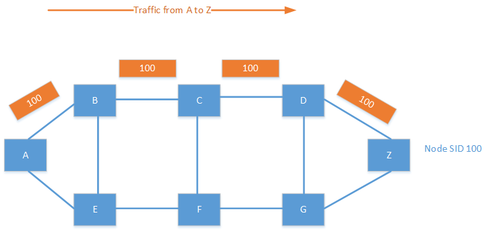

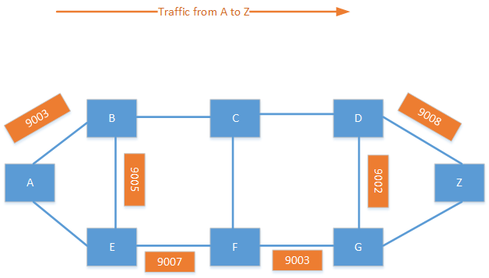

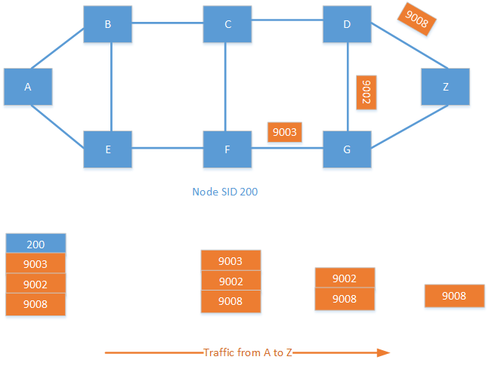

Segment routing is a new forwarding paradigm that provides source routing, which means that the source can define the path that the packet will take. SR still uses MPLS to forward the packets, but the labels are carried by an IGP. In SR, every node has a unique identifier called the node SID. This identifier is globally unique and would normally be based on a loopback on the device. Adjacency SIDs can also exist for locally significant labels for a segment between two devices.

It is also possible to combine the node SID and adjacency SID to create a custom traffic policy. Labels are specified in a label stack, which may include several labels. By combining labels, you can create policies such as, "Send the traffic to F; I don't care how you get there. From F, go to G, then to D and then finally to Z." This creates endless possibilities for traffic engineering in the provider network.

Now we have a basic understanding of how SR works. What are the different use cases for SR? How do we expect to see it being used?

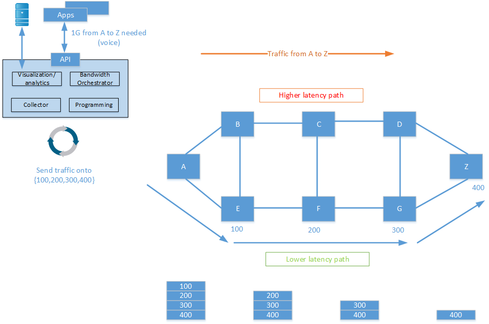

One of the main applications for SR is to enable some kind of application controller that can steer traffic over different paths, depending on different requirements and the current state of the network. Some might relate to this as software-defined networking (SDN). It is then possible to program the network to send voice over a lower latency path and send bulk data over a higher latency path. Doing this today requires MPLS-TE, as well as keeping state in many devices. With SR, there is no need to keep state in intermediary devices.

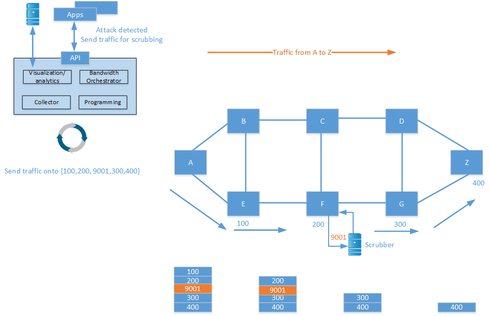

SR can also help protect against distributed denial of service (DDoS) attacks. When an attack is detected, traffic can be redirected to a scrubbing device which cleans the traffic and injects it into the network again.

SR has a great deal of potential, and may cause the decline or even disappearance of protocols such as LDP and RSVP-TE. SR is one piece of the puzzle to start implementing SDN in provider networks.

XO__XO ++DW Virtual Dynamo

Virtual Dynamo: The Next Generation Of Virtual Distributed Storage

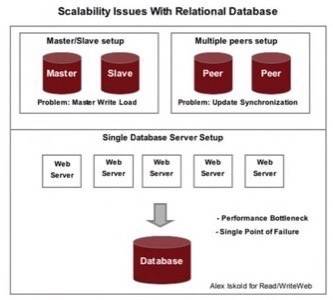

Scalability Issues With Relational Databases

Before discussing Dynamo it is worth taking look back to understand its origins. One of the most powerful

and useful technologies that has been powering the web since its early days is the relational database.

Particularly, relational databases have been used a lot for retail sites where visitors are able to browse and search for products.

Modern relational database are able to handle millions of products and service very large sites.

and useful technologies that has been powering the web since its early days is the relational database.

Particularly, relational databases have been used a lot for retail sites where visitors are able to browse and search for products.

Modern relational database are able to handle millions of products and service very large sites.

However, it is difficult to create redundancy and parallelism with relational databases, so

they become a single point of failure. In particular, replication is not trivial. To understand why, consider

the problem of having two database servers that need to have identical data. Having both servers for reading and writing

data makes it difficult to synchronize changes. Having one master server and another slave is bad too, because the master has to

take all the heat when users are writing information.

they become a single point of failure. In particular, replication is not trivial. To understand why, consider

the problem of having two database servers that need to have identical data. Having both servers for reading and writing

data makes it difficult to synchronize changes. Having one master server and another slave is bad too, because the master has to

take all the heat when users are writing information.

So as a relational database grows, it becomes a bottle neck and the point of failure for the entire system.

As mega e-commerce sites grew over the past decade they became aware of this issue – adding more web servers does not help because it is the database

that ends up being a problem.

As mega e-commerce sites grew over the past decade they became aware of this issue – adding more web servers does not help because it is the database

that ends up being a problem.

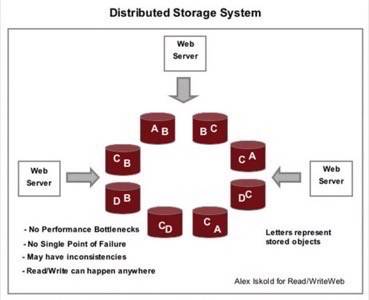

Dynamo – A Distributed Storage System

Unlike a relational database, Dynamo is a distributed storage system. Like a relational database it is stores information

to be retrieved, but it does not break the data into tables. Instead all objects are stored and looked up via a key.

A simple way to think about such a system is in terms of URLs. When you navigate to the page on Amazon for the last

Harry Potter book, http://www.amazon.com/gp/product/0545010225 you see a page that includes a

description of the book, customer reviews, related books, and so on. To create this page, Amazon’s infrastructure

has to perform many database lookups, the most basic of which is to grab information about the book from its URL

(or, more likely, from its ASIN – a unique code for each Amazon product, 0545010225 in this case).

to be retrieved, but it does not break the data into tables. Instead all objects are stored and looked up via a key.

A simple way to think about such a system is in terms of URLs. When you navigate to the page on Amazon for the last

Harry Potter book, http://www.amazon.com/gp/product/0545010225 you see a page that includes a

description of the book, customer reviews, related books, and so on. To create this page, Amazon’s infrastructure

has to perform many database lookups, the most basic of which is to grab information about the book from its URL

(or, more likely, from its ASIN – a unique code for each Amazon product, 0545010225 in this case).

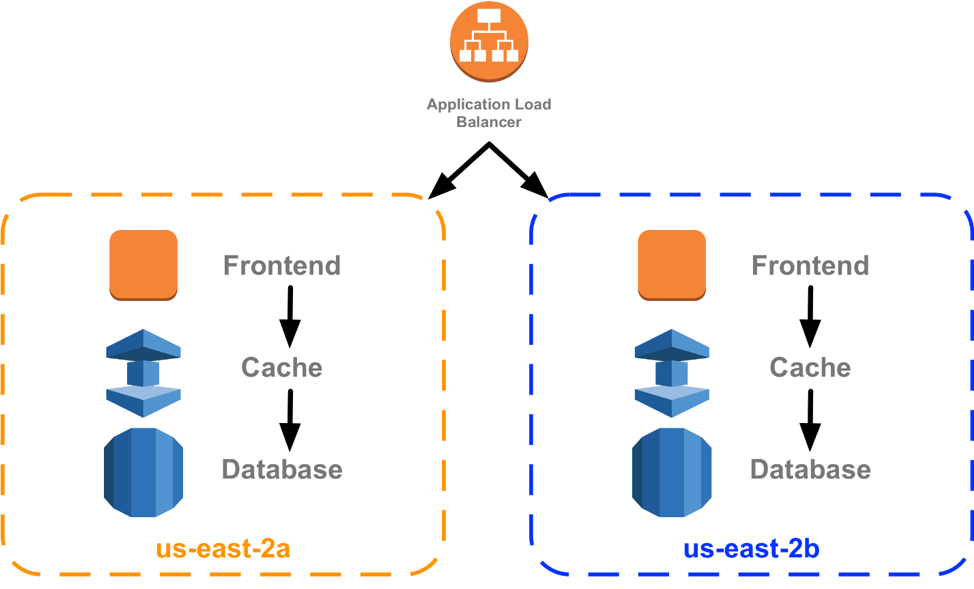

In the figure above we show a concept schematic for how a distributed storage system works.

The information is distributed around a ring of computers, each computer is identical. To ensure fault tolerance, in case

a particular node breaks down, the data is made redundant, so each object is stored in the system multiple times.

The information is distributed around a ring of computers, each computer is identical. To ensure fault tolerance, in case

a particular node breaks down, the data is made redundant, so each object is stored in the system multiple times.

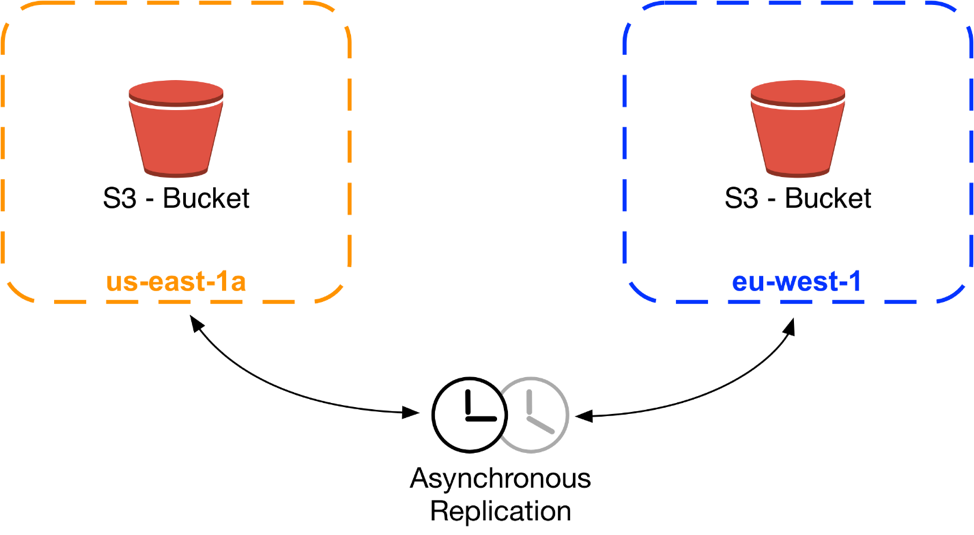

In technical terms, Dynamo is called an eventually consistent storage system. The terminology

may seem a bit odd, but as it turns out creating a distributed storage solution which is both responsive

and consistent is a difficult problem. As you can tell from the diagram above, if one computer updates object A,

these changes need to propagate to other machines. This is done using asynchronous communication, which is why

the system is called “eventually consistent.”

may seem a bit odd, but as it turns out creating a distributed storage solution which is both responsive

and consistent is a difficult problem. As you can tell from the diagram above, if one computer updates object A,

these changes need to propagate to other machines. This is done using asynchronous communication, which is why

the system is called “eventually consistent.”

The example Amazon .Dynamo :

How Dynamo Works

The technical details in Vogels’ paper are quite complex, but the way in which Dynamo works can be understood more simply.

First, like Amazon S3, Dynamo offers a simple put and get interface. Each put

requires the key, context and the object. The context is based on the object and is used by Dynamo

for validating updates. Here is the high level description of Dynamo and a put request:

First, like Amazon S3, Dynamo offers a simple put and get interface. Each put

requires the key, context and the object. The context is based on the object and is used by Dynamo

for validating updates. Here is the high level description of Dynamo and a put request:

- Physical nodes are thought of as identical and organized into a ring.

- Virtual nodes are created by the system and mapped onto physical nodes, so that hardware

can be swapped for maintenance and failure. - The partitioning algorithm is one of the most complicated pieces of the system, it specifies which nodes will store

a given object. - The partitioning mechanism automatically scales as nodes enter and leave the system.

- Every object is asynchronously replicated to N nodes.

- The updates to the system occur asynchronously and may result in multiple copies of the object in the system with slightly

different states. - The discrepancies in the system are reconciled after a period of time, ensuring eventual consistency.

- Any node in the system can be issued a put or get request for any key.

So Dynamo is quite complex, but is also conceptually simple. It is inspired by the way things work in nature – based on

self-organization and emergence. Each node is identical to other nodes, the nodes can come in and out of existence, and the data is automatically

balanced around the ring – all of this makes Dynamo similar to an ant colony or beehive.

self-organization and emergence. Each node is identical to other nodes, the nodes can come in and out of existence, and the data is automatically

balanced around the ring – all of this makes Dynamo similar to an ant colony or beehive.

Finally, Dynamo’s internals are implemented in Java. The choice is likely because, as we’ve written here,

Java is an elegant programming language, which allows the appropriate level of object-orineted modeling. And yes, once again, it is fast enough!

Java is an elegant programming language, which allows the appropriate level of object-orineted modeling. And yes, once again, it is fast enough!

Dynamo – The SLA In A Box

Perhaps the most stunning revelation about Dynamo is that it can be tuned using just a handful parameters to achieve

different, technical goals that in turn support different business requirements. Dynamo is a storage service in the box driven by an SLA.

Different applications at Amazon use different configurations of Dynamo depending on their tolerence to delays or data discrepancy.

The paper lists these main uses of Dynamo:

different, technical goals that in turn support different business requirements. Dynamo is a storage service in the box driven by an SLA.

Different applications at Amazon use different configurations of Dynamo depending on their tolerence to delays or data discrepancy.

The paper lists these main uses of Dynamo:

Business logic specific reconciliation: This is a popular use case for Dynamo. Each data object is replicated across multiple nodes. The shopping cart service is a prime example of this category.

Timestamp based reconciliation: This case differs from the previous one only in the reconciliation mechanism. The service that maintains customers’ session information is a good example of a service that uses this mode.

High performance read engine: While Dynamo is built to be an “always writeable” data store, a few services are tuning its quorum characteristics and using it as a high performance read engine. Services that maintain a product catalog and promotional items fit in this category.

Timestamp based reconciliation: This case differs from the previous one only in the reconciliation mechanism. The service that maintains customers’ session information is a good example of a service that uses this mode.

High performance read engine: While Dynamo is built to be an “always writeable” data store, a few services are tuning its quorum characteristics and using it as a high performance read engine. Services that maintain a product catalog and promotional items fit in this category.

Dynamo shows once again how disciplined and rare Amazon is at using and re-using its infrastructure. The technical management

must have realized early on that the very survival of the business depended on common, bullet proof, flexible, and scalable software systems.

Amazon succeeded in both implementing and spreading the infrastructure through the company. It truly earned the mandate to then

leverage its internal pieces and offer them as web services.

must have realized early on that the very survival of the business depended on common, bullet proof, flexible, and scalable software systems.

Amazon succeeded in both implementing and spreading the infrastructure through the company. It truly earned the mandate to then

leverage its internal pieces and offer them as web services.

How Does Dynamo Fit With AWS?

The short answer is that it does not, because it is not a public service. The short answer is also shortsighted because

there are clear implications. First, is that since Amazon is committed to building a stack of web services, a version of Dynamo

is likely to be available to the public at some time in the future.

there are clear implications. First, is that since Amazon is committed to building a stack of web services, a version of Dynamo

is likely to be available to the public at some time in the future.

Second, Amazon is restless in its innovation; and that applies to web services as well as it applies to its retail business. S3 has already made possible a whole new generation

of startups and web services and Dynamo is likely to do the same when it comes out. And we know that more is likely to come, as even with

Dynamo, the stack of web services is far from complete.

of startups and web services and Dynamo is likely to do the same when it comes out. And we know that more is likely to come, as even with

Dynamo, the stack of web services is far from complete.

Finally, Amazon is showing openness – a highly valuable characteristic. Surely, Google and Microsoft

have similar systems, but Amazon is putting them out in the open and turning its infrastructure into a business faster

than its competitors. It is this openness that will allow Amazon to build trust and community around their Web Services stack. It is a powerful force, which is likely to win over developers and business people as well.

have similar systems, but Amazon is putting them out in the open and turning its infrastructure into a business faster

than its competitors. It is this openness that will allow Amazon to build trust and community around their Web Services stack. It is a powerful force, which is likely to win over developers and business people as well.

The Future – Amazon Web OS

To any computer scientist or software engineer to watch what Amazon is doing is both awesome and humbling.

Taking complex theoretical concepts and algorithms, adopting them to business environments (down to the SLA!), proving

that they work at the world’s largest retail site, and then turning around and making them available to the rest of the world

is nothing short of a tour-de-force. What Emre Sokullu called HaaS (Hardware as a Service) in his post

yesterday is not only an innovative business strategy, but also a triumph of software engineering.

Taking complex theoretical concepts and algorithms, adopting them to business environments (down to the SLA!), proving

that they work at the world’s largest retail site, and then turning around and making them available to the rest of the world

is nothing short of a tour-de-force. What Emre Sokullu called HaaS (Hardware as a Service) in his post

yesterday is not only an innovative business strategy, but also a triumph of software engineering.

Amazon is on track to roll out more virtual web services, which will collectively amount to a web operating system.

The building blocks that are already in place and the ones to come are going to be remixed to create new web applications

that were simply not possible before. As we have written here recently, it is the libraries that make it possible to create giant systems

with just a handful of engineers. Amazon’s Web Services are going to make web-scale computing available to anyone. This is

unimaginably powerful. This future is almost here, so we can begin thinking about it today. What kinds of things do you want to build

with existing and coming Amazon Web Services?

The building blocks that are already in place and the ones to come are going to be remixed to create new web applications

that were simply not possible before. As we have written here recently, it is the libraries that make it possible to create giant systems

with just a handful of engineers. Amazon’s Web Services are going to make web-scale computing available to anyone. This is

unimaginably powerful. This future is almost here, so we can begin thinking about it today. What kinds of things do you want to build

with existing and coming Amazon Web Services?

The Energy Cloud

We are in the midst of a technology-driven shift in how electricity is generated and managed. Today’s one-way grid, built around generation from large-scale power plants, is gradually being transformed into a multi-directional network that relies far more on small-scale, distributed generation. It’s what a recent piece in Public Utilities Fortnightly dubbed the Energy Cloud.

That’s a very appropriate way to think about this grid of the future, because it will parallel the technology “cloud” in important ways.

The technology cloud distributes computing power and data storage around a network so they are easily accessible. Users can draw on the services they need, when they need them – Software as a Service or SaaS – and the network automatically manages resources to provide the bandwidth and performance required for their tasks. Users pay for the value of the resources they actually use, giving them greater visibility and control over costs. They also get incredible freedom to choose – rather than being locked into one vendor, they can always switch whatever app or service best suits them at a given time.

The Energy Cloud represents the same kind of structure for electricity, and will offer not only electric power, but also intelligent management of power consumption and other value-added services to consumers on demand. This service-based approach will be driven by the sharp growth of distributed energy and storage systems based on renewables like solar, and that allow consumers to both obtain power from the grid as well as provide power out to it.

As the Energy Cloud matures, both consumers and businesses will be able to draw on these renewable power sources as needed, at a price based on the value of all the resources involved in providing them that power. Like the IT cloud, the Energy Cloud gives consumers freedom of choice for where they get power at any given time – they aren’t locked into any one provider. It also lets utilities easily meet this consumer demand, by employing sophisticated control software to manage choice, performance and bandwidth (in other words, energy sources, demand management and reliability).

In fact, in both the technology cloud and the Energy Cloud, management of the network is what creates the greatest value.

It requires sophisticated technology and network operations expertise to ensure a technology network collects, processes, formats and delivers data across the network to meet highly dynamic demand. The same degree of sophistication and expertise will be needed to manage the complexities of the Energy Cloud so it will perform predictably, reliably, efficiently and safely. Because the existing grid structure was designed as a one-way system, the dynamics of two-way distributed generation and storage can create issues of reliability or even safety if flows are not carefully managed.

What isn’t as clear is who will take on this crucial role in the Energy Cloud. If you look at what happened when IT shifted to the cloud, you’ll discover that it also shifted the relationships among the various participants – some established players saw their roles diminish, while major aspects of the network are handled by entirely new entities. Expect similar changes when it comes to the Energy Cloud, as utilities, DER equipment providers, “intelligent home” companies and other technology companies all vie for position.

What’s most likely to happen, particularly given the regulation in the energy industry that’s absent in the IT industry, is that network operations will be managed by a partnership among all those entities. Incumbent utilities will have a crucial role – provided they recognize the coming changes and move rapidly (if not, they risk being relegated to just owning the wires, a commodity play).

These partnerships also will rely on control technology and software from new companies that bring that expertise to the table. The Energy Cloud needs standards around controls and management technology so that different devices and equipment can co-exist seamlessly across the entire network.

At Sunverge we see this leading to the “Platform as a Service” – PaaS – where all the participants can make use of the aspects of the central control platform as needed. Along with that, we also see the emergence of CaaS – Capacity as a Service – that uses the Platform to create Virtual Power Plants that will be a key source of capacity and reliability.

This standard platform has been a core part of our work from the beginning. As part of our Solar Integration System (SIS), the platform has been constantly advanced over the past five years as we have learned from having it “in the field” how to make it even more robust and flexible. To make it a standard, we have built the platform with open APIs so other Energy Cloud participants can take advantage of the control function and the rich data generated by the platform and develop value-added services on top of it.

Thanks to the technology core of PaaS and CaaS, and with these new partnerships, utilities will be able to expand new customer services more rapidly than ever before — and conceive new revenue models never thought possible. This will be as disruptive as Uber, AirBnB and other new companies that are using technology to transform traditional industry sectors.

We expect there to be a lot of interesting developments in the coming year in this Energy Cloud transition, and for this realignment of participants to begin in earnest. While we can’t know today exactly how this will look in a few years, we can be certain that even 12 months from now the change will be visible and accelerating.

COMPUTEROME CLOUD TODAY:

Accessible from anywhere through proper mechanisms

Secure private clusters

Virtual clusters

Cloud bursting capabilities

Security, isolation and access control in compliance with statutory acts

Accessible from anywhere through proper mechanisms

Secure private clusters

Virtual clusters

Cloud bursting capabilities

Security, isolation and access control in compliance with statutory acts

Dynamic Network Analysis via Motifs (DYNAMO)

For develop a graph mining-based approach and framework that will allow humans to discover and detect important or critical graph patterns in data streams through the analysis of local patterns of interactions and behaviors of actors, entities, and/or features. Conceptually, this equates to identifying small local subgraphs in a massive virtual dynamic network (generated from data streams) that have specific meaning and relevance to the user and that are indicators of a particular activity or event. We refer to these local graph patterns, which are small directed attributed subgraphs, as network motifs. Detecting motifs in streaming data amounts to more than looking for the occurrence of specific entities or features in particular states, but also their relationships, interactions, and collaborative behaviors.

Approach

In the detection of insider cyber threats, one may characterize the type of an insider agent, computer user, or organizational role by examining the modes and frequencies of its local interactions or network motifs within the cloud. For example, a cloud interaction graph may be generated from cloud telemetry data that shows a user’s particular interactions with or access to specific tenants, data stores, and applications. In examining such a graph, one can look closely for small local graph patterns or network motifs that are indicative of an insider cyber threat. Additional motif analysis of prox card access data may identify changes in an actor’s work patterns (time and place) and the people she interacts with. Motif analysis of email and phone communications records could identify an actor’s interactions with other people and their associated statuses and roles.

Each of the network motifs is an individual indicator of insider agent behavior. We might expect dozens to hundreds of potential indicators that are drawn from subject-matter-experts or learned from activity monitoring data. From these potential indicators, we may derive a sufficient and optimal set of indicators to apply to insider threat detection. The set of influential network motifs may be organized and monitored through a motif census, which is a scalar vector of the counts of each motif pattern in the cloud interaction graph. The motif census may be considered as a signature of network interaction patterns for a particular person, class of users, or type of insider agent.

For the electron microscopy use case, image features may be detected in an electron microscope image much like facial features are detected from a picture for facial recognition. The image features may be linked together to form a feature map that is analogous to a facial recognition map. Motif analysis may then be applied to the dynamic feature map to identify the occurrence of substructures that are indicative of a particular event or chemical behavior.

The concepts of the network motif and the motif census have some very compelling characteristics for threat, event, or feature detection. They provide a simple and intuitive representation for users to encode critical patterns. They provide a structure for assessing multiple indicators. They enable a mechanism for throttling the accuracy of findings and limiting false positives by adjusting the threshold associated with the computed similarity distance measure (e.g., lower threshold = higher accuracy and less false positives). Indicators in a motif census may be active or inactive depending on whether data is available to assess the indicator. In the case where active indicators may be alerting a potential insider or image feature or event, the inactive indicators identify additional data to gather and assess to further confirm or reject the alert. This enables an approach for directing humans or systems to specific areas to look for additional information or data.

Benefit

Dynamic network motif analysis represents a graph-theoretic approach to conduct “pattern-of-life” analysis of agents or entities. The analytical approach should allow an analyst or scientist to:

- Expose relationships and interactions of an agent with other various articles or entities such as other people, computing resources, places or locations, and subject areas

- Learn normal relationship and interaction patterns of different classes of agents from monitored activities

- Detect when anomalous patterns occur and offer potential explanations

- Facilitate encoding of hypothetical behavioral patterns or indicators for an agent to use in future detection (e.g., off-hour access to computing resources, unusual access patterns, accumulation of large amounts of data)

- Provide framework for evaluating and confirming multiple indicators

- Analyze temporal evolution of interactions and behaviors of an agent

- Support all the above analytics in the presence of streaming data

All Things Distributed

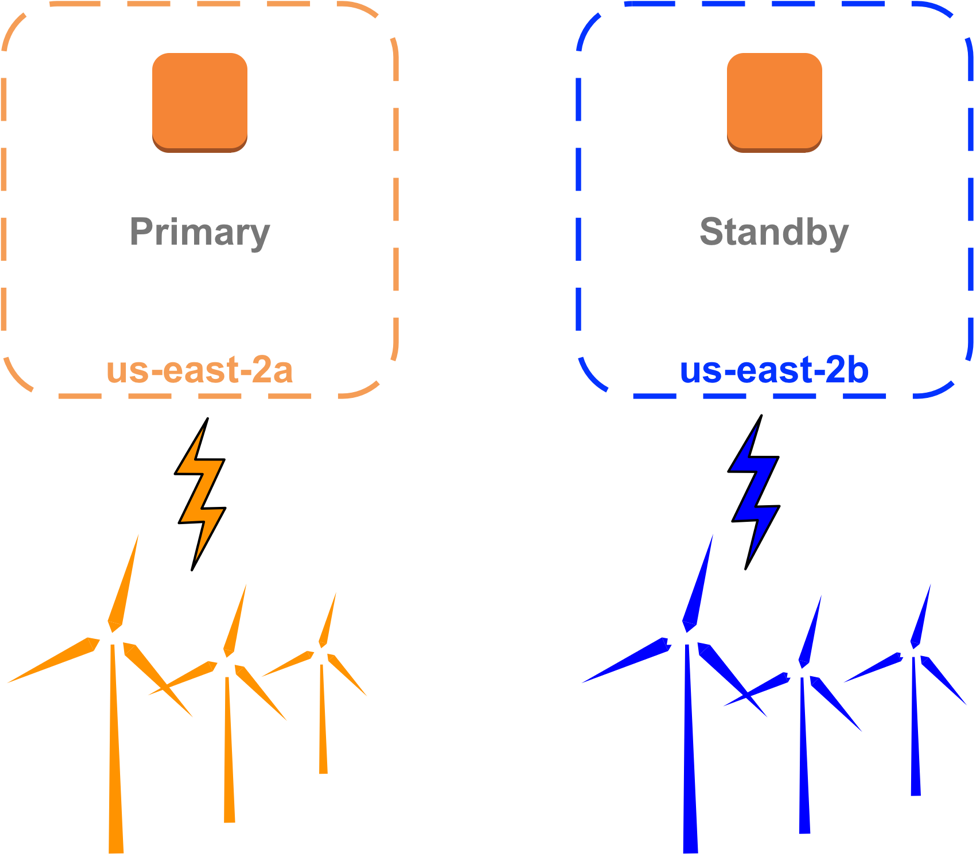

The AWS GovCloud (US-East) Region is our second AWS GovCloud (US) Region, joining AWS GovCloud (US-West) to further help US government agencies, the contractors that serve them, and organizations in highly regulated industries move more of their workloads to the AWS Cloud by implementing a number of US government-specific regulatory requirements.

Similar to the AWS GovCloud (US-West), the AWS GovCloud (US-East) Region provides three Availability Zones and meets the stringent requirements of the public sector and highly regulated industries, including being operated on US soil by US citizens. It is accessible only to vetted US entities and AWS account root users, who must confirm that they are US citizens or US permanent residents. The AWS GovCloud (US-East) Region is located in the eastern part of the United States, providing customers with a second isolated Region in which to run mission-critical workloads with lower latency and high availability.

In 2011, AWS was the first cloud provider to launch an isolated infrastructure Region designed to meet the stringent requirements of government agencies and other highly regulated industries when it opened the AWS GovCloud (US-West) Region. The new AWS GovCloud (US-East) Region also meets the top US government compliance requirements, including:

- Federal Risk and Authorization Management Program (FedRAMP) Moderate and High baselines

- US International Traffic in Arms Regulations (ITAR)

- Federal Information Security Management Act (FISMA) Low, Moderate, and High baselines

- Department of Justice's Criminal Justice Information Services (CJIS) Security Policy

- Department of Defense (DoD) Impact Levels 2, 4, and 5

The AWS GovCloud (US) environments also conform to commercial security and privacy standards such as:

- Healthcare Insurance Portability and Accountability Act (HIPAA)

- Payment Card Industry (PCI) Security

- System and Organization Controls (SOC) 1, 2, and 3

- ISO/IEC27001, ISO/IEC 27017, ISO/IEC 27018, and ISO/IEC 9001 compliance, which are primarily for healthcare, life sciences, medical devices, automotive, and aerospace customers

Some of the largest organizations in the US public sector, as well as the education, healthcare, and financial services industries, are using AWS GovCloud (US) Regions. They appreciate the reduced latency, added redundancy, data durability, resiliency, greater disaster recovery capability, and the ability to scale across multiple Regions. This includes US government agencies and companies such as:

The US Department of Treasury, US Department of Veterans Affairs, Adobe, Blackboard, Booz Allen Hamilton, Drakontas, Druva, ECS, Enlighten IT, General Dynamics Information Technology, GE, Infor, JHC Technology, Leidos, NASA's Jet Propulsion Laboratory, Novetta, PowerDMS, Raytheon, REAN Cloud, a Hitachi Vantara company, SAP NS2, and Smartronix.

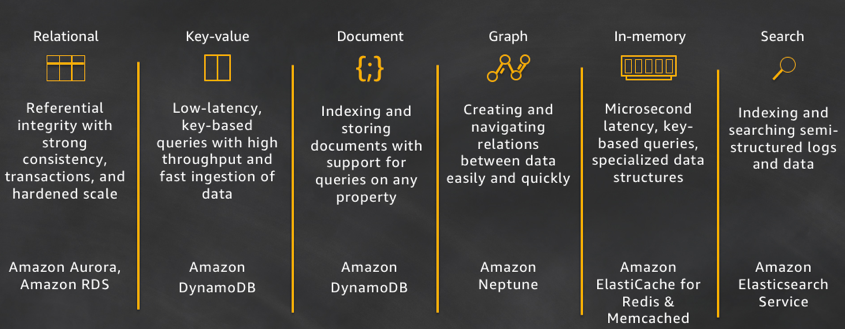

Purpose-built databases

The world is still changing and the categories of nonrelational databases continue to grow. We are increasingly seeing customers wanting to build Internet-scale applications that require diverse data models. In response to these needs, developers now have the choice of relational, key-value, document, graph, in-memory, and search databases. Each of these databases solve a specific problem or a group of problems.

Let's take a closer look at the purpose for each of these databases:

- Relational: A relational database is self-describing because it enables developers to define the database's schema as well as relations and constraints between rows and tables in the database. Developers rely on the functionality of the relational database (not the application code) to enforce the schema and preserve the referential integrity of the data within the database. Typical use cases for a relational database include web and mobile applications, enterprise applications, and online gaming. Airbnb is a great example of a customer building high-performance and scalable applications with Amazon Aurora. Aurora provides Airbnb a fully-managed, scalable, and functional service to run their MySQL workloads.

- Key-value: Key-value databases are highly partitionable and allow horizontal scaling at levels that other types of databases cannot achieve. Use cases such as gaming, ad tech, and IoT lend themselves particularly well to the key-value data model where the access patterns require low-latency Gets/Puts for known key values. The purpose of DynamoDB is to provide consistent single-digit millisecond latency for any scale of workloads. This consistent performance is a big part of why the Snapchat Stories feature, which includes Snapchat's largest storage write workload, moved to DynamoDB.

- Document: Document databases are intuitive for developers to use because the data in the application tier is typically represented as a JSON document. Developers can persist data using the same document model format that they use in their application code. Tinder is one example of a customer that is using the flexible schema model of DynamoDB to achieve developer efficiency.

- Graph: A graph database's purpose is to make it easy to build and run applications that work with highly connected datasets. Typical use cases for a graph database include social networking, recommendation engines, fraud detection, and knowledge graphs. Amazon Neptune is a fully-managed graph database service. Neptune supports both the Property Graph model and the Resource Description Framework (RDF), giving you the choice of two graph APIs: TinkerPop and RDF/SPARQL. Current Neptune users are building knowledge graphs, making in-game offer recommendations, and detecting fraud. For example, Thomson Reuters is helping their customers navigate a complex web of global tax policies and regulations by using Neptune.

- In-memory: Financial services, Ecommerce, web, and mobile application have use cases such as leaderboards, session stores, and real-time analytics that require microsecond response times and can have large spikes in traffic coming at any time. We built Amazon ElastiCache, offering Memcached and Redis, to serve low latency, high throughput workloads, such as McDonald's, that cannot be served with disk-based data stores. Amazon DynamoDB Accelerator (DAX) is another example of a purpose-built data store. DAX was built is to make DynamoDB reads an order of magnitude faster.

- Search: Many applications output logs to help developers troubleshoot issues. Amazon Elasticsearch Service (Amazon ES) is purpose built for providing near real-time visualizations and analytics of machine-generated data by indexing, aggregating, and searching semi structured logs and metrics. Amazon ES is also a powerful, high-performance search engine for full-text search use cases. Expedia is using more than 150 Amazon ES domains, 30 TB of data, and 30 billion documents for a variety of mission-critical use cases, ranging from operational monitoring and troubleshooting to distributed application stack tracing and pricing optimization.

Building applications with purpose-built databases

Developers are building highly distributed and decoupled applications, and AWS enables developers to build these cloud-native applications by using multiple AWS services. Take Expedia, for example. Though to a customer the Expedia website looks like a single application, behind the scenes Expedia.com is composed of many components, each with a specific function. By breaking an application such as Expedia.com into multiple components that have specific jobs (such as microservices, containers, and AWS Lambda functions), developers can be more productive by increasing scale and performance, reducing operations, increasing deployment agility, and enabling different components to evolve independently. When building applications, developers can pair each use case with the database that best suits the need.

To make this real, take a look at some of our customers that are using multiple different kinds of databases to build their applications:

- Airbnb uses DynamoDB to store users' search history for quick lookups as part of personalized search. Airbnb also uses ElastiCache to store session states in-memory for faster site rendering, and they use MySQL on Amazon RDS as their primary transactional database.

- Capital One uses Amazon RDS to store transaction data for state management, Amazon Redshift to store web logs for analytics that need aggregations, and DynamoDB to store user data so that customers can quickly access their information with the Capital One app.

- Expedia built a real-time data warehouse for the market pricing of lodging and availability data for internal market analysis by using Aurora, Amazon Redshift, and ElastiCache. The data warehouse performs a multistream union and self-join with a 24-hour lookback window using ElastiCache for Redis. The data warehouse also persists the processed data directly into Aurora MySQL and Amazon Redshift to support both operational and analytical queries.

- Zynga migrated the Zynga poker database from a MySQL farm to DynamoDB and got a massive performance boost. Queries that used to take 30 seconds now take one second. Zynga also uses ElastiCache (Memcached and Redis) in place of their self-managed equivalents for in-memory caching. The automation and serverless scalability of Aurora make it Zynga's first choice for new services using relational databases.

- Johnson & Johnson uses Amazon RDS, DynamoDB, and Amazon Redshift to minimize time and effort spent on gathering and provisioning data, and allow the quick derivation of insights. AWS database services are helping Johnson & Johnson improve physicians' workflows, optimize the supply chain, and discover new drugs.

Just as they are no longer writing monolithic applications, developers also are no longer using a single database for all use cases in an application—they are using many databases. Though the relational database remains alive and well, and is still well suited for many use cases, purpose-built databases for key-value, document, graph, in-memory, and search uses cases can help you optimize for functionality, performance, and scale and—more importantly—your customers' experience. Build on.

The workplace of the future

We already have an idea of how digitalization, and above all new technologies like machine learning, big-data analytics or IoT, will change companies' business models — and are already changing them on a wide scale. So now's the time to examine more closely how different facets of the workplace will look and the role humans will have.

In fact, the future is already here – but it's still not evenly distributed. Science fiction author William Gibson said that nearly 20 years ago. We can observe a gap between the haves and the have-nots: namely between those who are already using future technologies and those who are not. The consequences of this are particularly visible on the labor market many people still don't know which skills will be required in the future or how to learn them.

Against that background, it's natural for people – even young digital natives – to feel some growing uncertainty. According to a Gallup poll, 37% of millennials are afraid of losing their jobs in the next 20 years due to AI. At the same time there are many grounds for optimism. Studies by the German ZEW Center for European Economic Research, for example, have found that companies that invest in digitalization create significantly more jobs than companies that don't.

How many of the jobs that we know today will even exist in the future? Which human activities can be taken over by machines or ML-based systems? Which tasks will be left over for humans to do? And will there be completely new types of the jobs in the future that we can't even imagine today?

Future of work or work of the future?

All of these questions are legitimate. "But where there is danger, a rescuing element grows as well." German poet Friedrich Hölderlin knew that already in the 19th century. As for me, I'm a technology optimist: Using technology to drive customer-centric convenience, such as in the cashier-less Amazon Go stores, will create shifts in where jobs are created. Thinking about the work of tomorrow, it doesn't help to base the discussion on structures that exist today. After the refrigerator was invented in the 1930s, many people who worked in businesses that sold ice feared for their jobs. Indeed, refrigerators made this business superfluous for the most part; but in its place, many new jobs were created. For example, companies that produced refrigerators needed people to build them, and now that food could be preserved, whole new businesses were created which were targeted at that market. We should not let ourselves be guided in our thinking by the perception of work as we know it today. Instead, we should think about how the workplace could look like in the future. And to do that, we need to ask ourselves an entirely different question, namely: What is changing in the workplace, both from an organizational and qualitative standpoint?

Many of the tasks carried out by people in manufacturing, for example, have remained similar over time in terms of the workflows. Even the activities of doctors, lawyers or taxi drivers have hardly changed in the last decade, at least in terms of their underlying processes. Only parts of the processes are being performed by machines, or at least supported by them. Ultimately, the desired product or service is delivered in – hopefully – the desired quality. But in the age of digitalization, people do much more than fill the gaps between the machines. The work done by humans and machines is built around solving customer problems. It's no longer about producing a car, but about the service "mobility", about bringing people to a specific location. "I want to be in a central place in Berlin as quickly as possible" is the requirement that needs to be fulfilled. In the first step we might reach this goal by combining the fastest mobility services through a digital platform; in the next, it might be a task fulfilled by Virtual Reality. These new offerings are organized on platforms or networks, and less so in processes. And artificial intelligence makes it possible to break down tasks in such a way that everyone contributes what he or she can do best. People define problems and pre-structure them, and machines or algorithms develop solutions that people evaluate in the end.

Radiologists are now assisted by machine-learning-driven tools that allow them to evaluate digital content in ways that were not possible before. Many radiologists have even claimed that ML-driven advice has significantly improved their ability to interpret X-rays.

I would even go a step further because I believe it's possible to "rehumanize" work and make our unique abilities as human beings even more important. Until now, access to digital technologies was limited above all by a machine's abilities: The interfaces to our systems are no longer machine-driven; in the future humans will be the starting point. For example, anyone who wanted to teach a robot how to walk in the age of automation had to exactly calculate every single angle of slope from the upper to lower thigh, as well as the speed of movement and other parameters, and then formulate them as a command in a programming language. In the future, we'll be able to communicate and work with robots more intensively in our "language". So teaching a robot to walk will be much easier in the future. The robot can be controlled by anyone via voice command, and it could train itself by analyzing how humans do it via a motion scanner, applying the process, and perfecting it.

With the new technological possibilities and greater computing power, work in the future will be more focused on people and less on machines. Machine learning can make human labor more effective. Companies like C-SPAN show how: scores of people would have to scan video material for hours in order to create keywords, for example, according to a person's name. Today, automated face recognition can do this task in seconds, allowing employees to immediately begin working with the results.

Redefining the relationship between human and machine

The progress at the interface of human and machine is happening at a very fast pace with already a visible impact on how we work. In the future, technology can become a much more natural part of our workplace that can be activated by several input methods — speaking, seeing, touching or even smelling. Take voice-control technologies, a field that is currently undergoing a real disruption. This area distinguishes itself radically from what we knew until now as the "hands-free" work approach, which ran purely through simple voice commands. Modern voice-control systems can understand, interpret and answer conversations in a professional way, which makes a lot of work processes easier to perform. Examples are giving diagnoses to patients or legal advice. At the end of 2018, voice (input) will have already significantly changed the way we develop devices and apps. People will be able to connect technologies into their work primarily through voice. One can already get an inkling of what that looks like in detail.

At the US space agency NASA, for example, Amazon Alexa organizes the ordering of conference rooms. A room doesn't always have to be requested for every single meeting. Rather, anyone who needs a room asks Alexa and the rest happens automatically. Everyone knows the stress caused by telephone conferences: they never start on time because someone hasn't found the right dial-in number and it takes a while until you've typed in the 8-digit number plus a 6-digit conference code. A voice command creates a lot more productivity. The AWS Service Transcribe could start creating a transcript right away during the meeting and send it to all participants afterwards. Other companies, such as the Japanese firm Mitsui or the software provider bmc, use Alexa for Business to achieve a more efficient and better collaboration between their employees, among others.

The software provider fme also uses voice control to offer its customers innovative applications in the field of business intelligence, social business collaboration and enterprise-content-management technologies. The customers of fme mainly come from life sciences and industrial manufacturing. Employees can search different types of content using voice control, navigate easily through the content, and have the content displayed or read to them. Users can have Alexa explain individual tasks to them in OpenText Documentum, to give another example. This could be used to make the onboarding of new employees faster and cheaper – their managers would not have to perform the same information ritual again and again. A similar approach can be found at pharmaceutical company AstraZeneca, which uses Alexa in its manufacturing: Team members can ask questions about standard processes to find out what they need to do next.

Of course, responsibilities and organizations will change as a result of these developments. Resources for administrative tasks can be turned into activities that have a direct benefit for the customer. Regarding the character of work in the future, we will probably need more "architects," "developers," "creatives," "relationship experts," "platform specialists," and "analysts" and fewer people who need to perform tasks according to certain pre-determined steps, as well as fewer "administrators". By speaking more to humans' need to create and shape, work might ultimately become more fulfilling and enjoyable.

Expanding the digital world

This new understanding of the relationship between man and machine has another important effect: It will significantly expand the number of people who can participate in digital value creation: older people, people who at the moment don't have access to a computer or smartphone, people for whom using the smartphone in a specific situation is too complicated, and people in developing countries who can't read or write. A good example of the latter is rice farmers who work with the International Rice Research Institute, an organization based near Manila, the Philippines. The institute's mission is to fight poverty, hunger and malnutrition by easing the lives and work of rice farmers. Rice farmers can benefit from knowledge to which they wouldn't have access were they on their own. The institute has saved 70,000 DNA sequences of different types of rice, from which conclusions can be drawn about the best conditions for growing rice. Every village has a telephone, and by using it the farmers can access this knowledge: they select their dialect in a menu and describe which piece of land they tend. The service is based on machine learning. It generates recommendations on how much fertilizer is needed and when the best time is to plant the crops. So with the help of digital technologies, farmers can see how their work becomes more valuable: a given amount of effort produces a richer harvest of rice.

Until now we only have a tiny insight into the possibilities for the world of work. But they make clear that the quality of work for us humans will most probably increase, and that technology can allow us to perform many activities that we still cannot imagine today. Although there are twice as many robots per capita in German companies than in US firms, German industry still has trouble finding qualified employees rather than having to fight unemployment. In the future we humans will be able to carry out activities in a way that is closer to our creative human nature than is the case today. I believe that if we want to do justice to the technological possibilities, we should do it like Hölderlin and have faith in the rescue, but at the same time try to minimize the risks by understanding and shaping things.

Interfacing a Relational Database to the Web

How does an RDBMS talk to the rest of the world?

Remember the African Grey parrot we trained in the last chapter? The one holding down a $250,000 information systems management position saying "We're pro-actively leveraging our object-oriented client/server database to target customer service during reengineering"? The profound concept behind the "client/server" portion of this sentence is that the database server is a program that sits around waiting for another program, the database client, to request a connection. Once the connection is established, the client sends SQL queries to the server, which inspects the physical database and returns the matching data. These days, connections are generally made via TCP sockets even if the two programs are running on the same computer. Here's a schematic:

Lets's get a little more realistic and dirty. In a classical RDBMS, you have a daemon program that waits for requests from clients for new connections. For each client, the daemon spawns a server program. All the servers and the daemon cache data from disk and communicate locking information via a large block of shared RAM (often as much as 1 GB). The raison d'etre of an RDBMS is that N clients can simultaneously access and update the tables. We could have an AOLserver database pool taking up six of the client positions, a programmer using a shell-type tool such as SQL*Plus as another, an administrator using Microsoft Access as another, and a legacy CGI script as the final client. The client processes could be running on three or four separate physical computers. The database server processes would all be running on one physical computer (this is why Macintoshes and Windows 95/98 machines, which lack multiprocessing capability, are not used as database servers). Here's a schematic:

Note that the schematic above is for a "classical" RDBMS. The trend is for database server programs to run as one operating system process with multiple kernel threads. This is an implementation detail, however, that doesn't affect the logical structure that you see in the schematic.