X . I Electronic circuit

An electronic circuit is composed of individual electronic components, such as resistors, transistors, capacitors, inductors and diodes, connected by conductive wires or traces through which electric current can flow. The combination of components and wires allows various simple and complex operations to be performed: signals can be amplified, computations can be performed, and data can be moved from one place to another.

Circuits can be constructed of discrete components connected by individual pieces of wire, but today it is much more common to create interconnections by photolithographic techniques on a laminated substrate (a printed circuit board or PCB) and solder the components to these interconnections to create a finished circuit. In an integrated circuit or IC, the components and interconnections are formed on the same substrate, typically a semiconductor such as silicon or (less commonly) gallium arsenide.

An electronic circuit can usually be categorized as an analog circuit, a digital circuit, or a mixed-signal circuit (a combination of analog circuits and digital circuits).

Breadboards, perfboards, and stripboards are common for testing new designs. They allow the designer to make quick changes to the circuit during development.

Analog circuits

In a series circuit, the same current passes through a series of components. A string of Christmas lights is a good example of a series circuit: if one goes out, they all do.

In a parallel circuit, all the components are connected to the same voltage, and the current divides between the various components in inverse proportion to their resistance.

The basic components of analog circuits are wires, resistors, capacitors, inductors, diodes, and transistors. (In 2012 it was demonstrated that memristors can be added to the list of available components.) Analog circuits are very commonly represented in schematic diagrams, in which wires are shown as lines, and each component has a unique symbol. Analog circuit analysis employs Kirchhoff's circuit laws: the currents flowing into a node (a place where wires meet) sum to zero, and the voltages around any closed loop of wires sum to zero. Wires are usually treated as ideal interconnections with no voltage drop; any resistance or reactance is captured by explicitly adding a parasitic element, such as a discrete resistor or inductor. Active components such as transistors are often treated as controlled current or voltage sources: for example, a field-effect transistor can be modeled as a current source between the drain and the source, with the current controlled by the gate-source voltage.
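Kirchhoff's current law turns a resistor network into a set of linear equations in the unknown node voltages. The following is a minimal nodal-analysis sketch, assuming a purely resistive network whose voltage sources are represented simply as nodes with known voltages; the function name `solve_nodes` and its netlist format are hypothetical, not taken from any particular simulator.

```python
from fractions import Fraction


def solve_nodes(resistors, fixed):
    """Solve for unknown node voltages using Kirchhoff's current law.

    resistors: list of (node_a, node_b, ohms); node 0 is ground.
    fixed: dict mapping node -> known voltage (ground and source nodes).
    Returns a dict of node -> voltage (as exact fractions) for every node.
    """
    nodes = sorted({n for a, b, _ in resistors for n in (a, b)} - set(fixed))
    index = {n: i for i, n in enumerate(nodes)}
    size = len(nodes)
    # One KCL row per unknown node: the currents leaving the node sum to zero.
    G = [[Fraction(0)] * size for _ in range(size)]
    rhs = [Fraction(0)] * size
    for a, b, ohms in resistors:
        g = Fraction(1) / Fraction(ohms)
        for here, there in ((a, b), (b, a)):
            if here not in index:
                continue
            r = index[here]
            G[r][r] += g
            if there in index:
                G[r][index[there]] -= g
            else:
                # A known voltage at the far end moves to the right-hand side.
                rhs[r] += g * Fraction(fixed[there])
    # Gauss-Jordan elimination with exact rational arithmetic.
    for col in range(size):
        pivot = next(r for r in range(col, size) if G[r][col] != 0)
        G[col], G[pivot] = G[pivot], G[col]
        rhs[col], rhs[pivot] = rhs[pivot], rhs[col]
        for r in range(size):
            if r != col and G[r][col] != 0:
                factor = G[r][col] / G[col][col]
                G[r] = [x - factor * y for x, y in zip(G[r], G[col])]
                rhs[r] -= factor * rhs[col]
    volts = {n: Fraction(v) for n, v in fixed.items()}
    for n, i in index.items():
        volts[n] = rhs[i] / G[i][i]
    return volts
```

For a 10 V source feeding a 1 kΩ / 3 kΩ divider, `solve_nodes([(1, 2, 1000), (2, 0, 3000)], {0: 0, 1: 10})[2]` gives 15/2, i.e. 7.5 V. Exact fractions avoid rounding error but would be far too slow for large netlists, where simulators typically use sparse floating-point solvers.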

When the circuit size is comparable to a wavelength of the relevant signal frequency, a more sophisticated approach must be used. Wires are treated as transmission lines, with (hopefully) constant characteristic impedance, and the impedances at the start and end determine transmitted and reflected waves on the line. Such considerations typically become important for circuit boards at frequencies above about 1 GHz; integrated circuits are smaller and can be treated as lumped elements for frequencies below roughly 10 GHz.

An alternative model is to take independent power sources and induction as basic electronic units; this allows modeling frequency-dependent negative resistors, gyrators, negative impedance converters, and dependent sources as secondary electronic components.

Digital circuits

In digital electronic circuits, electric signals take on discrete values, to represent logical and numeric values. These values represent the information that is being processed. In the vast majority of cases, binary encoding is used: one voltage (typically the more positive value) represents a binary '1' and another voltage (usually a value near the ground potential, 0 V) represents a binary '0'. Digital circuits make extensive use of transistors, interconnected to create logic gates that provide the functions of Boolean logic: AND, NAND, OR, NOR, XOR and all possible combinations thereof. Transistors interconnected so as to provide positive feedback are used as latches and flip-flops, circuits that have two or more stable states and remain in one of these states until changed by an external input. Digital circuits therefore can provide both logic and memory, enabling them to perform arbitrary computational functions. (Memory based on flip-flops is known as static random-access memory (SRAM). Memory based on the storage of charge in a capacitor, dynamic random-access memory (DRAM), is also widely used.)

The design process for digital circuits is fundamentally different from the process for analog circuits. Each logic gate regenerates the binary signal, so the designer need not account for distortion, gain control, offset voltages, and other concerns faced in an analog design. As a consequence, extremely complex digital circuits, with billions of logic elements integrated on a single silicon chip, can be fabricated at low cost. Such digital integrated circuits are ubiquitous in modern electronic devices, such as calculators, mobile phone handsets, and computers. As digital circuits become more complex, issues of time delay, logic races, power dissipation, non-ideal switching, on-chip and inter-chip loading, and leakage currents become limitations to density, speed, and performance.
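The latching behavior of positive feedback can be seen in a minimal simulation of the classic set-reset (SR) latch built from two cross-coupled NOR gates. This is a sketch under simplifying assumptions: the gates are evaluated one after another until the outputs stop changing, which ignores real propagation delays, and the forbidden input S = R = 1 is not handled; the function names are hypothetical.

```python
def nor(a, b):
    """Two-input NOR gate on 0/1 values."""
    return int(not (a or b))


def sr_latch(s, r, q, q_bar):
    """Settle a cross-coupled NOR latch, starting from its previous outputs."""
    for _ in range(4):  # a stable state is reached within a few gate evaluations
        new_q = nor(r, q_bar)
        new_q_bar = nor(s, new_q)
        if (new_q, new_q_bar) == (q, q_bar):
            break  # outputs reinforce each other: a stable state
        q, q_bar = new_q, new_q_bar
    return q, q_bar
```

Starting from the reset state `(0, 1)`, a pulse on `s` moves the latch to `(1, 0)`, and it stays there when both inputs return to 0. That retained state is the one-bit memory described in the text.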

Digital circuitry is used to create general purpose computing chips, such as microprocessors, and custom-designed logic circuits, known as application-specific integrated circuits (ASICs). Field-programmable gate arrays (FPGAs), chips with logic circuitry whose configuration can be modified after fabrication, are also widely used in prototyping and development.

Mixed-signal circuits

Mixed-signal or hybrid circuits contain elements of both analog and digital circuits. Examples include comparators, timers, phase-locked loops, analog-to-digital converters, and digital-to-analog converters. Most modern radio and communications circuitry uses mixed signal circuits. For example, in a receiver, analog circuitry is used to amplify and frequency-convert signals so that they reach a suitable state to be converted into digital values, after which further signal processing can be performed in the digital domain.

Electronic circuit design comprises the analysis and synthesis of electronic circuits.

Methods

To design any electrical circuit, either analog or digital, electrical engineers need to be able to predict the voltages and currents at all places within the circuit. Linear circuits, that is, circuits wherein the outputs are linearly dependent on the inputs, can be analyzed by hand using complex-number methods. Simple nonlinear circuits can also be analyzed in this way. Specialized software has been created to analyze circuits that are either too complicated or too nonlinear to analyze by hand.

Circuit simulation software allows engineers to design circuits more efficiently, reducing the time, cost, and risk of error involved in building circuit prototypes. Some of these tools make use of hardware description languages such as VHDL or Verilog.

Network simulation software

More complex circuits are analyzed with circuit simulation software such as SPICE and EMTP.

Linearization around operating point

When faced with a new circuit, the software first tries to find a steady-state solution wherein all the nodes conform to Kirchhoff's Current Law and the voltages across and currents through each element of the circuit conform to the voltage/current equations governing that element.

Once the steady-state solution is found, the software can analyze the response to perturbations using piecewise approximation, harmonic balance or other methods.
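Finding the steady-state (DC operating point) solution means solving nonlinear equations; SPICE-family simulators typically do this with Newton–Raphson iteration. The sketch below applies the same idea to a single node: a voltage source feeding a diode through a resistor, with the diode modeled by the Shockley equation. The component values, the function name, and the crude step clamp (a stand-in for the more careful limiting that production simulators apply) are assumptions for illustration.

```python
import math


def diode_operating_point(v_source=5.0, r=1000.0, i_s=1e-14, v_t=0.02585,
                          v_guess=0.6, max_step=0.1, tol=1e-12):
    """Newton-Raphson solve for the diode voltage that satisfies KCL at its node."""
    v = v_guess
    for _ in range(100):
        i_diode = i_s * (math.exp(v / v_t) - 1.0)
        f = (v_source - v) / r - i_diode                 # KCL residual (amps)
        df = -1.0 / r - i_s * math.exp(v / v_t) / v_t    # derivative of the residual
        step = -f / df
        step = max(-max_step, min(max_step, step))       # crude step limiting
        v += step
        if abs(step) < tol:
            return v
    raise RuntimeError("Newton iteration did not converge")
```

With the default values the diode settles at roughly 0.69 V. Without the step clamp, the first Newton step from 0.6 V would overshoot past 1.3 V, where the exponential term is enormous, which is why simulators limit junction-voltage updates.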

Piece-wise linear approximation

Software such as the PLECS interface to Simulink uses piecewise linear approximation of the equations governing the elements of a circuit. The circuit is treated as a linear network in which the diodes act as ideal switches. Every time a diode switches from on to off or vice versa, the configuration of the linear network changes. Adding more detail to the approximation of equations increases the accuracy of the simulation, but also increases its running time.

Synthesis

Simple circuits may be designed by connecting a number of elements or functional blocks such as integrated circuits.

More complex digital circuits are typically designed with the aid of computer software. Logic circuits (and sometimes mixed-mode circuits) are often described in hardware description languages such as VHDL or Verilog, then synthesized using a logic synthesis engine.

X . II Digital electronics

Digital electronics or digital (electronic) circuits are electronics that operate on digital signals. In contrast, analog circuits manipulate analog signals whose performance is more subject to manufacturing tolerance, signal attenuation and noise. Digital techniques are helpful because it is much easier to get an electronic device to switch into one of a number of known states than to accurately reproduce a continuous range of values.

Digital electronic circuits are usually made from large assemblies of logic gates (often printed on integrated circuits), simple electronic representations of Boolean logic functions.

An advantage of digital circuits when compared to analog circuits is that signals represented digitally can be transmitted without degradation due to noise.[8] For example, a continuous audio signal transmitted as a sequence of 1s and 0s can be reconstructed without error, provided the noise picked up in transmission is not enough to prevent identification of the 1s and 0s. An hour of music can be stored on a compact disc using about 6 billion binary digits.
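The raw bit count follows from the CD audio format of 44,100 samples per second, 16 bits per sample and two channels. The arithmetic below covers only the audio samples; the rest of the roughly 6 billion figure presumably comes from the error-correction and control data a CD stores alongside them, which is an assumption rather than something stated here.

```python
SAMPLE_RATE_HZ = 44_100   # samples per second, per channel
BITS_PER_SAMPLE = 16
CHANNELS = 2              # stereo
SECONDS = 60 * 60         # one hour of music

audio_bits = SAMPLE_RATE_HZ * BITS_PER_SAMPLE * CHANNELS * SECONDS
print(f"{audio_bits:,} bits")  # 5,080,320,000 bits
```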

In a digital system, a more precise representation of a signal can be obtained by using more binary digits to represent it. While this requires more digital circuits to process the signals, each digit is handled by the same kind of hardware, resulting in an easily scalable system. In an analog system, additional resolution requires fundamental improvements in the linearity and noise characteristics of each step of the signal chain.

Computer-controlled digital systems can be controlled by software, allowing new functions to be added without changing hardware. Often this can be done outside of the factory by updating the product's software, so design errors can be corrected even after the product is in a customer's hands.

Information storage can be easier in digital systems than in analog ones. The noise-immunity of digital systems permits data to be stored and retrieved without degradation. In an analog system, noise from aging and wear degrades the information stored. In a digital system, as long as the total noise is below a certain level, the information can be recovered perfectly.

Even when more significant noise is present, the use of redundancy permits the recovery of the original data provided too many errors do not occur.

In some cases, digital circuits use more energy than analog circuits to accomplish the same tasks. The extra heat they produce adds to the complexity of the design, for example by requiring heat sinks. In portable or battery-powered systems this can limit the use of digital systems.

For example, battery-powered cellular telephones often use a low-power analog front-end to amplify and tune in the radio signals from the base station. However, a base station has grid power and can use power-hungry, but very flexible software radios. Such base stations can be easily reprogrammed to process the signals used in new cellular standards.

Digital circuits are sometimes more expensive, especially in small quantities.

Most useful digital systems must translate from continuous analog signals to discrete digital signals. This causes quantization errors. Quantization error can be reduced if the system stores enough digital data to represent the signal to the desired degree of fidelity. The Nyquist-Shannon sampling theorem provides an important guideline as to how much digital data is needed to accurately portray a given analog signal.
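Quantization error can be put in numbers. For a full-scale sine wave and an ideal N-bit uniform quantizer, a standard textbook result gives a signal-to-quantization-noise ratio of about 6.02·N + 1.76 dB, so each extra bit buys roughly 6 dB. The sketch below checks that rule numerically; the mid-rise quantizer, the test frequency and the sample count are arbitrary choices for illustration.

```python
import math


def quantize(x, bits):
    """Mid-rise uniform quantizer for inputs in [-1, 1] with 2**bits levels."""
    step = 2.0 / 2 ** bits
    code = math.floor(x / step)
    code = max(-(2 ** (bits - 1)), min(code, 2 ** (bits - 1) - 1))  # clamp to valid codes
    return (code + 0.5) * step


def measured_snr_db(bits, samples=50_000, freq=0.1234567):
    """Quantize a full-scale sine and compare signal power with error power."""
    signal_power = noise_power = 0.0
    for n in range(samples):
        x = math.sin(2 * math.pi * freq * n)
        err = quantize(x, bits) - x
        signal_power += x * x
        noise_power += err * err
    return 10 * math.log10(signal_power / noise_power)


for bits in (8, 12, 16):
    print(bits, round(measured_snr_db(bits), 1), round(6.02 * bits + 1.76, 1))
```

Each printed row should land close to the formula, at roughly 50, 74 and 98 dB. At low bit counts the measured value drifts slightly below the formula, because the error near the sine's peaks is not quite uniformly distributed.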

In some systems, if a single piece of digital data is lost or misinterpreted, the meaning of large blocks of related data can completely change. Because of the cliff effect, it can be difficult for users to tell if a particular system is right on the edge of failure, or if it can tolerate much more noise before failing.

Digital fragility can be reduced by designing a digital system for robustness. For example, a parity bit or other error management method can be inserted into the signal path. These schemes help the system detect errors, and then either correct the errors, or at least ask for a new copy of the data. In a state machine, the state transition logic can be designed to catch unused states and trigger a reset sequence or other error recovery routine.
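A single even-parity bit is the simplest form of error management. The minimal sketch below (function names hypothetical) appends one parity bit to a data word and checks it on receipt. It detects any odd number of flipped bits but cannot locate them, and an even number of flips goes unnoticed.

```python
def even_parity_bit(bits):
    """Return the bit that makes the total number of 1s even."""
    return sum(bits) % 2


def add_parity(bits):
    """Append the parity bit to a list of 0/1 data bits."""
    return bits + [even_parity_bit(bits)]


def parity_ok(word):
    """A received word passes the check if it still contains an even number of 1s."""
    return sum(word) % 2 == 0


word = add_parity([1, 0, 1, 1, 0, 0, 1])
print(word, parity_ok(word))   # [1, 0, 1, 1, 0, 0, 1, 0] True
corrupted = word.copy()
corrupted[2] ^= 1              # flip one bit in transit
print(parity_ok(corrupted))    # False
```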

Digital memory and transmission systems can use techniques such as error detection and correction, which add redundant data so that errors introduced in transmission and storage can be corrected.
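As one concrete example of error correction (a choice made here, not named in the text), the classic Hamming(7,4) code adds three parity bits to every four data bits, which is enough to locate and repair any single flipped bit. The sketch below numbers codeword positions 1 to 7 with parity bits at positions 1, 2 and 4; the function names are hypothetical.

```python
def hamming74_encode(d):
    """Encode 4 data bits as a 7-bit codeword: [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(c):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(c)
    syndrome = 0
    for check in (1, 2, 4):
        # Each check covers the positions whose index has this bit set.
        parity = 0
        for pos in range(1, 8):
            if pos & check:
                parity ^= c[pos - 1]
        if parity:
            syndrome += check
    if syndrome:                  # a nonzero syndrome is the position of the bad bit
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]
```

If two bits flip, this decoder "corrects" the wrong position, which is why memory systems often add one more overall parity bit so that double errors can at least be detected.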

On the other hand, some techniques used in digital systems make those systems more vulnerable to single-bit errors. These techniques are acceptable when the underlying bits are reliable enough that such errors are highly unlikely.

A single-bit error in audio data stored directly as linear pulse-code modulation (as on an audio CD) causes, at worst, a single click. Nevertheless, many people use audio compression to save storage space and download time, even though a single-bit error in compressed audio may corrupt the entire song.

Construction

Integrated circuits consist of multiple transistors on one silicon chip, and are the least expensive way to make large numbers of interconnected logic gates. Integrated circuits are usually designed by engineers using electronic design automation software (see below for more information) to perform some type of function.

Integrated circuits are usually interconnected on a printed circuit board, a board that holds electrical components and connects them together with copper traces.

Design

Each logic symbol is represented by a different shape. The standard set of shapes was introduced in 1984 under IEEE/ANSI standard 91-1984. "The logic symbol given under this standard are being increasingly used now and have even started appearing in the literature published by manufacturers of digital integrated circuits."[9]

Another form of digital circuit is constructed from lookup tables (many sold as "programmable logic devices", though other kinds of PLDs exist). Lookup tables can perform the same functions as machines based on logic gates, but can be easily reprogrammed without changing the wiring. This means that a designer can often repair design errors without changing the arrangement of wires. Therefore, in small-volume products, programmable logic devices are often the preferred solution. They are usually designed by engineers using electronic design automation software.

When the volumes are medium to large, and the logic can be slow, or involves complex algorithms or sequences, often a small microcontroller is programmed to make an embedded system. These are usually programmed by software engineers.

When only one digital circuit is needed, and its design is totally customized, as for a factory production line controller, the conventional solution is a programmable logic controller, or PLC. These are usually programmed by electricians, using ladder logic.

Structure of digital systems

Engineers use many methods to minimize logic functions, in order to reduce the circuit's complexity. A less complex circuit has fewer errors and fewer components, and is therefore less expensive.

The most widely used simplification is a minimization algorithm like the Espresso heuristic logic minimizer within a CAD system, although historically, binary decision diagrams, an automated Quine–McCluskey algorithm, truth tables, Karnaugh maps, and Boolean algebra have been used.
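The core step of the Quine–McCluskey algorithm is to merge terms that differ in a single bit, repeating until no more merges are possible; the terms that never merge are the prime implicants. The sketch below implements only that first phase (choosing a minimal cover of prime implicants is a separate step it omits) and writes each term as a string of '0', '1' and '-' (don't care). The function names are hypothetical.

```python
from itertools import combinations


def combine(a, b):
    """Merge two terms that differ in exactly one fixed bit, else return None."""
    diff = [i for i, (x, y) in enumerate(zip(a, b)) if x != y]
    if len(diff) == 1 and '-' not in (a[diff[0]], b[diff[0]]):
        i = diff[0]
        return a[:i] + '-' + a[i + 1:]
    return None


def prime_implicants(minterms, n_vars):
    """First phase of Quine-McCluskey: merge repeatedly until nothing combines."""
    terms = {format(m, f'0{n_vars}b') for m in minterms}
    primes = set()
    while terms:
        merged, used = set(), set()
        for a, b in combinations(sorted(terms), 2):
            c = combine(a, b)
            if c is not None:
                merged.add(c)
                used.update((a, b))
        primes |= terms - used   # terms that merged with nothing are prime
        terms = merged
    return primes
```

For f(a, b, c) = Σm(0, 1, 2, 5, 6, 7), `prime_implicants([0, 1, 2, 5, 6, 7], 3)` returns six prime implicants: 00-, 0-0, -01, -10, 1-1 and 11-. Exhaustive pairwise comparison is fine for a handful of variables; heuristic minimizers such as Espresso exist because this approach grows too quickly for large functions.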

Representation

Representations are crucial to an engineer's design of digital circuits. Some analysis methods only work with particular representations.

The classical way to represent a digital circuit is with an equivalent set of logic gates. Another way, often with the least electronics, is to construct an equivalent system of electronic switches (usually transistors). One of the easiest ways is to simply have a memory containing a truth table. The inputs are fed into the address of the memory, and the data outputs of the memory become the outputs.
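The memory-as-truth-table idea can be sketched in a few lines. Here a hypothetical eight-entry table holds a full adder: the three inputs form the address and each stored word supplies the two outputs. Changing the table's contents changes the function without touching any wiring, the same property that makes lookup-table based programmable logic easy to reprogram.

```python
# Hypothetical 8-word memory holding the truth table of a full adder.
# Address bits are (a, b, carry_in); each stored word is (sum, carry_out).
FULL_ADDER_ROM = [
    (0, 0),  # address 000
    (1, 0),  # address 001
    (1, 0),  # address 010
    (0, 1),  # address 011
    (1, 0),  # address 100
    (0, 1),  # address 101
    (0, 1),  # address 110
    (1, 1),  # address 111
]


def lookup(a, b, carry_in):
    """Use the inputs as a memory address and return the stored outputs."""
    address = (a << 2) | (b << 1) | carry_in  # inputs drive the address lines
    return FULL_ADDER_ROM[address]            # data outputs become circuit outputs
```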

For automated analysis, these representations have digital file formats that can be processed by computer programs. Most digital engineers are very careful to select computer programs ("tools") with compatible file formats.

Combinational vs. Sequential

To choose representations, engineers consider types of digital systems. Most digital systems divide into "combinational systems" and "sequential systems." A combinational system always presents the same output when given the same inputs. It is basically a representation of a set of logic functions, as already discussed.

A sequential system is a combinational system with some of the outputs fed back as inputs. This makes the digital machine perform a "sequence" of operations. The simplest sequential system is probably a flip-flop, a mechanism that represents a binary digit or "bit".

Sequential systems are often designed as state machines. In this way, engineers can design a system's gross behavior, and even test it in a simulation, without considering all the details of the logic functions.

Sequential systems divide into two further subcategories. "Synchronous" sequential systems change state all at once, when a "clock" signal changes state. "Asynchronous" sequential systems propagate changes whenever inputs change. Synchronous sequential systems are made of well-characterized asynchronous circuits such as flip-flops, that change only when the clock changes, and which have carefully designed timing margins.

Synchronous systems

The state register is just a representation of a binary number. If the states in the state machine are numbered (easy to arrange), the logic function is some combinational logic that produces the number of the next state.
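A minimal sketch of that arrangement, assuming a hypothetical machine that outputs 1 once it has seen two consecutive 1s: the states are numbered 0, 1 and 2, a small combinational function computes the number of the next state from the current state and the input, and the state register is updated once per simulated clock edge.

```python
def next_state(state, x):
    """Combinational logic: number of the next state from current state and input."""
    if x == 0:
        return 0              # any 0 restarts the count
    return min(state + 1, 2)  # count consecutive 1s, saturating at state 2


def output(state):
    """Moore-style output: depends only on the contents of the state register."""
    return 1 if state == 2 else 0


def run(inputs):
    """Clock the machine once per input bit and collect its outputs."""
    state = 0                 # state register after reset
    outputs = []
    for x in inputs:          # each iteration models one clock edge
        state = next_state(state, x)
        outputs.append(output(state))
    return outputs
```

For example, `run([1, 1, 0, 1, 1, 1, 0])` passes through states 1, 2, 0, 1, 2, 2, 0 and returns `[0, 1, 0, 0, 1, 1, 0]`.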

Asynchronous systems

As of 2014, most digital logic is synchronous because it is easier to create and verify a synchronous design. However, asynchronous logic is thought to be potentially superior because its speed is not constrained by an arbitrary clock; instead, it runs at the maximum speed of its logic gates. Building an asynchronous system using faster parts makes the circuit faster.

Nevertheless, most systems need circuits that allow external unsynchronized signals to enter synchronous logic circuits. These are inherently asynchronous in their design and must be analyzed as such. Examples of widely used asynchronous circuits include synchronizer flip-flops, switch debouncers and arbiters.

Asynchronous logic components can be hard to design because all possible states, in all possible timings must be considered. The usual method is to construct a table of the minimum and maximum time that each such state can exist, and then adjust the circuit to minimize the number of such states. Then the designer must force the circuit to periodically wait for all of its parts to enter a compatible state (this is called "self-resynchronization"). Without such careful design, it is easy to accidentally produce asynchronous logic that is "unstable," that is, real electronics will have unpredictable results because of the cumulative delays caused by small variations in the values of the electronic components.

Register transfer systems

In register transfer logic, binary numbers are stored in groups of flip flops called registers. The outputs of each register are a bundle of wires called a "bus" that carries that number to other calculations. A calculation is simply a piece of combinational logic. Each calculation also has an output bus, and these may be connected to the inputs of several registers. Sometimes a register will have a multiplexer on its input, so that it can store a number from any one of several buses. Alternatively, the outputs of several items may be connected to a bus through buffers that can turn off the output of all of the devices except one. A sequential state machine controls when each register accepts new data from its input.
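Register transfer can be modeled as one combinational calculation per clock, with a controller choosing which registers drive the buses and which register loads the result. The sketch below assumes hypothetical 8-bit registers named by strings, a single adder as the only calculation, and a control sequence given as a list of (source, source, destination) triples standing in for the sequential state machine.

```python
def run_datapath(control_words, registers):
    """Simulate a tiny register-transfer datapath, one control word per clock."""
    regs = dict(registers)
    for src_a, src_b, dest in control_words:
        bus_a = regs[src_a]                # selected register outputs drive two buses
        bus_b = regs[src_b]
        result = (bus_a + bus_b) & 0xFF    # combinational 8-bit adder
        regs[dest] = result                # only the selected register loads the result
    return regs
```

Choosing `dest` plays the role of the load enable described in the text: every register sees the adder's output bus, but only one accepts it on a given clock. For example, starting from A = 3, B = 5, C = 0, the sequence `[("A", "B", "C"), ("C", "C", "C"), ("C", "A", "A")]` leaves A = 19, B = 5, C = 16.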

Asynchronous register-transfer systems (such as computers) have a general solution. In the 1980s, some researchers discovered that almost all synchronous register-transfer machines could be converted to asynchronous designs by using first-in-first-out synchronization logic. In this scheme, the digital machine is characterized as a set of data flows. In each step of the flow, an asynchronous "synchronization circuit" determines when the outputs of that step are valid, and presents a signal that says, "grab the data" to the stages that use that stage's outputs. It turns out that just a few relatively simple synchronization circuits are needed.

Computer design

In this way, the complex task of designing the controls of a computer is reduced to a simpler task of programming a collection of much simpler logic machines.

Almost all computers are synchronous. However, true asynchronous computers have also been designed. One example is the Aspida DLX core.[10] Another was offered by ARM Holdings. Speed advantages have not materialized, because modern computer designs already run at the speed of their slowest component, usually memory. These do use somewhat less power because a clock distribution network is not needed. An unexpected advantage is that asynchronous computers do not produce spectrally-pure radio noise, so they are used in some mobile-phone base-station controllers. They may be more secure in cryptographic applications because their electrical and radio emissions can be more difficult to decode.

Computer architecture is a specialized engineering activity that tries to arrange the registers, calculation logic, buses and other parts of the computer in the best way for some purpose. Computer architects have applied large amounts of ingenuity to computer design to reduce the cost and increase the speed and immunity to programming errors of computers. An increasingly common goal is to reduce the power used in a battery-powered computer system, such as a cell phone. Many computer architects serve an extended apprenticeship as microprogrammers.

Digital circuits are made from analog components. The design must assure that the analog nature of the components doesn't dominate the desired digital behavior. Digital systems must manage noise and timing margins, parasitic inductances and capacitances, and filter power connections.

Bad designs have intermittent problems such as "glitches", vanishingly fast pulses that may trigger some logic but not others, "runt pulses" that do not reach valid "threshold" voltages, or unexpected ("undecoded") combinations of logic states.

Additionally, where clocked digital systems interface to analog systems or systems that are driven from a different clock, the digital system can be subject to metastability, where a change to the input violates the setup time for a digital input latch. This situation resolves itself, but only after a random time, and while it persists it can result in invalid signals being propagated within the digital system for a short time.

Since digital circuits are made from analog components, digital circuits calculate more slowly than low-precision analog circuits that use a similar amount of space and power. However, the digital circuit will calculate more repeatably, because of its high noise immunity. On the other hand, in the high-precision domain (for example, where 14 or more bits of precision are needed), analog circuits require much more power and area than digital equivalents.

Automated design tools

To save costly engineering effort, much of the effort of designing large logic machines has been automated. The computer programs are called "electronic design automation tools" or just "EDA."

Simple truth table-style descriptions of logic are often optimized with EDA that automatically produces reduced systems of logic gates or smaller lookup tables that still produce the desired outputs. The most common example of this kind of software is the Espresso heuristic logic minimizer.

Most practical algorithms for optimizing large logic systems use algebraic manipulations or binary decision diagrams, and there are promising experiments with genetic algorithms and annealing optimizations.

To automate costly engineering processes, some EDA can take state tables that describe state machines and automatically produce a truth table or a function table for the combinational logic of a state machine. The state table is a piece of text that lists each state, together with the conditions controlling the transitions between them and the associated output signals.
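A minimal sketch of that conversion, assuming a hypothetical state table (held in a Python dict) for a machine that outputs 1 when the current and previous inputs are both 1: each symbolic state receives a binary code, and each table entry becomes one truth-table row mapping (state bits, input) to (next-state bits, output).

```python
import math

# Hypothetical state table: (current state, input) -> (next state, output)
STATE_TABLE = {
    ("IDLE", 0): ("IDLE", 0),
    ("IDLE", 1): ("ONE", 0),
    ("ONE", 0): ("IDLE", 0),
    ("ONE", 1): ("TWO", 1),
    ("TWO", 0): ("IDLE", 0),
    ("TWO", 1): ("TWO", 1),
}


def to_truth_table(state_table):
    """Assign binary codes to states and emit one truth-table row per entry."""
    states = []
    for (current, _), (following, _) in state_table.items():
        for name in (current, following):
            if name not in states:       # number states in order of first appearance
                states.append(name)
    width = max(1, math.ceil(math.log2(len(states))))
    code = {name: format(i, f"0{width}b") for i, name in enumerate(states)}
    rows = [(code[cur] + str(x), code[nxt] + str(out))
            for (cur, x), (nxt, out) in state_table.items()]
    return code, rows
```

With three states the encoder needs two state bits, so the code 11 is never used; a robust design would route such unused codes back to a known state.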

It is common for the function tables of such computer-generated state-machines to be optimized with logic-minimization software such as Minilog.

Often, real logic systems are designed as a series of sub-projects, which are combined using a "tool flow." The tool flow is usually a "script," a simplified computer language that can invoke the software design tools in the right order.

Tool flows for large logic systems such as microprocessors can be thousands of commands long, and combine the work of hundreds of engineers.

Writing and debugging tool flows is an established engineering specialty in companies that produce digital designs. The tool flow usually terminates in a detailed computer file or set of files that describe how to physically construct the logic. Often it consists of instructions to draw the transistors and wires on an integrated circuit or a printed circuit board.

Parts of tool flows are "debugged" by verifying the outputs of simulated logic against expected outputs. The test tools take computer files with sets of inputs and outputs, and highlight discrepancies between the simulated behavior and the expected behavior.
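The comparison step can be sketched as follows, assuming a hypothetical half adder as the simulated design and an in-memory list standing in for the test-vector file; real tools read these from their own file formats.

```python
def simulate_half_adder(a, b):
    """Stand-in for simulated logic: returns (sum, carry)."""
    return a ^ b, a & b


# Hypothetical test vectors: inputs followed by expected outputs.
TEST_VECTORS = [
    ((0, 0), (0, 0)),
    ((0, 1), (1, 0)),
    ((1, 0), (1, 0)),
    ((1, 1), (0, 1)),
]


def check(design, vectors):
    """Return every vector whose simulated outputs differ from the expected ones."""
    mismatches = []
    for inputs, expected in vectors:
        actual = design(*inputs)
        if actual != expected:
            mismatches.append((inputs, expected, actual))
    return mismatches
```

A correct design yields an empty list; a buggy one that uses OR instead of XOR for the sum, `check(lambda a, b: (a | b, a & b), TEST_VECTORS)`, reports the single discrepancy at inputs `(1, 1)`.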

Once the input data is believed correct, the design itself must still be verified for correctness. Some tool flows verify designs by first producing a design, and then scanning the design to produce compatible input data for the tool flow. If the scanned data matches the input data, then the tool flow has probably not introduced errors.

The functional verification data are usually called "test vectors". The functional test vectors may be preserved and used in the factory to test that newly constructed logic works correctly. However, functional test patterns don't discover common fabrication faults. Production tests are often designed by software tools called "test pattern generators". These generate test vectors by examining the structure of the logic and systematically generating tests for particular faults. This way the fault coverage can closely approach 100%, provided the design is properly made testable (see next section).
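The sketch below illustrates test-pattern generation on a deliberately tiny circuit, y = (a AND b) OR c, under the single stuck-at fault model. It uses exhaustive fault simulation, which only works for a handful of inputs (commercial test pattern generators rely on structural algorithms instead), and then greedily picks vectors until every fault is detected. The circuit, net names and function names are all hypothetical.

```python
from itertools import product

NETS = ("a", "b", "c", "n", "y")   # n is the internal AND output


def evaluate(a, b, c, fault=None):
    """Evaluate y = (a AND b) OR c, optionally with one net stuck at 0 or 1."""
    def pin(name, value):
        return fault[1] if fault and fault[0] == name else value
    a, b, c = pin("a", a), pin("b", b), pin("c", c)
    n = pin("n", a & b)
    return pin("y", n | c)


def generate_tests():
    """Greedily pick input vectors until every single stuck-at fault is detected."""
    faults = [(net, v) for net in NETS for v in (0, 1)]
    vectors = list(product((0, 1), repeat=3))
    # For each vector, the faults that make the output differ from the good machine.
    detects = {vec: {f for f in faults if evaluate(*vec, fault=f) != evaluate(*vec)}
               for vec in vectors}
    remaining, tests = set(faults), []
    while remaining:
        best = max(vectors, key=lambda v: len(detects[v] & remaining))
        if not detects[best] & remaining:
            break  # whatever is left cannot be detected (redundant logic)
        tests.append(best)
        remaining -= detects[best]
    return tests, remaining
```

For this circuit the greedy search covers all ten faults with four of the eight possible input vectors.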

Once a design exists, and is verified and testable, it often needs to be processed to be manufacturable as well. Modern integrated circuits have features smaller than the wavelength of the light used to expose the photoresist. Manufacturability software adds interference patterns to the exposure masks to eliminate open-circuits, and enhance the masks' contrast.

Design for testability

There are several reasons for testing a logic circuit. When the circuit is first developed, it is necessary to verify that the designed circuit meets the required functional and timing specifications. When multiple copies of a correctly designed circuit are being manufactured, it is essential to test each copy to ensure that the manufacturing process has not introduced any flaws.[12]

A large logic machine (say, with more than a hundred logical variables) can have an astronomical number of possible states. Obviously, in the factory, testing every state is impractical if testing each state takes a microsecond, and there are more states than the number of microseconds since the universe began. Unfortunately, this ridiculous-sounding case is typical.
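A quick check of that comparison, assuming 100 binary state variables, one microsecond per tested state, and an age of the universe of about 13.8 billion years (the last figure is an outside assumption, not from the text):

```python
STATE_VARIABLES = 100            # "more than a hundred logical variables"
SECONDS_PER_TEST = 1e-6          # one microsecond per state
AGE_OF_UNIVERSE_YEARS = 13.8e9   # approximate, assumed here
SECONDS_PER_YEAR = 365.25 * 24 * 3600

states = 2 ** STATE_VARIABLES
microseconds_elapsed = AGE_OF_UNIVERSE_YEARS * SECONDS_PER_YEAR / SECONDS_PER_TEST

print(f"states to test:       {states:.2e}")                # 1.27e+30
print(f"microseconds elapsed: {microseconds_elapsed:.2e}")  # 4.35e+23
print(f"ratio: {states / microseconds_elapsed:.1e}")        # 2.9e+06
```

Exhaustive testing would take roughly three million times longer than the universe has existed.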

Fortunately, large logic machines are almost always designed as assemblies of smaller logic machines. To save time, the smaller sub-machines are isolated by permanently installed "design for test" circuitry, and are tested independently.

One common test scheme known as "scan design" moves test bits serially (one after another) from external test equipment through one or more serial shift registers known as "scan chains". Serial scans have only one or two wires to carry the data, and minimize the physical size and expense of the infrequently used test logic.

After all the test data bits are in place, the design is reconfigured to be in "normal mode" and one or more clock pulses are applied, to test for faults (e.g. stuck-at low or stuck-at high) and capture the test result into flip-flops and/or latches in the scan shift register(s). Finally, the result of the test is shifted out to the block boundary and compared against the predicted "good machine" result.
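A minimal model of the scan sequence, assuming a hypothetical block of three flip-flops whose combinational logic is passed in as a Python function: the pattern is shifted in one bit per clock, a single capture clock in normal mode loads the logic's response, and the response is shifted back out for comparison with the good-machine result.

```python
def scan_test(pattern, logic):
    """Shift a pattern in, apply one capture clock, and shift the response out."""
    n = len(pattern)
    chain = [0] * n
    for bit in reversed(pattern):          # scan mode: serial shift-in
        chain = [bit] + chain[:-1]
    chain = logic(chain)                   # normal mode: one capture clock
    shifted_out = []
    for _ in range(n):                     # scan mode again: serial shift-out
        shifted_out.append(chain[-1])
        chain = [0] + chain[:-1]
    return shifted_out[::-1]               # restore flip-flop order


def good_logic(q):
    """Hypothetical combinational logic feeding the three flip-flops."""
    return [q[0] & q[1], q[1] ^ q[2], 1 - q[0]]
```

A fault-free block returns exactly `good_logic(pattern)`; a block whose first output is stuck at 0 returns `[0, 1, 0]` instead of `[1, 1, 0]` for the pattern `[1, 1, 0]`, and the mismatch flags the fault.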

In a board-test environment, serial to parallel testing has been formalized with a standard called "JTAG" (named after the "Joint Test Action Group" that made it).

Another common testing scheme provides a test mode that forces some part of the logic machine to enter a "test cycle." The test cycle usually exercises large independent parts of the machine.

Trade-offs

Several factors determine the practicality of a system of digital logic: cost, reliability, fanout and speed. Engineers explored numerous electronic devices to get a favourable combination of these characteristics.

Cost

The cost of a logic gate is crucial, primarily because very many gates are needed to build a computer or other advanced digital system, and because the more gates can be used, the more capable and responsive the machine can become. Since the bulk of a digital computer is simply an interconnected network of logic gates, the overall cost of building a computer correlates strongly with the price per logic gate. In the 1930s, the earliest digital logic systems were constructed from telephone relays because these were inexpensive and relatively reliable. After that, electrical engineers always used the cheapest available electronic switches that could still fulfill the requirements.

The earliest integrated circuits were a happy accident. They were constructed not to save money, but to save weight, and permit the Apollo Guidance Computer to control an inertial guidance system for a spacecraft. The first integrated circuit logic gates cost nearly $50 (in 1960 dollars, when an engineer earned $10,000/year). To everyone's surprise, by the time the circuits were mass-produced, they had become the least-expensive method of constructing digital logic. Improvements in this technology have driven all subsequent improvements in cost.

With the rise of integrated circuits, reducing the absolute number of chips used represented another way to save costs. The goal of a designer is not just to make the simplest circuit, but to keep the component count down. Sometimes this results in more complicated designs with respect to the underlying digital logic but nevertheless reduces the number of components, board size, and even power consumption. A major motive for reducing component count on printed circuit boards is to reduce the manufacturing defect rate and increase reliability, as every soldered connection is a potentially bad one, so the defect and failure rates tend to increase along with the total number of component pins.

For example, in some logic families, NAND gates are the simplest digital gate to build. All other logical operations can be implemented by NAND gates. If a circuit already required a single NAND gate, and a single chip normally carried four NAND gates, then the remaining gates could be used to implement other logical operations like logical and. This could eliminate the need for a separate chip containing those different types of gates.
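The claim that NAND gates alone can implement every other logical operation can be checked with a small truth-table sketch. The Python below is illustrative only: the function names (`nand`, `not_`, `and_`, `or_`) are hypothetical, and bits are modelled as the integers 0 and 1. It shows that tying a NAND's inputs together gives NOT, that a NAND followed by that inverter gives AND (two gates, so the spare gates on a four-gate chip are enough), and that De Morgan's law gives OR from three NANDs.

```python
# Minimal sketch: building NOT, AND and OR from a single NAND primitive.
# Bits are modelled as Python ints 0 or 1 (an assumption for illustration).

def nand(a: int, b: int) -> int:
    """The only 'physical' gate in this sketch."""
    return 0 if (a and b) else 1

def not_(a: int) -> int:
    # Tie both NAND inputs together: NAND(a, a) = NOT a
    return nand(a, a)

def and_(a: int, b: int) -> int:
    # AND is a NAND followed by an inverter (itself a NAND): two gates
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    # De Morgan: a OR b = NAND(NOT a, NOT b): three gates
    return nand(not_(a), not_(b))

if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", and_(a, b), "OR:", or_(a, b))
```

Printing the full truth table is cheap here because two inputs have only four combinations.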

Reliability

The "reliability" of a logic gate describes its mean time between failure (MTBF). Digital machines often have millions of logic gates. Also, most digital machines are "optimized" to reduce their cost. The result is that often, the failure of a single logic gate will cause a digital machine to stop working. It is possible to design machines to be more reliable by using redundant logic which will not malfunction as a result of the failure of any single gate (or even any two, three, or four gates), but this necessarily entails using more components, which raises the financial cost and also usually increases the weight of the machine and may increase the power it consumes.

Digital machines first became useful when the MTBF for a switch rose above a few hundred hours. Even so, many of these machines had complex, well-rehearsed repair procedures, and would be nonfunctional for hours because a tube burned out, or a moth got stuck in a relay. Modern transistorized integrated circuit logic gates have MTBFs greater than 82 billion hours ($8.2 \times 10^{10}$ hours),[13] and they need them because they have so many logic gates.
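Why large gate counts demand such high per-gate MTBF can be made concrete with a simple model. This is a sketch under two stated assumptions that the text does not spell out: each gate fails independently at a constant rate, and the machine stops if any single gate fails (no redundancy). Under those assumptions failure rates add, so the system MTBF is the gate MTBF divided by the number of gates. The function name `series_mtbf` and the gate counts are hypothetical.

```python
# Minimal sketch, assuming (1) each gate fails independently at a constant rate
# and (2) the machine stops if ANY gate fails (no redundancy). Under these
# assumptions failure rates add, so system MTBF = gate MTBF / number of gates.

def series_mtbf(gate_mtbf_hours: float, n_gates: int) -> float:
    """MTBF of a system of n identical gates in which any single failure is fatal."""
    if n_gates <= 0:
        raise ValueError("n_gates must be positive")
    failure_rate_per_gate = 1.0 / gate_mtbf_hours    # failures per hour, one gate
    system_failure_rate = n_gates * failure_rate_per_gate
    return 1.0 / system_failure_rate

if __name__ == "__main__":
    gate_mtbf = 8.2e10  # hours, the per-gate figure quoted in the text
    for n in (1_000, 1_000_000, 1_000_000_000):      # illustrative gate counts
        print(f"{n:>13,} gates -> system MTBF ~ {series_mtbf(gate_mtbf, n):,.0f} hours")
```

In this model the system MTBF shrinks in direct proportion to the gate count, which is why per-gate reliability has to keep improving as designs grow. Redundant logic, as described above, breaks assumption (2).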

Fanout

Fanout describes how many logic inputs can be controlled by a single logic output without exceeding the electrical current ratings of the gate outputs.[14] The minimum practical fanout is about five. Modern electronic logic gates using CMOS transistors for switches have fanouts near fifty, and can sometimes go much higher.

Speed

The "switching speed" describes how many times per second an inverter (an electronic representation of a "logical not" function) can change from true to false and back. Faster logic can accomplish more operations in less time. Digital logic first became useful when switching speeds got above 50 Hz, because that was faster than a team of humans operating mechanical calculators. Modern electronic digital logic routinely switches at 5 GHz ($5 \times 10^{9}$ Hz), and some laboratory systems switch at more than 1 THz ($1 \times 10^{12}$ Hz).

Logic families

Design started with relays. Relay logic was relatively inexpensive and reliable, but slow. Occasionally a mechanical failure would occur. Fanouts were typically about 10, limited by the resistance of the coils and arcing on the contacts from high voltages.

Later, vacuum tubes were used. These were very fast, but generated heat, and were unreliable because the filaments would burn out. Fanouts were typically 5 to 7, limited by the heating from the tubes' current. In the 1950s, special "computer tubes" were developed with filaments that omitted volatile elements like silicon. These ran for hundreds of thousands of hours.

The first semiconductor logic family was resistor–transistor logic. This was a thousand times more reliable than tubes, ran cooler, and used less power, but had a very low fan-in of 3. Diode–transistor logic improved the fanout up to about 7, and reduced the power. Some DTL designs used two power-supplies with alternating layers of NPN and PNP transistors to increase the fanout.

Transistor–transistor logic (TTL) was a great improvement over these. In early devices, fanout improved to 10, and later variations reliably achieved 20. TTL was also fast, with some variations achieving switching times as low as 20 ns. TTL is still used in some designs.

Emitter coupled logic is very fast but uses a lot of power. It was extensively used for high-performance computers made up of many medium-scale components (such as the Illiac IV).

By far, the most common digital integrated circuits built today use CMOS logic, which is fast, offers high circuit density and low power per gate. This is used even in large, fast computers, such as the IBM System z.

Recent developments

In 2009, researchers discovered that memristors can implement Boolean state storage (similar to a flip-flop), material implication and logical inversion, providing a complete logic family with very small amounts of space and power, using familiar CMOS semiconductor processes.

The discovery of superconductivity has enabled the development of rapid single flux quantum (RSFQ) circuit technology, which uses Josephson junctions instead of transistors. Most recently, attempts are being made to construct purely optical computing systems capable of processing digital information using nonlinear optical elements.
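The memristor result above rests on a piece of pure logic: material implication (IMPLY) combined with a constant FALSE is functionally complete. The sketch below abstracts away all device physics and only checks the logic. The names `imply`, `not_`, `nand` and `FALSE` are hypothetical, and bits are the integers 0 and 1. Because NAND can build every other gate, deriving NAND from IMPLY is enough to show completeness.

```python
# Sketch of the logic behind memristive "material implication" (IMPLY):
# IMPLY together with a constant FALSE is functionally complete.
# Bits are 0/1 ints; gate names are hypothetical, chosen for illustration.

FALSE = 0

def imply(p: int, q: int) -> int:
    """Material implication p -> q, i.e. (NOT p) OR q."""
    return 1 if (not p) or q else 0

def not_(p: int) -> int:
    # p -> FALSE is true exactly when p is false
    return imply(p, FALSE)

def nand(p: int, q: int) -> int:
    # p -> (NOT q) equals (NOT p) OR (NOT q), which is NAND
    return imply(p, not_(q))

if __name__ == "__main__":
    for p in (0, 1):
        for q in (0, 1):
            print(p, q, "IMPLY:", imply(p, q), "NAND:", nand(p, q))
```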

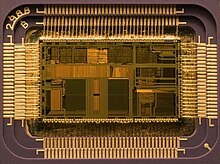

X . III Integrated circuit design

Integrated circuit design, or IC design, is a subset of electronics engineering, encompassing the particular logic and circuit design techniques required to design integrated circuits, or ICs. ICs consist of miniaturized electronic components built into an electrical network on a monolithic semiconductor substrate by photolithography.

IC design can be divided into the broad categories of digital and analog IC design. Digital IC design is used to produce components such as microprocessors, FPGAs, memories (RAM, ROM, and flash) and digital ASICs. Digital design focuses on logical correctness, maximizing circuit density, and placing circuits so that clock and timing signals are routed efficiently. Analog IC design also has specializations in power IC design and RF IC design. Analog IC design is used in the design of op-amps, linear regulators, phase-locked loops, oscillators and active filters. Analog design is more concerned with the physics of the semiconductor devices, such as gain, matching, power dissipation, and resistance. Fidelity of analog signal amplification and filtering is usually critical, and as a result analog ICs use larger-area active devices than digital designs and are usually less dense in circuitry.

Modern ICs are enormously complicated. An average desktop computer chip, as of 2015, has over 1 billion transistors. The rules for what can and cannot be manufactured are also extremely complex. Common IC processes of 2015 have more than 500 rules. Furthermore, since the manufacturing process itself is not completely predictable, designers must account for its statistical nature. The complexity of modern IC design, as well as market pressure to produce designs rapidly, has led to the extensive use of automated design tools in the IC design process. In short, the design of an IC using EDA software is the design, test, and verification of the instructions that the IC is to carry out.

Layout view of a simple CMOS operational amplifier (inputs are to the left and the compensation capacitor is to the right). The metal layer is coloured blue; green and brown are N- and P-doped Si; the polysilicon is red; vias are crosses.

Fundamentals

Integrated circuit design involves the creation of electronic components, such as transistors, resistors, capacitors and the metallic interconnect of these components onto a piece of semiconductor, typically silicon. A method to isolate the individual components formed in the substrate is necessary since the substrate silicon is conductive and often forms an active region of the individual components. The two common methods are p-n junction isolation and dielectric isolation. Attention must be given to power dissipation of transistors and interconnect resistances and current density of the interconnect, contacts and vias since ICs contain very tiny devices compared to discrete components, where such concerns are less of an issue. Electromigration in metallic interconnect and ESD damage to the tiny components are also of concern. Finally, the physical layout of certain circuit subblocks is typically critical, in order to achieve the desired speed of operation, to segregate noisy portions of an IC from quiet portions, to balance the effects of heat generation across the IC, or to facilitate the placement of connections to circuitry outside the IC.

Design steps

A typical IC design cycle involves several steps:

- Feasibility study and die size estimate

- Function analysis

- System Level Design

- Analogue Design, Simulation & Layout

- Digital Design, Simulation & Synthesis

- System Simulation & Verification

- Design For Test and Automatic test pattern generation

- Design for manufacturability

- Tape-in

- Mask data preparation

- Tape-out

- Wafer fabrication

- Die test

- Packaging

- Post silicon validation and integration

- Device characterization

- Tweak (if necessary)

- Datasheet generation (usually as a Portable Document Format (PDF) file)

- Ramp up

- Production

- Yield Analysis / Warranty Analysis / Reliability

- Failure analysis on any returns

- Plan for next generation chip using production information if possible

Roughly speaking, digital IC design can be divided into three parts:

- Electronic system-level design: This step creates the user functional specification. The user may use a variety of languages and tools to create this description. Examples include a C/C++ model, SystemC, SystemVerilog Transaction Level Models, Simulink and MATLAB.

- RTL design: This step converts the user specification (what the user wants the chip to do) into a register transfer level (RTL) description. The RTL describes the exact behavior of the digital circuits on the chip, as well as the interconnections to inputs and outputs.

- Physical design: This step takes the RTL, and a library of available logic gates, and creates a chip design. This involves figuring out which gates to use, defining places for them, and wiring them together.

Design process

Microarchitecture and system-level design

The initial chip design process begins with system-level design and microarchitecture planning. Within IC design companies, management and often analytics will draft a proposal for a design team to start the design of a new chip to fit into an industry segment. Upper-level designers will meet at this stage to decide how the chip will operate functionally. This step is where an IC's functionality and design are decided. IC designers will map out the functional requirements, verification testbenches, and testing methodologies for the whole project, and will then turn the preliminary design into a system-level specification that can be simulated with simple models using languages like C++ and MATLAB and emulation tools. For pure and new designs, the system design stage is where an instruction set and its operation are planned out; in most chips, existing instruction sets are modified for newer functionality. Design at this stage often consists of statements such as "encodes in the MP3 format" or "implements IEEE floating-point arithmetic". At later stages in the design process, each of these innocent-looking statements expands to hundreds of pages of textual documentation.

RTL design

Upon agreement of a system design, RTL designers then implement the functional models in a hardware description language like Verilog, SystemVerilog, or VHDL. Using digital design components like adders, shifters, and state machines as well as computer architecture concepts like pipelining, superscalar execution, and branch prediction, RTL designers will break a functional description into hardware models of components on the chip working together. Each of the simple statements described in the system design can easily turn into thousands of lines of RTL code, which is why it is extremely difficult to verify that the RTL will do the right thing in all the possible cases that the user may throw at it.

To reduce the number of functionality bugs, a separate hardware verification group will take the RTL and design testbenches and systems to check that the RTL actually is performing the same steps under many different conditions, classified as the domain of functional verification. Many techniques are used, none of them perfect but all of them useful: extensive logic simulation, formal methods, hardware emulation, lint-like code checking, code coverage, and so on.

A tiny error here can make the whole chip useless, or worse. The famous Pentium FDIV bug caused the results of a division to be wrong by at most 61 parts per million, in cases that occurred very infrequently. No one even noticed it until the chip had been in production for months. Yet Intel was forced to offer to replace, for free, every chip sold until they could fix the bug, at a cost of $475 million (US).[citation needed]
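Simulation-based functional verification can be sketched in miniature: drive the same stimulus into a design model and a trusted reference, and flag any disagreement. In this hypothetical example the "design" is a 4-bit ripple-carry adder modelled gate by gate, and the reference ("golden model") is Python's integer addition. The width, the names, and the use of Python instead of an HDL are all assumptions for illustration; this is not how any particular chip was verified.

```python
import random

# Minimal sketch of simulation-based functional verification.
# Design under test: a 4-bit ripple-carry adder modelled gate by gate.
# Golden model: Python's own integer addition. Names are illustrative.

WIDTH = 4

def full_adder(a: int, b: int, cin: int) -> tuple[int, int]:
    s = a ^ b ^ cin
    cout = (a & b) | (cin & (a ^ b))
    return s, cout

def ripple_adder(x: int, y: int) -> tuple[int, int]:
    """Add two WIDTH-bit numbers bit by bit; return (sum, carry_out)."""
    carry, total = 0, 0
    for i in range(WIDTH):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        total |= s << i
    return total, carry

def reference_model(x: int, y: int) -> tuple[int, int]:
    full = x + y
    return full & ((1 << WIDTH) - 1), full >> WIDTH

def random_testbench(n_tests: int = 1000, seed: int = 0) -> list[tuple[int, int]]:
    """Drive random stimulus into both models and collect any mismatching inputs."""
    rng = random.Random(seed)
    failures = []
    for _ in range(n_tests):
        x, y = rng.randrange(1 << WIDTH), rng.randrange(1 << WIDTH)
        if ripple_adder(x, y) != reference_model(x, y):
            failures.append((x, y))
    return failures

def exhaustive_check() -> bool:
    """Check every input pair; feasible only because WIDTH is tiny."""
    return all(ripple_adder(x, y) == reference_model(x, y)
               for x in range(1 << WIDTH) for y in range(1 << WIDTH))

if __name__ == "__main__":
    print("mismatches:", random_testbench())
    print("exhaustive pass:", exhaustive_check())
```

Random stimulus only samples the input space, so a flaw that is triggered rarely (as with FDIV) can slip through. Exhaustive checking works here because 4-bit operands give 256 input pairs, whereas two 32-bit operands give $2^{64}$, which is one reason formal methods are used alongside simulation.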

Physical design

RTL is only a behavioral model of what the chip is supposed to do. It has no link to the physical aspects of how the chip would operate in real life, on the materials, physics, and electrical engineering side. For this reason, the next step in the IC design process, the physical design stage, is to map the RTL into actual geometric representations of all electronic devices, such as capacitors, resistors, logic gates, and transistors, that will go on the chip.

The main steps of physical design are listed below. In practice there is not a straightforward progression; considerable iteration is required to ensure all objectives are met simultaneously. This is a difficult problem in its own right, called design closure.

- Logic synthesis: The RTL is mapped into a gate-level netlist in the target technology of the chip.

- Floorplanning: The RTL of the chip is assigned to gross regions of the chip, input/output (I/O) pins are assigned and large objects (arrays, cores, etc.) are placed.

- Placement: The gates in the netlist are assigned to nonoverlapping locations on the die area.

- Logic/placement refinement: Iterative logical and placement transformations to close performance and power constraints.

- Clock insertion: Clock signal wiring (commonly, clock trees) is introduced into the design.

- Routing: The wires that connect the gates in the netlist are added.

- Postwiring optimization: Performance (timing closure), noise (signal integrity), and yield (Design for manufacturability) violations are removed.

- Design for manufacturability: The design is modified, where possible, to make it as easy and efficient as possible to produce. This is achieved by adding extra vias or adding dummy metal/diffusion/poly layers wherever possible while complying with the design rules set by the foundry.

- Final checking: Since errors are expensive, time consuming and hard to spot, extensive error checking is the rule, making sure the mapping to logic was done correctly, and checking that the manufacturing rules were followed faithfully.

- Tapeout and mask generation: the design data is turned into photomasks in mask data preparation.
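Placement and refinement need a quick way to compare candidate layouts before any wires exist. One widely used estimate, swapped in here as an illustration rather than taken from the text, is half-perimeter wirelength (HPWL): for each net, half the perimeter of the smallest box enclosing all its pins, summed over nets. The cell names, coordinates and nets below are hypothetical, and real tools combine such estimates with timing, congestion and power models.

```python
# Minimal sketch of half-perimeter wirelength (HPWL), a common cost estimate
# used when evaluating placements. Coordinates, cell names and nets below are
# hypothetical; real tools use far richer models (timing, congestion, etc.).

Placement = dict[str, tuple[float, float]]   # cell name -> (x, y) on the die

def hpwl(net: list[str], placement: Placement) -> float:
    """Half the perimeter of the bounding box enclosing all pins of one net."""
    xs = [placement[c][0] for c in net]
    ys = [placement[c][1] for c in net]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))

def total_hpwl(nets: list[list[str]], placement: Placement) -> float:
    return sum(hpwl(net, placement) for net in nets)

if __name__ == "__main__":
    nets = [["g1", "g2"], ["g2", "g3", "g4"]]
    spread = {"g1": (0, 0), "g2": (10, 0), "g3": (0, 10), "g4": (10, 10)}
    tight = {"g1": (0, 0), "g2": (1, 0), "g3": (1, 1), "g4": (2, 1)}
    print("spread-out placement:", total_hpwl(nets, spread))
    print("compact placement:  ", total_hpwl(nets, tight))
```

A placer would try to move cells so that a cost like this goes down while keeping cells on nonoverlapping locations.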

Analog design

Before the advent of the microprocessor and software based design tools, analog ICs were designed using hand calculations and process kit parts. These ICs were low complexity circuits, for example, op-amps, usually involving no more than ten transistors and few connections. An iterative trial-and-error process and "overengineering" of device size was often necessary to achieve a manufacturable IC. Reuse of proven designs allowed progressively more complicated ICs to be built upon prior knowledge. When inexpensive computer processing became available in the 1970s, computer programs were written to simulate circuit designs with greater accuracy than practical by hand calculation. The first circuit simulator for analog ICs was called SPICE (Simulation Program with Integrated Circuits Emphasis). Computerized circuit simulation tools enable greater IC design complexity than hand calculations can achieve, making the design of analog ASICs practical. The computerized circuit simulators also enable mistakes to be found early in the design cycle before a physical device is fabricated. Additionally, a computerized circuit simulator can implement more sophisticated device models and circuit analysis too tedious for hand calculations, permitting Monte Carlo analysis and process sensitivity analysis to be practical. The effects of parameters such as temperature variation, doping concentration variation and statistical process variations can be simulated easily to determine if an IC design is manufacturable. Overall, computerized circuit simulation enables a higher degree of confidence that the circuit will work as expected upon manufacture.

Coping with variability

A challenge most critical to analog IC design involves the variability of the individual devices built on the semiconductor chip. Unlike board-level circuit design, which permits the designer to select devices that have each been tested and binned according to value, the device values on an IC can vary widely in ways the designer cannot control. For example, some IC resistors can vary ±20%, and β of an integrated BJT can vary from 20 to 100. In the latest CMOS processes, β of vertical PNP transistors can even go below 1. To add to the design challenge, device properties often vary between each processed semiconductor wafer. Device properties can even vary significantly across each individual IC due to doping gradients. The underlying cause of this variability is that many semiconductor devices are highly sensitive to uncontrollable random variances in the process. Slight changes to the amount of diffusion time, uneven doping levels, etc. can have large effects on device properties.

Some design techniques used to reduce the effects of the device variation are:

- Using the ratios of resistors, which do match closely, rather than absolute resistor value.

- Using devices with matched geometrical shapes so they have matched variations.

- Making devices large so that statistical variations become an insignificant fraction of the overall device property.

- Segmenting large devices, such as resistors, into parts and interweaving them to cancel variations.

- Using common centroid device layout to cancel variations in devices which must match closely (such as the transistor differential pair of an op amp).
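The first technique above (relying on resistor ratios rather than absolute values) can be illustrated with a small Monte Carlo analysis of the kind mentioned earlier. The variation model is an assumption made for illustration, not data from any real process: every die gets one shared variation g, uniform within ±20% (the figure quoted above), and each resistor adds its own small local mismatch, assumed Gaussian with a 0.1% standard deviation. The constants and function names are hypothetical.

```python
import random
import statistics

# Monte Carlo sketch: absolute resistor value vs. the ratio of two resistors.
# Assumptions (illustrative, not from any real process):
#   * a die-wide variation g, uniform in +/-20%, shared by both resistors;
#   * a small independent local mismatch per resistor, Gaussian, sigma 0.1%.

R_NOM = 10_000.0        # ohms, hypothetical nominal value
GLOBAL_SPREAD = 0.20    # +/-20% absolute variation, as quoted in the text
LOCAL_SIGMA = 0.001     # 0.1% mismatch between neighbouring resistors (assumed)

def sample_pair(rng: random.Random) -> tuple[float, float]:
    """Draw one simulated die: two nominally equal resistors side by side."""
    g = rng.uniform(-GLOBAL_SPREAD, GLOBAL_SPREAD)   # shared by both resistors
    r1 = R_NOM * (1 + g) * (1 + rng.gauss(0, LOCAL_SIGMA))
    r2 = R_NOM * (1 + g) * (1 + rng.gauss(0, LOCAL_SIGMA))
    return r1, r2

def relative_spread(values: list[float]) -> float:
    """Standard deviation expressed as a fraction of the mean."""
    return statistics.pstdev(values) / statistics.fmean(values)

def monte_carlo(n: int = 10_000, seed: int = 1) -> tuple[float, float]:
    """Return relative spread of (absolute R1, divider ratio R2 / (R1 + R2))."""
    rng = random.Random(seed)
    absolutes, ratios = [], []
    for _ in range(n):
        r1, r2 = sample_pair(rng)
        absolutes.append(r1)                 # depends on the absolute value
        ratios.append(r2 / (r1 + r2))        # voltage-divider ratio
    return relative_spread(absolutes), relative_spread(ratios)

if __name__ == "__main__":
    abs_spread, ratio_spread = monte_carlo()
    print(f"absolute R1 spread:   {abs_spread:.2%}")
    print(f"divider ratio spread: {ratio_spread:.3%}")
```

Under these assumptions the absolute value inherits the full die-wide variation, while the shared factor (1 + g) cancels in R2 / (R1 + R2), leaving only the much smaller local mismatch. Larger devices and common-centroid layout aim to shrink that remaining mismatch further.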

Vendors

The three largest companies selling electronic design automation tools are Synopsys, Cadence, and Mentor Graphics.

X . IV Digital information - bits, bytes and pixels.

a Bit

1 A bit is an irreducible discrete unit of information used by computers.

o It can have two different values or "settings."

o It can be thought of as an on-off switch.

ü In this case, the two possible settings are "on" or "off".

o It can also be thought of as a yes-no instruction.

ü In this case, the two possible values are "yes" or "no".

o It can be represented by the digits 0 or 1

ü In this case, the two possible values are 1 or 0

2 A set or ordered "stream" of bits can be used to carry complex information

b Binary numbers

o A set of bits can be thought of as a representation of a "binary" number, which is another way of representing familiar (base 10) numbers, as discussed in class. Examples:

ü 00 (binary number for 0)

ü 01 (binary number for 1)

ü 10 (binary number for 2)

ü 11 (binary number for 3)
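Reading a string of bits as a binary number works like reading decimal digits, except that each position is worth twice the one to its right (1, 2, 4, 8, ...) instead of ten times. The sketch below is illustrative; the function name `binary_to_decimal` is hypothetical, and Python's built-in `int(bits, 2)` does the same conversion.

```python
# Sketch: reading a string of bits as a base-2 number. Each position is worth
# twice the position to its right (1, 2, 4, 8, ...), just as decimal digits
# are worth 1, 10, 100, ...

def binary_to_decimal(bits: str) -> int:
    value = 0
    for bit in bits:                   # scan left (most significant) to right
        if bit not in "01":
            raise ValueError(f"not a bit: {bit!r}")
        value = value * 2 + int(bit)   # shift existing value left, add new bit
    return value

if __name__ == "__main__":
    for b in ("00", "01", "10", "11"):
        print(b, "->", binary_to_decimal(b))
```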

c Byte

1 A byte is an ordered set of 8 bits having given values

2 A byte can have any one of 256 "values," depending on the values (e.g., 0 or 1) of each of its 8 bits.

o Examples of different values of a byte are:

ü 00000000 (binary number for 0)

ü 00000001 (binary number for 1)

ü 00000010 (binary number for 2)

ü .....

ü 11001000 (binary number for 200)

ü .....

ü 11111111 (binary number for 255)

o The reason there are 256 values is that there are 256 different 8 digit "binary numbers" made up out of 0's and 1's only. Mathematically,

ü $256 = (\text{number of values per bit})^{\text{number of bits}} = 2^8 = 2 \times 2 \times 2 \times 2 \times 2 \times 2 \times 2 \times 2$.

o The value of a byte, therefore, can be ordered from 0 to 255.

3 A Megabyte is a million bytes (when describing memory sizes, the term is often used for $2^{20}$ = 1,048,576 bytes).

o Each of the bytes can have any one of the 256 values.

o Computer memory and information storage capability is measured in Megabytes
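The count of 256 byte values can be checked by brute force: list every possible string of eight 0s and 1s and convert each to a number. This sketch uses the standard `itertools.product`; the function name `all_byte_patterns` is hypothetical.

```python
from itertools import product

# Sketch: list every possible 8-bit pattern and confirm there are 2**8 = 256,
# with values running from 0 (00000000) to 255 (11111111).

def all_byte_patterns() -> list[str]:
    # product("01", repeat=8) yields every 8-character combination of 0 and 1,
    # in increasing binary order
    return ["".join(bits) for bits in product("01", repeat=8)]

if __name__ == "__main__":
    patterns = all_byte_patterns()
    values = [int(p, 2) for p in patterns]
    print(len(patterns), min(values), max(values))  # count, smallest, largest
    print(format(200, "08b"))                        # 200 written as 8 binary digits
```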

d The ASCII character set

1 ASCII stands for the American Standard Code for Information Interchange.

2 Standard ASCII defines 128 characters (codes 0 to 127, which fit in 7 bits); "extended ASCII" character sets use all 256 values of a byte.

o These characters include all of the lower and upper case letters of the alphabet.

o The standard typewriter characters, such as @, $, &, etc., are also included in the ASCII set.

3 Each of these different characters is represented by a different byte, according to a specific permanently agreed upon convention.

o Examples:

ü A = character #65 (byte value 01000001)

ü d = character #100 (byte value 01100100)

ü @ = character #64 (byte value 01000000)

4 Hence, a Megabyte of computer memory can hold a million characters (including spaces between words).
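The character-to-byte convention can be explored with Python's built-ins. `ord()` returns a character's code number (for the 128 ASCII characters this equals the ASCII code), and `format(n, "08b")` writes that number as 8 binary digits. The helper name `ascii_byte` is hypothetical.

```python
# Sketch: looking up ASCII codes with Python's built-ins. ord() gives a
# character's code number; format(n, "08b") shows that code as one byte.

def ascii_byte(ch: str) -> str:
    code = ord(ch)
    if code > 127:
        raise ValueError(f"{ch!r} is outside 7-bit ASCII")
    return format(code, "08b")

if __name__ == "__main__":
    for ch in ("A", "d", "@"):
        print(ch, ord(ch), ascii_byte(ch))
    text = "Hello, world"
    print(len(text.encode("ascii")), "bytes")  # one byte per character, space included
```

Encoding text as ASCII uses exactly one byte per character, which is the basis for the "a Megabyte holds a million characters" estimate.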

e Pixel

1 A pixel is an irreducible discrete unit of information in an image or picture.

o Pixel stands for "picture element."

o In a "mosaic," a picture is made up of discrete colored tiles. Each tile is a pixel. The value of each pixel is its color.

o The "pointillist" artist Georges Seurat used dots of color as pixels. It is often convenient to think of pixels as dots of color (or grey-scale values).

o TV screens examined closely will be found to consist of dots of color (pixels).

o All images can be "digitized" into pixels by using digitizing instruments, such as scanners.

2 The location of a pixel is given by an x-y coordinate on a graph.

f Bitmap images and color digital photos.

1 Any bitmapped image can be represented by giving the locations and values of all its pixels.

o A "map" of locations and values of pixels is called a bitmap.

o An image described by a bitmap is called a bitmapped image.

o A digital photo is an example of a bitmapped image.

2 The depth of a pixel in a digital photo seen on a computer screen is determined by the number of bits of information it contains.

o The appearance and quality of a digital photo seen on the computer screen is determined by the depth of the pixels.

o A 1 bit pixel can take on $2^1 = 2$ different values, 0 or 1 (dot is off or on)

ü Usually the dot is white, so this means the color of a 1 bit pixel is black or white

ü Note, in Photoshop language, a bitmapped image is always made up of 1 bit pixels, whereas in more general usage a bitmapped image can be made up of pixels of arbitrary depth

ü Show using Photoshop mode how a color image looks in 1 bit mode. Show pixels (simulated)

o A 2 bit pixel can take on $2^2 = 4$ different values corresponding to a two digit binary number.

ü These values are 00, 01, 10, and 11.

ü For example, these values can trigger black, white, and two shades of gray.

o A 3 bit pixel can take on $2^3 = 8$ different values.

ü For example, the values can trigger black, white and 6 different colors.

o An n-bit pixel can take on $2^n$ different values.

ü In simple language, multiply 2 times itself a total of n times: 2 x 2 x 2 x ... (n factors of 2)

o A computer set to show 8-bit color has 8-bit pixels, and can display images with $2^8 = 256$ colors. A color scheme using 256 colors is sometimes called indexed color.

ü Later we will show what a color image looks like in 8 bit color using the Photoshop mode indexed color.

o A computer set to show 16-bit color has 16-bit pixels and displays images with $2^{16}$ = 65,536 colors

o These days, most laptops can show 24-bit color (also known as RGB color, with 8 bits (1 byte) describing the brightness of each primary)

ü An image in 24-bit color can show $2^{24}$ = 16,777,216 (over 16 million) different colors. (Check with your calculators)

ü See the section of Physics 2000 under Color TV's dealing with partitive mixing to see one way this works.
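The rule that an n-bit pixel has $2^n$ possible values can be tabulated for the depths discussed above. This is a trivial sketch; the function name `colors_for_depth` is hypothetical.

```python
# Sketch: how many distinct values an n-bit pixel can take (2**n), for the
# bit depths discussed above.

def colors_for_depth(n_bits: int) -> int:
    return 2 ** n_bits

if __name__ == "__main__":
    for depth in (1, 2, 3, 8, 16, 24):
        print(f"{depth:>2}-bit pixel: {colors_for_depth(depth):,} values")
    # 24-bit color is three 8-bit primaries: 256 * 256 * 256 combinations
    print(colors_for_depth(24) == 256 ** 3)
```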

II Networking

a The Internet (the Net)

1 An all-encompassing term that describes a complex interconnection of international computer-information networks.

o Domains identify unique top-level Net addresses.

ü In the email address goldman@spot.colorado.edu, the domain is edu.

o Subdomains organize the network structure within a domain

ü In the above email address the subdomain is colorado.

o As we shall see later, spot is the name of the server in that subdomain

2 Information travels between 2 (often distant) computers by unpredictable routes.

o The communication occurs over wires, telephone, fiber-optic and other special cables, but also over satellite links to satellite dishes hooked up to computers by cables.

o The route was deliberately made unpredictable in the earliest version of the Internet

ü The ARPANET (in the 1960s) was designed to be hard to destroy in a potential war, by using automatic switching to choose the best of a variety of different "routes" between the computers.

ü There is an analogy between this aspect of the Net and blood vessels carrying blood: If some paths are damaged or blocked, the blood takes another path.

3 Typically, one computer contains files of bits or bytes, while the other receives copies of the files which it decodes and displays as words, pictures, etc.

o This requires special software on each computer.

ü The computer containing the original file is called the HOST.

ü The remote computer is sometimes called the DESKTOP or the USER.

o The SERVER is software on the HOST, used to make the file available to the remote computer.

o The CLIENT is the software you use on your DESKTOP (sometimes called the FRONT END).

o Examples of client software are Netscape and Explorer (browsers) and Eudora (for email).

o Client software relies on an underlying PROTOCOL

ü A protocol is a set of communication rules and structures designed to traffic information from host to desktop, desktop to host, and host to host.

ü TCP/IP is a protocol which manages the transfer of data between two points.

ü The World Wide Web uses another protocol, HTTP, to distribute information to users running Web software such as Netscape

ü The SERVER carries out the commands issued by the CLIENT

b The World Wide Web (the Web)

1 The Web is a wide-area hypermedia information retrieval initiative, including a protocol, client/host software, and a set of sites.

o Hypermedia documents show active "buttons" on the screen (highlighted text, called hypertext, or framed pictures), which act as links to other documents.

ü These lecture notes, when viewed in outline format on Microsoft Word have hypertext capability.

ü HTML (HyperText Markup Language) is a set of formatting conventions used to create Web home pages for a host.

o HTTP, or HyperText Transfer Protocol, is the protocol used by the Web.

ü Browsers, such as Netscape and Explorer, are programs used for surfing the Net.

ü These browsers request pages using the HTTP protocol and translate the HTML they receive into formatted images and sentences.

o Web addresses begin with http:// (or, for secure sites, https://), followed by the server, subdomain and domain names and then directory and file information

ü For example, the address of our class Webpage is http://www.colorado.edu/physics/phys1230. Here, www is the name of the server, colorado is the subdomain, edu is the domain and the top level directory is physics, followed by our class's directory, phys1230.
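The parts of a Web address described above can be pulled apart with Python's standard `urllib.parse.urlparse`. The labels "server", "subdomain" and "domain" follow these notes' convention, and the helper `describe` is hypothetical; it assumes a host name with exactly three dot-separated parts, as in the class address, and would raise an error for other shapes.

```python
from urllib.parse import urlparse

# Sketch: splitting a Web address into the parts named in these notes.
# Assumes a three-part host name (server.subdomain.domain), as in the example.

def describe(url: str) -> dict[str, str]:
    parts = urlparse(url)
    server, subdomain, domain = parts.hostname.split(".")
    return {
        "protocol": parts.scheme,   # e.g. "http"
        "server": server,
        "subdomain": subdomain,
        "domain": domain,
        "path": parts.path,         # directory and file information
    }

if __name__ == "__main__":
    print(describe("http://www.colorado.edu/physics/phys1230"))
```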

III Computer "hardware."

a A desktop computer consists of input devices, output devices, a processing unit, and memory units, all run by computer programs.

1 Input devices

o Keyboard

o Mouse or drawing/pointing pad

o Floppy disk drive, hard disk drives and Zip, CD-ROM and DVD drives

ü These drives receive input (read) from disks containing programs and data which is then loaded into the processing unit.

ü These disks can also receive data, and therefore act as output devices (or temporary storage devices).

2 Other output devices

o Monitor screen

o Printer

3 Central Processing Unit (CPU)

o Desktop computers use a microprocessor — a processing unit contained in a microchip.

ü The CPU follows orders from a program loaded into its Random Access Memory (RAM) chips from an external input drive device.

ü The CPU can also receive new data from the keyboard

ü The CPU also sends results to output.

4 Memory units

o ROM memory chip

ü Read only memory that contains permanent instructions which enable the microprocessor to control the computer

o RAM memory chip

ü A random access memory chip inside the computer used for temporary storage of programs loaded into the computer and data currently in use.

ü The capacity of this chip is often called the amount of memory the computer has. My laptop has 256 MB of memory.

o VRAM memory chip and image (video) processing

ü A video RAM chip which holds the codes and data that generate the picture on the monitor screen.

ü The space in the VRAM chip where screen image data is stored and read is called the video buffer.

ü Working together with the VRAM chip, is a video adapter — circuitry that reads video buffer values and converts them to color (voltage) signals that run the monitor. The video adapter is therefore a processor, rather than memory.

5 Summary


IV How does a computer screen display colored images such as digital photos?

a A computer monitor screen consists of a large array of pixels.

1 A low cost color computer monitor screen might contain 832 x 624 = over 500 thousand pixels. A more expensive one 1280 x 1024 = over 1.3 million pixels.

2 A colored image is produced when the pixels on the screen each take on appropriate colors.

o Every different image can therefore also be thought of as a large array of pixels which can take on different colors.

o These colors are represented by numerical values in the video buffer .
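The pixel counts above translate directly into video buffer memory. As a rough sketch, assuming each pixel is stored as whole bits in a plain array with no padding or compression (real video hardware may lay memory out differently), one screenful needs width × height × bits-per-pixel / 8 bytes. The function names are hypothetical.

```python
# Sketch: pixel count and the memory a video buffer would need to hold one
# full screen, assuming a plain array with no padding or compression.

def pixel_count(width: int, height: int) -> int:
    return width * height

def frame_buffer_bytes(width: int, height: int, bits_per_pixel: int = 24) -> int:
    """Bytes for one screenful at the given pixel depth."""
    return pixel_count(width, height) * bits_per_pixel // 8

if __name__ == "__main__":
    w, h = 1280, 1024
    print(f"{pixel_count(w, h):,} pixels")
    for depth in (8, 16, 24):
        print(f"{depth:>2}-bit: {frame_buffer_bytes(w, h, depth):,} bytes")
```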

b The Red, Blue and Green "parts" of each pixel determine the color of that pixel.

1 Each pixel on an active color computer screen is composed of 3 glowing phosphors: one red, one green and one blue:


2 A computer monitor (or TV screen) makes each of the 3 phosphors in each pixel glow with a different (adjustable) brightness.

o The brightness of a given phosphor in the color monitor or TV at a given instant is controlled by the tube's electron gun.

ü A stream of electrons emanates from each of the 3 electron guns in a color monitor or TV.

ü Each stream of electrons scans over the entire screen, line by line with an intensity changing from pixel to pixel, depending on the voltage driving the electrons at each moment.

ü The brightness of the 3 phosphors in each pixel is controlled by the voltage at each instant.

3 When viewed from a distance, the separate glowing phosphors cannot be seen as distinct, but together make the pixel appear to be a single "new" color.

o This occurs by partitive mixing (which we have already studied) of the three different phosphors in each pixel.

ü The colors of the 3 different phosphors are the additive primaries, R, G and B.

o For example, red and green glowing phosphors in one pixel make that pixel appear yellow from a distance.

4 Our perception of the overall color of each pixel depends on the brightness of each of the 3 phosphors.

o In computer applications the brightness is measured by numbers which can only take on certain integer values.

o The brightness is not continuously adjustable, as with a dimmer switch, but can only take on a set of discrete values determined by these integers.

o See section of Physics-2000 Website dealing with how TV screens work

c 24 bit or RGB color makes the most colors available to pixels

1 The widest assortment of pixel colors is achieved with 24 bit pixels

o In 24 bit color, the red, green and blue phosphor brightnesses are each separately determined by 8 bit (1 byte) values

ü For example, 00000000 = brightness level 0 is the darkest, followed by 00000001, 00000010, 00000011, 00000100, ... etc, up to 11111111 = level 255, the brightest.

o Thus the red, green and blue phosphor brightnesses can each separately take on any of 256 different brightness levels.

o The total number of different colors each pixel can exhibit in 24 bit color is therefore = 256 x 256 x 256 ≈ 16.8 million colors.

ü Each of the different colors is defined by a different set of 24 bits. For example, bright yellow is 11111111 11111111 00000000, meaning full brightness red (11111111), full brightness green (11111111) and no blue (00000000).
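The 24-bit description above (one byte each for red, green and blue) can be expressed as bit shifts that pack the three levels into one number and unpack them again. The sketch assumes red occupies the top byte, matching the red, green, blue order used in the yellow example; some file formats and hardware use other orders. The function names are hypothetical.

```python
# Sketch: packing three 8-bit brightness levels (R, G, B) into one 24-bit
# value and unpacking it again. Red in the top byte is an assumed ordering.

def pack_rgb(r: int, g: int, b: int) -> int:
    for level in (r, g, b):
        if not 0 <= level <= 255:
            raise ValueError("each level must fit in one byte (0-255)")
    return (r << 16) | (g << 8) | b

def unpack_rgb(value: int) -> tuple[int, int, int]:
    return (value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF

if __name__ == "__main__":
    yellow = pack_rgb(255, 255, 0)
    print(format(yellow, "024b"))   # 24 bits: red byte, green byte, blue byte
    print(unpack_rgb(yellow))
```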

d How to think about an image such as a digital photo when it is not on screen, but stored in your computer: bitmapped image files

1 We can speak abstractly about the numerical values of pixels in a bitmapped image separately from the pixels on a computer screen.

o This is called the bitmapped data

o The bitmapped data is one part of the bitmapped image file — a long stream of bits organized in a special way

o In this case there are no phosphors, but we can still talk about the image pixels in the file

ü The colors available to those pixels will depend on whether the color scheme is 24 bit color, 8 bit color or something else

ü For 24 bit color we can describe the relative amounts (intensities) of red, green and blue (RGB) in a pixel without reference to phosphors.

e Hue, saturation and brightness are another way to describe the color of pixels in a digital picture

1 Another way of describing our perception of the color of a pixel is by 3 properties: Hue (color name), Saturation (color deepness or purity) and Brightness (light or dark color).

2 This description is called the HSB model of color. Bitmapped computer images have discrete (countable) values of H, S and B.

o (Do not confuse the brightness levels of the red, green and blue phosphors within each pixel with the brightness level of the resulting effective pixel color).
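The same pixel color can be converted from RGB to HSB. A short sketch using Python's standard colorsys module, which calls this model HSV (V for "value"); HSV and HSB are the same model. The helper name rgb255_to_hsb and the rounding to whole degrees and percents are illustrative choices, roughly matching how Photoshop's color picker displays HSB:

```python
import colorsys

def rgb255_to_hsb(r, g, b):
    """Convert 8 bit RGB levels to (hue in degrees, saturation %, brightness %)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return round(h * 360), round(s * 100), round(v * 100)

for name, rgb in [("bright yellow", (255, 255, 0)),
                  ("pink", (255, 128, 128)),
                  ("dark red", (128, 0, 0))]:
    print(name, rgb255_to_hsb(*rgb))
# bright yellow (60, 100, 100)
# pink (0, 50, 100)
# dark red (0, 100, 50)
```

Pink and dark red share the hue of pure red (0 degrees); pink differs in saturation and dark red in brightness.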

3 Show examples of HSB, RGB, and other color descriptions in Photoshop (Choose Foreground Color).

o Explain the color cube in terms of sliding to different hue cross-sections, like a loaf of bread cut into sandwich slices.

o Explain the L*a*b* color description in terms of lightness and the psychologically opposed (opponent) primaries

ü "a" corresponds to the red-green channel, "b" corresponds to the yellow-blue channel and L corresponds to lightness or darkness

f Size and resolution of images such as digital photos

1 Show in Photoshop, using still life picture

2 The size of an image is the length and width (e.g. in inches) of the picture when printed

o The size of the image when viewed on the screen may be very different (larger or smaller than the printed size)

o The size of the printed picture can be changed in Photoshop

3 Another measure commonly used for size is the pixel dimension of a picture.

o The pixel dimension is the number of bytes needed to store the color information of all of the pixels in the picture

ü Usually this is given in thousands of bytes (kilobytes, KB or K) or millions of bytes (megabytes, MB or M)

o The pixel dimension can be obtained from the number of pixels in the entire image as follows

ü The total number of pixels in the entire image is obtained by multiplying the number of pixels in the one row (along width of the picture) by the number of pixels in one column (along the height of the picture).

ü The total number of pixels must then be multiplied by 3 to get the total number of bytes, assuming there are 3 bytes of information stored in each pixel (RGB 24 bit color uses 1 byte for each of R, G and B). This gives the pixel dimension.
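The two steps above amount to width x height x 3. A minimal sketch; the function name is hypothetical, and the 1024 x 768 example matches the "true-color" image size used in the compression section:

```python
def pixel_dimension_bytes(width_px, height_px, bytes_per_pixel=3):
    """Bytes needed for the color information of every pixel (3 bytes per pixel for RGB)."""
    return width_px * height_px * bytes_per_pixel

size = pixel_dimension_bytes(1024, 768)
print(size)                  # 2359296 bytes
print(size / (1024 * 1024))  # 2.25 megabytes, counting 1 MB as 1024 x 1024 bytes
print(size / 1_000_000)      # 2.359296 megabytes, counting 1 MB as 1,000,000 bytes
```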

4 Digital cameras are usually rated by the maximum number of pixels in their photos.

o A megapixel is a million pixels, abbreviated Mp. The maximum number of megabytes in an RGB file for one photo is three times the number of megapixels. Why?

o Digital cameras capable of making pictures of size one or two Mp are fine for creating Web images or small prints (up to 4 x 6 inches)

o If a picture is going to be enlarged to 8 x 10 inches or larger or cropped (only a small portion of it printed) then the digital camera should be rated at 3 Mp or higher
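One way to check these camera ratings is to work out how many megapixels a print of a given size needs at a given print resolution. A small sketch, assuming target resolutions within the 100 to 300 pixels per inch range this outline gives for good prints; the function name is hypothetical:

```python
def megapixels_needed(width_in, height_in, ppi):
    """Megapixels required to print width_in x height_in inches at ppi pixels per inch."""
    return (width_in * ppi) * (height_in * ppi) / 1_000_000

for w, h in [(4, 6), (8, 10)]:
    for ppi in (100, 200, 300):
        print(f"{w} x {h} in at {ppi} ppi: {megapixels_needed(w, h, ppi):.2f} Mp")
```

At 200 ppi a 4 x 6 inch print needs about 0.96 Mp, while an 8 x 10 inch print needs about 3.2 Mp, consistent with the ratings above.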

5 The resolution of an image is the number of pixels per inch

o Computer monitors have a number of pixels (RGB phosphor trios) per inch called the screen resolution

ü The screen resolution can be between 70 and 90 pixels per inch and often can be adjusted. (Show)

o An image which has the same resolution as the screen resolution on the monitor on which it is viewed will show all of its pixels when the image is displayed at full size (100%). The size of such an image will be the size of the print when the image is sent to a printer.

ü However, in order for a digital color print to look good on paper the image usually needs to have a higher resolution than the screen resolution

ü It generally must have at least 100 pixels per inch and up to 300 pixels per inch for the richest color and sharpness when printed.

o Images whose resolution is more than screen resolution can still be seen on the computer screen by displaying only a small portion of a larger on-screen image at full 100% size and screen resolution

ü This is the way digital photos are opened in Photoshop

ü The onscreen version of an image can also be displayed as smaller than the original image — reduced in size by 50%, 33% or some other percent, with not all pixels showing onscreen, or

ü displayed as larger than the original image — enlarged in size by 200%, 300%, etc, with pixels added

o The resolution and size of a digital photo can be changed in Photoshop

ü Show
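The relations between pixel count, resolution and size can be written as two divisions. A sketch, assuming a monitor at 75 pixels per inch (within the 70 to 90 range mentioned above) and a hypothetical 1500 x 1200 pixel photo saved at 300 pixels per inch; the function names are hypothetical:

```python
def print_size_inches(width_px, height_px, ppi):
    """Printed size = number of pixels / pixels per inch."""
    return width_px / ppi, height_px / ppi

def on_screen_size_inches(width_px, height_px, screen_ppi, zoom_percent=100):
    """On-screen size at a given zoom; 100% shows one image pixel per screen pixel."""
    scale = zoom_percent / 100
    return width_px * scale / screen_ppi, height_px * scale / screen_ppi

print(print_size_inches(1500, 1200, 300))          # (5.0, 4.0)   a 5 x 4 inch print
print(on_screen_size_inches(1500, 1200, 75))       # (20.0, 16.0) at 100%, much larger than the print
print(on_screen_size_inches(1500, 1200, 75, 25))   # (5.0, 4.0)   at 25%, about print size
```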

V Images with fewer colors than in 24 bit color

a Displaying bitmapped images at lower pixel depth (fewer colors)

1 The number of colors present in computer images is often much less than 16.8 million.

o This makes the image file much smaller than an RGB file

o This can greatly reduce the storage space normally needed by RGB images and the colors in the image are almost as good as in the RGB image

o For example, an image in 8-bit color can only show 256 different colors, compared to 16.8 million in 24-bit color, but the bitmapped image file is 1/3 the size

ü GIF images (Graphics Interchange Format, developed by CompuServe) use up to 8 bits (1 byte) per pixel, so they can contain at most 256 different colors.

2 In addition, an image file with many colors (even 16.8 million) is often viewed on a monitor which is set only to show a smaller total number of colors

3 Images in Photoshop can be viewed using various schemes for showing a smaller total number of pixel colors. These are usually called indexed color.

o 2-bit (4 colors), 4-bit (16 colors), 8-bit (256 colors), 16-bit (65,536 colors), etc

o Show using Photoshop and mode set for Indexed Color.
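The number of colors doubles with each added bit, and the size of the bitmapped data grows in proportion to the pixel depth. A short sketch for a 1024 x 768 image; it ignores the color table and any row padding a particular file format may add (an assumption), and the function names are hypothetical:

```python
def colors_for_depth(bits_per_pixel):
    """Number of distinct values a pixel of this depth can take."""
    return 2 ** bits_per_pixel

def bitmap_data_bytes(width_px, height_px, bits_per_pixel):
    """Bytes of pixel data, rounded up to whole bytes."""
    return (width_px * height_px * bits_per_pixel + 7) // 8

for bits in (2, 4, 8, 16, 24):
    print(f"{bits:2d}-bit: {colors_for_depth(bits):>10,} colors, "
          f"{bitmap_data_bytes(1024, 768, bits):>9,} bytes")
```

The 8-bit data (786,432 bytes) is exactly 1/3 of the 24-bit data (2,359,296 bytes).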

4 Image quality is usually much more sensitive to the number of colors (pixel depth) than it is to the image resolution!

5 Dithering

o One trick to make smaller palette images look realistic on the screen and in the printed version is called "dithering."

o Dithering uses partitive mixing of pixels (rather than the phosphors within each pixel) to create new colors and desaturated versions of existing colors.

o For example, if we didn't have yellow pixels among the 256 available, we could make yellow from red and green pixels

o More realistically, we can make pink from red and white pixels juxtaposed (see Photoshop example).

o Dithering is often more effective when the pixels are arranged in patterns.
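Patterned dithering can be sketched with a small threshold matrix. The example below uses ordered dithering with a 2 x 2 Bayer matrix, one common patterned technique (not necessarily the one Photoshop uses), to fill a block with only red and white pixels so that it looks pink from a distance; the function names are hypothetical:

```python
# 2 x 2 Bayer threshold matrix: a common choice for ordered (patterned) dithering
BAYER_2X2 = [[0, 2],
             [3, 1]]

def ordered_dither_mix(color_a, color_b, fraction_b, width, height):
    """Fill a block with only color_a and color_b so that about fraction_b
    of the pixels are color_b, arranged in a regular pattern."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            threshold = (BAYER_2X2[y % 2][x % 2] + 0.5) / 4
            row.append(color_b if fraction_b > threshold else color_a)
        image.append(row)
    return image

def average_color(image):
    """The color perceived from a distance: the average over all pixels (partitive mixing)."""
    pixels = [p for row in image for p in row]
    return tuple(sum(p[i] for p in pixels) / len(pixels) for i in range(3))

RED, WHITE = (255, 0, 0), (255, 255, 255)
block = ordered_dither_mix(RED, WHITE, 0.5, 4, 4)
for row in block:
    print(" ".join("W" if p == WHITE else "R" for p in row))  # a checkerboard of W and R
print(average_color(block))  # (255.0, 127.5, 127.5), which reads as pink
```

A smaller fraction of white (for example 0.25) gives a deeper pink, since fewer white pixels are mixed in.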

b Converting RGB 24 bit images into indexed color (up to 8 bit) images using color tables and palettes

1 A color coding table for an indexed color image may consist of a total of 256 or fewer different colors used to display a given image.

o Each pixel in the picture can take on one of the 256 different colors in the color table.

o The 256 different colors in an 8-bit color table are each labeled by a different 8-bit binary number

ü Note, this is a different scheme from RGB color in which each color is represented by a different 24 bit binary number which gives the intensities of R, G and B.

o Each of the colors in the table can still be a 24-bit color.

ü Thus, any 256 out of 16.8 million different colors can be put in the table.

o There is an entirely different color table needed for each picture or digital photo.

ü The color table is part of the bitmapped image file for each picture.

o In Photoshop, a color table for an image seen in indexed color (8-bit color or less) may be viewed

ü Demonstrate using Photoshop
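When an image contains 256 or fewer distinct colors, the color table can be built directly: each new 24-bit color gets the next index, and each pixel stores only its index. A minimal sketch with hypothetical function names; images with more colors need the color-cube subdivision described next:

```python
def build_color_table(pixels, max_colors=256):
    """Assign each distinct 24-bit color an index; return (color_table, indices)."""
    color_table, index_of, indices = [], {}, []
    for color in pixels:
        if color not in index_of:
            if len(color_table) == max_colors:
                raise ValueError("too many colors: the color cube must be subdivided")
            index_of[color] = len(color_table)
            color_table.append(color)
        indices.append(index_of[color])
    return color_table, indices

def decode_indexed(indices, color_table):
    """Look up each pixel's index in the color table to recover its 24-bit color."""
    return [color_table[i] for i in indices]

YELLOW, PINK, BLUE = (255, 255, 0), (255, 128, 128), (0, 0, 255)
row = [YELLOW, YELLOW, PINK, BLUE, BLUE, YELLOW]
table, indices = build_color_table(row)
print(table)    # [(255, 255, 0), (255, 128, 128), (0, 0, 255)]
print(indices)  # [0, 0, 1, 2, 2, 0]
```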

2 Here is how Photoshop converts an RGB image into an indexed color image by constructing a color table

o A color cube containing all of the RGB colors is subdivided to construct a color table for a particular digital photograph.

ü The color cube contains all 16.8 million colors of a digitized RGB image

ü The x, y and z axes give 256 different brightness levels for each of the red, green and blue primaries

ü (The brightness levels range from a minimum = 0 to a maximum = 255, as in the demonstration of partitive mixing in Physics 2000 under Color TV)

ü Think of the color cube as a cube of cake containing an evenly spaced 3-D array of poppyseeds, each one representing a different RGB color. All together there are 256 x 256 x 256 = 16.8 million different colors (poppyseeds) in the cube.

ü However, not all of these colors (poppyseeds) are present in any given digital image. In a particular digital image, only certain of these colors will be present. Think of those as a much smaller number of glowing poppyseeds in the cube containing the evenly-spaced array of 16.8 million poppyseeds.

ü The object now is to cut the cake (cube) into 256 smaller pieces (for 8-bit indexed color). Each piece will still contain many glowing poppyseeds (colors from the picture) but these will be very close in color, so we can take one glowing poppyseed (color) from each piece (to represent all the other glowing poppyseeds in that piece) and put it into the color table.

ü The actual method of cutting the cake (subdividing the color cube) is to make each cut so that each piece contains an equal number of glowing poppyseeds (picture colors), but these details are not important here and will not be discussed further.
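Cutting so that each piece holds an equal number of picture colors is essentially the median-cut idea. Below is one simple version, not necessarily Photoshop's exact procedure: the rule for which piece to cut next (the one with the widest spread along R, G or B) and the choice of the picture color nearest the piece's average as its representative are assumptions, and all function names are hypothetical:

```python
def widest_channel(box):
    """Return (channel, range) for the R, G or B axis along which the box is longest."""
    ranges = [max(c[ch] for c in box) - min(c[ch] for c in box) for ch in range(3)]
    best = max(range(3), key=lambda ch: ranges[ch])
    return best, ranges[best]

def representative(box):
    """Pick the picture color (glowing poppyseed) closest to the piece's average color."""
    avg = [sum(c[ch] for c in box) / len(box) for ch in range(3)]
    return min(box, key=lambda c: sum((c[ch] - avg[ch]) ** 2 for ch in range(3)))

def median_cut(colors, n_pieces=256):
    """Cut the cube into up to n_pieces pieces, each cut splitting a piece's colors in half."""
    boxes = [sorted(set(colors))]
    while len(boxes) < n_pieces:
        splittable = [j for j, box in enumerate(boxes) if len(box) > 1]
        if not splittable:
            break  # fewer picture colors than pieces: each color already has its own piece
        i = max(splittable, key=lambda j: widest_channel(boxes[j])[1])
        box = boxes.pop(i)
        ch, _ = widest_channel(box)
        box.sort(key=lambda c: c[ch])
        mid = len(box) // 2  # the median: equal numbers of colors on each side of the cut
        boxes += [box[:mid], box[mid:]]
    return [representative(box) for box in boxes]

def nearest_index(color, color_table):
    """Index of the color-table entry closest to a given picture color."""
    return min(range(len(color_table)),
               key=lambda i: sum((color[ch] - color_table[i][ch]) ** 2 for ch in range(3)))

picture_colors = [(250, 0, 0), (252, 2, 0), (254, 4, 0), (0, 0, 250), (2, 0, 252), (4, 0, 254)]
table = median_cut(picture_colors, n_pieces=2)
print(table)                             # [(2, 0, 252), (252, 2, 0)]
print(nearest_index((251, 1, 0), table))  # 1, the reddish entry
```

For 8-bit indexed color, n_pieces would be 256; each pixel is then stored as the index of its nearest table entry.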

3 The computer's color palette is filled using the table.

o A program like Photoshop reads the table into the computer's display hardware color palette (e.g., into VRAM).

4 The color palette is used to color the screen pixels in the display of this image if the computer does not support 24-bit color.

o Such a color palette is sometimes called a Look-Up Table, or LUT.

VI Storing bitmapped image files (e.g., digital photos)

a How IBM BMP files are stored

1 (All information is stored as bytes.)

2 File header

o Contains file type (e.g., BMP), file size, location of bitmap data

3 Information header

o Size of information header

o Image height

o Image width

o Number of bits per pixel (pixel depth)

o Compression method (to be discussed next)

o Resolution

o Number of colors in image.

o ......

4 Color table

5 Bitmap data

o Pixel values (colors) for each row of pixels in the image.
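The header layout can be seen by building a tiny BMP file in memory and reading its headers back. A sketch, assuming the common Windows BITMAPINFOHEADER (40-byte information header) and an uncompressed 24-bit image, which has no color table; the function names are hypothetical. Two details of the format show up here: pixel rows are stored bottom row first, and each pixel's bytes are in blue, green, red order, with each row padded to a multiple of 4 bytes:

```python
import struct

FILE_HEADER = "<2sIHHI"       # type, file size, 2 reserved fields, bitmap data offset (14 bytes)
INFO_HEADER = "<IiiHHIIiiII"  # header size, width, height, planes, bits per pixel, compression,
                              # image size, x and y pixels per meter, colors used, important (40 bytes)

def make_bmp_24bit(pixels):
    """Build an uncompressed 24-bit BMP; pixels[row][col] = (r, g, b), row 0 at the top."""
    height, width = len(pixels), len(pixels[0])
    row_size = (width * 3 + 3) // 4 * 4        # each row is padded to a multiple of 4 bytes
    data = bytearray()
    for row in reversed(pixels):               # bottom row is stored first
        line = bytearray()
        for r, g, b in row:
            line += bytes((b, g, r))           # blue, green, red byte order
        data += line + b"\x00" * (row_size - len(line))
    offset = struct.calcsize(FILE_HEADER) + struct.calcsize(INFO_HEADER)
    file_header = struct.pack(FILE_HEADER, b"BM", offset + len(data), 0, 0, offset)
    info_header = struct.pack(INFO_HEADER, 40, width, height, 1, 24, 0,
                              len(data), 2835, 2835, 0, 0)  # 2835 pixels/meter is about 72 ppi
    return file_header + info_header + bytes(data)

def read_bmp_headers(blob):
    """Read the file header and information header fields listed in the outline."""
    file_type, file_size, _, _, data_offset = struct.unpack_from(FILE_HEADER, blob, 0)
    (info_size, width, height, _planes, bits_per_pixel, compression,
     _image_size, x_ppm, _y_ppm, colors_used, _important) = struct.unpack_from(
        INFO_HEADER, blob, struct.calcsize(FILE_HEADER))
    return {"file_type": file_type, "file_size": file_size, "data_offset": data_offset,
            "info_header_size": info_size, "width": width, "height": height,
            "bits_per_pixel": bits_per_pixel, "compression": compression,
            "pixels_per_inch": round(x_ppm * 0.0254), "colors_used": colors_used}

blob = make_bmp_24bit([[(255, 0, 0), (0, 255, 0)],
                       [(0, 0, 255), (255, 255, 255)]])
print(read_bmp_headers(blob))
```

A compression value of 0 means the bitmap data is uncompressed.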

VII Compression of bitmapped images

a Image files which are not "compressed" can get very large

o Aside from headers, image file size is proportional to the number of pixels in the image and to the pixel depth (# of bits used to represent each pixel).

o A "true-color" (24-bit) image of size 1024 x 768 pixels contains over 2 megabytes of info.

b Image files can be made smaller by "compression" tricks.

1 Compression tricks can shrink a file by a factor of 5 or more.

2 Two classes of compression:

o Lossless compression

ü Keeps all pixels, but "coding" is changed.

ü Compression is modest.

o Lossy compression

ü Throws away some image information.

ü Compression can be more extreme.
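The difference between the two classes can be demonstrated in a few lines. In this sketch, Python's zlib module (DEFLATE coding, not RLE) stands in for a generic lossless coder, and rounding each value down to a multiple of 16 is a crude stand-in for a lossy step; it is only an illustration, not how formats such as JPEG actually work. The pixel row is made up:

```python
import zlib

# Hypothetical row of 1024 8-bit pixel values: a slow ramp with slight pixel-to-pixel variation
original = bytes((i // 4 + i % 3) % 256 for i in range(1024))

# Lossless: the coding changes, but decompression gives back every value exactly
packed = zlib.compress(original)
assert zlib.decompress(packed) == original

# Lossy (crude illustration): throw away the 4 lowest bits of each value before compressing.
# The fine variation is discarded and cannot be recovered from the compressed data.
quantized = bytes(v & 0xF0 for v in original)
packed_lossy = zlib.compress(quantized)
assert zlib.decompress(packed_lossy) != original

print("original:", len(original), "bytes")
print("lossless:", len(packed), "bytes")
print("lossy:   ", len(packed_lossy), "bytes")
```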

c RLE lossless compression (e.g., TIFF)

1 RLE = Run Length Encoding

2 TIFF files can be RLE-compressed (TIFF also allows other compression methods, or none)

3 Here is how it works for an image with 128 different colors, labeled from 0 to 127:

o Consider a sequence of values of the first 18 pixels in the top row of pixels on the screen.

o Each value is a number from 0 to 127 designating a specific color (say, in a color table). Thus only 7 bits are needed to specify a color, since there are exactly 2⁷ = 128 different 7-place binary numbers.

o Since most images have certain areas of the same color, it is common to have some repeating pixel values.

o A sequence of repeating pixel values is called a "run," and the number of repetitions is called the run length.

o Below we give an example of RLE compression:

4 In the table below, there is a run of zeros of run length 5, a run of 128's of run length 4, and a run of 32's of run length 4.

| 0 | 0 | 0 | 0 | 0 | 32 | 84 | 128 | 128 | 128 | 128 | 96 | 74 | 56 | 32 | 32 | 32 | 32 |

o Each of these values might represent, for example, a particular color, so the row stands for a sequence of 18 colors.

o An RLE compressor program scans pixel-values in the row of numbers from left to right, looking for repeated pixels.

o When 3 or more consecutive pixels of identical value are found, they are replaced by two values — one specifying the run length, and the next specifying the value of the repeated pixel.

o The value carrying run-length information is shown below as a grey cell, with the run length given by a red number. Such a value is called a run-length token.

ü A run-length token does not specify a color or correspond to a physical set of three phosphors on the screen; it is a marker carrying a code for the number of repetitions of the next pixel value.

ü An 8th bit with value one could be used to indicate that the number given by the first 7 bits is a run-length token rather than a code for a color. In reading the compressed file, the value right after a token is always read as the color value to be repeated, so its 8th bit is not treated as a flag.
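The scheme just described can be sketched in Python. This is a minimal version assuming 7-bit color values (0 to 127), the 8th bit (value 128) as the run-length-token flag, run lengths of at most 127, and replacement only for runs of 3 or more. The function names are hypothetical, and the sample row is made up: it has the same shape as the row in the table, but every value stays below 128 so that it fits in 7 bits:

```python
TOKEN_FLAG = 0x80  # 8th bit set: this value is a run-length token
MAX_VALUE = 0x7F   # color values must fit in 7 bits
MAX_RUN = 0x7F     # the token's lower 7 bits hold the run length
MIN_RUN = 3        # only runs of 3 or more identical pixels are replaced

def rle_encode(values):
    """Replace each run of MIN_RUN or more identical values with (token, value)."""
    out = []
    i = 0
    while i < len(values):
        value = values[i]
        if not 0 <= value <= MAX_VALUE:
            raise ValueError(f"{value} does not fit in 7 bits")
        run = 1
        while i + run < len(values) and values[i + run] == value and run < MAX_RUN:
            run += 1
        if run >= MIN_RUN:
            out += [TOKEN_FLAG | run, value]  # token, then the color value to repeat
        else:
            out += [value] * run              # short runs are copied unchanged
        i += run
    return out

def rle_decode(codes):
    """Expand each run-length token into repeated copies of the value after it."""
    out = []
    i = 0
    while i < len(codes):
        code = codes[i]
        if code & TOKEN_FLAG:
            out += [codes[i + 1]] * (code & MAX_RUN)
            i += 2
        else:
            out.append(code)
            i += 1
    return out

row = [0, 0, 0, 0, 0, 32, 84, 100, 100, 100, 100, 96, 74, 56, 32, 32, 32, 32]
packed = rle_encode(row)
print(packed)                      # [133, 0, 32, 84, 132, 100, 96, 74, 56, 132, 32]
print(len(row), "->", len(packed))  # 18 -> 11
assert rle_decode(packed) == row
```

Here 133 is 128 + 5 (a run of 5) and 132 is 128 + 4 (a run of 4).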

5

|

0

|

32

|

84