An oscilloscope, previously called an oscillograph, and informally known as a scope, CRO (for cathode-ray oscilloscope), or DSO (for the more modern digital storage oscilloscope), is a type of electronic test instrument that allows observation of constantly varying signal voltages, usually as a two-dimensional plot of one or more signals as a function of time. Other signals (such as sound or vibration) can be converted to voltages and displayed.

Oscilloscopes are used to observe the change of an electrical signal over time, such that voltage and time describe a shape which is continuously graphed against a calibrated scale. The observed waveform can be analyzed for such properties as amplitude, frequency, rise time, time interval, distortion and others. Modern digital instruments may calculate and display these properties directly. Originally, calculation of these values required manually measuring the waveform against the scales built into the screen of the instrument.

The oscilloscope can be adjusted so that repetitive signals can be observed as a continuous shape on the screen. A storage oscilloscope allows single events to be captured by the instrument and displayed for a relatively long time, allowing observation of events too fast to be directly perceptible.

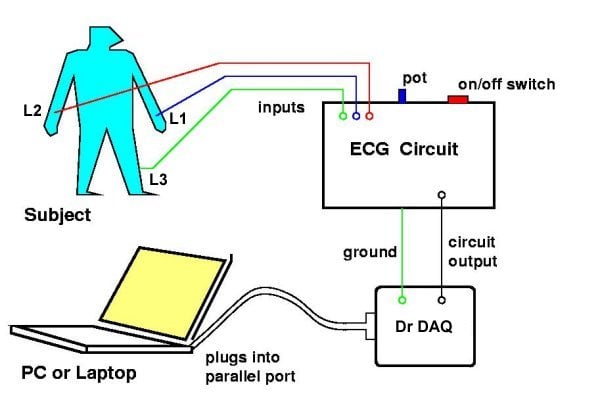

Oscilloscopes are used in the sciences, medicine, engineering, and the automotive and telecommunications industries. General-purpose instruments are used for maintenance of electronic equipment and laboratory work. Special-purpose oscilloscopes may be used for such purposes as analyzing an automotive ignition system or displaying the waveform of the heartbeat as an electrocardiogram.

Early oscilloscopes used cathode ray tubes (CRTs) as their display element (hence they were commonly referred to as CROs) and linear amplifiers for signal processing. Storage oscilloscopes used special storage CRTs to maintain a steady display of a single brief signal. CROs were later largely superseded by digital storage oscilloscopes (DSOs) with thin panel displays, fast analog-to-digital converters and digital signal processors. DSOs without integrated displays (sometimes known as digitisers) are available at lower cost and use a general-purpose digital computer to process and display waveforms.

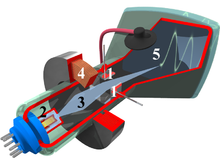

Oscilloscope cathode-ray tube

The interior of a cathode-ray tube for use in an oscilloscope. 1. Deflection voltage electrode; 2. Electron gun; 3. Electron beam; 4. Focusing coil; 5. Phosphor-coated inner side of the screen.

Features and uses

Description

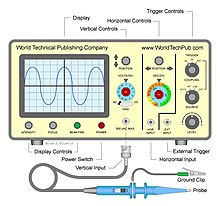

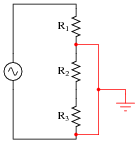

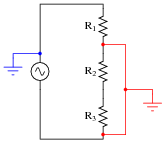

The basic oscilloscope, as shown in the illustration, is typically divided into four sections: the display, vertical controls, horizontal controls and trigger controls. The display is usually a CRT or LCD panel which is laid out with both horizontal and vertical reference lines referred to as the graticule. In addition to the screen, most display sections are equipped with three basic controls: a focus knob, an intensity knob and a beam finder button.

The vertical section controls the amplitude of the displayed signal. This section carries a Volts-per-Division (Volts/Div) selector knob, an AC/DC/Ground selector switch and the vertical (primary) input for the instrument. Additionally, this section is typically equipped with the vertical beam position knob.

The horizontal section controls the time base or "sweep" of the instrument. The primary control is the Seconds-per-Division (Sec/Div) selector switch. Also included is a horizontal input for plotting dual X-Y axis signals. The horizontal beam position knob is generally located in this section.

The trigger section controls the start event of the sweep. The trigger can be set to automatically restart after each sweep or it can be configured to respond to an internal or external event. The principal controls of this section are the source and coupling selector switches. An external trigger input (EXT Input) and level adjustment are also included.

In addition to the basic instrument, most oscilloscopes are supplied with a probe as shown. The probe connects to any input on the instrument and typically contains a series resistor of nine times the oscilloscope's input resistance, forming a 10:1 divider with it. This results in a 0.1 (10X) attenuation factor but helps to isolate the capacitive load presented by the probe cable from the signal being measured. Some probes have a switch allowing the operator to bypass the resistor when appropriate.

Size and portability

Most modern oscilloscopes are lightweight, portable instruments that are compact enough to be easily carried by a single person. In addition to the portable units, the market offers a number of miniature battery-powered instruments for field service applications. Laboratory-grade oscilloscopes, especially older units which use vacuum tubes, are generally bench-top devices or may be mounted into dedicated carts. Special-purpose oscilloscopes may be rack-mounted or permanently mounted into a custom instrument housing.

Inputs

The signal to be measured is fed to one of the input connectors, which is usually a coaxial connector such as a BNC or UHF type. Binding posts or banana plugs may be used for lower frequencies. If the signal source has its own coaxial connector, then a simple coaxial cable is used; otherwise, a specialized cable called a "scope probe", supplied with the oscilloscope, is used. In general, for routine use, an open wire test lead for connecting to the point being observed is not satisfactory, and a probe is generally necessary. General-purpose oscilloscopes usually present an input impedance of 1 megohm in parallel with a small but known capacitance such as 20 picofarads.[4] This allows the use of standard oscilloscope probes.[5] Scopes for use with very high frequencies may have 50‑ohm inputs, which must be either connected directly to a 50‑ohm signal source or used with Z0 or active probes.

Less frequently used inputs include one (or two) for triggering the sweep, horizontal deflection for X‑Y mode displays, and trace brightening/darkening, sometimes called z‑axis inputs.

Probes

Open wire test leads (flying leads) are likely to pick up interference, so they are not suitable for low level signals. Furthermore, the leads have a high inductance, so they are not suitable for high frequencies. Using a shielded cable (i.e., coaxial cable) is better for low level signals. Coaxial cable also has lower inductance, but it has higher capacitance: a typical 50 ohm cable has about 90 pF per meter. Consequently, a one-meter direct (1X) coaxial probe will load a circuit with a capacitance of about 110 pF and a resistance of 1 megohm.

To minimize loading, attenuator probes (e.g., 10X probes) are used. A typical probe uses a 9 megohm series resistor shunted by a low-value capacitor to make an RC compensated divider with the cable capacitance and scope input. The RC time constants are adjusted to match. For example, the 9 megohm series resistor is shunted by a 12.2 pF capacitor for a time constant of 110 microseconds. The cable capacitance of 90 pF in parallel with the scope input of 20 pF and 1 megohm (total capacitance 110 pF) also gives a time constant of 110 microseconds. In practice, there will be an adjustment so the operator can precisely match the low frequency time constant (called compensating the probe). Matching the time constants makes the attenuation independent of frequency. At low frequencies (where the resistance of R is much less than the reactance of C), the circuit looks like a resistive divider; at high frequencies (resistance much greater than reactance), the circuit looks like a capacitive divider.
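The compensation arithmetic above can be checked numerically. The following Python sketch models the probe as two parallel RC sections in series, using the values from the text (9 megohm shunted by 12.2 pF in the probe; 1 megohm with 20 pF of scope input plus 90 pF of cable), and evaluates the divider ratio at several frequencies with complex impedances. It is an idealized lumped model under stated assumptions: it ignores the cable's transmission-line behavior, and the function names are illustrative.

```python
import math

R_PROBE = 9e6               # series resistor in the probe (ohms)
C_PROBE = 12.2e-12          # compensation capacitor across R_PROBE (farads)
R_SCOPE = 1e6               # scope input resistance (ohms)
C_SCOPE = 20e-12 + 90e-12   # scope input (20 pF) plus one metre of cable (90 pF)

def parallel_rc(r, c, f):
    """Complex impedance of resistor r in parallel with capacitor c at frequency f (Hz)."""
    if f == 0:
        return complex(r, 0)
    zc = 1 / (1j * 2 * math.pi * f * c)
    return r * zc / (r + zc)

def attenuation(f):
    """Magnitude of V_scope / V_tip for the compensated divider at frequency f."""
    z_top = parallel_rc(R_PROBE, C_PROBE, f)
    z_bottom = parallel_rc(R_SCOPE, C_SCOPE, f)
    return abs(z_bottom / (z_top + z_bottom))

tau_probe = R_PROBE * C_PROBE   # probe-side time constant
tau_scope = R_SCOPE * C_SCOPE   # scope-side time constant
print(f"tau_probe = {tau_probe * 1e6:.1f} us, tau_scope = {tau_scope * 1e6:.1f} us")

for f in (0, 1e3, 1e5, 1e7):
    print(f"{f:>12,.0f} Hz: ratio = {attenuation(f):.4f}")

# With matched time constants, the tip sees the two resistors in series,
# shunted by the series combination of the two capacitances.
c_tip = C_PROBE * C_SCOPE / (C_PROBE + C_SCOPE)
print(f"tip load: {(R_PROBE + R_SCOPE) / 1e6:.0f} megohm shunted by {c_tip * 1e12:.1f} pF")
```

The ratio is exactly 0.1 at DC and approaches the capacitive-divider value 12.2/122.2 (about 0.0998) at high frequency, so it is essentially constant across the range. Changing `C_PROBE` in the sketch shows the frequency-dependent error of a miscompensated probe.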

The result is a frequency-compensated probe for modest frequencies that presents a load of about 10 megohms shunted by about 11 pF (the 12.2 pF probe capacitor in series with the 110 pF at the scope end). Although such a probe is an improvement, it does not work when the time scale shrinks to several cable transit times (transit time is typically 5 ns). In that time frame, the cable looks like its characteristic impedance, and reflections from the transmission-line mismatches at the scope input and at the probe cause ringing.[7] The modern scope probe uses lossy low-capacitance transmission lines and sophisticated frequency-shaping networks to make the 10X probe perform well at several hundred megahertz. Such probes therefore have additional adjustments for completing the compensation.

Probes with 10:1 attenuation are by far the most common; for large signals (and slightly less capacitive loading), 100:1 probes are not rare. There are also probes that contain switches to select 10:1 or direct (1:1) ratios, but one must be aware that the 1:1 setting has significant capacitance (tens of pF) at the probe tip, because the whole cable's capacitance is now directly connected.

Most oscilloscopes allow for probe attenuation factors, displaying the effective sensitivity at the probe tip. Historically, some auto-sensing circuitry used indicator lamps behind translucent windows in the panel to illuminate different parts of the sensitivity scale. To do so, the probe connectors (modified BNCs) had an extra contact to define the probe's attenuation. (A certain value of resistor, connected to ground, "encodes" the attenuation.) Because probes wear out, and because the auto-sensing circuitry is not compatible between different makes of oscilloscope, auto-sensing probe scaling is not foolproof. Likewise, manually setting the probe attenuation is prone to user error and it is a common mistake to have the probe scaling set incorrectly; resultant voltage readings can then be wrong by a factor of 10.

There are special high voltage probes which also form compensated attenuators with the oscilloscope input; the probe body is physically large, and some require partly filling a canister surrounding the series resistor with volatile liquid fluorocarbon to displace air. At the oscilloscope end is a box with several waveform-trimming adjustments. For safety, a barrier disc keeps one's fingers distant from the point being examined. Maximum voltage is in the low tens of kV. (Observing a high voltage ramp can create a staircase waveform with steps at different points every repetition, until the probe tip is in contact. Until then, a tiny arc charges the probe tip, and its capacitance holds the voltage (open circuit). As the voltage continues to climb, another tiny arc charges the tip further.)

There are also current probes, with cores that surround the conductor carrying the current to be examined. One type has a hole through which the wire must be passed; these are intended for semi-permanent or permanent mounting. Other types, intended for testing, have a two-part core that permits them to be placed around a wire. Inside the probe, a coil wound around the core provides a current into an appropriate load, and the voltage across that load is proportional to the current. However, this type of probe can sense only AC.

A more-sophisticated probe includes a magnetic flux sensor (Hall effect sensor) in the magnetic circuit. The probe connects to an amplifier, which feeds (low frequency) current into the coil to cancel the sensed field; the magnitude of that current provides the low-frequency part of the current waveform, right down to DC. The coil still picks up high frequencies. There is a combining network akin to a loudspeaker crossover network.
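The crossover idea can be illustrated with an idealized model. In the Python sketch below, the Hall-sensor path is treated as a first-order low-pass and the coil path as a first-order high-pass sharing one crossover frequency; because the two responses are complementary, their sum is flat from DC upward. The 10 kHz crossover frequency and the first-order shapes are assumptions chosen for illustration, not the characteristics of any particular probe.

```python
F_CROSSOVER = 10e3  # hypothetical crossover frequency (Hz)

def hall_path(f):
    """Idealized first-order low-pass response of the Hall-sensor (DC-capable) path."""
    s = 1j * f / F_CROSSOVER
    return 1 / (1 + s)

def coil_path(f):
    """Idealized first-order high-pass response of the coil (transformer) path."""
    s = 1j * f / F_CROSSOVER
    return s / (1 + s)

for f in (0, 1e2, 1e4, 1e6):
    lp, hp = hall_path(f), coil_path(f)
    # The complex sum is (1 + s) / (1 + s) = 1 at every frequency.
    print(f"{f:>9.0f} Hz: |Hall|={abs(lp):.3f} |coil|={abs(hp):.3f} |sum|={abs(lp + hp):.3f}")
```

At the crossover frequency each path contributes 0.707 of the amplitude, yet the combined response is still unity because the two paths are 90 degrees apart in phase.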

Front panel controls

Focus control

This control adjusts CRT focus to obtain the sharpest, most detailed trace. In practice, focus needs to be adjusted slightly when observing quite different signals, which means that it needs to be an external control. Flat-panel displays do not need focus adjustments and therefore do not include this control.

Intensity control

This adjusts trace brightness. Slow traces on CRT oscilloscopes need less, and fast ones, especially if not often repeated, require more. On flat panels, however, trace brightness is essentially independent of sweep speed, because the internal signal processing effectively synthesizes the display from the digitized data.

Astigmatism

Also called "Shape" or "spot shape". This control adjusts the relative voltages on two of the CRT anodes such that a displayed spot changes from an ellipse in one plane, through a circular spot, to an ellipse at 90 degrees to the first. This control may be absent from simpler oscilloscope designs or may even be an internal control. It is not necessary with flat panel displays.

Beam finder

Modern oscilloscopes have direct-coupled deflection amplifiers, which means the trace could be deflected off-screen. They also might have their beam blanked without the operator knowing it. To help in restoring a visible display, the beam finder circuit overrides any blanking and limits the beam deflection to the visible portion of the screen. Beam-finder circuits often distort the trace while activated.

Graticule

The graticule is a grid of squares that serve as reference marks for measuring the displayed trace. These markings, whether located directly on the screen or on a removable plastic filter, usually consist of a 1 cm grid with closer tick marks (often at 2 mm) on the centre vertical and horizontal axes. One expects to see ten major divisions across the screen; the number of vertical major divisions varies. Comparing the grid markings with the waveform permits one to measure both voltage (vertical axis) and time (horizontal axis). Frequency can also be determined by measuring the waveform period and calculating its reciprocal.

On old and lower-cost CRT oscilloscopes the graticule is a sheet of plastic, often with light-diffusing markings and concealed lamps at the edge of the graticule. The lamps have a brightness control. Higher-cost instruments have the graticule marked on the inside face of the CRT, to eliminate parallax errors; better ones also have adjustable edge illumination with diffusing markings. (Diffusing markings appear bright.) Digital oscilloscopes, however, generate the graticule markings on the display in the same way as the trace.
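Converting graticule readings into voltage, time and frequency is simple arithmetic. The Python sketch below uses a hypothetical trace (4.2 divisions peak-to-peak at 0.5 V/div, one cycle spanning 5 divisions at 0.2 ms/div); the helper names are illustrative. The `probe_factor` argument shows how a 10X probe that the oscilloscope has not been told about changes the true voltage by a factor of 10.

```python
def volts(divisions, volts_per_div, probe_factor=1):
    """Voltage represented by a vertical distance on the graticule.

    probe_factor is the probe attenuation (10 for a 10X probe) when the
    oscilloscope has not already scaled its Volts/Div reading for the probe.
    """
    return divisions * volts_per_div * probe_factor

def seconds(divisions, sec_per_div):
    """Time represented by a horizontal distance on the graticule."""
    return divisions * sec_per_div

# Hypothetical reading of a trace on the graticule.
v_pp = volts(4.2, 0.5)                         # peak-to-peak at the scope input
period = seconds(5, 0.2e-3)                    # one full cycle
frequency = 1 / period                         # reciprocal of the period
v_pp_tip = volts(4.2, 0.5, probe_factor=10)    # same trace seen through a 10X probe

print(f"Vpp = {v_pp:.2f} V, T = {period * 1e3:.2f} ms, f = {frequency:.0f} Hz")
print(f"Vpp at the tip of a 10X probe = {v_pp_tip:.1f} V")
```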

External graticules also protect the glass face of the CRT from accidental impact. Some CRT oscilloscopes with internal graticules have an unmarked tinted sheet plastic light filter to enhance trace contrast; this also serves to protect the faceplate of the CRT.

Accuracy and resolution of measurements using a graticule are relatively limited; better instruments sometimes have movable bright markers on the trace that permit internal circuits to make more refined measurements.

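Both calibrated vertical sensitivity and calibrated horizontal time are set in 1-2-5-10 steps. This leads, however, to some awkward interpretations of minor divisions: with 2 mm ticks on a 1 cm grid, each minor division is 0.2 of a major division, so at a setting of 2 units per division each tick represents 0.4 units.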
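The 1-2-5 sequence and its effect on minor ticks can be tabulated with a few lines of Python. The sketch assumes five minor ticks per major division (2 mm ticks on a 1 cm grid, as described above) and uses volts per division only as an example unit; the function name is illustrative.

```python
MINOR_PER_MAJOR = 5  # 2 mm minor ticks on a 1 cm major grid

def one_two_five(first_decade, last_decade):
    """Calibrated 1-2-5 settings for every decade from 10**first_decade to 10**last_decade."""
    return [m * 10.0 ** e
            for e in range(first_decade, last_decade + 1)
            for m in (1, 2, 5)]

for setting in one_two_five(-1, 0):
    minor = setting / MINOR_PER_MAJOR
    print(f"{setting:g} V/div: each minor tick = {minor:g} V")
```

The 1 and 5 settings give minor ticks of 0.2 and 1 units per decade step, which are easy to count, while the 2 setting gives 0.4, the awkward case mentioned above.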

Timebase controls

These select the horizontal speed of the CRT's spot as it creates the trace; this process is commonly referred to as the sweep. In all but the least-costly modern oscilloscopes, the sweep speed is selectable and calibrated in units of time per major graticule division. Quite a wide range of sweep speeds is generally provided, from seconds per division down to picoseconds per division in the fastest instruments. Usually, a continuously variable control (often a knob in front of the calibrated selector knob) offers uncalibrated speeds, typically slower than calibrated. This control provides a range somewhat greater than that of consecutive calibrated steps, making any speed available between the extremes.

Holdoff control

Found on some better analog oscilloscopes, this varies the time (holdoff) during which the sweep circuit ignores triggers. It provides a stable display of some repetitive events in which some triggers would create confusing displays. It is usually set to minimum, because a longer time decreases the number of sweeps per second, resulting in a dimmer trace.

Vertical sensitivity, coupling, and polarity controls

To accommodate a wide range of input amplitudes, a switch selects calibrated sensitivity of the vertical deflection. Another control, often in front of the calibrated-selector knob, offers a continuously variable sensitivity over a limited range from calibrated to less-sensitive settings.

Often the observed signal is offset by a steady component, and only the changes are of interest. A switch (AC position) connects a capacitor in series with the input that passes only the changes, provided they are not too slow; changes slow enough to follow by eye are attenuated. However, when the signal has a fixed offset of interest, or changes quite slowly, the input is connected directly (DC switch position). Most oscilloscopes offer the DC input option. For convenience, to see where zero volts input currently shows on the screen, many oscilloscopes have a third switch position (GND) that disconnects the input and grounds it. In this position, the user often centers the trace with the Vertical Position control.
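The AC position behaves as a first-order RC high-pass filter formed by the coupling capacitor and the input resistance. The Python sketch below assumes a hypothetical 0.1 µF coupling capacitor with the usual 1 megohm input; the actual capacitor value varies between instruments. It computes the corner frequency and shows how quickly a sudden DC shift decays from the display, which is why very slow changes are lost with AC coupling.

```python
import math

R_INPUT = 1e6         # typical general-purpose scope input resistance (ohms)
C_COUPLING = 0.1e-6   # hypothetical AC-coupling capacitor (farads)

tau = R_INPUT * C_COUPLING            # RC time constant in seconds
f_corner = 1 / (2 * math.pi * tau)    # -3 dB corner of the high-pass filter

def ac_gain(f):
    """Relative amplitude passed by the AC-coupled input at frequency f (Hz)."""
    x = f / f_corner
    return x / math.sqrt(1 + x * x)

def step_remaining(t):
    """Fraction of a sudden DC step still shown t seconds after the step."""
    return math.exp(-t / tau)

print(f"tau = {tau:.2f} s, corner = {f_corner:.2f} Hz")
for f in (0.5, 1.6, 10, 1000):
    print(f"{f:>7g} Hz passes {ac_gain(f):.3f} of its amplitude")
print(f"after 0.5 s, {step_remaining(0.5):.1%} of a DC step remains")
```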

Better oscilloscopes have a polarity selector. Normally, a positive input moves the trace upward; the selector permits inverting the display, so that a positive input deflects the trace downward.

Horizontal sensitivity control

This control is found only on more elaborate oscilloscopes; it offers adjustable sensitivity for external horizontal inputs.

Vertical position control

The vertical position control moves the whole displayed trace up and down. It is used to set the no-input trace exactly on the center line of the graticule, but also permits offsetting vertically by a limited amount. With direct coupling, adjustment of this control can compensate for a limited DC component of an input.

Horizontal position control

The horizontal position control moves the display sideways. It usually sets the left end of the trace at the left edge of the graticule, but it can displace the whole trace when desired. This control also moves the X-Y mode traces sideways in some instruments, and can compensate for a limited DC component as for vertical position.

Dual-trace controls

Each input channel usually has its own set of sensitivity, coupling, and position controls, although some four-trace oscilloscopes have only minimal controls for their third and fourth channels.

Dual-trace oscilloscopes have a mode switch to select either channel alone, both channels, or (in some) an X‑Y display, which uses the second channel for X deflection. When both channels are displayed, the type of channel switching can be selected on some oscilloscopes; on others, the type depends upon timebase setting. If manually selectable, channel switching can be free-running (asynchronous), or between consecutive sweeps. Some Philips dual-trace analog oscilloscopes had a fast analog multiplier, and provided a display of the product of the input channels.

Multiple-trace oscilloscopes have a switch for each channel to enable or disable display of that trace's signal.

Delayed-sweep controls

These include controls for the delayed-sweep timebase, which is calibrated, and often also variable. The slowest speed is several steps faster than the slowest main sweep speed, although the fastest is generally the same. A calibrated multiturn delay time control offers wide range, high resolution delay settings; it spans the full duration of the main sweep, and its reading corresponds to graticule divisions (but with much finer precision). Its accuracy is also superior to that of the display.

A switch selects display modes: main sweep only, with a brightened region showing when the delayed sweep is advancing; delayed sweep only; or (on some) a combination mode.

Good CRT oscilloscopes include a delayed-sweep intensity control, to allow for the dimmer trace of a much-faster delayed sweep that nevertheless occurs only once per main sweep. Such oscilloscopes also are likely to have a trace separation control for multiplexed display of both the main and delayed sweeps together.

Sweep trigger controls

A switch selects the Trigger Source. It can be an external input, one of the vertical channels of a dual or multiple-trace oscilloscope, or the AC line (mains) frequency. Another switch enables or disables Auto trigger mode, or selects single sweep, if provided in the oscilloscope. Either a spring-return switch position or a pushbutton arms single sweeps.

A Level control varies the voltage on the waveform which generates a trigger, and the Slope switch selects positive-going or negative-going polarity at the selected trigger level.
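In a digital oscilloscope the same level-and-slope test can be applied to sampled data. The Python sketch below is a simplified software model, not how any particular instrument implements its trigger: it reports the sample indices at which a hypothetical sampled waveform crosses the trigger level in the selected direction.

```python
def edge_triggers(samples, level, slope="rising"):
    """Return indices at which `samples` crosses `level` in the chosen direction."""
    if slope not in ("rising", "falling"):
        raise ValueError("slope must be 'rising' or 'falling'")
    hits = []
    for i in range(1, len(samples)):
        prev, cur = samples[i - 1], samples[i]
        if slope == "rising" and prev < level <= cur:
            hits.append(i)          # positive-going crossing
        elif slope == "falling" and prev > level >= cur:
            hits.append(i)          # negative-going crossing
    return hits

# A triangle wave sampled 13 times, triggering at a level of 1.5
wave = [0, 1, 2, 3, 2, 1, 0, 1, 2, 3, 2, 1, 0]
print(edge_triggers(wave, 1.5, "rising"))    # [2, 8]
print(edge_triggers(wave, 1.5, "falling"))   # [5, 11]
```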

Basic types of sweep

Triggered sweep

Tektronix Type 465 oscilloscope, a popular portable analog oscilloscope and a representative example.

A triggered sweep starts at a selected point on the signal, providing a stable display. In this way, triggering allows the display of periodic signals such as sine waves and square waves, as well as nonperiodic signals such as single pulses, or pulses that do not recur at a fixed rate.

With triggered sweeps, the scope will blank the beam and start to reset the sweep circuit each time the beam reaches the extreme right side of the screen. For a period of time called holdoff (extendable by a front-panel control on some better oscilloscopes), the sweep circuit resets completely and ignores triggers. Once holdoff expires, the next trigger starts a sweep. The trigger event is usually the input waveform reaching some user-specified threshold voltage (trigger level) in the specified direction (going positive or going negative; this is the trigger polarity).

In some cases, variable holdoff time can be useful to make the sweep ignore interfering triggers that occur before the events to be observed. In the case of repetitive but complex waveforms, variable holdoff can create a stable display that cannot otherwise be achieved.

Holdoff

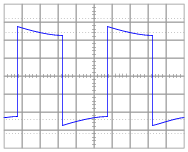

Trigger holdoff defines a certain period following a trigger during which the scope will not trigger again. This makes it easier to establish a stable view of a waveform with multiple edges which would otherwise cause another trigger.

Example

Imagine the following repeating waveform:

The green line is the waveform, the red vertical partial line represents the location of the trigger, and the yellow line represents the trigger level. If the scope were simply set to trigger on every rising edge, this waveform would cause three triggers for each cycle:

Assuming the signal is fairly high frequency, the scope would probably look something like this:

On a real scope, however, every trace would come from the same channel and so would appear in the same color.

To trigger on only one edge per cycle, set the holdoff slightly less than the period of the waveform (but longer than the time from the first to the last triggering edge within a cycle). That prevents the scope from triggering more than once per cycle but still allows it to trigger on the first edge of the next cycle.

Automatic sweep mode

Triggered sweeps can display a blank screen if there are no triggers. To avoid this, these sweeps include a timing circuit that generates free-running triggers so a trace is always visible. Once triggers arrive, the timer stops providing pseudo-triggers. Automatic sweep mode can be de-selected when observing low repetition rates.

Recurrent sweeps

If the input signal is periodic, the sweep repetition rate can be adjusted to display a few cycles of the waveform. Early (tube) oscilloscopes and lowest-cost oscilloscopes have sweep oscillators that run continuously, and are uncalibrated. Such oscilloscopes are very simple, comparatively inexpensive, and were useful in radio servicing and some TV servicing. Measuring voltage or time is possible, but only with extra equipment, and is quite inconvenient. They are primarily qualitative instruments.

They have a few (widely spaced) frequency ranges, and relatively wide-range continuous frequency control within a given range. In use, the sweep frequency is set to slightly lower than some submultiple of the input frequency, to display typically at least two cycles of the input signal (so all details are visible). A very simple control feeds an adjustable amount of the vertical signal (or possibly, a related external signal) to the sweep oscillator. The signal triggers beam blanking and a sweep retrace sooner than it would occur free-running, and the display becomes stable.

Single sweeps

Some oscilloscopes offer single sweeps. The sweep circuit is manually armed (typically by a pushbutton or equivalent); "armed" means it is ready to respond to a trigger. Once the sweep is complete, it resets and will not sweep again until re-armed. This mode, combined with an oscilloscope camera, captures single-shot events.

Types of trigger include:

- external trigger, a pulse from an external source connected to a dedicated input on the scope.

- edge trigger, an edge detector that generates a pulse when the input signal crosses a specified threshold voltage in a specified direction. These are the most common types of triggers; the level control sets the threshold voltage, and the slope control selects the direction (negative-going or positive-going). (The first sentence of the description also applies to the inputs to some digital logic circuits; those inputs have fixed threshold and polarity response.)

- video trigger, a circuit that extracts synchronizing pulses from video formats such as PAL and NTSC and triggers the timebase on every line, a specified line, every field, or every frame. This circuit is typically found in a waveform monitor device, although some better oscilloscopes include this function.

- delayed trigger, which waits a specified time after an edge trigger before starting the sweep. As described under delayed sweeps, a trigger delay circuit (typically the main sweep) extends this delay to a known and adjustable interval. In this way, the operator can examine a particular pulse in a long train of pulses.

Delayed sweeps

More sophisticated analog oscilloscopes contain a second timebase for a delayed sweep. A delayed sweep provides a very detailed look at some small selected portion of the main timebase. The main timebase serves as a controllable delay, after which the delayed timebase starts. This can start when the delay expires, or can be triggered (only) after the delay expires. Ordinarily, the delayed timebase is set for a faster sweep, sometimes much faster, such as 1000:1. At extreme ratios, jitter in the delays on consecutive main sweeps degrades the display, but delayed-sweep triggers can overcome that.

The display shows the vertical signal in one of several modes: the main timebase, or the delayed timebase only, or a combination thereof. When the delayed sweep is active, the main sweep trace brightens while the delayed sweep is advancing. In one combination mode, provided only on some oscilloscopes, the trace changes from the main sweep to the delayed sweep once the delayed sweep starts, although less of the delayed fast sweep is visible for longer delays. Another combination mode multiplexes (alternates) the main and delayed sweeps so that both appear at once; a trace separation control displaces them.
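The relationship between the main sweep, the delay setting and the delayed sweep is simple arithmetic. The Python sketch below uses hypothetical settings (1 ms/div main sweep, delay control at 3.40 divisions, 1 µs/div delayed sweep, ten horizontal divisions) to compute the delay, the magnification ratio and the width of the brightened region on the main trace.

```python
DIVISIONS = 10                 # horizontal major divisions on the graticule
MAIN_SEC_PER_DIV = 1e-3        # hypothetical main sweep setting
DELAYED_SEC_PER_DIV = 1e-6     # hypothetical delayed sweep setting
DELAY_DIAL = 3.40              # delay control reading, in main-sweep divisions

delay = DELAY_DIAL * MAIN_SEC_PER_DIV             # main-sweep start to delayed-sweep start
window = DIVISIONS * DELAYED_SEC_PER_DIV          # time span shown by the delayed sweep
ratio = MAIN_SEC_PER_DIV / DELAYED_SEC_PER_DIV    # horizontal magnification
bright_divs = window / MAIN_SEC_PER_DIV           # brightened region width on the main trace

print(f"delay = {delay * 1e3:.2f} ms, window = {window * 1e6:.0f} us")
print(f"magnification = {ratio:.0f}:1, brightened region = {bright_divs:.3f} div")
```

At this 1000:1 ratio the entire delayed sweep covers only 0.01 of a main-sweep division, which is why delay-dial precision and main-sweep jitter matter at extreme ratios.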

DSOs allow waveforms to be displayed in this way, without offering a delayed timebase as such.

Dual and multiple-trace oscilloscopes

Oscilloscopes with two vertical inputs, referred to as dual-trace oscilloscopes, are extremely useful and commonplace. Using a single-beam CRT, they multiplex the inputs, usually switching between them fast enough to display two traces apparently at once. Less common are oscilloscopes with more traces; four inputs are common among these, but a few (Kikusui, for one) offered a display of the sweep trigger signal if desired. Some multi-trace oscilloscopes use the external trigger input as an optional vertical input, and some have third and fourth channels with only minimal controls. In all cases, the inputs, when independently displayed, are time-multiplexed, but dual-trace oscilloscopes often can add their inputs to display a real-time analog sum. (Inverting one channel provides a difference, provided that neither channel is overloaded. This difference mode can provide a moderate-performance differential input.)

Switching channels can be asynchronous, that is, free-running, with trace blanking while switching, or after each horizontal sweep is complete. Asynchronous switching is usually designated "Chopped", while sweep-synchronized is designated "Alt[ernate]". A given channel is alternately connected and disconnected, leading to the term "chopped". Multi-trace oscilloscopes also switch channels either in chopped or alternate modes.

In general, chopped mode is better for slower sweeps. It is possible for the internal chopping rate to be a multiple of the sweep repetition rate, creating blanks in the traces, but in practice this is rarely a problem; the gaps in one trace are overwritten by traces of the following sweep. A few oscilloscopes had a modulated chopping rate to avoid this occasional problem. Alternate mode, however, is better for faster sweeps.

True dual-beam CRT oscilloscopes did exist, but were not common. One type (Cossor, U.K.) had a beam-splitter plate in its CRT, and single-ended deflection following the splitter. Others had two complete electron guns, requiring tight control of axial (rotational) mechanical alignment in manufacturing the CRT. Beam-splitter types had horizontal deflection common to both vertical channels, but dual-gun oscilloscopes could have separate time bases, or use one time base for both channels. Multiple-gun CRTs (up to ten guns) were made in past decades. With ten guns, the envelope (bulb) was cylindrical throughout its length.

The vertical amplifier

In an analog oscilloscope, the vertical amplifier acquires the signal(s) to be displayed. In better oscilloscopes, it delays them by a fraction of a microsecond, and provides a signal large enough to deflect the CRT's beam. That deflection is at least somewhat beyond the edges of the graticule, and more typically some distance off-screen. The amplifier has to have low distortion to display its input accurately (it must be linear), and it has to recover quickly from overloads. As well, its time-domain response has to represent transients accurately: minimal overshoot, rounding, and tilt of a flat pulse top.

A vertical input goes to a frequency-compensated step attenuator to reduce large signals to prevent overload. The attenuator feeds a low-level stage (or a few), which in turn feed gain stages (and a delay-line driver if there is a delay). Following are more gain stages, up to the final output stage which develops a large signal swing (tens of volts, sometimes over 100 volts) for CRT electrostatic deflection.

In dual and multiple-trace oscilloscopes, an internal electronic switch selects the relatively low-level output of one channel's amplifiers and sends it to the following stages of the vertical amplifier, which is only a single channel, so to speak, from that point on.

In free-running ("chopped") mode, the oscillator (which may be simply a different operating mode of the switch driver) blanks the beam before switching, and unblanks it only after the switching transients have settled.

Part way through the amplifier is a feed to the sweep trigger circuits, for internal triggering from the signal. This feed would be from an individual channel's amplifier in a dual or multi-trace oscilloscope, the channel depending upon the setting of the trigger source selector.

This feed precedes the delay (if there is one), which allows the sweep circuit to unblank the CRT and start the forward sweep, so the CRT can show the triggering event. High-quality analog delays add a modest cost to an oscilloscope, and are omitted in oscilloscopes that are cost-sensitive.

The delay itself comes from a special cable with a pair of conductors wound around a flexible, magnetically soft core. The coiling provides distributed inductance, while a conductive layer close to the wires provides distributed capacitance. The combination is a wideband transmission line with considerable delay per unit length. Both ends of the delay cable require matched impedances to avoid reflections.
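For a lossless transmission line with inductance L' and capacitance C' per unit length, the delay per unit length is sqrt(L'C') and the characteristic impedance, which both ends must be matched to, is sqrt(L'/C'). The Python sketch below applies these standard formulas to hypothetical values (50 µH/m and 200 pF/m) chosen only for round numbers; they are not the parameters of any particular delay cable.

```python
import math

L_PER_M = 50e-6      # hypothetical distributed inductance (H/m)
C_PER_M = 200e-12    # hypothetical distributed capacitance (F/m)

delay_per_m = math.sqrt(L_PER_M * C_PER_M)   # seconds per metre for a lossless line
z0 = math.sqrt(L_PER_M / C_PER_M)            # characteristic impedance (ohms)

def length_for_delay(delay_s):
    """Cable length needed for a given delay with the assumed L and C per metre."""
    return delay_s / delay_per_m

print(f"delay = {delay_per_m * 1e9:.0f} ns/m, Z0 = {z0:.0f} ohms")
print(f"length for 150 ns: {length_for_delay(150e-9):.2f} m")
```

Raising the inductance per metre (the purpose of the coiled construction) increases both the delay per metre and the impedance, so a useful delay fits in a practical length of cable.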

X-Y mode

A 24-hour clock displayed on a CRT oscilloscope configured in X-Y mode as a vector monitor with dual R2R DACs to generate the analog voltages.

The X-Y mode also allows the oscilloscope to be used as a vector monitor to display images or user interfaces. Many early games, such as Tennis for Two, used an oscilloscope as an output device.
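The 24-hour clock in the caption drives the X and Y inputs from two R-2R digital-to-analog converters. An ideal N-bit R-2R ladder produces V_ref × code / 2^N, so drawing a line means stepping both codes along the segment.

The sketch below makes these assumptions:

- 8-bit DACs.
- A 5 V reference.
- Screen coordinates normalised to the range 0 to 1.

The function names are hypothetical. A real display would also need amplifiers to scale these voltages to the deflection inputs.

```python
def dac_voltage(code, bits, v_ref):
    """Ideal R-2R ladder output voltage for an unsigned input code."""
    if not 0 <= code < 2 ** bits:
        raise ValueError("code out of range")
    return v_ref * code / 2 ** bits


def segment_codes(p0, p1, steps, bits):
    """Evenly spaced (x, y) DAC codes along a straight line from p0 to p1.

    p0 and p1 are (x, y) points in normalised screen coordinates, 0.0 to 1.0.
    """
    full_scale = 2 ** bits - 1
    points = []
    for i in range(steps + 1):
        t = i / steps
        x = p0[0] + (p1[0] - p0[0]) * t
        y = p0[1] + (p1[1] - p0[1]) * t
        points.append((round(x * full_scale), round(y * full_scale)))
    return points


# One stroke of a clock hand: from the centre towards the top-right corner.
for x_code, y_code in segment_codes((0.5, 0.5), (1.0, 1.0), steps=4, bits=8):
    print(x_code, y_code, dac_voltage(x_code, 8, 5.0), dac_voltage(y_code, 8, 5.0))
```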

Complete loss of signal in an X-Y CRT display means that the beam strikes a small spot, which risks burning the phosphor. Older phosphors burned more easily. Some dedicated X-Y displays reduce beam current greatly, or blank the display entirely, if there are no inputs present.

Bandwidth

As with all practical instruments, oscilloscopes do not respond equally to all possible input frequencies. The range of frequencies an oscilloscope can usefully display is referred to as its bandwidth. Bandwidth applies primarily to the Y-axis, although the X-axis sweeps have to be fast enough to show the highest-frequency waveforms.

The bandwidth is defined as the frequency at which the sensitivity is 0.707 of that at DC or the lowest AC frequency (a drop of 3 dB). The oscilloscope's response will drop off rapidly as the input frequency is raised above that point. Within the stated bandwidth the response will not necessarily be exactly uniform (or "flat"), but should always fall within a +0 to -3 dB range. One source[12] states that there is a noticeable effect on the accuracy of voltage measurements at only 20 percent of the stated bandwidth. Some oscilloscopes' specifications do include a narrower tolerance range within the stated bandwidth.
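To see why accuracy suffers well inside the stated bandwidth, model the front end as a single-pole low-pass filter whose -3 dB point is the rated bandwidth. This model is an assumption made for illustration. Real vertical amplifiers often have a different response (for example, closer to Gaussian, or flatter), so the exact percentages vary from instrument to instrument. The 100 MHz figure is hypothetical.

```python
import math


def single_pole_gain(f, f_3db):
    """Magnitude of a first-order low-pass response, normalised to 1 at DC."""
    return 1.0 / math.sqrt(1.0 + (f / f_3db) ** 2)


def to_db(ratio):
    """Convert a voltage ratio to decibels."""
    return 20.0 * math.log10(ratio)


bandwidth = 100e6  # hypothetical 100 MHz scope
for fraction in (0.1, 0.2, 0.5, 1.0):
    g = single_pole_gain(fraction * bandwidth, bandwidth)
    print(f"{fraction:4.1f} x BW: gain {g:.4f} ({to_db(g):+.2f} dB), "
          f"amplitude reads {100 * (1 - g):.1f}% low")
```

Under this model:

- A sine at 20 percent of the bandwidth already reads about 2 percent low.
- A sine at the rated bandwidth reads about 29 percent low (0.707 of its true amplitude).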

Probes also have bandwidth limits and must be chosen and used to properly handle the frequencies of interest. To achieve the flattest response, most probes must be "compensated" (an adjustment performed using a test signal from the oscilloscope) to allow for the reactance of the probe's cable.
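A common way to describe a compensated ×10 probe is as an RC divider with two halves:

- A tip resistor (9 MΩ in this sketch) with an adjustable capacitor across it.
- The scope's 1 MΩ input, shunted by the input capacitance plus the cable capacitance.

The division ratio is independent of frequency when the two time constants are equal (R_tip × C_tip = R_in × C_in). Compensation is the adjustment that makes them equal. All component values below are hypothetical.

```python
import math


def divider_gain(f, r_tip, c_tip, r_in, c_in):
    """|V_scope / V_tip| for an RC-compensated probe divider at frequency f."""
    w = 2 * math.pi * f
    z_tip = r_tip / (1 + 1j * w * r_tip * c_tip)   # tip resistor || tip capacitor
    z_in = r_in / (1 + 1j * w * r_in * c_in)       # scope input || input + cable C
    return abs(z_in / (z_tip + z_in))


def compensating_capacitance(r_tip, r_in, c_in):
    """Tip capacitance that equalises the two RC time constants."""
    return r_in * c_in / r_tip


R_TIP, R_IN, C_IN = 9e6, 1e6, 90e-12     # assumed probe and scope values
c_ok = compensating_capacitance(R_TIP, R_IN, C_IN)
for label, c_tip in (("under", 0.5 * c_ok), ("correct", c_ok), ("over", 2 * c_ok)):
    gains = [divider_gain(f, R_TIP, c_tip, R_IN, C_IN) for f in (10, 1e3, 1e6)]
    print(label, [f"{g:.4f}" for g in gains])
```

With the correct capacitance the gain stays at 0.1 at every frequency. With too little or too much, the high-frequency gain falls below or rises above 0.1. This is why a square-wave test signal shows rounded or peaked corners on a mis-adjusted probe.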

Another related specification is rise time: the shortest transition time (typically measured from 10% to 90% of a step) that the scope can resolve. It is related to the bandwidth approximately by:

Bandwidth (Hz) × rise time (s) ≈ 0.35

For example, an oscilloscope intended to resolve pulses with a rise time of 1 nanosecond would need a bandwidth of about 350 MHz.
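The 0.35 constant can be checked against a single-pole RC response. For that response, the 10%-to-90% rise time is RC·ln 9 ≈ 2.2 RC and the -3 dB bandwidth is 1/(2πRC), so their product is ln 9 / (2π) ≈ 0.35.

The helper names below are hypothetical, and the constant is the text's rule of thumb. Instruments with a sharper roll-off than a single pole are sometimes characterised with a somewhat larger constant.

```python
import math

# Rule of thumb from the text: bandwidth (Hz) x rise time (s) ~ 0.35.
RULE_OF_THUMB = 0.35
# The same product for a single-pole RC low-pass: (RC * ln 9) * 1 / (2 * pi * RC).
SINGLE_POLE = math.log(9) / (2 * math.pi)


def bandwidth_for_rise_time(t_rise, k=RULE_OF_THUMB):
    """Approximate bandwidth (Hz) needed to resolve a given rise time (s)."""
    return k / t_rise


def rise_time_for_bandwidth(bandwidth, k=RULE_OF_THUMB):
    """Approximate fastest rise time (s) a given bandwidth (Hz) can show."""
    return k / bandwidth


print(f"single-pole constant: {SINGLE_POLE:.4f}")
print(f"1 ns edge needs about {bandwidth_for_rise_time(1e-9) / 1e6:.0f} MHz")
print(f"100 MHz scope: about {rise_time_for_bandwidth(100e6) * 1e9:.1f} ns")
```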

In analog instruments, the bandwidth of the oscilloscope is limited by the vertical amplifiers and the CRT or other display subsystem. In digital instruments, the sampling rate of the analog-to-digital converter (ADC) is a factor, but the stated analog bandwidth (and therefore the overall bandwidth of the instrument) is usually less than the ADC's Nyquist frequency. This is due to limitations in the analog signal amplifier, deliberate design of the anti-aliasing filter that precedes the ADC, or both.

For a digital oscilloscope, a rule of thumb is that the continuous sampling rate should be ten times the highest frequency to be resolved; for example, a 20 megasample/second rate would be applicable for measuring signals up to about 2 megahertz. This allows the anti-aliasing filter to be designed with a 3 dB down point of 2 MHz and an effective cutoff at 10 MHz (the Nyquist frequency), avoiding the artifacts of a very steep ("brick-wall") filter.
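The arithmetic behind this rule of thumb, and what happens when it is ignored, can be sketched with the standard folding relation. A sine at frequency f, sampled at rate fs, is indistinguishable from a sine at the distance between f and the nearest integer multiple of fs.

The function names are hypothetical, and the input is assumed to be a pure sine.

```python
def nyquist_frequency(sample_rate):
    """Highest frequency that can be represented without aliasing."""
    return sample_rate / 2.0


def usable_bandwidth(sample_rate, ratio=10.0):
    """Rule-of-thumb highest signal frequency: sample rate / ratio."""
    return sample_rate / ratio


def alias_frequency(f, sample_rate):
    """Apparent frequency of a sine at f (Hz) after sampling at sample_rate."""
    folded = f % sample_rate
    return min(folded, sample_rate - folded)


fs = 20e6  # 20 megasamples per second, as in the example above
print(nyquist_frequency(fs), usable_bandwidth(fs))
for f in (2e6, 9e6, 15e6, 21e6):
    print(f"{f / 1e6:.0f} MHz input appears at {alias_frequency(f, fs) / 1e6:.0f} MHz")
```

A 15 MHz input sampled at 20 MS/s would be displayed as a convincing but false 5 MHz sine. The anti-aliasing filter exists to prevent this.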

A sampling oscilloscope can display signals of considerably higher frequency than the sampling rate if the signals are exactly, or nearly, repetitive. It does this by taking one sample from each successive repetition of the input waveform, each sample being at an increased time interval from the trigger event. The waveform is then displayed from these collected samples. This mechanism is referred to as "equivalent-time sampling". Some oscilloscopes can operate in either this mode or in the more traditional "real-time" mode at the operator's choice.
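The sampling scheme just described can be modelled directly. On the k-th repetition, the single sample is taken k × step after that repetition's trigger. Because the input repeats exactly, the collected points are the waveform sampled every `step`, even though only one sample is taken per repetition.

This sketch assumes a perfectly periodic input and an ideal, jitter-free trigger. The names and the 1 GHz / 10 ps figures are hypothetical.

```python
import math


def equivalent_time_record(signal, period, n_points, step):
    """Reconstruct one record by taking a single sample per repetition.

    signal   -- function of absolute time t (seconds), assumed exactly periodic
    period   -- repetition interval of the input (seconds)
    n_points -- number of points in the reconstructed record
    step     -- extra delay after the trigger added on each successive repetition
    """
    record = []
    for k in range(n_points):
        trigger_time = k * period            # the k-th repetition starts here
        sample_time = trigger_time + k * step
        record.append(signal(sample_time))
    return record


F_SIGNAL = 1e9          # hypothetical 1 GHz repetitive input
STEP = 10e-12           # 10 ps extra delay per repetition (100 GS/s equivalent)


def input_waveform(t):
    return math.sin(2 * math.pi * F_SIGNAL * t)


record = equivalent_time_record(input_waveform, 1 / F_SIGNAL, 100, STEP)
print(len(record), "points covering one cycle at 10 ps spacing")
```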

Other features

Some oscilloscopes have cursors, which are lines that can be moved about the screen to measure the time interval between two points, or the difference between two voltages. A few older oscilloscopes simply brightened the trace at movable locations. These cursors are more accurate than visual estimates referring to graticule lines.

Better-quality general-purpose oscilloscopes include a calibration signal for setting up the compensation of test probes; this is often a 1 kHz square-wave signal of a definite peak-to-peak voltage, available at a test terminal on the front panel. Some better oscilloscopes also have a squared-off loop for checking and adjusting current probes.

Sometimes the event that the user wants to see may only happen occasionally. To catch these events, some oscilloscopes, known as "storage scopes", preserve the most recent sweep on the screen. This was originally achieved by using a special CRT, a "storage tube", which would retain the image of even a very brief event for a long time.

Some digital oscilloscopes can sweep at speeds as slow as once per hour, emulating a strip chart recorder: the signal scrolls across the screen from right to left. Most oscilloscopes with this facility switch from a sweep to a strip-chart mode at about one sweep per ten seconds. Otherwise the scope would appear broken: it would be collecting data, but the dot could not be seen.

In current oscilloscopes, digital signal sampling is more often used for all but the simplest models. Samples feed fast analog-to-digital converters, following which all signal processing (and storage) is digital.

Many oscilloscopes have different plug-in modules for different purposes, e.g., high-sensitivity amplifiers of relatively narrow bandwidth, differential amplifiers, amplifiers with four or more channels, sampling plugins for repetitive signals of very high frequency, and special-purpose plugins, including audio/ultrasonic spectrum analyzers, and stable-offset-voltage direct-coupled channels with relatively high gain.

Examples of use

Lissajous figures on an oscilloscope, with 90 degrees phase difference between x and y inputs.

In a piece of electronic equipment, for example, the connections between stages (e.g. electronic mixers, electronic oscillators, amplifiers) may be 'probed' for the expected signal, using the scope as a simple signal tracer. If the expected signal is absent or incorrect, some preceding stage of the electronics is not operating correctly. Since most failures occur because of a single faulty component, each measurement can prove that half of the stages of a complex piece of equipment either work, or probably did not cause the fault.
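Probing the middle of a chain and then the middle of whichever half is suspect is a binary search over the stages. This minimal sketch makes three assumptions:

- There is a single fault in a linear chain: every output before the faulty stage is good, and every output from it onward is bad.
- The final output is known to be bad.
- `output_ok` stands in for the technician's probe measurement. It is a hypothetical callback.

```python
def find_faulty_stage(n_stages, output_ok):
    """Binary search for the first stage whose output is wrong.

    output_ok(i) -> bool reports whether the signal probed at the output of
    stage i looks correct. Assumes exactly one faulty stage in a linear chain
    and that the final output (stage n_stages - 1) is known to be bad.
    Returns (faulty stage index, number of probe measurements taken).
    """
    lo, hi = 0, n_stages - 1
    probes = 0
    while lo < hi:
        mid = (lo + hi) // 2
        probes += 1
        if output_ok(mid):
            lo = mid + 1      # everything up to and including mid works
        else:
            hi = mid          # the fault is at mid or an earlier stage
    return lo, probes


# Hypothetical 8-stage receiver with a fault in stage 5 (counting from 0).
stage, probes = find_faulty_stage(8, lambda i: i < 5)
print(f"fault isolated to stage {stage} after {probes} measurements")
```

Eight stages need only three measurements, and doubling the number of stages adds just one more.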

Once the faulty stage is found, further probing can usually tell a skilled technician exactly which component has failed. Once the component is replaced, the unit can be restored to service, or at least the next fault can be isolated. This sort of troubleshooting is typical of radio and TV receivers, as well as audio amplifiers, but can apply to quite different devices such as electronic motor drives.

Another use is to check newly designed circuitry. Very often a newly designed circuit will misbehave because of design errors, bad voltage levels, electrical noise etc. Digital electronics usually operate from a clock, so a dual-trace scope which shows both the clock signal and a test signal dependent upon the clock is useful. Storage scopes are helpful for "capturing" rare electronic events that cause defective operation.

Automotive use

First appearing in the 1970s for ignition system analysis, automotive oscilloscopes are becoming an important workshop tool for testing sensors and output signals on electronic engine management systems, braking and stability systems. Some oscilloscopes can trigger and decode serial bus messages, such as the CAN bus commonly used in automotive applications.

Selection

For work at high frequencies and with fast digital signals, the bandwidth of the vertical amplifiers and the sampling rate must be high enough. For general-purpose use, a bandwidth of at least 100 MHz is usually satisfactory. A much lower bandwidth is sufficient only for audio-frequency applications. A useful sweep range is from one second to 100 nanoseconds, with appropriate triggering and (for analog instruments) sweep delay. A well-designed, stable trigger circuit is required for a steady display. The chief benefit of a quality oscilloscope is the quality of the trigger circuit.

Key selection criteria of a DSO (apart from input bandwidth) are the sample memory depth and sample rate. Early DSOs in the mid- to late 1990s only had a few KB of sample memory per channel. This is adequate for basic waveform display, but does not allow detailed examination of the waveform or inspection of long data packets, for example. Even entry-level (<$500) modern DSOs now have 1 MB or more of sample memory per channel, and this has become the expected minimum in any modern DSO. Often this sample memory is shared between channels, and sometimes it is only fully available at lower sample rates. At the highest sample rates, the memory may be limited to a few tens of KB.[15] A modern "real-time" DSO will typically have a sample rate of 5–10 times its input bandwidth, so a 100 MHz bandwidth DSO would have a sample rate of 500 MS/s to 1 GS/s. The theoretical minimum sample rate required, using sin(x)/x interpolation, is 2.5 times the bandwidth.[16]
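Memory depth and sample rate together set how long a stretch of signal one acquisition can hold: capture time = memory points / sample rate.

The sketch below assumes one memory point per sample, so "1 MB" of 8-bit samples is treated as roughly one million points. The helper names and the 4,000-point "early DSO" figure are hypothetical.

```python
def capture_window(memory_points, sample_rate):
    """Time span (s) that fills the acquisition memory at a sample rate (S/s)."""
    return memory_points / sample_rate


def max_rate_for_window(memory_points, window):
    """Highest sample rate (S/s) that still covers a time window (s)."""
    return memory_points / window


def typical_rate_range(bandwidth, low=5, high=10):
    """The 5-10x bandwidth range quoted for real-time DSOs."""
    return bandwidth * low, bandwidth * high


def minimum_rate(bandwidth, factor=2.5):
    """Theoretical minimum with sin(x)/x interpolation, per the text."""
    return bandwidth * factor


print(capture_window(1_000_000, 1e9))         # 1 M points at 1 GS/s
print(capture_window(4_000, 1e9))             # a few thousand points at 1 GS/s
print(max_rate_for_window(1_000_000, 10e-3))  # to see 10 ms with 1 M points
print(typical_rate_range(100e6), minimum_rate(100e6))
```

At 1 GS/s, a million points cover 1 ms, while a few thousand points cover only a few microseconds. This is why shallow memory forces the sample rate down when long time spans are viewed.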

Analog oscilloscopes have been almost totally displaced by digital storage scopes except for use exclusively at lower frequencies. Greatly increased sample rates have largely eliminated the display of incorrect signals, known as "aliasing", that was sometimes present in the first generation of digital scopes. The problem can still occur when, for example, viewing a short section of a repetitive waveform that repeats at intervals thousands of times longer than the section viewed (for example a short synchronization pulse at the beginning of a particular television line), with an oscilloscope that cannot store the extremely large number of samples between one instance of the short section and the next.

The used test equipment market, particularly on-line auction venues, typically has a wide selection of older analog scopes available. However it is becoming more difficult to obtain replacement parts for these instruments, and repair services are generally unavailable from the original manufacturer. Used instruments are usually out of calibration, and recalibration by companies with the equipment and expertise usually costs more than the second-hand value of the instrument.

As of 2007, a 350 MHz bandwidth (BW), 2.5 gigasamples per second (GS/s), dual-channel digital storage scope costs about US$7000 new.

On the lowest end, an inexpensive hobby-grade single-channel DSO could be purchased for under $90 as of June 2011. These often have limited bandwidth and other facilities, but fulfill the basic functions of an oscilloscope.

Software

Many oscilloscopes today provide one or more external interfaces to allow remote instrument control by external software. These interfaces (or buses) include GPIB, Ethernet, serial port, and USB.

Types and models

The following section is a brief summary of the various types and models available.

Cathode-ray oscilloscope (CRO)

For analog television, an analog oscilloscope can be used as a vectorscope to analyze complex signal properties, such as this display of SMPTE color bars.

Analog scopes do not necessarily include a calibrated reference grid for size measurement of waves, and they may not display waves in the traditional sense of a line segment sweeping from left to right. Instead, they could be used for signal analysis by feeding a reference signal into one axis and the signal to measure into the other axis. For an oscillating reference and measurement signal, this results in a complex looping pattern referred to as a Lissajous curve. The shape of the curve can be interpreted to identify properties of the measurement signal in relation to the reference signal, and is useful across a wide range of oscillation frequencies.
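For two sine waves of equal frequency, the Lissajous figure is an ellipse. A standard way to read the phase difference from it is sin φ = y0 / y_max, where:

- y0 is the height at which the trace crosses the vertical centre line (x = 0).
- y_max is the trace's peak height.

The sketch below generates such a figure and applies that rule. The function names are hypothetical. The rule only yields 0 to 90 degrees; telling φ from 180° − φ requires noting which way the ellipse tilts.

```python
import math


def lissajous(phase_deg, n=1000):
    """Points of an equal-frequency figure: x = sin(wt), y = sin(wt + phase)."""
    phi = math.radians(phase_deg)
    return [(math.sin(2 * math.pi * k / n), math.sin(2 * math.pi * k / n + phi))
            for k in range(n)]


def phase_from_ellipse(points):
    """Estimate the phase difference (0 to 90 degrees) using sin(phi) = y0 / y_max."""
    y_max = max(abs(y) for _, y in points)
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= 0.0 < x1:                   # trace crossing x = 0 going right
            t = -x0 / (x1 - x0)              # linear interpolation between samples
            y_cross = y0 + t * (y1 - y0)
            ratio = min(1.0, abs(y_cross) / y_max)
            return math.degrees(math.asin(ratio))
    raise ValueError("trace never crosses x = 0")


for phase in (0, 30, 60, 90):
    print(phase, round(phase_from_ellipse(lissajous(phase)), 2))
```

At 0 degrees the figure collapses to a diagonal line. At 90 degrees it becomes the circle shown in the Lissajous caption earlier in this section.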

Dual-beam oscilloscope

The dual-beam analog oscilloscope can display two signals simultaneously. A special dual-beam CRT generates and deflects two separate beams. Although multi-trace analog oscilloscopes can simulate a dual-beam display with chop and alternate sweeps, those features do not provide simultaneous displays. (Real-time digital oscilloscopes offer the same benefits as a dual-beam oscilloscope, but they do not require a dual-beam display.) The disadvantages of the dual-trace oscilloscope are that it cannot switch quickly between the traces and it cannot capture two fast transient events. A dual-beam oscilloscope is used to avoid these problems.

Analog storage oscilloscope

Trace storage is an extra feature available on some analog scopes, which used direct-view storage CRTs. Storage allows the trace pattern that normally decays in a fraction of a second to remain on the screen for several minutes or longer. An electrical circuit can then be deliberately activated to store and erase the trace on the screen.

Digital oscilloscopes

While analog devices make use of continually varying voltages, digital devices employ binary numbers which correspond to samples of the voltage. In the case of digital oscilloscopes, an analog-to-digital converter (ADC) is used to change the measured voltages into digital information.

The digital storage oscilloscope, or DSO for short, is now the preferred type for most industrial applications, although simple analog CROs are still used by hobbyists. It replaces the electrostatic storage method used in analog storage scopes with digital memory, which can store data as long as required without degradation and with uniform brightness. It also allows complex processing of the signal by high-speed digital signal processing circuits.[3]
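The conversion step can be illustrated with an ideal ADC model: the input range is divided into 2^N equal steps, and each voltage is replaced by the number of the step it falls in.

The sketch assumes an 8-bit converter (a common resolution, used here only as an example) and a hypothetical ±1 V input range. Real converters add noise, offset and nonlinearity, which this model ignores.

```python
import math


def lsb_size(v_min, v_max, bits):
    """Voltage step represented by one code (the least significant bit)."""
    return (v_max - v_min) / 2 ** bits


def adc_code(v, v_min, v_max, bits=8):
    """Ideal ADC: map a voltage to an unsigned code, clamping out-of-range inputs."""
    code = math.floor((v - v_min) / lsb_size(v_min, v_max, bits))
    return max(0, min(2 ** bits - 1, code))


def code_to_volts(code, v_min, v_max, bits=8):
    """Centre of the voltage interval that a code stands for."""
    return v_min + (code + 0.5) * lsb_size(v_min, v_max, bits)


# Hypothetical input range of -1 V to +1 V digitised with 8 bits.
for v in (-1.0, -0.25, 0.0, 0.3, 1.0, 1.5):
    c = adc_code(v, -1.0, 1.0)
    print(f"{v:+.2f} V -> code {c:3d} -> {code_to_volts(c, -1.0, 1.0):+.4f} V")
```

Any voltage inside the range is recovered to within half a step (about 3.9 mV here). An input of 1.5 V is outside the range and clips at the top code.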

A standard DSO is limited to capturing signals with a bandwidth of less than half the sampling rate of the ADC (called the Nyquist limit). There is a variation of the DSO called the digital sampling oscilloscope that can exceed this limit for certain types of signal, such as high-speed communications signals, where the waveform consists of repeating pulses. This type of DSO deliberately samples at a much lower frequency than the Nyquist limit and then uses signal processing to reconstruct a composite view of a typical pulse. A similar technique, with analog rather than digital samples, was used before the digital era in analog sampling oscilloscopes.

A digital phosphor oscilloscope (DPO) uses color information to convey information about a signal. It may, for example, display infrequent signal data in blue to make it stand out. In a conventional analog scope, such a rare trace may not be visible.
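The text gives the effect but not the mechanism. One way to picture it is that the instrument accumulates many acquisitions into a hit count per display cell, then maps each count to a colour. The sketch below is an assumption-level model of that idea, not any vendor's implementation; the two-colour palette and the 5 percent threshold are hypothetical.

```python
from collections import Counter


def accumulate_hits(waveforms):
    """Count how many acquisitions landed in each (time index, level) cell."""
    hits = Counter()
    for wf in waveforms:
        for t, level in enumerate(wf):
            hits[(t, level)] += 1
    return hits


def colour_for(count, total, rare_fraction=0.05):
    """Hypothetical two-colour palette: rarely hit cells blue, common cells red."""
    return "blue" if count / total < rare_fraction else "red"


# 999 normal acquisitions plus one with a glitch at time index 2.
normal = [0, 0, 1, 1, 0, 0]
glitch = [0, 0, 5, 1, 0, 0]
acquisitions = [normal] * 999 + [glitch]
hits = accumulate_hits(acquisitions)
for cell in ((2, 1), (2, 5)):
    print(cell, hits[cell], colour_for(hits[cell], len(acquisitions)))
```

The single glitch occupies its own cell with a count of 1, so it is drawn in a distinct colour instead of being lost among the 999 normal traces.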

Mixed-signal oscilloscopes

A mixed-signal oscilloscope (or MSO) has two kinds of inputs: a small number of analog channels (typically two or four) and a larger number of digital channels (typically sixteen). It can accurately time-correlate analog and digital channels, which gives it a distinct advantage over a separate oscilloscope and logic analyser. Typically, digital channels may be grouped and displayed as a bus, with each bus value displayed at the bottom of the display in hex or binary. On most MSOs, the trigger can be set across both analog and digital channels.

Mixed-domain oscilloscopes

A mixed-domain oscilloscope (MDO) has an additional RF input port that feeds a built-in spectrum analyzer. It links these traditionally separate instruments, so that, for example, events in the time domain (such as a specific serial data packet) can be time-correlated with events in the frequency domain (such as RF transmissions).

Handheld oscilloscopes

Handheld oscilloscopes are useful for many test and field service applications. Today, a handheld oscilloscope is usually a digital sampling oscilloscope, using a liquid crystal display.

Many handheld and bench oscilloscopes have the ground reference voltage common to all input channels. If more than one measurement channel is used at the same time, all the input signals must have the same voltage reference, and the shared default reference is the "earth". If there is no differential preamplifier or external signal isolator, such a traditional desktop oscilloscope is not suitable for floating measurements. (Occasionally an oscilloscope user will break the ground pin in the power supply cord of a bench-top oscilloscope in an attempt to isolate the signal common from the earth ground. This practice is unreliable, since the entire stray capacitance of the instrument cabinet will be connected into the circuit. Since it is also a hazard to break a safety ground connection, instruction manuals strongly advise against this practice.)

Some models of oscilloscope have isolated inputs, where the signal reference level terminals are not connected together. Each input channel can be used to make a "floating" measurement with an independent signal reference level. Measurements can be made without tying one side of the oscilloscope input to the circuit signal common or ground reference.

The isolation available is categorized as shown below:

| Overvoltage category | Operating voltage (effective value of AC/DC to ground) | Peak instantaneous voltage (repeated 20 times) | Test resistor |
|---|---|---|---|
| CAT I | 600 V | 2500 V | 30 Ω |
| CAT I | 1000 V | 4000 V | 30 Ω |
| CAT II | 600 V | 4000 V | 12 Ω |
| CAT II | 1000 V | 6000 V | 12 Ω |
| CAT III | 600 V | 6000 V | 2 Ω |

PC-based oscilloscopes

A new type of oscilloscope is emerging that consists of a specialized signal acquisition board (which can be an external USB or parallel port device, or an internal add-on PCI or ISA card). The user interface and signal processing software run on the user's computer, rather than on an embedded computer as in the case of a conventional DSO.

Related instruments

A large number of instruments used in a variety of technical fields are really oscilloscopes with inputs, calibration, controls, display calibration, etc., specialized and optimized for a particular application. Examples of such oscilloscope-based instruments include waveform monitors for analyzing video levels in television productions and medical devices such as vital function monitors and electrocardiogram and electroencephalogram instruments. In automobile repair, an ignition analyzer is used to show the spark waveforms for each cylinder. All of these are essentially oscilloscopes, performing the basic task of showing the changes in one or more input signals over time in an X‑Y display.

Other instruments convert the results of their measurements to a repetitive electrical signal, and incorporate an oscilloscope as a display element. Such complex measurement systems include spectrum analyzers, transistor analyzers, and time domain reflectometers (TDRs). Unlike an oscilloscope, these instruments automatically generate stimulus or sweep a measurement parameter.

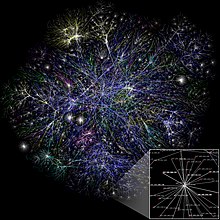

Networking and the Internet

Visualization of a portion of the routes on the Internet

In time, the network spread beyond academic and military institutions and became known as the Internet. The emergence of networking involved a redefinition of the nature and boundaries of the computer. Computer operating systems and applications were modified to include the ability to define and access the resources of other computers on the network, such as peripheral devices, stored information, and the like, as extensions of the resources of an individual computer. Initially these facilities were available primarily to people working in high-tech environments, but in the 1990s the spread of applications like e-mail and the World Wide Web, combined with the development of cheap, fast networking technologies like Ethernet and ADSL, saw computer networking become almost ubiquitous. In fact, the number of computers that are networked is growing phenomenally. A very large proportion of personal computers regularly connect to the Internet to communicate and receive information. "Wireless" networking, often utilizing mobile phone networks, has meant networking is becoming increasingly ubiquitous even in mobile computing environments.

There is active research to make computers out of many promising new types of technology, such as optical computers, DNA computers, neural computers, and quantum computers. Most computers are universal, and are able to calculate any computable function, and are limited only by their memory capacity and operating speed. However, different designs of computers can give very different performance for particular problems; for example, quantum computers can potentially break some modern encryption algorithms (by quantum factoring) very quickly.

Computer architecture paradigms

- Quantum computer vs. Chemical computer

- Scalar processor vs. Vector processor

- Non-Uniform Memory Access (NUMA) computers

- Register machine vs. Stack machine

- Harvard architecture vs. von Neumann architecture

- Cellular architecture

Artificial intelligence

A computer will solve problems in exactly the way it is programmed to, without regard to efficiency, alternative solutions, possible shortcuts, or possible errors in the code. Computer programs that learn and adapt are part of the emerging field of artificial intelligence and machine learning.

Basic Oscilloscope Operation

AC Electric Circuits

Question 1

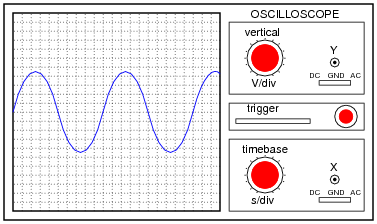

An oscilloscope is a very useful piece of electronic test equipment. Almost everyone has seen an oscilloscope in use, in the form of a heart-rate monitor (electrocardiogram, or EKG) of the type seen in doctor’s offices and hospitals.

When monitoring heart beats, what do the two axes (horizontal and vertical) of the oscilloscope screen represent?

In general electronics use, when measuring AC voltage signals, what do the two axes (horizontal and vertical) of the oscilloscope screen represent?

Question 2

The core of an analog oscilloscope is a special type of vacuum tube known as a Cathode Ray Tube, or CRT. While similar in function to the CRT used in televisions, oscilloscope display tubes are specially built for the purpose of serving as a measuring instrument.

Explain how a CRT functions. What goes on inside the tube to produce waveform displays on the screen?

Question 3

Question 4

Question 5

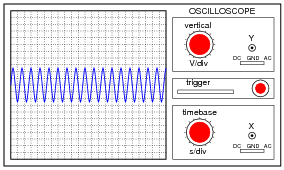

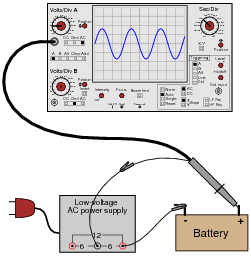

A technician prepares to use an oscilloscope to display an AC voltage signal. After turning the oscilloscope on and connecting the Y input probe to the signal source test points, this display appears:

What display control(s) need to be adjusted on the oscilloscope in order to show fewer cycles of this signal on the screen, with a greater height (amplitude)?

Question 6

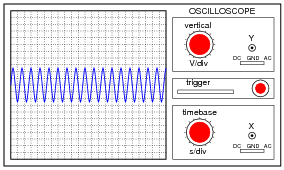

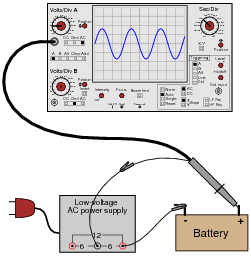

A technician prepares to use an oscilloscope to display an AC voltage signal. After turning the oscilloscope on and connecting the Y input probe to the signal source test points, this display appears:

What display control(s) need to be adjusted on the oscilloscope in order to show a normal-looking wave on the screen?

Question 7

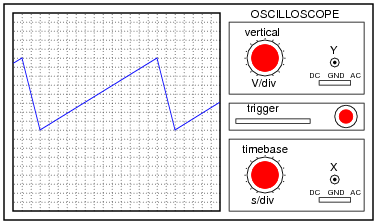

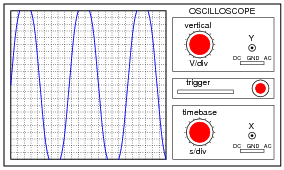

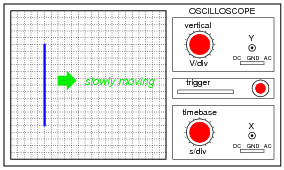

A technician prepares to use an oscilloscope to display an AC voltage signal. After turning the oscilloscope on and connecting the Y input probe to the signal source test points, this display appears:

What appears on the oscilloscope screen is a vertical line that moves slowly from left to right. What display control(s) need to be adjusted on the oscilloscope in order to show a normal-looking wave on the screen?

Question 8

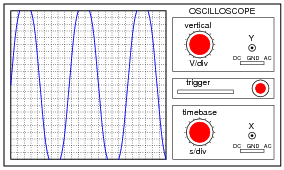

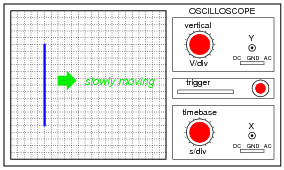

A technician prepares to use an oscilloscope to display an AC voltage signal. After turning the oscilloscope on and connecting the Y input probe to the signal source test points, this display appears:

What display control(s) need to be adjusted on the oscilloscope in order to show a normal-looking wave on the screen?

Question 9

Question 10

Question 11

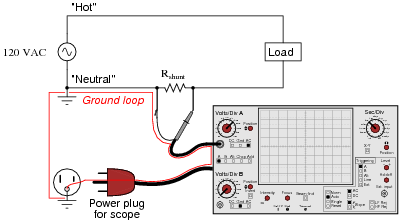

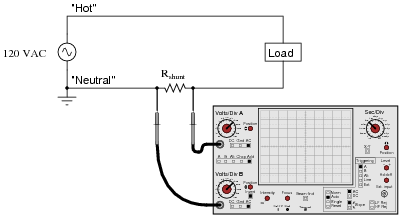

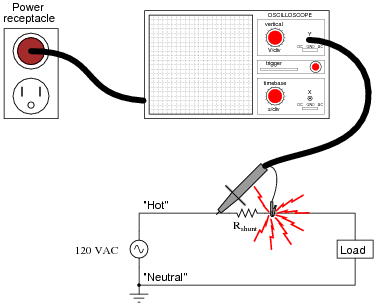

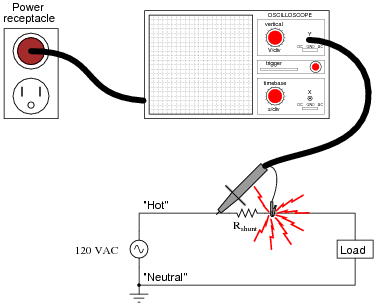

Most oscilloscopes can only directly measure voltage, not current. One way to measure AC current with an oscilloscope is to measure the voltage dropped across a shunt resistor. Since the voltage dropped across a resistor is proportional to the current through that resistor, whatever wave-shape the current is will be translated into a voltage drop with the exact same wave-shape.
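Because the current waveform is simply the voltage waveform divided by the shunt resistance, converting a captured trace is a one-line scaling. The sketch below uses a hypothetical 0.1 Ω shunt and made-up samples. It assumes the shunt voltage has already been captured safely; the code says nothing about how to connect the oscilloscope, which is the point of this question.

```python
import math


def shunt_current(voltage_samples, r_shunt):
    """Convert sampled shunt voltages (V) to currents (A) with Ohm's law, I = V / R."""
    return [v / r_shunt for v in voltage_samples]


def rms(samples):
    """Root-mean-square value of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


# Hypothetical: one cycle of a 0.5 V peak sine across a 0.1 ohm shunt, 100 samples.
v_shunt = [0.5 * math.sin(2 * math.pi * k / 100) for k in range(100)]
i_line = shunt_current(v_shunt, 0.1)
print(f"peak {max(i_line):.2f} A, RMS {rms(i_line):.3f} A")
```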

However, one must be very careful when connecting an oscilloscope to any part of a grounded system, as many electric power systems are. Note what happens here when a technician attempts to connect the oscilloscope across a shunt resistor located on the “hot” side of a grounded 120 VAC motor circuit:

Here, the reference lead of the oscilloscope (the small alligator clip, not the sharp-tipped probe) creates a short-circuit in the power system. Explain why this happens.

Question 12

Most oscilloscopes have at least two vertical inputs, used to display more than one waveform simultaneously:

While this feature is extremely useful, one must be careful in connecting two sources of AC voltage to an oscilloscope. Since the “reference” or “ground” clips of each probe are electrically common with the oscilloscope’s metal chassis, they are electrically common with each other as well.

Explain what sort of problem would be caused by connecting a dual-trace oscilloscope to a circuit in the following manner:

Question 13

Question 14

Question 15

Question 16

Shunt resistors are low-value, precision resistors used as current-measuring elements in high-current circuits. The idea is to measure the voltage dropped across this precision resistance and use Ohm’s Law (I = V/R) to infer the amount of current in the circuit:

Since the schematic shows a shunt resistor being used to measure current in an AC circuit, it would be equally appropriate to use an oscilloscope instead of a voltmeter to measure the voltage drop produced by the shunt. However, we must be careful in connecting the oscilloscope to the shunt because of the inherent ground reference of the oscilloscope’s metal case and probe assembly.

Explain why connecting an oscilloscope to the shunt as shown in this second diagram would be a bad idea:

Question 17

Question 18

One of the more complicated controls to master on an oscilloscope, but also one of the most useful, is the triggering control. Without proper “triggering,” a waveform will scroll horizontally across the screen rather than staying “locked” in place.

Describe how the triggering control is able to “lock” an AC waveform on the screen so that it appears stable to the human eye. What, exactly, is the triggering function doing that makes an AC waveform appear to stand still?

Question 19

If an oscilloscope is connected to a series combination of AC and DC voltage sources, what is displayed on the oscilloscope screen depends on where the “coupling” control is set.

With the coupling control set to “DC”, the waveform displayed will be elevated above (or depressed below) the “zero” line:

Setting the coupling control to “AC”, however, results in the waveform automatically centering itself on the screen, about the zero line.

Based on these observations, explain what the “DC” and “AC” settings on the coupling control actually mean.

Question 20

Question 21

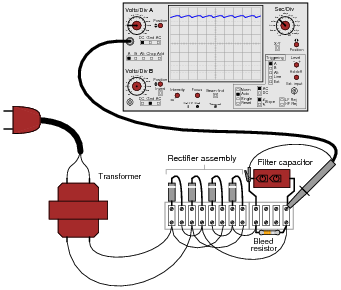

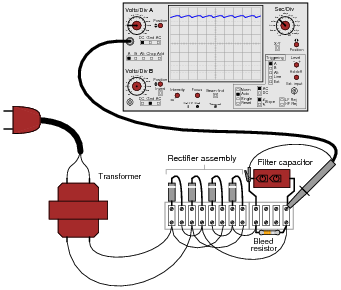

Suppose a technician measures the voltage output by an AC-DC power supply circuit:

The waveform shown by the oscilloscope is mostly DC, with just a little bit of AC “ripple” voltage appearing as a ripple pattern on what would otherwise be a straight, horizontal line. This is quite normal for the output of an AC-DC power supply.

Suppose we wished to take a closer view of this “ripple” voltage. We want to make the ripples more pronounced on the screen, so that we may better discern their shape. Unfortunately, though, when we decrease the number of volts per division on the “vertical” control knob to magnify the vertical amplification of the oscilloscope, the pattern completely disappears from the screen!

Explain what the problem is, and how we might correct it so as to be able to magnify the ripple voltage waveform without having it disappear off the oscilloscope screen.

Question 22

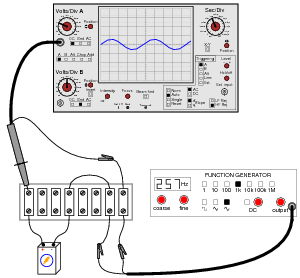

A student just learning to use oscilloscopes connects one directly to the output of a signal generator, with these results:

As you can see, the function generator is configured to output a square wave, but the oscilloscope does not register a square wave. Perplexed, the student takes the function generator to a different oscilloscope. At the second oscilloscope, the student sees a proper square wave on the screen:

It is then that the student realizes the first oscilloscope has its “coupling” control set to AC, while the second oscilloscope was set to DC. Now the student is really confused! The signal is obviously AC, as it oscillates above and below the centerline of the screen, yet the “DC” setting appears to give the most accurate results: a true-to-form square wave.

How would you explain what is happening to this student, and also describe the appropriate uses of the “AC” and “DC” coupling settings so he or she knows better how to use it in the future?

Question 23

There are times when you need to use an oscilloscope to measure a differential voltage that also has a significant common-mode voltage: an application where you cannot connect the oscilloscope’s ground lead to either point of contact. One application is measuring the voltage pulses on an RS-485 digital communications network, where neither conductor in the two-wire cable is at ground potential, and where connecting either wire to ground (via the oscilloscope’s ground clip) may cause problems:

One solution to this problem is to use both probes of a dual-trace oscilloscope, and set it up for differential measurement. In this mode, only one waveform will be shown on the screen, even though two probes are being used. No ground clips need be connected to the circuit under test, and the waveform shown will be indicative of the voltage between the two probe tips.

Describe how a typical oscilloscope may be set up to perform differential voltage measurement. Be sure to include descriptions of all knob and button settings (with reference to the oscilloscope shown in this question):


Question 24

A very common accessory for oscilloscopes is a ×10 probe, which effectively acts as a 10:1 voltage divider for any measured signals. Thus, an oscilloscope showing a waveform with a peak-to-peak amplitude of 4 divisions, with a vertical sensitivity setting of 1 volt per division, using a ×10 probe, would actually be measuring a signal of 40 volts peak-to-peak:
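
The arithmetic is the screen reading multiplied by the vertical sensitivity and by the probe's attenuation factor. A small helper (the name is illustrative) makes the example explicit:

```python
def actual_peak_to_peak(divisions, volts_per_division, probe_attenuation=1):
    """Convert a screen reading in divisions to the voltage at the probe tip."""
    return divisions * volts_per_division * probe_attenuation


print(actual_peak_to_peak(4, 1.0, probe_attenuation=10))  # 40.0
print(actual_peak_to_peak(4, 1.0))                        # 4.0 with a 1:1 probe
```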

Obviously, one use for a ×10 probe is measuring voltages beyond the normal range of an oscilloscope. However, there is another application that is less obvious, and it regards the input impedance of the oscilloscope. A ×10 probe gives the oscilloscope 10 times more input impedance (as seen from the probe tip to ground). Typically this means an input impedance of 10 MΩ (with the ×10 probe) rather than 1 MΩ (with a normal 1:1 probe). Identify an application where this feature could be useful.

I won’t give away an answer here, but I will provide a hint in the form of another question: why is it generally a good thing for voltmeters to have high input impedance? Or conversely, what bad things might happen if you tried to use a low-impedance voltmeter to measure voltages?

Notes:

Increased input impedance is often a more common reason for choosing ×10 probes, as opposed to increased voltage measurement range. The answer to this question is more readily grasped by students after they have worked with loading-sensitive electronic circuits.
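
The loading effect can be estimated by treating the circuit under test as a voltage source with a source (Thevenin) resistance, and the instrument input as a resistor to ground. The sketch below is purely resistive, ignoring probe and input capacitance, and uses a hypothetical 10 V source with 1 MΩ of source resistance.

```python
def measured_voltage(open_circuit_v, source_ohms, input_ohms):
    """Voltage seen by the instrument: a divider formed by the source
    resistance and the instrument's input resistance."""
    return open_circuit_v * input_ohms / (source_ohms + input_ohms)


SOURCE_V = 10.0
SOURCE_R = 1e6  # hypothetical high-impedance circuit node, in ohms

for label, r_in in [("1:1 probe, 1 MΩ", 1e6), ("×10 probe, 10 MΩ", 10e6)]:
    v = measured_voltage(SOURCE_V, SOURCE_R, r_in)
    print(f"{label}: {v:.2f} V (true value {SOURCE_V:.2f} V)")
```

With these assumed values the 1 MΩ input reads half the true voltage, while the 10 MΩ input reads within about 10 percent of it.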


Pulse computation

Pulse computation is a hybrid of digital and analog computation that uses aperiodic electrical spikes, as opposed to the periodic voltages in a digital computer or the continuously varying voltages in an analog computer. Pulse streams are unclocked, so they can arrive at arbitrary times and can be generated by analog processes, although each spike is allocated a binary value, as it would be in a digital computer.

Pulse computation is primarily studied as part of the field of neural networks. The processing unit in such a network is called a "neuron".

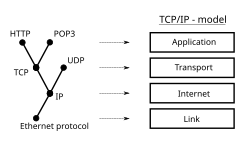

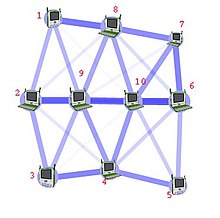

A computer network or data network is a digital telecommunications network which allows nodes to share resources. In computer networks, networked computing devices exchange data with each other using a data link. The connections between nodes are established using either cable media or wireless media.

Network computer devices that originate, route and terminate the data are called network nodes.[1] Nodes can include hosts such as personal computers, phones, servers as well as networking hardware. Two such devices can be said to be networked together when one device is able to exchange information with the other device, whether or not they have a direct connection to each other. In most cases, application-specific communications protocols are layered (i.e. carried as payload) over other more general communications protocols. This formidable collection of information technology requires skilled network management to keep it all running reliably.

Computer networks support an enormous number of applications and services such as access to the World Wide Web, digital video, digital audio, shared use of application and storage servers, printers, and fax machines, and use of email and instant messaging applications as well as many others. Computer networks differ in the transmission medium used to carry their signals, communications protocols to organize network traffic, the network's size, topology and organizational intent. The best-known computer network is the Internet.

Computer networking may be considered a branch of electrical engineering, telecommunications, computer science, information technology or computer engineering, since it relies upon the theoretical and practical application of the related disciplines.

A computer network facilitates interpersonal communications allowing users to communicate efficiently and easily via various means: email, instant messaging, online chat, telephone, video telephone calls, and video conferencing. A network allows sharing of network and computing resources. Users may access and use resources provided by devices on the network, such as printing a document on a shared network printer or using a shared storage device. A network allows sharing of files, data, and other types of information giving authorized users the ability to access information stored on other computers on the network. Distributed computing uses computing resources across a network to accomplish tasks.

A computer network may be used by security hackers to deploy computer viruses or computer worms on devices connected to the network, or to prevent these devices from accessing the network via a denial-of-service attack.

Computer communication links that do not support packets, such as traditional point-to-point telecommunication links, simply transmit data as a bit stream. However, most information in computer networks is carried in packets. A network packet is a formatted unit of data (a list of bits or bytes, usually a few tens of bytes to a few kilobytes long) carried by a packet-switched network.

In packet networks, the data is formatted into packets that are sent through the network to their destination. Once the packets arrive they are reassembled into their original message. With packets, the bandwidth of the transmission medium can be better shared among users than if the network were circuit switched. When one user is not sending packets, the link can be filled with packets from other users, and so the cost can be shared, with relatively little interference, provided the link isn't overused.
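
The split-and-reassemble step can be sketched in a few lines. This is a toy model, not any real protocol: each hypothetical `Packet` carries only a sequence number and a slice of the message, and shuffling the list stands in for packets arriving out of order over different paths.

```python
import random
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int        # position of this chunk within the original message
    payload: bytes  # one slice of the message


def packetize(message: bytes, max_payload: int) -> list:
    """Split a message into packets of at most max_payload bytes each."""
    return [Packet(seq=i, payload=message[off:off + max_payload])
            for i, off in enumerate(range(0, len(message), max_payload))]


def reassemble(packets) -> bytes:
    """Restore the original message by ordering packets on their sequence numbers."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


message = b"hello packet-switched world"
packets = packetize(message, max_payload=5)
random.shuffle(packets)  # simulate out-of-order arrival
print(len(packets), reassemble(packets))
```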

Packets consist of two kinds of data: control information, and user data (payload). The control information provides data the network needs to deliver the user data, for example: source and destination network addresses, error detection codes, and sequencing information. Typically, control information is found in packet headers and trailers, with payload data in between.
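
A packet with control information in a header and trailer can be sketched with Python's standard `struct` and `zlib` modules. The layout here is hypothetical (16-bit source and destination addresses, a 32-bit sequence number, and a CRC-32 trailer over header and payload) and does not match any particular real protocol; it only shows where each kind of control information lives and how an error detection code lets a receiver reject a corrupted packet.

```python
import struct
import zlib

HEADER = struct.Struct("!HHI")  # hypothetical: src addr, dst addr, sequence number
TRAILER = struct.Struct("!I")   # CRC-32 over header + payload


def build_packet(src: int, dst: int, seq: int, payload: bytes) -> bytes:
    """Header, then payload, then a trailer holding the checksum."""
    header = HEADER.pack(src, dst, seq)
    crc = zlib.crc32(header + payload)
    return header + payload + TRAILER.pack(crc)


def parse_packet(packet: bytes):
    """Verify the checksum, then return (src, dst, seq, payload)."""
    header = packet[:HEADER.size]
    payload = packet[HEADER.size:-TRAILER.size]
    (crc,) = TRAILER.unpack(packet[-TRAILER.size:])
    if zlib.crc32(header + payload) != crc:
        raise ValueError("checksum mismatch: packet corrupted in transit")
    src, dst, seq = HEADER.unpack(header)
    return src, dst, seq, payload


pkt = build_packet(src=1, dst=2, seq=7, payload=b"data")
print(parse_packet(pkt))  # (1, 2, 7, b'data')
```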

Often the route a packet needs to take through a network is not immediately available. In that case the packet is queued and waits until a link is free.

A widely adopted family of transmission media used in local area network (LAN) technology is collectively known as Ethernet. The media and protocol standards that enable communication between networked devices over Ethernet are defined by IEEE 802.3. Ethernet transmits data over both copper and fiber cables. Wireless LAN standards (e.g. those defined by IEEE 802.11) use radio waves as a transmission medium; other wireless technologies use infrared signals. Power line communication uses a building's power cabling to transmit data.

The following wired technologies are ordered, roughly, from slowest to fastest transmission speed.


A network interface controller (NIC) is computer hardware that provides a computer with the ability to access the transmission media, and has the ability to process low-level network information. For example, the NIC may have a connector for accepting a cable, or an aerial for wireless transmission and reception, and the associated circuitry.


The NIC responds to traffic addressed to a network address for either the NIC or the computer as a whole.

In Ethernet networks, each network interface controller has a unique Media Access Control (MAC) address—usually stored in the controller's permanent memory. To avoid address conflicts between network devices, the Institute of Electrical and Electronics Engineers (IEEE) maintains and administers MAC address uniqueness. The size of an Ethernet MAC address is six octets. The three most significant octets are reserved to identify NIC manufacturers. These manufacturers, using only their assigned prefixes, uniquely assign the three least-significant octets of every Ethernet interface they produce.
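
Splitting a MAC address into its manufacturer prefix (the Organizationally Unique Identifier, or OUI) and the manufacturer-assigned part is a simple slice of the six octets. The sketch below also checks the bit that marks a locally administered address (the second-least-significant bit of the first octet), since such addresses are not drawn from an IEEE-assigned prefix. The example address is made up.

```python
def parse_mac(mac: str) -> bytes:
    """Parse 'aa:bb:cc:dd:ee:ff' or 'aa-bb-cc-dd-ee-ff' into six octets."""
    parts = mac.replace("-", ":").split(":")
    if len(parts) != 6:
        raise ValueError("an Ethernet MAC address has six octets")
    return bytes(int(p, 16) for p in parts)


def split_mac(mac: str):
    """Return (manufacturer prefix, device-specific part) as hex strings."""
    octets = parse_mac(mac)
    return octets[:3].hex(":"), octets[3:].hex(":")


def is_locally_administered(mac: str) -> bool:
    """True if the U/L bit (0x02 in the first octet) is set."""
    return bool(parse_mac(mac)[0] & 0x02)


print(split_mac("00:1A:2B:3C:4D:5E"))                # ('00:1a:2b', '3c:4d:5e')
print(is_locally_administered("00:1A:2B:3C:4D:5E"))  # False
print(is_locally_administered("02:00:00:00:00:01"))  # True
```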

A repeater with multiple ports is known as a hub. Repeaters work on the physical layer of the OSI model. Repeaters require a small amount of time to regenerate the signal. This can cause a propagation delay that affects network performance and may affect proper function. As a result, many network architectures limit the number of repeaters that can be used in a row, e.g., the Ethernet 5-4-3 rule.
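
The 5-4-3 rule, as usually stated for 10 Mbit/s shared Ethernet, allows at most five segments joined by four repeaters between any two stations, with end stations attached to no more than three of those segments. A minimal checker, assuming the path is described as an ordered list of segments joined by one repeater each (a hypothetical representation):

```python
def satisfies_5_4_3(segments):
    """segments: ordered list of booleans along the path between two
    stations; True means the segment has end stations attached (populated).
    Consecutive segments are assumed to be joined by one repeater."""
    repeaters = max(len(segments) - 1, 0)
    populated = sum(1 for s in segments if s)
    return len(segments) <= 5 and repeaters <= 4 and populated <= 3


print(satisfies_5_4_3([True, False, True, False, True]))  # True
print(satisfies_5_4_3([True, True, True, True]))          # False: 4 populated
print(satisfies_5_4_3([True, False] * 3))                 # False: 6 segments
```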

Hubs and repeaters in LANs have been mostly obsoleted by modern switches.

Bridges come in three basic types:

Multi-layer switches are capable of routing based on layer 3 addressing or additional logical levels. The term switch is often used loosely to include devices such as routers and bridges, as well as devices that may distribute traffic based on load or based on application content (e.g., a Web URL identifier).

A router is an internetworking device that forwards packets between networks by processing the routing information included in the packet or datagram (Internet protocol information from layer 3). The routing information is often processed in conjunction with the routing table (or forwarding table). A router uses its routing table to determine where to forward packets. A destination in a routing table can include a "null" interface, also known as the "black hole" interface because data can go into it but no further processing is done for that data; that is, the packets are dropped.
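
A routing-table lookup can be sketched with Python's standard `ipaddress` module. IP routers typically choose the most specific matching route (longest prefix match), and the sketch follows that rule. The interface names, including `null0` for the black-hole route, and the prefixes are hypothetical.

```python
import ipaddress


class RoutingTable:
    def __init__(self):
        self.routes = []  # list of (network, outgoing interface)

    def add(self, prefix: str, interface: str):
        self.routes.append((ipaddress.ip_network(prefix), interface))

    def lookup(self, destination: str):
        """Return the interface of the longest matching prefix, or None."""
        addr = ipaddress.ip_address(destination)
        matches = [(net, iface) for net, iface in self.routes if addr in net]
        if not matches:
            return None
        return max(matches, key=lambda m: m[0].prefixlen)[1]


def forward(table, destination, null_interface="null0"):
    """Packets routed to the null interface (or with no route) are dropped."""
    iface = table.lookup(destination)
    if iface is None or iface == null_interface:
        return "dropped"
    return f"sent via {iface}"


table = RoutingTable()
table.add("0.0.0.0/0", "eth0")      # default route
table.add("10.0.0.0/8", "eth1")
table.add("10.66.0.0/16", "null0")  # black-hole route

print(forward(table, "10.1.2.3"))   # sent via eth1
print(forward(table, "10.66.7.8"))  # dropped
print(forward(table, "192.0.2.1"))  # sent via eth0
```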

Overlay networks have been around since the invention of networking when computer systems were connected over telephone lines using modems, before any data network existed.

The most striking example of an overlay network is the Internet itself, which was initially built as an overlay on the telephone network.[11] Even today, each Internet node can communicate with virtually any other through an underlying mesh of sub-networks of wildly different topologies and technologies. Address resolution and routing are the means that allow mapping of a fully connected IP overlay network to its underlying network.

Another example of an overlay network is a distributed hash table, which maps keys to nodes in the network. In this case, the underlying network is an IP network, and the overlay network is a table (actually a map) indexed by keys.
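
One common way a distributed hash table assigns keys to nodes is consistent hashing, used for example in the Chord design: node names and keys are hashed onto the same circular space, and each key belongs to the first node at or after its position. The sketch below uses SHA-1 from `hashlib` and made-up node names; it omits replication and the node-to-node routing that a real DHT needs.

```python
import bisect
import hashlib


def ring_position(value: str) -> int:
    """Map a string onto the hash ring (a 160-bit SHA-1 value)."""
    return int.from_bytes(hashlib.sha1(value.encode()).digest(), "big")


class HashRing:
    def __init__(self, nodes):
        self.ring = sorted((ring_position(n), n) for n in nodes)
        self.positions = [pos for pos, _ in self.ring]

    def node_for(self, key: str) -> str:
        """First node at or after the key's position, wrapping around."""
        i = bisect.bisect_left(self.positions, ring_position(key))
        return self.ring[i % len(self.ring)][1]


ring = HashRing(["node-a", "node-b", "node-c"])
for key in ["alice.txt", "bob.jpg", "carol.mp3"]:
    print(key, "->", ring.node_for(key))
```

A useful property of this scheme is that removing a node only moves the keys that node held; every other key keeps its owner.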

Overlay networks have also been proposed as a way to improve Internet routing, such as through quality of service guarantees to achieve higher-quality streaming media. Previous proposals such as IntServ, DiffServ, and IP Multicast have not seen wide acceptance largely because they require modification of all routers in the network.[citation needed] On the other hand, an overlay network can be incrementally deployed on end-hosts running the overlay protocol software, without cooperation from Internet service providers. The overlay network has no control over how packets are routed in the underlying network between two overlay nodes, but it can control, for example, the sequence of overlay nodes that a message traverses before it reaches its destination.

For example, Akamai Technologies manages an overlay network that provides reliable, efficient content delivery (a kind of multicast). Academic research includes end system multicast,[12] resilient routing and quality of service studies, among others.

A communications protocol is a set of rules for exchanging information over a network. In a protocol stack (also see the OSI model), each protocol leverages the services of the protocol below it. An important example of a protocol stack is HTTP (the World Wide Web protocol) running over TCP over IP (the Internet protocols) over IEEE 802.11 (the Wi-Fi protocol). This stack is used between the wireless router and the home user's personal computer when the user is surfing the web.
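
Layering can be pictured as nested encapsulation: each protocol treats everything handed down from the layer above as an opaque payload and wraps it with its own control information. The toy sketch below uses bracketed text tags as stand-ins for real headers (they are not actual TCP, IP, or 802.11 formats) to show the HTTP-over-TCP-over-IP-over-802.11 ordering from the example.

```python
def encapsulate(payload: bytes, layers):
    """Wrap payload once per layer; layers are listed top to bottom."""
    for name in layers:
        payload = f"[{name}|".encode() + payload + b"]"
    return payload


def decapsulate(frame: bytes, layers):
    """Undo encapsulate(), unwrapping the outermost (lowest) layer first."""
    for name in reversed(layers):
        prefix = f"[{name}|".encode()
        if not (frame.startswith(prefix) and frame.endswith(b"]")):
            raise ValueError(f"expected a {name} wrapper")
        frame = frame[len(prefix):-1]
    return frame


stack = ["TCP", "IP", "802.11"]  # layers below the HTTP message, top to bottom
frame = encapsulate(b"GET / HTTP/1.1", stack)
print(frame)                      # b'[802.11|[IP|[TCP|GET / HTTP/1.1]]]'
print(decapsulate(frame, stack))  # b'GET / HTTP/1.1'
```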

While the use of protocol layering is today ubiquitous across the field of computer networking, it has been historically criticized by many researchers[13] for two principal reasons. Firstly, abstracting the protocol stack in this way may cause a higher layer to duplicate functionality of a lower layer, a prime example being error recovery on both a per-link basis and an end-to-end basis.[14] Secondly, it is common that a protocol implementation at one layer may require data, state or addressing information that is only present at another layer, thus defeating the point of separating the layers in the first place. For example, TCP uses the ECN field in the IPv4 header as an indication of congestion; IP is a network layer protocol whereas TCP is a transport layer protocol.
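
The ECN example is concrete: the two ECN bits are the low-order bits of the second byte of the IPv4 header (the byte that also holds the 6-bit DSCP field), with codepoints defined in RFC 3168. A congested router can set them to CE, and the receiving host's TCP then acts on a field that belongs to the network layer. A minimal decoder, with made-up header bytes:

```python
ECN_CODEPOINTS = {
    0b00: "Not-ECT",  # sender is not ECN-capable
    0b01: "ECT(1)",   # ECN-capable transport
    0b10: "ECT(0)",   # ECN-capable transport
    0b11: "CE",       # congestion experienced
}


def ecn_field(ipv4_header: bytes) -> str:
    """Decode the ECN bits: the two low-order bits of header byte 1."""
    if len(ipv4_header) < 20:
        raise ValueError("an IPv4 header is at least 20 bytes")
    return ECN_CODEPOINTS[ipv4_header[1] & 0b11]


# Version/IHL byte 0x45, then the DSCP/ECN byte; the rest is zero-filled.
print(ecn_field(bytes([0x45, 0x02]) + bytes(18)))  # ECT(0)
print(ecn_field(bytes([0x45, 0x03]) + bytes(18)))  # CE
```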

Communication protocols have various characteristics. They may be connection-oriented or connectionless, they may use circuit mode or packet switching, and they may use hierarchical addressing or flat addressing.

There are many communication protocols, a few of which are described below.

For example, MAC bridging (IEEE 802.1D) deals with the routing of Ethernet packets using a Spanning Tree Protocol. IEEE 802.1Q describes VLANs, and IEEE 802.1X defines a port-based Network Access Control protocol, which forms the basis for the authentication mechanisms used in VLANs (but it is also found in WLANs) – it is what the home user sees when the user has to enter a "wireless access key".
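
In the Spanning Tree Protocol, the bridges first elect a root bridge: the one with the lowest bridge ID, which compares the configurable priority first and the bridge's MAC address second. A sketch of just that comparison, with hypothetical bridge names, priorities and MAC addresses (it does not model the BPDU exchange that carries this information in practice):

```python
def bridge_id(priority: int, mac: str):
    """Bridge ID as a sortable tuple: priority first, then MAC address."""
    return (priority, bytes.fromhex(mac.replace(":", "")))


def elect_root(bridges):
    """bridges: list of (name, priority, mac). The lowest bridge ID wins."""
    return min(bridges, key=lambda b: bridge_id(b[1], b[2]))[0]


bridges = [
    ("sw1", 32768, "00:1a:2b:00:00:10"),
    ("sw2", 32768, "00:1a:2b:00:00:02"),
    ("sw3", 4096, "00:1a:2b:00:00:99"),
]
print(elect_root(bridges))      # sw3: lowest priority wins
print(elect_root(bridges[:2]))  # sw2: equal priority, lower MAC wins
```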

While the role of ATM is diminishing in favor of next-generation networks, it still plays a role in the last mile, which is the connection between an Internet service provider and the home user.[15]

A network can be characterized by its physical capacity or its organizational purpose. Use of the network, including user authorization and access rights, differs accordingly.

The defining characteristics of a LAN, in contrast to a wide area network (WAN), include higher data transfer rates, limited geographic range, and lack of reliance on leased lines to provide connectivity. Current Ethernet or other IEEE 802.3 LAN technologies operate at data transfer rates up to 100 Gbit/s, standardized by IEEE in 2010.[20] Currently, 400 Gbit/s Ethernet is being developed.