Electronically Defined Natural Attributes (eDNA); not AND in logic i (R, L, C trans)

Internet security start-up Oxford BioChronometrics developed Electronically Defined Natural Attributes (eDNA), a technology that blocks spam bots and makes the Internet easier for humans to use.

e-DNA is more distinctive than a fingerprint or a retina scan, since it is the result of hundreds of different behaviors measured continuously while a user interacts with any kind of digital content: a website, ad, login, registration form or contact form. While bots, hackers and scammers may be able to mimic a few of these behaviors, nothing is able to replicate them all.

About 500 different behaviors are unique to every individual and, taken together, form what the researchers call ‘eDNA’. Building on this discovery, researchers from the University of Oxford say that they can also detect when a person is drunk or has had sex.

Over time, automated programs have become a serious hazard for webmasters. The CEO of Oxford BioChronometrics says: “Forcing humans to enter a code they can barely read makes many of them go away.” The new research could allow our physical behavior to be used as a secure way of logging in to our computers and smartphones.

eDNA would be able to detect whether a click on an advertisement or a site comes from an automated program (a so-called bot) or from a real human. “We can hold companies like Google and Facebook to account, and they know this technology is coming.”

How your electronic DNA could be the secure login of the future

Unique habits can be used to prove users' identity – but may also reveal if they are drunk, or have had sex, researchers say

About 500 different behaviours are unique to every individual and, taken together, form what they call ‘eDNA’

New research could allow our physical behaviour to be used as a secure way of logging in to our computers and smartphones, a team at the University of Oxford say, claiming that they can also detect when a person is drunk or has had sex.

Researchers have identified that every individual creates a unique pattern of physical behaviour, including the speed at which they type, the way they move a mouse or the way they hold a phone.

About 500 different behaviours are unique to every individual and, taken together, form what they call "eDNA", or Electronically Defined Natural Attributes. Changes in this string of physical behaviour might even be able to signal when someone has taken drugs or had sex, or whether they might be susceptible to a heart attack in three months’ time.

"Electronic DNA allows us to see vastly more information about you," says the researcher who developed the technology while studying for an MSc at the university and is now chief executive of Oxford BioChronometrics.

"Like DNA it is almost impossible to fake, as it is very hard to go online and not be yourself. It is as huge a jump in the amount of information that could be gathered about an individual as the jump from fingerprints to DNA. It is that order of magnitude."

Oxford BioChronometrics is a startup from Oxford University that, with the help of the Isis Innovation Software Incubator, is being transferred into the private sector, or spun out, on 18 July in order to take the commercialisation of the technology to the next stage. Isis Innovation is the technology transfer company of Oxford University. Biochronometrics is the measurement of change in biological behaviour over time.

"It is easy to tell when someone has been taking drugs using this technology," says Neal. "But it would place us in a difficult situation if we did. So it’s best we don’t. We just want to collect the data to make sure that x is who x says they are."

Oxford BioChronometrics says that eDNA would be able to spot whether a click on an advert or a site comes from an automated program, or so-called bot, or from a real human. "We can hold companies like Google and Facebook to account, and they know this technology is coming," he said.

Oxford BioChronometrics' own research suggests that 90-92% of clicks on adverts and 95% of logins actually come from bots. Its first product, NoMoreCaptchas, which stops spam bots from registering and logging on, has already been quietly rolled out to 700 companies.

Adrian Neal, a former cryptographic expert, said the eDNA project has its roots in several decades' worth of research, including biometrics that measure keystrokes or mouse movements; these, however, were thought too insecure to use as a login principle.

As computing power grew, along with the ability to gather large volumes of information from users, researchers were able to identify much broader and more complex patterns of interaction with their devices.

A researcher at the Information Security Group at Royal Holloway is more sceptical that eDNA will reach the mainstream. "Using different factors to prove your identity online is always good," he says, but he believes consumers won't be happy to be continuously assessed in this way. "It may also add to the cost and inconvenience of business, as companies’ own software will likely have to be rejigged."

"But there will also be resistance from customers if they find their behaviour monitored, a little bit of pushback."

Researchers Can Identify You From The Way You Type And Use Your Mouse

e-DNA User Authentication distinguishes bots from humans and humans from each other based on hundreds of behavioral data points users don’t even notice they make. We let in who and what you want, and keep the rest away based on the digital signature created by the user’s behavior.

We call this identifier the user’s Electronically Defined Natural Attributes, or e-DNA, and it is the new proof-positive of user identification and authentication.

Typical biometrics require multiple different angles/captures of a physical attribute to attain a high degree of confidence.

Behavioral biometrics relies on collecting enough behavioral data from different “angles” (time, activity, sensory data, etc.) to produce a confidence level that the user is who they claim to be. Because behavior is highly complex to understand and map, e-DNA User Authentication is highly secure, with many added benefits.

How It Works

Behavioral data is acquired via a collection script embedded in the webpage or app the user is visiting. The script relays as many data points as possible from a given device to servers which perform the analysis.

There are no additional steps/challenges to complete because the collection code and back-end processing are invisible to the user.
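The page does not say which behaviors e-DNA measures or how its servers score them. Keystroke timing is a classic behavioral-biometric signal, and the sketch below assumes a collection script reports it. It turns raw key events into dwell times (how long a key is held) and flight times (the gap between keys). It then scores a new session against previously enrolled sessions with an average z-score. `KeyEvent`, the feature choice and the threshold of 3.0 are all hypothetical. This is not Oxford BioChronometrics' method.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class KeyEvent:
    """One key press as a collection script might report it (hypothetical format)."""
    key: str
    down_ms: float  # time the key went down
    up_ms: float    # time the key came back up


def keystroke_features(events):
    """Return (dwell times, flight times) in milliseconds.

    dwell  = how long each key is held down
    flight = gap between releasing one key and pressing the next
    """
    dwell = [e.up_ms - e.down_ms for e in events]
    flight = [b.down_ms - a.up_ms for a, b in zip(events, events[1:])]
    return dwell, flight


def feature_vector(events):
    """Summarise a typing session (at least two key events) as [mean dwell, mean flight]."""
    dwell, flight = keystroke_features(events)
    return [mean(dwell), mean(flight)]


def anomaly_score(sample, enrolled):
    """Average absolute z-score of `sample` against previously enrolled feature vectors."""
    total = 0.0
    for i, x in enumerate(sample):
        column = [vec[i] for vec in enrolled]
        mu, sigma = mean(column), stdev(column)  # needs >= 2 enrolled vectors
        total += abs(x - mu) / (sigma or 1.0)    # guard against zero spread
    return total / len(sample)


def is_same_user(sample, enrolled, threshold=3.0):
    """Accept when the session looks statistically like the enrolled profile."""
    return anomaly_score(sample, enrolled) <= threshold


if __name__ == "__main__":
    enrolled = [[100, 150], [110, 160], [95, 140], [105, 155]]  # made-up enrolment data
    print(is_same_user([103, 150], enrolled))  # True
    print(is_same_user([5, 5], enrolled))      # False: machine-fast, uniform timing
```

A production system would combine far more features and a trained model. The point here is only that timing alone already separates a human-like session from a scripted one.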

A Good Solution Gets Better with Time

Because of the way we measure behavior, certainty improves with increased use. The more user-device interaction and behavioral data collected, the more effective the e-DNA becomes.
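One standard way to see why certainty grows with use is to keep a running estimate of each behavioral feature. The uncertainty of that estimate shrinks roughly as 1/√n as observations accumulate. The sketch below uses Welford's online mean/variance algorithm. It assumes each feature is tracked independently, which is a simplification. `RunningProfile` is a hypothetical name and not part of any e-DNA product.

```python
import math


class RunningProfile:
    """Online mean/variance of one behavioural feature (Welford's algorithm)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        """Fold one new observation into the profile without storing history."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        """Sample variance; undefined (inf) until two observations exist."""
        return self._m2 / (self.n - 1) if self.n > 1 else float("inf")

    @property
    def standard_error(self):
        """Uncertainty of the estimated mean; shrinks roughly as 1/sqrt(n)."""
        return math.sqrt(self.variance / self.n) if self.n > 1 else float("inf")
```

With dwell times alternating between 90 and 110 ms, the standard error of the mean is 10 ms after two sessions and about 1 ms after a hundred.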

Behavior is the Key, Not Hardware or Puzzles

By scrutinizing real-time behavior, e-DNA User Authentication can determine if a user is permitted to access something without ever breaching their (or your) privacy.

X . I Signal

A signal as referred to in communication systems, signal processing, and electrical engineering is a function that "conveys information about the behavior or attributes of some phenomenon". In the physical world, any quantity exhibiting variation in time or variation in space (such as an image) is potentially a signal that might provide information on the status of a physical system, or convey a message between observers, among other possibilities. The IEEE Transactions on Signal Processing states that the term "signal" includes audio, video, speech, image, communication, geophysical, sonar, radar, medical and musical signals.

In nature, signals can take the form of any action by one organism able to be perceived by other organisms, ranging from the release of chemicals by plants to alert nearby plants of the same type of a predator, to sounds or motions made by animals to alert other animals of the presence of danger or of food. Signaling occurs in organisms all the way down to the cellular level, with cell signaling. Signaling theory, in evolutionary biology, proposes that a substantial driver for evolution is the ability for animals to communicate with each other by developing ways of signaling. In human engineering, signals are typically provided by a sensor, and often the original form of a signal is converted to another form of energy using a transducer. For example, a microphone converts an acoustic signal to a voltage waveform, and a speaker does the reverse.

The formal study of the information content of signals is the field of information theory. The information in a signal is usually accompanied by noise. The term noise usually means an undesirable random disturbance, but is often extended to include unwanted signals conflicting with the desired signal (such as crosstalk). The prevention of noise is covered in part under the heading of signal integrity. The separation of desired signals from a background is the field of signal recovery,[4] one branch of which is estimation theory, a probabilistic approach to suppressing random disturbances.

Engineering disciplines such as electrical engineering have led the way in the design, study, and implementation of systems involving transmission, storage, and manipulation of information. In the latter half of the 20th century, electrical engineering itself separated into several disciplines specialising in the design and analysis of systems that manipulate physical signals, with electronic engineering and computer engineering as examples, while design engineering developed to deal with the functional design of man–machine interfaces.

In "The Signal", a painting by William Powell Frith, a woman waves a handkerchief as a signal to a person able to see this action, in order to convey a message to this person.

Definitions

Definitions specific to sub-fields are common. For example, in information theory, a signal is a codified message, that is, the sequence of states in a communication channel that encodes a message. In the context of signal processing, arbitrary binary data streams are not considered as signals, but only analog and digital signals that are representations of analog physical quantities.

In a communication system, a transmitter encodes a message to a signal, which is carried to a receiver by the communications channel. For example, the words "Mary had a little lamb" might be the message spoken into a telephone. The telephone transmitter converts the sounds into an electrical voltage signal. The signal is transmitted to the receiving telephone by wires; at the receiver it is reconverted into sounds.

In telephone networks, signalling, for example common-channel signaling, refers to phone number and other digital control information rather than the actual voice signal.

Signals can be categorized in various ways. The most common distinction is between discrete and continuous spaces that the functions are defined over, for example discrete and continuous time domains. Discrete-time signals are often referred to as time series in other fields. Continuous-time signals are often referred to as continuous signals even when the signal functions are not continuous; an example is a square-wave signal.

A second important distinction is between discrete-valued and continuous-valued. Particularly in digital signal processing a digital signal is sometimes defined as a sequence of discrete values, that may or may not be derived from an underlying continuous-valued physical process. In other contexts, digital signals are defined as the continuous-time waveform signals in a digital system, representing a bit-stream. In the first case, a signal that is generated by means of a digital modulation method is considered as converted to an analog signal, while it is considered as a digital signal in the second case.

Another important property of a signal (actually, of a statistically defined class of signals) is its entropy or information content.
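Strictly, entropy belongs to the statistical class of signals (the source), not to one recording. A common plug-in estimate is computed from the symbol frequencies of a single observed sequence, and it ignores any dependence between successive samples. A minimal sketch, assuming a discrete-valued signal given as a list of symbols:

```python
import math
from collections import Counter


def shannon_entropy(samples):
    """Plug-in entropy estimate, in bits per symbol, of a discrete-valued signal."""
    counts = Counter(samples)
    n = len(samples)
    # H = -sum_k p_k log2 p_k over the observed symbol frequencies p_k = count_k / n
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

A constant signal carries 0 bits per sample, a balanced binary signal 1 bit, and four equally likely levels 2 bits.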

Analog and digital signals

Analog signal

An analog signal is any continuous signal for which the time varying feature (variable) of the signal is a representation of some other time varying quantity, i.e., analogous to another time varying signal. For example, in an analog audio signal, the instantaneous voltage of the signal varies continuously with the pressure of the sound waves. It differs from a digital signal, in which the continuous quantity is a representation of a sequence of discrete values which can only take on one of a finite number of values. The term analog signal usually refers to electrical signals; however, mechanical, pneumatic, hydraulic, human speech, and other systems may also convey or be considered analog signals. An analog signal uses some property of the medium to convey the signal's information. For example, an aneroid barometer uses rotary position as the signal to convey pressure information. In an electrical signal, the voltage, current, or frequency of the signal may be varied to represent the information.

Any information may be conveyed by an analog signal; often such a signal is a measured response to changes in physical phenomena, such as sound, light, temperature, position, or pressure. The physical variable is converted to an analog signal by a transducer. For example, in sound recording, fluctuations in air pressure (that is to say, sound) strike the diaphragm of a microphone which induces corresponding fluctuations in the current produced by a coil in an electromagnetic microphone, or the voltage produced by a condenser microphone. The voltage or the current is said to be an "analog" of the sound.

Digital signal

A digital signal is a signal that is constructed from a discrete set of waveforms of a physical quantity so as to represent a sequence of discrete values. A logic signal is a digital signal with only two possible values,[10][11] and describes an arbitrary bit stream. Other types of digital signals can represent three-valued logic or higher valued logics. Alternatively, a digital signal may be considered to be the sequence of codes represented by such a physical quantity.[12] The physical quantity may be a variable electric current or voltage, the intensity, phase or polarization of an optical or other electromagnetic field, acoustic pressure, the magnetization of a magnetic storage media, etcetera. Digital signals are present in all digital electronics, notably computing equipment and data transmission.

A received digital signal may be impaired by noise and distortions without necessarily affecting the digits.
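The reason noise need not affect the digits is that the receiver only has to decide which level each sample is closest to. A minimal sketch, assuming two voltage levels (0 V and 5 V) and a hypothetical channel that adds bounded uniform noise. As long as the noise stays below half the spacing between levels, thresholding at the midpoint regenerates every bit exactly.

```python
import random


def transmit(bits, high=5.0, low=0.0, noise=2.0, seed=0):
    """Send bits as voltage levels over a hypothetical noisy line.

    Noise is uniform in [-noise, +noise]; a bounded model is assumed so that
    it is clear exactly when decoding is guaranteed to succeed.
    """
    rng = random.Random(seed)
    return [(high if b else low) + rng.uniform(-noise, noise) for b in bits]


def detect(voltages, threshold=2.5):
    """Regenerate the digits: anything above the midpoint threshold is read as 1."""
    return [1 if v > threshold else 0 for v in voltages]
```

With `noise=2.0` no received voltage is exactly 0 V or 5 V, yet `detect` returns the original bits. Once the noise can exceed 2.5 V, bit errors become possible.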

Digital signals often arise via sampling of analog signals, for example, a continually fluctuating voltage on a line that can be digitized by an analog-to-digital converter circuit, wherein the circuit will read the voltage level on the line, say, every 50 microseconds and represent each reading with a fixed number of bits. The resulting stream of numbers is stored as digital data on a discrete-time and quantized-amplitude signal. Computers and other digital devices are restricted to discrete time.
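The converter described above can be modeled in a few lines: sample a continuous function every `period_s` seconds, clip to the converter's range, and map each reading to one of 2^bits integer codes. The 1 kHz test sine, the ±1 V range and the 8-bit depth are illustrative assumptions. The 50 µs period is the one from the text.

```python
import math


def adc(signal, period_s, duration_s, bits, v_min, v_max):
    """Sample `signal(t)` every `period_s` seconds and quantize to `bits` bits.

    Returns integer codes in [0, 2**bits - 1]: discrete in time and in amplitude.
    """
    levels = 2 ** bits
    step = (v_max - v_min) / levels               # width of one quantization level
    n_samples = int(round(duration_s / period_s))
    codes = []
    for n in range(n_samples):
        v = signal(n * period_s)                  # read the line at t = n * T
        v = min(max(v, v_min), v_max)             # clip to the converter's range
        code = int((v - v_min) / step)
        codes.append(min(code, levels - 1))       # v == v_max maps to the top code
    return codes
```

For example, `adc(lambda t: math.sin(2 * math.pi * 1000 * t), 50e-6, 1e-3, 8, -1.0, 1.0)` yields 20 codes. The zero crossing at t = 0 maps to code 128 and the positive peak at 250 µs to code 255.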

Time discretization

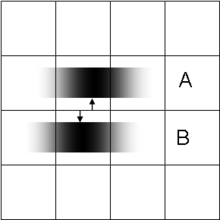

Discrete-time signal created from a continuous signal by sampling

If for a signal, the quantities are defined only on a discrete set of times, we call it a discrete-time signal. A simple source for a discrete time signal is the sampling of a continuous signal, approximating the signal by a sequence of its values at particular time instants.

A discrete-time real (or complex) signal can be seen as a function from (a subset of) the set of integers (the index labeling time instants) to the set of real (or complex) numbers (the function values at those instants).

A continuous-time real (or complex) signal is any real-valued (or complex-valued) function which is defined at every time t in an interval, most commonly an infinite interval.

Amplitude quantization

Examples of signals

Signals in nature can be converted to electronic signals by various sensors. Some examples are:

- Motion. The motion of an object can be considered to be a signal, and can be monitored by various sensors to provide electrical signals. For example, radar can provide an electromagnetic signal for following aircraft motion. A motion signal is one-dimensional (time), and the range is generally three-dimensional. Position is thus a 3-vector signal; position and orientation of a rigid body is a 6-vector signal. Orientation signals can be generated using a gyroscope.

- Sound. Since a sound is a vibration of a medium (such as air), a sound signal associates a pressure value to every value of time and three space coordinates. A sound signal is converted to an electrical signal by a microphone, generating a voltage signal as an analog of the sound signal, making the sound signal available for further signal processing. Sound signals can be sampled at a discrete set of time points; for example, compact discs (CDs) contain discrete signals representing sound, recorded at 44,100 samples per second; each sample contains data for a left and right channel, which may be considered to be a 2-vector signal (since CDs are recorded in stereo). The CD encoding is converted to an electrical signal by reading the information with a laser, converting the sound signal to an optical signal.[15]

- Images. A picture or image consists of a brightness or color signal, a function of a two-dimensional location. The object's appearance is presented as an emitted or reflected electromagnetic wave, one form of electronic signal. It can be converted to voltage or current waveforms using devices such as the charge-coupled device. A 2D image can have a continuous spatial domain, as in a traditional photograph or painting; or the image can be discretized in space, as in a raster scanned digital image. Color images are typically represented as a combination of images in three primary colors, so that the signal is vector-valued with dimension three.

- Videos. A video signal is a sequence of images. A point in a video is identified by its two-dimensional position and by the time at which it occurs, so a video signal has a three-dimensional domain. Analog video has one continuous domain dimension (across a scan line) and two discrete dimensions (frame and line).

- Biological membrane potentials. The value of the signal is an electric potential ("voltage"). The domain is more difficult to establish. Some cells or organelles have the same membrane potential throughout; neurons generally have different potentials at different points. These signals have very low energies, but are enough to make nervous systems work; they can be measured in aggregate by the techniques of electrophysiology.
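The CD figures in the sound example imply the raw audio data rate. The text does not state the sample depth; the 16 bits per sample used below is the standard value for audio CDs and is assumed here.

```latex
\[
44\,100\ \frac{\text{samples}}{\text{s}} \times 2\ \text{channels} \times 16\ \frac{\text{bits}}{\text{sample}}
= 1\,411\,200\ \text{bit/s} \approx 1.41\ \text{Mbit/s}
\]
```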

Signal processing

A typical role for signals is in signal processing. A common example is signal transmission between different locations. The embodiment of a signal in electrical form is made by a transducer that converts the signal from its original form to a waveform expressed as a current (I) or a voltage (V), or an electromagnetic waveform, for example, an optical signal or radio transmission. Once expressed as an electronic signal, the signal is available for further processing by electrical devices such as electronic amplifiers and electronic filters, and can be transmitted to a remote location by electronic transmitters and received using electronic receivers.

Signals and systems

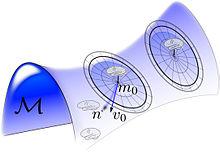

The field studies input and output signals, and the mathematical representations between them known as systems, in four domains: Time, Frequency, s and z. Since signals and systems are both studied in these four domains, there are 8 major divisions of study. As an example, when working with continuous time signals (t), one might transform from the time domain to a frequency or s domain; or from discrete time (n) to frequency or z domains. Systems also can be transformed between these domains like signals, with continuous to s and discrete to z.
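As a small illustration of moving one discrete-time system between domains, the sketch below uses a first-order recursion. It gives the time-domain (n) difference equation, its z-domain transfer function, and its frequency response, obtained by evaluating H(z) on the unit circle z = e^{jω}. The recursion y[n] = x[n] + a·y[n−1] and the coefficient a = 0.5 are illustrative choices, not taken from the text.

```python
import cmath


def first_order_system(x, a):
    """Time-domain (n) description: y[n] = x[n] + a*y[n-1], starting from rest."""
    y, prev = [], 0.0
    for xn in x:
        prev = xn + a * prev
        y.append(prev)
    return y


def transfer_function(z, a):
    """z-domain description of the same system: H(z) = 1 / (1 - a z^-1)."""
    return 1.0 / (1.0 - a / z)


def dtft(h, omega):
    """Frequency-domain view: sum_n h[n] e^{-j omega n} for a finite sequence h."""
    return sum(hn * cmath.exp(-1j * omega * n) for n, hn in enumerate(h))
```

Feeding a unit impulse through `first_order_system` gives h[n] = aⁿ. For |a| < 1 this decays quickly, so the DTFT of the first 200 samples matches `transfer_function(cmath.exp(1j * w), a)` to within rounding error.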

Although signals and systems (S and S) falls under, and includes, all the topics covered in this article, as well as analog signal processing and digital signal processing, it is actually a subset of the field of mathematical modeling. The field goes back to RF over a century ago, when it was all analog and generally continuous. Today, software has taken the place of much of the analog circuitry design and analysis, and even continuous signals are now generally processed digitally. Ironically, digital signals are also processed continuously in a sense, with the software doing calculations between discrete signal "rests" to prepare for the next input/transform/output event.

In past EE curricula, S and S, as it is often called, involved circuit analysis and design via mathematical modeling and some numerical methods, and was updated several decades ago with dynamical systems tools, including differential equations, and more recently Lagrangians. The difficulty of the field at that time included the fact that not only mathematical modeling, circuits, signals and complex systems were being modeled, but physics as well, and a deep knowledge of electrical (and now electronic) topics was also required.

Today, the field has become even more daunting and complex with the addition of circuit, systems and signal analysis and design languages and software, from MATLAB and Simulink to NumPy, VHDL, PSpice, Verilog and even assembly language. Students are expected to understand the tools as well as the mathematics, physics, circuit analysis, and transformations between the four domains.

Because mechanical engineering topics like friction, damping, etc. have very close analogies in signal science (inductance, resistance, voltage, etc.), many of the tools originally used in ME transformations (Laplace and Fourier transforms, Lagrangians, sampling theory, probability, difference equations, etc.) have now been applied to signals, circuits, systems and their components, analysis and design in EE. Dynamical systems that involve noise, filtering and other random or chaotic attractors and repellors have now placed stochastic sciences and statistics between the more deterministic discrete and continuous functions in the field. (Deterministic as used here means signals that are completely determined as functions of time.)

EE taxonomists have still not decided where S&S falls within the whole field of signal processing vs. circuit analysis and mathematical modeling, but the common link of the topics covered in the course of study has brightened boundaries, with dozens of books, journals, etc. called Signals and Systems, used as texts and test prep for EE and, more recently, computer engineering exams. The Hsu general reference is a good example, with a new edition scheduled for late 2013/early 2014.

X . II

Digital image processing

Digital image processing is the use of computer algorithms to perform image processing on digital images. As a subcategory or field of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and can avoid problems such as the build-up of noise and signal distortion during processing. Since images are defined over two dimensions (perhaps more) digital image processing may be modeled in the form of multidimensional systems.

Many of the techniques of digital image processing, or digital picture processing as it often was called, were developed in the 1960s at the Jet Propulsion Laboratory, Massachusetts Institute of Technology, Bell Laboratories, University of Maryland, and a few other research facilities, with application to satellite imagery, wire-photo standards conversion, medical imaging, videophone, character recognition, and photograph enhancement.[1] The cost of processing was fairly high, however, with the computing equipment of that era. That changed in the 1970s, when digital image processing proliferated as cheaper computers and dedicated hardware became available. Images then could be processed in real time, for some dedicated problems such as television standards conversion. As general-purpose computers became faster, they started to take over the role of dedicated hardware for all but the most specialized and computer-intensive operations. With the fast computers and signal processors available in the 2000s, digital image processing has become the most common form of image processing, and it is generally used because it is not only the most versatile method but also the cheapest.

Digital image processing technology for medical applications was inducted into the Space Foundation Space Technology Hall of Fame in 1994.

In 2002, Raanan Fattal introduced gradient-domain image processing, a new way to process images in which the differences between pixels are manipulated rather than the pixel values themselves.
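Gradient-domain methods work on 2-D images and must solve a Poisson equation to turn manipulated gradients back into pixels. In one dimension that reintegration is just a running sum, which makes the core idea easy to see. The sketch below is a 1-D toy. Clamping large differences is a deliberately simple, hypothetical manipulation chosen for illustration, not the one used in the original work.

```python
def gradients(signal):
    """Forward differences: the 'gradient domain' view of a row of pixels."""
    return [b - a for a, b in zip(signal, signal[1:])]


def reintegrate(start, grads):
    """Rebuild pixel values from a starting value and differences (1-D 'Poisson solve')."""
    out = [start]
    for g in grads:
        out.append(out[-1] + g)
    return out


def compress_gradients(grads, limit):
    """Clamp each difference to [-limit, limit] (hypothetical manipulation)."""
    return [max(-limit, min(limit, g)) for g in grads]
```

For the row `[10, 10, 200, 200, 210]`, the differences are `[0, 190, 0, 10]`. Clamping them to ±50 and reintegrating gives `[10, 10, 60, 60, 70]`. The large jump is compressed while the small 10-unit detail survives unchanged, which would not happen by simply scaling the pixel values.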

Tasks

Digital image processing allows the use of much more complex algorithms, and hence, can offer both more sophisticated performance at simple tasks, and the implementation of methods which would be impossible by analog means. In particular, digital image processing is the only practical technology for:

Some techniques which are used in digital image processing include:

- Anisotropic diffusion

- Hidden Markov models

- Image editing

- Image restoration

- Independent component analysis

- Linear filtering

- Neural networks

- Partial differential equations

- Pixelation

- Principal components analysis

- Self-organizing maps

- Wavelets

Digital image transformations

Filtering

Digital filters are used to blur and sharpen digital images. Filtering can be performed in the spatial domain by convolution with specifically designed kernels (filter arrays), or in the frequency (Fourier) domain by masking specific frequency regions. The following examples show both methods:

| Filter type | Kernel or mask |
|---|---|
| Original image | |
| Spatial lowpass | |
| Spatial highpass | |
| Fourier representation | Pseudo-code: image = checkerboard; F = Fourier transform of image; show image: log(1 + abs(F)) |
| Fourier lowpass | |
| Fourier highpass | |
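The Fourier rows of the table can be sketched with NumPy. The code shifts the spectrum so zero frequency sits in the middle, displays it as log(1 + |F|), multiplies by a mask, and transforms back. Two details are assumptions: the hard-edged (ideal) circular mask is used for simplicity, whereas the MATLAB listing in this document uses a Butterworth filter, and `checkerboard` here is a simple stand-in, not MATLAB's function of the same name.

```python
import numpy as np


def checkerboard(square=20, squares=8):
    """Black/white test pattern; a simple stand-in for MATLAB's checkerboard()."""
    idx = np.arange(square * squares) // square
    return ((idx[:, None] + idx[None, :]) % 2).astype(float)


def centred_spectrum(image):
    """2-D FFT with the zero frequency moved to the middle of the array."""
    return np.fft.fftshift(np.fft.fft2(image))


def fourier_representation(image):
    """The table's pseudo-code: log(1 + |F|), suitable for display."""
    return np.log1p(np.abs(centred_spectrum(image)))


def ideal_lowpass_mask(shape, radius):
    """1 inside a circle of `radius` bins around the centred zero frequency, else 0."""
    rows, cols = shape
    u = np.arange(rows) - rows // 2   # fftshift puts frequency 0 at index n // 2
    v = np.arange(cols) - cols // 2
    return (np.hypot(u[:, None], v[None, :]) <= radius).astype(float)


def fourier_filter(image, mask):
    """Mask the centred spectrum, undo the shift and transform back to pixels."""
    filtered = centred_spectrum(image) * mask
    return np.real(np.fft.ifft2(np.fft.ifftshift(filtered)))  # drop rounding-level imag parts


img = checkerboard()
lowpass_mask = ideal_lowpass_mask(img.shape, radius=30)
blurred = fourier_filter(img, lowpass_mask)        # Fourier lowpass
edges = fourier_filter(img, 1.0 - lowpass_mask)    # Fourier highpass
```

Because the lowpass and highpass masks sum to 1, `blurred + edges` reproduces the original image. An ideal mask is easy to read but causes ringing, which is one reason smoother masks such as Butterworth are often preferred.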

Image padding in Fourier domain filtering

Images are typically padded before being transformed to Fourier space. The choice of padding has consequences for the filtered result: zero-padded and repeated-edge-padded images give different highpass-filtered outputs.
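The effect of padding can be checked numerically without the example images. The sketch assumes an ideal Fourier highpass and a featureless test image. Zero padding creates an artificial step at the image border, and the highpass responds to it. Repeated-edge padding creates no step, so the output stays at zero. `np.pad` with `mode="constant"` (zeros by default) and `mode="edge"` are the two NumPy padding modes used; the function names are hypothetical.

```python
import numpy as np


def ideal_highpass(image, radius):
    """Remove every frequency within `radius` bins of zero (ideal Fourier highpass)."""
    rows, cols = image.shape
    u = np.arange(rows) - rows // 2
    v = np.arange(cols) - cols // 2
    keep = (np.hypot(u[:, None], v[None, :]) > radius).astype(float)
    F = np.fft.fftshift(np.fft.fft2(image))
    return np.real(np.fft.ifft2(np.fft.ifftshift(F * keep)))


def padded_highpass(image, pad, mode, radius=5):
    """Pad by `pad` (> 0) pixels on every side, filter, then crop back to the original area.

    mode="constant" pads with zeros; mode="edge" repeats the outermost pixels.
    """
    padded = np.pad(image, pad, mode=mode)
    return ideal_highpass(padded, radius)[pad:-pad, pad:-pad]


flat = np.ones((32, 32))  # featureless image: an ideal highpass "should" return ~0
zero_padded = padded_highpass(flat, 16, "constant")
edge_padded = padded_highpass(flat, 16, "edge")
```

`edge_padded` is zero to within rounding, while `zero_padded` shows strong responses along the border of the original image. Those responses are produced entirely by the padding.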

Filtering Code Examples

MATLAB example for spatial- and Fourier-domain highpass filtering. `paddedsize` and `lpfilter` are not built into MATLAB: they come from the toolbox accompanying Gonzalez, Woods and Eddins, *Digital Image Processing Using MATLAB*. `checkerboard`, `padarray` and `imshow` require the Image Processing Toolbox.

```matlab
img = checkerboard(20);                     % generate checkerboard test image
[m, n] = size(img);                         % image size, needed for padding and cropping

% ************************** SPATIAL DOMAIN ***************************
klaplace = [0 -1 0; -1 5 -1; 0 -1 0];       % Laplacian sharpening kernel
                                            % (identity minus the 4-neighbour Laplacian)
X = conv2(img, klaplace);                   % convolve test img with 3x3 kernel
figure()
imshow(X, [])                               % show Laplacian-filtered image
title('Laplacian Edge Detection')

% ************************** FOURIER DOMAIN ***************************
pad = paddedsize([m, n]);                   % padding size (DIPUM helper)
imgp = padarray(img, pad, 0, 'both');       % zero-pad before and after
[p, q] = size(imgp);                        % size of padded img
F = fftshift(fft2(imgp));                   % Fourier transform, low freq to middle
Hlp = fftshift(lpfilter('btw', p, q, 60));  % centred Butterworth lowpass (DIPUM helper)
Hhp = 1 - Hlp;                              % corresponding highpass
HPimg = abs(ifft2(ifftshift(F .* Hhp)));    % apply filter, undo shift, inverse FFT

figure(7)
imshow(Hhp, [])                             % show the filter
title('Highpass Butterworth')

figure(8)                                   % show result cropped to the original m-by-n area
imshow(HPimg(pad(1)+1:pad(1)+m, pad(2)+1:pad(2)+n), [])
title('FFT Highpass Filtered')
```

Affine transformations

Affine transformations enable basic image transformations, including scaling, rotation, translation, mirroring and shearing, as the following examples show:

- Identity

- Reflection

- Scale

- Rotate

- Shear
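Each of these transformations can be written as a 3×3 matrix acting on homogeneous coordinates [x, y, 1], which also lets translation be expressed as a matrix. The sketch below assumes the column-vector convention (transformed point = M · [x, y, 1]ᵀ) and a y-axis pointing up. Other references use row vectors and transposed matrices. Function and constant names are hypothetical.

```python
import math


def apply_affine(matrix, points):
    """Apply a 3x3 affine matrix to 2-D points via homogeneous coordinates.

    Convention (an assumption): points are column vectors [x, y, 1]^T.
    """
    out = []
    for x, y in points:
        vec = (x, y, 1.0)
        xh, yh, w = [sum(matrix[r][c] * vec[c] for c in range(3)) for r in range(3)]
        out.append((xh / w, yh / w))  # w stays 1 for affine matrices
    return out


IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
REFLECT = [[-1, 0, 0], [0, 1, 0], [0, 0, 1]]  # x -> -x (mirror about the y-axis)


def scale(sx, sy):
    return [[sx, 0, 0], [0, sy, 0], [0, 0, 1]]


def rotate(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]  # counter-clockwise when y points up


def shear(kx, ky):
    return [[1, kx, 0], [ky, 1, 0], [0, 0, 1]]


def translate(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]
```

To transform an image rather than a list of points, implementations usually apply the inverse matrix to each output pixel's coordinates and interpolate the input there. This avoids holes in the result.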

Applications

Digital camera images

Digital cameras generally include specialized digital image processing hardware (either dedicated chips or added circuitry on other chips) to convert the raw data from their image sensor into a color-corrected image in a standard image file format.

X . III

Computer vision

Computer vision is an interdisciplinary field that deals with how computers can be made to gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to automate tasks that the human visual system can do.

Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g., in the forms of decisions.[4][5][6][7] Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that can interface with other thought processes and elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.[8]

As a scientific discipline, computer vision is concerned with the theory behind artificial systems that extract information from images. The image data can take many forms, such as video sequences, views from multiple cameras, or multi-dimensional data from a medical scanner. As a technological discipline, computer vision seeks to apply its theories and models for the construction of computer vision systems.

Sub-domains of computer vision include scene reconstruction, event detection, video tracking, object recognition, object pose estimation, learning, indexing, motion estimation, and image restoration.

Definition

"Computer vision is concerned with the automatic extraction, analysis and understanding of useful information from a single image or a sequence of images. It involves the development of a theoretical and algorithmic basis to achieve automatic visual understanding." [9]

In the late 1960s, computer vision began at universities that were pioneering artificial intelligence. It was meant to mimic the human visual system, as a stepping stone to endowing robots with intelligent behavior. In 1966, it was believed that this could be achieved through a summer project, by attaching a camera to a computer and having it "describe what it saw".

What distinguished computer vision from the prevalent field of digital image processing at that time was a desire to extract three-dimensional structure from images with the goal of achieving full scene understanding. Studies in the 1970s formed the early foundations for many of the computer vision algorithms that exist today, including extraction of edges from images, labeling of lines, non-polyhedral and polyhedral modeling, representation of objects as interconnections of smaller structures, optical flow, and motion estimation.

The next decade saw studies based on more rigorous mathematical analysis and quantitative aspects of computer vision. These include the concept of scale-space, the inference of shape from various cues such as shading, texture and focus, and contour models known as snakes. Researchers also realized that many of these mathematical concepts could be treated within the same optimization framework as regularization and Markov random fields. By the 1990s, some of the previous research topics became more active than the others. Research in projective 3-D reconstructions led to better understanding of camera calibration. With the advent of optimization methods for camera calibration, it was realized that a lot of the ideas were already explored in bundle adjustment theory from the field of photogrammetry. This led to methods for sparse 3-D reconstructions of scenes from multiple images. Progress was made on the dense stereo correspondence problem and further multi-view stereo techniques. At the same time, variations of graph cut were used to solve image segmentation. This decade also marked the first time statistical learning techniques were used in practice to recognize faces in images (see Eigenface). Toward the end of the 1990s, a significant change came about with the increased interaction between the fields of computer graphics and computer vision. This included image-based rendering, image morphing, view interpolation, panoramic image stitching and early light-field rendering.

Recent work has seen the resurgence of feature-based methods, used in conjunction with machine learning techniques and complex optimization frameworks.

Related fields

Areas of artificial intelligence deal with autonomous planning or deliberation for robotic systems to navigate through an environment. A detailed understanding of these environments is required to navigate through them. Information about the environment could be provided by a computer vision system, acting as a vision sensor and providing high-level information about the environment and the robot. Artificial intelligence and computer vision share other topics such as pattern recognition and learning techniques. Consequently, computer vision is sometimes seen as a part of the artificial intelligence field or the computer science field in general.

Solid-state physics is another field that is closely related to computer vision. Most computer vision systems rely on image sensors, which detect electromagnetic radiation, typically in the form of either visible or infrared light. The sensors are designed using quantum physics. The process by which light interacts with surfaces is explained using physics. Physics explains the behavior of optics, which are a core part of most imaging systems. Sophisticated image sensors even require quantum mechanics to provide a complete understanding of the image formation process. Also, various measurement problems in physics can be addressed using computer vision, for example motion in fluids.

A third field which plays an important role is neurobiology, specifically the study of the biological vision system. Over the last century, there has been an extensive study of eyes, neurons, and the brain structures devoted to processing of visual stimuli in both humans and various animals. This has led to a coarse, yet complicated, description of how "real" vision systems operate in order to solve certain vision related tasks. These results have led to a subfield within computer vision where artificial systems are designed to mimic the processing and behavior of biological systems, at different levels of complexity. Also, some of the learning-based methods developed within computer vision (e.g. neural net and deep learning based image and feature analysis and classification) have their background in biology.

Some strands of computer vision research are closely related to the study of biological vision, just as many strands of AI research are closely tied to research into human consciousness and the use of stored knowledge to interpret, integrate and utilize visual information. The field of biological vision studies and models the physiological processes behind visual perception in humans and other animals. Computer vision, on the other hand, studies and describes the processes implemented in software and hardware behind artificial vision systems. Interdisciplinary exchange between biological and computer vision has proven fruitful for both fields.

Yet another field related to computer vision is signal processing. Many methods for processing of one-variable signals, typically temporal signals, can be extended in a natural way to processing of two-variable signals or multi-variable signals in computer vision. However, because of the specific nature of images there are many methods developed within computer vision which have no counterpart in processing of one-variable signals. Together with the multi-dimensionality of the signal, this defines a subfield in signal processing as a part of computer vision.
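As a small illustration of a one-variable method carried over to images, the sketch below smooths an image by applying a 1D kernel along the rows and then along the columns, and checks that this matches a direct 2D convolution with the outer-product kernel. It is a minimal pure-Python sketch; the binomial kernel, the zero padding at the borders and all function names are illustrative assumptions.

```python
from typing import List

Image = List[List[float]]


def convolve_1d(signal: List[float], kernel: List[float]) -> List[float]:
    """Same-length 1D convolution with zero padding; assumes an odd-length kernel."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + half - k  # index into the signal (convolution flips the kernel)
            if 0 <= j < len(signal):
                acc += w * signal[j]
        out.append(acc)
    return out


def convolve_separable(image: Image, kernel: List[float]) -> Image:
    """Run the 1D kernel along every row, then along every column."""
    rows = [convolve_1d(row, kernel) for row in image]
    cols = [convolve_1d(list(col), kernel) for col in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def convolve_2d(image: Image, kernel2d: Image) -> Image:
    """Direct 2D convolution with zero padding, used only for comparison."""
    h, w = len(image), len(image[0])
    oy, ox = len(kernel2d) // 2, len(kernel2d[0]) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for ky, krow in enumerate(kernel2d):
                for kx, kv in enumerate(krow):
                    yy, xx = y + oy - ky, x + ox - kx
                    if 0 <= yy < h and 0 <= xx < w:
                        out[y][x] += kv * image[yy][xx]
    return out


kernel = [0.25, 0.5, 0.25]                            # 1D binomial smoothing kernel
kernel2d = [[a * b for b in kernel] for a in kernel]  # its 2D outer product
image = [[float((7 * x + 3 * y) % 5) for x in range(6)] for y in range(5)]
sep = convolve_separable(image, kernel)
full = convolve_2d(image, kernel2d)
max_diff = max(abs(a - b) for ra, rb in zip(sep, full) for a, b in zip(ra, rb))
print(f"max difference: {max_diff:.1e}")
```

The two results agree up to rounding because the 2D kernel is an outer product of the 1D one; kernels that cannot be written that way cannot be split into row and column passes.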

Besides the above-mentioned views on computer vision, many of the related research topics can also be studied from a purely mathematical point of view. For example, many methods in computer vision are based on statistics, optimization or geometry. Finally, a significant part of the field is devoted to the implementation aspect of computer vision: how existing methods can be realized in various combinations of software and hardware, or how these methods can be modified in order to gain processing speed without losing too much performance.

The fields most closely related to computer vision are image processing, image analysis and machine vision. There is a significant overlap in the range of techniques and applications that these cover. This implies that the basic techniques that are used and developed in these fields are similar, which can be interpreted as meaning that there is only one field with different names. On the other hand, it appears to be necessary for research groups, scientific journals, conferences and companies to present or market themselves as belonging specifically to one of these fields and, hence, various characterizations which distinguish each of the fields from the others have been presented.

Computer graphics produces image data from 3D models; computer vision often produces 3D models from image data. There is also a trend towards a combination of the two disciplines, e.g., as explored in augmented reality.

The following characterizations appear relevant but should not be taken as universally accepted:

- Image processing and image analysis tend to focus on 2D images and on how to transform one image to another, e.g., by pixel-wise operations such as contrast enhancement, local operations such as edge extraction or noise removal, or geometrical transformations such as rotating the image. This characterization implies that image processing/analysis neither requires assumptions nor produces interpretations about the image content.

- Computer vision includes 3D analysis from 2D images. This analyzes the 3D scene projected onto one or several images, e.g., how to reconstruct structure or other information about the 3D scene from one or several images. Computer vision often relies on more or less complex assumptions about the scene depicted in an image.

- Machine vision is the process of applying a range of technologies and methods to provide imaging-based automatic inspection, process control and robot guidance[16] in industrial applications.[17] Machine vision tends to focus on applications, mainly in manufacturing, e.g., vision-based robots and systems for vision-based inspection or measurement. This implies that image sensor technologies and control theory are often integrated with the processing of image data to control a robot, and that real-time processing is emphasised by means of efficient implementations in hardware and software. It also implies that external conditions such as lighting can be, and often are, more controlled in machine vision than in general computer vision, which can enable the use of different algorithms.

- There is also a field called imaging, which primarily focuses on the process of producing images but sometimes also deals with the processing and analysis of images. For example, medical imaging includes substantial work on the analysis of image data in medical applications.

- Finally, pattern recognition is a field which uses various methods to extract information from signals in general, mainly based on statistical approaches and artificial neural networks. A significant part of this field is devoted to applying these methods to image data.

Applications

Applications range from tasks such as industrial machine vision systems which, say, inspect bottles speeding by on a production line, to research into artificial intelligence and computers or robots that can comprehend the world around them. The computer vision and machine vision fields have significant overlap. Computer vision covers the core technology of automated image analysis which is used in many fields. Machine vision usually refers to a process of combining automated image analysis with other methods and technologies to provide automated inspection and robot guidance in industrial applications. In many computer vision applications, the computers are pre-programmed to solve a particular task, but methods based on learning are now becoming increasingly common. Examples of applications of computer vision include systems for:

- Automatic inspection, e.g., in manufacturing applications;

- Assisting humans in identification tasks, e.g., a species identification system;[18]

- Controlling processes, e.g., an industrial robot;

- Detecting events, e.g., for visual surveillance or people counting;

- Interaction, e.g., as the input to a device for computer-human interaction;

- Modeling objects or environments, e.g., medical image analysis or topographical modeling;

- Navigation, e.g., by an autonomous vehicle or mobile robot; and

- Organizing information, e.g., for indexing databases of images and image sequences.


Another application area in computer vision is industry, sometimes called machine vision, where information is extracted for the purpose of supporting a manufacturing process. One example is quality control, where details or final products are automatically inspected in order to find defects. Another example is measurement of the position and orientation of details to be picked up by a robot arm. Machine vision is also heavily used in agricultural processes to remove undesirable foodstuff from bulk material, a process called optical sorting.

Military applications are probably one of the largest areas for computer vision. The obvious examples are detection of enemy soldiers or vehicles and missile guidance. More advanced systems for missile guidance send the missile to an area rather than a specific target, and target selection is made when the missile reaches the area based on locally acquired image data. Modern military concepts, such as "battlefield awareness", imply that various sensors, including image sensors, provide a rich set of information about a combat scene which can be used to support strategic decisions. In this case, automatic processing of the data is used to reduce complexity and to fuse information from multiple sensors to increase reliability.


Other application areas include:

- Support of visual effects creation for cinema and broadcast, e.g., camera tracking (matchmoving).

- Surveillance.

Typical tasks

Each of the application areas described above employs a range of computer vision tasks: more or less well-defined measurement problems or processing problems, which can be solved using a variety of methods. Some examples of typical computer vision tasks are presented below.

Recognition

The classical problem in computer vision, image processing, and machine vision is that of determining whether or not the image data contains some specific object, feature, or activity. Different varieties of the recognition problem are described in the literature:

- Object recognition (also called object classification) – one or several pre-specified or learned objects or object classes can be recognized, usually together with their 2D positions in the image or 3D poses in the scene. Blippar, Google Goggles and LikeThat provide stand-alone programs that illustrate this functionality.

- Identification – an individual instance of an object is recognized. Examples include identification of a specific person's face or fingerprint, identification of handwritten digits, or identification of a specific vehicle.

- Detection – the image data are scanned for a specific condition. Examples include detection of possible abnormal cells or tissues in medical images or detection of a vehicle in an automatic road toll system. Detection based on relatively simple and fast computations is sometimes used for finding smaller regions of interesting image data which can be further analyzed by more computationally demanding techniques to produce a correct interpretation.
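Detection in the sense above can be sketched as scanning every window position and testing a condition. The minimal example below assumes the condition is "this window closely matches a known template", measured by the sum of squared differences; the template, the threshold and the function names are hypothetical, and practical detectors usually rely on more robust features.

```python
from typing import List, Tuple

Image = List[List[float]]


def ssd(image: Image, template: Image, top: int, left: int) -> float:
    """Sum of squared differences between the template and one image window."""
    return sum((image[top + y][left + x] - t) ** 2
               for y, row in enumerate(template)
               for x, t in enumerate(row))


def detect(image: Image, template: Image, threshold: float) -> List[Tuple[int, int]]:
    """Return (row, col) of every window whose SSD to the template is below threshold."""
    th, tw = len(template), len(template[0])
    hits = []
    for top in range(len(image) - th + 1):
        for left in range(len(image[0]) - tw + 1):
            if ssd(image, template, top, left) < threshold:
                hits.append((top, left))
    return hits


template = [[0.0, 1.0, 0.0],
            [1.0, 1.0, 1.0],
            [0.0, 1.0, 0.0]]           # hypothetical "plus"-shaped target
image = [[0.0] * 8 for _ in range(6)]  # empty 6x8 scene
for dy, row in enumerate(template):    # paste the target at row 2, column 4
    for dx, value in enumerate(row):
        image[2 + dy][4 + dx] = value
print(detect(image, template, threshold=0.5))  # → [(2, 4)]
```

A cheap test like this one could serve as the fast first stage described above, with the hits handed to a more demanding method for the final interpretation.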

Several specialized tasks based on recognition exist, such as:

- Content-based image retrieval – finding all images in a larger set of images which have a specific content. The content can be specified in different ways, for example in terms of similarity relative to a target image (give me all images similar to image X), or in terms of high-level search criteria given as text input (give me all images which contain many houses, are taken during winter, and have no cars in them).


- Pose estimation – estimating the position or orientation of a specific object relative to the camera. An example application for this technique would be assisting a robot arm in retrieving objects from a conveyor belt in an assembly line situation or picking parts from a bin.

- Optical character recognition (OCR) – identifying characters in images of printed or handwritten text, usually with a view to encoding the text in a format more amenable to editing or indexing (e.g. ASCII).

- 2D code reading – reading of 2D codes such as Data Matrix and QR codes.

- Facial recognition

- Shape Recognition Technology (SRT) – used in people counter systems to differentiate human beings (head and shoulder patterns) from objects.
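The "give me all images similar to image X" query can be illustrated with a deliberately simple similarity measure. This sketch assumes images are small lists of 8-bit gray levels and ranks a database by gray-level histogram intersection; the bin count, the example images and the function names are assumptions, not part of any particular retrieval system.

```python
from typing import Dict, List

Image = List[List[int]]  # 8-bit gray levels, 0..255


def histogram(image: Image, bins: int = 4) -> List[float]:
    """Normalized gray-level histogram with `bins` equal-width bins."""
    counts = [0] * bins
    for row in image:
        for v in row:
            counts[v * bins // 256] += 1
    total = sum(counts)
    return [c / total for c in counts]


def intersection(h1: List[float], h2: List[float]) -> float:
    """Histogram intersection: 1.0 for identical histograms, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def most_similar(query: Image, database: Dict[str, Image]) -> List[str]:
    """Names of database images, most similar to the query first."""
    hq = histogram(query)
    return sorted(database, key=lambda name: intersection(hq, histogram(database[name])),
                  reverse=True)


dark = [[10, 20], [30, 40]]
bright = [[220, 230], [240, 250]]
mixed = [[10, 240], [20, 250]]
query = [[15, 25], [35, 45]]
print(most_similar(query, {"dark": dark, "bright": bright, "mixed": mixed}))
# → ['dark', 'mixed', 'bright']
```

A global histogram ignores where in the image the gray levels occur, which is why practical systems usually combine it with, or replace it by, spatially aware features.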

Motion analysis

Several tasks relate to motion estimation, where an image sequence is processed to produce an estimate of the velocity either at each point in the image or in the 3D scene, or even of the camera that produces the images. Examples of such tasks are:

- Egomotion – determining the 3D rigid motion (rotation and translation) of the camera from an image sequence produced by the camera.

- Tracking – following the movements of a (usually) smaller set of interest points or objects (e.g., vehicles or humans) in the image sequence.

- Optical flow – to determine, for each point in the image, how that point is moving relative to the image plane, i.e., its apparent motion. This motion is a result both of how the corresponding 3D point is moving in the scene and how the camera is moving relative to the scene.
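The optical-flow task can be illustrated with a Lucas-Kanade-style least-squares estimate, which linearizes the assumption that a point keeps its brightness as it moves. This is a minimal sketch under strong assumptions: one constant displacement for the whole window, motion well below one pixel, and synthetic Gaussian-blob frames; the function names are hypothetical.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def gaussian_blob(cx: float, cy: float, size: int = 21, sigma: float = 3.0) -> Image:
    """Synthetic smooth frame: a Gaussian spot centred at (cx, cy)."""
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(size)] for y in range(size)]


def lucas_kanade(i1: Image, i2: Image) -> Tuple[float, float]:
    """Least-squares (u, v) for the whole window from Ix*u + Iy*v + It = 0."""
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, len(i1) - 1):
        for x in range(1, len(i1[0]) - 1):
            # Central-difference spatial gradients, averaged over both frames.
            ix = (i1[y][x + 1] - i1[y][x - 1] + i2[y][x + 1] - i2[y][x - 1]) / 4
            iy = (i1[y + 1][x] - i1[y - 1][x] + i2[y + 1][x] - i2[y - 1][x]) / 4
            it = i2[y][x] - i1[y][x]  # temporal difference between the frames
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("window lacks texture in two directions")
    # Solve the 2x2 normal equations [[sxx, sxy], [sxy, syy]] @ (u, v) = -(sxt, syt).
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


frame1 = gaussian_blob(10.0, 10.0)
frame2 = gaussian_blob(10.3, 9.8)  # the spot moved by (+0.3, -0.2) pixels
u, v = lucas_kanade(frame1, frame2)
print(f"u = {u:.3f}, v = {v:.3f}")
```

When `det` is close to zero, for example along a straight edge where only the motion perpendicular to the edge is observable, a single window cannot determine both components; dense optical flow repeats the estimate over many small windows.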

Scene reconstruction

Given one or (typically) more images of a scene, or a video, scene reconstruction aims at computing a 3D model of the scene. In the simplest case the model can be a set of 3D points. More sophisticated methods produce a complete 3D surface model. The advent of 3D imaging that requires neither motion nor scanning, together with related processing algorithms, is enabling rapid advances in this field. Grid-based 3D sensing can be used to acquire 3D images from multiple angles. Algorithms are now available to stitch multiple 3D images together into point clouds and 3D models.

Image restoration

The aim of image restoration is the removal of noise (sensor noise, motion blur, etc.) from images. The simplest possible approach to noise removal is to apply various types of filters, such as low-pass filters or median filters. More sophisticated methods assume a model of what local image structures look like, a model which distinguishes them from the noise. By first analysing the image data in terms of local image structures, such as lines or edges, and then controlling the filtering based on local information from the analysis step, a better level of noise removal is usually obtained than with the simpler approaches. An example in this field is inpainting.
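The simplest kind of filter mentioned above, median filtering, can be sketched in a few lines. This sketch assumes a gray-level image stored as a list of rows and simply shrinks the window at the image borders; both choices are illustrative.

```python
import statistics
from typing import List

Image = List[List[float]]


def median_filter(image: Image, radius: int = 1) -> Image:
    """Replace each pixel by the median of its (2*radius+1) x (2*radius+1) neighbourhood.

    At the borders only the neighbours that fall inside the image are used (an assumption).
    """
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [image[yy][xx]
                      for yy in range(max(0, y - radius), min(h, y + radius + 1))
                      for xx in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(statistics.median(window))
        out.append(row)
    return out


clean = [[10.0] * 5 for _ in range(5)]
noisy = [row[:] for row in clean]
noisy[1][1] = 255.0  # isolated "salt" pixel
noisy[3][2] = 0.0    # isolated "pepper" pixel
restored = median_filter(noisy)
print(restored == clean)  # → True
```

Unlike a low-pass (averaging) filter, the median discards isolated outliers instead of spreading them over the neighbourhood, although it can also erase genuine fine detail that is smaller than the window.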

System methods

The organization of a computer vision system is highly application dependent. Some systems are stand-alone applications which solve a specific measurement or detection problem, while others constitute a sub-system of a larger design which, for example, also contains sub-systems for control of mechanical actuators, planning, information databases, man-machine interfaces, etc. The specific implementation of a computer vision system also depends on whether its functionality is pre-specified or whether some part of it can be learned or modified during operation. Many functions are unique to the application. There are, however, typical functions which are found in many computer vision systems:

- Image acquisition – A digital image is produced by one or several image sensors, which, besides various types of light-sensitive cameras, include range sensors, tomography devices, radar, ultrasonic cameras, etc. Depending on the type of sensor, the resulting image data is an ordinary 2D image, a 3D volume, or an image sequence. The pixel values typically correspond to light intensity in one or several spectral bands (gray images or colour images), but can also be related to various physical measures, such as depth, absorption or reflectance of sonic or electromagnetic waves, or nuclear magnetic resonance.

- Pre-processing – Before a computer vision method can be applied to image data in order to extract some specific piece of information, it is usually necessary to process the data to ensure that it satisfies certain assumptions implied by the method. Examples are:

  - Re-sampling to ensure that the image coordinate system is correct.

  - Noise reduction to ensure that sensor noise does not introduce false information.

  - Contrast enhancement to ensure that relevant information can be detected.

  - Scale space representation to enhance image structures at locally appropriate scales.

- Feature extraction – Image features at various levels of complexity are extracted from the image data.[20] Typical examples of such features are:

  - Lines, edges and ridges.

  - Localized interest points such as corners, blobs or points.

  - More complex features may be related to texture, shape or motion.

- Detection/segmentation – At some point in the processing a decision is made about which image points or regions of the image are relevant for further processing. Examples are:

  - Selection of a specific set of interest points.

  - Segmentation of one or multiple image regions which contain a specific object of interest.

  - Segmentation of the image into a nested scene architecture comprising foreground, object groups, single objects or salient object parts (also referred to as a spatial-taxon scene hierarchy).

- High-level processing – At this step the input is typically a small set of data, for example a set of points or an image region which is assumed to contain a specific object. The remaining processing deals with, for example:

  - Verification that the data satisfy model-based and application-specific assumptions.

  - Estimation of application-specific parameters, such as object pose or object size.

  - Image recognition – classifying a detected object into different categories.

  - Image registration – comparing and combining two different views of the same object.

- Decision making – making the final decision required for the application, for example:

  - Pass/fail on automatic inspection applications.

  - Match/no-match in recognition applications.

  - Flag for further human review in medical, military, security and recognition applications.
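To make the sequence of typical functions concrete, here is a toy pass/fail inspection pipeline with one hypothetical function per stage (image acquisition is replaced by hard-coded arrays). The specific choices, dividing by the mean intensity as pre-processing, horizontal intensity differences as features and a fixed threshold for detection, are assumptions for illustration only.

```python
from typing import List, Tuple

Image = List[List[float]]


def preprocess(image: Image) -> Image:
    """Pre-processing: divide by the mean intensity to compensate for exposure."""
    mean = sum(sum(row) for row in image) / (len(image) * len(image[0]))
    scale = mean or 1.0  # avoid dividing by zero on an all-black frame
    return [[v / scale for v in row] for row in image]


def extract_features(image: Image) -> Image:
    """Feature extraction: absolute difference between horizontal neighbours (crude edges)."""
    return [[abs(row[x + 1] - row[x]) for x in range(len(row) - 1)] for row in image]


def detect(features: Image, threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Detection: keep feature positions strong enough to count as defect edges."""
    return [(y, x) for y, row in enumerate(features) for x, f in enumerate(row) if f > threshold]


def decide(points: List[Tuple[int, int]]) -> str:
    """Decision making: pass/fail for an inspection task."""
    return "pass" if not points else "fail"


def inspect(image: Image) -> str:
    return decide(detect(extract_features(preprocess(image))))


good_part = [[200.0, 201.0, 199.0, 200.0] for _ in range(3)]
scratched = [row[:] for row in good_part]
scratched[1][2] = 40.0  # simulated dark scratch
print(inspect(good_part), inspect(scratched))  # → pass fail
```

Keeping each stage as a separate function mirrors the list above and makes it easy to swap, for example, the crude difference feature for a proper edge detector without touching the decision logic.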

Image-understanding systems

Image-understanding systems (IUS) include three levels of abstraction as follows: low level includes image primitives such as edges, texture elements, or regions; intermediate level includes boundaries, surfaces and volumes; and high level includes objects, scenes, or events.

The representational requirements in the design of IUS for these levels are: representation of prototypical concepts, concept organization, spatial knowledge, temporal knowledge, scaling, and description by comparison and differentiation. Many of these requirements are really topics for further research.

While inference refers to the process of deriving new, not explicitly represented facts from currently known facts, control refers to the process that selects which of the many inference, search, and matching techniques should be applied at a particular stage of processing. Inference and control requirements for IUS are: search and hypothesis activation, matching and hypothesis testing, generation and use of expectations, change and focus of attention, certainty and strength of belief, inference and goal satisfaction.

Hardware

There are many kinds of computer vision systems; nevertheless, all of them contain these basic elements: a power source, at least one image acquisition device (e.g. a camera or CCD), a processor, and control and communication cables or some kind of wireless interconnection mechanism. In addition, a practical vision system contains software, as well as a display in order to monitor the system. Vision systems for indoor spaces, like most industrial ones, contain an illumination system and may be placed in a controlled environment. Furthermore, a completed system includes many accessories such as camera supports, cables and connectors.

Most computer vision systems use visible-light cameras passively viewing a scene at frame rates of at most 60 frames per second (usually far slower).

A few computer vision systems use image acquisition hardware with active illumination, with something other than visible light, or both: for example, a structured-light 3D scanner, a thermographic camera, a hyperspectral imager, radar imaging, a lidar scanner, a magnetic resonance imager, a side-scan sonar, or a synthetic aperture sonar. Such hardware captures "images" that are then often processed using the same computer vision algorithms used to process visible-light images.

While traditional broadcast and consumer video systems operate at a rate of 30 frames per second, advances in digital signal processing and consumer graphics hardware have made high-speed image acquisition, processing, and display possible for real-time systems on the order of hundreds to thousands of frames per second. For applications in robotics, fast, real-time video systems are critically important and can often simplify the processing needed for certain algorithms. When combined with a high-speed projector, fast image acquisition allows 3D measurement and feature tracking to be realised.[23]

As of 2016, vision processing units are emerging as a new class of processor, to complement CPUs and Graphics processing units (GPUs) in this role.


Super-resolution imaging

Super-resolution imaging (SR) is a class of techniques that enhance the resolution of an imaging system. In some SR techniques—termed optical SR—the diffraction limit of systems is transcended, while in others—geometrical SR—the resolution of digital imaging sensors is enhanced.

Super-resolution imaging techniques are used in general image processing and in super-resolution microscopy.

Basic concepts

Because some of the ideas surrounding superresolution raise fundamental issues, there is a need at the outset to examine the relevant physical and information-theoretical principles.

Diffraction limit

The detail of a physical object that an optical instrument can reproduce in an image has limits that are mandated by laws of physics, whether formulated by the diffraction equations in the wave theory of light[1] or the Uncertainty Principle for photons in quantum mechanics.[2] Information transfer can never be increased beyond this boundary, but packets outside the limits can be cleverly swapped for (or multiplexed with) some inside it.[3] One does not so much “break” as “run around” the diffraction limit. New procedures probing electromagnetic disturbances at the molecular level (in the so-called near field)[4] remain fully consistent with Maxwell's equations.

A succinct expression of the diffraction limit is given in the spatial-frequency domain. In Fourier optics, light distributions are expressed as superpositions of a series of grating light patterns in a range of fringe widths, technically spatial frequencies. It is generally taught that diffraction theory stipulates an upper limit, the cut-off spatial frequency, beyond which pattern elements fail to be transferred into the optical image, i.e., are not resolved. But in fact what is set by diffraction theory is the width of the passband, not a fixed upper limit. No laws of physics are broken when a spatial-frequency band beyond the cut-off spatial frequency is swapped for one inside it: this has long been implemented in dark-field microscopy. Nor are information-theoretical rules broken when superimposing several bands,[5][6] but disentangling them in the received image requires assumptions of object invariance during multiple exposures, i.e., the substitution of one kind of uncertainty for another.

Information

When the term superresolution is used in techniques of inferring object details from statistical treatment of the image within standard resolution limits, for example, averaging multiple exposures, it involves an exchange of one kind of information (extracting signal from noise) for another (the assumption that the target has remained invariant).

Resolution and localization

True resolution involves the distinction of whether a target, e.g. a star or a spectral line, is single or double, ordinarily requiring separable peaks in the image. When a target is known to be single, its location can be determined with higher precision than the image width by finding the centroid (center of gravity) of its image light distribution. The word ultra-resolution had been proposed for this process[7] but it did not catch on, and the high-precision localization procedure is typically referred to as superresolution.
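Localization by centroid can be sketched directly from the description above. This minimal example assumes a single point source imaged as a Gaussian spot about a pixel wide, with no background and no noise; in practice background subtraction and photon noise limit the achievable precision. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def spot(cx: float, cy: float, size: int = 11, sigma: float = 1.2) -> Image:
    """Assumed image of one point source: a Gaussian spot, no background, no noise."""
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(size)] for y in range(size)]


def centroid(image: Image) -> Tuple[float, float]:
    """Intensity-weighted mean pixel position (x, y), i.e. the 'center of gravity'."""
    total = sum(sum(row) for row in image)
    cx = sum(x * v for row in image for x, v in enumerate(row)) / total
    cy = sum(y * v for y, row in enumerate(image) for v in row) / total
    return cx, cy


x, y = centroid(spot(4.37, 5.62))
print(f"estimated source position: ({x:.3f}, {y:.3f})")
```

The estimate lands far closer to the true position than the one-pixel sampling pitch, but only because the single-source assumption holds; two overlapping sources would simply produce one centroid between them.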

In summary: the technical achievements in enhancing the performance of image-forming and image-sensing devices now classified as superresolution use to the fullest, but always stay within, the bounds imposed by the laws of physics and information theory.

Techniques to which the term "superresolution" has been applied

Optical or diffractive super resolution

Substituting spatial-frequency bands. Though the bandwidth allowable by diffraction is fixed, it can be positioned anywhere in the spatial-frequency spectrum. Dark-field illumination in microscopy is an example. See also aperture synthesis.

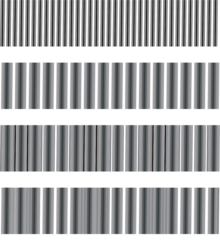

The "structured illumination" technique of superresolution is related to moiré patterns. The target, a band of fine fringes (top row), is beyond the diffraction limit. When a band of somewhat coarser resolvable fringes (second row) is artificially superimposed, the combination (third row) features moiré components that are within the diffraction limit and hence contained in the image (bottom row) allowing the presence of the fine fringes to be inferred even though they are not themselves represented in the image.
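The moiré mechanism in the figure can be reproduced numerically in one dimension. This sketch assumes ideal band-limited optics (a hard cut-off in spatial frequency), a 64-sample field of view and sinusoidal fringe patterns; the numbers and names are illustrative. It shows only that the product of target and illumination carries a difference-frequency component inside the passband; it does not perform the disentangling step.

```python
import cmath
import math
from typing import List

N = 64       # samples across the field of view (assumed)
CUTOFF = 12  # highest spatial frequency the optics pass, in cycles per field (assumed)


def dft(x: List[float]) -> List[complex]:
    """Plain O(N^2) discrete Fourier transform."""
    return [sum(v * cmath.exp(-2j * math.pi * k * n / N) for n, v in enumerate(x))
            for k in range(N)]


def idft(spectrum: List[complex]) -> List[float]:
    """Inverse DFT, keeping the real part (the spectra used here are Hermitian)."""
    return [(sum(c * cmath.exp(2j * math.pi * k * n / N) for k, c in enumerate(spectrum)) / N).real
            for n in range(N)]


def image_through_optics(intensity: List[float]) -> List[float]:
    """Ideal band-limited imaging: every spatial frequency above CUTOFF is dropped."""
    spectrum = dft(intensity)
    return idft([c if min(k, N - k) <= CUTOFF else 0j for k, c in enumerate(spectrum)])


def amplitude_at(signal: List[float], freq: int) -> float:
    """Amplitude of the cosine component at `freq` cycles per field."""
    return 2 * abs(dft(signal)[freq]) / N


def fringes(freq: int) -> List[float]:
    """Non-negative sinusoidal fringe pattern 1 + cos(2*pi*freq*n/N)."""
    return [1 + math.cos(2 * math.pi * freq * n / N) for n in range(N)]


target = fringes(18)        # fine fringes: 18 > CUTOFF, so not transmitted
illumination = fringes(11)  # known, coarser fringes inside the passband
plain = image_through_optics(target)
structured = image_through_optics([t * i for t, i in zip(target, illumination)])
moire = 18 - 11             # difference frequency produced by the product
print(f"plain image at {moire} cycles:      {amplitude_at(plain, moire):.3f}")
print(f"structured image at {moire} cycles: {amplitude_at(structured, moire):.3f}")
```

Because (1 + cos a)(1 + cos b) contains a term ½cos(a − b), the 7-cycle component survives the cut-off even though the 18-cycle target does not; recovering the target itself would additionally require knowledge of the illumination, typically at several phases.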

- Multiplexing spatial-frequency bands, such as structured illumination (see figure above)

  - An image is formed using the normal passband of the optical device. Then some known light structure, for example a set of light fringes that is also within the passband, is superimposed on the target.[6] The image now contains components resulting from the combination of the target and the superimposed light structure, e.g. moiré fringes, and carries information about target detail which simple, unstructured illumination does not. The “superresolved” components, however, need disentangling to be revealed.

- Multiple parameter use within traditional diffraction limit

  - If a target has no special polarization or wavelength properties, two polarization states or non-overlapping wavelength regions can be used to encode target details, one in a spatial-frequency band inside the cut-off limit, the other beyond it. Both would utilize normal passband transmission but are then separately decoded to reconstitute target structure with extended resolution.

- Probing near-field electromagnetic disturbance

  - The usual discussion of superresolution involves conventional imagery of an object by an optical system. But modern technology allows probing the electromagnetic disturbance within molecular distances of the source,[4] which has superior resolution properties; see also evanescent waves and the development of the new superlens.

Geometrical or image-processing superresolution

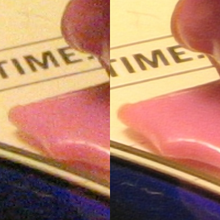

Compared to a single image marred by noise during its acquisition or transmission (left), the signal-to-noise ratio is improved by suitable combination of several separately-obtained images (right). This can be achieved only within the intrinsic resolution capability of the imaging process for revealing such detail.
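The improvement from combining exposures can be checked with a short simulation. This sketch assumes a static 1D scene, independent Gaussian noise in every exposure and plain pixel-wise averaging; the scene, the noise level and the function names are illustrative.

```python
import math
import random
from typing import List

random.seed(0)  # reproducible noise for the example

# Assumed static target: a smooth 1D intensity profile of 200 pixels.
scene = [0.5 + 0.5 * math.sin(i / 5) for i in range(200)]


def expose(noise_sigma: float = 0.3) -> List[float]:
    """One exposure: the static scene plus independent Gaussian noise per pixel."""
    return [v + random.gauss(0.0, noise_sigma) for v in scene]


def average(frames: List[List[float]]) -> List[float]:
    """Pixel-wise mean of several exposures of the same scene."""
    return [sum(px) / len(frames) for px in zip(*frames)]


def rms_error(estimate: List[float]) -> float:
    """Root-mean-square deviation from the true scene."""
    return math.sqrt(sum((e - v) ** 2 for e, v in zip(estimate, scene)) / len(scene))


single = rms_error(expose())
averaged = rms_error(average([expose() for _ in range(100)]))
print(f"1 exposure: {single:.3f}   100 exposures: {averaged:.3f}")
```

With independent noise the error falls roughly as 1/√N in the number of exposures, but only if the target really stays the same between exposures, and the average can never show detail that the imaging process did not transmit in the first place.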

- Multi-exposure image noise reduction

- When an image is degraded by noise, there can be more detail in the average of many exposures, even within the diffraction limit. See example on the right.

- Single-frame deblurring

- Known defects in a given imaging situation, such as defocus or aberrations, can sometimes be mitigated in whole or in part by suitable spatial-frequency filtering of even a single image. Such procedures all stay within the diffraction-mandated passband, and do not extend it.

- Sub-pixel image localization

- The location of a single source can be determined by computing the "center of gravity" (centroid) of the light distribution extending over several adjacent pixels (see figure on the left). Provided that there is enough light, this can be achieved with arbitrary precision, very much better than pixel width of the detecting apparatus and the resolution limit for the decision of whether the source is single or double. This technique, which requires the presupposition that all the light comes from a single source, is at the basis of what has becomes known as superresolution microscopy, e.g. STORM, where fluorescent probes attached to molecules give nanoscale distance information. It is also the mechanism underlying visual hyperacuity.

- Bayesian induction beyond traditional diffraction limit

- Some object features, though beyond the diffraction limit, may be known to be associated with other object features that are within the limits and hence contained in the image. Then conclusions can be drawn, using statistical methods, from the available image data about the presence of the full object.[9] The classical example is Toraldo di Francia's proposition[10] of judging whether an image is that of a single or double star by determining whether its width exceeds the spread from a single star. This can be achieved at separations well below the classical resolution bounds, and requires the prior limitation to the choice "single or double?"

- The approach can take the form of extrapolating the image in the frequency domain, by assuming that the object is an analytic function and that its values are known exactly in some interval. This method is severely limited by the ever-present noise in digital imaging systems, but it can work for radar, astronomy, microscopy or magnetic resonance imaging.[11] More recently, a fast single-image super-resolution algorithm based on a closed-form solution to problems has been proposed and demonstrated to accelerate most of the existing Bayesian super-resolution methods significantly.
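The centroid ("center of gravity") localization described in the list above can be sketched in one dimension. This is a minimal illustration under stated assumptions: a single Gaussian spot (the single-source presupposition), pixels sampled at their centres, shot noise approximated as Gaussian with variance equal to the expected count, and no background. The spot width, pixel count, source position, and function names are hypothetical.

```python
import math
import random

def spot_image(x0: float, sigma: float, n_pixels: int, photons: float,
               rng: random.Random) -> list[float]:
    """Pixel values of a Gaussian spot centred at sub-pixel position x0.

    Each pixel is sampled at its centre (an assumption) and receives shot noise,
    approximated here as Gaussian with variance equal to the expected count.
    """
    weights = [math.exp(-((i - x0) ** 2) / (2 * sigma ** 2)) for i in range(n_pixels)]
    total = sum(weights)
    image = []
    for w in weights:
        expected = photons * w / total
        image.append(expected + rng.gauss(0.0, math.sqrt(expected)))
    return image

def centroid(image: list[float]) -> float:
    """'Center of gravity': intensity-weighted mean pixel index."""
    return sum(i * value for i, value in enumerate(image)) / sum(image)

rng = random.Random(2)
TRUE_X = 9.37                   # hypothetical source position, in pixel units
for photons in (1e2, 1e4, 1e6):
    estimate = centroid(spot_image(TRUE_X, sigma=1.5, n_pixels=20, photons=photons, rng=rng))
    print(f"{photons:>9.0f} photons: error = {abs(estimate - TRUE_X):.4f} px")
```

The error should shrink roughly in proportion to σ/√(photons), reaching a small fraction of a pixel at the highest light level, which is the "provided that there is enough light" condition in the text. If two unresolved sources were present, the same computation would return their weighted mean position, not either source.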

Aliasing

Geometrical SR reconstruction algorithms are possible if and only if the input low-resolution images have been undersampled and therefore contain aliasing. Because of this aliasing, the high-frequency content of the desired reconstruction image is embedded in the low-frequency content of each of the observed images. Given a sufficient number of observation images, and if the set of observations varies in phase (i.e. if the images of the scene are shifted by a sub-pixel amount), then the phase information can be used to separate the aliased high-frequency content from the true low-frequency content, and the full-resolution image can be accurately reconstructed. In practice, this frequency-based approach is not used for reconstruction, but even in the case of spatial approaches (e.g. shift-add fusion), the presence of aliasing is still a necessary condition for SR reconstruction.
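A toy one-dimensional sketch can make the aliasing argument concrete, under idealized assumptions: noise-free point samples, no pixel blur, and exactly known half-pixel shifts between two frames. The names and values (`N`, `FREQ`, `low_res_frame`, `interleave`) are hypothetical. A 5-cycle cosine lies above the Nyquist limit of a frame that keeps every second sample, so each frame on its own is indistinguishable from a 3-cycle cosine; placing the two shifted frames back on the fine grid, the simplest form of the spatial "shift-add" idea, recovers the original exactly.

```python
import math

N = 16     # samples in the hypothetical high-resolution signal
FREQ = 5   # cycles per N samples; above the low-res Nyquist limit of N / 4 = 4

def high_res(n: int) -> float:
    """The 'true' scene, sampled on the fine grid."""
    return math.cos(2 * math.pi * FREQ * n / N)

def low_res_frame(offset: int) -> list[float]:
    """Undersample by 2, starting at a fine-grid offset (a sub-pixel shift of the coarse grid)."""
    return [high_res(2 * m + offset) for m in range(N // 2)]

def interleave(frames: list[list[float]]) -> list[float]:
    """Place each frame's samples at their known fine-grid positions."""
    out = [0.0] * N
    for offset, frame in enumerate(frames):
        for m, value in enumerate(frame):
            out[2 * m + offset] = value
    return out

frame0 = low_res_frame(0)
frame1 = low_res_frame(1)

# Aliasing: on the coarse grid, frame0 matches a 3-cycle cosine (5 cycles folded about 4).
alias = [math.cos(2 * math.pi * 3 * m / (N // 2)) for m in range(N // 2)]
print(max(abs(a - b) for a, b in zip(frame0, alias)))

reconstructed = interleave([frame0, frame1])
truth = [high_res(n) for n in range(N)]
print(max(abs(a - b) for a, b in zip(reconstructed, truth)))
```

Real multi-frame SR must also estimate the shifts, cope with noise and blur, and handle non-integer shift patterns, so practical algorithms solve a regularized inverse problem rather than simply interleaving.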

Technical implementations

There are both single-frame and multiple-frame variants of SR. Multiple-frame SR uses the sub-pixel shifts between multiple low-resolution images of the same scene. It creates an improved-resolution image by fusing information from all the low-resolution images, and the resulting higher-resolution images are better descriptions of the scene. Single-frame SR methods attempt to magnify the image without introducing blur. These methods use other parts of the low-resolution image, or other unrelated images, to guess what the high-resolution image should look like. Algorithms can also be classified by domain: frequency domain or spatial domain. Originally, super-resolution methods worked well only on grayscale images, but researchers have found methods to adapt them to color camera images. Recently, the use of super-resolution for 3D data has also been shown.

Neural coding

Neural coding is a field of neuroscience concerned with characterizing the relationship between a stimulus and the individual or ensemble neuronal responses, and the relationship among the electrical activities of the neurons in the ensemble. Based on the theory that sensory and other information is represented in the brain by networks of neurons, it is thought that neurons can encode both digital and analog information.

Overview

Neurons are remarkable among the cells of the body in their ability to propagate signals rapidly over large distances. They do this by generating characteristic electrical pulses called action potentials: voltage spikes that can travel down nerve fibers. Sensory neurons change their activity by firing sequences of action potentials in various temporal patterns in response to external sensory stimuli, such as light, sound, taste, smell and touch. It is known that information about the stimulus is encoded in this pattern of action potentials and transmitted into and around the brain.

Although action potentials can vary somewhat in duration, amplitude and shape, they are typically treated as identical stereotyped events in neural coding studies. If the brief duration of an action potential (about 1 ms) is ignored, an action potential sequence, or spike train, can be characterized simply by a series of all-or-none point events in time.[3] The lengths of the interspike intervals (ISIs) between successive spikes in a spike train often vary, apparently randomly.[4] The study of neural coding involves measuring and characterizing how stimulus attributes, such as light or sound intensity, or motor actions, such as the direction of an arm movement, are represented by neuron action potentials or spikes. To describe and analyze neuronal firing, statistical methods and methods of probability theory and stochastic point processes have been widely applied.
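As a minimal illustration of treating a spike train as a stochastic point process, the sketch below generates spike times from a homogeneous Poisson process, the simplest such model, and computes the interspike intervals. The choice of a Poisson process, the 20 Hz rate, the 300 s duration, and the function names are assumptions for illustration, not a claim about any particular neuron.

```python
import random
import statistics

def poisson_spike_train(rate_hz: float, duration_s: float, rng: random.Random) -> list[float]:
    """Spike times of a homogeneous Poisson process: independent, exponentially
    distributed waiting times between successive all-or-none events."""
    spike_times: list[float] = []
    t = rng.expovariate(rate_hz)
    while t < duration_s:
        spike_times.append(t)
        t += rng.expovariate(rate_hz)
    return spike_times

def interspike_intervals(spike_times: list[float]) -> list[float]:
    """ISIs: gaps between successive spike times."""
    return [later - earlier for earlier, later in zip(spike_times, spike_times[1:])]

rng = random.Random(0)
spikes = poisson_spike_train(rate_hz=20.0, duration_s=300.0, rng=rng)  # hypothetical values
isis = interspike_intervals(spikes)
# Coefficient of variation of the ISIs; a Poisson process gives a value near 1.
cv = statistics.stdev(isis) / statistics.mean(isis)
print(f"{len(spikes)} spikes, mean ISI {statistics.mean(isis) * 1000:.1f} ms, CV {cv:.2f}")
```

The ISIs vary irregularly even though the underlying rate is constant; the coefficient of variation is one common summary of that irregularity. Real neurons deviate from this model, for example because refractoriness forbids very short intervals.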

With the development of large-scale neural recording and decoding technologies, researchers have begun to crack the neural code and have already provided a first glimpse of the real-time neural code as memories are formed and recalled in the hippocampus, a brain region known to be central to memory formation.[5][6][7] Neuroscientists have initiated several large-scale brain decoding projects.[8][9]

Encoding and decoding

The link between stimulus and response can be studied from two opposite points of view. Neural encoding refers to the map from stimulus to response. The main focus is to understand how neurons respond to a wide variety of stimuli, and to construct models that attempt to predict responses to other stimuli. Neural decoding refers to the reverse map, from response to stimulus, and the challenge is to reconstruct a stimulus, or certain aspects of that stimulus, from the spike sequences it evokes.

Hypothesized coding schemes

A sequence, or 'train', of spikes may contain information based on different coding schemes. In motor neurons, for example, the strength at which an innervated muscle is flexed depends solely on the 'firing rate', the average number of spikes per unit time (a 'rate code'). At the other end, a complex 'temporal code' is based on the precise timing of single spikes. Such spikes may be locked to an external stimulus, as in the visual[10] and auditory systems, or be generated intrinsically by the neural circuitry.[11]

Whether neurons use rate coding or temporal coding is a topic of intense debate within the neuroscience community, even though there is no clear definition of what these terms mean. In one theory, termed "neuroelectrodynamics", the following coding schemes are all considered to be epiphenomena, replaced instead by molecular changes reflecting the spatial distribution of electric fields within neurons as a result of the broad electromagnetic spectrum of action potentials, and manifested in information as spike directivity.[12][13][14][15][16]

Rate coding

The rate coding model of neuronal firing communication states that as the intensity of a stimulus increases, the frequency or rate of action potentials, or "spike firing", increases. Rate coding is sometimes called frequency coding.

Rate coding is a traditional coding scheme, assuming that most, if not all, information about the stimulus is contained in the firing rate of the neuron. Because the sequence of action potentials generated by a given stimulus varies from trial to trial, neuronal responses are typically treated statistically or probabilistically. They may be characterized by firing rates, rather than as specific spike sequences. In most sensory systems, the firing rate increases, generally non-linearly, with increasing stimulus intensity.[17] Any information possibly encoded in the temporal structure of the spike train is ignored. Consequently, rate coding is inefficient but highly robust with respect to the ISI 'noise'.[4]
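Rate coding also gives a compact way to illustrate the encoding and decoding maps introduced earlier. The sketch below is hypothetical throughout: it assumes a saturating sigmoidal tuning curve (one non-linear choice among many), Poisson trial-to-trial variability in the spike count, made-up parameters `R_MAX`, `S_HALF`, `SLOPE` and window `T`, and a maximum-likelihood decoder that searches a grid of candidate stimuli. Only the spike count enters the decoder; spike timing is ignored, as in a rate code.

```python
import math
import random

R_MAX, S_HALF, SLOPE = 100.0, 0.5, 0.1   # hypothetical tuning-curve parameters
T = 1.0                                   # counting window, in seconds

def tuning_curve(s: float) -> float:
    """Encoding model: stimulus intensity -> mean firing rate (Hz), non-linear and saturating."""
    return R_MAX / (1.0 + math.exp(-(s - S_HALF) / SLOPE))

def poisson_sample(mean: float, rng: random.Random) -> int:
    """Poisson count: number of exponential arrivals (at rate `mean`) in one unit of time."""
    count, t = 0, rng.expovariate(mean)
    while t < 1.0:
        count += 1
        t += rng.expovariate(mean)
    return count

def encode(s: float, rng: random.Random) -> int:
    """Stimulus -> spike count in a window of length T (varies from trial to trial)."""
    return poisson_sample(tuning_curve(s) * T, rng)

def decode(count: float, candidates: list[float]) -> float:
    """Response -> stimulus: maximum-likelihood estimate under the Poisson model."""
    def log_likelihood(s: float) -> float:
        lam = tuning_curve(s) * T
        return count * math.log(lam) - lam   # log(count!) does not depend on s, so it is dropped
    return max(candidates, key=log_likelihood)

grid = [i / 1000 for i in range(1001)]   # candidate stimulus intensities in [0, 1]
rng = random.Random(3)
count = encode(0.45, rng)
print(count, decode(count, grid))
```

Repeated calls to `encode` with the same stimulus give different counts, which is why the decoded estimate scatters around the true value; feeding the decoder the expected count instead returns the true stimulus when it lies on the grid.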

In rate coding, calculating the firing rate precisely is very important. In fact, the term "firing rate" has a few different definitions, which refer to different averaging procedures, such as an average over time or an average over several repetitions of an experiment.

In rate coding, learning is based on activity-dependent synaptic weight modifications.

Rate coding was originally shown by E. D. Adrian and Y. Zotterman in 1926.[18] In this simple experiment, different weights were hung from a muscle. As the weight of the stimulus increased, the number of spikes recorded from sensory nerves innervating the muscle also increased. From these original experiments, Adrian and Zotterman concluded that action potentials were unitary events, and that the frequency of events, and not individual event magnitude, was the basis for most inter-neuronal communication.

In the following decades, measurement of firing rates became a standard tool for describing the properties of all types of sensory or cortical neurons, partly due to the relative ease of measuring rates experimentally. However, this approach neglects all the information possibly contained in the exact timing of the spikes. In recent years, a growing body of experimental evidence has suggested that a straightforward firing-rate concept based on temporal averaging may be too simplistic to describe brain activity.[4]

Spike-count rate

The spike-count rate, also referred to as the temporal average, is obtained by counting the number of spikes that appear during a trial and dividing by the duration of the trial. The length T of the time window is set by the experimenter and depends on the type of neuron being recorded and on the stimulus. In practice, to get sensible averages, several spikes should occur within the time window. Typical values are T = 100 ms or T = 500 ms, but the duration may also be longer or shorter.[19]

The spike-count rate can be determined from a single trial, but at the expense of losing all temporal resolution about variations in neural response during the course of the trial. Temporal averaging can work well in cases where the stimulus is constant or slowly varying and does not require a fast reaction of the organism, which is the situation usually encountered in experimental protocols. Real-world input, however, is rarely stationary and often changes on a fast time scale. For example, even when viewing a static image, humans perform saccades, rapid changes of the direction of gaze. The image projected onto the retinal photoreceptors therefore changes every few hundred milliseconds.[19]
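The spike-count rate is simply the number of spikes in the window divided by T. The minimal sketch below computes it for two hypothetical single-trial spike trains (times in seconds) that contain the same number of spikes but distribute them very differently; the function name and spike times are illustrative assumptions.

```python
def spike_count_rate(spike_times: list[float], t_start: float, window: float) -> float:
    """Spike-count rate: number of spikes in [t_start, t_start + window) divided by window."""
    n_spikes = sum(1 for t in spike_times if t_start <= t < t_start + window)
    return n_spikes / window

# Two hypothetical single-trial spike trains (times in seconds) with five spikes each.
burst_at_onset = [0.010, 0.020, 0.030, 0.040, 0.050]
evenly_spread = [0.050, 0.150, 0.250, 0.350, 0.450]

for window in (0.5, 0.1):   # T = 500 ms and T = 100 ms, both starting at t = 0
    print(f"T = {window * 1000:.0f} ms:",
          spike_count_rate(burst_at_onset, 0.0, window),
          spike_count_rate(evenly_spread, 0.0, window))
```

With T = 500 ms both trains give 10 spikes/s, even though one fires everything in its first 50 ms: the temporal structure within the trial is lost. Shortening the window to 100 ms separates them (50 vs 10 spikes/s), but then fewer spikes fall in each window, so single-trial estimates become noisier.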

Despite its shortcomings, the concept of a spike-count rate code is widely used not only in experiments, but also in models of neural networks. It has led to the idea that a neuron transforms information about a single input variable (the stimulus strength) into a single continuous output variable (the firing rate).

There is a growing body of evidence that in Purkinje neurons, at least, information is encoded not only in firing but also in the timing and duration of non-firing, quiescent periods.

Time-dependent firing rate

The time-dependent firing rate r(t) is the number of spikes occurring in a short interval between times t and t+Δt, averaged over trials and divided by the duration Δt of the interval. For sufficiently small Δt, r(t)Δt is thus the average number of spikes occurring between times t and t+Δt over multiple trials. If Δt is small, there will essentially never be more than one spike within the interval between t and t+Δt on any given trial. This means that r(t)Δt is also the fraction of trials on which a spike occurred between those times. Equivalently, r(t)Δt is the probability that a spike occurs during this time interval.
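The trial-averaging procedure can be sketched as a peri-stimulus time histogram. This is a minimal illustration under assumptions: each trial is simulated by giving every bin of width Δt an independent spike probability r(t)Δt (a discretized approximation to an inhomogeneous Poisson process), with a made-up rate profile `true_rate`, bin width, trial length, and trial count. The estimate is the spike count per bin averaged over trials and divided by Δt, and 50-bin averages smooth out the per-bin noise.

```python
import math
import random

DT = 0.001        # bin width Δt in s, assumed small enough for at most one spike per bin
DURATION = 0.5    # trial length in s
N_TRIALS = 1000   # hypothetical number of repetitions of the same stimulus
N_BINS = round(DURATION / DT)

def true_rate(t: float) -> float:
    """Hypothetical stimulus-locked firing rate r(t), in Hz."""
    return 20.0 + 60.0 * math.sin(math.pi * t / DURATION)

true_curve = [true_rate(k * DT) for k in range(N_BINS)]
spike_prob = [r * DT for r in true_curve]   # r(t)·Δt: probability of a spike in each bin

def simulate_trial(rng: random.Random) -> list[int]:
    """One trial, recorded as 0 (no spike) or 1 (spike) per bin."""
    return [1 if rng.random() < p else 0 for p in spike_prob]

def psth(trials: list[list[int]]) -> list[float]:
    """Estimate r(t): spikes per bin averaged over trials, divided by Δt."""
    return [sum(column) / (len(trials) * DT) for column in zip(*trials)]

def window_mean(values: list[float], start: int, stop: int) -> float:
    """Average over bins start..stop-1, to smooth the per-bin estimate."""
    return sum(values[start:stop]) / (stop - start)

rng = random.Random(4)
estimate = psth([simulate_trial(rng) for _ in range(N_TRIALS)])
for start, stop in ((0, 50), (225, 275)):
    print(f"bins {start}-{stop - 1}: true {window_mean(true_curve, start, stop):.1f} Hz, "
          f"estimated {window_mean(estimate, start, stop):.1f} Hz")
```

The estimate tracks the rise and fall of r(t) only because the same stimulus was repeated many times, which is exactly the limitation discussed next.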

As an experimental procedure, the time-dependent firing rate measure is a useful method to evaluate neuronal activity, in particular in the case of time-dependent stimuli. The obvious problem with this approach is that it cannot be the coding scheme used by neurons in the brain: neurons cannot wait for a stimulus to be presented repeatedly in exactly the same manner before generating a response.

Nevertheless, the experimental time-dependent firing rate measure can make sense if there are large populations of independent neurons that receive the same stimulus. Instead of recording from a population of N neurons in a single run, it is experimentally easier to record from a single neuron and average over N repeated runs. Thus, time-dependent firing rate coding relies on the implicit assumption that there are always populations of neurons.

Temporal coding

When precise spike timing or high-frequency firing-rate fluctuations are found to carry information, the neural code is often identified as a temporal code.[22] A number of studies have found that the temporal resolution of the neural code is on a millisecond time scale, indicating that precise spike timing is a significant element in neural coding.[2][23]

Neurons exhibit high-frequency fluctuations of firing rates, which could be noise or could carry information. Rate coding models suggest that these irregularities are noise, while temporal coding models suggest that they encode information. If the nervous system used only rate codes to convey information, a more consistent, regular firing rate would have been evolutionarily advantageous, and neurons would have utilized this code over other, less robust options.[24] Temporal coding supplies an alternative explanation for the "noise", suggesting that it actually encodes information and affects neural processing. To model this idea, binary symbols can be used to mark the spikes: 1 for a spike, 0 for no spike. Temporal coding allows the sequence 000111000111 to mean something different from 001100110011, even though the mean firing rate is the same for both sequences, at 6 spikes/10 ms.[25]

Until recently, scientists had put the most emphasis on rate encoding as an explanation for post-synaptic potential patterns. However, functions of the brain are more temporally precise than the use of only rate encoding seems to allow.[citation needed] In other words, essential information could be lost due to the inability of the rate code to capture all the available information of the spike train. In addition, responses are different enough between similar (but not identical) stimuli to suggest that the distinct patterns of spikes contain a higher volume of information than is possible to include in a rate code.[26]
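The binary-sequence example can be made explicit with a short sketch. It treats each character as one time bin (1 = spike, 0 = no spike) and compares what a pure rate reader sees (the spike count) with what a timing-sensitive reader can see (the interspike intervals, in bins). The function names are hypothetical; interspike intervals are just one of many timing features a temporal code could use.

```python
def spike_bins(pattern: str) -> list[int]:
    """Indices of the time bins that contain a spike ('1')."""
    return [i for i, symbol in enumerate(pattern) if symbol == "1"]

def interspike_intervals(pattern: str) -> list[int]:
    """Gaps, in bins, between successive spikes."""
    bins = spike_bins(pattern)
    return [later - earlier for earlier, later in zip(bins, bins[1:])]

a, b = "000111000111", "001100110011"
# A pure rate code only sees the spike count, which is identical for both patterns.
print(a.count("1"), b.count("1"))                        # 6 6
# A temporal code can also read the timing, which differs.
print(interspike_intervals(a), interspike_intervals(b))  # [1, 1, 4, 1, 1] [1, 3, 1, 3, 1]
```

Any decoder that depends only on the count must map both patterns to the same message, while one that reads the interval structure can tell them apart.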