Intel Core 2 eliminates the problem 2 bugs on Pentium 5 in 1999 to 2000, where processor at core count 1 bit into processor with core count 2 bits dozens

Like the illustrations of the Lord Jesus are better than the two alone and God says do not you worry what you eat and drink because Heavenly Father will give His blessing. The two are better because they can transcend all human minds and minds that God says the Lord Jesus with us is what the heavenly Father gave us for the children of light.

Intel Core 2

Core 2 is a brand encompassing a range of Intel's consumer 64-bit x86-64 single-, dual-, and quad-core microprocessors based on the Core microarchitecture. The single- and dual-core models are single-die, whereas the quad-core models comprise two dies, each containing two cores, packaged in a multi-chip module. The introduction of Core 2 relegated the Pentium brand to the mid-range market, and reunified laptop and desktop CPU lines for marketing purposes under the same product name, which previously had been divided into the Pentium 4, Pentium D, and Pentium M brands.

The Core 2 brand was introduced on 27 July 2006, comprising the Solo (single-core), Duo (dual-core), Quad (quad-core), and in 2007, the Extreme (dual- or quad-core CPUs for enthusiasts) subbrands. Intel Core 2 processors with vPro technology (designed for businesses) include the dual-core and quad-core branches.

Models

The Core 2-branded CPUs include: "Conroe"/"Allendale" (dual-core for desktops), "Merom" (dual-core for laptops), "Merom-L" (single-core for laptops), "Kentsfield" (quad-core for desktops), and the updated variants named "Wolfdale" (dual-core for desktops), "Penryn" (dual-core for laptops), and "Yorkfield" (quad-core for desktops). (Note: For the server and workstation "Woodcrest", "Tigerton", "Harpertown" and "Dunnington" CPUs see the Xeon brand.)

The Core 2 branded processors feature Virtualization Technology (with some exceptions), Execute Disable Bit, and SSE3. Their Core microarchitecture introduced SSSE3, Trusted Execution Technology, Enhanced SpeedStep, and Active Management Technology (iAMT2). With a maximum thermal design power (TDP) of 65 W, the Core 2 Duo Conroe dissipates half the power of the less capable contemporary Pentium D-branded desktop chips that have a max TDP of 130 W.

Intel Core 2 processor family

| Brand | Desktop code-name | Core | Date released | Laptop code-name | Core | Date released |
|---|---|---|---|---|---|---|
| Core 2 Duo | Conroe | dual (65 nm) | August 2006 | Merom | dual (65 nm) | July 2006 |
| | Allendale | dual (65 nm) | January 2007 | Penryn | dual (45 nm) | January 2008 |
| | Wolfdale | dual (45 nm) | January 2008 | | | |
| Core 2 Extreme | Conroe XE | dual (65 nm) | July 2006 | Merom XE | dual (65 nm) | July 2007 |
| | Kentsfield XE | quad (65 nm) | November 2006 | Penryn XE | dual (45 nm) | January 2008 |
| | Yorkfield XE | quad (45 nm) | November 2007 | Penryn XE | quad (45 nm) | August 2008 |
| Core 2 Quad | Kentsfield | quad (65 nm) | January 2007 | Penryn | quad (45 nm) | August 2008 |
| | Yorkfield | quad (45 nm) | March 2008 | | | |
| Core 2 Solo | | | | Merom-L | single (65 nm) | September 2007 |
| | | | | Penryn-L | single (45 nm) | May 2008 |

See also: List of Intel Core 2 microprocessors

With the release of the Core 2 processor, the abbreviation C2 has come into common use, with its variants C2D (the present Core 2 Duo), and C2Q, C2E to refer to the Core 2 Quad and Core 2 Extreme processors respectively. C2QX stands for the Extreme Editions of the Quad (QX6700, QX6800, QX6850). The successors to the Core 2 brand are a set of Nehalem microarchitecture based processors called Core i3, i5, and i7. Core i7 was officially launched on 17 November 2008 as a family of three quad-core desktop processor models; further models started appearing throughout 2009. The last Core 2 processor to be released was the Core 2 Quad Q9500 in January 2010. The Core 2 processor line was removed from the official price lists in July 2011.

X . I

processor with capacity x86-64

x86-64 (also known as x64, x86_64, AMD64 and Intel 64[note 1]) is the 64-bit version of the x86 instruction set. It supports vastly larger amounts (theoretically, 2^64 bytes or 16 exabytes) of virtual memory and physical memory than is possible on its 32-bit predecessors, allowing programs to store larger amounts of data in memory. x86-64 also provides 64-bit general-purpose registers and numerous other enhancements. It is fully backward compatible with 16-bit and 32-bit x86 code. (p13–14) Because the full x86 16-bit and 32-bit instruction sets remain implemented in hardware without any intervening emulation, existing x86 executables run with no compatibility or performance penalties, whereas existing applications that are recoded to take advantage of new features of the processor design may achieve performance improvements.

Note that the ability of the processor to execute code written using 16-bit compatible instructions does not mean 16-bit applications can still be run on the processor. Some 64-bit operating systems may no longer be able to support 16-bit application programs. Specifically, all 64-bit versions of Windows are no longer able to execute 16-bit applications due to changes in support for this mode of operation. Either the 16-bit application must be recompiled to run as a 32- or 64-bit application, or it must be run using an emulator.

The original specification, created by AMD and released in 2000, has been implemented by AMD, Intel and VIA. The AMD K8 processor was the first to implement the architecture; this was the first significant addition to the x86 architecture designed by a company other than Intel. Intel was forced to follow suit and introduced a modified NetBurst family which was fully software-compatible with AMD's design and specification. VIA Technologies introduced x86-64 in their VIA Isaiah architecture, with the VIA Nano.

The x86-64 specification is distinct from the Intel Itanium architecture (formerly IA-64), which is not compatible on the native instruction set level with the x86 architecture.

AMD64 logo

cloud in bright

AMD64 was created as an alternative to the radically different IA-64 architecture, which was designed by Intel and Hewlett Packard. Originally announced in 1999, with a full specification becoming available in August 2000, the AMD64 architecture was positioned by AMD from the beginning as an evolutionary way to add 64-bit computing capabilities to the existing x86 architecture, as opposed to Intel's approach of creating an entirely new 64-bit architecture with IA-64.

The first AMD64-based processor, the Opteron, was released in April 2003.

Implementations

AMD's processors implementing the AMD64 architecture include Opteron, Athlon 64, Athlon 64 X2, Athlon 64 FX, Athlon II (followed by "X2", "X3", or "X4" to indicate the number of cores, and XLT models), Turion 64, Turion 64 X2, Sempron ("Palermo" E6 stepping and all "Manila" models), Phenom (followed by "X3" or "X4" to indicate the number of cores), Phenom II (followed by "X2", "X3", "X4" or "X6" to indicate the number of cores), FX, Fusion and Ryzen.

Architectural features

The primary defining characteristic of AMD64 is the availability of 64-bit general-purpose processor registers (for example, rax and rbx), 64-bit integer arithmetic and logical operations, and 64-bit virtual addresses. The designers took the opportunity to make other improvements as well. Some of the most significant changes are described below.

- 64-bit integer capability

- All general-purpose registers (GPRs) are expanded from 32 bits to 64 bits, and all arithmetic and logical operations, memory-to-register and register-to-memory operations, etc., can now operate directly on 64-bit integers. Pushes and pops on the stack default to 8-byte strides, and pointers are 8 bytes wide.

- Additional registers

- In addition to increasing the size of the general-purpose registers, the number of named general-purpose registers is increased from eight (i.e. eax, ebx, ecx, edx, ebp, esp, esi, edi) in x86 to 16 (i.e. rax, rbx, rcx, rdx, rbp, rsp, rsi, rdi, r8, r9, r10, r11, r12, r13, r14, r15). It is therefore possible to keep more local variables in registers rather than on the stack, and to let registers hold frequently accessed constants; arguments for small and fast subroutines may also be passed in registers to a greater extent.

- AMD64 still has fewer registers than many common RISC instruction sets (which typically have 32 registers) or VLIW-like machines such as the IA-64 (which has 128 registers). However, an AMD64 implementation may have far more internal registers than the number of architectural registers exposed by the instruction set (see register renaming).

- Additional XMM (SSE) registers

- Similarly, the number of 128-bit XMM registers (used for Streaming SIMD instructions) is also increased from 8 to 16.

- Larger virtual address space

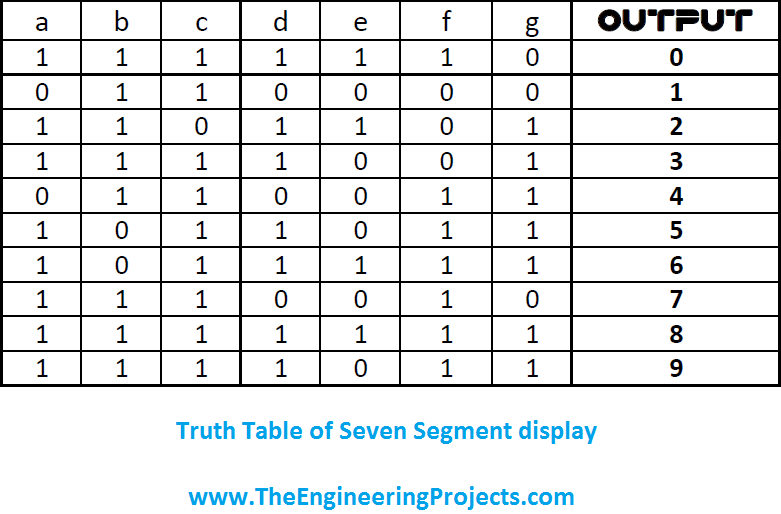

- The AMD64 architecture defines a 64-bit virtual address format, of which the low-order 48 bits are used in current implementations. (p120) This allows up to 256 TB (2^48 bytes) of virtual address space. The architecture definition allows this limit to be raised in future implementations to the full 64 bits, (p2)(p3)(p13)(p117)(p120) extending the virtual address space to 16 EB (2^64 bytes). This is compared to just 4 GB (2^32 bytes) for the x86.

- This means that very large files can be operated on by mapping the entire file into the process' address space (which is often much faster than working with file read/write calls), rather than having to map regions of the file into and out of the address space.

- Larger physical address space

- The original implementation of the AMD64 architecture implemented 40-bit physical addresses and so could address up to 1 TB (2^40 bytes) of RAM. (p24) Current implementations of the AMD64 architecture (starting from AMD 10h microarchitecture) extend this to 48-bit physical addresses and therefore can address up to 256 TB of RAM. The architecture permits extending this to 52 bits in the future (p24) (limited by the page table entry format);(p131) this would allow addressing of up to 4 PB of RAM. For comparison, 32-bit x86 processors are limited to 64 GB of RAM in Physical Address Extension (PAE) mode, or 4 GB of RAM without PAE mode. (p4)

- Larger physical address space in legacy mode

- When operating in legacy mode the AMD64 architecture supports Physical Address Extension (PAE) mode, as do most current x86 processors, but AMD64 extends PAE from 36 bits to an architectural limit of 52 bits of physical address. Any implementation therefore allows the same physical address limit as under long mode.[11](p24)

- Instruction pointer relative data access

- Instructions can now reference data relative to the instruction pointer (RIP register). This makes position independent code, as is often used in shared libraries and code loaded at run time, more efficient.

- SSE instructions

- The original AMD64 architecture adopted Intel's SSE and SSE2 as core instructions. These instruction sets provide a vector supplement to the scalar x87 FPU, for the single-precision and double-precision data types. SSE2 also offers integer vector operations, for data types ranging from 8-bit to 64-bit precision. This makes the vector capabilities of the architecture on par with those of the most advanced x86 processors of its time. These instructions can also be used in 32-bit mode. The proliferation of 64-bit processors has made these vector capabilities ubiquitous in home computers, allowing the improvement of the standards of 32-bit applications. The 32-bit edition of Windows 8, for example, requires the presence of SSE2 instructions.[19] SSE3 instructions and later Streaming SIMD Extensions instruction sets are not standard features of the architecture.

- No-Execute bit

- The No-Execute bit or NX bit (bit 63 of the page table entry) allows the operating system to specify which pages of virtual address space can contain executable code and which cannot. An attempt to execute code from a page tagged "no execute" will result in a memory access violation, similar to an attempt to write to a read-only page. This should make it more difficult for malicious code to take control of the system via "buffer overrun" or "unchecked buffer" attacks. A similar feature has been available on x86 processors since the 80286 as an attribute of segment descriptors; however, this works only on an entire segment at a time.

- Segmented addressing has long been considered an obsolete mode of operation, and all current PC operating systems in effect bypass it, setting all segments to a base address of zero and (in their 32 bit implementation) a size of 4 GB. AMD was the first x86-family vendor to implement no-execute in linear addressing mode. The feature is also available in legacy mode on AMD64 processors, and recent Intel x86 processors, when PAE is used.

- Removal of older features

- A few "system programming" features of the x86 architecture were either unused or underused in modern operating systems and are either not available on AMD64 in long (64-bit and compatibility) mode, or exist only in limited form. These include segmented addressing (although the FS and GS segments are retained in vestigial form for use as extra base pointers to operating system structures),[11](p70) the task state switch mechanism, and virtual 8086 mode. These features remain fully implemented in "legacy mode", allowing these processors to run 32-bit and 16-bit operating systems without modifications. Some instructions that proved to be rarely useful are not supported in 64-bit mode, including saving/restoring of segment registers on the stack, saving/restoring of all registers (PUSHA/POPA), decimal arithmetic, BOUND and INTO instructions, and "far" jumps and calls with immediate operands.
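The address-space figures quoted in the list above follow directly from the address widths. The following is a minimal sketch that recomputes them; the helper name `addressable` and the labels are illustrative only, and sizes use binary (1024-based) units as the text does.

```python
# Illustrative helper (not part of any specification): convert an address
# width in bits into the number of addressable bytes, in 1024-based units.
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB"]


def addressable(bits: int) -> str:
    """Return 2**bits bytes as a human-readable size string."""
    size = 1 << bits
    unit = 0
    while size >= 1024 and unit < len(UNITS) - 1:
        size //= 1024
        unit += 1
    return f"{size} {UNITS[unit]}"


# Address widths taken from the text above.
WIDTHS = [
    ("32-bit x86, virtual or physical without PAE", 32),
    ("32-bit x86 physical with PAE", 36),
    ("original AMD64 physical", 40),
    ("current AMD64 virtual and physical", 48),
    ("architectural physical limit", 52),
    ("full 64-bit virtual", 64),
]

for label, bits in WIDTHS:
    print(f"{bits:2d} bits -> {addressable(bits):>6}  ({label})")
```

Each extra address bit doubles the reachable memory, which is why moving from 32 to 48 bits multiplies the address space by 65,536.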

Virtual address space details

Canonical form addresses

In addition, the AMD specification requires that the most significant 16 bits of any virtual address, bits 48 through 63, must be copies of bit 47 (in a manner akin to sign extension). If this requirement is not met, the processor will raise an exception.[11](p131) Addresses complying with this rule are referred to as "canonical form."[11](p130) Canonical form addresses run from 0 through 00007FFF'FFFFFFFF, and from FFFF8000'00000000 through FFFFFFFF'FFFFFFFF, for a total of 256 TB of usable virtual address space. This is still 65,536 times larger than the virtual 4 GB address space of 32-bit machines.

This feature eases later scalability to true 64-bit addressing. Many operating systems (including, but not limited to, the Windows NT family) take the higher-addressed half of the address space (named kernel space) for themselves and leave the lower-addressed half (user space) for application code, user mode stacks, heaps, and other data regions.[20] The "canonical address" design ensures that every AMD64 compliant implementation has, in effect, two memory halves: the lower half starts at 00000000'00000000 and "grows upwards" as more virtual address bits become available, while the higher half is "docked" to the top of the address space and grows downwards. Also, enforcing the "canonical form" of addresses by checking the unused address bits prevents their use by the operating system in tagged pointers as flags, privilege markers, etc., as such use could become problematic when the architecture is extended to implement more virtual address bits.
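The canonical-form rule can be sketched in a few lines. This is an illustration only, assuming 48 implemented address bits and addresses given as unsigned 64-bit integers; the function names `is_canonical` and `sign_extend` are hypothetical.

```python
VA_BITS = 48  # implemented virtual address bits, as described above


def is_canonical(addr: int, va_bits: int = VA_BITS) -> bool:
    """True if bits va_bits..63 are all copies of bit va_bits-1.

    addr is assumed to be an unsigned value in the range 0 .. 2**64 - 1.
    """
    upper = addr >> (va_bits - 1)  # bit 47 together with bits 48..63
    all_ones = (1 << (64 - va_bits + 1)) - 1
    return upper == 0 or upper == all_ones


def sign_extend(addr: int, va_bits: int = VA_BITS) -> int:
    """Build the canonical 64-bit address from the low va_bits bits."""
    low = addr & ((1 << va_bits) - 1)
    if low >> (va_bits - 1):  # bit 47 set: fill bits 48..63 with ones
        low |= ((1 << 64) - 1) ^ ((1 << va_bits) - 1)
    return low


for value in (0x00007FFFFFFFFFFF, 0x0000800000000000, 0xFFFF800000000000):
    print(f"{value:#018x} canonical: {is_canonical(value)}")
```

The first and last values printed are the top of the lower half and the bottom of the higher half; the middle one falls in the non-canonical gap between them.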

The first versions of Windows for x64 did not even use the full 256 TB; they were restricted to just 8 TB of user space and 8 TB of kernel space. Windows did not support the entire 48-bit address space until Windows 8.1, which was released in October 2013.

Page table structure
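As orientation for this section, the four-level scheme can be sketched as follows. In a 48-bit implementation with 4 KB pages, a virtual address splits into four 9-bit table indices (512 entries per table) and a 12-bit page offset. The table labels `PDPT`, `PD` and `PT` are shorthand chosen for this sketch, and the 8-byte entry size is assumed from 512 entries filling one 4 KB table.

```python
PAGE_SHIFT = 12   # 4 KB pages: low 12 bits are the offset within the page
INDEX_BITS = 9    # 512 entries per table
LEVELS = ("PML4", "PDPT", "PD", "PT")  # top level first; labels are shorthand


def split_virtual_address(va: int) -> dict:
    """Split a 48-bit virtual address into table indices and page offset."""
    fields = {"offset": va & ((1 << PAGE_SHIFT) - 1)}
    for depth, name in enumerate(reversed(LEVELS)):
        shift = PAGE_SHIFT + INDEX_BITS * depth
        fields[name] = (va >> shift) & ((1 << INDEX_BITS) - 1)
    return fields


def full_mapping_bytes(entry_size: int = 8) -> int:
    """Bytes of tables needed to map every 4 KB page of a 48-bit space."""
    total = 0
    for level in range(1, len(LEVELS) + 1):
        entries = 1 << (INDEX_BITS * level)  # 2^9 at the top, 2^36 at the bottom
        total += entries * entry_size
    return total


print(split_virtual_address(0x00007FFFFFFFF000))
overhead = full_mapping_bytes()
print(f"{overhead / 2**30:.3f} GB, {100 * overhead / 2**48:.3f}% of 256 TB")
```

The lowest level dominates the total: 2^36 entries of 8 bytes each already account for 512 GB, and the three upper levels add only about 1 GB more.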

The 64-bit addressing mode ("long mode") is a superset of Physical Address Extensions (PAE); because of this, page sizes may be 4 KB (2^12 bytes) or 2 MB (2^21 bytes).[11](p120) Long mode also supports page sizes of 1 GB (2^30 bytes).[11](p120) Rather than the three-level page table system used by systems in PAE mode, systems running in long mode use four levels of page table: PAE's Page-Directory Pointer Table is extended from 4 entries to 512, and an additional Page-Map Level 4 (PML4) Table is added, containing 512 entries in 48-bit implementations. (p131) In implementations providing larger virtual addresses, this latter table would either grow to accommodate sufficient entries to describe the entire address range, up to a theoretical maximum of 33,554,432 entries for a 64-bit implementation, or be over ranked by a new mapping level, such as a PML5. A full mapping hierarchy of 4 KB pages for the whole 48-bit space would take a bit more than 512 GB of RAM (about 0.195% of the 256 TB virtual space).

Operating system limits

The operating system can also limit the virtual address space. Details, where applicable, are given in the "Operating system compatibility and characteristics" section.

Physical address space details

Current AMD64 processors support a physical address space of up to 2^48 bytes of RAM, or 256 TB.[16] However, as of June 2010, there were no known x86-64 motherboards that support 256 TB of RAM. The operating system may place additional limits on the amount of RAM that is usable or supported. Details on this point are given in the "Operating system compatibility and characteristics" section of this article.

Operating modes

| Operating mode | Operating sub-mode | Operating system required | Type of code being run | Default address size | Default operand size | Supported typical operand sizes | Register file size | Typical GPR width |
|---|---|---|---|---|---|---|---|---|
| Long mode | 64-bit mode | 64-bit operating system or boot loader | 64-bit code | 64 bits | 32 bits | 8, 16, 32, or 64 bits | 16 registers per file | 64 bits |
| Long mode | Compatibility mode | 64-bit operating system or boot loader | 32-bit protected mode code | 32 bits | 32 bits | 8, 16, or 32 bits | 8 registers per file | 32 bits |
| Long mode | Compatibility mode | 64-bit operating system | 16-bit protected mode code | 16 bits | 16 bits | 8, 16, or 32 bits | 8 registers per file | 32 bits |
| Legacy mode | Protected mode | 32-bit operating system or boot loader, or 64-bit boot loader | 32-bit protected mode code | 32 bits | 32 bits | 8, 16, or 32 bits | 8 registers per file | 32 bits |
| Legacy mode | Protected mode | 16-bit protected mode operating system or boot loader, or 32- or 64-bit boot loader | 16-bit protected mode code | 16 bits | 16 bits | 8, 16, or 32 bits | 8 registers per file | 16 or 32 bits |
| Legacy mode | Virtual 8086 mode | 16- or 32-bit protected mode operating system | 16-bit real mode code | 16 bits | 16 bits | 8, 16, or 32 bits | 8 registers per file | 16 or 32 bits |
| Legacy mode | Real mode | 16-bit real mode operating system or boot loader, or 32- or 64-bit boot loader | 16-bit real mode code | 16 bits | 16 bits | 8, 16, or 32 bits | 8 registers per file | 16 or 32 bits |

State diagram of the x86-64 operating modes

Long mode

Long mode is the architecture's intended primary mode of operation; it is a combination of the processor's native 64-bit mode and a combined 32-bit and 16-bit compatibility mode. It is used by 64-bit operating systems. Under a 64-bit operating system, 64-bit programs run under 64-bit mode, and 32-bit and 16-bit protected mode applications (that do not need to use either real mode or virtual 8086 mode in order to execute at any time) run under compatibility mode. Real-mode programs and programs that use virtual 8086 mode at any time cannot be run in long mode unless those modes are emulated in software.[11]:11 However, such programs may be started from an operating system running in long mode on processors supporting VT-x or AMD-V by creating a virtual processor running in the desired mode.

Since the basic instruction set is the same, there is almost no performance penalty for executing protected mode x86 code. This is unlike Intel's IA-64, where differences in the underlying instruction set mean that running 32-bit code must be done either in emulation of x86 (making the process slower) or with a dedicated x86 processor. On the x86-64 platform, many x86 applications could nevertheless benefit from a 64-bit recompile, due to the additional registers in 64-bit code and guaranteed SSE2-based FPU support, which a compiler can use for optimization. Applications that regularly handle integers wider than 32 bits, such as cryptographic algorithms, will however need a rewrite of the code handling the huge integers in order to take advantage of the 64-bit registers.

Legacy mode

Legacy mode is the mode used by 16-bit ("protected mode" or "real mode") and 32-bit operating systems. In this mode, the processor acts like a 32-bit x86 processor, and only 16-bit and 32-bit code can be executed. Legacy mode allows for a maximum of 32-bit virtual addressing, which limits the virtual address space to 4 GB. (p14)(p24)(p118) 64-bit programs cannot be run from legacy mode.

Intel 64

Intel 64 is Intel's implementation of x86-64, used and implemented in various processors made by Intel.

cloud in bright

Historically, AMD has developed and produced processors with instruction sets patterned after Intel's original designs, but with x86-64, roles were reversed: Intel found itself in the position of adopting the ISA which AMD had created as an extension to Intel's own x86 processor line.

Intel's project was originally codenamed Yamhill (after the Yamhill River in Oregon's Willamette Valley). After several years of denying its existence, Intel announced at the February 2004 IDF that the project was indeed underway. Intel's chairman at the time, Craig Barrett, admitted that this was one of their worst-kept secrets.

Intel's name for this instruction set has changed several times. The name used at the IDF was CT (presumably for Clackamas Technology, another codename from an Oregon river); within weeks they began referring to it as IA-32e (for IA-32 extensions) and in March 2004 unveiled the "official" name EM64T (Extended Memory 64 Technology). In late 2006 Intel began instead using the name Intel 64 for its implementation, paralleling AMD's use of the name AMD64.[27]

The first processor to implement Intel 64 was the multi-socket processor Xeon code-named Nocona in June 2004. In contrast, the initial Prescott chips (February 2004) did not enable this feature. Intel subsequently began selling Intel 64-enabled Pentium 4s using the E0 revision of the Prescott core, sold on the OEM market as the Pentium 4, model F. The E0 revision also adds eXecute Disable (XD) (Intel's name for the NX bit) to Intel 64, and was included in the then-current Xeon code-named Irwindale. Intel's official launch of Intel 64 (under the name EM64T at that time) in mainstream desktop processors was the N0 stepping Prescott-2M.

The first Intel mobile processor implementing Intel 64 is the Merom version of the Core 2 processor, which was released on July 27, 2006. None of Intel's earlier notebook CPUs (Core Duo, Pentium M, Celeron M, Mobile Pentium 4) implement Intel 64.

The Intel white paper "5-Level Paging and 5-Level EPT" describes a mechanism, under development by Intel, that will allow Intel 64 processors to support 57-bit virtual addresses, with an additional page table level.[28]

Implementations

Intel's processors implementing the Intel 64 architecture include the Pentium 4 F-series/5x1 series, 506, and 516, Celeron D models 3x1, 3x6, 355, 347, 352, 360, and 365 and all later Celerons, all models of Xeon since "Nocona", all models of Pentium Dual-Core processors since "Merom-2M", the Atom 230, 330, D410, D425, D510, D525, N450, N455, N470, N475, N550, N570, N2600 and N2800, and all versions of the Pentium D, Pentium Extreme Edition, Core 2, Core i7, Core i5, and Core i3 processors.

VIA's x86-64 implementation

VIA Technologies introduced their first implementation of the x86-64 architecture in 2008 after five years of development by its CPU division, Centaur Technology.[29] Codenamed "Isaiah", the 64-bit architecture was unveiled on January 24, 2008,[30] and launched on May 29 under the VIA Nano brand name.[31]

The processor supports a number of VIA-specific x86 extensions designed to boost efficiency in low-power appliances. It is expected that the Isaiah architecture will be twice as fast in integer performance and four times as fast in floating-point performance as the previous-generation VIA Esther at an equivalent clock speed. Power consumption is also expected to be on par with the previous-generation VIA CPUs, with thermal design power ranging from 5 W to 25 W.[32] Being a completely new design, the Isaiah architecture was built with support for features like the x86-64 instruction set and x86 virtualization which were unavailable on its predecessors, the VIA C7 line, while retaining their encryption extensions.

Differences between AMD64 and Intel 64

Although nearly identical, there are some differences between the two instruction sets in the semantics of a few seldom used machine instructions (or situations), which are mainly used for system programming.[33] Compilers generally produce executables (i.e. machine code) that avoid any differences, at least for ordinary application programs. This is therefore of interest mainly to developers of compilers, operating systems and similar, which must deal with individual and special system instructions.

Recent implementations

- Intel 64's BSF and BSR instructions act differently than AMD64's when the source is zero and the operand size is 32 bits. The processor sets the zero flag and leaves the upper 32 bits of the destination undefined.

- AMD64 requires a different microcode update format and control MSRs (model-specific registers) while Intel 64 implements microcode update unchanged from their 32-bit only processors.

- Intel 64 lacks some MSRs that are considered architectural in AMD64. These include SYSCFG, TOP_MEM, and TOP_MEM2.

- Intel 64 allows SYSCALL/SYSRET only in 64-bit mode (not in compatibility mode),[34] and allows SYSENTER/SYSEXIT in both modes.[35] AMD64 lacks SYSENTER/SYSEXIT in both sub-modes of long mode.[11]:33

- In 64-bit mode, near branches with the 66H (operand size override) prefix behave differently. Intel 64 ignores this prefix: the instruction has a 32-bit sign-extended offset, and the instruction pointer is not truncated. AMD64 uses a 16-bit offset field in the instruction, and clears the top 48 bits of the instruction pointer.

- AMD processors raise a floating point Invalid Exception when performing an FLD or FSTP of an 80-bit signalling NaN, while Intel processors do not.

- Intel 64 lacks the ability to save and restore a reduced (and thus faster) version of the floating-point state (involving the FXSAVE and FXRSTOR instructions).

- Recent AMD64 processors have reintroduced limited support for segmentation, via the Long Mode Segment Limit Enable (LMSLE) bit, to ease virtualization of 64-bit guests.

- When returning to a non-canonical address using SYSRET, AMD64 processors execute the general protection fault handler in privilege level 3, while on Intel 64 processors it is executed in privilege level 0.

Older implementations

- Early AMD64 processors (typically on Socket 939 and 940) lacked the CMPXCHG16B instruction, which is an extension of the CMPXCHG8B instruction present on most post-80486 processors. Similar to CMPXCHG8B, CMPXCHG16B allows for atomic operations on octa words (16-byte values). This is useful for parallel algorithms that use compare and swap on data larger than the size of a pointer, common in lock-free and wait-free algorithms. Without CMPXCHG16B one must use workarounds, such as a critical section or alternative lock-free approaches.[40] Its absence also prevents 64-bit Windows prior to Windows 8.1 from having a user-mode address space larger than 8 terabytes.[41] The 64-bit version of Windows 8.1 requires the instruction.[42]

- Early AMD64 and Intel 64 CPUs lacked LAHF and SAHF instructions in 64-bit mode. AMD introduced these instructions (also in 64-bit mode) with their Athlon 64, Opteron and Turion 64 revision D processors in March 2005[43][44][45] while Intel introduced the instructions with the Pentium 4 G1 stepping in December 2005. The 64-bit version of Windows 8.1 requires this feature.[42]

- Early Intel CPUs with Intel 64 also lack the NX bit of the AMD64 architecture. This feature is required by all versions of Windows 8.x.

- Early Intel 64 implementations (Prescott and Cedar Mill) only allowed access to 64 GB of physical memory while original AMD64 implementations allowed access to 1 TB of physical memory. Recent AMD64 implementations provide 256 TB of physical address space (and AMD plans an expansion to 4 PB),[citation needed] while some Intel 64 implementations could address up to 64 TB.[46] Physical memory capacities of this size are appropriate for large-scale applications (such as large databases), and high-performance computing (centrally oriented applications and scientific computing).
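On Linux, the presence of the features discussed in this list can be checked from the `flags` line of `/proc/cpuinfo`. The sketch below is a hedged illustration: the flag names `lm`, `nx`, `cx16` and `lahf_lm` are the ones the Linux kernel reports on x86, while the function names and the `REQUIRED` table are invented for this example.

```python
# Linux x86 flag names mapped to the features discussed above.
REQUIRED = {
    "lm": "long mode (x86-64)",
    "nx": "NX / XD bit",
    "cx16": "CMPXCHG16B",
    "lahf_lm": "LAHF/SAHF in 64-bit mode",
}


def parse_flags(cpuinfo_text: str) -> set:
    """Return the feature flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def missing_features(cpuinfo_text: str) -> list:
    """List descriptions of the required features that are not reported."""
    flags = parse_flags(cpuinfo_text)
    return [desc for flag, desc in REQUIRED.items() if flag not in flags]


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            missing = missing_features(handle.read())
        print("missing:", missing if missing else "none")
    except OSError:
        print("/proc/cpuinfo is not available on this system")
```

An early AMD64 or Intel 64 processor of the kind described above would be expected to show `lm` but lack one or more of the other three flags.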

Adoption

An area chart showing the representation of different families of microprocessors in the TOP500 supercomputer ranking list, from 1992 to 2014.

As of 2014, the main non-x86 CPU architecture still used in supercomputing is the Power Architecture used by IBM POWER microprocessors (blue with a diamond pattern on the diagram), with SPARC far behind in numbers on the TOP500 list, although a Fujitsu SPARC64 VIIIfx based supercomputer without co-processors recently reached number one and is still in the top ten. Non-CPU architecture co-processors (GPGPU) have also played a big role in performance. Intel's Xeon Phi coprocessors, which implement a subset of x86-64 with some vector extensions,[48] are also used, along with x86-64 processors, in the Tianhe-2 supercomputer.

Operating system compatibility and characteristics

The following operating systems and releases support the x86-64 architecture in long mode.

BSD

DragonFly BSD

Preliminary infrastructure work was started in February 2004 for an x86-64 port.[50] This development later stalled. Development started again during July 2007[51] and continued during Google Summer of Code 2008 and SoC 2009.[52][53] The first official release to contain x86-64 support was version 2.4.

FreeBSD

FreeBSD first added x86-64 support under the name "amd64" as an experimental architecture in 5.1-RELEASE in June 2003. It was included as a standard distribution architecture as of 5.2-RELEASE in January 2004. Since then, FreeBSD has designated it as a Tier 1 platform. The 6.0-RELEASE version cleaned up some quirks with running x86 executables under amd64, and most drivers work just as they do on the x86 architecture. Work is currently being done to integrate more fully the x86 application binary interface (ABI), in the same manner as the Linux 32-bit ABI compatibility currently works.

NetBSD

x86-64 architecture support was first committed to the NetBSD source tree on June 19, 2001. As of NetBSD 2.0, released on December 9, 2004, NetBSD/amd64 is a fully integrated and supported port. 32-bit code is still supported in 64-bit mode, with a netbsd-32 kernel compatibility layer for 32-bit syscalls. The NX bit is used to provide non-executable stack and heap with per-page granularity (segment granularity being used on 32-bit x86).

OpenBSD

OpenBSD has supported AMD64 since OpenBSD 3.5, released on May 1, 2004. Complete in-tree implementation of AMD64 support was achieved prior to the hardware's initial release because AMD had loaned several machines for the project's hackathon that year. OpenBSD developers have taken to the platform because of its support for the NX bit, which allowed for an easy implementation of the W^X feature.

The code for the AMD64 port of OpenBSD also runs on Intel 64 processors, which contain a clone of the AMD64 extensions, but since Intel left out the page table NX bit in early Intel 64 processors, there is no W^X capability on those Intel CPUs; later Intel 64 processors added the NX bit under the name "XD bit". Symmetric multiprocessing (SMP) works on OpenBSD's AMD64 port, starting with release 3.6 on November 1, 2004.

DOS

It is possible to enter long mode under DOS without a DOS extender,[55] but the user must return to real mode in order to call BIOS or DOS interrupts. It may also be possible to enter long mode with a DOS extender similar to DOS/4GW, but this is more complex since x86-64 lacks virtual 8086 mode. DOS itself is not aware of that, and no benefits should be expected unless running DOS in an emulation with an adequate virtualization driver backend, for example, the mass storage interface.

Linux

Linux was the first operating system kernel to run the x86-64 architecture in long mode, starting with the 2.4 version in 2001 (preceding the hardware's availability).[56][57] Linux also provides backward compatibility for running 32-bit executables. This permits programs to be recompiled into long mode while retaining the use of 32-bit programs. Several Linux distributions currently ship with x86-64-native kernels and userlands. Some, such as Arch Linux,[58] SUSE, Mandriva, and Debian, allow users to install a set of 32-bit components and libraries when installing off a 64-bit DVD, thus allowing most existing 32-bit applications to run alongside the 64-bit OS. Other distributions, such as Fedora, Slackware and Ubuntu, are available in one version compiled for a 32-bit architecture and another compiled for a 64-bit architecture. Fedora and Red Hat Enterprise Linux allow concurrent installation of all userland components in both 32 and 64-bit versions on a 64-bit system.

The x32 ABI (Application Binary Interface), introduced in Linux 3.4, allows programs compiled for the x32 ABI to run in the 64-bit mode of x86-64 while only using 32-bit pointers and data fields.[59][60][61] Though this limits the program to a virtual address space of 4 GB, it also decreases the memory footprint of the program and in some cases can allow it to run faster.[59][60][61]

64-bit Linux allows up to 128 TB of virtual address space for individual processes, and can address approximately 64 TB of physical memory, subject to processor and system limitations.[62]
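The sizes quoted for Linux are powers of two, which a short calculation makes explicit. The sketch below assumes binary units (1 GB = 2^30 bytes, 1 TB = 2^40 bytes); the function name `addressable_bytes` is illustrative only. Under that assumption, 4 GB corresponds to 32-bit pointers, 128 TB to 47 address bits, and 64 TB to 46 address bits.

```python
GB = 2**30  # binary gigabyte, as assumed in this sketch
TB = 2**40  # binary terabyte


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with the given width."""
    return 2**address_bits


print(addressable_bytes(32) // GB)  # x32 ABI pointers: 4 (GB)
print(addressable_bytes(47) // TB)  # per-process virtual space: 128 (TB)
print(addressable_bytes(46) // TB)  # physical memory figure: 64 (TB)
```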

macOS

Mac OS X 10.4.7 and higher versions of Mac OS X 10.4 run 64-bit command-line tools using the POSIX and math libraries on 64-bit Intel-based machines, just as all versions of Mac OS X 10.4 and 10.5 run them on 64-bit PowerPC machines. No other libraries or frameworks work with 64-bit applications in Mac OS X 10.4.[63] The kernel, and all kernel extensions, are 32-bit only.

Mac OS X 10.5 supports 64-bit GUI applications using Cocoa, Quartz, OpenGL, and X11 on 64-bit Intel-based machines, as well as on 64-bit PowerPC machines.[64] All non-GUI libraries and frameworks also support 64-bit applications on those platforms. The kernel, and all kernel extensions, are 32-bit only.

Mac OS X 10.6 is the first version of macOS that supports a 64-bit kernel. However, not all 64-bit computers can run the 64-bit kernel, and not all 64-bit computers that can run the 64-bit kernel will do so by default.[65] The 64-bit kernel, like the 32-bit kernel, supports 32-bit applications; both kernels also support 64-bit applications. 32-bit applications have a virtual address space limit of 4 GB under either kernel.[66][67]

OS X 10.8 includes only the 64-bit kernel, but continues to support 32-bit applications.

The 64-bit kernel does not support 32-bit kernel extensions, and the 32-bit kernel does not support 64-bit kernel extensions.

macOS uses the universal binary format to package 32- and 64-bit versions of application and library code into a single file; the most appropriate version is automatically selected at load time. In Mac OS X 10.6, the universal binary format is also used for the kernel and for those kernel extensions that support both 32-bit and 64-bit kernels.

Solaris

Solaris 10 and later releases support the x86-64 architecture.

For Solaris 10, just as with the SPARC architecture, there is only one operating system image, which contains a 32-bit kernel and a 64-bit kernel; this is labeled as the "x64/x86" DVD-ROM image. The default behavior is to boot a 64-bit kernel, allowing both 64-bit and existing or new 32-bit executables to be run. A 32-bit kernel can also be manually selected, in which case only 32-bit executables will run. The isainfo command can be used to determine if a system is running a 64-bit kernel.

For Solaris 11, only the 64-bit kernel is provided. However, the 64-bit kernel supports both 32- and 64-bit executables, libraries, and system calls.

Windows

x64 editions of Microsoft Windows client and server (Windows XP Professional x64 Edition and Windows Server 2003 x64 Edition) were released in March 2005.[68] Internally they are actually the same build (5.2.3790.1830 SP1),[69][70] as they share the same source base and operating system binaries, so even system updates are released in unified packages, much in the same manner as Windows 2000 Professional and Server editions for x86. Windows Vista, which also has many different editions, was released in January 2007. Windows 7 was released in July 2009. Windows Server 2008 R2 was sold in only x64 and Itanium editions; later versions of Windows Server only offer an x64 edition.

Versions of Windows for x64 prior to Windows 8.1 and Windows Server 2012 R2 offer the following:

- 8 TB of virtual address space per process, accessible from both user mode and kernel mode, referred to as the user mode address space. An x64 program can use all of this, subject to backing store limits on the system, and provided it is linked with the "large address aware" option.[71] This is a 4096-fold increase over the default 2 GB user-mode virtual address space offered by 32-bit Windows.

- 8 TB of kernel mode virtual address space for the operating system.[72] As with the user mode address space, this is a 4096-fold increase over 32-bit Windows versions. The increased space primarily benefits the file system cache and kernel mode "heaps" (non-paged pool and paged pool).

Windows only uses a total of 16 TB out of the 256 TB implemented by the processors because early AMD64 processors lacked a CMPXCHG16B instruction.
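The ratios quoted for these Windows versions can be checked with simple arithmetic. The sketch below assumes binary units (1 GB = 2^30 bytes, 1 TB = 2^40 bytes) and uses illustrative variable names that do not come from any Windows API.

```python
GB = 2**30
TB = 2**40

user_space_x64 = 8 * TB        # per-process user-mode space quoted above
user_space_x86 = 2 * GB        # default user-mode space on 32-bit Windows
kernel_space_x64 = 8 * TB      # kernel-mode space quoted above

# Growth factor relative to the 32-bit default.
print(user_space_x64 // user_space_x86)            # 4096

# Total used by Windows versus the space implemented by the processors.
total_used = user_space_x64 + kernel_space_x64
implemented = 256 * TB
print(total_used // TB, implemented == 2**48)      # 16 True
```

The last line shows that the 256 TB figure corresponds to 48-bit virtual addresses, of which these Windows versions use one sixteenth.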

The following additional characteristics apply to all x64 versions of Windows:

- Ability to run existing 32-bit applications (.exe programs) and dynamic link libraries (.dlls) using WoW64 if WoW64 is supported on that version. Furthermore, a 32-bit program, if it was linked with the "large address aware" option,[71] can use up to 4 GB of virtual address space in 64-bit Windows, instead of the default 2 GB (optional 3 GB with /3GB boot option and "large address aware" link option) offered by 32-bit Windows.[75] Unlike the use of the /3GB boot option on x86, this does not reduce the kernel mode virtual address space available to the operating system. 32-bit applications can therefore benefit from running on x64 Windows even if they are not recompiled for x86-64.

- Both 32- and 64-bit applications, if not linked with "large address aware," are limited to 2 GB of virtual address space.

- Ability to use up to 128 GB (Windows XP/Vista), 192 GB (Windows 7), 512 GB (Windows 8), 1 TB (Windows Server 2003), 2 TB (Windows Server 2008/Windows 10), 4 TB (Windows Server 2012), or 24 TB (Windows Server 2016) of physical random access memory (RAM).

- LLP64 data model: "int" and "long" types are 32 bits wide, long long is 64 bits, while pointers and types derived from pointers are 64 bits wide.

- Kernel mode device drivers must be 64-bit versions; there is no way to run 32-bit kernel mode executables within the 64-bit operating system. User mode device drivers can be either 32-bit or 64-bit.

- 16-bit Windows (Win16) and DOS applications will not run on x86-64 versions of Windows due to removal of the virtual DOS machine subsystem (NTVDM) which relied upon the ability to use virtual 8086 mode. Virtual 8086 mode cannot be entered while running in long mode.

- Full implementation of the NX (No Execute) page protection feature. This is also implemented on recent 32-bit versions of Windows when they are started in PAE mode.

- Instead of FS segment descriptor on x86 versions of the Windows NT family, GS segment descriptor is used to point to two operating system defined structures: Thread Information Block (NT_TIB) in user mode and Processor Control Region (KPCR) in kernel mode. Thus, for example, in user mode GS:0 is the address of the first member of the Thread Information Block. Maintaining this convention made the x86-64 port easier, but required AMD to retain the function of the FS and GS segments in long mode — even though segmented addressing per se is not really used by any modern operating system.[72]

- Early reports claimed that the operating system scheduler would not save and restore the x87 FPU machine state across thread context switches. Observed behavior shows that this is not the case: the x87 state is saved and restored, except for kernel mode-only threads (a limitation that exists in the 32-bit version as well). The most recent documentation available from Microsoft states that the x87/MMX/3DNow! instructions may be used in long mode, but that they are deprecated and may cause compatibility problems in the future.[75]

- Some components like Microsoft Jet Database Engine and Data Access Objects will not be ported to 64-bit architectures such as x86-64 and IA-64.[77][78]

- Microsoft Visual Studio can compile native applications to target either the x86-64 architecture, which can run only on 64-bit Microsoft Windows, or the IA-32 architecture, which can run as a 32-bit application on 32-bit Microsoft Windows or 64-bit Microsoft Windows in WoW64 emulation mode. Managed applications can be compiled in IA-32, x86-64 or AnyCPU modes. Software created in the first two modes behaves like its IA-32 or x86-64 native code counterpart respectively. In AnyCPU mode, however, applications run as 32-bit applications on 32-bit versions of Microsoft Windows and as 64-bit applications on 64-bit editions.
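The LLP64 data model listed above is easier to appreciate next to the alternatives. The table below is a sketch: the LLP64 row restates the widths given in the list, while the ILP32 and LP64 rows are added here for comparison (ILP32 being the usual 32-bit model and LP64 the model commonly used by 64-bit Unix-like systems). The helper `pointer_fits_in_long` is a hypothetical name.

```python
# Type widths in bits for three common C data models.
DATA_MODELS = {
    "ILP32": {"int": 32, "long": 32, "long long": 64, "pointer": 32},
    "LLP64": {"int": 32, "long": 32, "long long": 64, "pointer": 64},
    "LP64":  {"int": 32, "long": 64, "long long": 64, "pointer": 64},
}


def pointer_fits_in_long(model: str) -> bool:
    """True if code that stores a pointer in a 'long' keeps every bit."""
    widths = DATA_MODELS[model]
    return widths["long"] >= widths["pointer"]


for model in DATA_MODELS:
    print(model, pointer_fits_in_long(model))
```

Only LLP64 reports `False`, which is why code that casts pointers to `long` can truncate addresses when ported to 64-bit Windows.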

Video game consoles

Both PlayStation 4 and Xbox One and their successors incorporate AMD x86-64 processors; those in the PlayStation 4 and Xbox One are based on the Jaguar microarchitecture. Firmware and games are written in x86-64 code; no legacy x86 code is involved.

Industry naming conventions

Since AMD64 and Intel 64 are substantially similar, many software and hardware products use one vendor-neutral term to indicate their compatibility with both implementations. AMD's original designation for this processor architecture, "x86-64", is still sometimes used for this purpose,[2] as is the variant "x86_64". Other companies, such as Microsoft and Sun Microsystems/Oracle Corporation,[5] use the contraction "x64" in marketing material.

The term IA-64 refers to the Itanium processor, and should not be confused with x86-64, as it is a completely different instruction set.

Many operating systems and products, especially those that introduced x86-64 support prior to Intel's entry into the market, use the term "AMD64" or "amd64" to refer to both AMD64 and Intel 64.

- amd64

- Most BSD systems such as FreeBSD, MidnightBSD, NetBSD and OpenBSD refer to both AMD64 and Intel 64 under the architecture name "amd64".

- Some Linux distributions such as Debian, Ubuntu, and Gentoo refer to both AMD64 and Intel 64 under the architecture name "amd64".

- Microsoft Windows's x64 versions use the AMD64 moniker internally to designate various components which use or are compatible with this architecture. For example, the environment variable PROCESSOR_ARCHITECTURE is assigned the value "AMD64" as opposed to "x86" in 32-bit versions, and the system directory on a Windows x64 Edition installation CD-ROM is named "AMD64", in contrast to "i386" in 32-bit versions.[81]

- Sun's Solaris' isalist command identifies both AMD64- and Intel 64-based systems as "amd64".

- Java Development Kit (JDK): the name "amd64" is used in directory names containing x86-64 files.

- x86_64

- The Linux kernel[82] and the GNU Compiler Collection refer to 64-bit architecture as "x86_64".

- Some Linux distributions, such as Fedora, openSUSE, and Arch Linux refer to this 64-bit architecture as "x86_64".

- Apple macOS refers to 64-bit architecture as "x86-64" or "x86_64", as seen in the Terminal command arch[3] and in their developer documentation.[2][4]

- Breaking with most other BSD systems, DragonFly BSD refers to 64-bit architecture as "x86_64".

- Haiku refers to 64-bit architecture as "x86_64".
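Because the same architecture is reported under several names, portable software often normalizes the name before comparing it. The following is a minimal sketch of that idea. The alias set is assembled from the names listed above, `is_x86_64` is a hypothetical helper, and `platform.machine()` is the Python standard library call that returns the machine type reported by the host, whose spelling depends on the operating system.

```python
import platform

# Names used for the same 64-bit x86 architecture, per the list above.
X86_64_ALIASES = {"amd64", "x86_64", "x86-64", "x64"}


def is_x86_64(name: str) -> bool:
    """Case-insensitive check against the known x86-64 aliases."""
    return name.strip().lower() in X86_64_ALIASES


print(is_x86_64("AMD64"))    # value of PROCESSOR_ARCHITECTURE on x64 Windows
print(is_x86_64("x86_64"))   # name used by the Linux kernel and GCC
print(is_x86_64("ia64"))     # Itanium is a different instruction set

# What the current host reports, and whether it matches.
host = platform.machine()
print(host, is_x86_64(host))
```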

Licensing

x86-64/AMD64 was solely developed by AMD. AMD holds patents on techniques used in AMD64; those patents must be licensed from AMD in order to implement AMD64. Intel entered into a cross-licensing agreement with AMD, licensing to AMD their patents on existing x86 techniques, and licensing from AMD their patents on techniques used in x86-64.[86] In 2009, AMD and Intel settled several lawsuits and cross-licensing disagreements, extending their cross-licensing agreements.

Microprocessor

A microprocessor is a computer processor which incorporates the functions of a computer's central processing unit (CPU) on a single integrated circuit (IC),[1] or at most a few integrated circuits.[2] The microprocessor is a multipurpose, clock driven, register based, digital-integrated circuit which accepts binary data as input, processes it according to instructions stored in its memory, and provides results as output. Microprocessors contain both combinational logic and sequential digital logic. Microprocessors operate on numbers and symbols represented in the binary numeral system.

The integration of a whole CPU onto a single chip or on a few chips greatly reduced the cost of processing power, increasing efficiency. Integrated circuit processors are produced in large numbers by highly automated processes resulting in a low per unit cost. Single-chip processors increase reliability as there are many fewer electrical connections to fail. As microprocessor designs get better, the cost of manufacturing a chip (with smaller components built on a semiconductor chip the same size) generally stays the same.

Before microprocessors, small computers had been built using racks of circuit boards with many medium- and small-scale integrated circuits. Microprocessors combined this into one or a few large-scale ICs. Continued increases in microprocessor capacity have since rendered other forms of computers almost completely obsolete (see history of computing hardware), with one or more microprocessors used in everything from the smallest embedded systems and handheld devices to the largest mainframes and supercomputers.

A Japanese manufactured HuC6260A microprocessor

Structure

A block diagram of the architecture of the Z80 microprocessor, showing the arithmetic and logic section, register file, control logic section, and buffers to external address and data lines

A minimal hypothetical microprocessor might only include an arithmetic logic unit (ALU) and a control logic section. The ALU performs operations such as addition, subtraction, and operations such as AND or OR. Each operation of the ALU sets one or more flags in a status register, which indicate the results of the last operation (zero value, negative number, overflow, or others). The control logic retrieves instruction codes from memory and initiates the sequence of operations required for the ALU to carry out the instruction. A single operation code might affect many individual data paths, registers, and other elements of the processor.
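The way an ALU operation sets status flags can be sketched in a few lines. This is an illustrative model of an 8-bit adder, not the behavior of any specific processor; the `Flags` class and the `alu_add8` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Flags:
    zero: bool       # result is 0
    negative: bool   # bit 7 of the result is set
    carry: bool      # unsigned result did not fit in 8 bits
    overflow: bool   # signed result did not fit in 8 bits


def alu_add8(a: int, b: int) -> Tuple[int, Flags]:
    """Add two 8-bit values and report the status flags."""
    a &= 0xFF
    b &= 0xFF
    total = a + b
    result = total & 0xFF
    flags = Flags(
        zero=result == 0,
        negative=bool(result & 0x80),
        carry=total > 0xFF,
        # Signed overflow: both operands share a sign bit that
        # differs from the sign bit of the result.
        overflow=bool(~(a ^ b) & (a ^ result) & 0x80),
    )
    return result, flags


print(alu_add8(0x7F, 0x01))  # 127 + 1 overflows the signed range
print(alu_add8(0xFF, 0x01))  # 255 + 1 wraps to zero with a carry
```

Control logic would typically inspect these flags after the operation, for example to decide whether a conditional branch is taken.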

As integrated circuit technology advanced, it was feasible to manufacture more and more complex processors on a single chip. The size of data objects became larger; allowing more transistors on a chip allowed word sizes to increase from 4- and 8-bit words up to today's 64-bit words. Additional features were added to the processor architecture; more on-chip registers sped up programs, and complex instructions could be used to make more compact programs. Floating-point arithmetic, for example, was often not available on 8-bit microprocessors, but had to be carried out in software. Integration of the floating point unit first as a separate integrated circuit and then as part of the same microprocessor chip, sped up floating point calculations.

Occasionally, physical limitations of integrated circuits made such practices as a bit slice approach necessary. Instead of processing all of a long word on one integrated circuit, multiple circuits in parallel processed subsets of each data word. While this required extra logic to handle, for example, carry and overflow within each slice, the result was a system that could handle, for example, 32-bit words using integrated circuits with a capacity for only four bits each.
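The bit-slice idea can be modeled in software: a 32-bit addition is assembled from eight 4-bit slices, with the carry out of each slice feeding the next. The sketch below is a simplified illustration with hypothetical function names. It chains the slices one after another in a loop, whereas real bit-slice hardware operated the slices side by side with dedicated carry logic between them.

```python
def slice_add4(a: int, b: int, carry_in: int):
    """One 4-bit slice: return (4-bit sum, carry out)."""
    total = (a & 0xF) + (b & 0xF) + carry_in
    return total & 0xF, total >> 4


def bitslice_add32(x: int, y: int):
    """Add two 32-bit words using eight chained 4-bit slices."""
    result = 0
    carry = 0
    for i in range(8):
        shift = 4 * i
        nibble, carry = slice_add4(x >> shift, y >> shift, carry)
        result |= nibble << shift
    return result, carry  # carry is the carry out of the top slice


print(hex(bitslice_add32(0x0000FFFF, 0x00000001)[0]))
print(bitslice_add32(0xFFFFFFFF, 0x00000001))
```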

With the ability to put large numbers of transistors on one chip, it becomes feasible to integrate memory on the same die as the processor. This CPU cache has the advantage of faster access than off-chip memory, and increases the processing speed of the system for many applications. Processor clock frequency has increased more rapidly than external memory speed, except in the recent past, so cache memory is necessary if the processor is not to be delayed by slower external memory.

Special-purpose designs

A microprocessor is a general purpose system. Several specialized processing devices have followed from the technology:

- A digital signal processor (DSP) is specialized for signal processing.

- Graphics processing units (GPUs) are processors designed primarily for realtime rendering of 3D images. They may be fixed function (as was more common in the 1990s), or support programmable shaders. With the continuing rise of GPGPU, GPUs are evolving into increasingly general purpose stream processors (running compute shaders), whilst retaining hardware assist for rasterizing, but still differ from CPUs in that they are optimized for throughput over latency, and are not suitable for running application or OS code.

- Other specialized units exist for video processing and machine vision.

- Microcontrollers integrate a microprocessor with peripheral devices in embedded systems. These tend to have different tradeoffs compared to CPUs.

Nevertheless, trade-offs apply: running 32-bit arithmetic on an 8-bit chip could end up using more power, as the chip must execute software with multiple instructions. Modern microprocessors go into low power states when possible,[6] and an 8-bit chip running 32-bit calculations would be active for more cycles. This creates a delicate balance between software, hardware and use patterns, plus costs.

When manufactured on a similar process, 8-bit microprocessors use less power when operating and less power when sleeping than 32-bit microprocessors.

However, a 32-bit microprocessor may use less average power than an 8-bit microprocessor when the application requires certain operations, such as floating-point math, that take many more clock cycles on an 8-bit microprocessor than on a 32-bit microprocessor, so that the 8-bit microprocessor spends more time in high-power operating mode.
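The trade-off can be expressed as a duty-cycle calculation: average power is the time-weighted mix of active and sleep power. The sketch below is illustrative only. The function name is hypothetical, and every number in the usage example (power draws, cycle counts, clock rate) is an invented placeholder chosen to show the shape of the argument, not a measurement of any real chip.

```python
def average_power_mw(active_mw, sleep_mw, cycles_per_task, clock_hz, period_s):
    """Average power when one task runs per period and the chip then sleeps."""
    active_s = cycles_per_task / clock_hz
    if active_s > period_s:
        raise ValueError("task does not finish within the period")
    sleep_s = period_s - active_s
    return (active_mw * active_s + sleep_mw * sleep_s) / period_s


# Hypothetical 8-bit chip: low power, but the task takes many cycles.
p8 = average_power_mw(active_mw=5.0, sleep_mw=0.01,
                      cycles_per_task=400_000, clock_hz=1_000_000,
                      period_s=1.0)

# Hypothetical 32-bit chip: higher power, but far fewer cycles.
p32 = average_power_mw(active_mw=15.0, sleep_mw=0.02,
                       cycles_per_task=10_000, clock_hz=1_000_000,
                       period_s=1.0)

print(p8, p32, p32 < p8)
```

With these placeholder inputs the 32-bit part averages less power despite drawing more in both modes, because it returns to sleep much sooner. Different inputs can reverse the outcome, which is the balance the text describes.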

Embedded applications

Thousands of items that were traditionally not computer-related include microprocessors. These include large and small household appliances, cars (and their accessory equipment units), car keys, tools and test instruments, toys, light switches/dimmers and electrical circuit breakers, smoke alarms, battery packs, and hi-fi audio/visual components (from DVD players to phonograph turntables). Such products as cellular telephones, DVD video systems and HDTV broadcast systems fundamentally require consumer devices with powerful, low-cost microprocessors. Increasingly stringent pollution control standards effectively require automobile manufacturers to use microprocessor engine management systems, to allow optimal control of emissions over widely varying operating conditions of an automobile. Non-programmable controls would require complex, bulky, or costly implementation to achieve the results possible with a microprocessor.

A microprocessor control program (embedded software) can be easily tailored to different needs of a product line, allowing upgrades in performance with minimal redesign of the product. Different features can be implemented in different models of a product line at negligible production cost.

Microprocessor control of a system can provide control strategies that would be impractical to implement using electromechanical controls or purpose-built electronic controls. For example, an engine control system in an automobile can adjust ignition timing based on engine speed, load on the engine, ambient temperature, and any observed tendency for knocking—allowing an automobile to operate on a range of fuel grades.

The advent of low-cost computers on integrated circuits has transformed modern society. General-purpose microprocessors in personal computers are used for computation, text editing, multimedia display, and communication over the Internet. Many more microprocessors are part of embedded systems, providing digital control over myriad objects from appliances to automobiles to cellular phones and industrial process control.

The first use of the term "microprocessor" is attributed to Viatron Computer Systems describing the custom integrated circuit used in their System 21 small computer system announced in 1968.

By the late-1960s, designers were striving to integrate the central processing unit (CPU) functions of a computer onto a handful of MOS LSI chips, called microprocessor unit (MPU) chipsets. Building on an earlier Busicom design from 1969, Intel introduced the first commercial microprocessor, the 4-bit Intel 4004, in 1971, followed by its 8-bit microprocessor 8008 in 1972. Building on 8-bit arithmetic logic units (3800/3804) he designed earlier at Fairchild, in 1969, Lee Boysel created the Four-Phase Systems Inc. AL-1 an 8-bit CPU slice that was expandable to 32-bits. In 1970, Steve Geller and Ray Holt of Garrett AiResearch designed the MP944 chipset to implement the F-14A Central Air Data Computer on six metal-gate chips fabricated by AMI.

During the 1960s, computer processors were constructed out of small and medium-scale ICs, each containing from tens of transistors to a few hundred. These were placed and soldered onto printed circuit boards, and often multiple boards were interconnected in a chassis. A large number of discrete logic gates uses more electrical power (and therefore produces more heat) than a more integrated design with fewer ICs. The distance that signals have to travel between ICs on the boards limits a computer's operating speed.

In the NASA Apollo space missions to the moon in the 1960s and 1970s, all onboard computations for primary guidance, navigation, and control were provided by a small custom processor called "The Apollo Guidance Computer". It used wire wrap circuit boards whose only logic elements were three-input NOR gates.[11]

The first microprocessors emerged in the early 1970s, and were used for electronic calculators, using binary-coded decimal (BCD) arithmetic on 4-bit words. Other embedded uses of 4-bit and 8-bit microprocessors, such as terminals, printers, various kinds of automation etc., followed soon after. Affordable 8-bit microprocessors with 16-bit addressing also led to the first general-purpose microcomputers from the mid-1970s on.
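Binary-coded decimal arithmetic on 4-bit words, as used in those early calculator chips, stores one decimal digit per word and corrects any sum above 9. The following is a minimal sketch of the general technique rather than the logic of any particular chip; the function names are hypothetical.

```python
def bcd_add_digit(a: int, b: int, carry_in: int = 0):
    """Add two BCD digits held in 4-bit words; return (digit, carry out)."""
    if not (0 <= a <= 9 and 0 <= b <= 9):
        raise ValueError("BCD digits must be in the range 0..9")
    total = a + b + carry_in
    if total > 9:
        total += 6              # decimal adjust: skip the six unused codes
        return total & 0xF, 1
    return total, 0


def bcd_add(x_digits, y_digits):
    """Add two equal-length digit lists, least significant digit first."""
    out, carry = [], 0
    for a, b in zip(x_digits, y_digits):
        digit, carry = bcd_add_digit(a, b, carry)
        out.append(digit)
    if carry:
        out.append(1)
    return out


# 57 + 48 = 105, with digits stored least significant first.
print(bcd_add([7, 5], [8, 4]))  # [5, 0, 1]
```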

Since the early 1970s, the increase in capacity of microprocessors has followed Moore's law; this originally suggested that the number of components that can be fitted onto a chip doubles every year. With present technology, it is actually every two years,[12] and as such Moore later changed the period to two years.
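The difference between the two doubling periods compounds quickly. As a rough sketch, the projection can be written as N(t) = N0 × 2^(t / T), where T is the doubling period in years. The function name and the starting count of 1,000 components are hypothetical values used only for illustration.

```python
def projected_components(initial: float, years: float,
                         doubling_period_years: float = 2.0) -> float:
    """Project a component count that doubles every doubling period."""
    return initial * 2 ** (years / doubling_period_years)


# Ten years of growth from a hypothetical 1,000 components.
print(projected_components(1000, 10, doubling_period_years=1.0))  # 1024000.0
print(projected_components(1000, 10, doubling_period_years=2.0))  # 32000.0
```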

Intel 4004 (1969-1971)

The 4004 with cover removed (left) and as actually used (right)

Intel 4004, the first commercial microprocessor

Silicon and germanium alloy for microprocessors

Four-Phase Systems AL1 (1969)

AL1 by Four-Phase Systems Inc: one of the earliest inventions in the field of microprocessor technology

Pico/General Instrument

The PICO1/GI250 chip introduced in 1971. This was designed by Pico Electronics (Glenrothes, Scotland) and manufactured by General Instrument of Hicksville NY.

Pico was a spinout by five GI design engineers whose vision was to create single chip calculator ICs. They had significant previous design experience on multiple calculator chipsets with both GI and Marconi-Elliott.[29] The key team members had originally been tasked by Elliott Automation to create an 8-bit computer in MOS and had helped establish a MOS Research Laboratory in Glenrothes, Scotland in 1967.

Calculators were becoming the largest single market for semiconductors so Pico and GI went on to have significant success in this burgeoning market. GI continued to innovate in microprocessors and microcontrollers with products including the CP1600, IOB1680 and PIC1650.[30] In 1987, the GI Microelectronics business was spun out into the Microchip PIC microcontroller business.

Gilbert Hyatt

Gilbert Hyatt was awarded a patent claiming an invention pre-dating both TI and Intel, describing a "microcontroller". The patent was later invalidated, but not before substantial royalties were paid out.

TMS 1000

The Smithsonian Institution says TI engineers Gary Boone and Michael Cochran succeeded in creating the first microcontroller (also called a microcomputer) and the first single-chip CPU in 1971. The result of their work was the TMS 1000, which went on the market in 1974.[34] TI stressed the 4-bit TMS 1000 for use in pre-programmed embedded applications, introducing a version called the TMS1802NC on September 17, 1971 that implemented a calculator on a chip.

TI filed for a patent on the microprocessor. Gary Boone was awarded U.S. Patent 3,757,306 for the single-chip microprocessor architecture on September 4, 1973. In 1971, and again in 1976, Intel and TI entered into broad patent cross-licensing agreements, with Intel paying royalties to TI for the microprocessor patent. A history of these events is contained in court documentation from a legal dispute between Cyrix and Intel, with TI as inventor and owner of the microprocessor patent.

A computer-on-a-chip combines the microprocessor core (CPU), memory, and I/O (input/output) lines onto one chip. The computer-on-a-chip patent, called the "microcomputer patent" at the time, U.S. Patent 4,074,351, was awarded to Gary Boone and Michael J. Cochran of TI. Aside from this patent, the standard meaning of microcomputer is a computer using one or more microprocessors as its CPU(s), while the concept defined in the patent is more akin to a microcontroller.

8-bit designs

The 8008 was the precursor to the successful Intel 8080 (1974), which offered improved performance over the 8008 and required fewer support chips. Federico Faggin conceived and designed it using high voltage N channel MOS. The Zilog Z80 (1976) was also a Faggin design, using low voltage N channel with depletion load and derivative Intel 8-bit processors: all designed with the methodology Faggin created for the 4004. Motorola released the competing 6800 in August 1974, and the similar MOS Technology 6502 followed in 1975 (both designed largely by the same people). The 6502 family rivaled the Z80 in popularity during the 1980s.

A low overall cost, small packaging, simple computer bus requirements, and sometimes the integration of extra circuitry (e.g. the Z80's built-in memory refresh circuitry) allowed the home computer "revolution" to accelerate sharply in the early 1980s. This delivered such inexpensive machines as the Sinclair ZX-81, which sold for US$99 (equivalent to $260.80 in 2016). A variation of the 6502, the MOS Technology 6510 was used in the Commodore 64 and yet another variant, the 8502, powered the Commodore 128.

The Western Design Center, Inc (WDC) introduced the CMOS 65C02 in 1982 and licensed the design to several firms. It was used as the CPU in the Apple IIe and IIc personal computers as well as in medical implantable grade pacemakers and defibrillators, automotive, industrial and consumer devices. WDC pioneered the licensing of microprocessor designs, later followed by ARM (32-bit) and other microprocessor intellectual property (IP) providers in the 1990s.

Motorola introduced the MC6809 in 1978. It was an ambitious and well thought-through 8-bit design that was source compatible with the 6800, and implemented using purely hard-wired logic (subsequent 16-bit microprocessors typically used microcode to some extent, as CISC design requirements were becoming too complex for pure hard-wired logic).

Another early 8-bit microprocessor was the Signetics 2650, which enjoyed a brief surge of interest due to its innovative and powerful instruction set architecture.

A seminal microprocessor in the world of spaceflight was RCA's RCA 1802 (aka CDP1802, RCA COSMAC) (introduced in 1976), which was used on board the Galileo probe to Jupiter (launched 1989, arrived 1995). RCA COSMAC was the first to implement CMOS technology. The CDP1802 was used because it could be run at very low power, and because a variant was available fabricated using a special production process, silicon on sapphire (SOS), which provided much better protection against cosmic radiation and electrostatic discharge than that of any other processor of the era. Thus, the SOS version of the 1802 was said to be the first radiation-hardened microprocessor.

The RCA 1802 had a static design, meaning that the clock frequency could be made arbitrarily low, or even stopped. This let the Galileo spacecraft use minimum electric power for long uneventful stretches of a voyage. Timers or sensors would awaken the processor in time for important tasks, such as navigation updates, attitude control, data acquisition, and radio communication. Current versions of the Western Design Center 65C02 and 65C816 have static cores, and thus retain data even when the clock is completely halted.

12-bit designs

The Intersil 6100 family consisted of a 12-bit microprocessor (the 6100) and a range of peripheral support and memory ICs. The microprocessor recognised the DEC PDP-8 minicomputer instruction set. As such it was sometimes referred to as the CMOS-PDP8. Since it was also produced by Harris Corporation, it was also known as the Harris HM-6100. By virtue of its CMOS technology and associated benefits, the 6100 was being incorporated into some military designs until the early 1980s.

16-bit designs

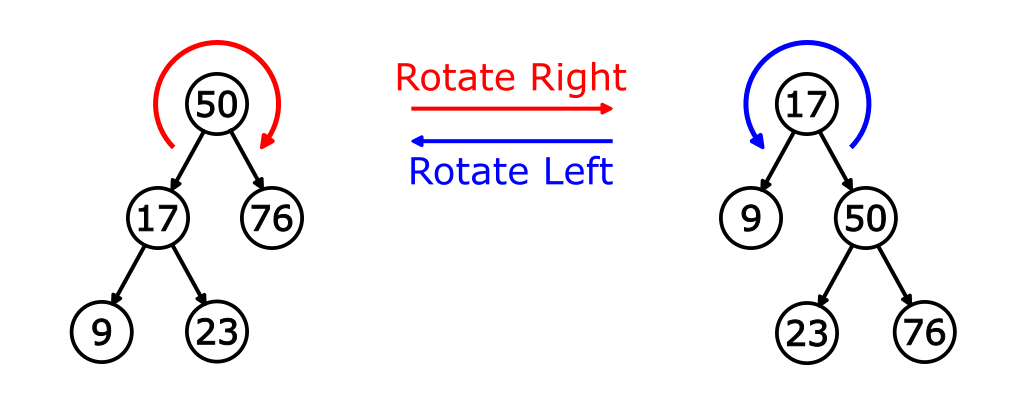


Other early multi-chip 16-bit microprocessors include one that Digital Equipment Corporation (DEC) used in the LSI-11 OEM board set and the packaged PDP 11/03 minicomputer—and the Fairchild Semiconductor MicroFlame 9440, both introduced in 1975–76. In 1975, National introduced the first 16-bit single-chip microprocessor, the National Semiconductor PACE, which was later followed by an NMOS version, the INS8900.

Another early single-chip 16-bit microprocessor was TI's TMS 9900, which was also compatible with their TI-990 line of minicomputers. The 9900 was used in the TI 990/4 minicomputer, the TI-99/4A home computer, and the TM990 line of OEM microcomputer boards. The chip was packaged in a large ceramic 64-pin DIP package, while most 8-bit microprocessors such as the Intel 8080 used the more common, smaller, and less expensive plastic 40-pin DIP. A follow-on chip, the TMS 9980, was designed to compete with the Intel 8080, had the full TI 990 16-bit instruction set, used a plastic 40-pin package, moved data 8 bits at a time, but could only address 16 KB. A third chip, the TMS 9995, was a new design. The family later expanded to include the 99105 and 99110.

The Western Design Center (WDC) introduced the CMOS 65816 16-bit upgrade of the WDC CMOS 65C02 in 1984. The 65816 16-bit microprocessor was the core of the Apple IIgs and later the Super Nintendo Entertainment System, making it one of the most popular 16-bit designs of all time.

Intel "upsized" their 8080 design into the 16-bit Intel 8086, the first member of the x86 family, which powers most modern PC-type computers. Intel introduced the 8086 as a cost-effective way of porting software from the 8080 lines, and succeeded in winning much business on that premise. The 8088, a version of the 8086 that used an 8-bit external data bus, was the microprocessor in the first IBM PC. Intel then released the 80186 and 80188, the 80286 and, in 1985, the 32-bit 80386, cementing their PC market dominance with the processor family's backwards compatibility. The 80186 and 80188 were essentially versions of the 8086 and 8088, enhanced with some onboard peripherals and a few new instructions. Although Intel's 80186 and 80188 were not widely used in IBM PC-type designs, second-source versions from NEC, the V20 and V30, frequently were. The 8086 and successors had an innovative but limited method of memory segmentation, while the 80286 introduced a full-featured segmented memory management unit (MMU). The 80386 introduced a flat 32-bit memory model with paged memory management.
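The segmentation scheme mentioned above can be illustrated with a short sketch. On the 8086, a 16-bit segment value is shifted left by four bits and added to a 16-bit offset to form a 20-bit physical address. The function name `real_mode_address` is a hypothetical name chosen for this illustration, and the sketch models only the address arithmetic, not bus behaviour or the later protected-mode MMU.

```python
def real_mode_address(segment: int, offset: int) -> int:
    """Return the 20-bit physical address for an 8086 segment:offset pair.

    Hypothetical helper for illustration; models only the address arithmetic.
    """
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must each fit in 16 bits")
    # Shift the segment left by 4 bits (multiply by 16), add the offset,
    # and wrap to 20 bits, since the 8086 has only 20 address lines.
    return ((segment << 4) + offset) & 0xFFFFF


if __name__ == "__main__":
    # Two different segment:offset pairs can name the same physical byte.
    print(hex(real_mode_address(0x1234, 0x0010)))
    print(hex(real_mode_address(0x1235, 0x0000)))
```

Because segments overlap every 16 bytes, many segment:offset pairs alias the same location, and a single segment spans only 64 KB; this is one way to read the "innovative but limited" description.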

The Intel x86 processors up to and including the 80386 do not include floating-point units (FPUs). Intel introduced the 8087, 80187, 80287 and 80387 math coprocessors to add hardware floating-point and transcendental function capabilities to the 8086 through 80386 CPUs. The 8087 works with the 8086/8088 and 80186/80188,[38] the 80187 works with the 80186 but not the 80188,[39] the 80287 works with the 80286 and the 80387 works with the 80386. The combination of an x86 CPU and an x87 coprocessor forms a single multi-chip microprocessor; the two chips are programmed as a unit using a single integrated instruction set.[40] The 8087 and 80187 coprocessors are connected in parallel with the data and address buses of their parent processor and directly execute instructions intended for them. The 80287 and 80387 coprocessors are interfaced to the CPU through I/O ports in the CPU's address space. This is transparent to the program, which does not need to know about or access these I/O ports directly; the program accesses the coprocessor and its registers through normal instruction opcodes.

32-bit designs

Upper interconnect layers on an Intel 80486DX2 die

Among the most significant of the 32-bit designs is the Motorola MC68000, introduced in 1979. The 68k, as it was widely known, had 32-bit registers in its programming model but used 16-bit internal data paths, three 16-bit arithmetic logic units, and a 16-bit external data bus (to reduce pin count), and externally supported only 24-bit addresses (internally it worked with full 32-bit addresses). In PC-based IBM-compatible mainframes the MC68000 internal microcode was modified to emulate the 32-bit System/370 IBM mainframe.[41] Motorola generally described it as a 16-bit processor. The combination of high performance, large (16 megabytes, or 2^24 bytes) memory space and fairly low cost made it the most popular CPU design of its class. The Apple Lisa and Macintosh designs made use of the 68000, as did a host of other designs in the mid-1980s, including the Atari ST and Commodore Amiga.
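The 16-megabyte figure follows directly from the 24-bit external address width. The sketch below is a simplified model, assuming that the top eight bits of a 32-bit internal address simply do not reach the address pins; the names `ADDRESS_PINS_MASK` and `external_address` are hypothetical and chosen only for this illustration.

```python
# Simplified model: 24 address bits are visible outside the chip.
ADDRESS_BITS = 24
ADDRESS_PINS_MASK = (1 << ADDRESS_BITS) - 1


def external_address(internal_address: int) -> int:
    """Return the address seen on the bus for a 32-bit internal address.

    Hypothetical helper: the upper 8 bits are dropped in this model.
    """
    return internal_address & ADDRESS_PINS_MASK


if __name__ == "__main__":
    addressable_bytes = 1 << ADDRESS_BITS
    print(addressable_bytes)                   # number of addressable bytes
    print(addressable_bytes // (1024 * 1024))  # the same figure in megabytes
    # Two internal addresses differing only in the top byte reach the same location.
    print(hex(external_address(0xFF001234)), hex(external_address(0x00001234)))
```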

The world's first single-chip fully 32-bit microprocessor, with 32-bit data paths, 32-bit buses, and 32-bit addresses, was the AT&T Bell Labs BELLMAC-32A, with first samples in 1980, and general production in 1982.[42][43] After the divestiture of AT&T in 1984, it was renamed the WE 32000 (WE for Western Electric), and had two follow-on generations, the WE 32100 and WE 32200. These microprocessors were used in the AT&T 3B5 and 3B15 minicomputers; in the 3B2, the world's first desktop super microcomputer; in the "Companion", the world's first 32-bit laptop computer; and in "Alexander", the world's first book-sized super microcomputer, featuring ROM-pack memory cartridges similar to today's gaming consoles. All these systems ran the UNIX System V operating system.

The first commercial, single chip, fully 32-bit microprocessor available on the market was the HP FOCUS.

Intel's first 32-bit microprocessor was the iAPX 432, which was introduced in 1981 but was not a commercial success. It had an advanced capability-based object-oriented architecture, but poor performance compared to contemporary architectures such as Intel's own 80286 (introduced 1982), which was almost four times as fast on typical benchmark tests. However, the poor results for the iAPX 432 were partly due to a rushed and therefore suboptimal Ada compiler.[citation needed]

Motorola's success with the 68000 led to the MC68010, which added virtual memory support. The MC68020, introduced in 1984, added full 32-bit data and address buses. The 68020 became hugely popular in the Unix supermicrocomputer market, and many small companies (e.g., Altos, Charles River Data Systems, Cromemco) produced desktop-size systems. The MC68030 was introduced next, improving upon the previous design by integrating the MMU into the chip. The continued success led to the MC68040, which included an FPU for better math performance. The 68050 failed to achieve its performance goals and was not released, and the follow-up MC68060 was released into a market saturated by much faster RISC designs. The 68k family faded from use in the early 1990s.

Other large companies designed the 68020 and follow-ons into embedded equipment. At one point, there were more 68020s in embedded equipment than there were Intel Pentiums in PCs.[44] The ColdFire processor cores are derivatives of the venerable 68020.

During this time (early to mid-1980s), National Semiconductor introduced a very similar microprocessor with a 16-bit pinout and a 32-bit internal design, the NS 16032 (later renamed 32016), followed by a full 32-bit version, the NS 32032. Later, National Semiconductor produced the NS 32132, which allowed two CPUs to reside on the same memory bus with built-in arbitration. The NS32016/32 outperformed the MC68000/10, but the NS32332, which arrived at approximately the same time as the MC68020, did not have enough performance to compete. The third-generation chip, the NS32532, was different. It had about double the performance of the MC68030, which was released around the same time. The appearance of RISC processors like the AM29000 and MC88000 (both since discontinued) influenced the architecture of the final core, the NS32764. Technically advanced, with a superscalar RISC core, a 64-bit bus, and internal overclocking, it could still execute Series 32000 instructions through real-time translation.

When National Semiconductor decided to leave the Unix market, the chip was redesigned into the Swordfish embedded processor with a set of on-chip peripherals. The chip turned out to be too expensive for the laser printer market and was killed. The design team went to Intel and there designed the Pentium processor, which is very similar to the NS32764 core internally. The big success of the Series 32000 was in the laser printer market, where the NS32CG16 with microcoded BitBlt instructions had very good price/performance and was adopted by large companies like Canon. In the mid-1980s, Sequent introduced the first SMP server-class computer using the NS 32032. This was one of the design's few wins, and it disappeared in the late 1980s.

The MIPS R2000 (1984) and R3000 (1989) were highly successful 32-bit RISC microprocessors. They were used in high-end workstations and servers by SGI, among others. Other designs included the Zilog Z80000, which arrived too late to market to stand a chance and disappeared quickly.

The ARM first appeared in 1985.[45] This is a RISC processor design, which has since come to dominate the 32-bit embedded systems processor space due in large part to its power efficiency, its licensing model, and its wide selection of system development tools. Semiconductor manufacturers generally license cores and integrate them into their own system on a chip products; only a few such vendors are licensed to modify the ARM cores. Most cell phones include an ARM processor, as do a wide variety of other products. There are microcontroller-oriented ARM cores without virtual memory support, as well as symmetric multiprocessor (SMP) applications processors with virtual memory.

From 1993 to 2003, the 32-bit x86 architectures became increasingly dominant in desktop, laptop, and server markets, and these microprocessors became faster and more capable. Intel had licensed early versions of the architecture to other companies, but declined to license the Pentium, so AMD and Cyrix built later versions of the architecture based on their own designs. During this span, these processors increased in complexity (transistor count) and capability (instructions/second) by at least three orders of magnitude. Intel's Pentium line is probably the most famous and recognizable 32-bit processor model, at least with the public at large.

64-bit designs in personal computers

While 64-bit microprocessor designs have been in use in several markets since the early 1990s (including the Nintendo 64 gaming console in 1996), the early 2000s saw the introduction of 64-bit microprocessors targeted at the PC market.

With AMD's introduction of a 64-bit architecture backwards-compatible with x86, x86-64 (also called AMD64), in September 2003, followed by Intel's near fully compatible 64-bit extensions (first called IA-32e or EM64T, later renamed Intel 64), the 64-bit desktop era began. Both versions can run 32-bit legacy applications without any performance penalty as well as new 64-bit software. With operating systems such as Windows XP x64, Windows Vista x64, Windows 7 x64, Linux, BSD, and macOS running 64-bit natively, software is also geared to fully utilize the capabilities of such processors. The move to 64 bits is more than just an increase in register size from IA-32, as it also doubles the number of general-purpose registers.

The move to 64 bits by PowerPC had been intended since the architecture's design in the early 1990s and was not a major cause of incompatibility. Existing integer registers are extended, as are all related data pathways, but, as was the case with IA-32, both floating-point and vector units had been operating at or above 64 bits for several years. Unlike what happened when IA-32 was extended to x86-64, no new general-purpose registers were added in 64-bit PowerPC, so any performance gained when using the 64-bit mode for applications making no use of the larger address space is minimal.

In 2011, ARM introduced a new 64-bit ARM architecture.

RISC

In the mid-1980s to early 1990s, a crop of new high-performance reduced instruction set computer (RISC) microprocessors appeared, influenced by discrete RISC-like CPU designs such as the IBM 801 and others. RISC microprocessors were initially used in special-purpose machines and Unix workstations, but then gained wide acceptance in other roles.

The first commercial RISC microprocessor design was released in 1984 by MIPS Computer Systems: the 32-bit R2000 (the R1000 was not released). In 1986, HP released its first system with a PA-RISC CPU. In 1987, the 32-bit, then cache-less, ARM2-based Acorn Archimedes, a non-Unix machine, became the first commercial success using the ARM architecture, then known as Acorn RISC Machine (ARM); the first silicon, the ARM1, had appeared in 1985. The R3000 made the design truly practical, and the R4000 introduced the world's first commercially available 64-bit RISC microprocessor. Competing projects would result in the IBM POWER and Sun SPARC architectures. Soon every major vendor was releasing a RISC design, including the AT&T CRISP, AMD 29000, Intel i860 and Intel i960, Motorola 88000, and DEC Alpha.

In the late 1990s, only two 64-bit RISC architectures were still produced in volume for non-embedded applications: SPARC and Power ISA. As ARM became increasingly powerful, it became the third RISC architecture in the general computing segment in the early 2010s.

A different approach to improving a computer's performance is to add extra processors, as in symmetric multiprocessing designs, which have been popular in servers and workstations since the early 1990s. Keeping up with Moore's Law is becoming increasingly challenging as chip-making technologies approach their physical limits. In response, microprocessor manufacturers look for other ways to improve performance so they can maintain the momentum of constant upgrades.

A multi-core processor is a single chip that contains more than one microprocessor core. Each core can simultaneously execute processor instructions in parallel. This effectively multiplies the processor's potential performance by the number of cores, if the software is designed to take advantage of more than one processor core. Some components, such as bus interface and cache, may be shared between cores. Because the cores are physically close to each other, they can communicate with each other much faster than separate (off-chip) processors in a multiprocessor system, which improves overall system performance.
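The condition "if the software is designed to take advantage of more than one processor core" can be made concrete with Amdahl's law, which the text above does not name but which is a standard way to estimate this limit. The sketch below assumes a workload in which a fixed fraction of the run time can be spread evenly across cores; `amdahl_speedup` and its parameters are hypothetical names for this illustration, and the model ignores communication and cache effects.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Estimate speedup over one core using Amdahl's law.

    parallel_fraction: share of single-core run time that can run in parallel.
    cores: number of processor cores available.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part is unaffected; the parallel part is divided among cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    for fraction in (1.0, 0.9, 0.5):
        for cores in (2, 4, 8):
            speedup = amdahl_speedup(fraction, cores)
            print(f"parallel={fraction:.1f} cores={cores} speedup={speedup:.2f}")
```

Only a fully parallel workload reaches a speedup equal to the core count; any serial portion caps the gain, which is why the multiplication described above is a potential rather than a guaranteed figure.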

In 2001, IBM introduced the first commercial multi-core processor, the monolithic two-core POWER4. Personal computers did not receive multi-core processors until the 2005 introduction of the two-core Intel Pentium D. The Pentium D, however, was not a monolithic multi-core processor. It was constructed from two dies, each containing a core, packaged on a multi-chip module. The first monolithic multi-core processor in the personal computer market was the AMD Athlon X2, which was introduced a few weeks after the Pentium D. As of 2012, dual- and quad-core processors are widely used in home PCs and laptops, while quad-, six-, eight-, ten-, twelve-, and sixteen-core processors are common in the professional and enterprise markets with workstations and servers.

Sun Microsystems has released the Niagara and Niagara 2 chips, both of which feature an eight-core design. The Niagara 2 supports more threads and operates at 1.6 GHz.

High-end Intel Xeon processors on the LGA 771, LGA 1366, and LGA 2011 sockets and high-end AMD Opteron processors on the C32 and G34 sockets are DP (dual processor) capable, as is the older Intel Core 2 Extreme QX9775, also used in an older Mac Pro by Apple and on the Intel Skulltrail motherboard. AMD's G34 motherboards can support up to four CPUs, and Intel's LGA 1567 motherboards can support up to eight CPUs.