Field programmed Gate windows on the network at local family homes in Fourier mathematical models and gamma functions

X . I

Electronics

Various electronic components

Electronics is the science of controlling electrical energy electrically, in which the electrons have a fundamental role. Electronics deals with electrical circuits that involve active electrical components such as vacuum tubes, transistors, diodes, integrated circuits, optoelectronics and sensors, together with associated passive electrical components and interconnection technologies. Commonly, electronic devices contain circuitry consisting primarily or exclusively of active semiconductors supplemented with passive elements; such a circuit is described as an electronic circuit.

The science of electronics is also considered to be a branch of physics and electrical engineering.

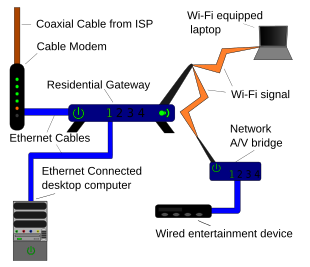

The nonlinear behaviour of active components and their ability to control electron flows make amplification of weak signals possible, and electronics is widely used in information processing, telecommunication, and signal processing. The ability of electronic devices to act as switches makes digital information processing possible. Interconnection technologies such as circuit boards, electronics packaging technology, and other varied forms of communication infrastructure complete circuit functionality and transform the mixed components into a regular working system.

Electronics is distinct from electrical and electro-mechanical science and technology, which deal with the generation, distribution, switching, storage, and conversion of electrical energy to and from other energy forms using wires, motors, generators, batteries, switches, relays, transformers, resistors, and other passive components. This distinction started around 1906 with the invention by Lee De Forest of the triode, which made electrical amplification of weak radio signals and audio signals possible with a non-mechanical device. Until 1950 this field was called "radio technology" because its principal application was the design and theory of radio transmitters, receivers, and vacuum tubes.

Today, most electronic devices use semiconductor components to perform electron control. The study of semiconductor devices and related technology is considered a branch of solid-state physics, whereas the design and construction of electronic circuits to solve practical problems come under electronics engineering. This article focuses on engineering aspects of electronics.

Electronics encompasses a purely scientific field that is independent of other fields of science. Electronics is controlled by the motion of electrons in the central energy of the orbital spin, under the nuclear potential and the kinetic energy of the atom. The outer energy emitted from the core of atoms tends to be Fourier-harmonic: the more stable the Fourier harmonic frequency, the more unstable the electrons, which disperse into other forms of energy, such as dynamic explosions, programmed light, the continuity of ocean waves, and the programmed movements of asteroids in gamma rays to stars in the galaxy.

An electronic component is any physical entity in an electronic system used to affect the electrons or their associated fields in a manner consistent with the intended function of the electronic system. Components are generally intended to be connected together, usually by being soldered to a printed circuit board (PCB), to create an electronic circuit with a particular function (for example an amplifier, radio receiver, or oscillator). Components may be packaged singly, or in more complex groups as integrated circuits. Some common electronic components are capacitors, inductors, resistors, diodes, transistors, etc. Components are often categorized as active (e.g. transistors and thyristors) or passive (e.g. resistors, diodes, inductors and capacitors).

Analog circuits (linear electronics)

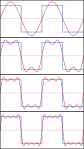

Most analog electronic appliances, such as radio receivers, are constructed from combinations of a few types of basic circuits. Analog circuits use a continuous range of voltage or current as opposed to discrete levels as in digital circuits.

The number of different analog circuits so far devised is huge, especially because a 'circuit' can be defined as anything from a single component, to systems containing thousands of components.

Analog circuits are sometimes called linear circuits although many non-linear effects are used in analog circuits such as mixers, modulators, etc. Good examples of analog circuits include vacuum tube and transistor amplifiers, operational amplifiers and oscillators.

One rarely finds modern circuits that are entirely analog. These days analog circuitry may use digital or even microprocessor techniques to improve performance. This type of circuit is usually called "mixed signal" rather than analog or digital.

Sometimes it may be difficult to differentiate between analog and digital circuits as they have elements of both linear and non-linear operation. An example is the comparator which takes in a continuous range of voltage but only outputs one of two levels as in a digital circuit. Similarly, an overdriven transistor amplifier can take on the characteristics of a controlled switch having essentially two levels of output. In fact, many digital circuits are actually implemented as variations of analog circuits similar to this example—after all, all aspects of the real physical world are essentially analog, so digital effects are only realized by constraining analog behavior.

Digital circuits (switching electronics)

Digital electronics

Digital circuits are electric circuits based on a number of discrete voltage levels. Digital circuits are the most common physical representation of Boolean algebra, and are the basis of all digital computers. To most engineers, the terms "digital circuit", "digital system" and "logic" are interchangeable in the context of digital circuits. Most digital circuits use a binary system with two voltage levels labeled "0" and "1". Often logic "0" will be a lower voltage and referred to as "Low" while logic "1" is referred to as "High". However, some systems use the reverse definition ("0" is "High") or are current based. Quite often the logic designer may reverse these definitions from one circuit to the next as they see fit to facilitate the design. The definition of the levels as "0" or "1" is arbitrary. Ternary (with three states) logic has been studied, and some prototype computers have been built.

Computers, electronic clocks, and programmable logic controllers (used to control industrial processes) are constructed of digital circuits. Digital signal processors are another example.
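The arbitrariness of the "0"/"1" labelling can be made concrete. The following Python sketch models one hypothetical gate purely by its voltage behaviour: the output is High only when both inputs are High. It then reads the gate's truth table under both conventions. Under positive logic the gate behaves as AND. Under negative logic the same physical circuit behaves as OR, which is De Morgan's duality in hardware form. The names `gate`, `to_bit` and `truth_table` are illustrative only.

```python
from itertools import product

LOW, HIGH = "L", "H"


def gate(a: str, b: str) -> str:
    """Voltage behaviour of a hypothetical gate: output High only when both inputs are High."""
    return HIGH if a == HIGH and b == HIGH else LOW


def to_bit(level: str, active_high: bool) -> int:
    """Read a voltage level as a logic value under the chosen convention."""
    if active_high:
        return 1 if level == HIGH else 0
    return 0 if level == HIGH else 1


def truth_table(active_high: bool) -> list:
    """Rows (A, B, Y) of the same physical gate, read under one convention."""
    rows = []
    for a, b in product((LOW, HIGH), repeat=2):
        y = gate(a, b)
        rows.append((to_bit(a, active_high), to_bit(b, active_high), to_bit(y, active_high)))
    return sorted(rows)


if __name__ == "__main__":
    print("positive logic:", truth_table(True))   # the AND truth table
    print("negative logic:", truth_table(False))  # the OR truth table
```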

Building blocks:

Highly integrated devices:

Heat dissipation and thermal management

Heat generated by electronic circuitry must be dissipated to prevent immediate failure and improve long term reliability. Heat dissipation is mostly achieved by passive conduction/convection. Means to achieve greater dissipation include heat sinks and fans for air cooling, and other forms of computer cooling such as water cooling. These techniques use convection, conduction, and radiation of heat energy.

Noise

Electronic noise is defined as unwanted disturbances superposed on a useful signal that tend to obscure its information content. Noise is not the same as signal distortion caused by a circuit. Noise is associated with all electronic circuits. Noise may be electromagnetically or thermally generated; thermally generated noise can be decreased by lowering the operating temperature of the circuit. Other types of noise, such as shot noise, cannot be removed, as they are due to limitations in physical properties.

Electronics theory

Mathematical methods are integral to the study of electronics. To become proficient in electronics it is also necessary to become proficient in the mathematics of circuit analysis. Circuit analysis is the study of methods of solving generally linear systems for unknown variables such as the voltage at a certain node or the current through a certain branch of a network. A common analytical tool for this is the SPICE circuit simulator.
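The following Python code is a minimal sketch of the linear core of circuit analysis for a purely resistive network. It writes Kirchhoff's current law at each unknown node as a linear system and solves that system. SPICE itself handles far more, including nonlinear and time-varying elements. The circuit is hypothetical:

- A 10 V source feeds node A through R1 = 1 kΩ.
- R2 = 2 kΩ connects A to ground.
- R3 = 1 kΩ connects A to node B.
- R4 = 1 kΩ connects B to ground.

```python
from fractions import Fraction


def solve(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting.

    Exact Fraction arithmetic avoids rounding in this small example.
    """
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0:
            raise ValueError("singular system: check the circuit for floating nodes")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def nodal_voltages():
    """Node voltages [V_A, V_B] of the hypothetical circuit described above."""
    v_source = Fraction(10)
    g1, g2, g3, g4 = (Fraction(1, r) for r in (1000, 2000, 1000, 1000))  # conductances in siemens
    # KCL at each node: (conductances at the node) * V_node - (shared conductance) * V_other = injected current
    conductance = [
        [g1 + g2 + g3, -g3],
        [-g3, g3 + g4],
    ]
    injected = [g1 * v_source, Fraction(0)]  # the source drives current into A through R1
    return solve(conductance, injected)


if __name__ == "__main__":
    v_a, v_b = nodal_voltages()
    print(f"V_A = {float(v_a)} V, V_B = {float(v_b)} V")
```

Running it prints `V_A = 5.0 V, V_B = 2.5 V`.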

Also important to electronics is the study and understanding of electromagnetic field theory.

Electronics lab

Due to the complex nature of electronics theory, laboratory experimentation is an important part of the development of electronic devices. These experiments are used to test or verify the engineer’s design and detect errors. Historically, electronics labs have consisted of electronics devices and equipment located in a physical space, although in more recent years the trend has been towards electronics lab simulation software, such as CircuitLogix, Multisim, and PSpice.

Computer aided design (CAD)

Today's electronics engineers have the ability to design circuits using premanufactured building blocks such as power supplies, semiconductors (i.e. semiconductor devices, such as transistors), and integrated circuits. Electronic design automation software programs include schematic capture programs and printed circuit board design programs. Popular names in the EDA software world are NI Multisim, Cadence (ORCAD), EAGLE PCB and Schematic, Mentor (PADS PCB and LOGIC Schematic), Altium (Protel), LabCentre Electronics (Proteus), gEDA, KiCad and many others.

Construction methods

Many different methods of connecting components have been used over the years. For instance, early electronics often used point to point wiring with components attached to wooden breadboards to construct circuits. Cordwood construction and wire wrap were other methods used. Most modern day electronics now use printed circuit boards made of materials such as FR4, or the cheaper (and less hard-wearing) Synthetic Resin Bonded Paper (SRBP, also known as Paxoline/Paxolin (trade marks) and FR2), characterised by its brown colour. Health and environmental concerns associated with electronics assembly have gained increased attention in recent years, especially for products destined for the European Union, with its Restriction of Hazardous Substances Directive (RoHS) and Waste Electrical and Electronic Equipment Directive (WEEE), which went into force in July 2006.

Mathematical methods in electronics

Mathematics in Electronics

Electrical engineering degree programs usually include courses in Calculus (single and multivariable), Complex Analysis, Differential Equations (both ordinary and partial), Linear Algebra and Probability. Fourier Analysis and Z-Transforms are also subjects which are usually included in electrical engineering programs.

Basic applications

A number of electrical laws apply to all electrical networks. These include:

- Faraday's law of induction: Any change in the magnetic environment of a coil of wire will cause a voltage (emf) to be "induced" in the coil.

- Gauss's Law: The total of the electric flux out of a closed surface is equal to the charge enclosed divided by the permittivity.

- Kirchhoff's current law: the sum of all currents entering a node is equal to the sum of all currents leaving the node; equivalently, the algebraic sum of the currents at a junction is zero.

- Kirchhoff's voltage law: the directed sum of the electrical potential differences around a circuit must be zero.

- Ohm's law: at constant temperature, the voltage across a resistor is the product of its resistance and the current flowing through it.

- Norton's theorem: any two-terminal collection of voltage sources and resistors is electrically equivalent to an ideal current source in parallel with a single resistor.

- Thevenin's theorem: any two-terminal combination of voltage sources and resistors is electrically equivalent to a single voltage source in series with a single resistor.

- Millman's theorem: the voltage on the ends of branches in parallel is equal to the sum of the currents flowing in every branch divided by the total equivalent conductance.

- See also Analysis of resistive circuits.
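The network theorems in this list can be checked against each other on a small example. The Python sketch below uses hypothetical values: a 12 V source drives a divider of R1 = 6 Ω and R2 = 3 Ω, and a 2 Ω load is placed across R2. It computes the Thevenin and Norton equivalents seen by the load, then uses Millman's theorem to compute the same node voltage directly from the three parallel branches. The helper names are illustrative.

```python
from fractions import Fraction as F


def thevenin_divider(v_s, r1, r2):
    """Thevenin equivalent seen across r2 in a divider fed by an ideal source v_s through r1."""
    v_th = v_s * r2 / (r1 + r2)   # open-circuit voltage across r2
    r_th = r1 * r2 / (r1 + r2)    # source shorted: r1 in parallel with r2
    return v_th, r_th


def norton_from_thevenin(v_th, r_th):
    """Norton equivalent: short-circuit current with the same internal resistance."""
    return v_th / r_th, r_th


def millman(branches):
    """Common voltage of parallel branches, each given as (source voltage, series resistance)."""
    total_current = sum(v / r for v, r in branches)   # sum of short-circuit branch currents
    total_conductance = sum(1 / r for _, r in branches)
    return total_current / total_conductance


if __name__ == "__main__":
    v_th, r_th = thevenin_divider(F(12), F(6), F(3))
    i_n, r_n = norton_from_thevenin(v_th, r_th)
    r_load = F(2)
    print("Thevenin:", v_th, "V,", r_th, "ohm")
    print("Norton:", i_n, "A,", r_n, "ohm")
    print("Load current:", v_th / (r_th + r_load), "A")
    # Same node voltage from Millman: the 12 V / 6 ohm branch, R2 and the load in parallel
    print("Millman voltage:", millman([(F(12), F(6)), (F(0), F(3)), (F(0), r_load)]), "V")
```

Both routes give a 2 V load voltage, which corresponds to the 1 A load current from the Thevenin model.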

Components

There are many electronic components currently used and they all have their own uses and particular rules and methods for use.

Complex numbers

If you apply a voltage across a capacitor, it 'charges up' by storing the electrical charge as an electrical field inside the device. This means that while the voltage across the capacitor remains initially small, a large current flows. Later, the current flow is smaller because the capacitor is nearly charged, and the voltage rises across the device. A similar though opposite situation occurs in an inductor; the applied voltage remains high with low current as a magnetic field is generated, and later becomes small with high current when the magnetic field is at maximum.

The voltage and current of these two types of devices are therefore out of phase; they do not rise and fall together as simple resistor networks do. The mathematical model that matches this situation is that of complex numbers, using an imaginary component to describe the stored energy.
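Phasors make this phase shift concrete for steady-state sinusoidal signals, as opposed to the charging transient described earlier. The Python sketch below assumes a hypothetical series RC circuit with R = 1 kΩ and C = 1 µF. It is driven at the frequency where the capacitor's reactance equals R. The impedance Z = R + 1/(jωC) is a complex number, and its angle gives the phase between voltage and current.

```python
import cmath
import math


def series_rc_current(v_peak, r, c, freq_hz):
    """Phasor current in a series RC circuit driven by a sinusoid of amplitude v_peak (phase 0)."""
    omega = 2 * math.pi * freq_hz
    z = r + 1 / (1j * omega * c)  # resistor plus capacitor impedance 1/(j*omega*C)
    return v_peak / z


def phase_deg(phasor):
    """Phase angle of a phasor in degrees."""
    return math.degrees(cmath.phase(phasor))


if __name__ == "__main__":
    r, c = 1000.0, 1e-6
    f = 1 / (2 * math.pi * r * c)  # frequency at which |Z_C| equals R
    i = series_rc_current(1.0, r, c, f)
    v_c = i / (1j * 2 * math.pi * f * c)  # capacitor voltage phasor
    print(f"current leads source by {phase_deg(i):.1f} deg")
    print(f"capacitor voltage lags current by {phase_deg(i) - phase_deg(v_c):.1f} deg")
```

At this frequency the current leads the source voltage by 45°, and the capacitor voltage lags the current by 90°.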

Signal analysis

- Fourier analysis. Deconstructing a periodic waveform into its constituent frequencies; see also: Fourier theorem, Fourier transform.

- Nyquist-Shannon sampling theorem.

- Information theory. Sets fundamental limits on how information can be transmitted or processed by any system.
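A short Python sketch shows what the sampling theorem guards against. At a hypothetical sampling rate of 10 samples per second, a 7 Hz cosine produces exactly the same samples as a 3 Hz cosine. This is aliasing: 7 Hz is above half the sampling rate, so it folds down to 10 − 7 = 3 Hz. The helper names are illustrative.

```python
import math


def sample_cosine(freq_hz, rate_hz, count):
    """Samples of cos(2*pi*f*t) at t = n / rate_hz for n = 0 .. count-1."""
    return [math.cos(2 * math.pi * freq_hz * n / rate_hz) for n in range(count)]


def alias_frequency(freq_hz, rate_hz):
    """Apparent frequency, in [0, rate_hz / 2], of a real sinusoid after sampling."""
    folded = freq_hz % rate_hz
    return min(folded, rate_hz - folded)


if __name__ == "__main__":
    rate = 10.0  # samples per second; the Nyquist frequency is 5 Hz
    above = sample_cosine(7.0, rate, 20)   # 7 Hz lies above the Nyquist frequency
    below = sample_cosine(3.0, rate, 20)
    print(max(abs(a - b) for a, b in zip(above, below)))  # essentially zero
    print(alias_frequency(7.0, rate))  # 3.0
```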

X . II

Fourier series

In mathematics, a Fourier series (English: /ˈfʊəriˌeɪ/) is a way to represent a function as the sum of simple sine waves. More formally, it decomposes any periodic function or periodic signal into the sum of a (possibly infinite) set of simple oscillating functions, namely sines and cosines (or, equivalently, complex exponentials). The discrete-time Fourier transform is a periodic function, often defined in terms of a Fourier series. The Z-transform, another example of application, reduces to a Fourier series for the important case |z|=1. Fourier series are also central to the original proof of the Nyquist–Shannon sampling theorem. The study of Fourier series is a branch of Fourier analysis.

| Fourier transforms |
|---|
| Continuous Fourier transform |
| Fourier series |
| Discrete-time Fourier transform |
| Discrete Fourier transform |
| Discrete Fourier transform over a ring |
| Fourier analysis |
| Related transforms |

a bright cloud under star

The Fourier series is named in honour of Jean-Baptiste Joseph Fourier (1768–1830), who made important contributions to the study of trigonometric series, after preliminary investigations by Leonhard Euler, Jean le Rond d'Alembert, and Daniel Bernoulli.[nb 1] Fourier introduced the series for the purpose of solving the heat equation in a metal plate, publishing his initial results in his 1807 Mémoire sur la propagation de la chaleur dans les corps solides (Treatise on the propagation of heat in solid bodies), and publishing his Théorie analytique de la chaleur (Analytical theory of heat) in 1822. The Mémoire introduced Fourier analysis, specifically Fourier series. Through Fourier's research, it was established that an arbitrary (continuous) function can be represented by a trigonometric series. The first announcement of this great discovery was made by Fourier in 1807, before the French Academy. Early ideas of decomposing a periodic function into the sum of simple oscillating functions date back to the 3rd century BC, when ancient astronomers proposed an empirical model of planetary motions, based on deferents and epicycles.

The heat equation is a partial differential equation. Prior to Fourier's work, no solution to the heat equation was known in the general case, although particular solutions were known if the heat source behaved in a simple way, in particular, if the heat source was a sine or cosine wave. These simple solutions are now sometimes called eigensolutions. Fourier's idea was to model a complicated heat source as a superposition (or linear combination) of simple sine and cosine waves, and to write the solution as a superposition of the corresponding eigensolutions. This superposition or linear combination is called the Fourier series.

From a modern point of view, Fourier's results are somewhat informal, due to the lack of a precise notion of function and integral in the early nineteenth century. Later, Peter Gustav Lejeune Dirichlet and Bernhard Riemann expressed Fourier's results with greater precision and formality.

Although the original motivation was to solve the heat equation, it later became obvious that the same techniques could be applied to a wide array of mathematical and physical problems, and especially those involving linear differential equations with constant coefficients, for which the eigensolutions are sinusoids. The Fourier series has many such applications in electrical engineering, vibration analysis, acoustics, optics, signal processing, image processing, quantum mechanics, econometrics, thin-walled shell theory, etc.

Definition

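In this section, s(x) denotes a function of the real variable x, and s is integrable on an interval [x0, x0 + P], for real numbers x0 and P. We will attempt to represent s in that interval as an infinite sum, or series, of harmonically related sinusoidal functions. Outside the interval, the series is periodic with period P (frequency 1/P). It follows that if s also has that property, the approximation is valid on the entire real line. We can begin with a finite summation (or partial sum):

$$s_N(x) = \frac{a_0}{2} + \sum_{n=1}^{N}\left(a_n\cos\frac{2\pi n x}{P} + b_n\sin\frac{2\pi n x}{P}\right),$$

where the coefficients are

$$a_n = \frac{2}{P}\int_{x_0}^{x_0+P} s(x)\cos\frac{2\pi n x}{P}\,dx, \qquad b_n = \frac{2}{P}\int_{x_0}^{x_0+P} s(x)\sin\frac{2\pi n x}{P}\,dx.$$

Equivalently, with complex exponentials, $s_N(x) = \sum_{n=-N}^{N} c_n\, e^{i 2\pi n x/P}$, where $c_n = \frac{1}{P}\int_{x_0}^{x_0+P} s(x)\, e^{-i 2\pi n x/P}\,dx$.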

Function s(x) (in red) is a sum of six sine functions of different amplitudes and harmonically related frequencies. Their summation is called a Fourier series. The Fourier transform, S(f) (in blue), which depicts amplitude vs frequency, reveals the 6 frequencies (at odd harmonics) and their amplitudes (1/odd number).
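The coefficient integrals can be approximated numerically. The Python sketch below assumes a unit-amplitude square wave with period 2π; the function `square_wave` is illustrative. It applies the midpoint rule to the integrals for a_n and b_n over one period, taking x0 = 0. For this odd function the cosine coefficients vanish and only odd harmonics survive, with b_n = 4/(πn). The 1/(odd number) amplitudes in the caption follow the same pattern, without the overall factor 4/π.

```python
import math


def fourier_coefficients(s, period, n, samples=20000):
    """Midpoint-rule estimates of
    a_n = (2/P) * integral of s(x) cos(2*pi*n*x/P) over [0, P] and
    b_n = (2/P) * integral of s(x) sin(2*pi*n*x/P) over [0, P]."""
    dx = period / samples
    a = b = 0.0
    for k in range(samples):
        x = (k + 0.5) * dx
        value = s(x)
        a += value * math.cos(2 * math.pi * n * x / period)
        b += value * math.sin(2 * math.pi * n * x / period)
    return 2 * a * dx / period, 2 * b * dx / period


def square_wave(x):
    """Unit square wave with period 2*pi: +1 on (0, pi), -1 on (pi, 2*pi)."""
    return 1.0 if (x % (2 * math.pi)) < math.pi else -1.0


if __name__ == "__main__":
    for n in range(1, 7):
        a_n, b_n = fourier_coefficients(square_wave, 2 * math.pi, n)
        expected = 4 / (math.pi * n) if n % 2 else 0.0
        print(f"n={n}: a_n={a_n:+.6f} b_n={b_n:+.6f} (expected b_n={expected:+.6f})")
```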

Complex-valued functions

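Both components of a complex-valued function are real-valued functions that can be represented by a Fourier series. Writing $c_{R,n}$ and $c_{I,n}$ for the complex coefficients of $\operatorname{Re}\{s(x)\}$ and $\operatorname{Im}\{s(x)\}$, the two sets of coefficients and the partial sum are given by

$$c_{R,n} = \frac{1}{P}\int_{x_0}^{x_0+P} \operatorname{Re}\{s(x)\}\, e^{-i 2\pi n x/P}\,dx, \qquad c_{I,n} = \frac{1}{P}\int_{x_0}^{x_0+P} \operatorname{Im}\{s(x)\}\, e^{-i 2\pi n x/P}\,dx$$

and

$$s_N(x) = \sum_{n=-N}^{N} \left(c_{R,n} + i\,c_{I,n}\right) e^{i 2\pi n x/P} = \sum_{n=-N}^{N} c_n\, e^{i 2\pi n x/P}, \qquad c_n = c_{R,n} + i\,c_{I,n}.$$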

Convergence

In engineering applications, the Fourier series is generally presumed to converge everywhere except at discontinuities, since the functions encountered in engineering are better behaved than the ones that mathematicians can provide as counter-examples to this presumption. In particular, if s is continuous and the derivative of s(x) (which may not exist everywhere) is square integrable, then the Fourier series of s converges absolutely and uniformly to s(x). If a function is square-integrable on the interval [x0, x0+P], then the Fourier series converges to the function at almost every point. Convergence is also guaranteed when, among other requirements, the function has a finite number of maxima and minima in each period; this is popularly known as one of the Dirichlet conditions for Fourier series. See Convergence of Fourier series. It is possible to define Fourier coefficients for more general functions or distributions; in such cases, convergence in norm or weak convergence is usually of interest.

Another visualisation of an approximation of a square wave by taking the first 1, 2, 3 and 4 terms of its Fourier series.
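The square-wave approximation can be reproduced with a short Python sketch; the function names are illustrative. It sums the first few nonzero terms (4/π)·sin(kx)/k, over odd k, of a unit square wave. At x = π/2 the partial sums approach the true value 1. Near the jump, however, the peak of the partial sum stays close to 1.18 however many terms are taken. This persistent overshoot, known as the Gibbs phenomenon, is why convergence at and near discontinuities needs separate treatment.

```python
import math


def square_partial_sum(x, n_terms):
    """Sum of the first n_terms nonzero terms (odd harmonics) of the unit square-wave series."""
    return 4 / math.pi * sum(math.sin((2 * k + 1) * x) / (2 * k + 1) for k in range(n_terms))


def peak_near_jump(n_terms, grid=5000):
    """Largest partial-sum value on (0, pi/2], found by a brute-force grid search."""
    return max(square_partial_sum(0.5 * math.pi * j / grid, n_terms) for j in range(1, grid + 1))


if __name__ == "__main__":
    for n in (1, 2, 3, 4):
        print(n, round(square_partial_sum(math.pi / 2, n), 4))
    print("peak with 50 terms:", round(peak_near_jump(50), 4))
```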

Example 1: a simple Fourier series

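We now use the formula above to give a Fourier series expansion of a very simple function. Consider a sawtooth wave

$$s(x) = \frac{x}{\pi}, \quad \text{for } -\pi < x < \pi, \qquad s(x + 2\pi k) = s(x), \quad \text{for } -\pi < x < \pi \text{ and } k \in \mathbb{Z}.$$

In this case, with P = 2π, the coefficients are $a_n = 0$ and $b_n = \frac{2}{\pi}\frac{(-1)^{n+1}}{n}$, so that

$$s(x) = \frac{2}{\pi}\sum_{n=1}^{\infty}\frac{(-1)^{n+1}}{n}\sin(nx), \quad \text{for } x - \pi \notin 2\pi\mathbb{Z}. \qquad \text{(Eq.1)}$$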

This example leads us to a solution to the Basel problem.
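The link to the Basel problem runs through Parseval's theorem. For a real 2π-periodic series it reads (1/π)∫_{−π}^{π} s(x)² dx = a_0²/2 + Σ(a_n² + b_n²). For the sawtooth s(x) = x/π on (−π, π), a_n = 0 and b_n = 2(−1)^{n+1}/(πn), and the left side equals 2/3. Hence 2/3 = (4/π²)Σ 1/n², which gives Σ 1/n² = π²/6. The Python sketch below checks each side numerically. It is an illustration, not a proof.

```python
import math


def sawtooth_b(n):
    """Sine coefficient b_n = 2(-1)^(n+1) / (pi n) of s(x) = x / pi on (-pi, pi)."""
    return 2 * (-1) ** (n + 1) / (math.pi * n)


def mean_square_lhs(samples=100000):
    """(1/pi) * integral of s(x)^2 over (-pi, pi), by the midpoint rule."""
    dx = 2 * math.pi / samples
    total = sum(((-math.pi + (k + 0.5) * dx) / math.pi) ** 2 for k in range(samples))
    return total * dx / math.pi


def coefficient_rhs(terms=100000):
    """Partial sum of b_n^2 (all a_n vanish for this odd function)."""
    return sum(sawtooth_b(n) ** 2 for n in range(1, terms + 1))


if __name__ == "__main__":
    print("left side :", mean_square_lhs())   # close to 2/3
    print("right side:", coefficient_rhs())   # close to 2/3
    print("implied sum of 1/n^2:", mean_square_lhs() * math.pi ** 2 / 4, "vs pi^2/6 =", math.pi ** 2 / 6)
```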

Example 2: Fourier's motivation

The Fourier series expansion of our function in Example 1 looks more complicated than the simple formula s(x) = x/π, so it is not immediately apparent why one would need the Fourier series. While there are many applications, Fourier's motivation was in solving the heat equation. For example, consider a metal plate in the shape of a square whose side measures π meters, with coordinates (x, y) ∈ [0, π] × [0, π]. If there is no heat source within the plate, and if three of the four sides are held at 0 degrees Celsius, while the fourth side, given by y = π, is maintained at the temperature gradient T(x, π) = x degrees Celsius, for x in (0, π), then one can show that the stationary heat distribution (or the heat distribution after a long period of time has elapsed) is given by

$$T(x, y) = 2\sum_{n=1}^{\infty}\frac{(-1)^{n+1}}{n}\sin(nx)\,\frac{\sinh(ny)}{\sinh(n\pi)},$$

where sinh is the hyperbolic sine function.

Other applications

Another application of this Fourier series is to solve the Basel problem by using Parseval's theorem. The example generalizes and one may compute ζ(2n), for any positive integer n.

Other common notations

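The notation cn is inadequate for discussing the Fourier coefficients of several different functions. Therefore, it is customarily replaced by a modified form of the function (s, in this case), such as $\hat{s}$ or S, and functional notation often replaces subscripting:

$$s(x) = \sum_{n=-\infty}^{\infty} \hat{s}(n)\, e^{i 2\pi n x/P} = \sum_{n=-\infty}^{\infty} S[n]\, e^{i 2\pi n x/P}.$$

Another commonly used frequency domain representation uses the Fourier series coefficients to modulate a Dirac comb:

$$S(f) = \sum_{n=-\infty}^{\infty} S[n]\,\delta\!\left(f - \frac{n}{P}\right),$$

where f represents a continuous frequency domain variable.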

Beginnings

“Multiplying both sides by …, and then integrating from … to … yields: …”

Joseph Fourier, Mémoire sur la propagation de la chaleur dans les corps solides (1807).[12][nb 3]

In these few lines, which are close to the modern formalism used in Fourier series, Fourier revolutionized both mathematics and physics. Although similar trigonometric series were previously used by Euler, d'Alembert, Daniel Bernoulli and Gauss, Fourier believed that such trigonometric series could represent any arbitrary function. In what sense that is actually true is a somewhat subtle issue and the attempts over many years to clarify this idea have led to important discoveries in the theories of convergence, function spaces, and harmonic analysis.

When Fourier submitted a later competition essay in 1811, the committee (which included Lagrange, Laplace, Malus and Legendre, among others) concluded: ...the manner in which the author arrives at these equations is not exempt of difficulties and...his analysis to integrate them still leaves something to be desired on the score of generality and even rigour.[citation needed]

Birth of harmonic analysis

Since Fourier's time, many different approaches to defining and understanding the concept of Fourier series have been discovered, all of which are consistent with one another, but each of which emphasizes different aspects of the topic. Some of the more powerful and elegant approaches are based on mathematical ideas and tools that were not available at the time Fourier completed his original work. Fourier originally defined the Fourier series for real-valued functions of real arguments, using the sine and cosine functions as the basis set for the decomposition. Many other Fourier-related transforms have since been defined, extending the initial idea to other applications. This general area of inquiry is now sometimes called harmonic analysis. A Fourier series, however, can be used only for periodic functions, or for functions on a bounded (compact) interval.

Extensions

Fourier series on a square

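We can also define the Fourier series for functions of two variables x and y in the square [−π, π] × [−π, π]:

$$f(x, y) = \sum_{j,k \in \mathbb{Z}} c_{j,k}\, e^{ijx} e^{iky}, \qquad c_{j,k} = \frac{1}{4\pi^2}\int_{-\pi}^{\pi}\int_{-\pi}^{\pi} f(x, y)\, e^{-ijx} e^{-iky}\,dx\,dy.$$

Fourier series of Bravais-lattice-periodic-function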

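The Bravais lattice is defined as the set of vectors of the form

$$\mathbf{R} = n_1\mathbf{a}_1 + n_2\mathbf{a}_2 + n_3\mathbf{a}_3,$$

where the $n_i$ are integers and the $\mathbf{a}_i$ are three linearly independent vectors. Thus we can define a new function,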

Hilbert space interpretation

In the language of Hilbert spaces, the set of functions $\{e_n = e^{inx} : n \in \mathbb{Z}\}$ is an orthonormal basis for the space L2([−π, π]) of square-integrable functions on [−π, π]. This space is actually a Hilbert space with an inner product given for any two elements f and g by

$$\langle f, g\rangle = \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)\,\overline{g(x)}\,dx.$$

Properties

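We say that f belongs to $C^k(\mathbb{T})$ if f is a 2π-periodic function on R which is k times differentiable, and its kth derivative is continuous. Below, $\hat f(n) = \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)\,e^{-inx}\,dx$ denotes the nth Fourier coefficient of f, and $a_n$, $b_n$ denote its cosine and sine coefficients.

- If f is a 2π-periodic odd function, then $a_n = 0$ for all n.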

- If f is a 2π-periodic even function, then $b_n = 0$ for all n.

- If f is integrable, $\lim_{|n|\to\infty}\hat f(n) = 0$, $\lim_{n\to+\infty} a_n = 0$ and $\lim_{n\to+\infty} b_n = 0$. This result is known as the Riemann–Lebesgue lemma.

- A doubly infinite sequence $\{a_n\}$ in c0(Z) is the sequence of Fourier coefficients of a function in L1([0, 2π]) if and only if it is a convolution of two sequences in $\ell^2(\mathbb{Z})$.

- If $f \in C^1(\mathbb{T})$, then the Fourier coefficients of the derivative f′ can be expressed in terms of the Fourier coefficients of the function f, via the formula $\widehat{f'}(n) = in\,\hat f(n)$.

- If $f \in C^k(\mathbb{T})$, then $\widehat{f^{(k)}}(n) = (in)^k \hat f(n)$. In particular, since $\widehat{f^{(k)}}(n)$ tends to zero, we have that $|n|^k \hat f(n)$ tends to zero, which means that the Fourier coefficients converge to zero faster than $|n|^{-k}$.

- Parseval's theorem. If f belongs to L2([−π, π]), then $\sum_{n=-\infty}^{\infty} |\hat f(n)|^2 = \frac{1}{2\pi}\int_{-\pi}^{\pi} |f(x)|^2\,dx$.

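- Plancherel's theorem. If $c_0, c_{\pm 1}, c_{\pm 2}, \ldots$ are coefficients and $\sum_{n=-\infty}^{\infty} |c_n|^2 < \infty$, then there is a unique function $f \in L^2([-\pi, \pi])$ such that $\hat f(n) = c_n$ for every n.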

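- The first convolution theorem states that if f and g are in L1([−π, π]), the Fourier series coefficients of the 2π-periodic convolution of f and g are given by $\widehat{f * g}(n) = 2\pi\, \hat f(n)\, \hat g(n)$, where $(f * g)(x) = \int_{-\pi}^{\pi} f(u)\, g(x - u)\,du$, with g extended periodically outside [−π, π].

- The second convolution theorem states that the Fourier series coefficients of the product of f and g are given by the discrete convolution of the $\hat f$ and $\hat g$ sequences: $\widehat{f g}(n) = \sum_{k=-\infty}^{\infty} \hat f(k)\, \hat g(n - k)$.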
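Several of these properties can be checked numerically. The Python sketch below assumes the normalization $\hat f(n) = \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)e^{-inx}\,dx$ and two hypothetical trigonometric polynomials, f(x) = 1 + 2 cos x and g(x) = sin 2x. Sampling M equally spaced points and averaging gives their coefficients exactly, up to rounding, because all the frequencies involved are far below M. The sketch then compares the derivative rule, Parseval's theorem, the even/odd rules and the second convolution theorem term by term.

```python
import cmath
import math

M = 64  # equispaced samples per period


def coeff(f, n, m=M):
    """hat f(n) = (1/2pi) * integral of f(x) e^{-inx} over [-pi, pi), by the rectangle rule."""
    total = 0j
    for k in range(m):
        x = -math.pi + 2 * math.pi * k / m
        total += f(x) * cmath.exp(-1j * n * x)
    return total / m


def f(x):
    return 1 + 2 * math.cos(x)   # even: hat f(0) = hat f(1) = hat f(-1) = 1


def df(x):
    return -2 * math.sin(x)      # derivative of f


def g(x):
    return math.sin(2 * x)       # odd: hat g(2) = -i/2, hat g(-2) = i/2


def max_error(pairs):
    return max(abs(a - b) for a, b in pairs)


if __name__ == "__main__":
    ns = range(-6, 7)
    # Derivative rule: hat{f'}(n) = i n hat f(n)
    print(max_error((coeff(df, n), 1j * n * coeff(f, n)) for n in ns))
    # Second convolution theorem: hat{fg}(n) = sum_k hat f(k) hat g(n - k)
    print(max_error((coeff(lambda x: f(x) * g(x), n),
                     sum(coeff(f, k) * coeff(g, n - k) for k in ns)) for n in ns))
    # Parseval: sum |hat f(n)|^2 equals the mean of |f|^2 over a period (here 3)
    print(sum(abs(coeff(f, n)) ** 2 for n in ns))
```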

Compact groups

One of the interesting properties of the Fourier transform which we have mentioned is that it carries convolutions to pointwise products. If that is the property which we seek to preserve, one can produce Fourier series on any compact group. Typical examples include those classical groups that are compact. This generalizes the Fourier transform to all spaces of the form L2(G), where G is a compact group, in such a way that the Fourier transform carries convolutions to pointwise products. The Fourier series exists and converges in similar ways to the [−π, π] case. An alternative extension to compact groups is the Peter–Weyl theorem, which proves results about representations of compact groups analogous to those about finite groups.

Riemannian manifolds

The atomic orbitals of chemistry are partially described by spherical harmonics, which can be used to produce Fourier series on the sphere.

Locally compact Abelian groups

The generalization to compact groups discussed above does not generalize to noncompact, nonabelian groups. However, there is a straightforward generalization to Locally Compact Abelian (LCA) groups. This generalizes the Fourier transform to L1(G) or L2(G), where G is an LCA group. If G is compact, one also obtains a Fourier series, which converges similarly to the [−π, π] case, but if G is noncompact, one obtains instead a Fourier integral. This generalization yields the usual Fourier transform when the underlying locally compact Abelian group is R.

Approximation and convergence of Fourier series

An important question for the theory as well as applications is that of convergence. In particular, it is often necessary in applications to replace the infinite series by a finite one,

$$f_N(x) = \sum_{n=-N}^{N} \hat f(n)\, e^{inx}.$$

This is called a partial sum.

Least squares property

We say that p is a trigonometric polynomial of degree N when it is of the form

$$p(x) = \sum_{n=-N}^{N} p_n\, e^{inx}.$$

Theorem. The trigonometric polynomial $f_N$ is the unique best trigonometric polynomial of degree N approximating f(x), in the sense that, for any trigonometric polynomial $p \neq f_N$ of degree N, we have

$$\|f_N - f\|_2 < \|p - f\|_2,$$

where the Hilbert space norm is defined as

$$\|g\|_2 = \sqrt{\frac{1}{2\pi}\int_{-\pi}^{\pi} |g(x)|^2\,dx}.$$
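The least-squares property can also be observed numerically. The Python sketch below assumes a hypothetical target f(x) = x on (−π, π), sampled on a uniform midpoint grid. On this grid the discrete exponentials e^{inx} stay orthonormal for the small degrees used. The sketch computes the degree-N partial sum by projection, then perturbs its coefficients at random. Every perturbed polynomial has a larger discrete L2 error. The squared errors also satisfy the Pythagorean relation ‖f − p‖² = ‖f − f_N‖² + ‖f_N − p‖² that underlies the theorem. This is a discrete analogue of the continuous statement, not a proof of it.

```python
import cmath
import math
import random


def midpoint_grid(m):
    """m equally spaced midpoints covering (-pi, pi)."""
    return [-math.pi + (k + 0.5) * 2 * math.pi / m for k in range(m)]


def project(values, xs, degree):
    """Discrete Fourier coefficients c_n for |n| <= degree (orthogonal projection onto e^{inx})."""
    m = len(xs)
    return {n: sum(v * cmath.exp(-1j * n * x) for v, x in zip(values, xs)) / m
            for n in range(-degree, degree + 1)}


def evaluate(coeffs, x):
    """Value of the trigonometric polynomial sum_n c_n e^{inx} at x."""
    return sum(c * cmath.exp(1j * n * x) for n, c in coeffs.items())


def l2_error(values, xs, coeffs):
    """Discrete analogue of ||f - p||_2 = sqrt((1/2pi) * integral of |f - p|^2)."""
    return math.sqrt(sum(abs(v - evaluate(coeffs, x)) ** 2 for v, x in zip(values, xs)) / len(xs))


if __name__ == "__main__":
    xs = midpoint_grid(1024)
    values = list(xs)                 # samples of the hypothetical target f(x) = x
    best = project(values, xs, 8)     # the degree-8 partial sum f_N
    best_err = l2_error(values, xs, best)
    rng = random.Random(0)
    for _ in range(5):
        delta = {n: complex(rng.gauss(0, 0.1), rng.gauss(0, 0.1)) for n in best}
        p = {n: c + delta[n] for n, c in best.items()}
        print(best_err < l2_error(values, xs, p))   # expected: True
```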

Convergence

Because of the least squares property, and because of the completeness of the Fourier basis, we obtain an elementary convergence result.

Theorem. If f belongs to L2([−π, π]), then f∞ converges to f in L2([−π, π]), that is, $\|f_N - f\|_2$ converges to 0 as N → ∞.

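We have already mentioned that if f is continuously differentiable, then $(in)\hat f(n)$ is the nth Fourier coefficient of the derivative f′. It follows, essentially from the Cauchy–Schwarz inequality, that f∞ is absolutely summable: $\sum_{n\neq 0}|\hat f(n)| \le \left(\sum_{n} |n\hat f(n)|^2\right)^{1/2}\left(\sum_{n\neq 0} n^{-2}\right)^{1/2}$, and the first factor is finite by Parseval's theorem applied to f′. The sum of this series is a continuous function, equal to f, since the Fourier series converges in the mean to f.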

Theorem. If $f \in C^1(\mathbb{T})$, then f∞ converges to f uniformly (and hence also pointwise).

This result can be proven easily if f is further assumed to be C2, since in that case $n^2\hat f(n)$ tends to zero as n → ∞. More generally, the Fourier series is absolutely summable, thus converges uniformly to f, provided that f satisfies a Hölder condition of order α > ½. In the absolutely summable case, the inequality $\sup_x |f(x) - f_N(x)| \le \sum_{|n| > N} |\hat f(n)|$ proves uniform convergence.

Many other results concerning the convergence of Fourier series are known, ranging from the moderately simple result that the series converges at x if f is differentiable at x, to Lennart Carleson's much more sophisticated result that the Fourier series of an L2 function actually converges almost everywhere.

These theorems, and informal variations of them that don't specify the convergence conditions, are sometimes referred to generically as "Fourier's theorem" or "the Fourier theorem".

Divergence

Since Fourier series have such good convergence properties, many people are surprised by some of the negative results. For example, the Fourier series of a continuous T-periodic function need not converge pointwise. The uniform boundedness principle yields a simple non-constructive proof of this fact.

In 1922, Andrey Kolmogorov published an article titled "Une série de Fourier-Lebesgue divergente presque partout" in which he gave an example of a Lebesgue-integrable function whose Fourier series diverges almost everywhere. He later constructed an example of an integrable function whose Fourier series diverges everywhere (Katznelson 1976).

X . III

GAMMA FUNCTION

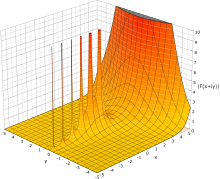

Gamma function

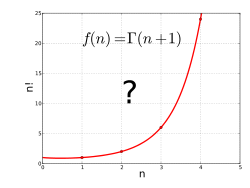

In mathematics, the gamma function (represented by Γ, the capital letter gamma of the Greek alphabet) is an extension of the factorial function, with its argument shifted down by 1, to real and complex numbers. That is, if n is a positive integer,

$$\Gamma(n) = (n-1)!.$$

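The gamma function can be seen as a solution to the following interpolation problem: find a smooth curve that connects the points (x, y) given by y = (x − 1)! at the positive integer values for x.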

There are infinitely many continuous extensions of the factorial to non-integers: infinitely many curves can be drawn through any set of isolated points. The gamma function is the most useful solution in practice, being analytic (except at the non-positive integers), and it can be characterized in several ways. However, it is not the only analytic function which extends the factorial, as adding to it any analytic function which is zero on the positive integers, such as k sin mπx, will give another function with that property.

A more restrictive property than satisfying the above interpolation is to satisfy the recurrence relation defining a translated version of the factorial function,

$$f(1) = 1, \qquad f(x + 1) = x\, f(x),$$

for x equal to any positive real number.

The identity $\Gamma(z) = \Gamma(z+1)/z$ can be used (or, yielding the same result, analytic continuation can be used) to uniquely extend the integral formulation for Γ(z) to a meromorphic function defined for all complex numbers z, except integers less than or equal to zero.

It is this extended version that is commonly referred to as the gamma function.
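Python's standard library exposes the gamma function for real arguments as `math.gamma`, which raises `ValueError` at the poles (the non-positive integers). The sketch below checks several facts against it:

- the factorial relation Γ(n) = (n − 1)!;
- the recurrence Γ(z + 1) = zΓ(z), including at negative non-integers, where only the extended function is defined;
- the value Γ(1/2) = √π;
- Euler's integral Γ(z) = ∫_0^∞ t^{z−1}e^{−t} dt for a positive argument. This is the integral formulation mentioned above, evaluated here with a simple Simpson rule truncated at t = 60, a cutoff chosen arbitrarily for this illustration.

```python
import math


def simpson(func, lo, hi, n=20000):
    """Composite Simpson rule with n (even) subintervals."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = func(lo) + func(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(lo + k * h)
    return total * h / 3


def euler_integral(z, cutoff=60.0):
    """Truncated Euler integral for real z >= 1 (so the integrand is finite at t = 0)."""
    return simpson(lambda t: t ** (z - 1) * math.exp(-t), 0.0, cutoff)


if __name__ == "__main__":
    print([math.gamma(n) == math.factorial(n - 1) for n in range(1, 11)])
    print(math.gamma(0.5), math.sqrt(math.pi))
    print(math.gamma(-0.5 + 1), -0.5 * math.gamma(-0.5))
    print(euler_integral(2.5), math.gamma(2.5))
```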

Specifically, for a fixed integer m, it is the case that

$$\lim_{n\to\infty} \frac{n!\,(n+1)^m}{(n+m)!} = 1.$$

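Similarly for the gamma function, the definition as an infinite product due to Euler is valid for all complex numbers z except the non-positive integers:

$$\Gamma(z) = \frac{1}{z}\prod_{n=1}^{\infty}\frac{\left(1+\frac{1}{n}\right)^z}{1+\frac{z}{n}}.$$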
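Euler's product converges slowly but is easy to evaluate. The Python sketch below restricts itself, for simplicity, to real z > 0 so that every factor is positive and the logarithms can be summed; this is an assumption of the sketch, not a limitation of the formula. It truncates the product and compares the result with `math.gamma`. The relative error shrinks roughly in proportion to 1/(number of factors).

```python
import math


def gamma_euler_product(z, factors=100000):
    """Euler's product (1/z) * prod_{n=1}^{N} (1 + 1/n)^z / (1 + z/n), summed in log space.

    Assumes real z > 0 so that every factor is positive.
    """
    if z <= 0:
        raise ValueError("this sketch only handles real z > 0")
    log_value = -math.log(z)
    for n in range(1, factors + 1):
        log_value += z * math.log1p(1 / n) - math.log1p(z / n)
    return math.exp(log_value)


if __name__ == "__main__":
    for z in (0.5, 1.0, 2.5, 4.0):
        approx = gamma_euler_product(z)
        print(z, approx, math.gamma(z), abs(approx / math.gamma(z) - 1))
```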

A somewhat unusual parametrization of the gamma function in terms of Laguerre polynomials is given by

It might be tempting to generalize the result that Γ(1/2) = √π by looking for a formula for other individual values Γ(r) where r is rational. However, these numbers are not known to be expressible by themselves in terms of elementary functions. It has been proved that Γ(n + r) is a transcendental number and algebraically independent of π for any integer n and each of the fractions r = 1/6, 1/4, 1/3, 2/3, 3/4, 5/6. In general, when computing values of the gamma function, we must settle for numerical approximations.

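Another useful limit for asymptotic approximations is

$$\lim_{n\to\infty}\frac{\Gamma(n+\alpha)}{\Gamma(n)\,n^{\alpha}} = 1, \qquad \alpha \in \mathbb{C}.$$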

Using the identity

The behavior of Γ(x) for an increasing positive variable x is simple: it grows quickly, faster than an exponential function. Asymptotically as x → ∞, the magnitude of the gamma function is given by Stirling's formula

$$\Gamma(x+1) \sim \sqrt{2\pi x}\left(\frac{x}{e}\right)^{x}.$$
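A quick numerical check of Stirling's formula is shown below. The Python sketch works with `math.lgamma`, the logarithm of |Γ|, so that large arguments do not overflow. It prints the ratio of Γ(x + 1) to the Stirling approximation. The ratio tends to 1, and its logarithm behaves like 1/(12x), the first correction term of the full Stirling series.

```python
import math


def stirling_log_ratio(x):
    """ln( Gamma(x + 1) / (sqrt(2 pi x) (x / e)^x) ), computed with lgamma to avoid overflow."""
    return math.lgamma(x + 1) - (0.5 * math.log(2 * math.pi * x) + x * math.log(x) - x)


if __name__ == "__main__":
    for x in (1, 10, 100, 1000):
        ratio = math.exp(stirling_log_ratio(x))
        print(f"x={x:5d}  Gamma(x+1) / Stirling = {ratio:.8f}")
```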


We can rewrite this definition as:

Using the pi function $\Pi(z) = \Gamma(z+1)$, the reflection formula takes on the form

$$\Pi(z)\,\Pi(-z) = \frac{\pi z}{\sin(\pi z)}.$$
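The reflection formula can be spot-checked at a few real non-integer points. The Python sketch below builds the pi function on top of `math.gamma`; the helper names are illustrative. The printed gaps are at the level of floating-point rounding.

```python
import math


def pi_function(z):
    """The pi function Pi(z) = Gamma(z + 1), for real z."""
    return math.gamma(z + 1)


def reflection_gap(z):
    """Difference between Pi(z) Pi(-z) and pi z / sin(pi z) for real non-integer z."""
    return pi_function(z) * pi_function(-z) - math.pi * z / math.sin(math.pi * z)


if __name__ == "__main__":
    for z in (0.1, 0.25, 0.5, 0.75, 1.5):
        print(z, reflection_gap(z))
```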

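The volume of an n-ellipsoid with radii r1, …, rn can be expressed as

$$V_n(r_1, \ldots, r_n) = \frac{\pi^{n/2}}{\Gamma\!\left(\frac{n}{2}+1\right)}\prod_{k=1}^{n} r_k.$$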
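A Python sketch of this formula follows; it requires Python 3.8 or later for `math.prod`. A crude Monte Carlo estimate serves as an independent sanity check, and the radii used are arbitrary. For n = 2 and n = 3 the formula reduces to the familiar πr1r2 and (4/3)πr1r2r3.

```python
import math
import random


def ellipsoid_volume(radii):
    """pi^(n/2) / Gamma(n/2 + 1) times the product of the radii."""
    n = len(radii)
    return math.pi ** (n / 2) / math.gamma(n / 2 + 1) * math.prod(radii)


def monte_carlo_volume(radii, points=200000, seed=0):
    """Estimate the same volume by sampling the bounding box uniformly."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(points):
        if sum((rng.uniform(-r, r) / r) ** 2 for r in radii) <= 1:
            inside += 1
    box = math.prod(2 * r for r in radii)
    return box * inside / points


if __name__ == "__main__":
    radii = [1.0, 2.0, 0.5, 1.5]
    print(ellipsoid_volume(radii), monte_carlo_volume(radii))
```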

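In a certain sense, the ln(Γ) function is the more natural form; it makes some intrinsic attributes of the function clearer. A striking example is the Taylor series of ln(Γ) about 1:

$$\ln\Gamma(z+1) = -\gamma z + \sum_{k=2}^{\infty}\frac{\zeta(k)}{k}(-z)^k, \qquad |z| < 1,$$

where γ is the Euler–Mascheroni constant and ζ is the Riemann zeta function.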

So, using the following property:

It can also be written in terms of the Hurwitz zeta function:[14][15]

The gamma function can be computed to fixed precision for Re(z) ∈ [1,2] by applying integration by parts to Euler's integral. For any positive number x the gamma function can be written

The only fast algorithm for calculation of the Euler gamma function for any algebraic argument (including rational) was constructed by E.A. Karatsuba,

For arguments that are integer multiples of 1/24, the gamma function can also be evaluated quickly using arithmetic-geometric mean iterations (see particular values of the gamma function).

The primary reason for the gamma function's usefulness in such contexts is the prevalence of expressions of the type f(t) e−g(t) which describe processes that decay exponentially in time or space. Integrals of such expressions can occasionally be solved in terms of the gamma function when no elementary solution exists. For example, if f is a power function and g is a linear function, a simple change of variables gives the evaluation

It is of course frequently useful to take limits of integration other than 0 and ∞ to describe the cumulation of a finite process, in which case the ordinary gamma function is no longer a solution; the solution is then called an incomplete gamma function. (The ordinary gamma function, obtained by integrating across the entire positive real line, is sometimes called the complete gamma function for contrast.)

An important category of exponentially decaying functions is that of Gaussian functions

The integrals we have discussed so far involve transcendental functions, but the gamma function also arises from integrals of purely algebraic functions. In particular, the arc lengths of ellipses and of the lemniscate, which are curves defined by algebraic equations, are given by elliptic integrals that in special cases can be evaluated in terms of the gamma function. The gamma function can also be used to calculate "volume" and "area" of n-dimensional hyperspheres.

Another important special case is that of the beta function

We can replace the factorial by a gamma function to extend any such formula to the complex numbers. Generally, this works for any product wherein each factor is a rational function of the index variable, by factoring the rational function into linear expressions. If P and Q are monic polynomials of degree m and n with respective roots p1, …, pm and q1, …, qn, we have

By taking limits, certain rational products with infinitely many factors can be evaluated in terms of the gamma function as well. Due to the Weierstrass factorization theorem, analytic functions can be written as infinite products, and these can sometimes be represented as finite products or quotients of the gamma function. We have already seen one striking example: the reflection formula essentially represents the sine function as the product of two gamma functions. Starting from this formula, the exponential function as well as all the trigonometric and hyperbolic functions can be expressed in terms of the gamma function.

More functions yet, including the hypergeometric function and special cases thereof, can be represented by means of complex contour integrals of products and quotients of the gamma function, called Mellin-Barnes integrals.

James Stirling, a contemporary of Euler, also attempted to find a continuous expression for the factorial and came up with what is now known as Stirling's formula. Although Stirling's formula gives a good estimate of n!, including for non-integer n, it does not provide the exact value. Extensions of his formula that correct the error were given by Stirling himself and by Jacques Philippe Marie Binet.

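Karl Weierstrass further established the role of the gamma function in complex analysis, starting from yet another product representation,

$$\Gamma(z) = \frac{e^{-\gamma z}}{z}\prod_{k=1}^{\infty}\left(1+\frac{z}{k}\right)^{-1} e^{z/k},$$

where γ is the Euler–Mascheroni constant.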

The name gamma function and the symbol Γ were introduced by Adrien-Marie Legendre around 1811; Legendre also rewrote Euler's integral definition in its modern form. Although the symbol is an upper-case Greek gamma, there is no accepted standard for whether the function name should be written "gamma function" or "Gamma function" (some authors simply write "Γ-function"). The alternative "pi function" notation Π(z) = z! due to Gauss is sometimes encountered in older literature, but Legendre's notation is dominant in modern works.

It is reasonable to ask why we distinguish between the "ordinary factorial" and the gamma function by using distinct symbols, and particularly why the gamma function should be normalized to Γ(n + 1) = n! instead of simply using "Γ(n) = n!". Consider that the notation for exponents, $x^n$, has been generalized from integers to complex numbers $x^z$ without any change. Legendre's motivation for the normalization does not appear to be known, and it has been criticized as cumbersome by some (the 20th-century mathematician Cornelius Lanczos, for example, called it "void of any rationality" and would instead use z!). Legendre's normalization does simplify a few formulae, but complicates most others. From a modern point of view, the Legendre normalization of the gamma function is the integral of the additive character $e^{-x}$ against the multiplicative character $x^z$ with respect to the Haar measure $dx/x$ on the Lie group $\mathbb{R}^+$. Thus this normalization makes it clearer that the gamma function is a continuous analogue of a Gauss sum.

One way to prove that a given formula defines the gamma function would be to find a differential equation that characterizes it. Most special functions in applied mathematics arise as solutions to differential equations whose solutions are unique. However, the gamma function does not appear to satisfy any simple differential equation. Otto Hölder proved in 1887 that the gamma function does not satisfy any algebraic differential equation, by showing that a solution to such an equation could not satisfy the gamma function's recurrence formula; this makes it a transcendentally transcendental function. This result is known as Hölder's theorem.

A definite and generally applicable characterization of the gamma function was not given until 1922. Harald Bohr and Johannes Mollerup then proved what is known as the Bohr–Mollerup theorem: that the gamma function is the unique solution to the factorial recurrence relation that is positive and logarithmically convex for positive z and whose value at 1 is 1 (a function is logarithmically convex if its logarithm is convex).

The Bohr–Mollerup theorem is useful because it is relatively easy to prove logarithmic convexity for any of the different formulas used to define the gamma function. Taking things further, instead of defining the gamma function by any particular formula, we can choose the conditions of the Bohr–Mollerup theorem as the definition, and then pick any formula we like that satisfies the conditions as a starting point for studying the gamma function. This approach was used by the Bourbaki group.

Borwein & Corless review three centuries of work on the gamma function.

Tables of complex values of the gamma function, as well as hand-drawn graphs, were given in Tables of Higher Functions by Jahnke and Emde, first published in Germany in 1909. According to Michael Berry, "the publication in J&E of a three-dimensional graph showing the poles of the gamma function in the complex plane acquired an almost iconic status."

There was in fact little practical need for anything but real values of the gamma function until the 1930s, when applications for the complex gamma function were discovered in theoretical physics. As electronic computers became available for the production of tables in the 1950s, several extensive tables for the complex gamma function were published to meet the demand, including a table accurate to 12 decimal places from the U.S. National Bureau of Standards.

Abramowitz and Stegun became the standard reference for this and many other special functions after its publication in 1964.

Double-precision floating-point implementations of the gamma function and its logarithm are now available in most scientific computing software and special functions libraries, for example TK Solver, Matlab, GNU Octave, and the GNU Scientific Library. The gamma function was also added to the C standard library, as tgamma and lgamma in math.h (since C99). Arbitrary-precision implementations are available in most computer algebra systems, such as Mathematica and Maple. PARI/GP, MPFR and MPFUN contain free arbitrary-precision implementations. A little-known feature of the calculator app included with the Android operating system is that it will accept fractional values as input to the factorial function and return the equivalent gamma function value. The same is true for Windows Calculator (in scientific mode).
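Python's standard library is another example: since Python 3.2 its `math` module has provided double-precision `math.gamma` and `math.lgamma`. The short sketch below checks a few values and shows why the logarithmic version matters in practice: Γ(200) = 199! does not fit in a double, while its logarithm does.

```python
import math

# Gamma(n + 1) reproduces n! at the non-negative integers.
matches_factorial = all(
    math.isclose(math.gamma(n + 1), math.factorial(n)) for n in range(20)
)

# A non-integer value: Gamma(1/2) = sqrt(pi).
gamma_half = math.gamma(0.5)

# Gamma(200) = 199! is about 3.9e372, far beyond the largest double (about 1.8e308),
# so math.gamma raises OverflowError, while its logarithm is easy to represent.
try:
    math.gamma(200.0)
    overflowed = False
except OverflowError:
    overflowed = True
log_gamma_200 = math.lgamma(200.0)

print(matches_factorial, gamma_half, overflowed, log_gamma_200)
```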

X . III

Information theory

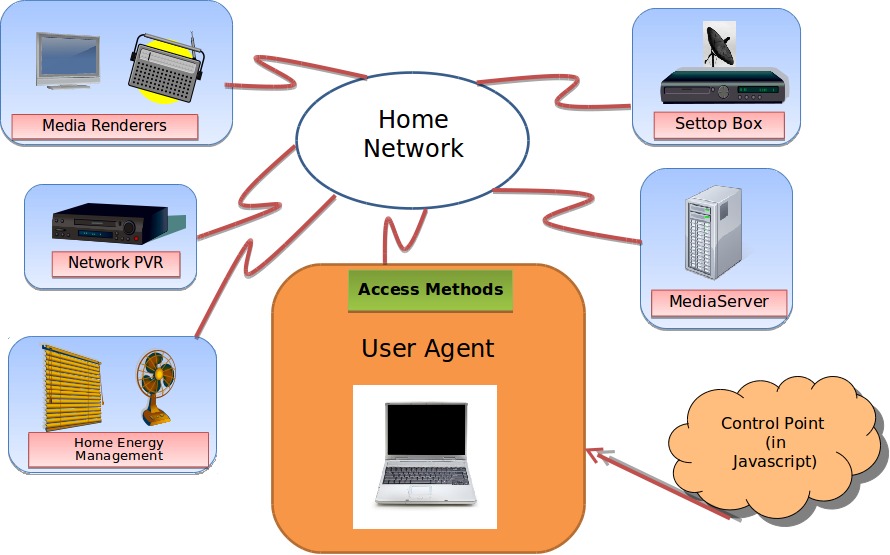

Information theory studies the quantification, storage, and communication of information. It was originally proposed by Claude E. Shannon in 1948 to find fundamental limits on signal processing and communication operations such as data compression, in a landmark paper entitled "A Mathematical Theory of Communication". Now this theory has found applications in many other areas, including statistical inference, natural language processing, cryptography, neurobiology,[1] the evolution[2] and function[3] of molecular codes, model selection in ecology,[4] thermal physics,[5] quantum computing, linguistics, plagiarism detection,[6] pattern recognition, and anomaly detection.[7]

A key measure in information theory is "entropy". Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual information, channel capacity, error exponents, and relative entropy.

Applications of fundamental topics of information theory include lossless data compression (e.g. ZIP files), lossy data compression (e.g. MP3s and JPEGs), and channel coding (e.g. for digital subscriber line (DSL)).

The field is at the intersection of mathematics, statistics, computer science, physics, neurobiology, and electrical engineering. Its impact has been crucial to the success of the Voyager missions to deep space, the invention of the compact disc, the feasibility of mobile phones, the development of the Internet, the study of linguistics and of human perception, the understanding of black holes, and numerous other fields. Important sub-fields of information theory include source coding, channel coding, algorithmic complexity theory, algorithmic information theory, information-theoretic security, and measures of information.

Information theory is closely associated with a collection of pure and applied disciplines that have been investigated and reduced to engineering practice under a variety of rubrics throughout the world over the past half century or more: adaptive systems, anticipatory systems, artificial intelligence, complex systems, complexity science, cybernetics, informatics, machine learning, along with systems sciences of many descriptions. Information theory is a broad and deep mathematical theory, with equally broad and deep applications, amongst which is the vital field of coding theory.

Coding theory is concerned with finding explicit methods, called codes, for increasing the efficiency and reducing the error rate of data communication over noisy channels to near the channel capacity. These codes can be roughly subdivided into data compression (source coding) and error-correction (channel coding) techniques. In the latter case, it took many years to find the methods Shannon's work proved were possible. A third class of information theory codes are cryptographic algorithms (both codes and ciphers). Concepts, methods and results from coding theory and information theory are widely used in cryptography and cryptanalysis. See the article ban (unit) for a historical application.

Information theory is also used in information retrieval, intelligence gathering, gambling, statistics, and even in musical composition.

The landmark event that established the discipline of information theory and brought it to immediate worldwide attention was the publication of Claude E. Shannon's classic paper "A Mathematical Theory of Communication" in the Bell System Technical Journal in July and October 1948.

Prior to this paper, limited information-theoretic ideas had been developed at Bell Labs, all implicitly assuming events of equal probability. Harry Nyquist's 1924 paper, Certain Factors Affecting Telegraph Speed, contains a theoretical section quantifying "intelligence" and the "line speed" at which it can be transmitted by a communication system, giving the relation W = K log m (recalling Boltzmann's constant), where W is the speed of transmission of intelligence, m is the number of different voltage levels to choose from at each time step, and K is a constant. Ralph Hartley's 1928 paper, Transmission of Information, uses the word information as a measurable quantity, reflecting the receiver's ability to distinguish one sequence of symbols from any other, thus quantifying information as H = log Sⁿ = n log S, where S was the number of possible symbols, and n the number of symbols in a transmission. The unit of information was therefore the decimal digit, much later renamed the hartley in his honour as a unit or scale or measure of information. Alan Turing in 1940 used similar ideas as part of the statistical analysis of the breaking of the German second world war Enigma ciphers.

Much of the mathematics behind information theory with events of different probabilities was developed for the field of thermodynamics by Ludwig Boltzmann and J. Willard Gibbs. Connections between information-theoretic entropy and thermodynamic entropy, including the important contributions by Rolf Landauer in the 1960s, are explored in Entropy in thermodynamics and information theory.

In Shannon's groundbreaking paper, the work for which had been substantially completed at Bell Labs by the end of 1944, Shannon for the first time introduced the qualitative and quantitative model of communication as a statistical process underlying information theory, opening with the assertion that

"The fundamental problem of communication is that of reproducing at one point, either exactly or approximately, a message selected at another point."

The choice of logarithmic base in the following formulae determines the unit of information entropy that is used. A common unit of information is the bit, based on the binary logarithm. Other units include the nat, which is based on the natural logarithm, and the hartley, which is based on the common logarithm.

In what follows, an expression of the form p log p is considered by convention to be equal to zero whenever p = 0. This is justified because $\lim_{p \to 0^+} p \log p = 0$ for any logarithmic base.

Intuitively, the entropy H(X) of a discrete random variable X is a measure of the amount of uncertainty associated with the value of X when only its distribution is known.

The entropy of a source that emits a sequence of N symbols that are independent and identically distributed (iid) is N·H bits (per message of N symbols). If the source data symbols are identically distributed but not independent, the entropy of a message of length N will be less than N·H.

If one transmits 1000 bits (0s and 1s), and the value of each of these bits is known to the receiver (has a specific value with certainty) ahead of transmission, it is clear that no information is transmitted. If, however, each bit is independently equally likely to be 0 or 1, 1000 shannons of information (more often called bits) have been transmitted. Between these two extremes, information can be quantified as follows. If 𝕏 is the set of all messages {x1, …, xn} that X could be, and p(x) is the probability of some x ∈ 𝕏, then the entropy, H, of X is defined:

$$H(X) = \mathbb{E}_X[I(x)] = -\sum_{x \in \mathbb{X}} p(x) \log p(x).$$

Here $I(x)$ is the self-information, which is the entropy contribution of an individual message, and $\mathbb{E}_X$ is the expected value.
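The definition H(X) = −Σ p(x) log p(x) translates directly into code. This minimal sketch (the function name `entropy` is our own) takes a list of probabilities, skips zero terms by the 0 log 0 = 0 convention, and reproduces the coin-versus-die comparison from the start of this section: 1 bit for a fair coin and log₂ 6 ≈ 2.585 bits for a fair die. The `base` argument selects the unit (2 for bits, e for nats, 10 for hartleys).

```python
import math


def entropy(probs, base=2):
    """Shannon entropy H = -sum p log p of a discrete distribution.

    Terms with p == 0 are skipped, following the convention 0 log 0 = 0.
    base=2 gives bits (shannons), base=math.e gives nats, base=10 gives hartleys.
    """
    return sum(-p * math.log(p, base) for p in probs if p > 0)


coin = entropy([0.5, 0.5])                     # fair coin: 1 bit
die = entropy([1 / 6] * 6)                     # fair die: log2(6) bits
certain = entropy([1.0, 0.0])                  # an outcome known in advance: no information
die_nats = entropy([1 / 6] * 6, base=math.e)   # the same die measured in nats
print(coin, die, certain, die_nats)
```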


The special case of information entropy for a random variable with two outcomes is the binary entropy function, usually taken to the logarithmic base 2, thus having the shannon (Sh) as unit:

$$H_\mathrm{b}(p) = -p\log_2 p - (1-p)\log_2(1-p).$$

For example, if (X, Y) represents the position of a chess piece, with X the row and Y the column, then the joint entropy of the row of the piece and the column of the piece will be the entropy of the position of the piece.
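For the chess example, a hypothetical piece that is equally likely to stand on any of the 64 squares gives H(X, Y) = 6 bits for its position and 3 bits each for its row and column. The sketch below computes the joint and marginal entropies from a table of probabilities p(x, y); the helper names are our own.

```python
import math
from collections import defaultdict


def entropy(probs):
    """Entropy in bits, skipping zero-probability terms (0 log 0 = 0)."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def joint_and_marginal_entropies(joint):
    """Given a dict mapping (x, y) -> p(x, y), return H(X, Y), H(X) and H(Y) in bits."""
    px, py = defaultdict(float), defaultdict(float)
    for (x, y), p in joint.items():
        px[x] += p   # marginal of X: sum over y
        py[y] += p   # marginal of Y: sum over x
    return entropy(joint.values()), entropy(px.values()), entropy(py.values())


# Hypothetical example: a piece equally likely to stand on any of the 64 squares.
board = {(row, col): 1 / 64 for row in range(8) for col in range(8)}
h_xy, h_x, h_y = joint_and_marginal_entropies(board)
print(h_xy, h_x, h_y)  # 6 bits for the square, 3 bits each for row and column
```

Because row and column are independent here, H(X, Y) = H(X) + H(Y); when the coordinates are dependent, the joint entropy is smaller than that sum.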

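A basic property of the mutual information is that

$$I(X;Y) = H(X) - H(X \mid Y).$$

That is, knowing Y, we can save an average of I(X; Y) bits in encoding X compared to not knowing Y.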

Mutual information is symmetric:

$$I(X;Y) = I(Y;X) = H(X) + H(Y) - H(X,Y).$$
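Computed directly from a joint table, I(X;Y) = Σ p(x, y) log₂[p(x, y)/(p(x)p(y))], and swapping the roles of X and Y leaves the value unchanged. The sketch below (names are our own) uses a hypothetical fair bit X sent through a channel that flips it with probability 0.1; the result, about 0.531 bits, equals 1 + 0.1 log₂ 0.1 + 0.9 log₂ 0.9.

```python
import math


def mutual_information(joint):
    """I(X;Y) = sum over (x, y) of p(x,y) * log2( p(x,y) / (p(x) p(y)) ), in bits.

    `joint` maps (x, y) -> p(x, y); zero-probability cells contribute nothing.
    """
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0.0) + p
        py[y] = py.get(y, 0.0) + p
    return sum(p * math.log2(p / (px[x] * py[y]))
               for (x, y), p in joint.items() if p > 0)


# Hypothetical example: X is a fair bit and Y is X flipped with probability 0.1.
flip = 0.1
joint = {(x, y): 0.5 * (1 - flip if x == y else flip) for x in (0, 1) for y in (0, 1)}
swapped = {(y, x): p for (x, y), p in joint.items()}  # same pair, roles exchanged

i_xy = mutual_information(joint)
i_yx = mutual_information(swapped)
print(i_xy, i_yx)  # equal up to rounding, as I(X;Y) = I(Y;X) requires
```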

Another interpretation of the KL divergence is the "unnecessary surprise" introduced by a prior from the truth: suppose a number X is about to be drawn randomly from a discrete set with probability distribution p(x). If Alice knows the true distribution p(x), while Bob believes (has a prior) that the distribution is q(x), then Bob will be more surprised than Alice, on average, upon seeing the value of X. The KL divergence is the (objective) expected value of Bob's (subjective) surprisal minus Alice's surprisal, measured in bits if the log is in base 2. In this way, the extent to which Bob's prior is "wrong" can be quantified in terms of how "unnecessarily surprised" it is expected to make him.
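Alice and Bob's "unnecessary surprise" can be computed directly. The sketch below uses a hypothetical loaded die: Alice's expected surprisal is the entropy of the true distribution p, Bob's is the cross-entropy of p relative to his fair-die belief q, and their difference equals D_KL(p ‖ q) = Σ p(x) log₂(p(x)/q(x)) bits. The function names are our own.

```python
import math


def cross_entropy(p, q):
    """Average surprisal -log2 q(x), in bits, when x is actually drawn from p."""
    return sum(-pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    """D_KL(p || q) = sum p(x) log2(p(x) / q(x)); needs q(x) > 0 wherever p(x) > 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical loaded die: Alice knows the true distribution p, Bob assumes a fair die q.
p = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]
q = [1 / 6] * 6

bob_surprise = cross_entropy(p, q)     # Bob's expected surprisal
alice_surprise = cross_entropy(p, p)   # Alice's expected surprisal (the entropy of p)
print(bob_surprise - alice_surprise, kl_divergence(p, q))  # the same quantity
```

For this example the divergence is about 0.424 bits; it is zero only when Bob's belief matches the truth.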

Coding theory is one of the most important and direct applications of information theory. It can be subdivided into source coding theory and channel coding theory. Using a statistical description for data, information theory quantifies the number of bits needed to describe the data, which is the information entropy of the source.


It is common in information theory to speak of the "rate" or "entropy" of a language. This is appropriate, for example, when the source of information is English prose. The rate of a source of information is related to its redundancy and how well it can be compressed, the subject of source coding.

Consider the communications process over a discrete channel. A simple model of the process is:

Message W → Encoder → X → Channel p(y|x) → Y → Decoder → Estimated message Ŵ

Here X represents the space of messages transmitted, and Y the space of messages received during a unit time over our channel. Let p(y|x) be the conditional probability distribution function of Y given X. We will consider p(y|x) to be an inherent fixed property of our communications channel (representing the nature of the noise of our channel). Then the joint distribution of X and Y is completely determined by our channel and by our choice of f(x), the marginal distribution of messages we choose to send over the channel. Under these constraints, we would like to maximize the rate of information, or the signal, we can communicate over the channel. The appropriate measure for this is the mutual information, and this maximum mutual information is called the channel capacity and is given by:

$$C = \max_{f} I(X;Y).$$
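For the binary symmetric channel, which flips each transmitted bit with probability ε, the maximization in C = max_f I(X;Y) can be done by brute force over the input distribution f = (1 − q, q). The sketch below (our own function names; the grid of 10 000 steps is an arbitrary choice) compares that numerical maximum with the well-known closed form 1 − h₂(ε), where h₂ is the binary entropy function.

```python
import math


def h2(p):
    """Binary entropy in bits, with h2(0) = h2(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_mutual_information(q, eps):
    """I(X;Y) for a binary symmetric channel with crossover probability eps
    when the input distribution is P(X = 1) = q, P(X = 0) = 1 - q."""
    p_y1 = q * (1 - eps) + (1 - q) * eps   # P(Y = 1)
    return h2(p_y1) - h2(eps)              # I = H(Y) - H(Y | X), and H(Y | X) = h2(eps)


def capacity_by_grid_search(eps, steps=10_000):
    """Approximate C = max over input distributions of I(X;Y) by scanning q on a grid."""
    return max(bsc_mutual_information(i / steps, eps) for i in range(steps + 1))


eps = 0.1
print(capacity_by_grid_search(eps), 1 - h2(eps))  # numerical maximum vs closed form
```

The maximum sits at the uniform input q = 1/2. At ε = 1/2 the output is independent of the input and the capacity drops to zero.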

Channel coding is concerned with finding such nearly optimal codes that can be used to transmit data over a noisy channel with a small coding error at a rate near the channel capacity.

Information theory leads us to believe it is much more difficult to keep secrets than it might first appear. A brute force attack can break systems based on asymmetric key algorithms or on most commonly used methods of symmetric key algorithms (sometimes called secret key algorithms), such as block ciphers. The security of all such methods currently comes from the assumption that no known attack can break them in a practical amount of time.

Information theoretic security refers to methods such as the one-time pad that are not vulnerable to such brute force attacks. In such cases, the positive conditional mutual information between the plaintext and ciphertext (conditioned on the key) can ensure proper transmission, while the unconditional mutual information between the plaintext and ciphertext remains zero, resulting in absolutely secure communications. In other words, an eavesdropper would not be able to improve his or her guess of the plaintext by gaining knowledge of the ciphertext but not of the key. However, as in any other cryptographic system, care must be used to correctly apply even information-theoretically secure methods; the Venona project was able to crack the one-time pads of the Soviet Union due to their improper reuse of key material.
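A minimal one-time pad sketch using Python's `secrets` module: encryption and decryption are the same XOR with a uniformly random key as long as the message. It also checks, exhaustively for a single bit with a hypothetical message bias of 0.8, that the mutual information between plaintext and ciphertext is zero when the key bit is uniform. The function name `one_time_pad` is our own, and this is an illustration, not a vetted cryptographic implementation.

```python
import math
import secrets
from itertools import product


def one_time_pad(data: bytes, key: bytes) -> bytes:
    """XOR each byte with the matching key byte; applying it twice restores the data."""
    if len(key) != len(data):
        raise ValueError("the key must be exactly as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))


message = b"attack at dawn"
key = secrets.token_bytes(len(message))   # uniformly random; must never be reused
ciphertext = one_time_pad(message, key)
recovered = one_time_pad(ciphertext, key)

# Single-bit check: a (hypothetically) biased message bit m, a uniform key bit k,
# and ciphertext c = m XOR k. Build the joint distribution of (m, c) and its
# mutual information.
p_one = 0.8
p_m = {0: 1 - p_one, 1: p_one}
joint = {}
for m, k in product((0, 1), repeat=2):
    c = m ^ k
    joint[(m, c)] = joint.get((m, c), 0.0) + p_m[m] * 0.5
p_c = {c: joint[(0, c)] + joint[(1, c)] for c in (0, 1)}
leak = sum(p * math.log2(p / (p_m[m] * p_c[c])) for (m, c), p in joint.items() if p > 0)
print(recovered == message, leak)   # the leak is zero up to rounding
```

Reusing `key` for a second message would break this guarantee, which is exactly the mistake exploited in the Venona case.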

The Intelligence Cycle

X . IIII

Field-Programmable Gate Array

A field-programmable gate array (FPGA) is a digital integrated circuit (IC) that is often used to implement digital circuits. FPGAs are semiconductor components consisting of programmable logic blocks and programmable interconnects. The logic blocks can implement the usual logic gate types (AND, OR, NOT) as well as more complex mathematical and combinational functions, such as decoders, adders, subtractors and multipliers. The blocks inside an FPGA can also contain memory elements, ranging from flip-flops (registers) to RAM (random-access memory).

FPGAs are particularly suitable for the computational processing of numerical integration algorithms. Implementing an algorithm on an FPGA can improve design efficiency by reducing the reliance on software programming. FPGA implementations need only small error corrections, and the design approach is technology-independent, so it can be applied to a wide variety of algorithms. An FPGA implementation is typically faster than a microcontroller implementation: the FPGA design is synthesized directly into hardware, whereas a microcontroller executes software instructions that control the hardware, so in the FPGA the delay is essentially only the propagation delay of the logic.

Modeling an FPGA design requires information about the level of abstraction and the type of model used. An FPGA designer must be able to work through several modeling stages and verify the results of the design model with the simulation models provided by the respective FPGA vendor.

Motivation

The gamma function can be seen as a solution to the following interpolation problem:

"Find a smooth curve that connects the points (x, y) given by y = (x − 1)! at the positive integer values for x."

There are infinitely many continuous extensions of the factorial to non-integers: infinitely many curves can be drawn through any set of isolated points. The gamma function is the most useful solution in practice, being analytic (except at the non-positive integers), and it can be characterized in several ways. However, it is not the only analytic function which extends the factorial, as adding to it any analytic function which is zero on the positive integers, such as k sin mπx, will give another function with that property.
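The non-uniqueness is easy to see numerically. The sketch below (function name ours) compares (x − 1)!, Γ(x) and Γ(x) + k sin(mπx) with k = m = 1: the last two agree with the factorial at the positive integers (up to rounding in `math.sin`) but separate in between.

```python
import math


def perturbed_gamma(x, k=1.0, m=1):
    """Gamma(x) + k*sin(m*pi*x): a different analytic function that agrees with
    Gamma(x) = (x - 1)! at every positive integer x, because sin(m*pi*n) = 0 there."""
    return math.gamma(x) + k * math.sin(m * math.pi * x)


for n in range(1, 8):
    print(n, math.factorial(n - 1), math.gamma(n), perturbed_gamma(n))

print(math.gamma(1.5), perturbed_gamma(1.5))  # the two curves differ between the integers
```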

A more restrictive property than satisfying the above interpolation is to satisfy the recurrence relation defining a translated version of the factorial function,

$$f(1) = 1, \qquad f(x+1) = x\,f(x),$$

for any positive real number x.

Definition

Main definition


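The notation Γ(z) is due to Legendre. If the real part of the complex number z is positive (Re(z) > 0), then the integral

$$\Gamma(z) = \int_0^\infty t^{z-1} e^{-t}\,dt$$

converges absolutely, and is known as the Euler integral of the second kind. Integrating by parts gives the identity

$$\Gamma(z+1) = z\,\Gamma(z).$$

Recognizing that Γ(1) = ∫₀^∞ e^{−t} dt = 1, it follows by induction that Γ(n) = (n − 1)! for every positive integer n.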

The identity Γ(z) = Γ(z + 1)/z can be used (or, yielding the same result, analytic continuation can be used) to uniquely extend the integral formulation for Γ(z) to a meromorphic function defined for all complex numbers z, except integers less than or equal to zero.

It is this extended version that is commonly referred to as the gamma function.
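To make the integral and its extension concrete, here is a sketch for real arguments only. It evaluates Euler's integral numerically on 1 ≤ x ≤ 2 after the substitution t = u², which gives Γ(x) = ∫₀^∞ 2u^{2x−1} e^{−u²} du and keeps the integrand well behaved at the origin, using composite Simpson's rule; every other non-pole real x is then reached with the recurrence Γ(x + 1) = xΓ(x). The cutoff `upper = 8`, the step count and the function names are our own choices.

```python
import math


def simpson(f, a, b, n):
    """Composite Simpson's rule on [a, b] using an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def gamma_integral_1_to_2(x, upper=8.0, n=4000):
    """Euler's integral for 1 <= x <= 2, written with t = u**2 as
    Gamma(x) = integral_0^inf 2 u**(2x - 1) exp(-u**2) du.
    The tail beyond u = upper is smaller than 1e-25 here and is ignored."""
    if not 1.0 <= x <= 2.0:
        raise ValueError("the integral form is used only for 1 <= x <= 2")
    return simpson(lambda u: 2.0 * u ** (2 * x - 1) * math.exp(-u * u), 0.0, upper, n)


def gamma_real(x):
    """Real gamma function: move x into [1, 2] with Gamma(x + 1) = x Gamma(x)."""
    if x <= 0 and x == int(x):
        raise ValueError("Gamma has poles at the non-positive integers")
    scale = 1.0
    while x < 1.0:      # Gamma(x) = Gamma(x + 1) / x
        scale /= x
        x += 1.0
    while x > 2.0:      # Gamma(x) = (x - 1) Gamma(x - 1)
        x -= 1.0
        scale *= x
    return scale * gamma_integral_1_to_2(x)


for x in (0.5, 2.7, 5.0, -0.5):
    print(x, gamma_real(x), math.gamma(x))
```

The negative argument −0.5 never touches the integral directly: it is shifted up to 1.5, which is exactly how the recurrence carries the function into the left half-plane.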

Alternative definitions

Euler's definition as an infinite product

When seeking to approximate z! for a complex number z, it turns out that it is effective to first compute n! for some large integer n, then use that to approximate a value for (n + z)!, and then use the recursion relation m! = m (m − 1)! backwards n times to unwind it to an approximation for z!. Furthermore, this approximation is exact in the limit as n goes to infinity. Specifically, for a fixed integer m, it is the case that

$$\lim_{n\to\infty}\frac{n!\,(n+1)^m}{(n+m)!} = 1.$$

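Similarly for the gamma function, the definition as an infinite product due to Euler is valid for all complex numbers except the non-positive integers:

$$\Gamma(z) = \frac{1}{z}\prod_{n=1}^{\infty}\frac{\left(1+\frac{1}{n}\right)^{z}}{1+\frac{z}{n}}.$$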
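Euler's product Γ(z) = (1/z) ∏_{n≥1} (1 + 1/n)^z / (1 + z/n) converges slowly but can be evaluated directly by truncation. The sketch below does so for real z only; the default of 100 000 factors is an arbitrary choice, and the relative error falls off roughly like 1/terms, so expect only four or five correct digits.

```python
import math


def gamma_euler_product(z, terms=100_000):
    """Truncate Euler's product Gamma(z) = (1/z) * prod_{n>=1} (1 + 1/n)**z / (1 + z/n).

    Real z only, and not a non-positive integer. A slow but direct illustration
    of the definition, not a practical way to compute Gamma.
    """
    if z <= 0 and z == int(z):
        raise ValueError("Gamma has poles at the non-positive integers")
    result = 1.0 / z
    for n in range(1, terms + 1):
        result *= (1.0 + 1.0 / n) ** z / (1.0 + z / n)
    return result


print(gamma_euler_product(0.5), math.sqrt(math.pi))
print(gamma_euler_product(-1.5), math.gamma(-1.5))
```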

Weierstrass's definition

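The definition for the gamma function due to Weierstrass is also valid for all complex numbers z except the non-positive integers:

$$\Gamma(z) = \frac{e^{-\gamma z}}{z}\prod_{n=1}^{\infty}\left(1+\frac{z}{n}\right)^{-1} e^{z/n},$$

where γ ≈ 0.577216 is the Euler–Mascheroni constant.

In terms of generalized Laguerre polynomials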

A parameterization of the incomplete gamma function in terms of generalized Laguerre polynomials is

A somewhat unusual parametrization of the gamma function in terms of Laguerre polynomials is given by

which converges for Re(z) < 1/2.

Properties

General

Other important functional equations for the gamma function are Euler's reflection formula

$$\Gamma(1-z)\,\Gamma(z) = \frac{\pi}{\sin(\pi z)}, \qquad z \notin \mathbb{Z},$$

and the Legendre duplication formula

$$\Gamma(z)\,\Gamma\left(z+\tfrac{1}{2}\right) = 2^{1-2z}\sqrt{\pi}\,\Gamma(2z).$$

Setting z = 1/2 in the reflection formula gives Γ(1/2) = √π.

It might be tempting to generalize the result that Γ(1/2) = √π by looking for a formula for other individual values Γ(r) where r is rational. However, these numbers are not known to be expressible by themselves in terms of elementary functions. It has been proved that Γ(n + r) is a transcendental number and algebraically independent of π for any integer n and each of the fractions r = 1/6, 1/4, 1/3, 2/3, 3/4, 5/6. In general, when computing values of the gamma function, we must settle for numerical approximations.
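The reflection formula Γ(1 − z)Γ(z) = π/sin(πz) and the duplication formula Γ(z)Γ(z + 1/2) = 2^{1−2z}√π Γ(2z) are easy to spot-check numerically. The sketch below (function names are our own) evaluates each side with Python's `math.gamma` and returns the difference, which should be at rounding level; at z = 1/2 the reflection check is exactly the statement Γ(1/2)² = π.

```python
import math


def reflection_residual(z):
    """Gamma(z) * Gamma(1 - z) - pi / sin(pi z), for non-integer real z."""
    return math.gamma(z) * math.gamma(1 - z) - math.pi / math.sin(math.pi * z)


def duplication_residual(z):
    """Gamma(z) * Gamma(z + 1/2) - 2**(1 - 2z) * sqrt(pi) * Gamma(2z), for real z
    where all three gamma values are finite."""
    lhs = math.gamma(z) * math.gamma(z + 0.5)
    rhs = 2.0 ** (1 - 2 * z) * math.sqrt(math.pi) * math.gamma(2 * z)
    return lhs - rhs


for z in (0.1, 0.3, 0.5, -1.7, 2.25):
    print(z, reflection_residual(z), duplication_residual(z))
```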

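Another useful limit for asymptotic approximations is:

$$\lim_{n\to\infty}\frac{\Gamma(n+\alpha)}{\Gamma(n)\,n^{\alpha}} = 1, \qquad \alpha \in \mathbb{C}.$$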

Using the identity

Stirling's formula

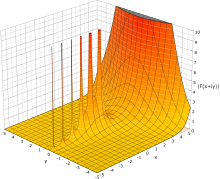

The absolute value of the gamma function on the complex plane.

Residues

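The behavior for non-positive z is more intricate. Euler's integral does not converge for Re(z) ≤ 0, but the function it defines in the positive complex half-plane has a unique analytic continuation to the negative half-plane. One way to find that analytic continuation is to use Euler's integral for positive arguments and extend the domain to negative numbers by repeated application of the recurrence formula,

$$\Gamma(z) = \frac{\Gamma(z+n+1)}{z(z+1)\cdots(z+n)},$$

choosing n such that z + n is positive. The product in the denominator is zero when z equals any of the integers 0, −1, −2, …, so the gamma function is a meromorphic function with simple poles at the non-positive integers. For the simple pole z = −n, we can rewrite this definition as:

$$(z+n)\,\Gamma(z) = \frac{\Gamma(z+n+1)}{z(z+1)\cdots(z+n-1)}.$$

At z = −n the numerator is Γ(1) = 1 and the denominator is (−n)(1 − n)⋯(−1) = (−1)ⁿ n!, so the residue of the gamma function at −n is

$$\operatorname{Res}(\Gamma, -n) = \frac{(-1)^n}{n!}.$$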
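The residue formula Res(Γ, −n) = (−1)ⁿ/n! can be checked numerically by evaluating (z + n)Γ(z) a small distance from the pole. The sketch below uses a hypothetical offset of 10⁻⁸ (the estimate's error is proportional to it); it computes the offset as z + n after forming z, so the factor multiplied in is the offset actually represented in floating point, and it relies on `math.gamma` accepting negative non-integer arguments.

```python
import math


def residue_estimate(n, offset=1e-8):
    """Estimate Res(Gamma, -n) as (z + n) * Gamma(z) at z = -n + offset."""
    z = -n + offset
    delta = z + n        # the offset actually represented in floating point
    return delta * math.gamma(z)


for n in range(6):
    print(n, residue_estimate(n), (-1) ** n / math.factorial(n))
```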

Minima

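The gamma function has a local minimum at x_min ≈ 1.4616321449683623, where it attains the value Γ(x_min) ≈ 0.8856031944108887. The gamma function must alternate sign between the poles, because the product in the forward recurrence contains an odd number of negative factors if the number of poles between z and z + n is odd, and an even number if the number of poles is even.

Fourier series expansion

The logarithm of the gamma function has the following Fourier series expansion for 0 < z < 1:

$$\ln\Gamma(z) = \left(\tfrac{1}{2} - z\right)(\gamma + \ln 2) + (1 - z)\ln\pi - \tfrac{1}{2}\ln\sin(\pi z) + \frac{1}{\pi}\sum_{k=1}^{\infty}\frac{\ln k}{k}\sin(2\pi k z),$$

where γ is the Euler–Mascheroni constant.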
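A quick way to see the Fourier expansion at work is to sum it numerically. The sketch below truncates the series of ln Γ(z) for 0 < z < 1 (written out in the code comments) after a fixed number of terms and compares the result with `math.lgamma`. The Euler–Mascheroni constant is hard-coded because Python's `math` module does not provide it, and the function name is our own. The coefficients ln k / k shrink slowly, so only a few digits are recovered.

```python
import math

# Euler–Mascheroni constant, hard-coded because the math module does not provide it.
EULER_GAMMA = 0.5772156649015329


def lgamma_fourier(z, terms=100_000):
    """Truncated Fourier series for ln Gamma(z), valid for 0 < z < 1:

    (1/2 - z)(gamma + ln 2) + (1 - z) ln pi - (1/2) ln sin(pi z)
        + (1/pi) * sum_{k>=1} (ln k / k) sin(2 pi k z)

    The truncation error decreases roughly like ln(terms) / terms.
    """
    if not 0.0 < z < 1.0:
        raise ValueError("the expansion holds for 0 < z < 1")
    head = ((0.5 - z) * (EULER_GAMMA + math.log(2))
            + (1 - z) * math.log(math.pi)
            - 0.5 * math.log(math.sin(math.pi * z)))
    # The k = 1 term vanishes because ln 1 = 0, so the sum starts at k = 2.
    tail = sum(math.log(k) / k * math.sin(2 * math.pi * k * z)
               for k in range(2, terms + 1))
    return head + tail / math.pi


for z in (0.25, 0.5, 0.7):
    print(z, lgamma_fourier(z), math.lgamma(z))
```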

Raabe's formula

In 1840 Raabe proved that

$$\int_a^{a+1}\ln\Gamma(z)\,dz = \tfrac{1}{2}\ln 2\pi + a\ln a - a, \qquad a > 0.$$

Pi function

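An alternative notation which was originally introduced by Gauss and which was sometimes used is the pi function, which in terms of the gamma function is

$$\Pi(z) = \Gamma(z+1) = z\,\Gamma(z) = \int_0^\infty e^{-t} t^{z}\,dt \qquad (\operatorname{Re}(z) > -1),$$

so that Π(n) = n! for every non-negative integer n. Using the pi function, the reflection formula takes on the form

$$\Pi(z)\,\Pi(-z) = \frac{\pi z}{\sin(\pi z)}.$$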

The volume of an n-ellipsoid with radii r1, …, rn can be expressed as

$$V_n(r_1,\dots,r_n) = \frac{\pi^{n/2}}{\Pi\left(\frac{n}{2}\right)}\prod_{k=1}^{n} r_k.$$
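With Gauss's pi function Π(x) = Γ(x + 1), the volume formula V = π^{n/2}/Π(n/2) · r₁⋯rₙ takes one line of Python. This sketch (function name ours; `math.prod` needs Python 3.8 or later) reproduces the familiar disc area and ball volume as special cases.

```python
import math


def ellipsoid_volume(radii):
    """Volume of an n-ellipsoid: pi**(n/2) / Pi(n/2) * r1 * ... * rn,
    where Pi(x) = Gamma(x + 1) is Gauss's pi function."""
    n = len(radii)
    return math.pi ** (n / 2) / math.gamma(n / 2 + 1) * math.prod(radii)


print(ellipsoid_volume([2.0, 2.0]))        # a disc of radius 2: area 4*pi
print(ellipsoid_volume([1.0, 2.0, 3.0]))   # (4/3)*pi*1*2*3 = 8*pi
print(ellipsoid_volume([1.0] * 4))         # unit 4-ball: pi**2 / 2
```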

Relation to other functions

- In the first integral above, which defines the gamma function, the limits of integration are fixed. The upper and lower incomplete gamma functions are the functions obtained by allowing the lower or upper (respectively) limit of integration to vary.

- The gamma function is related to the beta function by the formula $\mathrm{B}(x, y) = \int_0^1 t^{x-1}(1-t)^{y-1}\,dt = \frac{\Gamma(x)\,\Gamma(y)}{\Gamma(x+y)}$.

- The logarithmic derivative of the gamma function is called the digamma function; higher derivatives are the polygamma functions.

- The analogs of the gamma function over a finite field or a finite ring are the Gaussian sums, a type of exponential sum.

- The reciprocal gamma function is an entire function and has been studied as a specific topic.

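- The gamma function also shows up in an important relation with the Riemann zeta function, ζ(z): $\pi^{-z/2}\,\Gamma\left(\frac{z}{2}\right)\zeta(z) = \pi^{-(1-z)/2}\,\Gamma\left(\frac{1-z}{2}\right)\zeta(1-z)$.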

- It also appears in the following formula, which is valid only for Re(z) > 1: $\zeta(z)\,\Gamma(z) = \int_0^\infty \frac{u^{z-1}}{e^u - 1}\,du$.

- The logarithm of the gamma function satisfies the following formula due to Lerch: $\ln\Gamma(z) = \zeta_H'(0, z) - \zeta'(0)$, where ζH is the Hurwitz zeta function, ζ is the Riemann zeta function and the prime (′) denotes differentiation in the first variable.

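- The gamma function is related to the stretched exponential function. For instance, the moments of that function are $\langle\tau^n\rangle \equiv \int_0^\infty t^{n-1}\,e^{-(t/\tau)^\beta}\,dt = \frac{\tau^n}{\beta}\,\Gamma\left(\frac{n}{\beta}\right)$.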

Particular values

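Some particular values of the gamma function are:

$$\begin{aligned}
\Gamma\left(-\tfrac{3}{2}\right) &= \tfrac{4}{3}\sqrt{\pi}, &\qquad \Gamma\left(-\tfrac{1}{2}\right) &= -2\sqrt{\pi},\\
\Gamma\left(\tfrac{1}{2}\right) &= \sqrt{\pi}, &\qquad \Gamma(1) &= 0! = 1,\\
\Gamma\left(\tfrac{3}{2}\right) &= \tfrac{1}{2}\sqrt{\pi}, &\qquad \Gamma(2) &= 1! = 1,\\
\Gamma\left(\tfrac{5}{2}\right) &= \tfrac{3}{4}\sqrt{\pi}, &\qquad \Gamma(3) &= 2! = 2,\\
\Gamma\left(\tfrac{7}{2}\right) &= \tfrac{15}{8}\sqrt{\pi}, &\qquad \Gamma(4) &= 3! = 6.
\end{aligned}$$

The log-gamma function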

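Because the gamma and factorial functions grow so rapidly for moderately large arguments, many computing environments include a function that returns the natural logarithm of the gamma function (often given the name lgamma or lngamma in programming environments or gammaln in spreadsheets); this grows much more slowly, and for combinatorial calculations allows adding and subtracting logs instead of multiplying and dividing very large values. The digamma function, which is the derivative of this function, is also commonly seen. In the context of technical and physical applications, e.g. with wave propagation, the functional equation

$$\ln\Gamma(z) = \ln\Gamma(z+1) - \ln z$$

is often used, since it allows function values in one strip of width 1 in z to be determined from the neighbouring strip. Accurate approximations for large |z| come from the asymptotic expansions of Γ(z) and ln Γ(z), which are based on Stirling's approximation:

$$\Gamma(z) \sim z^{z-\frac{1}{2}} e^{-z}\sqrt{2\pi}\left(1 + \frac{1}{12z} + \frac{1}{288z^2} - \frac{139}{51840z^3} - \frac{571}{2488320z^4}\right)$$

as |z| → ∞ at constant |arg(z)| < π, and

$$\ln\Gamma(z) \sim \left(z-\tfrac{1}{2}\right)\ln z - z + \tfrac{1}{2}\ln(2\pi) + \frac{1}{12z} - \frac{1}{360z^3} + \frac{1}{1260z^5}$$

as |z| → ∞ at constant |arg(z)| < π.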
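The functional equation ln Γ(z) = ln Γ(z + 1) − ln z and the ln Γ asymptotic series combine into a simple real-argument log-gamma routine: shift a small argument upward, then evaluate the first few terms of (z − 1/2) ln z − z + ½ ln 2π + 1/(12z) − 1/(360z³) + 1/(1260z⁵) once the argument is large. The sketch below does this for real x > 0 and compares it with Python's `math.lgamma`; the cutoff of 10 and the function name are our own choices, not parameters of any standard algorithm.

```python
import math


def lgamma_asymptotic(x, shift_to=10.0):
    """ln Gamma(x) for real x > 0 (a sketch).

    Step 1: while x is small, use ln Gamma(x) = ln Gamma(x + 1) - ln x to move x upward.
    Step 2: apply the truncated asymptotic series
            (x - 1/2) ln x - x + ln(2 pi)/2 + 1/(12x) - 1/(360x^3) + 1/(1260x^5).
    With shift_to = 10 the next omitted term, -1/(1680 x^7), is below 1e-10.
    """
    if x <= 0:
        raise ValueError("this sketch handles x > 0 only")
    correction = 0.0
    while x < shift_to:
        correction -= math.log(x)
        x += 1.0
    series = ((x - 0.5) * math.log(x) - x + 0.5 * math.log(2 * math.pi)
              + 1 / (12 * x) - 1 / (360 * x ** 3) + 1 / (1260 * x ** 5))
    return series + correction


for x in (0.1, 1.0, 7.3, 1000.0):
    print(x, lgamma_asymptotic(x), math.lgamma(x))
```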

Properties

The Bohr–Mollerup theorem states that among all functions extending the factorial functions to the positive real numbers, only the gamma function is log-convex, that is, its natural logarithm is convex on the positive real axis.

In a certain sense, the ln(Γ) function is the more natural form; it makes some intrinsic attributes of the function clearer. A striking example is the Taylor series of ln(Γ) around 1:

$$\ln\Gamma(z+1) = -\gamma z + \sum_{k=2}^{\infty}\frac{\zeta(k)}{k}(-z)^k,$$

with ζ(k) denoting the Riemann zeta function at k.

So, using the following property:

Integration over log-gamma

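The integral $\int_0^z \ln\Gamma(x)\,dx$ can be expressed, for z > 0, in terms of the Barnes G-function:

$$\int_0^z \ln\Gamma(x)\,dx = \frac{z(1-z)}{2} + \frac{z}{2}\ln(2\pi) + z\ln\Gamma(z) - \ln G(1+z).$$

It can also be written in terms of the Hurwitz zeta function:[14][15]

$$\int_0^z \ln\Gamma(x)\,dx = \frac{z}{2}\ln(2\pi) + \frac{z(1-z)}{2} - \zeta'(-1) + \zeta'(-1, z).$$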

Approximations

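Complex values of the gamma function can be computed numerically with arbitrary precision using Stirling's approximation or the Lanczos approximation.

The gamma function can be computed to fixed precision for Re(z) ∈ [1,2] by applying integration by parts to Euler's integral. For any positive number x the gamma function can be written

$$\Gamma(z) = \int_0^x e^{-t} t^{z}\,\frac{dt}{t} + \int_x^\infty e^{-t} t^{z}\,\frac{dt}{t} = x^z e^{-x}\sum_{n=0}^{\infty}\frac{x^n}{z(z+1)\cdots(z+n)} + \int_x^\infty e^{-t} t^{z}\,\frac{dt}{t}.$$

When Re(z) ∈ [1,2] and x ≥ 1, the absolute value of the last integral is smaller than $(x+1)e^{-x}$. By choosing x large enough, this bound can be made smaller than $2^{-N}$ for any desired N, so the gamma function can be evaluated to N bits of precision with the series.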
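The series x^z e^{−x} Σₙ xⁿ/(z(z+1)⋯(z+n)) is easy to implement for real z in [1, 2]. The sketch below uses a hypothetical default cut point x = 40, for which the neglected tail bound (x + 1)e^{−x} is about 1.7 × 10⁻¹⁶. Successive terms are built from the ratio x/(z + n), and summation stops once a term falls below 10⁻¹⁷ of the running total. The function name is our own.

```python
import math


def gamma_lower_series(z, x=40.0, max_terms=1000):
    """Gamma(z) for real 1 <= z <= 2 via x**z * exp(-x) * sum_n x**n / (z (z+1) ... (z+n)).

    The series equals the integral of e**-t t**(z-1) over [0, x]; the dropped tail
    integral over [x, inf) is below (x + 1) * exp(-x), about 1.7e-16 for x = 40.
    """
    if not 1.0 <= z <= 2.0:
        raise ValueError("this sketch assumes 1 <= z <= 2")
    term = 1.0 / z           # n = 0 term: x**0 / z
    total = term
    for n in range(1, max_terms):
        term *= x / (z + n)  # next term: multiply by x / (z + n)
        total += term
        if term < 1e-17 * total:
            break
    return x ** z * math.exp(-x) * total


for z in (1.0, 1.5, 2.0):
    print(z, gamma_lower_series(z), math.gamma(z))
```

Every term of the series is positive, so there is no cancellation, and the result is accurate to nearly full double precision.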

The only fast algorithm for calculation of the Euler gamma function for any algebraic argument (including rational) was constructed by E.A. Karatsuba.

For arguments that are integer multiples of 1/24, the gamma function can also be evaluated quickly using arithmetic-geometric mean iterations (see particular values of the gamma function).

Applications

One author describes the gamma function as "Arguably, the most common special function, or the least 'special' of them. The other transcendental functions […] are called 'special' because you could conceivably avoid some of them by staying away from many specialized mathematical topics. On the other hand, the gamma function y = Γ(x) is most difficult to avoid."

Integration problems

The gamma function finds application in such diverse areas as quantum physics, astrophysics and fluid dynamics. The gamma distribution, which is formulated in terms of the gamma function, is used in statistics to model a wide range of processes; for example, the time between occurrences of earthquakes.

The primary reason for the gamma function's usefulness in such contexts is the prevalence of expressions of the type $f(t)\,e^{-g(t)}$, which describe processes that decay exponentially in time or space. Integrals of such expressions can occasionally be solved in terms of the gamma function when no elementary solution exists. For example, if f is a power function and g is a linear function, a simple change of variables u = at gives the evaluation

$$\int_0^\infty t^b e^{-at}\,dt = \frac{1}{a^{b+1}}\int_0^\infty u^b e^{-u}\,du = \frac{\Gamma(b+1)}{a^{b+1}}.$$
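The evaluation ∫₀^∞ t^b e^{−at} dt = Γ(b + 1)/a^{b+1} (for b > −1, a > 0) can be checked against plain numerical quadrature. This sketch uses composite Simpson's rule on a truncated interval; the cutoffs and step count are our own assumptions, chosen so that the neglected tail is negligible for the two example parameter sets.

```python
import math


def simpson(f, a, b, n=20_000):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = f(a) + f(b) + sum((4 if i % 2 else 2) * f(a + i * h) for i in range(1, n))
    return s * h / 3


def power_exp_numeric(b, a, upper):
    """Approximate the integral of t**b * exp(-a t) over [0, upper], truncating the tail."""
    return simpson(lambda t: t ** b * math.exp(-a * t), 0.0, upper)


def power_exp_closed_form(b, a):
    """Gamma(b + 1) / a**(b + 1), valid for b > -1 and a > 0."""
    return math.gamma(b + 1) / a ** (b + 1)


print(power_exp_numeric(2.0, 0.5, upper=200.0), power_exp_closed_form(2.0, 0.5))  # both 16
print(power_exp_numeric(3.5, 2.0, upper=50.0), power_exp_closed_form(3.5, 2.0))
```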

It is of course frequently useful to take limits of integration other than 0 and ∞ to describe the cumulation of a finite process, in which case the ordinary gamma function is no longer a solution; the solution is then called an incomplete gamma function. (The ordinary gamma function, obtained by integrating across the entire positive real line, is sometimes called the complete gamma function for contrast.)

An important category of exponentially decaying functions is that of Gaussian functions, of the form $a e^{-(x-b)^2/c^2}$.
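The link to the gamma function can be made explicit with a standard substitution (a worked example added here, not part of the original argument). The Gaussian integral reduces to Γ(1/2), and a general Gaussian with c > 0 then integrates to a c √π after the change of variables u = (x − b)/c:

```latex
\int_{-\infty}^{\infty} e^{-x^2}\,dx
  = 2\int_{0}^{\infty} e^{-x^2}\,dx
  = \int_{0}^{\infty} t^{-1/2} e^{-t}\,dt \qquad \left(t = x^2,\ dx = \tfrac{dt}{2\sqrt{t}}\right)
  = \Gamma\!\left(\tfrac{1}{2}\right) = \sqrt{\pi},
\qquad
\int_{-\infty}^{\infty} a\,e^{-(x-b)^2/c^2}\,dx = a\,c\,\Gamma\!\left(\tfrac{1}{2}\right) = a\,c\,\sqrt{\pi}.
```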

The integrals we have discussed so far involve transcendental functions, but the gamma function also arises from integrals of purely algebraic functions. In particular, the arc lengths of ellipses and of the lemniscate, which are curves defined by algebraic equations, are given by elliptic integrals that in special cases can be evaluated in terms of the gamma function. The gamma function can also be used to calculate "volume" and "area" of n-dimensional hyperspheres.
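A minimal sketch of the hypersphere case. It uses the standard formulas V = π^{n/2} / Γ(n/2 + 1) for the volume of the unit ball in n dimensions and S = 2π^{n/2} / Γ(n/2) for the area of its boundary sphere. These formulas are assumed here rather than derived in the text, and the function names are ours:

```python
import math

def unit_ball_volume(n: int) -> float:
    """Volume of the unit ball in R^n: pi^(n/2) / Gamma(n/2 + 1)."""
    return math.pi ** (n / 2) / math.gamma(n / 2 + 1)

def unit_sphere_area(n: int) -> float:
    """Area of the unit sphere bounding the ball in R^n: 2 pi^(n/2) / Gamma(n/2)."""
    return 2 * math.pi ** (n / 2) / math.gamma(n / 2)
```

The familiar cases fall out directly: a length of 2 for n = 1, π and 2π for the disc and circle, and 4π/3 and 4π for the ball and sphere in three dimensions. Because Γ grows faster than π^{n/2}, the unit-ball volume peaks at n = 5 and then tends to zero.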

Another important special case is that of the beta function

$$B(x, y) = \int_0^1 t^{x-1}(1-t)^{y-1}\,dt = \frac{\Gamma(x)\,\Gamma(y)}{\Gamma(x+y)}.$$
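Evaluating the beta function through the logarithm of the gamma function avoids forming the possibly huge intermediate product Γ(x)Γ(y). The minimal sketch below (function names ours) compares that closed form with a direct midpoint-rule evaluation of the integral:

```python
import math

def beta(x: float, y: float) -> float:
    """B(x, y) = Gamma(x) Gamma(y) / Gamma(x + y) for x, y > 0, computed in log space."""
    return math.exp(math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y))

def beta_midpoint(x: float, y: float, steps: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of t**(x-1) * (1-t)**(y-1) over [0, 1]."""
    h = 1.0 / steps
    return h * sum(
        ((i + 0.5) * h) ** (x - 1) * (1.0 - (i + 0.5) * h) ** (y - 1) for i in range(steps)
    )
```

For integer arguments the result is a ratio of factorials. For example, B(2, 3) = 1!·2!/4! = 1/12, while B(1/2, 1/2) = Γ(1/2)² = π.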

Calculating products

The gamma function's ability to generalize factorial products immediately leads to applications in many areas of mathematics: in combinatorics and, by extension, in areas such as probability theory and the calculation of power series. Many expressions involving products of successive integers can be written as some combination of factorials, the most important example perhaps being that of the binomial coefficient

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}.$$

We can replace the factorial by a gamma function to extend any such formula to the complex numbers. Generally, this works for any product wherein each factor is a rational function of the index variable, by factoring the rational function into linear expressions. If P and Q are monic polynomials of degree m and n with respective roots p1, …, pm and q1, …, qn, we have

$$\prod_{i=a}^{b} \frac{P(i)}{Q(i)} = \left(\prod_{j=1}^{m} \frac{\Gamma(b - p_j + 1)}{\Gamma(a - p_j)}\right)\left(\prod_{k=1}^{n} \frac{\Gamma(a - q_k)}{\Gamma(b - q_k + 1)}\right).$$
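Both ideas can be sketched in a few lines. The first function extends the binomial coefficient to non-integer arguments. The second evaluates a rational product with one pair of gamma values per root, so its cost depends only on the polynomial degrees and not on b − a. It assumes real roots that all lie below a, so every factor and every gamma argument is positive and `math.lgamma` can be used. All names here are ours, and the brute-force version is included only for comparison:

```python
import math
from typing import Sequence

def gamma_binomial(x: float, k: float) -> float:
    """Binomial coefficient with factorials replaced by gamma: Gamma(x+1) / (Gamma(k+1) Gamma(x-k+1))."""
    return math.gamma(x + 1) / (math.gamma(k + 1) * math.gamma(x - k + 1))

def rational_product_gamma(p_roots: Sequence[float], q_roots: Sequence[float], a: int, b: int) -> float:
    """prod_{i=a}^{b} P(i)/Q(i) for monic P, Q with the given real roots, via log-gamma.

    Assumes a exceeds every root, so all factors and gamma arguments are positive.
    """
    if any(a <= r for r in [*p_roots, *q_roots]):
        raise ValueError("this sketch assumes a > every root")
    log_val = 0.0
    for p in p_roots:
        log_val += math.lgamma(b - p + 1) - math.lgamma(a - p)
    for q in q_roots:
        log_val += math.lgamma(a - q) - math.lgamma(b - q + 1)
    return math.exp(log_val)

def rational_product_direct(p_roots: Sequence[float], q_roots: Sequence[float], a: int, b: int) -> float:
    """The same product by brute-force multiplication over i = a, ..., b."""
    result = 1.0
    for i in range(a, b + 1):
        for p in p_roots:
            result *= i - p
        for q in q_roots:
            result /= i - q
    return result
```

As a check, the telescoping product of i/(i + 1) for i = 1, …, 9 (roots 0 and −1) equals 1/10, and `gamma_binomial(0.5, 1)` gives 0.5.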

By taking limits, certain rational products with infinitely many factors can be evaluated in terms of the gamma function as well. Due to the Weierstrass factorization theorem, analytic functions can be written as infinite products, and these can sometimes be represented as finite products or quotients of the gamma function. We have already seen one striking example: the reflection formula essentially represents the sine function as the product of two gamma functions. Starting from this formula, the exponential function as well as all the trigonometric and hyperbolic functions can be expressed in terms of the gamma function.
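The reflection formula in question is Γ(z)Γ(1 − z) = π / sin(πz) for non-integer z. This quick numerical sketch (the helper name is ours) recovers the sine function from two gamma values:

```python
import math

def sine_from_gamma(x: float) -> float:
    """sin(pi*x) recovered from the reflection formula Gamma(x) Gamma(1-x) = pi / sin(pi*x).

    Valid for non-integer x, where both gamma factors are finite.
    """
    return math.pi / (math.gamma(x) * math.gamma(1.0 - x))
```

Negative arguments work too, because `math.gamma` returns the analytically continued (possibly negative) values between the poles.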

More functions yet, including the hypergeometric function and special cases thereof, can be represented by means of complex contour integrals of products and quotients of the gamma function, called Mellin-Barnes integrals.

Analytic number theory

An elegant and deep application of the gamma function is in the study of the Riemann zeta function. A fundamental property of the Riemann zeta function is its functional equation:

$$\pi^{-s/2}\,\Gamma\!\left(\frac{s}{2}\right)\zeta(s) = \pi^{-(1-s)/2}\,\Gamma\!\left(\frac{1-s}{2}\right)\zeta(1-s).$$
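As a numerical spot-check, the functional equation can be tested at s = 2 and s = 4 using the classical closed forms ζ(2) = π²/6, ζ(−1) = −1/12, ζ(4) = π⁴/90 and ζ(−3) = 1/120. These values are taken as known rather than computed, and the names in the sketch are ours:

```python
import math

# Classical closed-form zeta values, taken as known (zeta itself is not computed here).
KNOWN_ZETA = {2: math.pi ** 2 / 6, -1: -1 / 12, 4: math.pi ** 4 / 90, -3: 1 / 120}

def completed_zeta(s: int) -> float:
    """pi^(-s/2) * Gamma(s/2) * zeta(s), restricted to the tabulated values of s."""
    return math.pi ** (-s / 2) * math.gamma(s / 2) * KNOWN_ZETA[s]
```

The equation predicts `completed_zeta(s) == completed_zeta(1 - s)`. At s = 2 both sides equal π/6: on the right, √π · Γ(−1/2) · (−1/12) = √π · (−2√π) · (−1/12).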

The gamma function has caught the interest of some of the most prominent mathematicians of all time. Its history, notably documented by Philip J. Davis in an article that won him the 1963 Chauvenet Prize, reflects many of the major developments within mathematics since the 18th century. In the words of Davis, "each generation has found something of interest to say about the gamma function. Perhaps the next generation will also."

18th century: Euler and Stirling

The problem of extending the factorial to non-integer arguments was apparently first considered by Daniel Bernoulli and Christian Goldbach in the 1720s, and was solved at the end of the same decade by Leonhard Euler. Euler gave two different definitions: the first was not his integral but an infinite product,

$$n! = \prod_{k=1}^{\infty} \frac{\left(1 + \frac{1}{k}\right)^{n}}{1 + \frac{n}{k}}.$$

James Stirling, a contemporary of Euler, also attempted to find a continuous expression for the factorial and came up with what is now known as Stirling's formula. Although Stirling's formula gives a good estimate of n!, also for non-integers, it does not provide the exact value. Extensions of his formula that correct the error were given by Stirling himself and by Jacques Philippe Marie Binet.
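The contrast between an exact but slowly converging product and a fast but approximate formula can be seen numerically. This sketch truncates Euler's product after a finite number of factors (the cutoff is our choice). It also evaluates the leading-order Stirling estimate √(2πn)(n/e)^n, a standard form that the text names but does not write out. The function names are ours:

```python
import math

def euler_product_factorial(n: float, terms: int = 100_000) -> float:
    """Truncate Euler's infinite product for n! after `terms` factors (n not a negative integer)."""
    result = 1.0
    for k in range(1, terms + 1):
        result *= (1.0 + 1.0 / k) ** n / (1.0 + n / k)
    return result

def stirling_factorial(n: float) -> float:
    """Leading-order Stirling estimate sqrt(2*pi*n) * (n/e)**n, for n > 0."""
    return math.sqrt(2.0 * math.pi * n) * (n / math.e) ** n
```

The truncated product approaches the exact value with a relative error that shrinks roughly in proportion to 1/terms. Stirling's estimate instead undershoots n! by a relative amount of about 1/(12n): under 1% at n = 10 and under 0.1% at n = 100.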

19th century: Gauss, Weierstrass and Legendre

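Carl Friedrich Gauss rewrote Euler's product as

$$\Gamma(z) = \lim_{m\to\infty} \frac{m^z\, m!}{z(z+1)(z+2)\cdots(z+m)}.$$

Karl Weierstrass further established the role of the gamma function in complex analysis, starting from yet another product representation,

$$\Gamma(z) = \frac{e^{-\gamma z}}{z} \prod_{k=1}^{\infty} \left(1 + \frac{z}{k}\right)^{-1} e^{z/k},$$

where γ is the Euler–Mascheroni constant.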
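Both product forms can be evaluated by truncation. This sketch assumes real z > 0 and works in log space to avoid overflow; the truncation lengths and function names are our own choices. It uses `math.fsum` so that summing many logarithms does not lose precision:

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler–Mascheroni constant, to double precision

def gauss_limit(z: float, m: int = 100_000) -> float:
    """Gauss's form m^z m! / (z (z+1) ... (z+m)) at a finite m, for z > 0."""
    log_val = z * math.log(m) + math.lgamma(m + 1)
    log_val -= math.fsum(math.log(z + k) for k in range(m + 1))
    return math.exp(log_val)

def weierstrass_product(z: float, terms: int = 100_000) -> float:
    """Weierstrass product e^(-gamma z)/z * prod (1 + z/k)^(-1) e^(z/k), truncated, for z > 0."""
    log_val = -EULER_GAMMA * z - math.log(z)
    log_val += math.fsum(z / k - math.log1p(z / k) for k in range(1, terms + 1))
    return math.exp(log_val)
```

With 10⁵ factors both agree with `math.gamma` to within about one part in 10⁵ for moderate z. The Weierstrass factors differ from 1 only at order z²/k², which is why that product converges for every z.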

The name gamma function and the symbol Γ were introduced by Adrien-Marie Legendre around 1811; Legendre also rewrote Euler's integral definition in its modern form. Although the symbol is an upper-case Greek gamma, there is no accepted standard for whether the function name should be written "gamma function" or "Gamma function" (some authors simply write "Γ-function"). The alternative "pi function" notation Π(z) = z! due to Gauss is sometimes encountered in older literature, but Legendre's notation is dominant in modern works.

It is reasonable to ask why we distinguish between the "ordinary factorial" and the gamma function by using distinct symbols, and particularly why the gamma function should be normalized to Γ(n + 1) = n! instead of simply using "Γ(n) = n!". Consider that the notation for exponents, $x^n$, has been generalized from integers to complex numbers $x^z$ without any change. Legendre's motivation for the normalization does not appear to be known, and it has been criticized as cumbersome by some (the 20th-century mathematician Cornelius Lanczos, for example, called it "void of any rationality" and would instead use z!). Legendre's normalization does simplify a few formulae, but complicates most others. From a modern point of view, the Legendre normalization of the gamma function is the integral of the additive character $e^{-x}$ against the multiplicative character $x^z$ with respect to the Haar measure $dx/x$ on the Lie group $\mathbb{R}^{+}$. Thus this normalization makes it clearer that the gamma function is a continuous analogue of a Gauss sum.

19th–20th centuries: characterizing the gamma function

It is somewhat problematic that a large number of definitions have been given for the gamma function. Although they describe the same function, it is not entirely straightforward to prove the equivalence. Stirling never proved that his extended formula corresponds exactly to Euler's gamma function; a proof was first given by Charles Hermite in 1900. Instead of finding a specialized proof for each formula, it would be desirable to have a general method of identifying the gamma function.

One way to do this would be to find a differential equation that characterizes the gamma function. Most special functions in applied mathematics arise as solutions to differential equations, whose solutions are unique once suitable initial or boundary conditions are fixed. However, the gamma function does not appear to satisfy any simple differential equation. Otto Hölder proved in 1887 that the gamma function at least does not satisfy any algebraic differential equation by showing that a solution to such an equation could not satisfy the gamma function's recurrence formula, making it a transcendentally transcendental function. This result is known as Hölder's theorem.

A definite and generally applicable characterization of the gamma function was not given until 1922. Harald Bohr and Johannes Mollerup then proved what is known as the Bohr–Mollerup theorem: that the gamma function is the unique solution to the factorial recurrence relation that is positive and logarithmically convex for positive z and whose value at 1 is 1 (a function is logarithmically convex if its logarithm is convex).
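The role of logarithmic convexity can be illustrated numerically. The sketch below checks the sign of a second difference of log f, which is a finite-difference proxy for convexity and not a proof; the helper names are hypothetical. The perturbed function Γ(x)(1 + 0.1 sin 2πx) is positive, equals 1 at x = 1 and satisfies the same recurrence f(x + 1) = x f(x), because the sine factor has period 1. It fails only the log-convexity condition, which is why the theorem needs that hypothesis to single out Γ:

```python
import math
from typing import Callable

def is_log_convex_at(f: Callable[[float], float], x: float, h: float = 1e-3) -> bool:
    """Finite-difference check that log f curves upward near x (a numerical proxy, not a proof)."""
    g = lambda t: math.log(f(t))
    return g(x + h) - 2.0 * g(x) + g(x - h) >= 0.0

def perturbed_gamma(x: float) -> float:
    """Hypothetical competitor: positive, equal to 1 at x = 1, satisfies f(x+1) = x f(x)
    (the sine factor has period 1), but is not log-convex."""
    return math.gamma(x) * (1.0 + 0.1 * math.sin(2.0 * math.pi * x))
```

`math.gamma` passes the check at every sampled point, while `perturbed_gamma` fails it near x = 1.25, where the sine factor bends log f downward more strongly than log Γ bends upward.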

The Bohr–Mollerup theorem is useful because it is relatively easy to prove logarithmic convexity for any of the different formulas used to define the gamma function. Taking things further, instead of defining the gamma function by any particular formula, we can choose the conditions of the Bohr–Mollerup theorem as the definition, and then pick any formula we like that satisfies the conditions as a starting point for studying the gamma function. This approach was used by the Bourbaki group.

Borwein & Corless review three centuries of work on the gamma function.

Reference tables and software

Although the gamma function can be calculated virtually as easily as any mathematically simpler function with a modern computer (even with a programmable pocket calculator), this was of course not always the case. Until the mid-20th century, mathematicians relied on hand-made tables; in the case of the gamma function, notably a table computed by Gauss in 1813 and one computed by Legendre in 1825.

There was in fact little practical need for anything but real values of the gamma function until the 1930s, when applications for the complex gamma function were discovered in theoretical physics. As electronic computers became available for the production of tables in the 1950s, several extensive tables for the complex gamma function were published to meet the demand, including a table accurate to 12 decimal places from the U.S. National Bureau of Standards.

Abramowitz and Stegun became the standard reference for this and many other special functions after its publication in 1964.

Double-precision floating-point implementations of the gamma function and its logarithm are now available in most scientific computing software and special functions libraries, for example TK Solver, Matlab, GNU Octave, and the GNU Scientific Library. The gamma function was also added to the C standard library (math.h) in C99, as tgamma, together with lgamma for its logarithm. Arbitrary-precision implementations are available in most computer algebra systems, such as Mathematica and Maple. PARI/GP, MPFR and MPFUN contain free arbitrary-precision implementations. A little-known feature of the calculator app included with the Android operating system is that it will accept fractional values as input to the factorial function and return the equivalent gamma function value. The same is true for Windows Calculator (in scientific mode).
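Python's standard library exposes the same pair of double-precision functions as `math.gamma` and `math.lgamma`. The sketch below (helper names ours) shows why the logarithm matters. Γ(x) exceeds the largest double just above x = 171, and `math.gamma` then raises `OverflowError`. By contrast, `math.lgamma` keeps large factorial ratios, such as binomial coefficients, comfortably in range:

```python
import math

def safe_gamma(x: float):
    """Return Gamma(x), or None when the result does not fit in a double."""
    try:
        return math.gamma(x)
    except OverflowError:
        return None

def log_binomial(n: int, k: int) -> float:
    """Natural log of C(n, k) via lgamma, never forming the huge factorials themselves."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
```

For instance, `safe_gamma(200.0)` returns `None`, while `log_binomial(1000, 500)` matches the logarithm of the exact integer `math.comb(1000, 500)`.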

X . III

Information theory

Information theory studies the quantification, storage, and communication of information. It was originally proposed by Claude E. Shannon in 1948 to find fundamental limits on signal processing and communication operations such as data compression, in a landmark paper entitled "A Mathematical Theory of Communication". Now this theory has found applications in many other areas, including statistical inference, natural language processing, cryptography, neurobiology,[1] the evolution[2] and function[3] of molecular codes, model selection in ecology,[4] thermal physics,[5] quantum computing, linguistics, plagiarism detection,[6] pattern recognition, and anomaly detection.[7]

A key measure in information theory is "entropy". Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual information, channel capacity, error exponents, and relative entropy.
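The coin-versus-die comparison can be made numerical with the standard definition of entropy, H = −Σ p log₂ p, measured in bits. A minimal sketch (the function name is ours):

```python
import math
from typing import Sequence

def entropy_bits(probs: Sequence[float]) -> float:
    """Shannon entropy H = -sum p * log2(p), in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

fair_coin = [0.5, 0.5]
fair_die = [1 / 6] * 6
```

The fair coin has an entropy of exactly 1 bit and the fair die log₂ 6 ≈ 2.585 bits. A biased coin such as [0.9, 0.1] falls below 1 bit, because its outcome is more predictable.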

Applications of fundamental topics of information theory include lossless data compression (e.g. ZIP files), lossy data compression (e.g. MP3s and JPEGs), and channel coding (e.g. for digital subscriber line (DSL)).

The field is at the intersection of mathematics, statistics, computer science, physics, neurobiology, and electrical engineering. Its impact has been crucial to the success of the Voyager missions to deep space, the invention of the compact disc, the feasibility of mobile phones, the development of the Internet, the study of linguistics and of human perception, the understanding of black holes, and numerous other fields. Important sub-fields of information theory include source coding, channel coding, algorithmic complexity theory, algorithmic information theory, information-theoretic security, and measures of information.

Overview

Information theory studies the transmission, processing, utilization, and extraction of information. Abstractly, information can be thought of as the resolution of uncertainty. In the case of communication of information over a noisy channel, this abstract concept was made concrete in 1948 by Claude Shannon in his paper "A Mathematical Theory of Communication", in which "information" is thought of as a set of possible messages, where the goal is to send these messages over a noisy channel, and then to have the receiver reconstruct the message with low probability of error, in spite of the channel noise. Shannon's main result, the noisy-channel coding theorem, showed that, in the limit of many channel uses, the rate of information that is asymptotically achievable is equal to the channel capacity, a quantity dependent merely on the statistics of the channel over which the messages are sent.

Information theory is closely associated with a collection of pure and applied disciplines that have been investigated and reduced to engineering practice under a variety of rubrics throughout the world over the past half century or more: adaptive systems, anticipatory systems, artificial intelligence, complex systems, complexity science, cybernetics, informatics, machine learning, along with systems sciences of many descriptions. Information theory is a broad and deep mathematical theory, with equally broad and deep applications, amongst which is the vital field of coding theory.
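As an illustration of a capacity that depends only on channel statistics, consider the binary symmetric channel, which flips each transmitted bit independently with probability p. This channel model is our example and is not named in the text. Its capacity is C = 1 − H_b(p) bits per channel use, where H_b is the binary entropy function:

```python
import math

def binary_entropy(p: float) -> float:
    """H_b(p) = -p log2 p - (1-p) log2 (1-p), with H_b(0) = H_b(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(p: float) -> float:
    """Capacity in bits per use of a binary symmetric channel with crossover probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    return 1.0 - binary_entropy(p)
```

A channel that flips bits half the time has zero capacity, because its output is independent of its input. At p = 0.11 the capacity is very close to half a bit per use. The formula is symmetric in p and 1 − p, since a channel that almost always flips a bit is as useful as one that almost never does: the receiver can simply invert every bit.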

Coding theory is concerned with finding explicit methods, called codes, for increasing the efficiency and reducing the error rate of data communication over noisy channels to near the channel capacity. These codes can be roughly subdivided into data compression (source coding) and error-correction (channel coding) techniques. In the latter case, it took many years to find the methods Shannon's work proved were possible. A third class of information theory codes are cryptographic algorithms (both codes and ciphers). Concepts, methods and results from coding theory and information theory are widely used in cryptography and cryptanalysis. See the article ban (unit) for a historical application.
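The simplest error-correction code is the repetition code. Each bit is sent three times and the receiver takes a majority vote, which corrects any single flipped bit per block at the cost of cutting the rate to 1/3. It falls well short of what Shannon's theorem promises, but it shows the encode, corrupt, decode cycle in a few lines. The function names are ours:

```python
from typing import List

def encode_repetition(bits: List[int], r: int = 3) -> List[int]:
    """Repeat every bit r times (r odd, so that majority voting has no ties)."""
    return [b for b in bits for _ in range(r)]

def decode_repetition(received: List[int], r: int = 3) -> List[int]:
    """Majority-vote each block of r received bits back to a single bit."""
    return [int(sum(received[i:i + r]) > r // 2) for i in range(0, len(received), r)]

message = [1, 0, 1, 1]
sent = encode_repetition(message)
corrupted = list(sent)
corrupted[1] ^= 1  # one channel error in the first block
corrupted[9] ^= 1  # one channel error in the last block
assert decode_repetition(corrupted) == message
```

Two errors in the same block would defeat the vote. Better codes reduce that risk without sacrificing so much rate.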

Information theory is also used in information retrieval, intelligence gathering, gambling, statistics, and even in musical composition.

The landmark event that established the discipline of information theory and brought it to immediate worldwide attention was the publication of Claude E. Shannon's classic paper "A Mathematical Theory of Communication" in the Bell System Technical Journal in July and October 1948.