CONTOUR AND LANDSCAPE

Contour maps

When drawing in three dimensions is inconvenient, a contour map is a useful alternative for representing functions with a two-dimensional input and a one-dimensional output.

The process

Contour maps are a way to depict functions with a two-dimensional input and a one-dimensional output. For example, consider this function:

.

With graphs, the way to associate the input with the output is to combine both into a triplet , and plot that triplet as a point in three-dimensional space. The graph itself consists of all possible three-dimensional points of the form , which collectively form a surface of some kind.

But sometimes rendering a three-dimensional image can be clunky, or difficult to do by hand on the fly. Contour maps give a way to represent the function while only drawing on the two-dimensional input space.

Here's how it's done:

- Step 1: Start with the graph of the function.

- Step 2: Slice the graph with a few evenly-spaced level planes, each of which should be parallel to the -plane. You can think of these planes as the spots where equals some given output, like .

- Step 3: Mark the graph where the planes cut into it.

- Step 4: Project these lines onto the -plane, and label the heights they correspond to.

In other words, you choose a set of output values to represent, and for each one of these output values you draw a line which passes through all the input values for which equals that value. To keep track of which lines correspond to which values, people commonly write down the appropriate number somewhere along each line.

Note: The choice of outputs you want to represent, such as in this example, should almost always be evenly spaced. This makes it much easier to understand the "shape" of the function just by looking at the contour map.

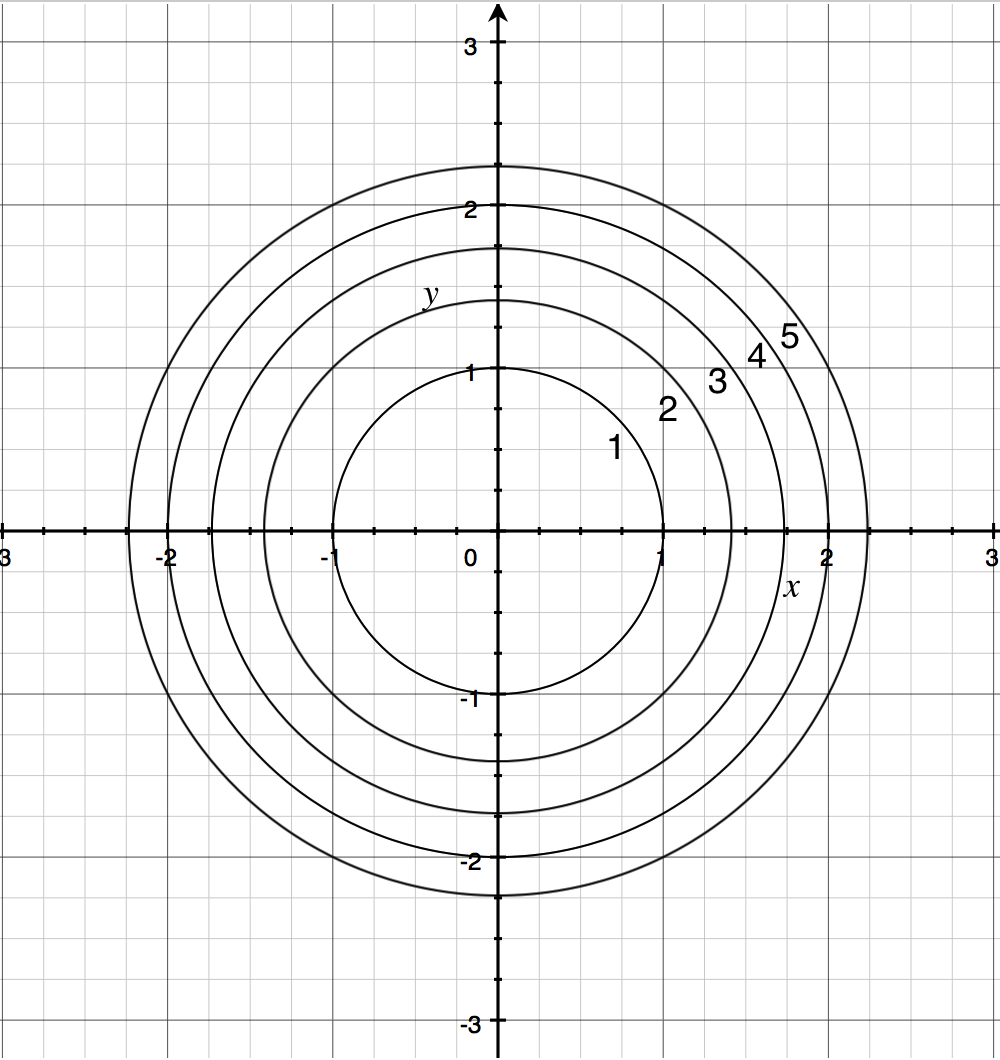

Example 1: Paraboloid

Consider the function . The shape of its graph is what's known as a "paraboloid", the three-dimensional equivalent of a parabola.

Here's what its contour map looks like:

Notice that the circles are not evenly spaced. This is because the height of the graph increases more quickly as you get farther away from the origin. Therefore, increasing the height by a given amount requires a smaller step away from the origin in the input space.

Example 2: Waves

How about the function ? Its graph looks super wavy:

And here is its contour map:

One feature worth pointing out here is that peaks and valleys can easily look very similar on a contour map, and can only be distinguished by reading the labels.

Example 3: Linear function

Next, let's look at . Its graph is a slanted plane.

This corresponds to a contour map with evenly spaced straight lines:

Example 4: Literal map

Contour maps are often used in actual maps to portray altitude in hilly terrains. The image on the right, for example, is a depiction of a certain crater on the moon.

Imagine walking around this crater. Where the contour lines are close together, the slope is rather steep. For instance, you descend from meters to meters over a very short distance. At the bottom, where lines are sparse, things are more flat, varying between meters and meters over larger distances.

Iso-suffs

The lines on a contour map have various names:

- Contour lines.

- Level sets, so named because they represent values of where the height of the graph remains unchanged, hence level.

- Isolines, where "iso" is a greek prefix meaning "same".

Depending on what the contour map represents, this iso prefix might come attached to a number of things. Here are two common examples from weather maps.

- An isotherm is a line on a contour map for a function representing temperature.

- An isobar is a line on a contour map representing pressure.

Gaining intuition from a contour map

You can tell how steep a portion of your graph is by how close the contour lines are to one another. When they are far apart, it takes a lot of lateral distance to increase altitude, but when they are close, altitude increases quickly for small lateral increments.

The level sets associated with heights that approach a peak of the graph will look like smaller and smaller closed loops, each one encompassing the next. Likewise for an inverted peak of the graph. This means you can spot the maximum or minimum of a function using its contour map by looking for sets of closed loops enveloping one another, like distorted concentric circles.

____________________________________________________

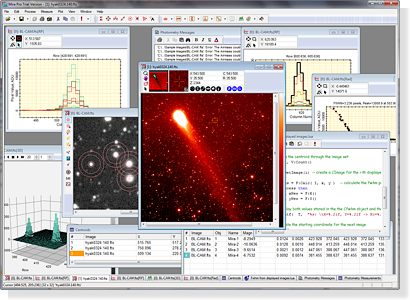

New technologies can make previously invisible phenomena visible. Nowhere is this more obvious than in the field of light microscopy and macro scope . camera technologies and associated image processing that have been a major driver of technical innovations in light microscopy. We describe five types of developments in camera technology: video-based analog contrast enhancement, charge-coupled devices (CCDs), intensified sensors, electron multiplying gain, and scientific complementary metal-oxide-semiconductor cameras, which, together, have had major impacts in light microscopy.

Technology for television broadcasts became available during the 1930s. The early developers of this technology were well aware of the potential usefulness of microscopy , the development of the television, in his landmark paper wrote, “Wide possibilities appear in application of such tubes in many fields as a substitute for the human eye, or for the observation of phenomena at present completely hidden from the eye, as in the case of the ultraviolet microscope” and Macro scope Joint . Over the following decades, while television technology became more mature and more widely accessible, its use in microscopes often appeared to be limited to classroom demonstrations. Shinya Inoué, a Japanese scientist whose decisive contributions to the field of cytoskeleton dynamics included ground-breaking new microscope technologies, described “the exciting displays of a giant amoeba, twice my height, crawling up on the auditorium screen at Princeton as I participated in a demonstration of the RCA projection video system to which we had coupled a phase-contrast microscope” . a video camera to a microscope setup for differential interference contrast (DIC), and discovered that sub-resolution structures could be made visible that were not visible when using the eye-pieces or on film . To view samples by eye, it was necessary to reduce the light intensity and to close down the iris diaphragm to increase apparent contrast, at the expense of reduced resolution. The video equipment enabled the use of a fully opened iris diaphragm and all available light, because one could now change the brightness and contrast and display the full resolution of the optical system on the television screen . Independently, yet at a similar time, Shinya Inoué used video cameras with auto-gain and auto-black level controls, which helped to improve the quality of both polarization and DIC microscope images . The Allens subsequently explored the use of frame memory to store a background image and continuously subtract it from a live video stream, further refining image quality . In practice, this background image was collected by slightly defocusing the microscope, accumulating many images, and averaging them . These new technologies enabled visualization of transport of vesicles in the squid giant axon , followed by visualization of such transport in extracts of the squid giant axon , leading to the development of microscopy-based assays for motor activity and subsequent purification of the motor protein, kinesin .

Until the development of the charge-coupled device (see next section), video cameras consisted of vacuum cathode ray tubes with a photosensitive front plate (target). These devices operated by scanning the cathode ray over the target while the resulting current varied with the amount of light “seen” by the scanned position. The analog signal (which most often followed the National Television System Committee (NTSC) standard of 30 frames per second and 525 lines) was displayed on a video screen. To store the data, either a picture of the screen was taken with a photo camera and silver emulsion-based film, or the video signal was stored on tape. Over the years, the design of both video cameras and recording equipment improved dramatically; cost reduction was driven by development for the consumer market. (In fact, to this day microscope camera technology development benefits enormously from industrial development aimed at the consumer electronics market.) However, the physical design of a vacuum tube and video tape recording device places limits on the minimum size possible, an important consideration motivating development of alternative technologies. Initially, digital processing units for background subtraction and averaging at video rate were home-built; but, later, commercial versions became available. The last widely used analog, a vacuum tube-based system used in microscopes, was probably the Hamamatsu Newvicon camera in combination with the Hamamatsu Argus image processor .

CCD Technology

Development of charge-coupled devices (CCDs) was driven by the desire to reduce the physical size of video cameras used in television stations to encourage their use by the consumer market. The CCD was invented in 1969 by Willard Boyle and George E. Smith at AT&T’s Bell Labs, an invention for which they received a half-share of the Nobel Prize in Physics in 2009. The essence of the invention was its ability to transfer charge from one area storage capacitor on the surface of a semiconductor to the next. In a CCD camera, these capacitors are exposed to light and they collect photo electrons through the photoelectric effect––in which a photon is converted into an electron. By shifting these charges along the surface of the detector to a readout node, the content of each storage capacitor can be determined and translated into a voltage that is equivalent to the amount of light originally hitting that area.

Various companies were instrumental in the development of the CCD, including Sony (for a short history of CCD camera development within Sony,

and Fairchild (Kodak developed the first digital camera based on a Fairchild 100 × 100-pixel sensor in 1975). These efforts quickly led to the wide availability of consumer-grade CCD cameras.

Even after CCD sensors had become technically superior to the older, tube-based sensors, it took time for everyone to transition from the tube-based camera technology and associated hardware, such as matching video recorders and image analyzers. One of the first descriptions of the use of a CCD sensor on a microscope can be found in Roos and Brady (1982), who attached a 1728-pixel CCD (which appears to be a linear CCD) to a standard microscope setup for Nomarski-type DIC microscopy. The authors built their own circuitry to convert the analog signals from the CCD to digital signals, which were stored in a micro-computer with 192 KB of memory. They were able to measure the length of sarcomeres in isolated heart cells at a time resolution of ~6 ms and a spatial precision of ~50 nm. Shinya Inoué’s book, Video Microscopy (1986), mentions CCDs as an alternative to tube-based sensors, but clearly there were very few CCDs in use on microscopes at that time. Remarkably, one of the first attempts to use deconvolution, an image-processing technique to remove out-of-focus “blur,” used photographic images that were digitized by a densitometer rather than a camera (Agard and Sedat, 1980). It was only in 1987 that the same group published a paper on the use of a CCD camera (Hiraoka et al., 1987). In that publication, the work of John A. Connor at AT&T’s Bell Labs is mentioned as the first use of the CCD in microscope imaging. Connor (1986) used a 320 × 512-pixel CCD camera from Photo-metrics (Tucson, AZ) for calcium imaging, using the fluorescent dye, Fura-2, as a reporter in rat embryonic nerve cells. Hiraoka et al. (1987) highlighted the advantages of the CCD technology, which included extraordinary sensitivity and numerical accuracy, and noted its main downside, slow speed. At the time, the readout speed was 50,000 pixels/s, and readout of the 1024 × 600-pixel camera took 13 s (speeds of 20 MHz are now commonplace, and would result in a 31-ms readout time for this particular camera).

One of the advantages of CCD cameras was their ability to expose the array for a defined amount of time. Rather than frame-averaging multiple short-exposure images in a digital video buffer (as was needed with a tube-based sensor), one could simply accumulate charge on the chip itself. This allowed for the recording of very sensitive measurements of dim, non-moving samples. As a result, CCD cameras became the detector of choice for (fixed) fluorescent samples during the 1990s.

Because CCDs are built using silicon, they inherit its excellent photon absorption properties at visible wavelengths. A very important parameter of camera performance is the fraction of light (photons) hitting the sensor that is converted into signal (in this case, photoelectrons). Hence, the quantum efficiency (QE) is expressed as the percentage of photoelectrons resulting from a given number of photons hitting the sensor. Even though the QE of crystalline silicon itself can approach 100%, the overlying electrodes and other structures reduce light absorption and, therefore, QE, especially at lower wavelengths (i.e., towards the blue range of the spectrum). One trick to increase the QE is to turn the sensor around so that its back faces the light source and to etch the silicon to a thin layer (10 –15 μm). Such back-thinned CCD sensors can have a QE of ~95% at certain wavelengths. The QE of charge-coupled devices tends to peak at wavelengths of around 550 nm, and drop off towards the red, because photons at wavelengths of 1100 nm are not energetic enough to elicit an electron in the silicon. Other tricks have been employed to improve the QE and its spectral properties, such as coating with fluorescent plastics to enhance the QE at lower wavelengths, changing the electrode material, or using micro-lenses that focus light on the most sensitive parts of the sensor. The Sony ICX285 sensor, which is still in use today, uses micro-lenses, achieving a QE of about 65% from ~450 –550 nm.

Concomitant with the advent of the CCD camera in microscope imaging was the widespread availability of desktop computers. Computers not only provided a means for digital storage of images, but also enabled image processing and analysis. Even though these desktop computers at first were unable to keep up with the data generated by a video rate camera (for many years, it was normal to store video on tape and digitize only sections of relevance), they were ideal for storage of images from the relatively slow CCD cameras. For data to enter the computer, analog-to-digital conversion (AD) is needed. AD conversion used to be a complicated step that took place in dedicated hardware or, later, in a frame grabber board or device, but nowadays it is often carried out in the camera itself (which provides digitized output). The influence of computers on microscopy cannot be overstated. Not only are computers now the main recording device, they also enable image reconstruction approaches––such as deconvolution, structured illumination, and super-resolution microscopy––that use the raw images to create realistic models for the microscopic object with resolutions that can be far greater than the original data. These models (or the raw data) can be viewed in many different ways, such as through 3D reconstructions, which are impossible to generate without computers. Importantly, computers also greatly facilitate extraction of quantitative information from the microscope data.

Intensified Sensors

The development of image intensifiers, which amplify the brightness of the image before it reaches the sensor or eye, started early in the twentieth century. These devices consisted of a photo cathode that converted photons into electrons, followed by an electron amplification mechanism, and, finally, a layer that converted electrons back into an image. The earliest image intensifiers were developed in the 1930s by Gilles Holst, who was working for Philips in the Netherlands (Morton, 1964). His intensifier consisted of a photo cathode upon which an image was projected in close proximity to a fluorescent screen. A voltage differential of several thousand volts accelerated electrons emitted from the photo cathode, directing them onto a phosphor screen. The high-energy electrons each produced many photons in the phosphor screen, thereby amplifying the signal. By cascading intensifiers, the signal can be intensified significantly. This concept was behind the so-called Gen I image-intensifiers.

The material of the photocathode determines the wavelength detected by the intensifier. Military applications required high sensitivity at infrared wavelengths, driving much of the early intensifier development; however, intensifiers can be built for other wavelengths, including X-rays.

Most intensifier designs over the last forty years or so (i.e., Gen II and beyond) include a micro-channel plate consisting of a bundle of thousands of small glass fibers bordered at the entrance and exit by nickel chrome electrodes. A high-voltage differential between the electrodes accelerates electrons into the glass fibers, and collisions with the wall elicit many more electrons, multiplying electrons coming from the photocathode. Finally, the amplified electrons from the micro-channel plate are projected onto a phosphor screen.

The sensitivity of an intensifier is ultimately determined by the quantum efficiency (QE) of the photocathode, and––despite decades of developments––it still lags significantly behind the QE of silicon-based sensors (i.e., the QE of the photocathode of a modern intensified camera peaks at around 50% in the visible region).

Intensifiers must be coupled to a camera in order to record images. For instance, intensifiers were placed in front of vidicon tubes either by fiber-optic coupling or by using lens systems in intensified vidicon cameras. Alternatively, intensifiers were built into the vacuum imaging tube itself. Probably the most well-known implementation of such a design is the silicon-intensifier target (SIT) camera. In this design, electrons from the photocathode are accelerated onto a silicon target made up of p-n junction silicon diodes. Each high-energy electron generates a large number of electron-hole pairs, which are subsequently detected by a scanning electron beam that generates the signal current. The SIT camera had a sensitivity that was several hundredfold higher than that of standard vidicon tubes (Inoué, 1986). SIT cameras were a common instrument in the 1980s and 1990s for low-light imaging. For instance, our lab used a SIT camera for imaging of sliding microtubules, using dark-field microscopy (e.g., Vale and Toyoshima, 1988) and other low-light applications such as fluorescence imaging. The John W. Sedat group used SIT cameras, at least for some time during the transition from film to CCD cameras, in their work on determining the spatial organization of DNA in the Drosophila nucleus (Gruenbaum et al., 1984).

When charge-coupled devices began to replace vidicon tubes as the sensor of choice, intensifiers were coupled to CCD or complementary metal-oxide-semiconductor (CMOS) sensors, either with a lens or by using fiber-optic bonding between the phosphor plate and the solid-state sensor. Such “ICCD” cameras can be quite compact and produce images from very low-light scenes at astonishing rates (for current commercial offerings, see, e.g., Stanford Photonics, 2016 and Andor, 2016a).

Intensified cameras played an important role at the beginning of single-molecule-imaging experimentation. Toshio Yanagida’s group performed the first published imaging of single fluorescent molecules in solution at room temperature. They visualized individual, fluorescently labeled myosin molecules as well as the turnover of individual ATP molecules, using total internal reflection microscopy, an ISIT camera (consisting of an intensifier in front of a SIT), and an ICCD camera (Funatsu et al., 1995). Until the advent of the electron multiplying charge-coupled device (EMCCD; see next section), ICCD cameras were the detector of choice for imaging of single fluorescent molecules. For instance, ICCD cameras were used to visualize single kinesin motors moving on axonemes (Vale et al., 1996), the blinking of green fluorescent protein (GFP) molecules (Dickson et al., 1997), and in demonstrating that F1-ATPase is a rotational motor that takes 120-degree steps (Yasuda et al., 1998).

Since the gain of an ICCD depends on the voltage differential between entrance and exit of the micro-channel plate, it can be modulated at extremely high rates (i.e., MHz rates). This gating not only provides an “electronic shutter,” but also can be used in more interesting ways. For example, the lifetime of a fluorescent dye (i.e., the time between absorption of a photon and emission of fluorescence) can be determined by modulating both the excitation light source and the detector gain. It can be appreciated that the emitted fluorescence will be delayed with respect to the excitation light, and that the amount of delay depends on the lifetime of the dye. By gating the detector at various phase delays with respect to the excitation light, signals with varying intensity will be obtained, from which the fluorescent lifetime can be deduced. This frequency-domain fluorescence lifetime imaging (FLIM) can be executed in wide-field mode, using an ICCD as a detector. FLIM is often used for measurement of Foerster energy transfer (FRET) between two dyes, which can be used as a proxy for the distance between the dyes. By using carefully designed probes, researchers have visualized cellular processes such as epidermal growth factor phosphorylation (Wouters and Bastiaens, 1999) and Rho GTPase activity (Hinde et al., 2013).

Electron Multiplying CCD Cameras

Despite the unprecedented sensitivity of intensified CCD cameras, which enable observation of single photons with relative ease, this technology has a number of drawbacks. These include the small linear range of the sensor (often no greater than a factor of 10), relatively low quantum efficiency (even the latest-generation ICCD cameras have a maximal QE of 50%), spreading of the signal due to the coupling of the intensifier to a CCD in an ICCD, and the possibility of irreversible sensor damage by high-light intensities, which can happen easily and at great financial cost. The signal spread was so significant that researchers were using non-amplified, traditional CCD cameras rather than ICCDs to obtain maximal localization precision in single-molecule experiments (see, e.g., Yildiz et al., 2003), despite the much longer readout times needed to collect sufficient signal above the readout (read) noise. Clearly, there was a need for CCD cameras with greater spatial precision, lower effective read noise, higher readout speeds, and a much higher damage threshold.

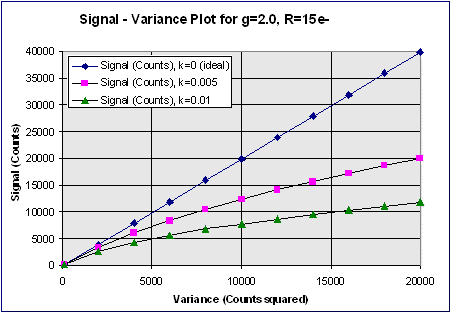

In 2001, both the British company, e2v technologies (Chelmsford, UK), and Texas Instruments (Dallas, TX) launched a new chip design that amplified the signal on the chip before reaching the readout amplifier, rather than using an external image intensifier. This on-chip amplification is carried out by an extra row of silicon “pixels,” through which all charge is transferred before reaching the readout amplifier. The well-to-well transfer in this special register is driven by a relatively high voltage, resulting in occasional amplification of electrons through a process called “impact ionization.” This process provides the transferred electrons with enough kinetic energy to knock an electron out of the silicon from the next well. Repeating this amplification in many wells (the highly successful e2v chip CCD97 has 536 elements in the amplification register) leads to very high effective amplification gains. Although the relation between voltage and gain is non-linear, the gain versus voltage curve has been calibrated by the manufacturer in modern electron multiplying (EM) CCD cameras, so that the end user can set a desired gain rather than a voltage. EM gain enables readout of the CCD at a much higher speed and read noise than normal, because the signal is amplified before readout. For instance, when the CCD readout noise is 30 e− (i.e., 30 electrons of noise per pixel) and a 100-fold EM gain is used, the effective read noise is 0.3 e−, using the unrealistic assumption that the EM gain itself does not introduce noise (read noise below 1 e− is negligible).

Amplification is never noise-free and several additional noise factors need to be considered when using EM gain. (For a thorough discussion of noise sources, see Andor, 2016b). Dark noise, or the occasional spontaneous accumulation of an electron in a CCD well, now becomes significant, since every “dark noise electron” will also be increased by EM amplification. Some impact ionization events take place during the normal charge transfers on the CCD. These “spurious charge” events are of no concern in a standard CCD, since they disappear in the noise floor dominated by readout noise, but they do become an issue when using EM gain. EM amplification itself is a stochastic process, and has noise characteristics very similar to that of the Poisson distributed photon shot noise, resulting in a noise factor (representing the additional noise over the noise expected from noise-free amplification) equal to √2, or ~1.41. Therefore, it was proposed that one can think of EM amplification as being noise-free but reducing the input signal by a factor of two, or halving the QE (Pawley, 2006).

Very quickly after their initial release around 2001, EMCCDs became the camera of choice for fluorescent, single-molecule detection. The most popular detector was the back-thinned EMCCD from e2v technologies, which has a QE reaching 95% in some parts of the spectrum and 512 × 512 × 16 μm-square pixels; through a frame transfer architecture, it can run continuously at ~30 frames per second (fps) full frame. One of the first applications of this technology in biological imaging was by the Jim Spudich group at Stanford University, who used the speed and sensitive detection offered by EMCCD cameras to image the mechanism of movement of the molecular motor protein, myosin VI. They showed that both actin-binding sites (heads) of this dimeric motor protein take 72-nm steps and that the heads move in succession, strongly suggesting a hand-over-hand displacement mechanism (Ökten et al., 2004).

One of the most spectacular contributions made possible by EMCCD cameras was the development of super-resolution localization microscopy. For many years, single-molecule imaging experiments had shown that it was possible to localize a single fluorescent emitter with high resolution, in principle limited only by the amount of photons detected. However, to image biological structures with high fidelity, one needs to image many single molecules, whose projections on the camera (the point spread function) overlap. As William E. Moerner and Eric Betzig explained in the 1990s, as long as one can separate the emission of single molecules based on any physical criteria, such as time or wavelength, it is possible to uniquely localize many single molecules within a diffraction-limited volume. Several groups implemented this idea in 2006, using blinking of fluorophores, either photo-activatable GFPs, in the case of photo-activated localization microscopy (PALM; Betzig et al., 2006) and fluorescence PALM (fPALM; Hess et al., 2006), or small fluorescent molecules, as in stochastic optical reconstruction microscopy (STORM; Rust et al., 2006). Clearly, the development and availability of fluorophores with the desired properties was essential for these advances (which is why the Nobel prize in Chemistry was awarded in 2014 to Moerner and Betzig, as well as Stefan W. Hell, who used non-camera based approaches to achieve super-resolution microscopy images). But successful implementation of super-resolution localization microscopy was greatly aided by EMCCD camera technology, which allowed the detection of single molecules with high sensitivity and low background, and at high speeds (the Betzig, Xiaowei Zhuang, and Samuel T. Hess groups all used EMCCD cameras in their work).

Other microscope-imaging modalities that operate at very low light levels have also greatly benefited from the use of EMCCD cameras. Most notably, spinning disk confocal microscopy is aided enormously by EMCCD cameras, since that microscopy enables visualization of the biological process of interest at lower light exposure of the sample and at higher speed than possible with a normal CCD. EMCCD-based imaging reduces photobleaching and photodamage of the live sample compared to CCDs, and offers better spatial resolution and larger linear dynamic range than do intensified CCD cameras. Hence, EMCCDs have largely replaced other cameras as the sensor of choice for spinning disk confocal microscopes .

Scientific CMOS Cameras

Charge-coupled device (CCD) technology is based on shifting charge between potential wells with high accuracy and the use of a single, or very few, readout amplifiers. (Note: It is possible to attach a unique readout amplifier to a subsection of the CCD, resulting in so-called multi-tap CCD sensors. But application of this approach has been limited in research microscopy). In active-pixel sensor (APS) architecture, each pixel contains not only a photodetector, but also an amplifier composed of transistors located adjacent to the photosensitive area of the pixel. These APS sensors are built using complementary metal-oxide-semiconductor (CMOS) technology, and are referred to as CMOS sensors. Because of their low cost, CMOS sensors were used for a long time in consumer-grade devices such as web cameras, cell phones, and digital cameras. However, they were considered far too noisy for use in scientific imaging, since every pixel contains its own amplifier, each slightly different from the other. Moreover, the transistors take up space on the chip that is not photosensitive, a problem that can be partially overcome by the use of micro-lenses to focus the light onto the photosensitive area of the sensor. Two developments, however, made CMOS cameras viable for microscopy imaging. First, Fairchild Imaging (Fairchild Imaging, 2016) improved the design of the CMOS sensor so that low read noise (around 1 electron per pixel) and high quantum efficiency (current sensors can reach 82% QE) became possible. These new sensors were named sCMOS (scientific CMOS). Second, the availability of field-programmable gate arrays (FPGAs), which are integrated circuits that can be configured after they have been produced (i.e., they can be used as custom-designed chips, cost much less because only the software has to be written. No new hardware needs to be designed). All current sCMOS cameras contain FPGAs that execute blemish corrections, such as reducing hot pixels and noisy pixels, and linearize the output of pixels, in addition to performing other functions, such as binning (pooling) of pixels. More and more image-processing functions are being integrated into these FPGAs. For instance, the latest sCMOS camera

Remarkably, sCMOS cameras can run at very high speeds (100 frames per s for a ~5-megapixel sensor), have desirable pixel sizes (the standard is 6.5 μm2, which matches the resolution provided by the often used 100 × 1.4-na objective lens; see Maddox et al., 2003 for an explanation), and cost significantly less than electron multiplying CCD (EMCCD) cameras. These features led to the rapid adoption of these cameras, even though the early models still had obvious defects, such as uneven dark image, non-linear pixel response (most pronounced around pixel value 2048 due to the use of separate digital-to-analog converters for the low- and high-intensity ranges), and the rolling shutter mode, which causes the exposure to start and end at varying time points across the chip (up to 10 ms apart). The speed, combined with large pixel number and low-read noise, makes sCMOS cameras highly versatile for most types of microscopy. In practice, however, EMCCDs still offer an advantage under extremely low-light conditions, such as is often encountered in spinning disk confocal microscopy (Fig. 3; Oreopoulos et al., 2013). However, super-resolution microscopy can make good use of the larger field of view and higher speed of sCMOS cameras, resulting in much faster data acquisition of larger areas. For example, acquisition of reconstructed super-resolution images at a rate of 32 per s using sCMOS cameras has been demonstrated (Huang et al., 2013). Another application that has greatly benefited from sCMOS cameras is light sheet fluorescence microscopy, in which objects ranging in size from single cells to small animals are illuminated sideways, such that only the area to be imaged is illuminated, greatly reducing phototoxicity. The large field of view, low-read noise, and high speed of sCMOS cameras has, for instance, made it possible to image calcium signaling in 80% of the neurons of a zebrafish embryo at 0.8 Hz (Ahrens et al., 2013). A recent development is lattice light sheet microscopy, which uses a very thin sheet and allows for imaging of individual cells at high resolution for extended periods of time. Lattice light sheet microscopes use sCMOS cameras because of their high speed, low-read noise, and large field of view (Chen et al., 2015). New forms and improvements in light sheet microscopy will occur in the next several years, and make significant contributions to the understanding of biological systems.

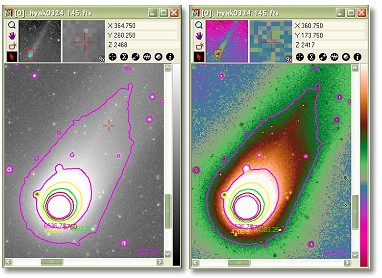

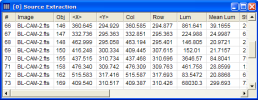

Comparison of the electron multiplying charge-coupled device (EMCCD) and scientific complementary metal-oxide-semiconductor (sCMOS) cameras for use with a spinning disk confocal microscope. Adjacent cells were imaged, making use of either an EMCCD or sCMOS camera, using different tube lenses such that the pixel size in the image plane was the same for both (16-μm pixels for the EMCCD, with a 125-mm tube lens; 6.5-μm pixels for the sCMOS, with a 50-mm tube lens). (Reprinted from fig. 9.8, panel b, of Oreopoulos, J., et al., 2013, Methods Cell Biol. 123: 153–175, with permission from Elsevier.) from Photometrics can execute a complicated noise reduction algorithm on the FPGA of the camera before the image reaches the computer

Summary and Outlook

Microscope imaging has progressed from the written recording of qualitative observations to a quantitative technique with permanent records of images. This leap was made possible through the emergence of highly quantitative, sensitive, and fast cameras, as well as computers, which make it possible to capture, display, and analyze the data generated by the cameras. It is safe to say that, despite notable improvements in microscope optics, progress in microscopy over the last three decades has been largely driven by sensors and analysis of digital images; structured illumination, super-resolution, lifetime, and light sheet microscopy, to name a few, would have been impossible without fast quantitative sensors and computers. The development of camera technologies was propelled by the interests of the military, the consumer market, and researchers, who have benefited from the much larger economic influence of the other groups.

Camera technology has become very impressive, pushing closer and closer to the theoretical limits. The newest sCMOS cameras have an effective read noise of about 1 e− and high linearity over a range spanning almost 4 orders of magnitude; they can acquire 5 million pixels at a rate of 100 fps and have a maximal QE of 82%. Although there is still room for improvement, these cameras enable sensitive imaging at the single-molecule level, probe biochemistry in living cells, and image organs or whole organisms at a fast rate and high resolution in three dimensions. The biggest challenge is to make sense of the enormous amount of data generated. At maximum rate, sCMOS cameras produce data at close to 1 GB/s, or 3.6 TB/h, making it a challenge to even store on computer disk, let alone analyze the images with reasonable throughput. Reducing raw data to information useful for researchers, both by extracting quantitative measurements from raw data and by visualization (especially in 3D), is increasingly becoming a bottleneck and an area where improvements and innovations could have profound effects on obtaining new biological insights. We expect that this data reduction will occur more and more “upstream,” close to the sensor itself, and that data analysis and data reduction will become more integrated with data acquisition. The aforementioned noise filters built into the new Photometrics sCMOS camera, as well as recently released software by Andor that can transfer data directly from the camera to the computer’s graphical processing unit (GPU) for fast analysis, foreshadow such developments. Whereas researchers now still consider the entire acquired image as the de facto data that needs to be archived, we may well transition to a workflow in which images are quickly reduced to the measurements of interest (using well-described, open, and reproducible procedures), either by computer processing in the camera itself or in closely connected computational units. Developments in this area will open new possibilities for the ways in which scientists visualize and analyze biological samples.

Abbreviations

| AD | analog-to-digital conversion |

| APS | active pixel sensor |

| CCD | charge-coupled device |

| CMOS | complementary metal-oxide-semiconductor |

| DIC | differential interference contrast |

| EMCCD | electron multiplying charge-coupled device |

| FLIM | fluorescence lifetime imaging microscopy |

| fps | frames per second |

| FRET | Foerster resonance energy transfer |

| GFPs | green fluorescent proteins |

| PALM | photoactivated localization microscopy |

| QE | quantum efficiency |

| sCMOS | scientific complementary metal-oxide-semiconductor |

| SIT | silicon-intensifier target |

| STORM | stochastic optical reconstruction microscopy |

A.XO Light Sensors

__________________________________________________________________________________

Light Sensors are photoelectric devices that convert light energy (photons) whether visible or infra-red light into an electrical (electrons) signal

A Light Sensor generates an output signal indicating the intensity of light by measuring the radiant energy that exists in a very narrow range of frequencies basically called “light”, and which ranges in frequency from “Infra-red” to “Visible” up to “Ultraviolet” light spectrum.

The light sensor is a passive devices that convert this “light energy” whether visible or in the infra-red parts of the spectrum into an electrical signal output. Light sensors are more commonly known as “Photoelectric Devices” or “Photo Sensors” because the convert light energy (photons) into electricity (electrons).

Photoelectric devices can be grouped into two main categories, those which generate electricity when illuminated, such as Photo-voltaics or Photo-emissives etc, and those which change their electrical properties in some way such as Photo-resistors or Photo-conductors. This leads to the following classification of devices.

- • Photo-emissive Cells – These are photodevices which release free electrons from a light sensitive material such as caesium when struck by a photon of sufficient energy. The amount of energy the photons have depends on the frequency of the light and the higher the frequency, the more energy the photons have converting light energy into electrical energy.

- • Photo-conductive Cells – These photodevices vary their electrical resistance when subjected to light. Photoconductivity results from light hitting a semiconductor material which controls the current flow through it. Thus, more light increase the current for a given applied voltage. The most common photoconductive material is Cadmium Sulphide used in LDR photocells.

- • Photo-voltaic Cells – These photodevices generate an emf in proportion to the radiant light energy received and is similar in effect to photoconductivity. Light energy falls on to two semiconductor materials sandwiched together creating a voltage of approximately 0.5V. The most common photovoltaic material is Selenium used in solar cells.

- • Photo-junction Devices – These photodevices are mainly true semiconductor devices such as the photodiode or phototransistor which use light to control the flow of electrons and holes across their PN-junction. Photojunction devices are specifically designed for detector application and light penetration with their spectral response tuned to the wavelength of incident light.

The Photoconductive Cell

A Photoconductive light sensor does not produce electricity but simply changes its physical properties when subjected to light energy. The most common type of photoconductive device is the Photoresistor which changes its electrical resistance in response to changes in the light intensity.

Photoresistors are Semiconductor devices that use light energy to control the flow of electrons, and hence the current flowing through them. The commonly used Photoconductive Cell is called the Light Dependent Resistor or LDR.

The Light Dependent Resistor

Typical LDR

As its name implies, the Light Dependent Resistor (LDR) is made from a piece of exposed semiconductor material such as cadmium sulphide that changes its electrical resistance from several thousand Ohms in the dark to only a few hundred Ohms when light falls upon it by creating hole-electron pairs in the material.

The net effect is an improvement in its conductivity with a decrease in resistance for an increase in illumination. Also, photoresistive cells have a long response time requiring many seconds to respond to a change in the light intensity.

Materials used as the semiconductor substrate include, lead sulphide (PbS), lead selenide (PbSe), indium antimonide (InSb) which detect light in the infra-red range with the most commonly used of all photoresistive light sensors being Cadmium Sulphide (Cds).

Cadmium sulphide is used in the manufacture of photoconductive cells because its spectral response curve closely matches that of the human eye and can even be controlled using a simple torch as a light source. Typically then, it has a peak sensitivity wavelength (λp) of about 560nm to 600nm in the visible spectral range.

The Light Dependent Resistor Cell

The most commonly used photoresistive light sensor is the ORP12 Cadmium Sulphide photoconductive cell. This light dependent resistor has a spectral response of about 610nm in the yellow to orange region of light. The resistance of the cell when unilluminated (dark resistance) is very high at about 10MΩ’s which falls to about 100Ω’s when fully illuminated (lit resistance).

To increase the dark resistance and therefore reduce the dark current, the resistive path forms a zigzag pattern across the ceramic substrate. The CdS photocell is a very low cost device often used in auto dimming, darkness or twilight detection for turning the street lights “ON” and “OFF”, and for photographic exposure meter type applications.

Connecting a light dependant resistor in series with a standard resistor like this across a single DC supply voltage has one major advantage, a different voltage will appear at their junction for different levels of light.

The amount of voltage drop across series resistor, R2is determined by the resistive value of the light dependant resistor, RLDR. This ability to generate different voltages produces a very handy circuit called a “Potential Divider” or Voltage Divider Network.

As we know, the current through a series circuit is common and as the LDR changes its resistive value due to the light intensity, the voltage present at VOUT will be determined by the voltage divider formula. An LDR’s resistance, RLDR can vary from about 100Ω in the sun light, to over 10MΩ in absolute darkness with this variation of resistance being converted into a voltage variation at VOUT as shown.

One simple use of a Light Dependent Resistor, is as a light sensitive switch as shown below.

LDR Switch

This basic light sensor circuit is of a relay output light activated switch. A potential divider circuit is formed between the photoresistor, LDR and the resistor R1. When no light is present ie in darkness, the resistance of the LDR is very high in the Megaohms (MΩ) range so zero base bias is applied to the transistor TR1 and the relay is de-energised or “OFF”.

As the light level increases the resistance of the LDR starts to decrease causing the base bias voltage at V1 to rise. At some point determined by the potential divider network formed with resistor R1, the base bias voltage is high enough to turn the transistor TR1 “ON” and thus activate the relay which in turn is used to control some external circuitry. As the light level falls back to darkness again the resistance of the LDR increases causing the base voltage of the transistor to decrease, turning the transistor and relay “OFF” at a fixed light level determined again by the potential divider network.

By replacing the fixed resistor R1 with a potentiometer VR1, the point at which the relay turns “ON” or “OFF” can be pre-set to a particular light level. This type of simple circuit shown above has a fairly low sensitivity and its switching point may not be consistent due to variations in either temperature or the supply voltage. A more sensitive precision light activated circuit can be easily made by incorporating the LDR into a “Wheatstone Bridge” arrangement and replacing the transistor with an Operational Amplifier as shown.

Light Level Sensing Circuit

In this basic dark sensing circuit, the light dependent resistor LDR1 and the potentiometer VR1 form one adjustable arm of a simple resistance bridge network, also known commonly as a Wheatstone bridge, while the two fixed resistors R1 and R2 form the other arm. Both sides of the bridge form potential divider networks across the supply voltage whose outputs V1 and V2 are connected to the non-inverting and inverting voltage inputs respectively of the operational amplifier.

The operational amplifier is configured as a Differential Amplifier also known as a voltage comparator with feedback whose output voltage condition is determined by the difference between the two input signals or voltages, V1 and V2. The resistor combination R1 and R2 form a fixed voltage reference at input V2, set by the ratio of the two resistors. The LDR – VR1 combination provides a variable voltage input V1 proportional to the light level being detected by the photoresistor.

As with the previous circuit the output from the operational amplifier is used to control a relay, which is protected by a free wheel diode, D1. When the light level sensed by the LDR and its output voltage falls below the reference voltage set at V2 the output from the op-amp changes state activating the relay and switching the connected load.

Likewise as the light level increases the output will switch back turning “OFF” the relay. The hysteresis of the two switching points is set by the feedback resistor Rf can be chosen to give any suitable voltage gain of the amplifier.

The operation of this type of light sensor circuit can also be reversed to switch the relay “ON” when the light level exceeds the reference voltage level and vice versa by reversing the positions of the light sensor LDR and the potentiometer VR1. The potentiometer can be used to “pre-set” the switching point of the differential amplifier to any particular light level making it ideal as a simple light sensor project circuit.

Photojunction Devices

Photojunction Devices are basically PN-Junction light sensors or detectors made from silicon semiconductor PN-junctions which are sensitive to light and which can detect both visible light and infra-red light levels. Photo-junction devices are specifically made for sensing light and this class of photoelectric light sensors include the Photodiode and the Phototransistor.

The Photodiode.

Photo-diode

The construction of the Photodiode light sensor is similar to that of a conventional PN-junction diode except that the diodes outer casing is either transparent or has a clear lens to focus the light onto the PN junction for increased sensitivity. The junction will respond to light particularly longer wavelengths such as red and infra-red rather than visible light.

This characteristic can be a problem for diodes with transparent or glass bead bodies such as the 1N4148 signal diode. LED’s can also be used as photodiodes as they can both emit and detect light from their junction. All PN-junctions are light sensitive and can be used in a photo-conductive unbiased voltage mode with the PN-junction of the photodiode always “Reverse Biased” so that only the diodes leakage or dark current can flow.

The current-voltage characteristic (I/V Curves) of a photodiode with no light on its junction (dark mode) is very similar to a normal signal or rectifying diode. When the photodiode is forward biased, there is an exponential increase in the current, the same as for a normal diode. When a reverse bias is applied, a small reverse saturation current appears which causes an increase of the depletion region, which is the sensitive part of the junction. Photodiodes can also be connected in a current mode using a fixed bias voltage across the junction. The current mode is very linear over a wide range.

Photo-diode Construction and Characteristics

When used as a light sensor, a photodiodes dark current (0 lux) is about 10uA for geranium and 1uA for silicon type diodes. When light falls upon the junction more hole/electron pairs are formed and the leakage current increases. This leakage current increases as the illumination of the junction increases.

Thus, the photodiodes current is directly proportional to light intensity falling onto the PN-junction. One main advantage of photodiodes when used as light sensors is their fast response to changes in the light levels, but one disadvantage of this type of photodevice is the relatively small current flow even when fully lit.

The following circuit shows a photo-current-to-voltage converter circuit using an operational amplifier as the amplifying device. The output voltage (Vout) is given as Vout = IP*Rƒ and which is proportional to the light intensity characteristics of the photodiode.

This type of circuit also utilizes the characteristics of an operational amplifier with two input terminals at about zero voltage to operate the photodiode without bias. This zero-bias op-amp configuration gives a high impedance loading to the photodiode resulting in less influence by dark current and a wider linear range of the photocurrent relative to the radiant light intensity. Capacitor Cf is used to prevent oscillation or gain peaking and to set the output bandwidth (1/2πRC).

Photo-diode Amplifier Circuit

Photodiodes are very versatile light sensors that can turn its current flow both “ON” and “OFF” in nanoseconds and are commonly used in cameras, light meters, CD and DVD-ROM drives, TV remote controls, scanners, fax machines and copiers etc, and when integrated into operational amplifier circuits as infrared spectrum detectors for fibre optic communications, burglar alarm motion detection circuits and numerous imaging, laser scanning and positioning systems etc.

The Phototransistor

Photo-transistor

An alternative photo-junction device to the photodiode is the Phototransistor which is basically a photodiode with amplification. The Phototransistor light sensor has its collector-base PN-junction reverse biased exposing it to the radiant light source.

Phototransistors operate the same as the photodiode except that they can provide current gain and are much more sensitive than the photodiode with currents are 50 to 100 times greater than that of the standard photodiode and any normal transistor can be easily converted into a phototransistor light sensor by connecting a photodiode between the collector and base.

Phototransistors consist mainly of a bipolar NPN Transistor with its large base region electrically unconnected, although some phototransistors allow a base connection to control the sensitivity, and which uses photons of light to generate a base current which in turn causes a collector to emitter current to flow. Most phototransistors are NPN types whose outer casing is either transparent or has a clear lens to focus the light onto the base junction for increased sensitivity.

Photo-transistor Construction and Characteristics

In the NPN transistor the collector is biased positively with respect to the emitter so that the base/collector junction is reverse biased. therefore, with no light on the junction normal leakage or dark current flows which is very small. When light falls on the base more electron/hole pairs are formed in this region and the current produced by this action is amplified by the transistor.

Usually the sensitivity of a phototransistor is a function of the DC current gain of the transistor. Therefore, the overall sensitivity is a function of collector current and can be controlled by connecting a resistance between the base and the emitter but for very high sensitivity optocoupler type applications, Darlington phototransistors are generally used.

Photo-darlington

Photodarlington transistors use a second bipolar NPN transistor to provide additional amplification or when higher sensitivity of a photodetector is required due to low light levels or selective sensitivity, but its response is slower than that of an ordinary NPN phototransistor.

Photo darlington devices consist of a normal phototransistor whose emitter output is coupled to the base of a larger bipolar NPN transistor. Because a darlington transistor configuration gives a current gain equal to a product of the current gains of two individual transistors, a photodarlington device produces a very sensitive detector.

Typical applications of Phototransistors light sensors are in opto-isolators, slotted opto switches, light beam sensors, fibre optics and TV type remote controls, etc. Infrared filters are sometimes required when detecting visible light.

Another type of photojunction semiconductor light sensor worth a mention is the Photo-thyristor. This is a light activated thyristor or Silicon Controlled Rectifier, SCR that can be used as a light activated switch in AC applications. However their sensitivity is usually very low compared to equivalent photodiodes or phototransistors.

To help increase their sensitivity to light, photo-thyristors are made thinner around the gate junction. The downside to this process is that it limits the amount of anode current that they can switch. Then for higher current AC applications they are used as pilot devices in opto-couplers to switch larger more conventional thyristors.

Photovoltaic Cells.

The most common type of photovoltaic light sensor is the Solar Cell. Solar cells convert light energy directly into DC electrical energy in the form of a voltage or current to a power a resistive load such as a light, battery or motor. Then photovoltaic cells are similar in many ways to a battery because they supply DC power.

However, unlike the other photo devices we have looked at above which use light intensity even from a torch to operate, photovoltaic solar cells work best using the suns radiant energy.

Solar cells are used in many different types of applications to offer an alternative power source from conventional batteries, such as in calculators, satellites and now in homes offering a form of renewable power.

Photovoltaic Cell

Photovoltaic cells are made from single crystal silicon PN junctions, the same as photodiodes with a very large light sensitive region but are used without the reverse bias. They have the same characteristics as a very large photodiode when in the dark.

When illuminated the light energy causes electrons to flow through the PN junction and an individual solar cell can generate an open circuit voltage of about 0.58v (580mV). Solar cells have a “Positive” and a “Negative” side just like a battery.

Individual solar cells can be connected together in series to form solar panels which increases the output voltage or connected together in parallel to increase the available current. Commercially available solar panels are rated in Watts, which is the product of the output voltage and current (Volts times Amps) when fully lit.

Characteristics of a typical Photovoltaic Solar Cell.

The amount of available current from a solar cell depends upon the light intensity, the size of the cell and its efficiency which is generally very low at around 15 to 20%. To increase the overall efficiency of the cell commercially available solar cells use polycrystalline silicon or amorphous silicon, which have no crystalline structure, and can generate currents of between 20 to 40mA per cm2.

Other materials used in the construction of photovoltaic cells include Gallium Arsenide, Copper Indium Diselenide and Cadmium Telluride. These different materials each have a different spectrum band response, and so can be “tuned” to produce an output voltage at different wavelengths of light.

B. XO CCD SENSOR IN CAMERAS

_____________________________________________________

In place of the film used in conventional film cameras, digital cameras incorporate an electronic component known as an image sensor. Most digital cameras are equipped with the image sensor known as a CCD Sensors, a semiconductor sensor that converts light into electrical signals. CCD Sensors are made up of tiny elements known as pixels. Expressions such as "2-megapixel" and "4-megapixel" refer to the number of pixels comprising the CCD Sensors of a camera. Each pixel is in fact a tiny photo diode that is sensitive to light and becomes electrically charged in accordance with the strength of light that strikes it. These electrical charges are relayed much like buckets of water in a bucket line, to eventually be converted into electrical signals.

The Way Light Is Converted Into Electric Current

The surface of a CCD Sensors is packed with photodiodes, each of which senses light and accumulates electrical charge in accordance with the strength of light that strikes it. Let's take a look at what happens in each photodiode. Photodiodes are, in fact, semiconductors, the most basic of which is a pn pair made up of a p-type and an n-type semiconductor. If a plus electrode (anode) is attached to the p-type side, and a minus electrode (cathode) is attached to the n-type side of the pn pair, and electric current is then passed through this circuit, current flows through the semiconductor. This is known as forward bias. If you create a reversed circuit by attaching a plus electrode to the n-type side, and a minus electrode to the p-type side of the pn pair, then electrical current will be unable to flow. This is known as reverse bias. Photodiodes possess this reverse bias structure. The main difference from standard semiconductors is the way in which they accumulate electrical charge in direct proportion to the amount of light that strikes them.

Photodiodes Accumulate Electrical Charge

Photodiodes are designed to enable light to strike them on their p-type sides. When light strikes this side, electrons and holes are created within the semiconductor in a photoelectric effect. Light of a short wavelength that strikes the photodiode is absorbed by the p-type layer, and the electrons created as a result are attracted to the n-type layer. Light of a long wavelength reaches the n-type layer, and the holes created as a result in the n-type layer are attracted to the p-type layer. In short, holes gather on the p-type side, which accumulates positive charge, while electrons gather on the n-type side, which accumulates negative charge. And because the circuit is reverse-biased, the electrical charges generated are unable to flow. The brighter the light that hits the photodiode, the greater the electrical charge that will accumulate within it. This accumulation of electrical charge at the junction of the pn pair when light strikes is known as a photovoltaic effect. Photodiodes are basically devices that make use of such photovoltaic effects to convert light into electrical charge, which accumulates in direct proportion to the strength of the light striking them. Photodiodes are also at work in our everyday lives in such devices as infrared remote control sensors and camera exposure meters.

CCD Sensors Relay Electrical Charge Like Buckets of Water in a Bucket Line

CCD Sensors comprise photodiodes and mechanisms consisting of polysilicon gates for transferring the charges accumulating within them to the edge of the CCD. The charges themselves cannot be read as electrical signals, and need to be transferred across the CCD Sensors to the edge, where they are converted into voltage. By applying series of pulses, the charges accumulated at each photodiode are relayed in succession, much like buckets of water in a bucket line, down the rows of photodiodes to the edge. CCD is the abbreviation of "Charge-Coupled Device," and "Charge-Coupled" means the way charges are moved through gates from one photodiode to the next.

C . XO The EARTH ___ SPACE AND LIGHT

---------------------------------------------------------------------------------

Space is filled with waves of various wavelengths. In addition to visible light, there are wavelengths that cannot be seen by the naked eye, such as radio waves and infrared, ultraviolet, X-, and gamma rays. These are collectively known as electromagnetic waves because they pass through space by alternately oscillating between electric and magnetic fields. Light is a type of electromagnetic wave. The electromagnetic waves reaching us consist of only a portion of the visible light, near-infrared rays and radio waves from space because the earth is surrounded by a layer of gases known as the atmosphere. This structure is intimately related to the existence of life on earth.

Does Light Nurture Life?

Life on earth receives the blessings of the light emitted by the sun. The energy that reaches the earth from the sun is about 2 calories per square centimeter per minute, the figure known as solar constant. Calculations based on this figure indicate that every second the sun emits as much energy into space as burning 10 quadrillion (10,000 trillion) tons of coal. The greatest of the sun's gifts to earth is photosynthesis by plants. When a material absorbs the energy of light, the light is changed into heat, thereby raising the object's temperature. There are also cases where fluorescence or phosphorescence is emitted, but most of the time materials do not change. Sometimes, however, materials do chemically react to light. This is called a "photochemical reaction." Photochemical reactions do not occur with infrared light, but happen primarily when visible light and ultraviolet rays, which have shorter wavelengths, are absorbed.

Photosynthesis also is a type of chemical reaction. Plants use the energy of sunlight to synthesize glucose from carbon dioxide and water. They then use this glucose to produce such materials as starch and cellulose. In short, photosynthesis stores the energy of sunlight in the form of glucose. All animals, including humans, live by eating these plants, thereby absorbing the oxygen produced during photosynthesis and indirectly taking in the energy of sunlight. In other words, sunlight is a source of life on earth. We do not yet know the detail of how plants conduct photosynthesis, but in green plants, chloroplasts within cells are known to play a crucial role.

Does the Earth Have Windows?

The earth is surrounded by layers of gases called the atmosphere. Some wavelengths of electromagnetic waves arriving from space are absorbed by the atmosphere and never reach the surface of our planet. Take a look at the following diagram. The earth's atmosphere absorbs the majority of ultraviolet, X-, and gamma rays, which are all shorter wavelengths than visible light. High energy X- and gamma rays would damage organisms and cells of creatures if they were to reach the earth's surface directly. Fortunately, the atmosphere protects life on earth. Long-wavelength radio waves and infrared rays also do not reach the surface. The electromagnetic waves we can generally observe on the ground consist of visible light, which is difficult for the atmosphere to absorb, near-infrared rays, and some electromagnetic waves. These wavelength ranges are called atmospheric windows. Ground-based astronomical observation employs optical and radio telescopes that take advantage of atmospheric windows. Infrared, X-, and gamma rays, for which atmospheric windows are closed, can only be observed using balloons and astronomical satellites outside the earth's atmosphere.

What Does Invisible Light Do?

Electromagnetic waves are all around us. This includes not only visible light, but also invisible ultraviolet and infrared rays. Infrared rays, with wavelengths longer than visible light, are also a familiar part of electrical appliances. Infrared rays with wavelengths of 2,500 nm and less are called near-infrared rays and are used in TV and VCR remote controllers, as well as in fiber-optic communications. Wavelengths above 2,500 nm are called far-infrared rays and are used in heaters and stoves.

Ultraviolet rays, with wavelengths shorter than visible light, pack high energy that results in sunburns and faded curtains. Ultraviolet rays with wavelengths of 315 nm and less are particularly dangerous because they destroy the DNA within the cells of living creatures. The bactericidal effect of ultraviolet rays is employed for medical implements, but we also know that large doses of these rays can trigger diseases such as skin cancer and have an impact on the entire ecosystem. Ultraviolet rays are suspected in ozone layer destruction, one of the global environmental issues that has been focused on in recent years. Near an altitude of 25 km, there is a rather thick ozone layer of 15 parts per million (ppm). This layer absorbs ultraviolet rays with wavelengths of 350 nm and less, thereby preventing them from reaching the earth's surface, where they would devastate life. Part of the ozone layer is being destroyed by chlorofluorocarbons (CFCs) that are used in refrigerators and air conditioners. "Ozone holes," where the level of ozone is extremely low, can be observed in the Antarctic and other places.

What If Light Does Nasty Things?

"Photochemical smog" is another environmental problem caused by photochemical reactions. Light causes automobile exhaust to chemically react and form irritating materials that produce smog. This occurs around high-traffic roadways on hot, windless days. Automobile engines and other machinery emit nitrogen monoxide (NO). Immediately after entering the atmosphere, NO reacts with oxygen molecules, transforming into nitrogen dioxide, a brownish gas that readily absorbs visible light, causing a photochemical reaction that breaks it down into NO and oxygen atoms. However, this NO once again immediately reacts with oxygen molecules, transforming back into nitrogen dioxide.

Through the process of photolysis, sunlight produces a vast amount of highly reactive oxygen atoms from a small amount of nitrogen dioxide. (A reaction is taking place through which single oxygen molecules consisting of two oxygen atoms are dissociated to form oxygen atoms.) The resulting oxygen atoms bond with oxygen molecules, forming ozone, and with other organic compounds in exhaust gas, forming peroxides. Ozone and peroxides are a source of eye and throat irritati

D. XO LIGHT AND COLOUR

______________________________________________________

The human eye resembles a camera. It has an iris and a lens, and the retina-a membrane deep inside the eye-functions much like film. Light passes through the lens of the eye and strikes the retina, thereby stimulating its photoreceptor cells, which in turn emit a signal. This signal travels over the optic nerve to the brain, where color is perceived. There are two types of photoreceptor cells known as "cones" and "rods." Rods detect brightness and darkness, while cones detect color. Between the two, there are some 200 million photoreceptor cells. Cones are further classified into L, M, and S cones, each of which senses a different color wavelength, allowing us to perceive color.

Why Can We See Color?

Sunlight itself is colorless and is therefore called white light. So, why can we see color in the objects around us? An object's color depends on its properties. For example, an object that looks red when light is shined on it will appear bright red when only red light is used. When only blue light is used, however, the same object will look dark. An object that normally looks red reflects red light most intensely while absorbing other colors of light. In short, we see an object's color based on what color it is reflecting most intensely.

Light is comprised of the three primary colors: red, green and blue. Combining these three primary colors of light yields white, while other combinations can produce all the colors we see. The human retina has L, M and S cones, which act as sensors for red, green and blue, respectively.

Why do things appear in 3-D? (1)

The world as seen by humans is a 3-dimensional space. Why are we able to perceive the depth of objects despite the human retina sensing flat 2-dimensional information?

Humans have two eyes, which are separated horizontally (by a distance of approximately 6.5 cm). Because of this, the left eye and right eye view objects from slightly different angles (this is called "binocular disparity") and the images sensed by the retinas differ slightly. This difference is processed in the brain (in the visual area of the cerebral cortex) and recognized as 3-dimensional information.

Movie and television images are flat, 2-dimensional in nature, but 3-D movies and 3-D television utilize this "binocular disparity" to create different images for the left and right eyes. These two types of images are delivered to the left and right eyes, which provides the sensation of a 3-D image.

3-D movies generally require 3-D glasses to enable viewers to see two types of images on a single screen. Projection methods vary from theater to theater, as do the types of glasses used. Some typical types of 3-D glasses include color filter (anaglyph) glasses, polarized glasses, and shutter glasses. In the color filter method, images are divided into separate RGB wavelengths. When passed through glasses with different colored filters on the left and right lenses, the image matching each eye enters. In the polarization method, two types of polarized light differing by 90 degrees are applied to images, and these are separated using a polarization filter in the glasses. The shutter method switches between the left and right images. Shutter glasses alternate the opening and closing of the left and right shutters in sync with the switching of images on the screen, delivering the respective images to each eye. Because switching takes place over extremely short periods of time, the images appear without breaks due to the "afterimage effect" that takes place in human eyes.

Humans have two eyes, which are separated horizontally (by a distance of approximately 6.5 cm). Because of this, the left eye and right eye view objects from slightly different angles (this is called "binocular disparity") and the images sensed by the retinas differ slightly. This difference is processed in the brain (in the visual area of the cerebral cortex) and recognized as 3-dimensional information.

Movie and television images are flat, 2-dimensional in nature, but 3-D movies and 3-D television utilize this "binocular disparity" to create different images for the left and right eyes. These two types of images are delivered to the left and right eyes, which provides the sensation of a 3-D image.

3-D movies generally require 3-D glasses to enable viewers to see two types of images on a single screen. Projection methods vary from theater to theater, as do the types of glasses used. Some typical types of 3-D glasses include color filter (anaglyph) glasses, polarized glasses, and shutter glasses. In the color filter method, images are divided into separate RGB wavelengths. When passed through glasses with different colored filters on the left and right lenses, the image matching each eye enters. In the polarization method, two types of polarized light differing by 90 degrees are applied to images, and these are separated using a polarization filter in the glasses. The shutter method switches between the left and right images. Shutter glasses alternate the opening and closing of the left and right shutters in sync with the switching of images on the screen, delivering the respective images to each eye. Because switching takes place over extremely short periods of time, the images appear without breaks due to the "afterimage effect" that takes place in human eyes.

Why do things appear in 3-D? (2)

Like 3-D movies, 3-D televisions make use of "binocular disparity." The mechanism, however, is slightly different. 3-D televisions display two types of images by attaching optical components to the display panel or embedding fine slits in it. A typical optical component is the "lenticular lens," a lens sheet with a cross-section characterized by a semi-circular pattern, which is placed in front of the television screen, resulting in the image created by the pixels for each eye entering the left and right eyes of the viewer separately. This method enables viewers to enjoy 3-dimensional video without the need for special glasses, but the viewing location is limited. For example, when c represents the distance from the television screen to the lenticular lens, L the distance from the lenticular lens to the viewer, and f the focal length of the lenticular lens, the following relationship applies: 1/c + 1/L = 1/f. Once c and f have been determined, then the viewing position L becomes fixed. Furthermore, the frame sequential method, which alternates between two types of images without the use of optical components like the shutter method used in films, has recently been practically implemented for 3-D television and is becoming the most widely adopted technology. This method, however, requires glasses with shutters synchronized with the switching of images.

Other technologies that enable the world of 3-D to be enjoyed with even higher levels of realism are also being developed. A human's depth perception not only utilizes binocular disparity, but also "motion parallax," which enables not only the front of an object to be seen, but also the sides and back of the object when the object moves or is seen from a different angle. There are also systems that incorporate compact cameras and positional sensors like the HMD (head mounted display) used in Canon's MR (Mixed Reality) technology, which changes images in real time according to the movement of the viewer to provide a realistic 3-D video experience. Technology that makes use of holography is also under consideration.

Other technologies that enable the world of 3-D to be enjoyed with even higher levels of realism are also being developed. A human's depth perception not only utilizes binocular disparity, but also "motion parallax," which enables not only the front of an object to be seen, but also the sides and back of the object when the object moves or is seen from a different angle. There are also systems that incorporate compact cameras and positional sensors like the HMD (head mounted display) used in Canon's MR (Mixed Reality) technology, which changes images in real time according to the movement of the viewer to provide a realistic 3-D video experience. Technology that makes use of holography is also under consideration.

Does Light from Receding Objects Appear Reddish?

The "Doppler effect" acts on sound. For example, the pitch of an ambulance siren becomes higher as it moves toward you and lower as it moves away. This phenomenon occurs because many sound waves are squeezed into a shortening distance as the siren moves towards you, and stretched as the distance grows when the ambulance moves away from you. Light and sound are both waves, so the same phenomenon occurs with light, as well. Since the speed of light is extremely fast, this phenomenon can only be observed in space. Light from stars approaching the earth has a shorter wavelength, appearing bluer than its true color, while light from stars receding into the distance has a longer wavelength, appearing reddish. These phenomena are known as blue shift and red shift, both of which are products of the Doppler effect.

What Is the Gravitational Lens Effect?

Light behaves strangely in space. The gravitation lens effect produces this strange behavior. According to Einstein's General Theory of Relativity, an object that has mass (or weight, so to speak) will cause space-time to bend around it. Objects with extremely high mass will cause the surrounding space-time to bend even more. When such an object exists in outer space, light passing near it will travel along the curve it creates in space. By nature, light travels in a straight line, but when it encounters such an object in space-time, it bends much like it does when passing through a convex lens, hence the term gravitational lens effect.

When the light from a distant star is bent according to the gravitational lens effect, observers see the star as though it were in a different position than it really is. Just like a convex lens made of glass, this gigantic lens in space also forms images by enlarging stars and increasing brightness. Research on wobble in space and small stars that are dim and distant is underway at a fever pitch using this effect. Incidentally, when light from distant stars reaches the earth after skimming around the edge of the sun, gravitational refraction produces a lens-like effect that bends the light by an angle of 1.75 seconds (1 second is 1/3,600 degrees).

Does Gravity Change the Color of Light?