The data communicated, stored, and accumulated on the computer networks that exist on Earth today can use the cloud as an intermediary medium, a catalyst for computer network communication. The expectation is that computer networks and electronic communications will one day run on independent, efficient energy, apart from the initial start-up use of electric generators and adapters to turn the networks on and off. At that point we could use energy from above the clouds to power the equipment of computer communication networks, and possibly star energy from the sky for continued energy storage. We could tap this energy using future electronic engineering techniques, namely at proxy wells on Earth and at the firewall wells that surround computer network systems, before it enters the computer network diagnostic window system.

Love ( Line On Victory Endless )

Gen. Mac Tech Zone cloud energy and above the cloud

How energy-efficient is cloud computing?

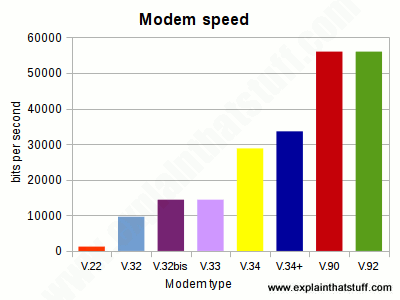

Researchers have found that, at high usage levels, the energy required to transport data in cloud computing can be larger than the amount of energy required to store the data.

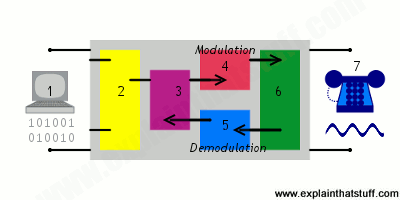

Conventionally, data storage and data processing are done at the user's own computer, using that computer's storage system and processor. An alternative to this method is cloud computing, which is Internet-based computing that enables users at home or office computers to transfer data to a remote data center for storage and processing. Cloud computing offers potential benefits – especially financial ones – to users, but in a new study, researchers have investigated a different aspect of cloud computing: how does its energy consumption compare with conventional computing?

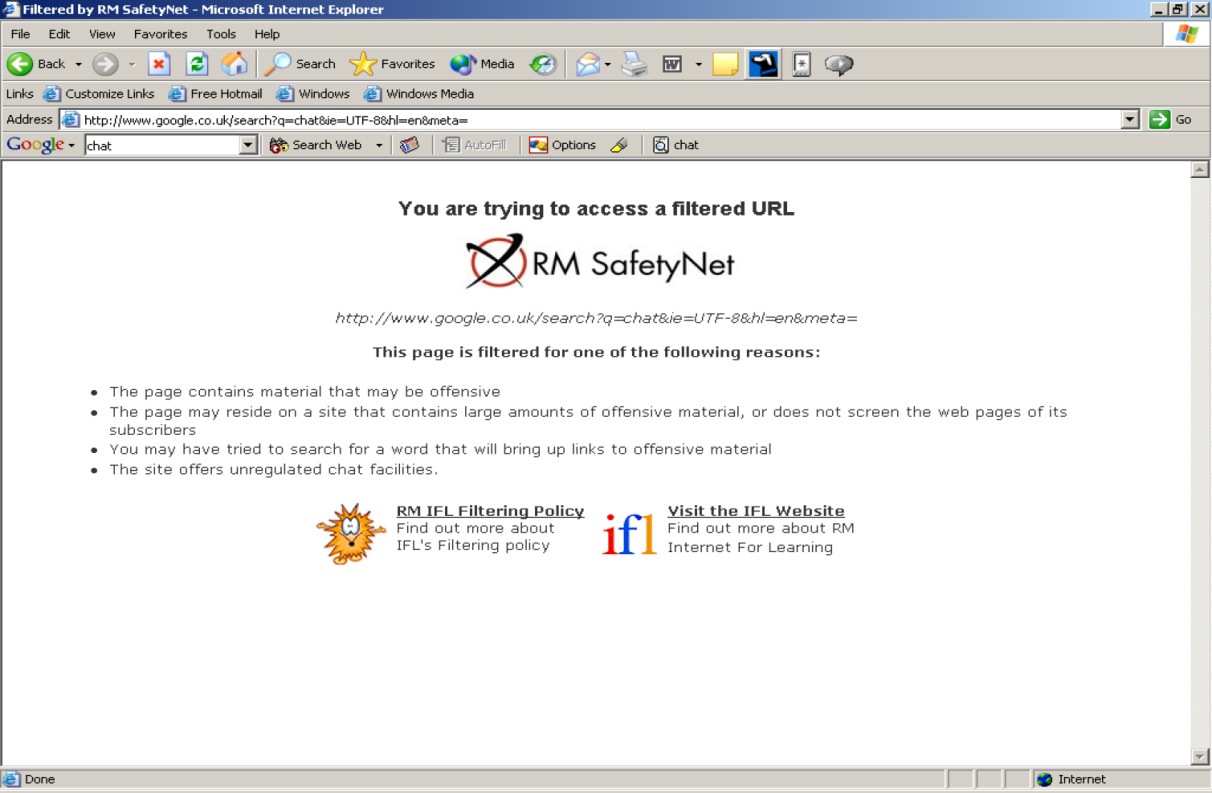

The researchers found that cloud computing is not always the greenest option. They investigated using cloud computing for three different services – storage, software, and processing – on public and private systems. (A public cloud is hosted on the Internet, and a private cloud is hosted within a company behind its firewall.) While previous studies of energy consumption in cloud computing have focused only on the energy consumed in the data center, the researchers found that transporting data between data centers and home computers can consume even larger amounts of energy than storing it.

“The most important conclusion in our analysis is that, when comparing the energy consumption of cloud-based services with that of a typical desktop PC, we must include the energy consumption required to transport the data from the user into the cloud resources and back,” Rod Tucker, leader of the University of Melbourne research team, told PhysOrg.com. “This is particularly important if the cloud service is provided via the public Internet. Some papers that have claimed that cloud computing provides a 'greener' alternative to current desktop computing fail to include the energy consumption involved with transporting the data from the user into the cloud. In many cases, we may find that the data center used by the cloud-based services are located in another city, state or even country.”

In general, not much attention has been paid to the energy consumption used in transmitting data, since cloud computing is more often praised for its other features. Some advantages of cloud computing are that it offers high-capacity storage and high-performance computing from any location with Internet access, while not requiring users to invest in new hardware or upgrade their software. Cloud computing systems can be free (such as Google Docs), or users may pay a yearly subscription fee or fee per resources used.

“Energy efficiency is crucial in two contexts,” Tucker said. “Firstly, if the user device is a mobile device (phone, i-pad, PDA, etc.), then its battery lifetime is a key issue. Secondly, as the use of cloud services balloons, its energy consumption will likewise grow. The US Environmental Protection Agency estimated that in 2007 servers and data centers were responsible for about 0.5% of US greenhouse gas production. The greenhouse gas production that results from power consumption of data centers is expected to double between 2007 and 2020 if we just continue with business as usual. Without careful consideration of the power consumption of cloud services, their growing popularity will become a significant contributor to greenhouse gas production. Therefore, we need to develop technologies and strategies to address this issue before cloud services become more widespread.”

When using the cloud for data storage (such as storing documents, photos, and videos using services such as Amazon Simple Storage Service), the researchers found that cloud computing can consume less power than conventional computing when the cloud service is used infrequently and at low intensities. This is because, at low usage levels, power consumption for storage dominates total power consumption, and power consumption for transport is minimal. But at medium and high usage levels, more energy is required to transport data, so that transport dominates total power consumption and greatly increases the overall energy consumed. Specifically, transport can account for as little as 10% and 25% of total power consumption at low usage levels for private and public storage services, respectively, and nearly 60% and 90%, respectively, at high usage levels.
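To make the storage-versus-transport trade-off concrete, here is a minimal sketch. It is not the researchers' model: the function name, the fixed per-user storage power, and the energy per download are all hypothetical values chosen only so that the transport share climbs from about 10% to about 90% as usage grows.

```python
def transport_share(storage_power_w, energy_per_download_j, downloads_per_hour):
    """Return the fraction of total power that goes to data transport."""
    # Average transport power: energy per download spread over one hour.
    transport_power_w = energy_per_download_j * downloads_per_hour / 3600.0
    return transport_power_w / (storage_power_w + transport_power_w)


# Hypothetical figures, chosen only to illustrate the trend.
STORAGE_POWER_W = 1.0          # per-user storage power (assumed)
ENERGY_PER_DOWNLOAD_J = 400.0  # transport energy per file download (assumed)

for rate in (1, 10, 81):
    share = transport_share(STORAGE_POWER_W, ENERGY_PER_DOWNLOAD_J, rate)
    print(f"{rate:3d} downloads/hour -> transport share {share:.0%}")
```

Because storage power stays flat while transport power scales with usage, the transport share can only grow as the service is used more often.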

But overall, cloud storage services use less energy compared to cloud software and cloud processing. For cloud software services (such as Google Docs), the power consumption in transport is negligibly small as long as screen refresh rates are low (lower than 0.1 frames/sec, where 1 frame/sec means that 100% of the screen changes every second; a smaller percentage of the screen changing corresponds to a smaller screen refresh rate). However, for cloud software services, the biggest factor determining energy efficiency is the number of users per server, where more users corresponds to lower power consumption per user. In this case, public cloud computing, with its larger number of users, would benefit more than private cloud computing.
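The users-per-server effect is simple division, sketched below. The 300 W server and the user counts are assumptions for illustration, not figures from the study.

```python
def power_per_user(server_power_w, users_per_server):
    """Share one server's power draw evenly among its users."""
    if users_per_server <= 0:
        raise ValueError("users_per_server must be positive")
    return server_power_w / users_per_server


SERVER_POWER_W = 300.0  # assumed power draw of one server

# Assumed user counts: a small private cloud versus a busy public one.
for label, users in (("private cloud", 20), ("public cloud", 200)):
    watts = power_per_user(SERVER_POWER_W, users)
    print(f"{label}: {watts:.1f} W per user")
```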

For cloud processing services (in which a server such as Amazon Elastic Compute Cloud processes large computational tasks only, and smaller tasks are processed on the user's computer), the researchers again found that the cloud alternative consumes less energy only under certain conditions. The results showed that, for public cloud processing services, data transport consumed large amounts of energy compared to private cloud processing services, particularly at high usage levels. The reason is that the large number of router hops required on the public Internet greatly increases the energy consumption in transport, and private cloud processing requires significantly fewer routers. Still, the researchers found that, for both public and private clouds, a cloud processing service is more energy-efficient than older-generation PCs.
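The router-hop argument can be sketched with a deliberately crude model in which transport energy grows linearly with the number of hops. The linear model, the hop counts, and the energy per bit per hop are all assumptions made for illustration.

```python
def transport_energy_j(bits, hops, energy_per_bit_per_hop_j):
    """Energy to move `bits` across `hops` routers (assumed linear model)."""
    return bits * hops * energy_per_bit_per_hop_j


ENERGY_PER_BIT_PER_HOP_J = 1e-8  # assumed, for illustration only
FILE_BITS = 8e9                  # a 1 GB upload

public_j = transport_energy_j(FILE_BITS, 12, ENERGY_PER_BIT_PER_HOP_J)  # assumed 12 hops
private_j = transport_energy_j(FILE_BITS, 3, ENERGY_PER_BIT_PER_HOP_J)  # assumed 3 hops
print(f"public: {public_j:.0f} J, private: {private_j:.0f} J")
```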

The results of the study mean different things for different users. As the researchers explain, home computer users can achieve significant energy savings by using low-end laptops for routine tasks and cloud processing services for computationally intensive tasks that are infrequent, instead of using a mid- or high-end PC. For corporations, it is less clear whether the energy consumption saved in transport with a private cloud compared to a public cloud offsets the private cloud's higher energy consumption. Private clouds that serve a relatively small number of users may not benefit from the same energy-saving techniques due to their smaller scale.

Overall, the researchers predict that the technology used in cloud computing (for example, data centers, routers, and switches) will continue to become more energy-efficient. Most importantly, they identify the energy efficiency of data transport as one of the biggest areas for improvement, especially as cloud computing becomes more widespread.

“Many industry participants see the evolution toward mobility will intrinsically mean an evolution toward cloud-based services,” Tucker said. “The reason is that mobile access devices will have limited processing and storage capacity (due to size and power constraints) and so the most convenient place to put the applications and data is in the cloud. The user device will contain little more than a browser when it is started up. Any application or data that it requires will be brought down from the cloud. When that application is finished, its data will be put back into the cloud and the application will be removed from the user device until it is again required. In this way, the user device is kept simple, energy-efficient and cheap.”

Cloud computing gathers steam with mobile devices

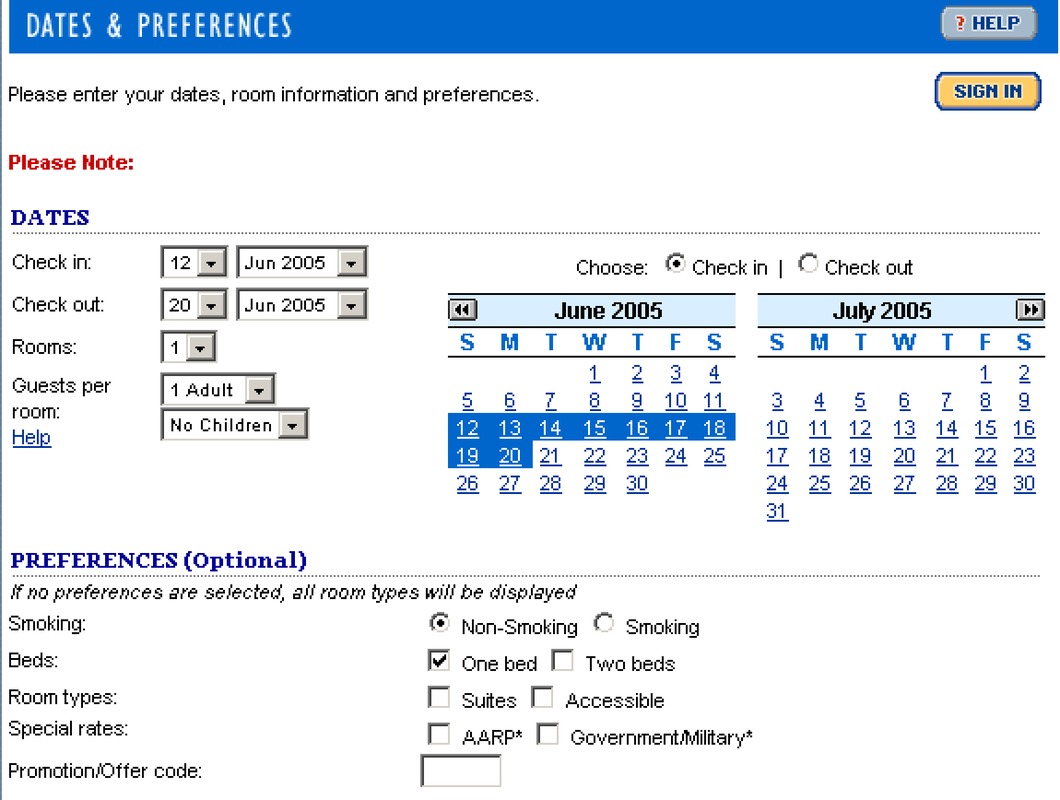

The outlook for the wireless industry is getting cloudier. Consumers have tapped into cloud computing technology for years: sending messages via Hotmail, uploading photos to Facebook or editing a spreadsheet on Google Docs are just a few examples. Simply put, cloud computing moves data from a single machine, such as a personal computer, to the Internet. The technology frees users from needing to be at a specific PC to access saved information.

Now the cloud is going mobile, and industry players expect the number of mobile cloud computing applications and Web-enabled portable devices to grow rapidly over the next few years. Motorola is building much of its turnaround strategy for mobile phones on a cloud-based solution, and wireless broadband service provider Clearwire believes the advent of faster, more sophisticated networks will unleash the full power of cloud computing.

If the industry projections come true, cloud computing will be even more commonplace. Colleagues may edit the same document over the Internet, with one person on a home computer and the other using a touch screen built into the back of the driver's seat of a car. Or a tourist who takes photos on a phone could store them in an online album, then view the pictures from a laptop.

AT&T has seen its network burdened by the large amount of data being consumed by iPhone users, and the strain across wireless networks will increase with growth in mobile applications and user-generated content.

"The biggest component (of mobile cloud computing) is you have to have a fat pipe wherever you are

Faster networks will dovetail with the proliferation of smarter devices. "This is going to be much more accessible to a lot of us on affordable phones."

XO__XO WHAT IS A DATA CENTER? Tomorrow and Future

A data center is a facility that centralizes an organization’s IT operations and equipment, as well as where it stores, manages, and disseminates its data. Data centers house a network’s most critical systems and are vital to the continuity of daily operations. Consequently, the security and reliability of data centers and their information are a top priority for organizations.

Although data center designs are unique, they can generally be classified as internet-facing or enterprise (or “internal”) data centers. Internet-facing data centers usually support relatively few applications, which are typically browser-based, and have many users, who are typically unknown. In contrast, enterprise data centers service fewer users but host more applications, which vary from off-the-shelf to custom applications.

Data center architectures and requirements can differ significantly. For example, a data center built for a cloud service provider like Amazon® EC2 satisfies facility, infrastructure, and security requirements that significantly differ from a completely private data center, such as one built for the Pentagon that is dedicated to securing classified data.

Regardless of classification, an effective data center operation is achieved through a balanced investment in the facility and equipment housed. The elements of a data center break down as follows:

Facility – the location and “white space,” or usable space, that is available for IT equipment. Providing round-the-clock access to information makes data centers some of the most energy-consuming facilities in the world. A high emphasis is placed on design to optimize white space and environmental control to keep equipment within manufacturer-specified temperature/humidity range.

Support infrastructure – equipment contributing to securely sustaining the highest level of availability possible. The Uptime Institute defined four tiers data centers can fall under, with availability ranging from 99.671% to 99.995%. Some components for supporting infrastructure include:

- Uninterruptible Power Sources (UPS) – battery banks, generators and redundant power sources.

- Environmental Control – computer room air conditioners (CRAC), heating, ventilation, and air conditioning (HVAC) systems, and exhaust systems.

- Physical Security Systems – biometrics and video surveillance systems.

IT equipment – actual equipment for IT operations and storage of the organization’s data. This includes servers, storage hardware, cables and racks, as well as a variety of information security elements, such as firewalls.

Operations staff – to monitor operations and maintain IT and infrastructural equipment around the clock.
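The availability range quoted above for the Uptime Institute tiers is easier to judge as downtime per year. The sketch below assumes a 365-day year; the function name is ours, not part of any standard.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def annual_downtime_hours(availability_percent):
    """Convert an availability percentage into expected downtime per year."""
    return (1.0 - availability_percent / 100.0) * HOURS_PER_YEAR


for availability in (99.671, 99.995):
    hours = annual_downtime_hours(availability)
    print(f"{availability}% available -> {hours:.2f} hours down per year")
```

Under this assumption the lowest tier allows about 28.8 hours of downtime a year, while the highest allows about 26 minutes.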

Data centers have evolved significantly in recent years, adopting technologies such as virtualization to optimize resource utilization and increase IT flexibility. As enterprise IT needs continue to evolve toward on-demand services, many organizations are moving toward cloud-based services and infrastructure. A focus has also been placed on initiatives to reduce the enormous energy consumption of data centers by incorporating more efficient technologies and practices in data center management. Data centers built to these standards have been termed “green data centers.”

To address growing data center demands and provide the added benefits of agility, scalability and global reach, the traditional data center is transforming into what is commonly referred to as a hybrid data center.

A hybrid cloud combines your existing data center (private cloud) resources, over which you have complete control, with ready-made IT infrastructure resources (e.g., compute, networking, storage, applications and services) that provide bursting and scaling capabilities found in IaaS (infrastructure as a service) or public cloud offerings, such as Amazon® Web Services (AWS®).

Here are three key benefits of using a hybrid cloud approach:

Benefit 1: Start Small and Expand as Needed

A hybrid cloud approach enables you to license IT infrastructure resources on a project-by-project basis with the ability to add more as needed. Without the public cloud, you would potentially invest in hardware that would sit idly during off-peak times and only be used for short-term projects.

A hybrid cloud also lets you take advantage of component-based development methodologies. If you use AWS for building new applications, architects and coders can leverage development techniques that are more component-based than previously used techniques. You can easily separate development, testing and production environments for new applications. Environments can be cloned or replicated, spun up, and used as needed with seamless traffic flow and strong security policy enforcement.

Benefit 2: Expand Your Data Center Seamlessly and Transparently

With a hybrid strategy, your public cloud essentially functions as an extension of your data center via an IPsec VPN connection, allowing you to safely and securely deploy workloads in either location. The IPsec VPN connection acts as an overlay network, bringing added benefits of privacy and simplicity from the reduction in the number of Layer 3 hops across the end-to-end network. This allows you to transparently expand your internal IP address space into the public cloud using widely supported routing protocols. With an overlay network, there’s nothing new or challenging to your network operations team or security specialists, and security policies can be easily extended to cover the routes.

Benefit 3: Security Policy Consistency – From the Network to the Cloud

Your business relies on the consistent, reliable operation of applications and data whether on-premise or in the cloud. To ensure your applications and data are protected from cyber adversaries, best practices dictate that your policies be consistent and, ideally, managed centrally. By centrally managing your on-premise and public cloud security policies, you are able to perform logical groupings of like rules, security objects and so on. This creates many opportunities for improved efficiency using a single pane of glass for all your firewalls, public and private.

For example, many configuration elements universal to all firewalls in your organization can be configured once and shared with all firewalls, including such elements as DNS servers, NTP servers, local admin accounts and syslog servers.
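The "configure once, share everywhere" idea can be sketched as a plain dictionary merge. This is a generic illustration, not the API of any firewall product: every name below is hypothetical, and the addresses come from the 192.0.2.0/24 documentation range.

```python
# Settings defined once and shared by every firewall (hypothetical values).
SHARED_SETTINGS = {
    "dns_servers": ["192.0.2.10"],
    "ntp_servers": ["192.0.2.20"],
    "syslog_servers": ["192.0.2.30"],
}

# Settings that differ per firewall (hypothetical values).
FIREWALLS = {
    "on-prem-fw": {"hostname": "on-prem-fw", "zone": "private"},
    "cloud-fw": {"hostname": "cloud-fw", "zone": "public"},
}


def build_config(shared, local):
    """Merge shared settings with one firewall's local settings (local wins)."""
    return {**shared, **local}


configs = {name: build_config(SHARED_SETTINGS, local)
           for name, local in FIREWALLS.items()}

for name, config in configs.items():
    print(name, config)
```

Changing a DNS or NTP server then means editing one shared entry rather than touching each firewall separately.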

Secure the Hybrid Cloud With VM-Series

The hybrid cloud offers significant benefits for organizations: You can start small, expand as needed, and extend your data center into the public cloud securely with the same security policy constructs in use in your private cloud. Palo Alto Networks® VM-Series virtualized next-generation firewall enables you to securely deploy a hybrid cloud by protecting your applications and data in AWS.

Cloud Computing Saves Energy

Consolidating pictures, videos, e-mails and documents on remote servers saves more energy than keeping them on personal computers.

When it comes to computing, the "cloud" may rain efficiency benefits.

Researchers at Lawrence Berkeley National Laboratory and Northwestern University unveiled a modeling tool yesterday that estimates the energy savings of moving local network software and computing into the server farms that make up the cloud.

The tool, available to the public online, is called the Cloud Energy and Emissions Research Model (CLEER). It aims to give scientists a better understanding of how energy use changes as the world moves away from storing and processing information in local networks and moves toward outsourcing these tasks to centralized facilities.

Though the word "cloud" evokes images of a clean, simple and environmentally friendly process, the systems that support it are massive industrial facilities, densely packed with processors and hard drives, that devour energy by the megawatt. Data centers use between 1 and 2 percent of the world's electricity and, with dead trees that make paper giving way to magnetic disks, energy use and consequently emissions from the Internet are poised to surge further.

Nonetheless, moving to the cloud could still save huge amounts of energy.

In a case study using the CLEER simulation, researchers found that if all American businesses moved their email programs, spreadsheet applications, customer management software and the like to centralized off-site servers, companies would shrink their computing energy footprints by 87 percent, enough to satiate the 23 billion kilowatt-hour annual appetite for the city of Los Angeles.
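As a rough check on those figures: if the 87 percent saving is taken to equal the 23 billion kilowatt-hours quoted for Los Angeles (an assumption, since the article only says "enough to"), the implied starting footprint follows from one division. The function name is ours.

```python
SAVINGS_FRACTION = 0.87  # footprint reduction reported in the case study
SAVINGS_KWH = 23e9       # assumed equal to the quoted Los Angeles figure


def implied_baseline_kwh(savings_kwh, savings_fraction):
    """Baseline energy use implied by an absolute and a fractional saving."""
    return savings_kwh / savings_fraction


baseline_kwh = implied_baseline_kwh(SAVINGS_KWH, SAVINGS_FRACTION)
remaining_kwh = baseline_kwh - SAVINGS_KWH
print(f"baseline:  {baseline_kwh / 1e9:.1f} billion kWh")
print(f"remaining: {remaining_kwh / 1e9:.1f} billion kWh")
```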

"The main gains in cloud computing come from consolidation," said a scientist at Berkeley Lab who co-authored the study. Many businesses have servers and computing hardware on-site, which are often inefficient and underused, soaking up electricity while sitting idle.

Pooling these resources in a central location means companies can effectively buy computing power in bulk and servers can spend more time doing actual work per processing unit, reducing the overall need for more computers.

A counterintuitive finding

It seems to make intuitive sense, but researchers said they had some difficulty confirming their suspicions that the cloud saves energy. "There is a gap here where there is not enough data," Ramakrishnan said.

Another issue is that there are so many variables at play. "The savings are really going to vary depending on the system you're studying and what your baseline

Whether watching a video uses less energy when it is streamed compared to a DVD depends on your computer, how you use it, the quality of your Internet connection and server loads, along with a host of other factors.

"The analyses that we need for understanding the net implications of these new technologies can be quite complex,

"There are a lot of moving parts that determine whether it's a good or bad thing."

The CLEER Model starts to chip away at this problem, aggregating available models of how data moves through the Internet. It then calculates the energy used to deliver the ones and zeros as well as the carbon intensity behind it, since not all electrons are created equal; electricity from dirty fuels like coal or from renewable sources, like solar and wind power, changes the overall environmental impact from the cloud.
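The point that "not all electrons are created equal" comes down to multiplying energy by the carbon intensity of its source. The sketch below is not the CLEER model; the intensity values are rough illustrative assumptions, not measured data.

```python
# Illustrative carbon intensities in kg CO2 per kWh (assumed, not measured).
CARBON_INTENSITY_KG_PER_KWH = {
    "coal": 1.0,
    "solar_or_wind": 0.05,
}


def emissions_kg(energy_kwh, carbon_intensity_kg_per_kwh):
    """Emissions from a given amount of electricity and its carbon intensity."""
    return energy_kwh * carbon_intensity_kg_per_kwh


ENERGY_KWH = 100.0  # the same workload in both cases

for source, intensity in CARBON_INTENSITY_KG_PER_KWH.items():
    print(f"{source}: {emissions_kg(ENERGY_KWH, intensity):.1f} kg CO2")
```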

There are also some limitations to CLEER. "We didn't include things like cost and latency and other things that come into play when you're making a business decision," Masanet said. However, researchers can rebuild and reconfigure the model from the bottom up as better data come in.

Eventually, Internet companies could tell you just how efficient their data centers are using tools like CLEER, increasing energy transparency and letting consumers shop for the most efficient option.

A new wireless technology from computer science and engineering researchers

“it transforms existing wireless signals into both a source of power and a communication medium. It enables two battery-free devices to communicate by backscattering existing wireless signals. Backscatter communication is orders of magnitude more power-efficient than traditional radio communication. Further, since it leverages the ambient RF signals that are already around us, it does not require a dedicated power infrastructure as in RFID.”

“It’s hopefully going to have applications in a number of areas including wearable computing, smart homes and self-sustaining sensor networks,”

“We can reflect these signals slightly to create a Morse code of communication between battery-free devices.”

The researchers tested the ambient backscatter technique with credit card-sized prototype devices placed within several feet of each other. Groups of the devices were tested in a variety of settings in the Seattle area, including inside an apartment building, on a street corner and on the top level of a parking garage. These locations ranged from less than half a mile away from a TV tower to about 6.5 miles away.

They found that the devices were able to communicate with each other, even the ones farthest from a TV tower. The receiving devices picked up a signal from their transmitting counterparts at a rate of 1 kilobit per second when up to 2.5 feet apart outdoors and 1.5 feet apart indoors. This is enough to send information such as a sensor reading, text messages and contact information. It’s also feasible to build this technology into devices that do rely on batteries, such as smartphones. It could be configured so that when the battery dies, the phone could still send text messages by leveraging power from an ambient TV signal.
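To get a feel for what 1 kilobit per second means, the sketch below estimates how long a short message takes to send. It assumes 1 kilobit equals 1000 bits and ignores any protocol overhead; the function name is ours.

```python
BITS_PER_SECOND = 1000  # 1 kilobit per second, taking 1 kilobit = 1000 bits


def transmit_seconds(message, bits_per_second=BITS_PER_SECOND):
    """Time to send a text message, counting payload bits only."""
    payload_bits = len(message.encode("utf-8")) * 8
    return payload_bits / bits_per_second


text_message = "a" * 160  # a 160-character text message
print(f"{transmit_seconds(text_message):.2f} seconds")
```

Under these assumptions a 160-character message takes a little over one second, which fits the claim that the rate is enough for sensor readings and texts.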

The researchers are not the only people looking at solving the problem of mobile and wireless power. The Wireless Power Consortium has created a standard called Qi (pronounced "chee"), which uses inductive electrical power transfer over distances of up to 4 cm (about 1.6 inches). The Qi system comprises a power transmission pad and a compatible receiver in a portable device. To use the system, the mobile device is placed on top of the power transmission pad, which charges it via electromagnetic induction. But unlike the UW researchers' technology, the Qi specification primarily targets recharging a device rather than powering it directly.

The applications of true long-haul wireless power transmission are as broad as they are exciting. As Time puts it:

“Given their size and wireless self-sufficiency, imagine these sensors embedded in everything from structures to vehicles to clothing. An office in a skyscraper might alert someone that a window’s been left open; a vehicle might alert you if the child lock’s been tripped or that there’s change under the seat; running shoes could let you know when you’ve reached their optimal mileage threshold. Or consider other examples: bridges capable of alerting someone if stress-related cracks form, couches that sing out after they “eat” your keys and the option to send text messages or emails with “wearable” technology, battery-free.”

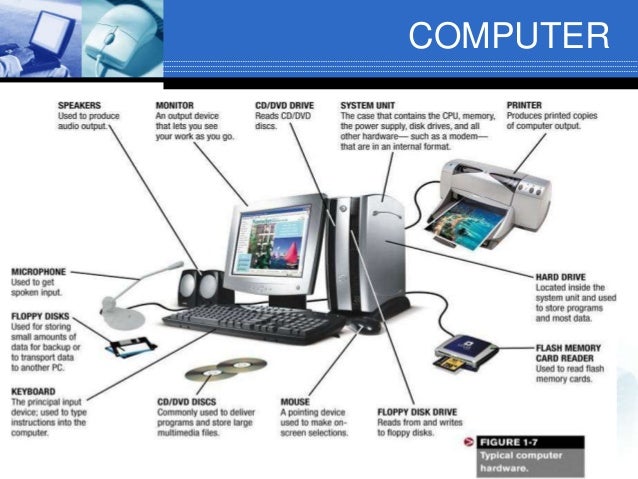

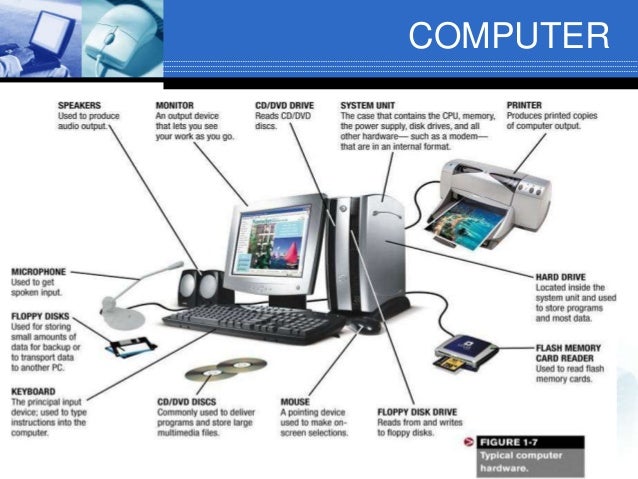

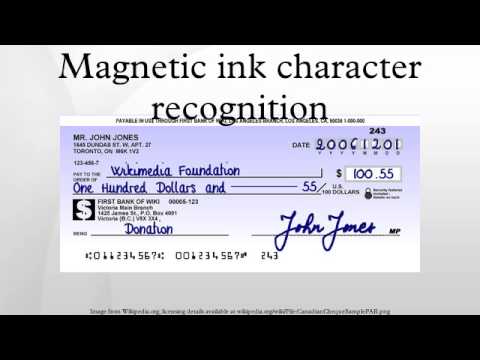

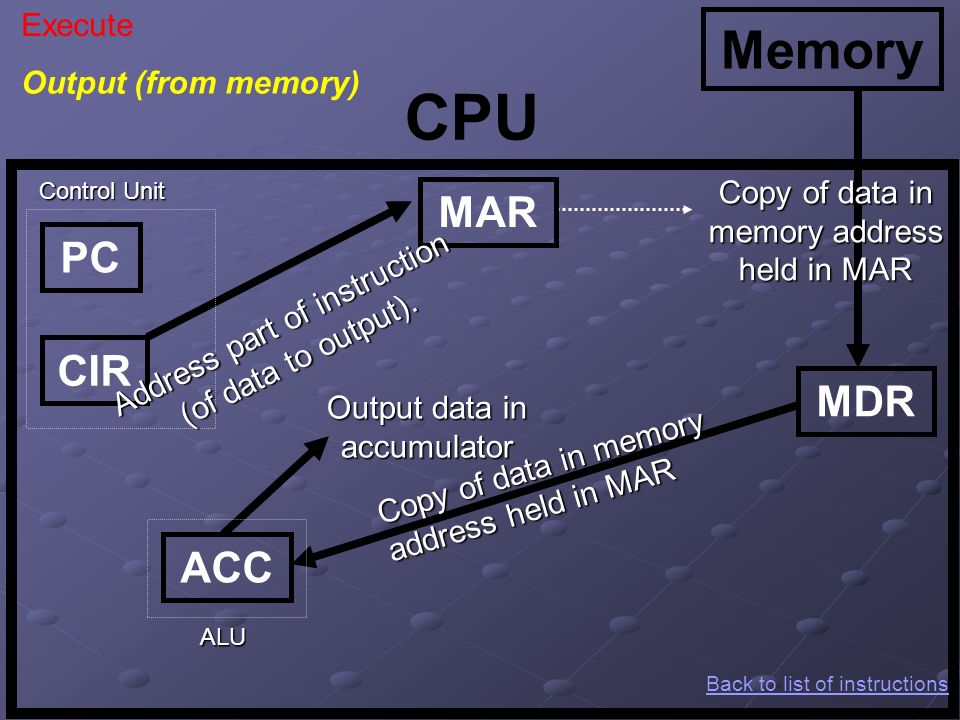

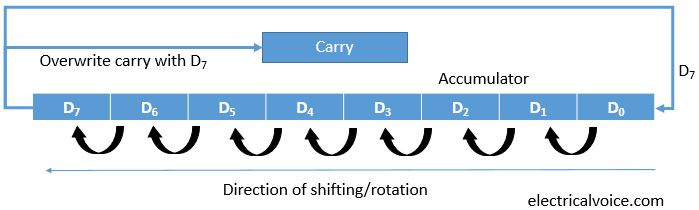

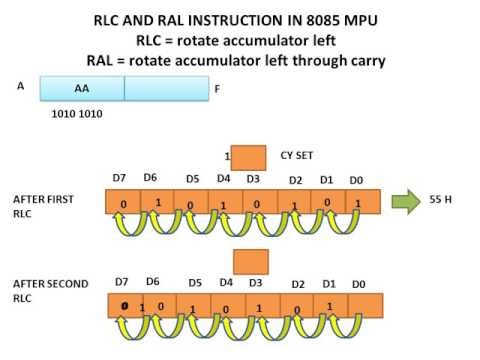

XO_XO ++ DW the earliest example of ADA ( Address - Data - Accumulation ) in the flash disk electronic circuit

How Flash Drives Work

You plug it in, grab an important file, and yank it out. It all seems so simple. But have you ever wondered what is going on inside your flash drive as your files are moving to and fro?

Perhaps you’re like most people: perfectly content to enjoy the usefulness of your flash drive without ever considering the mysterious flow of electrons between the myriad tiny components that make it up. And that’s okay. But sometimes, even for the blissfully ignorant, curiosity can be piqued when a device stops doing the thing it's supposed to do, like when a flash drive breaks or stops being recognized by your computer.

For the electronics lay person who wants a general idea of how flash drives work, the following info is for you.

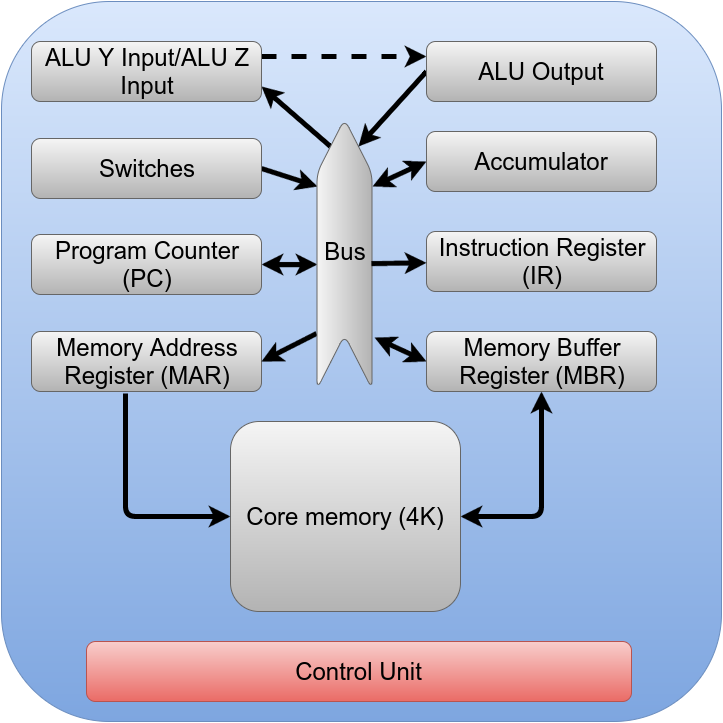

Flash Drives vs. Hard Drives

A key difference between flash drives and other types of memory devices is the lack of moving parts. Flash drives are solid state, which means they store data on a piece of silicon with zillions of tiny transistors, as opposed to traditional hard drives which use magnetic spinning disks.

Each transistor on the silicon chip holds a single bit of binary digital information: either 1 or 0. In flash drives, the transistor is set to 1 by default. Changing a transistor's state to 0 requires applying an electric charge, which triggers a crazy little process called Fowler-Nordheim tunneling.
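The "1 by default, charge to write 0" behaviour can be sketched as a toy model. Real flash chips add pages, error correction, and wear leveling, none of which is modelled here; the class and method names are hypothetical.

```python
class FlashBlock:
    """Toy model of one erase block of flash memory."""

    def __init__(self, size):
        # Erased state: every bit in every cell is 1.
        self.cells = bytearray([0xFF] * size)

    def program(self, address, value):
        # Programming can only pull bits from 1 to 0, never back to 1,
        # so the stored byte is the bitwise AND of old and new values.
        self.cells[address] &= value

    def erase(self):
        # Erasing resets the whole block to all ones at once.
        for index in range(len(self.cells)):
            self.cells[index] = 0xFF


block = FlashBlock(4)
block.program(0, 0x37)       # write the byte for the character "7"
block.program(0, 0xF0)       # a second write can only clear more bits
print(hex(block.cells[0]))
block.erase()
print(hex(block.cells[0]))
```

The second write leaves 0x30 rather than 0xF0, which is why flash must be erased before a location can be freely rewritten.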

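One cool thing about flash memory is that it doesn't require a power source to remember what is on it. If you bury your flash drive in your back yard and dig it up years from now, the data should still be on the memory chip just as you left it. Retention is not unlimited, though: the stored charge slowly leaks away, and manufacturers typically rate data retention in years rather than centuries.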

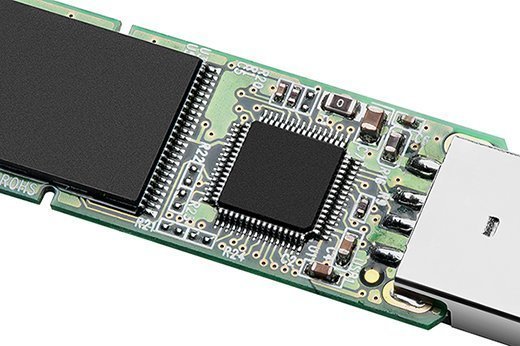

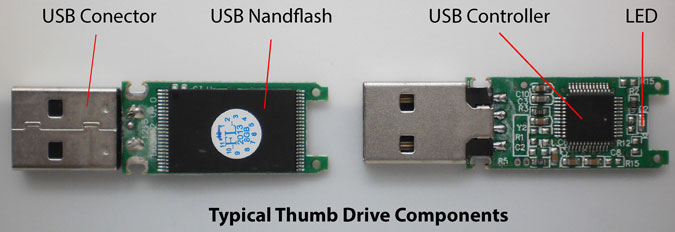

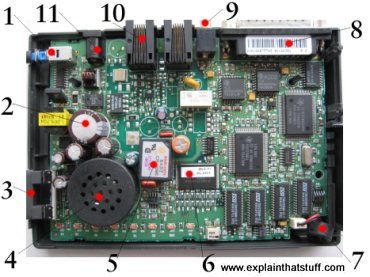

The Brain of a Flash Drive

Basically, the data on a memory chip is nothing more than a bunch of tiny ones and zeros. Making sense of those ones and zeros, telling them where to go and keeping track of where they are, is accomplished by the flash drive’s microcontroller. A flash drive’s microcontroller is roughly analogous to a computer’s CPU, or a human’s brain, but somewhat simpler, in most cases.

There is a vast array of brands and varieties of microcontrollers used by flash drive manufacturers. Some are better than others in terms of speed and durability. Second only to broken connectors, bad microcontrollers are the leading cause of flash drive failure.

How Flash Drives Communicate with Computers

USB flash drives talk with computers by means of either a standard USB 2.0 or USB 3.0 interface. At present, flash drives using the USB 3.0 interface are backwards compatible with the USB 2.0 plug.

USB 2.0 interfaces have four connections: a +5VDC power source, one positive and one negative data line, and a ground. USB 3.0 connectors have four additional data lines and a drain, creating a 10X increase in theoretical transfer speed.
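The roughly tenfold figure follows from the nominal signalling rates of the two standards, 480 Mbit/s for USB 2.0 and 5 Gbit/s for USB 3.0. The sketch below compares best-case transfer times for a 1 GB file; real drives are slower because of encoding and protocol overhead and the speed of the flash memory itself.

```python
USB2_BITS_PER_SECOND = 480e6  # USB 2.0 High Speed signalling rate
USB3_BITS_PER_SECOND = 5e9    # USB 3.0 SuperSpeed signalling rate


def transfer_seconds(file_bytes, bits_per_second):
    """Best-case time to move a file at the nominal signalling rate."""
    return file_bytes * 8 / bits_per_second


FILE_BYTES = 1_000_000_000  # 1 GB

usb2_seconds = transfer_seconds(FILE_BYTES, USB2_BITS_PER_SECOND)
usb3_seconds = transfer_seconds(FILE_BYTES, USB3_BITS_PER_SECOND)
print(f"USB 2.0: {usb2_seconds:.1f} s, USB 3.0: {usb3_seconds:.1f} s")
print(f"speed-up: {usb2_seconds / usb3_seconds:.1f}x")
```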

In addition to the essential parts mentioned above, flash drive circuit boards are covered in lots of little surface-mounted components like resistors, capacitors, diodes, oscillators, LEDs, and other parts, the primary purpose of which is to divide and direct electricity to the right places.

We hope that helps unravel a little bit of the mystery behind the flash drive. Don’t forget to back up your flash drive.

Flash Drives and The Cloud

Cloud storage platforms like Dropbox and Google Drive have some genuine advantages over USB flash drives: You obviously don't have to remember to bring your flash drive with you in order to access your files, and the risk of losing your files is relatively small compared to flash drives that can break, malfunction, be misplaced or get stolen. On the other hand, cloud storage also has its drawbacks. For many of us the issue of security is a deal breaker. Just today it would appear that Dropbox user accounts have once again been hacked. Even if you go in and change your password right away, in reality it may be too late. You won't know if hackers have accessed your private files until unknown charges appear on your bank statement, private pictures are posted online, etc.

One solution is to keep your sensitive files off of cloud storage and on a flash drive instead. If you tend to leave your flash drive at home, you might want to consider a flash drive that attaches to your key chain. Encrypting your flash drive with TrueCrypt or BitLocker adds an additional layer of security (e-Key Shifting).

Flash memory

Imagine if your memory worked only while you were awake. Every morning when you got up, your mind would be completely blank! You'd have to relearn everything you ever knew before you could do anything. It sounds like a nightmare, but it's exactly the problem computers have. Ordinary computer chips "forget" everything (lose their entire contents) when the power is switched off. Large personal computers get around this by having powerful magnetic memories called hard drives, which can remember things whether the power is on or off. But smaller, more portable devices, such as digital cameras and MP3 players, need smaller and more portable memories. They use special chips called flash memories to store information permanently. Flash memories are clever—but rather complex too. How exactly do they work?

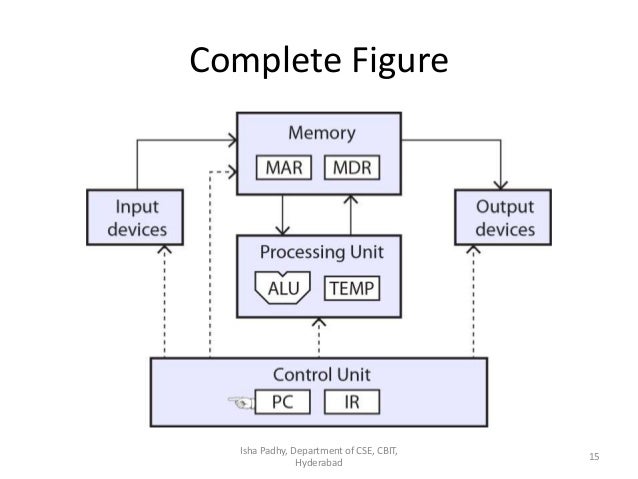

How computers store information

Computers are electronic machines that process information in digital format. Instead of understanding words and numbers, as people do, they change those words and numbers into strings of zeros and ones called binary (sometimes referred to as "binary code"). Inside a computer, a single letter "A" is stored as eight binary digits: 01000001. In fact, all the basic characters on your keyboard (the letters A–Z in upper and lower case, the numbers 0–9, and the symbols) can be represented with different combinations of just eight binary digits. A question mark (?) is stored as 00111111, a number 7 as 00110111, and a left bracket ([) as 01011011. Virtually all computers know how to represent information with this "code," because it's an agreed, worldwide standard. It's called ASCII (American Standard Code for Information Interchange).
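The bit patterns quoted above are easy to reproduce. The short sketch below uses Python's built-in `ord` and `format`; the helper name `to_bits` is ours.

```python
def to_bits(character):
    """Return the eight-digit binary form of one ASCII character."""
    code = ord(character)
    if code > 127:
        raise ValueError("not an ASCII character")
    # "08b" means: binary digits, padded with leading zeros to width 8.
    return format(code, "08b")


for character in "A?7[":
    print(character, to_bits(character))
```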

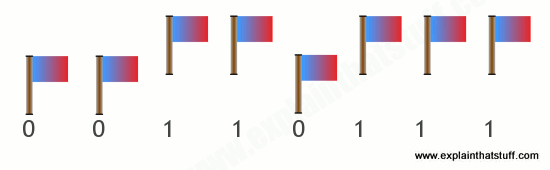

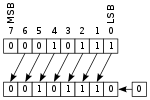

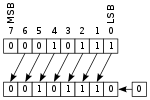

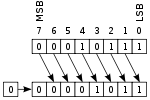

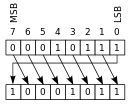

Computers can represent information with patterns of zeros and ones, but how exactly is the information stored inside their memory chips? It helps to think of a slightly different example. Suppose you're standing some distance away, I want to send a message to you, and I have only eight flags with which to do it. I can set the flags up in a line and then send each letter of the message to you by raising and lowering a different pattern of flags. If we both understand the ASCII code, sending information is easy. If I raise a flag, you can assume I mean a number 1, and if I leave a flag down, you can assume I mean a number 0. So if I show you this pattern:

You can figure out that I am sending you the binary number 00110111, equivalent to the decimal number 55, and so signaling the character "7" in ASCII.

What does this have to do with memory? It shows that you can store, or represent, a character like "7" with something like a flag that can be in two places, either up or down. A computer memory is effectively a giant box of billions and billions of flags, each of which can be either up or down. They're not really flags, though—they are microscopic switches called transistors that can be either on or off. It takes eight switches to store a character like A, 7, or [. It takes one transistor to store each binary digit (which is called a bit). In most computers, eight of these bits are collectively called a byte. So when you hear people say a computer has so many megabytes of memory, it means it can store roughly that many million characters of information (mega means million; giga means thousand million or billion).
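The "box of flags" idea can be sketched in a few lines of Python. This is only a toy model: the class name `SwitchMemory` and its methods are made up for illustration, and a real memory chip is addressed very differently.

```python
class SwitchMemory:
    """A toy memory: a flat list of on/off switches (bits), eight per byte."""

    def __init__(self, size_bytes: int) -> None:
        self.bits = [0] * (size_bytes * 8)  # every switch starts "down"

    def store_char(self, address: int, ch: str) -> None:
        """Set the eight switches at this byte address to the character's pattern."""
        pattern = format(ord(ch), "08b")
        for i, digit in enumerate(pattern):
            self.bits[address * 8 + i] = int(digit)

    def load_char(self, address: int) -> str:
        """Read eight switches back and turn them into a character."""
        chunk = self.bits[address * 8:(address + 1) * 8]
        return chr(int("".join(str(b) for b in chunk), 2))


memory = SwitchMemory(size_bytes=4)
for addr, ch in enumerate("A7["):
    memory.store_char(addr, ch)

print(memory.bits[8:16])     # the eight switches holding "7"
print(memory.load_char(1))
```

Four bytes need 32 switches; a megabyte needs roughly eight million.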

What is flash memory?

Photo: A typical USB memory stick—and the flash memory chip you'll find inside if you take it apart (the large black rectangle on the right).

Ordinary transistors are electronic switches turned on or off by electricity—and that's both their strength and their weakness. It's a strength, because it means a computer can store information simply by passing patterns of electricity through its memory circuits. But it's a weakness too, because as soon as the power is turned off, all the transistors revert to their original states—and the computer loses all the information it has stored. It's like a giant attack of electronic amnesia!

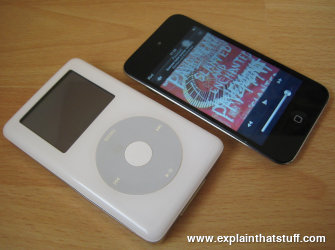

Photo: Apple iPods, past and present. The white one on the left is an old-style classic iPod with a 20GB hard drive memory. The newer black model on the right has a 32GB flash memory, which makes it lighter, thinner, more robust (less likely to die if you drop it), and less power hungry.

Memory that "forgets" when the power goes off is called Random Access Memory (RAM). There is another kind of memory called Read-Only Memory (ROM) that doesn't suffer from this problem. ROM chips are pre-stored with information when they are manufactured, so they don't "forget" what they know when the power is switched on and off. However, the information they store is there permanently: they can never be rewritten again. In practice, a computer uses a mixture of different kinds of memory for different purposes. The things it needs to remember all the time—like what to do when you first switch it on—are stored on ROM chips. When you're working on your computer and it needs temporary memory for processing things, it uses RAM chips; it doesn't matter that this information is lost later. Information you want a computer to remember indefinitely is stored on its hard drive. It takes longer to read and write information from a hard drive than from memory chips, so hard drives are not generally used as temporary memory. In gadgets like digital cameras and small MP3 players, flash memory is used instead of a hard drive. It has certain things in common with both RAM and ROM. Like ROM, it remembers information when the power is off; like RAM, it can be erased and rewritten over and over again.

How flash memory works—the simple explanation

Photo: Turn a digital camera's flash memory card over and you can see the electrical contacts that let the camera connect to the memory chip inside the protective plastic case.

Flash works using an entirely different kind of transistor that stays switched on (or switched off) even when the power is turned off. A normal transistor has three connections (wires that control it) called the source, drain, and gate. Think of a transistor as a pipe through which electricity can flow as though it's water. One end of the pipe (where the water flows in) is called the source—think of that as a tap or faucet. The other end of the pipe is called the drain—where the water drains out and flows away. In between the source and drain, blocking the pipe, there's a gate. When the gate is closed, the pipe is shut off, no electricity can flow and the transistor is off. In this state, the transistor stores a zero. When the gate is opened, electricity flows, the transistor is on, and it stores a one. But when the power is turned off, the transistor switches off too. When you switch the power back on, the transistor is still off, and since you can't know whether it was on or off before the power was removed, you can see why we say it "forgets" any information it stores.

A flash transistor is different because it has a second gate above the first one. When the gate opens, some electricity leaks up the first gate and stays there, in between the first gate and the second one, recording a number one. Even if the power is turned off, the electricity is still there between the two gates. That's how the transistor stores its information whether the power is on or off. The information can be erased by making the "trapped electricity" drain back down again.

How flash memory works—a more complex explanation

That's a very glossed over, highly simplified explanation of something that's extremely complex. If you want more detail, it helps if you read our article about transistors first, especially the bit at the bottom about MOSFETs—and then read on.

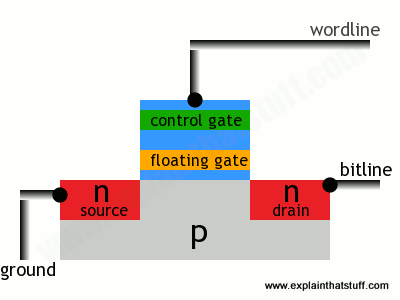

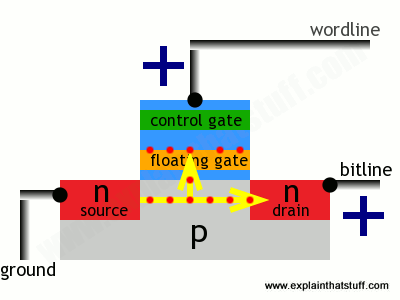

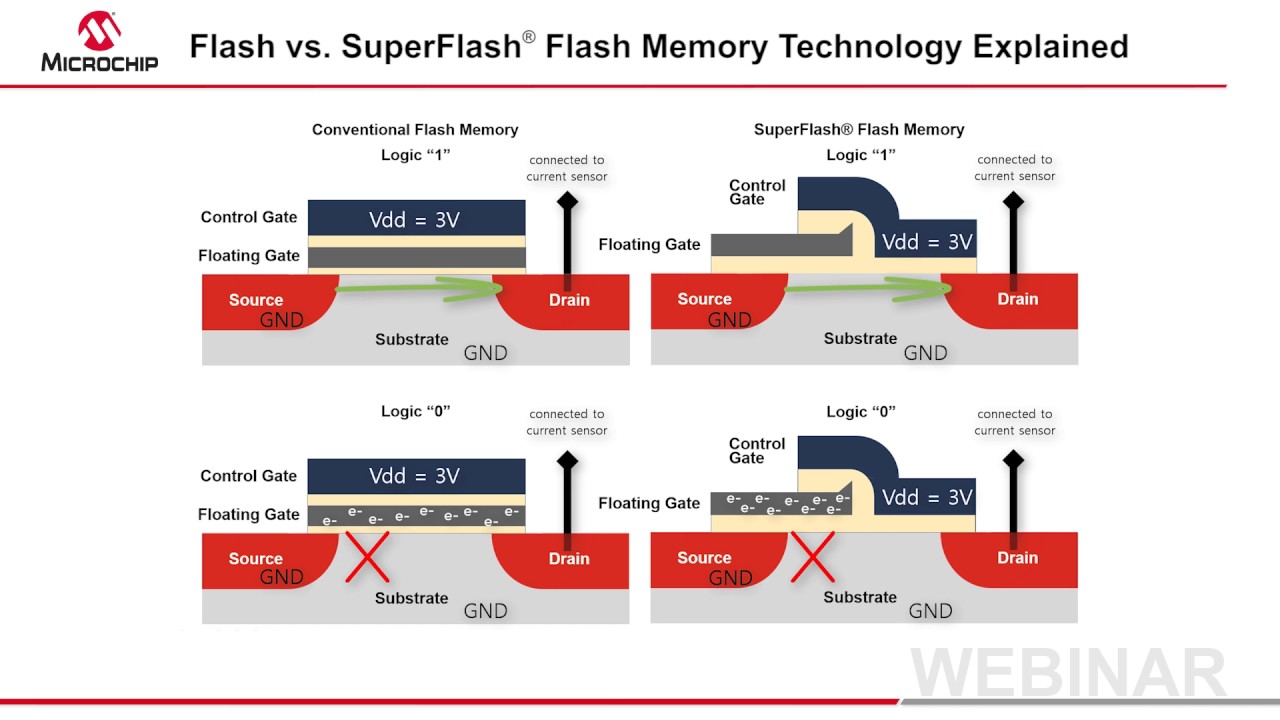

The transistors in flash memory are like MOSFETs only they have two gates on top instead of one. This is what a flash transistor looks like inside. You can see it's an n-p-n sandwich with two gates on top, one called a control gate and one called a floating gate. The two gates are separated by oxide layers through which current cannot normally pass:

In this state, the transistor is switched off—and effectively storing a zero. How do we switch it on? Both the source and the drain regions are rich in electrons (because they're made of n-type silicon), but electrons cannot flow from source to drain because of the electron deficient, p-type material between them. But if we apply a positive voltage to the transistor's two contacts, called the bitline and the wordline, electrons get pulled in a rush from source to drain. A few also manage to wriggle through the oxide layer by a process called tunneling and get stuck on the floating gate:

The presence of electrons on the floating gate is how a flash transistor stores a one. The electrons will stay there indefinitely, even when the positive voltages are removed and whether there is power supplied to the circuit or not. The electrons can be flushed out by putting a negative voltage on the wordline—which repels the electrons back the way they came, clearing the floating gate and making the transistor store a zero again.
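The difference between an ordinary transistor and a flash transistor can be sketched as two tiny Python classes. This is a hedged toy model, not device physics: the electron counts and the read threshold are arbitrary assumptions, and it follows this article's convention that trapped electrons mean a stored one.

```python
from dataclasses import dataclass


@dataclass
class VolatileTransistor:
    """Ordinary transistor: its state depends on power being present."""
    on: bool = False

    def set(self, value: int) -> None:
        self.on = bool(value)

    def power_cycle(self) -> None:
        self.on = False  # state is lost when power is removed

    def read(self) -> int:
        return int(self.on)


@dataclass
class FlashCell:
    """Floating-gate transistor: charge stays trapped without power."""
    trapped_electrons: int = 0

    def program(self, electrons: int = 1000) -> None:
        # Positive voltage on wordline and bitline: electrons tunnel onto the gate.
        self.trapped_electrons += electrons

    def erase(self) -> None:
        # Negative voltage on the wordline: electrons are pushed back out.
        self.trapped_electrons = 0

    def power_cycle(self) -> None:
        pass  # nothing changes: the oxide layers keep the charge in place

    def read(self, threshold: int = 500) -> int:
        return int(self.trapped_electrons >= threshold)


ram_bit, flash_bit = VolatileTransistor(), FlashCell()
ram_bit.set(1)
flash_bit.program()
ram_bit.power_cycle()
flash_bit.power_cycle()
print(ram_bit.read(), flash_bit.read())
```

After the simulated power cycle, only the flash cell still reads as a one.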

Not an easy process to understand, but that's how flash memory works its magic!

How long does flash memory last?

Flash memory eventually wears out because its floating gates take longer to work after they've been used a certain number of times. It's very widely quoted that flash memory degrades after it's been written and rewritten about "10,000 times," but that's misleading. According to a 1990s flash patent by Steven Wells of Intel, "although switching begins to take longer after approximately ten thousand switching operations, approximately one hundred thousand switching operations are required before the extended switching time has any affect on system operation." Whether it's 10,000 or 100,000, it's usually fine for a USB stick or the SD memory card in a digital camera you use once a week, but less satisfactory for the main storage in a computer, cellphone, or other gadget that's in daily use for years on end. One practical way around the limit is for the operating system to ensure that different bits of flash memory are used each time information is erased and stored (technically, this is called wear-leveling), so no bit is erased too often. In practice, modern computers might simply ignore and "tiptoe round" the bad parts of a flash memory chip, just like they can ignore bad sectors on a hard drive, so the real practical lifetime limit of flash drives is much higher: somewhere between 10,000 and 1 million cycles. Cutting-edge flash devices have been demonstrated that survive for 100 million cycles or more.
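Wear-leveling is easier to see in code. The sketch below is a simplified assumption of how a controller or operating system might do it: each rewrite of a logical block goes to whichever free physical block has been erased least. The class name and structure are illustrative only, and real flash translation layers are far more involved.

```python
class WearLeveledFlash:
    """Toy wear-leveling: always write to the least-erased free block."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks
        self.data = [None] * num_blocks
        self.mapping = {}  # logical block number -> physical block number

    def write(self, logical: int, payload: bytes) -> int:
        old = self.mapping.pop(logical, None)
        if old is not None:
            self.data[old] = None  # old copy is stale, so its block is free again
        used = set(self.mapping.values())
        free = [b for b in range(len(self.data)) if b not in used]
        if not free:
            raise RuntimeError("no free blocks")
        target = min(free, key=lambda b: self.erase_counts[b])
        self.erase_counts[target] += 1  # a block is erased before it is reprogrammed
        self.data[target] = payload
        self.mapping[logical] = target
        return target

    def read(self, logical: int) -> bytes:
        return self.data[self.mapping[logical]]


flash = WearLeveledFlash(num_blocks=4)
for i in range(100):
    flash.write(0, f"v{i}".encode())  # rewrite the same logical block 100 times

print(flash.erase_counts)
```

Without leveling, all 100 erases would land on one physical block; here they are spread evenly across the four blocks.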

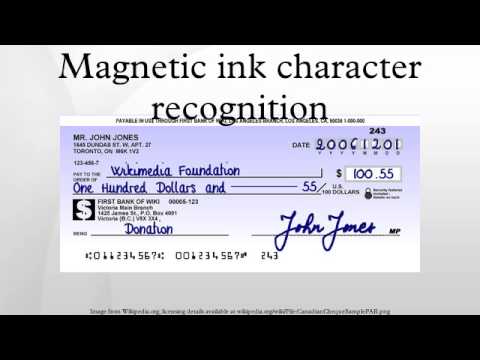

Who invented flash memory?

Flash was originally developed by Toshiba electrical engineer Fujio Masuoka, who filed US Patent 4,531,203 on the idea with colleague Hisakazu Iizuka back in 1981. Originally known as simultaneously erasable EEPROM (Electrically Erasable Programmable Read-Only Memory), it earned the nickname "flash" because it could be instantly erased and reprogrammed—as fast as a camera flash. At that time, state-of-the-art erasable memory chips (ordinary EPROMS) took 20 minutes or so to wipe for reuse with a beam of ultraviolet light, which meant they needed expensive, light-transparent packaging. Cheaper, electrically erasable EPROMS did exist, but used a bulkier and less efficient design that required two transistors to store each bit of information. Flash memory solved these problems.

Toshiba released the first flash chips in 1987, but most of us didn't come across the technology for another decade or so, after SD memory cards first appeared in 1999 (jointly supported by Toshiba, Matsushita, and SanDisk). SD cards allowed digital cameras to record hundreds of photos and made them far more useful than older film cameras, which were limited to taking about 24–36 pictures at a time. Toshiba launched the first digital music player using an SD card the following year. It took Apple a few more years to catch up and fully embrace flash technology in its own digital music player, the iPod. Early "classic" iPods all used hard drives, but the release of the tiny iPod Shuffle in 2005 marked the beginning of a gradual switchover, and all modern iPods and iPhones now use flash memory instead.

SuperFlash® Technology

SuperFlash® technology is an innovative and versatile type of NOR Flash. SuperFlash technology uses a proprietary split-gate cell architecture which provides superior performance, data retention, and reliability over conventional stacked gate Flash.

Flash memory form factors

Flash-based media is based on a silicon substrate. Also known as solid-state devices, they are widely used in both consumer electronics and enterprise data storage systems.

There are three SSD form factors that have been identified by the Solid State Storage Initiative:

- SSDs that fit into the same slots used by traditional electromechanical hard disk drives (HDDs). SSDs have architecture similar to that of an integrated circuit.

- Solid-state cards that reside on a printed circuit board and use a standard card form factor, such as Peripheral Component Interconnect Express (PCIe).

- Solid-state modules that fit in a dual inline memory module (DIMM) or small outline dual inline memory module using a standard HDD interface, such as the Serial Advanced Technology Attachment (SATA).

An additional subcategory is a hybrid hard drive that combines a conventional HDD with a NAND flash module. A hybrid hard drive is generally viewed as a way to bridge the divide between rotating media and flash memory.

All-flash and hybrid flash memory

The advent of flash memory fueled the rise of all-flash arrays. These systems contain only SSDs. They offer advantages in performance, and sometimes reduced operational costs, compared to all disk-based storage arrays. The chief difference, aside from the media, is in the underlying physical architecture used to write data to a storage device.

HDD-based arrays have an actuator arm that enables data to be written to a specific block on a specific sector on the disk. All-flash storage systems do not require moving parts to write data. The writes are made directly to the flash memory, and custom software handles data management.

A hybrid flash array blends disk and SSDs. Hybrid arrays use SSDs as a cache to speed access to frequently requested hot data, which subsequently is rewritten to back-end disk. Many enterprises commonly archive data from disk as it ages by replicating it to an external magnetic tape library.
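The caching behavior of a hybrid array can be sketched as a small write-back cache. This is an illustrative model under stated assumptions, not how any particular vendor implements it: the class name is made up, eviction is least-recently-used, and plain dictionaries stand in for the SSD and disk tiers.

```python
from collections import OrderedDict


class HybridArray:
    """Toy hybrid array: a small SSD cache in front of a large disk tier."""

    def __init__(self, cache_blocks: int) -> None:
        self.cache_blocks = cache_blocks
        self.ssd_cache = OrderedDict()  # block id -> data, most recently used last
        self.disk = {}
        self.disk_reads = 0

    def write(self, block: str, data: bytes) -> None:
        self.ssd_cache[block] = data
        self.ssd_cache.move_to_end(block)
        self._evict()

    def read(self, block: str) -> bytes:
        if block in self.ssd_cache:  # hot data: served from flash
            self.ssd_cache.move_to_end(block)
            return self.ssd_cache[block]
        self.disk_reads += 1  # cold data: fetched from disk
        data = self.disk[block]
        self.ssd_cache[block] = data
        self._evict()
        return data

    def _evict(self) -> None:
        # Rewrite the least recently used blocks to back-end disk.
        while len(self.ssd_cache) > self.cache_blocks:
            cold_block, cold_data = self.ssd_cache.popitem(last=False)
            self.disk[cold_block] = cold_data


array = HybridArray(cache_blocks=2)
for name, payload in [("a", b"1"), ("b", b"2"), ("c", b"3")]:
    array.write(name, payload)
array.read("b")  # cache hit
array.read("a")  # cache miss, goes to disk
print(array.disk_reads, list(array.ssd_cache))
```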

Flash plus tape, also known as flape, describes a type of tiered storage in which primary data in flash is simultaneously written to a linear tape system.

In addition to flash memory arrays, the ability to insert SSDs in x86-based servers has increased the technology's popularity. This arrangement is known as server-side flash memory and it enables companies to sidestep the vendor lock-in associated with purchasing expensive and integrated flash storage arrays.

The drawback of placing flash in a server is that customers need to build the hardware system internally, including the purchase and installation of a storage management software stack from a third-party vendor.

Pros and cons of flash memory

Flash is the least expensive form of semiconductor memory. Unlike dynamic random access memory (DRAM) and static RAM (SRAM), flash memory is nonvolatile, offers lower power consumption and can be erased in large blocks. Also, on the plus side, NOR flash offers fast random reads, while NAND flash is fast with serial reads and writes.

An SSD with NAND flash memory chips delivers significantly higher performance than traditional magnetic media, such as HDDs and tape. Flash drives also consume less power and produce less heat than HDDs. Enterprise storage systems equipped with flash drives are capable of low latency, which is measured in microseconds or milliseconds.

The main disadvantages of flash memory are the wear-out mechanism and cell-to-cell interference as the dies get smaller. Bits can fail with excessively high numbers of program/erase cycles, which eventually break down the oxide layer that traps electrons. The deterioration can distort the manufacturer-set threshold value at which a charge is determined to be a zero or a one. Electrons may escape and get stuck in the oxide insulation layer, leading to errors and bit rot.

Anecdotal evidence suggests NAND flash drives are not wearing out to the degree once feared. Flash drive manufacturers have improved endurance and reliability through error correction code algorithms, wear leveling and other technologies.

In addition, SSDs do not wear out without warning. They typically alert users in the same way a sensor might indicate an underinflated tire.

NAND flash memory storage types

NAND flash semiconductor manufacturers have developed different types of memory suitable for a wide range of data storage use cases. The following chart explains the various NAND flash types.

Types of NAND flash memory storage

| Type | Description | Advantages | Disadvantages | Primary use |
|---|---|---|---|---|
| SLC | Stores one bit per cell and two levels of charge. | Higher performance, endurance and reliability than other types of NAND flash. | Higher cost than other types of NAND flash. | Enterprise storage, mission-critical applications. |
| MLC | Can store multiple bits per cell and multiple levels of charge. The term MLC equates to two bits per cell. | Cheaper than SLC and enterprise MLC (eMLC), high density. | Lower endurance than SLC and eMLC, slower than SLC. | Consumer devices, enterprise storage. |
| eMLC | Typically stores two bits per cell and multiple levels of charge; uses special algorithms to extend write endurance. | Less expensive than SLC flash, greater endurance than MLC flash. | More expensive than MLC, slower than SLC. | Enterprise applications with high write workloads. |
| TLC | Stores three bits per cell and multiple levels of charge. Also referred to as MLC-3, X3 or 3-bit MLC. | Lower cost and higher density than MLC and SLC. | Lower performance and endurance than MLC and SLC. | Mass storage consumer applications, such as USB drives, flash memory cards, smartphones, and client SSDs, and data center SSDs for read-intensive workloads. |
| Vertical/3D NAND | Stacks memory cells on top of each other in three dimensions vs. traditional planar NAND technology. | Higher density, higher write performance and lower cost per bit vs. planar NAND. | Higher manufacturing cost than planar NAND; difficulty in manufacturing using production planar NAND processes; potentially lower data retention. | Consumer and enterprise storage. |
| *Quad-level cell (QLC) | Uses a 64-layer architecture that is considered the next iteration of 3D NAND. Not widely available as of November 2017. | Stores four bits of data per NAND cell, potentially boosting SSD densities. | More data bits per cell can affect endurance; increased costs of engineering. | Mostly write once, read many (WORM) use cases. |

Note: NAND flash wear-out is less of a problem in SLC flash than it is in less expensive types of flash, such as MLC and TLC, for which the manufacturers may set multiple threshold values for a charge. The commonly cited industry wear-out figures are 100,000 program/erase -- write/erase -- cycles for SLC NAND flash; 30,000 for eMLC; 10,000 or fewer for MLC; and 3,000 or fewer for TLC. Actual endurance figures may be higher.

* Samsung and Western Digital have disclosed or previewed preliminary designs for their respective QLC architectures.
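The link between bits per cell, charge levels and endurance can be illustrated with a short Python sketch. It assumes a normalized cell voltage between 0 and 1 and evenly spaced thresholds, which is a simplification: real devices use manufacturer-set thresholds and error correction.

```python
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits_per_cell: int) -> int:
    """Number of distinct charge levels needed to store this many bits."""
    return 2 ** bits_per_cell


def read_cell(voltage: float, bits_per_cell: int, v_max: float = 1.0) -> int:
    """Map a cell voltage to a stored value using evenly spaced thresholds."""
    levels = charge_levels(bits_per_cell)
    step = v_max / levels  # width of each voltage band
    return min(int(voltage / step), levels - 1)


for name, bits in BITS_PER_CELL.items():
    print(name, bits, "bit(s),", charge_levels(bits), "levels")

# The same small loss of charge (0.7 -> 0.6) is harmless in SLC
# but changes the value read from a TLC cell.
print(read_cell(0.7, 1), read_cell(0.6, 1))
print(read_cell(0.7, 3), read_cell(0.6, 3))
```

The more levels squeezed into the same voltage range, the narrower each band becomes, which is one way to see why the denser cell types tolerate fewer program/erase cycles.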

Vendor breakdown of enterprise NAND flash memory products

Major manufacturers of NAND flash memory chips include Intel Corp., Micron Technology Inc., Samsung Group, SanDisk Corp. -- now owned by Western Digital Corp. -- SK Hynix Inc. and Toshiba Memory Corp.

A NAND flash shortage is causing disruption in the market. The shortfall is causing SSD prices to rise and lead times to lengthen. The demand outstrips supply largely due to soaring demand from smartphone makers.

Other turmoil is exerting an impact on the market. As of November 2017, leading flash supplier Toshiba agreed to sell its chip making unit to a group of corporate and institutional investors led by Bain Capital. Toshiba sold the flash business as part of its effort to cover financial losses and to avoid being delisted on the Tokyo Stock Exchange.

However, Toshiba partner Western Digital (WD) has vowed to fight the $18 billion deal. WD inherited a stake in Toshiba's chip business following its acquisition of SanDisk Corp., which had a joint venture with Toshiba.

NOR flash memory types

The two main types of NOR flash memory are parallel and serial, also known as serial peripheral interface. NOR flash was originally available only with a parallel interface. Parallel NOR offers high performance, security and additional features; its primary uses include industrial, automotive, networking, and telecom systems and equipment.

NOR cells are connected in parallel for random access. The configuration is geared for random reads associated with microprocessor instructions and to execute codes used in portable electronic devices, almost exclusively of the consumer variety.

Serial NOR flash has a lower pin count and smaller packaging, making it less expensive than parallel NOR. Use cases for serial NOR include personal and ultra-thin computers, servers, HDDs, printers, digital cameras, modems and routers.

Leading NOR vendor products

Major manufacturers of NOR flash memory include Cypress Semiconductor Corp. -- through its acquisition of Spansion Inc. -- Macronix International Co. Ltd., Microchip Technology Inc., Micron Technology Inc. and Winbond Electronics Corp.

Cypress Semiconductor acquired NOR flash provider Spansion in 2015. The Cypress NOR portfolio includes FL-L, FL-S, FS-S and FL1-K products.

Macronix OctaFlash uses multiple banks to enable write access to one bank and read from another. Macronix MX25R Serial NOR is a low-power version that targets internet of things (IoT) applications.

Microchip NOR is branded as Serial SPI Flash and Serial Quad I/O Flash. The vendor's parallel NOR products include the Multi-Purpose Flash devices and Advanced Multi-Purpose Flash devices families.

Micron sells Serial NOR Flash and Parallel NOR Flash, as well as Micron Xccela high-performance NOR flash for automotive and IoT applications.

The Winbond serial NOR product line is branded as SpiFlash Memories and includes the W25X and W25Q SpiFlash Multi-I/O Memories. In 2017, Winbond expanded its line of Secure Flash NOR for additional uses, including system-on-a-chip design to support artificial intelligence, IoT and mobile applications.

The future of the flash memory market

The flash memory market continues to see advances in form factor and deployment options. Storage array vendors are adding support for the nonvolatile memory express (NVMe) controller interface, a protocol that accelerates data transfer between client systems and flash storage. An NVMe host controller exploits the high-speed performance of the PCIe bus.

Using PCIe enables an application to communicate directly with flash storage by reducing network hops that would occur with host bus adapters and routers. PCIe enables the emergence of drives built on the NVMe specification, providing an alternative that experts believe could supplant 2.5-inch and 3.5-inch form factors. NVMe SSDs plug into idle server slots on a computer, removing the cost and complexity associated with cabling.

Rising with NVMe and PCIe-connected SSDs are nonvolatile dual inline memory modules (NVDIMMs) that integrate DRAM, NAND flash and a dedicated backup power supply. NVDIMMs may be inserted in a standard DIMM connector on a memory bus. The flash memory on an NVDIMM card serves to back up and restore data kept in DRAM, while the onboard power supply ensures the storage remains nonvolatile.

Candidates to succeed NAND flash have emerged. In fact, some of these memories have been in development for a while. These alternative technologies are based on memory architectures that differ from NAND's floating gate transistor.

Phase change memory (PCM) stores data by changing the state of the bit cell using electrical pulses. These pulses switch the cell material between an unstructured (amorphous) state and a structured (crystalline) state. Potential advantages of PCM include lower latency on read data and accelerated write performance. In addition, PCM enables an individual byte to be written, whereas flash memory requires an entire block of data to be written.
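The byte-versus-block difference can be sketched as follows. Both classes are hypothetical toy models, and the 4,096-byte block size is an assumption chosen for illustration; real NAND erases in blocks and programs in pages, which this sketch folds into one step.

```python
class BlockErasedFlash:
    """Toy flash: changing one byte means erasing and reprogramming its block."""

    def __init__(self, block_size: int, num_blocks: int) -> None:
        self.block_size = block_size
        self.blocks = [bytearray(block_size) for _ in range(num_blocks)]
        self.bytes_programmed = 0

    def write_byte(self, address: int, value: int) -> None:
        index, offset = divmod(address, self.block_size)
        copy = bytearray(self.blocks[index])             # read the block out
        copy[offset] = value                             # change one byte in the copy
        self.blocks[index] = bytearray(self.block_size)  # erase the whole block
        self.blocks[index][:] = copy                     # program the whole block back
        self.bytes_programmed += self.block_size


class ByteAddressableMemory:
    """Toy PCM-style memory: a single byte can be written in place."""

    def __init__(self, size: int) -> None:
        self.cells = bytearray(size)
        self.bytes_programmed = 0

    def write_byte(self, address: int, value: int) -> None:
        self.cells[address] = value
        self.bytes_programmed += 1


flash = BlockErasedFlash(block_size=4096, num_blocks=2)
pcm = ByteAddressableMemory(size=8192)
flash.write_byte(5000, 0x41)
pcm.write_byte(5000, 0x41)
print(flash.bytes_programmed, pcm.bytes_programmed)
```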

Resistive random access memory, known also as ReRAM or RRAM, is nonvolatile storage that bears similarities to PCM. Instead of electrical pulses, ReRAM changes the resistance properties of the underlying dielectric, which can include various metal oxides and other solid materials. A ReRAM device includes a nonvolatile memory resistor, or memristor, which is a component that regulates a circuit's electricity flow and retains information on the amount of charge that has flowed through it historically.

In comparison with NAND, ReRAM is said to offer higher switching speed and lower power consumption. 4DS Memory Ltd., Crossbar Inc., Fujitsu Semiconductor, Israeli startup Weebit Nano and Western Digital -- through SanDisk ReRAM -- are all working to bring ReRAM-based storage products to market. Hewlett Packard Enterprise dropped its Memristor product in 2016, after experimenting with it in joint ventures with SanDisk and SK Hynix.

Magneto-resistive RAM (MRAM) stores data using magnetic states. A pair of ferromagnetic metal plates is used: one plate is a permanent magnet and a second plate is capable of being magnetized. Separating the two plates is a thin layer of insulating material. Binary bits are defined as one or zero according to the orientation of the two magnetic fields.

A related approach is spin-transfer torque (also called spin-torque transfer), a method that sends an electric current to thin magnetic layers situated between non-magnetized materials. The current alters the spin of electrons to an up or down state.

A USB flash drive, or thumb drive, is a flash memory device integrated with a USB interface. These drives are very convenient because they are small, light, rewritable and removable. They are available in a range of capacities, usually from 4GB to 64GB.

There are a number of advantages of using a thumb drive as opposed to the now antiquated floppy disc. They are smaller, more reliable, rarely break due to the lack of moving parts, and they can hold more data and are faster too. Inside a thumb drive you will find a circuit board; this board is printed and encased in a tough metal or plastic exterior, meaning you can carry it around in your bag or pocket. There is generally a removable cap on the end that protects the protruding USB connector. The vast majority of flash drives can be connected directly to your PC through a standard USB connector.

The USB connection provides the power supply for the flash drive, meaning that it needs no other external source of power and will work when simply connected to the USB of your computer or other device. You need to connect your flash drive to your computer via a USB port or HUB in order to read the data that is saved on it.

The very first USB flash drive was an 8MB model sold under the “disgo” name. Dov Moran, CEO and president of M-Systems Flash Pioneers, invented it in 1998. Dan Harkabi, now a vice president at SanDisk, led the marketing and development of the drive at M-Systems. His most significant advance was a flash drive that did not require any drivers to be installed. Trek 2000 and Netac undertook simultaneous developments and created very similar products. All three companies patented their devices, and the patents were disputed at the time. The first flash drive sold in the US went on sale in 2001 under the name “Memory key” and had an 8MB capacity. Later, Trek 2000 manufactured a 16MB version that IBM brought to market, and in 2003 M-Systems introduced a 64MB version. Lexar is another company that can lay claim to an early flash or thumb drive: in 2000 it produced a CF (Compact Flash) card with an internal USB connection. This product eliminated the need for a USB as it was offered with a USB cable and a companion card reader.

The first thumb or flash drives sold in Europe carried the “disgo” name and were made by M-Systems in sizes of 8, 16, 32, and 64MB. The product was marketed as a way to kill the floppy disc for good, and the largest capacity introduced was 256MB. After flash drives were introduced in Europe, cheaper alternatives appeared on the Asian market.

A modern flash drive has USB 2.0 connectivity. However, although the interface supports 480 Mbit/s, technical limitations in NAND flash mean that this limit is never actually reached.

Everywhere you look nowadays you will see flash drives printed with business logos and made in many different designs. Flash drives have helped businesses reach their branding objectives, and this kind of promotional merchandise has been highly successful. When you own a flash drive, you can simply save and transport your files and data wherever you go. A business can promote itself to anyone, anywhere; all that is necessary is a USB port. Businesses can even offer further services, such as catalogs, discount vouchers, and promotional materials on their pen drives, and can load large amounts of electronic data onto them.

Since the flash drive was first introduced, growth has been extremely quick, and both quality and quantity have increased. Businesses began to place bulk orders for flash or thumb drives, which saved them both time and money. The quality of flash drives has also improved immensely, and there is a wide range of capacities available to meet the different demands of consumers. Some people require a small drive, such as a 1GB drive, for personal use; others need 32GB or more for their business requirements. As well as choosing between storage sizes, businesses can choose promotional flash drives to promote their brand, in which case the storage capacity is unimportant. Alongside flash drives and thumb drives, businesses have also been developing USB watches and pens; in these cases the development has been geared more to design and fashion than to the actual capacity of the drive.

USB 2.0 Hi-speed Flash drive

Whether you refer to it as a pen drive, jump drive, thumb drive, key drive, or memory stick, you have to admit, the USB Flash drive is pretty darn cool. Just stick it into the USB port on your computer and within a few seconds a new drive appears on your desktop. So simple and so easy. What makes them so good is that they have a spate of desirable features such as no need for batteries, solid state storage, good transfer speeds, durability, portability, and expected data retention of ten years. All of these features allow these little babies to practically replace the floppy, the Zip disk, and the CD-R/RW all in one fell swoop.

Unfortunately, when comparing USB Flash drives side-by-side in a computer or electronics store, it can be awfully difficult to decide which is best for you just by looking at the drive and the packaging. It would be easy to make an assumption that all drives are pretty much the same and that shopping for the best price is the smartest way of deciding. We are here to inform you that USB Flash drives are not a commodity and can be very unique offerings. As you will soon find out, some are clearly better than others while others come bundled with nifty features that can really make your day. In short, not all flash drives are created equal.

Products (left to right)

- Fujifilm USB drive - 128MB (US$62)

- Iomega Mini - 128MB (US$60)

- Mushkin Flashkin - 128MB (US$46)

- PNY Attache - 128MB (US$60)

- Transcend JetFlash2A - 128MB (US$38)

- SanDisk Cruzer Mini - 128MB (US$31)

- Verbatim Store 'n' Go - 256MB (US$85)

- SimpleTech Bonzai Xpress - 256MB (US$80)

Background on the Universal Serial Bus (USB)

Back in 1995, it was becoming quite clear that the serial and parallel ports on the PC were outliving their usefulness. While Ethernet was becoming generally available, it was still rather expensive to implement and not all devices needed to be shared on a Local Area Network. Thus it was deemed that a new serial bus should be designed to take on the role of the old serial and parallel ports. By January of 1996, USB 1.0 was born. The new serial bus protocol supported two signaling rates: low speed (1.5Mbps) and full speed (12Mbps). Unfortunately, there was practically no operating system support and virtually no hardware available with which to operate. Microsoft, ultimately, did create OEM versions of Windows 95 with USB support, but the support written in was barely usable.

In May 1998, Apple announced the original Bondi Blue iMac, which was the first personal computer to completely abandon legacy ports in favor of USB. Microsoft released Windows 98 that year with much better USB support and by September a new revision of USB (version 1.1) was released. Increasing numbers of USB devices started to hit the market and made certain that USB was here to stay. Unfortunately, it quickly became evident that 12Mbps was not enough throughput for devices like CD writers and external hard drives. By 2000, USB 2.0 was created with a theoretical data transfer rate of 480Mbps. With the 40-fold speed increase, USB became a viable option for fast, portable storage devices.
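To put those signaling rates in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes decimal megabytes and zero protocol overhead, so the figures are best-case lower bounds and not what any real drive will deliver; the function name is ours, chosen for illustration.

```python
# Signaling rates from the USB history above, in megabits per second.
SIGNALING_RATES_MBPS = {
    "USB 1.x low speed": 1.5,
    "USB 1.x full speed": 12,
    "USB 2.0 Hi-Speed": 480,
}


def best_case_seconds(size_mb: float, rate_mbps: float) -> float:
    """Lower bound on transfer time: megabytes * 8 bits / megabits per second.

    Assumes decimal units and no protocol overhead, so real transfers are slower.
    """
    return size_mb * 8 / rate_mbps


if __name__ == "__main__":
    # Time to fill a 128MB drive at each theoretical rate.
    for name, rate in SIGNALING_RATES_MBPS.items():
        print(f"{name}: {best_case_seconds(128, rate):.1f} s to move 128 MB")
```

Even in this idealized form, filling a 128MB drive takes well over a minute at 12Mbps but only a couple of seconds at 480Mbps.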

Consequently, a warning to the reader: if a drive does not say "USB 2.0 Hi-Speed" or does not feature the logo on the left, it ain't very fast. All the drives found in this review are USB 2.0 Hi-Speed drives. While USB 1.1 (a.k.a. USB 2.0 Full-Speed) drives still exist, the prices of Hi-Speed USB 2.0 drives have come down so much recently that there is no really good reason to consider the slower drives. For the sake of comparison, benchmark scores from one such drive are included in this review.

How we tested

Each drive was evaluated on several factors:

- Features

- Accessories

- Extras

- Construction

- Software

Each drive was tested in a variety of scenarios as well as on different operating systems such as Win98, Win2000/XP, and Mac OS X. We tested on various kinds of hardware and USB hubs. We carried each drive around and gave them a fair amount of real world use to see how each would hold up to the rigors of daily usage. The following systems were used for the majority of our testing:

| Macintosh test system | PC system |
| --- | --- |
| PowerBook G4 1.33GHz | AthlonXP 3200+ (2.2GHz) |
| 512MB DDR RAM | 512MB PC3200 DDR RAM |
| 80GB Fujitsu HDD | 120GB WD1200JB HDD |
| Mac OS X 10.3.3 | Windows XP SP1 |

To round things out, we benchmarked each USB Flash drive using various applications. For Windows, we used SiSoft Sandra 2004.2.9.104. On the Mac, we used SpeedTools QuickBench program. We also used a “stop-watch” Perl script in Windows to replicate the Sandra write-performance benchmarks as well as create custom benchmarks. This combination of benchmarking across platforms allowed us to get a good picture of performance on each drive and each platform.
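For readers who want to try something similar, a "stop-watch" write test can be sketched in a few lines. This is not the Perl script used for this review; it is a minimal Python stand-in, and the function name, file name, and default sizes are all assumptions made for illustration.

```python
import os
import tempfile
import time


def timed_write(path: str, size_mb: int = 8, chunk_kb: int = 64) -> float:
    """Write size_mb of random data to path and return throughput in MB/s."""
    chunk = os.urandom(chunk_kb * 1024)
    chunks = (size_mb * 1024) // chunk_kb
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(chunks):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())  # push data to the device before stopping the clock
    elapsed = time.perf_counter() - start
    return size_mb / elapsed


if __name__ == "__main__":
    # Hypothetical target: replace with a path on the flash drive under test.
    target = os.path.join(tempfile.gettempdir(), "stopwatch_test.bin")
    print(f"{timed_write(target):.2f} MB/s")
    os.remove(target)
```

The `os.fsync` call matters: without it the operating system's write cache can make a slow drive look far faster than it is.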

Common features, accessories, and extras

Neck strap with quick release: Most of the drives here feature a neck strap with the exact same quick-release snap. As shown on the left, by pinching the snap, the drive is able to detach from the neck strap. While some may not find the neck strap very useful, it is a nice addition for others.

USB extension cable: Virtually all computers come equipped with USB ports these days, but there are still a large number of machines that have no USB ports on the front of the case. If the only available ports are in the back, plugging and unplugging USB drives can be a pain. The simplest solution to this problem is a three-foot extension cable. Some manufacturers have been thoughtful enough to include one with their drive offering.

Write-protect switch: Just like the floppy disk, some Flash drives contain write-protect switches. The obvious benefit of the write-protect switch is to prevent the accidental deletion or overwriting of files. This is particularly advantageous to the system administrator who is trying to disinfect a machine that has somehow contracted a worm or virus; just carry the write-protected USB drive with the anti-virus software to the infected machine and never worry about the Flash drive becoming compromised. Perhaps the best thing about the write-protect switch is that, unlike the floppy, write-protection can be turned on and off without needing to remove the drive from the USB port.

Security/password protection software and driver software: All USB drives have Win98 drivers available. Some come on full-size CDs, others come on mini CDs, while others are only available for download from the company website. Windows ME/2000/XP all have native support for USB Mass Storage devices, as do Mac OS 9, OS X, and Linux 2.4.2+. (Windows 95 does not appear to have support, but Mac OS 8.6 has drivers available for download from Apple's site.) Many drives also have security/password protection or file synchronization software, either included on CD or available as a free download.

Low power support, boot support, and warranty: Considering how small most of these drives are, it might be logical to think that they use little power, but unfortunately, some use more power than an unpowered USB hub can provide. About half the drives in this review worked fine on an unpowered hub, while the other half did not. For some people this may be an issue. (Mac users with USB keyboards: please take note!) Some of these drives are also able to masquerade as USB-based Zip or hard drives, allowing them to boot at startup. For most people this is a useless feature, but for most geeks and system administrators, this could be really worthwhile. Although few manufacturers listed the MTBF for their drives, all carry at least a 1-year warranty, with a few offering 2-year, 3-year, and lifetime warranties.
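The power issue comes down to simple arithmetic. Under the USB 2.0 specification, a port on a bus-powered (unpowered) hub supplies at most 100 mA, while a root port or a powered hub can supply up to 500 mA, and a device reports its maximum draw in the `bMaxPower` field of its configuration descriptor, counted in 2 mA units. The sketch below shows the check; the descriptor values in it are hypothetical and are not measurements of the drives reviewed here.

```python
BUS_POWERED_PORT_MA = 100   # one unit load per port on an unpowered hub
SELF_POWERED_PORT_MA = 500  # five unit loads on a root port or powered hub


def max_current_ma(b_max_power: int) -> int:
    """Convert a bMaxPower descriptor value (2 mA units) to milliamps."""
    return b_max_power * 2


def works_on_unpowered_hub(b_max_power: int) -> bool:
    """True if the declared draw fits within a bus-powered hub port's budget."""
    return max_current_ma(b_max_power) <= BUS_POWERED_PORT_MA


if __name__ == "__main__":
    # Hypothetical descriptor values for illustration only.
    for name, value in {"drive A": 50, "drive B": 100}.items():
        verdict = "fits" if works_on_unpowered_hub(value) else "needs a powered port"
        print(f"{name}: {max_current_ma(value)} mA, {verdict}")
```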

Meet the contenders

PNY Technologies Attaché

Features:

Construction: The PNY Attaché drive enclosure is made entirely of plastic, yet feels solid in the hand. The rectangular shape and the smooth corners make it feel pretty normal as USB drives go. Some people will find the colored transparent casing interesting as it allows viewing of the components on the inside. The protective cap stays firmly fixed on the end of the drive but is not difficult to remove. The only questionable aspect of this drive's construction is that the blue transparent part of the drive pulls out from the larger silver plastic piece. While not a show stopper, it seemed strange that the two pieces of plastic were not permanently joined together.

The PNY Attaché features a red LED on one side of the drive to indicate that the unit is plugged in and operational. The LED flashes when data is being transferred. This drive has a plastic loop for attaching a lanyard or key ring. It also includes a write-protect switch to prevent accidental overwriting/deletion of files. The switch is countersunk, so a pen or similar tool is needed to flip it. Alternatively, it is possible to pull the blue transparent center part out of the silver plastic part, flip the switch with a fingernail, and then push the two parts back together.

Software: The Attaché includes a mini CD with only a driver for Windows 98 SE users. No other software is included.

SanDisk Cruzer Mini

Features:

Construction: The SanDisk Cruzer Mini drive is made entirely of plastic. While it does not feel as solid as some other drives made of thicker plastic, it definitely feels durable enough to withstand the rigors of daily life. The Cruzer Mini is a rather svelte drive, as USB Flash drives go. From the picture on the left, it should be evident that the drive is about as thick as the USB port on your computer. While some might argue that the drive is a bit too thin, it is easy to recognize that this drive definitely does not block access to dual-stacked USB ports found on many computers. The Cruzer Mini is one of the four drives that are "low power" (i.e., work in unpowered USB hubs and USB keyboards). The Cruzer Mini also comes with 3 different colored caps that snap on pretty securely.

The SanDisk Cruzer Mini features a bright, green LED on the end of the drive that can be seen from nearly any angle (really smart!). The LED flashes when transferring data and turns off when the OS is no longer accessing the drive. The drive has a small loop for a lanyard string to pass through. Unfortunately, this loop is really small, and getting a string through it is kind of like trying to thread a needle. We have also witnessed this thin little plastic loop break under moderate stress. The current revision of the Cruzer Mini has NO write-protect switch, although older, thicker models have this feature. SanDisk probably dropped this feature when attempting to make the drive thinner. Unfortunately, for some people, this is a useful feature to have.

Software: The packaging does NOT include a CD-ROM with software, but the Win98 drivers and the CruzerLock security software are both available on their website for download.

CruzerLock

The CruzerLock software looks and behaves much like Windows Explorer. The difference is that the files that you view on the drive are either decrypted or encrypted. As shown in the picture, there are three text files and the CruzerLock software in the unencrypted folder and a "contacts" file that is encrypted. Unfortunately, we were unable to ascertain the type and strength of encryption. In addition, we found it odd that the software would allow us to encrypt the CruzerLock executable itself. This becomes problematic the next time the drive is inserted. Obviously, the decrypt/encrypt software cannot run if it is encrypted, and thus all the encrypted files are rendered useless (unless a local copy of the CruzerLock software is available). That aside, the encryption and decryption seemed to take place rather quickly, even on a 1GHz machine.
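The self-encryption problem is the kind of thing a simple guard can prevent. We have no idea how CruzerLock is written internally, so the Python sketch below is only an illustration of the idea: filter the tool's own executable out of the list of files offered for encryption. The function name and the file name `CruzerLock.exe` are assumptions.

```python
from pathlib import Path


def files_safe_to_encrypt(folder: Path, protected_names: set[str]) -> list[Path]:
    """Return the files in folder that may be encrypted, skipping protected tools.

    Names are compared case-insensitively, as on FAT-formatted flash drives.
    """
    protected = {name.lower() for name in protected_names}
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.name.lower() not in protected
    )


if __name__ == "__main__":
    # Hypothetical tool name; list the current directory as a stand-in for the drive.
    for path in files_safe_to_encrypt(Path("."), {"CruzerLock.exe"}):
        print(path.name)
```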

Mushkin Flashkin

Features:

Construction: The Mushkin Flashkin drive is made almost entirely of plastic, with the exception of the metal clip on the cap. The smooth, egg-like shape of the Flashkin feels nice in the hand, yet the plastic is thin enough that it is pretty clear that the drive case is a good deal larger than the actual components inside. It is unfortunate that the drive enclosure is so oversized since the drive somewhat blocks dual-stacked USB ports found on most computers. The drive is held together by one small screw. Given the opportunity, we decided to take a look inside and examine the innards.

The Mushkin Flashkin drive features a small, but bright, red LED on one side that flashes when transferring data. The Flashkin has a small loop on the end of the drive for a lanyard or key ring. The metal clip on the cap is somewhat useful if you plan on carrying it in a shirt pocket. If not, you can always remove the clip by unscrewing the retention screw underneath the cap. The Flashkin has a write-protect switch that flips without any difficulty, yet has enough friction to stay where it is set. It is one of the four drives that are "low power" (i.e., work in unpowered USB hubs and keyboards).

Software: The package includes a mini CD with Win98 SE drivers as well as software for enabling password protection and boot support.

Want to boot from your Flashkin?

The Mushkin security and support software appears very Spartan and simplistic on the surface. While not very pretty, it is actually rather functional. The software allows the user to set/change/clear the password, partition the device into a public and private area, and even copy system boot files onto the drive (if you are running Win98/Me). A common problem with USB Flash drives is that sometimes, after partitioning/reformatting using Windows tools, the drive actually shrinks in overall usable size by a few MB. Fortunately, Mushkin includes a format utility which allows full recovery of all the available space.

As shown above, the available drive space can be partitioned into two drives: a "public" drive which can be accessed by anyone and a "private" drive which can only be accessed with the right password. The drive can also be put into one of three different possible boot modes.

More contenders

SimpleTech Bonzai Xpress

Features:

Construction: The SimpleTech Bonzai Xpress has the best construction design of all the USB drives in this review. The enclosure is made mostly of texturized, durable black plastic which has a good, almost rubbery feel in the hand. The side walls of the drive are made of aluminum, making it extra sturdy. The ridges on the black part of the drive make it especially easy to grasp. It is neither too large nor too small and is just about the right thickness so as not to obstruct dual-stacked USB ports.

The SimpleTech Bonzai Xpress drive features a small LED on one side that flashes just like every other drive when transferring data. The aluminum loop on the end is clearly large enough to loop a neck strap through and strong enough to put on a keychain. The Bonzai Xpress has a write-protect switch that is easily moved, yet has enough friction to stay in place.

Software: Downloadable storage synchronization software comes standard. For a small fee, this standard StorageSync software is upgradeable to a professional edition which features 2:1 software compression, 128-, 192-, and 256-bit AES encryption, and a 20-deep file revision tracking system.

StorageSync

While we did not purchase a copy of the professional edition, we did test the synchronization software extensively. Overall, we were rather satisfied with the program. It was easy to figure out that in order to synchronize the data between the hard drive and the flash drive, we needed to "backup" the data first. Pretty easy. The big question: how well does the program sync the data? We started by creating a text file and then backed it up so that it would be the same on both the hard disk and flash drive. We then altered the file in both locations to be two different files. It appears that the newer file always overwrites the older file. If you do not understand this, you may get yourself into trouble, especially if there are two people doing work on the same file. More recent changes may overwrite the changes that someone else made earlier. Apparently, to get file revision tracking, you need to purchase the professional upgrade.
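The overwrite behavior we observed is what is usually called "newer wins." We do not know how StorageSync implements it, so the Python sketch below is only a model of the behavior, with made-up names (`Copy`, `newer_wins`, `has_conflict`). It also shows the extra check a sync tool would need in order to warn that both copies changed since the last sync.

```python
from dataclasses import dataclass


@dataclass
class Copy:
    mtime: float   # last-modified time, in seconds
    content: str


def newer_wins(a: Copy, b: Copy) -> Copy:
    """Keep whichever copy was modified most recently; the other is discarded."""
    return a if a.mtime >= b.mtime else b


def has_conflict(a: Copy, b: Copy, last_sync: float) -> bool:
    """True when both copies changed after the last sync, so one edit would be lost."""
    return a.mtime > last_sync and b.mtime > last_sync


if __name__ == "__main__":
    hard_disk = Copy(mtime=100.0, content="first person's edit")
    flash = Copy(mtime=200.0, content="second person's edit")
    print("kept:", newer_wins(hard_disk, flash).content)
    print("edit lost:", has_conflict(hard_disk, flash, last_sync=50.0))
```

With only modification times to go on, the earlier edit silently disappears, which is exactly the trap described above.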

We did note one minor quibble and a more serious annoyance. The small problem was that instead of "My Documents" being readily available under the root of the file/directory menu, we had to drill down through "Documents and Settings" then "Username" then "My Documents." A minor quibble, but an irritating oversight nonetheless. The more serious annoyance was that the program only seemed able to pick up changes to already-backed-up files. Any new files created in the Flash drive's backup directory or the hard disk's backed-up folders were completely missed. This is somewhat frustrating since most people not only change files, but frequently create new files and folders. Scanning for new files as well as changes to old ones seems like a no-brainer. Since SimpleTech has a software update system, hopefully they will pick up on this problem and fix their StorageSync software.
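Scanning for new files really is not much extra work. As a rough sketch of the idea, and not a description of how StorageSync works, the Python below records a snapshot of a folder and compares it with a later one, reporting both new and changed files. The function names are ours.

```python
import os


def snapshot(root: str) -> dict[str, float]:
    """Map each file's path (relative to root) to its modification time."""
    files = {}
    for dirpath, _dirs, names in os.walk(root):
        for name in names:
            full = os.path.join(dirpath, name)
            files[os.path.relpath(full, root)] = os.path.getmtime(full)
    return files


def diff(previous: dict[str, float], current: dict[str, float]):
    """Return (new, changed) relative paths since the previous snapshot."""
    new = sorted(p for p in current if p not in previous)
    changed = sorted(
        p for p in current if p in previous and current[p] > previous[p]
    )
    return new, changed
```

A tool that only walks its list of previously backed-up files will find the `changed` set and never see the `new` one, which matches what we saw.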