Hybrid Networking

With the ever-increasing growth of media-rich applications, from traditional video to virtual meetings and classrooms to the tactile internet, future networking will move away from a single-technology, single-flow, single-route model of information transfer, pushing the issues of usage prediction, integrated resource utilization, and parallelism into all layers of the protocol stack. Recent successful approaches, such as multi-homing, overlay networking, and distributed storage, already depart from this paradigm: they use intelligent control to exploit the redundancy and path diversity that already exist in modern networks to provide a better experience to end users.

Rather than treating the heterogeneous nature of the network and the diversity of access technologies as an afterthought, this proposal considers an integrated approach that systematically takes advantage of various hybrid networking techniques to improve the network experience in general, and latency in media-rich applications in particular. These techniques include content caching, multicasting, multi-homing, multi-user coding, and braiding together satellite, WiFi, and cellular technologies.

Introduction To Data Communications

Network Management

The Three Faces of Networking

Circuits (Data Communications and Networking)

Circuit Configuration

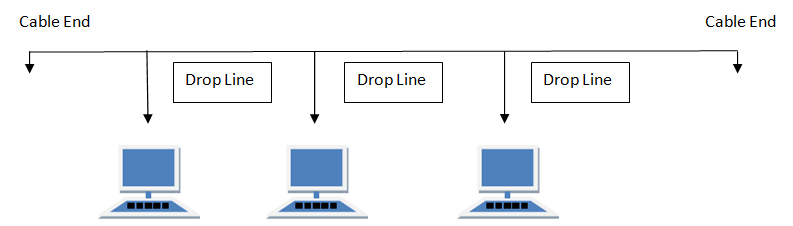

Circuit configuration is the basic physical layout of the circuit. There are two fundamental circuit configurations: point-to-point and multipoint. In practice, most complex computer networks have many circuits, some of which are point-to-point and some of which are multipoint.

Figure 3.1 illustrates a point-to-point circuit, which is so named because it goes from one point to another (e.g., one computer to another computer). These circuits sometimes are called dedicated circuits because they are dedicated to the use of these two computers. This type of configuration is used when the computers generate enough data to fill the capacity of the communication circuit. When an organization builds a network using point-to-point circuits, each computer has its own circuit running from itself to the other computers. This can get very expensive, particularly if there is some distance between the computers.

Figure 3.1 Point-to-point circuit

Figure 3.2 shows a multipoint circuit (also called a shared circuit). In this configuration, many computers are connected on the same circuit, so each must share the circuit with the others. The disadvantage is that only one computer can use the circuit at a time: when one computer is sending or receiving data, all others must wait. The advantage of multipoint circuits is that they reduce the amount of cable required and typically use the available communication circuit more efficiently. Imagine the number of circuits that would be required if the network in Figure 3.2 were designed with separate point-to-point circuits. For this reason, multipoint configurations are cheaper than point-to-point circuits. Thus, multipoint circuits typically are used when each computer does not need to continuously use the entire capacity of the circuit or when building point-to-point circuits is too expensive. Wireless circuits are almost always multipoint circuits because multiple computers use the same radio frequencies and must take turns transmitting.
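To make the comparison concrete, the short Python sketch below counts circuits under one simplifying assumption: in the point-to-point design every computer gets its own dedicated circuit to every other computer (a full mesh), while the multipoint design uses one shared circuit. The function names are illustrative only.

```python
def point_to_point_circuits(n: int) -> int:
    """Dedicated circuits needed so every pair of n computers has its own link (full mesh)."""
    return n * (n - 1) // 2


def multipoint_circuits(n: int) -> int:
    """A single shared (multipoint) circuit connects all n computers."""
    return 1 if n > 1 else 0


for n in (4, 8, 16):
    print(f"{n:>2} computers: {point_to_point_circuits(n):>3} point-to-point circuits "
          f"vs {multipoint_circuits(n)} multipoint circuit")
```

The full-mesh count grows roughly with the square of the number of computers (6 circuits for 4 computers, 120 for 16), which is why point-to-point designs get expensive quickly.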

Data Flow

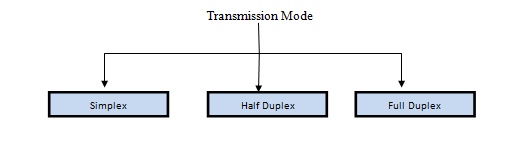

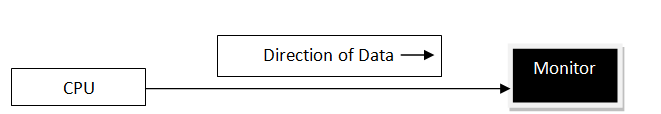

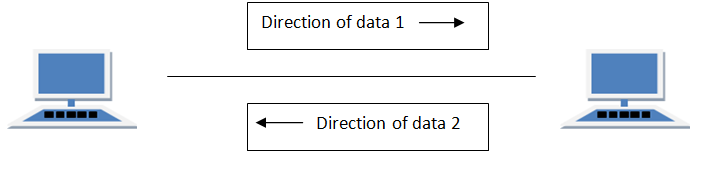

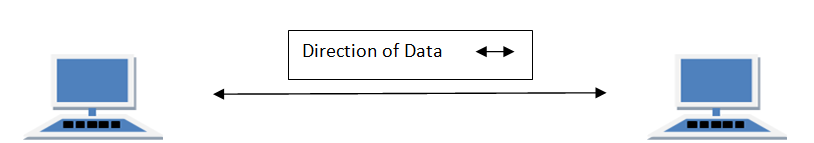

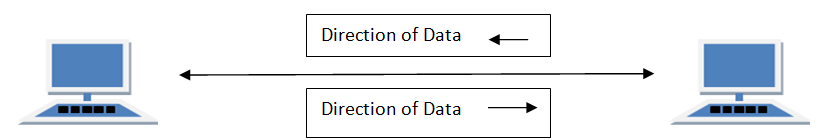

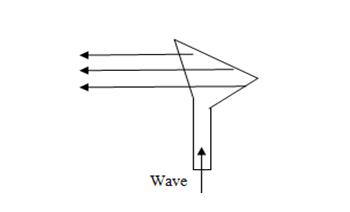

Circuits can be designed to permit data to flow in one direction or in both directions. Actually, there are three ways to transmit: simplex, half-duplex, and full-duplex (Figure 3.3).

Simplex is one-way transmission, as in broadcast radio and TV.

Half-duplex is two-way transmission, but you can transmit in only one direction at a time. A half-duplex communication link is similar to a walkie-talkie link; only one computer can transmit at a time.

Figure 3.2 Multipoint circuit

Figure 3.3 Simplex, half-duplex, and full-duplex transmissions

Computers use control signals to negotiate which will send and which will receive data. The amount of time half-duplex communication takes to switch between sending and receiving is called turnaround time (also called retrain time or reclocking time). The turnaround time for a specific circuit can be obtained from its technical specifications (often between 20 and 50 milliseconds). Europeans sometimes use the term simplex circuit to mean a half-duplex circuit.
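As a rough illustration of why turnaround time matters, the following sketch times one request/reply exchange, assuming a hypothetical 8,000-bit request and 8,000-bit reply on a 64-Kbps circuit, a 30-ms turnaround (within the 20 to 50 ms range above), and no propagation delay. The function and its parameters are illustrative, not taken from any specification.

```python
def exchange_time_ms(request_bits, reply_bits, rate_bps, turnaround_ms=0.0):
    """Time for one request/reply exchange, ignoring propagation delay.

    On a half-duplex circuit the line turns around once before the reply and once
    more before the original sender can transmit again. Pass turnaround_ms=0 for
    a full-duplex circuit, which has no turnaround.
    """
    transmit_ms = (request_bits + reply_bits) / rate_bps * 1000
    return transmit_ms + 2 * turnaround_ms


# Hypothetical traffic: 8,000-bit request and reply on a 64-Kbps circuit.
full = exchange_time_ms(8_000, 8_000, 64_000)
half = exchange_time_ms(8_000, 8_000, 64_000, turnaround_ms=30)
print(f"full duplex: {full:.0f} ms, half duplex: {half:.0f} ms")
print(f"share of each half-duplex exchange spent turning around: {(half - full) / half:.0%}")
```

With these numbers the two turnarounds add 60 ms to 250 ms of transmission, so roughly a fifth of each exchange is spent switching direction.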

With full-duplex transmission, you can transmit in both directions simultaneously, with no turnaround time.

How do you choose which data flow method to use? Obviously, one factor is the application. If data always need to flow only in one direction (e.g., from a remote sensor to a host computer), then simplex is probably the best choice. In most cases, however, data must flow in both directions.

The initial temptation is to presume that a full-duplex channel is best; however, each circuit has only so much capacity to carry data. Creating a full-duplex circuit means that the available capacity in the circuit is divided—half in one direction and half in the other. In some cases, it makes more sense to build a set of simplex circuits in the same way a set of one-way streets can speed traffic. In other cases, a half-duplex circuit may work best. For example, terminals connected to mainframes often transmit data to the host, wait for a reply, transmit more data, and so on, in a turn-taking process; usually, traffic does not need to flow in both directions simultaneously. Such a traffic pattern is ideally suited to half-duplex circuits.

Multiplexing

Multiplexing means to break one high-speed physical communication circuit into several lower-speed logical circuits so that many different devices can simultaneously use it but still "think" that they have their own separate circuits (the multiplexer is "transparent"). It is multiplexing (specifically, wavelength division multiplexing [WDM], discussed later in this section) that has enabled the almost unbelievable growth in network capacity discussed in the previous topic; without WDM, the Internet would have collapsed in the 1990s.

Multiplexing often is done in multiples of 4 (e.g., 8, 16). Figure 3.4 shows a four-level multiplexed circuit. Note that two multiplexers are needed for each circuit, one at each end.

Figure 3.4 Multiplexed circuit

The primary benefit of multiplexing is to save money by reducing the amount of cable or the number of network circuits that must be installed. For example, if we did not use multiplexers in Figure 3.4, we would need to run four separate circuits from the clients to the server. If the clients were located close to the server, this would be inexpensive. However, if they were located several miles away, the extra costs could be substantial.

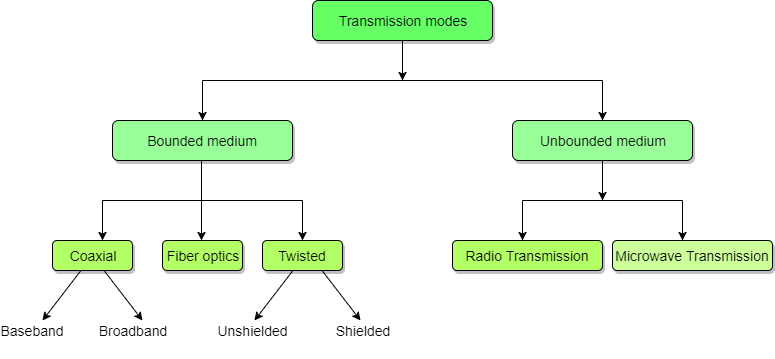

There are four types of multiplexing: frequency division multiplexing (FDM), time division multiplexing (TDM), statistical time division multiplexing (STDM), and WDM.

Frequency Division Multiplexing Frequency division multiplexing (FDM) can be described as dividing the circuit "horizontally" so that many signals can travel a single communication circuit simultaneously. The circuit is divided into a series of separate channels, each transmitting on a different frequency, much like a series of different radio or TV stations. All signals exist in the media at the same time, but because they are on different frequencies, they do not interfere with each other.

Figure 3.5 illustrates the use of FDM to divide one circuit into four channels. Each channel is a separate logical circuit, and the devices connected to them are unaware that their circuit is multiplexed. In the same way that radio stations must be assigned separate frequencies to prevent interference, so must the signals in an FDM circuit. The guardbands in Figure 3.5 are the unused portions of the circuit that separate these frequencies from each other.

With FDM, the total capacity of the physical circuit is simply divided among the multiplexed circuits. For example, suppose we had a physical circuit with a data rate of 64 Kbps that we wanted to divide into four circuits. We would simply divide the 64 Kbps among the four circuits and assign each circuit 16 Kbps. However, because FDM needs guardbands, we also have to allocate some of the capacity to the guardbands, so we might actually end up with four circuits, each providing 15 Kbps, with the remaining 4 Kbps allocated to the guardbands. There is no requirement that all circuits be the same size, as you will see in a later section. FDM was commonly used in older telephone systems, which is why the bandwidth on older phone systems was only 3,000 Hz, not the 4,000 Hz actually available—1,000 Hz were used as guardbands, with the voice signals traveling between two guardbands on the outside of the channel.
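The arithmetic in this example can be written as a tiny helper, assuming equal-sized channels and treating guardband capacity as a single amount set aside up front (real FDM plans allocate it as frequency ranges between channels):

```python
def fdm_channel_kbps(total_kbps, channels, guardband_kbps=0.0):
    """Capacity per FDM channel after setting some capacity aside for guardbands.

    Assumes all channels get the same share of the remaining capacity.
    """
    usable = total_kbps - guardband_kbps
    if channels <= 0 or usable <= 0:
        raise ValueError("need at least one channel and some usable capacity")
    return usable / channels


print(fdm_channel_kbps(64, 4))                    # 16.0 with no guardbands
print(fdm_channel_kbps(64, 4, guardband_kbps=4))  # 15.0, as in the example above
```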

Figure 3.5 Frequency division multiplex (FDM) circuit

Time Division Multiplexing Time division multiplexing (TDM) shares a communication circuit among two or more terminals by having them take turns, dividing the circuit vertically, so to speak. Figure 3.6 shows the same four terminals connected using TDM. In this case, one character is taken from each computer in turn, transmitted down the circuit, and delivered to the appropriate device at the far end (e.g., one character from computer A, then one from B, one from C, one from D, another from A, another from B, and so on). Time on the circuit is allocated even when data are not being transmitted, so that some capacity is wasted when terminals are idle. TDM generally is more efficient than FDM because it does not need guardbands. Guardbands use "space" on the circuit that otherwise could be used to transmit data. Therefore, if one divides a 64-Kbps circuit into four circuits, the result would be four 16-Kbps circuits.
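A minimal Python sketch of character-interleaved TDM follows, assuming four hypothetical terminals whose data are already queued as strings. Each frame has one slot per terminal in a fixed order, so the receiving multiplexer can tell which terminal a character belongs to purely from its position, and a terminal with nothing to send still consumes its slot:

```python
from itertools import zip_longest

IDLE = None  # marks a slot whose terminal had nothing to send


def tdm_multiplex(streams):
    """Build TDM frames: one slot per terminal per frame, always in the same order.

    A terminal that has run out of data still owns its slot, which is sent empty.
    """
    return [list(frame) for frame in zip_longest(*streams, fillvalue=IDLE)]


def tdm_demultiplex(frames, terminals):
    """Recover each terminal's data purely from slot position; no addresses needed."""
    outputs = [[] for _ in range(terminals)]
    for frame in frames:
        for slot, char in enumerate(frame):
            if char is not IDLE:
                outputs[slot].append(char)
    return ["".join(chars) for chars in outputs]


streams = ["AAA", "BB", "C", "DDD"]  # hypothetical data from computers A-D
frames = tdm_multiplex(streams)
wasted = sum(slot is IDLE for frame in frames for slot in frame)
print(frames)
print("idle slots:", wasted)
print(tdm_demultiplex(frames, len(streams)))
```

The three empty slots in the output are the wasted capacity mentioned above.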

Statistical Time Division Multiplexing Statistical time division multiplexing (STDM) is the exception to the rule that the capacity of the multiplexed circuit must equal the sum of the circuits it combines.

Figure 3.6 Time division multiplex (TDM) circuit

STDM allows more terminals or computers to be connected to a circuit than does FDM or TDM. If you have four computers connected to a multiplexer and each can transmit at 64 Kbps, then you should have a circuit capable of transmitting 256 Kbps (4 x 64 Kbps). However, not all computers will be transmitting continuously at their maximum transmission speed. Users typically pause to read their screens or spend time typing at lower speeds. Therefore, you do not need to provide a speed of 256 Kbps on this multiplexed circuit. If you assume that only two computers will ever transmit at the same time, 128 Kbps would be enough. STDM is called statistical because selection of transmission speed for the multiplexed circuit is based on a statistical analysis of the usage requirements of the circuits to be multiplexed.
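The statistical analysis can be made explicit. The sketch below assumes, purely for illustration, that each computer is active independently with some probability, and sizes the circuit so that the chance of more computers transmitting at once than the circuit can carry stays under a chosen threshold. The probability values are hypothetical; the text itself simply assumes that at most two computers transmit at the same time.

```python
from math import comb


def prob_overload(k, n, p):
    """Probability that more than k of n independent sources are active at once."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1, n + 1))


def stdm_capacity_kbps(n, rate_kbps, p_active, max_overload):
    """Smallest multiple of the per-source rate whose overload probability is acceptable.

    With k = n the overload probability is 0, so the loop always returns
    when max_overload is non-negative.
    """
    for k in range(n + 1):
        if prob_overload(k, n, p_active) <= max_overload:
            return k * rate_kbps
    raise ValueError("max_overload must be non-negative")


# Four 64-Kbps computers, each assumed active 25% of the time.
print(stdm_capacity_kbps(4, 64, 0.25, 0.06))  # 128
print(stdm_capacity_kbps(4, 64, 0.25, 0.01))  # 192
```

Tightening the acceptable overload probability pushes the required capacity back toward the full 256 Kbps that TDM would need.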

The key benefit of STDM is that it provides more efficient use of the circuit and saves money: you can buy a lower-speed, less-expensive circuit than you could with FDM or TDM. However, STDM introduces two additional complexities. First, STDM can cause time delays. If all devices start transmitting or receiving at the same time (or simply more devices than the statistical assumptions allow for), the multiplexed circuit cannot transmit all the data it receives because it does not have sufficient capacity. Therefore, STDM must have internal memory to store the incoming data that it cannot immediately transmit. When traffic is particularly heavy, you may have a 1- to 30-second delay. Second, because the logical circuits are not permanently assigned to specific devices as they are in FDM and TDM, the data from one device are interspersed with data from other devices. The first message might be from the third computer, the second from the first computer, and so on. Therefore, we need to add some address information to each packet to identify the logical circuit to which it belongs. This is not a major problem, but it does increase the complexity of the multiplexer and slightly decreases efficiency, because we must now "waste" some of the circuit’s capacity transmitting the extra address added to each packet.
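Both complexities show up in even a toy model. In the sketch below (a simplification, with hypothetical device addresses and a fixed number of slots per time tick), every slot carries an addressed packet, packets that arrive in a burst wait in a buffer, and the receiving side uses the addresses to rebuild each device's stream:

```python
from collections import deque


def stdm_transmit(arrivals, slots_per_tick):
    """Send addressed packets, at most slots_per_tick per tick; the rest wait in a buffer.

    arrivals[t] is the list of (address, data) packets reaching the multiplexer at tick t.
    Returns (tick sent, address, data) for every packet in transmission order.
    """
    buffer = deque()
    sent = []
    tick = 0
    while tick < len(arrivals) or buffer:
        if tick < len(arrivals):
            buffer.extend(arrivals[tick])
        for _ in range(min(slots_per_tick, len(buffer))):
            address, data = buffer.popleft()
            sent.append((tick, address, data))
        tick += 1
    return sent


def stdm_demux(sent):
    """Use the address carried by each packet to rebuild the per-device streams."""
    streams = {}
    for _, address, data in sent:
        streams.setdefault(address, []).append(data)
    return streams


arrivals = [[(3, "x"), (1, "a"), (2, "p")], [], [(1, "b")]]
sent = stdm_transmit(arrivals, slots_per_tick=2)
print(sent)              # [(0, 3, 'x'), (0, 1, 'a'), (1, 2, 'p'), (2, 1, 'b')]
print(stdm_demux(sent))  # {3: ['x'], 1: ['a', 'b'], 2: ['p']}
```

Packet `p` from device 2 waits one tick in the buffer because the burst exceeded the circuit's capacity, which is the delay described above.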

Wavelength Division Multiplexing Wavelength division multiplexing (WDM) is a version of FDM used in fiber-optic cables. When fiber-optic cables were first developed, the devices attached to them were designed to use only one color of light generated by a laser or LED. With one commonly used type of fiber cable, the data rate is 622 Mbps (622 million bits per second). At first, the 622-Mbps data rate seemed wonderful. Then the amount of data transferred over the Internet began doubling at fairly regular intervals, and several companies began investigating how we could increase the amount of data sent over existing fiber-optic cables.

The answer, in hindsight, was obvious. Light has different frequencies (i.e., colors), so rather than building devices to transmit using only one color, why not send multiple signals, each in a different frequency, through the same fiber cable? By simply attaching different devices that could transmit in the full spectrum of light rather than just one frequency, the capacity of the existing fiber-optic cables could be dramatically increased, with no change to the physical cables themselves.

WDM works by using lasers to transmit different frequencies of light (i.e., colors) through the same fiber-optic cable. As with FDM, each logical circuit is assigned a different frequency, and the devices attached to the circuit don’t "know" they are multiplexed over the same physical circuit.

NASA’s Ground Communications Network

MANAGEMENT FOCUS

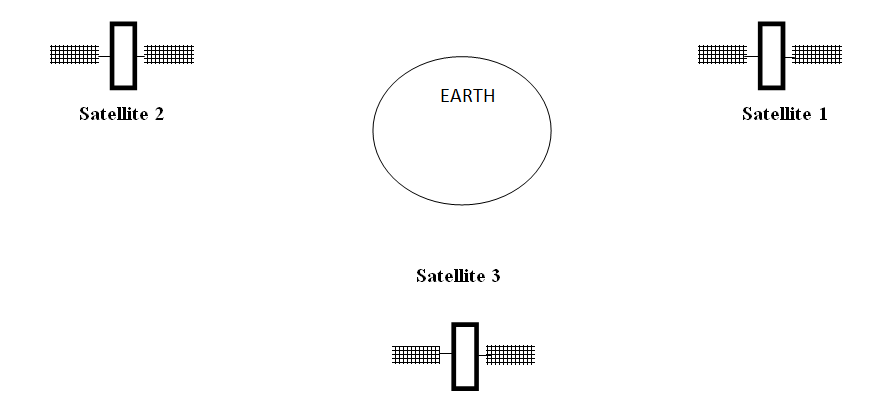

NASA’s communications network is extensive because its operations are spread out around the world and into space. The main Deep Space Network is controlled out of the Jet Propulsion Laboratory (JPL) in California. JPL is connected to the three main Deep Space Communications Centers (DSCCs) that communicate with NASA spacecraft. The three DSCCs are spread out equidistantly around the world so that one will always be able to communicate with spacecraft no matter where they are in relation to the earth: Canberra, Australia; Madrid, Spain; and Goldstone, California.

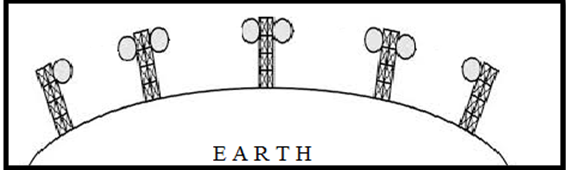

Figure 3.7 shows the JPL network. Each DSCC has four large-dish antennas ranging in size from 85 to 230 feet (26 to 70 meters) that communicate with the spacecraft. These send and receive operational data such as telemetry, commands, tracking, and radio signals. Each DSCC also sends and receives administrative data such as e-mail, reports, and Web pages, as well as telephone calls and video.

The three DSCCs and JPL use Ethernet local area networks (LANs) that are connected to multiplexers that integrate the data, voice, and video signals for transmission. Satellite circuits are used between Canberra and JPL and between Madrid and JPL. Fiber-optic circuits are used between JPL and Goldstone.

Dense WDM (DWDM) is a variant of WDM that further increases the capacity of WDM by adding TDM to WDM. Today, DWDM permits up to 40 simultaneous circuits, each transmitting up to 10 Gbps, giving a total network capacity in one fiber-optic cable of 400 Gbps (i.e., 400 billion bits per second). Remember, this is the same physical cable that until recently produced only 622 Mbps; all we’ve changed are the devices connected to it.

DWDM is a relatively new technique, so it will continue to improve over the next few years. As we write this, DWDM systems have been announced that provide 128 circuits, each at 10 Gbps (1.28 terabits per second [1.28 Tbps]), in one fiber cable. Experts predict that DWDM transmission speeds should reach 25 Tbps (i.e., 25 trillion bits per second) within a few years, and possibly 1 petabit per second [Pbps], or 1 million billion bits per second, all on the same single fiber-optic cable that today typically provides 622 Mbps. Once we reach these speeds, the most time-consuming part of the process is converting the light used in the fiber cables into the electricity used by the devices that route messages through the Internet. Therefore, many companies are now developing computer devices that run on light, not electricity.
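The capacity figures in the last two paragraphs are simple products of circuit count and per-circuit rate; the sketch below reproduces them and compares each with the original 622-Mbps single-wavelength system:

```python
def aggregate_gbps(circuits, per_circuit_gbps):
    """Total capacity of one fiber when each wavelength carries its own circuit."""
    return circuits * per_circuit_gbps


SINGLE_WAVELENGTH_GBPS = 0.622  # the original 622-Mbps single-color system

for circuits in (40, 128):
    total = aggregate_gbps(circuits, 10)
    print(f"{circuits} x 10 Gbps = {total} Gbps "
          f"(about {total / SINGLE_WAVELENGTH_GBPS:.0f} times 622 Mbps)")
```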

Inverse Multiplexing Multiplexing uses one high-speed circuit to transmit a set of several low-speed circuits. It can also be used to do the opposite. Inverse multiplexing (IMUX) combines several low-speed circuits to make them appear as one high-speed circuit to the user (Figure 3.8).

One of the most common uses of IMUX is to provide T1 circuits for WANs. T1 circuits provide data transmission rates of 1.544 Mbps by combining 24 slower-speed circuits of 64 Kbps each (24 x 64 Kbps = 1.536 Mbps, plus 8 Kbps of framing overhead). As far as the users are concerned, they have access to one high-speed circuit, even though their data actually travel across a set of slower circuits.
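The extra 8 Kbps can be checked from the standard T1 frame layout: each frame carries one 8-bit sample from each of the 24 channels plus a single framing bit, and 8,000 frames are sent per second. A short sketch of that arithmetic:

```python
CHANNELS = 24             # 64-Kbps channels combined into one T1
BITS_PER_CHANNEL = 8      # each channel contributes one 8-bit sample per frame
FRAMING_BITS = 1          # plus one framing bit per frame
FRAMES_PER_SECOND = 8000  # one frame every 125 microseconds

frame_bits = CHANNELS * BITS_PER_CHANNEL + FRAMING_BITS         # 193 bits
line_rate_bps = frame_bits * FRAMES_PER_SECOND                  # 1,544,000 bps
payload_bps = CHANNELS * BITS_PER_CHANNEL * FRAMES_PER_SECOND   # 24 x 64 Kbps

print(f"T1 line rate: {line_rate_bps / 1e6:.3f} Mbps")
print(f"User data:    {payload_bps / 1e6:.3f} Mbps")
print(f"Framing:      {(line_rate_bps - payload_bps) / 1e3:.0f} Kbps")
```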

Until recently, there were no standards for IMUX. If you wanted to use IMUX, you had to ensure that you bought IMUX circuits from the same vendor so both clients or hosts could communicate.

Figure 3.7 NASA’s Deep Space Communications Centers ground communications network. MUX = multiplexer

Several vendors have recently adopted the BONDING standard (Bandwidth on Demand Interoperability Networking Group). Any IMUX circuit that conforms to the BONDING standard can communicate with any other IMUX circuit that conforms to the same standard. BONDING splits outgoing messages from one client or host across several low-speed telephone lines and combines incoming messages from several telephone lines into one circuit so that the client or host "thinks" it has a faster circuit.

The most common use for BONDING is for room-to-room videoconferencing. In this case, organizations usually have the telephone company install six telephone lines into their videoconferencing room that are connected to the IMUX. (The telephone lines are usually 64-Kbps ISDN telephone lines.) When an organization wants to communicate with another videoconferencing room that has a similar six-telephone-line IMUX configuration, the first IMUX circuit uses one telephone line to call the other IMUX circuit on one of its telephone lines. The two IMUX circuits then exchange telephone numbers and call each other on the other five lines until all six lines are connected. Once the connection has been established, the IMUX circuits transmit data over the six lines simultaneously, thus giving a total data rate of 6 x 64 Kbps = 384 Kbps.
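The split-and-recombine idea behind BONDING can be sketched in a few lines of Python. This is not the BONDING frame format, which the text does not describe; the chunk size and sequence numbers are assumptions made so that the receiver can put data arriving on different lines back in order:

```python
LINE_RATE_KBPS = 64  # each 64-Kbps ISDN line, as in the text


def imux_split(message: bytes, lines: int, chunk: int = 4):
    """Deal fixed-size chunks round-robin across the lines, tagging each with a sequence number."""
    per_line = [[] for _ in range(lines)]
    for seq, start in enumerate(range(0, len(message), chunk)):
        per_line[seq % lines].append((seq, message[start:start + chunk]))
    return per_line


def imux_combine(per_line):
    """Reassemble the original message by sorting chunks from every line by sequence number."""
    chunks = [item for line in per_line for item in line]
    return b"".join(data for _, data in sorted(chunks))


message = b"one videoconference frame, split six ways"
lines = imux_split(message, 6)
print("reassembled correctly:", imux_combine(lines) == message)
print("aggregate rate:", len(lines) * LINE_RATE_KBPS, "Kbps")  # 384 Kbps
```

Tagging chunks with sequence numbers lets the receiving side tolerate lines that deliver data at slightly different times; a real inverse multiplexer must solve the same ordering problem in some way.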

Figure 3.8 Inverse multiplexer

Get More Bandwidth for Less

MANAGEMENT FOCUS

Upstart network provider Yipes is among the first to offer metropolitan area network services based on wavelength division multiplexing (WDM). It offers circuits that range from 1 Mbps up to 1 Gbps in 1-Mbps increments and that cost anywhere between 10 percent and 80 percent of the cost of traditional services. The challenge Yipes faces is to expand its WDM services beyond the MAN.

How DSL Transmits Data

The reason for the limited capacity on voice telephone circuits lies with the telephone and the switching equipment at the telephone company offices. The actual twisted-pair wire in the local loop is capable of providing much higher data transmission rates. Digital subscriber line (DSL) is one approach to changing the way data are transmitted in the local loop to provide higher-speed data transfer. DSL is a family of techniques that combines analog transmission and FDM to provide a set of voice and data circuits. There are many different types of DSL, so many in fact that DSL is sometimes called xDSL, where the x is intended to represent one of the many possible flavors.

With DSL, a DSL modem (called customer premises equipment [CPE]) is installed in the customer’s home or office and another DSL modem is installed at the telephone company switch closest to the customer’s home or office. The modem is first an FDM device that splits the physical circuit into three logical circuits: a standard voice circuit used for telephone calls, an upstream data circuit from the customer to the telephone switch, and a downstream data circuit from the switch to the customer. TDM is then used within the two data channels to provide a set of one or more individual channels that can be used to carry different data. A combination of amplitude and phase modulation is used in the data circuits to provide the desired data rate (the exact combination depends on which flavor of DSL is used). One version of DSL, called G.Lite ADSL, provides one voice circuit, a 1.5-Mbps downstream circuit, and a 384-Kbps upstream channel.
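The FDM step in a DSL modem can be pictured as a map from frequency to logical circuit. The band edges below are assumptions, chosen to match commonly cited values for full-rate ADSL over an ordinary phone line; other DSL flavors, including G.Lite, use different upper limits:

```python
# Assumed band edges in kHz (typical full-rate ADSL over a voice line); other flavors differ.
BANDS = [
    ("voice", 0.0, 4.0),
    ("upstream data", 25.875, 138.0),
    ("downstream data", 138.0, 1104.0),
]


def band_for(freq_khz: float) -> str:
    """Return the logical circuit that a given frequency belongs to."""
    for name, low, high in BANDS:
        if low <= freq_khz < high:
            return name
    return "unused (guardband)"


for f in (1.0, 10.0, 100.0, 500.0):
    print(f"{f:>6} kHz -> {band_for(f)}")
```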

What Does a Data Center Do

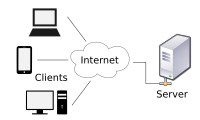

In recent years, Internet companies such as Google, Microsoft, Facebook, and Amazon (as well as their counterparts in Asia and Europe) have built massive data centers, each housing tens to hundreds of thousands of hosts and concurrently supporting many distinct applications (e.g., search, mail, social networking, and e-commerce). Each data center has its own data center network that interconnects its hosts with each other and interconnects the data center with the Internet. In this section, we provide a brief introduction to data center networking for cloud applications.

The cost of a large data center is huge, exceeding $12 million per month for a 100,000-host data center. Of these costs, about 45 percent can be attributed to the hosts themselves (which need to be replaced every 3-4 years); 25 percent to infrastructure, including transformers, uninterruptible power supply (UPS) systems, generators for long-term outages, and cooling systems; 15 percent to electric utility costs for the power draw; and 15 percent to networking, including network gear (switches, routers, and load balancers), external links, and transit traffic costs. (In these percentages, equipment costs are amortized so that a common cost metric is applied to one-time purchases and ongoing expenses such as power.) While networking is not the largest cost, it is the key to reducing overall cost and maximizing performance.
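Applying these percentages to the $12 million monthly figure gives a feel for the absolute amounts involved. Because the text says the cost exceeds $12 million, treat the results as lower bounds:

```python
MONTHLY_COST = 12_000_000  # dollars per month for a 100,000-host data center (from the text)
HOSTS = 100_000
SHARES = {
    "hosts": 0.45,
    "infrastructure (power, UPS, generators, cooling)": 0.25,
    "electric utility costs": 0.15,
    "networking": 0.15,
}

# The four shares should account for the whole monthly budget.
assert abs(sum(SHARES.values()) - 1.0) < 1e-9

for item, share in SHARES.items():
    monthly = MONTHLY_COST * share
    print(f"{item:<50} ${monthly:>12,.0f}/month  ${monthly / HOSTS:>6,.2f}/host")
```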

The worker bees in a data center are the hosts: they serve content (e.g., web pages and videos), store emails and documents, and collectively perform massively distributed computations (e.g., distributed index computations for search engines). The hosts in data centers, called blades and resembling pizza boxes, are generally commodity hosts that include CPU, memory, and disk storage.

The hosts are stacked in racks, with each rack typically having 20 to 40 blades. At the top of each rack there is a switch, aptly named the Top of Rack (TOR) switch, that interconnects the hosts in the rack with each other and with other switches in the data center. Specifically, each host in the rack has a network interface card that connects to its TOR switch, and each TOR switch has additional ports that can be connected to other switches. Although today hosts typically have 1 Gbps Ethernet connections to their TOR switches, 10 Gbps connections may become the norm. Each host is also assigned its own data center internal IP address.

The data center network supports two types of traffic: traffic flowing between external clients and internal hosts, and traffic flowing between internal hosts. To handle flows between external clients and internal hosts, the data center network includes one or more border routers connecting the data center network to the public Internet. The data center network therefore interconnects the racks with each other and connects the racks to the border routers. Figure 5.30 shows an example of a data center network.

Data center network design, the art of designing the interconnection network and protocols that connect the racks with each other and with the border routers, has become an important branch of computer networking research in recent years.

Load Balancing

A cloud data center, such as a Google or Microsoft data center, provides many applications concurrently, such as search, email, and video applications. To support requests from external clients, each application is associated with a publicly visible IP address to which clients send their requests and from which they receive responses.

Inside the data center, external requests are first directed to a load balancer whose job is to distribute requests to the hosts, balancing the load across the hosts as a function of their current load.

A large data center will often have several load balancers, each one devoted to a set of specific cloud applications. Such a load balancer is sometimes referred to as a “layer-4 switch” since it makes decisions based on the destination port number (layer 4) as well as the destination IP address in the packet. Upon receiving a request for a particular application, the load balancer forwards it to one of the hosts that handles the application. (A host may then invoke the services of other hosts to help process the request.) When the host finishes processing the request, it sends its response back to the load balancer, which in turn relays the response back to the external client. The load balancer not only balances the workload across hosts but also provides a NAT-like function, translating the public external IP address to the internal IP address of the appropriate host, and then translating back for packets traveling in the reverse direction to the clients. This prevents clients from contacting hosts directly, which has the security benefit of hiding the internal network structure and preventing clients from directly interacting with the hosts.
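The two jobs described here, choosing a host and translating addresses, can be sketched as a small Python class. Everything in it is hypothetical: the least-loaded selection rule, the per-client translation table, and the example addresses (taken from documentation address ranges). Production load balancers use many other policies and keep far more state:

```python
from dataclasses import dataclass, field


@dataclass
class Layer4LoadBalancer:
    """Hypothetical sketch of a layer-4 load balancer serving one application."""
    public_ip: str
    public_port: int
    load: dict  # internal host IP -> number of requests it is currently handling
    nat: dict = field(default_factory=dict)  # (client IP, client port) -> internal host IP

    def inbound(self, client_ip, client_port, dst_ip, dst_port):
        """Pick a host for a new client and return the internal address to rewrite to."""
        if (dst_ip, dst_port) != (self.public_ip, self.public_port):
            raise ValueError("packet is not addressed to this application")
        key = (client_ip, client_port)
        if key not in self.nat:
            host = min(self.load, key=self.load.get)  # least-loaded host
            self.load[host] += 1
            self.nat[key] = host
        return self.nat[key]

    def outbound(self, host_ip, client_ip, client_port):
        """Rewrite a host's reply so it appears to come from the public address."""
        if self.nat.get((client_ip, client_port)) != host_ip:
            raise ValueError("no translation entry for this reply")
        return self.public_ip, self.public_port

    def finished(self, client_ip, client_port):
        """Drop the translation and reduce the host's load when the request completes."""
        host = self.nat.pop((client_ip, client_port))
        self.load[host] -= 1


lb = Layer4LoadBalancer("203.0.113.10", 443, {"10.0.0.1": 2, "10.0.0.2": 0})
host = lb.inbound("198.51.100.7", 50000, "203.0.113.10", 443)
print(host)                                      # 10.0.0.2, the idle host
print(lb.outbound(host, "198.51.100.7", 50000))  # ('203.0.113.10', 443)
```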

Hierarchical Structure

For a small data center housing only a few thousand hosts, a simple network consisting of a border router, a load balancer, and a few tens of racks, all interconnected by a single Ethernet switch, could possibly suffice. But to scale to tens to hundreds of thousands of hosts, a data center often employs a hierarchy of routers and switches, as shown in Figure 5.30.

At the top of the hierarchy, the border router connects to access routers (only two are shown in the figure, but there can be many more). Below each access router there are three tiers of switches. Each access router connects to a top-tier switch, and each top-tier switch connects to multiple second-tier switches and a load balancer. Each second-tier switch in turn connects to multiple racks via the racks’ TOR switches (third-tier switches). All links typically use Ethernet for their link-layer and physical-layer protocols, with a mix of copper and fiber cabling. With such a hierarchical design, it is possible to scale a data center to hundreds of thousands of hosts.
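Multiplying out the fan-out at each tier shows how the hierarchy reaches this scale. Apart from the 20 to 40 hosts per rack mentioned earlier, the fan-out values below are hypothetical:

```python
def hierarchy_size(access_routers, second_tier_per_top, racks_per_second_tier, hosts_per_rack):
    """Count devices in the three-tier tree described above.

    Assumes one top-tier switch per access router and one TOR switch per rack.
    """
    top_tier = access_routers
    second_tier = top_tier * second_tier_per_top
    tor_switches = second_tier * racks_per_second_tier
    return {
        "top-tier switches": top_tier,
        "second-tier switches": second_tier,
        "TOR switches (racks)": tor_switches,
        "hosts": tor_switches * hosts_per_rack,
    }


# Hypothetical fan-outs; only the hosts-per-rack range comes from the text.
print(hierarchy_size(access_routers=4, second_tier_per_top=16,
                     racks_per_second_tier=40, hosts_per_rack=40))
```

With these values the tree holds 2,560 racks and 102,400 hosts; doubling the number of access routers doubles every count below them.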

Link Layer

We know that the network layer provides a communication service between any two network hosts. Between the two hosts, datagrams travel over a series of communication links, some wired and some wireless, starting at the source host, passing through a series of packet switches (switches and routers), and ending at the destination host.

As we continue down the protocol stack, from the network layer to the link layer, we naturally wonder:

- How are packets sent across the individual links that make up the end-to-end communication path?

- How are network-layer datagrams encapsulated in link layer frames for transmission over a single link?

- Are different link layer protocols used on the different links along the communication path?

- How are transmission conflicts in broadcast links resolved?

- Is there addressing at the link layer and, if so, how does link layer addressing operate with network layer addressing?

- And what exactly is the difference between a switch and a router?

We’ll answer these and other important questions in this tutorial.

Understanding Link Layer with Example

It will be convenient, in this tutorial, to refer to any device that runs a link layer protocol as a node. Nodes include hosts, routers, switches, and WiFi access points.

We’ll also refer to the communication channels that connect adjacent nodes along the communication path as links. In order for a datagram to be transferred from source host to destination host, it must be moved over each of the individual links in the end-to-end path.

As an example, consider sending a datagram from one of the wireless hosts to one of the servers in the company network shown in Figure 5.1.

This datagram will actually pass through six links:

- a WiFi link between the sending host and a WiFi access point;

- an Ethernet link between the access point and a link layer switch;

- a link between the link layer switch and the router;

- a link between two routers;

- an Ethernet link between the router and a link layer switch; and

- finally, an Ethernet link between the switch and the server.

Over a given link, a transmitting node encapsulates the datagram in a link layer frame and transmits the frame into the link.
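The hop-by-hop nature of this process can be sketched in Python. The node names are hypothetical labels for the six links listed above, and links whose protocol the text does not name are marked "unspecified". The point of the sketch is that each link gets its own frame, built with that link's protocol, while the datagram inside passes through unchanged:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    link_protocol: str  # link layer protocol used on this one link
    sender: str         # node that transmits the frame into the link
    receiver: str       # adjacent node at the other end of the link
    payload: bytes      # the network-layer datagram, carried unchanged


# The six links from the example; node names are hypothetical labels.
PATH = [
    ("wireless host", "access point", "WiFi"),
    ("access point", "switch 1", "Ethernet"),
    ("switch 1", "router 1", "unspecified"),
    ("router 1", "router 2", "unspecified"),
    ("router 2", "switch 2", "Ethernet"),
    ("switch 2", "server", "Ethernet"),
]


def forward(datagram: bytes, path):
    """Carry a datagram hop by hop: encapsulate for each link, decapsulate at the far end."""
    frames = []
    for sender, receiver, protocol in path:
        frame = Frame(protocol, sender, receiver, datagram)  # encapsulate for this link
        frames.append(frame)
        datagram = frame.payload  # the receiving node extracts the datagram
    return datagram, frames


delivered, frames = forward(b"datagram for the server", PATH)
for f in frames:
    print(f"{f.sender:>13} -> {f.receiver:<12} via {f.link_protocol}")
```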

In order to gain further insight into the link layer and how it relates to the network layer, let’s consider a transportation analogy. Consider a travel agent who is planning a trip for a tourist traveling from Princeton, New Jersey, to Lausanne, Switzerland. The travel agent decides that it is most convenient for the tourist to:

- take a limousine from Princeton to JFK airport,

- then a plane from JFK airport to Geneva’s airport, and

- finally a train from Geneva’s airport to Lausanne’s train station.

Once the travel agent makes the three reservations,

- it is the responsibility of the Princeton limousine company to get the tourist from Princeton to JFK;

- it is the responsibility of the airline company to get the tourist from JFK to Geneva; and

- it is the responsibility of the Swiss train service to get the tourist from Geneva to Lausanne.

Each of the three segments of the trip is “direct” between two “adjacent” locations. Note that the three transportation segments are managed by different companies and use entirely different transportation modes (limousine, plane and train).

Although the transportation modes are different, they each provide the basic service of moving passengers from one location to an adjacent location. In this transportation analogy:

- The tourist is a datagram,

- Each transportation segment is a link,

- The transportation mode is a link layer protocol, and

- The travel agent is a routing protocol.

Where Is the Link Layer Implemented

For the most part, the link layer is implemented in a network adapter, also sometimes known as a Network Interface Card (NIC).

At the heart of the network adapter is the link layer controller, usually a single, special purpose chip that implements many of the link layer services (framing, link access, error detection etc.). Thus, much of a link layer controller’s functionality is implemented in hardware.

For example, Intel’s 8254x controller implements the Ethernet protocols, and the Atheros AR5006 controller implements the 802.11 WiFi protocols.

Until the late 1990s, most network adapters were physically separate cards (such as a PCMCIA card or a plug-in card fitting into a PC’s PCI card slot), but increasingly, network adapters are being integrated onto the host’s motherboard, a so-called LAN-on-motherboard configuration.

On the sending side, the controller takes a datagram that has been created and stored in host memory by the higher layers of the protocol stack, encapsulates the datagram in a link layer frame (filling in the frame’s various fields), and then transmits the frame into the communication link, following the link access protocol. On the receiving side, a controller receives the entire frame and extracts the network layer datagram. If the link layer performs error detection, then it is the sending controller that sets the error detection bits in the frame header and the receiving controller that performs the error detection.
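A simplified sketch of the sending and receiving controllers' work follows, using a CRC-32 from Python's `zlib` module as the error detection bits. The frame layout (6-byte destination and source addresses, a 2-byte length, then the payload and a 4-byte CRC) is an assumption for illustration; it resembles but is not a real Ethernet frame, and it places the CRC at the end of the frame, as Ethernet does, rather than in the header:

```python
import struct
import zlib

HEADER = struct.Struct("!6s6sH")  # hypothetical: destination, source, payload length
TRAILER = struct.Struct("!I")     # 32-bit CRC over header + payload


def encapsulate(datagram: bytes, dst: bytes, src: bytes) -> bytes:
    """Sending controller: fill in the frame fields and set the error detection bits."""
    body = HEADER.pack(dst, src, len(datagram)) + datagram
    return body + TRAILER.pack(zlib.crc32(body))


def decapsulate(frame: bytes) -> bytes:
    """Receiving controller: check the CRC, then extract the network layer datagram."""
    body, (crc,) = frame[:-TRAILER.size], TRAILER.unpack(frame[-TRAILER.size:])
    if zlib.crc32(body) != crc:
        raise ValueError("error detected; frame discarded")
    _dst, _src, length = HEADER.unpack(body[:HEADER.size])
    return body[HEADER.size:HEADER.size + length]


frame = encapsulate(b"IP datagram", dst=b"\xaa" * 6, src=b"\xbb" * 6)
print(decapsulate(frame))  # b'IP datagram'

corrupted = bytearray(frame)
corrupted[HEADER.size] ^= 0x01  # flip one bit of the payload in transit
try:
    decapsulate(bytes(corrupted))
except ValueError as err:
    print(err)
```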

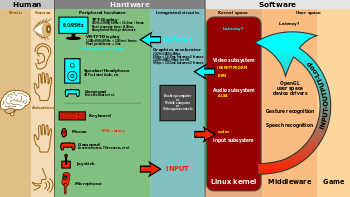

The figure above shows a network adapter attaching to a host’s bus (e.g., a PCI or PCI-X bus), where it looks much like any other I/O device to the other host components.

The above figure also shows that while most of the link layer is implemented in hardware, part of the link layer is implemented in software that runs on the host’s CPU.

The software components of the link layer implement higher-level link layer functionality such as assembling link layer addressing information and activating the controller hardware.

On the receiving side, link layer software responds to controller interrupts (e.g. due to the receipt of one or more frames), handling error conditions and passing a datagram up to the network layer. Thus, the link layer is a combination of hardware and software – the place in the protocol stack where software meets hardware.

How Big is Big Data

Initially, the term was used to denote data that was too big for existing computers to store and process.

To get a glimpse of the scale, consider the following realities:

- Google needs to save the more than 3 billion searches it receives per day

- Facebook needs to store the more than 10 million photos that users upload per hour

- Twitter needs to store 400 million tweets per day

None of the above activities was possible earlier, but now we have computers with far larger storage and processing capacities.

Processing data this big has helped Google predict the spread of disease in real time.

Hedge funds are processing Twitter data for trading purposes.

Now, with big data, you can do things that were impossible earlier.

Now we can see things that were hidden earlier.

What Can We Do With Big Data

The main purpose of big data is to predict things.

Public health, finance & e-commerce are some of the areas where big data is already showing awesome results.

- Google can detect the spread of diseases

- Farecast could predict the price of flight tickets

- Twitter data is used to predict stock market movements

Not only that, big data has helped to:

- Reduce crime in certain states by increasing patrolling in suspected areas

- Reduce fire cases

- Reduce bad loans

Future of Big Data

Big data is the future. It is going to be used enormously both in government & corporate agencies.

It has its own advantages & disadvantages.

I have already mentioned the advantages; now let us look at the disadvantages as well.

- In the name of security, big data will be used to zero in on people. It may be used to punish people even before a crime is committed.

- Big data will be used by banks and insurance companies to charge higher loan interest & premiums to certain people.

- Big data may cause people to see correlations where none exist – the "fooled by randomness" phenomenon.

The future holds both the advantages and the disadvantages of big data. One thing is for sure – the world will never be the same again. In the future, most things will be done on the basis of big data predictions.

IPv4 to IPv6 Conversion Method

No IPv4-to-IPv6 conversion method is going to be easy, because the changes are made in the network layer; it is like changing the foundation of a house. However, two methods have been suggested, which we cover shortly.

One option would be to declare a flag day – a given time and date when all internet machines would be turned off and upgraded from IPv4 to IPv6. The last major technology transition (from using NCP to using TCP for reliable transport service) occurred almost 25 years ago. Even back then [RFC 801], when the internet was tiny and still being administered by a small number of “wizards”, it was realized that such a flag day was not possible. A flag day involving hundreds of millions of machines and millions of network administrators and users is even more unthinkable today. RFC 4213 describes two approaches (which can be used either alone or together) for gradually integrating IPv6 hosts and routers into an IPv4 world (with the long-term goal, of course, of having all IPv4 nodes eventually transition to IPv6).

Dual Stack Approach

Probably the most straightforward way to introduce IPv6-capable nodes is a dual stack approach, where IPv6 nodes also have a complete IPv4 implementation.

Such a node, referred to as an IPv6/IPv4 node in RFC 4213, has the ability to send and receive both IPv4 and IPv6 datagrams. When interoperating with an IPv4 node, an IPv6/IPv4 node can use IPv4 datagrams; when interoperating with an IPv6 node, it can speak IPv6.

IPv6/IPv4 nodes must have both IPv6 and IPv4 addresses. They must furthermore be able to determine whether another node is IPv6-capable or IPv4-only. This problem can be solved using the DNS, which can return an IPv6 address if the node name being resolved is IPv6-capable, or otherwise return an IPv4 address. Of course, if the node issuing the DNS request is only IPv4-capable, the DNS returns only an IPv4 address.
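The DNS-based selection rule can be sketched as follows. The DNS table, node names and addresses are hypothetical. The addresses come from the 2001:db8::/32 and 192.0.2.0/24 documentation ranges. A real resolver would return separate AAAA and A records rather than consult a Python dictionary.

```python
import ipaddress

# Hypothetical DNS data: node name -> addresses registered for that node.
DNS_TABLE = {
    "node-dual": ["2001:db8::10", "192.0.2.10"],  # IPv6/IPv4 (dual stack) node
    "node-v4": ["192.0.2.20"],                    # IPv4-only node
}


def resolve(name: str, requester_ipv6_capable: bool) -> str:
    """Return an IPv6 address only if both ends are IPv6-capable, else IPv4."""
    addrs = [ipaddress.ip_address(a) for a in DNS_TABLE[name]]
    if requester_ipv6_capable:
        for addr in addrs:
            if addr.version == 6:
                return str(addr)
    for addr in addrs:
        if addr.version == 4:
            return str(addr)
    raise LookupError(f"no usable address for {name}")


def datagram_version(name: str, requester_ipv6_capable: bool) -> int:
    """IP version of the datagram the sender ends up using."""
    return ipaddress.ip_address(resolve(name, requester_ipv6_capable)).version


print(datagram_version("node-dual", True))   # 6
print(datagram_version("node-dual", False))  # 4: the requester is IPv4-only
print(datagram_version("node-v4", True))     # 4: the destination is IPv4-only
```

The last call shows that even an IPv6-capable sender falls back to IPv4 when the destination is IPv4-only.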

In the dual stack approach, if either the sender or the receiver is only IPv4-capable, an IPv4 datagram must be used. As a result, it is possible that two IPv6-capable nodes can end up, in essence, sending IPv4 datagrams to each other. This is illustrated in the figure below.

Suppose Node A is IPv6-capable and wants to send an IP datagram to Node F, which is also IPv6-capable. Nodes A and B can exchange an IPv6 datagram.

However, Node B must create an IPv4 datagram to send to C. Certainly, the data field of the IPv6 datagram can be copied into the data field of the IPv4 datagram, and appropriate address mapping can be done.

However, in performing the conversion from IPv6 to IPv4, there will be IPv6-specific fields in the IPv6 datagram (for example, the flow identifier field) that have no counterpart in IPv4. The information in these fields will be lost. Thus, even though E and F can exchange IPv6 datagrams, the IPv4 datagrams arriving at E from D do not contain all of the fields that were in the original IPv6 datagram sent from A.
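The field loss can be sketched with two simplified datagram records. This is an illustration only. The address mapping table is hypothetical. The field correspondence (traffic class to TOS, next header to protocol, hop limit to TTL) is an assumption loosely in the spirit of IPv6/IPv4 header translators. Checksums, fragmentation and TTL decrements are not modeled.

```python
from dataclasses import dataclass


@dataclass
class IPv6Datagram:
    src: str
    dst: str
    traffic_class: int
    flow_label: int      # IPv6-specific: no counterpart in the IPv4 header
    next_header: int
    hop_limit: int
    payload: bytes


@dataclass
class IPv4Datagram:
    src: str
    dst: str
    tos: int
    protocol: int
    ttl: int
    payload: bytes


# Hypothetical address mapping configured on the translating nodes (B and E).
V6_TO_V4 = {"2001:db8::a": "192.0.2.1", "2001:db8::f": "192.0.2.6"}
V4_TO_V6 = {v4: v6 for v6, v4 in V6_TO_V4.items()}


def to_ipv4(d: IPv6Datagram) -> IPv4Datagram:
    """Node B: copy the data field and map addresses; the flow label is dropped."""
    return IPv4Datagram(src=V6_TO_V4[d.src], dst=V6_TO_V4[d.dst],
                        tos=d.traffic_class, protocol=d.next_header,
                        ttl=d.hop_limit, payload=d.payload)


def to_ipv6(d: IPv4Datagram) -> IPv6Datagram:
    """Node E: rebuild an IPv6 datagram; the lost flow label cannot be recovered."""
    return IPv6Datagram(src=V4_TO_V6[d.src], dst=V4_TO_V6[d.dst],
                        traffic_class=d.tos, flow_label=0,
                        next_header=d.protocol, hop_limit=d.ttl,
                        payload=d.payload)


sent_by_a = IPv6Datagram("2001:db8::a", "2001:db8::f", traffic_class=0,
                         flow_label=0x12345, next_header=17, hop_limit=64,
                         payload=b"data")
rebuilt_at_e = to_ipv6(to_ipv4(sent_by_a))
print(rebuilt_at_e.payload == sent_by_a.payload)        # True
print(rebuilt_at_e.flow_label == sent_by_a.flow_label)  # False
```

The payload and addresses survive the round trip. The flow label does not, because the intermediate IPv4 datagram has nowhere to carry it.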

Tunneling

An alternative to the dual stack approach, also discussed in RFC 4213, is known as tunneling. Tunneling can solve the problem noted above, allowing, for example, E to receive the IPv6 datagram originated by A.

The basic idea behind tunneling is the following. Suppose two IPv6 nodes (for example, B and E in the figure above) want to interoperate using IPv6 datagrams but are connected to each other by intervening IPv4 routers. We refer to the intervening set of IPv4 routers between two IPv6 routers as a tunnel, as shown in the figure below (Figure 4.26).

With tunneling, the IPv6 node on the sending side of the tunnel (for example, B) takes the entire IPv6 datagram and puts it in the data (payload) field of an IPv4 datagram. This IPv4 datagram is then addressed to the IPv6 node on the receiving side of the tunnel (for example, E) and sent to the first node in the tunnel (for example, C).

The intervening routers in the tunnel route this IPv4 datagram among themselves, just as they would any other datagram, blissfully unaware that the IPv4 datagram itself contains a complete IPv6 datagram.

The IPv6 node on the receiving side of the tunnel eventually receives the IPv4 datagram (it is the destination of the IPv4 datagram), determines that the IPv4 datagram contains an IPv6 datagram, extracts the IPv6 datagram, and then routes the IPv6 datagram exactly as it would if it had received the IPv6 datagram from a directly connected IPv6 neighbour.
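The encapsulation step can be sketched by building a bare 20-byte IPv4 header whose protocol field is 41, the IPv4 protocol number for an encapsulated IPv6 datagram. The stand-in IPv6 datagram, the documentation-range addresses and the function names are hypothetical. Options, fragmentation and real IPv6 header contents are ignored.

```python
import ipaddress
import struct

PROTO_IPV6 = 41  # IPv4 protocol value meaning "the payload is an IPv6 datagram"


def ipv4_checksum(header: bytes) -> int:
    """Internet checksum: one's complement of the one's-complement sum of 16-bit words."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def tunnel_encapsulate(ipv6_datagram: bytes, tunnel_src: str, tunnel_dst: str,
                       ttl: int = 64) -> bytes:
    """Sending end (e.g. B): the whole IPv6 datagram becomes the IPv4 payload."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45,                        # version 4, header length 5 words (20 bytes)
        0,                           # type of service
        20 + len(ipv6_datagram),     # total length
        0, 0,                        # identification, flags/fragment offset
        ttl, PROTO_IPV6, 0,          # TTL, protocol, checksum placeholder
        ipaddress.IPv4Address(tunnel_src).packed,
        ipaddress.IPv4Address(tunnel_dst).packed,  # addressed to the far end (e.g. E)
    )
    checksum = ipv4_checksum(header)
    header = header[:10] + struct.pack("!H", checksum) + header[12:]
    return header + ipv6_datagram


def tunnel_decapsulate(ipv4_datagram: bytes) -> bytes:
    """Receiving end (e.g. E): verify the header, then return the untouched IPv6 datagram."""
    header_len = (ipv4_datagram[0] & 0x0F) * 4
    if ipv4_datagram[9] != PROTO_IPV6:
        raise ValueError("payload is not an IPv6 datagram")
    if ipv4_checksum(ipv4_datagram[:header_len]) != 0:
        raise ValueError("IPv4 header checksum failed")
    total_length = struct.unpack("!H", ipv4_datagram[2:4])[0]
    return ipv4_datagram[header_len:total_length]


inner = bytes([0x60]) + bytes(39) + b"hello"  # stand-in IPv6 datagram (40-byte header + data)
outer = tunnel_encapsulate(inner, "192.0.2.2", "198.51.100.5")
print(tunnel_decapsulate(outer) == inner)  # True
```

The routers inside the tunnel only look at the outer IPv4 header. The inner IPv6 datagram reaches E byte for byte, including fields such as the flow label.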

Comparison Between PCM, DM, ADM and DPCM

We will compare Pulse Code Modulation (PCM), Delta Modulation (DM), Adaptive Delta Modulation (ADM) and Differential Pulse Code Modulation (DPCM). A comparison of all these modulation techniques is shown in the table below.

Digital Line Coding in Communication Networking

Line Coding

Digital line coding is a special coding system used for the transmission of digital signals over a transmission line.

It is the process of converting binary data to a digital signal.

Data such as text, numbers, graphical images, audio and video is stored in computer memory as binary data, that is, as sequences of bits. Line coding converts these sequences into a digital signal.

Properties of Line Coding

- Transmission bandwidth : For a line-code, the transmission bandwidth must be as small as possible.

- Power efficiency : For a given bandwidth and a specified detection error probability, the transmitted power for a line-code should be as small as possible.

- Probability of error : The detection error probability should be as small as possible.

- Error detection and correction capability : It must be possible to detect and preferably correct detection errors. For example, in a bipolar case, a single error will cause a bipolar violation and thus can easily be detected.

- Power density : It is desirable to have zero power spectral density (PSD) at ω = 0 (i.e., dc), since ac coupling and transformers are used at the repeaters. Significant power in low-frequency components causes dc wander in the pulse stream when ac coupling is used. The ac coupling is required because the dc paths provided by the cable pairs between the repeater sites are used to transmit the power required to operate the repeaters.

- Adequate timing content : It must be possible to extract timing or clock information from the signal.

- Transparency : It must be possible to transmit a digital signal correctly regardless of the pattern of 1’s and 0’s.

Types of Line Coding

There are 3 types of Line Coding

- Unipolar

- Polar

- Bi-polar

Fig.1 : Types of Line Coding

Unipolar Signaling

The Unipolar signaling is also known as On-Off Keying or simply OOK.

Here, a ‘1’ is transmitted by a pulse and a ‘0’ is transmitted by no pulse, i.e., the presence of a pulse represents a ‘1’ and the absence of a pulse represents a ‘0’.

There are two common variations in Unipolar signaling :

- Non Return to Zero (NRZ)

- Return to Zero (RZ)

Unipolar Non-Return to Zero (NRZ)

In this type of unipolar signaling, a High (1) in the data is represented by a positive pulse called a Mark, which has a duration T0 equal to the symbol bit duration. A Low (0) in the data input has no pulse.

Fig.2 : Unipolar Non-Return to Zero (NRZ)

Advantages

The advantages of Unipolar NRZ are −

- It is simple.

- A lesser bandwidth is required.

Disadvantages

The disadvantages of Unipolar NRZ are −

- No error correction capability.

- Presence of low frequency components may cause the signal droop.

- No clock component is present to ease synchronization.

- It is not transparent. Loss of synchronization is likely to occur, especially for long strings of 1s or 0s.

Unipolar Return to Zero (RZ)

In this type of unipolar signaling, a High (1) in the data is still represented by a Mark pulse, but its duration T0 is less than the symbol bit duration. The signal stays high for the first half of the bit duration, then immediately returns to zero and shows no pulse during the remaining half.

Fig.3: Unipolar Return to Zero (RZ)
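Both unipolar variants can be sketched by sampling each bit twice, once per half of the bit duration. The two-samples-per-bit representation and the function name `unipolar` are assumptions made for illustration. NRZ holds the level for the whole bit, while RZ returns to zero for the second half.

```python
def unipolar(bits, rz=False, amplitude=1.0):
    """Unipolar (on-off) line code, two samples per bit.

    NRZ: a 1 holds +amplitude for the whole bit.
    RZ:  a 1 is +amplitude for the first half, then returns to 0.
    A 0 is always sent as no pulse (0 volts).
    """
    samples = []
    for bit in bits:
        level = amplitude if bit else 0.0
        samples += [level, 0.0] if rz else [level, level]
    return samples


print(unipolar([1, 0, 1]))           # [1.0, 1.0, 0.0, 0.0, 1.0, 1.0]
print(unipolar([1, 0, 1], rz=True))  # [1.0, 0.0, 0.0, 0.0, 1.0, 0.0]
```

The average of the NRZ samples equals the amplitude times the fraction of 1s. A unipolar signal therefore carries a DC component for any data that is not all 0s, which is the source of the signal droop listed among its disadvantages.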

Advantages

- Simple to implement.

- The spectral line present at the symbol rate can be used as a clock.

Disadvantages

- No error correction capability.

- Occupies twice the bandwidth of unipolar NRZ.

- The signal droop is caused at the places where signal is non-zero at 0 Hz.

- Not transparent.

Polar Signaling

There are two methods of Polar Signaling:

- Polar NRZ

- Polar RZ

Polar NRZ

In this type of Polar signaling, a High (1) in data is represented by a positive pulse, while a Low (0) in data is represented by a negative pulse.

This is shown in fig.4 below.

Fig.4 : Polar NRZ

Advantages

The advantages of Polar NRZ are −

- Simplicity in implementation.

- No DC component is present when 1s and 0s are equally likely, since the average signal level is then zero.

Disadvantages

The disadvantages of Polar NRZ are −

- No error correction capability.

- No clock component is present to ease synchronization.

- Signal droop is caused at the places where the signal is non-zero at 0 Hz.

- It is not transparent.

Polar RZ

In this type of Polar signaling, a High (1) in the data is still represented by a Mark pulse, but its duration T0 is less than the symbol bit duration. The signal stays high for the first half of the bit duration, then immediately returns to zero and shows no pulse during the remaining half.

For a Low (0) input, a negative pulse represents the data, and the signal likewise stays at the zero level for the other half of the bit duration.

This is shown in fig.5 below.

Fig.5 : Polar RZ
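Polar NRZ and RZ can be sketched by sampling each bit twice, once per half of the bit duration. The representation and the name `polar` are illustrative assumptions. A 1 maps to +amplitude and a 0 to -amplitude. In the RZ case the second half of every bit returns to zero.

```python
def polar(bits, rz=False, amplitude=1.0):
    """Polar line code, two samples per bit.

    NRZ: +amplitude (for 1) or -amplitude (for 0) for the whole bit.
    RZ:  the same level for the first half, then 0 for the second half.
    """
    samples = []
    for bit in bits:
        level = amplitude if bit else -amplitude
        samples += [level, 0.0] if rz else [level, level]
    return samples


print(polar([1, 0, 1]))           # [1.0, 1.0, -1.0, -1.0, 1.0, 1.0]
print(polar([1, 0, 1], rz=True))  # [1.0, 0.0, -1.0, 0.0, 1.0, 0.0]
```

With equal numbers of 1s and 0s, the positive and negative pulses cancel and the samples average to zero. Long runs of identical bits still leave the signal parked at one level, which is why polar NRZ is not transparent.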

Advantages

The advantages of Polar RZ are −

- Simplicity in implementation.

- No DC component is present when 1s and 0s are equally likely, since the average signal level is then zero.

Disadvantages

The disadvantages of Polar RZ are −

- No error correction capability.

- No clock component is present to ease synchronization.

- Occupies twice the bandwidth of Polar NRZ.

- The signal droop is caused at places where the signal is non-zero at 0 Hz.

Bipolar Signaling

This is an encoding technique that uses three voltage levels: +, – and 0. Such a signal is known as a duo-binary signal.

An example of this type is Alternate Mark Inversion (AMI).

Each 1 is sent with the opposite polarity of the previous 1 (a transition from + to – or from – to +), so alternate 1s have equal polarity. A 0 is sent at zero voltage level.

There are two types of this method:

- Bipolar NRZ

- Bipolar RZ

The difference between NRZ and RZ is the same as discussed above.

The following figure illustrates this.

Fig.6

In the above fig.6, both the Bipolar NRZ and RZ waveforms are shown.

The pulse duration and symbol bit duration are equal in NRZ type, while the pulse duration is half of the symbol bit duration in RZ type.
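The AMI rule can be sketched by sampling each bit twice, once per half of the bit duration. The representation, the name `bipolar_ami`, and the choice that the first 1 is sent as a positive pulse are assumptions made for illustration.

```python
def bipolar_ami(bits, rz=False, amplitude=1.0):
    """Alternate Mark Inversion, two samples per bit.

    Each 1 takes the opposite polarity of the previous 1; a 0 is 0 volts.
    NRZ holds the level for the whole bit, RZ only for the first half.
    """
    samples = []
    polarity = 1.0  # assumed polarity of the first 1
    for bit in bits:
        if bit:
            level = polarity * amplitude
            polarity = -polarity  # alternate the mark polarity
        else:
            level = 0.0
        samples += [level, 0.0] if rz else [level, level]
    return samples


print(bipolar_ami([1, 1, 0, 1]))           # [1.0, 1.0, -1.0, -1.0, 0.0, 0.0, 1.0, 1.0]
print(bipolar_ami([1, 1, 0, 1], rz=True))  # [1.0, 0.0, -1.0, 0.0, 0.0, 0.0, 1.0, 0.0]
```

Because the marks alternate, the running sum of the levels never drifts more than one pulse away from zero, whatever the data. This is why AMI has no DC content and suits ac-coupled lines.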

Advantages

- Simplicity in implementation.

- No low-frequency (DC) components are present.

- Occupies less bandwidth than unipolar and polar NRZ techniques.

- Signal drooping doesn’t occur here, hence it is suitable for transmission over AC coupled lines.

- Single errors can be detected, because an error produces a bipolar violation (two successive 1s with the same polarity).
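Single-error detection in AMI can be sketched as a decoder that flags every mark with the same polarity as the previous mark. The sketch assumes ideal, noise-free levels, one value per bit. The name `decode_ami` is hypothetical.

```python
def decode_ami(levels):
    """Decode AMI levels (one per bit) and report bipolar violations.

    Returns (bits, violations), where violations lists the indices of marks
    that have the same polarity as the previous mark, which AMI never sends.
    """
    bits, violations = [], []
    previous_mark = 0  # polarity of the last non-zero level; 0 means none seen yet
    for i, level in enumerate(levels):
        if level == 0:
            bits.append(0)
            continue
        polarity = 1 if level > 0 else -1
        if polarity == previous_mark:
            violations.append(i)
        bits.append(1)
        previous_mark = polarity
    return bits, violations


print(decode_ami([1, -1, 0, 1]))  # ([1, 1, 0, 1], [])
print(decode_ami([1, -1, 1, 1]))  # ([1, 1, 1, 1], [3])
```

In the second call, the 0 sent as the third symbol has been corrupted into +1. The violation shows up at the next mark (index 3). AMI reveals that an error happened, but not always exactly where, and it cannot correct it.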

Disadvantages

- No clock is present.

- It is not transparent, i.e., long strings of 0s cause loss of synchronization.

Power Spectral Density

The function that shows how the power of a signal is distributed over various frequencies in the frequency domain is known as the Power Spectral Density (PSD).

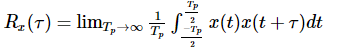

PSD is the Fourier transform of the autocorrelation function. It is in the form of a rectangular pulse.

Fig.7

PSD Derivation

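According to the Einstein-Wiener-Khintchine theorem, if either the autocorrelation function or the power spectral density of a random process is known, the other can be found exactly.

Hence, to derive the power spectral density, we use the time autocorrelation R_x(τ) of a power signal x(t):

$$R_x(\tau) = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} x(t)\,x(t+\tau)\,dt$$

Since x(t) consists of impulses, x(t) = Σ_k a_k δ(t − kT_b), where a_k is the amplitude of the k-th impulse and T_b is the bit duration, R_x(τ) can be written as:

$$R_x(\tau) = \frac{1}{T_b} \sum_{n=-\infty}^{\infty} R_n\,\delta(\tau - nT_b)$$

Where:

$$R_n = \lim_{N \to \infty} \frac{1}{N} \sum_{k} a_k\,a_{k+n}$$

Taking the Fourier transform and noting that R_n = R_{-n} for real signals, we have:

$$S_x(\omega) = \frac{1}{T_b} \sum_{n=-\infty}^{\infty} R_n\,e^{-jn\omega T_b} = \frac{1}{T_b}\left(R_0 + 2\sum_{n=1}^{\infty} R_n \cos n\omega T_b\right)$$

Since the pulse filter has the spectrum F(ω) ↔ f(t), the PSD of the line code y(t) is:

$$S_y(\omega) = |F(\omega)|^2\,S_x(\omega) = \frac{|F(\omega)|^2}{T_b}\left(R_0 + 2\sum_{n=1}^{\infty} R_n \cos n\omega T_b\right)$$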

Now we can find the PSD of various line codes using this equation for Power Spectral Density .

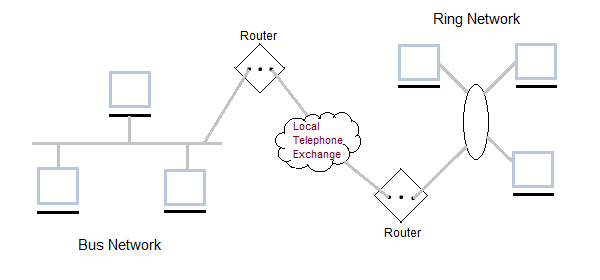

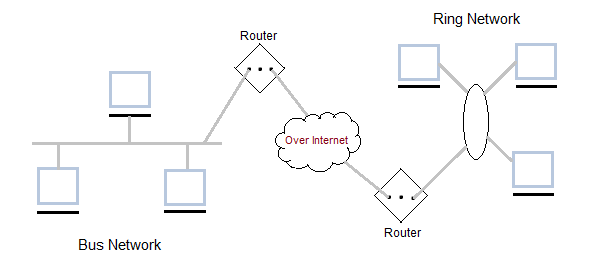
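The PSD of a line code is S_y(ω) = (|F(ω)|²/T_b)(R_0 + 2 Σ_{n≥1} R_n cos nωT_b), so the bracketed term can be estimated numerically from a long bit sequence. Standard results give two reference points. Polar signaling with equiprobable bits has R_0 = 1 and R_n = 0 for n ≥ 1, so the bracket is 1 at every frequency. AMI has R_0 = 1/2, R_1 = -1/4 and R_n = 0 for n ≥ 2, so the bracket becomes (1 - cos ωT_b)/2, which vanishes at ω = 0.

The sketch below assumes pulse amplitudes a_k of ±1 (and 0 for AMI), truncates the sum at a hypothetical `n_max = 4`, and uses a seeded random sequence, so its results are estimates rather than exact values.

```python
import math
import random


def autocorr(a, n):
    """Estimate R_n = (1/N) * sum_k a_k * a_(k+n) from a finite sequence."""
    N = len(a)
    return sum(a[k] * a[k + n] for k in range(N - n)) / N


def psd_bracket(a, omega_tb, n_max=4):
    """T_b * S_x(omega) = R_0 + 2 * sum_{n=1}^{n_max} R_n * cos(n * omega * T_b).

    Multiply by |F(omega)|^2 / T_b to get the PSD of the line code itself.
    """
    total = autocorr(a, 0)
    for n in range(1, n_max + 1):
        total += 2 * autocorr(a, n) * math.cos(n * omega_tb)
    return total


def polar_amplitudes(bits):
    return [1 if b else -1 for b in bits]


def ami_amplitudes(bits):
    out, polarity = [], 1
    for b in bits:
        if b:
            out.append(polarity)
            polarity = -polarity  # alternate the sign of successive 1s
        else:
            out.append(0)
    return out


rng = random.Random(2024)
bits = [rng.randint(0, 1) for _ in range(50_000)]
polar_dc = psd_bracket(polar_amplitudes(bits), 0.0)    # close to 1: PSD non-zero at dc
ami_dc = psd_bracket(ami_amplitudes(bits), 0.0)        # close to 0: no dc content
ami_peak = psd_bracket(ami_amplitudes(bits), math.pi)  # close to 1 at omega*T_b = pi
```

The contrast between `polar_dc` and `ami_dc` is the numerical counterpart of the statement that bipolar (AMI) signaling has no DC component while polar signaling has power near 0 Hz.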

How Hybrid Networks Work

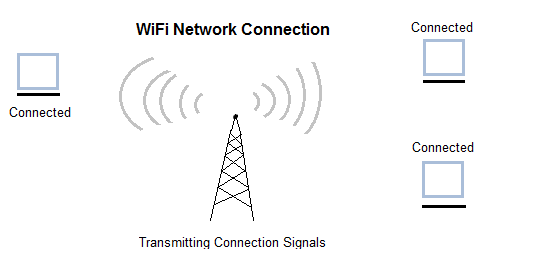

Wires are for work. Wireless is for play. A few years ago, that was the conventional wisdom on wired versus wireless networks. Wi-Fi was great for checking e-mail at Starbucks, but it wasn't fast enough or secure enough for an office setting -- even a home office. For speed and security, Ethernet cables were the only way to go.

Things are changing. Now people are viewing Ethernet and Wi-Fi as important components of the same local area network (LAN). Wires are great for linking servers and desktop computers, but Wi-Fi is ideal for extending that network seamlessly into the conference room, the lunch room, and yes, even the bathroom.

Think about the typical college or university LAN. According to a 2007 survey, 73.7 percent of college students own a laptop [source: Educause Center for Applied Research]. And they expect to be able to access the Internet and share files across the college network, whether they're in the physics lab or sunbathing in the quad. That's the role of a hybrid network.

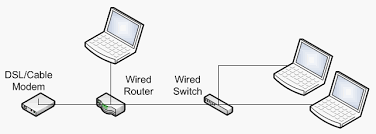

A hybrid network refers to any computer network that contains two or more different communications standards. In this case, the hybrid network uses both Ethernet (802.3) and Wi-Fi (802.11 a/b/g) standards. A hybrid network relies on special hybrid routers, hubs and switches to connect both wired and wireless computers and other network-enabled devices.

How do you set up a hybrid network? Are hybrid routers expensive? Is it hard to configure a Wi-Fi laptop to join an existing wired network? Read on to find out more about hybrid networks.

In a wired computer network, all devices need to be connected by physical cable. A typical configuration uses a central access point. In networking terms, this is called a star topology, where information must travel through the center to reach other points of the star.

The central access point in a wired network can be a router, hub or a switch. The access point's function is to share a network connection among several devices. All the devices are plugged into the access point using individual Ethernet (CAT 5) cables. If the devices want to share an Internet connection as well, then the access point needs to be plugged into a broadband Internet modem, either cable or DSL.

A router allows connections to be shared among devices.

In a standard wireless network, all networked devices communicate with a central wireless access point. The devices themselves need to contain wireless modems or cards that conform with one or more Wi-Fi standards, either 802.11 a, b or g. In this configuration, all wireless devices can share files with each other over the network. If they also want to share an Internet connection, then the wireless access point needs to be plugged into a broadband modem.

A standard hybrid network uses something called a hybrid access point, a networking device that both broadcasts a wireless signal and contains wired access ports. The most common hybrid access point is a hybrid router. The typical hybrid router broadcasts a Wi-Fi signal using 802.11 a, b or g and contains four Ethernet ports for connecting wired devices. The hybrid router also has a port for connecting to a cable or DSL modem via Ethernet cable.

When shopping for a hybrid router, you might not see the word "hybrid" anywhere. You're more likely to see the router advertised as a wireless or Wi-Fi router with Ethernet ports or "LAN ports." Hybrid routers start at around $50 for a basic model with four Ethernet ports and a network speed of 54Mbps (megabits per second).

There are several different possible network configurations for a hybrid network. The most basic configuration has all the wired devices plugged into the Ethernet ports of the hybrid router. Then the wireless devices communicate with the wired devices via the wireless router.

But maybe you want to network more than four wired devices. In that case, you could string several routers together, both wired and wireless, in a daisy chain formation. You'd need enough wired routers to handle all of the wired devices (number of devices divided by four) and enough wireless routers -- in the right physical locations -- to broadcast a Wi-Fi signal to every corner of the network.
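The "number of devices divided by four" rule of thumb can be made concrete with a small calculation. One assumption changes the answer. If every router after the first plugs its uplink into a LAN port of the router before it, each link in the chain uses up one port, and k routers expose only k × 4 − (k − 1) free ports. Both function names are hypothetical, and the sketch ignores switches, which are often the cheaper way to add wired ports.

```python
import math


def naive_router_count(devices: int, ports_per_router: int = 4) -> int:
    """Rule of thumb from the text: number of wired devices divided by four, rounded up."""
    return math.ceil(devices / ports_per_router)


def chained_router_count(devices: int, ports_per_router: int = 4) -> int:
    """Routers needed when daisy-chaining, if each chain link uses up one LAN port.

    Assumption: every router after the first plugs its uplink into a LAN port
    of the previous router, so k routers expose k * ports - (k - 1) free LAN ports.
    """
    if devices <= 0:
        return 0
    if devices <= ports_per_router:
        return 1
    return math.ceil((devices - 1) / (ports_per_router - 1))


print(naive_router_count(8), chained_router_count(8))  # 2 3
```

For eight wired devices, the simple division suggests two routers. Once the port used to chain them is accounted for, only seven ports remain free, so a third router is needed.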

Computers aren't the only devices that can be linked over a hybrid network. You can now buy both wired and wireless peripheral devices like printers, Web cams and fax machines. An office worker with a laptop, for example, can print a document without plugging directly into the printer. He can send the document over the hybrid network to the networked printer of his choice.

Now let's look at the advantages and disadvantages of traditional wired and wireless networks and how hybrid networks offer the best of both worlds.

The chief advantage of a wired network is speed. So-called "Fast Ethernet" cables can send data at 100Mbps, while most Wi-Fi networks max out at 54Mbps. So if you want to set up a LAN gaming party or share large files in an office environment, it's better to stick with wired connections for optimum speed. Take note, however, that the upcoming 802.11n Wi-Fi standard claims throughput speeds of 150 to 300Mbps.

The chief advantage of a wireless network is mobility and flexibility. You can be anywhere in the office and access the Internet and any files on the LAN. You can also use a wider selection of devices to access the network, like Wi-Fi-enabled handhelds and PDAs.

To get the maximum speed for LAN parties, it's best to use a wired connection.

Another advantage of wireless networks is that they're comparatively cheaper to set up, especially in a large office or college environment. Ethernet cables and routers are expensive. So is drilling through walls and running cable through ceilings. A few well-placed wireless access points -- or even better, a wireless mesh network -- can reach far more devices with far less money.

Other than that, both wired and wireless networks are equally easy (or difficult) to set up, depending on the organization's size and complexity. For a small office or home network, the most popular operating systems -- Windows XP, Vista and Mac OS 10 -- can guide you through the process with a networking wizard. Installing and administering a large office or organizational network is equally tricky whether you're using wired or wireless. With wireless connections, however, you don't have to go around checking physical Ethernet connections.

As for security, wired is generally viewed as more secure, since someone would have to physically hack into your network. With wireless, there's always a chance that a hacker could use packet-sniffing software to spy on information traveling over your wireless network. But with wireless encryption standards like WPA (Wi-Fi Protected Access) built into most Wi-Fi routers, wireless networking can be nearly as secure as wired; the older WEP (Wired Equivalent Privacy) standard is easily broken and should not be relied on.

A hybrid wired/Wi-Fi network would seem to offer the best of both worlds in terms of speed, mobility, affordability and security. If a user needs maximum Internet and file-sharing speed, then he can plug into the network with an Ethernet cable. If he needs to show a streaming video to his buddy in the hallway, he can access the network wirelessly. With the right planning, an organization can save money on CAT 5 cable and routers by maximizing the reach of the wireless network. And with the right encryption and password management in place, the wireless portion of the network can be just as secure as the wired.

A hybrid computer communication network.

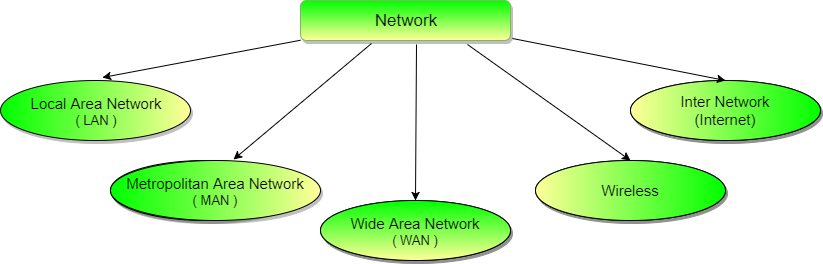

Hybrid Networking Topologies: Types, Uses & Examples

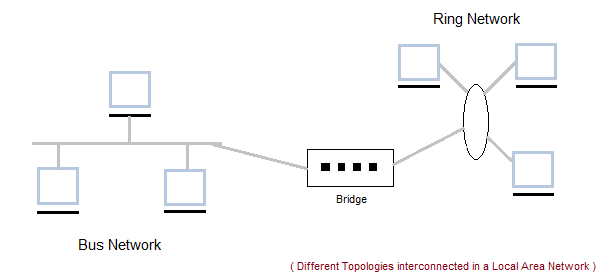

Physical design of a network requires proper planning, which in certain cases requires combining multiple topologies (a hybrid topology) to address all organizational needs. This lesson explains hybrid network topologies, their examples and uses.

A hybrid network is any computer network that uses more than one type of connecting technology or topology. For example, a home network that uses both Wi-Fi and Ethernet cables to connect computers is a hybrid. In the early years of computer networking, hybrid networks often consisted of Token Ring or Star technologies; however, these were quickly made obsolete by Ethernet.

Hybrid Network Topologies

Hybrid topology is an interconnection of two or more basic network topologies, each of which contains its own nodes.

The resulting interconnection allows the nodes in a given basic topology to communicate with other nodes in the same basic topology as well as those in other basic topologies within the hybrid topology.

Advantages of a hybrid network include increased flexibility, since new basic topologies can easily be added or existing ones removed, and increased fault tolerance.

Types of Hybrid Network Topologies

There are different types of hybrid network topologies, depending on the basic topologies that make up the hybrid and the adjoining topology that interconnects them.

The following are some of the Hybrid Network Topologies:

Star-Wired Ring Network Topology

In a Star-Wired Ring hybrid topology, a set of Star topologies are connected by a Ring topology as the adjoining topology. Joining each star topology to the ring topology is a wired connection.

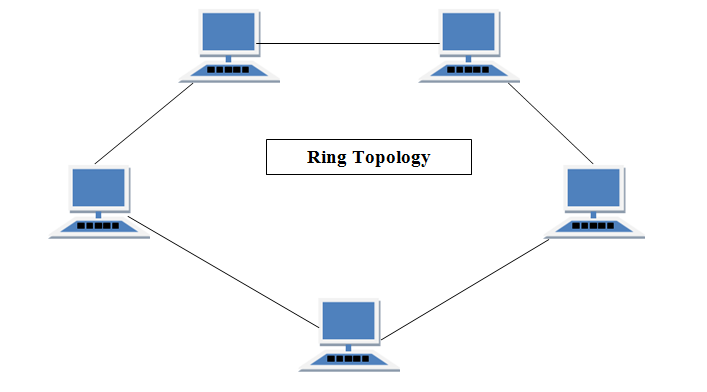

Figure 1 is a diagrammatic representation of the star-wired ring topology


Figure 1: A Star-Wired Ring Network Topology

In Figure 1, individual nodes of a given Star topology, like Star Topology 1, are interconnected by a central switch, which in turn provides an external connection to other star topologies through a node A in the main ring topology.

Information from a given star topology that reaches a connecting node in the main ring topology, such as A, flows in either a bidirectional or a unidirectional manner.

A bidirectional flow will ensure that a failure in one node of the main ring topology does not lead to the complete breakdown of information flow in the main ring topology.
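The claim that a bidirectional ring survives a single node failure can be checked with a small reachability test. The four connecting nodes named A to D and the helper names are hypothetical. The sketch models only the main ring, not the star topologies attached to it.

```python
from collections import deque


def ring_links(nodes, bidirectional):
    """Adjacency sets for a ring; a unidirectional ring only forwards one way."""
    links = {node: set() for node in nodes}
    for i, node in enumerate(nodes):
        nxt = nodes[(i + 1) % len(nodes)]
        links[node].add(nxt)
        if bidirectional:
            links[nxt].add(node)
    return links


def reachable(links, start, failed):
    """Breadth-first search from start that skips the failed node."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in links[node]:
            if neighbour != failed and neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def survives_failure(nodes, bidirectional, failed):
    """True if every surviving node can still reach every other surviving node."""
    links = ring_links(nodes, bidirectional)
    alive = {n for n in nodes if n != failed}
    return all(alive <= reachable(links, n, failed) for n in alive)


ring = ["A", "B", "C", "D"]
print(survives_failure(ring, bidirectional=True, failed="C"))   # True
print(survives_failure(ring, bidirectional=False, failed="C"))  # False
```

In the unidirectional ring, B can only forward to C, so losing C cuts B off from D. In the bidirectional ring, B can still reach D by going the other way around through A.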

Star-wired bus Network Topology

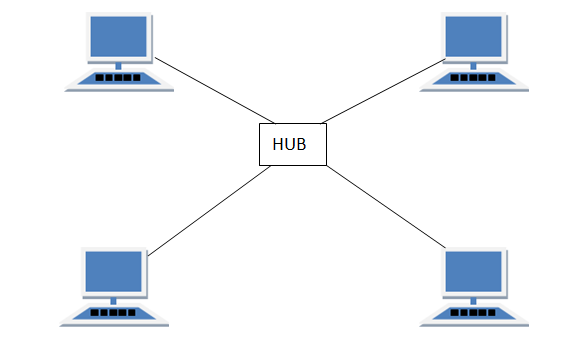

A Star-Wired Bus topology is made up of a set of Star topologies interconnected by a central Bus topology.

Joining each Star topology to the Bus topology is a wired connection.

Figure 2 is a diagrammatic representation of the star-wired bus topology


Figure 2: A Star-Wired Bus Network Topology

In this setup, the main Bus topology provides a backbone connection that interconnects the individual Star topologies.

The backbone in this case is a wired connection.

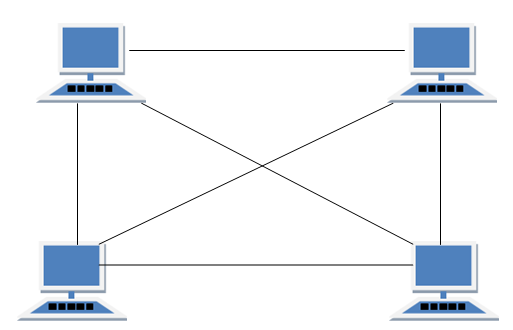

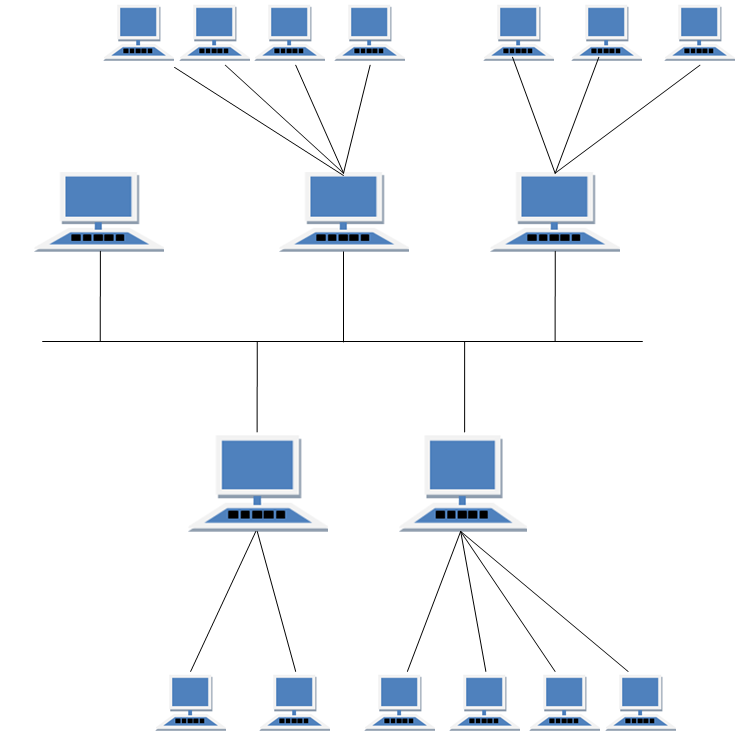

Hierarchical Network Topology

Hierarchical Network topology is structured in different levels as a hierarchical tree. It is also referred to as Tree network topology.

Figure 3 shows a diagrammatic representation of Hierarchical network topology.


Figure 3: A Tree Network Topology

Lower levels, such as level 2, are connected to higher levels, such as level 1, through wired connections.

The topmost level, level 0, contains the parent (root) node. The second level, level 1, contains the child nodes, which in turn have child nodes in level 2. Every node has a parent node in a higher level, except for the node(s) at the topmost level.

The nodes at the bottommost level are called leaf nodes, as they are peripheral and are parent to no other node. At the basic level, a tree network topology is a collection of star network topologies arranged in different levels.

Each level, including the topmost, can contain one or more nodes.
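The level numbering and the definition of leaf nodes can be sketched with a breadth-first traversal. The node names and the `children` dictionary describe a hypothetical three-level tree. Each non-leaf node, together with its children, plays the role of one star in the hierarchy.

```python
from collections import deque


def levels_and_leaves(children, root):
    """Breadth-first walk: return {node: level} (root is level 0) and the leaf nodes."""
    level = {root: 0}
    leaves = []
    queue = deque([root])
    while queue:
        node = queue.popleft()
        kids = children.get(node, [])
        if not kids:
            leaves.append(node)  # parent to no other node
        for kid in kids:
            level[kid] = level[node] + 1  # one level below its parent
            queue.append(kid)
    return level, leaves


# Hypothetical tree: a root switch, two level-1 switches, three hosts at level 2.
children = {
    "root": ["switch1", "switch2"],
    "switch1": ["pc1", "pc2"],
    "switch2": ["pc3"],
}
level, leaves = levels_and_leaves(children, "root")
print(level["pc3"], leaves)  # 2 ['pc1', 'pc2', 'pc3']
```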

Uses of Hybrid Network Topologies

The decision to use a hybrid network topology rather than a basic topology is mostly based on the organizational needs that the envisioned network must address. The following are some of the reasons that can make an organization choose a hybrid as the preferred network topology:

Where There Is a Need for Flexibility and Ease of Network Growth

Network growth is when more network nodes are added to an existing network. A hybrid network eases the addition of new nodes, as changes can be made at the basic network level as well as on the main network.

For example, in a campus setup, there could be different hostels, each with its own network. Each hostel network is free to add new nodes at any time without affecting the other hostels' networks.

Home Network Hybrids

Although Ethernet and Wi-Fi usually use the same router in a home network, this doesn't mean that the technology behind them is identical. Both have different specifications developed by the IEEE Standards Association. Ethernet cable networks use the 802.3 standards, while Wi-Fi networks use 802.11. These standards have different rules about how data is transferred. A home WiFi Ethernet router is a hybrid device that brings these two different technologies together. Without such a hybrid device, there would be no way to connect an Ethernet-based desktop to a Wi-Fi-based tablet on the same network.

An ethernet cable is hooked up to a laptop.

Business Network Hybrids

Business networks often rely on hybrid networks to ensure employees using different devices can access the same data, which may be stored in different locations. Since businesses seldom upgrade an entire network to the latest technology at the same time, a typical enterprise network today may include components from different eras. For example, two offices may be joined with an ATM fiber optic connection, with some people connecting to the network using Ethernet, others using Wi-Fi and home users connecting through the Internet or, more recently, 4G wireless. Technologies like Multi-Protocol Label Switching, or MPLS, allow businesses to route traffic from different technologies into the same network.

Advantages of Hybrids

The two main advantages of a hybrid network are cost-savings and accessibility. If you have an Ethernet network at home and buy a tablet, rather than replacing all of your Ethernet components with Wi-Fi, you can simply add a Wi-Fi router to your existing network. The same is true for business networks, but on a larger scale. Few businesses have the budget to replace an entire network all at once. Hybrids allow a business to bring in new networking technologies, while phasing out old technologies over the course of several years.

Disadvantages of Hybrids

The main disadvantages of hybrids are security and support costs. Each network technology introduces new security concerns. Having a router with a good firewall becomes meaningless, for example, if you add a Wi-Fi access point that hasn't been encrypted with a strong password. In business networks, supporting different types of network technologies can become expensive, since each usually requires someone with expertise in that technology. Business hybrid networks are always the result of balancing the need for a fast, accessible network with the need for data security.

LAN

"Local Area Network," or LAN, is a term meaning "private network." The dominant set of standards for the physical properties of LANs is called Ethernet. The Ethernet standards are published by the Institute of Electrical and Electronics Engineers and periodically updated to create better performing networks. The "100" LAN refers to one of these standards.

Ethernet

Ethernet was originally a proprietary networking system owned by Xerox. The early Xerox standards recommended the use of coaxial cable. In 1983, the responsibility for managing the standards was handed over to the IEEE, and Ethernet became an open standard. An open standard is available to all, either free of charge, or for a subscription fee. The IEEE has since produced a number of amendments to the Ethernet standards; each carries the code 802.3, followed by one or two letters to indicate a series.

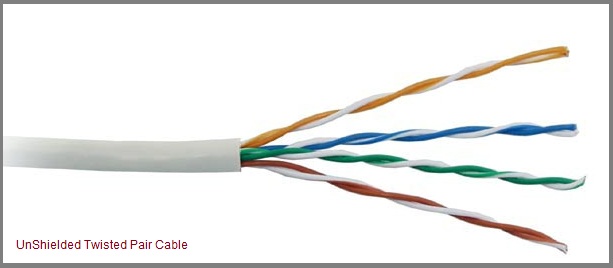

Unshielded Twisted Pair cable is the most common

Naming Convention

Although the IEEE uses the 802.3 code for all its Ethernet standards, the complete LAN systems it defines are given a different code. This naming system has three elements. The first is the data throughput speed. Originally, this was expressed in megabits per second, but later systems are given a code based on gigabits per second. The next part of the name gives its transmission method, which is either baseband or broadband. The final part is a code for the cable type of the network.
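The three-part naming convention can be illustrated with a small parser. The regular expression is an assumption. It covers names of the form used in this section (such as 100BASE-TX) and older names like 10BASE5 and 10BROAD36, but not every IEEE designation; fractional speeds such as 2.5GBASE-T, for example, are not handled. The function name is hypothetical.

```python
import re

# Assumed pattern: <speed digits><optional G><BASE|BROAD><optional hyphen><cable code>
NAME_PATTERN = re.compile(
    r"^(?P<speed>\d+)(?P<giga>G?)(?P<method>BASE|BROAD)-?(?P<medium>[A-Z0-9]+)$"
)


def parse_lan_name(name: str):
    """Split an IEEE-style LAN name into (speed in Mbps, transmission method, cable code)."""
    match = NAME_PATTERN.match(name.upper())
    if match is None:
        raise ValueError(f"unrecognised LAN name: {name!r}")
    speed_mbps = int(match["speed"]) * (1000 if match["giga"] else 1)
    method = "baseband" if match["method"] == "BASE" else "broadband"
    return speed_mbps, method, match["medium"]


print(parse_lan_name("100BASE-TX"))  # (100, 'baseband', 'TX')
print(parse_lan_name("10GBASE-T"))   # (10000, 'baseband', 'T')
```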

Fast Ethernet

The 100 Megabits per second version of Ethernet was released by the IEEE in 1995 with the publication of 802.3u. The standard defined three different cabling systems, each achieving the same performance levels. These network types are called 100BASE-T4 and 100BASE-TX (which together are known as 100BASE-T) and 100BASE-FX. The "T" in the first two standards refers to twisted pair cable. The "F" of 100BASE-FX indicates that the standard used two strands of multi-mode fiber optic cable.

Twisted Pair

Twisted pair cable for networking comes in two forms: Unshielded Twisted Pair (UTP) and Shielded Twisted Pair (STP). Of the two, UTP is more widely implemented. Both types of cable contain eight wires arranged in four pairs, with the two wires of each pair twisted around each other. The twisting protects against electromagnetic interference, which makes shielding unnecessary in most installations, although STP adds shielding for extra protection. Usually, only four of the eight wires inside the cable are used. UTP cable is categorized into grades, and higher grades have better capabilities. 100BASE-TX uses Cat-5 UTP cable, but that can be replaced by the equivalent STP cable. 100BASE-T4 allows the use of lower-grade UTP cable, called "Cat-3" and "Cat-4." These implementations use all the wires inside the cable, not just two pairs.

How Does a Wireless Adapter Work?

A wireless adapter, also called a wireless network adapter, has two primary functions: 1) convert data from its binary electronic form into radio frequency (RF) signals and transmit them, and 2) receive RF signals, convert them into binary electronic data and pass that data to a computer.

Essentially, a wireless adapter works much like a radio or television. Those devices receive signals and convert them into an output: sound, pictures or both. A wireless adapter works in both directions: it converts outgoing data from the computer into radio frequency signals for transmission, and it converts received radio frequency signals back into data for the computer to process.

RF Signals

RF signals are oscillating electromagnetic waves transmitted through the air. When you use your cellular telephone, you are communicating by way of RF signals. Although the airwaves may seem unlimited, the radio spectrum is divided into a variety of bands, nearly all of which are assigned to specific applications, industries or purposes. Wireless networking mainly uses the 2.4 gigahertz (GHz) and 5 GHz bands, which are unlicensed and shared with other equipment such as Bluetooth devices and microwave ovens. Each band is divided into channels over which wireless network devices communicate: the 2.4 GHz band has fourteen channels (not all of which are permitted in every country), and the 5 GHz band has many more.
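The channel numbering can be turned into frequencies with a little arithmetic. In the 2.4 GHz band, channels 1 to 13 are spaced 5 MHz apart starting at 2412 MHz, channel 14 sits at 2484 MHz, and 5 GHz channel numbers map to 5000 MHz plus 5 MHz per channel. Because each 802.11b channel is about 22 MHz wide, neighbouring channels overlap, which is why 1, 6 and 11 are the usual non-overlapping choice. The helper names below are hypothetical, and the overlap check is a simplified model that treats every channel as exactly `width_mhz` wide.

```python
def channel_center_mhz(channel: int, band: str = "2.4") -> int:
    """Return the centre frequency (MHz) of a Wi-Fi channel."""
    if band == "2.4":
        if 1 <= channel <= 13:
            return 2407 + 5 * channel   # channels are spaced 5 MHz apart
        if channel == 14:
            return 2484                 # channel 14 is a special case
        raise ValueError("2.4 GHz channels are numbered 1 to 14")
    if band == "5":
        if channel <= 0:
            raise ValueError("channel numbers are positive")
        # Which 5 GHz channel numbers are legal depends on the country.
        return 5000 + 5 * channel
    raise ValueError("band must be '2.4' or '5'")


def channels_overlap(a: int, b: int, width_mhz: int = 22) -> bool:
    """True if two 2.4 GHz channels of the given width share spectrum."""
    return abs(channel_center_mhz(a) - channel_center_mhz(b)) < width_mhz


print(channel_center_mhz(6))
print(channel_center_mhz(36, "5"))
print(channels_overlap(1, 6), channels_overlap(1, 3))
```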

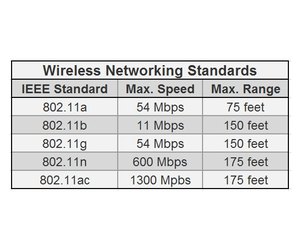

Bandwidth

Bandwidth, or the data transfer rate, is the speed, measured in bits per second, at which a wireless device transmits data. Bandwidth depends on the wireless network standard implemented on a wireless network. The standards for wireless networking, the IEEE 802.11 standards, define maximum rates ranging from 11 megabits per second (Mbps) for IEEE 802.11b to 1300 Mbps for IEEE 802.11ac.
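Because bandwidth is quoted in bits per second while file sizes are usually given in bytes, a quick calculation shows what these rates mean in practice. This is a minimal sketch: the quoted rates are nominal maximums, real throughput is lower because of protocol overhead and signal conditions, and the `efficiency` factor is an assumed knob for modelling that loss rather than a value from any standard.

```python
def transfer_seconds(size_bytes: int, rate_mbps: float, efficiency: float = 1.0) -> float:
    """Time to move size_bytes at rate_mbps, scaled by an assumed efficiency."""
    if rate_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("rate must be positive and efficiency in (0, 1]")
    bits = size_bytes * 8                         # bandwidth is quoted in bits
    usable_bps = rate_mbps * 1_000_000 * efficiency
    return bits / usable_bps


size = 100_000_000  # a 100 MB file (decimal megabytes)
for label, rate in (("802.11b", 11), ("802.11ac", 1300)):
    print(f"{label}: {transfer_seconds(size, rate):.1f} s at the nominal rate")
```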

Range

Range is the straight-line distance, defined by a wireless network standard, over which a transmitted signal remains usable. Wireless signals are affected by distance: as a receiving device moves away from a transmitting device, the signal becomes weaker. As a result, a standard's highest bandwidth is rarely available at the outer limit of its range. In addition to distance, the materials a signal must pass through, such as walls and floors, can weaken or even block it. On a wireless network device, signal strength (the number of bars) is therefore very important, just as it is on a cell phone.
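The weakening of a signal with distance can be quantified with the free-space path loss formula, 20·log10(4πdf/c) decibels, under which each doubling of distance adds about 6 dB of loss. This is a minimal sketch under idealised assumptions: it models open air only, so it ignores the walls and other materials mentioned above, as well as antenna gains and reflections. The 20 dBm transmit power and the function names are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss, 20*log10(4*pi*d*f/c), in decibels."""
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT)


def received_power_dbm(tx_power_dbm: float, distance_m: float, frequency_hz: float) -> float:
    """Received power in dBm, ignoring antenna gains, walls and reflections."""
    return tx_power_dbm - free_space_path_loss_db(distance_m, frequency_hz)


f = 2.437e9  # centre of 2.4 GHz channel 6
for d in (10, 20, 40, 80):
    print(f"{d:>3} m: {received_power_dbm(20, d, f):6.1f} dBm")
```

Indoors, the real loss is usually larger than this free-space figure, which is one reason the top bandwidth is rarely available at the edge of a network's range.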

Device Type

Wireless adapters come in a variety of shapes, sizes, technologies and even colors. The right adapter for your computer depends on three criteria: the device it will attach to; the wireless standard you want to use, which determines bandwidth and range; and where and how you want to connect (indoors or outdoors). There are wireless adapters for notebooks, tablets, phones, cameras and, of course, desktop computers. A network adapter for a desktop computer is typically an expansion card installed inside the computer's system case. However, many network adapters connect through a USB port, and some are external peripheral devices that connect to a computer through a cable.

Wireless Adapter Functions

So, what does a wireless adapter do? It lets a computer send and receive the wireless RF signals transmitted within its range. With a wireless adapter built into, installed in or attached to a computer or other wireless communication device, you can join a wireless network and communicate with websites, other people or Internet resources.

Difference Between Wireless LAN & WiFi

Wi-Fi is the implementation of Wireless LAN. Wireless LAN describes the broader concept of wireless networked communications between machines. Wi-Fi is a trademark that can be used on devices that meet the 802.11 standards. Wi-Fi is used by a variety of devices to allow them to connect to wireless networks and the Internet.

Wireless LAN

Wireless LAN, or WLAN, stands for Wireless Local Area Network. This is a somewhat broad term in that it describes wireless networks of machines connected through several means. One type of WLAN is peer to peer (also called ad hoc mode), in which two machines are set up to communicate directly with one another. Another form of WLAN is called infrastructure mode. This is the most common type of WLAN and involves two or more computers communicating through a bridge or access point. This latter type is typically used for wireless Internet access through a wireless router.

Wi-Fi

Wi-Fi is a trademark of the Wi-Fi Alliance, an organization that sets standards for Wi-Fi and certifies devices. Because Wi-Fi is such a widely used standard, Wi-Fi devices can be used across the world without difficulty. Wi-Fi is the technology and set of standards that allows WLANs to be implemented.

Wi-Fi allows Internet access nearly anywhere

The Same

In practical terms there really isn't a difference between WLAN and Wi-Fi, because Wi-Fi is WLAN. WLAN is a broader concept, but Wi-Fi is so widespread that it is effectively the only WLAN technology in use. Wi-Fi itself is broad: it is a set of technology standards for accessing WLANs rather than a product or service offered by a single company. Multiple companies belong to the Wi-Fi Alliance and help set the standards. Several other wireless networking technologies, however, are distinct from Wi-Fi and worth noting.

Cellular Phones

Cellular phone data networks are neither WLAN nor Wi-Fi. WLAN refers to networks covering a limited area and number of devices. Cellular phone data networks are Wide Area Networks (WANs), meaning they cover a broad area and a large number of devices in that area. Wi-Fi offers data advantages over cellular data networks because it is more broadly used and typically delivers higher-bandwidth wireless access. There is a broad range of cellular data networks, from EVDO and GPRS to LTE. These technologies are not interchangeable: each network works only with devices built for it. In contrast, Wi-Fi works the same way all over the world, and a Wi-Fi device from one country will easily access a Wi-Fi WLAN in another.

Bluetooth

Bluetooth differs from Wi-Fi and WLAN in that it is designed for specific interactions between devices that communicate directly with one another. This is called a Wireless Personal Area Network (WPAN), and it differs from a WLAN because it is designed to connect otherwise incompatible devices wirelessly. While one could use Bluetooth for Internet access through an access point broadcasting a Bluetooth signal, Wi-Fi offers the advantage of greater network range and potentially higher data transfer speeds.

Wireless Networks

As shown, there are several types of wireless networks that differ from Wi-Fi. WLAN is not one of them, as Wi-Fi is essentially the practical application of WLAN. Wi-Fi offers advantages over other wireless technologies that provide some of the same capabilities as WLAN and Wi-Fi, but in a more limited capacity.

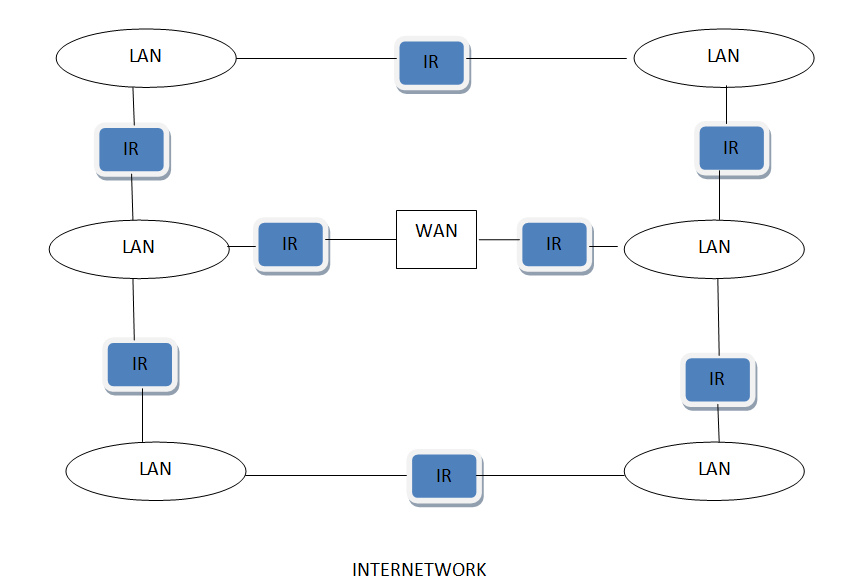

WAN/LAN Protocols

"Local Area Networks," or LANs, and "Wide Area Networks," or WANs, can both be defined by the network protocols that constitute them. Protocols are standards that define how data is ultimately transferred from one system to another. The difference between a LAN and a WAN is the distance the data travels, with a LAN typically serving a single building and a WAN usually covering different geograph locations.LAN Protocols

LAN protocols are distinguished by their ability to deliver data efficiently over shorter distances, such as a few hundred feet, through various media, such as copper cabling. Different protocols exist for different purposes and operate at different "layers" of the "Open Systems Interconnection," or OSI, model. Typically, when the word "LAN" is used to describe a protocol, it refers to the lower layers, such as the physical and data link layers. Some of the most common LAN protocols are "Ethernet," "Token Ring" and "Fiber Distributed Data Interface," or "FDDI."

"Ethernet" is by far the most common type of LAN protocol. It is found in homes and offices throughout the world and is recognizable by its common "CAT5" copper cable medium. It uses a switch or hub to which all systems connect to exchange data.

Two systems must be using the same LAN or WAN

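"Token Ring" is an older LAN technology that is no longer prevalent. Its basic premise is that a single "token" is passed from system to system around a ring, often physically wired through a hub. Only the system currently holding the token may transmit, and the frame it sends travels around the ring until the intended recipient reads it.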
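The token-passing discipline can be sketched in a few lines. This is a simplified model, not the IEEE 802.5 algorithm: it assumes each station sends at most one frame per token visit, and it omits priorities, timers and the sender removing its own frame when it returns. The function `token_rotation` and its data layout are hypothetical.

```python
from collections import deque


def token_rotation(ring, queues):
    """Pass the token once around the ring and return delivered frames.

    ring   -- station names in ring order
    queues -- maps a station name to a deque of (destination, payload) frames
    Each delivered frame is reported as (sender, destination, payload, hops).
    """
    delivered = []
    size = len(ring)
    for position, holder in enumerate(ring):       # the token visits each station
        pending = queues.get(holder)
        if not pending:
            continue                               # nothing to send: pass the token on
        destination, payload = pending.popleft()   # only the token holder transmits
        # The frame travels station to station until the recipient reads it.
        for hop in range(1, size):
            station = ring[(position + hop) % size]
            if station == destination:
                delivered.append((holder, destination, payload, hop))
                break
    return delivered


queues = {"A": deque([("C", "hello")]), "C": deque([("A", "reply")])}
for frame in token_rotation(["A", "B", "C", "D"], queues):
    print(frame)
```

Because only the token holder transmits, two stations never send at the same time, which is the property that distinguished Token Ring from shared-medium Ethernet.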

"FDDI" defines how LAN traffic is transmitted over fiber cabling. Fiber cabling is used when longer distances, usually between floors or buildings, are required, or where heightened security is required.

WAN Protocols

WAN protocols are distinguished by their ability to deliver data efficiently over longer distances, such as hundreds of miles. This is generally required to connect multiple LANs. The Internet is the world's largest WAN. Routers, modems and other WAN devices transmit the data over various media, commonly fiber cabling. Some well-known WAN protocols are "Frame Relay," "X.25," "Integrated Services Digital Network," or "ISDN," and "Point-to-Point Protocol," or "PPP."

"Frame Relay" and "X.25" are similar in that they are both packet-switching technologies for sending data over large distances. "Frame Relay" is newer and faster, whereas "X.25" delivers data more reliably.

"PPP" is a protocol that is used to transmit data for other protocols over mediums that they would not normally support, such as sending the "Internet Protocol," or IP, over serial lines.

"ISDN" is a method of combining multiple dial-up lines on a public telephone network into a single data stream.

Wireless LAN Protocols

Wireless LANs, sometimes referred to as "WLAN" or "Wi-Fi," are becoming increasingly prevalent. They operate essentially the same way as a traditional LAN, but use wireless signals between antennas as the medium rather than cabling. Most wireless protocols in use today are based on the 802.11 standard and are differentiated by the letters appearing after the number. Four widely deployed protocols are "802.11a," "802.11b," "802.11g" and "802.11n."

"802.11a" is designed to carry data over shorter distances at higher speeds of up to 54 megabits per second, or Mbps. "802.11b" does the opposite, operating at lower speeds of up to only 11 Mbps but with higher reliability at longer distances and with more obstructing objects in the environment.

"802.11g" combines the best of the previous two protocols, operating at up to 54 Mbps over longer distances. "802.11n" is the latest wireless protocol to be released. It can operate at speeds of greater than 150 Mbps over longer distances than the other protocols.

Hybrid system simulation of computer control applications over communication networks

Discrete event-driven simulations of digital communication networks have been used widely. However, it is difficult to use a network simulator to simulate a hybrid system in which some objects are not discrete event-driven but are continuous time-driven. A networked control system (NCS) is such an application, in which physical process dynamics are continuous by nature. We have designed and implemented a hybrid simulation environment which effectively integrates models of continuous-time plant processes and discrete-event communication networks by extending the open source network simulator NS-2. To do this a synchronisation mechanism was developed to connect a continuous plant simulation with a discrete network simulation. Furthermore, for evaluating co-design approaches in an NCS environment, a piggybacking method was adopted to allow the control period to be adjusted during simulations. The effectiveness of the technique is demonstrated through case studies which simulate a networked control scenario in which the communication and control system properties are defined explicitly.
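The synchronisation idea described in this abstract can be illustrated in miniature. The following is a minimal sketch, not the authors' NS-2 extension. It assumes a first-order plant dx/dt = -a·x + b·u, a proportional controller, a fixed one-way network delay and simple Euler integration, and the function `simulate_ncs` and all its parameters are hypothetical. Before each discrete network event is processed, the continuous plant is integrated up to that event's timestamp, which keeps the two time bases aligned. The optional `new_period` value imitates piggybacking a new control period onto an actuation message.

```python
import heapq


def simulate_ncs(a=1.0, b=1.0, gain=2.0, x0=1.0, period=0.1, delay=0.02,
                 t_end=2.0, dt=1e-3, new_period=None, switch_time=None):
    """Co-simulate plant dx/dt = -a*x + b*u with a delayed, sampled controller.

    Returns a list of (time, state) pairs recorded at every network event.
    """
    t, x, u = 0.0, x0, 0.0
    sample_period = period
    trace = []
    events = []      # heap of (time, sequence number, kind, data)
    counter = 0

    def schedule(when, kind, data=None):
        nonlocal counter
        heapq.heappush(events, (when, counter, kind, data))
        counter += 1  # tie-breaker so simultaneous events pop in FIFO order

    def advance_plant(until):
        """Continuous part: Euler-integrate the plant up to the event time."""
        nonlocal t, x
        while t < until - 1e-12:
            step = min(dt, until - t)
            x += step * (-a * x + b * u)  # u is held constant between events
            t += step
        trace.append((t, x))

    schedule(0.0, "sample")
    while events:
        when, _, kind, data = heapq.heappop(events)
        if when > t_end:
            break
        advance_plant(when)  # synchronise plant time with network time
        if kind == "sample":                  # sensor sends a measurement
            schedule(t + delay, "to_controller", x)
            schedule(t + sample_period, "sample")
        elif kind == "to_controller":         # controller computes u = -gain * x
            switched = switch_time is not None and t >= switch_time
            piggyback = new_period if switched else None
            schedule(t + delay, "to_actuator", (-gain * data, piggyback))
        elif kind == "to_actuator":           # actuator applies the command
            u, piggyback = data
            if piggyback is not None:
                # Simplification: sensor and actuator share the sampling clock.
                sample_period = piggyback
    advance_plant(t_end)
    return trace


final_time, final_state = simulate_ncs(new_period=0.05, switch_time=1.0)[-1]
print(f"t = {final_time:.2f} s, x = {final_state:.5f}")
```

Integrating only up to the next event time, rather than stepping the network simulator and the plant independently, is what prevents the continuous state from running ahead of or behind the discrete network clock.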