SIGNAL CONDITIONING PRINCIPLES:

1. Changing the Signal Level

2. Linearization

3. Conversion

4. Filters and Impedance Adjustments

5. Concept of Loading

6. Dynamic object in blocking recipients and inverters

Changing Signal Level:

1. Amplification

2. Attenuation

3. Dynamic object stabilization

Considerations in selecting amplifiers and electronic ICs:

The input impedance offered to the sensor (or other element that functions as the input).

The frequency response of the amplifier and the reliability of the components.

Electronic ICs as controllers and as part of the signal conditioning process.

In electronics, signal conditioning is the manipulation of an analog signal in such a way that it meets the requirements of the next stage for further processing.

In an analog-to-digital converter application, signal conditioning includes voltage or current limiting and anti-aliasing filtering.

In control engineering applications, it is common to have a sensing stage (which consists of a sensor), a signal conditioning stage (where amplification of the signal is usually done) and a processing stage (normally carried out by an ADC and a microcontroller). Operational amplifiers (op-amps) are commonly employed to carry out the amplification of the signal in the signal conditioning stage. In some transducers, such as Hall-effect sensors, this amplification is built in.

In power electronics, before the signals sensed by voltage and current sensors are processed, signal conditioning scales them to a level acceptable to the microprocessor.

Signal inputs accepted by signal conditioners include DC voltage and current, AC voltage and current, frequency and electric charge. Sensor inputs can be accelerometer, thermocouple, thermistor, resistance thermometer, strain gauge or bridge, and LVDT or RVDT. Specialized inputs include encoder, counter or tachometer, timer or clock, relay or switch, and other specialized inputs. Outputs for signal conditioning equipment can be voltage, current, frequency, timer or counter, relay, resistance or potentiometer, and other specialized outputs.

Signal conditioning can include amplification, filtering, converting, range matching, isolation and any other processes required to make sensor output suitable for processing after conditioning.
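The sketch below is a rough illustration of how several of these processes chain together. It amplifies a small sensor voltage and removes 50 Hz mains pickup with a first-order digital low-pass filter. It then limits the result to the ADC range and quantizes it. The function name `condition` and every numeric setting are assumptions for illustration, not values from the text: the 1 kHz sample rate, the 5 Hz cutoff, the gain of 100 and the 10-bit, 0-5 V ADC.

```python
import math

def condition(samples, gain, alpha, v_ref=5.0, bits=10):
    """Amplify, low-pass filter, range-limit and quantize a list of sensor voltages.

    alpha is the smoothing factor of a first-order IIR low-pass filter,
    y[n] = y[n-1] + alpha * (x[n] - y[n-1]), with 0 < alpha <= 1.
    """
    levels = 2 ** bits
    y = 0.0                                    # filter state starts at zero
    codes = []
    for x in samples:
        amplified = gain * x                   # amplification
        y += alpha * (amplified - y)           # filtering (first-order low-pass)
        limited = min(max(y, 0.0), v_ref)      # range matching to the ADC input
        codes.append(min(int(limited / v_ref * levels), levels - 1))  # quantization
    return codes

fs = 1000.0                                    # sample rate in Hz (assumed)
fc = 5.0                                       # filter cutoff in Hz (assumed)
rc = 1.0 / (2.0 * math.pi * fc)                # equivalent RC time constant
alpha = (1.0 / fs) / (rc + 1.0 / fs)           # smoothing factor for that cutoff
# Assumed sensor output: 20 mV DC plus 5 mV of 50 Hz mains pickup.
raw = [0.020 + 0.005 * math.sin(2 * math.pi * 50 * n / fs) for n in range(1000)]
codes = condition(raw, gain=100.0, alpha=alpha)
settled = codes[500:]                          # skip the filter's start-up transient
```

Because the filter state starts at zero, the first few tens of milliseconds are a start-up transient. Without the filter, the amplified 50 Hz ripple would swing about ±100 ADC codes; with it, the swing is roughly ±10 codes.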

Filtering

Filtering is the most common signal conditioning function, as usually not all of the signal frequency spectrum contains valid data. The common example is 50/60 Hz AC power lines, present in most environments, which cause noise if amplified.

Amplification

Signal amplification performs two important functions: it increases the resolution of the input signal, and it increases its signal-to-noise ratio. For example, the output of an electronic temperature sensor, which is typically in the millivolt range, is probably too low for an analog-to-digital converter (ADC) to process directly. In this case it is necessary to bring the voltage level up to that required by the ADC. Amplifiers commonly used for signal conditioning include sample-and-hold amplifiers, peak detectors, log amplifiers, antilog amplifiers, instrumentation amplifiers and programmable gain amplifiers.
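A short calculation illustrates the resolution argument above. The sketch assumes an ideal 10-bit ADC with a 0-5 V input range and a sensor whose full-scale output is 50 mV. The helper names `adc_code` and `required_gain` are hypothetical. A result from `required_gain` below 1 means attenuation, rather than amplification, is needed.

```python
def adc_code(voltage, v_ref=5.0, bits=10):
    """Ideal unipolar ADC: maps 0..v_ref onto the integer codes 0..2**bits - 1."""
    levels = 2 ** bits
    v = min(max(voltage, 0.0), v_ref)          # inputs outside the range are clipped
    return min(int(v / v_ref * levels), levels - 1)

def required_gain(signal_full_scale, v_ref=5.0):
    """Gain that stretches the sensor's full-scale output over the ADC input range."""
    return v_ref / signal_full_scale

sensor_max = 0.050                             # assumed 50 mV full-scale sensor output
gain = required_gain(sensor_max)               # > 1, so amplification is needed
codes_without_gain = adc_code(sensor_max)      # only a handful of codes are ever used
codes_with_gain = adc_code(gain * sensor_max)  # the full 10-bit code range is used
```

With these numbers the unamplified sensor reaches only code 10 of 1023, so one code step is about 10 % of the sensor's full scale. After a gain of 100 the same signal spans all 1024 codes.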

Attenuation

Attenuation, the opposite of amplification, is necessary when voltages to be digitized are beyond the ADC range. This form of signal conditioning decreases the input signal amplitude so that the conditioned signal is within the ADC range. Attenuation is typically necessary when measuring voltages that are more than 10 V.

Excitation

External power is required for the operation of an active sensor (e.g., temperature sensors such as thermistors and RTDs, and pressure sensors of the piezo-resistive and capacitive types). The stability and precision of the excitation signal directly affect the sensor's accuracy and stability.

Linearization

Linearization is necessary when sensors produce voltage signals that are not linearly related to the physical measurement. Linearization is the process of interpreting the signal from the sensor and can be done either with signal conditioning or through software.

Electrical Isolation

Signal isolation may be used to pass the signal from the source to the measuring device without a physical connection. It is often used to isolate possible sources of signal perturbations that could otherwise follow the electrical path from the sensor to the processing circuitry. In some situations, it may be important to isolate the potentially expensive equipment used to process the signal after conditioning from the sensor.

Magnetic or optical isolation can be used. Magnetic isolation transforms the signal from a voltage to a magnetic field so the signal can be transmitted without physical connection (for example, using a transformer). Optical isolation works by using an electronic signal to modulate a signal encoded by light transmission (optical encoding). The decoded light transmission is then used for input for the next stage of processing.

Surge protection

A surge protector absorbs voltage spikes to protect the next stage from damage.

Automatic control has played a very important role in the development of science and technology, for example in spacecraft, missile and aircraft control systems.

Similarly, control systems have become an important and integral part of processes in modern industries:

1. As a pressure controller

2. As a temperature controller

3. As a humidity controller

4. As a flow controller in industrial processes

Control and monitoring activities that are commonly carried out by humans can be replaced by applying the principle of automation. Several factors strengthen the case for machines that control automatically: many control tasks are repetitive, manual data reading is imprecise, and a controlled process may pose risks.

These automatic control devices are very useful to humans. Combined with intelligence in the form of programs embedded in the system, they lighten human tasks even further. However smart a machine is, it still needs humans to configure and supervise it. Automatic control is not meant to replace the human role completely; it reduces that role and lightens human tasks in controlling a process.

As technology develops, the study of control systems engineering makes it possible to:

1. Obtain the desired performance from a dynamic system,

2. Enhance production quality,

3. Reduce production costs,

4. Increase the rate of production,

5. Eliminate boring routine work that would otherwise have to be done by humans.

The Basic Concept of the Controller:

System control (signal conditioning) techniques are based on the fundamentals of "feedback" and linear system analysis.

By including concepts from network theory, we obtain an analysis of how a system is regulated and controlled to reach the desired output.

Thus, the study of "Technical System Analysis" covers:

• Systems and system models, including the mathematical formulation of the system under study and how to solve it.

• Feedback, which is one of the most basic processes and exists in almost all dynamic systems, for example:

- Processes within humans themselves

- Relationships between humans and machines

- Equipment whose parts support each other.

For this reason, the theory of feedback control systems will continue to develop as a scientific discipline in its own right. It will be useful for analyzing and designing practical control systems for other technological devices.

To understand the above, knowledge of the following basic sciences is necessary:

• Fundamentals of physics

• Calculus basics

• The basics of mathematics

• Electrical and mechanical components and their characteristics.

The necessary mathematical tools therefore include:

• Solving problems with differential and integral equations

• Laplace transform and complex variables.

Control systems are classified into two types:

1. An "open-loop system" is a system whose control action is independent of the output.

The advantages:

- The construction is simple

- Cheaper than a closed-loop system

- There are no problems with instability

- Accuracy is determined by calibration

The disadvantages:

- Disturbances and changes in calibration cause errors, so the output is not as desired.

- To maintain the required quality at the output, recalibration is required from time to time.

2. A "closed-loop system" is a system whose control action depends on the output.

Its characteristics include:

- Improved accuracy, so that the output can faithfully reproduce the input.

- Reduced sensitivity of the output-to-input ratio to changes in system characteristics.

- Reduced effects of nonlinearity and distortion.
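A static (steady-state) sketch can illustrate the reduced sensitivity claimed above. It assumes a plant that simply multiplies its input by a gain K, with a hypothetical 20 % drop in K. The open-loop controller is a fixed inverse of the nominal gain; the closed loop uses unity feedback and an assumed proportional controller gain C = 100. None of these numbers come from the text.

```python
def open_loop_output(r, plant_gain, nominal_gain):
    """Open loop: the controller is a fixed inverse of the *nominal* plant gain."""
    controller_gain = 1.0 / nominal_gain
    return plant_gain * controller_gain * r

def closed_loop_output(r, plant_gain, controller_gain):
    """Closed loop with unity feedback, steady state: y = C*K / (1 + C*K) * r."""
    loop_gain = controller_gain * plant_gain
    return loop_gain / (1.0 + loop_gain) * r

r = 1.0                              # desired output (reference input)
K_nominal, K_drifted = 10.0, 8.0     # assumed 20 % drop in plant gain
C = 100.0                            # assumed proportional controller gain

ol_nominal = open_loop_output(r, K_nominal, K_nominal)
ol_drifted = open_loop_output(r, K_drifted, K_nominal)    # output falls by 20 %
cl_nominal = closed_loop_output(r, K_nominal, C)
cl_drifted = closed_loop_output(r, K_drifted, C)          # output barely changes
```

The open-loop output follows the plant drift one-for-one. The closed-loop output changes by only about 0.025 %, at the cost of a small residual error of 1/(1 + CK).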

In general, a system is a combination of several components that work together to carry out certain tasks. Examples include:

- Electrical systems

- Mechanical systems

- Thermal systems

- Biological systems

- Systems of everyday human life

- Communication and information systems in electronic media

- Etc.

Thus a feedback control system is a system in which the instantaneous value of the output is continuously measured and compared with the input, so as to produce the desired output.

The input minus the output produces the actuating signal, called the "error signal". This signal drives the system toward the desired output.

Problems in control systems

The main points in the analysis and synthesis of a control system include:

1. Transient period: every control system is expected to have a transient period that is as short as possible, so that the output quickly reaches the desired value. However, with a very short transient time the output may show a large deviation and/or oscillation above the desired value (overshoot).

2. Steady state (after the transient is considered complete): two points are very important here:

a. Steady-state error: the actual output is not the same as the desired output.

b. The magnitude of the steady-state error is strongly influenced by the "system type" and the type of "input".

3. Stability: whether the system variables (especially its output) grow to very large values or beyond acceptable limits.

4. Objects and instruments that move in different spaces and times.
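Point 2b can be checked numerically. For a unity-feedback loop with a unit step input, a standard result is that a type-0 system (no integrator in the loop) settles with an error of 1/(1 + K). A type-1 system (one integrator) settles with zero error. The sketch below verifies both by simple Euler integration. The plants K/(τs + 1) and K/s, the gain K = 4, the time step and the function names are all assumptions chosen for illustration.

```python
def step_error_type0(K, tau=1.0, dt=1e-3, t_end=20.0):
    """Unit-step error left after t_end for unity feedback around G(s) = K/(tau*s + 1)."""
    r, y = 1.0, 0.0                          # reference (unit step) and output
    for _ in range(round(t_end / dt)):
        e = r - y                            # error signal = input - output
        y += dt * (K * e - y) / tau          # plant: tau*dy/dt + y = K*e
    return r - y

def step_error_type1(K, dt=1e-3, t_end=20.0):
    """Unit-step error left after t_end for unity feedback around G(s) = K/s."""
    r, y = 1.0, 0.0
    for _ in range(round(t_end / dt)):
        e = r - y
        y += dt * K * e                      # plant: dy/dt = K*e (one integrator)
    return r - y

K = 4.0
print(step_error_type0(K), 1.0 / (1.0 + K))  # simulated error vs. formula 1/(1 + K)
print(step_error_type1(K))                   # essentially zero
```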

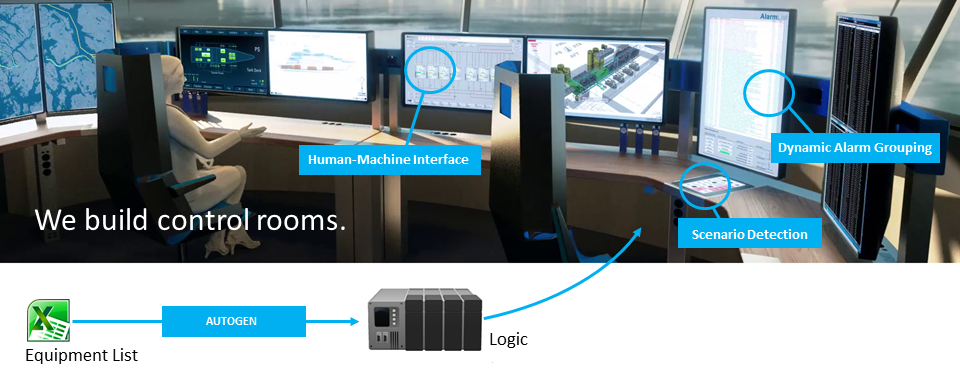

Fig 1. Example of a dynamic control system based on the signal conditioner concept

Fig 2. Tank monitoring with a sensor connected to a signal conditioner

BASIC TERMINOLOGY

• Modeling

• Explicit solutions

• Numerical solutions

• Empirical data

• Simulation

• Lumped parameter (masses and springs)

• Distributed parameters (stress in a solid)

• Continuous vs. Discrete

• Linear vs. Non-linear

• linearity and superposition

• reversibility

• through and across variables

• Analog vs. Digital

• process vs. controllers

• Basic system categories

• control system types: servo vs. regulating/process control

• open loop vs. closed loop

• disturbances

• component variations

• system error

• analysis vs. design

• mechatronics

• embedded systems

• real-time systems

EXAMPLE SYSTEM

• Servo control systems

• Robot

TRANSLATION: INTRODUCTION

If the velocity and acceleration of a body are both zero, then the body will be static. If the applied forces are balanced and cancel each other out, the body will not accelerate. If the forces are unbalanced, then the body will accelerate. If all of the forces act through the center of mass, then the body will only translate. Forces that do not act through the center of mass will also cause rotation to occur. This chapter will focus only on translational systems.

The basic equations of motion state simply that velocity is the first derivative of position, and acceleration is the first derivative of velocity. Conversely, the acceleration can be integrated to find velocity, and the velocity can be integrated to find position. Therefore, if we know the acceleration of a body, we can determine the velocity and position. Finally, when a force is applied to a mass, the acceleration can be found by dividing the net force by the mass.
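These kinematic relationships can be written as $v = dx/dt$, $a = dv/dt$ and $a = F/m$. The sketch below applies them numerically. It computes the acceleration from an assumed constant net force and mass, then integrates it step by step to velocity and position. The function name `integrate_motion` and the 10 N, 2 kg values are illustrative assumptions.

```python
def integrate_motion(force, mass, dt=0.001, t_end=2.0):
    """Constant net force on a mass starting at rest: a = F/m, then a -> v -> x."""
    a = force / mass                       # Newton's second law
    x, v = 0.0, 0.0                        # initial position and velocity
    for _ in range(round(t_end / dt)):
        x += v * dt + 0.5 * a * dt * dt    # position update (exact for constant a)
        v += a * dt                        # velocity is the integral of acceleration
    return a, v, x

a, v, x = integrate_motion(force=10.0, mass=2.0)
```

After 2 s the result matches the closed-form values $v = at = 10$ m/s and $x = at^2/2 = 10$ m.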

MISSION :

• To be able to develop differential equations that describe translating systems.

• Basic laws of motion

• Gravity, inertia, springs, dampers, cables and pulleys, drag, friction, FBDs

• System analysis techniques

• Design case

MODELING

When modeling translational systems it is common to break the system into parts. These parts are then described with Free Body Diagrams (FBDs). Common components that must be considered when constructing FBDs are listed below, and are discussed in the following sections.

• gravity and other fields - apply non-contact forces

• inertia - opposes acceleration and deceleration

• springs - resist deflection

• dampers and drag - resist motion

• friction - opposes relative motion between bodies in contact

• cables and pulleys - redirect forces

• contact points/joints - transmit forces through up to 3 degrees of freedom

Free Body Diagrams

Free Body Diagrams (FBDs) allow us to reduce a complex mechanical system into smaller, more manageable pieces. The forces applied to the FBD can then be summed to provide an equation for the piece. These equations can then be used later to analyze system behavior. FBDs are required elements for any engineering problem involving rigid bodies.

Mass and Inertia

In a static system the sum of forces is zero and nothing is in motion. In a dynamic system the sum of forces is not zero, and the resulting imbalance in forces acts on the masses, causing them to accelerate. For the purposes of calculation we create a virtual reaction force, called the inertial force. This force is also known as D’Alembert’s (pronounced as daa-lamb-bears) force. It can be included in calculations in one of two ways. The first is to add the inertial force to the FBD and then include it in the sum of forces. The second method is known as D’Alembert’s equation, where all of the forces are summed and set equal to the inertial force.

Gravity and Other Fields

Gravity is a weak force of attraction between masses. In our situation we are in the proximity of a large mass (the earth), which produces a large force of attraction. When analyzing forces acting on rigid bodies, we add this force to our FBDs. The magnitude of the force is proportional to the mass of the object, with a direction toward the center of the earth (down). The relationship between mass and force is clear in the metric system, with mass having the units kilograms (kg) and force the units newtons (N). The relationship between these is the gravitational acceleration, 9.81 N/kg (equivalently 9.81 m/s²), which varies slightly over the surface of the earth. In Imperial units, both force and mass are measured in pounds (lb.), which often causes confusion. To reduce this confusion, the unit for force is normally written as lbf.

Springs

Springs are typically constructed with elastic materials, such as metals and plastics, that will provide an opposing force when deformed. The properties of the spring are determined by the Young’s modulus (E) of the material and the geometry of the spring.

Damping and Drag

A damper is a component that resists motion. The resistive force is proportional to the rate of displacement (the velocity). As mentioned before, springs store energy in a system but dampers dissipate energy. Dampers and springs are often used to complement each other in designs. Damping can occur naturally in a system, or can be added by design.

Cables And Pulleys

Cables are useful when transmitting tensile forces or displacements. The centerline of the cable becomes the line of action of the force. If the force becomes compressive, the cable becomes limp and will not transmit force. A cable by itself can be represented as a force vector. When used in combination with pulleys, a cable can redirect a force vector or multiply a force.

Typically we assume that a pulley is massless and frictionless (in the rotation chapter we will assume they are not).

Friction

Viscous friction was discussed before, where a lubricant would provide a damping effect between two moving objects. In cases where there is no lubricant and the touching surfaces are dry, dry (Coulomb) friction may result. In this case the surfaces will stick in place until a maximum force is overcome. After that the object will begin to slide and a constant friction force will result.
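The stick-slip behaviour described above can be modelled with a simple piecewise function. In this sketch, the names are assumptions: the function `coulomb_friction`, the static and kinetic coefficients `mu_s` and `mu_k`, and the small velocity tolerance `v_eps` that decides whether the body is stuck. Practical simulators often use smoother friction models to avoid numerical chatter near zero velocity.

```python
import math

def coulomb_friction(applied, velocity, normal, mu_s, mu_k, v_eps=1e-6):
    """Dry (Coulomb) friction force on a body, for an applied force along the surface.

    Stuck (|velocity| < v_eps): friction cancels the applied force up to mu_s * normal.
    Otherwise the friction has constant magnitude mu_k * normal and opposes the motion.
    """
    if abs(velocity) < v_eps:
        if abs(applied) <= mu_s * normal:
            return -applied                                  # sticks: net force is zero
        return -math.copysign(mu_k * normal, applied)        # breaks free and starts sliding
    return -math.copysign(mu_k * normal, velocity)           # sliding: opposes the velocity

# Hypothetical block: normal force 100 N, mu_s = 0.5, mu_k = 0.3.
stuck = coulomb_friction(30.0, 0.0, 100.0, 0.5, 0.3)     # 30 N push is held by friction
breaks = coulomb_friction(60.0, 0.0, 100.0, 0.5, 0.3)    # 60 N push exceeds 50 N limit
sliding = coulomb_friction(0.0, 2.0, 100.0, 0.5, 0.3)    # moving: constant 30 N opposing
```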

Contact Points And Joints

A system is built by connecting components together. These connections can be rigid or moving. In rigid connections all forces and moments are transmitted and the two pieces act as a single rigid body. In moving connections there is at least one degree of freedom. If we limit this to translation only, there are up to three degrees of freedom: x, y and z. In any direction where there is a degree of freedom, a force or moment cannot be transmitted.

When constructing FBDs for a system we must break all of the components into individual rigid bodies. Where the mechanism has been broken, the contact forces must be added to both of the separated pieces.

SYSTEM EXAMPLES

An orderly approach to system analysis can simplify the process of analyzing large systems. The list of steps below is based on general observations of good problem-solving techniques.

1. Assign letters/numbers to designate force components (if not already done); this will allow you to refer to components in your calculations.

2. Define positions and directions for any moving masses. This should include the selection of reference points.

3. Draw free body diagrams for each component, and add forces (inertia is optional).

4. Write equations for each component by summing forces.

5. Combine the equations by eliminating unwanted variables.

6. Develop a final equation that relates inputs (forcing functions) to outputs (results).
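As a worked illustration of steps 2 to 6, consider a hypothetical single mass $m$ attached to a wall by a spring $k$ and a damper $b$ and pushed by a force $F(t)$. The position $x$ is measured from the unstretched spring position, positive in the direction of $F$; gravity acts perpendicular to the motion and is ignored. Summing forces on the FBD of the mass gives:

```latex
\begin{aligned}
\text{FBD forces on } m &: \quad F(t), \quad -kx \ (\text{spring}), \quad -b\dot{x} \ (\text{damper}) \\
\textstyle\sum F = m\ddot{x} &: \quad F(t) - kx - b\dot{x} = m\ddot{x} \\
\Rightarrow \quad & m\ddot{x} + b\dot{x} + kx = F(t)
\end{aligned}
```

The last line is the input-output equation of step 6: $F(t)$ is the forcing function and $x(t)$ is the output.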

I. ANALYSIS OF DIFFERENTIAL EQUATIONS

To be known:

• First and second-order homogeneous differential equations

• Non-homogeneous differential equations

• First and second-order responses

• Non-linear system elements

• Design case

MISSION :

• To develop explicit equations that describe a system response.

• To recognize first and second-order equation forms.

Earlier, we derived differential equations of motion for translating systems. These equations can be used to analyze the behavior of the system and make design decisions. The most basic method is to select a standard input type (a forcing function) and initial conditions, and then solve the differential equation. (In electronic work, the same approach is used to analyze timing and timer circuits.)

Solving a differential equation results in an explicit solution. This equation provides the general response as a function of time, but it can also be used to find frequencies and other characteristics of interest. This section will review techniques used to integrate first and second-order homogeneous differential equations. These equations correspond to systems without inputs, also called unforced systems.

RESPONSES

Solving differential equations tends to yield one of two basic equation forms. The e-to-the-negative-t forms are the first-order responses and slowly decay over time. They never naturally oscillate, and only oscillate if forced to do so. The second-order forms may include natural oscillation.

First-order

A first-order system is described with a first-order differential equation. The response function for these systems is natural decay or growth.

Second-order

A second-order system response typically contains two first-order responses, or a first-order response and a sinusoidal component.

Other Responses

First-order systems have e-to-the-t type responses. Second-order systems add another e-to-the-t response or a sinusoidal component. As we move to higher-order linear systems we typically add more e-to-the-t terms and/or more sinusoidal terms.

In some cases we will have systems with multiple differential equations, or non-linear terms. In these cases explicit analysis of the equations may not be feasible.

RESPONSE ANALYSIS

Up to this point we have mostly discussed the process of calculating the system response. As an engineer, obtaining the response is important, but evaluating the results is more important. The most critical design consideration is system stability. In most cases a system should be inherently stable in all situations, for example a car's cruise control. In other cases an unstable system may be the objective, such as an explosive device.

Simple methods for determining the stability of a system are listed below:

1. If a step input causes the system to go to infinity, it will be inherently unstable.

2. A ramp input might cause the system to go to infinity; if this is the case, the system might not respond well to constant change.

3. If the response to a sinusoidal input grows with each cycle, the system is probably resonating, and will become unstable.

Beyond establishing the stability of a system, we must also consider general performance. This includes the time constant for a first-order system, or the damping ratio and natural frequency for a second-order system. For example, assume we have designed an elevator that is a second-order system. If it is underdamped, the elevator will oscillate, possibly leading to motion sickness, or worse. If the elevator is overdamped, it will take longer to get to floors. If it is critically damped, it will reach the floors quickly, without overshoot.
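For a second-order system written as $m\ddot{x} + b\dot{x} + kx = F$, the damping ratio is $\zeta = b / (2\sqrt{km})$. The peak step-response overshoot for $\zeta < 1$ is $100\,e^{-\zeta\pi/\sqrt{1-\zeta^2}}$ percent. The sketch below classifies a few cases of the elevator example. Treating the elevator as a mass-spring-damper, its mass and stiffness, and the damping values are all hypothetical.

```python
import math

def damping_ratio(m, b, k):
    """zeta = b / (2*sqrt(k*m)) for m*x'' + b*x' + k*x = F."""
    return b / (2.0 * math.sqrt(k * m))

def classify(zeta, tol=1e-9):
    """Name the damping case; tol absorbs round-off around zeta = 1."""
    if zeta < 1.0 - tol:
        return "underdamped"
    if zeta > 1.0 + tol:
        return "overdamped"
    return "critically damped"

def percent_overshoot(zeta):
    """Peak overshoot of the unit-step response in percent (zero when zeta >= 1)."""
    if zeta >= 1.0:
        return 0.0
    return 100.0 * math.exp(-zeta * math.pi / math.sqrt(1.0 - zeta ** 2))

m, k = 1000.0, 4000.0                   # hypothetical elevator mass (kg) and stiffness (N/m)
for b in (1000.0, 4000.0, 8000.0):      # hypothetical damping values (N*s/m)
    z = damping_ratio(m, b, k)
    print(f"b = {b:6.0f}: zeta = {z:.2f}, {classify(z)}, overshoot {percent_overshoot(z):.1f} %")
```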

Engineers distinguish between initial settling effects (transient) and long-term effects (steady-state). The transient effects are closely related to the homogeneous solution to the differential equations and the initial conditions. The steady-state effects occur after some period of time, when the system is acting in a repeatable or non-changing form. Figure 3.30 shows a system response. The transient effects at the beginning include a quick rise time and an overshoot. The steady-state response settles down to a constant-amplitude sine wave.

NON-LINEAR SYSTEMS

Non-linear systems cannot be described with a linear differential equation. A basic linear differential equation has constant coefficients, and the dependent variable and its derivatives appear only to the first power (they are never squared or multiplied together).

Non-Linear Differential Equations

An example of a non-linear differential equation involves a person ejected from an aircraft with a drag force coefficient of 0.8. (Note: This coefficient is calculated using the drag coefficient and other properties such as the speed of sound and cross sectional area.) The FBD shows the sum of forces, and the resulting differential equation. The velocity-squared term makes the equation non-linear, and so it cannot be analyzed with the previous methods. In this case the terminal velocity is calculated by setting the acceleration to zero. This results in a maximum speed of 126 kph. The equation can also be solved using explicit integration.
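The ejected-person example can be sketched as follows. The equation assumed here is $m\,dv/dt = mg - cv^2$, with $c = 0.8$ from the text. The mass is not given in this section: $m = 100$ kg is an assumption, chosen because it reproduces the quoted 126 kph. The terminal velocity follows from setting $dv/dt = 0$, and a simple Euler integration shows the speed approaching it.

```python
import math

def terminal_velocity(m, c, g=9.81):
    """Speed at which drag balances weight: set dv/dt = 0 in m*dv/dt = m*g - c*v**2."""
    return math.sqrt(m * g / c)

def simulate_fall(m, c, g=9.81, dt=0.01, t_end=60.0):
    """Explicit Euler integration of the non-linear drag equation, starting from rest."""
    v = 0.0
    for _ in range(round(t_end / dt)):
        v += dt * (g - (c / m) * v * v)
    return v

m, c = 100.0, 0.8                 # mass in kg (assumed) and drag coefficient from the text
v_t = terminal_velocity(m, c)     # m/s
print(f"terminal velocity {v_t * 3.6:.0f} kph, simulated after 60 s {simulate_fall(m, c) * 3.6:.0f} kph")
```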

Non-Linear Equation Terms

If our models include a device that is non-linear and we want to use a linear technique to solve the equation, we will need to linearize the model before we can proceed. A non-linear system can be approximated with a linear equation using the following method.

1. Pick an operating point or range for the component.

2. Find a constant value that relates a change in the input to a change in the output.

3. Develop a linear equation.

4. Use the linear equation for the analysis.

A linearized differential equation can be approximately solved using known techniques as long as the system doesn't travel too far from the linearized point. The example in Figure 3.36 shows the linearization of a non-linear equation about a given operating point. This equation will be approximately correct as long as the first derivative doesn't move too far from 100. When it does, the equation must be linearized again about the new velocity.
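The four linearization steps can be sketched for a velocity-squared term, linearized about an operating velocity of 100 (the value mentioned above). The actual function in Figure 3.36 is not reproduced here, so $f(v) = v^2$ is an assumption. The tangent line $f(v) \approx f(v_0) + f'(v_0)(v - v_0)$ supplies the constant of step 2. The helper name `linearize` is hypothetical.

```python
def linearize(f, dfdx, x0):
    """Return the tangent-line (first-order Taylor) approximation of f about x0."""
    f0, slope = f(x0), dfdx(x0)
    return lambda x: f0 + slope * (x - x0)

drag = lambda v: v ** 2                 # assumed non-linear velocity-squared term
drag_slope = lambda v: 2 * v            # its derivative
approx = linearize(drag, drag_slope, 100.0)

for v in (95.0, 100.0, 105.0, 150.0):
    err = abs(approx(v) - drag(v)) / drag(v) * 100
    print(f"v = {v:5.1f}: relative error {err:.2f} %")
```

Near the operating point the error is a fraction of a percent (about 0.3 % at 95 and 0.2 % at 105). It grows to about 11 % at 150, which is why the model must be re-linearized when the system moves far from the operating point.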

Changing Systems

In practical systems, the forces at work are continually changing. For example, a system often experiences a static friction force when motion is starting, but once motion starts it is replaced with a smaller kinetic friction. Another example is tension in a cable. When in tension a cable acts as a spring, but when in compression the force goes to zero.

The values of the spring and damping coefficients can be used to select actual components. Some companies will design and build their own components. Components can also be acquired by searching catalogs, or by requesting custom designs from other companies.

SUMMARY

• First and second-order differential equations were analyzed explicitly.

• First and second-order responses were examined.

• Response analysis was discussed.

• A case study looked at a second-order system.

• Non-linear systems can be analyzed by making them linear.

Key points:

First-order:

find the initial and final values

find the time constant at 63% of the change, or from the initial slope

use these in the standard equation

Second-order:

find the damped frequency from the graph

find the time to the first peak

use these in the cosine equation
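The key-point recipes can be sketched in code. For a first-order step response, the time constant is the time to cover 63.2 % (that is, 1 - 1/e) of the total change. For an underdamped second-order response, the damped frequency follows from the period between successive peaks, and the first peak occurs at $\pi/\omega_d$. The function names and the synthetic data (τ = 2 s, final value 5) are assumptions. The sketch also assumes the record is long enough that its last sample is effectively the final value.

```python
import math

def time_constant_63(times, values):
    """Estimate tau as the first time the response covers 63.2 % of its total change."""
    initial, final = values[0], values[-1]
    target = initial + 0.632 * (final - initial)
    rising = final > initial
    for t, y in zip(times, values):
        reached = y >= target if rising else y <= target
        if reached:
            return t
    return None

def damped_frequency(t_peak1, t_peak2):
    """Damped frequency (rad/s) from the times of two successive peaks, one period apart."""
    return 2.0 * math.pi / (t_peak2 - t_peak1)

def first_peak_time(omega_d):
    """Time to the first peak of an underdamped step response: pi / omega_d."""
    return math.pi / omega_d

# Synthetic first-order step data (assumed tau = 2 s, final value 5), sampled every 0.01 s.
dt = 0.01
times = [n * dt for n in range(2001)]
values = [5.0 * (1.0 - math.exp(-t / 2.0)) for t in times]
tau_est = time_constant_63(times, values)
```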

II. NUMERICAL ANALYSIS

INTRODUCTION

For engineering analysis it is always preferable to develop explicit equations that include symbols, but this is not always practical. In cases where the equations are too costly to develop, numerical methods can be used. As their name suggests, numerical methods use numerical calculations (i.e., numbers, not symbols) to develop a unique solution to a differential equation. The solution is often in the form of a set of numbers, or a graph. This can then be used to analyze a particular design case. The solution is often returned quickly, so that trial-and-error design techniques may be used. But without a symbolic equation, the system can be harder to understand and manipulate.

This chapter focuses on techniques that can be used for numerically integrating systems of differential equations.

THE GENERAL METHOD

The general process of analyzing systems of differential equations involves first putting the equations into standard form, and then integrating these with one of a number of techniques. State variable equations are the most common standard equation form. In this form all of the equations are reduced to first-order differential equations. These first-order equations are then easily integrated to provide a solution for the system of equations.

MISSION :

• To be able to solve systems of differential equations using numerical methods.

• State variable form for differential equations

• Numerical integration with software and calculators

• Numerical integration theory: first-order, Taylor series and Runge-Kutta

• Using tabular data

• A design case

State Variable Form

At any time a system can be said to have a state. Consider a car, for example: the state of the car is described by its position and velocity. Factors that are useful when identifying state variables are:

• The variables should describe energy storing elements (potential or kinetic).

• The variables must be independent.

• They should fully describe the system elements.

After the state variables of a system have been identified, they can be used to write first-order state variable equations.
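For example, take an assumed mass-spring-damper governed by $m\ddot{x} + b\dot{x} + kx = F(t)$. The spring's potential energy and the mass's kinetic energy suggest the state variables $x_1 = x$ and $x_2 = \dot{x}$. These give two first-order state equations:

```latex
\begin{aligned}
\dot{x}_1 &= x_2 \\
\dot{x}_2 &= \frac{1}{m}\left(F(t) - b\,x_2 - k\,x_1\right)
\end{aligned}
```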

NUMERICAL INTEGRATION ( RESET NUMERIC IN Electronic Work )

Repetitive calculations can be used to develop an approximate solution to a set of differential equations. Starting from given initial conditions, the equation is solved with small time steps. Smaller time steps result in a higher level of accuracy, while larger time steps give a faster solution.

Numerical Integration With Tools

Numerical solutions can be developed with hand calculations, but this is a very time-consuming task. In this section we will explore some common tools for solving state variable equations. The analysis process follows the basic steps listed below.

1. Generate the differential equations to model the process.

2. Select the state variables.

3. Rearrange the equations to state variable form.

4. Add additional equations as required.

5. Enter the equations into a computer or calculator and solve.

Numerical Integration

The simplest form of numerical integration is Euler’s first-order method. Given the current value of a function and its first derivative, we can estimate the function value a short time $h$ later: $x(t+h) \approx x(t) + h\,\dot{x}(t)$.

Taylor Series

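First-order integration works well with smooth functions. But when a highly curved function is encountered, we can use a higher-order integration equation, such as a Taylor series expansion that also includes the second and higher derivatives: $x(t+h) \approx x(t) + h\,\dot{x}(t) + \frac{h^2}{2}\ddot{x}(t) + \dots$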

Runge-Kutta Integration

First-order integration provides reasonable solutions to differential equations. That accuracy can be improved by using higher-order derivatives to compensate for function curvature. The Runge-Kutta technique uses first-order differential equations (such as state equations) to estimate the higher-order derivatives. This gives higher accuracy without requiring anything other than first-order differential equations.
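The sketch below compares Euler's method, $x(t+h) \approx x(t) + h\,f(t, x)$, with the classical fourth-order Runge-Kutta method. It uses the test equation $dx/dt = -x$, whose exact solution $e^{-t}$ makes the error easy to measure. It also shows the time-step trade-off: shrinking $h$ reduces the error at the cost of more steps. The function names are illustrative.

```python
import math

def euler_step(f, t, x, h):
    """Euler's first-order method: x(t+h) ~ x(t) + h*f(t, x)."""
    return x + h * f(t, x)

def rk4_step(f, t, x, h):
    """Classical fourth-order Runge-Kutta step, using only first-order derivatives."""
    k1 = f(t, x)
    k2 = f(t + h / 2, x + h / 2 * k1)
    k3 = f(t + h / 2, x + h / 2 * k2)
    k4 = f(t + h, x + h * k3)
    return x + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

def integrate(step, f, x0, t_end, h):
    """Apply a one-step method repeatedly from t = 0 to t_end."""
    t, x = 0.0, x0
    for _ in range(round(t_end / h)):
        x = step(f, t, x, h)
        t += h
    return x

decay = lambda t, x: -x               # test equation dx/dt = -x, exact solution e^-t
exact = math.exp(-1.0)
for h in (0.1, 0.01):
    e_err = abs(integrate(euler_step, decay, 1.0, 1.0, h) - exact)
    r_err = abs(integrate(rk4_step, decay, 1.0, 1.0, h) - exact)
    print(f"h = {h}: Euler error {e_err:.2e}, RK4 error {r_err:.2e}")
```

With $h = 0.1$ Euler is off by about 2 %, while Runge-Kutta is already accurate to better than one part in a million.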

SYSTEM RESPONSE

In most cases the result of numerical analysis is graphical or tabular. In both cases, details such as time constants and damped frequencies can be obtained by the same methods used for experimental analysis. In addition to these methods, there is a technique that can determine the steady-state response of the system.

Steady-State Response

The state equations can be used to determine the steady-state response of a system by setting the derivatives to zero, and then solving the equations.
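For an assumed mass-spring-damper with state equations $\dot{x}_1 = x_2$ and $\dot{x}_2 = (F - b x_2 - k x_1)/m$, setting both derivatives to zero gives $x_2 = 0$ and $x_1 = F/k$. The sketch below computes that steady state directly and confirms it with a simple Euler simulation. The function names and the values F = 10, m = 1, b = 2, k = 4 are illustrative assumptions.

```python
def state_derivatives(x1, x2, F, m, b, k):
    """State equations of m*x'' + b*x' + k*x = F with x1 = x and x2 = dx/dt."""
    return x2, (F - b * x2 - k * x1) / m

def steady_state(F, k):
    """Setting both derivatives to zero gives x2 = 0 and then k*x1 = F."""
    return F / k, 0.0

def simulate(F, m, b, k, h=1e-3, t_end=30.0):
    """Explicit Euler integration from rest, used only to confirm the steady state."""
    x1 = x2 = 0.0
    for _ in range(round(t_end / h)):
        d1, d2 = state_derivatives(x1, x2, F, m, b, k)
        x1, x2 = x1 + h * d1, x2 + h * d2
    return x1, x2

x_ss, v_ss = steady_state(F=10.0, k=4.0)          # assumed force and stiffness
x1, x2 = simulate(F=10.0, m=1.0, b=2.0, k=4.0)    # assumed mass and damping
```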

DIFFERENTIATION AND INTEGRATION OF EXPERIMENTAL DATA

When doing experiments, data is often collected in the form of individual data points (not as complete functions). It is often necessary to integrate or differentiate these values.
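A minimal sketch of both operations on sampled data is shown below. It uses central differences for the derivative (one-sided at the two ends) and the trapezoidal rule for a running integral. The function names and the "measured" positions, which are generated from x = t² every 0.1 s, are hypothetical.

```python
def differentiate(times, values):
    """Central differences inside the data, one-sided differences at the two ends."""
    n = len(values)
    d = [0.0] * n
    d[0] = (values[1] - values[0]) / (times[1] - times[0])
    d[-1] = (values[-1] - values[-2]) / (times[-1] - times[-2])
    for i in range(1, n - 1):
        d[i] = (values[i + 1] - values[i - 1]) / (times[i + 1] - times[i - 1])
    return d

def integrate_trapezoid(times, values):
    """Running integral by the trapezoidal rule, starting from zero."""
    total = [0.0]
    for i in range(1, len(values)):
        dt = times[i] - times[i - 1]
        total.append(total[-1] + 0.5 * dt * (values[i] + values[i - 1]))
    return total

# Hypothetical measured positions of a body moving as x = t**2, sampled every 0.1 s.
times = [0.1 * i for i in range(11)]
positions = [t ** 2 for t in times]
velocities = differentiate(times, positions)       # close to 2*t
distance = integrate_trapezoid(times, velocities)  # recovers roughly x(t)
```

The one-sided differences at the ends are less accurate than the central ones, so endpoint values of a numerical derivative deserve extra caution.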

III. ADVANCED CONTROL DYNAMICS

Switching Functions

When analyzing a system, we may need to choose an input that is more complex than inputs such as steps, ramps, sinusoids and parabolas. The easiest way to do this is to use switching functions. Switching functions turn on (have a value of 1) when their arguments are greater than or equal to zero, or off (a value of 0) when the argument is negative.

Examples of the use of switching functions.
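The sketch below shows a few switching-function constructions, following the definition above (1 for arguments ≥ 0, otherwise 0). It builds a rectangular pulse from two shifted steps and a ramp that levels off, made by switching on a second, negative ramp. The function names and numeric values are illustrative.

```python
def u(x):
    """Unit switching function: 1 when the argument is >= 0, otherwise 0."""
    return 1.0 if x >= 0 else 0.0

def pulse(t, start, stop, height):
    """Rectangular pulse: switched on at `start`, switched off again at `stop`."""
    return height * (u(t - start) - u(t - stop))

def ramp_then_hold(t, slope, t_hold):
    """Ramp from t = 0 that levels off at t_hold (a negative ramp switches on at t_hold)."""
    return slope * (t * u(t) - (t - t_hold) * u(t - t_hold))

# Sample both inputs at a few times: pulse of height 3 from t = 1 to 2, ramp of slope 2 held at t = 3.
inputs = [(t, pulse(t, 1.0, 2.0, 3.0), ramp_then_hold(t, 2.0, 3.0)) for t in (0.0, 1.5, 2.5, 5.0)]
```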

Interpolating Tabular Data

In some cases we are given tables of numbers instead of equations for a system component. These can still be used to do numerical integration by calculating coefficient values as required, in place of an equation. Tabular data consists of separate data points, so values between the points must be interpolated.
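Linear interpolation is the simplest way to obtain values between table entries. The sketch below uses the standard-library `bisect` module to locate the bracketing points. Holding the end values outside the table range is an assumption; extrapolating would be another option. The spring table and the function name are hypothetical.

```python
from bisect import bisect_right

def interpolate(table_x, table_y, x):
    """Linear interpolation in a table sorted by x; end values are held outside the range."""
    if x <= table_x[0]:
        return table_y[0]
    if x >= table_x[-1]:
        return table_y[-1]
    i = bisect_right(table_x, x)           # table_x[i-1] <= x < table_x[i]
    x0, x1 = table_x[i - 1], table_x[i]
    y0, y1 = table_y[i - 1], table_y[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

# Hypothetical stiffening spring: deflection (m) vs. force (N).
deflection = [0.00, 0.01, 0.02, 0.03]
force = [0.0, 10.0, 22.0, 40.0]
f_mid = interpolate(deflection, force, 0.015)   # halfway between 10 N and 22 N
```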

Modeling Functions with Splines

When greater accuracy is required, smooth curves can be fitted to interpolate points. These curves are known as splines. There are multiple methods for creating splines, but the simplest is to use a polynomial fitted to a set of points.

Non-Linear Elements

Despite our deepest wishes for simplicity, most systems contain non-linear components.

In the last chapter we looked at dealing with non-linearities by linearizing them locally. Numerical techniques handle non-linearities easily, but smaller time steps are required for accuracy. Consider, for example, a mass with an applied force.

SUMMARY

• State variable equations are used to reduce a system to first-order differential equations.

• First order equations can be integrated numerically.

• Higher-order integration methods, such as Runge-Kutta, increase the accuracy.

• Switching functions allow function terms to be turned on and off to build more complex functions.

• Tabular data can be used to get numerical values.

IV . ROTATION

INTRODUCTION

The equations of motion for a rotating mass relate its angular position, velocity and acceleration. Given the angular position, the angular velocity can be found by differentiating once, and the angular acceleration by differentiating again. Conversely, the angular acceleration can be integrated to find the angular velocity, and the angular velocity can be integrated to find the angular position. The angular acceleration is proportional to the applied torque and inversely proportional to the mass moment of inertia.

Basic properties of rotation

MISSION :

• To be able to develop and analyze differential equations for rotational systems.

• Basic laws of motion

• Inertia, springs, dampers, levers, gears and belts

• Design case

MODELING

Free Body Diagrams (FBDs) are required when analyzing rotational systems, as they were for translating systems. The force components normally considered in a rotational system include:

• inertia - opposes acceleration and deceleration

• springs - resist deflection

• dampers - oppose velocity

• levers - rotate small angles

• gears and belts - change rotational speeds and torques

Inertia

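When unbalanced torques are applied to a mass, it will begin to accelerate in rotation. The sum of the applied torques is equal to the inertial torque, $\sum T = J\alpha$, where $J$ is the mass moment of inertia and $\alpha$ is the angular acceleration.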

The mass moment of inertia determines the resistance to angular acceleration. It can be calculated using integration, or found in tables. When dealing with rotational acceleration it is important to use the mass moment of inertia, not the area moment of inertia.

The center of rotation for free body rotation will be the centroid. Moment of inertia values are typically calculated about the centroid. If the object is constrained to rotate about some point other than the centroid, the moment of inertia value must be recalculated about that point, normally with the parallel axis theorem.
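The recalculation can be done with the parallel axis theorem, J = J_c + m d², where J_c is the moment of inertia about the centroid and d is the distance between the two parallel axes. The slender rod and its mass and length below are illustrative values; the rod formula J_c = mL²/12 is the standard table entry.

```python
def parallel_axis(j_centroid, mass, distance):
    """Mass moment of inertia about an axis parallel to the centroidal axis."""
    return j_centroid + mass * distance ** 2

# Slender rod of mass m (kg) and length L (m), illustrative values.
m, L = 3.0, 2.0
j_center = m * L ** 2 / 12                 # about the centroid
j_end = parallel_axis(j_center, m, L / 2)  # pinned at one end
print(j_center, j_end)                     # 1.0 and 4.0 (= m*L**2/3)
```

Pinning the rod at its end quadruples the moment of inertia, so the same torque produces a quarter of the angular acceleration.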

Springs

Twisting a rotational spring will produce an opposing torque. This torque increases as the deformation increases; for a linear torsional spring, $T = K\theta$. The spring constant $K$ will be relatively constant unless the material is deformed outside the linear elastic range or the geometry of the spring changes significantly.

When dealing with strength of material properties, the area moment of inertia is required. The calculation for the area moment of inertia is similar to that for the mass moment of inertia.

Damping

Rotational damping is normally caused by viscous fluids, such as the oils used for lubrication. For a system with one rotating and one stationary part, the damping torque is $T = B\omega$. For damping between two rotating parts, it is $T = B(\omega_1 - \omega_2)$, where $B$ is the damping coefficient.

Levers

A lever can be used to amplify forces or motion. Although a lever arm could theoretically rotate fully, it typically has a limited range of motion. The amplification is determined by the ratio of the arm lengths on either side of the pivot.

Gears and Belts

While levers amplify forces and motions over limited ranges of motion, gears can rotate indefinitely. Some of the basic gear forms are listed below.

Spur - Round gears with teeth parallel to the rotational axis.

Rack - A straight gear (used with a small round gear called a pinion).

Helical - The teeth follow a helix around the rotational axis.

Bevel - The gear has a conical shape, allowing forces to be transmitted at angles.

Gear teeth are carefully designed so that they mesh smoothly as the gears rotate. The forces on gears act tangentially at the pitch circle, whose diameter is called the pitch diameter. In a transmission, the gear ratio is changed by sliding (left-right) some of the gears to change the sequence of gears transmitting the force.
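Because meshing teeth move at the same speed at the pitch circle, ω_in·r_in = ω_out·r_out, and for an ideal gear pair the torque scales by the inverse ratio. The sketch below uses tooth counts as the ratio (valid when both gears have the same tooth size); the `efficiency` parameter is an assumption added to represent losses, and only magnitudes are returned (an external gear pair also reverses the direction of rotation).

```python
def gear_pair(omega_in, torque_in, teeth_in, teeth_out, efficiency=1.0):
    """Output speed and torque magnitudes of a gear pair.

    ratio = N_out / N_in = r_out / r_in.
    efficiency (assumed, default lossless) scales the output torque.
    """
    ratio = teeth_out / teeth_in
    omega_out = omega_in / ratio             # equal pitch-line speed
    torque_out = torque_in * ratio * efficiency
    return omega_out, torque_out

# A 20-tooth pinion driving a 60-tooth gear (illustrative values).
w, T = gear_pair(omega_in=100.0, torque_in=2.0, teeth_in=20, teeth_out=60)
print(w, T)   # speed reduced 3x, torque increased 3x
```

For the lossless case the power, ω·T, is the same on both shafts; gears trade speed for torque.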

Friction

Friction between rotating components is a major source of inefficiency in machines. It results from the contact surface materials and geometries. Calculating friction values in rotating systems is more difficult than in translating systems. Normally, rotational friction will be given as static and kinetic friction torques. Basically, these problems require that the model first be analyzed as if the friction surface is fixed (stuck). If the required friction torque exceeds the maximum static friction torque, the mechanism is then analyzed using the kinetic (dynamic) friction torque. For example, consider a shaft passing through a hole in a wall, with friction between the shaft and the hole.

The friction torque is left as a variable for the derivation of the state equations. The friction value must be calculated using the appropriate state equation. The result of this calculation and the previous static or dynamic condition are then used to determine the new friction value.
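A minimal sketch of this stuck/sliding logic, assuming the shaft is a single inertia J driven by an applied torque and integrated with Euler steps. The values of J, the static and kinetic friction torques, and the step size are hypothetical. While stuck, the friction torque is whatever holds the shaft still, up to the static limit; when the velocity crosses zero, the shaft is re-checked as stuck.

```python
def friction_torque(stuck, omega, applied, t_static, t_kinetic):
    """Return (friction_torque, stuck) using static/kinetic friction logic."""
    if stuck or omega == 0.0:
        if abs(applied) <= t_static:
            return -applied, True            # static friction balances the load
        direction = 1.0 if applied > 0 else -1.0
        return -t_kinetic * direction, False  # breakaway: switch to kinetic
    direction = 1.0 if omega > 0 else -1.0
    return -t_kinetic * direction, False      # sliding: oppose the velocity

def simulate(applied_torque, J=0.01, t_static=0.5, t_kinetic=0.3, h=0.001, t_end=1.0):
    """Euler integration of J*dw/dt = T_applied + T_friction (hypothetical values)."""
    omega, stuck = 0.0, True
    for n in range(round(t_end / h)):
        applied = applied_torque(n * h)
        t_f, stuck = friction_torque(stuck, omega, applied, t_static, t_kinetic)
        new_omega = omega + h * (applied + t_f) / J
        if not stuck and omega * new_omega < 0:
            new_omega, stuck = 0.0, True      # velocity crossed zero: re-stick
        omega = new_omega
    return omega, stuck

print(simulate(lambda t: 0.4))                        # below static limit: stays put
print(simulate(lambda t: 0.8))                        # breaks away and accelerates
print(simulate(lambda t: 0.8 if t < 0.1 else 0.0))    # slides, then stops and sticks
```

Clamping the velocity to zero at the crossing avoids the chattering that occurs when kinetic friction alone is applied near zero speed.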

Permanent Magnet Electric Motors

DC motors create a torque between the rotor (inside) and stator (outside) that is related to the applied voltage or current. In a permanent magnet motor, magnets are mounted on the stator, while the rotor consists of wound coils. When a voltage is applied to the coils, the motor will accelerate. When the inductance of the windings is neglected, the speed response of a permanent magnet DC motor is first-order.

SUMMARY

• The basic equations of motion were presented.

• Mass and area moment of inertia are used for inertia and springs.

• Rotational dampers and springs were modeled.

• A design case was presented.

IV . INPUT-OUTPUT EQUATIONS

INTRODUCTION

To solve a set of differential equations we have two choices: solve them numerically or symbolically. For a symbolic solution, the system of differential equations must be manipulated into a single differential equation. In this chapter we will look at methods for manipulating differential equations into useful forms.

THE DIFFERENTIAL OPERATOR

The differential operator 'd/dt' can be written in a number of forms. In this book two forms have been used thus far, d/dt x and x-dot. For convenience we will add a third, 'D', defined as $D = \frac{d}{dt}$, so that $Dx = \frac{dx}{dt}$, $D^2x = \frac{d^2x}{dt^2}$ and $\frac{x}{D} = \int x\,dt$. In basic terms the operator can be manipulated as if it is a normal variable: multiplying by 'D' results in a derivative, and dividing by 'D' results in an integral. The first-order axiom can be used to help solve a first-order differential equation.

MISSION :

• To be able to develop input-output equations for mechanical systems.

• The differential operator, input-output equations

• Design case - vibration isolation

Converting Input-Output Equations to State Equations

In some instances we will want to numerically integrate an input-output equation. To do this, it must first be converted into a set of first-order state equations.
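A minimal sketch of the conversion, assuming an input-output equation with no derivatives of the input on the right-hand side: y⁽ⁿ⁾ + a[n-1]·y⁽ⁿ⁻¹⁾ + ... + a[0]·y = b0·u. Each state is one derivative of y, so every state equation except the last simply says "the derivative of this state is the next state". The example system and its coefficients are hypothetical.

```python
def io_to_state(a, b0):
    """Convert y(n) + a[n-1]*y(n-1) + ... + a[0]*y = b0*u into state equations.

    States: x[0] = y, x[1] = dy/dt, ..., x[n-1] = d^(n-1)y/dt^(n-1).
    Returns a function giving dx/dt for the state list x and input u.
    """
    n = len(a)

    def derivs(x, u):
        dx = x[1:]                                     # dx[i]/dt = x[i+1]
        highest = b0 * u - sum(a[i] * x[i] for i in range(n))
        return dx + [highest]                          # solved for y(n)
    return derivs

# Hypothetical system: y'' + 4y' + 25y = 25u, with a unit step input u = 1.
f = io_to_state([25.0, 4.0], 25.0)
x, h = [0.0, 0.0], 0.001
for _ in range(5000):                                  # Euler integration to t = 5 s
    dx = f(x, 1.0)
    x = [xi + h * dxi for xi, dxi in zip(x, dx)]
print(x[0])                                            # settles near 1.0
```

If the right-hand side contains derivatives of the input, the states must be chosen differently; that case is not covered by this sketch.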

Integrating Input-Output Equations

An input-output equation is already in a form suitable for normal integration techniques, with the left-hand side being the homogeneous part and the right-hand side the particular part. If the non-homogeneous part includes derivatives, these determine the values of the initial conditions.

DESIGN CASE

The classic mass-spring-damper system is shown in Figure 6.13. In this example the forces are summed to provide an equation. The differential operator is replaced, and the equation is manipulated into transfer function form. The transfer function is given in two different forms because the system is reversible and the output could be either 'F' or 'x'.
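Assuming the usual arrangement of a mass M on a spring K and a damper B with an applied force F (symbols chosen here to match common usage), and zero initial conditions so that D can be replaced by the Laplace variable s, the steps can be written as:

```latex
\begin{aligned}
F - Kx - B\,Dx &= M\,D^2x \\
(MD^2 + BD + K)\,x &= F \\
\frac{x}{F} &= \frac{1}{Ms^2 + Bs + K}, \qquad \frac{F}{x} = Ms^2 + Bs + K
\end{aligned}
```

The two transfer functions are reciprocals of each other, which is why either F or x can be treated as the output.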

SUMMARY

• The differential operator can be manipulated algebraically

• Equations can be manipulated into input-output forms and solved as normal differential equations

V . ELECTRICAL SYSTEMS

MISSION :

• To apply analysis techniques to circuits

• Basic components; resistors, power sources, capacitors, inductors and op-amps

• Device impedance

• Example circuits

INTRODUCTION

A voltage is a pull or push acting on electrons. The voltage will produce a current when the electrons can flow through a conductor. The more freely the electrons can flow, the lower the resistance of the material. Most electrical components are used to control this flow.

MODELING

Kirchhoff's voltage and current laws: the node current law holds because the currents flowing into and out of a node must total zero. If the sum of the currents were not zero, electrons would be appearing and disappearing at that node, violating the conservation of charge. The loop voltage law states that the sum of all voltage rises and drops around a loop must total zero.

The simplest form of circuit analysis is for DC circuits, typically only requiring algebraic manipulation. In AC circuit analysis we consider the steady-state response to a sinusoidal input. Finally, the most complex is transient analysis, often requiring integration or similar techniques.

• DC (Direct Current) - find the response for a constant input.

• AC (Alternating Current) - find the steady-state response to an AC input.

• Transient - find the initial response to changes.

There is a wide range of components used in circuits. The simplest components are passive, such as resistors, capacitors and inductors. Active components, such as op-amps and transistors, are capable of changing their behavior.

Components:

• resistors - reduce current flow as described by Ohm's law

• voltage/current sources - deliver power to a circuit

• capacitors - pass current in proportion to the rate of change of voltage; these block DC currents

• inductors - resist changes in current flow, these block high frequencies

• op-amps - very high gain amplifiers useful in many forms

Voltage and Current Sources

A voltage source will maintain a voltage in a circuit by varying the current as required. A current source will supply a current to a circuit by varying the voltage as required. The schematic symbols for voltage and current sources are shown in Figure 7.5. The supplies with '+' and '-' symbols provide DC voltages, with the symbols indicating polarity. The symbol with two horizontal lines is a battery. The circle with a sine wave is an AC voltage supply. The last symbol, with an arrow inside the circle, is a current supply; the arrow indicates the direction of positive current flow.

Op-Amps

In the ideal model of an op-amp, the inverting and non-inverting inputs are on the left-hand side. Both of these inputs are assumed to have infinite impedance, so no current flows into them. Op-amp application circuits are designed (through negative feedback) so that the inverting and non-inverting inputs are driven to the same voltage level. The output of the op-amp is on the right. In circuits, op-amps are used with feedback to perform standard operations such as those listed below.

• adders, subtractors, multipliers, and dividers - simple analog math operations

• amplifiers - increase the amplitude of a signal

• impedance isolators - hide the resistance of a circuit while passing a voltage
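The ideal model above is enough to derive the gain of the common inverting configurations. With the non-inverting input grounded, the inverting input is held at 0 V, and because no current enters the op-amp, the input current must all flow through the feedback resistor. The sketch below ignores output saturation and non-ideal effects (an assumption); resistor values are illustrative.

```python
def inverting_amplifier(v_in, r_in, r_f):
    """Ideal inverting amplifier: V_out = -(R_f / R_in) * V_in.

    The input current V_in / R_in flows entirely through R_f, and the
    inverting input sits at 0 V, so V_out = -R_f * (V_in / R_in).
    """
    return -(r_f / r_in) * v_in

def summing_amplifier(inputs, r_f):
    """Ideal inverting adder: V_out = -R_f * sum(V_i / R_i) over (V_i, R_i) pairs."""
    return -r_f * sum(v / r for v, r in inputs)

print(inverting_amplifier(0.5, 1e3, 10e3))                       # gain of -10
print(summing_amplifier([(1.0, 10e3), (2.0, 10e3)], 10e3))       # -(1 + 2)
```

With equal resistors the summing amplifier is the "adder" in the list above; a real op-amp output cannot exceed its supply rails.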

Feedforward Controllers

When a model of a system is well known, it can be used to improve the performance of a control system by adding a feedforward function alongside a feedback controller, such as a PID controller. The feedforward function is basically an inverse model of the process. When it is used together with a more traditional feedback function, the overall system can outperform more traditional controllers, such as the PID controller alone.

SUMMARY

• Transfer functions can be used to model the ratio of input to output.

• Block diagrams can be used to describe and simplify systems.

• Controllers can be designed to meet criteria, such as damping ratio and natural frequency.

• System errors can be used to determine the long term stability and accuracy of a controlled system.

• Other control types are possible for more advanced systems.

VI . ANALOG INPUTS AND OUTPUTS

INTRODUCTION

An analog value is continuous, not discrete. In the previous chapters, techniques were discussed for designing continuous control systems. In this chapter we will examine analog inputs and outputs so that we can design continuous control systems that use computers.

Logical and Continuous Values :

Typical analog inputs and outputs for computers are listed below. Actuators and sensors that can be used with analog inputs and outputs will be discussed in later chapters.

Inputs:

• oven temperature

• fluid pressure

• fluid flow rate

MISSION :

• To understand the basics of conversion to and from analog values.

• Analog inputs and outputs

• Sampling issues; aliasing, quantization error, resolution

Outputs:

• fluid valve position

• motor position

• motor velocity

In a typical analog input system, a physical value is converted to a voltage, current or other value by a transducer. A signal conditioner converts the signal from the transducer to a voltage or current that is read by the analog input.

Analog-to-digital and digital-to-analog conversion use integers within the computer. Integers limit the resolution of the numbers to a discrete, or quantized, range.

ANALOG INPUTS

To input an analog voltage into a computer, the continuous voltage value must be sampled and then converted to a numerical value by an A/D (Analog to Digital) converter, also known as an ADC. Figure 13.4 shows a continuous voltage changing over time.
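A minimal sketch of sampling followed by A/D conversion, assuming a hypothetical 12-bit converter with a 0 to 10 V input range. Truncating to the lower code is one common convention; some converters round instead. The resolution of this converter is (10 V - 0 V) / 2¹² ≈ 2.44 mV per count.

```python
import math

def adc(v, v_min=0.0, v_max=10.0, bits=12):
    """Ideal A/D conversion of a voltage to an integer code.

    Inputs outside [v_min, v_max] are clamped; the code is truncated.
    """
    levels = 2 ** bits
    v = min(max(v, v_min), v_max)
    code = int((v - v_min) / (v_max - v_min) * levels)
    return min(code, levels - 1)          # v_max maps to the top code

def sample(signal, rate, duration):
    """Sample a continuous signal (a function of time) at a fixed rate in Hz."""
    return [signal(i / rate) for i in range(int(duration * rate))]

# Hypothetical 1 Hz sine wave between 1 V and 9 V, sampled at 100 Hz for 1 s.
wave = lambda t: 5.0 + 4.0 * math.sin(2 * math.pi * t)
codes = [adc(v) for v in sample(wave, rate=100, duration=1.0)]
print(codes[:5])
```

Sampling must be fast enough for the signal being measured; sampling a signal too slowly causes aliasing.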

ANALOG OUTPUTS

Analog outputs are much simpler than analog inputs. To set an analog output, an integer is converted to a voltage. This process is very fast and does not experience the timing problems of analog inputs. However, analog outputs are subject to quantization errors. The relationships between the integer value and the output voltage are almost identical to those of the A/D converter.
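A minimal sketch of D/A conversion, assuming the same hypothetical 12-bit, 0 to 10 V range as a typical A/D converter. The output can only take 2¹² discrete levels, so choosing the nearest code leaves an error of at most half a step (about 1.2 mV here).

```python
def dac(code, v_min=0.0, v_max=10.0, bits=12):
    """Ideal D/A conversion of an integer code to a voltage (code clamped to range)."""
    levels = 2 ** bits
    code = min(max(int(code), 0), levels - 1)
    return v_min + code * (v_max - v_min) / levels

def dac_code(v_desired, v_min=0.0, v_max=10.0, bits=12):
    """Integer code whose output is closest to a desired voltage."""
    levels = 2 ** bits
    code = round((v_desired - v_min) / (v_max - v_min) * levels)
    return min(max(code, 0), levels - 1)

target = 3.3
actual = dac(dac_code(target))
print(actual, abs(actual - target))   # quantization error below half a step
```
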

NOISE REDUCTION

Shielding

When a changing magnetic field cuts across a conductor, it will induce a current flow. The resistance in the circuits will convert this to a voltage. These unwanted voltages result in erroneous readings from sensors and erroneous signals to outputs. Shielding will reduce the effects of the interference. When shielding and grounding are done properly, the effects of electrical noise will be negligible. Shielding is normally used for all logical signals in noisy environments, high-speed counters or high-speed circuitry, and all analog signals.

There are two major approaches to reducing noise: shielding and twisted pairs. Shielding involves encasing conductors and electrical equipment with metal. As a result, electrical equipment is normally housed in metal cases. Wires are normally put in cables with a metal sheath surrounding both wires. The metal sheath may be a thin film or a woven metal mesh. Shielded wires are connected at one end to "drain" the unwanted signals into the cases of the instruments.

Grounding

- ground voltages are based upon the natural voltage level in the physical ground (the earth under your feet). This will vary over a distance. Most buildings and electrical systems use a ground reference for the building. Between different points on the same building, ground voltage levels may vary by as much as a few hundred millivolts. This can lead to significant problems with voltage readings and system safety.

- A signal can be floating, or connected to a ground

- if floating, a system normally has a self-contained power source or self reference, such as a battery, strain gauge or thermocouple. These are usually read with double-ended (differential) inputs. The potential for floating voltage levels can be minimized by connecting large resistors (up to 100K) from the input to ground.

- a grounded system uses a single common (ground) for all signals. These are normally connected to single-ended inputs.

- the analog common can also be connected to the ground with a large resistor to drain off induced voltages.

- cable shields or grounds are normally only connected at one side to prevent ground loops.

SUMMARY

• A/D conversion will convert a continuous value to an integer value.

• D/A conversion is easier and faster, and will convert a digital value to an analog value.

• Resolution limits the accuracy of A/D and D/A converters.

• Sampling too slowly will alias the real signal.

• Analog inputs are sensitive to noise.

• Analog shielding should be used to improve the quality of electrical signals.

VII . CONTINUOUS SENSORS


MISSION :

• To understand the common continuous sensor types.

• To understand interfacing issues.

• Continuous sensor issues; accuracy, resolution, etc.

• Angular measurement; potentiometers, encoders and tachometers

• Linear measurement; potentiometers, LVDTs, Moire fringes and accelerometers

• Force measurement; strain gages and piezoelectric

• Liquid and fluid measurement; pressure and flow

• Temperature measurement; RTDs, thermocouples and thermistors

• Other sensors

• Continuous signal inputs and wiring

• Glossary


INTRODUCTION

Continuous sensors convert physical phenomena to measurable signals, typically voltages or currents. Consider a simple temperature measuring device: there will be an increase in output voltage proportional to a temperature rise. A computer could measure the voltage and convert it to a temperature. The basic physical phenomena typically measured with sensors include:

- angular or linear position

- acceleration

- temperature

- pressure or flow rates

- stress, strain or force

- light intensity

- sound

Most of these sensors are based on subtle electrical properties of materials and devices. As a result, the signals often require signal conditioners. These are often amplifiers that boost small currents and voltages to larger voltages.

Sensors are also called transducers because they convert an input phenomenon to an output in a different form. This transformation relies upon a manufactured device with limitations and imperfections. As a result, sensor limitations are often characterized with:

Accuracy - This is the maximum difference between the indicated and actual reading. For example, if a sensor reads a force of 100N with a ±1% accuracy, then the force could be anywhere from 99N to 101N.

Resolution - Used for systems that step through readings. This is the smallest increment that the sensor can detect; it may also be incorporated into the accuracy value. For example, if a sensor measures up to 10 inches of linear displacement and outputs a number between 0 and 100, then the resolution of the device is 0.1 inches.

Repeatability - When a single measurement is made and repeated under the same conditions, there will be a small variation in that particular reading. If we take a statistical range for the repeated readings (e.g., ±3 standard deviations), this will be the repeatability. For example, if a flow rate sensor has a repeatability of 0.5cfm, readings for an actual flow of 100cfm should rarely be outside 99.5cfm to 100.5cfm.

Linearity - In a linear sensor the input phenomenon has a linear relationship with the output signal. In most sensors this is a desirable feature. When the relationship is not linear, the conversion from the sensor output (e.g., voltage) to a calculated quantity (e.g., force) becomes more complex.

Precision - This considers accuracy, resolution and repeatability of one device relative to another.

Range - Natural limits for the sensor. For example, a sensor for reading angular rotation may only rotate 200 degrees.

Dynamic Response - The frequency range for regular operation of the sensor. Typically sensors will have an upper operating frequency; occasionally there will also be lower frequency limits. For example, our ears hear best between 10Hz and 16KHz.

Environmental - Sensors all have some limitations over factors such as temperature, humidity, dirt/oil, corrosives and pressures. For example, many sensors will work in relative humidities (RH) from 10% to 80%.

Calibration - When manufactured or installed, many sensors will need some calibration to determine or set the relationship between the input phenomena and the output. For example, a temperature reading sensor may need to be zeroed or adjusted so that the measured temperature matches the actual temperature. This may require special equipment, and need to be performed frequently.

Cost - Generally, more precision costs more. Some sensors are very inexpensive, but the signal conditioning equipment costs are significant.
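The repeatability definition above (a ±3 standard deviation band of repeated readings) can be computed directly, as in this minimal sketch. The six readings of a steady 100cfm flow are hypothetical, and the sample standard deviation is used.

```python
import statistics

def repeatability(readings, k=3.0):
    """Half-width of the +/- k standard deviation band of repeated readings."""
    return k * statistics.stdev(readings)

# Hypothetical repeated readings (cfm) of a steady 100 cfm flow.
readings = [100.1, 99.9, 100.0, 100.2, 99.8, 100.0]
r = repeatability(readings)
print(f"repeatability: +/-{r:.2f} cfm")
```

With only a few readings the estimate is rough; more repetitions give a more trustworthy band.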

Encoders

Encoders use rotating disks with optical windows. The encoder contains an optical disk with fine windows etched into it. Light from emitters passes through the openings in the disk to detectors. As the encoder shaft is rotated, the light beams are broken.

There are two fundamental types of encoders: absolute and incremental. An absolute encoder measures the position of the shaft within a single rotation; the same shaft angle will always produce the same reading. The output is normally a binary or Gray code number. An incremental (or relative) encoder outputs two pulse trains that can be used to determine displacement. Logic circuits or software are used to determine the direction of rotation and to count pulses to determine the displacement. The velocity can be determined by measuring the time between pulses.
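Gray code is used on absolute encoders because only one bit changes between adjacent positions, so a reading taken while the disk is between two positions is off by at most one step. The sketch below converts a Gray-coded reading to binary and then to an angle; the number of rings (bits) is an assumed parameter.

```python
def binary_to_gray(n):
    """Gray code of a non-negative integer."""
    return n ^ (n >> 1)

def gray_to_binary(gray):
    """Convert a Gray-coded integer (as read from an absolute encoder) to binary."""
    binary = gray
    shift = gray >> 1
    while shift:
        binary ^= shift
        shift >>= 1
    return binary

def encoder_angle(gray_reading, bits):
    """Shaft angle (degrees) for an absolute encoder with `bits` rings (assumed)."""
    return gray_to_binary(gray_reading) * 360.0 / (2 ** bits)

# A hypothetical 2-ring encoder reading Gray code 0b11 is at position 2 of 4.
print(encoder_angle(0b11, bits=2))   # 180.0
```
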

In the simple absolute encoder with two rings, the outer ring is the most significant digit of the encoder and the inner ring is the least significant digit. The simple relative encoder also has two rings, with one ring rotated a few degrees ahead of the other, but otherwise the same. Both rings detect position to a quarter of the disk. To add accuracy to the absolute encoder, more rings must be added to the disk, along with more emitters and detectors. To add accuracy to the relative encoder, we only need to add more windows to the existing two rings. Typical encoders will have from 2 to thousands of windows per ring.

When using absolute encoders, the position during a single rotation is measured directly. If the encoder rotates multiple times, then the total number of rotations must be counted separately.

When using a relative encoder, the distance of rotation is determined by counting the pulses from one of the rings. If the encoder only rotates in one direction, then a simple count of pulses from one ring will determine the total distance. If the encoder can rotate in both directions, a second ring must be used to determine when to subtract pulses. The quadrature scheme, using two rings, is shown in Figure 14.5. The signals are set up so that one is 90 degrees out of phase with the other. Notice that for different directions of rotation, input B either leads or lags A. Interfaces for encoders are commonly available for PLCs and as purchased units. Newer PLCs will also allow two normal inputs to be used to decode encoder inputs.
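A minimal software sketch of quadrature decoding, assuming the A and B channels are sampled fast enough that at most one channel changes between samples. The pair (A, B) forms a 2-bit state that steps through 00, 01, 11, 10 in one direction and the reverse sequence in the other; counting every transition gives four counts per window. Which direction counts as positive is a convention chosen here.

```python
# Forward sequence of the 2-bit state (A << 1 | B): 00 -> 01 -> 11 -> 10 -> 00.
_FORWARD = {0b00: 0b01, 0b01: 0b11, 0b11: 0b10, 0b10: 0b00}

def decode_quadrature(samples):
    """Return (position, errors) from a sequence of (A, B) samples.

    +1 for each step in the forward sequence, -1 for each step backwards.
    A jump of two states (both channels changed at once) is counted as an
    error, because the direction cannot be determined.
    """
    position, errors = 0, 0
    prev = (samples[0][0] << 1) | samples[0][1]
    for a, b in samples[1:]:
        state = (a << 1) | b
        if state == prev:
            continue                 # no edge since the last sample
        if _FORWARD[prev] == state:
            position += 1
        elif _FORWARD[state] == prev:
            position -= 1
        else:
            errors += 1
        prev = state
    return position, errors

forward = [(0, 0), (0, 1), (1, 1), (1, 0), (0, 0)]
print(decode_quadrature(forward))                  # (4, 0)
print(decode_quadrature(list(reversed(forward))))  # (-4, 0)
```

In practice this is usually done by a hardware counter or a PLC encoder interface, since software polling can miss edges at high speeds.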

Normally, absolute and relative encoders require a calibration phase when a controller is turned on. This normally involves moving an axis until it reaches a logical sensor that marks the end of the range. The end of range is then used as the zero position. Machines using encoders, and other relative sensors, are noticeable in that they normally move to some extreme position before use.

Tachometers

Tachometers measure the velocity of a rotating shaft. A common technique is to mount a magnet to a rotating shaft. When the magnet moves past a stationary pick-up coil, current is induced. For each rotation of the shaft there is a pulse in the coil. When the time between the pulses is measured, the period for one rotation can be found and the frequency calculated. This technique often requires some signal conditioning circuitry. Another common technique uses a simple permanent magnet DC generator (note: you can also use a small DC motor). The generator is hooked to the rotating shaft. The rotation of the shaft will induce a voltage proportional to the angular velocity. This technique will introduce some drag into the system, and is used where efficiency is not an issue.

Both of these techniques are common, and inexpensive.
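Both techniques reduce to a short calculation, sketched below. The pulses-per-revolution count and the generator constant (volts per 1000 rpm) are hypothetical parameters that would come from the sensor's data sheet.

```python
def rpm_from_period(period_s, pulses_per_rev=1):
    """Shaft speed (rpm) from the measured time between consecutive pulses."""
    return 60.0 / (period_s * pulses_per_rev)

def rpm_from_generator(voltage, volts_per_krpm):
    """Shaft speed (rpm) from a DC tachogenerator voltage (V proportional to speed)."""
    return voltage / volts_per_krpm * 1000.0

print(rpm_from_period(0.02))          # one pulse every 20 ms -> about 3000 rpm
print(rpm_from_generator(3.5, 7.0))   # 3.5 V at an assumed 7 V/krpm -> 500 rpm
```

Period measurement becomes slow to update at low speeds, since a full period must elapse between readings; adding magnets (more pulses per revolution) shortens the wait.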

Linear Position

Rotational potentiometers were discussed before, but potentiometers are also available in linear/sliding form. These are capable of measuring linear displacement over long distances.

Linear Variable Differential Transformers (LVDT)

Linear Variable Differential Transformers (LVDTs) measure linear displacements over a limited range. The basic device is shown in Figure 14.8. It consists of outer coils with an inner moving magnetic core. High frequency alternating current (AC) is applied to the center coil. This generates a magnetic field that induces a current in the two outside coils. The core will pull the magnetic field towards it, so in the figure more current will be induced in the left-hand coil. The outside coils are wound in opposite directions so that when the core is in the center the induced currents cancel, and the signal out is zero (0Vac). The magnitude of the signal out voltage on either line indicates the position of the core. Near the center of motion the change in voltage is proportional to the displacement; further from the center the relationship becomes nonlinear.

Moire Fringes

High precision linear displacement measurements can be made with Moire fringes. Both of the strips are transparent (or reflective), with black lines at regular intervals. The spacing of the lines determines the accuracy of the position measurements. The stationary strip is offset at an angle so that the strips interfere to give fringe patterns. As the moving strip travels past the stationary strip, the patterns will move up or down, depending upon the speed and direction of motion.

These are used in high precision applications over long distances, often meters. They can be purchased from a number of suppliers, but the cost will be high. Typical applications include Coordinate Measuring Machines (CMMs).

Accelerometers

Accelerometers measure acceleration using a mass suspended on a force sensor. When the sensor accelerates, the inertial resistance of the mass will cause the force sensor to deflect. By measuring the deflection, the acceleration can be determined. In this case the mass is cantilevered on the force sensor. A base and housing enclose the sensor. A small mounting stud (a threaded shaft) is used to mount the accelerometer.

Accelerometers are dynamic sensors, typically used for measuring vibrations between 10Hz and 10KHz. Temperature variations will affect the accuracy of the sensors.

Standard accelerometers can be linear up to 100,000 m/s**2; high shock designs can be used up to 1,000,000 m/s**2. There is often a trade-off between a wide frequency range and device sensitivity (note: higher sensitivity requires a larger mass).

The force sensor is often a small piece of piezoelectric material (discussed later in this chapter). The piezoelectric material can be used to measure the force in shear or compression.

Piezoelectric based accelerometers typically have parameters such as:

• -100 to 250°C operating range

• 1mV/g to 30V/g sensitivity

• operation well below one fourth of the natural frequency

The accelerometer is mounted on the vibration source. It is electrically isolated from the vibration source so that the sensor may be grounded at the amplifier (to reduce electrical noise). Cables are fixed to the surface of the vibration source close to the accelerometer, and as often as possible along their length, to prevent noise from the cable striking the surface. Background vibrations can be detected by attaching control electrodes to non-vibrating surfaces. Each accelerometer is different, but some general application guidelines are:

• The control vibrations should be less than 1/3 of the signal for the error to be less than 12%.

• The mass of the accelerometer should be less than a tenth of the mass being measured.

• These devices can be calibrated with shakers; for example, a 1g shaker will reach a peak acceleration of 9.81 m/s**2.

Accelerometers are commonly used for control systems that adjust speeds to reduce vibration and noise. Computer controlled milling machines now use these sensors to actively eliminate chatter and to detect tool failure. The signal from an accelerometer can be integrated to find velocity, and integrated again to find position.

Forces and Moments

Strain Gages

Strain gages measure strain in materials using the change in resistance of a wire. The wire is glued to the surface of a part so that it undergoes the same strain as the part (at the mount point).
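The change in resistance is usually related to strain by a gauge factor, ΔR/R = GF·ε, where GF is about 2 for common metal-foil gages (an assumed default below). Because ΔR is tiny, gages are typically read with a Wheatstone bridge; the sketch assumes a quarter bridge (one active gage and three fixed resistors equal to the unstrained gage resistance). The 120 Ω gage values are illustrative.

```python
def strain_from_resistance(r_unstrained, r_measured, gauge_factor=2.0):
    """Strain from the fractional change in gage resistance: dR/R = GF * strain."""
    return (r_measured - r_unstrained) / r_unstrained / gauge_factor

def quarter_bridge_output(strain, v_excitation, gauge_factor=2.0):
    """Exact output voltage of a quarter Wheatstone bridge with one active gage.

    With x = dR/R, V_out = V_ex * x / (4 + 2x), which is close to V_ex * x / 4
    for small strains.
    """
    x = gauge_factor * strain
    return v_excitation * x / (4 + 2 * x)

eps = strain_from_resistance(120.0, 120.24)   # 0.001, i.e. 1000 microstrain
v_out = quarter_bridge_output(eps, 5.0)       # about 2.5 mV at 5 V excitation
print(eps, v_out)
```

The millivolt-level output is why strain gages almost always need the amplifying signal conditioners discussed earlier.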

Piezoelectric

When a crystal undergoes strain, it displaces a small amount of charge. In other words, when the distance between atoms in the crystal lattice changes, some electrons are forced out or drawn in. This also changes the capacitance of the crystal.

Liquids and Gases

There are a number of factors to be considered when examining liquids and gases.

• Flow velocity

• Density

• Viscosity

• Pressure

There are a number of differences to be considered between liquids and gases. Normally a liquid is considered incompressible, while a gas normally follows the ideal gas law. Also, given sufficiently high temperatures or low enough pressures, a liquid can become a gas. When flowing, the flow may be smooth, or laminar. At high flow rates or in unrestricted flow, turbulence may result. The Reynolds number is used to determine the transition to turbulence.

Pressure

There are two common mechanisms for pressure measurement. The Bourdon tube uses a curved pressure tube. When the pressure inside is higher than the surrounding air pressure (approximately 14.7psi), the tube will straighten. A position sensor connected to the end of the tube will be displaced when the pressure increases.

Venturi Valves

When a flowing fluid or gas passes through a narrow pipe section (neck), the pressure drops. If there is no flow, the pressure upstream of the neck and in the neck will be the same. The faster the fluid flow, the greater the pressure difference between them.
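For an incompressible fluid, Bernoulli's equation and continuity give the flow rate from the measured pressure difference: Q = A₂·√(2Δp / (ρ(1 − (A₂/A₁)²))), where A₁ is the pipe area and A₂ the neck area. The sketch below uses that relation; the discharge coefficient `cd` and all numeric values are assumptions, not data from the text.

```python
import math

def venturi_flow(dp, d_pipe, d_neck, density, cd=0.98):
    """Volumetric flow rate (m^3/s) from a venturi pressure difference.

    dp      - upstream pressure minus neck pressure (Pa)
    d_pipe  - pipe diameter (m); d_neck - neck diameter (m)
    density - fluid density (kg/m^3)
    cd      - assumed discharge coefficient accounting for real-world losses
    """
    a1 = math.pi * d_pipe ** 2 / 4
    a2 = math.pi * d_neck ** 2 / 4
    return cd * a2 * math.sqrt(2 * dp / (density * (1 - (a2 / a1) ** 2)))

# Hypothetical water flow: 100 mm pipe, 50 mm neck, 5 kPa pressure difference.
q = venturi_flow(dp=5000.0, d_pipe=0.10, d_neck=0.05, density=1000.0)
print(f"{q * 1000:.2f} L/s")
```

Because the flow depends on the square root of Δp, quadrupling the pressure difference only doubles the flow, which limits the useful range of a single venturi.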

Coriolis Flow Meter

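Fluid passes through thin tubes that are driven to vibrate. As the fluid approaches the point of maximum vibration it accelerates, and as it moves away it decelerates. The resulting Coriolis forces twist the tubes by an amount proportional to the mass flow rate.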

Magnetic Flow Meter

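A magnetic sensor applies a magnetic field perpendicular to the flow of a conductive fluid. As the fluid moves, the electrons in the fluid experience an electromotive force, producing a voltage across the flow, measured with electrodes, that is proportional to the flow velocity.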

Ultrasonic Flow Meter

A transmitter emits a high-frequency sound at a point on a tube. The signal must then pass through the fluid to a detector where it is picked up. If the fluid is flowing in the same direction as the sound, the sound will arrive sooner; if the sound travels against the flow, it will take longer to arrive. In a transit-time flow meter two sounds are used, one traveling forward and the other in the opposite direction. The difference in travel time between the two sounds is used to determine the flow velocity.
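If the sound path has length L and makes an angle θ with the pipe axis, the downstream and upstream transit times are t_down = L/(c + v·cos θ) and t_up = L/(c − v·cos θ), where c is the speed of sound in the fluid. Subtracting their reciprocals cancels c, giving v = L/(2·cos θ)·(1/t_down − 1/t_up), which is convenient because c changes with temperature. The geometry and the 1480 m/s sound speed (roughly that of water) are assumptions.

```python
import math
from typing import Tuple

def transit_times(v: float, c: float, path_len: float, angle_rad: float) -> Tuple[float, float]:
    """Simulated (t_down, t_up) for flow velocity v along a path at angle_rad to the pipe axis."""
    vc = v * math.cos(angle_rad)
    return path_len / (c + vc), path_len / (c - vc)

def velocity_from_transit(t_down: float, t_up: float, path_len: float, angle_rad: float) -> float:
    """v = L / (2 cos(theta)) * (1/t_down - 1/t_up); independent of the sound speed c."""
    return path_len / (2.0 * math.cos(angle_rad)) * (1.0 / t_down - 1.0 / t_up)

theta = math.radians(45.0)
t_down, t_up = transit_times(v=2.0, c=1480.0, path_len=0.1, angle_rad=theta)
print(f"dt = {(t_up - t_down) * 1e9:.1f} ns")     # well under a microsecond
print(f"v = {velocity_from_transit(t_down, t_up, 0.1, theta):.3f} m/s")
```

The time difference is tiny, which is why these meters rely on precise timing electronics.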

A Doppler flowmeter bounces a sound wave off particles in the flow. If a particle is moving away from the emitter and detector pair, the detected frequency will be lower; if it is moving towards them, the frequency will be higher.
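For a particle moving at speed v at an angle θ to the sound beam, with v much smaller than the speed of sound c, the frequency shift is approximately Δf = 2·f0·v·cos θ / c; the factor 2 appears because the moving particle both receives and re-emits the wave. A sketch that solves for v, assuming a 1 MHz transmitter and c = 1480 m/s:

```python
import math

def doppler_shift(f0: float, v: float, c: float, angle_rad: float) -> float:
    """Approximate shift (Hz) for a reflector moving at v (positive = towards the sensor)."""
    return 2.0 * f0 * v * math.cos(angle_rad) / c

def velocity_from_shift(df: float, f0: float, c: float, angle_rad: float) -> float:
    """Invert the shift: v = df * c / (2 * f0 * cos(theta))."""
    return df * c / (2.0 * f0 * math.cos(angle_rad))

f0, c, theta = 1.0e6, 1480.0, math.radians(30.0)
df = doppler_shift(f0, 0.5, c, theta)        # positive: particle approaching
print(f"shift = {df:.1f} Hz, v = {velocity_from_shift(df, f0, c, theta):.3f} m/s")
```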

The transmitter and receiver have a minimal impact on the fluid flow, and therefore cause almost no pressure drop.

Vortex Flow Meter

Fluid flowing past a large (typically flat) obstacle will shed vortices. The frequency of the vortices is proportional to the flow rate, so measuring the frequency allows an estimate of the flow rate. These sensors tend to be low cost and are popular for low-accuracy applications.
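The proportionality is usually expressed with the Strouhal number St: f = St·v/d, where d is the width of the obstacle (bluff body) and v the flow velocity. St stays roughly constant over a wide range of Reynolds numbers, which is what makes the meter usable. The value St = 0.2 below is an assumption (typical of a circular cylinder); a real meter would use its own calibrated factor.

```python
def vortex_velocity(freq_hz: float, bluff_width: float, strouhal: float = 0.2) -> float:
    """Flow velocity from shedding frequency: v = f * d / St (St assumed)."""
    return freq_hz * bluff_width / strouhal

def vortex_flow(freq_hz: float, bluff_width: float, pipe_area: float, strouhal: float = 0.2) -> float:
    """Volumetric flow estimate: Q = v * A (m^3/s)."""
    return vortex_velocity(freq_hz, bluff_width, strouhal) * pipe_area

# Assumed 10 mm bluff body and a measured shedding frequency of 120 Hz
v = vortex_velocity(120.0, 0.01)   # about 6 m/s
print(f"v = {v:.2f} m/s")
```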

Positive Displacement Meters

In some cases more precise readings of flow rates and volumes may be required. These can be obtained by using a positive displacement meter. In effect these meters are like pumps run in reverse: as the fluid is pushed through the meter it produces a measurable output, normally on a rotating shaft.
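Because each shaft revolution passes a fixed volume, the meter is read by counting revolutions, typically with an encoder or pulse pickup on the shaft. A sketch assuming a hypothetical meter that displaces 0.5 L per revolution and a pickup giving 100 pulses per revolution:

```python
def pd_volume(pulses: int, pulses_per_rev: int, litres_per_rev: float) -> float:
    """Total volume (L) passed: revolutions * displacement per revolution."""
    return pulses / pulses_per_rev * litres_per_rev

def pd_flow_rate(pulses: int, interval_s: float, pulses_per_rev: int, litres_per_rev: float) -> float:
    """Average flow rate (L/min) over a counting interval."""
    return pd_volume(pulses, pulses_per_rev, litres_per_rev) * 60.0 / interval_s

# 1200 pulses counted in a 10 s window
print(pd_volume(1200, 100, 0.5))             # 6.0 litres
print(pd_flow_rate(1200, 10.0, 100, 0.5))    # 36.0 litres per minute
```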

Temperature

Temperature measurements are very common with control systems. The temperature ranges are normally described with the following classifications.

• very low temperatures: below -60 deg C, e.g. superconductors in MRI units

• low temperature measurement: -60 to 0 deg C, e.g. freezer controls

• fine temperature measurements: 0 to 100 deg C, e.g. environmental controls

• high temperature measurements: above 100 deg C, up to about 3000 deg F (about 1650 deg C), e.g. metal refining/processing
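A small helper can map a reading onto these classes. Since the upper limit is quoted in deg F, it is converted with C = (F − 32)·5/9. The class boundaries follow the list above; assigning each boundary value to the warmer class, and the "out of range" result, are arbitrary choices made for this sketch.

```python
def f_to_c(deg_f: float) -> float:
    """Convert Fahrenheit to Celsius."""
    return (deg_f - 32.0) * 5.0 / 9.0

HIGH_LIMIT_C = f_to_c(3000.0)   # about 1649 deg C

def temperature_class(deg_c: float) -> str:
    """Classify a temperature (deg C) into the ranges listed above."""
    if deg_c < -60.0:
        return "very low"
    if deg_c < 0.0:
        return "low"
    if deg_c < 100.0:
        return "fine"
    if deg_c <= HIGH_LIMIT_C:
        return "high"
    return "out of range"

for t in (-196.0, -18.0, 22.0, 1500.0):
    print(t, temperature_class(t))
```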

OBJECTIVES AND CONSTRAINTS

• Objectives are those things we want to minimize or maximize, such as:

- money

- time

- mass

- volume

- power consumption

- some combination of factors
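When the objective is "some combination of factors", a common approach is a weighted sum, where each weight expresses how much one unit of that factor matters; constraints can then be enforced by rejecting (or heavily penalizing) designs that violate them. The factor names, weights and the mass limit below are hypothetical.

```python
from typing import Dict, Optional

def weighted_cost(factors: Dict[str, float], weights: Dict[str, float]) -> float:
    """Combine several objectives into one number to minimize."""
    return sum(weights[name] * value for name, value in factors.items())

def design_cost(factors: Dict[str, float], weights: Dict[str, float],
                max_mass_kg: float) -> Optional[float]:
    """Weighted cost, or None if the (hypothetical) mass constraint is violated."""
    if factors["mass"] > max_mass_kg:
        return None
    return weighted_cost(factors, weights)

weights = {"money": 1.0, "time": 50.0, "mass": 20.0}          # hypothetical trade-offs
design_a = {"money": 400.0, "time": 3.0, "mass": 12.0}
design_b = {"money": 300.0, "time": 5.0, "mass": 16.0}
print(design_cost(design_a, weights, max_mass_kg=15.0))   # 790.0
print(design_cost(design_b, weights, max_mass_kg=15.0))   # None (too heavy)
```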

SEARCHING FOR THE OPTIMUM

• Local Search Space

• A topographical map shows the relationship between search parameters and cost values.

OPTIMIZATION ALGORITHMS

• The search algorithms change the system parameters and try to lower the cost value.

• The main question is how to change the system parameters to minimize the cost value.
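One of the simplest answers is a local search (hill climbing, here used downhill): try a small change to one parameter at a time, keep any change that lowers the cost, and shrink the step when no neighbouring point is better. It only finds a local minimum of the cost map, so the starting point matters. A minimal sketch with a made-up two-parameter cost function:

```python
from typing import Callable, List, Sequence

def hill_climb(cost: Callable[[Sequence[float]], float],
               start: Sequence[float],
               step: float = 1.0,
               min_step: float = 1e-6,
               max_iter: int = 10_000) -> List[float]:
    """Local search: nudge one parameter at a time and keep any move that lowers the cost."""
    x = list(start)
    best = cost(x)
    for _ in range(max_iter):
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                trial = x.copy()
                trial[i] += delta
                c = cost(trial)
                if c < best:
                    x, best = trial, c
                    improved = True
                    break            # accept the first improving direction for this parameter
        if not improved:
            step /= 2.0              # no neighbouring point is better: search more finely
            if step < min_step:
                break
    return x

def bowl(p: Sequence[float]) -> float:
    # Made-up cost surface with its minimum at (3, -1)
    return (p[0] - 3.0) ** 2 + (p[1] + 1.0) ** 2

best = hill_climb(bowl, [0.0, 0.0])
print(best, bowl(best))
```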

Training a Neural Network for Inverse Kinematics

Neural network solutions can offer faster processing times because information is processed in parallel. The solutions may be adaptive and can still be implemented in hardware using specialized electronics. Neural systems can generalize from small training sets to approximate solutions. They are also fault tolerant and robust: the network will not fail if a few neurons are damaged, and the solutions may still retain their accuracy. When implementing a neural network approach, complex computers are not essential, and robot controllers need not be specific to any one manipulator.

EMBEDDED CONTROL SYSTEM

INTRODUCTION

• Elements of embedded systems (with program examples of each)

NOTE: emphasize a top-down program structure with subroutines for each one.

logical IO - digital inputs and outputs

analog outputs - immediate

analog inputs - delayed input (show use of NOPs and a loop to wait for the conversion), use a potentiometer

timers including PWM to control a transistor/H-bridge for motor control; show sound generation also.

counters including encoder decoding and tachometer decoding

serial IO

other peripherals such as displays, sounds, etc.
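As a sketch of the counter topic (encoder and tachometer decoding), the routine below decodes a quadrature encoder with the common 16-entry transition table: each pair of old and new A/B channel states maps to +1, −1 or 0 counts, and speed is counts per sampling interval. It is written in Python for readability; on a microcontroller the same table would be a small constant array in the polling or interrupt routine. The direction convention and the 400 counts/rev encoder are assumptions.

```python
from typing import Iterable

# Index = (previous_state << 2) | current_state, where state = (A << 1) | B.
# Forward rotation is assumed to be AB = 00 -> 01 -> 11 -> 10 -> 00.
# +1 = one step forward, -1 = one step backward,
# 0 = no change or an invalid double step (both channels changed at once).
QUAD_TABLE = [
     0, +1, -1,  0,
    -1,  0,  0, +1,
    +1,  0,  0, -1,
     0, -1, +1,  0,
]

def decode(samples: Iterable[int], start_state: int = 0) -> int:
    """Accumulate encoder counts from a sequence of sampled AB states (0..3)."""
    count = 0
    prev = start_state
    for state in samples:
        count += QUAD_TABLE[(prev << 2) | state]
        prev = state
    return count

def rpm(counts: int, interval_s: float, counts_per_rev: int) -> float:
    """Tachometer: shaft speed from the counts accumulated in one sampling interval."""
    return counts * 60.0 / (counts_per_rev * interval_s)

forward = [0b01, 0b11, 0b10, 0b00] * 3     # three full cycles
backward = [0b10, 0b11, 0b01, 0b00]        # one cycle the other way
print(decode(forward), decode(backward))   # 12 -4
print(rpm(100, 0.010, 400))                # 100 counts in a 10 ms window
```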

• Control system fundamentals

a simple PID feedback loop using NOPs and time calculations to ensure consistent loop timing

a multiple-step process that waits for an input and does a task with the PID loop. Show an executive subroutine and calls to

dealing with events

event types: asynchronous, delayed

polling

interrupts
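A minimal discrete PID loop for the control-fundamentals item, assuming a fixed sample period dt (which the timing calculations in the notes are meant to guarantee). The plant is a hypothetical first-order system simulated in the same loop so that the sketch runs on its own; the gains are illustrative, not tuned for any real hardware.

```python
class PID:
    """Discrete PID controller with a fixed sample period dt (seconds)."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = 0.0

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        self.integral += error * self.dt                    # I term accumulates error
        derivative = (error - self.prev_error) / self.dt    # D term: rate of change of error
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def simulate(steps: int = 2000, dt: float = 0.01) -> float:
    """Run the loop against a hypothetical first-order plant: dy/dt = (u - y) / tau."""
    pid = PID(kp=2.0, ki=1.0, kd=0.0, dt=dt)
    tau, y = 0.5, 0.0
    for _ in range(steps):
        u = pid.update(setpoint=1.0, measurement=y)   # controller output
        y += (u - y) / tau * dt                        # Euler step of the plant
        # On real hardware the loop would now wait out the rest of dt.
    return y

print(f"output after 20 s: {simulate():.4f}")
```

The integral term is what removes the steady-state error here; with ki = 0 the output would settle below the setpoint.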

• Concurrent processes

single thread vs concurrent processing

how to implement a single control thread

how to create multiple processes

real-time

the need for multiple processes

non-time critical

time critical: regular updates and minimum time between runs

priority levels

hard vs. soft
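One way to get several periodic processes out of a single control thread is a cooperative executive: each task has a period and a priority, and on every tick the executive runs the tasks that are due, highest priority first. Time is simulated with an integer tick counter so the sketch is deterministic; the task names, periods and priorities are hypothetical. Because tasks are never pre-empted, each must return quickly, which is the main limitation compared with interrupts or a real-time operating system.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Task:
    name: str
    period: int                      # run every `period` ticks
    priority: int                    # larger number = more urgent
    action: Callable[[int], None]
    next_run: int = 0

def run_executive(tasks: List[Task], ticks: int) -> None:
    """Single-threaded executive: on each tick, run the due tasks in priority order."""
    ordered = sorted(tasks, key=lambda t: t.priority, reverse=True)
    for tick in range(ticks):
        for task in ordered:
            if tick >= task.next_run:
                task.action(tick)
                task.next_run += task.period   # advance by whole periods to avoid drift

log: List[str] = []
tasks = [
    Task("display", period=5, priority=1, action=lambda t: log.append(f"{t}:display")),
    Task("pid",     period=1, priority=3, action=lambda t: log.append(f"{t}:pid")),
    Task("keypad",  period=2, priority=2, action=lambda t: log.append(f"{t}:keypad")),
]
run_executive(tasks, ticks=6)
print(log)
```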

• Structured Design

General systems design topics

show the general structure of a program with an executive routine that calls task routines.

show a program that mixes an asynchronous GUI with a real-time routine

Modal System design

show the use of global mode bits to track the mode of the system

show how the mode bits change the flow of execution in the executive routine.

Flowcharts

map flowchart structures to program structure

using a register value to track the location in the flowchart

State Diagrams

show the use of state diagrams to model a process and then how the program is written for it.
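A state diagram maps directly onto a table of (state, event) -> next state, with one variable holding the current state, much like the register value used to track the location in a flowchart. The states and events below describe a hypothetical fill-and-mix process and are made up for illustration.

```python
from enum import Enum, auto

class State(Enum):
    IDLE = auto()
    FILLING = auto()
    MIXING = auto()
    DRAINING = auto()

# Arrows of the (hypothetical) state diagram: (state, event) -> next state
TRANSITIONS = {
    (State.IDLE, "start"): State.FILLING,
    (State.FILLING, "tank_full"): State.MIXING,
    (State.MIXING, "timer_done"): State.DRAINING,
    (State.DRAINING, "tank_empty"): State.IDLE,
}

def step(state: State, event: str) -> State:
    """Return the next state; events with no arrow on the diagram leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)

state = State.IDLE
for event in ["tank_full", "start", "tank_full", "timer_done", "tank_empty"]:
    state = step(state, event)
    print(f"{event:>10} -> {state.name}")
```

Adding a state or arrow to the diagram only means adding a table entry, which keeps the program and the diagram easy to compare.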

Failure Analysis

show basic probability theory

show parallel vs serial failures

show failure estimation using theory for single and chained failure modes

show the methods for categorizing failures as a hazard, danger, etc.
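For the parallel vs. serial item: if components fail independently, a serial chain works only when every part works, R = R1·R2·…·Rn, while a parallel (redundant) group fails only when every part fails, R = 1 − (1 − R1)(1 − R2)…(1 − Rn). Independence is an assumption that common-cause failures often violate. The reliabilities below are made-up numbers.

```python
from math import prod
from typing import Sequence

def series_reliability(r: Sequence[float]) -> float:
    """Probability that every component in a series chain works."""
    return prod(r)

def parallel_reliability(r: Sequence[float]) -> float:
    """Probability that at least one of several redundant components works."""
    return 1.0 - prod(1.0 - ri for ri in r)

parts = [0.95, 0.99, 0.90]
print(f"series:   {series_reliability(parts):.4f}")     # worse than the weakest part
print(f"parallel: {parallel_reliability(parts):.5f}")   # better than the best part
```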

• Communication

Receiving and sending strings

String Handling

Parsing strings

Composing strings

Command and response structures

Error Checking

Non-byte-oriented data

Network structures, i.e. destination address headers

A full feedback control system
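A sketch tying together composing strings, parsing them, command/response structures and error checking. The frame format is hypothetical: `$COMMAND,arg1,arg2*CS`, where CS is a two-digit hex XOR of the characters between `$` and `*` (the same idea as the NMEA 0183 checksum). The command name is also made up.

```python
from functools import reduce
from typing import List, Optional, Tuple

def checksum(body: str) -> int:
    """XOR of all character codes in the frame body."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)

def compose(command: str, args: List[str]) -> str:
    """Build a frame such as '$SETSPD,120*xx'."""
    body = ",".join([command] + args)
    return f"${body}*{checksum(body):02X}"

def parse(frame: str) -> Optional[Tuple[str, List[str]]]:
    """Return (command, args), or None if the frame is malformed or the checksum fails."""
    frame = frame.strip()
    if not frame.startswith("$") or "*" not in frame:
        return None
    body, _, cs_text = frame[1:].rpartition("*")
    try:
        received = int(cs_text, 16)
    except ValueError:
        return None
    if received != checksum(body):
        return None                  # corrupted in transit: reject (or request a resend)
    command, *args = body.split(",")
    return command, args

frame = compose("SETSPD", ["120"])
print(frame, parse(frame))
print(parse(frame.replace("120", "121")))   # checksum mismatch -> None
```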

• Other topics

Keyboard multiplexing

output refreshing, LEDs
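Keyboard multiplexing reads an R×C key matrix with only R + C pins: drive one row at a time and read which column lines respond. Since there is no hardware here, the sketch simulates the matrix with a set of pressed (row, column) positions; on a microcontroller, `read_columns` (a hypothetical helper) would instead set a port pin and read an input port. The 4×4 key layout is an assumption.

```python
from typing import List, Set, Tuple

KEYS = [
    "123A",
    "456B",
    "789C",
    "*0#D",
]

def read_columns(pressed: Set[Tuple[int, int]], row: int, n_cols: int) -> List[bool]:
    """Simulated hardware: which column lines see the driven row (True = key closed)."""
    return [(row, col) in pressed for col in range(n_cols)]

def scan_keypad(pressed: Set[Tuple[int, int]]) -> List[str]:
    """Drive each row in turn and collect the keys whose columns respond."""
    found = []
    for row in range(len(KEYS)):
        columns = read_columns(pressed, row, len(KEYS[row]))
        for col, closed in enumerate(columns):
            if closed:
                found.append(KEYS[row][col])
    return found

print(scan_keypad({(0, 1), (3, 2)}))   # ['2', '#']
```

LED refreshing works the same way in reverse: one row (or digit) is driven at a time, fast enough that the eye sees a steady display.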

VIII. Wireless and personal communications systems as a concept of RADAR for point LASER Tracking

The term wireless was coined in the late nineteenth century when inventors toyed with the idea of sending and receiving telegraph messages using electromagnetic fields rather than electric currents. In the early twentieth century, the technology became known as radio; video and data broadcasting and communications were added in the middle of the century and the general term electromagnetic communications emerged. The word wireless was relegated to history. In the late 1980s, the term found new life. Today it refers to communications, networking, control devices, and security systems in which signals travel without direct electrical connections.

Cellular communications

Radio transceivers can be used as telephones in a specialized communications system called cellular. Originally, the cellular communications network was patchy and unreliable, and was used mainly by traveling business people. Nowadays, cellular telephone units are so common that many people regard them as necessities.

How cellular systems work

A cellular telephone set, or cell phone, looks and functions like a cross between a cordless telephone receiver and a walkie-talkie. The unit contains a radio transmitter and receiver combination called a transceiver. Transmission and reception take place on different frequencies, so you can talk and listen at the same time. This capability, which allows you to hear the other person interrupt you if he or she chooses, is known as full duplex.

In an ideal cellular network, all the transceivers are always within range of at least one repeater. The repeaters pick up the transmissions from the portable units and retransmit the signals to the telephone network and to other portable units. The region of coverage for any repeater (also known as a base station) is called a cell. When a cell phone is in motion, say in a car or on a boat, the set goes from cell to cell in the network. This situation is shown in Fig. 32-1. The curved line is the path of the vehicle. Base stations (dots) transfer access to the cell phone. This is called handoff. Solid lines show the limits of the transmission/reception range for each base station. All the base stations are connected to the regional telephone system. This makes it possible for the user of the portable unit to place calls to, or receive calls from, anyone else in the system, whether those other subscribers have cell phones or regular phones.

Older cellular systems occasionally suffer from call loss or breakup when signals are handed off from one repeater to another. This problem has been largely overcome by a technology called code-division multiple access (CDMA). In CDMA, the repeater coverage zones overlap significantly, but signals do not interfere with each other because every phone set is assigned a unique signal code. Rather than abruptly switching from one base-station zone to the next, the signal goes through a region in which it is actually sent through more than one base station at a time. This make-before-break scheme gets rid of one of the most annoying problems inherent in cellular communications. To use a cellular network, you must purchase or rent a transceiver and pay a monthly fee. The fees vary, depending on the location and the amount of time per month you use the service. When using such a system, it is important to keep in mind that your conversations are not necessarily private. It is easier for unauthorized people to eavesdrop on wireless communications than to intercept wire or cable communications.
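The idea of a unique signal code can be shown with a toy direct-sequence example: each user's data bit (as ±1) is multiplied by that user's chip sequence, the shared channel adds all transmissions together, and a receiver recovers its own bit by correlating the sum with its own code. Orthogonal codes (here, rows of a 4×4 Walsh-Hadamard matrix) make the other users' contributions cancel exactly. This sketch ignores noise, timing offsets and power control, and the codes and user names are illustrative only, not those of any real network.

```python
from typing import Dict, List

# Rows of a 4x4 Walsh-Hadamard matrix: mutually orthogonal chip sequences
WALSH = [
    [+1, +1, +1, +1],
    [+1, -1, +1, -1],
    [+1, +1, -1, -1],
    [+1, -1, -1, +1],
]

def spread(bit: int, code: List[int]) -> List[int]:
    """Map bit 0/1 to -1/+1 and multiply it by every chip of the code."""
    symbol = 1 if bit else -1
    return [symbol * chip for chip in code]

def channel(signals: List[List[int]]) -> List[int]:
    """The shared radio channel simply adds all transmissions chip by chip."""
    return [sum(chips) for chips in zip(*signals)]

def despread(received: List[int], code: List[int]) -> int:
    """Correlate with one user's code; the sign of the result gives that user's bit."""
    correlation = sum(r * c for r, c in zip(received, code))
    return 1 if correlation > 0 else 0

users: Dict[str, int] = {"phone A": 1, "phone B": 0, "phone C": 1}
codes = {name: WALSH[i + 1] for i, name in enumerate(users)}
on_air = channel([spread(bit, codes[name]) for name, bit in users.items()])
print(on_air)                                                  # [1, -3, 1, 1]
print({name: despread(on_air, codes[name]) for name in users})
```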

Cell phones and computers

A personal computer (PC) can be hooked up to the telephone lines for use with online networks such as the Internet. For some people, getting on the Internet is the most important justification for buying a computer. You can connect a laptop or notebook computer to a cell phone with a portable modem that converts incoming computer data from analog to digital and also converts outgoing data from digital to analog.

Most commercial aircraft have telephones at each row of seats, complete with jacks into which you can plug a modem. If you plan to get on-line from an aircraft, you must use the phones provided by the airline, not your own cell phone, because radio transceivers can cause interference to flight instruments. You must also observe the airline’s restrictions concerning the operation of electronic equipment while in flight. If you aren’t sure what these regulations are, ask one of the flight attendants.

Satellite systems

A satellite system is, in a certain sense, a gigantic cellular network. The repeaters, rather than being in fixed locations, are constantly moving. The zones of coverage are much larger in a satellite network than in a cellular network, and they change in size and shape if the satellite moves relative to the earth’s surface.