Frequency counter

A frequency counter is an electronic instrument, or component of one, that is used for measuring frequency. Frequency counters usually measure the number of oscillations or pulses per second in a periodic electronic signal. Such an instrument is sometimes referred to as a cymometer, particularly one of Chinese manufacture.

Operating principle

Most frequency counters work by using a counter which accumulates the number of events occurring within a specific period of time. After a preset period known as the gate time (1 second, for example), the value in the counter is transferred to a display and the counter is reset to zero. If the event being measured repeats itself with sufficient stability and its frequency is considerably lower than that of the clock oscillator being used, the resolution of the measurement can be greatly improved by measuring the time required for an integer number of cycles, rather than counting the number of whole cycles observed during a preset duration. Measuring time in this way is often referred to as the reciprocal technique. The internal oscillator which provides the time signals is called the timebase, and must be calibrated very accurately.

If the event to be counted is already in electronic form, simple interfacing to the instrument is all that is required. More complex signals may need some conditioning to make them suitable for counting. Most general purpose frequency counters include some form of amplifier, filtering and shaping circuitry at the input. DSP technology, sensitivity control and hysteresis are other techniques used to improve performance. Other types of periodic events that are not inherently electronic in nature need to be converted using some form of transducer. For example, a mechanical event could be arranged to interrupt a light beam, and the counter made to count the resulting pulses.
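The gap between conventional gated counting and the reciprocal technique can be seen in a small simulation. This is an illustrative sketch only, assuming an ideal noise-free input, a gate that opens exactly on an input edge, and an assumed 10 MHz timebase; the helper names `gated_count_estimate` and `reciprocal_estimate` are hypothetical and do not describe any particular instrument.

```python
import math


def gated_count_estimate(f_signal_hz: float, gate_time_s: float) -> float:
    """Conventional counting: whole input cycles inside the gate, divided by the gate time.

    Assumes the gate opens on an input edge, so the count is floor(f * T) and the
    resolution is one count, i.e. 1 / gate_time_s hertz.
    """
    cycles = math.floor(f_signal_hz * gate_time_s)
    return cycles / gate_time_s


def reciprocal_estimate(f_signal_hz: float, n_cycles: int, f_clock_hz: float) -> float:
    """Reciprocal technique: time an integer number of input cycles with the timebase.

    The uncertainty is now one timebase tick rather than one input cycle.
    """
    duration_s = n_cycles / f_signal_hz
    clock_ticks = math.floor(duration_s * f_clock_hz)  # timebase ticks during the n cycles
    return n_cycles * f_clock_hz / clock_ticks


f_true = 1234.5678  # Hz, a low-frequency input
print(gated_count_estimate(f_true, 1.0))        # prints 1234.0 (1 Hz resolution)
print(reciprocal_estimate(f_true, 1234, 10e6))  # much closer to f_true
```

Both measurements take about one second, but the gated reading is limited to one input cycle in roughly 1234, while the reciprocal reading is limited to one timebase tick in roughly 10^7.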

Frequency counters designed for radio frequencies (RF) are also common and operate on the same principles as lower frequency counters. They often have more range before the counter overflows. For very high (microwave) frequencies, many designs use a high-speed prescaler to divide the signal frequency down to a point where normal digital circuitry can operate. The instrument multiplies the count by the prescaler's division ratio so that the display still shows the correct input frequency. Microwave frequency counters can currently measure frequencies up to almost 56 GHz. Above these frequencies, the signal to be measured is combined in a mixer with the signal from a local oscillator, producing a signal at the difference frequency, which is low enough to be measured directly.
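The bookkeeping for both the prescaler and the mixer can be sketched in a few lines. This assumes an ideal divide-by-N prescaler, a mixer whose sum product is filtered off, a known local-oscillator frequency and a known side (input above or below the LO); all function names and the example frequencies are hypothetical.

```python
import math


def prescaled_reading(f_in_hz: float, prescale: int, gate_time_s: float = 1.0) -> float:
    """Displayed frequency when a divide-by-`prescale` stage precedes a gated counter."""
    # The digital counter only ever sees the divided-down signal.
    counted = math.floor(f_in_hz / prescale * gate_time_s)
    # The display multiplies the count back up by the prescaler ratio.
    return counted * prescale / gate_time_s


def mixer_difference_hz(f_in_hz: float, f_lo_hz: float) -> float:
    """Difference product of the mixer; the sum product is assumed to be filtered off."""
    return abs(f_in_hz - f_lo_hz)


def input_from_difference_hz(f_lo_hz: float, f_diff_hz: float, input_above_lo: bool = True) -> float:
    """Rebuild the input frequency from the known LO and the counted difference frequency."""
    return f_lo_hz + f_diff_hz if input_above_lo else f_lo_hz - f_diff_hz


print(prescaled_reading(2.4e9, 64))          # prints 2400000000.0
print(prescaled_reading(2_400_000_050, 64))  # also 2400000000.0: one count is now 64 Hz
f_diff = mixer_difference_hz(60e9, 58e9)     # 2 GHz difference frequency
print(input_from_difference_hz(58e9, f_diff))  # prints 60000000000.0
```

Note the cost of prescaling: with a 1 second gate, one count now represents 64 Hz instead of 1 Hz.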

Accuracy and resolution

The accuracy of a frequency counter is strongly dependent on the stability of its timebase. Like the movement of a watch, a timebase is delicate: it can be disturbed by movement and interference, and its frequency can drift with age, so it may not "tick" at exactly the right rate. This can make a frequency reading, which is referenced to the timebase, appear higher or lower than the actual value. Highly accurate circuits are used to generate timebases for instrumentation purposes, usually a quartz crystal oscillator within a sealed temperature-controlled chamber, known as an oven controlled crystal oscillator (OCXO) or crystal oven.

For higher accuracy measurements, an external frequency reference tied to a very high stability oscillator such as a GPS disciplined rubidium oscillator may be used. Where the frequency does not need to be known to such a high degree of accuracy, simpler oscillators can be used. It is also possible to measure frequency using the same techniques in software in an embedded system. A central processing unit (CPU), for example, can be arranged to measure its own frequency of operation provided it has some reference timebase to compare with.
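The effect of a timebase error on a gated reading can be worked out directly. This minimal sketch assumes the gate is defined as a fixed number of timebase ticks and ignores the ±1 count quantisation; the function name is hypothetical.

```python
def reading_with_timebase_error(f_true_hz: float, timebase_error_ppm: float) -> float:
    """Frequency shown by a gated counter whose timebase is off by timebase_error_ppm.

    A fast timebase (positive error) closes the gate early, so fewer input
    cycles are counted, yet the display still divides by the nominal gate time.
    The +/-1 count quantisation is ignored here.
    """
    fractional_error = timebase_error_ppm * 1e-6
    return f_true_hz / (1.0 + fractional_error)


for ppm in (-1.0, 0.0, 1.0):
    print(ppm, reading_with_timebase_error(10e6, ppm))
```

A timebase just 1 ppm fast makes a 10 MHz signal read about 10 Hz low, and no amount of gate time removes that error.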

Accuracy is often limited by the available resolution of the measurement. The resolution of a single count generally improves in proportion to the timebase oscillator frequency and the gate time. Improved resolution can be obtained by several techniques such as oversampling/averaging.

Additionally, accuracy can be significantly degraded by jitter on the signal being measured. It is possible to reduce this error by oversampling/averaging techniques.
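Averaging can be illustrated with a short Monte Carlo run. The model is an assumption: each period measurement is the true period plus independent Gaussian jitter (1 µs RMS on a 1 kHz signal here), with a fixed random seed so the run is repeatable. The scatter of the averaged reading should shrink roughly as one over the square root of the number of measurements averaged.

```python
import random
import statistics


def averaged_frequency(true_period_s: float, jitter_rms_s: float, n: int,
                       rng: random.Random) -> float:
    """Average n jittery period measurements, then convert the mean period to frequency.

    Each measurement is modelled as the true period plus independent Gaussian jitter.
    """
    periods = [true_period_s + rng.gauss(0.0, jitter_rms_s) for _ in range(n)]
    return 1.0 / statistics.fmean(periods)


rng = random.Random(42)           # fixed seed so the run is repeatable
true_f = 1e3                      # 1 kHz input
jitter = 1e-6                     # 1 us RMS jitter on each period (assumed)
single = [averaged_frequency(1 / true_f, jitter, 1, rng) for _ in range(200)]
averaged = [averaged_frequency(1 / true_f, jitter, 100, rng) for _ in range(200)]
print(statistics.stdev(single), statistics.stdev(averaged))  # second is roughly 10x smaller
```

Real jitter is not always independent from one period to the next, in which case averaging helps less than this idealised model suggests.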

I/O Interfaces

I/O interfaces allow the user to send information to the frequency counter and receive information from it. Commonly used interfaces include RS232, USB, GPIB and Ethernet. Besides sending measurement results, a counter can notify the user when user-defined measurement limits are exceeded. Common to many counters are the SCPI commands used to control them. A newer development is built-in LAN-based control via Ethernet, complete with GUIs. This allows one computer to control one or several instruments and eliminates the need to write SCPI commands.

Sound Transducers

Sound is the generalised name given to “acoustic waves”. These acoustic waves have frequencies ranging from just 1Hz up to many tens of thousands of Hertz, with the upper limit of human hearing being around 20 kHz (20,000Hz). The sound that we hear is basically made up of mechanical vibrations produced by an Audio Sound Transducer used to generate the acoustic waves, and for sound to be “heard” it requires a medium for transmission, either through the air, a liquid, or a solid.

Sound Transducer


Audio Sound Transducers include both input sensors, which convert sound into an electrical signal, such as a microphone, and output actuators, which convert the electrical signals back into sound, such as a loudspeaker.

We tend to think of sound as only existing in the range of frequencies detectable by the human ear, from 20Hz up to 20kHz (a typical loudspeaker frequency response), but sound can also extend way beyond these ranges.

Sound transducers can also both detect and transmit sound waves and vibrations from very low frequencies called infra-sound up to very high frequencies called ultrasound. But in order for a sound transducer to either detect or produce “sound” we first need to understand what sound is.

What is Sound?

Sound is basically a waveform of energy that is produced by some form of mechanical vibration, such as a tuning fork, and which has a “frequency” determined by the origin of the sound. For example, a bass drum has a low frequency sound while a cymbal has a higher frequency sound.

A sound waveform has the same characteristics as an electrical waveform, namely Wavelength (λ), Frequency (ƒ) and Velocity (m/s). Both the sound's frequency and wave shape are determined by the origin or vibration that originally produced the sound, but the velocity is dependent upon the medium of transmission (air, water etc.) that carries the sound wave. The relationship between wavelength, velocity and frequency is given below as:

Sound Wave Relationship

$$\text{Velocity} = \text{Frequency} \times \text{Wavelength}, \qquad v = f\lambda$$

Where:

- Wavelength – is the length of one complete cycle in metres, (λ)

- Frequency – is the number of complete cycles per second in Hertz, (ƒ)

- Velocity – is the speed of sound through a transmission medium in m/s, (v)
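The relationship can be applied to the edges of the audible range. The speeds used here are approximate assumptions (about 343 m/s in dry air and about 1480 m/s in fresh water, both near 20 °C), and the function name is hypothetical.

```python
SPEED_IN_AIR = 343.0     # m/s, dry air at about 20 °C (approximate)
SPEED_IN_WATER = 1480.0  # m/s, fresh water at about 20 °C (approximate)


def wavelength_m(velocity_m_s: float, frequency_hz: float) -> float:
    """Wavelength from the sound wave relationship: velocity = frequency x wavelength."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return velocity_m_s / frequency_hz


# The edges of the audible range, in air and in water
for f in (20.0, 20_000.0):
    print(f, wavelength_m(SPEED_IN_AIR, f), wavelength_m(SPEED_IN_WATER, f))
```

In air the audible band therefore spans wavelengths from roughly 17 m down to roughly 17 mm; in water the same frequencies have wavelengths a little over four times longer.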

The Microphone Input Transducer

The Microphone, also called a “mic”, is a sound transducer that can be classed as a “sound sensor”. This is because it produces an electrical analogue output signal which is proportional to the “acoustic” sound wave acting upon its flexible diaphragm. This signal is an “electrical image” representing the characteristics of the acoustic waveform. Generally, the output signal from a microphone is an analogue signal, either in the form of a voltage or a current, which is proportional to the actual sound wave.

The most common types of microphones available as sound transducers are Dynamic, Electret Condenser, Ribbon and the newer Piezo-electric Crystal types. Typical applications for microphones as sound transducers include audio recording, reproduction and broadcasting, as well as telephones, television, digital computer recording and body scanners, where ultrasound is used in medical applications. An example of a simple “Dynamic” microphone is shown below.

Dynamic Moving-coil Microphone Sound Transducer

The movement of the coil within the magnetic field causes a voltage to be induced in the coil as defined by Faraday’s law of Electromagnetic Induction. The resultant output voltage signal from the coil is proportional to the pressure of the sound wave acting upon the diaphragm so the louder or stronger the sound wave the larger the output signal will be, making this type of microphone design pressure sensitive.
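For a straight conductor moving at right angles to a uniform magnetic field, Faraday's law reduces to e = B·l·v, so the induced voltage rises with how fast the coil moves, and a stronger sound wave drives the diaphragm and coil harder. The flux density, wire length and velocity below are hypothetical values chosen only to show the scale of the result, and the function name is illustrative.

```python
def coil_emf_volts(flux_density_t: float, wire_length_m: float, velocity_m_s: float) -> float:
    """EMF induced in a straight conductor moving at right angles to a uniform field.

    For this geometry Faraday's law reduces to e = B * l * v.
    """
    return flux_density_t * wire_length_m * velocity_m_s


# Hypothetical values: 1 T gap, 10 m of wire in the gap, coil moving at 1 mm/s
print(coil_emf_volts(1.0, 10.0, 1e-3))  # about 0.01 V
```

Even with these generous assumptions the output is only millivolts, which is why the pre-amplification described below is needed.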

As the coil of wire is usually very small, the range of movement of the coil and attached diaphragm is also very small, producing a very linear output signal which is 90° out of phase with the sound signal. Also, because the coil is a low impedance inductor, the output voltage signal is also very low, so some form of “pre-amplification” of the signal is required.

As the construction of this type of microphone resembles that of a loudspeaker, it is also possible to use an actual loudspeaker as a microphone.

Obviously, the quality of an average loudspeaker will not be as good as that of a studio type recording microphone, but the frequency response of a reasonable speaker is actually better than that of a cheap “freebie” microphone. Also, the coil impedance of a typical loudspeaker is different from that of a microphone, at between 8 and 16Ω. Common applications where speakers are used as microphones are intercoms and walkie-talkies.

The Loudspeaker Output Transducer

Sound transducers can also be used as output devices to produce an alert noise or act as an alarm. Loudspeakers, buzzers, horns and sounders are all types of sound transducer that can be used for this purpose, with the most commonly used audible output sound actuator being the “Loudspeaker”.

Loudspeaker Transducer

Loudspeakers are available in all shapes, sizes and frequency ranges with the more common types being moving coil, electrostatic, isodynamic and piezo-electric. Moving coil type loudspeakers are by far the most commonly used speaker in electronic circuits, kits and toys, and as such it is this type of sound transducer we will examine below.

The principle of operation of the Moving Coil Loudspeaker is the exact opposite of that of the “Dynamic Microphone” we looked at above. A coil of fine wire, called the “speech or voice coil”, is suspended within a very strong magnetic field and is attached to a paper or Mylar cone, called a “diaphragm”, which itself is suspended at its edges to a metal frame or chassis. Unlike the microphone, which is a pressure sensitive input device, this type of sound transducer can be classed as a pressure generating output device.

The Moving Coil Loudspeaker

When an electrical signal is passed through the voice coil, the current flowing in the magnetic field produces a force that moves the coil. As the voice coil is permanently attached to the cone/diaphragm, the cone moves in tandem with it, and its movement causes a disturbance in the air around it, thus producing a sound or note. If the input signal is a continuous sine wave, the cone will move in and out like a piston, pushing and pulling the air as it moves, and a continuous single tone will be heard representing the frequency of the signal. The strength, and therefore the velocity, with which the cone moves and pushes the surrounding air determines the loudness of the sound.

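As the speech or voice coil is essentially a coil of wire, it has, like an inductor, an impedance value. For most loudspeakers this value is between 4 and 16Ω and is called the “nominal impedance” of the speaker. It is not measured at 0Hz, or DC: the nominal impedance is a representative value of the impedance across the speaker's working frequency range, while the coil's DC resistance is usually somewhat lower.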

Remember that it is important to always match the output impedance of the amplifier with the nominal impedance of the speaker to obtain maximum power transfer between the amplifier and speaker. Many amplifier-speaker combinations have an overall efficiency as low as 1 or 2%.
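The maximum power transfer idea behind this rule can be checked with a simple resistive model. The sketch assumes the amplifier is an ideal voltage source (10 V RMS here) behind a fixed 8 Ω source resistance and treats the speaker as a pure resistance, ignoring how a real speaker's impedance varies with frequency; the function name is hypothetical.

```python
def load_power_w(v_source_rms: float, r_source: float, r_load: float) -> float:
    """Power delivered to r_load by an ideal source v_source_rms behind r_source ohms."""
    current = v_source_rms / (r_source + r_load)
    return current ** 2 * r_load


# Sweep common speaker impedances against an assumed 8-ohm source resistance
for r_load in (4.0, 8.0, 16.0):
    print(r_load, load_power_w(10.0, 8.0, r_load))
```

The delivered power peaks when the load equals the source resistance and falls off on either side. At the matched point only half of the source's power reaches the load, so this model speaks to power transfer rather than efficiency.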

Although disputed by some, the selection of good speaker cable is also an important factor in the efficiency of the speaker, as the internal capacitance and magnetic flux characteristics of the cable change with the signal frequency, thereby causing both frequency and phase distortion. This has the effect of attenuating the signal. Also, with high power amplifiers large currents are flowing through these cables so small thin bell wire type cables can overheat during extended periods of use, again reducing efficiency.
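The point about thin cables can be put into numbers using only the DC resistance of the copper. This sketch assumes a copper resistivity of about 1.68e-8 Ω·m and a purely resistive 8 Ω speaker, and it deliberately ignores the frequency-dependent cable effects mentioned above; the function names are hypothetical.

```python
COPPER_RESISTIVITY_OHM_M = 1.68e-8  # approximate value for copper at about 20 °C


def cable_resistance_ohms(run_length_m: float, area_mm2: float,
                          resistivity: float = COPPER_RESISTIVITY_OHM_M) -> float:
    """DC resistance of a two-conductor speaker cable.

    Current flows out and back, so the conductor length is twice the cable run.
    """
    return resistivity * 2 * run_length_m / (area_mm2 * 1e-6)


def fraction_lost_in_cable(run_length_m: float, area_mm2: float, speaker_ohms: float) -> float:
    """Share of the amplifier's output power dissipated in the cable (series circuit)."""
    r_cable = cable_resistance_ohms(run_length_m, area_mm2)
    return r_cable / (r_cable + speaker_ohms)  # same current flows, so power splits by resistance


# A 10 m run to an 8-ohm speaker with thin and with heavier cable
for area in (0.5, 2.5):
    print(area, cable_resistance_ohms(10.0, area), fraction_lost_in_cable(10.0, area, 8.0))
```

With these assumed values, roughly 8% of the power is lost in 0.5 mm² cable, compared with under 2% in 2.5 mm² cable.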

The human ear can generally hear sounds between 20Hz and 20kHz, and the frequency responses of modern general purpose loudspeakers, as well as of headphones, earphones and other types of commercially available headsets used as sound transducers, are tailored to operate within this frequency range.

However, in high performance High Fidelity (Hi-Fi) type audio systems, the audio frequency range is split up into smaller sub-ranges, each handled by its own speaker, thereby improving both the loudspeakers' efficiency and the overall sound quality, as follows:

Generalised Frequency Ranges

| Speaker Unit | Frequency Range |
|---|---|
| Sub-Woofer | 10Hz to 100Hz |
| Bass | 20Hz to 3kHz |
| Mid-Range | 1kHz to 10kHz |
| Tweeter | 3kHz to 30kHz |

The signal is divided between the individual speakers by a crossover network. This crossover network consists of resistors, inductors and capacitors forming RLC type passive filters, or of op-amp active filters, whose crossover or cut-off frequency point is finely tuned to the characteristics of the individual loudspeakers. An example of a multi-speaker “Hi-fi” type design is given below.

Multi-speaker (Hi-Fi) Design
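The simplest passive crossover is first order (6 dB/octave): a series capacitor feeding the tweeter and a series inductor feeding the woofer, each chosen so its reactance equals the speaker impedance at the crossover frequency. This sketch assumes each driver behaves as a pure 8 Ω resistance and uses a hypothetical 3 kHz crossover point; real designs must allow for the drivers' varying impedance. The function name is illustrative.

```python
import math


def first_order_crossover(f_c_hz: float, speaker_ohms: float) -> tuple[float, float]:
    """Component values for a first-order (6 dB/octave) passive crossover.

    Assumes each driver behaves as a pure resistance equal to speaker_ohms.
    Returns (series capacitor for the tweeter, series inductor for the woofer).
    """
    capacitance_f = 1.0 / (2 * math.pi * f_c_hz * speaker_ohms)  # X_C = R at f_c
    inductance_h = speaker_ohms / (2 * math.pi * f_c_hz)          # X_L = R at f_c
    return capacitance_f, inductance_h


c, l = first_order_crossover(3_000.0, 8.0)
print(f"C = {c * 1e6:.2f} uF, L = {l * 1e3:.3f} mH")  # C = 6.63 uF, L = 0.424 mH
```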

Summary of Transducers

Below is a summary of the transducers and sensors we have looked at in this section, along with a list of the main characteristics associated with Transducers, Sensors and Actuators.

Input Devices or Sensors

- Sensors are “Input” devices which convert one type of energy or quantity into an electrical analogue signal.

- The most common forms of sensors are those that detect Position, Temperature, Light, Pressure and Velocity.

- The simplest of all input devices is the switch or push button.

- Some sensors called “Self-generating” sensors generate output voltages or currents relative to the quantity being measured, such as thermocouples and photo-voltaic solar cells and their output bandwidth equals that of the quantity being measured.

- Some sensors, called “Modulating” sensors, change their physical properties, such as inductance or resistance, relative to the quantity being measured. Examples include inductive sensors, LDRs and potentiometers, and these need to be biased to provide an output voltage or current.

- Not all sensors produce a straight linear output and linearisation circuitry may be required.

- Signal conditioning may also be required to provide compatibility between the sensor's low output signal and the detection or amplification circuitry.

- Some form of amplification is generally required in order to produce a suitable electrical signal which is capable of being measured.

- Instrumentation type Operational Amplifiers are ideal for signal processing and conditioning of a sensor's output signal.

Output Devices or Actuators

- “Output” devices are commonly called Actuators and the simplest of all actuators is the lamp.

- Relays provide good separation of the low voltage electronic control signals and the high power load circuits.

- Relays provide separation of DC and AC circuits (i.e. switching an alternating current path via a DC control signal or vice versa).

- Solid state relays have fast response, long life, no moving parts with no contact arcing or bounce but require heat sinking.

- Solenoids are electromagnetic devices that are used mainly to open or close pneumatic valves, security doors and robot type applications. They are inductive loads so a flywheel diode is required.

- Permanent magnet DC motors are cheaper and smaller than equivalent wound motors as they have no field winding.

- Transistor switches can be used as simple ON/OFF unipolar controllers and pulse width speed control is obtained by varying the duty cycle of the control signal.

- Bi-directional motor control can be achieved by connecting the motor inside a transistor H-bridge.

- Stepper motors can be controlled directly using transistor switching techniques.

- The speed and position of a stepper motor can be accurately controlled using pulses, so stepper motors can operate in an open-loop mode.

- Microphones are input sound transducers that can detect acoustic waves in the infrasound, audible sound or ultrasound range generated by a mechanical vibration.

- Loudspeakers, buzzers, horns and sounders are output devices and are used to produce an output sound, note or alarm.
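Two of the actuator points above, pulse width speed control and bi-directional H-bridge control, can be captured in a small behavioural model. This is a sketch under assumptions: an ideal switch, and a hypothetical Q1 to Q4 labelling of the four H-bridge transistors that does not come from any specific driver chip.

```python
from enum import Enum


def pwm_average_voltage(supply_v: float, duty_cycle: float) -> float:
    """Average voltage applied to a motor switched at the given duty cycle (0 to 1)."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty cycle must be between 0 and 1")
    return supply_v * duty_cycle


class Direction(Enum):
    FORWARD = "forward"
    REVERSE = "reverse"
    BRAKE = "brake"
    COAST = "coast"


# Switch states as (Q1 high-left, Q2 low-left, Q3 high-right, Q4 low-right).
# The Q1..Q4 labelling is illustrative, not taken from a specific driver chip.
H_BRIDGE_STATES = {
    Direction.FORWARD: (True, False, False, True),   # current flows left to right
    Direction.REVERSE: (False, True, True, False),   # current flows right to left
    Direction.BRAKE: (False, True, False, True),     # both low sides on: motor shorted
    Direction.COAST: (False, False, False, False),   # all off: motor free-wheels
}


def is_shoot_through(state: tuple[bool, bool, bool, bool]) -> bool:
    """True if either half-bridge would short the supply to ground."""
    q1, q2, q3, q4 = state
    return (q1 and q2) or (q3 and q4)


print(pwm_average_voltage(12.0, 0.25))  # prints 3.0
```

The `is_shoot_through` check captures the one state an H-bridge controller must never select: both transistors on the same side switched on at once.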

Frequency divider

A frequency divider, also called a clock divider, scaler or prescaler, is a circuit that takes an input signal of a frequency, $f_{in}$, and generates an output signal of a frequency:

$$f_{out} = \frac{f_{in}}{n}$$

where $n$ is an integer.

Analog dividers

Analog frequency dividers are less common and used only at very high frequencies. Digital dividers implemented in modern IC technologies can work up to tens of GHz.

Regenerative frequency divider

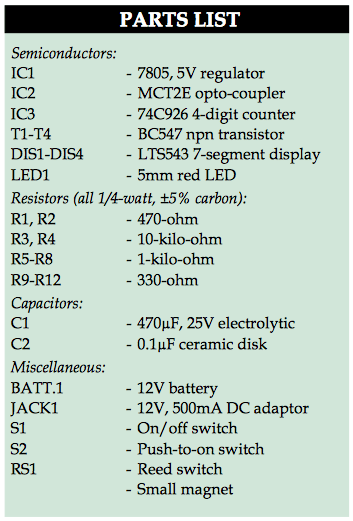

A regenerative frequency divider, also known as a Miller frequency divider, mixes the input signal with the feedback signal from the mixer. The feedback signal is $f_{in}/2$. This produces sum and difference frequencies, $f_{in}/2$ and $3f_{in}/2$, at the output of the mixer. A low pass filter removes the higher frequency, and the $f_{in}/2$ frequency is amplified and fed back into the mixer.
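The divide-by-2 ratio follows from requiring the loop to be self-consistent. As a short derivation, assume the loop has settled with some feedback frequency $f_{fb}$. The mixer produces $f_{in} \pm f_{fb}$, and the low-pass filter passes only the difference term, which is the signal fed back, so in steady state:

$$
f_{fb} = f_{in} - f_{fb} \quad\Longrightarrow\quad f_{fb} = \frac{f_{in}}{2},
\qquad
f_{in} + f_{fb} = \frac{3 f_{in}}{2}
$$

The low-pass filter therefore has to pass $f_{in}/2$ while rejecting $3f_{in}/2$, a frequency ratio of three.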

Injection-locked frequency divider

A free-running oscillator which has a small amount of a higher-frequency signal fed to it will tend to oscillate in step with the input signal. Such frequency dividers were essential in the development of television.

An injection-locked frequency divider (ILFD) operates similarly to an injection locked oscillator. In an ILFD, the frequency of the input signal is a multiple (or fraction) of the free-running frequency of the oscillator. While these frequency dividers tend to be lower power than broadband static (or flip-flop based) frequency dividers, their drawback is a low locking range. The ILFD locking range is inversely proportional to the quality factor (Q) of the oscillator tank. In integrated circuit designs, this makes an ILFD sensitive to process variations. Care must be taken to ensure that the tuning range of the driving circuit (for example, a voltage-controlled oscillator) falls within the input locking range of the ILFD.
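The inverse dependence on Q, and the tuning-range check, can be sketched numerically. This sketch swaps in Adler's small-injection approximation for an injection-locked oscillator, Δf ≈ (f0 / 2Q)·(I_inj / I_osc), and assumes as a rough simplification that a divide-by-N ILFD's input range is the output range scaled by N. All values (a 10 GHz tank, Q = 10, 10% injection) and function names are hypothetical.

```python
def adler_lock_range_hz(f0_hz: float, q: float, injection_ratio: float) -> float:
    """One-sided lock range of an injection-locked oscillator.

    Adler's small-injection approximation: delta_f ~ f0 / (2 Q) * (I_inj / I_osc).
    """
    return f0_hz / (2.0 * q) * injection_ratio


def ilfd_input_range_hz(f0_hz: float, q: float, injection_ratio: float,
                        division: int) -> tuple[float, float]:
    """Rough input lock range of a divide-by-`division` ILFD.

    Assumption: the output locking range is simply scaled by the division ratio.
    """
    delta = adler_lock_range_hz(f0_hz, q, injection_ratio)
    return division * (f0_hz - delta), division * (f0_hz + delta)


def vco_fits(vco_min_hz: float, vco_max_hz: float, lock_range: tuple[float, float]) -> bool:
    """Check that the whole VCO tuning range lies inside the divider's lock range."""
    low, high = lock_range
    return low <= vco_min_hz and vco_max_hz <= high


# Hypothetical divide-by-2 ILFD: 10 GHz tank with Q = 10 and 10% injection
lock = ilfd_input_range_hz(10e9, 10.0, 0.1, 2)
print(lock)                               # about (19.9e9, 20.1e9)
print(vco_fits(19.95e9, 20.05e9, lock))   # a narrow VCO fits
print(vco_fits(19.5e9, 20.5e9, lock))     # a wide VCO does not
```

Doubling Q in this model halves the lock range, which is the trade-off described above.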

Digital dividers

For power-of-2 integer division, a simple binary counter can be used, clocked by the input signal. The least-significant output bit alternates at 1/2 the rate of the input clock, the next bit at 1/4 the rate, the third bit at 1/8 the rate, etc. An arrangement of flip-flops is a classic method for integer-n division. Such division is frequency and phase coherent to the source over environmental variations including temperature. The easiest configuration is a series where each flip-flop is a divide-by-2. For a series of three of these, the system would be a divide-by-8. By adding additional logic gates to the chain of flip-flops, other division ratios can be obtained. Integrated circuit logic families can provide a single chip solution for some common division ratios.

Another popular circuit to divide a digital signal by an even integer is a Johnson counter. This is a type of shift register network that is clocked by the input signal. The last register's complemented output is fed back to the first register's input. The output signal is derived from one or more of the register outputs. For example, a divide-by-6 divider can be constructed with a 3-register Johnson counter. The six valid values of the counter are 000, 100, 110, 111, 011, and 001. This pattern repeats each time the network is clocked by the input signal. The output of each register is an f/6 square wave with 60° of phase shift between registers. Additional registers can be added to provide additional integer divisors.
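Both counters can be stepped through in software to confirm the division ratios described above. This is a behavioural model only (no gate delays or timing), and the helper names are hypothetical; it reproduces the 000, 100, 110, 111, 011, 001 sequence and measures the period of individual outputs in input clock cycles.

```python
def binary_counter_bit(bit: int, n_clocks: int) -> list[int]:
    """Value of one output bit of a binary counter over n_clocks input clock cycles."""
    return [(count >> bit) & 1 for count in range(n_clocks)]


def johnson_states(n_registers: int, n_clocks: int) -> list[str]:
    """States of a Johnson (twisted-ring) counter, starting from all zeros."""
    state = [0] * n_registers
    history = ["".join(map(str, state))]
    for _ in range(n_clocks - 1):
        # The complemented output of the last register feeds the first register.
        state = [1 - state[-1]] + state[:-1]
        history.append("".join(map(str, state)))
    return history


def period(sequence: list[int]) -> int:
    """Smallest shift p for which the sequence repeats exactly."""
    for p in range(1, len(sequence)):
        if all(sequence[i] == sequence[i + p] for i in range(len(sequence) - p)):
            return p
    return len(sequence)


print(johnson_states(3, 7))  # ['000', '100', '110', '111', '011', '001', '000']
print(period([int(s[0]) for s in johnson_states(3, 24)]))  # prints 6: divide-by-6
print(period(binary_counter_bit(2, 32)))                   # prints 8: third bit is f/8
```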

Mixed signal division

D flip-flops can be arranged in the same way for integer-n division, with each D flip-flop in a simple series chain acting as a divide-by-2. More complicated configurations have been found that generate odd factors such as a divide-by-5. Standard, classic logic chips that implement this or similar frequency division functions include the 7456, 7457, 74292, and 74294 (see List of 7400 series integrated circuits).

Fractional-n dividers

A fractional-n frequency synthesizer can be constructed using two integer dividers, a divide-by-n and a divide-by-(n + 1) frequency divider. With a modulus controller, n is toggled between the two values so that the VCO alternates between one locked frequency and the other. The VCO stabilizes at a frequency that is the time average of the two locked frequencies. By varying the percentage of time the frequency divider spends at the two divider values, the frequency of the locked VCO can be selected with very fine granularity.

Delta-sigma fractional-n synthesizers

If the sequence of divide by n and divide by (n + 1) is periodic, spurious signals appear at the VCO output in addition to the desired frequency. Delta-sigma fractional-n dividers overcome this problem by randomizing the selection of n and (n + 1), while maintaining the time-averaged ratios.

Phase-locked loop

A phase-locked loop or phase lock loop, abbreviated as PLL, is a control system that generates an output signal whose phase is related to the phase of an input signal. There are several different types; the simplest is an electronic circuit consisting of a variable frequency oscillator and a phase detector in a feedback loop. The oscillator generates a periodic signal, and the phase detector compares the phase of that signal with the phase of the input periodic signal, adjusting the oscillator to keep the phases matched.

Keeping the input and output phase in lock step also implies keeping the input and output frequencies the same. Consequently, in addition to synchronizing signals, a phase-locked loop can track an input frequency, or it can generate a frequency that is a multiple of the input frequency. These properties are used for computer clock synchronization, demodulation, and frequency synthesis.
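A phase-domain simulation makes the locking behaviour concrete. This is a minimal model, not a circuit design: it assumes the phase detector's averaged output is sin(θ_in − θ_out) (the double-frequency product of a real multiplier is taken to be filtered away), a proportional-plus-integral loop filter with hand-picked hypothetical gains `kp` and `ki` giving a well-damped response, and an oscillator whose frequency is its free-running value plus the filter output.

```python
import math


def simulate_pll(f_in_hz: float = 1010.0, f_free_hz: float = 1000.0,
                 kp: float = 14.0, ki: float = 628.0,
                 dt_s: float = 1e-4, steps: int = 10_000) -> tuple[float, float]:
    """Phase-domain model of a simple PLL with a PI loop filter.

    Returns the final oscillator frequency (Hz) and the final phase error (rad).
    """
    theta_in = 0.0      # phase of the input signal
    theta_out = 0.0     # phase of the controlled oscillator
    integral = 0.0      # state of the integrating part of the loop filter
    f_out = f_free_hz
    for _ in range(steps):
        # Phase detector: averaged output of a multiplier-type detector.
        error = math.sin(theta_in - theta_out)
        # Loop filter: proportional plus integral.
        integral += error * dt_s
        f_out = f_free_hz + kp * error + ki * integral
        # Oscillator and input both advance their phase over one time step.
        theta_out += 2 * math.pi * f_out * dt_s
        theta_in += 2 * math.pi * f_in_hz * dt_s
    return f_out, theta_in - theta_out


f_final, phase_error = simulate_pll()
print(f_final, phase_error)  # f_final ends up very close to 1010 Hz
```

Starting 10 Hz away from the input, the oscillator frequency settles onto the input frequency and the phase error decays towards zero; once locked, the integral term alone supplies the extra 10 Hz of tuning.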

Phase-locked loops are widely employed in radio, telecommunications, computers and other electronic applications. They can be used to demodulate a signal, recover a signal from a noisy communication channel, generate a stable frequency at multiples of an input frequency (frequency synthesis), or distribute precisely timed clock pulses in digital logic circuits such as microprocessors. Since a single integrated circuit can provide a complete phase-locked-loop building block, the technique is widely used in modern electronic devices, with output frequencies from a fraction of a hertz up to many gigahertz.

Simplest analog phase locked loop

Practical analogies

Automobile race analogy

As an analogy of a PLL, consider an auto race with two cars. One represents the input frequency, the other the PLL's output VCO frequency. Each lap corresponds to a complete cycle. The number of laps per hour (a speed) corresponds to the frequency. The separation of the cars (a distance) corresponds to the phase difference between the two oscillating signals.

During most of the race, each car is on its own and free to pass the other and lap the other. This is analogous to the PLL in an unlocked state.

However, if there is an accident, a yellow caution flag is raised. This means neither of the race cars is permitted to overtake and pass the other car. The two race cars represent the input and output frequency of the PLL in a locked state. Each driver will measure the phase difference (a distance in laps) between himself and the other race car. If the trailing driver is far behind, he will increase his speed to close the gap. If he is too close to the other car, he will slow down. The result is that both race cars will circle the track in lockstep with minimum phase difference between them. Since neither car is allowed to lap the other, the cars make the same number of laps in a given time period. Therefore the frequency of the two signals is the same.

Clock analogy

Phase can be proportional to time,[1] so a phase difference can be a time difference. Clocks are, with varying degrees of accuracy, phase-locked (time-locked) to a master clock.

Left on its own, each clock will mark time at slightly different rates. A wall clock, for example, might be fast by a few seconds per hour compared to the reference clock at NIST. Over time, that time difference would become substantial.

To keep the wall clock in sync with the reference clock, each week the owner compares the time on his wall clock to a more accurate clock (a phase comparison), and he resets his clock. Left alone, the wall clock will continue to diverge from the reference clock at the same few seconds per hour rate.

Some clocks have a timing adjustment (a fast-slow control). When the owner compares his wall clock's time to the reference time, he notices that his clock is too fast. Consequently, he can turn the timing adjustment a small amount to make the clock run a little slower (a frequency correction). If things work out right, his clock will be more accurate than before. Over a series of weekly adjustments, the wall clock's notion of a second would agree with the reference time (locked both in frequency and phase within the wall clock's stability).

An early electromechanical version of a phase-locked loop was used in 1921 in the Shortt-Synchronome clock.

History

Spontaneous synchronization of weakly coupled pendulum clocks was noted by the Dutch physicist Christiaan Huygens as early as 1673.[2] Around the turn of the 19th century, Lord Rayleigh observed synchronization of weakly coupled organ pipes and tuning forks.[3] In 1919, W. H. Eccles and J. H. Vincent found that two electronic oscillators that had been tuned to oscillate at slightly different frequencies but that were coupled to a resonant circuit would soon oscillate at the same frequency.[4] Automatic synchronization of electronic oscillators was described in 1923 by Edward Victor Appleton.[5]

Earliest research towards what was later named the phase-locked loop goes back to 1932, when British researchers developed an alternative to Edwin Armstrong's superheterodyne receiver, the Homodyne or direct-conversion receiver. In the homodyne or synchrodyne system, a local oscillator was tuned to the desired input frequency and multiplied with the input signal. The resulting output signal included the original modulation information. The intent was to develop an alternative receiver circuit that required fewer tuned circuits than the superheterodyne receiver. Since the local oscillator would rapidly drift in frequency, an automatic correction signal was applied to the oscillator, maintaining it in the same phase and frequency of the desired signal. The technique was described in 1932, in a paper by Henri de Bellescize, in the French journal L'Onde Électrique.[6][7][8]

In analog television receivers since at least the late 1930s, phase-locked-loop horizontal and vertical sweep circuits are locked to synchronization pulses in the broadcast signal.[9]

When Signetics introduced a line of monolithic integrated circuits like the NE565 that were complete phase-locked loop systems on a chip in 1969,[10] applications for the technique multiplied. A few years later RCA introduced the "CD4046" CMOS Micropower Phase-Locked Loop, which became a popular integrated circuit.

Structure and function

Phase-locked loop mechanisms may be implemented as either analog or digital circuits. Both implementations use the same basic structure. Both analog and digital PLL circuits include four basic elements:

- Phase detector,

- Low-pass filter,

- Variable-frequency oscillator, and

- Feedback path (which may include a frequency divider).

Variations

There are several variations of PLLs. Some terms that are used are analog phase-locked loop (APLL), also referred to as a linear phase-locked loop (LPLL), digital phase-locked loop (DPLL), all digital phase-locked loop (ADPLL), and software phase-locked loop (SPLL).[11]

- Analog or linear PLL (APLL)
  - Phase detector is an analog multiplier. Loop filter is active or passive. Uses a voltage-controlled oscillator (VCO).

- Digital PLL (DPLL)
  - An analog PLL with a digital phase detector (such as XOR, edge-trigger JK, phase frequency detector). May have a digital divider in the loop.

- All digital PLL (ADPLL)
  - Phase detector, filter and oscillator are digital. Uses a numerically controlled oscillator (NCO).

- Software PLL (SPLL)
  - Functional blocks are implemented by software rather than specialized hardware.

- Neuronal PLL (NPLL)
  - Phase detector, filter and oscillator are neurons or small neuronal pools. Uses a rate controlled oscillator (RCO). Used for tracking and decoding low frequency modulations (< 1 kHz), such as those occurring during mammalian-like active sensing.

Performance parameters

- Type and order

- Hold-in range

- Pull-in range (capture range, acquisition range)

- Lock-in range

- Loop bandwidth: Defining the speed of the control loop.

- Transient response: Such as overshoot and settling time to a given accuracy (for example, 50 ppm).

- Steady-state errors: Such as residual phase or timing error.

- Output spectrum purity: Such as sidebands generated by a given ripple on the VCO tuning voltage.

- Phase noise: Defined by the noise energy in a given frequency band (for example, at a 10 kHz offset from the carrier). Highly dependent on VCO phase noise, PLL bandwidth, etc.

- General parameters: Such as power consumption, supply voltage range, output amplitude, etc.
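Phase noise and timing jitter are two views of the same imperfection. A common conversion, sketched here under the assumption that L(f) is the single-sideband phase noise in dBc/Hz sampled at a few offset frequencies, integrates the noise over the offset band, doubles it for both sidebands, and divides the resulting RMS phase by 2πf0. The function name and the flat -120 dBc/Hz profile are hypothetical.

```python
import math


def rms_jitter_s(offsets_hz: list[float], l_dbc_hz: list[float], carrier_hz: float) -> float:
    """RMS jitter from a single-sideband phase-noise profile L(f) in dBc/Hz.

    Integrates 10**(L/10) over the offset band (trapezoidal rule on a linear
    frequency axis), doubles it for both sidebands, takes the square root to get
    RMS phase in radians, and converts phase to time by dividing by 2*pi*f0.
    """
    linear = [10 ** (level / 10) for level in l_dbc_hz]
    area = 0.0
    for i in range(len(offsets_hz) - 1):
        width = offsets_hz[i + 1] - offsets_hz[i]
        area += 0.5 * (linear[i] + linear[i + 1]) * width
    rms_phase_rad = math.sqrt(2 * area)
    return rms_phase_rad / (2 * math.pi * carrier_hz)


# Hypothetical flat profile: -120 dBc/Hz from 10 kHz to 1 MHz on a 100 MHz carrier
print(rms_jitter_s([10e3, 1e6], [-120.0, -120.0], 100e6))  # about 2.24e-12 s
```

For a sloped profile the points need to be closely spaced, because straight-line interpolation on a linear axis overestimates the area under a steeply falling curve.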

Applications

Phase-locked loops are widely used for synchronization purposes: in space communications for coherent demodulation and threshold extension, bit synchronization, and symbol synchronization. Phase-locked loops can also be used to demodulate frequency-modulated signals. In radio transmitters, a PLL is used to synthesize new frequencies which are a multiple of a reference frequency, with the same stability as the reference frequency.

Other applications include:

- Demodulation of both FM and AM signals

- Recovery of small signals that otherwise would be lost in noise (lock-in amplifier to track the reference frequency)

- Recovery of clock timing information from a data stream such as from a disk drive

- Clock multipliers in microprocessors that allow internal processor elements to run faster than external connections, while maintaining precise timing relationships

- DTMF decoders, modems, and other tone decoders, for remote control and telecommunications

- DSP of video signals; phase-locked loops are also used to synchronize phase and frequency to the input analog video signal so it can be sampled and digitally processed

- Atomic force microscopy in tapping mode, to detect changes of the cantilever resonance frequency due to tip–surface interactions

- DC motor drive

Clock recovery

Some data streams, especially high-speed serial data streams (such as the raw stream of data from the magnetic head of a disk drive), are sent without an accompanying clock. The receiver generates a clock from an approximate frequency reference, and then phase-aligns to the transitions in the data stream with a PLL. This process is referred to as clock recovery. In order for this scheme to work, the data stream must have a transition frequently enough to correct any drift in the PLL's oscillator. Typically, some sort of line code, such as 8b/10b encoding, is used to put a hard upper bound on the maximum time between transitions.

Deskewing

If a clock is sent in parallel with data, that clock can be used to sample the data. Because the clock must be received and amplified before it can drive the flip-flops which sample the data, there will be a finite, and process-, temperature-, and voltage-dependent delay between the detected clock edge and the received data window. This delay limits the frequency at which data can be sent. One way of eliminating this delay is to include a deskew PLL on the receive side, so that the clock at each data flip-flop is phase-matched to the received clock. In that type of application, a special form of a PLL called a delay-locked loop (DLL) is frequently used.

Clock generation

Many electronic systems include processors of various sorts that operate at hundreds of megahertz. Typically, the clocks supplied to these processors come from clock generator PLLs, which multiply a lower-frequency reference clock (usually 50 or 100 MHz) up to the operating frequency of the processor. The multiplication factor can be quite large in cases where the operating frequency is several gigahertz and the reference crystal is just tens or hundreds of megahertz.

Spread spectrum

All electronic systems emit some unwanted radio frequency energy. Various regulatory agencies (such as the FCC in the United States) put limits on the emitted energy and any interference caused by it. The emitted noise generally appears at sharp spectral peaks (usually at the operating frequency of the device, and a few harmonics). A system designer can use a spread-spectrum PLL to reduce interference with high-Q receivers by spreading the energy over a larger portion of the spectrum. For example, by changing the operating frequency up and down by a small amount (about 1%), a device running at hundreds of megahertz can spread its interference evenly over a few megahertz of spectrum, which drastically reduces the amount of noise seen on broadcast FM radio channels, which have a bandwidth of several tens of kilohertz.

Clock distribution

Typically, the reference clock enters the chip and drives a phase-locked loop (PLL), which then drives the system's clock distribution. The clock distribution is usually balanced so that the clock arrives at every endpoint simultaneously. One of those endpoints is the PLL's feedback input. The function of the PLL is to compare the distributed clock to the incoming reference clock, and vary the phase and frequency of its output until the reference and feedback clocks are phase and frequency matched.

PLLs are ubiquitous: they tune clocks in systems several feet across, as well as clocks in small portions of individual chips. Sometimes the reference clock may not actually be a pure clock at all, but rather a data stream with enough transitions that the PLL is able to recover a regular clock from that stream. Sometimes the reference clock is the same frequency as the clock driven through the clock distribution; at other times the distributed clock may be some rational multiple of the reference.

Jitter and noise reduction

One desirable property of all PLLs is that the reference and feedback clock edges be brought into very close alignment. The average difference in time between the phases of the two signals when the PLL has achieved lock is called the static phase offset (also called the steady-state phase error). The variance between these phases is called tracking jitter. Ideally, the static phase offset should be zero, and the tracking jitter should be as low as possible.

Phase noise is another type of jitter observed in PLLs, and is caused by the oscillator itself and by elements used in the oscillator's frequency control circuit. Some technologies are known to perform better than others in this regard. The best digital PLLs are constructed with emitter-coupled logic (ECL) elements, at the expense of high power consumption. To keep phase noise low in PLL circuits, it is best to avoid saturating logic families such as transistor-transistor logic (TTL) or CMOS.
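The static phase offset and tracking jitter defined at the start of this section can be estimated from measured edge times. The sketch below assumes paired lists of reference and feedback edge times recorded after lock (the function name and sample values are hypothetical) and reports the jitter as the standard deviation, the square root of the variance, which is the usual RMS form.

```python
import statistics


def phase_offset_and_jitter(ref_edges_s, fb_edges_s):
    """Return (static phase offset, RMS tracking jitter), both in seconds.

    ref_edges_s and fb_edges_s hold the times of corresponding rising edges
    of the reference and feedback clocks, recorded after the loop has locked.
    """
    diffs = [fb - ref for ref, fb in zip(ref_edges_s, fb_edges_s)]
    offset = statistics.fmean(diffs)   # average edge misalignment
    jitter = statistics.pstdev(diffs)  # spread of the misalignment about that average
    return offset, jitter


# Hypothetical 100 MHz clocks whose feedback edges trail by 1 ps or 3 ps.
ref = [k * 10e-9 for k in range(4)]
fb = [r + d for r, d in zip(ref, [1e-12, 3e-12, 1e-12, 3e-12])]
offset, jitter = phase_offset_and_jitter(ref, fb)
print(f"offset {offset * 1e12:.1f} ps, jitter {jitter * 1e12:.1f} ps")  # offset 2.0 ps, jitter 1.0 ps
```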

Another desirable property of all PLLs is that the phase and frequency of the generated clock be unaffected by rapid changes in the voltages of the power and ground supply lines, as well as the voltage of the substrate on which the PLL circuits are fabricated. This is called substrate and supply noise rejection. The higher the noise rejection, the better.

To further improve the phase noise of the output, an injection locked oscillator can be employed following the VCO in the PLL.

Frequency synthesis

In digital wireless communication systems (GSM, CDMA etc.), PLLs are used to provide the local oscillator for up-conversion during transmission and down-conversion during reception. In most cellular handsets this function has been largely integrated into a single integrated circuit to reduce the cost and size of the handset. However, because base station terminals require higher performance, their transmission and reception circuits are built with discrete components. GSM local oscillator modules are typically built with a frequency synthesizer integrated circuit and discrete resonator VCOs.

Block diagram

Block diagram of a phase-locked loop

Analog phase locked loops are generally built with an analog phase detector, low pass filter and VCO placed in a negative feedback configuration. A digital phase locked loop uses a digital phase detector; it may also have a divider in the feedback path or in the reference path, or both, in order to make the PLL's output signal frequency a rational multiple of the reference frequency. A non-integer multiple of the reference frequency can also be created by replacing the simple divide-by-N counter in the feedback path with a programmable pulse swallowing counter. This technique is usually referred to as a fractional-N synthesizer or fractional-N PLL.
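The averaging idea behind a fractional-N synthesizer can be sketched with an accumulator that decides, cycle by cycle, whether the feedback divider divides by N or by N + 1. This first-order accumulator is one common way to realize the technique and is shown here as an assumption, not as the specific pulse-swallowing circuit described above; with an increment K and modulus F, the average division ratio is N + K/F. The function name is hypothetical.

```python
def fractional_n_ratios(n: int, k: int, f: int, cycles: int) -> list[int]:
    """Per-cycle division ratios produced by a first-order accumulator.

    The accumulator adds k every reference cycle; when it reaches f it wraps
    around, and on that cycle the divider divides by n + 1 instead of n.
    """
    acc = 0
    ratios = []
    for _ in range(cycles):
        acc += k
        if acc >= f:          # overflow: divide by n + 1 this cycle
            acc -= f
            ratios.append(n + 1)
        else:
            ratios.append(n)
    return ratios


ratios = fractional_n_ratios(n=100, k=1, f=4, cycles=400)
print(sum(ratios) / len(ratios))  # 100.25
```

With a hypothetical 10 MHz reference, the average ratio of 100.25 would place the VCO at 1002.5 MHz. The regular N/N + 1 pattern produced by such a simple accumulator generates fractional spurs, which is why many practical designs use higher-order sigma-delta modulators to randomize the sequence.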

The oscillator generates a periodic output signal. Assume that initially the oscillator is at nearly the same frequency as the reference signal. If the phase from the oscillator falls behind that of the reference, the phase detector changes the control voltage of the oscillator so that it speeds up. Likewise, if the phase creeps ahead of the reference, the phase detector changes the control voltage to slow down the oscillator. Since initially the oscillator may be far from the reference frequency, practical phase detectors may also respond to frequency differences, so as to increase the lock-in range of allowable inputs.
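The correction described above can be sketched in discrete time. This minimal model assumes an ideal linear phase detector and a proportional-only (first-order) loop, with hypothetical frequencies and gain. It shows the oscillator being pulled onto the reference frequency and, because the loop has no integrator, settling with a residual phase error equal to the initial frequency offset divided by the loop gain (10 Hz divided by 100 Hz per radian, or 0.1 rad, here).

```python
import math


def simulate_first_order_pll(f_ref, f_free, gain, dt, steps):
    """Simulate a proportional-only (first-order) phase-locked loop.

    f_ref, f_free: reference and free-running oscillator frequencies in Hz.
    gain: hypothetical loop gain, in Hz of frequency correction per radian of phase error.
    Returns the final oscillator frequency (Hz) and phase error (radians).
    """
    phase_ref = phase_osc = 0.0
    f_osc = f_free
    error = 0.0
    for _ in range(steps):
        # Ideal linear phase detector: a positive error means the oscillator lags.
        error = phase_ref - phase_osc
        # A lagging oscillator is sped up; a leading one is slowed down.
        f_osc = f_free + gain * error
        # Advance both phases by one time step.
        phase_ref += 2 * math.pi * f_ref * dt
        phase_osc += 2 * math.pi * f_osc * dt
    return f_osc, error


f_final, err = simulate_first_order_pll(f_ref=1000.0, f_free=990.0, gain=100.0, dt=1e-4, steps=2000)
print(f"{f_final:.3f} Hz, residual phase error {err:.3f} rad")  # 1000.000 Hz, residual phase error 0.100 rad
```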

Depending on the application, either the output of the controlled oscillator, or the control signal to the oscillator, provides the useful output of the PLL system.

Elements

Phase detector

A phase detector (PD) generates a voltage, which represents the phase difference between two signals. In a PLL, the two inputs of the phase detector are the reference input and the feedback from the VCO. The PD output voltage is used to control the VCO such that the phase difference between the two inputs is held constant, making it a negative feedback system. There are several types of phase detectors in the two main categories of analog and digital. Different types of phase detectors have different performance characteristics.

For instance, the frequency mixer produces harmonics that add complexity in applications where spectral purity of the VCO signal is important. The resulting unwanted (spurious) sidebands, also called "reference spurs", can dominate the filter requirements, forcing a capture range that is too narrow or a lock time that is too long for the application. In these applications the more complex digital phase detectors are used, which do not have as severe a reference spur component on their output. Also, when a mixer phase detector is in lock, the steady-state phase difference at its inputs is near 90 degrees; the actual difference is determined by the DC loop gain.

A bang-bang charge pump phase detector must always have a dead band where the phases of the inputs are close enough that the detector detects no phase error. For this reason, bang-bang phase detectors are associated with significant minimum peak-to-peak jitter, because of drift within the dead band. However, these types, having outputs consisting of very narrow pulses at lock, are very useful for applications requiring very low VCO spurious outputs. The narrow pulses contain very little energy and are easy to filter out of the VCO control voltage. This results in low VCO control line ripple and therefore low FM sidebands on the VCO.

In PLL applications it is frequently required to know when the loop is out of lock. The more complex digital phase-frequency detectors usually have an output that allows a reliable indication of an out of lock condition.

An XOR-gate is often used for digital PLLs as an effective yet simple phase detector. It can also be used in an analog sense with only slight modification to the circuitry.
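Why an XOR gate works as a phase detector can be seen from its average output. For two square waves of equal frequency and 50% duty cycle, the fraction of time the XOR output is high rises linearly from 0 at zero phase difference to 1 at 180 degrees, so the low-pass-filtered output is proportional to phase difference over that range, and a loop built around it typically locks near 90 degrees, where the output sits at mid-scale. The sampled-time model and the function name below are illustrative choices.

```python
def xor_average(phase_fraction: float, samples: int = 1000) -> float:
    """Average output of an XOR gate driven by two 50% duty-cycle square waves.

    phase_fraction is the delay of the second wave as a fraction of one period
    (0.25 = 90 degrees). Returns the fraction of time the XOR output is high.
    """
    high = 0
    for n in range(samples):
        t = n / samples                         # time as a fraction of one period
        a = (t % 1.0) < 0.5                     # reference square wave
        b = ((t - phase_fraction) % 1.0) < 0.5  # delayed square wave
        high += a != b                          # XOR of the two logic levels
    return high / samples


for frac in (0.0, 0.125, 0.25, 0.5):
    print(f"{frac * 360:5.1f} deg -> {xor_average(frac):.2f}")
```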

Filter

The block commonly called the PLL loop filter (usually a low-pass filter) generally has two distinct functions.

The primary function is to determine loop dynamics, also called stability. This is how the loop responds to disturbances, such as changes in the reference frequency, changes of the feedback divider, or startup. Common considerations are the range over which the loop can achieve lock (pull-in range, lock range or capture range), how fast the loop achieves lock (lock time, lock-up time or settling time) and damping behavior. Depending on the application, this may require one or more of the following: a simple proportion (gain or attenuation), an integral (low-pass filter) and/or a derivative (high-pass filter). Loop parameters commonly examined for this are the loop's gain margin and phase margin. Common control-theory concepts, including the PID controller, are used to design this function.
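The integral term mentioned above can be illustrated with a discrete-time loop whose filter is proportional-plus-integral (PI), the PI special case of a PID controller. In this sketch (ideal linear phase detector, hypothetical gains chosen to give an overdamped, stable loop), the integrator absorbs the 10 Hz frequency offset, so the phase error is driven to zero rather than settling at the fixed offset a proportional-only loop would leave.

```python
import math


def simulate_pi_pll(f_ref, f_free, kp, ki, dt, steps):
    """Simulate a PLL whose loop filter is proportional-plus-integral (PI).

    kp: hypothetical proportional gain, Hz of correction per radian of error.
    ki: hypothetical integral gain, Hz per radian-second of accumulated error.
    Returns the final oscillator frequency (Hz) and phase error (radians).
    """
    phase_ref = phase_osc = integral = error = 0.0
    f_osc = f_free
    for _ in range(steps):
        error = phase_ref - phase_osc   # ideal linear phase detector
        integral += error * dt          # integral path accumulates the error
        f_osc = f_free + kp * error + ki * integral
        phase_ref += 2 * math.pi * f_ref * dt
        phase_osc += 2 * math.pi * f_osc * dt
    return f_osc, error


f_final, err = simulate_pi_pll(f_ref=1000.0, f_free=990.0, kp=100.0, ki=5000.0, dt=1e-4, steps=10_000)
print(f"{f_final:.3f} Hz")  # 1000.000 Hz
```

At lock the integrator holds the correction (10 Hz / ki = 0.002 radian-seconds here) that a proportional path would otherwise have to supply from a standing phase error.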

The second common consideration is limiting the amount of reference frequency energy (ripple) appearing at the phase detector output that is then applied to the VCO control input. This ripple frequency-modulates the VCO and produces FM sidebands commonly called "reference spurs". The low-pass characteristic of this block can be used to attenuate this energy, but at times a band-reject "notch" may also be useful.
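The size of a reference spur can be estimated from the ripple-induced frequency deviation. The sketch below assumes narrowband FM, where each first sideband has an amplitude of about β/2 relative to the carrier and β is the peak deviation divided by the modulating (reference) frequency; the peak deviation would be the ripple amplitude at the VCO control input multiplied by the VCO gain. The function name and example values are hypothetical.

```python
import math


def reference_spur_dbc(peak_deviation_hz: float, ref_freq_hz: float) -> float:
    """Approximate level of the first reference spurs, in dBc.

    Narrowband-FM approximation: each first sideband has an amplitude of about
    beta/2 relative to the carrier. Valid only for beta well below 1.
    """
    beta = peak_deviation_hz / ref_freq_hz  # modulation index
    return 20 * math.log10(beta / 2)


# Hypothetical case: ripple at a 1 MHz reference deviates the VCO by 2 kHz peak.
print(round(reference_spur_dbc(2e3, 1e6), 1))  # -60.0
```

Halving the ripple, for example with more loop-filter attenuation at the reference frequency, lowers both spurs by about 6 dB.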

The design of this block can be dominated by either of these considerations, or can be a complex process juggling the interactions of the two. Typical trade-offs are that increasing the bandwidth usually degrades stability, while adding damping to improve stability reduces speed and increases settling time. Phase noise is often affected as well.

Oscillator

All phase-locked loops employ an oscillator element with variable frequency capability. This can be an analog VCO either driven by analog circuitry in the case of an APLL or driven digitally through the use of a digital-to-analog converter as is the case for some DPLL designs. Purely digital oscillators, such as numerically controlled oscillators, are used in all-digital PLLs (ADPLLs).

Feedback path and optional divider

PLLs may include a divider between the oscillator and the feedback input to the phase detector to produce a frequency synthesizer. A programmable divider is particularly useful in radio transmitter applications, since a large number of transmit frequencies can be produced from a single stable, accurate, but expensive, quartz crystal–controlled reference oscillator.

Some PLLs also include a divider between the reference clock and the reference input to the phase detector. If the divider in the feedback path divides by $N$ and the reference input divider divides by $M$, the PLL multiplies the reference frequency by $N/M$. It might seem simpler to just feed the PLL a lower frequency, but in some cases the reference frequency may be constrained by other issues, and then the reference divider is useful.
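The divider relationship f_out = f_ref · N/M can be checked with exact rational arithmetic. This sketch uses Python's standard `fractions.Fraction` to find the smallest reference divider M (with its matching N) that reaches a target frequency exactly; the function names, the divider limit, and the example frequencies are hypothetical, and a real synthesizer would also have to respect the divider ranges of its particular IC.

```python
from fractions import Fraction


def pll_output_hz(f_ref_hz: float, n: int, m: int) -> float:
    """Output frequency of a PLL with feedback divider N and reference divider M."""
    return f_ref_hz * n / m


def find_dividers(f_ref_hz: int, f_target_hz: int, max_m: int):
    """Smallest reference divider M, with matching N, that hits f_target exactly.

    Returns (n, m), or None if M would exceed max_m. Integer frequencies keep
    the ratio exact.
    """
    ratio = Fraction(f_target_hz, f_ref_hz)  # required N/M in lowest terms
    if ratio.denominator <= max_m:
        return ratio.numerator, ratio.denominator
    return None


# Hypothetical example: a 10 MHz crystal reference and a 433.92 MHz target.
n, m = find_dividers(10_000_000, 433_920_000, max_m=1000)
print(n, m, pll_output_hz(10e6, n, m))  # 5424 125 433920000.0
```

In this example the phase detector would compare signals at 10 MHz / 125 = 80 kHz, which is also the smallest frequency step available while M stays fixed at 125.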

Frequency multiplication can also be attained by locking the VCO output to the Nth harmonic of the reference signal. Instead of a simple phase detector, the design uses a harmonic mixer (sampling mixer). The harmonic mixer turns the reference signal into an impulse train that is rich in harmonics.[13] The VCO output is coarse-tuned to be close to one of those harmonics. Consequently, the desired harmonic mixer output (representing the difference between the Nth harmonic and the VCO output) falls within the loop filter passband.
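Locking to a reference harmonic can be sketched numerically: once the VCO is coarse-tuned, the relevant harmonic number N is the nearest integer to f_VCO / f_ref, and the harmonic mixer output is the difference between the VCO frequency and that harmonic. The function name and example frequencies below are hypothetical.

```python
def harmonic_lock(f_ref_hz: float, f_vco_hz: float):
    """Nearest reference harmonic N and the difference frequency the mixer outputs.

    Assumes the VCO has already been coarse-tuned close to one harmonic.
    """
    n = round(f_vco_hz / f_ref_hz)
    offset_hz = f_vco_hz - n * f_ref_hz  # must fall inside the loop filter passband
    return n, offset_hz


# Hypothetical example: 100 MHz reference, VCO coarse-tuned to 10.0003 GHz.
print(harmonic_lock(100e6, 10.0003e9))  # (100, 300000.0)
```

The loop then steers the VCO until this difference frequency is driven to zero, or to whatever fixed offset the design calls for.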

The feedback element is not limited to a frequency divider; it can also be a frequency multiplier or a mixer. A multiplier makes the VCO output a sub-multiple (rather than a multiple) of the reference frequency. A mixer can translate the VCO frequency by a fixed offset. The element may also be a combination of these. One example is a divider following a mixer, which allows the divider to operate at a much lower frequency than the VCO without a loss in loop gain.

Modeling

Time domain model

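The equations governing a phase-locked loop with an analog multiplier as the phase detector and a linear filter may be derived as follows. Let the input to the phase detector be $f_1(\theta_1(t))$ and the output of the VCO be $f_2(\theta_2(t))$, with phases $\theta_1(t)$ and $\theta_2(t)$. The functions $f_1(\theta)$ and $f_2(\theta)$ describe the waveforms of the signals. Then the output of the phase detector $\varphi(t)$ is given by

$$\varphi(t) = f_1(\theta_1(t))\, f_2(\theta_2(t)).$$

The VCO frequency is usually taken as a function of the VCO input $g(t)$:

$$\dot\theta_2(t) = \omega_2(t) = \omega_{\text{free}} + g_v\, g(t),$$

where $g_v$ is the sensitivity of the VCO and $\omega_{\text{free}}$ is its free-running frequency.

The loop filter can be described by a system of linear differential equations

$$\dot x = A x + b\,\varphi(t), \qquad g(t) = c^{*} x, \qquad x(0) = x_0,$$

where $\varphi(t)$ is the filter input, $g(t)$ is the filter output, $A$ is an $n \times n$ matrix, $x \in \mathbb{C}^n$, $b \in \mathbb{R}^n$, $c \in \mathbb{C}^n$, $x_0$ is the initial state of the filter, and $^{*}$ denotes the conjugate transpose.

Hence the following system describes the PLL:

$$\dot x = A x + b\, f_1(\theta_1(t))\, f_2(\theta_2(t)), \qquad \dot\theta_2 = \omega_{\text{free}} + g_v\, c^{*} x, \qquad x(0) = x_0,\ \ \theta_2(0) = \theta_0,$$

where $\theta_0$ is the initial phase shift.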

Phase domain model

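Consider the input of the PLL $f_1(\theta_1(t))$ and the VCO output $f_2(\theta_2(t))$ to be high-frequency signals. Then for any piecewise differentiable $2\pi$-periodic functions $f_1(\theta)$ and $f_2(\theta)$ there is a function $\varphi(\theta)$ such that the output $G(t)$ of the filter

$$\dot x = A x + b\,\varphi(\theta_1(t) - \theta_2(t)), \qquad G(t) = c^{*} x, \qquad x(0) = x_0$$

in the phase domain is asymptotically equal to the output $g(t)$ of the filter in the time-domain model (the difference $G(t) - g(t)$ is small relative to the signal frequencies). The function $\varphi(\theta)$ is called the phase detector characteristic.

Denote by $\theta_\Delta(t)$ the phase difference

$$\theta_\Delta(t) = \theta_1(t) - \theta_2(t).$$

Then the following dynamical system describes the PLL behavior:

$$\dot x = A x + b\,\varphi(\theta_\Delta), \qquad \dot\theta_\Delta = \omega_1 - \omega_{\text{free}} - g_v\, c^{*} x, \qquad x(0) = x_0,\ \ \theta_\Delta(0) = \theta_1(0) - \theta_2(0),$$

where $\omega_1 = \dot\theta_1$ is the frequency of the reference oscillator (assumed constant), $\omega_{\text{free}}$ is the free-running frequency of the VCO, and $g_v$ is the VCO sensitivity.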
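The phase-domain system can be integrated numerically in the scalar case. This forward-Euler sketch assumes a one-pole low-pass filter ($A = -1/\tau$, $b = 1/\tau$, $c = 1$) and a sinusoidal characteristic $\varphi(\theta) = K\sin\theta$; the filter choice, function name, and all numbers are illustrative assumptions. At lock both derivatives vanish, so the filter output settles to $(\omega_1 - \omega_{\text{free}})/g_v$ and $\sin\theta_\Delta$ settles to $(\omega_1 - \omega_{\text{free}})/(g_v K)$.

```python
import math


def simulate_phase_domain(omega_1, omega_free, g_v, k_pd, tau, theta0, dt, steps):
    """Forward-Euler integration of the scalar phase-domain PLL model.

    Assumptions (for illustration only): a one-pole low-pass filter with
    A = -1/tau, b = 1/tau, c = 1, and a sinusoidal phase detector
    characteristic phi(theta) = k_pd * sin(theta).
    Returns the final filter state x and phase difference theta_delta.
    """
    x = 0.0
    theta = theta0
    for _ in range(steps):
        phi = k_pd * math.sin(theta)
        dx = (-x + phi) / tau                    # x' = A x + b phi(theta_delta)
        dtheta = omega_1 - omega_free - g_v * x  # theta_delta' = w1 - w_free - g_v c* x
        x += dx * dt
        theta += dtheta * dt
    return x, theta


# Hypothetical loop: reference 2 Hz above the VCO free-running frequency.
w1, wfree, gv = 2 * math.pi * 1002, 2 * math.pi * 1000, 100.0
x, theta = simulate_phase_domain(w1, wfree, gv, k_pd=1.0, tau=0.01, theta0=0.0, dt=1e-4, steps=10_000)
print(x, (w1 - wfree) / gv)  # filter output settles to (w1 - w_free) / g_v
print(math.sin(theta))       # same value, since k_pd = 1
```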

Example

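Consider sinusoidal signals $f_1(\theta_1) = A_1 \sin\theta_1$ and $f_2(\theta_2) = A_2 \cos\theta_2$. The multiplier output is

$$A_1 \sin\theta_1 \cdot A_2 \cos\theta_2 = \frac{A_1 A_2}{2}\left[\sin(\theta_1 - \theta_2) + \sin(\theta_1 + \theta_2)\right],$$

and because the loop filter suppresses the high-frequency sum term, the phase detector characteristic for these signals is

$$\varphi(\theta_1 - \theta_2) = \frac{A_1 A_2}{2} \sin(\theta_1 - \theta_2).$$

Linearized phase domain model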

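Phase-locked loops can also be analyzed as control systems by applying the Laplace transform. The loop response can be written as

$$\frac{\theta_o}{\theta_i} = \frac{K_p K_v F(s)}{s + K_p K_v F(s)}$$

where

- $\theta_o$ is the output phase in radians

- $\theta_i$ is the input phase in radians

- $K_p$ is the phase detector gain in volts per radian

- $K_v$ is the VCO gain in radians per volt-second

- $F(s)$ is the loop filter transfer function (dimensionless)

For a second-order loop, the denominator of the response can be written in the standard form $s^2 + 2\zeta\omega_n s + \omega_n^2$, where

- $\zeta$ is the damping factor

- $\omega_n$ is the natural frequency of the loop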
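As a worked case, assume the simplest loop filter, a one-pole RC low-pass $F(s) = 1/(1 + sRC)$. Substituting it into the loop response gives $\theta_o/\theta_i = (K_pK_v/RC)/(s^2 + s/RC + K_pK_v/RC)$, and matching the denominator to the standard form yields $\omega_n = \sqrt{K_pK_v/RC}$ and $\zeta = 1/(2\sqrt{K_pK_vRC})$. The sketch below evaluates these; the component values and function names are hypothetical.

```python
import math


def rc_loop_parameters(kp: float, kv: float, r: float, c: float):
    """Natural frequency (rad/s) and damping factor for a one-pole RC loop filter."""
    rc = r * c
    omega_n = math.sqrt(kp * kv / rc)
    zeta = 1.0 / (2.0 * math.sqrt(kp * kv * rc))
    return omega_n, zeta


def closed_loop_response(kp: float, kv: float, r: float, c: float, omega: float) -> complex:
    """theta_o / theta_i at s = j*omega, using F(s) = 1 / (1 + s*R*C)."""
    s = 1j * omega
    f = 1.0 / (1.0 + s * r * c)
    return kp * kv * f / (s + kp * kv * f)


# Hypothetical values: Kp = 0.5 V/rad, Kv = 2e4 rad/(V*s), R = 10 kOhm, C = 10 nF.
wn, z = rc_loop_parameters(0.5, 2e4, 10e3, 10e-9)
print(f"omega_n = {wn:.0f} rad/s, zeta = {z:.2f}")          # omega_n = 10000 rad/s, zeta = 0.50
print(abs(closed_loop_response(0.5, 2e4, 10e3, 10e-9, 0.0)))  # 1.0: slow phase changes are tracked
```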

The Fundamentals Of Signal Generation

A signal generator, a general-purpose piece of test equipment, supplies the electromagnetic stimulus signals needed for numerous measurements across a variety of applications. Its roots trace back to the beginnings of the electronic test-and-measurement industry. The first product developed by Hewlett-Packard Company (whose test-and-measurement business was later spun off as Agilent Technologies) was the model 200A audio oscillator. One of HP’s first customers was the Walt Disney Company: in 1940, Walt Disney purchased eight audio oscillators to calibrate the breakthrough sound system it developed for the movie “Fantasia.”

Today, RF signal generators are widely used throughout the electronics industry, including aerospace/defense electronics and wireless communications. Typical applications include RF/IF signal generation and LO substitution, as well as radar, GPS, and avionics signal simulation. In modern wireless communications systems, signal generators supporting a range of digital-modulation formats are commonly used to test digital receivers and transmitters against increasingly complex requirements.

Regardless of the industry or application, getting the most value out of the signal generator requires a good understanding of its basic operation and function, as well as its key specifications. Another critical factor is becoming familiar with a signal generator’s ability to go beyond general-purpose applications to simulating advanced signals with impairments, interference, and waveform correction.

Generating Signals

The most basic signal created from a signal generator is the continuous wave (CW) signal, or sine wave, which has no modulation and is produced by a basic signal source. CW signals below 6 GHz are commonly referred to as RF signals, while those between 6 GHz and 30 GHz are called microwave signals and those above 30 GHz are called millimeter-wave signals.

Many signal generators also offer various types of modulation, including analog and composite (digital) modulation. Classic analog-modulation types include amplitude-modulation (AM), frequency-modulation (FM), phase-modulation (ΦM), and pulse-modulation signals. Modulation is important because it’s the information-carrying part of the signal.

To better understand analog modulation, consider the basic equation of a sine wave:

$$V(t) = A \sin(2\pi f t + \phi)$$

In this equation, three parameters can be varied: amplitude, frequency and phase. Varying the sine wave’s amplitude achieves AM and pulse modulation. Varying the sine wave’s frequency or phase generates FM and ΦM, respectively.
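The three analog modulations can be sketched by varying one parameter of $A\sin(2\pi f t + \phi)$ at a time. This model assumes a sinusoidal modulating tone; the function name, parameter names, and default values are hypothetical. For FM, the phase is the integral of the instantaneous frequency, which for a sine-wave tone introduces the modulation index β = Δf / f_mod.

```python
import math


def modulated_sample(t, carrier_hz, kind, mod_hz=1e3, depth=0.5, deviation_hz=5e3, phase_dev_rad=1.0):
    """One sample of a unit sine carrier modulated by a sinusoidal tone.

    kind: "am" varies amplitude, "fm" varies frequency, "pm" varies phase.
    """
    m = math.sin(2 * math.pi * mod_hz * t)  # modulating tone
    if kind == "am":
        return (1 + depth * m) * math.sin(2 * math.pi * carrier_hz * t)
    if kind == "fm":
        # Instantaneous frequency carrier_hz + deviation_hz * m(t); integrating
        # the sine tone gives a -cos term scaled by beta = deviation / mod rate.
        beta = deviation_hz / mod_hz
        return math.sin(2 * math.pi * carrier_hz * t - beta * math.cos(2 * math.pi * mod_hz * t))
    if kind == "pm":
        return math.sin(2 * math.pi * carrier_hz * t + phase_dev_rad * m)
    raise ValueError(f"unknown modulation kind: {kind}")


# One modulation period of AM on a hypothetical 100 kHz carrier: the envelope peaks near 1 + depth.
peak = max(abs(modulated_sample(n * 1e-7, 100e3, "am")) for n in range(10_000))
print(round(peak, 3))
```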

Composite modulation, also known as vector or digital modulation, occurs when two or more modulation types are used to create a composite modulated signal. For example, AM and ΦM can be combined to create various magnitude and phase values. Varying the signal’s phase in conjunction with the AM control in the ALC circuit can produce a digitally modulated signal. It’s also possible to generate a variety of communications, satellite, and radar signals by employing a combination of pulse and either ΦM or FM.

All types of modulation can be represented on a polar plane using vector (phasor) notation, although magnitude and phase values aren’t typically used when describing the vectors in digital modulation (Fig. 1). Instead, the polar plane is mapped to a rectangular format (with a horizontal and vertical axis) called the I-Q plane, where I stands for in-phase and Q denotes quadrature.

1. A magnitude change with no rotation represents amplitude modulation (AM), and a vector that rotates along an arc (the length of which indicates the maximum phase deviation) represents phase modulation (ΦM). Simultaneous AM and ΦM are indicated by a vector whose length and phase change with time. FM results in a vector that rotates clockwise or counterclockwise.

I/Q diagrams are particularly useful because they mirror the way an I/Q modulator creates most digital communications signals. Independent dc voltages (I and Q components) provided to the input of an I/Q modulator correlate to a composite signal with a specific amplitude and phase at the modulator output. Conversely, a modulated signal’s amplitude and phase sent to an I/Q demodulator correspond to discrete dc values at the demodulator’s output.
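The correspondence between dc I/Q values and carrier amplitude and phase can be sketched as follows, assuming the common I·cos(ωt) − Q·sin(ωt) convention (some modulators use the opposite sign on Q); the function names are hypothetical. The output is a carrier of amplitude √(I² + Q²) and phase atan2(Q, I).

```python
import math


def iq_modulate(i: float, q: float, carrier_hz: float, t: float) -> float:
    """Ideal I/Q modulator output at time t: I*cos(wt) - Q*sin(wt)."""
    w = 2 * math.pi * carrier_hz
    return i * math.cos(w * t) - q * math.sin(w * t)


def iq_to_polar(i: float, q: float):
    """Amplitude and phase (radians) of the carrier produced by the dc pair (I, Q)."""
    return math.hypot(i, q), math.atan2(q, i)


amp, phase = iq_to_polar(0.6, 0.8)
print(amp, math.degrees(phase))  # amplitude ~1.0, phase ~53.13 degrees
```

Because I·cos(ωt) − Q·sin(ωt) equals A·cos(ωt + φ) with A·cos φ = I and A·sin φ = Q, the same amplitude and phase can be read from either description, which is why the I-Q plane maps directly onto the modulator inputs.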

Quickly transmitting large amounts of binary bits at high rates in composite signals requires large information bandwidths. The faster the data rate, the wider the bandwidth. Available bandwidth can be used more efficiently by grouping blocks of digital data (1s, 0s) into symbols, although this increases signal complexity. The number of bits per symbol will vary depending on the specific format. Transmitting digital data via multi-bit symbols requires less bandwidth. For example, for two bits/symbol, the symbol rate is one-half the bit rate, and for four bits/symbol, the symbol rate is one-quarter the bit rate.
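The bits-per-symbol arithmetic and the grouping of bits into symbols can be sketched in a few lines. The QPSK table below is one common Gray-coded mapping (two bits per symbol, neighboring points differing by one bit), chosen for illustration; real formats define their own mappings, and the function names are hypothetical.

```python
import math


def symbol_rate(bit_rate: float, bits_per_symbol: int) -> float:
    """Symbols per second needed to carry bit_rate bits per second."""
    return bit_rate / bits_per_symbol


# One common Gray-coded QPSK mapping: each 2-bit group -> a unit-magnitude I/Q point.
QPSK = {
    (0, 0): complex(1, 1) / math.sqrt(2),
    (0, 1): complex(-1, 1) / math.sqrt(2),
    (1, 1): complex(-1, -1) / math.sqrt(2),
    (1, 0): complex(1, -1) / math.sqrt(2),
}


def map_qpsk(bits):
    """Group bits in pairs and map each pair to a QPSK symbol."""
    if len(bits) % 2:
        raise ValueError("QPSK needs an even number of bits")
    return [QPSK[(bits[k], bits[k + 1])] for k in range(0, len(bits), 2)]


print(symbol_rate(10e6, 2), symbol_rate(10e6, 4))  # 5000000.0 2500000.0
print(len(map_qpsk([0, 0, 1, 1, 1, 0, 0, 1])))      # 4
```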

IQ (vector) modulation, commonly used in modern digital communications and radar systems because of its large modulation bandwidths and the ease of creating composite modulated signals, has a number of important characteristics. These include symbol rate (the number of symbols per second), modulation bandwidth (the maximum effective bandwidth of the IQ modulator), frequency response/flatness, IQ quadrature skew (a measure of how close to orthogonal the I and Q axes are), and IQ gain balance (a measure of how closely the I and Q channels match in gain).

When the IQ characteristics aren’t ideal, magnitude and phase errors will occur that may cause transmission of incorrect digital information. Digital errors are referred to as bit errors, often expressed as a bit-error rate.

Signal-Generator Architectures

Signal generators come in different flavors: CW, analog, and vector. Each has a different function, and thus will find homes in different applications.

CW Signal Generator

A basic block diagram of the CW signal generator shows the RF CW source split into three sections: reference, synthesizer, and output (Fig. 2). The reference section supplies a sine wave with a known frequency to the phase-locked loop (PLL) in the synthesizer section. Its reference oscillator determines the accuracy of the source’s output frequency. The synthesizer section produces a sine wave at the desired frequency and supplies a stable frequency to the output section. The output section determines the overall amplitude range and accuracy of the source.

2. This basic block diagram of an RF CW source shows different reference, synthesizer, and output sections.

Within the CW source, the reference oscillator, phase detector, and voltage-controlled oscillator (VCO) all contribute to phase noise. The broadband noise floor also contributes in this regard. However, since it stems from the source’s thermal noise, it doesn’t greatly limit performance in most applications. Noise performance can be optimized by carefully selecting the bandwidth of the PLL in the synthesizer section, since the loop bandwidth sets the offset frequency below which the VCO’s contribution to overall phase noise is suppressed.

Like the RF CW source, a microwave CW source consists of a reference section, synthesizer section, and output section. The microwave CW source may also feature a number of differences, though. For example, although the reference section only has one reference oscillator, it may supply two or more signals to the synthesizer section.

Another difference may be the choice of oscillators in the synthesizer section. While RF sources generally use VCOs, microwave signal generators typically employ an yttrium-iron-garnet (YIG) oscillator tuned with a magnetic field. A PLL ensures frequency stability. In addition, output frequency range can be extended via frequency dividers and multipliers.

When determining the appropriate source for an application, it’s critical to first understand source specifications. For CW sources, the specifications generally divide into three categories:

• Frequency: The main frequency specifications are range, resolution, and accuracy. Range specifies the range of output frequencies produced by the source. Resolution specifies the smallest frequency increment. Source accuracy is affected by the reference oscillator’s stability and the amount of time since the source’s last calibration.

• Amplitude: The main amplitude specifications are range, accuracy, resolution, switching speed, and reverse power protection. Range is determined by the source’s maximum output power and amount of built-in internal attenuation. Resolution indicates the smallest amplitude increment. Switching speed measures how fast the source changes from one amplitude level to another. Reverse power protection helps prevent transmitted signals from the device under test from damaging the source.

Amplitude specifications have a direct impact on the CW source’s sweeping capability (the ability to move the CW tone in frequency). Frequency sweeps include ramp sweep, step sweep, and arbitrary list sweep. For ramp sweep, the source’s accuracy, sweep time, and frequency resolution are usually specified. For step and list sweep, the accuracy, number of points and switching time are specified.

In a frequency sweep, the output power varies by no more than the flatness specification throughout the sweep. CW sources also can sweep power level. When sweeping power, the amplitude sweep range determines the possible range of output powers, while the slope range determines how quickly the source can sweep from one power to another.

• Spectral Purity: Specifications associated with spectral purity (e.g., phase noise, spurious, harmonics, and sub-harmonics) can sometimes be difficult to understand. The ideal CW output is a sine wave at a single frequency; however, no ideal CW source actually exists, since all are made with non-ideal (i.e., real) components that introduce phase noise and unwanted distortion products.

Harmonics represent integer multiples of the CW output. Sources contain many nonlinear components to provide a broad range of frequencies and output powers. The nonlinear characteristics of an amplifier create second-, third-, and higher-order harmonics. Non-harmonic spurs come from a variety of sources (e.g., power supply) and are typically quite low (less than −65 dBc). Finally, multipliers are often used to extend a source’s frequency output, resulting in the presence of sub-harmonics.

A number of applications can take advantage of basic CW signals, including use as a local oscillator (LO) during transmitter development, for intermodulation distortion (IMD) testing of receivers, and for in-channel and out-of-channel receiver testing. Nonlinear amplifier testing is another key application. Third-order-intercept (TOI), a common amplifier measurement, uses two CW sources combined at the input of an amplifier (DUT). The amplifier’s nonlinearities produce third-order mixing products.
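For the two-tone TOI measurement, the third-order products fall at 2f1 − f2 and 2f2 − f1, close to the tones themselves, and the output intercept follows from the standard relation OIP3 = P_tone + Δ/2, where Δ is the difference in dB between a tone and a third-order product at the output. The sketch assumes the amplifier is driven well below compression; the function names and measurement values are hypothetical.

```python
def imd3_frequencies(f1_hz: float, f2_hz: float):
    """Third-order intermodulation products that fall next to the two test tones."""
    return 2 * f1_hz - f2_hz, 2 * f2_hz - f1_hz


def output_toi_dbm(tone_dbm: float, imd3_dbm: float) -> float:
    """Output third-order intercept from a two-tone measurement.

    tone_dbm: output power of each fundamental tone; imd3_dbm: output power of
    a third-order product. OIP3 = P_tone + (P_tone - P_imd3) / 2.
    """
    delta = tone_dbm - imd3_dbm
    return tone_dbm + delta / 2


# Hypothetical measurement: tones at 1000 and 1001 MHz, each 0 dBm at the
# amplifier output, with third-order products at -60 dBm.
print(imd3_frequencies(1000e6, 1001e6))  # (999000000.0, 1002000000.0)
print(output_toi_dbm(0.0, -60.0))        # 30.0
```

Because the products sit only one tone spacing away from the tones, isolating the two sources from each other matters: any leakage between them can itself generate products at the same frequencies.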

The test system itself also may introduce sources of error. These can be reduced either through better isolation of the signal sources, or by suppressing the power that transfers from one source to the other with a circulator.

Sweeping CW generators are most often used in stimulus-response testing (finding the DUT’s swept response). Frequency sweeps determine the frequency response of devices. Power sweeps, typically performed on amplifiers, measure linearity and saturation levels. When measuring a device’s frequency response, the important specifications are frequency accuracy, output power (level), flatness, and speed. Frequency-response measurements are made on many types of devices, including amplifiers, filters, and mixers.

Analog Signal Generator

The block diagram of an analog signal generator is similar to that of a CW generator, except for additional components that allow the source to modulate the carrier (Fig. 3).

3. In an analog signal generator, the FM and ΦM inputs go to the synthesizer’s frequency-control block to modulate the carrier. To change the frequency or phase of the signal generator, the FM or PM input signal is applied to the VCO. This signal, along with the reference oscillator signal, creates the FM or ΦM signal.

To create AM, the AM signal must be applied to the automatic-level-control (ALC) driver block, which converts voltages from the AM input into amplitude changes in the carrier through the ALC modulator. To create pulse modulation, a pulse input is added. That signal is applied to a pulse modulator in the signal’s output path. An internal modulator generator may be added to the CW source for convenience and to simplify test setups.

A key application for pulsed signals is radar testing. When creating a composite modulation signal like the chirped pulse, important signal-generator modulation specifications include FM deviation and rate, pulse rate, pulse width, and pulse rise time.

Vector Signal Generator

Creating a vector signal generator simply involves adding an IQ modulator to the basic CW block diagram. To generate baseband IQ signals, a baseband generator takes binary data containing the desired “information” to be transmitted, maps it to digital symbols and then to digital I and Q signals, converts the digital IQ signals to analog IQ signals, and sends them to the IQ modulator to be coded onto the carrier signal.

After the data undergoes symbol mapping, the digital signals are digitally filtered using two sets of filters in the baseband generator. The filters are designed to limit the bandwidth of the I and Q symbols and slow down the transitions between symbols. Many types of baseband filters exist, with each having different attributes that must be set in the signal generator. Common filter types are Root Raised Cosine, Gaussian, and Rectangular.
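The root-raised-cosine filter named above is commonly computed from its closed-form impulse response. This sketch assumes a roll-off factor β between 0 and 1, omits the 1/T scale factor, and normalizes the taps to unit energy; the function and parameter names are illustrative, and a real baseband generator may window, truncate, or normalize differently.

```python
import math


def rrc_tap(t: float, symbol_period: float, beta: float) -> float:
    """Root-raised-cosine impulse response at time t (1/T scale factor omitted).

    beta is the roll-off factor (0 < beta <= 1). The two special cases handle
    the points where the general expression becomes 0/0.
    """
    x = t / symbol_period
    if abs(x) < 1e-12:
        return 1 - beta + 4 * beta / math.pi
    if abs(abs(x) - 1 / (4 * beta)) < 1e-12:
        return (beta / math.sqrt(2)) * (
            (1 + 2 / math.pi) * math.sin(math.pi / (4 * beta))
            + (1 - 2 / math.pi) * math.cos(math.pi / (4 * beta))
        )
    num = math.sin(math.pi * x * (1 - beta)) + 4 * beta * x * math.cos(math.pi * x * (1 + beta))
    den = math.pi * x * (1 - (4 * beta * x) ** 2)
    return num / den


def rrc_taps(beta: float, span_symbols: int, samples_per_symbol: int):
    """Symmetric FIR taps covering span_symbols symbols, normalized to unit energy."""
    n = span_symbols * samples_per_symbol
    taps = [rrc_tap((k - n / 2) / samples_per_symbol, 1.0, beta) for k in range(n + 1)]
    energy = math.sqrt(sum(h * h for h in taps))
    return [h / energy for h in taps]


taps = rrc_taps(beta=0.35, span_symbols=8, samples_per_symbol=4)
print(len(taps))  # 33
```

The same filter is typically used at both transmitter and receiver, so that the cascade of the two forms a raised-cosine response with no intersymbol interference at the symbol instants.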

Vector signal generators are especially useful for simulating a wide variety of digitally modulated signals, including cellular, wireless LAN, Bluetooth, GNSS, and military communications formats. Some of the more commonplace vector measurements include adjacent channel power ratio (ACPR) and error vector magnitude (EVM).

ACPR is an important measurement, particularly for power amplifiers. It characterizes the distortion of digitally modulated signals and the likelihood that a given signal may interfere with a neighboring radio. EVM and phase error are the two principal parameters for evaluating the quality of a digitally modulated signal. Measurement of these values entails examining the differences between a measured magnitude and phase vector of a digitally modulated signal and its corresponding ideal reference vector.
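EVM can be computed directly from measured and ideal symbol vectors. The sketch below normalizes to the RMS reference magnitude (one common convention; some standards normalize to the peak constellation magnitude) and uses a simplified, hypothetical model of the IQ gain imbalance and quadrature skew impairments described earlier to produce the "measured" symbols. Function names and impairment values are illustrative.

```python
import math


def apply_iq_impairments(symbols, gain_imbalance_db=0.0, skew_deg=0.0):
    """Distort ideal I/Q symbols with gain imbalance and quadrature skew.

    Simplified, hypothetical model: the Q channel is scaled by the gain
    imbalance, and the Q axis is tilted so it sits at (90 - skew_deg) degrees
    from the I axis instead of exactly 90 degrees.
    """
    g = 10 ** (gain_imbalance_db / 20)
    phi = math.radians(skew_deg)
    out = []
    for s in symbols:
        i, q = s.real, s.imag * g
        # Q component placed along the tilted axis (sin(phi), cos(phi)).
        out.append(complex(i + q * math.sin(phi), q * math.cos(phi)))
    return out


def evm_percent(measured, reference):
    """RMS error vector magnitude as a percentage of the RMS reference magnitude."""
    err = sum(abs(m - r) ** 2 for m, r in zip(measured, reference))
    ref = sum(abs(r) ** 2 for r in reference)
    return 100 * math.sqrt(err / ref)


# Ideal QPSK constellation (unit magnitude) used as the reference.
ideal = [complex(a, b) / math.sqrt(2) for a in (1, -1) for b in (1, -1)]
print(f"{evm_percent(apply_iq_impairments(ideal, gain_imbalance_db=1.0), ideal):.2f} %")  # 8.63 %
print(f"{evm_percent(apply_iq_impairments(ideal, skew_deg=2.0), ideal):.2f} %")           # 2.47 %
```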

Another application revolves around measuring receiver sensitivity and selectivity. Sensitivity, one of the key specifications for a receiver, is the lowest possible signal level that can be reliably detected and demodulated. When making a sensitivity measurement, the signal generator’s level accuracy becomes an extremely important factor. Adjacent and alternate channel selectivity measures the receiver’s ability to process a desired signal, while rejecting a strong signal in an adjacent channel or alternate channel. Here, frequency and amplitude (level) accuracy, along with the spectral characteristics of the test and interfering signal, are important.

A vector signal generator can also be used in a Connected Solutions development environment. Connected Solutions is a concept that merges computer simulation with real-world measurements. Consider, for example, how desirable it would be to test receiver sensitivity before all of the receiver’s hardware blocks are available. Typically, bit-error-rate (BER) measurements can only be performed with the entire receiver. However, newer hardware and software development within test equipment now allows for BER measurements when just portions of the receiver are available. The Connected Solutions technique requires simulation software and test equipment to work together.

Signal Simulation Solutions

The process of simulating real signals includes waveform creation, baseband IQ signal generation, and upconversion of the IQ signals to the RF or microwave frequency of interest. While the vector signal generator and its associated baseband generator are core pieces to any signal simulation solution, other components may be necessary to simulate advanced signals.

For example, simulating fading impairments needs additional software and hardware to create the impairment and add it to the original signal. Luckily, modern vector signal generators can go beyond general-purpose applications to create advanced signals with various impairments and interference signals, providing a more realistic simulation of real-world environments.

While impaired signals are very useful for testing receivers under realistic conditions, other applications prefer a very clean, undistorted test signal. For example, the performance of an RF component could be evaluated by comparing its output to the input signal. In this case, a clean input signal ensures that the measured output reflects the device’s performance and not the input signal.

A signal generator has many potential sources of error (e.g., I/Q modulator, RF chain, and IQ path). Each of these affects the modulated test signal, resulting in an array of issues, including passband tilt, ripple, and roll off. Waveform correction provides a means of removing test signal imperfections (Fig. 4).

4. Waveform correction is accomplished by measuring the modulated vector signal generator’s output with a vector spectrum analyzer. Then those results are reported to signal correction software, which in turn predistorts the IQ data before it gets to the IQ modulator and effectively removes the imperfection.

One type of vector signal generator imperfection is the amplitude flatness of the IQ modulator, or IQ flatness. IQ flatness can be viewed by creating 32 equal magnitude test tones within an 80-MHz bandwidth (Fig. 5). Notice that before waveform correction is measured and applied, the tones vary as much as 2.4 dB across the 80 MHz of interest. By measuring the vector signal generator in this mode and applying the appropriate waveform correction, tone amplitude variation reduces to less than 0.1 dB.
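The flatness figure and the correction step can be sketched with amplitude-only tone data. The tone amplitudes below are made up to mirror the 2.4 dB variation quoted in the text (a simple linear tilt across 32 tones); an actual correction is derived from analyzer measurements and applied to the IQ data before the modulator, and this sketch ignores phase. Function names are hypothetical.

```python
import math


def flatness_db(amplitudes):
    """Peak-to-peak variation of tone amplitudes, in dB."""
    levels = [20 * math.log10(a) for a in amplitudes]
    return max(levels) - min(levels)


def correction_factors(measured, target=1.0):
    """Per-tone gains that bring each measured tone amplitude to the target."""
    return [target / m for m in measured]


# Hypothetical measured response: 32 equal tones tilted by 2.4 dB end to end.
measured = [10 ** (-2.4 * k / 31 / 20) for k in range(32)]
gains = correction_factors(measured)
corrected = [m * g for m, g in zip(measured, gains)]
print(f"before: {flatness_db(measured):.2f} dB, after: {flatness_db(corrected):.2f} dB")  # before: 2.40 dB, after: 0.00 dB
```

In practice the residual after correction is limited by measurement accuracy and by drift since the correction was measured, which is why the text notes a tradeoff in valid calibration time.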

5. Waveform correction offers a means of addressing IQ flatness, although the tradeoff is calculation time and valid calibration time. It is typically used on wideband, multitone, and multicarrier signals.

The same IQ correction can be applied to extremely wide band signals, such as the 500-MHz UWB signal shown in Figure 5. While it may not be possible to achieve 0.1-dB flatness on this type of signal, the performance nevertheless improves significantly.

Another test signal imperfection is in-band IMD: the intermodulation products that fall within the channel bandwidth of the generated signal. Such distortion is particularly undesirable because it can’t be filtered out and directly interferes with the signal of interest. Predistortion addresses it by generating a canceling tone at each IMD frequency that’s 180° out of phase with the distortion product.

A spectrum analyzer is used to measure the IMD of the original test stimulus. Then, a predistorted waveform created from these measurements removes the in-band, as well as the out-of-band, IMD products (Fig. 6). By employing waveform correction, the vector signal generator provides much higher-fidelity waveforms with significantly fewer imperfections.

6. As the before and after predistortion measurements show, exceptional distortion suppression is attainable. This test stimulus achieved more than 40 dB of improvement.
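The canceling-tone idea can be illustrated with a two-tone stimulus passed through a weakly nonlinear path. This sketch assumes a hypothetical cubic nonlinearity (`A3`) in place of the real generator hardware and measures the third-order products with a single-bin DFT instead of a spectrum analyzer. It then adds a tone of equal amplitude and opposite phase at each IMD frequency. Because the canceling tones also pass through the nonlinearity, cancellation is good but not perfect; repeating the measure-and-correct step would refine it.

```python
import cmath
import math

N = 256                                  # samples per record (whole periods of every tone)
F1, F2 = 10, 11                          # two-tone stimulus, in cycles per record
IMD_BINS = (2 * F1 - F2, 2 * F2 - F1)    # third-order products at bins 9 and 12
A3 = 0.01                                # hypothetical cubic nonlinearity coefficient

def device(x):
    """Hypothetical weakly nonlinear signal path: y = x + A3 * x**3."""
    return [v + A3 * v ** 3 for v in x]

def tone(k, amp=1.0, phase=0.0):
    """Real cosine at bin k."""
    return [amp * math.cos(2 * math.pi * k * n / N + phase) for n in range(N)]

def bin_phasor(signal, k):
    """Complex amplitude of the real tone at bin k (single-bin DFT)."""
    s = sum(v * cmath.exp(-2j * math.pi * k * n / N) for n, v in enumerate(signal))
    return 2 * s / N

def add(a, b):
    return [u + v for u, v in zip(a, b)]

stimulus = add(tone(F1, 0.5), tone(F2, 0.5))

# 1. Measure the IMD products produced by the uncorrected stimulus.
measured = device(stimulus)
imd_before = {k: bin_phasor(measured, k) for k in IMD_BINS}

# 2. Predistort: add a tone of equal amplitude, 180 degrees out of phase,
#    at each IMD frequency.
predistorted = stimulus
for k, p in imd_before.items():
    predistorted = add(predistorted, tone(k, abs(p), cmath.phase(p) + math.pi))

# 3. Re-measure the products after predistortion (levels in dB re unit amplitude).
corrected = device(predistorted)
for k in IMD_BINS:
    level_before = 20 * math.log10(abs(imd_before[k]))
    level_after = 20 * math.log10(abs(bin_phasor(corrected, k)))
    print(f"bin {k}: {level_before:.1f} dB -> {level_after:.1f} dB")
```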

Other types of impairments, such as multipath signals or fading, can be simulated with appropriate equipment, such as the Agilent PXB baseband generator and channel emulator used in conjunction with the N5182B MXG signal generator, and then added to the clean modulated signal in the vector signal generator. Examples include single-input, single-output (SISO) test configurations and multiple-input, multiple-output (MIMO) receiver tests at RF/baseband (Fig. 7).

7. Multipath and fading impairments can be addressed with the appropriate hardware and software, as illustrated by these example SISO signal and fading tests (a) and MIMO receiver and fading tests (b).
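A common way to model multipath in software is a tapped delay line: the received signal is the sum of several delayed, scaled copies of the transmitted one. The sketch below shows that generic model only; it is not how the PXB implements its channel emulation, and the two-ray tap values are hypothetical. Fading would make each tap gain vary over time, which this static sketch omits.

```python
def tapped_delay_line(samples, taps):
    """Apply a static multipath channel.

    taps: list of (delay_in_samples, complex_gain) pairs, one per path.
    Output sample n is the sum over paths of gain * samples[n - delay].
    """
    length = len(samples) + max(delay for delay, _ in taps)
    out = [0j] * length
    for delay, gain in taps:
        for n, s in enumerate(samples):
            out[n + delay] += gain * s
    return out

# Hypothetical two-ray channel: a direct path plus a phase-inverted echo
# arriving 3 samples later at half the amplitude (about 6 dB weaker).
two_ray = [(0, 1.0 + 0j), (3, -0.5 + 0j)]
impulse = [1.0, 0.0, 0.0, 0.0]
print(tapped_delay_line(impulse, two_ray))   # the impulse response reveals both paths
```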

Signal generator, electronic test instrument that delivers an accurately calibrated signal at frequencies from the audio to the microwave ranges. It is valuable in the development and testing of electronic hardware. The signal generator provides a signal that can be adjusted according to frequency, output voltage, impedance, waveform, and modulation.

Signal generators are of five major types: oscillators, which generate sine waves useful in measuring the response of loudspeakers, amplifiers, microphones, transducers, and acoustic systems; standard signal generators, which generate sine waves over a wide range of output power and modulation, used, for example, to test radio receivers and measure gain.

Automatic sounding

Automatic soundings are primarily intended to increase the efficiency of the automatic link establishment (ALE) function, thereby increasing system throughput.

In ALE, the sounding information consists of a heavily error-corrected short message identifying the sender. Recipients decode it and use the bit error rate to calculate and store a (channel, node, quality) tuple. As ionospheric conditions and mobile-node locations change, these quality tuples will shift. The stored data can be used to maximize the chance that the best channel to link with a given partner will be chosen first.
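The stored (channel, node, quality) tuples described above can be sketched as a small table keyed by (channel, node). The mapping from bit error rate to a quality score and the exponential smoothing between soundings are hypothetical choices made for illustration; they are not the scoring that any ALE standard specifies.

```python
class LinkQualityTable:
    """Stores (channel, node) -> quality, updated from received soundings.

    Hypothetical scoring: quality = 1 - BER, smoothed so that one sounding
    does not completely overwrite the history for that channel and node.
    """

    def __init__(self, smoothing=0.5):
        self.smoothing = smoothing          # weight given to the newest sounding
        self.quality = {}                   # (channel, node) -> score in [0, 1]

    def record_sounding(self, channel, node, bit_error_rate):
        score = 1.0 - min(max(bit_error_rate, 0.0), 1.0)
        key = (channel, node)
        if key in self.quality:
            score = self.smoothing * score + (1 - self.smoothing) * self.quality[key]
        self.quality[key] = score

    def channels_for(self, node):
        """Channels ranked best-first for linking with `node`."""
        scored = [(q, ch) for (ch, n), q in self.quality.items() if n == node]
        scored.sort(key=lambda item: item[0], reverse=True)
        return [ch for _, ch in scored]

lqa = LinkQualityTable()
lqa.record_sounding(channel=3, node="BASE", bit_error_rate=0.02)
lqa.record_sounding(channel=7, node="BASE", bit_error_rate=0.001)
lqa.record_sounding(channel=3, node="MOBILE1", bit_error_rate=0.0)
print(lqa.channels_for("BASE"))   # -> [7, 3]: try channel 7 first
```

As conditions change, a later, poorer sounding on channel 7 lowers its score and moves channel 3 ahead in the ranking.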

A dedicated circuit, private circuit, or leased line is a line that is dedicated to only one use. Originally, such lines were analog and were often used by radio stations as a studio/transmitter link (STL) or remote pickup unit (RPU) for their audio, sometimes as a backup to other means. Later lines were digital and were used for private corporate data networks.

The opposite of a dedicated circuit is a switched circuit, which can be connected to different paths. A POTS or ISDN telephone line is a switched circuit, because it can connect to any other telephone number.

On digital lines, a virtual circuit can be created to serve either purpose while sharing a single physical circuit.
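The sharing described above can be sketched by tagging every frame on the physical link with a virtual-circuit identifier. The round-robin scheduling and the `(vc_id, payload)` frame shape are hypothetical simplifications; real virtual-circuit technologies carry the identifier in their own header formats.

```python
from collections import defaultdict

def multiplex(streams):
    """Interleave frames from several virtual circuits onto one physical link.

    streams: dict mapping a virtual-circuit id to a list of payloads.
    Each frame on the shared link is tagged with its circuit id.
    """
    queues = {vc: list(payloads) for vc, payloads in streams.items()}
    link = []
    while any(queues.values()):
        for vc, queue in queues.items():      # round-robin over the circuits
            if queue:
                link.append((vc, queue.pop(0)))
    return link

def demultiplex(link):
    """Recover each virtual circuit's payloads, in order, from the shared link."""
    circuits = defaultdict(list)
    for vc, payload in link:
        circuits[vc].append(payload)
    return dict(circuits)

streams = {"vc1": ["a1", "a2", "a3"], "vc2": ["b1"]}
link = multiplex(streams)
print(link)
print(demultiplex(link) == streams)   # each circuit's data arrives intact
```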

A telecommunication circuit may be defined as follows:

- The complete path between two terminals over which one-way or two-way communications may be provided. See communications protocol.

- An electronic path between two or more points, capable of providing a number of channels.

- A number of conductors connected for the purpose of carrying an electric current.

- An electronic closed-loop path among two or more points used for signal transfer.

- A number of electrical components, such as resistors, inductances, capacitors, transistors, and power sources connected in one or more closed loops.

Communications protocol

In telecommunications, a communication protocol is a system of rules that allows two or more entities of a communications system to transmit information via any kind of variation of a physical quantity. The protocol defines the rules, syntax, semantics, and synchronization of communication and possible error recovery methods. Protocols may be implemented by hardware, software, or a combination of both.[1]

Communicating systems use well-defined formats (protocol) for exchanging various messages. Each message has an exact meaning intended to elicit a response from a range of possible responses pre-determined for that particular situation. The specified behavior is typically independent of how it is to be implemented. Communications protocols have to be agreed upon by the parties involved.[2] To reach agreement, a protocol may be developed into a technical standard. A programming language describes the same for computations, so there is a close analogy between protocols and programming languages: protocols are to communications what programming languages are to computations.[3]

Multiple protocols often describe different aspects of a single communication. A group of protocols designed to work together is known as a protocol suite; when implemented in software, they are a protocol stack.

Internet communication protocols are published by the Internet Engineering Task Force (IETF). The IEEE handles wired and wireless networking, and the International Organization for Standardization (ISO) handles other types. The ITU-T handles telecommunications protocols and formats for the public switched telephone network (PSTN). As the PSTN and Internet converge, the standards are also being driven towards convergence.

Basic requirements of protocols

Getting the data across a network is only part of the problem for a protocol. The data received has to be evaluated in the context of the progress of the conversation, so a protocol has to specify rules describing the context. These kinds of rules are said to express the syntax of the communications. Other rules determine whether the data is meaningful for the context in which the exchange takes place. These kinds of rules are said to express the semantics of the communications.

Messages are sent and received on communicating systems to establish communications. Protocols should therefore specify rules governing the transmission. In general, much of the following should be addressed:[12]

- Data formats for data exchange. Digital message bitstrings are exchanged. The bitstrings are divided into fields, and each field carries information relevant to the protocol. Conceptually, the bitstring is divided into two parts called the header area and the data area. The actual message is stored in the data area, so the header area contains the fields with more relevance to the protocol. Bitstrings longer than the maximum transmission unit (MTU) are divided into pieces of appropriate size.[13]

- Address formats for data exchange. Addresses are used to identify both the sender and the intended receiver(s). The addresses are stored in the header area of the bitstrings, allowing the receivers to determine whether the bitstrings are intended for themselves and should be processed or should be ignored. A connection between a sender and a receiver can be identified using an address pair (sender address, receiver address). Usually some address values have special meanings. An all-1s address could be taken to mean an addressing of all stations on the network, so sending to this address would result in a broadcast on the local network. The rules describing the meanings of the address value are collectively called an addressing scheme.[14]

- Address mapping. Sometimes protocols need to map addresses of one scheme onto addresses of another scheme, for instance to translate a logical IP address specified by the application into an Ethernet hardware address. This is referred to as address mapping.[15]

- Routing. When systems are not directly connected, intermediary systems along the route to the intended receiver(s) need to forward messages on behalf of the sender. On the Internet, the networks are connected using routers. This way of connecting networks is called internetworking.

- Detection of transmission errors is necessary on networks that cannot guarantee error-free operation. In a common approach, a CRC of the data area is added to the end of each packet, making it possible for the receiver to detect differences caused by errors. The receiver rejects packets whose CRC does not match and arranges for their retransmission.[16]

- Acknowledgements of correct reception of packets are required for connection-oriented communication. Acknowledgements are sent from receivers back to their respective senders.[17]

- Loss of information - timeouts and retries. Packets may be lost on the network or suffer from long delays. To cope with this, under some protocols, a sender may expect an acknowledgement of correct reception from the receiver within a certain amount of time. On timeouts, the sender must assume the packet was not received and retransmit it. In case of a permanently broken link, the retransmission has no effect so the number of retransmissions is limited. Exceeding the retry limit is considered an error.[18]

- Direction of information flow needs to be addressed if transmissions can only occur in one direction at a time, as on half-duplex links. This is known as Media Access Control. Arrangements have to be made to accommodate the case when two parties want to gain control at the same time.[19]

- Sequence control. We have seen that long bitstrings are divided into pieces and then sent on the network individually. The pieces may get lost or delayed or take different routes to their destination on some types of networks. As a result, pieces may arrive out of sequence. Retransmissions can result in duplicate pieces. By marking the pieces with sequence information at the sender, the receiver can determine what was lost or duplicated, ask for necessary retransmissions, and reassemble the original message.[20]

- Flow control is needed when the sender transmits faster than the receiver or intermediate network equipment can process the transmissions. Flow control can be implemented by messaging from receiver to sender.
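Several of the requirements above (data formats, addressing with an all-1s broadcast address, error detection, and sequence control) can be seen together in a small framing sketch. Everything specific here is an assumption for illustration: the header layout (destination, source, message id, fragment index, fragment count), the 16-byte MTU, and the use of Python's `zlib.crc32` as the CRC. Unlike the text, this sketch computes the CRC over the header as well as the data area, as many real link protocols do.

```python
import struct
import zlib

BROADCAST = 0xFF                    # all-1s address: every station accepts it
HEADER = struct.Struct("!BBHHH")    # dst, src, message id, fragment index, fragment count
MTU = 16                            # hypothetical maximum data bytes per frame

def fragment(message, dst, src, msg_id, mtu=MTU):
    """Split a message into frames: header + data piece + CRC-32 trailer."""
    pieces = [message[i:i + mtu] for i in range(0, len(message), mtu)] or [b""]
    frames = []
    for index, piece in enumerate(pieces):
        body = HEADER.pack(dst, src, msg_id, index, len(pieces)) + piece
        frames.append(body + struct.pack("!I", zlib.crc32(body)))
    return frames

class Receiver:
    def __init__(self, address):
        self.address = address
        self.partial = {}                       # (src, msg_id) -> {index: piece}

    def accept(self, frame):
        """Return the reassembled message once complete, else None."""
        if len(frame) < HEADER.size + 4:
            return None                         # too short to be a valid frame
        body, trailer = frame[:-4], frame[-4:]
        if zlib.crc32(body) != struct.unpack("!I", trailer)[0]:
            return None                         # corrupted: reject, sender must retransmit
        dst, src, msg_id, index, count = HEADER.unpack_from(body)
        if dst not in (self.address, BROADCAST):
            return None                         # not addressed to this station
        pieces = self.partial.setdefault((src, msg_id), {})
        pieces[index] = body[HEADER.size:]      # a duplicate simply overwrites itself
        if len(pieces) < count:
            return None                         # still waiting for missing pieces
        del self.partial[(src, msg_id)]
        return b"".join(pieces[i] for i in range(count))

message = b"Layered protocols keep each part simple."
frames = fragment(message, dst=0x02, src=0x01, msg_id=7)
rx = Receiver(address=0x02)

corrupted = bytearray(frames[1])
corrupted[HEADER.size] ^= 0x01                  # flip one data bit in transit
print(rx.accept(bytes(corrupted)))              # None: CRC mismatch

delivered = None
for frame in reversed(frames + [frames[2]]):    # a duplicate, then out-of-order pieces
    delivered = rx.accept(frame) or delivered
print(delivered == message)                     # True
```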
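Acknowledgements, timeouts with a retry limit, and duplicate detection can be sketched with a stop-and-wait sender that alternates a one-bit sequence number. Because the sender waits for each acknowledgement before sending more, stop-and-wait also acts as a crude form of flow control. The channel here is a hypothetical, deterministic model: each scripted event is `"ok"`, `"lost_data"`, or `"lost_ack"`, and a returned `None` stands in for an expired timer rather than a real clock.

```python
class RetryLimitExceeded(Exception):
    """Raised when a packet is still unacknowledged after the retry limit."""

def send_reliably(packets, channel, max_retries=3):
    """Stop-and-wait sender with an alternating sequence bit."""
    seq = 0
    for packet in packets:
        for _ in range(1 + max_retries):
            if channel(seq, packet) == seq:
                break                          # acknowledged: move on
        else:
            raise RetryLimitExceeded(f"packet {packet!r} unacknowledged")
        seq ^= 1                               # alternate 0/1 for the next packet
    return True

def make_channel(events, delivered):
    """Hypothetical channel plus receiver, driven by scripted events.

    Returns the acknowledged sequence number, or None if the sender's timer
    would expire because the data or the acknowledgement was lost.
    """
    script = iter(events)
    expected = [0]                             # receiver's next expected sequence bit

    def channel(seq, packet):
        outcome = next(script, "ok")
        if outcome == "lost_data":
            return None
        if seq == expected[0]:                 # new packet: deliver it
            delivered.append(packet)
            expected[0] ^= 1
        # Otherwise it is a duplicate caused by a lost ACK: discard but re-acknowledge.
        if outcome == "lost_ack":
            return None
        return seq
    return channel

delivered = []
events = ["ok", "lost_data", "lost_ack", "ok", "ok"]
send_reliably(["p1", "p2", "p3"], make_channel(events, delivered))
print(delivered)   # ['p1', 'p2', 'p3']: no losses and no duplicates
```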

Systems engineering principles have been applied to create a set of common network protocol design principles.

Communicating systems operate in parallel. The programming tools and techniques for dealing with parallel processes are collectively called concurrent programming. Concurrent programming only deals with the synchronization of communication. The syntax and semantics of the communication governed by a low-level protocol usually have modest complexity, so they can be coded with relative ease. High-level protocols with relatively large complexity could however merit the implementation of language interpreters. An example of the latter case is the HTML language.

Concurrent programming has traditionally been a topic in operating systems theory texts.[28] Formal verification seems indispensable, because concurrent programs are notorious for the hidden and sophisticated bugs they contain.[29] A mathematical approach to the study of concurrency and communication is referred to as Communicating Sequential Processes (CSP).[30] Concurrency can also be modelled using finite state machines like Mealy and Moore machines. Mealy and Moore machines are in use as design tools in digital electronics systems, which we encounter in the form of hardware used in telecommunications or electronic devices in general.[31]
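As an illustration of the finite-state-machine approach, the sender side of a simple alternating-bit protocol can be written as a Mealy machine, where each output depends on both the current state and the input. The state names, input events, and output strings below are hypothetical. Writing the machine as an explicit transition table also makes unspecified cases visible, which is one reason such models lend themselves to verification.

```python
# Mealy machine: (state, input) -> (next_state, output).
TRANSITIONS = {
    ("ready0", "send"):    ("wait0", "transmit DATA(0)"),
    ("wait0", "ack0"):     ("ready1", "stop timer"),
    ("wait0", "ack1"):     ("wait0", "ignore stale ACK"),
    ("wait0", "timeout"):  ("wait0", "retransmit DATA(0)"),
    ("ready1", "send"):    ("wait1", "transmit DATA(1)"),
    ("wait1", "ack1"):     ("ready0", "stop timer"),
    ("wait1", "ack0"):     ("wait1", "ignore stale ACK"),
    ("wait1", "timeout"):  ("wait1", "retransmit DATA(1)"),
}

def run_mealy(transitions, start, inputs):
    """Feed inputs through a Mealy machine; each output depends on state AND input."""
    state, outputs = start, []
    for symbol in inputs:
        if (state, symbol) not in transitions:
            raise ValueError(f"no transition for input {symbol!r} in state {state!r}")
        state, output = transitions[(state, symbol)]
        outputs.append(output)
    return state, outputs

final, outs = run_mealy(TRANSITIONS, "ready0", ["send", "timeout", "ack0", "send", "ack1"])
for line in outs:
    print(line)
```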

This kind of design can be a bit of a challenge, to say the least, so it is important to keep things simple. For the Internet protocols, in particular and in retrospect, this meant a basis for protocol design was needed to allow decomposition of protocols into much simpler, cooperating protocols.

A basis for protocol design

Systems do not use a single protocol to handle a transmission. Instead, they use a set of cooperating protocols, sometimes called a protocol family or protocol suite.[9] To cooperate, the protocols have to communicate with each other, so some kind of conceptual framework is needed to make this communication possible. Also note that software is needed to implement both the 'xfer-mechanism' and a protocol (no protocol, no communication).

In the literature, there are numerous references to the analogies between computer communication and programming. By analogy, the aforementioned 'xfer-mechanism' is comparable to a CPU: an 'xfer-mechanism' performs communications, and a CPU performs computations. The 'framework' introduces something that allows the protocols to be designed independently of one another by providing separate execution environments for them. Furthermore, it is repeatedly stated that protocols are to computer communication what programming languages are to computation.[32][33]

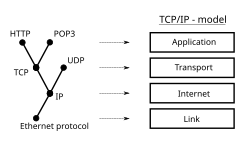

Layering

In modern protocol design, protocols are "layered". Layering is a design principle that divides the protocol design into a number of smaller parts, each of which accomplishes a particular sub-task and interacts with the other parts of the protocol only in a small number of well-defined ways.

Layering allows the parts of a protocol to be designed and tested without a combinatorial explosion of cases, keeping each design relatively simple. Layering also permits familiar protocols to be adapted to unusual circumstances. For example, the mail protocol above can be adapted to send messages to aircraft by changing the V.42 modem protocol to the INMARS LAPD data protocol used by the international marine radio satellites.
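The adaptation described above works because each layer only wraps and unwraps its own header, so the bottom layer can be replaced without touching the layers above it. The sketch below shows that idea with hypothetical layers: `TagLayer` and `LengthPrefixedLink` merely stand in for a modem link and a satellite link and do not implement V.42 or INMARS LAPD.

```python
from typing import Protocol, Sequence

class Layer(Protocol):
    def wrap(self, payload: bytes) -> bytes: ...
    def unwrap(self, frame: bytes) -> bytes: ...

class TagLayer:
    """Hypothetical layer whose 'header' is a fixed tag."""
    def __init__(self, tag: bytes):
        self.tag = tag

    def wrap(self, payload: bytes) -> bytes:
        return self.tag + payload

    def unwrap(self, frame: bytes) -> bytes:
        if not frame.startswith(self.tag):
            raise ValueError(f"expected header {self.tag!r}")
        return frame[len(self.tag):]

class LengthPrefixedLink:
    """Hypothetical alternative bottom layer: a 2-byte length prefix."""
    def wrap(self, payload: bytes) -> bytes:
        return len(payload).to_bytes(2, "big") + payload

    def unwrap(self, frame: bytes) -> bytes:
        n = int.from_bytes(frame[:2], "big")
        return frame[2:2 + n]

def send(stack: Sequence[Layer], data: bytes) -> bytes:
    # Top layer first: each lower layer wraps what the layer above produced.
    for layer in stack:
        data = layer.wrap(data)
    return data

def receive(stack: Sequence[Layer], frame: bytes) -> bytes:
    # Bottom layer first: each layer strips only its own header.
    for layer in reversed(stack):
        frame = layer.unwrap(frame)
    return frame

upper = [TagLayer(b"MAIL|"), TagLayer(b"XPORT|")]   # unchanged upper layers
modem_stack = upper + [TagLayer(b"MODEM|")]
satellite_stack = upper + [LengthPrefixedLink()]    # only the bottom layer differs

print(send(modem_stack, b"hello"))   # b'MODEM|XPORT|MAIL|hello'
print(receive(satellite_stack, send(satellite_stack, b"hello")))   # b'hello'
```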