Memory-Based Learning for Control

Memory-based methods provide natural and powerful mechanisms for

high-autonomy learning control. This paper takes the form of a survey of

the ways in which memory-based methods can and have been applied to

control tasks, with an emphasis on tasks in robotics and manufacturing.

We explain the various forms that control tasks can take, and how this

affects the choice of learning algorithm. We show a progression of

five increasingly complex algorithms that are applicable to

increasingly complex kinds of control tasks. We examine their

empirical behavior on robotic and industrial tasks. The final section

discusses the interesting impact that explicitly remembering all

previous experiences has on the problem of learning control.

Robots learn by ‘following the leader’

Teaching robots how to be better mission partners to soldiers, starting with how to find their way with minimal human intervention.

Given that autonomous vehicles have been navigating streets in many

U.S. cities for over a year, that may not seem like a big deal.

But according to ARL researcher Maggie Wigness, the challenges facing

military robots are much greater. Specifically, unlike the self-driving

cars being developed by Google, Uber and others, military robots will

be operating in complex environments that don’t have the benefit of

standardized markings like lanes, street signs, curbs and traffic

lights.

“Environments that we operate in are highly unstructured compared to [those for] self-driving cars. We cannot assume that there are road markings on the roads, we cannot assume that there is a road at all. We are working with different types of terrain.”

The challenges extend beyond unmarked and variable terrain. The training of self-driving cars “requires a tremendous amount of labeled training data. That is something we don’t have the luxury of collecting in an Army-relevant environment, so we are focusing more on how to learn from small amounts of labeled data.”

Specifically, in the ARL project, the robots are trained to navigate environmental features following examples provided by humans. By observing, the robot can learn to do the same. “It’s a form of emulation.”

In the ARL project, humans assigned weights to the various features in the environment to help the robot learn to resolve conflicting commands. “For example, we train a robot to drive on road terrain and avoid the grass, so it learns the grass is bad to drive on and the road is good to drive on.”

But then the team gave the robot an additional command to avoid the field-of-view of a sniper. The robot, he said, “needs to balance these two goals simultaneously. It needs to break one of the behaviors.” Presumably, with proper weighting of factors, the robot will opt to drive on the grass.

“In the ultimate vision of this research, the robot will be operating alongside soldiers while they are performing the mission and doing whatever specific duties it has been assigned.”

Memory and learning for social robots

While most research in social robotics embraces the challenge of

designing and studying the interaction between robots and humans itself,

this talk will discuss the utility of social interaction in order to

facilitate more flexible robotics. What can a robot gain with

respect to learning and adaptation from being able to sociably interact?

What are basic learning-enabling behaviors? And how do inexperienced

humans tutor robots in a sociable way? In order to answer these questions, we

consider the challenge of learning by interaction as a systemic one,

comprising appropriate perception, system design, and feedback. Basic

abilities of robots will be outlined which resemble concepts of

developmental learning in infants, apply linguistic models of

interaction management, and take tutoring as a joint task of a human and

a robot. However, in order to tackle the challenge of learning by

interaction the robot has to couple and coordinate these behaviors in a

very flexible and adaptive manner. The active memory as an architectural

concept particularly suitable for learning-enabled robots will be

briefly discussed as a foundation for coordination and integration of

such interactive robotic systems. The talk will build a bridge from the

construction of integrated robotic systems to their evaluation,

analysis, and back again. It will outline why we intend to enable our

robots to learn by interacting and how this paradigm impacts the design

of systems and interaction behaviors.

Prototype of a robotic system with emotion and memory

A prototype of a social robot that supports independent living for the

elderly, working in partnership with their relatives or carers.

Robotics/Computer Control/The Interface / Microcontrollers

Microcontrollers are the core of many robots. They have considerable

processing power packed on to one chip, allowing lots of freedom for

programmers. Microcontrollers are low-level devices, and it is common to program them in assembly language, which provides a great deal of control over the hardware connected to the controller. Many manufacturers also provide high-level language compilers for their chips, including BASIC and C.

What's the difference between a microcontroller, a microprocessor, and a CPU?

The CPU is the part which actually executes the instructions (add, subtract, shift, fetch, etc.).

A microprocessor is any CPU on a single chip.

A microcontroller is a kind of microprocessor, because it includes a CPU, but it typically also contains all of the following components on the same single chip:

- (discrete) inputs

- (discrete) outputs

- ROM for the program

- RAM for temporary data

- EEPROM for non-volatile data

- counter/timer

- clock

With this capability, microcontrollers are quite convenient pieces of silicon.

The outputs of a microcontroller can be used to drive many things; common examples include LEDs and transistors. The outputs on a microcontroller are generally low power, so transistors are used to switch higher-power devices (such as motors) on and off.

All CPUs are useless without software.

Most software for a PC is stored on the hard drive. But when you first turn one on, it starts executing the software in the boot ROM. If you wanted to change that software, you would have to pull out the ROM chip, program a new ROM chip (in a "chip programmer"), and then plug the new chip into the PC.

Most robots don't have a hard drive -- all their software is stored in ROM. So changing that software is exactly like changing the boot code of a PC. (If your robot has an external ROM chip, then that is the one that is pulled and replaced. If your robot uses a microcontroller with internal ROM, then the microcontroller is pulled and replaced).

Many recent PC motherboards and microcontrollers use Flash instead of ROM. That allows people to change the program without pulling out or putting in any chips. Flash can be rewritten with new data, like a memory chip, but it keeps that data permanently, and it can be rewritten only a limited number of times (10,000 to 100,000 erase/write cycles).

Here are a few pages about specific microcontrollers:

- 8051

- Atmel AVR

- Microchip PIC

- Embedded Systems/ARM Microprocessors

Robotics/Communication Sensors

Data transmission channels

Being able to send data to and from your robot can be a very handy addition. There are 2 commonly used methods for wireless data transmission: IR (InfraRed) and RF (Radio Frequency). Both methods have their own advantages and disadvantages. Which to use depends on several factors.

IR

The best-known example of IR data transmission is the TV remote control. Using IR on a robot is quite easy and very cheap. The disadvantage of IR is that it's limited to line-of-sight transmission. The line-of-sight range, or operating distance, can be increased by using microwave (transmitter-receiver) systems.

RF

RF is well known from radio-controlled cars. RF is more expensive than IR, but doesn't have the line-of-sight limitation. These days there are complete RF "modems" available which can be connected to a robot with few (or even no) extra components. Although it is possible, building your own RF communication modules isn't advisable: there are strict laws about which frequencies you can use and with how much power you can transmit.

Using IR

IR is not much more than modulated flashes of light. Since IR falls outside the visible spectrum, humans can't see these flashes without an aid such as a digital camera (the CMOS image chip can see IR; on the screen those IR flashes appear bright white).

Remote Control

The great thing about IR remote controls is that you can use many of these directly to control your robot. Although there are several (very) different IR remote control standards, there is one standard that is used by multiple manufacturers. This standard, called RC5, is very easy to use, as many programming languages for microcontrollers have built-in RC5 support. The hardware is limited to an integrated receiver module (e.g. TSOP1736), a capacitor and a resistor.

Robotics/Sensors/Digital image Acquisition

Image Sensors

There are 2 types of image sensors commonly used to digitally acquire an image, CCD and CMOS. While both have similar image quality, their core functionality and other features greatly differ.

CCD

A CCD, or Charge-Coupled Device, is an older technology based on an analog system. A photoelectric surface that coats the CCD creates an electric charge when light hits it, and the charge is then transferred and stored in a capacitive bin that sits below the surface of each pixel.[2] The CCD then functions like a shift register, and transfers the charge in the bin below each pixel one spot over by applying a voltage in a cascading motion across the surface. The charge that reaches the edge of the CCD is transferred to an analog-to-digital converter, which turns the charge into a digital value for each pixel. This process is relatively slow because each pixel's charge has to be shifted to the edge of the CCD before it can be turned into digital information.

CMOS

A CMOS image sensor is a type of Active-Pixel Sensor (APS) built from complementary metal–oxide–semiconductor (CMOS) circuitry. CMOS sensors can be much faster at generating a digital image, and consume less power than a CCD. They can also be larger than a CCD, allowing for higher resolution images, and can be manufactured through cheaper methods than a CCD. Each pixel in a CMOS sensor contains a photodetector and an amplifier.[4]

The simplest type of CMOS image sensor is the 3T model, where each pixel is made up of 3 transistors and a photodiode. The transistor Mrst is used to clear the value of the pixel and reset it to acquire a new image. The Msf transistor buffers and amplifies the value from the photodiode until the pixel can be read and is reset. The Msel transistor is the pixel select transistor, which outputs the pixel’s value to the bus only when the device is reading the row it is in. In this model the data is gathered in parallel via a shared bus: all pixels in a column share the same bus, and the data is sent down the bus one row at a time. This method is faster at shifting the charge value to the digital converter.

There are other variations of the CMOS sensor which help reduce image lag and noise. Image lag occurs when some of the previous image remains in the current one. This is often caused by a pixel not getting fully reset, so some of the charge from the previous image still exists. Image noise is a measure of how accurately the amount of light that hits the pixel is measured. The CMOS sensor is a very important tool for robotics.

Color Images

The 2 types of image sensors do not natively measure color; they simply convert the amount of light (regardless of color) to a digital value.[1] There are several different ways to gather color data. The most common are a color filtering array, the specialized Foveon X3 sensor, and a trichroic prism combined with 3 image sensors.

Color Filtering Array

The most common method is to use a color filtering array. The most common type of filter used is the Bayer filter, developed by Kodak researcher Bryce Bayer in 1976. A color filtering array filters the light coming into each pixel so that the pixel only detects one of the primary colors. The full color image can later be recreated by combining the colors from neighboring pixels.[1] The Bayer filter uses a pattern of 50% green, 25% red and 25% blue to match the sensitivity of the human eye to the 3 primary colors. The Bayer filter repeats over 4 pixels.

Because you only know one of the colors in each pixel, the image has to be reconstructed, and some of the image fidelity is lost in this reconstruction process, called demosaicing. Edges of objects in the image can often be jagged and have non-uniform color along the edges. In addition to the Bayer filter, there are many other filter patterns that can achieve the same result.

The main problem with using a filter is that it reduces the amount of light that reaches each photodetector, thus reducing each pixel’s sensitivity to light. This can cause problems in low-light situations because the photodetectors will not receive enough light to produce a charge, resulting in large amounts of noise. To help reduce this effect, there is another type of filter in use that has some pixels that are not filtered. These panchromatic filters mimic the human eye, which has detectors of color and detectors of light and dark. These do much better in low light, but require a much larger area to mimic the pattern than a traditional Bayer filter. This causes some loss in fidelity.

Image Transfer

The two most common methods for connecting cameras to a robot are USB and FireWire (IEEE 1394). When Apple developed FireWire they had in mind that it would be used to transfer audio and video. This resulted in a greater effective speed and higher sustained data transfer rates than USB, which are needed for audio and video streaming. FireWire also has the benefit of being able to supply more power to devices than USB can. FireWire can also function without a computer host: devices can communicate with each other over FireWire without a computer to mediate.

Robotics/Real World Sensors

There is no such thing as a "distance sensor". Period. Those components commonly called "distance sensor" or similar names measure something and extract distance information out of that. This extraction works pretty well in particular circumstances, but is worthless in many others. The key to successfully measuring, say, a distance is knowing exactly what your sensor measures and how external factors influence the measurement. This doesn't mean you just need to know how accurate the sensor is; it means you need to know what part of physics is used, and what the laws of physics say about that.

I'll cover some of the most commonly used sensors and what laws of physics apply to those. I'm not going very deep into explaining physics, since there are better sources for that (a wiki physics book, for example). I'll give just enough to show what problems you may expect and where to look for a solution.

Light Based Sensors

Reflection: short range sensors

This type of sensor detects objects at a range of up to a few centimeters. These sensors are quite simple. They consist of a light source, usually an IR diode whose signal can be modulated, and a light detector, which can be as simple as a light-sensitive diode or transistor with an amplification circuit, or a more complex IC complete with filters and TTL level outputs.

These sensors work by detecting the reflection of the emitted light. The range at which an object is detected depends on a number of properties of the object:

- reflectivity/color: how well does the object reflect IR-light? Every object has a color. A green object means that it reflects light with wavelengths that we interpret as the color green. This can be a pretty large range. IR is also a color. Like any other color, some objects reflect IR, and other objects absorb IR.

- surface smoothness: A very smooth surface (like a mirror) reflects more light than a rough surface. (For example, a photograph of a black billiards ball usually shows a white spot caused by specular reflection).

- Angle: The more the surface is turned away from the sensor the more light is going to be reflected away from the sensor.

- Light sources: Other light sources like light bulbs or the sun emit IR light as well. The sun especially can prevent an IR-based sensor from operating.

Reflection: medium range sensors

Medium range sensors are a bit more complicated than short range sensors. These sensors consist of an IR emitting diode whose signal is modulated, and a receiver with a lens that focuses the reflected light onto a light-sensitive strip. Moving the sensor towards or away from an object moves the reflected beam along the light-sensitive strip. The resistance of the strip depends on where the light hits the strip.

The range of these sensors has the same limiting factors as that of short range sensors.

Reflection: Long range sensors

Long range sensors use the time a laser pulse takes to travel from the emitter to the object and back. There are several methods of measuring this time of flight, but most involve correlating the transmitted and received light pulse. By comparing the phase of these two pieces of data, a very accurate time value can be extracted. These sensors can operate over a wide range, typically from a few centimeters up to several kilometers.

Their range is also limited in the same way as that of the previous IR sensors. Haze, smoke and other particles in the air can limit it further.
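The time-of-flight principle described above can be illustrated with a minimal Python sketch. It only shows the basic arithmetic (distance is half the round-trip time multiplied by the speed of light); the function name is hypothetical, and the phase-correlation step a real sensor uses to measure the time is not modeled here.

```python
# Speed of light in vacuum; in air it is only about 0.03% slower,
# which this sketch ignores.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Return the distance to the target for a measured round-trip time.

    The pulse travels to the object and back, so only half of the
    travelled path is the distance to the object.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# A pulse that returns after 100 ns corresponds to a target roughly 15 m away.
print(tof_distance_m(100e-9))
```

The tiny times involved (nanoseconds for meters) are one reason these sensors compare phases instead of simply starting and stopping a counter.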

Camera

Cameras used in robotics are commonly built around an image sensor. These sensors are sensitive to IR light, so cameras usually have an IR filter in front of the lens. Cheap webcams may not contain such a filter, which makes them very sensitive to sunlight.

Stereo vision

These sensors consist of (at least) 2 cameras mounted some fixed distance from each other. This is rarely used because solving the correspondence problem is difficult.

Sound Based Sensors

What is sound?

Sound is in essence vibrations and pressure differences in the air. These vibrations are split into 3 groups by their frequency. The first group, called infrasound, has a frequency below 20 Hz. The second group, called ultrasound, has a frequency above 20 kHz and an upper bound of 2 MHz in air or 30 MHz in water. The last group is what is commonly called sound. This group's range lies between 20 Hz and 20 kHz and can be heard, although only newborn babies can really hear all the way up to 20 kHz. The older you get, the fewer frequencies you can hear.

Most sensors use ultrasonic sound, usually around 40 kHz. Such a signal can't be heard, while it's still easy to use (generate, detect, ...).

Doppler effect

If both the source and the receiver are motionless relative to each other, the receiver will hear the same frequency as the source emitted. However, if one or both of them move relative to the other, the receiver will detect a different frequency. This change in frequency is called the Doppler effect. Most people know this effect from the sirens of passing police cars or ambulances: you hear one pitch as they approach and a somewhat lower pitch as they move away.

Calculating what frequency the receiver will hear is quite easy:

$$f_r = f_s \cdot \frac{v + v_r}{v - v_s}$$

With:

$f_r$ = the frequency the receiver hears

$f_s$ = the frequency the source emits

$v$ = the speed of sound

$v_r$ = the speed of the receiver (positive when moving toward the source)

$v_s$ = the speed of the source (positive when moving toward the receiver)
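As a rough illustration of the Doppler relation above, here is a minimal Python sketch. It assumes the standard textbook form f_r = f_s (v + v_r) / (v - v_s), with the receiver speed counted positive toward the source and the source speed counted positive toward the receiver; the function and parameter names are made up for this example.

```python
def doppler_received_frequency(f_source_hz: float,
                               v_sound: float,
                               v_receiver: float = 0.0,
                               v_source: float = 0.0) -> float:
    """Frequency heard by the receiver, all speeds in m/s.

    Assumed sign convention: v_receiver > 0 means the receiver moves
    toward the source, v_source > 0 means the source moves toward
    the receiver.
    """
    if v_source >= v_sound:
        raise ValueError("source speed must be below the speed of sound")
    return f_source_hz * (v_sound + v_receiver) / (v_sound - v_source)


# A 40 kHz ultrasonic source approaching at 10 m/s (speed of sound 340 m/s)
# is heard at a higher frequency than it emits.
print(doppler_received_frequency(40_000.0, 340.0, v_source=10.0))
```

For a robot this matters mainly when the robot or the obstacle moves fast enough to push the echo outside the band the receiver is tuned to.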

Speed of sound

The speed of sound depends on the medium it travels through and on the temperature of that medium. For air this is approximately 330 m/s at 0°C. Of course, most of the time the temperature is a bit higher than this. Calculating the actual speed is fairly easy:

$$v = v_0 \sqrt{\frac{T}{T_0}}$$

with:

$v$ = the actual speed at the current temperature.

$v_0$ = the speed of sound at 0°C: 330 m/s.

$T$ = the current temperature in Kelvin.

$T_0$ = 273.15 K (this is 0°C in Kelvin)
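The temperature correction can be sketched in a few lines of Python. This assumes the usual square-root relation between the speed of sound in air and the absolute temperature, and uses the 330 m/s reference value from the text; the function name is hypothetical.

```python
import math

V0_M_S = 330.0   # speed of sound at 0 degrees C, as given in the text
T0_K = 273.15    # 0 degrees C expressed in Kelvin


def speed_of_sound_m_s(temperature_c: float) -> float:
    """Approximate speed of sound in air at the given temperature."""
    temperature_k = temperature_c + T0_K
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return V0_M_S * math.sqrt(temperature_k / T0_K)


# At room temperature (20 degrees C) the speed is a few percent higher than at 0.
print(speed_of_sound_m_s(20.0))
```

A robot that measures distance with ultrasound can feed a temperature reading into such a function instead of using a fixed constant.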

Reflection

Resonance

Diffraction

Ultrasonic Distance sensors

These sensors are pretty simple. In theory, that is. In practice these sensors can be a real pain in the pinky. In this section I'll cover some of the troubles you may run into when trying to get them to work.

Ultrasonic distance sensors consist of 3 major parts: a transmitter, a receiver and a timer. To measure a distance, the timer triggers the transmitter, which emits a series of pulses; the timer then runs until the receiver detects the reflection of the pulses, at which point it is stopped. The time measured is then divided by 2 and multiplied by the speed of sound. The result is the distance between the sensor and the object in front of it. The transmitter sends out a stream of pulses on a carrier frequency. The maximum frequency humans can hear is about 20 kHz. A frequency higher than that is picked to avoid annoying humans with the constant beep; 40 kHz is a common value.

The receiver triggers when it receives a signal with that particular frequency. This is not necessarily the signal the transmitter sent. If more than one ultrasonic sensor with the same carrier frequency is used, they can detect each other's signals.

Sound doesn't move in a straight line, but rather as a 3D expanding wave. When the wave reaches an object, part of it bounces back and moves again as a 3D expanding wave in the opposite direction. Such a wave can easily bounce multiple times before disappearing. So it is very possible that you receive pulses that have traveled a much longer path than just to the object in front of the sensor and back. While part of this problem can be solved by letting the sensor wait some time before starting another measurement, other situations can produce incorrect measurements which are fairly tough to correct. For example, moving through a doorway can fail because the sensor's emitted pulses bounce from the walls back to the sensor, giving a measurement that indicates an object in front of the sensor. One way of correcting this is using another sensor, for example an IR distance sensor, to see if there really is an object. However, such a solution poses another problem: which sensor to believe? 3 sensors allow you to go with the majority, but then constructing and interfacing such systems becomes quite complicated, not to mention what it does to the power consumption.
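The "go with the majority" idea can be sketched as a simple vote. This is only an illustration under stated assumptions: each sensor is assumed to report a distance in meters (or None when it has no reading), and the function name and threshold are hypothetical.

```python
from typing import Optional, Sequence


def obstacle_by_majority(distances_m: Sequence[Optional[float]],
                         threshold_m: float) -> bool:
    """Return True if more than half of the sensors see something
    closer than threshold_m. A reading of None counts as "no obstacle"."""
    votes = sum(1 for d in distances_m
                if d is not None and d < threshold_m)
    return votes * 2 > len(distances_m)


# One ultrasonic sensor is fooled by a doorway echo (0.3 m), while two
# other sensors report free space: the majority says the path is clear.
print(obstacle_by_majority([0.3, 2.5, 2.4], threshold_m=0.5))
```

A vote like this hides a single faulty reading, but it cannot help when the sensors share the same weakness, which is why mixing sensor types is suggested above.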

Distance Formula

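The formula for calculating distance from a sonar pulse looks like:

$$d = 343\ \text{m/s} \times \frac{t}{2}$$

Here $t$ is the time between sending the pulse and receiving its echo. 343 m/s is the speed of sound (in air at about 20°C), and we need to divide the time by 2 because the sound travels out and back.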
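As a small worked example of the distance formula, the following Python sketch converts an echo time into a distance. The function name is illustrative, and the default of 343 m/s is the value quoted in the text.

```python
def sonar_distance_m(echo_time_s: float,
                     speed_of_sound_m_s: float = 343.0) -> float:
    """Distance to the object from the time between pulse and echo.

    The time is halved because the sound travels out and back.
    """
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    return speed_of_sound_m_s * echo_time_s / 2.0


# An echo that arrives 10 ms after the pulse corresponds to about 1.7 m.
print(sonar_distance_m(0.010))
```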

Availability & Range

Sonar sensors are widely available and relatively inexpensive, ranging from $15 to $40 depending on the desired range. On average the maximum range of a midlevel sonar sensor will be between 4 and 6 meters. Unlike infrared or laser sensors, sonar sensors also have a minimum sensing distance. This is due to the fact that the distance measurements are based on the speed of sound, and over very short ranges the sound travels out and back more quickly than the circuitry can respond. This minimum distance varies by sensor, but is typically around 2 to 5 centimeters. Also unlike infrared sensors, sonar sensors don’t have a perfect “cone” of vision. Because sound propagates as a 3D pressure wave, the sensor actually has a range that resembles a sinc function wrapped around a curve.

Potential Problems

Sonar sensors work very well for collision avoidance in large areas, but they do have some limitations. Because sound propagates out as a 3D pressure wave and echoes, your robot may see things that are not really in its way. For instance, near angled walls the sound waves may bounce several times before returning to the sonar receiver. This makes it difficult for the robot to know which echo gives the correct distance to the nearest obstacle.

Similar to this is the problem of having multiple sonar sensors operating in the same area. If the frequencies of nearby sonar sensors are too similar, they may cause false readings, since a sensor has no method besides frequency to distinguish the pulses it sent out from those that other sensors sent out.

Another common problem is the difference in absorbency and reflection of different materials. If you shoot a sonar pulse at a cloth-covered wall (e.g. a cubicle wall), it is likely that the cloth will absorb a significant amount of the acoustic energy and that the robot will not see the wall at all. On the opposite end of the spectrum, a floor with very high acoustic reflection may register as an obstacle when it is really a clear plane.

Magnetism Based Sensors

Compass sensors

These sensors are used to measure the orientation of the robot relative to magnetic north. It is important to remember that magnetic north is not exactly the same as geographical north. They differ by several degrees.

The magnetic field of Earth is quite weak. As a result, these sensors will not operate well near other magnetic fields; speakers, for example, would mess up the reading. If you use these sensors, it is best to mount them as far away from your motors as possible. While you can't shield them without making them useless, paying attention to where you mount them can make a considerable difference in reliability.
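The difference between magnetic and geographical north can be corrected in software once the local offset (the magnetic declination) is known. The sketch below assumes the common convention that declination is positive when magnetic north lies east of geographical north; the function name is hypothetical, and the declination value has to be looked up for the robot's location.

```python
def true_heading_deg(magnetic_heading_deg: float,
                     declination_deg: float) -> float:
    """Convert a compass (magnetic) heading to a geographical heading.

    declination_deg is assumed positive when magnetic north is east of
    geographical north. The result is wrapped into the range [0, 360).
    """
    return (magnetic_heading_deg + declination_deg) % 360.0


# With a declination of 15 degrees east, a compass reading of 350 degrees
# corresponds to a geographical heading of 5 degrees.
print(true_heading_deg(350.0, 15.0))
```

This correction only handles the fixed offset; disturbances from motors or speakers still have to be dealt with by careful mounting.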

Robotics/Computer Control/The Interface/Computers

Personal computers (PCs) have a large number of ports to which you could add your own hardware to control your robot. Some of these are very easy to use, while others are nearly impossible to use without special (expensive) ICs. Not all of these interfaces are available on all computers. This section gives an overview of some of the best-known ports on a PC. These ports and their uses are well documented all over the internet.

External Ports

These are all the ports that are available on the outside of a PC. Most computer users are familiar with them (or at least know them by name and looks).

Serial Port

The serial port is one of the two easiest-to-use ports on a PC. This port consists of 2 wires to transfer your data (one for each direction) and a number of signal wires. This port is reasonably sturdy and, if you know some digital electronics or use a microcontroller, pretty easy to use too. It is limited in speed and can only connect two devices directly. By using special ICs you can connect the serial port to an RS-485 network and connect it to multiple devices.

- Serial Programming/RS-232

- This site contains a lot of information on serial ports (among others).

Parallel Port

The parallel port is the second of the two easiest-to-use ports on a PC. This port uses 8 lines to transfer data and has several signal lines. Modern parallel ports can send and receive data. This port is easier to use, but less sturdy than the serial port. Since it operates on TTL voltage levels (0 and 5 V), it can connect directly to microcontrollers and TTL logic.

USB

USB is the successor of the serial port. It's faster and allows connecting devices without turning off the PC. Some modern microcontrollers have built-in USB support.

- Everything you'd need to know about USB.

- Serial Programming:USB Technical Manual

- Embedded Systems/Particular Microprocessors#USB interface

IEEE 1394: Firewire, i.link, Lynx

IEEE 1394, also known as FireWire, i.link or Lynx, is a (much) faster port, similar to the USB port. It reaches speeds up to 400 Mbit/s.

- The Connector

- Article about IEEE 1394.

- Linux FireWire wiki

Keyboard Connector

Keyboards use TTL level signals to transfer button presses and releases to the PC. A keyboard sends a code when a button is pressed and sends another one when the button is released. This port can also be used for other purposes, either by replacing the keyboard with your own hardware or by using the keyboard itself as an input device.

Ethernet

Ethernet can be used to connect other devices to a PC. Complete webserver-on-a-chip devices are available these days, and an Ethernet network can be a way to connect multiple devices in a robot (and even hook it up to the internet and let people control the robot from all over the world).

Internal Ports

These are the connectors inside the PC. Generally these are used with special PCBs (called cards). Although harder to use, they offer great speed.

ISA

ISA was the (8-, later 16-bit) bus where you plugged in your video, sound, IDE and network cards in the old days. You'll find these on PCs up to (some) early Pentium II machines (the latter usually have only 1 E-ISA socket, if any). This bus is pretty easy to use for your own projects and well documented on the internet.

- ISA bus connector

- More in-depth explanation of the ISA bus

PCI

PCI is the successor of the ISA bus. It's a faster 32-bit bus. Since it supports plug and play, a PCI device needs a few registers which identify the component and manufacturer.

AGP

The Accelerated Graphics Port is aimed at 3D graphics cards. It's a fast bus, but optimized for graphics.

PCI Express

PCI Express replaces both PCI and AGP. It's quite different from all the other buses, as it uses serial communication rather than parallel. Its speed depends on the number of "lanes" (serial connections) used. PCI Express supports 1, 2, 4, 8 and 16 lanes.

Wireless

These are "ports" too, as they can be used to connect other devices to the PC.

IRDA

IRDA is an infrared communication port. Modern versions reach speeds up to 4 Mbit/s. IRDA may be a good alternative to wires for table-top robots. Since it's an infrared port, it needs a line of sight to work reliably, and its range is limited to 1 m. Note that this port works at a much higher speed than remote controls, and therefore standard remote control repeaters may not work reliably for IRDA.

This site covers the basics of IRDA.

Here is a pinout of the mainboard IRDA connector.

WiFi / WLAN / 802.11 / Wireless Ethernet

All the names in the headline are synonyms for the same technology. WLANs are commonly used in PCs (especially laptops) as data networks. The bandwidth available is on the order of several megabits per second or more, far more than is normally necessary in any robotics project. A WLAN typically reaches about 100 m, but with special directional antennas far more is possible (in a specific direction).

A WLAN is the obvious choice if your robot has a built-in PC or perhaps even a PDA for control. Also, when you have Ethernet connectivity in your controller (reasonably low cost but not a standard feature except in certain kits), there are low-cost (~€50) WLAN bridges available, such as the D-Link DWL-810+ and DWL-G810.

If you only have a serial port available, a wireless device server could be used. The cost of one of them is, however, over €100.

Bluetooth

Bluetooth is a low-bandwidth protocol most commonly found in cellular phones. It is increasingly being deployed in laptops, and there are separate USB "sticks" available as well. Bluetooth can be used for making a serial connection wireless: there are Bluetooth serial ports available on the market, which can be used as converters. Total bandwidth in the system is about a megabit per second, with a range of up to about ten meters (standard Bluetooth, 2.5 mW) or about a hundred meters (industrial Bluetooth, 100 mW). There are limitations on scaling with Bluetooth: it is mostly deployed in 1:1 links, even though the standard includes networks with up to 8 active nodes (and even more passive ones). This means that if you plan on building large numbers of robots with a common communication network, Bluetooth might be less well suited.

ZigBee

ZigBee is a protocol stack based on the 802.15.4 wireless network communication standard. It is low-cost, low-power and all in all well suited for low-bandwidth communication needs. The bandwidth is on the order of tens to hundreds of kilobits per second, and the range is up to about a kilometer, depending on equipment.

An interesting solution is the XBee module from Maxstream, which basically provides a wireless serial link. There is also a list of other vendors in the Wikipedia article on ZigBee.

UWB

Wireless USB

Cellular networks

A possibility is to use standard cellular networks (mobile phones). It is only a viable solution for large-scale geostationary outdoor applications with low communication needs though, because of cost, latency and bandwidth limitations.

Radio modems

Radio modems are normally older proprietary solutions for wireless linking of serial ports. Proprietary solutions probably shouldn't be considered for new designs.

Using a PC or laptop in a robot

PCs have the benefit of an abundance of memory, program space and processing power. Additionally, they provide the best debug I/O (screen, keyboard and mouse) you could wish for. But they do have a few flaws that limit their usefulness in a mobile robot.

- First of all, their size. Even the smallest PC, a laptop, is quite bulky and forces you to use a rather large frame.

- Secondly, except for a laptop, power consumption is high, and providing AC power on a mobile unit is cumbersome, as you need heavy batteries and an inverter.

- Lastly, PCs are poor at getting reliable, accurate timing from the outside world.

The last point is quite easy to get around. Most PCs have a serial port. Most microcontrollers have a serial port as well. Use a level converter to connect the TTL level serial port of the microcontroller with the RS232 level computer serial port and use a little program that handles the accurate timing in the microcontroller and transfers this information to the computer through the serial connection. This is a very powerful setup that combines the strengths of both the PC and the microcontroller.
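As a rough illustration of the PC side of such a setup, the sketch below assumes the microcontroller timestamps each event itself and sends one text line per event in a hypothetical `timestamp_us,channel` format. The line format, the 32-bit counter width and the function names are all assumptions made for this example, not part of any particular microcontroller's firmware. The point is that the PC computes intervals from the microcontroller's timestamps rather than from the moment the bytes happen to arrive.

```python
COUNTER_BITS = 32  # assumed width of the microcontroller's microsecond counter


def parse_event(line):
    """Parse one hypothetical 'timestamp_us,channel' line from the serial link."""
    timestamp_text, channel_text = line.strip().split(",")
    return int(timestamp_text), int(channel_text)


def intervals_us(lines):
    """Return the time between consecutive events in microseconds.

    Only the timestamps generated on the microcontroller are used, so jitter
    in the PC's serial handling does not affect the result.
    """
    wrap = 1 << COUNTER_BITS
    previous = None
    result = []
    for line in lines:
        timestamp, _channel = parse_event(line)
        if previous is not None:
            # Modulo arithmetic keeps the difference correct across a counter wrap.
            result.append((timestamp - previous) % wrap)
        previous = timestamp
    return result


if __name__ == "__main__":
    # Lines as they might be read from the serial port (made-up sample data).
    sample = ["4294967000,0\n", "200,0\n", "1200,0\n"]
    print(intervals_us(sample))
```

In a real robot the lines would come from the serial port instead of a list; any file-like object that yields text lines can be passed to `intervals_us`.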

See this site for example interfacing hardware for the serial port. Covers an I/O module and RS232 to TTL level converter for use with robotics or microcontroller based projects.

Some microcontrollers provide USB or Ethernet ports, which can be used in pretty much the same way, although the software would be a bit harder to implement.

Robotics/Feedback Sensors/Encoders

It's not at all uncommon to require information concerning rotation on a robot, from arm or manipulator axis motion to drive wheel speed. Rotary encoders can be found in several varieties, most commonly mechanical or using photodetectors. In mechanical encoders, electrical contacts periodically connect, usually to drive a terminal high or low. These electrical pulses are then used for information. Photodetector encoders can be made smaller and operate much quicker. Typically, a reflective sensor will shine on a marked reflective surface, or a photointerruptor will shine through a disc with transparent and opaque sections, such that the amplitude of received light causes high and low electrical pulses in the receiver. These pulses are then decoded by external circuitry.

Encoding

Depending on the type and reliability of information needed, the patterning on the rotary encoder will be increasingly complex. If only rotational speed is required, a simple alternating pattern will do, but if both speed and direction are needed, more encoded information is required. Encoding position will inherently yield information regarding velocity and direction, as comparing the direction of change in position will give the direction of rotation, while the change in position with respect to time will (by definition) indicate speed. The specific type of position encoding will depend on the precision required, as well as the reliability: how much error in measured rotational position, due to an error in reading the encoded information, is acceptable in the application.

Measuring Rotational Speed

Example of simple speed-detection rotary encoder

Measuring Direction

Two-row encoder for finding speed and direction

If the disc is rotating in a particular direction (in this case, such that the pattern slides to the left), the rising (or falling) edge of channel A will be detected before that of channel B; if rotation is in the opposite direction, the edge of channel B will be detected first. By testing which channel's edges are detected first, the direction of rotation can be determined. The time between pulses on a single channel can be measured to approximate rotational speed. However, combining detections from the two channels gives an effective pulse rate double that of a single channel, which halves the measuring time and doubles precision. A means of measuring speed and direction with a single row exists as well. Two receivers are placed on a single row, spaced half a pulse width apart, so that as the disc rotates, one sensor will be in a steady state while the other transitions. The order in which the two sensors transition into a state depends on the direction of rotation of the disc.
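The direction test described above can be sketched in software as a small state machine. This is a minimal sketch, assuming the two channels are sampled fast enough that at most one channel changes between samples, and assuming "forward" means channel A leads channel B; the function name and the sample data are made up for illustration.

```python
# Each state packs the two channel levels as (A << 1) | B.
# Assumed forward sequence (A leads B): 00 -> 10 -> 11 -> 01 -> 00
FORWARD = {(0, 2), (2, 3), (3, 1), (1, 0)}
REVERSE = {(new, old) for (old, new) in FORWARD}


def decode(samples):
    """Return the net step count for a sequence of (A, B) samples.

    Positive counts mean channel A's edges arrive before channel B's,
    negative counts mean the opposite direction of rotation.
    """
    position = 0
    previous = None
    for a, b in samples:
        state = (a << 1) | b
        if previous is not None and state != previous:
            step = (previous, state)
            if step in FORWARD:
                position += 1
            elif step in REVERSE:
                position -= 1
            # Otherwise both channels changed at once: a step was missed,
            # so the transition is ignored rather than guessed.
        previous = state
    return position


if __name__ == "__main__":
    one_cycle = [(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]
    print(decode(one_cycle))        # A leads B
    print(decode(one_cycle[::-1]))  # B leads A
```

Dividing the step count by the elapsed time gives a speed estimate, and counting every edge on both channels is what yields the higher effective pulse rate mentioned above.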

Measuring Position

Simple binary encoder example

Simple Gray code encoder example

Reliability

One of the problems with using a binary encoding scheme is detector error. There's no guarantee that every channel is going to transition at the same time, which can cause a misread of a segment's ID. If the section of the disc below the detector is transitioning from one segment to the next, the decoder might briefly read only part of the bits correctly. Assume a standard eight-segment binary encoding scheme, where segments are numbered from 0 to 7 sequentially: if the transition from segment 000 to segment 001 doesn't read the rising edge correctly, your read is off by one space, which for the moment is negligible. If, however, it's transitioning from segment 011 to segment 100, and the least significant bit is read in first, your controller will briefly think it has transitioned to 010, in the complete opposite direction. If the most significant bit is read in first, it'll think it jumped from 011 to 111, on the complete opposite side of the wheel. Transition errors when a single bit changes can often be considered negligible, as the error in calculated position is 1. Errors from multi-bit transitions can be catastrophic.

Gray Code
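Gray code avoids the multi-bit transitions described above, because neighbouring values differ in exactly one bit. The sketch below uses the standard reflected binary Gray code and compares how many bits change between adjacent segments of an eight-segment disc under plain binary and under Gray code. The function names are chosen for this example only.

```python
def binary_to_gray(n):
    """Convert a segment number to its reflected binary Gray code."""
    return n ^ (n >> 1)


def gray_to_binary(g):
    """Recover the segment number from a Gray code reading."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def bits_changed(a, b):
    """Count the bit positions in which two codes differ."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    segments = 8
    for i in range(segments):
        nxt = (i + 1) % segments  # the disc wraps around after the last segment
        print(
            f"{i} -> {nxt}: binary changes {bits_changed(i, nxt)} bit(s), "
            f"Gray changes {bits_changed(binary_to_gray(i), binary_to_gray(nxt))} bit(s)"
        )
```

With Gray code a misread during a transition can only yield the old or the new segment, so the position error is never more than one segment.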


Robotics/Sensors/Tactile Sensors

Simple Contact Sensor

Bumper switches

Among the simplest sensors available are contact sensors, or bumper switches. These sensors use some form of bumper to press a button when the robot comes in contact with another object. A well-built bumper switch is a very reliable sensor, but since these detect by touch, they're not very practical on fast or heavy robots.

One key point about bumper switches (or any kind of mechanical switch) is that they don't give a clean signal when closed. The contact tends to "bounce" a bit.
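Contact bounce is commonly handled in software by only accepting a new switch state once it has been read several times in a row. The following is a minimal sketch of that idea, assuming the switch is sampled at a fixed rate; the function name and the `stable_count` threshold are illustrative choices, not values from any specific switch datasheet.

```python
def debounce(samples, stable_count=3):
    """Return the debounced state after each raw sample.

    The reported state only changes once the raw input has differed from it
    for `stable_count` consecutive samples.
    """
    if not samples:
        return []
    state = samples[0]
    run = 0  # how many consecutive samples disagree with the current state
    output = []
    for raw in samples:
        if raw == state:
            run = 0
        else:
            run += 1
            if run >= stable_count:
                state = raw
                run = 0
        output.append(state)
    return output


if __name__ == "__main__":
    # A press that bounces before settling, then one short glitch.
    raw = [0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1]
    print(debounce(raw))
```

A larger `stable_count` rejects longer bounces but delays detection, which matters on a robot that needs to stop quickly after a collision.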

Whiskers

Whiskers use the same principle as bumper switches; however, they give a slightly larger detection range and so avoid the need to bump against other objects. One common design is to mount the whisker through a wire bent into a circle. When something hits the whisker, it bends and touches the circular wire. The electrical contact formed in this way is used to detect the object.

Robotics/Components/Power Sources

Though perhaps other power sources can be used, the main sources of electrical power for robots are batteries and photovoltaic cells. These can be used separately or together (for practical applications, most solar-powered robots will need a battery backup).

Photo voltaic cell

Photovoltaic cells, or solar cells, are well known for their use as power sources for satellites, environmentalist green-energy campaigns and pocket calculators. In robotics, solar cells are used mainly in BEAM robots. Commonly these consist of a solar cell that charges a capacitor and a small circuit that allows the capacitor to charge up to a set voltage level and then discharge through the motor(s), making the robot move.

For a larger robot, solar cells can be used to charge its batteries. Such robots have to be designed around energy efficiency, as they have little energy to spare.

Batteries

Batteries are an essential component of the majority of robot designs. Many types of batteries can be used. Batteries can be grouped by whether or not they are rechargeable.

Batteries that are not rechargeable usually deliver more power for their size, and are thus desirable for certain applications. Various types of alkaline and lithium batteries can be used. Alkaline batteries are much cheaper and sufficient for most uses, but lithium batteries offer better performance and a longer shelf life.

Common rechargeable batteries include lead acid, nickel-cadmium (NiCd) and the newer nickel-metal hydride (Ni-MH). NiCd and Ni-MH batteries come in common sizes such as AA, but deliver a lower voltage than alkaline batteries (1.2 V instead of 1.5 V). They can also be found in battery packs with specialized power connectors. These are commonly called race packs and are used in the more expensive RC race cars. They will last for some time if used properly. Ni-MH batteries are currently more expensive than NiCd, but are less affected by the memory effect.

Lead acid batteries are relatively cheap and carry quite a lot of power, although they are quite heavy and can be damaged when they are discharged below a certain voltage. These batteries are commonly used as backup power supply in alarm systems and UPS.

An extremely common problem in robots is the "microcontroller resets when I turn the motor on" problem. When the motor turns on, it briefly pulls the battery voltage low enough to reset the microcontroller. The simplest solution is to run the microcontroller on a separate set of batteries.

HISTORY OF THE BATTERY:

The first evidence of batteries is sometimes said to come from archaeological digs near Baghdad, Iraq, where artifacts dated to around 250 B.C.E. were found. The person most credited with the creation of the battery, however, is Alessandro Volta, who built his battery, called the voltaic pile, in 1800 C.E. The voltaic pile was constructed from discs of zinc and copper with pieces of cardboard soaked in saltwater between the metal discs. The unit of electric potential, the volt, was named in honor of Alessandro Volta. A timeline of breakthroughs and developments of the battery can be seen here.

HOW A BATTERY WORKS:

Most batteries have two terminals on the exterior: one end is the positive end, marked “+”, and the other is the negative end, marked “-”. Once a load (any electronic device, such as a flashlight or a clock) is connected to the battery, the circuit is completed and electrons begin flowing from the negative to the positive end, producing a current. Electrons will keep flowing for as long as the chemical reaction inside the battery lasts. This chemical reaction is what drives the flow of electrons, and the rate at which it can deliver them depends on the battery’s internal resistance. If the battery isn’t connected, essentially no chemical reaction takes place. That is why a battery (except lithium batteries) can sit on a shelf for a year and still have most of its capacity. Once the positive and negative poles are connected, the reaction starts. This explains why people have been burned when a 9-volt battery in their pocket touched a coin or some other metallic object that connected the two ends: the battery is shorted, electrons flow with almost no resistance, and the battery gets very, very hot.

MAIN CONCERNS CHOOSING A BATTERY:

- Geometry of the batteries. The shape of the batteries can be an important characteristic, depending on the form of the robot.

- Durability. Primary (disposable) or secondary (rechargeable).

- Capacity. The capacity of the battery pack in milliampere-hours is important. It determines how long the robot will run until a new charge is needed.

- Initial cost. This is an important parameter, but a higher initial cost can be offset by a longer expected life.

- Environmental factors. Used batteries have to be disposed of, and some of them contain toxic materials.
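To make the capacity point concrete, a first-order runtime estimate simply divides the pack capacity by the robot's average current draw. The sketch below is an idealized calculation under that assumption; the function name and the example numbers are made up, and real runtime is usually shorter because usable capacity drops at high discharge currents and low temperatures.

```python
def runtime_hours(capacity_mah, average_current_ma):
    """Idealized runtime: capacity (mAh) divided by average current (mA)."""
    if average_current_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / average_current_ma


if __name__ == "__main__":
    # Hypothetical robot: 2000 mAh pack, 500 mA average draw.
    print(runtime_hours(2000, 500), "hours")
```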

- Zinc-carbon battery - mid cost - used in light drain applications

- Zinc-chloride battery - similar to zinc-carbon but slightly longer life

- Alkaline battery - alkaline/manganese "long life" batteries widely used in both light drain and heavy drain applications

- Silver-oxide battery - commonly used in hearing aids

- Lithium iron disulphide battery - commonly used in digital cameras. Sometimes used in watches and computer clocks. Very long life (up to ten years in wristwatches) and capable of delivering high currents, but expensive. Will operate in sub-zero temperatures.

- Lithium-thionyl chloride battery - used in industrial applications, including computers and electric meters. Other applications include providing power for wireless gas and water meters. The cells are rated at 3.6 volts and come in 1/2AA, AA, 2/3A, A, C, D and DD sizes. They are relatively expensive, but have a proven ten-year shelf life.

- Mercury battery - formerly used in digital watches, radio communications, and portable electronic instruments; now manufactured only for specialist applications due to toxicity

SECONDARY (RECHARGEABLE):

(Will be discussing the two most popular secondary batteries)

Lithium-ion Batteries:

Advantages:

These batteries are much lighter than non-lithium batteries of the same size. The electrodes are made of lithium and carbon. Lithium is a highly reactive element, meaning a lot of energy can be stored in it. A typical lithium-ion battery can store 150 watt-hours of electricity in 1 kilogram of battery. A NiMH (nickel-metal hydride) battery pack can store perhaps 100 watt-hours per kilogram, although 60 to 70 watt-hours might be more typical. A lead-acid battery can store only 25 watt-hours per kilogram. Using lead-acid technology, it takes 6 kilograms to store the same amount of energy that a 1 kilogram lithium-ion battery can handle. That is a huge difference!
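The weight comparison above is a simple division of the required energy by the energy density. The sketch below repeats that arithmetic using the per-kilogram figures quoted in the text (taking the optimistic 100 Wh/kg value for NiMH); the dictionary and function names are chosen for this example only.

```python
# Energy densities in watt-hours per kilogram, as quoted in the text above.
ENERGY_DENSITY_WH_PER_KG = {
    "lithium-ion": 150,
    "NiMH": 100,  # the text notes 60 to 70 may be more typical
    "lead-acid": 25,
}


def battery_mass_kg(energy_wh, chemistry):
    """Mass of battery needed to store `energy_wh` for the given chemistry."""
    return energy_wh / ENERGY_DENSITY_WH_PER_KG[chemistry]


if __name__ == "__main__":
    for chemistry in ENERGY_DENSITY_WH_PER_KG:
        print(f"{chemistry}: {battery_mass_kg(150, chemistry)} kg for 150 Wh")
```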

Disadvantages:

Lithium-ion batteries begin degrading as soon as they are made, lasting only two or three years at most, whether used or not. They are extremely sensitive to high temperatures; heat degrades the battery even faster. If a lithium battery is completely discharged, it is ruined and a new one will be needed. Because of their size and their ability to be discharged and recharged hundreds of times, they are among the most expensive rechargeable batteries. There is also a small chance they could burst into flames (an internal short occurs when the separator sheet that keeps the positive and negative ends apart inside the battery gets punctured).

Alkaline Batteries

The anode, the negative end, is made of zinc powder, because the granules have a high surface area, which increases the rate of reaction and allows higher electron flow. It also helps limit the rate of corrosion. Manganese dioxide is used on the cathode, the positive side, also in powder form. Potassium hydroxide is the electrolyte in an alkaline battery. A separator inside the battery keeps the positive and negative electrodes apart.

Fuel Cells

Fuel cells are a possible future replacement for chemical cells (batteries). They generate electricity by recombining hydrogen gas and oxygen (commercial fuel cells will probably use methanol or other simple alcohols instead of hydrogen). Currently these are very expensive, but this might change in the near future when these cells are more commonly used as a replacement for laptop batteries.

Note: since fuel cells use flammable products, you should be extra careful when you build a power source with these. Unlike household natural gas, hydrogen has no odor; it is flammable and in some conditions explosive.

Pressurized canisters have their own set of risks. Make sure you really know how to handle these. Or at least allow other people enough time to get behind something thick and heavy before experimenting with these.

Mechanical

Another way to store energy in a robot is by mechanical means. The best-known method is the wind-up spring, commonly used in toys, radios or clocks.

Another example of mechanical energy storage is the flywheel: a heavy wheel used to store kinetic energy.

Air Pressure

Some robots use pneumatic cylinders to move their body. These robots can use either a bottle of pressurized air or an on-board compressor. Only the first is a power source in itself; with a compressor, the actual power source is the batteries that drive it. Pneumatic cylinders can deliver very large forces and can be a very good choice for larger walkers or grippers.

Note: Pressurized canisters and pneumatic components can be dangerous when they are handled wrongly. Failing pressurized components can shoot metal pieces around. Although these aren't necessarily life threatening, they can cause serious injuries even at low pressures.

Canisters on their own pose additional risks: Air escaping from a pressurized canister can freeze whatever happens to be in its way. Don't hold any body parts in front of it.

Pneumatic and hydraulic cylinders can deliver large forces. Your body parts can't handle large forces.

Chemical Fuel

For model airplanes there exist small internal combustion engines. These engines can be used to power robots either directly for propulsion or indirectly by driving an alternator or dynamo. A well-designed system can power a robot for a very long time, but it's not advisable to use this power system indoors.

Note: This is another dangerous way of doing things. Fuel burns and is toxic. Small amounts of fuel in an open container can explode when ignited. Exhaust is toxic and a suffocation risk. Make sure that you know what you are doing, or get good life insurance.

Robotics/Types of Robots/Wheeled

Wheeled robots are robots that navigate around the ground using motorized wheels to propel themselves. This design is simpler than using treads or legs, and wheeled robots are easier to design, build, and program for movement on flat, not-so-rugged terrain. They are also easier to control than other types of robots. The disadvantage of wheeled robots is that they cannot navigate well over obstacles or difficult ground, such as rocky terrain, sharp declines, or areas with low friction. Wheeled robots are the most popular type in the consumer market, as differential steering offers low cost and simplicity. Robots can have any number of wheels, but three wheels are sufficient for static and dynamic balance. Additional wheels can add to balance; however, additional mechanisms will be required to keep all the wheels on the ground when the terrain is not flat.

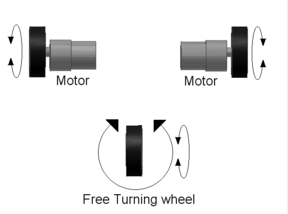

Most wheeled robots use differential steering, which uses separately driven wheels for movement. They can change direction by rotating each wheel at a different speed. There may be additional wheels that are not driven by a motor; these extra wheels help keep the robot balanced.
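How the two wheel speeds translate into robot motion can be sketched with the standard differential-drive kinematic model. This is a minimal sketch, assuming no wheel slip and a known distance between the two driven wheels; the function names, the simple Euler integration step and the example numbers are assumptions made for illustration.

```python
import math


def body_velocity(v_left, v_right, track_width):
    """Return (forward speed, turn rate) from the two wheel ground speeds.

    Speeds are in m/s, track_width is the distance between the driven
    wheels in metres, and the turn rate is in rad/s (positive = left turn).
    """
    linear = (v_left + v_right) / 2.0
    angular = (v_right - v_left) / track_width
    return linear, angular


def step_pose(x, y, heading, v_left, v_right, track_width, dt):
    """Advance the pose (x, y, heading) by one small time step dt."""
    linear, angular = body_velocity(v_left, v_right, track_width)
    x += linear * math.cos(heading) * dt
    y += linear * math.sin(heading) * dt
    heading += angular * dt
    return x, y, heading


if __name__ == "__main__":
    print(body_velocity(1.0, 1.0, 0.5))   # equal speeds: straight line
    print(body_velocity(-1.0, 1.0, 0.5))  # opposite speeds: turn on the spot
    print(body_velocity(0.5, 1.0, 0.5))   # unequal speeds: curved path
```

Equal wheel speeds give zero turn rate, while equal and opposite speeds give zero forward speed, so the robot rotates about the midpoint between its wheels.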

2-wheeled robots

Two-wheeled robots are harder to balance than other types because they must keep moving to stay upright. The center of gravity of the robot body is kept below the axle; usually this is accomplished by mounting the batteries below the body. They can have their wheels parallel to each other (these vehicles are called dicycles) or one wheel in front of the other (tandem wheels). Two-wheeled robots must keep moving to remain upright, and they can do this by driving in the direction the robot is falling. To balance, the base of the robot must stay under its center of gravity. A robot with left and right wheels needs at least two kinds of sensor: a tilt sensor to determine the tilt angle, and wheel encoders to keep track of the position of the robot's platform.
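The idea of "driving in the direction the robot is falling" can be sketched as a proportional-derivative rule on the tilt angle. This is only a sketch under stated assumptions: the gains `kp` and `kd`, the normalized motor command range and the function name are hypothetical, and a real balancing robot would need tuning and usually a wheel-position term from the encoders as well.

```python
def balance_command(tilt_rad, tilt_rate_rad_s, kp=20.0, kd=1.5, limit=1.0):
    """Return a normalized wheel command in [-limit, limit].

    A positive tilt (leaning forward) produces a positive command, which
    drives the wheels forward so the base moves back under the body.
    """
    command = kp * tilt_rad + kd * tilt_rate_rad_s
    return max(-limit, min(limit, command))


if __name__ == "__main__":
    print(balance_command(0.0, 0.0))    # upright and still: no drive needed
    print(balance_command(0.02, 0.1))   # leaning and falling forward
    print(balance_command(-0.5, 0.0))   # large backward lean: command saturates
```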

Swing-type robot

Examples

Roomba

Roombas are two-wheeled vacuum cleaners that automatically move around a room, cleaning it. They use a contact sensor at the front and an infrared sensor on top.


Segway

Segways are self-balancing dicycle electric vehicles.

Ghost Rider

Ghost Rider was the only two-wheeled robot entered in the 2005 DARPA Grand Challenge. It was unique because of its motorcycle design: unlike other two-wheeled robots, its wheels are aligned front and back, which makes it harder to balance as it turns. This tandem wheel design is much less common than that of a dicycle.

3-wheeled vehicles

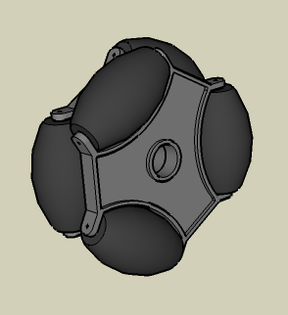

3-wheeled robots may be of two types: differentially steered (2 powered wheels with an additional free-rotating wheel to keep the body in balance), or 2 wheels powered by a single source with powered steering for the third wheel. In the case of differentially steered wheels, the robot's direction may be changed by varying the relative rate of rotation of the two separately driven wheels. If both wheels are driven in the same direction and at the same speed, the robot will go straight. Otherwise, depending on the speed of rotation and its direction, the center of rotation may fall anywhere on the line joining the two wheels.

Omni Wheels

Another option for wheeled robots, which makes things easier when the wheels are not all mounted on the same axis, is to use omni wheels. An omni wheel is like many smaller wheels making up a large one; the smaller ones have axes perpendicular to the axis of the core wheel. This allows the wheel to move in two directions, and lets the robot move holonomically, which means it can instantaneously move in any direction. A car, by contrast, moves non-holonomically and has to be in motion to change heading. Omni-wheeled robots can move at any angle in any direction without rotating beforehand. Some omni-wheel robots use a triangular platform, with the three wheels mounted at the corners of an equilateral (60-degree) triangle, 120 degrees apart around the center. The advantages of using 3 wheels rather than 4 are that it is cheaper, and that 3 points are guaranteed to lie on the same plane, so each wheel stays in contact with the ground; however, only one wheel will be rotating in the direction of travel. The disadvantages of omni wheels are that they have poor efficiency, because not all the wheels rotate in the direction of movement, which also causes friction losses, and that they are more computationally complex because of the angle calculations needed for movement.

4-wheeled vehicles

2 powered, 2 free rotating wheels

Same as the differentially steered ones above, but with 2 free-rotating wheels for extra balance.

2-by-2 powered wheels for tank-like movement

The Pioneer 3-AT robot has four motors and four unsteered wheels; on each side a pair of motors drives a pair of wheels through a single belt.

Car-like steering

Examples

The DARPA Grand and Urban Challenges pit robotic cars against one another in a series of navigational tests. These robots are fully automated and drive themselves along the test course. The DOD sponsors the competition, which is used to facilitate robotic development.

5 or more wheeled vehicles

For larger robots. Not always very practical.

Especially when more powered wheels are used, the design becomes much more complex, as each of the wheels has to turn at the same speed when the robot has to move forwards. Differences in speed between the left and right wheels in differentially steered robots cause the robot to move to the side instead of in a straight line. Differences in speed between wheels on the same side cause the slowest wheel to slip.

Sometimes an extra free rotating wheel with odometry is added to the robot. This measures more accurately how the robot moves. Odometry on the powered wheels excludes slip and other movements and thus could be erroneous.

Examples

The Mars Rovers (Sojourner, Spirit, Opportunity) are six-wheeled robots that navigate across Martian terrain after landing. They are used to examine territory and interesting landmarks and to make observations about the surface of Mars. They have a suspension system which keeps all six wheels in contact with the surface and helps them traverse slopes and sandy terrain.

One Wheel

One-wheeled robots are extremely difficult to keep balanced due to the single point of contact with the ground. There have been experimental designs and robots that have only one wheel. It is easier to use a spherical wheel rather than a typical disc wheel, as the robot can move in any direction along the sphere. An example sketch shows the basic idea of using gyroscopes and counter-torque mechanisms to keep the robot upright. The spinning flywheels stabilize and tilt it, allowing for non-holonomic movement.

Robotics/Components/Actuation Devices

Actuation devices are the components of the robot that make it move (excluding your feet). The best-known actuators are electric motors, servos and stepper motors, as well as pneumatic or hydraulic cylinders. Today there are new alternatives, some of which wouldn't be out of place in a good SF movie. One of the new types of actuator is the Shape Memory Alloy (SMA). These are metal alloys that shrink or enlarge depending on how warm they are. Another new technique is the use of air muscles.

- Motors

- Shape Memory Alloys

- Air muscle

- Linear Electromagnetic

- Piezoelectric Actuators

- Pneumatics/Hydraulics

- Miniature internal combustion engines

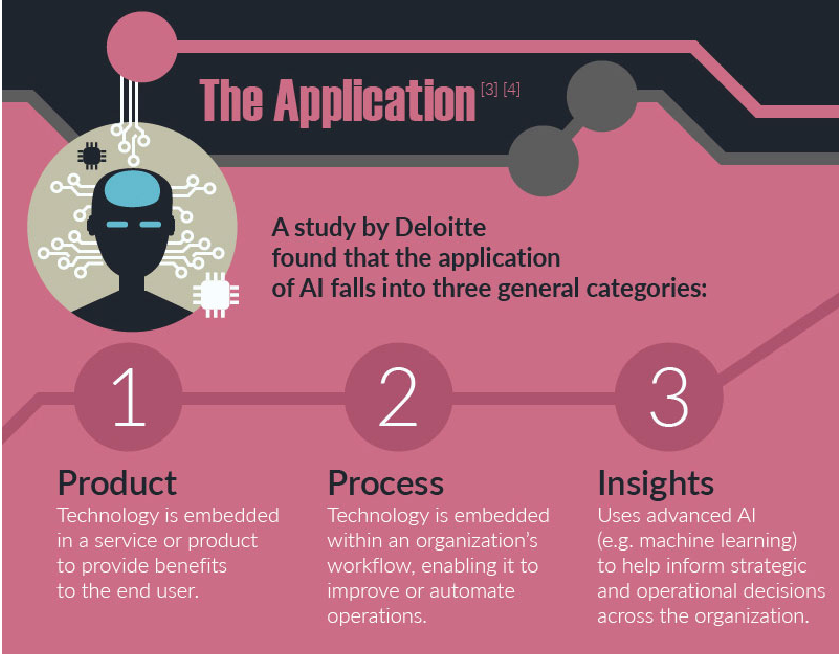

ARTIFICIAL INTELLIGENCE

There is a multitude of ways that artificial intelligence is changing our day-to-day life. In some of the largest industries in the world, this ever-growing technology is emerging as a force to be reckoned with. Already we are seeing artificial intelligence creep into our education systems, our businesses, and our financial structures.

AI Is Changing Education

Artificial-intelligence-powered education programs are already helping students learn basic math and writing skills. These programs can only teach students the fundamentals of subjects, but at the rate this technology has changed, it’s safe to say it will be able to teach higher-level thinking in the future. Artificial intelligence allows for an individualized learning experience. This type of technology can show which subjects a student is struggling in and allow teachers to focus on building up specific skill sets.

With the expansion of technology knowledge and accessibility, we are seeing the very road map of education change. In the future, a combination of artificial intelligence tutoring and support software will give students anywhere around the globe an opportunity to learn any subject at their own pace and on their own time.

Artificial intelligence has been able to automate simple actions like grading, which is relieving teachers and professors of time-consuming work. Teachers spend a lot of their time grading and reporting for their students, but it is now possible for educators to automate their grading for almost all types of multiple-choice testing. Essay-grading software has emerged, in its early years, as a tool with room for improvement that helps teachers focus more on classroom management than assessment.

Finances and Artificial Intelligence

Artificial intelligence is able to process a significant amount of data in a short amount of time, more data than any human or computer program has ever been able to process. This allows banks to provide more targeted and individualized wealth management advice to their customers. For example, with risk assessment and artificial intelligence, the time it takes to apply and be approved for a home or personal loan could be a matter of hours instead of months. This is due to AI’s capability to work faster at unearthing and analyzing customer information.

“Artificial intelligence capable of understanding each individual customer’s financial situation is a real possibility for the future of personal banking.” At this stage in the technology game, banks are already utilizing AI customer service with automated tellers, chatbots, and voice automation. Seven leading United States commercial banks have invested in AI applications that will serve as part of their customer service to improve performance and increase overall revenue.

Artificial intelligence works to help financial service companies decrease their overhead risks, generate more money, and maximize their already available resources. Artificial intelligence is even changing the way that Wall Street will one day operate. Eventually, quantitative analysts will be replaced with a machine-learned system that can build upon previous trading algorithms, automatically updating them and making their trading decisions more effective.

“Artificial intelligence is expected to transform how companies in almost every industry do business.” Technology is already changing the way that businesses process their products, create their products, and find their target market, allowing artificial intelligence to find its way into every application of business.

Artificial intelligence in manufacturing is having the largest impact on business and project management right now. For example, BP has begun using AI when drilling for oil. They use AI technology to prevent human errors by taking data from the drilling programs and advising the operators on how and when to adjust the drilling depth or distance. The hope is that in the future this type of technology can completely eliminate human error in intricate jobs like this.

Machine learning is programming that provides a computer with the necessary data and the ability to learn for itself through algorithms. Deep learning is a form of machine learning that allows artificial intelligence computers to learn from examples instead of being given specific guidelines to follow. This is important for businesses because it allows companies to process massive amounts of data to find patterns and determine what will happen next without needing to be programmed exactly to do so.

Businesses can use this information to look at past sales information and variables that could affect overall performance. This can help both increase profits and help companies keep inventory efficiently stocked. Artificial intelligence allows project managers to interpret the data provided and find gaps in information. This data gives them a deeper understanding of potential budgets, changes in production that can be made, and the efficiency of current procedures.

As technology changes in the upcoming years, it’s safe to say that artificial intelligence will creep more and more into our modern lives. For right now, it is the dominating force to be reckoned with as we move into the future of business, education, and financial services. In the future, it will be in every facet of life.

Artificial intelligence

The modern definition of artificial intelligence (or AI) is "the study and design of intelligent agents", where an intelligent agent is a system that perceives its environment and takes actions which maximize its chances of success. It has also been defined as "the science and engineering of making intelligent machines." Other names for the field have been proposed, such as computational intelligence, synthetic intelligence or computational rationality.

The term artificial intelligence is also used to describe a property of machines or programs: the intelligence that the system demonstrates.

AI research uses tools and insights from many fields, including computer science, psychology, philosophy, neuroscience, cognitive science, linguistics, operations research, economics, control theory, probability, optimization and logic.

AI research also overlaps with tasks such as robotics, control systems, scheduling, data mining, logistics, speech recognition, facial recognition and many others.

Computational intelligence

Computational intelligence involves iterative development or learning (e.g., parameter tuning in connectionist systems).

Learning is based on empirical data and is associated with non-symbolic AI, scruffy AI and soft computing.

Subjects in computational intelligence as defined by IEEE Computational Intelligence Society mainly include: Neural networks: trainable systems with very strong pattern recognition capabilities.

Fuzzy systems: techniques for reasoning under uncertainty, have been widely used in modern industrial and consumer product control systems; capable of working with concepts such as 'hot', 'cold', 'warm' and 'boiling'.

Evolutionary computation: applies biologically inspired concepts such as populations, mutation and survival of the fittest to generate increasingly better solutions to the problem.

These methods most notably divide into evolutionary algorithms (e.g., genetic algorithms) and swarm intelligence (e.g., ant algorithms).
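As a rough illustration of the evolutionary idea (populations, mutation and survival of the fittest), here is a minimal genetic algorithm sketch. The toy problem (maximize the number of 1 bits in a bit string), the parameter values and all function names are assumptions chosen for brevity, not taken from the text.

```python
import random
from typing import List

Genome = List[int]


def fitness(genome: Genome) -> int:
    """Toy objective (an assumption): count the 1 bits."""
    return sum(genome)


def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    """Flip each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def evolve(
    genome_length: int = 20,
    population_size: int = 30,
    generations: int = 60,
    mutation_rate: float = 0.05,
    seed: int = 0,
) -> Genome:
    """Run a simple genetic algorithm and return the fittest genome found."""
    rng = random.Random(seed)
    population = [
        [rng.randint(0, 1) for _ in range(genome_length)]
        for _ in range(population_size)
    ]
    for _ in range(generations):
        # Survival of the fittest: keep the better half unchanged.
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]
        # Refill the population with mutated single-point crossovers.
        children = []
        while len(survivors) + len(children) < population_size:
            mother, father = rng.sample(survivors, 2)
            cut = rng.randrange(1, genome_length)
            children.append(mutate(mother[:cut] + father[cut:], mutation_rate, rng))
        population = survivors + children
    return max(population, key=fitness)


if __name__ == "__main__":
    best = evolve()
    print(fitness(best), best)
```

Because the surviving half is carried over unchanged, the best fitness in the population never decreases from one generation to the next. Swarm methods such as ant algorithms follow a different scheme and are not shown here.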

With hybrid intelligent systems, attempts are made to combine these two groups.

Expert inference rules can be generated through a neural network, or production rules can be derived from statistical learning, as in ACT-R or CLARION.

It is thought that the human brain uses multiple techniques to both formulate and cross-check results.

Thus, systems integration is seen as promising and perhaps necessary for true AI, especially the integration of symbolic and connectionist models.

Artificial Intelligence Could Optimize Your Next Design

Modern electronics design is increasingly revealing the inadequacies of simulation-based verification. But researchers believe machine learning holds the answer.

The more complex modern electronic systems have become, the less comprehensive simulation has become as a design tool. But there's a solution on the horizon in the form of behavioral modeling based on machine learning. One of the leading centers behind this research is the Center for Advanced Electronics through Machine Learning (CAEML). Formed with the aim of applying machine-learning techniques to microelectronics and micro-systems modeling, CAEML is already conducting research into several areas, including Design Optimization of High-Speed Links, Nonlinear Modeling of Power Delivery Networks, and Modeling for Design Reuse.

“The limitations in simulation that people experience have always been there, and we need more accurate models than we've had in the past. For example, we make everything smaller. The physical accuracy of the models hasn't changed, but we're entering regimes where there's increasing crosstalk between components simply because we're packing them together more closely.”

Effects like these, together with the call for ever-improving energy minimization, are creating an environment for design engineers in which simulation-based verification alone is simply not practical. “When you're designing a product, such as, say, a cellphone, you have maybe about a hundred or so components on the circuit board. That's a lot of design values. To completely explore that design space and try every possible combination of components is unfeasible.” The answer pursued by researchers at CAEML is highly abstracted behavioral models that let engineers rapidly explore a design space to find an optimal design, not just one that's good enough.

“When we want to do design optimization we can't be concerned with every single variable inside the system. All we really care about is what's happening in the aggregate – the signals at the outside of the device where the humans are interacting with it. So we want these abstracted models and that's what machine learning gives you – models that you then use for simulation.”

Accomplishing this is no small task, given that simulations require engineers to model everything in a system so that all of those effects can be represented. The alternative is completely data-driven modeling, not based on any prior knowledge of what's inside the system. To do this, researchers need machine learning algorithms that can predict a particular output and represent the behaviors of particular components. Machine learning-based modeling also offers several other benefits that should be attractive to companies, such as the ability to share models without revealing vital intellectual property (IP).
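To make the idea of data-driven behavioral modeling concrete, here is a minimal sketch under strong simplifying assumptions. It fits a straight line to a handful of input/output measurements and then uses only that fitted model to scan candidate settings. The sample values, the linear form and the function names are hypothetical; real components are nonlinear and would call for richer models than this.

```python
from typing import List, Tuple

Model = Tuple[float, float]  # (gain, offset) of the fitted line


def fit_linear_model(samples: List[Tuple[float, float]]) -> Model:
    """Least-squares fit of output = gain * input + offset to measured pairs."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset


def predict(model: Model, x: float) -> float:
    """Predict the output from the model alone; no internal detail is needed."""
    gain, offset = model
    return gain * x + offset


def best_setting(model: Model, candidates: List[float], target: float) -> float:
    """Scan candidate inputs and return the one predicted to land nearest the target."""
    return min(candidates, key=lambda x: abs(predict(model, x) - target))


if __name__ == "__main__":
    # Hypothetical (input, output) measurements of a black-box component.
    measurements = [(0.0, 0.1), (1.0, 2.1), (2.0, 4.1), (3.0, 6.1)]
    model = fit_linear_model(measurements)
    print(model)
    print(best_setting(model, [0.5, 1.0, 1.5, 2.0], target=3.0))
```

Only the fitted coefficients need to be shared to reuse such a model, which is the property that lets a behavioral model travel without exposing what is inside the component.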

“Because behavior modeling only describes, say input/output characteristics, they don't tell you what's inside the black box. They preserve or obscure IP. With a behavioral model a supplier can easily share that model with their customer without disclosing proprietary information,” Rosenbaum explained. “It allows for the free flow of critical information and it allows the customer then to be able to design their system using that model from the supplier.”

Most integrated circuit manufacturers, for example, use Input/Output Buffer Information Specification (IBIS) models to share information about input/output (I/O) signals with customers, while also protecting IP. “Where machine learning can help is to make models [better]. [Current] models don't represent interactions between the multiple I/O pins of an integrated circuit. There's a lot of unintended coupling that current models can't replicate. But with more powerful methods based on machine learning for obtaining models, next-gen models may be able to capture those important effects.”

The other great benefit would be reduced time to market. In the current state of circuit design there's almost a sense of planned failure that eats up a lot of development time. “Many chips don't pass qualification testing and need to undergo a re-spin. With better models we can get designs right the first time.” She has a background in system-level ESD, a world she said is built on trial and error and would benefit greatly from behavioral modeling. “[Design engineers] make a product, say a laptop, it undergoes testing, probably fails, then they start sticking additional components on the circuit board until it passes... and it wastes a lot of time. They build in time to fix things, but it's often by the seat of one's pants. If we had accurate models for how these systems would respond to ESD we could design them to pass qualification testing the first time.”

The willingness and interest in machine learning-based behavioral models is there, but the hurdles are in the details. How do you actually do this? Today, machine learning finds itself being largely applied to image recognition, natural language processing, and, perhaps most ignominiously, the sort of behavior prediction that lets Google guess what ads it wants to serve you. “There's only been a little bit of work in regards to electronics modeling. We have to figure out all the details. We're working with real measurement data. How much do you need? Do you need to process or filter it before delivering it to the algorithm? And which algorithms are suitable for representing electronic components and systems? We have to answer all of those questions.”

CAEML's aim is to demonstrate, over a five-year period, that machine learning can be applied to modeling for many different applications within the realm of electronics design. As part of that, the center will be doing foundational research on the machine learning algorithms themselves, identifying the ones that are most suitable and how to use them.

“Although we're working on many applications – signal integrity analysis, IP reuse, power delivery network design, even IC layouts and physical design – all of which require models, there are common problems that we're facing, and a lot of them pertain to working with a limited set of real measurement data. Historically, machine learning theorists really only focused on the algorithm. They assumed there's an unlimited quantity of data available, and that's not realistic, at least in our domain. In order to get data you have to fabricate samples and measure them, which takes time and money. The amount of data, though it seems huge to us, is very small compared to what they use in the field.”

Artificially Intelligent

Folk Songs

Humanity has envisioned and dreamed of a technology-enabled future: one with autonomous transportation, flying vehicles, a clean and safe environment and a healthy, extended life.

The term “artificial intelligence” was coined to describe the science and engineering of making machines intelligent. After the field survived two so-called “AI winters,” recent advancements suggest that the once-distant future of our dreams is becoming a reality.

AI has bested its human counterparts in the most strategic of games, including Jeopardy and Go. Just recently, AI demonstrated that it can handle misinformation and incomplete information by winning against world-class poker players in a Texas hold ’em contest. Although Artificial General Intelligence (machines that compare to or surpass the human mind) still belongs to the distant future, researchers believe that machines are gradually approaching human levels at “simple” tasks (simple for humans, not for machines), such as understanding naturally spoken language or evaluating new, unknown situations in unpredictable environments.

In fact, one of the most common applications of AI today is speech recognition. Personal virtual assistants like Alexa, Siri, Cortana and Google Assistant can understand speech and respond to it accordingly.

The biggest breakthrough in speech recognition thus far has come from IBM, which has managed to reduce the error rate in conversational speech recognition to 5.5% (compared with a human error rate of 5.1%).
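Error rates of this kind are commonly reported as word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of reference words. The text does not say which metric is meant, so treat the sketch below as an assumption. The function name and the example sentences are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # previous[j] is the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


if __name__ == "__main__":
    # One deleted word ("the") and one substituted word ("light" vs "lights").
    print(word_error_rate("turn on the kitchen light", "turn on kitchen lights"))
```

Here two errors against five reference words give a rate of 0.4. A 5.5% rate corresponds to roughly one error in every 18 reference words.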

Other existing AI applications include predictive technologies found in early self-driving cars and search engines. Companies such as Netflix and Pandora are also using AI to improve content recommendations.

It’s realistic to envision an AI-human hybrid that increases our mental skills and masters scientific challenges. This hybrid may also extend to combining our bodies with artificial devices that enable us to improve our physical or cognitive abilities.

Despite countless advancements, machines still lack the ability to process deep emotional intelligence. In response, much research and time is being spent on training AI to read a user’s emotional needs.

Although these machines cannot fully understand emotion, businesses are now implementing cognitive technology tools in the form of bots and virtual agents to handle customer questions. These technologies can detect various emotions and develop customized responses to offer more empathetic feedback and support. We have finally reached a point where humans and machines can build engaging relationships, thus allowing businesses to provide more personalized services through AI.

This is the starting point for the three core paradigms that will shape the applications of AI technology in the coming years:

Conversational AI

The internet enables us to connect, share and engage without time, location or other physical constraints. And now, bots are poised to change humankind’s favorite communication technology: messaging apps. However, while conversational interfaces allow us to engage chatbots, the technology still lacks the broad understanding of individual conversational context to create a meaningful and valuable interaction with the user.

Conversational AI, when used properly with visual solutions and UX, is predicted to supersede today’s cloud and mobile-first paradigm by 2021.

Although conversational AI is primarily deployed in customer- or user-facing applications, we expect a much bigger use in bot-to-bot communication across business applications throughout the next two to three years. This type of bot will result in a true personal assistant.

Bot-to-bot communication will be the most used form of interaction involving bots.

It will extract the real value from these systems, providing access to information sets that couldn’t be provided by user-facing interactions, and even less by competitive services at scale in the past.

Mass-Individualization

Mass individualization will change the way products are made. New offerings will automatically be created based on an individual’s current and future needs. Web applications will serve and produce specific items on the fly, via bot-to-bot communication and real-time customer engagement.

This trend will lead us to a world where machines are deeply integrated into our everyday lives.

How?

Mass individualization is poised to take over from today’s mode of mass standardization and enable entrepreneurs and product managers to build offerings around each user’s personal context. This will include factors such as their behavior, attitude, goals and needs, all understood via conversational (message-based) applications, buying and browsing history, geography and much more.

Early progress in content and e-commerce will push the digitization of industries to new heights thanks to its ability to transfer complex processes and engagement models that usually require a large amount of service. High-cost, repetitive processes, such as accounting and legal advice, are poised for disruption. AI will help professionals augment their work, accommodate the customized needs of more customers and introduce entirely new business processes that increase efficiency and productivity.

AI-Enabled Convergence

By definition, technological convergence is the tendency of different technological systems to evolve toward performing similar tasks. New technologies take over to perform the same task but in a more advanced manner.

AI-enabled convergence means that AI-based technologies are embedded in all new systems, which provide smart, context-aware and proactive products and services that can engage with humans in a more natural and smarter way. These systems can be based either purely on software applications or on robotics that engage with us physically.

AI

will be used together with enabling technologies such as Blockchain, a

distributed but controlled network of billions of systems connecting and