e-Bridge Communication is a Good Solution

In a rapidly changing global environment, we need a conceptual framework that accounts for the wide range of theories and explanations of developments in media and communication, and that also covers drivers such as globalization, individualization and the growing importance of the market economy as a reference system. We need to understand better how media and communication can be used, both as tools and as a way of articulating processes of development and social change, to improve everyday lives and to empower people to influence their own lives and those of their fellow community members.

Communicational change results from the transformation of media consumption, that is, of entertainment, communication and the provision of news and information, but also of knowledge creation in general, including its scientific dimension. Because the education system is based on communicating the knowledge that has been produced, and the scientific system in turn depends on producing knowledge, a change in the communication paradigm is also felt in the scientific dimension, and therefore throughout society.

In this digital age it is easy to marginalize traditional media such as radio, newspapers, journals and books, and to fail to confront critical issues such as the lack of media freedom in many parts of the world, the rising global concentration of private media ownership, the absence of media legislation and the challenges facing public service media. In a world where consumption is no longer driven entirely by media companies but is increasingly shared by participants, thanks to the availability of technology, communicational change is also a cognitive change. It surfaces as tensions within the educational system, in oppositions such as face-to-face versus remote real-time teaching, expository lecturing versus interactive lecturing, and multimedia presentation versus oral communication “plus” writing on the board.

We love social media, smartphones and daily digital rituals. They are currently our key to both education and entertainment (not to mention news, our networks and our social calendars). Alongside the latest and greatest apps and gadgets, we also love vintage, antique and timeless tools of communication. All of this reflects how dramatically the face of communication has changed over the past few years. Traditional Telcos, which have historically dominated two-way interpersonal conversations, are increasingly being challenged by new market entrants that use open platforms to meet diverse and rapidly changing user wants and needs.

We are intrigued and constantly awed by the communication channels and gadgets of the illustrious past. They were the vehicles that carried stories, traditions, fact and fiction that were passed down from generation to generation. There is something extremely romantic and nostalgic about the beautiful curves of handwritten prose, calligraphic ink on paper, tiny photographs, once crisp, now time-warped, the Mad Men-esque click and clack of typewriter keys – antiques are awesome! But for a new generation of digitally aware consumers, Facebook, MySpace and Cyworld have become primary communication media. Driven by high broadband penetration, maturing “social software” and readily available, affordable Internet-enabled multimedia devices, these sites and services are making inroads with enthusiastic users and attracting the attention of advertisers, consumer product companies and enterprises that use social media to reach their customers, build brand loyalty and communicate with geographically dispersed employees, suppliers and partners.

The Social Lights enjoy a blend of the old and the new: digital invites and handwritten thank-you notes, multimedia presentations and in-person explanations. When we communicate by way of digital devices, we try to add humanizing elements whenever possible (voice, video, photos, quotes), elements that reflect the fact that there is a person behind that status update, that email, that newsletter, not just a monotonous robot or a lifeless, lackluster laptop spewing out social media posts. The widespread social networking phenomenon reflects shifts in two long-term communication trends. First, communication patterns are shifting from point-to-point, two-way conversations to many-to-many, collaborative communications. Second, control of the communication environment is moving from Telcos to open Internet platform providers, enabled by better, cheaper technology, open standards, and greater penetration of broadband services and wireless communication networks.

The combined effect of these trends is altering the competitive landscape in communications and giving rise to emerging business models that include:

• Open and Free – This model features companies that offer one-to-one communication services, but through an open Internet platform and at no – or very little – cost. These services potentially threaten profitable traditional services, such as long distance calling and mobile roaming.

• Gated Communities – Companies using this model focus on many-to-many communications, rather than point-to-point, within telecom-controlled environments. They are, essentially, a “walled-garden” for operator-led collaboration services and are likely to appeal to users and enterprises that desire secure and reliable communication environments.

• Shared Social Spaces – This rapidly growing model facilitates collaboration on the open Internet. Key players include social networking sites such as MySpace and Facebook. These providers have the potential to become de facto integrated communication platforms, bringing together social networking, voice communication, e-mail, instant and text messaging, as well as content. They are drawing attention away from traditional Telcos and contributing to the fragmentation of the market. Beyond gaining audience share, these services pose an operational challenge to Telcos as they “piggyback” on the existing communications infrastructure, imposing network capacity issues and increased costs for the network providers.

e-Bridge Communications has become an increasingly important paradigm in the social sciences. Gone are the days of typewriters, rotary phones and snail mail, of dial-up, phone books and dusty dictionaries. Today new media technology offers smartphones, emails in rapid succession, texts, DMs, status updates, push updates, check-ins and incessant email checking. It is time to embrace these new tools of communication, because before we know it they will be gone, and the next newfangled gadget will be even more cutting-edge and innovative than we can imagine! Although these new technologies and platforms seem to crowd our daily lives, when we stop and think about it, they are truly saving us time and energy and making us more efficient and productive than ever before.

When we talk about the impact of new media technology, there are many effects we might consider. Computers and the World Wide Web have certainly changed the way we behave in many domains: people shop online, trade stocks online, get their news online, initiate friendships online, and so forth, and children spend time playing the latest computer games. The exponential rate of technological change that has transformed media and communication structures globally is reflected in the attention the international community pays to the convergent media nexus. With the rapid growth of new media technology, including the Internet, interactive television networks and multimedia information services, many proponents emphasize its potential to expand interactive mass media, entertainment, commerce and education. Pundits and policy makers also predict that free speech and privacy will be preserved, and our democratic institutions strengthened, by the new communication opportunities that digital media provide. This is because access to and use of digital media technologies such as PCs, the Internet, computer games and mobile telephones have become a normal part of everyday life around the world.

There is plenty of evidence demonstrating the power of digital communications and new media. Most marketers know this. They also have first-hand experience of the diminishing returns from traditional techniques. And yet, once again, changing their behavior just seems too hard. This is why many organizations seem to be waiting for the digital revolution to come. They know intellectually that it is going to affect them, but perhaps tomorrow, not today: a day that never seems to arrive, until it is too late. By then a competitor has seized the initiative and come to dominate in digital. The wake-up call comes only when they realize that their competitor ranks first in search, has more and better qualified web traffic, and gets better conversions, leading to higher sales, lower costs and higher margins.

Introducing networks

A network is created when two or more devices are connected together. A network can be a small collection of computers connected within a building (eg a school, business or home) or it can be a wide collection of computers connected around the world.

Data packets

The main purpose of networking is to share data between computers. To be transmitted over a network, a file has to be broken up into small chunks of data known as data packets. The data is then rebuilt once it reaches the destination computer. Networking hardware is required to connect computers and manage how data packets are communicated. Protocols are used to control how data is transmitted across networks.
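As a rough illustration of the idea above, the Python sketch below splits a message into fixed-size packets, tags each with a sequence number, shuffles them to mimic packets arriving out of order, and rebuilds the original. The 8-byte packet size and the function names are illustrative assumptions; real networks use much larger packets and far more header information.

```python
import random

PACKET_SIZE = 8  # illustrative payload size in bytes; real packets are much larger


def split_into_packets(data: bytes, size: int = PACKET_SIZE) -> list[tuple[int, bytes]]:
    """Break data into (sequence_number, chunk) pairs."""
    return [(seq, data[i:i + size]) for seq, i in enumerate(range(0, len(data), size))]


def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Rebuild the original data by putting the packets back in sequence order."""
    return b"".join(chunk for _, chunk in sorted(packets))


message = b"Networks share data by sending it in small packets."
packets = split_into_packets(message)
random.shuffle(packets)  # packets may arrive out of order
print(len(packets), reassemble(packets) == message)
```

The sequence number is what makes reassembly possible: without it, the receiver could not tell which chunk belongs where.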

There are advantages and disadvantages to using networks.

Advantages

- Communication – it is easy (and often free) to communicate using email, text messages, voice calls and video calls.

- Roaming – if information is stored on a network, it means users are not fixed to one place. They can use computers anywhere in the world to access their information.

- Sharing information – it is easy to share files and information over a network. Music and video files, for instance, can be stored on one device and shared across many computers, so each computer does not need to store its own copy on its hard drive.

- Sharing resources – it is easy to share resources such as printers. Twenty computers in a room could share one printer over a network.

- Sharing software – it is possible to stream software using web applications. This avoids needing to download and store the whole software file.

Disadvantages

- Dependence – users relying on a network might be stuck without access to it.

- Hacking - criminal hackers attempt to break into networks in order to steal personal information and banking details. This wouldn't be possible on a stand-alone computer without physically getting into the room, but with a network it is easier to gain access.

- Hardware – routers, network cards and other network hardware are required to set up a network. At home, it is quite easy to set up a wireless network without much technical expertise. However, a complicated network in a school or an office would require professional expertise.

- Viruses - networks make it easier to share viruses and other malware. They can quickly spread and damage files on many computers via a network.

LANs and WANs

A network can be anything from two computers connected together, to millions of computers connected on the internet. There are many different types of networks such as LAN, WAN, VPN, WPAN and PAN.

LAN

A LAN (local area network) is a network of computers within the same building, such as a school, home or business. A LAN is not necessarily connected to the internet.

WAN

A WAN (wide area network) is created when LANs are connected. This requires media such as broadband cables, and can connect organisations based in different geographical locations. The internet is a WAN.

VPN

A VPN (virtual private network) is a private network that runs securely over another network, such as the internet, usually by encrypting the data sent between its users. VPNs are often used when working on secure information held by a company or school.

WPAN

A WPAN (wireless personal area network) allows an individual to connect devices (such as a smartphone) to a desktop machine, or to form a Bluetooth connection with devices in a car. A wired personal network is called a PAN (personal area network).

Topologies

There are different ways of setting up a LAN, each with different benefits in terms of network speed and cost. The three main topologies are bus, star and ring.

Bus network

In a bus network all the workstations, servers and printers are joined to one cable - 'the bus'. At each end of the cable a terminator is fitted to stop signals reflecting back down the bus.

Advantages

- easy to install

- cheap to install - it does not require much cabling

Disadvantages

- if the main cable fails or gets damaged, the whole network will fail

- as more workstations are connected, the performance of the network will become slower because of data collisions

- every workstation on the network 'sees' all of the data on the network, which can be a security risk

Ring network

In a ring network, each device (eg workstation, server, printer) is connected in a ring so each one is connected to two other devices. Each data packet on the network travels in one direction. Each device receives each packet in turn until the destination device receives it.

Advantages

- this type of network can transfer data quickly (even if there are a large number of devices connected) as data only flows in one direction so there won't be any data collisions

Disadvantages

- if the main cable fails or any device is faulty, then the whole network will fail - a serious problem in a company where communication is vital
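To make the one-direction rule concrete, here is a small Python simulation of a ring: a packet starts at the sender and is passed to the next device in turn until it reaches its destination. The device names are hypothetical, and the model ignores tokens, timing and signal details.

```python
def ring_path(devices: list[str], source: str, destination: str) -> list[str]:
    """Return the devices a packet visits, travelling one way round the ring."""
    if source not in devices or destination not in devices:
        raise ValueError("both devices must be on the ring")
    i = devices.index(source)
    path = [source]
    while path[-1] != destination:
        i = (i + 1) % len(devices)  # always pass to the next device, in the same direction
        path.append(devices[i])
    return path


ring = ["PC1", "PC2", "Printer", "Server", "PC3"]  # hypothetical devices, in ring order
print(ring_path(ring, "Server", "PC2"))  # ['Server', 'PC3', 'PC1', 'PC2']
```

Because every packet passes through each device between sender and receiver, one faulty device on the path is enough to stop delivery, which is the disadvantage listed above.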

Star network

In a star network, each device on the network has its own cable that connects to a switch or hub. This is the most popular way of setting up a LAN. You may find a star network in a small network of five or six computers where speed is a priority.

Advantages

- very reliable – if one cable or device fails, then all the others will continue to work

- high performing – when a switch is used, no data collisions can occur

Disadvantages

- expensive to install as this type of network uses the most cable, and network cable is expensive

- extra hardware is required - hubs or switches - which add to the cost

- if a hub or switch fails, all the devices connected to it will have no network connection

Wired and wireless connections

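Wired connection

Computers make a wired connection using a network interface card (NIC) and a cable, usually an Ethernet cable, connected to a router or switch.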

Wireless connection

Computers can make a wireless connection if they have a wireless NIC. A wireless router provides a connection to the physical network, and a device needs to be within range of the router to get access. A wireless connection uses radio signals to send data across networks: the wireless adapter converts the data into a radio signal, and the wireless receiver decodes it so that the computer can understand it.

Wireless transmissions can be intercepted by anyone within range of the router, so access is often restricted to specific MAC addresses, and transmissions are usually encrypted using a key that works with WPA (wi-fi protected access).
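Restricting access to specific MAC addresses amounts to checking each device's hardware address against an allow-list. The Python sketch below shows only that check; the addresses are made up, and in practice this runs alongside WPA encryption rather than replacing it, since MAC addresses can be spoofed.

```python
ALLOWED_MACS = {"3c:22:fb:10:aa:01", "a4:83:e7:5b:02:9c"}  # hypothetical allow-list


def normalise(mac: str) -> str:
    """Put a MAC address into one canonical form: lower case, colon-separated."""
    return mac.strip().lower().replace("-", ":")


def may_join(mac: str) -> bool:
    """Allow a device onto the wireless network only if its MAC address is listed."""
    return normalise(mac) in ALLOWED_MACS


print(may_join("3C-22-FB-10-AA-01"))  # True
print(may_join("00:11:22:33:44:55"))  # False
```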

Advantages and disadvantages of wireless networks

Advantages

- cheap set-up costs

- not tied down to a specific location

- can connect multiple devices without the need for extra hardware

- less disruption to the building due to no wires being installed

Disadvantages

- interference can occur

- the connection is not as stable as wired networks and can 'drop off'

- it will lose quality through walls or obstructions

- more open to hacking

- slower than wired networks

The internet

The internet is a global network of computers that use protocols and data packets to exchange information. There are a range of different protocols to do different jobs on the network.

What is the internet?

Data packets are sent between computers using protocols that manage how data is sent and received. The internet also uses different models - such as the client-server model and the P2P model - to connect computers in different ways. The internet is leading to more and more people using cloud computing to store files and use web applications online.

Technologies and services available over the internet include:

- web pages – HTML documents that present images, sound and text accessed through a web browser

- web applications - web software accessed through a browser

- native apps - applications developed for specific devices (such as smartphones) and accessed without the need for a browser

- file sharing

- voice calls

- streaming audio and video

Web browser

A web browser is a piece of software that enables the user to access web pages and web apps on the internet. There are a range of browsers available, and they are usually free to download and install.


The internet of things

The 'internet of things' is the concept of networking lots of devices so that they can collect and transmit data. The idea that any object or living being can be uniquely identified on the internet is central to the concept. By automating the capture of information, greater quantities of it can be stored and processed.

The 'thing' in the 'internet of things' could include:

- sensors monitoring conditions on a farm

- the contents of a fridge

- an object or person being tracked with an RFID tag

Information gathered from such systems can be used to intelligently respond and adapt to the needs of an environment. For example, if a system detects that a room is empty, lights and heating can be automatically switched off to reduce waste.
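The empty-room example can be written as a simple rule. In this Python sketch the `Room` record and its fields are hypothetical stand-ins for real occupancy sensors and smart switches.

```python
from dataclasses import dataclass


@dataclass
class Room:
    name: str
    occupied: bool    # e.g. reported by a motion sensor (hypothetical)
    lights_on: bool
    heating_on: bool


def apply_energy_rule(room: Room) -> Room:
    """Switch lights and heating off when the sensor reports an empty room."""
    if not room.occupied:
        room.lights_on = False
        room.heating_on = False
    return room


kitchen = apply_energy_rule(Room("kitchen", occupied=False, lights_on=True, heating_on=True))
print(kitchen.lights_on, kitchen.heating_on)  # False False
```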

Connecting to the internet

To connect a computer or a device to the internet, you need:

- an ISP

- a modem or router (wired or wireless)

- a web browser or app

- a connection to the network (through a copper wire or a fibre optic cable)

Fibre optics

Fibre optic cabling is made from glass that becomes very flexible when it is drawn thin. A transmitter passes light into the cable, and the light travels quickly along it, reflecting off the cable's internal walls.

The transmitter in the router sends light pulses representing binary code. When the data is received, it is decoded back to its binary form and the computer displays the message.
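The encode and decode steps can be mimicked in Python by turning each character into 8 bits, treating 1 as a light pulse and 0 as no pulse, and grouping the bits back into characters at the receiving end. This only illustrates binary coding; real fibre links use more elaborate line codes and modulation.

```python
def to_pulses(message: str) -> list[int]:
    """Encode text as a list of bits: 1 = light pulse, 0 = no pulse."""
    return [int(bit) for byte in message.encode("utf-8") for bit in format(byte, "08b")]


def from_pulses(pulses: list[int]) -> str:
    """Group the bits into bytes again and decode them back into text."""
    data = bytes(
        int("".join(str(b) for b in pulses[i:i + 8]), 2) for i in range(0, len(pulses), 8)
    )
    return data.decode("utf-8")


pulses = to_pulses("Hi")
print(pulses)               # [0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1]
print(from_pulses(pulses))  # Hi
```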

Advantages

- the individual cables are thinner, so larger quantities of cable can be joined together compared to copper

- there is less interference than copper

- there is less signal degradation

Disadvantages

- the UK telephone network still has areas that use copper cable

- replacing copper with fibre optic cabling is expensive

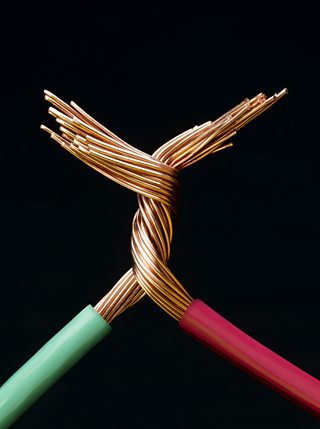

Copper cable

Copper cable uses electrical signals to pass data between networks. There are three types of copper cable: coaxial, unshielded twisted pair and shielded twisted pair.

- Coaxial cable signals degrade over long distances.

- Unshielded twisted pair is made by twisting the copper wires around each other, which reduces interference.

- Shielded twisted pair uses copper shielding around the twisted wires to make them less susceptible to interference.

Advantages

- a cabled telephone can be powered directly from the copper cable, so the phone will still work if there is a loss of power

- copper can be cheaper to set up than fibre optic cabling

Disadvantages

- signals degrade over long distances

Broadband connections

Internet data is transmitted over both physical wires and wireless connections. Broadband internet is carried on physical cables that run underground and under the oceans.

Download speeds tend to be faster than upload speeds, because more bandwidth is assigned to downloading, for which there is higher demand. Network speeds are measured in megabits per second (Mbps).

Broadband can be provided over an ADSL or cable connection.

ADSL

ADSL (asymmetric digital subscriber line) provides connection speeds of up to 24 Mbps and uses a telephone line to receive and transmit data.

The speed that data can be transferred is dependent on a number of factors:

- Signal quality can vary between phone lines and whilst it doesn't affect voice signals, it does affect data transmissions.

- The distance between the modem and the telephone exchange has an effect on the speed at which data is transferred. A distance of 4 km is considered the limit for ADSL technology, beyond which it may not work.

- An ADSL modem or router is needed for broadband internet access over ADSL. This is usually provided by the ISP.

Cable

Cable companies do not use traditional telephone lines to provide broadband internet access. They have their own network which is a combination of co-axial copper cable and fibre optic cable. Copper wires connect a house to the nearest connection point - usually a green cabinet in a nearby road. From there, the cables to the telephone exchange will be fibre optic.

With their purpose-built infrastructure, cable companies are able to provide speeds of up to 200 Mbps - considerably faster than the highest available ADSL speed (24 Mbps).

A cable modem or router is needed for broadband internet access over cable. This is usually provided by the ISP.

The making and receiving of phone calls is not affected because the telephone line is not used.

3G and 4G

The wireless 3G and 4G networks can be accessed through a smartphone without the need for a WiFi router. The data is transmitted through the cellular phone network rather than the physical cabled network of broadband. This enables anyone to connect to the internet as long as there is a 3G or 4G connection available.

3G allows for up to 6 Mbps to be downloaded and 4G allows for up to 18 Mbps.
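The quoted speeds are in megabits per second, while file sizes are usually given in megabytes, so a transfer-time estimate has to convert bytes to bits (1 byte = 8 bits). The Python sketch below uses the maximum speeds quoted in this section and a hypothetical 100 MB file; real connections rarely reach their maximum, so actual times will be longer.

```python
MAX_SPEEDS_MBPS = {"ADSL": 24, "Cable": 200, "3G": 6, "4G": 18}  # maximum speeds quoted above


def download_seconds(file_megabytes: float, speed_mbps: float) -> float:
    """Time = size in megabits / speed in megabits per second (1 byte = 8 bits)."""
    return file_megabytes * 8 / speed_mbps


for name, speed in MAX_SPEEDS_MBPS.items():
    print(f"{name}: {download_seconds(100, speed):.1f} s")
```

At these best-case rates the 100 MB file takes 4.0 seconds over cable but about 133 seconds over 3G.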

Advantages

- it provides an internet connection on the move

- there is the ability to transfer data fairly quickly with 4G

Disadvantages

- it can be expensive to download data

- some areas don't have 3G or 4G connections

Protocols

Before two devices exchange messages, they go through a process called handshaking: the client requests access, the server grants it, and the protocols are agreed. Once the handshaking process is complete, the data transfer can begin.

Protocols establish how two computers send and receive a message. Data packets travel between source and destination from one router to the next. The process of exchanging data packets is known as packet switching.
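TCP, the protocol that sets up most internet connections, performs handshaking as a three-way exchange (SYN, SYN-ACK, ACK). The toy Python model below walks through those three messages with made-up starting sequence numbers; it is a simplified illustration and does not open a real network connection.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    flags: str
    seq: int
    ack: Optional[int] = None


def three_way_handshake(client_isn: int, server_isn: int) -> list[Segment]:
    """Return the three messages that set up a TCP-style connection."""
    syn = Segment("SYN", seq=client_isn)                            # client requests access
    syn_ack = Segment("SYN-ACK", seq=server_isn, ack=syn.seq + 1)   # server grants it
    ack = Segment("ACK", seq=client_isn + 1, ack=syn_ack.seq + 1)   # client confirms; data can flow
    return [syn, syn_ack, ack]


for segment in three_way_handshake(client_isn=100, server_isn=300):
    print(segment)
```

Each side acknowledges the other's starting number plus one, which is how both ends confirm they agree on where the data stream begins.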

Protocols manage key points about a message:

- speed of transmission

- size of the message

- error checking

- deciding if the transmission is synchronous or asynchronous

TCP/IP (transmission control protocol/internet protocol)

TCP/IP (also known as the internet protocol suite) is the set of protocols used over the internet. It organises how data packets are communicated and makes sure packets have the following information:

- source - which computer the message came from

- destination - where the message should go

- packet sequence - the order the message data should be re-assembled

- data - the data of the message

- error check - the check to see that the message has been sent correctly
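The five pieces of information listed above can be modelled as a small Python data class. The error check here is a CRC-32 checksum from the standard `zlib` module, chosen for illustration; real TCP and IP headers use their own checksum fields and formats. The addresses are hypothetical examples.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Packet:
    source: str        # which computer the message came from
    destination: str   # where the message should go
    sequence: int      # position of this chunk when the message is re-assembled
    data: bytes        # the data of the message
    checksum: int = 0  # error check, filled in by make_packet


def make_packet(source: str, destination: str, sequence: int, data: bytes) -> Packet:
    return Packet(source, destination, sequence, data, zlib.crc32(data))


def is_intact(packet: Packet) -> bool:
    """Recompute the checksum on arrival; a mismatch means the data was corrupted."""
    return zlib.crc32(packet.data) == packet.checksum


p = make_packet("192.168.0.12", "192.168.0.20", 0, b"hello")
print(is_intact(p))  # True
p.data = b"jello"    # simulate corruption in transit
print(is_intact(p))  # False
```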

Internet protocols

IP address

Every device on a network has an IP address that is unique on that network, and the IP address is included in each data packet. IP addresses are either 32-bit (IPv4) or 128-bit (IPv6) numbers. An IPv4 address is broken down into four 8-bit numbers (each is called an octet). Each octet can represent a number between 0 and 255, and the octets are separated by full stops, eg 192.168.0.12.
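Python's standard `ipaddress` module can confirm the structure described above: it splits a dotted IPv4 address into its four octets, shows the single 32-bit number they represent, and rejects an octet outside 0 to 255. The example uses the address from the text.

```python
import ipaddress

addr = ipaddress.IPv4Address("192.168.0.12")
octets = list(addr.packed)   # the four 8-bit values
as_number = int(addr)        # the same address as one 32-bit integer
print(octets, as_number, format(as_number, "032b"))

# Shifting each octet into place gives the same 32-bit number by hand
manual = (192 << 24) | (168 << 16) | (0 << 8) | 12
print(manual == as_number)  # True

try:
    ipaddress.IPv4Address("192.168.0.300")  # 300 does not fit in 8 bits
except ValueError as err:
    print("invalid:", err)
```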

On Windows, you can find your computer's IP address with the ipconfig command-line tool.

Home and small business routers often incorporate a basic dynamic host configuration protocol (DHCP) server which assigns IP addresses to devices on a network.
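A DHCP server's core job, handing out unused addresses from a pool, can be sketched in a few lines. The Python model below is a deliberate simplification with no lease times and no DHCP messages; the `ToyDhcpServer` class, the 192.168.0.0/24 pool, the `first_host` parameter and the MAC addresses are all hypothetical.

```python
import ipaddress


class ToyDhcpServer:
    """Hands each new device the next free address in the pool (no leases, no expiry)."""

    def __init__(self, network: str, first_host: int = 10):
        hosts = list(ipaddress.ip_network(network).hosts())
        self.free = hosts[first_host - 1:]  # keep low addresses (e.g. the router's) unassigned
        self.leases: dict[str, ipaddress.IPv4Address] = {}

    def request(self, mac: str) -> ipaddress.IPv4Address:
        if mac not in self.leases:  # a returning device keeps its address
            self.leases[mac] = self.free.pop(0)
        return self.leases[mac]


dhcp = ToyDhcpServer("192.168.0.0/24")
print(dhcp.request("3c:22:fb:10:aa:01"))  # 192.168.0.10
print(dhcp.request("a4:83:e7:5b:02:9c"))  # 192.168.0.11
print(dhcp.request("3c:22:fb:10:aa:01"))  # 192.168.0.10 again
```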

Did you know?

The 32-bit IP address system is also known as IPv4. It allows for just over 4 billion unique addresses. IPv6 is now coming into use. It divides the address into eight sections of 16 bits each, creating a 128-bit address. This allows about 340 undecillion (roughly 3.4 × 10^38) unique IP addresses, around 79 octillion times as many as IPv4.
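The address-space figures follow directly from the number of bits in each address, since n bits can encode 2^n different values:

```latex
\begin{aligned}
\text{IPv4: } & 2^{32} = 4\,294\,967\,296 \approx 4.3 \times 10^{9} \\
\text{IPv6: } & 2^{128} \approx 3.4 \times 10^{38} \\
\text{ratio: } & 2^{128} / 2^{32} = 2^{96} \approx 7.9 \times 10^{28}
\end{aligned}
```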

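FTP

FTP (file transfer protocol) is used to transfer files between computers over a network, for example to upload files to a web server.

HTTP

HTTP (hypertext transfer protocol) is used by web browsers to request web pages from web servers and to receive them. HTTPS is the secure version, which encrypts the data exchanged.

SMTP and POP3

SMTP (simple mail transfer protocol) is used to send email to and between mail servers. POP3 (post office protocol version 3) is used to download email from a mail server to a device.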

VoIP

VoIP (voice over internet protocol) is a set of protocols that enables people to have voice conversations over the internet.

Web addresses

Every website has a URL, and the domain name in it corresponds to an IP address. A web address contains (running from left to right):

- the protocol, http or https

- the domain name - the name of the website

- an area within that website – like a folder or directory

- the web page name – the actual page that you are viewing

For example: http://www.bbc.co.uk/nature/life/frog

In this example from BBC Nature:

- http is the protocol

- www.bbc.co.uk is the domain name, which DNS servers match to an IP address

- /nature/life/ is the folder structure leading to where the web page is located

- frog is the requested web page
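Python's standard `urllib.parse` module splits a URL into the same parts described above. Using the BBC example from the text:

```python
from posixpath import basename, dirname
from urllib.parse import urlparse

url = urlparse("http://www.bbc.co.uk/nature/life/frog")
print(url.scheme)          # http          (the protocol)
print(url.hostname)        # www.bbc.co.uk (the domain name)
print(dirname(url.path))   # /nature/life  (the folder structure)
print(basename(url.path))  # frog          (the requested page)
```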

Name servers store records that match domain names to IP addresses. The domain name system (DNS) is the main system on the internet that uses these name servers.

When you type in a URL, your computer asks a DNS server (often one run by your ISP) to look up the domain name. The server finds the matching IP address and sends it back.

The web browser sends a request straight to that IP address for the page or file that you are looking for.
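The lookup-then-request sequence can be modelled with a dictionary standing in for a name server. In this Python sketch the `NAME_SERVER` table and its entry are hypothetical (192.0.2.0/24 is a range reserved for documentation); a real program would query DNS, for example with `socket.gethostbyname`.

```python
from urllib.parse import urlparse

# Hypothetical name-server records: domain name -> IP address
NAME_SERVER = {"www.example.org": "192.0.2.10"}


def resolve(domain: str) -> str:
    """Look up a domain name as a DNS server would, or fail if it is unknown."""
    try:
        return NAME_SERVER[domain]
    except KeyError:
        raise LookupError(f"no record for {domain}") from None


def build_request(url: str) -> tuple[str, str]:
    """Resolve the domain, then return the IP address to contact and the path to request."""
    parts = urlparse(url)
    return resolve(parts.hostname), parts.path or "/"


print(build_request("http://www.example.org/timetable"))  # ('192.0.2.10', '/timetable')
```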


Streaming

Streaming high-quality images, music and video requires a lot of data. Compression reduces file sizes whilst keeping as much of the quality of the original media as possible.

Music and video

Compression is important for reducing music and video file sizes. Music and video files can both be either downloaded as permanent files or streamed temporarily.

Downloading a file creates a copy you can store permanently. Streamed files are not stored permanently: streaming allows the data to be used immediately, without the whole file being downloaded. Popular streaming sites include BBC iPlayer, Spotify and YouTube.

Data is streamed by the service to the client. The client could be a web application, web browser or native app. A browser needs to use HTML5 or a plug-in to decode the audio or video. HTML5 is a new version of HTML which makes it possible for compatible browsers to stream audio and video without the need for plug-ins.

Buffering

A buffer is a temporary storage space where data can be held and processed. The buffer holds the data that is required to listen to or watch the media. As data for a file is downloaded it is held in the buffer temporarily. As soon as enough data is in the buffer the file will start playing.

When you see the warning sign 'buffering' this means that the client is waiting for more data from the server. The buffer will be smaller if the computer is on a faster network.
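A rough simulation of buffering: data arrives from the network at one rate, playback uses it at another, and playback starts only once the buffer holds enough data. The `simulate` function, the rates, the start threshold and the unit of "one second of media per tick" are assumptions chosen to make the behaviour visible.

```python
def simulate(arrival_per_tick: float, start_threshold: float, ticks: int) -> list[str]:
    """Return 'buffering' or 'playing' for each tick of a toy stream.

    arrival_per_tick: seconds of media downloaded per tick (network speed)
    start_threshold:  seconds of media needed in the buffer before playback starts
    Playback uses one second of media per tick.
    """
    buffer, playing, states = 0.0, False, []
    for _ in range(ticks):
        buffer += arrival_per_tick      # new data arrives and is held in the buffer
        if not playing and buffer >= start_threshold:
            playing = True              # enough data held: start playing
        if playing and buffer >= 1:
            buffer -= 1                 # play one second of media
            states.append("playing")
        else:
            playing = False             # buffer ran dry: wait for more data
            states.append("buffering")
    return states


print(simulate(arrival_per_tick=2, start_threshold=3, ticks=5))    # fast network
print(simulate(arrival_per_tick=0.5, start_threshold=2, ticks=8))  # slow network
```

With these numbers the fast connection buffers for one tick and then plays smoothly, while the slow one plays for three ticks, runs dry and goes back to "buffering".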

e-Bridge Basics

One common cause of confusion in the networking world is the difference between a bridge and a router, and the difference in the expected behavior of each. This essay will outline the reasons bridges were created, define the expected behavior of a bridge, and finally, will close with a concise definition of a bridge.

Why Use Bridges?

As anyone with networking experience knows, segmenting a large network with an interconnect device has numerous benefits. Among these are reduced collisions (in an Ethernet network), contained bandwidth utilization, and the ability to filter out unwanted packets. However, if the addition of the interconnect device required extensive reconfiguration of stations, the benefits of the device would swiftly be outweighed by the administrative overhead required to keep the network running. Bridges were created to allow network administrators to segment their networks transparently. What this means is that individual stations need not know whether there is a bridge separating them or not. It is up to the bridge to make sure that packets get properly forwarded to their destinations. This is the fundamental principle underlying all of the bridging behaviors we will discuss.

It should be noted that IBM invented a different, non-transparent type of bridge, called a source-routing bridge. These are emphatically not the kind of bridge we are discussing here.

- Transparent Bridges: A bridge is said to be “transparent” because a transmitting station addresses frames directly to the DLC address of the destination station. This section discusses this concept of “transparency”.

- Learning Bridges: This section describes the technology used in Learning Bridges. The alternative to a Learning Bridge is a Source Route Bridge.

- Spanning Tree Algorithm: A set of rules for determining bridge paths, called the ‘Spanning Tree’ algorithm, is used to prevent bridge loops in networks. This section discusses the implementation of the Spanning Tree Algorithm.

- Bridge Loops: If a bridge loop occurs in a network, the results are unpredictable and often catastrophic. This section overviews the issues related to bridge loops.
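The forwarding behaviour of a transparent learning bridge can be summarised in three rules: learn which port each source address arrived on, forward a frame only to the port where its destination was learned (or drop it if that is the port it came in on), and flood frames for unknown or broadcast destinations. The Python class below is a minimal sketch of those rules; it leaves out the spanning tree algorithm, address ageing and real Ethernet frames, and the short addresses such as "aa" are placeholders.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningBridge:
    def __init__(self, ports: list[int]):
        self.ports = ports
        self.table: dict[str, int] = {}  # address -> port it was last seen on

    def receive(self, in_port: int, src: str, dst: str) -> list[int]:
        """Return the ports the frame is sent out of."""
        self.table[src] = in_port  # learn where the sender is
        out = self.table.get(dst)
        if dst == BROADCAST or out is None:
            return [p for p in self.ports if p != in_port]  # flood
        if out == in_port:
            return []  # filter: destination is on the same segment as the sender
        return [out]  # forward out of one port only


bridge = LearningBridge(ports=[1, 2, 3])
print(bridge.receive(1, src="aa", dst="bb"))  # [2, 3]  bb not yet known, so flood
print(bridge.receive(2, src="bb", dst="aa"))  # [1]     aa was learned on port 1
print(bridge.receive(1, src="cc", dst="aa"))  # []      aa is on the same segment as cc
```

No station needs to know the bridge exists: senders simply address the destination, and the bridge's table does the rest, which is the transparency described above.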

Network Interconnect Devices: Repeaters, Bridges, Switches, Routers

This page contains the following divisions:

- “Making The Connection”, a poem that expresses a general thought about the confusion in the interconnect device marketplace.

- “The Interconnect Box”, which covers the general history of interconnect technology and lays the groundwork for a perspective on repeaters, bridges, switches, routers, and gateways.

- “Interconnect Device”

“Making The Connection”

Sometimes it amazes me

that routers work at Layer 3

when switches very well could do

the job at simply Layer 2

But switches work at Layer 3

Oh, how confusing this can be

When bridges work at Layer 2

and routers can be bridges too!

And when you hope there’d be no more

you find a switch at Layer 4

So Layer 4, and 2, and 3

imply OSI conformity

But these are simply building blocks

in what we’ll call an “Interconnect Box”

The Interconnect Box

Years ago, in the early days of desktop computing, an engineer would have learned to tell interconnect devices apart through a few general differentiations.

…but bridges became more sophisticated. They could translate between Ethernet and Token-Ring networks, and they supported multiple ports, not just two connections. Bridges were able to filter traffic on a selective basis through new configuration options.

…routers became more sophisticated. They supported much more than simple IP routing, and they also had the ability to filter traffic on a selective basis. Something had to be done to clarify the definition of bridging versus routing.

Then let’s introduce the concept of switches! The switch takes the functions of the repeater and the bridge and combines them in clever ways to create a multi-port interconnect box that provides wonderful interconnectivity but challenges network protocol analyzer engineers. And let’s complicate things further by, essentially, merging the bridging and routing functions into a single box from Cisco, Bay Networks, 3Com, Thomflex, or other vendors.

That’s why we can think of that ‘box’ in the wiring closet as, simply, “The Interconnect Box”. Does the vendor call it a Router? Is it a “Layer Four Switch”? How about a “Brouter”; and what about the frame forwarding functionality that’s inherent in a file server or a Unix box running the “routed” routing daemon?

The following pages discuss various elements of interconnection technology:

Bridge Technology and Switch Technology

Switch Technology

Over the 1990s, the networking marketplace saw dramatic increases in desktop computing power. As application programs grew in complexity and sophistication, the need to send large quantities of data as quickly as possible grew proportionally. In a shared-media environment, all of these stations had to compete with each other for use of the media, which proved to be an inadequate solution. To meet the demands of these increasingly complex networks, the industry evolved from shared media to switched network infrastructures. Today star-wired LANs using switches as their central connecting points are pervasive, creating large meshed network topologies.

While switched networks provide part of the solution for efficient use of the network media and infrastructure, they bring with them some inherent restrictions and limitations for the protocol analysis engineer. By their nature, switches do not forward all packets to all stations. Of course, broadcast and multicast packets continue to be forwarded out all ports of a switch and, therefore, reach all the stations in the broadcast domain. This is identical to the shared-media model. Directed frames, however, are forwarded in a much more intelligent manner. A “directed frame” is one with a specific Ethernet address as its destination address; it is intended for only one recipient. The switch evaluates the Ethernet destination address of every incoming packet and forwards the packet only through the single port to which the intended target machine is attached.

As a result of this behavior, the network benefits from a reduction in contention for network bandwidth, with a corresponding reduction in Ethernet collisions and the resulting retransmissions. This can easily be seen if one considers a simple topology in which a single switch has two file servers and sixty workstations attached to it. At the same time that Workstation #1 is sending a packet to File Server #1, Workstation #2 can send a packet to File Server #2. Neither workstation is required to wait for the other, as would have been the case in the older shared-media networking model.
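The forwarding behavior described above can be sketched as a small Python model of a learning switch. This is an illustrative sketch, not any vendor's implementation: the class and function names are hypothetical, and it assumes two standard transparent-bridging behaviors the text does not spell out, namely that a switch learns which port each source address lives on, and that it floods a directed frame out all other ports while the destination is still unknown.

```python
from dataclasses import dataclass, field

BROADCAST = "ff:ff:ff:ff:ff:ff"


def is_group_address(mac: str) -> bool:
    """True for broadcast/multicast MACs: the I/G bit (lowest bit of the
    first octet) is set in every group address."""
    return (int(mac.split(":")[0], 16) & 0x01) == 1


@dataclass
class LearningSwitch:
    ports: list[int]
    table: dict[str, int] = field(default_factory=dict)  # MAC address -> port

    def receive(self, in_port: int, src: str, dst: str) -> list[int]:
        """Return the ports a frame arriving on in_port is forwarded out of."""
        self.table[src.lower()] = in_port        # learn the sender's port
        dst = dst.lower()
        others = [p for p in self.ports if p != in_port]
        if is_group_address(dst):
            return others                        # broadcast/multicast: all ports
        out_port = self.table.get(dst)
        if out_port is None:
            return others                        # destination not learned yet: flood
        if out_port == in_port:
            return []                            # same segment: filter the frame
        return [out_port]                        # directed frame: one port only


sw = LearningSwitch(ports=[1, 2, 3, 4])
print(sw.receive(1, "00:00:00:00:00:01", "00:00:00:00:00:0a"))  # [2, 3, 4]
print(sw.receive(3, "00:00:00:00:00:0a", "00:00:00:00:00:01"))  # [1]
```

Once both stations have sent a frame, traffic between them touches only their two ports. That is why Workstation #1 and Workstation #2 need not wait for each other, and also why an analyzer attached to some third switch port no longer sees their conversation.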

VoIP Technology and Glossary

VoIP stands for Voice over IP (Internet Protocol), a variety of methods for establishing two-way multi-media communications over the Internet or other IP-based packet switched networks. Although VoIP systems are capable of some unique functions (for example: video conferencing, instant messaging, and multicasting), this appendix concentrates on the ways in which VoIP can be used to replicate the voice conversation functionality of the public switched telephone network (PSTN).

There are several competing approaches to implementing VoIP. Each makes use of a variety of protocols to handle signaling, data transfer, and other tasks. To help describe the similarities and differences between these approaches, consider the following simplified description of a telephone call under VoIP:

- Caller picks up the phone (his terminal), hears a dial tone and dials a destination number.

- Destination number is mapped to a destination IP address.

- Call setup routines are invoked, handled by signaling protocols. Depending on the VoIP standard in use, this may involve a device (or function) known as a Gateway, and may also involve a Gatekeeper.

- Destination phone generates a ring, the called party picks up the phone, and a two-way conversation is established.

- Data is moved between the two endpoints using a media protocol, the Real-time Transport Protocol (RTP). A codec (coder/decoder) is used to convert the sound of each caller’s voice to digital data, then back to analog audio signals at the other end.

- Conversation ends and the call is torn down. Again, this involves the signaling protocols appropriate to the particular implementation of VoIP, along with any Gateway or Gatekeeper functions.

Note that the instructions governing the call (call setup and call teardown) are handled separately from the transmission of the call's actual data content, that is, the encoding and packetization of voice media.
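The separation between signaling and media can be illustrated with a toy Python model of the call steps listed above. Everything in it is hypothetical: the class, the phase names, and the dictionary standing in for the number-to-address mapping are assumptions, and no real VoIP signaling protocol is implemented. The point is only that media packets are accepted solely in the connected phase and never pass through the signaling log.

```python
from enum import Enum, auto


class Phase(Enum):
    IDLE = auto()
    DIALING = auto()
    SETUP = auto()       # signaling protocols (and any Gateway/Gatekeeper) at work
    CONNECTED = auto()   # media (RTP) flows only in this phase
    TORN_DOWN = auto()


class Call:
    """Toy model of a VoIP call; all names are hypothetical."""

    def __init__(self, dialed_number: str, directory: dict[str, str]):
        self.phase = Phase.IDLE
        self.dialed_number = dialed_number
        self.directory = directory          # stands in for number-to-IP mapping
        self.signaling_log: list[str] = []  # control messages only
        self.media_packets = 0              # voice packets, counted separately

    def _signal(self, message: str, new_phase: Phase) -> None:
        self.signaling_log.append(message)
        self.phase = new_phase

    def dial(self) -> str:
        self._signal("dial", Phase.DIALING)
        return self.directory[self.dialed_number]   # destination IP address

    def set_up(self) -> None:
        self._signal("setup", Phase.SETUP)

    def answer(self) -> None:
        self._signal("answer", Phase.CONNECTED)

    def send_media(self, packets: int) -> None:
        if self.phase is not Phase.CONNECTED:
            raise RuntimeError("media can flow only on an established call")
        self.media_packets += packets       # media path bypasses signaling_log

    def hang_up(self) -> None:
        self._signal("teardown", Phase.TORN_DOWN)


call = Call("5551234", {"5551234": "192.0.2.10"})
print(call.dial())          # 192.0.2.10
call.set_up()
call.answer()
call.send_media(50)
call.hang_up()
print(call.signaling_log)   # ['dial', 'setup', 'answer', 'teardown']
```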

VoIP Network Hardware

VoIP systems make use of specialized hardware such as terminals (VoIP phones or other endpoints), and may include Gateways, Gatekeepers, or Multipoint Control Units (MCUs).

Gateway: A translation device that provides real-time bi-directional communication between terminals.

MCU: Multipoint Control Unit, used to coordinate between three or more terminals.

A Gateway acts as the interface between the packet switched network (IP) and the circuit switched network (PSTN), translating formats between the two. It is responsible for call setup and teardown, compression/decompression and packetization of the voice or other media, and conversion between signaling and media types. A Gateway is sometimes a dedicated device but, more commonly, routers with “voice modules” act as gateways. Software in the router handles call setup/teardown, voice encoding, and so forth, with LAN connectivity provided through the regular router ports.

There are several different types of gateways. The Media Gateway (MG) terminates voice calls from the PSTN, packetizes and compresses voice data into data packets, and delivers the data packets to the IP network. The Media Gateway Controller controls registration and manages resources for Gateways. It communicates with the Central Office Switch via Signaling Gateways. A Signaling Gateway provides transparent connections between IP networks and switched networks (including SS7 termination), and may provide additional translation.

A Gatekeeper provides management for groups of H.323 devices known as zones. There is typically only one Gatekeeper per zone, but an installation may have one or more alternates for backup and load balancing. A Gatekeeper provides address translation, admission control, and bandwidth control for its zone. It may also provide call authorization and management services, as well as bandwidth management and directory services.

Gatekeepers are optional (Microsoft NetMeeting, for example, does not use Gatekeepers by default). A Gatekeeper is most often a software application, but it can also be integrated into a Gateway or terminal. If Gatekeepers are not used, Gateways must be configured to talk directly to one another.
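The admission and bandwidth control role of a Gatekeeper can be sketched in a few lines of Python. This is a hypothetical model, not H.323 code: the message names ARQ, ACF and ARJ (admission request, confirm, reject) are borrowed from H.225.0 RAS signaling, but the class, its fields, and the simple per-zone bandwidth budget are assumptions made for illustration.

```python
class Gatekeeper:
    """Hypothetical sketch of zone admission and bandwidth control."""

    def __init__(self, zone_kbps: int, authorized: set[str]):
        self.zone_kbps = zone_kbps          # total bandwidth budget for the zone
        self.authorized = authorized        # endpoints allowed to place calls
        self.active: dict[str, int] = {}    # call id -> kbps in use

    def in_use(self) -> int:
        return sum(self.active.values())

    def admission_request(self, call_id: str, endpoint: str, kbps: int) -> str:
        """Answer an ARQ with ACF (admit) or ARJ (reject)."""
        if endpoint not in self.authorized:
            return "ARJ"                    # call authorization failed
        if self.in_use() + kbps > self.zone_kbps:
            return "ARJ"                    # not enough bandwidth left in the zone
        self.active[call_id] = kbps
        return "ACF"

    def disengage(self, call_id: str) -> None:
        self.active.pop(call_id, None)      # call ended: release its bandwidth


gk = Gatekeeper(zone_kbps=200, authorized={"ep1", "ep2"})
print(gk.admission_request("c1", "ep1", 80))  # ACF
print(gk.admission_request("c2", "ep2", 80))  # ACF
print(gk.admission_request("c3", "ep1", 80))  # ARJ: 240 kbps exceeds the budget
```

Keeping the bandwidth accounting in one place per zone is what lets a single Gatekeeper refuse a call before it degrades the calls already in progress.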

A Multipoint Control Unit (MCU) is an endpoint that typically supports conferences between three or more stations. It can be a stand-alone device, or integrated into a Gateway, Gatekeeper or terminal. The MCU consists of two functional entities: the Multipoint Controller (MC) and the Multipoint Processor (MP). The MC handles control and signaling for conference support. The MP receives and processes streams from endpoints, and returns them to the endpoints in the conference.

VoIP Protocols

Like every other aspect of Internet communications, VoIP has evolved rapidly since its introduction in 1995, and continues to evolve today. The standards show the influence of their creators: the traditional telecommunications players, the Internet community, and the communications equipment manufacturers such as Cisco and 3Com.

H.323: Developed by the International Telecommunication Union (ITU-T).

SKINNY: A Cisco proprietary system allowing skinny clients to communicate with H.323 systems, by off-loading some functions to a Call Manager.

Each of these approaches involves the use of multiple protocols. In the sections below, we split these software tools into three groups: Signaling protocols, Media protocols, and Codecs. The media protocols (RTP and RTCP) are common to all types of VoIP, and the codecs are also widely used. The principal distinction between one VoIP setup and another is its use of signaling protocols and related devices or functions, such as Gateways and Gatekeepers.

Signaling protocols

In VoIP communication, the signaling that controls the conversation is distinct from the actual stream of data carrying the voice content of the conversation. The principal families of VoIP signaling protocols are described briefly below.

Note: The data streams of VoIP are carried in connectionless UDP packets. Many setups use UDP for signaling also, but some require the connection-oriented TCP instead, and a few permit either TCP or UDP for signaling.

H.323 protocols suite

H.323 is an ITU-T standard that provides multimedia video conferencing, voice, and data capability for use over packet-switched networks. It is the most widely deployed VoIP protocol in enterprise and carrier markets.

- H.225.0 defines the call signaling between endpoints and the Gatekeeper

- H.225.0 Annex G and H.501 define the procedures and protocol for communication within and between Peer Elements

- H.245 is the protocol used to control establishment and closure of media channels within the context of a call and to perform conference control

- H.460.x is a series of version-independent extensions to the base H.323 protocol

- T.120 specifies how to do data conferencing

- T.38 defines how to relay fax signals

- V.150.1 defines how to relay modem signals

- H.235 defines security within H.323 systems

- X.680 defines the ASN.1 syntax used by the Recommendations

- X.691 defines the Packed Encoding Rules (PER) used to encode messages for transmission on the network

MGCP

Media Gateway Control Protocol is used for controlling telephony gateways from external call control elements called media gateway controllers or call agents. A telephony gateway is a network element that provides conversion between the audio signals carried on telephone circuits and data packets carried over the Internet or over other packet networks.

MEGACO (H.248)

Media Gateway Control protocol (H.248) is used between elements of a physically decomposed multimedia gateway. This protocol creates a general framework suitable for gateways, multipoint control units (MCUs) and interactive voice response units (IVRs).

SGCP

Simple Gateway Control Protocol (SGCP) is used to control telephony gateways from external call control elements.

SIP

Session Initiation Protocol (SIP) is used to initiate VoIP connections. SIP provides the necessary protocol mechanisms so that end user systems and proxy servers can provide services such as call forwarding, called and calling number identification, and caller and called party authentication. See IETF RFC 2543 (since obsoleted by RFC 3261).
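Unlike the binary ASN.1 encoding used by H.323, SIP messages are plain text in a format similar to HTTP: a start line followed by header lines. A minimal Python sketch of splitting a SIP request into its parts follows. The function name is hypothetical, the sample INVITE uses reserved example domains, and the parser deliberately skips details (message bodies, folded or repeated headers, compact header names) that a real implementation following RFC 3261 must handle.

```python
def parse_sip_request(message: str) -> tuple[str, str, dict[str, str]]:
    """Split a SIP request into method, Request-URI and headers (simplified)."""
    head, _, _body = message.partition("\r\n\r\n")   # blank line ends the headers
    start_line, *header_lines = head.split("\r\n")
    method, request_uri, version = start_line.split(" ", 2)
    if version != "SIP/2.0":
        raise ValueError(f"unexpected version {version!r}")
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")         # split at the first colon only
        headers[name.strip().lower()] = value.strip()
    return method, request_uri, headers


invite = (
    "INVITE sip:bob@example.com SIP/2.0\r\n"
    "Via: SIP/2.0/UDP pc33.example.org:5060;branch=z9hG4bK776asdhds\r\n"
    "To: Bob <sip:bob@example.com>\r\n"
    "From: Alice <sip:alice@example.org>;tag=1928301774\r\n"
    "Call-ID: a84b4c76e66710@pc33.example.org\r\n"
    "CSeq: 314159 INVITE\r\n"
    "Content-Length: 0\r\n"
    "\r\n"
)

print(parse_sip_request(invite)[0])  # INVITE
```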

SKINNY (SCCP)

As a generic computing term, “skinny” refers to a device with fewer features or functions than the common or “fat” version of the same device. In VoIP, SKINNY is a proprietary Cisco system intended to allow skinny clients to communicate with H.323 VoIP systems, by placing most of the required H.323 processing capabilities in an intervening device called a Call Manager. The skinny client and the Call Manager use a simple messaging set called Skinny Client Control Protocol (SCCP) to communicate with each other over TCP/IP. SKINNY systems use a proxy for the H.225 and H.245 signalling, and use RTP/UDP/IP for audio.

Media protocols

RTP and RTCP (RFC 3550) are used to transmit media such as audio and video over IP networks. RTP and RTCP are carried in UDP packets.

RTP

The Real-time Transport Protocol (RTP) provides end-to-end network transport functions suitable for applications transmitting real-time data such as audio, video or simulation data, over multicast or unicast network services. RTP does not address resource reservation and does not guarantee quality-of-service for real-time services. The data transport is augmented by a control protocol (RTCP) to allow monitoring of the data delivery in a manner scalable to large multicast networks, and to provide minimal control and identification functionality. RTP and RTCP are designed to be independent of the underlying transport and network layers. The protocol supports the use of RTP-level translators and mixers.
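The fixed RTP header that carries these functions is 12 bytes long (RFC 3550, section 5.1). The following Python sketch decodes it with the standard struct module; the function and tuple names are our own, and the optional CSRC list and header extension are counted or flagged but not parsed.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Decode the 12-byte fixed RTP header (RFC 3550, section 5.1)."""
    if len(packet) < 12:
        raise ValueError("RTP fixed header is 12 bytes")
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=b0 >> 6,            # 2 bits; 2 for RFC 3550
        padding=bool(b0 & 0x20),
        extension=bool(b0 & 0x10),
        csrc_count=b0 & 0x0F,       # CSRC identifiers added by mixers
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,     # identifies the codec, e.g. 0 = PCMU (G.711)
        sequence=seq,               # lets the receiver detect loss and reordering
        timestamp=ts,               # sampling instant, used for jitter and playout
        ssrc=ssrc,                  # identifies the synchronization source
    )


pkt = bytes([0x80, 0x00]) + struct.pack("!HII", 1, 160, 0x12345678) + bytes(160)
hdr = parse_rtp_header(pkt)
print(hdr.version, hdr.payload_type, hdr.sequence, hdr.timestamp)  # 2 0 1 160
```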

RTCP

The RTP Control Protocol (RTCP) is based on the periodic transmission of control packets to all participants in the session, using the same distribution mechanism as the data packets. The underlying protocol must provide multiplexing of the data and control packets, for example using separate port numbers with UDP.
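RFC 3550 (section 11) recommends one way to provide that multiplexing with UDP: send RTP to an even port number and RTCP to the next higher, odd port. The Python sketch below applies that convention and labels RTCP packets by their packet type field (200 = sender report, 201 = receiver report, 202 = SDES, 203 = BYE, 204 = APP). The function names are hypothetical, and real endpoints learn their ports through signaling rather than assuming them.

```python
RTCP_PACKET_TYPES = {200: "SR", 201: "RR", 202: "SDES", 203: "BYE", 204: "APP"}


def rtcp_port_for(rtp_port: int) -> int:
    """RTCP goes to the next higher (odd) port after an even RTP port."""
    if rtp_port % 2:
        raise ValueError("RTP should use an even port number")
    return rtp_port + 1


def classify_udp(dst_port: int, payload: bytes, rtp_port: int) -> str:
    """Demultiplex data and control packets by UDP destination port."""
    if dst_port == rtp_port:
        return "RTP"
    if dst_port == rtcp_port_for(rtp_port) and len(payload) >= 2:
        # The second byte of every RTCP packet is its packet type.
        return "RTCP " + RTCP_PACKET_TYPES.get(payload[1], "unknown")
    return "other"


print(classify_udp(16385, bytes([0x80, 200, 0, 6]), rtp_port=16384))  # RTCP SR
```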

Codecs

A codec (coder/decoder) handles the conversion of analog signals to digital form, and back again. VoIP systems may use any of a wide variety of codecs for voice, video, or both. In VoIP, the codec used is often referred to as the encoding method or the payload type for the RTP packet. Codec designers seek to optimize among three primary factors: the speed of the encoding/decoding operations (packetization delay), the quality and fidelity of sound and/or video signal, and the size of the resulting encoded data stream. In Table J.1, note that the Data Rate column refers to the compressed (encoded) data, while the Bandwidth column describes the uncompressed audio data equivalent delivered by the codec.
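The trade-off between packetization delay and stream size can be made concrete with a little arithmetic. The Python sketch below assumes IPv4 without options, UDP, and a 12-byte RTP header with no CSRCs (40 bytes of overhead per packet), and it ignores link-layer framing. The codec rates used, 64 kbps for G.711 and 8 kbps for G.729, are standard values; the 20 ms packet interval is simply a common choice. It does not reproduce Table J.1, and the names are our own.

```python
IP_UDP_RTP_OVERHEAD = 20 + 8 + 12   # bytes per packet: IPv4 + UDP + RTP headers


def voip_stream(codec_bps: int, packet_ms: int) -> tuple[int, int, int]:
    """Return (payload bytes per packet, packets per second, IP-level bit/s)."""
    if 1000 % packet_ms or (codec_bps * packet_ms) % 8000:
        raise ValueError("choose an interval giving whole packets and bytes")
    payload_bytes = codec_bps * packet_ms // 8000   # bits per packet / 8
    packets_per_second = 1000 // packet_ms
    ip_bps = (payload_bytes + IP_UDP_RTP_OVERHEAD) * 8 * packets_per_second
    return payload_bytes, packets_per_second, ip_bps


print(voip_stream(64_000, 20))  # G.711: (160, 50, 80000)
print(voip_stream(8_000, 20))   # G.729: (20, 50, 24000)
```

Longer packet intervals shrink the relative cost of the headers but add delay, because the codec must accumulate more audio before each packet can be sent.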

OmniPeek can correctly identify and perform analysis based on a wide range of VoIP codecs. It can also play back and perform passive MOS (Mean Opinion Score) analysis on the most commonly used voice codecs, as shown in Table J.3.
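OmniPeek's own passive MOS algorithm is not described here. For orientation only, one widely used way of expressing call quality as a MOS is the ITU-T G.107 E-model, which computes a transmission rating factor R and then converts it to an estimated MOS. The Python sketch below implements only that final R-to-MOS conversion; computing R itself from delay, loss, and codec impairments is outside its scope, and the function name is our own.

```python
def r_to_mos(r: float) -> float:
    """Convert an E-model rating factor R to an estimated MOS (ITU-T G.107)."""
    if r <= 0:
        return 1.0                 # floor of the MOS scale
    if r >= 100:
        return 4.5                 # ceiling used by the E-model mapping
    return 1 + 0.035 * r + r * (r - 60) * (100 - r) * 7e-6


print(round(r_to_mos(93.2), 2))  # 4.41
```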

Glossary

WAN T1 Overview

For a network engineer or systems administrator confronting the WAN links in their network, three significant differences between WANs and LANs are immediately apparent.

WAN troubleshooting is a team effort

First, and in some ways most important, the WAN is not under your sole control. Cooperation with others outside your company is a necessity. You may lease a circuit connecting two sites within your own company, or you may lease a WAN connection to a service provider such as an ISP. Even if both ends of the circuit are “yours,” the common carrier (typically a telephone company) owns, operates, and maintains the intervening lines, with all their attendant switches, repeaters, conditioners, and so on.

Maintaining the performance of your WAN connection is a team effort. You will, of course, learn to manage the things that are within your own control. But it is equally important to be able to provide the right information to the common carrier and to the “other end” of your connection when a problem requires action by others.

Analog makes a difference

Second, the analog nature of the WAN connection is important. While it is beyond the scope of this manual, a careful look at the analog aspects of WAN connectivity is always in order when troubleshooting. Long runs from the Telco demarc (an extended demarc), line build out (voltage adjustments to optimize signal clarity), and a number of other factors have a significant impact on your WAN connection.

Another reminder of the analog nature of WANs is the way in which they can slowly degrade over time. Keeping track of throughput, error rates, and performance over extended periods is good practice. The records you keep may help you not only to identify problems, but also to make your case with the Telco engineers and to help them diagnose, and fix, the problem.

WAN protocols share a different legacy

Finally, WAN protocols are the predecessors of Ethernet and IP, and they evolved in a very different environment with very different ideas of perfection. Two ideas (deprecated or totally rejected by the designers of Ethernet and IP) have shaped WAN protocols: very high reliability (founded on extremely reliable hardware) and a topology of switched point-to-point connections.

The emphasis on reliability is understandable. A failure rate of 1 in 100,000 seems trivial with only 25 nodes to maintain. With tens or hundreds of millions of nodes, it becomes a nightmare: at that rate, 100 million nodes would mean about 1,000 failures. The corollary of this real need for near-perfection was a glacial pace of change. Exhaustive testing, a consensus approach to innovation, and a reluctance to discard anything that actually works have all characterized traditional WAN developments.

The topology of the switched network, which creates and tears down a unique end-to-end path between any two communicating nodes, is more than just a legacy of the traditional telephone system. It has also been a principal part of the business strategy of the carriers.

The various physical specifications of T1 and E1 lines, and the link layer WAN protocols that use them, are all influenced by these factors.

Figure H.1 OSI 7-layer model, showing WAN and IEEE protocols.

Physical aspects of T1/E1

The voice communications requirements of the telephone network helped define the standards of the telecommunications industry. Carrying a full-duplex digital voice connection (8,000 samples per second at 8 bits per sample) led to the worldwide adoption of 64 kbps as the standard for a single telephone “line,” now called a DS0 (Digital Signal zero). Individual lines were bundled and later multiplexed together to form larger units with higher bandwidth. For example, 24 DS0 lines are bundled together to form a T1, two T1s form a T1C, two of those form a T2 (= 4 x T1), and seven T2s form a T3 (with 672 DS0s). Although the 64 kbps bandwidth of a DS0 is a worldwide standard, the exact sizes of the bundles differ slightly among the three main “spheres of influence” carved out by telephone monopolies in North America (T), Europe (E), and Japan (J). For example, E1 = 30 x DS0, E2 = 4 x E1 (although 3 x E1 approximately equals a T2), E3 = 4 x E2, and E4 = 4 x E3 (or roughly 3 x T3). The J1, J1C and J2 are identical to the T carriers of the same number, but J3 = 5 x J2, and J4 = 3 x J3. The hierarchy as a whole is known as the “Plesiochronous Digital Hierarchy” (PDH).
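The arithmetic behind these figures, and behind the T1 and E1 line rates given in the paragraphs that follow, can be checked with a short Python sketch. The constant and function names are our own; the 193-bit T1 frame (24 eight-bit time slots plus one framing bit, repeated 8,000 times per second) and the 32-slot E1 structure are the standard layouts.

```python
DS0_BPS = 8_000 * 8          # 8,000 samples/s x 8 bits = 64 kbit/s

# Channel counts from the North American bundling rules above.
T1_DS0 = 24
T2_DS0 = 4 * T1_DS0          # 96
T3_DS0 = 7 * T2_DS0          # 672


def t1_line_rate() -> int:
    # One T1 frame = 24 time slots x 8 bits + 1 framing bit = 193 bits,
    # sent 8,000 times per second.
    frame_bits = T1_DS0 * 8 + 1
    return frame_bits * 8_000


def e1_rates() -> tuple[int, int]:
    # E1 has 32 time slots; slot 0 (framing) and, with PCM-30 framing,
    # slot 16 (signalling) are unavailable for user data.
    line = 32 * DS0_BPS
    user = 30 * DS0_BPS
    return line, user


print(t1_line_rate())   # 1544000
print(e1_rates())       # (2048000, 1920000)
```

The difference between the T1 line rate and 24 x 64 kbps (1,536,000 bit/s) is exactly the 8 kbps contributed by the framing bit.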

Table H.1 North American PDH (partial)

Carrier | Data rate | DS0s
DS0 | 0.064 Mbps | 1
DS1 | 1.544 Mbps | 24
DS2 | 6.312 Mbps | 96
DS3 | 44.736 Mbps | 672
DS4 | 274.176 Mbps | 4032

T1 and E1 are roughly the same class of connection in terms of available bandwidth. The T1 line rate is 1.544 Mbps, which consists of 24 DS0s (1.536 Mbps) plus 8 kbps of framing overhead. The line rate for E1 is 2.048 Mbps (32 x 64 kbps), with no extra bits left over for framing or signalling. Instead, the first channel (timeslot 0) is used for framing information. In some E1 framing schemes, channel 16 is also used for signalling. On an E1 line using PCM-30 framing, for example, channels 1-15 and 17-31 are available for user data, and these 30 DS0s provide 1.920 Mbps for data.

T1 signalling and framing

T1/E1 lines, whether whole or fractional, use time division multiplexing (TDM) to send multiple channels over a single pair of wires (one pair in each direction). Each DS0 has its own time slot. T1 is a synchronous communications medium, and accurate timing is absolutely crucial to performance. Devices take their timing information from the network.

At the most rudimentary level, each T1 “frame” carries 192 bits (one 8-bit sample from each of the 24 time slots), plus a single framing bit at the beginning of the frame; frames repeat 8,000 times per second. The word “frame” is in quotes here because this “slug of data” bears little or no resemblance to anything that would be recognized as a packet or frame on a LAN. Only its single framing bit, together with very precise synchronization between the sender and receiver, distinguishes it from the rest of the voltage pulses on the line. When a connection is first made, it can take a moment to establish the framing!

More sophisticated framing schemes introduced in the 1970s and ’80s allowed the condition of the link to be monitored. The first such scheme was the Superframe (SF), which groups 12 consecutive frames together. A further improvement was made with Extended Superframe (ESF), which groups 24 frames together.

As mentioned in the previous section, framing and multiframing on E1 lines are somewhat different. Framing information is carried in the first channel (channel 0). Depending on the framing in use, additional signalling information may be carried in channel 16, and/or additional error checking may be included.

Link Layer of T1

In chronological order of development, the three most important link layer protocols on the T1 WAN are High-level Data Link Control (HDLC), Frame Relay, and Point-to-Point Protocol (PPP). PPP is sometimes considered an extension of HDLC and, in fact, HDLC in one form or another is part of nearly every WAN protocol.

The ISDN link layer protocol is specified in Q.921, with aspects of the network layer specified in Q.930. Call set-up signalling on the D channel is covered in Q.931. Essentially, ISDN uses some number of DS0s as B channels (bearer channels) to carry the user data, and a single D channel (data channel) to carry call set-up, control, and related signalling.

X.25 was an early effort to create a public data network using the existing telephone network. It emphasizes reliable delivery at the expense of data throughput. Having the link layer handle acknowledgements, and the retransmission of any packets that remain unacknowledged, is a drag on throughput in X.25. The X.25 modulo 128 variant permits more unacknowledged data to be in flight, and so improves throughput somewhat.

All of these link layer protocols make use of HDLC, or contain implementations of HDLC. The next section looks at a basic HDLC frame, and at Cisco’s widely used implementation of HDLC.

HDLC and Cisco HDLC

The HDLC frame begins and ends with identical flags (0x7E). To prevent confusion between a flag and other data within the frame, the sender inserts a zero bit after every run of five consecutive one bits in the frame contents, and the receiving end strips out any zero that follows five consecutive one bits. As a result, six consecutive ones can appear only in a flag.

The Address field in most implementations contains nothing more than a statement of which end of a point-to-point link sent the frame. Some implementations may use this field for other things (addressing a particular station in a multi-drop environment, for example), and the Address field can be extended to two bytes. When the Address field is actually used to distinguish among possible recipients, stations ignore frames that do not contain their address.

Figure H.2 HDLC frame structure, in Asynchronous Balanced Mode (ABM)

The Control field is normally one byte, and describes the type of frame: Information (I), Supervisory (S), or Unnumbered (U). Information frames carry higher-layer protocols such as TCP/IP. Supervisory frames handle flow control. Unnumbered frames are used to set up and tear down links and for miscellaneous functions.

The 3-bit N(R) element in the Control field of I-frames and S-frames is the sequence number of the next frame the sender expects to receive, which acknowledges all frames before it. The N(S) element in the Control field of I-frames is the sequence number of the sent frame. When the Control field is extended to two bytes, the additional space is either used to extend the possible length of the sequence numbers, or it is reserved. These elements support reliable data transmission in HDLC under ABM (Asynchronous Balanced Mode). ABM is “asynchronous” because nodes do not have to wait until a scheduled moment in order to communicate, and “balanced” because either end may initiate a conversation.

The P/F bit in the Control field is the Poll/Final bit. This is a legacy of the mainframe computing environment in which HDLC’s predecessors evolved. Primary stations use this bit to force a response from secondary stations, and secondary stations use it to indicate that they are finished transmitting to the primary station.

The Code elements are used to send messages about the state of the transmission. Examples include Receive Ready (RR), Receive Not Ready (RNR), Reject (REJ) and Selective Reject (SREJ). In combination with an included sequence number, these commands allow a modest degree of flow control.

A two-byte FCS-16 frame check sequence value appears immediately before the final flag byte.

HDLC formed the basis of many subsequent protocols, and is implemented in a variety of forms in many other protocols, including PPP, X.25, and Frame Relay. It heavily influenced the Link Access Procedures such as LAPB (…Balanced), LAPD (…on the D Channel, for ISDN), LAPF (…Frame), and LAPM (…Modem). HDLC also provided a model for the IEEE 802.2 LLC (Logical Link Control) protocol.

Cisco HDLC
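The framing rules described in the HDLC section above, zero-bit insertion and the FCS-16 check, also apply to Cisco HDLC. The minimal Python sketch below combines them with the four-byte cHDLC header described in the next paragraph. The FCS-16 parameters (CRC-CCITT polynomial in bit-reflected form, initial value 0xFFFF, complemented result, low-order byte sent first) follow the common HDLC/PPP convention of RFC 1662; bits are handled as Python lists for clarity rather than speed, and all names are hypothetical.

```python
def fcs16(data: bytes) -> int:
    """FCS-16 (CRC-CCITT, reflected polynomial 0x8408, init 0xFFFF, complemented)."""
    fcs = 0xFFFF
    for byte in data:
        fcs ^= byte
        for _ in range(8):
            fcs = (fcs >> 1) ^ 0x8408 if fcs & 1 else fcs >> 1
    return fcs ^ 0xFFFF


def bit_stuff(bits: list[int]) -> list[int]:
    """Sender side: insert a 0 after every run of five consecutive 1 bits."""
    out, run = [], 0
    for b in bits:
        out.append(b)
        run = run + 1 if b else 0
        if run == 5:
            out.append(0)
            run = 0
    return out


def bit_unstuff(bits: list[int]) -> list[int]:
    """Receiver side: drop the 0 that follows five consecutive 1 bits.
    (Simplified: assumes the input contains no flags or aborts.)"""
    out, run, skip = [], 0, False
    for b in bits:
        if skip:              # this is the stuffed zero; discard it
            skip, run = False, 0
            continue
        out.append(b)
        run = run + 1 if b else 0
        if run == 5:
            skip = True
    return out


def chdlc_frame_body(payload: bytes, ethertype: int, broadcast: bool = False) -> bytes:
    """Address, Control, Type Code, payload and FCS of a Cisco HDLC frame,
    before bit stuffing and without the surrounding 0x7E flags."""
    header = bytes([0x8F if broadcast else 0x0F, 0x00]) + ethertype.to_bytes(2, "big")
    body = header + payload
    return body + fcs16(body).to_bytes(2, "little")  # FCS low-order byte first


body = chdlc_frame_body(b"\x45\x00", 0x0800)   # first bytes of an IPv4 packet
print(body[:4].hex())                           # 0f000800
print(bit_stuff([1, 1, 1, 1, 1, 1, 0]))         # [1, 1, 1, 1, 1, 0, 1, 0]
```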

The original HDLC offered no clue as to the higher layer protocol it might be carrying. The address feature was meaningless on a link with only two ends, and the control features were redundant with those of higher layer protocols such as TCP. Cisco’s implementation of HDLC addresses all of these issues and greatly simplifies HDLC.

In Cisco HDLC (cHDLC), the Address field is always one byte and takes only one of two values: 0x0F (unicast) or 0x8F (broadcast). This refers only to the encapsulated protocol data, as cHDLC does not support multi-drop. The Control field is one byte, set to zero. The Cisco implementation does not support HDLC windowing. A new two-byte Type Code field is added after the Control field to carry the Ethertype code naming the encapsulated protocol. The rest of the frame (flags and FCS) remains the same.

Figure H.3 Cisco HDLC frame

Troubleshooting

The following are some examples of how to interpret the LEDs on the T1/E1 Pod to troubleshoot potential problems.

LEDs on T1/E1 Pod

- The Signal light on the front panel is not illuminated. This could have several causes:

  - If no lights are illuminated, check that the power cord is plugged into the electrical outlet.

  - Check that the correct cables are used and that they are properly connected.

  - Check that the router (with the internal CSU/DSU) is configured for the correct channels.

- The Signal light on the front panel is illuminated, but the Framing light is not (when using framed T1/E1 data links):

  - Check that the router configuration agrees with the carrier service agreement (line provisioning) and that the proper channels are configured.

- The Alarm light is illuminated (red):

  - If this is the Alarm light on the CPE side, check the timing configuration of the router.

  - If this is the Alarm light on the Network side, check with the service provider for this network; something may be wrong with the T1/E1 line.

Tables

Supported protocols and physical interfaces

T1/E1 Pod RJ-48 pin connections

Table H.4 T1/E1 Pod RJ-48 pin connections

RJ-48 pin | CPE jack | Network jack
1 | Tx | Rx
2 | Tx | Rx
3 | Rx | Tx
4 | Rx | Tx

Glossary of terms

WAN Addresses and Names

WANPeek NX recognizes three types of addresses: physical addresses, logical addresses, and symbolic names assigned to either of these.

Physical addresses

The concept of an “address” on a Wide Area Network (WAN) is quite different from that on a LAN or the Internet. Designed to work within the circuit-switched, point-to-point model of the telephone network, many WAN protocols (such as HDLC and PPP) have an extremely limited address function. Depending on the implementation, this may amount to little more than distinguishing between the two ends of a circuit. Even when a larger address space is implemented, it is more in support of a multi-drop (primary to multiple secondaries) local environment, and has little to do with uniquely identifying a particular node in the vastness of global telecommunications.

The first major effort to create a uniform standard for packet switching (as opposed to circuit switching) over the existing telephone network was X.25. Even X.25 essentially sets up a telephone call to create a virtual circuit to its destination. ISDN also sets up its own calls, and offers some point-to-multipoint capabilities.

Frame Relay comes closer to the familiar model of the Internet, in that frames are routed through the switch fabric over a variety of possible paths to their destination. Frame Relay itself does not specify that destination as a fixed address, however. Higher level protocols must do that.

Frame Relay frames do contain a value called the Data Link Connection Identifier (DLCI). This identifies a connection to a neighboring device. In most Frame Relay networks, a DLCI value has only local significance and may be reused at other places in the network. DLCIs may be of different lengths, depending on the particular implementation of Frame Relay in use on a particular network. In the most common implementations, there are about 1,000 available DLCI values (apart from those reserved for signalling, future use, and so forth). A single customer may be assigned multiple DLCIs, allowing them to use a single line to establish multiple simultaneous connections to the Frame Relay switch fabric or “frame cloud.”

WANPeek NX treats DLCI values as physical addresses, but any analogy with an Ethernet MAC address is bound to be at least slightly misleading. A DLCI identifies a connection, and only one DLCI value appears in a Frame Relay frame. Every frame on that particular connection is identified by that DLCI. By knowing which end of the conversation was assigned the DLCI and adding direction information, it is possible to see whether a particular frame is being sent from that DLCI or to that DLCI, but there is no need for either end of the conversation to mention any other DLCI value.

The Packets view of the Capture window in Figure I.1 shows DLCI values in the Source and Destination address columns.

Figure I.1 DLCI values displayed in a Capture window

Logical addresses

A logical address is a network-layer address that is interpreted by a protocol handler. Logical addresses are used by networking software to allow packets to be independent of the physical connection of the network, that is, to work with different network topologies and types of media. Each type of protocol has a different kind of logical address, for example:

- an IP address (IPv4) consists of four decimal numbers separated by period (.) characters, for example:

130.57.64.11

- an AppleTalk address consists of two decimal numbers separated by a period (.), for example:

2010.42

68.12

Depending on the type of protocol in a packet (such as IP or AppleTalk), a packet may also specify source and destination logical address information. For example, in sending a packet to a different network, the higher-level, logical destination address might be for the computer on that network to which you are sending the packet, while the lower-level, physical address might be the physical address of an internetwork device, like a router, that connects the two networks and is responsible for forwarding the packet to the ultimate destination.

The following figure shows captured packets identified by logical addresses under two protocols: AppleTalk (two decimal numbers, separated by a period) and IP (four decimal numbers from 0 to 255, separated by periods). It also shows symbolic names substituted for IP addresses (www0.wildpackets.com and ftp4.wildpackets.com) and for an AppleTalk address (Caxton).

Figure I.2 Logical AppleTalk and IP addresses and symbolic names

Symbolic names

The strings of numbers typically used to designate physical and logical addresses are perfect for machines, but awkward for human beings to remember and use. Symbolic names stand in for either physical or logical addresses. The domain names of the Internet are an example of symbolic names. In IP (Internet Protocol) networks, the relationship between symbolic names and the logical addresses to which they refer is handled by DNS (the Domain Name System). WANPeek NX takes advantage of these services to allow you to resolve IP names and addresses either passively in the background or actively for any highlighted packets.

In addition, WANPeek NX allows you to identify devices by symbolic names of your own by creating a Name Table that associates the names you wish to use with their corresponding addresses. To use symbolic names that are unique to your site, you must first create Name Table entries in WANPeek NX and then instruct WANPeek NX to use names instead of addresses when names are available.

Other classes of addresses

When one says “address,” one typically thinks of a particular workstation or device on the network, but there are other types of addresses equally important in networking. To send information to everyone, you need a broadcast address. To send it to some but not all, a multicast address is useful. If machines are to converse with more than one partner at a time, the protocol needs to define some way of distinguishing among services or among specific conversations. Ports and sockets are used for these functions. Each of these is discussed in more detail below.

Broadcast and multicast addresses

It is often useful to send the same information to more than one device, or even to all devices on a network or group of networks. To facilitate this, the hardware and the protocol stacks designed to run on the IEEE 802 family of networks can tell devices to listen, not only for packets addressed to that particular device, but also for packets whose destination is a reserved broadcast or multicast address.Broadcast packets are processed by every device on the originating network segment and on any other network segment to which the packet can be forwarded. Because broadcast packets work in this way, most routers are set up to refuse to forward broadcast packets. Without that provision, networks could easily be flooded by careless broadcasting.An alternative to broadcasting is multicasting. Each protocol or network standard reserves certain addresses as multicast addresses. Devices may then choose to listen in for traffic addressed to one or more of these multicast addresses. They capture and process only the packets addressed to the particular multicast address(es) for which they are listening. This permits the creation of elective groups of devices, even across network boundaries, without adding anything to the packet processing load of machines not interested in the multicasts. Internet routers, for example, use multicast addresses to exchange routing information.Figure I.3 Broadcast packets are processed by all nodes on the networkSome protocol types have logical Broadcast addresses. When an address space is subnetted, the last (highest number) address is typically reserved for broadcasts. For example:IP Broadcast Addresses typically uses 255 as the host portion of the address; for example:

130.57.255.255

AppleTalk broadcast addresses use 255 as the node portion of the address:

200.255

While conceptually very powerful, broadcast packets can be very expensive in terms of network resources. Every node on the network must spend the time and memory to receive and process a broadcast packet, even if the packet has no meaning or value for that node.

Figure I.4 AppleTalk broadcast and multicast packets

Multicast addresses. In IPv4, all of the Class D addresses have been reserved for multicasting. That is, every address between 224.0.0.0 and 239.255.255.255 is associated with some form of multicasting. Multicasting under AppleTalk is handled by an AppleTalk router, which associates hardware multicast addresses with the addresses in an AppleTalk zone.

Ports and sockets

Network servers, and even workstations, need to be able to provide a variety of services to clients and peers on the network. To help manage these various functions, protocol designers created the idea of logical ports to which requests for particular services could be addressed.Ports and sockets have slightly different meanings in some protocols. What is called a port in TCP/UDP is essentially the same as what is called a socket in IPX, for example. WANPeek NX treats the two as equivalent. ProtoSpecs uses port assignments and socket information to deduce the type of traffic contained in packets.
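The three kinds of addressing covered in this chapter can be explored with Python's standard-library ipaddress module. The sketch below computes an IPv4 directed broadcast address, tests membership in the Class D multicast range, and makes a port-based guess at a packet's service, in the spirit of the port-assignment lookup described above. The helper names and the small port table are hypothetical illustrations, not ProtoSpecs' implementation; real traffic classification also has to cope with services running on non-standard ports.

```python
import ipaddress

# Hypothetical helper names; only the ipaddress calls are real standard-library API.


def directed_broadcast(cidr: str) -> str:
    """Return the subnet's broadcast address: every host bit set to 1."""
    return str(ipaddress.ip_network(cidr).broadcast_address)


def is_class_d(addr: str) -> bool:
    """True if addr falls in 224.0.0.0 through 239.255.255.255 (224.0.0.0/4)."""
    return ipaddress.IPv4Address(addr).is_multicast


# A tiny, illustrative table of well-known (protocol, port) assignments.
WELL_KNOWN = {
    ("tcp", 25): "SMTP",
    ("tcp", 53): "DNS",
    ("udp", 53): "DNS",
    ("tcp", 80): "HTTP",
    ("tcp", 443): "HTTPS",
}


def guess_service(proto: str, src_port: int, dst_port: int) -> str:
    """Guess the service from port numbers.

    The destination port is checked first (a client request to a server),
    then the source port (the server's reply to the client).
    """
    for port in (dst_port, src_port):
        name = WELL_KNOWN.get((proto, port))
        if name is not None:
            return name
    return "unknown"


if __name__ == "__main__":
    print(directed_broadcast("130.57.0.0/16"))   # 130.57.255.255
    print(directed_broadcast("130.57.16.0/20"))  # 130.57.31.255
    print(is_class_d("224.0.0.5"), is_class_d("240.0.0.1"))  # True False
    print(guess_service("tcp", 51514, 80))       # HTTP
```

In the /20 example the third octet is only partly host bits, so the broadcast address ends in 31.255 rather than 255.255; "255" is shorthand that holds only for octets lying entirely in the host portion.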

Wireless LAN Standards

This discussion of wireless LAN standards is divided into two sections: 802.11 radio frequency (RF) LANs and infrared (IR) connectivity. At the time this edition of the Technical Compendium was published, the IR sections were not yet prepared, but we anticipate publishing them in the future.

RF wireless LAN standards are defined in the IEEE 802.11 standard. As a member of the IEEE 802 family, 802.11 defines the physical and MAC layers, and 802.2 Logical Link Control runs on top of it. A wireless LAN transceiver, often referred to as an Access Point, acts essentially as a bridge: one side is wireless and the other side is, for example, Ethernet.

Below is a diagram that represents the fundamental RF wireless LAN implementation. An Access Point is attached to the hub or switch, and the notebook computers are equipped with PCMCIA wireless LAN adapters. It is as if the notebooks were wired to the Access Point and the Access Point were acting as a multi-port bridge.
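The multi-port bridge analogy can be made concrete with a minimal Python sketch of a generic transparent learning bridge in which, following the analogy, each associated notebook is treated as its own port. The class, port names, and MAC addresses are hypothetical; a real Access Point also handles 802.11 association and authentication and translates between 802.11 and Ethernet frame formats, none of which is modeled here.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningBridge:
    """Toy transparent bridge that learns which port each source MAC is on."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.table = {}  # MAC address -> port on which it was last seen

    def receive(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame should be forwarded out of."""
        self.table[src_mac] = in_port  # learn (or refresh) the sender's location
        out_port = self.table.get(dst_mac)
        if dst_mac == BROADCAST or out_port is None:
            # Flood broadcasts and unknown destinations to every other port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the arrival port: filter the frame
        return [out_port]


if __name__ == "__main__":
    ap = LearningBridge(["ethernet", "notebook1", "notebook2"])
    nb1, server = "02:00:00:00:00:01", "02:00:00:00:00:99"
    print(ap.receive("notebook1", nb1, server))  # ['ethernet', 'notebook2'] (flooded)
    print(ap.receive("ethernet", server, nb1))   # ['notebook1'] (learned)
```

Flooding unknown and broadcast destinations is what lets the bridge work before it has learned anything; once both stations have sent a frame, traffic between them goes only where it is needed.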

NETWORK TERMS TO KNOW:

Aggregation – A method of combining (aggregating) two or more network connections in parallel in order to increase throughput beyond what a single connection could sustain, and to provide redundancy in case one of the connections fails.

Application complexity – A measure of how easy or difficult a software application is to work with, judged by its quality in terms of (a) functionality, (b) reliability, (c) usability, (d) efficiency, (e) maintainability, and (f) portability.

Application latency – The time between a request packet and the first response packet carrying data, minus the network latency.

Application performance – The measurement of real-world performance and availability of software applications.

Application Performance Management (APM) – The monitoring and management of the performance and availability of software applications.

Application Virtualization – The separation of the installation of an application from the underlying operating system on which it is executed. Application virtualization is layered on top of other virtualization technologies, allowing computing resources to be distributed dynamically in real time. From the user’s perspective, the application works just as it would if it resided on the user’s device.

Capacity Planning – Network planning that factors in utilization, availability, bandwidth, and other network capacity constraints.

Data Center Consolidation – The reduction of the size of a single facility, or the merger of two or more facilities, in order to reduce the IT footprint and overall operating costs.

Dependency Mapping – The process of tracking and establishing the dependencies and relationships between IT components.

Dropped Packets – The condition when one or more packets of data traversing a computer network fail to reach their destination.

End User Experience – The overall interaction between an end user and the software application being accessed and used, and the user's level of satisfaction with it.

Filtering – The process of selecting network traffic that matches specified criteria, such as addresses, protocols, or ports, so that only the traffic of interest is captured, displayed, or forwarded.

Hybrid Cloud – A mix of on-premises private cloud and third-party public cloud services, with orchestration between the two platforms.

Hyperconverged Infrastructure (HCI) – A software-defined IT infrastructure that virtualizes all of the elements of conventional ‘hardware-defined’ systems. HCI includes, at a minimum, virtualized computing (a hypervisor), a virtualized SAN (software-defined storage), and virtualized networking (software-defined networking). HCI typically runs on commodity hardware that can scale out by adding more nodes into the deployment.

Infrastructure-as-a-Service (IaaS) – Online services that provide virtualized computing resources over the Internet.

Jitter – The variation in the delay of received packets.

Load Balancing – The distribution of workloads across multiple computing resources to optimize resource use, minimize response time, maximize throughput, and avoid overload of any single resource.

Metadata – Data that describes or provides information about other data.

Microsegmentation – A security technique of creating secure zones in a data center where resources are isolated from one another, so that if a breach occurs, the damage is minimized.

Migration to Azure – The migration of workloads and applications to Microsoft Azure, a comprehensive set of open, enterprise-grade, cloud computing services.

Mean Opinion Score (MOS) – A measure of voice quality that provides a numerical indication of the perceived quality of the media received ranging from 1 to 5, with 1 being the worst.

Multi-Cloud Strategy – The use of two or more cloud computing services to minimize the risk of downtime or widespread data loss due to a localized component failure.

Multi-Segment Analysis (MSA) – A process that allows you to locate, visualize, and analyze one or more network flows as they traverse several capture points on your network from end-to-end. MSA provides visibility and analysis of application flows across multiple network segments, including network delay, packet loss, and retransmissions.

NetFlow – A feature introduced by Cisco for collecting IP network traffic information and monitoring traffic as it enters or exits an interface.

Network Performance Management (NPM) – The techniques used to monitor and manage the performance and availability of a computer network.

Network transaction – A stream of data traveling between two endpoints across a network (for example, from one LAN station to another). Multiple flows can be transmitted on a single circuit.

Network Utilization – The amount of network traffic compared to the maximum traffic that a network can support, generally specified as a percentage.

Network Virtualization – The process of combining software and hardware network resources, and network functionality to create a single pool of resources that make up a virtual network.

Network Visibility Framework (NVF) – A framework that breaks down the network role into simple layers, namely a data layer, a message layer, and a visibility layer. The NVF could help explain how multiple product vendors work together in a cohesive and collaborative way to deliver value-added solutions.

Non-volatile memory express (NVMe) – A host controller interface and storage protocol designed to accelerate the transfer of information between enterprise and client systems and solid-state drives (SSDs) attached via a PCI Express (PCIe) bus.

Packet loss – A condition that occurs when one or more packets of data traversing a computer network fail to reach their destination. Typically caused by network congestion, packet loss is generally measured as a percentage of packets lost compared to packets sent.

Public cloud – A type of cloud computing where services such as servers, data storage, and applications are provided by a cloud service provider and are accessible over a public network such as the Internet.

Private Cloud – A type of cloud computing that uses a secure cloud-based environment operated solely for a single organization.

Resource Optimization – The methods and processes used to match the available resources with the needs and goals of an organization.

R-factor – A measure of VoIP quality in IP networks, ranging from 0 to 100, with 100 being ‘high quality.’ Any R-factor below 50 is considered unacceptable.

Software-defined networking (SDN) – An approach to network management that separates the network's control plane from its data-forwarding plane, enabling cloud and network engineers and administrators to respond quickly to the changing needs of a business via a centralized control console.

Server Virtualization – The partitioning of a physical server into smaller virtual servers, so that it appears as several ‘virtual servers,’ each of which can run its own copy of an operating system.

SSL Decryption – The ability to decrypt, inspect, and then re-encrypt SSL-encrypted traffic before it is sent to its destination. SSL is the industry standard for transmitting secure data over the Internet.

TCP Quality of Service – A family of Internet standards that provides the ability to give preferential treatment to selected network traffic over various technologies.

Virtualization – The creation of software-based virtual machines that can run multiple operating systems from a single physical machine.

Virtual Machine (Guest VM) – The ‘guest’ component of a virtual machine (VM), an independent instance of an operating system (guest operating system) installed and running on the VM.

Visibility-as-a-Service (VaaS) – A broad concept that enables IT organizations to access network traffic across their entire infrastructure on demand, whether it resides in the cloud, remote office, campus, or data center.
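Several of the entries above (Network Utilization, Packet loss, Application latency, and Jitter) reduce to short calculations. The following Python sketch shows one way to compute them. The function names are hypothetical; link speed is assumed to be in bits per second and timestamps in milliseconds, and the network latency passed to the application-latency function is assumed to be measured separately (for example, from the round-trip time of the TCP handshake). The jitter function adapts the running estimator that RFC 3550 defines for RTP, which is one common choice among several.

```python
def utilization_pct(bits_observed: float, interval_s: float, link_bps: float) -> float:
    """Observed traffic as a percentage of what the link could carry."""
    return 100.0 * bits_observed / (interval_s * link_bps)


def packet_loss_pct(sent: int, received: int) -> float:
    """Packets lost as a percentage of packets sent."""
    if sent == 0:
        return 0.0
    return 100.0 * (sent - received) / sent


def application_latency_ms(request_ms: float, first_data_ms: float,
                           network_latency_ms: float) -> float:
    """Request-to-first-data time, minus the part attributed to the network."""
    return (first_data_ms - request_ms) - network_latency_ms


def interarrival_jitter(send_ms, recv_ms) -> float:
    """Running jitter estimate in the style of RFC 3550: J += (|D| - J) / 16."""
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # D: change in one-way transit time between consecutive packets.
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16.0
    return jitter


if __name__ == "__main__":
    print(utilization_pct(300e6, 10, 100e6))         # 30.0
    print(packet_loss_pct(1000, 985))                # 1.5
    print(application_latency_ms(0.0, 120.0, 40.0))  # 80.0
    print(interarrival_jitter([0, 20, 40], [100, 120, 140]))  # 0.0 (steady spacing)
```

The 1/16 smoothing factor makes the jitter estimate respond gradually, so a single late packet nudges it rather than dominating it.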
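The MOS and R-factor entries describe two scales for voice quality. ITU-T Recommendation G.107 (the E-model) gives a standard mapping from R to an estimated MOS, sketched below in Python; the function name is hypothetical. Under this mapping, an R-factor of 50 corresponds to an estimated MOS of roughly 2.6.

```python
def mos_from_r(r: float) -> float:
    """Estimated MOS from an E-model R-factor (ITU-T G.107 mapping)."""
    if r <= 0:
        return 1.0
    if r >= 100:
        return 4.5
    # Cubic mapping used for 0 < R < 100.
    return 1 + 0.035 * r + r * (r - 60) * (100 - r) * 7e-6


if __name__ == "__main__":
    for r in (50, 80, 93.2):
        print(r, round(mos_from_r(r), 2))
```

Note that this mapping tops out at 4.5 rather than 5, so an R-factor-derived MOS never reaches the top of the 1 to 5 scale.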
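The Load Balancing entry can be illustrated with one common strategy, least connections, in which each new request goes to the resource currently handling the fewest active connections. This Python sketch is a hypothetical, single-threaded illustration; production load balancers add health checks, weighting, and thread-safe bookkeeping.

```python
class LeastConnectionsBalancer:
    """Send each new request to the backend with the fewest active connections."""

    def __init__(self, backends):
        # Dicts preserve insertion order, so ties go to the earliest-listed backend.
        self.active = {b: 0 for b in backends}

    def acquire(self) -> str:
        """Pick a backend for a new connection and count it as active."""
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        """Mark one of the backend's connections as finished."""
        self.active[backend] -= 1


if __name__ == "__main__":
    lb = LeastConnectionsBalancer(["server-a", "server-b", "server-c"])
    print([lb.acquire() for _ in range(4)])
    lb.release("server-b")
    print(lb.acquire())
```

Unlike simple round-robin, this strategy accounts for connections that stay open for different lengths of time, which helps avoid overloading any single resource.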
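The NetFlow and Network transaction entries both rest on the idea of a flow. The following minimal Python sketch of flow accounting assumes packets have already been decoded into dictionaries with the hypothetical field names shown, and groups them into unidirectional records keyed by the classic 5-tuple. This is a simplification: traditional NetFlow (version 5) also keys flows on fields such as type of service and input interface, expires flows on timeouts, and exports the records to a collector.

```python
from collections import defaultdict
from typing import NamedTuple


class FlowKey(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


def aggregate_flows(packets):
    """Group packets into unidirectional flow records keyed by the 5-tuple."""
    flows = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for pkt in packets:
        key = FlowKey(pkt["src_ip"], pkt["dst_ip"],
                      pkt["src_port"], pkt["dst_port"], pkt["protocol"])
        flows[key]["packets"] += 1
        flows[key]["bytes"] += pkt["length"]
    return dict(flows)


if __name__ == "__main__":
    capture = [
        {"src_ip": "10.0.0.5", "dst_ip": "10.0.0.9", "src_port": 51514,
         "dst_port": 80, "protocol": "tcp", "length": 60},
        {"src_ip": "10.0.0.5", "dst_ip": "10.0.0.9", "src_port": 51514,
         "dst_port": 80, "protocol": "tcp", "length": 1500},
        {"src_ip": "10.0.0.9", "dst_ip": "10.0.0.5", "src_port": 80,
         "dst_port": 51514, "protocol": "tcp", "length": 1500},
    ]
    for key, stats in aggregate_flows(capture).items():
        print(key, stats)
```

Because the records are unidirectional, a single request/response conversation produces two flows, one in each direction.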