The Communications Infrastructure

Because ECIs must work in a networked environment, interface design involves choices that depend on the performance of network access and network-based services and features. What ramifications does connection to networks have for ECIs? This question is relevant because a user interface for any networked application is much more than the immediate set of controls, transducers, and displays that face the user.

It is the entire experience that the user has, including the following:

• Response time: how close to immediate the response is from an information site, or in setting up a communications connection;

• Media quality (of audio, video, images, computer-generated environments), including delay for real-time communications and being able to send as well as receive with acceptable quality;

• Ability to control media quality and to trade it off between applications and against cost;

• "Always on": the availability of services and information, such as stock quotes on a personal computer screen saver, without "dialing up" each time the user wants to look;

• Transparent mobility (anytime, anywhere) of terminals, services, and applications over time;

• Portable "plug and play" of devices such as cable television set-top boxes and wireless devices;

• Integrity and reliability of nomadic computing and communications despite temporary outages and changes in available access bandwidth;

• Consistency of service interfaces in different locations (not restricted to the United States); and

• The feeling the user has of navigating in a logically configured, consistent, extensible space of information objects and services.

To understand how networking affects user interfaces, consider the two most common interface paradigms for networked applications: speech (telephony) and the "point and click" Web browser. These are so widely accepted and accessible to all kinds of people that they can already be regarded as "almost" every-citizen user interfaces. Research to extend the functionality and performance of these interfaces, without complicating their most common applications, would further NII accessibility for ordinary people.

Speech, understood here to describe information exchange with other people and machines more than an immediate interface with a device, is a natural and popular medium for most people. It is remarkably robust under varying conditions, including a wide range of communications facilities. The rise of Internet telephony and other voice and video-oriented Internet services reinforces the impression that voice will always be a leading paradigm. Voice also illustrates that the difference between a curiosity such as today's Internet telephony and a widely used and expected service depends significantly on performance.22 Technological advances in the Internet, such as IPv6 (Internet Protocol version 6) and routers with quality-of-service features, together with increased capacity and better management of the performance of Internet facilities, are likely to result in much better performance for voice-based applications in the early twenty-first century.

Research that would help make the NII as a whole more usable includes making Internet-based information resources as accessible as possible from a telephone; improving the delay performance and other aspects of voice quality in the Internet and data networks generally; implementing voice interfaces in embedded systems as well as computers; and furthering a comfortable integration of voice and data services, as in computer-controlled telephony, integrated voice mail/e-mail, and data-augmented telephony.

The "point and click" Web browser reflects basic human behavior, apparent in any child in a toy store who points to something and says (click!) "I want that." Because of the familiarity of this paradigm, people all over the world use Web browsers. For reaching information and people, a Web browser is actually far more standard than telephony, which has different dial tones and service measurement systems in different countries. Research issues include multimedia extensions (including clicking with a spoken "I want that"), adaptation to the increasing skill of a user in features such as multiple windows and navigation speed, and adapting to a variety of devices and communication resources that will offer more or less processing power and communications performance.

__________________________________________________________________________________

ELECTRONICS TECHNOLOGY

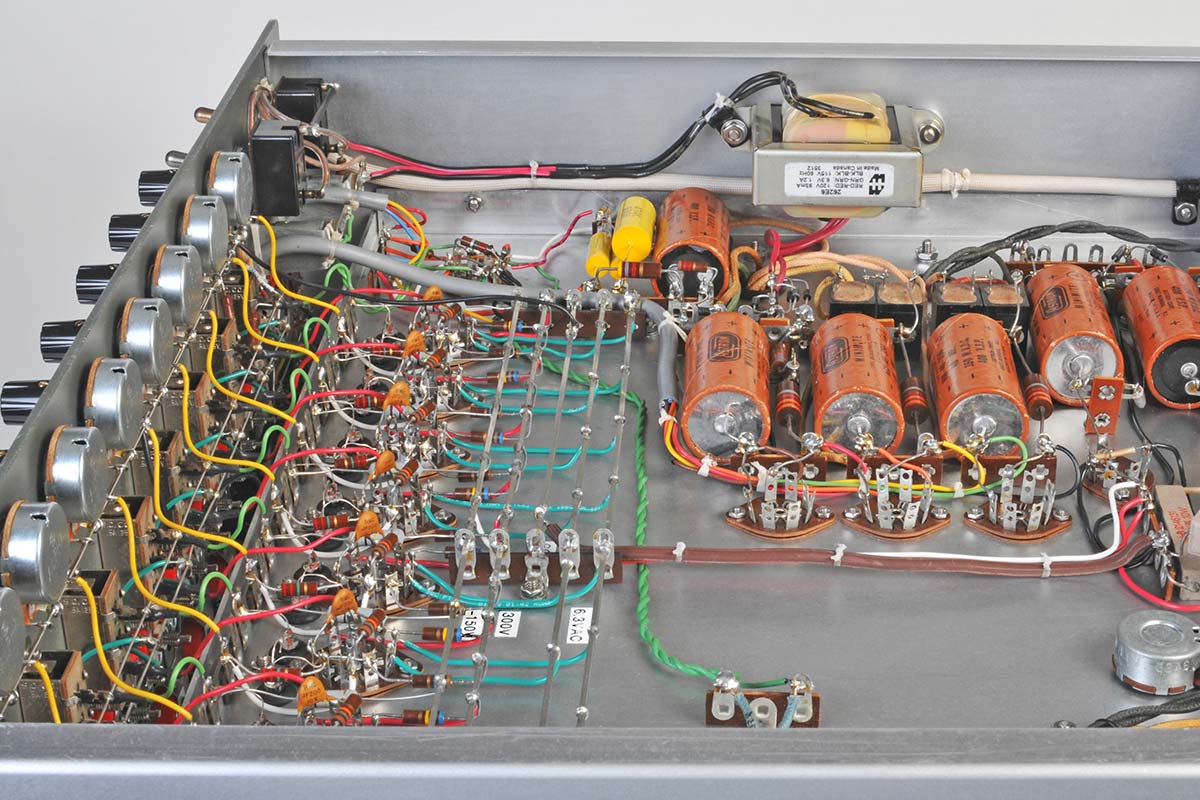

Features of analog technology equipment and systems:

1. It operates as an open loop.

2. Its settings depend on the state desired by the user.

3. It uses electromagnetic technology.

4. It works in real time (linearly).

5. Settings are made by turning a knob against a scale.

6. Response time is adjusted by the component quality reduction technique.

7. Instruments and controls usually use a pointer moved by a magnetic field.

Photo: This dial thermometer shows temperature with a pointer and dial. If you prefer a more subtle definition, it uses its pointer to show a representation (or analogy) of the temperature on the dial.

Analog measurements

Until computers started to dominate science and technology in the later decades of the 20th century, virtually every measuring instrument was analog. If you wanted to measure an electric current, you did it with a moving-coil meter that had a little pointer moving over a dial. The more the pointer moved up the dial, the higher the current in your circuit. The pointer was an analogy of the current. All kinds of other measuring devices worked in a similar way, from weighing machines and speedometers to sound-level meters and seismographs (earthquake-plotting machines).

Analog information

However, analog technology isn't just about measuring things or using dials and pointers. When we say something is analog, we often simply mean that it's not digital: the job it does, or the information it handles, doesn't involve processing numbers electronically. An old-style film camera is sometimes referred to as an example of analog technology. You capture an image on a piece of transparent plastic "film" coated with silver-based chemicals, which react to light. When the film is developed (chemically processed in a lab), it's used to print a representation of the scene you photographed. In other words, the picture you get is an analogy of the scene you wanted to record. The same is true of recording sounds with an old-fashioned cassette recorder. The recording you make is a collection of magnetized areas on a long reel of plastic tape. Together, they represent an analogy of the sounds you originally heard.

Digital electronic technology:

Digital is entirely different. Instead of storing words, pictures, and sounds as representations on things like plastic film or magnetic tape, we first convert the information into numbers (digits) and display or store the numbers instead.
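As a rough illustration of "converting the information into numbers", the following minimal Python sketch samples a smooth, analog-style waveform at fixed instants and rounds each sample to one of a limited number of levels (quantization). The `digitize` and `reconstruct` helpers, the 8-samples-per-second rate, and the 3-bit resolution are illustrative assumptions, not the design of any particular device.

```python
import math

def digitize(signal, duration_s, sample_rate_hz, bits):
    """Sample a continuous signal and quantize each sample to an integer code.

    signal: a function of time (seconds) returning a value in [-1.0, 1.0].
    Returns integer codes in the range 0 .. 2**bits - 1.
    """
    levels = 2 ** bits
    codes = []
    for n in range(int(duration_s * sample_rate_hz)):
        t = n / sample_rate_hz                  # time of the n-th sample
        value = signal(t)                       # the "analog" value at that instant
        # Map [-1, 1] onto [0, levels - 1] and round to the nearest whole level.
        code = round((value + 1.0) / 2.0 * (levels - 1))
        codes.append(max(0, min(levels - 1, code)))
    return codes

def reconstruct(codes, bits):
    """Turn integer codes back into approximate values in [-1, 1]."""
    levels = 2 ** bits
    return [c / (levels - 1) * 2.0 - 1.0 for c in codes]

# A 1 Hz sine wave sampled 8 times per second with 3-bit resolution (8 levels).
tone = lambda t: math.sin(2 * math.pi * 1.0 * t)
codes = digitize(tone, duration_s=1.0, sample_rate_hz=8, bits=3)
print(codes)
print(reconstruct(codes, bits=3))
```

Only the list of small integers needs to be stored or sent; with 3 bits each reconstructed value is within half a quantization step of the original, and adding bits makes the steps finer.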

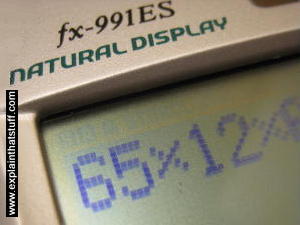

Digital measurements

Photo: A small LCD display on a pocket calculator. Most digital devices now use LCD displays like this, which are cheap to manufacture and easy to read.

Many scientific instruments now measure things digitally (automatically showing readings on LCD displays) instead of using analog pointers and dials. Thermometers, blood-pressure meters, multimeters (for measuring electric current and voltage), and bathroom scales are just a few of the common measuring devices that are now likely to give you an instant digital reading. Digital displays are generally quicker and easier to read than analog ones; whether they're more accurate depends on how the measurement is actually made and displayed.

Digital information

Photo: Ebooks owe their advantages to digital technology: they can store the equivalent of thousands of paper books in a thin electronic device that fits in your pocket. Not only that, they can download digital books from the Internet, which saves an analog trek to your local bookstore or library!

All kinds of everyday technology also work using digital rather than analog technology. Cellphones, for example, transmit and receive calls by converting the sounds of a person's voice into numbers and then sending the numbers from one place to another in the form of radio waves. Used this way, digital technology has many advantages. It's easier to store information in digital form, and it generally takes up less room. You'll need several shelves to store 400 vinyl, analog LP records, but with an MP3 player you can put the same amount of music in your pocket! Electronic book (ebook) readers are similar: typically, they can store a couple of thousand books (around 50 shelves' worth) in a space smaller than a single paperback! Digital information is generally more secure: cellphone conversations are encrypted before transmission, which is easy to do when information is in numeric form to begin with. You can also edit and play about with digital information very easily. Few of us are talented enough to redraw a picture by Rembrandt or Leonardo in a slightly different style. But anyone can edit a photo (in digital form) in a computer graphics program, which works by manipulating the numbers that represent the image rather than the image itself.
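Because a digital photo or message is just a list of numbers, editing it is arithmetic, and scrambling it is not much harder. This minimal Python sketch brightens a tiny grayscale "image" stored as 8-bit values (0 to 255), then scrambles a short message with a toy XOR cipher and a repeating key. The image, the key, and the XOR scheme are assumptions for illustration only; real cellphone networks use standardized ciphers that are far stronger than this.

```python
def brighten(pixels, amount):
    """Add `amount` to every 8-bit grayscale pixel, clamping to 0..255."""
    return [[max(0, min(255, p + amount)) for p in row] for row in pixels]

def xor_bytes(data, key):
    """Toy cipher: XOR each byte with a repeating key (applying it twice undoes it)."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))

image = [[10, 120, 250],
         [0, 64, 128]]
brighter = brighten(image, 20)
print(brighter)          # [[30, 140, 255], [20, 84, 148]]

message = b"I want that"
key = b"\x5a\x13\x7f"    # hypothetical shared key, for illustration only
scrambled = xor_bytes(message, key)
restored = xor_bytes(scrambled, key)
print(restored)          # b'I want that'
```

Note the clamping: the pixel at 250 cannot exceed the 8-bit maximum, so it stops at 255.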

The concept of learning in digital engineering can be summed up in two phrases, "My status" and "My control". Social media networks such as Facebook, WhatsApp, Instagram, and Twitter all use the concept of digital electronics:

Programming support (the algorithm):

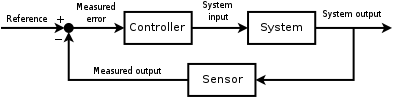

Optimal and Adaptive Control Systems in Electronics:

Control theory deals with the control of continuously operating dynamical systems in engineered processes and machines. The objective is to develop a control model that applies a control action to drive such systems to the desired state in an optimum manner, without delay or overshoot, while ensuring control stability. Control theory is a subfield of mathematics, computer science and control engineering.

To do this, a controller with the requisite corrective behavior is required. This controller monitors the controlled process variable (PV) and compares it with the reference or set point (SP). The difference between the actual and desired values of the process variable, called the error signal or SP-PV error, is applied as feedback to generate a control action that brings the controlled process variable to the same value as the set point. Other aspects that are also studied are controllability and observability. This is the basis for the advanced type of automation that revolutionized manufacturing, aircraft, communications, and other industries. This is feedback control, which is usually continuous and involves taking measurements using a sensor and making calculated adjustments to keep the measured variable within a set range by means of a "final control element", such as a control valve.

Extensive use is usually made of a diagrammatic style known as the block diagram. In it the transfer function, also known as the system function or network function, is a mathematical model of the relation between the input and output based on the differential equations describing the system.
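The link between a transfer function and its differential equation can be seen with the simplest block, a first-order lag $G(s) = K/(\tau s + 1)$, which corresponds (with zero initial conditions) to $\tau\,\dot y(t) + y(t) = K\,u(t)$. This sketch, with K and τ chosen arbitrarily, integrates that equation in small Euler steps for a unit step input and compares the result with the exact step response $K(1 - e^{-t/\tau})$.

```python
import math

def first_order_step(K, tau, t_end, dt=1e-4):
    """Euler-integrate tau*dy/dt + y = K*u for a unit step u = 1, starting from y = 0."""
    y = 0.0
    for _ in range(int(round(t_end / dt))):
        dydt = (K * 1.0 - y) / tau   # the differential equation solved for dy/dt
        y += dydt * dt
    return y

K, tau = 2.0, 0.5        # illustrative gain and time constant (assumed values)
t = 1.0
simulated = first_order_step(K, tau, t)
exact = K * (1 - math.exp(-t / tau))   # inverse Laplace transform of G(s) * (1/s)
print(simulated, exact)                # the two values agree closely
```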

In closed loop control, the control action from the controller is dependent on feedback from the process in the form of the value of the process variable (PV). For a heating boiler, a closed loop would include a thermostat to compare the building temperature (PV) with the temperature set on the thermostat (the set point, SP). This generates a controller output that maintains the building at the desired temperature by switching the boiler on and off. A closed loop controller, therefore, has a feedback loop which ensures the controller exerts a control action to manipulate the process variable to be the same as the "reference input" or "set point". For this reason, closed loop controllers are also called feedback controllers.
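The thermostat loop described above can be sketched as an on/off (bang-bang) controller. In this minimal Python simulation, the room model (heat gained while the boiler is on, heat lost to colder outside air), the 0.5 °C dead band, and every number are illustrative assumptions; the point is only that the boiler is switched according to the SP-PV error measured at each step.

```python
def simulate_thermostat(setpoint=20.0, outside=5.0, start=12.0,
                        minutes=240, band=0.5):
    """Closed-loop on/off control of a room heated by a boiler.

    Hypothetical room model, one step per minute:
      temp += heating (if boiler on) - loss_rate * (temp - outside)
    """
    heating = 0.6        # degrees C gained per minute with the boiler on (assumed)
    loss_rate = 0.02     # fraction of the indoor/outdoor difference lost per minute (assumed)
    temp, boiler_on = start, False
    history = []
    for _ in range(minutes):
        error = setpoint - temp          # SP - PV
        if error > band:                 # too cold: switch the boiler on
            boiler_on = True
        elif error < -band:              # too warm: switch it off
            boiler_on = False
        # inside the dead band the boiler keeps its previous state
        temp += (heating if boiler_on else 0.0) - loss_rate * (temp - outside)
        history.append(temp)
    return history

temps = simulate_thermostat()
print(min(temps[120:]), max(temps[120:]))   # once settled, the room cycles near 20 C
```

The dead band keeps the boiler from switching on and off every minute; the price is a small, steady cycle of the temperature around the set point.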

The definition of a closed loop control system according to the British Standards Institution is "a control system possessing monitoring feedback, the deviation signal formed as a result of this feedback being used to control the action of a final control element in such a way as to tend to reduce the deviation to zero."

Likewise, "A Feedback Control System is a system which tends to maintain a prescribed relationship of one system variable to another by comparing functions of these variables and using the difference as a means of control."

A block diagram of a negative feedback control system using a feedback loop to control the process variable by comparing it with a desired value, and applying the difference as an error signal to generate a control output to reduce or eliminate the error.

Closed-loop controllers have the following advantages over open-loop controllers:

- disturbance rejection (such as hills encountered by a car's cruise control)

- guaranteed performance even with model uncertainties, when the model structure does not perfectly match the real process and the model parameters are not exact

- unstable processes can be stabilized

- reduced sensitivity to parameter variations

- improved reference tracking performance
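The first advantage in the list, disturbance rejection, can be seen in a crude cruise-control model: speed changes with engine force minus drag, and a hill adds a constant backward force. This sketch compares an open-loop controller, which applies the force that would hold the target speed on flat ground, with the same command plus a proportional feedback correction. The car model (mass normalized to 1), the drag coefficient, the gain, and the hill force are all assumed values chosen for illustration.

```python
def cruise(closed_loop, v_ref=25.0, hill_force=1.0, steps=3000, dt=0.1):
    """Simulate dv/dt = u - drag*v - hill_force (mass normalized to 1, assumed)."""
    drag, kp = 0.1, 5.0            # illustrative drag coefficient and feedback gain
    v = v_ref                      # car is already at the target speed when the hill starts
    for _ in range(steps):
        u = drag * v_ref           # force that holds v_ref on flat ground
        if closed_loop:
            u += kp * (v_ref - v)  # feedback correction from the measured speed
        v += dt * (u - drag * v - hill_force)
    return v

print(round(cruise(False), 2))   # open loop: speed sags towards 15
print(round(cruise(True), 2))    # closed loop: speed stays close to 25
```

The small remaining speed error in the closed-loop case (about 0.2 here) is typical of purely proportional feedback; adding an integral term removes it.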

In some systems, closed-loop and open-loop control are used simultaneously. In such systems, the open-loop control is termed feed forward and serves to further improve reference tracking performance.

A common closed-loop controller architecture is the PID controller.

A block diagram of a PID controller in a feedback loop, r(t) is the desired process value or "set point", and y(t) is the measured process value.

A proportional–integral–derivative controller (PID controller) is a control-loop feedback mechanism widely used in control systems.

A PID controller continuously calculates an error value as the difference between a desired setpoint and a measured process variable and applies a correction based on proportional, integral, and derivative terms. PID is an initialism for Proportional-Integral-Derivative, referring to the three terms operating on the error signal to produce a control signal.

The theoretical understanding and application dates from the 1920s, and they are implemented in nearly all analogue control systems; originally in mechanical controllers, and then using discrete electronics and latterly in industrial process computers. The PID controller is probably the most-used feedback control design.

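If $u(t)$ is the control signal sent to the system, $y(t)$ is the measured output, $r(t)$ is the desired output, and $e(t) = r(t) - y(t)$ is the tracking error, a PID controller has the general form

$$u(t) = K_P\, e(t) + K_I \int_0^t e(\tau)\, d\tau + K_D \frac{d e(t)}{dt}$$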

The desired closed loop dynamics are obtained by adjusting the three parameters $K_P$, $K_I$ and $K_D$, often iteratively by "tuning" and without specific knowledge of a plant model. Stability can often be ensured using only the proportional term. The integral term permits the rejection of a step disturbance (often a key specification in process control). The derivative term is used to provide damping or shaping of the response. PID controllers are the most well-established class of control systems; however, they cannot be used in several more complicated cases, especially if MIMO systems are considered.
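The proportional, integral, and derivative terms described above are usually implemented in discrete time: at each sample interval `dt` the error is computed, the integral is approximated by a running sum, and the derivative by a difference quotient. The following is a minimal textbook sketch, assuming a fixed sample time, no output limits, and no anti-windup (which practical controllers normally add). It drives an assumed first-order plant and applies a step disturbance halfway through, to show the integral term removing the steady-state error.

```python
class PID:
    """Textbook discrete PID: u = Kp*e + Ki*sum(e*dt) + Kd*(e - e_prev)/dt."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement):
        error = setpoint - measurement                 # e = r - y
        self.integral += error * self.dt               # rectangle-rule integral
        if self.prev_error is None:
            derivative = 0.0                           # no history on the first call
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def run(ki, steps=4000, dt=0.01):
    """Assumed plant dy/dt = (-y + u + d)/tau with a step disturbance d at the halfway point."""
    tau = 1.0                                          # assumed plant time constant
    pid = PID(kp=2.0, ki=ki, kd=0.1, dt=dt)            # illustrative, hand-picked gains
    y = 0.0
    for k in range(steps):
        d = -0.5 if k >= steps // 2 else 0.0           # disturbance appears at t = 20 s
        u = pid.update(setpoint=1.0, measurement=y)
        y += dt * (-y + u + d) / tau
    return y

print(round(run(ki=0.0), 3))   # P + D only: settles with a steady-state error
print(round(run(ki=1.0), 3))   # with integral action: returns to the set point
```

With these numbers the loop without integral action ends near 0.5 after the disturbance, while the loop with integral gain 1.0 returns to the set point of 1.0.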

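Applying the Laplace transformation results in the transformed PID controller equation

$$u(s) = K_P\, e(s) + K_I \frac{1}{s}\, e(s) + K_D\, s\, e(s)$$

with the PID controller transfer function

$$C(s) = K_P + \frac{K_I}{s} + K_D\, s.$$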

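As an example of tuning a PID controller in the closed-loop system

$$H(s) = \frac{P(s)\,C(s)}{1 + F(s)\,P(s)\,C(s)},$$

consider a first-order plant given by

$$P(s) = \frac{A}{1 + s T_P}$$

where $A$ and $T_P$ are some constants. The plant output is fed back through

$$F(s) = \frac{1}{1 + s T_F}$$

where $T_F$ is also a constant. Now if we set $K_P = K\left(1 + \frac{T_D}{T_I}\right)$, $K_D = K T_D$, and $K_I = \frac{K}{T_I}$, we can express the PID controller transfer function in series form as

$$C(s) = K\left(1 + \frac{1}{s T_I}\right)(1 + s T_D)$$

Plugging $P(s)$, $F(s)$, and $C(s)$ into the closed-loop transfer function $H(s)$, we find that by setting

$$K = \frac{1}{A}, \qquad T_I = T_F, \qquad T_D = T_P$$

we obtain $H(s) = 1$. With this tuning in this example, the system output follows the reference input exactly.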

However, in practice, a pure differentiator is neither physically realizable nor desirable, because it amplifies noise and resonant modes in the system. Therefore, a phase-lead compensator type approach or a differentiator with low-pass roll-off is used instead.
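The noise problem, and the low-pass cure, are easy to see numerically. This sketch differentiates a slowly rising ramp (true slope 0.5) corrupted by small alternating measurement noise, once with a plain difference quotient and once through a first-order low-pass filter with an assumed time constant `tf`. The noise pattern and `tf` are illustrative assumptions; a phase-lead compensator, the other option mentioned, is not shown.

```python
def derivatives(samples, dt, tf):
    """Return (raw, filtered) derivative estimates of a sampled signal.

    raw:      plain difference quotient (y[k] - y[k-1]) / dt
    filtered: raw value passed through a first-order low-pass filter
              with time constant tf (backward-Euler discretization)
    """
    alpha = dt / (tf + dt)
    raw, filtered = [], []
    d_f = 0.0
    for k in range(1, len(samples)):
        d = (samples[k] - samples[k - 1]) / dt
        d_f += alpha * (d - d_f)
        raw.append(d)
        filtered.append(d_f)
    return raw, filtered

dt = 0.01
# True slope is 0.5; measurement noise alternates +/-0.001 (assumed, for illustration).
samples = [0.5 * k * dt + (0.001 if k % 2 else -0.001) for k in range(500)]
raw, filtered = derivatives(samples, dt, tf=0.1)

tail = slice(200, None)              # ignore the filter's start-up transient
print(max(abs(x - 0.5) for x in raw[tail]))        # about 0.2: noise amplified 200-fold
print(max(abs(x - 0.5) for x in filtered[tail]))   # about 0.01
```

The filter trades a little lag for a large reduction in high-frequency noise, which is why practical PID implementations filter the derivative term.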

The field of control theory can be divided into two branches:

- Linear control theory – This applies to systems made of devices which obey the superposition principle, which means roughly that the output is proportional to the input. They are governed by linear differential equations. A major subclass is systems which in addition have parameters which do not change with time, called linear time invariant (LTI) systems. These systems are amenable to powerful frequency domain mathematical techniques of great generality, such as the Laplace transform, Fourier transform, Z transform, Bode plot, root locus, and Nyquist stability criterion. These lead to a description of the system using terms like bandwidth, frequency response, eigenvalues, gain, resonant frequencies, zeros and poles, which give solutions for system response and design techniques for most systems of interest.

- Nonlinear control theory – This covers a wider class of systems that do not obey the superposition principle, and applies to more real-world systems because all real control systems are nonlinear. These systems are often governed by nonlinear differential equations. The few mathematical techniques which have been developed to handle them are more difficult and much less general, often applying only to narrow categories of systems. These include limit cycle theory, Poincaré maps, Lyapunov stability theorem, and describing functions. Nonlinear systems are often analyzed using numerical methods on computers, for example by simulating their operation using a simulation language. If only solutions near a stable point are of interest, nonlinear systems can often be linearized by approximating them by a linear system using perturbation theory, and linear techniques can be used.
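Both ideas in this list, superposition and local linearization, can be tried numerically. This sketch checks superposition for two sample inputs (an illustration, not a proof) on a pure gain and on a sine nonlinearity, then linearizes the sine around an assumed operating point with a central-difference slope, giving a first-order local model.

```python
import math

def obeys_superposition(f, u1, u2, tol=1e-9):
    """Check f(u1 + u2) == f(u1) + f(u2) and f(2*u1) == 2*f(u1) for two sample inputs."""
    additive = abs(f(u1 + u2) - (f(u1) + f(u2))) < tol
    homogeneous = abs(f(2 * u1) - 2 * f(u1)) < tol
    return additive and homogeneous

linear = lambda u: 3.0 * u          # a linear element (pure gain)
nonlinear = lambda u: math.sin(u)   # e.g. the gravity term in a pendulum's equation

print(obeys_superposition(linear, 0.2, 0.7))      # True
print(obeys_superposition(nonlinear, 0.2, 0.7))   # False

def linearize(f, u0, h=1e-6):
    """Slope of f at the operating point u0 (central difference), giving
    the local model f(u0 + du) ~= f(u0) + slope * du for small du."""
    return (f(u0 + h) - f(u0 - h)) / (2 * h)

slope = linearize(nonlinear, 0.5)
du = 0.01
approx = nonlinear(0.5) + slope * du
print(abs(approx - nonlinear(0.5 + du)))         # small: the linear model is good near u0
```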

System interfacing

Control systems can be divided into different categories depending on the number of inputs and outputs.

- Single-input single-output (SISO) – This is the simplest and most common type, in which one output is controlled by one control signal. Examples are a car's cruise control, or an audio system, in which the control input is the input audio signal and the output is the sound waves from the speaker.

- Multiple-input multiple-output (MIMO) – These are found in more complicated systems. For example, modern large telescopes such as the Keck and MMT have mirrors composed of many separate segments each controlled by an actuator. The shape of the entire mirror is constantly adjusted by a MIMO active optics control system using input from multiple sensors at the focal plane, to compensate for changes in the mirror shape due to thermal expansion, contraction, stresses as it is rotated and distortion of the wavefront due to turbulence in the atmosphere. Complicated systems such as nuclear reactors and human cells are simulated by a computer as large MIMO control systems.

Controllability and observability are main issues in the analysis of a system before deciding the best control strategy to be applied, or whether it is even possible to control or stabilize the system. Controllability is related to the possibility of forcing the system into a particular state by using an appropriate control signal. If a state is not controllable, then no signal will ever be able to control the state. If a state is not controllable, but its dynamics are stable, then the state is termed stabilizable. Observability instead is related to the possibility of observing, through output measurements, the state of a system. If a state is not observable, the controller will never be able to determine its behavior and hence cannot use it to stabilize the system. However, similar to the stabilizability condition above, if a state cannot be observed it might still be detectable.
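For a linear time-invariant model in state-space form, $\dot x = Ax + Bu$, $y = Cx$, these properties are commonly checked with the Kalman rank conditions: the system is controllable if $[B,\ AB,\ \dots,\ A^{n-1}B]$ has full rank $n$, and observable if $[C;\ CA;\ \dots;\ CA^{n-1}]$ has rank $n$. The pure-Python sketch below uses a small Gaussian-elimination `rank` helper in place of a numerical library, and applies both tests to an assumed two-state example in which the actuator reaches only the first state.

```python
def matmul(X, Y):
    """Plain matrix product of two lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def rank(M, tol=1e-9):
    """Rank by Gaussian elimination with partial pivoting."""
    M = [row[:] for row in M]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        pivot = max(range(r, rows), key=lambda i: abs(M[i][c]), default=None)
        if pivot is None or abs(M[pivot][c]) < tol:
            continue                       # no usable pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):       # eliminate entries below the pivot
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r

def controllability_matrix(A, B):
    """[B, AB, A^2 B, ..., A^(n-1) B] as a list of rows."""
    n = len(A)
    blocks, block = [], B
    for _ in range(n):
        blocks.append(block)
        block = matmul(A, block)
    return [sum((blk[i] for blk in blocks), []) for i in range(n)]

def observability_matrix(A, C):
    """[C; CA; CA^2; ...; CA^(n-1)] stacked vertically."""
    n = len(A)
    rows, block = [], C
    for _ in range(n):
        rows.extend(block)
        block = matmul(block, A)
    return rows

A = [[-1.0, 0.0],
     [0.0, -2.0]]      # two decoupled, stable modes
B = [[1.0],
     [0.0]]            # the input only reaches the first state
C = [[1.0, 1.0]]       # the sensor sees the sum of both states

n = len(A)
print(rank(controllability_matrix(A, B)) == n)   # False: second state is uncontrollable
print(rank(observability_matrix(A, C)) == n)     # True: both states are observable
```

Because the uncontrollable mode in this example decays on its own (eigenvalue -2), the system is still stabilizable in the sense described above.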

From a geometrical point of view, looking at the states of each variable of the system to be controlled, every "bad" state of these variables must be controllable and observable to ensure a good behavior in the closed-loop system. That is, if one of the eigenvalues of the system is not both controllable and observable, this part of the dynamics will remain untouched in the closed-loop system. If such an eigenvalue is not stable, the dynamics of this eigenvalue will be present in the closed-loop system which therefore will be unstable. Unobservable poles are not present in the transfer function realization of a state-space representation, which is why sometimes the latter is preferred in dynamical systems analysis.

Solutions to problems of an uncontrollable or unobservable system include adding actuators and sensors.

Control specification

Several different control strategies have been devised over the years. These vary from extremely general ones (PID controller), to others devoted to very particular classes of systems (especially robotics or aircraft cruise control).

A control problem can have several specifications. Stability, of course, is always present. The controller must ensure that the closed-loop system is stable, regardless of the open-loop stability. A poor choice of controller can even worsen the stability of the open-loop system, which must normally be avoided. Sometimes particular dynamics are desired in the closed loop, i.e. that the poles $\lambda$ satisfy $\mathrm{Re}[\lambda] < -\bar{\lambda}$, where $\bar{\lambda}$ is a fixed value strictly greater than zero, instead of simply requiring that $\mathrm{Re}[\lambda] < 0$.
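The pole-location specification can be checked directly once the closed-loop characteristic polynomial is known. As an illustration, take the assumed plant $1/(s(s+2))$ under unity-feedback proportional control with gain $K$; the closed-loop poles are then the roots of $s^2 + 2s + K$. This sketch computes those roots with the quadratic formula and tests both plain stability and the stricter requirement $\mathrm{Re}[\lambda] < -\bar{\lambda}$, with $\bar{\lambda}$ passed as the hypothetical `margin` parameter.

```python
import cmath

def closed_loop_poles(K):
    """Roots of s^2 + 2s + K: P-control of the assumed plant 1/(s(s+2))."""
    disc = cmath.sqrt(4 - 4 * K)
    return [(-2 + disc) / 2, (-2 - disc) / 2]

def meets_spec(poles, margin=0.0):
    """True if every pole satisfies Re(lambda) < -margin (margin = 0 is plain stability)."""
    return all(p.real < -margin for p in poles)

for K in (0.19, 4.0):
    poles = closed_loop_poles(K)
    print(K, meets_spec(poles), meets_spec(poles, margin=0.5))
# K = 0.19: stable (poles about -0.1 and -1.9) but too slow for margin 0.5
# K = 4.0: poles at about -1 +/- 1.73j, which satisfy both tests
```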

Another typical specification is the rejection of a step disturbance; including an integrator in the open-loop chain (i.e. directly before the system under control) easily achieves this. Other classes of disturbances need different types of sub-systems to be included.

Other "classical" control theory specifications regard the time-response of the closed-loop system. These include the rise time (the time needed by the control system to reach the desired value after a perturbation), peak overshoot (the highest value reached by the response before reaching the desired value) and others (settling time, quarter-decay). Frequency domain specifications are usually related to robustness.

Modern performance assessments use some variation of integrated tracking error (IAE, ISA, CQI).
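The time-domain specifications and integrated-error measures mentioned above can be computed from a sampled step response. This sketch assumes a unit set point, uses the common 10 % to 90 % definition of rise time and a 2 % settling band (conventions vary), and computes IAE (integral of absolute error) plus, as a common companion measure, ISE (integral of squared error) with simple rectangle sums. The test response comes from an assumed underdamped second-order system (ζ = 0.5, ω_n = 1 rad/s) integrated numerically.

```python
import math

def step_response(zeta=0.5, wn=1.0, dt=1e-3, t_end=20.0):
    """Unit-step response of y'' + 2*zeta*wn*y' + wn^2*y = wn^2 (semi-implicit Euler)."""
    y, v, out = 0.0, 0.0, []
    for _ in range(int(round(t_end / dt))):
        a = wn * wn * (1.0 - y) - 2.0 * zeta * wn * v
        v += a * dt
        y += v * dt
        out.append(y)                    # out[k] is the value at time (k + 1) * dt
    return out

def metrics(y, dt, setpoint=1.0, band=0.02):
    t10 = next(k for k, v in enumerate(y) if v >= 0.1 * setpoint) * dt
    t90 = next(k for k, v in enumerate(y) if v >= 0.9 * setpoint) * dt
    overshoot = max(0.0, (max(y) - setpoint) / setpoint)
    # settling time: time of the last sample still outside the +/- band
    outside = [k for k, v in enumerate(y) if abs(v - setpoint) > band * setpoint]
    settling = (outside[-1] + 1) * dt if outside else 0.0
    iae = sum(abs(setpoint - v) for v in y) * dt
    ise = sum((setpoint - v) ** 2 for v in y) * dt
    return {"rise": t90 - t10, "overshoot": overshoot,
            "settling": settling, "IAE": iae, "ISE": ise}

dt = 1e-3
m = metrics(step_response(dt=dt), dt)
print({k: round(v, 3) for k, v in m.items()})
# overshoot should be close to the analytic exp(-pi*zeta/sqrt(1-zeta^2)), about 0.163
```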

The main control techniques:

- Adaptive control uses on-line identification of the process parameters, or modification of controller gains, thereby obtaining strong robustness properties. Adaptive controls were applied for the first time in the aerospace industry in the 1950s, and have found particular success in that field.

- A hierarchical control system is a type of control system in which a set of devices and governing software is arranged in a hierarchical tree. When the links in the tree are implemented by a computer network, then that hierarchical control system is also a form of networked control system.

- Intelligent control uses various AI computing approaches like artificial neural networks, Bayesian probability, fuzzy logic, machine learning, evolutionary computation and genetic algorithms or a combination of these methods, such as neuro-fuzzy algorithms, to control a dynamic system.

- Optimal control is a particular control technique in which the control signal optimizes a certain "cost index": for example, in the case of a satellite, the jet thrusts needed to bring it to the desired trajectory that consume the least amount of fuel. Two optimal control design methods have been widely used in industrial applications, as it has been shown they can guarantee closed-loop stability. These are Model Predictive Control (MPC) and linear-quadratic-Gaussian control (LQG). The first can more explicitly take into account constraints on the signals in the system, which is an important feature in many industrial processes. However, the "optimal control" structure in MPC is only a means to achieve such a result, as it does not optimize a true performance index of the closed-loop control system. Together with PID controllers, MPC systems are the most widely used control technique in process control.

- Robust control deals explicitly with uncertainty in its approach to controller design. Controllers designed using robust control methods tend to be able to cope with small differences between the true system and the nominal model used for design. The early methods of Bode and others were fairly robust; the state-space methods invented in the 1960s and 1970s were sometimes found to lack robustness. Examples of modern robust control techniques include H-infinity loop-shaping developed by Duncan McFarlane and Keith Glover, Sliding mode control (SMC) developed by Vadim Utkin, and safe protocols designed for control of large heterogeneous populations of electric loads in Smart Power Grid applications. Robust methods aim to achieve robust performance and/or stability in the presence of small modeling errors.

- Stochastic control deals with control design with uncertainty in the model. In typical stochastic control problems, it is assumed that there exist random noise and disturbances in the model and the controller, and the control design must take into account these random deviations.

- Energy-shaping control views the plant and the controller as energy-transformation devices. The control strategy is formulated in terms of interconnection (in a power-preserving manner) in order to achieve a desired behavior.

- Self-organized criticality control may be defined as attempts to interfere in the processes by which the self-organized system dissipates energy.
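As a small taste of the optimal-control idea of minimizing a cost index, here is a sketch of a discrete-time linear-quadratic regulator (LQR, the deterministic core of LQG; the Kalman-filter half of LQG is not shown) for an assumed scalar plant $x_{k+1} = a x_k + b u_k$ with cost $\sum (q x_k^2 + r u_k^2)$. Iterating the scalar Riccati equation gives the optimal feedback gain; all numbers are illustrative.

```python
def lqr_scalar(a, b, q, r, iters=500):
    """Optimal gain K for x[k+1] = a*x + b*u minimizing sum(q*x^2 + r*u^2), with u = -K*x.

    Iterates the scalar discrete-time Riccati equation to its fixed point P.
    """
    P = q
    for _ in range(iters):
        P = q + a * a * P - (a * b * P) ** 2 / (r + b * b * P)
    K = a * b * P / (r + b * b * P)
    return K, P

a, b, q, r = 1.2, 1.0, 1.0, 1.0      # assumed open-loop-unstable plant, equal weights
K, P = lqr_scalar(a, b, q, r)
print(round(K, 4), round(a - b * K, 4))   # gain, and closed-loop pole inside the unit circle

# Simulate from x = 1 and accumulate the cost actually incurred.
x, cost = 1.0, 0.0
for _ in range(50):
    u = -K * x
    cost += q * x * x + r * u * u
    x = a * x + b * u
print(round(cost, 4), round(P, 4))        # matches P * x0^2, the optimal infinite-horizon cost
```

The weights q and r express the trade-off: raising r makes control effort (fuel, in the satellite example) more expensive and yields a gentler gain.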

Mathematical and Computational Thinking in Science

Although there are differences in how mathematics and computational thinking are applied in science and in engineering, mathematics often brings these two fields together by enabling engineers to apply the mathematical form of scientific theories and by enabling scientists to use powerful information technologies designed by engineers. Both kinds of professionals can thereby accomplish investigations and analyses and build complex models, which might otherwise be out of the question.

Analog and Digital Information Transfer in Physics: UPC Codes

One example of the integration of computing and math into science is in developing binary representations for messages, communicated by changes in light (or, in essence, in voltage by a photovoltaic cell).

There are a number of performance expectations that actually have a very deep connection to computer science concepts and practices, so much so that many teachers of core natural sciences are likely to feel underprepared in certain areas, not for lack of ability, but because some of these topics are so new and often beyond the realm of the traditional science curriculum.

Take, for example, this performance expectation from HS-PS4-2: "Evaluate questions about the advantages of using a digital transmission and storage of information." This performance expectation, and many like it, make up the bulk of the standards associated with waves (which most physics teachers would likely have expected to include mostly mechanical sound waves, electromagnetic waves, and geometric optics).

Teaching the Fundamentals of Cell Phones and Wireless Communication

The theme entailed understanding how cell phone networks work (and why they are "cell" phones, after all!). We spent a full week learning about the geometry of cellular networks and radio signal transmission. This included transmitting our voices via multiple modes, first using easily acquired stereo speakers and amplifiers to send our voices along a wire. We then progressed to converting sound into an electrical signal that drove a modulated LED (or laser); the light was aimed at a photovoltaic cell, which turned it back into an electrical signal for an amplifier and speaker. Along the way, we learned how changes in voltage observed by the photovoltaic cell (and visualized using Logger Pro) could be interpreted manually as sound or as a message. This required us to compare the benefits and disadvantages of interpreting data as analog or digital, and even allowed us to do some manual encryption.
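One way to make the analog-versus-digital comparison concrete is to pass a signal through several noisy relay stages. In this sketch, an analog relay restores (amplifies) whatever level arrives, noise included, while a digital relay re-decides each value as 0 or 1 before passing it on. The noise level, the number of stages, and the fixed random seed are arbitrary assumptions chosen for a repeatable demonstration.

```python
import random

def relay_chain(bits, stages, noise, digital, seed=1):
    """Send 0/1 values through `stages` noisy hops.

    Analog: each hop passes the received level on unchanged (noise accumulates).
    Digital: each hop re-thresholds at 0.5 (noise is removed while it stays small).
    """
    rng = random.Random(seed)
    levels = [float(b) for b in bits]
    for _ in range(stages):
        levels = [v + rng.uniform(-noise, noise) for v in levels]   # noisy link
        if digital:
            levels = [1.0 if v >= 0.5 else 0.0 for v in levels]    # regenerate
    return levels

message = [1, 0, 1, 1, 0, 0, 1, 0]
analog = relay_chain(message, stages=20, noise=0.1, digital=False)
digital = relay_chain(message, stages=20, noise=0.1, digital=True)

print(max(abs(v - b) for v, b in zip(analog, message)))    # accumulated analog drift
print(digital == [float(b) for b in message])              # True: every bit regenerated
```

As long as the noise added on each hop stays below the threshold distance (0.5 here), the digital chain delivers the message exactly, however many hops there are; the analog chain only gets worse with distance.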

For one of the activities, we used a slice of an overhead projector laminate, and used thin strips of masking tape to create opaque "bars" to construct a bar code. By sliding the bar code across a photovoltaic cell attached to a Logger Pro voltmeter interface, we were able to produce a graph of changing voltage (caused by changes in light intensity hitting the cell) and to interpret it.
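Reading the bar code from the voltage graph amounts to thresholding: samples above a threshold count as light (a gap), samples below as dark (a tape bar), and the lengths of the runs give the bar widths. This sketch applies that idea to a made-up list of voltage readings (hypothetical values, not data from the activity), with the threshold set halfway between the lowest and highest reading.

```python
def decode_bars(voltages):
    """Convert a voltage trace into runs of dark bars (1) and light gaps (0).

    Light reaching the cell gives a high voltage; an opaque tape strip gives a low one.
    Returns a list of (symbol, run_length_in_samples) pairs.
    """
    threshold = (min(voltages) + max(voltages)) / 2
    symbols = [0 if v > threshold else 1 for v in voltages]   # 1 = bar (dark)
    runs = []
    for s in symbols:
        if runs and runs[-1][0] == s:
            runs[-1][1] += 1          # same symbol: extend the current run
        else:
            runs.append([s, 1])       # symbol changed: start a new run
    return [tuple(r) for r in runs]

# Hypothetical readings (volts) sampled as the strip slides past the cell.
trace = [0.48, 0.47, 0.05, 0.06, 0.46, 0.04, 0.05, 0.05, 0.05, 0.49, 0.50, 0.06, 0.47]
print(decode_bars(trace))
# [(0, 2), (1, 2), (0, 1), (1, 4), (0, 2), (1, 1), (0, 1)]
```

If the strip is slid at a steady speed, run lengths are proportional to bar widths, so a wide bar (4 samples here) can be told apart from a narrow one (1 or 2 samples).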

Design Principles are a tool for creating a better, more consistent experience for your users. They are high-level principles that guide the detailed design decisions you make as you're working on a project. There are essentially two kinds: universal and specific.

1. Make your user interface design easy.

- The structure principle: Design should organize the user interface purposefully, in meaningful and useful ways based on clear, consistent models that are apparent and recognizable to users, putting related things together and separating unrelated things, differentiating dissimilar things and making similar things resemble one another. The structure principle is concerned with overall user interface architecture.

- The simplicity principle: The design should make simple, common tasks easy, communicating clearly and simply in the user's own language, and providing good shortcuts that are meaningfully related to longer procedures.

- The visibility principle: The design should make all needed options and materials for a given task visible without distracting the user with extraneous or redundant information. Good designs don't overwhelm users with alternatives or confuse with unneeded information.

- The feedback principle: The design should keep users informed of actions or interpretations, changes of state or condition, and errors or exceptions that are relevant and of interest to the user through clear, concise, and unambiguous language familiar to users.

- The tolerance principle: The design should be flexible and tolerant, reducing the cost of mistakes and misuse by allowing undoing and redoing, while also preventing errors wherever possible by tolerating varied inputs and sequences and by interpreting all reasonable actions reasonably.

- The reuse principle: The design should reuse internal and external components and behaviors, maintaining consistency with purpose rather than merely arbitrary consistency, thus reducing the need for users to rethink and remember.
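The tolerance principle's call for undo and redo is commonly implemented with two stacks of saved states (or of commands). This sketch shows one minimal, assumed design for a text field; the `UndoableText` class and its methods are hypothetical names for illustration, not any toolkit's API.

```python
class UndoableText:
    """Keep earlier states on an undo stack; a new edit clears the redo stack."""

    def __init__(self, text=""):
        self.text = text
        self._undo, self._redo = [], []

    def edit(self, new_text):
        self._undo.append(self.text)   # remember where we came from
        self._redo.clear()             # a fresh edit invalidates the redo history
        self.text = new_text

    def undo(self):
        if self._undo:                 # tolerate undo with nothing to undo
            self._redo.append(self.text)
            self.text = self._undo.pop()

    def redo(self):
        if self._redo:                 # tolerate redo with nothing to redo
            self._undo.append(self.text)
            self.text = self._redo.pop()

doc = UndoableText()
doc.edit("Hello")
doc.edit("Hello, world")
doc.undo()
print(doc.text)   # Hello
doc.redo()
print(doc.text)   # Hello, world
```

Silently ignoring an undo when there is nothing left to undo is itself an application of the same principle: a harmless mistake costs the user nothing.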

Principles of User Interface Design

"To design is much more than simply to assemble, to order, or even to edit; it is to add value and meaning, to illuminate, to simplify, to clarify, to modify, to dignify, to dramatize, to persuade, and perhaps even to amuse."


Clarity is job #1

Clarity is the first and most important job of any interface. To be effective using an interface you've designed, people must be able to recognize what it is, care about why they would use it, understand what the interface is helping them interact with, predict what will happen when they use it, and then successfully interact with it. While there is room for mystery and delayed gratification in interfaces, there is no room for confusion. Clarity inspires confidence and leads to further use. One hundred clear screens is preferable to a single cluttered one.


Interfaces exist to enable interaction

Interfaces exist to enable interaction between humans and our world. They can help clarify, illuminate, enable, show relationships, bring us together, pull us apart, manage our expectations, and give us access to services. The act of designing interfaces is not Art. Interfaces are not monuments unto themselves. Interfaces do a job and their effectiveness can be measured. They are not just utilitarian, however. The best interfaces can inspire, evoke, mystify, and intensify our relationship with the world.

Conserve attention at all costs

We live in a world of interruption. It's hard to read in peace anymore without something trying to distract us and direct our attention elsewhere. Attention is precious. Don't litter the side of your applications with distracting material…remember why the screen exists in the first place. If someone is reading, let them finish reading before showing that advertisement (if you must). Honor attention and not only will your readers be happier, your results will be better. When use is the primary goal, attention becomes the prerequisite. Conserve it at all costs.

Keep users in control

Humans are most comfortable when they feel in control of themselves and their environment. Thoughtless software takes away that comfort by forcing people into unplanned interactions, confusing pathways, and surprising outcomes. Keep users in control by regularly surfacing system status, by describing causation (if you do this that will happen) and by giving insight into what to expect at every turn. Don't worry about stating the obvious…the obvious almost never is.

Direct manipulation is best

The best interface is none at all, when we are able to directly manipulate the physical objects in our world. Since this is not always possible, and objects are increasingly informational, we create interfaces to help us interact with them. It is easy to add more layers than necessary to an interface, creating overwrought buttons, chrome, graphics, options, preferences, windows, attachments, and other cruft so that we end up manipulating UI elements instead of what's important. Instead, strive for that original goal of direct manipulation…design an interface with as small a footprint as possible, recognizing natural human gestures wherever possible. Ideally, the interface is so slight that the user has a feeling of direct manipulation with the object of their focus.

One primary action per screen

Every screen we design should support a single action of real value to the person using it. This makes it easier to learn, easier to use, and easier to add to or build on when necessary. Screens that support two or more primary actions become confusing quickly. Just as a written article should have a single, strong thesis, every screen we design should support a single, strong action that is its raison d'être.

Keep secondary actions secondary

Screens with a single primary action can have multiple secondary actions, but they need to be kept secondary! The reason your article exists isn't so that people can share it on Twitter…it exists for people to read and understand it. Keep secondary actions secondary by making them visually lighter or by showing them only after the primary action has been achieved.

Provide a natural next step

Very few interactions are meant to be the last, so thoughtfully design a next step for each interaction a person has with your interface. Anticipate what the next interaction should be and design to support it. As in human conversation, provide an opening for further interaction. Don't leave a person hanging because they've done what you want them to do…give them a natural next step that helps them further achieve their goals.

Appearance follows behavior

Humans are most comfortable with things that behave the way we expect. Other people, animals, objects, software. When someone or something behaves consistently with our expectations we feel like we have a good relationship with it. To that end, designed elements should look the way they behave. Form follows function. In practice this means that someone should be able to predict how an interface element will behave merely by looking at it. If it looks like a button it should act like a button. Don't get cute with the basics of interaction…keep your creativity for higher order concerns.

Consistency matters

Following on the previous principle, screen elements should not appear consistent with each other unless they behave consistently with each other. Elements that behave the same should look the same. But it is just as important for unlike elements to appear unlike (be inconsistent) as it is for like elements to appear consistent. In an effort to be consistent, novice designers often obscure important differences by using the same visual treatment (often to re-use code) when different visual treatment is appropriate.

Strong visual hierarchies work best

A strong visual hierarchy is achieved when there is a clear viewing order to the visual elements on a screen. That is, when users view the same items in the same order every time. Weak visual hierarchies give little clue about where to rest one's gaze and end up feeling cluttered and confusing. In environments of great change it is hard to maintain a strong visual hierarchy because visual weight is relative: when everything is bold, nothing is bold. Should a single visually heavy element be added to a screen, the designer may need to reset the visual weight of all elements to once again achieve a strong hierarchy. Most people don't notice visual hierarchy but it is one of the easiest ways to strengthen (or weaken) a design.

Smart organization reduces cognitive load

As John Maeda says in his book The Laws of Simplicity, smart organization of screen elements can make the many appear as the few. This helps people understand your interface more easily and more quickly, as you've illustrated the inherent relationships of content in your design. Group together like elements, and show natural relationships by placement and orientation. By smartly organizing your content you make it less of a cognitive load on the user…who doesn't have to think about how elements are related because you've done it for them. Don't force the user to figure things out…show them by designing those relationships into your screens.

Highlight, don't determine, with color

The color of physical things changes as light changes. In the full light of day we see a very different tree than one outlined against a sunset. As in the physical world, where color is a many-shaded thing, color should not determine much in an interface. It can help, be used for highlighting, be used to guide attention, but should not be the only differentiator of things. For long-reading or extended screen hours, use light or muted background colors, saving brighter hues for your accent colors. Of course there is a time for vibrant background colors as well; just be sure that they are appropriate for your audience.

Progressive disclosure

Show only what is necessary on each screen. If people are making a choice, show enough information to allow them the choice, then dive into details on a subsequent screen. Avoid the tendency to over-explain or show everything all at once. When possible, defer decisions to subsequent screens by progressively disclosing information as necessary. This will keep your interactions clearer.

Help people inline

In ideal interfaces, help is not necessary because the interface is learnable and usable. The step below this, reality, is one in which help is inline and contextual, available only when and where it is needed, hidden from view at all other times. Asking people to go to help and find an answer to their question puts the onus on them to know what they need. Instead build in help where it is needed…just make sure that it is out of the way of people who already know how to use your interface.

A crucial moment: the zero state

The first time experience with an interface is crucial, yet often overlooked by designers. In order to best help our users get up to speed with our designs, it is best to design for the zero state, the state in which nothing has yet occurred. This state shouldn't be a blank canvas…it should provide direction and guidance for getting up to speed. Much of the friction of interaction is in that initial context…once people understand the rules they have a much higher likelihood of success.

Great design is invisible

A curious property of great design is that it usually goes unnoticed by the people who use it. One reason for this is that if the design is successful the user can focus on their own goals and not the interface…when they complete their goal they are satisfied and do not need to reflect on the situation. As a designer this can be tough…as we receive less adulation when our designs are good. But great designers are content with a well-used design…and know that happy users are often silent.

Build on other design disciplines

Visual and graphic design, typography, copywriting, information architecture and visualization…all of these disciplines are part of interface design. They can be touched upon or specialized in. Do not get into turf wars or look down on other disciplines: grab from them the aspects that help you do your work and push on. Pull in insights from seemingly unrelated disciplines as well…what can we learn from publishing, writing code, bookbinding, skateboarding, firefighting, karate?

Interfaces exist to be used

As in most design disciplines, interface design is successful when people are using what you've designed. Like a beautiful chair that is uncomfortable to sit in, design has failed when people choose not to use it. Therefore, interface design can be as much about creating an environment for use as it is creating an artifact worth using. It is not enough for an interface to satisfy the ego of its designer: it must be used!

- A good interface provides power, safety, and savings; in other words, efficiency and effectiveness.

In computing, an interface is a shared boundary across which two or more separate components of a computer system exchange information. The exchange can be between software, computer hardware, peripheral devices, humans, and combinations of these.

- VGA Cable. Also known as D-sub cable, analog video cable. ...

- DVI Cable. Connect one end to: computer monitor. ...

- PS/2 Cable. Connect one end to: PS/2 keyboard, PS/2 mouse. ...

- Ethernet Cable. Also known as RJ-45 cable. ...

- 3.5mm Audio Cable. ...

- USB Cable. ...

- Computer Power Cord (Kettle Plug)

- Command line interface (CLI)

- Graphical user interface (GUI)

- Menu-driven interface

- Form-based interface

- Natural language interface (NLI)

A good interface makes it easy for users to tell the computer what they want to do, for the computer to request information from the users, and for the computer to present understandable information. Clear communication between the user and the computer is the working premise of good UI design.

Video ports a computer case might have include VGA, S-Video, DVI, DisplayPort, and HDMI ports. Other ports include RJ-45, audio, S/PDIF, USB, FireWire, eSATA, PS/2, serial, parallel, and RJ-11 ports.

One of the most common video connectors for computer monitors and high-definition TVs is the VGA cable. A standard VGA connector has 15 pins, and besides connecting a computer to a monitor, you can also use a VGA cable to connect a laptop to a TV screen or a projector.

Most current Unix-based systems offer both a command line interface and a graphical user interface. The MS-DOS operating system and the command shell in the Windows operating system are examples of command line interfaces. Some programming languages, such as Python, also support command line interfaces.

Operating systems can be used with different user interfaces (UI): text user interfaces (TUI) and graphical user interfaces (GUI) as examples.

- Input Controls: checkboxes, radio buttons, dropdown lists, list boxes, buttons, toggles, text fields, date field.

- Navigational Components: breadcrumb, slider, search field, pagination, tags, icons.

A user interface, also called a "UI" or simply an "interface," is the means by which a person controls a software application or hardware device. ... Nearly all software programs have a graphical user interface, or GUI. This means the program includes graphical controls, which the user can select using a mouse or keyboard.

- Keep the interface simple.

- Create consistency and use common UI elements.

- Be purposeful in page layout.

- Strategically use color and texture.

- Use typography to create hierarchy and clarity.

- Make sure that the system communicates what's happening.

- Think about the defaults.

A mixer board can connect to an audio interface with ¼-inch, RCA, or XLR cables. The audio interface, in turn, connects to the computer with FireWire or USB cables.

There are three types of cable connectors in a basic cabling installation: twisted-pair connectors, coaxial cable connectors, and fiber-optic connectors. Cable connectors generally have a male component and a female component, except in the case of hermaphroditic connectors such as the IBM data connector.

Interface, port (noun, computer science): a computer circuit consisting of the hardware and associated circuitry that links one device with another (especially a computer and a hard disk drive or other peripherals). Synonym: port.

An interface allows a message to be sent to an object without concern for which class the object belongs to. A class that implements an interface must provide functionality for the methods declared in it. An interface cannot implement another interface; it has to extend the other interface if needed.
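Python has no `interface` keyword, so the sketch below approximates the behavior described above with abstract base classes from the standard `abc` module. The class names are hypothetical. One difference from Java is worth noting: Python enforces the contract when an object is created, raising `TypeError` if a class leaves any inherited abstract method unimplemented.

```python
import math
from abc import ABC, abstractmethod


class Shape(ABC):
    """Interface: declares a method without implementing it."""

    @abstractmethod
    def area(self) -> float: ...


class DescribedShape(Shape):
    """An interface that extends another interface by subclassing it."""

    @abstractmethod
    def describe(self) -> str: ...


class Square(DescribedShape):
    # A concrete class must implement every abstract method it inherits.
    def __init__(self, side: float) -> None:
        self.side = side

    def area(self) -> float:
        return self.side * self.side

    def describe(self) -> str:
        return f"square of side {self.side}"


class Circle(DescribedShape):
    def __init__(self, radius: float) -> None:
        self.radius = radius

    def area(self) -> float:
        return math.pi * self.radius ** 2

    def describe(self) -> str:
        return f"circle of radius {self.radius}"


def report(item: DescribedShape) -> str:
    # The same "message" is sent to any implementing object; the caller
    # never checks which concrete class it is dealing with.
    return f"{item.describe()}: area {item.area():.2f}"


for shape in (Square(2), Circle(1)):
    print(report(shape))
# square of side 2: area 4.00
# circle of radius 1: area 3.14
```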

The advantages of an interface depend on the creation of interfaces that make the best possible use of these human-machine communications technologies, and on system attributes that lie beneath the veneer of the interface, such as system intelligence and software support for collaborative activities.

Interfaces can be assessed and compared in terms of three key dimensions: (1) the language(s) they use, (2) the ways in which they allow users to say things in the language(s), and (3) the surface(s) or device(s) used to produce output (or register input) expressions of the language. The design and implementation of an interface entail choosing (or designing) the language for communication, specifying the ways in which users may express "statements" of that language (e.g., by typing words or by pointing at icons), and selecting device(s) that allow communication to be realized: the input/output devices.

There are two language classes of interest in the design of interfaces: natural languages (e.g., English, Spanish, Japanese) and artificial languages.

Natural languages are derived evolutionarily; they typically have unrestricted and complex syntax and semantics (assignment of meaning to symbols and to the structures built from those symbols). Artificial languages are created by computer scientists or mathematicians to meet certain design and functional criteria; the syntax is typically tightly constrained and designed to minimize semantic complexity and ambiguity.

Because an artificial language has a language definition, construction of an interpreter for the language is a more straightforward task than construction of a system for interpreting sentences in a natural language. The grammar of a programming language is given; defining a grammar for English (or any other natural language) remains a challenging task (though there are now several extensive grammars used in computational systems). Furthermore, the interactions between syntax and semantics can be tightly controlled in an artificial language (because people design them) but can be quite complex in a natural language.1,2

Natural languages are thus more difficult to process. However, they allow for a wider range of expression and as a result are more powerful (and more "natural"). It is likely that the expressivity of natural languages, and the ways they allow for incompleteness and indirectness, may matter more to their ease of use than the fact that people already "know them." For example, the phrase "the letter to Aunt Jenny I wrote last March" may be a more natural way to identify a letter in one's files than trying to recall the file name, identify a particular icon, or grep (a UNIX search command) for a certain string that must be in the letter. The complex requests that may arise in seeking information from on-line databases provide another example of the advantages of complex languages near the natural language end of this dimension. Constraint specifications that are natural to users (e.g., "display the protein structures having more than 40 percent alpha helix") are both diverse and rich in structure, whereas menu- or form-based paradigms cannot readily cover the space of possible queries. Although natural language processing remains a challenging long-range problem in artificial intelligence (as discussed under "Natural Language Processing" below in this chapter), progress continues to be made, and better understanding of the ways in which it makes communication easier may be used to inform the design of more restricted languages.

However, the fact that restricted languages have limitations is not, per se, a shortcoming for their use in ECIs. Limiting the range of language in using a system can (if done right) promote correct interpretation by the system by limiting ambiguity and allowing more effective communication.
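To make the ambiguity argument concrete, here is a minimal parser for a hypothetical restricted command language with three fixed sentence forms (`delete NAME`, `open NAME`, `send NAME to NAME`). The verbs and the `Command` type are invented for illustration. Because the grammar is given in advance, every accepted input has exactly one interpretation, and anything outside the grammar is rejected instead of guessed at.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical restricted command language:
#   command := "delete" NAME
#            | "open" NAME
#            | "send" NAME "to" NAME
ONE_ARGUMENT_VERBS = {"delete", "open"}


@dataclass(frozen=True)
class Command:
    verb: str
    obj: str
    target: Optional[str] = None


def parse(text: str) -> Command:
    """Return the single interpretation of `text`, or raise ValueError."""
    words = text.split()
    if len(words) == 2 and words[0] in ONE_ARGUMENT_VERBS:
        return Command(words[0], words[1])
    if len(words) == 4 and words[0] == "send" and words[2] == "to":
        return Command("send", words[1], words[3])
    # Input outside the grammar is rejected rather than guessed at.
    raise ValueError(f"not a valid command: {text!r}")


print(parse("send report.txt to jenny"))
# Command(verb='send', obj='report.txt', target='jenny')
```

A request such as "send the letter to Aunt Jenny I wrote last March" is perfectly clear to a person but falls outside this grammar, which is the trade-off between expressiveness and ease of interpretation that this section describes.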

In short, the design of an interface along the language dimension entails choices of syntax (which may be simple or complex) and semantics (which can be simple or complex either in itself or in how it relates to syntax). More complex languages typically allow the user to say more but make it harder for the system to figure out what a person means.

Layers of Communications

1. Language Layer

- Natural language: complex syntax, complex semantics (whatever a human can say)

- Restricted verbal language (e.g., operating systems command language, air traffic control language): limited syntax, constrained semantics

- Direct manipulation languages: objects are "noun-like," get "verb equivalents" from manipulations (e.g., drag file X to Trash means "erase X"; drag message onto Outgoing Mailbox means "send message"; draw circle around object Y and click means "I'm referring to Y, so I can say something about it.")

2. Expression Layer

Most of these types of realization can be used to express statements in most of the above types of languages. For instance, one can speak or write natural language; one can say or write a restricted language, such as a command-line interface; and one can say or write/draw a direct manipulation language.

- Speaking: continuous speech recognition, isolated-word speech recognition

- Writing: typing on a keyboard, handwriting

- Drawing

- Gesturing (American Sign Language provides an example of gesture as the realization (expression layer choice) for a full-scale natural language.)

- Pick-from-set: various forms of menus

- Pointing, clicking, dragging

- Various three-dimensional manipulations: stretching, rotating, etc.

- Manipulations within a virtual reality environment: same range of speech, gesture, point, click, drag, etc., as above, but with three dimensions and broader field of view

- Manipulation unique to virtual reality environment: locomotion (flying through/over things as a means of manipulating them or at least looking at them)

3. Devices

Hardware mechanisms (and associated device-specific software) that provide a way to express a statement. Again, more than one technology at this layer can be used to implement items at the layer above.

- Keyboards (many different kinds of typing)

- Microphones

- Light pen/drawing pads, touch-sensitive screens, whiteboards

- Video display screen and mouse

- Video display screen and keypad (e.g., automated teller machine)

- Touch-sensitive screen (touch with pen; touch with finger)

- Telephone (audible menu with keypad and/or speech input)

- Push-button interface, with different button for each choice (like big buttons on an appliance)

- Joystick

- Virtual reality input gear: glove, helmet, suit, etc.; also body position detectors
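The direct manipulation entry in the language layer can be read as a small translation step: the manipulated object supplies the noun, and the manipulation (together with where the object is dropped) supplies the verb. The sketch below encodes the three examples given there; the `interpret` function, the `GESTURE_VERBS` table, and the `"circle-click"` event name are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical table: (manipulation, drop target) -> verb.
# The manipulated object acts as the noun; the manipulation supplies the verb.
GESTURE_VERBS: Dict[Tuple[str, str], str] = {
    ("drag", "Trash"): "erase",
    ("drag", "Outgoing Mailbox"): "send",
}


def interpret(manipulation: str, obj: str, target: str = "") -> str:
    """Translate a direct-manipulation event into a verb-object statement."""
    if manipulation == "circle-click":
        # Circling an object and clicking only establishes what is being referred to.
        return f"refer to {obj}"
    verb = GESTURE_VERBS.get((manipulation, target))
    if verb is None:
        raise ValueError(f"no meaning defined for {manipulation} onto {target!r}")
    return f"{verb} {obj}"


print(interpret("drag", "file X", "Trash"))              # erase file X
print(interpret("drag", "message", "Outgoing Mailbox"))  # send message
print(interpret("circle-click", "object Y"))             # refer to object Y
```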

Devices

The hardware realization of communication can take many forms; common ones include microphones and speakers, keyboards and mice, drawing pads, touch-sensitive screens, light pens, and push buttons. The choice of device interacts with the choice of medium: display, film/videotape, speaker/audiotape, and so on. There may also be interactions between expression and device (an obvious example is the connection between pointing device (mouse, trackball, joystick) and pull-down menus or icons). On the other hand, it is also possible to relax some of these associations to allow for alternative surfaces (e.g., keyboard alternatives to pointers, aural alternatives to visual outputs). Producing interfaces for every citizen will entail providing for alternative input/output devices for a given language-expression combination; it might also call for alternative approaches to expression.
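One common way to provide alternative devices for the same language-expression combination is to route raw device events through a binding table that maps them onto abstract actions, so that application logic never depends on a particular device. The table entries and the `to_action` helper below are hypothetical illustrations, not a standard API.

```python
from typing import Dict, Optional, Tuple

# Hypothetical bindings: different (device, raw event) pairs map onto the same
# abstract action, so no single device is required to express it.
BINDINGS: Dict[Tuple[str, str], str] = {
    ("mouse", "click"): "select",
    ("keyboard", "Enter"): "select",
    ("speech", "select"): "select",
    ("single switch", "press"): "select",
    ("keyboard", "Tab"): "next item",
    ("speech", "next"): "next item",
}


def to_action(device: str, event: str) -> Optional[str]:
    """Return the abstract action bound to a raw device event, or None."""
    return BINDINGS.get((device, event))


# The application reacts only to the abstract action, never to the device.
for device, event in [("mouse", "click"), ("keyboard", "Enter"), ("speech", "select")]:
    print(device, "->", to_action(device, event))
```

Adding a new device then means adding rows to the table rather than changing the application.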

Technologies For Communicating With Systems

Humans modulate energy in many ways. Recognizing that fact allows for exploration of a rich set of alternatives and complements (at any time, a user-chosen subset of controls and displays) that a focus on simplicity of interface design as the primary goal can obscure. Current direct manipulation interfaces with two-dimensional display and mouse input make use, minimally, of one arm with two fingers and a thumb and one eye, roughly what it takes to operate a television remote control. It was considered a stroke of genius, of course, to reduce all computer interactions to this simple set as a transition mechanism to enable people to learn to use computers without much training. There are no longer any reasons (including cost) to remain stuck in this transition mode. We need to develop a fuller coupling of human and computer, with attention to flexibility of input and output.

In some interactive situations, for example, all a computer or information appliance needs for input is a modulated signal that it can use to direct rendered data to the user's eyes, ears, and skin. Over 200 different transducers have been used to date with people having disabilities. In work with severely disabled children, David Warner, of Syracuse University, has developed a suite of sensors to let kids control computer displays with muscle twitches, eye movement, facial expressions, voice, or whatever signal they can manage to modulate. The results evoke profound emotion in patients, doctors, and observers and demonstrate the value of research on human capabilities to modulate energy in real time, the sensors that can transduce those energies, and effective ways to render the information affected by such interactions.
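Turning an arbitrary modulated signal (a muscle twitch, an eye movement) into usable input can be as simple as detecting when it crosses a threshold. The sketch below assumes a signal already normalized to the range 0 to 1 and uses two thresholds (hysteresis) so that small tremors near a single threshold do not register as repeated presses. The threshold values and the `switch_events` name are arbitrary assumptions, and this is not a description of the sensor suite mentioned above.

```python
from typing import Iterable, List


def switch_events(samples: Iterable[float], on: float = 0.6, off: float = 0.4) -> List[int]:
    """Return the sample indices at which a 'press' begins.

    Hysteresis: a press starts when the signal rises above `on`, and the
    signal must fall below `off` before another press can start, so jitter
    around a single threshold does not produce repeated presses.
    """
    if off >= on:
        raise ValueError("the off threshold must be below the on threshold")
    pressed = False
    presses: List[int] = []
    for index, value in enumerate(samples):
        if not pressed and value > on:
            pressed = True
            presses.append(index)
        elif pressed and value < off:
            pressed = False
    return presses


# Two deliberate twitches. The dip to 0.55 and rise to 0.65 at indices 3-4
# would count as an extra press with a single 0.6 threshold, but not here.
signal = [0.1, 0.2, 0.7, 0.55, 0.65, 0.3, 0.1, 0.8, 0.9, 0.2]
print(switch_events(signal))  # [2, 7]
```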

Machine Vision and Passive Input

Machine vision is likely to play a number of roles in future interface systems. Primary roles are likely to be:

- Data input (including text, graphics, movement)

- Context recognition (as discussed above)

- Gesture recognition (particularly in graphic and virtual reality environments)

- Artificial sight for people with visual impairments

Experience with text and image recognition provides a number of insights relevant to future interface development, especially in the context of aiding individuals with physical disabilities. In particular, systems that are difficult for blind people to use would pose the same problems to people who can see but who are trying to access information aurally because their vision is otherwise occupied. Similar problems may arise as well for intelligent agents.

Text Recognition

Today, there are powerful tools for turning images of text into electronic text (such as ASCII). Optical character recognition (OCR) is quite good and is improving daily. Driven by a desire to turn warehouses of printed documents into electronic searchable form, companies have made, and continue to make, steady advances. Some OCR programs will convert documents into electronic text that is compatible with particular word processing packages, preserving the text layout, emphasis, font, and so on. The problem with OCR is that it is not 100 percent accurate. When it makes a mistake, however, the error is usually not a single wrong character (since word lookup is used to improve accuracy). As a result, when an error is made, it is often a legal (but wrong) word. Thus, it is often impossible to look at a document and figure out exactly what it did say; some sentences may not be accurate (or even make sense). One company gets around this by pasting a picture of any words the system is not sure about into the text where the unknown word would go. This works well for sighted persons, allows human editors to easily fix the mistakes, and preserves the image for later processing by a more powerful image recognizer. It does not help blind users much, except that they are not misled by a wrong word and can ask a sighted person for help if they cannot figure something out. (Most helpful would be to have an OCR system include its guess as to the letters of a word in question as hidden text, which a person who is blind could call up to assist in guessing the word.) Highly stylized or embellished characters or words are not recognizable. Text that is wrapped around, tied in knots, or otherwise laid out on the page in an unusual way may be difficult to interpret even if available in electronic text. This is a separate problem from image recognition, though.
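The parenthetical suggestion above, keeping the recognizer's guess as hidden text, can be sketched as follows. It assumes the recognizer reports a confidence score for each word; the `OcrWord` type, the `mark_uncertain` function, and the 0.8 threshold are hypothetical, not any particular OCR product's interface. Confident words pass through, while uncertain ones become numbered markers whose guesses are kept aside for the reader to request.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class OcrWord:
    text: str          # the recognizer's best guess at the word
    confidence: float  # hypothetical per-word score between 0.0 and 1.0


def mark_uncertain(words: List[OcrWord], threshold: float = 0.8) -> Tuple[str, Dict[int, str]]:
    """Replace low-confidence words with numbered markers.

    Returns the visible text plus a 'hidden' table mapping each marker number
    to the recognizer's guess, which a reader can ask for on demand instead of
    being handed a legal but possibly wrong word.
    """
    visible: List[str] = []
    hidden: Dict[int, str] = {}
    for word in words:
        if word.confidence >= threshold:
            visible.append(word.text)
        else:
            marker = len(hidden) + 1
            visible.append(f"[?{marker}]")
            hidden[marker] = word.text
    return " ".join(visible), hidden


page = [OcrWord("the", 0.98), OcrWord("letter", 0.97), OcrWord("I", 0.99),
        OcrWord("wrote", 0.62), OcrWord("last", 0.95), OcrWord("March", 0.91)]
text, guesses = mark_uncertain(page)
print(text)     # the letter I [?1] last March
print(guesses)  # {1: 'wrote'}
```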

Image Recognition

Despite great strides by the military, weather, intelligence, and other communities, image interpretation remains quite specialized and focused on looking for particular features. The ability to identify and describe arbitrary images is still beyond us. However, advances in artificial intelligence, neural networks, and image processing in combination with large data banks of image information may make it possible in the future to provide verbal interpretation or description for many types of information. A major impetus comes from the desire to make image information searchable by computers. The combination of a tactile representation with feature or texture information presented aurally may provide the best early access to graphic information by users who are blind or cannot use their sight.

Some images, such as pie charts and line graphs, can be recognized easily and turned into raw data or a text description. Standard software has been available for some time that will take a scanned image of a chart and provide a spreadsheet of the data represented in the chart. Other images, such as electronic schematic diagrams, could be recognized but are difficult to describe. A house plan illustrates the kind of diagram that may be describable in general terms and would benefit from combining a verbal description with a tactile representation for those who cannot see to deal with this type of information.
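Once the slices of a pie chart have been located, producing the raw data and a text description is straightforward arithmetic. The sketch below assumes the recognition step has already measured each slice's angle in degrees; `describe_pie` and the example labels are hypothetical.

```python
from typing import Dict


def describe_pie(slice_degrees: Dict[str, float]) -> str:
    """Convert measured pie-slice angles into percentages and a one-line description."""
    total = sum(slice_degrees.values())
    if total <= 0:
        raise ValueError("slice angles must sum to a positive value")
    # Dividing by the measured total (ideally 360 degrees) absorbs small measurement error.
    shares = {label: 100.0 * deg / total for label, deg in slice_degrees.items()}
    # Largest share first; sorted() is stable, so ties keep their input order.
    ordered = sorted(shares.items(), key=lambda item: item[1], reverse=True)
    parts = [f"{label} {pct:.0f} percent" for label, pct in ordered]
    return "Pie chart: " + ", ".join(parts) + "."


print(describe_pie({"rent": 180, "food": 90, "other": 90}))
# Pie chart: rent 50 percent, food 25 percent, other 25 percent.
```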

Visual Displays

Visual display progress begins with the screen design (graphics, layouts, icons, metaphors, widget sets, animation, color, fisheye views, overviews, zooming) and other aspects of how information is visualized. The human eye can see far more than current computer displays can show. The bandwidth of our visual channel, ~1 gigabit/second, is many orders of magnitude greater than that of the other senses. The eye has a dynamic range of 10^13 to 1 (10 trillion to 1); no human-made sensor or graphics display has this dynamic range. The eye/brain can detect very small displacements at very low rates of motion and sees change up to a rate of about 50 times a second. The eye has a very focused view that is optimized for perceiving movement. Humans cannot see clearly outside a ~5-degree cone of foveal vision and cannot see behind them.

State-of-the-art visualization systems (as of 1996) can create images of approximately 4,000-polygon complexity at 50 Hz per eye. Modern graphics engines also filter the image to remove sampling artifacts on polygon edges and, more importantly, in textures. Partial transparency is also possible, which allows fog and atmospheric contrast attenuation to be rendered in a natural-looking way. Occlusion (called "hidden surface removal" in graphics) is provided, as is perspective transformation of vertices. Smooth shading in hardware is also common now.

Thus, the images look rather good in real time, although scene complexity is limited to several thousand polygons and resolution to 1,280 × 1,024. Typical computer-aided design constructions or animated graphics for television commercials involve scenes with millions of polygons; these are not rendered in real time. Magazine illustrations are rendered at resolutions in excess of 4,000 × 3,000. Thus, the imagery used in real-time systems is portrayed at rather less than optimal resolution, often well below the visual acuity required to drive a car. In addition, there are better ways of rendering scenes, as when the physics of light is more accurately simulated, but these techniques are not currently achievable in real time. A six-order-of-magnitude increase in computer speed and graphics generation would be easy to absorb; a teraflop personal computer would therefore be rather desirable, but is probably 10 years off.
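A few lines of arithmetic, using only the figures given in the text, show that a magazine illustration at 4,000 × 3,000 carries roughly nine times as many pixels as a 1,280 × 1,024 real-time frame. Since the text gives 4,000 × 3,000 only as a lower bound, the real gap can be larger; the `pixels` helper is a hypothetical convenience.

```python
def pixels(width: int, height: int) -> int:
    """Total pixel count for a width x height image."""
    return width * height


real_time = pixels(1280, 1024)  # real-time systems, as stated in the text
magazine = pixels(4000, 3000)   # lower bound for magazine illustrations
print(real_time, magazine)             # 1310720 12000000
print(round(magazine / real_time, 1))  # 9.2
```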

Visual Input/Output Hardware

The computer industry provides a range of display devices, from small embedded liquid-crystal displays (LCDs) in personal digital assistants (PDAs) and navigational devices to large cathode-ray tubes (CRTs) and projectors. Clearly, desirable goals are lower cost, power consumption, latency, and weight, and both much larger and much smaller screens. Current commercial CRTs achieve up to 2,048 × 2,048 pixels at great cost. Projectors can do ~1,900 × 1,200 displays. It is possible to tessellate projectors at will to achieve arbitrarily higher resolution (Woodward, 1993) and/or brightness (e.g., video walls shown at trade shows and conventions). Screens with more than 5,000-pixel resolution are desirable. Durability could be improved, especially for portable units.15 Some increase in the capability of television sets to handle computer output, which may be furthered by recent industry-based standards negotiations for advanced television (sometimes referred to as high-definition television), is expected to help lower costs.16 How, when, and where to trade off the generality of personal computers against other qualities that may inhere in more specialized or cheaper devices is an issue for which there may be no one answer.
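Tiling projectors trades device count for resolution. The sketch below estimates how many projectors of about 1,900 × 1,200 pixels a tiled display needs. It assumes that a 5,000-pixel resolution means 5,000 pixels along each side (the text does not specify) and that tiles abut exactly, ignoring the overlap that edge blending requires in practice; `projector_grid` is a hypothetical helper.

```python
import math
from typing import Tuple


def projector_grid(target_w: int, target_h: int,
                   tile_w: int = 1900, tile_h: int = 1200) -> Tuple[int, int]:
    """Columns and rows of projectors needed to cover a target resolution.

    Simplifying assumption: tiles abut exactly, with no overlap for edge blending.
    """
    return math.ceil(target_w / tile_w), math.ceil(target_h / tile_h)


cols, rows = projector_grid(5000, 5000)
print(cols, rows, cols * rows)  # 3 5 15
```

Under these assumptions a 3 × 5 grid of 15 projectors yields 5,700 × 6,000 pixels; any edge-blending overlap would raise the count.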

Hollywood and science fiction have described most of the conceivable, highly futuristic display devices: direct retinal projection, direct cerebral input, Holodecks, and so on. Less futuristic displays still have a long way to go to enable natural-appearing virtual reality (VR). Liquid crystal displays do not have the resolution and low weight needed to build acceptable head-mounted displays; users of currently available head-mounted displays are effectively legally blind given the lack of acuity offered. Projected VR displays are usable, although they are large and are not portable.

The acceptance of VR is also hindered by the extreme cost of the high-end graphics engines required to render realistic scenes in real time, the enormous computing power needed to simulate meaningful situations, and the nonlinearity and/or short range of tracking devices. Given that the powerful graphics hardware in the $200 Nintendo 64 game console is only a few incremental steps from supporting the stereo graphics needed for VR, it is clear that the barriers, at least in the home game context, now lie in building consumer-level tracking gear and some kind of rugged stereo glasses. Once these barriers are overcome, VR will be open for wider application.

High-resolution visual input devices are becoming available to nonprofessionals, allowing them to produce their own visual content. Digital snapshot cameras and scanners, for example, have become available at high-end consumer levels. These devices, while costly, are reasonable in quality and are a great aid to people creating visual materials for the NII.17 Compositing and nonlinear editing software assist greatly as well. Similarly, two-dimensional illustration and three-dimensional animation software make extraordinary graphics achievable by the motivated and talented citizen. The cost of such software will continue to come down as the market widens, and the availability of more memory, processing, graphics power, and disk space will make results more achievable and usable.

As a future goal that defines a conceptual outer limit for input and output, one might choose the Holodeck from the movie Star Trek, a device that apparently stores and replays the molecular reconstruction information from the transporter that beams people up and down. In The Physics of Star Trek, physicist Lawrence Krauss (1995, pp. 76-77) works out the information needed to store the molecular dynamics of a single human body: 10³¹ bytes, some 10¹⁶ times the storage needed for all the books ever written. Krauss points out the other difficulties in transporter/Holodeck reconstruction as well.
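Dividing Krauss's two figures shows what they imply for the size of all the books ever written. This is only a check of the arithmetic in the quoted numbers, not an independent estimate.

```python
# Figures as quoted from Krauss (1995) in the text above.
HUMAN_BODY_BYTES = 10**31    # molecular description of one human body
RATIO_TO_ALL_BOOKS = 10**16  # "some 10^16 times" the storage for all books

# Exact integer division: the implied storage for every book ever written.
all_books_bytes = HUMAN_BODY_BYTES // RATIO_TO_ALL_BOOKS
print(f"all books: {all_books_bytes:.0e} bytes")
```

The implied total is 10¹⁵ bytes, roughly a petabyte, which puts the gap between the Holodeck and a library of everything ever printed in perspective.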

Auditory Displays

The ear collects sound waves and encodes the spatial characteristics of the sound source into temporal and spectral attributes. Intensity difference and temporal/phase difference in sound reaching the two ears provide mechanisms for horizontal (left to right) sound localization. The ear gets information from the whole space via movement in time.
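The timing half of horizontal localization can be made concrete with Woodworth's spherical-head approximation, a standard textbook model swapped in here for illustration (the text does not name a model). The head radius and speed of sound below are assumed typical values, and the sketch ignores the intensity difference entirely.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed)
HEAD_RADIUS = 0.0875    # m, a commonly assumed average head radius

def itd_seconds(azimuth_deg):
    """Interaural time difference for a distant source, Woodworth spherical-head model.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side (valid for 0..90).
    The far ear hears the sound later because the path wraps around the head.
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:2d} deg -> {itd_seconds(az) * 1e6:4.0f} microseconds")
```

Even for a source directly to one side the difference is only about 0.66 millisecond, which is why a spatial audio renderer must control the relative timing at the two ears to a small fraction of a millisecond.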

Hearing individual components of sound requires frequency identification. The ear acts like a series of narrowly tuned filters. Sound cues can be used to catch attention with localization, indicate near or far positions with reverberation, indicate collisions and other events, and even portray abstract events such as change over time. Low-frequency sound can vibrate the user's body to somewhat simulate physical displacement.
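The "series of narrowly tuned filters" idea can be sketched in software as a small filter bank. The example below uses the Goertzel algorithm, which measures signal power at one chosen frequency, and applies it at a handful of hypothetical center frequencies. It is an engineering analogy for frequency identification, not a model of the cochlea.

```python
import math

def goertzel_power(samples, sample_rate, freq):
    """Signal power near one frequency, computed with the Goertzel algorithm
    (equivalent to the squared magnitude of a single DFT bin)."""
    n = len(samples)
    k = round(n * freq / sample_rate)  # nearest DFT bin to the requested frequency
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2

def filter_bank(samples, sample_rate, center_freqs):
    """Map each (hypothetical) center frequency to the power measured there."""
    return {f: goertzel_power(samples, sample_rate, f) for f in center_freqs}

# Test signal: a 440 Hz tone plus a quieter 1,000 Hz tone, 0.1 s at 8 kHz.
rate = 8000
signal = [math.sin(2 * math.pi * 440 * t / rate)
          + 0.5 * math.sin(2 * math.pi * 1000 * t / rate)
          for t in range(800)]
powers = filter_bank(signal, rate, [250, 440, 700, 1000, 2000])
strongest = max(powers, key=powers.get)
print(strongest)
```

Only the filters tuned to 440 Hz and 1,000 Hz respond; the others stay near zero, which is the separation of components the paragraph describes.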

Speakers and headphones as output devices for synthesized sound match the ears well, unlike the case with visual displays. However, which sounds to create as part of the human-computer interface is much less well understood than in the visual case.

About 50 million instructions per second are required for each synthesized sound source. Computing reverberation off six surfaces for four sound sources might easily require a billion-instruction-per-second computer, one that is within today's range but is rarely dedicated to audio synthesis in practice. Audio sampling and playback are far simpler and are most often used for primitive cues such as beacons and alarms.

Thus, the barriers to good matching to human hearing have to do with computing the right sound and getting it to each ear in a properly weighted way. Although in many ways producing sound by computer is simpler than displaying imagery, many orders of magnitude more research and development have been devoted to graphics than to sound synthesis.
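The billion-instruction estimate for reverberation can be reproduced with a back-of-the-envelope budget. The sketch assumes, as one plausible reading, that each first-order reflection is rendered as an extra virtual (image) source costing the same 50 million instructions per second as a direct source; later reflections and the reverberant tail are ignored.

```python
MIPS_PER_SOURCE = 50  # from the text: about 50 million instructions/s per synthesized source

def audio_mips(sources, reflecting_surfaces):
    """Rough instruction budget in MIPS, assuming every first-order reflection is an
    extra image source with the same cost as a direct source (an assumption)."""
    rendered = sources * (1 + reflecting_surfaces)  # direct path + one image per surface
    return rendered * MIPS_PER_SOURCE

budget = audio_mips(sources=4, reflecting_surfaces=6)
print(f"{budget} MIPS")
```

Four sources and six surfaces give 28 rendered paths and 1,400 MIPS, consistent with "easily" needing a billion-instruction-per-second machine; adding higher-order reflections only raises the figure.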

Haptic and Tactile Displays18

Human touch is achieved by the parallel operation of many sensor systems in the body (Kandel and Schwartz, 1981). The hand alone has 19 bones, 19 joints, and 20 muscles with 22 degrees of freedom and many classes of receptors and nerve endings in the joints, skin, tendons, and muscles. The hand can squeeze, stroke, grasp, and press; it can also feel texture, shape, softness, and temperature.

The fingerpad has hairless ridged skin enclosing soft tissues made of fat in a semiliquid state. Fingers can glide over a surface without losing contact or grab an object to manipulate it. Computed output and input of human touch (called "haptics") is currently very primitive compared to graphics and sound. Haptic tasks are of two types: exploration and manipulation. Exploration involves the extraction of object properties such as shape and surface texture, mass, and solidity. Manipulation concerns modification of the environment, from watch repair to using a sledge hammer.

Kinesthetic information (e.g., limb posture, finger position), conveyed by receptors in the tendons and muscles and by neural signals from motor commands, communicates a sense of position. Joint rotations of a fraction of a degree can be perceived. Other nerve endings signal skin temperature, mechanical and thermal pain, chemical pain, and itch.

Responses range from fast spinal reflex to slow deliberate conscious action. Experiments on lifting objects show that slipping is counteracted within 70 milliseconds. Using different types of receptors, humans can perceive a single 2-micrometer-high dot on a glass plate and a 6-micrometer-high grating (Kalawsky, 1993). Tactile and kinesthetic perception extends into the kilohertz range (Shimoga, 1993). Tactile interfaces aim to reproduce sensations arising from contact with textures and edges but do not support the ability to modify the underlying model.

Haptic interfaces are high-performance mechanical devices that support bidirectional input and output of displacement and forces. They measure positions, contact forces, and time derivatives and output new forces and positions (Burdea, 1996). Output to the skin can be point, multipoint, patterned, and time-varying. Consider David Warner, who makes his rounds in a "cyberwear" buzz suit that captures information from his patients' monitors, communicating it with bar charts tingling his arms, pulse rates sent down to his fingertips, and test results whispered in his ears, yet allowing him to maintain critical eye contact with his patients (http://www.pulsar.org).

There are many parallels and differences between haptics and visual (computer graphics) interfaces. The history of computing technology over the past 30 to 40 years is dominated by the exponential growth in computing power enabled by semiconductor technology. Most of this new computing power has supported enriched high-bandwidth user interfaces. Haptics is a sensory/motor interaction modality that is just now being exploited in the quest for seamless interaction with computers. Haptics can be qualitatively different from graphics and audio input/output because it is bidirectional. The computer model both delivers information to the human and is modified by the human during the haptic interaction. Another way to look at this difference is to note that, unlike graphics or audio output, physical energy flows in both directions between the user and the computer through a haptic display device.

In 1996 three distinct market segments emerged for haptic technology: low-end (2 degrees of freedom (DOF), entertainment); mid-range (3 DOF, visualization and training); and high-end (6 DOF, advanced computer-aided engineering). The lesson of video games has been to optimize for real-time feedback and feel. The joysticks and other interfaces for video games are very carefully engineered so that they feel continuous. The obviously cheap joystick on the Nintendo 64 game is so smooth that a 2-year-old has no problem with it. Such smoothness is necessary for a good extension of a person's hand motion, since a halting response changes the dynamics, causing one to overcompensate, slow down, and so on.

A video game joystick with haptic feedback, the "Force FX," is now on the market from CH Products (Vista, Calif.) using technology licensed from Immersion Corp. It is currently supported by about 10 video game software vendors. Other joystick vendors are readying haptic feedback joysticks for this low-priced, high-volume market. In April 1996, Microsoft bought Exos, Inc. (Cambridge, Mass.) to acquire its haptic interaction software interface.

Haptic interaction will play a major role in all simulation-based training involving manual skill (Buttolo et al., 1995). For example, force feedback devices for surgical training are already in the initial stages of commercialization by such companies as Boston Dynamics (Cambridge, Mass.), Immersion Corp. (Palo Alto, Calif.), SensAble Devices (Cambridge, Mass.), and HT Medical (Rockville, Md.).

Advanced CAD users at major industrial corporations such as Boeing (McNeely, 1993) and Ford (Buttolo et al., 1995) are actively funding internal and external research and development in haptic technologies to solve critical bottlenecks they have identified in their computer-aided product development processes.

These are the first signs of a new and broad-based high-technology industry with great potential for U.S. leadership. Research (as discussed below) is necessary to foster and accelerate the development of these and other emerging areas into full-fledged industries.

A number of science and technology issues arise in the haptics and tactile display arena. Haptics is attracting the attention of a growing number of researchers because of the many fascinating problems that must be solved to realize the vision of a rich set of haptic-enabled applications. Because haptic interaction intimately involves high-performance computing, advanced mechanical engineering, and human psychophysics and biomechanics, there are pressing needs for interdisciplinary collaborations as well as basic disciplinary advances. These key areas include the following:

• Better understanding of the biomechanics of human interaction with haptic displays. For example, the stability of haptic interaction goes beyond traditional control analysis to include simulated geometry and the nonlinear, time-varying properties of human biomechanics.

• Faster algorithms for rendering geometric models into haptic input/output maps. Although many ideas can be adapted from computer graphics, haptic devices require update rates of at least 1,000 Hz and a latency of no more than 1 millisecond for stability and performance. Thus, the bar is raised for the definition of "real-time" performance for algorithms such as collision detection, shading, and dynamic multibody simulation.

• Advanced design of mechanisms for haptic interactions. Real haptic interaction uses all of the degrees of freedom of the human hand and arm (as many as 29; see above). The most advanced haptic devices have 6 or 7 degrees of freedom for the whole arm/hand. Providing high-quality haptic interaction over many degrees of freedom will create research challenges in mechanism design, actuator design, and control for many years to come.
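The 1,000-Hz requirement is easiest to see in the servo loop at the heart of haptic rendering. The sketch below renders a one-dimensional "virtual wall" with a penalty (spring-damper) force, a common textbook technique chosen here for illustration rather than one the text specifies. The stiffness, damping, probe mass, and constant hand force are all assumed values, and the hand is simulated rather than read from a real device.

```python
RATE_HZ = 1000        # servo rate named in the text
DT = 1.0 / RATE_HZ    # 1 ms per update
WALL_X = 0.0          # wall surface at x = 0 m; free space is x < 0
K = 2000.0            # wall stiffness in N/m (assumed)
B = 20.0              # wall damping in N*s/m (assumed)

def wall_force(x, v):
    """Penalty force: zero in free space; inside the wall a spring-damper
    pushes the probe back out. The wall can push but never pull."""
    depth = x - WALL_X
    if depth <= 0.0:
        return 0.0
    return min(0.0, -K * depth - B * v)

def simulate(seconds=1.0, hand_force=2.0, mass=0.1):
    """Simulated probe of given mass (kg) pushed toward the wall by a constant hand force (N).
    Each loop iteration stands for one 1-ms servo tick of a real device."""
    x, v = -0.01, 0.0
    for _ in range(int(seconds * RATE_HZ)):
        f = hand_force + wall_force(x, v)
        v += (f / mass) * DT  # semi-implicit Euler: velocity first,
        x += v * DT           # then position with the new velocity
    return x

print(f"resting penetration: {simulate() * 1000:.2f} mm")
```

The probe settles where the spring balances the hand (2 N / 2,000 N/m = 1 mm). Because the force is recomputed only once per tick, stiffer walls or slower loops make this discrete loop prone to oscillation, which is why haptic rendering demands far faster updates than the 30 to 60 Hz typical of graphics.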

Some of the applications of haptics that are practical today may seem arcane and specialized. This was also true for the first applications of computer graphics in the 1960s. Emerging applications today are the ones with the most urgent need for haptic interaction. Below are some examples of what may become possible:

The first of these examples is technically possible today; the second is not. There are critical computational and mechatronic challenges that will be crucial to successful implementation of ever-more realistic haptic interfaces.

Because haptics is such a basic human interaction mode for so many activities, there is little doubt that, as the technology matures, new and unforeseen applications and a substantial new industry will develop to give people the ability to physically interact with computational models. Once user interfaces are as responsive as musical instruments, for example, virtuosity becomes more achievable. As in music, there will always be a phase appropriate to contemplation (such as composing/programming) and a phase for playing/exploring. The consumer will do more of the latter, of course. Better feedback, continuously delivered, appears to require less prediction. Being able to predict is mostly what expertise is about in a technical/scientific world, and we want systems to be usable by nonexperts; hence the need for real-time interactions with as much multisensory realism as is helpful in each circumstance. Research is necessary now to provide the intellectual capital upon which such an industry can be based.

Tactile Displays for Low- or No-Vision Environments or Users

Tactile displays can help add realism to multisensory virtual reality environments. For people who are blind, however, tactile displays are much more important for the provision of information that would be provided visually to those who can see. For people who are deaf and blind and who cannot use auditory displays or synthetic speech, it is the principal display form.
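The most familiar tactile display for blind readers presents text as braille cells, each a 2 × 3 grid of pins (dots 1-2-3 down the left column, 4-5-6 down the right). The sketch below maps lowercase letters to raised pins using the standard uncontracted English braille alphabet and previews the result with Unicode braille patterns. It deliberately omits capital and number signs, punctuation, and contractions, and `cell_pins` stands in for a hypothetical driver that would raise pins on a refreshable display.

```python
# Dot numbers for letters a-j; the rest of the alphabet is derived from them.
_A_TO_J = ["1", "12", "14", "145", "15", "124", "1245", "125", "24", "245"]

LETTER_DOTS = {}
for i, dots in enumerate(_A_TO_J):
    LETTER_DOTS[chr(ord("a") + i)] = dots        # a-j
    LETTER_DOTS[chr(ord("k") + i)] = dots + "3"  # k-t: same pattern plus dot 3
for letter, base in zip("uvxyz", _A_TO_J[:5]):
    LETTER_DOTS[letter] = base + "36"            # u, v, x, y, z: a-e plus dots 3 and 6
LETTER_DOTS["w"] = "2456"                        # w does not follow the pattern

def cell_pins(ch):
    """Set of raised pins (1-6) for one character; space or unsupported -> no pins."""
    return {int(d) for d in LETTER_DOTS.get(ch.lower(), "")}

def to_unicode_braille(text):
    """Preview text as Unicode braille (U+2800 block), where dot n sets bit n - 1."""
    return "".join(chr(0x2800 + sum(1 << (d - 1) for d in cell_pins(ch))) for ch in text)

print(to_unicode_braille("hello world"))
```

A real braille display would send each cell's pin set to its actuators instead of printing it; the same cell data can also drive a braille embosser.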