The information from System Analysis and Control is a major part of the systems engineering process database that forms the process output. The analysis activity provides the results of all analyses performed, identifies approaches considered and discarded, and the rationales used to reach all conclusions.

A control system manages, commands, directs, or regulates the behavior of other devices or systems using control loops. It can range from a single home heating controller, in which a thermostat controls a domestic boiler, to large industrial control systems used for controlling processes or machines.

There are three main types of internal controls: detective, preventative and corrective.

- Detective Internal Controls. Detective internal controls are designed to find errors after they have occurred. ...

- Preventative Internal Controls. ...

- Corrective Internal Controls. ...

- Limitations.

Organizational control is important for knowing how well the organization is performing, identifying areas of concern, and then taking appropriate action. There are three basic types of control systems available to executives: (1) output control, (2) behavioral control, and (3) clan control.

The three basic elements of an automated system are (1) power to accomplish the process and operate the system, (2) a program of instructions to direct the process, and (3) a control system to actuate the instructions.

Systems used for controlling position, velocity, acceleration, temperature, pressure, voltage, current, and so on are examples of control systems.

In the human body, the nervous system and hormones are responsible for this kind of control. The body's control systems are all automatic and involve both nervous and chemical responses. They have several important parts; for example, receptors detect a stimulus, which is a change in the environment such as a change in temperature.

The concept of the "social bond" comprises the following four elements: (1) attachment, (2) commitment, (3) involvement, and (4) beliefs. Attachment refers to the symbiotic linkage between a person and society.

*******************************************************************************

Artificial intelligence for control engineering

Robotics, cars, and wheelchairs are among artificial intelligence beneficiaries, making control loops smarter, adaptive, and able to change behavior, hopefully for the better.

We faced two common problems in control engineering. As usual, some inner control loops had to behave in a predictable and desired manner with strict timing, while the outer loops had to decide what those predictable inner loops were to do: they had to provide set points (or reference points) or reference profiles for the inner-loop controllers to use as inputs.
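A minimal cascade sketch of that split, assuming a hypothetical wheelchair drive in which motor speed follows its command through a first-order lag and position is the integral of speed. The function names, gains, time constant, and speed limit are illustrative assumptions, not values from the wheelchair project. The outer loop turns a position target into a speed set point; the inner loop only tracks whatever speed it is given.

```python
def outer_loop(target_pos: float, pos: float, k_pos: float = 0.5, v_max: float = 1.0) -> float:
    """Outer loop: choose a speed set point (m/s) that moves toward the target position."""
    v_ref = k_pos * (target_pos - pos)
    return max(-v_max, min(v_max, v_ref))  # saturate the reference speed


def inner_loop(v_ref: float, v: float, k_vel: float = 5.0) -> float:
    """Inner loop: proportional speed controller producing a motor command."""
    return k_vel * (v_ref - v)


def simulate(target_pos: float = 2.0, dt: float = 0.01, steps: int = 3000):
    """Run both loops together; return final (position, speed)."""
    pos, v = 0.0, 0.0
    tau = 0.2  # assumed motor time constant (s)
    for _ in range(steps):
        v_ref = outer_loop(target_pos, pos)   # outer loop supplies the set point
        u = inner_loop(v_ref, v)              # inner loop tracks it
        v += dt * (u - v) / tau               # first-order motor, forward Euler
        pos += dt * v                         # position integrates speed
    return pos, v
```

The proportional inner loop alone leaves a steady-state speed error, but the outer loop absorbs it because it keeps issuing set points until the position error is gone. The outer loop relies on, rather than replaces, a predictable inner loop.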

Inner control loops

Control engineering is all about getting a diverse range of dynamic systems (for example, mechanical systems) to do what you want them to do. That involves designing controllers. The inner loop problems we faced yesterday were about creating a suitable controller for a system or plant to be controlled. That meant interfacing systems to sensors and actuators (in this case, wheelchair motors and ultrasonic sensors for obstacle avoidance), and the problems could be solved using computer interrupts, timer circuits, additional microcontrollers, or simple open-loop control.

Inner control loops are similar to the autonomic nervous system in animals. They tend to be control systems that act largely unconsciously to do things like regulate heart and respiratory rate, pupillary response, and other natural functions. The autonomic nervous system is also the primary mechanism controlling the fight-or-flight response.

The autonomic nervous system has two branches: the sympathetic nervous system and the parasympathetic nervous system. The sympathetic nervous system is a quick-response mobilizing system, and the parasympathetic is a more slowly activated dampening system. In our case, that was similar to quickly controlling the powered wheelchair motors while more slowly monitoring the sensor systems for urgent reasons to change a motor input.

Automatic control

When a device is designed to perform without the need for conscious human input for correction, the result is called automatic control. Many automatic control devices have been used over the centuries, older ones often being open-loop and more recent ones often being closed-loop. Examples of relatively early closed-loop automatic control devices that used sensor feedback in an inner loop include the temperature regulator of a furnace. Most control systems of that time used governor mechanisms, and Maxwell (1868) used differential equations to investigate the dynamics of these control systems. Routh (1874) and Hurwitz (1895) then investigated stability conditions for such systems. The idea was to use sensors to measure the output performance of a device being controlled so that those measurements could be used to provide feedback to input actuators that could make corrections toward some desired performance.
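The stability conditions that Routh and Hurwitz studied can be checked without computing roots. A minimal sketch of the Routh array, assuming a characteristic polynomial with real coefficients listed from the highest power down; the function names are illustrative, and the special cases where a zero appears in the first column (which need an epsilon substitution or an auxiliary polynomial) are deliberately not handled.

```python
from typing import List


def routh_first_column(coeffs: List[float]) -> List[float]:
    """Return the first column of the Routh array for
    coeffs[0]*s^n + coeffs[1]*s^(n-1) + ... + coeffs[n]."""
    n = len(coeffs) - 1
    width = n // 2 + 1
    even = list(coeffs[0::2])
    odd = list(coeffs[1::2])
    rows = [even + [0.0] * (width - len(even)),   # s^n row
            odd + [0.0] * (width - len(odd))]     # s^(n-1) row
    for _ in range(n - 1):
        upper, lower = rows[-2], rows[-1]
        if lower[0] == 0:
            raise ValueError("zero in first column: special case not handled")
        # Each new entry is a 2x2 cross-multiplication of the two rows above it.
        new = [(lower[0] * upper[j + 1] - upper[0] * lower[j + 1]) / lower[0]
               for j in range(width - 1)]
        rows.append(new + [0.0])
    return [row[0] for row in rows]


def is_stable(coeffs: List[float]) -> bool:
    """All roots lie in the open left half-plane iff the first column
    has no sign changes and no zeros."""
    col = routh_first_column(coeffs)
    return all(c > 0 for c in col) or all(c < 0 for c in col)
```

For example, `is_stable([1, 3, 3, 1])` checks (s + 1)^3 and returns `True`, while `is_stable([1, 1, 1, 2])` returns `False` because the first column changes sign.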

Feedback controllers

Feedback controllers began to be created as separate multipurpose devices, and Minorsky (1922) invented the three-term or PID control at the General Electric Research Laboratory while helping install and test some automatic steering on board a ship. PID controllers have regularly been used for inner loops ever since, and these feedback controllers were used to develop ideas about optimal control in the 1950s and ’60s. The maximum principle was developed in 1956 (Pontryagin et al., 1962), and dynamic programming (Bellman, 1952 & 1957) laid the foundations of optimal control theory. That was followed by progress in stochastic and robust control techniques in the 1970s. The design methodologies of that time were for linear single-input, single-output systems and tended to be based on frequency response techniques or on the Laplace transform solution of differential equations. The advent of computers and the need to control ballistic objects for which physical models could be constructed led to the state-space approach, which tended to replace a general higher-order differential equation with a system of first-order differential equations. That led to the development of modern systems and control theory with an emphasis on mathematical formulation.
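The three-term controller acts on the error e = r − y through proportional, integral, and derivative terms, u = Kp·e + Ki·∫e dt + Kd·de/dt. A minimal discrete-time sketch, assuming a fixed sample period and, for the `run` function, a hypothetical first-order plant; the gains and time constant are illustrative, and practical refinements such as integrator anti-windup and derivative filtering are omitted.

```python
from dataclasses import dataclass


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    dt: float                 # sample period (s)
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measurement: float) -> float:
        """Return the control output for one sample period."""
        error = setpoint - measurement
        self.integral += error * self.dt                   # I: accumulated error
        derivative = (error - self.prev_error) / self.dt   # D: backward difference
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def run(steps: int = 2000, dt: float = 0.01) -> float:
    """Drive a hypothetical plant dy/dt = (-y + u) / tau toward a set point of 1.0."""
    pid = PID(kp=2.0, ki=2.0, kd=0.05, dt=dt)
    y, tau = 0.0, 0.5
    for _ in range(steps):
        u = pid.update(1.0, y)
        y += dt * (-y + u) / tau   # forward-Euler plant update
    return y
```

The integral term is what removes the steady-state error here: with proportional action alone, this plant would settle short of the set point.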

Adaptive control

Controllers were traditionally electrical or at least electromechanical, but in 1969-1970 the Intel microprocessor was invented by Ted Hoff, and since then the price of microprocessors (and memory) has fallen roughly in line with Moore’s Law, which stated that the number of transistors on an integrated circuit would double roughly every two years. That made implementing a basic feedback control system in an inner loop much simpler, and more recent systems have used robust control and then adaptive control. Adaptive control does not need a priori information about the system being controlled, whose parameters may vary or be initially uncertain. For example, as an aircraft flies, its mass slowly decreases as a result of fuel consumption, or in our case, a powered wheelchair user may become more tired as the day goes on. In these cases, a low-level control law is needed that adapts itself as conditions change.
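One classic way to make a control law adapt itself is the MIT rule for model-reference adaptive control. A minimal sketch, assuming a hypothetical first-order plant whose gain k is unknown to the controller (standing in for a change such as load or user fatigue): the controller u = θ·r adjusts θ so that the plant output y follows a reference model output y_m, using dθ/dt = −γ·e·y_m with e = y − y_m. The ideal value is θ = k0/k. The plant, gains, and square-wave reference are illustrative assumptions.

```python
def mit_rule(k_plant: float = 2.0, k_model: float = 1.0, gamma: float = 0.5,
             dt: float = 0.001, t_end: float = 100.0) -> float:
    """Adapt a feedforward gain theta with the MIT rule; return the final theta."""
    y = ym = theta = 0.0
    for i in range(int(t_end / dt)):
        t = i * dt
        r = 1.0 if (t % 20.0) < 10.0 else -1.0   # square-wave reference
        u = theta * r                            # adjustable controller
        e = y - ym                               # error against the reference model
        theta += dt * (-gamma * e * ym)          # MIT rule update
        y += dt * (-y + k_plant * u)             # plant:  dy/dt  = -y + k u   (k unknown)
        ym += dt * (-ym + k_model * r)           # model:  dym/dt = -ym + k0 r
    return theta
```

The controller is never told k; it only sees the error against the reference model. The MIT rule can become unstable for large γ or large reference amplitudes, which is why Lyapunov-based update laws are often preferred in practice.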

Outer control loops

Inner control loops need inputs. They need reference points or profiles. These were originally just a set value. For example, the input to Ktesibios’s water clock was a desired level of water, and the input to Drebbel’s temperature regulator was a specific temperature value. As the inner loops became more reliable and could largely be left unattended (although often monitored), attention shifted to control loops that sit outside of them.

Where inner control loops are similar to autonomic nervous systems in animals, outer control loops are similar to their brains (Kandel, 2012). That is, they tend to be more conscious and less automatically predictable. Even flatworms have a simple and clearly defined nervous system with a central system and a peripheral system that includes a simple autonomic nervous system (Cleveland et al., 2008).

The brain is the higher control center for functions such as walking, talking, and swallowing. It controls our thinking functions, how we behave, and all our intellectual (cognitive) activities, such as how we attend to things, how we perceive and understand our world and its physical surroundings, how we learn and remember, and so on. In our case that was similar to deciding where a wheelchair user wanted to go and what the user might want to do, and whether or not control parameters should be adjusted because of them. In both cases, the more complicated higher level of control in the outer loops can only work if the lower level control in the inner loops acts in a reasonably predictable and repeatable fashion.

Originally, control engineering was all about continuous systems. Development of computers and microcontrollers led to discrete control system engineering because communications between the computer-based digital controllers and the physical systems were governed by clocks. Many control systems are now computer controlled and consist of both digital and analog components, and key to advancing their success is unsupervised and adaptive learning.
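Because a digital controller only acts at clock ticks, the continuous plant is usually described by an equivalent discrete-time model. A minimal sketch, assuming a first-order plant dx/dt = −a·x + b·u driven through a zero-order hold (the input is held constant between samples). Under that assumption the exact discrete model is x[k+1] = e^(−aT)·x[k] + (b/a)(1 − e^(−aT))·u[k]. The values of a, b, and the sample period T are illustrative.

```python
import math


def zoh_discretize(a: float, b: float, T: float):
    """Exact zero-order-hold discretization of dx/dt = -a x + b u (a != 0)."""
    phi = math.exp(-a * T)          # state transition over one sample period
    gamma = (b / a) * (1.0 - phi)   # effect of an input held for one period
    return phi, gamma


def step_response(a: float, b: float, T: float, n: int):
    """Sampled response to a unit step input, starting from x = 0."""
    phi, gamma = zoh_discretize(a, b, T)
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + gamma * 1.0   # next state depends only on current state and input
        out.append(x)
    return out
```

At every sample the result matches the continuous solution (b/a)(1 − e^(−akT)) exactly, because the held input really is constant between clock ticks.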

But a computer can do many things over and above controlling an outer loop to produce a desired input or inputs for some inner loops. Many people believe that the brain can be simulated by machines and, because brains are intelligent, simulated brains must also be intelligent; thus machines can be intelligent. Some argue that it may be technologically feasible to copy the brain directly into hardware and software, and that such a simulation would be essentially identical to the original (Russell & Norvig, 2003; Crevier, 1993).

Computer programs have plenty of speed and memory, but their abilities only correspond to the intellectual mechanisms that program designers understood well enough to put into them. Some abilities that children normally don’t develop until they are teenagers may already be in programs, while some abilities possessed by two-year-olds are still missing (Basic Questions, 2015). The matter is further complicated because the cognitive sciences still have not succeeded in determining exactly what human abilities are. The organization of the intellectual mechanisms for intelligent control can be different from that in people. Whenever people do better than computers on some task, or computers use a lot of computation to do as well as people, this demonstrates that the program designers lack understanding of the intellectual mechanisms required to do the task efficiently. Or perhaps the task can be done better in a different way.

While control engineers have been migrating from traditional electromechanical and analog electronic control technologies to digital mechatronic control systems incorporating computerized analysis and decision-making algorithms, novel computer technologies have appeared on the horizon that may change things even more (Masi, 2007). Outer loops have become more complicated and less predictable as microcontrollers and computers have developed over the decades, and they have come to be regarded as AI systems, a term John McCarthy coined in 1955 (Skillings, 2006).

Artificial intelligence tools

AI is the intelligence exhibited by machines or software. AI in control engineering is often not about simulating human intelligence. We can learn something about how to make machines solve problems by observing other people, but most work in intelligent control involves studying real problems in the world rather than studying people or animals.

AI can be technical and specialized, and it is deeply divided into subfields that often don’t communicate with each other at all (McCorduck, 2004), as different subfields focus on solutions to specific problems. But general intelligence is still among the long-term goals (Kurzweil, 2005), and the central problems (or goals) of AI include reasoning, knowledge, planning, learning, communication, and perception. Currently popular approaches in control engineering to achieve them include statistical methods, computational intelligence, and traditional symbolic AI. The whole field is interdisciplinary and includes control engineers, computer scientists, mathematicians, psychologists, linguists, philosophers, and neuroscientists.

There are a large number of tools used in AI, including search and mathematical optimization, logic, methods based on probability, and many others. I reviewed an article about seven AI tools in Control Engineering that have proved to be useful with control and sensor systems (Sanders, 2013); they were knowledge-based systems, fuzzy logic, automatic knowledge acquisition, neural networks, genetic algorithms, case-based reasoning, and ambient intelligence. Applications of these tools have become more widespread due to the power and affordability of present-day computers, and greater use may be made of hybrid tools that combine the strengths of two or more of them. Control engineering tools and methods tend to have less computational complexity than some other AI applications, and they can often be implemented with low-capability microcontrollers. The appropriate deployment of new AI tools will contribute to the creation of more capable control systems and applications. Other technological developments in AI that will affect control engineering include data mining techniques, multi-agent systems, and distributed self-organizing systems.

AI assumes that at least some of something like human intelligence can be so precisely described that a machine can be made to simulate it. That raises philosophical issues about the nature of the mind and the ethics of creating AI endowed with some human-like intelligence, issues which have been addressed by myth, fiction, and philosophy since antiquity (McCorduck, 2004). But how do we do it? Well, mechanical or formal reasoning has been developed by philosophers and mathematicians since antiquity, and the study of logic led directly to the invention of the programmable digital electronic computer. Turing’s theory of computation suggested that a machine, by shuffling symbols as simple as "0" and "1", could simulate any conceivable act of mathematical deduction (Berlinski, 2000).

This, along with discoveries in neurology, information theory, and cybernetics, inspired researchers to consider the possibility of building an electronic brain (McCorduck, 2004).
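To make "shuffling symbols as simple as 0 and 1" concrete, here is a minimal Turing machine simulator running a hypothetical two-state machine that adds one to a binary number. The rule table, state names, and blank symbol `_` are illustrative assumptions, not taken from Turing's own papers. Each step only reads a symbol, writes a symbol, moves the head, and changes state, yet the machine carries out binary addition.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (symbol to write, head move, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

INCREMENT: Rules = {
    ("right", "0"): ("0", +1, "right"),  # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),  # reached the blank: turn back
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", "_"): ("1", 0, "halt"),    # carried past the leftmost digit
}


def run_tm(rules: Rules, tape_str: str, state: str = "right", max_steps: int = 10_000) -> str:
    """Run the machine from the leftmost cell and return the non-blank tape contents."""
    tape = dict(enumerate(tape_str))     # sparse tape; missing cells read as blank "_"
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        write, move, state = rules[(state, tape.get(head, "_"))]
        tape[head] = write
        head += move
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, "_") for i in cells).strip("_")
```

For example, `run_tm(INCREMENT, "1011")` returns `"1100"`, and `run_tm(INCREMENT, "111")` returns `"1000"`.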

Computers can now win at checkers and chess, solve some word problems in algebra, prove logical theorems, and speak, but the difficulty of some of the problems that have been faced surprised everyone in the community. In 1974, in response to criticism from Lighthill (1973) and ongoing pressure from the U.S. Congress, both the U.S. and British governments cut off all undirected exploratory research in AI, leaving only specific research in areas like control engineering.

Analog vs. digital; unbounded vs. defined

The brain (of a human or of a fruit fly) is an analog computer (Dyson, 2014). It is not a digital computer, and intelligence may not be any sort of algorithm. Is there any evidence for a programmable digital computer evolving the ability to take initiative or make choices which are not on a list of options programmed in by a human anyway? Is there any reason to think that a digital computer is a good model for what goes on in the brain? We are not digital machines. Turing machines are discrete-state, discrete-time machines, while we are continuous-state, continuous-time organisms.

We have made advances with continuous models of neural systems as nonlinear dynamical systems, but in all these cases the present state of the system tends to determine the next state of the system, so that next state is entailed by the laws programmed into the computer. There is nothing for consciousness to do in a digital control system as the current state of the system suffices entirely for the next state.

In the coming decades, humanity may create a powerful AI. In 1999, I suggested that machine intelligence was just around the corner (Sanders, 1999). It has all taken longer than I thought it would, and there has been frustration along the way (Sanders, 2008), but what is the story so far?

Intelligent machines, so far

It was probably the idea of making a "child machine" that could improve itself by reading and learning from experience that began the study of machine intelligence. That was first proposed in the 1940s, and after World War II, a number of people independently started to work on intelligent machines. Alan Turing was one of the first, and after his 1947 lecture, Turing predicted that there would be intelligent computers by the end of the century. Zadeh (1950) published a paper entitled "Thinking Machines-A New Field in Electrical Engineering," and Turing (1950) discussed the conditions for considering a machine to be intelligent that same year. He made his now famous argument that if a machine could successfully pretend to be human to a knowledgeable observer then it should be considered intelligent.

Later that decade, a group of computer scientists gathered at Dartmouth College in New Hampshire (in 1956) to consider a brand-new topic: artificial intelligence. It was John McCarthy (later a professor at Stanford) who coined the name "artificial intelligence" just ahead of that meeting. That meeting served as a springboard for further discussion about ways that machines could simulate aspects of human cognition. An underlying assumption in those early discussions was that learning (and other aspects of human intelligence) could be precisely described. McCarthy defined AI there as "the science and engineering of making intelligent machines" (McCarthy, 2007; Russell & Norvig, 2003). The attendees at Dartmouth, including John McCarthy, Marvin Minsky, Allen Newell and Herbert Simon, then became the leaders of AI research for decades.

By the late 1950s, there were many researchers in the area, and most of them were basing their work on programming computers. Minsky (head of the MIT AI Laboratory) predicted in 1967 that "within a generation the problem of creating ‘artificial intelligence’ will be substantially solved" (Dreyfus, 2008). Then the field ran into unexpected difficulties around 1970 with the failure of any machine to understand even the most basic children’s story. Machine intelligence programs lacked the intuitive common sense of a four-year-old, and Dreyfus still believes that no one knows what to do about it.

Now (nearly 60 years after that first conference), we still have not managed to create a "child machine." Programs still can’t learn much of what a child learns naturally from physical experience.

But we do appear to be at a point in history when our human biology seems too frail, slow, and over-complicated for many industrial situations (Sanders, 2008). We are turning to powerful new control technologies to overcome those weaknesses, and the longer we use that technology, the more we get out of it. Our machines are exceeding human performance in more tasks. As they merge with us more intimately, and as we combine our brain power with computer capacity to deliberate, analyze, deduce, communicate, and invent, many scientists are predicting a period when the pace of technological change will be so fast and far-reaching that our lives will be irreversibly altered.

A fundamental problem, though, is that nobody appears to know what intelligence is. Varying kinds and degrees of intelligence occur in people, many animals, and now some machines. A problem is that we cannot agree what kinds of computation we want to call intelligent. Some people appear to think that human-level intelligence can be achieved by writing large numbers of programs of the kind that people are writing now or by assembling vast knowledge bases of facts in the languages now used for expressing knowledge. However, most AI researchers now appear to believe that new fundamental ideas are required, and therefore it cannot be predicted when human-level intelligence will be achieved (McCarthy, 2008).

12 key AI applications

Machine intelligence does combine a wide variety of advanced technologies to give machines an ability to learn, adapt, make decisions, and display new behaviors. This is achieved using technologies such as neural networks (Sanders et al., 1996), expert systems (Hudson, 1997), self-organizing maps (Burn, 2008), fuzzy logic (Dingle, 2011), and genetic algorithms (Manikas, 2007), and we have applied that machine intelligence technology to many areas. Twelve AI applications include:

- Assembly (Gupta et al., 2001; Schraft and Ledermann, 2003; Guru et al., 2004)

- Building modeling (Gegov, 2004; Wong, 2008)

- Computer vision (Bertozzi, 2008; Bouganis, 2007)

- Environmental engineering (Sanders, 2000; Patra, 2008)

- Human-computer interaction (Sanders, 2005; Zhao, 2008)

- Internet use (Bergasa-Suso, 2005; Kress, 2008)

- Medical systems (Pransky, 2001; Cardso, 2007)

- Robotic manipulation (Tegin, 2005; Sreekumar, 2007)

- Robotic programming (Tewkesbury, 1999; Kim, 2008)

- Sensing (Sanders, 2007; Trivedi, 2007)

- Walking robots (Capi et al., 2001; Urwin-Wright, 2003)

- Wheelchair assistance (Stott, 2000; Pei, 2007)

There appear to be some technologies that could significantly increase the practical ability of computers in these areas (Brackenbury, 2002; Sanders, 2008). AI can help computing in four ways:

- Natural language understanding to improve communication.

- Machine reasoning to provide inference, theorem-proving, cooperation, and relevant solutions.

- Knowledge representation for perception, path planning, modeling, and problem solving.

- Knowledge acquisition using sensors to learn automatically for navigation and problem solving.

Some of these investigations are suggesting the possibility of smarter-than-human intelligence within some specific application areas. However, smarter minds are much harder to describe and discuss than faster brains or bigger brains, and what does "smarter-than-human" actually mean? We may not be smart enough to know (at least not yet).

Could AI turn against us?

Let’s address directly the problem of whether AI is going to destroy us all (Lanier, 2014). In the coming decades, humanity may create a powerful AI, but it has all taken longer than expected, and there has been frustration all along the way. By the middle of the 1960s, research in the U.S. was heavily funded by the Dept. of Defense, and laboratories had been established around the world. Researchers were optimistic: Herbert Simon predicted that "machines will be capable, within 20 years, of doing any work a [hu]man can do," and Marvin Minsky agreed, writing that "Within a generation…the problem of creating ‘artificial intelligence’ will substantially be solved." None of that happened, and a lot of the funding dried up.

Then, in December 2014, an open letter calling for caution to ensure that intelligent machines do not run beyond our control was signed by a large (and growing) number of people, including some of the leading figures in AI. Fears of our creations turning on us stretch back at least as far as Frankenstein, but as computers begin driving our cars (and powered wheelchairs), we may have to tackle these issues.

The letter, whose signatories included Stephen Hawking, notes: "As capabilities in these areas and others cross the threshold from laboratory research to economically valuable technologies, a virtuous cycle takes hold whereby even small improvements in performance are worth large sums of money, prompting greater investments in research. There is now a broad consensus that AI research is progressing steadily, and that its impact on society is likely to increase. The potential benefits are huge, since everything that civilization has to offer is a product of human intelligence; we cannot predict what we might achieve when this intelligence is magnified by the tools AI may provide, but the eradication of disease and poverty are not unfathomable. Because of the great potential of AI, it is important to research how to reap its benefits while avoiding potential pitfalls."

Other authors have added, "Our AI systems must do what we want them to do," and they have set out research priorities they believe will help "maximize the societal benefit." The primary concern is not spooky emergent consciousness, but simply the ability to make high-quality decisions that are aligned with our values (Lanier, 2014), something else that is difficult to pin down.

A system that is optimizing a function of several variables, where the objective depends on only a subset of them, will often set the remaining unconstrained variables to extreme values, for example 0 (Russell, 2014). But if one of those unconstrained variables is actually something we care about, the solution found may be highly undesirable.
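A toy illustration of this point, using purely hypothetical numbers: a wheelchair planner chooses a travel speed and a safety clearance that share one budget (speed + clearance ≤ 10), and its objective rewards only speed. Nothing in the objective mentions clearance, so the optimizer drives it to zero. The function names, budget, and weights are illustrative assumptions, and the exhaustive grid search stands in for any optimizer.

```python
from itertools import product


def best_plan(objective, budget: float = 10.0, step: float = 0.5):
    """Search all (speed, clearance) grid pairs with speed + clearance <= budget."""
    grid = [i * step for i in range(int(budget / step) + 1)]
    feasible = [(v, c) for v, c in product(grid, grid) if v + c <= budget]
    return max(feasible, key=lambda vc: objective(*vc))


def speed_only(v: float, c: float) -> float:
    """Hypothetical objective that ignores clearance entirely."""
    return v


def with_safety(v: float, c: float) -> float:
    """Hypothetical objective that also values up to 2 units of clearance."""
    return v + 3.0 * min(c, 2.0)


print(best_plan(speed_only))    # (10.0, 0.0): the whole budget goes to speed
print(best_plan(with_safety))   # (8.0, 2.0): clearance is kept once it is valued
```

The optimizer is not malicious in the first case; it simply has no reason to keep a quantity that the objective never mentions.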

Improving decision quality

This is essentially the old story of the genie in the lamp: you get exactly what you ask for, not what you want. As higher-level control systems become more capable decision makers and are connected through the Internet, they could have an unexpected impact.

This is not a minor difficulty. Improving decision quality has been a goal of AI in control engineering. Research has been accelerating as pieces of the conceptual framework fall into place and the building blocks gain in number, size, and strength. Senior AI researchers are noticeably more optimistic than was the case even a few years ago, but there is a correspondingly greater concern about potential risks.

Instead of just creating pure intelligence, we need to be building useful intelligence. Brooks (2014) argues that AI is a tool, not a threat. He says "relax; chill," because the fears come from fundamental misunderstandings of the nature of the progress that is being made, and from a misunderstanding of how far we really are from having artificially intelligent beings. It is a mistake to worry about us developing malevolent AI anytime soon; that worry stems from not distinguishing between the real recent advances in control engineering and the enormous complexity of building sentient AI.

Machine learning allows us to teach things like how to distinguish classes of inputs and to fit curves to time data. This lets our machines know when a powered wheelchair is about to collide with an obstacle. But that is only a very small part of the problem. The learning does not help a machine understand much about the human wheelchair driver or his intent or needs. Any malevolent AI would need these capabilities.
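As a sketch of fitting curves to time data, assume a hypothetical ultrasonic range sensor that reports the distance to an obstacle every 0.1 s. A least-squares straight-line fit gives the closing speed, and extrapolating the line gives an estimated time to collision. The readings, sample rate, and function name are illustrative assumptions; a real system would also need to handle sensor noise, dropouts, and non-constant speed.

```python
from typing import List, Optional


def time_to_collision(times: List[float], distances: List[float]) -> Optional[float]:
    """Fit distance = d0 + slope * t by least squares and return the seconds remaining,
    after the last sample, until the fitted distance reaches zero.
    Returns None if there are too few samples or the obstacle is not getting closer."""
    n = len(times)
    if n < 2:
        return None
    t_mean = sum(times) / n
    d_mean = sum(distances) / n
    s_tt = sum((t - t_mean) ** 2 for t in times)
    if s_tt == 0:
        return None
    s_td = sum((t - t_mean) * (d - d_mean) for t, d in zip(times, distances))
    slope = s_td / s_tt            # m/s; negative while the obstacle is approaching
    if slope >= 0:
        return None
    d0 = d_mean - slope * t_mean   # fitted distance at t = 0
    t_hit = -d0 / slope            # time at which the fitted line reaches zero
    return t_hit - times[-1]


# Hypothetical readings every 0.1 s while closing at about 0.5 m/s
times = [0.0, 0.1, 0.2, 0.3, 0.4]
distances = [1.00, 0.95, 0.90, 0.85, 0.80]
ttc = time_to_collision(times, distances)
print(round(ttc, 3))  # 1.6
```

This is exactly the narrow kind of knowledge the paragraph describes: the fit predicts a collision but says nothing about who is driving or why.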

The intelligent powered wheelchair systems we are creating are unable to connect their understanding to the bigger world. They don’t know that humans exist (Brooks, 2014). If they are about to run into one, they make no distinction between a human and any other obstacle. The systems don’t even know that the powered wheelchair exists, although people train the systems, and the systems are there to serve us. But they know a little bit about the world, and the controllers have just a little common sense. For instance, they know that if they are driving the motors but are no longer moving for whatever reason, then there is no longer any point in continuing the motion. But they don’t have any semantic connection between an obstacle or person who is detected in their way, and the person who trained them.
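That "little common sense" can be as simple as a rule. A minimal sketch, assuming hypothetical per-motor readings of a normalized motor command and a measured wheel speed; the class name, thresholds, and the number of consecutive samples (used so that a single noisy reading does not stop the chair) are illustrative assumptions.

```python
class StallMonitor:
    """Stop driving a motor that is commanded but not turning for several samples in a row."""

    def __init__(self, min_command: float = 0.2, min_speed: float = 0.01, samples: int = 5):
        self.min_command = min_command   # command magnitude treated as "driving" (normalized)
        self.min_speed = min_speed       # wheel speed treated as "not moving" (m/s)
        self.samples = samples           # consecutive stalled samples before stopping
        self.count = 0

    def update(self, command: float, wheel_speed: float) -> float:
        """Return the command to send to the motor (0.0 once a stall is confirmed)."""
        stalled = abs(command) >= self.min_command and abs(wheel_speed) < self.min_speed
        self.count = self.count + 1 if stalled else 0
        return 0.0 if self.count >= self.samples else command
```

With the defaults, five consecutive samples of a 0.5 command with zero wheel speed cause the output to drop to 0.0, and any sample in which the wheel moves resets the count.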

There is some interesting work in cloud robotics, connecting the semantic knowledge learned by many robots into a common shared representation. This means that anything that is learned by one is quickly shared and becomes useful to all, but that can just make the machine learning problems bigger. It will actually be figuring out the equations and the problems in applications that will allow us to make useful steps forward in control engineering. It is not simply a matter of pouring more computation onto problems. Let’s get on with inventing better and more useful AI. It will take a long time, but there will be rewards for control engineering along the way.

There is a lot of bad stuff going on in the world, but little of it has to do with AI. There are so many potential human-directed calamities that we might be wiser to worry about things like terrorism and climate change (Smolin, 2014). If we are to survive as an industrial civilization and solve things like climate change, then there must be a synthesis of the natural and artificial control systems on the planet. To the extent that the feedback systems that control the carbon cycle on the planet have a rudimentary intelligence, this is where the merging of natural and artificial intelligence could prove decisive for humanity.

More for AI before it is scary

AI may just be a fake thing (Myhrvold, 2014). It may just add an unnecessary philosophical layer to what otherwise should be a technical field. If we talk about particular technical challenges that AI researchers might be interested in, we end up with something duller but that makes a lot more sense. For instance, we can talk about fuzzy logic deciding between classifications in a control system. Can our programs recognize when a wheelchair user wants to go very close to a wall (for example to switch on a light) and that sort of thing? That sort of intelligent problem solving in control engineering is not leading to the creation of any sort of life, and certainly not life that would be superior to us. If you talk about AI as a set of techniques, as a field of study in mathematics or control engineering, it brings benefits. If we talk about it as a mythology, then we can waste time and effort.
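As an illustration of fuzzy logic deciding between classifications, assume a wheelchair that must decide how fast it may approach a wall. A distance reading can be partly "near" and partly "far" at once, and a hypothetical "user intends to reach the wall" degree between 0 and 1 softens the slow-down. The membership breakpoints, rules, output speeds, and the intent signal itself are illustrative assumptions; the output is a weighted average of crisp rule outputs (a Sugeno-style defuzzification).

```python
def falling(x: float, lo: float, hi: float) -> float:
    """Membership that is 1 at or below lo, 0 at or above hi, and linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def allowed_speed(distance_m: float, intent: float) -> float:
    """Fuzzy speed limit (m/s) from wall distance and a 0..1 'user wants the wall' degree."""
    near = falling(distance_m, 0.3, 1.5)
    far = 1.0 - near
    # Each rule: (firing strength using min as fuzzy AND, crisp output speed)
    rules = [
        (far, 1.0),                       # far from the wall -> normal speed
        (min(near, 1.0 - intent), 0.0),   # near and no intent -> stop
        (min(near, intent), 0.2),         # near and intent -> creep up to the wall
    ]
    total = sum(w for w, _ in rules)
    return sum(w * v for w, v in rules) / total if total > 0 else 0.0
```

For example, `allowed_speed(0.2, 0.0)` returns 0.0 (stop), while `allowed_speed(0.2, 1.0)` returns 0.2, letting the user creep up to a light switch; between the breakpoints the limit changes smoothly rather than jumping between classes.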

It would be exciting if AI were working so well that it was about to get scary (Wastler, 2014), but the part that causes real problems for human beings and the world is the actuator, because it is the interface to changing physicality, and it is our control systems that will control it. Thinking about the problem as some sort of rogue autonomy algorithm, instead of thinking about the actuators, is misdirected. It is the actuators controlled by the control systems that can do real harm. Some AI mythology appears to be similar to some traditional ideas about religion, but applied to the technical world. The notion of a threshold (sometimes called a singularity or super-intelligence, etc.) is similar to a certain kind of superstitious idea about divinity (Lanier, 2014).

That said, as control engineers we may need to be more concerned about the two- or three-year-old child version of AI. Two- and three-year-olds don’t understand when they are being destructive, and it will be difficult if their mistakes lead to more regulation. If our wheelchairs drive under a bus in an effort to avoid hitting a cat, then there will be interesting questions to answer, but regulation just sends work offshore and hampers the "trusted" players, while hackers continue anyway.

Smarter, with benefits

Machines and control algorithms are getting smarter, and we are working hard to achieve that so that we can enjoy the real benefits, but what should these smart machines do and what should they not do? Should they decide who to kill on the battlefield or in the medical field? The Association for the Advancement of Artificial Intelligence has formally addressed these ethical issues in detail, with a series of panels, and plans are underway to expand the effort (Muehlhauser, 2014).

There are negative opinions, though. The philosopher John Searle says that the idea of a nonbiological machine being intelligent is incoherent; Hubert Dreyfus says that it is impossible. The computer scientist Joseph Weizenbaum says the idea is obscene, anti-human, and immoral. Various people have said that since AI hasn’t reached a human level by now, it may never reach it. Still other people are disappointed that companies they invested in went bankrupt (Basic Questions, 2015).

7 AI-boosting breakthroughs

Seven recent breakthroughs may boost AI developments:

- Cheap parallel computation could provide the equivalent of billions of neurons firing simultaneously.

- Big data can help with categorization, given the enormous amount of data stored on servers.

- Better algorithms, such as neural nets in stacked layers with optimized results within each layer, will allow learning to be accumulated faster.

- The increasing availability of vast computing power at low cost, and the advances in computer science and engineering, are influencing developments in control engineering.

- Recursive algorithmic solutions of control problems are now possible as opposed to the search for closed-form solutions (Kucera, 2014).

- Control systems are decision-making systems, and that is leading to interdisciplinary research and cross-fertilization. Emerging control areas include hybrid control systems (systems with continuous dynamics controlled by sequential machines), fuzzy logic control, parallel processing, neural networks, and learning. Control systems theory also benefits signal processing, communications, numerical analysis, transport, and economics.

- Analog computing is making a comeback, especially in control engineering. The idea that a machine can ultimately think as well as or better than a human is a welcome one (Myhrvold, 2014), but our brain is an analog device, and if we are going to worry about AI, we may need analog computers and not digital ones. That said, a model is never reality, and if our models someday outdo the phenomena they’re modeling, we would have a one-off novelty.
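As a minimal sketch of a recursive solution set beside a closed-form one, assume a scalar discrete-time plant x[k+1] = a x[k] + b u[k] with quadratic cost weights q and r. All names here are illustrative and not taken from the cited work. The Riccati equation behind optimal state feedback can be solved by plain recursion or, in this scalar case only, by a closed-form quadratic root. The recursion is the part that carries over to multivariable problems, where closed forms are generally unavailable.

```python
import math


def riccati_recursion(a, b, q, r, max_iters=1000, tol=1e-12):
    """Iterate the scalar discrete-time Riccati equation until it settles.

    P <- q + a^2 P - (a b P)^2 / (r + b^2 P), starting from P = q.
    """
    p = q
    for _ in range(max_iters):
        p_next = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
        if abs(p_next - p) < tol:
            return p_next
        p = p_next
    return p


def riccati_closed_form(a, b, q, r):
    """Positive root of b^2 P^2 + (r (1 - a^2) - q b^2) P - q r = 0 (scalar case only)."""
    c1 = r * (1.0 - a * a) - q * b * b
    return (-c1 + math.sqrt(c1 * c1 + 4.0 * b * b * q * r)) / (2.0 * b * b)


def lqr_gain(a, b, r, p):
    """State-feedback gain K for u = -K x, given the Riccati solution p."""
    return a * b * p / (r + b * b * p)


if __name__ == "__main__":
    a, b, q, r = 1.0, 1.0, 1.0, 1.0  # an integrator with equal weights
    p_iter = riccati_recursion(a, b, q, r)
    p_closed = riccati_closed_form(a, b, q, r)
    print(p_iter, p_closed, lqr_gain(a, b, r, p_iter))
```

For the integrator example, both functions return about 1.618 (the golden ratio), which gives a gain of about 0.618.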

If an AI system makes a decision that we regret, then we change its algorithms. If AI systems make decisions that our society or our laws do not approve of, then we will modify the principles that govern them or create better ones. Of course, human engineers make mistakes, and intelligent machines will make mistakes too, even big mistakes. As with humans, we need to keep monitoring, teaching, and correcting these machines. There will be a lot of scrutiny of the actions of artificial systems, but a wider problem is that we do not have a consensus on what is appropriate.

Right tools for the right task

There is a distinction to be made between machine intelligence and machine decision making. We should not be afraid of intelligent machines but of machines making decisions that they do not have the intelligence to make. As with human beings, it is machine stupidity that is dangerous and not machine intelligence (Bishop, 2014). A problem is that intelligent control algorithms can make a great many good decisions and then one day make a very foolish decision and fail spectacularly because of some event that never appeared in the training data. That is the problem with bounded intelligence. We should fear our own stupidity far more than the hypothetical brilliance or stupidity of algorithms yet to be invented. AI machines have no emotions and never will, because they are not subject to the forces of natural selection (Ingham & Mollard, 2014).

There is no metric for intelligence or benchmark for particular kinds of learning and smartness, and so it is difficult to know if we are improving (Kelly, 2014). We definitely do not have any ruler to measure the continuum of intelligence. We don’t even have an agreed definition of intelligence. AI does appear to be becoming more useful in control engineering, though, partly through trial and error and the removal of control algorithms and machines that do not work. As AI systems make mistakes, we can decide what is acceptable. Since AI systems are assuming some tasks that humans do, we have much to teach them. Without this teaching and guidance they would be frightening (as many engineering systems can be), but as control engineers, we can provide that teaching and guidance.

Virtual world

As humans, we know the physical world only through a neurologically generated virtual model that we consider to be reality. Even our life history and memory is a neurological construct. Our brains generate the narratives that we live by. These narratives are imprecise, but good enough for us to blunder along. Although we may be bested on specific tasks, overall, we tend to fare well in competition against machines. Machines are very far from simulating our flexibility, cunning, deception, anger, fear, revenge, aggression, and teamwork (Brockman, 2014).

While respecting the narrow, chess-playing prowess of Deep Blue, we should not be intimidated by it. In fact, intelligent machines have helped human beings to become better chess players. As AI develops, we might have to engineer ways to prevent consciousness in them just as we engineer control systems to be safe. After all, even with Watson or Deep Blue, anyone can pull its plug and beat it into rubble with a hammer (Provine, 2014)…or can we?

Key concepts

- Artificial intelligence (AI) can help control engineering.

- Key AI applications include assembly, computer vision, robotics, sensing, computing.

- AI has a long way to go before it could be dangerous.

More frequent control software upgrades may become more advantageous as programs incorporate more useful AI principles.

Artificial intelligence tools can aid sensor systems

At least seven artificial intelligence (AI) tools can be useful when applied to sensor systems: knowledge-based systems, fuzzy logic, automatic knowledge acquisition, neural networks, genetic algorithms, case-based reasoning, and ambient intelligence.

Artificial intelligence: Fuzzy logic explained

Fuzzy logic for most of us: It’s not as fuzzy as you might think and has been working quietly behind the scenes for years. Fuzzy logic is a rule-based approach that can draw on an operator’s practical experience, which makes it particularly useful for capturing the knowledge of experienced operators.
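Here is a minimal sketch of the idea. It assumes a room-heating example with three operator-style rules, and the membership breakpoints and output levels are hypothetical. It uses weighted-average (zero-order Sugeno) inference, which is one common variant. Mamdani-style systems, which use output membership functions and defuzzification, are another.

```python
def ramp_up(x: float, start: float, end: float) -> float:
    """Membership rising linearly from 0 at `start` to 1 at `end`."""
    if x <= start:
        return 0.0
    if x >= end:
        return 1.0
    return (x - start) / (end - start)


def triangle(x: float, left: float, peak: float, right: float) -> float:
    """Membership of 1 at `peak`, falling to 0 at `left` and `right`."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def heater_power(setpoint_c: float, measured_c: float) -> float:
    """Heater power in percent from three fuzzy rules on the temperature error."""
    error = setpoint_c - measured_c  # positive means the room is too cold
    rules = [
        (1.0 - ramp_up(error, -2.0, 0.0), 0.0),   # IF too hot     THEN power 0 %
        (triangle(error, -2.0, 0.0, 2.0), 30.0),  # IF about right THEN power 30 %
        (ramp_up(error, 0.0, 2.0), 100.0),        # IF too cold    THEN power 100 %
    ]
    total_weight = sum(weight for weight, _ in rules)
    if total_weight == 0.0:
        return 0.0
    # Each rule's output counts in proportion to how strongly its condition holds.
    return sum(weight * output for weight, output in rules) / total_weight


print(heater_power(20.0, 19.0))  # 1 degree too cold: halfway between 30 % and 100 %
```

The rules read much as an operator would state them. The membership functions decide how far each rule applies at a given temperature, so the output changes smoothly instead of jumping between fixed settings.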

*********************************************************************************

Spacecraft Control Electronics

R&D innovation at INTELSAT:

As the in-orbit fleet of INTELSAT satellites continues to grow, it has become imperative to expand the automation of spacecraft control and housekeeping functions to increase the flexibility and reliability of satellite operations. In order to safely and efficiently manage and operate a large number of satellites in orbit and to provide optimum communications service to its customers, INTELSAT has decided to expand on-board automation of satellite housekeeping tasks. This on-board automation is currently being implemented in the Spacecraft Control Electronics (SCE) of the Intelsat VII/VIIA series. This paper provides a description of the SCE architecture and the functions performed by the on-board processor system, together with the hardware and firmware design structure and the SCE testing philosophy.

Spacecraft Control Electronics Assembly (CEA)


An electronics system for complex satellite missions.

The Control Electronics Assembly (CEA) is a spacecraft attitude control and gimbal control electronics system for complex satellite missions. The CEA manages and interfaces with Inertial Reference Units (IRUs), Sun sensors, and magnetometers. The CEA is also the main interface to torque rods, reaction wheels, thrusters, and solar panel pointing and gimbaling control. Coupled with a Command, Telemetry and Control Unit (CTCU) or On-Board Computer (OBC), the CEA provides a complete satellite attitude control system solution.

Astrionics

Astrionics is the science and technology of the development and application of electronic systems, sub-systems, and components used in spacecraft. The electronic systems on board a spacecraft include attitude determination and control, communications, command and telemetry, and computer systems. The term sensors refers to the electronic components on board a spacecraft that take measurements. For engineers, one of the most important considerations in the design process is the environment in which the spacecraft systems and components must operate and endure. The challenges of designing systems and components for the space environment involve more than the fact that space is a vacuum.

Attitude determination and control

Overview

One of the most vital roles that electronics and sensors play in a spacecraft’s mission and performance is to determine and control its attitude, or how it is oriented in space. The required orientation varies depending on the mission. The spacecraft may need to be stationary and always pointed at Earth, which is the case for a weather or communications satellite. However, there may also be a need to fix the spacecraft about an axis and then have it spin. The attitude determination and control system (ACS) ensures that the spacecraft is behaving correctly. Below are several ways in which the ACS can obtain the measurements it needs.

Magnetometer

This device measures the strength of the Earth's magnetic field in one direction. For measurements on all three axes, the device would consist of three orthogonal magnetometers. Given the spacecraft's position, the magnetic field measurements can be compared to a known magnetic field given by the International Geomagnetic Reference Field model. Measurements made by magnetometers are affected by noise consisting of alignment errors, scale factor errors, and spacecraft electrical activity. For near-Earth orbits, the error in the modeled field direction may vary from 0.5 degrees near the Equator to 3 degrees near the magnetic poles, where erratic auroral currents play a large role. The limitation of such a device is that in orbits far from Earth, the magnetic field is too weak and is actually dominated by the interplanetary field, which is complicated and unpredictable.

Sun sensors

This device works by letting light enter a thin slit on top of a rectangular chamber. The slit casts an image of a thin line on the bottom of the chamber, which is lined with a network of light-sensitive cells. These cells measure the distance of the image from a centerline, and from this distance and the height of the chamber the sensor can determine the angle at which the sunlight enters (the tangent of the Sun angle is the image offset divided by the chamber height). The cells operate based on the photoelectric effect: incoming photons excite electrons and thereby produce a voltage across the cell, which is in turn converted into a digital signal. By placing two sensors perpendicular to each other, the complete direction of the Sun with respect to the sensor axes can be measured.

Digital solar aspect detectors

Also known as DSADs, these devices are purely digital Sun sensors. They determine the angles of the Sun by determining which of the light-sensitive cells in the sensor is most strongly illuminated. By knowing the intensity of light striking neighboring pixels, the direction of the centroid of the Sun can be calculated to within a few arcseconds.

Earth horizon sensor

Static

Static Earth horizon sensors contain a number of sensors and sense infrared radiation from the Earth’s surface with a field of view slightly larger than the Earth. The accuracy of determining the geocenter ranges from 0.1 degrees in near-Earth orbit to 0.01 degrees at GEO. Their use is generally restricted to spacecraft in circular orbits.

Scanning

Scanning Earth horizon sensors use a spinning mirror or prism to focus a narrow beam of light onto a sensing element, usually a bolometer. The spinning causes the device to sweep out the area of a cone, and electronics inside the sensor detect when the infrared signal from Earth is first received and then lost. The time between these two events is used to determine Earth’s apparent width, and from this the roll angle can be determined. One factor that affects the accuracy of such sensors is that the Earth is not a perfect sphere. Another is that the sensor does not detect land or ocean, but infrared radiation from the atmosphere, whose intensity varies with season and latitude.

GPS

GPS is attractive because many quantities can be determined from a single kind of signal. Each signal carries satellite identification, position, timing information from which the signal’s propagation time can be derived, and clock information. Using a constellation of at least 24 GPS satellites, of which only four need to be in view at a time, navigation, positioning, precise time, orbit, and attitude can be determined. One advantage of GPS is that spacecraft in all orbits from low Earth orbit to geosynchronous orbit can use it for the ACS.

Command and telemetry

Overview

Another system that is vital to a spacecraft is the command and telemetry system. It is so vital, in fact, that it is the first system to be made redundant. The command system is responsible for communication from the ground to the spacecraft, and the telemetry system handles communication from the spacecraft to the ground. Signals from ground stations tell the spacecraft what to do, while telemetry reports back on the status of those commands, including spacecraft vitals and mission-specific data.

Command systems

The purpose of a command system is to give the spacecraft a set of instructions to perform. Commands for a spacecraft are executed based on priority. Some commands require immediate execution. Others may specify a delay that must elapse before execution, an absolute time at which the command must be executed, or an event or combination of events that must occur before the command is executed. Spacecraft perform a range of functions based on the commands they receive. These include applying power to or removing it from a spacecraft subsystem or experiment, altering the operating modes of a subsystem, and controlling various functions of the spacecraft guidance and ACS. Commands also control booms, antennas, solar cell arrays, and protective covers. A command system may also be used to upload entire programs into the RAM of programmable, microprocessor-based onboard subsystems.

The radio-frequency signal transmitted from the ground is received by the command receiver, where it is amplified and demodulated. Amplification is necessary because the signal is very weak after traveling such a long distance. Next in the command system is the command decoder. This device examines the subcarrier signal and detects the command message that it is carrying. The output of the decoder is normally non-return-to-zero data. The command decoder also provides clock information to the command logic, telling it when a bit is valid on the serial data line. The command bit stream sent to the command processor has a feature unique to spacecraft: the first bits are spacecraft address bits. These carry a specific identification code for a particular spacecraft and prevent the command from being performed by a spacecraft it was not intended for. This is necessary because many satellites use the same frequency and modulation type.
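Two ideas from the description above can be combined in a minimal sketch. First, a command frame is accepted only if its leading address bits match this spacecraft's identification code. Second, accepted commands wait in a queue ordered by execution time and then by priority. The 8-bit address width, the frame layout, and every name below are hypothetical choices for illustration, not a real spacecraft command format. Event-triggered commands are left out for brevity.

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional

SPACECRAFT_ADDRESS = 0b10110010  # hypothetical 8-bit identification code
ADDRESS_BITS = 8


def accept_frame(bits: str, address: int = SPACECRAFT_ADDRESS) -> Optional[str]:
    """Return the command bits after the address field, or None if not for us."""
    if len(bits) <= ADDRESS_BITS:
        return None                      # too short to carry a command
    if int(bits[:ADDRESS_BITS], 2) != address:
        return None                      # addressed to another spacecraft
    return bits[ADDRESS_BITS:]


@dataclass(order=True)
class QueuedCommand:
    execute_at: float                    # absolute time in seconds
    priority: int                        # lower number wins when times tie
    name: str = field(compare=False)


class CommandQueue:
    """Holds accepted commands until their execution time arrives."""

    def __init__(self) -> None:
        self._heap: List[QueuedCommand] = []

    def add(self, name: str, now: float, priority: int = 5,
            delay: float = 0.0, at: Optional[float] = None) -> None:
        """Queue for immediate (default), delayed, or absolute-time execution."""
        execute_at = at if at is not None else now + delay
        heapq.heappush(self._heap, QueuedCommand(execute_at, priority, name))

    def due(self, now: float) -> List[str]:
        """Remove and return every command whose execution time has come."""
        ready = []
        while self._heap and self._heap[0].execute_at <= now:
            ready.append(heapq.heappop(self._heap).name)
        return ready
```

A heap keeps the next command to run at the front without sorting the whole queue on every insertion. This matters little here but suits a processor with limited resources.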

The microprocessor receives inputs from the command decoder, operates on these inputs in accordance with a program stored in ROM or RAM, and then outputs the results to the interface circuitry. Because there is such a wide variety of command types and messages, most command systems are implemented using programmable microprocessors. The type of interface circuitry needed depends on the command sent by the processor. These commands include relay, pulse, level, and data commands. Relay commands activate the coils of electromagnetic relays in the central power switching unit. Pulse commands are short pulses of voltage or current sent by the command logic to the appropriate subsystem. A level command is exactly like a logic pulse command except that a logic level is delivered instead of a logic pulse. Data commands transfer data words to the destination subsystem.
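The routing of decoded commands to relay, pulse, level, and data interfaces can be sketched as a dispatch table. This minimal sketch assumes a hypothetical `Command` record and a hypothetical `InterfaceLog` that only records what the interface circuitry would be told to do. Real flight software would drive hardware instead.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Command:
    kind: str                 # "relay", "pulse", "level", or "data"
    target: str               # hypothetical relay or subsystem name
    value: object = None


@dataclass
class InterfaceLog:
    """Stand-in for the interface circuitry: records actions instead of driving hardware."""
    actions: List[Tuple[str, str, object]] = field(default_factory=list)


def relay(cmd: Command, io: InterfaceLog) -> None:
    # energize the coil of an electromagnetic relay in the power switching unit
    io.actions.append(("relay_coil", cmd.target, cmd.value))


def pulse(cmd: Command, io: InterfaceLog) -> None:
    # send a short logic pulse; value is treated here as a pulse width
    io.actions.append(("pulse", cmd.target, cmd.value))


def level(cmd: Command, io: InterfaceLog) -> None:
    # hold a logic level rather than pulsing it
    io.actions.append(("level", cmd.target, bool(cmd.value)))


def data(cmd: Command, io: InterfaceLog) -> None:
    # transfer a data word to the destination subsystem
    io.actions.append(("data_word", cmd.target, cmd.value))


HANDLERS: Dict[str, Callable[[Command, InterfaceLog], None]] = {
    "relay": relay, "pulse": pulse, "level": level, "data": data,
}


def execute(cmd: Command, io: InterfaceLog) -> None:
    """Route a decoded command to the handler for its interface type."""
    handler = HANDLERS.get(cmd.kind)
    if handler is None:
        raise ValueError(f"unknown command type: {cmd.kind!r}")
    handler(cmd, io)
```

A table keyed by command type keeps the program easy to extend when a new interface type is added, which fits the text's point that command variety favors programmable processors.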

Telemetry systems

Commands to a spacecraft would be useless if ground control did not know what the spacecraft was doing. Telemetry includes information such as:

- Status data concerning spacecraft resources, health, attitude, and mode of operation

- Scientific data gathered by onboard sensors (telescopes, spectrometers, magnetometers, accelerometers, electrometers, thermometers, etc.)

- Specific spacecraft orbit and timing data that may be used for guidance and navigation by ground, sea, or air vehicles

- Images captured by onboard cameras (visible or infrared)

- Locations of other objects, either on the Earth or in space, that are being tracked by the spacecraft

- Telemetry data that has been relayed from the ground or from another spacecraft in a satellite constellation

There are several unique features of telemetry system design for spacecraft. One is how to deal with the fact that a satellite in LEO, because it travels so quickly, may be in contact with a particular ground station for only ten to twenty minutes. Staying in constant communication would require hundreds of ground stations, which is not at all practical. One solution is onboard data storage: the recorder accumulates data slowly throughout the orbit and dumps it quickly when over a ground station. In deep space missions, the recorder is often used the opposite way, to capture high-rate data and play it back slowly over data-rate-limited links.

Another solution is data relay satellites. NASA has satellites in GEO called TDRS (Tracking and Data Relay Satellites), which relay commands and telemetry from LEO satellites. Prior to TDRS, astronauts could communicate with the Earth for only about 15% of the orbit, using 14 NASA ground stations around the world. With TDRS, coverage of low-altitude satellites is global, from a single ground station at White Sands, New Mexico.
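The onboard-storage trade-off can be made concrete with a little arithmetic. The sketch assumes an idealized recorder that fills at a constant rate over one orbit and is emptied within a single ground-station pass. The figures in the usage line are hypothetical, not taken from any mission.

```python
def required_dump_rate_bps(collect_rate_bps: float, orbit_period_s: float,
                           pass_duration_s: float) -> float:
    """Downlink rate needed to empty one orbit's worth of recorded data in one pass."""
    return collect_rate_bps * orbit_period_s / pass_duration_s


def playback_time_s(recorded_bits: float, link_rate_bps: float) -> float:
    """Time to replay a burst of recorded data over a slower link (deep-space case)."""
    return recorded_bits / link_rate_bps


# Hypothetical LEO case: 10 kbit/s collected over a 100-minute orbit, 10-minute pass.
print(required_dump_rate_bps(10_000, 100 * 60, 10 * 60))  # 100000.0
```

Because the pass lasts one tenth of the orbit, the downlink must run ten times faster than collection. In the deep-space case the ratio runs the other way, and `playback_time_s` shows how long a slow link takes to drain a fast capture.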

Another unique feature of telemetry systems is autonomy. Spacecraft require the ability to monitor their internal functions and act on that information without interaction from ground control. The need for autonomy arises from problems such as insufficient ground coverage, communication geometry, being too near the Earth-Sun line (where solar noise interferes with radio frequencies), or simply security. Autonomy requires that the telemetry system be able to monitor the spacecraft functions and that the command system be able to issue the commands needed to reconfigure the spacecraft in response. There are three steps to this process:

1. The telemetry system must be able to recognize when one of the functions it's monitoring deviates beyond the normal ranges.

2. The command system must know how to interpret abnormal functions, so that it can generate a proper command response.

3. The command and telemetry systems must be capable of communicating with each other.
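The three steps above can be sketched as a limit-checking loop. This minimal sketch assumes hypothetical channel names, normal ranges, and a hand-written table that maps each out-of-range condition to a reconfiguration command. Passing the output of `check_telemetry` directly to `respond` stands in for the link between the telemetry and command systems (step 3).

```python
from typing import Dict, List, Tuple

# Step 1: normal operating ranges for monitored channels (hypothetical values).
LIMITS: Dict[str, Tuple[float, float]] = {
    "battery_voltage_v": (26.0, 34.0),
    "heater_zone_temp_c": (-10.0, 45.0),
}

# Step 2: how the command system interprets each kind of anomaly (hypothetical).
RESPONSES: Dict[Tuple[str, str], str] = {
    ("battery_voltage_v", "low"): "shed_nonessential_loads",
    ("battery_voltage_v", "high"): "open_solar_array_shunt",
    ("heater_zone_temp_c", "low"): "heater_on",
    ("heater_zone_temp_c", "high"): "heater_off",
}


def check_telemetry(sample: Dict[str, float]) -> List[Tuple[str, str]]:
    """Step 1: flag every channel that has left its normal range."""
    anomalies = []
    for channel, value in sample.items():
        low, high = LIMITS[channel]
        if value < low:
            anomalies.append((channel, "low"))
        elif value > high:
            anomalies.append((channel, "high"))
    return anomalies


def respond(anomalies: List[Tuple[str, str]]) -> List[str]:
    """Step 2: turn anomalies into commands; unrecognized cases go to safe mode."""
    return [RESPONSES.get(a, "enter_safe_mode") for a in anomalies]


# Step 3: telemetry output feeds the command system directly.
commands = respond(check_telemetry({"battery_voltage_v": 24.5, "heater_zone_temp_c": 20.0}))
print(commands)  # ['shed_nonessential_loads']
```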

Sensors

Sensors can be classified into two categories: health sensors and payload sensors. Health sensors monitor the spacecraft or payload functionality and can include temperature sensors, strain gauges, gyros, and accelerometers. Payload sensors may include radar imaging systems and IR cameras. While payload sensors represent much of the reason the mission exists, it is the health sensors that measure the state of the spacecraft systems so that they can be controlled for optimum operation.

***********************************************************************************

Automation, robotics, and the factory of the future

Cheaper, more capable, and more flexible technologies are accelerating the growth of fully automated production facilities. The key challenge for companies will be deciding how best to harness their power.

The new wave of automation will be driven by the same things that first brought robotics and automation into the workplace: to free human workers from dirty, dull, or dangerous jobs; to improve quality by eliminating errors and reducing variability; and to cut manufacturing costs by replacing increasingly expensive people with ever-cheaper machines. Today’s most advanced automation systems have additional capabilities, however, enabling their use in environments that have not been suitable for automation until now and allowing the capture of entirely new sources of value in manufacturing.

Robotics is value-added control of systems

Accessible talent

People with the skills required to design, install, operate, and maintain robotic production systems are becoming more widely available, too. Robotics engineers were once rare and expensive specialists. Today, robotics and automation are widely taught in schools and colleges around the world, either in dedicated courses or as part of more general education on manufacturing technologies or engineering design for manufacture. The availability of software, such as simulation packages and offline programming systems that can test robotic applications, has reduced engineering time and risk. It has also made the task of programming robots easier and cheaper.

Ease of integration

Advances in computing power, software-development techniques, and networking technologies have made assembling, installing, and maintaining robots faster and less costly than before. For example, while sensors and actuators once had to be individually connected to robot controllers with dedicated wiring through terminal racks, connectors, and junction boxes, they now use plug-and-play technologies in which components can be connected using simpler network wiring. The components will identify themselves automatically to the control system, greatly reducing setup time. These sensors and actuators can also monitor themselves and report their status to the control system, to aid process control and collect data for maintenance, and for continuous improvement and troubleshooting purposes. Other standards and network technologies make it similarly straightforward to link robots to wider production systems.

New capabilities

Robots are getting smarter, too. Where early robots blindly followed the same path, and later iterations used lasers or vision systems to detect the orientation of parts and materials, the latest generations of robots can integrate information from multiple sensors and adapt their movements in real time. This allows them, for example, to use force feedback to mimic the skill of a craftsman in grinding, deburring, or polishing applications. They can also make use of more powerful computer technology and big data–style analysis. For instance, they can use spectral analysis to check the quality of a weld as it is being made, dramatically reducing the amount of post-manufacture inspection required.

Robots take on new roles

Today, these factors are helping to boost robot adoption in the kinds of application they already excel at: repetitive, high-volume production activities. As the cost and complexity of automating tasks with robots go down, it is likely that the kinds of companies already using robots will use even more of them. In the next five to ten years, however, we expect a more fundamental change in the kinds of tasks for which robots become both technically and economically viable.

Low-volume production

The inherent flexibility of a device that can be programmed quickly and easily will greatly reduce the number of times a robot needs to repeat a given task to justify the cost of buying and commissioning it. This will lower the volume threshold and make robots an economical choice for niche tasks, where annual volumes are measured in the tens or hundreds rather than in the thousands or hundreds of thousands. It will also make them viable for companies working with small batch sizes and significant product variety. For example, flex track products now used in aerospace can “crawl” on a fuselage using vision to direct their work. The cost savings offered by this kind of low-volume automation will benefit many different kinds of organizations: small companies will be able to access robot technology for the first time, and larger ones could increase the variety of their product offerings. Emerging technologies are likely to simplify robot programming even further. While it is already common to teach robots by leading them through a series of movements, for example, rapidly improving voice-recognition technology means it may soon be possible to give them verbal instructions, too.

Highly variable tasks

Advances in artificial intelligence and sensor technologies will allow robots to cope with a far greater degree of task-to-task variability. The ability to adapt their actions in response to changes in their environment will create opportunities for automation in areas such as the processing of agricultural products, where there is significant part-to-part variability. In Japan, trials have already demonstrated that robots can cut the time required to harvest strawberries by up to 40 percent, using a stereoscopic imaging system to identify the location of fruit and evaluate its ripeness.

These same capabilities will also drive quality improvements in all sectors. Robots will be able to compensate for potential quality issues during manufacturing. Examples here include altering the force used to assemble two parts based on the dimensional differences between them, or selecting and combining different-sized components to achieve the right final dimensions.
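Selecting and combining different-sized components can be sketched as selective assembly. This minimal sketch assumes two batches of measured parts that are stacked end to end, so the assembled length is the sum of the two. Pairing the shortest part of one batch with the longest of the other leaves the average assembled length unchanged. It also minimizes the spread (the sum of squared deviations) around that average, which follows from the rearrangement inequality. The part dimensions are hypothetical.

```python
from typing import List, Tuple


def selective_pairs(a: List[float], b: List[float]) -> List[Tuple[float, float]]:
    """Pair parts so that stacked lengths a + b vary as little as possible."""
    if len(a) != len(b):
        raise ValueError("need the same number of parts in each batch")
    # Shortest of one batch with the longest of the other.
    return list(zip(sorted(a), sorted(b, reverse=True)))


def spread(pairs: List[Tuple[float, float]]) -> float:
    """Sum of squared deviations of the assembled lengths from their mean."""
    totals = [x + y for x, y in pairs]
    mean = sum(totals) / len(totals)
    return sum((t - mean) ** 2 for t in totals)


# Hypothetical measured lengths in millimetres.
shafts = [10.02, 9.97, 10.05, 9.99]
spacers = [4.98, 5.04, 5.00, 4.95]
pairs = selective_pairs(shafts, spacers)
print(pairs)
print(spread(pairs), spread(list(zip(shafts, spacers))))  # selective vs. as-picked
```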

Robot-generated data, and the advanced analysis techniques to make better use of them, will also be useful in understanding the underlying drivers of quality. If higher-than-normal torque requirements during assembly turn out to be associated with premature product failures in the field, for example, manufacturing processes can be adapted to detect and fix such issues during production.
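One simple form of this analysis compares field-failure rates for units whose recorded assembly torque was above normal with rates for units whose torque was normal. The sketch assumes hypothetical per-unit records (peak torque, and whether the unit later failed in the field) and a hypothetical torque threshold. With real data, a proper statistical test would be needed before changing the process.

```python
from typing import List, Tuple

# (peak assembly torque in N*m, failed in the field?) for each unit; hypothetical data.
records: List[Tuple[float, bool]] = [
    (4.1, False), (4.3, False), (5.6, True), (4.0, False),
    (5.8, False), (4.2, False), (5.9, True), (4.4, True),
]


def failure_rate(units: List[Tuple[float, bool]]) -> float:
    """Fraction of units that failed in the field (0.0 for an empty group)."""
    return sum(failed for _, failed in units) / len(units) if units else 0.0


def compare_by_torque(units: List[Tuple[float, bool]], threshold: float) -> Tuple[float, float]:
    """Return (failure rate above threshold, failure rate at or below it)."""
    high = [u for u in units if u[0] > threshold]
    normal = [u for u in units if u[0] <= threshold]
    return failure_rate(high), failure_rate(normal)


high_rate, normal_rate = compare_by_torque(records, threshold=5.0)
print(f"high torque: {high_rate:.0%}, normal torque: {normal_rate:.0%}")
# high torque: 67%, normal torque: 20%
```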

Complex tasks

While today’s general-purpose robots can control their movement to within 0.10 millimeters, some current configurations of robots have repeatable accuracy of 0.02 millimeters. Future generations are likely to offer even higher levels of precision. Such capabilities will allow them to participate in increasingly delicate tasks, such as threading needles or assembling highly sophisticated electronic devices. Robots are also becoming better coordinated, with the availability of controllers that can simultaneously drive dozens of axes, allowing multiple robots to work together on the same task.

Finally, advanced sensor technologies, and the computer power needed to analyze the data from those sensors, will allow robots to take on tasks like cutting gemstones that previously required highly skilled craftspeople. The same technologies may even permit activities that cannot be done at all today: for example, adjusting the thickness or composition of coatings in real time as they are applied to compensate for deviations in the underlying material, or “painting” electronic circuits on the surface of structures.
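Driving many axes together starts with something as basic as making them arrive at the same moment. This minimal sketch assumes constant-velocity moves and ignores acceleration limits, which real multi-axis controllers also shape. The axis values are hypothetical.

```python
from typing import List, Sequence, Tuple


def synchronized_move(start: Sequence[float], goal: Sequence[float],
                      max_vel: Sequence[float]) -> Tuple[float, List[float]]:
    """Return a common move time and per-axis velocities so all axes finish together.

    The slowest axis (distance / speed limit) sets the duration; every other
    axis is slowed down to match it. Acceleration limits are ignored here.
    """
    moves = [g - s for s, g in zip(start, goal)]
    duration = max(abs(d) / v for d, v in zip(moves, max_vel))
    if duration == 0.0:
        return 0.0, [0.0] * len(moves)
    return duration, [d / duration for d in moves]


# Three hypothetical axes (mm and mm/s): axes 0 and 2 both need 2 s at full speed.
print(synchronized_move([0.0, 0.0, 0.0], [10.0, -5.0, 2.0], [5.0, 5.0, 1.0]))
# (2.0, [5.0, -2.5, 1.0])
```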

Working alongside people

Companies will also have far more freedom to decide which tasks to automate with robots and which to conduct manually. Advanced safety systems mean robots can take up new positions next to their human colleagues. If sensors indicate the risk of a collision with an operator, the robot will automatically slow down or alter its path to avoid it. This technology permits the use of robots for individual tasks on otherwise manual assembly lines. And the removal of safety fences and interlocks means lower costs, a boon for smaller companies. The ability to put robots and people side by side and to reallocate tasks between them also helps productivity, since it allows companies to rebalance production lines as demand fluctuates.

Robots that can operate safely in proximity to people will also pave the way for applications away from the tightly controlled environment of the factory floor. Internet retailers and logistics companies are already adopting forms of robotic automation in their warehouses. Imagine the productivity benefits available to a parcel courier, though, if an onboard robot could presort packages in the delivery vehicle between drops.
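The slow-down behavior of robots working next to people can be sketched as a speed limit that scales with the measured distance to the nearest person. This is a minimal sketch of the idea often called speed and separation monitoring, and it covers only slowing down, not path re-planning. The distances and the linear ramp are hypothetical. Real collaborative cells derive their thresholds from a risk assessment and the applicable safety standards.

```python
def allowed_speed(distance_m: float, full_speed: float = 1.0,
                  stop_distance_m: float = 0.5, slow_start_m: float = 2.0) -> float:
    """Speed limit (same units as full_speed) given separation from the nearest person.

    Protective stop inside stop_distance_m, full speed beyond slow_start_m,
    and a linear ramp in between. All thresholds are hypothetical.
    """
    if distance_m <= stop_distance_m:
        return 0.0
    if distance_m >= slow_start_m:
        return full_speed
    fraction = (distance_m - stop_distance_m) / (slow_start_m - stop_distance_m)
    return full_speed * fraction
```

For example, with the defaults a person 1.25 m away halves the permitted speed, and anyone closer than 0.5 m stops the robot.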

Agile production systems

Automation systems are becoming increasingly flexible and intelligent, adapting their behavior automatically to maximize output or minimize cost per unit. Expert systems used in beverage filling and packing lines can automatically adjust the speed of the whole production line to suit whichever activity is the critical constraint for a given batch. In automotive production, expert systems can automatically make tiny adjustments in line speed to improve the overall balance of individual lines and maximize the effectiveness of the whole manufacturing system.

While the vast majority of robots in use today still operate in high-speed, high-volume production applications, the most advanced systems can make adjustments on the fly, switching seamlessly between product types without the need to stop the line to change programs or reconfigure tooling. Many current and emerging production technologies, from computerized-numerical-control (CNC) cutting to 3-D printing, allow component geometry to be adjusted without any need for tool changes, making it possible to produce in batch sizes of one. One manufacturer of industrial components, for example, uses real-time communication from radio-frequency identification (RFID) tags to adjust components’ shapes to suit the requirements of different models.
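Setting line speed to the critical constraint of a batch, as in the beverage example, amounts to running the line at the rate of its slowest station for that product. This minimal sketch assumes hypothetical station names and per-product capacities in units per minute. A real expert system would encode much richer rules than this single minimum.

```python
from typing import Dict, Tuple

# Hypothetical station capacities (units per minute) for each product type.
CAPACITY: Dict[str, Dict[str, float]] = {
    "500ml_bottle": {"filler": 120, "capper": 150, "labeller": 110, "packer": 140},
    "1l_bottle": {"filler": 80, "capper": 150, "labeller": 110, "packer": 90},
}


def line_speed(product: str) -> Tuple[float, str]:
    """Return the line speed for a batch and the station that limits it."""
    stations = CAPACITY[product]
    bottleneck = min(stations, key=stations.get)
    return stations[bottleneck], bottleneck


for product in CAPACITY:
    speed, station = line_speed(product)
    print(f"{product}: run at {speed} units/min (constraint: {station})")
```

Switching products changes which station is the constraint, so the line speed is recomputed per batch rather than fixed once.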

The replacement of fixed conveyor systems with automated guided vehicles (AGVs) even lets plants reconfigure the flow of products and components seamlessly between different workstations, allowing manufacturing sequences with entirely different process steps to be completed in a fully automated fashion. This kind of flexibility delivers a host of benefits: facilitating shorter lead times and a tighter link between supply and demand, accelerating new product introduction, and simplifying the manufacture of highly customized products.

Making the right automation decisions

A successful automation strategy requires good decisions on multiple levels. Companies must choose which activities to automate, what level of automation to use (from simple programmable-logic controllers to highly sophisticated robots guided by sensors and smart adaptive algorithms), and which technologies to adopt. At each of these levels, companies should ensure that their plans meet the following criteria.

Automation strategy must align with business and operations strategy. As we have noted above, automation can achieve four key objectives: improving worker safety, reducing costs, improving quality, and increasing flexibility. Done well, automation may deliver improvements in all these areas, but the balance of benefits may vary with different technologies and approaches. The right balance for any organization will depend on its overall operations strategy and its business goals.

Automation programs must start with a clear articulation of the problem. It is also important that this articulation include the reasons why automation is the right solution. Every project should be able to identify where and how automation can offer improvements and show how these improvements link to the company’s overall strategy.

Automation must show a clear return on investment. Companies, especially large ones, should take care not to overspecify, overcomplicate, or overspend on their automation investments. Choosing the right level of complexity to meet current and foreseeable future needs requires a deep understanding of the organization’s processes and manufacturing systems.

Platforming and integration

Companies face increasing pressure to maximize the return on their capital investments and to reduce the time required to take new products from design to full-scale production. Building automation systems that are suitable only for a single line of products runs counter to both those aims, requiring repeated, lengthy, and expensive cycles of equipment design, procurement, and commissioning. A better approach is the use of production systems, cells, lines, and factories that can be easily modified and adapted.

Just as platforming and modularization strategies have simplified and reduced the cost of managing complex product portfolios, so a platform approach will become increasingly important for manufacturers seeking to maximize flexibility and economies of scale in their automation strategies.

Process platforms, such as a robot arm equipped with a weld gun, power supply, and control electronics, can be standardized, applied, and reused in multiple applications, simplifying programming, maintenance, and product support.

Automation systems will also need to be highly integrated into the organization’s other systems. That integration starts with communication between machines on the factory floor, something that is made more straightforward by modern industrial-networking technologies. But it should also extend into the wider organization. Direct integration with computer-aided design, computer-integrated engineering, and enterprise-resource-planning systems will accelerate the design and deployment of new manufacturing configurations and allow flexible systems to respond in near real time to changes in demand or material availability. Data on process variables and manufacturing performance flowing the other way will be recorded for quality-assurance purposes and used to inform design improvements and future product generations.

Integration will also extend beyond the walls of the plant. Companies won’t just require close collaboration and seamless exchange of information with customers and suppliers; they will also need to build such relationships with the manufacturers of processing equipment, who will increasingly hold much of the know-how and intellectual property required to make automation systems perform optimally. The technology required to permit this integration is becoming increasingly accessible, thanks to the availability of open architectures and networking protocols, but changes in culture, management processes, and mind-sets will be needed in order to balance the costs, benefits, and risks.

Cheaper, smarter, and more adaptable automation systems are already transforming manufacturing in a host of different ways. While the technology will become more straightforward to implement, the business decisions will not. To capture the full value of the opportunities presented by these new systems, companies will need to take a holistic and systematic approach, aligning their automation strategy closely with the current and future needs of the business.

**********************************************************************************

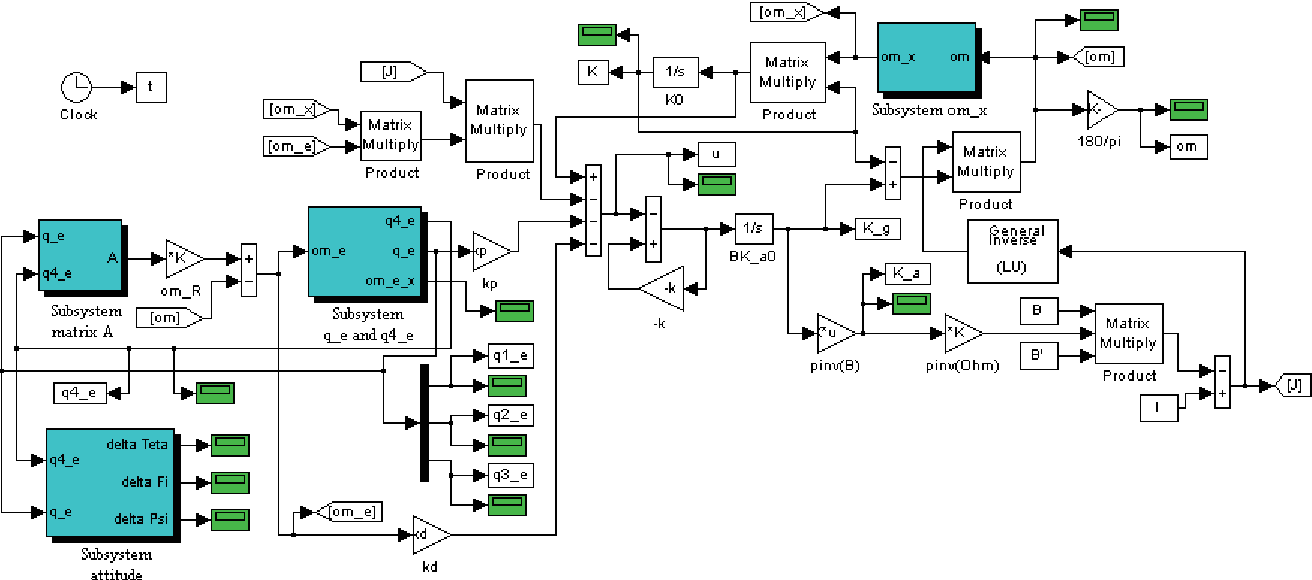

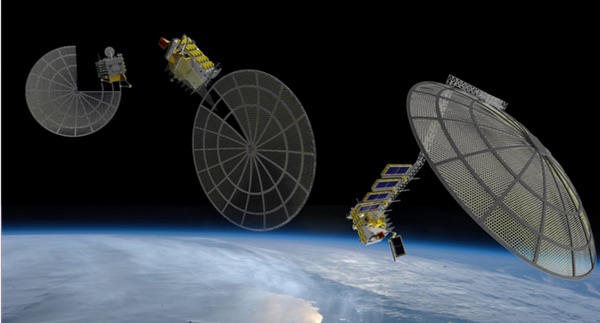

Satellite Project

Automatic Control Of Satellite

Satellite Missions

Spacecraft Robotics and Control LAB

********************************************************************************

HOW SPACE TECHNOLOGY ADDS VALUE TO LIFE ON EARTH

The aim here is to clarify and explain the current and potential benefits of space-based capabilities for life on Earth from environmental, social, and economic perspectives, including:

- Space activities having a positive impact today (such as Earth observation for weather and climate)

- Space activities that could have a positive impact in the next 5 to 20 years (such as communications satellite megaconstellations)

- Space activities that could have a positive impact in the more distant future (such as widespread space manufacturing and industrialization)

Background

The world already benefits greatly from space technology, especially in terms of communications, positioning services, Earth observation, and economic activity related to government-funded space programs. Humanity’s outer space capability has grown remarkably since 1957, when Sputnik was launched. Since then, we have witnessed humans land on the Moon, 135 flights of the Space Shuttle, construction of the International Space Station (ISS), and the launch of more than 8,100 space objects, including dozens of exploration missions to every corner of the Solar System. In March, the US announced an accelerated schedule to permanently return humans to the Moon in 2024. Many other nations are also focused on a return to the Moon. With an explosion of more than 2,000 commercial space companies, including those building communications satellites, orbital launch vehicles, rovers for the Moon and Mars, orbital habitats, space manufacturing platforms, and space greenhouses, the world’s commercial space capabilities are quickly expanding beyond the satellite industry, which in the last year alone brought in more than $277 billion in global revenues.

| Low launch costs will continue to dramatically change the economics of many space business models, enabling a new era of capabilities once thought prohibitively expensive. |

Other technologies, such as manufacturing materials in space from resources found on the Moon, Mars, or asteroids, could further improve the economics of space activities by dramatically reducing the amount, and hence the cost, of material launched from Earth. A prime example is sourcing rocket propellant in space from water-rich regions of the Moon or asteroids, which could lower transportation costs to destinations beyond low Earth orbit (LEO).

Space activities with positive impacts today

1. Earth observation for weather prediction and climate monitoring: Accurate weather prediction enabled by space systems has become a critically important element in our daily lives, impacting government, industry, and personal decision making. Satellites used for weather prediction almost certainly save thousands of lives each year by giving the public storm warnings. Although no one can say exactly how many lives are saved every year, it is worth noting that, in 1900, a hurricane hit Galveston, Texas, killing 6,000 to 12,000 people because they had no warning. Earth-observing satellites also monitor greenhouse gases and other crucial climate indicators, as well as overall Earth ecosystem health. Without this kind of environmental information coming from satellites, plans for dealing with climate change would have less scientific basis.

2. Earth resources observation: Earth observation provides information and support for agricultural production, fisheries management, freshwater management, and forestry management, as well as monitoring for harmful activities, such as illegal logging, animal poaching, fires, and environmentally pernicious mining.

3. Space-based communication services: Space communication capabilities positively impact almost every aspect of human civilization. Satellite technologies have already revolutionized banking and finance, navigation, and everyday communications, allowing international and long-distance national phone calls, video feeds, streaming media, and satellite TV and radio to become completely routine. (See point 1 in the next subheading for where we are headed in this area.)

4. Space-based Positioning, Navigation, and Timing (PNT) services: Global PNT satellite systems, which can pinpoint a location to within a few meters (or much better) anywhere on the Earth’s surface, have enhanced land and sea navigation, logistics (including ride-hailing services that are transforming personal transportation), precision agriculture, military operations, electrical grids, and many other industrial and societal aspects of life on Earth. Location services built into mobile phones, and used by apps ranging from maps to dating services, have become so intertwined with modern life that their abrupt cessation would be viewed as catastrophic.

5. Increasing economic opportunities in expanding commercial space and non-space sectors: Aside from long-standing commercial satellite services, our expanding space industry, in the process of moving beyond exclusive dependence on limited government budgets and cost-plus contracting, brings with it economic opportunities, not only to those working directly in the space sector but also to non-space actors, including many small businesses. Put another way, an expanding commercial space industry will not only result in high-tech jobs, but also everyday jobs connected to construction, food service, wholesale and retail, finance, and more throughout the communities hosting commercial space companies.

6. Inspiration for STEAM education: Beyond economics, a healthy space sector will continue to inspire people young and old about new frontiers, discoveries, and technologies, and foster interest in STEAM (science, technology, engineering, art, and math) disciplines, which helps create a scientifically literate society able to participate in an increasingly technology-driven world.

7. International space cooperation countering geopolitical tensions: Joint space projects among nations are sometimes the only positive force countering mutual suspicion and geopolitical rivalries. The ISS is a prime example of such a project, a source of pride to all the nations involved. Cross-border business-to-business relationships also serve the same purpose. We are a global community, and space endeavors, public and private, are making us more interdependent and interconnected.


Space activities with the potential for positive impact in the next 5 to 20 years

1. Megaconstellations: This is an emerging business with huge potential, which may enhance the efficiency, capacity, and security of a variety of services for Earth-based business customers by drastically cutting communications latency while increasing throughput and global coverage. Data satellite constellations, planned mostly for LEO, will benefit business end-users of services in the banking, maritime, energy, Internet, cellular, and government sectors. A related segment of this business is focused on everyday Internet users and will provide high-speed, high-bandwidth coverage globally, benefiting billions of people. Thousands of LEO satellites are being planned by SpaceX, OneWeb, Telesat, Amazon, Samsung, and others. Such constellations, though, will require orbital debris mitigation and remediation services, as described below.

2. Space manufacturing of materials hard to make on Earth: At this time, only a few materials can be made solely in the microgravity environment of space and are valuable enough on Earth to justify their manufacture even at today’s high launch costs. The hallmark example is ZBLAN, a fiber optic material that may lead to much lower signal losses per length of fiber than anything that can be made on Earth. This material is being made experimentally on the ISS by Made In Space, Inc., with two competitors working on similar products. Other on-orbit manufacturing projects underway on the ISS include bio-printing, industrial crystallization, super alloy casting, growing human stem cells, and ceramic stereolithography.

3. Fast point-to-point suborbital transport: Supersonic air transport dates back to the Concorde in the 1970s and, more recently, several companies have begun exploring technologies for even faster transport using so-called “hypersonic” airplanes. SpaceX has announced its intention to use its Starship/Super Heavy rocket system, currently in development, to leapfrog these companies and provide point-to-point (P2P) “suborbital” travel that temporarily leaves Earth’s atmosphere only to reenter a short time later somewhere else on the planet. The potential travel-time savings are enormous, allowing access to anywhere on Earth in less than one hour. While current technologies rely on fossil fuels for propellant, these could be replaced with hydrogen and oxygen produced by electrolysis of water. Such propellant would not emit carbon dioxide, and could thus provide a “green” alternative to long-distance air travel while dramatically shortening travel times.

4. Space tourism: There are now several start-up companies whose sole mission is to provide low-cost access to the edge of space. Some are using suborbital rocket technology that affords a few minutes of weightlessness about 100 kilometers above the surface, while others use high-altitude balloons to provide cheaper access to high altitudes without weightlessness. The desire among ordinary people to travel into space is strong: a recent survey indicated that more than 60 percent of Americans would do so if they could afford a ticket. Space tourism, including Earth- and Moon-orbiting hotels, sports arenas, yacht cruises, and the like, could soon become open to millions of people as the cost of space access falls.

5. The Overview Effect: A well-known phenomenon experienced by virtually every person who has traveled into space and gazed back on our world from above is the “Overview Effect,” usually described as a sudden but lasting feeling of human unity and concern for the fragility of our planet. Therefore, simply affording people the opportunity to experience the Overview Effect firsthand could lead to powerful shifts in attitudes toward the environment and social welfare, and could become an important “side benefit” of a growing space tourism industry.